Abstract

Background

Low levels of numeracy and literacy skills are associated with a range of negative outcomes later in life, such as reduced earnings and health. Obtaining information about effective interventions for children with or at risk of academic difficulties is therefore important.

Objectives

The main objective was to assess the effectiveness of interventions targeting students with or at risk of academic difficulties in kindergarten to Grade 6.

Search Methods

We searched 15 international electronic databases, covering 1980 to July 2018, and seven national repositories. We also searched the grey literature using governmental sites, academic clearinghouses and repositories for reports and working papers, and trial registries (10 sources). We hand searched recent volumes of six journals and contacted international experts. Lastly, we used included studies and 23 previously published reviews for citation tracking.

Selection Criteria

Studies had to meet the following criteria to be included:

Population: The population eligible for the review included students attending regular schools in kindergarten to Grade 6, who were having academic difficulties, or were at risk of such difficulties.

Intervention: We included interventions that sought to improve academic skills, were conducted in schools during the regular school year, and were targeted (selected or indicated).

Comparison: Included studies used an intervention‐control group design or a comparison group design. We included randomised controlled trials (RCT); quasi‐randomised controlled trials (QRCT); and quasi‐experimental studies (QES).

Outcomes: Included studies used standardised tests in reading or mathematics.

Setting: Studies carried out in regular schools in an OECD country were included.

Data Collection and Analysis

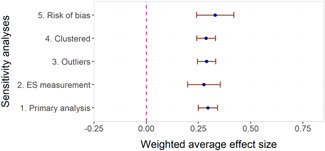

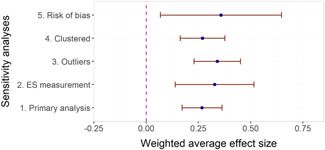

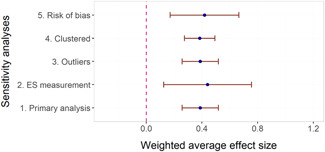

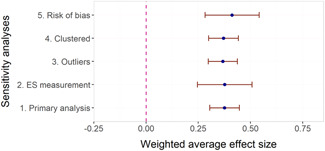

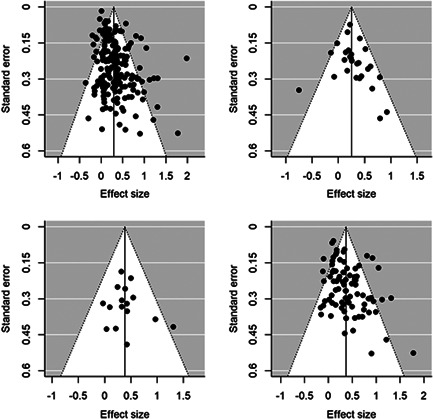

Descriptive and numerical characteristics of included studies were coded by members of the review team. A review author independently checked coding. We used an extended version of the Cochrane Risk of Bias tool to assess risk of bias. We used random‐effects meta‐analysis and robust‐variance estimation procedures to synthesise effect sizes. We conducted separate meta‐analyses for tests performed within three months of the end of interventions (short‐term effects) and longer follow‐up periods. For short‐term effects, we performed subgroup and moderator analyses focused on instructional methods and content domains. We assessed sensitivity of the results to effect size measurement, outliers, clustered assignment of treatment, risk of bias, missing moderator information, control group progression, and publication bias.
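As a rough illustration of the random-effects pooling described above, the following Python sketch implements the DerSimonian-Laird estimator. It is a simplification under stated assumptions: the function name `random_effects_pool` and the input numbers are hypothetical, and the sketch does not implement the robust-variance estimation that the review also used to handle dependent effect sizes.

```python
import math

def random_effects_pool(effects, variances):
    """Pool effect sizes with the DerSimonian-Laird random-effects estimator.

    Returns the pooled effect, a 95% confidence interval, and the
    estimated between-study variance (tau squared).
    """
    k = len(effects)
    # Inverse-variance (fixed-effect) weights.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    # Cochran's Q measures dispersion around the fixed-effect mean.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    # Method-of-moments estimate of between-study variance, truncated at zero.
    tau2 = max(0.0, (q - (k - 1)) / c)
    # Random-effects weights add tau2 to each sampling variance.
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    return pooled, (pooled - 1.96 * se, pooled + 1.96 * se), tau2

# Hypothetical effect sizes and sampling variances (not data from the review).
effects = [0.10, 0.35, 0.50, 0.25]
variances = [0.01, 0.02, 0.015, 0.01]
pooled, ci, tau2 = random_effects_pool(effects, variances)
print(f"pooled ES = {pooled:.2f}, 95% CI = [{ci[0]:.2f}, {ci[1]:.2f}], tau^2 = {tau2:.3f}")
```

When the estimated between-study variance is zero, the random-effects weights reduce to the fixed-effect weights. When it is large, studies are weighted more equally.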

Results

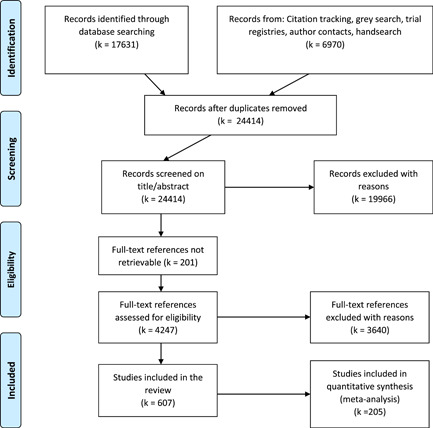

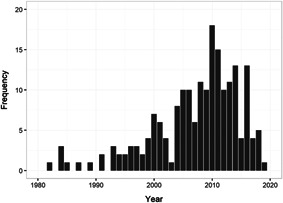

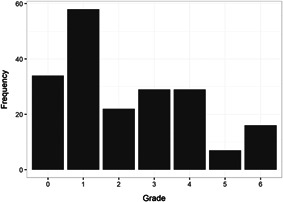

We found in total 24,414 potentially relevant records, screened 4,247 of them in full text, and included 607 studies that met the inclusion criteria. We included 205 studies of a wide range of intervention types in at least one meta‐analysis (202 intervention‐control studies and 3 comparison designs). The reasons for excluding studies from the analysis were that they had too high a risk of bias (257 studies), compared two alternative interventions (104 studies), lacked necessary information (24 studies), or used overlapping samples (17 studies). The total number of student observations in the analysed studies was 226,745. Of the 327 interventions included in the meta‐analysis of intervention‐control contrasts, 93% were evaluated in RCTs and 86% were from the United States. The target group consisted of, on average, 45% girls, 65% minority students, and 69% low‐income students. The mean Grade was 2.4. Most studies included in the meta‐analysis had a moderate to high risk of bias.
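The study counts above can be cross‐checked with simple arithmetic. The short Python sketch below uses only the numbers reported in this paragraph; the variable names are hypothetical labels chosen for illustration.

```python
# Counts reported in the Results paragraph above.
studies_meeting_criteria = 607
excluded_from_analysis = {
    "too high risk of bias": 257,
    "compared two alternative interventions": 104,
    "lacked necessary information": 24,
    "overlapping samples": 17,
}
analysed_by_design = {"intervention-control": 202, "comparison": 3}

# Studies left for meta-analysis after the four exclusion reasons.
analysed = studies_meeting_criteria - sum(excluded_from_analysis.values())
print(analysed, sum(analysed_by_design.values()))
```

Both figures agree with the 205 studies included in at least one meta‐analysis.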

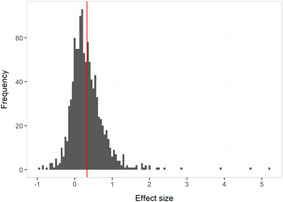

The overall average effect sizes (ES) for short‐term and follow‐up outcomes were positive and statistically significant (ES = 0.30, 95% confidence interval [CI] = [0.25, 0.34] and ES = 0.27, 95% CI = [0.17, 0.36], respectively). The effect sizes correspond to around one third to one half of the achievement gap between fourth Grade students with high and low socioeconomic status in the United States and to a 58% chance that a randomly selected score of an intervention group student is greater than the score of a randomly selected control group student.
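One common way to translate a standardised mean difference into such a probability is the "probability of superiority", which equals Φ(ES/√2) when both groups' scores are assumed to be normally distributed with equal variance. The sketch below applies that conversion; whether the review used exactly this formula is an assumption, and the function name is hypothetical.

```python
import math
from statistics import NormalDist

def probability_of_superiority(effect_size):
    """Chance that a random treated score exceeds a random control score.

    Assumes normally distributed scores with equal variance in both groups.
    """
    return NormalDist().cdf(effect_size / math.sqrt(2))

for label, es in [("short-term", 0.30), ("follow-up", 0.27)]:
    print(f"{label}: ES = {es:.2f} -> {probability_of_superiority(es):.0%}")
```

Under these assumptions, both reported effect sizes round to the 58% figure quoted above.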

All measures indicated substantial heterogeneity across short‐term effect sizes. Follow‐up outcomes pertain almost exclusively to studies examining small‐group instruction by adults and effects on reading measures. The follow‐up effect sizes were considerably less heterogeneous than the short‐term effect sizes, although there was still statistically significant heterogeneity.

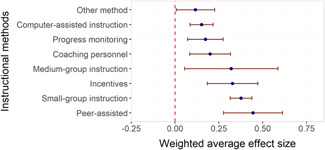

Two instructional methods, peer‐assisted instruction and small‐group instruction by adults, had large and statistically significant average effect sizes that were robust across specifications in the subgroup analysis of short‐term effects (ES around 0.35–0.45). In meta‐regressions that adjusted for methods, content domains, and other study characteristics, they had significantly larger effect sizes than computer‐assisted instruction, coaching of personnel, incentives, and progress monitoring. Peer‐assisted instruction also had significantly larger effect sizes than medium‐group instruction. Besides peer‐assisted instruction and small‐group instruction, no other methods were consistently significant across the analyses that tried to isolate the association between a specific method and effect sizes. However, most analyses showed statistically significant heterogeneity also within categories of instructional methods.

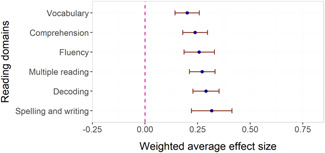

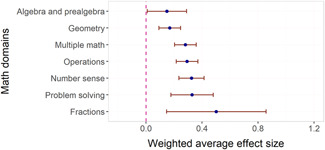

We found little evidence that effect sizes were larger in some content domains than others. Fractions had significantly higher associations with effect sizes than all other math domains, but there were only six studies of interventions targeting fractions. We found no evidence of adverse effects in the sense that no method or domain had robustly negative associations with effect sizes.

The meta‐regressions revealed few other significant moderators. Interventions in higher Grades tended to have somewhat lower effect sizes, whereas there were no significant differences between QES and RCTs, general tests and tests of subdomains, and math tests and reading tests.

Authors’ Conclusions

Our results indicate that interventions targeting students with or at risk of academic difficulties from kindergarten to Grade 6 have on average positive and statistically significant short‐term and follow‐up effects on standardised tests in reading and mathematics. Peer‐assisted instruction and small‐group instruction are likely to be effective components of such interventions.

We believe the relatively large effect sizes together with the substantial unexplained heterogeneity imply that schools can reduce the achievement gap between students with or at risk of academic difficulties and not‐at‐risk students by implementing targeted interventions, and that more research into the design of effective interventions is needed.

1. PLAIN LANGUAGE SUMMARY

1.1. Targeted school‐based interventions improve achievement in reading and maths for at‐risk students in Grades K‐6

School‐based interventions that target students with, or at risk of, academic difficulties in kindergarten to Grade 6 have positive effects on reading and mathematics. The most effective interventions include peer‐assisted instruction and small‐group instruction by adults. These have substantial potential to decrease the achievement gap.

What is the aim of this review?

This Campbell systematic review examines the effects of targeted school‐based interventions on standardised tests in reading and mathematics. The review analyses evidence from 205 studies, 186 of which are randomised controlled trials.

1.2. What is this review about?

Low levels of mathematics and reading skills are associated with a range of negative outcomes in life, including reduced employment and earnings, and poor health. This review examines the impact of a broad range of school‐based interventions that specifically target students with or at risk of academic difficulties in Grades K‐6. The students in this review either have academic difficulties or are at risk of such difficulties because of their background.

Examples of interventions that are included in this review are peer‐assisted instruction, using financial and non‐financial incentives, instruction by adults to small or medium‐sized groups of students, monitoring progress, using computer‐assisted instruction, and providing coaching to teachers.

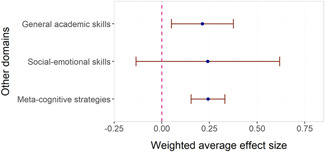

Some interventions target specific domains in reading and mathematics such as reading comprehension, fluency, number sense, and operations, while others also focus on building different skills, for example, meta‐cognition and social‐emotional learning.

The review looks at whether these interventions are effective in improving students’ performance on standardised tests of reading and/or mathematics.

1.3. What studies are included?

In total, 607 studies are included in this review. However, only 205 of these were of sufficiently high methodological quality to be included in the analysis. Of these, 175 are from the United States, 10 from Sweden, 7 from the United Kingdom, 3 from the Netherlands, 2 from Australia, 2 from Germany, 2 from New Zealand, and 1 each from Canada, Denmark, Ireland, and Israel.

1.4. Do targeted school‐based interventions improve reading and mathematics outcomes?

Yes. High‐quality evidence shows that, on average, school‐based interventions aimed at students who are experiencing, or at risk of, academic difficulties, do improve reading and mathematics outcomes in the short term.

1.5. What type of intervention is the most effective?

Two instructional methods stand out as being particularly and consistently effective. Peer‐assisted instruction and small‐group instruction by adults showed the largest (short‐term) improvements in reading and mathematics. Other instructional methods showed smaller improvements; however, there is substantial variation in the magnitude of these effects.

1.6. Are positive effects sustained in the longer term?

Follow‐up outcomes measured more than three months after the end of the intervention pertain almost exclusively to studies examining small‐group instruction and reading. There is evidence of fadeout but positive effects are still reported up to 2 years after the end of intervention. Only five studies measured intervention effects after more than 2 years.

1.7. What do the findings of the review mean?

School‐based interventions in Grades K‐6 can improve reading and mathematics outcomes for students with or at risk of academic difficulties. In particular, the evidence shows that peer‐assisted instruction and small‐group instruction are two of the most effective approaches that schools can implement. These interventions make a real difference in the achievement gap for at‐risk students.

At the same time, we need more research to better understand why interventions work better in some contexts than in others. We also need to know more about the long‐term effects of interventions, and about interventions implemented in countries other than the United States. Furthermore, there are fewer studies of mathematics interventions than of reading interventions.

1.8. How up‐to‐date is this review?

The review authors searched for studies up to July 2018.

2. BACKGROUND

2.1. Description of the condition

International research has consistently shown that low academic achievement during primary school increases the risk of school dropout, and additionally decreases prospects of secondary or higher education (Berktold et al., 1998; Ensminger & Slusarcick, 1992; Finn et al., 2005; Garnier et al., 1997; Goldschmidt & Wang, 1999; Randolph et al., 2004; Winding et al., 2013). Entering adulthood with a low level of education is associated with reduced employment prospects as well as limited possibilities for financial progression in adult life (De Ridder et al., 2012; Johnson et al., 2010; OECD, 2012; Scott & Bernhardt, 2000). Furthermore, adults with higher levels of educational attainment are more likely to live longer, show higher levels of civic engagement, and exhibit greater satisfaction with life (OECD, 2010a, 2012). Conversely, low levels of education are associated with numerous health‐related issues and risk behaviours such as drug use and crime, which have serious implications for the individual as well as for society (Berridge et al., 2001; Brook et al., 2008; Feinstein et al., 2006; Horwood et al., 2010; Sabates et al., 2013).

Overall, in the member countries of the Organisation for Economic Co‐operation and Development (OECD), almost one in five of all youth between 25 and 34 years of age have not earned the equivalent of a high‐school degree/upper secondary education (OECD, 2013). Moreover, on average across the OECD countries, around 15% of 18‐24 year‐olds are neither employed, nor in education or training (OECD, 2018). The Programme for International Student Assessment (PISA) tests show that on average about 20%–25% of 15‐year‐olds in the OECD countries are not proficient readers (OECD, 2010b, 2016, 2019).1 Likewise, in mathematics, around 20%–25% of students could only manage the lowest level in the PISA test (OECD, 2010b, 2016, 2019).2 These results indicate that a large proportion of students do not obtain sufficient academic skills in school and stand outside the labour market once they have left school.

Skill differences between groups of students with low and high risk of ending up with academic difficulties appear early and are often present already before primary school. For example, struggling readers tend to be persistently behind their peers from the early Grades (e.g., Elbro & Petersen, 2004; Francis et al., 1996) and early math and language abilities strongly predict later academic achievement (e.g., Duncan et al., 2007; Golinkoff et al., 2019). Low‐income preschool children have more behaviour problems (e.g., Huaqing & Kaiser, 2003) and there is a strong continuity between emotional and behavioural problems in preschool and psychopathology in later childhood (Link Egger & Angold, 2006). Emotional and behavioural problems are in turn linked to lower academic achievement in school (e.g., Durlak et al., 2011; Taylor et al., 2017). Lastly, the gap between majority and minority children on cognitive skills tests is large already when children are 3–4 years old (e.g., Burchinal et al., 2011; Fryer & Levitt, 2013).

The prenatal and early childhood environment appears to be an important factor that keeps students from realising their academic potential (e.g., Almond et al., 2018). Currie (2009) furthermore documented that children from families with low socioeconomic status (SES) have worse health, including measures of foetal conditions, physical health at birth, incidence of chronic conditions, and mental health problems. Immigrant and minority children are often overrepresented among low SES families and face similar risks (e.g., Bradley & Corwyn, 2002; Deater‐Deckard et al., 1998; Morgan et al., 2012).

Family environments also differ in aspects thought to affect educational achievement: low SES families are less likely to provide a rich language and literacy environment (Bus et al., 1995; Golinkoff et al., 2019; Hart & Risley, 2003). The parenting practices and access to resources such as early childhood education and intervention, health care, nutrition, and enriching spare‐time activities also differ between high‐ and low‐risk groups (Esping‐Andersson et al., 2012; Morgan et al., 2012). Low SES parents also seem to have lower academic expectations for their children (Bradley & Corwyn, 2002; Slates et al., 2012), and teachers often have lower expectations for low SES and minority students (e.g., Good et al., 2003; Timperley & Phillips, 2003). Low SES children are also more likely to experience a decline in motivation during the course of primary, secondary, and upper secondary school (Archambault et al., 2010).

The neighbourhood that students grow up in is another potential determinant of achievement (e.g., Björklund & Salvanes, 2011; Campbell et al., 2000; Chetty et al., 2018). It seems likely that many students in high‐risk groups live in neighbourhoods that are less supportive of academic achievement in terms of, for example, peer support and role models. To get by in a disadvantaged neighbourhood may also require a very different set of skills compared with what is needed to thrive in school, something that may increase the risk that pupils have trouble decoding the “correct” behaviour in educational environments (e.g., Heller et al., 2017).

As indicated by the previous discussion, the group of students experiencing academic difficulties is diverse. It includes for instance students with learning disabilities, students who are struggling because they lack family support, because they have emotional or behavioural problems, or because they are learning the first language of the country they are living in. Some groups of students may not currently have academic difficulties but are “at risk” in the sense that they are more in danger of ending up with difficulties in the future, at least in the absence of intervention (McWhirter et al., 2004). Although being at risk points to a future negative situation, it is sometimes used to designate a current situation (McWhirter et al., 2004; Tidwell & Corona Garret, 1994), as current academic difficulties are a risk factor for future difficulties and having difficulties in one area may be a risk factor in other areas (McWhirter et al., 2004).

After this review of risk factors for academic difficulties, it is worth noting that the life circumstances placing children and youth at risk are only partially predictive. That is, risk factors increase the probability of having academic difficulties, but are not deterministic. As academic difficulties therefore cannot be perfectly predicted and may show up relatively late in a child's life, interventions in early childhood may not be enough and effective interventions during school may be needed to reduce the achievement gaps substantially.

As the test score gaps between high‐ and low‐risk groups remain relatively stable from the early grades, schools do not seem to be a major reason for the inequality in academic achievement (e.g., Heckman, 2006; Lipsey et al., 2012; von Hippel et al., 2018). Further evidence is provided by the seasonality in achievement gaps. In the United States, the gap between high and low SES students tends to widen during summer breaks when schools are out of session (e.g., Alexander et al., 2001; Gershenson, 2013; Kim & Quinn, 2013; although von Hippel et al., 2018, show that this pattern is not universal across risk groups, grades and cohorts). However, the stability of the test score gaps over time also implies that current school practice is not, in general, enough to decrease the achievement gaps. As schools are perhaps the societal arena where most children can be affected by attempts to reduce the gaps, finding effective school‐based interventions for students with or at risk of academic difficulties is a question of major importance.

Information about effective interventions for students with or at risk of academic difficulties is also of significant interest in most countries. This interest has been reflected in increased political initiatives such as the European Union (EU) Strategic Framework for Education and Training (The Council of the European Union, 2009), or comprehensive legislation such as the No Child Left Behind Act from 2001 in the United States (U.S. Congress, 2002; U.S. Department of Education, 2004).

The research on interventions aimed at academic achievement is rapidly growing, and the interventions described in the literature are numerous and very diverse in terms of for example intervention focus, target group, and delivery mode. The current review focused on targeted, school‐based interventions provided to students in kindergarten (K) to Grade 6 (ages range from 5–7 to 11–13, depending on country/state), where academic learning and skill building were the intervention aims. The outcome variables were standardised tests of achievement in reading and mathematics.

In line with the diversity of reasons for ending up with a low level of skills and educational attainment, we included interventions targeting students who for a broad range of reasons were having academic difficulties, or were at risk of such difficulties. We prioritised already having difficulties over belonging to an at‐risk group in the sense that if there was information about for example test scores and grade point averages, we did not require information about at‐risk status. Furthermore, we did not include interventions targeting high‐performing students in groups that may otherwise be at risk.

This review shares the aims, most inclusion criteria, and the search and screening process with another review about interventions for students in Grades 7–12 (Dietrichson et al., 2020). Consequently, some of the sections below are very similar, and a reader who has already read that review may want to skip some parts of the background and method sections.

2.2. Description of the intervention

We included interventions that were targeted to students with or at risk of academic difficulties (i.e., interventions that were selected or indicated) and aimed to improve the students’ academic achievement. Targeted interventions can be delivered in various settings, including in class (e.g., peer‐assisted instruction interventions), in group sessions (e.g., the READ180 programme), or one‐to‐one. We restricted the settings to school‐based interventions, by which we mean interventions implemented in school, during the regular school year, and in which schools were one of the stakeholders. This restriction excluded for example after‐school programmes, summer camps and summer reading programmes, and interventions involving only parents and families (see e.g., Zief et al., 2006 for a review of after‐school programmes; Kim & Quinn, 2013, for a review of summer reading programmes; and Jeynes, 2012, for a review of programmes that involve families or parents).

We included a wide range of interventions that aimed to improve the academic achievement of students by changing the method of instruction (such as tutoring, peer‐assisted instruction, and computer‐assisted instruction interventions) or by changing the content of the instruction (for instance, interventions emphasising mathematical problem‐solving skills, reading comprehension, and meta‐cognitive and social‐emotional skills). Many interventions involved changes to both method and content, and included several major components. That is, we included interventions based on their aim to improve academic achievement and on their targeting of students with or at risk of academic difficulties, and not based on the type of components used in the intervention.

Therefore, we excluded interventions that may improve academic achievement as a side effect, but did not have academic achievement as an aim. Examples are interventions where behavioural or social‐emotional problems were the primary intervention aim. However, interventions with behavioural and social‐emotional components may very well have academic achievement as one of their primary aims, and use standardised tests of reading and mathematics as one of their primary outcomes. Such interventions were included.

Universal interventions applied to improve the quality of the common learning environment at school in order to raise academic performance of all students (including average and above average students) were excluded. We also excluded whole‐school reform strategy concepts such as Success for All, as well as reduced class size interventions and general professional development interventions for principals and teachers that did not target at‐risk students. However, we included some interventions with a professional development component, for example, in the form of coaching of teachers during the implementation of the intervention, as long as the intervention specifically targeted students with or at risk of academic difficulties.

2.3. How the intervention might work

All the included interventions strove to improve academic achievement for students with or at risk of academic difficulties. However, they did so with different approaches and with diverse strategies of how to create that improvement. This diversity reflects the varying reasons for why students are struggling or are at risk. In turn, the theoretical background for the interventions varied accordingly. It is therefore not possible to specify one particular theory of change or one theoretical framework for this review. Instead, we briefly review three theoretical perspectives that we believe are characteristic for the majority of the included interventions. We then discuss and exemplify how existing targeted interventions may address some of the reasons for academic difficulties mentioned in Section 2.1 in the light of the theoretical perspectives.

2.3.1. Theoretical perspectives

The reasons why students may be struggling laid out in the previous section are multifaceted, and the theoretical perspectives underlying the included interventions are broad. Nevertheless, three superordinate components are characteristic for the majority of the included programmes:

Adaptation of behaviour (social learning theory).

Individual cognitive learning (cognitive developmental theory).

Alteration of the social learning environment (pedagogical theory).

We emphasise that the following presentation of these three theoretical perspectives is not exhaustive, and, although components are presented as demarcated, they contain some conceptual overlap.

Social learning theory has its origins in social and personality psychology, and was initially developed by psychologist Julian Rotter and further developed especially by Albert Bandura (1977, 1986). From the perspective of social learning theory, behaviour and skills are primarily learned by observing and imitating the actions of others, and behaviour is in turn regulated by the recognition of those actions by others (reinforcement), or discouraged by lack of recognition or sanctions (punishment). According to social learning theory, creating the right social context for the student can therefore stimulate more productive behaviour through social modelling and reinforcement of certain behaviours that can lead to higher academic achievement.

Cognitive developmental theory is not one particular theory, but rather a myriad of theories about human development that focus on how cognitive functions such as language skills, comprehension, memory and problem‐solving skills enable students to think, act and learn in their social environment. Some theories emphasise a concept of intelligence where children gradually come to acquire, construct, and use cognitive functions as the child naturally matures with age (e.g., Piaget, 2001; Perry, 1999). Other theories hold a more socio‐cultural view of cognitive development and use a more culturally distinct and individualised concept of intelligence that to a greater extent includes social interaction and individual experience as the basis for cognitive development. Examples include the theories of Robert Sternberg (2009) and Howard Gardner (1999).

Pedagogical theory draws on the different disciplines in psychology and social theory such as cognitivism, social‐interactional theory and socio‐cultural theory of learning and development. There is not one uniform pedagogical model, but examples of contemporary models in mainstream pedagogy are concepts such as Scaffolding (Bruner, 2006) and the Zone of Proximal Development (Vygotsky, 1978), which originated in developmental and educational psychology. These notions hold that learning and development emerge through practical activity and interaction. Acquisition of new knowledge is therefore considered to be dependent on social experience and previous learning, as well as the availability and type of instruction. Accordingly, school interventions require educators to interact and organise the learning environment for the student in certain ways to fit the individual student's needs and potentials for development.

2.3.2. Interventions in practice

School interventions affect academic achievement by changing the methods by which instruction is given (instructional methods) and by targeting certain content (the content domain), and many combine several intervention components as well as theoretical perspectives. Examples of instructional methods covered in earlier reviews are tutoring, coaching of personnel, cooperative learning/peer‐assisted instruction, computer‐assisted instruction, feedback and progress monitoring, and incentive programmes (e.g., Dietrichson et al., 2017). Reading interventions directed to younger students often target content domains such as phonemic awareness, phonics, fluency, vocabulary, and comprehension (e.g., Slavin et al., 2009). Slavin and Lake (2008) describe differences in elementary school math curricula in terms of how they emphasise domains such as problem solving, manipulatives, concept development, algorithms, computation and word problems. Gersten et al. (2009) used the following domains to divide mathematics interventions into categories: operations (e.g., addition, subtraction, and multiplication), word problems, fractions, algebra, and general math proficiency (or multiple components). Many school interventions have additional goals concerning other aspects of the student's life, such as reducing problematic behaviour of the students (Cheung & Slavin, 2012; Slavin & Lake, 2008; Wasik, 1997; Wasik & Slavin, 1993).

As indicated, many interventions combine theoretical perspectives. For example, interventions such as tutoring and peer‐assisted instruction interventions often have in common that they comprise an eclectic theoretical model that combines components from all three perspectives on learning presented in the previous section. They are comprehensive interventions that rely on mechanisms such as increased feedback and tailor‐made instruction (pedagogical theory), regulation of behaviour by for example rewards or interaction with role models (social learning theory), and development of cognitive functions such as learning how to learn (cognitive developmental theory).

Another way of viewing these and other types of interventions is that they address the differential family and neighbourhood resources of students with high and low risk of academic difficulties. Low‐risk students are more likely to have access to “tutors” all year round, as parents, siblings, and other family members help out with homework and schoolwork. Interventions to change mindsets, increase expectations, and mitigate stereotype threat may also substitute for low‐risk families and teachers already having such expectations or teaching low‐risk students such a mindset. Different types of extrinsic rewards may be a way to bolster motivation, which may be especially important for students whose families place less weight on educational achievement.

Furthermore, if the differences between students with high and low risk of academic difficulties can be understood as a consequence of differential access to a combination of resources, then remedial efforts may need to address several problems at once to be effective. Programmes that combine certain components may therefore be more effective than others. Another reason why it is interesting to examine combinations of components relates to an often suggested explanation for missing impacts: lack of motivation among participants (e.g., Edmonds et al., 2009; Fuchs et al., 1999). It is therefore possible that programmes will be more effective if they, for example, include some form of rewards for participating students, along with other components providing for instance specific pedagogical support.

2.4. Why it is important to do the review

In this section, we first discuss earlier related reviews, and then the contributions of our review in relation to the earlier literature. We focus on reviews that, like our review, compared types of interventions in terms of either instructional methods or content domains.

2.4.1. Prior reviews

Prior reviews have in particular covered reading interventions. Slavin et al. (2009) reviewed reading programmes for elementary Grades. They focused on all kinds of programmes and not only programmes for at‐risk or low‐performing students specifically. Wanzek et al. (2006) reviewed reading programmes directed at students in Grades K‐12 with learning disabilities, and Flynn et al. (2012), Inns et al. (2019), Scammaca et al. (2015), Slavin et al. (2011), and Wanzek et al. (2018) reviewed programmes for struggling readers in Grades 5‐9, K‐5, 4‐12, K‐5, and K‐3, respectively.3 These reviews thus covered low‐achieving students, but neither at‐risk students nor areas other than reading. Suggate (2016) reviewed the long‐run effects of phonemic awareness, phonics, fluency, and reading comprehension interventions from preschool up to Grade 7, but did not distinguish between interventions targeting students with or at risk of academic difficulties and interventions targeting other students.

Mathematics interventions were reviewed in Slavin and Lake (2008) and Pellegrini et al. (2018) for general student populations in elementary school. Gersten et al. (2009) examined four types of components of mathematics instruction for students with learning disabilities, but did not include studies for students at risk of math difficulties (or other reasons for difficulties than learning disabilities). Dietrichson et al. (2017) included interventions targeting both reading and mathematics and based inclusion on the share of students with low SES, but did not consider whether students had academic difficulties or not. Fryer (2017) included both math and reading interventions for all types of student groups.4

All reviews that reported an overall effect size found that it was positive. Most also found substantial variation between interventions. Regarding intervention types, we provide a more detailed comparison to our results in Section 6.5 (including reviews focused on a specific intervention type), but to preview that discussion we describe some overarching results here. Among instructional methods, many reviews indicated that one‐to‐one or small‐group tutoring has relatively large effect sizes across both mathematics and reading interventions compared with other intervention types (Dietrichson et al., 2017; Fryer, 2017; Inns et al., 2019; Pellegrini et al., 2018; Slavin & Lake, 2008; Slavin et al., 2009, 2011). Peer‐assisted instruction or cooperative learning interventions also showed relatively large effect sizes in some reviews (Dietrichson et al., 2017; Inns et al., 2019; Pellegrini et al., 2018; Slavin & Lake, 2008; Slavin et al., 2009, 2011), but not in all (Gersten et al., 2009). Computer‐assisted or technology‐supported instruction typically has positive but smaller effect sizes than small‐group and peer‐assisted instruction (Dietrichson et al., 2017; Inns et al., 2019; Pellegrini et al., 2018; Slavin & Lake, 2008; Slavin et al., 2009, 2011).

Gersten et al. (2009) examined some components of mathematics instruction that do not map neatly into the categories used in the current review or in some of the other reviews. They found, for example, most support for explicit instruction, use of heuristics, and curriculum design. Regarding specific math domains, interventions targeting word problems had higher effect sizes than other math domains, but not significantly so.

Reviews focusing on short‐term effects across reading domains reported positive effects in general but few reliable differences over reading domains (Flynn et al., 2012; Scammaca et al., 2015; Wanzek et al., 2006). An exception is that reading comprehension interventions were associated with significantly higher effect sizes than fluency interventions in Scammaca et al. (2015), but this difference disappeared when they only considered standardised tests. Suggate (2016) found that comprehension and phonemic awareness interventions showed relatively lasting effects that transferred to non‐targeted skills, whereas phonics and fluency interventions did not (mean follow‐up was around 11 months).

2.4.2. The contribution of this review

Academic difficulties and lack of educational attainment are significant societal problems. Moreover, as shown by the Salamanca declaration from 1994 (UNESCO, 1994), there has for decades been great interest among policy makers in improving the inclusion of students with academic difficulties in mainstream schooling, and a desire to increase the number of empirically supported interventions for these student groups.

The main objective of this review is to provide policy makers and educational decision‐makers at all levels—from governments to teachers—with evidence of the effectiveness of interventions aimed to improve the academic achievement of students with or at risk of academic difficulties. To this end, we chose a broad scope in terms of the target group and the types of interventions we included. We included studies that measured the effects of interventions by standardised tests in reading and mathematics. The reason is that many interventions are not directed specifically to either subject and outcomes are therefore measured in both (Dietrichson et al., 2017). Including both students with and at risk of academic difficulties in the target group should also decrease the risk of biasing the results due to omission of studies where information about either academic difficulties or at‐risk status is available, but not both. Furthermore, making comparisons over intervention components within one review, rather than across reviews, should increase the possibilities of a fair comparison. For instance, controlling that effect sizes are calculated in the same way, that the definitions of intervention components are consistent, and that moderators are coded in the same way, is easier within the scope of one review than across reviews.

Earlier reviews with a comparable focus on students with or at risk of academic difficulties have included a more narrowly defined target group. Furthermore, their analyses either did not include intervention components together with other moderators in a meta‐regression, or only included very broad categories of instructional methods and content domains. Such analyses risk confounding the effects of intervention components with, for example, participant characteristics, and preclude testing whether components have significantly different effect sizes. Furthermore, some reviews have coded interventions regarding the instructional methods used, or regarding the type of content taught, and used such indicators in meta‐regressions (e.g., Dietrichson et al., 2017; Gersten et al., 2009; Scammaca et al., 2015). With the exception of Gersten et al. (2009), who included an indicator for word problems alongside indicators of instructional methods, the analyses did not include both instructional method and content domain indicators. They therefore risk confounding instructional methods with content domains.

Lastly, we are not aware of another review that has provided meta‐analytic estimates of medium‐ and long‐term effects specifically for students with or at risk of academic difficulties.

3. OBJECTIVES

The primary objective of this review was to assess the effectiveness of targeted interventions aimed at improving the academic achievement for students with or at risk of academic difficulties in Grades K to 6.

The secondary objective was to examine the comparative effectiveness of different types of interventions, focusing on instructional methods and content domains. We conducted subgroup and moderator analyses in which we attempted to identify those methods and domains that have the strongest and most reliable associations with academic outcomes, as measured by standardised test scores in reading and mathematics.

The tertiary objective was to explore the evidence for differential effects across participant and study characteristics. We prioritised characteristics that were relevant for all types of interventions.

4. METHODS

4.1. Criteria for considering studies for this review

4.1.1. Types of studies

According to our protocol, included studies should use an intervention‐control group design or a comparison group design (Dietrichson et al., 2016). Included study designs were randomised controlled trials (RCT), including cluster‐RCTs; quasi‐randomised controlled trials (QRCTs), that is, where participants are allocated by means such as alternate allocation, person's birth date, the date of the week or month, case number, or alphabetical order; and quasi‐experimental studies (QES). To be included, QES had to credibly demonstrate that outcome differences between intervention and control groups are the effect of the intervention and not the result of systematic baseline differences between groups. That is, selection bias should not be driving the results. This assessment is included as a part of the risk of bias tool, which we elaborate on in the “Risk of bias” section, and no QES was excluded on this criterion in the screening process. A fair number of studies within educational research use single group pre–post comparisons (e.g., Edmonds et al., 2009; Wanzek et al., 2006); such studies were, however, excluded in the screening process due to the higher risk of bias.

Control groups received treatment‐as‐usual (TAU) or a placebo treatment. We found no studies in which the control group explicitly received nothing (i.e., a no‐treatment control), as all students experienced regular schooling. That is, control groups got whatever instruction the intervention group would have gotten, had there not been an intervention. The TAU condition can for this reason differ substantially between studies (although many studies did not describe the control condition in much detail). Eligible types of control groups also included waiting list control groups, which only differed in the time frame in which researchers estimate the effects. That is, students in both waiting list and regular control groups were offered regular schooling, but once the students in the waiting list control group had received the intervention, they could no longer be used as controls.

Comparison designs compared alternative interventions against each other. That is, they made it clear that all students get something other than TAU because of the intervention. Effect sizes from such studies are not fully comparable to effect sizes from intervention‐control designs. We therefore planned to analyse comparison designs separately from intervention‐control designs, and use them where they may shed light on an issue, which could not be fully analysed using the sample of intervention‐control studies. However, the number of studies that were, in this sense, relevant was small and we used them only in one analysis of the effects of group sizes in small‐group instruction interventions.

Due to language restrictions in the review team, we included studies written in English, German, Danish, Norwegian, and Swedish. To ensure a certain degree of comparability between school settings and to align TAU conditions in included studies, we only included studies published in or after 1980.
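The study‐type criteria in this subsection can be summarised as a simple screening check. The following is a minimal sketch for illustration only, not the procedure used in the review: the function name, the design labels, and the idea of encoding the criteria as sets are hypothetical. Cluster‐RCTs are assumed to be labelled "RCT", and the separate requirement that a QES credibly addresses selection bias is not modelled.

```python
# Hypothetical encoding of the study-type criteria described above.
ELIGIBLE_DESIGNS = {"RCT", "QRCT", "QES"}  # cluster-RCTs assumed labelled "RCT"
ELIGIBLE_LANGUAGES = {"English", "German", "Danish", "Norwegian", "Swedish"}
EARLIEST_YEAR = 1980  # studies published in or after 1980 were eligible


def passes_study_type_screen(design: str, language: str, year: int) -> bool:
    """Return True if a study meets the design, language, and year criteria.

    Single-group pre-post designs (or any label outside ELIGIBLE_DESIGNS)
    fail the check.
    """
    return (
        design in ELIGIBLE_DESIGNS
        and language in ELIGIBLE_LANGUAGES
        and year >= EARLIEST_YEAR
    )
```

For example, `passes_study_type_screen("QES", "Danish", 1995)` evaluates to `True`, whereas a single‐group pre–post study in any language or year does not pass.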

4.1.2. Types of participants

The population samples eligible for the review included students attending regular schools in Grades K‐6, who were having academic difficulties, or were at risk of such difficulties. Students attending regular private, public, and boarding schools were included, and students receiving special education services within these school settings were also included.

We included only studies carried out in OECD countries. This selection made it more likely that school settings and TAU conditions were comparable across included studies. Grades K‐6 correspond roughly to primary school, defined as the first step in a three‐tier educational system consisting of primary education, secondary education and tertiary or higher education. We included studies with a student population in higher Grades than K‐6 as long as the majority of the students were in Grades K‐6. The age range included differed between countries, and sometimes between states within countries (ages range from 4–7 to 11–13, depending on country/state). Far fewer studies reported the participants’ ages than their Grades, which was also our main reason for formulating the inclusion criteria in terms of Grade rather than age.

The eligible student population included both students identified in the studies by their observed academic achievement (e.g., low academic test results, low grade point average or students with specific academic difficulties such as learning disabilities), and students that were identified primarily on the basis of their educational, psychological, or social background (e.g., students from families with low socioeconomic status, students placed in care, students from minority ethnic/cultural backgrounds, and second language learners). We excluded interventions that only targeted students with physical learning disabilities (e.g., blind students), students with dyslexia/dyscalculia, and interventions that were specifically directed towards students with a certain neuropsychiatric disorder (e.g., autism, ADHD), as some interventions targeting such students are different from interventions targeting the general struggling or at‐risk student population (e.g., they include medical treatments like in Ackerman et al., 1991).

Because there was substantial overlap between students that were already struggling and groups considered at‐risk of difficulties in studies found in a previous review (Dietrichson et al., 2017), we chose to include both students with difficulties and students that were deemed at‐risk, or were considered educationally disadvantaged. If the two criteria were inconsistent, we gave priority to students having academic difficulties. For example, we excluded interventions that targeted high‐achieving students from low‐income backgrounds.

Some interventions included other students, who neither had academic difficulties nor were at risk of such difficulties. For example, in some peer‐assisted learning interventions high‐performing students were paired with struggling students. Studies of such interventions were included if the total sample (intervention and control group) included at least 50% students that were either having academic difficulties or were at risk of developing such difficulties, or if there were separate effect sizes reported for these groups.
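The sample‐composition rule above can be written as a short check. This is a minimal sketch under our own assumptions, not code used in the review: the function name and its arguments are hypothetical, and it assumes that the number of students in the target group can be counted in the total sample.

```python
def meets_target_group_rule(
    n_target: int,
    n_total: int,
    has_separate_effect_sizes: bool = False,
) -> bool:
    """Return True if a study satisfies the sample-composition rule.

    n_target: students with or at risk of academic difficulties,
              counted over intervention and control groups combined.
    n_total:  all students in the total sample.
    has_separate_effect_sizes: True if effect sizes are reported
              separately for the target group.
    """
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    if not 0 <= n_target <= n_total:
        raise ValueError("n_target must lie between 0 and n_total")
    share = n_target / n_total
    # Eligible if at least 50% of the sample is in the target group,
    # or if separate effect sizes are available for that group.
    return share >= 0.5 or has_separate_effect_sizes
```

A peer‐assisted learning study with 40 struggling students in a sample of 100 would thus be eligible only if it reported separate effect sizes for the struggling students.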

4.1.3. Types of interventions

We included interventions that sought to improve academic achievement or specific academic skills. This does not mean that the intervention had to consist of academic activities, but there had to be an expectation in the study that the intervention, regardless of the nature of the intervention content, would result in improved academic achievement or a higher skill level in a specific academic task. We chose, however, to exclude interventions that only sought to improve performance on a single test instead of improving a skill that would improve test scores. For similar reasons, we excluded studies of interventions where students are provided with accommodations when taking tests; for instance, when some students are allowed to use calculators and others are not.

An explicit academic aim of the intervention did not per se exclude interventions that also included non‐academic objectives and outcomes. However, we excluded interventions having academic learning as a possible secondary objective. If the objectives were not explicitly stated, we used the presence of a standardised test in mathematics or reading as an indication that the authors expected the intervention to improve academic achievement. We excluded cases where such tests were included but the authors explicitly stated that they did not expect the intervention to improve reading or math skills.

Furthermore, we only included school‐based interventions. That is, interventions conducted in schools during the regular school year with schools as one of the stakeholders. This latter restriction excluded summer reading programmes, after‐school programmes, parent tutoring programmes, and other programmes delivered in the home of students.

Universal interventions that aimed to improve the quality of the common learning environment at the school level in order to raise academic achievement of all students (including average and above average students) were excluded. Interventions such as the one described in Fryer (2014), where a bundle of best practices was implemented at the school level in low‐achieving schools in which most students are struggling or at risk, were also excluded. This criterion also excluded whole‐school reform strategy concepts such as Success for All, curriculum‐based programmes like Elements of Mathematics (EMP), as well as reduced class size interventions.

This criterion also meant that we excluded interventions where teachers or principals receive professional development training in order to improve general teaching or management skills. Interventions targeting students with or at risk of academic difficulties may on the other hand include a professional development component, for example, when a reading programme includes providing teachers with reading coaches. Such interventions were therefore included.

Our protocol contained no criterion for the duration of interventions and we included interventions of all durations. We coded the duration of the interventions and this variable was included as a moderator in some of the analyses.

4.1.4. Types of outcome measures

We included outcomes that cover two areas of fundamental academic skills:

Standardised tests in reading

Standardised tests in mathematics

Studies were only included if they considered one or more of the primary outcomes. Standardised tests included norm‐referenced tests (e.g., Gates‐MacGinitie Reading Tests and Star Math), state‐wide tests (e.g., Iowa Test of Basic Skills), and national tests (e.g., National Assessment of Educational Progress, NAEP). If it was not clear from the description of the outcome measures in the studies, we used online sources to determine whether a test was standardised or not. For example, if a commercial test has been normed, this was typically mentioned on the publisher's homepage. However, for older tests it was not always possible to find information about the test from electronic sources. In these cases, we included the test if there was a reference to a publication describing the test, which made it clear that the test had not been developed for the intervention or the study.

We restricted our attention to standardised tests in part to increase the comparability between effect sizes. Earlier related reviews of academic interventions have pointed out that effect sizes tend to be significantly lower for standardised tests compared with researcher‐developed tests (e.g., Flynn et al., 2012; Gersten et al., 2009; Scammaca et al., 2015). Scammaca et al. (2015) furthermore reported that whereas mean effect sizes differed significantly between the periods 1980–2004 and 2005–2011 for other types of tests, mean effect sizes were not significantly different for standardised tests. As researcher‐developed tests are usually less comprehensive and more likely to measure aspects of content inherent to intervention but not control group instruction (Slavin & Madden, 2011), standardised tests should provide a more reliable measure of lasting differences between intervention and control groups.

We excluded tests that provided composite results for several academic subjects other than mathematics and reading, but included tests of specific domains (e.g., vocabulary, fractions) as well as more general tests, which tested several domains of reading or mathematics. Tests of subdomains had significantly larger effect sizes compared with more general tests in Dietrichson et al. (2017). This result may indicate that it is easier to improve scores on tests of subdomains than on tests of more general skills, or that tests of subdomains are more likely to be inherent to intervention group instruction. At the same time, it seems reasonable that interventions that target subdomains of reading and mathematics are tested on whether they affect these subdomains. Therefore, we did not want to exclude either type of test, but coded the type of test and used it as a moderator in the analysis. However, to mitigate problems with test content being inherent to intervention and not control group instruction, we did not consider tests where researchers themselves picked a subset of questions from a norm‐referenced test as being standardised. The subset should either have been predefined (as in e.g., the passage comprehension subset of Woodcock‐Johnson Tests of Achievement) or have been picked by someone other than the researchers (e.g., released items from the NAEP).

We included all postintervention tests and coded the timing of each test (see “Multiple time points” section).

4.2. Search methods for identification of studies

This section describes the search strategy for identifying potentially relevant studies. We used the EPPI reviewer software to track the search and screening processes. A flowchart describing the search process and specific numbers of references screened on different levels can be found in Section 5.1.2. The search documentation, reporting and details relating to the search can be found in the Supporting Information Appendix A.

4.2.1. Limitations and restrictions of the search strategy

All searches were restricted to publications after 1980. This year was chosen to balance the competing demands of comparability between intervention settings and comprehensiveness of the review. We used no further limiters in the searches.

4.2.2. Electronic database searches

Relevant published studies were identified through electronic searches of bibliographic databases, government and policy databanks. We searched the following electronic resources/databases:

Academic Search Premier (EBSCO)

ERIC (EBSCO)

PsycINFO (EBSCO)

SocIndex (EBSCO)

British Education Index (EBSCO)

Teacher Reference Center (EBSCO)

ECONLIT (EBSCO)

FRANCIS (EBSCO)

CBCA Education (ProQuest)

Australian Education Index (ProQuest)

Social Science Citation Index (ISI Web of Science)

Science Citation Index (ISI Web of Science)

Medline (OVID)

Embase (OVID)

All databases were originally searched from 1st of January 1980 to March 2016. As mentioned, we only included studies published in or after 1980 to ensure a certain degree of comparability between school settings and to align TAU conditions in included studies. We updated the searches in June/July 2018 using identical search strings. Some database searches were not updated in 2018 due to access limitations.

In Supporting Information Appendix A, we report the search strings as well as details for each electronic database and resource searched.

Note that the searches contained terms relating to secondary school, since the search contributed to a review about this older age group (Grades 7–12, see Dietrichson et al., 2020). There is overlap in the literature among the age groups, and in order to rationalise and accelerate the screening process, we decided upon performing one extensive search.

4.2.3. Searching other web‐based resources

We also searched the following national/international repositories and review/trial archives/registries:

DIVA—Swedish repository for research publications and theses (http://www.diva-portal.org/smash/search.jsf?dswid=9447)

CRISTIN—Current Research Information Systems In Norway (https://www.cristin.no/)

Danish National Research Database (Forskningsdatabasen.dk)

Cochrane Library (http://www.cochranelibrary.com/)

Social Care Online (http://www.scie-socialcareonline.org.uk/)

Centre for Reviews and Dissemination Databases (https://www.crd.york.ac.uk/CRDWeb/)

What Works Clearinghouse—U.S. Department of Education (https://ies.ed.gov/ncee/wwc/)

Danish Clearinghouse for Education Research (edu.au.dk/clearinghouse)

Our protocol stated that we should search two trial registries: The Institute for Education Sciences’ (IES) Registry of Randomized Controlled Trials (http://ies.ed.gov/ncee/wwc/references/registries/index.aspx), and American Economic Association's RCT Registry (https://www.socialscienceregistry.org). We were however unable to search the IES registry as it was not available (last tried 23 July 2018). We have asked IES about availability, but have to date not received a reply. We updated the search of American Economic Association's RCT Registry on 23 July 2018.

4.2.4. Hand search

The following selected journals had the highest frequency of potentially relevant studies based on the initial pilot‐searches during the development of the search string and the protocol:

American Educational Research Journal

Journal of Educational Research

Journal of Educational Psychology

Journal of Learning Disabilities

Journal of Research on Educational Effectiveness

Journal of Education for Students Placed at Risk

The search was performed on editions from 2015 to July 2018 (i.e., including an updated search) of the journals mentioned, in order to capture relevant studies recently published and therefore not found in the systematic search.

4.2.5. Grey literature searches

We performed a wide range of searches of the institutional and governmental resources, academic clearinghouses and repositories listed below for relevant academic theses, reports and conference/working papers. Most of the resources searched for grey literature include multiple types of references. The resources are listed under the category of literature most prevalent in the resource, even though multiple types of unpublished/published literature might be identified in the resource.

Search for Dissertations

ProQuest dissertation & theses A&I (ProQuest)

Theses Canada (https://www.bac-lac.gc.ca/eng/services/theses/Pages/search.aspx)

Search for Working Papers/Conference Proceedings

European Educational Research Association (http://www.eera-ecer.de/).

American Educational Research Association (http://www.aera.net/).

German Educational Research Association (http://www.dgfe.de/en/aktuelles.html).

NBER working paper series (http://nber.org/).

Search for Reports

OpenGrey (http://www.opengrey.eu/).

Best Evidence Encyclopedia (http://www.bestevidence.org/).

Google Scholar (https://scholar.google.dk/).

Google (https://www.google.dk/).

4.2.6. Contacts to international experts

We contacted international experts to identify unpublished and ongoing studies. We primarily contacted corresponding authors of the related reviews mentioned in Section 2.4.1,5 and authors with many and/or recent included studies. The following authors replied: Douglas Fuchs, Lynn Fuchs, Russell Gersten, Nancy Scammaca, Robert Slavin, and Sharon Vaughn. Furthermore, during work with another review about the use of randomised controlled trials in Scandinavian compulsory school, authors were contacted about studies with sometimes overlapping inclusion criteria with the current review (see Pontoppidan et al., 2018).

4.2.7. Citation‐tracking/snowballing strategies

In order to identify both published studies and grey literature we used citation‐tracking/snowballing strategies. Our primary strategy was to citation‐track related systematic reviews and meta‐analyses. 1446 references from 23 existing reviews were screened in order to find further relevant grey and published studies (see Section 2.4.1 and the list in Supporting Information Appendix A, subsection Grey Literature Searches). The review team also checked reference lists of included primary studies for new leads.

4.3. Data collection and analysis

4.3.1. Selection of studies

Under the supervision of the review authors, at least two review team assistants independently screened titles and abstracts to exclude studies that were clearly irrelevant. Any disagreement about eligibility was resolved by the review authors. We retrieved studies considered eligible in full text. Two review team assistants then independently screened the full texts under the supervision of the review authors. Again, any disagreement about eligibility was resolved by the review authors. The review authors piloted the study inclusion criteria with all review team assistants.
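The logic of independent double screening can be illustrated as follows. This is a minimal sketch and not the EPPI reviewer workflow itself: the function name, the reference identifiers, and the representation of decisions as dictionaries are hypothetical, and the sketch assumes exactly two screeners per reference.

```python
from typing import Dict, List, Tuple


def reconcile_screening(
    decisions_a: Dict[str, bool],
    decisions_b: Dict[str, bool],
) -> Tuple[List[str], List[str], List[str]]:
    """Compare two screeners' include/exclude decisions per reference.

    Returns three sorted lists of reference ids: those both screeners
    included, those both excluded, and those on which they disagreed
    (to be passed on to the review authors for resolution).
    """
    if decisions_a.keys() != decisions_b.keys():
        raise ValueError("both screeners must assess the same references")

    include: List[str] = []
    exclude: List[str] = []
    disputed: List[str] = []
    for ref_id in sorted(decisions_a):
        a, b = decisions_a[ref_id], decisions_b[ref_id]
        if a and b:
            include.append(ref_id)
        elif not a and not b:
            exclude.append(ref_id)
        else:
            disputed.append(ref_id)
    return include, exclude, disputed
```

Only the references in the third list require a decision by the review authors; the first two lists reflect agreement between the independent screeners.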

4.3.2. Data extraction and management

Two members of the review team independently coded and extracted data from included studies. A coding sheet was piloted on several studies and revised. Any disagreements were resolved by discussion, and it was possible to reach consensus in all cases. We extracted data on the characteristics of participants, characteristics of the intervention and control/comparison conditions, research design, sample size, outcomes, and results. We contacted study authors if a study did not include sufficient information to calculate an effect size. Extracted data was stored electronically, and we used EPPI Reviewer 4, Microsoft Excel, and R as the primary software tools.

4.3.3. Assessment of risk of bias in included studies

We assessed the risk of bias of effect estimates using a model developed by Prof. Barnaby Reeves in association with the Cochrane Non‐Randomised Studies Methods Group. This model is an extension of the Cochrane Collaboration's risk of bias tool and covers risk of bias in non‐randomised studies that have a well‐defined control group. The extended model is organised in the same way and follows the same steps as the risk of bias model according to the 2008‐version of the Cochrane Handbook, chapter 8 (Higgins & Green, 2008). The extension to the model is explained in the three following points:

1. The extended model specifically incorporates a formalised and structured approach for the assessment of selection bias in non‐randomised studies by adding an explicit item about confounding. This is based on a list of confounders considered to be important and defined in the protocol for the review. The assessment of confounding is made using a worksheet where, for each confounder, it is marked whether the confounder was considered by the researchers, the precision with which it was measured, the imbalance between groups, and the care with which adjustment was carried out. This assessment informed the final risk of bias score for confounding.

2. Another feature of non‐randomised studies that puts their effect estimates at high risk of bias is that they need not have a protocol in place before the recruitment process starts (this is however also true for a very large majority of RCTs in education). The item concerning selective reporting therefore also requires assessment of the extent to which analyses (and potentially, other choices) could have been manipulated to bias the findings reported, for example, choice of method of model fitting, potential confounders considered/included. In addition, the model includes two separate yes/no items asking reviewers whether they think the researchers had a prespecified protocol and analysis plan.

3. Finally, the risk of bias assessment is refined, making it possible to discriminate between effect estimates with varying degrees of risk. This refinement is achieved with the addition of a 5‐point scale for certain items (see the next section for details).

The refined assessment is pertinent when thinking of data synthesis as it operationalises the identification of studies (especially in relation to non‐randomised studies) with a very high risk of bias. The refinement increases transparency in assessment judgements and provides justification for not including a study with a very high risk of bias in the meta‐analysis.

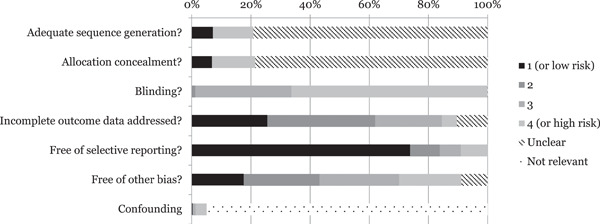

Risk of bias judgement items

The risk of bias model used in this review is based on nine items (see Supporting Information Appendix B: Risk of bias tool for a fuller description). The nine items refer to: sequence generation, allocation concealment, blinding, incomplete outcome data, selective outcome reporting, other potential threats to validity, a priori protocol, a priori analysis plan, and confounders (for non‐randomised studies). As all but the last follow standard procedures described in the Cochrane Handbook (Higgins & Green, 2011), we focus on the confounding item below.

Confounding

An important part of the risk of bias assessment of effect estimates in non‐randomised studies is how studies deal with confounding factors. Selection bias is understood as systematic baseline differences between groups, which can compromise the comparability of the groups. Baseline differences can be observable (e.g., age and gender) or unobservable to the researcher (e.g., motivation). Included studies use, for example, matching and statistical controls to mitigate selection bias, or demonstrate evidence of preintervention equivalence on key risk variables and participant characteristics. In each study, we assessed whether the observable confounding factors of age and Grade level, performance at baseline, gender, and socioeconomic background had been considered, and how each study dealt with unobservables.

There is no single non‐randomised study design that always deals adequately with the selection problem. Different designs represent different approaches to dealing with selection problems under different assumptions and require different types of data. For example, differences in preintervention test score levels do not have to be a major problem in a difference‐in‐differences design, where the main identifying assumption is that the trends of the outcome variable in the intervention and control group would not have differed, had the intervention not occurred (e.g., Abadie, 2005). Similar differences in levels would, in general, be more problematic in a matching design as they indicate that the matching technique has not been able to balance the sample even on observable variables. For this reason, we did not specify thresholds in terms of preintervention differences (in, say, effect sizes) for when a study has too high a risk of bias on confounding.
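The point about level differences in a difference‐in‐differences design can be illustrated with a minimal sketch. The function name and all group means below are hypothetical and are not taken from any included study; the sketch only shows that a constant preintervention gap between groups cancels out of the estimate when trends would otherwise have been parallel.

```python
def did_estimate(treat_pre, treat_post, control_pre, control_post):
    """Difference-in-differences of group means: change in the intervention
    group minus change in the control group."""
    return (treat_post - treat_pre) - (control_post - control_pre)


# Hypothetical test score means: the intervention group starts 10 points lower.
effect = did_estimate(treat_pre=40.0, treat_post=50.0,
                      control_pre=50.0, control_post=55.0)

# Shifting the intervention group's level by a constant (here +5 at both
# time points) leaves the estimate unchanged.
shifted = did_estimate(treat_pre=45.0, treat_post=55.0,
                       control_pre=50.0, control_post=55.0)
```

In a matching design there is no such differencing step, which is why the same baseline gap would be a more direct sign of imbalance.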

Importance of prespecified confounding factors

We describe the motivation for focusing on age and Grade level, performance at baseline, gender, and socioeconomic background below.

The development of cognitive functions relating to school performance and learning is age dependent. Furthermore, systematic differences in performance level often reflect systematic differences in the preconditions for further development and learning, of both a cognitive and a social character (Piaget, 2001; Vygotsky, 1978). Therefore, to be sure that an effect estimate resulted from a comparison of groups with no systematic baseline differences, it was important to control for the students' Grade level (or age).

Performance at baseline is generally a very strong predictor of posttest scores (e.g., Hedges & Hedberg, 2007), and controlling for this confounder was therefore highly important.

With respect to gender, it is well known that there are gender differences in school performance (e.g., Holmlund & Sund, 2005). In terms of our primary outcome measures, girls tend to outperform boys in reading and boys tend to outperform girls in mathematics (Stoet & Geary, 2013), although parts of the literature find that these gender differences vanish over time (Hyde et al., 1990; Hyde & Linn, 1988). As there is no consensus around the disappearance of gender differences, we found it important to include this potential confounder.

Students from more advantaged socioeconomic backgrounds on average begin school better prepared to learn (e.g., Fryer & Levitt, 2013). As outlined in Section 2, students from socioeconomically disadvantaged backgrounds have lower test scores on international tests (OECD, 2010c, 2013). Therefore, the accuracy of the estimated effects of an intervention may depend on how well socioeconomic background is controlled for. Socioeconomic background factors were, for example, parents’ educational level, family income, and parental occupation.

Bias assessment in practice

At least two review authors independently assessed the risk of bias for each included study. Disagreements were resolved by discussion, and it was possible to reach a consensus in all cases. We reported the risk of bias assessment in risk of bias tables for each included study (see Supporting Information Appendices F and G).

In accordance with Cochrane and Campbell methods, we did not aggregate the 5‐point scale across items. Effect sizes given a rating of 5 on any item should be interpreted as being more likely to mislead than inform and were not included in the meta‐analysis (the items with a 3‐point scale did not warrant exclusion). If an effect size received a rating of 5 on any item (from both reviewers), we did not continue the assessment because, as per our protocol, these effect sizes would not be included in any analysis. We discuss the risk of bias assessment, including the most common reasons for excluding an effect size, in Section 5.2. For studies with a lower than 5‐point rating, we used the ratings of the major items in sensitivity analyses.

A note is warranted on how we assessed some items in practice. Allocation concealment was assessed as a type of second‐order bias in RCTs. If there was doubt or uncertainty about how the random sequence was generated, this automatically carried over to the allocation concealment rating, which was also rated “Unclear”. Similarly, if the sequence generation rating was “High”, as for example in a QES, then the allocation concealment rating was also “High”. RCTs rated “Low” on sequence generation could get a “High” rating on allocation concealment if the sequence was not concealed from those involved in the enrolment and assignment of participants. However, if the randomisation was not done sequentially, this should not present a problem, and allocation concealment in non‐sequentially randomised RCTs was rated “Low”, given that the rating on sequence generation was also “Low”.
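The carry‐over rules for allocation concealment described above can be summarised as a small decision function. This is only an illustrative sketch: the function and argument names are hypothetical, and the handling of sequentially randomised RCTs that do not report on concealment (rated “Unclear” here) is an assumption not stated in the text.

```python
def allocation_concealment_rating(sequence_generation, sequential=True, concealed=None):
    """Return 'Low', 'Unclear' or 'High' for the allocation concealment item.

    sequence_generation: rating on the sequence generation item.
    sequential: whether participants were randomised sequentially.
    concealed: True/False if concealment was reported, None if not reported.
    """
    if sequence_generation in ("Unclear", "High"):
        # Doubt about, or high risk in, sequence generation carries over.
        return sequence_generation
    if sequence_generation != "Low":
        raise ValueError("rating must be 'Low', 'Unclear' or 'High'")
    if not sequential:
        # Non-sequential randomisation: concealment is not a concern.
        return "Low"
    if concealed is None:
        # Assumption (not in the text): unreported concealment is 'Unclear'.
        return "Unclear"
    return "Low" if concealed else "High"
```

For example, a QES (rated “High” on sequence generation) is rated “High” regardless of the other arguments, whereas a non‐sequentially randomised RCT rated “Low” on sequence generation is rated “Low”.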

Blinding is in practice always a problem in the interventions we included. For example, no included study was double‐blind, a standard that is very difficult to attain in an educational field trial. Furthermore, blinding was not extensively discussed in many studies, likely because it is difficult to attain in education interventions (Sullivan, 2011). For these reasons, we did not exclude any effect size due to insufficient blinding. Rather than rating all studies that did not explicitly discuss blinding as “Unclear”, we sought to assess how likely it was that a particular group of participants was blind to treatment status. We used the following categories of participants: students in intervention and control groups, teachers, parents, and testers. We assessed the blinding item by the following standard: if all participant groups were likely to be aware of treatment status, we gave the study a rating of 4. If at least one group was likely blind to treatment status, the study got a 3, and we lowered the rating further when more groups were blinded.

There were moreover very few studies that reported having an a priori protocol or analysis plan. We did not count hypotheses stated in the study as an a priori analysis plan. The plan should have been published before the analysis took place and we had to be able to find the plan.

This lack of prespecified outcome measures made it difficult to assess selective outcome reporting bias. However, a few studies lacked information regarding all outcomes described in, for example, the methods section of the study. To separate these effect sizes from the ones that did not contain information about a protocol or an analysis plan, we rated the latter ones with 1 (i.e., there was no evidence of selective outcome reporting). This rating should therefore not necessarily be considered as representing a low risk of bias.

4.3.4. Measures of treatment effect

The analysis of effect sizes involved comparing an intervention to control or comparison conditions. We conducted separate analyses for short‐term and follow‐up outcomes. The sections below apply to both types of outcomes, unless otherwise mentioned.

Effect sizes using continuous data

For continuous data, we calculated standardised mean differences (SMDs) whenever sufficient information was available in the included studies. We used Hedges’ g to estimate SMDs, calculated as (Lipsey & Wilson, 2001, pp. 47–49):

$$g = \left(1 - \frac{3}{4N - 9}\right) \frac{\bar{X}_1 - \bar{X}_2}{s_p} \tag{1}$$

$$SE_g = \sqrt{\frac{N}{n_1 n_2} + \frac{g^2}{2N}} \tag{2}$$

where $N = n_1 + n_2$ is the total sample size, $\bar{X}$ the postintervention mean in each group, and $s_p$ the pooled standard deviation defined as

$$s_p = \sqrt{\frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{(n_1 - 1) + (n_2 - 1)}} \tag{3}$$

Here, $s_1$ and $s_2$ denote the raw standard deviation of the intervention and control group. We used covariate‐adjusted means, and the unadjusted posttest standard deviation whenever available. However, most studies did not report covariate‐adjusted means in a way that we could use. We then used the raw means instead (we test whether the studies reporting only raw means have different effect sizes in the sensitivity analysis). We decided to use the postintervention standard deviation, as more studies included this information than the preintervention standard deviation. In the few cases where the postintervention standard deviation was missing, we used the preintervention standard deviation.
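As an illustration, the calculation of Hedges' g and its standard error can be sketched in a few lines of Python. The sketch assumes the usual form given by Lipsey and Wilson (2001): the mean difference divided by the pooled standard deviation, multiplied by the small‐sample correction 1 − 3/(4N − 9), with N the total sample size. The function names and the input values are hypothetical and do not come from any included study.

```python
import math


def pooled_sd(n1, s1, n2, s2):
    """Pooled standard deviation of two groups with sizes n1, n2 and SDs s1, s2."""
    return math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))


def hedges_g(mean1, mean2, n1, s1, n2, s2):
    """Return (g, se): small-sample corrected SMD and its standard error."""
    total_n = n1 + n2
    d = (mean1 - mean2) / pooled_sd(n1, s1, n2, s2)
    g = (1 - 3 / (4 * total_n - 9)) * d
    se = math.sqrt(total_n / (n1 * n2) + g**2 / (2 * total_n))
    return g, se


# Hypothetical posttest data: group 1 is the intervention group.
g, se = hedges_g(mean1=52.0, mean2=48.0, n1=30, s1=10.0, n2=30, s2=10.0)
```

With equal standard deviations of 10 in both groups, the pooled standard deviation is 10, so the uncorrected difference of 0.4 is shrunk slightly by the correction factor.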

All studies included in the data synthesis, except one, provided information so that we could calculate student‐level effect sizes. For the exception, we used information about intra‐cluster correlations (ICC) from Hedges and Hedberg (2007, table 6, p. 72, pre‐test covariate model for math in Grade 6, which is 0.098) to transform the teacher/class‐level effect size to a student‐level effect size.
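The text does not spell out the transformation, so the following is only a sketch under stated assumptions: that the reported effect size was standardised by the standard deviation of cluster (teacher/class) means, and that clusters are of equal size. Under those assumptions the variance of a cluster mean is the total student‐level variance multiplied by ICC + (1 − ICC)/n, which tends to ICC for large clusters. The function name and the example cluster size are hypothetical.

```python
import math


def cluster_to_student_smd(d_cluster, icc, cluster_size=None):
    """Rescale an SMD standardised by the SD of cluster means to the
    student-level (total) SD.

    Assumes equal cluster sizes. If cluster_size is None, the sampling
    variance of the cluster means is ignored (large-cluster approximation).
    """
    if not 0 < icc <= 1:
        raise ValueError("icc must be in (0, 1]")
    if cluster_size is None:
        variance_ratio = icc
    else:
        variance_ratio = icc + (1 - icc) / cluster_size
    return d_cluster * math.sqrt(variance_ratio)


# ICC of 0.098 as cited in the text; cluster size of 20 is hypothetical.
d_student = cluster_to_student_smd(1.0, icc=0.098, cluster_size=20)
```

Because cluster means vary less than individual scores, a cluster‐level effect size is larger than the corresponding student‐level one, and the rescaling always shrinks it.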

Table 6.

Tests of differences between intervention components

| Hypothesis | Coefficient difference (1) | F‐statistic (2) | df (3) | p Value (4) |
|---|---|---|---|---|
| Peer‐assisted = CAI | 0.31 | 10.65 | 51.76 | 0.002 |
| Peer‐assisted = Coaching personnel | 0.42 | 8.84 | 43.26 | 0.005 |
| Peer‐assisted = Incentives | 0.31 | 8.49 | 33.26 | 0.006 |
| Peer‐assisted = Medium‐group | 0.21 | 4.40 | 34.30 | 0.043 |
| Peer‐assisted = Progress monitoring | 0.49 | 9.28 | 43.48 | 0.004 |
| Peer‐assisted = Small group | 0.06 | 0.91 | 45.58 | 0.345 |
| Small‐group = CAI | 0.26 | 13.51 | 35.75 | 0.001 |
| Small‐group = Coaching personnel | 0.37 | 9.08 | 48.32 | 0.004 |
| Small‐group = Incentives | 0.25 | 8.46 | 27.60 | 0.007 |
| Small‐group = Medium‐group | 0.15 | 3.58 | 25.21 | 0.070 |
| Small‐group = Progress monitoring | 0.43 | 10.45 | 38.39 | 0.003 |
| Fractions = Algebra/Pre‐algebra | 0.53 | 14.15 | 10.18 | 0.004 |
| Fractions = Geometry | 0.33 | 6.12 | 10.21 | 0.032 |
| Fractions = Number sense | 0.39 | 8.12 | 7.16 | 0.024 |
| Fractions = Operations | 0.47 | 10.09 | 6.29 | 0.018 |
| Fractions = Problem solving | 0.29 | 6.21 | 8.95 | 0.035 |

Column 1 reports the difference between the coefficients of the two components mentioned in the Hypothesis‐column. To calculate the F‐statistic, the degrees of freedom, and the (two‐sided) p value in columns 2–4, we used the test described in Tipton and Pustejovsky (2015), and implemented it using our own extension to the Wald_test function in R package clubSandwich (Pustejovsky, 2020) and the “HTZ” small‐sample correction procedure. The coefficients and variance estimates are from the model reported in Table 5, column 2.

Abbreviation: CAI, computer‐assisted instruction.

Some studies reported an effect size where the mean difference was standardised using the control group's standard deviation (i.e., a Glass's δ) or reported effect sizes calculated with unclear methods (and no other information that we could use). Furthermore, a few studies used the school‐, district‐, or nation‐wide standard deviation to calculate a standardised mean effect size, but did not include information about the respective standard deviation for intervention and control group. We included these effect sizes, and tested the sensitivity to their inclusion in Section 5.4.

Our protocol stated that we would use intention‐to‐treat (ITT) estimates of the mean difference whenever possible. However, very few studies reported explicit ITTs, and the overwhelming majority only reported results for the students who actually received the intervention, rather than for all students for whom the intervention was intended (often because they lacked outcome data for students who left the study). We therefore believe that the estimates are closer to treatment‐on‐the‐treated (TOT) effects and used TOT estimates when both ITTs and TOTs were available.

A few effect sizes are based on tests where low scores denote beneficial effects. We reverse coded these so that positive effect sizes imply beneficial effects of the intervention.

Effect sizes using discrete data

Only two studies exclusively reported discrete outcome measures. We transformed the outcomes into SMDs using the methods described in Sánchez‐Meca et al. (2003) and included them in the analyses together with studies reporting continuous outcomes.
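The text does not state which of the transformations discussed by Sánchez‐Meca et al. (2003) was applied. As a hedged illustration, two of the indices covered there can be sketched for a 2×2 table: the Cox index, which divides the log odds ratio by 1.65, and the logit‐based index, which multiplies it by √3/π. The function names and the cell counts below are hypothetical.

```python
import math


def log_odds_ratio(a, b, c, d):
    """a, b: successes and failures in the intervention group;
    c, d: successes and failures in the control group."""
    return math.log((a * d) / (b * c))


def d_cox(a, b, c, d):
    """Cox index: log odds ratio divided by 1.65, with its approximate variance."""
    smd = log_odds_ratio(a, b, c, d) / 1.65
    var = 0.367 * (1 / a + 1 / b + 1 / c + 1 / d)
    return smd, var


def d_logit(a, b, c, d):
    """Logit-based index: log odds ratio times sqrt(3)/pi, with its variance."""
    smd = log_odds_ratio(a, b, c, d) * math.sqrt(3) / math.pi
    var = (3 / math.pi**2) * (1 / a + 1 / b + 1 / c + 1 / d)
    return smd, var


# Hypothetical counts: 30 of 50 intervention students and 20 of 50 control
# students reach a proficiency threshold.
cox_smd, cox_var = d_cox(30, 20, 20, 30)
logit_smd, logit_var = d_logit(30, 20, 20, 30)
```

Both indices rescale the same log odds ratio, so they agree in sign and differ only by a constant factor; neither should be read as the specific choice made in this review.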

4.3.5. Unit of analysis issues

Errors in statistical analysis can occur when the unit of allocation differs from the unit of analysis. In cluster‐randomised trials, participants are randomised to intervention and control groups in clusters, as when participants are randomised by school. QES may also include clustered assignment of treatment. Effect sizes and standard errors from such studies may be biased if the unit‐of‐analysis is the individual and an appropriate cluster adjustment is not used (Higgins & Green, 2011).
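To indicate the size of the problem, the design effect approach described in the Cochrane Handbook can be sketched as follows: with average cluster size m and intra‐cluster correlation ICC, the variance of an estimate that ignores clustering is understated by roughly the factor 1 + (m − 1) × ICC. The function names and numbers are hypothetical, and the sketch is not necessarily the adjustment applied in this review.

```python
import math


def design_effect(avg_cluster_size, icc):
    """Variance inflation factor due to clustered assignment."""
    return 1 + (avg_cluster_size - 1) * icc


def cluster_adjusted_se(se, avg_cluster_size, icc):
    """Inflate a standard error that ignored clustering by sqrt(design effect)."""
    return se * math.sqrt(design_effect(avg_cluster_size, icc))


# Hypothetical example: classes of 20 students and an ICC of 0.1.
deff = design_effect(avg_cluster_size=20, icc=0.1)
adjusted = cluster_adjusted_se(se=0.10, avg_cluster_size=20, icc=0.1)
```

Even a modest ICC can therefore widen confidence intervals considerably when clusters are large, which is why unadjusted standard errors from clustered designs are treated with caution.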