1. PLAIN LANGUAGE SUMMARY

1.1. Early grade literacy (EGL) interventions in Latin America and the Caribbean (LAC) are only effective under certain conditions

Children across the world are not acquiring basic reading and math skills despite increases in primary school enrollment and attendance. Teacher training and nutrition programs in LAC are not effective in improving EGL overall, but they may be, under certain conditions. Technology in schools can be detrimental to learning outcomes if these programs only focus on technology.

1.2. What is this review about?

Approximately 250 million children across the world are not acquiring basic reading and math skills, even though about 50% of them have spent at least 4 years in school. Educational policies on EGL in the LAC region have long suffered from a disjuncture between school practice and research.

This review examines the effectiveness and fidelity of implementation of various programs implemented in the LAC region that aim to improve EGL outcomes, including teacher training, school feeding, computer‐aided instruction, nutrition, and technology‐in‐education.

What is the aim of this review?

This Campbell systematic review summarizes findings from 107 studies to inform policy for EGL in the LAC region.

1.3. What studies are included?

This review includes four types of EGL studies from the LAC region:

- (1) Quantitative interventions (23 studies)
- (2) Qualitative interventions (6 studies)
- (3) Quantitative noninterventions (61 studies)
- (4) Qualitative noninterventions (14 studies).

1.4. What are the main findings of this review?

Overall, programs did not have statistically significant effects on EGL outcomes. But there are instances in which programs may have positive or negative effects.

For example, teacher training did not show positive effects on EGL outcomes on average, but a study from Chile showed that teacher training may improve EGL outcomes in high‐income economies when it is well implemented and complemented by sustained coaching. Similarly, nutrition programs did not improve EGL outcomes on average. However, a study from Guatemala showed positive effects on EGL, possibly because Guatemala has high rates of stunting and wasting.

Although there is no statistically significant effect of technology‐in‐education programs on EGL outcomes in the LAC region, a study from Peru showed that the distribution of laptops to children can have adverse effects, particularly when not complemented by additional programs.

Other studies showed that phonemic awareness, phonics, fluency, and comprehension are associated with reading ability. Furthermore, poverty and child labor are negatively correlated with EGL outcomes. This finding supports the result that nutrition programs may be effective in settings with high rates of stunting and wasting.

Finally, the quality of preschool and promoting social learning are positively associated with EGL outcomes.

1.5. What do the findings of this review mean?

Teacher training, nutrition, and technology‐in‐education programs on average do not show positive effects on EGL outcomes in the LAC region. However, there are several factors that could potentially enable positive impacts. These include combining teacher training with coaching, targeting school feeding and other nutrition programs to low‐income countries with high rates of stunting and wasting, and combining technology‐in‐education programs with a strong focus on pedagogical practices.

The review also identifies some opportunities for improving the design and implementation of EGL programs. Studies support the need to teach phonological awareness (PA) skills early on, but caution is required considering the small evidence‐base in the LAC region. The evidence also supports investing in preschool quality through well‐implemented teacher training.

Finally, ministries of education in low‐income countries with high rates of stunting and wasting could consider investing in programs to improve the nutrition outcomes of students.

Caution is needed in interpreting these findings since the evidence base on what works to improve EGL outcomes in the LAC region is weak, with indications of publication bias.

1.6. How up‐to‐date is this review?

The review authors searched for studies published up to February 2016.

2. EXECUTIVE SUMMARY

2.1. Background

Improvements in students' learning achievement have lagged behind in low‐ and middle‐income countries despite significant progress in school enrollment numbers. Approximately 250 million children across the world are not acquiring basic reading and math skills, even though about 50% of them have spent at least 4 years in school (United Nations Educational, Scientific and Cultural Organization (UNESCO), 2014). The World Development Report (World Bank, 2018) presents evidence showing that learning is unlikely to improve unless the quality of each factor improves. The LAC region has experienced some positive trends in educational outcomes in the last decade, including improved EGL outcomes for third‐grade students in the majority of the countries. However, educational policies on EGL in the LAC region have long suffered from a disjuncture between school practice and research. As a result, policy makers, pedagogy and curriculum specialists, and other stakeholders in the region are unable to identify high‐quality research or to determine what works in improving EGL outcomes. For this reason, they are unable to shape policy, practice, and programs in an evidence‐driven manner.

2.2. Objectives

This systematic review examines the effectiveness of various programs implemented in the LAC region that aim to improve EGL outcomes, including teacher training, school feeding, computer‐aided instruction, programs with an emphasis on nutrition, and technology in education programs. In addition, we assess the fidelity of implementation of programs that aim to improve EGL outcomes as well as the factors that predict EGL outcomes. Finally, we examine the experiences and perspectives of various stakeholders about EGL in the LAC region.

Specifically, this review addressed the following research questions:

1. What is the impact of reading programs, practices, policies, and products aimed at improving the reading skills of children from birth through Grade 3 on reading outcomes in the LAC region?
2. What factors predict the reading outcomes of children from birth through Grade 3?
3. What factors contribute to improving the reading outcomes of children from birth through Grade 3?

2.3. Search methods

We searched electronic databases, gray literature, relevant journals, and institutional websites, and we performed keyword hand searches and requested recommendations from key stakeholders. The search was conducted from July to August 2015 and we finalized the search in February 2016. In addition, we used novel computational approaches (specifically Wikilabeling) to maximize the comprehensiveness of the review.

2.4. Selection criteria

This review includes studies that are relevant for the literacy of children in early grades in the LAC region. This literature included both studies with an emphasis on education and studies with a focus on enabling factors that are linked to education programs or reading outcomes. For example, we included studies with a focus on nutrition that may indirectly influence reading outcomes. We developed a theory of change to identify these enabling factors.

To answer our research questions, we included four study types. The first type comprises experimental and multivariate nonexperimental studies that include a control or comparison group. We defined these studies as “quantitative intervention studies.” We included these studies to determine the impact of specific programs on EGL outcomes. The second study type consists of qualitatively oriented studies with a focus on interventions. These studies usually emphasize the process of program implementation or beneficiaries' experiences of the program's performance. We defined these studies as “qualitative intervention studies.” The third study type emphasizes the predictors of reading outcomes but does not focus on the effects of a specific program. We defined these studies as “quantitative nonintervention studies.” We included these studies to increase our understanding of intermediate outcomes and their ability to predict reading outcomes. The fourth study type consists of qualitative studies that discuss literacy in the LAC region but do not emphasize a specific program. We defined these studies as “qualitative nonintervention studies.”

2.5. Data collection and analysis

We systematically coded information from the studies included in the review and critically appraised them. We conducted statistical meta‐analysis and sensitivity analysis using the data extracted from quantitative experimental and quasiexperimental studies. We also used narrative synthesis techniques to synthesize the findings from qualitative studies and studies that focused on predictors of literacy outcomes.
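The pooling step of a statistical meta‐analysis can be illustrated with a minimal sketch. The summary does not state which pooling model was used, so the DerSimonian‐Laird random‐effects estimator below is an assumption chosen for illustration, and the effect sizes and variances are hypothetical values, not data extracted from the included studies.

```python
import math


def random_effects_pool(effects, variances):
    """Pool effect sizes with the DerSimonian-Laird random-effects estimator.

    effects:   per-study standardized mean differences (hypothetical inputs)
    variances: per-study sampling variances of those effects
    Returns (pooled effect, 95% confidence interval, between-study variance).
    """
    k = len(effects)
    # Inverse-variance (fixed-effect) weights and pooled estimate
    w = [1.0 / v for v in variances]
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    # Cochran's Q measures heterogeneity around the fixed-effect estimate
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    # Between-study variance, truncated at zero
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    # Random-effects weights add tau2 to each study's variance
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    return pooled, (pooled - 1.96 * se, pooled + 1.96 * se), tau2


# Hypothetical effect sizes and variances, for illustration only
effects = [0.30, 0.05, -0.10]
variances = [0.02, 0.03, 0.01]
pooled, ci, tau2 = random_effects_pool(effects, variances)
print(f"pooled SMD = {pooled:.2f}, 95% CI = ({ci[0]:.2f}, {ci[1]:.2f})")
```

A sensitivity analysis in this style would rerun the same function while leaving out one study at a time, or while restricting the inputs to studies with a low risk of bias.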

2.6. Results

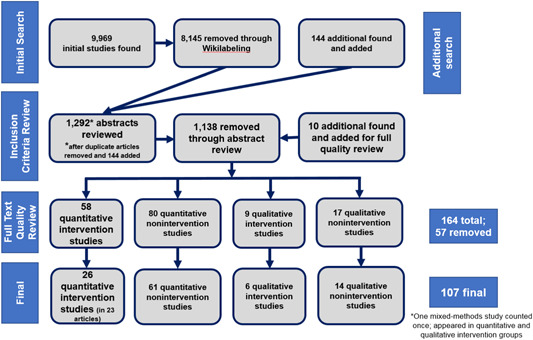

We included 107 studies with a focus on EGL in the LAC region. Initial searches resulted in 9,696 articles. Following a manual review of the abstracts, we were left with a total of 164 studies that underwent full‐text review. During this phase, an additional 57 articles were removed as not relevant, resulting in 107 studies included in the final review.

The 107 included articles comprised quantitative intervention research, quantitative nonintervention research, qualitative intervention research, and qualitative nonintervention research. We included 23 articles with studies that were experimental or quasiexperimental with a focus on the effects of specific development programs on EGL outcomes. Three of these 23 articles (Cardoso‐Martins, Mesquita, & Ehri, 2011; Larraín, Strasser, & Lissi, 2012; Vivas, 1996) each covered two distinct studies, bringing the number of quantitative intervention studies included to 26. We also included 61 quantitative studies that had an emphasis on EGL outcomes but did not emphasize a specific intervention, 14 qualitative studies without a focus on a specific intervention, and six qualitative studies that focused on a specific intervention. Most of the studies included in our review of evidence were published journal articles and came from either Mexico or South America; significantly fewer articles were from Central America and the Caribbean. Almost all articles were published in English or Spanish. More than 90% of the articles were focused on high‐ to upper‐middle‐income countries.
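The counts reported above can be checked with simple arithmetic. The short sketch below uses only numbers stated in the text; the variable names are illustrative. It shows that the four study types sum to 107 when the quantitative intervention category is counted as 26 studies rather than 23 articles.

```python
# Screening flow, as reported in the text
full_text_reviewed = 164
excluded_at_full_text = 57
included = full_text_reviewed - excluded_at_full_text

# Three of the 23 quantitative intervention articles each report two studies
quant_intervention_articles = 23
articles_with_two_studies = 3
quant_intervention_studies = quant_intervention_articles + articles_with_two_studies

# Study counts by type, as reported in the text
by_type = {
    "quantitative intervention": quant_intervention_studies,
    "quantitative nonintervention": 61,
    "qualitative nonintervention": 14,
    "qualitative intervention": 6,
}
total = sum(by_type.values())

print(included, quant_intervention_studies, total)  # prints: 107 26 107
```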

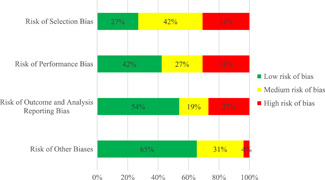

We found only a few quantitative intervention studies with a low risk of bias. Of the 26 included studies, seven were rated as having a low risk of selection bias, five were rated as having a medium risk of selection bias, and eight were rated as having a high risk of selection bias. Furthermore, 11 studies were rated as having a low risk of performance bias, seven studies were rated as having a medium risk of performance bias, and eight studies were rated as having a high risk of performance bias. We rated 14 studies as having a low risk of outcome and analysis reporting bias, five studies as having a medium risk of outcome and analysis reporting bias, and seven studies as having a high risk of outcome and analysis reporting bias. Finally, we rated 17 studies as having a low risk of other biases, eight studies as having a medium risk of other biases, and one study as having a high risk of other biases.

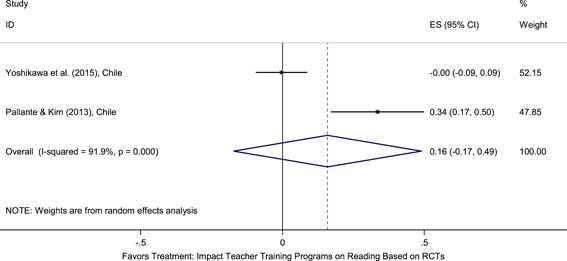

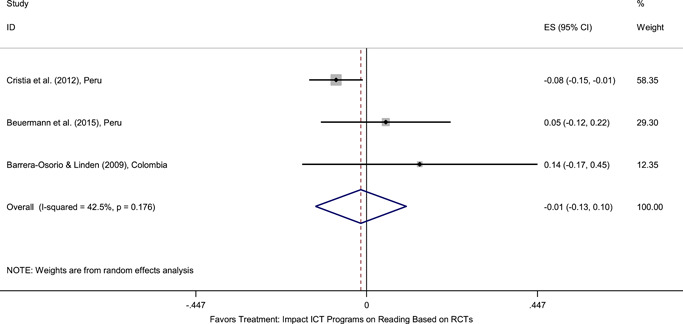

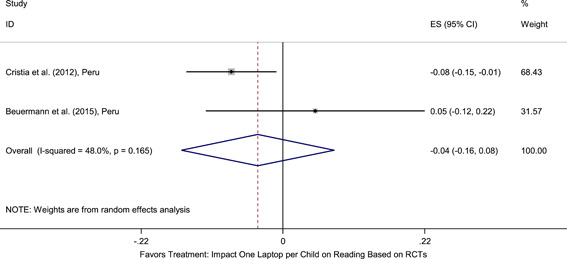

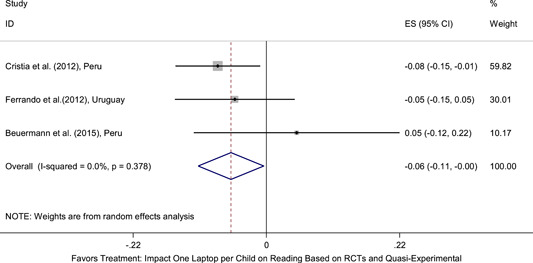

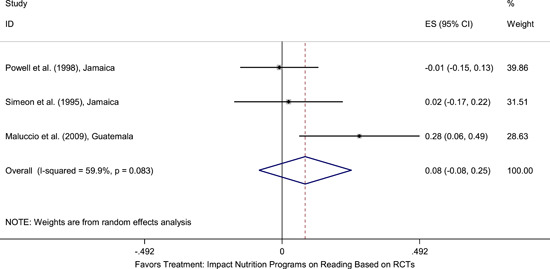

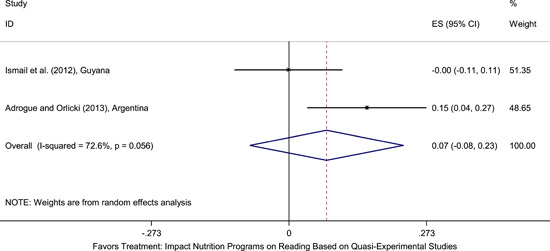

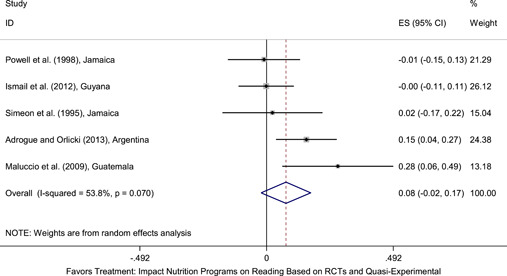

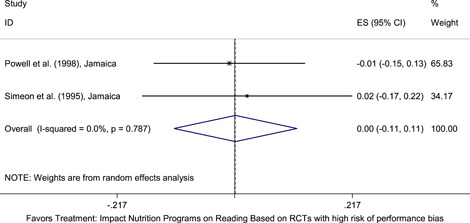

Meta‐analyses did not show statistically significant average effects of development programs on EGL outcomes, but a narrative synthesis of the limited number of high‐quality quantitative intervention studies did show some examples of development programs that may have positive effects on EGL outcomes in specific circumstances and contexts. For example, a meta‐analysis that focused on teacher training did not show positive effects on EGL outcomes (95% confidence interval [CI] = −0.17, 0.48; evidence from two programs). However, a study from Chile showed that teacher training programs may positively affect EGL outcomes in high‐income economies when they are well implemented and complemented by the sustained coaching of teachers (Pallante & Kim, 2013). In addition, a meta‐analysis that focused on nutrition programs did not show positive effects on EGL outcomes (95% CI = −0.08, 0.25; evidence from two programs). However, a study from Guatemala showed some evidence that nutrition programs can have positive effects on EGL outcomes in contexts where stunting and wasting are high (Hoddinott et al., 2013). On average, we also did not find statistically significant effects of technology in education programs on EGL outcomes in the LAC region (SMD = −0.01, 95% CI = −0.13, 0.10; evidence from three studies). However, a study from Peru showed that the distribution of laptops to children can have adverse effects on EGL outcomes, particularly when the distribution of laptops is not complemented by additional programs (Cristia, Ibarrarán, Cueto, Santiago, & Severín, 2012).
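The reported confidence intervals can be read as follows: a 95% CI that contains zero is consistent with no effect at the 5% significance level. The sketch below applies that check to the three intervals quoted above. The implied standard error is a rough back‐calculation that assumes a symmetric, normal‐based interval, which the summary does not confirm; the function names are illustrative.

```python
# 95% confidence intervals as reported in the text
reported_cis = {
    "teacher training": (-0.17, 0.48),
    "nutrition": (-0.08, 0.25),
    "technology in education": (-0.13, 0.10),
}


def includes_zero(ci):
    """Return True when the interval contains zero (no significant effect)."""
    low, high = ci
    return low <= 0.0 <= high


def implied_se(ci, z=1.96):
    """Back out a standard error, assuming a symmetric normal-based interval."""
    low, high = ci
    return (high - low) / (2.0 * z)


for program, ci in reported_cis.items():
    print(program, includes_zero(ci), round(implied_se(ci), 3))
```

All three intervals contain zero, which matches the statement that the pooled estimates were not statistically significant.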

The findings of the quantitative nonintervention studies indicate that phonemic awareness, phonics, fluency, and comprehension are associated with reading ability. The research also indicates that poverty and child labor are negatively correlated with EGL outcomes. This finding on the importance of poverty and socioeconomic factors for EGL outcomes supports the quantitative intervention result that nutrition programs may be effective in improving EGL outcomes in contexts with high rates of stunting and wasting. Finally, the quantitative nonintervention studies show that the quality of preschool is positively associated with EGL outcomes.

Both qualitative and quantitative studies indicated that consideration of context is key to improving reading outcomes. The most frequently discussed topic in qualitative nonintervention articles was the need to promote social learning to improve EGL.

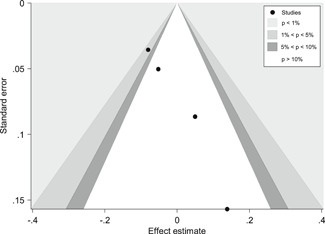

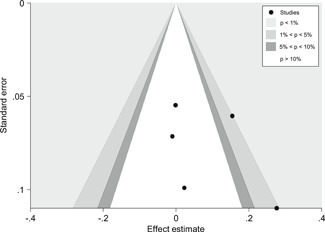

We found some indications of publication bias in the studies that focus on the effects of teacher practices, parental involvement, and Information and Communication Technology (ICT) programs on EGL outcomes in the LAC region; that is, it is possible that some studies that focus on EGL in the LAC region were not published because they did not find statistically significant effects.

2.7. Authors' conclusions

Our review highlighted several important implications for practice and policy related to the rollout, design, and potential impact of education programs that aim to improve EGL outcomes in the LAC region. First, our quantitative evidence suggests that teacher training, nutrition, and technology in education programs on average do not show positive effects on EGL outcomes in the LAC region. However, the quantitative narrative synthesis shows several factors that could potentially enable positive impacts of these programs on EGL outcomes. These factors include combining teacher training with teacher coaching, targeting school feeding and other nutrition programs to low‐income countries with high rates of stunting and wasting, and combining technology in education programs with a strong focus on pedagogical practices. However, the evidence‐base is too small to derive strong conclusions about the ability of these components to improve EGL outcomes in the LAC region.

Second, the systematic review identified some promising opportunities for improving the design and implementation of education programs that aim to improve EGL outcomes. We found evidence for a strong correlation between PA and reading ability. In addition, studies focused on the importance of PA and phonics to help students become strong decoders. These findings suggest the need to teach PA skills early on, but caution is required considering the small evidence‐base in the LAC region.

Third, the review suggests that more resources may potentially need to be focused on enhancing the quality of preschools through well‐implemented teacher training. The findings of this review suggest that such teacher training could possibly enhance reading outcomes if the training is complemented with sustained teacher coaching. The evidence‐base is, however, again too small to derive strong conclusions about the effects of teacher training in preschools.

Fourth, ministries of education in low‐income countries with high rates of stunting and wasting could consider investing in programs to improve the nutrition outcomes of students in order to improve EGL outcomes. These efforts may be less effective in middle‐ or high‐income countries, however, and more evidence is needed to derive strong conclusions about the effects of programs that aim to improve nutrition on EGL outcomes.

In general, the evidence base on what works to improve EGL outcomes in the LAC region is weak. We found only a small number of studies that can present credible estimates of the impact of development programs on EGL outcomes. The majority of the studies suffer from either a medium or high risk of selection bias or a medium or high risk of performance bias. Furthermore, we found some indications of publication bias in the studies that focus on the effects of teacher practices and parental involvement on EGL outcomes in the LAC region.

3. BACKGROUND

3.1. The problem, condition, or issue

There is evidence of a global learning crisis (Berry, Barnett, & Hinton, 2015; Nakamura, de Hoop, & Holla, 2019; Pritchett & Sandefur, 2013). School enrollment has improved, but EGL and math assessment data have shown high “zero” scores in literacy assessments in many low‐ and middle‐income countries (e.g., Annual Status of Education Report [ASER], 2013; EGRA data, n.d.).

The findings of the latest World Development Report on education highlight how educational outcomes are affected directly by the quality of school inputs, school management, and teachers, as well as the education preparedness of learners. In theory, improvements in the quality of one of these factors could result in improvements in learning outcomes. However, the World Development Report (World Bank, 2018) presents evidence demonstrating that learning is unlikely to improve unless the quality of each factor improves. A systematic review by Snilstveit et al. (2012) also argues that education programs are unlikely to improve learning outcomes unless they ease more than one constraint.

The LAC region is composed of more than 40 countries and territories on two continents with five different official languages (English, Spanish, French, Dutch, and Portuguese) and many more regional languages. The region has experienced some positive trends in educational outcomes in the last decade, including improvements in pupil/teacher ratios, increases in the percentage of trained teachers (UNESCO, 2014, p. 8), and improved EGL outcomes for third‐grade students in the majority of the countries (see Figure 1).

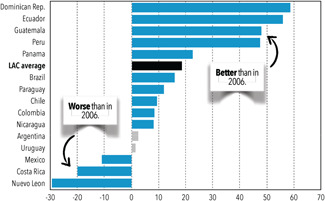

Figure 1.

Change in mean scores in third‐grade reading, 2006–2013. (1) Only changes shown in blue or black are statistically significant. (2) The mean score for the region includes all countries in this graph with equal weight. Source: from Are Latin American children's reading skills improving? Highlights of the second and third regional comparative and explanatory studies (SERCE & TERCE). Washington, DC: American Institutes for Research; p. 15. Reprinted with permission.

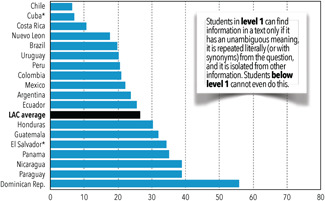

However, we still find great disparities among the poor, rural, indigenous, and other disadvantaged groups in the region. In addition, one in four third graders performed so poorly that they were categorized in the lowest level of the reading test, and fewer than 5% of the third graders performed so well that they were categorized as achieving the highest levels of reading. Figure 2 depicts these challenges by demonstrating that a significant number of third graders still score at the lowest levels of reading. In fact, more than 60% of third‐grade students have only achieved basic reading skills (Levels 1 and 2).

Figure 2.

Percentage of third graders scoring at level 1 or below on reading, 2013. (1) Lowest levels include level 1 and below. (2) The mean score for the region includes all countries except for Cuba, El Salvador, and Honduras with equal weights. (3) Cuba's and El Salvador's scores are from 2006. Source: from Are Latin American children's reading skills improving? Highlights of the second and third regional comparative and explanatory studies (SERCE & TERCE). Washington, DC: American Institutes for Research; p. 19. Reprinted with permission.

Many factors may explain the poor literacy outcomes in the region, but one of the key potential reasons is the lack of evidence‐based training, preparation, and support for teachers. According to Bruns and Luque (2015), “the seven million teachers of LAC are the critical actors in the region's efforts to improve education quality and raise student learning levels, which lag far behind those of OECD countries and East Asian countries such as China.” Some of the reasons they cite are the low standards for entry into teacher training, poor quality training programs that are removed from the realities of the classroom, few career incentives, and weak support for teachers once they are on the job. In addition, teachers are not receiving the training they need to deal with students at very different learning levels, of different ages, speaking different languages, and so forth, which is the reality of most LAC classrooms.

Evidence‐informed EGL policy can contribute to mitigating some of the concerns associated with EGL outcomes in the LAC region. However, up until now, education policies to improve reading outcomes have only been informed by evidence to a limited extent.

3.2. The interventions

National governments and development agencies in the LAC region have created a range of programs to improve EGL outcomes. Some of these programs specifically aim to improve EGL outcomes while others might improve EGL through indirect mechanisms. This review aimed to include any program that had the potential to affect EGL outcomes. We found and included research on the following program types and practices: teacher training, technology in education programs, school feeding and other nutrition programs, school governance programs, preschool programs, teacher practices and general pedagogical approaches, parental and community participation, and curricula. We discuss each of these intervention types below.

Teacher training programs can take several forms ranging from extensive multiyear, one‐on‐one coaching delivered to teachers in their classrooms to training workshops delivered outside of the classroom. Emerging evidence suggests that teacher training models that emphasize sustained in‐class coaching may produce larger effects on learning outcomes than short‐term training models in developing countries (Kraft, Blazar, & Hogan, 2018). For instance, a study from South Africa showed that monthly visits from specialized training coaches resulted in statistically significant effects on reading outcomes (0.25 standard deviations), while two 2‐day training sessions (provided over the course of a year) did not result in statistically significant effects on reading outcomes (Cilliers & Taylor, 2017).

Technology in education programs involve providing technological equipment (e.g., laptops, digital game‐based technology, mobile phones) to teachers or learners and integrating these tools into existing curriculums or including technology as additional tools. The equipment may have been refurbished and donated by the private sector or produced specifically for classroom instruction (Barrera‐Osorio & Linden, 2009; Cristia et al., 2012). Some programs may complement the distribution of technological equipment with training modules for teachers on the use of technology in the classroom for specific subjects. Other programs do not provide any complementary training. Studies that have examined the impact of technology in education programs on learning outcomes in low‐ and middle‐income countries suggest mixed evidence with a pattern of null results for programs that do not focus on complementary training for teachers (Bulman & Fairlie, 2016, p. 2). However, recent evidence shows more promising results for programs that include a strong focus on teaching at the right level. For example, Muralidharan et al. (2019) showed that a technology‐based afterschool instruction program with a strong emphasis on learning at the right level produced large and statistically significant effects on reading outcomes in India.

School feeding and nutrition programs vary in their modes of delivery and expected outcomes. Most programs are delivered within schools and provide meals (typically breakfast or lunch) to participating children (Adrogue & Orlicki, 2013; Powell, Walker, Chang, & Grantham‐McGregor, 1998). Other programs may provide children with specific nutrients that might be missing from their diets (Maluccio et al., 2009). Nutrition programs may aim to improve school attendance and boost learning outcomes, in addition to aiming to improve children's food security and nutritional status.

School governance interventions address school management issues that affect the delivery, quality, and financing of education. These programs often focus on decentralizing decision‐making at the school level or improving parents' and communities' involvement in school management. Some school governance interventions involve cash transfers to schools and provision of matching funds for private investment to schools along with institutional changes, which allow parents to decide how to allocate funds (Bando, 2010). Other models might provide support to poor performing schools based on needs identified in a school improvement plan (Lockheed, Harris, & Jayasundera, 2010).

Early childhood education programs often focus on preschool before the start of primary education. The effects of preschool can be moderated by variations in the length of time spent in preschool, availability and quality of school resources, quality of instruction and extraschool factors such as household income (Gardinal‐Pizato, Marturano, & Fontaine, 2012).

Interventions aimed at supporting parents in fostering children's early literacy take varied approaches and have shown mixed results. In developed countries, several interventions focus on addressing parent tutoring to improve children's literacy (Hannon, 1995; Tizard, Schofield, & Hewison, 1982; Topping, 1995). Several reviews have summarized the findings from literacy training programs for parents (Brooks, 2002; National Literacy Trust, 2001), but the effectiveness of parent training on children's literacy has not been established through systematic reviews, largely because of methodological discrepancies among the studies (Sylva, Scott, Totsika, Ereky‐Stevens, & Crook, 2008).

Interventions that target curriculum and teacher practices for literacy instruction take varied approaches as well. For instance, some interventions encourage teachers to explain unknown words to learners during storybook reading in order to boost reading comprehension (Larraín et al., 2012). Other interventions focus on providing PA training to boost learners' letter sound recognition (Cardoso‐Martins et al., 2011). Curricular interventions involve more actors and may have systemwide outcomes. For instance, interventions may focus on the reform of an existing curriculum to integrate content across subject areas or implement teaching strategies that cater to different cognitive levels (Roofe, 2014).

3.3. How the intervention might work

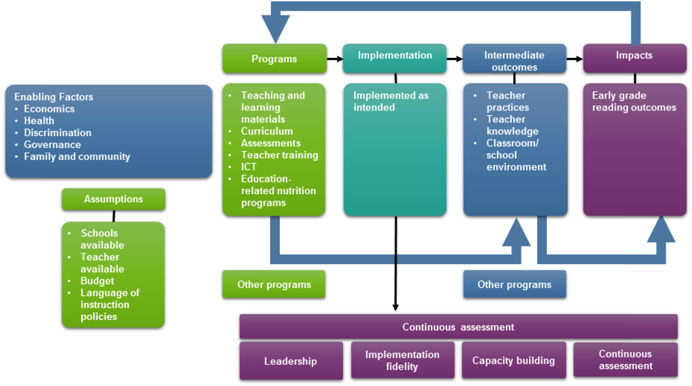

We developed a generic theory of change that—for all types of programs described above—maps out the plausible linkages across enabling factors, education‐ and noneducation‐related programs or initiatives that are associated with literacy, intermediate outcomes, and reading outcomes, as well as the assumptions that underlie the theory of change. The theory of change explains how programs or initiatives can contribute to improving EGL outcomes in a sustainable manner. Figure 3 depicts the theory of change.

Figure 3.

Theory of change

The theory of change begins with the enabling factors and assumptions that are necessary for any intervention or program to be able to impact EGL outcomes in the LAC region. These factors refer to assumptions that need to be in place to enable successful programs that are effective in improving reading outcomes. Then, education programs are implemented along with other noneducation programs that may have complementary, indirect, or moderating effects on EGL. Successful implementation then enables the achievement of intermediate EGL outcomes, such as changes in teacher knowledge and practices, which can, in turn, improve EGL. Finally, we include key elements for sustainability—namely, leadership, implementation fidelity, capacity building, and continuous assessment—that enable implementation to continue producing outcomes and impacts. Sustainability also depends on overcoming potential barriers, including financing, motivation at the community level, turnover in the government, and prioritization of these goals among competing initiatives.

The theory of change also considers mechanisms that influence how stakeholders interact with programs or practices, as well as external or contextual factors that influence implementation and the linkages in the conceptual framework. Importantly, the linkages in the conceptual framework can be moderated by the enabling environment. This enabling environment consists of the institutions and other contextual characteristics that need to be in place to enable the implementation of successful programs that are effective in improving EGL outcomes. For example, teacher training programs are likely to be more effective in an environment with a sufficient number of qualified teachers with the incentive to attend school. Similarly, teaching students how to read is likely to be more effective in an environment in which students are not stunted or wasted. Finally, a strong governance structure sets the stage for high‐quality education by ensuring that schools and teachers are available and have a budget within which they can implement programs or practices.

3.4. Why it is important to do the review

The World Conference on Education for All held in Jomtien in 1990 expanded the focus of the education agenda from access to quality and brought a new interest in the quality of education students received (World Conference on Education for All, 1990). Two of the six goals adopted at the Jomtien conference led to greater interest and support for EGL development: Goal 1, the expansion of early childhood care and development activities; and Goal 3, improvement in learning achievement.

There is evidence that programs in low‐ and middle‐income countries that focus on increasing educational inputs without addressing other constraints to learning are not sufficient to improve learning outcomes (Snilstveit et al., 2012). Banerjee et al. (2007) note that increasing inputs fails to have an impact on student attainment if what is being taught remains too difficult for students to learn. Similarly, a number of studies focused on computer‐assisted learning programs did not find significant impacts. For example, Cristia et al. (2012) analyzed the effect of the One‐Laptop‐Per‐Child program for students in rural Peru; they found little impact on the attendance and educational attainment of students. They argue that this lack of impact is due to the computers not containing software directly linked to class material, such as mathematics or reading, as well as the lack of clear instruction on how teachers should use the computers in class.

This evidence shows the importance of identifying programs that are effective in improving learning outcomes. Recent systematic reviews show that structured pedagogical interventions targeted at teaching the right skills are among the most effective education interventions to improve learning outcomes in low‐ and middle‐income countries, particularly when the structured pedagogical intervention primarily focuses on teaching in the mother tongue (Evans & Popova, 2015; Snilstveit et al., 2012). However, it is important to develop context‐specific solutions for the LAC region. This systematic review aims to do so by providing specific evidence on what works to improve EGL outcomes in this region.

Educational policy around EGL in the LAC region has long suffered from a disjuncture between school practice and research. Systematic reviews exist on the effects of education programs on learning outcomes (Evans & Popova, 2015; Snilstveit et al., 2012) and the impact of parental, community, and familial support interventions to improve children's literacy (Spier et al., 2016), but it is unclear whether these global findings can be extrapolated to the LAC region. Also, within the LAC region itself, research on EGL is fragmented and often of poor quality. There is no comprehensive or systematic overview of the EGL research literature specific to the LAC region. As a result, policy makers, pedagogy and curriculum specialists, and other stakeholders in the region are unable to determine what is relevant and are thus unable to shape policy, practice, and programs in an evidence‐driven manner.

Critical gaps in the literature and challenges in the achievement of EGL outcomes remain inside the LAC region. Most of the existing evidence on EGL is from outside the LAC region, and it is unclear whether these findings can be extrapolated to the LAC region. In addition, most of the evidence on EGL, both inside and outside the LAC region, is based on correlations and does not allow for causal claims about the impact of education and noneducation programs on EGL outcomes. These factors limit the possibility of evidence‐informed policy making.

This study will be the first systematic review to assess the evidence on EGL specifically from the LAC region. The review will also provide evidence on additional factors that support early literacy development outside of programs. This information could help to improve the design of early literacy programs at home, in schools and with parents and communities. Policy makers and practitioners need guidance in order to make use of evidence that is voluminous, diverse, and fragmented across disciplines. For research to be relevant to policy, it must be captured and consolidated in a reliable and accessible manner. It is important to differentiate research results on the basis of the quality of the methodology so that policy makers can make decisions that are based on valid findings. To that end, we reviewed and appraised the quality of all of the different methodological approaches used by the evaluations.

4. OBJECTIVES

The objective of this systematic review is to synthesize the high‐quality quantitative and qualitative evidence on what works to improve EGL outcomes in LAC. To achieve this goal, we addressed the following research questions.

(1) What is the impact of reading programs, practices, policies, and products aimed at improving the reading skills of children from birth through Grade 3 on reading outcomes in the LAC region?

(2) What factors predict the reading outcomes of children from birth through Grade 3?

(3) What factors contribute to improving the reading outcomes of children from birth through Grade 3?

5. METHODS

5.1. Criteria for considering studies for this review

5.1.1. Types of studies

To answer our research questions, we included four study types. The first type consists of experimental and multivariate nonexperimental studies that include a control or comparison group. We defined these studies as “quantitative intervention studies.” We included these studies to determine the impact of specific programs on EGL outcomes. The second study type consists of qualitatively oriented studies with a focus on interventions. These studies usually emphasize the process of program implementation or experiences of beneficiaries about the performance of the program. We defined these studies as “qualitative intervention studies.” The third type of study emphasizes predictors of reading outcomes and does not focus on the effects of a specific program. We defined these studies as “quantitative nonintervention studies.” We included these studies to increase our understanding of intermediate outcomes and their ability to predict reading outcomes. Fourth, we included qualitative studies that discuss literacy in the LAC region but do not include an emphasis on a specific program. We defined these studies as “qualitative nonintervention studies.” We included these studies to assess the experiences and perspectives of key stakeholders, including students, teachers, and policy makers, concerning literacy and reading.

5.1.1.1. Experimental and quasiexperimental studies

We relied on quantitative experimental or quasiexperimental studies to address Research Question 1. We included both randomized controlled trials (RCTs) and quasiexperimental designs with nonrandom assignment. We also included multivariate nonexperimental designs such as regression discontinuity designs, “natural experiments,” and studies in which students or schools self‐select into the program. To be included, the studies needed to collect cross‐sectional or longitudinal data for both beneficiaries and control or comparison groups and use propensity score or other types of matching, difference‐in‐difference estimation, instrumental variables regression, multivariate cross‐sectional or longitudinal regression analysis, or other forms of multivariate analysis, such as the Heckman selection model. The studies did not necessarily have to demonstrate baseline equivalence to be included in the review.

5.1.1.2. Qualitative studies on interventions

The second study type consists of qualitatively oriented studies with a focus on interventions that aim to improve EGL outcomes (either directly or indirectly). These studies usually emphasize the process of program implementation or experiences of beneficiaries about the performance of the program. We defined these studies as “qualitative intervention studies.” We included these studies to assess the experiences and perspectives of key stakeholders, including students, teachers, and policy makers, concerning literacy and reading.

5.1.1.3. Qualitative nonintervention studies

We also included qualitative studies that discuss literacy in the LAC region, but do not include an emphasis on a specific program. We defined these studies as “qualitative nonintervention studies.” We included these studies to assess the experiences and perspectives of key stakeholders, including students, teachers, and policy makers, concerning literacy and reading.

5.1.1.4. Quantitative studies that focus on predictors of reading outcomes

This study type emphasizes predictors of reading outcomes and does not focus on the effects of a specific program. We defined these studies as “quantitative nonintervention studies.” We included these studies to increase our understanding of intermediate outcomes and their ability to predict reading outcomes.

5.1.2. Types of participants

We included studies that focused on programs that included children in early grades in LAC from birth through grade 3. This time period was selected as it aligns with the funder's (USAID) definition of EGL. In cases where effects were reported for children in early grades and higher grades in LAC, studies were eligible for inclusion if a subgroup of the beneficiaries were children in early grades in LAC from birth through grade 3. Studies were also eligible for inclusion if they included children who were in grade 4 or higher during the endline survey but were in early grades (from birth through grade 3) during the start of the program.

5.1.3. Types of interventions included

The interventions included in this review were programs that aimed to improve EGL outcomes directly or could improve EGL outcomes through indirect mechanisms. We did not exclude studies that focused on programs that did not explicitly aim to improve EGL outcomes.

5.1.4. Types of interventions excluded

We excluded studies that focused on interventions that could influence reading but that did not discuss the link between the intervention and reading outcomes specifically (e.g., studies with a focus on improving IQ).

5.1.5. Types of outcome measures

To address Research Questions 1 and 2, we included studies that focused on EGL outcomes. To be included, studies needed to assess either EGL outcomes or EGL practices.

EGL outcomes: We included all studies that focused on a range of measures of EGL, including assessment tests and self‐reported measures of EGL.

EGL practices: We included all studies that focused on a range of measures of EGL practices, including measures of the time children spent on reading books.

We did not define outcome criteria to address Research Question 3, because studies included to address this research question were qualitative studies.

5.1.6. Language

We searched for studies published in any language that would have been relevant to the LAC region, including but not limited to English, Spanish, Portuguese, French, and Dutch. We did not exclude any studies based on language.

5.1.7. Types of settings

We included studies from all countries in the LAC region, as defined by the World Bank. We included any studies we found from or about the following countries:

Antigua and Barbuda, Argentina, Aruba, Bahamas, Barbados, Belize, Bermuda, Bolivia, Brazil, British Virgin Islands, Cayman Islands, Chile, Colombia, Costa Rica, Cuba, Curacao, Dominica, Dominican Republic, Ecuador, El Salvador, French Guiana, Grenada, Guadeloupe, Guatemala, Guyana, Haiti, Honduras, Jamaica, Martinique, Mexico, Mont Serrat, Netherlands Antilles, Nicaragua, Panama, Paraguay, Peru, Puerto Rico, Saint Barthelemy, Saint Kitts and Nevis, Saint Lucia, Saint‐Martin, Saint Vincent and the Grenadines, Sint Maarten, Suriname, Trinidad and Tobago, Turks and Caicos Islands, Uruguay, U.S. Virgin Islands, Venezuela

5.2. Search methods for identification of studies

5.2.1. Developing the search strategy

To develop and refine the search strategy, we relied on our PICO criteria and consultations with other researchers, librarians, computer scientists, and content experts. Through this process, we selected the most relevant databases for our review. The primary requirement for selected databases, the ability to search the full database, was critical to ensuring that the selection process was impartial. For example, Google Scholar is a source of unpublished or “gray” literature. However, it does not provide an interface that allows for a systematic search and retrieval of all potentially relevant documents. Rather, a query yields only the top results as defined by the Google search algorithm. After selecting appropriate databases, the team drafted, tested, and refined the initial search queries, both overall and by database specifications, to identify the search string that best captured the most potentially relevant evidence for the population, topic, and time frame of interest.

The systematic review team constructed a database query by identifying search terms using the population criteria. To capture quantitative intervention studies for answering Research Question 1, quantitative nonintervention research relevant to Research Question 2, and qualitative intervention and nonintervention studies relevant to Research Question 3, we did not include search strings for study design, comparison condition, or outcome measures. Using these criteria in the search strategy would have excluded relevant qualitative studies, as well as quantitative and mixed‐methods studies that omitted this information from the title and abstract.

The terms below represent the keywords and phrases that were identified for our English search. Their equivalents in the other target languages are listed in Table 1.

Population:

Birth to grade 3, 0–10, early childhood, preschool, preprimary, primary, kindergarten, grade 1, grade 2, grade 3, day care, early‐grade, elementary

Latin America, Caribbean, Central America, South America, Antigua and Barbuda, Argentina, Aruba, Bahamas, Barbados, Belize, Bermuda, Bolivia, Brazil, British Virgin Islands, Cayman Islands, Chile, Colombia, Costa Rica, Cuba, Curacao, Dominica, Dominican Republic, Ecuador, El Salvador, French Guiana, Grenada, Guadeloupe, Guatemala, Guyana, Haiti, Honduras, Jamaica, Martinique, Mexico, Mont Serrat, Netherlands Antilles, Nicaragua, Panama, Paraguay, Peru, Puerto Rico, Saint Barthelemy, Saint Kitts and Nevis, Saint Lucia, Saint‐Martin, Saint Vincent and the Grenadines, Sint Maarten, Suriname, Trinidad and Tobago, Turks and Caicos Islands, Uruguay, U.S. Virgin Islands, Venezuela

Table 1. Search terms in English, Spanish, French, Portuguese, and Dutch

| English |

|---|

| (Read* OR Litera* OR writ* OR communic*) AND (primary sch* OR primary grad* OR “grades 1 through 3” OR “grades 1 to 3” OR “grades 1–3” OR “first through third” OR “Grade 1” OR first grade* OR “grade 2” OR second grade* OR “grade 3” OR third grade* OR early grade* OR elementary OR kinder* OR pre‐school* OR preschool* OR prekindergarten* OR preK OR pre‐K OR “early childhood”) AND (Latin America* OR Caribbean OR South America* OR Antigua* and Barbuda OR Argentin* OR Aruba OR Bahama* OR Barbados OR Beliz* OR Bermud* OR Bolivia* OR Brazil* OR “British Virgin Islands” OR “Cayman Islands” OR Chile* OR Colombia* OR Costa Ric* OR Cuba* OR Curaca* OR Dominica* OR “Dominican Republic” OR Ecuador* OR El Salvador* OR French Guiana* OR Grenada* OR Guadeloup* OR Guatemala* OR Guyana* OR Haiti* OR Hondura* OR Jamaica* OR Martinique OR Mexic* OR Mont Serrat OR “Netherlands Antilles” OR Nicaragua* OR Panama* OR Paraguay* OR Peru* OR “Puerto Ric*” OR “Saint Barthelemy” OR “Saint Kitts and Nevis” OR Saint Lucia* OR “Saint‐Martin” OR “Saint Vincent and the Grenadines” OR “Sint Maarten” OR Surinam* OR “Trinidad and Tobago” OR “Turks and Caicos” OR Uruguay OR “Virgin Islands” OR Venezuela) |

| Spanish |

|---|

| (Leer OR Lecto‐escritura OR Alfabetiz* OR “Ambiente letrado”) AND (“la escuela primaria” OR “grados de primaria” OR “grados 1ero a 3ero” OR “grados 1 a 3” OR “grados 1–3” OR “de primer grado a tercer grado” OR “Grado 1” OR “primer grado” OR “primeros grados” OR “primer grado” OR “grado 2” OR “segundo grado” OR “grado 3” OR “tercer grado “OR “grados iniciales” OR “grados tempranos” OR “educación preescolar” OR “Educación maternal” OR “jardín de infancia” OR “Jardines de infancia” OR Kinder* OR preescolar OR pre‐kindergarten OR “primera infancia” OR “Educación Inicial”) AND (“Latino América” OR Caribe OR “Sud América” OR “América del Sur” OR “Antigua y Barbuda” OR Argentin* OR Arub* OR Baham* OR Barbados OR Belice* OR Bermud* OR Bolivi* OR Brasil OR “Islas Virgenes Birtánicas” OR “Gran Cayman” OR Chil* OR Colombi* OR “Costa Rica” OR Cub* OR Curaca* OR Dominica* OR “República Dominicana” OR Ecuador* OR “El Salvador” OR “Guayana Francesa” OR Grenada* OR Guadalupe OR Guatemal* OR Guyana* OR Guayana OR Haiti* OR Hondur* OR Jamaic* OR Martinic* OR Méxic* OR “Mont Serrat” OR “Antillas Holandesas” OR Nicaragu* OR Panamá* OR Paraguay* OR Perú* OR “Puerto Ric*” OR “San Bartolomé” OR “Saint Kitts y Nevis” OR “Saint Lucia” OR “Saint‐Martin” OR “Saint Vincente y Granadines” OR “San Martín” OR Surinam OR “Trinidad y Tobago” OR “Turks y Caicos” OR Uruguay OR “Islas Vírgenes” OR Venezuel*) |

| French |

|---|

| (lire OR “la lecture” OR l'écriture OR écrire OR “l'Alphabétisation” OR “environnement lettré” OR “lire‐écrire”) AND (“l'école primaire” OR “Enseignement primaire” OR “l'école élémentaire” OR “première année” OR “deuxième année de cycle 2” OR “cours préparatoire” OR “CP” OR “troisième année de cycle 2” OR “cours élémentaire1re année” OR “CE1” OR “première année du cycle 3” OR “cours élémentaire 2e année” OR “CE2” OR “maternelle” OR “Préscolaire” OR “petite enfance”) AND (“Amérique latine” OR Caraïbes OR “Amérique du Sud” OR “Antigua‐et‐Barbuda” OR Argentine OR Aruba OR Antilles OR Bahamas OR Barbade OR Belize OR Bermudes OR Bolivie OR Brésil OR “Îles Vierges britanniques” OR “Grand Cayman” OR Chili OR Colombie OR “Costa Rica” OR Cuba OR Curaçao OR Dominique OR “République dominicaine” OR Equateur OR “El Salvador” OR Guyane OR Grenade OR Guadeloupe OR Guatemala OR Haïti OR Honduras OR Jamaïque OR Martinique OR Mexique OR “Mont Serrat” OR Nicaragua OR Panama OR Paraguay OR Pérou OR “Puerto Rico” OR “San Bartolomé” OU “Saint Kitts‐et‐Nevis” OR “Saint Lucia” OR “Saint‐Martin” OR “Saint‐Vincent‐et‐Grenadines” OR Suriname OR “Trinité‐et‐Tobago” OR “îles Turks et Caicos” OR Uruguay OR Venezuela) |

| Portuguese |

|---|

| (Leitura OR Escrever OR Alfabetização OR “Alfabetização Inicial” OR “Alfabetização Infantil” OR “Alfabetização Emergente” OR “Alfabetização de Crianças” OR “Meio de Alfabetização” OR “Ambiente Escritura” OR “Compreensão de leitura” OR “Literatura Infantil” OR “tradições orais indígenas” OR “alfabetização inicial endógena na língua maternal”) AND (“Escola Primária *” OR “graus elementares” OR “graus primeiro‐terceiro” OR “graus 1–3” OR “graus 1–3” OR “primeiro grau para a terceira série” OR “Grau 1” OR “primeiro grau” OR “séries iniciais” OR “pré‐escolar” OR “jardim de infância” OR Creche OR Maternal OR Kinder OR pré‐escola OR pré‐jardim de infância* OR “primeira infância” OR “Educação da Primeira Infância”) AND (“America Latina” OR Caribe OR “América do Sul* OR “Antígua e Barbuda” OR Argentina OR Aruba, OR Bahamas OR Barbados OR Belize OR Bermuda OR Bolívia OR Ilhas Virgens OR Brasil OR Gran Cayman Británicas OR Chile* OR Colômbia* OR Costa Rica* OR Cuba, OR Curacao OR Dominicana* OR Equador OR “El Salvador” OR Grenada OR Guiana OR Guadalupe OR Guatemala* OR Haiti OR Honduras OR Jamaica OR Martinica OR México OR “Mont Serrat” OR “Antilhas Holandesas” OR Nicarágua OR Panamá* OR Paraguai* OR Peru* OR “Porto Rico” OR “São Bartolomeu” OR “São Cristóvão e Nevis” OR “Santa Lúcia” OR “São Martin” OR “São Vicente e Granadinas” OR Suriname OR “Trinidad e Tobago” OR “Turcas e Caicos” OR Uruguai OR Venezuela) AND (meninas OR meninos OR crianças* OR bebês OR infantil) |

| Dutch |

|---|

| (Lezen* OR Alfabetisering) AND (“basisschool*” OR “basisonderwijs*” OR “groep 3 tot en met 5” “groep 3 tot 5” OR “groep 3–5” OR “groep 3” OR “groep 4”OR “groep 5” OR kleuterschool* OR peuterspeelzaal* OR kinderopvang* OR brede school* OR “vroegste kinderjaren”) AND (“Latijns Amerika*” OR Latijns‐Amerika OR “Zuid Amerika* OR “Zuid‐Amerika* OR Centraal‐Amerika” OR Centraal Amerika” OR Antigua* en Barbuda OR Argentinie* OR Argentinië* OR Aruba OR Bahama's OR Barbados OR Belize OR Bermuda OR Bolivia* OR Brazilië* OR Brazilie* OR “Britse Maagdeneilanden” OR “Kaaimaneilanden” OR Chilli* OR Colombia* OR Columbia* OR “Costa Rica*” OR Cuba* OR Curacao OR Curaçao OR Dominica* OR “Dominicaanse Republiek” OR Ecuador* OR “El Salvador*” OR “Frans Guyana*” OR Grenada* OR Guadeloupe OR Guatemala* OR Guyana* OR Haiti* OR Haïti* OR Honduras OR Jamaica* OR Martinique OR Mexico OR Montserrat OR “Nederlandse Antillen” OR Nicaragua* OR Panama* OR Paraguay* OR Peru* OR “Puerto Rico” OR “Saint Barthelemy” OR “Saint Barthélemy” OR “Saint Kitts en Nevis” OR “Saint Lucia*” OR “Saint‐Martin” OR “Saint Vincent en de Grenadines” OR “Sint Maarten” OR Suriname OR Trinidad en Tobago OR “Turks‐ en Caicoseilanden” OR “Turks en Caicoseilanden” OR Uruguay OR “Maagdeneilanden” OR Venezuela) |

We also included a time frame (1990–2015) in the search parameters. We selected this time frame because it provided us with access to a large amount of relevant evidence; we also wanted to be more inclusive and make sure we did not leave out any important evidence. In addition, this time frame focuses on the period after the Education for All (EFA) movement and the World Conference on Education for All held in 1990 in Jomtien, Thailand. Based on the population criteria and time frame, we constructed a search string in five languages (English, Spanish, French, Portuguese, and Dutch) to cover the variety of literature most likely to address EGL in the LAC region.

We aimed to make the search strings as broad as possible to retrieve the maximum amount of potentially relevant items from all databases (Schuelke‐Leech, Barry, Muratori, & Yurkovich, 2015). In theory, the use of one standardized search string ensures an unbiased search strategy across all databases. In practice, using one standardized search string is challenging because the search rules are not standardized across repositories. For example, SAGE Publications has an interface that looks for two‐word and longer phrases encapsulated in double quotation marks (e.g., “early grade”). In contrast, the Thomson Reuters Web of Science research platform instructs users to include search terms/phrases in parentheses: (early grad*). The rules of using Boolean logic, including wildcards (e.g., “*” and “?”), are also different across various data sources. Furthermore, some databases impose limits on the number of queries and the length of search strings. As a result, the team modified the search string according to each database and documented the iterative process of modifying the search strings (see Appendix A).
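The kind of per‐database adaptation described above can be illustrated with a minimal Python sketch. It is not the review team's tooling: the function name `render_query` and the two style labels are hypothetical, and the only syntax rules it encodes are the two mentioned in the text (multi‐word phrases in double quotation marks versus multi‐word phrases in parentheses).

```python
def render_query(terms, style):
    """Join alternative terms with OR, wrapping multi-word phrases per style.

    `style` is a hypothetical label, not an option of any real database:
      "quoted"        -> multi-word phrases in double quotation marks
      "parenthesized" -> multi-word phrases in parentheses
    """
    if style == "quoted":
        parts = [f'"{t}"' if " " in t else t for t in terms]
    elif style == "parenthesized":
        parts = [f"({t})" if " " in t else t for t in terms]
    else:
        raise ValueError(f"unknown style: {style}")
    return " OR ".join(parts)


quoted = render_query(["early grade", "preschool*"], "quoted")
parenthesized = render_query(["early grad*", "preschool*"], "parenthesized")
print(quoted)
print(parenthesized)
```

Keeping the term list separate from the rendering step is one way to document each database‐specific variant of the same underlying search string.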

The primary focus of the initial search for evidence was to retrieve as many potentially relevant documents from all data sources as possible. However, different data sources have different search functionalities and interfaces. For example, the SAGE Publications website only allowed us to search by a limited number of keywords (e.g., “early grade” AND literacy OR “early grade” AND reading). As a result, we had to limit our results by several journal categories (e.g., Special Education, Regional Studies, Language and Linguistics). In contrast, we were able to use the full search string at the ScienceDirect website (see Appendix A). To overcome these differences in search capabilities, we exported all 9,696 documents into a comma‐separated values (CSV) file and applied a “standardized” search string across all documents using the same algorithm in Python.
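As an illustration of this step, the following sketch applies one search string to every row of a CSV export. It is a simplified assumption rather than the algorithm the team used: the column names (`id`, `title`, `abstract`), the three sample rows, and the heavily shortened term lists are all hypothetical, and the full strings appear in Table 1.

```python
import csv
import io
import re

# Hypothetical, shortened concept groups; the full strings are in Table 1.
TOPIC = r"\b(read\w*|litera\w*|writ\w*)"
POPULATION = r"\b(early grade\w*|primary|preschool\w*|kindergarten|early childhood)"
REGION = r"\b(latin america\w*|caribbean|chile\w*|guatemala\w*|peru\w*|jamaica\w*)"

GROUPS = [re.compile(p, re.IGNORECASE) for p in (TOPIC, POPULATION, REGION)]


def matches_search_string(text):
    """True when every concept group (joined by AND) has at least one hit."""
    return all(group.search(text) for group in GROUPS)


def screen_csv(handle):
    """Yield the IDs of rows whose title plus abstract satisfy the search string."""
    for row in csv.DictReader(handle):
        if matches_search_string(f"{row['title']} {row['abstract']}"):
            yield row["id"]


# Made-up records standing in for the exported file.
sample = io.StringIO(
    "id,title,abstract\n"
    "1,Early grade reading in Guatemala,A trial of a literacy program in primary schools.\n"
    "2,Reading fluency in Kenya,Early grade literacy outcomes in rural primary schools.\n"
    "3,Road safety in Chile,Traffic data from Santiago.\n"
)
relevant_ids = list(screen_csv(sample))
print(relevant_ids)
```

Because the same compiled patterns run over every record, documents exported from sources with limited search interfaces are held to the same criteria as those from sources that accepted the full string.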

5.2.2. Electronic searches

After the systematic review team developed the broad search strings, research associates with expertise in quantitative or qualitative research used the search terms and strings (in each of the target languages) to conduct an initial search of online databases and development‐focused websites, reviewed bibliographies of accepted articles to find other potentially relevant studies, and sent out emails to EGL experts in the LAC region and beyond in order to cast a broad net and capture as much of the evidence base as possible. We used three primary methods to search for EGL evidence.

Internet searches of predefined online databases, journals, and international development organizations

The review team worked with other researchers, librarians, computer scientists, and content experts to identify appropriate online databases, journals, and international development organizations for our search.

i. Online databases:

3ie

British Library for Development Studies

Campbell Collaboration

Cochrane Library

Dissertation Abstracts

Directory of Open Access Journals (DOAJ)

Directory of Open Access Books (DOAB)

Development Experience Clearinghouse (DEC)

Education International

JSTOR Arts & Sciences I–X Collections and JSTOR Business III Collection

SAGE Publications

ScienceDirect

Taylor & Francis

Wiley

WorldCat

Within EBSCO:

- Academic Search Premier

- EconLit

- Education Source

- ERIC (Education Resource Information Center)

- Psychology & Behavioral Sciences Collection

- PsycINFO

- SocINDEX with Full Text

ii. Development‐focused databases/websites:

The U.K. Department for International Development (DfID)

The United States Agency for International Development (USAID)

The Joint Libraries of the World Bank and International Monetary Fund (JOLIS)

The British Library for Development Studies (BLDS)

Institute of Development Studies (eldis)

The International Initiative for Impact Evaluation (3ie)

The Abdul Latif Jameel Poverty Action Lab (J‐PAL)

Innovations for Poverty Action (IPA)

World Health Organization (WHO)

United Nations Educational, Scientific and Cultural Organization (UNESCO)

The United Nations Children's Fund (UNICEF)

The United Nations High Commissioner for Refugees (UNHCR)

Population Council

World Vision

Save the Children

Plan International

Organization of American States (OAS)

iii. LAC region databases and websites:

Latindex

Red de Revistas Científicas de América Latina y el Caribe, España y Portugal (Redalyc)

Scientific Electronic Library Online / Biblioteca Científica Electrónica en Línea (SciELO)

Consejo Latinoamericano de Ciencias Sociales (CLACSO)

Dialnet

eRevistas

Forward and backward snowballing of the references of key papers provided additional studies for review that may not have been found in database searches. Citation searches were conducted in Google Scholar.

5.2.3. Searching other resources

5.2.3.1. Gray literature

To ensure we captured all of the relevant and applicable literature in the region, we reviewed the bibliographies of accepted articles and reports to identify relevant and high‐quality studies that might fit our criteria. We then searched for these studies and applied our inclusion criteria to them.

The research team compiled a list of 43 EGL experts—particularly those from the wider LAC region—and asked them to provide additional sources of evidence that may not have been captured through the online evidence search. We used a snowball approach and asked these experts to share the contacts of others, so that we could identify other relevant research.

5.3. Data collection and analysis

5.3.1. Selection of studies

We imported all citations found through the above search methods into the Mendeley reference management software (http://www.mendeley.com/). Mendeley automatically extracted bibliographic data from each book, article, or reference and removed all duplicates.

The following sections detail the additional steps that we took to identify the most potentially relevant articles, review them manually, and apply the strict inclusion criteria.

5.3.2. Screening Phase 1: WikiLabeling

We applied Wikipedia‐based labeling and classification techniques to the abstract data to categorize and screen articles and thereby increase the relevance of the retrieved results (Egozi, Markovitch, & Gabrilovich, 2011; Gabrilovich & Markovitch, 2006). Due to the broad and inclusive nature of our search strings, much of the initial evidence we captured was not actually relevant to our review. Therefore, we applied Wikipedia‐based labeling to help us identify the most relevant pages. The process of identifying these pages involved two steps: first, experts shared a list of potentially relevant categories; next, we mined Wikipedia to find pages associated with exactly these or similar categories. We then validated the resulting list with the experts. For example, “learning outcomes,” originally proposed by our experts, maps directly to “outcome‐based education” within Wikipedia. Wikipedia's innate hierarchical structure allowed us to make our categories less ambiguous and better organize them into a meaningful list (Box 1).

Box 1. List of relevant categories that have individual Wikipedia pages.

Dual language

Emergent literacies

First language

Fluency

Free writing

Grammar

Language education

Language proficiency

Listening

Literacy

Orthography

Outcome‐based education

Phonemic awareness

Phonics

Phonological awareness

Reading (process)

Reading comprehension

Second‐language

Second language acquisition

Spoken language

Transitional bilingual education

Understanding

Vocabulary

Writing

We combined the WikiLabeling results with the “standardized” search term strategy described in the previous section. Although WikiLabeling is generally effective at assessing the overall context of a document and its relevance to a given subject, the search term strategy helps narrow down the search by specific keywords and phrases, such as individual countries and the region name. We used this approach to categorize documents in all target languages (English, French, Spanish, Dutch, and Portuguese).

The “standardized” search term strategy and WikiLabeling are complementary in several important ways:

Search terms and regular expressions help discover individual words and phrases within a document, no matter where they appear. For example, the geographic region may be mentioned only in the discussion part of a paper when writing about broader potential impacts. Meanwhile, the main body of the paper might have nothing to do with Latin America or the Caribbean (e.g., we have seen some studies evaluating an intervention in sub‐Saharan Africa, which mention other developing countries that could learn from this experience). In contrast, Wikipedia‐based labeling assesses the entire context of the document by comparing all words and phrases used in an academic paper with the ones used to describe individual concepts, such as “language education” or “phonological awareness.”

Search strings can cover a wide range of inclusion criteria and be structured to include three or four different variables. WikiLabeling looks into every concept individually and therefore provides a more in‐depth assessment of the relevance of a document for the subject of focus.

Search term strategies are more flexible and do not depend on the user community curating an online encyclopedia every day. However, the continuous curation in Wikipedia helps improve the quality of knowledge and introduce new meaningful concepts into the scientific language through discovery and analysis applied in WikiLabeling.

For this review, we used the search term strategy followed by Wikipedia‐based labeling and classification to define which documents were most likely to be relevant for the subject in focus. This computational approach can be considered largely systematic and unbiased in how it decides the relevance of documents on a given subject. Both the search term strategy and Wikipedia‐based labeling apply standardized approaches and offer several methods of robust evaluation and validation.
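The idea of comparing the words in a document with the words that describe a concept can be sketched in a few lines of Python. This is a deliberately simplified illustration and not the WikiLabeling implementation: the concept descriptions are short hypothetical stand‐ins for Wikipedia page text, and raw word counts with cosine similarity replace whatever weighting the actual pipeline applied.

```python
import math
import re
from collections import Counter

# Hypothetical stand-ins for the text of Wikipedia concept pages.
CONCEPT_TEXT = {
    "Phonological awareness":
        "awareness of the sound structure of spoken words syllables rhymes phonemes",
    "Reading comprehension":
        "ability to read text process it and understand its meaning",
    "Road traffic safety":
        "methods to prevent road users from being injured in traffic collisions",
}


def vectorize(text):
    """Bag-of-words vector: lowercase word -> count."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def label(abstract, top_n=1):
    """Return the top_n concepts whose descriptions are most similar to the abstract."""
    doc = vectorize(abstract)
    scores = {c: cosine(doc, vectorize(t)) for c, t in CONCEPT_TEXT.items()}
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


abstract = "Children who could segment spoken words into syllables and phonemes read better."
best = label(abstract)
print(best)
```

Unlike the keyword match, the score here depends on the whole vocabulary of the abstract, which is why the two approaches can disagree about the same document and are useful in combination.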

Importantly, our approach supplements but does not replace the human review of potentially relevant articles. We built in several quality control procedures to ensure that our algorithm did not lead to the exclusion of relevant papers. We created four samples, with 100 abstracts each. Within each sample we included a set of 80 randomly selected abstracts that were retrieved by the search strategy, WikiLabeling, or both. The remaining 20 documents were randomly selected from the subset not retrieved by any of our approaches (i.e., the 8,145 documents that the search strategy, WikiLabeling, or both considered irrelevant). We then distributed these samples to four senior reviewers and reading experts and asked them to identify the irrelevant articles. This process enabled us to check for both false negatives (articles among the 20 that were not retrieved through our search approaches but were deemed relevant) and false positives (articles among the 80 that were retrieved through our search approaches but were deemed irrelevant).
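The sampling design above can be sketched as follows. The document IDs and pool sizes are made up, and two details are assumptions that the text does not specify: the four samples are drawn without overlap, and each sample is shuffled so that a reviewer cannot tell which pool an abstract came from.

```python
import random


def build_qc_samples(retrieved, not_retrieved, n_samples=4,
                     n_retrieved=80, n_not_retrieved=20, seed=0):
    """Draw n_samples batches of 80 retrieved plus 20 non-retrieved documents."""
    rng = random.Random(seed)
    kept = rng.sample(retrieved, n_samples * n_retrieved)
    dropped = rng.sample(not_retrieved, n_samples * n_not_retrieved)
    samples = []
    for i in range(n_samples):
        batch = (kept[i * n_retrieved:(i + 1) * n_retrieved]
                 + dropped[i * n_not_retrieved:(i + 1) * n_not_retrieved])
        rng.shuffle(batch)  # assumption: reviewers do not see the source pool
        samples.append(batch)
    return samples


def count_errors(sample, retrieved_ids, judged_relevant):
    """Return (false positives, false negatives) given a reviewer's judgments."""
    false_pos = sum(1 for d in sample
                    if d in retrieved_ids and d not in judged_relevant)
    false_neg = sum(1 for d in sample
                    if d not in retrieved_ids and d in judged_relevant)
    return false_pos, false_neg


# Hypothetical ID pools, not the review's actual document counts.
retrieved = [f"R{i}" for i in range(1000)]
not_retrieved = [f"N{i}" for i in range(5000)]
samples = build_qc_samples(retrieved, not_retrieved)
print([len(s) for s in samples])
```

A reviewer's list of relevant abstracts for one sample can then be passed to `count_errors` together with the set of retrieved IDs to obtain the two error counts for that sample.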

5.3.2.1. Phase 2: Applying inclusion criteria and recording key indicators

After narrowing down our list of articles through WikiLabeling, we imported all remaining 1,824 citations back into the Mendeley reference manager software. We divided citations among reviewers, who applied the predetermined inclusion criteria (see Table 2) to each title and abstract. We chose to err on the side of sensitivity rather than specificity during our initial title and abstract review. Our inclusion criteria were purposefully broad because we did not want to miss any relevant citations due to narrow inclusion criteria. Any article that failed to meet any one of the five threshold criteria laid out in Table 2 was automatically excluded from further review.

Table 2.

Initial inclusion criteria for early grade literacy evidence

| # | Category | Criteria | Notes |
|---|---|---|---|
| 1 | Year of publication | Include literature from the last 25 years, a time frame spanning 1990–2015 | |
| 2 | Relevance to the region | The evidence must be from or on the LAC region including any or all of the following: Antigua and Barbuda, Argentina, Aruba, Bahamas, Barbados, Belize, Bermuda, Bolivia, Brazil, British Virgin Islands, Cayman Islands, Chile, Colombia, Costa Rica, Cuba, Curacao, Dominica, Dominican Republic, Ecuador, El Salvador, French Guiana, Grenada, Guadeloupe, Guatemala, Guyana, Haiti, Honduras, Jamaica, Martinique, Mexico, Montserrat, Netherlands Antilles, Nicaragua, Panama, Paraguay, Peru, Puerto Rico, Saint Barthelemy, Saint Kitts and Nevis, Saint Lucia, Saint‐Martin, Saint Vincent and the Grenadines, Sint Maarten, Suriname, Trinidad and Tobago, Turks and Caicos Islands, Uruguay, U.S. Virgin Islands, Venezuela | |
| 3 | Relevance to the population | Boys or girls from birth through Grade 3 in the LAC region. Children enrolled in Grade 3 or below fall within our population regardless of their age | |
| 4 | Relevance to the topic | The literature must have a focus on reading or literacy (which includes reading and writing) | |
| 5 | Is it research? | There must be a research question or research objective and a methodology that matches that objective | |

Abbreviation: LAC, Latin America and the Caribbean.

During the title and abstract review, reviewers selected “yes,” “no,” “unclear,” or “not rated” on the Excel spreadsheet for each of the inclusion criteria (i.e., published since 1990, from or on the LAC region, ages birth to Grade 3, reading or literacy focused, and includes a research question or objective). Here is an explanation of each option:

Marking “yes” for any of the five criteria indicated that the reviewer should continue on to the next criterion on the coding sheet. If the reviewer marked “yes” to all of the inclusion criteria, then they were required to fill in the remaining indicators outlined in Table 3.

Marking “no” indicated that the reviewer should stop because the study did not meet the criteria for further review. In this case, the remaining inclusion criteria were automatically marked as “not rated,” signifying that the study had failed one of the inclusion criteria and that whether it met the others was therefore no longer relevant.

Marking “unclear” indicated that the study was tagged for review by a senior technical expert who was equipped to determine relevance. At this stage, we followed the motto “When in doubt–include,” and maintained a record of all excluded articles indicating the criterion on which each was excluded.
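The coding rules for the three options can be summarized in a short Python sketch. This is an illustration only; the review recorded ratings in an Excel spreadsheet. The criterion keys and decision labels are hypothetical, and the sketch assumes that a reviewer keeps rating the remaining criteria after an “unclear,” which the text does not specify.

```python
from enum import Enum


class Rating(Enum):
    YES = "yes"
    NO = "no"
    UNCLEAR = "unclear"
    NOT_RATED = "not rated"


# Hypothetical short keys for the five criteria in Table 2, in coding order.
CRITERIA = ["year", "region", "population", "topic", "is_research"]


def screen(reviewer_ratings):
    """Apply the title-and-abstract coding rules to one citation.

    reviewer_ratings maps each criterion key to a Rating. Returns a tuple
    (decision, recorded), where decision is "include", "exclude", or
    "senior_review" and recorded holds the ratings as they would be stored.
    """
    recorded = {}
    decision = "include"
    stopped = False
    for criterion in CRITERIA:
        if stopped:
            # After a "no", the remaining criteria are marked "not rated".
            recorded[criterion] = Rating.NOT_RATED
            continue
        rating = reviewer_ratings[criterion]
        recorded[criterion] = rating
        if rating is Rating.NO:
            decision = "exclude"
            stopped = True
        elif rating is Rating.UNCLEAR:
            # Assumption: coding continues, and the citation goes to a
            # senior technical expert unless a later criterion is "no".
            decision = "senior_review"
    return decision, recorded
```

A citation rated “yes” on all five criteria returns `"include"`, which corresponds to the reviewer going on to fill in the Table 3 indicators.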

Table 3.

Key indicators for early grade literacy evidence

| Categories | Selection choices |
|---|---|
| Abstract number | |
| Citation information | |
| Abstract | |
| Document reviewer name | |
| Country(ies) of focus | Antigua and Barbuda, Argentina, Aruba, Bahamas, Barbados, Belize, Bermuda, Bolivia, Brazil, British Virgin Islands, Cayman Islands, Chile, Colombia, Costa Rica, Cuba, Curacao, Dominica, Dominican Republic, Ecuador, El Salvador, French Guiana, Grenada, Guadeloupe, Guatemala, Guyana, Haiti, Honduras, Jamaica, Martinique, Mexico, Montserrat, Netherlands Antilles, Nicaragua, Panama, Paraguay, Peru, Puerto Rico, Saint Barthelemy, Saint Kitts and Nevis, Saint Lucia, Saint‐Martin, Sint Maarten, Saint Vincent and the Grenadines, Suriname, Trinidad and Tobago, Turks and Caicos Islands, Uruguay, U.S. Virgin Islands, Venezuela, or multiple countries |
| Region | South America, Central America, Caribbean, North America |
| World Bank income level | Low income, lower‐middle income, upper‐middle income, high‐income non‐OECD, high‐income OECD |
| Type of document | Journal article, technical report, dissertation/thesis, book chapter, other |
| Full text available to AIR | Yes, No, Other |
| Full text available to public | Yes, No, Other |
| How was document located? | Source bibliography, hand search of journal, online source, in‐person contact, recommended by a content expert |
| Language of publication? | English, Spanish, French, Dutch, Portuguese, Bilingual, Other |
| Target group | Early childhood, preprimary (pre‐k or kindergarten), primary, out‐of‐school children (school‐age children who are not enrolled), other |
| Type of evidence | Quantitative: Intervention‐based: Experimental, Quasiexperimental, Multivariate Regression, Univariate Regression, Graphics, Other |
| | Quantitative: Nonintervention‐based: Psychology, linguistics, reading science studies (methods include structural equation models, multivariate and univariate regressions, lab‐type pilot studies, writing system analyses, other) |
| | Qualitative: Intervention, nonintervention: Case study, focus groups, interviews, multiple methods, other |
| | Mixed methods: Includes both quantitative and qualitative methodologies |

Abbreviation: OECD, Organisation for Economic Co‐operation and Development.

Reviewers then used the same Excel spreadsheet to record key indicators (Table 3) for literature that met all five inclusion criteria.1

5.3.3. Screening Phase 2: Data extraction and management

We compiled all of the full‐text articles and books that met all inclusion criteria, as well as those that were still unclear after the title and abstract review, and assigned them to senior researchers based on language and type of study. The senior researchers reviewed the articles using separate quality review protocols based on the type of study.

5.3.4. Assessment of risk of bias in included studies

Researchers reviewed the articles using separate quality review protocols (see Appendix D for full versions of each protocol) based on the type of study as follows:

5.3.4.1. Assessment of risk of bias in quantitative studies

We used an adapted version of a risk of bias (RoB) assessment tool developed by Hombrados and Waddington (2012). Specifically, we assessed the risk of the following biases:

- (1) Selection bias and confounding, based on the quality of the identification strategy used to determine causal effects and assessment of equivalence across the beneficiaries and comparison or control group
- (2) Performance bias, based on the extent of spillovers to the students in the control or comparison groups and contamination of the control or comparison group
- (3) Outcome and analysis reporting biases, including:
  - The use of potentially endogenous control variables
  - Failure to report nonsignificant results
  - Other unusual methods of analysis
- (4) Other biases, including:
  - Courtesy and social desirability bias
  - Differential attrition bias
  - Small sample sizes and no clustering of standard errors
  - Strong researcher involvement in the implementation of the intervention and the Hawthorne effect

Two or more reviewers read and rated all quantitative intervention studies to ensure consensus. The reviewers resolved disagreements in assessments through discussion or by third‐party adjudication. Reviewers reread studies several times if something was unclear and maximized the use of all the available information from the studies. Assessments were based on the reporting in the primary studies, erring on the side of caution. For example, in those cases in which it was not clear whether standard errors were clustered, we assumed they were not clustered and took that into consideration in the risk of bias assessment.
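As a rough illustration of the two rules in this paragraph (the cautious default for unclear reporting, and the resolution of disagreements between reviewers), consider the following Python sketch. The function names and the rating labels are hypothetical and are not taken from the adapted RoB tool.

```python
from typing import Callable, Optional


def clustering_for_assessment(reported: Optional[bool]) -> bool:
    """Decide whether standard errors are treated as clustered.

    reported is True or False when the study is explicit, and None when the
    study does not make it clear. Unclear reporting is treated as
    "not clustered", erring on the side of caution.
    """
    return reported is True


def reconcile(rating_a: str, rating_b: str,
              adjudicate: Optional[Callable[[str, str], str]] = None) -> str:
    """Combine two reviewers' ratings for one risk-of-bias item.

    Matching ratings are accepted as they are. Otherwise the adjudicate
    callable, standing in for discussion or a third-party adjudicator,
    decides the final rating.
    """
    if rating_a == rating_b:
        return rating_a
    if adjudicate is None:
        raise ValueError("Reviewers disagree; discussion or adjudication is needed")
    return adjudicate(rating_a, rating_b)
```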

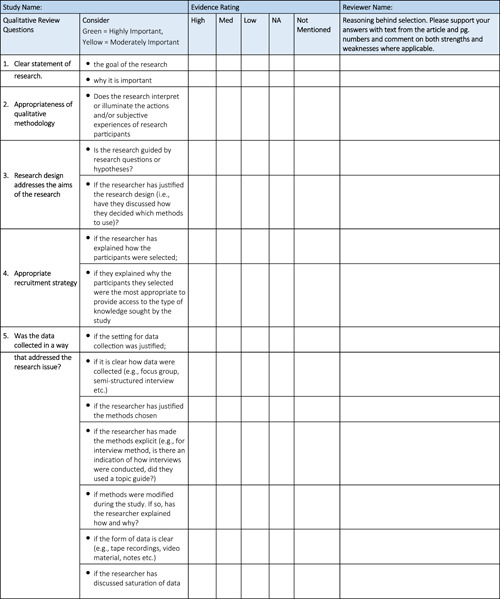

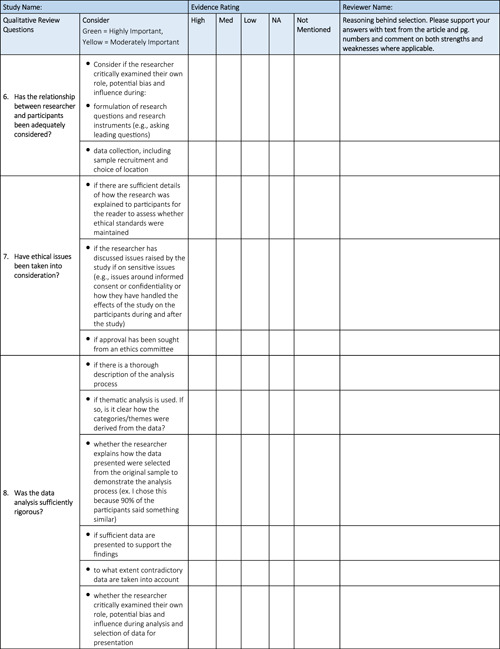

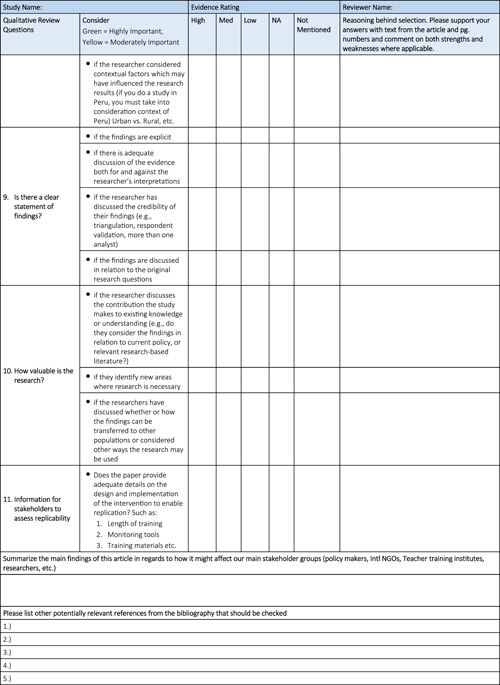

5.3.4.2. Quality appraisal of qualitative studies

We adapted the Critical Appraisal Skills Programme (CASP) Qualitative Research Checklist (Critical Appraisal Skills Programme, 2018) to assess the research design, data analysis, ethical considerations, and relevance to practice. The tool examines reviewers' responses to 11 main questions, each of which has multiple subquestions. Upon reading the full‐text article, reviewers selected “High,” “Medium,” “Low,” “N/A,” or “Not Mentioned” for each of the 11 questions and subquestions and provided a justification for their rating, supported by text and page numbers from the article. Reviewers were encouraged to comment on both strengths and weaknesses when applicable. The 11 qualitative review questions were divided into three categories: research design, ethics and reflexivity, and relevance to the field, as shown below:

Research design:

Clear statement of research?

Appropriateness of qualitative methodology?

Addresses the aims of the research?

Was the data collected in a way that addressed the research issue?

Was the data analysis sufficiently rigorous?

Is there a clear statement of findings?

Ethics and reflexivity:

Has the relationship between researcher and participants been adequately considered?

Have ethical issues been taken into consideration?

Appropriate recruitment strategy?

Relevance to the field:

How valuable is the research?

Information for stakeholders to assess replicability?

In addition to these 11 quality criteria, reviewers summarized the main findings of each qualitative article. Finally, reviewers reviewed the bibliography for each article and identified other relevant references for further review. Pairs of reviewers rated the same studies at the outset to ensure a common understanding of the quality categories, but the remaining articles were reviewed by single reviewers due to time constraints.

5.3.4.3. Quantitative appraisal of correlational studies

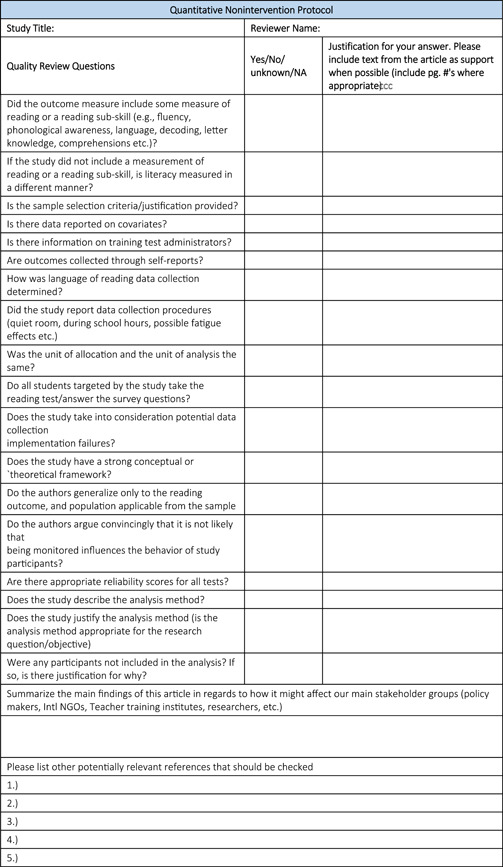

For the quantitative appraisal of correlational studies, we used an adapted version of the RoB tool for quantitative intervention studies (Hombrados & Waddington, 2012), removing any questions regarding interventions. The quantitative nonintervention quality review tool assesses the relevance, data and methodology, and analytical approach of the research by eliciting reviewers' responses to the following 18 quality criteria questions:

- (1) Did the outcome measure include some measure of reading or a reading subskill (e.g., fluency, PA, language, decoding, letter knowledge, comprehension, etc.)?
- (2) If the study did not include a measurement of reading or a reading subskill, is literacy measured in a different manner?
- (3) Are the sample selection criteria or justification provided?
- (4) Are data reported on covariates?
- (5) Is there information on training test administrators?
- (6) Are outcomes collected through self‐reports?
- (7) How was language of reading data collection determined?
- (8) Did the study report data collection procedures (e.g., quiet room, during school hours, possible fatigue effects)?
- (9) Was the unit of allocation and the unit of analysis the same?
- (10) Do all students targeted by the study take the reading test/answer the survey questions?
- (11) Does the study take into consideration potential data collection implementation failures?
- (12) Does the study have a strong conceptual or theoretical framework?
- (13) Do the authors generalize only to the reading outcome and population applicable from the sample?
- (14) Do the authors argue convincingly that it is not likely that being monitored influences the behavior of study participants?
- (15) Are there appropriate reliability scores for all tests?
- (16) Does the study describe the analysis method?
- (17) Does the study justify the analysis method (is the analysis method appropriate for the research question/objective)?
- (18) Were any participants not included in the analysis? If so, is there justification for why?

Upon reading the full‐text article, reviewers responded to each question by selecting “Yes,” “No,” “Unclear,” or “N/A” and provided a justification for the rating, citing the text whenever possible. Finally, reviewers provided a summary of the article's main findings and their relevance to target stakeholder groups.

In order to synthesize the findings of the quantitative nonintervention research, we first determined which studies should be included in the analysis. To achieve this goal, we referred to the quality protocols filled out by the reviewers for each article and only included studies that were considered high quality. For instance, if there was missing information about data administration or no information provided about how the language of testing was determined, we did not dismiss the study; however, if the reviewers judged that there were notable problems with the method or sample selection, we did not include the study in our analysis.

The following seven ratings from the protocol were considered key to determining inclusion because they ensure that the study is focused on reading and has a strong research design and methodology:

- (1) Did the outcome measure include some measure of reading or a reading subskill?
- (2) Are the sample selection criteria or justification provided?
- (3) Did the study report data collection procedures?
- (4) Does the study have a strong conceptual or theoretical framework?
- (5) Are there appropriate reliability scores for all tests?

-

(6)