1. PLAIN LANGUAGE SUMMARY

Adaptive teaching and individualization for K‐12 students improve academic achievement

1.1. The review in brief

Teaching methods that individualize and adapt instructional conditions to K‐12 learners’ needs, abilities, and interests help improve learning achievement. The most important variables are the teacher's role in the classroom as a guide and mentor and the adaptability of learning activities and materials.

What is the aim of this review?

This Campbell systematic review assesses the overall impact on student achievement of processes and methods that are more student‐centered versus less student‐centered. It also considers the strength of student‐centered practices in four teaching domains.

Flexibility: Degree to which students can contribute to course design, selecting study materials, and stating learning objectives.

Pacing of instruction: Students can decide how fast to progress through course content and whether this progression is linear or iterative.

Teacher's role: Ranging from authority figure and sole source of information, to teacher as equal partner in the learning process.

Adaptability: Degrees of manipulating learning environments, materials, and activities to make them more student‐centered.

1.2. What is this review about?

Teaching in K‐12 classrooms involves many decisions about the appropriateness of methods and materials that both provide content and encourage learning.

This review assesses the overall impact on student achievement of processes and methods that are more student‐centered versus less student‐centered (and thus more teacher‐centered, i.e., more under the direct control of a teacher). It also considers in which instructional dimensions the application of more of these student‐centered practices is most appropriate, and the strength of student‐centered practices in each of four teaching domains.

1.3. What are the main findings of this review?

1.3.1. What studies are included?

This review presents evidence from 299 studies (covering 43,175 students in a formal school setting) yielding 365 estimates of the impact of teaching practices. The studies spanned the period 2000–2017 and were mostly carried out in the United States, Europe, and Australia.

What is the overall average effect of more versus less student‐centered instruction on achievement outcomes? Which demographic variables moderate the overall results?

More student‐centered instructional conditions have a moderate positive effect on student achievement compared to less student‐centered.

Which dimensions of instruction are most important in promoting better achievement through the application of more versus less student‐centered instruction? Do these dimensions interact?

The teacher's role has a significantly positive impact on student achievement; more student‐centered instruction produces better achievement. Pacing of instruction/learning, where learners have more choice over setting the pace and content navigation of learning activities, has a significant effect in the opposite direction (i.e., a significantly negative relationship). Neither adaptability nor flexibility is related to student achievement.

There are interactive effects. The teacher's role combined with adaptability produces stronger effects, whereas flexibility (greater involvement of students in course design and selection of learning materials and objectives) has the opposite effect; it reduces the effectiveness of teacher's role on learning outcomes.

Special education students perform significantly better in achievement compared to the general population.

Three other factors (grade level; science, technology, engineering, and mathematics [STEM] versus non‐STEM subjects; and individual subjects) do not have any effect on the impact of the intervention.

1.4. What do the findings of this review mean?

This review confirms previous research on the effectiveness of student‐centered and active learning. It goes further in suggesting the teacher's role promotes effective student‐centered learning, and excessive student control over pacing appears to inhibit it.

An important element of these findings relates to the significant combination of teacher's role and adaptability, in that it suggests the domain in which the teacher's role should focus.

Since adaptability relates to increasing the involvement of students in more student‐centered activities, the evidence suggests that instruction that involves activity‐based learning, either individually or in groups, increases learning beyond the overall effect found for more student‐centered versus less student‐centered activities.

Various student‐centered approaches, such as cooperative learning and peer‐tutoring, have been found to accomplish this goal.

1.5. How up‐to‐date is this review?

This meta‐analysis contains studies that date from 2000 to 2017.

2. EXECUTIVE SUMMARY/ABSTRACT

2.1. Background

The question of how best to deliver instruction to k‐12 students has dominated the educational conversation, both in terms of theory and practice, since before 1960. Two predominant models have clashed: (a) traditional teacher‐directed instruction (referred to here as Teacher‐Centered instruction), where there is little methodological adaptation for individual differences in ability, skills, interests, etc. among students; and (b) so‐called student‐centered instruction (referred to here as Student‐Centered instruction), deriving much of its theoretical justification and methodological intricacies from constructivist thought embodied in the works of Jean Piaget, Lev Vygotsky, Jerome Bruner, and many others. While radical constructivism has never become dominant in k‐12 schooling (except in a relatively small number of demonstration schools), there has been considerable interest in embedding some of the principles of constructivism into k‐12 schooling. This is often referred to as individualized or adaptive instruction, meaning an operational concern for individual students, their abilities, interests, etc., which is nearly the opposite of Teacher‐Centered instruction. A great deal of research has demonstrated that approaches to individualization, such as mastery learning, collaborative and cooperative learning, problem‐based learning, peer tutoring, and computer‐based instruction, are effective in promoting achievement and attitudinal gains, as contrasted with Teacher‐Centered instruction, where mastery of content or subject matter is of the greatest concern, and the teacher is the “delivery mechanism.” More recently, this has been extended to include video‐based lectures often delivered through the internet, as proposed by proponents of blended learning and its variant the flipped classroom (e.g., Baepler, Walker, & Driessen, 2014).
Research has also demonstrated that Teacher‐Centered instruction is particularly useful in developing basic skills in areas such as reading, spelling, and math (Stockard, Wood, Coughlin, & Khoury, 2018).

More recent theory and practice concerning Teacher‐Centered (more conventional) and Student‐Centered (more adaptive and individualized) instruction suggest that neither perspective is entirely sufficient and that some combination of Teacher‐Centered and Student‐Centered instruction is possibly more productive. This notion of combined teaching methods (i.e., Teacher‐Centered plus Student‐Centered) is one of the defining characteristics of the flipped classroom (Baepler et al., 2014). Certainly, students need to acquire skills and knowledge, but they also need to develop their own personal preferences, creativity, problem‐solving abilities, and evaluative and self‐evaluative perspectives. The current meta‐analysis aims to determine if the advantage endowed by Student‐Centered instruction also affects content achievement (i.e., content achievement is the outcome measure in this meta‐analysis).

The current meta‐analysis was designed to explore teaching and learning in k‐12 classrooms and the achievement benefit that derives from more Student‐Centered versus less Student‐Centered classrooms. Several perspectives informed the basis for the research approach described here, but none more so than the words of Gersten et al. (2008) while exploring through meta‐analysis the question of Teacher‐Centered versus Student‐Centered instructional practices in elementary mathematics instruction. In the final report of their study, the group stated: “The Task Group found no examples of studies in which learners were teaching themselves or each other without any teacher guidance; nor did the Task Group find studies in which teachers conveyed … content directly to learners without any attention to their understanding or response. The fact that these terms, in practice, are neither clearly nor uniformly defined, nor are they true opposites, complicates the challenge of providing a review and synthesis of the literature …” (p. 12). The current meta‐analysis intends to investigate variations of more versus less Student‐Centered instruction and the four domains of the instructional process in which they are more or less profitable.

2.2. Objectives (research questions)

There are four primary objectives that this meta‐analysis intends to address (research questions that this study explores):

Overall, do more Student‐Centered instructional practices lead to a significant advantage in the acquisition of content (subject matter) knowledge (i.e., measured learning achievement)?

Do any of the four primary (substantive) moderator variables (entered into multiple meta‐regression), Teacher's Role, Pacing, Adaptability, and Flexibility, predict an increase or decrease in achievement across degrees of Student‐Centered use (From less Student‐Centered to more Student‐Centered)?

Is there a difference in categorical levels of less Student‐Centered to more Student‐Centered for each of the dimensions of instructional practice listed above, tested in mixed moderator variable analysis?

Do any of the secondary (demographic) moderator variables interact with each other (i.e., combine) to produce more versus less Student‐Centered instructional practices?

2.3. Search methods

Following the guidelines of the Campbell Collaboration (Kugley et al., 2017), in order to retrieve a broad base of studies to review, we started by having an experienced Information Specialist search across an array of bibliographic databases, both in the subject area and in related disciplines. The following databases were searched for relevant publications: ABI/Inform Global (ProQuest), Academic Search Complete (EBSCO), ERIC (EBSCO), PsycINFO (EBSCO), CBCA Education (ProQuest), Education Source (EBSCO), Web of Knowledge, Engineering Village, Francis, ProQuest Dissertations & Theses Global, ProQuest Education Database, Linguistics and Language Behavior Abstracts (ProQuest).

The search strategy was tailored to the features of each database, making use of database‐specific controlled vocabulary and search filters, but based on the same core key terms. Searches were limited to the years 2000–2017 and targeted a k‐12 population.

Database searching was supplemented by using the Google search engine to locate additional articles, but principally grey literature (research reports, conference papers, theses, and research published outside conventional journals).

2.4. Selection criteria

The overall set of inclusion/exclusion criteria (i.e., selection) for the meta‐analysis contained the following requirements:

Be publicly available and encompass studies from 2000 to the present;

Feature at least two groups of different instructional strategies/practices that can be compared according to the research question as Student‐Centered and Teacher‐Centered instruction;

Include course content and outcome measures that are compatible with the groups that form these comparisons;

Contain sufficient descriptions of major instructional events in both instructional conditions;

Satisfy the requirements of either experimental or high‐quality quasi‐experimental design;

Be conducted in formal k‐12 educational settings eventually leading to a certificate, diploma, degree, or promotion to a higher grade level;

Contain legitimate measures of academic achievement (i.e., teacher/researcher‐made, standardized); and

Contain sufficient statistical information for effect size extraction.

2.5. Data collection and analysis

2.5.1. Effect size extraction and calculation

One of the selection criteria was “Contain sufficient statistical information for effect size extraction,” so that an effect size could be calculated for each independent comparison. This information could take several forms (in all cases sample size data were required):

Means and standard deviations for each treatment and control group;

Exact t value or F value, with an indication of the ± direction of the effect;

Exact p value (e.g., p = .011), with an indication of the ± direction of the effect;

Effect sizes converted from correlations or log odds ratios;

Estimates of the mean difference (e.g., adjusted means, regression β weight, gain score means when r is unknown);

Estimates of the pooled standard deviation (e.g., gain score standard deviation, one‐way ANOVA with three or more groups, ANCOVA);

Estimates based on a probability of a significant t test using α (e.g., p < .05); and

Approximations based on dichotomous data (e.g., percentages of students who succeeded or failed the course requirements).
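As a rough illustration of how several of the reporting formats listed above can be turned into a standardized mean difference, the sketch below uses common textbook conversions for two independent groups. It is not taken from the review: the function names are hypothetical, and the formulas assumed by the authors' software may differ in detail.

```python
import math

def d_from_means(m_t, m_c, sd_t, sd_c, n_t, n_c):
    """Cohen's d from group means and SDs, using the pooled standard deviation."""
    pooled_var = ((n_t - 1) * sd_t**2 + (n_c - 1) * sd_c**2) / (n_t + n_c - 2)
    return (m_t - m_c) / math.sqrt(pooled_var)

def d_from_t(t, n_t, n_c):
    """Cohen's d from an independent-samples t value; the sign of t carries the direction."""
    return t * math.sqrt(1 / n_t + 1 / n_c)

def d_from_f(f, n_t, n_c, favors_treatment=True):
    """Cohen's d from a two-group F value (t = sqrt(F)); direction must be supplied."""
    sign = 1.0 if favors_treatment else -1.0
    return sign * d_from_t(math.sqrt(f), n_t, n_c)

def d_from_r(r):
    """Cohen's d from a point-biserial correlation (assumes roughly equal group sizes)."""
    return 2 * r / math.sqrt(1 - r**2)

def d_from_log_odds_ratio(log_or):
    """Approximate d from a log odds ratio via the logistic-distribution conversion."""
    return log_or * math.sqrt(3) / math.pi
```

For example, `d_from_t(2.0, 50, 50)` gives 0.4, and a two-group F of 4.0 with the same sample sizes gives the same magnitude once the direction of the effect is supplied.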

Effect sizes were initially calculated as Cohen's d (Cohen, 1988) and then converted to Hedges' g (i.e., correction for small samples; Hedges & Olkin, 1985). Standard errors (SE_d) were calculated for d and then converted to SE_g by applying the correction formula for g. Hedges’ g, SE_g, and sample sizes (i.e., treatment and control) were entered into Comprehensive Meta‐Analysis 3.3.07 (Borenstein, Hedges, Higgins, & Rothstein, 2014) where statistical analyses were performed.
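The conversion from d to g described above can be sketched as follows. This is a minimal example that assumes the widely used approximate correction factor J = 1 − 3/(4df − 1) and the usual large-sample variance of d for two independent groups; the function name is hypothetical and the exact formulas applied by the authors are not stated in the text.

```python
import math

def hedges_g(d, n_t, n_c):
    """Convert Cohen's d to Hedges' g and return (g, SE_d, SE_g).

    Assumes two independent groups and the approximate small-sample
    correction J = 1 - 3 / (4 * df - 1), with df = n_t + n_c - 2.
    """
    df = n_t + n_c - 2
    j = 1 - 3 / (4 * df - 1)
    # Large-sample variance of d for two independent groups
    var_d = (n_t + n_c) / (n_t * n_c) + d**2 / (2 * (n_t + n_c))
    se_d = math.sqrt(var_d)
    # g and its standard error are d and SE_d scaled by the same factor J
    return j * d, se_d, j * se_d
```

With 20 students per group and d = 0.50, the correction shrinks the estimate only slightly (J is about 0.98), which shows why the adjustment matters mainly for very small samples.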

The effect sizes were coded for precision and these data were analyzed in moderator variable analysis.

2.5.2. Statistical analyses

Analyses were conducted using the following statistical tests:

Overall weighted random effects analysis with the statistics of g (the weighted average effect size), SE_g, V_g, upper and lower limits of the 95% confidence interval, z_g, and p value;

Homogeneity is estimated using Q‐Total, df, and p value. I² (i.e., the percentage of total variation attributable to heterogeneity rather than sampling error) and tau² (i.e., the estimated between‐study variance) are also calculated and reported;

Meta‐regression (single and multiple) is used to determine the relationship between covariates and effect sizes; and

Mixed‐model (i.e., random and fixed) moderator variable analysis is used to compare levels (categories) of each coded moderator variable. Q‐Between, df, and p value are used to make decisions about the significance of each categorical variable.
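To make the overall random effects and homogeneity statistics listed above concrete, here is a minimal sketch of a random-effects summary. It assumes the DerSimonian-Laird (method of moments) estimator of tau², which is an assumption on our part: the text does not say which estimator the software applied, and the function name is hypothetical.

```python
import math

def random_effects_summary(effects, variances):
    """Random-effects mean with Q, I^2, and tau^2 (DerSimonian-Laird estimator assumed)."""
    k = len(effects)
    w = [1 / v for v in variances]                      # fixed-effect (inverse-variance) weights
    sum_w = sum(w)
    fixed_mean = sum(wi * gi for wi, gi in zip(w, effects)) / sum_w
    q = sum(wi * (gi - fixed_mean) ** 2 for wi, gi in zip(w, effects))
    df = k - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0     # between-study variance
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0 # percent of variation due to heterogeneity
    w_star = [1 / (v + tau2) for v in variances]        # random-effects weights
    mean = sum(wi * gi for wi, gi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    return {
        "mean": mean, "se": se, "variance": se ** 2,
        "ci95": (mean - 1.96 * se, mean + 1.96 * se),
        "z": mean / se, "q": q, "df": df, "i2": i2, "tau2": tau2,
    }
```

For two hypothetical studies with effects 0.0 and 1.0 and variances of 0.01 each, this returns Q = 50 on 1 df, tau² = 0.49, and I² = 98, with a pooled mean of 0.5 and a standard error of 0.5.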

2.6. Results

The results are presented here in relationship to the four research questions previously described.

Question 1: Overall, do more Student‐Centered instructional practices lead to a significant advantage in the acquisition of content (subject matter) achievement (i.e., measured learning)?

Result: Answering the basic question, more Student‐Centered instructional conditions (i.e., the treatment described above) outperform less Student‐Centered conditions to a moderate extent. The average effect between the mean of the more Student‐Centered treatment and the less Student‐Centered control (g = 0.44, k = 365, z = 4.56, p < .001, SE = 0.03, Q = 3,095.89, I² = 88.22, tau² = 0.27) suggests that teachers who promote and enact active classroom processes (more Student‐Centered instruction) can expect to see better student achievement than in classrooms where teachers employ less Student‐Centered instruction. Also, a linear trend was found in meta‐regression when Hedges’ g was regressed on degree of Student‐Centered instruction (β = 0.04, SE = 0.02, z = 2.41, p = .032). The distribution remains significantly heterogeneous.

Question 2: Do any of the four moderator variables (entered into multiple meta‐regression), Teacher's Role, Pacing, Adaptability, and Flexibility, predict an increase or decrease in achievement across degrees of Student‐Centered use (From less Student‐Centered to more Student‐Centered)?

Result: In meta‐regression, Teacher's Role (β = 0.06, SE = 0.04, z = 4.42, p < .001) and Pacing (β = −0.14, SE = 0.04, z = 3.18, p = .002) each produce a significant linear trend. Adaptability and Flexibility are not significant (p > .05). However, the trends for Teacher's Role and Pacing run in opposite directions (note the opposite signs on β). Teacher's Role is significantly positive (i.e., more Student‐Centered instruction produced higher achievement), while Pacing produces the reverse (i.e., a significantly negative trend). For Pacing, more Student‐Centered methods produce lower achievement.

Question 3: Do any of the moderator variables interact with each other (i.e., combine) to produce more versus less Student‐Centered instructional practices?

Result: Yes. Teacher's Role alone and Teacher's Role combined with each of two other dimensions produce significantly different results (Q‐Between = 7.76, df = 3, p = .02): Teacher's Role and Teacher's Role plus Adaptability significantly outperformed Teacher's Role plus Flexibility.

Question 4: Is there a difference in categorical levels of less Student‐Centered to more Student‐Centered for each of the dimensions of instructional practice listed above, tested in mixed moderator variable analysis?

Result: Only one of the four demographic moderator variables (i.e., grade level; STEM versus non‐STEM subjects; individual subjects; and ability profile) produced a significant differentiation among levels: ability profile. Special education students demonstrated significantly higher achievement compared to the general population of students.

2.7. Authors’ conclusions

This meta‐analysis provides strong evidence that Student‐Centered instruction leads to improvements in learning with k‐12 students. Not only is the overall random effects average effect size of medium strength (g = 0.44), but there is also a demonstrated (subtle but significant) linear relationship between more Student‐Centered classroom instruction and effect size (p = .03). Taken together, these results support the efficacy of allowing students to engage in active learning or other forms of Student‐Centered enterprise as part of a comprehensive educational experience.

3. BACKGROUND

3.1. Adaptive teaching and individualization for k‐12 students improve academic achievement: A meta‐analysis of classroom studies

The question of how to provide the best‐quality instructional conditions for students of all grade levels has been scrutinized extensively since the early 1960s, principally from two major perspectives: teacher‐centeredness (Teacher‐Centered) and student‐centeredness (Student‐Centered). Student‐Centered education initially arose from the writings of early progressive educators like John Dewey, and was carried on subsequently, in various forms, by Jean Piaget, Lev Vygotsky, Jerome Bruner, and Carl Rogers, to name only a few. The ideas were radical when first introduced, but the notion of Student‐Centered education resonated in educational circles, where lecturing and rote memorization were still the standard for quality education, and led to vast amounts of theorizing and research to show that students could succeed in learning of all sorts without a strongly transmissive approach on the part of the teacher. Today, the terms individualized instruction and adaptive teaching have become popular expressions for current practice and are used nearly synonymously with Student‐Centered learning.

However, since their inception, Student‐Centered practices have inspired resistance, both from the public and from educational theorists. Thus, after Student‐Centered practices were widely introduced, a dichotomy arose in the literature, with one side promoting the continuation of Teacher‐Centered learning and the other side promoting the adoption of Student‐Centered learning practices. This was argued as a dichotomy for many years. However, the arguments have abated somewhat now with the general recognition that there is value in both approaches. Generally speaking, educators no longer aspire to a pure implementation of either approach, but now discuss questions of which method, when, and for what purpose is best.

3.1.1. Individualized learning and adaptive student‐centered education (Student‐Centered)

Conceptual understanding of individualized learning and adaptive teaching varies broadly, encompassing a multitude of instructional strategies, approaches, and activities. It stretches from accounts of specific systems of instruction such as mastery learning (Bloom, 1968) and scaffolded adaptive feedback in computer‐based instruction (e.g., Azevedo & Bernard, 1995) to more general conceptions of active learning and individualization that involve approaches such as cooperative learning (e.g., Johnson & Johnson, 2002; Johnson, Johnson, & Maruyama, 1983), collaborative learning (e.g., Bernard, Rojo de Rubalcava, & St‐Pierre, 2000), problem‐based learning (e.g., Zhang et al., 2015), and project‐based learning (e.g., Bernard & Lundgren‐Cayrol, 2001). It also includes educational concepts, largely derived from elements of constructivism, such as discovery learning, inquiry‐based learning, activity‐based learning, experiential learning, and other forms of Student‐Centered education (Tobias & Duffy, 2009).

Notions of unguided Student‐Centered learning have not been free from detractors. Dewey criticized this approach in Experience and Education (Dewey, 1938), and, more recently, Kirschner, Sweller, and Clark (2006) published an influential piece that argued that the practice of turning kids loose to learn defies many of the tenets of the psychological principles of working memory and that guided instruction is both more efficient and ultimately more profitable to long‐term learning outcomes. A flurry of responses and rejoinders ensued with no clear resolution, but the educational community was left with the strong impression that a teacher's role in Student‐Centered learning was better as a guide on the side rather than a silent witness (King, 1993).

The learning sciences have further contributed to the distinction between social constructivism and individual constructivism, providing a theoretical grounding for teacher versus learner‐based strategies (Kolodner, 2004). Current and developing applications are informed by pedagogical principles espoused by case‐based learning (e.g., Kolodner et al., 2003).

Research on more individualized and adaptive education

The earliest large‐scale research project, aimed at exploring the efficacy of so‐called progressive education, was conducted between 1933 and 1941 by the Progressive Education Association (funded by the General Education Board and other foundations). Twenty‐nine model schools were selected for curricular experimentation with the security that over 200 colleges and universities would accept their students upon recommendation by their principals. Changes in these schools included more individualized instruction and more access to alternative and cross‐disciplinary programs, which emphasized greater access to arts and extracurricular programs.

Results indicated that students graduating from the experimental schools scored on par in basic courses (e.g., mathematics and science) with students from traditionally oriented schools and that there was more activity in artistic, political, and social engagement in students from the alternative experimental schools. The long‐term impact of these experiments is generally described as influence on its participants and subsequent reformers rather than dramatic change. The intervening conservatism brought about by World War II and the ensuing Cold War are often cited as deterrents to widespread change in the overall educational system in the United States (Aiken, 1942).

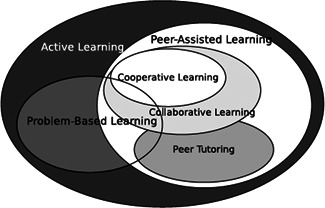

Examples of further attempts to make teaching and learning more individualized and adaptive can be found in both the early and current research literature. They include, but are not limited to, mastery learning (e.g., Bloom, 1968), Personalized System of Instruction (PSI; e.g., Keller, 1968), assorted forms of peer instruction (e.g., Mazur, 1997), various practices of reciprocal reading/writing activities (e.g., Huang & Yang, 2015), collaborative and cooperative learning, problem and project‐based learning and, more recently, Intelligent Tutoring Systems (ITS; e.g., Huang & Shiu, 2012). Several of these approaches are summarized in the following paragraphs and a number of the most common group‐based Student‐Centered approaches are depicted in a Venn Diagram (Figure 2) that shows their inter‐relationship and approximate overlap (Bishop & Verleger, 2013, p. 6).

Figure 2.

Venn diagram of the overlap among methods of active learning (Student‐Centered; Bishop & Verleger, 2013, p. 6)

The benefits and limitations of so‐called systems of instruction (i.e., mastery learning, PSI, and ISI) are summarized separately in both qualitative and quantitative reviews. In the late 1970s and early 1980s, several relevant meta‐analyses were published on mastery learning and its variant PSI. First, Lysakowski and Walberg (1982), Guskey and Gates (1986), Guskey and Pigott (1988), Slavin (1987), and Kulik, Kulik, and Bangert‐Drowns (1990) each performed successive meta‐analyses (Slavin's was a best‐evidence synthesis) on the efficacy of mastery learning. The studies produced equivocal and highly debatable findings. Kulik, Kulik, and Cohen (1979) reviewed 75 individual comparative studies of Keller's Personalized System of Instruction, a college teaching method that is a spin‐off of mastery learning. In comparison to conventional instruction, the PSI approach was demonstrated to have a positive effect on student achievement and course perception (mean effect size of nearly 0.70 SD for both).

Bangert and Kulik (1982) looked at the effectiveness of the Individualized Systems of Instruction (ISI, a spin‐off of PSI) with secondary school students. They broadened the list of outcomes to account not only for student achievement (e.g., final exams), but also critical thinking, attitudes toward subject matter, and student self‐concept. For all outcome types, the findings were inconclusive. For example, for the achievement data, only 8 out of 49 studies demonstrated statistically significant results in favor of ISI (four studies favored more conventional teaching methods and the rest were inconclusive). Finally, Kulik (1984) attempted a wider research synthesis (encompassing over 500 individual studies) of the effectiveness of programmed instruction and ISI, paying special attention to the moderator variables of study dates and grade levels. The most promising findings indicated that more recent studies showed higher effects than the earlier ones and that college‐level students benefited significantly from using ISI compared with elementary and secondary school students. In summary, as stated earlier, these meta‐analyses produced inconclusive results. Moreover, they are rather outdated and practically none of the above‐mentioned instructional methods exist now in their original forms (e.g., Eyre, 2007 was able to identify fewer than 50 studies of PSI for the period between 1990 and 2006 in the PsycInfo database).

Much of the preceding discussion has been about systems of individualized instruction, designed and intended as self‐contained approaches to individualizing student learning. Because of their rule‐based nature, they may be thought to be individualized, but insufficiently adaptive (systems often are not very adaptive).

Several meta‐analyses addressed the topic of individualized and adaptive instruction (i.e., instructional approaches that can be applied as local circumstances dictate), though in very specific, narrowly focused forms. Aiello and Wolfle (1980) summarized research on individualized instruction in science education compared with traditional lectures and found that individualized instruction was more effective. Horak's (1981) meta‐analysis of self‐paced modular instruction of elementary and secondary school math produced a wide variety of both positive and negative effect sizes. A highly cited meta‐analysis of active learning in science, engineering, and mathematics subject matters (Freeman et al., 2014) found a moderate effect size (0.47) based on 158 studies. The authors also state that “The results raise questions about the continued use of traditional lecturing as a control in research studies, and support active learning as the preferred, empirically validated teaching practice in regular classrooms” (p. 8410). This sentiment appears to add support to the comparative approach that is employed in the current meta‐analysis.

Kraft, Blazar, and Hogan (2018) examined the effects of teacher coaching (i.e., tutoring) on student achievement and found a minor effect on achievement (effect size = 0.08). Though these instructional approaches are not “adaptive,” per se, at least peer tutoring opens the educational process to much greater involvement of students, and thus accounts more for their individual inputs in learning. The effect size tended to be relatively small in middle school students, but higher at elementary and high school levels.

There have been numerous reviews and meta‐analyses of various forms of computer‐based instruction (CBI). Ma, Adesope, Nesbit, and Liu (2014) meta‐analyzed studies of ITS in a variety of subject matters, from reading and math to law and medical education. The list of moderator variables included the type of both experimental and comparison treatments, as well as outcome type, student academic level, study discipline, etc. The highest achievement effects of using ITS were found in comparison with non‐ITS computer‐based instruction (effect size = 0.57) and teacher‐centered, large‐group instruction (effect size = 0.42), whereas in comparison with human tutoring the effect was negative (effect size = −0.11), though not statistically significant. ITS‐based practices were similarly effective when used either alone or in combination with various forms of teacher‐led instruction in many subject domains. More specific reviews have examined particular aspects of instruction, such as feedback and scaffolding, in CBI and ITS. Azevedo and Bernard (1995) examined studies testing the effectiveness of computer‐provided feedback against no feedback, and Belland, Walker, Olsen, and Leary (2015) synthesized studies investigating feedback in computer‐based scaffolding. In both cases, the average effect size was around 0.50 in favor of feedback conditions. Overall, the research literature paints a positive picture of Student‐Centered learning.

3.1.2. Less individualized and less adaptive teacher‐centered education (Teacher‐Centered)

There has also been considerable research on Teacher‐Centered education over the years. In the 1960s, during the Lyndon Johnson administration in the United States, a massive experiment called Project Follow Through was initiated to test the efficacy of a range of instructional strategies. The intent was to evaluate the relative advantages of models of instruction that ranged from Direct Instruction (i.e., DISTAR) to so‐called Open Education (i.e., based on the British Infant School Model). After years of testing and millions of dollars spent, only one really striking finding emerged: that direct instruction advantaged learners in terms of both measures of achievement and affect, outperforming other models by as much as 1.5 SD (standard deviation). While a great deal of controversy surrounds the conduct and findings of this large‐scale educational trial, its results set a tone of teacher‐centeredness that is still influential (Magliaro, Lockee, & Burton, 2005).

The most recent addition to the direct instruction literature comes from Stockard et al. (2018). The report, published in Review of Educational Research, entitled “The Effectiveness of Direct Instruction Curricula: A Meta‐Analysis of a Half‐Century of Research,” synthesized 328 studies involving 413 study designs and almost 4,000 effect sizes. Average effect sizes, calculated between the Direct Instruction group and a comparison group (not specifically described), were reported for reading (0.51), math (0.55), language (0.54), and spelling (0.66), interpreted as medium effect sizes by Cohen's (1988) criteria. This report suggests that teaching basic skills and competencies through a Direct Instruction curriculum is at least as effective as the best forms of individualized and adaptive instructional systems and approaches. This quote from the Stockard et al. report summarizes their view as to the distinction between Teacher‐Centered and Student‐Centered instruction:

Direct Instruction builds on the assumption that all students can learn with well‐designed instruction. When a student does not learn, it does not mean that something is wrong with the student but, instead, that something is wrong with the instruction. Thus, the theory underlying DI lies in opposition to developmental approaches, constructivism, and theories of learning styles, which assume that students’ ability to learn depends on their developmental stage, their ability to construct or derive understandings, or their own unique approach to learning. Instead, DI assumes all students can learn new material when (a) they have mastered prerequisite knowledge and skills and (b) the instruction is unambiguous. (p. 480)

This quotation acknowledges that there is a marked distinction between Teacher‐Centered and Student‐Centered learning. Our premise is that the answer to the question regarding Teacher‐Centered and Student‐Centered classrooms is not either/or but a spectrum of practices that usually avoids either extreme. The central question posed in this systematic review is the location of the sweet spot on the Teacher‐Centered/Student‐Centered spectrum. Where and when should the teacher maintain control of the sort described by Stockard et al. (2018) and where and when can students take more ownership of their own learning processes?

3.1.3. Comparing teacher‐centered and student‐centered instructional practices

A large‐scale examination (Hattie, 2008) of various influences on educational outcomes, covering both Teacher‐Centered and Student‐Centered practices, offers an opportunity to examine instructional practices side‐by‐side (see Table 1). Second‐order meta‐analyses relating to the teacher, the school, the curriculum, the home, etc. found average effect sizes for a number of instructional approaches that are shown in Table 1. Some of these approaches are clearly teacher‐centered, while some are more learner‐centered, and some have elements of both (or can be either depending on their application). Judging from these results, it is difficult to establish a clear pattern; Student‐Centered, Teacher‐Centered and both/either can be highly effective or not so effective. Clearly, a more in‐depth analysis is called for (Table 2).

Table 1.

Results of second‐order meta‐analyses of selected educational practices (ordered by average effect size)

| Instructional/pedagogical approach | Activity category (Teacher‐Centered, Student‐Centered or both/either) | Average effect size |
|---|---|---|
| Reciprocal teaching | Both/either | +0.74 |
| Feedback to students | Both/either | +0.73 |
| Problem‐solving teaching | Both/either | +0.61 |
| Cooperative versus individualistic learning | Both/either | +0.59 |
| Direct instruction | Teacher‐centered | +0.59 |
| Peer tutoring | Learner‐centered | +0.55 |
| Cooperative versus competitive learning | Both/either | +0.54 |
| Cooperative learning versus other strategies | Both/either | +0.41 |
| Inductive teaching | Teacher‐centered | +0.33 |
| Inquiry‐based teaching | Both/either | +0.31 |
| Problem‐based learning | Both/either | +0.15 |
| Learner control of learning | Learner‐centered | +0.04 |
| Open versus traditional education | Learner‐centered | +0.01 |

Note: Based on Hattie (2008). Visible learning: A synthesis of over 800 meta‐analyses related to achievement. London: Routledge.

Table 2.

Duval & Tweedie's Trim and Fill

| | k | Effect size | Lower 95th | Upper 95th | Q value |
|---|---|---|---|---|---|
| Observed values | 365 | 0.44 | 0.38 | 0.50 | 3095.89 |
| Adjusted values | 0 | 0.44 | 0.38 | 0.50 | 3095.89 |

3.2. The pragmatics of teaching and learning

One might be tempted to organize some of these practices according to a spectrum of more and less constructivist practice. However, since constructivism has many different strands, both philosophically and pedagogically (Phillips, 1995), and since those strands vary significantly and counter‐intuitively in the degree of teacher‐centeredness they tend to imply, other approaches organize teaching practice more directly. These approaches label instructional strategies from more Student‐Centered (e.g., collaborative learning, discovery learning, problem‐based learning, inquiry‐based learning) to more teacher‐centered (e.g., direct or explicit instruction, didactic and expository instruction, lecturing, lecture‐discussion, drill, and practice).

3.2.1. The genesis of this project

The current project deconstructs teaching and learning according to the events (or dimensions) associated with instructional conditions. Any of these events can be either more Teacher‐Centered or Student‐Centered. A more Teacher‐Centered environment is one where teachers are in charge of most of the instructional events. A more Student‐Centered classroom is one in which teachers pass on control over the responsibility for many of the instructional events to learners, thereby acting as guides rather than directors. These events are then isolated and rated, and a composite can be constructed that will yield a greater‐to‐lesser Student‐Centered scale along a continuum of instructional practices. This approach is multidimensional and avoids problems associated with the vague and somewhat confusing nature of the first approach (i.e., holistically, more constructivist vs. less constructivist) and the inexact labeling (i.e., inquiry learning) of the second. It also has the advantage of allowing for the examination of clusters or combinations of instructional events that will be more practically relevant to k‐12 education.

There is support for this approach in the conclusion of Gersten et al. (2008), who were tasked with conducting a meta‐analysis of mathematics teaching practices in Teacher‐Centered and Student‐Centered classrooms. They noted: “The Task Group found no examples of studies in which learners were teaching themselves or each other without any teacher guidance; nor did the Task Group find studies in which teachers conveyed … content directly to learners without any attention to their understanding or response. The fact that these terms, in practice, are neither clearly nor uniformly defined, nor are they true opposites, complicates the challenge of providing a review and synthesis of the literature…” (p. 12). Similarly, the National Mathematics Advisory Panel Final Report (2008) noted that most teachers do not rely on one single methodology (i.e., either/or, the extremes of teacher‐directedness or learner‐centeredness) but attempt to blend the two so that each is strengthened by the other.

In an attempt to help settle the issue with regard to inquiry instruction (in particular) versus direct instruction in k‐12 education, a team of researchers (Cobern et al., 2010), funded through NSF/IERI, conducted a 4‐year set of large‐scale RCTs comparing inquiry methods of teaching (Student‐Centered) with direct instruction (Teacher‐Centered). Results suggested that both models produced significant pretest‐posttest learning, but that there was no significant difference between the classroom models. One of their conclusions was that “… soundly constructed lessons, involving learner engagement, and competently taught by good teachers, are as important for development as to whether a lesson is cast as inquiry or direct instruction. Thus, the promotion of one mode of instruction over the other, where both are based on sound models of expert instruction, cannot be based simply on content acquisition alone” (p. 37). This result runs counter to the findings of a meta‐analysis by Schroeder, Scott, Tolson, Huang, and Lee (2007) of instructional practices in science education, where an average effect size of 0.65 was found for Inquiry Strategies, although the write‐up of that review leaves unclear what served as the control condition.

While it seems quite clear that both Student‐Centered and Teacher‐Centered instructional practices can contribute to learning, it is not at all clear how they work together and in what instructional domains. This project seeks answers to these questions.

3.3. Description of the intervention

The main research question of this meta‐analysis is: Can more Student‐Centered (i.e., more adaptive and individualized) approaches to k‐12 instruction be distinguished from more Teacher‐Centered approaches, and if they can, what approaches work best in terms of their effect on student achievements and what substantive (including combinations among dimensions) and demographic factors moderate these effects?

A concrete example of the advantage of using this approach compared to other classification schemes can be observed in the literature of cooperative and collaborative learning. While cooperative learning strategies tend to be more Teacher‐Centered, and collaborative learning tends to be more Student‐Centered (i.e., there tend to be more rules for delivering cooperative learning than there are in collaborative learning), great variance can be observed in the way the steps (e.g., group composition, task selection, role assignment, assessment methods) in each are operationalized. As a teaching/learning strategy, cooperative learning is perhaps the most heavily researched and best‐understood technique for involving learners in small‐group, process‐oriented learning. However, it can be viewed as either Teacher‐Centered or Student‐Centered depending on how its components are implemented.

For better understanding and more successful practical application, educational practices subsumed under this generic pedagogical idea of adaptive teaching and individualized learning deserve a valid conceptual working model, both inclusive enough to account for various forms of personalized/individualized instruction and sufficiently sensitive to fluctuations due not only to the influence of numerous moderator variables, but also to nuanced qualities of particular instructional approaches themselves. Student‐Centered instructional strategies could, in our view, serve as such an overarching conceptual framework with adequate explanatory power, but only if operationalized properly to avoid an oversimplified dichotomy such as inductive versus deductive or constructivist versus direct instruction.

3.4. How the intervention might work

The phenomenon being investigated in this review is not an intervention in the normal way that this word is used in the experimental literature. It is more correctly a set of instructional practices that have been defined along a continuum from extremely Teacher‐Centered (where the teacher is the boss in control of all instructional events) to extremely Student‐Centered (i.e., where the teacher is a guide and facilitator, even sometimes an equal partner). As such, any classroom research, regardless of the intervention being investigated, is eligible so long as there is sufficient detail provided as to what each group did.

Following a review of the literature by the research team on instructional practices in grades k‐12, we developed a list of instructional events (or dimensions) that can be rated on a Teacher‐Centered to Student‐Centered continuum. These are: (a) Flexibility in Course Planning: degree to which teachers/learners participate in course design, setting objectives, selecting or creating materials; (b) Pacing of Instruction/Learning: degree to which teachers/learners set the pace and content navigation of learning activities; (c) Teacher's Role: degree to which the teacher's role in the classroom ranges from lecturer/authority figure to facilitator/guide/partner; and (d) Adaptability of Instruction: degree to which materials and activities are generic or modified for individual students.

To define the key qualities of instruction as adaptive and individualized (referred to here as Student‐Centered) for the purposes of this systematic review, we have deconstructed teaching and learning according to the events associated with them. Accordingly, a more Student‐Centered (more adaptive and individualized) classroom is one in which students play a more central role in the conduct of the instructional events. Conversely, if teachers dominate the instructional events, the classroom might be referred to as less adaptive. We have isolated these categories of instruction in reports of primary classroom research and rated them individually on a Teacher‐Centered to Student‐Centered continuum. Each event could then be: (a) examined separately to determine its individual strength; (b) examined in clusters as combinations of events; or (c) collapsed into a multidimensional composite that would yield a greater‐to‐lesser distinction between two different instructional settings. This approach avoids problems associated with either subjectively defining instructional conditions as Teacher‐Centered versus Student‐Centered or the specific labeling of them, as, for instance, PSI, mastery learning, etc. It also has the advantage of allowing us to examine instructional events in isolation and in various combinations in the search for optimal instructional practices.

3.5. Why it is important to do the review

Most of the significant effects from the meta‐analyses described in the first section of this report cluster around 0.40, but the data also reflect a wide range of effects depending on the whole spectrum of moderator variables. Also, the overall picture painted by these meta‐analyses is less useful today as most are now dated. Of special concern to us is the fact that both earlier and recent meta‐analyses are rather limited in scope and focus of interest, addressing very specific instructional practices. There were no serious attempts to find and conceptualize pedagogical commonalities among the interventions in question that would allow treating them within the same class of phenomena broadly depicted as individualized learning and adaptive teaching. Thus, a review that is broad in scope and summarizes up‐to‐date evidence is a logical next step in investigating these phenomena.

3.6. Objectives

The main objective of this review is to summarize research on the effectiveness (in terms of learning achievement outcomes) of adaptive and individualized instructional interventions operationally defined here as more Student‐Centered pedagogical approaches. The overall weighted average effect size will be an indication of that. Additionally, and no less important, the review aims to provide a better understanding of the circumstances (e.g., with what populations of learners, for what subject matters) under which the effects of adaptive and individualized instruction reach their highest potential, and of the conditions that may depress them. To explore this, a set of substantive and demographic study features are coded and subjected to moderator variable analyses.

The outcomes of this review will inform education practitioners and the research community of the best instructional practices, preconditions for their successful implementation, and potential pitfalls, as well as directions for further empirical research in the area.

4. METHODS

4.1. Criteria for considering studies for this review

4.1.1. Types of studies

Only studies that examined the difference between two groups were eligible for inclusion in this review. Included are experimental studies (i.e., Randomized Controlled Trials) and high‐quality quasi‐experimental designs (i.e., with statistically verified group equivalence or adjustment) that adequately addressed the research question of group comparisons, contained legitimate measures of academic achievement (i.e., teacher/researcher‐made, standardized), and reported sufficient statistical information for effect size extraction.

4.1.2. Types of participants

The participants are students in k‐12 formal educational settings (~ages 5–18) eventually leading to a certificate, diploma, degree, or promotion to a higher level. Educational interventions take place either in the classroom, via distance education, or as a blended intervention (various combinations of classroom and distance education).

4.1.3. Types of interventions

As described earlier, the intervention in question (an experimental condition) was considered to be any combination of instructional events that is rated higher in Student‐Centered qualities than a comparison (control) condition. Student participation in decisions about or control over the selection of study materials and learning activities, pacing of instruction, adapting learning for students’ individual needs, interests, backgrounds, etc., as well as various degrees of involvement in “partnership” with teachers, constitute, in our view, such Student‐Centered qualities of instruction. Two experienced independent reviewers coded instructional conditions featured in a given primary study (on a scale from 1–5) to reflect the extent to which each group possessed these qualities. Below we describe the dimensions that were the focus of our review.

Within each eligible comparative study all participation groups were coded for the four effect dimensions using a five‐point scale, as follows:

Dimension of Teacher's Role represents a continuum of a teacher's major responsibilities for organizing/delivering instruction/managing classroom activities, etc.

Coding: Describes the teacher's predominant role in the teaching/learning process:

1. Teacher almost exclusively lectures, is the main source of content‐relevant information and/or an authority figure.
2. Teacher provides some guidance, feedback, initiates and supports discussions, etc.
3. Teacher functions as a guide, coach, tutor, provocateur of thinking.
4. Teacher functions as a colleague, partner in learning.
5. Teacher almost exclusively acts as a facilitator of learning, responding to students’ specific needs (follows students’ lead, consults, clarifies, encourages, etc.).

Dimension of Pacing reflects the degree of student control over the time of instruction/learning and over the progression through the course content (i.e., pedagogical flexibility—revisiting/selecting/skipping/reordering topics and tasks).

Coding: Describes the degree to which students are given control over course progression:

1. Instruction is highly structured and progresses step‐by‐step; no flexibility is allowed.
2. Minor degree of either logistical or pedagogical flexibility is available to students.
3. Program/teacher's control over course progression is balanced with that of students.
4. Students have a substantial amount of flexibility in course progression.
5. High degree of flexibility (up to the point of completely self‐paced and/or self‐planned/self‐managed learning).

Dimension of Flexibility describes the degree of student control over course design, selection, and the provision of study materials and the setting up of learning objectives.

Coding: Describes the degree to which teachers/students participate in course planning:

1. No involvement of students (most is determined by the teacher or program/curriculum).
2. Student involvement in at least one of the components of course planning is present but limited.
3. Teachers and students collaborate in the course planning, but teacher's role is still dominant.
4. Teachers and students collaborate in the course planning equally.
5. High student involvement: students play a leading role in course planning and selection of learning materials.

Dimension of Adaptability of Instruction describes the degree to which levels of, or modifications in, the instructional process are provided to accommodate individual students.

Coding: Describes the degree to which instruction takes into account students’ needs/interests/level of knowledge:

1. Learning materials, settings, group formation (if any), activities and other work arrangements are predetermined and unchanged throughout the instruction (e.g., standardized or required curriculum).
2. Minor modifications are allowed to either learning materials, group composition, or the context of instruction.
3. Elements of either individualized feedback, or role and tasks assignments based on students’ interests and/or previous achievements, etc.
4. Adapting several instructional components (in combinations) to students’ individual needs/interests/levels of knowledge.
5. High levels of joint Adaptability of several components of instruction.

Based on the results of this coding (carried out independently by two reviewers, then compared and finalized in discussion), numeric values for each participating group were derived. The sum of these values determined the experimental (higher total) and control (lower total) conditions in every included study. The differential score was subsequently calculated to reflect the degree of Student‐Centered components of instruction and to serve as a “continuous” predictor in meta‐regression of effect sizes against the “strength” of the intervention. In the Results section this variable is depicted as “Student‐Centered Total Differential Score” (i.e., sum of scores for the experimental group minus sum of scores for the control group) with a theoretical range from 1 (one point difference in coding on a single dimension) to 16 (maximum difference between groups on all four coded dimensions).

Similarly, we determined and reflected the number of dimensions with differential scores higher than zero (i.e., on how many dimensions adaptive qualities of the instruction were present in the experimental group to a greater extent than in the control group). This variable is labeled “Difference by Dimension” and could range from 1 (difference on a single dimension) to 4 (difference on all four dimensions), regardless of the magnitude of that difference.

Finally, we wanted to trace and analyze the source of the difference. To that end, a categorical variable, Source of the Difference, was designed to reflect which dimensions, in which combinations, contributed to the magnitude of the respective effect size. The initial letters of the coded dimensions denote the levels of this variable. For example, F_T_A stands for some difference on the dimensions of Flexibility, Teacher's Role, and Adaptability of Instruction, with zero differential score on the dimension of Pacing.
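The three derived variables described above can be illustrated with a minimal Python sketch. It is not the authors' coding tool: the function name, the dictionary keys (F, P, T, A for Flexibility, Pacing, Teacher's Role, and Adaptability), and the ordering of letters in the label are assumptions made for illustration only.

```python
# Illustrative sketch only: names and key order are hypothetical.
DIMENSIONS = ("F", "P", "T", "A")  # Flexibility, Pacing, Teacher's Role, Adaptability


def derive_predictors(group_a, group_b):
    """Derive the three predictors from two groups' 1-5 ratings per dimension."""
    # The group with the higher rating total is treated as experimental.
    if sum(group_a.values()) >= sum(group_b.values()):
        experimental, control = group_a, group_b
    else:
        experimental, control = group_b, group_a

    # Per-dimension differential: experimental rating minus control rating.
    diffs = {d: experimental[d] - control[d] for d in DIMENSIONS}
    # Dimensions on which the experimental group was rated higher.
    positive = [d for d in DIMENSIONS if diffs[d] > 0]

    return {
        "total_differential": sum(diffs.values()),
        "difference_by_dimension": len(positive),
        "source_of_difference": "_".join(positive),
    }


ratings_1 = {"F": 3, "P": 2, "T": 4, "A": 3}
ratings_2 = {"F": 1, "P": 2, "T": 2, "A": 2}
print(derive_predictors(ratings_1, ratings_2))
```

In this hypothetical comparison the first group has the higher total (12 versus 7), so it is treated as the experimental condition; the groups differ on three dimensions and are tied on Pacing, which gives the label F_T_A.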

Decisions about the completeness of the reported information were made at three points in time. First, two independent coders decided in general on each study's inclusion/exclusion status (overall qualitative judgment). Second, when dimensions were actually coded, reviewers searched for all relevant information in the study and, if this information was not found, assigned the value of “999” (or missing information), subsequently excluding studies with more than one “999.” Third, at the analysis stage, “999” codes were converted into zeroes, indicating no difference between the two respective conditions, and if after this transformation the overall composite score was zero these studies were also excluded.

As a result, only studies judged by coders to have provided sufficient description were retained for analysis.
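The second and third screening points can be sketched in Python as follows. This is one possible reading of the procedure, not the authors' implementation: the function name and return structure are hypothetical, and the sketch assumes that a dimension counts as missing when either group's rating on it is coded 999.

```python
# Illustrative sketch only; the counting rule for "999" is an assumption.
MISSING = 999  # code assigned when the needed information was not reported
DIMENSIONS = ("F", "P", "T", "A")  # Flexibility, Pacing, Teacher's Role, Adaptability


def screen_comparison(experimental, control):
    """Apply the missing-information rules to one coded comparison."""
    missing = [d for d in DIMENSIONS if MISSING in (experimental[d], control[d])]

    # Coding stage: more than one missing dimension leads to exclusion.
    if len(missing) > 1:
        return {"retain": False, "reason": "more than one dimension coded 999"}

    # Analysis stage: a missing dimension is treated as a zero difference.
    diffs = {
        d: 0 if d in missing else experimental[d] - control[d]
        for d in DIMENSIONS
    }
    composite = sum(diffs.values())

    # A zero composite means the two conditions cannot be distinguished.
    if composite == 0:
        return {"retain": False, "reason": "composite differential is zero"}
    return {"retain": True, "differentials": diffs, "composite": composite}
```

Under these assumptions, a comparison with one missing dimension is retained only if the remaining dimensions still separate the two conditions.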

4.1.4. Types of outcome measures

Primary outcome

All types of objective measures of academic achievements were considered. Their psychometric features (e.g., standardized, nonstandardized teacher/researcher‐made assessment tools) and type of representativeness (e.g., cumulative final examinations or averages of several performance tasks covering various components of the course/unit content) were documented and used in subsequent moderator variable analyses. Self‐assessments were excluded, as well as attitudinal and behavioral measures.

4.1.5. Duration of follow‐up

To maximize coverage of primary research, fully compatible in terms of outcome measures, only immediate post‐test results were considered. Various forms of delayed post‐tests were documented and their time lags categorized to inform further reviews.

4.1.6. Types of settings

As stated earlier, k‐12 formal educational settings (~ages 5–18), in educational programs leading to advancement to the next academic level/grade, were required in the current meta‐analysis. Other settings (i.e., homeschooling, auxiliary programs, summer camps, vocational workshops, etc.) were excluded.

4.2. Search methods for identification of studies

4.2.1. Electronic searches

Following the Guidelines of the Campbell Collaboration (Kugley et al., 2017), in order to retrieve a broad base of studies to review we started by having an experienced Information Specialist search across an array of bibliographic databases, both in the subject area and in related disciplines. The following databases were searched for relevant publications: ABI/Inform Global (ProQuest), Academic Search Complete (EBSCO), ERIC (EBSCO), PsycINFO (EBSCO), CBCA Education (ProQuest), Education Source (EBSCO), Web of Knowledge, Engineering Village, Francis, ProQuest Dissertations & Theses Global, ProQuest Education Database, Linguistics and Language Behavior Abstracts (ProQuest).

The search strategy was tailored to the features of each database, making use of database‐specific controlled vocabulary and search filters. Searches were limited to the years 2000–2017, and targeted a k‐12 population. The following is an example from the ERIC database:

(AB (adaptive OR personalized OR personalized OR individuali*) AND AB (pedagog* OR learning OR teaching OR instruction OR education OR classroom OR curriculum)

OR (AB “self direct*” OR “self regulate*”) OR (DE “Open Education” OR DE “Discovery Learning” OR DE “Individual Activities” OR DE “Student‐Centered Curriculum” OR DE “Student Centered Learning” OR DE “Mastery Learning” OR DE “Independent Reading” OR DE “Independent Study” OR DE “Individualized Instruction” OR DE “Competency‐Based Education” OR DE “Individual Instruction” OR DE “Individualized Programs” OR DE “Individualized Reading” OR DE “Individualized Transition Plans” OR DE “Learner Controlled Instruction” OR DE “Pacing” OR DE “Individual Testing” OR DE “Adaptive Testing” OR DE “Experiential Learning” OR DE “Learner Engagement” OR DE “Cooperative Learning”))

AND

(DE “Program Validation” OR DE “Academic Achievement” OR DE “Instructional Improvement” OR DE “Progress Monitoring” OR DE “Educational Assessment” OR DE “Instructional Effectiveness” OR DE “Program Evaluation” OR DE “School Effectiveness” OR DE “Evidence” OR DE “Outcomes of Education” OR DE “Program Effectiveness”)

AND

(DE “Pretesting” OR DE “Pretests Posttests” OR DE “Control Groups” OR DE “Experimental Groups” OR DE “Matched Groups” OR DE “Mixed Methods Research” OR DE “Randomized Controlled Trials” OR DE “Effect Size” OR DE “Quasiexperimental Design” OR DE “Comparative Analysis”)

Limiters—Date Published: 20000101‐20171231; Educational Level: Elementary Education, Grade 1, Grade 2, Grade 3, Grade 4, Grade 5, Grade 6, Grade 7, Grade 8, Grade 9, Grade 10, Grade 11, Grade 12, High Schools, Junior High Schools, Kindergarten, Middle Schools, Primary Education, Secondary Education; Publication Type: Books, Collected Works (All), Dissertations/Theses (All), ERIC Publications, Information Analyses, Journal Articles, Numerical/Quantitative Data, Reports—Descriptive, Reports—Evaluative Reports, Reports—Research.

4.2.2. Searching other resources

Theses/Conference papers/Research reports

Database searching was supplemented by using the Google search engine to locate additional articles, but principally grey literature (research reports, conference papers, theses, and research published outside conventional journals). Finally, an in‐house database of empirical studies of teaching methods, assembled by the research team from previous research reviews, was searched and produced an additional 254 studies. While these studies had been previously collected, the same set of inclusion criteria used for other studies was applied to them.

4.3. Data collection and analysis

4.3.1. Selection of studies

The overall set of inclusion/exclusion criteria for the meta‐analysis contained the following requirements:

Be publicly available (or archived) and encompass studies from 2000 to the present.

Feature at least two groups of different instructional strategies/practices that can be compared according to the research question as Student‐Centered and Teacher‐Centered instruction.

Include course content and outcome measures that are compatible with the groups that form these comparisons.

Contain sufficient descriptions of major instructional events in both instructional conditions.

Satisfy the requirements of either experimental or high‐quality quasi‐experimental design.

Be conducted in formal k‐12 educational settings eventually leading to a certificate, diploma, degree, or promotion to a higher grade level.

Contain measures representative of course achievement (i.e., teacher/researcher‐made, standardized).

Contain sufficient statistical information for effect size extraction.

4.3.2. Data extraction and management

Two researchers independently conducted abstract screening and full‐text review of studies identified through the whole complex of searching activities, compared notes, discussed and resolved disagreements, and documented reliability rates. Similar procedures were employed for effect size extraction and coding of moderator variables.

4.3.3. Effect size extraction and calculation

One of the selection criteria was “Contain sufficient statistical information for effect size extraction,” so that an effect size could be calculated for each independent comparison. This information could take several forms (in all cases sample size data were required):

Means and standard deviations for each treatment and control group;

Exact t value, F‐value, with an indication of the ± direction of the effect;

Exact p value (e.g., p = .011), with an indication of the ± direction of the effect;

Effect sizes converted from correlations or log odds ratios;

Estimates of the mean difference (e.g., adjusted means, regression β weight, gain score means when r is unknown);

Estimates of the pooled standard deviation (e.g., gain score standard deviation, one‐way ANOVA with three or more groups);

Estimates based on a probability of a significant t test using α (e.g., p < .05); and

Approximations based on dichotomous data (e.g., percentages of students who succeeded or failed the course requirements).

Effect sizes were initially calculated as Cohen's d and then converted to Hedges’ g (i.e., correction for small samples). Standard errors were calculated for d and then converted to standard errors of g (SE g) by applying the correction formula for g. Hedges’ g, SE g, and sample sizes (i.e., treatment and control) were entered into Comprehensive Meta‐Analysis 3.3.07 (Borenstein et al., 2014) where statistical analyses were performed.
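As an illustration of this conversion, the following Python sketch uses the standard textbook formulas for two independent groups: the pooled standard deviation for d, the usual large‐sample variance of d, and the small‐sample correction factor J = 1 − 3/(4df − 1). The review does not state the exact formulas applied, so the function names and the choice of formulas here are assumptions, and the results may differ slightly from those produced by the software the authors used.

```python
import math


def cohens_d_from_means(m1, sd1, n1, m2, sd2, n2):
    """Standardized mean difference using the pooled standard deviation."""
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2)
    return (m1 - m2) / math.sqrt(pooled_var)


def cohens_d_from_t(t, n1, n2):
    """Convert an independent-groups t value to d; the sign of t gives direction."""
    return t * math.sqrt((n1 + n2) / (n1 * n2))


def hedges_g(d, n1, n2):
    """Apply the small-sample correction to d; returns (g, SE of g)."""
    # Approximate standard error of d for two independent groups.
    se_d = math.sqrt((n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2)))
    # Correction factor J, with df = n1 + n2 - 2.
    j = 1 - 3 / (4 * (n1 + n2 - 2) - 1)
    return j * d, j * se_d


# Hypothetical example: treatment M = 75, control M = 70, SD = 10, n = 30 each.
d = cohens_d_from_means(75, 10, 30, 70, 10, 30)
g, se_g = hedges_g(d, 30, 30)
print(round(d, 3), round(g, 3), round(se_g, 3))
```

With these hypothetical inputs d is 0.5, and the correction shrinks it slightly because the groups are small; with larger samples J approaches 1 and g converges on d.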

The effect sizes were coded for precision of calculations and analyzed in moderator variable analysis.

4.3.4. Description of methods used in primary research

True experimental and quasi‐experimental studies were included provided that they featured two educational interventions covering the same content (required knowledge acquisition and/or skill development) and assessed on compatible outcome measures, where one group (experimental) was higher in Student‐Centered qualities (as described earlier) than the other (control) group. Reporting quantitative data sufficient for effect size extraction was a necessary condition for study inclusion.

4.3.5. Criteria for determination of independent findings

There are several potential major threats to the independence of the findings: (a) repeated use of data coming from the same participants (i.e., dependence); (b) reporting multiple outcomes of the same type; and (c) aggregating outcomes of different types representing the same sample of participants (this does not apply to this review, as it is limited to learning achievement outcomes only). To ensure data independence, no group of participants was used more than once, which in most cases resulted in only one effect size per study, and only one outcome measure (either a cumulative or a composite achievement score) was used in each comparison.

4.3.6. Details of study coding categories

In addition to the coding dimensions of Student‐Centered pedagogical qualities that would determine proper comparisons for effect size extraction, the following groups of study coding categories were used in the review. First, study methodological quality was assessed for features such as design type, the fidelity of treatment implementation, attrition, and the unit of assignment/analysis (Cooper, Hedges, & Valentine, 2009). Within the same category, we coded for outcome source and psychometric quality of the assessment tools, as well as for the precision of procedures used for effect size extraction and for equivalence of instructor and study materials. Jointly, these methodological study features were used in moderator variable analyses to inform us of any potential threats to all types of study validity (Cooper et al., 2009).

Substantive study features further clarify descriptions of Student‐Centered pedagogical qualities by specifying the theoretical models underlying the instructional practices under review, treatment duration, instructor's experience, provision of professional development for teachers, and training for students whenever the specific instructional intervention requires it. Demographic study features encompass learners’ age, educational background, and ability level, as well as the subject matter studied. All of these study features were subsequently analyzed as moderators for their potential impact on treatment effects.

4.3.7. Assessment of risk of bias in included studies

Assessment of the risk of bias was accomplished in several ways:

4.3.8. Sensitivity analysis

Sensitivity analysis was performed to determine whether issues such as research design, effect size extraction method, instructor and material equivalence, publication bias, and assessment tool category might have introduced bias into the results. It also involved a “one study removed” analysis of the distribution of effect sizes. For the results of this analysis, please see Table 5a–e.

Table 5.

a–e Research design, extraction method, instrument type, and publication source

| Codes | k | Mean g | SE | Lower 95th | Upper 95th | z‐value | p value | Q‐B | df | p value |
|---|---|---|---|---|---|---|---|---|---|---|
| Research design (a) | | | | | | | | | | |
| QED | 273 | 0.46 | 0.03 | 0.39 | 0.53 | 13.18 | .00 | | | |
| RCT | 90 | 0.40 | 0.07 | 0.28 | 0.53 | 6.17 | .00 | | | |
| Total between | | | | | | | | 0.55 | 1 | .46 |
| Extraction method (b) | | | | | | | | | | |
| Exact | 107 | 0.37 | 0.05 | 0.27 | 0.47 | 7.22 | .00 | | | |
| Approximate | 257 | 0.48 | 0.04 | 0.40 | 0.55 | 12.69 | .00 | | | |
| Total between | | | | | | | | 2.83 | 1 | .09 |
| Instrument type (c) | | | | | | | | | | |
| Standardized | 78 | 0.37 | 0.00 | 0.28 | 0.46 | 7.95 | .00 | | | |
| Mod. Stand. | 79 | 0.44 | 0.01 | 0.30 | 0.59 | 5.95 | .00 | | | |
| Teacher/Rcher. | 196 | 0.48 | 0.00 | 0.39 | 0.57 | 10.26 | .00 | | | |
| Combo | 11 | 0.35 | 0.02 | 0.10 | 0.60 | 2.78 | .01 | | | |
| Total between | | | | | | | | 3.11 | 3 | .37 |
| Teacher class assignment (d) | | | | | | | | | | |
| Same teacher | 156 | 0.32 | 0.00 | 0.23 | 0.42 | 6.64 | .00 | | | |
| Diff. teacher | 196 | 0.52 | 0.00 | 0.44 | 0.60 | 13.12 | .00 | | | |
| Total between | | | | | | | | 10.19 | 1 | .00 |
| Teacher training (e) | | | | | | | | | | |
| No | 128 | 0.50 | 0.05 | 0.40 | 0.60 | 9.86 | .00 | | | |
| Yes | 208 | 0.45 | 0.04 | 0.37 | 0.53 | 10.86 | .01 | | | |
| Total between | | | | | | | | 0.63 | 1 | .43 |

Note: Missing data has been removed so k does not always equal 365.

Abbreviations: QED: quasi‐experimental design; RCT: randomized control trial.

4.3.9. Assessment of reporting biases

Publication bias was assessed based on the examination of a Funnel Plot and associated “Trim and Fill” analysis plus other tests such as Classic Fail‐safe analysis and Orwin's Fail‐safe N (Orwin, 1983).

4.3.10. Assessment of heterogeneity

Homogeneity assessment, sometimes called an analysis of precision, was accomplished using the fixed effect model of analysis. The following indicators are reported and discussed:

Q‐Total, df, test of the null hypothesis, I² (percentage of error variance over and above chance), and tau² (average variability used in the calculation of random weights).
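To make these indicators concrete, the following sketch computes Q‐Total, df, I², and tau² from a list of effect sizes and their variances. It assumes inverse‐variance fixed effect weights and the DerSimonian‐Laird (method of moments) estimator for tau², which is a common default but is not confirmed by the text; the function name is hypothetical.

```python
def heterogeneity(effects, variances):
    """Return (Q, df, I2 in percent, tau2) for a set of effect sizes."""
    weights = [1 / v for v in variances]  # fixed effect weights
    sum_w = sum(weights)
    mean_fixed = sum(w * g for w, g in zip(weights, effects)) / sum_w
    # Q: weighted squared deviations from the fixed effect mean.
    q = sum(w * (g - mean_fixed) ** 2 for w, g in zip(weights, effects))
    df = len(effects) - 1
    # I2: share of observed variation beyond what chance (df) predicts.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    # tau2: between-study variance (DerSimonian-Laird), floored at zero.
    c = sum_w - sum(w**2 for w in weights) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    return q, df, i2, tau2

q, df, i2, tau2 = heterogeneity([0.0, 1.0], [0.1, 0.1])
```

When Q is no larger than its degrees of freedom, both I² and tau² are set to zero, meaning the spread of effects is no greater than sampling error alone would produce.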

4.3.11. Data synthesis

Data are synthesized, initially, under the random effects model, and the following statistics are reported: Overall weighted random effects analysis with the statistics of mean g, SE g, V g, upper and lower limits of the 95% confidence interval, z g, and p value;

Heterogeneity is estimated using Q‐Total, df, and p value. I² (i.e., percentage of error variation) and tau² (i.e., average heterogeneity) are also calculated and reported;

Meta‐regression (single and multiple) is used to determine the relationship between covariates and effect sizes; and

Mixed‐model (i.e., random and fixed) moderator variable analysis is used to compare categories of each coded moderator variable. Q‐Between, df, and p value are used to make decisions about the significance of difference among levels of each categorical variable.
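The mixed‐model comparison can be sketched as follows: effect sizes are pooled within each category under a random effects model, and the category means are then compared with a fixed effect Q‐Between statistic. This is a simplified illustration with hypothetical function names; it assumes a DerSimonian‐Laird tau² estimated separately within each category, whereas meta‐analysis software may offer other options (e.g., a pooled tau²).

```python
def random_effects_mean(effects, variances):
    """DerSimonian-Laird random effects mean and its variance."""
    w = [1 / v for v in variances]
    sum_w = sum(w)
    mean_fixed = sum(wi * g for wi, g in zip(w, effects)) / sum_w
    q = sum(wi * (g - mean_fixed) ** 2 for wi, g in zip(w, effects))
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (len(effects) - 1)) / c) if c > 0 else 0.0
    # Random weights add tau2 to each study's sampling variance.
    w_star = [1 / (v + tau2) for v in variances]
    mean = sum(wi * g for wi, g in zip(w_star, effects)) / sum(w_star)
    return mean, 1 / sum(w_star)

def q_between(subgroups):
    """subgroups: dict of name -> (effects, variances).

    Returns (Q_between, df), treating the category means as fixed.
    """
    summaries = [random_effects_mean(e, v) for e, v in subgroups.values()]
    w = [1 / var for _, var in summaries]
    grand = sum(wi * m for wi, (m, _) in zip(w, summaries)) / sum(w)
    q_b = sum(wi * (m - grand) ** 2 for wi, (m, _) in zip(w, summaries))
    return q_b, len(summaries) - 1

# Hypothetical data, not taken from the review.
q_b, df = q_between({
    "A": ([0.2, 0.2], [0.02, 0.02]),
    "B": ([0.6, 0.6], [0.02, 0.02]),
})
```

Q‐Between is then referred to a chi‐square distribution with df equal to the number of categories minus one to decide whether the category means differ.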

The protocol for this review was published in the Campbell Library, August 2016: https://campbellcollaboration.org/media/k2/attachments/Bernard_Operationalized_Adaptive_Teaching_Title.pdf

5. RESULTS

5.1. Description of studies

5.1.1. Results of the searches

All searches were conducted by a full‐time Information Specialist (MLS level) and member of the Systematic Review Team at the Centre for the Study of Learning and Performance at Concordia University in Montreal, QC, Canada. As shown in the PRISMA flowchart in Figure 1, there were three sources of studies: (a) 1,663 studies from dedicated bibliographic searches detailed in the Method; (b) 95 studies retrieved from the grey literature; and (c) 254 studies transferred from an internal database of studies retrieved for a larger meta‐analysis that includes all grade levels, but with the same inclusion/exclusion criteria as the current study. Figure 1 details the results at each stage of the search and retrieval process. All bibliographic information was exported into an EndNote database and managed from there.

Figure 1.

Flow diagram of the review process

Duplicate studies were removed (n = 247) and the remaining 1,765 studies were subjected to an abstract screening process. In all, 817 studies were retrieved as full‐text documents. Examination of these studies proceeded according to the details described in the Method. A total of 518 full‐text documents were excluded for reasons detailed in the inclusion/exclusion description in the Method, leaving 299 studies that were included in the final analysis. In the final stage, 365 independent effect sizes were extracted from these studies, coded, and analyzed.

5.1.2. Included studies

There are 365 effect sizes (299 individual studies) included in this review, representing 43,175 treatment and control participants. References to these 299 studies appear in the section entitled References to included studies. Please see Table S13 for complete statistical information for the 365 effect sizes.

5.1.3. Excluded studies

A total of 1,613 studies were excluded from this review. Figure 1 shows how this number diminished over the course of the review and selection process and references to these excluded studies are presented in the section entitled References to excluded studies (found in Online Supplement 1).

5.2. Risk of bias in included studies

In assessing the quality of included studies we used the following criteria: Methodological quality moderators, publication and sensitivity bias analysis, data independence, and sufficiency of the description of instructional practices.

5.2.1. Publication bias analysis

Borenstein et al. (2014) state:

“The basic issue of publication bias is that not all completed studies are published, and the selection process is not random (hence the “bias”). Rather, studies that report relatively large treatment effects are more likely to be submitted and/or accepted for publication than studies [that] report more modest treatment effects. Since the treatment effect estimated from a biased collection of studies would tend to overestimate the true treatment effect, it is important to assess the likely extent of the bias, and its potential impact on the conclusions” (Publication Bias Report, Comprehensive Meta‐Analysis, 2014).

Thus, this report includes an extensive investigation of publication bias, as a potential source of difficulty and error in interpreting these results.

Funnel Plot analysis and Trim and Fill

A Funnel Plot (see Figure 3) and associated Trim and Fill procedure (Duval & Tweedie, 2000) of 365 effect sizes (see Table 3) indicate that there is no discernible publication bias on the negative side of the plot (i.e., left of the mean effect size) under the random effects model.

Figure 3.

Random effects funnel plot (effect size by standard error). [Color figure can be viewed at wileyonlinelibrary.com]

Table 3.

Analysis of publication source

| Categories | k | Mean g | SE | Lower 95th | Upper 95th | z‐value | p value | Q‐B | df | p value |
|---|---|---|---|---|---|---|---|---|---|---|
| Journal articles | 302 | 0.46 | 0.03 | 0.40 | 0.53 | 13.69 | .00 | | | |
| Theses | 8 | 0.31 | 0.12 | 0.08 | 0.54 | 2.60 | .01 | | | |
| Other | 54 | 0.35 | 0.07 | 0.21 | 0.50 | 4.85 | .00 | | | |
| Total between | | | | | | | | 3.12 | 2 | .21 |

Another indicator, Classic fail‐safe N, suggests that 121,993 additional “null” effect sizes would be needed to raise the observed p value above alpha = .05 (i.e., 860 additional “null” effect sizes per each observed effect size). Also, Orwin's fail‐safe N (Orwin, 1983) suggests that 125 additional “null” effect sizes would be needed to bring the observed average effect size down to a trivial level of g = 0.10.
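For readers unfamiliar with the two fail‐safe statistics, here is a minimal sketch of how each is usually defined. The function names and example inputs are hypothetical and are not the review's data. The classic version follows Rosenthal's combined‐z logic, and whether the criterion is one‐ or two‐tailed is left as a parameter because the text does not specify it.

```python
import math
from statistics import NormalDist

def classic_fail_safe_n(z_values, alpha=0.05, two_tailed=True):
    """Null-effect studies needed for the combined z to lose significance.

    Solves sum(z) / sqrt(k + N) = z_crit for N and rounds up.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2 if two_tailed else 1 - alpha)
    k = len(z_values)
    n = sum(z_values) ** 2 / z_crit**2 - k
    # The small tolerance guards against floating-point noise before rounding up.
    return max(0, math.ceil(n - 1e-9))

def orwin_fail_safe_n(k, mean_effect, trivial_effect, missing_effect=0.0):
    """Studies averaging `missing_effect` needed to pull the unweighted
    mean effect down to `trivial_effect` (Orwin's formula)."""
    n = k * (mean_effect - trivial_effect) / (trivial_effect - missing_effect)
    return max(0, math.ceil(n - 1e-9))

# Hypothetical inputs for illustration only.
n_classic = classic_fail_safe_n([2.0] * 10)   # ten studies, each z = 2.0
n_orwin = orwin_fail_safe_n(10, 0.5, 0.25)    # mean 0.5, trivial level 0.25
```

The classic statistic asks how many unseen null results would erase statistical significance, whereas Orwin's asks how many would shrink the average effect to a level judged practically trivial, which is why the two numbers can differ by orders of magnitude.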

In addition, an analysis of publication type (see Table 3) indicated that journal articles (mean g = 0.46, k = 302), unpublished theses (mean g = 0.31, k = 8), and other unpublished documents (e.g., conference papers; mean g = 0.35, k = 54) were not significantly different in mixed‐model moderator analysis (Q‐Between = 3.12, df = 2, p = .21). However, this nonsignificant finding should be viewed cautiously because the small k for theses may have limited statistical power.

Overall, there appear to be no serious issues of bias related to the analysis of published data.

5.2.2. Sensitivity analysis

Sensitivity analysis examines issues in the data and coding that might affect the reliability of the results. First, we conducted a one study removed analysis (Borenstein et al., 2014, CMA, Version 3.3.070) to gauge how much individual effect sizes and study samples influence the average across the distribution. Table 4 shows partial results of that analysis. The table contains six studies from the top of the distribution (highest effect sizes) and six studies from the bottom (lowest effect sizes, all negative). There is only a 0.01 difference in average effect size between the top and the bottom of the distribution when each study is removed sequentially. The standard errors and the limits of the 95% confidence interval show the same consistency. Because the most problematic studies often reside on the peripheries of the distribution, with extreme effect sizes and large samples (i.e., high‐influence studies), the relative random weights are included in the last column. For the 12 studies displayed, relative weights ranged from 0.13 to 0.28 at the upper end and from 0.19 to 0.33 at the lower end, indicating little concern for undue influence.
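The one study removed procedure can be sketched as a simple loop that re‐estimates the random effects mean with each effect size left out in turn. The code below is an illustration under stated assumptions (DerSimonian‐Laird tau², hypothetical function names, made‐up data); it is not the routine used by the software cited above.

```python
import math

def pooled_random_effects(effects, variances):
    """Return (mean, se) under a DerSimonian-Laird random effects model."""
    w = [1 / v for v in variances]
    sum_w = sum(w)
    mean_fixed = sum(wi * g for wi, g in zip(w, effects)) / sum_w
    q = sum(wi * (g - mean_fixed) ** 2 for wi, g in zip(w, effects))
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (len(effects) - 1)) / c) if c > 0 else 0.0
    w_star = [1 / (v + tau2) for v in variances]
    mean = sum(wi * g for wi, g in zip(w_star, effects)) / sum(w_star)
    return mean, math.sqrt(1 / sum(w_star))

def one_study_removed(effects, variances):
    """Re-pool the data k times, leaving out one effect size each time."""
    effects, variances = list(effects), list(variances)
    results = []
    for i in range(len(effects)):
        rest_e = effects[:i] + effects[i + 1:]
        rest_v = variances[:i] + variances[i + 1:]
        results.append(pooled_random_effects(rest_e, rest_v))
    return results

# Hypothetical data: the third effect size is an outlier.
leave_one_out = one_study_removed([0.2, 0.4, 3.0], [0.04, 0.04, 0.04])
```

With only three studies, dropping the outlier shifts the mean considerably; with several hundred effect sizes, as in this review, each individual study carries a small relative weight, so the re‐pooled means barely move.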

Table 4.

Sample (top six studies and bottom six studies) of one study removed

| Study names | Actual g | One study removed: mean g | One study removed: SE | One study removed: Lower 95th | One study removed: Upper 95th | Relative wt. |
|---|---|---|---|---|---|---|
| 1. Zohar_2 2008 | 3.10 | 0.44 | 0.03 | 0.38 | 0.50 | 0.20 |
| 2. Lamidi 2015 | 3.10 | 0.44 | 0.03 | 0.38 | 0.49 | 0.28 |
| 3. Garcâ_1 2006 | 3.10 | 0.44 | 0.03 | 0.38 | 0.50 | 0.26 |
| 4. Ben‐David 2009 | 3.10 | 0.44 | 0.03 | 0.38 | 0.49 | 0.27 |
| 5. Alfassi_1 2003 | 3.10 | 0.44 | 0.03 | 0.38 | 0.50 | 0.15 |
| 6. Alfassi_2 2003 | 2.85 | 0.44 | 0.03 | 0.38 | 0.50 | 0.13 |
| 360. Eysink_2 2009 | −0.88 | 0.45 | 0.04 | 0.23 | 0.39 | 0.33 |
| 361. Furtak_1 2012 | −0.97 | 0.45 | 0.04 | 0.23 | 0.39 | 0.19 |
| 362. Chang_2 2002 | −0.97 | 0.45 | 0.04 | 0.23 | 0.39 | 0.25 |
| 363. Sola_2 2007 | −0.98 | 0.45 | 0.04 | 0.23 | 0.39 | 0.29 |
| 364. Wesche 2002 | −1.07 | 0.45 | 0.04 | 0.23 | 0.39 | 0.32 |
| 365. Bassett 2014 | −1.48 | 0.45 | 0.04 | 0.23 | 0.39 | 0.25 |