Abstract

The link between spatial (where) and temporal (when) aspects of the neural correlates of most psychological phenomena is not clear. Elucidation of this relation, which is crucial to fully understand human brain function, requires integration across multiple brain imaging modalities and cognitive tasks that reliably modulate the engagement of the brain systems of interest. By overcoming the methodological challenges posed by simultaneous recordings, the present report provides proof‐of‐concept evidence for a novel approach using three complementary imaging modalities: functional magnetic resonance imaging (fMRI), event‐related potentials (ERPs), and event‐related optical signals (EROS). Using the emotional oddball task, a paradigm that taps into both cognitive and affective aspects of processing, we show the feasibility of capturing converging and complementary measures of brain function that are not currently attainable using traditional unimodal or other multimodal approaches. This opens up unprecedented possibilities to clarify spatiotemporal integration of brain function.

Keywords: data fusion, event‐related optical signal (EROS), event‐related potentials (ERPs), functional magnetic resonance imaging (fMRI), multimodal neuroimaging, simultaneous recording

1. INTRODUCTION

Brain imaging methodologies have progressed dramatically over the past decades, but current techniques still have important limitations in either the spatial or the temporal domain, and hence provide only an incomplete view of how the human brain functions. Multimodal imaging approaches may overcome such limitations by jointly capitalizing on the individual strengths of different brain imaging modalities (Uludağ & Roebroeck, 2014). This is possible because simultaneous acquisition with multiple imaging techniques enables direct examination of diverse indices of brain activity related to the same events, within the same participants, and at the same time. Additionally, simultaneous multimodal brain imaging eliminates important discrepancies between measures obtained in separate sessions, which arise from phenomena such as habituation, practice, and memory and introduce confounds in modality fusion. It also allows for a more comprehensive investigation of phenomena that can be difficult to examine across multiple unimodal sessions, such as moment‐to‐moment variability in cognitive functions (e.g., attention) or in physiological responses (e.g., electrophysiological or neurovascular state), as well as acute changes, such as short‐term effects of drugs. Application in humans is particularly useful because simultaneous noninvasive measures of brain function can be directly examined in relation to complex cognitive processes, behaviors, self‐report assessments, and clinical diagnoses (S. Liu et al., 2015; Uludağ & Roebroeck, 2014). Furthermore, increasing interest in the dynamics of neuronal activity has been highlighted by recent and emerging frameworks such as spatiotemporal neuroscience, which point to the relevance of spatiotemporal dynamics for mental features such as self, consciousness, and psychiatric disorders (Demertzi et al., 2019; Northoff, Wainio‐Theberge, & Evers, 2019).
Notably, however, simultaneous multimodal imaging also poses specific technical and interpretational challenges, which have traditionally limited its applicability.

The main goal of the present investigation was to address many of these challenges in order to implement and validate a novel protocol for simultaneous trimodal brain imaging, using the following three methodologies: functional magnetic resonance imaging (fMRI), event‐related potentials (ERPs), and event‐related optical signals (EROS). The resulting protocol provides a novel brain imaging tool that can further clarify the links between spatial and temporal aspects of brain activity. In the following sections, we first provide a brief overview of the three brain imaging techniques and the rationale for capitalizing on their overlapping and complementary features, and then demonstrate the feasibility of using them simultaneously for the study of human brain function, with a task involving emotion–cognition interactions. Finally, we discuss potential approaches for data fusion and interpretation.

1.1. Basic features of fMRI, ERP, and EROS

Functional MRI is a widely used noninvasive method for examining spatial aspects of brain function (Glover, 2011). The typical fMRI method by which spatial mapping of hemodynamic responses is achieved is blood oxygen level‐dependent (BOLD) fMRI, which captures changes in blood oxygenation in a local brain region (Buxton, 2002) and is commonly taken as an indicator of changes in neural activity. However, while fMRI can localize changes in brain activity with high resolution, on the order of millimeters (Glover, 2011), the detected changes in the hemodynamic response are inherently slow and may conflate activities occurring up to several seconds apart (but see Ogawa et al., 2000). Thus, fMRI is best suited for investigating where changes occur, but is generally much less accurate regarding when they occur. In contrast, ERP recording is a widely used noninvasive method for examining temporal aspects of brain function (Fabiani, Gratton, Karis, & Donchin, 1987; Teplan, 2002). This method capitalizes on the brain's electrical properties, typically by recording from electrodes placed on the surface of the scalp. Although ERPs can capture brain responses with millisecond resolution, scalp recordings do not provide precise information about the location of signal sources within the brain, because of volume conduction and the effect of the skull on the propagation of signals from the brain to the surface of the head (Burle et al., 2015; but see recent advances in Ferracuti et al., 2020; Migliore et al., 2019; Porcaro, Balsters, Mantini, Robertson, & Wenderoth, 2019). Hence, fMRI and ERP can be seen as complementary with regard to spatial and temporal resolution, but because they capture different underlying mechanisms (i.e., hemodynamic vs. postsynaptic potential changes), it is helpful to also include a method that can bridge between them.
Therefore, the present protocol included a third neuroimaging method, EROS, to help identify spatial and/or temporal overlaps between neural activities, by avoiding the collapsing across time and across space that fMRI and ERPs entail, respectively.

Fast optical imaging/EROS is a diffuse optical imaging method based on the measurement of changes in near‐infrared light scattering associated with neural activity. At least for cortical phenomena, it can provide the type of data required for integrating and bridging between fMRI and ERPs (Gratton & Fabiani, 2010). Changes in neural activity may be associated with depolarization or hyperpolarization. Depolarization appears to be accompanied by swelling of neurites (primarily dendrites), and hyperpolarization by their shrinking, which is posited to be related to the movement of water across the membrane associated with ion transport (Foust & Rector, 2007; Gratton & Fabiani, 2010; Rector, Carter, Volegov, & George, 2005; Rector, Poe, Kristensen, & Harper, 1997). The swelling/shrinking of neurites during neural activity changes the way in which light scatters within the tissue, and these changes can be measured as the fast optical signal, or EROS. As a result, EROS measures of photon time of flight, indexed by the phase delay of a photon density wave traveling between a source and a detector, can show spatial correspondence with fMRI activations and temporal correspondence with ERP components (Fabiani et al., 2014; Gratton et al., 1997; Gratton & Fabiani, 2010; Tse et al., 2007). Notably, because fast optical signals can be recorded only down to a few centimeters (approximately 3 cm) below the head surface (Chiarelli et al., 2016; Gratton & Fabiani, 2010), findings are limited to cortical areas close to the head surface, and this technique does not fully replace the advantages of combined fMRI–ERP. However, as mentioned above, because it shares properties with both of these methods, it can help bridge between them when examining brain activity near the cortical surface.
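The conversion from a measured phase delay to a change in photon time of flight follows from the fact that one full 360° cycle of the modulated light corresponds to one modulation period, 1/f. The sketch below illustrates only this arithmetic. The 110 MHz modulation frequency is an assumption (this section does not report it), and the function name is hypothetical.

```python
# Hypothetical helper: phase delay of the photon density wave -> time-of-flight change.
# ASSUMPTION: 110 MHz modulation frequency; substitute the value used by the instrument.
MOD_FREQ_HZ = 110e6


def phase_to_delay_ps(phase_deg: float, mod_freq_hz: float = MOD_FREQ_HZ) -> float:
    """Convert a phase delay in degrees to a delay in picoseconds.

    One full cycle (360 degrees) equals one modulation period (1 / f),
    so delay = phase / (360 * f).
    """
    return phase_deg / (360.0 * mod_freq_hz) * 1e12


if __name__ == "__main__":
    # A 1-degree phase shift at 110 MHz corresponds to roughly 25.3 ps.
    print(f"{phase_to_delay_ps(1.0):.1f} ps")
```

Expressing delays in time units rather than degrees makes values comparable across instruments that use different modulation frequencies, because the same time-of-flight change produces a larger phase shift at a higher frequency.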

1.2. The need for a simultaneous trimodal approach

Although considerable progress has been made in integrating different brain imaging techniques, current bimodal protocols (i.e., protocols involving two imaging modalities) still have important limitations. For example, it is difficult to determine whether apparent mismatches between fMRI and ERP data are due to decoupling of the signals or to signal detection failures (Daunizeau, Laufs, & Friston, 2010). Also, bimodal EROS–ERP recording can provide only limited information about subcortical brain activity, due to the limited depth of optical imaging and the incomplete source information in ERPs. Furthermore, integrating moment‐to‐moment variability across fMRI and ERP, or across EROS and ERP, is more challenging when these modalities are collected separately and integrated only at the analysis stage. Finally, current bimodal fMRI–EROS integrations (Zhang, Toronov, Fabiani, Gratton, & Webb, 2005) are limited in that no systems are available to provide full‐head EROS coverage in an MRI scanner, and the available targeted optical arrays cannot replace the broad coverage provided by MR‐compatible ERP.

Additionally, when fusing data from different imaging modalities, it is often assumed that the same neural signals are present in each (given the same experimental manipulation), and that the signals from one modality can be mapped one‐to‐one onto those of another. For instance, task responses might be associated with BOLD fMRI activity in particular brain areas (providing spatial specificity) and with the amplitude of particular ERP components (providing temporal specificity), under the often‐implicit assumption that both reflect the very same phenomenon. However, a one‐to‐one relationship need not exist between phenomena identified through modalities with limited spatial or temporal resolution. A significant problem with collapsing over time is that two conditions may appear similar in the BOLD signal (and therefore generate no difference), even though the underlying activity occurs at different times (thus generating ERP differences). The same problem occurs when collapsing over space: activities emanating from relatively close regions may generate largely overlapping scalp distributions (and therefore no differences in ERP activity), yet produce significant fMRI differences. Trimodal data integration allows such assumptions to be tested.
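The problem of collapsing over time can be illustrated with a toy simulation. The sketch below assumes a double‐gamma hemodynamic response function of the kind commonly used in fMRI analysis (peak near 5 s, undershoot near 15 s), two hypothetical 100 ms bursts of identical neural activity separated by 300 ms, and BOLD sampling at the 2 s TR used in the present study. It is an illustration of the argument, not a model of the present data.

```python
import math

import numpy as np

DT = 0.01     # simulation step in seconds (10 ms)
TR = 2.0      # BOLD sampling interval in seconds
T_MAX = 30.0  # simulated epoch length in seconds


def double_gamma_hrf(t):
    """Assumed double-gamma HRF: gamma(6) peak minus 1/6 of a gamma(16) undershoot."""
    peak = t**5 * np.exp(-t) / math.gamma(6)
    undershoot = t**15 * np.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0


def neural_burst(onset, duration=0.1):
    """Hypothetical burst of neural activity starting at `onset` seconds."""
    t = np.arange(0.0, T_MAX, DT)
    return ((t >= onset) & (t < onset + duration)).astype(float)


def bold_from_neural(neural):
    """Convolve neural activity with the HRF, then keep one sample per TR."""
    t = np.arange(0.0, T_MAX, DT)
    bold = np.convolve(neural, double_gamma_hrf(t))[: len(t)] * DT
    step = int(round(TR / DT))
    return bold[::step]


# Two conditions with identical activity, one occurring 300 ms later.
early = neural_burst(onset=1.0)
late = neural_burst(onset=1.3)

neural_r = np.corrcoef(early, late)[0, 1]  # millisecond-scale (ERP-like) time courses
bold_r = np.corrcoef(bold_from_neural(early), bold_from_neural(late))[0, 1]
print(f"neural r = {neural_r:.3f}, BOLD r = {bold_r:.3f}")
```

With these settings, the sampled BOLD time courses of the two conditions are nearly perfectly correlated, whereas the underlying neural time courses do not overlap at all, which is exactly the kind of difference that only a temporally precise measure can reveal.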

Furthermore, a challenge with a modality like EROS, which possesses both high spatial and high temporal resolution, is that describing brain activity with it may require a large number of free parameters (because the data vary in both space and time), which can be difficult to estimate simultaneously with precision. This problem is aggravated by the relatively low signal‐to‐noise ratio of EROS recordings. The number of free parameters, however, can be greatly reduced by prior information about the location and timing of “candidate” activity provided by other imaging methods, which narrows the confidence interval for the estimate of each remaining parameter. Thus, fMRI and ERPs can help reduce the number of parameters to be estimated from EROS by imposing spatial and temporal constraints, respectively, in the form of regions‐of‐interest (ROIs) and intervals‐of‐interest. In this sense, trimodal integration of fMRI, ERP, and EROS can overcome the respective limitations of each technique by capitalizing on their strengths, which clarifies both their complementarity and their integration. Additionally, unlike combined fMRI/ERP recording, EROS recording does not introduce artifacts into either fMRI or ERP data, nor do these modalities introduce artifacts into EROS data. However, adding sensors for ERPs and EROS within the MRI environment lengthens participant preparation and requires space within the MRI head coil. For this reason, the current proof‐of‐concept study involved simultaneous recordings of ERPs and EROS inside the scanner based on relatively small sensor sets, with correspondingly targeted research questions.
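A minimal sketch of how fMRI and ERP priors can shrink the EROS estimation problem, assuming EROS data stored as a trials × channels × samples array at the 39 Hz rate reported for these recordings. The channel indices, epoch, and time window are hypothetical placeholders (in practice, they would come from fMRI ROIs and ERP latencies), and the data are synthetic noise.

```python
import numpy as np

FS = 39.0  # EROS sampling rate in Hz, as reported for the optical recordings


def constrain_eros(eros, times, roi_channels, window):
    """Average EROS data over fMRI-derived ROI channels and an ERP-derived window.

    eros: array (n_trials, n_channels, n_samples); times: sample times in seconds.
    Returns one mean value per ROI channel (averaged over trials and window samples).
    """
    t_start, t_end = window
    in_window = (times >= t_start) & (times <= t_end)
    subset = eros[:, roi_channels, :][:, :, in_window]
    return subset.mean(axis=(0, 2))


rng = np.random.default_rng(0)
n_trials, n_channels = 40, 64  # hypothetical array dimensions
times = np.arange(-0.2, 1.0, 1.0 / FS)  # hypothetical epoch: -200 to 1,000 ms
eros = rng.normal(size=(n_trials, n_channels, times.size))  # synthetic noise only

roi = [3, 7, 12]      # HYPOTHETICAL channels overlying an fMRI-defined ROI
window = (0.3, 0.6)   # HYPOTHETICAL interval of interest from ERP latencies (s)
roi_means = constrain_eros(eros, times, roi, window)

# Unconstrained: one value per channel and sample; constrained: one per ROI channel.
n_free_full = n_channels * times.size
n_free_constrained = len(roi)
print(f"{n_free_full} values unconstrained vs {n_free_constrained} constrained")
```

Averaging within the constrained window also pools samples, which partially offsets the low signal‐to‐noise ratio of single EROS samples.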

Notably, to date, no study has simultaneously recorded and integrated all three of these techniques for the purpose of clarifying the link between spatial and temporal aspects of human brain function, and hence implementation of their simultaneous recording is the focus of the present approach. For the purpose of developing clear initial hypotheses and proof‐of‐concept evidence in the present study, we targeted the phasic/event‐related activity associated with stimulus onsets in a typical event‐related design. To this end, it is critical to show that the trimodal approach can help dissociate and individually describe brain activities that are relatively close in time (a few hundred milliseconds or less) and in space (a few centimeters or less). Hence, we employed the emotional oddball paradigm, for which previous data suggest that this should be the case. Most interestingly, however, the spatial information provided by scalp ERP activity in this paradigm (see below) is not readily consistent with that obtained in fMRI studies, so the relationship between ERP and fMRI data in this case remains uncertain, which makes application of the trimodal paradigm particularly interesting. In the next section, we discuss extant evidence supporting the logic presented here.

1.3. Neural correlates of emotion–cognition interactions

The emotional oddball task provides clear targets for expected spatial dissociations in brain response and temporal responses from scalp electrodes, making it an ideal candidate for testing simultaneous multimodal recordings (Moore, Shafer, Bakhtiari, Dolcos, & Singhal, 2019). This task has been studied with both fMRI (Fichtenholtz et al., 2004; Wang, Krishnan, et al., 2008; Wang, LaBar, et al., 2008; Wang, McCarthy, Song, & Labar, 2005; Yamasaki, LaBar, & McCarthy, 2002) and ERP (Briggs & Martin, 2009; Schluter & Bermeitinger, 2017; Singhal et al., 2012). Functional MRI research using the emotional oddball or similar tasks with distraction has shown greater response to goal‐relevant stimuli (targets) compared to distracters in dorsal executive system (DES) regions, such as the dorsolateral prefrontal and lateral parietal cortices (dlPFC, LPC), and greater response to distracters compared to targets in ventral affective system (VAS) regions,1 such as the ventrolateral prefrontal cortex (vlPFC) and the amygdala (Anticevic, Repovs, & Barch, 2010; Chuah et al., 2010; Denkova et al., 2010; Diaz et al., 2011; Dolcos, Iordan, & Dolcos, 2011; Dolcos & McCarthy, 2006; Fichtenholtz et al., 2004; Iordan, Dolcos, & Dolcos, 2013; Oei et al., 2012; Wang et al., 2005; Wang, Krishnan, et al., 2008; Wang, LaBar, et al., 2008; Yamasaki et al., 2002; reviewed in Iordan et al., 2013), in addition to activities in parietal regions. Notably, fMRI studies have specifically linked vlPFC responses with both general emotion processing and engagement of control mechanisms to cope with emotional distraction (Dolcos et al., 2011; Iordan et al., 2013; Ochsner, Silvers, & Buhle, 2012), suggesting an important role of these frontal regions in emotional distraction. 
More specifically, these findings have generally suggested that anterior vlPFC (e.g., Brodmann Areas [BAs] 45/47) is engaged during the initial impact of emotional distraction (Dolcos & McCarthy, 2006; Iordan et al., 2013), whereas more posterior vlPFC subregions (e.g., BAs 44/45) appear to be engaged during coping with emotional distraction (Dolcos, Kragel, Wang, & McCarthy, 2006). Together, this evidence points to extensive functional heterogeneity within the vlPFC, which makes it a prime target for examining activity with EROS, guided by known ERP responses to these processes.

However, the dynamics of the spatial dissociations identified with fMRI in PFC areas are not captured by ERP data from frontal electrodes; instead, sensitivity in the timing of responses is identified in the P300 response to targets (Fabiani et al., 1987; Katayama & Polich, 1999; Polich & Heine, 1996; Singhal et al., 2012) and in the late positive potential (LPP) response to emotional pictures (Dolcos & Cabeza, 2002; Schupp et al., 2004; Schupp, Junghofer, Weike, & Hamm, 2003; Singhal et al., 2012; Weinberg & Hajcak, 2010) at posterior electrode locations. This is an example in which, as alluded to above, neural signals appear in one modality with no apparent corresponding signal in another. Of course, it is entirely possible that ERP signals show maximum values at scalp locations distant from the cortical regions involved in their generation (in large part because of the orientation of these regions relative to the surface of the head). However, an important open question is whether the brain activity ultimately responsible for the BOLD signal in frontal areas (which is delayed by several seconds) actually occurs at the same time as the parietal ERP phenomena, or instead occurs at subsequent or even earlier points in time. Answering such questions may be of great importance for understanding the chain of brain events occurring in this particular paradigm, and for emotion–cognition interactions and brain function in general (Figure 1 illustrates how trimodal brain imaging data can be integrated using the present paradigm).

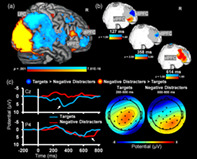

FIGURE 1.

Integration of multimodal data. First, signals from functional magnetic resonance imaging (fMRI), event‐related optical signals (EROS), and event‐related potentials (ERPs) can be extracted and analyzed individually in relation to cognitive processes, behaviors, or individual differences (top panel). Note the differences in the spatial and temporal scales of the fMRI, EROS, and ERP signals. For display purposes, the EROS data were downsampled by a factor of 4. Second, pairs of brain imaging modalities can be analyzed together to identify associations between brain signals (middle panel), and the combined information can be examined in relation to the activity of interest. Third, all three brain imaging modalities can be integrated (bottom panel), by linking the emergent information from the integrated pairs of modalities and/or by jointly analyzing spatiotemporal features across all three modalities. Illustrations of fMRI (A), ERP (C), and data integrations (AC, BC, and bottom panel) include adaptations from Moore et al. (2019), with permission. For consistency across imaging modalities, integration data from the right hemisphere are featured here at a lower threshold. Notably, the right hemisphere regions shown are homologous to the fMRI–ERP integration results identified in Moore et al. (2019)

1.4. Main goal and expected findings

The key goal of this project is to provide proof‐of‐concept evidence for the feasibility of implementing and validating a novel protocol for trimodal brain imaging that provides a spatiotemporal description of brain events related to emotional distraction. This involved successful simultaneous acquisition of fMRI, ERP, and EROS data, as well as thorough data cleaning, so that artifacts inherent to the multimodal acquisition could be removed and targeted effects assessed. The dorso‐ventral dissociations identified in the lateral PFC mentioned above, along with the evidence highlighting the role of the vlPFC, guided the placement of the EROS sensors (see Section 2). Consistent with the expected feasibility of trimodal recordings and integration, we predicted that (a) fMRI data would effectively capture the established dorso‐ventral spatial dissociations in the lateral PFC, as well as in parietal areas, in response to targets versus emotional distracters; (b) ERPs would identify timing and functional differences associated with the processing of targets versus emotional distracters, most evident at parietal scalp locations (P300 and LPP, respectively) but not at frontal locations; and (c) EROS would reveal dorso‐frontal activity with timing similar to that of the P300 in response to targets, and ventro‐frontal activity with timing similar to that of the LPP in response to emotional distracters. Finally, (d) we also sought to illustrate how tracking trial‐to‐trial variations in the latency of ERP responses could improve the EROS data, emphasizing the relationships between the phenomena observed with these two methods and the advantage of their simultaneous recording for EROS analysis.
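Prediction (d) can be sketched as latency‐corrected averaging: single‐trial ERP peak latencies are estimated, and each simultaneously recorded EROS trial is shifted so that these latencies align before averaging. This is a minimal illustration on synthetic data, assuming a P300‐like peak within a 200–700 ms window, ERP data at 500 Hz, and EROS data at 39 Hz. The function names are hypothetical, and this is not necessarily the procedure used in the study.

```python
import numpy as np


def single_trial_peak_latency(erp, erp_times, window):
    """Latency (s) of the maximum ERP amplitude within `window` for each trial.

    erp: array (n_trials, n_samples); erp_times: sample times in seconds.
    """
    mask = (erp_times >= window[0]) & (erp_times <= window[1])
    peak_idx = np.argmax(erp[:, mask], axis=1)
    return erp_times[mask][peak_idx]


def latency_corrected_average(eros, eros_fs, latencies):
    """Align EROS trials (n_trials, n_samples) on their ERP latencies, then average.

    Each trial is shifted by its deviation from the median latency, rounded to
    whole EROS samples. np.roll wraps samples around the epoch edges, which is
    tolerable only when shifts are small relative to the epoch.
    """
    shifts = np.round((np.median(latencies) - latencies) * eros_fs).astype(int)
    aligned = np.stack([np.roll(trial, shift) for trial, shift in zip(eros, shifts)])
    return aligned.mean(axis=0)


def gaussian_bumps(times, centers, width):
    """One Gaussian peak per trial, centered at the given latencies (synthetic data)."""
    return np.exp(-((times[None, :] - centers[:, None]) ** 2) / (2 * width**2))


rng = np.random.default_rng(1)
erp_fs, eros_fs = 500.0, 39.0
erp_times = np.arange(-0.2, 1.0, 1 / erp_fs)
eros_times = np.arange(-0.2, 1.0, 1 / eros_fs)
true_latency = 0.4 + rng.uniform(-0.1, 0.1, size=50)  # jittered P300-like latencies

erp = gaussian_bumps(erp_times, true_latency, width=0.05)
eros = gaussian_bumps(eros_times, true_latency, width=0.04)

estimated = single_trial_peak_latency(erp, erp_times, window=(0.2, 0.7))
naive = eros.mean(axis=0)
corrected = latency_corrected_average(eros, eros_fs, estimated)
print(f"peak of naive average: {naive.max():.2f}; latency-corrected: {corrected.max():.2f}")
```

Because the jitter is estimated from the ERP, which has far finer temporal sampling than EROS, the correction is only possible when both signals are recorded on the same trials, which is the point of prediction (d).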

2. METHODS

Trimodal and bimodal (fMRI–ERP, EROS–ERP) recordings were collected to validate the expected findings in each individual modality, based on findings from the extant literature (including our own work) and from preceding unimodal sessions. The primary focus is on data resulting from implementing the proposed trimodal brain imaging protocol, which built on the procedures used to obtain bimodal recordings. These protocols were optimized for participant and equipment safety as well as data quality.

2.1. Participants

Given that the purpose of the present study was to provide proof‐of‐principle evidence for the trimodal recording approach, rather than to generate inferences about brain–cognition relationships based on multimodal data (e.g., Moore et al., 2019), only a small number of participants was included. For the present pilot investigation, data were collected from 13 healthy young adults (age range: 18–36 years; 7 females). Of these, eight participated in the trimodal fMRI–EROS–ERP sessions and five participated in preceding bimodal sessions (two in fMRI–ERP sessions and three in EROS–ERP sessions). Accordingly, the main analyses and results reported in the main text focus on the trimodal data, whereas aspects of the bimodal data are provided in Supplementary Materials. Behavioral data from one trimodal participant were excluded due to a technical issue during recording. The experimental protocols were approved for ethical treatment of human participants by the Office for the Protection of Research Subjects at the University of Illinois, and all participants provided written informed consent.

2.2. The emotional oddball task

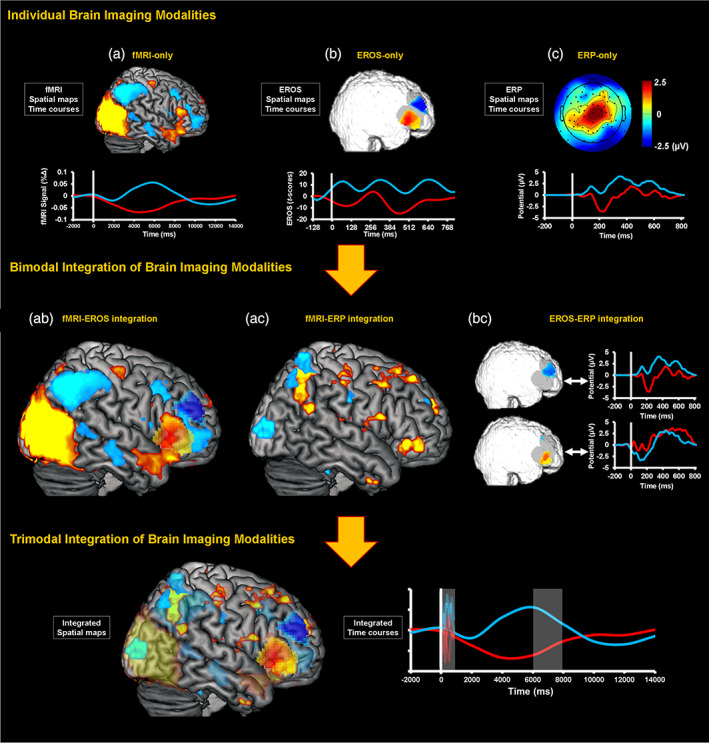

Participants underwent simultaneous recording of brain imaging data while performing an emotional oddball task (Figure 2; Moore et al., 2019; Singhal et al., 2012). Participants detected “oddball” target stimuli (circles) presented in a string of standard (scrambled) and distracter (emotional and neutral) stimuli (squares). There were 595 scrambled image trials, 60 target trials, 45 emotional (40 negative, 5 positive) distracter trials, and 40 neutral distracter trials. Each stimulus was displayed for 1,250 ms, and a fixation cross was presented for 750 ms during the interstimulus intervals. The infrequent distracter stimuli (negative and neutral pictures) were selected from the International Affective Picture System (IAPS; Lang, Bradley, & Cuthbert, 2008) based on normative ratings for valence and arousal, and were supplemented with in‐house pictures used in previous studies (Dolcos & McCarthy, 2006; Singhal et al., 2012; Wang et al., 2005). The small number of positive distracters was included to provide anchors for comparison with negative and neutral distracters and to avoid the induction of longer‐lasting negative states. For the purposes of analysis, targets were compared with negative distracters only, to assess differences in brain activity linked to the two main aspects of processing engaged by the oddball task: goal‐relevant cognitive processing versus goal‐irrelevant emotional distraction (i.e., oddball targets vs. negative distracters, respectively).

FIGURE 2.

Diagram of the emotional oddball task. Participants detected rare “oddball” target stimuli presented in a string of standard (scrambled) and distracter (emotional and neutral) pictures. Participants pressed a button with their right index finger in response to all target stimuli, and with their left index finger in response to all frequent (i.e., scrambled pictures) and infrequent (i.e., emotional and neutral pictures) stimuli. Adapted from Moore et al. (2019), with permission
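The trial counts and timings given for the emotional oddball task imply some simple bookkeeping, sketched below together with the 2,000 ms fMRI repetition time (TR) reported in Section 2.3.1. The total duration assumes that trials ran back to back with no pauses between runs, which this section does not state.

```python
# Trial counts and timing reported for the emotional oddball task.
trial_counts = {"scrambled": 595, "target": 60, "negative": 40, "positive": 5, "neutral": 40}
STIMULUS_MS, FIXATION_MS, TR_MS = 1250, 750, 2000

n_trials = sum(trial_counts.values())
trial_ms = STIMULUS_MS + FIXATION_MS
# ASSUMPTION: trials run back to back with no breaks between runs.
total_min = n_trials * trial_ms / 60_000
proportion = {name: count / n_trials for name, count in trial_counts.items()}
n_volumes = n_trials * trial_ms // TR_MS

print(f"{n_trials} trials, {trial_ms} ms each, ~{total_min:.1f} min, {n_volumes} volumes")
print(f"target proportion: {proportion['target']:.3f}")
```

Under that assumption, the 740 trials last about 24.7 minutes, each 2,000 ms trial (1,250 ms stimulus plus 750 ms fixation) spans one 2,000 ms TR, and targets make up about 8% of trials, which is what makes them "oddballs".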

2.3. Brain imaging procedures for data acquisition, preprocessing, and analysis

Trimodal imaging was preceded by unimodal and bimodal imaging sessions. Procedures for the latter are described first below, because their implementation was essential for trimodal imaging.

2.3.1. Bimodal fMRI–ERP acquisition

Functional MRI scanning was conducted using a 3 Tesla Siemens MAGNETOM Trio scanner. Following the sagittal localizer and the 3D MPRAGE anatomical images (repetition time [TR] = 2,000 ms; inversion time [TI] = 900 ms; echo time [TE] = 2.32 ms; flip angle 9°; field of view = 230 × 230 mm²; volume size = 192 slices; voxel size = 0.9 × 0.9 × 0.9 mm³), fMRI data consisting of a series of T2*‐weighted images were acquired axially using an echo‐planar imaging sequence (TR = 2,000 ms; TE = 25 ms; field of view = 240 × 208 mm²; volume size = 25 slices; voxel size = 2.6 × 2.6 × 4.4 mm³; GRAPPA factor of 2) with a Siemens 32‐channel head coil. ERP data were acquired using a Brain Products 32‐electrode cap and BrainAmp MR Plus (Supplementary Figure 1, top panels) at a sampling rate of 5 kHz. The built‐in reference electrode was located at Fz, the ground electrode at AFz, and the electrooculogram (EOG) electrodes below the right eye and at the outer canthi of the left and right eyes. An electrocardiogram (ECG) electrode was placed on the participant's back for later correction of ballistocardiogram artifacts in the ERP data. The analytical procedures for the fMRI data collected during the bimodal sessions were the same as those for the trimodal sessions (see below), and the procedures for the ERP data from these fMRI–ERP sessions are described in the Supplementary Materials.
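The ECG channel supports ballistocardiogram correction. One widely used approach, which is not necessarily the one applied here (the actual ERP procedures are described in the Supplementary Materials), is average artifact subtraction: EEG segments time‐locked to ECG R‐peaks are averaged across neighboring heartbeats to form a template, which is then subtracted from each heartbeat. The sketch below assumes R‐peaks have already been detected, uses synthetic data at a hypothetical 500 Hz rate, and uses a 21‐beat moving template as an illustrative choice.

```python
import numpy as np


def average_artifact_subtraction(eeg, r_peaks, pre, post, n_avg=21):
    """Remove a heartbeat-locked artifact by subtracting a moving-average template.

    eeg: array (n_channels, n_samples); r_peaks: sample indices of ECG R-peaks.
    pre/post: samples before/after each R-peak in the artifact window; windows of
    consecutive beats are assumed not to overlap. n_avg: beats per template.
    """
    cleaned = eeg.copy()
    n_samples = eeg.shape[1]
    beats = [p for p in r_peaks if p - pre >= 0 and p + post <= n_samples]
    segments = np.stack([eeg[:, p - pre:p + post] for p in beats])  # beats x ch x time
    half = n_avg // 2
    for i, p in enumerate(beats):
        lo, hi = max(0, i - half), min(len(beats), i + half + 1)
        cleaned[:, p - pre:p + post] -= segments[lo:hi].mean(axis=0)
    return cleaned


# Synthetic demonstration: noise-like "brain" signal plus a fixed heartbeat artifact.
rng = np.random.default_rng(2)
fs = 500
n = 60 * fs
brain = rng.normal(0.0, 5.0, size=(2, n))                   # two channels, microvolts
r_peaks = np.arange(fs, n - fs, int(round(0.87 * fs)))       # hypothetical ~69 bpm
pre, post = 50, 300
artifact = 40.0 * np.exp(-((np.arange(pre + post) - 150) ** 2) / (2 * 30.0**2))
eeg = brain.copy()
for p in r_peaks:
    eeg[:, p - pre:p + post] += artifact

cleaned = average_artifact_subtraction(eeg, r_peaks, pre, post)
rms_before = np.sqrt(np.mean((eeg - brain) ** 2))
rms_after = np.sqrt(np.mean((cleaned - brain) ** 2))
print(f"residual artifact RMS: {rms_before:.1f} -> {rms_after:.1f} microvolts")
```

Averaging over neighboring beats suppresses activity that is not time‐locked to the heartbeat, so the template mainly captures the artifact; real ballistocardiogram artifacts vary from beat to beat, which is why a moving rather than a global template is typically used.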

2.3.2. Bimodal EROS–ERP acquisition

EROS data were collected using ISS Imagent frequency‐domain oximeters (http://www.iss.com/biomedical/instruments/imagent.html) and source fibers (64 laser diodes) emitting light at a wavelength of 830 nm. Light was detected by 24 photomultiplier tubes, and both amplitude and delay were sampled at 39 Hz. Optical fibers were placed against the scalp using a custom‐built helmet designed for full‐head coverage (Supplementary Figure 1, bottom panels). Application involved typical procedures: parting the hair beneath each optical fiber location and securing the fiber in place at each location of the montage. ERP data were collected using 10 drop‐electrodes at a sampling rate of 1 kHz using the BrainAmp MR Plus. The right mastoid was used as the online reference (the left mastoid was also recorded), and the ground electrode was placed near the nasion. EOG electrodes were placed below the right eye and at the outer canthi of the left and right eyes. Electrode placements for ERP data were based on available locations in the EROS helmet, within approximately 2 cm of standard extended 10–20 system locations: FC1, FC2, FC5, FC6, C3, C4, CP1, CP2, P3, and P4. ERP data were downsampled to 500 Hz and re‐referenced to an average mastoid reference during preprocessing. For the analysis of EROS data, delay data were processed using the same procedures as those described for the trimodal sessions (see below). For ERP data, consistent with the fMRI–ERP analysis (see Supplementary Materials), average ERPs were computed time‐locked to the onset of the targets and distracters at centroparietal electrodes (CP1, CP2), after excluding trials with amplitudes exceeding ±100 μV.
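Two of the ERP preprocessing steps described above can be written compactly. Because the data were recorded against the right mastoid (RM) with the left mastoid (LM) also recorded, subtracting half of the LM channel from every channel yields an averaged‐mastoid reference: (X − RM) − (LM − RM)/2 = X − (LM + RM)/2. The sketch below shows this step and the ±100 μV epoch rejection before averaging. Array layouts and function names are assumptions, and downsampling (which should include anti‐alias filtering) is omitted.

```python
import numpy as np


def rereference_to_averaged_mastoids(data, lm_index):
    """Re-reference data from a right-mastoid to an averaged-mastoid reference.

    data: array (n_channels, n_samples) recorded against the right mastoid (RM);
    row `lm_index` holds the left mastoid (LM - RM). Subtracting half of that row
    gives X - (LM + RM) / 2 for every channel X.
    """
    return data - data[lm_index] / 2.0


def reject_and_average(epochs, limit_uv=100.0):
    """Drop epochs exceeding +/- limit_uv on any channel, then average the rest.

    epochs: array (n_epochs, n_channels, n_samples) in microvolts, e.g. CP1 and CP2
    epochs time-locked to stimulus onset. Returns (average, number of epochs kept).
    """
    keep = np.all(np.abs(epochs) <= limit_uv, axis=(1, 2))
    return epochs[keep].mean(axis=0), int(keep.sum())


# Worked check with absolute potentials X = 10, LM = 4, RM = 2 (microvolts):
# recorded X - RM = 8 and LM - RM = 2, so the averaged-mastoid value is 10 - 3 = 7.
recorded = np.array([[8.0], [2.0]])
print(rereference_to_averaged_mastoids(recorded, lm_index=1)[0, 0])  # 7.0

epochs = np.zeros((3, 2, 5))
epochs[1, 0, 2] = 150.0  # one epoch with an out-of-range sample
average, n_kept = reject_and_average(epochs)
print(n_kept)  # 2
```

Re‐referencing offline in this way avoids biasing activity toward the hemisphere of the online reference, which is the usual rationale for an averaged‐mastoid reference.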

2.3.3. Trimodal fMRI–EROS–ERP acquisition

The trimodal data sample was collected in two stages (each including four participants), corresponding to the development of the project and the upgrade of the local MRI scanning facilities. The first stage involved acquisition using focused optical arrays in a patch format to provide targeted coverage over lateral PFC, while minimizing adjustments to the MR‐compatible ERP equipment. The second stage coincided with the upgrade of the MRI scanner from a 3 Tesla Siemens Trio to a 3 Tesla Siemens Prisma; there were no compatibility issues between the two scanners. For this stage, EROS coverage was also expanded from the patch format to a helmet format that covered the same PFC areas as well as parietal cortex, with the ultimate goal of attaining full scalp coverage. The focus here is on data from frontal locations, obtained from all eight participants who underwent trimodal recordings.

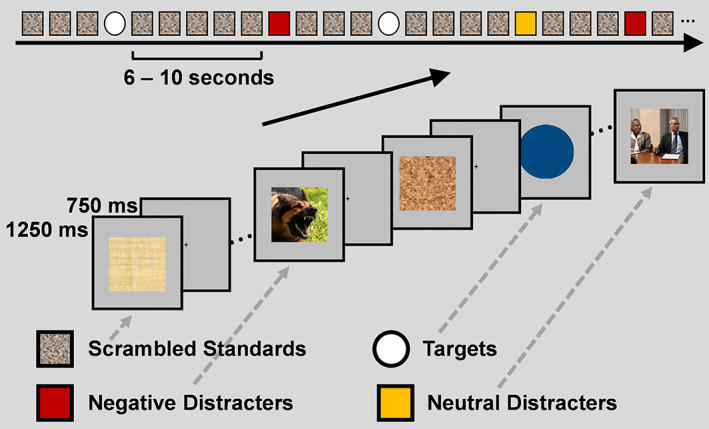

For the initial four participants who completed the trimodal recordings in the 3 Tesla Siemens Trio scanner, fMRI data were acquired and processed using the same parameters described for bimodal fMRI–ERP, with the exception of TE, which was changed to 26 ms, in association with the use of the RAPID Biomedical one‐channel transmit/eight‐channel receive head coil for collection of trimodal data. EROS data were collected using custom patches made of rubber and elastic, which held plastic optical fiber housings and prisms and were secured by Velcro straps across the head. Similar to the approach used in a previous study combining optical imaging with transcranial magnetic stimulation (Parks et al., 2012), the prisms allowed the optical fibers to be placed tangentially to the scalp surface under the electrode cap (Figure 3a). The placement of the patches was guided by fMRI findings regarding functional dissociations identified in the lateral PFC in studies of emotion–cognition interactions (Figure 3b).

FIGURE 3.

(a) Diagram of the trimodal imaging equipment with frontal event‐related optical signals (EROS) coverage. In the functional magnetic resonance imaging (fMRI) scanner, EROS, and event‐related potential (ERP) data were recorded using bilateral patches (top left), used to apply optical fibers over lateral PFC, and a MR‐compatible electrode cap (top right). The optical detector fibers were applied to the scalp using prisms, to allow for tangential orientation such that the electrode cap could be placed over the patch, and the optical emitter fibers were threaded through the mesh of the electrode cap. Optical fibers were connected to the ISS Imagent unit placed in the scanner's control room, which input to the acquisition computer running optical recording software (bottom left). The ERP signal was acquired using the Brain Products BrainAmp MR Plus and USB2 Adapter, sending the signal to the acquisition laptop. The ERP acquisition also recorded the clock signal from the MR scanner via the SyncBox, and TR and event markers via a parallel port cable bringing signals from the MRI scanner and stimulus computer (bottom right). For the trimodal helmet version, the optical arrays covered the same locations as well as parietal cortex (not shown), and curved optical fibers replaced the prisms. (b) Dorso‐ventral dissociation of brain activation in response to emotional distraction guiding the placement of EROS patches. Peak activation voxels from ventral (VAS) areas showing increased (red) and dorsal (DES) areas showing decreased (blue) activity to negative distraction are displayed on anatomical images, based on their locations identified across fMRI studies of emotional distraction, including with emotional oddball tasks (reviewed in Iordan et al., 2013). The white diamonds and triangles mark peak voxels from areas involved in coping with emotional distraction. The line graphs show the typical time course of activity in dorsal (dlPFC) and ventral (vlPFC) regions. 
Emotional distraction had the strongest disrupting effect on dlPFC activity and the strongest enhancing effect on vlPFC activity. These regions were therefore targeted for EROS recording using the lateral PFC patches shown in the left panel. The white ovals illustrate the relative location of the patches over the lateral PFC. The gray boxes above the x‐axes indicate the onset and duration of the working memory task's phases: memoranda, distracters, and probes. L, left; R, right; dlPFC, dorsolateral prefrontal cortex; vlPFC, ventrolateral PFC. Adapted from Dolcos and McCarthy (2006) (Copyright 2006 Society for Neuroscience) and Iordan et al. (2013), with permission

The patches covered dlPFC and vlPFC areas bilaterally, which in the 10–20 system for electroencephalography (EEG) correspond to the locations of the F3/4 and F7/8 electrodes, respectively. Source optical fibers were threaded through the mesh of the electrode cap into small holes in the rubber patches and held in place using adhesive putty. EROS data were collected with an ISS frequency‐domain oximeter; the sources were 16 laser diodes emitting at a wavelength of 830 nm. Light was detected by four photomultiplier tubes, and both amplitude and delay were sampled at 39 Hz (Mathewson et al., 2014; Tse et al., 2007). The ERP equipment was the same Brain Products system, and for these sessions the electrodes located over the optical patches (F3, F4, F7, F8, FC5, FC6) were moved and secured to unused posterior locations in the cap (PO1, PO2, POz, Oz, P1, P2), based on safety‐related advice from Brain Products. Notably, to ensure safety during recording, the relocated electrodes were still prepared with electrode gel and kept below the suggested impedance threshold (i.e., 20 kΩ).

For the remaining four participants, who completed the trimodal recording in the 3 Tesla Siemens Prisma scanner, fMRI data were also acquired and processed using the same parameters described for bimodal fMRI–ERP, except that TE was 30 ms and the field of view was 240 × 240 mm². The 3D MPRAGE anatomical acquisition was also adjusted (TR = 2,300 ms; flip angle = 8°; field of view = 240 × 240 mm²). The optical arrays followed the same design as the patches described above but were built into a full foam helmet, which allowed the EEG electrodes to remain in place at frontal locations. More specifically, the full set of electrodes was removed from the net cap and sewn into the corresponding locations in a foam helmet consistent with the general design shown in Supplementary Figure 1. Furthermore, two additional optical detectors were placed in each frontal array to increase coverage of both dorsal and ventral regions of interest. Finally, parietal optical patches were also included in the helmet but were not of primary interest for the current proof‐of‐concept analyses.

ERP data from all trimodal sessions were processed using a procedure consistent with the method for bimodal fMRI–ERP, and the electrodes moved to posterior locations during the optical patch sessions were excluded from the calculation of the average reference. This was done to avoid over‐weighting the average reference with posterior scalp activity. Importantly, because the expected ERP effects peak at posterior locations, moving the frontal electrodes that overlapped with the optical arrays and excluding them from analyses did not compromise acquisition of the data of interest.
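As a minimal illustration of the re‐referencing step described above, the Python sketch below computes an average reference from all channels except an exclusion list. The function name, the channels × samples array layout, and the choice to still re‐reference the excluded channels (rather than drop them) are assumptions for illustration; the actual bimodal fMRI–ERP pipeline is not reproduced here.

```python
import numpy as np


def average_reference(data, channel_names, exclude=()):
    """Re-reference EEG data (channels x samples) to the mean of a channel subset.

    Hypothetical helper: channels named in `exclude` (e.g., electrodes relocated
    to posterior sites during optical-patch sessions) do not contribute to the
    reference, but are still re-referenced so the array keeps its shape.
    """
    data = np.asarray(data, dtype=float)
    excluded = set(exclude)
    keep = [i for i, name in enumerate(channel_names) if name not in excluded]
    if not keep:
        raise ValueError("no channels left to form the average reference")
    reference = data[keep].mean(axis=0)  # one reference value per sample
    return data - reference              # subtract from every channel


# Usage with made-up values: PO1 holds a relocated frontal electrode.
eeg = np.array([[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]])
rereferenced = average_reference(eeg, ["Cz", "Pz", "PO1"], exclude=["PO1"])
```

Because the excluded channel never enters the mean, a large posterior signal on PO1 leaves the reference for Cz and Pz unchanged, which is the over‐weighting the text aims to avoid.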

2.4. Brain imaging procedures for data preprocessing and analysis

fMRI data preprocessing and analyses were performed using SPM12 (Wellcome Department of Cognitive Neurology, London, UK). First, fMRI data were corrected for differences in acquisition time between slices for each image. Second, each functional image was spatially realigned to the first image of its run to correct for head movement and was co‐registered to the participant's high‐resolution 3D anatomical image. Third, the functional images were transformed into the standard anatomical space defined by the Montreal Neurological Institute (MNI) template implemented in SPM12. Finally, the normalized functional images were spatially smoothed with an 8 mm full‐width‐at‐half‐maximum Gaussian kernel to increase the signal‐to‐noise ratio. Preprocessed functional images were submitted to fixed‐effects t‐test analyses using an event‐related design in the general linear model (GLM) framework, in which the onsets of target and distracter stimuli were convolved with a canonical hemodynamic response function and included as the regressors of interest. Event durations were modeled as the stimulus presentation time (i.e., 1,250 ms) using a boxcar function. To control for motion‐related artifacts, the six motion parameters estimated during spatial realignment for each run were included in the GLM as regressors of no interest. These analyses generated contrast images identifying differential BOLD activation for each event of interest relative to baseline, as well as differences in activation between events of interest. In the present analyses, we focused on two main contrasts that identify brain regions whose activity was sensitive to the manipulation of stimulus type: targets > negative distracters and negative distracters > targets.
Given the proof‐of‐concept nature of the study, a voxel‐wise intensity threshold of p < .001, uncorrected for multiple comparisons, was combined with a cluster extent threshold of p < .05, family‐wise error (FWE) corrected for multiple comparisons; activations surviving intensity thresholds corrected for multiple comparisons are also reported.
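The event‐related regressor described above (a boxcar of stimulus duration convolved with a canonical HRF) can be sketched in Python as follows. This is not SPM12 code: it approximates SPM's canonical HRF as a difference of two unit‐scale gamma densities (shape parameters 6 and 16, undershoot ratio 1/6), samples the convolved signal at the start of each scan rather than at SPM's reference slice, and uses a placeholder TR of 2 s because the TR is not restated in this section. All function names are hypothetical.

```python
import math

import numpy as np


def double_gamma_hrf(dt, length_s=32.0):
    """Difference-of-gammas HRF sampled every `dt` seconds, normalized to sum 1."""
    t = np.arange(0.0, length_s, dt)

    def gamma_pdf(x, shape):  # gamma density with unit scale (seconds)
        return x ** (shape - 1.0) * np.exp(-x) / math.gamma(shape)

    hrf = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return hrf / hrf.sum()


def event_regressor(onsets_s, duration_s, n_scans, tr_s, dt=0.1):
    """Boxcar of each event's duration convolved with the HRF, one value per scan."""
    n_fine = int(round(n_scans * tr_s / dt))
    boxcar = np.zeros(n_fine)
    for onset in onsets_s:
        start = int(round(onset / dt))
        stop = int(round((onset + duration_s) / dt))
        boxcar[start:stop] = 1.0  # stimulus on screen
    response = np.convolve(boxcar, double_gamma_hrf(dt))[:n_fine]
    scan_idx = np.round(np.arange(n_scans) * tr_s / dt).astype(int)
    return response[scan_idx]  # sampled at each scan's start (assumption)


# Usage: one 1,250 ms stimulus at 10 s; TR = 2 s is a placeholder value.
regressor = event_regressor([10.0], duration_s=1.25, n_scans=20, tr_s=2.0)
```

Building the boxcar on a fine time grid before downsampling keeps the 1,250 ms duration from being rounded to whole scans; the modeled response peaks roughly 5 to 6 s after stimulus onset.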

For EROS, phase delay data were corrected off‐line for phase wrapping, pulse artifacts were removed, and the data were high‐pass filtered at 0.1 Hz and low‐pass filtered at 8 Hz (Gratton & Fabiani, 2003; Tse et al., 2007). Optical fiber locations were digitized in 3D relative to three fiducial points (the nasion and the left and right preauricular points) on each participant, using a Polhemus "3Space" 3D digitizer. The same fiducial points were then marked on the MR anatomical images, and the two were co‐registered using a recently developed optimized co‐registration package (Chiarelli, Maclin, Low, Fabiani, & Gratton, 2015). The optical source and detector locations were then transformed to MNI space so that all participants shared a common space. The analysis software OPT‐3D (Gratton, 2000) was used to estimate optical paths, construct activity images, and perform statistical tests, time‐locked to the onset of the targets and negative distracters. Statistical maps of the optical phase delay signal were generated for each data point by 3D reconstruction of the z‐scores on a template brain in MNI space, with an 8 mm spatial filter, based on the location information from the co‐registration procedure. Channels with an SD of the delay greater than 100 ps were removed to limit noise.
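The delay preprocessing and channel screening above can be sketched as follows. Several details are assumptions rather than reported values: the light modulation frequency (110 MHz below is a placeholder), the Butterworth band‐pass design and its order, and computing the 100 ps SD criterion on the filtered delay. Pulse artifact removal and the OPT‐3D reconstruction are not reproduced, and the function names are hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS_HZ = 39.0  # optical sampling rate reported above


def preprocess_delay(phase_rad, mod_freq_hz, fs=FS_HZ, band_hz=(0.1, 8.0), order=2):
    """Unwrap phase (channels x samples, radians), convert to delay in ps, band-pass."""
    unwrapped = np.unwrap(phase_rad, axis=-1)                  # remove 2*pi jumps
    delay_ps = unwrapped / (2.0 * np.pi * mod_freq_hz) * 1e12  # phase -> time delay
    sos = butter(order, band_hz, btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, delay_ps, axis=-1)                 # zero-phase filtering


def good_channels(delay_ps, max_sd_ps=100.0):
    """True for channels whose delay SD does not exceed the noise criterion."""
    return np.std(delay_ps, axis=-1) <= max_sd_ps


# Usage with simulated phase data: channel 2 is much noisier than the others.
rng = np.random.default_rng(0)
phase = rng.normal(0.0, 1e-3, size=(3, 39 * 60))
phase[2] *= 300.0
delay = preprocess_delay(phase, mod_freq_hz=110e6)
keep = good_channels(delay)
```

Forward‐backward (zero‐phase) filtering is used in the sketch so that band‐passing does not shift response latencies, which matters when optical responses are compared with ERP timing.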

For ERP data, after trials with large amplitudes (exceeding ±100 μV) were excluded, each participant's data set was confirmed to have at least 30 trials per condition of interest (targets: M = 42.88, SD = 9.11; negative distracters: M = 33.00, SD = 4.07), with the exception of one participant who had 25 negative distracter trials (Cohen & Polich, 1997; Vilberg, Moosavi, & Rugg, 2006). Additionally, data from targeted electrodes were filtered using a wavelet decomposition approach (Ahmadi & Quiroga, 2013), a common technique for enhancing the extraction of task‐related activity from average and single‐trial ERP data. The wavelet decomposition was implemented using freely available automated software in MATLAB (Ahmadi & Quiroga, 2013), and was visually checked and manually adjusted in cases of poor decomposition (e.g., overfiltering). ERP analyses targeted central and parietal midline electrodes within expected time windows, consistent with those identified in previous studies using similar paradigms (Katayama & Polich, 1999; Singhal et al., 2012). Specifically, ERP analysis first involved a within‐participant analysis of variance (ANOVA), with factors of electrode (Cz, Pz), time window (250–500 ms, 550–800 ms), and condition (targets, negative distracters), to dissociate P300 and LPP sensitivity to targets and emotional distracters, respectively (Hajcak, MacNamara, & Olvet, 2010; Katayama & Polich, 1999; Moore et al., 2019; Singhal et al., 2012; Warbrick et al., 2009). ANOVA results are reported with the Greenhouse–Geisser correction. Planned comparisons also included two‐tailed paired t‐tests on each participant's average ERP amplitudes, with a threshold of p < .05.
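The trial screening and window‐based amplitude measures described above can be sketched in Python as follows. Applying the ±100 μV criterion to any channel and sample of an epoch, issuing a warning (rather than excluding a participant) when fewer than 30 trials remain, the trials × channels × samples array layout, and the function names are all assumptions; the wavelet denoising step is not reproduced.

```python
import warnings

import numpy as np

WINDOWS_MS = {"P300": (250.0, 500.0), "LPP": (550.0, 800.0)}
MIN_TRIALS = 30
LIMIT_UV = 100.0


def reject_epochs(epochs_uv, limit_uv=LIMIT_UV):
    """Drop trials (trials x channels x samples) exceeding +/-limit anywhere."""
    epochs_uv = np.asarray(epochs_uv, dtype=float)
    ok = np.all(np.abs(epochs_uv) <= limit_uv, axis=(1, 2))
    return epochs_uv[ok]


def window_means(epochs_uv, times_ms):
    """Average retained trials, then take the mean amplitude in each window."""
    clean = reject_epochs(epochs_uv)
    if clean.shape[0] < MIN_TRIALS:
        warnings.warn(f"only {clean.shape[0]} trials left after rejection")
    erp = clean.mean(axis=0)  # channels x samples
    out = {}
    for name, (start, stop) in WINDOWS_MS.items():
        mask = (times_ms >= start) & (times_ms <= stop)
        out[name] = erp[:, mask].mean(axis=1)  # one value per channel
    return out, clean.shape[0]


# Usage with synthetic data: 40 trials, 2 channels (e.g., Cz, Pz), 5 artifacts.
times = np.arange(-100.0, 900.0, 4.0)
epochs = np.full((40, 2, times.size), 5.0)
epochs[:5, 0, 10] = 150.0
amplitudes, n_kept = window_means(epochs, times)
```

The per‐participant window means returned here are the kind of values that would enter the electrode × time window × condition ANOVA and the paired t‐tests.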

Finally, spatial information from fMRI and temporal information from ERPs were integrated to inform the analysis of the EROS data. Namely, the fMRI contrast maps were used to identify the peak coordinate within the vlPFC showing sensitivity to emotional distracters compared to targets. A 10 mm spherical mask was then defined around this coordinate and used as an ROI for targeted extraction of the EROS signal. Additionally, the ERP peak latencies for the P300 at electrode Pz were used to adjust the time‐locking of the EROS data trial by trial. We expected that, if the spatial and temporal information from fMRI and ERPs converges with or complements the activity captured by EROS, using this information to inform the EROS analysis would improve signal extraction.
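The spherical ROI step can be illustrated with a short Python sketch that marks every voxel whose center lies within 10 mm of a peak MNI coordinate. It assumes a volume defined by a 4 × 4 voxel‐to‐MNI affine; the 2 mm MNI152 grid (91 × 109 × 91 voxels) in the example is an assumed choice, the function name is hypothetical, and the ROI handling inside OPT‐3D is not reproduced. The example center is the anterior vlPFC peak reported in the Results (x = 32, y = 32, z = −16).

```python
import numpy as np


def sphere_mask(shape, affine, center_mm, radius_mm=10.0):
    """Boolean mask of voxels whose centers lie within radius_mm of center_mm."""
    ijk = np.indices(shape).reshape(3, -1)                      # 3 x N voxel indices
    homogeneous = np.vstack([ijk, np.ones((1, ijk.shape[1]))])  # 4 x N
    xyz = (affine @ homogeneous)[:3]                            # 3 x N, in mm
    center = np.asarray(center_mm, dtype=float)[:, None]
    distance = np.linalg.norm(xyz - center, axis=0)
    return (distance <= radius_mm).reshape(shape)


# Assumed 2 mm MNI152 grid: voxel (45, 63, 36) maps to MNI (0, 0, 0).
MNI_2MM_AFFINE = np.array([
    [-2.0, 0.0, 0.0, 90.0],
    [0.0, 2.0, 0.0, -126.0],
    [0.0, 0.0, 2.0, -72.0],
    [0.0, 0.0, 0.0, 1.0],
])
roi = sphere_mask((91, 109, 91), MNI_2MM_AFFINE, center_mm=(32.0, 32.0, -16.0))
```

Working in millimeters through the affine, rather than in voxel units, keeps the 10 mm radius independent of the grid resolution.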

3. RESULTS

3.1. Behavioral results

Behaviorally, trimodal participants showed high accuracy for every stimulus category. Mean accuracy was 94.76% (SD = 2.24%) for targets and over 95% in the distracter and standard conditions (neutral distracters: M = 98.93%, SD = 1.97%; negative distracters: M = 98.57%, SD = 1.97%; positive distracters: M = 97.14%, SD = 7.56%; standards: M = 99.69%, SD = 0.36%). Bimodal participants showed a consistent pattern (see Supplementary Materials).

3.2. Brain imaging results

3.2.1. Proof‐of‐concept evidence for simultaneous trimodal imaging

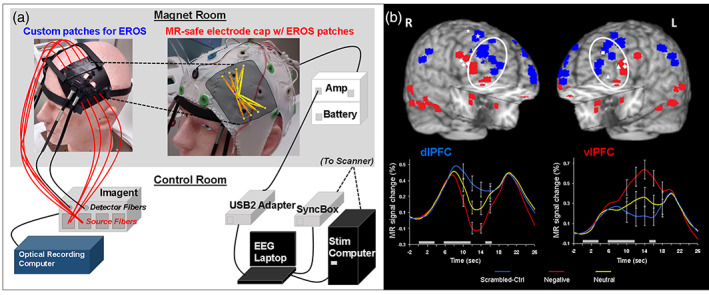

Following successful artifact removal (see Supplementary Figure 2), analyses of the data from the trimodal sessions confirmed our predictions, supporting the feasibility of using these methods simultaneously. The fMRI, EROS, and ERP data captured the expected prefrontal and parietal cortical responses, consistent with spatial and temporal evidence from unimodal recordings. Namely, results from the trimodal sample (n = 8) showed (1) in the fMRI data, the expected dorso‐ventral dissociation between responses to targets and distracters in the lateral PFC; (2) in the EROS data, a similar spatial dissociation between dlPFC and vlPFC, but at a temporal resolution similar to that of ERPs; and (3) in the ERP data, sensitivity to targets and distracters, reflected in modulations of known components (P300 and LPP, respectively), only at posterior locations (Figure 4).

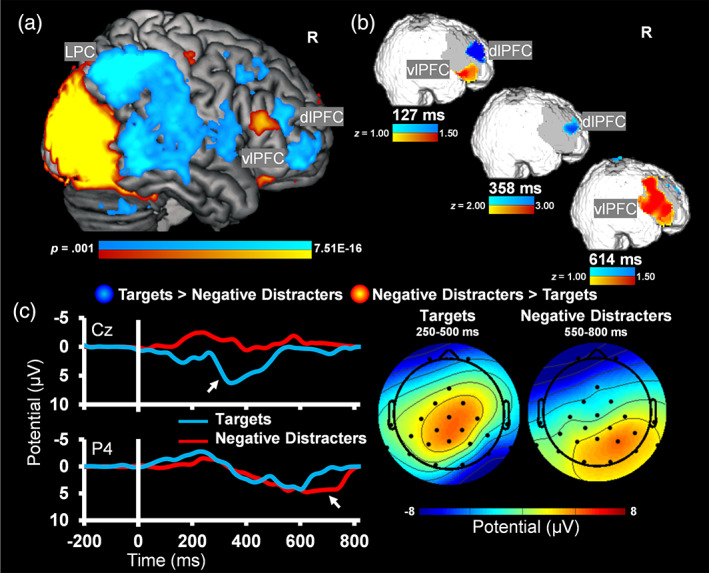

FIGURE 4.

Converging evidence from simultaneous trimodal recordings. Dorso‐ventral dissociations typically observed in the functional magnetic resonance imaging (fMRI) data 6–8 s poststimulus onset (a) were first identified by event‐related optical signals (EROS) less than 150 ms after stimulus onset (b). Event‐related potentials (ERPs) captured similar temporal dissociations for targets and distracters, but at posterior electrode locations (c); see also the topographic maps. Notably, the peak differential sensitivity to targets (250–500 ms) and distracters (550–800 ms) corresponds to known ERP components, the P300 and LPP, respectively. The ERP plots show the locations at which the P300 and LPP were maximal. R, right; dlPFC, dorsolateral prefrontal cortex; vlPFC, ventrolateral PFC; LPC, lateral parietal cortex

Regarding (1), consistent with the expected dorso‐ventral dissociation of brain responses during the emotional oddball task, whole‐brain t‐tests showed differential sensitivity to targets and negative emotional distracters in DES (dlPFC, LPC) and VAS (vlPFC) regions, bilaterally. More specifically, the contrasts between the most dissimilar conditions (i.e., targets vs. negative distracters) identified the expected pattern of greater response to targets than to negative distracters in bilateral dlPFC and LPC, and the opposite pattern in bilateral vlPFC (Figure 4a and Table 1). Regarding (2), EROS analyses showed dorso‐ventral dissociations similar to the patterns identified in the fMRI data, but resolved at latencies typical of ERPs (i.e., 127, 358, and 614 ms). Differential activity at 127 and 614 ms post‐stimulus onset was analyzed using a z‐score threshold range of ±1.00–1.50, whereas activity at 358 ms was analyzed using a range of ±2.00–3.00 (Figure 4b). Finally, regarding (3), consistent with the expected P300 and LPP responses, ERP deflections over central/parietal sites peaked for targets in the earlier time window, and deflections over parietal sites peaked for negative distracters in the later time window (Figure 4c). Specifically, an electrode × time window × condition ANOVA showed main effects of electrode, F(1, 7) = 7.93, p = .026, ηp² = .53, and condition, F(1, 7) = 17.16, p = .004, ηp² = .71; a time window × condition interaction, F(1, 7) = 16.40, p = .005, ηp² = .70; and a marginal electrode × condition interaction, F(1, 7) = 4.58, p = .070, ηp² = .40. As expected, the average P300 amplitude at Cz within the early time window was significantly greater for targets (M = 4.12, SD = 3.09) than for negative distracters (M = −0.61, SD = 2.66; t[7] = 4.99, p = .002, d = 1.76).
The average LPP amplitude at Pz within the late time window was numerically, but not significantly, greater for negative distracters (M = 2.49, SD = 1.82) than for targets (M = 1.83, SD = 2.23; t[7] = 0.71, p = .503, d = 0.25). Notably, the LPP was largest around electrode P4, where the difference was significant (negative distracters: M = 3.85, SD = 1.53; targets: M = 1.71, SD = 2.48; t[7] = 2.78, p = .027, d = 0.98).
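The reported effect sizes can be checked against the test statistics. The values above appear consistent with partial eta squared computed from F and its degrees of freedom, ηp² = F·df_effect / (F·df_effect + df_error), and with Cohen's d for paired data computed as d_z = t/√n (n = 8). Which d variant was used is our inference from the numbers, not a statement from the Methods; the short Python sketch below only reproduces that arithmetic.

```python
import math


def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


def cohens_dz(t_value, n):
    """Cohen's d for paired samples (mean difference / SD of differences) from t."""
    return t_value / math.sqrt(n)


# Reported ANOVA effects, F(1, 7), and paired t-tests with n = 8.
eta_electrode = partial_eta_squared(7.93, 1, 7)   # about .53
eta_condition = partial_eta_squared(17.16, 1, 7)  # about .71
d_p300_cz = cohens_dz(4.99, 8)                    # about 1.76
d_lpp_p4 = cohens_dz(2.78, 8)                     # about 0.98
```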

TABLE 1.

fMRI results: brain regions showing sensitivity to targets and negative distracters. This table lists brain regions showing differential activity between the most dissimilar experimental conditions (targets and negative distracters), identified by t‐tests at a voxel‐wise intensity threshold of p < .001, uncorrected for multiple comparisons, and a cluster extent threshold of pFWE < .05, corrected. Coordinates (x, y, z) are MNI peak coordinates in mm. An asterisk in the "Sig." column marks peaks that also survived an intensity threshold of pFWE < .05, corrected; L, left; R, right; BA, Brodmann's area

| Brain region | Side | BA | MNI x | MNI y | MNI z | t | Sig. |
|---|---|---|---|---|---|---|---|
| Targets > negative distracters | | | | | | | |
| Frontal lobe | | | | | | | |
| Superior frontal gyrus | L | 10 | −28 | 60 | 8 | 4.16 | |
| Superior frontal gyrus | R | 10 | 28 | 60 | −8 | 7.10 | * |
| Superior frontal gyrus | R | 9 | 24 | 48 | 24 | 4.43 | |
| Superior frontal gyrus | R | 8 | 28 | 36 | 44 | 4.03 | |
| Superior frontal gyrus | R | 6 | 28 | 20 | 52 | 5.25 | * |
| Medial frontal gyrus | L | 6 | −4 | −12 | 52 | 6.43 | * |
| Medial frontal gyrus | R | 8 | 4 | 32 | 36 | 4.10 | |
| Medial frontal gyrus | R | 6 | 20 | 12 | 52 | 4.89 | * |
| Middle frontal gyrus | L | 10 | −28 | 44 | 12 | 4.28 | |
| Middle frontal gyrus | L | 9 | −40 | 36 | 24 | 4.34 | |
| Middle frontal gyrus | R | 10 | 40 | 44 | 12 | 7.25 | * |
| Middle frontal gyrus | R | 9 | 28 | 32 | 24 | 3.43 | |
| Middle frontal gyrus | R | 8 | 24 | 40 | 32 | 5.03 | * |
| Middle frontal gyrus | R | 6 | 32 | 16 | 44 | 5.04 | * |
| Inferior frontal gyrus | L | 46 | −32 | 36 | 12 | 5.39 | * |
| Inferior frontal gyrus | R | 44 | 56 | 8 | 12 | 5.40 | * |
| Precentral gyrus | L | 6 | −56 | 0 | 4 | 6.81 | * |
| Precentral gyrus | R | 6 | 56 | 8 | 24 | 4.01 | |
| Paracentral lobule | L | 6 | −4 | −20 | 56 | 4.88 | * |
| Anterior cingulate | R | 32 | 12 | 36 | 16 | 5.21 | * |
| Cingulate gyrus | R | 32 | 4 | 36 | 24 | 5.25 | * |
| Cingulate gyrus | L | 24 | −8 | 4 | 44 | 4.22 | |
| Cingulate gyrus | L | 23 | 0 | −24 | 28 | 5.85 | * |
| Cingulate gyrus | R | 24 | 16 | 8 | 36 | 4.41 | |
| Subgyral | R | | 40 | 52 | −8 | 6.95 | * |
| Subgyral | R | | 32 | −32 | 36 | 3.96 | |
| Parietal lobe | | | | | | | |
| Postcentral gyrus | L | 40 | −60 | −20 | 16 | 9.51 | * |
| Postcentral gyrus | L | 2 | −48 | −24 | 56 | 11.70 | * |
| Postcentral gyrus | R | 40 | 60 | −24 | 16 | 7.25 | * |
| Postcentral gyrus | R | 2 | 52 | −24 | 44 | 7.22 | * |
| Inferior parietal lobule | L | 40 | −44 | −60 | 52 | 6.16 | * |
| Inferior parietal lobule | R | 40 | 48 | −56 | 48 | 9.97 | * |
| Inferior parietal lobule | R | 39 | 44 | −64 | 48 | 9.69 | * |
| Superior parietal lobule | L | 7 | −28 | −48 | 64 | 4.46 | |
| Supramarginal gyrus | L | 40 | −36 | −48 | 36 | 4.93 | * |
| Angular gyrus | L | 39 | −36 | −56 | 40 | 5.54 | * |
| Precuneus | L | 7 | 0 | −64 | 52 | 9.21 | * |
| Precuneus | R | 31 | 8 | −44 | 36 | 6.56 | * |
| Cingulate gyrus | L | 31 | −12 | −28 | 48 | 4.13 | |
| Cingulate gyrus | R | 31 | 8 | −36 | 32 | 5.60 | * |
| Posterior cingulate | L | 23 | 0 | −36 | 24 | 6.10 | * |
| Posterior cingulate | L | 29 | −16 | −40 | 12 | 4.37 | |
| Posterior cingulate | R | 29 | 20 | −40 | 12 | 4.86 | * |
| Subgyral | R | 31 | 12 | −28 | 48 | 3.52 | |
| Temporal lobe | | | | | | | |
| Superior temporal gyrus | L | 39 | −60 | −56 | 32 | 3.79 | |
| Superior temporal gyrus | R | 41 | 64 | −16 | 4 | 5.93 | * |
| Superior temporal gyrus | R | 39 | 48 | −56 | 36 | 9.14 | * |
| Transverse temporal gyrus | R | 41 | 40 | −32 | 8 | 4.40 | |
| Middle temporal gyrus | L | 21 | −64 | −28 | −8 | 3.23 | |
| Middle temporal gyrus | L | 39 | −28 | −52 | 28 | 4.17 | |
| Middle temporal gyrus | R | 21 | 64 | −24 | −12 | 5.76 | * |
| Middle temporal gyrus | R | 20 | 64 | −44 | −12 | 7.73 | * |
| Middle temporal gyrus | R | 22 | 64 | −48 | 0 | 7.47 | * |
| Inferior temporal gyrus | L | 20 | −60 | −24 | −20 | 4.53 | |
| Hippocampus | R | | 28 | −44 | 4 | 4.09 | |
| Parahippocampal gyrus | R | | 40 | −44 | 0 | 3.69 | |
| Sublobar | | | | | | | |
| Caudate (caudate head) | R | | 12 | 12 | −4 | 5.62 | * |
| Caudate (caudate body) | L | | −16 | 16 | 0 | 6.57 | * |
| Caudate (caudate body) | R | | 12 | 4 | 4 | 5.10 | * |
| Lentiform nucleus (putamen) | L | | −28 | 8 | 0 | 4.93 | * |
| Lentiform nucleus (putamen) | R | | 20 | 8 | −12 | 3.99 | |
| Claustrum | L | | −36 | −16 | 4 | 5.50 | * |
| Insula | L | 13 | −52 | −20 | 20 | 8.56 | * |
| Insula | R | 13 | 60 | −36 | 24 | 5.83 | * |
| Thalamus (ventral anterior nucleus) | L | | −12 | 0 | 4 | 4.48 | |
| Thalamus | L | | 0 | −8 | 12 | 5.23 | * |
| Thalamus (medial dorsal nucleus) | L | | −4 | −16 | 12 | 3.96 | |
| Thalamus | R | | 4 | −4 | 4 | 4.55 | |
| Thalamus (pulvinar) | R | | 12 | −28 | 12 | 4.11 | |
| Cerebellum | | | | | | | |
| Cerebellar tonsil | L | | −48 | −64 | −40 | 5.62 | * |
| Cerebellar tonsil | R | | 52 | −52 | −40 | 4.40 | |
| Inferior semilunar lobule | L | | −40 | −76 | −36 | 4.78 | * |
| Pyramis | L | | −20 | −68 | −28 | 4.47 | |
| Cerebellar lingual | R | | 8 | −52 | −16 | 4.72 | * |
| Culmen | R | | 16 | −52 | −20 | 6.50 | * |
| Negative distracters > targets | | | | | | | |
| Frontal lobe | | | | | | | |
| Superior frontal gyrus | L | 9 | −8 | 64 | 12 | 5.75 | * |
| Superior frontal gyrus | R | 9 | 8 | 56 | 24 | 5.43 | * |
| Medial frontal gyrus | L | 9 | −8 | 52 | 20 | 4.58 | |
| Medial frontal gyrus | L | 6 | −4 | 48 | 28 | 5.15 | * |
| Middle frontal gyrus | L | 46 | −52 | 28 | 24 | 4.64 | * |
| Middle frontal gyrus | R | 46 | 56 | 28 | 16 | 6.17 | * |
| Inferior frontal gyrus | L | 47 | −36 | 32 | −16 | 9.76 | * |
| Inferior frontal gyrus | L | 45 | −56 | 28 | 16 | 5.89 | * |
| Inferior frontal gyrus | R | 47 | 32 | 32 | −16 | 8.69 | * |
| Precentral gyrus | R | 4 | 36 | −20 | 56 | 5.07 | * |
| Anterior cingulate | L | 32 | 0 | 40 | −20 | 8.69 | * |
| Parietal lobe | | | | | | | |
| Postcentral gyrus | R | 3 | 48 | −12 | 56 | 3.97 | |
| Precuneus | L | 7 | −20 | −68 | 48 | 4.72 | * |
| Precuneus | R | 7 | 24 | −68 | 48 | 7.01 | * |
| Posterior cingulate | L | 30 | −4 | −52 | 20 | 7.25 | * |
| Temporal lobe | | | | | | | |
| Superior temporal gyrus | L | 22 | −40 | −56 | 16 | 5.01 | * |
| Middle temporal gyrus | L | 21 | −52 | −4 | −20 | 4.60 | * |
| Occipital lobe | | | | | | | |
| Lingual gyrus | L | 18 | −12 | −72 | 8 | 4.44 | |
| Lingual gyrus | R | 17 | 24 | −92 | 0 | 20.68 | * |
| Fusiform gyrus | R | 19 | 28 | −60 | −8 | 12.56 | * |
| Middle occipital gyrus | L | 19 | −32 | −84 | 24 | 13.53 | * |
| Middle occipital gyrus | L | 18 | −8 | −96 | 20 | 7.79 | * |
| Middle occipital gyrus | R | 19 | 40 | −76 | 16 | 15.03 | * |
| Middle occipital gyrus | R | 18 | 32 | −88 | −4 | 19.56 | * |
| Inferior occipital gyrus | L | 18 | −28 | −92 | −8 | 21.74 | * |
| Inferior occipital gyrus | R | 19 | 48 | −76 | 0 | 15.97 | * |
| Cuneus | L | 7 | −20 | −72 | 40 | 5.52 | * |
| Cuneus | L | 19 | −12 | −88 | 40 | 7.71 | * |
| Cuneus | R | 19 | 12 | −88 | 40 | 6.48 | * |
| Cerebellum | | | | | | | |
| Culmen | L | | −24 | −52 | −12 | 10.69 | * |
| Culmen | R | | 40 | −44 | −24 | 15.07 | * |
| Declive | L | | −40 | −80 | −12 | 16.46 | * |
| Declive | R | | 40 | −56 | −16 | 13.96 | * |

Abbreviation: fMRI, functional magnetic resonance imaging.

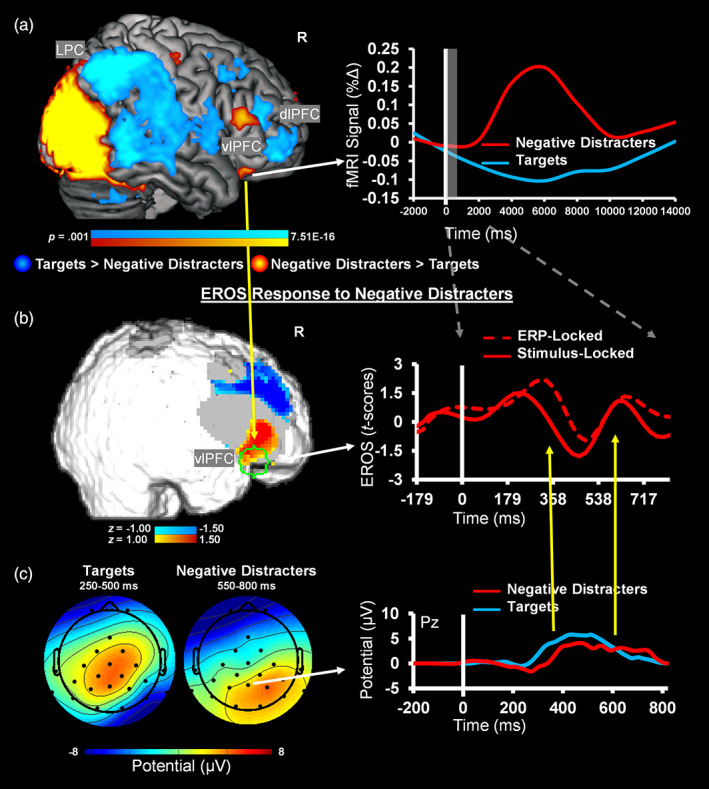

3.2.2. Demonstration of crossmodal integration

To demonstrate integration across the three modalities, we next illustrate an fMRI‐ and ERP‐informed analysis for extracting and refining the EROS signal. For this analysis, the spatial information from the fMRI analysis was used to define an anterior vlPFC ROI (10 mm sphere around MNI coordinates x = 32, y = 32, z = −16; BA 47) (Figure 5a), and the P300 latency at Pz was used to adjust the time‐locking of the EROS data trial by trial (Figure 5b,c). Because of slight differences in the fit of the trimodal helmet, the EROS signal for the anterior vlPFC was not captured for one participant, so this analysis was performed on data from seven participants. The fMRI/ERP‐informed EROS analysis showed evidence of convergence and improved signal extraction compared to the unimodal EROS analysis. Specifically, the timing alignment showed that, around the P300 peak following emotionally negative distracters (M = 414.17 ms, SD = 16.39 ms), the anterior vlPFC region whose slower BOLD response was sensitive to emotional distracters also responded in the EROS data (Figure 5b). Incorporating the spatial information from fMRI and the temporal information from ERPs yielded a refined EROS signal, with significantly greater amplitude during the expected interval around the P300 peak (333–410 ms; M = 3.13, SD = 4.60) compared to the stimulus‐locked EROS waveform (M = −2.15, SD = 4.21; t[6] = 3.40, p = .015, d = 1.28) (Figure 5b). The fMRI‐ and ERP‐informed EROS signal (M = 1.05, SD = 4.24) was also numerically greater than the stimulus‐locked signal (M = 0.42, SD = 6.32) in the LPP time window of interest (550–800 ms), but this difference was not statistically significant (t[6] = 0.57, p = .589, d = 0.22).
Overall, as evident from Figure 5b, the gain in signal‐to‐noise ratio of the EROS response was greatest within the ERP P300 time window of 250–500 ms, whose peak served as the trial‐by‐trial time‐locking point, and was less apparent outside this interval. This is not surprising, since shifting the EROS waveform to accommodate trial‐to‐trial variability in ERP peak latency should help most for phenomena that are closely related in time to the time‐locking signal. These results illustrate how overlapping information from the three modalities can be integrated to refine the signal obtained from an individual modality.
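The trial‐by‐trial re‐time‐locking described above can be sketched in Python as follows: each trial's P300 latency is taken as the most positive value at Pz within 250–500 ms, and each EROS trial is shifted so that its P300 latency lines up with the mean latency before averaging. The peak definition, the alignment target (the mean latency), NaN padding at the shifted edges, and all function names are assumptions for illustration, not a description of the OPT‐3D implementation. The default EROS rate is the 39 Hz reported in the Methods; the toy example uses 100 Hz only so that the shifts come out as whole samples.

```python
import numpy as np


def peak_latency(erp_trial, times_ms, window_ms=(250.0, 500.0)):
    """Latency (ms) of the most positive sample within the window."""
    mask = (times_ms >= window_ms[0]) & (times_ms <= window_ms[1])
    return times_ms[mask][np.argmax(erp_trial[mask])]


def shift_with_nan(x, k):
    """Shift a 1-D array by k samples (positive = later), padding with NaN."""
    out = np.full(x.shape, np.nan)
    if k > 0:
        out[k:] = x[:-k]
    elif k < 0:
        out[:k] = x[-k:]
    else:
        out[:] = x
    return out


def latency_locked_average(eros_trials, latencies_ms, fs_eros=39.0):
    """Align each EROS trial to the mean ERP latency, then average across trials."""
    lat = np.asarray(latencies_ms, dtype=float)
    shifts = np.rint((lat.mean() - lat) * fs_eros / 1000.0).astype(int)
    aligned = np.array([shift_with_nan(np.asarray(tr, dtype=float), k)
                        for tr, k in zip(eros_trials, shifts)])
    return np.nanmean(aligned, axis=0)  # NaN edges are ignored in the mean


# Usage: a synthetic ERP peaking at 414 ms, and three toy EROS trials whose
# responses are offset in the same way as their (hypothetical) P300 latencies.
times = np.arange(0.0, 800.0, 2.0)
erp = np.exp(-((times - 414.0) / 30.0) ** 2)
p300_latency = peak_latency(erp, times)

trials = np.zeros((3, 50))
for row, peak in enumerate((18, 20, 22)):
    trials[row, peak] = 1.0
average = latency_locked_average(trials, [380.0, 400.0, 420.0], fs_eros=100.0)
```

Shifting with NaN padding, rather than circularly rolling the data, prevents samples from one end of an epoch from wrapping around into the other.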

FIGURE 5.

Evidence for integration of functional magnetic resonance imaging (fMRI) and event‐related potential (ERP) information for analysis of event‐related optical signal (EROS) data. (a) fMRI peak sensitivity for the contrast of negative distracters versus targets was used to define a spatial region of interest (ROI; delineated in green in Panel (b)), and (c) P300 latencies from electrode Pz, which served as a common location for P300 amplitudes across conditions, were used to adjust the time‐locking of the EROS data trial by trial, yielding spatially and temporally informed EROS data. (b) Adjusting the time‐locking of fMRI‐informed EROS data based on ERP latency yielded increased EROS amplitude to negative distracters compared to baseline (the brain map shows activity ~76 ms before the P300 peak following negative distracters), while also showing temporal richness evident in modulations within time windows similar to those of ERPs. For display purposes, the EROS data were downsampled by a factor of 5. R, right; dlPFC, dorsolateral prefrontal cortex; vlPFC, ventrolateral PFC; LPC, lateral parietal cortex

Altogether, the present results provide proof‐of‐concept evidence supporting the feasibility of simultaneous trimodal fMRI–EROS–ERP recordings for studying brain function. These data also highlight EROS as a feasible bridge between fMRI and ERPs, since it shares spatial properties with fMRI and temporal properties with ERPs, and illustrate how fMRI‐ and ERP‐informed analysis of EROS data can refine the signal. Importantly, by identifying complementarities and overlaps across measures of brain function during a task involving emotion–cognition interactions, the present results also point to ways in which these distinct methodologies can be used together to study the spatial and temporal correlates of brain function in general. This proof‐of‐concept evidence opens up the possibility of investigating such associations in larger samples, based on signal integration across spatiotemporal scales (Figure 1).

4. DISCUSSION

The goal of the present study was to implement and validate a novel protocol for simultaneous trimodal brain imaging (fMRI–EROS–ERP), which was preceded by the implementation of two bimodal protocols (fMRI–ERP and EROS–ERP). Using an emotional oddball task, which taps into both cognitive and affective aspects of processing, we show that: (a) fMRI data effectively captured the expected dorso‐ventral spatial dissociations in the PFC in response to targets and emotional distracters; (b) EROS identified frontal spatial effects similar to those captured by fMRI, but at a temporal resolution similar to that of ERPs; and (c) ERPs showed temporal modulation of the associated components (P300, LPP) at parietal but not frontal locations. Finally, (d) we illustrate how integrating spatial information from fMRI and trial‐by‐trial temporal information from ERPs allows the EROS data to be refined beyond what any of the three modalities can accomplish individually. These findings, which demonstrate the feasibility of using the three techniques simultaneously, are discussed below.

Trimodal brain imaging involves clear challenges, which the current protocol addressed. First, the optical and electrode arrays had to be carefully configured to capture the expected effects of interest. The present results point to notable convergences and parallels among fMRI, EROS, and ERP measures of brain activity, even with a small number of data sets. fMRI and EROS showed spatially convergent results, with both capturing the expected dorso‐ventral dissociations in the lateral PFC, and EROS and ERPs showed temporally convergent results, capturing responses to targets and negative distracters at similar latencies after stimulus presentation. Specifically, we demonstrated that a targeted optical array over the lateral PFC can capture dorso‐ventral spatial dissociations similar to those identified by fMRI, but at a much higher temporal resolution, while ERP electrodes at posterior locations can capture the expected responses with timing similar to that of EROS. Notably, EROS sensitivity to targets coincided with the P300 timing and also appeared in an earlier time window, whereas EROS sensitivity to negative distracters coincided with the typical LPP time window. Furthermore, integration analyses that used fMRI to define an ROI and ERP latencies to time‐lock the EROS data trial by trial showed evidence that incorporating information from each modality improves signal extraction. Importantly, a key requirement for integrating fMRI, EROS, and ERP signals is cleaning the data of artifacts inherent to the multimodal acquisition format, which the current protocol also demonstrated.

The present findings go beyond what can be accomplished by bimodal investigations of brain function that rely on associations between BOLD and ERP components (Bénar et al., 2007; Y. Liu, Huang, McGinnis‐Deweese, Keil, & Ding, 2012; Sabatinelli, Lang, Keil, & Bradley, 2007; Warbrick et al., 2009), and demonstrate complementarity across spatial and temporal aspects, using EROS as a bridging technique. For instance, the present EROS findings identified dorso‐ventral PFC dissociations that occur much earlier than what is detectable with fMRI and that are not captured by ERPs at frontal locations. The EROS response to targets in the dlPFC is consistent with the DES–VAS dissociation previously observed in fMRI studies and suggests that dorsal PFC regions are engaged in processing oddball targets even before the expected P300 peak recorded at parietal sites. Similarly, the EROS results point to a role of the vlPFC in early responses, consistent with the idea that the vlPFC is part of the VAS and sensitive to bottom‐up processing, which might be dissociable from later processing associated with coping with emotional distraction (Iordan et al., 2013). Overall, the present results are consistent with the idea that DES and VAS regions play dissociable roles in the context of emotional distraction and suggest that these responses are detectable even at early stages of visual stimulus processing. Future research combining these three modalities can further explore the spatiotemporal dynamics of these brain systems to clarify the mechanisms underlying the impact of, and coping with, emotional distraction, which are still not well understood (Iordan et al., 2013).

The task used in the present study was selected primarily because the literature on emotion–cognition interactions provided clear expectations for fMRI and ERP responses (Iordan et al., 2013; Singhal et al., 2012; Wang et al., 2005), which also guided the placement of the EROS arrays. However, other conceptual frameworks can also provide insight into the mechanisms underlying the responses elicited by this task and are relevant to understanding brain function in general. For example, the enhanced engagement of DES regions in response to targets is consistent with cognitive control frameworks that emphasize endogenous control (Corbetta & Shulman, 2002), proactive processing (Braver, 2012), and the cognitive control process of shifting (i.e., flexible changes between task sets or goals) (Miyake et al., 2000; Miyake & Friedman, 2012), which also highlight the activity of dorsal brain regions during top‐down processing. The P300 (here, the so‐called P3b) has also been proposed to be related to these processes, although some evidence suggests links to other cognitive control processes as well (Gratton, Cooper, Fabiani, Carter, & Karayanidis, 2018). On the other hand, the enhanced engagement of VAS regions in response to emotional distraction is consistent with models of cognitive control that emphasize exogenous control (Corbetta & Shulman, 2002), reactive processing (Braver, 2012), and the cognitive control process of updating (i.e., monitoring and changing working memory contents) (Miyake et al., 2000; Miyake & Friedman, 2012), which engage ventral brain regions during bottom‐up processing.

Although investigating these alternative accounts of the processing indexed by the dorso‐ventral dissociations identified here is beyond the scope of the present study, future investigations using simultaneous multimodal imaging based on the protocol implemented here could clarify them. A recent magnetoencephalography (MEG) investigation of emotional distraction (García‐Pacios, Garcés, Del Río, & Maestú, 2015) has provided initial evidence for a temporal dissociation in the orbito‐lateral PFC between an earlier (70–130 ms) and a later (360–455 ms) response. These findings are consistent with the timing of the responses identified in our EROS data, and support the idea, derived from fMRI findings, that neural engagement related to the initial impact of distraction and to coping with it is detectable at earlier and later stages, respectively. However, it is unclear how the fast neuronal responses captured by MEG relate to the slower hemodynamic changes in the vlPFC revealed by fMRI studies. Our recent work integrating fMRI and ERPs in the context of the emotional oddball task (Moore et al., 2019) has provided spatial evidence consistent with subregional specificity within the vlPFC. Namely, whereas the LPP was associated with BOLD modulation in anterior vlPFC, possibly linked to basic emotion processing, sensitivity in posterior vlPFC was associated with individual differences in emotion regulation and self‐control, consistent with an involvement of this region in cognitive/executive control processing. However, these findings do not provide information regarding the differential timing of these processes. Hence, by incorporating EROS, future studies capitalizing on the trimodal imaging approach demonstrated here will be able to clarify the dynamic roles of vlPFC subregions in various aspects of processing linked to the impact of emotional distraction (basic processing vs. coping).

Simultaneous fMRI–EROS–ERP recordings have benefits beyond the study of emotion–cognition interactions or cognitive/executive control. For example, recent studies have conducted comprehensive multimethod investigations of topics such as neurovascular coupling in aging (Fabiani et al., 2014), using simultaneously acquired EROS–ERP and separately acquired fMRI. Capitalizing on multiple indices of brain activity allows validation measures to be built into a given study, which can help strengthen inferences about the results. Additionally, multimodal brain imaging can help control for effects such as habituation and/or memory, which are difficult to control across multiple unimodal sessions. Simultaneous multimodal data collection is also important in experiments that capture moment‐to‐moment spontaneous variations in activity, so that not only variability across individuals but also variability in individual responses or ongoing activity can be identified. This aspect could also be important for understanding different types of brain activity, as alluded to above, such as widespread shifts in neural activity, as well as spontaneous and event‐related oscillatory activity, which might reflect different processes than the phasic responses associated with event‐related paradigms (Gratton & Fabiani, 2010; Gratton, Goodman‐Wood, & Fabiani, 2001; Logothetis, Pauls, Augath, Trinath, & Oeltermann, 2001; Makeig et al., 2004; Sadaghiani et al., 2010; Scheeringa et al., 2009; Scheeringa et al., 2011; Teplan, 2002). One clear example is the study of resting‐state networks, in which spontaneous, uncontrolled variations in activity are correlated over time between brain locations. Hence, to fully understand the relations between activity fluctuations observed with different imaging modalities, the modalities must be recorded simultaneously.

Finally, even for event‐related paradigms, as previously noted, trial‐by‐trial variations may be an important consideration for cognitive (e.g., attention) or physiological (e.g., EEG phase/microstate or neurovascular state) reasons. Some practical limitations can also be avoided or addressed by simultaneous multimodal brain imaging, such as when only a limited set of appropriate unique stimuli is available, or when time and resources do not allow participants to be brought in for multiple sessions. Therefore, brain imaging studies targeting various topics of interest can capitalize on trimodal imaging for advantages in both study design and interpretation of results, with considerable benefits for the field of cognitive neuroscience.
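
One way to exploit trial‐by‐trial variation is to extract a single‐trial ERP measure, such as the mean amplitude within a P300 latency window, and relate it to single‐trial estimates of the BOLD response acquired at the same time. The sketch below is a minimal illustration under stated assumptions, not the analysis used in the present study: the electrode, the 300–600 ms window, the 500 Hz sampling rate, and all data are hypothetical and synthetic, and `bold_beta` merely stands in for single‐trial fMRI estimates.

```python
import numpy as np

def single_trial_amplitude(epochs, times, window):
    """Mean voltage within a latency window, computed separately for each trial.

    epochs: (n_trials, n_samples) baseline-corrected data from one electrode
    times:  (n_samples,) seconds relative to stimulus onset
    window: (start, stop) in seconds
    """
    in_window = (times >= window[0]) & (times <= window[1])
    return epochs[:, in_window].mean(axis=1)

# Synthetic illustration (not study data): 40 trials at 500 Hz whose
# P300-like deflection varies in size from trial to trial.
rng = np.random.default_rng(0)
fs, n_trials = 500, 40
times = np.arange(-0.2, 1.0, 1.0 / fs)
engagement = rng.uniform(0.5, 1.5, n_trials)                        # hypothetical trial-wise state
waveform = 8.0 * np.exp(-((times - 0.45) ** 2) / (2 * 0.08 ** 2))   # assumed peak near 450 ms
epochs = engagement[:, None] * waveform + rng.normal(0, 2.0, (n_trials, times.size))
bold_beta = 0.6 * engagement + rng.normal(0, 0.1, n_trials)         # hypothetical single-trial BOLD estimates

amplitude = single_trial_amplitude(epochs, times, (0.3, 0.6))       # assumed P300 window
r = np.corrcoef(amplitude, bold_beta)[0, 1]
print(f"trial-by-trial r = {r:.2f}")
```

Because both measures share the same hidden trial‐wise state only when they are recorded on the same trials, this kind of coupling is recoverable only from simultaneous acquisition.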

Integration of multimodal brain imaging data is an emerging and highly anticipated area in cognitive neuroscience, and various analytic approaches can be employed (detailed in Supplementary Materials). Integration of data from simultaneous fMRI–EROS–ERP recordings can be used to identify associations as well as dissociations among these signals (see also Figure 1), and an important aspect to consider when analytically integrating multimodal imaging data is which measure(s) to extract from each signal. Whereas some studies involving task manipulations use ERP amplitude (Mayhew, Hylands‐White, Porcaro, Derbyshire, & Bagshaw, 2013; Warbrick et al., 2009), it is also possible to use features of signals extracted via source separation approaches such as independent component analysis (Ostwald, Porcaro, & Bagshaw, 2011; Porcaro, Ostwald, & Bagshaw, 2010). Furthermore, recent work has shown that a single channel (Fz, Cz, or Pz) does not fully describe the mechanisms underlying the P300 observed in scalp electrode data (Ferracuti et al., 2020; Migliore et al., 2019; Porcaro et al., 2019), and advanced approaches for extracting information from EEG can further inform fMRI analyses and improve results (Ostwald et al., 2011; Ostwald, Porcaro, & Bagshaw, 2010; Ostwald, Porcaro, Mayhew, & Bagshaw, 2012; Porcaro et al., 2010). Additionally, it is possible to extract other measures, such as power in various EEG frequency bands (Mayhew, Ostwald, Porcaro, & Bagshaw, 2013; Michels et al., 2010), because not all brain activity is captured by event‐related measures such as ERPs. Indeed, a substantial amount of brain activity appears to occur in the form of ongoing oscillatory activity, which can be quantified in different ways. For example, spectral perturbation measures, commonly used in the EEG literature (Onton & Makeig, 2006), offer another way of capturing oscillatory changes.
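
As an illustration of extracting power in an EEG frequency band, the sketch below computes band power from a Hann‐tapered periodogram and expresses a post‐stimulus change relative to a pre‐stimulus interval in decibels, a simplified single‐trial analogue of spectral perturbation measures. It is a minimal sketch under stated assumptions, not the procedure used in the present study or in the cited works: the sampling rate, the band limits (8–12 Hz alpha, 13–30 Hz beta), the 1‐s segments, and the synthetic signals are all hypothetical, and the power values are unnormalized, so they are only comparable across segments of equal length.

```python
import numpy as np

def band_power(segment, fs, band):
    """Mean unnormalized periodogram power of a 1-D segment within band=(low, high) Hz."""
    x = np.asarray(segment, dtype=float)
    x = x - x.mean()                                            # remove the DC offset
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)                 # frequency of each FFT bin (Hz)
    power = np.abs(np.fft.rfft(x * np.hanning(x.size))) ** 2    # Hann taper reduces spectral leakage
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return float(power[in_band].mean())

def power_change_db(pre, post, fs, band):
    """Event-related change in band power (post vs. pre-stimulus), in dB."""
    return 10.0 * np.log10(band_power(post, fs, band) / band_power(pre, fs, band))

# Synthetic illustration (not study data): 10 Hz activity that is attenuated
# after a hypothetical stimulus, plus white noise.
fs = 250
t = np.arange(0, 1.0, 1.0 / fs)
rng = np.random.default_rng(1)
pre = 5.0 * np.sin(2 * np.pi * 10 * t) + rng.normal(0, 1.0, t.size)
post = 2.0 * np.sin(2 * np.pi * 10 * t) + rng.normal(0, 1.0, t.size)

alpha, beta = (8, 12), (13, 30)
print(band_power(pre, fs, alpha) > band_power(pre, fs, beta))   # True: alpha dominates
print(round(power_change_db(pre, post, fs, alpha), 1))          # negative: alpha decrease
```

Welch averaging or wavelet decompositions would give smoother estimates over time and frequency; the plain periodogram is used here only to keep the example short.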

Interestingly, oscillatory measures have been shown to be especially informative in some studies examining EEG associations with the fMRI BOLD signal, and thus might provide complementary information about the BOLD response that is not captured by ERPs (Engell, Huettel, & McCarthy, 2012), reflecting different aspects of brain activity. Similar considerations apply to the examination of multimodal resting‐state data (Mantini, Perrucci, Del Gratta, Romani, & Corbetta, 2007). Based on the extant literature, features of event‐related signals from EEG and fast optical imaging, such as ERP or EROS amplitudes, may be expected to be associated with the amplitude of peak hemodynamic changes captured by fMRI and fNIRS (Goldman et al., 2009; Y. Liu et al., 2012). Ongoing oscillatory activity in the EEG and fast optical data may also be expected to be associated with features of the fMRI and fNIRS signals (e.g., Sadaghiani et al., 2010; Scheeringa et al., 2009; Scheeringa et al., 2011). Such associations can link the fast fluctuations or ongoing activity captured by measures related to neuronal responses with the subsequent hemodynamic changes in large‐scale brain networks (Jann et al., 2009; Sadaghiani et al., 2010).
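
One common way to test such associations in the EEG–fMRI literature is to build an EEG‐informed regressor: a band‐power time course is convolved with a hemodynamic response function (HRF) and sampled at the fMRI repetition time (TR), so that it can be compared with the BOLD signal. The sketch below is a minimal illustration under stated assumptions rather than the method of any cited study: it uses a double‐gamma HRF with SPM‐style default shape parameters, assumes that the TR is a whole multiple of the power sampling interval and that both series start together (which is only guaranteed when the modalities are recorded simultaneously), and uses synthetic data with an arbitrary coupling in place of real recordings.

```python
import numpy as np
from math import gamma

def canonical_hrf(dt, duration=32.0):
    """Double-gamma HRF sampled every dt seconds.

    Assumed SPM-style shape (gamma shapes 6 and 16, undershoot ratio 1/6),
    giving a peak near 5 s and an undershoot near 15 s.
    """
    t = np.arange(0.0, duration, dt)
    h = t ** 5 * np.exp(-t) / gamma(6) - t ** 15 * np.exp(-t) / (6.0 * gamma(16))
    return h / h.sum()  # unit-sum normalization

def eeg_informed_regressor(power_ts, dt, tr):
    """Z-score a band-power time course (sampled every dt s), convolve it with the
    HRF, and keep one value per fMRI volume. Assumes tr is a multiple of dt and
    that the EEG and fMRI series share the same start time."""
    z = (power_ts - power_ts.mean()) / power_ts.std()
    predicted = np.convolve(z, canonical_hrf(dt))[: z.size]  # causal, trimmed to input length
    step = int(round(tr / dt))
    return predicted[::step]

# Synthetic illustration (not study data): 600 s of slowly fluctuating band power
# sampled every 0.5 s, and a BOLD series built from it with an arbitrary coupling.
rng = np.random.default_rng(2)
dt, tr = 0.5, 2.0
power = 10.0 + np.convolve(rng.normal(size=1200), np.ones(8) / 8, mode="same")
regressor = eeg_informed_regressor(power, dt, tr)            # 300 volumes
bold = regressor + rng.normal(0, 0.5 * regressor.std(), regressor.size)

r = np.corrcoef(regressor, bold)[0, 1]
print(regressor.size, round(r, 2))
```

In practice, such a regressor would typically enter a general linear model alongside task regressors and nuisance terms; the simple correlation here only keeps the example short.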

4.1. Caveats

An important consideration for the present study is that the optical data in the trimodal format focused on PFC regions and were based on a limited number of data sets. Despite these limitations, the present trimodal results are consistent with typical findings from other acquisition formats (i.e., bimodal) and with data obtained independently with these methodologies (Katayama & Polich, 1999; Moore et al., 2019; Singhal et al., 2012; Wang et al., 2005; Wang, Krishnan, et al., 2008; Wang, LaBar, et al., 2008). Hence, they provide proof‐of‐concept evidence regarding the feasibility of simultaneous fMRI–EROS–ERP recordings, and future research will capitalize on further improvements (e.g., larger optical arrays for more widespread coverage, with the ultimate goal of full scalp coverage) to address open questions regarding the spatiotemporal dynamics of brain function. Furthermore, although the equipment in the present study was customized for this initial application of trimodal recording, the individual elements of the trimodal setup are commercially available, including the ERP equipment (https://www.brainproducts.com/), as well as the EROS equipment and the materials for the patches and helmets that integrated the equipment for these modalities (http://www.iss.com/biomedical/instruments/imagent.html). The protocols described in this report provide an overview and proof of concept that interested researchers can build upon to use the trimodal approach in future work.

5. CONCLUSION

In sum, the present report provides proof‐of‐concept evidence demonstrating implementation and validation of simultaneous trimodal fMRI–EROS–ERP recordings. This novel imaging approach provides considerable advantages that (a) overcome the current limitations of unimodal or bimodal imaging, (b) help to clarify the fundamental links between brain activity and these individual measures, and (c) elucidate the links between the spatial and temporal aspects of dynamic brain functioning. This technique will be useful for future studies to comprehensively examine the brain mechanisms associated with healthy psychological functioning and in disease, and will inform future theoretical models of brain function.

CONFLICTS OF INTEREST

The authors declare no conflicts of interest.

Supporting information

ACKNOWLEDGMENTS

This work was conducted/funded in part at/by the Biomedical Imaging Center of the Beckman Institute for Advanced Science and Technology at the University of Illinois at Urbana‐Champaign (UIUC‐BI‐BIC). Data collection was supported by funds from UIUC‐Psychology, Campus Research Board, and BI/BIC to F. D. The authors also wish to acknowledge NCRR grant S10‐RR029294 to G. G., NIA grants RF1AG062666 and R01AG059878 to M. F. and G. G., and a Campus Research Board grant to E. L. M. During the preparation of this manuscript, M. M. was supported by Beckman Institute Predoctoral and Postdoctoral Fellowships, provided by the Beckman Foundation, Y. K. was supported by the Honjo International Scholarship Foundation, A. T. S. was supported by the Intramural Research Program, National Institute on Aging, NIH, and F. D. was supported by a Helen Corley Petit Scholarship in Liberal Arts and Sciences and an Emanuel Donchin Professorial Scholarship in Psychology from the University of Illinois. The authors wish to thank Dr. Kathy Low and members of the Dolcos Lab for assisting with data collection.

Moore, M., Maclin, E. L., Iordan, A. D., Katsumi, Y., Larsen, R. J., Bagshaw, A. P., Mayhew, S., Shafer, A. T., Sutton, B. P., Fabiani, M., Gratton, G., & Dolcos, F. (2021). Proof‐of‐concept evidence for trimodal simultaneous investigation of human brain function. Human Brain Mapping, 42(13), 4102–4121. 10.1002/hbm.25541

Funding information Beckman Institute for Advanced Science and Technology, University of Illinois at Urbana‐Champaign; Campus Research Board, University of Illinois at Urbana‐Champaign; Department of Psychology, University of Illinois at Urbana‐Champaign; Honjo International Scholarship Foundation; National Center for Research Resources, Grant/Award Number: S10‐RR029294; National Institute on Aging, Grant/Award Numbers: R01AG059878, RF1AG062666; National Institute on Aging, Intramural Research Program of the National Institutes of Health; University of Illinois at Urbana‐Champaign

Endnote

Although the DES and VAS are not treated as equivalent to brain networks, there is considerable overlap between these larger neural systems, which are sensitive to emotional distraction, and the large‐scale functional networks identified during resting state (Iordan & Dolcos, 2017). Specifically, the task‐induced dorso‐ventral dissociation between DES and VAS overlaps with the resting‐state dissociation between the frontoparietal control/central‐executive/dorsal‐attention networks and the salience/cingulo‐opercular/ventral‐attention networks, respectively (Bressler & Menon, 2010; Dosenbach et al., 2007; Dosenbach, Fair, Cohen, Schlaggar, & Petersen, 2008; Power et al., 2011; Seeley et al., 2007; Yeo et al., 2011).

Contributor Information

Matthew Moore, Email: mmoore16@illinois.edu.

Gabriele Gratton, Email: grattong@illinois.edu.

Florin Dolcos, Email: fdolcos@illinois.edu.

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.

REFERENCES

- Ahmadi, M., & Quiroga, R. Q. (2013). Automatic denoising of single‐trial evoked potentials. NeuroImage, 66, 672–680. 10.1016/j.neuroimage.2012.10.062