Abstract

Head motion is a major source of image artefacts in neuroimaging studies and can degrade the quantitative accuracy of reconstructed PET images. Simultaneous magnetic resonance‐positron emission tomography (MR‐PET) makes it possible to estimate head motion from high‐resolution MR images and then correct motion artefacts in PET images. In this article, we introduce a fully automated PET motion correction method, MR‐guided MAF, based on the co‐registration of multicontrast MR images. The performance of the MR‐guided MAF method was evaluated using MR‐PET data acquired from a cohort of ten healthy participants who received a slow infusion of fluorodeoxyglucose ([18‐F]FDG). Compared with conventional methods, MR‐guided PET image reconstruction can reduce head‐motion‐induced artefacts and improve the image sharpness and quantitative accuracy of PET images acquired on simultaneous MR‐PET scanners. The fully automated motion estimation method has been implemented as a publicly available web service.

Keywords: MR image registration, MR‐guided MAF, MR‐guided motion correction, multiple acquisition frame (MAF), PET motion artefacts, PET motion correction, PET/MR, simultaneous MR‐PET

1. INTRODUCTION

The long duration of simultaneous magnetic resonance‐positron emission tomography (MR‐PET) brain imaging experiments can lead to head‐motion‐induced artefacts in the PET images (Chen et al., 2018). Even sub‐millimetre motion that does not produce visible image artefacts can cause systematic and regionally specific biases in anatomical MRI estimates (Alexander‐Bloch et al., 2016), and head motion has significant effects on fMRI functional connectivity measurements (Satterthwaite et al., 2012). With recent improvements in PET scanner resolution, head motion is increasingly becoming one of the major causes of image quality degradation, including loss of spatial resolution and erroneous estimation of radioligand concentrations.

A widely used technique for correcting head motion in PET and PET‐CT scanners is the multiple acquisition frame (MAF) method (Picard & Thompson, 1997). The MAF method subdivides the PET raw data (i.e., list‐mode data) into a number of short duration temporal frames, with the frames then co‐registered to correct for head motion under the assumption that intra‐frame motion is negligible.
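At its core, the framing step assigns each list‐mode event to a time window. The sketch below assumes the event times (seconds from scan start) have already been decoded from the list‐mode file, which in practice needs vendor‐specific tools; the helper name and its inputs are hypothetical and not part of any PET software.

```python
import numpy as np


def assign_events_to_frames(event_times_s, frame_starts_s, scan_end_s):
    """Return the MAF frame index of each list-mode event (hypothetical helper).

    frame_starts_s must be sorted, with the first entry at the scan start.
    Events before the first frame or at/after scan_end_s are labelled -1.
    """
    t = np.asarray(event_times_s, dtype=float)
    starts = np.asarray(frame_starts_s, dtype=float)
    # side="right": an event exactly on a frame boundary goes to the later frame
    idx = np.searchsorted(starts, t, side="right") - 1
    idx[(t < starts[0]) | (t >= scan_end_s)] = -1
    return idx


# Three frames starting at 0, 60 and 120 s; acquisition ends at 180 s
labels = assign_events_to_frames([0, 30, 59.9, 60, 150, 200], [0, 60, 120], 180)
counts = np.bincount(labels[labels >= 0])  # events (counts) per frame
# labels -> [0, 0, 0, 1, 2, -1]; counts -> [3, 1, 1]
```

Each frame's events would then be reconstructed separately, and the resulting images co‐registered, as described above.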

External motion tracking devices can also be installed to monitor motion (see Maclaren, Herbst, Speck, & Zaitsev, 2013 for a detailed review). These devices can provide excellent motion estimation accuracy and sample motion parameters at millisecond temporal resolution, but they are generally complex to set up and often have patient compliance issues. Additionally, the MR compatibility and PET attenuation of an external device have to be considered thoroughly for use in a hybrid MR‐PET scanner. Because of the workflow complexity and potential patient compliance issues, external device motion correction methods are currently not commonly used in routine clinical and experimental studies.

Data‐driven methods form another category of motion correction methods in PET imaging. Recently, Thielemans et al. applied principal component analysis (PCA) to detect head movements directly from PET sinogram or list‐mode data, and then used the estimated motion information to guide the MAF framing process (Schleyer et al., 2015; Thielemans, Schleyer, Dunn, Marsden, & Manjeshwar, 2013). The PCA motion detection method identifies changes in the principal components of the PET time activity curve, and compared with the conventional MAF technique, PCA‐guided MAF has been shown to reduce intra‐frame motion in PET image reconstruction. However, PCA motion detection only works when the tissue biological kinetics are stable, so that any signal change in the tissue time activity curve can be attributed to motion. This assumption is invalid in dynamic PET data acquisition, where tracer uptake in the brain increases with time. Furthermore, PET‐based methods rely on co‐registration of PET images, which have intrinsically lower spatial resolution and anatomical contrast than MR images.

Recently, slow infusion based dynamic fluorodeoxyglucose ([18‐F]FDG) PET imaging has shown promising results for investigating dynamic brain metabolism (Hahn et al., 2016; Villien et al., 2014). In these methods, PET list‐mode data are acquired for 60–90 min and then binned into 1‐min frames. Because of the long acquisition time, motion correction is critical in dynamic PET imaging. Conventional PET data‐driven approaches cannot accurately estimate the motion because the radioactivity distribution in the brain accumulates and changes over time. A reliable motion correction method for these experiments is therefore still required.

Simultaneous MR‐PET makes it possible to model head motion from high‐resolution MR images and then correct motion artefacts in PET images. While PET data‐driven methods may work for [18‐F]FDG PET when the signal‐to‐noise ratio (SNR) is sufficient, MR‐based motion correction can be advantageous in many applications, including low‐dose FDG PET and other tracers, such as receptor‐targeted PET, where spatial SNR is limited. Echo Planar Imaging (EPI) MRI volumes are often used as image navigators to track head movements. In Blood‐Oxygen‐Level Dependent functional MRI (BOLD fMRI) experiments, dynamic EPI volumes are acquired every repetition time (TR of 2 s or less) and provide motion estimates for PET data correction (Catana et al., 2011; Ullisch et al., 2012). Single EPI volumes, often several minutes apart, can also be inserted between MR sequences (Keller et al., 2015). The specific advantages of EPI navigators for motion correction are their high temporal resolution (on the order of seconds) and their spatial resolution and SNR, which are sufficient for accurate image registration. Many software toolboxes (e.g., FSL, SPM, ANTS) have been developed to co‐register fMRI EPI volumes, and these tools achieve excellent co‐registration accuracy. Ardekani, Bachman, and Helpern (2001) demonstrated that co‐registration accuracy on the order of 0.20 mm can be obtained when the image SNR is greater than 5. However, inserting EPI acquisitions between MR sequences takes additional imaging time. In recent work, EPI navigators have also been embedded directly into T1 weighted MR sequences (i.e., in every TR) for intra‐sequence motion correction (Tisdall et al., 2016). However, this method lengthens the minimum TR and is not routinely available for other MR sequences.

Our aim in this research was to develop a fully automated MR‐guided method based on co‐registration of multicontrast MR images with different resolutions and imaging parameters. The MR‐guided PET motion correction method has the following advantages: (a) it is fully automated and does not require any image or k‐space navigators, (b) it provides excellent motion estimation accuracy owing to the high spatial resolution of the MR images and (c) it can be applied in both static and dynamic PET experiments. The introduced method, MR‐guided MAF, optimises the MR image registration for different types of MR image contrast (e.g., T1, T2, EPI BOLD, Diffusion Weighted Imaging (DWI), Arterial Spin Labelling (ASL)). Including different MR image contrasts makes it possible to correct motion throughout the complete neuroimaging examination. Like BOLD EPIs, DWI and ASL sequences are dynamic scans and can be used to extract motion estimates with high temporal resolution. Anatomical T1 and T2 weighted MR acquisitions normally have long scan durations (e.g., 5–10 min), so motion estimates derived from them have limited temporal resolution. Nevertheless, in a comparable approach, Keller et al. (2015) demonstrated improved PET image quality using navigators several minutes apart. The MR‐guided MAF extracts motion parameters, which are then used to rebin the PET raw data for a multiple acquisition frame reconstruction. The PET attenuation map can also be re‐aligned to match the head position in each frame to further improve the quality of the PET image reconstruction. The MR‐guided MAF method was evaluated on a volunteer with controlled head motion as well as on a cohort of 10 subjects who were instructed to minimise their head motion during data acquisition.
Participants in the cohort were slowly administered 260 MBq [18‐F]FDG at a constant infusion rate. To the best of our knowledge, the impact of MR‐based PET motion correction has not previously been investigated in a cohort of subjects undergoing a slow infusion FDG PET experiment. The motion correction performance of the MR‐guided MAF method was evaluated in the cohort using a static (single frame) PET image reconstruction. The impact of PET attenuation map re‐alignment was investigated in the motion controlled experiment. Improvements in image sharpness and in the accuracy of PET image quantification in dynamic PET image reconstruction were also investigated.

2. METHODS

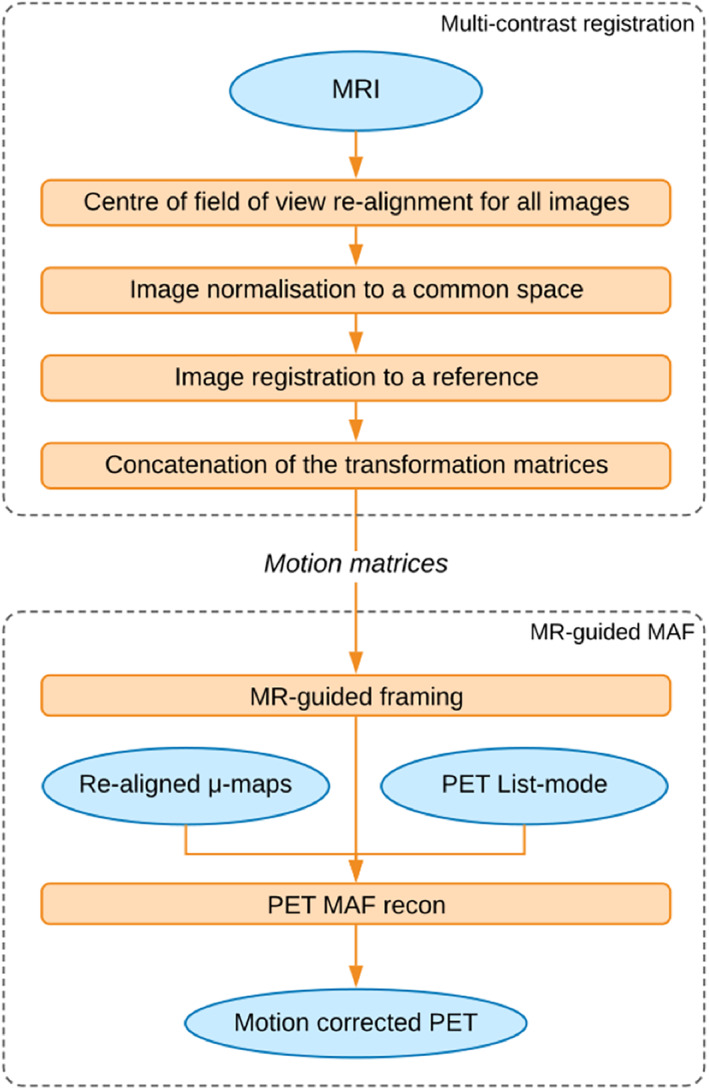

The MR‐guided MAF method contains two main steps: (a) multicontrast MR co‐registration and (b) the MR‐guided MAF PET image reconstruction. In the first step, the motion parameters are estimated by image registration and concatenation of transformation matrices. These motion parameters are then used to guide the reconstruction of static or dynamic PET images in the second step. An overview of the MR‐guided MAF method is shown in Figure 1.

FIGURE 1.

Overview of the MR‐guided MAF method

2.1. Motion estimation based on multicontrast MR image registration

2.1.1. Selection of reference image for registration

A reference image was first selected for registration of the multicontrast MR images. The selected reference image was chosen to minimise the bias introduced by image registration. T1‐weighted contrasts are typically used as the anatomical reference due to their good grey/white matter contrast. In this work, we compared the registration imprecision from both 3D isotropic T1‐weighted and T2‐weighted images as references.

To determine which of the T1 and T2 weighted images is the optimal anatomical reference, an IIDA brain phantom (Iida et al., 2013) was used to acquire T1 weighted 3D Magnetization‐Prepared Rapid Gradient‐Echo (MPRAGE), T2 weighted 3D Fluid‐Attenuated Inversion Recovery (FLAIR), Proton Density (PD) weighted, Diffusion Weighted Imaging (DWI) based on SE‐EPI (Spin Echo‐Echo Planar Imaging), Blood‐Oxygen‐Level Dependent functional MRI (BOLD fMRI) using GE‐EPI (Gradient Echo EPI) and 2D Gradient Echo (GRE) images (see Table 1 for detailed acquisition parameters). The brain phantom was fixed inside the head coil and kept free of motion during the acquisition. Each MR image contrast was then rigidly registered (6 degrees of freedom) to the T1 and T2 weighted images. The acquisition was repeated four times to calculate the standard errors of the mean registration bias. In this work, we selected the T2 weighted FLAIR image as the reference image (see Results section).

TABLE 1.

MR image acquisition parameters for the motion instructed volunteer and the phantom experiments

| Scan | TR [ms] | TE [ms] | Matrix | Slices | Resolution [mm] |
|---|---|---|---|---|---|
| UTE | 11.94 | 0.07, 2.46 | 192 × 192 | 192 | 1.5 × 1.5 × 1.5 |
| T1 MPRAGE | 1,640 | 2.34 | 256 × 256 | 176 | 1.0 × 1.0 × 1.0 |
| T2 FLAIR | 3,200 | 418 | 256 × 256 | 176 | 1.0 × 1.0 × 1.0 |
| Proton density | 3,800 | 16 | 128 × 128 | 34 | 1.0 × 1.0 × 2.0 |
| ASL | 2,500 | 13 | 64 × 64 | 9 | 4.0 × 4.0 × 10.0 |
| BOLD fMRI | 3,200 | 20 | 64 × 64 | 60 | 3.5 × 3.5 × 3.0 |
| DWI | 13,100 | 110 | 96 × 96 | 60 | 2.5 × 2.5 × 2.5 |
| 2D GRE | 466 | 4.92, 7.38 | 64 × 64 | 44 | 3.0 × 3.0 × 3.0 |

TR, repetition time [ms]; TE, echo time [ms]; Resolution, voxel dimensions [mm].

2.1.2. Preprocessing of images

To improve image registration accuracy and robustness, brain extraction (BET, Smith, 2002) was applied to each MR image.

For EPI acquisitions (i.e., BOLD and diffusion weighted), geometric distortion correction was applied using an EPI image acquired with the opposite phase‐encoding direction. The distortion correction was implemented using the FSL‐TOPUP toolkit (Andersson, Skare, & Ashburner, 2003).

The T2 weighted image was segmented into grey matter, white matter and CSF using FAST (Zhang, Brady, & Smith, 2001). The segmented white matter boundaries were used to improve the registration accuracy of the BOLD and Arterial Spin Labelling (ASL) MR images to the reference T2 weighted image.
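The preprocessing steps above map onto standard FSL command‐line tools. The sketch below only builds the command lines (running them requires an FSL installation); all file names, including the TOPUP acquisition‐parameter file, are hypothetical placeholders, and the exact options used by the authors' package are not stated in this article.

```python
import shlex


def preprocessing_commands(t2, epi, blip_pair, acqparams, out):
    """Build (do not run) FSL command lines for the preprocessing steps.

    All file names are hypothetical. acqparams is the TOPUP text file giving
    the phase-encoding direction and readout time of each volume in blip_pair.
    """
    return [
        # Estimate the susceptibility field from EPI volumes acquired with
        # opposite phase-encoding directions
        ["topup", f"--imain={blip_pair}", f"--datain={acqparams}",
         "--config=b02b0.cnf", f"--out={out}/topup"],
        # Apply the field to the EPI series (row 1 of acqparams describes it)
        ["applytopup", f"--imain={epi}", "--inindex=1", f"--datain={acqparams}",
         f"--topup={out}/topup", "--method=jac", f"--out={out}/epi_dc"],
        # Brain extraction (BET) of the corrected EPI and of the T2 reference
        ["bet", f"{out}/epi_dc", f"{out}/epi_brain"],
        ["bet", t2, f"{out}/t2_brain"],
        # FAST tissue segmentation: -t 2 declares T2-weighted input, -n 3 classes
        ["fast", "-t", "2", "-n", "3", "-o", f"{out}/t2_seg", f"{out}/t2_brain"],
    ]


for cmd in preprocessing_commands("t2_flair.nii.gz", "bold.nii.gz",
                                  "bold_blip_pair.nii.gz", "acqparams.txt", "work"):
    print(shlex.join(cmd))  # with FSL installed: subprocess.run(cmd, check=True)
```

Keeping commands as argument lists (rather than shell strings) avoids quoting problems when paths contain spaces. FAST's partial‐volume output ordering should be checked before picking the white matter map for boundary‐based registration.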

2.1.3. Multicontrast image registration

Motion matrices containing the rotational and translational parameters for the anatomical MR images (i.e., T1, T2 and PD) and multivolume images (i.e., BOLD, ASL and DWI) were estimated as per the following steps:

1) All MR images (the first volume, for multivolume MRI) were normalised to the image space of the reference, accounting for differences in field‐of‐view and image resolution.

2a) For anatomical MRI, each image contrast was rigidly registered to the T2 weighted reference image using FSL FLIRT (Jenkinson & Smith, 2001).

2b) For multivolume MRI, only the first image volume was rigidly registered to the reference. The registration steps for BOLD fMRI and ASL were optimised using white matter boundaries from the T2 weighted image. Each of the remaining volumes was then aligned to the first volume using MCFLIRT (Jenkinson, Bannister, Brady, & Smith, 2002) for ASL and BOLD fMRI, and using EDDY (Andersson & Sotiropoulos, 2016) for DWI (with a b0 volume as the first volume).

3a) For anatomical MRI, the motion matrix of the corresponding image contrast was calculated by multiplying the inverse of the transformation matrix from step 1) and the registration matrix from step 2a).

3b) For multivolume MRI, the motion matrix of the corresponding image volume was calculated by multiplying the inverses of the registration matrices obtained in step 2b).
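The matrix arithmetic in the motion‐matrix calculations above (the anatomical and the multivolume case) can be illustrated with 4 × 4 homogeneous matrices. This is a minimal sketch, not the package's implementation: the rotation convention (Rz·Ry·Rx), the multiplication order and all function names are our assumptions.

```python
import numpy as np


def rigid_matrix(rx, ry, rz, tx, ty, tz):
    """4x4 rigid-body matrix. Rotations in rad, composed as Rz @ Ry @ Rx
    (an assumed convention), translations in mm."""
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    Rx = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    Ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    Rz = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    M = np.eye(4)
    M[:3, :3] = Rz @ Ry @ Rx
    M[:3, 3] = [tx, ty, tz]
    return M


def anatomical_motion(norm_mat, reg_mat):
    """Anatomical case: inverse of the step-1 normalisation matrix times the
    step-2a registration matrix (multiplication order is an assumption)."""
    return np.linalg.inv(norm_mat) @ reg_mat


def multivolume_motion(first_to_ref, vol_to_first):
    """Multivolume case: product of the inverses of the step-2b matrices, i.e.
    the first-volume-to-reference matrix and each volume-to-first-volume
    matrix (order again assumed)."""
    ref_inv = np.linalg.inv(first_to_ref)
    return [ref_inv @ np.linalg.inv(m) for m in vol_to_first]


# FLIRT writes 4x4 matrices as plain text, e.g. np.loadtxt("t1_to_t2.mat")
norm = rigid_matrix(0, 0, 0, 5.0, 0, 0)
reg = rigid_matrix(0, 0, 0, 7.0, 0, 0)
print(anatomical_motion(norm, reg)[:3, 3])  # translation part: [2. 0. 0.]
```

Homogeneous matrices make chaining transforms a plain matrix product, and inverting a registration (which maps a moved image back onto the reference) gives the motion itself.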

Rotational and translational parameters, as well as the mean displacement, were derived from the estimated motion matrices. During periods without MR acquisition (MR idle time), the last known motion estimates were used. For anatomical MR images (e.g., T1 and T2 weighted), which take several minutes to acquire, the estimated motion parameters represent the average motion over the acquisition period.
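The article does not give the formula for the mean displacement. A common choice, assumed here, is the average distance that points inside a head‐sized sphere (radius 80 mm, also an assumption) move under the motion matrix. The sketch also recovers the six rigid‐body parameters for an assumed Rz·Ry·Rx rotation convention and holds the last known estimate during MR idle time; all names are hypothetical.

```python
import numpy as np


def euler_zyx(R):
    """(rx, ry, rz) in rad for R = Rz @ Ry @ Rx (assumed convention, |ry| < pi/2)."""
    ry = np.arcsin(-R[2, 0])
    rx = np.arctan2(R[2, 1], R[2, 2])
    rz = np.arctan2(R[1, 0], R[0, 0])
    return rx, ry, rz


def motion_parameters(M):
    """Six parameters [rx, ry, rz, tx, ty, tz] from a 4x4 rigid motion matrix."""
    return np.array([*euler_zyx(M[:3, :3]), *M[:3, 3]])


def sphere_points(radius_mm=80.0, step_mm=10.0):
    """Grid points (mm) inside a sphere centred at the origin."""
    g = np.arange(-radius_mm, radius_mm + step_mm / 2, step_mm)
    pts = np.stack(np.meshgrid(g, g, g, indexing="ij"), axis=-1).reshape(-1, 3)
    return pts[np.linalg.norm(pts, axis=1) <= radius_mm]


def mean_displacement(M, points_mm):
    """Average Euclidean distance (mm) that the points move under M."""
    moved = points_mm @ M[:3, :3].T + M[:3, 3]
    return float(np.mean(np.linalg.norm(moved - points_mm, axis=1)))


def last_known(query_t, est_t, est_vals):
    """Hold the most recent estimate during MR idle time; before the first
    estimate, the first one is used."""
    i = np.searchsorted(est_t, query_t, side="right") - 1
    return np.asarray(est_vals)[np.clip(i, 0, None)]


M = np.eye(4)
M[:3, 3] = [3.0, 4.0, 0.0]
print(mean_displacement(M, sphere_points()))  # 5.0 for a pure translation
```

For a pure translation every point moves by the same distance, so the mean displacement equals the translation length; rotations contribute more for points far from the rotation centre.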

2.2. Multiple acquisition frame correction

2.2.1. MR‐guided MAF

The mean displacement parameter was used to guide the multiple acquisition frame (MAF) algorithm in subdividing the PET list‐mode data into motion correction frames. Specifically, a new motion correction frame was formed when either of the following two criteria indicated that motion had occurred:

The absolute difference between the mean displacement values of two consecutive volumes was greater than a predefined threshold parameter (d1). This criterion detected sudden movements. The parameter d1 was set to 2 mm in this work.

The mean displacement between the current volume and the reference was greater than a predefined threshold parameter (d2). This criterion detected gradual motion (e.g., the subject's head position slowly drifting). The parameter d2 was also set to 2 mm in this work.

The threshold values d1 and d2 were a compromise between computational time and motion estimation accuracy, with 2 mm chosen because it was smaller than the voxel size of the reconstructed PET images. Furthermore, the minimum duration of a motion correction frame was 1 min to ensure that each frame had sufficient radioactivity counts to reconstruct a PET image.
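The framing rule can be sketched as a single pass over the MR‐derived mean displacement series, using the 2 mm thresholds and the 1‐min minimum frame duration stated above. One reading is assumed for the gradual‐drift criterion: "the reference" is taken to be the displacement at the start of the current frame, since comparing against the fixed reference image would re‐trigger on every volume once the head had drifted. The function name is hypothetical.

```python
import numpy as np


def mr_guided_frames(times_s, mean_disp_mm, d1=2.0, d2=2.0, min_frame_s=60.0):
    """Return start times (s) of motion correction frames (hypothetical helper).

    times_s: sorted acquisition times of the MR volumes/images.
    mean_disp_mm: mean displacement of each volume relative to the reference image.
    Assumption: the drift criterion compares against the displacement at the
    start of the current frame.
    """
    t = np.asarray(times_s, dtype=float)
    md = np.asarray(mean_disp_mm, dtype=float)
    starts = [float(t[0])]
    frame_ref = md[0]
    for i in range(1, len(t)):
        sudden = abs(md[i] - md[i - 1]) > d1   # criterion 1: sudden movement
        drift = abs(md[i] - frame_ref) > d2    # criterion 2: gradual drift
        long_enough = t[i] - starts[-1] >= min_frame_s
        if (sudden or drift) and long_enough:
            starts.append(float(t[i]))
            frame_ref = md[i]
    return starts


# A 3 mm jump at 100 s, then a 0.5 mm per 10 s drift from 200 s onwards
times = np.arange(0, 310, 10)
md = np.where(times < 100, 0.0, 3.0) + np.where(times >= 200, 0.5 * (times - 200) / 10, 0.0)
print(mr_guided_frames(times, md))  # [0.0, 100.0, 250.0]
```

In the example, the drift exceeds 2 mm again at 300 s, but that frame would be shorter than 1 min, so no new frame is opened.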

μ‐Map realignment

The attenuation correction μ‐map was re‐aligned to the head position for each motion correction frame prior to the PET image reconstruction. All the MR derived motion parameters within one motion correction frame were averaged to obtain an averaged motion estimate which was then applied to the original μ‐map data.
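The μ‐map re‐alignment can be sketched with `scipy.ndimage.affine_transform`: average the motion parameters falling within a frame, build a rigid transform and resample the μ‐map. The parameter layout (rotations in radians about the volume centre, translations in voxels along the array axes), the rotation convention and the direction of the applied transform are assumptions for illustration; the function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import affine_transform


def rotation_zyx(rx, ry, rz):
    """3x3 rotation R = Rz @ Ry @ Rx (assumed convention), angles in rad."""
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    Rx = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    Ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    Rz = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    return Rz @ Ry @ Rx


def realign_mu_map(mu_map, frame_params):
    """Move the mu-map by the frame-averaged motion.

    frame_params: (n, 6) estimates [rx, ry, rz, t0, t1, t2] within one frame;
    rotations in rad about the volume centre, translations in voxels along
    array axes 0, 1, 2 (units and axes are assumptions).
    """
    rx, ry, rz, *t = np.mean(np.asarray(frame_params, dtype=float), axis=0)
    R = rotation_zyx(rx, ry, rz)
    c = (np.asarray(mu_map.shape, dtype=float) - 1) / 2
    # Forward motion: p -> R (p - c) + c + t. affine_transform samples the input
    # at matrix @ o + offset for each output voxel o, so pass the inverse mapping.
    R_inv = R.T
    offset = c - R_inv @ (c + np.asarray(t))
    return affine_transform(mu_map, R_inv, offset=offset, order=1,
                            mode="constant", cval=0.0)
```

Averaging Euler angles is reasonable here because head rotations are small; for large rotations, averaging rotation matrices or quaternions would be more robust.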

Timestamps, re‐aligned attenuation maps and PET list‐mode data were used with the PET image reconstruction software (see Section 2.3) to reconstruct one PET image per motion correction frame. The final motion corrected static PET image was calculated using the frame duration weighted average of the motion corrected PET images. The dynamic PET images were reconstructed by re‐binning the list‐mode data into 90 frames (1 min per frame), and the averaged motion parameters within each of these frames were used to correct for head motion.
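The frame‐duration‐weighted average used for the static image is a weighted mean over frames. A minimal NumPy sketch, assuming the per‐frame reconstructions are already on a common voxel grid (the helper name is hypothetical):

```python
import numpy as np


def duration_weighted_average(frame_images, durations_s):
    """Combine per-frame reconstructions, weighting each by its frame duration."""
    imgs = np.stack([np.asarray(im, dtype=float) for im in frame_images])
    w = np.asarray(durations_s, dtype=float)
    # tensordot sums w[k] * imgs[k] over the frame axis k
    return np.tensordot(w, imgs, axes=1) / w.sum()


a = np.ones((2, 2))
static = duration_weighted_average([a, 4 * a], [60.0, 180.0])  # every voxel = 3.25
```

Weighting by duration means a long, motion‐free frame contributes proportionally more than a short frame opened after a movement, matching what a single uninterrupted reconstruction would weight.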

2.2.2. Fixed frame MAF

The fixed frame MAF is often used to compare motion correction methods (Schleyer et al., 2015). In the fixed frame MAF, the PET list‐mode data were first re‐binned into 1 min length frames, which were then reconstructed and registered to a reference image using FSL‐FLIRT (normalised mutual information as cost function) to generate a motion corrected dynamic PET image series. The final PET image was computed as the average of the motion corrected images. The 1 min frame length was required in order to have sufficient counts for the PET image reconstruction and image co‐registration.
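For comparison, the fixed‐frame MAF needs only regular frame boundaries and one image registration per frame. The sketch below builds the 1‐min boundaries and the corresponding FLIRT command lines (6 degrees of freedom, normalised mutual information cost); the file names and helper names are hypothetical, and the commands are not executed.

```python
import numpy as np


def fixed_frame_edges(scan_s, frame_s=60.0):
    """Frame boundaries for fixed-length MAF; the last frame is clipped to scan_s."""
    n = int(np.ceil(scan_s / frame_s))
    return np.minimum(np.arange(n + 1) * frame_s, scan_s)


def flirt_frame_commands(frame_files, ref_file):
    """FLIRT command lines (not executed) registering each frame image to the
    reference with 6 DOF and normalised mutual information."""
    cmds = []
    for f in frame_files:
        stem = f[:-len(".nii.gz")] if f.endswith(".nii.gz") else f
        cmds.append(["flirt", "-in", f, "-ref", ref_file, "-dof", "6",
                     "-cost", "normmi", "-omat", f"{stem}_to_ref.mat",
                     "-out", f"{stem}_reg.nii.gz"])
    return cmds


edges = fixed_frame_edges(90 * 60)  # 91 edges -> 90 one-minute frames
```

After registration, the final fixed‐MAF image is the plain mean of the registered frames (for example `np.mean(np.stack(frames), axis=0)`), since all frames have equal duration.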

2.3. PET image reconstruction

PET image reconstruction was performed as follows. List‐mode data were reconstructed with an ordinary Poisson ordered‐subsets expectation maximisation algorithm (OP‐OSEM: 21 subsets, 3 iterations) with point spread function (PSF) correction, using a 344 × 344 × 127 matrix with a slice thickness of 2.03 mm and a pixel size of 2.09 mm. The reconstructed PET data were smoothed using a 3D Gaussian filter (5 mm in all directions). The image reconstruction was performed using the scanner software.
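To make the OP‐OSEM update concrete, here is a toy dense‐matrix version. The scanner implementation (with PSF modelling, normalisation and attenuation factors) is far more involved; this sketch only illustrates the multiplicative subset update for y ~ Poisson(Ax + r), where r is an additive randoms‐plus‐scatter term, which is what "ordinary Poisson" refers to. It also converts the 5 mm filter width into per‐axis sigmas, assuming the 5 mm is a FWHM and that the array axes are (x, y, z) with the stated voxel sizes.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def op_osem(A, y, r, n_subsets=21, n_iter=3, x0=None):
    """Toy OP-OSEM for y ~ Poisson(A @ x + r), dense system matrix A (bins x voxels)."""
    A, y, r = (np.asarray(v, dtype=float) for v in (A, y, r))
    x = np.ones(A.shape[1]) if x0 is None else np.asarray(x0, dtype=float).copy()
    subsets = [np.arange(s, A.shape[0], n_subsets) for s in range(n_subsets)]
    for _ in range(n_iter):
        for idx in subsets:
            if idx.size == 0:  # more subsets than bins in a toy problem
                continue
            As = A[idx]
            expected = As @ x + r[idx]   # forward projection plus background
            sens = As.sum(axis=0)        # subset sensitivity A_s^T 1
            upd = As.T @ (y[idx] / expected)
            # Multiplicative EM update; voxels unseen by this subset are kept
            x = np.where(sens > 0, x * upd / np.where(sens > 0, sens, 1.0), x)
    return x


def poisson_loglik(A, y, r, x):
    """Poisson log-likelihood (up to a constant) of image x."""
    ybar = A @ x + r
    return float(np.sum(y * np.log(ybar) - ybar))


FWHM_TO_SIGMA = 1.0 / (2.0 * np.sqrt(2.0 * np.log(2.0)))  # about 0.4247


def smooth_fwhm(img, fwhm_mm=5.0, voxel_mm=(2.09, 2.09, 2.03)):
    """Gaussian post-filter specified by FWHM in mm (axis order assumed x, y, z)."""
    sigma_vox = [fwhm_mm * FWHM_TO_SIGMA / v for v in voxel_mm]
    return gaussian_filter(np.asarray(img, dtype=float), sigma=sigma_vox)
```

With one subset, the update reduces to MLEM, whose Poisson log‐likelihood is non‐decreasing across iterations; more subsets trade that guarantee for faster early convergence.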

2.4. Data acquisition

Data from a total of 11 healthy subjects were acquired on an MR‐PET scanner (Siemens Biograph mMR, Erlangen, Germany) equipped with a 20‐channel head and neck coil at Monash Biomedical Imaging, Melbourne, Australia. The human scans were approved by the Monash University human research ethics committee.

2.4.1. Motion controlled study

One healthy volunteer was injected with a bolus of 110 MBq [18‐F]FDG, and instructed to move their head during the scan at specific times. Head motion was introduced during the EPI BOLD, as well as between structural scans (e.g., T1 and T2 weighted scans). MR images were acquired (see Table 1 for acquisition parameters). PET list‐mode data were acquired for 60 min. The PET attenuation map was acquired using the ultrashort echo time (UTE) sequence on the Siemens Biograph mMR.

2.4.2. Group study – Slow infusion based static and dynamic PET imaging

Ten subjects were administered 260 MBq FDG at a constant slow infusion rate of 36 mL/hr over 90 min. MR images were acquired as per Table 3. Prior to data acquisition, the subjects were instructed to keep movements to a minimum during the 90‐min examination.
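As a quick check of the infusion protocol, the nominal infused volume, activity concentration and activity rate follow directly from the stated numbers, assuming the full 260 MBq is delivered uniformly over 90 min and ignoring radioactive decay during the infusion:

$$
V = 36\ \text{mL/h} \times 1.5\ \text{h} = 54\ \text{mL}, \qquad
\frac{260\ \text{MBq}}{54\ \text{mL}} \approx 4.8\ \text{MBq/mL}, \qquad
\frac{260\ \text{MBq}}{90\ \text{min}} \approx 2.9\ \text{MBq/min}
$$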

TABLE 3.

MR image acquisition parameters for the slow infusion FDG PET experiments

| Scan | TR [ms] | TE [ms] | Matrix | Slices | Resolution [mm] |
|---|---|---|---|---|---|
| UTE | 11.94 | 0.07, 2.46 | 192 × 192 | 192 | 1.5 × 1.5 × 1.5 |
| T1 MPRAGE | 1,640 | 2.34 | 256 × 256 | 176 | 1.0 × 1.0 × 1.0 |
| T2 FLAIR | 5,000 | 395 | 256 × 256 | 160 | 1.0 × 1.0 × 1.0 |
| SWI | 31 | 5.70, 10.97, 16.24, 21.25, 26.78 | 384 × 384 | 104 | 0.6 × 0.6 × 1.2 |
| Proton density | 4,800 | 11 | 128 × 128 | 35 | 1.8 × 1.8 × 4.0 |
| ASL | 4,800 | 11 | 64 × 64 | 23 | 3.5 × 3.5 × 4.0 |
| BOLD fMRI | 2,450 | 30 | 64 × 64 | 44 | 3.0 × 3.0 × 3.0 |
| 2D GRE | 466 | 4.92, 7.38 | 64 × 64 | 44 | 3.0 × 3.0 × 3.0 |

TR, repetition time [ms]; TE, echo time [ms]; Resolution, voxel dimensions [mm].

2.5. PET image quality assessment

2.5.1. Static PET image reconstruction – Image sharpness

Relative image sharpness was used to quantify the improvements in the motion corrected PET images. Image sharpness was calculated as the mean absolute Laplacian of Gaussian (LoG) (Schleyer et al., 2015), defined as:

$$
S = \frac{1}{N}\sum_{r} \left| (f \ast H)(r) \right|
$$

where r is the voxel index in the image f(r), N is the number of voxels, and f is convolved (∗) with H, a 9 × 9 × 9 kernel describing the 3D LoG with a standard deviation of 1.9. The group sharpness index was calculated as the mean and standard error across the 10 subjects in the slow infusion study.
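The sharpness index can be computed with `scipy.ndimage.gaussian_laplace`. This sketch assumes the standard deviation of 1.9 is in voxels and chooses the `truncate` argument so that the kernel radius is 4 voxels, giving the 9 × 9 × 9 support described above (SciPy uses a radius of `int(truncate * sigma + 0.5)`). The function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter, gaussian_laplace


def sharpness_index(img, sigma=1.9, radius=4):
    """Mean absolute LoG response of a 3D image (sigma assumed in voxels)."""
    truncate = radius / sigma  # kernel radius = int(truncate * sigma + 0.5) = 4
    log = gaussian_laplace(np.asarray(img, dtype=float), sigma=sigma,
                           truncate=truncate)
    return float(np.mean(np.abs(log)))


def relative_change_percent(s_new, s_ref):
    """Percentage change in sharpness of s_new relative to s_ref."""
    return 100.0 * (s_new - s_ref) / s_ref


# A sharp-edged cube loses LoG response once it is blurred
cube = np.zeros((32, 32, 32))
cube[8:24, 8:24, 8:24] = 1.0
blurred = gaussian_filter(cube, sigma=2.0)
change = relative_change_percent(sharpness_index(blurred), sharpness_index(cube))
```

Blurring spreads each edge over more voxels and lowers its second derivative, so motion blur reduces the index; the percentage figures reported in the Results are relative changes of this kind.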

2.5.2. Dynamic PET image reconstruction – DICE coefficients

To assess the quality of the slow infusion dynamic PET data, DICE coefficients were calculated as follows. First, grey matter (GM) was segmented from the T2 weighted reference image using FAST with a probability threshold of 0.5 (Zhang et al., 2001). The DICE coefficients for the GM region were then calculated for images reconstructed using the MR‐guided MAF method, the fixed‐MAF method and the original motion corrupted images:

$$
\mathrm{DICE} = \frac{2\,\lvert GM_{\mathrm{ref}} \cap GM_{\mathrm{PET}} \rvert}{\lvert GM_{\mathrm{ref}} \rvert + \lvert GM_{\mathrm{PET}} \rvert}
$$

where GM_ref is the grey matter mask from the T2 reference image and GM_PET is the grey matter mask segmented from each dynamic PET image (Hatt, Cheze le Rest, Turzo, Roux, & Visvikis, 2009). The operator ∩ denotes the intersection of two spatial masks and |·| the number of voxels in a mask. The DICE coefficient ranges from 0 (no spatial overlap between the two masks) to 1 (complete spatial overlap).
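The DICE coefficient is a direct count of overlapping mask voxels. A minimal NumPy sketch follows. It assumes both masks are on the same voxel grid and obtains a mask by thresholding a probability map at 0.5; how the PET grey matter mask itself was segmented is not reproduced here. Treating two empty masks as a perfect match is a convention we chose, and the names are hypothetical.

```python
import numpy as np


def mask_from_probability(pve, threshold=0.5):
    """Binary mask from a tissue probability (partial volume) map."""
    return np.asarray(pve, dtype=float) > threshold


def dice(mask_a, mask_b):
    """DICE = 2|A ∩ B| / (|A| + |B|); 1.0 if both masks are empty (convention)."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    denom = a.sum() + b.sum()
    return 2.0 * np.logical_and(a, b).sum() / denom if denom else 1.0


gm_ref = mask_from_probability([0.9, 0.7, 0.2, 0.1])  # [True, True, False, False]
gm_pet = np.array([True, False, True, False])
score = dice(gm_ref, gm_pet)  # 2 * 1 / (2 + 2) = 0.5
```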

2.6. Software availability

The MR‐guided MAF method has been implemented in a fully automated software package, written in Python using the Arcana framework (Close et al., 2018), which is available as a web service: http://mbi-tools.erc.monash.edu/motion_correction. The source code for the package can be found at: https://github.com/MonashBI/banana/releases/tag/v0.2.0.

3. RESULTS

3.1. Selection of MR reference image

Table 2 compares the image registration imprecision of the different MR image contrasts registered to the T1 weighted MPRAGE and to the T2 weighted FLAIR images. The mean registration errors for both the T1 and T2 weighted references were less than 1 mm. The optimal reference image was determined by comparing the mean registration errors for the two reference contrasts: the T2 FLAIR reference gave a lower mean registration error for every MR contrast acquired in this study.

TABLE 2.

Comparison of image registration bias (in mm) using T1‐MPRAGE and T2‐FLAIR images as references

| Image contrast | T1‐MPRAGE reference | T2‐FLAIR reference |
|---|---|---|
| GRE‐EPI | 0.92 ± 0.24 | 0.54 ± 0.21 |
| Proton density | 0.51 ± 0.10 | 0.36 ± 0.11 |
| SE‐EPI | 0.52 ± 0.04 | 0.23 ± 0.04 |
| 2D GRE | 0.96 ± 0.17 | 0.39 ± 0.10 |

3.2. Motion controlled study results

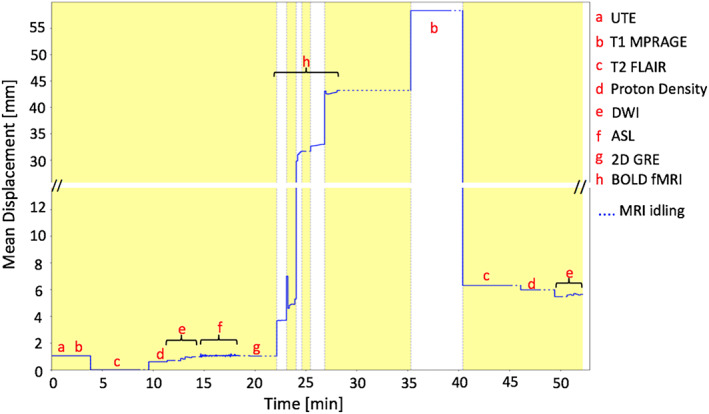

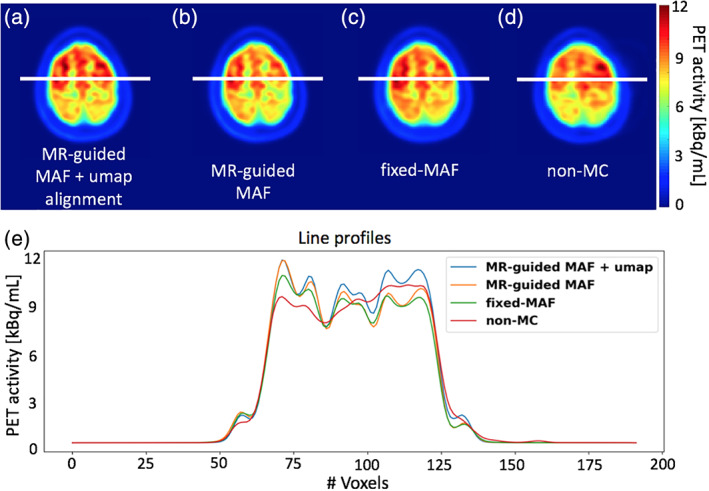

The results for the single subject controlled head motion study, in which the volunteer was instructed to move their head multiple times during the examination, are given in Figures 2 and 3. The mean displacement plot (Figure 2) shows a maximum movement close to 60 mm. Translation and rotation motion parameters are shown in Supporting Information Figures S1a and S1b. The image from the MR‐guided MAF with μ‐map realignment shows symmetric radiotracer uptake in the two hemispheres of the brain (Figure 3a). Images reconstructed with the MR‐guided MAF without μ‐map realignment (Figure 3b), with the fixed MAF (Figure 3c) and without motion correction (Figure 3d) show asymmetric radiotracer uptake in the brain hemispheres, which is also evident in the line profiles drawn across the hemispheres in each image (Figure 3e). The asymmetric radiotracer uptake is most likely due to misalignment in sinogram space between the μ‐map and the head position (see Supporting Information Figure S2). Based on the sharpness index, the images from the MR‐guided MAF with μ‐map realignment showed an approximately 23% increase in image sharpness compared with the original motion corrupted images, and an approximately 4% increase compared with the MR‐guided MAF without μ‐map realignment. Furthermore, the fixed‐MAF images showed 21% greater blurriness than the fully corrected images.

FIGURE 2.

Mean displacement plot for the motion instructed volunteer, showing head movement with respect to the T2 weighted reference image as detected by the multicontrast registration method. The alternating yellow/white bands indicate the durations of successive motion correction frames

FIGURE 3.

Motion correction results for the controlled motion experiment. Images in panels (a)–(d) show the reconstructed PET images using different reconstruction methods. The plots in panel (e) show the signal intensity variation along the line profiles in panels (a)–(d)

FIGURE 4.

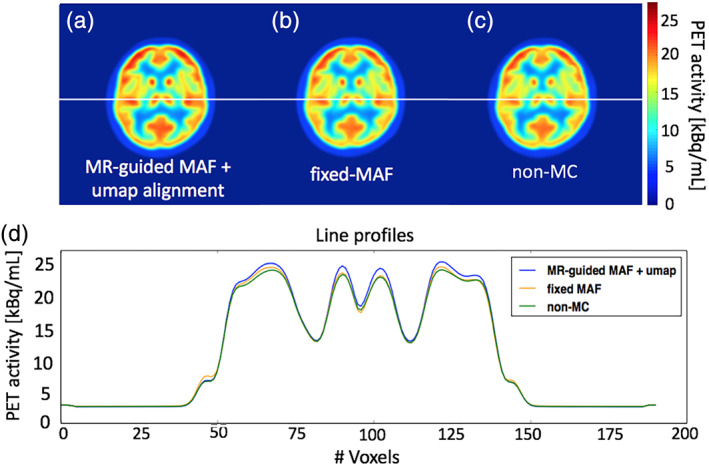

Comparison of the motion correction results for the group averaged image. The images in panels (a)–(c) show the reconstructed PET images using the three different reconstruction methods. The plots in panel (d) show the signal intensity variation along the line profiles in panels (a)–(c)

3.3. Group study results

The 10 participants were instructed to keep motion to a minimum during the 90‐min MR‐PET scan. The estimated motion parameters are shown in Table 4. Overall, a mean displacement of 2–5 mm was observed in the group. Figure 4 compares the group averaged results from the different motion correction methods with the nonmotion corrected images. For each method, the reconstructed images of all subjects were co‐registered to derive the group averaged image. The fully motion corrected image (i.e., MR‐guided MAF with μ‐map realignment, Figure 4a) shows improved grey and white matter contrast compared with the fixed‐MAF (Figure 4b) and the nonmotion corrected images (Figure 4c). The comparison of line profiles (Figure 4d) shows that the best grey and white matter delineation is obtained from the fully corrected image. All three images show symmetric tracer uptake in both hemispheres, consistent with the relatively small estimated mean displacements.

TABLE 4.

The average mean displacement [mm] and the average six motion parameters (three for rotation [mrad] and three for translation [mm]) for the 10 subjects used in group analysis

| Subject | Average mean displacement [mm] | Average rotation x [mrad] | Average rotation y [mrad] | Average rotation z [mrad] | Average translation x [mm] | Average translation y [mm] | Average translation z [mm] |
|---|---|---|---|---|---|---|---|
| Subject 1 | 1.98 ± 0.59 | 10.3 ± 9.7 | 7.4 ± 4.0 | −6.7 ± 3.7 | 0.17 ± 0.08 | −0.30 ± 0.06 | −1.74 ± 0.50 |
| Subject 2 | 5.60 ± 2.27 | 17.1 ± 14.3 | 12.5 ± 8.2 | −4.5 ± 23.8 | 0.58 ± 0.27 | −1.25 ± 0.39 | 4.92 ± 2.62 |
| Subject 3 | 4.05 ± 1.33 | 7.8 ± 6.1 | −12.3 ± 5.9 | −1.5 ± 5.2 | 0.42 ± 1.51 | −0.68 ± 0.12 | −3.63 ± 1.12 |
| Subject 4 | 5.74 ± 3.22 | 39.1 ± 20.3 | 9.7 ± 4.5 | −10 ± 5.1 | 0.03 ± 0.18 | −0.30 ± 0.30 | 5.21 ± 3.20 |
| Subject 5 | 4.18 ± 1.13 | 58.2 ± 20.6 | −9.5 ± 7.0 | −20.2 ± 8.8 | 0.50 ± 0.51 | −0.64 ± 0.22 | 2.37 ± 0.92 |
| Subject 6 | 4.88 ± 1.38 | 15.7 ± 12.5 | −17.1 ± 13.1 | 0 ± 6.7 | 0.97 ± 1.32 | −0.41 ± 0.44 | 4.33 ± 1.26 |
| Subject 7 | 1.82 ± 0.61 | 18.6 ± 6.3 | 4.1 ± 1.5 | 2.2 ± 2.6 | 0.09 ± 0.08 | −0.40 ± 0.14 | −1.45 ± 0.57 |
| Subject 8 | 5.94 ± 1.51 | 26.3 ± 11.8 | 7.6 ± 6.5 | 3.7 ± 11.0 | 0.19 ± 0.11 | −1.45 ± 0.39 | 5.47 ± 1.61 |
| Subject 9 | 5.85 ± 2.54 | 33.5 ± 17.3 | −28.9 ± 6.8 | 36.4 ± 7.2 | −1.16 ± 0.31 | −0.42 ± 0.19 | 4.71 ± 2.70 |
| Subject 10 | 2.56 ± 0.96 | 26.1 ± 15.4 | −0.8 ± 10.3 | −28.6 ± 11.1 | 1.10 ± 0.19 | −0.52 ± 0.14 | 0.70 ± 0.66 |

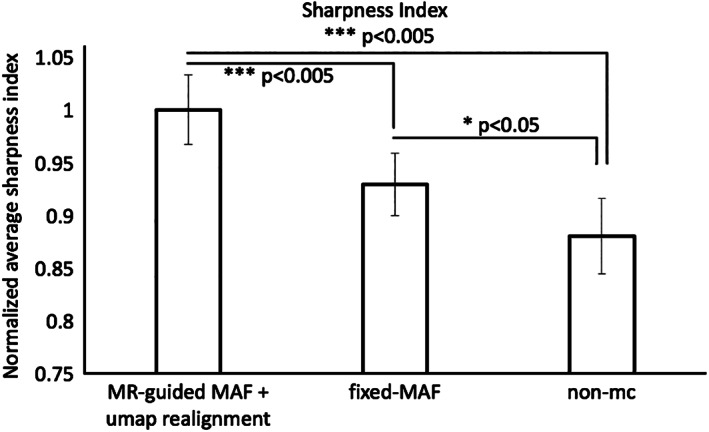

Figure 5 shows the averaged sharpness index (mean and standard error) of the 10 participants. The fully corrected images demonstrate a 7% increase in mean sharpness index compared with fixed‐MAF, and a 12% increase compared with nonmotion corrected images. These differences are all statistically significant (***p < 0.005, *p < 0.05).

FIGURE 5.

Comparison of the averaged (mean and standard errors) sharpness indices for the experimental group of 10 participants

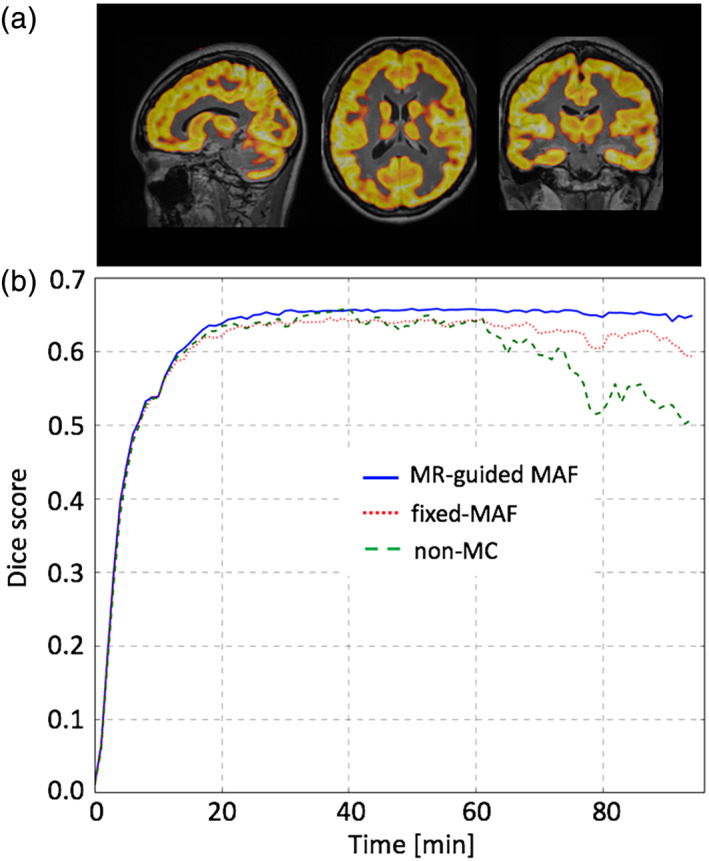

3.4. Dynamic PET image reconstruction

The grey matter segmented from a PET frame, overlaid on the reference image, is shown in Figure 6a. The DICE coefficients were calculated and used to investigate the accuracy of motion correction in the grey matter region. Apart from the first 20 min, where the counts in the PET images were too low, the DICE coefficients of the MR‐guided motion corrected images remained approximately constant at around 0.65 (Figure 6b). The DICE coefficients of the frames aligned using the standard fixed‐MAF approach were lower than those of the MR‐guided MAF method. For both the motion corrupted and the fixed‐MAF corrected images, the DICE coefficients fluctuated considerably toward the end of the 90‐min acquisition, where the head motion was greater (see mean displacement plot in Supporting Information Figure S3). In addition, the percentage difference in the time activity curve between the MR‐guided MAF and the fixed‐MAF varied between 1 and 5%, while that between the MR‐guided MAF and the frames without motion correction reached 15% (see Supporting Information Figures S4a,b).
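The time activity curve comparison can be sketched as a per‐frame mean within a grey matter mask, followed by a percentage difference between two reconstructions. The array layout (frames first) and the helper names are assumptions, and which reconstruction serves as the denominator is our choice.

```python
import numpy as np


def masked_tac(dynamic, mask):
    """Mean uptake inside the mask for each frame; dynamic has shape (frames, x, y, z)."""
    return np.asarray(dynamic, dtype=float)[:, np.asarray(mask, dtype=bool)].mean(axis=1)


def percent_difference(tac_a, tac_b):
    """Frame-wise percentage difference of tac_a relative to tac_b."""
    a = np.asarray(tac_a, dtype=float)
    b = np.asarray(tac_b, dtype=float)
    return 100.0 * (a - b) / b


# Toy 3-frame series whose uptake rises over time, as in a slow infusion
dyn = np.ones((3, 2, 2, 2)) * np.arange(1, 4)[:, None, None, None]
tac = masked_tac(dyn, np.ones((2, 2, 2), dtype=bool))  # [1.0, 2.0, 3.0]
```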

FIGURE 6.

Comparison of the motion correction results between the MR‐guided MAF, fixed‐MAF and for the images without motion correction, for a dynamic PET reconstruction for one test subject. The Dice scores are shown in (b) using grey matter masks shown in (a)

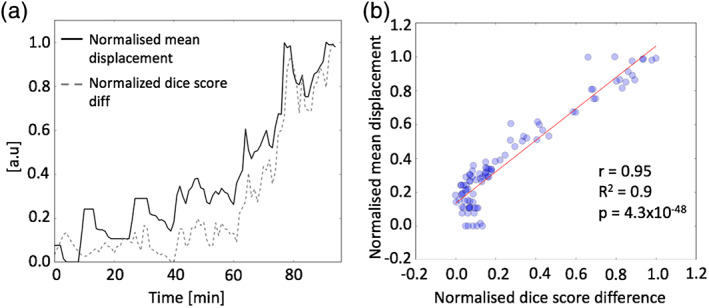

The difference in the DICE coefficients between the nonmotion corrected and the MR‐guided motion corrected frames was significantly correlated with the mean displacement of the head position (Figure 7), supporting the accuracy of the MR‐guided MAF approach.
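The correlation analysis can be sketched as follows. The article does not name the correlation test, so Pearson's r (via `scipy.stats.pearsonr`) is an assumption here, as is the helper name.

```python
import numpy as np
from scipy.stats import pearsonr


def dice_gain_vs_motion(dice_mr, dice_nomc, mean_disp_mm):
    """Per-frame DICE gain from MR-guided correction and its Pearson correlation
    with the mean displacement (test choice is an assumption)."""
    gain = np.asarray(dice_mr, dtype=float) - np.asarray(dice_nomc, dtype=float)
    r, p = pearsonr(gain, np.asarray(mean_disp_mm, dtype=float))
    return gain, float(r), float(p)


# Toy frames: the uncorrected DICE drops as displacement grows
gain, r, p = dice_gain_vs_motion([0.65] * 5, [0.60, 0.55, 0.50, 0.45, 0.40],
                                 [1, 2, 3, 4, 5])
```

A positive r means the benefit of MR‐guided correction grows with head displacement, which is the pattern that would be expected if the motion estimates are accurate.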

FIGURE 7.

Plots of the Dice score differences and the mean displacement between the MR‐based motion corrected and the nonmotion corrected PET images in panel (a). Panel (b) shows the correlation scatter plot between the Dice score differences and the mean displacement

4. DISCUSSION

The aim of this study was to develop a fully automated MR‐based method to estimate and correct head motion in simultaneous MR‐PET imaging. The MR‐guided MAF method relies on co‐registration of multicontrast MR images. One advantage of the MR‐guided method is that no additional imaging navigators or dedicated EPI volumes need to be acquired for tracking motion, as was the case in previous work (Keller et al., 2015; Ullisch et al., 2012), thereby making efficient use of scanning time and simplifying the experimental workflow. Using both bolus injection and slow constant infusion FDG PET datasets, we have shown that the method reduces head‐motion‐induced image artefacts, improves image sharpness and provides more uniform tracer uptake across the brain. Compared with nonmotion corrected images, the relative increase in image sharpness using MR‐guided MAF was approximately 25% in the motion controlled study and approximately 12% on average in the subject cohort. MR‐guided MAF, both with and without μ‐map realignment, performed better than the standard fixed‐MAF, most likely because the fixed‐MAF method does not correct intra‐frame motion. The MR‐guided method can be expected to be robust and accurate even when the PET tracer activity is low, for example, during the early phase of slow infusion experiments and in high temporal resolution dynamic PET image reconstruction (e.g., 20‐s frames).

Our findings highlight the importance of re‐aligning the μ‐map before PET image reconstruction when large motion occurs. Head motion causes a misalignment between the head position during the attenuation map measurement and the head position during PET data acquisition. Consequently, without re‐alignment of the μ‐map, the reconstructed PET image contains regions with inaccurate attenuation correction, leading to significant errors in the quantification of tracer uptake. As our results show, these inaccuracies can be recovered using the MR‐aligned attenuation map. In an [18‐F]FDG PET dementia study, Chen et al. (2018) used a time‐weighted averaged coil μ‐map to account for motion during image reconstruction. With the conventional fixed‐MAF approach, re‐alignment of the μ‐map requires two reconstructions, which can significantly increase the computation time and propagate reconstruction errors.

The robustness and accuracy of the MR‐guided MAF method have been demonstrated using datasets from a group of 10 subjects. With motion between 2 and 5 mm, MR‐guided MAF achieved an increase in image sharpness of around 7% compared with fixed‐MAF correction, and of around 12% with respect to the nonmotion corrected image. Compared with fixed‐MAF, MR‐guided MAF corrects intra‐frame motion more effectively and requires fewer re‐binned frames. Because the method forms fewer frames than the 1 min binned fixed‐MAF method, less computation time is required.
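The difference in frame count follows from how the two approaches re‐bin the data. A minimal sketch, assuming a scalar displacement trace sampled from the MR registrations and a hypothetical 1 mm threshold: a new frame is opened only when the displacement has changed by more than the threshold since the current frame began, whereas fixed‐MAF cuts a frame every 60 s regardless of motion. Comparing a scalar displacement is a simplification (two different head poses can share the same value); a fuller version would compare the 6‐DOF transforms. Function names are hypothetical.

```python
import numpy as np


def motion_based_frames(times_s, displacement_mm, threshold_mm=1.0):
    """Hypothetical MR-guided re-binning into (start_s, end_s) frames.

    A new PET frame is opened whenever the displacement has changed by more
    than threshold_mm since the current frame started.
    """
    times = np.asarray(times_s, dtype=float)
    disp = np.asarray(displacement_mm, dtype=float)
    frames, start = [], 0
    for i in range(1, len(times)):
        if abs(disp[i] - disp[start]) > threshold_mm:
            frames.append((float(times[start]), float(times[i])))
            start = i
    frames.append((float(times[start]), float(times[-1])))  # close the last frame
    return frames


def fixed_frames(t_start_s, t_end_s, bin_s=60.0):
    """Conventional fixed-MAF binning into equal frames (the last may be shorter)."""
    starts = np.arange(t_start_s, t_end_s, bin_s)
    return [(float(s), float(min(s + bin_s, t_end_s))) for s in starts]


# Usage: 10 min of motion samples every 10 s, with a single 3 mm shift at 5 min.
times = np.arange(0, 601, 10)
disp = np.where(times >= 300, 3.0, 0.0)
print(len(motion_based_frames(times, disp)), len(fixed_frames(0, 600)))
```

In this toy trace the motion‐based scheme needs 2 frames, while 1 min binning needs 10, and each of those frames still contains any motion that happens inside its 60 s window.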

MR‐based motion correction has the advantage that MR image co‐registration is more accurate than PET image co‐registration, owing to the high spatial resolution and high contrast‐to‐noise ratio of MR images. Using both T1 and T2 weighted images as reference images, the image registration imprecision was less than 1 mm, which is substantially smaller than the PET image resolution. Furthermore, PET data driven motion correction methods (Schleyer et al., 2015; Thielemans et al., 2013) are heavily dependent on PET radioactivity count rates, which is problematic for short temporal frames and low dose applications. Conversely, MR motion correction using EPI scans can provide a temporal resolution of 2 s or less. Several articles in the literature have used MR navigators to correct motion in PET imaging of the brain. EPI based fMRI scans were first used as motion navigators and demonstrated improved PET image quality in several healthy subjects (Catana et al., 2011; Ullisch et al., 2012). Catana et al. (2011) also implemented and evaluated cloverleaf MRI navigators (CLNs) to reduce motion artefacts. Instead of using dynamic EPI navigators, Keller et al. (2015) inserted EPI volumes several minutes apart to extract motion information throughout the complete PET examination. Chen et al. (2018) employed both fMRI navigators and EPI navigators embedded in the T1 weighted images to improve PET quantification accuracy in a group of FDG PET dementia patients. In our work, we further extended MR‐based PET motion correction and optimised it for other MR contrasts (DWI/DTI, ASL, etc.). This approach offers motion correction throughout the complete course of the PET examination and for most popular MR neuroimaging sequences. The quantitative improvement in PET images was further evaluated in slow infusion FDG PET datasets.
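Registration imprecision and head motion are both quoted in millimetres, while a rigid transform mixes rotations (radians) and translations (mm). One way to put them on a common scale, sketched here under assumptions, is the mean distance moved by points on a head‐sized sphere centred at the rotation centre. The 80 mm radius, the Fibonacci sampling and the function names are hypothetical choices for illustration, not the metric used for Figure S3.

```python
import numpy as np


def fibonacci_sphere(n_points, radius_mm):
    """Approximately uniform points on a sphere surface (Fibonacci lattice)."""
    k = np.arange(n_points) + 0.5
    polar = np.arccos(1.0 - 2.0 * k / n_points)
    azimuth = np.pi * (1.0 + np.sqrt(5.0)) * k
    return radius_mm * np.column_stack(
        [np.cos(azimuth) * np.sin(polar), np.sin(azimuth) * np.sin(polar), np.cos(polar)]
    )


def mean_displacement(rot, trans_mm, radius_mm=80.0, n_points=4000):
    """Mean distance (mm) moved by sphere points under x -> rot @ x + t.

    The sphere is centred at the rotation centre; its radius is an assumption.
    """
    pts = fibonacci_sphere(n_points, radius_mm)
    moved = pts @ np.asarray(rot, dtype=float).T + np.asarray(trans_mm, dtype=float)
    return float(np.linalg.norm(moved - pts, axis=1).mean())


# Usage: a 1 degree rotation about the z axis, with no translation.
theta = np.deg2rad(1.0)
rot_z = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                  [np.sin(theta), np.cos(theta), 0.0],
                  [0.0, 0.0, 1.0]])
print(mean_displacement(rot_z, [0.0, 0.0, 0.0]))
```

For a pure rotation θ about one axis the exact sphere average is (π r / 2) sin(θ/2), about 1.1 mm for 1° at r = 80 mm, which shows why rotations of around a degree already matter at the millimetre scale of PET resolution.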

In our work, motion correction has been implemented as a post‐reconstruction step (i.e., MAF), but there are also methods that apply motion correction prior to or during image reconstruction. These methods are motivated by the consideration that better motion correction can be achieved at the coincidence event level. Two early studies, by Catana et al. (2011) and Ullisch et al. (2012), both applied motion correction to PET list‐mode data prior to image reconstruction. They compared list‐mode motion correction with post‐reconstruction correction using the same motion estimates and found the final reconstructed images to be comparable. The advantage of applying motion correction prior to image reconstruction is that it reduces the total number of reconstruction jobs, resulting in faster overall data processing. On the other hand, the advantage of the MAF based method is its simple implementation and application on clinical and research MR‐PET scanners. Although this work presents an MAF based motion correction, the motion parameters estimated using multicontrast MR image registration could potentially be fed into list‐mode reconstruction. Jiao et al. (2017) proposed a method for joint estimation of PET kinetic parameters and correction of head motion during image reconstruction. Compared with the post‐reconstruction MAF method, their method improved the accuracy of kinetic parameter estimation, especially at low radioactivity doses and when large motion occurred. Their work highlighted that PET data driven MAF methods suffer from inaccurate motion estimation when the SNR is poor.
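As a post‐reconstruction step, MAF reconstructs each motion frame separately, moves each reconstructed frame back into the reference head position and then combines the frames. The sketch below assumes a duration‐weighted mean (so the result stays in activity concentration units) and per‐frame rigid transforms given in SciPy's `affine_transform` "pull" convention; the function name and weighting are hypothetical illustrations rather than the authors' code.

```python
import numpy as np
from scipy.ndimage import affine_transform


def maf_combine(frame_images, frame_durations_s, pull_transforms):
    """Hypothetical post-reconstruction MAF combination.

    Each reconstructed frame is resampled into the reference head position and
    the aligned frames are averaged with weights proportional to frame duration.
    pull_transforms holds one (matrix, offset) pair per frame, mapping reference
    voxel coordinates to that frame's voxel coordinates.
    """
    total = np.zeros(np.shape(frame_images[0]), dtype=float)
    total_time = 0.0
    for image, duration, (matrix, offset) in zip(frame_images, frame_durations_s, pull_transforms):
        aligned = affine_transform(
            np.asarray(image, dtype=float), matrix, offset=offset,
            order=1, mode="constant", cval=0.0,
        )
        total += duration * aligned
        total_time += duration
    return total / total_time


# Usage: the same hot spot seen in two frames, the head having moved +2 voxels in x.
frame_a = np.zeros((10, 10, 10))
frame_a[4, 4, 4] = 1.0  # reference position, 60 s frame
frame_b = np.zeros((10, 10, 10))
frame_b[6, 4, 4] = 1.0  # displaced position, 120 s frame
combined = maf_combine(
    [frame_a, frame_b], [60.0, 120.0],
    [(np.eye(3), np.zeros(3)), (np.eye(3), np.array([2.0, 0.0, 0.0]))],
)
print(combined[4, 4, 4])
```

Passing identity transforms for both frames reproduces an uncorrected sum, in which the hot spot is split between two locations and its peak value drops to one third.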

In this article, we developed and applied the MR‐guided MAF method to slow infusion dynamic PET imaging. Our results show the importance of motion correction for dynamic imaging approaches. Indeed, head movements can lead to large differences in the time activity curves and in the DICE scores measured before and after MR‐based motion correction. The differences between the time activity curves correlated well with the motion estimates.
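Both quantities mentioned above are simple to compute once the images are available. The sketch below shows a DICE overlap between two binary masks and a Pearson correlation between the absolute time activity curve difference and a displacement trace. Which masks were compared, and how curves and motion were sampled in time, are not stated in this section, so the inputs here are hypothetical and the function names are illustrative.

```python
import numpy as np


def dice(mask_a, mask_b):
    """DICE overlap 2|A and B| / (|A| + |B|) between two binary masks."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0  # two empty masks are treated as identical
    return float(2.0 * np.logical_and(a, b).sum() / denom)


def tac_motion_correlation(tac_corrected, tac_uncorrected, displacement_mm):
    """Pearson correlation between |TAC difference| and displacement, frame by frame."""
    diff = np.abs(np.asarray(tac_corrected, dtype=float) - np.asarray(tac_uncorrected, dtype=float))
    return float(np.corrcoef(diff, np.asarray(displacement_mm, dtype=float))[0, 1])


# Usage with toy values: overlap of two small masks, and TAC errors that grow with motion.
print(dice([1, 1, 0, 0], [1, 0, 0, 0]))
print(tac_motion_correlation([10, 10, 10, 10], [10, 9, 8, 7], [0.0, 1.0, 2.0, 3.0]))
```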

4.1. Limitations and future work

The current method uses an MR‐derived attenuation map rather than a 68‐Germanium based PET transmission scan or a CT (X‐ray) derived attenuation map. Previous work by ourselves and others has demonstrated the accuracy of head image segmentation and of assigning tissue attenuation values using advanced MR methods (Baran et al., 2018). However, irrespective of the absolute accuracy of the attenuation maps used in the PET image reconstructions, the advantages of the fully motion corrected method (i.e., MR‐guided MAF with μ‐map re‐alignment) have been clearly demonstrated. One limitation of the current method is the absence of motion estimation during anatomical MR scans (e.g., T1 or T2 weighted). These scans can take several minutes, and motion may occur during these acquisitions. One possible solution is to insert volumetric navigators (Tisdall et al., 2012) into the anatomical MR sequences. Depending on the repetition time of the sequence, such navigators (image or k‐space) can provide motion estimates every few hundred milliseconds to every few seconds. Another possible solution is to use PET raw data driven motion correction during these MR sequences and during periods without MR data acquisition.

5. CONCLUSIONS

In this article, we have introduced a fully automated MR‐based motion correction method and software for simultaneous MR‐PET imaging. In in vivo datasets, the MR‐guided MAF method significantly improved PET image contrast and sharpness compared with both nonmotion corrected images and images from the conventional fixed‐MAF method. The new method has also been applied to a slow FDG infusion dynamic PET study of brain metabolism, where it produced significant improvements in PET image quality and in the accuracy of whole brain and regional time activity curve estimation.

CONFLICT OF INTERESTS

None.

Supporting information

Figure S1 Translational (a) and rotational (b) parameters for the motion instructed volunteer, as determined by the MR‐guided MAF method.

Figure S2 Demonstration of the μ‐map realignment in the MR‐guided MAF method. Images in row (a) show misalignment between the head position and the original μ‐map (highlighted in the circled area). The μ‐map re‐aligned by the MR‐guided MAF method using the MR‐derived motion parameters completely recovers the PET image signal intensity, as shown in row (b).

Figure S3 Mean displacement plot for one subject, showing head movement with respect to the reference images as detected by the MR‐guided MAF method. The alternating yellow/white bands indicate the time durations of the motion correction frames.

Figure S4 Panel (a) shows the differences between the time activity curves extracted from the MR‐based motion correction method (blue), the fixed‐MAF (red) and the nonmotion corrected PET images (green). Panel (b) shows percentage errors between the whole head time activity curves extracted from the MR‐based motion corrected frames and those from the fixed‐MAF (grey line) and from the nonmotion corrected (black line) PET images.

ACKNOWLEDGMENTS

The research was supported by a grant from the Reignwood Cultural Foundation and an Australian Research Council (ARC) Linkage grant (LP170100494). GE is supported by the ARC Centre of Excellence for Integrative Brain Function (CE140100007). The authors acknowledge Richard McIntyre and Alexandra Carey for their assistance in acquiring data, Shenjun Zhong for assistance in software preparation and Kamlesh Pawar for useful discussions. The authors acknowledge the useful discussions with Benjamin Schmitt, Thomas Gaass, and Daniel Staeb from Siemens Healthineers.

Chen Z, Sforazzini F, Baran J, Close T, Shah NJ, Egan GF. MR‐PET head motion correction based on co‐registration of multicontrast MR images. Hum Brain Mapp. 2021;42:4081–4091. 10.1002/hbm.24497

Funding information: ARC Centre of Excellence for Integrative Brain Function, Grant/Award Number: CE140100007; Australian Research Council (ARC) Linkage, Grant/Award Number: LP170100494; Reignwood Cultural Foundation; Siemens Healthineers

REFERENCES

- Alexander‐Bloch, A., Clasen, L., Stockman, M., Ronan, L., Lalonde, F., Giedd, J., & Raznahan, A. (2016). Subtle in‐scanner motion biases automated measurement of brain anatomy from in vivo MRI. Human Brain Mapping, 37, 2385–2397. 10.1002/hbm.23180

- Andersson, J. L. R., Skare, S., & Ashburner, J. (2003). How to correct susceptibility distortions in spin‐echo echo‐planar images: Application to diffusion tensor imaging. NeuroImage, 20, 870–888. 10.1016/S1053-8119(03)00336-7

- Andersson, J. L. R., & Sotiropoulos, S. N. (2016). An integrated approach to correction for off‐resonance effects and subject movement in diffusion MR imaging. NeuroImage, 125, 1063–1078. 10.1016/j.neuroimage.2015.10.019

- Ardekani, B. A., Bachman, A. H., & Helpern, J. A. (2001). A quantitative comparison of motion detection algorithms in fMRI. Magnetic Resonance Imaging, 19, 959–963.

- Baran, J., Chen, Z., Sforazzini, F., Ferris, N., Jamadar, S., Schmitt, B., … Egan, G. F. (2018). Accurate hybrid template‐based and MR‐based attenuation correction using UTE images for simultaneous PET/MR brain imaging applications. BMC Medical Imaging, 18, 41.

- Catana, C., Benner, T., van der Kouwe, A., Byars, L., Hamm, M., Chonde, D. B., … Sorensen, A. G. (2011). MRI‐assisted PET motion correction for neurologic studies in an integrated MR‐PET scanner. Journal of Nuclear Medicine, 52, 154–161. 10.2967/jnumed.110.079343

- Chen, K. T., Salcedo, S., Chonde, D. B., Izquierdo‐Garcia, D., Levine, M. A., Price, J. C., … Catana, C. (2018). MR‐assisted PET motion correction in simultaneous PET/MRI studies of dementia subjects. Journal of Magnetic Resonance Imaging, 48, 1288–1296.

- Chen, K. T., Salcedo, S., Gong, K., Chonde, D. B., Izquierdo‐Garcia, D., Drzezga, A. E., … Catana, C. (2018). An efficient approach to perform MR‐assisted PET data optimization in simultaneous PET/MR neuroimaging studies. Journal of Nuclear Medicine.

- Chen, Z., Jamadar, S. D., Li, S., Sforazzini, F., Baran, J., Ferris, N., … Egan, G. F. (2018). From simultaneous to synergistic MR‐PET brain imaging: A review of hybrid MR‐PET imaging methodologies. Human Brain Mapping, 39, 5126–5144.

- Close, T. G., Ward, P. G. D., Sforazzini, F., Goscinski, W., Chen, Z., & Egan, G. (2018). A comprehensive framework to capture the arcana of neuroimaging analysis. bioRxiv.

- Hahn, A., Gryglewski, G., Nics, L., Hienert, M., Rischka, L., Vraka, C., … Lanzenberger, R. (2016). Quantification of task‐specific glucose metabolism with constant infusion of 18F‐FDG. Journal of Nuclear Medicine, 57, 1933–1940. 10.2967/jnumed.116.176156

- Hatt, M., Cheze le Rest, C., Turzo, A., Roux, C., & Visvikis, D. (2009). A fuzzy locally adaptive Bayesian segmentation approach for volume determination in PET. IEEE Transactions on Medical Imaging, 28, 881–893. 10.1109/TMI.2008.2012036

- Iida, H., Hori, Y., Ishida, K., Imabayashi, E., Matsuda, H., Takahashi, M., … Zeniya, T. (2013). Three‐dimensional brain phantom containing bone and grey matter structures with a realistic head contour. Annals of Nuclear Medicine, 27, 25–36. 10.1007/s12149-012-0655-7

- Jenkinson, M., Bannister, P., Brady, M., & Smith, S. (2002). Improved optimization for the robust and accurate linear registration and motion correction of brain images. NeuroImage, 17, 825–841. 10.1006/nimg.2002.1132

- Jenkinson, M., & Smith, S. (2001). A global optimisation method for robust affine registration of brain images. Medical Image Analysis, 5, 143–156.

- Jiao, J., Bousse, A., Thielemans, K., Burgos, N., Weston, P. S. J., Schott, J. M., … Ourselin, S. (2017). Direct parametric reconstruction with joint motion estimation/correction for dynamic brain PET data. IEEE Transactions on Medical Imaging, 36(1), 203–213.

- Keller, S. H., Hansen, C., Hansen, C., Andersen, F. L., Ladefoged, C., Svarer, C., … Hansen, A. E. (2015). Motion correction in simultaneous PET/MR brain imaging using sparsely sampled MR navigators: A clinically feasible tool. EJNMMI Physics, 2, 14. 10.1186/s40658-015-0118-z

- Maclaren, J., Herbst, M., Speck, O., & Zaitsev, M. (2013). Prospective motion correction in brain imaging: A review. Magnetic Resonance in Medicine, 69, 621–636. 10.1002/mrm.24314

- Picard, Y., & Thompson, C. J. (1997). Motion correction of PET images using multiple acquisition frames. IEEE Transactions on Medical Imaging, 16, 137–144. 10.1109/42.563659

- Satterthwaite, T. D., Wolf, D. H., Loughead, J., Ruparel, K., Elliott, M. A., Hakonarson, H., … Gur, R. E. (2012). Impact of in‐scanner head motion on multiple measures of functional connectivity: Relevance for studies of neurodevelopment in youth. NeuroImage, 60, 623–632. 10.1016/j.neuroimage.2011.12.063

- Schleyer, P. J., Dunn, J. T., Reeves, S., Brownings, S., Marsden, P. K., & Thielemans, K. (2015). Detecting and estimating head motion in brain PET acquisitions using raw time‐of‐flight PET data. Physics in Medicine and Biology, 60, 6441–6458. 10.1088/0031-9155/60/16/6441

- Smith, S. M. (2002). Fast robust automated brain extraction. Human Brain Mapping, 17, 143–155. 10.1002/hbm.10062

- Thielemans, K., Schleyer, P., Dunn, J., Marsden, P. K., & Manjeshwar, R. M. (2013). Using PCA to detect head motion from PET list mode data. In 2013 IEEE Nuclear Science Symposium and Medical Imaging Conference (2013 NSS/MIC) (pp. 1–5). 10.1109/NSSMIC.2013.6829254

- Tisdall, M. D., Hess, A. T., Reuter, M., Meintjes, E. M., Fischl, B., & van der Kouwe, A. J. W. (2012). Volumetric navigators for prospective motion correction and selective reacquisition in neuroanatomical MRI. Magnetic Resonance in Medicine, 68, 389–399. 10.1002/mrm.23228

- Tisdall, M. D., Reuter, M., Qureshi, A., Buckner, R. L., Fischl, B., & van der Kouwe, A. J. W. (2016). Prospective motion correction with volumetric navigators (vNavs) reduces the bias and variance in brain morphometry induced by subject motion. NeuroImage, 127, 11–22. 10.1016/j.neuroimage.2015.11.054

- Ullisch, M. G., Scheins, J. J., Weirich, C., Kops, E. R., Celik, A., Tellmann, L., … Shah, N. J. (2012). MR‐based PET motion correction procedure for simultaneous MR‐PET neuroimaging of human brain. PLoS One, 7, e48149. 10.1371/journal.pone.0048149

- Villien, M., Wey, H.‐Y., Mandeville, J. B., Catana, C., Polimeni, J. R., Sander, C. Y., … Hooker, J. M. (2014). Dynamic functional imaging of brain glucose utilization using fPET‐FDG. NeuroImage, 100, 192–199. 10.1016/j.neuroimage.2014.06.025

- Zhang, Y., Brady, M., & Smith, S. (2001). Segmentation of brain MR images through a hidden Markov random field model and the expectation‐maximization algorithm. IEEE Transactions on Medical Imaging, 20, 45–57. 10.1109/42.906424
