Abstract

High-throughput data make it possible to study expression levels of thousands of genes simultaneously under a particular condition. However, only few of the genes are discriminatively expressed. How to identify these biomarkers precisely is significant for disease diagnosis, prognosis, and therapy. Many studies utilized pathway information to identify the biomarkers. However, most of these studies only incorporate the group information while the pathway structural information is ignored. In this paper, we proposed a Bayesian gene selection with a network-constrained regularization method, which can incorporate the pathway structural information as priors to perform gene selection. All the priors are conjugated; thus, the parameters can be estimated effectively through Gibbs sampling. We present the application of our method on 6 microarray datasets, comparing with Bayesian Lasso, Bayesian Elastic Net, and Bayesian Fused Lasso. The results show that our method performs better than other Bayesian methods and pathway structural information can improve the result.

1. Introduction

Identifying disease-associated genes, which can be treated as diagnostic biomarkers, can bring a significant effect on disease diagnosis, prognosis, and treatments [1, 2]. With the development of high-throughput technologies in recent years, gene expression profiling has provided a useful way to find biomarkers. Researchers can identify the genes which are differentially expressed between two groups of samples. These genes are regarded as disease-associated genes. However, gene expression data usually contains a large number of genes and a relatively small sample size [3, 4]. And many of the genes are also redundant or irrelevant to the prediction [5, 6]. Furthermore, there are also noises in the experiment procedures which will influence the gene expression values. Therefore, identifying the biomarkers from gene expression data is challenging.

During the last decades, a number of gene selection methods have been developed to tackle this problem. Feature selection and feature extraction are two major methods (we treat gene and feature equally in this paper). On the one hand, the aim of feature selection is to select relevant features and do not change the form of the features. On the other hand, feature extraction will extract the feature from the original data and may alter the form of the features. Here, we focus on the feature selection methods since the results of such methods could be interpreted easily. Feature selection methods can be generally organized into three categories: filter, wrapper, and embedded methods. Both the wrapper and embedded methods are classifier-dependent methods; thus, they are always time consuming and easy to overfitting. However, the filter methods are usually based on statistic approaches [7] such as mRMR [5], PLSRFE [8], lasso [9], and elastic net [10], which are relatively efficient in terms of computation and can derive a score of each of the genes which represents the significance of the gene. Therefore, we focus on the filter methods in this paper.

Although these methods are successful in many applications, they usually obtain suboptimal solutions. Therefore, the prediction accuracies are not satisfied and the disease-associated genes selected from different methods have few overlaps [11]. This is partly due to the fact that the discriminatory power of many biomarkers is similar. Furthermore, some genes which have low discriminatory powers play important roles in cellular functions. Their combinations are highly discriminative while they are usually ignored [12, 13].

Recently, with a large amount of biological information accumulated, there is an increased interest in gene selection with incorporating information on pathways, which can partially compensate for the lack of reliable expression data [14]. Pathways depict a series of chemical interactions in living cells; genes that interact with one another usually mean that they function together concertedly. Therefore, these genes should be highly correlated and have dependence structures. However, many studies only utilize the information that pathways cluster genes into the natural group; the pathway structural information is neglected. Li and Li have overcome this disadvantage by incorporating pathway structure information through a Laplacian matrix of a global graph [15, 16] and combined with lasso penalty to perform network-constrained penalty which can select subgroups of correlated features in the network. This penalty is based on the assumption that genes belonging to the same pathway have similar functions and therefore smoothed regression coefficients. And this penalty has been successfully applied in many studies [17–19].

The Bayesian approach has three major advantages over Bayesian selection methods [20]. Firstly, hyperparameters can be estimated automatically through fulfilling stochastic draws; thus, 10-fold cross-validation for estimating penalized parameters is not required. Secondly, the Bayesian framework can utilize the pathway information naturally by integrating it in the model as prior knowledge. Finally, the Bayesian estimation with the posterior distributions can provide credible intervals for the regression coefficients, which is a great advantage over frequentist methods.

In this paper, we work with a Bayesian framework to perform gene selection through network-constrained regularization. Similar to the Bayesian Lasso [21], Bayesian Elastic Net [22], and Bayesian Fused Lasso [23], we use shrinkage priors to perform regularization. We show that all the conditional posteriors of the proposed model are available in closed form and proper. Thus, parameter estimation can be performed through Gibbs sampling easily. The pathway information is obtained from the Kyoto Encyclopedia of Genes and Genomes (KEGG) [24], which is the most popular pathway public database, especially pathways associated with several types of cancer could be obtained in the model. Furthermore, following Held and Holmes [25], we extend the regression model to binary regression which can perform binary classification through an auxiliary variable. This method is assessed by applying it to several microarray datasets.

2. Method

2.1. The Bayesian Network-Constrained Model for Gene Selection

Considering an N × P matrix X, where P is the number of genes and N is the number of the samples, with a response vector y = (y1, ⋯,yn)T, we normalize the values of each feature as the tradition in variable selection; thus, the mean and standard deviation of each feature are 0 and 1. We assume the likelihood function of the continuous response is Gaussian function:

| (1) |

which can be also expressed as

| (2) |

Following Li and Li's work [16], we incorporate the pathway information through its normalized Laplacian matrix. Consider an undirected graph G = (V, E, W). In this graph, genes are represented by a set of nodes V, and the interactions between genes are represented by a set of edges E = {u ~ v}, and W is the weights of the edges, where w(u, v) represents the weight of edge e = (u ~ v) which indicates the uncertainty of the edge between the vertices u and v. The degree of each vertex is defined as dv = ∑u∼vw(u, v). Then, the normalized Laplacian matrix L for graph G with the uth and vth elements can be defined by

| (3) |

Here, we let w(u, v) = 1 if there exists an interaction between gene u and v, and w(u, v) = 0, otherwise.

To form the network-constrained regularization, we assign the prior distribution for β as follows:

| (4) |

where Λ is taking the form:

| (5) |

Note that Λ only contains hyperparameter τ.

To eliminate the |Λ|1/2 in the prior distribution of β, we assign the prior distribution for τ as follows:

| (6) |

where Cτ is the normalizing constant.

The prior distribution defined in (6) is proper, due to the following analysis:

Let A = Λ − In, and A is a symmetric and positive semidefinite matrix.

Let DA = diag(a1, ⋯, ap), where a1, ⋯, ap are eigenvalues of A and 0 ≤ a1 ≤ ⋯≤ap.

Since A is the symmetric and positive semidefinite, there exists an orthonormal matrix Q. Hence, the eigendecomposition of matrix A can be written as A = QDAQT.

Because of Λ = A + In = QDAQT + QQT = Q(DA + In)QT, so ∣Λ | = ∏i=1n(ai + 1) ≥ 1.

Then,

| (7) |

where the integrand is kernels of the gamma density that indicates the integral is finite. Therefore, the prior distribution is proper.

Since

| (8) |

Λ is positive semidefinite.

The joint posterior distribution can be written as

| (9) |

Integrating out τ2, we have

| (10) |

Applying the fact as follows to the above equation:

| (11) |

we have

| (12) |

Thus, maximizing the posterior distribution is equivalent to minimizing the following equation:

| (13) |

which has the same regularization term as the method proposed in [19].

We assign the prior distribution for σ2 as follows:

| (14) |

And we assign the following prior for the hyperparameters r and λ:

| (15) |

Then, the hierarchical Bayesian model is

| (16) |

2.2. Gibbs Sampling Method

The likelihood is

| (17) |

According to the above hierarchical model and the likelihood, the joint posterior distribution on data is

| (18) |

Due to the fact that all the prior distributions are conjugated, the full conditional posterior distributions for the parameters have closed forms.

| (19) |

Let μ = (X′X + rΛ)−1X′Y, Σ = σ2(X′X + rΛ)−1 , we have

| (20) |

| (21) |

| (22) |

| (23) |

This implies that τ2 follows a generalized inverse Gaussian distribution:

| (24) |

| (25) |

| (26) |

| (27) |

| (28) |

The Gibbs sampling scheme iterates as follows:

2.3. The Binary Response Case

Binary data such as absence or presence or different types of a disease are often used as response variables in gene selection problems. To perform binary classification, we use probit regression using auxiliary variables. Then, the model can be represented as follows:

| (29) |

where Xi is the ith sample and P(yi = 1) is the probability of yi = 1. Here, latent variables Z = (z1, z2, ⋯, zn) are defined as

| (30) |

Then, the full conditional posterior distribution for each zi is truncated normal:

| (31) |

And Z follows a multivariate truncated normal distribution:

| (32) |

| (33) |

Sampling from this distribution directly is difficult. We use the method proposed in [26] to sample this latent variable.

Then, the hierarchical Bayesian model is

| (34) |

To derive the Gibbs sampling scheme, we only need to replace Y with Z in the Gibbs sampling scheme defined in Section 2.2. And the latent variables Z are sampled from (32).

3. Results

3.1. Datasets and Preprocessing

To demonstrate the effectiveness of our methods, a regression microarray dataset and 5 real-life binary classification microarray datasets were tested in this paper, which are described as follows. The pathway information was obtained from the KEGG database.

A breast cancer dataset was used to predict the survival time of patients [27]. We used gene expression profiles of 76 patients. Each patient was measured with 24481 probes. 3592 genes were found in the KEGG database from this dataset. We used the logarithm of survival times of patients as the response variable in this dataset.

The other 5 binary classification microarray datasets are shown in Table 1. No. genes mean the genes we found both existing in the microarray dataset and KEGG pathway database.

Table 1.

Binary classification microarray datasets used.

Lastly, the gene expression values were normalized; thus, its mean and standard deviation are 0 and 1.

3.2. Parameter Settings

In the procedure of Bayesian network-constrained regularization, we recommend small values for a, b, c, d, e, f in (16) and we set these values to 0.01 in our experiments. The Gibbs sampling iteration was conducted 6000 times, and we chose the second half of the samples to estimate the regression parameters. The posterior estimates of all parameters were obtained through the posterior averages of the chains. For the classification problem, the classifiers were built by a support vector machine (SVM). In this paper, we used the radial basic function as the kernel function in SVM. And the regularization parameter and the kernel width parameter were optimized by a grid search approach. We used Libsvm [32] to model the SVM.

3.3. Results and Analysis

In this section, we will describe the results on 6 microarray gene expression datasets (Table 1) to evaluate the performance of the proposed method. Our method was compared with the other three Bayesian regularized regression methods, including Bayesian Lasso, Bayesian Elastic Net, and Bayesian Fused Lasso. A comprehensive review of these methods can be found in [23]. When L = I, which means we know nothing about the pathway structure, the Bayesian network-constrained regularization is equivalent to Bayesian Elastic Net. And when L = O, our method is equivalent to Bayesian Lasso. These three methods can also be extended to perform binary classification through an auxiliary variable. We also used Gibbs sampling to perform parameter estimation. Previous review [23] also shows that these three Bayesian methods' performances are similar to and in some cases better than the frequentist methods. Prediction mean square error was used to evaluate the performance on regression problem. Meanwhile, ACC and AUC were used as the evaluation criteria for binary classification problem. According to previous studies, the number of important genes is probably about 50 [28]; thus, we selected the top 50 genes based on the absolute value of their regression coefficient for the binary classification problem.

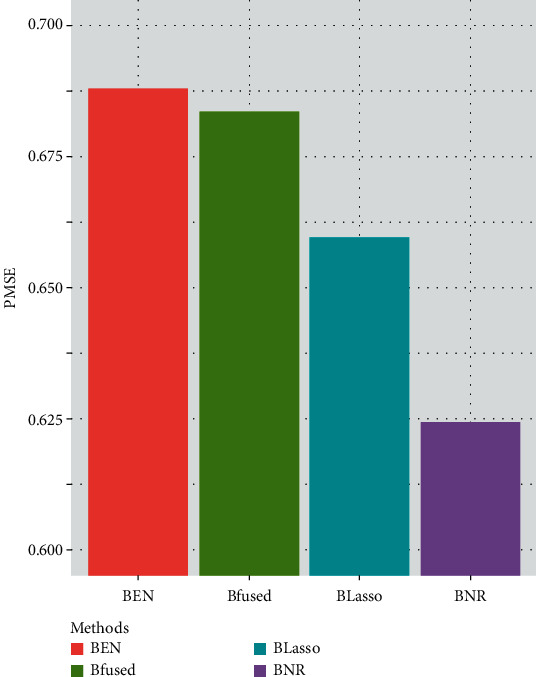

Figure 1 shows the performance of all the four methods on the regression microarray dataset. And the classification performances on the five binary classification microarray datasets are summarized in Table 2. In the binary classification datasets, the first three datasets are usually treated as easy classification datasets, while the other two datasets are relatively hard to classify. From Figure 1, we can see that the PMSE of our method is lower than other Bayesian methods. Table 2 also shows that on the four easy binary classification datasets, our method achieves the highest ACC and AUC. In the other two hard classification datasets, our method achieves the highest ACC and AUC on GSE412. Although the AUC of Bayesian Elastic Net is higher than our method on GSE4922, our method achieves the highest ACC. In general, Bayesian network-constrained regularization shows better prediction and classification ability than other three Bayesian methods, which is similar to the results implied by [15]. Since our method can be transferred to Bayesian Lasso or Bayesian Elastic Net when the normalized Laplacian matrix L = O or L = I, the results also show that pathway information indeed contributes to the accuracy of the gene selection.

Figure 1.

PMSE performance on regression microarray dataset.

Table 2.

Comparison of results of 4 Bayesian methods.

| Dataset | Methods | AUC | ACC |

|---|---|---|---|

| Leukemia | BEN | 0.9955 | 0.9600 |

| BFused | 1 | 0.9733 | |

| BLasso | 1 | 0.9447 | |

| BNR | 1 | 0.9733 | |

| DLBCL | BEN | 0.9674 | 0.9223 |

| BFused | 0.9674 | 0.9223 | |

| BLasso | 0.9485 | 0.9223 | |

| BNR | 0.9958 | 0.9482 | |

| Prostate | BEN | 0.9784 | 0.9414 |

| BFused | 0.9655 | 0.9314 | |

| BLasso | 0.9784 | 0.9419 | |

| BNR | 0.9900 | 0.9510 | |

| GSE412 | BEN | 0.9428 | 0.8498 |

| BFused | 0.9046 | 0.8619 | |

| BLasso | 0.9541 | 0.8792 | |

| BNR | 0.9637 | 0.9074 | |

| GSE4922 | BEN | 0.6274 | 0.6666 |

| BFused | 0.6028 | 0.6523 | |

| BLasso | 0.6132 | 0.6860 | |

| BNR | 0.6132 | 0.6860 |

Consistent with previous studies [33, 34], all the Bayesian regularization regression methods could classify Leukemia, DLBCL, Prostate, and GSE412 dataset accurately. However, the performances of all the methods were poor on GSE 4922 dataset. Therefore, we demonstrate the effectiveness of our method by selecting the top 18 genes which make the prediction accuracy to achieve the highest value and most of those genes are associated with breast cancer (Table 3).

Table 3.

Description of top 18 genes of GSE4922.

| Gene symbol | Description | Reference |

|---|---|---|

| SYCP3∗ | Synaptonemal complex protein 3 | [35] |

| CDKN2A∗ | Cyclin dependent kinase inhibitor 2A | [36] |

| PLB1∗ CTNNBIP1∗ |

Phospholipase B1 Catenin beta-interacting protein 1 |

[37] [38] |

| GBE1∗ | 1,4-Alpha-glucan-branching enzyme 1 | [39] |

| SMURF1∗ | SMAD-specific E3 ubiquitin protein ligase 1 | [40] |

| NR1H4 | Nuclear receptor subfamily 1 group H member 4 | / |

| PDE11A | Phosphodiesterase 11A | / |

| UGT1A1∗ | UDP glucuronosyltransferase family 1 member A1 | [41] |

| FGF19∗ | Fibroblast growth factor 19 | [42] |

| OR51B4∗ | Olfactory receptor family 51 subfamily B member 4 | [43] |

| RAB7A∗ | RAB7A, member RAS oncogene family | [44] |

| SDHD∗ | Succinate dehydrogenase complex subunit D | [45] |

| IFNA8 | Interferon alpha 8 | / |

| VANGL2∗ | VANGL planar cell polarity protein 2 | [46] |

| UMPS | Uridine monophosphate synthetase | / |

| CASP3 | Caspase 3 | [47] |

| SUFU | SUFU negative regulator of hedgehog signaling | [48] |

∗The gene was reported as an oncogene in previous literatures.

4. Conclusion

In this paper, we propose a Bayesian approach to perform gene selection, which can incorporate the pathway information as prior biological knowledge through network-constrained regularization to improve the accuracy of gene selection. All the prior distributions we propose are strictly conjugated; thus, all the conditional posteriors of the model are available in closed form. An auxiliary variable is also introduced to extend the regression model to perform binary classification. An efficient Gibbs sampling method is used to estimate regression coefficients and tune parameters simultaneously, which can perform feature filter feasible for high dimensional microarray datasets. The performance of the proposed method is demonstrated by applying it to a regression microarray dataset and five binary classification microarray datasets. The results show that compared with Bayesian Lasso, Bayesian Elastic Net, and Bayesian Fused Lasso, our method performs better both in prediction and classification. And the pathway information indeed improves the accuracy of gene selection.

Acknowledgments

This work is supported in part by the National Science Foundation of China, under Grant 61173111.

Data Availability

The breast cancer dataset could be obtained from the R package breast cancer NKI. Leukemia, DLBCL, and Prostate datasets are available on the website http://portals.broadinstitute.org/cgi-bin/cancer/. GSE412 and GSE4922 datasets are available in the GEO of NCBI under accession GSE412 and GSE4922.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

References

- 1.Govindarajan R., Duraiyan J., Kaliyappan K., Palanisamy M. Microarray and its applications. Journal of Pharmacy & Bioallied Sciences. 2012;4(Supplement 2):S310–S312. doi: 10.4103/0975-7406.100283. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Wang H., Jing X., Niu B. A discrete bacterial algorithm for feature selection in classification of microarray gene expression cancer data. Knowledge-Based Systems. 2017;126:8–19. doi: 10.1016/j.knosys.2017.04.004. [DOI] [Google Scholar]

- 3.Dessì N., Pes B. Similarity of feature selection methods: an empirical study across data intensive classification tasks. Expert Systems with Applications. 2015;42(10):4632–4642. doi: 10.1016/j.eswa.2015.01.069. [DOI] [Google Scholar]

- 4.Lu L., Townsend K. A., Daigle B. J., Jr. GEOlimma: differential expression analysis and feature selection using pre-existing microarray data. BMC Bioinformatics. 2021;22(1):p. 44. doi: 10.1186/s12859-020-03932-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Peng H., Long F., Ding C. Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2005;27(8):1226–1238. doi: 10.1109/TPAMI.2005.159. [DOI] [PubMed] [Google Scholar]

- 6.Sun S., Peng Q., Zhang X. Global feature selection from microarray data using Lagrange multipliers. Knowledge-Based Systems. 2016;110:267–274. doi: 10.1016/j.knosys.2016.07.035. [DOI] [Google Scholar]

- 7.Kanti Ghosh K., Begum S., Sardar A., et al. Theoretical and empirical analysis of filter ranking methods: experimental study on benchmark DNA microarray data. Expert Systems with Applications. 2021;169:p. 114485. doi: 10.1016/j.eswa.2020.114485. [DOI] [Google Scholar]

- 8.You W., Yang Z., Ji G. Feature selection for high-dimensional multi-category data using PLS-based local recursive feature elimination. Expert Systems with Applications. 2014;41(4):1463–1475. doi: 10.1016/j.eswa.2013.08.043. [DOI] [Google Scholar]

- 9.Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society: Series B (Methodological) 1996;58(1):267–288. doi: 10.1111/j.2517-6161.1996.tb02080.x. [DOI] [Google Scholar]

- 10.Hui Z., Hastie T. Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society. 2005;67(5):768–768. [Google Scholar]

- 11.Fröhlich H. Network based consensus gene signatures for biomarker discovery in breast cancer. PLoS One. 2011;6(10, article e25364) doi: 10.1371/journal.pone.0025364. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Michailidis G. Statistical challenges in biological networks. Journal of Computational and Graphical Statistics. 2012;21(4):840–855. doi: 10.1080/10618600.2012.738614. [DOI] [Google Scholar]

- 13.Wu M. Y., Zhang X. F., Dai D. Q., Ou-Yang L., Zhu Y., Yan H. Regularized logistic regression with network-based pairwise interaction for biomarker identification in breast cancer. BMC Bioinformatics. 2016;17(1):p. 108. doi: 10.1186/s12859-016-0951-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Su J., Yoon B. J., Dougherty E. R. Accurate and reliable cancer classification based on probabilistic inference of pathway activity. PLoS One. 2009;4(12, article e8161) doi: 10.1371/journal.pone.0008161. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Li L. H., Li H. Network-constrained regularization and variable selection for analysis of genomic data. Bioinformatics. 2008;24(9):1175–1182. doi: 10.1093/bioinformatics/btn081. [DOI] [PubMed] [Google Scholar]

- 16.Li C., Li H. Variable selection and regression analysis for graph-structured covariates with an application to genomics. The Annals of Applied Statistics. 2010;4(3):1498–1516. doi: 10.1214/10-AOAS332. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Liu J., Huang J., Ma S. Incorporating network structure in integrative analysis of cancer prognosis data. Genetic Epidemiology. 2013;37(2):173–183. doi: 10.1002/gepi.21697. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Zhang W., Wan Y. W., Allen G. I., Pang K., Anderson M. L., Liu Z. Molecular pathway identification using biological network-regularized logistic models. BMC Genomics. 2013;14(Supplement 8):p. S7. doi: 10.1186/1471-2164-14-s8-s7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Jiang H., Hong T., Wang T., et al. Gene expression profiling of human bone marrow mesenchymal stem cells during osteogenic differentiation. Journal of Cellular Physiology. 2019;234(5):7070–7077. doi: 10.1002/jcp.27461. [DOI] [PubMed] [Google Scholar]

- 20.Fan Y., Wang X., Peng Q. Inference of gene regulatory networks using Bayesian nonparametric regression and topology information. Computational and Mathematical Methods in Medicine. 2017;2017:8. doi: 10.1155/2017/8307530.8307530 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Hui Z. The Bayesian Lasso. 2008.

- 22.Li Q., Lin N. The Bayesian elastic net. Bayesian Analysis. 2010;5(1):151–170. doi: 10.1214/10-ba506. [DOI] [Google Scholar]

- 23.Casella G., Ghosh M., Gill J., Kyung M. Penalized regression, standard errors, and Bayesian lassos. Bayesian Analysis. 2010;5(2):369–412. doi: 10.1214/10-ba607. [DOI] [Google Scholar]

- 24.Kanehisa M., Araki M., Goto S., et al. KEGG for linking genomes to life and the environment. Nucleic Acids Research. 2008;36(Database issue):D480–D484. doi: 10.1093/nar/gkm882. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Held L., Holmes C. C. Bayesian auxiliary variable models for binary and multinomial regression. Bayesian Analysis. 2006;1(1):145–168. doi: 10.1214/06-ba105. [DOI] [Google Scholar]

- 26.Ivshina A. V., George J., Senko O., et al. Genetic reclassification of histologic grade delineates new clinical subtypes of breast cancer. Cancer Research. 2006;66(21):10292–10301. doi: 10.1158/0008-5472.CAN-05-4414. [DOI] [PubMed] [Google Scholar]

- 27.van 't Veer L. J., Dai H., van de Vijver M. J., et al. Gene expression profiling predicts clinical outcome of breast cancer. Nature. 2002;415(6871):530–536. doi: 10.1038/415530a. [DOI] [PubMed] [Google Scholar]

- 28.Golub T. R., Slonim D. K., Tamayo P., et al. Molecular classification of cancer: class discovery and class prediction by gene expression monitoring. Science. 1999;286(5439):531–537. doi: 10.1126/science.286.5439.531. [DOI] [PubMed] [Google Scholar]

- 29.Shipp M. A., Ross K. N., Tamayo P., et al. Diffuse large B-cell lymphoma outcome prediction by gene-expression profiling and supervised machine learning. Nature Medicine. 2002;8(1):68–74. doi: 10.1038/nm0102-68. [DOI] [PubMed] [Google Scholar]

- 30.Singh D., Febbo P. G., Ross K., et al. Gene expression correlates of clinical prostate cancer behavior. Cancer Cell. 2002;1(2):203–209. doi: 10.1016/S1535-6108(02)00030-2. [DOI] [PubMed] [Google Scholar]

- 31.Cheok M. H., Yang W., Pui C. H., et al. Treatment-specific changes in gene expression discriminate _in vivo_ drug response in human leukemia cells. Nature Genetics. 2003;34(1):85–90. doi: 10.1038/ng1151. [DOI] [PubMed] [Google Scholar]

- 32.Ferrari V. Libsvm : A Library for Support Vector Machines. 2008.

- 33.Momenzadeh M., Sehhati M., Rabbani H. A novel feature selection method for microarray data classification based on hidden Markov model. Journal of Biomedical Informatics. 2019;95:p. 103213. doi: 10.1016/j.jbi.2019.103213. [DOI] [PubMed] [Google Scholar]

- 34.Dabba A., Tari A., Meftali S., Mokhtari R. Gene selection and classification of microarray data method based on mutual information and moth flame algorithm. Expert Systems with Applications. 2021;166:p. 114012. doi: 10.1016/j.eswa.2020.114012. [DOI] [Google Scholar]

- 35.Mobasheri M. B., Shirkoohi R., Modarressi M. H. Synaptonemal complex protein 3 transcript analysis in breast cancer. Iranian Journal of Public Health. 2016;45(12):1618–1624. [PMC free article] [PubMed] [Google Scholar]

- 36.Lubiński J., Górski B., Huzarski T., et al. BRCA1-positive breast cancers in young women from Poland. Breast Cancer Research and Treatment. 2006;99(1):71–76. doi: 10.1007/s10549-006-9182-3. [DOI] [PubMed] [Google Scholar]

- 37.Ueda A., Oikawa K., Fujita K., et al. Therapeutic potential of PLK1 inhibition in triple-negative breast cancer. Laboratory Investigation. 2019;99(9):1275–1286. doi: 10.1038/s41374-019-0247-4. [DOI] [PubMed] [Google Scholar]

- 38.Mukherjee N., Dasgupta H., Bhattacharya R., et al. Frequent inactivation of MCC/CTNNBIP1 and overexpression of phospho-beta- cateninY654 are associated with breast carcinoma: clinical and prognostic significance. Biochimica et Biophysica Acta (BBA)-Molecular Basis of Disease. 2016;1862(9):1472–1484. doi: 10.1016/j.bbadis.2016.05.009. [DOI] [PubMed] [Google Scholar]

- 39.Birch A. H., Quinn M. C. J., Filali-Mouhim A., Provencher D. M., Mes-Masson A. M., Tonin P. N. Transcriptome analysis of serous ovarian cancers identifies differentially expressed chromosome 3 genes. Molecular Carcinogenesis. 2008;47(1):56–65. doi: 10.1002/mc.20361. [DOI] [PubMed] [Google Scholar]

- 40.Kwon A., Lee H. L., Woo K. M., Ryoo H. M., Baek J. H. SMURF1 plays a role in EGF-induced breast cancer cell migration and invasion. Molecules and Cells. 2013;36(6):548–555. doi: 10.1007/s10059-013-0233-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Kuo S.-H., Yang S. Y., You S. L., et al. Polymorphisms of ESR1, UGT1A1, HCN1, MAP3K1 and CYP2B6 are associated with the prognosis of hormone receptor-positive early breast cancer. Oncotarget. 2017;8(13):20925–20938. doi: 10.18632/oncotarget.14995. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Tiong K. H., Tan B. S., Choo H. L., et al. Fibroblast growth factor receptor 4 (FGFR4) and fibroblast growth factor 19 (FGF19) autocrine enhance breast cancer cells survival. Oncotarget. 2016;7(36):57633–57650. doi: 10.18632/oncotarget.9328. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Zhang C., Zhao H., Li J., et al. The identification of specific methylation patterns across different cancers. PLoS One. 2015;10(3, article e0120361) doi: 10.1371/journal.pone.0120361. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Xie J., Yan Y., Liu F., et al. Knockdown of Rab7a suppresses the proliferation, migration, and xenograft tumor growth of breast cancer cells. Bioscience Reports. 2019;39(2) doi: 10.1042/BSR20180480. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Yu W., He X., Ni Y., Ngeow J., Eng C. Cowden syndrome-associated germline SDHD variants alter PTEN nuclear translocation through SRC-induced PTEN oxidation. Human Molecular Genetics. 2015;24(1):142–153. doi: 10.1093/hmg/ddu425. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Hatakeyama J., Wald J. H., Printsev I., Ho H. Y. H., Carraway K. L. Vangl1 and Vangl2: planar cell polarity components with a developing role in cancer. Endocrine-Related Cancer. 2014;21(5):R345–R356. doi: 10.1530/ERC-14-0141. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Pu X., Storr S. J., Zhang Y., et al. Caspase-3 and caspase-8 expression in breast cancer: caspase-3 is associated with survival. Apoptosis. 2017;22(3):357–368. doi: 10.1007/s10495-016-1323-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Alimirah F., Peng X., Gupta A., et al. Crosstalk between the vitamin D receptor (VDR) and miR-214 in regulating SuFu, a hedgehog pathway inhibitor in breast cancer cells. Experimental Cell Research. 2016;349(1):15–22. doi: 10.1016/j.yexcr.2016.08.012. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The breast cancer dataset could be obtained from the R package breast cancer NKI. Leukemia, DLBCL, and Prostate datasets are available on the website http://portals.broadinstitute.org/cgi-bin/cancer/. GSE412 and GSE4922 datasets are available in the GEO of NCBI under accession GSE412 and GSE4922.