Abstract

Background

Panoramic radiography is an imaging method for displaying maxillary and mandibular teeth together with their supporting structures. Panoramic radiography is frequently used in dental imaging due to its relatively low radiation dose, short imaging time, and low burden to the patient. We evaluated the diagnostic performance of an artificial intelligence (AI) system based on a deep convolutional neural network for detecting and numbering teeth on panoramic radiographs.

Methods

The data set included 2482 anonymized panoramic radiographs from adults from the archive of Eskisehir Osmangazi University, Faculty of Dentistry, Department of Oral and Maxillofacial Radiology. A Faster R-CNN Inception v2 model was used to develop an AI algorithm (CranioCatch, Eskisehir, Turkey) to automatically detect and number teeth on panoramic radiographs. Human observation and AI methods were compared on a test data set consisting of 249 panoramic radiographs. True positive, false positive, and false negative rates were calculated for each quadrant of the jaws. The sensitivity, precision, and F-measure values were estimated using a confusion matrix.

Results

The total numbers of true positive, false positive, and false negative results were 6940, 250, and 320 for all quadrants, respectively. Consequently, the estimated sensitivity, precision, and F-measure were 0.9559, 0.9652, and 0.9606, respectively.

Conclusions

The deep convolutional neural network system was successful in detecting and numbering teeth. Clinicians can use AI systems to detect and number teeth on panoramic radiographs, which may eventually replace evaluation by human observers and support decision making.

Keywords: Artificial intelligence, Deep learning, Tooth, Panoramic radiography

Background

Panoramic radiography is a method for producing a single tomographic image of the facial structures that includes both the maxillary and mandibular teeth and their supporting structures. It has several advantages, including a relatively low radiation dose, short imaging time, and low burden to the patient. Panoramic radiography is widely used in clinical settings to solve diagnostic problems in dentistry because it shows both jaws in a single image and allows evaluation of all teeth. Automated diagnosis of panoramic radiographs could therefore help clinicians in daily practice [1, 2].

Artificial intelligence (AI) is defined as the ability of a machine to mimic intelligent behavior to perform complex tasks, such as decision making, recognition of words and objects, and problem solving. Deep learning systems are among the most promising systems in AI. The deep learning approach was developed to improve the performance of traditional artificial neural networks (ANNs) by using more complex architectures. Deep learning methods are characterized by multiple levels of representation, and the raw data are processed directly to perform classification or detection tasks [3–5]. In deep learning, multiple layers are organized into a hierarchy in which each layer extracts increasingly meaningful representations from the output of the previous one. These layers receive input data and produce output that changes gradually as the AI system learns new features from the supplied data. ANNs are composed of thousands to millions of connected nodes or units. When a unit is activated, the activation spreads to the units linked to it, and each link carries a numerical weight that determines its strength [3]. ANNs must be trained on data sets in which the images have first been manually labeled to establish the ground truth [3, 6, 7].

Convolutional neural networks (CNNs) are a class of ANNs widely applied in computer vision. In this class of deep ANNs, convolution operations are used to obtain feature maps. In such maps, the value of each pixel/voxel is a weighted sum of the neighboring pixels/voxels of the input image, with the weights given by a convolution matrix, also called a kernel. Different kernels perform specific tasks, such as sharpening, blurring, or edge detection. CNNs are biologically inspired and mimic the organization of the visual cortex, in which individual cells respond to small regions of the visual field. The deep CNN architecture allows complex features to be built up from simple features extracted from raw image data, which enables specific features to be identified [8–12].
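To make the kernel idea concrete, the following is a minimal pure-Python sketch (not taken from the study's software) of a 2D convolution applied with two classic hand-crafted kernels. As in the convolution layers of common deep learning libraries such as TensorFlow, the kernel is applied without flipping (strictly a cross-correlation). In a CNN the kernel values are learned during training rather than fixed as here; the function and kernel names are illustrative.

```python
from typing import List

Image = List[List[float]]


def convolve2d(image: Image, kernel: Image) -> Image:
    """Valid-mode 2D convolution as used in CNN layers (kernel not flipped):
    each output value is the weighted sum of the input neighbourhood under the kernel."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out: Image = []
    for r in range(ih - kh + 1):
        row_out = []
        for c in range(iw - kw + 1):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row_out.append(acc)
        out.append(row_out)
    return out


# 3 x 3 mean filter: blurs by averaging each neighbourhood
BOX_BLUR = [[1 / 9] * 3 for _ in range(3)]
# Sobel-x kernel: responds to intensity changes along x, i.e. vertical edges
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]

# A 4 x 6 image with a vertical step edge between columns 2 and 3
step_edge = [[0, 0, 0, 10, 10, 10] for _ in range(4)]
edges = convolve2d(step_edge, SOBEL_X)
blurred = convolve2d(step_edge, BOX_BLUR)
```

The edge kernel gives a strong response only where the window straddles the step, while the mean filter smooths the step into a ramp; stacking many learned kernels of this kind is what produces the feature maps described above.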

AI became a cornerstone of radiology with the introduction of digital picture archiving and communication systems (PACS), which provide large amounts of imaging data and therefore offer great potential for AI training [8, 13, 14]. However, the use of AI, especially CNNs, in dentistry has been limited. Panoramic radiography is a baseline imaging modality and an essential tool for diagnosis, treatment planning, and follow-up in dentistry. Therefore, in this study, it was considered worthwhile to train a deep CNN for detection and numbering of teeth and to compare the performance of the CNN with that of expert human observers.

Methods

Patient selection

This retrospective observational study evaluated a radiographic data set that included 2482 anonymized panoramic radiographs from adults obtained between January 2018 and January 2020 from the archive of Eskisehir Osmangazi University, Faculty of Dentistry, Department of Oral and Maxillofacial Radiology. Panoramic radiographs with artifacts related to metal superposition, position errors, movement, etc., were removed from the data set. Panoramic radiographs showing developmental anomalies, crowded teeth, malocclusion, rotated teeth, anterior teeth with inclination, retained deciduous and supernumerary teeth, and transposition between the upper left canine and lateral incisor were excluded from the study. Panoramic radiographs showing teeth with dental caries, restorative fillings, crowns and bridges, implants, etc., were included in the study. The non-interventional Clinical Research Ethical Committee of Eskisehir Osmangazi University approved the study protocol (decision date and approval number: 06.08.2019/14). The study was conducted in accordance with the principles of the Declaration of Helsinki.

Radiographic data set

All panoramic radiographs were obtained using the Planmeca Promax 2D (Planmeca, Helsinki, Finland) panoramic dental imaging unit with the following parameters: 68 kVp, 16 mA, 13 s.

Image evaluation

Two oral and maxillofacial radiologists (E.B. and I.S.B.) with 10 years of experience and a third oral and maxillofacial radiologist (F.A.K.) with 3 years of experience provided ground-truth annotations for all images using Colabeler (MacGenius, Blaze Software, CA, USA). The experts drew a bounding box around each tooth and, at the same time, assigned each box a class label giving the tooth number according to the FDI tooth numbering system.
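The FDI two-digit notation used for the class labels can be decoded mechanically: the first digit gives the quadrant (1 upper right, 2 upper left, 3 lower left, 4 lower right, from the patient's perspective) and the second digit gives the position counted from the midline (1 central incisor to 8 third molar). The sketch below, with illustrative names that are not part of the study's software, decodes a label and enumerates the 32 permanent-tooth classes.

```python
from dataclasses import dataclass

# Quadrant digit -> (jaw, patient's side)
QUADRANTS = {1: ("upper", "right"), 2: ("upper", "left"),
             3: ("lower", "left"), 4: ("lower", "right")}

# Position digit -> tooth type, counted outward from the midline
TOOTH_TYPES = {1: "central incisor", 2: "lateral incisor", 3: "canine",
               4: "first premolar", 5: "second premolar",
               6: "first molar", 7: "second molar", 8: "third molar"}

# The 32 permanent-tooth classes: 11-18, 21-28, 31-38, 41-48
FDI_CLASSES = [q * 10 + p for q in range(1, 5) for p in range(1, 9)]


@dataclass(frozen=True)
class ToothLabel:
    quadrant: int
    position: int   # 1 = next to the midline, 8 = third molar
    jaw: str
    side: str       # the patient's side, not the viewer's
    tooth_type: str


def parse_fdi(code: int) -> ToothLabel:
    """Decode a two-digit FDI code for a permanent tooth."""
    quadrant, position = divmod(code, 10)
    if quadrant not in QUADRANTS or position not in TOOTH_TYPES:
        raise ValueError(f"not a permanent-tooth FDI code: {code}")
    jaw, side = QUADRANTS[quadrant]
    return ToothLabel(quadrant, position, jaw, side, TOOTH_TYPES[position])
```

For example, `parse_fdi(36)` describes the lower left first molar. Codes outside the permanent dentition (such as deciduous quadrants 5 to 8) are rejected, which matches the exclusion of retained deciduous teeth from the data set.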

Deep convolutional neural network

A random sequence was generated using the open-source Python programming language (Python 3.6.1, Python Software Foundation, Wilmington, DE, USA; retrieved August 01, 2019 from https://www.python.org). An Inception v2 Faster R-CNN (region-based CNN) network implemented with the TensorFlow library was used to create a model for tooth detection and numbering. This architecture consists of 22 deep layers and can obtain features at different scales by applying convolutional filters of various sizes within the same layer. It includes an auxiliary classifier, fully connected layers, and a total of nine Inception modules, as well as softmax functions [15].
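The idea of applying filters of several sizes "within the same layer" can be illustrated with a deliberately simplified, single-channel sketch of an Inception-style module: 1 × 1, 3 × 3, and 5 × 5 filters plus a pooling branch all read the same input, and their outputs are stacked as channels. This is an assumption-laden toy, not the network used in the study: real Inception modules use many learned filters per branch and 1 × 1 bottleneck convolutions, and in the Inception v2 design described in [15] the 5 × 5 branch is factorized into two stacked 3 × 3 convolutions. All names below are hypothetical.

```python
from typing import List

Grid = List[List[float]]


def conv_same(x: Grid, kernel: Grid) -> Grid:
    """Apply one kernel with zero padding so the output has the input's size."""
    h, w = len(x), len(x[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    rr, cc = r + i - ph, c + j - pw
                    if 0 <= rr < h and 0 <= cc < w:  # positions outside count as zero
                        acc += x[rr][cc] * kernel[i][j]
            out[r][c] = acc
    return out


def max_pool_same(x: Grid, size: int = 3) -> Grid:
    """Stride-1 max pooling that keeps the input's size (window clipped at borders)."""
    h, w = len(x), len(x[0])
    p = size // 2
    return [[max(x[rr][cc]
                 for rr in range(max(0, r - p), min(h, r + p + 1))
                 for cc in range(max(0, c - p), min(w, c + p + 1)))
             for c in range(w)]
            for r in range(h)]


def inception_like_module(x: Grid, kernels: List[Grid]) -> List[Grid]:
    """Run filters of different sizes on the same input in parallel, add a pooling
    branch, and stack all branch outputs as channels of one output."""
    branches = [conv_same(x, k) for k in kernels]
    branches.append(max_pool_same(x))
    return branches


def mean_kernel(n: int) -> Grid:
    """n x n averaging kernel, used here as a stand-in for a learned filter."""
    return [[1.0 / (n * n)] * n for _ in range(n)]


image = [[0.0] * 5 for _ in range(5)]
image[2][2] = 1.0  # a single bright pixel in the centre
channels = inception_like_module(image, [mean_kernel(1), mean_kernel(3), mean_kernel(5)])
```

Because every branch keeps the spatial size, the outputs can be concatenated channel-wise; each channel "sees" the bright pixel at a different spatial scale, which is the multi-scale property referred to above.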

Model pipeline

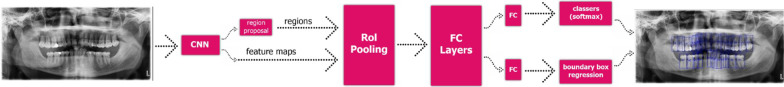

In this study, we developed an AI algorithm (CranioCatch, Eskisehir, Turkey) to automatically detect and number teeth on panoramic radiographs using deep learning techniques, including Faster R-CNN Inception v2 models. Using the Inception v2 architecture for transfer learning, the transfer values were first saved in a cache, and a fully connected layer and a softmax classifier were then used to build the final model layers (Fig. 1). Training was performed for 7000 steps on a PC with 16 GB of RAM and an NVIDIA GeForce GTX 1050 graphics card. The training and validation data sets were used to estimate the optimal weights of the CNN algorithm.
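The transfer-learning step described above (caching the outputs of a pretrained backbone, then training only a fully connected layer with a softmax classifier on top) can be sketched in plain Python. This is a toy under stated assumptions: the "cached features" are hand-made three-dimensional vectors standing in for real backbone outputs, the `SoftmaxHead` class and its learning rate and epoch count are hypothetical, and the study itself trained its layers in TensorFlow.

```python
import math
import random
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


class SoftmaxHead:
    """Hypothetical classifier head: one fully connected layer followed by softmax,
    trained on feature vectors cached from a frozen, pretrained backbone."""

    def __init__(self, n_features: int, n_classes: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.weights = [[rng.gauss(0.0, 0.01) for _ in range(n_features)]
                        for _ in range(n_classes)]
        self.bias = [0.0] * n_classes

    def predict_proba(self, x: Sequence[float]) -> List[float]:
        logits = [sum(w * xi for w, xi in zip(row, x)) + b
                  for row, b in zip(self.weights, self.bias)]
        return softmax(logits)

    def predict(self, x: Sequence[float]) -> int:
        probs = self.predict_proba(x)
        return max(range(len(probs)), key=probs.__getitem__)

    def fit(self, features, labels, lr: float = 0.5, epochs: int = 200) -> "SoftmaxHead":
        # Stochastic gradient descent on the cross-entropy loss; only the head
        # is updated, the cached features themselves never change.
        for _ in range(epochs):
            for x, y in zip(features, labels):
                probs = self.predict_proba(x)
                for k, p in enumerate(probs):
                    grad = p - (1.0 if k == y else 0.0)  # dLoss/dlogit_k
                    self.bias[k] -= lr * grad
                    for j, xj in enumerate(x):
                        self.weights[k][j] -= lr * grad * xj
        return self


# Toy "cached bottleneck features" standing in for backbone outputs
cached_features = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0],
                   [0.0, 1.0, 0.0], [0.1, 0.9, 0.0],
                   [0.0, 0.0, 1.0], [0.0, 0.1, 0.9]]
cached_labels = [0, 0, 1, 1, 2, 2]
head = SoftmaxHead(n_features=3, n_classes=3).fit(cached_features, cached_labels)
```

Caching pays off because the expensive backbone is evaluated only once per image, while the small head is trained repeatedly. Note that in a Faster R-CNN detector the final classifier scores region proposals rather than whole images; the sketch only shows the head-on-frozen-features principle.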

Fig. 1.

System architecture and tooth detection and numbering pipeline

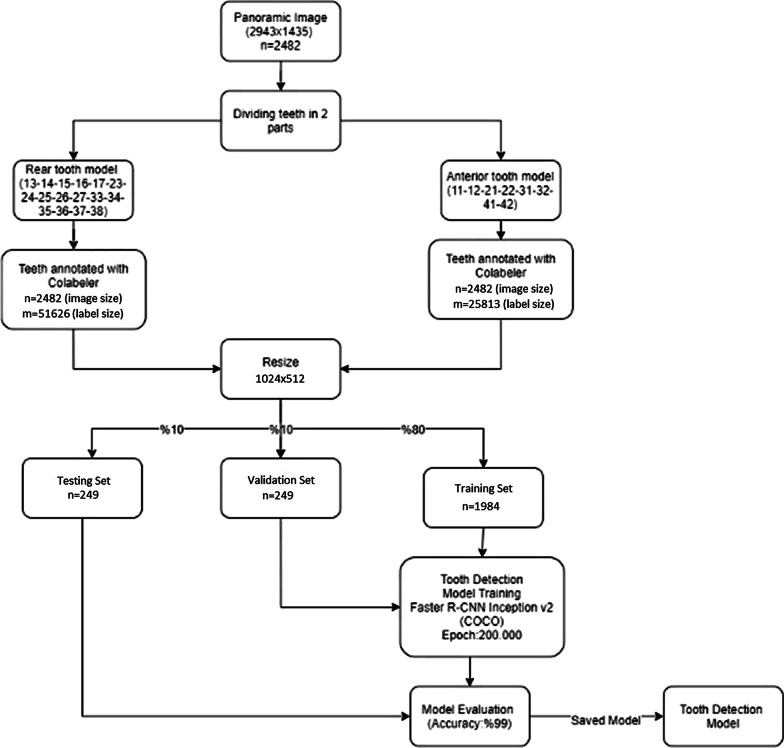

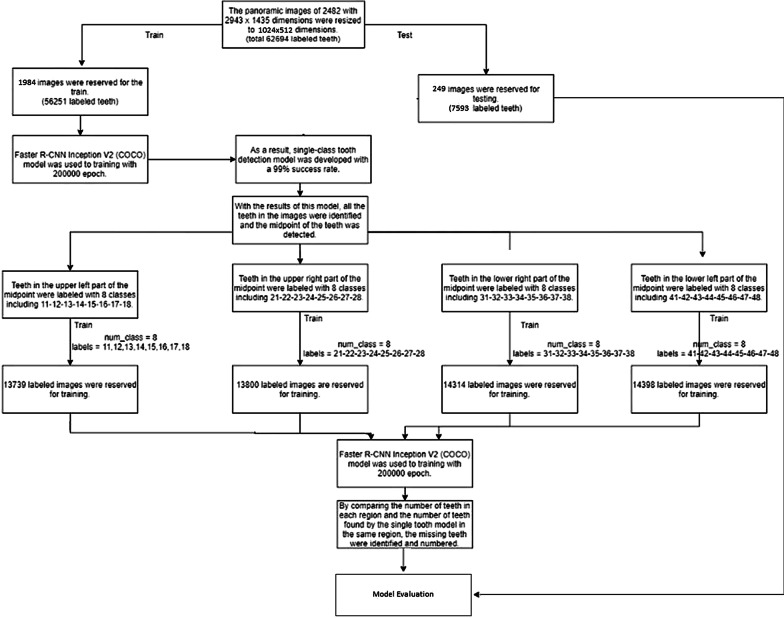

Before training, each radiograph was resized from its original dimensions of 2943 × 1435 pixels to 1024 × 512 pixels. The training data set, in which all 32 tooth classes were labeled simultaneously, consisted of 1984 images, on which a total of 56,251 teeth were labeled. The classes were as follows: 11, 12, 13, 14, 15, 16, 17, 18, 21, 22, 23, 24, 25, 26, 27, 28, 31, 32, 33, 34, 35, 36, 37, 38, 41, 42, 43, 44, 45, 46, 47, 48. To compensate for tooth numbers that could not be found, the teeth were ordered using the single-tooth detection model: for missing teeth, the gap between the right and left teeth was checked using the single-tooth detection model, and the gap was filled.
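Two steps in the paragraph above can be sketched. First, resizing from 2943 × 1435 to 1024 × 512 pixels scales the two axes by slightly different factors (about 0.348 and 0.357), so bounding-box annotations must be rescaled per axis. Second, the text does not specify how the single-tooth detection model orders teeth and fills gaps, so `number_quadrant` below is only a hypothetical heuristic (its name and the `gap_ratio` threshold are assumptions): it numbers the teeth of one quadrant outward from the jaw midline and skips a position wherever an unusually wide empty space suggests a missing tooth.

```python
from statistics import median
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def rescale_box(box: Box, src_size=(2943, 1435), dst_size=(1024, 512)) -> Box:
    """Map a box annotated on the original radiograph onto the resized image."""
    sx = dst_size[0] / src_size[0]  # horizontal scale factor
    sy = dst_size[1] / src_size[1]  # vertical scale factor (slightly different)
    x0, y0, x1, y1 = box
    return (x0 * sx, y0 * sy, x1 * sx, y1 * sy)


def number_quadrant(boxes: List[Box], midline_x: float, quadrant: int,
                    gap_ratio: float = 0.5) -> List[int]:
    """HYPOTHETICAL heuristic: number the teeth of one quadrant outward from the
    midline, skipping positions where the empty space before a tooth is wider
    than gap_ratio * median tooth width (interpreted as missing teeth)."""
    ordered = sorted(boxes, key=lambda b: abs((b[0] + b[2]) / 2 - midline_x))
    typical_width = median([b[2] - b[0] for b in ordered])
    labels: List[int] = []
    position = 0
    prev_edge = midline_x
    for b in ordered:
        # Which box edge faces the midline depends on the side of the image
        near, far = (b[0], b[2]) if b[0] >= midline_x else (b[2], b[0])
        gap = abs(near - prev_edge)
        missing = max(1, round(gap / typical_width)) if gap > gap_ratio * typical_width else 0
        position += 1 + missing
        labels.append(quadrant * 10 + position)  # FDI code, e.g. 2 * 10 + 4 = 24
        prev_edge = far
    return labels


# Upper jaw, image right of the midline (quadrant 2), with one tooth-width gap
upper_left = [(500, 0, 540, 10), (540, 0, 580, 10), (620, 0, 660, 10), (660, 0, 700, 10)]
labels = number_quadrant(upper_left, midline_x=500, quadrant=2)
```

With a gap of one tooth width after the lateral incisor, the heuristic labels the teeth 21, 22, 24, and 25, leaving 23 unassigned. A real system would need more robust cues than box spacing alone.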

Training phase

The images were divided into training (80%), validation (10%), and test (10%) groups. For each quadrant (regions 1, 2, 3, and 4), 1984, 249, and 249 images were randomly assigned to the training, validation, and test groups, respectively.
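A reproducible random split with the stated group sizes (1984 + 249 + 249 = 2482 images) can be sketched with the Python standard library. The seed value and the image identifiers below are hypothetical; the text does not report how its random sequence was seeded.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(items: Sequence[T], n_train: int, n_val: int, n_test: int,
                  seed: int = 42) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle once with a fixed seed, then cut into disjoint train/val/test lists."""
    if n_train + n_val + n_test != len(items):
        raise ValueError("split sizes must add up to the number of items")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # reproducible random order
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Hypothetical identifiers for the 2482 anonymized radiographs
image_ids = [f"pano_{i:04d}" for i in range(2482)]
train, val, test = split_dataset(image_ids, n_train=1984, n_val=249, n_test=249)
```

Splitting at the image level, rather than at the tooth level, keeps all teeth and quadrants of one radiograph in the same group, so no test image contributes to training.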

The CranioCatch approach for detecting teeth is based on a deep CNN trained with Faster R-CNN Inception v2 for 200,000 epochs with a learning rate of 0.0002. At this stage, the exact type of each tooth must be specified using a separate deep CNN. Once trained, the models are used to identify teeth in the following stages (training was carried out in four models; Figs. 2, 3, 4):

- dental object detection model;
- different models (100%): teeth numbered 11, 12, 21, 22, 31, 32, 41, and 42 were detected using the anterior tooth model, and the remaining teeth were detected using the posterior tooth model;
- jaw midpoint detection;
- upper/lower jaw classification model (100%).
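The routing logic implied by these stages can be sketched as follows. This is a hypothetical geometric stand-in, not the CranioCatch implementation: `quadrant_of` assigns a quadrant from a box centre relative to a detected jaw midpoint (replacing the trained upper/lower classification model with a simple above/below test), and `numbering_model_for` sends incisors to the anterior model and all other teeth to the posterior model. It assumes the usual panoramic display, in which the patient's right appears on the viewer's left.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), y grows downward

# Second FDI digit 1 or 2: central and lateral incisors (11, 12, 21, 22, 31, 32, 41, 42)
ANTERIOR_POSITIONS = {1, 2}


def quadrant_of(box: Box, midpoint: Tuple[float, float]) -> int:
    """Assign an FDI quadrant from the box centre relative to the jaw midpoint.
    Assumes the patient's right is displayed on the viewer's left."""
    cx = (box[0] + box[2]) / 2
    cy = (box[1] + box[3]) / 2
    mid_x, mid_y = midpoint
    upper = cy < mid_y           # stand-in for the upper/lower jaw classifier
    patient_right = cx < mid_x   # left half of the image
    if upper:
        return 1 if patient_right else 2
    return 4 if patient_right else 3


def numbering_model_for(fdi_code: int) -> str:
    """Route a tooth number to the anterior or posterior tooth model."""
    return "anterior" if fdi_code % 10 in ANTERIOR_POSITIONS else "posterior"
```

Splitting anterior from posterior teeth lets each model specialize in visually similar tooth types (narrow incisors versus broad premolars and molars), while the midpoint and jaw stages supply the quadrant digit of the final FDI number.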

Fig. 2.

The diagram of Dental Object Detection Model (CranioCatch, Eskisehir-Turkey)

Fig. 3.

The diagram of Different Models (CranioCatch, Eskisehir-Turkey)

Fig. 4.

The diagram of AI model (CranioCatch, Eskisehir-Turkey) developing stages

Statistical analysis

The confusion matrix, a table that summarizes predicted versus actual outcomes, was used to quantify the success of the model. The following procedures and metrics were used to assess the performance of the AI model:

Initially, the numbers of true positives (TP), false positives (FP), and false negatives (FN) were counted.

TP: the outcome in which the model correctly predicts the positive class (teeth correctly detected and numbered on panoramic radiographs).

FP: the outcome in which the model incorrectly predicts the positive class (teeth correctly detected but incorrectly numbered on panoramic radiographs).

FN: the outcome in which the model incorrectly predicts the negative class (teeth incorrectly detected and numbered on panoramic radiographs).
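How individual detections become TP, FP, and FN counts depends on how predicted boxes are matched to ground-truth boxes, which the text does not specify. The sketch below assumes greedy one-to-one matching at an intersection over union (IoU) of at least 0.5 (both the threshold and the function names are assumptions) and follows the definitions above: a matched box with the correct FDI number is a TP, a matched box with a wrong number (or a box matching no tooth) is an FP, and a ground-truth tooth left unmatched is an FN.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_outcomes(preds: List[Tuple[Box, int]], truths: List[Tuple[Box, int]],
                   threshold: float = 0.5) -> Dict[str, int]:
    """Greedy matching of (box, FDI number) predictions to ground truth.
    Predictions are processed in the given order (in practice, by confidence)."""
    tp = fp = 0
    unmatched = list(range(len(truths)))
    for box, number in preds:
        best, best_iou = None, threshold
        for gi in unmatched:
            v = iou(box, truths[gi][0])
            if v >= best_iou:
                best, best_iou = gi, v
        if best is None:
            fp += 1  # box does not correspond to any annotated tooth
            continue
        unmatched.remove(best)
        if number == truths[best][1]:
            tp += 1  # detected and correctly numbered
        else:
            fp += 1  # detected but incorrectly numbered
    return {"TP": tp, "FP": fp, "FN": len(unmatched)}


truths = [((0, 0, 10, 10), 11), ((20, 0, 30, 10), 12), ((40, 0, 50, 10), 13)]
preds = [((0, 0, 10, 10), 11), ((21, 0, 31, 10), 21), ((100, 100, 110, 110), 14)]
outcome = count_outcomes(preds, truths)
```

In this toy example one tooth is found and correctly numbered, one is found but given the wrong number, one prediction matches nothing, and tooth 13 is missed.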

The following metrics were then calculated using the TP, FP, and FN values:

Sensitivity (Recall): TP/(TP + FN)

Precision: TP/(TP + FP)

F1 Score: 2TP/(2TP + FP + FN)

False Discovery Rate = FP/(FP + TP)

False Negative Rate = FN/(FN + TP)
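The formulas above can be checked directly against the per-quadrant counts reported in Table 1. The short sketch below sums the four regions and recomputes the values given in Table 2; the function and variable names are illustrative.

```python
from typing import Dict


def detection_metrics(tp: int, fp: int, fn: int) -> Dict[str, float]:
    """Confusion-matrix metrics as defined above (no true negatives are needed)."""
    return {
        "sensitivity": tp / (tp + fn),
        "precision": tp / (tp + fp),
        "f1": 2 * tp / (2 * tp + fp + fn),
        "fdr": fp / (fp + tp),
        "fnr": fn / (fn + tp),
    }


# (TP, FP, FN) per quadrant, taken from Table 1
TABLE_1 = {
    "Region-1": (1710, 85, 95),
    "Region-2": (1610, 60, 160),
    "Region-3": (1800, 70, 20),
    "Region-4": (1820, 35, 45),
}

totals = tuple(sum(counts[i] for counts in TABLE_1.values()) for i in range(3))
overall = detection_metrics(*totals)
for name, value in overall.items():
    print(f"{name}: {value:.4f}")
# Prints sensitivity: 0.9559, precision: 0.9652, f1: 0.9606, fdr: 0.0348, fnr: 0.0441
```

Because true negatives are not defined for object detection (there is no finite set of "non-teeth"), all five measures are built only from TP, FP, and FN; the F1 score computed this way equals the harmonic mean of precision and sensitivity.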

Results

The deep CNN system was successful in detecting and numbering the teeth (Fig. 5). The total numbers of TP, FP, and FN results across all quadrants were 6940, 250, and 320, respectively (Table 1). The sensitivity and precision rates were promising for detecting and numbering the teeth. Consequently, the estimated sensitivity, precision, false discovery rate, false negative rate, and F-measure were 0.9559, 0.9652, 0.0348, 0.0441, and 0.9606, respectively (Tables 1, 2).

Fig. 5.

Detecting and numbering the teeth with the deep convolutional neural network system in panoramic radiographs

Table 1.

Numbers of teeth correctly and incorrectly detected and numbered by the AI model, by region (quadrant)

| Quadrant | Region-1 | Region-2 | Region-3 | Region-4 | Total |
|---|---|---|---|---|---|
| True positives (TP) | 1710 | 1610 | 1800 | 1820 | 6940 |
| False positives (FP) | 85 | 60 | 70 | 35 | 250 |
| False negatives (FN) | 95 | 160 | 20 | 45 | 320 |

Table 2.

Performance measures of the AI model estimated from the confusion matrix

| Measure | Value | Derivation |
|---|---|---|
| Sensitivity (Recall) | 0.9559 | TPR = TP/(TP + FN) |
| Precision | 0.9652 | PPV = TP/(TP + FP) |
| False Discovery Rate | 0.0348 | FDR = FP/(FP + TP) |
| False Negative Rate | 0.0441 | FNR = FN/(FN + TP) |
| F1 Score | 0.9606 | F1 = 2TP/(2TP + FP + FN) |

Discussion

Radiology has undergone two major digital revolutions: the introduction of advanced imaging methods and the development of workstations and archiving systems. These developments have been followed by the use of AI, especially in radiographic analysis [8, 13]. Just as radiologists are trained by repeatedly evaluating medical images, the main advantage of machine learning is that a dedicated AI model can continue to improve with experience as it is trained on larger and newer image data sets. With AI diagnostic models, radiologists hope not only to read and report large numbers of medical images but also to improve work efficiency and obtain more precise results in the diagnosis of various diseases [8, 9].

The application of AI in dentistry is a relatively new development. Deep learning and deep CNNs have been applied to object detection, the detection of periapical lesions and caries, and fracture determination [11, 12, 16–21]. AI has also been applied to cone-beam CT, panoramic, bitewing, and periapical radiographs for charting purposes [9–11, 22–24]. Miki et al. [23] applied an automated method based on a deep CNN to classify teeth in cone-beam CT images. They reported a dental charting accuracy of 91.0% and concluded that AI could be an efficient tool for automated dental charting and in forensic dentistry [23]. Zhang et al. [25] described the use of a label tree with a cascade network for effective recognition of teeth. They applied deep learning not only to tooth detection but also to tooth classification on dental periapical radiographs. The technique performed well even with limited training data, with a precision of 95.8% and a recall of 96.1% [25]. Lin et al. [22] developed a system for the segmentation, classification, and numbering of teeth on intraoral bitewing radiographs. The bitewing radiographs were processed using homomorphic filtering, homogeneity-based contrast stretching, and adaptive morphological transformation to improve contrast and uniformity of illumination, and the authors reported a numbering accuracy of 95.7% [22]. Chen et al. [26] presented a deep learning approach for automated detection and numbering of teeth on dental periapical films. They reported that both precision and recall exceeded 90%, and the mean intersection over union (IoU) between detected boxes and the ground truth reached 91%. In addition, the results of the machine learning system were similar to those obtained by junior dentists [26]. Tuzoff et al. [24] focused on tooth detection and numbering on panoramic radiographs using a trained CNN-based deep learning model to provide automated dental charting according to the FDI two-digit notation. They aimed to number maxillary and mandibular teeth in a single image and used the state-of-the-art Faster R-CNN model based on the VGG-16 convolutional architecture. Their results were promising and showed that deep learning algorithms have potential for practical application in clinical dentistry [24]. VGG-16 consists of 16 weight layers (13 convolutional and 3 fully connected), with pooling layers between blocks of convolutional layers. The VGG network was designed to achieve a much deeper architecture by using much smaller (3 × 3) convolutional filters. Inception v2, used in this study, consists of 22 deep layers with convolutional filters of various sizes in the same layer. VGG-16 and Inception v2 achieved top-1 classification accuracies on ImageNet of 71% and 73.9%, respectively [27].

Consistent with the literature, the precision and sensitivity obtained in our study were high. The relatively lower values reported by studies of periapical and bitewing radiographs may be explained by the fact that such systems must first determine whether a radiograph shows the upper or lower jaw, whereas CNN-based deep learning on panoramic radiographs is performed in fewer steps. Although the present Faster R-CNN-based algorithm showed promising results, this study had some limitations. Further studies using larger data sets are needed to determine the estimation success for each tooth type (incisors, canines, premolars, and molars) and to examine other CNN architectures and libraries. An improved method with better tooth detection and numbering results may then be developed. Future studies should also investigate the possible advantages of applying AI to radiographic images acquired with lower radiation doses.

Conclusion

In the present study, a deep CNN was proposed for tooth detection and numbering. The precision and sensitivity of the model were high and showed that AI is useful for tooth identification and numbering. This AI system can be used to support clinicians in detecting and numbering teeth on panoramic radiographs and may eventually replace evaluation by human observers and improve performance in the future.

Acknowledgements

Not applicable

Abbreviations

- AI: Artificial intelligence

- ANNs: Artificial neural networks

- CNN: Convolutional neural network

- CT: Computed tomography

- FN: False negative

- FP: False positive

- HOG: Histogram of oriented gradients

- PACS: Picture archiving and communication system

- SIFT: Scale-invariant feature transform

- TP: True positive

Authors' contributions

ISB and KO were responsible for the concept of the research. EB and OC worked on the methodology of the research. OC, AO and AFA provided software for the research. OC, ISB and KO validated the data; ISB, EB, FAK, HS, CO, MK contributed to data curation; ISB, EB prepared the draft; KO, IR-K were responsible for writing, review and editing of the manuscript; KO, IR-K were supervisors of the research. All authors read and approved the final manuscript.

Funding

This research received no external funding.

Declarations

Ethics approval and consent to participate

The research protocol was approved by the non-interventional Clinical Research Ethical Committee of Eskisehir Osmangazi University (decision date and number: 06.08.2019/14). All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards. Additional informed consent was obtained from all individual participants included in the study.

Consent for publication

Not applicable.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Competing interests

The authors declare that they have no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Shah N, Bansal N, Logani A. Recent advances in imaging technologies in dentistry. World J Radiol. 2014;6:794–807. doi: 10.4329/wjr.v6.i10.794.

- 2. Angelopoulos C, Bedard A, Katz JO, Karamanis S, Parissis N. Digital panoramic radiography: an overview. Semin Orthod. 2004;10:194–203. doi: 10.1053/j.sodo.2004.05.003.

- 3. European Society of Radiology (ESR). What the radiologist should know about artificial intelligence – an ESR white paper. Insights Imaging. 2019;10:44. doi: 10.1186/s13244-019-0738-2.

- 4. Schier R. Artificial Intelligence and the Practice of Radiology: An Alternative View. J Am Coll Radiol. 2018;15:1004–1007. doi: 10.1016/j.jacr.2018.03.046.

- 5. Syed AB, Zoga AC. Artificial intelligence in radiology: current technology and future directions. Semin Musculoskelet Radiol. 2018;22:540–545. doi: 10.1055/s-0038-1673383.

- 6. Nichols JA, Herbert Chan HW, Baker MAB. Machine learning: applications of artificial intelligence to imaging and diagnosis. Biophys Rev. 2019;11:111–118. doi: 10.1007/s12551-018-0449-9.

- 7. Park WJ, Park JB. History and application of artificial neural networks in dentistry. Eur J Dent. 2018;12:594–601. doi: 10.4103/ejd.ejd_325_18.

- 8. Hung K, Montalvao C, Tanaka R, Kawai T, Bornstein MM. The use and performance of artificial intelligence applications in dental and maxillofacial radiology: a systematic review. Dentomaxillofac Radiol. 2020;49:20190107. doi: 10.1259/dmfr.20190107.

- 9. Hwang JJ, Jung YH, Cho BH, Heo MS. An overview of deep learning in the field of dentistry. Imaging Sci Dent. 2019;49:1–7. doi: 10.5624/isd.2019.49.1.1.

- 10. Lee JH, Han SS, Kim YH, Lee C, Kim I. Application of a fully deep convolutional neural network to the automation of tooth segmentation on panoramic radiographs. Oral Surg Oral Med Oral Pathol Oral Radiol. 2020;129:635–642. doi: 10.1016/j.oooo.2019.11.007.

- 11. Murata M, Ariji Y, Ohashi Y, Kawai T, Fukuda M, Funakoshi T, Kise Y, Nozawa M, Katsumata A, Fujita H, Ariji E. Deep-learning classification using convolutional neural network for evaluation of maxillary sinusitis on panoramic radiography. Oral Radiol. 2019;35:301–307. doi: 10.1007/s11282-018-0363-7.

- 12. Poedjiastoeti W, Suebnukarn S. Application of convolutional neural network in the diagnosis of jaw tumors. Healthc Inform Res. 2018;24:236–241. doi: 10.4258/hir.2018.24.3.236.

- 13. Deyer T, Doshi A. Application of artificial intelligence to radiology. Ann Transl Med. 2019;7:230. doi: 10.21037/atm.2019.05.79.

- 14. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJWL. Artificial intelligence in radiology. Nat Rev Cancer. 2018;18:500–510. doi: 10.1038/s41568-018-0016-5.

- 15. Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z. Rethinking the Inception Architecture for Computer Vision. In: Proceedings of the IEEE conference on computer vision and pattern recognition; 2016. p. 2818–2826. arXiv:1512.00567 [cs.CV].

- 16. Ezhov M, Zakirov A, Gusarev M. Coarse-to-fine volumetric segmentation of teeth in Cone-Beam CT. In: 2019 IEEE 16th international symposium on biomedical imaging (ISBI 2019). IEEE; 2019.

- 17. Ekert T, Krois J, Meinhold L, Elhennawy K, Emara R, Golla T, Schwendicke F. Deep Learning for the Radiographic Detection of Apical Lesions. J Endod. 2019;45:917–922. doi: 10.1016/j.joen.2019.03.016.

- 18. Fukuda M, Inamoto K, Shibata N, Ariji Y, Yanashita Y, Kutsuna S, Nakata K, Katsumata A, Fujita H, Ariji E. Evaluation of an artificial intelligence system for detecting vertical root fracture on panoramic radiography. Oral Radiol. 2020;36:337–343. doi: 10.1007/s11282-019-00409-x.

- 19. Reddy MS, Shetty SR, Shetty RM, Vannala V, Sk S. Future of periodontics lies in artificial intelligence: Myth or reality? J Investig Clin Dent. 2019;10:e12423. doi: 10.1111/jicd.12423.

- 20. Valizadeh S, Goodini M, Ehsani S, Mohseni H, Azimi F, Bakhshandeh H. Designing of a computer software for detection of approximal caries in posterior teeth. Iran J Radiol. 2015;12:e16242. doi: 10.5812/iranjradiol.12(2)2015.16242.

- 21. Zakirov A, Ezhov M, Gusarev M, Alexandrovsky V, Shumilov E. Dental pathology detection in 3D cone-beam CT; 2018. arXiv preprint arXiv:1810.10309.

- 22. Lin PL, Lai YH, Huang PW. An effective classification and numbering system for dental bitewing radiographs using teeth region and contour information. Pattern Recogn. 2010;43:1380–1392. doi: 10.1016/j.patcog.2009.10.005.

- 23. Miki Y, Muramatsu C, Hayashi T, Zhou X, Hara T, Katsumata A, Fujita H. Classification of teeth in cone-beam CT using deep convolutional neural network. Comput Biol Med. 2017;80:24–29. doi: 10.1016/j.compbiomed.2016.11.003.

- 24. Tuzoff DV, Tuzova LN, Bornstein MM, Krasnov AS, Kharchenko MA, Nikolenko SI, Sveshnikov MM, Bednenko GB. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac Radiol. 2019;48:20180051. doi: 10.1259/dmfr.20180051.

- 25. Zhang K, Wu J, Chen H, Lyu P. An effective teeth recognition method using label tree with cascade network structure. Comput Med Imaging Graph. 2018;68:61–70. doi: 10.1016/j.compmedimag.2018.07.001.

- 26. Chen H, Zhang K, Lyu P, Li H, Zhang L, Wu J, Lee CH. A deep learning approach to automatic teeth detection and numbering based on object detection in dental periapical films. Sci Rep. 2019;9:3840. doi: 10.1038/s41598-019-40414-y.

- 27. Huang J, Rathod V, Sun C, Zhu M, Korattikara A, Fathi A, Fischer I, Wojna Z, Song Y, Guadarrama S, Murphy K. Speed/accuracy trade-offs for modern convolutional object detectors. In: Proceedings of the IEEE conference on computer vision and pattern recognition; 2017. p. 7310–7311. arXiv:1611.10012 [cs.CV].
