Abstract

Objective

Although the representation of women in science has improved, women remain underrepresented in scientific publications. This study compares women and men in scholarly dissemination through the AMIA Annual Symposium.

Materials and Methods

Through a retrospective observational study, we analyzed 2017–2020 AMIA submissions for differences in panels, papers, podium abstracts, posters, workshops, and awards for men compared with women. We assigned a label of woman or man to authors and reviewers using Genderize.io, and then compared submission and acceptance rates, performed regression analyses to evaluate the impact of the assumed gender, and performed sentiment analysis of reviewer comments.

Results

Of the 4687 submissions for which Genderize.io could predict man or woman based on first name, 40% were led by women and 60% were led by men. Acceptance rates were similar for women and men. Although submission and acceptance rates for women increased over the 4 years, women-led podium abstracts, panels, and workshops were underrepresented. Men reviewers were associated with increased odds of rejection. Men reviewers provided longer reviews and lower reviewer scores, whereas women reviewers provided reviews that had more positive words.

Discussion

Overall, our findings reflect significant gains for women in the 4 years of conference data analyzed. However, there remain opportunities to improve representation of women in workshop submissions, panel and podium abstract speakers, and balanced peer reviews. Future analyses could be strengthened by collecting gender directly from authors, including diverse genders such as non-binary.

Conclusion

We found little evidence of major bias against women in submission, acceptance, and awards associated with the AMIA Annual Symposium from 2017 to 2020. Our study is unique because of the analysis of both authors and reviewers. The encouraging findings raise awareness of progress and remaining opportunities in biomedical informatics scientific dissemination.

Keywords: gender equity, authorship, bias, women, sex distribution

INTRODUCTION

Despite efforts to increase representation, women remain underrepresented in science, including biomedical informatics. The American Medical Informatics Association (AMIA) has spearheaded several initiatives to promote women in biomedical informatics, including awards, career advancement, networking, mentoring, and leadership programs.1 This study examines research publications for women and men through AMIA scholarly dissemination, which carries implications for journals,2 conferences,3–4 and peer review,5 and can significantly impact career and promotion.6

Although the representation of women in science and medicine has increased dramatically over the past several decades, bias towards women persists.7 Underrepresentation of women has been demonstrated in many fields, including gender gaps in scientific publication and impact.2,8–10 For example, an analysis of over 10 million academic papers published in Science, Technology, Engineering, Mathematics, and Medicine (STEMM) disciplines since 2002 found that gains for women authors have been slowest in historically male-dominated fields, such as surgery and computer science.11 Differences between women and men have also been demonstrated in author order. For example, among 1.8 million JSTOR articles, West et al12 found first authorship roughly matched the proportion of women in a given field, but women were underrepresented as last (ie, senior) authors. Although the majority of prior work focuses on authorship, a study of peer reviews for over 9000 publications in top-tier economics journals found publications authored by women spent 6 months longer in review despite higher readability scores than those authored by men.13 Thus women continue to face biases, including increased barriers for publication.

Women continue to be underrepresented in conferences as well, in both biomedical14,15 and technology fields.16,17 Opportunities to present at scientific conferences are important academic accomplishments for career advancement. Yet inequities between women and men have been shown at many scientific conferences, often demonstrating that one-third or less of speakers are women.14,18–25 Among biomedical conferences, differences between women and men have been documented in speaker topics16,23,26 and prominence of all-male panels,21 despite increased audience satisfaction associated with >50% female panel composition.27 Differences between women and men are more pronounced in fields like critical care and algorithms, where there is a smaller pool of women than other fields like nursing or human factors.26,28 The COVID-19 pandemic only further amplified these differences.29

We know little about how women and men compare in AMIA scholarly dissemination, including any differences in conference submission rates, submission types, or acceptance rates. Most prior work examines differences between women and men authors without considering other factors, such as the role of gender in peer review.13 There is an opportunity for more detailed analyses in scientific conference submissions within biomedical informatics. Documenting trends in AMIA scholarly dissemination will help to describe any demonstrable bias against women, provide a benchmark to track future improvements, and help inform any needed corrective strategies.

OBJECTIVE

The objective of this study was to examine associations between women and men authors, reviewers, and outcomes for the AMIA Annual Symposium submissions and awards. We examined differences in submission rates, submission types, acceptance rates, reviewer scores, sentiment of reviews, odds of rejection, and awards for women and men.

MATERIALS AND METHODS

We conducted a retrospective observational study using deidentified conference submission data from the AMIA Annual Symposium from 2017 to 2020. The University of Washington Institutional Review Board approved procedures for analysis of submission data. AMIA granted access to the conference submission data under the condition that identity remains confidential. A data use agreement was established with AMIA to ensure deidentification of the submission data.

Data set

The data set included a total of 4940 submissions to the AMIA Annual Symposia from 2017 to 2020. Author and reviewer names were assigned codes for deidentification and the original file was loaded to a secure institutional server. For our analysis, each submission included the following data elements: first name of first author, senior author, and reviewers, submission type (ie, panel, regular paper, student paper, podium abstract, poster, workshop), submission acceptance (accept, reject), reviewer score (1 [definitely should be rejected] to 5 [definitely should be on program]), and reviewer comments to the author.

In addition to analyzing conference submission data, we compared women and men who received AMIA awards between 2017 and 2020. Awards are made each year at the AMIA Annual Symposium. We included the award data listed on the public AMIA website.30 We used the first name of award winners in our analysis. For awards based on a manuscript with multiple authors, we used the first name of the first author in our analysis. We included 3 categories of awards from the AMIA Annual Symposia from 2017 to 2020: Research, Leadership, and Signature awards. Research awards include the “Distinguished Paper Award,” “Distinguished Poster Award,” “Doctoral Dissertation Award,” “Homer R. Warner Award,” and “The Martin Epstein Student Paper Award.” Both Signature and Research awards contain links to the Student Paper awards. We include the Student Paper awards in the Research awards, since they are similarly based on a publication. Leadership awards include the “AMIA Leadership Award” and exclude the “Member Get a Member Award,” since no awards were bestowed during the analysis period. Signature awards include the “AMIA New Investigator Award,” “Don Eugene Detmer Award for Health Policy Contributions in Informatics,” “Donald A.B. Lindberg Award for Innovation in Informatics,” “Morris F. Collen Award of Excellence,” “Virginia K. Saba Informatics Award,” and the “William W. Stead Award for Thought Leadership in Informatics.”

Prediction of gender

Because the actual gender of authors, reviewers, and award winners was not available for our data set, we applied an automated approach to predict gender. This approach aligns with the reviewer experience since reviewers are not explicitly told author gender, but they do see author names. Between 11/10/2020 and 1/25/2021, we used Genderize.io (https://genderize.io/)31 to assign a label of man or woman to authors, reviewers, and award winners. The Genderize.io API is a first name gender prediction system that includes over 250,000 names with country-specific name–gender associations for approximately 80 countries. The system has been used in related prior work10,11,32 and provides a minimum accuracy of 82% with an F1 score of 90% for women and 86% for men.33 Although Genderize.io offers a global database that is continuously updated,34 we recognize that an inherent limitation of this approach is its binary operationalization of gender.35
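The labeling step can be sketched as follows, assuming Genderize.io's JSON response with a `gender` field (`"male"`, `"female"`, or null) and a `probability` field. The mapping to woman/man and the `min_probability` cutoff are illustrative assumptions: the study does not report a probability threshold, and the default of 0.0 accepts any non-null prediction. Names with a null prediction are returned as `None`, corresponding to the ungendered names excluded from analysis. No network request is made here; the sample response bodies are made up for illustration.

```python
import json
from typing import Optional
from urllib.parse import urlencode

API_URL = "https://api.genderize.io"
LABELS = {"female": "woman", "male": "man"}  # binary labels, as in the study


def request_url(first_name: str) -> str:
    """Build a single-name Genderize.io query URL (nothing is sent here)."""
    return f"{API_URL}?{urlencode({'name': first_name})}"


def label_from_response(body: str, min_probability: float = 0.0) -> Optional[str]:
    """Map a Genderize.io-style JSON body to 'woman', 'man', or None (ungendered).

    min_probability is a hypothetical cutoff; the study does not report one.
    """
    data = json.loads(body)
    gender = data.get("gender")  # null (None) when the name has no prediction
    probability = data.get("probability") or 0.0
    if gender not in LABELS or probability < min_probability:
        return None
    return LABELS[gender]


print(request_url("Hun Ji"))  # https://api.genderize.io?name=Hun+Ji
print(label_from_response('{"name": "maria", "gender": "female", "probability": 0.98}'))  # woman
```

Multi-term names such as "Hun Ji" are sent as a single query string, which is one way such names can end up with a null prediction.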

Analysis

We conducted 6 analyses to compare women and men for submissions and awards. Data analyses were performed with Stata statistical software version 16.1 (StataCorp).

Submission rates

We used descriptive statistics to summarize submission rates and submission types by women and men first authors. We compared submission frequencies over years with Fisher exact tests and between women and men with chi-square tests. We also compared the proportion of all-male panels across years with Fisher exact tests.
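As an illustration of the between-gender comparison, the sketch below computes a Pearson chi-square test of independence for first author gender by submission type using the Table 1 counts, plus adjusted standardized residuals showing which types depart from the overall 2:3 ratio. It is a pure-Python approximation of what Stata's `tabulate` with the `chi2` option reports; the residuals are an added diagnostic, not part of the published analysis.

```python
import math

# First author counts by submission type from Table 1: (women, men).
COUNTS = {
    "Panels": (142, 215),
    "Regular papers": (375, 583),
    "Student papers": (137, 163),
    "Podium abstracts": (467, 710),
    "Posters": (708, 881),
    "Workshops": (68, 238),
}


def chi_square_k_by_2(counts):
    """Pearson chi-square, df, and adjusted residuals (women cells) for a k x 2 table."""
    total_w = sum(w for w, _ in counts.values())
    total_m = sum(m for _, m in counts.values())
    n = total_w + total_m
    p_w = total_w / n  # overall share of women first authors
    stat = 0.0
    residuals = {}
    for name, (w, m) in counts.items():
        row = w + m
        exp_w, exp_m = row * p_w, row * (1 - p_w)
        stat += (w - exp_w) ** 2 / exp_w + (m - exp_m) ** 2 / exp_m
        # Adjusted standardized residual: approximately N(0, 1) under independence.
        residuals[name] = (w - exp_w) / math.sqrt(exp_w * (1 - row / n) * (1 - p_w))
    df = len(counts) - 1  # (k - 1) * (2 - 1)
    return stat, df, residuals


stat, df, residuals = chi_square_k_by_2(COUNTS)
print(f"chi2 = {stat:.1f}, df = {df}")
for name, r in residuals.items():
    print(f"{name:17s} {r:+.2f}")
```

Residuals beyond about ±2 flag the cells driving the result; with these counts, posters are positive and workshops strongly negative, the same direction reported in the Results. `scipy.stats.chi2_contingency` returns the same statistic together with a P value.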

Acceptance rates

We used descriptive statistics to summarize acceptance rates by women and men first authors. We compared acceptance frequencies over years with Fisher exact tests and between women and men with chi-square tests. We also compared the acceptance of all-male panels across years with Fisher exact tests.
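Fisher exact tests compare proportions without relying on large-sample approximations. The sketch below implements the two-sided 2×2 version from the hypergeometric distribution, summing the probabilities of all tables with the same margins that are no more likely than the observed one. The study's across-year comparisons span 4 years (a larger table, which Stata handles with `tabulate ..., exact`), so this 2×2 case, for example 2017 versus 2020, is a simplification rather than a reproduction of the published tests.

```python
from math import comb


def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher exact P value for the table [[a, b], [c, d]]."""
    row1, row2 = a + b, c + d
    col1 = a + c
    total = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability that the top-left cell equals x given the margins.
        return comb(row1, x) * comb(row2, col1 - x) / total

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Relative tolerance so tables tied with the observed one are not dropped by rounding.
    tail = sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_obs * (1 + 1e-7))
    return min(1.0, tail)


# Accepted women-led vs men-led submissions, 2017 vs 2020 (Table 2).
print(fisher_exact_2x2(251, 419, 280, 350))
```

Python's arbitrary-precision integers keep the binomial coefficients exact even for conference-sized counts; only the final division rounds to a float.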

Odds of rejection

Odds of rejection were determined by mixed-effects multivariable logistic regression, with submission nested within reviewer as random effects.
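Odds ratios and their 95% CIs in a logistic model are exponentiated coefficients: OR = exp(β), with CI exp(β ± 1.96 SE). The helper below shows that conversion and its inverse, which recovers an approximate SE of log(OR) from a reported CI. The coefficients themselves are not reported in this paper, so any SE recovered from Table 3 is only approximate because the published bounds are rounded.

```python
import math

Z_975 = 1.959963984540054  # 97.5th percentile of the standard normal


def odds_ratio_ci(beta, se, z=Z_975):
    """Convert a log-odds coefficient and its SE into (OR, lower, upper) with a Wald CI."""
    return math.exp(beta), math.exp(beta - z * se), math.exp(beta + z * se)


def se_from_ci(lower, upper, z=Z_975):
    """Approximate the SE of log(OR) from the bounds of a 95% CI."""
    return (math.log(upper) - math.log(lower)) / (2 * z)


# Man reviewer row of Table 3: OR 1.2 (95% CI, 1.02-1.4).
se = se_from_ci(1.02, 1.4)
print(round(se, 3))
print(odds_ratio_ci(math.log(1.2), se))
```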

Reviewer scores

For each submission, the reviewer score was defined as the score provided by each reviewer (minimum 1; maximum 5). Average scores are reported as mean ± SD. We used descriptive statistics to summarize reviewer scores of women and men reviewers. We performed a univariable mixed effects linear regression to predict reviewer scores based on reviewer gender, nesting submission within the reviewer.

Sentiment of reviews

We calculated the sentiment of reviewer comments for each submission. We processed the text using Python and the spaCy library36 to tokenize and remove stop words. After stop word removal, we used the NRC Emotion Lexicon37,38 to conduct a lexical lookup of all remaining words. The NRC lexicon was developed using crowdsourcing and contains 2312 positive and 3324 negative terms. Each remaining word in the reviewer comments was compared against both the positive and the negative term lists, and counts of positive and negative words were retained. For each comment, we calculated the word count and sentiment, defined as the percentage of positive words and the percentage of negative words: the number of positive (or negative) words divided by the word count after stop word removal. We used t tests to compare the mean percentages of positive and negative words in comments received by women and men first authors and in comments given by women and men reviewers.
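The ratio computation can be sketched as follows. This is not the study's pipeline: a regular-expression tokenizer stands in for spaCy, and the tiny stop word and term sets are hypothetical placeholders for spaCy's stop word list and the NRC positive and negative terms. Percentages are computed over the words remaining after stop word removal.

```python
import re

# Hypothetical placeholders: the study used spaCy's stop word list and the
# NRC Emotion Lexicon (2312 positive, 3324 negative terms).
STOP_WORDS = {"the", "a", "an", "is", "and", "of", "to", "this", "but", "it"}
POSITIVE = {"clear", "novel", "strong", "good"}
NEGATIVE = {"unclear", "weak", "depression"}


def sentiment_ratios(comment):
    """Return (word count, % positive, % negative) after stop word removal."""
    tokens = re.findall(r"[a-z']+", comment.lower())  # crude tokenizer, not spaCy
    words = [t for t in tokens if t not in STOP_WORDS]
    if not words:
        return 0, 0.0, 0.0
    pos = sum(w in POSITIVE for w in words)  # booleans sum as 0/1
    neg = sum(w in NEGATIVE for w in words)
    return len(words), 100 * pos / len(words), 100 * neg / len(words)


print(sentiment_ratios("This is a novel and clear study, but the methods are weak."))
```

With spaCy, stop words are typically filtered with the `Token.is_stop` attribute. The placeholder negative set includes "depression" to show how a topic word is counted as negative regardless of the reviewer's tone.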

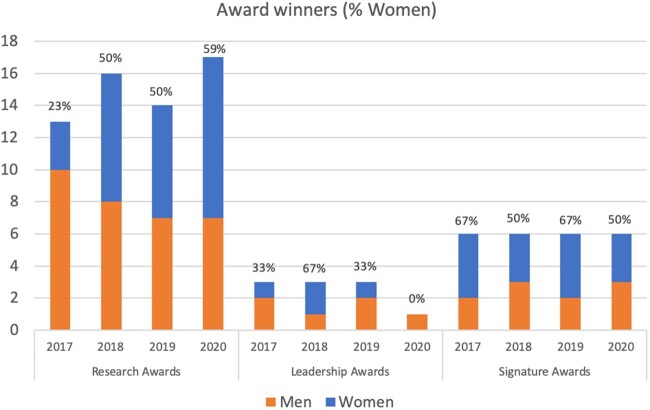

Awards

The distribution of women and men award winners is reported as number of winners (%) for the 3 categories of awards from the AMIA Annual Symposium: Research, Leadership, and Signature. We compared total awards made to women and men between 2017 and 2020 with Fisher exact test.

RESULTS

Sample

Submission data

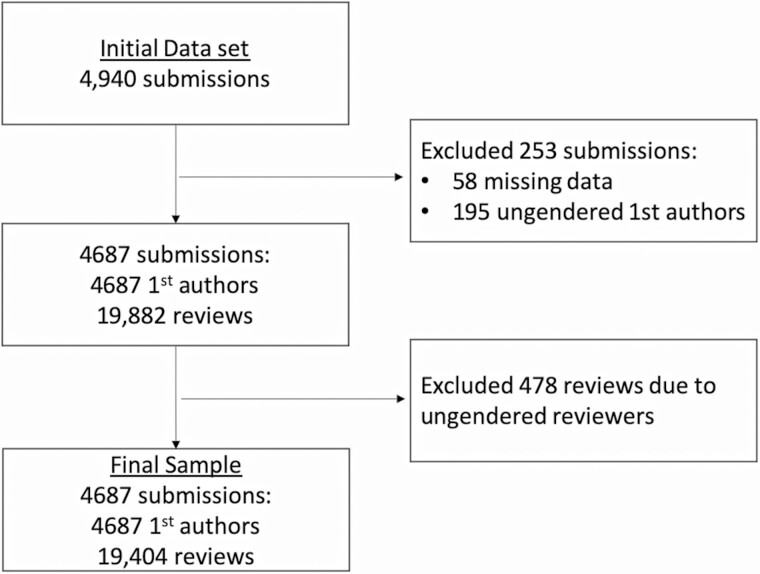

Of the 4940 submissions in the data set, we excluded a total of 253 submissions, including 58 (1%) that had missing data and 195 (4%) for which we were unable to predict gender. Through a manual audit of the 195 ungendered first author names, we identified common patterns, including hyphenated names (eg, Hye-Chung) (22%), names with more than 1 unhyphenated term (eg, Hun Ji) (30%), and typographical variations, such as first initials (eg, “A.”) and nonsense characters (eg, “-”) (4%). There was no clear pattern evident in the remaining 44% of ungendered names. For completeness, we further investigated name origin using Names.org (https://www.names.org/) and found that many ungendered names were Chinese (26%) or Korean (12%) in origin, while others remained of unknown origin (33%) (Supplementary Appendix A provides the full manual audit of ungendered names). Excluding those 253 submissions resulted in a final sample of 4687 submissions with a gendered first author included in our analysis (Figure 1). The final sample included 40% women-led submissions and 34% women senior authors. Each submission had an average of 4.4 reviews (SD = 0.87). Of the 19 882 reviews associated with the 4687 included submissions, we were unable to predict reviewer gender for 478 (2%), which were excluded. Thus, the final submission sample included 19 404 reviews with a gendered reviewer included in our analysis (38% women).
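The exclusion counts above can be cross-checked with simple arithmetic; the sketch below recomputes the final sample sizes and the rounded percentages from the reported counts.

```python
# Counts reported in the Results text.
SUBMISSIONS = 4940
MISSING_DATA = 58
UNGENDERED_FIRST_AUTHOR = 195
REVIEWS = 19_882
UNGENDERED_REVIEWER = 478


def pct(part, whole):
    """Percentage rounded to the nearest whole number, as reported in the text."""
    return round(100 * part / whole)


final_submissions = SUBMISSIONS - MISSING_DATA - UNGENDERED_FIRST_AUTHOR
final_reviews = REVIEWS - UNGENDERED_REVIEWER

print(final_submissions, final_reviews)  # 4687 19404
print(pct(MISSING_DATA, SUBMISSIONS),
      pct(UNGENDERED_FIRST_AUTHOR, SUBMISSIONS),
      pct(UNGENDERED_REVIEWER, REVIEWS))  # 1 4 2
```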

Figure 1.

Sample of AMIA Annual Symposium conference submissions.

Award data

Of the 94 awards made from 2017 to 2020 that we collected from the AMIA website, we were able to use Genderize.io to predict gender for all. Based on the gender prediction procedure, the final award sample included 49% of awards made to women.

Submission rates

Table 1 summarizes the distribution of women and men first author submissions by submission year and type (Supplementary Appendix B shows women-led and men-led submissions for all types and all years). The total number of submissions increased over the 4 years. Overall, 40% of submissions had a woman first author and 60% had a man first author, a 2:3 ratio. The proportion of women first author submissions increased significantly from 2017 (38%) to 2020 (43%) (P = .009). Posters had a significantly higher than expected proportion of women first authors (P < .001), while workshops had a significantly lower than expected proportion (P < .001). The proportion of all-male panel submissions decreased significantly from 28% of all panels submitted in 2017 to 8% in 2020 (P < .001).

Table 1.

Distribution of women and men first author submissions by submission year and type

| Year | Submissions n | Woman first author n (%) | Man first author n (%) |
|---|---|---|---|
| 2017 | 1112 | 424 (38) | 688 (62) |
| 2018 | 1145 | 460 (40) | 685 (60) |
| 2019 | 1158 | 465 (40) | 693 (60) |
| 2020 | 1272 | 548 (43) | 724 (57) |
| All years | 4687 | 1897 (40) | 2790 (60) |
| Submission type | Submissions n (%)ᵃ | Woman first author n (%)ᵇ | Man first author n (%)ᵇ |
| Panels | 357 (8) | 142 (40) | 215 (60) |
| Regular papers | 958 (20) | 375 (39) | 583 (61) |
| Student papers | 300 (6) | 137 (46) | 163 (54) |
| Podium abstracts | 1177 (25) | 467 (40) | 710 (60) |
| Posters | 1589 (34) | 708 (45) | 881 (55) |
| Workshops | 306 (7) | 68 (22) | 238 (78) |
| All types | 4687 (100) | 1897 (40) | 2790 (60) |

ᵃ Percentage of all submission types.

ᵇ Percentage of each submission type.

Acceptance rates

Table 2 summarizes the distribution of accepted women and men first author submissions by submission year and type (Supplementary Appendix C shows accepted women- and men-led submissions for all types and years). Across all years, the proportion of women-led to men-led accepted submissions follows a 2:3 ratio, similar to submissions. The overall acceptance rate decreased from 60% in 2017 to 50% in 2020, a relative decline of 17%. However, the proportion of accepted submissions that were women-led increased significantly from 2017 (37%) to 2020 (44%) (P = .01). Relative to submission rates, the proportion of accepted submissions was similar for women and men first authors across all submission types except panels (P = .05) and podium abstracts (P = .01), for which a significantly lower proportion of women first author submissions was accepted. Relative to submission rates, the acceptance rate of all-male panels decreased significantly from 28% in 2017 to 6% in 2020 (P = .006).

Table 2.

Distribution of accepted women and men first author submissions by year and type

| Year | Accepted submissions n (%)ᵃ | Woman first author n (%)ᵇ | Man first author n (%)ᵇ | P value |
|---|---|---|---|---|
| 2017 | 670/1112 (60) | 251 (37) | 419 (63) | .57 |
| 2018 | 694/1145 (61) | 281 (40) | 413 (60) | .79 |
| 2019 | 620/1158 (54) | 249 (40) | 371 (60) | .99 |
| 2020 | 630/1272 (50) | 280 (44) | 350 (56) | .33 |
| All years | 2614/4687 (56) | 1061 (41) | 1553 (59) | .86 |
| Submission type | Accepted submissions n (%)ᵃ | Woman first author n (%)ᵇ | Man first author n (%)ᵇ | P value |
| Panels | 181/357 (51) | 69 (38) | 112 (62) | .05 |
| Regular papers | 375/958 (39) | 139 (37) | 236 (63) | .29 |
| Student papers | 173/300 (58) | 86 (50) | 87 (50) | .10 |
| Podium abstracts | 459/1177 (39) | 161 (35) | 298 (65) | .01 |
| Posters | 1266/1589 (80) | 569 (45) | 697 (55) | .50 |
| Workshops | 160/306 (52) | 37 (23) | 123 (77) | .69 |
| All types | 2614/4687 (56) | 1061 (41) | 1553 (59) | .86 |

ᵃ Acceptance rate: accepted submissions as a percentage of all submissions in that year or type.

ᵇ Percentage of accepted submissions in each year or type.

Odds of rejection

A mixed-effects logistic regression of rejection, with individual reviewers nested within unique submissions, showed that a woman first author was not associated with increased odds of rejection (Table 3). However, having a man reviewer for a submission was associated with increased odds of rejection (OR, 1.2; 95% CI, 1.02–1.4). The odds of rejection were lower for student papers and posters and higher for regular papers and podium abstracts (Table 3). For the random effects, intraclass correlation coefficients indicated that reviewer accounted for 4% and submission (while controlling for reviewer) for 31% of the variance in rejection.

Table 3.

Rejection of submissions modeled with mixed effects multivariable logistic regressionᵃ

| Variable | OR | 95% CI | P value |
|---|---|---|---|
| Fixed effects | | | |
| Woman 1st author | 1.11 | 0.99–1.24 | .06 |
| Man reviewer | 1.2 | 1.02–1.4 | .03 |
| Man senior author | 0.93 | 0.84–1.03 | .17 |
| Panel | 0.92 | 0.74–1.14 | .44 |
| Paper (regular) | 1.5 | 1.04–2.19 | .03 |
| Paper (student) | 0.58 | 0.36–0.94 | .03 |
| Podium abstract | 1.6 | 1.07–2.53 | .02 |
| Poster | 0.16 | 0.04–0.66 | .01 |
| Random effects | ICC | 95% CI | |
| Reviewer | 0.04 | 0.02–0.06 | |
| Submission | 0.31 | 0.01–0.97 | |

ᵃ N = 18 752 reviews after dropping submissions with 647 ungendered senior authors and 5 missing senior authors. Workshops were omitted from the model due to collinearity.

Abbreviations: CI, confidence interval; ICC, intraclass correlation coefficient; OR, odds ratio.
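For random-intercept logistic models, ICCs are usually defined on the latent scale, where the individual-level residual variance is fixed at π²/3 (the variance of the standard logistic distribution). The sketch below assumes that convention and two random-intercept levels (reviewer, and submission within reviewer, as described in the Methods). Under this convention the submission-level ICC counts both variance components, so it is always at least as large as the reviewer ICC. The variance components are not reported in the paper, so the inputs below are placeholders.

```python
import math

LOGISTIC_VAR = math.pi ** 2 / 3  # latent residual variance of the logistic distribution


def latent_iccs(var_reviewer, var_submission):
    """Return (reviewer ICC, submission-within-reviewer ICC) on the latent scale."""
    total = var_reviewer + var_submission + LOGISTIC_VAR
    icc_reviewer = var_reviewer / total
    # Observations in the same submission-within-reviewer unit share both random intercepts.
    icc_submission = (var_reviewer + var_submission) / total
    return icc_reviewer, icc_submission


# Placeholder variance components, not estimates from this study.
print(latent_iccs(0.15, 1.4))
```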

Reviewer scores

The mean of reviewer scores for all years combined was 3.3 ± 0.5. For all years combined, the mean reviewer score was lower for men (3.2 ± 0.5) than women reviewers (3.4 ± 0.5; P < .001); this difference was similar in each year analyzed separately. In a univariable mixed effects linear model with reviewer score as the outcome and reviewer nested within submission, men reviewers scored submissions lower than women reviewers (mean difference, −0.11; 95% CI, −0.14 to −0.08; P < .001).

Sentiment of reviews

Of the 19 404 reviews, reviewer comments were available for 13 292 from 2017 to 2019. We analyzed the sentiment of reviews from 2 perspectives: as received by first authors (ie, women versus men first authors) and as provided by reviewers (ie, women versus men reviewers). For each perspective, we excluded reviews for which the relevant gender (of the first author or of the reviewer) was unknown. Table 4 summarizes the word count and sentiment of review comments addressed to women and men first authors (top) and provided by women and men reviewers (bottom).

Table 4.

Sentiment of reviews addressed to authors by reviewers (all submission types)

| Group, year | Reviews, women | Reviews, men | Word count, women (mean ± SD) | Word count, men (mean ± SD) | P | % Positive words, women | % Positive words, men | P | % Negative words, women | % Negative words, men | P |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Authorsᵃ | | | | | | | | | | | |
| 2017 | 1523 | 2478 | 72 ± 81 | 66 ± 70 | .02 | 14.8 | 14.0 | .007 | 3.0 | 3.0 | .90 |
| 2018 | 1801 | 2636 | 69 ± 71 | 68 ± 70 | .60 | 14.7 | 14.7 | .95 | 3.2 | 3.0 | .20 |
| 2019 | 1724 | 2498 | 76 ± 74 | 78 ± 77 | .31 | 14.7 | 14.2 | .03 | 3.2 | 3.1 | .90 |
| All years | 5048 | 7612 | 72 ± 75 | 71 ± 73 | .30 | 14.7 | 14.3 | .005 | 3.1 | 3.0 | .25 |
| Reviewersᵇ | | | | | | | | | | | |
| 2017 | 1537 | 2575 | 67 ± 81 | 69 ± 71 | .40 | 14.9 | 14.0 | <.001 | 2.8 | 3.1 | .02 |
| 2018 | 1613 | 2969 | 63 ± 65 | 71 ± 72 | <.001 | 15.3 | 14.4 | <.001 | 3.1 | 3.1 | .85 |
| 2019 | 1674 | 2610 | 71 ± 74 | 80 ± 78 | <.001 | 15.0 | 14.0 | .01 | 3.1 | 3.1 | .53 |
| All years | 4824 | 8154 | 67 ± 74 | 73 ± 74 | <.001 | 15.0 | 14.2 | <.001 | 3.0 | 3.1 | .12 |

Note: N = 14 863 reviews; 1571 reviews that had no comments were excluded, leaving 13 292 reviews included in the analysis.

ᵃ Reviews received by women and men first authors: 632 reviews that had no gendered first author were excluded, leaving 12 660 reviews received by gendered authors.

ᵇ Reviews given by women and men reviewers: 314 reviews that had no gendered reviewer were excluded, leaving 12 978 reviews given by gendered reviewers.

Sentiment of reviews analyzed by women and men first authors

Overall, the average word count (ie, length of review) ranged from 66 to 78 words, with an average of 14% positive words and 3% negative words across years. The standard deviations of word counts are large because some comments were very short (eg, 1 word) and others very long (eg, over 700 words). Although review length was similar for women and men first authors in all years except 2017, women first authors received a higher mean percentage of positive words (14.7%) than men first authors (14.3%) across all years (P = .005).

Sentiment of reviews analyzed by women and men reviewers

Similar to reviews analyzed by first authors, the average word count ranged from 63 to 80 words, with an average of 15% positive words and 3% negative words across years. Although comments provided by men reviewers had a significantly higher mean word count than those provided by women reviewers (P < .001), comments provided by women reviewers had a significantly higher mean percentage of positive words (15.0%) than those provided by men (14.2%) (P < .001). In 1 year (2017), comments provided by men reviewers also had a significantly higher percentage of negative words (3.1%) than those provided by women reviewers (2.8%) (P = .02).
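Because Table 4 reports group sizes, means, and SDs, the word-count comparisons can be approximately reproduced from summary statistics. The paper states only that t tests were used; the sketch assumes Welch's unequal-variance version, and because the published means and SDs are rounded, the result is an approximation rather than the study's exact statistic.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite df from summary statistics."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2  # squared standard errors of each mean
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# All-years word counts from Table 4: men reviewers (73 ± 74, n = 8154)
# vs women reviewers (67 ± 74, n = 4824).
t, df = welch_t(73, 74, 8154, 67, 74, 4824)
print(f"t = {t:.2f}, df = {df:.0f}")
```

`scipy.stats.ttest_ind_from_stats` with `equal_var=False` performs the same calculation and also returns a P value.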

Awards

For all awards and years, awards were made to 46 women (49%) and 48 men (51%) (Figure 2, Supplementary Appendix D). The frequency of awards to women did not differ significantly from 2017 to 2020. For 2017 to 2020 combined, research awards were given to 28 women (47%) and 32 men (53%); research awards were given to 3 women (23%) in 2017 and 10 women (59%) in 2020 (not significant) (Supplementary Appendix Table D). From 2017 to 2020, leadership awards were given to 4 women (40%) and 6 men (60%). Signature awards were given to 14 women (58%) and 10 men (42%), including Virginia Saba Awards, which are limited to nurses who practice in a historically women-dominated field, with 3 of the 4 awards to women. When this award was excluded from the analysis, there were 11 (55%) Signature awards given to women and 9 (45%) to men.

Figure 2.

Awards by award type and year.

DISCUSSION

Findings from this study fill an important gap in our understanding of the representation of women in biomedical informatics scholarly dissemination. In the context of the AMIA Annual Symposium from 2017 to 2020, we identified several promising gains for women. Although the overall submission rate for women (40%) was lower than for men (60%), the proportion of women first author submissions increased significantly from 2017 to 2020. Relative to submission rates, which show an overall 2:3 woman-to-man ratio of first authors, acceptance rates were similar, and a woman first author was not associated with higher odds of rejection. Although the overall acceptance rate decreased from 2017 to 2020, the proportion of accepted submissions led by women first authors increased significantly over that time. Women made particularly strong gains in accepted student papers and posters, where they led 50% and 45% of accepted submissions, respectively. It is unclear whether this trend reflects these submission types offering an easier path to acceptance or a growing proportion of junior women in the field beginning to publish. Furthermore, both the submission and acceptance rates of all-male panels decreased significantly over the 4-year period. As with submissions, awards showed no major differences between women and men. Overall, our findings reflect significant gains for women in biomedical informatics scholarly dissemination.

Despite these promising results, we also identified critical areas for improvement. The 2:3 woman-to-man ratio held for all submission types except posters and workshops. Whereas posters had a higher-than-expected submission rate by women first authors, workshops had a significantly lower one. Relative to rates of submission, the acceptance rates of women-led panels and podium abstracts were significantly lower than those of panels and podium abstracts led by men. Although it may be easier for women first authors to have a student paper or poster accepted, the odds of rejection for all authors were higher for regular papers and podium abstracts. There is an opportunity for women to increase workshop submissions and to have work recognized, particularly through acceptance of a greater number of women-led panels and podium abstracts.

One of the key strengths of this study is the inclusion of reviewer data in the analysis, including reviewer scores and sentiment of reviewer comments. We identified significant but small differences in reviewer scores from men reviewers, and men reviewers were associated with higher odds of rejection. In addition to the larger share of men reviewers (62%), which may affect reviewer scores, the sentiment of reviews differed between women and men. Whereas men reviewers provided longer reviews than women, women reviewers provided reviews that were more positive than those of men reviewers. Further, women first authors received reviews that were more positive than those received by men first authors.

Our study has several limitations. Because our conference submission data were deidentified, we could not account for individual factors that may have impacted findings, such as actual gender, author age, or eminence in the field (eg, h-index).9,12 Without data on the actual gender of authors and reviewers, we assigned a label of woman or man through an automated gender prediction approach. Although this computational approach has been applied in much prior work,10,11,32,33 the prediction is limited to binary gender (ie, woman or man), which excludes diverse gender identities. The consequences of such “gender reductionism” (ie, simplifying gender to binary categories) are serious and require adaptations to accommodate gender diversity.39

Further, the gender predictor may have produced additional bias against groups whose names disproportionately follow certain patterns (eg, hyphenated or 2+ terms) or have certain origins (eg, Chinese, Korean). Such inherent flaws in algorithms have received increased recognition for perpetuating racial and ethnic bias in healthcare.40 Such inequities may apply to gender as well and warrant greater algorithmic stewardship.41 To this end, we reported an audit of ungendered names to help surface the nature of biases that limit our approach. Future work should investigate non-binary and trans-inclusive alternatives to automated gender prediction, such as reliance on user-provided profile data with diverse gender options to mitigate misgendering, discrimination, and algorithmic injustice.35

A further limitation relates to the sentiment analysis. The NRC lexicon is a general English lexicon containing a wide variety of terms. The comments made by reviewers sometimes referred to the topic of the paper, which may have resulted in positive or negative terms being counted (eg, “depression” is scored as a negative term). We opted not to modify the lexicon for such terms. Instead, we assumed that the presence of such terms was consistent across years and between women and men, and only increased the counts without changing the relative frequencies.

Our findings carry practical implications and directions for future research. Interesting follow-on studies could include assessing differences in submission topics among women and men16,27,28 or “prestige bias” for eminent authors.5,12 Similar analyses could be conducted for biomedical informatics journals, editorial boards, and leadership roles in the field. Perhaps more important are the practical implications of this work for conference organizing and peer review among AMIA members, leaders, and scholars in the field. Women should consider submitting more workshops to further strengthen their representation. Limitations of our approach to gendering authors and reviewers should motivate more comprehensive collection of demographic data from authors, reviewers, and conference attendees to provide the data on actual gender needed for benchmarking improvements4 and reducing potential for inequities from automated gender prediction approaches.35,39 For example, sparse gender data prevented us from determining whether women were represented at rates proportional to AMIA Annual Symposium attendance from 2017 to 2020 (38% male, 35% not specified, 26% female, 1% prefer not to answer). Similarly, membership data provided by AMIA (as of April 2021) lacks comprehensive gender data (50% male, 30% female, 18% not specified, 1% prefer not to answer, <1% nonbinary, <1% Other). Conference organizers should safeguard peer review by ensuring a balance of women and men reviewers and introduce processes that promote equitable peer review.
For example, double-blind review has been shown to help reduce bias against women authors42 and could address quality–quantity tradeoffs that have also been observed in other fields.13 There are also opportunities for automated approaches to optimize equitable assignment of reviewers to submissions.43 Finally, increasing involvement of women leaders in conference organizing could have a positive impact by improving opportunities for women speakers.3,44,45

CONCLUSION

Although gender equity in science has improved, women remain underrepresented in publication productivity and impact. We report findings from the first study to compare scholarly dissemination among women and men in biomedical informatics and found little evidence of bias against women in acceptance of submissions to the AMIA Annual Symposium from 2017 to 2020 relative to the submission rates of women and men. Although both the rates of submission and acceptance by women first authors increased significantly over time, there remain opportunities to improve representation of women in workshop submissions, podium abstract speakers, and balanced peer reviews. The granular data on reviews extends prior work with the addition of reviewer scores and sentiment. These promising findings raise awareness of progress for women in scientific dissemination and opportunities to promote equity and inclusiveness in biomedical informatics.

FUNDING

No external funding.

AUTHOR CONTRIBUTIONS

GL, CS, and AH conceived of the study, analyzed the data, and wrote the manuscript; MO conducted the literature review; and BD preprocessed the data and predicted gender. DC and JW prepared the data set and provided feedback on the manuscript but did not participate in data analysis. All coauthors participated in review and preparation of the manuscript.

ACKNOWLEDGMENTS

We wish to thank the Women in AMIA (WIA) Program for their support, AMIA for sharing conference submission data, and AMIA authors and reviewers who made this study possible.

DATA AVAILABILITY STATEMENT

The data underlying this article cannot be shared publicly to protect the confidentiality of authors and reviewers. The data were provided by AMIA by permission. Data will be shared on reasonable request to the corresponding author with permission of AMIA.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

CONFLICT OF INTEREST STATEMENT

GL, CS, AH, MO, and BD declare no competing interests. DC and JW are AMIA employees.

REFERENCES

1. American Medical Informatics Association. Women in AMIA Initiative. https://www.amia.org/women-amia-initiative Accessed April 9, 2021.

2. Jagsi R, Guancial EA, Worobey CC, et al. The “gender gap” in authorship of academic medical literature–a 35-year perspective. N Engl J Med 2006; 355 (3): 281–7.

3. Lithgow KC, Earp M, Bharwani A, et al. Association between the proportion of women on a conference planning committee and the proportion of women speakers at medical conferences. JAMA Netw Open 2020; 3 (3): e200677.

4. Martin JL. Ten simple rules to achieve conference speaker gender balance. PLoS Comput Biol 2014; 10 (11): e1003903.

5. Tomkins A, Zhang M, Heavlin WD. Reviewer bias in single- versus double-blind peer review. Proc Natl Acad Sci USA 2017; 114 (48): 12708–13.

6. Holden G, Rosenberg G, Barker K. Bibliometrics: a potential decision making aid in hiring, reappointment, tenure and promotion decisions. Soc Work Health Care 2005; 41 (3–4): 67–92.

7. Roper RL. Does gender bias still affect women in science? Microbiol Mol Biol Rev 2019; 83 (3): e00018-19.

8. Puri K, First LR, Kemper AR. Trends in gender distribution among authors of research studies in pediatrics: 2015–2019. Pediatrics 2021; 147 (4): e2020040873.

9. Mayer EN, Lenherr SM, Hanson HA, et al. Gender differences in publication productivity among academic urologists in the United States. Urology 2017; 103: 39–46.

10. Huang J, Gates AJ, Sinatra R, et al. Historical comparison of gender inequality in scientific careers across countries and disciplines. Proc Natl Acad Sci USA 2020; 117: 4609–16.

11. Holman L, Stuart-Fox D, Hauser CE. The gender gap in science: how long until women are equally represented? PLoS Biol 2018; 16 (4): e2004956.

12. West JD, Jacquet J, King MM, et al. The role of gender in scholarly authorship. PLoS One 2013; 8 (7): e66212.

13. Hengel E. Publishing while female. Are women held to higher standards? Evidence from peer review. Cambridge Working Papers in Economics 2017; 1753.

14. Larson AR, Sharkey KM, Poorman JA, et al. Representation of women among invited speakers at medical specialty conferences. J Womens Health 2020; 29 (4): 550–60.

15. Jones TM, Fanson KV, Lanfear R, et al. Gender differences in conference presentations: a consequence of self-selection? PeerJ 2014; 2: e627.

16. Shishkova E, Kwiecien NW, Hebert AS, et al. Gender diversity in a STEM subfield: analysis of a large scientific society and its annual conferences. J Am Soc Mass Spectrom 2017; 28 (12): 2523–31.

17. Early K, Hammer J, Hofmann MK, et al. Understanding gender equity in author order assignment. Proc ACM Hum-Comput Interact 2018; 2 (CSCW): 1–21.

18. Carley S, Carden R, Riley R, et al. Are there too few women presenting at emergency medicine conferences? Emerg Med J 2016; 33: 681–3.

19. Ruzycki SM, Fletcher S, Earp M, et al. Trends in the proportion of female speakers at medical conferences in the United States and in Canada, 2007 to 2017. JAMA Netw Open 2019; 2 (4): e192103.

20. Patel SH, Truong T, Tsui I, Moon JY, Rosenberg JB. Gender of presenters at ophthalmology conferences between 2015 and 2017. Am J Ophthalmol 2020; 213: 120–4.

21. Moeschler SM, Gali B, Goyal S, et al. Speaker gender representation at the American Society of Anesthesiology annual meeting: 2011–2016. Anesth Analg 2019; 129 (1): 301–5.

22. Fournier LE, Hopping GC, Zhu L, et al. Females are less likely invited speakers to the international stroke conference: time’s up to address sex disparity. Stroke 2020; 51 (2): 674–8.

23. Davids JS, Lyu HG, Hoang CM, et al. Female representation and implicit gender bias at the 2017 American Society of Colon and Rectal Surgeons’ Annual Scientific and Tripartite Meeting. Dis Colon Rectum 2019; 62: 357–62.

24. Kalejta RF, Palmenberg AC. Gender parity trends for invited speakers at four prominent virology conference series. J Virol 2017; 91 (16): e00739-17.

25. Schroeder J, Dugdale HL, Radersma R, et al. Fewer invited talks by women in evolutionary biology symposia. J Evol Biol 2013; 26: 2063–9.

26. Cohoon JM, Nigai S, Kaye JJ. Gender and computing conference papers. Commun ACM 2011; 54 (8): 72–80.

27. Rahimy E, Jagsi R, Park HS, et al. Quality at the American Society for Radiation Oncology Annual Meeting: gender balance among invited speakers and associations with panel success. Int J Radiat Oncol Biol Phys 2019; 104 (5): 987–96.

28. Mehta S, Rose L, Cook D, et al. The speaker gender gap at critical care conferences. Crit Care Med 2018; 46: 991–6.

29. Ribarovska AK, Hutchinson MR, Pittman QJ, et al. Gender inequality in publishing during the COVID-19 pandemic. Brain Behav Immun 2021; 91: 1–3.

30. American Medical Informatics Association. AMIA Awards. https://amia.org/programs/awards Accessed April 28, 2021.

31. Stromgren C. Determine the gender of a name. Genderize. 2018. https://genderize.io/ Accessed April 28, 2021.

32. Qureshi R, Lê J, Li T, et al. Gender and editorial authorship in high-impact epidemiology journals. Am J Epidemiol 2019; 188 (12): 2140–5.

33. Karimi F, Wagner C, Lemmerich F, et al. Inferring gender from names on the web: a comparative evaluation of gender detection methods. In: Proceedings of the 25th International Conference Companion on World Wide Web; April 11–15, 2016; Montréal, Québec, Canada.

34. Wais K. Gender prediction methods based on first names with genderizeR. R J 2016; 8 (1): 17.

35. Keyes O. The misgendering machines: trans/HCI implications of automatic gender recognition. Proc ACM Hum-Comput Interact 2018; 2 (CSCW): 1–22.

36. spaCy. https://spacy.io/ Accessed April 28, 2021.

37. Mohammad SM, Turney PD. Crowdsourcing a word–emotion association lexicon. Comput Intell 2013; 29 (3): 436–65.

38. Mohammad S, Turney P. Emotions evoked by common words and phrases: using Mechanical Turk to create an emotion lexicon. In: Proceedings of the NAACL HLT 2010 Workshop on Computational Approaches to Analysis and Generation of Emotion in Text; June 5, 2010; Los Angeles, CA.

39. Hamidi F, Scheuerman MK, Branham SM. Gender recognition or gender reductionism? The social implications of embedded gender recognition systems. In: Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems; April 21–26, 2018; Montreal, Canada.

40. Obermeyer Z, Powers B, Vogeli C, et al. Dissecting racial bias in an algorithm used to manage the health of populations. Science 2019; 366 (6464): 447–53.

41. Eaneff S, Obermeyer Z, Butte AJ. The case for algorithmic stewardship for artificial intelligence and machine learning technologies. JAMA 2020; 324 (14): 1397.

42. Roberts SG, Verhoef T. Double-blind reviewing at EvoLang 11 reveals gender bias. J Lang Evol 2016; 1 (2): 163–7.

43. Fiez T, Shah N, Ratliff L. A SUPER algorithm to optimize paper bidding in peer review. In: Proceedings of the 36th Conference on Uncertainty in Artificial Intelligence; August 3–6, 2020; Toronto, Canada.

44. Sardelis S, Drew JA. Not “Pulling up the Ladder”: women who organize conference symposia provide greater opportunities for women to speak at conservation conferences. PLoS One 2016; 11 (7): e0160015.

45. Casadevall A, Handelsman J. The presence of female conveners correlates with a higher proportion of female speakers at scientific symposia. mBio 2014; 5 (1): e00846-13.
