Significance

During the COVID-19 pandemic, individuals have been forced to balance conflicting needs: stay-at-home guidelines mitigate the spread of the disease but often at the expense of people’s mental health and economic stability. To balance these needs, individuals should be mindful of actual local virus transmission risk. We found that although pandemic-related risk perception was likely inaccurate, perceived risk closely predicted compliance with public health guidelines. Realigning perceived and actual risk is crucial for combating pandemic fatigue and slowing the spread of disease. Therefore, we developed a fast and effective intervention to realign perceived risk with actual risk. Our intervention improved perceived risk and reduced willingness to engage in risky activities, both immediately and after a 1- to 3-wk delay.

Keywords: COVID-19, cognition, psychology, risk, intervention

Abstract

The COVID-19 pandemic reached staggering new peaks during a global resurgence more than a year after the crisis began. Although public health guidelines initially helped to slow the spread of disease, widespread pandemic fatigue and prolonged harm to financial stability and mental well-being contributed to this resurgence. In the late stage of the pandemic, it became clear that new interventions were needed to support long-term behavior change. Here, we examined subjective perceived risk about COVID-19 and the relationship between perceived risk and engagement in risky behaviors. In study 1 (n = 303), we found that subjective perceived risk was likely inaccurate but predicted compliance with public health guidelines. In study 2 (n = 735), we developed a multifaceted intervention designed to realign perceived risk with actual risk. Participants completed an episodic simulation task; we expected that imagining a COVID-related scenario would increase the salience of risk information and enhance behavior change. Immediately following the episodic simulation, participants completed a risk estimation task with individualized feedback about local viral prevalence. We found that information prediction error, a measure of surprise, drove beneficial change in perceived risk and willingness to engage in risky activities. Imagining a COVID-related scenario beforehand enhanced the effect of prediction error on learning. Importantly, our intervention produced lasting effects that persisted after a 1- to 3-wk delay. Overall, we describe a fast and feasible online intervention that effectively changed beliefs and intentions about risky behaviors.

The COVID-19 pandemic has brought unprecedented global challenges, affecting both physical health and mental well-being (1–8). Public health experts have promoted restrictions to mitigate the spread of disease, including social distancing (i.e., physical distancing) and closing nonessential businesses (7). Despite rapid progress in preventative and palliative care, widespread global vaccination will require an extended period of time, and social/physical distancing continues to be crucial for protecting vulnerable individuals and limiting the spread of viral variants (9). Severe outbreaks will limit the success of vaccine implementation, underscoring the need for behavioral interventions that reduce the spread of disease (10). Given the exponential rate of virus transmission (9, 11), encouraging even a single individual to comply with public health guidelines could have significant and widespread downstream effects (12–16).

To make adaptive decisions during the pandemic, individuals should balance conflicting needs, which might include avoiding exposure to the virus, earning an income, supporting local businesses, or seeking social support to bolster mental health (1–3, 5–7). Accurately assessing the risks associated with behavioral options is fundamental to adaptive decision making in any context (17–19), especially under chronic stress (20–22). Nonetheless, risk misestimation is common, especially for low-probability events (23–26), and low quantitative literacy is linked to poor health decision making and outcomes (27, 28). During the pandemic, risk underestimation could lead to risky behaviors that harm individuals and society at large, but risk overestimation could increase distress and anxiety while reducing mental well-being (29, 30).

Encouraging large-scale, long-term behavior change during the COVID-19 pandemic has proven difficult: widespread “pandemic fatigue” and prolonged economic hardship contributed to a deadly global resurgence of the virus during late 2020 and early 2021 (7, 9). Empowering individuals to accurately assess local risk levels can support more informed decision making, bolstering sustainable compliance with public health recommendations. Although recent studies have found that subjective perceived risk relates to demographic variables, attitudes, and risky behaviors during the pandemic (3, 29, 31–36), past studies have not evaluated the accuracy of perceived risk or intervened to change perceived risk. Local risk levels can change rapidly over time (11, 37); an intervention that is fast, low effort, and easy to administer could realign perceived risk with actual risk.

Prior interventions on risk estimation have shown some success, although effect sizes are typically small and weaken over time (38, 39). A separate line of research has demonstrated that episodic simulation, or imagination, of the downstream outcomes of choices can enhance decision making, including self-regulation (40–44). The rich, personalized mental imagery generated during episodic simulation may drive these effects by increasing the salience of an intervention (44–46) and supporting the formation of “gist” representations that persist over time (47). Furthermore, thinking concretely about outcomes increases perceived risk and estimation accuracy for common adverse events (48). Other studies have shown that increasing the salience of an intervention can enhance initial behavioral outcomes and also boost long-term effects (49, 50). Risk perception is influenced by the availability of information about outcomes (51–53); anecdotes tend to be more vivid and easily recalled, and can exert greater influence on risk perception than statistics (54–56). Crucially, combining statistical information with an imagined narrative could create a synergistic effect that enhances learning (57).

Other studies have explored how individuals update beliefs and knowledge in response to feedback (58–60). Information prediction error (i.e., surprise) describes the discrepancy between expectation and reality; the valence (better or worse than expected) and magnitude of this surprise signal drive learning. Larger prediction errors lead to more successful belief revision (58–61). A prior study found that prediction error allowed beliefs about risk to be updated, but participants tended to resist using bad news to learn about future adverse events (62). Likewise, another study found a valence bias in belief updating (particularly in youths), such that negative information about risk tended to be discounted (63). Overall, presenting surprising risk information may change beliefs and improve the accuracy of risk perception. However, combining prediction error with another psychological intervention—such as an episodic simulation—could enhance learning, particularly if people tend to resist updating beliefs about adverse events.

Here, we report the results of an efficient and accessible intervention designed to reduce risk misestimation and realign individual behavior with public health guidelines. Using a large, nationally representative sample of US residents, we first showed that perceived risk was not aligned with actual risk (study 1). To remedy this misalignment, we developed an intervention that combined an episodic simulation with a risk estimation exercise that provided accuracy feedback (study 2). In this preregistered experiment, we found that a simple 10-min intervention helped realign perceived risk with actual risk and reduced willingness to engage in potentially risky activities. The magnitude of the information prediction error experienced during a prevalence-based risk estimation exercise drove change in the perceived risk of engaging in a variety of everyday risky activities; this effect of surprise on learning was enhanced when the intervention included an episodic simulation about the possible outcomes of risky decisions.

Study 1

First, we sought to test whether subjective risk perception corresponded with actual local risk levels. We recruited a nationally representative sample of 303 US residents in May 2020. Participants completed an online survey that assessed perceived risk of engaging in six different activities in the participant’s current location: going for a walk outside, shopping at a grocery store, eating inside a restaurant, meeting with a small group of friends, traveling within one’s geographical state, and traveling beyond one’s state. Although the precise risk associated with these activities is not known, these activities have been identified as scenarios in which exposure to an infected individual could increase risk of infection and spread. Participants also reported willingness to engage in risky activities during reopening, and past compliance with public health guidelines. We also measured actual risk based on case prevalence in each participant’s location by obtaining the number of active COVID-19 cases in their county of residence on the day that the study was completed. Actual risk (prevalence based) was calculated as the probability (log transformed) that at least one individual in a hypothetical gathering of 10 people would be infected with SARS-CoV-2 (37). However, it is important to note that this measure of actual risk is subject to several limitations: risk levels can vary within a county, across event types, and across individuals (e.g., depending on the socioeconomic status of a neighborhood, the demographic characteristics of a group, ventilation, mask wearing, comorbidities, or vaccination status), and actual prevalence is estimated from recent confirmed cases, but some cases may be undiagnosed or not yet reported.
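The prevalence-based risk measure described above can be illustrated with a short sketch. It assumes the standard "at least one" binomial form, in which each attendee is independently infected with probability equal to local prevalence; the exact procedure is the one given in ref. 37, and the function names, the use of the natural logarithm, and the absence of any correction for undiagnosed cases are assumptions made here for illustration only.

```python
import math


def exposure_risk(prevalence: float, group_size: int) -> float:
    """Probability that at least one of `group_size` attendees is infected.

    Assumes each attendee is independently infected with probability
    `prevalence` (a proportion between 0 and 1).
    """
    if not 0.0 <= prevalence <= 1.0:
        raise ValueError("prevalence must be a proportion between 0 and 1")
    if group_size < 1:
        raise ValueError("group_size must be at least 1")
    # P(at least one infected) = 1 - P(nobody infected)
    return 1.0 - (1.0 - prevalence) ** group_size


def log_exposure_risk(active_cases: int, county_population: int,
                      group_size: int = 10) -> float:
    """Log-transformed exposure risk for a hypothetical gathering.

    Natural log is an assumption; the text says only "log transformed".
    A county with zero active cases raises ValueError because log(0)
    is undefined; how such cases were handled is not stated in the text.
    """
    prevalence = active_cases / county_population
    return math.log(exposure_risk(prevalence, group_size))
```

Under these assumptions, risk rises steeply with event size even when prevalence is low, which is one reason intuitive estimates may diverge from the computed probabilities.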

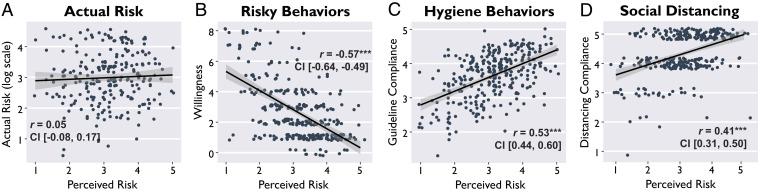

If subjective perceived risk of engaging in various everyday activities is aligned with the actual risk of COVID-19 prevalence in a given location, then perceived risk and actual risk should be positively correlated. Critically, we found that perceived risk was not correlated with actual risk, Pearson’s r(232) = 0.05, 95% CI [−0.08, 0.17], P = 0.472 (Fig. 1A). Moreover, actual risk was not correlated with willingness to engage in risky activities, r(232) = −0.01, 95% CI [−0.14, 0.12], P = 0.854. Equivalence tests provided evidence in favor of the null hypothesis that perceived risk was not correlated with actual risk (SI Appendix, Equivalence Testing). This striking disconnect between actual and perceived risk indicated that subjective risk perception was likely inaccurate. Individuals did not seem to have a realistic understanding of risk levels in their given locations, or, at minimum, did not judge the riskiness of everyday activities on the basis of the true prevalence of positive cases in their local community.
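For readers who want to reproduce correlations of this kind, the following is a minimal sketch of Pearson's r with an approximate 95% confidence interval. The Fisher z transformation is an assumption; the text does not state how the reported intervals were computed, and the function names are illustrative.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two sequences of equal length (n >= 3)")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fisher_ci(r, n, z_crit=1.959964):
    """Approximate 95% CI for r via the Fisher z transformation.

    Requires n > 3 and |r| < 1 (atanh is undefined at +/-1).
    """
    z = math.atanh(r)
    se = 1.0 / math.sqrt(n - 3)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)
```

As a rough check, `fisher_ci(0.05, 234)` (taking n as the reported degrees of freedom plus 2) gives an interval of about −0.08 to 0.18, close to the interval reported above; small differences are expected because r is rounded.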

Fig. 1.

Perceived risk was not aligned with actual risk, but perceived risk predicted compliance with public health guidelines. In study 1, we found the following: (A) Perceived risk of engaging in various everyday activities was not correlated with actual risk based on COVID-19 prevalence. (B) Perceived risk was negatively associated with willingness to engage in risky activities and was positively associated with (C) compliance with hygiene guidelines and (D) compliance with social/physical distancing guidelines. Points are minimally jittered for visualization, in order to display all data without overlapping points. Shaded bands indicate 95% confidence intervals around the regression line. ***P < 0.001.

Although subjective perceived risk was misaligned with local prevalence, subjective perceived risk was significantly related to behavior. Individuals who reported greater perceived risk tended to report lower willingness to engage in risky activities during reopening (r(301) = −0.57, 95% CI [−0.64, −0.49], P < 0.001; Fig. 1B), greater adherence to hygiene and sanitation guidelines (r(301) = 0.52, 95% CI [0.44, 0.60], P < 0.001; Fig. 1C), and more compliance with social/physical distancing (r(301) = 0.41, 95% CI [0.31, 0.50], P < 0.001; Fig. 1D). Overall, we found that subjective perceived risk was not aligned with reality, but it predicted a variety of behaviors with crucial public health implications; we identified subjective perceived risk as a critical target for interventions.

Study 2

In study 2, we developed a new intervention designed to change beliefs and intentions about risky behaviors during the pandemic. We expected that, on average, realigning perceived risk with actual risk would lead to better compliance with public health guidelines because people tend to underestimate the risk of virus transmission. An online informational intervention could enable quick, broad dissemination of risk information. Numerous websites and tools have emerged to provide information about COVID-19 cases and deaths (11, 37, 64–66). Yet, the efficacy of these interventions has not been directly measured; to our knowledge, no past studies have tested whether exposure to information about the prevalence of COVID-19 cases influences risk perception or risky decision making. Our preregistered (https://osf.io/6fjdy) intervention included two components: an episodic simulation task (Fig. 2B) and a risk estimation task (Fig. 2 C and D). Participants completed the intervention during session 1 and later returned for a follow-up survey during session 2 (1- to 3-wk delay) to evaluate the durability of the intervention over time.

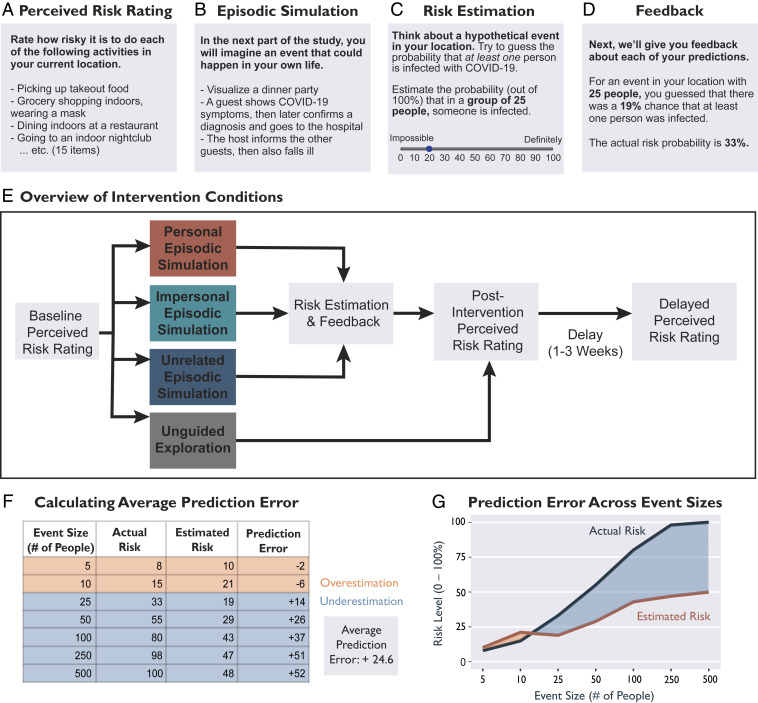

Fig. 2.

Overview of the intervention approach used in study 2. (A) The perceived risk rating was an assessment of subjective perceived risk of 15 activities and willingness to engage in those activities. Participants completed the perceived risk rating preintervention, immediately postintervention, and 1 to 3 wk postintervention. (B) During the episodic simulation task, participants were guided through an imagination exercise and typed responses. (C) During the risk estimation task, participants estimated exposure risk probabilities (based on the prevalence of COVID-19 cases) for seven hypothetical event sizes (ranging from 5 to 500 people) in their location. (D) After estimating the risk for each event size, participants received feedback about the actual exposure risk probabilities. (E) Overview of the four intervention conditions and the order in which participants completed tasks. (F) Table demonstrating the calculation of average prediction error, using responses from the risk estimation task for one example participant. (G) Visualization of the values provided in F.

We expected that imagining the potential consequences of pandemic-related risky decisions would increase the efficacy of our intervention, especially if the scenario included personalized elements. Drawing on past studies (44, 46, 57), we predicted that this imagination exercise would enhance the salience of subsequent numerical information and thus boost learning during the subsequent risk estimation task. Therefore, we randomly assigned participants to receive one of three versions (personal, impersonal, unrelated) of the episodic simulation task (i.e., guided imagination). In the personal simulation, participants imagined themselves hosting a dinner party with four guests (specific close others, such as friends or neighbors) invited to their home. During this scenario, one of the guests exhibited symptoms of COVID-19 and later confirmed a diagnosis. The host then contacted the other guests to inform them of the exposure and eventually also fell ill with the disease. Participants were asked to visualize sensory details of the episode and imagine the emotions that they would experience. In the impersonal simulation, participants imagined a fictional character experiencing the same scenario. Lastly, in the unrelated simulation, participants imagined an episode that was thematically similar, but neither pandemic related nor personalized (a story about rabbits eating rotten vegetables). The unrelated simulation was a control condition; we did not expect this condition to influence risk perception, but this condition required participants to exert the same amount of time and attention as in the other conditions.

Immediately following the episodic simulation, participants completed the risk estimation task, in which they attempted to numerically estimate general risk levels in their location based on the prevalence of positive COVID-19 cases. After receiving a brief tutorial on risk and probability, participants were asked to think about events of various sizes (5, 10, 25, 50, 100, 250, and 500 people) that could happen in their location. For each event size, participants estimated the probability (ranging from 0%: impossible to 100%: definitely) that at least one person attending the event was infected with COVID-19. After making estimations for all seven event sizes, participants received individualized, veridical feedback about the actual risk probabilities in their local communities (37). We calculated information prediction error as the discrepancy between actual risk and estimated risk. For each participant, we averaged the estimation errors across the seven event sizes to calculate an average prediction error score, reflecting the average discrepancy between estimated and actual risk (based on prevalence). For our primary analyses, this average prediction error score served as a continuous independent variable that captured the valence (i.e., underestimation or overestimation) and magnitude of each participant’s overall misestimation bias. In contrast, our primary dependent variable was perceived risk, the average subjective riskiness of engaging in 15 different everyday activities. To clarify the differences between these two measures, and the individual items that contributed to each composite measure, we provided data visualizations for three example subjects (SI Appendix, Fig. S1).
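The average prediction error score can be sketched as follows. The sign convention (actual − estimated, so that positive values indicate underestimation) follows the definition used in the session 1 results; the function name and the example values in the usage note are hypothetical.

```python
# The seven hypothetical event sizes used in the risk estimation task.
EVENT_SIZES = (5, 10, 25, 50, 100, 250, 500)


def average_prediction_error(actual, estimated):
    """Mean of (actual - estimated) across the seven event sizes.

    Both inputs are probabilities on a 0-100 scale, ordered to match
    EVENT_SIZES. Positive scores indicate underestimation of risk;
    negative scores indicate overestimation.
    """
    if len(actual) != len(EVENT_SIZES) or len(estimated) != len(EVENT_SIZES):
        raise ValueError("expected one value per event size")
    errors = [a - e for a, e in zip(actual, estimated)]
    return sum(errors) / len(errors)
```

For example, a hypothetical participant whose estimates fall below the actual probabilities at every event size would receive a positive score, whereas one who guesses too high at every size would receive a negative score.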

We hypothesized that prediction error (from the risk estimation task) would drive change in subjective perceived risk (of everyday activities), thus demonstrating that learning numerical risk information about disease prevalence can transfer to influence the perceived risk of engaging in specific behaviors. We expected that our intervention would realign perceived risk with actual risk: Individuals who underestimated risk should report increases in perceived risk, and individuals who overestimated risk should report decreases in perceived risk. Importantly, we predicted that the effect of prediction error on perceived risk would be enhanced if the risk estimation task was preceded by a COVID-related imagination exercise (personal and impersonal simulation conditions). We expected that the personal simulation would be most effective, the impersonal simulation would be somewhat less effective, and the unrelated simulation would be the least effective. Specifically, the unrelated control condition allowed us to test whether prediction error could influence risk perception in the absence of any relevant contextualizing information.

In addition to the three simulation conditions, we included an unguided exploration condition in which participants viewed an interactive nationwide risk assessment map (37) for a minimum of 1 min, without specific instructions regarding how to engage with the information. This condition used a well-advertised tool that reflects existing standards for disseminating risk information; this tool has been cited or promoted by the media over 2,500 times (65). Statistics about COVID-19 cases were presented without guidance or personalization, consistent with how individuals would encounter this information in a naturalistic setting. Participants in the unguided exploration condition did not complete the episodic simulation or risk estimation tasks. This condition offered some insight into the efficacy of existing methods for communicating risk information, but was not directly comparable to the three simulation conditions because of the differences in the tasks.

We tested the four interventions across two sessions on a nationally representative sample of 735 US residents, after exclusions (Fig. 2). Participants were randomly assigned to one of four conditions: personal simulation (session 1: n = 181, session 2: n = 158), impersonal simulation (session 1: n = 180, session 2: n = 165), unrelated simulation (session 1: n = 185, session 2: n = 172), or unguided exploration (session 1: n = 189, session 2: n = 176). In all four conditions, participants completed an assessment of perceived risk of engaging in 15 potentially risky everyday activities and willingness to engage in the same activities preintervention (session 1, baseline), immediately postintervention (end of session 1), and after a delay (session 2). To determine whether the intervention influenced perceived risk of everyday activities, we calculated within-subjects change scores (postintervention − baseline) in perceived risk for each testing session. Lastly, participants returned after a delay (1 to 3 wk) to complete session 2, which included a follow-up assessment of perceived risk and a version of the risk estimation task without feedback.

We defined subjective perceived risk as the average riskiness rating (on a 5-point Likert scale) for all 15 everyday activities (e.g., picking up takeout, dining indoors at a restaurant, exercising at a gym, going to a house party). These activities are labeled in Fig. 3 and described in detail in SI Appendix, Everyday Activities Assessed (Fig. 2A). Importantly, this perceived risk measure was distinct from the information prediction error measure that we derived from the risk estimation task (Fig. 2C). Whereas perceived risk concerned the subjective riskiness of everyday activities, information prediction error measured the numerical discrepancy between actual and estimated probabilities of virus exposure risk (Fig. 2 F and G). SI Appendix, Fig. S1 details how the perceived risk and prediction error measures were calculated for three example subjects.

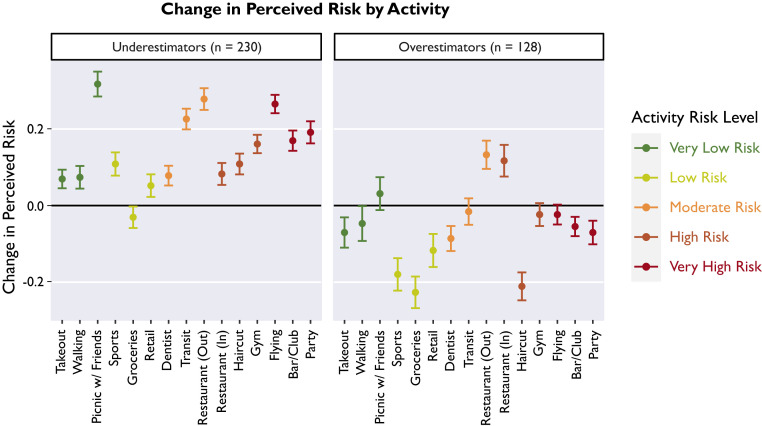

Fig. 3.

Change in perceived risk by activity. Points depict the average within-subjects change in perceived risk for each of the 15 everyday activities assessed. Activities are color coded according to approximate risk level (89). Participants who had been underestimating risk (average prediction error ≥15) reported increases in perceived risk (Left), whereas participants who had been overestimating risk (average prediction error ≤ −15) reported decreases in perceived risk (Right). Error bars indicate 95% confidence intervals around the mean. Black line indicates zero, no change from the preintervention baseline.

Study 2, Session 1 Results.

Overall effects.

Consistent with study 1, we found that preintervention, perceived risk of engaging in various everyday activities was unrelated to actual risk levels (based on prevalence in each participant’s location), r(733) = −0.003, 95% CI [−0.08, 0.07], P = 0.94. Similarly, willingness to engage in risky activities was unrelated to actual risk at baseline, r(733) = −0.05, 95% CI [−0.12, 0.02], P = 0.183. Equivalence tests provided evidence in favor of the null hypothesis that perceived risk was not correlated with actual risk (SI Appendix, Equivalence Testing). Importantly, subjective risk perception was related to behavior: Perceived risk was inversely related to willingness to engage in risky activities (r(733) = −0.72, 95% CI [−0.75, −0.68], P < 0.001) and positively associated with social distancing (i.e., physical distancing) compliance (r(671) = 0.46, 95% CI [0.40, 0.52], P < 0.001). Overall, we replicated the associations between perceived risk and risky behaviors that we observed in study 1. We also found that on average, participants tended to underestimate risk levels, evidenced by a directional bias in the risk estimation task (average prediction error = +8.9 points, indicating that actual risk was greater than estimated risk).

Across all four intervention conditions, receiving numerical risk information improved the alignment between perceived risk (of engaging in various everyday activities) and actual risk (based on prevalence). At the end of session 1, perceived risk was weakly positively correlated with actual risk, r(733) = 0.09, 95% CI [0.01, 0.16], P = 0.019. Next, we calculated within-subjects difference scores to assess postintervention change in perceived risk of various everyday activities and willingness to engage in those activities. On average, there was an increase in perceived risk after the intervention, t(734) = 5.04, P < 0.001, Cohen’s d = 0.19, 95% CI [0.11, 0.26]. Likewise, there was a decrease in willingness to engage in potentially risky activities, t(734) = −16.82, P < 0.001, Cohen’s d = −0.62, 95% CI [−0.70, −0.54]. Changes in perceived risk were negatively correlated with changes in willingness, r(733) = −0.23, 95% CI [−0.30, −0.16], P < 0.001. Summary statistics are provided in SI Appendix.
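The within-subjects change analysis can be sketched as below. The variant of Cohen's d is an assumption: the text does not specify it, but the reported values are consistent with d computed on the difference scores (mean change divided by the SD of change), for which d = t / √n; for example, 5.04 / √735 ≈ 0.19. The function name and returned keys are illustrative.

```python
import math
import statistics


def paired_change_summary(baseline, post):
    """Summarize within-subjects change (post - baseline).

    Returns the mean change, Cohen's d on the difference scores
    (assumed variant), the paired t statistic, and its degrees of
    freedom. Requires at least two participants with nonzero variance
    in their change scores.
    """
    if len(baseline) != len(post):
        raise ValueError("baseline and post must have the same length")
    diffs = [p - b for b, p in zip(baseline, post)]
    n = len(diffs)
    mean_diff = statistics.fmean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    d = mean_diff / sd_diff
    t = d * math.sqrt(n)  # paired t statistic
    return {"mean_change": mean_diff, "cohens_d": d, "t": t, "df": n - 1}
```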

Next, we visualized the average change in perceived risk for each of the 15 activities individually (Fig. 3). We expected that the intervention would shift perceived risk for each activity to counteract each participant’s baseline risk estimation bias. For a visual exploration of item-level effects, we classified participants as either risk underestimators, overestimators, or accurate estimators on the basis of their average prediction error scores from the risk estimation task (actual − estimated risk). We defined underestimators as those who believed that risk levels were lower than reality (average prediction error ≥ 15), accurate estimators as those who were relatively accurate at estimating exposure risk (average prediction error between −14 and 14), and overestimators as those who believed that risk levels were higher than reality (average prediction error ≤ −15). (Importantly, this binned classification was used only for the sake of visualization. Prediction error scores were treated as a continuous variable in all statistical analyses reported in the following sections.)
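The visualization bins can be expressed as a small helper, using cutoffs of +15 and −15 on the average prediction error (actual − estimated), as in the Fig. 3 legend. One assumption is labeled in the code: scores strictly between 14 and 15 in absolute value are treated as accurate, because the text lists the accurate range as −14 to 14 without stating how fractional scores were handled. The function name and labels are illustrative.

```python
def classify_estimator(avg_prediction_error: float) -> str:
    """Bin a participant by average prediction error (actual - estimated).

    Used only for visualization; the statistical analyses treat
    prediction error as a continuous variable.
    """
    if avg_prediction_error >= 15:
        return "underestimator"   # believed risk was lower than reality
    if avg_prediction_error <= -15:
        return "overestimator"    # believed risk was higher than reality
    # Assumption: anything between the two cutoffs counts as accurate.
    return "accurate"
```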

This visualization (Fig. 3) revealed that on average, underestimators reported increases in perceived risk for 14/15 activities (with the exception of grocery shopping) (Fig. 3, Left). On average, overestimators reported decreases in perceived risk for 12/15 activities (with the exception of riskier social activities, such as dining in a restaurant) (Fig. 3, Right). Taken together, this exploratory visualization of item-level effects demonstrated that our intervention effectively changed perceived risk of various everyday activities, in a manner that is optimal for both public health (discouraging risky social gatherings) and economic needs (encouraging necessary shopping in overestimators). Refer to SI Appendix for figures that show participants who were relatively accurate at estimating risk, and separate panels for each intervention condition (SI Appendix, Figs. S4 and S5).

We also investigated possible backfire effects. Before implementing these interventions, it is important to determine whether any participants posed a greater risk to public health after the intervention. As previously discussed, the behavior of individuals during a pandemic can have widespread consequences. Therefore, we identified underestimators who counterintuitively reported lower perceived risk and greater willingness to engage in potentially risky activities after the intervention. We found that only a very small percentage of respondents (3.3%, 18 of the 546 participants across the three simulation conditions) reported these increases in riskiness, suggesting that our intervention did not produce a backfire effect. The small number of participants and the small numerical increases in riskiness are not convincingly different from what might be expected from measurement error. About half of participants responded to the intervention in the intended direction, whereas others did not report changes in perceived risk (SI Appendix, Responders and Non-Responders).

The effect of prediction error across simulation conditions.

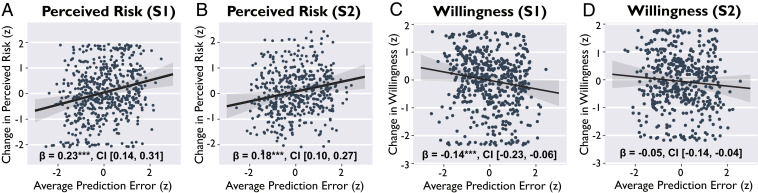

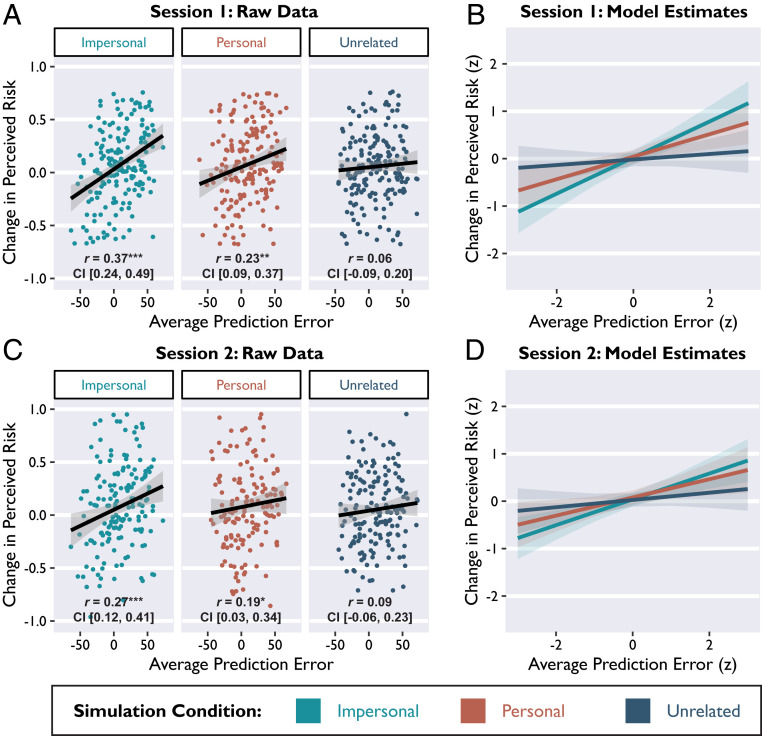

Next, we compared the efficacy of the three interventions that included episodic and numerical risk information (personal, impersonal, and unrelated conditions). We hypothesized that the numerical feedback provided during the risk estimation portion of the intervention would shift perceived risk of everyday activities: Individuals who underestimated risk should report increases in perceived risk, and individuals who overestimated risk should report decreases in perceived risk. The magnitude of this realignment should depend on the magnitude of each participant’s misestimation bias. In other words, we expected that information prediction errors (actual − estimated risk) experienced during the risk estimation task would drive change in perceived risk. Using multiple linear regression, we found that average prediction error was positively related to change in perceived risk, β = 0.23, 95% CI [0.14, 0.31], t = 5.32, P < 0.001 (Fig. 4 A and B). There was also an interaction between prediction error and simulation condition predicting change in perceived risk [F(2,531) = 4.79, P = 0.009], such that the effect of prediction error was stronger in the impersonal and personal conditions (Fig. 5 A and B), relative to the unrelated condition (unrelated vs. impersonal: β = −0.17, 95% CI [−0.29, −0.05], t = −2.57, P = 0.006; personal vs. unrelated: β = 0.16, 95% CI [0.04, 0.27], t = 2.59, P = 0.01). The effect of prediction error did not differ between the personal and impersonal conditions, β = 0.01, 95% CI [−0.11, 0.13], t = 0.20, P = 0.839.

Fig. 4.

Prediction error influenced perceived risk and willingness to engage in potentially risky everyday activities. Prediction error was positively associated with change in perceived risk both immediately postintervention (A) and after a delay (B). Prediction error was negatively associated with change in willingness to engage in risky activities immediately postintervention (C), but not after a delay (D). Points depict average scores from individual subjects (standardized units). Lines depict slope estimates for the main effect of prediction error, derived from multiple regression models that also account for variance that can be attributed to the intervention condition and delay between sessions. S1 = session 1; S2 = session 2. Shaded bands indicate 95% confidence intervals around the regression line. ***P < 0.001.

Fig. 5.

Relevant episodic simulations enhance the effect of prediction error on perceived risk. Prediction error from the risk estimation task was significantly positively associated with change in perceived risk in the impersonal and personal conditions (imagining a COVID-related scenario), but not the unrelated condition. (A and B) Session 1 results and (C and D) session 2 results. (A and C) All raw data points with original units, subset by simulation condition. (B and D) Slope estimates derived from multiple regression models (standardized units), overlaid for comparison. Shaded bands indicate 95% confidence intervals around the regression lines. *P < 0.05, **P < 0.01, ***P < 0.001.

To examine this interaction further, we tested the relationship between prediction error and change in perceived risk in each condition separately. Prediction error was positively associated with change in perceived risk in the impersonal simulation condition (r(175) = 0.37, 95% CI [0.24, 0.49], P < 0.001) and personal simulation condition (r(176) = 0.23, 95% CI [0.09, 0.37], P = 0.002), but not in the unrelated simulation condition (r(180) = 0.06, 95% CI [−0.09, 0.20], P = 0.429). These effects remained statistically significant even after controlling for relevant demographic and individual difference variables: political conservatism, age, episodic future thinking ability, subjective numeracy ability, and self-reported vividness and affect ratings from the simulation task (SI Appendix, Controlling for Individual Differences).
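The per-condition follow-up can be illustrated with a simplified sketch that fits a separate least-squares slope of change in perceived risk on prediction error within each simulation condition. This is not the reported model: it omits the covariates and standardization used in the multiple regressions, and the function names and the row layout are hypothetical. With no other covariates, the difference between two condition slopes corresponds to the interaction contrast between those conditions.

```python
def ols_slope(x, y):
    """Least-squares slope of y on x for a single predictor."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    if sxx == 0:
        raise ValueError("predictor has no variance")
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    return sxy / sxx


def slopes_by_condition(rows):
    """Fit one slope per condition.

    `rows` is an iterable of (condition, prediction_error, change)
    tuples; this layout is an assumption made for illustration.
    """
    grouped = {}
    for condition, prediction_error, change in rows:
        xs, ys = grouped.setdefault(condition, ([], []))
        xs.append(prediction_error)
        ys.append(change)
    return {cond: ols_slope(xs, ys) for cond, (xs, ys) in grouped.items()}
```

A steeper slope in the personal or impersonal condition than in the unrelated condition would correspond to the pattern described above, in which a COVID-related simulation strengthens the link between prediction error and change in perceived risk.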

Next, we conducted the same analysis for a different dependent variable: change in willingness to engage in potentially risky activities. Prediction error experienced during the risk estimation task was negatively related to change in willingness, β = −0.14, 95% CI [−0.23, −0.06], t = −3.26, P = 0.001 (Fig. 4 C and D). In other words, individuals who had been severely underestimating actual risk levels showed a greater reduction in willingness to engage in potentially risky activities. This effect remained significant after controlling for several covariates (SI Appendix, Controlling for Individual Differences). However, the interaction between prediction error and simulation condition was not significantly related to change in willingness (unrelated vs. impersonal: β = 0.03, 95% CI [−0.09, 0.16], t = −0.56, P = 0.579; personal vs. unrelated: β = −0.02, 95% CI [−0.15, 0.10], t = −0.40, P = 0.590; and impersonal vs. personal: β = −0.01, 95% CI [−0.13, 0.11], t = −0.16, P = 0.872).

Overall, we found that prediction error elicited during the risk estimation task was a moderately strong and statistically robust predictor of change in both perceived risk and willingness to engage in risky activities. This finding demonstrates that receiving veridical numerical information about local risk statistics can exert transfer effects on subjective perceived risk. Furthermore, imagining a COVID-related scenario (either impersonal or personal) enhanced the effect of prediction error on perceived risk. Receiving numerical information about risk without accompanying contextual information (unrelated simulation condition) did not successfully change perceived risk.

Study 2, Session 2 Results.

Overall effects.

First, we tested whether the average changes in perceived risk and willingness to engage in potentially risky activities persisted after a 1- to 3-wk delay. We found that across all four conditions, the average increase in perceived risk (relative to the preintervention baseline) was still evident at session 2, t(670) = 3.41, P < 0.001, Cohen’s d = 0.13, 95% CI [0.06, 0.21]. Within subjects, change in perceived risk during session 1 was positively correlated with lasting change in session 2, r(669) = 0.51, 95% CI [0.45, 0.56], P < 0.001.

Likewise, the average decrease in willingness to engage in potentially risky activities also persisted after a delay, t(670) = −6.61, P < 0.001, Cohen’s d = −0.26, 95% CI [−0.33, −0.18]. Within subjects, change in willingness from session 1 was positively correlated with lasting change in session 2, r(669) = 0.48, 95% CI [0.42, 0.54], P < 0.001. Consistent with session 1, we also found that lasting changes in perceived risk were negatively correlated with lasting changes in willingness, r(669) = −0.28, 95% CI [−0.35, −0.21], P < 0.001. Overall, we found that across all four intervention conditions, participants reported lasting increases in perceived risk and decreases in willingness to engage in risky activities after a delay. We also asked participants to retrospectively report engagement in risky activities between sessions, but did not find any differences among conditions (SI Appendix, Retrospective Report of Risky Activities).
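The effect sizes reported for these within-subject changes can be cross-checked against the test statistics. The sketch below assumes that Cohen's d was computed as the mean change divided by the SD of the change scores, which implies d = t/√n with n = df + 1; the text does not state the formula, so this is an assumption, and the function name is hypothetical.

```python
import math

def cohens_d_from_t(t, df):
    """Cohen's d for a one-sample or paired t test, assuming d = mean / SD of change."""
    n = df + 1                  # number of participants contributing change scores
    return t / math.sqrt(n)

# Values reported above for session 2 (df = 670)
d_risk = cohens_d_from_t(3.41, 670)    # lasting change in perceived risk
d_will = cohens_d_from_t(-6.61, 670)   # lasting change in willingness
print(round(d_risk, 2), round(d_will, 2))  # 0.13 -0.26
```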

The effect of prediction error across simulation conditions.

Next, we tested whether prediction error during the session 1 risk estimation task predicted lasting changes in perceived risk. We accounted for variable delay lengths in all of the following models by including a covariate for the number of days between session 1 and session 2. We found that prediction error experienced during the risk estimation task in session 1 continued to predict lasting changes in perceived risk in session 2, β = 0.18, 95% CI [0.10, 0.27], t = 4.17, P < 0.001 (Fig. 4 E and F). The interaction between prediction error and simulation condition was no longer significant (unrelated vs. impersonal: β = −0.10, 95% CI [−0.22, 0.02], t = −1.69, P = 0.092; personal vs. unrelated: β = 0.09, 95% CI [−0.03, 0.21], t = 1.53, P = 0.126; and impersonal vs. personal: β = 0.01, 95% CI [−0.11, 0.13], t = 0.19, P = 0.850). However, numerically the results across conditions were consistent with session 1 (Fig. 5 C and D), such that prediction error was positively correlated with lasting change in perceived risk in both the impersonal condition (r(162) = 0.27, 95% CI [0.12, 0.41], P < 0.001) and the personal condition (r(153) = 0.19, 95% CI [0.03, 0.34], P = 0.018), but not in the unrelated condition (r(168) = 0.09, 95% CI [−0.06, 0.23], P = 0.258). Overall, prediction errors experienced during session 1 were associated with lasting changes in perceived risk, particularly in the impersonal and personal simulation conditions.

We then conducted the same analysis for lasting change in willingness to engage in potentially risky activities (Fig. 4 G and H). Prediction error was not significantly related to willingness in session 2, β = −0.06, 95% CI [−0.15, 0.03], t = −1.30, P = 0.194. There was no significant interaction between prediction error and simulation condition predicting willingness (personal vs. unrelated: β = −0.03, 95% CI [−0.15, 0.10], t = −0.40, P = 0.689; impersonal vs. personal: β = 0.01, 95% CI [−0.12, 0.13], t = 0.10, P = 0.918; and unrelated vs. personal: β = 0.02, 95% CI [−0.10, 0.14], t = 0.31, P = 0.759). As reported above (overall effects), we found that participants were less willing to engage in risky activities after the intervention, both immediately and after a delay. However, prediction error only described the magnitude of change in willingness immediately after the intervention, suggesting that the parametric effect of prediction error on willingness was attenuated over time. One possibility is that participants who were highly risk averse may tend to revert to risk aversion over time.

Change in risk estimation accuracy over time.

We also computed a nonparametric measure of estimation accuracy to evaluate risk estimation change across all event sizes. Note that only participants in the three simulation conditions completed the risk estimation task during session 1, but participants in all four conditions completed the risk estimation task during session 2. We examined how each individual’s risk estimation function related to actual risk across all group sizes by computing the area between the two curves, representing actual risk and estimated risk (Fig. 2G). We compared the curves for actual and estimated risk first for session 1 and again at session 2. This measure of misestimation was very strongly correlated with the absolute value of the average prediction error scores used in prior analyses (session 1: r(535) = 0.97, 95% CI [0.96, 0.97], P < 0.001; session 2: r(669) = 0.95, 95% CI [0.94, 0.96], P < 0.001), but provides additional information, especially visually, about where (i.e., for which particular event sizes; Fig. 2G) individuals tend to misestimate risk. We found that overall misestimation decreased significantly from session 1 to session 2 (paired t(489) = 10.06, P < 0.001, Cohen’s d = 0.45, 95% CI [0.36, 0.55]), reflecting substantial mitigation of both underestimation and overestimation.
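One way to compute an area-between-curves measure of this kind is sketched below. It is an illustration, not the authors' implementation: it assumes the trapezoidal rule applied to the absolute gap between estimated and actual risk, with raw event size on the x axis. The scaling of the x axis, the function name, and the example values are all hypothetical.

```python
def area_between_curves(x, estimated, actual):
    """Trapezoidal area between two curves sampled at the same x values.

    Uses the absolute gap at each x, so over- and underestimation both
    add to the score. This is approximate if the curves cross between points.
    """
    gaps = [abs(e - a) for e, a in zip(estimated, actual)]
    area = 0.0
    for i in range(1, len(x)):
        width = x[i] - x[i - 1]
        area += width * (gaps[i] + gaps[i - 1]) / 2
    return area

event_sizes = [5, 10, 25, 50, 100, 250, 500]
# Hypothetical participant estimates and hypothetical actual risk (both in %)
estimated = [1, 2, 5, 10, 20, 40, 60]
actual = [10, 18, 40, 64, 87, 99, 100]
misestimation = area_between_curves(event_sizes, estimated, actual)
print(misestimation)  # 26407.5
```

Because the gap is taken in absolute value, the score is unsigned, which is consistent with its strong correlation with the absolute value of the average prediction error.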

Lastly, we used this measure of misestimation to compare the longer-term effects of the four intervention conditions (including the unguided exploration condition). We compared average risk misestimation scores at session 2 and found that participants in the personal and impersonal simulation conditions were significantly more accurate at estimating risk (i.e., lower misestimation scores), relative to participants in the unguided condition (personal vs. unguided: β = −0.18, 95% CI [−0.31, −0.05], t = −2.75, P = 0.006; impersonal vs. unguided: β = −0.18, 95% CI [−0.31, −0.05], t = −2.78, P = 0.006). However, risk misestimation scores in the unrelated simulation condition did not significantly differ from those in the unguided exploration condition (unrelated vs. unguided: β = −0.10, 95% CI [−0.22, 0.03], t = −1.50, P = 0.134). Taken together, these results demonstrate that the personal and impersonal interventions improved the accuracy of risk estimation, above and beyond the benefits of existing risk assessment tools.

General Discussion

During the COVID-19 pandemic, individuals have struggled to balance conflicting needs and make informed decisions in an environment characterized by high uncertainty. Although public health guidelines initially helped to slow the spread of disease, widespread pandemic fatigue (7) and the emergence of new highly transmissible viral variants contributed to resurgences around the world (67). New interventions are necessary to sustain long-term behavior change, allowing individuals to comply with public health guidelines while also fulfilling other needs. Here, we report an informational intervention that may help individuals make decisions and balance public health, personal, financial, and community needs. In study 1, we found that subjective perceived risk was likely inaccurate, yet predicted compliance with public health guidelines. In study 2, we demonstrated that a brief online intervention changed beliefs and intentions about risk. Information prediction error, a measure of surprise about the actual local risk of virus exposure, drove beneficial change in perceived risk and willingness to engage in potentially risky activities. Imagining a pandemic-related scenario prior to receiving risk information enhanced learning. Importantly, the benefits of our intervention persisted after a 1- to 3-wk delay.

We predicted that the efficacy of the intervention would be driven by both the numerical information about local risk (information prediction error) and the context in which it was received (episodic simulation). Our results supported this hypothesis, demonstrating that imagining a COVID-related scenario enhanced subsequent learning from prediction error, perhaps by increasing the salience of the intervention context. Postintervention, participants who had previously underestimated risk reported greater perceived risk for a variety of everyday activities (Fig. 3) and reduced willingness to engage in these activities (e.g., dining indoors at a restaurant, traveling, exercising at a gym without a mask). These changes reflect a realignment with public health guidelines both immediately and after a delay, with perceived risk showing the most durable change. Although on average participants continued to be less willing to engage in these potentially risky activities 1 to 3 wk postintervention, the parametric effect of prediction error on willingness did not persist after a delay. More frequent, regular exposure to risk information may be critical for linking interventions on risk estimation to behavioral risk tolerance (68).

Interestingly, we found that the personal and impersonal simulations were similarly effective. We had expected the personal simulation to be most effective, as suggested by several theoretical frameworks of risk perception related to personalized emotional processing (69, 70). Although the effects of the personal and impersonal conditions did not differ statistically, the personal simulation was numerically less effective because individuals who tended to overestimate risk did not respond as well (SI Appendix, Table S1). The personal simulation may have been aversive for participants who were already overestimating risk, thus counteracting the effect of the numerical risk information and resulting in no net change in perceived risk. Our results suggest that personalization may be beneficial for remedying risk underestimation but not overestimation. Furthermore, our results suggest that cognitive effects, rather than emotional effects from personalized appeals, may be more useful for correcting perceived risk. The impersonal simulation was effective at counteracting both risk underestimation and overestimation, offering practical utility because impersonal elements are easy to implement in large-scale online interventions.

Prior interventions seeking to mitigate biases in risk perception have largely targeted numerical cognition, especially in individuals low in quantitative literacy (28, 71). Overall, risk communication entails three main goals: sharing factual information, changing beliefs, and changing behavior (72). Traditional informational interventions (e.g., pamphlets in clinical settings) have been widely used, especially in health decision making (27, 28). Such decision aids are easy to implement, but they lack features that engage attention, facilitate retention, and drive lasting changes in behavior (73, 74). Importantly, there is little evidence of long-term efficacy for even the most effective interventions (28, 73). Recent work has highlighted the potential of using affect and gist-based thinking to shape the learning context, thereby making risk information more salient and potentially improving long-term efficacy (47, 69, 75).

To increase the likelihood of intervention success, we combined the most effective elements of past interventions, pairing surprising risk information with a novel interactive experience designed to contextualize and increase the salience of risk information. Past studies have shown that prediction error (i.e., surprise) drives belief and knowledge updating (59–62), and can influence risk perception (62). Here, we demonstrated that information prediction error realigned perceived risk with actual risk and also influenced willingness to engage in potentially risky activities. Crucially, we found that an episodic simulation prior to a learning experience enhanced the effect of prediction error on learning. Past studies have shown that episodic simulation can support decision making in other domains, improving both patience (43, 76) and prosociality (46). However, other studies have shown no effect of episodic simulation on risk perception (77, 78), perhaps because narratives are more powerful when they are paired with statistics (57). Importantly, our intervention combines an episodic simulation with prediction error. Imagining a COVID-related episode may link numerical risk information with the potential outcomes of risky decisions, thus enhancing the effect of prediction error (40, 41). Our findings bear broader practical implications: In other domains, combining episodic simulation with prediction error might support revising common misconceptions (e.g., about vaccine safety), correcting misinformation in the media, and learning in educational settings.

We assessed whether our effects persisted after a relatively short delay of 1 to 3 wk. Because risk levels can change rapidly over time, an effective intervention should be updated frequently and administered repeatedly. In the present study, it is possible that participants encountered new information about COVID-19 risks during the delay between sessions, such as by consulting a risk map (11, 65) or reading the news. Such information, whether accurate or inaccurate, may update or interfere with prior learning about risk. Future interventions could focus on cultivating a habit of information seeking from reputable sources; these small behavioral nudges could be used to quickly realign perceived risk with actual risk. Our intervention is fast to complete and easy to disseminate online; these features enhance feasibility for both participants and behavior designers.

Limitations and Future Directions

Some of our results suggest important avenues for future research. Not all participants responded to the intervention (SI Appendix, Responders and Non-Responders), perhaps because other factors may limit belief updating. The COVID-19 pandemic has created a breeding ground for conspiratorial thinking on social media (8, 79), with many Americans confidently dismissing the pandemic as a hoax (80–82). Conspiratorial thinking about the pandemic tracks the propensity for people to engage in antisocial and risky behaviors (83, 84). Alternative (or additional) methods may be necessary to successfully realign risk-related beliefs for people who dismiss the severity of the pandemic, perhaps through facilitating analytic thinking or through training to identify disinformation. Other recent studies have suggested that age (14), political partisanship (82, 85), gender (86), analytical thinking (80), and open mindedness (87) may influence beliefs about risk during the pandemic. In related analyses, we found that age influenced responses to our intervention, such that older adults were less sensitive to prediction error but more responsive to a personalized episodic simulation (88).

Notably, our measure of actual risk does not capture the complexity of factors that influence viral transmission. Although our measure of actual risk based on prevalence is validated by epidemiologists and offers a useful heuristic for understanding local risk levels (37), it is best regarded as an approximate estimate of prevalence-based risk rather than an exact probability of infection. In addition to group size, distance between people, number of infected individuals, ventilation, and masking all influence the probability of viral transmission. Furthermore, the risk level for a given individual is influenced by other factors, such as age, comorbid conditions, vaccination status, or community vulnerability. Future research could leverage our intervention tools to encourage other behaviors (e.g., masking, outdoor activities, vaccination) that reduce the likelihood of infection.

In intervention studies, particularly when the goal is to aid individuals who lie at the extreme ends of a distribution, it is important to rule out regression to the mean. This statistical artifact arises when extreme values of a dependent variable become less extreme when repeatedly measured over time, giving the illusion of beneficial change. To rule out regression to the mean as an explanation for our results, we conducted an analysis that demonstrated that our intervention shifted perceived risk by a similar amount for each participant, regardless of each participant’s baseline measurement (SI Appendix, Regression to the Mean and Figs. S6 and S7). The composite score used for perceived risk also helped to safeguard against regression to the mean; we averaged perceived risk across 15 everyday activities (Fig. 3 and SI Appendix, Fig. S1), thus potentially reducing noise and measurement error that can contribute to regression to the mean.

The everyday activities used in our perceived risk assessment vary in their potential for transmission of the virus, which is why we refer to these as potential risks throughout. We included a range of low-risk to high-risk activities in order to capture variability in risk tolerance among individuals (89). Our intervention did not aim to change how participants assessed the relative risks of these everyday activities. Although the precise risk level of each activity is not known, the riskiness of most of these activities should be affected by local viral prevalence. Consistent with this idea, the reported effects generally applied to the full range of activities assessed (Fig. 3). Another limitation of the study is that we measured self-reported behavior (e.g., willingness to engage in risky activities, recent compliance with social distancing guidelines). These self-reported measures are not direct measures of actual real-world behavior. Overall, our results indicate that receiving numerical information about local viral prevalence can exert transfer effects on subjective perceived risk of everyday activities.

Conclusion

Globally, the outbreak reached new levels of severity more than a year after initial lockdowns. Viral transmission has followed an exponential trajectory during severe outbreaks (7, 11, 65), and the World Health Organization has recommended a harm reduction approach to combat widespread pandemic fatigue (7). Severe outbreaks may limit the success of vaccination programs (10), highlighting the urgent need for behavior change to reduce viral transmission. Here, we report the results of interventions that beneficially changed perceived risk and willingness to engage in potentially risky activities. In this high-stakes context, increasing even a single individual’s compliance with public health guidelines could have significant downstream effects and limit superspreading events (12, 15, 16). Furthermore, since individuals repeatedly choose whether or not to engage in everyday risky activities, the impact of changing perceived risk would accumulate over many decisions.

Importantly, our intervention is simple to implement; elements of our intervention could be applied to existing risk assessment tools (37). We showed that the impact of numerical risk information was enhanced when it was paired with contextualizing information. Existing websites that present COVID-19 statistics could be modified to include images and scenarios that add context, or a risk estimation game that elicits information prediction error (e.g., “Imagine a restaurant in your area with 25 people dining inside. Estimate the probability that at least one of the diners is infected.”). Overall, we describe a fast and effective intervention to realign perceived risk with actual risk, and offer concrete recommendations for implementation. Effectively communicating local risk information could empower individuals to make better decisions by finding the optimal balance between personal and public health needs.

Methods

Study 1.

Participants.

We recruited 303 current US residents to complete an online survey via Prolific, an online testing platform. Of these, 70 participants did not provide location data or resided in counties that were not reporting COVID-19 statistics; these participants were omitted from analyses that involved measures of actual risk. The sample was nationally representative, stratified by age, sex, and race to approximate the demographic makeup of the United States. Participants were paid $4.75 for completing a task that took ∼30 min. The study was approved by the Duke University Health System Institutional Review Board (IRB) (Protocol #00101720). Participants provided informed consent by reading an online description of the study and payment, then clicking a button to indicate agreement. Data collection took place on May 18 and 19, 2020.

Procedure.

The task was administered with Qualtrics survey software. Participants answered questions about perceived risk related to COVID-19, willingness to engage in risky activities, and compliance with public health guidelines. We measured perceived risk by asking participants to rate how risky they believed it was to engage in six different everyday activities, using a 5-point Likert scale (ranging from 1 = "not at all risky" to 5 = "extremely risky") (SI Appendix, Everyday Activities Assessed). Perceived risk scores were averaged across the six items. We measured willingness to engage in risky activities by asking participants if they would be willing to participate (yes/no) in eight different activities, if hypothetically all stay-at-home restrictions were lifted (SI Appendix, Everyday Activities Assessed). Willingness scores were summed across the eight items.
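The two composite scores described above can be sketched as follows. The function names and the example responses are hypothetical; only the scoring rules (a mean of six 1-to-5 ratings, and a count of "yes" answers across eight items) come from the text.

```python
from statistics import mean

def perceived_risk_score(ratings):
    """Mean of six Likert ratings (1 = not at all risky, 5 = extremely risky)."""
    if len(ratings) != 6 or any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("expected six ratings on a 1-5 scale")
    return mean(ratings)

def willingness_score(answers):
    """Number of 'yes' responses across eight yes/no items (range 0-8)."""
    if len(answers) != 8:
        raise ValueError("expected eight yes/no answers")
    return sum(1 for a in answers if a)

# Hypothetical participant
risk = perceived_risk_score([4, 5, 3, 4, 2, 5])
willing = willingness_score([True, False, True, True, False, False, True, False])
print(round(risk, 2), willing)  # 3.83 4
```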

Study 2.

Participants.

We recruited a nationally representative sample of 816 current US residents via Prolific. After exclusions (SI Appendix, Exclusions), our final sample consisted of 735 participants who were randomly assigned to four different intervention conditions: personal simulation (n = 181), impersonal simulation (n = 180), unrelated simulation (n = 185), and unguided exploration (n = 189). Participants were paid $4.50 for a survey that took ∼20 to 30 min to complete. The study was approved by the Duke University Health System IRB (Protocol #00101720). Data collection took place between September 14 and October 9, 2020. The intervention study was preregistered (https://osf.io/6fjdy) (SI Appendix, Deviations from Preregistration).

Additionally, we recontacted our participants 1 wk later for a follow-up survey. Of the 735 participants who successfully completed session 1, 671 returned and successfully completed session 2 after a delay (personal simulation: n = 158, impersonal simulation: n = 165, unrelated simulation: n = 172, and unguided exploration: n = 176). The average delay between session 1 and session 2 was 7.74 d (SD = 2.11, range [7, 25]). Participants were paid $1.25 for a survey that took ∼5 min to complete.

Procedure.

The experiment was conducted using Qualtrics survey software. The assessment of perceived risk and willingness to engage in potentially risky activities was expanded to include 15 activities sampled evenly across five levels of risk, ranging from low-risk activities (e.g., picking up takeout) to high-risk activities (e.g., going to a crowded nightclub). These activities are listed in Fig. 3 and described in greater detail in SI Appendix, Everyday Activities Assessed. Using 5-point Likert scales, participants rated perceived risk (ranging from 1 = "not at all risky" to 5 = "extremely risky") and willingness to engage in these activities (ranging from 1 = "definitely would not do this" to 5 = "definitely would do this"). Participants were randomly assigned to one of four conditions (personal simulation, impersonal simulation, unrelated simulation, or unguided exploration), described in detail in the main text.

Episodic simulation.

The narratives of the episodic simulations are described in the main text, and the full text for all conditions is provided in SI Appendix, Episodic Simulation Text. During the simulation, participants followed step-by-step instructions to imagine the story (e.g., narrative, dialogue, spatial context, characters, and emotions). On each page, participants typed into a text box to describe the details they imagined before proceeding to the next step. Each step of the simulation had a minimum duration (appropriate for the amount of typing required), but there was no maximum duration.

Risk estimation task.

Immediately after the episodic simulation, participants in the three simulation conditions completed an exposure risk estimation task. Participants provided and confirmed their current location (county within state), then read a brief tutorial about probability and risk. Next, participants were asked to think about events of various sizes (5, 10, 25, 50, 100, 250, and 500 people) in their location and estimate the probability (ranging from 0% = "impossible" to 100% = "definitely") that at least one person in the group would be infected with COVID-19. Participants also rated confidence in their estimates (ranging from 0% = "guessing" to 100% = "very sure"). After making estimates for all event sizes, participants received veridical feedback about the actual risk probability (Prevalence-Based Exposure Risk) and rated subjective surprise about the feedback on a 5-point Likert scale (ranging from 1 = "not at all surprised" to 5 = "extremely surprised").
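As an illustration of how the estimates and feedback from this task can be summarized, the sketch below computes an average signed prediction error across the seven event sizes. The sign convention (actual minus estimated, so that positive values indicate underestimation) is inferred from the results reported above and should be treated as an assumption, as should the function name and the example values.

```python
from statistics import mean

EVENT_SIZES = [5, 10, 25, 50, 100, 250, 500]

def mean_prediction_error(estimated, actual):
    """Average signed error (actual minus estimated), in percentage points.

    Positive values mean the participant underestimated risk.
    """
    if len(estimated) != len(actual):
        raise ValueError("estimated and actual must have the same length")
    return mean(a - e for e, a in zip(estimated, actual))

# Hypothetical participant estimates and hypothetical feedback values (in %)
estimated = [1, 2, 5, 10, 20, 40, 60]
actual = [10, 18, 40, 64, 87, 99, 100]
pe = mean_prediction_error(estimated, actual)
print(pe)  # 40
```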

Analysis.

Statistics.

Analyses were conducted with R v4.0.3 and RStudio v1.3.1093. Data and code necessary to reproduce the results and figures are available in a public repository hosted by the Open Science Framework (https://osf.io/35us2) (90). Further information about data cleaning and exclusions is provided in SI Appendix, Variable Transformations, Exclusions.

Prevalence-based exposure risk.

We measured the actual risk of viral exposure using the number of active COVID-19 cases in each participant’s county on the day that the study was completed, using data from the COVID Tracking Project (62) and the US Census (83). We used the formula employed by the COVID-19 Risk Assessment Planning Tool developed by researchers at the Georgia Institute of Technology (37). The risk assessment formula estimates the probability that at an event of a given size, there will be at least one individual who is infected with COVID-19 and may spread the disease to others. Risk estimates were calculated for hypothetical events with 10 attendees, on the basis of the current number of active cases in a participant’s county and an ascertainment bias of 10 (accounting for additional cases that are unidentified because of insufficient testing). The ascertainment bias used was the recommended default for the risk assessment tool at the time that the study was conducted, but ascertainment bias is related to COVID-19 testing availability, which has changed over time. Note that the choice of event size for the actual risk measure is arbitrary; we were interested in the correlation between perceived and actual risk scores, despite the different measurement scales.
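A minimal sketch of this calculation is given below. It assumes the formula takes the form risk = 1 − (1 − p)^n, where p is the estimated per-person prevalence (active cases × ascertainment bias ÷ county population) and n is the event size. The function name and the county figures are hypothetical, and the documentation of the risk assessment tool (37) remains the authoritative source for the exact formula.

```python
def exposure_risk(active_cases, population, event_size, ascertainment_bias=10):
    """Probability that at least one attendee of an event is infected (sketch)."""
    # Estimated prevalence, inflated to account for undetected cases; capped at 1
    prevalence = min(1.0, active_cases * ascertainment_bias / population)
    # Complement of the probability that every attendee is uninfected
    return 1.0 - (1.0 - prevalence) ** event_size

# Hypothetical county: 500 active cases among 250,000 residents
risk_10 = exposure_risk(500, 250_000, event_size=10)
print(f"{risk_10:.1%}")  # 18.3%
```

Because the risk grows with event size for any nonzero prevalence, the same function also yields the feedback curve across the event sizes used in the risk estimation task.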

Acknowledgments

The study was funded by discretionary funding and a US National Institute on Aging grant awarded to G.R.S.-L. (R01-AG058574). A.H.S. is supported by a Graduate Research Fellowship from the NSF and a Postgraduate Scholarship from the Natural Sciences and Engineering Research Council of Canada. We thank Aroon Chande, Seolha Lee, Quan Nguyen, Stephen J. Beckett, Troy Hilley, Clio Andris, and Joshua S. Weitz at the Georgia Institute of Technology and Mallory Harris at Stanford University for openly sharing the tools they developed to assess local virus levels, which made the present studies possible.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2100970118/-/DCSupplemental.

Data Availability

Anonymized, raw, and cleaned behavioral data (CSV files) have been deposited in Open Science Framework (DOI: 10.17605/OSF.IO/35US2) (90).

References

- 1.Choi K. R., Heilemann M. V., Fauer A., Mead M., A second pandemic: Mental health spillover from the novel coronavirus (COVID-19). J. Am. Psychiatr. Nurses Assoc. 26, 340–343 (2020). [DOI] [PubMed] [Google Scholar]

- 2.Fitzpatrick K. M., Drawve G., Harris C., Facing new fears during the COVID-19 pandemic: The State of America’s mental health. J. Anxiety Disord. 75, 102291 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Fofana N. K., et al., Fear and agony of the pandemic leading to stress and mental illness: An emerging crisis in the novel coronavirus (COVID-19) outbreak. Psychiatry Res. 291, 113230 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Lenzen M., et al., Global socio-economic losses and environmental gains from the Coronavirus pandemic. PLoS One 15, e0235654 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Nicola M., et al., The socio-economic implications of the coronavirus pandemic (COVID-19): A review. Int. J. Surg. 78, 185–193 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Shah K., et al., Focus on mental health during the coronavirus (COVID-19) pandemic: Applying learnings from the past outbreaks. Cureus 12, e7405 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.WHO , Pandemic Fatigue—Reinvigorating the Public to Prevent COVID-19. Policy Framework for Supporting Pandemic Prevention and Management (WHO Regional Office for Europe, Copenhagen, 2020). [Google Scholar]

- 8.Bavel J. J. V., et al., Using social and behavioural science to support COVID-19 pandemic response. Nat. Hum. Behav. 4, 460–471 (2020). [DOI] [PubMed] [Google Scholar]

- 9.Honein M. A., Summary of guidance for public health strategies to address high levels of community transmission of SARS-CoV-2 and related deaths, December 2020. MMWR Morb. Mortal. Wkly. Rep. 69, 1860–1867 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Paltiel A. D., Schwartz J. L., Zheng A., Walensky R. P., Clinical outcomes of a COVID-19 vaccine: Implementation over efficacy. Health Aff. (Millwood) 40, 42–52 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.America’s COVID warning system. Covid Act Now (2020) (December 8, 2020).

- 12.Wong F., Collins J. J., Evidence that coronavirus superspreading is fat-tailed. Proc. Natl. Acad. Sci. U.S.A. 117, 29416–29418 (2020).

- 13.West R., Michie S., Rubin G. J., Amlôt R., Applying principles of behaviour change to reduce SARS-CoV-2 transmission. Nat. Hum. Behav. 4, 451–459 (2020).

- 14.Kim J. K., Crimmins E. M., How does age affect personal and social reactions to COVID-19: Results from the national Understanding America Study. PLoS One 15, e0241950 (2020).

- 15.Valle S. Y. D., Mniszewski S. M., Hyman J. M., “Modeling the impact of behavior changes on the spread of pandemic influenza” in Modeling the Interplay Between Human Behavior and the Spread of Infectious Diseases, Manfredi P., D'Onofrio A., Eds. (Springer, 2012), pp. 59–77.

- 16.Funk S., Salathé M., Jansen V. A. A., Modelling the influence of human behaviour on the spread of infectious diseases: A review. J. R. Soc. Interface 7, 1247–1256 (2010).

- 17.Boholm A., Comparative studies of risk perception: A review of twenty years of research. J. Risk Res. 1, 135–163 (1998).

- 18.Vasvári T., Risk, risk perception, risk management—A review of the literature. Public Finan. Q. 60, 29–48 (2015).

- 19.Winch G. M., Maytorena E., Making good sense: Assessing the quality of risky decision-making. Organ. Stud. 30, 181–203 (2009).

- 20.Chumbley J. R., et al., Endogenous cortisol predicts decreased loss aversion in young men. Psychol. Sci. 25, 2102–2105 (2014).

- 21.Ceccato S., Kudielka B. M., Schwieren C., Increased risk taking in relation to chronic stress in adults. Front. Psychol. 6, 2036 (2016).

- 22.Porcelli A. J., Delgado M. R., Stress and decision making: Effects on valuation, learning, and risk-taking. Curr. Opin. Behav. Sci. 14, 33–39 (2017).

- 23.Slovic P., et al., Perceived risk: Psychological factors and social implications. Proc.-R. Soc. Lond., Math. Phys. Sci. 376, 17–34 (1981).

- 24.Slovic P., Fischhoff B., Lichtenstein S., “The psychometric study of risk perception” in Risk Evaluation and Management, Contemporary Issues in Risk Analysis, Covello V. T., Menkes J., Mumpower J., Eds. (Springer US, 1986), pp. 3–24.

- 25.Barnett J., Breakwell G. M., Risk perception and experience: Hazard personality profiles and individual differences. Risk Anal. 21, 171–177 (2001).

- 26.Szollosi A., Liang G., Konstantinidis E., Donkin C., Newell B. R., Simultaneous underweighting and overestimation of rare events: Unpacking a paradox. J. Exp. Psychol. Gen. 148, 2207–2217 (2019).

- 27.Reyna V. F., Nelson W. L., Han P. K., Dieckmann N. F., How numeracy influences risk comprehension and medical decision making. Psychol. Bull. 135, 943–973 (2009).

- 28.Garcia-Retamero R., Cokely E. T., Designing visual aids that promote risk literacy: A systematic review of health research and evidence-based design heuristics. Hum. Factors 59, 582–627 (2017).

- 29.Yıldırım M., Güler A., Positivity explains how COVID-19 perceived risk increases death distress and reduces happiness. Pers. Individ. Dif. 168, 110347 (2021).

- 30.Leppin A., Aro A. R., Risk perceptions related to SARS and avian influenza: Theoretical foundations of current empirical research. Int. J. Behav. Med. 16, 7–29 (2009).

- 31.Dryhurst S., et al., Risk perceptions of COVID-19 around the world. J. Risk Res. 23, 994–1006 (2020).

- 32.Yıldırım M., Güler A., Factor analysis of the COVID-19 Perceived Risk Scale: A preliminary study. Death Stud. 45, 1–8 (2020).

- 33.Vai B., et al., Risk perception and media in shaping protective behaviors: Insights from the early phase of COVID-19 Italian outbreak. Front. Psychol. 11, 563426 (2020).

- 34.Malecki K. M. C., Keating J. A., Safdar N., Crisis communication and public perception of COVID-19 risk in the era of social media. Clin. Infect. Dis. 72, 697–702 (2020).

- 35.Kwok K. O., et al., Community Responses during early phase of COVID-19 Epidemic, Hong Kong. Emerging Infect. Dis. 26, 1575–1579, 10.3201/eid2607.200500 (2020).

- 36.Yıldırım M., Geçer E., Akgül Ö., The impacts of vulnerability, perceived risk, and fear on preventive behaviours against COVID-19. Psychol. Health Med. 26, 35–43 (2021).

- 37.Chande A., et al., Real-time, interactive website for US-county-level COVID-19 event risk assessment. Nat. Hum. Behav. 4, 1313–1319 (2020).

- 38.Lustria M. L. A., et al., A meta-analysis of web-delivered tailored health behavior change interventions. J. Health Commun. 18, 1039–1069 (2013).

- 39.Portnoy D. B., Scott-Sheldon L. A. J., Johnson B. T., Carey M. P., Computer-delivered interventions for health promotion and behavioral risk reduction: A meta-analysis of 75 randomized controlled trials, 1988-2007. Prev. Med. 47, 3–16 (2008).

- 40.St-Amand D., Sheldon S., Otto A. R., Modulating episodic memory alters risk preference during decision-making. J. Cogn. Neurosci. 30, 1433–1441 (2018).

- 41.Benoit R. G., Paulus P. C., Schacter D. L., Forming attitudes via neural activity supporting affective episodic simulations. Nat. Commun. 10, 2215 (2019).

- 42.Peters J., Büchel C., Episodic future thinking reduces reward delay discounting through an enhancement of prefrontal-mediotemporal interactions. Neuron 66, 138–148 (2010).

- 43.Bulley A., Henry J., Suddendorf T., Prospection and the present moment: The role of episodic foresight in intertemporal choices between immediate and delayed rewards. Rev. Gen. Psychol. 20, 29–47 (2016).

- 44.Schacter D. L., Addis D. R., Buckner R. L., “Episodic simulation of future events: Concepts, data, and applications” in The Year in Cognitive Neuroscience 2008, Annals of the New York Academy of Sciences, Kingstone A., Miller M. B., Eds. (Blackwell Publishing, 2008), pp. 39–60.

- 45.Daniel T. O., Sawyer A., Dong Y., Bickel W. K., Epstein L. H., Remembering versus imagining: When does episodic retrospection and episodic prospection aid decision making? J. Appl. Res. Mem. Cogn. 5, 352–358 (2016).

- 46.Gaesser B., Schacter D. L., Episodic simulation and episodic memory can increase intentions to help others. Proc. Natl. Acad. Sci. U.S.A. 111, 4415–4420 (2014).

- 47.Reyna V. F., Mills B. A., Theoretically motivated interventions for reducing sexual risk taking in adolescence: A randomized controlled experiment applying fuzzy-trace theory. J. Exp. Psychol. Gen. 143, 1627–1648 (2014).

- 48.Lermer E., Streicher B., Sachs R., Raue M., Frey D., Thinking concretely increases the perceived likelihood of risks: The effect of construal level on risk estimation. Risk Anal. 36, 623–637 (2016).

- 49.Ryan P., Lauver D. R., The efficacy of tailored interventions. J. Nurs. Scholarsh. 34, 331–337 (2002).

- 50.Bettinger E., Cunha N., Lichand G., Madeira R., “Are the Effects of Informational Interventions Driven by Salience?” (Social Science Research Network, 2020). 10.2139/ssrn.3633821.

- 51.Tversky A., Kahneman D., Availability: A heuristic for judging frequency and probability. Cognit. Psychol. 5, 207–232 (1973).

- 52.Lunn P. D., et al., Using behavioral science to help fight the coronavirus. J. Behav. Public Adm. 30, 1–5 (2020).

- 53.Lichtenstein S., Slovic P., Fischhoff B., Layman M., Combs B., Judged frequency of lethal events. J. Exp. Psychol. [Hum. Learn.] 4, 551–578 (1978).

- 54.Shen F., Sheer V. C., Li R., Impact of narratives on persuasion in health communication: A meta-analysis. J. Advert. 44, 105–113 (2015).

- 55.Jaramillo S., Horne Z., Goldwater M., The impact of anecdotal information on medical decision-making. PsyArXiv [Preprint] (2019) 10.31234/osf.io/r5pmj (Accessed 15 December 2020).

- 56.Fagerlin A., Wang C., Ubel P. A., Reducing the influence of anecdotal reasoning on people’s health care decisions: Is a picture worth a thousand statistics? Med. Decis. Making 25, 398–405 (2005).

- 57.Allen M., et al., Testing the persuasiveness of evidence: Combining narrative and statistical forms. Commun. Res. Rep. 17, 331–336 (2000).

- 58.Charpentier C. J., Bromberg-Martin E. S., Sharot T., Valuation of knowledge and ignorance in mesolimbic reward circuitry. Proc. Natl. Acad. Sci. U.S.A. 115, E7255–E7264 (2018).

- 59.Metcalfe J., Learning from errors. Annu. Rev. Psychol. 68, 465–489 (2017).

- 60.Pine A., Sadeh N., Ben-Yakov A., Dudai Y., Mendelsohn A., Knowledge acquisition is governed by striatal prediction errors. Nat. Commun. 9, 1673 (2018).

- 61.Sinclair A. H., Stanley M. L., Seli P., Closed-minded cognition: Right-wing authoritarianism is negatively related to belief updating following prediction error. Psychon. Bull. Rev. 27, 1348–1361 (2020).

- 62.Kuzmanovic B., Rigoux L., Valence-dependent belief updating: Computational validation. Front. Psychol. 8, 1087 (2017).

- 63.Moutsiana C., et al., Human development of the ability to learn from bad news. Proc. Natl. Acad. Sci. U.S.A. 110, 16396–16401 (2013).

- 64.Data API. COVID Track. Proj. (2020) (Accessed 8 December 2020).

- 65.The Path to Zero: Key Metrics for COVID Suppression. Glob. Epidemics (2020) (Accessed 8 December 2020).

- 66.Eisenstein M., What’s your risk of catching COVID? These tools help you to find out. Nature 589, 158–159 (2021).

- 67.Volz E., et al., Report 42 - Transmission of SARS-CoV-2 Lineage B.1.1.7 in England: Insights From Linking Epidemiological and Genetic Data (Imperial College London, 2020) (Accessed 4 January 2021).

- 68.Sheridan S. L., et al., The effect of giving global coronary risk information to adults: A systematic review. Arch. Intern. Med. 170, 230–239 (2010).

- 69.Slovic P., Peters E., Risk perception and affect. Curr. Dir. Psychol. Sci. 15, 322–325 (2006).

- 70.van Gelder J.-L., de Vries R. E., van der Pligt J., Evaluating a dual-process model of risk: Affect and cognition as determinants of risky choice. J. Behav. Decis. Making 22, 45–61 (2009).

- 71.Garcia-Retamero R., Sobkow A., Petrova D., Garrido D., Traczyk J., Numeracy and risk literacy: What have we learned so far? Span. J. Psychol. 22, E10 (2019).

- 72.Fischhoff B., Communicating Risks and Benefits: An Evidence Based User’s Guide (Government Printing Office, 2012).

- 73.Malloy-Weir L. J., Schwartz L., Yost J., McKibbon K. A., Empirical relationships between numeracy and treatment decision making: A scoping review of the literature. Patient Educ. Couns. 99, 310–325 (2016).

- 74.Lewis N. A. Jr., Kougias D. G., Takahashi K. J., Earl A., The behavior of same-race others and its effects on black patients’ attention to publicly presented HIV-prevention information. Health Commun. 36, 1–8 (2020).

- 75.Reyna V. F., A scientific theory of gist communication and misinformation resistance, with implications for health, education, and policy. Proc. Natl. Acad. Sci. U.S.A. 118, e1912441117 (2020).

- 76.Loewenstein G., Anticipation and the valuation of delayed consumption. Econ. J. (Lond.) 97, 666–684 (1987).

- 77.Bø S., Wolff K., I can see clearly now: Episodic future thinking and imaginability in perceptions of climate-related risk events. Front. Psychol. 11, 218 (2020).

- 78.Bø S., Wolff K., A terrible future: Episodic future thinking and the perceived risk of terrorism. Front. Psychol. 10, 2333 (2019).

- 79.Roozenbeek J., et al., Susceptibility to misinformation about COVID-19 around the world. R. Soc. Open Sci. 7, 201199 (2020).

- 80.Stanley M., Barr N., Peters K., Seli P., Analytic-thinking predicts hoax beliefs and helping behaviors in response to the COVID-19 pandemic. PsyArXiv (2020) 10.31234/osf.io/m3vth (Accessed 15 December 2020).

- 81.Frenkel S., Alba D., Zhong R., Surge of virus misinformation stumps Facebook and Twitter. NY Times (Accessed 15 December 2020).

- 82.Russonello G., Afraid of coronavirus? That might say something about your politics. NY Times (Accessed 15 December 2020).

- 83.Imhoff R., Lamberty P., A bioweapon or a hoax? The link between distinct conspiracy beliefs about the coronavirus disease (COVID-19) outbreak and pandemic behavior. Soc. Psychol. Personal. Sci. 11, 1110–1118 (2020).

- 84.Jovančević A., Milićević N., Optimism-pessimism, conspiracy theories and general trust as factors contributing to COVID-19 related behavior—A cross-cultural study. Pers. Individ. Dif. 167, 110216 (2020).