Abstract

Purpose

To describe the research capacity and culture, and research activity (publications and new projects) of medical doctors across a health service and determine if the research activity of specialty groups correlated with their self-reported “team” level research capacity and culture.

Methods

Cross-sectional, observational survey and audit of medical doctors at a tertiary health service in Queensland. The validated Research Capacity and Culture (RCC) survey was used to measure self-reported research capacity and culture at organisation, team and individual levels, and the presence of barriers and facilitators to research. An audit of publications and ethically approved research projects was used to determine research activity.

Results

Approximately 10% of medical doctors completed the survey (n=124). Overall, median scores on the RCC were 5 out of 10 at the organisational level, 5.5 at the specialty level and 6 at the individual level; however, mean specialty-level scores varied significantly between specialty groups (range 3.1–7.8). Over 80% of participants reported lack of time and other work roles taking priority as barriers to research. One project was commenced per year for every 12.5 doctors employed in the health service, and one article was published per year for every 7.5. There was a positive association between a team’s number of publications and projects per FTE and its self-reported research capacity and culture on the RCC; this association was stronger for publications.

Conclusion

Health service research capacity building interventions may need a tailored approach for different specialty teams to accommodate varying baselines of capacity and activity. When evaluating these initiatives, research activity measures and subjective self-report measures may be complementary.

Keywords: research culture, research activity, health service, hospital, medical, doctor

Introduction

Clinicians play a key role in shaping research agendas, generating research questions, and conducting research that supports rapid translation of findings.1 Studies have found that health services whose clinicians conduct more research tend to have lower mortality rates, greater organisational efficiency, better staff retention, and higher patient and staff satisfaction.2–4 Additionally, a key recommendation for reducing avoidable waste in health research, estimated at 85%, is greater involvement of health service-embedded clinicians in driving research agendas.1,5

In Australia, building the research capacity of clinicians embedded within healthcare services has been identified as a key priority for health research,6 and most recently as one of the 12 priorities for the $20 billion Medical Research Future Fund.7 However, with increasing pressure on public health systems to meet activity targets and provide services to a growing and ageing population, finding the time, money and resources to conduct research is challenging. Medical doctors are the second-largest health profession in Australia,8 and have an important role in many types of health research from bench to bedside. However, only 7% of the medical workforce report active involvement in research, and it has been argued that this proportion is decreasing.9

A key facilitator of building research capacity is an in-depth understanding of context-specific barriers and facilitators, research culture, and the levels of and drivers for current research activity. In health services in particular, arguments have been made that traditional research output measures, such as publications, may need to be replaced or supplemented by process measures.10 However, there have been very few studies comparing different forms of measurement in this context.

In Australia, the Research Capacity and Culture (RCC) survey has frequently been used to understand the current level of research culture and engagement of a profession, most commonly in Allied Health,11–13 but also in medical professions.14–16 Studies using this tool have found differences in research culture between teams, and findings have been used to inform tailored development of research capacity building strategies.11,13 One study in Allied Health also found that there was no association between a team’s RCC score and their research activity; however, publication and project outputs were low (0–4 publications and 0–8 new projects per team), which limited interpretation.13

In line with the increasing focus on improving health professional research capacity, there is a need to robustly measure and evaluate outcomes of research capacity building initiatives. There is currently little understanding of the relationship between validated measures of research culture and capacity, and traditional research activity and output measures.

Thus, this study aimed to:

describe the research capacity and culture of medical doctors across a health service using the Research Capacity and Culture tool

describe the research activity, in terms of publications and projects, of medical doctors across a health service

determine whether “team” level research capacity and culture on the Research Capacity and Culture tool is significantly different between specialty groups, and whether this score is associated with actual research activity

Materials and Methods

This cross-sectional, observational study collected data using a survey and audit. Ethics approval was obtained from the Gold Coast Hospital and Health Service Human Research Ethics Committee (HREC/10/QGC/177).

Context

Gold Coast Health (GCH) is a publicly funded tertiary health service in South-East Queensland, Australia. The service includes two hospitals with 750 and 403 beds, respectively, as well as outpatient and community-based services. Research is not currently routinely included in medical role descriptions, and engagement in research varies across the organisation. GCH’s 2019–2022 research strategy focuses on growing clinician research capacity and establishing a sustainable research culture.17 Strategic incentives and support for research include an annual grant scheme; access to small-scale grants for conference presentations and open access journal fees; an annual research week; a centralised Clinical Trials Unit; and a Research Council and Research Subcommittee of the Board to oversee implementation of the research strategy.17 Considerable work has also been done to increase Allied Health research engagement in the organisation,18 with the RCC tool used across multiple years to measure improvements.13,19 The current study aims to build on this work in the medical stream.

Sample

Convenience sampling was used for the RCC survey. All medical doctors employed by GCH were invited to participate. “Medical doctors” included all professions requiring a medical degree (MBBS, MD or equivalent), including physicians, surgeons, anaesthetists, radiologists and others. Data for the audit were gathered from institutional records of publications and projects.

Survey Tool

The Research Capacity and Culture (RCC) tool is a validated questionnaire that measures indicators of research capacity and culture at three levels: organisation, team and individual.8 The tool comprises 52 items on self-reported success or skill in research: 18 at the organisational level, 19 at the team level and 15 at the individual level. Each item is scored on a scale of 1–10, with 10 being the highest possible level of skill or success; an “unsure” option is also available. The RCC also asks respondents to select applicable barriers and motivators to participating in research from a list of 18 of each, and prompts them to add further barriers or motivators if desired. Lastly, the tool includes a standard set of demographic questions.

Small modifications were made to the tool to better fit the target population, and additional demographic questions were included (eg the participant’s facility within GCH). For this study, participants were asked to consider their Medical College specialty (eg cardiology, general surgery) when answering questions about their “team”. This clarification was needed because participants could otherwise have interpreted “team” as their multispecialty or multidisciplinary team. The tool was administered via a secure online platform (SurveyMonkey®).

Procedures

Active promotion and recruitment for the survey occurred in two stages: the first in January and February 2019, and the second in July and August, after a pause while the organisation transitioned to an integrated electronic medical record. Potential participants were provided with an electronic participant information sheet and gave voluntary informed consent before participating. The survey took approximately 15 minutes to complete.

Two audits were performed to collect data on the publications and new projects of doctors in the health service for the calendar years 2018 and 2019. Both years were included because survey recruitment was spread across 2019, and it was judged that activity in both years would be relevant to doctors’ RCC responses. For the audit, new projects were defined as projects that obtained health service governance approval to proceed within that year. Research governance approval and publication databases maintained by the health service were used to identify projects and publications involving medical doctors from the health service. Where necessary, original articles were accessed, or authors and investigators were contacted to clarify information. Information on the number of doctors and their FTE status was extracted from internal institutional records.

Data Analysis

Quantitative survey data were analysed using Stata 15 (StataCorp, College Station, TX, USA). Survey and audit data were summarised descriptively using frequencies, percentages, medians and interquartile ranges (IQRs). “Unsure” responses on the RCC were excluded from the analyses, but the percentage of “unsure” responses is reported for each item. A one-way analysis of variance (ANOVA) with post hoc Scheffé tests was used to determine whether specialty groups differed on the mean of all “team” level RCC item scores. Linear regression was used to investigate the relationship between these means and the number of publications and projects per full-time equivalent (FTE) for each specialty group. Open-ended survey responses were analysed using inductive qualitative content analysis,20 in which core meanings were derived from the text and grouped into themes. This analysis was completed by a single researcher with experience in health-related qualitative research (CB), and themes were discussed with a subset of the team with qualitative expertise (CB, CN, SM) to reach consensus.
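The ANOVA and Scheffé comparison described above can be sketched with the Python standard library. This is an illustrative sketch, not the authors’ Stata analysis: the function names `one_way_anova` and `scheffe_pair` are hypothetical, the example scores are made up, and the critical F value (or P value) for judging significance still has to come from a statistics package or an F table.

```python
from statistics import mean


def one_way_anova(groups: dict[str, list[float]]) -> tuple[float, float, int, int]:
    """One-way ANOVA on scores grouped by specialty.

    Returns (F, MS_within, df_between, df_within).
    """
    group_means = {name: mean(scores) for name, scores in groups.items()}
    all_scores = [s for scores in groups.values() for s in scores]
    grand_mean = mean(all_scores)
    k, n_total = len(groups), len(all_scores)

    # Between-group and within-group sums of squares
    ss_between = sum(len(scores) * (group_means[name] - grand_mean) ** 2
                     for name, scores in groups.items())
    ss_within = sum((s - group_means[name]) ** 2
                    for name, scores in groups.items() for s in scores)

    df_between, df_within = k - 1, n_total - k
    ms_within = ss_within / df_within
    f_stat = (ss_between / df_between) / ms_within
    return f_stat, ms_within, df_between, df_within


def scheffe_pair(a: list[float], b: list[float], ms_within: float, df_between: int) -> float:
    """Scheffé statistic for the pairwise contrast mean(a) - mean(b).

    The pair differs at level alpha if this value exceeds the critical
    F(df_between, df_within) at alpha (look-up not included here).
    """
    contrast = mean(a) - mean(b)
    return contrast ** 2 / (ms_within * (1 / len(a) + 1 / len(b))) / df_between


# Made-up scores for three hypothetical groups (not study data)
groups = {"Group A": [1, 2, 3], "Group B": [4, 5, 6], "Group C": [7, 8, 9]}
f_stat, msw, dfb, dfw = one_way_anova(groups)
print(f"F({dfb}, {dfw}) = {f_stat:.2f}")
print(f"Scheffé A vs C: {scheffe_pair(groups['Group A'], groups['Group C'], msw, dfb):.2f}")
```

Dividing the squared contrast by (k − 1) lets every pairwise comparison be checked against the same F critical value used for the overall ANOVA, which is what makes the Scheffé procedure conservative across all possible contrasts.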

Results

Survey: Quantitative Results

In total, 225 participants consented to complete the survey. Of these, 96 did not complete all questions and 129 completed the entire survey. Five complete responses were excluded: four were from non-medical staff (allied health, nursing or midwifery) and one was a duplicate. In total, 124 survey responses were available for analysis, representing 10.1% of the health service’s estimated total medical workforce.

Participant characteristics are shown in Table 1. The sample had almost equal numbers of males (49.2%) and females (46.0%). Consultants were overrepresented, making up 72.5% of respondents compared with slightly less than half of doctors in the health service. Conversely, registrars (12.1% of respondents) and junior doctors (12.9%) were underrepresented. Research was part of the role description for 34.7% of respondents and was not for 42.7%, while almost a quarter (22.6%) were unsure.

Table 1.

Demographic Information and Professional Qualifications

| Gender | n (%) |

| Female | 57 (46.0) |

| Male | 61 (49.2) |

| Other/prefer not to disclose | 6 (4.8) |

| Current employment status | n (%) |

| Full-time permanent | 61 (49.2) |

| Full-time temporary | 23 (18.5) |

| Part-time permanent | 28 (22.6) |

| Part-time temporary | 9 (7.3) |

| Casual | 3 (2.4) |

| No. of years employed as a Doctor | n (%) |

| Less than 2 years | 11 (8.9) |

| 2–5 years | 9 (7.3) |

| 6–10 years | 13 (10.5) |

| 11–15 years | 28 (22.6) |

| 16–20 years | 24 (19.4) |

| 20+ years | 39 (31.5) |

| No. of years employed in this health service | n (%) |

| Less than 2 years | 33 (26.6) |

| 2–5 years | 29 (23.4) |

| 6–10 years | 29 (23.4) |

| 11–15 years | 13 (10.5) |

| 16–20 years | 15 (12.1) |

| 20+ years | 5 (4.0) |

| Current career stage | n (%) |

| Consultant | 90 (72.5) |

| Registrar | 15 (12.1) |

| Junior doctor | 16 (12.9) |

| Not specified | 3 (2.4) |

| Master’s or PhD by research | n (%) |

| None | 104 (83.9) |

| Completed PhD | 9 (7.3) |

| Enrolled in PhD | 2 (1.6) |

| Completed research Master’s degree | 7 (5.6) |

| Enrolled in research Master’s degree | 2 (1.6) |

| Research-related activities are part of role description | n (%) |

| Yes | 43 (34.7) |

| No | 53 (42.7) |

| Unsure | 28 (22.6) |

Note: n= number of respondents.

The median score for research capacity and culture on the RCC was 5 at the organization level, 5.5 at the team level, and 6 at the individual level. Higher scores on the 10-point scale indicate a more positive perception of research culture and capacity. There was a high rate of “unsure” responses for the organization (18.6% of all item responses) and team (14.7%) levels, and a low rate for individual level (2.8%). “Unsure” responses relating to external funding, applications for scholarships/degrees, mechanisms for monitoring research quality, consumer involvement, and research software made up over a fifth of responses at both the organisational and team level. At the organisational level only, ensuring the availability of career pathways and having a policy/plan for research development also resulted in over 20% “unsure” responses.
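A minimal sketch of how each item in Tables 2–4 can be summarised while setting aside “unsure” answers, assuming responses are stored as integers 1–10 with the string `"unsure"` for the unsure option (a hypothetical coding). The quartile method passed to Python’s `statistics.quantiles` is also an assumption; Stata’s percentile definition may give slightly different IQR bounds.

```python
from statistics import median, quantiles

UNSURE = "unsure"  # hypothetical coding of the RCC "unsure" option


def summarise_item(responses: list) -> dict:
    """Median, IQR and % unsure for one RCC item.

    "Unsure" answers are excluded from the median and IQR but are
    counted in the % unsure figure, as described in Data Analysis.
    """
    scored = [r for r in responses if r != UNSURE]
    pct_unsure = 100 * (len(responses) - len(scored)) / len(responses)
    # ASSUMPTION: "inclusive" quartile convention; Stata's may differ slightly.
    q1, _, q3 = quantiles(scored, n=4, method="inclusive")
    return {"median": median(scored), "iqr": (q1, q3), "pct_unsure": round(pct_unsure, 1)}


# Made-up responses for a single item (not study data)
item = [7, 5, 8, "unsure", 6, 9, 4, 7, "unsure", 8, 5]
print(summarise_item(item))
```

Because the denominator for % unsure is all responses while the median uses only scored ones, items with many “unsure” answers (eg research software, 36.3%) rest on noticeably fewer scores than items such as “promotes clinical practice based on evidence”.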

Median scores for each item at the organizational level ranged between 3 and 7 (Table 2). Key strengths of the organisation’s research culture were “promotes clinical practice based on evidence” (median=7), “engages external partners (eg universities) in research” (6), “supports the peer-reviewed publication of research” (6) and “has regular forums to present research findings” (6). Lowest success was reported for “ensures staff career pathways are available in research” (3) and “has funds, equipment or admin to support research” (4).

Table 2.

Median Score for Organisation Level RCC Items, Arranged in Descending Order

| Item | Median Score | IQR | % Unsure Responses |

|---|---|---|---|

| Promotes clinical practice based on evidence | 7 | 5–8 | 3.2 |

| Engages external partners (eg universities) in research | 6 | 4–8 | 12.1 |

| Supports the peer-reviewed publication of research | 6 | 3–8 | 16.1 |

| Has regular forums to present research findings | 6 | 3–7 | 10.5 |

| Supports a multidisciplinary approach to research | 5 | 4–7 | 18.5 |

| Encourages research activities which are relevant to practice | 5 | 4–7 | 6.5 |

| Accesses external funding for research | 5 | 3–7 | 25.8 |

| Has resources to support staff research training | 5 | 3–7 | 11.3 |

| Has identified experts accessible for research advice | 5 | 3–7 | 16.9 |

| Has a plan or policy for research development | 5 | 3–6 | 20.2 |

| Has software programs for analysing research data | 5 | 3–7 | 36.3 |

| Has mechanisms to monitor research quality | 5 | 3–6 | 32.3 |

| Supports applications for research scholarships or degrees | 5 | 3–6 | 27.4 |

| Ensures that organisational planning is guided by evidence | 5 | 3–6 | 16.9 |

| Has executive managers that support research | 5 | 3–7 | 16.1 |

| Has consumers involved in research | 5 | 2.5–6.5 | 29.8 |

| Has funds, equipment or admin to support research | 4 | 2–6 | 13.7 |

| Ensures staff career pathways are available in research | 3 | 2–5 | 21.8 |

Abbreviation: IQR, Interquartile range.

Median scores for items at the team level ranged from 3 to 7 (Table 3). Key strengths of team-level research culture reflected the organizational level, including “conducts research activities which are relevant to practice” (7), “supports peer-reviewed publication of research” (7) and “supports a multidisciplinary approach to research” (7). Lowest success was reported for “has funds, equipment or admin to support research” (3) and “has incentives and support for research mentoring activities” (3).

Table 3.

Median Scores for Team Level RCC Items, Arranged in Descending Order

| Item | Median Score | IQR | % Unsure Responses |

|---|---|---|---|

| Conducts research activities which are relevant to practice | 7 | 5–9 | 6.5 |

| Supports peer reviewed publication of research | 7 | 4–9 | 10.5 |

| Supports a multidisciplinary approach to research | 7 | 3.5–9 | 10.5 |

| Has team leaders that support research | 7 | 3–8 | 5.6 |

| Does planning that is guided by evidence | 7 | 4–8 | 10.5 |

| Disseminates research results at forums/seminars | 6 | 4–8 | 9.7 |

| Has applied for external funding for research | 6 | 3–9 | 25.8 |

| Has external partners (eg universities) engaged in research | 6 | 3–8 | 18.5 |

| Has identified experts accessible for research advice | 6 | 3–8 | 14.5 |

| Provides opportunities to get involved in research | 6 | 3–8 | 2.4 |

| Supports applications for research scholarships/degrees | 5 | 2.25–8 | 21.0 |

| Has mechanisms to monitor research quality | 5 | 2–7 | 27.4 |

| Ensures staff involvement in developing the plan for research development | 5 | 2–7 | 11.3 |

| Has resources to support staff research training | 5 | 2–7 | 9.7 |

| Has consumer involvement in research activities/planning | 4 | 2–7 | 25.0 |

| Does team level planning for research development | 4 | 2–7 | 12.1 |

| Has software available to support research activities | 4 | 2–6 | 28.2 |

| Has incentives and support for research mentoring activities | 3 | 2–6.5 | 16.9 |

| Has funds, equipment or admin to support research | 3 | 2–6 | 12.9 |

Abbreviation: IQR, Interquartile range.

Median scores for items at the individual level ranged from 3.5 to 7 (Table 4). Strengths in individual research success were related to evidence-based practice (EBP) skills, including “finding relevant literature” (7), “integrating research findings into practice” (7) and “critically reviewing the literature” (7). The lowest rated item was “securing research funding” (3.5).

Table 4.

Median Scores for Individual Level RCC Items, Arranged in Descending Order

| Item | Median Score | IQR | % Unsure Responses |

|---|---|---|---|

| Finding relevant literature | 7 | 7–8 | 0.8 |

| Integrating research findings into practice | 7 | 6–8 | 2.4 |

| Critically reviewing the literature | 7 | 6–8 | 1.6 |

| Collecting data (eg surveys, interviews) | 6 | 5–8 | 1.6 |

| Writing for publication in peer-reviewed journals | 6 | 4–8 | 4.8 |

| Writing a research report | 6 | 4–7.25 | 3.2 |

| Using a computer referencing system (eg Endnote) | 6 | 4–7.25 | 0.8 |

| Analysing quantitative research data | 6 | 3–7 | 1.6 |

| Using computer data management systems | 6 | 3–7 | 2.4 |

| Writing a research protocol | 5 | 4–7 | 1.6 |

| Writing an ethics application | 5 | 3–7 | 3.2 |

| Providing advice to less experienced researchers | 5 | 2.5–7 | 4.0 |

| Designing questionnaires | 5 | 3–7 | 4.0 |

| Analysing qualitative research data | 5 | 2–7 | 3.2 |

| Securing research funding | 3.5 | 2–5 | 6.5 |

Abbreviation: IQR, Interquartile range.

Table 5 shows the most commonly reported barriers and motivators by percent of total survey respondents. “Lack of time for doing research” and “Other work roles take priority” were the most commonly reported barriers, identified by 83.9% and 82.3% of respondents, respectively. In decreasing order of frequency, other common barriers were the “lack of funds for research” (57.3%), “desire for work/life balance” (45.2%), “lack of a coordinated approach to research” (45.2%), and “lack of skills for research” (45.2%). Only 4% of respondents reported “not interested in research” as a barrier. The most commonly reported motivators were “to develop skills” (70.2%), followed closely by “increased job satisfaction” (66.9%). Other common motivators included “problem identified that needs changing” (58.9%), “career advancement” (58.1%) and “to keep the brain stimulated” (57.3%).

Table 5.

Reported Frequency of Personal Barriers and Motivators to Conducting Research, Arranged in Descending Order

| Barrier | % of Respondents (n=124) | Motivator | % of Respondents (n=124) |

|---|---|---|---|

| Lack of time for doing research | 83.9 | To develop skills | 70.2 |

| Other work roles take priority | 82.3 | Increased job satisfaction | 66.9 |

| Lack of funds for research | 57.3 | Problem identified that needs changing | 58.9 |

| Desire for work/life balance | 45.2 | Career advancement | 58.1 |

| Lack of a co-ordinated approach to research | 45.2 | To keep the brain stimulated | 57.3 |

| Lack of skills for research | 45.2 | Desire to explore a theory/hunch | 53.2 |

| Lack of support from management | 39.5 | Dedicated time for research | 52.4 |

| Lack of software for research | 39.5 | Links to universities | 50.8 |

| Isolation | 36.3 | Increased credibility | 50.0 |

| Lack of access to equipment for research | 34.7 | Mentors available to supervise | 45.2 |

| Other personal commitments | 34.7 | Opportunities to participate at own level | 45.2 |

| Intimidated by research language | 21.0 | Colleagues doing research | 42.7 |

| Different experience levels of team members (applies to team only) | 17.7 | Research encouraged by managers | 37.9 |

| Staff shortages (applies to team only) | 16.9 | Grant funds | 37.1 |

| Intimidated by fear of getting it wrong | 14.5 | Research written into role description | 36.3 |

| Not interested in research | 4.0 | Forms part of post-graduate study | 25.0 |

| Lack of library/internet access | 2.4 | Team building (applies to specialty group only) | 25.0 |

| Other barrier/s (please specify) – lack of dedicated research support roles | 4.8 | Study or research scholarships available | 21.0 |

| Other barrier/s (please specify) – lack of pathways to engage in research | 3.2 | Required as part of Specialty College Training | 20.2 |

| Other barrier/s (please specify) – difficulty with research ethics and governance processes | 1.6 | Other motivator/s (please specify) | 0.0 |

| Other barrier/s (please specify) – lack of value placed on research in organisational culture | 1.6 | | |

| Other barrier/s (please specify) – contract role does not allow research | 0.8 | | |

Survey: Qualitative Results

Twenty-nine percent (n=36) of respondents provided free-text responses to the question “Do you have any final comments or suggestions about the survey or research in general?”. Responses averaged 34 words in length. The four main themes are summarised in Table 6. The most common theme (n=21) was expansion on barriers to doing research, which mostly reflected the quantitative results. A substantial proportion (n=16) described a tension for change,21 emphasising the need to improve research engagement, suggesting that the health service was behind others in this respect, and expressing frustration with a perceived lack of focus on and support for research in the service. Others (n=11) suggested potential strategies for improving the research culture at GCH, such as dedicated time for research, research support staff, easier ways to link in with potential projects, and improved research culture and planning in the health service. A small number of respondents (n=4) outlined their perceptions of the benefits of increased research engagement as justification for the need for change.

Table 6.

Codes from Qualitative Analysis of Final Free Text Question

| Theme (n) | Example quote | Codes |

|---|---|---|

| Expanding on barriers (n=21) | “It is so very difficult to balance doing the clinical job with finding time to do, or supervise, research, especially when most of the time is spent just getting the project running!” | Difficulty finding out about research projects; Research is not valued by leadership; Lack of research/nonclinical time and overloaded clinical rosters; Lack of skills and knowledge, including HDR experience; Lack of interest in department; Lack of general support and infrastructure; Ethics and governance process and long start-up process for projects; Lack of support staff; Lack of awareness about what support is available; Casual contracts |

| Potential strategies (n=11) | “Please make available projects easier to find. Consider an online noticeboard where all research groups post available roles and projects.” | Involvement with statewide clinical trials networks; More professorial appointments; Pathways to make available projects easier to find, including online listing; More long-term planning; Appoint more research support staff; Increase awareness of the benefits of research at an executive level; Substantial investment in changing research culture; Dedicated time for research, including rostering more clinicians; More funding and access to HDRs; Research criteria should be included in performance reviews |

| Benefits of a research culture (n=4) | “At exec level there needs to be awareness this investment will be paid back in spades in 1) economic return; 2) job satisfaction; 3) career progression; 4) staff retention.” | Attraction and retention of high quality trainees; Retention of quality staff; Economic return; Job satisfaction; Career progression for staff; Generally a good thing for the health service |

| Tension for change (n=16) | “Urgent need for institutional support for formal research ….training among clinical staff in all disciplines” | General statements that there is a need for change; Our health service is not as research active as others in the region; Increased research engagement is needed to strengthen our identity as a tertiary institution; Despite being part of clinical role descriptions, research is mostly completed in personal time; The health service is focused on short-term operational concerns at the expense of research |

Project Audit

There were 266 research projects that received health service governance approval from January 2018 to December 2019. Of these, 197 (74.1%) had a medical doctor from the health service as an investigator. Data were not collected on investigators who were not affiliated with the health service. One new project was commenced per year for every 12.5 doctors employed in the health service.

Table 7 displays further details on these 197 projects. The health service was the only site in 50.8% of the projects, the lead site of a multisite study in 6.6%, and nonlead site on a multisite study in 42.6%. Most (80.2%) projects involved doctors from a single specialty in the health service, while 19.8% involved doctors from multiple specialties (eg cardiology and rheumatology). A third of projects involved collaboration with other professional streams, such as nursing and Allied Health.

Table 7.

Characteristics of Publications and Projects from Medical Doctors in 2018 and 2019

| Characteristic | Category | n (%) |

|---|---|---|

| Projects (n=197) | 2018 | 92 |

| | 2019 | 105 |

| Site information | Health service as only site | 100 (50.8%) |

| | Health service as lead site of a multisite study | 13 (6.6%) |

| | Health service as nonlead site on a multisite study | 84 (42.6%) |

| Inter-specialty collaborationᵃ | Investigators from only one specialty | 158 (80.2%) |

| | Investigators from more than one specialty (eg cardiology and rheumatology) | 39 (19.8%) |

| Inter-professional collaborationᵃ | Investigators only from the medical stream | 132 (67.0%) |

| | Investigators from nursing and medical streams | 28 (14.2%) |

| | Investigators from Allied Health and medical streams | 16 (8.1%) |

| | Investigators from otherᵇ and medical streams | 6 (3.0%) |

| | Investigators from more than two streams (eg nursing, Allied Health and medical) | 15 (7.6%) |

| Publications (n=327) | 2018 | 175 |

| | 2019 | 152 |

| Unique medical doctor authors in 2018 (n=161), by number of publications | 1 publication | 117 (72.6%) |

| | 2 publications | 22 (13.7%) |

| | 3–5 publications | 19 (11.8%) |

| | 6–9 publications | 1 (0.6%) |

| | 10+ publications | 2 (1.2%) |

| Unique medical doctor authors in 2019 (n=162), by number of publications | 1 publication | 122 (75.3%) |

| | 2 publications | 31 (19.1%) |

| | 3–5 publications | 5 (3.1%) |

| | 6–9 publications | 2 (1.2%) |

| | 10+ publications | 2 (1.2%) |

| Authorship order | Medical doctor as first or last author | 174 (53.2%) |

| | Medical doctor as middle author | 153 (46.8%) |

| Inter-professional collaborationᵃ | Authors only from the medical stream | 282 (86.2%) |

| | Authors from nursing and medical streams | 26 (8.0%) |

| | Authors from Allied Health and medical streams | 14 (4.3%) |

| | Authors from otherᵇ and medical streams | 3 (0.9%) |

| | Authors from more than two streams (eg nursing, Allied Health and medical) | 2 (0.6%) |

| Publication type | Primary research | 196 (59.9%) |

| | Case reports | 42 (12.8%) |

| | Systematic reviews, scoping reviews and meta-analyses | 19 (5.8%) |

| | Protocols | 4 (1.2%) |

| | Other article types (eg correspondence, topic summaries, opinions) | 66 (20.2%) |

Notes: ᵃOnly represents collaborations within the health service; specialty and profession data were not collected for other institutions. ᵇOther refers to staff outside the medical, nursing and Allied Health streams (eg statisticians, human resources professionals).

Publication Audit

There were 479 total publications that included health service staff between January 2018 and December 2019. More than two thirds (68.3%; n=327) of these publications had a medical doctor from the health service as an author (data was not collected about authors not employed in the health service). For every 7.5 doctors employed in the health service, one article was published each year.
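The “one project per 12.5 doctors” and “one article per 7.5 doctors” figures are annual ratios: total outputs over 2018–2019 are halved and divided into the medical headcount. The headcount itself is not reported in this section, so the sketch below assumes it can be back-calculated from the survey sample (124 respondents representing 10.1% of the workforce); the actual figure from institutional records may differ slightly. The constant and function names are illustrative only.

```python
# Back-of-envelope check of the annual output ratios reported in the audit.
# ASSUMPTION: the medical headcount is inferred from the survey sample
# (124 respondents = 10.1% of the workforce); institutional records may differ.
RESPONDENTS = 124
RESPONSE_RATE = 0.101
YEARS = 2                      # audit covered 2018 and 2019
PROJECTS_2018_2019 = 197       # projects with a GCH doctor as investigator
PUBLICATIONS_2018_2019 = 327   # publications with a GCH doctor as author
UNIQUE_AUTHORS_PER_YEAR = 160  # "around 160" unique authors each year


def doctors_per_output(headcount: float, outputs: int, years: int) -> float:
    """Number of doctors employed for every single output produced per year."""
    outputs_per_year = outputs / years
    return headcount / outputs_per_year


headcount = RESPONDENTS / RESPONSE_RATE  # inferred, not a reported figure
print(f"Implied medical headcount: {headcount:.0f}")
print(f"Doctors per new project per year: "
      f"{doctors_per_output(headcount, PROJECTS_2018_2019, YEARS):.1f}")
print(f"Doctors per publication per year: "
      f"{doctors_per_output(headcount, PUBLICATIONS_2018_2019, YEARS):.1f}")
print(f"Unique authors as share of headcount: {UNIQUE_AUTHORS_PER_YEAR / headcount:.0%}")
```

With these inputs the sketch gives an implied headcount of about 1,228 doctors, 12.5 doctors per new project and 7.5 doctors per publication per year, and the roughly 160 unique authors each year correspond to about 13% of that headcount, in line with the figures reported in this section.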

Table 7 displays further details on these 327 publications. There were around 160 unique medical doctor authors each year (approximately 13% of GCH doctors), and just over a quarter of these published in both years. In 2018, 72.6% of these authors published a single paper, 13.7% published two papers, and only 1.2% published ten or more papers. Results were similar in 2019.

A medical doctor from the health service was first or last author in 53.2% of the publications, and 13.8% involved authors from another professional stream in the health service, such as nursing or Allied Health. The most common publication type was primary research (59.9%), followed by other article types like letters or opinions (20.2%) and case reports (12.8%).

Relationship Between Survey Results and Research Activity for Specialty Groups

“Team” level scores on the RCC were summarised into seven broad specialty groups (Table 8), which were (in no particular order): emergency medicine; surgery and intensive care; anaesthetics; psychiatry; paediatrics, obstetrics and gynaecology; and two physician groups, split according to the division of the health service in which each specialty was placed. One response could not be assigned to a specialty group. Specialty groups were anonymised as Groups 1–7 in accordance with this study’s ethical approval.

Table 8.

Mean Scores for Team Level RCC Items and Publications and Projects per FTE, by Broad Specialty Group

| Measure | Group 1 (n=16) | Group 2 (n=14) | Group 3 (n=12) | Group 4 (n=17) | Group 5 (n=18) | Group 6 (n=20) | Group 7 (n=26) |
|---|---|---|---|---|---|---|---|
| Survey results | | | | | | | |
| Overall mean for team-level items | 6.4 | 4.0 | 4.6 | 5.2 | 7.8 | 3.1 | 6.0 |
| Overall SD for team-level items | 2.1 | 2.8 | 2.3 | 2.1 | 1.1 | 2.4 | 1.7 |
| Audit results | | | | | | | |
| Number of publications per FTE | 0.50 | 0.01 | 0.15 | 0.33 | 0.67 | 0.18 | 0.44 |
| Number of new projects per FTE | 0.26 | 0.08 | 0.09 | 0.31 | 0.21 | 0.13 | 0.45 |

Differences were identified in the mean total-item RCC scores at the “team” level between specialty groups (P<0.0001). Group 5 had the highest mean score (7.8) which was greater than Group 6 (3.1, P<0.0001), Group 2 (4.0, P<0.0001), Group 3 (4.6, P=0.010), and Group 4 (5.2, P=0.036). Group 6 had the lowest mean score (3.1) and was lower than Group 7 (6.0, P=0.003) and Group 1 (6.4, P=0.006) as well as Group 5.
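This section does not name the statistical test behind the between-group comparison. As a rough plausibility check only, the sketch below assumes a one-way ANOVA and recomputes its F statistic from the rounded group sizes, means and SDs in Table 8, treating each SD as the spread of respondents' team-level scores within a group (an assumption). `Group` and `anova_f` are illustrative names, not part of the study's analysis.

```python
# Sketch: one-way ANOVA F statistic from per-group summary statistics (Table 8).
# Assumption: the authors' actual test is not stated; inputs are rounded values.
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Group:
    n: int       # respondents in the specialty group
    mean: float  # mean team-level RCC score
    sd: float    # SD of respondents' team-level scores (assumed meaning)


GROUPS = [
    Group(16, 6.4, 2.1), Group(14, 4.0, 2.8), Group(12, 4.6, 2.3),
    Group(17, 5.2, 2.1), Group(18, 7.8, 1.1), Group(20, 3.1, 2.4),
    Group(26, 6.0, 1.7),
]


def anova_f(groups: list[Group]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) computed from summary statistics."""
    total_n = sum(g.n for g in groups)
    grand_mean = sum(g.n * g.mean for g in groups) / total_n
    # Between-group variation: spread of group means around the grand mean.
    ss_between = sum(g.n * (g.mean - grand_mean) ** 2 for g in groups)
    # Within-group variation recovered from each group's SD.
    ss_within = sum((g.n - 1) * g.sd ** 2 for g in groups)
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


f_stat, df_b, df_w = anova_f(GROUPS)
print(f"F({df_b}, {df_w}) = {f_stat:.1f}")
```

A corresponding P value could be obtained with `scipy.stats.f.sf(f_stat, df_b, df_w)` if SciPy is available. Because the inputs are rounded, the result only approximates the authors' analysis, but an F statistic of this size is consistent with the reported P<0.0001.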

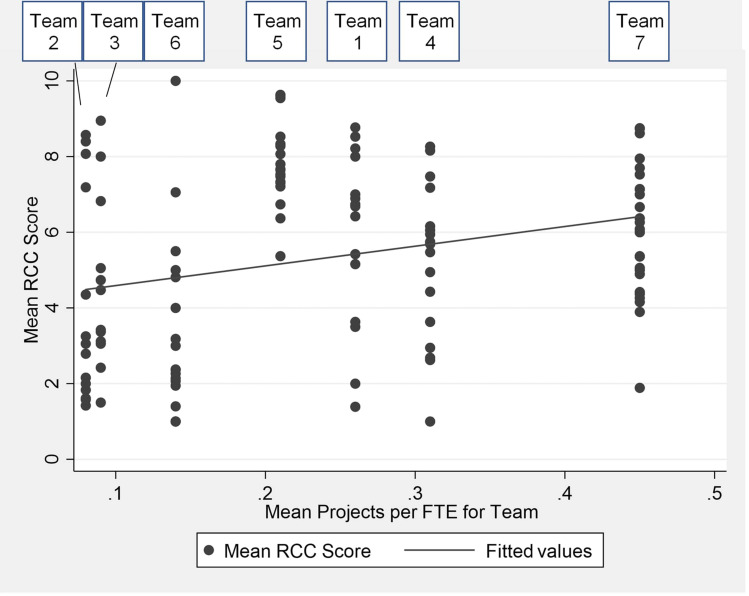

Linear regression showed a positive relationship between mean “team” level RCC score and both projects (Figure 1) and publications (Figure 2) per FTE. Each additional project or publication per FTE corresponded to an increase of 5.3 (95% CI 2.0–8.6; P=0.002) or 6.6 (95% CI 4.8–8.5; P<0.0001), respectively, in the mean “team” level RCC score. An estimated 29.95% of the total variance in “team” level RCC scores was associated with variance in publications per FTE, and 7.91% with variance in projects per FTE.
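The reported slopes and variance proportions appear consistent with a regression fitted to individual respondents, with each respondent assigned their group's publications or projects per FTE. The sketch below assumes that design (this section does not state it) and reconstructs the fit from Table 8 alone: because the predictor is constant within a group, the ordinary least squares slope equals a regression of group means weighted by group size, and the within-group SDs contribute only to the total variance. `grouped_ols` is a hypothetical helper.

```python
# Hypothetical reconstruction of the respondent-level regression from Table 8.
# Assumption: each respondent's mean "team" RCC score was regressed on their
# group's publications (or projects) per FTE; inputs are rounded table values.

SIZES = [16, 14, 12, 17, 18, 20, 26]
MEANS = [6.4, 4.0, 4.6, 5.2, 7.8, 3.1, 6.0]
SDS = [2.1, 2.8, 2.3, 2.1, 1.1, 2.4, 1.7]
PUBS_PER_FTE = [0.50, 0.01, 0.15, 0.33, 0.67, 0.18, 0.44]
PROJECTS_PER_FTE = [0.26, 0.08, 0.09, 0.31, 0.21, 0.13, 0.45]


def grouped_ols(x, n=SIZES, y=MEANS, sd=SDS):
    """Slope and R^2 of individual-level OLS when x is constant within each group."""
    total = sum(n)
    x_bar = sum(ni * xi for ni, xi in zip(n, x)) / total
    y_bar = sum(ni * yi for ni, yi in zip(n, y)) / total
    # Group-size-weighted cross-products reproduce the individual-level sums.
    s_xy = sum(ni * (xi - x_bar) * (yi - y_bar) for ni, xi, yi in zip(n, x, y))
    s_xx = sum(ni * (xi - x_bar) ** 2 for ni, xi in zip(n, x))
    slope = s_xy / s_xx
    # Total sum of squares = between-group part + within-group part (from SDs).
    ss_between = sum(ni * (yi - y_bar) ** 2 for ni, yi in zip(n, y))
    ss_within = sum((ni - 1) * si ** 2 for ni, si in zip(n, sd))
    r_squared = slope * s_xy / (ss_between + ss_within)
    return slope, r_squared


results = {
    "publications": grouped_ols(PUBS_PER_FTE),
    "projects": grouped_ols(PROJECTS_PER_FTE),
}
for label, (slope, r2) in results.items():
    print(f"{label}: slope {slope:.1f} per unit per FTE, R^2 {r2:.1%}")
```

With these rounded inputs the sketch gives slopes of about 6.7 (publications) and 5.5 (projects), with R² of about 30% and 8%, close to the reported 6.6, 5.3, 29.95% and 7.91%. Confidence intervals are not reconstructed here.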

Figure 1.

Relationship between Projects per FTE of teams and the teams’ mean RCC scores.

Figure 2.

Relationship between Publications per FTE of teams and teams’ mean RCC scores.

Discussion

The research capacity and culture of medical doctors at the individual, team and organisational levels were moderate, with medians of 6, 5.5 and 5, respectively. Similar levels, and the same pattern of team and individual RCC scores exceeding organisational-level scores, have been reported in studies that included medical doctors in other Australian health services.15,16

The pattern and spread of results between the highest- and lowest-rated items found in this study is also broadly reflected in the results of these other studies.14,15 This includes the general trend of Evidence Based Practice-related items scoring more positively than pure research-related items, and items relating to funding, career pathways and incentives for research activity consistently scoring the lowest.22 This reflects progress towards the commonly cited goal that all clinicians should understand and use research, while fewer will participate in or lead it.23 The finding that lack of time and other work roles taking priority were the most common barriers to undertaking research is also consistent with the literature on clinicians within health services.12–15

A 2017 study using the RCC with Allied Health professionals in the same health service19 returned organisation-level scores that were consistently 1–3 points higher, with an overall median of 7. The reasons for this are unclear, as the organisation’s research culture is unlikely to have changed so markedly in that time. The Allied Health clinicians also rated their team-level success slightly higher (median 6) and their individual capability lower (median 5). Notably, both studies found a high proportion of “unsure” responses, especially at the organisational level, suggesting that the health service could improve communication and promotion of its institutional research supports and initiatives.19

Consistent with the Allied Health study, differences were identified between specialty groups at the team level, with mean scores varying from 3.1 to 7.8. Other research has also shown that different teams within the same health service may have different barriers, motivations and levels of research capacity and current activity.11,13,19,24,25 Literature on research capacity building in health settings has found that team-based approaches are likely to be most effective, as they allow strategies to be tailored to specific needs.11,24–26 Tools like the RCC are a useful way for health services to capture their teams’ current research engagement and needs.

This study found that medical doctors were the largest producers of research projects and publications within the health service. Over a third of projects involved collaborations with other professions like Allied Health and nursing, reflecting the importance of the multidisciplinary team in modern models of care.27 Because studies measure and report research activity differently,28 it is difficult to compare research activity with other health services.29,30 Available international literature on research outputs from medical clinicians usually focuses on specific specialties, often further limited to registrars and academic doctors, rather than on whole-of-health-service measurements.16,31–35 However, one self-report survey found that for every 12.8 Australian physiotherapists employed in tertiary facilities, one article was published per year, compared to 7.5 doctors in this study.36
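Expressed as per-capita rates, the two “staff per article” figures can be compared directly. This is a minimal sketch using only the two ratios quoted above; as the paragraph notes, the physiotherapy figure comes from a self-report survey and the doctor figure from an audit, so the comparison is indicative only. `articles_per_staff_year` is an illustrative name.

```python
# Sketch: convert "one article per year for every k staff" into a per-capita rate.
# Caveat: physiotherapy data are self-reported; doctor data come from an audit.


def articles_per_staff_year(staff_per_article: float) -> float:
    """Articles published per staff member per year."""
    return 1 / staff_per_article


physio_rate = articles_per_staff_year(12.8)  # Australian tertiary physiotherapists
doctor_rate = articles_per_staff_year(7.5)   # doctors in this health service

print(f"Physiotherapists: {physio_rate:.3f} articles per person-year")
print(f"Doctors: {doctor_rate:.3f} articles per person-year")
print(f"Ratio: {doctor_rate / physio_rate:.2f}")
```

On these figures, doctors in this health service published roughly 1.7 times as many articles per person per year as the surveyed physiotherapists.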

We identified an association between a specialty group’s research activity and their self-reported, subjective research culture and capacity. Publications demonstrated a stronger association than projects, likely because the two highest scoring groups on the RCC (1 and 5) had relatively few projects. Further investigation showed that one of these groups had a high proportion of multiphase, multisite and complex projects. This indicates that the number of projects may be a poorer indicator than the number of publications, as a simple project like a retrospective chart audit is counted equivalently to a multisite, multiphase interventional trial. The same can also be true of publications; however, complex projects will likely result in multiple publications, which helps offset this effect.

Previous research has called into question the utility of research activity and output measures, such as publications, for measuring clinician research capacity. As producing research is not the core role of clinicians, an improvement in research culture is likely to take considerable time to translate into a measurable increase in outputs.10,12,37,38 Some authors have argued that self-report measures of research capacity and culture should be combined with traditional research activity measures.12,13 To our knowledge, this is the first study to identify that the two types of measure are associated, which adds further weight to this argument.

Limitations

Limitations of the study were the low (10.1%) survey response rate and the non-random sample. Other studies using the RCC have achieved both lower14,15 and higher12,13,16 response rates. Selection bias may also affect the results, as doctors interested in research were probably more likely to respond to the survey. This may also explain the overrepresentation of consultants relative to junior doctors; a poor response rate from junior doctors has also been found in other surveys of research culture.24 Consequently, the results may mainly reflect the opinions of senior doctors and should be interpreted with caution for junior doctors and registrars.

Future Directions

Locally, results from this study were fed back to key strategic groups at Gold Coast Health in February 2020; however, the COVID-19 pandemic, staffing changes and an organisational restructure have slowed the translation of this work. Nevertheless, funding has been obtained for a Knowledge Translation study to identify and implement evidence-based strategies for increasing medical engagement in research. The findings of this study are being used to inform that work, provide a baseline measure, and help localise and tailor potential strategies.

In terms of wider implications, the findings of this work may help inform the approach to research capacity building in similar settings. This study has demonstrated that research capacity and culture vary widely between teams within an organisation, and comparison with other studies shows that they also vary between organisations. While this means that the results should not be directly applied to other settings, there are common patterns, and this work adds to the literature on the research capacity and culture of medical professionals15,16 by providing a set of results for comparison. More importantly, however, few studies report data on health service-wide research activity, in contrast to the plethora of research describing the research outputs of universities.39 As health services and clinicians are increasingly called upon to be producers, not just consumers, of research,3,4 systematic study of their research activity is essential.

The link between RCC results and the actual research activity of teams should be explored further, especially in longitudinal research. It would be valuable to determine whether there is a delay between improvements in a team’s RCC scores and their translation into increased research activity, such as new projects. This would help establish whether a self-report measure like the RCC reflects improvements in research capacity and culture more rapidly, and is therefore more sensitive to change than traditional research output measures.

Conclusion

This study reinforces the most significant challenge faced in supporting clinical research within a tertiary health context governed by activity-based funding allocations: providing medical staff with time to engage in research activities. New approaches to address this in an increasingly constrained fiscal environment are needed. This study also demonstrated significant differences between teams’ reported research activity and culture, indicating that research capacity building initiatives may need to be tailored to specialty groups. Objective activity measures, particularly publications, were shown to be associated with a team’s self-rated research capacity and culture. A combination of subjective process and objective activity outcome measures may therefore be complementary when measuring the impact of research capacity building initiatives. The results of the RCC in this study are intended to identify areas for improvement and provide a baseline for multifaceted and tailored research capacity building programs in the health service.

Acknowledgments

We would like to sincerely thank all the Gold Coast Health staff who participated in or helped to disseminate this research.

Funding Statement

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors. Costs associated with open access publication have been funded by the Gold Coast Health Study, Education and Research Trust Account.

Author Contributions

All authors made a significant contribution to the work reported, whether in the conception, study design, execution, acquisition of data, analysis and interpretation, or in all these areas; took part in drafting, revising or critically reviewing the article; have agreed on the journal to which the article has been submitted; and agree to be accountable for all aspects of the work.

Disclosure

The authors declare no conflicts of interest for this work and that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

1. Chalmers I, Glasziou P. Avoidable waste in the production and reporting of research evidence. Lancet. 2009;374(9683):86–89. doi: 10.1016/S0140-6736(09)60329-9

2. Ozdemir BA, Karthikesalingam A, Sinha S, et al. Research activity and the association with mortality. PLoS One. 2015;10(2):e0118253. doi: 10.1371/journal.pone.0118253

3. Harding K, Lynch L, Porter J, et al. Organisational benefits of a strong research culture in a health service: a systematic review. Aust Health Rev. 2016;41(1):45–53. doi: 10.1071/AH15180

4. Jonker L, Fisher SJ, Dagnan D. Patients admitted to more research-active hospitals have more confidence in staff and are better informed about their condition and medication: results from a retrospective cross-sectional study. J Eval Clin Pract. 2020;26(1):203–208. doi: 10.1111/jep.13118

5. Stehlik P, Noble C, Brandenburg C, et al. How do trainee doctors learn about research? Content analysis of Australian specialist colleges’ intended research curricula. BMJ Open. 2020;10(3):e034962. doi: 10.1136/bmjopen-2019-034962

6. Australian Government Department of Health and Ageing. Strategic review of health and medical research final report. Canberra: Commonwealth of Australia; 2013.

7. Australian Government Department of Health. MRFF strategy and priorities. Canberra; 2020. Available from: https://www.health.gov.au/initiatives-and-programs/medical-research-future-fund/about-the-mrff/mrff-strategy-and-priorities. Accessed August 2020.

8. Australian Institute of Health and Welfare. Australia’s health 2016. Canberra: AIHW; 2016.

9. Joyce CM, Piterman L, Wesselingh SL. The widening gap between clinical, teaching and research work. Med J Aust. 2009;191(3):169–172. doi: 10.5694/j.1326-5377.2009.tb02731.x

10. Cooke J. A framework to evaluate research capacity building in health care. BMC Fam Pract. 2005;6(1):1–11. doi: 10.1186/1471-2296-6-44

11. Holden L, Pager S, Golenko X, et al. Validation of the research capacity and culture (RCC) tool: measuring RCC at individual, team and organisation levels. Aust J Prim Health. 2012;18(1):62–67. doi: 10.1071/PY10081

12. Alison JA, Zafiropoulos B, Heard R. Key factors influencing allied health research capacity in a large Australian metropolitan health district. J Multidiscip Healthc. 2017;10:277–291. doi: 10.2147/JMDH.S142009

13. Wenke RJ, Mickan S, Bisset L. A cross sectional observational study of research activity of allied health teams: is there a link with self-reported success, motivators and barriers to undertaking research? BMC Health Serv Res. 2017;17(1):114. doi: 10.1186/s12913-017-1996-7

14. Gill SD, Gwini SM, Otmar R, et al. Assessing research capacity in Victoria’s south‐west health service providers. Aust J Rural Health. 2019;27(6):505–513. doi: 10.1111/ajr.12558

15. Lee SA, Byth K, Gifford JA, et al. Assessment of health research capacity in Western Sydney Local Health District (WSLHD): a study on medical, nursing and allied health professionals. J Multidiscip Healthc. 2020;13:153–163. doi: 10.2147/JMDH.S222987

16. McBride KE, Young JM, Bannon PG, et al. Assessing surgical research at the teaching hospital level. ANZ J Surg. 2017;87(1–2):70–75. doi: 10.1111/ans.13863

17. Gold Coast Health. Research Strategy 2019–2022. Southport; 2020. Available from: https://www.publications.qld.gov.au/dataset/gold-coast-hospital-and-health-service-plans/resource/7aa0850a-886c-4c2c-a1a7-866e6f9427ed. Accessed August 2020.

18. Mickan S, Wenke R, Weir K, Bialocerkowski A, Noble C. Strategies for research engagement of clinicians in allied health (STRETCH): a mixed methods research protocol. BMJ Open. 2017;7(9):e014876. doi: 10.1136/bmjopen-2016-014876

19. Matus J, Wenke R, Hughes I, Mickan S. Evaluation of the research capacity and culture of allied health professionals in a large regional public health service. J Multidiscip Healthc. 2019;12:83–96. doi: 10.2147/JMDH.S178696

20. Graneheim UH, Lundman B. Qualitative content analysis in nursing research: concepts, procedures, and measures to achieve trustworthiness. Nurse Educ Today. 2004;24(2):105–112. doi: 10.1016/j.nedt.2003.10.001

21. Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q. 2004;82(4):581–629. doi: 10.1111/j.0887-378X.2004.00325.x

22. Phang DTY, Rogers G, Hashem F, et al. Factors influencing junior doctor workplace engagement in research: an Australian study. Focus Health Prof Educ. 2020;21(1):13–28. doi: 10.11157/fohpe.v21i1.299

23. Del Mar C. Publishing research in Australian family physician. Aust Fam Physician. 2001;30:1094.

24. Paget SP, Lilischkis KJ, Morrow AM, Caldwell PHY. Embedding research in clinical practice: differences in attitudes to research participation among clinicians in a tertiary teaching hospital. Intern Med J. 2014;44(1):86–89. doi: 10.1111/imj.12330

25. Pager S, Holden L, Golenko X. Motivators, enablers, and barriers to building allied health research capacity. J Multidiscip Healthc. 2012;5:53–59. doi: 10.2147/JMDH.S27638

26. Holden L, Pager S, Golenko X, et al. Evaluating a team-based approach to research capacity building using a matched-pairs study design. BMC Fam Pract. 2012;13(1):16. doi: 10.1186/1471-2296-13-16

27. Mitchell GK, Tieman JJ, Shelby-James TM. Multidisciplinary care planning and teamwork in primary care. Med J Aust. 2008;188(8):S63. doi: 10.5694/j.1326-5377.2008.tb01747.x

28. Caminiti C, Iezzi E, Ghetti C, et al. A method for measuring individual research productivity in hospitals: development and feasibility. BMC Health Serv Res. 2015;15(1):468. doi: 10.1186/s12913-015-1130-7

29. DiDiodato G, DiDiodato JA, McKee AS. The research activities of Ontario’s large community acute care hospitals: a scoping review. BMC Health Serv Res. 2017;17(1):566. doi: 10.1186/s12913-017-2517-4

30. Prasad V, Goldstein JA, Wray KB. US news and world report cancer hospital rankings: do they reflect measures of research productivity? PLoS One. 2014;9(9):e107803. doi: 10.1371/journal.pone.0107803

31. Bateman EA, Teasell R. Publish or perish: research productivity during residency training in physical medicine and rehabilitation. Am J Phys Med Rehabil. 2019;98(12):1142–1146. doi: 10.1097/PHM.0000000000001299

32. Boudreaux ED, Higgins SE Jr, Reznik-Zellen R, Volturo G. Scholarly productivity and impact: developing a quantifiable, norm-based benchmark for academic emergency departments. Acad Emerg Med. 2016;23:S44.

33. Elliott ST, Lee ES. Surgical resident research productivity over 16 years. J Surg Res. 2009;153(1):148–151. doi: 10.1016/j.jss.2008.03.029

34. Gaught AM, Cleveland CA, Hill JJ 3rd. Publish or perish? Physician research productivity during residency training. Am J Phys Med Rehabil. 2013;92(8):710–714. doi: 10.1097/PHM.0b013e3182876197

35. Wang H, Chu MWA, Dubois L. Discrepancies in research productivity among Canadian surgical specialties. J Vasc Surg. 2019;70(4):e103. doi: 10.1016/j.jvs.2019.07.030

36. Skinner EH, Hough J, Wang YT, et al. Physiotherapy departments in Australian tertiary hospitals regularly participate in and disseminate research results despite a lack of allocated staff: a prospective cross-sectional survey. Physiother Theory Pract. 2014;31(3):200–206. doi: 10.3109/09593985.2014.982775

37. Pain T, Plummer D, Pighills AC, Harvey D. Comparison of research experience and support needs of rural versus regional allied health professionals. Aust J Rural Health. 2015;23(5):277–285. doi: 10.1111/ajr.12234

38. Sweeny A, van den Berg L, Hocking J, et al. A Queensland research support network in emergency healthcare: collaborating to build the research capacity of more clinicians in more locations. J Health Organ Manag. 2019;33(1):93–109. doi: 10.1108/JHOM-02-2018-0068

39. Rhaiem M. Measurement and determinants of academic research efficiency: a systematic review of the evidence. Scientometrics. 2017;110(2):581–615. doi: 10.1007/s11192-016-2173-1