Abstract

In response to questions regarding the scientific basis for mindfulness-based interventions (MBIs), we evaluated their empirical status by systematically reviewing meta-analyses of randomized controlled trials (RCTs). We searched six databases for effect sizes based on ≥4 trials that did not combine passive and active controls. Heterogeneity, moderators, tests of publication bias, risk of bias, and adverse effects were also extracted. Representative effect sizes based on the largest number of studies were identified across a wide range of populations, problems, interventions, comparisons, and outcomes (PICOS). A total of 160 effect sizes were reported in 44 meta-analyses (k=336 RCTs, N=30,483 participants). MBIs showed superiority to passive controls across most PICOS (ds=0.10–0.89). Effects were typically smaller and less often statistically significant when compared to active controls. MBIs were similar or superior to specific active controls and evidence-based treatments. Heterogeneity was typically moderate. Few consistent moderators were found. Results were generally robust to publication bias, although other important sources of bias were identified. Reporting of adverse effects was inconsistent. Statistical power may be lacking in meta-analyses, particularly for comparisons with active controls. As MBIs show promise across some PICOS, future RCTs and meta-analyses should build upon identified strengths and limitations of this literature.

Keywords: mindfulness, meditation, meta-analysis, evidence-based treatments

Mindfulness meditation has entered mainstream culture in the United States (US) and many other countries over the past several decades. Meditation instructions are published by the New York Times (Gelles, n.d.), and potential benefits are discussed by Fox News (Miller & Zaharna, 2018) and Al Jazeera (Breaking bad habits: Mindful addiction recovery, 2016). Mindfulness meditation has been embraced by business (Wieczner, 2016), schools (Magra, 2019), and health care providers (Mayo Clinic Staff, n.d.). Hundreds of smartphone applications offer mindfulness-related content (Mani et al., 2015). Data on utilization mirror this increased cultural visibility: the number of US adults meditating in the past 12 months more than tripled between 2012 and 2017 (4.1% to 14.2%; Clarke, Barnes, Black, Stussman, & Nahin, 2018).

The term mindfulness derives from the Pali word sati (or smrti in Sanskrit). The cultivation of sati is emphasized across Buddhist traditions, defined in part as the development of receptive, present-moment awareness¹ (Analayo, 2018; Goldstein, 2013). As has been acknowledged (Davidson & Kaszniak, 2015; Grossman & Van Dam, 2011; Van Dam et al., 2018), mindfulness has a variety of meanings in the scientific literature, with the term used to reference a mental trait, a spiritual path for cultivating well-being and relieving suffering, and a cognitive process or mental faculty that can be trained. Within the psychotherapeutic literature, mindfulness-based interventions (MBIs) often adopt Kabat-Zinn’s (1994) definition of mindfulness as the intentional self-regulation of attention to the present moment without judgment.

Mindfulness meditation was initially introduced in the Western biomedical context for the treatment of chronic pain (Kabat-Zinn, 1982). Since then, numerous interventions based on the cultivation of mindfulness through various forms of meditation practice have been developed and tested in randomized controlled trials (RCTs). The prototypical MBI, Mindfulness-Based Stress Reduction (MBSR; Kabat-Zinn, 2013), has been widely studied and, to some extent, disseminated in clinical and non-clinical populations (Bohlmeijer, Prenger, Taal, & Cuijpers, 2010; Chiesa & Serretti, 2009). Although mindfulness meditation was not originally developed to treat illness (Harrington & Dunne, 2015), MBIs have been used to treat various psychiatric disorders (e.g., Mindfulness-Based Cognitive Therapy for depression; Segal, Williams, & Teasdale, 2013) and physical health conditions (e.g., Mindfulness-Based Cancer Recovery; Carlson et al., 2013), and as a prevention strategy in the general population (e.g., university students; Galante et al., 2018). Extensive experimental research has examined the effects of MBIs, including large-scale RCTs (Kuyken et al., 2015; Segal et al., 2020). A growing number of meta-analyses have aggregated the effects of MBIs (Supplemental Materials Figure 1). Based on evidence derived from RCTs and meta-analyses, one MBI, mindfulness-based cognitive therapy (MBCT; Segal et al., 2013), is included in the United Kingdom’s National Institute for Health and Care Excellence (2009) guidelines for depression treatment and is listed as an evidence-based treatment for depression with strong research support by the American Psychological Association Society of Clinical Psychology (Goldberg & Segal, n.d.).

In tandem with growing popular and scientific interest surrounding mindfulness meditation and MBIs, there has been ongoing criticism of these approaches and their evidence base. A recent consensus statement from 15 researchers working in this area highlighted important conceptual issues related to how mindfulness has been defined and persistent methodological shortcomings within the empirical literature (Van Dam et al., 2018). Van Dam and colleagues argue that public consumers may be “harmed, misled, and disappointed” (p. 36) by the gap between popular media representations of mindfulness meditation and actual engagement with these practices and interventions. The authors (and more recently Baer, Crane, Miller, & Kuyken, 2019) also note the lack of acknowledgement of potential adverse effects. Other concerns raised with the MBI evidence base include an over-reporting of trials demonstrating statistically significant effects (Coronado-Montoya et al., 2016), limitations associated with a reliance on self-report measures (especially of mindfulness itself; Davidson & Kaszniak, 2015; Grossman, 2008), and a host of study design features that have largely not improved over time (e.g., small sample sizes, lack of active controls, lack of treatment fidelity assessment; Goldberg et al., 2017). Based on these concerns, some have questioned whether evidence actually supports beneficial effects of these practices and, if so, for whom (Farias, Wikholm, & Delmonte, 2016). Thus, it seems the question raised by Bishop (2002) almost two decades ago remains: what do we really know about MBIs?

Evaluating the Evidence Base for MBIs

The confluence of hundreds of RCTs testing MBIs (Strohmaier, in press) with ongoing criticism of the evidence base highlights the need for a comprehensive and systematic evaluation of this literature. Meta-analysis has become the gold standard method for quantitatively synthesizing experimental research within medicine, psychology, and many other disciplines (Higgins & Green, 2008). Indeed, meta-analyses have become an increasingly large proportion of the MBI literature. In 2019, 46 studies appeared in PubMed with the term “mindfulness” and the publication type “meta-analysis,” whereas only 149 studies appeared with the publication type “clinical trial” (Supplemental Materials Figure 1). The sheer number of RCTs and meta-analyses makes it difficult to draw firm conclusions. Moreover, meta-analyses of this literature have at times come to contrasting conclusions, even when evaluating theoretically similar constructs and presumably somewhat overlapping primary studies (e.g., prosocial effects of meditation; Donald et al., 2019; Kreplin, Farias, & Brazil, 2019). Given the volume of research in this area, a review of meta-analyses can provide a comprehensive depiction of the literature far beyond a single meta-analysis, while also identifying potential gaps and highlighting methodological trends to guide both future RCTs and meta-analyses. Systematic reviews of meta-analyses have been conducted to evaluate the empirical status of other commonly used psychological interventions (e.g., cognitive-behavioral therapy; Butler et al., 2006; Hofmann, Asnaani, Vonk, Sawyer, & Fang, 2012). To our knowledge, no such review exists for MBIs.

The current study aimed to systematically review meta-analyses of RCTs testing MBIs. The overall goal was to clarify strengths and weaknesses of both the primary RCTs and the meta-analyses themselves, highlighting areas of consistency and inconsistency. We hoped to assess the empirical status of MBIs using methods similar to those that have been used to evaluate other psychotherapeutic approaches (e.g., cognitive-behavioral therapy; Butler et al., 2006). Specifically, we aimed to catalogue and summarize (1) effect size estimates, (2) heterogeneity, (3) tests of moderation, (4) assessment of bias (publication bias and risk of bias), and (5) reports of adverse effects. Following recommendations from the Cochrane Collaboration, these features were examined across populations, problems, interventions, comparisons, and outcomes (i.e., PICOS; Higgins & Green, 2008). In addition, similar to recent evaluations of statistical power in neuroscience (Button et al., 2013), we also sought to assess the statistical power of meta-analyses conducted in this area. We hoped such a comprehensive review could provide guidance for both RCTs and meta-analyses in this area.

Method

Protocol and Registration

This systematic review was registered through the Open Science Framework (https://osf.io/eafy7/). We made four deviations from the protocol. First, we restricted our review to meta-analyses of RCTs, given that a reasonably large number of eligible meta-analyses were available to allow this more stringent requirement. Second, we did not code meta-analyst allegiance or meta-analysis quality, as we were unable to find established methods for doing so that we felt would meaningfully add to the review. Third, we reported representative effect sizes by PICOS along with the range of effect sizes (rather than the range alone) in order to estimate the effects of MBIs more accurately. Fourth, we reported additional data beyond effect sizes (heterogeneity, moderators, publication bias, risk of bias, adverse effects, statistical power). We followed the PRISMA guidelines (Moher et al., 2009).

Eligibility Criteria

We aimed to include all meta-analyses examining the effects of MBIs tested through RCTs across the range of PICOS subcategories (with the exception of S [i.e., study design], which was restricted to RCTs). Eligible studies had to report effect sizes in standardized units that could be converted to Cohen’s d (e.g., odds ratio, correlation coefficient; Borenstein et al., 2009), along with a 95% confidence interval. In terms of PICOS subcategories, no restrictions were placed on the population or problem (P) being studied. Interventions (I) were restricted to MBIs. We followed the definitional boundaries of MBIs laid out by Crane et al. (2017) and implemented by Dunning et al. (2019), which characterize MBIs as training that cultivates a present-moment focus through engagement in sustained meditation practice (i.e., not a single mindfulness induction; Leyland, Rowse, & Emerson, 2018). Like Dunning et al., we required mindfulness practice to be the central component of the intervention (i.e., unlike Acceptance and Commitment Therapy [ACT; Hayes, Strosahl, & Wilson, 1999] or Dialectical Behavior Therapy [DBT; Linehan, 1993], which would be considered “mindfulness-informed”; Crane et al., 2017, p. 991). Beyond these requirements, no restrictions were placed on the specific type of MBI (i.e., not restricted to MBSR or MBCT) or the delivery format (e.g., training could be delivered through mobile health technology; Spijkerman, Pots, & Bohlmeijer, 2016).

For comparisons (C), meta-analyses including both passive (e.g., waitlist) and active (e.g., other therapies) control conditions were eligible. However, meta-analyses were excluded if they only reported results that combined passive and active controls, as the inferences that each type of comparison allows differ markedly. Passive controls estimate intervention effects relative to the passage of time and related potential confounds (e.g., regression to the mean, natural recovery), whereas active controls additionally estimate intervention effects beyond non-specific treatment ingredients (e.g., meeting with an instructor or group, expectancy; Wampold & Imel, 2015). Robust meta-analytic evidence indicates that the strength of the comparison group moderates the magnitude of MBI effects (Goldberg et al., 2018). No restrictions were placed on outcome (O) type.

To characterize the evidence base across PICOS, results were summarized by PICOS subcategory. To improve the precision of our results, we adopted the Agency for Healthcare Research and Quality recommendation of requiring at least four primary studies per effect size (Fu et al., 2011). No restriction was placed on the publication status of the meta-analysis (e.g., dissertations were eligible), and results were not restricted by publication language.

Information Sources

We searched six databases (PubMed, Scopus, Web of Science, Cochrane Database of Systematic Reviews, CINAHL, PsycINFO) for meta-analyses published up to September 12, 2019.

Search

We used the search terms “mindful*” and “meta-analy*.” Search terms and fields searched for each of the six databases are included in Supplemental Materials Table 1.

Study Selection

Titles and/or abstracts of potentially eligible studies were independently coded by the first and second authors. Disagreements were discussed until a consensus was reached.

Data Collection Process

Standardized spreadsheets were developed for coding all study-level data. Two coders with doctoral degrees in psychology and experience conducting meta-analyses coded all study data. Data were extracted independently by the first and third authors. Inter-rater reliabilities were in the good to excellent range (i.e., κs and ICCs > 0.60; Cicchetti, 1994). When a meta-analysis was otherwise eligible but the data necessary for computing a standardized effect size and its confidence interval were not available, we contacted the study authors.
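The reliability threshold above can be made concrete. As a sketch only, the Python below computes Cohen's κ for two coders assigning categorical codes (for example, comparison type) and maps a coefficient onto Cicchetti's (1994) bands (below .40 poor, .40 to .59 fair, .60 to .74 good, .75 and above excellent). The function names and the coder ratings are hypothetical illustrations, not the authors' coding data, and ICCs for continuous codes are not shown.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning nominal codes to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items both raters coded identically.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: products of the raters' marginal proportions, summed over categories.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    p_chance = sum(counts_a[c] * counts_b[c] for c in categories) / n ** 2
    if p_chance == 1:
        return 1.0  # both raters used one identical category; kappa is undefined, report 1
    return (p_observed - p_chance) / (1 - p_chance)


def cicchetti_label(coefficient):
    """Cicchetti (1994) qualitative bands for kappa or ICC values."""
    if coefficient < 0.40:
        return "poor"
    if coefficient < 0.60:
        return "fair"
    if coefficient < 0.75:
        return "good"
    return "excellent"


# Hypothetical comparison-type codes from two coders for ten effect sizes.
coder_1 = ["passive", "active", "active", "passive", "active",
           "passive", "passive", "active", "active", "passive"]
coder_2 = ["passive", "active", "active", "passive", "active",
           "passive", "passive", "active", "passive", "passive"]
kappa = cohens_kappa(coder_1, coder_2)
```

Here the coders agree on 9 of 10 items with chance agreement of 0.50, giving κ = 0.80, which falls in the excellent band.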

Data Items

All eligible effect sizes (i.e., based on ≥4 RCTs testing MBIs versus a passive or active control condition) were extracted. To evaluate the strength of the evidence, for each effect size we also coded the associated confidence interval, estimate of heterogeneity (i.e., I²), number of studies and participants represented, and results of publication bias tests (i.e., influence of potentially unpublished studies). To evaluate potential moderating variables, for each effect size we also coded results of moderation tests (i.e., study-level characteristics predicting effect sizes; Borenstein et al., 2009). To characterize effects based on PICOS, for each effect size we coded the sample population based on demographic characteristics (e.g., children and adolescents) and/or problem (e.g., breast cancer), the assessment timepoint (i.e., post-intervention or follow-up), the intervention type, the comparison type, and the outcome assessed (e.g., pain intensity, depression). Comparison type was coded as passive or active, with active comparisons further disaggregated into other therapies (i.e., specific active controls; Goyal et al., 2014) and evidence-based treatments (i.e., cognitive-behavioral therapy, antidepressants; Goldberg et al., 2018).

Meta-analysis-level characteristics were also coded, including the focus of the review (i.e., PICOS), MBI type, sample population and/or problem, and, when available, risk of bias (e.g., selective reporting, blinding; Higgins & Green, 2008), and reports of adverse events.

Summary Measures

The primary effect size measure was the standardized mean difference (i.e., Cohen’s [1988] d). Where Cohen’s d had been adjusted for small-sample bias (i.e., yielding Hedges’ g; Borenstein et al., 2009), the adjusted effect size was used. Alternative standardized effect sizes (e.g., odds ratio, correlation coefficient) were converted into Cohen’s d using standard methods (Borenstein et al., 2009). I² was used as the metric of heterogeneity, reflecting the proportion of observed variance in effects that is attributable to true between-study differences rather than sampling error (Borenstein et al., 2009). The magnitudes of Cohen’s d and I² were interpreted using established guidelines (Cohen, 1988; Higgins, Thompson, Deeks, & Altman, 2003). As discussed below, second-order meta-analysis (Schmidt & Oh, 2013) was not possible due to the overlap of primary studies (i.e., first-order meta-analyses were not independent). However, each extracted effect size was itself based on non-overlapping primary studies (i.e., per standard meta-analytic methods, primary studies are not duplicated within a meta-analysis; Borenstein et al., 2009).
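The conversions and heterogeneity statistic described above rest on standard formulas (Borenstein et al., 2009). The Python sketch below gathers them in one place, assuming the logit method for odds ratios: d from a log odds ratio, d from a correlation, the Hedges' g small-sample correction, and I² computed from Cochran's Q. The function names are hypothetical, and the cut-points in `magnitude_label` (0.2, 0.5, 0.8) are an assumption chosen to mirror how effect sizes are described in the Results, not code used by the authors.

```python
import math


def d_from_log_odds_ratio(log_or):
    """Logit-method conversion: d = ln(OR) * sqrt(3) / pi."""
    return log_or * math.sqrt(3) / math.pi


def d_from_correlation(r):
    """Correlation to d: d = 2r / sqrt(1 - r^2)."""
    if not -1 < r < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    return 2 * r / math.sqrt(1 - r ** 2)


def hedges_g(d, n1, n2):
    """Small-sample correction g = J * d, with J = 1 - 3 / (4 * df - 1), df = n1 + n2 - 2."""
    df = n1 + n2 - 2
    return (1 - 3 / (4 * df - 1)) * d


def i_squared(q, k):
    """I^2 in percent: (Q - df) / Q with df = k - 1, truncated at zero when Q < df."""
    df = k - 1
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100


def magnitude_label(d):
    """Verbal label for |d|; cut-points are an assumption modeled on Cohen (1988)."""
    size = abs(d)
    if size < 0.2:
        return "very small"
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "moderate"
    return "large"
```

For example, an odds ratio of 2 converts to d ≈ 0.38, and Q = 20 across 11 studies gives I² = 50%.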

Similar to procedures used by Button et al. (2013) to evaluate primary studies in neuroscience, we examined the statistical power of the included meta-analytic effect size estimates using standard formulas for a random-effects meta-analysis (Valentine, Pigott, & Rothstein, 2010). Specifically, we determined the observed statistical power for each effect size based on the size of the effect, the sample size (number of studies and number of participants), and the degree of heterogeneity.
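The text does not spell out the power formulas. A minimal sketch, assuming the general approach described by Valentine, Pigott, and Rothstein (2010): the typical within-study variance of d is approximated from the average study size (assuming two equal arms), the between-study variance τ² is backed out of the reported I², and power is the two-tailed probability that the random-effects mean exceeds the critical z. The even split of participants across studies and arms, and the function name, are assumptions; estimates with a missing participant count (NA in the tables) would need separate handling.

```python
import math
from statistics import NormalDist


def random_effects_power(d, k, n_total, i2_percent, alpha=0.05):
    """Approximate two-tailed power of a random-effects mean effect size.

    Assumptions (illustrative, not necessarily the authors' exact inputs):
    - participants are split evenly across the k studies and across two arms;
    - tau^2 is recovered from I^2 via I^2 = tau^2 / (tau^2 + v).
    """
    n_per_study = n_total / k
    n_per_arm = n_per_study / 2
    # Typical within-study variance of d for two equal arms:
    # (n1 + n2) / (n1 * n2) + d^2 / (2 * (n1 + n2)).
    v = n_per_study / (n_per_arm * n_per_arm) + d ** 2 / (2 * n_per_study)
    i2 = i2_percent / 100
    if not 0 <= i2 < 1:
        raise ValueError("I^2 must be in [0, 100)")
    tau2 = v * i2 / (1 - i2)
    # Variance of the random-effects mean is (v + tau^2) / k.
    lam = d / math.sqrt((v + tau2) / k)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    # Probability of rejecting H0 in either tail.
    return (1 - NormalDist().cdf(z_crit - lam)) + NormalDist().cdf(-z_crit - lam)
```

Under these assumptions, the older-adults estimate in Table 1 (d = 0.74, k = 4, N = 330, I² = 92%) yields power of roughly 0.45, whereas the adult estimate (d = 0.55, k = 89, N = 5,748, I² = 58%) yields power close to 1; these are outputs of the sketch, not results reported by the review.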

Synthesis of Results

We considered a variety of approaches for synthesizing effect sizes and other data extracted from the eligible studies. As noted by Cooper and Koenka (2012), no definitive, established method for this task exists. While some previous reviews have conducted a second-order meta-analysis by quantitatively combining results across included meta-analyses (e.g., Gotink et al., 2015; Wilson & Lipsey, 2001), this approach has the substantial limitation of over-representing studies published earlier (which may be more likely to appear in multiple meta-analyses) and over-estimating the precision of the observed effects (because individual studies can be counted more than once). Second-order meta-analysis has been recommended for literatures that are entirely distinct (e.g., validity of personality measures across countries; Schmidt & Oh, 2013). Moreover, we were interested not only in evaluating MBIs but also in evaluating the meta-analytic literature itself, examining patterns in findings and methodologies across often independently conducted meta-analyses (in some ways akin to replications across research labs; Open Science Collaboration, 2012). To balance representing the breadth and variability within the meta-analytic literature with providing interpretable estimates of the magnitude of effects, we report both the range of effect sizes and a representative effect size estimate for each PICOS subcategory. The representative estimate was the one based on the largest number of studies, which in principle should provide the most precise and reliable effect size for each PICOS subcategory.²
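The selection rule for the representative estimate can be expressed compactly. The Python sketch below assumes each extracted estimate is stored as a record with its PICOS subcategory, number of studies k, participants n, and effect size d; it applies the ≥4-study eligibility rule from the Method, reports the range, and picks the estimate with the largest k. The record fields are hypothetical, the tie-break on n is a hypothetical choice the text does not specify, and the example reviews are toy values, not data from the included meta-analyses.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class EffectSize:
    """One extracted meta-analytic estimate (fields are hypothetical)."""
    review: str          # meta-analysis label
    subcategory: str     # PICOS subcategory, e.g. "Depression"
    k: int               # number of primary RCTs behind the estimate
    n: Optional[int]     # participants; None when not reported (NA)
    d: float             # standardized mean difference


def summarize_subcategory(
    effects: List[EffectSize], min_k: int = 4
) -> Optional[Tuple[float, float, EffectSize]]:
    """Return (min d, max d, representative estimate) for one PICOS subcategory."""
    # Eligibility: only estimates pooling at least min_k RCTs are considered.
    eligible = [e for e in effects if e.k >= min_k]
    if not eligible:
        return None
    ds = [e.d for e in eligible]
    # Representative estimate: the one based on the most primary studies.
    # Ties are broken by larger n, a hypothetical rule not stated in the text.
    representative = max(eligible, key=lambda e: (e.k, e.n or 0))
    return min(ds), max(ds), representative


# Toy inputs (not data from the included meta-analyses).
toy = [
    EffectSize("Review A", "Depression", 12, 800, 0.70),
    EffectSize("Review B", "Depression", 30, 2000, 0.59),
    EffectSize("Review C", "Depression", 3, 150, 1.30),  # excluded: k < 4
    EffectSize("Review D", "Depression", 8, None, 0.49),
]
low, high, rep = summarize_subcategory(toy)
```

With these toy records, Review C is dropped by the ≥4-study rule, the range is 0.49 to 0.70, and Review B (k = 30) is selected as representative.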

The specific PICOS subcategories examined were determined using an inductive approach based on the eligible meta-analyses. We aimed to maximize representation of the included meta-analyses while avoiding an overwhelming number of subcategories. Thus, we examined six population types, 11 problem types, five intervention types, and eight outcome types. Results were subdivided by comparison type (passive, active, specific active, and evidence-based treatment).

Estimates of heterogeneity were reported for PICOS subcategories and summarized for passive and active controls. Results of moderator tests, publication bias, risk of bias, and adverse effects reporting were summarized across the included meta-analyses (i.e., across PICOS). Statistical power was summarized for passive and active controls separately.

Results

Study Selection

A total of 2,037 citations were retrieved and evaluated. After application of the exclusion criteria (see PRISMA flow diagram in Supplemental Materials Figure 2), 44 meta-analyses with 160 effect size estimates were retained for analysis. Thirty-nine potentially eligible meta-analyses were excluded because they combined passive and active controls. A total of 336 unique primary studies and 30,483 participants were represented across the 44 meta-analyses. As shown in Supplemental Materials Figure 3, there was considerable overlap in the primary studies included across meta-analyses, making second-order meta-analysis infeasible. The included meta-analyses were published between 2010 and 2019.

Study Characteristics

All meta-analysis-level and effect size-level data are shown in Supplemental Materials Tables 2 and 3. Meta-analyses included an average of 17.68 studies (SD = 25.14, range = 4 to 142) and 1,657 participants (SD = 2,203, range = 115 to 12,005). The majority (56.8%) of the included meta-analyses were focused on a specific problem, 15.9% on a particular population, 31.8% on a particular intervention (e.g., MBCT) or intervention delivery format (e.g., mobile health), 15.9% on a particular comparison, and 20.5% on a particular outcome. Note that a single meta-analysis could have multiple areas of focus (e.g., effects of MBCT in the prevention of depressive relapse has an intervention and problem focus; Kuyken et al., 2016). For both comparisons with active and passive controls, the most common population was adults (k = 39 meta-analyses), the most common problem was psychiatric conditions (k = 20), the most common intervention included more than one type of MBI (k = 23), and the most common outcome was psychiatric symptoms (k = 33).

Of the 160 effect sizes, most were post-treatment effects (k = 118), with 42 representing effects at follow-up. For meta-analyses including follow-up effect sizes, when reported, the average length of follow-up was 7.19 months (SD = 3.30, range = 2.25 to 12.00). Approximately half of the effect sizes were from comparisons with passive controls (k = 82), with the remainder from comparisons with active controls (k = 78). Of the active controls, 46 comparisons were with specific active controls and 11 were with evidence-based treatments.

Primary studies included across the 44 meta-analyses had an average sample size of 90.72 (SD = 83.99, range = 13 to 551). The majority of primary studies were conducted in North America (52.7%), with 28.0% in Europe, 8.1% in Asia, 5.4% in Oceania (e.g., Australia or New Zealand), 4.8% in the Middle East, 0.6% in multiple regions, and 0.3% in Africa.

Results of Individual Studies

For each included meta-analysis, effect size estimates and confidence intervals are reported in Supplemental Materials Table 3. All included PICOS and their associated subcategories are reported in Supplemental Materials Table 4.

Synthesis of Results

Effect sizes by populations, problems, interventions, comparisons, and outcomes (PICOS).

Effect sizes separated by PICOS and timepoint are reported in Tables 1 to 4, Figures 1 to 3, Supplemental Materials Tables 5 to 8, and Supplemental Materials Figures 1 to 4. As shown in Supplemental Materials Figure 4, there was wide variability across PICOS subcategories in the number of available meta-analyses (range = 1 to 26) and effect size estimates (range = 1 to 53). Likewise, for the representative effect sizes (i.e., those based on the largest number of studies for each PICOS subcategory), there was wide variability in the number of primary studies (range = 4 to 89) and the sample size from these primary studies (range = 115 to 5,748; Tables 1 to 4).

Table 1.

Post-treatment comparisons with passive controls across PICOS

| PICOS | Subcategory | k_all | ES_all | ES_all min | ES_all max | Rep. study | k_rep | n_rep | ES_rep | 95% CI_rep | I²_rep |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Population | Children | 1 | 2 | 0.10 | 0.38 | Dunning (2019) | 11 | 1501 | 0.10 | 0.04, 0.16 | 67.99 |
| Population | Adults | 26 | 53 | 0.21 | 1.27 | Goldberg (2018) | 89 | 5748 | 0.55 | 0.47, 0.63 | 58 |
| Population | Older adults | 1 | 1 | 0.74 | 0.74 | Li (2019) | 4 | 330 | 0.74 | −0.20, 1.68 | 92 |
| Population | Employees | 1 | 1 | 0.43 | 0.43 | Phillips (2019) | 4 | NA | 0.43 | 0.20, 0.66 | 17.14 |
| Population | Healthcare | 1 | 1 | 0.39 | 0.39 | Chen (2019) | 4 | 624 | 0.39 | 0.09, 0.68 | 41 |
| Population | Students | 2 | 2 | 0.39 | 0.49 | Halladay (2019) | 20 | 1266 | 0.49 | 0.30, 0.68 | 59 |
| Problem | Psychiatric | 11 | 22 | 0.24 | 1.27 | Goldberg (2018) | 89 | 5748 | 0.55 | 0.47, 0.63 | 58 |
| Problem | Physical | 13 | 32 | 0.21 | 1.13 | Goldberg (2018) | 24 | 1534 | 0.45 | 0.30, 0.60 | 46 |
| Problem | Cancer | 6 | 20 | 0.21 | 1.13 | Cillessen (2019) | 20 | 2346 | 0.40 | 0.29, 0.52 | 38.9 |
| Problem | Pain | 5 | 9 | 0.24 | 0.78 | Goldberg (2018) | 24 | 1534 | 0.45 | 0.30, 0.60 | 46 |
| Problem | Weight/eating | 1 | 1 | 0.79 | 0.79 | Goldberg (2018) | 5 | 226 | 0.79 | 0.44, 1.15 | 82 |
| Problem | Psychotic | 1 | 1 | 0.50 | 0.50 | Goldberg (2018) | 7 | 456 | 0.50 | 0.23, 0.76 | 46 |
| Problem | Anxiety | 1 | 1 | 0.89 | 0.89 | Goldberg (2018) | 8 | 472 | 0.89 | 0.62, 1.17 | 81 |
| Problem | Depression | 4 | 4 | 0.49 | 1.27 | Goldberg (2018) | 30 | 2369 | 0.59 | 0.46, 0.73 | 35 |
| Problem | MDD relapse | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Problem | Substance use | 1 | 1 | 0.35 | 0.35 | Goldberg (2018) | 5 | 149 | 0.35 | −0.06, 0.76 | 69 |
| Problem | Smoking | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Interv | Various MBIs | 17 | 28 | 0.08 | 1.27 | Goldberg (2018) | 89 | 5748 | 0.55 | 0.47, 0.63 | 58 |
| Interv | MBSR | 7 | 16 | 0.29 | 1.05 | Teleki (2010) | 12 | 613 | 0.54 | 0.33, 0.75 | NA |
| Interv | MBCT | 2 | 2 | 0.49 | 0.76 | Lenz (2016) | 16 | NA | 0.76 | 0.56, 0.95 | 51.08 |
| Interv | MBSR/MBCT | 12 | 29 | 0.21 | 1.05 | Lenz (2016) | 16 | NA | 0.76 | 0.56, 0.95 | 51.08 |
| Interv | mHealth | 2 | 2 | 0.43 | 0.54 | Spijkerman (2016) | 7 | 1174 | 0.54 | 0.27, 0.82 | 85.18 |
| Outcome | Psych sx | 20 | 36 | 0.08 | 1.27 | Goldberg (2018) | 89 | 5748 | 0.55 | 0.47, 0.63 | 58 |
| Outcome | Stress | 3 | 3 | 0.33 | 0.54 | Cillessen (2019) | 20 | 2346 | 0.40 | 0.29, 0.52 | 38.9 |
| Outcome | Phys sx | 7 | 9 | 0.23 | 0.78 | Goldberg (2018) | 24 | 1534 | 0.45 | 0.30, 0.60 | 46 |
| Outcome | Physiology | 1 | 1 | 0.48 | 0.48 | Sanada (2016) | 4 | 115 | 0.48 | −0.39, 1.35 | 79 |
| Outcome | Well-being | 1 | 1 | 0.21 | 0.21 | Haller (2017) | 7 | 958 | 0.21 | 0.04, 0.39 | 41 |
| Outcome | Mindfulness | 5 | 5 | 0.24 | 0.52 | Goldberg (2019) | 25 | 1415 | 0.52 | 0.40, 0.64 | 17.45 |
| Outcome | Objective | 1 | 1 | 0.48 | 0.48 | Sanada (2016) | 4 | 115 | 0.48 | −0.39, 1.35 | 79 |
| Outcome | Sleep | 2 | 2 | 0.23 | 0.38 | Haller (2017) | 4 | 504 | 0.23 | 0.05, 0.40 | 0 |

Note: PICOS = populations, problems, interventions, comparisons, outcomes, and study design; Subcategory = PICOS subcategory; k_all = number of meta-analyses providing an effect size for the PICOS subcategory; ES_all = number of effect sizes for the PICOS subcategory; ES_all min = minimum effect size for the subcategory; ES_all max = maximum effect size for the subcategory; Rep. study = meta-analysis that included the effect size based on the largest number of studies for the PICOS subcategory; k_rep = number of studies in the representative effect size; n_rep = number of participants for the representative effect size; ES_rep = representative effect size; 95% CI_rep = confidence interval for the representative effect size; I²_rep = heterogeneity (%) for the representative effect size; NA = not available; sx = symptoms; Interv = intervention; MDD = major depressive disorder; MBI = mindfulness-based intervention; MBSR = mindfulness-based stress reduction; MBCT = mindfulness-based cognitive therapy; mHealth = mobile health; Phys = physical; Psych = psychiatric.

Table 4.

Follow-up comparisons with active controls across PICOS

| PICOS | Subcategory | k_all | ES_all | ES_all min | ES_all max | Rep. study | k_rep | n_rep | ES_rep | 95% CI_rep | I²_rep |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Population | Children | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Population | Adults | 7 | 18 | −0.14 | 0.52 | Goldberg (2018) | 29 | 3810 | 0.29 | 0.13, 0.45 | 72 |
| Population | Older adults | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Population | Employees | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Population | Healthcare | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Population | Students | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Problem | Psychiatric | 6 | 16 | −0.14 | 0.52 | Goldberg (2018) | 29 | 3810 | 0.29 | 0.13, 0.45 | 72 |
| Problem | Physical | 2 | 3 | 0.01 | 0.18 | Goldberg (2018) | 8 | 946 | 0.18 | −0.07, 0.44 | 51 |
| Problem | Cancer | 1 | 1 | 0.01 | 0.01 | Cillessen (2019) | 7 | 772 | 0.01 | −0.14, 0.15 | 0 |
| Problem | Pain | 1 | 1 | 0.18 | 0.18 | Goldberg (2018) | 8 | 946 | 0.18 | −0.07, 0.44 | 51 |
| Problem | Weight/eating | 1 | 1 | 0.18 | 0.18 | Goldberg (2018) | 5 | 437 | 0.18 | −0.16, 0.53 | 74 |
| Problem | Psychotic | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Problem | Anxiety | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Problem | Depression | 4 | 6 | 0.04 | 0.35 | Goldberg (2018) | 7 | 1064 | 0.04 | −0.13, 0.20 | 0 |
| Problem | MDD relapse | 1 | 2 | 0.16 | 0.17 | Kuyken (2016) | 5 | 892 | 0.16 | 0.01, 0.31 | 0 |
| Problem | Substance use | 1 | 1 | 0.38 | 0.38 | Goldberg (2018) | 4 | 900 | 0.38 | 0.00, 0.76 | 73 |
| Problem | Smoking | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Interv | Various MBIs | 5 | 15 | −0.14 | 0.52 | Goldberg (2018) | 29 | 3810 | 0.29 | 0.13, 0.45 | 72 |
| Interv | MBSR | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Interv | MBCT | 3 | 4 | 0.16 | 0.26 | Lenz (2016) | 7 | NA | 0.16 | 0.01, 0.30 | 0 |
| Interv | MBSR/MBCT | 3 | 4 | 0.16 | 0.26 | Lenz (2016) | 7 | NA | 0.16 | 0.01, 0.30 | 0 |
| Interv | mHealth | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Outcome | Psych sx | 8 | 17 | −0.14 | 0.52 | Goldberg (2018) | 29 | 3810 | 0.29 | 0.13, 0.45 | 72 |
| Outcome | Stress | 1 | 1 | 0.01 | 0.01 | Cillessen (2019) | 7 | 772 | 0.01 | −0.14, 0.15 | 0 |
| Outcome | Phys sx | 2 | 2 | −0.14 | 0.18 | Goldberg (2018) | 8 | 946 | 0.18 | −0.07, 0.44 | 51 |
| Outcome | Physiology | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Outcome | Well-being | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Outcome | Mindfulness | 1 | 1 | 0.10 | 0.10 | Goldberg (2019) | 13 | 1430 | 0.10 | −0.08, 0.28 | 58.54 |
| Outcome | Objective | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Outcome | Sleep | 1 | 1 | −0.14 | −0.14 | Rusch (2019) | 4 | 527 | −0.14 | −0.62, 0.34 | 84 |

Note: PICOS = populations, problems, interventions, comparisons, outcomes, and study design; Subcategory = PICOS subcategory; k_all = number of meta-analyses providing an effect size for the PICOS subcategory; ES_all = number of effect sizes for the PICOS subcategory; ES_all min = minimum effect size for the subcategory; ES_all max = maximum effect size for the subcategory; Rep. study = meta-analysis that included the effect size based on the largest number of studies for the PICOS subcategory; k_rep = number of studies in the representative effect size; n_rep = number of participants for the representative effect size; ES_rep = representative effect size; 95% CI_rep = confidence interval for the representative effect size; I²_rep = heterogeneity (%) for the representative effect size; NA = not available; sx = symptoms; Interv = intervention; MDD = major depressive disorder; MBI = mindfulness-based intervention; MBSR = mindfulness-based stress reduction; MBCT = mindfulness-based cognitive therapy; mHealth = mobile health; Phys = physical; Psych = psychiatric.

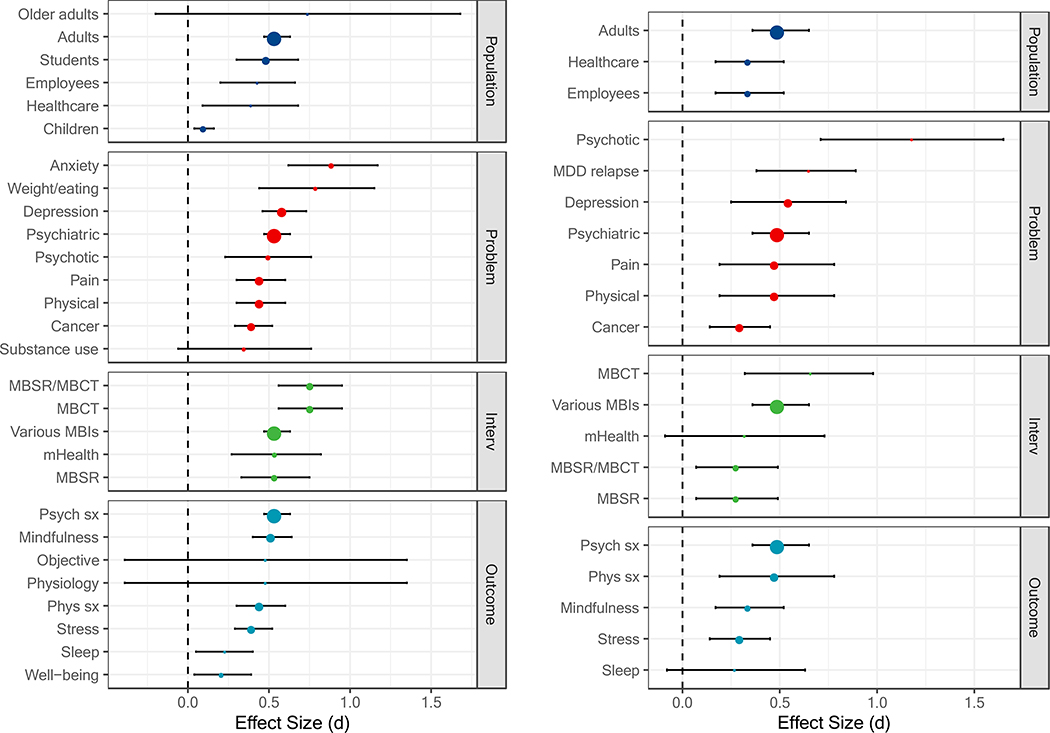

Figure 1.

Comparisons with passive controls at post-treatment (left panel) and follow-up (right panel) based on the largest number of studies. The size of each point is relative to the number of primary studies it represents. MBSR = mindfulness-based stress reduction; MBCT = mindfulness-based cognitive therapy; mHealth = mobile health; Psych sx = psychiatric symptoms; Phys = physical symptoms; MDD = major depressive disorder; Interv = intervention.

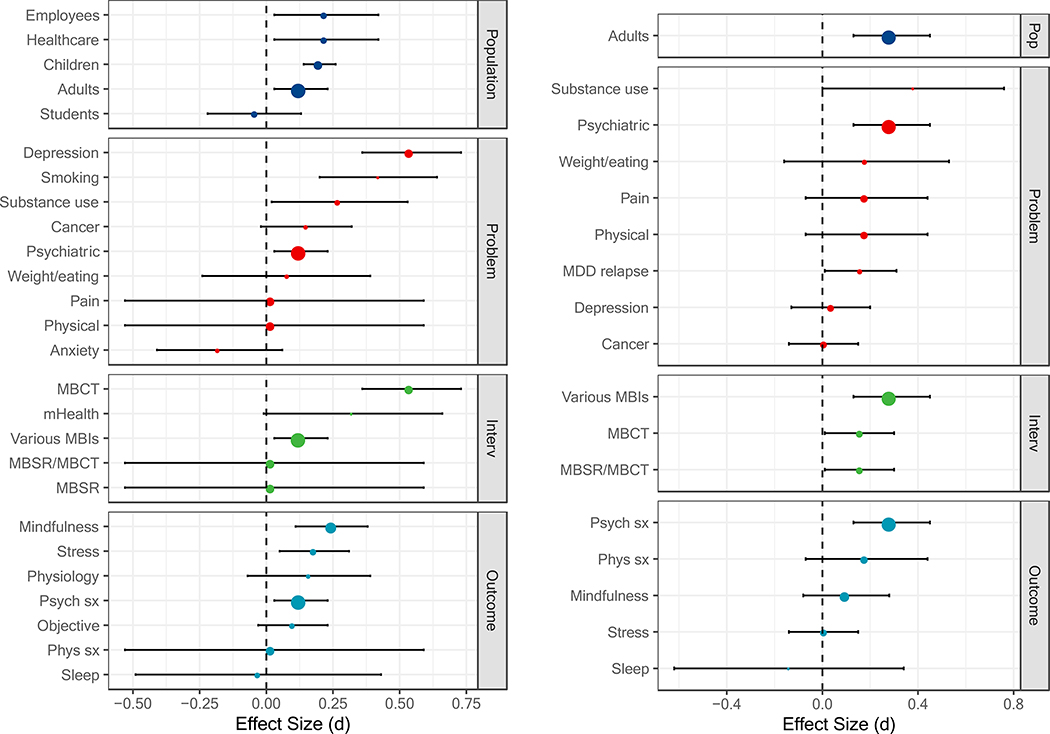

Figure 3.

Comparisons with active controls at post-treatment (left panel) and follow-up (right panel) based on the largest number of studies. The size of each point is relative to the number of primary studies it represents. MBSR = mindfulness-based stress reduction; MBCT = mindfulness-based cognitive therapy; mHealth = mobile health; Psych sx = psychiatric symptoms; Phys = physical symptoms; MDD = major depressive disorder; Pop = population; Interv = intervention.

Comparison with passive controls.

Post-treatment.

Representative effect sizes indicated that MBIs, on the whole, compared favorably with passive controls across a wide range of PICOS, with some exceptions (Table 1; Figure 1). MBIs showed superiority to passive controls for children and adolescents, healthcare professionals/trainees, employees, students (i.e., post-secondary or allied healthcare students), and adults. Statistically significant meta-analytic effect sizes ranged from very small (d = 0.10, for children and adolescents) to moderate (d = 0.55, for adults). Although largest in magnitude (d = 0.74, 95% confidence interval [CI] [−0.20, 1.68]), the effect size for older adults did not differ from zero and was based on a small number of studies (k = 4; Table 1). MBIs showed superiority to passive controls across most problems assessed. Statistically significant effect sizes ranged from small (d = 0.40, for cancer) to large (d = 0.89, for anxiety disorders). MBIs did not differ from passive controls for substance use disorders (d = 0.35, [−0.06, 0.76]). MBIs showed superiority to passive controls across all MBI types (ds = 0.54 to 0.76). MBIs showed superiority to passive controls for most outcome types, with the exception of objective measures and physiological measures (d = 0.48, [−0.39, 1.35]). Statistically significant meta-analytic effect sizes ranged from small (d = 0.21, for well-being) to moderate (d = 0.55, for psychiatric symptoms).

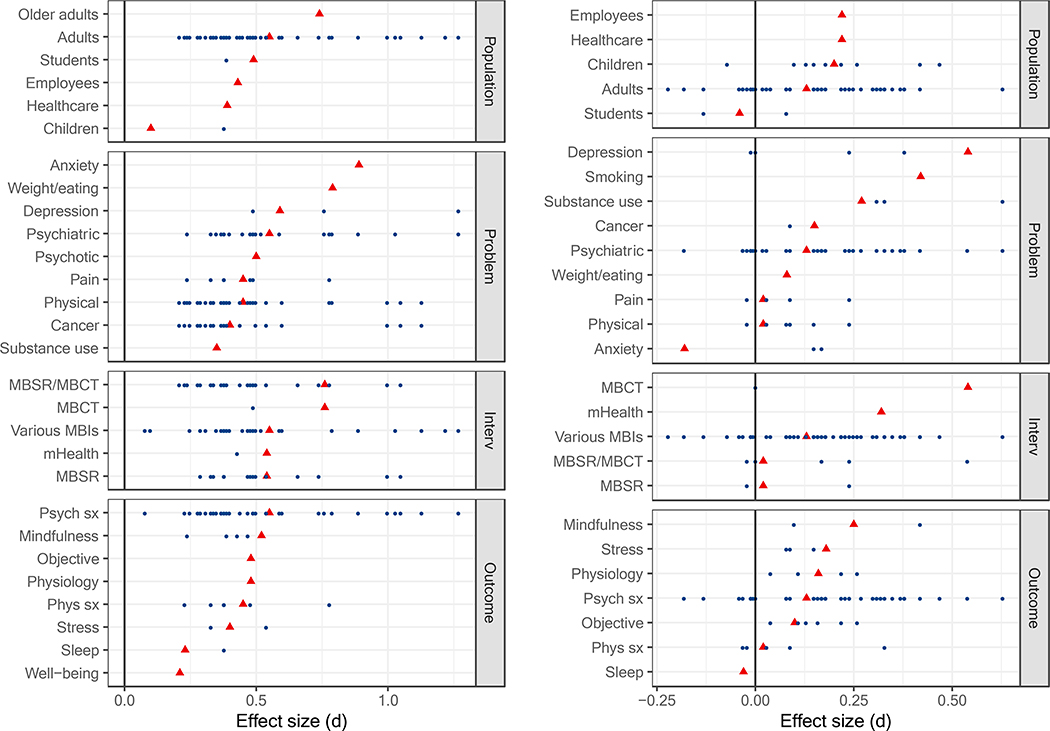

Figure 2 displays the range of meta-analytic effect size estimates across PICOS. As can be seen, some PICOS subcategories were much more densely represented by the included meta-analyses than others. When multiple meta-analytic estimates were available, the representative effect size tended to occur toward the middle of the distribution. One exception was the effect size for mindfulness, which appeared at the high end.

Figure 2.

All meta-analytic effect size estimates for comparisons with passive controls (left panel) and active controls (right panel) at post-treatment. The representative estimate for each PICOS (i.e., based on the largest number of studies) is displayed as a red triangle. MBSR = mindfulness-based stress reduction; MBCT = mindfulness-based cognitive therapy; mHealth = mobile health; Psych sx = psychiatric symptoms; Phys = physical symptoms; Interv = intervention.
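The selection rule used throughout (for each PICOS subcategory, take the estimate pooled over the largest number of RCTs, and read it as statistically significant when its 95% CI excludes zero) can be sketched in Python as follows. This is a minimal illustration, not the authors' code: the `Estimate` record and helper names are hypothetical, every candidate other than the Goldberg (2018) row from Table 2 is invented, and the tie-breaking behavior is an assumption because no tie-breaking rule is described.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Estimate:
    """One meta-analytic effect size reported for a PICOS subcategory."""
    source: str     # meta-analysis that reported the estimate
    k: int          # number of RCTs pooled in this estimate
    d: float        # standardized mean difference
    ci_low: float   # lower bound of the 95% CI
    ci_high: float  # upper bound of the 95% CI


def representative(estimates: list[Estimate]) -> Estimate:
    """Return the estimate pooled over the largest number of RCTs.

    max() keeps the first maximum it meets, so ties are broken by list
    order; this tie-breaking rule is an assumption of the sketch.
    """
    return max(estimates, key=lambda e: e.k)


def is_significant(e: Estimate) -> bool:
    """Treat an estimate as significant when its 95% CI excludes zero."""
    return e.ci_low > 0 or e.ci_high < 0


# Hypothetical candidates for one subcategory; only the Goldberg (2018)
# row mirrors a value reported in Table 2 (adults, follow-up).
candidates = [
    Estimate("Hypothetical A", 9, 0.19, 0.02, 0.36),
    Estimate("Goldberg (2018)", 37, 0.50, 0.36, 0.65),
    Estimate("Hypothetical B", 6, 1.18, 0.40, 1.96),
]

rep = representative(candidates)
print(rep.source, rep.k, rep.d, is_significant(rep))  # → Goldberg (2018) 37 0.5 True
```

Because the rule keys only on k, a representative estimate can sit anywhere in the distribution of candidate values, which is why Figure 2 plots it alongside all other estimates.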

Follow-up.

Fewer estimates were available at follow-up, although they followed a pattern similar to post-treatment (Table 2). MBIs again compared favorably with passive controls across a range of PICOS, with two exceptions (Figure 1). MBIs showed superiority to passive controls for adults, healthcare professionals/trainees, and employees (ds = 0.34 to 0.50). MBIs showed superiority to passive controls across problems assessed, including physical health conditions, cancer, depression, pain conditions, psychiatric disorders, relapse of major depressive disorder, and psychotic disorders (ds = 0.30 to 1.18). MBIs showed superiority to passive controls across MBI types (ds = 0.28 to 0.66), with the exception of mobile health (d = 0.32, [−0.09, 0.73]). MBIs showed superiority to passive controls across outcome types (ds = 0.30 to 0.50), with the exception of sleep (d = 0.27, [−0.08, 0.63]).

Table 2.

Follow-up comparisons with passive controls across PICOS

| PICOS | Subcategory | kall | ESall | ESall Min | ESall Max | Rep Study | krep | nrep | ESrep | 95% CIrep | I2rep |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Population | Children | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Population | Adults | 12 | 22 | 0.19 | 1.18 | Goldberg (2018) | 37 | 5748 | 0.50 | 0.36, 0.65 | 80 |
| Population | Older adults | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Population | Employees | 2 | 2 | 0.32 | 0.34 | Spinelli (2019) | 7 | NA | 0.34 | 0.17, 0.52 | 0 |
| Population | Healthcare | 1 | 1 | 0.34 | 0.34 | Spinelli (2019) | 7 | NA | 0.34 | 0.17, 0.52 | 0 |
| Population | Students | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Problem | Psychiatric | 6 | 11 | 0.27 | 1.18 | Goldberg (2018) | 37 | 5748 | 0.50 | 0.36, 0.65 | 80 |
| Problem | Physical | 5 | 10 | 0.19 | 0.48 | Goldberg (2018) | 12 | 1534 | 0.48 | 0.19, 0.78 | 54 |
| Problem | Cancer | 4 | 9 | 0.19 | 0.32 | Cillessen (2019) | 11 | 1435 | 0.30 | 0.14, 0.45 | 46.1 |
| Problem | Pain | 1 | 1 | 0.48 | 0.48 | Goldberg (2018) | 12 | 1534 | 0.48 | 0.19, 0.78 | 54 |
| Problem | Weight/eating | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Problem | Psychotic | 1 | 1 | 1.18 | 1.18 | Goldberg (2018) | 4 | 456 | 1.18 | 0.71, 1.65 | 97 |
| Problem | Anxiety | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Problem | Depression | 5 | 6 | 0.45 | 0.97 | Goldberg (2018) | 12 | 2369 | 0.55 | 0.25, 0.84 | 60 |
| Problem | MDD relapse | 1 | 1 | 0.65 | 0.65 | Galante (2013) | 4 | 307 | 0.65 | 0.38, 0.89 | 0 |
| Problem | Substance use | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Problem | Smoking | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Interv | Various MBIs | 5 | 9 | 0.27 | 1.18 | Goldberg (2018) | 37 | 5748 | 0.50 | 0.36, 0.65 | 80 |
| Interv | MBSR | 1 | 4 | 0.27 | 0.32 | Schell (2019) | 7 | 1094 | 0.28 | 0.07, 0.49 | NA |
| Interv | MBCT | 4 | 5 | 0.45 | 0.97 | Chiesa (2011) | 4 | 326 | 0.66 | 0.32, 0.98 | 39 |
| Interv | MBSR/MBCT | 7 | 13 | 0.19 | 0.97 | Schell (2019) | 7 | 1094 | 0.28 | 0.07, 0.49 | NA |
| Interv | mHealth | 1 | 1 | 0.32 | 0.32 | Stratton (2017) | 4 | 215 | 0.32 | −0.09, 0.73 | 0 |
| Outcome | Psych sx | 11 | 18 | 0.19 | 1.18 | Goldberg (2018) | 37 | 5748 | 0.50 | 0.36, 0.65 | 80 |
| Outcome | Stress | 2 | 2 | 0.3 | 0.32 | Cillessen (2019) | 11 | 1435 | 0.30 | 0.14, 0.45 | 46.1 |
| Outcome | Phys sx | 2 | 2 | 0.27 | 0.48 | Goldberg (2018) | 12 | 1534 | 0.48 | 0.19, 0.78 | 54 |
| Outcome | Physiology | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Outcome | Well-being | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Outcome | Mindfulness | 2 | 2 | 0.34 | 0.52 | Spinelli (2019) | 7 | NA | 0.34 | 0.17, 0.52 | 0 |
| Outcome | Objective | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Outcome | Sleep | 1 | 1 | 0.27 | 0.27 | Schell (2019) | 4 | 654 | 0.27 | −0.08, 0.63 | NA |

Note: PICOS = populations, problems, interventions, comparisons, outcomes, and study design; Subcategory = PICOS subcategory; kall = number of meta-analyses providing an effect size for the PICOS subcategory; ESall = number of effect sizes for the PICOS subcategory; ESall Min = minimum effect size for the subcategory; ESall Max = maximum effect size for the subcategory; Rep Study = meta-analysis that included the effect size based on the largest number of studies for the PICOS subcategory; krep = number of studies in the representative effect size; nrep = number of participants for the representative effect size; ESrep = representative effect size; 95% CIrep = 95% confidence interval for the representative effect size; I2rep = heterogeneity (I², %) for the representative effect size; NA = not available; sx = symptoms; MDD = major depressive disorder; MBI = mindfulness-based intervention; MBSR = mindfulness-based stress reduction; MBCT = mindfulness-based cognitive therapy; mHealth = mobile health; Phys = physical; Psych = psychiatric.

Comparison with active controls.

Post-treatment.

As expected, effect sizes were smaller and less often statistically significant when MBIs were compared with active controls (which ranged from attentional controls to evidence-based treatments; Table 3, Figure 3). MBIs showed superiority to active controls for adults, children, employees, and healthcare professionals/trainees (ds = 0.13 to 0.22). MBIs did not differ from active controls for students (d = −0.04, [−0.22, 0.13]). MBIs showed superiority to active controls for psychiatric disorders, substance use, smoking, and depression (ds = 0.13 to 0.54). MBIs did not differ from active controls for physical health conditions, pain, weight/eating-related conditions, cancer, or anxiety (ds = −0.18 to 0.15). MBIs showed superiority to active controls for MBCT and various MBIs (ds = 0.13 to 0.54), but not for mobile health, MBSR, or MBSR/MBCT (i.e., meta-analyses that included MBSR, MBCT, or a combination of these; ds = 0.02 to 0.32). MBIs showed superiority to active controls for mindfulness, stress, and psychiatric symptoms (ds = 0.13 to 0.25), but not for sleep, physical health symptoms, objective measures, or physiological measures (ds = −0.03 to 0.16).
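The ranges in the paragraph above are read off the table by splitting subcategories on whether the representative estimate's 95% CI excludes zero. The sketch below does this for the Problem rows of Table 3 (post-treatment, active controls). Equating "CI excludes zero" with statistical significance is an assumption of the sketch (it matches a two-sided test at α = .05 for a symmetric Wald interval), and the function names are illustrative.

```python
# Problem-subcategory rows from Table 3:
# (subcategory, representative d, 95% CI lower, 95% CI upper)
TABLE3_PROBLEMS = [
    ("Psychiatric", 0.13, 0.03, 0.23),
    ("Physical", 0.02, -0.53, 0.59),
    ("Cancer", 0.15, -0.02, 0.32),
    ("Pain", 0.02, -0.53, 0.59),
    ("Weight/eating", 0.08, -0.24, 0.39),
    ("Anxiety", -0.18, -0.41, 0.06),
    ("Depression", 0.54, 0.36, 0.73),
    ("Substance use", 0.27, 0.02, 0.53),
    ("Smoking", 0.42, 0.20, 0.64),
]


def split_by_significance(rows):
    """Partition rows by whether the 95% CI excludes zero."""
    sig, nonsig = [], []
    for name, d, lo, hi in rows:
        (sig if lo > 0 or hi < 0 else nonsig).append((name, d))
    return sig, nonsig


def d_range(pairs):
    """Return (smallest d, largest d) over (name, d) pairs."""
    ds = [d for _, d in pairs]
    return min(ds), max(ds)


sig, nonsig = split_by_significance(TABLE3_PROBLEMS)
print("significant:", [n for n, _ in sig], d_range(sig))
print("non-significant:", [n for n, _ in nonsig], d_range(nonsig))
```

With these rows, the significant group spans d = 0.13 to 0.54 and the non-significant group spans d = −0.18 to 0.15.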

Table 3.

Post-treatment comparisons with active controls across PICOS

| PICOS | Subcategory | kall | ESall | ESall Min | ESall Max | Rep Study | krep | nrep | ESrep | 95% CIrep | I2rep |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Population | Children | 1 | 10 | −0.07 | 0.47 | Dunning (2019) | 17 | 1762 | 0.20 | 0.14, 0.26 | 67.08 |
| Population | Adults | 18 | 42 | −0.22 | 0.63 | Goldberg (2020) | 58 | 5627 | 0.13 | 0.03, 0.23 | 69.7 |
| Population | Older adults | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Population | Employees | 1 | 1 | 0.22 | 0.22 | Spinelli (2019) | 9 | NA | 0.22 | 0.03, 0.42 | 0 |
| Population | Healthcare | 1 | 1 | 0.22 | 0.22 | Spinelli (2019) | 9 | NA | 0.22 | 0.03, 0.42 | 0 |
| Population | Students | 1 | 3 | −0.13 | 0.08 | Halladay (2019) | 9 | 830 | −0.04 | −0.22, 0.13 | 29 |
| Problem | Psychiatric | 13 | 31 | −0.18 | 0.63 | Goldberg (2020) | 58 | 5627 | 0.13 | 0.03, 0.23 | 69.7 |
| Problem | Physical | 4 | 8 | −0.02 | 0.24 | Khoo (2019) | 17 | 1221 | 0.02 | −0.53, 0.59 | NA |
| Problem | Cancer | 1 | 2 | 0.09 | 0.15 | Cillessen (2019) | 5 | 518 | 0.15 | −0.02, 0.32 | 0 |
| Problem | Pain | 3 | 5 | −0.02 | 0.24 | Khoo (2019) | 17 | 1221 | 0.02 | −0.53, 0.59 | NA |
| Problem | Weight/eating | 1 | 1 | 0.08 | 0.08 | Goldberg (2018) | 5 | 437 | 0.08 | −0.24, 0.39 | 0 |
| Problem | Psychotic | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Problem | Anxiety | 2 | 3 | −0.18 | 0.17 | Goldberg (2018) | 5 | 362 | −0.18 | −0.41, 0.06 | 38 |
| Problem | Depression | 4 | 5 | −0.01 | 0.54 | Lenz (2016) | 16 | NA | 0.54 | 0.36, 0.73 | 46.15 |
| Problem | MDD relapse | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Problem | Substance use | 2 | 4 | 0.27 | 0.63 | Goldberg (2018) | 7 | 900 | 0.27 | 0.02, 0.53 | 18 |
| Problem | Smoking | 1 | 1 | 0.42 | 0.42 | Goldberg (2018) | 4 | 587 | 0.42 | 0.20, 0.64 | 11 |
| Interv | Various MBIs | 17 | 52 | −0.22 | 0.63 | Goldberg (2020) | 58 | 5627 | 0.13 | 0.03, 0.23 | 69.7 |
| Interv | MBSR | 1 | 3 | −0.02 | 0.24 | Khoo (2019) | 17 | 1221 | 0.02 | −0.53, 0.59 | NA |
| Interv | MBCT | 2 | 2 | 0.002 | 0.54 | Lenz (2016) | 16 | NA | 0.54 | 0.36, 0.73 | 46.15 |
| Interv | MBSR/MBCT | 4 | 6 | −0.02 | 0.54 | Khoo (2019) | 17 | 1221 | 0.02 | −0.53, 0.59 | NA |
| Interv | mHealth | 1 | 1 | 0.32 | 0.32 | Martin (2018) | 4 | 386 | 0.32 | −0.01, 0.66 | 63 |
| Outcome | Psych sx | 17 | 37 | −0.18 | 0.63 | Goldberg (2020) | 58 | 5627 | 0.13 | 0.03, 0.23 | 69.7 |
| Outcome | Stress | 3 | 4 | 0.08 | 0.18 | Dunning (2019) | 9 | 844 | 0.18 | 0.05, 0.31 | 0 |
| Outcome | Phys sx | 5 | 6 | −0.03 | 0.33 | Khoo (2019) | 17 | 1221 | 0.02 | −0.53, 0.59 | NA |
| Outcome | Physiology | 1 | 6 | 0.04 | 0.26 | Pascoe (2017) | 5 | 298 | 0.16 | −0.07, 0.39 | 0 |
| Outcome | Well-being | NA | NA | NA | NA | NA | NA | NA | NA | NA | NA |
| Outcome | Mindfulness | 3 | 3 | 0.1 | 0.42 | Goldberg (2019) | 30 | 2863 | 0.25 | 0.11, 0.38 | 59.85 |
| Outcome | Objective | 2 | 8 | 0.04 | 0.26 | Dunning (2019) | 7 | 958 | 0.10 | −0.03, 0.23 | 5.1 |
| Outcome | Sleep | 1 | 1 | −0.03 | −0.03 | Rusch (2019) | 7 | 716 | −0.03 | −0.49, 0.43 | 88 |

Note: PICOS = populations, problems, interventions, comparisons, outcomes, and study design; Subcategory = PICOS subcategory; kall = number of meta-analyses providing an effect size for the PICOS subcategory; ESall = number of effect sizes for the PICOS subcategory; ESall Min = minimum effect size for the subcategory; ESall Max = maximum effect size for the subcategory; Rep Study = meta-analysis that included the effect size based on the largest number of studies for the PICOS subcategory; krep = number of studies in the representative effect size; nrep = number of participants for the representative effect size; ESrep = representative effect size; 95% CIrep = 95% confidence interval for the representative effect size; I2rep = heterogeneity (I², %) for the representative effect size; NA = not available; sx = symptoms; MDD = major depressive disorder; MBI = mindfulness-based intervention; MBSR = mindfulness-based stress reduction; MBCT = mindfulness-based cognitive therapy; mHealth = mobile health; Phys = physical; Psych = psychiatric.

Representative effect sizes again tended to occur toward the middle of the distribution, with some exceptions (Figure 2). The effect size for depression appeared at the higher end, and the effect sizes for pain, physical health conditions, physical health symptoms, and MBSR/MBCT appeared at the lower end.

Follow-up.

As with passive controls, fewer estimates were available at follow-up for active controls. As at post-treatment, effect size estimates were generally smaller and less often statistically significant when MBIs were compared with active controls (Table 4, Figure 3). MBIs continued to show superiority to active controls for adults at follow-up (d = 0.29). MBIs showed superiority to active controls for psychiatric disorders and prevention of relapse in major depressive disorder (ds = 0.16 to 0.29), but did not differ from active controls for substance use, depression, weight/eating-related disorders, cancer, pain, or physical health conditions (ds = 0.01 to 0.38). All MBI types assessed showed statistically significant effects at follow-up (ds = 0.16 to 0.18). In contrast, effects across outcomes at follow-up appeared less uniform: MBIs showed sustained benefits over active controls only for psychiatric symptoms (d = 0.29) and did not differ from active controls on measures of physical health, mindfulness, stress, or sleep (ds = −0.14 to 0.18).

Comparison with specific active controls.

Post-treatment.

Comparisons with specific active controls followed a pattern similar to that for active controls generally (Supplemental Materials Table 5, Supplemental Materials Figure 5). MBIs showed superiority to specific active controls for all four populations assessed (children/adolescents, healthcare professionals/trainees, employees, and adults; ds = 0.13 to 0.26). MBIs showed superiority to specific active controls for psychiatric disorders, substance use, smoking, and depression (ds = 0.16 to 0.54), but not for physical health conditions, pain, weight/eating-related conditions, cancer, or anxiety (ds = −0.18 to 0.09). MBIs showed superiority to specific active controls for MBCT and various MBIs (ds = 0.13 to 0.54), but not for mobile health, MBSR, or MBSR/MBCT (ds = 0.02 to 0.32). MBIs showed superiority to specific active controls for mindfulness and psychiatric symptoms (ds = 0.13 to 0.25), but not for stress, physical health symptoms, or sleep (ds = −0.03 to 0.09).

Follow-up.

Available comparisons with specific active controls at follow-up followed a pattern identical to that for active controls (Supplemental Materials Table 6, Supplemental Materials Figure 6). This was because all representative effect sizes for active controls at follow-up came from comparisons with specific active controls, with the exception of Cillessen et al. (2019); consequently, no cancer-related estimate is available for specific active controls at follow-up.

Comparison with evidence-based treatments.

Post-treatment.

MBIs did not differ from evidence-based treatments for any PICOS subcategory at post-treatment, with one exception. MBIs showed superiority to evidence-based treatments for smoking (d = 0.42). All other effect size estimates ranged from d = −0.18 to d = 0.02 (Supplemental Materials Table 7, Supplemental Materials Figure 7).

Follow-up.

MBIs did not differ from evidence-based treatments at follow-up in most PICOS (adults, depression, psychiatric conditions, various MBIs, psychiatric symptoms; Supplemental Materials Table 8, Supplemental Materials Figure 8). MBCT, however, did show superiority over evidence-based treatments for the prevention of depressive relapse (d = 0.17; Kuyken et al., 2016).

Heterogeneity.

Heterogeneity estimates (I2) were available for most of the effect sizes in the included meta-analyses (k = 136 out of 160, 85.0%; Supplemental Materials Figures 9 and 10). On average, heterogeneity was moderate at post-treatment and follow-up for comparisons with both passive and active controls, although also fairly variable (passive controls at post-treatment = 44.81%, SD = 33.46; passive controls at follow-up = 37.60%, SD = 37.60; active controls at post-treatment = 37.41%, SD = 29.85; active controls at follow-up = 44.09%, SD = 33.74). When restricted to estimates of heterogeneity based on the representative effect sizes, heterogeneity at post-treatment was high for comparisons with passive controls (53.40%, SD = 22.12) and moderate for comparisons with active controls (35.72%, SD = 31.18).
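I² expresses the share of observed variability in effect sizes that exceeds what sampling error alone would produce: I² = max(0, (Q − df)/Q) × 100, where Q is Cochran's Q and df = k − 1. A minimal sketch of that computation, plus the mean/SD summary reported above, follows. The trial effects, variances, and I² values are hypothetical, and using the sample SD (`statistics.stdev`) is an assumption about how the summary SDs were computed.

```python
import statistics


def cochran_q(effects, variances):
    """Cochran's Q with inverse-variance (fixed-effect) weights."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))


def i_squared(q, k):
    """I^2 in percent for k studies, floored at zero."""
    df = k - 1
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100.0


# Hypothetical meta-analysis of three trials (d and sampling variance of d).
effects = [0.2, 0.5, 0.8]
variances = [0.01, 0.01, 0.01]
q = cochran_q(effects, variances)
i2 = i_squared(q, len(effects))
print(f"Q = {q:.2f}, I^2 = {i2:.1f}%")  # Q = 18.00, I^2 = 88.9%

# Summarizing a (hypothetical) set of I^2 values as mean and sample SD.
i2_values = [0, 38, 54, 80]
print(statistics.mean(i2_values), round(statistics.stdev(i2_values), 2))
```

Because I² is a proportion of observed variance rather than an absolute amount, the same I² can correspond to very different between-study variances depending on the precision of the trials.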

Moderators.

A surprisingly small number of meta-analyses (k = 7 out of 44; 15.9%) reported eligible tests of moderation. While other tests of moderation were reported, these tests were not associated with eligible effect sizes (e.g., were conducted using both passive and active controls; Kuyken et al., 2016). Therefore, they were not considered interpretable. Across the seven meta-analyses reporting eligible tests of moderation, 73 tests were conducted (see Supplemental Materials Table 9). A minority (17.8%, k = 13) of the moderators tested were statistically significant.

Across 29 moderator tests focused on study quality, four were statistically significant, and all four indicated smaller effects in higher-quality studies. Across 13 moderator tests focused on intervention dosage, one was statistically significant and indicated larger effects for longer interventions. Across 13 moderator tests that examined participant characteristics, four were statistically significant: two found larger effects in younger samples, one found smaller effects in samples with a higher percentage of female participants, and one found larger effects in studies conducted in Asia or South Africa than in other locations. Across 12 moderator tests focused on comparison type, one found larger effects when MBIs were compared with non-evidence-based treatments than when they were compared with evidence-based treatments. Across three moderator tests comparing MBSR with MBCT, one found no difference and two found larger effects for MBCT. In a single moderator test each, analysis method and therapist experience did not predict outcomes, whereas higher researcher allegiance to MBIs was associated with larger effects in favor of MBIs.
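Many of the tests summarized above compare effects across subgroups of trials within a single meta-analysis (e.g., MBSR versus MBCT). The simplest such test, sketched below under the assumption that the two subgroup estimates are independent and come with standard errors, is a z-test on their difference; with two subgroups this is equivalent to the fixed-effect between-subgroups Q (Q_between = z²). Individual meta-analyses may instead have used mixed-effects meta-regression. The helper names and the numbers in the usage lines are hypothetical, and `se_from_ci` assumes a symmetric normal-theory confidence interval.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def se_from_ci(lower, upper, level=0.95):
    """Back out a standard error from a symmetric normal-theory CI."""
    z = _STD_NORMAL.inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% CI
    return (upper - lower) / (2 * z)


def subgroup_difference(d1, se1, d2, se2):
    """Two-sided z-test for the difference between two independent subgroup estimates.

    With two subgroups this matches the fixed-effect Q_between test,
    because Q_between = z ** 2 on 1 degree of freedom.
    """
    diff = d1 - d2
    se_diff = (se1 ** 2 + se2 ** 2) ** 0.5
    z = diff / se_diff
    p = 2 * (1 - _STD_NORMAL.cdf(abs(z)))
    return diff, z, p


# Hypothetical subgroups within one meta-analysis (e.g., MBCT vs. MBSR trials),
# each summarized by a pooled d and its 95% CI.
se_a = se_from_ci(0.40, 0.80)
se_b = se_from_ci(0.10, 0.50)
diff, z, p = subgroup_difference(0.60, se_a, 0.30, se_b)
print(f"difference = {diff:.2f}, z = {z:.2f}, p = {p:.3f}")
```

Running many such tests on the same literature inflates the chance of spurious significant moderators, so isolated significant results are best read alongside the total number of tests conducted.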

Publication bias.

The majority of meta-analyses (70.5%, k = 31 out of 44; Supplemental Materials Table 10) did not conduct tests of publication bias on an eligible effect size. Among those that did report an eligible test, 13 meta-analyses used a test related to funnel plot asymmetry (e.g., Egger's test), and six reported a fail-safe N (i.e., the number of unpublished null findings that would need to exist to nullify an observed effect; Rosenberg, 2003).

At the effect size-level, quantitative assessment of publication bias was reported for 41.3% of estimates (k = 66 out of 160). Among these, most reported no evidence of publication bias (57.6%, k = 38), 27.3% indicated an upwardly biased original estimate (“true” effect size is smaller than estimate; k = 18), 10.6% indicated a downwardly biased original estimate (“true” effect size is larger than estimate; k = 7), and 4.5% (k = 3) reported bias but did not indicate the direction. The significance test for the publication bias adjusted effect size changed in four instances (ks = 2 for upwardly and downwardly biased original estimates, respectively).
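The two families of tests named above can be sketched as follows. `rosenthal_fail_safe_n` implements the classic unweighted fail-safe N (how many null studies must be added before the Stouffer-combined z drops to the one-tailed critical value); weighted variants exist, and which variant each included meta-analysis used is not recorded here. `egger_intercept` regresses the standardized effect (d/SE) on precision (1/SE) by ordinary least squares; an intercept far from zero indicates funnel-plot asymmetry. The intercept's t statistic should be referred to a t distribution with k − 2 degrees of freedom, which is omitted to keep the sketch dependency-free. Function names and inputs are hypothetical.

```python
from statistics import NormalDist


def rosenthal_fail_safe_n(z_values, alpha=0.05):
    """Unweighted (Rosenthal-style) fail-safe N.

    Solves sum(z) / sqrt(k + N) = z_crit for N, i.e., the number of
    additional studies averaging z = 0 that would make the combined
    result non-significant at a one-tailed alpha.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha)  # about 1.645 when alpha = .05
    k = len(z_values)
    return sum(z_values) ** 2 / z_crit ** 2 - k


def egger_intercept(effects, ses):
    """Egger's regression of d/SE on 1/SE by ordinary least squares.

    Returns (intercept, slope, t statistic for the intercept).
    """
    x = [1.0 / s for s in ses]                 # precision
    y = [d / s for d, s in zip(effects, ses)]  # standardized effect
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    resid = [yi - intercept - slope * xi for xi, yi in zip(x, y)]
    s2 = sum(r ** 2 for r in resid) / (n - 2)  # residual variance, df = n - 2
    se_int = (s2 * (1.0 / n + x_bar ** 2 / sxx)) ** 0.5
    t = intercept / se_int if se_int > 0 else float("nan")
    return intercept, slope, t


# Hypothetical inputs, for illustration only.
n_fs = rosenthal_fail_safe_n([2.0, 2.5, 1.5, 3.0])
b0, b1, t = egger_intercept([0.45, 0.52, 0.61, 0.80, 0.30],
                            [0.10, 0.18, 0.25, 0.40, 0.12])
print(f"fail-safe N = {n_fs:.1f}; Egger intercept = {b0:.2f} (t = {t:.2f}, df = 3)")
```

Both tests only flag asymmetry or fragility; neither produces the bias-adjusted estimates described above, which require methods such as trim-and-fill.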

Risk of bias.

A measure related to risk of bias was included in most meta-analyses (63.6%, k = 28 out of 44; Supplemental Materials Table 11). These meta-analyses used several different tools designed to detect risk of bias, assess methodological quality, and/or evaluate the overall strength of the evidence. The Cochrane risk-of-bias tool was by far the most commonly used (k = 18), followed by the Grading of Recommendations, Assessment, Development, and Evaluations (GRADE) tool (k = 5). Four meta-analyses used a version of the Jadad criteria, and six used other assessment methods.

Results of the Cochrane risk-of-bias assessments were tabulated across meta-analyses (Supplemental Materials Table 12; Supplemental Materials Figure 11). In no domain was risk of bias consistently rated as low, although the degree of potential bias varied across domains. The domains most commonly rated as having high risk of bias were blinding of personnel/participants (43.6%) and blinding of outcome assessment (39.3%). Examination of meta-analysis-level ratings in these domains reveals a further concern about this aspect of the literature: potential inconsistency across meta-analysts in how these domains are interpreted. As reported by Kuyken et al. (2016), the nature of a behavioral intervention like an MBI made blinding of personnel and participants impossible (see Wampold & Imel, 2015). Likewise, as reported in the one eligible Cochrane review (Schell et al., 2019), the use of self-report measures made blinding of outcome assessment impossible (i.e., the "assessor" was the participant, who in behavioral interventions cannot readily be blinded to group assignment; Higgins & Green, 2008). However, as shown in Supplemental Materials Figure 11, these domains were commonly coded as at low or unclear risk of bias, including in instances in which all outcome measures were self-report (e.g., depression symptoms; Martin, Golijani-Moghaddam, & dasNair, 2018).

Adverse effects.

Adverse effects were discussed in 34.1% (k = 15 out of 44) of the meta-analyses (Supplemental Materials Table 13). Of these, 11 noted a lack of reporting of adverse events in primary studies. Five meta-analyses discussed serious adverse events: four reported that no serious adverse events occurred, and one reported that serious adverse events did occur. In that meta-analysis, Kuyken et al. (2016) concluded that the serious adverse events were not attributable to the MBI (MBCT). Rusch et al. (2019) likewise concluded that the non-serious adverse events reported (e.g., worsening of sleep quality, muscle soreness) did not indicate an increased risk of harm from various MBIs for sleep. One meta-analysis reported mild adverse events associated with MBIs for treating low back pain but did not specify whether these indicated an increased risk of harm (e.g., increased pain; Anheyer et al., 2017).

Statistical power.

Data necessary for computing meta-analytic statistical power were available for 76.3% of effect sizes. Average statistical power was examined separately for comparisons with passive and active controls (Supplemental Materials Figure 13). As would be expected, statistical power was typically higher for comparisons with passive controls (mean power [1 − β] = .78, SD = .29) than for comparisons with active controls (mean power = .52, SD = .39). A majority (62.3%) of tests had statistical power ≥ .80 for comparisons with passive controls, whereas only 34.4% of tests had statistical power ≥ .80 for comparisons with active controls.
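Statistical power here is the probability (1 − β) that a meta-analytic test detects a true effect of a given size. The formula used for these computations is not spelled out in this section, so the sketch below uses one common normal-approximation approach for a random-effects mean, under stated assumptions: k two-arm trials of equal size, the usual large-sample variance v of Cohen's d, and between-study variance τ² expressed as a multiple of v (e.g., one-third, one, or three times v for small, moderate, or large heterogeneity). The function name and defaults are illustrative.

```python
from statistics import NormalDist


def meta_power(d, n_per_group, k, tau2_ratio=1.0, alpha=0.05):
    """Approximate power (1 - beta) of a two-tailed test of a random-effects mean.

    Illustrative assumptions, not taken from the review:
      * k two-arm trials, each with n_per_group participants per arm;
      * within-study variance v of Cohen's d from the large-sample formula;
      * between-study variance tau^2 = tau2_ratio * v.
    """
    n1 = n2 = n_per_group
    v = (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))
    tau2 = tau2_ratio * v
    var_mean = (v + tau2) / k          # variance of the random-effects mean
    lam = d / var_mean ** 0.5          # noncentrality parameter
    std_normal = NormalDist()
    c = std_normal.inv_cdf(1 - alpha / 2)
    return (1 - std_normal.cdf(c - lam)) + std_normal.cdf(-c - lam)


# How power for d = 0.50 (20 participants per arm) changes with k and heterogeneity.
for k in (4, 10, 30):
    for ratio in (1 / 3, 1.0, 3.0):
        print(k, round(ratio, 2), round(meta_power(0.50, 20, k, ratio), 3))
```

Under these assumptions, power rises with the number of trials and falls as heterogeneity grows, which is consistent with the lower power observed for comparisons with active controls, where expected effects are smaller.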

Discussion

The current study sought to rigorously evaluate the empirical status of MBIs through systematically reviewing the available meta-analytic literature. Our search yielded 44 meta-analyses that represent 336 RCTs with 30,483 participants. This literature base is similar in magnitude to that used in previous large-scale evaluations of psychotherapy generally (k = 375; Smith & Glass, 1977) and cognitive-behavioral therapy specifically (k = 332; Butler et al., 2006). Notably, unlike these previous reviews, the current review is restricted to RCTs. Perhaps the most obvious conclusion that can be drawn is simply that the experimental literature for MBIs is quite large. This is not a family of interventions that has gone untested. Thus, the question becomes: what does the evidence suggest? And, what are the limitations of the literature?

Estimates of Efficacy

We evaluated the efficacy of MBIs by assessing the magnitude of treatment effects relative to passive controls (e.g., waitlist) and active controls (e.g., attentional control, other therapies). Meta-analytic effect sizes based on the largest number of studies available suggest that MBIs compare favorably with passive controls across several (but not all) populations, problems, interventions, and outcomes (i.e., PICOS; Higgins & Green, 2008). The magnitude of effects at post-treatment ranged considerably, from very small (d = 0.10, for children/adolescents) to large (d = 0.89, for anxiety disorders), with most near the moderate range. Effects at follow-up also showed a wide range (ds = 0.28 to 1.18), with most small to moderate in magnitude. On the whole, the pattern of comparisons with passive controls suggests that MBIs may have transdiagnostic relevance, with effects persisting at follow-up. However, not all PICOS subcategories showed significant effects. In particular, MBIs did not differ from passive controls for older adults, substance use disorders, or objective or physiological measures at post-treatment, or for sleep or mobile health MBIs at follow-up. Notably, in each of these cases the meta-analytic estimates were based on five or fewer RCTs. Depending on the degree of heterogeneity (which in some cases was high, e.g., Li & Bressington, 2019), such tests are very likely underpowered to detect even moderate effects (e.g., post hoc power to detect d = 0.74 in Li and Bressington was 47.9%).

Comparisons with active controls showed less consistent evidence for the superiority of MBIs. Significant effects were observed across several PICOS, with MBIs outperforming active controls for adults, children/adolescents, depression, smoking, substance use, and psychiatric conditions, and on measures of mindfulness, stress, and psychiatric symptoms (ds = 0.13 to 0.54).3 However, many effect sizes for comparisons with active controls were very small and non-significant, including for students, anxiety disorders, cancer, pain, physical health conditions, MBSR, mobile health, physiology, objective measures, and sleep (ds = −0.18 to 0.32). Overall, this pattern of evidence suggests that MBIs sometimes, but not always, show effects beyond those associated with non-specific factors (e.g., instructor attention, expectancy; Wampold & Imel, 2015). A similar pattern emerged at follow-up, with MBIs showing superiority to active controls for some PICOS (e.g., adults, psychiatric disorders, depression relapse) but not others (e.g., weight/eating-related disorders, pain, cancer, stress, sleep). At follow-up, the effect of MBIs for substance use disorders was one of the larger effect sizes (d = 0.38, [0.00, 0.76]) but did not differ from zero and was based on only 4 studies (Goldberg et al., 2018).

Comparisons with other therapies provide a more rigorous test of efficacy and can address the question facing clinicians and patients who may be choosing between these and other interventions. MBIs tended to perform similarly to specific active controls (i.e., other interventions) at post-treatment and follow-up, with effect sizes often close to zero (e.g., for cancer, weight/eating-related conditions, pain, physical health conditions, sleep). MBIs did show superiority, with very small to small effect sizes, in some PICOS subcategories (all populations assessed, depression, substance use, smoking, psychiatric symptoms, and MBCT), suggesting there may be instances in which MBIs are a preferred approach. The pattern was similar at follow-up, with some evidence of superiority (e.g., for psychiatric disorders and prevention of depressive relapse). MBIs may be less effective than other therapies for sleep at follow-up, although the effect size did not differ from zero (d = −0.14, [−0.62, 0.34]; Rusch et al., 2019).

The theoretically most rigorous test of MBIs is comparison with evidence-based treatments, which primarily included cognitive-behavioral therapy or antidepressants (Goldberg et al., 2018; Kuyken et al., 2016). In general, MBIs performed on par with these treatments at both post-treatment and follow-up. MBIs may outperform evidence-based treatments for smoking cessation and for the prevention of depressive relapse. MBIs may be less effective than evidence-based treatments for anxiety, although the effect size did not differ from zero (d = −0.18, [−0.41, 0.06]; Goldberg et al., 2018).

Potential Sources of Bias

In the absence of other potential sources of bias and unreliability, these effect sizes support the notion that MBIs may hold promise across a range of PICOS, in most cases outperforming passive controls and performing on par with or better than active controls, including other therapies and evidence-based treatments. However, additional factors are important to consider when evaluating the strength of this evidence. One essential factor is the degree of heterogeneity (Higgins & Green, 2008). Although heterogeneity itself was quite variable, on average the meta-analytic estimates reviewed showed a moderate to high degree of heterogeneity (25% ≤ I² ≤ 75%). This magnitude of heterogeneity is similar to estimates found in large-scale meta-analyses of evidence-based psychotherapies (e.g., cognitive-behavioral therapy, interpersonal psychotherapy; Cuijpers et al., 2011, 2013). It nonetheless suggests there is considerable variability across the RCTs represented by each effect size. Further, our review of moderators detected no study-level characteristics that have been consistently shown to account for this heterogeneity.4 The degree of heterogeneity and the absence of consistent moderators highlight the need for future meta-analytic work clarifying the study characteristics that explain this variability.

Publication bias and risk of bias are two additional potential sources of uncertainty. Unfortunately, publication bias was formally tested for only 41.3% of the included effect sizes (k = 66). Among these tests, a sizable minority (27.3%, k = 18) found evidence of publication bias suggesting upwardly biased original estimates, although only two of these (i.e., 3.0% of the tests conducted) resulted in a changed significance test. Seven publication bias tests suggested downwardly biased original estimates. Taken together, effect sizes may be modestly inflated by publication bias, but this source of bias alone is unlikely to account for the observed pattern of findings.

Other sources of bias may be more influential. Perhaps the most pernicious is a lack of blind outcome assessment, which leaves much of the MBI literature vulnerable to social desirability and other biases associated with self-report. Of course, including non-self-report measures is not straightforward for all outcome types. Valid behavioral measures are not always available. Clinician-rated measures are another option (e.g., Kuyken et al., 2016), although these can be costly. Nonetheless, this is an important future direction for increasing the rigor of work in this area, both in primary studies and meta-analyses (for recent meta-analyses of objective measures, see Pascoe et al., 2017; Treves, Tello, Davidson, & Goldberg, 2019). Other commonly noted sources of bias within the included studies were incomplete outcome data and selective reporting. As is increasingly discussed, selective reporting in particular allows opportunistic bias and can substantially reduce the scientific integrity of a body of literature (DeCoster, Sparks, Sparks, Sparks, & Sparks, 2015). It is crucial that researchers embrace the spirit of open science to address these biases (Open Science Collaboration, 2012). We commend those who have begun doing so (e.g., Lindsay, Young, Brown, Smyth, & Creswell, 2019).

The remaining potential sources of bias are, from our perspective, either less influential or unavoidable. Random sequence generation and allocation concealment were commonly rated as having an unclear risk of bias, indicating that authors of primary studies often omit these aspects of their design from published reports. We hope that clinical trialists, along with journal editors and reviewers, become attuned to the necessity of reporting these details of randomization.

The lack of blinding of personnel (e.g., instructors) and participants seems largely unavoidable. With few exceptions (e.g., comparison conditions that involve sham or dismantled meditation; Zeidan, Johnson, Gordon, & Goolkasian, 2010; Lindsay et al., 2019), it is typically not possible to blind participants and mindfulness instructors to the condition they are receiving or providing. Moreover, instructors' belief that the treatment they deliver will actually work has been recognized as an important factor common across various forms of psychological intervention (Wampold & Imel, 2015). The challenge of applying the double-blind placebo-controlled design is not unique to MBIs but exists for all behavioral interventions (Wampold et al., 2005). One potential solution is to use designs that compare MBIs to other therapies that are intended to be therapeutic (i.e., bona fide treatments; Wampold et al., 1997). However, even for these comparisons, it is crucial that researcher allegiance to MBIs be balanced with allegiance to the comparison condition (Goldberg & Tucker, 2020; Munder et al., 2013).

A final note of concern regarding risk of bias is variability in how these items are interpreted across meta-analyses. For example, some authors coded self-report outcomes as blinded (e.g., Martin et al., 2018), which seems inaccurate when participants are aware of the intervention they received. Such variation in item interpretation makes it impossible to accurately evaluate these sources of bias across meta-analyses. Again, we hope that future meta-analysts, journal editors, and reviewers will evaluate this important aspect of meta-analyses carefully.

One important criticism raised by Van Dam et al. (2018) and others (e.g., Baer et al., 2019) is the potential for MBIs to cause harm. Unfortunately, reporting of adverse effects was inconsistent in both primary studies and the included meta-analyses. Better reporting of these outcomes is vital for definitively evaluating the safety of MBIs.

Lastly, we assessed meta-analytic statistical power. In keeping with concerns regarding small sample sizes in RCTs testing MBIs (Baer, 2003; Goldberg et al., 2017), many meta-analyses also appear to be underpowered. Because we restricted our sample to estimates based on at least four studies, we likely overestimated the average statistical power of meta-analytic effect sizes in this literature; our estimates therefore represent an upper bound on typical power. Tests of moderation within these meta-analyses are almost certainly often underpowered (Borenstein et al., 2009).
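Meta-analytic power can be approximated analytically along the lines described by Borenstein et al. (2009). The sketch below assumes k studies of equal size, each comparing two groups of n participants, a true standardized mean difference d, and a between-study variance τ² supplied by the user. It computes the noncentrality λ = d / √((v + τ²)/k), where v is the sampling variance of d in a single study, and returns two-tailed power. The function `meta_power`, its defaults, and the example values are illustrative assumptions.

```python
import math
from statistics import NormalDist


def meta_power(d: float, n_per_group: int, k: int,
               tau2: float = 0.0, alpha: float = 0.05) -> float:
    """Approximate two-tailed power of the pooled test of H0: delta = 0.

    Assumes k equally sized two-arm studies (n_per_group per arm), a true
    standardized mean difference d, and between-study variance tau2
    (tau2 = 0 reduces to the fixed-effect case).
    """
    n1 = n2 = n_per_group
    v = (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))  # within-study variance of d
    v_pooled = (v + tau2) / k                              # variance of the pooled mean
    lam = d / math.sqrt(v_pooled)                          # noncentrality parameter
    z = NormalDist()
    c = z.inv_cdf(1 - alpha / 2)                           # two-tailed critical value
    return 1 - z.cdf(c - lam) + z.cdf(-c - lam)


# Hypothetical scenario: small effect, 20 participants per arm, tau^2 = 0.05.
for k in (4, 8, 16):
    print(k, round(meta_power(0.3, 20, k, tau2=0.05), 2))
```

Power rises with the number of studies and falls as τ² grows, which is why estimates pooling only a handful of small trials (and moderator tests that split those trials further) are especially vulnerable.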

Key Areas for Improvement

There is clearly room for improving the evidence base for MBIs. However, on the whole, it seems unlikely that the sources of bias reviewed here undermine the pattern of efficacy observed for MBIs across numerous (but not all) PICOS.5 Based on comparisons with active controls, MBIs appear to hold promise for both adult and child/adolescent samples and may be particularly promising for addressing some psychiatric symptoms and disorders (e.g., depression, depressive relapse, substance use, smoking). Evidence was weaker for the superiority of MBIs over active controls for health-related conditions and outcomes (e.g., cancer, pain, sleep).

Our results suggest several fruitful directions for future RCTs (Table 5). Given the known biases associated with small samples (Button et al., 2013), large-scale RCTs conducted and reported in ways that minimize risk of bias are essential. RCTs focused on older adults, mobile health delivery, and physiological measures may be particularly valuable, as these areas showed promising effect sizes but wide confidence intervals because they were based on a small number of RCTs. Necessary steps for improving the strength of this evidence base include reporting adverse events, including non-self-report outcomes, conducting intention-to-treat analyses, and including follow-up assessments. For MBIs with established efficacy (e.g., MBCT for preventing depressive relapse), dissemination and implementation RCTs may be appropriate (Dimidjian & Segal, 2015).
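As a rough illustration of why small RCTs are a concern, the per-arm sample size needed for a two-arm trial to detect a standardized mean difference d with a two-tailed test can be approximated by the normal formula n = 2(z₁₋α/₂ + z₁₋β)² / d². The sketch below is a planning heuristic under that normal approximation (an exact t-test calculation gives slightly larger values); `n_per_group` is a hypothetical helper, not a recommendation from this review.

```python
import math
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-arm sample size for a two-arm trial (normal approximation).

    n = 2 * (z_{1 - alpha/2} + z_{power})^2 / d^2, rounded up.
    """
    if d <= 0:
        raise ValueError("d must be positive")
    z = NormalDist()
    total_z = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    return math.ceil(2 * total_z ** 2 / d ** 2)


# Prints 25, 63, and 393 participants per arm for d = 0.8, 0.5, and 0.2.
for d in (0.8, 0.5, 0.2):
    print(f"d = {d}: about {n_per_group(d)} participants per arm")
```

Because required sample size scales with 1/d², trials powered only for large effects will frequently miss the smaller effects typically observed against active controls.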

Table 5.

Recommendations for future research

| Domain | Recommendation |
|---|---|
| RCTs | Increase sample sizes |
| | Report details of randomization procedure |
| | Use intention-to-treat analyses |
| | Embrace open science practices |
| | Study MBIs for older adults |
| | Test mHealth delivery formats |
| | Include non-self-report and physiological measures |
| | Assess and report adverse events |
| | Study dissemination and implementation for established MBIs (e.g., MBCT) |
| Meta-analyses | Avoid combining passive and active controls |
| | Test and report moderators based on theory |
| | Code risk of bias based on standard methods |
| | Formally assess publication bias |
| | Consider impact of statistical power in study planning and interpretation |
| | Study objective measures |
| | Assess effects at follow-up |
| | Create publicly available database of MBI RCTs with effect sizes |

Note: mHealth = mobile health; MBI = mindfulness-based intervention; MBCT = mindfulness-based cognitive therapy.

Several of these recommendations have been voiced elsewhere, with little evidence that practices have improved over time (Goldberg et al., 2017). This signals a need to evaluate barriers to implementing best practices and to identify strategies for encouraging their adoption. There are likely influences throughout the research pipeline (e.g., from graduate training to grant review study sections). While a full discussion of these factors is outside the scope of this review, we note that journal policies are a powerful way to effect change. Strategies adopted to incentivize open science (e.g., badges for preregistration, methodology disclosure statements; Eich, 2014) are promising models. If journal editors accept manuscripts using intention-to-treat analyses with null findings over those using completer analyses with statistically significant findings, we believe authors will modify their practices.

Our results also suggest fruitful directions for future meta-analyses. One important recommendation is that meta-analysts avoid combining passive and active control conditions (and avoid testing moderators based on a combination of passive and active control conditions). Combining passive and active controls yields results that are inherently ambiguous and potentially misleading: the pooled estimate blends two different contrasts, so its magnitude depends partly on the proportion of trials using each type of control.6 Other steps to strengthen the meta-analytic literature include more consistent coding of risk of bias, systematic testing and reporting of theoretically important moderators, formal assessment of publication bias, and consideration of statistical power when conducting and interpreting meta-analytic results. Meta-analyses focused on objective outcomes and assessing effects at follow-up would also be welcome contributions. Having a publicly available database of MBI RCTs with associated effect sizes (as has been done for psychotherapies for depression; Cuijpers, Karyotaki, Ebert, & Harrer, 2020) could facilitate future large-scale, open science meta-analyses of this literature.
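The ambiguity of pooling across control types can be illustrated with a fixed-effect, inverse-variance average. The sketch below uses hypothetical inputs (not data from this review): every passive-control contrast is assumed to estimate d = 0.6 and every active-control contrast d = 0.1, all with equal sampling variance. Holding the total number of trials fixed, the pooled estimate shifts with the share of passive controls, so the same intervention can appear more or less effective depending only on which comparisons a meta-analysis happens to include. The constants and helper names are illustrative assumptions.

```python
from typing import Sequence


def pooled_d(effects: Sequence[float], variances: Sequence[float]) -> float:
    """Fixed-effect (inverse-variance weighted) mean effect size."""
    weights = [1.0 / v for v in variances]
    return sum(w * d for w, d in zip(weights, effects)) / sum(weights)


# Hypothetical true contrasts, chosen only for illustration.
D_VS_PASSIVE = 0.6   # MBI vs. a passive control (e.g., waitlist)
D_VS_ACTIVE = 0.1    # MBI vs. an active comparison
VARIANCE = 0.04      # identical sampling variance for every trial
TOTAL_TRIALS = 8


def mixed_pool(n_passive: int) -> float:
    """Pooled d when n_passive of TOTAL_TRIALS trials used passive controls."""
    n_active = TOTAL_TRIALS - n_passive
    effects = [D_VS_PASSIVE] * n_passive + [D_VS_ACTIVE] * n_active
    return pooled_d(effects, [VARIANCE] * TOTAL_TRIALS)


for n_passive in range(0, TOTAL_TRIALS + 1, 2):
    print(f"{n_passive} passive / {TOTAL_TRIALS - n_passive} active: "
          f"pooled d = {mixed_pool(n_passive):.3f}")
```

A single pooled number of this kind answers neither "is the MBI better than no treatment?" nor "is it better than an alternative?", which is the practical argument for keeping the two comparisons separate.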

Limitations

Just as meta-analyses are limited by the available primary studies, our review was likewise limited by both the available meta-analyses and the RCTs of which those meta-analyses were composed. Gaps in both meta-analyses and primary studies (e.g., reporting of adverse events) necessarily resulted in gaps in our review. One especially troubling gap is a lack of primary studies and meta-analyses focused on the efficacy of MBIs for underserved and underrepresented groups (Waldron, Hong, Moskowitz, & Burnett-Zeigler, 2018), which limits the generalizability of our results to these populations. Second, our decision to require a minimum of four studies for effect sizes to be included resulted in some estimates being based on only four studies (and likely less reliable as a result; Pereira & Ioannidis, 2011) and in other estimates being excluded due to an insufficient number of available studies (and therefore not represented in the current review). A third limitation was the moderate degree of heterogeneity within the included studies (compounded by uncertainty in the I² estimates themselves, given the small number of studies for some effect sizes; von Hippel, 2015). There was likewise variability across meta-analytic estimates for a given PICOS. This variability decreases confidence in the pattern of findings and suggests that systematic variation may exist both between RCTs and between meta-analyses. In the absence of a second-order meta-analysis (Schmidt & Oh, 2013), we could not evaluate the sources of this variability directly.

A fourth limitation was the need to choose a method for determining representative effect sizes. Our decision to report representative effect sizes based on the largest number of studies seemed likely to provide the most precise estimate, but a different metric (e.g., effect sizes with the narrowest confidence intervals) may have yielded a somewhat different pattern of findings. Further, selecting the largest meta-analysis may have neglected smaller but perhaps more homogeneous meta-analyses (e.g., MBCT for depressive relapse; Kuyken et al., 2016).
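The two selection rules mentioned above can disagree whenever the meta-analysis with the most studies is not also the one with the narrowest confidence interval (e.g., because it pools more heterogeneous trials). The sketch below encodes both rules over hypothetical records; the `MetaEstimate` fields and example values are illustrative assumptions, not estimates from the included meta-analyses.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class MetaEstimate:
    label: str       # hypothetical identifier for a meta-analysis
    k: int           # number of RCTs pooled
    d: float         # pooled standardized mean difference
    ci_low: float    # lower bound of the 95% CI
    ci_high: float   # upper bound of the 95% CI

    @property
    def ci_width(self) -> float:
        return self.ci_high - self.ci_low


def by_largest_k(estimates: Sequence[MetaEstimate]) -> MetaEstimate:
    """Rule used in this review: the estimate based on the most studies."""
    return max(estimates, key=lambda e: e.k)  # ties: first in input order


def by_narrowest_ci(estimates: Sequence[MetaEstimate]) -> MetaEstimate:
    """Alternative rule: the estimate with the narrowest confidence interval."""
    return min(estimates, key=lambda e: e.ci_width)  # ties: first in input order


candidates = [
    MetaEstimate("A", k=12, d=0.30, ci_low=0.05, ci_high=0.55),
    MetaEstimate("B", k=7, d=0.45, ci_low=0.30, ci_high=0.60),
]
print(by_largest_k(candidates).label, by_narrowest_ci(candidates).label)
```

Here the largest-k rule selects the more heterogeneous estimate (A) while the precision rule selects B, so the reported representative effect would differ by 0.15 depending only on the rule chosen.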

Conclusion

Based on 44 meta-analyses examining the effects of MBIs across 336 unique RCTs with 30,483 participants, it appears that interventions based on mindfulness meditation indeed hold substantial transdiagnostic potential, albeit with stronger evidence for some PICOS than others. Therefore, the utilization of MBIs as a family of interventions is at least partially supported by scientific evidence. Ongoing, rigorous experimental research evaluating these interventions, with attention to limitations of the existing literature, is warranted.


Acknowledgments

This systematic review was registered through the Open Science Framework (https://osf.io/eafy7/). Study data are included in supplemental materials. This research was supported by the National Institute of Mental Health (NIMH) Grant R01MH43454 (Richard J. Davidson) and Grant T32MH078788 (Shufang Sun), by the National Center for Complementary and Integrative Health Grant P01AT004952 (Richard J. Davidson) and Grant K23AT010879 (Simon B. Goldberg), and by the University of Wisconsin-Madison, Office of the Vice Chancellor for Research and Graduate Education with funding from the Wisconsin Alumni Research Foundation (Simon B. Goldberg). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. Richard J. Davidson is the founder and president of, and serves on the board of directors for, the nonprofit organization Healthy Minds Innovations, Inc. We are grateful to Scott A. Baldwin for comments on our study design and Zach G. Daily for assisting with gathering information regarding primary studies. We are grateful to Willem Kuyken, Fiona Warren, Christina Spinelli, and Michaela Pascoe for sharing supplemental data from their meta-analyses.

Footnotes

See Analayo (2018) for a discussion of the aspects of sati that connote memory or remembering.

We considered the possibility of re-analyzing the included primary RCTs. Ultimately, we concluded that the value of doing so (i.e., providing updated estimates of the effects of MBIs) would be better served by a future large-scale meta-analysis with an independent literature search. Such a review would be useful, particularly for testing moderators, which are frequently underpowered. Re-analyzing the included studies and replicating the tests reported across the included meta-analyses seemed to us both unwieldy and unlikely to yield results different from the representative effect sizes based on the largest number of studies. Given that most of the representative effect sizes were based on recent meta-analyses, a re-analysis drawn from the included meta-analyses would likely include almost entirely the same studies and presumably yield very similar results.

In only one instance was an effect significant relative to active but not passive controls (substance use disorders at post-treatment). Statistical power is again a likely explanation, with only five studies available for comparisons with passive controls (n = 149) versus seven studies (n = 900) available for active controls.

Butler et al. (2006) also failed to find consistent moderators beyond researcher allegiance in their review of meta-analyses of cognitive behavioral therapy.