Abstract

Purpose

To determine in what way the proposed simulation-based intervention (SBI) is an effective intervention for use in basic arthroscopic skills training.

Methods

Twenty candidates were recruited and grouped according to experience. Performance metrics included the time to activity completion, errors made, and Global Rating Scale score. Qualitative data were collected using a structured questionnaire.

Results

Performance on the SBI differed depending on previous arthroscopic training received. Performance on the simulator differed between groups to a statistically significant level regarding time to completion. A difference was also present between participants with no previous training and those with previous training when assessed using the Global Rating Scale. The SBI was deemed acceptable, user-friendly, and realistic. Participants practicing at the expert level believed that such an SBI would be beneficial in developing basic arthroscopic skills.

Conclusions

The results of this study provide evidence that the use of an SBI consisting of a benchtop workstation, laptop viewing platform, 30° arthroscope, and defined performance metrics can detect differences in the level of arthroscopic experience. This format of SBI has been deemed acceptable and useful to the intended user, increasing the feasibility of introducing it into surgical training.

Clinical Relevance

This study adds to the existing body of evidence supporting the potential benefits of benchtop SBIs in arthroscopic skills training. Improved performance on such an SBI may be beneficial for the purpose of basic arthroscopic skills training, and we would support the inclusion of this system in surgical training programs such as those developed by the Arthroscopy Association of North America and American Board of Orthopaedic Surgery.

The traditional model of surgical training has evolved significantly in recent years. As a result, surgeons in training are exposed to a lower volume of surgical procedures, with less opportunity to develop the psychomotor skills required for arthroscopic surgery.1,2 Many trainees therefore feel less confident performing arthroscopic procedures than open procedures on completing their training.3

Consequently, significant efforts have been made to develop techniques for surgical skills training outside of the operating theater. One growing area of research is in the use of simulation-based intervention (SBI) in surgery.

High-fidelity virtual-reality (VR) simulators have many advantages, but they are expensive and are not designed for personal practice. By contrast, benchtop simulators have the advantage of being less expensive and more accessible to trainees. They do not necessarily require complex viewing platforms and can often be used with personal computers or laptops. This design of SBI has the potential to be portable and accessible and therefore increase the distribution of simulation within the surgical domain. Many such models have been produced; however, before each SBI can be incorporated into a training program, it should first undergo a validation process for use in this way.4,5

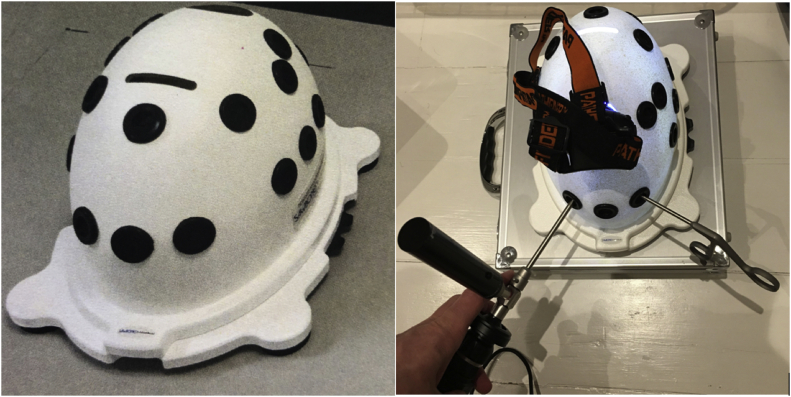

The FAST (Fundamentals of Arthroscopic Surgery Training) workstation (Sawbones–Pacific Research Laboratories, Vashon, WA) is a commercially produced benchtop simulator consisting of a baseplate with interchangeable components and a solid plastic dome with premade entry portals for instrument placement (Fig 1). The dome can be either clear or opaque depending on whether an arthroscopic camera is used, and the changeable components vary in design to allow a variety of activities to be performed, from basic triangulation to complex tissue debridement and repair.

Fig 1.

FAST workstation with camera and arthroscopic instrument.

The American Academy of Orthopaedic Surgeons, Arthroscopy Association of North America, and American Board of Orthopaedic Surgery have developed a course module consisting of online written and video material to guide the trainee through a series of predetermined activities to be performed on the FAST workstation. Previous research investigated the construct validity of the FAST workstation when combined with a VR simulator (ArthroS; VirtaMed, Zurich, Switzerland).6 The study concluded that training year but not prior number of arthroscopic cases correlated significantly with performance and that the use of the FAST workstation in combination with a VR simulator may provide an excellent environment for basic arthroscopic skill acquisition. However, there is little evidence available to support the use of the FAST workstation when combined with a standard laptop or home computer, as recommended in the course design.

The purpose of this research project was to determine in what way the proposed SBI is an effective intervention for use in basic arthroscopic skills training. We hypothesized that a measurable difference would be present between participants with differing levels of training in basic arthroscopic skills when performing tasks on the FAST benchtop workstation in combination with a laptop viewing platform and 30° arthroscope. We also hypothesized that the proposed SBI would be deemed acceptable and useful by participants.

Methods

Between January and March 2019, all members of the orthopaedic department were invited to participate via email. Twenty candidates were recruited and grouped according to arthroscopic experience: novice, trainee, or expert (Table 1). This represents the maximum number of participants who could be recruited during the study period and is consistent with numbers in similar studies in the literature. We enrolled 17 men and 3 women (2 in the novice group and 1 in the trainee group) in the study. No potential participants were excluded. The novice group contained junior doctors with an interest in orthopaedic surgery but no prior training in arthroscopy. The trainee group included orthopaedic trainees who had received training in arthroscopic procedures but were not yet operating at the expert level. The expert group included consultants who regularly performed arthroscopic procedures as part of their orthopaedic practice and fellows who had completed their training in arthroscopic surgery.

Table 1.

Participant Demographic Characteristics

| | Novice | Trainee | Expert |
|---|---|---|---|
| n | 9 participants (7 men) | 5 participants (4 men) | 6 participants (6 men) |
| Right hand dominant, n | 8 | 4 | 5 |
| No. of previous arthroscopies performed, mean (range) | 3.11 (0-15) | 180.8 (50-270) | >1,000 (466 to >1,000) |
| Participant level range | F2 to ST3 | ST5 to ST8 | Fellow to consultant |

F2, foundation year 2; ST3, specialty trainee year 3; ST5, specialty trainee year 5; ST8, specialty trainee year 8.

The workstation instruments consisted of a 30° arthroscope connected to a commercially available USB (Universal Serial Bus) camera head and a light lead, arthroscopic graspers for manipulation of cylinders, and a standard hook probe for manipulation of a ball through a maze. These instruments are identical to those used in real-life arthroscopic surgery. The use of a 30° arthroscope was considered fundamental because manipulation of this instrument is required to afford the appropriate view during an arthroscopic procedure. To keep the system suitable for home use, the light lead was not connected to a light source; instead, a separate light source was placed at the apex of the dome.

The camera was linked to a laptop via a USB link. The laptop acted as both the screen to view the procedure and the recording device to capture the performance for future analysis.

All subjects provided informed consent prior to participation. All participants performed the same activities: placing cylinders on vertical pegs, placing cylinders on horizontal pegs, and moving a metal ball through a maze. These activities were selected because it was deemed that key basic arthroscopic skills would be required for their completion. The use of more complex activities available with the FAST workstation, such as meniscal debridement, requires a greater understanding of the surgical procedure and therefore would unfairly benefit participants with previous exposure to that procedure. Each participant performed the 3 activities with one hand controlling the arthroscope and the other controlling the arthroscopic instrument, and then repeated them with the hands switched.

Each participant was given an instruction sheet and reviewed an introductory video prior to attending the session allocated for data collection. The video explained in detail the simulation activities to be performed. On the day of data collection, each participant received a 10-minute face-to-face orientation to familiarize himself or herself with the simulator prior to completing the activities.

Each activity was recorded on a standard Windows laptop using the AmCap program (Noel Danjou). The following data were collected for each hand of each participant: mean time taken to complete the activity, mean number of errors made, mean Arthroscopic Surgical Skill Evaluation Tool (ASSET) Global Rating Scale (GRS) score, and questionnaire responses. Demographic data included sex, hand dominance, number of arthroscopic procedures performed in a training situation, and level of training.

The ASSET has been specifically designed to assess performance during arthroscopic procedures. It is known to have high internal consistency and inter-rater reliability in simulated and operating room environments.7 An error was defined as dropping a cylinder or placing it on the wrong peg during the vertical and horizontal peg activities or losing control of the metal ball during the maze activity.

The performance of participants on the maze activity was rated using the ASSET GRS by 2 assessors with an interest in surgical simulation. Both assessors were blinded to the participant being rated and were not directly related to the study. The raters independently reviewed footage from the simulator remotely and rated the participant’s performance according to 7 domains: safety, field of view, camera dexterity, instrument dexterity, bimanual dexterity, flow of procedure, and quality of procedure. Each domain was rated on a scale from 1 to 5 points, with 1 point representing the lowest score and 5 points, the highest. The domain for autonomy was removed because no help was available to participants. The scores for each domain were summed to give an overall total ranging from 7 to 35 points.
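The scoring rule described above (7 domains, each rated from 1 to 5 and summed to a total of 7 to 35) can be illustrated with a minimal sketch. The function name, the dictionary layout, and the example ratings are hypothetical and are not taken from the study materials.

```python
# Domain names follow the text; identifiers are illustrative assumptions.
ASSET_DOMAINS = (
    "safety",
    "field_of_view",
    "camera_dexterity",
    "instrument_dexterity",
    "bimanual_dexterity",
    "flow_of_procedure",
    "quality_of_procedure",
)


def asset_total(ratings):
    """Sum the 7 domain ratings (1-5 each) into a total from 7 to 35."""
    missing = [d for d in ASSET_DOMAINS if d not in ratings]
    if missing:
        raise ValueError(f"missing domains: {missing}")
    for domain in ASSET_DOMAINS:
        if ratings[domain] not in (1, 2, 3, 4, 5):
            raise ValueError(f"{domain} must be an integer from 1 to 5")
    # The autonomy domain is deliberately absent, as in the text.
    return sum(ratings[d] for d in ASSET_DOMAINS)


# Made-up ratings for one recorded performance; not study data.
example = dict.fromkeys(ASSET_DOMAINS, 3)
example["safety"] = 4
print(asset_total(example))  # six domains at 3 plus one at 4 gives 22
```

How the 2 assessors' totals were combined for each participant is not shown here because the text does not specify it.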

Each participant completed a 19-item questionnaire after completion of the activities (Table 2). Each item was rated on a 5-point Likert scale (from 1 [strongly disagree] to 5 [strongly agree]) to evaluate the realism of the SBI, acceptability to the user, and perceived usefulness as a training tool. Ethical approval was given for this research project.

Table 2.

Participant Questionnaire

| Section | Item |
|---|---|
| Demographic characteristics | Level |
| | No. of arthroscopies in logbook |
| | Right or left handed |
| Participant feedback∗ | The external instrumentation was realistic. |
| | The position of your hands while operating was realistic. |
| | The visual experience of the arthroscopy screen was realistic. |
| | The visual appearance of the instruments on screen was realistic. |
| | The camera ergonomics were realistic (size, weight). |
| | The use of the 30° scope and light lead was realistic. |
| | The feel of the instruments on the internal simulator was realistic. |
| | The triangulation skills required to complete the tasks were realistic. |
| | The simulator gave a sense of what a real arthroscopy is like. |
| | The simulator provided an unthreatening learning environment. |
| | I enjoyed using the simulator. |
| | The simulator is a useful training tool for orthopaedic core trainees. |
| | The simulator is a useful training tool for orthopaedic specialty trainees. |
| | The simulator is a useful training tool for orthopaedic consultants. |
| Incorporation into ST training∗ | Practice with this simulator could improve early attempts at arthroscopy. |
| | If given the arthroscopic simulator for home use, I would use it. |
| | The simulation exercise was interesting. |
| | The simulator is easy to use. |
| | The simulator is well designed and constructed. |
| Other feedback† | Free-text response |

ST, specialty trainee.

∗The following scale was used for each response: 1, strongly disagree; 2, disagree; 3, neither agree nor disagree; 4, agree; or 5, strongly agree.

†Participants were asked to provide other feedback in free-text format.
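Because the questionnaire responses are reported as the percentage in agreement with each statement, a short sketch of that calculation may help. It assumes that "agreement" means a response of 4 (agree) or 5 (strongly agree) on the scale above; the function name and the response vector are hypothetical.

```python
def percent_agreement(responses):
    """Percentage of 1-5 Likert responses that are 4 (agree) or 5 (strongly agree)."""
    if not responses:
        raise ValueError("at least one response is required")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    agree = sum(1 for r in responses if r >= 4)
    return 100.0 * agree / len(responses)


# Made-up responses from 15 respondents to one statement; not study data.
responses = [5, 4, 4, 5, 4, 4, 5, 4, 4, 4, 5, 4, 4, 5, 3]
print(f"{percent_agreement(responses):.1f}%")  # 14 of 15 in agreement
```

Treating the responses as ordinal and reporting a proportion avoids taking a mean of Likert codes, which would assume equal spacing between the response categories.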

All data were analyzed using RStudio software (version 1.2.1335; RStudio, Boston, MA). Nonparametric tests were used for time, errors, and total ASSET GRS score because previous studies have found these data not to be normally distributed.8

Kruskal-Wallis tests were used for time, errors, and total ASSET GRS score grouped by experience level. When a difference was identified, pair-wise Wilcoxon rank sum tests were used between the novice and trainee groups, between the novice and expert groups, and between the trainee and expert groups to delineate the level at which the various aspects measured could distinguish between different groups. A significance level of P < .05 was selected.
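The two-stage procedure above can be sketched as follows. This is an illustration under stated assumptions rather than the analysis script used in the study: the Kruskal-Wallis P value uses the tie-corrected chi-square approximation (with 3 groups there are 2 degrees of freedom, so the upper tail is exp(-H/2)), the pair-wise P value is an exact two-sided permutation P value on the rank sum, and no multiple-comparison adjustment is applied because none is described in the text. All function names and data values are hypothetical.

```python
import math
from itertools import combinations


def midranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kruskal_wallis_3(a, b, c):
    """Tie-corrected H statistic and chi-square P value (2 df) for 3 groups."""
    groups = [a, b, c]
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    ranks = midranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum * rank_sum / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3 * (n + 1)
    counts = {}
    for x in pooled:
        counts[x] = counts.get(x, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in counts.values()) / (n ** 3 - n)
    if correction == 0:  # every observation identical
        return 0.0, 1.0
    h /= correction
    return h, math.exp(-h / 2)  # chi-square upper tail with 2 df


def rank_sum_exact(x, y):
    """Exact two-sided permutation P value for the rank sum of x versus y."""
    ranks = midranks(list(x) + list(y))
    n = len(x)
    expected = n * (len(ranks) + 1) / 2
    deviation = abs(sum(ranks[:n]) - expected)
    hits = count = 0
    for subset in combinations(ranks, n):
        count += 1
        if abs(sum(subset) - expected) >= deviation - 1e-9:
            hits += 1
    return hits / count


# Hypothetical completion times in seconds; NOT the study data.
novice = [150, 172, 121, 180, 165]
trainee = [110, 98, 137, 105]
expert = [92, 87, 116, 90]

h, p_all = kruskal_wallis_3(novice, trainee, expert)
print(f"Kruskal-Wallis: H = {h:.2f}, P = {p_all:.3f}")
if p_all < 0.05:
    pairs = {
        "novice vs trainee": (novice, trainee),
        "novice vs expert": (novice, expert),
        "trainee vs expert": (trainee, expert),
    }
    for label, (x, y) in pairs.items():
        print(f"{label}: P = {rank_sum_exact(x, y):.3f}")
```

Statistical packages may handle ties and small samples differently (for example, by switching to a normal approximation), so P values from this sketch need not match those from any particular software.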

Descriptive statistics for these data are reported as medians with lower and upper quartiles. Inter-rater agreement between the 2 sets of ASSET scores was assessed with the Cohen linear-weighted κ coefficient. Questionnaire responses were analyzed as ordinal data and reported as the percentage in agreement with the anchor statement.9
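For readers unfamiliar with the linear-weighted κ, the following sketch shows one way to compute it for two raters' total scores. It assumes disagreement weights proportional to the absolute difference between the two scores, which is one common reading of "linear-weighted"; some software instead weights by the distance between ordered category positions, so results can differ. The function name and the score lists are hypothetical.

```python
from collections import Counter


def linear_weighted_kappa(rater_a, rater_b):
    """Cohen kappa with disagreement weights proportional to |a - b|."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equally long, non-empty rating lists")
    n = len(rater_a)
    # Mean observed disagreement across the rated performances.
    observed = sum(abs(a - b) for a, b in zip(rater_a, rater_b)) / n
    # Mean disagreement expected by chance, from each rater's own marginals.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(
        abs(a - b) * ca * cb
        for a, ca in count_a.items()
        for b, cb in count_b.items()
    ) / (n * n)
    if expected == 0:
        raise ValueError("kappa is undefined when all scores are identical")
    return 1 - observed / expected


# Made-up total scores (7-35) from two raters; not study data.
rater_1 = [12, 15, 20, 24, 27, 30, 18, 22]
rater_2 = [13, 15, 19, 25, 27, 31, 17, 22]
print(round(linear_weighted_kappa(rater_1, rater_2), 2))
```

The constant that normalises the weights (the largest possible score difference) cancels between the observed and expected terms, which is why it does not appear in the code.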

Results

Time

The overall time taken to complete the activities showed a difference among the 3 experience groups, with novices requiring more time than trainees, who in turn required more time than experts (Table 3). For the vertical peg activity, the comparison across all groups did not reach statistical significance (P = .11), although pair-wise analysis showed a statistically significant difference between the novice and expert groups (P = .036). A statistically significant difference was shown across all groups during the horizontal peg activity (P = .0039), with pair-wise analysis confirming statistically significant differences between the novice and expert groups (P = .0016) and between the trainee and expert groups (P = .017). A statistically significant difference was shown across all groups during the maze activity (P = .021), with pair-wise analysis confirming statistically significant differences between the novice and expert groups (P = .021) and between the novice and trainee groups (P = .042).

Table 3.

Time Taken to Complete Each Activity

| Activity | Novice Time, Median (IQR), s | Trainee Time, Median (IQR), s | Expert Time, Median (IQR), s | P Value, All Groups∗ | P Value, Novice vs Trainee† | P Value, Novice vs Expert† | P Value, Trainee vs Expert† |
|---|---|---|---|---|---|---|---|
| Vertical peg | 115.5 (85-150.5) | 79.5 (77.5-125.5) | 64.75 (54.86-80.63) | .11 | .36 | .036‡ | .54 |
| Horizontal peg | 163 (133-221) | 113.5 (102-127) | 59.5 (54.5-69) | .0039‡ | .15 | .0016‡ | .017‡ |
| Maze | 170.5 (122-180) | 109.5 (97.5-137) | 91.5 (86.5-116.75) | .021‡ | .042‡ | .021‡ | .31 |

IQR, interquartile range.

∗Kruskal-Wallis test.

†Wilcoxon rank sum test.

‡Statistically significant (P < .05).

Errors

The overall number of errors made showed a statistically significant difference among groups during the horizontal peg activity (P = .027), with pair-wise analysis showing a statistically significant difference only between the novice and expert groups (P = .018) (Table 4). No difference was found between groups during the vertical peg and maze activities.

Table 4.

Errors Made During Each Task

| Activity | Novice Errors, Median (IQR) | Trainee Errors, Median (IQR) | Expert Errors, Median (IQR) | P Value, All Groups∗ | P Value, Novice vs Trainee† | P Value, Novice vs Expert† | P Value, Trainee vs Expert† |
|---|---|---|---|---|---|---|---|
| Vertical peg | 1 (0.5-2) | 1 (0-1.5) | 1.25 (1-1.5) | .86 | .68 | .95 | .71 |
| Horizontal peg | 4.5 (2.5-5) | 2 (1-2.5) | 1.5 (0.75-1.8) | .027‡ | .079 | .018‡ | .46 |
| Maze | 0 (0-1) | 0 (0-0) | 0 (0-0.75) | .55 | .31 | .79 | .56 |

IQR, interquartile range.

∗Kruskal-Wallis test.

†Wilcoxon rank sum test.

‡Statistically significant (P < .05).

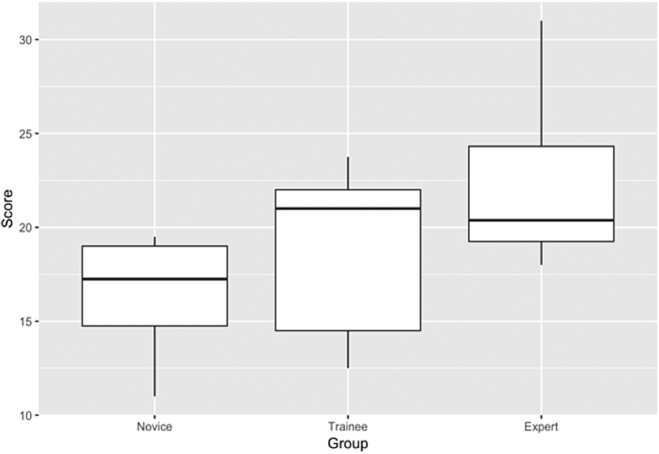

GRS Score

Good inter-rater agreement of the ASSET score was found between the 2 assessors. The Cohen linear-weighted κ coefficient of agreement was 0.83. A statistically significant difference was found between the novice and expert groups (P = .018). A trend toward higher scores with an increasing level of experience was observed (Fig 2).

Fig 2.

Box-and-whisker plot showing total Arthroscopic Surgical Skill Evaluation Tool (ASSET) score attained in each group. A statistically significant difference was observed between the novice and expert groups (P = .018). The center bar indicates the median; hinges, first and third quartiles; whiskers, 1.5 times the interquartile range; and points, outliers.

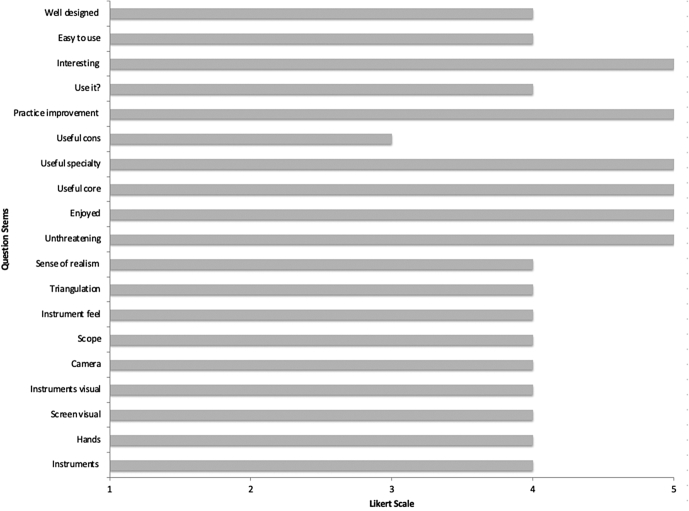

Questionnaire Results

Each participant completed all aspects of the questionnaire (Fig 3). Of the 15 participants who had previously performed an arthroscopic procedure, all either agreed or strongly agreed that the external instrumentation and use of the 30° scope and light lead were realistic; 93.3% agreed or strongly agreed that the visual appearance of the instruments on the screen and feel of the instruments on the internal structure of the simulator were realistic; 80% agreed or strongly agreed that the camera ergonomics regarding size and weight and the triangulation skills required to complete the activities were realistic; and 73.3% agreed or strongly agreed that the position of the hands while operating was realistic, the visual experience of the arthroscopy screen was realistic, and the simulator gave a sense of what a real arthroscopy was like. Responses from the 5 participants who had not performed an arthroscopy before were excluded because of the lack of real-life experience on which to base a judgment regarding realism.

Fig 3.

Summary of questionnaire responses. (cons, orthopaedic consultants.)

In terms of whether the simulator represents an acceptable learning tool, all 20 participants agreed or strongly agreed that the simulator provided an unthreatening learning environment and was interesting and enjoyable to use. All but 1 participant in the trainee and novice groups (95%) agreed or strongly agreed that if given the simulator for home use, they would use it (80% of all participants). All trainees and novices agreed or strongly agreed that it was easy to use (95% of all participants), and 78.6% agreed or strongly agreed that the simulator was well designed and constructed (85% of all participants).

In terms of whether the simulator represents a useful learning tool, all participants agreed or strongly agreed that it would be a useful training tool for orthopaedic core trainees and 95% agreed or strongly agreed that it would be a useful training tool for orthopaedic specialty trainees and that practice with this training tool could improve early attempts at arthroscopy. Three participants commented that the light source could be improved, 3 indicated that the workstation itself needed better anchoring to reduce movement during the activities, 1 noted that the rubber around the entry portals was too slippery, 1 commented that the laptop screen negatively impacted realism, and 1 indicated that the feel of the instruments within the simulator was unrealistically hard. One trainee stated that he or she believed his or her hand-eye coordination and triangulation had improved throughout the simulation experience.

Discussion

The pertinent findings of this study are that performance on the FAST workstation benchtop simulator, used in conjunction with a laptop viewing platform, was positively associated with the amount of previous arthroscopic training received. Performance on the simulator differed between groups to a statistically significant level regarding time to completion. A difference was also present between participants with no previous training and those with previous training when assessed using the ASSET GRS. The FAST workstation was deemed acceptable and user-friendly by the intended end user. The use of a 30° arthroscope and light source in combination with actual surgical instruments was deemed realistic, and participants practicing at the expert level believed the use of such a simulator would be beneficial in developing basic arthroscopic skills in the early years of training.

The findings of this study add to the existing body of evidence supporting the use of benchtop SBIs in the training of basic arthroscopic skills and are in keeping with those previously reported in the literature for similar simulators. Tofte et al.6 combined the FAST workstation with a VR system and found that novice arthroscopists had lower performance scores than trainees and experts but trainees and experts performed at a similar level. They assessed basic arthroscopic activities including “periscoping,” “tracing the lines,” and “gathering the stars” using total operation time, camera path length, and a composite score derived from multiple measurements produced by the VR simulator. Although their study used the benchtop FAST workstation, it did so in combination with a VR simulator and not a laptop viewing platform.

Colaco et al.10 and Lopez et al.11 both reported similar findings when using homemade benchtop simulators in combination with a laptop viewing platform and a 0° arthroscope. Colaco et al. assessed basic arthroscopic activities in combination with the time taken to complete the task and the number of times participants looked at their hands. Their study showed that students were significantly slower and looked at their hands more frequently than trainees and experts; however, times did not differ significantly between trainees and experts. They concluded that they had established construct validity owing to the ability of the simulator to differentiate the participant’s level of training based on these metrics. Similarly, Lopez et al. evaluated triangulation, probing, horizon changes, suture management, and object manipulation in combination with a time score, an assessment of accuracy, and a GRS, reaching very similar conclusions. The findings of our study add to these data by showing that the SBI was able to discriminate between novices and experts to a statistically significant level regarding time during all 3 activities; however, significant differences were identified only between the trainee and expert groups for the horizontal peg activity and between the novice and trainee groups for the maze activity.

This study provides some evidence for the use of errors as a metric for differentiating levels of expertise. Although a significant difference was identified for all groups during the horizontal peg activity, with pair-wise analysis showing a significant difference between the novice and expert groups, no significant difference was identified for this metric during the vertical peg and maze activities. Errors have been used successfully in previous studies to discriminate between levels of expertise using benchtop simulators.11, 12, 13, 14, 15 There was a low error rate in all activities, which may account for the findings of our study, and it is likely that with the use of increasingly complex activities available with the FAST workstation, more errors would occur, providing significant differences.

In our study, a significant difference was identified between the novice and expert groups regarding the ASSET GRS score, although the differences between the novice and trainee groups and between the trainee and expert groups did not reach significance. Similar findings have been reported with the use of GRSs when differentiating between novices and experts14,16 and when differentiating between 3 to 4 different levels of expertise when more complex surgical procedures are being simulated.15,17, 18, 19, 20

Owing to the basic nature of the activities involved in this study, there is likely less opportunity to demonstrate higher-level surgical skills; therefore, a lower ASSET score in the expert group may be expected. This is supported by previous studies reporting on the observed ASSET score of expert participants for both basic and complex simulated procedures. Garfjeld Roberts et al.8 recorded mean ASSET scores of 22.5 and 23 when participants performed basic simulated knee and shoulder activities, respectively, with the achieved scores increasing to 31 for the knee and 33 for the shoulder when more complex simulated activities were performed. Other authors have shown similar findings.20

One of the strengths of this study is the allocation of the participants. Previous studies have grouped participants based on training grade or the number of arthroscopic procedures performed. Owing to the nonsequential acquisition of skills throughout training, assuming a higher skill level in a certain area purely based on training level is likely inappropriate because expertise does not develop in a linear, stepwise manner. Participants in our study were therefore grouped depending on the amount of training in arthroscopic procedures that they had received.

Previous studies have also included medical students—who may have only limited surgical experience—in the novice group. The inclusion of only orthopaedic trainees was deemed appropriate to allow a more useful interpretation of any difference found between groups. With the goal of introducing the surgical skills SBI into orthopaedic training programs, it seems intuitive to include the end user in the investigative process and this may promote more reliable generalization of results to the wider population.

Limitations

Several limitations have been identified. This study may have been limited by the size of, and variation in, the participant groups. Such variation in group sizes is commonly seen in simulation studies and relates to the inherent difficulty of recruiting such highly skilled individuals into such studies.21 Moreover, having more participants in the novice group and fewer participants in the expert group is potentially desirable owing to the wider range in innate ability of those with less experience.22

Because the aim of this study was to provide evidence for the use of the current SBI in developing basic arthroscopic surgical skills, only the 3 simplest FAST workstation activities were chosen. It is reasonable to predict, on the basis of the results of this study and previous investigations, that with the use of more complex activities, the difference in performance scores between groups would be more apparent. However, prior knowledge of the procedure being performed introduces a degree of bias; therefore, to control for this, only the 3 most basic activities were included in this study.

Conclusions

The results of this study provide evidence that the use of an SBI consisting of a benchtop workstation, laptop viewing platform, 30° arthroscope, and defined performance metrics can detect differences in the level of arthroscopic experience. This format of SBI has been deemed acceptable and useful to the intended user, increasing the feasibility of introducing it into surgical training.

Footnotes

The authors report the following potential conflicts of interest or sources of funding: D.T. receives lecture fees from Arthrex, outside the submitted work; receives royalties from Arthrex, outside the submitted work; and has a patent issued for an acromioclavicular joint fixation technique and receives royalties from Arthrex. Full ICMJE author disclosure forms are available for this article online, as supplementary material.

References

- 1. Breen K.J., Hogan A.M., Mealy K. The detrimental impact of the implementation of the European working time directive (EWTD) on surgical senior house officer (SHO) operative experience. Ir J Med Sci. 2013;182:383–387. doi: 10.1007/s11845-012-0894-6.

- 2. Skipworth R.J., Terrace J.D., Fulton L.A., Anderson D.N. Basic surgical training in the era of the European Working Time Directive: What are the problems and solutions. Scott Med J. 2008;53:18–21. doi: 10.1258/RSMSMJ.53.4.18.

- 3. Hall M.P., Kaplan K.M., Gorczynski C.T., Zuckerman J.D., Rosen J.E. Assessment of arthroscopic training in U.S. orthopedic surgery residency programs—A resident self-assessment. Bull NYU Hosp Jt Dis. 2010;68:5–10.

- 4. Frank R.M., Erickson B., Frank J.M., et al. Utility of modern arthroscopic simulator training models. Arthroscopy. 2014;30:121–133. doi: 10.1016/j.arthro.2013.09.084.

- 5. Samia H., Khan S., Lawrence J., Delaney C.P. Simulation and its role in training. Clin Colon Rectal Surg. 2013;26:47–55. doi: 10.1055/s-0033-1333661.

- 6. Tofte J.N., Westerlind B.O., Martin K.D., et al. Knee, shoulder, and Fundamentals of Arthroscopic Surgery Training: Validation of a virtual arthroscopy simulator. Arthroscopy. 2017;33:641–646.e3. doi: 10.1016/j.arthro.2016.09.014.

- 7. Koehler R., John T., Lawler J., Moorman C., III, Nicandri G. Arthroscopic training resources in orthopedic resident education. J Knee Surg. 2015;28:67–74. doi: 10.1055/s-0034-1368142.

- 8. Garfjeld Roberts P., Guyver P., Baldwin M., et al. Validation of the updated ArthroS simulator: Face and construct validity of a passive haptic virtual reality simulator with novel performance metrics. Knee Surg Sports Traumatol Arthrosc. 2017;25:616–625. doi: 10.1007/s00167-016-4114-1.

- 9. Jamieson S. Likert scales: How to (ab)use them. Med Educ. 2004;38:1217–1218. doi: 10.1111/j.1365-2929.2004.02012.x.

- 10. Colaco H.B., Hughes K., Pearse E., Arnander M., Tennent D. Construct validity, assessment of the learning curve, and experience of using a low-cost arthroscopic surgical simulator. J Surg Educ. 2017;74:47–54. doi: 10.1016/j.jsurg.2016.07.006.

- 11. Lopez G., Martin D.F., Wright R., et al. Construct validity for a cost-effective arthroscopic surgery simulator for resident education. J Am Acad Orthop Surg. 2016;24:886–894. doi: 10.5435/JAAOS-D-16-00191.

- 12. Braman J.P., Sweet R.M., Hananel D.M., Ludewig P.M., Van Heest A.E. Development and validation of a basic arthroscopy skills simulator. Arthroscopy. 2015;31:104–112. doi: 10.1016/j.arthro.2014.07.012.

- 13. Molho D.A., Sylvia S.M., Schwartz D.L., Merwin S.L., Levy I.M. The grapefruit: An alternative arthroscopic tool skill platform. Arthroscopy. 2017;33:1567–1572. doi: 10.1016/j.arthro.2017.03.010.

- 14. Angelo R.L., Pedowitz R.A., Ryu R.K., Gallagher A.G. The Bankart performance metrics combined with a shoulder model simulator create a precise and accurate training tool for measuring surgeon skill. Arthroscopy. 2015;31:1639–1654. doi: 10.1016/j.arthro.2015.04.092.

- 15. Coughlin R.P., Pauyo T., Sutton J.C., Coughlin L.P., Bergeron S.G. A validated orthopaedic surgical simulation model for training and evaluation of basic arthroscopic skills. J Bone Joint Surg Am. 2015;97:1465–1471. doi: 10.2106/JBJS.N.01140.

- 16. Chang J., Banaszek D.C., Gambrel J., Bardana D. Global rating scales and motion analysis are valid proficiency metrics in virtual and benchtop knee arthroscopy simulators. Clin Orthop Relat Res. 2016;474:956–964. doi: 10.1007/s11999-015-4510-8.

- 17. Dwyer T., Slade Shantz J., Chahal J., et al. Simulation of anterior cruciate ligament reconstruction in a dry model. Am J Sports Med. 2015;43:2997–3004. doi: 10.1177/0363546515608161.

- 18. Dwyer T., Schachar R., Leroux T., et al. Performance assessment of arthroscopic rotator cuff repair and labral repair in a dry shoulder simulator. Arthroscopy. 2017;33:1310–1318. doi: 10.1016/j.arthro.2017.01.047.

- 19. Erturan G., Alvand A., Judge A., Pollard T.C.B., Glyn-Jones S., Rees J.L. Prior generic arthroscopic volume correlates with hip arthroscopic proficiency: A simulator study. J Bone Joint Surg Am. 2018;100:e3. doi: 10.2106/JBJS.17.00352.

- 20. Phillips L., Cheung J.J.H., Whelan D.B., et al. Validation of a dry model for assessing the performance of arthroscopic hip labral repair. Am J Sports Med. 2017;45:2125–2130. doi: 10.1177/0363546517696316.

- 21. Morgan M., Aydin A., Salih A., Robati S., Ahmed K. Current status of simulation-based training tools in orthopedic surgery: A systematic review. J Surg Educ. 2017;74:698–716. doi: 10.1016/j.jsurg.2017.01.005.

- 22. Alvand A., Auplish S., Khan T., Gill H.S., Rees J.L. Identifying orthopaedic surgeons of the future: The inability of some medical students to achieve competence in basic arthroscopic tasks despite training: A randomised study. J Bone Joint Surg Br. 2011;93:1586–1591. doi: 10.1302/0301-620X.93B12.27946.
