Abstract

Objectives

Access to full texts of randomised controlled clinical trials (RCTs) is often limited, so brief summaries of studies play a pivotal role. In 2008, a checklist was provided to ensure the transparency and completeness of abstracts. The aim of this investigation was to estimate adherence to the reporting guidelines of the Consolidated Standards of Reporting Trials (CONSORT) criteria for abstracts (CONSORT-A) in RCT publications.

Primary endpoint

Percentage of the 16 CONSORT-A criteria fulfilled per study.

Materials and methods

This study is based on a full survey (212 RCT abstracts in dental implantology, PubMed search, publication period 2014–2016, 45 journals, median impact factor: 2.328). In addition to merely documenting ‘adherence’ to criteria, the authors also assessed the ‘correct implementation’ of the requested information where possible. Data were collected independently by two dentists, and a final consensus was reached. The primary endpoint was evaluated by medians and quartiles. Additionally, a Poisson regression was conducted to detect influencing factors.

Results

A median of 50% (Q1–Q3: 44%–63%) was documented for the 16 criteria listed in the CONSORT-A statement. Nine of the 16 criteria were considered in fewer than 50% of the abstracts. ‘Correct implementation’ was achieved for a median of 43% (Q1–Q3: 31%–50%) of the criteria. An additional application of Poisson regression revealed that the number of words used had a locally significant impact on the number of reported CONSORT criteria for abstracts (incidence rate ratio 1.001, 95% CI 1.001 to 1.002).

Conclusion

Transparent and complete reporting in abstracts appears problematic. A limited word count seems to result in a reduction in necessary information. As full texts are often not readily accessible, abstracts constitute the primary basis for decision making in clinical practice and research. Journals should therefore refrain from limiting the number of words too strictly, in order to facilitate comprehensive reporting in abstracts.

Keywords: oral & maxillofacial surgery, oral medicine, statistics & research methods

Strengths and limitations of this study.

Literature search: We searched one electronic database—PubMed—because it comprises more than 30 million citations for biomedical literature from MEDLINE and has been declared the world’s largest and most important medical bibliographic database.

Data extraction: Two dentists reviewed the abstracts in parallel and independently of each other; the final set of data was produced in consensus.

Reporting quality assessed by adherence to and correct implementation of the Consolidated Standards of Reporting Trials statement: this approach to assessing reporting quality is new in the field of dental implantology; in addition to merely documenting ‘adherence’ to criteria, the authors also assessed the ‘correct implementation’ of the requested information.

Background and objective

Transparent and comprehensive reporting forms the basis for the evaluation and interpretation of published scientific findings. The EQUATOR network currently provides a total of 425 reporting guidelines for health research to improve the quality of reporting in healthcare studies.1 The Consolidated Standards of Reporting Trials (CONSORT) statement contains recommendations for reporting randomised controlled trials (RCTs), which represent the highest level of evidence (Ib) and serve as a basis for recommendations and therapeutic decisions in daily clinical routine and evidence-based practice. The CONSORT group has developed guidelines for a variety of study designs, interventions and data and provides checklists for authors to use when preparing publications.2 A specific checklist for abstracts has been available since 2008, because this part of a publication plays a key role: researchers and physicians worldwide use the information in abstracts to judge the relevance of a scientific paper and whether to pursue it further. An abstract, that is, a publication in miniature format, should therefore convey all necessary information from a scientific study.

A look at the current literature reveals differences among published abstracts in terms of completeness, structure and scope, despite existing and freely available guidelines. Reporting is frequently non-transparent and incomplete, which inevitably leads to two problems:

- Fragmented and incomplete abstract reporting of study results hampers therapeutic decision making in daily clinical routine.

Due to increasing time constraints in hospitals and a rapidly growing number of published study results, many interested individuals only have time to read the abstracts.3 On this basis, they need to decide for or against the inspection or acquisition of full texts and possibly for or against a therapy.

Even though it is strongly advised to consult the full texts for decision making, this advice cannot always be followed, as is also regularly described in publications.4 In regions with fewer healthcare resources in particular, limited, chargeable and expensive access to full texts forces medical staff to make treatment decisions exclusively on the basis of abstracts.5 This entails a high risk of errors with possibly far-reaching consequences for patients.

- Fragmented and incomplete abstract reporting of study results complicates the compilation of reviews, meta-analyses and evidence-based information in medicine.

Lund et al published the following key finding: ‘An evidence-based research approach—the use of existing evidence in a transparent and explicit way—is needed to justify the need for and design a new study’.6 Especially in projects with moderate or no funding, a literature search is followed by abstract screening to identify relevant literature and to keep the costs of procuring literature to a minimum. Non-transparent or fragmentary reporting in abstracts entails the risk that relevant study reports will be overlooked, since the presentation of the results may be incomplete, incorrect or selective. As a result, relevant studies may be missed when reviews and meta-analyses are prepared. This effect is especially critical for drafting recommendations, where RCT reports are used for an evidence-based presentation of the results. As a consequence of unclear and incomplete reporting, articles of importance for formulating therapeutic guidelines may not be identified and therefore not considered.

These problems are widespread in publications on various clinical indications.7–10 In oral implantology, we found one publication that assessed the reporting quality in abstracts of RCTs.11 Focusing on six leading implantology journals between 2008 and 2012, this study determined a mean overall reporting quality score of 58.6%. Our investigation aimed to provide updated results on the extent to which authors in the field comply with the recommendations of the CONSORT statement for transparent and complete abstracts. The objective was to check RCT publications in dental implantology (2014–2016) for the information requested by the CONSORT statement for abstracts.

Materials and methods

The authors of this study examined abstracts of published study reports in dental implantology for compliance with the 16 criteria of the CONSORT statement for abstracts (CONSORT-A).12 The objective was to determine, per study and as a percentage, the degree to which all criteria requested in CONSORT-A were adhered to (primary endpoint). A secondary research question was to identify, via regression analysis, factors that may be associated with better implementation of CONSORT criteria. The criteria were also assessed for correct and meaningful documentation, so assessment was conducted in two steps. For the ‘degree of adherence I’, the focus was exclusively on whether the information requested by CONSORT-A was documented (retrievable) in the abstract. For the ‘degree of adherence II’, correctness and completeness as required by CONSORT-A2 12 13 were evaluated. This assessment is based on the requirements defined in the CONSORT-A statement,12 as well as on the information required in ‘Explanation and Elaboration’,13 which describes in several subsections how each criterion should be reported. An assessment of correctness was meaningful, and therefore possible, for only 6 of the 16 criteria (see online supplemental table 1).
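The two-step scoring can be sketched as follows. This is a minimal illustration, not the authors' Excel data mask: the criterion names are shortened from table 2, the six criteria checked for correctness are the rows of table 2 without an asterisk, and the example abstract is hypothetical. For the remaining ten criteria, degree II is assumed to equal degree I, as the asterisked rows of table 2 indicate.

```python
# The 16 CONSORT-A criteria (names shortened from table 2).
CRITERIA = [
    "title", "trial_design", "participants", "interventions", "objective",
    "primary_endpoint", "randomisation", "blinding", "numbers_randomised",
    "recruitment", "numbers_analysed", "outcome", "harms", "conclusion",
    "trial_registration", "funding",
]

# Criteria for which correctness/completeness (degree II) was assessed separately.
CHECKED_FOR_CORRECTNESS = {
    "interventions", "primary_endpoint", "randomisation",
    "blinding", "outcome", "conclusion",
}


def adherence(documented, correct):
    """Return (degree I, degree II) as percentages of the 16 criteria.

    documented: criteria whose information is retrievable in the abstract.
    correct: checked criteria that are reported as CONSORT-A requires.
    A criterion without a separate correctness check counts for degree II
    whenever it is documented.
    """
    n = len(CRITERIA)
    degree_1 = sum(c in documented for c in CRITERIA)
    degree_2 = sum(
        c in documented and (c not in CHECKED_FOR_CORRECTNESS or c in correct)
        for c in CRITERIA
    )
    return 100 * degree_1 / n, 100 * degree_2 / n


# Hypothetical abstract: eight criteria documented, two checked criteria correct.
doc = {"title", "participants", "interventions", "objective",
       "primary_endpoint", "recruitment", "outcome", "conclusion"}
ok = {"interventions", "outcome"}
print(adherence(doc, ok))
```

Here the primary endpoint and the conclusion are present but not reported as required, so they count towards degree I only.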


The analysis was based on a literature search in PubMed, the search engine for the MEDLINE database, covering the publication period January 2014 to December 2016. We deliberately used a broad search to obtain as many hits as possible: the keywords ‘dental implantation’, ‘dental implant’ and ‘tooth artificial’ were combined with the logical operator OR, and the study type was restricted to RCTs (online supplemental table 2). Excel14 was used to compile the data, and the data mask was generated on the basis of the CONSORT statement for abstracts.12
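A search of this kind can be expressed as a PubMed query string. The exact string used in this study is given in online supplemental table 2; the version below is a hypothetical reconstruction that uses the standard PubMed field tags `[pt]` (publication type) and `[dp]` (date of publication), and it only builds, without sending, a request URL for the NCBI E-utilities `esearch` endpoint.

```python
from urllib.parse import urlencode

ESEARCH = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"
KEYWORDS = ["dental implantation", "dental implant", "tooth artificial"]


def build_query(keywords, start="2014/01/01", end="2016/12/31"):
    """OR-combine the keywords, restrict to RCTs and to a publication-date range.

    Hypothetical reconstruction; the study's own string may differ in detail.
    """
    terms = " OR ".join(f'"{k}"' for k in keywords)
    return f"({terms}) AND randomized controlled trial[pt] AND {start}:{end}[dp]"


def esearch_url(query, retmax=500):
    """Build (but do not send) an E-utilities esearch request for PubMed."""
    return ESEARCH + "?" + urlencode({"db": "pubmed", "term": query, "retmax": retmax})


query = build_query(KEYWORDS)
print(query)
print(esearch_url(query))
```

Keeping the keyword list separate from the filters makes it easy to document and rerun the search, for example for a later publication period.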


At the start of the project, a tool for evaluating abstract quality was available from a preceding study;8 the investigators slightly adapted and extended it for the purposes of this investigation. All 16 CONSORT-A criteria were included in data compilation and analysis. General information on each publication was documented to allow a clear classification of the reports later on, together with additional data to be examined for their potential impact on reporting quality (year of publication, ‘structured’ or ‘unstructured’ abstract, number of patients included, word count and impact factor of the respective journal).

Two dentists reviewed the abstracts in parallel and independently of each other. The final data set was drawn up in consensus (JL and CL). Data analysis was performed using the SPSS Statistics V.24 software program15 (SK and CB). The authors determined relative frequencies and IQRs for study-related implementation rates and for the implementation of individual criteria. The results at the primary endpoint are depicted in box plots. Criteria-related frequencies are illustrated with bar charts.14
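The per-study summary can be sketched with Python's standard library. The counts below are hypothetical, and `statistics.quantiles` with its default `'exclusive'` method interpolates at positions (n + 1)p; SPSS may use a different quantile definition, so quartiles can differ slightly from those reported.

```python
import statistics

N_CRITERIA = 16


def summarise(criteria_met, n_criteria=N_CRITERIA):
    """Median and quartiles (Q1, Q3) of per-abstract adherence, in percent."""
    pct = [100 * c / n_criteria for c in criteria_met]
    q1, median, q3 = statistics.quantiles(pct, n=4)  # default 'exclusive' method
    return median, q1, q3


# Hypothetical numbers of fulfilled criteria for nine abstracts
counts = [7, 7, 8, 8, 8, 9, 10, 10, 11]
print(summarise(counts))
```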

Possible factors influencing abstract quality, measured as the number of criteria fulfilled per publication, were identified by an additional explorative Poisson regression analysis (ST). Backward variable selection was performed using the Akaike information criterion (AIC). Effects are described by incidence rate ratios (IRRs) with 95% CIs and p values determined via the Wald test. Year of publication (reference: 2014), presence of a structured abstract (reference: no), number of patients analysed, impact factor and word count were examined as potential influencing factors. The analysis was conducted in R (R Core Team, 2015).
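The explorative model can be sketched in plain Python. This is an illustration under stated assumptions, not the authors' R code: it fits a log-link Poisson model by Newton-Raphson (equivalent to IRLS for this canonical link), reports Wald 95% CIs for the IRRs as exp(β ± 1.96·SE), and performs backward selection with AIC = 2k − 2 log L. The data are simulated (hypothetical word counts and a structured-abstract flag with no true effect), not the study data; in R, `glm(family = poisson)` combined with `step()` is the usual route.

```python
import math
import random


def invert(m):
    """Invert a small square matrix (Gauss-Jordan with partial pivoting)."""
    n = len(m)
    a = [row[:] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]
        for r in range(n):
            if r != col:
                f = a[r][col]
                a[r] = [vr - f * vc for vr, vc in zip(a[r], a[col])]
    return [row[n:] for row in a]


def score_info(X, y, beta):
    """Score vector, Fisher information and log-likelihood of a log-link Poisson model."""
    k = len(beta)
    grad, info, loglik = [0.0] * k, [[0.0] * k for _ in range(k)], 0.0
    for row, yi in zip(X, y):
        eta = sum(b * x for b, x in zip(beta, row))
        mu = math.exp(eta)
        loglik += yi * eta - mu - math.lgamma(yi + 1)
        for j in range(k):
            grad[j] += (yi - mu) * row[j]
            for i in range(k):
                info[j][i] += mu * row[j] * row[i]
    return grad, info, loglik


def fit_poisson(X, y, max_iter=100, tol=1e-10):
    """Newton-Raphson fit; returns coefficients, standard errors and log-likelihood."""
    k = len(X[0])
    beta = [math.log(sum(y) / len(y))] + [0.0] * (k - 1)
    for _ in range(max_iter):
        grad, info, loglik = score_info(X, y, beta)
        inv = invert(info)
        step = [sum(inv[j][i] * grad[i] for i in range(k)) for j in range(k)]
        for _ in range(30):  # step-halving in case a full step lowers the likelihood
            trial = [b + s for b, s in zip(beta, step)]
            if score_info(X, y, trial)[2] >= loglik - 1e-9:
                break
            step = [s / 2 for s in step]
        beta = trial
        if max(abs(s) for s in step) < tol:
            break
    _, info, loglik = score_info(X, y, beta)
    cov = invert(info)
    return beta, [math.sqrt(cov[j][j]) for j in range(k)], loglik


def aic(columns, names, y):
    """AIC = 2k - 2 log L for an intercept plus the named covariates."""
    X = [[1.0] + [columns[c][r] for c in names] for r in range(len(y))]
    return 2 * (len(names) + 1) - 2 * fit_poisson(X, y)[2]


def backward_aic(columns, y):
    """Drop the covariate whose removal lowers the AIC most; stop when none does."""
    kept = list(columns)
    best = aic(columns, kept, y)
    while kept:
        score, drop = min((aic(columns, [c for c in kept if c != d], y), d) for d in kept)
        if score >= best:
            break
        kept.remove(drop)
        best = score
    return kept


def rpois(lam, rng):
    """Poisson draw via Knuth's multiplication method (fine for small means)."""
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


# Simulated data: counts depend on word count only; 'structured' is pure noise.
rng = random.Random(2021)
n = 200
words = [rng.uniform(94, 659) for _ in range(n)]
structured = [float(rng.random() < 0.8) for _ in range(n)]
met = [rpois(math.exp(1.2 + 0.002 * w), rng) for w in words]
columns = {"words": words, "structured": structured}

selected = backward_aic(columns, met)
X = [[1.0] + [columns[c][r] for c in selected] for r in range(n)]
beta, se, _ = fit_poisson(X, met)
for name, b, s in zip(["(intercept)"] + selected, beta, se):
    lo, hi = math.exp(b - 1.96 * s), math.exp(b + 1.96 * s)
    print(f"{name}: IRR {math.exp(b):.4f} (95% CI {lo:.4f} to {hi:.4f})")
```

A plain Poisson model ignores the ceiling of 16 criteria; the sketch keeps that simplification to mirror the analysis described above.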

Patient and public involvement

No patients were directly involved.

Results

Research results

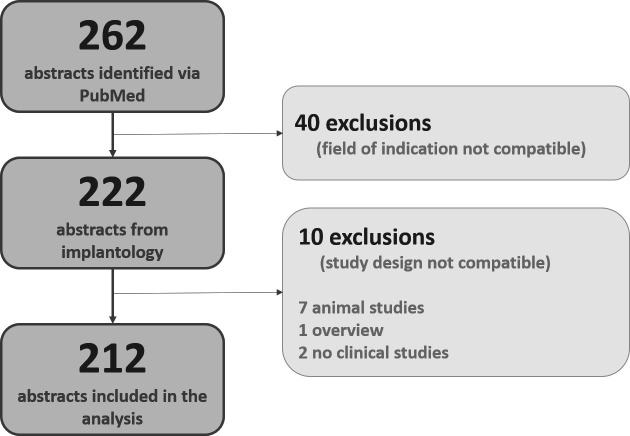

The electronic search yielded a data pool of 262 reports, 40 abstracts of which had to be excluded after a first screening due to a mismatch in disciplines. Ten additional abstracts were excluded because the publications in question did not report clinical studies. As a result, a total of 212 abstracts from RCT publications (see figure 1) were included in this study.

Figure 1.

Description of the selection procedure and documentation of the number of published RCTs from dental implantology, the aim being a data pool to identify, per criterion and per study, the degree of adherence to the CONSORT recommendations for abstracts. Fifty study reports were excluded from further investigation and analysis because the clinical indication (n=1) or the study design (n=39) was not compatible, because they referred to investigations of animals (n=7) or were reviews (n=1), or because they were not identifiable as RCTs (n=2). CONSORT, Consolidated Standards of Reporting Trials; RCTs, randomised controlled trials.

General study characteristics and journals

The analysis included RCT abstracts from 45 journals (see online supplemental table 3) with a median impact factor (IF) of 2.3280 (min. 0, max. 5.62). Two journals, the ‘European Journal of Oral Implantology’ (36 of 212 abstracts; 17%) and ‘Clinical Oral Implants Research’ (36 of 212 abstracts; 17%), accounted for approximately one-third of the abstracts. Table 1 shows the general study characteristics of the data pool evaluated. Most abstracts were structured (174/212; 82%), with a median of 258 words (min. 94 words16 and max. 659 words17). The number of cases reported in the abstracts ranged from 10 participants18–20 to 360 participants21; the median number of study participants was 36.

Table 1.

Study characteristics of 212 RCT abstracts of implantology in terms of the frequency (N) and relative frequency (%)

| Study characteristic | Category | N (%) or median (min; max) |
|---|---|---|
| Form of abstract | Structured | 174 (82%) |
| | Unstructured | 38 (18%) |
| Year of publication | 2014 | 101 (48%) |
| | 2015 | 74 (35%) |
| | 2016 | 37 (17%) |
| Journals | European Journal of Oral Implantology | 36 (17%) |
| | Clinical Oral Implants Research | 36 (17%) |
| | The International Journal of Oral & Maxillofacial Implants | 23 (11%) |
| | Clinical Implant Dentistry and Related Research | 22 (11%) |
| | Other | 95 (45%) |
| Provenance | Europe | 70 (33%) |
| | America | 21 (10%) |
| | Africa | 6 (3%) |
| | Asia | 35 (17%) |
| | Not specified | 80 (38%) |
| Word count | | 258 (94; 659) |
| No of cases analysed | | 36 (10; 360) |
| Impact factor | | 2.3280 (0; 5.62) |

RCT, randomised controlled trial.


Implementation rate per study

The studies included showed a median implementation of CONSORT-A recommendations (degree of adherence I) of 50% (Q1–Q3 43.8%–62.5%) per abstract, corresponding to 8 of the 16 criteria (min. 7, max. 14 criteria; see also online supplemental file 5). The criterion documented most often was ‘interventions’ (100%). Fewer than 10% of abstracts documented ‘trial registration’ and ‘funding’ (see table 2).


Table 2.

Implementation N (%) of CONSORT-A in 212 reports of published RCTs in the field of implantology

| CONSORT-A criterion | Degree of adherence I, N (%) | Degree of adherence II, N (%) |
|---|---|---|
| Identification as a randomised trial in the title | 95 (45) | 95 (45)* |
| Trial design | 66 (31) | 66 (31)* |
| Participant characteristics | 154 (73) | 154 (73)* |
| Interventions | 212 (100) | 200 (94) |
| Objective | 209 (99) | 209 (99)* |
| Definition primary endpoint | 198 (93) | 117 (55) |
| Randomisation | 35 (17) | 13 (6) |
| Blinding | 41 (19) | 21 (10) |
| Numbers randomised | 97 (46) | 97 (46)* |
| Recruitment | 196 (93) | 196 (93)* |
| Numbers analysed | 57 (27) | 57 (27)* |
| Results of outcome | 207 (98) | 133 (63) |
| Harms | 23 (11) | 23 (11)* |
| Conclusion | 204 (96) | 47 (22) |
| Trial registration | 10 (5) | 10 (5)* |
| Funding | 8 (4) | 8 (4)* |

Presentation of the degree of adherence I (information given in the abstract) and degree of adherence II (correct documentation in accordance with CONSORT-A).

*Variables without a formal degree of adherence II.

CONSORT, Consolidated Standards of Reporting Trials; CONSORT-A, CONSORT criteria for abstracts; RCTs, randomised controlled trials.


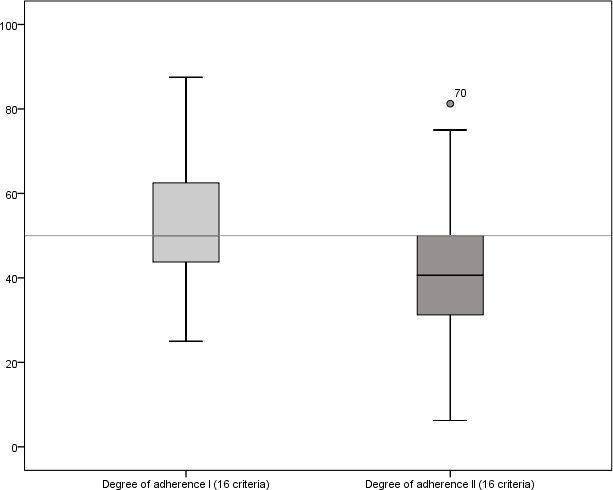

In terms of correct implementation (degree of adherence II), a median implementation of 40.6% (6.5 criteria) was found with an IQR of 31.3%–50.0%. One abstract22 revealed the lowest implementation with only the ‘objective’ criterion (6.25%), whereas Esposito et al23 documented a maximum number of 13 criteria (81.25%).

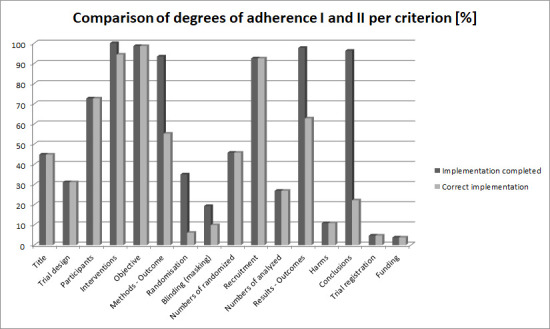

For the ‘randomisation’ (documentation 17%, correct implementation 6%) and ‘conclusion’ (documentation 96%, correct implementation 22%) criteria, the implementation rate decreased by ≥50% (relative) from the degree of adherence I to the degree of adherence II (see table 2).
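The relative decline can be recomputed directly from the counts in table 2 for the six criteria with a separate degree of adherence II. The short sketch below uses only those published counts; working from counts rather than rounded percentages gives slightly different figures (for randomisation, 62.9% rather than the 64.7% implied by 17% and 6%).

```python
# (degree I count, degree II count) out of 212 abstracts, taken from table 2
TABLE2 = {
    "interventions": (212, 200),
    "definition primary endpoint": (198, 117),
    "randomisation": (35, 13),
    "blinding": (41, 21),
    "results of outcome": (207, 133),
    "conclusion": (204, 47),
}


def relative_decline(degree_1, degree_2):
    """Share of documented abstracts that did not meet the correctness requirement."""
    return (degree_1 - degree_2) / degree_1


for name, (d1, d2) in TABLE2.items():
    print(f"{name}: {100 * relative_decline(d1, d2):.1f}% relative decline")
```

Only ‘randomisation’ and ‘conclusion’ exceed 50%; ‘blinding’ falls just short (48.8%), and the primary endpoint and results criteria decline by roughly 41% and 36%.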

Implementation rates per criterion

General criteria

Among the 212 abstracts included, 45% mentioned RCTs as the study design in the title of the study (95/212). Thirty-one per cent provided a more detailed description of the study design, such as parallel group studies, blinded studies and placebo-controlled studies (66/212).

Methods

The ‘aim of the study’ was documented in 99% of the abstracts examined (209/212). Information on the ‘primary endpoint’ was given in 93% (198/212), but it was clearly defined, including specification of the measurement variable, in only 55% (117/212). ‘Eligibility criteria for participants and the settings’ were found in 73% (154/212) of the abstracts, and the ‘intervention for each group’ was documented in all 212 abstracts (100%); 94% (200/212) described the intervention for each group in detail. Random allocation of participants to the intervention groups was documented in 17% (35/212) of abstracts, but only 6% (13/212) contained information on the generation of the random sequence and its implementation. Nineteen per cent (41/212) of abstracts mentioned blinding; only 10% (21/212) also specified which groups were blinded.

Results

In terms of result presentation, 93% (196/212) of abstracts provided information on the current status of the study (study completed, interim analysis after xy years). The number of randomised participants was given in 46% (97/212) of abstracts and the number of analysed participants in 27% (57/212). Results for the primary endpoint were reported in 98% (207/212) of abstracts, but only 63% (133/212) gave a precise effect size. Eleven per cent (23/212) of the examined RCT abstracts documented major (significant) harms. Ninety-six per cent (204/212) provided a general summary of the results, but only 22% (47/212) described the strengths and limitations of the respective study. The registration ID was documented in 5% (10/212) of the abstracts and information on funding in 4% (8/212).

The additional explorative analysis by means of Poisson regression included 199 of the 212 abstracts, since 13 abstracts did not provide information on all potential influencing factors. For both degrees of adherence, I and II, the number of words used had a locally significant impact on the number of reported CONSORT abstract criteria (degree of adherence I: IRR 1.001, 95% CI 1.001 to 1.002; degree of adherence II: IRR 1.002, 95% CI 1.001 to 1.003). The percentages of explained variance according to Nagelkerke’s R² were 14% and 21%, respectively. The other possible influencing variables (year of publication, presence of a structured abstract, number of patients included and impact factor) were not selected in the backward variable selection via AIC and had no significant influence on the number of reported CONSORT-A criteria.
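A per-word IRR is easier to read when scaled to a larger difference in length. Assuming that word count entered the model per single word (as the size of the IRRs suggests), effects in a log-linear Poisson model multiply, so a difference of d words corresponds to IRR^d. The sketch uses the rounded IRRs reported above, which makes the scaled values approximate.

```python
def irr_for_difference(irr_per_word, extra_words):
    """Log-linear model: per-word effects multiply, so the IRR scales as IRR ** words."""
    return irr_per_word ** extra_words


for label, irr in [("degree of adherence I", 1.001), ("degree of adherence II", 1.002)]:
    factor = irr_for_difference(irr, 100)
    print(f"{label}: 100 extra words -> expected criteria count x {factor:.3f}")

# Rounding matters: per-word IRRs of 1.0005 or 1.0015 would give about x1.051 or x1.162.
```

Under these assumptions, 100 additional words go along with roughly 10% (degree I) and 22% (degree II) more reported criteria; being an observational association, this does not show that longer abstracts cause better reporting.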

Discussion

This study examined the degree to which recommendations of the CONSORT statement for abstracts were implemented in trial publications on dental implantology. A total of 212 abstracts published 2014–2016 showed a median documentation of the required criteria (degree of adherence I) of only 50%. When focusing on ‘correct’ compliance with the requirements of the statement (in this context: degree of adherence II), adherence declined to 40.6% (see figure 2 and table 2).

Figure 2.

Illustration of the degree of adherence per study (%) in a box plot (n=212). Degree of adherence I (quantitative implementation), degree of adherence II (qualitative implementation).

A comparison of all criteria revealed that two criteria, ‘funding’ and ‘trial registration’, were rarely documented (4% and 5%, respectively). Journal editors generally request these details separately, so they appear in the publication but often not in the abstract.

In addition, the content of abstracts is often substantially reduced by word count limits imposed by publishers. The Poisson regression conducted in this study showed that a lower word count was associated with fewer reported details in abstracts (IRR 1.001, 95% CI 1.001 to 1.002). An influence of the number of words used in the abstract had already been documented in a previous study by Baulig et al (N=136; Poisson regression-based IRR 1.002, 95% CI 1.001 to 1.003), which explored abstract quality in ophthalmology RCTs on age-related macular degeneration and found a median implementation of seven criteria (95% CI 7 to 8).8 These results are similar to those of the present study in dental implantology. Xie et al also investigated the quality of 249 RCT abstracts published in dental science.24 They found major gaps in the documentation of general items (5.6% documented trial registration), methods (only one publication, ie, 0.4%, reported the sequence generation procedure for randomisation and allocation concealment; 7.6% described blinding; and only 16.9% clearly stated a primary outcome) and results (the number of participants analysed was described in only 8.8%, and adverse events in only 14.9%). As in our study, this research group found a significant association between word count and reporting quality (multivariable linear regression, B=0.020; p<0.001).

Notwithstanding word count limits, minor additional information (such as the registration ID, identification as an RCT in the title, or patient numbers at randomisation and analysis) can be included in the text with few or no additional words (eg, numbers in brackets). Such details support indexing, help readers and improve the transparency required for abstracts. A publication by Berwanger et al25 offers an excellent template for transparent and comprehensive reporting in abstracts even when the word count is restricted.

The assessment of the documentation of the CONSORT-A criteria in this paper is based exclusively on abstracts. Full texts and information provided outside the abstract text were neither examined nor taken into consideration. Because the ‘funding’ and ‘trial registration’ criteria do not necessarily have to be included in the abstract, counting them might have biased the implementation ratio to the detriment of the authors. We therefore performed an additional evaluation based on only 14 criteria, excluding ‘funding’ and ‘trial registration’. This evaluation yielded a somewhat higher implementation ratio for the degree of adherence I of 57.1% (IQR 50.5%–71.4%; see online supplemental file 6). For the degree of adherence II, the implementation ratio remained lower at 42.9% (Q1–Q3 35.7%–57.1%). A median of eight criteria (IQR 7–10 criteria) was documented, and a median of six criteria (IQR 5–8 criteria) was correctly implemented. Both analyses (14 and 16 criteria) thus showed that far fewer CONSORT-A criteria were met when correct implementation was examined in addition to mere documentation (see online supplemental file 7).
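Because a median is unchanged by dividing every value by the same number of criteria, the reported median percentages can be checked against the median counts. The sketch below reproduces the figures quoted in this section; it assumes, as the identical median count of eight suggests, that excluding the two criteria changes only the denominator for the median abstract. The 40.6% for degree II over 16 criteria corresponds to a median of 6.5 criteria, which arises because 212 is an even number of abstracts.

```python
def adherence_pct(criteria_met, n_criteria):
    """Per-abstract adherence in percent, rounded to one decimal as in the text."""
    return round(100 * criteria_met / n_criteria, 1)


print(adherence_pct(8, 16), adherence_pct(8, 14))    # degree I: 16 vs 14 criteria
print(adherence_pct(6.5, 16), adherence_pct(6, 14))  # degree II: 16 vs 14 criteria
```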


A Poisson regression analysis of the 14 CONSORT-A criteria also revealed a locally significant influence of abstract word count on abstract quality (degree of adherence I: IRR 1.001, 95% CI 1.001 to 1.002, p<0.001; degree of adherence II: IRR 1.002, 95% CI 1.001 to 1.003, p<0.001). The percentages of explained variance according to Nagelkerke’s R² were 13% and 20%, respectively. Other possible influencing variables, that is, year of publication, presence of a structured abstract, number of patients included and impact factor, were again not selected in the backward selection via AIC and had no significant impact on the number of CONSORT-A criteria reported.

Findings from a doctoral project are presented in this study; the project did not receive any financial support or assistance. The literature search was therefore conducted exclusively in the internet-based database PubMed, which contains over 30 million citations for biomedical literature from MEDLINE, life science journals and online books, and which was freely available to all researchers involved. When interpreting the findings of this study, readers should be aware that restricting the search to one database may have biased the results (deviation in the estimated degree of implementation).

However, we assume that searching additional databases would have yielded only a few additional publications, as PubMed includes all relevant implantology journals. Congress abstracts, which might have been found in Embase, were explicitly not part of this investigation. The authors therefore assume that including a few additional studies would not have changed the results substantially. The search was limited to the 3-year period from January 2014 to December 2016. Implantology abstracts up to 2012 had been examined by Kiriakou et al;11 a follow-up examination of more recent studies was therefore warranted.

To minimise bias on the part of the evaluators, two researchers/physicians performed the analysis in parallel and independently of each other. Abstracts were evaluated on the basis of txt files generated directly in PubMed after the search had been completed. This ensured that all abstracts were available in the same visual form and ruled out any influence of their graphical presentation. However, evaluators were not blinded to journals, authors and publication periods, so assessor bias cannot be ruled out.

Cohen’s kappa indicated high agreement between the assessors for 8 of the 16 criteria (see online supplemental table 4). In terms of percentage agreement, the lowest agreement between assessors was found for the ‘harms’ criterion (62%; κ=0.041). Information on harms can sometimes only be inferred from the abstract (by reading between the lines) and is not always explicitly presented as health disadvantages for patients. The assessment tool, evaluation procedure and analysis of a previous study on abstract quality in ophthalmology8 served as the basis for the present study, so no separate study protocol was deemed necessary.
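Percentage agreement and Cohen's kappa can diverge, because kappa subtracts the agreement expected by chance from the raters' marginal frequencies: κ = (p_o − p_e)/(1 − p_e). The sketch below implements this formula; the individual ratings from this study are not reported here, so both examples are hypothetical.

```python
import math


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical ratings of the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal frequency of every category
    p_expected = sum(
        (rater_a.count(c) / n) * (rater_b.count(c) / n)
        for c in set(rater_a) | set(rater_b)
    )
    if p_expected == 1.0:
        return math.nan  # both raters used one and the same category: kappa undefined
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical ratings (1 = criterion reported, 0 = not reported) for 50 abstracts
a = [1] * 25 + [0] * 25
b = [1] * 20 + [0] * 5 + [1] * 10 + [0] * 15
print(round(cohens_kappa(a, b), 3))    # 70% agreement

# Skewed prevalence: 86% agreement, yet kappa is slightly negative
a2 = [0] * 45 + [1] * 5
b2 = [0] * 43 + [1] * 2 + [0] * 5
print(round(cohens_kappa(a2, b2), 3))
```

When one category dominates, as for a rarely reported item such as ‘harms’, chance agreement is high and kappa can be low even when raw agreement looks acceptable.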


Several publications on other clinical indications with similar research questions confirmed our results for the general implementation of criteria. Gallo et al analysed 126 abstracts from 2011 to 2018 for the rate of implementation and found that, on average, seven criteria (SD ±2) were considered per publication; ‘trial registration’, ‘method of randomisation’ and ‘source of trial funding’ were each documented in fewer than 5%.26 Chow et al reported on adherence to CONSORT criteria in 395 abstracts in the field of anaesthesiology: 75% of the abstracts from RCTs published in 2016 met fewer than half of the 16 criteria. In line with the present study, none of the included publications reported all 16 CONSORT-A criteria. An implementation rate of <50% was found for the following criteria: ‘designation in the title’, ‘study design’, ‘baseline data’, ‘objective’, ‘randomisation’, ‘blinding’, ‘number of randomised participants’, ‘outcome’, ‘registration’ and ‘funding’.27 Speich et al explored abstract quality in published study reports from the field of surgery (2014–2016)28 and found a general implementation of eight criteria (95% CI 7.83 to 8.39), with ‘randomisation’, ‘blinding’ and ‘funding’ each reported in fewer than 20%.

The above-mentioned reports are consistent with the present study both in the low number of criteria met and in the criteria with the lowest degree of adherence. These authors particularly criticise the documentation of ‘randomisation’, ‘blinding’ and ‘number of randomised/analysed participants’. The ‘Explanation and Elaboration’ document13 describes in detail how each of the 16 CONSORT-A criteria contributes to the completeness and transparency of an abstract, but it does not rank the criteria by relevance. Nor does the literature indicate whether individual criteria have a larger or smaller impact on reporting quality. Future studies should nevertheless consider weighting the required criteria, which might allow the degree of implementation to be presented more objectively.

In addition to the poor documentation of the ‘blinding’, ‘randomisation’ and ‘harms’ criteria, our study revealed substantial deficits in the documentation of ‘definition of primary endpoint’, ‘results/outcomes’ and ‘conclusion’ (see table 2 and figure 3). When correctness was evaluated, the degree of adherence for these criteria declined by at least 30% (relative), because the information was documented in the abstract but not in the manner required by CONSORT-A. Deficits in the implementation of CONSORT-A recommendations therefore tend to occur more frequently for the methodological criteria, as previously reported by Ghimire et al.

Figure 3.

Graphical representation of proportional implementation of criteria to facilitate locating the corresponding information in the abstract (degree of adherence I vs degree of adherence II).

Ghimire et al reported documentation of randomisation (‘allocation concealment’) in only 12% of abstracts and of blinding in only 21%.29 Evidently, authors generally report some criteria, whereas a few others (statistical criteria) are reported infrequently. Presumably, several individuals are involved in compiling an abstract (publication), so different sections are drafted in different contexts and to different quality standards (transparency and completeness). This may explain deficits in specific areas. Deficits in statistical aspects in particular might be reduced by involving medical statisticians/biometricians in the preparation of publications and in the review process.

Several investigations have assessed reporting quality in abstracts of dental research. Fleming et al reported an overall reporting quality score of 60.2% for abstracts published in five orthodontic journals from 2006 to 2011.30 They assessed 117 RCT abstracts using a modified CONSORT for Abstracts checklist containing 21 items. Reporting was particularly insufficient for randomisation procedures, allocation concealment, blinding, CIs and harms. Seehra et al reported a mean overall reporting quality of 62.5% for 228 RCT abstracts in dental specialty journals.31 They found that randomisation restrictions, allocation concealment, blinding, numbers analysed, CIs, intention-to-treat analysis, harms, registration and funding were rarely described. Faggion et al compared the quality of reporting in abstracts between 2005–2007 and 2009–2011 in seven leading journals of periodontology and implant dentistry.32 Reviewing 392 abstracts, they concluded that reporting quality could be improved; only the documentation of the title improved significantly over time.

Kiriakou et al assessed the reporting quality in abstracts of RCTs in oral implantology.11 For this purpose, they reviewed six leading implantology journals for the period 2008 to 2012 and rated abstracts as providing ‘no description’, ‘inadequate description’ or ‘adequate description’. They found a mean overall reporting quality score of 58.6% (95% CI 57.6% to 59.7%), with insufficient reporting of randomisation procedures and allocation concealment. They also found failures to report CIs, effect estimates and sources of funding.

In contrast to existing investigations of abstract reporting quality in dentistry, we used CONSORT-A without modifying the number of items. Nevertheless, we found a degree of implementation similar to that reported by the authors mentioned above. A new finding of our investigation is the clear difference between implementing a guideline criterion at all and documenting it fully/correctly (50% implementation vs 40.6% full/correct implementation).
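The distinction between the two degrees of adherence can be illustrated with a minimal Python sketch. It assumes a hypothetical three-level rating per criterion (‘absent’, ‘partial’, ‘complete’) and, for simplicity, that every one of the 16 criteria can be rated for completeness, whereas the study did so only where possible. The function names and the three example abstracts are made up for illustration; this is not the authors’ analysis, and the quartiles from Python’s `statistics.quantiles` may differ slightly from the quartile definition used in statistical packages.

```python
from statistics import median, quantiles

N_CRITERIA = 16  # number of items on the CONSORT-A checklist

# Hypothetical rating scale (an assumption, not the study's coding sheet):
# 'absent'   = criterion not reported,
# 'partial'  = mentioned, but not as completely/correctly as CONSORT-A requires,
# 'complete' = fully and correctly reported.
VALID_RATINGS = {"absent", "partial", "complete"}


def adherence_degrees(ratings):
    """Return (degree I, degree II) in percent for one abstract.

    Degree I counts every criterion that is mentioned at all;
    degree II counts only completely/correctly reported criteria.
    """
    if len(ratings) != N_CRITERIA:
        raise ValueError(f"expected {N_CRITERIA} ratings, got {len(ratings)}")
    if not set(ratings) <= VALID_RATINGS:
        raise ValueError("unknown rating value")
    mentioned = sum(r != "absent" for r in ratings)
    complete = sum(r == "complete" for r in ratings)
    return 100 * mentioned / N_CRITERIA, 100 * complete / N_CRITERIA


def median_and_quartiles(values):
    """Median with first and third quartiles (needs at least two values)."""
    q1, _, q3 = quantiles(values, n=4)  # default 'exclusive' method
    return median(values), q1, q3


# Usage with three made-up abstracts (not data from this study)
abstracts = [
    ["complete"] * 6 + ["partial"] * 2 + ["absent"] * 8,
    ["complete"] * 8 + ["partial"] * 2 + ["absent"] * 6,
    ["complete"] * 4 + ["partial"] * 2 + ["absent"] * 10,
]
degrees = [adherence_degrees(a) for a in abstracts]
print(median_and_quartiles([d[0] for d in degrees]))  # degree I
print(median_and_quartiles([d[1] for d in degrees]))  # degree II
```

Because degree II only counts a subset of the criteria counted by degree I, it can never exceed degree I for the same abstract; the gap between the two summaries is what the comparison above quantifies.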

Currently, 585 journals refer authors to the CONSORT statement33; nevertheless, there is an urgent need to improve abstracts. Findings from this study suggest that not all authors follow the CONSORT statement as recommended by journals. The statement for abstracts, the corresponding checklist and the interactive exploration platform provided in this context (CONSORT, 2019b; Hopewell et al, 2008b) appear insufficient to ensure transparent and comprehensive reporting, even though they contain exact and detailed instructions for implementation as well as specific examples. Particularly worrying is that this low rate of implementation was also found for criteria that are easy to meet, such as identifying the publication as an RCT in the title, documenting the registration ID or reporting the number of participants included in the analysis. One plausible explanation is that implementing the recommendations requires authors to study additional literature, for which they may lack the time or patience.

Many journals support explicitly requesting authors to use the CONSORT checklist; as of April 2020, CONSORT was endorsed by over 50% of the core medical journals listed in the Abridged Index Medicus on PubMed.34 If all journals requested adherence to the CONSORT-A checklist when publications are drafted and made its implementation obligatory, reporting quality in abstracts might improve further, promoting comprehensive and transparent presentation. Moreover, reviewers should check abstracts for completeness and correctness according to CONSORT-A. Given that publications are reviewed under increasing time pressure, largely outside working hours and on a voluntary basis, the entire review system might have to be reconsidered. One option would be to check abstracts/publications for completeness of reporting as a preliminary step, followed by the actual review. This additional step would allow poorly structured and non-transparent papers to be identified early in the review process and returned for revision. Even if this requires additional human resources, it offers an opportunity to focus the journals’ review process on content-related issues.

Conclusion

Even though the CONSORT group issued recommendations for the compilation of abstracts as early as 2008, the quality of such ‘miniature publications’ remains suboptimal. Coauthors well versed in statistics should address and/or check methodological criteria, particularly when abstracts are drafted and during the review process. Word count limitations seem to be another reason for the omission of important information. Because abstracts play a key role for readers, journals should not restrict the admissible number of words too rigidly.
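The practical size of the word-count effect reported in the abstract (incidence rate ratio 1.001 per additional word, 95% CI 1.001 to 1.002) can be made concrete with a worked calculation. This sketch assumes the multiplicative structure of a Poisson model, holds any other model covariates constant, and uses the rounded point estimate; the increase of 100 words is an arbitrary illustrative choice. With $Y$ the number of reported CONSORT-A criteria and $w$ the word count:

$$
\frac{\mathrm{E}[Y \mid w + 100]}{\mathrm{E}[Y \mid w]} = \mathrm{IRR}^{100} = 1.001^{100} = e^{100 \ln 1.001} \approx e^{0.0999} \approx 1.105
$$

Under these assumptions, 100 additional words would correspond to roughly 10% more criteria reported. Because $1.002^{100} \approx 1.22$, the figure is sensitive to the rounding of the IRR and should be read as indicative only.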

Supplementary Material

Acknowledgments

The authors thank Christina Wagner for linguistic support in the preparation of this manuscript.

Footnotes

Contributors: SK wrote the initial draft of this manuscript and performed major parts of the statistical analysis. JL screened all abstracts of the literature search through PubMed and documented suitable publications for further processing. Moreover, he extracted the necessary information for evaluating the abstract quality and performed data entry and data validation. ST conducted the regression analysis and revised the initial draft of this manuscript. CL validated the pool of studies by screening all abstracts and evaluating the studies included. CB designed the review and its analysis concept; she implemented the literature search, including the identification of those RCTs to be included in the review and thoroughly revised the initial draft of this manuscript.

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: This study is part of the doctoral thesis written by Jeremias Loddenkemper in pursuit of a doctoral degree in dental medicine (‘Dr. med. dent.’) at Witten/Herdecke University. Furthermore, the results contained in this article have already been presented as part of a poster presentation at the 33rd DGI Congress, Hamburg (28–30 November 2019). The authors declare that they have no competing financial, professional or personal interests that might have influenced the performance or presentation of the work described in this manuscript.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Data availability statement

Data are available on reasonable request.

Ethics statements

Patient consent for publication

Not required.

Ethics approval

This study constitutes an analysis of published abstracts on RCTs and therefore does not require approval by an ethics committee.

References

- 1. EQUATOR-Network. EQUATOR-network: Minervation Ltd. Available: https://www.equator-network.org/reporting-guidelines/

- 2. CONSORT. Extensions of the CONSORT statement, 2019. Available: http://www.consort-statement.org/extensions

- 3. Barry HC, Ebell MH, Shaughnessy AF, et al. Family physicians’ use of medical abstracts to guide decision making: style or substance? J Am Board Fam Pract 2001;14:437–42.

- 4. Sivendran S, Newport K, Horst M, et al. Reporting quality of abstracts in phase III clinical trials of systemic therapy in metastatic solid malignancies. Trials 2015;16:341. 10.1186/s13063-015-0885-9

- 5. Barbour V, Chinnock P, Cohen B, et al. The impact of open access upon public health. Bull World Health Organ 2006;84:339. 10.2471/BLT.06.032409

- 6. Lund H, Juhl CB, Nørgaard B, et al. Evidence-based research series-paper 2: using an evidence-based research approach before a new study is conducted to ensure value. J Clin Epidemiol 2021;129:158–66. 10.1016/j.jclinepi.2020.07.019

- 7. Alharbi F, Almuzian M. The quality of reporting RCT abstracts in four major orthodontics journals for the period 2012-2017. J Orthod 2019;46:225–34. 10.1177/1465312519860160

- 8. Baulig C, Krummenauer F, Geis B, et al. Reporting quality of randomised controlled trial abstracts on age-related macular degeneration health care: a cross-sectional quantification of the adherence to CONSORT abstract reporting recommendations. BMJ Open 2018;8:e021912. 10.1136/bmjopen-2018-021912

- 9. Khan MS, Shaikh A, Ochani RK, et al. Assessing the quality of abstracts in randomized controlled trials published in high impact cardiovascular journals. Circ Cardiovasc Qual Outcomes 2019;12:e005260. 10.1161/CIRCOUTCOMES.118.005260

- 10. Kuriyama A, Takahashi N, Nakayama T. Reporting of critical care trial abstracts: a comparison before and after the announcement of CONSORT guideline for abstracts. Trials 2017;18:32. 10.1186/s13063-017-1786-x

- 11. Kiriakou J, Pandis N, Madianos P, et al. Assessing the reporting quality in abstracts of randomized controlled trials in leading journals of oral implantology. J Evid Based Dent Pract 2014;14:9–15. 10.1016/j.jebdp.2013.10.018

- 12. Hopewell S, Clarke M, Moher D, et al. CONSORT for reporting randomised trials in journal and conference abstracts. Lancet 2008;371:281–3. 10.1016/S0140-6736(07)61835-2

- 13. Hopewell S, Clarke M, Moher D, et al. CONSORT for reporting randomized controlled trials in journal and conference abstracts: explanation and elaboration. PLoS Med 2008;5:e20. 10.1371/journal.pmed.0050020

- 14. Microsoft Corporation. Microsoft Excel 2010. Redmond, WA, USA: Microsoft Corporation, 2010.

- 15. IBM Corp. IBM SPSS Statistics for Windows, version 26.0. IBM Corp, 2020.

- 16. De Angelis N, Nevins ML, Camelo MC, et al. Platform switching versus conventional technique: a randomized controlled clinical trial. Int J Periodontics Restorative Dent 2014;34:s75–9. 10.11607/prd.2069

- 17. Pistilli R, Felice P, Piatelli M, et al. Blocks of autogenous bone versus xenografts for the rehabilitation of atrophic jaws with dental implants: preliminary data from a pilot randomised controlled trial. Eur J Oral Implantol 2014;7:153–71.

- 18. Göçmen G, Atalı O, Aktop S, et al. Hyaluronic acid versus ultrasonic resorbable pin fixation for space maintenance in non-grafted sinus lifting. J Oral Maxillofac Surg 2016;74:497–504. 10.1016/j.joms.2015.10.024

- 19. Kasperski J, Rosak P, Rój R, et al. The influence of low-frequency variable magnetic fields in reducing pain experience after dental implant treatment. Acta Bioeng Biomech 2015;17:97–105.

- 20. Torroella-Saura G, Mareque-Bueno J, Cabratosa-Termes J, et al. Effect of implant design in immediate loading. A randomized, controlled, split-mouth, prospective clinical trial. Clin Oral Implants Res 2015;26:240–4. 10.1111/clr.12506

- 21. Arduino PG, Tirone F, Schiorlin E, et al. Single preoperative dose of prophylactic amoxicillin versus a 2-day postoperative course in dental implant surgery: a two-centre randomised controlled trial. Eur J Oral Implantol 2015;8:143–9.

- 22. Arbab H, Greenwell H, Hill M, et al. Ridge preservation comparing a nonresorbable PTFE membrane to a resorbable collagen membrane: a clinical and histologic study in humans. Implant Dent 2016;25:128–34. 10.1097/ID.0000000000000370

- 23. Esposito M, Cannizzaro G, Barausse C, et al. Cosci versus summers technique for crestal sinus lift: 3-year results from a randomised controlled trial. Eur J Oral Implantol 2014;7:129–37.

- 24. Xie L, Qin W, Gu Y, et al. Quality assessment of randomized controlled trial abstracts on drug therapy of periodontal disease from the abstracts published in dental science citation indexed journals in the last ten years. Med Oral Patol Oral Cir Bucal 2020;25:e626–33. 10.4317/medoral.23647

- 25. Berwanger O, Ribeiro RA, Finkelsztejn A, et al. The quality of reporting of trial abstracts is suboptimal: survey of major general medical journals. J Clin Epidemiol 2009;62:387–92. 10.1016/j.jclinepi.2008.05.013

- 26. Gallo L, Wakeham S, Dunn E, et al. The reporting quality of randomized controlled trial abstracts in plastic surgery. Aesthet Surg J 2020;40:335–41. 10.1093/asj/sjz199

- 27. Chow JTY, Turkstra TP, Yim E, et al. The degree of adherence to CONSORT reporting guidelines for the abstracts of randomised clinical trials published in anaesthesia journals: a cross-sectional study of reporting adherence in 2010 and 2016. Eur J Anaesthesiol 2018:942–8. 10.1097/EJA.0000000000000880

- 28. Speich B, Mc Cord KA, Agarwal A, et al. Reporting quality of journal abstracts for surgical randomized controlled trials before and after the implementation of the CONSORT extension for abstracts. World J Surg 2019:2371–8. 10.1007/s00268-019-05064-1

- 29. Ghimire S, Kyung E, Kang W, et al. Assessment of adherence to the CONSORT statement for quality of reports on randomized controlled trial abstracts from four high-impact general medical journals. Trials 2012;13:77. 10.1186/1745-6215-13-77

- 30. Fleming PS, Buckley N, Seehra J, et al. Reporting quality of abstracts of randomized controlled trials published in leading orthodontic journals from 2006 to 2011. Am J Orthod Dentofacial Orthop 2012;142:451–8. 10.1016/j.ajodo.2012.05.013

- 31. Seehra J, Wright NS, Polychronopoulou A, et al. Reporting quality of abstracts of randomized controlled trials published in dental specialty journals. J Evid Based Dent Pract 2013;13:1–8. 10.1016/j.jebdp.2012.11.001

- 32. Faggion CM, Giannakopoulos NN. Quality of reporting in abstracts of randomized controlled trials published in leading journals of periodontology and implant dentistry: a survey. J Periodontol 2012;83:1251–6. 10.1902/jop.2012.110609

- 33. CONSORT. Endorsers - Journals and organizations: CONSORT, 2019. Available: http://www.consort-statement.org/about-consort/endorsers1

- 34. The CONSORT Group. Endorsers - Journals and organizations, 2020. Available: http://www.consort-statement.org/about-consort/endorsers1


Supplementary Materials

bmjopen-2020-045372supp001.pdf (84.4KB, pdf)

bmjopen-2020-045372supp002.pdf (78.5KB, pdf)

bmjopen-2020-045372supp003.pdf (113.8KB, pdf)

bmjopen-2020-045372supp004.pdf (332.8KB, pdf)

bmjopen-2020-045372supp005.pdf (262KB, pdf)

bmjopen-2020-045372supp006.pdf (284.4KB, pdf)

bmjopen-2020-045372supp007.pdf (80.3KB, pdf)
