Abstract

Background

Global interest in research skills in undergraduate medical education is growing, yet there is no consensus on the research skills expected of medical students at graduation. We conducted a systematic review to determine the aims and intended learning outcomes (ILOs) of mandatory research components of undergraduate medical curricula and to describe the teaching, assessment, and evaluation methods of these programs.

Methods

Following the PRISMA reporting guidelines, MEDLINE and ERIC databases were searched with keywords related to “medical student research programs” for relevant articles published up to February 2020. Stated aims and ILOs were thematically analysed under three categories: student experience/reactions, mentoring/career development, and knowledge/skill development.

Results

Of 4880 citations, 41 studies from 30 institutions met the inclusion criteria. Programs were project-based in 24 (80%) and coursework-only in 6 (20%). Program aims/ILOs were stated in 24 programs (80%). Twenty-seven different aims/ILOs were identified: 19 focused on knowledge/skill development, 4 on experience/reactions, and 4 on mentoring/career development. Project-based programs aimed to provide an in-depth research experience, foster/increase research skills, and develop the ability to critically appraise scientific literature. Coursework-based programs aimed to foster/apply analytical skills for decision-making in healthcare and to critically appraise scientific literature. Reporting of interventions was often incomplete, and studies were often short term and conducted at a single institution. In most programs, aims, teaching, assessment, and evaluation methods were poorly aligned.

Conclusions

The diversity of teaching programs highlights the challenges in defining core competencies in research skills for medical graduates. Incomplete reporting limits the evidence for effective research skills education; we recommend that those designing and reporting educational interventions adopt recognized educational reporting criteria when describing their findings. Whether students learn by “doing”, “proposing to do”, or “critiquing”, good curriculum design requires constructive alignment between teaching, assessment, and evaluation methods, aims, and outcomes. Peer-reviewed publications and presentations evaluate only one aspect of the student research experience.

Electronic supplementary material

The online version of this article (10.1007/s40670-020-01059-z) contains supplementary material, which is available to authorized users.

Keywords: Medical students, Research project, Scholarly concentration, Intended learning outcomes, Curriculum design

Background

Mounting research competitiveness amongst universities [1, 2] and competition for desirable careers amongst medical graduates have led to the renewal of research skills curricula for medical students worldwide [3, 4]. Conducting research during medical school can increase students’ interest and confidence in performing research after graduation, their knowledge of research principles, and their ability to critically evaluate scientific literature and write scientific papers [5]. Research skills can be acquired through “learning by doing”, such as completing an independent research project; “learning by proposing to do”, such as writing a research proposal; or “learning by critiquing”, such as critiquing articles in the literature [6].

Despite this need to upskill medical students in research methodology and practice, there is no authoritative consensus on the research skills and knowledge medical students should have acquired by graduation, or on the optimal method of research training [7], although several authors have described the research skills expected of medical students at graduation [8, 9]. This absence poses a challenge for curriculum developers. Ideally, assessment should be aligned with intended learning outcomes to maximise teaching and learning effects [10] and should meet the requirements of the profession. What evidence and scholarship, then, can developers draw upon to plan and implement research skills curricula for medical students?

A preliminary search identified five reviews that have attempted to systematically investigate medical student research programs. These focused either on elective programs [11–13] or on a combination of elective, dual-degree, and mandatory research components and degree types [14, 15]. These reviews sought evidence for elective program outcomes such as generation of an academic product (e.g. a written thesis, poster, or oral presentation), change in student attitudes to research involvement, and/or short- or long-term involvement of students in research activities. However, none specifically examined what was expected of medical students in research skills and knowledge at graduation, none identified the programs’ aims and goals, and none determined whether the assessment methods documented how and whether students gained the expected skills and knowledge. Without knowledge of program aims and intended outcomes, and of their alignment with assessment, gaps remain in our understanding of how research skills should be learned and taught.

We therefore conducted this systematic review of primary studies reporting research training programs in undergraduate medical education and identified directions for future research and practice. We asked whether goals were stated as intended learning outcomes (ILOs) or clearly defined competencies (i.e. pre-emptive/foresight), or instead as research-related skills and abilities that were presumed to have been achieved (i.e. retrospective/hindsight). We compared project-based and non-project-based (e.g. coursework) research programs to determine whether different models of delivery, with very different resourcing implications, were associated with different ILOs/competencies. We also sought to appraise the assessment methods used to document student learning, and whether these methods formally assessed the stated ILOs/competencies or relied on indirect measures such as student research productivity or output.

As our questions focused on the skills and competencies expected of all medical graduates, the search was limited to studies reporting compulsory medical student research programs. Elective research programs such as summer research electives were excluded because they may not be available to all students and are likely subject to significant selection bias towards students who are more capable of and/or interested in research. Furthermore, the goals and ILOs of elective research programs may not be consistent with those of compulsory research programs [16].

Methods

This study followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) reporting guidelines [17].

Definitions

Research components of medical courses are often known as “scholarly concentrations” in North America [11, 13] or “student selected components” in the UK [18]. For this review, the term “research program” is used to describe the mandatory research components which are the focus of our study; elective or optional components were excluded. We defined a research program as project-based if it required in-depth inquiry into an area beyond the standard curriculum that could result in new data or knowledge, often requiring its documentation in a research product such as a thesis, article, or poster [11, 13]. We defined a research program as coursework-based if it required students to learn generic knowledge or skills related to any aspect of the research process, such as literature searching, critical analysis, ethics, statistical analysis, or scientific writing and presentation, but did not require in-depth inquiry or a research product.

Search Strategy

Searches of the MEDLINE and Education Resources Information Center (ERIC) databases were conducted with the following terms (see Appendix 1):

(medical or medicine) adj2 (student* or graduate or graduates or graduate)

research

skill or skills or skillsets or skillset or training or competency or competencies or curriculum or performance or perform or performing or task or tasks

scholarly concentration/s

research literacy

scholarly skill* or scholarly activit* or scholarly program*

research activit*

research project* or research program*

teach or teaching

teaching or education or curriculum or medical school*

biomedical research/ed

medical education or medical school* or medical student* or education medical

After removal of duplicate articles, all titles and abstracts were read by the first author (MGYL), who selected articles describing a research program for medical students that was neither an elective nor an optional program. The full-text versions of these articles were then read to determine whether they met the inclusion criteria. The reference lists of these papers were scanned to identify additional studies for appraisal.

Inclusion and Exclusion Criteria

Twenty articles (approximately 20%) were randomly chosen from those selected for full-text review and independently read by the third author (JB). Any article whose eligibility was disputed was independently read by the second author (WH). The three authors then discussed the article by email until consensus on its eligibility was reached. This process was undertaken as a quality control measure to ensure agreement amongst the authors on the application of the inclusion and exclusion criteria.

Articles were included if they:

described a mandatory research program involving medical students only

were in English

were published in peer-reviewed journals

Articles were excluded if they:

described an optional research program, including double research degrees, elective or short-term initiatives, and summer programs

did not explicitly state whether the research program was mandatory for all medical students

did not explicitly state whether the research program was assessed

provided only secondary data such as a literature review

provided only evaluation data such as cross-sectional studies on student opinions of research without reference to a current research program

There was no limit on publication date.

Multiple Articles from the Same Institution

All articles describing the same research program from the same institution were included if the articles described different aims or outcomes or provided substantial updates to the research program such as serial publications on outcomes. These articles were all classified as belonging to the same research program and institution. Only the most recent article from that research program and institution has been referenced in this review.

Multiple Institutions in the Same Article

Articles describing research programs from multiple institutions were included if at least one of the programs was mandatory. If more than one research program from these studies was described as mandatory, then each was classified as belonging to different institutions and referenced as “first author surname (institution name)” in the data summary tables.

Data Extraction

Data extraction was performed using a predetermined checklist, and included:

article details: first author, year of publication

institution characteristics: name of institution, country of institution

research program characteristics: year the mandatory research program was initiated, program type (project-based or coursework-based), year level(s) at which the program was taught, and whether there was dedicated curriculum time for research

research program details: study design, aims and ILOs/competencies

required assessment tasks

research program outcome measures: quantitative (e.g. scholarly outputs, including the numbers and proportions of student abstracts, publications, and presentations) and qualitative (e.g. student surveys and the themes they addressed)

Data Analysis

Each of the included studies was described by year of publication, country of origin, and the program characteristics listed above. Thematic analysis was conducted to identify stated research program aims and/or ILOs/competencies, which were initially grouped according to the three categories described by Bierer et al. [13]: experience/reactions, mentoring/career development, and knowledge/skill development. Subthemes were then identified through further analysis and discussion within the research team. No inferences were made about program outcomes where the aims, outcomes, or competencies were not explicitly stated.

Results

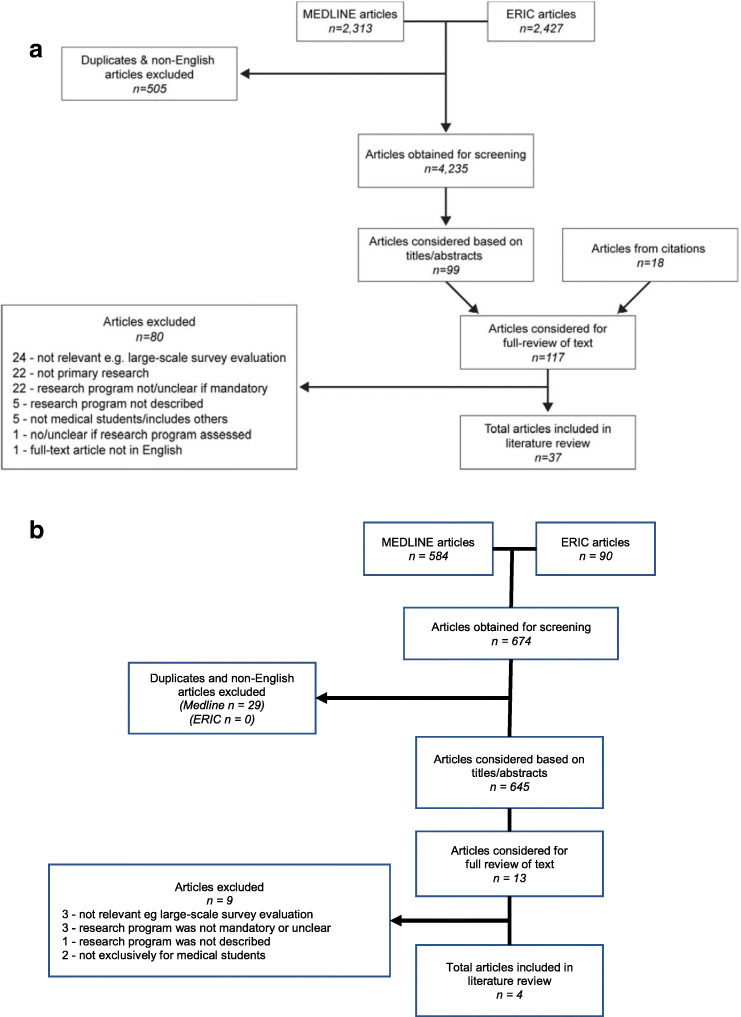

The primary search, conducted in March–April 2017, yielded 2274 and 2427 citations from the MEDLINE and ERIC databases, respectively. After removal of duplicates and of citations whose titles and abstracts were not in English, 4235 unique citations remained. After review of titles and abstracts and scanning of reference lists, 117 articles were selected for full-text review. Of these, 37 studies met the inclusion criteria.

The remaining 80 studies were excluded for the following reasons: not relevant, e.g. large-scale survey evaluation (n = 24); not primary research, e.g. literature review (n = 22); research program not mandatory or mandatory status unclear (n = 22); research program not described (n = 5); research program or study not exclusively for medical students (n = 5); no assessment tasks required, or unclear whether any were required (n = 1); or full-text article not in English (n = 1) (see Fig. 1a for the complete search and study selection strategy).

Fig. 1.

a Primary search and selection strategy. b Update to primary search and selection strategy

An update to the primary search was conducted in February 2020. After removal of duplicates and of citations whose abstracts were not in English, 645 unique citations (555 in MEDLINE and 90 in ERIC) published between March 2017 and February 2020 were identified. After title and abstract review, 13 articles were selected for full-text review. Of these, four studies met the inclusion criteria: two [19, 20] were new reports of research training programs and two [21, 22] were updates to programs identified in the primary search. The remaining nine were excluded for the following reasons: not relevant, e.g. large-scale survey evaluation (n = 3); research program not mandatory or mandatory status unclear (n = 3); research program not described (n = 1); or research program or study not exclusively for medical students (n = 2) (see Fig. 1b for the updated search and study selection strategy).
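The selection counts reported for the primary and updated searches can be checked for internal consistency with simple arithmetic. The Python sketch below is illustrative only: the dictionary keys are shorthand labels introduced here for the exclusion reasons, not categories taken from any PRISMA tool, and all numbers are those stated in the text.

```python
# Illustrative consistency check of the study-selection counts reported in the text.
# Key names are hypothetical shorthand labels introduced for this sketch.

primary = {
    "records_medline": 2274,
    "records_eric": 2427,
    "unique_after_dedup": 4235,
    "full_text_reviewed": 117,
    "included": 37,
    "excluded": {
        "large_scale_survey": 24,
        "not_primary_research": 22,
        "not_mandatory_or_unclear": 22,
        "program_not_described": 5,
        "not_exclusively_medical_students": 5,
        "assessment_none_or_unclear": 1,
        "full_text_not_english": 1,
    },
}

update = {
    "unique_medline": 555,
    "unique_eric": 90,
    "unique_after_dedup": 645,
    "full_text_reviewed": 13,
    "included": 4,
    "excluded": {
        "large_scale_survey": 3,
        "not_mandatory_or_unclear": 3,
        "program_not_described": 1,
        "not_exclusively_medical_students": 2,
    },
}


def check_stage(stage: dict) -> int:
    """Check that included + excluded equals the number of full texts reviewed."""
    excluded = sum(stage["excluded"].values())
    assert stage["included"] + excluded == stage["full_text_reviewed"]
    return excluded


excluded_primary = check_stage(primary)
excluded_update = check_stage(update)
assert update["unique_medline"] + update["unique_eric"] == update["unique_after_dedup"]

# Totals across both searches, and records removed before screening in the primary search
total_unique = primary["unique_after_dedup"] + update["unique_after_dedup"]
total_included = primary["included"] + update["included"]
removed_primary = (primary["records_medline"] + primary["records_eric"]
                   - primary["unique_after_dedup"])

print(total_unique, total_included, excluded_primary, excluded_update, removed_primary)
```

Running it prints `4880 41 80 9 466`, matching the 4880 citations and 41 included studies reported in the Abstract. The figure of 466 is simply the difference between raw and unique primary-search records and therefore covers both duplicates and citations without English titles or abstracts.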

Included Studies

These 41 articles originated from 30 different institutions and are summarised in Appendix 2. Study designs included cross-sectional student questionnaire/test [5, 21, 23–31], descriptive [32–41], mixed descriptive and cross-sectional student questionnaire/test [18–21, 42–49], retrospective cohort [50–52], longitudinal student questionnaire [22, 53, 54], cross-sectional graduate questionnaire [55], and prospective student questionnaire [22]. The year of article publication ranged from 1960 to 2019 with more than half of the articles (54%, 22/41) published in the last 7 years [18–27, 29, 32, 33, 36, 37, 42, 43, 45, 47, 50, 51, 54].

Programs most commonly originated from North America, including the United States of America (US) [5, 27, 28, 32–36, 43, 48, 51, 55] and Canada [20, 44, 46], and from Europe, including the Netherlands [26, 42, 47], the UK [18, 23], Sweden [22, 56], Germany [19], Portugal [50], and Croatia [54]; the remainder came from Australia [21, 57], India [24, 29], South Africa [45], and Sudan [25]. The year the mandatory research program was initiated was provided for 24 programs [5, 19, 21, 23–29, 32–36, 43, 46–48, 50, 51, 54, 55]. Most programs were project-based with or without additional coursework (80%, 24/30) [5, 18–26, 32–35, 42–47, 50, 51, 55], while the remainder were coursework-only (20%, 6/30) [27–29, 36, 48, 54].

Program Aims and Intended Learning Outcomes

A summary of the themes and subthemes from any stated aims and ILOs is provided in Table 1.

Table 1.

Thematic analysis of aims and intended learning outcomes of mandatory medical student research programs

References are listed alphabetically by first author.

| Aims and intended learning outcomes | Project-based | Coursework-based* |
|---|---|---|
| Experience/reactions | | |
| Provide an in-depth research experience | Boninger [32], Burgoyne [23], Houlden [44], Laskowitz (Duke and Stanford) [33], Möller [22, 56], Rosenblatt [35] | |
| Foster/increase self-identification as a scholar/researcher | Dyrbye [51] | |
| Foster/increase self-identification as a scientifically proficient clinician/evidence-based practitioner | Möller [22, 56] | Mullan [21, 57] |
| Improve student attitudes towards research | Smith [46] | |
| Mentoring/career development | | |
| Participation in research | | |
| Foster/increase student interest/participation in research (during medical school) | | Hunt [48], Vujaklija [54] |
| Foster/increase future interest/participation in research (beyond graduation) | Dyrbye [51], Laskowitz (Duke) [33] | Hunt [48] |
| Enhance culture of self-directed learning and/or group learning | Boninger [32], Smith [46] | |
| Relationships | | |
| Foster mentoring relationships outside of usual course structure | Boninger [32], Laskowitz (Stanford) [33], Ogunyemi [5], Rosenblatt [35] | |
| Knowledge/skill development | | |
| Foster/increase general research skills | Barbosa [50], Burgoyne [23], Devi [24], Dyrbye [51], Laskowitz (Duke) [33], Rhyne [34], Rosenblatt [35] | Vujaklija [54] |
| Foster/apply analytical skills for decision-making/problem solving in healthcare | Boninger [32], Laskowitz (Duke) [33], Mullan [21, 57], Riley [18] | Boninger [32], Crabtree [36], DiGiovanni [27], Hunt [48], Riegelman [28] |
| Select a mentor and research project | Gotterer [43], Smith [46] | |
| Conduct primary care research in areas that impact disadvantaged communities | Ogunyemi [5] | |
| Technology | | |
| Use computers and other information systems effectively | Riley [18] | |
| Literature | | |
| Conduct a literature search | Riley [18], Smith [46] | Crabtree [36], Hunt [48], Mullan [21, 57] |
| Critically appraise scientific literature | Dyrbye [51], Gotterer [43], Knight [45], Laskowitz (Duke) [33], Möller [22, 56], Riley [18], Smith [46] | Crabtree [36], Mullan [21, 57], Riegelman [28], Vujaklija [54] |
| Research methodology | | |
| Formulate a research question/hypothesis | Knight [45], Riley [18], Smith [46] | Crabtree [36], Hunt [48], Vujaklija [54] |
| Write a research protocol | Knight [45], Smith [46] | |
| Learn ± select appropriate research methods | Gotterer [43], Knight [45], Riley [18], Smith [46] | Hunt [48], Mullan [21, 57], Shaikh [29], Vujaklija [54] |
| Understand principles of ethical research conduct | Gotterer [43], Knight [45], Riley [18], Smith [46] | Vujaklija [54] |
| Successfully obtain ethics approval | Knight [45], Smith [46] | |
| Data collection and interpretation | | |
| Collect data using appropriate methods | Knight [45], Riley [18], Smith [46] | |
| Appropriately analyse and interpret data | Gotterer [43], Knight [45], Riley [18], Smith [46] | Crabtree [36], Hunt [48] |
| Communication | | |
| Foster/increase written communication skills | Boninger [32], Gotterer [43], Rosenblatt [35], Smith [46] | Vujaklija [54] |
| Foster/increase presentation (oral or poster) skills | Boninger [32], Gotterer [43], Knight [45] | |
| Self-reflect on own research experience/efforts | Gotterer [43], Knight [45] | |
| Intervention/outcome | | |
| Use research findings to prepare an intervention | Knight [45], Riley [18] | |
| Implement and evaluate the intervention | Knight [45] | |

*Includes project-based programs with compulsory coursework where assessment tasks for the coursework components were stated separately (references italicised)

Program aims or ILOs were reported in 24 of the 30 programs (80%) [5, 18, 19, 21–24, 27–29, 32–36, 43–46, 48, 50, 51, 54]. The six programs that did not explicitly state any aims or ILOs were all project-based [20, 25, 26, 42, 47, 55], and all but one [55] were from outside North America [20, 25, 26, 42, 47]. A total of 27 different aims and ILOs were identified, with 70% focused on knowledge/skill development (19/27), 15% on experience/reactions (4/27), and 15% on mentoring/career development (4/27). Knowledge/skill development was the most common category in both project-based programs (73%, 19/26) and coursework-based programs (75%, 9/12). The most common aims for project-based programs were to provide an in-depth research experience [19, 20, 22, 23, 32, 33, 35, 44], foster/increase general research skills [20, 23, 24, 33–35, 50, 51], and critically appraise scientific literature [18, 22, 33, 43, 45, 46, 51]. The most frequently stated aims for coursework-based programs were to foster/apply analytical skills for decision-making/problem solving in healthcare [27, 28, 32, 36, 48] and to critically appraise scientific literature [21, 28, 36, 54].
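The percentage breakdowns above are simple proportions rounded to whole numbers. A minimal Python sketch, assuming rounding to the nearest whole percent and using only counts given in the text, reproduces them:

```python
# Illustrative recomputation of percentages quoted in the text.
# Rounding to the nearest whole percent is an assumption of this sketch.

def share(count: int, total: int) -> int:
    """Return count/total as a percentage rounded to the nearest whole number."""
    return round(100 * count / total)


aim_counts = {
    "knowledge/skill development": 19,
    "experience/reactions": 4,
    "mentoring/career development": 4,
}
total_aims = sum(aim_counts.values())  # distinct aims/ILOs identified

breakdown = {category: share(n, total_aims) for category, n in aim_counts.items()}
print(total_aims, breakdown)

# Other proportions quoted in this section
print(share(24, 30))  # programs stating aims or ILOs
print(share(9, 12))   # knowledge/skill aims among coursework-based aims
```

This yields 27 aims split 70%, 15%, and 15%, followed by 80% and 75%. Rounded shares need not always sum to 100; here they happen to.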

Some programs listed up to 13 aims and ILOs [45, 46], spanning knowledge/skill development, the experience of participating in research, mentoring/career development, and/or teamwork. Two programs had population-based aims focused on serving local communities, typically in disadvantaged, low-income areas [5, 45].

Teaching Methods

Project-Based Research Programs

Thirteen programs stated the entry level [5, 18–24, 42, 44, 45, 47, 50], of which nine were school-leaver entry [18, 22–24, 42, 44, 45, 47, 50]. Ten of the 11 project-based programs that did not state the entry level were based in the US, where medical programs are typically 4-year graduate-entry programs [31–35, 43, 51, 55].

Eleven programs were undertaken in the penultimate or final year [5, 21–23, 25, 32, 33, 42, 47, 50, 57], five were undertaken in the early years of the course [24, 43–46, 55], three were “longitudinal” or “multiyear” programs [19, 20] with no further details [18, 19, 33, 34], and this was unclear in two programs [26, 35].

Project-based program duration ranged from three 4-week blocks [45] to 4 years [20]. Fourteen programs included dedicated time for the project [5, 18–20, 22, 24, 26, 33, 42–46, 51]. Where described [22, 33, 42, 44, 45, 51], this dedicated time ranged from an unquantified number of “dedicated hours per week” [33] to 24 weeks full-time [42]. One program required students to spend at least 8 weeks of the summer between years 1 and 2 on the project [43].

Three programs required a small-group project instead of an individual project [18, 24, 45], and two gave students the option of a small-group project [5, 32]. Of these five, only one stated an aim to enhance self-directed and/or group learning [32].

Thirteen provided additional compulsory research coursework in preclinical years [21, 26, 42], or during the research project [5, 25, 33, 43, 46, 51], or both [18–20, 32].

Coursework-Based Research Programs

Three of the six coursework-based programs were school-leaver entry [29, 36, 54]; the three that did not state the entry level were from the US [27, 28, 48]. Most delivered the research program in the first or second year of the course [28, 29, 36, 48, 54]. Program duration ranged from 8 [29] to 20 [54] contact hours, and most programs combined large-group lectures with small-group tutorials. In the three project-based programs with compulsory research coursework in the preclinical years, the coursework ranged from 7 weeks [26] to four 3-week blocks [42] in duration, and one did not specify a duration [21].

Assessment Methods

A summary of assessment tools used in medical student research programs is provided in Table 2.

Table 2.

Summary of assessment methods of mandatory medical student research programs

References are listed alphabetically by first author.

| Assessment methods | Project-based | Coursework-based* |
|---|---|---|
| Written reports | | |
| Research article/report | Barbosa [50], Burgoyne [23], Dekker [42], Devi [24], Drees [19], Dyrbye [51], Houlden [44], Laskowitz (Stanford) [33], Mullan [21, 57], Rhyne [34], Rosenblatt [35], Sawarynski [20], Smith [46], [19, 20] | |
| Thesis | Ebbert [55], Möller [22, 56], Ogunyemi [5], Osman [25], Zee [26] | |
| Research article/report or thesis | Laskowitz (Duke) [33] | |
| Research proposal | | DiGiovanni [27] |
| Abstract | Gotterer [43], Mullan [21, 57] | |
| Self-reflection | Boninger [32], Gotterer [43], Riley [18] | |
| Summaries/critical appraisal of literature | | Crabtree [36], Vujaklija [54] |
| Presentation | | |
| Presentation required but type not stated | Laskowitz (Duke) [33], Ogunyemi [5], Zee [26] | |
| Oral presentation only | Burgoyne [23], Drees [19] | |
| Poster presentation only | Devi [24], Gotterer [43], Knight [45], Mullan [21, 57], Riley [18] | |
| Either oral or poster presentation | Rhyne [34] | |
| Both oral and poster presentation | Sawarynski [20] | DiGiovanni (presentation of research proposals) [27] |
| External to medical school | | |
| Publication in peer-reviewed journal | Barbosa [50], Boninger [32] | |
| Presentation at extramural scientific conference | Boninger [32] | |
| Portfolio | | |
| Student portfolio | Riley [18], van den Akker [47] | |
| Assignments/examinations | | |
| Assignments | | Dekker [42], Hunt [48], Riegelman [28] |
| Formal test/examination | Knight [45] | Boninger [32], DiGiovanni [27], Hunt [48], Shaikh [29] |
| Peer assessment | | |
| Peer assessment of behaviours in tutorials | | Hunt [48] |

*Includes project-based programs with compulsory coursework where assessment tasks for the coursework components were stated separately (references italicised)

Project-Based Research Programs

The most commonly required assessments were a written research article or report [19–21, 23, 24, 32–35, 42, 44, 46, 50, 51] or thesis [5, 22, 25, 26, 55]; one program allowed students to choose either format [33]. Of these 18, four stated that an aim was to improve written communication skills [32, 35, 43, 46]. Ten project-based programs required an oral [19] and/or poster [5, 18–21, 23, 24, 26, 33, 34, 43, 45] presentation to peers and faculty members but only two stated that an aim was to improve oral or poster presentation skills [43, 45]. Two programs included publication in a peer-reviewed journal or presentation at a scientific conference as an alternative assessment, but these criteria were not required [32, 50].

A student portfolio was used in two project-based research programs [18, 47]. The University of Edinburgh required students to develop an e-portfolio throughout the medical course containing all research training related reports, ethics reviews for projects, or reflective practice tasks [18]. The portfolio assessment at Maastricht University in the Netherlands for the last two clinical years required students to perform a strengths, weaknesses, opportunities, and threats (SWOT) analysis for each CanMEDS competency domain [58] and was not limited to the research project [47].

Coursework-Based Research Programs

The most commonly required assessments were formal tests or examinations [27, 29, 32, 48], assignments [28, 42, 48], and written summaries or critical appraisal of the literature [36, 54]. Four programs which required formal tests or assignments stated an aim to apply analytical skills for decision-making or problem solving in healthcare [27, 28, 32, 48] and two aimed to learn or select appropriate research methods [29, 48]. Both programs which assessed students using written summaries or critical appraisal of literature aimed to critically appraise scientific literature [36, 54], with one aiming to improve written communication skills [54].

Evaluation of Research Programs

A summary of the methods used to evaluate medical student research programs is provided in Table 3. The most commonly used quantitative measures were the frequency of peer-reviewed publications and of extramural presentations.

Table 3.

Summary of evaluation methods of mandatory medical student research programs

References are listed alphabetically by first author*.

| Evaluation methods | Project-based | Coursework-based |
|---|---|---|
| Quantitative | | |
| Knowledge assessment | | |
| Test/assignment scores on research methodology | Devi [24], Knight [45] | Hunt [48], Riegelman [28], Shaikh [29], Vujaklija [54] |
| Frequency of grades of student written reports/posters | Devi [24], Knight [45] | |
| Directly related to student research (objective) | | |
| Frequency of publication in peer-reviewed journals | Barbosa [50], Boninger [32], Dekker [42], Devi [24], Dyrbye [51], Rhyne [34] | |
| Frequency of first authorship in peer-reviewed journals | Dekker [42], Dyrbye [51] | |
| Frequency of abstract publication | Dyrbye [51] | |
| Frequency of presentation in extramural conferences | Boninger [32], Devi [24], Dyrbye [51], Rosenblatt [35] | |
| Frequency of research collaborations | Devi [24] | |
| Number of students receiving faculty commendation in research at graduation | Rhyne [34] | |
| Number of evidence summaries translated into clinical guidelines (by faculty and clinicians) | | Crabtree [36] |
| Directly related to student research (self-reported) | | |
| Frequency of publication in peer-reviewed journals | Houlden [44], Laskowitz (Duke and Stanford) [33], Smith [46] | |
| Frequency of abstract publication | Smith [46] | |
| Frequency of presentation in extramural conferences | Houlden [44], Smith [46] | |
| Frequency of projects discussed in residency interviews | Gotterer [43] | |
| Unrelated/may be related to student research (objective) | | |
| Frequency of publications within 3 years of graduation | Dyrbye [51] | |
| Frequency of future grant success | Boninger [32] | |
| Unrelated/may be related to student research (self-reported) | | |
| Frequency of voluntary participation in research projects outside of compulsory research program | Dekker [42] | |
| Frequency of publications/presentations/attendance at scientific conferences within 2 years of research program | Möller [22, 56] | |
| Qualitative | | |
| Student-reported | | |
| Perceptions/satisfaction with research program | Boninger [32], Devi [24], Drees [19], Gotterer [43], Houlden [44], Knight [45], Laskowitz (Duke and Stanford) [33], Ogunyemi [5], Sawarynski [20], Smith [46], van den Akker [47], Drees, Sawarynski [19, 20] | Crabtree [36], Shaikh [29] |
| Perceptions/confidence of own research skills | Boninger [32], Burgoyne [23], Devi [24], Drees [19], Houlden [44], Knight [45], Mullan [21, 57], Riley [18], Sawarynski [20], Zee [26] | Riegelman [28], Shaikh [29] |
| Attitudes/perceptions of research | Devi [24], Möller [22, 56], Ogunyemi [5] | DiGiovanni [27], Shaikh [29], Vujaklija [54] |
| Motivation for future research career | Burgoyne [23], Devi [24], Gotterer [43], Houlden [44], Laskowitz (Duke and Stanford) [33], Möller [22, 56] | |
| Amount of journal reading and names of journals | | Riegelman [28] |
| Awareness of research activities at own institution | Burgoyne [23] | |
| Usefulness of project in residency application/interviews | Ogunyemi [5], Rhyne [34] | |
| Perceptions/attitudes of small-group vs. individual learning | | Hunt [48] |
| Graduate-reported | | |
| Research and medical career since graduation | Ebbert [55] | |
| Faculty-reported | | |
| Perceptions/satisfaction with research program | Devi [24] | Shaikh [29] |
| Quality and usefulness of students’ evidence summaries | | Crabtree [36] |
| Externally reported | | |
| Observations of students’ behaviour in small-group sessions | | Hunt [48] |

*Italicised articles refer to research programs that have planned to utilise that particular evaluation method but for which no results are available yet. PhD, Doctor of Philosophy

Quantitative Methods

Eighteen research programs used quantitative measures, including 13 project-based programs [22, 24, 33–35, 42–46, 50, 51] and five coursework-based programs [28, 29, 36, 48, 54]. The most common measure was frequency of publication in peer-reviewed journals (n = 9), measured objectively in six programs [5, 24, 34, 42, 50, 51] and self-reported in three [33, 44, 46]. The number of presentations at extramural conferences was used in six programs, measured objectively in four [5, 24, 35, 51] and self-reported in two [44, 46]. Two other programs stated an intention to use such measures [32, 33]. Objectively measured rates of publication in peer-reviewed journals and of extramural presentation ranged from 10 to 41% [5, 24, 34, 42, 50, 51] and from 11 to 50% [5, 24, 35, 51], respectively; self-reported rates ranged from 28 to 76% [33, 44] and from 22 to 47% [44, 46], respectively. Two project-based programs also measured first authorship objectively, reporting rates of 22% and 63% [42, 51].

The Mayo Medical School reported the highest rates, with 66% of graduates having a tangible research product (published research report, published abstract, or extramural conference presentation) directly related to their required research project during medical school [51].

Factors that may increase student publication and first authorship rates include the addition of publication as a non-compulsory assessment criterion, conducting clinical or basic science projects [50], and a longer duration of dedicated research time [51]. Barbosa et al. reported an increase in the objective publication rate from 10.4 to 23.9% over 6 years after introducing publication as an optional assessment criterion, with a concurrent decrease in the proportion of students choosing to perform a systematic literature review [50]. The authors concluded that students who conducted clinical or basic science research projects were four and six times, respectively, more likely to publish than students with projects in the health sciences [50]. At Mayo, dedicated research periods of 13, 17–18, or 21 weeks did not differ in the proportion of students publishing or presenting [51].
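Because changes in rates are easy to misread, the short worked example below separates the absolute change (in percentage points) from the relative change for the publication rates of 10.4% and 23.9% quoted in the preceding paragraph [50]. It uses only those two figures and is offered as an illustrative calculation, not as a result reported by the original authors.

```latex
\begin{aligned}
\text{absolute change} &= 23.9\% - 10.4\% = 13.5 \text{ percentage points},\\
\text{relative change} &= \frac{23.9 - 10.4}{10.4} = \frac{13.5}{10.4} \approx 1.30,
\end{aligned}
```

that is, roughly a 130% relative increase, or about 2.3 times the original rate.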

Two project-based programs reported increased short-term research activity among graduates [51, 56]. Dyrbye et al. showed that graduates with a research product related to the required research experience during medical school produced more unrelated research within 3 years of graduation [51]. The Karolinska Institutet reported that 9% of students had enrolled in a Doctor of Philosophy (PhD) program 2 years after a mandatory research project, with an additional 34% planning to enrol in a PhD program [56]. There were no long-term or longitudinal studies with quantitative measures of research outputs after graduating from a course with a mandatory research program.

The most commonly used quantitative measure for coursework-based programs was test scores on research concepts and methodology [28, 29, 48, 54]. Three programs reported content mastery on assignments [48] or higher test scores compared with students who had not yet undergone the course [29, 54]. One program used pre- and post-course knowledge tests in first-year undergraduate students but the effect size was weak and it was unclear whether the same test was used on both occasions [29].

Qualitative Measures

Student questionnaires were the most common qualitative method [5, 18–24, 26–29, 33, 34, 36, 43–48, 54]; three programs used faculty questionnaires [24, 29, 36], one an alumni questionnaire [55], and one externally reported observations of student behaviour [48]. Common topics included student perceptions of and satisfaction with the research program [5, 19, 24, 29, 33, 36, 43–47], and confidence in research skills [18, 19, 21, 23, 24, 26, 28, 29, 44, 45]. These topics were most common in project-based programs, whereas coursework-based programs focused on students’ overall perceptions of research [27, 29, 54].

Student satisfaction, attitudes, and perceptions towards research after a mandatory research program were largely positive, whether the program was project-based [5, 19, 20, 22, 24, 33, 43–47] or coursework-based [27, 29, 36, 54]. Interestingly, only one program had a stated aim of improving student attitudes towards research [54]. This coursework-based program provided students with lectures in scientific methodology and small-group problem-based learning in the second year of the medical course, and used a longitudinal questionnaire on students’ attitudes towards science and scientific methodology. It demonstrated an increase in positive attitudes between the pre-course and first post-course questionnaires, as well as over the 6 years of the medical course.

However, negative responses were also reported. In a questionnaire study of 140 undergraduate students who completed a 6-month research project without dedicated research time, attitudes towards research changed only minimally between pre- and post-participation [24]. Students described the experience as stressful, and the authors speculated that the lack of dedicated research time was the likely reason. Burgoyne et al. reported that 13% of survey respondents, drawn from cohorts both before and after their research project, found research overly challenging, unstimulating, and generally uninteresting [23].

Students’ self-perceived confidence and research abilities generally increased after the research program [18, 19, 21, 23, 24, 26, 28, 29, 44, 45]. These included skills such as performing a literature search, critical appraisal of literature, problem formulation, survey design, laboratory skills, statistical analysis, manuscript preparation, and presentation skills. Interestingly, laboratory skills were the least likely of these to increase after the program [44].

The type of research program may affect student perception of research skill acquisition. Zee et al. compared student perceptions during a course restructure [26]. Unsurprisingly, perceptions of skills in writing and in retrieving information were significantly higher in the project-based research program, which required a written thesis, compared with students who had undergone lecture-based learning. However, perceptions of critical judgement were higher in the latter group. The authors speculated that students completing research projects may often end up working in isolation and consequently have fewer opportunities to critique scientific information.

The perceived usefulness of completing a research project for obtaining top residency choices was examined in three project-based programs [5, 34, 43]. In one US program, half of the respondents who had completed a primary care research project reported that it made them more competitive in residency applications and interviews [5]. In another US program, graduates reported that completing a research project in medical school helped them obtain their top residency choices, but it was not reported how these data were obtained [34].

Student motivation for a future research career was evaluated only in project-based programs. Overall, students were more interested in medical research after completing a mandatory research project [22–24, 33, 43, 44, 55]. For example, a survey of 60 students (85% response rate) reported an increase in the proportion interested in pursuing a career in medical research from 35 to 42% [44]. One study surveyed 1034 graduates (85.7% response rate) and found that those who had completed a research project in internal medicine were more likely to specialise in that field [55].

Discussion

Our systematic review of mandatory medical student research programs from across the world confirms growing interest in research training in medical school curricula, particularly in the last 7 years. Where reported, there was considerable variation in program format and design, aims, ILOs/competencies, and assessment and evaluation methods. It appears that agreed statements or consensus on the expected research skills and knowledge of medical students at graduation, and on the consequent program design, have yet to emerge. Without such guidance, it is difficult to design and benchmark best practice, and to conduct comparative research on the effectiveness of different teaching methods. As a result, the research to date has comprised single-program evaluations, with the better studies able to demonstrate effects over time. Outcome measures, however, have tended to focus on easily measurable outputs, such as publications, rather than on pedagogical aims such as developing higher-order critical thinking capabilities, or clinical aims such as improved patient care.

The following discussion synthesises key findings and addresses their implications for curricular elements, with the aim of assisting curriculum developers and educators to better identify best practice and areas for further research.

Program Format and Design

A disparate range of program designs was described, none of which was explicitly informed by educational theory. Designs differed in whether programs were project-based or coursework-based, whether there was dedicated time for research, the length and timing of any dedicated research time, and whether research projects were undertaken in small groups or individually.

While hands-on research projects are often considered the most authentic way for students to develop research skills [19, 59, 60], didactic methods such as lectures and skills-based activities focused on research methodology and critical thinking were also described [20, 27–29, 36, 48, 54]. Some have advocated mandatory research projects [61], but others remain uncertain [62]. There is no clear evidence that in-depth research during medical school produces better clinicians or clinician-scientists [32, 63]. Regardless, “learning by doing” (such as completing an independent research project) is thought more likely to produce deep learning than “learning by proposing to do” (such as writing a research proposal without collecting or analysing data) or “learning by critiquing” (such as critiquing existing articles in the literature) [6]. However, not all program aims and ILOs/competencies need to be met “by doing”. “Critical appraisal of literature”, the most frequently stated aim, can be learnt through coursework, which may be more likely to ensure a minimum skill level than self-taught and potentially ad hoc independent approaches [64]. Likewise, “providing an in-depth research experience” could be met by writing a comprehensive research proposal. It seems reasonable that coursework should supplement research projects [26]. However, we identified only 13 project-based programs that stated that additional mandatory research coursework was included [5, 18–21, 25, 26, 32, 33, 42, 43, 46, 51].

Mandatory research projects may or may not be given dedicated curriculum time. Students without dedicated research time are expected to work on projects while completing clinical rotations, which may be detrimental to learning [63] and cause stress [24]. Dedicated time may result in greater improvement in attitudes towards research compared with projects without dedicated time [24]. However, it is also argued that time management skills such as juggling research with clinical commitments are an essential graduate competency [20]. The optimal length and timing of dedicated research time will depend on expected outcomes (e.g. longer time is associated with more students as first authors [51]) and any scaffolding from other program elements.

Several project-based programs were conducted in small groups [5, 18, 24, 32, 45]. However, only one stated collaborative learning as an aim [32], a notable gap given that most medical research is conducted in teams. Although students reportedly value the opportunity to receive peer feedback and develop teamwork [45], others have reported mixed feelings about group learning, citing uncooperative group members and misunderstandings within the group [24]. Fair assessment of groupwork is challenging; one program addressed this by requiring each student to analyse a unique portion of the group’s research data and produce a unique thesis [5].

Appropriate Assessment Tools

Many programs, particularly project-based programs, did not have clear links between the aims, ILOs, and the teaching and assessment methods used [5, 18, 20–22, 24–26, 32–35, 38, 42–46, 50, 51, 55].

A written report or thesis with a research presentation was commonly used in project-based programs [5, 18–22, 24–26, 32–35, 38, 42–46, 50, 51, 55] but often did not address all stated ILOs/competencies, particularly reflective practice aims such as “to self-reflect on own research experience/efforts” and “to foster/increase self-identification as a scholar/researcher”. A few programs utilised portfolio assessment containing multisource evidence and self-reflection to assess research skill attainment [18, 20, 47]. Portfolios have become more common since competency-based assessment has become more established [65]. Advantages of a portfolio include authenticity and the capacity to assess learning outcomes not easily assessed by other methods, such as professionalism and reflective ability [20, 66].

Need for Rigorous Evaluation Tools

Frequency of peer-reviewed publications and presentations was the most commonly used evaluation measure. Several programs [33, 44] relied on self-reports, which generally yielded higher rates than objective measurement. Adding publication as a non-compulsory assessment criterion, conducting clinical or basic science projects, and allowing longer dedicated research time [50, 51] were reported to result in higher publication and first authorship rates.

The Mayo Medical School reported 66% of students producing an objectively measured research output (published article or abstract, or extramural presentation) directly related to their required medical school research, including 41% with a publication [51]. The Mayo program is undertaken in the third year and includes 13 weeks of dedicated research time and has been a mandatory component since the school’s establishment in 1972. With almost half a century of experience, it is likely that a strong student research culture, supported by students and faculty, has developed. Faculty members who mentor student projects allocate up to 8% of their time and are reimbursed up to $750 for laboratory expenses per student. The success of medical student research programs is dependent on strong school commitment and faculty experienced in research supervision, supported by administrative structures and dedicated resources [67].

Numbers of publications and presentations may not be appropriate measures for programs aimed at improving the health of disadvantaged communities [5, 45]. Neither of the two programs with socially accountable aims used publication and presentation rates for program evaluation [5, 45]. Alignment with socially accountable goals would require measures of the impact of student research on identified communities. One program reported future plans to base an intervention on student research findings [45].

Unfortunately, no studies reported impact on patient outcomes or healthcare delivery, or stated an aim or intended outcome to do so. This may reflect the short-term, single-institution nature of the program outcomes reported.

There was frequent use of questionnaires about student attitudes and motivation towards future research [19, 22–24, 33, 43, 44, 55], or self-perceived confidence in research skills [18, 21, 23, 24, 26, 28, 29, 44, 45]. However, such measures only evaluate the lowest two levels of Kirkpatrick’s model [68]. The highest level of evaluation reported was the impact on future research interests and activities. Only two institutions reported quantitative measures of graduate research endeavours and only within 2–3 years of graduation [22, 51]. Only one reported on graduate perceptions of the impact on their careers [55].
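To illustrate why attitude and confidence questionnaires sit at the bottom of Kirkpatrick’s model, the Python sketch below encodes the four levels (reaction, learning, behaviour, results) and reports the highest level reached by a set of evaluation measures. The measure names and their level assignments in `MEASURE_LEVEL` are hypothetical, illustrative assumptions, not the coding used in this review.

```python
from enum import IntEnum
from typing import Iterable, Optional


class Kirkpatrick(IntEnum):
    """Kirkpatrick's four evaluation levels, ordered lowest to highest."""
    REACTION = 1   # learners' satisfaction with, and perceptions of, the program
    LEARNING = 2   # changes in attitudes, knowledge, skills, or confidence
    BEHAVIOUR = 3  # transfer into later practice, e.g. research after graduation
    RESULTS = 4    # wider impact, e.g. on communities or patient outcomes


# Hypothetical mapping of evaluation measures to levels.
# These assignments are assumptions for illustration only.
MEASURE_LEVEL = {
    "satisfaction with research program": Kirkpatrick.REACTION,
    "attitudes towards research": Kirkpatrick.LEARNING,
    "self-perceived confidence in research skills": Kirkpatrick.LEARNING,
    "test scores on research methodology": Kirkpatrick.LEARNING,
    "research activity after graduation": Kirkpatrick.BEHAVIOUR,
    "impact on patient outcomes": Kirkpatrick.RESULTS,
}


def highest_level(measures: Iterable[str]) -> Optional[Kirkpatrick]:
    """Return the highest Kirkpatrick level reached, or None if no measures.

    Raises KeyError for a measure that is not listed in MEASURE_LEVEL.
    """
    levels = [MEASURE_LEVEL[m] for m in measures]
    return max(levels) if levels else None


if __name__ == "__main__":
    # A program evaluated only by satisfaction and confidence questionnaires.
    print(highest_level([
        "satisfaction with research program",
        "self-perceived confidence in research skills",
    ]))
```

Under these assumed assignments, a program evaluated only by satisfaction and self-perceived confidence questionnaires reaches `Kirkpatrick.LEARNING` (level 2), whereas reaching levels 3 or 4 requires follow-up of graduates’ research activity or of community and patient outcomes.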

Multimethod, longitudinal prospective studies that include the views of students, supervisors, and alumni, together with measures of research productivity (tracking the publications, presentations, and clinical and research careers of graduates), could lead to measures of more distal effects such as impact on communities and health outcomes. Such evaluation programs would provide a more complete and useful dataset for curriculum developers and researchers, but ideally should be planned and set up early in program development.

Limitations

This review has several limitations. Firstly, articles may have been omitted due to our search strategy, inclusion criteria, and restriction to English-language articles. To maximise yield from the two databases, we also scanned reference lists. Secondly, we did not include grey literature. Many curricula, which may be informed by best practice, may only be published in the grey literature. However, it is likely that any program which has been rigorously evaluated would be published in a peer-reviewed journal. Our search and analysis were informed by graduate outcomes required by international accreditation standards [69, 70]. Medical school curricula are required to conform to these standards, so we are unlikely to have missed major themes in program aims and outcomes. Data extraction, article selection, and thematic analysis were largely performed by one author (MGYL), and we did not estimate inter-rater variability. However, regular discussion among all authors is likely to have minimised variation in the application of criteria and in theme development. As previous authors have found [11–15], many programs were incompletely reported, so our analysis may be limited by a lack of published evidence rather than by a lack of actual effect. We strongly recommend that those designing and reporting educational interventions adopt both widely accepted standards for curriculum quality (for example, the World Federation for Medical Education Accreditation Standards; https://wfme.org/standards/) and recognized educational reporting criteria, such as those listed on the EQUATOR network. This would help limit inconsistent evaluation and reporting of educational interventions, a recognised barrier to identifying the most effective teaching strategies and establishing intervention efficacy [71–73].

Conclusion

We aimed to identify the minimum expected research skills and knowledge of medical students at graduation by reviewing the aims, ILOs/competencies, and teaching, assessment, and evaluation methods of mandatory medical student research programs from across the world. Despite limitations in reporting, the diversity of program elements suggests that no universal approach can be applied, and the variability in the included studies likely reflects contextual differences between medical programs across the globe. As medical schools become more socially accountable and re-orient their research priorities towards the health concerns of the communities they serve, their graduate competencies should reflect their ethos, their research strengths and focus, and the needs of those communities. For example, there was strong alignment between one medical school’s mission to serve disadvantaged communities and its student research program’s aim to conduct primary care research in areas that affect disadvantaged communities [5].

Whether students learn by “doing”, “proposing to do”, or “critiquing”, medical school curriculum developers must ensure constructive alignment between teaching, assessment, and evaluation methods and the intended aims and ILOs/competencies. The number of peer-reviewed publications and presentations, a common measure of program outcomes, captures only one aspect of the scholarly experience. Measures that document outcomes such as problem solving, teamwork, and communication skills; students’ short- and long-term engagement with research; and the benefits of student research for healthcare outcomes are more authentic and reflect competencies that medical graduates require throughout their careers.

Electronic Supplementary Material

(DOCX 121 kb)

Acknowledgements

We would like to acknowledge Mr Patrick Condron, from the University of Melbourne Brownless Biomedical Library, for his assistance in designing the search strategy.

Authors’ Contributions

MGYL and JB developed the conception, design, and methodology of the review, and searched, analysed, and interpreted the literature within the review. JB drafted the manuscript and revised it for submission. JB and MGYL give permission for this version to be published and agree to be accountable for all aspects of the work. WH provided advice and consultation throughout, assisted with the analysis of the literature, and reviewed multiple draft manuscripts. WH gives permission for this version to be published and agrees to be accountable for all aspects of the work. All authors read and approved the final manuscript.

Funding Information

This work was partly funded by a Faculty of Medicine, Dentistry and Health Sciences (University of Melbourne) Learning and Teaching Initiative Seed Funding Grant awarded to Dr Melissa Lee and Dr Justin Bilszta.

Data Availability

The data extraction sheet used and/or analysed during the current study is available from the corresponding author on request. Data is provided in the tables and appendices and all data is based on published studies.

Compliance with Ethical Standards

Competing Interests

The authors declare that they have no competing interests.

Ethics Approval and Consent to Participate

Not applicable

Consent for Publication

Not applicable

Disclaimer

The funding body had no role in the design of the study or in the collection, analysis, and interpretation of data.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Melissa G. Y. Lee, Email: melissa.lee1@unimelb.edu.au

Wendy C. Y. Hu, Email: w.hu@westernsydney.edu.au

Justin L. C. Bilszta, Email: jbilszta@unimelb.edu.au

References

- 1. Shaw K, Holbrook A, Bourke S. Student experience of final-year undergraduate research projects: an exploration of ‘research preparedness’. Stud High Educ. 2013;38(5):711–727.

- 2. Mohrman K, Ma W, Baker D. The research university in transition: the emerging global model. Higher Educ Policy. 2008;21:5–27.

- 3. Lawson McLean A, Saunders C, Velu PP, Iredale J, Hor K, Russell CD. Twelve tips for teachers to encourage student engagement in academic medicine. Med Teacher. 2013;35(7):549–554. doi: 10.3109/0142159X.2013.775412.

- 4. Mabvuure NT. Twelve tips for introducing students to research and publishing: a medical student’s perspective. Med Teacher. 2012;34(9):705–709. doi: 10.3109/0142159X.2012.684915.

- 5. Ogunyemi D, Bazargan M, Norris K, Jones-Quaidoo S, Wolf K, Edelstein R, Baker RS, Calmes D. The development of a mandatory medical thesis in an urban medical school. Teach Learn Med. 2005;17(4):363–369. doi: 10.1207/s15328015tlm1704_9.

- 6. Overfield T, Duffy ME. Research on teaching research in the baccalaureate nursing curriculum. J Adv Nurs. 1984;9(2):189–196. doi: 10.1111/j.1365-2648.1984.tb00360.x.

- 7. Barton JR. Academic training schemes reviewed: implications for the future development of our researchers and educators. Med Educ. 2008;42(2):164–169. doi: 10.1111/j.1365-2923.2007.02978.x.

- 8. Brannan GD, Dogbey GY, McCament CL. A psychometric analysis of research perceptions in osteopathic medical education. Med Sci Educ. 2012;22:151–161. doi: 10.1007/BF03341780.

- 9. Allen A. Research skills for medical students. United Kingdom: Sage Publishing; 2012.

- 10. Biggs JB. Teaching for quality learning at university: what the student does. Buckingham: Society for Research into Higher Education: Open University Press; 1999.

- 11. Havnaer AG, Chen AJ, Greenberg PB. Scholarly concentration programs and medical student research productivity: a systematic review. Perspect Med Educ. 2017;6(4):216–226. doi: 10.1007/s40037-017-0328-2.

- 12. Burk-Rafel J, Mullan PB, Wagenschutz H, Pulst-Korenberg A, Skye E, Davis MM. Scholarly concentration program development: a generalizable, data-driven approach. Acad Med. 2016;91(11 Suppl, Association of American Medical Colleges Learn Serve Lead: Proceedings of the 55th Annual Research in Medical Education Sessions):S16–S23. doi: 10.1097/ACM.0000000000001362.

- 13. Bierer SB, Chen HC. How to measure success: the impact of scholarly concentrations on students--a literature review. Acad Med. 2010;85(3):438–452. doi: 10.1097/ACM.0b013e3181cccbd4.

- 14. Chang Y, Ramnanan CJ. A review of literature on medical students and scholarly research: experiences, attitudes, and outcomes. Acad Med. 2015;90(8):1162–1173. doi: 10.1097/ACM.0000000000000702.

- 15. Amgad M, Man Kin Tsui M, Liptrott SJ, Shash E. Medical student research: an integrated mixed-methods systematic review and meta-analysis. PLoS One. 2015;10(6):e0127470. doi: 10.1371/journal.pone.0127470.

- 16. Agarwal N, Norrmén-Smith IO, Tomei KL, Prestigiacomo CJ, Gandhi CD. Improving medical student recruitment into neurological surgery: a single institution’s experience. World Neurosurg. 2013;80(6):745–750. doi: 10.1016/j.wneu.2013.08.027.

- 17. Moher D, Liberati A, Tetzlaff J, Altman D, The PRISMA Group. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. Br Med J. 2009;339:b2535. doi: 10.1136/bmj.b2535.

- 18. Riley SC, Morton J, Ray DC, Swann DG, Davidson DJ. An integrated model for developing research skills in an undergraduate medical curriculum: appraisal of an approach using student selected components. Perspect Med Educ. 2013;2(4):230–247. doi: 10.1007/s40037-013-0079-7.

- 19. Drees S, Schmitzberger F, Grohmann G, Peters H. The scientific term paper at the Charité: a project report on concept, implementation, and students’ evaluation and learning. GMS J Med Educ. 2019;36(5):Doc53. doi: 10.3205/zma001261.

- 20. Sawarynski K, Baxa D, Folberg R. Embarking on a journey of discovery: developing transitional skill sets through a scholarly concentration program. Teach Learn Med. 2019;31(2):195–206. doi: 10.1080/10401334.2018.1490184.

- 21. Mullan J, Mansfield K, Weston K, Rich W, Burns P, Brown C, et al. ‘Involve me and I learn’: development of an assessment program for research and critical analysis. J Med Educ Curric Dev. 2017;4:1–8. doi: 10.1177/2382120517692539.

- 22.Möller R, Shoshan M. Does reality meet expectations? An analysis of medical students’ expectations and perceived learning during mandatory research projects. BMC Med Educ. 2019;19:93. doi: 10.1186/s12909-019-1526-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Burgoyne LN, O’Flynn S, Boylan GB. Undergraduate medical research: the student perspective. Med Educ Online. 2010;10. [DOI] [PMC free article] [PubMed]

- 24.Devi V, Ramnarayan K, Abraham RR, Pallath V, Kamath A, Kodidela S. Short-term outcomes of a program developed to inculcate research essentials in undergraduate medical students. J Postgrad Med. 2015;61(3):163–168. doi: 10.4103/0022-3859.159315. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Osman T. Medical students’ perceptions towards research at a Sudanese University. BMC Med Educ. 2016;16(1):253. doi: 10.1186/s12909-016-0776-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Zee M, de Boer M, Jaarsma AD. Acquiring evidence-based medicine and research skills in the undergraduate medical curriculum: three different didactical formats compared. Perspect Med Educ. 2014;3(5):357–370. doi: 10.1007/s40037-014-0143-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.DiGiovanni BF, Ward DS, O’Donnell SM, Fong CT, Gross RA, Grady-Weliky T, et al. Process of discovery: a fourth-year translational science course. Med Educ Online. 2011;16. [DOI] [PMC free article] [PubMed]

- 28.Riegelman RK. Effects of teaching first-year medical students skills to read medical literature. J Med Educ. 1986;61(6):454–460. doi: 10.1097/00001888-198606000-00003. [DOI] [PubMed] [Google Scholar]

- 29.Shaikh W, Patel M, Shah H, Singh S. ABC of research: experience of Indian medical undergraduates. Med Educ. 2015;49(5):523–524. doi: 10.1111/medu.12714. [DOI] [PubMed] [Google Scholar]

- 30.Blazer D, Bradford W, Reilly C. Duke’s 3rd year: a 35-year retrospective. Teach Learn Med. 2001;13(3):192–198. doi: 10.1207/S15328015TLM1303_9. [DOI] [PubMed] [Google Scholar]

- 31.Holloway R, Nesbit K, Bordley D, Noyes K. Teaching and evaluating first and second year medical students’ practice of evidence-based medicine. Med Educ. 2004;38(8):868–878. doi: 10.1111/j.1365-2929.2004.01817.x. [DOI] [PubMed] [Google Scholar]

- 32.Boninger M, Troen P, Green E, Borkan J, Lance-Jones C, Humphrey A, Gruppuso P, Kant P, McGee J, Willochell M, Schor N, Kanter SL, Levine AS. Implementation of a longitudinal mentored scholarly project: an approach at two medical schools. Acad Med. 2010;85(3):429–437. doi: 10.1097/ACM.0b013e3181ccc96f. [DOI] [PubMed] [Google Scholar]

- 33.Laskowitz DT, Drucker RP, Parsonnet J, Cross PC, Gesundheit N. Engaging students in dedicated research and scholarship during medical school: the long-term experiences at Duke and Stanford. Acad Med. 2010;85(3):419–428. doi: 10.1097/ACM.0b013e3181ccc77a. [DOI] [PubMed] [Google Scholar]

- 34.Rhyne RL. A scholarly research requirement for medical students: the ultimate problem-based learning experience. Acad Med. 2000;75(5):523–524. doi: 10.1097/00001888-200005000-00045. [DOI] [PubMed] [Google Scholar]

- 35.Rosenblatt RA, Desnick L, Corrigan C, Keerbs A. The evolution of a required research program for medical students at the University of Washington School of Medicine. Acad Med. 2006;81(10):877–881. doi: 10.1097/01.ACM.0000238240.04371.52. [DOI] [PubMed] [Google Scholar]

- 36.Crabtree EA, Brennan E, Davis A, Squires JE. Connecting education to quality: engaging medical students in the development of evidence-based clinical decision support tools. Acad Med. 2017;92(1):83–86. doi: 10.1097/ACM.0000000000001326. [DOI] [PubMed] [Google Scholar]

- 37.Rao G, Kanter SL. Physician numeracy as the basis for an evidence-based medicine curriculum. Acad Med. 2010;85(11):1794–1799. doi: 10.1097/ACM.0b013e3181e7218c. [DOI] [PubMed] [Google Scholar]

- 38.Schor NF, Troen P, Kanter SL, Levine AS. The Scholarly Project Initiative: introducing scholarship in medicine through a longitudinal, mentored curricular program. Acad Med. 2005;80(9):824–831. doi: 10.1097/00001888-200509000-00009. [DOI] [PubMed] [Google Scholar]

- 39.O’Connor Grochowski C, Halperin EC, Buckley EG. A curricular model for the training of physician scientists: the evolution of the Duke University School of Medicine curriculum. Acad Med. 2007;82(4):375–382. doi: 10.1097/ACM.0b013e3180333575. [DOI] [PubMed] [Google Scholar]

- 40.Dogas Z. Teaching scientific methodology at a medical school: experience from Split, Croatia. Nat Med J India. 2004;17(2):105–107. [PubMed] [Google Scholar]

- 41.Marusic A, Marusic M. Teaching students how to read and write science: a mandatory course on scientific research and communication in medicine. Acad Med. 2003;78(12):1235–1239. doi: 10.1097/00001888-200312000-00007. [DOI] [PubMed] [Google Scholar]

- 42.Dekker FW, Halbesma N, Zeestraten EA, Vogelpoel EM, Blees MT, de Jong PGM. Scientific training in the Leiden Medical School preclinical curriculum to prepare students for their research projects. J Int Assoc Med Sci Educ. 2009;19(2s).

- 43.Gotterer GS, O’Day D, Miller BM. The Emphasis Program: a scholarly concentrations program at Vanderbilt University School of Medicine. Acad Med. 2010;85(11):1717–1724. doi: 10.1097/ACM.0b013e3181e7771b. [DOI] [PubMed] [Google Scholar]

- 44.Houlden RL, Raja JB, Collier CP, Clark AF, Waugh JM. Medical students’ perceptions of an undergraduate research elective. Med Teacher. 2004;26(7):659–661. doi: 10.1080/01421590400019542. [DOI] [PubMed] [Google Scholar]

- 45.Knight SE, Van Wyk JM, Mahomed S. Teaching research: a programme to develop research capacity in undergraduate medical students at the University of KwaZulu-Natal, South Africa. BMC Med Educ. 2016;16:61. doi: 10.1186/s12909-016-0567-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46. Smith FG, Harasym PH, Mandin H, Lorscheider FL. Development and evaluation of a Research Project Program for medical students at the University of Calgary Faculty of Medicine. Acad Med. 2001;76(2):189–194. doi: 10.1097/00001888-200102000-00023.

- 47. van den Akker M, Dornan T, Scherpbier AJJA, Oude Egbrink MGA, Snoeckx LHEH. Easing the transition: the final year of medical education at Maastricht University. Z Evid Fortbild Qual Gesundh Wesen. 2012;106(2):92–97. doi: 10.1016/j.zefq.2012.02.013.

- 48. Hunt DP, Haidet P, Coverdale JH, Richards B. The effect of using team learning in an evidence-based medicine course for medical students. Teach Learn Med. 2003;15(2):131–139. doi: 10.1207/S15328015TLM1502_11.

- 49. Roland CG, Cox BG. A mandatory course in scientific writing for undergraduate medical students. J Med Educ. 1976;51(2):89–93. doi: 10.1097/00001888-197602000-00002.

- 50. Barbosa JM, Magalhaes SI, Ferreira MA. Call to publish in an undergraduate medical course: dissemination of the final-year research project. Teach Learn Med. 2016;28(4):432–438. doi: 10.1080/10401334.2016.1182916.

- 51. Dyrbye LN, Davidson LW, Cook DA. Publications and presentations resulting from required research by students at Mayo Medical School, 1976–2003. Acad Med. 2008;83(6):604–610. doi: 10.1097/ACM.0b013e3181723108.

- 52. McPherson JR, Mitchell MM. Experience with providing research opportunities for medical students. J Med Educ. 1984;59(11 Pt 1):865–868. doi: 10.1097/00001888-198411000-00004.

- 53. Hren D, Lukic IK, Marusic A, Vodopivec I, Vujaklija A, Hrabak M, Marusic M. Teaching research methodology in medical schools: students’ attitudes towards and knowledge about science. Med Educ. 2004;38(1):81–86. doi: 10.1111/j.1365-2923.2004.01735.x.

- 54. Vujaklija A, Hren D, Sambunjak D, Vodopivec I, Ivanis A, Marusic A, et al. Can teaching research methodology influence students’ attitude toward science? Cohort study and nonrandomized trial in a single medical school. J Investig Med. 2010;58(2):282–286. doi: 10.2310/JIM.0b013e3181cb42d9.

- 55. Ebbert A Jr. A retrospective evaluation of research in the medical curriculum. J Med Educ. 1960;35:637–643.

- 56. Moller R, Shoshan M. Medical students’ research productivity and career preferences; a 2-year prospective follow-up study. BMC Med Educ. 2017;17(1):51. doi: 10.1186/s12909-017-0890-7.

- 57. Mullan JR, Weston KM, Rich WC, McLennan PL. Investigating the impact of a research-based integrated curriculum on self-perceived research experiences of medical students in community placements: a pre- and post-test analysis of three student cohorts. BMC Med Educ. 2014;14:161. doi: 10.1186/1472-6920-14-161.

- 58. Frank JR. The CanMEDS 2005 physician competency framework: better standards, better physicians, better care. Ottawa: Royal College of Physicians and Surgeons of Canada; 2005.

- 59. Laidlaw A, Guild S, Struthers J. Graduate attributes in the disciplines of Medicine, Dentistry and Veterinary Medicine: a survey of expert opinions. BMC Med Educ. 2009;9:28. doi: 10.1186/1472-6920-9-28.

- 60. Shankar PR, Chandrasekhar TS, Mishra P, Subish P. Initiating and strengthening medical student research: time to take up the gauntlet. Kathmandu Univ Med J. 2006;4(1):135–138.

- 61. Laidlaw A, Aiton J, Struthers J, Guild S. Developing research skills in medical students: AMEE Guide No. 69. Med Teach. 2012;34(9):e754–e771. doi: 10.3109/0142159X.2012.704438.

- 62. Frishman WH. Student research projects and theses: should they be a requirement for medical school graduation? Heart Dis. 2001;3(3):140–144. doi: 10.1097/00132580-200105000-00002.

- 63. Parsonnet J, Gruppuso PA, Kanter SL, Boninger M. Required vs. elective research and in-depth scholarship programs in the medical student curriculum. Acad Med. 2010;85(3):405–408. doi: 10.1097/ACM.0b013e3181cccdc4.

- 64. Maggio LA, ten Cate O, Irby DM, O’Brien BC. Designing evidence-based medicine training to optimize the transfer of skills from the classroom to clinical practice: applying the four component instructional design model. Acad Med. 2015;90(11):1457–1461. doi: 10.1097/ACM.0000000000000769.

- 65. Royal College of General Practitioners. Portfolio-based learning in general practice: report of a working group on higher professional education. Royal College of General Practitioners; 1993.

- 66. Friedman Ben David M, Davis MH, Harden RM, Howie PW, Ker J, Pippard MJ. AMEE Medical Education Guide No. 24: portfolios as a method of student assessment. Med Teach. 2001;23(6):535–551. doi: 10.1080/01421590120090952.

- 67. Zier K, Stagnaro-Green A. A multifaceted program to encourage medical students’ research. Acad Med. 2001;76(7):743–747. doi: 10.1097/00001888-200107000-00021.

- 68. Kirkpatrick DL. Evaluation of training. In: Craig RL, editor; American Society for Training and Development. Training and development handbook: a guide to human resource development. 3rd ed. New York: McGraw-Hill; 1987.

- 69. Australian Medical Council Limited. Accreditation standards for primary medical education providers and their program of study and graduate outcome statements. Australian Medical Council Limited; 2012.

- 70. Liaison Committee on Medical Education. Functions and structure of a medical school. Washington, DC: Liaison Committee on Medical Education; 2017.

- 71. Haidet P, Levine RE, Parmelee DX, Crow S, Kennedy F, Kelly PA, Perkowski L, Michaelsen L, Richards BF. Guidelines for reporting team-based learning activities in the medical and health sciences education literature. Acad Med. 2012;87(3):292–299. doi: 10.1097/ACM.0b013e318244759e.

- 72. Patricio M, Juliao M, Fareleira F, Young M, Norman G, Vaz CA. A comprehensive checklist for reporting the use of OSCEs. Med Teach. 2009;31(2):112–124. doi: 10.1080/01421590802578277.

- 73. Phillips A, Lewis L, McEvoy M, Galipeau J, Glasziou P, Moher D, et al. Development and validation of the guideline for reporting evidence-based practice educational interventions and teaching (GREET). BMC Med Educ. 2016;16:237. doi: 10.1186/s12909-016-0759-1.

Associated Data

Supplementary Materials

(DOCX 121 kb)

Data Availability Statement

The data extraction sheet used and/or analysed during the current study is available from the corresponding author on request. Data are provided in the tables and appendices, and all data are derived from published studies.