Abstract

Concept and knowledge maps have been shown to improve students’ learning by emphasising meaningful relationships between phenomena. A user-friendly online tool that enables assessment of students’ maps with automated feedback might therefore have significant benefits for learning. For that purpose, we developed an online software platform known as Knowledge Maps. Two pilot studies were performed to evaluate the usability and efficacy of Knowledge Maps. Study A demonstrated significantly improved perceptions of learning after using Knowledge Maps to learn pathology. Study B showed significant improvement between pre-test and post-test scores in an anatomy course. These preliminary studies indicate that this software is readily accepted and may have potential benefits for learning.

Keywords: Concept maps, Knowledge maps, Medical education, eLearning, Assessment, Feedback, Teaching and learning strategies

Background

In higher education, both students and teachers are at risk of being overwhelmed by the ever-growing body of scholarly knowledge. Meaningful learning is dependent upon making connections with prior knowledge and between relevant concepts, no matter how disparate these might seem [1].

Concept and knowledge maps are graphical representations of understanding, which have been shown to promote and cultivate meaningful learning and critical thinking [2–5]. Various methods of manual grading for concept and knowledge maps have been proposed, compared and tested, with varying levels of success [6–8]. However, all of them add to the workload of time-poor academics. A valid and reliable automated tool for assessing students' online concept and knowledge maps would therefore be of great value to both students and teachers [9].

Developing such a tool is challenging: it must not only provide consistent feedback but also be user-friendly. Usability is a key feature of any educational tool or strategy. Designers of human–computer interaction must be aware of such constraints [10, 11], because presenting students with tasks that require unnecessary effort can hamper learning [12].

Even though computer-based concept mapping has been available for more than 10 years [13], and despite advances in artificial intelligence, automated feedback on concept and knowledge maps has been difficult to achieve [14–19]. A common limitation is reliance on the installation of third-party software and plugins. These additional steps can greatly hinder usability and reduce engagement, which may explain why none of these systems has come into general use. It therefore remains challenging and time-consuming to provide worthwhile constructive feedback to students on their maps.

This manuscript describes the development of a knowledge mapping tool that would be usable and effective in providing automated feedback to assist students’ learning.

Methods

Development of Knowledge Maps

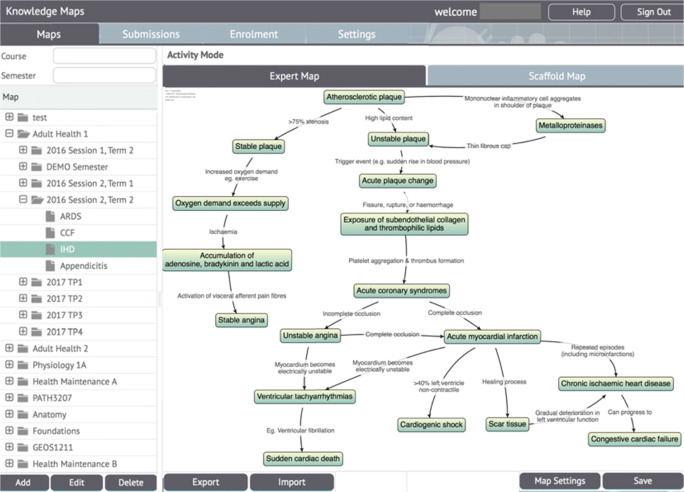

Knowledge Maps is a novel online mapping software platform based, with permission, on the work of a team at the National Taiwan University of Science and Technology [19]. It is a wholly Web-based interactive mapping system that can be used to create, edit and share maps, while students receive automated graphical feedback in real time (Fig. 1).

Fig. 1.

User interface of Knowledge Maps

Teachers can create mapping activities for students. First, an "expert map" is constructed, either directly within Knowledge Maps or by importing it from the freeware program CmapTools™ (https://cmap.ihmc.us/). Linked to each expert map is a "scaffold map", which is presented to students with some nodes and/or linking phrases hidden. The difficulty of the mapping task can be tailored to each group of students by altering the level of scaffolding, ranging from no scaffold at all to providing the entire structure.
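To make the relationship between an expert map and its scaffold map concrete, here is a minimal Python sketch. It assumes a hypothetical representation (a dictionary of node labels plus a list of (source, linking phrase, target) links) and a hypothetical `hide_fraction` parameter standing in for the level of scaffolding. It is an illustration, not the Knowledge Maps implementation, and for simplicity it always keeps the links between nodes visible.

```python
import random
from dataclasses import dataclass


@dataclass
class ConceptMap:
    # Hypothetical representation: node id -> label (None = hidden from students)
    nodes: dict
    # (source node id, linking phrase or None if hidden, target node id)
    links: list


def make_scaffold(expert, hide_fraction, seed=0):
    """Return (scaffold, answers) for an expert map.

    About hide_fraction of all node labels and linking phrases are blanked
    (set to None) in the scaffold; answers maps each hidden slot, written as
    ("node", node_id) or ("link", link_index), to its correct label.
    The links themselves are kept, so the map structure is always shown.
    """
    slots = [("node", nid) for nid in expert.nodes]
    slots += [("link", i) for i in range(len(expert.links))]
    rng = random.Random(seed)  # seeded so a given activity is reproducible
    hidden = set(rng.sample(slots, round(hide_fraction * len(slots))))

    nodes = {nid: None if ("node", nid) in hidden else label
             for nid, label in expert.nodes.items()}
    links = [(src, None if ("link", i) in hidden else phrase, dst)
             for i, (src, phrase, dst) in enumerate(expert.links)]
    answers = {slot: expert.nodes[slot[1]] if slot[0] == "node"
               else expert.links[slot[1]][1]
               for slot in hidden}
    return ConceptMap(nodes, links), answers


# Hypothetical example map on DVT/PE
expert = ConceptMap(
    nodes={"a": "thrombus", "b": "embolus", "c": "lung"},
    links=[("a", "may become", "b"), ("b", "lodges in", "c")],
)
scaffold, answers = make_scaffold(expert, hide_fraction=0.4, seed=1)
```

Seeding the random generator is a design choice in this sketch, so that every student in a cohort could receive the same scaffold for a given activity.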

Various settings can be selected for the activity itself. Teachers can have students generate their own text for nodes and linking phrases, or choose from an alphabetically ordered drop-down list. The map can be locked so that students cannot move existing nodes or linking phrases, which is advantageous in maps with a greater degree of scaffolding. Conversely, the map can be "unlocked" for tasks that require students to generate their own framework or to restructure the scaffold provided for them.
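The activity options just described could be modelled as a small settings object. In this sketch the class `ActivitySettings`, its fields and both helper functions are hypothetical, and the drop-down is assumed to offer exactly the hidden labels (no distractors), which the text does not specify.

```python
from dataclasses import dataclass


@dataclass
class ActivitySettings:
    # Hypothetical settings object; field names are illustrative only.
    free_text: bool = False      # True: students type their own labels
    locked_layout: bool = True   # True: existing nodes/links cannot be moved


def dropdown_options(hidden_labels, settings):
    """Alphabetical, de-duplicated drop-down choices, or None for free text.

    Assumes the choices are simply the hidden labels themselves (no distractors).
    """
    if settings.free_text:
        return None
    return sorted(set(hidden_labels), key=str.casefold)


def can_move_existing_items(settings):
    """Students may reposition existing nodes/links only in an unlocked map."""
    return not settings.locked_layout


options = dropdown_options(["lung", "Embolus", "thrombus", "lung"], ActivitySettings())
```

Sorting with `str.casefold` keeps the list alphabetical regardless of capitalisation.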

Knowledge Maps provides two main forms of feedback. The first is a mark out of 100, generated by the weighted proposition method [20]. The second is graphical feedback displayed in the browser window: the student's map is shown with nodes and linking phrases highlighted in red if incorrect and in yellow if omitted. The teacher can choose to show the correct label for an omitted node, or only its first letter as a hint.
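The paper does not detail the weighted proposition method, so the sketch below is only one plausible reading under stated assumptions: each expert proposition (source, linking phrase, target) carries a teacher-assigned weight, a student proposition earns that weight only if all three labels match, and the mark is the earned weight as a percentage of the total. The red/yellow highlighting and the first-letter hint follow the description above; all function names are hypothetical.

```python
RED, YELLOW = "red", "yellow"  # incorrect / omitted, as described in the text


def _matches(given, expected):
    """Case- and whitespace-insensitive label comparison; None means blank."""
    return given is not None and given.strip().casefold() == expected.strip().casefold()


def weighted_score(expert, student, weights):
    """Mark out of 100 (ASSUMED weighting scheme, not the published method).

    expert and student are aligned lists of (source, link, target) labels,
    as in a locked scaffold; student entries may be None when left blank.
    """
    earned = sum(
        w for exp, stu, w in zip(expert, student, weights)
        if all(_matches(s, e) for e, s in zip(exp, stu))
    )
    return round(100 * earned / sum(weights), 1)


def label_feedback(expected, given, reveal_answer=True):
    """Return (highlight colour or None, text to display) for one label."""
    if given is None or not given.strip():
        # Omitted: show the correct label, or only its first letter as a hint
        return YELLOW, expected if reveal_answer else expected[:1]
    if _matches(given, expected):
        return None, given
    return RED, given


expert = [("thrombus", "may become", "embolus"), ("embolus", "lodges in", "lung")]
student = [("thrombus", "may become", "embolus"), ("embolus", None, "heart")]
print(weighted_score(expert, student, weights=[2, 1]))  # 66.7
```

Here the first proposition earns its weight of 2 and the second earns nothing, giving 2/3 of the marks.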

Teachers can view attempts made by students through a “Submissions” tab, which can help identify common areas of misunderstanding across a cohort.
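A cohort-level view of the kind the Submissions tab supports can be approximated by counting, for each hidden slot, how many students answered it incorrectly or left it blank. The data format and the function `most_missed` below are hypothetical assumptions made for illustration.

```python
from collections import Counter


def most_missed(answers, submissions, top=3):
    """Rank hidden slots by how many students got them wrong or left them blank.

    answers: slot id -> correct label (hypothetical format)
    submissions: one dict per student, slot id -> the label that student entered
    """
    missed = Counter()
    for entry in submissions:
        for slot, correct in answers.items():
            given = entry.get(slot)
            if given is None or given.strip().casefold() != correct.casefold():
                missed[slot] += 1
    return missed.most_common(top)


answers = {"node:embolus": "embolus", "link:lodges-in": "lodges in"}
submissions = [
    {"node:embolus": "embolus", "link:lodges-in": "causes"},
    {"node:embolus": "plaque"},
    {"node:embolus": "embolus", "link:lodges-in": "lodges in"},
]
print(most_missed(answers, submissions))  # [('link:lodges-in', 2), ('node:embolus', 1)]
```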

Pilot Studies of Usability and Efficacy

These studies were approved by the University of New South Wales Human Research Ethics Committee (no. HC15114). Each map included brief instructions for students explaining how to navigate and complete the map.

Pilot Study A

Students were recruited from the first and second years of an undergraduate Medicine programme and randomly allocated to two study groups. This crossover trial was designed to evaluate usability and acceptability and to observe any measurable impact on student learning. The trial ran over 2 weeks, during which group 1 (n = 84) was provided with a map on ischaemic heart disease (IHD) and group 2 (n = 83) with a map on deep venous thrombosis and pulmonary embolism (DVT/PE). These were scaffolded maps in which students completed missing concepts or linking phrases by choosing options from a drop-down list. As a control, each group was given a link to existing online resources on the topic that the other group studied using a map.

At the end of each week, students completed a timed online quiz comprising multiple-choice questions on each topic. An online questionnaire was also provided, containing Likert scale items rated from 0.0 (least favourable) to 6.0 (most favourable). Further items asked students to rate their understanding of the topic before and after using Knowledge Maps on a scale from 1 to 10. Open-ended questions enabled students to comment on the strengths and weaknesses of the tool.

Pilot Study B

Study B also evaluated the impact of Knowledge Maps on learning, using objective measures. Participants (n = 54) were recruited from a third-year undergraduate anatomy course. During a tutorial class, participants worked in pairs to complete scaffolded maps on cranial nerves. Each student individually completed an online pre-test on cranial nerves before the mapping activity and a post-test after it.

Results

Pilot Study A

Of the 167 students recruited, only 53 from group 1 and 59 from group 2 completed the online quiz. Unpaired t tests showed no significant difference in mean quiz scores for either IHD or DVT/PE between students who had studied the topic with a map and those who had used the control resources (Table 1). In total, 135 students completed the questionnaire. Students reported significantly higher perceived understanding after using the maps than before (median 4.5, IQR 4.0–6.0, before; median 7.0, IQR 6.0–8.0, after; p < 0.0001, Wilcoxon signed-rank test).
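The paper does not state which software performed the Wilcoxon signed-rank test, and the raw ratings are not reported, so the following is only a standard-library Python sketch of the test (with a normal approximation) and of median/IQR summaries, applied to a few hypothetical ratings. For real analyses an established implementation such as `scipy.stats.wilcoxon` would normally be preferred.

```python
import math
import statistics


def median_iqr(values):
    """Median and (Q1, Q3). Quartile conventions differ between packages,
    so these may not match the values reported in the paper exactly."""
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return q2, (q1, q3)


def wilcoxon_signed_rank(before, after):
    """Two-sided Wilcoxon signed-rank test, normal approximation.

    Zero differences are dropped and tied |differences| receive average
    ranks; the variance is NOT corrected for ties and no continuity
    correction is applied, so p is approximate (adequate for large n).
    Returns (W+, W-, p).
    """
    diffs = [a - b for b, a in zip(before, after) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i  # extend j over a run of tied absolute differences
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of 1-based ranks i+1..j+1
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean_w) / sd_w
    return w_plus, w_minus, math.erfc(abs(z) / math.sqrt(2))


# Hypothetical before/after self-ratings (1-10) for five students
before = [4, 5, 3, 6, 4]
after = [7, 7, 6, 8, 5]
print(wilcoxon_signed_rank(before, after))
print(median_iqr(before), median_iqr(after))
```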

Table 1.

Quiz results (mean percentage score ± standard error of the mean); p values calculated by unpaired t tests

| Topic | Group 1 (n = 53) | Group 2 (n = 59) | p value |
|---|---|---|---|
| IHD | 60.38 ± 3.40 | 61.02 ± 2.84 | 0.885 |
| DVT/PE | 58.18 ± 2.64 | 58.19 ± 2.36 | 0.996 |
| Both topics | 59.28 ± 2.56 | 59.60 ± 2.06 | 0.920 |
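As an arithmetic cross-check, the p values in Table 1 can be approximately recovered from the reported means and standard errors alone. The sketch below assumes an unpooled standard error and a normal approximation to the t distribution (with about 110 degrees of freedom the two are very close); it is not the authors' exact procedure, so small differences in the third decimal place are expected.

```python
import math


def approx_two_sided_p(mean1, sem1, mean2, sem2):
    """Approximate p for a difference between two independent means.

    Uses the unpooled standard error sqrt(sem1**2 + sem2**2) and a normal
    approximation to the t distribution, which is reasonable with ~110
    degrees of freedom. It is a consistency check, not the authors' analysis.
    """
    z = (mean2 - mean1) / math.hypot(sem1, sem2)
    return math.erfc(abs(z) / math.sqrt(2))


# (group 1 mean, SEM), (group 2 mean, SEM), reported p, taken from Table 1
TABLE_1 = {
    "IHD": ((60.38, 3.40), (61.02, 2.84), 0.885),
    "DVT/PE": ((58.18, 2.64), (58.19, 2.36), 0.996),
    "Both topics": ((59.28, 2.56), (59.60, 2.06), 0.920),
}

for topic, ((m1, s1), (m2, s2), reported) in TABLE_1.items():
    p = approx_two_sided_p(m1, s1, m2, s2)
    print(f"{topic}: approximate p = {p:.3f}, reported p = {reported}")
```

Each approximate value agrees with the reported p to within about 0.003, consistent with the group means being essentially identical.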

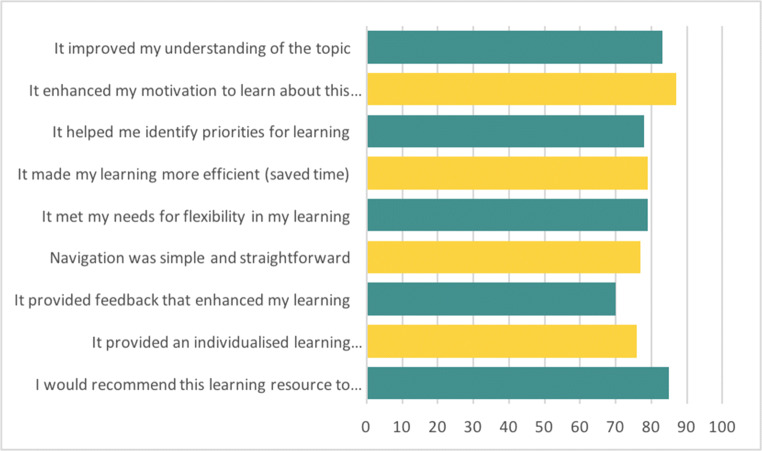

Percentage agreement for each Likert scale item was calculated as the percentage of students who rated the item 4.0 or higher; it ranged from 70 to 87% across the 9 items (Fig. 2). Qualitative analysis of the open-ended responses describing benefits (n = 99) revealed several common themes: providing an overview or summary of the topic (n = 18); demonstrating links and relationships between concepts (n = 17); providing feedback (n = 14); and being a simple and logical tool (n = 11).

Fig. 2.

Percentage agreement (ratings of 4.0–6.0) for Likert scale items in the Perceived Utility of Learning Technology Scale survey (n = 135)
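The percentage-agreement figures are a simple proportion: the share of respondents rating an item 4.0 or higher on the 0.0 to 6.0 scale. A minimal Python sketch follows; the item names and ratings in the example are hypothetical.

```python
def percent_agreement(ratings, threshold=4.0):
    """Share of ratings (0.0-6.0 scale) at or above the agreement threshold, in %."""
    if not ratings:
        raise ValueError("at least one rating is required")
    return 100 * sum(r >= threshold for r in ratings) / len(ratings)


def agreement_by_item(responses, threshold=4.0):
    """responses: item name -> list of ratings (hypothetical format)."""
    return {item: percent_agreement(r, threshold) for item, r in responses.items()}


print(agreement_by_item({"item_1": [6, 5, 4, 3, 2], "item_2": [4.0, 4.5]}))
# {'item_1': 60.0, 'item_2': 100.0}
```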

Pilot Study B

Students' mean test scores increased significantly after the mapping activity (pre-test mean 5.98 out of 10, 95% CI [5.51, 6.65]; post-test mean 7.67, 95% CI [7.13, 8.20]; p < 0.0001, paired t test).
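The paired t test compares each student's post-test score with their own pre-test score. The sketch below computes the paired t statistic from hypothetical scores using only the Python standard library; the study's individual scores are not reported, and turning t into a p value or confidence interval would additionally require t-distribution quantiles (for example from `scipy.stats.t`).

```python
import math
import statistics


def paired_t(pre, post):
    """Paired t statistic for post - pre, with df and the mean difference's SE.

    Converting t to a p value, or building a 95% CI as
    mean_diff +/- t_crit * se, needs a t-distribution quantile, which the
    standard library does not provide (e.g. scipy.stats.t could be used).
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two paired scores")
    diffs = [b - a for a, b in zip(pre, post)]
    mean_diff = statistics.mean(diffs)
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return mean_diff / se, len(diffs) - 1, mean_diff, se


# Hypothetical pre-/post-test scores (out of 10) for four students
t, df, mean_diff, se = paired_t([5, 6, 5, 7], [7, 7, 8, 8])
print(f"t = {t:.2f}, df = {df}, mean gain = {mean_diff:.2f}")
```

Pairing removes between-student variation in baseline knowledge, which is why it suits a pre-test/post-test design without a control group.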

Discussion

Knowledge Maps, a novel software tool, was readily accepted by the student cohorts in the pilot studies described here. Learning is a complex and multidimensional construct. Although it would have been encouraging to demonstrate significantly improved quiz scores in pilot study A, the absence of a difference was unsurprising, given that all students had access to face-to-face teaching and other course resources covering the topics addressed by our knowledge maps. This research was thus performed in a broader context in which the effects of innovations are virtually impossible to observe in isolation [20, 21]. Furthermore, the lack of an objective increase in knowledge does not negate students' perceptions of significantly improved understanding. Indeed, Kirschner and colleagues [22] suggest that successful educational design results in increased effectiveness, efficiency or satisfaction without a concomitant decrease in any of the other parameters; improved perceived understanding with no loss of quiz performance meets this criterion.

One of the major benefits of Knowledge Maps is its accessibility. Because the system runs entirely in the browser, students can engage with the activity without installing additional software, which is likely to support participation, uptake and acceptance [10, 11]. For a learning resource to have any impact on learning, it must first be a resource that students are willing to use [23]. By lowering the barriers to uptake, Knowledge Maps could therefore prove to be a superior tool for the construction of student maps and the delivery of feedback on these maps.

Another advantage is that Knowledge Maps can create a range of activities with varying levels of scaffolding, which many other systems do not allow. Teachers thus have the flexibility to design learning activities suited to their students.

Limitations

These preliminary data were gathered in exploratory studies, and more evidence is needed before Knowledge Maps can be definitively validated as a resource that enhances learning. Pilot study B was carried out in a scheduled class, which made the inclusion of a control group impractical. Randomised controlled trials comparing learning after use of Knowledge Maps with learning by traditional methods in a control group would help to establish whether use of the tool produces objective learning gains, as opposed to gains in students' perceptions of learning. Further crossover trials similar to pilot study A, using topics for which fewer alternative resources exist, could also be informative.

Conclusion

Knowledge Maps is an accessible tool for creating concept and knowledge maps that enables assessment of students' maps with automated feedback. The data presented here suggest that use of Knowledge Maps may benefit learning, as reflected both in students' perceptions of their understanding and in the pre-test to post-test gains in pilot study B. This tool has the potential to enhance learning across a range of contexts and disciplines.

Abbreviations

- CI: confidence interval
- DVT/PE: deep venous thrombosis and pulmonary embolism
- IHD: ischaemic heart disease
- IQR: interquartile range

Funding

The development of Knowledge Maps was supported by a University of New South Wales Learning and Teaching Development Grant.

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethical Approval

These studies were approved by the University of New South Wales Human Research Ethics Committee (no. HC15114).

Informed Consent

Information about the trials was supplied to all participants, who were informed that non-participation would have no effect on their academic standing. Consent was implied by students' complete participation.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Veronica W. Ho, Email: veronica.ho@unsw.edu.au

Meng Meng, Email: mmeng0912@gmail.com

Gwo-Jen Hwang, Email: gjhwang.academic@gmail.com

Nalini Pather, Email: n.pather@unsw.edu.au

Rakesh K. Kumar, Email: r.kumar@unsw.edu.au

Richard M. Vickery, Email: richard.vickery@unsw.edu.au

Gary M. Velan, Phone: +61 2 9385 1278, Email: g.velan@unsw.edu.au

References

1. Jacobs HH. The growing need for interdisciplinary curriculum content. In: Jacobs HH, editor. Interdisciplinary curriculum: design and implementation. Alexandria: Association for Supervision and Curriculum Development; 1989. pp. 1–11.

2. Novak JD, Cañas AJ. The theory underlying concept maps and how to construct and use them. 2008 [cited 2013]. Available from: http://cmap.ihmc.us/Publications/ResearchPapers/TheoryUnderlyingConceptMaps.pdf.

3. Gonzalez HL, Palencia AP, Umana LA, Galindo L, Villafrade LA. Mediated learning experience and concept maps: a pedagogical tool for achieving meaningful learning in medical physiology students. Adv Physiol Educ. 2008;32:312–316. doi: 10.1152/advan.00021.2007.

4. O'Donnell AM, Dansereau DF, Hall RH. Knowledge maps as scaffold for cognitive processing. Educ Psychol Rev. 2002;14(1):71–86. doi: 10.1023/A:1013132527007.

5. Daley BJ, Shaw CA, Balistrieri T, Glasenapp K, Piacentine L. Concept maps: a strategy to teach and evaluate critical thinking. J Nurs Educ. 1999;38(1):42–47. doi: 10.3928/0148-4834-19990101-12.

6. Besterfield-Sacre M, Gerchak J, Lyons M, Shuman LJ, Wolfe H. Scoring concept maps: an integrated rubric for assessing engineering education. J Eng Educ. 2004;93(2):105–115. doi: 10.1002/j.2168-9830.2004.tb00795.x.

7. Watson MK, Pelkey J, Noyes CR, Rodgers MO. Assessing conceptual knowledge using three concept map scoring methods. J Eng Educ. 2016;105(1):118–146. doi: 10.1002/jee.20111.

8. Yin Y, Vanides J, Ruiz-Primo MA, Ayala CC, Shavelson RJ. Comparison of two concept-mapping techniques: implications for scoring, interpretation and use. J Res Sci Teach. 2005;42(2):166–184. doi: 10.1002/tea.20049.

9. Hwang G-J, Wu PH, Ke HR. An interactive concept map approach to supporting mobile learning activities for natural science courses. Comput Educ. 2011;57(4):2272–2280. doi: 10.1016/j.compedu.2011.06.011.

10. Te'eni D, Carey JM, Zhang P. Human-computer interaction: developing effective organizational information systems. Wiley; 2005.

11. Weinerth K, Koenig V, Brunner M, Martin R. Concept maps: a useful and usable tool for computer-based knowledge assessment? A literature review with a focus on usability. Comput Educ. 2014;78:201–209. doi: 10.1016/j.compedu.2014.06.002.

12. de Jong T. Cognitive load theory, educational research, and instructional design: some food for thought. Instr Sci. 2010;38:105–134. doi: 10.1007/s11251-009-9110-0.

13. Cañas AJ, Hill G, Carff R, Suri N, Lott J, Eskridge T, et al., editors. CmapTools: a knowledge modeling and sharing environment. Concept maps: theory, methodology, technology. Proceedings of the First International Conference on Concept Mapping; 2004.

14. Ho V, Velan G. Online concept maps in medical education: are we there yet? FoHPE. 2016;17(1):18. doi: 10.11157/fohpe.v17i1.119.

15. Gouli E, Gogoulou A, Papanikolaou K, Grigoriadou M, editors. COMPASS: an adaptive web-based concept map assessment tool. Pamplona: International Conference on Concept Mapping; 2004.

16. Anohina-Naumeca A, Grundspenkis J, Strautmane M. The concept map-based assessment system: functional capabilities, evolution, and experimental results. Int J Contin Eng Educ Life Long Learn. 2011;21(4):308–327. doi: 10.1504/IJCEELL.2011.042790.

17. Luckie D, Harrison SH, Ebert-May D. Model-based reasoning: using visual tools to reveal student learning. Adv Physiol Educ. 2011;35(1):59–67. doi: 10.1152/advan.00016.2010.

18. Cline BE, Brewster CC, Fell RD. A rule-based system for automatically evaluating student concept maps. Expert Syst Appl. 2010;37:2282–2291. doi: 10.1016/j.eswa.2009.07.044.

19. Wu PH, Hwang G-J, Milrad M, Ke HR, Huang YM. An innovative concept map approach for improving students' learning performance with an instant feedback mechanism. Br J Educ Technol. 2012;43(2):217–232. doi: 10.1111/j.1467-8535.2010.01167.x.

20. Pirrie A. Evidence-based practice in education: the best medicine? Br J Educ Stud. 2001;49(2):124–136. doi: 10.1111/1467-8527.t01-1-00167.

21. Regehr G. It’s NOT rocket science: rethinking our metaphors for research in health professions education. Med Educ. 2010;44(1):31–39. doi: 10.1111/j.1365-2923.2009.03418.x.

22. Kirschner PA, Martens RL, Strijbos J-W. CSCL in higher education? A framework for designing multiple collaborative environments. In: Strijbos J-W, Kirschner PA, Martens RL, Dillenbourg P, editors. What we know about CSCL and implementing it in higher education. Kluwer Academic Publishers; 2004. pp. 3–30.

23. Nielsen J. Usability engineering. Elsevier; 1994.