Abstract

With the introduction of McMaster University’s problem-based, self-directed learning and cognitive integration in the medical school curriculum, learning in small groups has been gaining popularity with medical schools worldwide. Problem-based learning (PBL) places emphasis on the value of basic medical sciences as the basis of learning medicine using clinical problems. For a successful outcome, a PBL curriculum needs to have a student-centered learning environment, problem-based design and facilitation, and assessment of learning in PBL domains. We describe a PBL program that has been used for undergraduate medical education, including changes made to learning resources and assessment. The changes required input from both faculty educators and students, and success depended on buy-in into the process. One of the changes included implementing the use of standard textbooks, which students use as the primary source of information during self-directed learning. Another change was the use of several reliable, valid, and cost-effective high-stakes written exams from internal and external sources, to promote spaced retrieval of biomedical facts and clinical contexts. By making these and other changes, we have been able to achieve pass rates and board scores which are consistently above the national average for 12 years. We conclude that in order to ensure sustainable successful outcomes, it is important to keep our program dynamic by making improvements in the PBL domains and assessment methods, taking into consideration students’ course evaluations of the learning environment.

Keywords: Problem-based learning, Active learning, Preclinical medical education

Introduction

Problem-based learning (PBL) focused on small groups, in the “purist” perspective, is designed to promote learning principles of being constructivist, collaborative, self-directed, and contextual and has been in use in medical education since the early 1970s [1]. In traditional PBL, small groups of learners are presented with a full-length clinical case developed in a progressive disclosure design, anchored to systems-based learning objectives. PBL groups set their own learning topics, issues, and objectives progressively throughout the case, often teaching each other, and explaining causal mechanisms and pathophysiologic connections to clinical features of the case, guided by a skilled facilitator. The PBL preclinical medical education curriculum at LECOM Bradenton (which was founded in 2004) was designed around the original model used by Schools of Medicine at McMaster University and The Ohio State University (OSU), using original patient cases written at OSU. In this paper, we describe in detail how we have conducted the PBL program at LECOM Bradenton over a 14-year period and the learning outcomes that resulted.

Description of the PBL Program at LECOM Bradenton

In our preclinical curriculum (first two years of osteopathic medical school), students learn all basic biomedical sciences (except anatomy) in a modified PBL format. Curricular contents include the basic sciences vital to medical education such as anatomy, embryology, histology, genetics, biochemistry, physiology, pathology, pharmacology, microbiology, immunology, and neuroanatomy [2], driven by clinical cases in the body systems. Behavioral sciences, ethics, nutrition, public health, human sexuality, medical jurisprudence, and geriatrics are offered as mini-courses (usually of one- to two-week duration), in various semesters. Anatomy is taught as a 10-week structured course at the start of the first semester, with lectures and laboratory sessions utilizing cadavers, plastinated specimens, and lab exercises. In all four semesters for the preclinical curriculum, students are required to take three major longitudinal courses—PBL, clinical exam (CE) skills, and osteopathic manipulative medicine (OMM).

Each PBL group session is two hours long, and sessions are generally held three times a week, resulting in six contact hours in PBL each week. Each PBL group consists of seven to eight students with one faculty facilitator, and students remain in the same group for the entire semester (20 weeks). Faculty facilitators (basic or clinical science faculty) change groups every 10 weeks. PBL sessions are generally held on Mondays, Wednesdays, and Fridays, in order to allow protected time in between for students to do independent study and self-directed learning (SDL) based on the learning issues assigned by the group. Students take ownership of their learning by studying learning issues and related learning materials. The days in between are also utilized for teaching CE and OMM. Attendance and punctuality for all PBL sessions are mandatory, as they constitute a percentage of the grade for the course. Each PBL room is equipped with a whiteboard, and students are encouraged to use the board to list case details, differential diagnoses, an assessment and plan for each PBL patient, and learning issues [3]. Each session begins with a progress note or subjective, objective, assessment, and plan (SOAP) note [4] and concludes with a wrap-up, in which each student evaluates both the group process and individual participation. Students are encouraged to play roles of physician, patient, SOAP note presenter, scribe, and reader in each session.

For each PBL case, facilitators are given learning objectives in briefing sessions before a unit or organ-system module begins. During each PBL session, students identify learning issues (what they do not know) and list them on the board during the brainstorming, problem synthesis, and hypotheses-generation stage. Learning issues are usually sections/chapters of the relevant (to the PBL case) basic science textbooks. As the case is progressively disclosed with laboratory results (CBC, metabolic panel, liver function tests, other end-organ assessments), students continue to list their learning issues on the board. Students then research and study during inquiry-driven SDL and return ready to answer questions, teach each other, and apply newly acquired knowledge to the clinical problem in subsequent sessions. Students are encouraged to integrate the basic sciences widely, so that they pick learning issues from several different textbooks for any given case.

At the completion of a PBL case, students in a group reach a consensus on which learning issues were important to understand the foundational basic sciences for that case. These are then submitted as “learning objectives” (commonly known to students as “exam topics”) to the faculty member in charge of creating PBL assessments (or PBL exams, described below). In general, students submit three to ten (or more) chapters for each case, depending on the length and complexity of the case. Each group submits its own selected chapters to be tested on, and the PBL exam for each group is comprised of multiple-choice questions (MCQs) from those selected chapters. Thus, the PBL exam is constructed separately for each group, based on their choices. Students’ learning objectives from independent PBL groups are vetted against the expected learning objectives of the cases (provided only to the facilitators in briefing notes) and become the basis of specific clinical vignette-based MCQs as stand-alone or “case-cluster” PBL questions.

When our program first began (in 2004), students were allowed to choose learning issues and exam topics from any medical textbook or resource of their choice. When we conducted PBL exams, we found that exam questions derived from one textbook would not necessarily be answerable from other textbooks. This was discovered by students during the post-exam review, where questions with poor statistics were often those that could not be found in all textbooks. It was observed that these questions frustrated students and led to low PBL exam scores.

We then examined COMLEX Level 1 (the licensing exam offered by the National Board of Osteopathic Medical Examiners, NBOME) results for the inaugural (graduating) Class of 2008 (students who took COMLEX Level 1 in 2006). We found that their average COMLEX Level 1 score (467) and pass rate (83%) were significantly below the national COMLEX score (490) and pass rate (88%) for that year (see Figs. 1 and 2). Based on feedback provided by students regarding their frustrations with the learning environment and textbook resources, along with the lower-than-average board results, we decided to implement changes in the program to see if outcomes would improve. We used the following questions to guide our improvements:

- How do we sequence and intertwine all basic medical sciences with cases and assess medical knowledge in a PBL pathway?

- How do we standardize reliable sources of information across the preclinical years and provide scope to students for identification, analysis, and synthesis of information based on evidence-based medicine and also provide a point of reference for “problem-analysis questions” for summative exams?

- How do we improve curricular outcomes and national board COMLEX Level 1 results?

Fig. 1.

The mean first-time COMLEX Level 1 exam scores from 2008 to 2020. The national mean (excluding LECOM Bradenton) and the LECOM Bradenton school mean (with 95% confidence intervals) are reported for each graduating class. LECOM Bradenton had class sizes ranging from 148 to 155 students between 2008 and 2014 and class sizes of 181–195 for 2015–2020. Sample sizes for the national mean (excluding LECOM Bradenton) roughly doubled from 2008 to 2020 (yearly totals: 3287, 3503, 3627, 4092, 4462, 4699, 4901, 5260, 5350, 5988, 6368, 6573, and 6832). Numbers above bars indicate the average exam scores. All differences between school and national means were statistically significant (adjusted p value < 0.001)

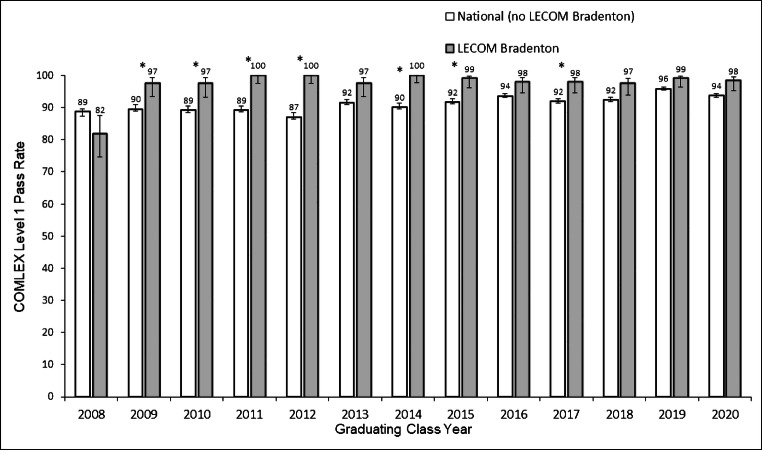

Fig. 2.

COMLEX Level 1 pass rates from 2008 to 2020. The national (excluding LECOM Bradenton) and the LECOM Bradenton school pass rates and 95% confidence intervals are reported for each graduating class. Numbers above bars indicate the pass rate (percent of students who passed the COMLEX Level 1). Asterisk symbol denotes years for which school and national pass rates were significantly different (adjusted p value < 0.05). All unadjusted p values were < 0.05

Modifications to the Original PBL Program

The results from COMLEX Level 1 for the Class of 2008 (Fig. 1) led us to make several changes to the way the PBL program was executed, starting in 2006. Some of those changes, implemented over the course of several years, include the following:

- Provide students with a list of standard textbooks which they are required to use, preferably as print copies, both to study from and to select exam topics from.

- Create integrated 200-question PBL group-specific exams, which are written by faculty using national boards-style MCQs.

- Administer additional assessments such as the in-house Krueger diagnostic exams (K1–K4) and standardized tests such as the National Board of Medical Examiners (NBME) Comprehensive Basic Science exam (CBSE), to promote learning and retention.

- Write/review PBL cases on an ongoing basis to ensure that the content is up to date and includes all medical basic sciences, population/public health, behavioral/social science, and osteopathic manipulative treatment (OMT) in every case.

- Use PBL course and facilitator evaluations to make necessary changes on an ongoing basis based on student and faculty feedback.

- Mentor new faculty facilitators in a rigorous manner (in a semester-long training program with a seasoned facilitator), so that there is consistency amongst them in terms of PBL facilitation and in providing formative evaluations using facilitator assessment of student performance (FASP) forms.

- Encourage and regularly remind students to pick learning issues and exam topics from several biomedical disciplines for any given PBL case, for better integration.

To ensure improved curricular outcomes and students’ buy-in, it became necessary to report from which textbook the exam questions were derived. Hence, in 2005, we decided to implement a list of required textbooks, which included all the standard basic medical science textbooks determined by faculty content experts. A list of required textbooks is now provided in the beginning of the year. Students are required to buy and use print copies (not pdfs or online versions) of specific medical textbooks for each science. Currently, students may continue to use alternative textbooks/resources, but exam questions for PBL are derived from the required textbooks.

A study conducted in 2014 found that an effort to increase the quality of resources students use in researching PBL-learning issues resulted in substantially greater use of medical textbooks [5]. PBL cases unfold in a progressive disclosure model [1], and similar to what Distlehorst et al. report [6], students identify what they do not know by brainstorming, asking “why questions,” and applying available knowledge. Our learners use a variety of resources including textbooks, the internet, journals, medical literature, and faculty and community experts. When students have to reconcile pieces of information from various sources, it serves to develop their critical appraisal and critical thinking skills [7]. Knowledge acquisition and performance in the framework of PBL cases (where process rather than problem-solving is desired) aids the learners in making repeated journeys to higher levels in Bloom’s cognitive taxonomy [8].

Our assessments for PBL include PBL exams; there are five PBL exams in each of the first and second years. Each PBL exam is in an MCQ format with about 200 questions and generally covers six to nine cases that were offered as a block over six to eight weeks. Faculty facilitators provide one-on-one feedback to students on their performance in PBL sessions, during the middle and end of the 10-week facilitation period. Feedback is provided using a numeric rubric-based facilitator assessment of student performance (FASP) form; this FASP grade accounts for 15–20% of the final PBL grade in any semester. In addition, there are two Krueger diagnostic exams (around 200 MCQs each) given each year, to test students’ retrieval of knowledge. These multiple-choice exams were created in-house and are termed “Krueger” diagnostics K1–K4 (collected from content experts and archived by the late dean Dr. Wayne Krueger). These exams integrate several basic medical sciences and serve as summative exams for testing content from the system/block that the students covered in the semester. We also offer students the NBME CBSE in semester 4; it is mandatory that students take it eight to nine weeks before they take the first national board exam, COMLEX Level 1. NBME CBSE scores do not count towards students’ final grades for PBL. A percentage of the score from the K2 diagnostic exam is added to the final PBL grade at the end of semester 2, in order to provide an incentive to the students.

When the first semester begins and students start anatomy through lecture/laboratory, we organize them in groups of seven–eight based on gender and grades (so that groups are gender-balanced and not all high-performing students are in one group). Students work through anatomy lab exercises in those groups thrice a week. PBL begins once a week in week 6 of the semester and increases to three times/week after week 10. Since the same group of students have worked together in anatomy, it allows for a smooth transition in terms of group dynamics and interaction when they start PBL. We have found (by trial and error) that this schedule works well for the students’ performance in the first semester. Students are given two PBL exams in semester 1.

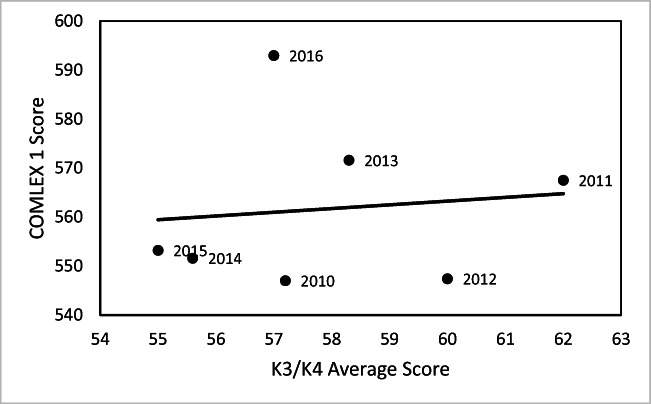

In the second semester, students are given three PBL exams and the K1 and K2 diagnostic exams. In the third semester, the students are given three PBL exams. At the beginning of the fourth (and final) semester for the preclinical curriculum, students take the K3 and K4 exams. As seen in Fig. 4, performance on K3/K4 does not serve as a good predictor of COMLEX Level 1 scores (t = 0.25, n = 7, p = 0.81, and R-squared = 0.012). However, we have found that it serves to identify at-risk students who are then offered additional board review preparation in semester 4. The Krueger diagnostic exams also serve as important tools that allow for retrieval, encoding, and consolidation, which according to Brown et al. act powerfully to enhance learning and durable retention [9]. Over the years, students have indicated that the timing of the diagnostic exams in the curricular map serves the purpose of enhancing retrieval and retention of the basic sciences. About 10 weeks after the K3/K4 diagnostic exams, students take the NBME CBSE exam. Again, we use the NBME exam scores to identify at-risk students and provide them with extra board review sessions during the 8 weeks before they take COMLEX Level 1. Students are also given two PBL exams in semester 4.

Fig. 4.

Relationship between the mean K3/K4 scores and COMLEX 1 scores for Class of 2010–2016, along with the best-fit linear trend line

In this paper, we describe how we accomplished our goals of ongoing strong board results and enhanced student satisfaction. Some of the salient changes we made since the beginning of the program include the following: (1) having students pick exam topics specific to each group’s learning issues and objectives and proactively writing MCQs linked to group discussions and learning issues and (2) using assessments including PBL exams, the NBME CBSE, COMLEX Level 1 exams, and the K1–K4 diagnostic exams to measure outcomes. We present data on COMLEX Level 1 scores, NBME CBSE scores, in-house diagnostic exam scores, and school pass rates, in order to examine how the program and the changes we made affected learning outcomes.

Materials and Methods

We compared performance on COMLEX 1 for LECOM Bradenton students to all other osteopathic students who took the COMLEX 1 in the same year. We had all COMLEX 1 scores for LECOM Bradenton students graduating in the years 2008–2020, which allowed us to estimate the standard deviation as well as the mean for each year. For the national COMLEX data, the national means, pass rates, and sample sizes were reported, but no measure of error was provided. Moreover, we did not have access to individual student or school scores for the national data. We therefore made the assumption that the variance for the LECOM student exam scores was the best available estimate of variance for non-LECOM student exam scores. The assumption of equal variances (a standard assumption in t tests and analyses of variance) allowed us to construct t tests within each year to compare LECOM student COMLEX 1 exam scores with non-LECOM student COMLEX 1 exam scores. Since 13 p values were generated (one for each graduating class from 2008 to 2020), the Bonferroni adjustment was used to maintain a family-wise error rate of 0.05.
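The comparison described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the authors' analysis code: the function names are hypothetical, the example standard deviation of 80 is invented for illustration (the paper does not report it), the degrees of freedom are assumed to be the usual pooled value, and the p value uses a normal approximation to the t distribution, which is reasonable only because the samples run into the thousands.

```python
import math


def t_from_summary(school_mean, school_sd, school_n, national_mean, national_n):
    """Two-sample t statistic when the school SD stands in for both groups.

    If both groups share one SD estimate s, the pooled SD is s itself, so the
    standard error of the difference in means is s * sqrt(1/n1 + 1/n2).
    """
    se = school_sd * math.sqrt(1.0 / school_n + 1.0 / national_n)
    t = (school_mean - national_mean) / se
    df = school_n + national_n - 2  # assumption: usual pooled-variance df
    return t, df


def two_sided_p_normal_approx(t):
    """Two-sided p value from the standard normal (large-sample approximation)."""
    return math.erfc(abs(t) / math.sqrt(2.0))


def bonferroni(p_values):
    """Multiply each p value by the number of tests, capping at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


if __name__ == "__main__":
    # Means and sample sizes are the 2008 values quoted in the text;
    # the SD of 80 is a made-up placeholder.
    t, df = t_from_summary(467.0, 80.0, 150, 490.0, 3287)
    p = two_sided_p_normal_approx(t)
    print(t, df, p)
    # Adjust a family of p values, one per graduating class.
    print(bonferroni([p, 0.001, 0.2]))
```

Comparing each adjusted p value with 0.05 is equivalent to comparing each raw p value with 0.05 divided by the number of tests.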

To obtain national averages which did not include LECOM Bradenton scores, we used the yearly school COMLEX 1 exam averages and number of students to adjust the national averages and number of test takers. The COMLEX 1 pass rates for LECOM and non-LECOM students were compared for each year using Fisher’s exact tests. The Bonferroni-adjusted p values were used to indicate significant differences. Pass rates and 95% confidence intervals for proportions using method 3 in [10] are reported. Statistical analyses were conducted in R v3.5.1 (R Core Team 2018).
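As a rough illustration of the two steps in this paragraph, the sketch below removes one school's contribution from a reported overall mean and then runs a two-sided Fisher's exact test on a 2x2 pass/fail table. The function names and all example numbers are hypothetical; the paper's analysis was run in R, and this standard-library Python version only mirrors the arithmetic. The two-sided rule used here (summing the probabilities of all tables no more likely than the observed one) is a common convention and is assumed rather than confirmed by the source.

```python
from math import comb


def exclude_group(total_mean, total_n, group_mean, group_n):
    """Return (mean, n) for everyone in the total except the given group."""
    if not 0 < group_n < total_n:
        raise ValueError("group_n must be positive and smaller than total_n")
    rest_n = total_n - group_n
    # Subtract the group's score total from the overall score total.
    rest_mean = (total_mean * total_n - group_mean * group_n) / rest_n
    return rest_mean, rest_n


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact p value for the table [[a, b], [c, d]].

    Rows are groups (e.g. school, everyone else); columns are pass, fail.
    """
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x passes in row 1 with fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    lo = max(0, col1 - row2)
    hi = min(row1, col1)
    p_obs = prob(a)
    # Small relative tolerance so ties with the observed table are included.
    total = sum(p for p in (prob(x) for x in range(lo, hi + 1))
                if p <= p_obs * (1 + 1e-7))
    return min(1.0, total)


if __name__ == "__main__":
    # Hypothetical figures, not taken from the paper.
    print(exclude_group(500.0, 4000, 520.0, 150))
    # Hypothetical counts: school 145 pass / 5 fail, others 3400 pass / 450 fail.
    print(fisher_exact_two_sided(145, 5, 3400, 450))
```

The same `exclude_group` arithmetic applies to pass rates, since a pass rate is the mean of a 0/1 outcome.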

We present data on the relationships amongst the different exams since the start of the school, with the (graduating) Class of 2008 being the inaugural class. The exams include COMLEX Level 1, NBME CBSE exam, and our internal diagnostic exams (K3/K4). The first year we started using the NBME CBSE exam was with the Class of 2010. We considered other factors such as the selection process of students (interviews, grade point averages (GPAs), and Medical College Admission Test (MCAT) scores) to see if those influenced our outcomes, but there was no correlation of these to COMLEX 1 scores. Since this is beyond the scope of our paper, the results are not shown here.

Results

LECOM Bradenton has significantly outperformed the national COMLEX Level 1 average scores in every year except its inaugural year (Fig. 1, t test within a year, the Bonferroni-adjusted p values < 0.001). The school mean COMLEX Level 1 score has been between 26 and 55 points above the national mean score for 12 of the 13 years studied. The pass rate for first-time COMLEX Level 1 test takers was also significantly higher in LECOM Bradenton compared to the national average in most years, with pass rates at the school at or approaching 100% in some years (Fig. 2). The school pass rate was the lowest for the Class of 2008 (inaugural class) and increased to surpass the national average every year thereafter.

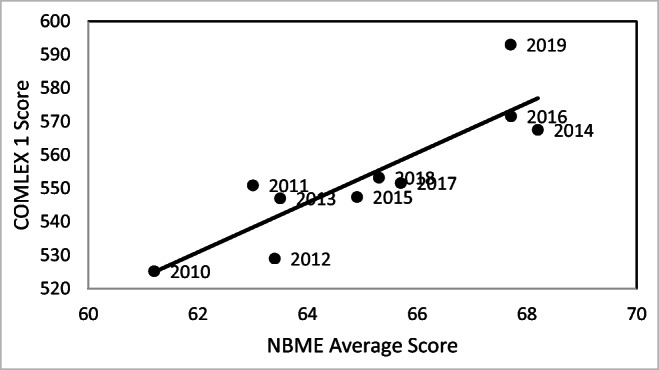

The average score for the NBME CBSE exam was a significant positive predictor of COMLEX Level 1 score for student cohorts from 2010 to 2019 (Fig. 3; intercept = 70.7, slope = 7.4, t = 4.84, n = 10, p = 0.001), and it explained 74.6% of the variance in COMLEX Level 1 scores. In contrast, the average scores for the K3/K4 diagnostic exams were not good predictors of COMLEX 1 scores in the years the test was done (Fig. 4; 2010–2016; intercept = 517.6, slope = 0.76, t = 0.25, n = 7, p = 0.81).
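For a regression with a single predictor, the variance explained can be recovered from the slope's t statistic and the number of points, since R² = t² / (t² + n − 2). The short sketch below (the function name is hypothetical) applies that identity to the rounded values reported here. With t = 0.25 and n = 7 it gives about 0.012, and with t = 4.84 and n = 10 it gives about 0.745, close to the reported 74.6%; the small gap presumably reflects rounding of the published t value.

```python
def r_squared_from_t(t, n):
    """R-squared of a one-predictor linear regression from the slope's t statistic.

    Uses the identity t^2 = R^2 * df / (1 - R^2), with df = n - 2 residual
    degrees of freedom (one intercept and one slope are estimated).
    """
    df = n - 2
    if df <= 0:
        raise ValueError("need at least three data points")
    return t * t / (t * t + df)


if __name__ == "__main__":
    # Rounded statistics as reported in the text for the cohort-level fits.
    print(r_squared_from_t(4.84, 10))  # NBME CBSE vs COMLEX Level 1
    print(r_squared_from_t(0.25, 7))   # K3/K4 vs COMLEX Level 1
```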

Fig. 3.

Relationship between mean NBME CBSE scores and COMLEX 1 scores for Class of 2010–2019, along with the best-fit linear trend line

Our findings suggest that the academic program has produced consistently strong exam outcomes relative to peer institutions.

Discussion

Our data support the idea that a dynamic PBL-based curriculum can attain and sustain excellent learning outcomes. Our PBL program was originally designed around the Barrows model [1], as adopted by The OSU College of Medicine PBL program in the 1990s. When we were faced with the challenge of frustrated students and less-than-ideal outcomes on COMLEX Level 1 with the inaugural class of 2008, we made changes to the program. We required students to use standard medical textbooks and introduced several testing strategies to see if they would improve the outcomes.

Introducing MCQ testing methods into the curriculum that are reliable assessments, while maintaining the essential PBL elements of self-directed learning and knowledge application, is a difficult challenge for PBL schools [11]. We, like all medical schools, also strive to ensure that our learners are adequately prepared not only for national board/licensing exams, but also for practice as future knowledgeable, empathetic physicians with a holistic approach to caregiving towards patients. We can serve our students best by fusing elements of various methods such as PBL, team-based or case-based learning, and flipped classrooms, followed by rigorously evaluating our innovations so that we can provide an evidence-based approach in medical education [12].

We cannot definitively state which of the changes we outlined contributed to the learning outcomes reported, but the data offer some ideas. Performance on K3/K4 did not serve as a good predictor of COMLEX Level 1 scores, but its purpose was to identify at-risk students, who were then offered additional board review preparation in semester 4, and to allow for retrieval, encoding, and consolidation, which act powerfully to enhance learning and durable retention [9]. The NBME CBSE exam is taken closer to COMLEX 1 (eight to nine weeks before it), which may be why average scores on the two exams are positively related.

Overall, our data show that, after the inaugural class, students consistently performed above the national mean. We therefore conclude that all of the changes, taken together, work towards creating an improved learning environment, enabling adult learners to actively engage in a PBL curriculum and perform well in the first level of board exams.

Challenges of the PBL Curriculum

A PBL curriculum requires increased faculty time expenditure and high faculty workload [7]. While we employ one faculty facilitator for a PBL group of seven to eight students, a significant amount of time is spent training faculty as well as doing case writing/review, item writing, and PBL exam preparation, in addition to mentoring and advising students. Cases are reviewed by faculty periodically to ensure not only that the biomedical, clinical, and scientific aspects are accurate, but also that they reflect the intersecting, overlapping, and interwoven discourses that construct a patient’s illness [13]. Evaluations of the PBL course provided by students at the end of each semester indicate that students dislike the variability in FASP scores amongst different faculty facilitators, especially since those can affect overall PBL grades. Students also indicate that not having PBL post-exam review is a disadvantage, since they do not have the opportunity to see which questions they missed. The post-exam review process is discouraged, to maintain the security of item banks and to maintain the cognitive elements of andragogy (doing or performing tasks with the empowerment of acquired resources matters most in life-long learning) [9]. Similar to the national board exams, students are provided with a breakdown of their performance in each discipline for PBL exams, NBME CBSE, and the K1–K4 exams. This allows them to determine their performance in a particular science, but not on individual questions.

Benefits of the PBL Curriculum

Since our program is entirely PBL (except for anatomy, clinical exam, and OMM) without lectures, we provide sufficient time for independent exploration and inquiry between sessions. We believe that this promotes critical thinking and problem-solving skills in students. Students report high levels of satisfaction with the PBL pathway and the assessment methods, during end-of-semester evaluations of the learning environment and all domains of the PBL pathway, using a “Likert scale” (rubric) and narratives. In one published commentary, a medical student stated that it is important that students learn lifelong learning skills by learning content independently before sessions [14]. Srinivasan et al. note that PBL may allow promotion of more aggressive students who dominate the sessions [15]. Student evaluations indicate that few students report this aggression as being a problem or hindering learning. Faculty are trained to address such an issue if it becomes a problem during PBL sessions. Students are encouraged to tackle such issues (of aggression or dysfunctionality) as a group, allowing them to learn some life lessons in conflict resolution along the way. In PBL, since students are not provided with learning objectives/learning issues, they sometimes wander through the back alleys and dead ends of a case presentation [16]. However, that learning contributes to their understanding as much as, or perhaps more than, a directed path.

Limitations

Our study is limited in that, while we provide data before and after the educational interventions, we do not have cohorts that did not receive them, and students could not be randomized into groups. Also, several changes were made at the same time, so we cannot identify one factor as being responsible for the outcomes. One advantage the data offer, however, is that we can compare across 12 years, with an n for each class ranging from 145 to 195.

Conclusion

In conclusion, the original PBL program and the changes made over the years have resulted in consistently strong outcomes. While there are not many schools that use an entirely (except anatomy) PBL-based preclinical medical school curriculum, we hope that providing a detailed description will be useful for other programs that utilize group formats such as team-based, case-based, or large-group learning. Our study raises some important questions: does learning by reading textbooks and applying the knowledge to problem-solving promote self-directed learning? Does it promote life-long learning? Does allowing students to pick their own exam topics contribute, as a major factor, to student buy-in and their eventual success on board exams? If so, do groups that pick more exam topics do better than groups that pick fewer? We hope to be able to answer some of these in future studies.

Abbreviations

- PBL: Problem-based learning
- NBOME: National Board of Osteopathic Medical Examiners
- NBME: National Board of Medical Examiners
- CBSE: Comprehensive Basic Science exam
- K1–K4: The Krueger Diagnostic exams 1–4
- COMLEX: Comprehensive Osteopathic Medical Licensing Examination of the United States
- MCQ: Multiple-choice question

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they have no conflict of interest.

Footnotes

VMC and CV are fourth-year medical students at LECOM-B


References

- 1. Barrows HS. Problem-based learning applied to medical education, revised edition. Illinois: Southern Illinois School of Medicine; 2000. Ch 9: pp 48–66.

- 2. Finnerty EP, Chauvin S, Bonaminio G, Andrews M, Carroll RG, Pangaro LN. Flexner revisited: the role and value of the basic sciences in medical education. Acad Med. 2010;85(2):349–355. doi: 10.1097/ACM.0b013e3181c88b09.

- 3. Hmelo-Silver CE. Problem-based learning: what and how do students learn? Educ Psychol Rev. 2004;16(3):235–266. doi: 10.1023/B:EDPR.0000034022.16470.f3.

- 4. Sleszynski SL, Glonek T, Kuchera WA. Standardized medical record: a new outpatient osteopathic SOAP note form: validation of a standardized office form against physician’s progress notes. J Am Osteopath Assoc. 1999;99(10):516–529. doi: 10.7556/jaoa.1999.99.10.516.

- 5. Krasne S, Stevens CD, Wilkerson L. Improving medical literature sourcing by first-year medical students in problem-based learning: outcomes of early interventions. Acad Med. 2014;89(7):1069–1074. doi: 10.1097/ACM.0000000000000288.

- 6. Distlehorst LH, Dawson E, Robbs RS, Barrows HS. Problem-based learning outcomes: the glass half-full. Acad Med. 2005;80(3):294–299. doi: 10.1097/00001888-200503000-00020.

- 7. Donner RS, Bickley H. Problem-based learning in American medical education: an overview. Bull Med Libr Assoc. 1993;81(3):294–298.

- 8. Adams NE. Bloom’s taxonomy of cognitive learning objectives. J Med Libr Assoc. 2015;103(3):152–153. doi: 10.3163/1536-5050.103.3.010.

- 9. Brown PC, Roediger HL, McDaniel MA. Make it stick. Massachusetts: Harvard University Press; 2014. Ch 8, pp 200–11.

- 10. R Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2018. Available online at https://www.R-project.org/

- 11. Wood TJ, Cunnington JPW, Norman GR. Assessing the measurement properties of a clinical reasoning exercise. Teach Learn Med. 2000;12(4):196–200. doi: 10.1207/S15328015TLM1204_6.

- 12. Schwartzstein RM, Roberts DH. Saying goodbye to lectures in medical school - paradigm shift or passing fad? N Engl J Med. 2017;377(7):605–607. doi: 10.1056/NEJMp1706474.

- 13. MacLeod A. Six ways problem-based learning cases can sabotage patient-centered medical education. Acad Med. 2011;86(7):818–825. doi: 10.1097/ACM.0b013e31821db670.

- 14. Chang BJ. Problem-based learning in medical school: a student’s perspective. Ann Med Surg (Lond). 2016;12:88–89. doi: 10.1016/j.amsu.2016.11.011.

- 15. Srinivasan M, Wilkes M, Stevenson F, Nguyen T, Slavin S. Comparing problem-based learning with case-based learning: effects of a major curricular shift at two institutions. Acad Med. 2007;82(1):74–82. doi: 10.1097/01.ACM.0000249963.93776.aa.

- 16. Sklar DP. Just because I am teaching doesn’t mean they are learning: improving our teaching for a new generation of learners. Acad Med. 2017;92(8):1061–1063. doi: 10.1097/ACM.0000000000001808.