Abstract

Team-based learning (TBL) is gaining popularity at medical schools transitioning from lecture-based to active learning curricula. Here, we review challenges and opportunities faced in implementing TBL at 2 new medical schools. We discuss the importance of using meaningful TBL grades as well as the role TBL plays in developing critical reasoning skills and in early identification of struggling students. We also discuss how the concurrent use of learning strategies with different incentive structures such as problem- and case-based learning could foster the development of well-rounded physicians. We hope this monograph helps and even inspires educators implementing TBL at their schools.

Keywords: Active Learning, Infectious Diseases, Teaching Methods: Problem-Based Learning PBL, Teaching Methods: Team-Based Learning TBL, Teaching Methods: Case-Based Learning CBL

Introduction

It is essential for future physicians to analyze and apply new medical knowledge to keep pace with the rapidly changing landscape of medical care. Additionally, the physician of the twenty-first century needs to function within inter-professional teams to integrate all aspects of medical care, from prevention to therapy. Clerkship students and residents have the opportunity to learn from their preceptors and other members of the medical team by working in small groups, often using a Socratic-like apprenticeship model [1]. In contrast, basic science topics, covered during the first years of medical school, have traditionally been taught in large classes where students learn passively by listening to a content expert [2]. In these sessions, the only direct interactions between students and professors are sometimes the questions asked by students in attendance. Some educators have attempted to increase student participation by using “engaged lectures,” in which students are encouraged to participate voluntarily in one or more question-based activities using online/computer-based software [2, 3]. This strategy increases student engagement but does not always incentivize critical thinking. Also, because participation is voluntary, engaged lectures do not necessarily involve all students attending the lecture. To complicate this further, in many medical schools, lecture attendance is optional and lectures are recorded. As a result, a significant number of students decide to watch lectures on their computers in the comfort of their own homes, further reducing opportunities for engagement [4]. Although Benjamin Franklin’s old adage, “tell me and I forget, teach me and I remember. Involve me and I learn,” was scientifically confirmed at the beginning of the twenty-first century by researchers studying active learning strategies, science instructors have been slow to incorporate and study pedagogical techniques that use active learning.
In a meta-analysis of 225 studies examining student performance in science, engineering, and mathematics, Freeman et al. reported better student performance in courses using active learning strategies as compared with those using traditional lectures [5]. In a physics class, Deslauriers et al. clearly demonstrated better student performance, engagement, and attendance as well as deeper learning of course content when students were involved in course-related experiments as compared with traditional pedagogical techniques [6]. Thus, the empirical perception, which was elegantly stated by Franklin, is now backed by strong statistical evidence that favors active learning over more traditional, lecture-based methods.

If the value of active learning has been so clearly backed by data, why is there so much resistance to implementation in medical education? A possible explanation is that the traditional lecture-based format is a safe and comfortable environment for faculty and students alike. Both parties typically have vast experience with this format and clearly understand the expectations of this method. For faculty, preparing a lecture is often easier than preparing an active learning session. Lecture-based teaching is passive for students, and even when lecturers want to engage the students in a Socratic-like discussion, class sizes are often too large to make this practical. In the 1980s, L. Michaelsen encountered these same issues in the business school classes he taught [7]. He addressed these problems through the development of team-based learning (TBL™). Since then, TBL has been used in medical education [8–10] as well as other professional fields including nursing [11], veterinary medicine [12], dentistry [13], and law [14]. In TBL, students are required to prepare content prior to class and are individually tested on this material, using mastery level questions, to ensure preparation. This individually administered test has been dubbed the individual readiness assurance test (iRAT). Completion of this test is followed by cooperative completion of the same quiz by teams of 5–7 students in an activity called the group readiness assurance test (gRAT) or more recently, the team readiness assurance test (tRAT). During this time, peer teaching occurs and students attempt to cooperatively master the assigned course content. The students then apply their newly acquired knowledge to the last portion of the exercise, the application questions. These questions are open book but more challenging than those found on the iRAT/tRAT. 
Student teams discuss the questions and arrive at a consensus answer which is reported to the class resulting in a larger discussion of the tested concepts amongst the teams, facilitated by a faculty member [8, 15].

This monograph will briefly review challenges, opportunities, insights, and pitfalls learned during the implementation of TBL exercises at the 2 newest medical schools in the state of New Jersey (USA).

Implementing TBL at a Medical School: Insights and Pitfalls

Cooper Medical School of Rowan University (CMSRU) in Camden and Hackensack Meridian School of Medicine at Seton Hall University (Hackensack Meridian) in Nutley are the 2 newest medical schools in New Jersey. They admitted their inaugural classes in 2012 and 2018, respectively. CMSRU graduated its charter class in 2016, and its pre-clinical curriculum included limited lecturing (6 h/week) and extensive use of case-based learning (CBL) (6 h/week). The Hackensack Meridian curriculum is “lecture-less” and also focuses on active learning, specifically large-group active learning sessions, problem-based learning (PBL) (3 h/week), and typically 2 TBL exercises per week.

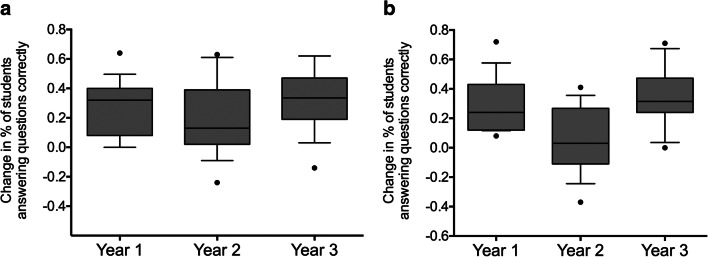

At CMSRU, TBL exercises were slowly incorporated into the medical school’s programs. Initially, TBL exercises were included in a 6-week summer pipeline program for students from disadvantaged and/or underrepresented minorities in medicine (URMs). These pipeline programs are common in US medical schools and are aimed at strengthening academic performance and enhancing the portfolios of URM students so they are more competitive in medical school admissions [16, 17]. As part of this program, students took a course in Medical Microbiology where TBL exercises were used to promote development of critical reasoning skills [18, 19]. This course also included several engaged lectures with intra-lecture questions using a pairwise instruction method similar to that developed by E. Mazur [20]. Students studied course material in the afternoons with the help of a first-year medical student and participated in several TBL exercises that covered these concepts. In the first year of this program, students indicated their preference for engaged lectures over TBL exercises. Therefore, in the second year of the program, we decreased the number of TBL exercises from 6 to 3 and replaced them with engaged lectures resulting in improved student satisfaction as evidenced by increased evaluation scores for the instructor [21]. However, there was a concomitant significant decrease (p < 0.01) in student performance on the course final examination. In the following year, we restored the number of TBL exercises to 6, which resulted in a significant (p < 0.001) increase in final course grades [21]. Further analysis showed that over these 3 years, there were no significant differences in student performance on Bloom’s taxonomy level 1 (remember) final examination questions. 
However, there was significant improvement in performance (p < 0.001) on Bloom’s taxonomy level 2, 3, and 4 (understand, apply, and analyze) final examination questions in years 1 and 3 as compared with year 2 (please see Fig. 1) [21]. Thus, in agreement with other authors [22, 23], we concluded that, contrary to students’ perceptions that more engaged lectures enhance learning of course content, TBL exercises are actually more effective in improving learning of course material and critical thinking [21]. Interestingly, in course evaluations, when students were asked, “What is the single best aspect of this course that needs to be continued?” 91% of students in year 1, 67% of students in year 2, and 75% of students in year 3 answered “TBL.” Therefore, even those students who felt more comfortable with lectures enjoyed the active learning environment provided by the TBL exercises.

Fig. 1.

Change in percentage of students answering questions correctly between the pre-course and post-course examinations according to Bloom’s taxonomy classification. The distribution of changes in the percentage of students answering each Bloom’s taxonomy level 1 (remember) (a) or Bloom’s taxonomy level 2, 3, and 4 (understand, apply, and analyze) (b) question correctly between the pre-course and post-course examinations was analyzed using a one-way analysis of variance. In the case of the Bloom’s taxonomy level 2, 3, and 4 questions, this was followed by a pairwise multiple comparison (Student-Newman-Keuls). For the Bloom’s taxonomy level 1 questions, there was no significant difference in the distributions between years 1, 2, and 3 (p = 0.213). For the Bloom’s taxonomy level 2, 3, and 4 questions, there was a significant difference in the distributions between years 1 and 2 (p = 0.001) and years 2 and 3 (p < 0.001) but not between years 1 and 3 (p = 0.587). The upper and lower limits of each box represent the 75th and 25th percentiles of the data, respectively, while the upper and lower whiskers represent the 90th and 10th percentiles, respectively. Data points outside of the whiskers, the outliers, are represented by solid circles. The horizontal line within each box represents the median (p = 0.317). Data from: Behling K.C., Murphy M.M., Mitchell-Williams J., Rogers-McQuade H., and Lopez O.J., Team-based learning in a pipeline course in medical microbiology for under-represented student populations in medicine improves learning of microbiology concepts [21]. Available from doi: 10.1128/jmbe.v17i3.1083

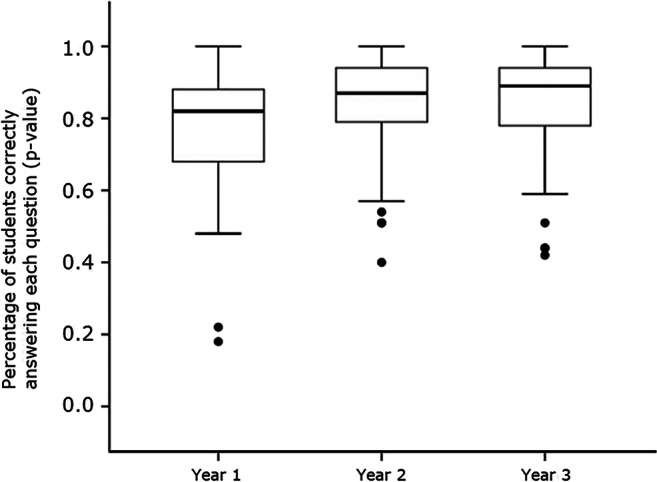

The pre-clinical curriculum at CMSRU is organ systems–based, and the first organ systems–based course is a 4-week Infectious Diseases (ID) course. This course reviews the fundamental basis of identification, diagnosis, and management of infectious diseases. The first iteration of the ID course included primarily CBL activities, lectures, and laboratory practical sessions, and there was a relatively high number of non-passing grades. On review of the literature, we found that use of TBL exercises in health profession education in Europe, the Middle East, and the USA was associated with a significant increase in course grades [24]. There was also evidence that use of TBL exercises was helpful for at-risk students [25]. Thus, based on these studies and our experience with TBL in our pipeline program, we decided to implement weekly TBL exercises in the second iteration of the ID course. These exercises were held on Mondays, and the assigned materials for review included the lectures and laboratory practical sessions from the prior week. There were essentially no changes in faculty and content delivered between years 1 and 2. As an external incentive, we graded the RAT exercises as follows: each RAT contributed 3% to the final grade where the iRAT counted for 1% and the tRAT counted for 2%. The application questions were not graded. Use of TBL exercises in the second iteration of the ID course followed by continued use in the next year was associated with improved final exam performance compared with the first iteration when no TBL exercises were used (please see Fig. 2) [26]. More importantly, the final examination failure rate decreased from 16% in year 1 to 6.3% and 2.8% in years 2 and 3, respectively. Another first-year organ systems–based course, Hematology-Oncology, which did not incorporate TBL exercises, was used as a control in our study. Interestingly, there was no change in the final examination failure rate in this course over the same time period [26]. 
Additionally, on further study of student performance in the ID course, we identified a significant positive correlation between iRAT and final course scores [26, 27], suggesting that iRAT scores may help in the early identification of struggling students who may need additional assistance.
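As a rough illustration of the weighting described above (each RAT worth 3% of the final grade, split into 1% for the iRAT and 2% for the tRAT), the following Python sketch computes how many final-grade percentage points a student earns from a series of weekly RATs. The function names, the number of RATs, and the example scores are hypothetical and are not data from the study.

```python
# Minimal sketch of the ID-course RAT weighting described in the text:
# each RAT contributes 3% of the final grade (iRAT 1%, tRAT 2%).
# All scores below are hypothetical, not study data.

IRAT_WEIGHT = 1.0  # percentage points of the final grade per iRAT
TRAT_WEIGHT = 2.0  # percentage points of the final grade per tRAT


def rat_points(irat_fractions, trat_fractions):
    """Return final-grade percentage points earned from all RATs.

    Each argument is a list of scores expressed as fractions (0.0-1.0),
    one entry per weekly RAT.
    """
    if len(irat_fractions) != len(trat_fractions):
        raise ValueError("each RAT needs both an iRAT and a tRAT score")
    total = 0.0
    for irat, trat in zip(irat_fractions, trat_fractions):
        total += IRAT_WEIGHT * irat + TRAT_WEIGHT * trat
    return total


def max_rat_points(n_rats):
    """Maximum percentage points available from n_rats weekly RATs."""
    return n_rats * (IRAT_WEIGHT + TRAT_WEIGHT)


# Hypothetical student with 3 weekly RATs
irat = [0.6, 0.7, 0.8]
trat = [0.9, 0.9, 1.0]
print(round(rat_points(irat, trat), 2), "of", max_rat_points(3))  # prints: 7.7 of 9.0
```

Because the tRAT carries twice the weight of the iRAT, most of the RAT credit in this scheme comes from team performance, even though the total RAT contribution to the final grade remains modest.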

Fig. 2.

Performance on individual final examination questions after implementation of team-based learning (TBL) exercises in an Infectious Diseases course. TBL exercises were implemented in years 2 and 3, while results of year 1 served as a control in this study. Importantly, as performance improved in years 2 and 3 of this study, the final examination failure rate decreased from 16% in year 1 to 6.3% and 2.8% in years 2 and 3, respectively. Another first-year organ systems–based course, Hematology-Oncology, which did not incorporate TBL exercises, was used as a control in our study. There was no change in the final examination failure rate in the Hematology-Oncology course over the same time period [26]. Figure from: Behling, KC, Kim, R, Gentile, M, Lopez O. Does team-based learning improve performance in an infectious diseases course in a pre-clinical curriculum? [26]. Available from doi: 10.5116/ijme.5895.0eea

Students also provided many positive comments about the TBL exercises in the ID course [26] including “weekly TBL exercises helped me to stay on track with studying.” Moreover, they also stated that the TBL exercises provided them the opportunity to dispel any misconceptions they might have through peer teaching during the tRAT. The success of TBL exercises in the ID course has prompted other course directors to consider using TBL exercises in their courses.

One of the authors of this monograph (OJL) has recently moved to Hackensack Meridian, where students engage in 2 TBL exercises per week as part of the 16-month pre-clinical curriculum. The TBL prework consists of new content that students must learn on their own during self-directed learning time, and TBL grades do not influence the final course grade in a meaningful way. Preliminary experiences during the first year of TBL implementation have revealed 2 challenges associated with this strategy: (1) 2 TBL exercises in 1 week may increase stress levels in medical students because of the large amount of prework necessary for each TBL exercise, and (2) because the TBL exercises do not significantly influence the final course grade, students with below-average RAT scores face no significant consequences, a missed opportunity to incentivize pre-class preparation and thereby improve the quality of student participation in team discussions. Additionally, in contrast to our previous findings [27], preliminary data from an 11-week-long Immunity, Infectious Diseases and Cancer course show no correlation between iRAT and final examination scores in this setting, where the grading incentive is relatively insignificant.

To Grade or Not to Grade TBL, That Is the Question

TBL grading was one of the earliest challenges that we encountered with the implementation of TBL exercises in the pre-clinical curriculum at CMSRU [28]. Grading of TBL exercises is a very relevant and complex subject, and its pros and cons have been discussed in the recent TBL literature [28–31]. Some authors have suggested that ungraded TBL exercises promote an active learning environment, foster the improvement of teamwork skills, and are friendlier to learners [19, 32], while others highlight the importance of TBL scores contributing to the overall course grade [15]. As mentioned above, in the first phase of TBL [15], learners study independently prior to the TBL exercise to master identified objectives. In the second phase, mastery of relevant course material prepared during self-directed learning time is individually evaluated in a quantitative fashion by the iRAT and then for each team by the tRAT. After introducing TBL exercises into the pre-clinical curriculum, we used a retrospective design to study the effect of grading incentives on iRAT and final examination performance during the first 3 years of TBL implementation [28]. We found that iRAT scores were significantly higher and positively correlated with final examination scores when they contributed to the course grade as compared with the year when they did not [28]. Given our experiences at that time, in agreement with experts in TBL [33], we suggested that an appropriate grading incentive that struck a balance between providing motivation to prepare without being too onerous would improve student preparation and satisfaction with TBL exercises without unduly increasing stress and anxiety related to graded assessments.

We extended our study of TBL performance and its relationship to final examination scores by conducting a retrospective analysis of weekly iRAT scores as well as final examination scores from 3 cohorts of students (n = 260) in our pre-clinical ID course at CMSRU [34]. In addition to analyzing overall course performance, we examined the performance of students scoring in the upper, middle, and lower 33rd percentiles (thirds) on the final examination to gain insight into the effect of weekly TBL exercises on high-performing and struggling students. There was a significant (p < 0.001) correlation between final examination and iRAT scores in all 3 student cohorts. Notably, we detected highly significant (p < 0.01) weekly improvements in iRAT scores only in students performing in the upper and middle 33rd percentiles on the final examination, not in the lower 33rd percentile. These data led us to hypothesize that poorly performing students may struggle during the first 3 weeks of the course and are only able to improve their iRAT scores in the last week of the course, once they have synthesized all the course content [34]. Alternatively, struggling students may be inadequately prepared for TBL exercises early in the course, failing to complete the required prework [15], and may only perform well during the last TBL exercise as they prepare for the final examination. Unfortunately, either explanation may be associated with poorer preparation for TBL exercises, which likely interferes with the efficacy of this learning method for struggling students while also depriving their TBL teammates of the benefits of peer teaching. Importantly, we also found that iRAT scores can be used for early identification of struggling students who might need additional support, both to make better use of their self-directed learning time and to develop coping skills for the stresses inherent to medical education [35, 36].
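The two analyses in this paragraph, splitting a cohort into upper, middle, and lower thirds and correlating iRAT with final examination scores, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the statistical procedure used in our study: it computes a Pearson coefficient without a significance test, and the flagging rule (follow up with students whose mean iRAT falls in the lowest third) and all scores are hypothetical.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / math.sqrt(var_x * var_y)


def terciles(scores):
    """Split student indices into (lower, middle, upper) thirds by score.

    When the class size is not divisible by 3, the extra students fall
    into the middle group.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    k = len(order) // 3
    return order[:k], order[k:len(order) - k], order[len(order) - k:]


# Hypothetical mean weekly iRAT scores (out of 10) and final exam scores (%)
mean_irat = [5.0, 6.0, 6.5, 7.5, 8.0, 9.0]
final_exam = [68, 72, 75, 80, 85, 90]

print("r =", round(pearson_r(mean_irat, final_exam), 2))

# Hypothetical early-warning rule: follow up with the lowest third by iRAT
lower, middle, upper = terciles(mean_irat)
print("flag for follow-up:", lower)  # prints: flag for follow-up: [0, 1]
```

With real cohort data, the correlation would normally be reported with a p-value from a statistics package, and any early-warning cut-off would need to be validated against course outcomes before being used to target support.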

The aforementioned studies provide substantial evidence that graded TBL exercises that influence the final course grade encourage student preparation, allowing for maximal educational impact of this learning strategy. However, the distribution of points assigned to the iRAT and tRAT has been a matter of debate amongst TBL practitioners. At our institutions, iRAT and tRAT scores account for 33% and 67%, respectively, of the total TBL grade, and each team member receives the tRAT score regardless of their individual contribution to the team discussion. Not surprisingly, average tRAT scores are generally higher than average iRAT scores [26, 27]. We have been concerned that assigning such significant weight to the tRAT would unfairly allow more poorly prepared students, also referred to as “free-riders” by Michaelsen et al. [33], to benefit from the efforts of their better prepared teammates.
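The free-rider concern can be made concrete with a short calculation. In the Python sketch below, the 33%/67% weights are treated as exact thirds, and two hypothetical teammates share a tRAT score of 90 but differ by 45 points on the iRAT; the function name and all numbers are illustrative only.

```python
# Minimal sketch of the 33%/67% split of the TBL grade described above,
# modeled here as exact thirds. Scores are on a hypothetical 0-100 scale.
IRAT_SHARE = 1 / 3  # individual component
TRAT_SHARE = 2 / 3  # team component, identical for every team member


def tbl_grade(irat, team_trat):
    """Combine an individual iRAT score with the team's shared tRAT score."""
    return IRAT_SHARE * irat + TRAT_SHARE * team_trat


team_trat = 90.0  # one team, one shared tRAT score
prepared = tbl_grade(85.0, team_trat)
unprepared = tbl_grade(40.0, team_trat)
print(round(prepared, 1), round(unprepared, 1))  # prints: 88.3 73.3
```

Because the shared tRAT carries two thirds of the weight, the 45-point gap in individual preparation shrinks to a 15-point gap in the TBL grade (45 × 1/3), which is how a poorly prepared student can benefit from better prepared teammates.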

To address this issue, we changed the grading of the iRAT and tRAT to create an incentive that would encourage students to prepare better for TBL exercises. This study was conducted in a pipeline course for senior undergraduate URM students that combined TBL and hands-on experiments in molecular biology [37]. We evaluated how a novel grading paradigm, in which students had to achieve a minimum iRAT score to share the team’s tRAT score, affected individual preparation of relevant course material as assessed by the iRAT. We found that this new grading incentive lowered the variance of TBL scores (iRAT and tRAT) and improved the statistical correlation between final examination and iRAT scores [37]. The reduced variance suggests that this grading incentive reduces disparity in knowledge amongst the students, while the blending of TBL and hands-on sessions improved student acquisition of new knowledge and promoted teamwork skills [37].
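A grading rule of this kind can be expressed as a small function. The Python sketch below assumes the same 33%/67% weighting used at our institutions; the minimum iRAT value of 60 and the choice to substitute the student’s own iRAT score for the tRAT score below the threshold are hypothetical assumptions and are not necessarily the rule used in the study [37].

```python
# Minimal sketch of a threshold-gated TBL grade. The cut-off value and the
# rule applied below the cut-off are hypothetical assumptions for
# illustration; they are not taken from the study described above.
MIN_IRAT = 60.0  # hypothetical minimum iRAT score (0-100 scale)


def gated_tbl_grade(irat, team_trat, min_irat=MIN_IRAT):
    """Return a 0-100 TBL grade weighted 1/3 iRAT and 2/3 tRAT credit.

    A student shares the team's tRAT score only if their iRAT meets
    min_irat; otherwise (assumption) their own iRAT score is used as
    the tRAT credit.
    """
    trat_credit = team_trat if irat >= min_irat else irat
    return (irat + 2 * trat_credit) / 3


team_trat = 90.0
print(round(gated_tbl_grade(85.0, team_trat), 1))  # prints: 88.3
print(round(gated_tbl_grade(40.0, team_trat), 1))  # prints: 40.0
```

Compared with unconditional sharing of the tRAT score, a poorly prepared student (iRAT 40) no longer receives most of the team’s credit, which illustrates why a rule of this type could encourage individual preparation.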

Other groups have investigated alternative methods to motivate student preparation and participation in the tRAT portion of TBL exercises. Haidet et al. [38] highlight incentive structure as 1 of the 7 core elements of TBL implementation. They stated that individual TBL grading provides enough motivation to enhance preparation before the TBL session, whereas team grading provides an incentive to maximize collaboration [38]. The importance of peer evaluation in TBL assessment, another core element of TBL, has been extensively investigated [7, 33, 39, 40]. Peer evaluation acknowledges the different contributions of individual team members during the tRAT, which could improve the quality and quantity of individual student participation. Indeed, Stein et al. recently reported that students consistently give the lowest peer evaluation scores to the least involved student, increasing individual student accountability in TBL [41]. Importantly, Michaelsen et al. suggested that the issue of “free-riders” is critical in all group approaches (TBL, CBL, PBL, etc.) and that peer evaluations can provide an incentive for students to do their fair share [33]. Moreover, they suggested that peer assessment is fundamental to TBL because it allows students to practice important skills, including teamwork and self-management in an independent working group; to develop better working relationships with peers; to improve interpersonal skills; and to provide feedback in a professional setting [33].

Overall, our studies have demonstrated a statistically significant positive correlation between iRAT scores and final examination scores in both a medical school [28, 34] and a pre-medical pipeline program for URM students [37]. Moreover, graded TBL exercises [34] with additional assessment incentives for preparation [37] are positively correlated with improved overall course performance, highlighting the link between assessment and learning. Some may object to using additional assessments to motivate preparation, arguing that the conventional wisdom “assessment drives learning” should be replaced by “assessment drives learning for assessment (rather than learning)” [42]. This newer statement describes a vicious circle in which students tend to regard as “learning” only the subject(s) on which they are assessed [42]. Furthermore, as stated by McLachlan, assessment can also inhibit the learning process because of the increased stress and anxiety associated with graded assessments [42]. Therefore, while assessments can certainly drive learning, educators need to be aware that different learning styles and individual responses to assessment may influence performance on graded TBL assessments.

Our published studies demonstrate the relevance and benefits of graded assessment in TBL [28, 34, 37]. However, while assessment does not necessarily drive learning in all settings, it could specifically drive learning in TBL by reinforcing changes in attitudes and behaviors, an area that has been understudied in the current literature. For instance, several established medical schools are transitioning to curricula in which active and self-directed learning play more central roles, while more traditional pedagogies, such as teacher-centered didactic lectures, are being used less. However, most undergraduate medical students are most experienced with traditional teacher-centered approaches, such as didactic lectures, and therefore need more instruction in how best to use newer student-centered pedagogies associated with active and self-directed learning. While some institutions may provide orientation to these teaching/learning methods at the beginning of the first year, further guidance and accountability over time may be necessary. Notably, at CMSRU, many students commented favorably on TBL; in one such comment, a student “found the use of TBL exercises in the ID block to be EXTREMELY beneficial. I would like to see this incorporated into other blocks if possible. I felt it served as a nice benchmark and allowed structured time to discuss topics with classmates.” We feel that the current system works well; however, adding “peer assessment” to the current iRAT and tRAT grading scheme may enrich students’ contributions to TBL. Moreover, it may induce changes in behaviors and attitudes that will improve peer teaching and critical thinking. Finally, we also contend that graded TBL exercises benefit not only the learners but also the faculty, because they allow early identification of students who are at risk of underperforming in a course, potentially providing enough time for intervention.

Cognitive Versus Non-cognitive Assessments in Active Learning: The Role of Incentive Structure

At CMSRU, undergraduate medical students are exposed to both CBL and TBL as part of the pre-clinical curriculum, whereas at Hackensack Meridian, pre-clinical instruction uses PBL and TBL. The classroom settings for CBL and PBL are similar; the main difference between these 2 active learning strategies is that in PBL, the group is mostly self-guided and students identify their own learning objectives, while in CBL, the learning objectives for each case are determined by the case author, and the facilitator’s role is to guide the students towards those objectives using “guiding” questions [43, 44] (please see Table 1 for a comparison between CBL, PBL, and TBL). In CBL at CMSRU, groups of 8 students work with 2 faculty facilitators, 1 basic science and 1 clinical, who are not necessarily content experts in the subject(s) discussed. These CBL groups, which meet for 2 h, 3 times per week, are assembled on the basis of the Myers-Briggs personality test as well as race, sex, national origin, academic achievement (MCAT scores and undergraduate GPA), and undergraduate institution, and they remain constant for an entire academic year.

Table 1.

Comparison between distinctive features of case-based learning (CBL), problem-based learning (PBL), and team-based learning (TBL) as used at 2 new US medical schools

| | PBL | CBL | TBL |
|---|---|---|---|
| Student groups | Case presentation: all groups in the same large classroom. Second day: small teams (8 students) with facilitators in separate small rooms | Always small teams (8 students) with facilitator(s) in separate small rooms | Small teams (5–7 students): all teams are in the same large classroom with one facilitator |
| Sessions per week | Two | Three | One |
| Prework | No | Yes. Students receive a clinical case and do independent research prior to the initial session and after each session during the week | Yes. Mandatory prework assigned before the session |
| Grading of prework | N/A | 5-point Likert scale; narrative feedback | Multiple-choice (RAT) at the beginning of the session |
| Learning objectives | Students identify issues for independent learning after initial clinical case presentation with the help of a content expert at the beginning of the week | Developed by content expert faculty prior to the sessions | Developed by content expert faculty prior to the session |
| Use of external resources for problem solving | Yes | Yes | None for RAT; yes for application questions |
| Discussion | Students discuss content researched by them after initial case presentation | Students discuss content researched by them after reading the first release of the clinical case and additional releases throughout the week | Students discuss and negotiate a multiple-choice option for each question in their teams and defend their choice in intra-team discussions |
| Facilitators’ role | Guides the discussion in small groups based on their expertise | Guides the discussion towards the learning objectives using open-ended questions | No role during the iRAT/tRAT intra-team discussions; facilitator is a content expert and guides inter-team discussions |
| External incentives (overall assessment) | Likert scale and narrative feedback based on preparation, participation, teamwork, and justification of concepts | Likert scale and narrative feedback based on preparation, participation, teamwork, and completion of learning objectives | RAT is graded (33% iRAT/67% tRAT); application questions are not graded |

With the exception of the ID course, TBL exercises at CMSRU are sporadic and are not a part of every course in the pre-clinical curriculum. Each TBL group is composed of 5–6 students who are selected based on undergraduate GPA, sex, and undergraduate institution. Of note, the cases discussed during CBL sessions are related to the content taught during the week, which, in the ID course, is also covered in the TBL exercises. In contrast to CBL, in TBL, 1 faculty content expert facilitates the inter-team discussions of up to 15 teams at once. Another important difference between these active learning strategies is that in CBL, students receive different releases of information during the week that provide them clues regarding the subjects that they should prepare for each session, while in TBL, the faculty selects a mandatory pre-class assignment. In TBL, students are individually tested on this assignment at the beginning of each TBL session, whereas there is no objective assessment of medical knowledge acquisition in CBL.

The assessment and incentive structures for pre-class preparation and participation are quite different in CBL and TBL. In CBL, non-cognitive skills, including student preparation, participation, completion of learning objectives, and teamwork, are evaluated each week by faculty facilitators using a Likert scale. As described above, assessment of cognitive skills in TBL is based on individual (iRAT) and team (tRAT) evaluations. We recently compared the effects of these different incentive structures on student preparation, participation, and final exam performance in the ID course [27] by studying the performances of a volunteer group of students in CBL and TBL. The performance of this group was consistent with the rest of the class, and our analysis showed that iRAT, but not CBL, scores were highly correlated (p < 0.01) with final examination scores, confirming our previous results highlighting the correlation between assessments of cognitive skills in TBL and final examination performance [28].

In this study, we also measured the percentage of time (%Time) that students in the volunteer study group spoke during CBL and TBL exercises as a surrogate for student participation. Notably, we found a strong correlation (p < 0.01) between either CBL or iRAT scores and %Time only in students who performed in the upper 33rd percentile on the final examination [28], suggesting that students who are more successful in assessments of their cognitive skills tend to participate more in both CBL and TBL discussions (please see Table 2) [27]. This published study prompted 2 different groups of medical students to write supportive letters to the editor endorsing our findings and conclusions, stating that “TBL is an effective teaching method, and it should be used more often in other departments and institutions” [45] and that “we wholeheartedly agree with the authors that preparation for sessions is paramount to the eventual learning of an individual as well as the wider case group” [46]. In our most recently published study [47], we also reported a strong correlation (p < 0.001) between participation in TBL and both iRAT and final examination scores in female, but not male, students in a pipeline program for freshman and sophomore URM college students at CMSRU. These results suggest that in this group of URM students, knowledge of the subject may improve self-confidence, particularly in female students, which in turn increases their level of participation in the TBL exercise.

Table 2.

ALG and iRAT scores and percentage of participation in ALG and TBL of students in the upper and lower 33rd percentiles of ALG and iRAT scores

| Measure, average (standard deviation) | Cohort | ALG scores: upper 33rd percentile | ALG scores: lower 33rd percentile | iRAT scores: upper 33rd percentile | iRAT scores: lower 33rd percentile |
|---|---|---|---|---|---|
| ALG scores | Full class | 4.94 (0.06)** | 4.10 (0.06) | 4.59 (0.25) | 4.56 (0.45) |
| ALG scores | Study group | 4.73 (0.06)** | 4.10 (0.30) | 4.42 (0.20) | 4.44 (0.34) |
| iRAT scores | Full class | 6.79 (1.08) | 6.74 (1.07) | 8.15 (0.59)** | 5.82 (0.36) |
| iRAT scores | Study group | 6.58 (2.06) | 7.00 (1.90) | 8.25 (1.43)** | 5.95 (1.73) |
| Percentage of participation in ALG | Full class | - | - | - | - |
| Percentage of participation in ALG | Study group | 15.96 (6.26)** | 9.17 (6.93) | 14.06 (7.41) | 11.90 (7.83) |
| Percentage of participation in TBL/gRAT | Full class | - | - | - | - |
| Percentage of participation in TBL/gRAT | Study group | 17.81 (8.23)** | 12.50 (7.12) | 22.10 (9.67)** | 15.53 (9.38) |

**p < 0.01

Table from Carrasco GA, Behling KC, and Lopez OJ, Evaluation of the role of incentive structure on student participation and performance in active learning strategies: a comparison of case-based and team-based learning [27]. Active learning group (ALG) = case-based learning (CBL).

Available from doi: 10.1080/0142159X.2017.1408899

Overall, these studies indicate that TBL (iRAT) scores, but not CBL scores, are strong predictors of the acquisition of medical knowledge as assessed by course final examinations. Because CBL facilitators are not content experts, they may lack the expertise to assess the accuracy of each student’s contributions to the CBL discussion. However, faculty facilitators individually evaluate and provide feedback for each student on non-cognitive skills such as participation and teamwork. Interestingly, a study by Kulatunga-Moruzi and Norman suggests that while cognitive assessments in medical school correlate significantly with future cognitive and clinical assessments, non-cognitive assessments correlate better with performance in clinical clerkships [48], reinforcing the value of these types of evaluations in medical education.

Conclusion

Our experience implementing active learning exercises in the 2 newest medical schools in New Jersey (USA) highlights the need for a robust understanding of how student participation and grading incentive structures affect the performance of undergraduate medical students. Our results suggest that when the RAT grade is meaningful to students, individual assessment of cognitive skills by the iRAT is the best predictor of student success on course final examinations and can therefore also be used for early identification of students who struggle with course content. Additionally, we have found that better knowledge of course content is associated with greater participation in active learning activities, likely leading to better peer teaching and educational impact. Importantly, most CMSRU students favor the use of TBL and would like to see more of these exercises in their courses, as preparation for these activities keeps them on track for learning course content. On the other hand, assessment of non-cognitive skills during CBL still serves an important role, as it may be predictive of future performance in clinical clerkships.

The use of CBL/PBL and/or TBL in medical education has been discussed in the recent literature [49–51]. Some authors argue that although there is no definitive proof that CBL/PBL helps in the acquisition of medical knowledge, CBL/PBL has positive effects on the social and cognitive competencies necessary for physicians after graduation [31, 48]. Our results and the current literature suggest that TBL and CBL/PBL can be used in a complementary fashion in medical education to “combine the best of both worlds,” as suggested by Dolmans et al. [51], which may provide a holistic incentive structure to improve educational outcomes in undergraduate medical education. These pedagogical methods also help prepare medical students for lifelong learning by supporting the development of important problem-solving and critical-thinking skills. In conclusion, our findings suggest that a combination of active learning strategies with different incentive structures, such as TBL and CBL/PBL, can foster the development of essential cognitive and non-cognitive skills required for the practice of medicine in the twenty-first century.

Funding Information

This monograph was funded in part by an International Association of Medical Science Educators (IAMSE) Educational Scholarship Grant.

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Gonzalo A. Carrasco, Email: carrasco@rowan.edu

Osvaldo J. Lopez, Email: osvaldo.lopez@shu.edu

References

1. Spencer JA, Jordan RK. Learner centred approaches in medical education. BMJ. 1999;318(7193):1280–1283. doi: 10.1136/bmj.318.7193.1280.

2. Luscombe C, Montgomery J. Exploring medical student learning in the large group teaching environment: examining current practice to inform curricular development. BMC Med Educ. 2016;16:184. doi: 10.1186/s12909-016-0698-x.

3. Pickering JD, Swinnerton BJ. Exploring the dimensions of medical student engagement with technology-enhanced learning resources and assessing the impact on assessment outcomes. Anat Sci Educ. 2019;12(2):117–128. doi: 10.1002/ase.1810.

4. Burton WB, Ma TP, Grayson MS. The relationship between method of viewing lectures, course ratings, and course timing. J Med Educ Curric Dev. 2017;4:2382120517720215. doi: 10.1177/2382120517720215.

5. Freeman S, Eddy SL, McDonough M, Smith MK, Okoroafor N, Jordt H, et al. Active learning increases student performance in science, engineering, and mathematics. Proc Natl Acad Sci USA. 2014;111(23):8410–8415. doi: 10.1073/pnas.1319030111.

6. Deslauriers L, Schelew E, Wieman C. Improved learning in a large-enrollment physics class. Science. 2011;332(6031):862–864. doi: 10.1126/science.1201783.

7. Michaelsen L, Sweet M. New directions for teaching and learning. In: MLS M, editor. The essential elements of team based learning. Wiley; 2008. p. 7–27.

8. Parmelee DX, Michaelsen LK. Twelve tips for doing effective team-based learning (TBL). Med Teach. 2010;32(2):118–122. doi: 10.3109/01421590903548562.

9. McLean SF. Case-based learning and its application in medical and health-care fields: a review of worldwide literature. J Med Educ Curric Dev. 2016;3:39–49. doi: 10.4137/JMECD.S20377.

10. Zgheib NK, Simaan JA, Sabra R. Using team-based learning to teach pharmacology to second year medical students improves student performance. Med Teach. 2010;32(2):130–135. doi: 10.3109/01421590903548521.

11. Clark MC, Nguyen HT, Bray C, Levine RE. Team-based learning in an undergraduate nursing course. J Nurs Educ. 2008;47(3):111–117. doi: 10.3928/01484834-20080301-02.

12. Hazel SJ, Heberle N, McEwen MM, Adams K. Team-based learning increases active engagement and enhances development of teamwork and communication skills in a first-year course for veterinary and animal science undergraduates. J Vet Med Educ. 2013;40(4):333–341. doi: 10.3138/jvme.0213-034R1.

13. Pileggi R, O’Neill PN. Team-based learning using an audience response system: an innovative method of teaching diagnosis to undergraduate dental students. J Dent Educ. 2008;72(10):1182–1188. doi: 10.1002/j.0022-0337.2008.72.10.tb04597.x.

14. Sparrow SM, McCabee MS. Team-based learning in law. Soc Sci Res Netw. 2012:1–42.

15. Parmelee D, Michaelsen LK, Cook S, Hudes PD. Team-based learning: a practical guide: AMEE guide no. 65. Med Teach. 2012;34(5):e275–e287. doi: 10.3109/0142159X.2012.651179.

16. Fincher RM, Sykes-Brown W, Allen-Noble R. Health science learning academy: a successful “pipeline” educational program for high school students. Acad Med. 2002;77(7):737–738. doi: 10.1097/00001888-200207000-00023.

17. Smith SG, Nsiah-Kumi PA, Jones PR, Pamies RJ. Pipeline programs in the health professions, part 2: the impact of recent legal challenges to affirmative action. J Natl Med Assoc. 2009;101(9):852–863. doi: 10.1016/S0027-9684(15)31031-2.

18. Hrynchak P, Batty H. The educational theory basis of team-based learning. Med Teach. 2012;34(10):796–801. doi: 10.3109/0142159X.2012.687120.

19. Thompson BM, Haidet P, Borges NJ, Carchedi LR, Roman BJ, Townsend MH, et al. Team cohesiveness, team size and team performance in team-based learning teams. Med Educ. 2015;49(4):379–385. doi: 10.1111/medu.12636.

20. Miller K, Schell J, Ho A, L B, Mazur E. Response switching and self-efficacy in peer-instruction classrooms. Phys Rev ST Phys Educ Res. 2015;11:1–8. doi: 10.1103/PhysRevSTPER.11.010104.

21. Behling KC, Murphy MM, Mitchell-Williams J, Rogers-McQuade H, Lopez OJ. Team-based learning in a pipeline course in medical microbiology for under-represented student populations in medicine improves learning of microbiology concepts. J Microbiol Biol Educ. 2016;17(3):370–379. doi: 10.1128/jmbe.v17i3.1083.

22. Persky AM. The impact of team-based learning on a foundational pharmacokinetics course. Am J Pharm Educ. 2012;76(2):31. doi: 10.5688/ajpe76231.

23. Jost M, Brustle P, Giesler M, Rijntjes M, Brich J. Effects of additional team-based learning on students’ clinical reasoning skills: a pilot study. BMC Res Notes. 2017;10(1):282. doi: 10.1186/s13104-017-2614-9.

24. Fatmi M, Hartling L, Hillier T, Campbell S, Oswald AE. The effectiveness of team-based learning on learning outcomes in health professions education: BEME Guide No. 30. Med Teach. 2013;35(12):e1608–e1624. doi: 10.3109/0142159X.2013.849802.

25. Koles PG, Stolfi A, Borges NJ, Nelson S, Parmelee DX. The impact of team-based learning on medical students’ academic performance. Acad Med. 2010;85(11):1739–1745. doi: 10.1097/ACM.0b013e3181f52bed.

26. Behling KC, Kim R, Gentile M, Lopez O. Does team-based learning improve performance in an infectious diseases course in a preclinical curriculum? Int J Med Educ. 2017;8:39–44. doi: 10.5116/ijme.5895.0eea.

27. Carrasco GA, Behling KC, Lopez OJ. Evaluation of the role of incentive structure on student participation and performance in active learning strategies: a comparison of case-based and team-based learning. Med Teach. 2018;40(4):379–386. doi: 10.1080/0142159X.2017.1408899.

28. Behling KC, Gentile M, Lopez OJ. The effect of graded assessment on medical student performance in TBL exercises. Med Sci Educ. 2017;27:451–455. doi: 10.1007/s40670-017-0415-3.

29. Wahawisan J, Salazar M, Walters R, Alkhateeb FM, Attarabeen O. Reliability assessment of a peer evaluation instrument in a team-based learning course. Pharm Pract (Granada). 2016;14(1):676. doi: 10.18549/PharmPract.2016.01.676.

30. Fete MG, Haight RC, Clapp P, McCollum M. Peer evaluation instrument development, administration, and assessment in a team-based learning curriculum. Am J Pharm Educ. 2017;81(4):68. doi: 10.5688/ajpe81468.

31. Koh YYJ, Rotgans JI, Rajalingam P, Gagnon P, Low-Beer N, Schmidt HG. Effects of graded versus ungraded individual readiness assurance scores in team-based learning: a quasi-experimental study. Adv Health Sci Educ Theory Pract. 2019. doi: 10.1007/s10459-019-09878-5.

32. Deardorff AS, Moore JA, McCormick C, Koles PG, Borges NJ. Incentive structure in team-based learning: graded versus ungraded Group Application exercises. J Educ Eval Health Prof. 2014;11:6. doi: 10.3352/jeehp.2014.11.6.

33. Michaelsen LK, Davidson N, Major CH. Team-based learning practices and principles in comparison with cooperative learning and problem based learning. J Excell Coll Teach. 2014;25(3-4):57–84.

34. Carrasco GA, Behling KC, Lopez OJ. First year medical student performance on weekly team-based learning exercises in an infectious diseases course: insights from top performers and struggling students. BMC Med Educ. 2019;19(1):185. doi: 10.1186/s12909-019-1608-9.

35. Pulfrey C, Butera F. Why neoliberal values of self-enhancement lead to cheating in higher education: a motivational account. Psychol Sci. 2013;24(11):2153–2162. doi: 10.1177/0956797613487221.

36. Smith CK, Peterson DF, Degenhardt BF, Johnson JC. Depression, anxiety, and perceived hassles among entering medical students. Psychol Health Med. 2007;12(1):31–39. doi: 10.1080/13548500500429387.

37. Carrasco GA, Behling KC, Lopez OJ. A novel grading strategy for team-based learning exercises in a hands-on course in molecular biology for senior undergraduate underrepresented students in medicine resulted in stronger student performance. Biochem Mol Biol Educ. 2018. doi: 10.1002/bmb.21200.

38. Haidet P, Levine RE, Parmelee DX, Crow S, Kennedy F, Kelly PA, et al. Perspective: guidelines for reporting team-based learning activities in the medical and health sciences education literature. Acad Med. 2012;87(3):292–299. doi: 10.1097/ACM.0b013e318244759e.

39. Branney J, Priego-Hernandez J. A mixed methods evaluation of team-based learning for applied pathophysiology in undergraduate nursing education. Nurse Educ Today. 2018;61:127–133. doi: 10.1016/j.nedt.2017.11.014.

40. Cestone CM, Levine RE, Lane DR. Peer assessment and evaluation in team-based learning. In: Periodicals W, editor. New Directions for Teaching and Learning: Wiley Interscience: Wiley; 2008. p. 69–78.

41. Stein RE, Colyer CJ, Manning J. Student accountability in team-based learning classes. Teach Sociol. 2016;44(1):28–38. doi: 10.1177/0092055X15603429.

42. McLachlan JC. The relationship between assessment and learning. Med Educ. 2006;40(8):716–717. doi: 10.1111/j.1365-2929.2006.02518.x.

43. Taylor D, Miflin B. Problem-based learning: where are we now? Med Teach. 2008;30(8):742–763. doi: 10.1080/01421590802217199.

44. Srinivasan M, Wilkes M, Stevenson F, Nguyen T, Slavin S. Comparing problem-based learning with case-based learning: effects of a major curricular shift at two institutions. Acad Med. 2007;82(1):74–82. doi: 10.1097/01.ACM.0000249963.93776.aa.

45. Ho CLT. Team-based learning: a medical student’s perspective. Med Teach. 2019;1. doi: 10.1080/0142159X.2018.1555370.

46. Bradley M, Broome C, Davies M, Watson C. A case to answer - could CBL do more for medical students? A response to Carrasco et al. Med Teach. 2018;1. doi: 10.1080/0142159X.2018.1506572.

47. Collins CM, Carrasco GA, Lopez OJ. Participation in active learning correlates to higher female performance in a pipeline course for underrepresented students in medicine. Med Sci Educ. 2019, in press. doi: 10.1007/s40670-019-00794-2.

48. Kulatunga-Moruzi C, Norman GR. Validity of admissions measures in predicting performance outcomes: a comparison of those who were and were not accepted at McMaster. Teach Learn Med. 2002;14(1):43–48. doi: 10.1207/S15328015TLM1401_10.

49. Burgess A, Bleasel J, Haq I, Roberts C, Garsia R, Robertson T, et al. Team-based learning (TBL) in the medical curriculum: better than PBL? BMC Med Educ. 2017;17(1):243. doi: 10.1186/s12909-017-1068-z.

50. Burgess A, Roberts C, Ayton T, Mellis C. Implementation of modified team-based learning within a problem based learning medical curriculum: a focus group study. BMC Med Educ. 2018;18(1):74. doi: 10.1186/s12909-018-1172-8.

51. Dolmans D, Michaelsen L, van Merrienboer J, van der Vleuten C. Should we choose between problem-based learning and team-based learning? No, combine the best of both worlds! Med Teach. 2015;37(4):354–359. doi: 10.3109/0142159X.2014.948828.