Abstract

Purpose

Design and test the usability of a novel virtual rehabilitation system for bimanual training of gravity supported arms, pronation/supination, grasp strengthening, and finger extension.

Methods

A robotic rehabilitation table, therapeutic game controllers, and adaptive rehabilitation games were developed. The rehabilitation table lifted/lowered and tilted up/down to modulate gravity loading. Movement of both arms was measured simultaneously, allowing bilateral training. Therapeutic games adapted through a baseline process. Four healthy adults performed four usability evaluation sessions each, and provided feedback using the USE questionnaire and custom questions. Participants’ game play performance was sampled and analyzed, and system modifications were made between sessions.

Results

Participants played four sessions of about 50 minutes each, with training difficulty gradually increasing. Participants averaged a total of 6,300 arm repetitions, 2,200 grasp counts, and 2,100 finger extensions when adding counts for each upper extremity. USE questionnaire data averaged 5.1/7 rating, indicative of usefulness, ease of use, ease of learning, and satisfaction with the system. Subjective feedback on the custom evaluation form was 84% favorable.

Conclusions

The novel system was well-accepted, induced high repetition counts, and the usability study helped optimize it and achieve satisfaction. Future studies include examining effectiveness of the novel system when training patients acute post-stroke.

Keywords: Sub-acute stroke, virtual reality, gamification, therapeutic game controller, integrative rehabilitation, BrightArm Compact, upper extremity, cognition, usability evaluation

Introduction

Stroke is the leading cause of disability in the United States (US),1 responsible for approximately 140,000 deaths each year in this country.2 The incidence of stroke is projected to increase 20% by 2030, compared to 2012,3 with related annual costs expected to exceed $180 billion. Stroke is clearly a major disease with enormous costs for the individual and society. Stroke survivors typically present with motor, cognitive, as well as mood dysfunction, requiring complex and extended intervention. It is thus important to modernize methods of treating the stroke survivor, whether in hospital, clinic or home. Technology plays an important role in this effort.

Upper extremity (UE) functional deficits impact 60% to 80% of stroke survivors,4 leading to a lifetime of disability and affecting quality of life.5 Common UE impairments associated with stroke are reduced joint mobility, loss of muscle strength,6 compounded by cognitive deficits affecting memory, attention, and executive function.7 These impairments adversely affect independence in activities of daily living (ADLs).7 It follows that post-stroke rehabilitation needs to be integrative (motor and cognitive) and be done at a single point of care, to reduce costs. Bilateral training has advantages over customary training which involves only the more affected arm/hand. Advantages of bilateral training include more neural reorganization as motor centers in both hemispheres are activated and the ability to train at higher cognitive engagement levels required when performing bimanual tasks. A meta-analysis of bilateral training8 found a significant effect from bimanual reach timed to auditory cues. In a randomized controlled trial (RCT),9 an experimental group of stroke survivors, at the end of outpatient therapy, trained only healthy arms. A 23% functional improvement was observed in their untrained paretic arm. The control group showed no significant change.

Advances in UE rehabilitation technology have led to a wide array of robotic and virtual reality-based training systems.10 However, as bilateral robotic rehabilitation was studied, the associated costs and required space became more of a concern.11 Robotic systems used in rehabilitation need to be designed with redundant safety measures due to their active forces applied on weak limbs. However, there is always the possibility that programming errors could lead to unforeseen robot movements that may cause accidents.12

There is evidence that game-based therapy for patients with stroke offers significantly more motor training in both upper and lower body13,14 than standard of care. Due to the intrinsic nature of video games, it is easier to alleviate learned nonuse and boredom, as well as to increase the much-needed number of movement repetitions beneficial to recovery after stroke.15 Moreover, game-based therapy has been widely used to boost patients’ motivation, to increase exercise intensity, and to provide means to measure objective outcomes in a quantifiable way,16 either locally or at a distance. What is needed is technology which is passive (safer as no actuators act on the trained limbs),17 that allows bimanual training on a single system (lower cost, compactness), and uses virtual reality therapeutic games (high number of arm repetitions, motivation).14

This research group pioneered the development of robotic rehabilitation tables that modulate gravity bearing on weak arms, facilitate UE strengthening18 and provide bilateral, integrative game-based training.19 The BrightArm Duo robotic table (Figure 1(a)), developed in 2015, used a low-friction motorized table to assist forward arm reach by tilting its distal side down. Conversely, forward arm reach was resisted and bringing the arm closer to the trunk was assisted, once the table work surface was tilted up. Arms were placed in low-friction forearm supports with infrared (IR) light emitting diodes (LEDs), electronics and wireless transmitters (Figure 2(a)). Grasping strength was measured by rubber pears connected to digital pressure sensors and transmitted to a PC which was controlling the system. The forearm supports were tracked by a pair of overhead IR cameras communicating with the same PC. The table was accessible for patients in wheelchairs and its work surface could be lifted or lowered to accommodate different body sizes. A large display in front of the patient presented several therapeutic games that adapted to the patient’s motor and cognitive functioning level at each session. However, BrightArm Duo had shortcomings due to its large size, difficult control (owing to its 4 linear actuators, 2 for lifting and 2 for tilting), and inability to train pronation/supination while arms were supported on the table. Furthermore, BrightArm Duo forearm supports could not train finger extension, a movement which, like pronation/supination, is important for ADLs. The BrightArm Duo system underwent an RCT on chronic stroke survivors in nursing homes.20 It did not, however, undergo an initial usability evaluation, which might have uncovered some of the above design issues.

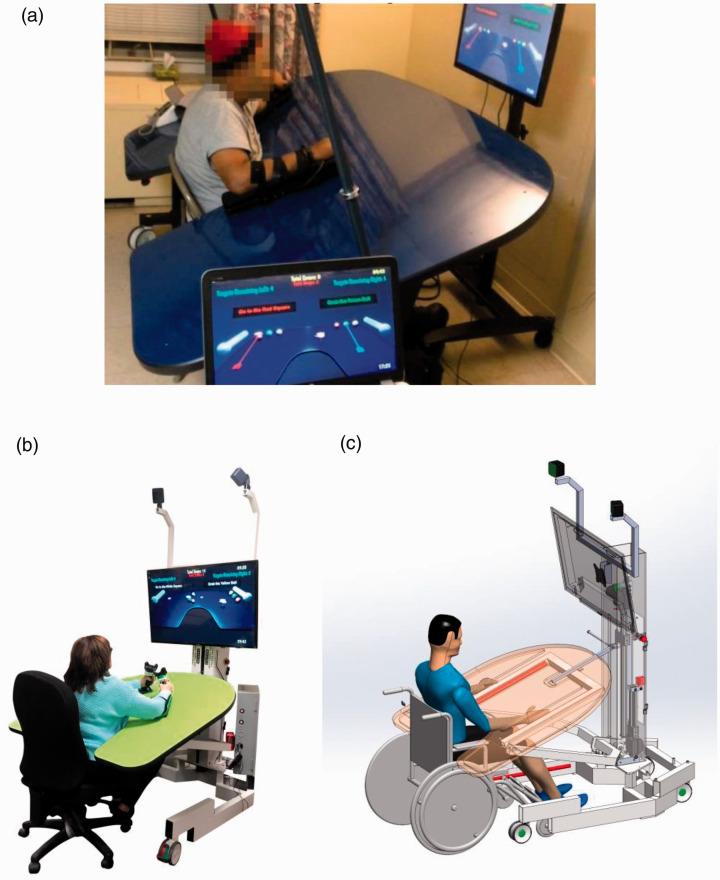

Figure 1.

Rehabilitation tables modulating gravity: a) BrightArm Duo rehabilitation system [17]; b) BrightArm Compact with participant; c) 3D CAD rendering model with key components shown.

© Bright Cloud International Corp. Reprinted by permission.

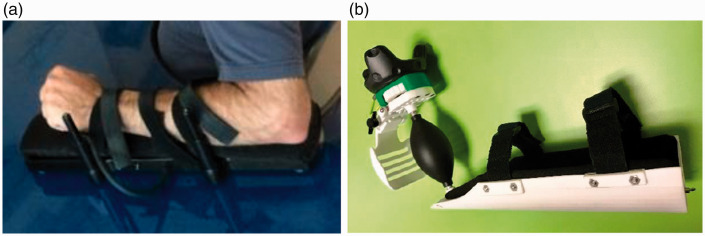

Figure 2.

a) BrightArm Duo game controller; b) BrightBrainer Grasp therapeutic game controller.

© Bright Cloud International Corp. Reprinted by permission.

This article presents the next generation BrightArm Compact (BAC) rehabilitation table, and its novel game controllers, designed to overcome the limitations of the Duo system. Results of an initial usability evaluation with four healthy adult participants who trained on the system are included.

Methods

The BrightArm Compact rehabilitation table

Actuator assembly

The design process of the BAC system was focused on reducing size and complexity (Figure 1(b)). Instead of 4 actuators used in the Duo version, the BAC table had only two electrical linear actuators, which were placed in a central column (Figure 1(c)). One actuator lifted/lowered the rehabilitation table to adjust to patient’s height, while the other actuator was responsible for tilting the table up/down. This second actuator was mounted in a piggy-back arrangement on the table lifting one, and a hinge was used to allow table rotation regardless of height. The up/down translation range was 8 inches, while the tilt angle was adjustable between +20° and −15° (0° corresponding to horizontal). The hinge was detachable allowing the work surface of the table to be placed in a vertical position for transport.

The table work surface was made of a custom honeycomb wood material so as to reduce weight, and covered with laminated Formica film to allow low-friction movement of the game controllers. Its top surface had a matte finish to reduce ambient light reflection, while its pastel green color was chosen to be attractive to the patient. Similar to its precursor BrightArm Duo, the work surface had a center cutout on its proximal edge, so as to allow a patient’s trunk to be placed against the table inner edge. All the work surface edges were rounded and covered with a rubber mold so as to reduce the chance of arm skin injury. The total area of the work surface was 1,605 in² (10,355 cm²), which represented a 54% reduction from the Duo’s 3,459 in² (22,316 cm²) work surface. The BAC overall footprint was 4,400 in² (28,387 cm²), a 45% reduction of the Duo footprint of 8,000 in² (51,613 cm²).
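The area figures above can be cross-checked with a few lines of arithmetic. The sketch below is only a verification aid; the function and variable names are illustrative and are not part of the BAC software.

```python
# Cross-check of the reported areas and percentage reductions.
# All names here are illustrative; only the numeric inputs come from the text.

IN2_TO_CM2 = 2.54 ** 2  # 1 square inch = 6.4516 square centimeters


def to_cm2(area_in2: float) -> int:
    """Convert square inches to square centimeters, rounded to the nearest integer."""
    return round(area_in2 * IN2_TO_CM2)


def percent_reduction(new: float, old: float) -> float:
    """Percentage by which `new` is smaller than `old`."""
    return 100.0 * (1.0 - new / old)


work_surface_in2 = {"BAC": 1605, "Duo": 3459}
footprint_in2 = {"BAC": 4400, "Duo": 8000}

for label, areas in (("work surface", work_surface_in2), ("footprint", footprint_in2)):
    reduction = percent_reduction(areas["BAC"], areas["Duo"])
    print(f"{label}: BAC {to_cm2(areas['BAC'])} cm2, "
          f"Duo {to_cm2(areas['Duo'])} cm2, reduction {reduction:.0f}%")
```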

Unlike the BrightArm Duo display, which had been mounted on a separate TV stand, the BAC system had a TV display (40 inch diagonal) mounted directly on its central column. The TV could be tilted 20° downward to facilitate prolonged viewing with minimal neck strain. The same central column anchored two HTC VIVE IR illuminators,21 part of the tracking mechanism of its game controllers.

The actuator assembly and work surface were supported by a U-shape steel frame placed on lockable wheels. The same underside frame also supported a custom lockable computer box, housing a medical grade HP PC (z240 SFF), the actuators’ power supply, a medical grade power cord (Tripp Lite ISOBAR6ULTRAHG) and the VIVE head mounted display (HMD). Images normally seen on this HMD were transmitted to the TV through an HDMI cable routed in the center tower. The decision to use a TV instead of an HMD was taken so as to minimize disease transmission through repeat use in clinical settings.

Table safety mechanism

While the BAC was designed to be a passive system, it was important to maintain patient’s safety by preventing collision with the table underside during height adjustments or while tilting. Another concern was the possibility of collision with the wheels of a wheelchair. Finally, attending therapists had to have a way to stop the table movement manually in case of malfunction. A triple-layer safety mechanism was developed to address these possible scenarios.

The first safety layer was composed of pairs of IR illuminator strips (Seco-Larm E-9660-8B25, E-9622-4B25) placed on the underside of the work surface. Close proximity between the patient’s knees and the table was detected as an interruption of one of several IR beams, and this change in status was transmitted to the BAC control box. This stopped the table from moving further and triggered an audible warning sound. A special pair of IR strips was placed left and right of the work surface central cutout, to detect a patient’s presence. This signal, interrupted when a patient was rolled onto the BAC, did not disable the table.

The second layer of the safety mechanism consisted of a movable small mechanical plate located on the table underside, above the right wheel of any wheelchair placed against the table. The mechanical plate rotated if pressed against the wheel and interrupted an electrical circuit through a micro switch. This in turn stopped the table from further pressing against the wheel during table upward tilting.

The third layer of the BAC safety system consisted of two emergency power shut-off switches (model AutomationDirect.com; GCX3226-24). These emergency switches were mounted on the left and right sides of the central column at 100 cm above the floor, so as to be easily reachable. Once an emergency switch had been pushed by a therapist, the table was immobilized.
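The decision logic of the three safety layers can be summarized in a short sketch. This is not the BAC control box firmware: the emergency switches cut power in hardware, and the text does not state which table motions each sensor blocks. The sketch assumes that an interrupted knee beam blocks every motion and sounds the warning, that the wheel plate blocks only upward tilting, and that the presence beams at the cutout never block motion. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

MOTIONS = {"lift", "lower", "tilt_up", "tilt_down"}


@dataclass(frozen=True)
class SafetyInputs:
    """Snapshot of the safety sensors (hypothetical field names)."""
    knee_beam_interrupted: bool = False      # layer 1: IR strips under the work surface
    presence_beam_interrupted: bool = False  # cutout IR pair: patient present, informational only
    wheel_plate_pressed: bool = False        # layer 2: micro switch above the wheelchair wheel
    emergency_stop_pushed: bool = False      # layer 3: either column-mounted shut-off switch


def evaluate(command: str, sensors: SafetyInputs) -> Tuple[bool, bool]:
    """Return (motion_allowed, sound_warning) for a requested table motion."""
    if command not in MOTIONS:
        raise ValueError(f"unknown motion: {command}")
    if sensors.emergency_stop_pushed:
        # Power is cut, so the table is immobilized regardless of the command.
        return False, False
    if sensors.knee_beam_interrupted:
        # Assumption: any motion is stopped and the audible warning is triggered.
        return False, True
    if sensors.wheel_plate_pressed and command == "tilt_up":
        # Assumption: only upward tilting is blocked, as described for layer 2.
        return False, False
    # The presence beams alone do not disable the table.
    return True, False
```

For example, `evaluate("tilt_up", SafetyInputs(wheel_plate_pressed=True))` returns `(False, False)`, whereas the same sensor state still permits `"tilt_down"` under the assumptions stated above.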

Therapeutic game controllers

This study used a novel game controller optimized for UE rehabilitation. The BrightBrainer Grasp (BBG) (Figure 2(b)) incorporated a VIVE tracker (HTC 99HANL00200) mounted on top of a mechanical assembly, and grounded on the controller curved bottom support. The mechanical assembly had a lever mechanism which measured global finger extension,20 as well as a rubber pear used to measure grasping strength. The rubber pear was part of a novel grasp sensing mechanism as it had a pneumatic connection with a digital pressure sensor embedded in the bottom support sled. The same sled housed electronics and battery, such that the game controller transmitted lever position and grasp force values wirelessly to the PC running the therapeutic games.

Hand 3D position was measured in real time by a combination of the two VIVE IR illuminators and the tracker sensors. Position and orientation data were transmitted wirelessly to a VIVE HMD which in turn communicated with the PC running the therapeutic games. The combination of the two data streams (from the VIVE system and from the BBG controller) enabled real time control of one or two avatars (corresponding to the use of one or two controllers in unilateral or bilateral training). Further details on the BBG therapeutic game controller design and its usability evaluation are given in Burdea et al.22

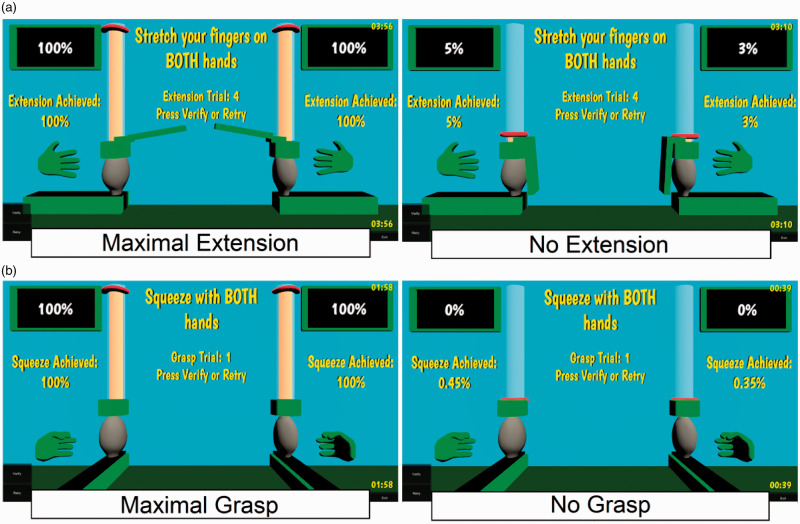

System baselining

The BBG controllers were designed to passively adapt to impaired hand characteristics. Finger extension was detected globally, regardless of which finger or group of fingers pushed the mechanical lever away. This characteristic was chosen to accommodate dissimilar finger range of motion due, for example, to spasticity. Conversely, grasping force was detected regardless of which finger (or group of fingers) flexed around the central rubber pear.

A baselining process was implemented to measure maximal extension range and to map it to an avatar being controlled. Unlike the Duo table model, where each hand was baselined in sequence, the BAC simultaneously baselined both hands, so as to save setup time. As shown in Figure 3(a), the extension baseline scene depicted two simplified controllers. The amount of extension was visualized by the position of two mechanical levers, a percentage number, and a vertical tube that colored in proportion to the amount of global finger extension. Residual extension (typical of spastic hands) was also measured for each hand, and then subtracted from the extension value, to determine net movement.

Figure 3.

Therapeutic game controller baselining: a) finger extension; b) grasping force.

© Bright Cloud International Corp. Reprinted by permission.

Figure 3(b) depicts the grasp baseline scene, again for both hands measured simultaneously. The vertical tube would fill with color proportional to the grasp force and would become transparent when no grasp was requested. Residual values due to involuntary grasping were then subtracted so as to determine actual grasping range.

Impairments subsequent to stroke typically result in dissimilar UE characteristics, with weakness and less range of motion present in the more impaired arm. However, in order to optimize game play it was necessary to show fully functional virtual hands or other avatars, so that they could move the whole extent of the virtual scene. As a consequence, the gains between physical movement and the corresponding avatar movement were dissimilar for the two UEs. Showing scenes with fully functional arms was also aimed at giving the patient a feeling of being in control and improving wellbeing (reducing depression typically seen in those with severe impairments23).
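The baselining described above (subtracting a residual value, then mapping each arm's measured range to the full virtual scene) can be illustrated with a minimal sketch. It assumes simple linear scaling with clamping; the actual mapping used by the BAC software is not given in the text, and all function names and numeric values below are hypothetical.

```python
def normalize(raw: float, residual: float, maximum: float) -> float:
    """Map a raw sensor reading to [0, 1] after subtracting the residual.

    `residual` is the involuntary extension or grasp value and `maximum`
    is the largest value reached during baselining.
    """
    span = maximum - residual
    if span <= 0:
        return 0.0  # no usable net range was measured for this hand
    return min(max((raw - residual) / span, 0.0), 1.0)


def reach_gain(scene_extent: float, baseline_reach: float) -> float:
    """Gain that lets a limited physical reach cover the whole scene."""
    return scene_extent / baseline_reach


def avatar_position(physical: float, baseline_reach: float, scene_extent: float) -> float:
    """Scale physical displacement to avatar displacement, clamped to the scene."""
    scaled = physical * reach_gain(scene_extent, baseline_reach)
    return min(max(scaled, 0.0), scene_extent)


# Hypothetical baselines: the more impaired arm reaches 25 cm, the other 50 cm.
# Both avatars must be able to cross a scene that is 100 units wide.
print(reach_gain(100.0, 25.0))  # gain for the more impaired arm
print(reach_gain(100.0, 50.0))  # gain for the less impaired arm
```

Because the two baselines differ, the two gains differ, which is the dissimilarity between the UEs noted above: the more impaired arm receives the larger gain so that its avatar can still reach the edge of the scene.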

Therapeutic games

Ten serious games, previously developed by this group for UE integrative therapy,20,24 were used in this BAC usability evaluation study.

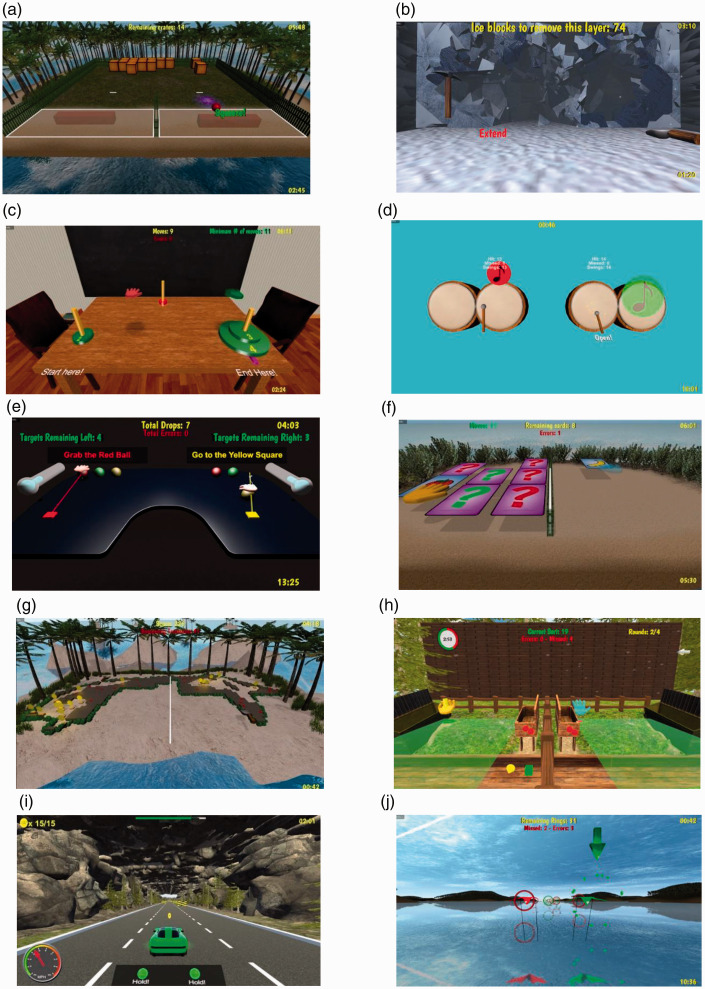

Breakout 3D (Figure 4(a)) was aimed at training speed of reaction, hand-eye coordination, and executive function. Participants were tasked to destroy an array of crates on an island, using virtual balls bounced with one of two paddle avatars. The game had two variants, depending on whether paddles moved predominantly left-right (shoulder abduction/adduction), or in-out (shoulder flexion/extension). At higher levels of difficulty there were more crates (more repetitions needed to destroy all of them), balls became faster and paddles shorter (requiring shorter reaction time). At yet higher difficulty, participants had to remember to squeeze the BBG rubber pear to “solidify” the paddle just before a bounce, lest the ball pass right through it. A finger extension was subsequently required to reset the paddle in preparation for the next bounce.

Figure 4.

Screenshots of 10 BrightArm Compact games that were played during the usability study: a) Breakout 3D; b) Avalanche; c) Towers of Hanoi; d) Drums; e) Pick-and-Place; f) Card Island; g) Treasure Island; h) Catch 3D; i) Car race; and j) Kites.

© Bright Cloud International Corp. Reprinted by permission.

Avalanche (Figure 4(b)) was designed to train motor endurance and task sequencing. Participants were asked to break through three walls of ice to rescue people trapped in a cottage by an avalanche. The right arm controller was mapped to a pickaxe avatar, and the left controller to a shovel avatar. To break the ice participants had to repeatedly hit it by placing the pickaxe at the desired location and grasping. Broken ice (visualized by a blue color) could then be shoveled out by moving the shovel avatar while grasping. A number of repetitions were required to shovel out a sufficiently large hole in the ice wall, with higher levels of difficulty corresponding to thicker walls (more repetitions).

Towers of Hanoi 3D (Figure 4(c)) trained executive function by adapting a well-known game usually played with a mouse. In this group’s 3D version, participants controlled a green and a red hand avatar, and saw disks stacked on a start pole in increasing order of size from top to bottom. The smallest disk at the top had a green color and the other disks were red, with game difficulty increasing with total number of disks. Participants were asked to pick disks from the starting pole (by grasping), and restack them in the same order of sizes on a target pole (by extending fingers). A third pole was used as a via point. Decision making was trained by setting the condition that disks could only be handled by avatars of matching color, and that larger disks could never be placed on top of smaller diameter ones. Participants were asked to use the minimum number of moves to accomplish this task.

Drums (Figure 4(d)) was designed to train hand-eye coordination and split attention. Participants had to hit falling notes at the precise instant when they overlapped an array of drums. A cognitive cue was provided by the change in note color from red to green, once they overlapped a drum, indicative of the moment when they should be hit. To hit a note a participant had to grasp to activate a mallet avatar, and to overlap a note by moving the respective arm. Game difficulty increased with the speed of falling musical notes, their number, as well as the number of drums.

Pick and Place (Figure 4(e)) was a virtual representation of the classical rehabilitation task intended to train motor control. In its bilateral training version, the game depicted two hand avatars, multiple balls of different colors and two square colored targets. The patients were told to pick the ball of a color indicated in text (thus training reading comprehension and matching), and follow an ideal straight line to the target. The patient had to identify the ball to pick up, move the arm so the hand avatar hovered over the desired ball and squeeze the rubber pear, in order to pick it up. Then the patient had to follow the line to the target as best as possible, and once the target had been reached, extend fingers to release the ball. The degree of grasping force was visualized by coloring tubes on either side of the virtual table. Picking up and moving both arms at the same time trained split attention, and several repetitions were done until the game completed or had timed out. Upon completion the game displayed all the trajectory traces corresponding to each repetition. This bundle of traces was indicative of uniformity and smoothness of movement (straight and tight bundle), or could indicate ballistic movement at the edge of arm reach. At higher difficulty levels more repetitions were required, and instructional text color did not match the color of the ball to be picked up.

Card Island (Figure 4(f)) was intended to primarily train visual and auditory memory by asking the patient to pair an array of cards placed face down on an island. To turn a card face up, reveal its image and hear a corresponding noun, a patient had to hover one of the hand avatars over that card, then grasp. To be able to grasp again with that hand, so as to turn another card face up, it was necessary to first extend fingers in order to “reset” the status of the hand avatar. Placement of matching cards was random, so pairs could be on either side of a center divider or on one side of the divider. The center divider was meant to elicit movement of both arms so as to combat learned nonuse. A congratulatory text appeared once all cards had been paired and had disappeared from the island. Higher levels of difficulty involved more cards to be paired, which in turn meant a higher cognitive load and more arm reach, grasping and extension repetitions.

Treasure Island (Figure 4(g)) was designed to train motor endurance and visual memory. Participants were asked to find treasures buried in sand on an island, in an area surrounded by boulders. To dig treasures out of the sand participants had to grasp to “activate” shovel avatars, then reach out into the sand, with a center divider preventing crossing arms. This was done so as to prevent learned nonuse, which is often found in impaired patient populations (such as stroke survivors). At lower levels of difficulty treasure locations were indicated by marks on the sand. At higher difficulty levels there were no marks, and sand storms periodically covered some of the already discovered treasures, so as to elicit more arm reach and grasping repetitions.

Catch 3D (Figure 4(h)) trained arm reaching, pronation/supination, as well as reaction time and pattern recognition. Specifically, participants had to catch falling objects before they hit the ground, using hand avatars with extended fingers. The objects varied in shape and color, and had to be placed in bins by grasping, reaching to a chosen bin, pronating, and then extending such that the object fell in that bin. Objects had to be sorted by choosing bins showing matching objects on their walls, or by placing a non-matching object into a side bin. At higher difficulty there were more objects to sort (more repetitions), objects fell with higher speed (shorter reaction time) and lateral winds acted as disturbances (requiring better motor control to catch before the object fell on the ground and disappeared).

Car race (Figure 4(i)) asked participants to drive a race car avatar by coordinating pronation or supination movements to change lanes, extending fingers on the BBG controller to accelerate, and grasping to brake. Lane changes were needed to avoid obstacles such as oil spills or boulders, with a longer racetrack, faster car avatar and more obstacles corresponding to higher levels of difficulty. This game was aimed at training primarily grasping, finger extension and arm pronation/supination, as well as speed of reaction and hand-eye coordination.

Kites (Figure 4(j)) asked participants to fly a green kite and a red one through an array of circular targets of matching color and random position. This elicited numerous arm crossing movements, as targets alternated in the horizontal plane. Difficulty was increased with a higher number of targets (more arm repetitions) and faster kites (shorter reaction time). Thus playing Kites trained hand-eye coordination, pattern matching (fly through like-colored targets), reaction time (the position and color of targets were random), as well as abduction/adduction shoulder movements to fly the kite through the array of targets.

Therapeutic games design principles

While the 10 games used in the BAC usability evaluation were custom built, they did follow principles used in commercial videogames to increase user (patient) engagement and motivation. Lohse and colleagues25 looked at the intersection of industrial game design, neuroscience and motor learning in rehabilitation, domains which all benefit from one’s engagement and motivation. The authors formulated six principles of successful rehabilitation game design: 1) reward; 2) challenge; 3) feedback; 4) choice; 5) clear goals; and 6) socialization.

Rewards were implemented by providing visual and auditory congratulatory messages upon success in a game. Visual rewards were fireworks, or congratulatory text (“Great!” or “Good work!”, for example) while auditory rewards could be applause, or whistles. What constituted success depended on each game; for example, in Breakout 3D success meant that all crates had been destroyed in the allotted time. Similarly, in Towers of Hanoi success meant that all disks had been restacked using the minimum number of necessary movements. It was also important not to discourage patients when they were unable to complete a particular game task. Unlike commercial games for entertainment, which occasionally tell players “you lost,” or “you are dead,” the games developed for the BAC therapy told participants “Nice try.”

Challenge within rehabilitation gaming is a delicate principle by which the game needs to motivate a patient to exert maximally, without, however, making the games impossibly hard to win. What constitutes maximal exertion is, of course, patient-dependent, and for a given patient maximal exertion can change from day to day. Maximal exertion whether in arm reach counts or grasp force, for example, will eventually lead to fatigue, and even pain. In the case of the games described above, an appropriate amount of challenge was dependent on baseline outcomes (described later in this article), as well as game-specific tasks. For example, Treasure Island crates had more gold when located near the boulders, as boulders traced the horizontal arm reach baseline. Thus to maximize the number of gold coins found, a participant had to reach maximally.

Feedback relates to task status (in progress or completed), errors, and timing. When a game task is in progress, momentary feedback follows each of the player’s actions, while feedback provided at the end of a game is summative, often in the form of statistics, or total points earned in that game. For the BAC games, for example Card Island, momentary feedback was a prerecorded voice uttering a noun associated with the image shown once a card had been turned face up. Momentary feedback was also the fact that a card turned face down when paired incorrectly. Timer and partial scores were displayed and updated in each game. In Musical Drums and in Kites, summative feedback was presented upon completion, or upon timeout, in the form of a percentage. This percentage represented how many notes had been successfully hit by a mallet out of the total number of notes (for Musical Drums), or the percentage of targets successfully flown through (for Kites). Summative feedback was also a factor determining the particular reward provided, such as “Great job” for a set percentage success, or “Nice try” when performance was below such percentage threshold.
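The summative feedback described above reduces to a percentage and a threshold comparison, sketched below. The text does not give the threshold value, so the 80% default is a placeholder assumption, and the function names are hypothetical.

```python
def success_percentage(successes: int, total: int) -> float:
    """Percentage of notes hit (Musical Drums) or targets flown through (Kites)."""
    if total <= 0:
        return 0.0  # nothing was presented, so report zero rather than divide by zero
    return 100.0 * successes / total


def reward_message(percentage: float, threshold: float = 80.0) -> str:
    """Pick the end-of-game message; the threshold value is an assumed placeholder."""
    return "Great job" if percentage >= threshold else "Nice try"


# Example: 45 of 50 falling notes were hit with the mallet avatar.
score = success_percentage(45, 50)
print(score, reward_message(score))
```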

Choice relates to variety of available difficulty levels for a given game, as well as variety of different games in a given session. Choice is important in maintaining a patient’s interest, especially over many months of needed rehabilitation. Typically rehabilitation session duration would grow over this time span, allowing new games to become available on a weekly basis. Giving a patient the choice of what games to play is extremely motivating, and increases the feeling of being in control over the rehabilitation process. However choice needs to be weighed against therapy goals.

Clear goals. Therapists typically select among available games depending on what specific impairments a particular patient presents with. Therapist-selected games may, however, not necessarily be a patient’s favorite ones. A hybrid approach was taken previously by this group,26 by which all games selected by a therapist needed to be played at least once in a session, followed by free choice in subsequent game play in that session. Within each game, goals need to be clearly explained, such that a patient knows what the task is for that game. In the games used for BAC usability evaluation, goals were explained in text format displayed in the game starting scene. For example, Card Island starting scene displayed the text “Move your controller LEFT, RIGHT, FORWARD and BACK to control the HAND. FLIP over PAIRS and try to find MATCHES!”

Socialization is important in increasing motivation for a patient. Unlike network-linked game play, typical sessions on a BAC system are with a single participant. However, it is theoretically possible to have two patients compete, as long as two BACs are connected over the internet, and the patients’ schedules overlap. In the present study participants evaluated the system individually, taking turns on a single system, something that follows established norms for formative usability evaluations.20 However, many of the games used in this evaluation had been played in a first-ever tournament between stroke survivors at two nursing homes located 12 km apart, each housing a BrightArm Duo system.27 Each team consisted of two participants, who controlled one avatar each on their BrightArm Duo, performing a collaborative task. For example, when they played Breakout 3D, each patient controlled a paddle, so that together they could keep the ball in play and destroy all crates. Game designers need, however, to be careful how they modulate competitive socialization when players are disabled individuals. One way to address this is in team selection, which needs to set competitions between players with similar degree of impairment severity. This may not always be practical, thus a better method is to have teams in which team members are competing against the computer. This was in fact the scenario in the nursing homes tournament, previously described, and it was well liked by the participants.

The features of the therapeutic game controller and the baselining process described above were ways to adapt games to each patient’s functional level, which could change over time with recovery. An important role in adapting to each patient’s characteristics, and in constantly challenging the individual, was played by the BAC Artificial Intelligence (AI) software. This software, developed by this research group, mapped each arm’s physical reach in both horizontal and vertical planes to the full size of the game space. Another component was the variation of game difficulty levels based on the patient’s past performance, so as to facilitate winning and benefit well-being. The AI program monitored performance in each game and automatically changed its level of difficulty accordingly for each game. Thus games played in a given session were not all of the same difficulty; rather, they were set by the AI based on how successful the patient had been previously when playing them. Had the patient succeeded in a particular game three times in a row, the next time that game was played, its difficulty was increased one level. Conversely, had a patient failed two times in a row in a particular game, difficulty for that game was then automatically reduced one level, so as to prevent disengagement. Eight different games, each with 10 to 16 levels of difficulty, ensured that there was sufficient variation and challenge during BAC training. The AI was also in charge of automatically scheduling games for a given week of training, based on a set protocol. Finally, the AI extracted game performance variables from a session and assembled a session report. More detail on game performance variables is provided in the Outcomes section below.
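The difficulty adaptation rule stated above (up one level after three consecutive successes, down one level after two consecutive failures) can be sketched as a small per-game state machine. This is an illustration, not the BAC AI software: resetting the streak counters after each level change and clamping the level between 1 and the game's maximum are assumptions, and the class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GameDifficulty:
    """Per-game difficulty tracker (hypothetical names)."""
    level: int = 1
    max_level: int = 10      # the text reports 10 to 16 levels depending on the game
    wins_in_row: int = 0
    losses_in_row: int = 0

    def record(self, success: bool) -> int:
        """Update the streaks with one game outcome and return the next level."""
        if success:
            self.wins_in_row += 1
            self.losses_in_row = 0
            if self.wins_in_row >= 3:
                self.level = min(self.level + 1, self.max_level)
                self.wins_in_row = 0   # assumption: streak restarts after a change
        else:
            self.losses_in_row += 1
            self.wins_in_row = 0
            if self.losses_in_row >= 2:
                self.level = max(self.level - 1, 1)
                self.losses_in_row = 0  # assumption: streak restarts after a change
        return self.level


# One tracker per game, so games in the same session can sit at different levels.
levels = {"Breakout 3D": GameDifficulty(level=4, max_level=16),
          "Kites": GameDifficulty(level=2, max_level=10)}
for outcome in (True, True, True):
    levels["Breakout 3D"].record(outcome)
print(levels["Breakout 3D"].level, levels["Kites"].level)
```

Keeping one tracker per game reflects the statement that games played in a given session were not all of the same difficulty.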

Participants in the usability evaluation

This study received initial human subject approval from the Western Institutional Review Board (WIRB). Between September and December 2018, 5 participants were consented to take part in the usability evaluation of a BAC system. One participant withdrew from the study due to scheduling conflicts and 4 completed it at the Bright Cloud International Research Laboratory (North Brunswick, NJ, USA). The participants’ characteristics are shown in Table 1. They were in general good health, and had no prior stroke or any symptoms of cognitive, emotional, or physical dysfunction. Participants’ ages ranged from 57 to 67 years, they were all English speakers, each had 18 years of formal education, and they were computer literate. Three of the participants were female Caucasians and one was an Asian male. Each participant signed an informed consent prior to data collection and was compensated with a payment of $25 at the completion of each usability session. In a survey of methods to recruit participants in usability studies, Sova and Nielsen28 report that the majority of worldwide studies use paid participants. The pay for such participation is reported at $63/hour when external participants were used, substantially larger than the $25/hour in this study. While monetary (cash) payment is universally accepted as a way to facilitate recruitment in usability studies, the much smaller compensation provided here was aimed at mitigating bias. Bias was further reduced by recruiting exclusively external participants, rather than using employees as evaluators.

Table 1.

Usability study participants demographics. © Bright Cloud International Corp. Reprinted by permission.

| Participant | Sex (M/F) | Age (years) | Race | Education (years) | Dominant arm | Primary language | Computer literacy |
|---|---|---|---|---|---|---|---|
| 1 | F | 67 | White | 18 | Right | English | Literate |
| 2 | F | 57 | White | 18 | Left | English | Literate |
| 3 | F | 58 | White | 18 | Right | English | Literate |
| 4 | M | 67 | Asian | 18 | Right | English | Literate |

Data collection instruments

This study followed a formative usability evaluation protocol,29 with data collected at every evaluation session. The sessions collected data on game performance in terms of duration of play, errors, game difficulty level, number of repetitions for arm movements, grasps and finger extensions, as well as intensity of play as repetitions/minute. These data were non-standardized, collected automatically by the BAC system and stored in a local database.

Participants provided feedback by completing the USE questionnaire30 and by answering questions on a custom form. The USE form is a standardized questionnaire which rates the usability of technology on a 7-point Likert scale (1 – least desirable and 7 – most desirable outcome). These questions were designed to ascertain an evaluator’s ability to learn how to use a computer system (in this case the BAC system), perceived level of discomfort, appropriateness of training intensity, and overall satisfaction with the computer system.

The non-standardized questionnaire was a custom form which had been developed by this research team. It consisted of 23 questions, each using a 5-point Likert scale,31 with 1 representing the least desirable outcome and 5 the most desirable one. These questions asked participants to evaluate the appropriateness of BAC games and their difficulty progression, ease of baselining, comfort of game controllers, responsiveness of arm tracking, ability to detect grasping and finger extension, ease of game selection, appropriateness of session length, individual ratings for each of the 10 games tested, as well as the overall rating of the BAC system. Participants were asked to fill out the forms at the end of each usability evaluation session, so as to determine changes in rating once games became harder, sessions became longer, and the table was tilted downwards or upwards from its initial horizontal setting.

Protocol

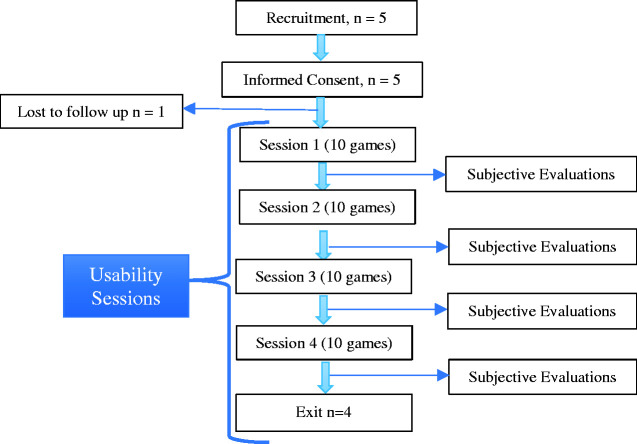

Figure 5 is a flowchart of the usability study protocol which provided for four sessions to be completed within a two-week period. Each participant was to start on a subset of BAC games at their lowest level of difficulty, playing uni-manually during the first session. Interaction mode was then to change to bimanual play, with game difficulty progressively increasing over sessions 2, 3 and 4, according to a pre-determined schedule (Table 2).

Figure 5.

Flowchart diagram of the BrightArm Compact usability study protocol.

© Bright Cloud International Corp. Reprinted by permission

Table 2.

Game difficulty level progression, interaction mode and rehabilitation table settings in the BrightArm Compact usability study. © Bright Cloud International Corp. Reprinted by permission.

| Game name | 1st Session | 2nd Session | 3rd Session | 4th Session |
|---|---|---|---|---|
| Avalanche | 1,2 | 3,4 | 6,7 | 9,10 |
| Breakout 3D | 3,4 | 6,7 | 11,12 | 15,16 |
| Card Island | 3,4 | 6,7 | 11,12 | 15,16 |
| Kites | 3,4 | 6,7 | 11,12 | 15,16 |
| Musical Drums | 3,4 | 6,7 | 11,12 | 15,16 |
| Pick-and-Place | 1,2 | 3,4 | 6,7 | 9,10 |
| Towers of Hanoi | 3,4 | 6,7 | 11,12 | 15,16 |
| Treasure Island | 1,2 | 3,4 | 6,7 | 9,10 |
| Catch 3D | 1,2 | 4,5 | 8,9 | 11,12 |
| Car Race | 1,2 | 4,5 | 8,9 | 11,12 |
| Interaction mode | Uni-manual | Bimanual | Bimanual | Bimanual |
| Table tilt angle | 0° | -10° | 10° | 20° |

Participants were to sit at the BAC table such that their belly touched the inside of the table cutout, and the table height was to be set to ensure comfortable supported movement of their arms (Figure 1(b) and (c)). Table tilt angle was to be kept at 0 degrees in the first session (table horizontal) and at -10 degrees (table tilted distally downwards) in session 2, while sessions 3 and 4 were to have the table tilted up 10 and 20 degrees, respectively. Once set at the start of a session, the table tilt angle was to remain fixed during the remainder of that session.

Subsequent to measures of blood pressure and pulse, participants were to perform horizontal and then vertical baselines for arm reach, as well as baselines for grasping strength, finger extension and arm pronation and supination ranges of motion. Provisions were made so sessions could be interrupted in case of fatigue, without requiring a repeat of the baseline procedure for that session.

During each evaluation session participants were to complete each game at least twice at the difficulty level set for that session. Inability to successfully complete a game, after several attempts, was to trigger a reduction in that game’s level of difficulty. At the end of each session, participants were to provide feedback on the BAC system using the instruments previously described.
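
The pre-determined schedule in Table 2 lends itself to a simple lookup structure. The following Python sketch transcribes the table values; the names `SESSION_SETTINGS`, `GAME_LEVELS` and `plan_for` are hypothetical and are not part of the BAC software. Each pair of numbers is treated as the two difficulty levels listed for that game and session.

```python
# Interaction mode and table tilt (degrees) per session, from Table 2.
SESSION_SETTINGS = {
    1: ("uni-manual", 0),
    2: ("bimanual", -10),
    3: ("bimanual", 10),
    4: ("bimanual", 20),
}

# Difficulty levels listed for sessions 1 to 4; Table 2 uses three progressions.
_SLOW = [(1, 2), (3, 4), (6, 7), (9, 10)]
_MEDIUM = [(1, 2), (4, 5), (8, 9), (11, 12)]
_FAST = [(3, 4), (6, 7), (11, 12), (15, 16)]

GAME_LEVELS = {
    "Avalanche": _SLOW,
    "Pick-and-Place": _SLOW,
    "Treasure Island": _SLOW,
    "Catch 3D": _MEDIUM,
    "Car Race": _MEDIUM,
    "Breakout 3D": _FAST,
    "Card Island": _FAST,
    "Kites": _FAST,
    "Musical Drums": _FAST,
    "Towers of Hanoi": _FAST,
}


def plan_for(session: int, game: str) -> dict:
    """Return the scheduled settings for one game in one session (1 to 4)."""
    mode, tilt_deg = SESSION_SETTINGS[session]
    return {
        "game": game,
        "levels": GAME_LEVELS[game][session - 1],
        "mode": mode,
        "tilt_deg": tilt_deg,
    }


if __name__ == "__main__":
    print(plan_for(3, "Kites"))
```

Storing the schedule as data rather than logic keeps the protocol easy to audit against the table and easy to change between sessions.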

Outcomes

Outcomes when measuring the BAC system usability were participants’ performance when playing the various therapeutic games, as well as the participants’ subjective evaluation of their experience.

Participants’ game performance

The progression in participants’ average game performance over the 4 usability sessions is shown in Table 3. The total game-play duration used to evaluate the system averaged 200 minutes per participant, with each evaluation session averaging 50 minutes. On average participants exceeded 6,300 total arm repetitions, 2,200 total grasps and 2,100 total finger extensions over the evaluation process. Only two of the tested games (Catch 3D and Car Race) induced arm pronation/supination movements. Participants averaged a total of 139 pronation/supination repetitions when playing Catch 3D. Data on similar repetitions during Car Race play are not available.

Table 3.

Game performance outcomes from usability participants using the BrightArm Compact system. © Bright Cloud International Corp. Reprinted by permission.

| Game performance variable | Participant 1 | Participant 2 | Participant 3 | Participant 4 | Average |
|---|---|---|---|---|---|
| Total gameplay duration (minutes) | 136.1 | 226.3 | 187.8 | 251 | 200.3 |
| Total number of arm repetitions | 3577 | 9166 | 4814 | 7973 | 6382.5 |
| Total number of grasps | 1126 | 2098 | 2001 | 3675 | 2225 |
| Total number of finger extensions | 1213 | 2356 | 1793 | 3190 | 2138 |
| Average intensity of training (arm repetitions/minute) | 24.8 | 37.2 | 25.6 | 29.9 | 29.4 |
| Average intensity of training (grasps/minute) | 8.1 | 8.7 | 10.3 | 13.7 | 10.2 |
| Average intensity of training (extensions/minute) | 8.5 | 9.9 | 9.3 | 11.9 | 9.9 |

Game performance data provided additional information on the intensity of training possible on the BAC, namely an average of 29 arm repetitions, 10 grasps, and 10 extensions every minute. The intensity of play is a quantitative variable characterizing motor training on the BAC, and clinically a measure of an individual’s UE endurance and speed of movement.
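
As a hedged illustration of the intensity variable, the sketch below defines intensity as a count divided by minutes of play and recomputes the group averages in Table 3 from the per-participant intensities. The function name `intensity_per_minute` is hypothetical. Dividing a participant’s total count by total duration does not exactly reproduce the tabulated intensities, which suggests they may have been averaged per game or per session; that is an inference, not something stated in the study.

```python
from statistics import fmean


def intensity_per_minute(count: float, minutes: float) -> float:
    """Training intensity as movements per minute of game play."""
    if minutes <= 0:
        raise ValueError("duration must be positive")
    return count / minutes


# Per-participant intensities as reported in Table 3.
reported = {
    "arm repetitions/minute": [24.8, 37.2, 25.6, 29.9],
    "grasps/minute": [8.1, 8.7, 10.3, 13.7],
    "extensions/minute": [8.5, 9.9, 9.3, 11.9],
}

# Group average for each variable, rounded to one decimal as in the table.
group_average = {name: round(fmean(vals), 1) for name, vals in reported.items()}

if __name__ == "__main__":
    for name, value in group_average.items():
        print(f"{name}: {value}")
```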

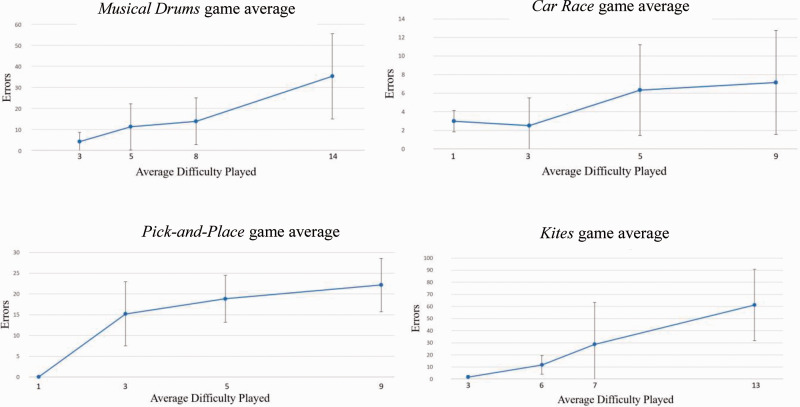

Figure 6 graphs the number of errors during game play as a function of game difficulty for a subset of 4 games used in this usability evaluation (Musical Drums, Car Race, Pick-and-Place and Kites). As a trend, participants made more errors as game difficulty increased. There was no plateau in these graphs, indicative of appropriate cognitive challenge. Figure 6 also shows the standard deviations of errors made by participants, as a function of game and its difficulty. Standard deviations in error rates were a measure of the uniformity of performance among the group. The standard deviation in session 4 (last session) was larger than that for session 2 (mid-point) in three of the four games shown. For Pick-and-Place the standard deviation was smaller in session 4 (played at an average difficulty of 9) than in session 2 (average game difficulty of 3).

Figure 6.

BrightArm Compact usability evaluation group average game errors.

© Bright Cloud International Corp. Reprinted by permission.
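
The group statistics plotted in Figure 6 can be derived from per-play error counts as sketched below. The data are hypothetical and serve only to show the computation; the study’s raw error counts are not given here. The sample standard deviation (`statistics.stdev`) is an assumed choice, since the text does not state whether the sample or population form was used.

```python
from collections import defaultdict
from statistics import mean, stdev


def error_stats_by_level(records):
    """Group (difficulty_level, error_count) pairs by level and return
    {level: (mean_errors, sample_sd)} sorted by level."""
    by_level = defaultdict(list)
    for level, errors in records:
        by_level[level].append(errors)
    stats = {}
    for level, errs in sorted(by_level.items()):
        sd = stdev(errs) if len(errs) > 1 else 0.0  # SD undefined for one play
        stats[level] = (mean(errs), sd)
    return stats


# Hypothetical error counts for four participants at two difficulty levels.
sample = [(3, 2), (3, 4), (3, 4), (3, 6), (9, 5), (9, 9), (9, 7), (9, 11)]
stats = error_stats_by_level(sample)

if __name__ == "__main__":
    for level, (avg, sd) in stats.items():
        print(f"level {level}: mean errors {avg}, SD {sd:.2f}")
```

A larger standard deviation at a given level indicates less uniform performance across the group, which is how the text interprets the error bars.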

Participants’ feedback

BAC evaluation with the USE form

Participants’ USE questionnaire ratings are shown in Table 4. The average rating score was 5.1/7 indicating that the participants agreed with the usefulness, ease of use, ease of learning, and satisfaction with the system. The lowest rating (3.3) was for the statement “I can use it without written instructions,” followed by “I don’t notice any inconsistencies as I use it” (3.8) and “I can use it successfully every time” (3.8). The highest rating (6.7) was given for the statement “It is pleasant to use,” followed by “I would recommend it to a friend” (6.5) and “It is wonderful” (6.3). In their critical comments on the USE form participants wrote “Somewhat hard to grasp,” “Extension needed for small hands,” “Finger extension hard at times,” “Side-to-side motion lacked responsiveness, did not feel in total control.” A positive comment was “Fun games - you don’t think you’re in therapy.”

Table 4.

Responses given by participants on the USE Questionnaire when rating the usability of the BrightArm Compact system (1 – least desirable, 7 – most desirable outcome). © Bright Cloud International Corp. Reprinted by permission.

| Number | Question | Participant 1 | Participant 2 | Participant 3 | Participant 4 | Question average |
|---|---|---|---|---|---|---|
| 1 | It helps me be more effective | 5 | 7 | 5 | 5.7 | |
| 2 | It helps me be more productive | 5 | 3 | 7 | 6 | 5.3 |
| 3 | It is useful | 7 | 4 | 7 | 6 | 6.0 |
| 4 | It gives me more control over activities in my life | 5 | 4 | 7 | 5 | 5.3 |
| 5 | It makes the things I want to accomplish easier to get done | 5 | 4 | 7 | 4 | 5.0 |
| 6 | It saves me time when I use it | 5 | N/A | 5 | 5.0 | |
| 7 | It meets my needs | 6 | 4 | 7 | 5 | 5.5 |
| 8 | It does everything I would expect it to do | 6 | 4 | 7 | 5 | 5.5 |
| 9 | It is easy to use | 4 | 5 | 7 | 6 | 5.5 |
| 10 | It is simple to use | 3 | 3 | 7 | 6 | 4.8 |
| 11 | It is user friendly | 3 | 4 | 7 | 6 | 5.0 |
| 12 | It requires the fewest steps possible to accomplish what I want to do | 4 | 5 | 6 | 6 | 5.3 |
| 13 | It is flexible | 3 | 5 | 5 | 6 | 4.8 |
| 14 | Using it is effortless | 3 | 5 | 3 | 6 | 4.3 |
| 15 | I can use it without written instructions | 1 | 3 | 6 | 3.3 | |
| 16 | I don’t notice any inconsistencies as I use it | 3 | 2 | 4 | 6 | 3.8 |
| 17 | Both occasional and regular users would like it | 4 | 4 | 7 | 6 | 5.3 |
| 18 | I can recover from mistakes quickly and easily | 5 | 3 | 2 | 6 | 4.0 |
| 19 | I can use it successfully every time | 5 | 2 | 2 | 6 | 3.8 |
| 20 | I learned to use it quickly | 4 | 2 | 7 | 6 | 4.8 |
| 21 | I easily remembered how to use it | 4 | 2 | 5 | 6 | 4.3 |
| 22 | It is easy to learn to use it | 4 | 3 | 7 | 6 | 5.0 |
| 23 | I quickly became skillful with it | 4 | 3 | 7 | 6 | 5.0 |
| 24 | I am satisfied with it | 5 | 6 | 7 | 6 | 6.0 |
| 25 | I would recommend it to a friend | 7 | 6 | 7 | 6.5 | 6.5 |
| 26 | It is fun to use | 7 | 4 | 7 | 6 | 6.0 |
| 27 | It works the way I want it to work | 6 | 3 | 6 | 6 | 5.3 |
| 28 | It is wonderful | 6 | 7 | 6 | 6.3 | |
| 29 | I feel I need to have it | 5 | 3 | N/A | 6 | 4.7 |
| 30 | It is pleasant to use | 7 | N/A | 7 | 6 | 6.7 |
| | Average of all scores per participant and overall score | 4.7 | 3.7 | 6.1 | 5.8 | 5.1 (7-max) |
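
The per-question averages in Table 4 are consistent with averaging only the ratings that were actually given, skipping N/A responses. The short Python sketch below illustrates that computation for three rows whose four cells are unambiguous; the helper name `likert_mean` is hypothetical, and treating N/A as excluded (rather than as zero) is an assumption inferred from the tabulated values.

```python
from statistics import mean


def likert_mean(scores):
    """Average the answered Likert ratings, ignoring N/A (None) entries.
    Returns None when no participant answered."""
    answered = [s for s in scores if s is not None]
    if not answered:
        return None
    return mean(answered)


# Ratings by Participants 1 to 4, transcribed from Table 4 (None = N/A).
use_rows = {
    "It is useful": [7, 4, 7, 6],
    "I feel I need to have it": [5, 3, None, 6],
    "It is pleasant to use": [7, None, 7, 6],
}

if __name__ == "__main__":
    for question, scores in use_rows.items():
        print(question, round(likert_mean(scores), 1))
```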

BAC usability ratings using custom evaluation form

Participants’ ratings using the custom evaluation form are shown in Table 5. Each participant’s score for a question is the average of the 4 scores that question received from that participant over the 4 evaluation sessions. When all ratings were averaged, the overall usability score for the BAC system was 4.2/5 (or 84%).

Table 5.

Subjective evaluation average scores from usability study over four sessions (1: least desirable, 5: most desirable outcome). Each participant submitted one feedback form per session. © Bright Cloud International Corp. Reprinted by permission.

| Number | Question | Participant 1 | Participant 2 | Participant 3 | Participant 4 | Question Avg. Score |
|---|---|---|---|---|---|---|
| 1 | Baseline | 3.7 | 4.5 | 5.0 | 4.8 | 4.5 |
| 2 | Game selection interface | 4.5 | 4.3 | 5.0 | 5.0 | 4.7 |
| 3 | 3D hand controller use | 3.8 | 3.8 | 3.5 | 3.5 | 3.6 |
| 4 | Chair or screen positioning | 3.5 | 4.3 | 5.0 | 4.3 | 4.3 |
| 5 | Grasping responsiveness | 3.3 | 3.5 | 3.3 | 3.8 | 3.4 |
| 6 | Detection of finger extension | 3.3 | 3.5 | 3.8 | 3.8 | 3.6 |
| 7 | Level of noise when moving | 4.8 | 4.5 | 5.0 | 4.3 | 4.6 |
| 8 | Progression of game difficulty | 4.8 | 4.3 | 4.8 | 4.5 | 4.6 |
| 9 | Weight of controller | 4.0 | 4.3 | 3.5 | 4.0 | 3.9 |
| 10 | Length of therapy | 4.5 | 4.0 | 5.0 | 4.5 | 4.5 |
| 11 | Tracker delay | 3.8 | 3.5 | 4.5 | 3.8 | 3.9 |
| 12 | Table tilting | 5.0 | 4.3 | 5.0 | 4.3 | 4.6 |
| 13 | Overall system | 4.5 | 4.0 | 5.0 | 4.3 | 4.4 |
| 14 | Breakout 3D game | 3.3 | 4.3 | 5.0 | 4.5 | 4.3 |
| 15 | Avalanche game | 3.0 | 4.0 | 3.8 | 4.5 | 3.8 |
| 16 | Card Island game | 3.8 | 4.0 | 5.0 | 4.8 | 4.4 |
| 17 | Musical Drums game | 3.3 | 2.7 | 3.0 | 4.5 | 3.4 |
| 18 | Pick-and-Place game | 4.0 | 4.0 | 5.0 | 4.8 | 4.4 |
| 19 | Towers of Hanoi game | 4.3 | 4.0 | 5.0 | 4.8 | 4.5 |
| 20 | Treasure Island game | 4.5 | 4.3 | 5.0 | 4.5 | 4.6 |
| 21 | Catch 3D game | 4.7 | 4.0 | 5.0 | 4.5 | 4.5 |
| 22 | Car Race game | 4.0 | 3.5 | 4.8 | 4.7 | 4.2 |
| 23 | Kites game | 4.5 | 3.5 | 3.5 | 4.0 | 3.9 |
| | Average score for all questions and overall score | 4.0 | 3.9 | 4.5 | 4.3 | 4.2 (5-max) |
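
The overall score of 4.2/5 and its 84% equivalent can be checked from the question averages in Table 5. The Python sketch below is only an arithmetic illustration; the variable names are hypothetical, and averaging the 23 rounded per-question scores (rather than the underlying raw ratings, which are not listed) is an assumption.

```python
from statistics import fmean

# "Question Avg. Score" column of Table 5, questions 1 to 23.
question_avg = [
    4.5, 4.7, 3.6, 4.3, 3.4, 3.6, 4.6, 4.6, 3.9, 4.5, 3.9, 4.6,
    4.4, 4.3, 3.8, 4.4, 3.4, 4.4, 4.5, 4.6, 4.5, 4.2, 3.9,
]

SCALE_MAX = 5  # 5-point Likert scale used by the custom form

overall = round(fmean(question_avg), 1)              # overall usability score
percent_of_max = round(100 * overall / SCALE_MAX)    # expressed as a percentage

if __name__ == "__main__":
    print(f"overall score: {overall}/{SCALE_MAX} ({percent_of_max}%)")
```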

The lowest ratings were for the responsiveness of the BBG grasping detection and for the Musical Drums game (both with a score of 3.4). These were followed by “3D Hand Controller Use” and “Detection of Finger Extension” (both with 3.6), and by the Avalanche game (3.8). The highest rating was for the Game Selection Interface (4.7), followed by the “Level of Noise when Moving” (while supported by table), “Progression of Game Difficulty,” “Table Tilting,” and the Treasure Island game, all rated at 4.6/5.

The comments provided by each participant over the 4 custom rating forms changed with the composition and difficulty of each session and with the table tilt angle setting. Participant 1 in her first session found “the instructions seemed complicated and non-intuitive,” although she wrote “Some games had ‘reminders’ e.g. extend that were helpful.” She thought the screen needed to be lowered as she complained that “my neck is killing me.” In her second session, when the work table surface had been tilted downwards, Participant 1 commented about the baseline stating “The instructions said to make as big a circle as you can. I didn’t but was still pulled way forward sometimes. Was almost pulled forward off the table at one point (circle too big?)” In her third session Participant 1 thought the screen positioning was good, with the chair reclined back, and gave it a perfect score (5). In that same session, when rating the Progression of Game Difficulty with a perfect 5, she commented “Nice new features at higher levels.” In her last session (the hardest), when rating the same question with a 4, she wrote “Most were good, a few got diabolical.”

Participant 2 in her first session, while sitting all the way in against the table, wrote that she needed “something more comfortable on the lumbar spine.” However, in her last session this participant found the chair and screen position “always comfortable.” When first playing Avalanche Participant 2 commented that she needed “A little more explanation of what you are breaking the ice to get to.” In Session 3 she wrote “When I finally get how to break the ice, game is over,” but in her 4th session she wrote “Good game, difficult in the end.” In her first Car Race session Participant 2 felt dizzy at times (“I get a bit dizzy when changing lanes”); however, in the second session she “did not get dizzy this time.” Participant 2 really liked the Towers of Hanoi, which trained executive functions (decision making). She wrote “I like (that) it’s a brain teaser too. Good for dementia/forgetfulness” (session 1), “Makes me think (but) I do not like the timer” (session 3), and in the hardest session 4, “Loved this. Wish I could complete it before time runs out.”

Participant 3 in her first session rated the 3D Hand Controller Use as a 3 (neutral), and noted “Not as responsive as I would like.” In session 3 she rated Musical Drums with a 2, noting that “(it is) hard to do two sides at once” when she was playing the game bimanually. Similarly she rated Kites with a 2, noting “hard to not hit controllers when trying to move.” In the same session she rated the Towers of Hanoi game excellent (5) writing “makes you think.” In the last session she felt she did better with the tilted table, but had problems with the hand controllers, noting “wasn’t going where I wanted it to.” Nonetheless in her final comments Participant 3 wrote “Whole concept is excellent, really makes your mind work trying to get your hands to move the way you want.”

Participant 4 in his first session noted that “games were very good,” but thought that “Response of the arm needs to be improved.” There were no negative observations from this participant in subsequent sessions.

Discussion

The BrightArm Compact rehabilitation system described here improved over its BrightArm Duo predecessor in compactness, better control of its table height and tilt, as well as better tracking of UE movements. Its BBG therapeutic game controllers improved in functionality over the Duo forearm supports, by adding the ability to detect global finger extension, as well as to pronate/supinate the supported arm.

The added finger extension and pronation/supination measurements meant new baselines had to be done on the affected and unaffected UEs, so as to accommodate bimanual play during bilateral training. These were added to the existing baselines for grasping, as well as arm horizontal and arm vertical reach. While baselines were needed to customize games to each patient’s abilities each session, they could be an issue in clinical practice where time is of the essence. To address this issue for the BAC, both UEs were baselined simultaneously for grasping, for finger extension, for pronation, and for supination.

Length of baseline is only one of the potential barriers to adoption of new technology in clinical care. Langhan and colleagues32 interviewed 19 physicians and nurses within 10 emergency departments. They concluded that barriers to adoption included infrequent use, perceived complexity of the device, resistance to change, learner fatigue, and anxiety related to performance among staff. As discussed at the American Congress of Rehabilitation Medicine,33 the largest gathering of rehabilitation researchers and practitioners in the world, flaws in equipment design, complexity of graphics scenes, and reluctance of therapists to learn anything new significantly hamper the introduction of new technology. Patients are the ones who suffer as a consequence, by receiving suboptimal care.

The graphics scenes of BAC games, while somewhat complex, were not perceived as overwhelming by the participants, as seen in their system evaluations. This was somewhat expected since these participants were cognitively intact, thus had better processing speed than someone with cognitive disabilities.34 It is possible that those with Alzheimer’s disease would have been overwhelmed by the graphics, especially for higher levels of difficulty. However, in the authors’ experience, even those with stage II or III Alzheimer’s Disease were able to play and enjoy BrightArm games,35 as long as they were assisted by staff.

One BAC evaluation participant, however, complained about dizziness when playing the Car Race game. Dizziness is a symptom of simulation sickness,36 known to be associated with VR simulations. The Car Race game was one of two therapeutic games in which the camera view moved through the scene. This created a sensory conflict between visual feedback indicating motion and the participant’s proprioceptive system indicating lack of motion (sitting in a chair).

Game task difficulty was constantly increased to challenge the participants, and error rates did not plateau. As seen in Figure 6, error rates continued to increase with increased game difficulty. Had these rates plateaued, it would have been indicative of games that were either too easy or too difficult for the participants to play.37

Other robotic rehabilitation tables exist in clinical use, such as the Bi-Manu-Track.38 Its shape resembles the BAC in its center cutout, while the table is only horizontal, and bimanual training is for pronation/supination and for finger flexion/extension. While the Bi-Manu-Track is less engaging since it does not have a VR component, its electrical actuators allow active/passive training of the impaired arms, while the BAC allows active training only.

The Gloreha Workstation Plus (Gloreha, Italy) has a table cutout and provides unilateral training using integrative games, similar to the games used in this study.39 Unlike the BAC, however, the affected arm is gravity supported in a mechanical arm, and tracking of wrist movement is done using a LEAP Motion tracker.40 LEAP Motion trackers have a relatively small range, which would not have allowed the larger 3D arm movements of the BAC (when unsupported). Assisted grasping is possible with the Gloreha rehabilitation glove, which uses compressed air to close fingers during functional grasping of real objects.

While all participants in the current study were age-matched to envisioned end users, thought to be elderly impaired individuals, one study limitation was that all participants were healthy. The decision to use healthy participants stemmed from the need to test the technology first on able-bodied individuals, so as to uncover obvious issues and to have a more uniform evaluation population. Stroke survivors, while more representative of the device’s intended use, are also more heterogeneous in their impairments.

Another limitation of the study was its relatively small number of participants. Cost and logistics of large-scale recruiting prevented a large n, which in turn resulted in more heterogeneity among the recruited participants. Within medical device usability studies participant counts are typically smaller than for studies of new drugs. As an example, Pei and colleagues41 in their usability study of a robotic table using bimanual training enrolled 12 participants, of whom only 4 were patients post-stroke, 4 were caregivers and 4 were therapists. Prior to testing on patients, they had pre-tested the rehabilitation table prototype on 5 healthy individuals.

Conclusions

The BAC usability study presented here is the first clinical trial of the novel BrightArm Compact system. Subsequently, two more studies were conducted. One was a feasibility case series with two subjects who were in the early sub-acute phase post-stroke and inpatients at a local rehabilitation facility.42 These patients underwent 12 training sessions on the BAC over three weeks in addition to the standard of care they were receiving. The participants were able to attain between 250 and close to 500 arm repetitions per session, which illustrates the intensity of training possible with the BAC. This training intensity, combined with standard of care, resulted in marked improvements in the affected shoulder strength of 225% and 100%, respectively. Interestingly, elbow active supination, which typically recovers later in a patient’s progression, became larger by 75% and 58%, respectively. Motor function improved above Minimal Clinically Important Differences (MCIDs) when assessed with standardized measures (Fugl-Meyer Assessment,43 Chedoke Inventory44 and Upper Extremity Functional Index45). Just as important for technology acceptance, each of the two therapists involved in the study rated the ease of learning how to use the BAC system 4 out of 5.

A second BAC clinical study was a randomized controlled trial (RCT) of stroke survivors in the acute phase, who were first inpatients and then outpatients at a stroke hospital in New Jersey, USA. Results from this subsequent study are being analyzed at the time of this writing and will be presented elsewhere.

Supplemental Material

Supplemental material, sj-pdf-1-jrt-10.1177_20556683211012885 through sj-pdf-6-jrt-10.1177_20556683211012885, for Novel integrative rehabilitation system for the upper extremity: Design and usability evaluation by Grigore Burdea, Nam Kim, Kevin Polistico, Ashwin Kadaru, Doru Roll and Namrata Grampurohit in Journal of Rehabilitation and Assistive Technologies Engineering

Acknowledgements

The authors gratefully acknowledge the contribution of Team Design Group, which manufactured the prototype BrightArm Compact system described here.

Declaration of conflicting interests: The author(s) declared the following potential conflicts of interest with respect to the research, authorship, and/or publication of this article: Dr. Burdea is principal shareholder of Bright Cloud International, the company which developed the medical device in this study.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This research was funded by NIH grant R44AG044639.

Guarantor: Grigore C. Burdea PhD.

Contributorship: Grigore Burdea contributed to the mechanical design and therapeutic game concepts. He interacted with WIRB for approval of the study, co-analyzed data and wrote this manuscript. Nam Kim PhD contributed to the mechanical design together with Doru Roll. Dr. Kim also did data analysis and contributed to this manuscript.

Kevin Polistico and Ashwin Kadaru did software development, and data collection during the usability evaluation.

Namrata Grampurohit OT PhD advised on therapeutic game design, performed data analysis and contributed to this manuscript.

ORCID iD: Grigore Burdea https://orcid.org/0000-0001-5429-054X

References

- 1.Centers for Disease Control and Prevention. Division of Heart Disease and Stroke Prevention: at a glance, www.cdc.gov/chronicdisease/pdf/aag/dhdsp-H.pdf. (2018, accessed 27 April, 2021).

- 2.Yang Q, Tong X, Schieb L, et al. Vital signs: Recent trends in stroke death rates – United States 2000–2015. Mmwr Morb Mortal Wkly Rep 2017; 66: 933–939. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Benjamin EJ, Blaha MJ, Chiuve SE, et al. Heart disease and stroke statistics-2017 update: a report from the American Heart Association. Circulation 2017; 135: e146-603. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Rosamond W, Flegal F, Friday G, et al. Heart disease and stroke statistics – 2007 update: a report from the American Heart Association statistics committee and stroke statistics subcommittee. Circulation 2007; 115: e69–e171. [DOI] [PubMed] [Google Scholar]

- 5.Robinson RG, Spalletta G.Poststroke depression: a review. Can J Psychiatry 2010; 55: 341–349. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Raghavan P.Upper limb motor impairment post stroke. Phys Med Rehabil Clin N Am 2015; 26: 599–610. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Mullick AA, Sandeep K, Subramanian SK.Emerging evidence of the association between cognitive deficits and arm motor recovery after stroke: a meta-analysis. RNN 2015; 33: 389–403. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Cauraugh JH, Lodha H, Naik SK, et al. Bilateral movement training and stroke motor recovery progress: a structured review and meta-analysis. Human Move Sci 2010; 29: 853–870. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Ausenda C, Carnovali M.Transfer of motor skill learning from the healthy hand to the paretic hand in stroke patients: a randomized controlled trial. Eur J Phys Rehabil Med 2011; 47: 417–425. [PubMed] [Google Scholar]

- 10.Laver KE, George S, Thomas S, et al. Virtual reality for stroke rehabilitation. Cochrane Datab Syst Rev2017; 1.

- 11.Laut J, Porfiri M, Raghavan P.The present and future of robotic technology in rehabilitation. Curr Phys Med Rehabil Rep 2016; 4: 312–319. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Roderick S, Carignan C. Designing safety-critical rehabilitation robots (Chapter 4). In: Sashi S and Kommu, et al. Rehabilitation Robotics. Vienna, Austria: Itech Education and Publishing, 2007, pp. 648–670.

- 13.Choi HS, Shin WS, Bang DH, et al. Effects of game-based constraint-induced movement therapy on balance in patients with stroke: a single-blind randomized controlled trial. Am J Phys Med Rehabil 2017; 96: 184–190.

- 14.Burdea G, Polistico K, Grampurohit N, et al. Concurrent virtual rehabilitation of service members post-acquired brain injury – a randomized clinical study. In: Proc. 12th ICDVRAT, University of Nottingham, England, 4–6 September 2018.

- 15.Zeiler SR, Krakauer JW. The interaction between training and plasticity in the poststroke brain. Curr Opin Neurol 2013; 26: 609–616.

- 16.Holden M. Virtual environments for motor rehabilitation: review. Cyberpsychol Behav 2005; 8: 187–211.

- 17.Vanderniepen R, Van Ham J, Naudet M, et al. Novel compliant actuator for safe and ergonomic rehabilitation robots – design of a powered elbow orthosis. In: IEEE 10th international conference on rehabilitation robotics, Noordwijk, Netherlands, 13–15 June 2007. pp.790–797, Piscataway: IEEE.

- 18.Rabin B, Burdea G, Roll D, et al. Integrative rehabilitation of elderly stroke survivors: the design and evaluation of the BrightArm. Disabil Rehab – Assist Tech 2012; 7: 323–335.

- 19.House G, Burdea G, Polistico K, et al. Integrative rehabilitation of stroke survivors in skilled nursing facilities: the design and evaluation of the BrightArm duo. Disabil Rehab – Assist Tech 2016; 11: 683–694.

- 20.House G, Burdea G, Polistico K, et al. BrightArm duo integrative rehabilitation for post-stroke maintenance in skilled nursing facilities. In: Proc. int. conf. virtual rehabilitation, Valencia, Spain, 11–12 June 2015. pp.217–214. Piscataway, NJ, USA: IEEE.

- 21.Cipresso P, Chicchi Giglioli IA, Alcañiz Raya M, et al. The past, present, and future of virtual and augmented reality research: a network and cluster analysis of the literature. Front Psychol 2018; 9: 20.

- 22.Burdea G, Kim N, Polistico K, et al. Assistive game controller for artificial intelligence-enhanced telerehabilitation post-stroke. Assist Tech 2019; 31: 1–12.

- 23.Bhattacharjee S, Al Yami M, Kurdi S, et al. Prevalence, patterns and predictors of depression treatment among community-dwelling older adults with stroke in the United States: a cross sectional study. BMC Psychiatry 2018; 18: 130.

- 24.Burdea G, Polistico K, Kim N, et al. Novel therapeutic game controller for telerehabilitation of spastic hands: two case studies. In: Proc. 13th Int. Conf. Virtual Rehabilitation 2019, Tel Aviv, Israel, 21–24 July 2019. 8 pp. Piscataway, NJ, USA: IEEE.

- 25.Lohse K, Shirzad N, Verster A, et al. Video games and rehabilitation: using design principles to enhance engagement in physical therapy. J Neurol Physiother 2013; 37: 166–175.

- 26.Burdea G, Polistico K, House G, et al. Novel integrative virtual rehabilitation reduces symptomatology in primary progressive aphasia – a case study. Int J Neurosci 2015; 125: 949–958. PMID 25485610

- 27.House G, Burdea G, Polistico K, et al. A rehabilitation first – nursing home tournament between teams of chronic post-stroke residents. Games for Health 2016; 5: 75–83.

- 28.Hinderer SD, Nielsen J. 234 tips and tricks for recruiting users as participants in usability studies. Fremont, CA: Nielsen Norman Group, 2003.

- 29.Abts N. The case for formative human factors testing. Med Device Online, 4 October 2016, www.meddeviceonline.com/doc/the-case-for-formative-human-factors-testing-0001 (accessed 27 April 2021).

- 30.Lund AM. Measuring usability with the USE questionnaire. STC Usability SIG Newsletter 2001; 8: 2.

- 31.Sullivan GM, Artino AR. Analyzing and interpreting data from Likert-Type scales. J Grad Med Ed 2013; 5: 541–542.

- 32.Langhan ML, Riera A, Kurtz JC, et al. Implementation of newly adopted technology in acute care settings: a qualitative analysis of clinical staff. J Med Eng Technol 2015; 39: 44–53.

- 33.American Congress on Rehabilitation Medicine. The shower bench in the closet: end user acceptance and uptake of rehabilitation technology (600484). Instructional Course. Chicago: Author, 2019.

- 34.Bonnechère B, Van Vooren M, Bier J-C, et al. The use of mobile games to assess cognitive function of elderly with and without cognitive impairment. JAD 2018; 64: 1285–1293.

- 35.Burdea G, Polistico K, Krishnamoorthy A, et al. Feasibility study of the BrightBrainer™ integrative cognitive rehabilitation system for elderly with dementia. Disabil Rehabil Assist Technol 2015; 10: 421–432.

- 36.Ihemedu-Steinke QC, Rangelova S, Weber M, et al. Simulation sickness related to virtual reality driving simulation. In: Proc int conf virtual, augmented and mixed reality, Osaka, Japan, 2017. pp 521–532. Berlin Heidelberg: Springer-Verlag.

- 37.Burdea G, Coiffet P. Virtual reality technology. 2nd ed. Hoboken, NJ: John-Wiley & Sons, 2003.

- 38.Hasomed Solutions for Neurological Rehabilitation, www.hasomed.de/en/home.html (accessed 27 April 2021).

- 39.Gloreha. Gloreha Workstation Plus. Product brochure, www.gloreha.com/ (2018, accessed 22 April 2021).

- 40.Bachmann D, Weichert F, Rinkenauer G. Review of three-dimensional human-computer interaction with focus on the leap motion controller. Sensors (Basel) 2018; 18: 2194.

- 41.Pei Y-C, Chen J-L, Wong AMK, et al. An evaluation of the design and usability of a novel robotic bilateral arm rehabilitation device for patients with stroke. Front Neurorobot 2017; 11: 36.

- 42.Burdea G, Kim N, Polistico K, et al. Robotic Table and Serious Games for Integrative Rehabilitation Early Post-Stroke: Two Case Reports. J Rehab Assist Tech. JRAT 26990 Under revision.

- 43.Gladstone DJ, Danells CJ, Black SE. The Fugl-Meyer assessment of motor recovery after stroke: a critical review of its measurement properties. Neurorehabil Neural Repair 2002; 16: 232–240.

- 44.Barreca SR, Stratford PW, Lambert CL, et al. Test-retest reliability, validity, and sensitivity of the Chedoke arm and hand activity inventory: a new measure of upper-limb function for survivors of stroke. Arch Phys Med Rehabil 2005; 86: 1616–1622.

- 45.Chesworth BM, Hamilton CB, Walton DM, et al. Reliability and validity of two versions of the upper extremity functional index. Physiother Canada 2014; 66: 243–253.

Supplementary Materials

Supplemental material, sj-pdf-1-jrt-10.1177_20556683211012885 for Novel integrative rehabilitation system for the upper extremity: Design and usability evaluation by Grigore Burdea, Nam Kim, Kevin Polistico, Ashwin Kadaru, Doru Roll and Namrata Grampurohit in Journal of Rehabilitation and Assistive Technologies Engineering

Supplemental material, sj-pdf-2-jrt-10.1177_20556683211012885 for Novel integrative rehabilitation system for the upper extremity: Design and usability evaluation by Grigore Burdea, Nam Kim, Kevin Polistico, Ashwin Kadaru, Doru Roll and Namrata Grampurohit in Journal of Rehabilitation and Assistive Technologies Engineering

Supplemental material, sj-pdf-3-jrt-10.1177_20556683211012885 for Novel integrative rehabilitation system for the upper extremity: Design and usability evaluation by Grigore Burdea, Nam Kim, Kevin Polistico, Ashwin Kadaru, Doru Roll and Namrata Grampurohit in Journal of Rehabilitation and Assistive Technologies Engineering

Supplemental material, sj-pdf-4-jrt-10.1177_20556683211012885 for Novel integrative rehabilitation system for the upper extremity: Design and usability evaluation by Grigore Burdea, Nam Kim, Kevin Polistico, Ashwin Kadaru, Doru Roll and Namrata Grampurohit in Journal of Rehabilitation and Assistive Technologies Engineering

Supplemental material, sj-pdf-5-jrt-10.1177_20556683211012885 for Novel integrative rehabilitation system for the upper extremity: Design and usability evaluation by Grigore Burdea, Nam Kim, Kevin Polistico, Ashwin Kadaru, Doru Roll and Namrata Grampurohit in Journal of Rehabilitation and Assistive Technologies Engineering

Supplemental material, sj-pdf-6-jrt-10.1177_20556683211012885 for Novel integrative rehabilitation system for the upper extremity: Design and usability evaluation by Grigore Burdea, Nam Kim, Kevin Polistico, Ashwin Kadaru, Doru Roll and Namrata Grampurohit in Journal of Rehabilitation and Assistive Technologies Engineering