Abstract

BACKGROUND/OBJECTIVES:

No data exist regarding the validity of International Classification of Diseases, 10th Revision (ICD-10) dementia diagnoses against a clinician-adjudicated reference standard within Medicare claims data. We examined the accuracy of claims-based diagnoses with respect to expert clinician adjudication using a novel database with individual-level linkages between electronic health record (EHR) and claims data.

DESIGN:

In this retrospective observational study, two neurologists and two psychiatrists performed a standardized review of patients’ medical records from January 2016 to December 2018 and adjudicated dementia status. We measured the accuracy of three claims-based definitions of dementia against this reference standard.

SETTING:

Mass-General-Brigham Healthcare (MGB), Massachusetts, USA.

PARTICIPANTS:

From an eligible population of 40,690 fee-for-service (FFS) Medicare beneficiaries aged 65 years and older within the MGB Accountable Care Organization (ACO), we generated a random sample of 1,002 patients, stratified by the pretest likelihood of dementia using administrative surrogates.

INTERVENTION:

None.

MEASUREMENTS:

We evaluated the accuracy (area under the receiver operating characteristic curve [AUROC]) and calibration (calibration-in-the-large [CITL] and calibration slope) of three ICD-10 claims-based definitions of dementia against clinician-adjudicated standards. We applied inverse probability weighting to reconstruct the eligible population and reported the mean and 95% confidence interval (95% CI) for all performance characteristics, using 10-fold cross-validation (CV).

RESULTS:

Beneficiaries had an average age of 75.3 years and were predominantly female (59%) and non-Hispanic white (93%). The adjudicated prevalence of dementia in the eligible population was 7%. The best-performing definition demonstrated excellent accuracy (CV-AUC 0.94; 95% CI 0.92-0.96) and was well calibrated to the reference standard of clinician-adjudicated dementia (CV-CITL <0.001, CV-slope 0.97).

CONCLUSION:

This study is the first to validate ICD-10 diagnostic codes against a robust and replicable approach to dementia ascertainment, using a real-world clinical reference standard. The best performing definition includes diagnostic codes with strong face validity and outperforms an updated version of a previously validated ICD-9 definition of dementia.

Keywords: Dementia, Dementia Prevalence, Medicare Claims, Electronic Health Record, Validation

INTRODUCTION

Dementia affects an estimated 5.7 million Americans and poses a substantial challenge to the Medicare program and the broader United States healthcare system.1 The care of persons with dementia is far costlier than for those without dementia, due to greater use of both acute- and long-term care services.2,3 Because dementia disproportionately affects older adults, its associated morbidity, mortality, and costs are projected to increase with population aging.3,4 Informed policy and population health management are contingent upon the reliable ascertainment of disease status and healthcare utilization for persons with dementia.5-7

Previous studies have examined Medicare claims as a valuable, albeit imperfect, source of information for determining dementia status and per capita costs.5,6,8 Specifically, studies have assessed the accuracy of dementia-related diagnoses using the 9th revision of the International Classification of Diseases (ICD-9) coding system against reference standards of variable quality.9-12 The most robust of these validation studies indicated favorable sensitivity (0.85) and specificity (0.95) of Medicare claims for dementia, as diagnosed by the Aging, Demographics, and Memory Study (ADAMS) clinical assessments within the broader Health and Retirement Study.5

The applicability of these findings, however, is constrained by inclusion of diagnostic codes that do not maintain fidelity to clinical diagnostic criteria for dementia when mapped onto ICD-10 equivalents.5 For example, several of the ICD-9 diagnostic codes used in previously validated claims-based dementia definitions correspond to ICD-10 equivalents that represent reversible etiologies of altered mental status. More recent validation studies within longitudinal population health surveys have used reference standards that exclude important diagnostic information (e.g., functional status) for self-respondents and, in head-to-head comparisons, demonstrate excellent specificity but underperform alternative classification schemes with respect to sensitivity and accuracy among proxy-respondents.13-15

A reexamination of the validity of Medicare claims data in dementia ascertainment is timely. Medicare transitioned from the 9th to the 10th ICD revision in 2015; the 10th revision provides an updated and more granular set of diagnostic codes intended to improve the classification of disease.16 Contemporaneous policy changes, especially the reinstatement of dementia within the Medicare Advantage risk-adjustment criteria, may influence the documentation of dementia diagnoses.17,18 Clinical cognitive screening practices may have evolved concurrently, as suggested by greater parity between claims-derived diagnoses and validated reference standards over time.12

In this manuscript, we examine the accuracy of ICD-10 diagnostic codes in ascertaining dementia status using a novel longitudinal dataset that includes person-level electronic health record (EHR) data for a well-defined population, combined with Medicare insurance claims for all inpatient and outpatient services. We build upon prior studies examining the accuracy of ICD-9 diagnosis codes in ascertaining dementia status.19 In this study, physicians with expertise in dementia reviewed all available clinical data to adjudicate disease status using a structured diagnostic protocol. With this real-world clinical reference standard, we then examined the performance of Medicare claims in identifying patients with dementia.

METHODS

Data sources and sampling approach

We used multiple sources of longitudinal data, linked at the individual level, including provider documentation, pharmacy data, and ICD-10 codes. The claims data were from the Mass General Brigham Healthcare Medicare Pioneer and Next Generation Accountable Care Organizations (ACOs), which serve a population of over 100,000 beneficiaries across two academic medical centers, seven community hospitals, three specialty institutions, and twenty-one community health centers. We used claims from Medicare Parts A and B (hospital and physician services) and Part D (prescription drugs), with data from 2016 through 2018.

Sample selection

We designed our sample to identify prevalent dementia among community-dwelling older adults, a population in need of validated measures. We required that beneficiaries meet the following eligibility criteria as of 01/01/2016: 1) alive in January 2016; 2) aged 65 years or older; 3) community-dwelling at the time of ACO entry; 4) enrolled in Medicare Parts A and B; and 5) aligned to the ACO during the entire observation period (01/01/2016 – 12/31/2018) or until death.20 For beneficiaries who died during the 2016-18 observation period, we required at least six months of continuous Medicare enrollment and ACO alignment. We also required that each beneficiary have a designated Medicare Original Reason for Entitlement Code (OREC) of age or disability.

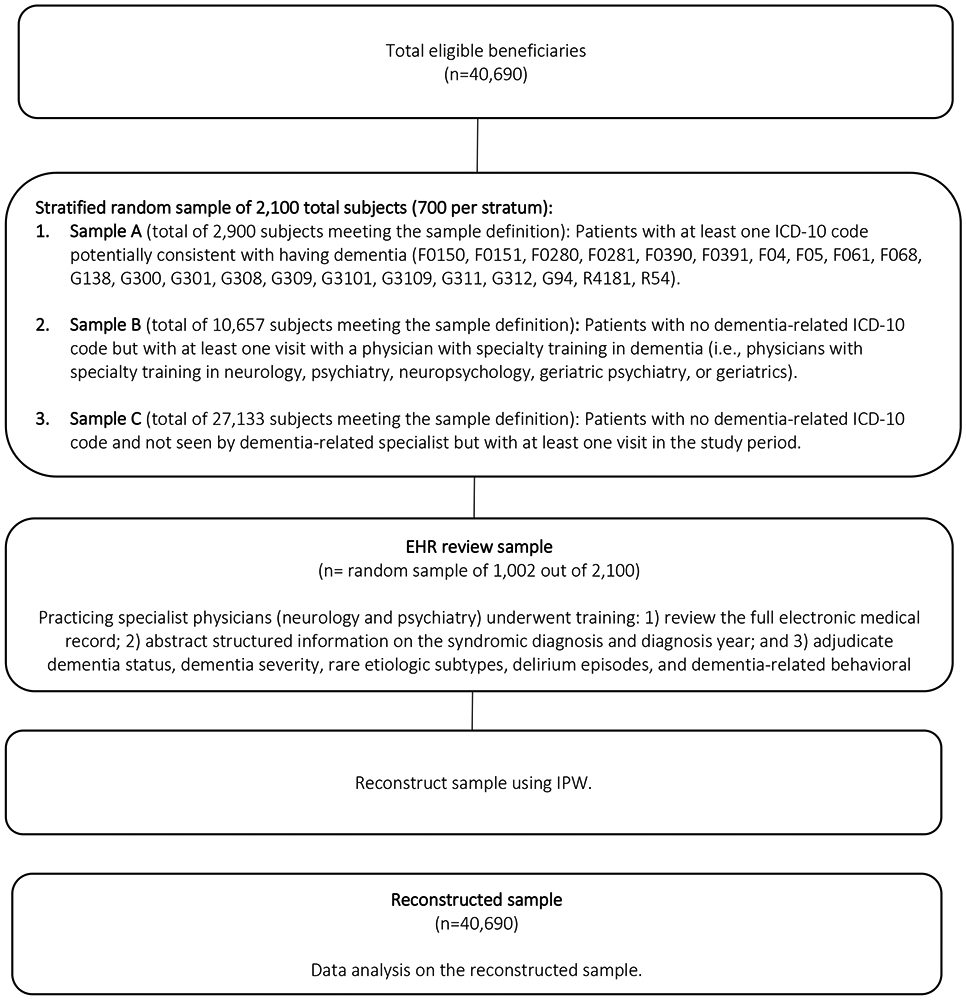

Among the 40,690 beneficiaries meeting eligibility criteria, we generated a random sample, stratified according to their pre-abstraction probability of dementia, with the following three mutually exclusive samples: A) patients with an ICD-10 diagnosis code suggestive of dementia (Supplementary Table S1); B) patients with a clinic visit during the observation period with a neurologist, psychiatrist, geriatrician, neuropsychologist, or geriatric psychiatrist (i.e., a clinician with specialty training in dementia care), but without an ICD-10 diagnosis suggestive of dementia; and C) all other patients. Of note, as indicated in Supplementary Table S1, several of the ICD codes for group A only suggest the possibility of dementia (e.g., “presenile dementia, uncomplicated”) and thus might not identify patients who meet clinical diagnostic standards for dementia.

We randomly sampled patients within each of the three strata, selecting 1,002 patients for detailed chart review based on initial power calculations. Among the 1,002 sampled patients, 952 (95%) had available electronic health record data during the observation period; the remaining 5% had no visits to an outpatient clinic, emergency department, or hospital within the healthcare system during the three-year period. This analytic sample of 952 patients included 312 patients from Sample A, 341 patients from Sample B, and 299 patients from Sample C. We applied inverse probability weighting to reconstruct the characteristics of the eligible population (n=40,690). More detail is given in Figure 1 and Supplementary Table S2.20
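To illustrate the reweighting step, the sketch below assumes the standard stratified-sampling weight, in which each reviewed patient in stratum s stands in for N_s / n_s eligible beneficiaries; the manuscript does not spell out its exact weight formula, so this is an assumption. The stratum population sizes are taken from Table 1, while the sampled and case counts are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Stratum:
    name: str
    population: int  # N_s: eligible beneficiaries in the stratum
    sampled: int     # n_s: charts reviewed in the stratum
    cases: int       # d_s: reviewed charts adjudicated as dementia


def ipw_prevalence(strata):
    """Prevalence in the eligible population, weighting each reviewed chart by N_s / n_s."""
    weighted_cases = 0.0
    total_weight = 0.0
    for s in strata:
        weight = s.population / s.sampled  # inverse of the sampling fraction n_s / N_s
        weighted_cases += weight * s.cases
        total_weight += weight * s.sampled  # sums back to N_s
    return weighted_cases / total_weight


# Population sizes from Table 1; sampled and case counts are hypothetical.
strata = [
    Stratum("A", 2_900, 300, 180),
    Stratum("B", 10_657, 300, 18),
    Stratum("C", 27_133, 300, 6),
]
print(f"Weighted prevalence: {ipw_prevalence(strata):.3f}")  # Weighted prevalence: 0.072
```

Without weights, the same hypothetical charts would suggest a prevalence of 204/900 (about 23%), because the dementia-enriched Sample A is heavily oversampled relative to its share of the eligible population.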

FIGURE 1: Overview of sampling approach.

The workflow delineates our sampling procedure for building the final reconstructed sample for analysis, beginning with 40,690 FFS Medicare beneficiaries aged 65 years and older within the ACO. We performed stratified random sampling based on the pretest likelihood of dementia using administrative surrogates.

Abbreviations: EHR, electronic health record; ICD-10, International Classification of Diseases, 10th Revision

Clinical data review and disease adjudication

We developed a standard operating procedure (SOP) for dementia ascertainment (Supplementary Text S1), designed to ensure systematic review of available clinical information in the EHR. We based disease adjudication on the diagnostic criteria of the Diagnostic and Statistical Manual of Mental Disorders, 5th Edition (DSM-5) and the report of the National Institute on Aging and Alzheimer's Association.21-23 The reviewers (two neurologists, LM and SZ, and two psychiatrists, NB and DB) underwent a three-month period of EHR abstraction training and SOP refinement, during which we evaluated interrater agreement, discussed potential ambiguities within the protocol, and refined the SOP. Interrater agreement for the final protocol was strong (κ ≥ 0.80) for adjudication of the key measures: cognitive concern (binary), year of onset of cognitive concern (prior to 2016 vs. 2016-2018), dementia (binary), severe dementia (binary), and delirium (binary).22,24
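Cohen's kappa corrects raw agreement for the agreement expected by chance. The sketch below computes pairwise Cohen's kappa for two reviewers on a binary dementia rating; the manuscript does not state whether pairwise Cohen's kappa or a multi-rater statistic (e.g., Fleiss' kappa) was used across the four reviewers, and the ratings are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater1, rater2):
    """Cohen's kappa: (observed agreement - chance agreement) / (1 - chance agreement)."""
    if len(rater1) != len(rater2) or not rater1:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater1)
    observed = sum(a == b for a, b in zip(rater1, rater2)) / n
    counts1, counts2 = Counter(rater1), Counter(rater2)
    # Chance agreement: product of the two raters' marginal proportions, summed over labels.
    expected = sum(counts1[k] * counts2[k] for k in counts1.keys() | counts2.keys()) / n**2
    if expected == 1.0:
        return float("nan")  # undefined when both raters use one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical binary dementia ratings (1 = dementia) for ten charts.
reviewer_1 = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
reviewer_2 = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
print(f"kappa = {cohens_kappa(reviewer_1, reviewer_2):.2f}")  # kappa = 0.74
```

In this toy example the reviewers agree on 90% of charts, yet κ is only about 0.74, because much of the agreement on the common "no dementia" label would be expected by chance; this is why κ ≥ 0.80 is a demanding threshold.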

Reference standard: Electronic health record abstraction

Each physician-adjudicator received a random, blinded list of patients for whom they reviewed electronic health records (i.e., reviewers did not receive any information regarding the sampling strata or diagnostic claims). Using all available clinical information in the medical records, reviewers classified each patient into one of the following categories: 1) normal cognition; 2) borderline of normal cognition and mild cognitive impairment (MCI); 3) MCI; 4) borderline of MCI and dementia; 5) dementia; or 6) uncertain. For patients in the fourth (borderline of MCI and dementia) and fifth (dementia) diagnostic categories, reviewers also assessed severity and etiologies of disease, as per DSM-5.22 The reviewers also rated their confidence in each cognitive classification on a scale of “highly,” “moderately,” “mildly,” or “not at all” confident.

Reviewers’ classifications of diagnostic certainty were informed by clinical considerations, as well as data quality and availability. As dementia exists on a spectrum with mild cognitive impairment (MCI),25-27 there is potential for diagnostic misclassification, as may occur in clinical practice. Because our approach relied on the available clinical information within the EHR, there are also potential errors or omissions with respect to evaluation or documentation by clinicians. In some cases, limited information within the EHR constrained diagnostic certainty. These considerations were each reflected in reviewers’ documentation of their diagnostic confidence.

Importantly, this reference standard reflects the systematic and expert assessment of disease status based on all available clinical data contained within the medical record, including the level of diagnostic certainty. This real-world clinical standard is related to but distinct from a definition based on true disease status (i.e., a hypothetical gold standard). Accordingly, the interpretation of this standard reflects the approach by which an expert clinician would classify disease status, based on the available EHR data.

Claims-based dementia diagnoses

We used all available Medicare data (2016-2018) to create claims-based definitions of dementia, evaluating three distinct sets of diagnostic criteria. Our first, “base,” definition is an indicator variable incorporating all ICD-10 diagnostic codes corresponding to the ICD-9 codes included in a previously validated claims-based dementia definition,5 which we identified using the CMS General Equivalence Mapping (Supplementary Table S3). Our second, “refined,” definition is an indicator variable incorporating a modified set of ICD-10 diagnostic codes that clinical reviewers judged to have strong face validity. In constructing the refined definition, we removed administrative codes inconsistent with current diagnostic criteria for dementia (e.g., F05, “Delirium due to known physiological condition”) (Supplementary Table S3). Our third, “refined-count,” definition comprised two integer variables, one for each care setting in which a beneficiary had been assigned a diagnostic claim included in the refined definition: the first counted qualifying outpatient diagnostic claims, and the second counted qualifying inpatient diagnostic claims. To distinguish clinical encounters, each patient could contribute at most one qualifying diagnostic claim per care setting per day. We identified care setting using Place of Service (POS) codes.
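The deduplication rule for the refined-count definition (at most one qualifying claim per care setting per day) can be sketched as follows. The code list, the Place of Service mapping (POS 11, office, treated as outpatient; POS 21, inpatient hospital, treated as inpatient), and the claim tuples are hypothetical placeholders; the study's actual code lists are in Supplementary Table S3, and its full POS-to-setting mapping is not reproduced here.

```python
from collections import defaultdict
from datetime import date

# Hypothetical placeholders: the study's code lists are in Supplementary Table S3.
REFINED_CODES = {"G30.9", "F03.90"}
# Hypothetical Place of Service mapping (11 = office, 21 = inpatient hospital).
POS_TO_SETTING = {"11": "outpatient", "21": "inpatient"}


def refined_counts(claims):
    """Per patient, count distinct days with a qualifying claim in each care setting."""
    qualifying_days = set()
    for patient_id, service_date, pos_code, dx_codes in claims:
        setting = POS_TO_SETTING.get(pos_code)
        if setting is None or not REFINED_CODES.intersection(dx_codes):
            continue
        # A set keeps at most one qualifying claim per patient, setting, and day.
        qualifying_days.add((patient_id, setting, service_date))
    counts = defaultdict(lambda: {"outpatient": 0, "inpatient": 0})
    for patient_id, setting, _ in qualifying_days:
        counts[patient_id][setting] += 1
    return dict(counts)


claims = [
    ("p1", date(2017, 3, 1), "11", ["F03.90"]),
    ("p1", date(2017, 3, 1), "11", ["G30.9"]),  # same day and setting: not counted twice
    ("p1", date(2017, 5, 9), "21", ["G30.9"]),
    ("p1", date(2017, 6, 2), "11", ["F05"]),    # delirium code, excluded from the refined list
    ("p2", date(2018, 1, 15), "11", ["I10"]),   # no qualifying code
]
print(refined_counts(claims))  # {'p1': {'outpatient': 1, 'inpatient': 1}}
```

Beneficiaries with no qualifying claims (p2 here) are absent from the output and would enter the model with both counts equal to zero.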

Statistical analysis

We used a series of logistic regression models to assess the performance of each claims-based definition of dementia against the reference standard diagnosis within the target population (n=40,690).

We considered only patients categorized in the fifth diagnostic category, “dementia,” as having clinician-adjudicated disease in the main analyses (Models 1-7, described below). In later sensitivity analyses, we also varied the diagnostic categories used to define dementia.

We examined a total of seven models across the three claims-based definitions described above. The first, unadjusted model (Model 1) regressed clinician-adjudicated dementia (i.e., the reference standard) on the “base definition” indicator variable. Model 2 added patient sex and age to Model 1. Model 3 additionally adjusted for the first occurrence of a dementia diagnosis within the inpatient setting.

The next four models used the “refined,” rather than “base,” set of ICD-10 codes. Model 4 regressed clinician-adjudicated dementia on a separate indicator variable for the “refined definition.” Model 5 added patient sex and age to Model 4. Model 6 additionally adjusted for the first occurrence of a dementia diagnosis within the inpatient setting. Model 7 regressed clinician-adjudicated dementia on the refined-count definition (i.e., the number of days with a qualifying diagnostic claim), with adjustment for age and sex.
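Model 7 can be read as logit P(dementia) = β0 + β1·age + β2·female + β3·(outpatient claim-days) + β4·(inpatient claim-days). The sketch below fits such a model by Newton-Raphson on simulated data. The manuscript does not name its software, state whether the counts entered linearly, or say whether inverse probability weights entered model fitting, so the optional weight vector `w`, the linear count terms, the age scaling, and all data values are assumptions.

```python
import numpy as np


def fit_weighted_logit(X, y, w, max_iter=25, tol=1e-10):
    """Weighted logistic regression fit by Newton-Raphson (equivalently, IRLS)."""
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        score = X.T @ (w * (y - p))                      # gradient of weighted log-likelihood
        info = X.T @ (X * (w * p * (1.0 - p))[:, None])  # weighted Fisher information
        step = np.linalg.solve(info, score)
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    return beta


# Simulated, hypothetical data with the Model 7 covariate structure.
rng = np.random.default_rng(0)
n = 5000
age = rng.uniform(65, 95, n)
female = rng.integers(0, 2, n)
outpatient_days = rng.poisson(0.5, n)
inpatient_days = rng.poisson(0.1, n)
X = np.column_stack([np.ones(n), (age - 75) / 10, female, outpatient_days, inpatient_days])
true_beta = np.array([-3.0, 0.8, 0.1, 0.9, 0.6])
y = rng.binomial(1, 1.0 / (1.0 + np.exp(-X @ true_beta)))
w = np.ones(n)  # replace with inverse probability weights if weighting the fit

beta_hat = fit_weighted_logit(X, y, w)
p_hat = 1.0 / (1.0 + np.exp(-X @ beta_hat))
print(np.round(beta_hat, 2))
```

At convergence the weighted score equations are zero, so with an intercept in the model the weighted mean predicted probability equals the weighted observed prevalence.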

For each model, we simulated dementia status for a “new” patient with the observed characteristics by drawing from a Bernoulli distribution with probability equal to the model-predicted probability of dementia. We repeated each analysis 1,000 times, using Monte Carlo resampling to calculate sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV). Separately, we applied 10-fold cross-validation and plotted receiver operating characteristic (ROC) and calibration curves for each model.
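The Monte Carlo step admits more than one reading. One plausible interpretation, sketched below as an assumption, is that each of the 1,000 draws resamples patients with replacement, simulates their dementia status from a Bernoulli distribution with the model-predicted probability, and scores the classification at a 0.5 probability threshold against that simulated status. The function name and the example probabilities are hypothetical.

```python
import numpy as np


def monte_carlo_metrics(p_hat, threshold=0.5, n_draws=1000, seed=0):
    """Return an (n_draws, 4) array of sensitivity, specificity, PPV, and NPV."""
    rng = np.random.default_rng(seed)
    p_hat = np.asarray(p_hat, dtype=float)
    n = p_hat.size
    draws = np.empty((n_draws, 4))
    for i in range(n_draws):
        p = p_hat[rng.integers(0, n, n)]  # resample "new" patients with replacement
        truth = rng.random(n) < p         # Bernoulli(p) dementia status
        flagged = p >= threshold          # classification at the probability threshold
        tp = np.sum(flagged & truth)
        fp = np.sum(flagged & ~truth)
        fn = np.sum(~flagged & truth)
        tn = np.sum(~flagged & ~truth)
        draws[i] = [tp / (tp + fn), tn / (tn + fp), tp / (tp + fp), tn / (tn + fn)]
    return draws


# Hypothetical predicted probabilities for 500 patients.
draws = monte_carlo_metrics(np.linspace(0.01, 0.99, 500))
for name, col in zip(["Sensitivity", "Specificity", "PPV", "NPV"], draws.T):
    print(f"{name}: mean {col.mean():.3f}, SD {col.std(ddof=1):.3f}, "
          f"min {col.min():.3f}, max {col.max():.3f}")
```

The mean, SD, minimum, and maximum of each column mirror the kind of summary rows reported in Table 2.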

We reported the mean and 95% confidence interval (95% CI) for the cross-validated area under the ROC curve (CVAUC), as well as the cross-validated calibration-in-the-large (CV CITL) and calibration slope (CV slope). We compared the CVAUC of the base claims-based definition of dementia (Model 1) with that of each novel definition. Because the probability of dementia increases with age, we also examined model performance in strata defined by beneficiary age group.
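The three cross-validated metrics can be computed from out-of-fold predicted probabilities. The sketch below uses the rank-based (Mann-Whitney) form of the AUC and estimates the calibration slope as the coefficient on logit(p) in a logistic recalibration model. As one common convention, it reports calibration-in-the-large as observed prevalence minus mean predicted probability; some authors instead use the intercept of a logistic model with logit(p) as an offset, and the manuscript does not specify which definition it used. The simulated data are hypothetical.

```python
import numpy as np


def auc(y, p):
    """Rank-based AUC: probability a random case outranks a random non-case (ties = 1/2)."""
    y, p = np.asarray(y), np.asarray(p, dtype=float)
    diff = p[y == 1][:, None] - p[y == 0][None, :]
    return (np.sum(diff > 0) + 0.5 * np.sum(diff == 0)) / diff.size


def calibration_slope(y, p, max_iter=50):
    """Coefficient b in logit P(y = 1) = a + b * logit(p), fit by Newton-Raphson."""
    y, p = np.asarray(y, dtype=float), np.asarray(p, dtype=float)
    lp = np.log(p / (1.0 - p))
    X = np.column_stack([np.ones_like(lp), lp])
    beta = np.zeros(2)
    for _ in range(max_iter):
        mu = 1.0 / (1.0 + np.exp(-X @ beta))
        step = np.linalg.solve(X.T @ (X * (mu * (1.0 - mu))[:, None]), X.T @ (y - mu))
        beta = beta + step
        if np.max(np.abs(step)) < 1e-10:
            break
    return beta[1]


def citl_difference(y, p):
    """Calibration-in-the-large as observed prevalence minus mean predicted probability."""
    return float(np.mean(y) - np.mean(p))


print(auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75

# Simulated, perfectly calibrated out-of-fold predictions (hypothetical).
rng = np.random.default_rng(1)
p_oof = rng.uniform(0.02, 0.98, 2000)
y_obs = (rng.random(2000) < p_oof).astype(int)
print(round(auc(y_obs, p_oof), 3), round(calibration_slope(y_obs, p_oof), 2),
      round(citl_difference(y_obs, p_oof), 3))
```

For well-calibrated predictions the slope should be near 1 and CITL near 0; a slope below 1 indicates predictions that are too extreme, as in several of the age-stratified results.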

Sensitivity analysis

We repeated our analyses while varying dimensions of our case definition to evaluate model performance. We first varied our case definition to include diagnostic category 4 (“borderline MCI and dementia”) and category 5 (“dementia”). We next repeated our analyses weighting for the level of diagnostic certainty assigned to each diagnosis by reviewers. We also repeated our analyses using claims over a shortened, one-year timeframe.

RESULTS

Sample characteristics

Table 1 displays the characteristics of the overall reconstructed target population (n=40,690). The mean age of patients was 75.3 years, and females comprised 59% of the sample. A total of 1,010 patients (2%) died by the end of the study period. The adjudicated prevalence of dementia in the target population was 7% (n=2,854; 95% CI: 6%-9%). Patients in Sample A (i.e., beneficiaries who had been assigned a diagnostic claim potentially consistent with dementia) were older, on average, than beneficiaries in the other two samples, with mean ages of 80.9, 75.9, and 74.4 years for Samples A, B, and C, respectively. Please see Supplementary Table S2 for information regarding the cognitive characteristics of the analytic sample.

Table 1: Population Characteristics of Reconstructed Sample.

Headings in bold, subheadings below not bolded.

Sample A: patients with an ICD-10 diagnosis code suggestive of dementia (Supplementary Table S1)

Sample B: patients with a clinic visit during the observation period with a neurologist, psychiatrist, geriatrician, neuropsychologist, or geriatric psychiatrist, but without an ICD-10 diagnosis suggestive of dementia

Sample C: all other patients

| Characteristics | Sample A | Sample B | Sample C | Total |
|---|---|---|---|---|
| Patients, n | 2,900 | 10,657 | 27,133 | 40,690 |
| **Demographics**ᵃ | | | | |
| Age in years, mean (SD) | 80.9 (0.41) | 75.8 (0.36) | 74.4 (0.36) | 75.2 (0.26) |
| Female, n (%) | 1,849 (64%) | 6,328 (59%) | 15,799 (58%) | 23,976 (59%) |
| White, n (%) | 2,657 (92%) | 10,051 (94%) | 24,986 (92%) | 37,695 (93%) |
| Deceased by end of study period, n (%) | 217 (7%) | 363 (3%) | 429 (2%) | 1,010 (2%) |
| **Any Cognitive Concern** | | | | |
| No, n (%) | 226 (8%) | 6,449 (62%) | 22,926 (89%) | 29,600 (76%) |
| Yes, n (%) | 2,483 (92%) | 3,875 (38%) | 2,748 (11%) | 9,106 (24%) |
| **Cognitive Status**ᵇ | | | | |
| Cognitively normal, n (%) | 287 (11%) | 7,115 (69%) | 22,840 (89%) | 30,241 (78%) |
| Normal vs. MCI, n (%) | 96 (4%) | 817 (8%) | 687 (3%) | 1,600 (4%) |
| MCI, n (%) | 226 (8%) | 969 (9%) | 429 (2%) | 1,624 (4%) |
| MCI vs. dementia, n (%) | 217 (8%) | 484 (5%) | 258 (1%) | 959 (2%) |
| Dementia, n (%) | 1,789 (66%) | 636 (6%) | 429 (2%) | 2,854 (7%) |
| Unknown, n (%) | 96 (4%) | 303 (3%) | 1,030 (4%) | 1,429 (4%) |
| Missing chart data, n (%) | 191 (7%) | 333 (3%) | 1,460 (5%) | 1,984 (5%) |
| **Cognitive Status by Claims-Based Definitions** | | | | |
| Met base claims-based dementia definition, n (%) | 2,891 (100%) | 908 (9%) | 601 (2%) | 4,400 (11%) |
| Met refined claims-based dementia definition, n (%) | 2,579 (89%) | 575 (5%) | 258 (1%) | 3,412 (8%) |

ᵃ Data derived from Partners Medicare claims.

ᵇ Data abstracted from EHRs.

Abbreviations

MCI, mild cognitive impairment; EHR, electronic health record

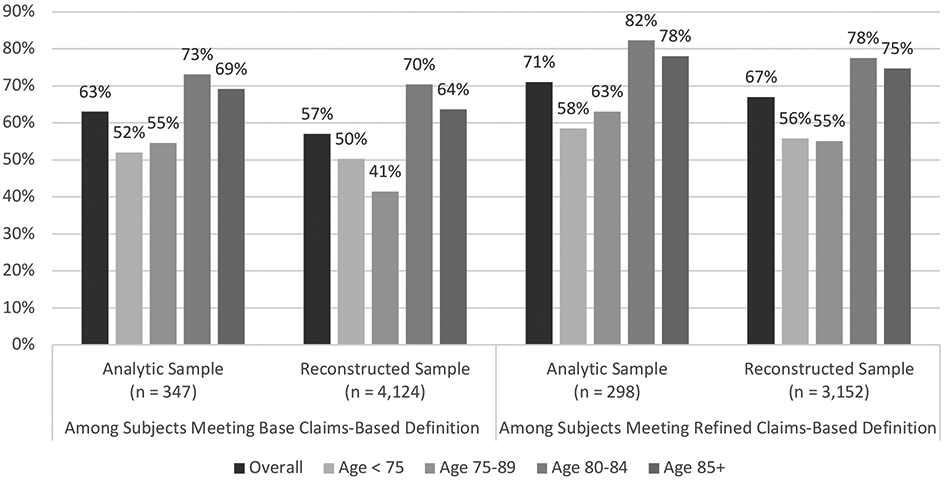

Among beneficiaries assigned an ICD-10 diagnostic code included in the base claims-based definition of dementia, only 57% had clinician-adjudicated dementia (Figure 2). That is, approximately four in ten patients with an ICD-10 diagnosis code under the base definition did not meet criteria for dementia on expert review of their medical records. Of beneficiaries meeting criteria for the refined claims-based definition of dementia, 67% were determined to have clinician-adjudicated dementia.

FIGURE 2: Overlap of Claims-Based Definitions with EHR-Adjudicated Dementia, Overall and by Age.

Percent of subjects with clinician-adjudicated dementia, among those meeting criteria for the base and refined claims-based definitions of dementia, overall and by age group.

Base claims-based definition: Indicator variable incorporating all ICD-10 diagnostic codes corresponding to the ICD-9 codes included in a previously validated claims-based dementia definition, identified using Centers for Medicare & Medicaid Services General Equivalence Mapping.

Refined claims-based definition: Indicator variable incorporating a modified set of ICD-10 diagnostic codes, in which we removed codes inconsistent with current diagnostic criteria for dementia.

Analytic sample: Comprised of subjects whose electronic health records were fully evaluated by clinician reviewers. Only the overall sample size is shown.

Reconstructed sample: Target overall sample, reconstructed from the analytic sample using inverse probability weighting. Only the overall sample size is shown.

Performance characteristics of claims-based diagnoses in ascertaining dementia: Base definition

Supplementary Table S4 summarizes model performance by the base definition of dementia in ascertaining clinician-adjudicated dementia. For the base claims-based definition of dementia (Model 1), we observed good discrimination (CVAUC 0.86; 95% CI 0.83-0.88). This definition was well-calibrated to the reference standard with CV CITL of 0.002 and CV slope of 0.99.

Performance characteristics of claims-based diagnoses in ascertaining dementia: Refined definition

Supplementary Table S5 displays the performance characteristics of the refined set of ICD-10 codes (i.e., after removing the codes with poor face validity) in ascertaining dementia status. The adjusted model for the refined claims-based definition of dementia (Model 6) demonstrated excellent discrimination (CVAUC 0.93; 95% CI 0.92-0.95). This model was also well calibrated to the reference standard, with CV CITL of 0.003 and CV slope of 0.99, albeit modestly more likely to overpredict dementia.

Performance of a refined claims-based definition with incorporation of a count element: Refined-count definition

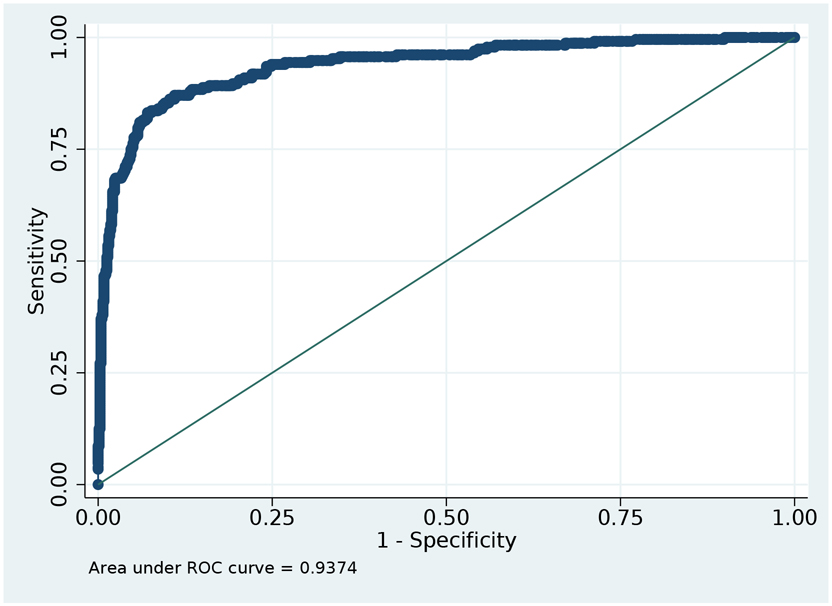

Table 2 displays the performance characteristics for the ascertainment of dementia using the refined-count definition (also see Supplementary Table S6). This definition reflects the number of days with a dementia code per patient per care setting. The adjusted model for the refined-count definition (Model 7) yielded the best overall performance, with excellent discrimination (CVAUC 0.94; 95% CI 0.92-0.96; Figure 3). This model was also the best calibrated to the reference standard, with CV CITL of 0.002 and CV slope of 0.97 (Supplementary Table S7, Figures S1 and S2). Using a predicted probability threshold of 0.5 to classify dementia status, the refined-count definition demonstrated sensitivity of 68.0% (SD 1.8%; range 60.8-73.7%), specificity of 93.5% (SD 0.7%; range 91.3-95.6%), positive predictive value of 77.1% (SD 2.0%; range 70.7-83.4%), and negative predictive value of 90.1% (SD 0.5%; range 88.1-91.6%).

Table 2: Performance Characteristics: Logistic Model of Diagnostic Claim Count, Refined Claims-Based Definition (Model 7), Overall and by Age Group.

The table is based primarily on the reconstructed sample, but Cross-Validation (CV) metrics are shown for the original (analytic) sample. Cross-validation was repeated with reconstructed sample for sensitivity analysis, which yielded similar results.

Mean: Mean value of the performance characteristic across 1,000 Monte Carlo resamples

Min: Minimum value of the performance characteristic across 1,000 Monte Carlo resamples

Max: Maximum value of the performance characteristic across 1,000 Monte Carlo resamples

| Subgroup | Clinician-Adjudicated Dementia | Metric | Sensitivity (%) | Specificity (%) | PPV (%) | NPV (%) | CV AUC (0-1) | CV CITL | CV Slope |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Freq: 2,854; Prev: 7.4% | Mean | 68.0 | 93.5 | 77.1 | 90.1 | 0.94 | 0.00 | 0.97 |
| | | SD | 1.8 | 0.7 | 2.0 | 0.5 | - | - | - |
| | | Min | 60.8 | 91.3 | 70.7 | 88.1 | - | - | - |
| | | Max | 73.7 | 95.6 | 83.4 | 91.6 | - | - | - |
| Age 65-74 | Freq: 412; Prev: 1.9% | Mean | 56.6 | 98.0 | 75.2 | 95.6 | 0.90 | 0.00 | 0.81 |
| | | SD | 3.8 | 0.5 | 5.2 | 0.4 | - | - | - |
| | | Min | 45.0 | 96.1 | 58.8 | 94.4 | - | - | - |
| | | Max | 67.5 | 99.2 | 89.3 | 96.6 | - | - | - |
| Age 75-79 | Freq: 477; Prev: 6.1% | Mean | 60.9 | 93.6 | 73.1 | 89.4 | 0.88 | −0.02 | 0.71 |
| | | SD | 4.0 | 1.6 | 5.0 | 1.0 | - | - | - |
| | | Min | 51.1 | 88.1 | 57.1 | 86.9 | - | - | - |
| | | Max | 75.6 | 98.1 | 90.3 | 93.0 | - | - | - |
| Age 80-84 | Freq: 599; Prev: 11.1% | Mean | 72.6 | 92.3 | 84.3 | 85.6 | 0.92 | 0.02 | 0.78 |
| | | SD | 3.2 | 2.0 | 3.6 | 1.5 | - | - | - |
| | | Min | 64.4 | 85.6 | 73.2 | 81.7 | - | - | - |
| | | Max | 81.4 | 97.1 | 93.9 | 89.8 | - | - | - |
| Age 85+ | Freq: 1,366; Prev: 32.7% | Mean | 74.6 | 72.4 | 75.8 | 71.1 | 0.88 | 0.00 | 0.84 |
| | | SD | 3.5 | 4.4 | 3.1 | 3.2 | - | - | - |
| | | Min | 62.5 | 59.2 | 67.8 | 62.3 | - | - | - |
| | | Max | 86.4 | 86.8 | 87.3 | 82.6 | - | - | - |

Abbreviations

SD: Standard Deviation; PPV: Positive Predictive Value; NPV: Negative Predictive Value; 95%CI: 95% Confidence Interval; CV: 10-fold cross validation; CV AUC: 95% CI is Asymptotic Normal; Freq: Frequency; Prev: Prevalence; CV CITL: Calibration-in-the-large

FIGURE 3: Area under ROC curve: tradeoff between sensitivity and specificity.

Figure is based on the analytic sample (area under the ROC curve = 0.94). Figure 3 plots sensitivity against 1 − specificity across classification thresholds for Model 7, which used the refined claims-based definition and diagnostic claim count.

The refined-count model was most accurate (CVAUC 0.92; 95% CI 0.87-0.97) for the subgroup of beneficiaries aged 80-84 years, at the expense of systematically higher predicted probabilities of dementia (CV CITL 0.02 and CV slope 0.78). This model was best calibrated (CV CITL <0.001 and CV slope 0.84) to the reference standard for the subgroup of beneficiaries aged 85-years and older, despite a decrement in accuracy (CVAUC 0.88; 95% CI 0.82-0.93).

Sensitivity analyses

Overall, the findings were consistent across sensitivity analyses that varied the diagnostic categories included in the reference standard (Supplementary Table S8), the diagnostic claims included in the refined-count definition (Supplementary Tables S9-S10), the degree of diagnostic certainty (Supplementary Tables S11-S15), and the period over which diagnostic claims were counted (Supplementary Tables S16 and S17).

DISCUSSION

ICD-10-based diagnostic codes are a useful tool for identifying Medicare beneficiaries with dementia and, arguably, the only feasible way to obtain national estimates of dementia within the entire Medicare program.5 Sets of diagnostic codes with strong face validity, combined with information regarding beneficiary age and the frequency of diagnoses, can aid in identifying individuals with a high likelihood of true dementia. This type of validated approach is critical to the study of dementia within the traditional, fee-for-service Medicare program, which accounts for the majority of older adults in the United States. Accordingly, such an approach is essential to any research or policy utilizing large, administrative datasets to capture dementia status.

This study is the first to validate ICD-10 diagnostic codes against clinician-adjudicated dementia status, using all available clinical information within the electronic health records of FFS Medicare beneficiaries. Through standardized, expert review of 952 electronic health records, we observed 24.4% dementia prevalence within our analytic sample and 7.0% prevalence in the target population, which was reweighted to represent those enrolled in the ACO. Consistent with prior literature, claims-based definitions of dementia overestimated disease prevalence.5 Overcounting was most pronounced for beneficiaries aged 75-79 years, suggesting a potentially higher rate of false-positive diagnoses in this subgroup. A stringent, refined claims-based definition of dementia overcounted fewer cases than the base definition.

Our results support the utility of validated claims-based measures as an approximation of dementia prevalence. We developed a refined claims-based definition of dementia, in which we excluded ICD-10 codes that were nonspecific or inconsistent with clinical diagnostic criteria for dementia, such as reversible etiologies of altered mental status. The refined claims-based definition demonstrated excellent accuracy in ascertaining the reference standard. Model performance improved further when we incorporated the count of days on which a beneficiary had been assigned a qualifying dementia diagnostic code. The more stringent refined-count definition has strong face validity, has excellent accuracy, and is well calibrated to clinician-adjudicated dementia status.

The refined-count approach (Model 7) performed best within our sample. The refined-count approach is well-suited to studies of prevalent dementia and its downstream healthcare costs within a population of Medicare beneficiaries. The optimal approach to dementia ascertainment within future analyses could depend on the nature of the research question, as well as the quality and extent of available data.

The existing literature on the validity of ICD-9 claims-based definitions of dementia includes several studies that have been limited by small and nonrepresentative samples, omission of performance characteristics, and/or problematic face validity when the constituent diagnostic codes of claims-based definitions are directly mapped onto ICD-10 equivalents.6,8 Prior instruments have also adjusted for age and applied count thresholds for diagnostic claims without reporting calibration measures, limiting interpretation of their external validity.12 This has resulted in widely variable agreement between claims-based definitions of dementia and selected reference standards (κ=0.23-0.70) across distinct samples.5,6,8 The validation studies that ascertained cases through expert review of electronic medical records did not clearly delineate either standardized diagnostic criteria or adjudication procedures.12,17,18 The available validation literature also preceded evidence of improved concordance between claims- and survey-defined dementia, which is consistent with an evolution in the accuracy with which Medicare claims ascertain dementia.12,28

Our analysis addresses the limitations of prior validation studies by developing a claims-based instrument that is accurate and well calibrated to a parsimonious and replicable reference standard of clinician-adjudicated dementia. Our use of ICD-10 diagnostic codes reflects the most recent diagnostic and administrative conventions. Because of our defined and recent study period (2016-2018), this analysis is well situated to reflect current clinical and administrative practice patterns, as well as recent Medicare reforms.

Limitations

Our study has several limitations. First, we are limited to the information available in the medical record, data that are contingent upon evaluation and documentation by clinicians, largely in the context of clinical encounters (i.e., ascertainment is neither systematic nor random). Not all patients or caregivers seek care for symptoms potentially suggestive of dementia, even when otherwise presenting for routine care. Not all physicians screen for or document cognitive changes in the medical record. Patients who underutilize care are underrepresented in any medical dataset.

Overall healthcare utilization and care-seeking for cognitive symptoms may vary with demographic factors, which could result in differential validity across age, gender, racial and ethnic, and socioeconomic groups. Although such issues lie beyond this examination of the first-order question of overall diagnostic accuracy, they are important and will be explored in future work.

There were also 50 patients (5%) in the sample for whom medical record data were sparse (e.g., records limited to nonspecific clinical encounters, such as phone call notes, or to focused preventive care, such as routine eye exams). We suspect that low healthcare utilization among these patients reflects intact health and cognition. Nonetheless, some of these individuals could have avoided presenting to care or, theoretically, could have had impairments that precluded interaction with the healthcare system.

Our analysis is restricted to FFS Medicare beneficiaries within a single metropolitan ACO, so our findings may not generalize to the broader older adult population or to beneficiaries enrolled in private insurance plans.29 In particular, it would be desirable to replicate our analysis among Medicare Advantage beneficiaries and in samples with greater geographic and racial diversity. In addition, our calibration measures may be optimistic because we used cross-validation rather than external validation. We plan to externally validate the claims-based instruments evaluated in this study in forthcoming work.
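The optimism noted above arises because cross-validated calibration is assessed on held-out patients drawn from the same population, not on an external sample. The sketch below illustrates, under stated assumptions, how cross-validated calibration-in-the-large (CITL) and calibration slope might be computed for a single-predictor logistic model of diagnostic claim count. It is not the authors' code: the function names, the simulated data, and the definition of CITL as the (optionally weighted) mean observed outcome minus the mean predicted probability are assumptions. CITL is also commonly defined as the intercept of a logistic recalibration model with logit(p) as an offset. The optional `weights` argument stands in for inverse probability weights and, in this sketch, is applied only when assessing calibration.

```python
import math
import random


def expit(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(x, y, weights=None, max_iter=50):
    """Fit P(y=1) = expit(b0 + b1*x) by Newton-Raphson, optionally with case weights."""
    w = weights if weights is not None else [1.0] * len(x)
    b0 = b1 = 0.0
    for _ in range(max_iter):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi, wi in zip(x, y, w):
            p = expit(b0 + b1 * xi)
            r = wi * (yi - p)          # weighted score contribution
            v = wi * p * (1.0 - p)     # weighted information contribution
            g0 += r
            g1 += r * xi
            h00 += v
            h01 += v * xi
            h11 += v * xi * xi
        det = h00 * h11 - h01 * h01
        if det <= 1e-12:
            break  # information matrix is (near-)singular; stop iterating
        # Newton step: solve the 2x2 system H * step = g.
        step0 = (h11 * g0 - h01 * g1) / det
        step1 = (h00 * g1 - h01 * g0) / det
        b0, b1 = b0 + step0, b1 + step1
        if max(abs(step0), abs(step1)) < 1e-10:
            break
    return b0, b1


def cross_validated_probs(x, y, k=10, seed=0):
    """Out-of-fold predicted probabilities from k-fold cross-validation."""
    idx = list(range(len(x)))
    random.Random(seed).shuffle(idx)
    probs = [0.0] * len(x)
    for fold in range(k):
        held_out = idx[fold::k]
        held_set = set(held_out)
        train = [i for i in idx if i not in held_set]
        b0, b1 = fit_logistic([x[i] for i in train], [y[i] for i in train])
        for i in held_out:
            probs[i] = expit(b0 + b1 * x[i])
    return probs


def calibration(y, probs, weights=None, eps=1e-12):
    """Return (calibration-in-the-large, calibration slope) for predicted probabilities."""
    w = weights if weights is not None else [1.0] * len(y)
    total = sum(w)
    # CITL, defined here as weighted mean observed minus weighted mean predicted.
    citl = sum(wi * (yi - pi) for wi, yi, pi in zip(w, y, probs)) / total
    # Slope: coefficient on logit(p) when regressing the outcome on logit(p).
    logits = [math.log(max(p, eps) / max(1.0 - p, eps)) for p in probs]
    _, slope = fit_logistic(logits, y, w)
    return citl, slope


# Hypothetical simulated data: dementia-claim counts and adjudicated status.
rng = random.Random(1)
counts = [rng.randint(0, 10) for _ in range(2000)]
status = [1 if rng.random() < expit(-3.0 + 0.8 * c) else 0 for c in counts]
cv_probs = cross_validated_probs(counts, status, k=10)
citl, slope = calibration(status, cv_probs)
print(f"CV-CITL {citl:.3f}, CV-slope {slope:.2f}")
```

Because each fold's model never sees its held-out patients, these estimates are less optimistic than in-sample calibration, but every fold still shares the population and coding practices of the training data, which is why external validation remains necessary.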

Conclusions

This study is the first to validate ICD-10 diagnostic codes against a robust and replicable approach to dementia ascertainment using a real-world clinical reference standard derived from the electronic health records of fee-for-service Medicare beneficiaries. Our analysis both modernizes and augments existing knowledge of claims-based dementia ascertainment. Our approach is accessible to clinicians, health systems, and policy makers because it is simple in construction and does not require prior knowledge of software-based methods.29

We have demonstrated the utility of novel ICD-10 claims-based definitions in ascertaining dementia status; these definitions outperformed previously validated definitions. The best-performing claims-based definition is well aligned with clinical diagnostic criteria for dementia, demonstrated excellent accuracy, and was well calibrated to disease status in a cross-validated sample. These results suggest that this claims-based instrument may improve estimates of dementia prevalence and costs.
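Accuracy in this study is summarized by the AUROC. As a minimal sketch (the function name `auroc` and the toy data are hypothetical, and this is not the authors' implementation), the AUROC can be computed as the Mann-Whitney probability that a randomly chosen adjudicated case receives a higher score than a randomly chosen non-case, counting ties as one half:

```python
def auroc(labels, scores):
    """AUROC as the Mann-Whitney probability that a case outscores a non-case (ties = 1/2)."""
    cases = [s for s, y in zip(scores, labels) if y == 1]
    non_cases = [s for s, y in zip(scores, labels) if y == 0]
    if not cases or not non_cases:
        raise ValueError("need at least one case and one non-case")
    wins = 0.0
    for sc in cases:
        for sn in non_cases:
            if sc > sn:
                wins += 1.0
            elif sc == sn:
                wins += 0.5  # tied scores count as half a concordant pair
    return wins / (len(cases) * len(non_cases))


# Hypothetical toy example: dementia-claim counts for six patients.
status = [0, 0, 0, 1, 1, 1]
claim_counts = [0, 0, 2, 2, 3, 5]
print(auroc(status, claim_counts))  # 8.5 of 9 case/non-case pairs favor the case, about 0.944
```

A logistic model with a single claim-count predictor and a positive coefficient is a monotone transformation of the count, so its AUROC equals that of the raw count. The quadratic pair loop is chosen for clarity; a rank-based formula scales better to large samples.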

Supplementary Material

Supplementary Figure S1: CV Calibration Plot: Logistic Model of Diagnostic Claim Count, Refined Claims-Based Definition (Model 7, Overall Model)

Supplementary Figure S2: Expected probabilities <0.1: Logistic Model of Diagnostic Claim Count, Refined Claims-Based Definition (Model 7, Overall Model)

Supplementary Table S1: ICD-9 to ICD-10 GEMS Crosswalk of Codes in Base (Taylor) Claims-Based Definition of Dementia

Supplementary Table S2: EHR-Adjudicated Dementia Status of Analytic Sample

Supplementary Table S3: ICD-10 GEMS Diagnostic Codes, Removed from and Added to Refined Claims-Based Definition of Dementia (From Base Definition)

Supplementary Table S4: Performance Characteristics: Ascertainment of EHR-Adjudicated Dementia by Base Claims-Based Definition

Supplementary Table S5: Logistic Regression Results: Ascertainment of EHR-Adjudicated Dementia by Refined Claims-Based Definition

Supplementary Table S6: Logistic Model of Diagnostic Claim Count, Refined Claims-Based Definition

Supplementary Table S7: CV AUC from Models 2-7, compared with CV AUC from Model 1 (Base Model)

Supplementary Table S8: Sensitivity Analysis; Logistic Model of Diagnostic Claim Count, Refined Claims-Based Definition, and Adjusted Reference Standard (Dementia and Mild Cognitive Impairment versus Dementia), Overall

Supplementary Table S9: Logistic Regression Results and Performance Characteristics, Claims-Based Definition with Removal of Nonspecific Diagnoses, Only

Supplementary Table S10: Logistic Regression Results and Performance Characteristics, Claims-Based Definition with Removal of Reversible Etiologies of Altered Mental Status, Only

Supplementary Table S11: Logistic Model Results: Ascertainment of EHR-Adjudicated Dementia with “High or Moderate” Diagnostic Certainty by Base Claims-Based Definition

Supplementary Table S12: Logistic Model Results: Ascertainment of EHR-Adjudicated Dementia with “High or Moderate or Mild” Diagnostic Certainty by Base Claims-Based Definition

Supplementary Table S13: Logistic Model Results: Ascertainment of EHR-Adjudicated Dementia with “High or Moderate” Diagnostic Certainty by Refined Claims-Based Definition

Supplementary Table S14: Logistic Model Results: Ascertainment of EHR-Adjudicated Dementia with “High or Moderate or Mild” Diagnostic Certainty by Refined Claims-Based Definition

Supplementary Table S15: Logistic Model Results: Ascertainment of EHR-Adjudicated Dementia by Level of Diagnostic Certainty, Count-Based Refined Claims Definition

Supplementary Table S16: Performance Characteristics: Logistic Model of Diagnostic Claim Count, Refined Claims-Based Definition (Model 7), Overall and by Age Group, Using only 2018 Claims

Supplementary Table S17: Logistic Model of Diagnostic Claim Count, Refined Claims-Based Definition, Using only 2018 Claims

Supplementary Text S1: Standard Operating Procedure (SOP) for Electronic Health Record Based Ascertainment of Dementia

Key Points

This study has improved upon previous reference standard definitions of dementia.

The current ICD-10 system of claims data can identify dementia with high accuracy.

Why does this matter? This study provides a highly accurate method to identify dementia using ICD-10 codes.

Acknowledgments

Funding: This project is supported by three NIH research grants (NIH-NIA 5K08AG053380-02, NIH-NIA 5R01AG062282-02, and NIH-NIA 2P01AG032952-11).

Sponsor’s Role: This work was done in partial fulfillment of the requirements for Dr. Moura’s doctoral degree in Population Health Sciences (Epidemiology) at the Harvard T.H. Chan School of Public Health. This project is supported by three NIH research grants (NIH-NIA 5K08AG053380-02, NIH-NIA 5R01AG062282-02, and NIH-NIA 2P01AG032952-11), following NIH policies of resource allocation.

Footnotes

Conflict of Interest: The authors report no conflict of interest.

REFERENCES

1. Alzheimer’s Association. 2018 Alzheimer’s Disease Facts and Figures. Alzheimer’s & Dementia. 2018;14(3):367–429. Available at: https://www.alz.org/media/HomeOffice/Facts%20and%20Figures/facts-and-figures.pdf. Accessed February 17, 2021.

2. Feng Z, Coots LA, Kaganova Y, Wiener JM. Hospital And ED Use Among Medicare Beneficiaries With Dementia Varies By Setting And Proximity To Death. Health Affairs. 2014;33(4):683–690. doi: 10.1377/hlthaff.2013.1179

3. Hurd MD, Martorell P, Delavande A, Mullen KJ, Langa KM. Monetary Costs of Dementia in the United States. New England Journal of Medicine. 2013;368(14):1326–1334. doi: 10.1056/nejmc1305541

4. Colby SL, Ortman JM. The Baby Boom Cohort in the United States: 2012 to 2060. Current Population Reports, Population Estimates and Projections, P25-1141. Washington, DC: United States Census Bureau; 2014. Available at: https://www.census.gov/content/dam/Census/library/publications/2014/demo/p25-1141.pdf. Accessed February 17, 2021.

5. Taylor DH, Østbye T, Langa KM, Weir D, Plassman BL. The accuracy of Medicare claims as an epidemiological tool: The case of dementia revisited. Journal of Alzheimer’s Disease. 2009;17(4):807–815. doi: 10.3233/JAD-2009-1099

6. Taylor DH, Fillenbaum GG, Ezell ME. The accuracy of Medicare claims data in identifying Alzheimer’s disease. Journal of Clinical Epidemiology. 2002;55(9):929–937. doi: 10.1016/S0895-4356(02)00452-3

7. Hurd MD, Martorell P, Langa K. Future Monetary Costs of Dementia in the United States Under Alternative Dementia Prevalence Scenarios. Journal of Population Ageing. 2015;8(1-2):101–112. doi: 10.1007/s12062-015-9112-4

8. Østbye T, Taylor DH, Clipp EC, van Scoyoc L, Plassman BL. Identification of dementia: Agreement among national survey data, Medicare claims, and death certificates. Health Services Research. 2008;43(1 Pt 1):313–326. doi: 10.1111/j.1475-6773.2007.00748.x

9. Hoops S, Nazem S, Siderowf AD et al. Validity of the MoCA and MMSE in the detection of MCI and dementia in Parkinson disease. Neurology. 2009;73(21):1738–1745. doi: 10.1212/WNL.0b013e3181c34b47

10. Weir DR, Wallace RB, Langa KM et al. Reducing case ascertainment costs in U.S. population studies of Alzheimer’s disease, dementia, and cognitive impairment - Part 1. Alzheimer’s & Dementia. 2011;7(1):94–109. doi: 10.1016/j.jalz.2010.11.004

11. Houx PJ, Shepherd J, Blauw G-J et al. Testing cognitive function in elderly populations: the PROSPER study. J Neurol Neurosurg Psychiatry. 2002;73:385–389. doi: 10.1136/jnnp.73.4.385

12. Chen Y, Tysinger B, Crimmins E, Zissimopoulos JM. Analysis of dementia in the US population using Medicare claims: Insights from linked survey and administrative claims data. Alzheimer’s & Dementia: Translational Research and Clinical Interventions. 2019;5:197–207. doi: 10.1016/j.trci.2019.04.003

13. Jain S, Rosenbaum PR, Reiter JG et al. Using Medicare claims in identifying Alzheimer’s disease and related dementias. Alzheimer’s & Dementia. 2020 [Epub ahead of print]. doi: 10.1002/alz.12199

14. Gianattasio KZ, Wu Q, Glymour MM, Power MC. Comparison of Methods for Algorithmic Classification of Dementia Status in the Health and Retirement Study. Epidemiology. 2019;30(2):291–302. doi: 10.1097/EDE.0000000000000945

15. Herzog AR, Wallace RB. Measures of Cognitive Functioning in the AHEAD Study. The Journals of Gerontology: Series B. 1997;52B(Special Issue):37–48. doi: 10.1093/geronb/52b.special_issue.37

16. ICD-10. CMS.gov. 2020. Available at: https://www.cms.gov/Medicare/Coding/ICD10/index. Accessed May 17, 2020.

17. Willink A, DuGoff EH. Integrating Medical and Nonmedical Services - The Promise and Pitfalls of the CHRONIC Care Act. New England Journal of Medicine. 2018;378(23):2153–2155. doi: 10.1056/NEJMp1803292

18. Kouzoukas D. Advance Notice of Methodological Changes for Calendar Year (CY) 2020 for the Medicare Advantage (MA) CMS-HCC Risk Adjustment Model. CMS.gov. December 2018. Available at: https://www.cms.gov/Medicare/Health-Plans/MedicareAdvtgSpecRateStats/Downloads/Advance2020Part1.pdf. Accessed February 20, 2021.

19. Yun H, Kilgore ML, Curtis JR et al. Identifying types of nursing facility stays using Medicare claims data: An algorithm and validation. Health Services and Outcomes Research Methodology. 2010;10(1-2):100–110. doi: 10.1007/s10742-010-0060-4

20. Moura LMVR, Smith JR, Blacker D et al. Epilepsy Among Elderly Medicare Beneficiaries. Medical Care. 2019;57(4):318–324. doi: 10.1097/MLR.0000000000001072

21. McKhann GM, Knopman DS, Chertkow H et al. The diagnosis of dementia due to Alzheimer’s disease: Recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimer’s & Dementia. 2011;7(3):263–269. doi: 10.1016/j.jalz.2011.03.005

22. American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition. Arlington, VA: American Psychiatric Association; 2013. doi: 10.1176/appi.books.9780890425596

23. Albert MS, DeKosky ST, Dickson D et al. The diagnosis of mild cognitive impairment due to Alzheimer’s disease: Recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimer’s & Dementia. 2011;7(3):270–279. doi: 10.1016/j.jalz.2011.03.008

24. McHugh ML. Interrater reliability: The kappa statistic. Biochemia Medica. 2012;22(3):276–282. doi: 10.11613/bm.2012.031

25. Mitchell AJ, Shiri-Feshki N. Rate of progression of mild cognitive impairment to dementia-meta-analysis of 41 robust inception cohort studies. Acta Psychiatrica Scandinavica. 2009;119(4):252–265. doi: 10.1111/j.1600-0447.2008.01326.x

26. Pereira T, Lemos L, Cardoso S et al. Predicting progression of mild cognitive impairment to dementia using neuropsychological data: A supervised learning approach using time windows. BMC Medical Informatics and Decision Making. 2017;17(1):110. doi: 10.1186/s12911-017-0497-2

27. Roberts RO, Knopman DS, Mielke MM et al. Higher risk of progression to dementia in mild cognitive impairment cases who revert to normal. Neurology. 2014;82(4):317–325. doi: 10.1212/WNL.0000000000000055

28. Park S, White L, Fishman P, Larson EB, Coe NB. Health Care Utilization, Care Satisfaction, and Health Status for Medicare Advantage and Traditional Medicare Beneficiaries With and Without Alzheimer Disease and Related Dementias. JAMA Network Open. 2020;3(3):e201809. doi: 10.1001/jamanetworkopen.2020.1809

29. McCoy TH, Han L, Pellegrini AM, Tanzi RE, Berretta S, Perlis RH. Stratifying risk for dementia onset using large-scale electronic health record data: A retrospective cohort study. Alzheimer’s & Dementia. 2020;16(3):531–540. doi: 10.1016/j.jalz.2019.09.084
