Abstract

National policy and litigation have been a catalyst in many states for expanding personal outcomes for people with intellectual and developmental disabilities (IDD) and have served as an impetus for change in state IDD systems. Although several metrics are used to examine personal outcomes, the National Core Indicators (NCI) In-Person Survey (IPS) is one tool that provides an annual depiction of the lives of people who receive Medicaid Home and Community-Based IDD waiver services (HCBS). This article examines whether a validated, three-factor (Privacy Rights, Everyday Choice, and Community Participation) measure of Personal Opportunity, derived from NCI items, functions as predicted across non-equivalent NCI cohorts (N = 2,418) from Virginia in 2017, 2018, and 2019. Multiple-groups confirmatory factor analysis (MG-CFA) was employed to examine the invariance and generalizability of the Personal Opportunity constructs. Results indicated that Privacy Rights, Everyday Choice, and Community Participation measured the same concepts across years and cohorts. Latent factor means for Privacy Rights and Community Participation were significantly higher in 2018 and 2019 than in 2017. Findings provide stakeholders with a tool for interpreting personal outcomes in the contexts of policy and practice intended to improve inclusion and quality of life for adults with IDD.

Keywords: National Core Indicators, rights, choice, community participation, intellectual disability

Background

National policy and litigation such as the Americans With Disabilities Act in 1990, the Supreme Court Olmstead v. L.C. decision in 1999, and the 2014 Home and Community-Based Services (HCBS) Settings Final Rule have cleared a path for people with intellectual and developmental disabilities (IDD) to be fully engaged and included in their communities. For example, key provisions of the Final Rule emphasize increased access to personal opportunity for participants in HCBS programs, particularly opportunities to have autonomy and independence in making choices, to have desired privacy, and to exercise full access to the greater community (Developmental Disabilities Program, 2015).

Like the Final Rule, many policies are guided by person-referenced principles, such as choice, rights, and inclusion (Schalock et al., 2010). Policies are also developed to assure that the agencies and organizations delivering supports and services to eligible people with IDD are well coordinated and accountable to the people they serve (Shogren et al., 2018). Personal outcomes are useful for operationalizing policy impacts on people and systems. People with IDD value certain personal outcomes, embedded in human rights, and those same outcomes can be used to monitor system impacts (Houseworth et al., 2019; Tichá et al., 2018).

Clear delineations among personal outcomes, system performance, and policy goals have emerged over the past decade. Shogren et al. (2015) improved a system for linking personal outcome indicators with policy goals by synthesizing national and international policy documents and distilling regulation and legislation into three overarching disability policy goals: Human Dignity and Autonomy, Human Endeavor, and Human Engagement. However, there remains a need for valid and stable mechanisms for measuring personal outcomes, such as choice-making, privacy rights, and community inclusion, that correspond to the Human Dignity and Autonomy and Human Engagement policy goals (Shogren et al., 2015). Accurately measuring personal outcomes allows systems to adhere to the spirit of a policy and to the people it references.

Current Outcome Measurement Approaches

Two current mechanisms for tracking personal outcomes have been widely adopted. The Council on Quality and Leadership’s (2017) Personal Outcome Measures (POMs) uses 21 indicators, mapped onto 5 factors: Security, Community, Relationships, Choices, and Goals. A quality of life lens guides the POMs, and its orientation is clearly person-referenced. The National Association of State Directors of Developmental Disabilities Services (NASDDDS) and Human Services Research Institute’s (HSRI) National Core Indicators (NCI; n.d.), like the POMs, draws on multiple perspectives, but it always references systems, often through person-referenced outcomes collected with the NCI In-Person Survey (IPS; n.d.).

Although the NCI-IPS organizes personal outcome indicators into outcome domains such as “community inclusion, participation, and leisure,” “choice and decision-making,” and “respect and rights,” the relationships between items and theorized constructs are not always aligned. As a result, many scales measuring a range of constructs have emerged through factor analytic techniques, which allow items to be combined based on the strength of their empirical relationships rather than on fidelity to the concepts they were initially intended to measure. Factors that contain some but not all indicators in a given domain, or that combine personal outcome indicators across domains, include, but are not limited to, Social Participation and Relationships, Personal Control, Social Determination (Mehling & Tassé, 2014), Everyday Choice (Lakin et al., 2008), and Budgetary Agency (Houseworth et al., 2019). Although these measures have been validated, often on subsets of NCI data from particular states or with specific subpopulations of people with IDD, difficulties in replication have been reported when existing measures are used in other state or national contexts (Jones et al., 2018).

Many of the measures combined data from multiple states, but measures do not always hold in disaggregated systems (Jones et al., 2018). The poor translation of measures from the national to the state level poses problems for state systems and decision-makers that rely on valid personal outcome measures that can be used year after year. Thus, there is a need to develop IDD system scales that can be used for monitoring and quality assurance and to make informed, data-based decisions about policy and regulatory directions. To this end, we sought to develop stable measures that (a) fit state and national data, (b) were similarly valid for different randomly selected NCI cohorts, and (c) could compare and benchmark personal outcomes across years. Measures that meet these criteria could reasonably be expected to show whether state systems are improving personal opportunities for adult service users with IDD.

Personal Opportunity

In this study, we use Personal Opportunity to refer to the chances for self-directed action, including acting on one’s strengths and preferences. A range of contextual elements can promote or inhibit personal opportunities to meaningfully engage in life activities. For some, personal opportunities may be conditioned on the supports they use or the permissions they are required to seek (Mansell & Beadle-Brown, 2012; McConkey & Collins, 2010; Wilson et al., 2017). Support provided should be proportionate to the gap between environmental demands and one’s preparedness to meet those demands independently. As people learn strategies to better navigate their environments, they are given, or take, more chances for autonomous action (Prohn et al., 2018). Personal outcomes serve as indicators of access to personal opportunity.

Responsive systems use personal outcome information to adapt circumstances that contribute to personal opportunities (Shogren et al., 2018). Opportunities to meaningfully participate in a range of life activities are tied to several personal outcomes, many of which are measured through the NCI-IPS. We chose to consider a specific constellation of personal outcomes (Everyday Choice, Privacy Rights, and Community Participation) that contribute to Personal Opportunity when systems and the policies that govern them are supportive. These personal outcomes have been measured individually, in multiple configurations, through items on the NCI-IPS. Regardless of the measurement tool, the three outcomes are malleable: In supportive contexts, they should improve over time, enhancing personal opportunities.

Privacy Rights

The right to privacy, like everyday choice, has been detailed in international policy, namely through Article 22 of the Convention on the Rights of Persons with Disabilities, which states that “No person with disabilities…shall be subjected to arbitrary or unlawful interference with his or her privacy” (United Nations, n.d.). More control over liminal spaces can offer dignity and a sense of security. This right permits people to negotiate an individualized balance between the outside world and their place of residence, or between personal and private spaces within residences. Privacy features prominently in the HCBS Settings Final Rule (2014), which requires that residential experiences have the liberties and flexibility associated with a home rather than replicate institutional functioning (i.e., transinstitutionalization). IDD systems can use regulation to improve privacy for those receiving supports and services. Improved privacy rights outcomes reflect positively on the system while creating opportunities for service users.

Everyday Choice

Everyday Choice refers to a category of choices, often made daily, that guide personal activities (Lakin et al., 2008). The freedom to make choices has been identified as a hallmark of human rights (United Nations, 2006). The Final Rule (2014) echoes the importance of autonomy by requiring that individuals have control over their own schedules and activities and have individual choices (42 CFR 441.301(c)(4)). There is some evidence that autonomy and the opportunity to make choices predict a variety of valued personal outcomes, such as employment, social relationships, and financial independence (Bush & Tassé, 2017; Shogren & Shaw, 2016). Contexts such as residence size have been shown to either improve or restrict everyday choices (Houseworth et al., 2018; Lakin et al., 2008; Tichá et al., 2012). Personal opportunities can be attained when systems become aware of aggregate levels of everyday choice and develop contexts that strengthen everyday choices.

Community Participation

Presence in the community and social participation are the defining features of community participation (Bigby et al., 2018; Chang & Coster, 2014; Lee & Morningstar, 2019). Optimal human functioning depends on participation (Schalock et al., 2010). Participation is also a right captured across multiple articles of the United Nations Convention on the Rights of Persons with Disabilities (Tichá et al., 2018). Through participation, people grow and learn. Improvements in supporting the participation of individuals with IDD in their communities have been documented (Verdonschot et al., 2009). People with IDD who participate in their communities have more personal opportunities (Braddock et al., 2017).

Study Aims

Everyday choice, privacy rights, and community participation are meaningful for individuals with IDD and for the systems that support them. It is important to reflect on policies that aim for these outcomes to feature more prominently in people’s daily lives. As people strive for these outcomes, and systems cede unnecessary controls, people with disabilities should have greater access to the opportunities that occur in inclusive community living. However, dependable tools for measuring opportunity are required if systems, states, or countries are to hold themselves accountable to people with disabilities. In this study, we examined whether personal outcome measures fit state data and whether the personal outcome concepts being measured were stable over time. We addressed these needs through two primary research questions:

Do measurement models for three factors (privacy rights, everyday choice, and community participation) fit state data from cohorts over 3 consecutive years?

Do cohorts from 2017, 2018, and 2019 differ in their latent mean levels of privacy rights, everyday choice, and community participation?

Methods

Sample

We employed identical procedures to collect NCI samples in the 2016–2017, 2017–2018, and 2018–2019 years. The state Developmental Disabilities (DD) agency designated five planning regions, each containing 5 to 10 community services boards (CSBs). CSBs are Virginia’s local entities that provide state-funded services for people with IDD. Mutually exclusive strata were formed from the five regions, and the sample in each region was proportional to that region’s participation in the IDD waiver. Within strata, we used simple random sampling without replacement to select from the population of adults (i.e., 18 years or older) who used case management and at least one other public service. This process yielded samples of 806, 807, and 805 in 2017 (Cohort 1), 2018 (Cohort 2), and 2019 (Cohort 3), respectively. Basic demographics and characteristics of each sample are included in Table 1.
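The sampling design above (regional strata, proportional allocation, and simple random sampling without replacement within strata) can be sketched in Python as follows. This is an illustration under stated assumptions, not the procedure the state agency actually ran: the sampling frame, the region names and sizes, and the largest-remainder rule for rounding fractional allocations are hypothetical choices made for the example.

```python
import random

def proportional_allocation(stratum_sizes, total_n):
    """Split total_n draws across strata in proportion to stratum size.

    Fractional quotas are floored, and the leftover draws go to the strata
    with the largest fractional remainders (an assumed rounding rule), so
    the allocations always sum to total_n.
    """
    population = sum(stratum_sizes.values())
    if not 0 <= total_n <= population:
        raise ValueError("total_n must be between 0 and the population size")
    quotas = {s: total_n * size / population for s, size in stratum_sizes.items()}
    alloc = {s: int(q) for s, q in quotas.items()}  # floor of each quota
    leftover = total_n - sum(alloc.values())
    by_remainder = sorted(quotas, key=lambda s: quotas[s] - alloc[s], reverse=True)
    for s in by_remainder[:leftover]:
        alloc[s] += 1
    return alloc

def stratified_sample(frame, total_n, seed=None):
    """Draw a proportional stratified sample.

    `frame` maps each stratum (e.g., a planning region) to a list of eligible
    person IDs; within each stratum, IDs are drawn by simple random sampling
    without replacement.
    """
    rng = random.Random(seed)
    alloc = proportional_allocation({s: len(ids) for s, ids in frame.items()}, total_n)
    return {s: rng.sample(ids, alloc[s]) for s, ids in frame.items()}

# Hypothetical frame: five regions with made-up numbers of eligible adults.
region_sizes = [3000, 2500, 2000, 1500, 1000]
frame = {f"region_{i + 1}": list(range(i * 10_000, i * 10_000 + n))
         for i, n in enumerate(region_sizes)}
sample = stratified_sample(frame, total_n=800, seed=2017)
print({region: len(ids) for region, ids in sample.items()})
```

With these made-up counts the quotas divide evenly, so the regions receive 240, 200, 160, 120, and 80 draws. The largest-remainder step only matters when quotas are fractional, which is the usual case with real waiver counts.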

Table 1.

Demographics of State National Core Indicators Samples From 2017, 2018, and 2019

| Demographics | Cohort 1 | Cohort 2 | Cohort 3 |
|---|---|---|---|
| N | 806 | 807 | 805 |
| Age, M (SD) | 45.65 (16.49) | 43.73 (15.64) | 43.43 (15.57) |
| Gender | | | |
| Female | 40% | 44% | 42% |
| Male | 60% | 56% | 58% |
| Race | | | |
| Asian | 3% | 2% | 2% |
| African American | 27% | 31% | 34% |
| White | 61% | 63% | 59% |
| Latinx | 2% | 3% | 1% |
| Other | 1% | 1% | 2% |
| Do not know | 6% | 1% | 1% |
| Level of Intellectual Disability | | | |
| Mild | 28% | 30% | 28% |
| Moderate | 32% | 40% | 38% |
| Severe | 17% | 15% | 16% |
| Profound | 14% | 13% | 9% |
| Expression | | | |
| Spoken | 28% | 76% | 74% |
| Gestures | 32% | 20% | 20% |
| Sign Language | 17% | 2% | 2% |
| Communication aid | 14% | 1% | 2% |
| Residence Type | | | |
| Independent home or apartment | 6% | 8% | 7% |
| Parent or relative’s home | 29% | 33% | 37% |
| Agency 1–3 residents | 6% | 12% | 8% |
| Agency 4–6 residents | 32% | 34% | 35% |
| Agency 7–15 residents | 4% | 6% | 5% |
| Specialized facility | 6% | N/A | 1% |
| Nursing facility | 6% | N/A | 1% |

Instrumentation and Variables

The 2017–2019 versions of the NCI-IPS contained up to 109 items across three survey sections: (a) Background Information, (b) Section 1: Face-to-Face with Person Receiving Services and Supports, and (c) Section 2: Survey with Person Receiving Services or with or without Proxy Respondents. Demographics, health, employment, and residential information comprise much of the Background Information section, which is completed by case managers. The remainder of the NCI-IPS was administered through face-to-face interviews by trained interviewers residing in the same region as respondents.

The person receiving services is the only person who can answer items in Section 1. These items cover topics such as privacy, safety, community participation, and satisfaction with supports and services. In Section 2, a proxy can answer items, but only if the person receiving services is unable to answer and the proxy is knowledgeable about the section topics, which include community inclusion, choice making, rights, and access to supports and services. Our analysis includes items from Section 2 of the NCI-IPS that were related to factors that fit data from the state as well as from a national sample (Dinora et al., 2019). The configuration of privacy rights, everyday choice, and community participation is described below.

Privacy Rights

Neely-Barnes et al. (2008) created a single rights score from a composite of six items (e.g., open mail, use phone), with a total score ranging from 0 to 12 points. The single score was used as an outcome and was found to be predicted by choice. The rights items used by Neely-Barnes et al. did not adequately fit Virginia’s NCI data. Instead of using Neely-Barnes et al.’s rights composite, we measured rights as suggested by Dinora et al. (2019), using two residential privacy rights items: “Do you have a key to your home?” and “Can you lock your bedroom door if you want to?” Confirmatory factor analyses using state and national NCI data showed that these items adequately loaded onto a Privacy Rights factor. The response options for these items were recoded to 0 = No, 1 = Maybe, 2 = Yes. Ordinal alpha ranged from .40 to .50.
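The recoding step can be illustrated with a small Python sketch. The raw response labels and field names below are assumptions for illustration (the actual NCI-IPS codebook labels are not reproduced in this article); any label outside the mapping, such as a "Don't know" response, is treated as missing rather than forced onto the 0 to 2 scale.

```python
# Hypothetical raw labels mapped onto the 0 = No, 1 = Maybe, 2 = Yes coding.
PRIVACY_CODES = {"No": 0, "Maybe": 1, "Yes": 2}
# Hypothetical field names for "Do you have a key...?" and "Can you lock your bedroom door...?"
PRIVACY_ITEMS = ("key", "lock")

def recode_items(record, items, codes):
    """Return ordinal scores for `items` in one respondent's record.

    Labels missing from `codes` (including absent items) become None,
    which downstream software can treat as missing data.
    """
    return {item: codes.get(record.get(item)) for item in items}

respondent = {"key": "Yes", "lock": "Don't know"}
print(recode_items(respondent, PRIVACY_ITEMS, PRIVACY_CODES))
```

The same helper would work for the Everyday Choice items with a different mapping (0 = someone else chose, 1 = person had some input, 2 = person made the choice).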

Everyday Choice

The NCI-IPS uses nine indicators to capture “choice and decision-making” (NCI, n.d.). These indicators have been reported as two different factors, one called “support-related choice” and another called “short-term decision making” (Bush & Tassé, 2017), the latter often referred to as Everyday Choice (Lakin et al., 2008). This study measured Everyday Choice as it has been measured since 2008 (Lakin et al., 2008), with three items (“Who decides your daily schedule?”; “Who decides how you spend your free time?”; “Do you choose what you buy with your spending money?”). The response options varied slightly depending on the question, but all were on a 3-point scale that was recoded to 0 = someone else chose, 1 = person had some input, and 2 = person made the choice. Ordinal alpha ranged from .75 to .81.
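Ordinal alpha is coefficient alpha computed from a polychoric rather than a Pearson correlation matrix. A minimal sketch, assuming the polychoric correlations have already been estimated elsewhere (that estimation step is not shown), applies the standardized alpha formula to the correlation matrix; the correlation values in the example are hypothetical.

```python
def alpha_from_correlations(corr):
    """Standardized coefficient alpha from a k x k correlation matrix.

    alpha = k / (k - 1) * (1 - k / S), where S is the sum of every entry of
    the matrix (diagonal included). Passing a polychoric matrix yields
    ordinal alpha.
    """
    k = len(corr)
    if k < 2 or any(len(row) != k for row in corr):
        raise ValueError("need a square matrix with at least two items")
    total = sum(sum(row) for row in corr)
    return k / (k - 1) * (1 - k / total)

def equicorrelated(k, r):
    """k x k matrix with ones on the diagonal and r everywhere else."""
    return [[1.0 if i == j else r for j in range(k)] for i in range(k)]

# Three items with a common (hypothetical) polychoric correlation of .50
print(round(alpha_from_correlations(equicorrelated(3, 0.5)), 2))  # 0.75
```

With two items the formula reduces to 2r / (1 + r), so the Privacy Rights values of .40 to .50 correspond to an average polychoric correlation of roughly .25 to .33. Because alpha also rises with the number of items, part of the gap between the two-item Privacy Rights factor and the three- and four-item factors reflects scale length alone.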

Community Participation

A continuum of community participation ranges from physical presence in the community to meaningful social participation in community contexts (O’Brien & Blessing, 2011; Schalock et al., 2010). The NCI-IPS distinguishes between tasks performed in daily life, which often require some monetary exchange, and elements of community participation more akin to social inclusion. The former is measured by listing various activities that take place in the community and asking respondents to estimate how often they participated in each. Specifically, respondents report how often over the past month, and with whom, they went on errands, went out to eat, went out for entertainment, went shopping, or attended religious services. Different combinations of these items have been used to capture community participation. Mehling and Tassé (2015) combined shopping, errands, entertainment, vacation, and eating out with two friendship items to form a factor referred to as “Social Relationships and Community Inclusion.” This factor did not fit Virginia’s NCI-IPS data; in particular, the friendship items did not load with the community participation items as they did in Mehling and Tassé’s (2014, 2015) samples. Instead, we used the Community Participation items outlined by Dinora et al. (2019): shopping, errands, entertainment, and eating out. The response options for these items approximated the frequency of outings over the past month and were recoded to 0 = did not go out, 1 = 1 or 2 times, 2 = 3 or 4 times, and 3 = 5 or more times. Ordinal alpha ranged from .74 to .83.
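If outings were recorded as raw monthly counts, the four-category coding described above could be applied with a function like the one below. This is a hypothetical sketch: the NCI-IPS response options are themselves frequency categories, so the count input and the item field names here are assumptions made only to make the cut points explicit.

```python
# Hypothetical field names for the four Community Participation items.
COMMUNITY_ITEMS = ("shopping", "errands", "entertainment", "eat_out")

def outing_category(times_last_month):
    """Map a count of outings in the past month to the 0-3 ordinal code.

    0 = did not go out, 1 = 1 or 2 times, 2 = 3 or 4 times, 3 = 5 or more.
    None (missing) passes through unchanged.
    """
    if times_last_month is None:
        return None
    if times_last_month < 0:
        raise ValueError("count of outings cannot be negative")
    if times_last_month == 0:
        return 0
    if times_last_month <= 2:
        return 1
    if times_last_month <= 4:
        return 2
    return 3

def recode_community(record):
    """Recode all four items for one respondent; absent items become None."""
    return {item: outing_category(record.get(item)) for item in COMMUNITY_ITEMS}

print([outing_category(n) for n in (0, 1, 2, 3, 4, 5, 12)])  # [0, 1, 1, 2, 2, 3, 3]
```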

Statistical Analyses

Multiple-Group Confirmatory Factor Analysis

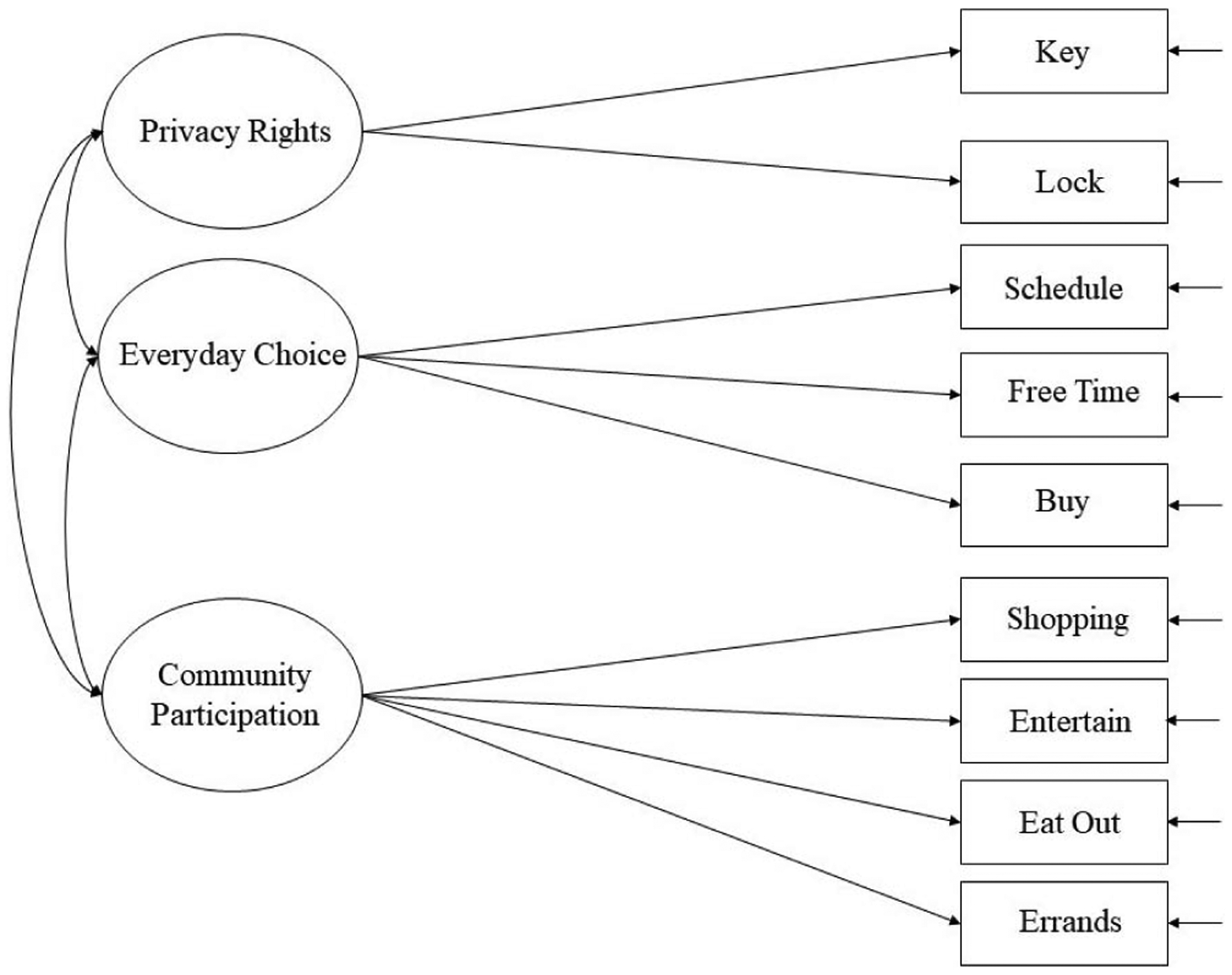

The Personal Opportunity construct consisted of three latent factors. We replicated Dinora et al.’s (2019) model, in which two items loaded on Privacy Rights, three items loaded on Everyday Choice, and four NCI-IPS items loaded on Community Participation (Figure 1). Whereas Dinora et al. (2019) conducted a confirmatory factor analysis on a single NCI sample, we were interested in comparing latent factor means across NCI samples from different years. Before making comparisons, we needed to determine that the personal outcome factors, as we measured them, held the same meaning for different response groups. Therefore, we used multiple-groups confirmatory factor analysis (MG-CFA) to examine whether the theorized model was invariant across the 2017, 2018, and 2019 response groups. Comparisons across groups are meaningful only when the factors have the same measurement properties and mean the same thing for each group. To evaluate the equivalence of different aspects of the model, we tested it through a series of standard steps.

Figure 1.

Hypothesized factor model.

In line with Brown’s (2015) recommendations, our first step was to test configural invariance by fitting the least restrictive model, in which the factor structure was identical across cohorts. This step ensured that each cohort had the same number of factors and items and that each item loaded on the same factor regardless of cohort. Next, we tested metric invariance to determine whether the relationships between factors and specific items (i.e., factor loadings) were equal across cohorts. Finally, we tested scalar invariance to determine whether response patterns on the items, captured by the item thresholds, were uniform across years. Scalar invariance is particularly important for comparing the means of latent factors. Because the items were ordered categorical, we used the weighted least squares mean and variance adjusted (WLSMV) estimator with theta parameterization in Mplus 8.3 (Muthén & Muthén, 1998–2017) rather than the maximum likelihood (ML) estimator commonly used for continuous variables.

Evaluation of Model Fit

The degree to which the data aligned with the Personal Opportunity factors was captured with fit indices, which indicate how well a proposed model reproduces observed data. Overall goodness of fit was evaluated with the chi-square test (χ²), the comparative fit index (CFI), the Tucker–Lewis index (TLI), the root mean square error of approximation (RMSEA), and the standardized root mean square residual (SRMR). Acceptable fit was defined as CFI ≥ .95, TLI ≥ .95, RMSEA ≤ .06, and SRMR ≤ .08 (Hu & Bentler, 1999). Modification indices were inspected to identify sources of misfit between the data and our models. Measurement invariance was retained when ΔCFI ≥ −.004 and ΔRMSEA ≤ .050 for metric invariance, and when ΔCFI ≥ −.004 and ΔRMSEA ≤ .010 for scalar invariance, even when the Δχ² test was significant (Rutkowski & Svetina, 2017; Svetina et al., 2020).
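The ΔCFI and ΔRMSEA decision rule above can be expressed as a short Python check. This is a sketch, not part of the original analysis: it compares fit indices rounded to three decimals (as reported in Table 2) and deliberately leaves out Δχ², because under WLSMV the chi-square difference must come from a scaled difference test (DIFFTEST in Mplus) rather than from subtracting the two χ² values.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Fit:
    cfi: float
    rmsea: float

# Cutoffs from the criteria stated above (Rutkowski & Svetina, 2017).
CRITERIA = {
    "metric": {"min_delta_cfi": -0.004, "max_delta_rmsea": 0.050},
    "scalar": {"min_delta_cfi": -0.004, "max_delta_rmsea": 0.010},
}

def invariance_retained(constrained: Fit, baseline: Fit, level: str) -> bool:
    """True if the more constrained model fits no worse than its baseline.

    Differences are rounded to three decimals so that, for example,
    .984 - .988 is treated as exactly -.004 rather than a float just below it.
    """
    rule = CRITERIA[level]
    delta_cfi = round(constrained.cfi - baseline.cfi, 3)
    delta_rmsea = round(constrained.rmsea - baseline.rmsea, 3)
    return delta_cfi >= rule["min_delta_cfi"] and delta_rmsea <= rule["max_delta_rmsea"]

# Fit indices from Table 2
configural = Fit(cfi=0.989, rmsea=0.034)
metric = Fit(cfi=0.988, rmsea=0.033)
scalar = Fit(cfi=0.981, rmsea=0.034)
partial_scalar = Fit(cfi=0.984, rmsea=0.033)

print(invariance_retained(metric, configural, "metric"))      # True
print(invariance_retained(scalar, metric, "scalar"))          # False
print(invariance_retained(partial_scalar, metric, "scalar"))  # True
```

The three calls reproduce the decisions reported in the Results: metric invariance retained, full scalar invariance rejected on ΔCFI, and partial scalar invariance retained.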

Comparing Latent Means

If the evidence shows that each latent factor (Privacy Rights, Everyday Choice, and Community Participation) is interpreted in the same way by different groups of participants, and goodness-of-fit criteria are met, latent mean comparisons can be made. Understanding the direction and magnitude of changes in latent personal outcomes across 3 consecutive years helps clarify the outcomes of the supports and services provided by the DD system and, if necessary, informs adjustments. Latent mean differences can be examined through the scalar invariance model. Estimated standardized effect sizes (i.e., the magnitude of the differences) are also reported.
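A standardized latent mean effect size divides the latent mean difference (κ) by a pooled latent standard deviation. The sketch below assumes a sample-size-weighted pooled factor variance, one common formulation in the structured means literature (e.g., Hancock, 2001); the factor variances in the example are hypothetical because they are not reported in this section.

```python
import math

def latent_effect_size(kappa, var_a, n_a, var_b, n_b):
    """Standardized latent mean difference.

    kappa: estimated latent mean difference between groups a and b.
    var_a, var_b: estimated factor variances in each group.
    The pooled variance is weighted by sample size (an assumed formulation).
    """
    if min(var_a, var_b) <= 0 or min(n_a, n_b) <= 0:
        raise ValueError("variances and sample sizes must be positive")
    pooled_var = (n_a * var_a + n_b * var_b) / (n_a + n_b)
    return abs(kappa) / math.sqrt(pooled_var)

# Hypothetical: Privacy Rights difference for Cohort 2 vs. Cohort 1 (kappa from
# Table 7) with both factor variances assumed to equal 1.
print(round(latent_effect_size(0.321, 1.0, 806, 1.0, 807), 3))  # 0.321
```

When both factor variances equal 1, the effect size is simply |κ|, which is one way to read the κ values in Table 7 as approximate standard-deviation differences.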

Handling of Missing Data

The proportion of missing data across cohorts ranged from 0.62% to 6.05%. Little’s (1988) MCAR test supported the assumption that data were missing completely at random (MCAR) for the first (p = .20) and second (p = .29) cohorts. Data in the third cohort were not MCAR (p = .01): The probability of missing data on the everyday choice items was related to the eating-out items in community participation (p < .01). Within each level of the eating-out items, there were no significant differences in participants’ responses to the everyday choice items; hence, the missing at random (MAR) assumption was considered tenable. The WLSMV estimator in Mplus handles missing values through pairwise deletion (Muthén & Muthén, 1998–2017). Although pairwise deletion can still lead to biased parameter estimates under MAR (Enders, 2010), the low proportion of missing data in the third cohort (< 10%) was unlikely to meaningfully affect the findings (Bennett, 2001). The final dataset contained 806, 807, and 805 participants in the first, second, and third cohorts, respectively.
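Two quantities in this paragraph, the proportion of missing data and the cases available under pairwise deletion, can be computed with a short sketch. It assumes each respondent is a dictionary of item scores with None for missing values, and it defines the missing-data proportion over all item-by-respondent cells; the article does not state which denominator was used, and the field names and rows are hypothetical. Little’s MCAR test itself is not shown.

```python
from itertools import combinations

def missing_proportion(rows, items):
    """Share of item-by-respondent cells that are missing (None)."""
    cells = len(rows) * len(items)
    missing = sum(1 for row in rows for item in items if row.get(item) is None)
    return missing / cells if cells else 0.0

def pairwise_n(rows, items):
    """Number of respondents observed on both items, for every item pair.

    Under pairwise deletion each pairwise sample statistic uses only these
    cases, so different item pairs can rest on different subsets of respondents.
    """
    return {
        (a, b): sum(1 for row in rows if row.get(a) is not None and row.get(b) is not None)
        for a, b in combinations(items, 2)
    }

rows = [
    {"key": 2, "lock": None, "buy": 1},
    {"key": None, "lock": 1, "buy": 2},
    {"key": 2, "lock": 2, "buy": 0},
]
items = ("key", "lock", "buy")
print(missing_proportion(rows, items))  # 2 of 9 cells
print(pairwise_n(rows, items))
```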

Results

A series of measurement invariance models was constructed and evaluated with three distinct latent factors specified: Privacy Rights, Everyday Choice, and Community Participation. Following conventional procedures (Brown, 2015), measurement invariance tests proceeded from the least restrictive (i.e., configural invariance) model to the most restrictive (i.e., scalar invariance) model. The first model, the configural invariance model (Model A1), examined whether the number of latent factors and indicators was identical across cohorts. As shown in Table 2, the configural model yielded a satisfactory fit, χ²(72) = 137.59, p < .001, CFI = .99, TLI = .98, RMSEA = .03, SRMR = .03. This finding indicated that the structure of the model (i.e., the numbers of factors and items) was identical across respondent cohorts and that the model fit the data well.

Table 2.

Measurement Invariance Models

| Model | χ² | df | CFI | TLI | RMSEA | SRMR | Model comparison | Δχ² | ΔCFI | ΔRMSEA |
|---|---|---|---|---|---|---|---|---|---|---|
| A1 Configural invariance | 137.592* | 72 | .989 | .984 | .034 | .030 | | | | |
| A2 Metric invariance | 159.673* | 84 | .988 | .984 | .033 | .033 | A2 vs. A1 | 24.043* | −.001 | −.001 |
| A3 Scalar invariance | 237.772* | 122 | .981 | .984 | .034 | .035 | A3 vs. A2 | 79.940* | −.007 | .001 |
| A3–1 Partial scalar invariance | 220.704* | 118 | .984 | .985 | .033 | .034 | A3–1 vs. A2 | 62.205* | −.004 | .000 |

Note. Cohort 1 n = 806; Cohort 2 n = 807; Cohort 3 n = 805. CFI = Comparative Fit Index; TLI = Tucker–Lewis Index; RMSEA = Root Mean Square Error of Approximation; SRMR = Standardized Root Mean Square Residual.

*p < .05.

Given that configural invariance was retained, we then examined the metric invariance model (Model A2), which tested whether the magnitude of the relationships between factors and indicators was identical across cohort years. The metric invariance model yielded a satisfactory fit (χ²(84) = 159.67, p < .001, CFI = .99, TLI = .98, RMSEA = .03, SRMR = .03), and changes in CFI and RMSEA relative to the configural model were minimal (ΔCFI = −.001 and ΔRMSEA = −.001, within the ΔCFI ≥ −.004 and ΔRMSEA ≤ .050 criteria). Therefore, the metric invariance model fit no worse than the baseline model. Retaining metric invariance indicated that the relationships between survey items and latent factors were equal across cohorts; therefore, a one-unit change in a given latent factor (i.e., Privacy Rights, Everyday Choice, or Community Participation) is associated with the same change in participants’ responses to a specific item in every cohort.

Given that metric invariance was retained, we then examined the scalar invariance model (Model A3), which tested whether response propensity was equivalent for respondents with the same levels of Privacy Rights, Everyday Choice, and Community Participation. The scalar invariance model yielded a satisfactory fit (χ²(122) = 237.77, p < .001, CFI = .98, TLI = .98, RMSEA = .03, SRMR = .04). However, CFI dropped by .007 relative to the metric model, exceeding the ΔCFI ≥ −.004 criterion and suggesting that response propensities for some survey items differed between cohorts. The modification indices indicated that the KEY item (“Do you have a key to your home?”) was the largest source of misfit and should be freely estimated in each cohort, that is, each cohort should be allowed its own response propensity for the KEY item. After we revised the model, the changes in CFI and RMSEA relative to the metric invariance model met the criteria (ΔCFI = −.004, ΔRMSEA = .000). Therefore, the partial scalar invariance model (Model A3–1) was retained, indicating that all items except KEY had the same response propensities across cohorts. Because full scalar invariance is uncommon, it has become commonplace to test latent mean differences with partially invariant factors (Putnick & Bornstein, 2016).

In terms of latent means, the second and third cohorts had significantly higher Privacy Rights (κ = .321, p = .004; κ = .469, p < .001) and Community Participation (κ = .204, p < .001; κ = .199, p < .001) scores than the first cohort (Table 7; see Hancock, 2001, for computing standardized effect sizes in structured means modeling). There were no significant differences in Privacy Rights or Community Participation between the second and third cohorts, and no significant differences in the latent mean of Everyday Choice between any cohorts. Therefore, on average, the mean levels of Privacy Rights and Community Participation in the second and third cohorts were approximately 0.2 to 0.5 standard deviations higher than those of the first cohort. These differences represent small-to-medium effects (Cohen, 1988).
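The p values for latent mean differences come from Wald-type z tests (estimate divided by its standard error). A minimal sketch, assuming a two-sided test against the standard normal distribution, recomputes a few of the Table 7 p values from the reported κ and SE entries:

```python
import math

def wald_z_test(estimate, se):
    """Two-sided z test of H0: parameter = 0.

    Returns (z, p), where p = 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    """
    if se <= 0:
        raise ValueError("standard error must be positive")
    z = estimate / se
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p

# kappa and SE values from Table 7
for label, kappa, se in [
    ("Privacy Rights, Cohort 2 - Cohort 1", 0.321, 0.113),
    ("Everyday Choice, Cohort 2 - Cohort 1", -0.004, 0.059),
    ("Privacy Rights, Cohort 3 - Cohort 2", 0.147, 0.109),
]:
    z, p = wald_z_test(kappa, se)
    print(f"{label}: z = {z:.2f}, p = {p:.3f}")
```

The recomputed values agree with Table 7 only to within rounding, because the table’s κ and SE entries are themselves rounded to three decimals before the test is redone.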

In terms of the associations between factors, the three factors were positively associated with one another in all three cohorts: Participants with high levels of privacy rights also tended to have high levels of choice and community participation. Note, however, that the magnitude of these relationships appeared to decrease over time. Model fit indices are provided in Table 2, and model parameters from the partial scalar invariance model are provided in Tables 3–7.

Table 3.

Factor Loadings

| Indicators | λ | SE | p-value |
|---|---|---|---|
| Privacy Rights | | | |
| key | .479 | .075 | < .001 |
| lock | 1.001 | .192 | < .001 |
| Everyday Choice | | | |
| schedule | 1.183 | .080 | < .001 |
| free time | 1.647 | .154 | < .001 |
| buy | 1.021 | .063 | < .001 |
| Community Participation | | | |
| shopping | 1.496 | .088 | < .001 |
| entertain | .913 | .050 | < .001 |
| eat out | 1.463 | .084 | < .001 |
| errands | .844 | .046 | < .001 |

Table 7.

Factor Mean Differences

| Factors | κ | SE | p-value |
|---|---|---|---|
| Cohort 1 | | | |
| Privacy Rights | .000 | .000 | — |
| Everyday Choice | .000 | .000 | — |
| Community Participation | .000 | .000 | — |
| Cohort 2 – Cohort 1 | | | |
| Privacy Rights | .321 | .113 | .004 |
| Everyday Choice | −.004 | .059 | .941 |
| Community Participation | .204 | .051 | < .001 |
| Cohort 3 – Cohort 1 | | | |
| Privacy Rights | .469 | .130 | < .001 |
| Everyday Choice | .039 | .057 | .497 |
| Community Participation | .199 | .051 | < .001 |
| Cohort 3 – Cohort 2 | | | |
| Privacy Rights | .147 | .109 | .176 |
| Everyday Choice | .043 | .055 | .431 |
| Community Participation | −.005 | .046 | .913 |

Discussion

In a policy climate that emphasizes the full inclusion of people with disabilities in their communities, state IDD systems have an increasing responsibility to use sound and stable measures to ensure compliance with mandates and to track progress over time. Although the NCI-IPS is widely used to measure IDD system performance, an item-by-item analysis of the IPS can be unwieldy and overwhelming for policy makers, families, and other stakeholders. To address this issue, researchers have worked with national- and state-level NCI datasets to identify personal outcomes that encompass multiple survey items and allow constructs such as personal opportunity to be monitored more holistically. The analysis presented here extends this growing body of research by identifying valid measures and further testing the invariance of validated personal outcome factors across different cohorts over 3 consecutive years.

To investigate our first research question, we used MG-CFA to examine whether the NCI-IPS factors Privacy Rights, Everyday Choice, and Community Participation had the same meaning for different cohorts of NCI respondents over a 3-year period. Retaining configural invariance indicated that the organization of the factors was supported across cohorts, and retaining metric invariance indicated that the magnitude of the relationships between factors and their indicators was identical across cohorts. We also achieved partial scalar invariance. These results support the use of the NCI personal outcome factors, in that the meaning of the factors remained unchanged from year to year.

Policy feedback loops connect policies to support and service practices. Personal outcomes are key components of these loops because they indicate when policies and practices should be altered. Conversely, in the absence of valid and meaningful personal outcome data, feedback loops are broken or delayed. The measures we presented for capturing outcomes therefore offer a considerable opportunity for the state system and for the people receiving services. Although the NCI-IPS has always captured personal outcome items, our measures allow discrete outcomes to be examined in relation to specific policy goals. These data can support continuous quality improvement because they can inform state-level policy and regulatory decision making. Completing policy feedback loops allows systems to best meet the spirit of disability policies that were developed to improve personal outcomes and to hold systems accountable for those outcomes. Further, our MG-CFA allowed us to capture personal outcomes and monitor changes in those outcomes over a 3-year period.

Because partial scalar invariance was achieved, we were able to compare latent group means across consecutive years for Privacy Rights, Everyday Choice, and Community Participation (i.e., research question 2). To our knowledge, this analysis has not been performed elsewhere, yet it is critical for states to monitor the direction and magnitude of changes in aggregate personal outcomes. Monitoring these results allows the IDD system to respond accordingly to maintain or improve observed changes in personal outcomes. From 2017 through 2019, the latent means for Everyday Choice did not differ significantly across cohorts. For Privacy Rights and Community Participation, the 2018 and 2019 cohorts had significantly higher latent means than the 2017 cohort.
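The significance tests behind these comparisons can be reproduced from the reported estimates and standard errors. The Python sketch below assumes that each p-value comes from a Wald test, that is, the estimate divided by its standard error and referred to a standard normal distribution (the kind of test Mplus prints next to its estimates); the function name `wald_z_test` is ours, and the inputs are the Cohort 3 – Cohort 2 rows of the latent mean table.

```python
import math


def wald_z_test(estimate: float, se: float) -> tuple[float, float]:
    """Return (z, two-sided p) for H0: parameter = 0, using a normal reference."""
    z = estimate / se
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)), the two-sided p-value.
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p


# Cohort 3 - Cohort 2 latent mean differences: (estimate, SE) from the table above.
COHORT3_MINUS_COHORT2 = {
    "Privacy Rights": (0.147, 0.109),
    "Everyday Choice": (0.043, 0.055),
    "Community Participation": (-0.005, 0.046),
}

for factor, (est, se) in COHORT3_MINUS_COHORT2.items():
    z, p = wald_z_test(est, se)
    print(f"{factor}: z = {z:.2f}, p = {p:.3f}")
```

Because the published estimates are rounded to three decimals, the recomputed p-values agree with the reported .176, .431, and .913 only to within a few thousandths.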

In general, service users exercise everyday choices more frequently than larger decisions such as where to work and where to live, although long-term choices do affect Everyday Choice (Lakin et al., 2008; Sheppard-Jones et al., 2005). Some have argued that Everyday Choice is less dictated by service-delivery systems than long-term choices are, and some have even claimed that Everyday Choice can be realized independently of HCBS service delivery (Houseworth et al., 2018; Lakin et al., 2008). In this study, Everyday Choice was unchanged over the 3-year period. In each year’s sample, more than 50% of respondents lived in agency-licensed residences. State residential licenses typically require person-centered individualized service plans and assurances of rights, including freedom to spend personal money and to have choice in daily activities (i.e., everyday choices). It is therefore possible that these licensing requirements indirectly contribute to Everyday Choice latent mean scores and to the maintenance of those outcomes over time.

Nonetheless, plateaus in choice making should always be closely scrutinized. When patterns of Everyday Choice item responses indicate little autonomy in choice making, action is needed to increase the influence people have over their everyday lives. One approach is to consider the contexts that support or constrain Everyday Choice. For example, ample evidence indicates that Everyday Choice outcomes are stronger for people living in smaller (i.e., fewer co-residents) and less restrictive residences (Houseworth et al., 2018; Lakin et al., 2008; Tichá et al., 2012; Wehmeyer & Garner, 2003). Emerging evidence from analyses that nested Everyday Choice outcomes within state context shows stronger outcomes in states with lower costs of living (Houseworth et al., 2018). Responsive states and systems can use outcome data to develop more supportive contexts, monitor results, and expand choice-making rights.

Dinora et al.’s (2019) Personal Opportunity model showed a strong relationship between Everyday Choice and Privacy Rights. Jones et al. (2018) found that a specific Privacy Rights item, whether individuals can lock their bedroom door, predicted self-determination. Similarly, Neely-Barnes et al. (2008) developed a path model showing that greater choice making predicted rights and community participation. Although our models were not designed to test relationships between latent factors, we did observe that the latent means of the personal outcome factors changed differently between 2017 and 2018: whereas the Everyday Choice latent means were statistically unchanged over all 3 years, the Privacy Rights latent means improved significantly between 2017 and 2018.

Although many unexamined factors, such as choice, may have contributed to Privacy Rights trends, basic differences in sample composition are a likely contributor. In 2017, 12% of the sample lived in institutional settings. After that year, respondents from institutional settings were not included in the sample, and Privacy Rights outcomes improved significantly for the 2018 and 2019 cohorts. This pattern is consistent with previous findings that respondents living in larger group residences had lower rights scores than those living in more individualized residences (Neely-Barnes et al., 2008).

The Privacy Rights items measured whether respondents had a key to their residence and whether they could lock their bedroom door. It would be unusual for people living in institutional settings to report these sorts of privacy rights and freedoms (Human Services Research Institute, 2015). Another potential contributing explanation is methodological. The NCI-IPS Privacy Rights items have restricted, essentially binary, response options (yes, no, don’t know), and changes in state-regulated residences would likely occur only once. For example, if a group home added locks to all bedroom doors in a given year to improve privacy for its residents, these changes to the physical environment would likely be maintained. When such changes are enacted across many agency-regulated residences in a relatively short period, improvements in aggregate Privacy Rights outcomes may reflect system-level changes.

States can also enact measures to increase community participation. For example, NCI-IPS data from Kentucky, when compared with the General Population Survey, showed that individuals with IDD were lonelier than the general population (Moseley et al., 2013). In response to this finding, the state DD agency increased funding for providers to improve community access in the evenings and on weekends.

Our results showed small improvements in the Community Participation latent mean between 2017 and 2018. These changes were documented 1 year after Virginia implemented a Medicaid waiver redesign. The revisions further aligned services with the Final Rule (2014) and other federal regulations assuring that all service users are provided with opportunities to learn and build relationships in their community. These policy and system changes likely encouraged service users to set goals to participate in their communities more frequently and, if sustained, may help maintain improved levels of community participation over time.
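One way to make a label such as small concrete is a standardized latent mean difference, analogous to Cohen’s d: the difference in latent means divided by a pooled latent standard deviation, in the spirit of the structured-means effect size discussed by Hancock (2001). The sketch below is illustrative only. It uses the Cohort 3 – Cohort 2 differences from the latent mean table and the factor variances in Table 5 because both appear in this section, and it pools the two variances with equal weights because per-cohort sample sizes are not reported here; the function name and the weighting choice are our assumptions, not the authors’ procedure.

```python
import math


def standardized_latent_mean_diff(delta_kappa: float, var_a: float, var_b: float,
                                  n_a: int = 1, n_b: int = 1) -> float:
    """Latent mean difference divided by a sample-size-weighted pooled latent SD.

    With the default n_a = n_b = 1 the two factor variances are simply averaged,
    an assumption made because cohort sizes are not given in this section.
    """
    pooled_var = (n_a * var_a + n_b * var_b) / (n_a + n_b)
    return delta_kappa / math.sqrt(pooled_var)


# (Cohort 3 - Cohort 2 difference, Cohort 3 variance, Cohort 2 variance)
INPUTS = {
    "Privacy Rights": (0.147, 0.751, 1.115),
    "Everyday Choice": (0.043, 0.665, 0.802),
    "Community Participation": (-0.005, 0.584, 0.591),
}

for factor, (diff, var_c3, var_c2) in INPUTS.items():
    d = standardized_latent_mean_diff(diff, var_c3, var_c2)
    print(f"{factor}: d = {d:.3f}")
```

Under these assumptions all three values fall below Cohen’s (1988) conventional benchmark of 0.2 for a small effect, which is consistent with the nonsignificant Cohort 3 – Cohort 2 comparisons; the same function could be applied to the 2017 comparisons by passing the actual cohort sizes as `n_a` and `n_b`.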

Data-driven planning and policy construction, however, depend on basic assurances that outcome measures are stable. Evidence that constructs hold a common meaning across multiple cohorts also makes data more useful: large numbers of items can be represented by a small number of factors, which lets decision makers consider holistic concepts rather than independently deciding how to combine similar items and derive their meaning. Confidence in Dinora et al.’s (2019) measures allows decision makers to plan innovative ways to use personal outcome measures to track the impact of programs, regulations, and policies on HCBS service user outcomes. After all, improvements in personal outcomes translate into more personal opportunities for people with IDD.

Limitations and Future Research

There are several limitations associated with the current study. First, the personal outcomes captured by the three measures reviewed in this study (Privacy Rights, Everyday Choice, and Community Participation) are necessary but not exhaustive indicators of personal opportunity. Broader concepts related to personal opportunity, such as human functioning, involve the interaction of many personal outcomes and contextual elements that are not included in the current measure. Framing outcomes in terms of personal opportunity is nonetheless important because it advances the notion that restrictions on personal rights and outcomes are restrictions on opportunities for meaningful community inclusion.

Second, the NCI-IPS items that we used to operationalize Community Participation primarily addressed access to the community. Community participation, however, is often conceptualized as access to places and activities as well as social interactions and relationships in the community (Bigby et al., 2018; Chang & Coster, 2014; Lee & Morningstar, 2019). Dinora et al. (2019) were able to better measure the social component of community participation through a personal outcome measure called Friendship, which tracked whether community activities occurred with friends, as opposed to family or staff. The 2017 NCI-IPS did not include the items that captured this social aspect of community participation. Future studies should measure elements of social embeddedness along with physical emplacement.

Third, Privacy Rights, Everyday Choice, and Community Participation demonstrated good overall psychometric properties across the three cohorts. However, one Privacy Rights item, having a key to one’s home, was found to be noninvariant. This matters because the degree of bias in subgroup mean estimates increases with the number of noninvariant items on a factor (Chen, 2008). By comparing the partial scalar invariance solution obtained in this study with a full scalar invariance model, we determined that the noninvariant key item had little impact on the study’s findings.
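What noninvariance means for the key item can be illustrated with the thresholds in Table 4. Under an ordinal probit measurement model, the probability of the lowest response category at latent level η is Φ((τ₁ − λη)/σ), where τ₁ is the item’s first threshold, λ its loading, and σ its residual standard deviation. The sketch below evaluates this at η = 0 with σ = 1 in every cohort; both values are simplifying assumptions made only for illustration (loadings and residual variances are not reported in this section, and at η = 0 the loading drops out). The function names are ours.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


# First thresholds (tau_1) from Table 4. The key item's thresholds were freed by
# cohort; the lock item has a single threshold shared by all cohorts.
FIRST_THRESHOLD = {
    "key": {1: 0.236, 2: 0.055, 3: -0.018},
    "lock": {1: -0.219, 2: -0.219, 3: -0.219},
}


def p_lowest_category(tau1: float, eta: float = 0.0, loading: float = 1.0,
                      resid_sd: float = 1.0) -> float:
    """P(lowest category | eta) under the probit model y* = loading * eta + error.

    loading and resid_sd are illustrative placeholders (not reported here);
    at eta = 0 the loading has no effect on the result.
    """
    return norm_cdf((tau1 - loading * eta) / resid_sd)


for item, by_cohort in FIRST_THRESHOLD.items():
    probs = {cohort: round(p_lowest_category(tau), 3) for cohort, tau in by_cohort.items()}
    print(item, probs)
```

Because the lock thresholds are held equal, respondents at the same latent level have the same response probabilities in every cohort, whereas the freed key thresholds move the probability of the lowest category from about .59 in cohort 1 to about .49 in cohort 3. Partial scalar invariance absorbs that cohort-specific shift in the key item, leaving the invariant items to anchor the latent mean comparisons.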

Fourth, each NCI indicator can be answered either directly by the individual or by a proxy respondent. Proxy respondents, such as service providers and guardians, may misinterpret individuals’ experiences, which could undermine the validity of the data. Our additional analyses showed no significant differences between direct and proxy respondents on most variables across cohorts. Future researchers are encouraged to examine differences in item responses between direct and proxy respondents using the latest NCI data.

Last, the scales formed in this study provided a more parsimonious understanding of personal outcome variables that can be benchmarked from one year to the next. However, because our data, albeit from 3 consecutive years, came from only one state, it is possible that studies using data from other states or from national NCI-IPS data could lead to different results than those reported here. Future studies using cohorts from a different timeframe or location should examine the degree to which current findings can be replicated.

Conclusion

Despite the importance of having predictable outcome measures, caution should be exercised when generalizing best-fitting models from one state, or even from a national dataset, to another state. Although federal mandates apply equally, every state has a particular policy context, regulatory environment, and service array. Although forming scales from NCI items provides a more parsimonious and elevated understanding of outcomes, states are encouraged to test these scales with their own data to confirm accuracy and fit. It has long been established that states differ in their resources, cultures, and service environments, and such differences have been associated with differing NCI outcomes (Houseworth et al., 2018; Lakin et al., 2008). Therefore, each state should take advantage of opportunities to benchmark specific NCI items against other states and the nation, while also recognizing that NCI data can be further optimized. Using the techniques presented in this research, states can measure change over time in domains such as Privacy Rights, Everyday Choice, and Community Participation in a clearer, more concise, and more accurate way, which is valuable for ensuring compliance with federal mandates that people have personal opportunities to live full lives in the community.

Table 4.

Item Thresholds

| Thresholds | τ | SE | p-value |
|---|---|---|---|
| Privacy Rights | | | |
| key_1: cohort 1 | .236 | .050 | .000 |
| key_2: cohort 1 | .254 | .050 | .000 |
| key_1: cohort 2 | .055 | .066 | .401 |
| key_2: cohort 2 | .073 | .066 | .268 |
| key_1: cohort 3 | −.018 | .072 | .805 |
| key_2: cohort 3 | −.014 | .072 | .843 |
| lock_1 | −.219 | .066 | .001 |
| lock_2 | −.126 | .065 | .052 |
| Everyday Choice | | | |
| schedule_1 | −1.410 | .070 | .000 |
| schedule_2 | .381 | .057 | .000 |
| free time_1 | −2.675 | .173 | .000 |
| free time_2 | −.661 | .087 | .000 |
| buy_1 | −1.649 | .066 | .000 |
| buy_2 | .070 | .051 | .171 |
| Community Participation | | | |
| shopping_1 | −1.912 | .090 | .000 |
| shopping_2 | −.641 | .068 | .000 |
| shopping_3 | .490 | .067 | .000 |
| entertain_1 | −.911 | .048 | .000 |
| entertain_2 | .197 | .045 | .000 |
| entertain_3 | .978 | .048 | .000 |
| eat out_1 | −1.688 | .081 | .000 |
| eat out_2 | −.358 | .065 | .000 |
| eat out_3 | .640 | .066 | .000 |
| errands_1 | −1.263 | .051 | .000 |
| errands_2 | .248 | .044 | .000 |
| errands_3 | 1.076 | .048 | .000 |
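Thresholds are easier to read when converted back to response proportions. Assuming a probit model with the delta parametrization (the Mplus default for categorical indicators under weighted least squares estimation; the estimator is not restated in this section), cohort 1’s latent responses have mean 0 and variance 1, so the model-implied share of cohort 1 responses in category k is Φ(τ_{k+1}) − Φ(τ_k), with τ_0 = −∞ and the last cut at +∞. The sketch applies that rule to the invariant Everyday Choice and Community Participation thresholds; it is an illustration, not a reproduction of the authors’ output, and the function names are ours.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal CDF; math.erf handles +/-inf, giving 0 and 1."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def category_proportions(thresholds: list[float]) -> list[float]:
    """Model-implied category shares for a standardized latent response."""
    if any(a >= b for a, b in zip(thresholds, thresholds[1:])):
        raise ValueError("thresholds must be strictly increasing")
    cuts = [-math.inf, *thresholds, math.inf]
    return [norm_cdf(hi) - norm_cdf(lo) for lo, hi in zip(cuts, cuts[1:])]


# Thresholds from Table 4 (held equal across cohorts); the proportions
# computed below apply to cohort 1 only, the standardized reference group.
TABLE4 = {
    "schedule": [-1.410, 0.381],
    "free time": [-2.675, -0.661],
    "buy": [-1.649, 0.070],
    "shopping": [-1.912, -0.641, 0.490],
    "entertain": [-0.911, 0.197, 0.978],
    "eat out": [-1.688, -0.358, 0.640],
    "errands": [-1.263, 0.248, 1.076],
}

for item, taus in TABLE4.items():
    shares = category_proportions(taus)
    print(item, [round(s, 3) for s in shares])
```

For schedule, for instance, roughly 8% of cohort 1 responses are implied to fall in the lowest category. The same thresholds do not translate directly into proportions for cohorts 2 and 3, because those cohorts’ latent means and variances differ from cohort 1’s.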

Table 5.

Factor Variances

| Factors | ϕ | SE | p-value |
|---|---|---|---|
| Cohort 1 | | | |
| Privacy Rights | 1.000 | .000 | — |
| Everyday Choice | 1.000 | .000 | — |
| Community Participation | 1.000 | .000 | — |
| Cohort 2 | | | |
| Privacy Rights | 1.115 | .423 | .008 |
| Everyday Choice | .802 | .103 | .000 |
| Community Participation | .591 | .067 | .000 |
| Cohort 3 | | | |
| Privacy Rights | .751 | .317 | .018 |
| Everyday Choice | .665 | .088 | .000 |
| Community Participation | .584 | .066 | .000 |

Table 6.

Factor Covariances

| Factors | 1 | 2 | 3 |
|---|---|---|---|
| Cohort 1 | | | |
| 1. Privacy Rights | — | | |
| 2. Everyday Choice | .523* | — | |
| 3. Community Participation | .589* | .341* | — |
| Cohort 2 | | | |
| 1. Privacy Rights | — | | |
| 2. Everyday Choice | .378* | — | |
| 3. Community Participation | .227* | .199* | — |
| Cohort 3 | | | |
| 1. Privacy Rights | — | | |
| 2. Everyday Choice | .296* | — | |
| 3. Community Participation | .168* | .168* | — |

\*p < .05.
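Because cohort 1’s factor variances are fixed at 1 (Table 5), its entries in Table 6 are already correlations, but the cohort 2 and 3 entries are easier to compare after rescaling. Assuming the Table 6 values are unstandardized covariances on the metric of the Table 5 variances, each can be converted to a correlation by dividing by the product of the two factor standard deviations. The sketch below does this; the abbreviations PR, EC, and CP and the function names are ours.

```python
import math

# Factor variances from Table 5 (cohort 1 is fixed at 1.0, so it is omitted).
VARIANCES = {
    2: {"PR": 1.115, "EC": 0.802, "CP": 0.591},
    3: {"PR": 0.751, "EC": 0.665, "CP": 0.584},
}

# Factor covariances from Table 6 (PR = Privacy Rights, EC = Everyday Choice,
# CP = Community Participation).
COVARIANCES = {
    2: {("PR", "EC"): 0.378, ("PR", "CP"): 0.227, ("EC", "CP"): 0.199},
    3: {("PR", "EC"): 0.296, ("PR", "CP"): 0.168, ("EC", "CP"): 0.168},
}


def to_correlation(cov: float, var_a: float, var_b: float) -> float:
    """r = cov(a, b) / (sd(a) * sd(b))."""
    return cov / math.sqrt(var_a * var_b)


def cohort_correlations(cohort: int) -> dict[tuple[str, str], float]:
    """Rescale every factor covariance in one cohort to a correlation."""
    variances = VARIANCES[cohort]
    return {
        (a, b): to_correlation(cov, variances[a], variances[b])
        for (a, b), cov in COVARIANCES[cohort].items()
    }


for cohort in (2, 3):
    rounded = {pair: round(r, 3) for pair, r in cohort_correlations(cohort).items()}
    print(f"Cohort {cohort}: {rounded}")
```

Under that assumption, Privacy Rights and Everyday Choice correlate at roughly .40 in cohort 2 and .42 in cohort 3, and all six rescaled values remain below the corresponding cohort 1 correlations.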

Acknowledgments

This work was supported by the National Institute on Disability, Independent Living, and Rehabilitation Research (NIDILRR) Grant #90IFRE0015.

References

- Americans with Disabilities Act of 1990, 42 U.S.C. §§ 12101–12213.

- Bennett DA (2001). How can I deal with missing data in my study? Australian and New Zealand Journal of Public Health, 25(5), 464–469. 10.1111/j.1467-842X.2001.tb00294.x

- Bigby C, Anderson S, & Cameron N (2018). Identifying conceptualizations and theories of change embedded in interventions to facilitate community participation for people with intellectual disability: A scoping review. Journal of Applied Research in Intellectual Disabilities, 31(2), 165–180. 10.1111/jar.12390

- Braddock D, Hemp R, Tanis ES, Wu J, & Haffer L (2017). The state of the states in intellectual and developmental disabilities (11th ed.). American Association on Intellectual and Developmental Disabilities.

- Brown TA (2015). Confirmatory factor analysis for applied research (2nd ed.). Guilford Publications.

- Bush KL, & Tassé MJ (2017). Employment and choice-making for adults with intellectual disability, autism, and Down syndrome. Research in Developmental Disabilities, 65, 23–34. 10.1016/j.ridd.2017.04.004

- Centers for Medicare and Medicaid Services. (2014). Medicaid Program; State Plan Home and Community-Based Services, 5-Year Period for Waivers, Provider Payment Reassignment, and Home and Community-Based Setting Requirements for Community First Choice (Section 1915(k) of the Act) and Home and Community-Based Services (HCBS) Waivers (Section 1915(c) of the Act). https://www.medicaid.gov/medicaid-chip-program-information/by-topics/long-term-services-and-supports/home-and-community-based-services/downloads/final-rule-slides-01292014.pdf

- Chang FH, & Coster WJ (2014). Conceptualizing the construct of participation in adults with disabilities. Archives of Physical Medicine and Rehabilitation, 95(9), 1791–1798. 10.1016/j.apmr.2014.05.008

- Chen FF (2008). What happens if we compare chopsticks with forks? The impact of making inappropriate comparisons in cross-cultural research. Journal of Personality and Social Psychology, 95(5), 1005–1018. 10.1037/a0013193

- Cohen J (1988). Statistical power analysis for the behavioral sciences (2nd ed.). L. Erlbaum Associates.

- Developmental Disabilities Program, 45 CFR 1385 (2015). https://www.federalregister.gov/documents/2015/07/27/2015-18070/developmental-disabilities-program

- Dinora P, Bogenschutz M, Broda M, & Prohn S (2019, November 17–20). New personal opportunity and wellness scales using the National Core Indicators [Poster session]. Association of University Centers on Disabilities, Washington, D.C.

- Enders CK (2010). Applied missing data analysis. Guilford Press.

- Hancock GR (2001). Effect size, power, and sample size determination for structured means modeling and MIMIC approaches to between-groups hypothesis testing of means on a single latent construct. Psychometrika, 66(3), 373–388. 10.1007/BF02294440

- Houseworth J, Stancliffe RJ, & Tichá R (2018). Association of state-level and individual-level factors with choice making of individuals with intellectual and developmental disabilities. Research in Developmental Disabilities, 83, 77–90. 10.1016/j.ridd.2018.08.008

- Houseworth J, Stancliffe RJ, & Tichá R (2019). Examining the National Core Indicators’ potential to monitor rights of people with intellectual and developmental disabilities according to the CRPD. Journal of Policy and Practice in Intellectual Disabilities, 16(4), 342–351. 10.1111/jppi.12315

- Hu L, & Bentler PM (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal, 6(1), 1–55. 10.1080/10705519909540118

- Human Services Research Institute. (2015). NCI data brief: Rights and respect. https://www.nationalcoreindicators.org/upload/coreindicators/NCI_DataBrief_MARCH2015_edits_v2_042015.pdf

- Jones JL, Shogren KA, Grandfield EM, Vierling KL, Gallus KL, & Shaw LA (2018). Examining predictors of self-determination in adults with intellectual and developmental disabilities. Journal of Developmental and Physical Disabilities, 30(5), 601–614. 10.1007/s10882-018-9607-z

- Lakin KC, Doljanac R, Byun S, Stancliffe R, Taub S, & Chiri G (2008). Choice-making among Medicaid HCBS and ICF/MR recipients in six states. American Journal on Mental Retardation, 113(5), 325–342. 10.1352/2008.113.325-342

- Lee H, & Morningstar ME (2019). Exploring predictors of community participation among young adults with severe disabilities. Research and Practice for Persons With Severe Disabilities, 44(3), 186–199. 10.1177/1540796919863650

- Little RJ (1988). A test of missing completely at random for multivariate data with missing values. Journal of the American Statistical Association, 83(404), 1198–1202. 10.1080/01621459.1988.10478722

- Mansell J, & Beadle-Brown J (2012). Active support: Enabling and empowering people with intellectual disabilities. Jessica Kingsley Publishers.

- McConkey R, & Collins S (2010). The role of support staff in promoting the social inclusion of persons with an intellectual disability. Journal of Intellectual Disability Research, 54(8), 691–700. 10.1111/j.1365-2788.2010.01295.x

- Mehling MH, & Tassé MJ (2014). Empirically derived model of social outcomes and predictors for adults with ASD. Intellectual and Developmental Disabilities, 52(4), 282–295. 10.1352/1934-9556-52.4.282

- Mehling MH, & Tassé MJ (2015). Impact of choice on social outcomes of adults with ASD. Journal of Autism and Developmental Disorders, 45(6), 1588–1602. 10.1007/s10803-014-2312-6

- Moseley C, Kleinert H, Sheppard-Jones K, & Hall S (2013). Using research evidence to inform public policy decisions. Intellectual and Developmental Disabilities, 51(5), 412–422. 10.1352/1934-9556-51.5.412

- Muthén LK, & Muthén BO (1998–2017). Mplus user’s guide (8th ed.).

- National Core Indicators. (n.d.) https://www.nationalcoreindicators.org/about/indicators/

- Neely-Barnes S, Marcenko M, & Weber L (2008). Does choice influence quality of life for people with mild intellectual disabilities? Intellectual and Developmental Disabilities, 46(1), 12–26. 10.1352/0047-6765(2008)46[12:DCIQOL]2.0.CO;2

- O’Brien J, & Blessing C (Eds.). (2011). Conversations on citizenship and person-centered work (Vol. 3). Inclusion Press.

- Olmstead v. L.C., 527 U.S. 581 (1999).

- Prohn SM, Kelley KR, & Westling DL (2018). Students with intellectual disability going to college: What are the outcomes? A pilot study. Journal of Vocational Rehabilitation, 48(1), 127–132. 10.3233/JVR-170920

- Putnick DL, & Bornstein MH (2016). Measurement invariance conventions and reporting: The state of the art and future directions for psychological research. Developmental Review, 41, 71–90. 10.1016/j.dr.2016.06.004

- Rutkowski L, & Svetina D (2017). Measurement invariance in international surveys: Categorical indicators and fit measure performance. Applied Measurement in Education, 30(1), 39–51. 10.1080/08957347.2016.1243540

- Schalock RL, Borthwick-Duffy SA, Bradley VJ, Buntinx WHE, Coulter DL, Craig EM, Gomez SC, Lachapelle Y, Luckasson R, Reeve A, Shogren KA, Snell ME, Spreat S, Tasse MJ, Thompson JR, Verdugo-Alonso MA, Wehmeyer ML, & Yeager MH (2010). Intellectual disability: Definition, classification, and systems of supports. American Association on Intellectual and Developmental Disabilities.

- Sheppard-Jones K, Prout HT, & Kleinert H (2005). Quality of life dimensions for adults with developmental disabilities: A comparative study. Mental Retardation, 43(4), 281–291. 10.1352/0047-6765(2005)43[281:QOLDFA]2.0.CO;2

- Shogren KA, Luckasson R, & Schalock RL (2015). Using context as an integrative framework to align policy goals, supports, and outcomes in intellectual disability. Intellectual and Developmental Disabilities, 53(5), 367–376. 10.1352/1934-9556-53.5.367

- Shogren KA, Luckasson R, & Schalock RL (2018). The responsibility to build contexts that enhance human functioning and promote valued outcomes for people with intellectual disability: Strengthening system responsiveness. Intellectual and Developmental Disabilities, 56(4), 287–300. 10.1352/1934-9556-56.5.287

- Shogren KA, & Shaw LA (2016). The role of autonomy, self-realization, and psychological empowerment in predicting outcomes for youth with disabilities. Remedial and Special Education, 37(1), 55–62. 10.1177/0741932515585003

- Svetina D, Rutkowski L, & Rutkowski D (2020). Multiple-group invariance with categorical outcomes using updated guidelines: An illustration using Mplus and the lavaan/semTools packages. Structural Equation Modeling: A Multidisciplinary Journal, 27(1), 111–130. 10.1080/10705511.2019.1602776

- The Council on Quality and Leadership. (2017). Personal Outcome Measures: Measuring personal quality of life (4th ed.). https://www.c-q-l.org/wp-content/uploads/2020/03/2017-CQLPOM-Manual-Adults.pdf

- Tichá R, Lakin KC, Larson SA, Stancliffe RJ, Taub S, Engler J, Bershadsky J, & Moseley C (2012). Correlates of everyday choice and support-related choice for 8,892 randomly sampled adults with intellectual and developmental disabilities in 19 states. Intellectual and Developmental Disabilities, 50, 486–504. 10.1352/1934-9556-50.06.486

- Tichá R, Qian X, Stancliffe RJ, Larson SA, & Bonardi A (2018). Alignment between the Convention on the Rights of Persons with Disabilities and the National Core Indicators Adult Consumer Survey. Journal of Policy and Practice in Intellectual Disabilities, 15(3), 247–255. 10.1111/jppi.12260

- United Nations Convention on the Rights of Persons with Disabilities – Articles (n.d.). https://www.un.org/development/desa/disabilities/convention-on-the-rights-of-persons-with-disabilities/convention-on-the-rights-of-persons-with-disabilities-2.html

- Verdonschot MM, De Witte LP, Reichrath E, Buntinx WHE, & Curfs LM (2009). Community participation of people with an intellectual disability: A review of empirical findings. Journal of Intellectual Disability Research, 53(4), 303–318. 10.1111/j.1365-2788.2008.01144.x

- Wehmeyer ML, & Garner NW (2003). The impact of personal characteristics of people with intellectual and developmental disability on self-determination and autonomous functioning. Journal of Applied Research in Intellectual Disabilities, 16(4), 255–265. 10.1046/j.1468-3148.2003.00161.x

- Wilson NJ, Jaques H, Johnson A, & Brotherton ML (2017). From social exclusion to supported inclusion: Adults with intellectual disability discuss their lived experiences of a structured social group. Journal of Applied Research in Intellectual Disabilities, 30(5), 847–858. 10.1111/jar.12275