Abstract

Background:

Patient-reported outcomes (PROs) allow for direct measurement of functional and psychosocial effects related to treatment. However, technological barriers, survey fatigue, and clinician adoption have hindered meaningful integration of PROs in clinical care. We aim to develop an electronic PROs (ePROs) program that meets a range of clinical needs across a head and neck multidisciplinary disease management team (DMT).

Methods:

We developed the ePROs module using literature review and stakeholder input in collaboration with health informatics. We designed an ePROs platform that was integrated as standard of care for personalized survey delivery by diagnosis across the DMT. We used Tableau software to create dashboards for data visualization and monitoring at clinical enterprise, disease subsite, and patient levels. All patients treated for head and neck cancer were eligible for ePROs assessment as part of standard of care. A descriptive analysis of ePROs program implementation is presented.

Results:

The Head and Neck Service at Memorial Sloan Kettering Cancer Center has integrated ePROs into clinical care. Surveys are delivered via patient portal at time of diagnosis and longitudinally through care. From August 1, 2018 to February 1, 2020, 4,154 patients have completed ePROs surveys. Average patient participation rate has been 69%. Median time of completion has been 5 minutes.

Conclusions:

Integration of the head and neck ePROs program as part of clinical care is feasible and could be used in the future to assess value and counsel patients. Continued qualitative assessments of stakeholders and workflow will refine content and enhance the health informatics platform.

Lay summary:

Patients with head and neck cancer have significant changes in quality of life after treatment. Measuring and integrating patient-reported outcomes as part of clinical care has been challenging given the multimodal treatment options, vast subsites, and unique domains affected. We present a case study of successful integration of electronic patient-reported outcomes into a high-volume head and neck cancer practice.

Keywords: head and neck neoplasm, patient-reported outcomes measures, patient portals, data visualization, medical informatics

Precis for use in the Table of Contents:

Integration of an electronic patient-reported outcomes (ePROs) program into a head and neck oncology practice is feasible and will allow for measurement of outcomes. This program can be used as a model for future development of ePROs programs across oncology practices.

Introduction

In 2014, the National Cancer Institute (NCI) underscored the value of patient-reported outcomes (PROs) in head and neck oncology by calling for the inclusion of a core set of 12 patient-reported symptoms and health-related quality-of-life (HRQOL) domains in head and neck clinical trials.1 PROs and HRQOL can be used to monitor symptoms, detect functional or psychological concerns, and track long-term side effects of treatment. Although the ability to collect PROs to measure secondary endpoints represents an important step in clinical research,2 these data are often not accessible, appropriate, or reliable for a multidisciplinary head and neck cancer disease management team (DMT) to make clinical decisions on individual patients or follow groups of patients to assess outcomes.

Patients with head and neck cancer are an ideal group to benefit from the use of PROs in clinical care because of the high burden of treatment effects with actionable interventions. These patients have a high acute and chronic toxicity burden affecting basic physiologic functions, including eating, swallowing, and communication. However, there are unique challenges to incorporating PROs into the clinical care of patients with head and neck cancer. Treatment for head and neck cancer often involves multimodal approaches, including a combination of surgery, radiation therapy, and chemotherapy. Effective PRO measures (PROMs) would require coordination of survey delivery across a complex system without duplication, which can lead to survey fatigue. The anatomical location of the disease crosses many functional (swallowing and eating) and social (speaking and facial appearance) domains, which can complicate the development of a head and neck cancer-specific PROM tool with the relevant functional and psychosocial domains. As a result, there are no validated head and neck cancer PROMs that were generated using patient input, designed to assess the relevant functional and psychosocial domains, and able to help make clinical decisions on individual patients over time rather than populations.

We sought to investigate the feasibility of developing and implementing an electronic PROs (ePROs) program as standard of care across the entire head and neck DMT and to identify areas that can be modified to improve integration. We present a case study detailing the experience, obstacles, and opportunities to incorporate PROs to improve health care delivery to patients with head and neck cancer.

Methods

As part of the Patient-Reported Information for Strategic Management (PRISM) program at Memorial Sloan Kettering Cancer Center (MSK), we developed and implemented the Head and Neck PROs Oncology platform. This feasibility study was conducted at a high-volume National Comprehensive Cancer Network center across all sites of care, including 7 regional network campuses. Guidelines published by the International Society for Quality of Life Research and Patient-Centered Outcomes Research Institute were used as a framework.3,4 The Head and Neck PROs Oncology platform was implemented as standard of care for all patients treated for head and neck cancer and therefore was exempt from review by the Institutional Review Board.

Selection of Domains and Measures

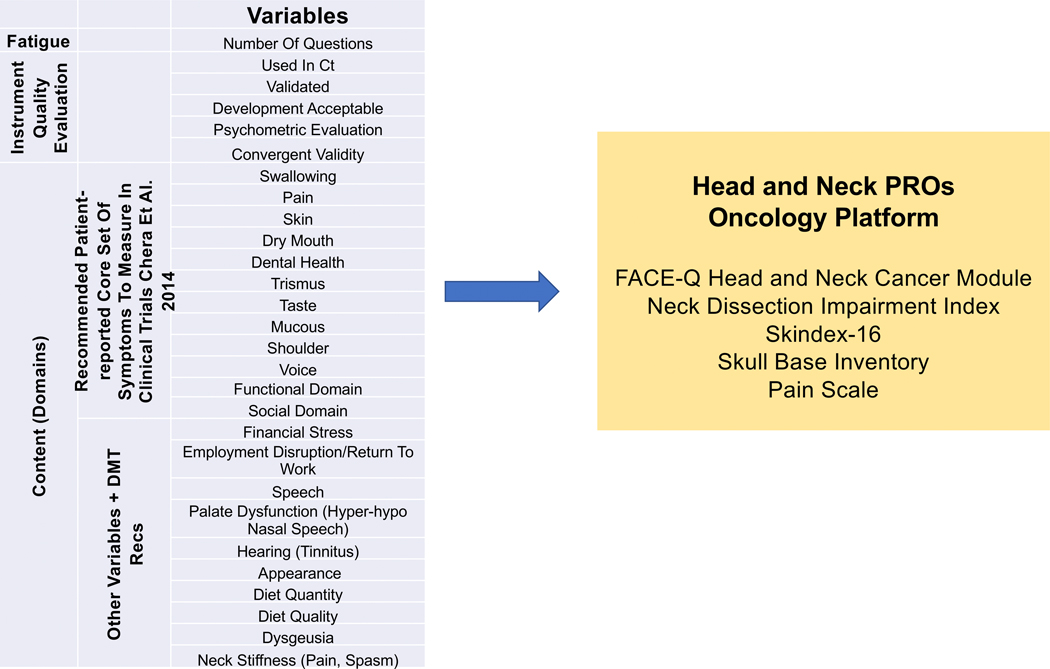

Measure selection involved a stepwise process that included input from the head and neck DMT. First, relevant domains affected by head and neck cancer and treatment were identified. The 12 core patient-reported symptoms and domains identified by the NCI Symptom Management and Health-Related Quality of Life Steering Committee were also included. Additional domains were selected through expert opinion and literature review. Figure 1 shows the relevant symptoms and domains that informed measure selection and the final list for the Head and Neck PROs Oncology platform.

Figure 1.

The content of the Head and Neck Patient-Reported Outcomes (PROs) Oncology platform was developed based on National Cancer Institute guidelines and MSK disease management team (DMT) input. Survey fatigue reduction strategies and institutional governance contributed to the final list of measures included in the module.

Measures were selected based on how they were developed, whether they were psychometrically sound, whether they were used in active and completed head and neck clinical trials, and whether they measured the defined domains. Measures that were developed using patient input were ranked higher. We also assessed the rigor of the psychometric testing, including the validity, reliability, and responsiveness of an instrument. The newly developed and validated FACE-Q Head and Neck Cancer module5 was used to capture secondary relevant head and neck domains because of its robust psychometric development and modular format, which allows for subsite- and treatment-specific delivery of scales. Domains not covered in the FACE-Q Head and Neck Cancer Module were captured with other validated measures (skin, pain, and shoulder and neck morbidity). All selected measures were reviewed for clinical use by the MSK eForms Committee.

Cohort Development

The cohort was defined based on head and neck diagnosis independent of treatment modality, with the goal of capturing all head and neck subsites, across all domains (Figure 2). We used the International Classification of Diseases, 10th Revision (ICD-10) codes to designate patients at the time of presentation. Patients with predesignated ICD-10 codes were included in the cohort and assigned the subsite-specific domains to be measured. Table 1 shows a representative example. The baseline cohort included 16 unique subsites and 183 specific ICD-10 codes. We used specific Current Procedural Terminology (CPT) codes to designate patients to the “follow-up cohort” and designate time 0 from treatment. There were 192 CPT codes included in the cohort build. Codes for radiation simulation (medical radiation physics, dosimetry, treatment devices, and special services for radiation treatment, and therapeutic radiology simulation-aided field setting), surgery (excluding biopsies), and chemotherapy administration were included. To capture long-term follow ups, all patients treated at the institution starting in 2008 were eligible for follow-up.

Figure 2:

Capturing all head and neck subsites, across all domains, independent of primary treatment modality was accomplished using a system based on International Classification of Diseases, 10th Revision (ICD-10) codes.

Table 1:

Examples of subsite ICD-10 code-dictated measure delivery to provide personalized and relevant surveys while reducing survey fatigue.

| SUBSITE | ICD-10 CODES | MEASURES |
|---|---|---|
| Nose | C44.309, C43.31, C43.9, C44.300, C44.301, C44.310, C44.311, C44.319, C44.320, C44.321, C44.329, C44.390, C44.391, C44.399 | FACE-Q Head and Neck Cancer Module (Facial Appearance, Cancer Worry), Facial Sensation, Runny Nose, Pain, Skin, Nasal, Neck Dissection Impairment Index |
| Salivary/Parotid | D11.0, C07, C08, D37.030, K11.9 | FACE-Q Head and Neck Cancer Module (Eating, Oral Function, Salivation, Drooling, Smile, Swallowing, Facial Appearance, Cancer Worry), Facial Sensation, Eye Tearing, Vision, Pain Scale, Skin, Neck Dissection Impairment Index |
| Oral Cavity | D10.1, C02.0, C02.1, C02.2, C02.9, C03.9, C04.0, C04.1, C04.8, C04.9, C05.9, C06.0, C06.1, C06.2, C06.9, C80.1, D10.2, D10.30, D10.39, D49.9, K13.21, K13.79, K14.8, K14.9, D00.07, D16.5, M27.2, C03.1, C41.1, C05.0, C03.0 | FACE-Q Head and Neck Cancer Module (Eating, Oral Function, Salivation, Drooling, Smile, Speech, Swallowing, Facial Appearance, Cancer Worry), Facial Sensation, Pain Scale, Skin, Neck Dissection Impairment Index |
| Orbit/Skull Base | D31.50, C31.9, C69.50, C69.51, C69.52, C69.60, C69.61, C69.62, D31.51, D31.52, D31.60, D31.61, D31.62, D32.0, D32.9, D35.2, D35.3, D44.3, D44.4, D48.0, D49.1, D49.89, J32.0, J32.1, J32.2, J32.3, J32.9, J34.1, R09.89, D16.4, C41.0 | The Skull Base Inventory, Pain Scale |

Abbreviations: ICD-10, International Classification of Diseases, 10th Revision
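The code-directed assignment illustrated in Table 1 can be thought of as a lookup from diagnosis code to subsite and measures. The following Python fragment is an illustrative sketch only and is not the MSK Engage implementation: the names `SUBSITE_RULES` and `assign_measures` are hypothetical, the Orbit/Skull Base code list is deliberately abbreviated, and the FACE-Q scales are collapsed into a single entry.

```python
from typing import List, Optional, Tuple

# Partial copy of Table 1: subsite -> (ICD-10 codes, measures).
# Abbreviated for illustration; not the full institutional build.
SUBSITE_RULES = {
    "Salivary/Parotid": (
        {"D11.0", "C07", "C08", "D37.030", "K11.9"},
        ["FACE-Q Head and Neck Cancer Module", "Facial Sensation", "Eye Tearing",
         "Vision", "Pain Scale", "Skin", "Neck Dissection Impairment Index"],
    ),
    "Orbit/Skull Base": (
        {"D31.50", "C31.9", "C69.50", "D32.0", "C41.0"},  # partial code list
        ["The Skull Base Inventory", "Pain Scale"],
    ),
}


def assign_measures(icd10_code: str) -> Optional[Tuple[str, List[str]]]:
    """Return (subsite, measures) for a predesignated code, else None."""
    code = icd10_code.strip().upper()  # tolerate stray whitespace and case
    for subsite, (codes, measures) in SUBSITE_RULES.items():
        if code in codes:
            return subsite, measures
    return None  # code is not part of the cohort build
```

Under this sketch, a patient whose charge ticket carries a code outside the predesignated list simply receives no survey, which mirrors the cohort definition described in the text.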

Electronic Platform and Measure Delivery

The survey of the Head and Neck PROs Oncology platform is delivered to the patient via MSK Engage, the electronic survey application in the MyMSK patient portal. A robust, user-friendly, and secure platform, MSK Engage enables the collection and visualization of patient-generated data and PROs for clinical and research purposes. MSK clinicians can collect data from new and current patients both remotely and onsite (electronically). Patients can complete a survey on a desktop, a tablet, or a smartphone. MSK Engage is integrated with Clinical Information Systems, Clinical Documentation, electronic medical records (EMR), and the institutional database so the data are accessed in existing clinical workflows and then stored for advanced analytics.

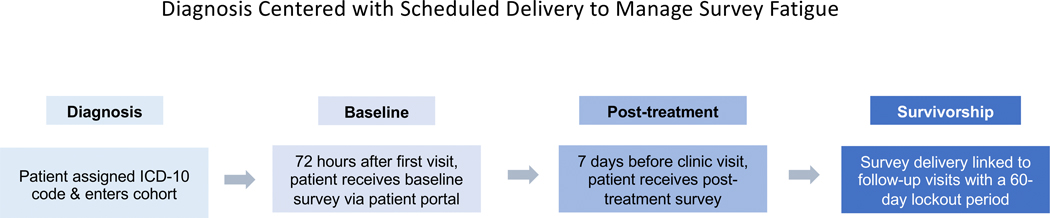

Timing of delivery is automated with the MSK Engage platform (Figure 3). At time of presentation, a head and neck oncologist electronically designates a diagnosis code (ICD-10) as part of routine clinical workflow. By submitting an electronic charge ticket, the clinician automatically triggers a “baseline” survey delivered via the patient portal with PROM scales that have been designated for this disease site. Patients then enter the follow-up cohort if a procedure code (CPT) follows within 3 months. After a CPT code (dedicated for chemotherapy administration, radiation simulation, or surgery) is recorded, patients are followed with PROMs at every scheduled follow-up visit. Surveys are delivered 7 days before the scheduled follow-up clinic visits with a member of the DMT. If a patient in the follow-up cohort arrives at the appointment without having completed the module, they are asked to complete the survey in the waiting room before the visit. To prevent survey fatigue, no additional surveys are delivered for 60 days (allowing for multiple visits within the DMT without repeat survey delivery). Surveys “expire” 30 days after a baseline module is delivered and 24 hours after a follow-up visit. This strategy avoids duplicate surveys in the patient portal.
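The timing rules in the preceding paragraph can be expressed compactly in code. The Python sketch below is a hedged illustration rather than the production logic: the function and constant names are hypothetical, the 60-day window is assumed to be measured from the previous delivery date, and a delivery falling exactly 60 days later is assumed to be allowed.

```python
from datetime import date, datetime, timedelta
from typing import Optional

LEAD_TIME = timedelta(days=7)           # follow-up survey sent 7 days before the visit
QUIET_PERIOD = timedelta(days=60)       # no repeat delivery within 60 days (assumed from last delivery)
BASELINE_EXPIRY = timedelta(days=30)    # baseline module expires 30 days after delivery
FOLLOW_UP_EXPIRY = timedelta(hours=24)  # follow-up survey expires 24 hours after the visit


def follow_up_delivery_date(visit: date, last_delivery: Optional[date]) -> Optional[date]:
    """Return the delivery date for a follow-up survey, or None if suppressed."""
    delivery = visit - LEAD_TIME
    if last_delivery is not None and delivery - last_delivery < QUIET_PERIOD:
        return None  # still inside the quiet period, so no new survey
    return delivery


def baseline_expiry(delivered: date) -> date:
    """Date on which an uncompleted baseline module expires."""
    return delivered + BASELINE_EXPIRY


def follow_up_expiry(visit: datetime) -> datetime:
    """Time at which an uncompleted follow-up survey expires."""
    return visit + FOLLOW_UP_EXPIRY
```

Keeping the intervals as named constants makes the DMT-agreed schedule easy to audit and adjust without touching the delivery logic.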

Figure 3:

Patients are followed from diagnosis to survivorship. Timing of delivery was agreed on by the disease management team and was automated with the MSK Engage platform.

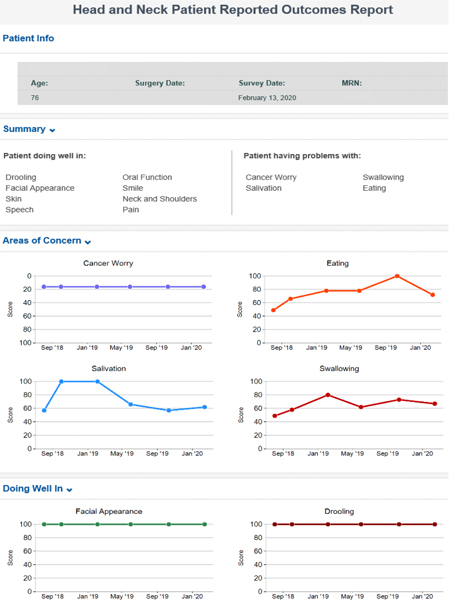

To encourage adoption, survey fatigue reduction strategies, including the use of “trigger” yes/no questions, were used to guide hide/show computer logic. For example, the patient will see: “Do you have problems swallowing?” If they answer “no,” the computer will adapt to move to the next domain; if they answer “yes,” a full scale of dysphagia questions will display and be scored. Patients are assigned a “maximum” measure score based on the validated instrument. An advanced patient-facing report is developed to monitor module response over time (Figure 4). The advanced report shows patient information, summary of “patient doing well in” and “patient having problems with” responses, and graphs showing scores over time.
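The hide/show behavior driven by a trigger question can be sketched as follows. This is a minimal Python illustration under stated assumptions: `administer_domain` and the `ask` callback are hypothetical names, and scoring of the full scale is omitted because it depends on each validated instrument.

```python
from typing import Callable, Dict, List


def administer_domain(
    trigger: str,
    scale_items: List[str],
    ask: Callable[[str], str],
) -> Dict[str, str]:
    """Ask the trigger question; show the full scale only on a "yes" answer."""
    responses = {trigger: ask(trigger).strip().lower()}
    if responses[trigger] == "yes":
        # Patient reports a problem: display every item of the scale.
        for item in scale_items:
            responses[item] = ask(item)
    # On "no", the scale stays hidden and the survey moves to the next domain.
    return responses
```

A patient who answers "no" to every trigger therefore sees only one question per domain, which is the mechanism by which this design limits response burden.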

Figure 4:

Internally developed advanced patient-facing report to monitor response over time and improve communication with the clinician.

Data Visualization and EMR Integration

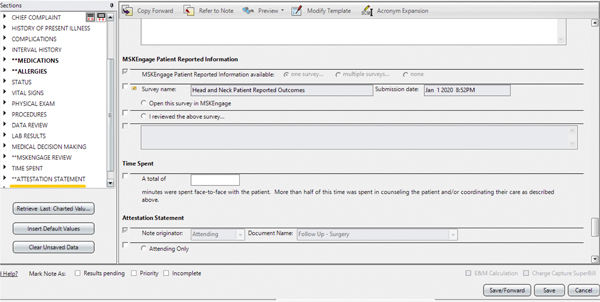

Data were visualized on a clinical enterprise, disease subsite, and individual patient level. Individual patient responses can be seen in the electronic medical record through MSK Engage. We also used Tableau (Tableau Software, LLC., Seattle, WA) for clinical enterprise and disease subsite levels because of its flexibility to support a variety of data visualizations and robust integration with our institutional database. The dashboards were iteratively designed using a human-centered design framework. Tableau dashboards were developed to operationalize MSK Engage survey roll-out by monitoring key performance indicators: response rates across MSK regional campuses, MyMSK portal enrollment, survey completion, and portal vs in-clinic completion. In addition, we developed a population- (subsite-) level response dashboard. Descriptive subsite data across domains were examined for trends and as hypothesis-generating visualization tools. Patient-level reports were internally developed and integrated into the EMR for patient and clinician review. The clinical document was built to include a box that will transmit the report into EMRs when checked for integration into the clinical record (Figure 5). Illustrative case reports were extracted from the medical record.

Figure 5:

For electronic medical record integration, the clinical document was revised to include a box to check when the patient-reported outcomes report is reviewed by the clinician at the time of the visit.

Results

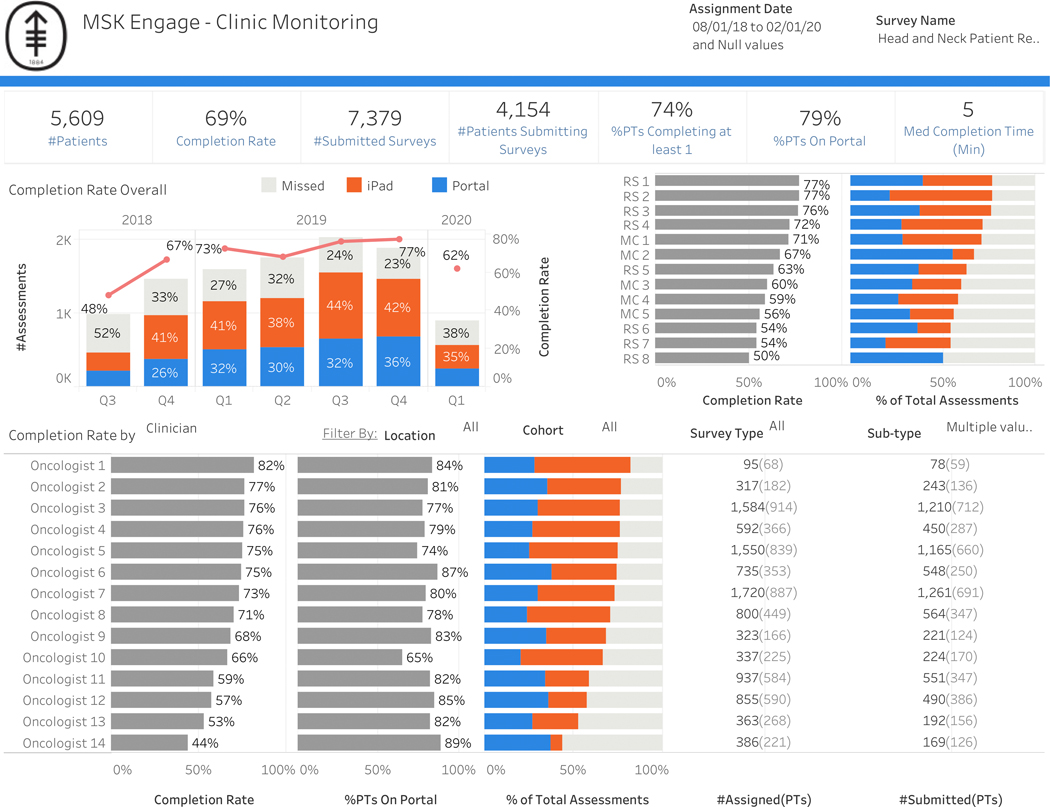

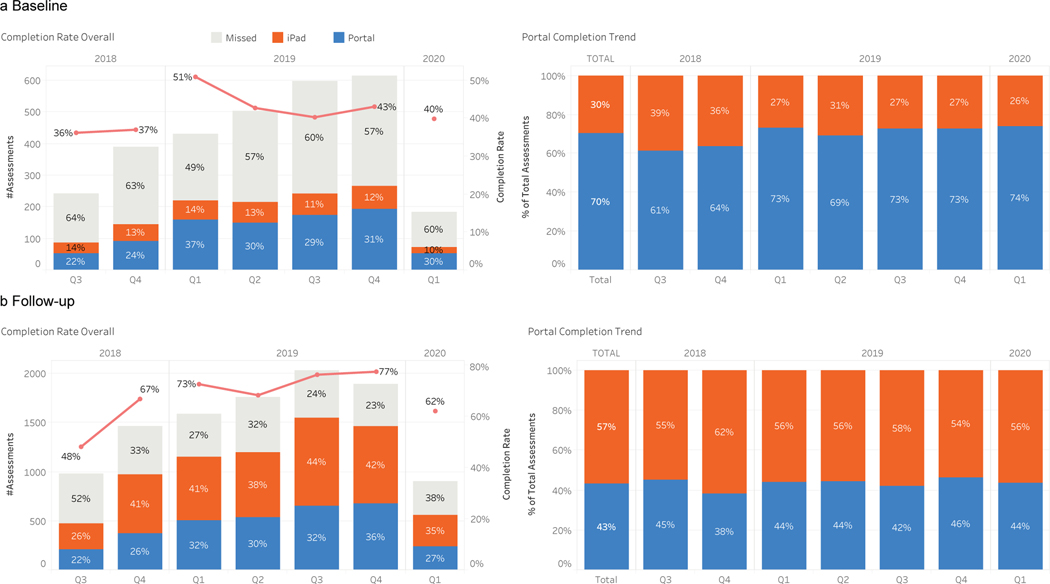

The integration of ePROs as part of standard of care in head and neck oncology is feasible (Figure 6). Since August 2018, all patients who have presented to MSK with head and neck cancer have used the Head and Neck PROs Oncology platform in MSK Engage as part of routine clinical care. From August 2018 to February 2020, 4,154 patients have completed survey measures. Average patient participation rate has been 69%. Baseline completion rates were much lower than completion rates at follow-up visits. The number of patients who were enrolled in the institutional patient portal increased over the course of the case study from 64% to 84% (Supplemental Figure 1). Forty-two percent of baseline surveys were completed, whereas 80% of follow-up surveys were completed (Figure 7). For baseline surveys, the majority (70%) were completed via the institutional patient portal. In contrast, most follow-up surveys were completed at the time of the visit (62%) while 38% were completed before the visit via the institutional patient portal. Median time of completion was 5 minutes.

Figure 6:

Integration of Head and Neck PROs Oncology platform across clinicians and all sites (main and regional campuses).

Abbreviations: #, number; Q, quarter; med, median; min, minutes; PT, patients; MC, main campus; RS, regional site

Figure 7:

Dashboards allow for internal monitoring of completion rates. We identified that a) baseline compliance (which does not include an in-clinic completion option) was lower (42%) and was more often completed on the patient portal. b) Follow-up compliance was higher (80%), which reflects the in-clinic completion option with higher in-clinic completion rates.

Abbreviation: Q, quarter

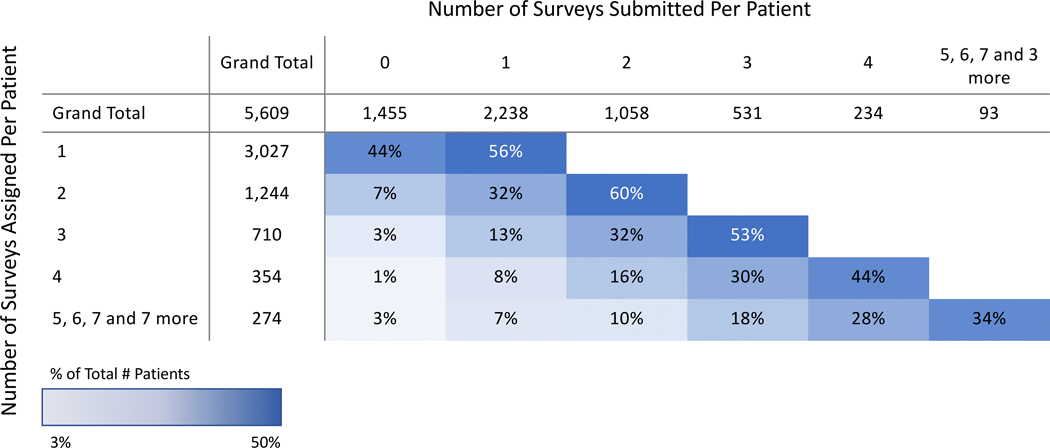

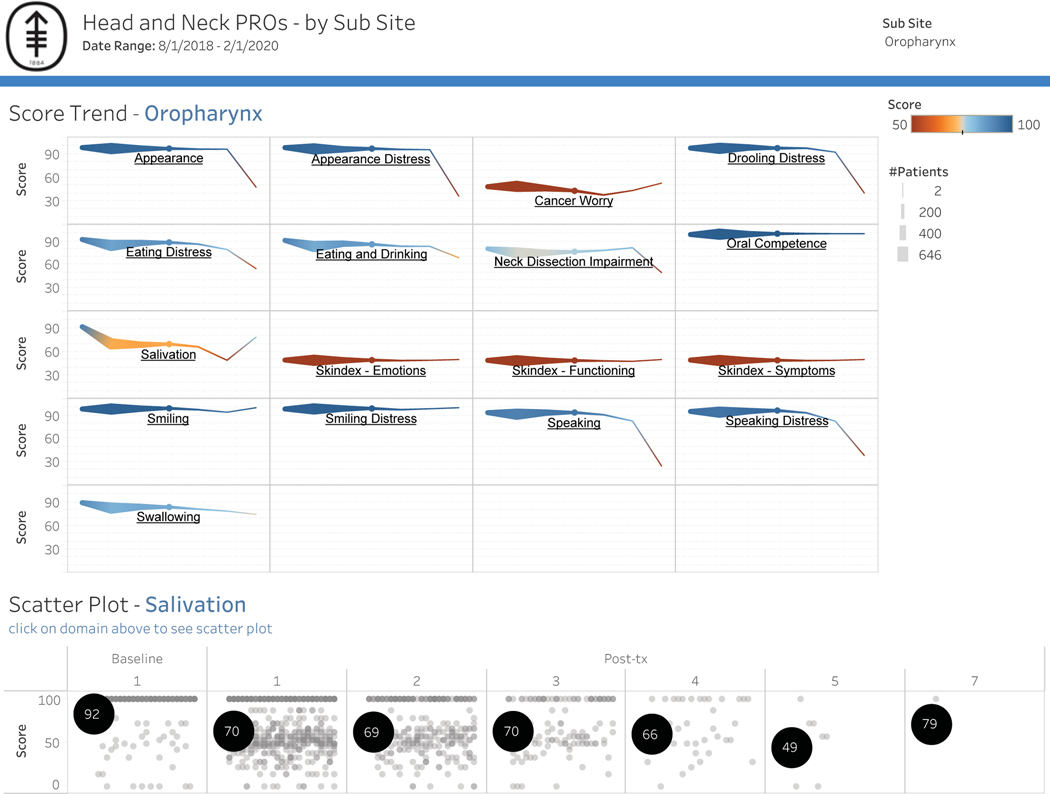

Based on the response rates, it was feasible to include both main campus and regional centers. Patient engagement was defined as the proportion of patients who complete the module when a survey is delivered (Figure 8). For example, in the analyzed cohort of patients who received 4 modules, 44% returned to the survey and completed all measures. Overall subsite scores by domain were also designed to generate hypotheses for clinical research. Figure 9 shows all oropharynx cancers across measured functional domains, with salivation highlighted to show the range of responses across all patients. Clinician adoption rate, as measured by “checking EMR box,” was 12%.

Figure 8:

Patient engagement grid. Patients were first divided into categories based on number of surveys assigned. Patient engagement was calculated by dividing the number of patients who completed a given number of surveys by the total number of patients in that category.
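The calculation described in this caption can be sketched in a few lines of Python. The function name `engagement_grid` and the input layout (one pair of assigned and completed survey counts per patient) are assumptions made for illustration and do not describe the institutional dashboard code.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def engagement_grid(patients: Iterable[Tuple[int, int]]) -> Dict[int, Dict[int, float]]:
    """Build {surveys_assigned: {surveys_completed: proportion of patients}}.

    Each input pair is (surveys_assigned, surveys_completed) for one patient.
    """
    counts = defaultdict(Counter)
    for assigned, completed in patients:
        counts[assigned][completed] += 1  # tally patients per grid cell
    grid = {}
    for assigned, by_completed in counts.items():
        total = sum(by_completed.values())  # all patients in this category
        grid[assigned] = {c: n / total for c, n in by_completed.items()}
    return grid
```

Each row of the resulting grid sums to 1, so a cell can be read directly as the share of patients in that category who completed a given number of surveys.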

Figure 9:

Normative data will be used to enhance feedback. a) Responses across all patients with oropharynx cancer by domain over time. b) Dashboard allows for toggling between domains, highlighted is a scatter plot of Salivation Scale showing the range of responses across patients over time.

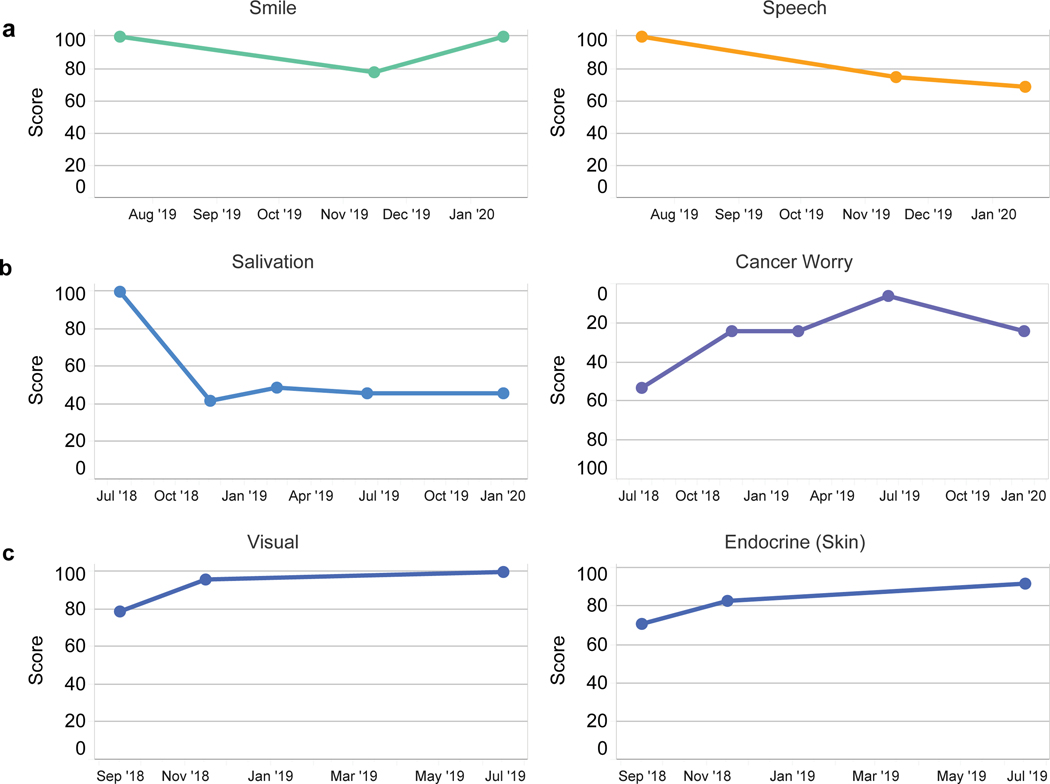

Three illustrative cases are presented to demonstrate the patient-level data visualization, a tool that is used to communicate in the clinic and offers opportunities to follow the clinical course to assess for needed intervention as well as monitor for response to treatment (Figure 10). Case 1 shows the status of a patient who underwent glossectomy and neck dissection for T1N0 oral tongue cancer. The patient advanced report captured marginal mandibular nerve weakness, which resolved but with persistent speech complaints; a consult with speech pathology was recommended. Case 2 is a patient with T1N0 acinic cell carcinoma intermediate grade status after parotidectomy and adjuvant radiation. Since persistent xerostomia and cancer worry were reported as “areas of concern,” acupuncture was recommended through rehabilitation medicine. Case 3 is a patient with acromegaly secondary to an insulin-like growth factor-1 secreting pituitary tumor with cavernous sinus invasion and mild diplopia status post-endoscopic resection of tumor with radiographic and biochemical cure. Postoperatively, the patient reported resolved visual complaints and noted improvement in skin appearance (endocrine).

Figure 10.

Case examples of individual patients. a) Patient with tongue cancer after glossectomy and neck dissection. Postoperative marginal mandibular nerve weakness improves while speech complaints persist. b) Patient with salivary gland cancer post parotidectomy with adjuvant radiation. Dry mouth and cancer worry represent continued “areas of concern.” c) Patient with acromegaly and diplopia secondary to pituitary tumor status post endoscopic resection. Visual complaints resolved postoperatively and skin appearance (endocrine) improved.

Discussion

The integration of PROs as part of standard clinical care in head and neck oncology has been limited by underpowered information technology platforms, limited PROMs, suboptimal data visualization, and the lack of a comprehensive DMT effort. We demonstrate the feasibility of developing and implementing into a high-volume head and neck oncology practice an ePROs program that 1) longitudinally follows individual patients to inform care, 2) generates normative data across disease subsites and treatment options to improve shared decision-making during consultation, and 3) studies our practices, reports our experiences, and adds patient-reported HRQOL endpoints to our research studies. Our case study also highlights opportunities and obstacles to integration.

Monitoring PROs in clinical care is thought to be most effective when tailored to a specific population in a defined disease with treatment-related issues that can be systematically identified and managed.6 We selected domains that capture subsite/treatment modality HRQOL effects that are common and unique to head and neck cancer by involving stakeholder (all DMT members) input and recommendations from the NCI.1 Involving treating clinicians in survey selection allowed for the measurement of symptoms across head and neck cancer treatment modalities. Investment in stakeholders has been shown to increase clinician adoption of PROs.7 Use of our recently developed FACE-Q Head and Neck Cancer module5 and ICD-10 code-directed delivery has also enabled tailored measure delivery to specific disease subsites across the entire population of head and neck cancer patients.

The use of universal ICD-10 diagnosis codes as prompts for content delivery has enabled the administration of the Head and Neck PROs Oncology platform based on diagnosis, independent of treatment modality. For example, patients are designated to the cohort based on their diagnosis of laryngeal cancer rather than its treatment with total laryngectomy. This is especially important in head and neck oncology where primary radiation approaches are often employed rather than primary surgery. The design of our ePROs program may allow for future comparative effectiveness research across different treatment modalities that may be oncologically equivalent (in terms of survival) as well as for maximum generalizability. Cohort development based on universal codes (ICD-10 and CPT) permits expansion to other oncology disease sites and general medical conditions, as well as widespread adaptability outside of the institution.

Timing and frequency of delivery of ePROs surveys were designed to longitudinally measure treatment and disease toxicity while balancing patient response burden with generating quality data. The International Society for Quality of Life Research presents options ranging from only once to frequent completion, with assessments either tied to visits or used to monitor patients between visits.4 In our ePROs program, we opted to administer multiple longitudinal surveys anchored with a clinical visit. Multiple surveys allowed for analysis of trajectory of treatment-related side effects4 and monitoring for recurrence.8–10 Incorporating survey delivery into a visit also improved adoption in the follow-up setting (Figure 7) by providing a strategy to collect surveys from patients who had not filled them out before the visit.

Delivery within a clinic visit also offers a forum for the survey responses to be reviewed. The incremental benefits of collecting PROs during an already scheduled patient–clinician visit to improve communication need further study. While survey delivery between visits may improve access to care, timely responses to time-sensitive issues will require an increase in clinical infrastructure.4 The landmark work by Basch et al showed a survival benefit from PRO-based symptom monitoring (between clinical visits) versus usual care. Importantly, while the number of calls was equivalent between the 2 arms, the benefits from the PROs arm may have been related to content-directed clinical interactions.11 Access to PROs at the time of the clinical visit may structure the visit, enhance communication, and improve workflow.12 Our study cohort includes long-term monitoring in a patient cohort (from diagnosis to survivorship) outside of a clinical trial and, therefore, both content and schedule of survey delivery have additional complexity and will continue to evolve as we develop our ePROs program.

Integration of PROs into the EMR allowed for clinical data to be readily accessible to individual head and neck DMT members. While accessible, PRO-integrated EMR data should be presented in a format that improves (does not slow down) clinical workflow, and outcomes need to be displayed in a way that imposes minimal cognitive and time load on the user.13 We used dashboards to optimize meaningful use through visualization of the data by the patient, project managers, and clinicians. These dashboards were developed to display responses on a clinical enterprise, disease site, and patient level; population surveillance level data, allowing the monitoring of adoption rates across sites, physicians, and departments, were easily adaptable (Figure 6). Each dashboard also provided information including time-to-completion and where it was completed (in clinic or on portal remotely). Interventions to improve adoption could also be monitored for effectiveness. With dynamic dashboards that allowed for toggling between survey types, we identified that the response rate was higher for follow-up surveys than for baseline surveys. This finding prompted the use of education sections and scripts for staff to improve baseline participation.

“Quality” dashboards to provide feedback on care based on quality metrics are widely used and have been shown to be effective in health care.13–15 “Clinical” dashboards where real-time data from an individual patient or data from a group of patients are displayed to trigger clinically actionable responses are less developed than “quality” dashboards that provide feedback. In this study, line graphs of individual patient scores were developed to improve patient communication with clinicians as well as monitor responses over time. Line graphs have been shown to convey HRQOL more effectively than other types of graphics.16,17

In our study, we presented examples of cases in which the PROs prompted clinician intervention or demonstrated the effects of an intervention (Figure 10). Over time, normative data will develop within the program and allow for enhanced feedback with integration of expected HRQOL outcomes.16,18 Integration of a “comparison group,” which allows patients to compare their HRQOL with that of other “patients like me,” has been indicated as one of the most important functions of a clinical dashboard.16 Aggregated normative data, generated by this ePROs program over time, will advance clinical care by 1) setting expectations for treatment-related side effects, 2) identifying cohorts at high risk for poor HRQOL, and 3) improving shared decision-making. Real-time modeling of nadirs, predicted improvement (“How long will my dry mouth last?”), and plateaus (“Will my taste ever be back to normal?”) by tumor, patient, and treatment factors will replace anecdotal responses. These data will allow for early interventions in people who deviate from the normative curve and prophylactic intervention in populations who are at risk for poor functional outcomes related to treatment. Visualizing how “people like me with head and neck cancer” do would improve shared decision-making during consultation. While toxicity grade may be similar across treatment options, the toxicity may be in different domains, which would be captured with the Head and Neck PROs Oncology platform at MSK. For example, a musician with human papillomavirus-associated oropharynx cancer metastatic to the neck may opt for a primary surgical approach with adjuvant therapy over concurrent chemoradiation therapy to avoid ototoxicity. In contrast, a construction worker with the same diagnosis, who is dependent on shoulder function, may elect to avoid the neck dissection in the primary setting and proceed with nonsurgical treatment. ePRO data collected across all patients as standard of care will allow clinicians to visualize these preferences for future patients.

There are obstacles and limitations to full ePRO integration across the head and neck clinical service. Engagement of clinicians and incorporation of these discussions into clinical workflow remain a challenge because of the scope of clinicians’ practices and time constraints in the clinic. Strategies to improve patient engagement are also required but will be linked to increasing clinician adoption. Patients are more likely to participate if the clinician reviews the survey responses and incorporates them into interventions. In our study, only 12% of clinicians checked the box in the EMR that the PROs were reviewed with the patient. While such a small percentage likely underestimates the number of physicians who reviewed the PROs with the patient, it does illustrate lower-than-anticipated physician engagement and barriers to EMR integration. How individual clinicians integrate the PROs into their workflows is variable at this time. The advancement of a computerized decision support system in response to PROs data may improve utilization, as well as standardize and enhance care. Incorporating user-centered design, in which stakeholders participate in optimizing workflow and data visualization, is one strategy that may enhance integration and engagement.16 Based on patient and clinician input, domains focused on chemotherapy toxicity (including ototoxicity and neuropathy) are underrepresented in the current platform and will be added. While trigger questions are already being used to reduce patient burden in our surveys, information technology enhancements and PROMs developed with computer-adaptive testing will improve the quality of data while managing survey fatigue. Future analysis of non-responders may identify barriers to adoption, such as age, technology literacy, and education. Finally, although the information technology infrastructure to develop this ePROs program may not be available in all clinical settings, this study provides a road map to direct information technology enhancements around the needs of a successful standard-of-care ePROs program.

Conclusions

Through presentation of the MSK Head and Neck PROs Oncology platform, we have demonstrated the feasibility of an ePROs program as a component of clinical care across a DMT. The platform’s use of standardized, internationally recognized codes (ICD-10 and CPT) allows for wide expansion across disease sites and institutions. Integration of PROs as standard of care will provide novel disease- and treatment-related information beyond what can be collected from the clinician that can be used to assess outcomes and quality and to counsel patients. We will continue to test enhancements of the platform, including content, workflow, data visualization, and health informatics, to confirm their added value to oncology care.

Supplementary Material

Acknowledgments

Funding: This research was funded in part through the NIH/NCI Cancer Center Support Grant P30 CA008748, which supports Memorial Sloan Kettering Cancer Center’s research infrastructure.

Footnotes

Conflict of Interest: All co-authors have nothing to disclose.

References

- 1. Chera BS, Eisbruch A, Murphy BA, et al. Recommended patient-reported core set of symptoms to measure in head and neck cancer treatment trials. J Natl Cancer Inst. 2014;106(7).

- 2. National Cancer Institute. Clinical trials search results: head and neck cancer. Accessed May 26, 2020. https://www.cancer.gov/about-cancer/treatment/clinical-trials/search/r?loc=0&rl=1&t=C35850.

- 3. Snyder C, Wu A, eds. Users’ guide to integrating patient-reported outcomes in electronic health records. May 2017. Accessed April 21, 2020. www.pcori.org/sites/default/files/PCORI-JHU-Users-Guide-To-Integrating-Patient-Reported-Outcomes-in-Electronic-Health-Records.pdf.

- 4. Aaronson N, Elliott T, Greenhalgh J, et al. User’s guide to implementing patient-reported outcomes assessment in clinical practice. January 2015. Accessed April 21, 2020. https://www.isoqol.org/wp-content/uploads/2019/09/2015UsersGuide-Version2.pdf.

- 5. Cracchiolo JR, Klassen AF, Young-Afat DA, et al. Leveraging patient-reported outcomes data to inform oncology clinical decision making: introducing the FACE-Q Head and Neck Cancer Module. Cancer. 2019;125(6):863–872.

- 6. Jensen RE, Snyder CF. PRO-cision medicine: personalizing patient care using patient-reported outcomes. J Clin Oncol. 2016;34(6):527–529.

- 7. Stover AM, Tompkins Stricker C, Hammelef K, et al. Using stakeholder engagement to overcome barriers to implementing patient-reported outcomes (PROs) in cancer care delivery: approaches from 3 prospective studies. Med Care. 2019;57 Suppl 5 Suppl 1:S92–S99.

- 8. Brandstorp-Boesen J, Zatterstrom U, Evensen JF, Boysen M. Value of patient-reported symptoms in the follow up of patients potentially cured of laryngeal carcinoma. J Laryngol Otol. 2019;133(6):508–514.

- 9. Zatterstrom U, Boysen M, Evensen JF. Significance of self-reported symptoms as part of follow-up routines in patients treated for oral squamous cell carcinoma. Anticancer Res. 2014;34(11):6593–6599.

- 10. Scharpf J, Karnell LH, Christensen AJ, Funk GF. The role of pain in head and neck cancer recurrence and survivorship. Arch Otolaryngol Head Neck Surg. 2009;135(8):789–794.

- 11. Basch E, Deal AM, Dueck AC, et al. Overall survival results of a trial assessing patient-reported outcomes for symptom monitoring during routine cancer treatment. JAMA. 2017;318(2):197–198.

- 12. Rogers SN, Ahiaku S, Lowe D. Is routine holistic assessment with a prompt list feasible during consultations after treatment for oral cancer? Br J Oral Maxillofac Surg. 2018;56(1):24–28.

- 13. Khairat SS, Dukkipati A, Lauria HA, Bice T, Travers D, Carson SS. The impact of visualization dashboards on quality of care and clinician satisfaction: integrative literature review. JMIR Hum Factors. 2018;5(2):e22.

- 14. Dowding D, Randell R, Gardner P, et al. Dashboards for improving patient care: review of the literature. Int J Med Inform. 2015;84(2):87–100.

- 15. Dowding D, Merrill J, Russell D. Using feedback intervention theory to guide clinical dashboard design. AMIA Annu Symp Proc. 2018;2018:395–403.

- 16. Izard J, Hartzler A, Avery DI, Shih C, Dalkin BL, Gore JL. User-centered design of quality of life reports for clinical care of patients with prostate cancer. Surgery. 2014;155(5):789–796.

- 17. Brundage M, Feldman-Stewart D, Leis A, et al. Communicating quality of life information to cancer patients: a study of six presentation formats. J Clin Oncol. 2005;23(28):6949–6956.

- 18. Stabile C, Temple LK, Ancker JS, et al. Ambulatory cancer care electronic symptom self-reporting (ACCESS) for surgical patients: a randomised controlled trial protocol. BMJ Open. 2019;9(9):e030863.
