Abstract

Objectives

To explore the impact of data-sharing initiatives on the intent to share data, on actual data sharing, on the use of shared data and on research output and impact of shared data.

Eligibility criteria

All studies investigating data-sharing practices for individual participant data (IPD) from clinical trials.

Sources of evidence

We searched the Medline database, the Cochrane Library, the Science Citation Index Expanded and the Social Sciences Citation Index via Web of Science, and preprints and proceedings of the International Congress on Peer Review and Scientific Publication. In addition, we inspected major clinical trial data-sharing platforms, contacted major journals/publishers, editorial groups and some funders.

Charting methods

Two reviewers independently extracted information on methods and results from resources identified using a standardised questionnaire. A map of the extracted data was constructed and accompanied by a narrative summary for each outcome domain.

Results

93 studies identified in the literature search (published between 2001 and 2020, median: 2018) and 5 from additional information sources were included in the scoping review. Most studies were descriptive and focused on early phases of the data-sharing process. While the willingness to share IPD from clinical trials is extremely high, actual data-sharing rates are suboptimal. A survey of journal data suggests poor to moderate enforcement of the policies by publishers. Metrics provided by platforms suggest that a large majority of data remains unrequested. When requested, the purpose of the reuse is more often secondary analyses and meta-analyses, rarely re-analyses. Finally, studies focused on the real impact of data-sharing were rare and used surrogates such as citation metrics.

Conclusions

There is currently a gap in the evidence base for the impact of IPD sharing, which entails uncertainties in the implementation of current data-sharing policies. High level evidence is needed to assess whether the value of medical research increases with data-sharing practices.

Keywords: health informatics, information management, information technology

Strengths and limitations of this study.

Exhaustive review of both the literature and the main initiatives in data sharing.

Analysis of the full data-sharing process covering intention to share, actual sharing, use of shared data, research output and impact.

Retrieval and synthesis of information proved to be difficult because of a very siloed landscape where each initiative/platform operates with its own metrics.

Data sharing is a moving target in a rapidly changing environment with more and more new initiatives.

Only a limited research output from data sharing is available so far.

The time from submitting a data-sharing request to receiving the requested data was not systematically investigated in the review.

Introduction

Rationale

Data sharing is increasingly recognised as a key requirement in clinical research.1 In any discussion about clinical trial data sharing, the emphasis is naturally on the data sets themselves, but data sharing is much broader. Besides the individual participant data (IPD) sets, other clinical trial data sources should be made available for sharing (eg, protocols, clinical study reports, statistical analysis plans, blank consent forms) to enable a full understanding of any data set. In this scoping review, there is a focus on the sharing of IPD from clinical trials.

Within clinical research, data sharing can enhance reproducibility and the generation of new knowledge, but it also has an ethical and economic dimension.2 Scientifically, sharing makes it possible to compare or combine the data from different studies, and to more easily aggregate it for meta-analysis. It enables conclusions to be re-examined and verified or, occasionally, corrected, and it can enable new hypotheses to be tested. Sharing can, therefore, increase data validity, but it also draws more value from the original research investment, as well as helping to avoid unnecessary repetition of studies. Agencies and funders are referring more and more to the economic advantages of data reuse. Ethically, data sharing provides a better way to honour the generosity of clinical trial participants, because it increases the utility of the data they provide. Despite the high potential for sharing clinical trial data, the launch and implementation of several data-sharing initiatives and platforms, and outstanding examples related to the value of data sharing,3 to date data sharing is not the norm in clinical research, unlike many other scientific disciplines.4 One major hurdle is that clinical trial data concerns individuals and their health status, and as such requires specific measures to protect privacy.

To support sharing of IPD in clinical trials, several organisations have developed generic principles, guidance and practical recommendations for implementation. In 2016, the International Committee of Medical Journal Editors (ICMJE), a small group of medical journal editors, published an editorial stating that ‘it is an ethical obligation to responsibly share data generated by interventional clinical trials because participants have put themselves at risk’.5 The ICMJE considers that there is an implicit social contract imposing an ethical obligation for the results to lead to the greatest possible benefit to society. The ICMJE proposed to require that deidentified IPD is made publicly available no later than 6 months after publication of the main trial results. This time lapse would be useless for public health emergencies like COVID-19. However, the ICMJE proposal triggered debate, and a large number of trialists were reluctant to adopt this new norm6 on account of the feasibility of the proposed requirements, the resources required, the real or perceived risks to trial participants, and the need to protect the interests of patients and researchers.7

Despite the cultural shift towards sharing clinical trial data and the major commitment of scientific organisations, funders and initiatives, overall there is still a lack of effective policies in the biomedical literature to ensure that underlying data is maximally available and reusable. The only requirement appears to be a data management plan or a data-sharing plan. A few journals require data sharing and, for those that do, guidelines are heterogeneous and somewhat ambiguous.8 Nevertheless, some innovative and progressive funders (eg, Wellcome Trust, Bill & Melinda Gates Foundation) and publishers/journals (eg, Public Library of Science (PLOS), in 2014; The British Medical Journal (BMJ), 2009–2015) have adopted strong data-sharing policies. As part of a wider cultural shift towards more open science, there have been various attempts to explore how clinical researchers can best plan for data sharing and prepare their ‘raw’ IPD so that it becomes available to others9 (although often under controlled access conditions rather than simply being publicly available online10) and can structure that data to make it FAIR (findable, accessible, interoperable and reusable).11 Meanwhile, several data-sharing platforms and repositories are available and in use to provide practical support for the data-sharing process in clinical research (eg, Yale University Open Data Access (YODA), launched in 2011; ClinicalStudyDataRequest.com (CSDR), launched in 2013; Vivli, launched in 2018). A considerable number of individual studies have been performed to assess and explore the sharing of data from clinical trials under different circumstances and within different frameworks. What is strongly needed is a scoping review providing an overview of the status of implementation of data sharing as a whole and the implications originating from the available evidence.

Objectives

In this scoping review, we explored the impact of data-sharing initiatives on the willingness to share data, the status of data sharing, the use of shared data and the impact of research outputs from shared data.

Methods

Protocol and registration

The study protocol was registered on the Open Science Framework on 12 September 2018 (registration number: osf.io/pb8cj). The protocol followed the methodology manual published by the Joanna Briggs Institute for scoping reviews.12 Methods and results are reported using the Preferred Reporting Items for Systematic Reviews and Meta Analyses extension for Scoping Reviews.13

Eligibility criteria

The following eligibility criteria for studies were used:

All study designs were eligible, including case studies, surveys, metrics and experimental studies, using qualitative or quantitative methods. Only published or unpublished reports (eg, preprints, congress presentations, non-indexed information such as websites) in English, German, French or Spanish were considered.

We included all studies and reports (1) providing information on current IPD data-sharing practices for clinical trials and (2) reporting on one or more of five outcome domains defined according to the data-sharing process presented in box 1.

Box 1. Definitions used for the five outcome domains.

1. Intention to share data

There is an intention to share data, expressed by a stakeholder (eg, sponsor/principal investigator, funder). This can be done by a written data-sharing commitment or by a declaration included in the trial registration. This also includes surveys on attitudes towards data sharing.

2. Actual data sharing

Data are truly made available for data sharing to secondary users. This is important because there are cases known where the data is offered for sharing but sharing does not take place, as a result of a possible hidden agenda or change in plans.

3. Use of shared data

Shared data can be used for various purposes. It can be used as background for research, usually not leading to research outputs. This covers use for education, researcher training and understanding of data. Study types that should lead to new research outputs include (1) validation/reproducibility of results, (2) further additional analyses (prognostic models, decision support, subgroup analyses, etc) and (3) individual participant data meta-analyses.

4. Research outputs from shared data

Research outputs are scientific presentations, reports and publications.

5. Impact of research output from shared data

Research output from shared data can have an impact on medical research (eg, development of new hypotheses and methods) and/or medical health (eg, changes in treatment via guidelines).

In the scoping review, only data sharing of IPD from clinical trials was considered. We defined clinical trials following the ClinicalTrials.gov definition: ‘a clinical study is a research study involving human volunteers (also called participants) that is intended to add to medical knowledge. There are two types of clinical studies: interventional studies (also called clinical trials) and observational studies. Clinical trial is another name for an interventional study’.14 We, therefore, considered any interventional clinical studies (no matter whether they were randomised), and we did not consider studies on data-sharing concerning observational and non-clinical studies (eg, on genomics) nor different fields outside medicine (eg, economics).

We included studies that investigated and reported information on current data-sharing practices performed without restrictions in terms of promotional initiatives, type of repository or platform (see box 2 for definitions) and that promoted data-sharing practices (eg, at editorial level, at funder level, at research level). We considered many different types of studies (eg, experimental studies, surveys, metrics, quality assurance studies, qualitative research, reviews, reports), as the inclusion criteria were not method-specific but rather content-specific.

Box 2. Definitions used for initiatives, repository and platform.

Initiatives

Major activities of an organisation (or a network of several organisations) to actively promote data-sharing in this area (eg, Pharmaceutical Research and Manufacturers of America/European Federation of Pharmaceutical Industries and Associations, Nordic Trial Alliance, Institute of Medicine, ICMJE, Research Data Alliance).

Repository

Large database infrastructures set up to manage, share, access and archive researchers’ datasets from clinical trials. Repositories can be specialised and dedicated to specific disciplines (eg, FreeBird, Biological Specimen and Data Repository Information Coordination Center) or more general (eg, FigShare, Dryad).

Platform

A computer environment where researchers can find datasets from clinical trials across different repositories, and where additional functionalities (eg, protected analysis environment) are provided (eg, ClinicalStudyDataRequest.com, Yale University Open Data Access, Project Data Sphere, Github).

Information sources

The identification of studies was performed in two complementary stages:

A systematic literature search in bibliographic databases (MEDLINE databases, Cochrane Library, Science Citation Index Expanded and Social Science Citation Index). In addition, preprint servers and proceedings were searched.

Inspection of, and if required contact with, known information sources (eg, webpages, documents and reports from platforms, funders, publishers) to explore whether they had an evaluation component and provided detailed research output from shared data (see online supplemental material 1).


Between 25 January 2019 and 12 June 2019 (with an update on 2 November 2020), one researcher (MS) inspected (and when necessary contacted) major clinical trial data-sharing platforms to explore whether they had an evaluation component and provided details of research output from shared data (see online supplemental material 1). Similarly, in the same time period, the researcher contacted major journals and/or publishers and/or editorial groups (The BMJ, PLOS, The Annals of Internal Medicine, BioMedCentral (Springer/Nature), Faculty of 1000 Research (F1000Research)). These journals/publishers were targeted because they had either an early or a robust data-sharing policy (NEJM, Lancet and JAMA had no data-sharing policy before the 2018 ICMJE policy). Some funders (see online supplemental material 1) were also contacted, and preprint repositories were explored (bioRxiv, PeerJ, Preprints.org, PsyArXiv and MedRxiv). For the sake of completeness, ASAPbio (Accelerating Science and Publication in biology) and the Center for Open Science were also contacted for the same information, as three International Congress on Peer Review and Scientific Publication conference abstracts. In addition, when relevant references were found in various papers these references were included (snowballing searches).

Search

On 29 October 2018 (update on 12 September 2020), one researcher (EM) searched the Medline databases for indexed and non-indexed citations via Ovid from Wolters Kluwer, the Cochrane Library via Wiley, Science Citation Index Expanded and Social Sciences Citation Index via Web of Science from Clarivate Analytics for articles meeting our inclusion criteria.

The detailed search terms for the MEDLINE databases, the Cochrane Library and the Web of Science databases can be found in online supplemental material 2. The main search strategy developed by CO, DM and FN was independently peer-reviewed by a senior medical documentalist (EM, who joined the team subsequently) using evidence-based guidelines for Peer Review of Electronic Search Strategies.15 Discrepancies were resolved between the authors, and EM performed the search. All references were managed and deduplicated using a reference manager system (Endnote).
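The deduplication step mentioned above can be illustrated with a minimal sketch. It is not the algorithm used by the reference manager; it only assumes, for illustration, that two records are duplicates when they share a DOI or, failing that, a normalised title. The record fields and example entries are hypothetical.

```python
import re

def normalise_title(title: str) -> str:
    """Lower-case a title and collapse punctuation and whitespace."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()

def deduplicate(records):
    """Keep the first record seen for each DOI, or for each normalised
    title when a record has no DOI. A record with a DOI is not matched
    against a copy of the same record that lacks one."""
    seen = set()
    unique = []
    for record in records:
        doi = (record.get("doi") or "").lower()
        key = ("doi", doi) if doi else ("title", normalise_title(record["title"]))
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique

# Hypothetical records, invented for illustration only.
records = [
    {"title": "Sharing of trial data: a survey", "doi": "10.1000/example.1"},
    {"title": "Sharing of Trial Data: A Survey.", "doi": "10.1000/EXAMPLE.1"},
    {"title": "Data-sharing statements in journals", "doi": None},
    {"title": "Data sharing statements in journals", "doi": None},
]
unique_records = deduplicate(records)
print(len(unique_records))
```

In practice, automated matching of this kind is usually followed by a manual check, since near-duplicates (eg, conference abstract and full paper) may need to be kept separately.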

On 23 January 2019 (update on 2 November 2020), two researchers (MS and FN) independently searched for relevant pre-prints on OSF PREPRINTS using the search function to find all papers relevant to medicine with the following keyword (trial* OR random*). On 29 January 2019, the two researchers independently searched the proceedings of the three latest International Congress on Peer Review and Scientific Publication reports for relevant abstracts (2009, 2013 and 2017).
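As an illustration of what the truncated keyword (trial* OR random*) selects, the sketch below approximates it locally with a regular expression. This is an assumption about how the truncation behaves (any word beginning with "trial" or "random", case-insensitive) and not a description of the OSF PREPRINTS search engine; the example titles are invented.

```python
import re

# Local approximation of the truncated query (trial* OR random*):
# any word starting with "trial" or "random", ignoring case.
PATTERN = re.compile(r"\b(?:trial|random)\w*", re.IGNORECASE)

def matches_query(text: str) -> bool:
    """Return True if the text contains a word matched by the query."""
    return PATTERN.search(text) is not None

# Invented titles, for illustration only.
titles = [
    "A randomised controlled study of data-sharing incentives",
    "Trials and data sharing: a survey of investigators",
    "Cohort study of registry data",
]
selected = [title for title in titles if matches_query(title)]
print(selected)
```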

Selection of sources of evidence

The selection of sources of evidence was performed by two independent reviewers (CO and FN). Contact with initiatives/platforms/journals/publishers was made by a single reviewer (MS). Disagreements were resolved by consensus between CO and FN and, when necessary, in consultation with a third reviewer (DM).

Data charting process

We developed a data collection form and pilot-tested it on 10 randomly selected research papers which were later included in our final study. Disagreements were resolved by consensus and, when necessary, in consultation with a third reviewer (DM).

Data items

For each research paper included according to the selection criteria we extracted: (1) basic information on the paper (type of study exploring data-sharing practices, authors, year, references and type of initiative and/or repository and/or platform studied), (2) information on the material shared (sharing of data, code, programmes and material), (3) whether it reported data about one or more of the five outcomes domains defined in box 1, (4) how these outcome domains were assessed and (5) a qualitative description of the main results observed on these outcomes.
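The five groups of items listed above can be pictured as a single charting record per paper. The sketch below is one possible representation, not the data collection form actually used; the class name, field names and example entry are hypothetical, and the domain labels are taken from box 1.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Outcome domains as named in box 1.
OUTCOME_DOMAINS = (
    "intention to share data",
    "actual data sharing",
    "use of shared data",
    "research outputs from shared data",
    "impact of research output from shared data",
)

@dataclass
class ChartingRecord:
    # (1) basic information on the paper
    study_type: str
    authors: str
    year: int
    reference: str
    initiative_or_platform: str
    # (2) material shared (data, code, programmes, material)
    material_shared: List[str]
    # (3) outcome domains reported
    domains_reported: List[str] = field(default_factory=list)
    # (4) how each domain was assessed, keyed by domain
    assessment_methods: Dict[str, str] = field(default_factory=dict)
    # (5) qualitative description of main results, keyed by domain
    main_results: Dict[str, str] = field(default_factory=dict)

    def __post_init__(self):
        # Reject domain labels that are not among the five in box 1.
        unknown = [d for d in self.domains_reported if d not in OUTCOME_DOMAINS]
        if unknown:
            raise ValueError(f"Unknown outcome domain(s): {unknown}")

# Hypothetical entry, invented for illustration only.
example = ChartingRecord(
    study_type="survey",
    authors="Example et al",
    year=2018,
    reference="ref-00",
    initiative_or_platform="journal policy",
    material_shared=["data"],
    domains_reported=["intention to share data"],
    assessment_methods={"intention to share data": "questionnaire"},
    main_results={"intention to share data": "most respondents willing to share"},
)
print(example.domains_reported)
```

Validating the domain labels at entry is a design choice that keeps two reviewers' extractions comparable when they are later reconciled.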

For each data-sharing platform, publisher and funder providing detailed research output from shared data, we extracted the following information: authors, date of request, date of publication and type of reuse. We initially planned to describe the scale of reuse in qualitative terms and the observed results of the reuse (ie, ‘positive’ or ‘negative’ study), but these two characteristics were difficult to extract, with very poor inter-rater agreement, and we decided not to detail them.

Critical appraisal of individual sources of evidence

The studies included were classified according to study type (eg, survey, metrics, experimental). Potentially relevant characteristics of studies included with regard to their internal–external validity and risk of bias were not assessed systematically with a specific tool, but explored when one of the two reviewers considered it relevant, and in this case each study was thoroughly discussed between the reviewers.

Synthesis of results

No outcome was prioritised since there was no quantitative synthesis for this study. All outcomes were described separately in sections corresponding to the outcome domain and subsections corresponding to similar types of initiative. Our plan for the presentation of results was specified in our protocol and organised into (1) different sections corresponding to the key concepts detailed in the data-sharing pipeline (intention-to-share data, actual data sharing, results of reuse, output from data sharing, impact of data sharing) and (2) different subsections corresponding to the different contexts and actors involved in the data-sharing pipeline (eg, targeted group for intention to share data or type of use for reuse of shared data). A summary of the data extracted from the papers included was constructed in tabular form with basic characteristics, and was accompanied by a narrative summary describing all results observed in the light of the review objective and question/s. Usually, individual studies were summarised in a short text with descriptive statistics of the main results (numbers, percentages); when appropriate, visual representations of the data extracted were provided.

Patient and public involvement

There was no patient or public involvement in this scoping review.

Changes to the initial protocol

We initially planned to contact leading authors in the field to ask whether they were aware of other unpublished initiatives, but this was not done as it was difficult to identify relevant authors. We found relevant references about data-sharing policies including both clinical trials and observational studies, without making a distinction. These references were included in the scoping review and this point was discussed in the text.

Results

Selection of sources of evidence

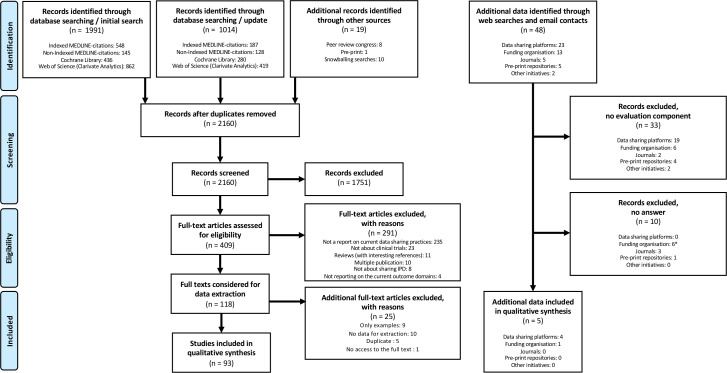

A total of 3024 records were identified; 3005 records (1991+1014 in the update) were retrieved by database search (2141 without duplicates). An additional eight records were identified by screening the abstracts of the last three International Congress on Peer Review and Scientific Publication conferences and ten records by snowballing searches. One additional relevant record was identified after screening 630 identified preprints. We screened out irrelevant records by title and abstract, leaving 409 possibly relevant references which were eligible for full-text screening. Subsequently, 316 references were excluded, leaving 93 reports that met the inclusion criteria (figure 1). We inspected websites and when needed contacted 48 initiatives/platforms/journals (we actually screened 49 but Supporting Open Access for Research Initiative (SOAR) is now integrated into Vivli): 23 data-sharing platforms, 13 funding organisations, 5 journals, 5 preprint repositories and 2 other initiatives. For 33 of these different sources, there was no evaluation component and for 10 additional contacts we received no answer as to whether they had an evaluation component and/or any data. Four data-sharing platforms (CSDR, YODA, National Institute of Diabetes and Digestive and Kidney Diseases (NIDDK), Vivli) and one funding organisation (Medical Research Council United Kingdom (MRC UK)) provided some additional data (online metrics and/or data about its policy) (figure 1), which was extracted in June 2019 and updated in December 2020.

Figure 1.

PRISMA flow diagram. *For the US National Institutes of Health, the answer we received was not informative. IPD, individual participant data; PRISMA, Preferred Reporting Items for Systematic Reviews and Meta-Analyses.
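The record counts reported in the selection paragraph above can be cross-checked with simple arithmetic. The sketch below uses only the numbers stated in the text; the variable names are ours and are not taken from the flow diagram.

```python
# Records identified, by source (numbers as reported in the text).
database_records = 1991 + 1014   # initial search + update
congress_abstracts = 8
snowballing = 10
preprints = 1
total_identified = (
    database_records + congress_abstracts + snowballing + preprints
)

# Full-text screening.
full_text_screened = 409
full_text_excluded = 316
reports_included = full_text_screened - full_text_excluded

# Additional information sources inspected or contacted.
sources_by_type = {
    "data-sharing platforms": 23,
    "funding organisations": 13,
    "journals": 5,
    "preprint repositories": 5,
    "other initiatives": 2,
}
sources_contacted = sum(sources_by_type.values())

# Outcome of the contacts.
no_evaluation_component = 33
no_answer = 10
provided_data = 4 + 1            # four platforms + one funder

print(total_identified, reports_included, sources_contacted)
```

The three groups of contact outcomes (33, 10 and 5) add up to the 48 sources contacted, and the 93 reports from the literature search plus the 5 additional sources match the totals given in the abstract.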

Characteristics of sources of evidence

Of the 93 reports, 5 were classified as experimental studies, 58 as surveys, 19 as metrics, 5 as qualitative research and 6 as other (4 case studies, 1 metrics and survey, 1 metrics and qualitative). The median year of publication was 2018 (range 2001–2020). The vast majority of these studies were from North America (50, 54%), Europe (16, 17%) and the UK (15, 16%). Eight (9%) were from Asia and 4 (4%) from Australia. Most (78, 84%) were focused on IPD data-sharing while the remaining 15 (16%) adopted a wider definition of the material shared (eg, by including protocols, codes). Thirty-eight reports (41%) were focused on data-sharing in publications/journals, 23 (25%) on data repositories, 8 (9%) on data-sharing by various institutions, 4 (4%) on trial registries and 20 (21%) in various other contexts (see online supplemental material 3 which presents study characteristics in detail).
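As a worked example of how the percentages above relate to the counts, the sketch below recomputes the shares by study design, region and material shared from the 93 included reports. It assumes simple rounding to the nearest whole per cent; the helper name is ours.

```python
TOTAL_REPORTS = 93

def percentage(count: int, total: int = TOTAL_REPORTS) -> int:
    """Share of the total, rounded to the nearest whole per cent."""
    return round(100 * count / total)

# Counts as reported in the text.
study_designs = {
    "experimental": 5, "survey": 58, "metrics": 19,
    "qualitative": 5, "other": 6,
}
regions = {
    "North America": 50, "Europe": 16, "UK": 15,
    "Asia": 8, "Australia": 4,
}
material = {"IPD only": 78, "wider definition": 15}

region_percentages = {name: percentage(n) for name, n in regions.items()}
material_percentages = {name: percentage(n) for name, n in material.items()}

for name, n in regions.items():
    print(f"{name}: {n} ({region_percentages[name]}%)")
```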

Collating and summarising the data

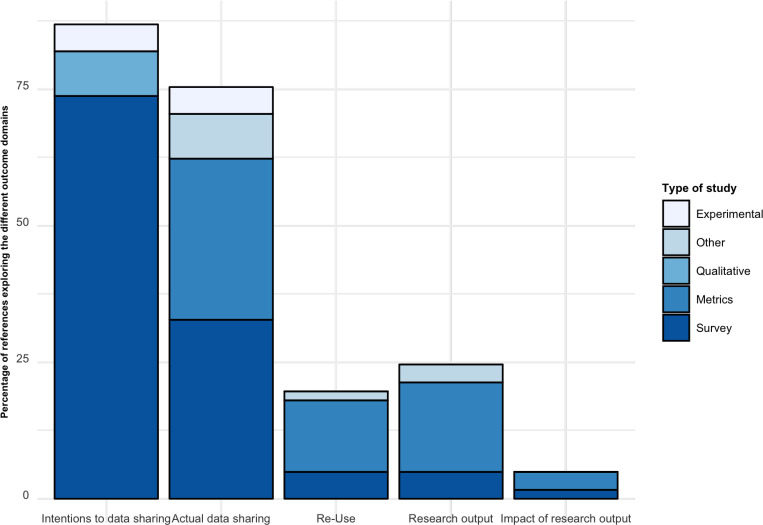

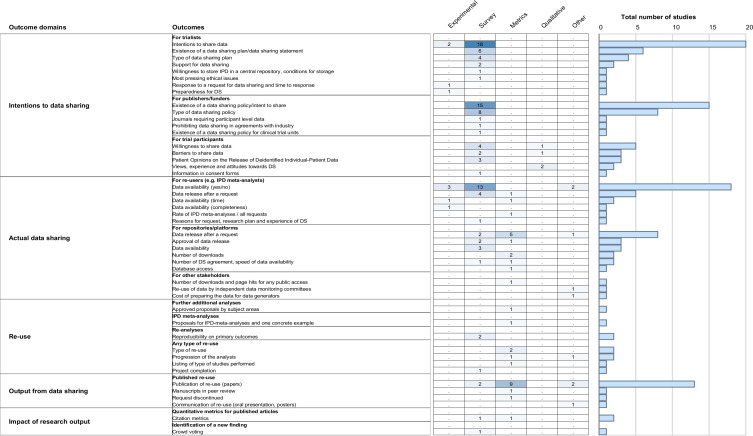

Figure 2 shows the proportion of the 93 references exploring each outcome domain. In an effort to create a useful synthesis of results, we collated results on each outcome from each publication and organised them into the prespecified categories. Figure 3 presents a detailed overview of the different outcome domains and the related outcomes used in the 93 different references included, organised by type of research.

Figure 2.

Proportion of the 93 references exploring each outcome domain. Study designs considered. Experimental: prospective research that implies testing the impact of a strategy (eg, randomised controlled trial). Survey: a general overview, exploration or description of individuals and/or research objects. Metrics: descriptive metrics from each initiative provided by the initiative. Qualitative: research that relies on non-numerical data to understand concepts, opinions or experiences. Other: any other research not covered above (eg, case studies, environmental scans).

Figure 3.

Outcomes used to assess current data-sharing practices for individual participant data for clinical trials organised per outcome domain and number of studies exploring these outcomes. Study designs considered. Experimental: prospective research that implies testing the impact of a strategy (eg, randomised controlled trial). Survey: a general overview, exploration or description of individuals and/or research objects. Metrics: descriptive metrics from each initiative provided by the initiative. Qualitative: research that relies on non-numerical data to understand concepts, opinions or experiences. Other: any other research not covered above (eg, case studies, environmental scans). IPD, individual participant data.

Critical appraisal of sources of evidence

In general, there was a high risk of bias, especially due to study design (eg, surveys with low response rates and absence of experimental design). As stated in the methods, this was not assessed systematically. If available, we have tried to present this information in the narrative part of the review.

Results for individual sources of evidence: intentions to share data

Clinical trialists

Surveys of attitudes

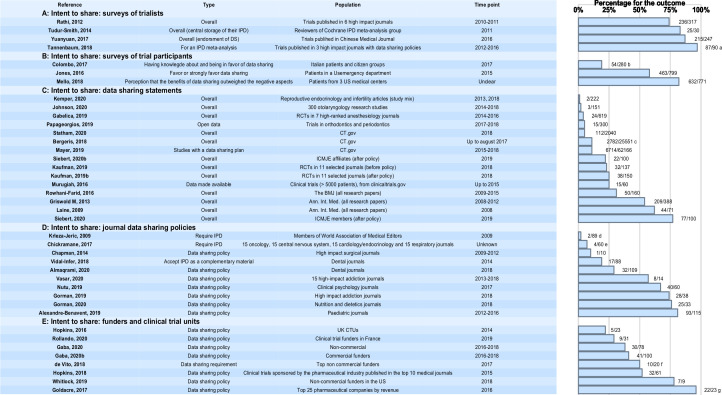

Four surveys investigating intention to share data by trialists reported high rates of intention to share, of around 75% or more (see figure 4). These surveys targeted authors of published trials and, in one study, reviewers in a Cochrane group (where the majority of respondents had been involved in a randomised controlled trial (RCT)). The studies differed in their estimation of data-sharing rates, selection criteria and/or survey methods. Response rates were comparable across the surveys (42%–58%). Reviewers in the Cochrane IPD meta-analysis group were strongly in favour of a central repository and of providing IPD for central storage (83%).16 In the survey by Rathi et al, 74% and 72%, respectively, thought that sharing deidentified data through data repositories should be required and that investigators should be required to share deidentified data in response to individual requests. However, only 18% indicated that they were required by the trial funder to place the trial data in a repository. In this survey, support for data sharing did not differ by trialist or trial characteristics.17 Trialists in Western Europe indicated they had shared or would share data in order to receive academic benefits or recognition more frequently than those from the USA or Canada (58% vs 31%). The most academically productive trialists less frequently indicated they had withheld or would withhold data in order to protect research subjects (24% vs 40% for the least productive), as did those who had received industry funding compared with those who had not (24% vs 43%).18 The survey by Tannenbaum, 2018 suggested that willingness to share data could depend on the intended reuse of the data (97% of respondents were willing to share data for a meta-analysis vs 73% for a reanalysis).19 For secondary analyses, the willingness to share was largely influenced by respondents' willingness to conduct a similar analysis. In addition, willingness to share was more marked after 1 year than after 6 months. In the fourth survey, on trials published in Chinese medical journals, the overwhelming majority (87%) stated that they endorsed data sharing.20

Figure 4.

Intent to share. Numbers correspond to the numbers of cases with the outcome/number of cases reported in each reference. aThe proportion is 73% if the purpose is a reanalysis. bFifty-four participants out of 60 had an opinion about data-sharing (the others had no knowledge or no opinion). cAn additional 25% were undecided. dThe proportion is 19% for requiring a data-sharing plan. eThirty-five per cent have a data-sharing policy (encouraging data-sharing). fOnly two with a mandatory policy. gThe proportion is 71% for a sample of all companies (not only the top 25). In DeVito et al, we extracted the information on policies that made data-sharing mandatory (ie, a requirement to share the data). CTUs, Clinical Trial Units; ICMJE, International Committee of Medical Journal Editors; IPD, individual participant data; RCT, randomised controlled trial.

Metrics of data-sharing statements in Journal articles

Intentions to share data for trialists were less clear for data-sharing statements in published journal articles (although this section is not specific to clinical trials) (see figure 4). Depending on the journals considered, the rates vary from less than 5% to around 25%. An analysis of the first year after the Annals of Internal Medicine policies encouraged data sharing found that data were available without condition for 4%, with conditions for 57% and unavailable for 38%.21 Over the first 4 years, data were available without condition for 7%, with conditions for 47%, and unavailable for 46% of research articles.22 Nine per cent and 22% of 160 randomly sampled research articles in the BMJ from 2009 to 2015 made data available or indicated the availability of their data sets, respectively.23 Among 60 randomised cardiovascular interventional trials registered on ClinicalTrials.gov up to 2015, with enrolment of >5000 participants and sponsored by one of the top 20 pharmaceutical companies in terms of 2014 global sales, IPD was available for 15 trials (25%) amounting to 204 452 patients, unavailable for 15 trials (25%) and undetermined for the remaining 50%, because of either no response or requirements for a full proposal.24 Reasons for non-availability were: cosponsor did not agree to make IPD available (four trials) and trials were not conducted within a specific time (five trials); for the remaining six trials, no specific reason was provided. Of 619 RCTs published between 2014 and 2016 in seven high-ranked anaesthesiology journals, only 24 (4%) had a data-sharing statement and none provided data in the manuscript or a link to data in a repository.25 In a survey targeting the authors of these RCTs, 86 (14%) responded and raw data was obtained from 24 participants. The authors concluded that willingness to share data among anaesthesiology RCTs is very low. From 1 July 2018, clinical trials submitted to ICMJE journals are required to contain a data-sharing statement. 
The reporting of the statement was investigated in a 2-month period before and after this date.26 The proportion of articles with a data-sharing statement was 23% (32/137) before and 25% (38/150) after 1 July 2018, while the number of journals publishing data-sharing statements increased from 4/11 to 7/11. Few data-sharing statements complied fully with the ICMJE journal criteria, and the majority did not refer to IPD. A total of 300 trials published in 2017–2018 and approximately equally distributed across orthodontics and periodontics were selected, assessed and analysed with respect to transparency and reporting.27 Open data sharing (repository or appendix) was found in 5% of the trials (11/150 orthodontics and 4/150 periodontics trials). Articles on reproducible research practices and transparency in reproductive endocrinology and infertility (REI) were investigated for original articles with a study type mix from REI journals (2013, 2018) and articles published in high-impact general journals between 2013 and 2018.28 Raw data were available on request or via online database for 1/98 articles in REI RCTs (2013), 0/90 in 2018 and 1/34 in high impact journals. In a random sample of 151 empirical studies in 300 otolaryngology research publications, using a PubMed search for records published between 1 January 2014 and 31 December 2018, only five provided a data availability statement and 3 (2.0%) indicated that data were available.29

Metrics of data-sharing statements in clinical trial registries

Intention to share could be even lower when considering data-sharing plans of trials registered at ClinicalTrials.gov. Here, the willingness to share data is between 5% and 10%. In one study, 25 551 trial records (72%) contained a response to the Plan to share IPD field. Of these, 10.9% of the records indicated ‘yes’ and 25.3% indicated ‘undecided’.30 Differences were observed by key funder type, with 11% of records funded by the National Institutes of Health (NIH) and 0% of industry-funded records answering ‘yes’. Importantly, an in-depth review of 154 data-sharing plans suggested a possible misunderstanding of IPD sharing, with discrepancies found between data-sharing plans and reports of actual data sharing. In a survey, the prevalence and quality of IPD-sharing statements among 2040 clinical trials first posted on ClinicalTrials.gov between 1 January 2018 and 6 June 2018 were investigated.31 The vast majority of trials included in this study did not indicate an intention to share IPD (n=1928; 94.5%). Among the trials that did commit to sharing IPD (n=112, 5.5%), significant variability existed in the content and structure of the IPD-sharing statements, indicating a need for further clarification and better outreach. Data from 287 626 clinical trials registered in ClinicalTrials.gov on 20 December 2018 were analysed with respect to sharing of IPD.32 Overall, 10.8% of trials with a first registration date after 1 December 2015 answered ‘yes’ to plans to share deidentified IPD. The sharing rate ranged from 0% (biliary tract neoplasms) to 72.2% (meningitis, meningococcal infection) when analysed by disease. For HIV, which was analysed separately, the sharing rate was higher on average (24.5%). In a prediction model, studies that deposit basic summary results on ClinicalTrials.gov, large studies and phase 3 interventional studies were the most likely to declare an intention to share IPD.

Other data sources

A 2015 survey focused on The National Patient-Centered Clinical Research Network found that a possible barrier toward data-sharing intentions related to how data can be used when shared with institutions that have different levels of experience, and to the possibility of some ‘competition’ between institutions on the marketplace of ideas.33

Experimental studies

Experimental data suggest that estimations of intention to share data could differ depending on the formulation of the request. For instance, a small randomised prospective study conducted in 2001 including 29 corresponding authors of research publications published in the BMJ, explored their preparedness to share the data from their research.34 The email contact, randomly allocated, was in one of two forms, a general request (asking if the author would ‘in general’ be prepared to release data for reanalysis) and a specific request (a direct request for the data for reanalysis). Researchers receiving specific requests for data were less likely and slower to respond than researchers receiving general requests. Similarly, in 2019, a randomised controlled trial in conjunction with a Web-based survey included study authors to explore whether and how far a data-sharing agreement affected primary study authors’ willingness to share IPD.35 The response rate was relatively low (21%) in this study since more than 1200 individuals were initially contacted and 247 responded. Among the responders, study authors who received a data-sharing agreement were more willing to share their data set, with an estimated effect size of 0.65 (95% CI (0.39 to 0.90)).

Authors of published reports on prevention or treatment trials in stroke were asked to provide data for a systematic review and randomised to receive either a short email with a protocol of the systematic review attached (‘short’) or a longer email that contained detailed information, without the protocol attached (‘long’).36 Eighty-eight trials with 76 primary authors were identified in the systematic review, and of these, 36 authors were randomised to short (trials=45) and 40 to long (trials=43). Responses were received for 69 trials. There was no evidence of a difference in response rate between trial arms (short vs long, OR 1.10, 95% CI 0.36 to 3.33).

Trial participants

Qualitative studies

Perceptions of trial participants toward data sharing and their intention to share were explored qualitatively. A systematic review with a thematic analysis of nine qualitative studies from Africa, Asia and North America identified four key themes emerging among patients: the benefits of data sharing (including benefit to participants or immediate community, benefits to the public and benefits to science or research), fears and harm (including fear of exploitation, stigmatisation or repercussions, alongside concerns about confidentiality and misuse of data), data-sharing processes (mostly consent to the process) and the relationship between participants and research (eg, trust in different types of research or organisations, relationships with the original research team).37 Some qualitative reports provide data on heterogeneous samples including patients and various stakeholders from low-income and middle-income countries. In-depth interviews and focus group discussions involving 48 participants in Vietnam suggested that trial participants could be more willing to be involved in data-sharing than trialists.38 A similar study on a range of relevant stakeholders in Thailand found that data sharing was seen as something positive (eg, a means to contribute to scientific progress, better use of resources, greater accountability and more output) but it underlined considerable reservations, including potential harm to research participants, their communities and the researchers themselves.39

In a qualitative study with 16 in-depth interviews, patients with cancer currently participating in a clinical trial indicated a general willingness to allow reuse of their clinical trial data and/or samples by the original research team, and supported a generally open approach to sharing data and/or samples with other research teams, but some would like to be informed in this case.40 Despite divergent opinions about how patients prefer to be involved, ranging from passive contributors to those explicitly wanting more control, participants expressed positive opinions toward technical solutions that allow their preferences to be taken into account.

Surveys

Two surveys performed in the USA and one in Italy assessed the intention-to-share rates among trial participants (see figure 4). In one survey with a moderate response rate (47%), 463/799 (58%) patients favoured or strongly favoured data sharing, while only 9% were against or strongly against it.41 Most participants (84%) believed that disclosing the data-sharing plan within the informed consent process was important or very important. A higher percentage of ethnic minority participants was against data sharing (white, 6% vs ‘other’, 13%).

In a second survey with a high response rate (79%), 93% were very or somewhat likely to allow their own data to be shared with university scientists and less than 8% of respondents felt that the potential negative consequences of data-sharing outweighed the benefits.42 Predictors of this outcome were a low level of trust in others, concern about the risk of reidentification or about information theft, and having a college degree. Ninety-three per cent and 82%, respectively, were very or somewhat likely to allow their data to be shared with academic scientists and scientists in for-profit companies. The purpose for which the data would be used did not influence willingness to share data except for use in litigation. However, patients were concerned that data-sharing might make others less willing to enrol in clinical trials, that data would be used for marketing purposes, or that data could be stolen. Less concern was expressed about discrimination and exploitation of data for profit.

In a survey of Italian patient and citizen groups, 280/2003 (14%) contacts provided questionnaires eligible for analysis.43 Of 280, 144 (51%) had some knowledge about the IPD sharing debate and 60/144 (42%) had an official position. Of those who had an official position, 35/60 (58%) were in favour and 19/60 (32%) in favour with restrictions. Thirty-nine per cent approved broad access by researchers and other professionals to identified information.

Other data sources

While consent seems to be a crucial issue for trial participants, an analysis of 98 informed consent forms found that only 6 (4%) indicated a commitment to share deidentified IPD with third party researchers.44 Commitments to share were more common in publicly funded trials than in industry-funded trials (7% vs 3%).

Publishers/funders

Publishers

Metrics of data sharing statements and policies

Several studies addressed the intentions (and data-sharing policies) of publishers. Many publishers have developed data-sharing policies (20%–75%); however, fewer than 10% are mandatory (see figure 4). In a 2009 survey of editors of different member journals of the World Association of Medical Editors (response rate 22%), 2% and 19% of journals, respectively, required provision of participant-level data and specification by authors of their data-sharing plan.45 A similar survey of 10 high-impact surgical journals in 2009 and 2012 found only one journal that had a mandatory data-sharing policy.46 Data-sharing statements were found in only 2/246 (1%) RCTs published in these 10 journals. Another study of a random sample of 60 journals found that 21 (35%) provided instructions for patient-level data, but only 4 (7%) required sharing of IPD (all were oncology journals).47 A review of 88 websites of dental journals suggested that 17 accepted raw data as complementary material.48 A 6-year cross-sectional investigation of the rates and methods of data-sharing in 15 high-impact addiction journals that published clinical trials between 2013 and 2018 was performed.49 Of 14, eight (57.1%) journals had data-sharing policies for published RCTs. Of the 394 RCTs included, none shared their data publicly.

A total of 40/60 clinical psychology journals had a specific policy for data sharing (2017).50 Only one journal made data-sharing mandatory, while 37 recommended it. The findings suggest great heterogeneity in journal policies and little enforcement. Online instructions for authors from 38 high-impact addiction journals were reviewed for six publication procedures, including data-sharing (2018). Of 38, 28 (74%) of the addiction journals had a data-sharing policy, none was mandatory.51 It was concluded that many addiction journals have adopted publication policies, but more stringent requirements have not been widely adopted. Instructions for authors in 43 high-impact nutrition and dietetics journals were reviewed with respect to procedures to increase research transparency (2017).52 Of 33, 25 (75%) journals publishing original research and 4/10 review journals had a data-sharing policy.

Among 109 peer-reviewed and original research-oriented dental journals that were indexed in the MEDLINE and/or SCIE database in 2018, a data-sharing policy was present in 32/109 (29.4%) and 2 of these had a mandatory policy.53 This study concluded that at present data-sharing policies are not widely endorsed by dental journals. In a cross-sectional survey, 14 ICMJE-member journals and 489 ICMJE-affiliated journals that published an RCT in 2018 were evaluated with respect to data-sharing recommendations.54 Of 14, eight (57%) member journals and 145/489 (30%) affiliated journals had an explicit data-sharing policy on their website. In RCTs published in member journals with a data-sharing policy, there were data-sharing statements in 98/100 (98%), with an expressed intention to share individual patient data in 77/100 (77%). In RCTs published in affiliated journals with an explicit data-sharing policy, data-sharing statements were rare, 25/100 (25%), and expressed intentions to share IPD were found in 22/100 (22%).

Changes in policies from 2013 to 2016 regarding public availability of published research data were investigated in 115 paediatric journals.55 In 2012, 77/115 (67%) and in 2016, 56/115 (49%) accepted storage in thematic or institutional repositories. Publication of data on a website was accepted by 27/115 (23%) and 15/115 (13%). Most paediatric journals recommend that authors deposit their data in a repository but they do not provide clear instructions for doing so.

Funders and clinical trial units

Metrics of data sharing policies by funders

Several studies investigated mandatory data-sharing policies of funders. Between 30% and 80% of the non-commercial funders provided data-sharing policies; the highest rates were observed in the USA. Only around 10%–20% of these policies were mandatory (see figure 4). In one study, 50% of the top non-commercial funders had a data-sharing policy, but data-sharing was required in only 2/20 cases. Six funders offered technical or financial resources to support IPD sharing.56 Trial transparency policies were investigated for 9/10 top non-commercial funders in the USA (May to November 2018).57 Of nine, seven (78%) funders had a policy for individual patient data-sharing; for one, it was mandatory. Six offered data-sharing and five monitored compliance. Of 96 responders out of 190 non-commercial funders contacted in France, 31 were identified as funding clinical trials (2019).58 Of 31, nine (29%) had implemented a data-sharing policy. Among these nine funders, only one had a mandatory sharing policy and eight had a policy supporting but not enforcing data sharing. Funders with a data-sharing policy were small funders in terms of total financial volume.

Three studies investigated mandatory data-sharing policies among commercial sponsors (see figure 4). In a 2016 survey, 22/23 (96%) companies among the top 25 companies by revenue had a policy to share IPD. In a second sample of 42 unselected companies, 30 (71%) had one. These policies generally did not cover unlicensed products or trials for an off-label use of a licensed product. Fifty-two per cent of top companies, and 38% in the sample including all companies, considered that requests for IPD for additional trials were not explicitly covered by their policy.59 A second survey studied data availability for 56 publications reporting on 61 industry-sponsored clinical trials of medications.60 Of these 61 studies, 32 (52%) had a public data-sharing policy/process.

Seventy-eight non-commercial funders and a sample of 100 leading commercial funders in terms of drug sales, each having funded at least one RCT in the years 2016 to 2018, were surveyed (15 February 2019–10 September 2019).61 Of 78, 30 (38%) non-commercial funders had a data-sharing policy, with 18/30 (60%) making data-sharing mandatory and 12/30 (40%) encouraging data-sharing. Of 100, 41 (41%) commercial funders had a data-sharing policy. Among funders with a data-sharing policy, a survey of two random samples of 100 RCTs registered on ClinicalTrials.gov found that data-sharing statements were present for 77/100 (77%) and 81/100 (81%) of RCTs funded by non-commercial and commercial funders, respectively. Intention to share data was expressed in 12/100 (12%) and 59/100 (59%) of RCTs funded by non-commercial and commercial funders, respectively. The survey indicated suboptimal performance by funders in setting up data-sharing policies.

Metrics of data-sharing policies by Clinical Trial Units

Among 23 UK Clinical Research Collaboration registered Clinical Trial Units (CTUs) (response rate=51%), five (22%) had an established data-sharing policy and eight (35%) specifically required consent to use patient data beyond the scope of the original trial (see table).10 Concerns were raised about patient identification, misuse of data and financial burden. No CTUs supported the use of an open access model for data sharing.

Other data sources

A 2005 survey of 107/122 accredited medical schools in the USA (response rate=88%) explored data sharing in the context of contractual provisions that could restrict investigators’ control over data in the context of industry-funded trials.62 There was poor consensus among senior administrators in the offices of sponsored research at these institutions on the question of prohibiting investigators from sharing data with third parties after the trial is over (41% allowed it, 34% disallowed it and 24% were not sure whether they should allow it).

In a survey targeting European heads of imaging departments and speakers at the clinical trials in radiology sessions (July–September 2018), the response rate was 132/460 (29%).63 Responses were received from institutions in 29 countries, reporting 429 clinical trials. For future trials, 98% of respondents (93/95) said they would be interested in sharing data, although only 34% had already shared data (23/68). The main barriers to data sharing were data protection, ethical issues and lack of a data-sharing platform.

Results for individual sources of evidence: actual data sharing

Reusers

Studies related to journal articles

Metrics of actual data sharing

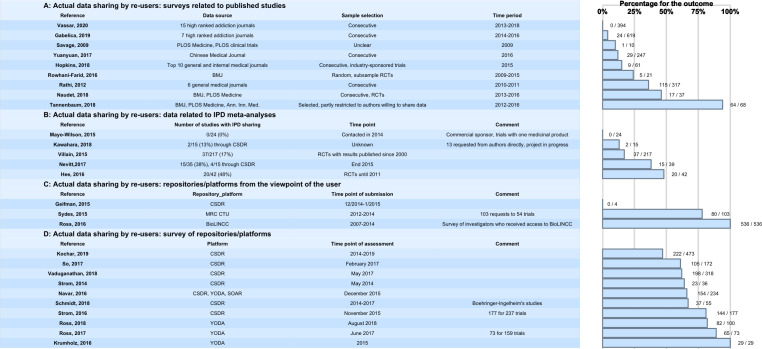

Several studies have been performed investigating data-sharing rates for studies that have been published in journals, the majority with data-sharing policies and high impact (figure 5). Even with strict data-sharing policies, the data-sharing rates are low or at most moderate, and vary between 10% and 46%, except for one study with a very high data-sharing rate due to a partly preselected sample of authors willing to share their data.19 In the 6-year cross-sectional investigation of the rates and methods of data sharing in 15 high-impact addiction journals that published clinical trials between 2013 and 2018, none of the 394 clinical trials included shared their data publicly.49 Of 86 responders in a survey targeting the corresponding authors of 619 RCTs published between 2014 and 2016 in 7 high-ranking anaesthesiology journals, raw data were obtained only for 24 studies.25 Sixty-two declined to share raw data. In a study targeting PLOS Medicine and PLOS Clinical Trials publications conducted in 2009, 1/10 (10%) of the data sets was made available after request.64 In articles in Chinese and international journals from 2016, sharing practices were indicated for 29/247 (11%) of the articles.20 Among the top 10 general and internal medical journals investigated in 2016, IPD was provided after request for 9/61 (15%) of pharmaceutical-sponsored studies.60 For BMJ research articles published between 2009 and 2015, data sets were made available in 7/157 (4%) of the articles.23 For the subsample of clinical trials, the rate was higher (5/21 (24%)). Of 317 clinical trials published in 6 general medical journals between 2011 and 2012, 115 (36%) granted access to data.17 The data availability for RCTs published in BMJ and PLOS Medicine between 2013 and 2016 was 17/37 (46%).65

Figure 5.

Actual data sharing. Numbers correspond to the numbers of cases with the outcome/number of cases reported in each reference. CSDR, Clinical StudyDataRequest.com; CTU, Clinical Trial Unit; IPD, individual participant data; PLOS, Public Library of Science; SOAR, Supporting Open Access for Research Initiative; RCT, randomised controlled trial; YODA, Yale University Open Data Access.

Experimental studies

In a parallel group RCT, an intervention group (offer of an Open Data Badge for data sharing) was compared with a control group (no badge for data sharing).66 The primary outcome was the data-sharing rate. Of 160 research articles published in BMJ Open, 80 were randomised to each of the intervention and control groups, of which 57 could be analysed in the intervention group and 54 in the control group. In the intervention group, data were available on a third-party repository for 2/57 (3.5%) and on request for 32/57 (56.1%); in the control group, the corresponding figures were 3/54 (5.6%) and 30/54 (56%). Data-sharing rates were low in both groups and did not differ between groups.
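As an illustration of how the counts reported above translate into a between-group comparison, the following minimal sketch computes the difference in proportions with an approximate 95% Wald interval. The function name `risk_difference` and the choice of a Wald interval are assumptions made for this example, not the method used in the cited trial, and the Wald approximation is known to be crude for very small counts such as 2/57.

```python
from math import sqrt


def risk_difference(events_a, n_a, events_b, n_b, z=1.96):
    """Return (difference, lower, upper) for p_a - p_b with a Wald interval.

    Hypothetical helper for illustration only; not taken from the cited study.
    """
    p_a = events_a / n_a
    p_b = events_b / n_b
    diff = p_a - p_b
    # Standard error of the difference between two independent proportions.
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    return diff, diff - z * se, diff + z * se


# Counts as reported for the badge trial (intervention vs control).
on_request = risk_difference(32, 57, 30, 54)
repository = risk_difference(2, 57, 3, 54)

for label, (diff, lower, upper) in [
    ("on request", on_request),
    ("third-party repository", repository),
]:
    print(f"{label}: difference {diff:+.3f} (95% CI {lower:+.3f} to {upper:+.3f})")
```

Under these assumptions, both intervals include zero, which is consistent with the reported absence of a difference between groups.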

Data sharing for IPD meta-analyses

Metrics of data sharing for IPD meta-analyses

Some examples demonstrate that data availability for IPD meta-analyses is still limited despite the various data-sharing initiatives/platforms (figure 5). Availability can be increased under specific circumstances, such as the creation of a disease-specific repository for a scientific community, as demonstrated for a repository of IPD from multiple low back pain RCTs with IPD from 20/42 (48%) RCTs included67 and a study on antiepileptic drugs conducted by a Cochrane group with IPD for 15/39 (38%) studies included.68 In another study on different databases, 35 IPD meta-analyses with more than 10 eligible RCTs were identified (1 May 2015 to 13 February 2017).69 Of 774 eligible RCTs identified in these meta-analyses, 517 (66.8%) contributed data. The country where RCTs are conducted (the UK vs the USA), the impact factor of the journal (high vs low) and a recent RCT publication year were associated with higher sharing rates. In three other studies, the availability of datasets for IPD meta-analysis was limited (0%–17%). In one study performed in 2014, devoted to one commercial sponsor with one specific medicinal product, IPD from 24 trials was requested without success.70 Of 15 requests (13 direct to authors, 2 to a repository) in 2014/2016, IPD was received for 2/15 (13%) of the studies.71 Of 217 RCTs published since 2000 in orthopaedic surgery, agreement to send IPD was obtained for 37/217 (17%).72

Experimental studies

The low data availability for IPD-meta-analyses is underlined by two experimental studies. One experimental study covered the issue of actual data-sharing. In this small randomised prospective study where 29 corresponding authors of original research articles in a medical journal were contacted via two different modes (general vs specific request), only one author actually sent the data immediately in response to a specific request and one author, without caveats, reported willingness to send the data in response to a general request.34

A randomised controlled trial investigated the effect of financial incentives on IPD sharing.73 All study participants (129 in all) were asked to provide the IPD from their RCT. Those allocated to the intervention group received financial incentives, those from the control group did not. The primary outcome was the proportion of authors who provided IPD. None of the authors shared their IPD, whichever the group.

Other data sources

Two studies investigated the completeness of data availability in IPD meta-analyses. Out of 30 IPD meta-analyses included in a survey,74 16 did not have all the IPD requested. The access rate for retrieving IPD for use in IPD meta-analyses was investigated in a systematic review.68 Only 188 (25%) of 760 IPD meta-analyses retrieved 100% of the eligible IPD for analysis and there was poor evidence that IPD retrieval rates improved over time.

Access to repositories/platforms

Only a few studies describe access to repositories/platforms from the viewpoint of the user (figure 5). Experiences with two major platforms (CSDR, Project Data Sphere (PDS)) were reported.75 In these very early-phase projects, no data access was possible with CSDR, and faster data acquisition was achieved via the PDS. High sharing rates were reported for academic repositories (MRC CTU, BioLINCC). Of 103 requests to MRC CTUs, access was granted in 80/103 (78%) cases.76 In a survey of investigators 536/536 (100%) received access to BioLINCC over a time period between 2007 and 2014.77

Repositories/platforms

Commercial sponsors

Metrics of actual re-use

Different initiatives and platforms were initially implemented for the pharmaceutical and medical device industry to support sharing of IPD from clinical trials (these platforms are now open to academic trials but this has not been used very often so far). This covers the YODA project, CSDR, Vivli and SOAR (which is now part of Vivli). For the different platforms and repositories, metrics describing the actual use of data are available (figure 5).

Six studies have assessed data-sharing rates for CSDR. From 2014 to the end of January 2019, there was a total of 473 research proposals submitted to CSDR.78 Of these, 364 met initial administrative and data availability checks, and the independent review panel approved 291. Of 473, 222 (46.9%) of the requests gained access to the data (in progress and completed). Of the 90 research teams that had completed their analyses by January 2018, 41 reported at least one resulting publication to CSDR. Less than half of the studies ever listed on CSDR have been requested. Between 2014 and 2017, CSDR received a total of 172 research proposals, of which 105 (61%) were approved.79 In another study focusing on availability and use of shared data from cardiometabolic clinical trials in CSDR covering the time period between 2013 and 2017, 198 (62%) were approved with or without conditions.80 In year one of the use of CSDR (2013–2014), 36 research proposals were approved with conditions; of these, 23 (64%) progressed to a signed data-sharing agreement.81 From 2014 to 2017, Boehringer-Ingelheim listed 350 trials for potential data-sharing at CSDR.82 Fifty-five research proposals were submitted, of which 37 (67.3%) were approved. All approved research proposals submitted to Boehringer-Ingelheim except one addressed new scientific questions or were structured to generate new hypotheses for further confirmatory research, rather than replicating analyses by the sponsor to confirm previous research. Between 2013 and 2015, 177 research proposals were submitted to CSDR, and access was granted for 144 (81%) of these proposals.83

In the first year following the launch in October 2014, YODA received 29 requests all of which were approved (100%).84 In 2017 the YODA project reported 73 proposals of which 65 were approved.85 A more recent publication reported the metrics for data sharing of Johnson & Johnson clinical trials in the YODA project up to August 27, 2018.86 One hundred data requests were received from 89 principal investigators (PI) for a median of 3 trials per request. Of 100, 90 requests (90%) were approved and a data use agreement was signed in 82/100 (82%).

The use of the open access platforms CSDR, YODA and SOAR together between 2013 and 2015 was investigated in one study. Of the 234 proposals submitted, 154 (66%) were approved.87

The available data show that the use of these platforms has increased steadily since their initiation and that 50% or more of the data requests lead to actual data sharing. The reasons for not sharing are numerous, but data access is rarely denied by the platforms. Our assessment of CSDR, YODA, NIDDK and Vivli websites is presented in table 1.

Table 1.

Metrics of CSDR, YODA and Vivli websites

| Platform | Metrics date | Available studies | No of requests | No of requests with data shared | No of requests with data leading to publication | No of publications |
| --- | --- | --- | --- | --- | --- | --- |
| CSDR | 30/11/2020 | 3008 | 621 | 318 | 59* | 79 |
| YODA | 15/11/2019 | 334 | 196 | 173 | 29 | 35 |
| Vivli | 2/11/2020 | 5203 | 215 | 123 | 8 | 9 |

NIDDK also provided metrics concerning the number of requests (530) but no other information.

*Publication anticipated.

CSDR, ClinicalStudyDataRequest.com; NIDDK, National Institute of Diabetes and Digestive and Kidney Diseases; YODA, Yale University Open Data Access.
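The proportion of requests that led to data being shared can be derived directly from the counts in table 1. The short sketch below does this arithmetic; the dictionary layout and the helper name `percentage` are assumptions introduced for illustration, and the figures are simply those listed in the table.

```python
# Request counts copied from table 1 (platform websites, dates as listed there).
platforms = {
    "CSDR": {"requests": 621, "shared": 318},
    "YODA": {"requests": 196, "shared": 173},
    "Vivli": {"requests": 215, "shared": 123},
}


def percentage(part, whole):
    """Hypothetical helper: percentage of `whole` represented by `part`, to one decimal."""
    return round(100 * part / whole, 1)


# Share of requests for which data were actually shared, per platform.
sharing_rates = {
    name: percentage(metrics["shared"], metrics["requests"])
    for name, metrics in platforms.items()
}

for name, rate in sharing_rates.items():
    print(f"{name}: {rate}% of requests led to data being shared")
```

On these counts, each of the three platforms shared data for at least half of the requests received.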

Metrics of trial coverage for data-sharing

Ethics approval in applications for open-access clinical trial data from CSDR was investigated in a survey.79 Projects with and without ethics approval were approved at roughly similar rates (62/111 and 43/61).

The proportion of trials for which the pharmaceutical and medical device industry provided IPD for secondary analyses, and thus the completeness of trial data, is still limited.60 Only 15% of 61 industry-sponsored clinical trials were available 2 years after publication. For companies listing at least 100 studies on CSDR, a search was performed in ClinicalTrials.gov (January 2016, studies terminated/completed at least 18 months before search date).88 Among 966 RCTs registered in ClinicalTrials.gov, only 512 (53%) were available on CSDR and only 385 (40%) of the RCTs were registered and listed on CSDR with all datasets and documents available. This was the case despite the time lapse of 18 months since the completion of the drug trials by the company sponsor. Differences across sponsors were observed. Pharmaceutical repositories may cover only part of the trials with commercial sponsors needed for meta-analyses. In a study investigating data availability for industry-sponsored cardiovascular RCTs with more than 5000 patients, performed by a top-20 pharmaceutical company and registered at ClinicalTrials.gov (up to January 2015), only 25% of the identified trial data was confirmed to be available.24 In 50% of cases, availability could not be definitively confirmed.

As part of the Good Pharma Scorecard project, data-sharing practices were assessed for large pharmaceutical companies with novel drugs approved by the FDA in 2015, using data from ClinicalTrials.gov, Drugs@FDA, corporate websites, data-sharing platforms and registries (eg, YODA, CSDR).89 A total of 628 trials were analysed. Twenty-five per cent of the large pharmaceutical companies made IPD accessible to external investigators for new drug approvals; this proportion improved to 33% after applying a ranking tool.

Non-commercial sponsors

Disease-specific academic clinical trial networks (CTNs) have a long history of IPD sharing, especially US-related NIH institutions. This is clearly demonstrated by the available literature; however, the metrics of data sharing are not always as transparent as with the industry platforms, and data cannot be structured and documented easily in a table.

In a survey on the use of the National Heart, Lung, and Blood Institute (NHLBI) Data Repository, access to 100 studies initiated between 1972 and 2010 was investigated.90 A total of 88 trial datasets were requested at least once, and the median time from repository availability to the first request was 235 days.

Since its inception in 2006 and through to October 2012, nearly 1700 downloads from 27 clinical trials have been accessed from the Data Share website belonging to the National Drug Abuse Treatment CTN in the USA, with use increasing over the years.91 Individuals from 31 countries have downloaded data so far.

In a case study approach, the data-sharing platform Data Share of the National Institute on Drug Abuse (NIDA) was investigated in detail.92 As of March 2017, the Data Share platform included 51 studies from two trial networks (36 studies from CTN and 15 studies from the NIDA Division of Therapeutics and Medical Consequences). From 2006 to March 2017, there were 5663 downloads from the Data Share website. Of these, 4111 downloads were from the USA.

The PDS is an open-source data-sharing model that was launched in 2014 as an independent, non-profit initiative of the CEO Roundtable on Cancer.93 PDS contains data from 72 oncology trials, donated by academics, governments and industry sponsors. More than 1400 researchers have accessed the PDS database more than 6500 times. As an example, a challenge to create a better prognostic model for advanced prostate cancer was issued in 2014, with 549 registrants from 58 teams and 21 countries.

The Immune Tolerance Network (ITN) is a National Institute of Allergy and Infectious Diseases/National Institutes of Health-sponsored academic CTN.94 The trial sharing portal, which was released for public access in 2013, provides complete open access to clinical trial data and laboratory studies from ITN trials at the time of the primary study publication. Currently, data from 20 clinical trials is available and data for an additional 17 will be released to the public at the timepoint of first publication. So far, more than 1000 downloads have been registered.

In the MRC Clinical Trials Transparency Review Final Report (November 2017), the MRC UK reported that 24/107 (22%) trials that started during the review period had created a database for sharing. Seven of these datasets (7/24, 29%) had already been shared with other researchers.95

Of 215 requests submitted for Prostate, Lung, Colorectal and Ovarian (PLCO) cancer screening trial data, 199 (93%) were approved; of 240 requests for National Lung Screening Trial (NLST) data, 214 (89%) were approved.96

Other stakeholders

In a case study about experiences with data-sharing among data monitoring committees, access to five concurrent trials assessing which level of arterial oxygen should be targeted in the care of very premature neonates was investigated.97 The goal of taking all relevant evidence into account when monitoring clinical trials was only partially achieved.

One case study directly addressed the issue of costs. Data from two UK publicly funded trials were used to assess the resource implications of preparing IPD from a clinical trial to share with external researchers.98 One trial, published in 2007, required 50 hours of staff time at a total estimated cost of £3185, and the other, published in 2012, required 39.5 hours at an estimated cost of £2540.

Results of individual sources of evidence: reuse

Any type of reuse

The majority of research projects using shared clinical trial data address new research questions. These include studies on risk factors and biomarkers, methodological studies, studies on optimising treatment and patient stratification, and subgroup analyses. IPD meta-analyses were a less frequent reason for data-sharing requests to repositories and only a few have been reported. Reanalyses are performed only in exceptional cases.

Early experiences with CSDR involving GlaxoSmithKline trials showed low rates of IPD meta-analyses and reanalyses, the vast majority of projects being secondary analyses (studies on risk factors or biomarkers, methodological studies, predictive toxicology or risk models, studies of optimising treatments, subgroup analyses, etc).81 Similar results were found in an update of the analysis.83

In the YODA project, which had received 73 proposals for data sharing as of June 2017 and had approved 65 proposals, the most common study purposes were to address secondary research questions (n=39), to combine data as part of larger meta-analyses (n=35) and/or to validate previously published studies (n=17).85

Among the 172 requests to the NHLBI data repository with online project descriptions and coded purpose, 72% of requests were initiated to address a new question or hypothesis, 7% to perform a meta-analysis or combined study analysis, 2% to test statistical methods, 9% to investigate methods relevant to clinical trials and 9% for other reasons.90 In only two requests, the available description suggested a reanalysis.

From 2014 to the end of January 2019, 222/473 (46.9%) of the requests to CSDR gained access to the data (in progress and completed).78 Of these 222, 90 (40.5%) of the research teams had completed their analyses by January 2018. Forty-one had published at least one paper, and another 28 were expected to publish shortly.

In the Systolic Blood Pressure Intervention Trial (SPRINT) challenge, individuals or groups were invited to analyse the dataset underlying the SPRINT RCT and to identify novel scientific or clinical findings.99 Among 200 qualifying teams, 143 entries were received.

Further additional analyses

There were few indications concerning the exact type of secondary analysis that was performed. Approved proposals per subject matter are available for the Cancer Data Access system, covering two large cancer screening trials (PLCO, NLST).96 Of the 199 approved requests to PLCO between November 2012 and October 2016, 84 (42%) were devoted to cancer aetiology, 66 (33%) to trial-related screening, 29 (15%) to other areas, 14 (7%) to risk prediction and 6 (3%) to image analysis. Of the 214 approved requests to NLST, 95 (44%) were devoted to image analysis, 90 (42%) to trial-related screening, 14 (7%) to other subjects, 10 (5%) to cancer aetiology and 5 (2%) to risk prediction.
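The percentage breakdown above can be recomputed from the reported counts. The following is a minimal sketch, not part of the cited analysis; the dictionary layout and the `shares` helper are illustrative assumptions, and only the counts are taken from the text.

```python
# Approved-request counts by subject area, copied from the paragraph above.
plco = {
    "cancer aetiology": 84,
    "trial-related screening": 66,
    "other areas": 29,
    "risk prediction": 14,
    "image analysis": 6,
}
nlst = {
    "image analysis": 95,
    "trial-related screening": 90,
    "other subjects": 14,
    "cancer aetiology": 10,
    "risk prediction": 5,
}


def shares(counts):
    """Return each category's share of the total as a whole-number percentage."""
    total = sum(counts.values())
    return {area: round(100 * n / total) for area, n in counts.items()}


plco_shares = shares(plco)
nlst_shares = shares(nlst)

for trial, counts, pct in (("PLCO", plco, plco_shares), ("NLST", nlst, nlst_shares)):
    print(f"{trial}: {sum(counts.values())} approved requests")
    for area, n in counts.items():
        print(f"  {area}: {n} ({pct[area]}%)")
```

Because each share is rounded independently, the rounded percentages need not add up to exactly 100.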

IPD meta-analyses

In one study, IPD meta-analyses proved to amount to a small proportion of data reuse. Among the 174 research proposals approved up to 31 August 2017 by CSDR, 12 proposals were IPD meta-analyses, including network meta-analyses.71 All were retrospective IPD meta-analyses (ie, none was a prospective IPD meta-analysis).

Reanalyses

A 2014 survey of published reanalyses100 found that a small number of reanalyses of RCTs have been published (only 37 reanalyses of 36 initial RCTs) and only a few were conducted by entirely independent authors. 35% of these reanalyses led to changes in findings that implied conclusions different from those of the original article for the types and numbers of patients who should be treated.

In the survey of 37 RCTs in the BMJ and PLOS Medicine published between 2013 and 2016, 14 out of 17 (82%, 95% CI: 59% to 94%) available studies were fully reproduced on all their primary outcomes.65 Of the remaining three RCTs, errors were identified in two, although the reanalyses reached similar conclusions, and one paper did not provide enough information in the Methods section to reproduce the analyses.
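For readers who want to see how a confidence interval for a proportion such as 14 out of 17 can be obtained, the sketch below computes a Wilson score interval. The source does not state which method the cited study used, so the choice of the Wilson interval and the function name `wilson_interval` are assumptions made for illustration. With these inputs the interval rounds to 59% to 94%, which agrees with the reported bounds but does not establish that this was the method used.

```python
import math


def wilson_interval(successes, n, z=1.959964):
    """Wilson score interval for a binomial proportion (z=1.959964 gives ~95%)."""
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


# 14 of 17 available RCTs fully reproduced on all primary outcomes.
low, high = wilson_interval(14, 17)
print(f"{14 / 17:.0%} (95% CI: {low:.0%} to {high:.0%})")
```

With only 17 studies the interval is wide, so the point estimate of 82% should be read with that uncertainty in mind.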

Results for individual sources of evidence: output from data sharing

Publications can be considered as the main research output of data-sharing. Publication activity in the reuse of clinical trial data was considered in several studies. Detailed data are available for academic CTNs and disease-specific repositories in the USA, some of them already practising data-sharing for a period longer than 10 years. Here, fair to moderate publication output has been observed depending on the individual repository. So far this is not the case for the repositories storing clinical trial data from commercial sponsors, taking into consideration that these repositories were established around 5 years ago and that there is usually a considerable time lag between request, approval, analysis and publication. Current statistics indicate improvement in publication output with time.

Non-commercial sponsors

In a cross-sectional web-based survey about access to clinical research data from BioLINCC, covering the period from 2007 to 2014, 98 out of 195 responders (50%) reported that their projects had been completed, among which 66 (67%) had been published.77 Of the 97 respondents who had not yet completed their proposed projects, 81 (84%) explained that they planned to complete their project; 63 (65%) indicated that their project was in the analysis/manuscript draft phase.

In a survey targeting European heads of imaging departments and speakers at the Clinical Trials in Radiology sessions (July–September 2018), 23/68 reported that they had already shared data.63 At least 44 original studies were published based on the data shared by the 23 institutions involved.

In five studies (table 2), the number of publications was reported, usually referring to the number of trials included in the repository/platform.

Table 2.

Studies reporting published outputs for non-commercial sponsors

| Reference | Repository/platform | No of trials included in repository/platform | No of published articles | Date of assessment |
| --- | --- | --- | --- | --- |
| Shmueli-Blumberg et al91 | CTN Data Share | 27 trials (1700 downloads) | 13 | 2012 |
| Zhu et al96 | CDAS | 2 trials (PLCO, NLST) (455 requests) | 25% for PLCO projects, 19% for NLST projects | 2016 |
| Coady et al90 | BioLINCC | 100 trials (88 requested at least once) | 35% of clinical trials had at least 1 publication 5 years after availability in the repository | 5/2016 |
| Huser and Shmueli-Blumberg92 | NIDA Data Store | 51 trials | 14 | 3/2017 |
| Pisani and Botchway105 | WWARN | 186 trials | 18 | 2016 |

CDAS, Cancer Data Access system; CTN, clinical trial network; NIDA, National Institute on Drug Abuse; NLST, National Lung Screening Trial; PLCO, Prostate, Lung, Colorectal and Ovarian; WWARN, WorldWide Antimalarial Resistance Network.

Commercial sponsors

Various studies explored metrics of both YODA and CSDR (online supplemental material 4).

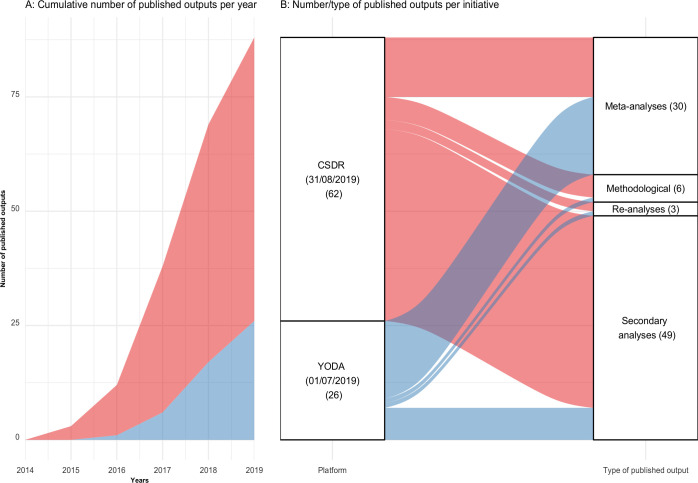

Up to 2021, Vivli’s website indicates very little published output. We were not able to retrieve published output from NIDDK. Figure 6 presents publication metrics for CSDR (up to 31 August 2019) and YODA (up to 1 July 2019). Among 88 published papers (62 from CSDR and 26 from YODA), 49 were secondary analyses (42 from CSDR and 7 from YODA), 30 were meta-analyses (13 from CSDR and 17 from YODA), 6 were methodological studies (5 from CSDR and 1 from YODA) and 3 were reanalyses (2 from CSDR and 1 from YODA). The details of these publications are presented in online supplemental material 5.80 83 85

Figure 6.

Temporal trends, number and type of published output from CSDR and YODA. Blue: YODA. Red: CSDR. CSDR, ClinicalStudyDataRequest.com; YODA, Yale University Open Data Access.
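The publication counts reported for CSDR and YODA in the paragraph preceding Figure 6 can be cross-checked with a short tally. This is only an illustrative sketch: the nested dictionary and variable names are assumptions, and the figures are copied from the text, not from the supplemental material.

```python
# Published papers by type and platform, as reported in the text.
published = {
    "secondary analyses": {"CSDR": 42, "YODA": 7},
    "meta-analyses": {"CSDR": 13, "YODA": 17},
    "methodological studies": {"CSDR": 5, "YODA": 1},
    "reanalyses": {"CSDR": 2, "YODA": 1},
}

# Row totals: papers per publication type.
by_type = {kind: sum(counts.values()) for kind, counts in published.items()}

# Column totals: papers per platform.
by_platform = {
    platform: sum(counts[platform] for counts in published.values())
    for platform in ("CSDR", "YODA")
}

total = sum(by_type.values())

print(by_type)
print(by_platform)
print(f"Total: {total}; reanalyses: {by_type['reanalyses'] / total:.1%}")
```

The row and column totals agree with the reported 88 papers (62 from CSDR and 26 from YODA), and the tally shows that reanalyses are a very small share of the published output.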

Results of individual sources of evidence: impact of research output

Evidence on the impact of research output from sharing IPD from clinical trials is still very sparse. So far, only two studies addressing this issue could be identified; both focused only on citation metrics, and their results were inconsistent.

Metrics on citations

One study, published as early as 2007, suggested that sharing detailed research data was associated with an increased citation rate.101 Of 85 cancer microarray clinical trials published between January 1999 and April 2003, 41 made their microarray data publicly available on the internet. For 2004–2005, the trials with publicly available data received 85% of the aggregate citations. Publicly available data was significantly associated with a 69% increase in citations, independently of journal impact factor, date of publication and the author’s country of origin.

Citation metrics for 224 publications based on repository data for clinical trials in the NHLBI Data Repository were compared with those for publications that used repository observational study data, as well as for a 10% random sample of all NHLBI-supported articles published in the same period (January 2000–May 2015).90 Half of the publications based on clinical trial data had cumulative citations that ranked in the top 34% normalised for subject category and year of publication, compared with 28.3% for publications based on observational studies and 29% for the random sample. The differences were, however, not statistically significant.

Other data sources

In the SPRINT challenge, individuals or groups were invited to analyse the dataset underlying the SPRINT RCT and to identify novel scientific or clinical findings.99 Among 200 qualifying teams, 143 entries were received. Entries were judged by a panel of experts on the basis of the utility of the findings to clinical medicine, the originality and novelty of the findings, and the quality and clarity of the methods used. All submissions were also open for crowd voting among the 16 000 individuals following the SPRINT Challenge. Cash prizes were awarded, and winners were invited to present their results.

Discussion

Summary of evidence

There are major differences with respect to the intention to share IPD from clinical trials across the different stakeholder groups. The studies available so far show that clinical trialists and to some extent study participants, as the two main actors of clinical trials, usually have great willingness to share data (60%–80%). This is much less pronounced when it comes to data-sharing statements published in journal articles. Depending on the journals considered, the rates vary from less than 5% to around 25%. The situation is even worse when data-sharing plans documented in registries (eg, ClinicalTrials.gov) are analysed. Here the willingness to share data is between 5% and 10%.

As a consequence, there is a considerable discrepancy between the positive attitude towards data-sharing in general and the intention to share data in an actual study. Publishers, who enable the publication of research output from clinical trials, and funders/sponsors, who finance clinical trials, could be major drivers of change. Many publishers have now developed data-sharing policies (20%–75%), but fewer than 10% are mandatory, so most have not been enforced. There are differences between journals, with some of the high-impact journals being more involved in the data-sharing movement than others (eg, PLOS Medicine, the BMJ, Annals of Internal Medicine). For funders, the situation is similar but differs between commercial and non-commercial funders. Between 30% and 80% of the non-commercial funders provide data-sharing policies, with the USA and the NIH at the forefront. Only around 10%–20% of these policies are mandatory. Data-sharing policies have been developed more often among commercial funders (40%–95%), but information on the proportion of mandatory policies is lacking. In short, the pressure from publishers and funders to share data is still limited and the situation is improving only slowly. Stronger policies on data-sharing that include a strong evaluation component are needed. The situation is better for the pharmaceutical industry, which has not only promoted data-sharing policies within its organisations to a large degree but has also implemented platforms and repositories that provide practical support for the process of data-sharing (eg, CSDR, YODA, Vivli).