Abstract

Transitioning from one electronic health record (EHR) system to another is one of the most disruptive events in health care, and research on its impact on the experience of hospitalized patients is limited. This study aimed to assess the impact of an EHR transition on patient experience as measured by the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) composites and global items. An interrupted time series study was conducted to evaluate quarter-specific changes in patient experience following implementation of a new EHR at a Midwest health care system during 2017 to 2018. The first quarter post-implementation was associated with statistically significant decreases in Communication with Nurses (−1.82; 95% CI, −3.22 to −0.43; P = .0101), Responsiveness of Hospital Staff (−2.73; 95% CI, −4.90 to −0.57; P = .0131), Care Transition (−2.01; 95% CI, −3.96 to −0.07; P = .0426), and Recommend the Hospital (−2.42; 95% CI, −4.36 to −0.49; P = .0142). No statistically significant changes were observed in the transition, second, or third quarters post-implementation. Patient experience scores returned to baseline levels after 2 quarters, suggesting that the impact of the EHR transition was temporary.

Keywords: HCAHPS, EHR transition, patient experience

Introduction

With the enactment of the Health Information Technology for Economic and Clinical Health (HITECH) Act, health care providers in the United States, including hospitals, are required to adopt electronic health records (EHRs) as part of the standard of care. EHRs were allowed to be phased in through “meaningful use,” which included electronic prescribing, health information exchange, and reporting of data for quality improvement (1). Meaningful use provided monetary incentives for health care providers to adopt EHRs, and hospitals have also been transitioning to more advanced EHRs. Transitioning to a different EHR can be disruptive (2), and hospitals strive to plan carefully for a successful transition (3).

Because patient experience measures are indicative of health care quality and their scores also affect both the reputation and the finances of a hospital (4), it is important for hospital leaders to understand how an EHR transition affects patient experience. Previous reports, mostly from ambulatory settings, have found that patient experience drops during initial EHR implementation (5). A study from the Mayo Clinic also reported significant initial drops in outpatient satisfaction during an EHR transition (6). Transitioning to a new EHR in the inpatient setting could disrupt day-to-day operations and negatively affect patient experience. For example, providers might need time to learn the new EHR and spend less time on patient care, and workflows that differ from the legacy EHR could lengthen the time needed to fulfill patients’ requests (7). We hypothesized that the temporary decline in patient experience associated with an EHR transition in outpatient settings would extend to inpatient settings.

The preferences and expectations of hospitalized patients are measured by the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) survey, which has become a key quality indicator for hospitals (8). HCAHPS scores are publicly reported and integrated into pay-for-performance programs managed by the Centers for Medicare & Medicaid Services (CMS) (9). Specifically, patient experience accounted for 25% of the total performance score used in the Hospital Value-Based Purchasing program (10), and an estimated 2% of certain Medicare payments were redistributed to hospitals based on their total performance scores (10,11).

Although evidence suggests that advanced EHRs have a positive long-term impact on quality measures (12), the impact of the transition to a new EHR on patient experience in inpatient settings remains under-researched. In this Midwest health care system, 10 adult hospitals transitioned from a fragmented multivendor system to a single advanced EHR system in 4 waves between Q3 2017 and Q2 2018. Given concerns about a potential negative impact on patient experience during the EHR implementation, we conducted this study to examine short-term changes in patient experience scores associated with implementation of the EHR system, using data spanning 2015 to 2019.

Materials and Methods

Study Design

The study used an interrupted time series design (13), comparing patient experience as measured by HCAHPS survey composites and global items between the pre-EHR and post-EHR periods to determine whether there were any statistically significant differences in scores after the EHR transition. This design can also adjust for temporal trends, seasonal patterns, and other characteristics (14,15).

Hospitals and Patients

The study included the 10 adult hospitals of a Midwest health care system that went live on a new EHR between July 2017 and June 2018. Before go-live, the health care system used EHRs from multiple vendors, including Allscripts, Cerner, and McKesson; after go-live, a single instance of an EHR from Epic was implemented. Each wave of 1 to 4 hospitals went live on the new EHR, followed by several months of stabilization before the next wave. Random samples of patients from each hospital were selected and interviewed following the HCAHPS Quality Assurance Guidelines protocols (16). The overall response rate was around 35%, and patients who completed surveys accounted for around 10% of all inpatient discharges across the hospitals. Patients who were 18 years or older at admission, had at least 1 overnight hospital stay, had a nonpsychiatric Medicare Severity Diagnosis-Related Group (MS-DRG), and were alive at discharge were eligible for the survey (8). We further excluded patients who were not mapped to an inpatient unit or had invalid MS-DRG codes. Informed consent was not necessary, given the retrospective nature of the study.

Study Periods

Calendar quarters were used as the basic units to define study periods and to evaluate changes in each quarter post-implementation (15). The hospitals went live on the EHR in 4 waves, as shown in Table 1. The calendar quarter containing the EHR go-live date was defined as the transition quarter, and the 3 subsequent quarters were defined as the first, second, and third quarters post-implementation. Together, these 4 quarters formed the post-implementation period. The 8 quarters prior to the transition quarter formed the pre-implementation period.
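The period definitions above reduce to calendar-quarter arithmetic on each patient's discharge date. The sketch below is illustrative only: `quarter_index` and `study_period` are hypothetical names, and the go-live day used in the example (the 15th) is an assumption, since Table 1 gives only the month.

```python
from datetime import date


def quarter_index(d):
    """Sequential calendar-quarter index, e.g. 2017 Q3 -> 2017 * 4 + 2."""
    return d.year * 4 + (d.month - 1) // 3


def study_period(discharge, golive):
    """Assign a discharge to a study period relative to one hospital's go-live.

    Returns "pre" for the 8 quarters before the transition quarter,
    "A".."D" for the transition and first to third post quarters,
    and None for discharges outside the study window.
    """
    rel = quarter_index(discharge) - quarter_index(golive)
    if -8 <= rel <= -1:
        return "pre"
    return {0: "A", 1: "B", 2: "C", 3: "D"}.get(rel)


# Hypothetical hospital in the July 2017 wave (go-live day assumed).
golive = date(2017, 7, 15)
for d in (date(2015, 6, 30), date(2015, 7, 1), date(2017, 8, 1), date(2018, 6, 30)):
    print(d, study_period(d, golive))
```

Because assignment uses the whole calendar quarter, a patient discharged a few days before go-live in the same quarter is still counted in the transition quarter (A), which is one reason a separate transition indicator is useful.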

Table 1.

Definition of Study Periods for Pre-implementation and Post-implementation.a

| EHR go-live date | Pre-implementation (8 quarters) | A | B | C | D |
|---|---|---|---|---|---|
| July 2017 (1 hospital) | 2015 Q3–2017 Q2 | 2017 Q3 | 2017 Q4 | 2018 Q1 | 2018 Q2 |
| December 2017 (4 hospitals) | 2015 Q4–2017 Q3 | 2017 Q4 | 2018 Q1 | 2018 Q2 | 2018 Q3 |
| February 2018 (3 hospitals) | 2016 Q1–2017 Q4 | 2018 Q1 | 2018 Q2 | 2018 Q3 | 2018 Q4 |
| June 2018 (2 hospitals) | 2016 Q2–2018 Q1 | 2018 Q2 | 2018 Q3 | 2018 Q4 | 2019 Q1 |

Abbreviation: EHR, electronic health record.

a Patients were assigned to the study periods based on discharge date. A: Transition quarter corresponds to the calendar quarter of EHR go-live date; B: First quarter post-implementation; C: Second quarter post-implementation; D: Third quarter post-implementation.

Outcomes

The primary outcomes were ratings of care from the patients’ perspective, reported as the percentage of most positive responses following the CMS “top-box” methodology (16). Seven composite measures (Communication with Nurses, Communication with Doctors, Responsiveness of Hospital Staff, Communication about Medicines, Discharge Information, Care Transition, and Hospital Environment) and 2 global measures (Hospital Rating, Recommend the Hospital) were calculated. For each measure, the most positive response to an individual item was scored as 100 and any other valid response as 0; the arithmetic average of the items making up that measure was then taken as the patient-level outcome (17). A summary score was calculated as the arithmetic average of 8 outcomes (the 7 composite measures and Hospital Rating) (16).
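A minimal sketch of the top-box scoring described above. The response sets in `TOP_BOX` are simplified assumptions for illustration, not the official HCAHPS item wording, and `measure_score` and `summary_score` are hypothetical names. Averaging only the non-missing outcomes in the summary score is also an assumption; the text does not say how a missing outcome was handled.

```python
from statistics import mean

# Most-positive ("top-box") responses per measure (simplified, assumed sets).
TOP_BOX = {
    "Communication with Nurses": {"Always"},
    "Communication with Doctors": {"Always"},
    "Responsiveness of Hospital Staff": {"Always"},
    "Communication about Medicines": {"Always"},
    "Discharge Information": {"Yes"},
    "Care Transition": {"Strongly agree"},
    "Hospital Environment": {"Always"},
    "Hospital Rating": {9, 10},
    "Recommend the Hospital": {"Definitely yes"},
}

# The 8 outcomes averaged into the summary score (Recommend is excluded).
SUMMARY_MEASURES = [m for m in TOP_BOX if m != "Recommend the Hospital"]


def measure_score(responses, top_values):
    """Patient-level score: top box = 100, other valid = 0, mean over non-missing items."""
    scored = [100.0 if r in top_values else 0.0 for r in responses if r is not None]
    return mean(scored) if scored else None


def summary_score(patient):
    """Mean of the non-missing summary outcomes for one patient (assumed rule)."""
    scores = [measure_score(patient[m], TOP_BOX[m]) for m in SUMMARY_MEASURES if m in patient]
    scores = [s for s in scores if s is not None]
    return mean(scores) if scores else None


# Hypothetical survey responses for one patient (None = missing item).
patient = {
    "Communication with Nurses": ["Always", "Always", "Usually"],
    "Communication with Doctors": ["Always", "Always", "Always"],
    "Responsiveness of Hospital Staff": ["Usually", None],
    "Communication about Medicines": [None, None],
    "Discharge Information": ["Yes", "Yes"],
    "Care Transition": ["Strongly agree", "Agree", "Strongly agree"],
    "Hospital Environment": ["Always", "Sometimes"],
    "Hospital Rating": [9],
}
print(round(summary_score(patient), 2))
```

Hospital-level percentages are then averages of these patient-level scores, which is why the unadjusted outcomes in Table 2 range from 0 to 100.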

Additional Variables

For all study participants, we extracted unique patient identifiers (UPIs) from the survey data and used them to link to additional data in our internal enterprise data warehouse. Age, gender (female/male), race (Black/White/Other), education level (below college/college or above), overall health (excellent or not excellent), and overall mental health (excellent or not excellent) were collected during the phone interviews. MS-DRG (18), admission source (emergent or elective), length of stay, and Elixhauser comorbidities (19) were extracted from the enterprise data warehouse.

Statistical Analysis

An interrupted time series design was used to compare patient experience during the pre-implementation and post-implementation periods, and 2 multivariable regression models (base and full) were fit for each outcome to evaluate changes during the study periods. To account for underlying temporal trends, quarter was treated as a continuous time variable, with zero set at the last quarter of the pre-implementation period. Because implementation dates were staggered across hospitals, and because we hypothesized that patient experience would be disrupted and then recover, each post-implementation quarter was entered as a separate indicator rather than modeled as a single post-implementation trend (ie, a continuous variable). Seasonality was controlled by including calendar quarter. A random intercept for inpatient unit was included to account for variation among units within hospitals. The base model included time, the quarter-specific post-implementation indicators, and calendar quarter as independent variables. The full model additionally included patient characteristics and inpatient unit.
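The structure of the base model can be sketched as a segmented regression design matrix. This is an illustrative approximation, not the authors’ SAS code: it fits ordinary least squares with NumPy to simulated data and omits the random intercept for inpatient unit and the patient covariates. The go-live quarters mirror the 4 waves in Table 1, but the effect sizes, noise level, and sample sizes are invented for the simulation, and all function and column names are hypothetical.

```python
import numpy as np

# Column order of the hypothetical design matrix.
COLUMNS = ["intercept", "time", "A_transition", "B_first", "C_second", "D_third",
           "cal_q2", "cal_q3", "cal_q4"]


def design_row(rel_q, cal_q):
    """One design-matrix row.

    rel_q: quarter relative to go-live; 0 = last pre-implementation quarter,
           1 = transition quarter (A), 2..4 = first..third post quarters (B..D).
    cal_q: calendar quarter 1..4; Q1 is the seasonality reference level.
    """
    row = [1.0, float(rel_q)]                                  # intercept, continuous time
    row += [1.0 if rel_q == k else 0.0 for k in (1, 2, 3, 4)]  # quarter-specific indicators
    row += [1.0 if cal_q == q else 0.0 for q in (2, 3, 4)]     # calendar-quarter dummies
    return row


def simulate(rng, golive_quarters=(10, 11, 12, 13), n_per_cell=200):
    """Simulated scores for 4 staggered go-lives (quarter index 0 = 2015 Q1).

    True model: slow upward trend, seasonal offsets, and a -2 point dip
    in the first post-implementation quarter (B) only.
    """
    season = {1: 0.0, 2: 0.5, 3: -0.5, 4: 1.0}
    X, y = [], []
    for g in golive_quarters:
        for rel_q in range(-7, 5):            # 8 pre quarters (-7..0), then A..D (1..4)
            cal_q = (g + rel_q - 1) % 4 + 1   # rel_q == 1 falls in go-live quarter g
            mean = 80.0 + 0.1 * rel_q + season[cal_q] + (-2.0 if rel_q == 2 else 0.0)
            X.extend([design_row(rel_q, cal_q)] * n_per_cell)
            y.extend(mean + rng.normal(0.0, 3.0, size=n_per_cell))
    return np.array(X), np.array(y)


rng = np.random.default_rng(42)
X, y = simulate(rng)
beta, *_ = np.linalg.lstsq(X, y, rcond=None)
estimates = dict(zip(COLUMNS, beta))
for name in ("A_transition", "B_first", "C_second", "D_third"):
    print(f"{name}: {estimates[name]:+.2f}")
```

Because each post-implementation quarter has its own indicator, each coefficient estimates that quarter’s deviation from the pre-implementation trend extrapolated forward, so a dip followed by recovery appears as a negative `B_first` with near-zero `C_second` and `D_third`. Staggered go-lives also help separate seasonality from the implementation effect, because the same relative quarter falls in different calendar quarters across waves.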

To test the robustness of the findings, a generalized additive model (GAM) was fitted for each outcome to explore patterns in patient experience over time on a monthly basis, adjusting for the same covariates as the full model in the main analysis (20). Post-implementation month was defined as the new time variable, with zero set at the last month before implementation. For example, for a hospital with an August 2017 go-live date, patients discharged in June, July, and August 2017 would have post-implementation month values of −1, 0, and 1, respectively. A cubic smoothing spline of the time variable with 12 degrees of freedom was included to capture variation over time. The GAM also included calendar quarter, patient characteristics, and inpatient unit as additional independent variables.

Analyses were conducted between October 2019 and March 2020 by the statisticians in our organizations. All analyses were performed using SAS version, and a 2-sided P value less than .05 was considered significant.

Results

Patient Characteristics

During the study period, 34 425 patients completed the HCAHPS survey. After exclusion of patients from unmapped units (85, 0.25%) and with invalid MS-DRGs (34, 0.10%), the final analysis included 34 306 patients (99.65% of the initial population). Patient characteristics and unadjusted outcomes for the pre-implementation and post-implementation periods are presented in Table 2. There were 18 096 and 16 210 patients in the pre-implementation and post-implementation periods, respectively. The mean (SD) age of the study population was 59.6 (17.1) years; 19 808 (57.7%) were female, 25 917 (75.5%) were White, 7508 (21.9%) were Black, and 17 964 (52.4%) had Medicare as the primary payer. The mean (SD) length of stay was 4.0 (4.4) days, and 94.5% were discharged home under self-care or with home health care. Patient demographics, comorbidities, admission and discharge status, and treatment received were similar in the pre-implementation and post-implementation periods.
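The patient-flow percentages above can be re-derived from the reported counts. This short check uses only numbers stated in the text; the variable names are illustrative.

```python
surveyed = 34_425
excluded = {"unmapped inpatient unit": 85, "invalid MS-DRG": 34}
analyzed = surveyed - sum(excluded.values())
pre_n, post_n = 18_096, 16_210

for reason, n in excluded.items():
    print(f"excluded, {reason}: {n} ({100 * n / surveyed:.2f}%)")
print(f"analyzed: {analyzed} ({100 * analyzed / surveyed:.2f}%)")

# Shares of the analytic sample, from the counts reported in the text.
counts = {"female": 19_808, "White": 25_917, "Black": 7_508, "Medicare": 17_964}
shares = {k: round(100 * v / analyzed, 1) for k, v in counts.items()}
print(shares)

assert pre_n + post_n == analyzed  # period counts add up to the analytic sample
```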

Table 2.

Patient Characteristics and Unadjusted Study Outcomes during Study Periods.a

| Characteristic | Patients discharged during pre-implementation period, n = 18 096 | Patients discharged during post-implementation period, n = 16 210 | Patients during both pre-implementation and post-implementation periods, n = 34 306 |
|---|---|---|---|
| Admission characteristics | | | |
| Age, mean (SD), years | 59.6 (17.2) | 59.6 (17.1) | 59.6 (17.1) |
| Sex, % | | | |
| Female | 58.7 | 56.7 | 57.7 |
| Male | 41.3 | 43.3 | 42.3 |
| Race, % | | | |
| Black | 21.3 | 22.6 | 21.9 |
| White | 76.6 | 74.4 | 75.5 |
| Other | 2.2 | 3.0 | 2.6 |
| Primary payer, % | | | |
| Commercial | 31.0 | 30.6 | 30.8 |
| Medicaid | 11.1 | 10.6 | 10.9 |
| Medicare | 52.4 | 50.3 | 52.4 |
| Self-pay | 3.4 | 3.5 | 3.4 |
| Other | 2.2 | 3.0 | 2.5 |
| Admission source, % | | | |
| Elective | 40.2 | 40.6 | 40.4 |
| Emergent | 59.8 | 59.4 | 59.6 |
| Discharge characteristics | | | |
| Length of stay, mean (SD), days | 3.8 (3.8) | 4.3 (5.1) | 4.0 (4.4) |
| Discharge disposition, % | | | |
| Home | 69.5 | 71.0 | 70.2 |
| Home health | 24.8 | 23.7 | 24.3 |
| Other | 5.7 | 5.3 | 5.5 |
| MS-DRG type, % | | | |
| Medical | 56.8 | 55.6 | 56.3 |
| Surgical | 43.2 | 44.4 | 43.7 |
| MS-DRG complication, % | 1.7 | 2.3 | |
| Comorbidities, % | | | |
| 0-1 | 35.6 | 33.2 | 34.5 |
| 2-3 | 35.2 | 37.4 | 36.2 |
| ≥4 | 29.2 | 29.4 | 29.3 |
| Self-reported characteristics | | | |
| Overall health: excellent, % | 13.1 | 13.0 | 13.0 |
| Overall mental health: excellent, % | 32.5 | 32.0 | 32.2 |
| Interview language: English, % | 99.9 | 99.5 | 99.7 |
| Education: college or above, % | 23.3 | 24.5 | 23.8 |
| Unadjusted study outcomes | | | |
| Communication with Nurses | 83.70 | 83.15 | 83.44 |
| Communication with Doctors | 83.18 | 83.40 | 83.28 |
| Responsiveness of Hospital Staff | 66.92 | 65.10 | 66.06 |
| Communication about Medicines | 67.06 | 67.93 | 67.48 |
| Discharge Information | 91.29 | 91.66 | 91.47 |
| Care Transition | 58.05 | 59.15 | 58.57 |
| Hospital Environment | 69.94 | 69.87 | 69.91 |
| Hospital Rating | 75.02 | 75.13 | 75.07 |
| Recommend the Hospital | 78.97 | 79.20 | 79.08 |
| Summary Score | 74.86 | 74.87 | 74.87 |

Abbreviation: MS-DRG, Medicare Severity Diagnosis-Related Groups.

a Pre-implementation included the 8 quarters prior to the transition quarter, and post-implementation included the transition quarter and the 3 following quarters. Study outcomes were averages of individual nonmissing items and ranged from 0 to 100.

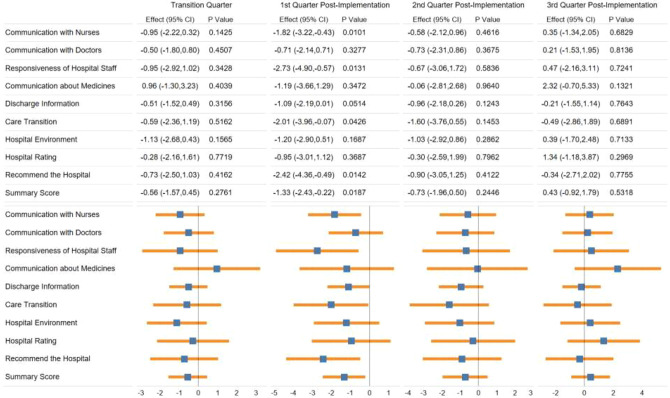

Quarter-Specific Effect on Outcome Scores

The quarter-specific effects from the base model and the full model are shown in Figure 1 and Supplemental Figure 1, respectively. After adjustment for temporal trends and seasonality in the base model (Figure 1), the first quarter post-implementation was associated with significant decreases in Communication with Nurses (−1.82; 95% CI, −3.22 to −0.43; P = .0101), Responsiveness of Hospital Staff (−2.73; 95% CI, −4.90 to −0.57; P = .0131), Care Transition (−2.01; 95% CI, −3.96 to −0.07; P = .0426), Recommend the Hospital (−2.42; 95% CI, −4.36 to −0.49; P = .0142), and Summary Score (−1.33; 95% CI, −2.43 to −0.22; P = .0187) compared with the pre-implementation period. In the second and third quarters post-implementation, no statistically significant changes were observed in any of the outcome measures compared with the pre-implementation period.
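When only an estimate and its 95% CI are reported, a normal (Wald) approximation recovers the standard error and an approximate 2-sided P value. The sketch below applies this to the base-model first-quarter estimates quoted above. It assumes symmetric Wald intervals, so its P values should agree with the reported ones only to within rounding, not exactly; `wald_from_ci` is a hypothetical helper name.

```python
import math

# Base-model first-quarter effects from the text: (estimate, lower, upper).
BASE_MODEL_Q1 = {
    "Communication with Nurses": (-1.82, -3.22, -0.43),
    "Responsiveness of Hospital Staff": (-2.73, -4.90, -0.57),
    "Care Transition": (-2.01, -3.96, -0.07),
    "Recommend the Hospital": (-2.42, -4.36, -0.49),
    "Summary Score": (-1.33, -2.43, -0.22),
}


def wald_from_ci(estimate, lower, upper, z_crit=1.959964):
    """Approximate SE, z and 2-sided P from a symmetric 95% Wald interval."""
    se = (upper - lower) / (2 * z_crit)
    z = estimate / se
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return se, z, p


for name, (est, lower, upper) in BASE_MODEL_Q1.items():
    se, z, p = wald_from_ci(est, lower, upper)
    print(f"{name}: SE={se:.2f}, z={z:.2f}, approx P={p:.4f}")
```

A check like this is a quick way to catch transcription errors in reported estimates, intervals, or P values.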

Figure 1.

Adjusted quarter-specific effect of the association between electronic health record implementation and patient experience outcomes from base model. Notes: Effects were estimated from a random-effect linear model adjusting for temporal trends, seasonality, and a random intercept for inpatient unit nested in hospital. Forest plots show the point estimate and 95% CI of each quarter-specific effect.

After further controlling for inpatient unit and the patient characteristics listed in Table 2 in the full model, the pattern of associations between specific quarters and outcomes was similar. In the full model (Supplemental Figure 1), the first quarter after EHR implementation was associated with significant decreases in Communication with Nurses (−1.91; 95% CI, −3.27 to −0.54; P = .0062), Responsiveness of Hospital Staff (−2.87; 95% CI, −4.99 to −0.75; P = .0080), Care Transition (−2.02; 95% CI, −3.88 to −0.15; P = .0344), Recommend the Hospital (−2.65; 95% CI, −4.56 to −0.74; P = .0066), and Summary Score (−1.43; 95% CI, −2.50 to −0.37; P = .0082). Consistent with the base model, the second and third quarters post-implementation were not associated with significant changes in any outcome.

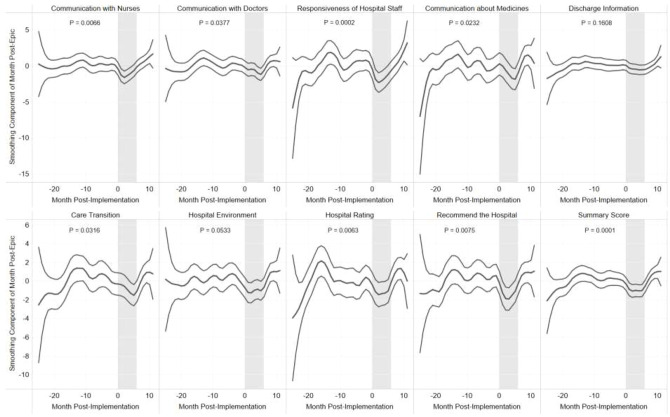

Effect of Post-EHR Implementation Month on Outcome Scores

Changes in patient experience scores by post-implementation month are shown in Figure 2. The trends show how scores changed over time after adjustment for seasonality, patient characteristics, and inpatient unit. The effect of post-implementation month was not significant for Discharge Information and Hospital Environment but was significant for the other 8 outcomes, all of which showed decreasing trends during the first 6 months of the post-implementation period. For example, Communication with Nurses scores (Figure 2) remained stable before EHR implementation, decreased significantly during months 0 to 6 post-implementation (the upper bound of the 95% CI was below zero), and then returned to baseline levels with no significant changes (the 95% CI mostly included zero).

Figure 2.

Smoothed effect and 95% CI of month post-implementation on patient experience outcomes. Notes: Covariates in the generalized additive model included the time variable, seasonality, patient characteristics, and inpatient unit. Shaded areas mark months 0 to 6 post-implementation.

Discussion

We hypothesized that transitioning to another EHR would compromise patient experience, and the transient decreases observed in several care domains support this hypothesis. Our findings suggest that patient experience returned to historical performance after 2 quarters on the new EHR system. This normalization may help allay concerns about patient experience performance after implementation of a new EHR. Our study extends knowledge about the association of EHR transitions with patient experience from outpatient to inpatient settings. A similar return to previous levels of patient experience after an EHR transition has been observed in outpatient settings, where patient satisfaction took several months to recover (6). Whereas access to care was most affected in the outpatient setting (6), our study indicated that responsiveness of hospital staff was most negatively affected.

Hospitals have acknowledged the potential disruptions from transitioning EHRs and have described strategies for mitigating them, such as engaging leaders, standardizing workflows, and investing in infrastructure (3). Nurse involvement is cited as especially important to a smooth transition because nurses deliver care both directly and indirectly and influence how other team members perceive the new EHR system (21). Because nurses spend large amounts of time both documenting in the EHR and communicating with patients (22), disruption to their work could partially explain the decreases in the nurse communication and staff responsiveness domains observed here. Multiple studies in the United States and Europe have identified patients’ interactions with nursing staff as top contributors to the HCAHPS global measures (overall rating and recommend the hospital) (23,24). As nurses learn to adapt to new EHR workflows, their interactions with patients may be compromised during implementation, and this reduced interaction could contribute to the decrease in patient experience scores. From the second quarter after implementation onward, scores were no different from those before the transition; thus, the return to pre-implementation baselines may reflect overcoming the initial learning curve associated with a new EHR. The findings suggest that the negative impact on patient experience associated with a large-scale implementation of a new EHR is short-lived. In the long term, different EHRs are unlikely to have an impact on patient experience; for example, one study that evaluated different EHRs found no statistically significant associations with patient experience in inpatient settings (25).

By using an interrupted time series design, we accounted for secular trends and controlled for confounding characteristics, with a larger sample size than most existing studies of patient experience. The GAM also revealed the pattern of patient experience scores over the EHR implementation period, reinforcing the findings from the quarterly comparisons. Nevertheless, this is an observational study with several limitations. First, all patients were from a single large health care system. There is no standard procedure for transitioning to another EHR, and hospitals likely encounter different issues, such as patient safety and employee stress (26,27). Second, the patients in the analysis represented about 10% of the total patient population, and data to compare responders and nonresponders were not available. Third, the restored patient experience scores could be attributed to patient experience interventions rather than to recovery from EHR implementation. This is unlikely, because system- and hospital-specific patient experience initiatives would have had to be timed to coincide with the staggered hospital-specific EHR go-lives, whereas most interventions are implemented on a calendar-year basis or aligned across the health care system. These limitations could reduce the generalizability of these findings to the entire underlying patient population (23).

Conclusion

In this interrupted time series study of hospitalized patients, the first quarter after transition to a new EHR was associated with reduced ratings in several HCAHPS domains, including Communication with Nurses, Responsiveness of Hospital Staff, and Care Transition. Monthly analyses also showed reduced patient experience scores during months 0 to 6 after implementation of the new EHR. These patient experience measures also reflect the quality of care delivered by nurses, who spend a substantial amount of time interacting with the EHR. Such changes could be expected given the multifaceted challenges stemming from an EHR transition (7). The subsequent rebound in scores to pre-implementation levels suggests that the disruptions to patient experience associated with an EHR transition may be short-lived. After full implementation, the new EHR is unlikely to be a cause of lower patient experience scores relative to the legacy EHR. Our study describes the potential disruptions to patient experience when health systems change to a new EHR. Health systems undergoing such changes should be aware of these impacts, be prepared to recognize issues around nursing interaction, and communicate with both patients and staff about these changes. Health systems can also be reassured that patient experience is likely to be restored after the temporary disruptions associated with EHR transitions.

Supplemental Material

Supplemental Material, sj-pdf-1-jpx-10.1177_23743735211034064 for Disrupted and Restored Patient Experience With Transition to New Electronic Health Record System by Dajun Tian, Christine M. Hoehner, Keith F. Woeltje, Lan Luong and Michael A. Lane in Journal of Patient Experience

Acknowledgments

The authors thank the patient experience leaders who provided feedback on the study and findings.

Authors’ Note: Ethical approval for this study was obtained from the institutional review board of Washington University School of Medicine (IRB ID: 202003063).

Informed consent for patient information to be published in this article was not obtained because informed consent was waived by the institutional review board of Washington University School of Medicine.

Declaration of Conflicting Interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) received no financial support for the research, authorship, and/or publication of this article.

ORCID iD: Christine M. Hoehner, PhD, MSPH https://orcid.org/0000-0001-9952-686X

Supplemental Material: Supplemental material for this article is available online.

References

1. Jha AK. Meaningful use of electronic health records: the road ahead. JAMA. 2010;304:1709–10. doi:10.1001/jama.2010.1497

2. Gettinger A, Csatari A. Transitioning from a legacy EHR to a commercial, vendor-supplied, EHR: one academic health system’s experience. Appl Clin Inform. 2012;3:366–76.

3. Johnson KB, Ehrenfeld JM. An epic switch: preparing for an electronic health record transition at Vanderbilt University Medical Center. J Med Syst. 2018;42:6.

4. Manary MP, Boulding W, Staelin R, Glickman SW. The patient experience and health outcomes. N Engl J Med. 2013;368:201–3.

5. Meyerhoefer CD, Sherer SA, Deily ME, Chou SY, Guo X, Chen J, et al. Provider and patient satisfaction with the integration of ambulatory and hospital EHR systems. J Am Med Inform Assoc. 2018;25:1054–63.

6. North F, Pecina JL, Tulledge-Scheitel SM, Chaudhry R, Matulis JC, Ebbert JO. Is a switch to a different electronic health record associated with a change in patient satisfaction? J Am Med Inform Assoc. 2020;27:867–76.

7. Huang C, Koppel R, McGreevey JD, Craven CK, Schreiber R. Transitions from one electronic health record to another: challenges, pitfalls, and recommendations. Appl Clin Inform. 2020;11:742–54.

8. Giordano LA, Elliott MN, Goldstein E, Lehrman WG, Spencer PA. Development, implementation, and public reporting of the HCAHPS survey. Med Care Res Rev. 2010;67:27–37.

9. VanLare JM, Conway PH. Value-based purchasing — national programs to move from volume to value. N Engl J Med. 2012;367:292–5.

10. Kahn CN, Ault T, Potetz L, Walke T, Chambers JH, Burch S. Assessing Medicare’s hospital pay-for-performance programs and whether they are achieving their goals. Health Aff (Millwood). 2015;34:1281–8.

11. Total performance scores [Internet]. Accessed July 12, 2021. https://www.medicare.gov/hospitalcompare/data/total-performance-scores.html

12. Boonstra A, Versluis A, Vos JF. Implementing electronic health records in hospitals: a systematic literature review. BMC Health Serv Res. 2014;14:1–24.

13. Lopez Bernal J, Cummins S, Gasparrini A. Interrupted time series regression for the evaluation of public health interventions: a tutorial. Int J Epidemiol. 2017;46:348–55.

14. McDonald EG, Dendukuri N, Frenette C, Lee TC. Time-series analysis of health care–associated infections in a new hospital with all private rooms. JAMA Intern Med. 2019;179:1501–6.

15. Kahn JM, Davis BS, Yabes JG, Chang CCH, Chong DH, Hershey TB, et al. Association between state-mandated protocolized sepsis care and in-hospital mortality among adults with sepsis. JAMA. 2019;322:240.

16. Centers for Medicare & Medicaid Services. Quality Assurance Guidelines CAHPS® Hospital Survey (HCAHPS). Centers for Medicare & Medicaid Services; 2018.

17. Martino SC, Mathews M, Agniel D, Orr N, Wilson-Frederick S, Ng JH, et al. National racial/ethnic and geographic disparities in experiences with health care among adult Medicaid beneficiaries. Health Serv Res. 2019;54:287–96.

18. McNutt R, Johnson TJ, Odwazny R, Remmich Z, Skarupski K, Meurer S, et al. Change in MS-DRG assignment and hospital reimbursement as a result of Centers for Medicare & Medicaid changes in payment for hospital-acquired conditions: is it coding or quality? Qual Manag Health Care. 2010;19:17–24.

19. Moore BJ, White S, Washington R, Coenen N, Elixhauser A. Identifying increased risk of readmission and in-hospital mortality using hospital administrative data: the AHRQ Elixhauser comorbidity index. Med Care. 2017;55:698–705.

20. Dominici F, McDermott A, Zeger SL, Samet JM. On the use of generalized additive models in time-series studies of air pollution and health. Am J Epidemiol. 2002;156:193–203.

21. Katterhagen L. Creating a climate for change introduction of a new hospital electronic medical record. Nurse Lead. 2013;11:40–3.

22. Yen PY, Kellye M, Lopetegui M, Saha A, Loversidge J, Chipps EM, et al. Nurses time allocation and multitasking of nursing activities: a time motion study. AMIA Annu Symp Proc. 2018;2018:1137–46.

23. Thiels CA, Hanson KT, Yost KJ, Zielinski MD, Habermann EB, Cima RR. Effect of hospital case mix on the hospital consumer assessment of healthcare providers and systems star scores: are all stars the same? Ann Surg. 2016;264:666–73.

24. Orindi BO, Lesaffre E, Quintero A, Sermeus W, Bruyneel L. Contribution of HCAHPS specific care experiences to global ratings varies across 7 countries: what can be learned for reporting these global ratings? Med Care. 2019;57:e65–e72.

25. Jarvis B, Johnson T, Butler P, O’Shaughnessy K, Fullam F, Tran L, et al. Assessing the impact of electronic health records as an enabler of hospital quality and patient satisfaction. Acad Med. 2013;88:1471–7.

26. Babbott S, Manwell LB, Brown R, Montague E, Williams E, Schwartz M, et al. Electronic medical records and physician stress in primary care: results from the MEMO Study. J Am Med Inform Assoc. 2014;21:100–6.

27. Whalen K, Lynch E, Moawad I, John T, Lozowski D, Cummings BM. Transition to a new electronic health record and pediatric medication safety: lessons learned in pediatrics within a large academic health system. J Am Med Inform Assoc. 2018;25:848–54.
