Abstract

We propose parameter optimization techniques for weighted ensemble sampling of Markov chains in the steady-state regime. Weighted ensemble consists of replicas of a Markov chain, each carrying a weight, that are periodically resampled according to their weights within each of a number of bins that partition state space. We derive, from first principles, strategies for optimizing the choices of weighted ensemble parameters, in particular the choice of bins and the number of replicas to maintain in each bin. In a simple numerical example, we compare our new strategies with more traditional ones and with direct Monte Carlo.

Keywords: Markov chains, resampling, sequential Monte Carlo, weighted ensemble, molecular dynamics, reaction networks, steady state, coarse graining

AMS subject classifications. 65C05, 65C20, 65C40, 65Y05, 82C80

1. Introduction.

Weighted ensemble is a Monte Carlo method based on stratification and resampling, originally designed to solve problems in computational chemistry [8, 12, 15, 17, 18, 23, 34, 46, 47, 51, 61, 62, 64, 66]. Weighted ensemble currently has a substantial user base; see [65] for software and a more complete list of publications. In general terms, the method consists of periodically resampling from an ensemble of weighted replicas of a Markov process. In each of a number of bins, a certain number of replicas is maintained according to a prescribed replica allocation. The weights are adjusted so that the weighted ensemble has the correct distribution [62]. In the context of rare event or small probability calculations, weighted ensemble can significantly outperform direct Monte Carlo or independent replicas; the performance gain comes from allocating more particles to important or rare regions of state space. In this sense, weighted ensemble can be understood as an importance sampling method. We assume the underlying Markov process is expensive to simulate, so that optimizing variance versus cost is critical. In applications, the Markov process is usually a high dimensional drift diffusion, such as Langevin molecular dynamics [50], or a continuous time Markov chain representing reaction network dynamics [3].

We focus on the computation of the average of a given function or observable with respect to the unique steady state of the Markov process, though many of our ideas could also be applied in a finite time setting. One of the most common applications of weighted ensemble is the computation of a mean first passage time, or the mean time for a Markov process to go from an initial state to some target set. The mean first passage time is an important quantity in physical and chemical processes, but it can be so large that computing it by direct Monte Carlo simulation is prohibitively expensive [1, 33, 42]. In weighted ensemble, the mean first passage time to a target set is reformulated, via the Hill relation [32], as the inverse of the steady-state flux into the target. Here, the observable is the characteristic or indicator function of the target set, and the flux is the steady-state average of this observable. This steady state can sometimes be accessed on time scales much smaller than the mean first passage time [1, 16, 63]. On the other hand, when the mean first passage time is very large, the corresponding steady-state flux is very small and must be estimated with high relative precision, which is why importance sampling is needed. The steady state in this case, and in most weighted ensemble applications, is not known, even up to a normalization factor. As a result, many standard Monte Carlo methods, including those based on Metropolis–Hastings, do not apply.

In this article we consider weighted ensemble parameter optimization for steady-state averages. Our work here expands on related results in [5] for weighted ensemble optimization on finite time horizons. For a given Markov chain, weighted ensemble is completely characterized by the choice of resampling times, bins, and replica allocation. In this article we discuss how to choose the bins and replica allocation. We also argue that the frequency of resampling is mainly limited by processor interaction cost and not by variance. We use a first-principles, finite replica analysis based on a Doob decomposition of the weighted ensemble variance. Our earlier work [5] is based on this same mathematical technique, but the methods described there are not suitable for long-time computations or bin optimization [4]. As is usual in importance sampling, our parameter optimizations require estimates of the very variance terms we want to minimize. However, because weighted ensemble is statistically exact no matter the parameters [4, 62], these estimates can be crude. The choice of parameters affects the variance, not the mean, so we only require parameters that are good enough to beat direct Monte Carlo.

From the point of view of applications, similar particle methods employing stratification include exact milestoning [7], nonequilibrium umbrella sampling [55, 22], transition interface sampling [53], trajectory tilting [54], and boxed molecular dynamics [30]. There are related methods based on sampling reactive paths, or paths going directly from a source to a target, in the context of the mean first passage time problem just described. Such methods include forward flux sampling [2] and adaptive multilevel splitting [9, 10, 11]. These methods differ from weighted ensemble in that they estimate the mean first passage time directly from reactive paths rather than from the steady state and the Hill relation. In contrast with many of these methods, weighted ensemble is simple enough to allow for a relatively straightforward nonasymptotic variance analysis based on Doob decomposition [24].

From a mathematical viewpoint, weighted ensemble is simply a resampling-based evolutionary algorithm. In this sense it resembles particle filters, sequential importance sampling, and sequential Monte Carlo. For a review of sequential Monte Carlo, see the textbook [19], the articles [20, 21], or the compilation [28]. There is some recent work on optimizing the Gibbs–Boltzmann input potential functions in sequential Monte Carlo [6, 13, 36, 56, 60] (see also [21]). We emphasize that weighted ensemble is different from most sequential Monte Carlo methods, as it relies on a bin-based resampling mechanism rather than a globally defined fitness function like a Gibbs–Boltzmann potential. In particular, sequential Monte Carlo is more commonly used to sample rare events on finite time horizons, and may not be appropriate for very long-time computations of the sort considered here, as explained in [4].

To our knowledge, the binning and particle allocation strategies we derive here are new. A similar allocation strategy for weighted ensemble on finite time horizons was proposed in [5]. Our allocation strategy, which minimizes mutation variance—the variance corresponding to evolution of the replicas—extends ideas from [5] to our steady-state setup. We draw an analogy between minimizing mutation variance and minimizing sensitivity in an appendix. In most weighted ensemble simulations, and in [5], bins are chosen in an ad hoc way. We show below, however, that choosing bins carefully is important, particularly if relatively few can be afforded. We propose a new binning strategy based on minimizing selection variance—the variance associated with resampling from the replicas—in which weighted ensemble bins are aggregated from a collection of smaller microbins [15].

Formulas for the mutation and selection variance are derived in a companion paper [4] that proves an ergodic theorem for weighted ensemble time averages. These variance formulas, which we use to define our allocation and bin optimizations, involve the Markov kernel K that describes the evolution of the underlying Markov process between resampling times, as well as the solution, h, of a certain Poisson equation. We propose estimating K and h with techniques from Markov state modeling [35, 45, 48, 49]. In this formulation, the microbins correspond to the Markov states, and a microbin-to-microbin transition matrix defines the approximations of K and h.

This article is organized as follows. In section 2, we introduce notation, give an overview of weighted ensemble, and describe the parameters we wish to optimize. In section 3, we present weighted ensemble in precise detail (Algorithm 3.1), reproduce the aforementioned variance formulas (Lemmas 3.4 and 3.5) from our companion paper [4], and describe the solution, h, to a certain Poisson equation arising from these formulas ((3.15) and (3.16)). In section 4, we introduce novel optimization problems ((4.3) and (4.6)) for choosing the bins and particle allocation. The resulting allocation in (4.4) can be seen as a version of (6.4) in [5], modified for steady state and our new microbin setup. These optimizations are idealized in the sense that they involve K and h, which cannot be computed exactly. Thus in section 5, we propose using microbins and Markov state modeling to estimate their solutions (Algorithms 5.1 and 5.2). In section 6, we test our methods with a simple numerical example. Concluding remarks and suggestions for future work are in section 7. In the appendix, we describe residual resampling [26], a common resampling technique, and draw an analogy between sensitivity and our mutation variance minimization strategy.

2. Algorithm.

A weighted ensemble consists of a collection of replicas, or particles, belonging to a common state space, with associated positive scalar weights. The particles repeatedly undergo resampling and evolution steps. Using a genealogical analogy, we refer to particles before resampling as parents, and just after resampling as children. A child is initially just a copy of its parent, though it evolves independently of other children of the same parent. The total weight remains constant in time, which is important for the stability of long-time averages [4] (and is a critical difference between the optimization algorithm described in [5] and the one outlined here). Between resampling steps, the particles evolve independently via the same Markov kernel K. The initial parents can be arbitrary, though their weights must sum to 1; see Algorithm 5.3 for a description of an initialization step tailored to steady-state calculations. The number Ninit of initial particles can be larger than the number N of weighted ensemble particles after the first resampling step.

For the resampling or selection step, we require a collection of bins, denoted $\mathcal{B}$, and a particle allocation $(N_t(u))_{u \in \mathcal{B}}$, where $N_t(u)$ is the (nonnegative integer) number of children in bin $u \in \mathcal{B}$ at time $t$. We will assume the bins form a partition of state space (though in general they can be any grouping of the particles [4]). The total number of children is always $N = \sum_{u \in \mathcal{B}} N_t(u)$. Both the bins and the particle allocation are user-chosen parameters, and to a large extent this article concerns how to pick them. In general, the bins and particle allocation can be time dependent and adaptive. For simpler presentation, however, we assume that the bins are based on a fixed partition of state space. After selection, the weight of each child in bin $u$ is $\omega_t(u)/N_t(u)$, where $\omega_t(u)$ is the sum of the weights of all the parents in bin $u$. By construction, this preserves the total weight $\sum_{u \in \mathcal{B}} \omega_t(u) = 1$. In every occupied bin $u$, the $N_t(u) \ge 1$ children are selected from the parents in bin $u$ with probability proportional to the parents' weights. (Here, we mean bin $u$ is occupied if $\omega_t(u) > 0$. In unoccupied bins, where $\omega_t(u) = 0$, we set $N_t(u) = 0$.)

In the evolution or mutation step, the time $t$ advances, and all the children independently evolve one step according to a fixed Markov kernel $K$, keeping their weights from the selection step, and becoming the next parents. In practice, $K$ corresponds to the underlying process observed at resampling intervals of length $\Delta t$. That is, $K$ is a $\Delta t$-skeleton of the underlying Markov process [43]. For the mathematical analysis below, this will only be important when we consider varying the resampling times and, in particular, the limit $\Delta t \to 0$. (We think of this underlying process as being continuous in time, though of course time discretization is usually required for simulations. Weighted ensemble is used on top of an integrator of the underlying process; in particular, weighted ensemble does not handle the time discretization. We will not be concerned with the unavoidable error resulting from time discretization.) We assume that $K$ is uniformly geometrically ergodic [27] with respect to a stationary distribution, or steady state, $\mu$. Recall that we are interested in steady-state averages. Thus for a given bounded real-valued function or observable $f$ on state space, we estimate $\int f\,d\mu$ at each time by evaluating the weighted sum of $f$ over the current collection of parent particles.

We summarize weighted ensemble as follows:

In the selection step at time t, inside each bin u, we resample Nt(u) children from the parents in u, according to the distribution defined by their weights. After selection, all the children in bin u have the same weight ωt(u)/Nt(u), where ωt(u) is the total weight in bin u before and after selection.

In each mutation step, the children evolve independently according to the Markov kernel K. After evolution, these children become the new parents.

The weighted ensemble evolves by repeated selection and then mutation steps.

The time t advances after a single pair of selection and mutation steps.
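As a rough illustration of the selection and mutation steps just summarized, the following Python sketch runs weighted ensemble on a small finite-state Markov chain, with a row-stochastic matrix `P` standing in for the kernel $K$. The function name `weighted_ensemble`, the even split of the $N$ children over the occupied bins, and multinomial resampling inside each bin are illustrative assumptions, not the optimized choices developed in this article; the returned value is the running average over time of the weighted sums of $f$ over the parents.

```python
import numpy as np


def weighted_ensemble(P, f, bin_of, N, T, x0, seed=0):
    """Minimal weighted ensemble run on a finite-state Markov chain (illustrative sketch).

    P      : row-stochastic transition matrix, standing in for the kernel K
    f      : observable values, one per state
    bin_of : integer array mapping each state to its bin label
    Returns the running average over t = 0, ..., T-1 of the weighted sums of f.
    Assumption: the N children are split as evenly as possible over the occupied
    bins, and children are drawn by multinomial resampling inside each bin.
    """
    rng = np.random.default_rng(seed)
    cum = np.cumsum(P, axis=1)              # cumulative rows, used to sample one step
    states = np.full(N, x0)                 # initial parents, all at state x0
    weights = np.full(N, 1.0 / N)           # initial weights sum to 1
    running = 0.0
    for _ in range(T):
        running += np.dot(weights, f[states])          # weighted sum of f over parents
        # Selection: resample inside each occupied bin.
        labels = bin_of[states]
        occupied = np.unique(labels)
        assert occupied.size <= N, "need at least one child per occupied bin"
        alloc = np.full(occupied.size, N // occupied.size)
        alloc[: N % occupied.size] += 1                # N_t(u) >= 1 and sum_u N_t(u) = N
        new_states, new_weights = [], []
        for u, n_u in zip(occupied, alloc):
            idx = np.flatnonzero(labels == u)
            w_u = weights[idx].sum()                   # bin weight omega_t(u)
            picks = rng.choice(idx, size=n_u, p=weights[idx] / w_u)
            new_states.append(states[picks])
            new_weights.append(np.full(n_u, w_u / n_u))  # child weight omega_t(u)/N_t(u)
        states = np.concatenate(new_states)
        weights = np.concatenate(new_weights)
        # Mutation: every child takes one independent step of the chain.
        r = rng.random(N)
        states = np.minimum((r[:, None] >= cum[states]).sum(axis=1), P.shape[0] - 1)
    return running / T


# Usage: a doubly stochastic 3-state chain, so the steady state is uniform.
P = np.array([[0.9, 0.1, 0.0], [0.1, 0.8, 0.1], [0.0, 0.1, 0.9]])
f = np.array([0.0, 0.0, 1.0])               # indicator of state 2; exact average is 1/3
bins = np.array([0, 1, 2])                  # one bin per state
theta = weighted_ensemble(P, f, bins, N=30, T=3000, x0=0)
```

The total weight stays equal to 1 after every selection step, because each bin hands its weight $\omega_t(u)$ to its children in equal shares.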

See Algorithm 3.1 for a detailed description of weighted ensemble.

An important property of weighted ensemble is that it is unbiased no matter the choice of parameters: at time $t$ the weighted particles have the same distribution as a Markov chain evolving according to $K$. See Theorem 3.1. With bad parameters, however, weighted ensemble can suffer from large variance, even worse than direct Monte Carlo. As there is no free lunch, choosing parameters cleverly requires some information about $K$, gleaned either from prior simulations or adaptively during weighted ensemble simulations. We will assume we have a collection of microbins, which we use to gain information about $K$. The microbins, like the weighted ensemble bins, form a partition of state space, and each bin is a union of microbins. We use the term microbins because the microbins may be smaller than the actual weighted ensemble bins. We discuss the reasoning behind this distinction in section 5; see Remark 5.1.

3. Mathematical notation and algorithm.

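We write $\xi_t^1, \ldots, \xi_t^{N_t}$ for the parents at time $t$ and $\omega_t^1, \ldots, \omega_t^{N_t}$ for their weights, where $N_0 = N_{\rm init}$ and $N_t = N$ for $t \ge 1$. Their children are denoted $\hat\xi_t^1, \ldots, \hat\xi_t^N$, with weights $\hat\omega_t^1, \ldots, \hat\omega_t^N$. Thus, weighted ensemble advances in time as follows:

$$\{(\xi_t^i, \omega_t^i)\}_{i=1}^{N_t} \xrightarrow{\ \text{selection}\ } \{(\hat\xi_t^j, \hat\omega_t^j)\}_{j=1}^{N} \xrightarrow{\ \text{mutation}\ } \{(\xi_{t+1}^j, \omega_{t+1}^j)\}_{j=1}^{N}.$$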

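The particles belong to a common standard Borel state space [29]. This state space is divided into a finite collection $\mathcal{B}$ of disjoint subsets, the bins $u \in \mathcal{B}$ (throughout we only consider measurable sets and functions). We define the bin weights at time $t$ as

$$\omega_t(u) = \sum_{i:\, \xi_t^i \in u} \omega_t^i, \qquad u \in \mathcal{B},$$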

where the empty sum is zero (so an unoccupied bin u has ωt(u) = 0).

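For the parent of $\hat\xi_t^j$, we write $\mathrm{par}(\hat\xi_t^j)$. A child is just a copy of its parent:

$$\hat\xi_t^j = \xi_t^i \quad \text{if } \mathrm{par}(\hat\xi_t^j) = \xi_t^i.$$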

Each child has a unique parent.

Setting the number of children of each parent completely defines the children, as the choices of the children's indices (the $j$'s in $\hat\xi_t^j$) do not matter. The number of children of $\xi_t^i$ will be written $C_t^i$:

$$C_t^i = \#\{j : \mathrm{par}(\hat\xi_t^j) = \xi_t^i\}, \qquad (3.1)$$

where $\#S$ is the number of elements in a set $S$.

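Recall that $N_t(u)$ is the number of children in bin $u$ at time $t$. We require that there is at least one child in each occupied bin, no children in unoccupied bins, and $N$ total children at each time $t$. Thus,

$$N_t(u) \ge 1 \ \text{if}\ \omega_t(u) > 0, \qquad N_t(u) = 0 \ \text{if}\ \omega_t(u) = 0, \qquad \sum_{u \in \mathcal{B}} N_t(u) = N. \qquad (3.2)$$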

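We write $\mathcal{F}_t$ for the σ-algebra generated by the weighted ensemble up to, but not including, the $t$th selection step. We will assume the particle allocation is known before selection, that is, $(N_t(u))_{u \in \mathcal{B}}$ is $\mathcal{F}_t$-measurable. Similarly, we write $\hat{\mathcal{F}}_t$ for the σ-algebra generated by the weighted ensemble up to and including the $t$th selection step. In detail,

$$\mathcal{F}_t = \sigma\Big( (\xi_s^i, \omega_s^i)_{0 \le s \le t},\ (\hat\xi_s^j, \hat\omega_s^j)_{0 \le s \le t-1},\ (N_s(u))_{0 \le s \le t,\, u \in \mathcal{B}} \Big), \qquad \hat{\mathcal{F}}_t = \sigma\Big( \mathcal{F}_t,\ (\hat\xi_t^j, \hat\omega_t^j)_{1 \le j \le N} \Big).$$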

In Algorithm 3.1, we do not explicitly say how we sample the Nt(u) children in each bin u. Our framework below allows for any unbiased resampling scheme. We give a selection variance formula that assumes residual resampling; see Lemma 3.5 and Algorithm 8.1. Residual resampling has performance on par with other standard resampling methods like systematic and stratified resampling [26]. See [57] for more details on resampling in the context of sequential Monte Carlo.

3.1. Ergodic averages.

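We are interested in using Algorithm 3.1 to estimate

$$\int f\,d\mu \approx \theta_T,$$

where we recall $\mu$ is the stationary distribution of the Markov kernel $K$, and

$$\theta_T = \frac{1}{T} \sum_{t=0}^{T-1} \sum_{i=1}^{N_t} \omega_t^i f(\xi_t^i). \qquad (3.5)$$

Algorithm 3.1.

Weighted ensemble.

Pick initial parents $\xi_0^1, \ldots, \xi_0^{N_{\rm init}}$ and weights $\omega_0^1, \ldots, \omega_0^{N_{\rm init}}$ with $\sum_i \omega_0^i = 1$, choose a collection $\mathcal{B}$ of bins, and define a final time $T$. Then for $t \ge 0$, iterate the following:

• (Selection step) Each parent $\xi_t^i$ is assigned a number $C_t^i$ of children, as follows. In each occupied bin $u \in \mathcal{B}$, conditional on $\mathcal{F}_t$, let $I_t^1(u), \ldots, I_t^{N_t(u)}(u)$ be $N_t(u)$ samples from the distribution $\{\omega_t^i/\omega_t(u) : \xi_t^i \in u\}$ on the indices of the parents in $u$, where $(N_t(u))_{u \in \mathcal{B}}$ satisfies (3.2), and set $C_t^i = \#\{k : I_t^k(u) = i\}$. The children are defined by (3.1), with weights

$$\hat\omega_t^j = \frac{\omega_t(u)}{N_t(u)} \quad \text{if } \hat\xi_t^j \in u. \qquad (3.3)$$

Selections in distinct bins are conditionally independent.

• (Mutation step) Each child $\hat\xi_t^j$ independently evolves one time step as follows. Conditionally on $\hat{\mathcal{F}}_t$, the children evolve independently according to the Markov kernel $K$, becoming the next parents $\xi_{t+1}^1, \ldots, \xi_{t+1}^N$, with weights

$$\omega_{t+1}^j = \hat\omega_t^j, \qquad j = 1, \ldots, N. \qquad (3.4)$$

Then time advances, $t \leftarrow t + 1$. Stop if $t = T$, else return to the selection step.

Algorithm 5.1 outlines an optimization for the allocation $(N_t(u))_{u \in \mathcal{B}}$, and a procedure for choosing the bins $\mathcal{B}$ is in Algorithm 5.2. For the initialization, see Algorithm 5.3.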

Note that θT is simply the running average of f over the weighted ensemble up to time T − 1. In particular, (3.5) is not a time average over ancestral lines that survive up to time T − 1, but rather it is an average over the weighted ensemble at each time 0 ≤ t ≤ T − 1. Our time averages (3.5) require no replica storage and should have smaller variances than averages over surviving ancestral lines [4].

3.2. Consistency results.

The next results, from a companion article [4], show that weighted ensemble is unbiased and that weighted ensemble time averages converge. The latter does not in general follow from the former, as standard unbiased particle methods such as sequential Monte Carlo can have variance explosion [4]. (The proofs in [4] have $N_{\rm init} = N$, but they are easily modified for $N_{\rm init} \ne N$.)

Theorem 3.1 (from [4]).

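In Algorithm 3.1, suppose that the initial particles and weights are distributed as $\nu$, in the sense that

$$\mathbb{E}\Big[ \sum_{i=1}^{N_{\rm init}} \omega_0^i\, g(\xi_0^i) \Big] = \int g\,d\nu \qquad (3.6)$$

for all real-valued bounded functions $g$ on state space. Let $(\xi_t)_{t \ge 0}$ be a Markov chain with kernel $K$ and initial distribution $\xi_0 \sim \nu$. Then for any time $T > 0$,

$$\mathbb{E}\Big[ \sum_{i=1}^{N} \omega_T^i\, g(\xi_T^i) \Big] = \mathbb{E}[g(\xi_T)]$$

for all real-valued bounded functions $g$ on state space.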

Recall we assume K is uniformly geometrically ergodic [27].

Theorem 3.2 (from [4]).

Weighted ensemble is ergodic in the following sense:

$$\lim_{T \to \infty} \theta_T = \int f\,d\mu \quad \text{almost surely}.$$

Theorem 3.1 does not use ergodicity of K, though Theorem 3.2 obviously does.

3.3. Variance analysis.

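We will make use of the following analysis from [4] concerning the weighted ensemble variance. Define the Doob martingales [25]

$$D_t = \mathbb{E}[\theta_T \mid \mathcal{F}_t], \qquad \hat D_t = \mathbb{E}[\theta_T \mid \hat{\mathcal{F}}_t]. \qquad (3.7)$$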

The Doob decomposition in Theorem 3.3 below filters the variance through the σ-algebras $\mathcal{F}_t$ and $\hat{\mathcal{F}}_t$. It decomposes the variance into contributions from the initial condition and from each time step. This type of telescopic variance decomposition is quite standard in sequential Monte Carlo, although it is usually applied at the level of measures on state space, which corresponds to the infinite particle limit $N \to \infty$ [19]. We use the finite $N$ formula directly to minimize variance, building on ideas in [5].

Theorem 3.3 (from [4]).

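By Doob decomposition,

$$\Big(\theta_T - \int f\,d\mu\Big)^2 = \Big(D_0 - \int f\,d\mu\Big)^2 \qquad (3.8)$$

$$\qquad + \sum_{t=0}^{T-2} \mathbb{E}\big[(D_{t+1} - \hat D_t)^2 \,\big|\, \hat{\mathcal{F}}_t\big] \qquad (3.9)$$

$$\qquad + \sum_{t=0}^{T-2} \mathbb{E}\big[(\hat D_t - D_t)^2 \,\big|\, \mathcal{F}_t\big] + R_T, \qquad (3.10)$$

where $R_T$ is mean zero, $\mathbb{E}[R_T] = 0$.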

The terms on the right-hand side of (3.8), (3.9), and (3.10) yield the contributions to the variance of θT from, respectively, the initial condition, mutation steps, and selection steps of Algorithm 3.1. We thus refer to the summands of (3.9) and (3.10) as the mutation variance and selection variance of weighted ensemble.
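The origin of the three contributions is a telescoping identity. The short derivation below assumes that both sums in (3.9) and (3.10) run over $t = 0, \ldots, T-2$, with the mutation increment written $D_{t+1} - \hat D_t$, and uses the fact that $\theta_T$ depends only on parents up to time $T - 1$, so that $D_{T-1} = \theta_T$:

```latex
\theta_T - \int f\,d\mu
  = \Big(D_0 - \int f\,d\mu\Big)
  + \sum_{t=0}^{T-2} \big(D_{t+1} - \hat D_t\big)
  + \sum_{t=0}^{T-2} \big(\hat D_t - D_t\big).
```

Squaring and taking expectations, the cross terms vanish because each increment has zero conditional mean given the σ-algebra just before it, $\mathbb{E}[D_{t+1} - \hat D_t \mid \hat{\mathcal{F}}_t] = 0$ and $\mathbb{E}[\hat D_t - D_t \mid \mathcal{F}_t] = 0$. Replacing each squared increment by its conditional expectation only adds further mean-zero terms, which together with the cross terms make up $R_T$.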

Below, define

$$h_t(\xi) = \frac{1}{T} \sum_{s=0}^{T-t-1} K^s f(\xi), \qquad (3.11)$$

and for any function $g$ and probability distribution $\eta$ on state space, let

$$\mathrm{Var}_\eta\, g = \int g^2\,d\eta - \Big(\int g\,d\eta\Big)^2. \qquad (3.12)$$

Above and below, the dependence of $D_t$, $\hat D_t$, and $h_t$ on $T$ is suppressed.

Lemma 3.4 (from [4]).

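The mutation variance at time $t$ is

$$\mathbb{E}\big[(D_{t+1} - \hat D_t)^2 \,\big|\, \hat{\mathcal{F}}_t\big] = \sum_{j=1}^{N} (\hat\omega_t^j)^2 \Big[ K(h_{t+1}^2)(\hat\xi_t^j) - \big(Kh_{t+1}(\hat\xi_t^j)\big)^2 \Big]. \qquad (3.13)$$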

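To formulate the selection variance, we define, for each occupied bin $u$ and each parent $\xi_t^i \in u$,

$$\beta_t^i = \frac{N_t(u)\,\omega_t^i}{\omega_t(u)}, \qquad \delta_t(u) = N_t(u) - \sum_{i:\, \xi_t^i \in u} \lfloor \beta_t^i \rfloor,$$

and, when $\delta_t(u) > 0$, we let $\eta_t(u)$ be the probability distribution that assigns mass $(\beta_t^i - \lfloor \beta_t^i \rfloor)/\delta_t(u)$ to each parent $\xi_t^i \in u$. Here $\lfloor x \rfloor$ is the floor function, or the greatest integer less than or equal to $x$. Thus $\delta_t(u)$ is the number of children in $u$ that residual resampling draws at random, from the distribution $\eta_t(u)$, after each parent $\xi_t^i$ has deterministically received $\lfloor \beta_t^i \rfloor$ children. In Lemma 3.5, we assume that the $C_t^i$ in Algorithm 3.1 are obtained using residual resampling. See [26, 57] or Algorithm 8.1 in the appendix below for a description of residual resampling.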

Lemma 3.5 (from [4]).

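The selection variance at time $t$ is

$$\mathbb{E}\big[(\hat D_t - D_t)^2 \,\big|\, \mathcal{F}_t\big] = \sum_{u \in \mathcal{B}:\, \delta_t(u) > 0} \Big(\frac{\omega_t(u)}{N_t(u)}\Big)^2 \delta_t(u) \Big[ \int (Kh_{t+1})^2\, d\eta_t(u) - \Big(\int Kh_{t+1}\, d\eta_t(u)\Big)^2 \Big]. \qquad (3.14)$$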
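The Python sketch below illustrates residual resampling inside a single bin, together with the per-bin term of (3.14) for a generic vector `g` of values at the parents in place of $Kh_{t+1}$ (using a plain vector is an assumption made for illustration). The names `residual_resample` and `bin_selection_variance` are hypothetical; `w` holds the parent weights in the bin and `n` plays the role of $N_t(u)$.

```python
import numpy as np


def residual_resample(w, n, rng):
    """Residual resampling of n children from the parents in one bin.

    w: parent weights in the bin. Returns the child counts C^i.
    Parent i first receives floor(beta_i) children, with beta_i = n * w_i / sum(w);
    the remaining delta children are drawn multinomially from the residuals.
    """
    beta = n * w / w.sum()
    counts = np.floor(beta).astype(int)
    delta = n - counts.sum()                       # children left to draw at random
    if delta > 0:
        resid = beta - np.floor(beta)
        counts += rng.multinomial(delta, resid / resid.sum())
    return counts


def bin_selection_variance(w, n, g):
    """(omega(u)/n)^2 * delta * Var_eta(g): variance of the weighted sum of g over
    the children of one bin under residual resampling (per-bin term of (3.14))."""
    beta = n * w / w.sum()
    resid = beta - np.floor(beta)
    delta = n - int(np.floor(beta).sum())
    if delta == 0:
        return 0.0                                 # no random draws, no variance
    eta = resid / resid.sum()                      # residual distribution eta(u)
    var_eta = eta @ g**2 - (eta @ g) ** 2
    return (w.sum() / n) ** 2 * delta * var_eta


rng = np.random.default_rng(1)
w = np.array([0.5, 0.3, 0.2])
counts = residual_resample(w, 2, rng)              # parent 0 always gets one child
var = bin_selection_variance(w, 2, np.array([0.0, 1.0, 0.0]))
```

The deterministic floor part carries no randomness, so only the $\delta$ residual draws contribute to the variance; when every $\beta$ is an integer, nothing is resampled at random.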

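By definition (3.12), the variances in Lemmas 3.4 and 3.5 are rewritten as

$$\mathbb{E}\big[(D_{t+1} - \hat D_t)^2 \,\big|\, \hat{\mathcal{F}}_t\big] = \sum_{j=1}^{N} (\hat\omega_t^j)^2\, \mathrm{Var}_{K(\hat\xi_t^j, \cdot)}\, h_{t+1}, \qquad \mathbb{E}\big[(\hat D_t - D_t)^2 \,\big|\, \mathcal{F}_t\big] = \sum_{u \in \mathcal{B}:\, \delta_t(u) > 0} \Big(\frac{\omega_t(u)}{N_t(u)}\Big)^2 \delta_t(u)\, \mathrm{Var}_{\eta_t(u)}\, Kh_{t+1}.$$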

3.4. Poisson equation.

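Because we are interested in large time horizons $T$, below we will consider the mutation and selection variances in the limit $T \to \infty$. We will see that for any probability distribution $\eta$ on state space and any fixed $t$,

$$\lim_{T \to \infty} T^2\, \mathrm{Var}_\eta\, h_t = \mathrm{Var}_\eta\, h, \qquad \lim_{T \to \infty} T^2\, \mathrm{Var}_\eta\, Kh_t = \mathrm{Var}_\eta\, Kh,$$

where $h$ is the solution to the Poisson equation [40, 44]

$$(\mathrm{Id} - K)\, h = f - \int f\,d\mu, \qquad \int h\,d\mu = 0, \qquad (3.15)$$

where $\mathrm{Id}$ is the identity kernel. Existence and uniqueness of the solution $h$ easily follow from uniform geometric ergodicity of the Markov kernel $K$. Indeed, if $(\xi_t)_{t \ge 0}$ is a Markov chain with kernel $K$, then we can write

$$h(\xi) = \sum_{t=0}^{\infty} \Big( \mathbb{E}[f(\xi_t) \mid \xi_0 = \xi] - \mathbb{E}_{\xi_0 \sim \mu}[f(\xi_t)] \Big) = \lim_{T \to \infty} \Big( \mathbb{E}\Big[\sum_{t=0}^{T-1} f(\xi_t) \,\Big|\, \xi_0 = \xi\Big] - \mathbb{E}_{\xi_0 \sim \mu}\Big[\sum_{t=0}^{T-1} f(\xi_t)\Big] \Big), \qquad (3.16)$$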

where ξ0 ~ μ indicates ξ0 is initially distributed according to the steady state μ of K. Uniform geometric ergodicity and the Weierstrass M-test show that the sums in (3.16) converge absolutely and uniformly. As a consequence, h in (3.16) solves (3.15).
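For a finite-state chain, where $K$ is a row-stochastic matrix `P`, the Poisson equation (3.15) can be solved directly by linear algebra. The Python sketch below is an illustration under that finite-state assumption, not the estimation procedure of section 5, and the helper names are hypothetical. It relies on the standard fact that $\mathrm{Id} - P + \mathbf{1}\mu^{\mathsf T}$ is invertible for an irreducible chain; multiplying the system on the left by $\mu^{\mathsf T}$ shows that its solution automatically satisfies $\int h\,d\mu = 0$.

```python
import numpy as np


def stationary_distribution(P):
    """Stationary distribution mu of a row-stochastic matrix P (left eigenvector for 1)."""
    vals, vecs = np.linalg.eig(P.T)
    v = np.real(vecs[:, np.argmin(np.abs(vals - 1.0))])
    return v / v.sum()


def poisson_solution(P, f):
    """Solve (I - P) h = f - mu(f) with mu(h) = 0 for an irreducible finite chain."""
    n = P.shape[0]
    mu = stationary_distribution(P)
    A = np.eye(n) - P + np.outer(np.ones(n), mu)    # invertible for irreducible P
    h = np.linalg.solve(A, f - mu @ f)
    return h, mu


P = np.array([[0.9, 0.1, 0.0], [0.1, 0.8, 0.1], [0.0, 0.1, 0.9]])
f = np.array([0.0, 0.0, 1.0])
h, mu = poisson_solution(P, f)

# Cross-check against a truncated version of the series (3.16).
series, v = np.zeros(3), f.copy()
for _ in range(500):
    series += v - mu @ f        # add P^t f - mu(f)
    v = P @ v
```

In this toy chain the eigenvalues of `P` are 1, 0.9, and 0.7, so the truncated series converges geometrically and agrees with the direct solve.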

Interpreting (3.16), h(ξ) is the mean discrepancy of a time average of f(ξt) starting at ξ0 = ξ with a time average of f(ξt) starting at steady state ξ0 ~ μ. This discrepancy in the time averages has been normalized so that it has a nontrivial limit and, in particular, does not vanish, as time goes to infinity.
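The limit $\lim_{T \to \infty} T^2\, \mathrm{Var}_\eta\, h_t = \mathrm{Var}_\eta\, h$ follows from a one-line argument. The sketch below assumes $h_t = \frac{1}{T}\sum_{s=0}^{T-t-1} K^s f$ as in (3.11), holds $t$ fixed, and uses $\mathbb{E}[f(\xi_s) \mid \xi_0 = \xi] = K^s f(\xi)$ in (3.16):

```latex
T\,h_t - (T-t)\int f\,d\mu
  = \sum_{s=0}^{T-t-1}\Big(K^s f - \int f\,d\mu\Big)
  \xrightarrow[T\to\infty]{} h \quad \text{uniformly},
\qquad\text{so}\qquad
T^2\,\mathrm{Var}_\eta\, h_t
  = \mathrm{Var}_\eta\Big(T h_t - (T-t)\int f\,d\mu\Big)
  \xrightarrow[T\to\infty]{} \mathrm{Var}_\eta\, h .
```

The middle equality holds because a variance is unchanged when a constant is added to its argument. The same argument applies to $Kh_t$, since $\sup|Kg - Kg'| \le \sup|g - g'|$ for any Markov kernel $K$.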

The Poisson solution $h$ is critical for understanding and estimating the weighted ensemble variance. In addition, $h$ can be used to identify model features, such as sets that are metastable for the underlying Markov chain defined by $K$, as well as narrow pathways between these sets. Metastable sets are, roughly speaking, regions of state space in which the Markov chain tends to become trapped.

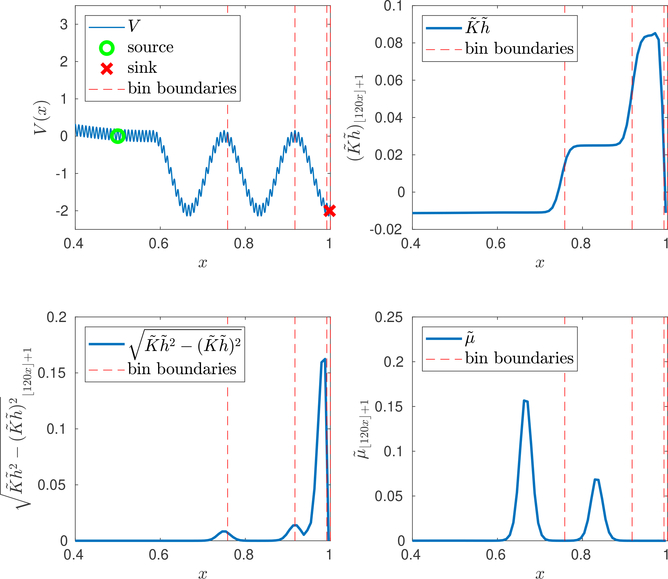

To understand the behavior of $h$, we define metastable sets more precisely. A region $R$ in state space is metastable for $K$ if $(\xi_t)_{t \ge 0}$ tends to equilibrate in $R$ much faster than it escapes from $R$. The rate of equilibration in $R$ can be understood in terms of the quasi-stationary distribution [14] in $R$. See, e.g., [39] for more discussion on metastability. The Poisson solution $h$ tends to be nearly constant in regions that are metastable for $K$. This is because the mean discrepancy in a time average of $f(\xi_t)$ over two copies of $(\xi_t)_{t \ge 0}$ with different starting points in the same metastable set $R$ is small: both copies tend to reach the same quasi-stationary distribution in $R$ before escaping from $R$. In the regions between metastable sets, however, $h$ tends to vary sharply, so that the local variance $\mathrm{Var}_{K(\xi, \cdot)}\, h$ tends to be large there. If $f$ is a characteristic or indicator function, this variance tends to be larger the closer these regions are to the support of $f$ (the set where $f = 1$). More generally, the local variance of $h$ is larger near regions $R$ where the stationary average of $f$ is large. See Figure 6.1 for an illustration of these features.

Fig. 6.1.

Using Algorithm 5.2 to compute the weighted ensemble bins when the number of bins is $M = 4$. We use $10^6$ iterations of Algorithm 5.2 with $\alpha = 10^5$. Top left: Potential energy $V$ and bin boundaries when $M = 4$. Top right: The vector $\tilde K \tilde h$ defining the objective function in Algorithm 5.2, where $\tilde h$ is the approximate Poisson solution. Note that $\tilde K \tilde h$ is nearly constant on each superbasin. Bottom left: (square root of) the vector $\tilde K(\tilde h^2) - (\tilde K \tilde h)^2$ involved in the mutation variance optimization in Algorithm 5.1. Bottom right: Approximate steady-state distribution $\tilde\mu$. All plots have been cropped at $x > 0.4$, where the values of $\tilde\mu$ and $\tilde K(\tilde h^2) - (\tilde K \tilde h)^2$ are negligible and $\tilde K \tilde h$ is nearly constant.

4. Minimizing the variance.

Our strategy for minimizing the variance is based on choosing the particle allocation to minimize mutation variance and picking the bins to mitigate selection variance. Minimizing mutation variance is closely connected with minimizing a certain sensitivity; see the appendix below. Both strategies require some coarse estimates of K and h. We propose using ideas from Markov state modeling to construct microbins from which we estimate K, h. The microbins can be significantly smaller than the weighted ensemble bins, as we discuss below.

4.1. Resampling times.

Recall that weighted ensemble is fully characterized by the choice of resampling times, bins, and particle allocation. Though we focus on the latter two here, we briefly comment on the former. The resampling times are implicit in our framework. We assume here that $K = K_{\Delta t}$ is a $\Delta t$-skeleton of an underlying Markov process, that is, the sequence of values of the underlying process at time intervals $\Delta t$. In this setup, $\Delta t$ is a fixed resampling time, and we are ignoring the time discretization. (Actually, the resampling times need not be fixed: they can be any times at which the underlying process has the strong Markov property [5]. In practice, the underlying process must be discretized in time, and weighted ensemble is used with the discretized process.)

Suppose that the underlying Markov process is one of those mentioned in the introduction: either Langevin dynamics, or a reaction network modeled by a continuous time Markov chain on a finite state space. Suppose, moreover, that microbins in continuous state space are domains with piecewise smooth boundaries (for instance, Voronoi regions), and that the bins are unions of microbins. Then the underlying process does not cross between distinct microbins, or between distinct bins, infinitely often in finite time. As a result, weighted ensemble should not degenerate in the limit $\Delta t \to 0$, as we now argue.

Consider the variance from selection in Lemma 3.5. By (3.3), after the selection step all the children in each bin $u$ have the same weight $\omega_t(u)/N_t(u)$. If $\Delta t$ is very small, then almost none of the children move to different microbins or bins in the mutation step. If no children change bins, then for residual resampling in the selection step at the next time $t$, provided the allocation has not changed, we have $N_t(u)\,\omega_t^i/\omega_t(u) = 1$ whenever $\xi_t^i \in u$, for all $i = 1, \ldots, N$. (Note that with our optimal allocation strategy in Algorithm 5.1, if we avoid unnecessary resampling of $(N_t(u))_{u \in \mathcal{B}}$, then $(N_t(u))_{u \in \mathcal{B}}$ does not change unless particles move between microbins.) Thus from Lemma 3.5 there is zero selection variance and, in fact, no resampling occurs in the selection step: each parent simply has one child. Provided particles do not cross bins or microbins infinitely often in finite time, and the allocation only changes when particles move between microbins, this suggests there is no variance blowup as $\Delta t \to 0$. We therefore expect that the resampling interval $\Delta t$ should be driven not by variance cost but by computational cost, e.g., processor communication cost.

4.2. Minimizing mutation variance.

The mutation variance depends on the choice of weighted ensemble bins as well as the particle allocation at each time t. In this section we focus on the particle allocation for an arbitrary choice of bins. To understand this relationship between the allocation and mutation variance, following ideas from [5], we look at the mutation variance visible before selection. It is so named because, unlike the mutation variance in Lemma 3.4, it is a function of quantities ωt(u), Nt(u), that are known at time t before selection.

Proposition 4.1.

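The mutation variance visible before selection satisfies

$$\lim_{T \to \infty} T^2\, \mathbb{E}\big[(D_{t+1} - \hat D_t)^2 \,\big|\, \mathcal{F}_t\big] = \sum_{u \in \mathcal{B}:\, \omega_t(u) > 0} \frac{\omega_t(u)}{N_t(u)} \sum_{i:\, \xi_t^i \in u} \omega_t^i\, \mathrm{Var}_{K(\xi_t^i, \cdot)}\, h,$$

where $h$ is defined in (3.15).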

Proof.

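By definition of the selection step (see Algorithm 3.1), for any bounded function $g$ and any occupied bin $u$,

$$\mathbb{E}\Big[ \sum_{j:\, \hat\xi_t^j \in u} g(\hat\xi_t^j) \,\Big|\, \mathcal{F}_t \Big] = \frac{N_t(u)}{\omega_t(u)} \sum_{i:\, \xi_t^i \in u} \omega_t^i\, g(\xi_t^i). \qquad (4.1)$$

From Lemma 3.4, the child weights (3.3), and (4.1),

$$\mathbb{E}\big[(D_{t+1} - \hat D_t)^2 \,\big|\, \mathcal{F}_t\big] = \sum_{u \in \mathcal{B}:\, \omega_t(u) > 0} \frac{\omega_t(u)}{N_t(u)} \sum_{i:\, \xi_t^i \in u} \omega_t^i\, \mathrm{Var}_{K(\xi_t^i, \cdot)}\, h_{t+1}. \qquad (4.2)$$

In light of (3.11) and (3.16), and using the fact that

$$T h_{t+1} - (T - t - 1)\int f\,d\mu = \sum_{s=0}^{T-t-2} \Big( K^s f - \int f\,d\mu \Big) \xrightarrow[T \to \infty]{} h \quad \text{uniformly},$$

while $\mathrm{Var}_\eta$ is unchanged when a constant is added to its argument, we get the result from letting $T \to \infty$. ▯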
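For a finite chain with transition matrix `P` and a precomputed Poisson solution `h` (both assumptions made for illustration), the right-hand side of Proposition 4.1 is a short computation. The Python sketch below, with the hypothetical name `limiting_mutation_variance`, evaluates it for a given ensemble and integer allocation. The vector `var_kh` of local variances $\mathrm{Var}_{K(x, \cdot)}\, h = K(h^2)(x) - (Kh(x))^2$ is the quantity that enters the allocation rule derived from this proposition.

```python
import numpy as np


def limiting_mutation_variance(P, h, states, weights, bin_of, alloc):
    """Right-hand side of Proposition 4.1 for a finite chain:
    sum over occupied bins u of omega(u)/N(u) * sum_{i in u} omega^i Var_{K(xi^i, .)} h.

    alloc[u] is the number of children N_t(u) assigned to bin label u.
    """
    var_kh = P @ h**2 - (P @ h) ** 2             # Var_{K(x,.)} h at every state x
    labels = bin_of[states]
    total = 0.0
    for u in np.unique(labels):                   # occupied bins only
        idx = labels == u
        w_u = weights[idx].sum()
        total += w_u / alloc[u] * np.dot(weights[idx], var_kh[states[idx]])
    return total


# Toy example: h = (1, -1) solves the Poisson equation for this P with f = (1, -1).
P = np.array([[0.5, 0.5], [0.5, 0.5]])
h = np.array([1.0, -1.0])                         # Var_{K(x,.)} h = 1 for both states
value = limiting_mutation_variance(P, h, np.array([0, 1]), np.array([0.5, 0.5]),
                                   bin_of=np.array([0, 0]), alloc=np.array([2]))
```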

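We let $T \to \infty$ since we are interested in long-time averages. The resulting formulas are simpler, as they involve $h$ instead of $h_t$, and they allow for strategies to estimate fixed optimal bins before beginning weighted ensemble simulations, which we discuss more below. Thus, to minimize the limiting mutation variance, we consider the following optimization:

$$\min_{(N_t(u))_{u \in \mathcal{B}}}\ \sum_{u \in \mathcal{B}:\, \omega_t(u) > 0} \frac{\omega_t(u)}{N_t(u)} \sum_{i:\, \xi_t^i \in u} \omega_t^i\, \mathrm{Var}_{K(\xi_t^i, \cdot)}\, h \quad \text{subject to} \quad \sum_{u \in \mathcal{B}} N_t(u) = N, \qquad (4.3)$$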

where the bins are fixed, and we temporarily allow the allocation to be noninteger. A Lagrange multiplier calculation shows that the solution to (4.3) is

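$$N_t(u) = N\, \frac{\sqrt{\omega_t(u) \sum_{i:\, \xi_t^i \in u} \omega_t^i\, \mathrm{Var}_{K(\xi_t^i, \cdot)}\, h}}{\sum_{u' \in \mathcal{B}} \sqrt{\omega_t(u') \sum_{i:\, \xi_t^i \in u'} \omega_t^i\, \mathrm{Var}_{K(\xi_t^i, \cdot)}\, h}}, \qquad (4.4)$$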

provided the denominator above is nonzero. Note this solution is idealized, as Nt(u) must always be an integer, and h and K are not known exactly. We explain a practical implementation of (4.4) in Algorithm 5.1.
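The Lagrange multiplier calculation behind (4.4) is short. Here $a_u \ge 0$ denotes the coefficient of $1/N_t(u)$ in (4.3), and the $N_t(u) > 0$ are treated as continuous variables:

```latex
a_u = \omega_t(u) \sum_{i:\,\xi_t^i \in u} \omega_t^i\, \mathrm{Var}_{K(\xi_t^i,\cdot)}\, h,
\qquad
\mathcal{L} = \sum_{u} \frac{a_u}{N_t(u)} + \lambda\Big(\sum_{u} N_t(u) - N\Big),
\\
\frac{\partial \mathcal{L}}{\partial N_t(u)} = -\frac{a_u}{N_t(u)^2} + \lambda = 0
\;\Longrightarrow\; N_t(u) = \sqrt{a_u/\lambda},
\qquad
\sum_u N_t(u) = N \;\Longrightarrow\; \sqrt{\lambda} = \frac{1}{N}\sum_{u'} \sqrt{a_{u'}},
\\
N_t(u) = N\,\frac{\sqrt{a_u}}{\sum_{u'} \sqrt{a_{u'}}},
\qquad
\min \sum_u \frac{a_u}{N_t(u)} = \frac{1}{N}\Big(\sum_u \sqrt{a_u}\Big)^2 .
```

Since each $a_u/N_t(u)$ is convex in $N_t(u) > 0$, this stationary point is the minimizer. The minimal value also shows that, for fixed $a_u$, the optimized limiting mutation variance decreases like $1/N$.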

Our choice of the particle allocation will be based on (4.4); see Algorithm 5.1. Notice that at each time t we only minimize one term, the summand in (3.9) corresponding to the mutation variance at time t in the Doob decomposition in Theorem 3.3. Later, when we optimize bins, we will optimize the summand in (3.10) corresponding to the selection variance at time t. In particular, we only minimize the mutation and selection variances at the current time, and not the sum of these variances over all times. In the T → ∞ limit, we expect that weighted ensemble reaches a steady state, provided the bin choice and allocation strategy (e.g., from Algorithms 5.1 and 5.2) do not change over time. If the weighted ensemble indeed reaches a steady state, then the mutation and selection variances become stationary in t, making it reasonable to minimize them only at the current time. (Under appropriate conditions, the variances in (3.9)–(3.10) should also become independent over time t in the N → ∞ limit. See [19] for related results in the context of sequential Monte Carlo.)
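Because $N_t(u)$ must be an integer with $N_t(u) \ge 1$ in occupied bins, (4.4) has to be rounded in practice. The Python sketch below shows one possible rounding, under assumptions made only for this illustration and not necessarily matching Algorithm 5.1: each of the $k$ occupied bins first receives one child, the remaining $N - k$ children are split in proportion to the square-root scores of (4.4), and leftovers go to the bins with the largest fractional parts. The inputs `bin_weight` ($\omega_t(u)$) and `mut_score` ($\sum_{i:\,\xi_t^i \in u} \omega_t^i\, \mathrm{Var}_{K(\xi_t^i, \cdot)}\, h$, which in practice would come from microbin estimates) and the name `allocate_children` are hypothetical.

```python
import numpy as np


def allocate_children(bin_weight, mut_score, N):
    """Integer particle allocation based on the idealized formula (4.4).

    bin_weight[u] = omega_t(u); mut_score[u] = sum_{i in u} omega_t^i Var_{K(xi_t^i,.)} h.
    Rounding rule (an assumption): one child per occupied bin, then the remaining
    N - k children split proportionally to sqrt(bin_weight * mut_score), with
    leftovers assigned by largest fractional part.
    """
    occupied = bin_weight > 0
    k = int(occupied.sum())
    if k > N:
        raise ValueError("need at least one child per occupied bin")
    score = np.where(occupied, np.sqrt(bin_weight * mut_score), 0.0)
    if score.sum() == 0:                       # denominator in (4.4) vanishes
        score = occupied.astype(float)         # fall back to uniform over occupied bins
    ideal = (N - k) * score / score.sum()      # noninteger share of the remaining children
    alloc = occupied.astype(int) + np.floor(ideal).astype(int)
    leftover = N - alloc.sum()
    order = np.argsort(-(ideal - np.floor(ideal)))   # largest fractional parts first
    alloc[order[:leftover]] += 1
    return alloc


# Bin 2 is occupied but has zero score, so it keeps its single child; bin 3 is empty.
alloc = allocate_children(np.array([0.5, 0.3, 0.2, 0.0]), np.array([0.01, 0.2, 0.0, 0.0]), 10)
```

The bin with the larger local variance of $h$ (bin 1 here) receives most of the children even though it carries less weight, which is the behavior (4.4) is meant to produce.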

Note the term $\mathrm{Var}_{K(\xi_t^i, \cdot)}\, h$ appearing in (4.4). As discussed in section 3, this variance tends to be large in regions between metastable sets. The optimal allocation (4.4) favors putting children in such regions, increasing the likelihood that their descendants will visit both of the adjacent metastable sets. See the appendix for a connection between minimizing mutation variance and minimizing the sensitivity of the stationary distribution $\mu$ to perturbations of $K$.

4.3. Mitigating selection variance.

We begin by observing that if bins are small, then so is the selection variance. In particular, if each bin is a single point in state space, then Lemma 3.5 shows that the selection variance is zero. One way, then, to get small selection variance is to have a lot of bins. When simulations of the underlying Markov chain are very expensive, however, we cannot afford a large number of bins; see Remark 5.1 in section 5 below. As a result the bins are not so small, and we investigate the selection variance to decide how to construct them.

Lemma 4.2.

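The selection variance at time $t \ge 1$ satisfies

$$\lim_{T \to \infty} T^2\, \mathbb{E}\big[(\hat D_t - D_t)^2 \,\big|\, \mathcal{F}_t\big] = \sum_{u \in \mathcal{B}:\, \delta_t(u) > 0} \Big(\frac{\omega_t(u)}{N_t(u)}\Big)^2 \delta_t(u)\, \mathrm{Var}_{\eta_t(u)}\, Kh, \qquad (4.5)$$

where $\delta_t(u)$ and $\eta_t(u)$ are as in Lemma 3.5 and $h$ is defined in (3.15).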

Proof.

From Lemma 3.5, (3.11), and (3.16), letting T → ∞ gives the result. ▯

We choose to mitigate selection variance by choosing bins so that (4.5) is small. This likely requires some sort of search in bin space. For simplicity, we assume that the bins do not change in time and are chosen at the start of Algorithm 3.1. Of course it is possible to update bins adaptively, at a frequency that depends on how the cost of bin searches compares to that of particle evolution.

We will minimize an agnostic variant of (4.5), for which we make no assumptions about $N_t(u)$, $\omega_t(u)$, and $\delta_t(u)$. This allows us to optimize fixed bins using a time-independent objective function, without taking the particle allocation into account. Our agnostic optimization is also not specific to residual resampling. Indeed, though the precise formula for the selection variance depends on the resampling method, the selection variance should always contain terms of the form $\mathrm{Var}_\eta\, Kh$, where the $\eta$ are probability distributions in the individual weighted ensemble bins; see [4]. It is exactly these terms that we choose to minimize.

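Thus, for our agnostic selection variance minimization, we let $\eta_u$ be the uniform distribution in bin $u$, and consider the following problem:

$$\text{minimize} \quad \sum_{u \in \mathcal{B}} \mathrm{Var}_{\eta_u}(Kh) \qquad (4.6)$$

over choices of the collection $\mathcal{B}$ of bins, subject to the constraint $\#\mathcal{B} = M$.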

Like (4.3), this is idealized because we cannot directly access K or h. We describe a practical implementation of (4.6) in Algorithm 5.2. Informally, solutions to (4.6) are characterized by the property that, inside each individual bin u, the value of Kh does not change very much.

Our choice of bins is based on (4.6). Here M is the desired total number of bins. Our agnostic perspective leads us to use the uniform distribution in each bin u. When bins are formed from combinations of a fixed collection of microbins, (4.6) is a discrete optimization problem that usually lacks a closed form solution. Algorithm 5.2 below solves a discrete version of (4.6) by simulated annealing. Because of the similarity of (4.6) with the k-means problem [41], we expect that there are more efficient methods, but this is not the focus of the present work.
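To make the discrete version of (4.6) concrete: when bins are unions of microbins and Kh is replaced by one value per microbin, the objective becomes a sum over bins of the population variance of those values within each bin. The sketch below assumes equally sized microbins (as in section 6), so that the uniform distribution on a bin becomes the uniform distribution on its microbins; the function name and the vector `g` are hypothetical. It illustrates the informal characterization above: bins that group similar values of Kh give a much smaller objective than bins that mix very different values.

```python
import numpy as np

def agnostic_selection_objective(g, labels):
    """Sum over bins of the population variance of g over the microbins in each bin.

    g      : length-P array, one value of (an approximation of) Kh per microbin
    labels : length-P integer array, labels[p] = bin containing microbin p
    """
    g = np.asarray(g, dtype=float)
    labels = np.asarray(labels)
    # np.var uses the 1/n (population) normalization by default, which matches
    # a uniform distribution over the microbins of each bin.
    return float(sum(np.var(g[labels == u]) for u in np.unique(labels)))

# Hypothetical values of Kh on 6 microbins.
g = np.array([0.0, 0.1, 0.1, 2.0, 2.1, 5.0])
grouped = np.array([0, 0, 0, 1, 1, 2])     # each bin holds similar values of g
scrambled = np.array([0, 1, 2, 0, 1, 2])   # each bin mixes very different values
print(agnostic_selection_objective(g, grouped))    # about 0.0047
print(agnostic_selection_objective(g, scrambled))  # about 8.0
```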

5. Microbins and parameter choice.

To approximate the solutions to (4.3) and (4.6), we use microbins to gain information about K and h. The collection of microbins is a finite partition of state space that refines the collection of weighted ensemble bins, in the sense that every bin is a union of microbins. Thus each bin consists of a number of microbins, and each microbin is inside exactly one bin. The idea is to use exploratory simulations, over short time horizons, to approximate K and h by observing transitions between microbins.

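In more detail, we estimate the probability to transition from microbin p to microbin q by the (p, q) entry of a matrix $\hat K$,

$$\hat K_{pq} = \frac{\int_p K(x, q)\,\nu(dx)}{\nu(p)}, \qquad (5.1)$$

and we estimate f on microbin p by the pth entry of a vector $\hat f$,

$$\hat f_p = \frac{\int_p f(x)\,\nu(dx)}{\nu(p)}. \qquad (5.2)$$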

Here ν is some convenient measure, for instance an empirical measure obtained from preliminary weighted ensemble simulations. This strategy echoes work in the Markov state model community [35, 45, 48, 49].

For small enough microbins, we could replace ν in (5.1) with any other measure without changing too much the value of the estimates on the right-hand sides of (5.1) and (5.2). Moreover, if f is the characteristic function of a microbin, then ν could be replaced with any measure supported in microbin p without changing at all the value of the right-hand side of (5.2). This is the case for the mean first passage time problem mentioned in the introduction, and fleshed out in the numerical example in section 6; there, f is the characteristic function of the target set, which can be chosen to be a microbin.
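In practice the ν-averages in (5.1) and (5.2) are replaced by sample averages over exploratory data. A minimal sketch, assuming we have start points x_i drawn from ν (possibly weighted, as for an empirical weighted ensemble measure), the microbin of each x_i, the microbin reached after one step of K, and the values f(x_i); all names and the toy data are hypothetical.

```python
import numpy as np

def estimate_microbin_model(start_bins, end_bins, f_values, num_microbins, weights=None):
    """Weighted Monte Carlo estimates of K_hat (P x P) and f_hat (length P).

    start_bins[i] : microbin of a start point x_i sampled from nu
    end_bins[i]   : microbin reached after one step of K from x_i
    f_values[i]   : f(x_i)
    weights[i]    : weight of x_i under nu (defaults to 1)
    """
    start_bins = np.asarray(start_bins)
    end_bins = np.asarray(end_bins)
    f_values = np.asarray(f_values, dtype=float)
    w = np.ones(len(start_bins)) if weights is None else np.asarray(weights, dtype=float)
    P = num_microbins
    counts = np.zeros((P, P))
    np.add.at(counts, (start_bins, end_bins), w)        # weighted transition counts p -> q
    mass = counts.sum(axis=1)                            # proportional to nu(p)
    K_hat = np.divide(counts, mass[:, None], out=np.zeros_like(counts),
                      where=mass[:, None] > 0)           # rows of unvisited microbins stay 0
    f_sum = np.bincount(start_bins, weights=w * f_values, minlength=P)
    f_hat = np.divide(f_sum, mass, out=np.zeros(P), where=mass > 0)
    return K_hat, f_hat

# Toy data (hypothetical): 3 microbins, 8 sampled one-step transitions.
start = np.array([0, 0, 0, 0, 1, 1, 2, 2])
end = np.array([0, 0, 1, 1, 1, 2, 2, 2])
f_vals = (start == 2).astype(float)      # f = characteristic function of microbin 2
K_hat, f_hat = estimate_microbin_model(start, end, f_vals, num_microbins=3)
```

When f is the characteristic function of a microbin, as here, each entry of `f_hat` is exactly 0 or 1 whatever the samples, matching the remark above about (5.2).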

Algorithm 5.1.

Optimizing the particle allocation.

| Given the particles and weights at t = 0 or at t ≥ 1: | ||

| • Define the following approximate solution to (4.3): | ||

| ||

| where is the microbin containing . | ||

| • Let count the occupied bins, | ||

|

| ||

| • Let be samples from the distribution . | ||

| • In Algorithm 3.1, define the particle allocation as | ||

|

| ||

| If the denominator of (5.3) is 0, we set . |

With $\hat f$ and the microbin-to-microbin transition matrix $\hat K$ in hand, we can obtain an approximate solution $\hat h$ to the Poisson equation (3.15), simply by replacing K, f, and μ in that equation with, respectively, $\hat K$, $\hat f$, and the stationary distribution $\hat\mu$ of $\hat K$ (we assume $\hat K$ is aperiodic and irreducible). That is, $\hat h$ solves

$$(I - \hat K)\hat h = \hat f - (\hat\mu^T \hat f)\mathbf{1}, \qquad \hat\mu^T \hat h = 0, \qquad (5.4)$$

where $I$ and $\mathbf{1}$ are the identity matrix and all-ones column vector of the appropriate sizes, and $\hat h$, $\hat f$, and $\hat\mu$ are column vectors. Then we can approximate the solutions to (4.3) and (4.6) by simply replacing K and h in those optimization problems with $\hat K$ and $\hat h$. See Algorithms 5.1 and 5.2 for details. We can also use $\hat\mu$ to initialize weighted ensemble; see Algorithm 5.3.
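A minimal NumPy sketch of this step, assuming the Poisson equation has the standard form $(I - \hat K)\hat h = \hat f - (\hat\mu^T\hat f)\mathbf{1}$ with $\hat\mu^T\hat h = 0$. It uses the fact that, for irreducible $\hat K$, the matrix $I - \hat K + \mathbf{1}\hat\mu^T$ is invertible and any solution of the resulting linear system automatically satisfies $\hat\mu^T\hat h = 0$. The function names and the 3-state example matrix are hypothetical.

```python
import numpy as np

def stationary_distribution(K):
    """Stationary distribution mu of an irreducible stochastic matrix K (mu^T K = mu^T, sum mu = 1)."""
    P = K.shape[0]
    # The balance equations (I - K)^T mu = 0 have one redundant row;
    # replace the last one with the normalization sum(mu) = 1.
    A = np.vstack([(np.eye(P) - K).T[:-1], np.ones(P)])
    b = np.zeros(P)
    b[-1] = 1.0
    return np.linalg.solve(A, b)

def poisson_solution(K, f):
    """Solve (I - K) h = f - (mu^T f) 1 subject to mu^T h = 0."""
    P = K.shape[0]
    mu = stationary_distribution(K)
    ones = np.ones(P)
    # Multiplying this system on the left by mu^T shows that mu^T h = 0,
    # after which it reduces to the Poisson equation itself.
    h = np.linalg.solve(np.eye(P) - K + np.outer(ones, mu), f - (mu @ f) * ones)
    return mu, h

# Hypothetical 3-microbin chain; f is the characteristic function of microbin 2.
K_hat = np.array([[0.9, 0.1, 0.0],
                  [0.1, 0.8, 0.1],
                  [0.0, 0.1, 0.9]])
f_hat = np.array([0.0, 0.0, 1.0])
mu_hat, h_hat = poisson_solution(K_hat, f_hat)
```

Since this example matrix is symmetric, and hence doubly stochastic, its stationary distribution is uniform, which makes the output easy to check by hand.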

Algorithm 5.2.

Optimizing the bins.

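Choose an initial collection $\mathcal{B}$ of bins. Define an objective function on the bin space,

$$\mathcal{O}(\mathcal{B}) = \sum_{u \in \mathcal{B}} \mathrm{Var}\big((\hat K \hat h)_u\big),$$

where $(\hat K \hat h)_u$ is the restriction of $\hat K \hat h$ to the microbins in $u$, and Var is the usual vector population variance. Choose an annealing parameter α > 0, set $\mathcal{B}_{\min} = \mathcal{B}$, and iterate the following for a user-prescribed number of steps:

1. Perturb $\mathcal{B}$ to get new bins $\tilde{\mathcal{B}}$ (say, by moving a microbin from one bin to another bin).

2. With probability $\min\{1, \exp[\alpha(\mathcal{O}(\mathcal{B}) - \mathcal{O}(\tilde{\mathcal{B}}))]\}$, set $\mathcal{B} = \tilde{\mathcal{B}}$.

3. If $\mathcal{O}(\mathcal{B}) < \mathcal{O}(\mathcal{B}_{\min})$, then update $\mathcal{B}_{\min} = \mathcal{B}$. Return to step 1.

Once the bin search is complete, the output is $\mathcal{B}_{\min}$.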
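A minimal Python sketch of the bin search in Algorithm 5.2, specialized (as an assumption) to the setting of section 6: microbins are ordered along a line, bins are connected and are described by cut points, a perturbation moves one microbin across a bin boundary, and a proposal is accepted with probability min{1, exp(α(O_old − O_new))}. The function names, the toy vector `g` standing in for $\hat K\hat h$, and the parameter values are hypothetical; the sketch requires 2 ≤ M ≤ number of microbins.

```python
import numpy as np

def segment_objective(g, cuts):
    """Sum over bins of the population variance of g; bin k is g[cuts[k]:cuts[k+1]]."""
    return sum(np.var(g[a:b]) for a, b in zip(cuts[:-1], cuts[1:]))

def anneal_connected_bins(g, M, alpha, n_steps, seed=0):
    """Simulated-annealing sketch of a search over M connected bins on a 1D chain of
    microbins. g[p] plays the role of (K_hat @ h_hat)[p]."""
    rng = np.random.default_rng(seed)
    P = len(g)
    cuts = np.linspace(0, P, M + 1).round().astype(int)  # initial: near-uniform bins
    obj = segment_objective(g, cuts)
    best_cuts, best_obj = cuts.copy(), obj
    for _ in range(n_steps):
        # Step 1: move one interior boundary, i.e., move one microbin to a neighboring bin.
        new = cuts.copy()
        k = rng.integers(1, M)                 # interior boundary index in 1..M-1
        new[k] += rng.choice([-1, 1])
        if new[k] <= new[k - 1] or new[k] >= new[k + 1]:
            continue                           # would empty a bin; discard this proposal
        new_obj = segment_objective(g, new)
        # Step 2: accept with probability min{1, exp(alpha * (obj - new_obj))}.
        if rng.random() < np.exp(min(0.0, alpha * (obj - new_obj))):
            cuts, obj = new, new_obj
        # Step 3: keep track of the best bins seen so far.
        if obj < best_obj:
            best_cuts, best_obj = cuts.copy(), obj
    return best_cuts, best_obj

# Toy landscape with three plateaus of unequal length (hypothetical values).
g_demo = np.concatenate([np.zeros(6), np.ones(3), 3.0 * np.ones(3)])
cuts, val = anneal_connected_bins(g_demo, M=3, alpha=50.0, n_steps=5000, seed=0)
```

Here the best bins should be the three plateaus, cut points [0, 6, 9, 12], where the objective vanishes. Restricting moves to boundary shifts keeps every bin connected, which is the extra condition imposed in section 6.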

We have in mind that the microbins are constructed using ideas from Markov state modeling [35, 45, 48, 49]. In this setup, the microbins are simply the Markov states. These could be determined from a clustering analysis (e.g., using k-means [41]) of preliminary weighted ensemble simulations with short time horizons. The resulting Markov state model can be crude: it will be used only for choosing parameters, and weighted ensemble is unbiased no matter the parameters. Indeed, if our Markov state model were very refined, it could be used directly to estimate $\int f\,d\mu$. In practice, we expect that our crude model would estimate $\int f\,d\mu$ with significant bias. In our formulation, a bad set of parameters may lead to large variance, but there is never any bias. In short, the Markov state model should be good enough to pick reliable weighted ensemble parameters, but not necessarily good enough to accurately estimate $\int f\,d\mu$.

Remark 5.1.

We distinguish microbins from bins because the number of weighted ensemble bins is limited by the number of particles we can afford to simulate over the time horizon needed to reach μ. As an extreme case, suppose we have many more bins than particles, so that all bins contain 0 or 1 particles at almost every time. Then, because Algorithm 3.1 requires at least one child per occupied bin, parents almost never have more than one child, and we recover direct Monte Carlo. This condition, that the collection of parents in a given bin must have at least one child, is essential for the stability of long-time calculations [4]. As a result, too many bins lead to poor weighted ensemble performance. A very rough rule of thumb is that the number M of bins should not be much larger than the number N of particles.

The number of weighted ensemble bins is limited by the number N of particles. (In the references in the introduction, N is usually on the order of $10^2$ to $10^3$.) The number of microbins, on the other hand, is limited primarily by the cost of the sampling that produces $\hat K$ and $\hat f$. The microbins could be computed by postprocessing exploratory weighted ensemble data generated using larger bins. The quality of the microbin-to-microbin transition matrix $\hat K$ depends on the microbins and on the number of particles used in these exploratory simulations. But these exploratory simulations, compared to our steady-state weighted ensemble simulations, can use more particles, since their time horizons can be much shorter. As a result, the number of microbins can be much greater than the number of bins.

In Algorithm 5.2, we could enforce the additional condition that the bins must be connected regions in state space. We do this in our implementation of Algorithm 5.2 in section 6 below. Traditionally, bins are connected, not-too-elongated regions, e.g., Voronoi regions. Bins are chosen this way because resampling in bins with distant particles can lead to a large variance in the weighted ensemble. However, since we are employing weighted ensemble only to compute $\int f\,d\mu$ for a single observable f, a large variance in the full ensemble can be tolerated so long as the variance associated with estimating $\int f\,d\mu$ is still small. This could be achieved with disconnected or elongated bins, or even a nonspatial assignment of particles to bins (based, e.g., on the values of $\hat K\hat h$ on the microbins containing the particles). We leave a more complete investigation to future work.

5.1. Initialization.

Note that the steady state $\hat\mu$ of $\hat K$ can be used to precondition the weighted ensemble simulations, so that they start closer to the true steady state. This basically amounts to adjusting the weights of the initial particles so that they match $\hat\mu$. This is called reweighting in the weighted ensemble literature [8, 15, 51, 64]. One way to do this is the following. Take initial particles and weights from the preliminary simulations that define $\hat K$, $\hat\mu$, $\hat f$, and $\hat h$. These initial particles can be a large subsample from these simulations; in particular, the initial number of particles Ninit can be much greater than the number N of particles in the weighted ensemble simulations. (The large number can be obtained by sampling at different times along the preliminary simulation particle ancestral lines.) We require that there is at least one initial particle in each microbin. The weights of these particles are adjusted using $\hat\mu$ to get new weights, such that the total adjusted weight in each microbin matches the value of $\hat\mu$ on that microbin. Then these Ninit ≫ N initial particles are fed into the first (t = 0) selection step of Algorithm 3.1. This selection step prunes the number of particles to a manageable number, N, for the rest of the main weighted ensemble simulation. See Algorithm 5.3 for a precise description of this initialization.

Algorithm 5.3.

Initializing weighted ensemble.

After the preliminary simulations that produce a collection of particles $\xi^1, \ldots, \xi^{N_{\rm init}}$ and weights $\omega^1, \ldots, \omega^{N_{\rm init}}$, together with approximations $\hat K$, $\hat\mu$, $\hat f$, and $\hat h$ of K, μ, f, and h:

• Adjust the weights of the particles in each microbin p according to $\hat\mu$:

$$\tilde\omega^i = \hat\mu_p\,\frac{\omega^i}{\sum_{j:\,\xi^j \in p}\omega^j} \qquad \text{for each } i \text{ with } \xi^i \in p.$$

There should be at least one initial particle in each microbin, so that the denominator above is positive.

• Proceed to the selection step of Algorithm 3.1 at time t = 0 with the initial particles having the adjusted weights $\tilde\omega^i$.

The number, Ninit, of initial particles can be much greater than the number, N, of particles in the weighted ensemble simulations of Algorithm 3.1.
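The reweighting step of this initialization can be sketched in a few lines, assuming the total weight in each microbin p is rescaled to $\hat\mu_p$ while relative weights within a microbin are preserved. The function name and the 3-particle example are hypothetical.

```python
import numpy as np

def reweight_to_mu_hat(weights, microbins, mu_hat):
    """Rescale initial weights so that the total weight in each microbin p equals mu_hat[p],
    keeping relative weights within a microbin unchanged."""
    weights = np.asarray(weights, dtype=float)
    microbins = np.asarray(microbins)
    mu_hat = np.asarray(mu_hat, dtype=float)
    totals = np.bincount(microbins, weights=weights, minlength=len(mu_hat))
    if np.any(totals <= 0):
        # The initialization requires at least one initial particle in every microbin.
        raise ValueError("some microbin has no initial particle (or zero total weight)")
    return weights * mu_hat[microbins] / totals[microbins]

# Hypothetical example: 3 initial particles in 2 microbins.
new_w = reweight_to_mu_hat([0.1, 0.3, 0.6], [0, 0, 1], [0.2, 0.8])
```

Because the entries of $\hat\mu$ sum to 1, the adjusted weights also sum to 1 and can be passed directly to the t = 0 selection step.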

5.2. Gain over naive parameter choices.

The gain of optimizing parameters, compared to naive parameter choices or direct Monte Carlo, comes from the larger number of particles that optimized weighted ensemble puts in important regions of state space, compared to a naive method. These important regions are exactly the ones identified by h; roughly speaking they are regions R where the variance of h is large. A rule of thumb is that the variance can decrease by a factor of up to

| (5.6) |

To see why, consider the mutation variance from Proposition 4.1,

| (5.7) |

The contribution to this mutation variance at time t from bin u is

Increasing Nt(u) by some factor decreases the mutation variance from bin u at time t by the same factor. The mutation variance can be reduced by a factor of almost (5.6) if Nt(u) is increased by the factor (5.6) in the bins u where is large, and if is small enough in the other bins that decreasing the allocation in those bins does not significantly increase the mutation variance.
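The scaling argument above can be illustrated with a toy model, assuming bin u contributes $c_u/N_t(u)$ to the mutation variance for some hypothetical per-bin constant $c_u \ge 0$. Minimizing $\sum_u c_u/N_u$ subject to $\sum_u N_u = N$ with a Lagrange multiplier gives $N_u \propto \sqrt{c_u}$. We do not claim this reproduces (4.4) exactly. The sketch ignores integrality and the requirement (Remark 5.1) that every occupied bin gets at least one child, and the values of `c` are made up.

```python
import numpy as np

def toy_mutation_variance(c, alloc):
    """Toy model: bin u contributes c[u] / alloc[u] to the mutation variance."""
    return float(np.sum(np.asarray(c) / np.asarray(alloc)))

def sqrt_allocation(c, N):
    """Minimizer of sum_u c[u] / N_u subject to sum_u N_u = N (ignoring integrality):
    a Lagrange multiplier argument gives N_u proportional to sqrt(c[u])."""
    s = np.sqrt(np.asarray(c, dtype=float))
    return N * s / s.sum()

c = np.array([1e-4, 1e-4, 1.0, 1e-4])   # hypothetical: one bin with a much larger variance term
N = 40
uniform = np.full(len(c), N / len(c))
optimal = sqrt_allocation(c, N)
gain = toy_mutation_variance(c, uniform) / toy_mutation_variance(c, optimal)
# For this toy model the gain equals M * sum(c) / (sum(sqrt(c)))**2, here about 3.77.
```

Most of the variance reduction comes from moving particles into the single bin with a large variance term, which is the mechanism described above.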

Of course, the variance formulas in Lemmas 3.4 and 3.5 can, in principle, describe the gain more precisely, although it is difficult to accurately estimate these variances a priori outside of the N → ∞ limit. Since we focus on relatively small N, we do not pursue this analytic direction. Instead, we numerically illustrate the improvement from optimizing parameters in Figures 6.3 and 6.4.

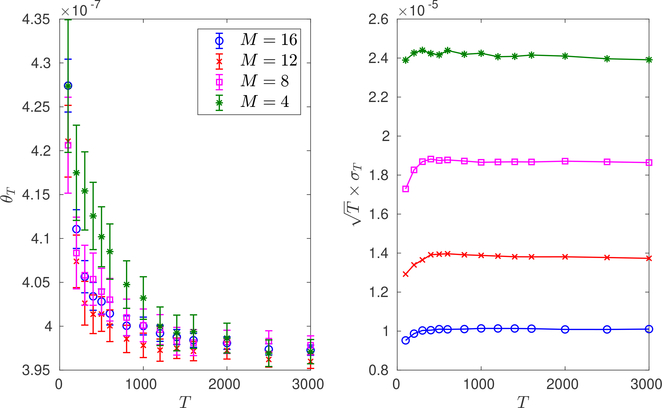

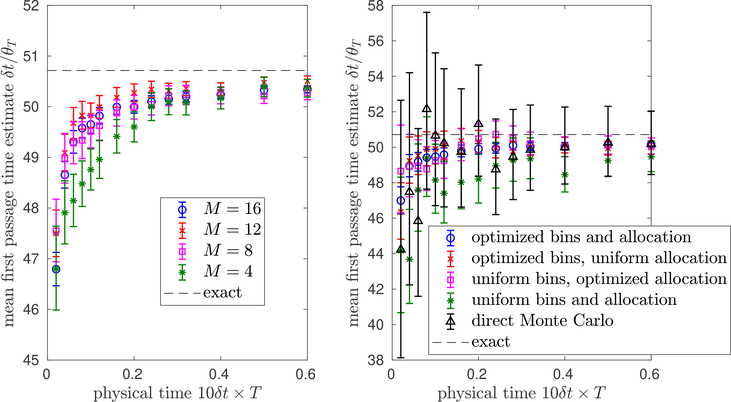

Fig. 6.3.

Varying the number M of weighted ensemble bins in Algorithm 5.2. Left: Weighted ensemble running means θT versus T for Algorithm 3.1 with the optimal allocation and binning of Algorithms 5.1 and 5.2, when M = 4, 8, 12, 16. Values shown are averages over $10^5$ independent trials. Error bars are based on σT, the empirical standard deviations. Right: Scaled empirical standard deviations versus T in the same setup. We use N = 40 particles, and the bins correspond exactly to the ones in Figure 6.2. More bins are not always better, since with too many bins we return to the direct Monte Carlo regime; see Remark 5.1.

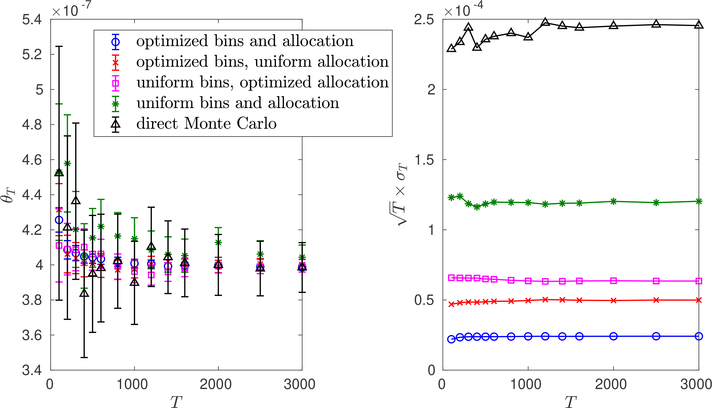

Fig. 6.4.

Comparison of direct Monte Carlo with weighted ensemble, where the bins and/or allocation are optimized, M = 4, and N = 40. Left: Weighted ensemble running means θT versus T for Algorithm 3.1 with the indicated choices for allocation and binning. Values shown are averages over $10^5$ independent trials. Error bars are based on σT, the empirical standard deviations. Right: Scaled empirical standard deviations versus T in the same setup. By optimized bins, we mean the weighted ensemble bins are chosen using Algorithm 5.2 with M = 4. These optimized bins are exactly the ones plotted in the top left of Figure 6.2. Optimized allocation means the particle allocation follows Algorithm 5.1. Uniform bins means that the bins are uniformly spaced on [0, 1], while uniform allocation means the particles are distributed uniformly among the occupied bins.

5.3. Adaptive methods.

We have proposed handling the optimizations (4.3) and (4.6) by approximating K and h with $\hat K$ and $\hat h$, where the latter are built by observing microbin-to-microbin transitions and solving the appropriate Poisson problem. Since K and h are fixed in time and we are doing long-time calculations, it is natural to estimate them adaptively, for instance, via stochastic approximation [38]. Thus both (4.3) and (4.6) could be solved adaptively, at least in principle. Depending on the number of microbins, it may be relatively cheap to compute $\hat h$ compared to the cost of evolving particles. If this is the case, it is natural to solve (4.3) on the fly. We could also perform bin searches intermittently, depending on their cost.

6. Numerical illustration.

In this section we illustrate the optimizations in Algorithms 5.1 and 5.2, for a simple example of a mean first passage time computation. Consider overdamped Langevin dynamics

$$dX_t = -V'(X_t)\,dt + \sqrt{2\beta^{-1}}\,dW_t, \qquad (6.1)$$

where β = 5, (Wt)t≥0 is a standard Brownian motion, and the potential energy is

See Figure 6.1. We impose reflecting boundary conditions on the interval [0, 1]. We will estimate the mean first passage time of (Xt)t≥0 from 1/2 to [119/120, 1] using the Hill relation [5, 32]. The Hill relation reformulates the mean first passage time as a steady-state average, as we explain below.

We choose 120 uniformly spaced microbins between 0 and 1:

$$\left[\frac{p-1}{120}, \frac{p}{120}\right], \qquad p = 1, \ldots, 120.$$

(They are not actually disjoint, since adjacent microbins share an endpoint, but this is unimportant.)

The microbins correspond to the basins of attraction of V. A basin of attraction of V is the set of initial conditions x(0) for which the solution of dx(t)/dt = −V′(x(t)) converges to the same local minimum of V. The microbins combine to make 3 larger metastable sets (as defined in section 3). These larger metastable sets, each consisting of many smaller basins of attraction, will be called superbasins. The microbins do not need to be basins of attraction: they only need to be sufficiently “small” to give useful estimates of K and h. We choose microbins in this way to illustrate the qualitative features we expect from Markov state modeling, where the Markov states (our microbins) are often basins of attraction. In this case, the bins might be clusters of Markov states corresponding to superbasins.

The kernel K is defined as follows. First, let Kδt be an Euler–Maruyama time discretization of (6.1) with time step δt = 2×10−5. We introduce a sink at the target state [119/120, 1] that recycles at a source x = 1/2 via

Then we define K as a Δt-skeleton of where Δt = 10:

| (6.2) |

This just means we take 10 Euler–Maruyama [37] time steps in the mutation step of Algorithm 3.1. The Hill relation [5] shows that, if is a Markov chain with kernel either Kδt or and , then

where μ is the stationary distribution of K. Thus if τ = inf{ t > 0 : Xt ∈ [119/120, 1]},

By construction μ([119/120, 1]) is small (on the order 10−7), so it must be estimated with substantial precision to resolve the mean first passage time.
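A minimal sketch of the mutation kernel K described above, under several assumptions: (6.1) is taken to be overdamped Langevin dynamics $dX_t = -V'(X_t)\,dt + \sqrt{2\beta^{-1}}\,dW_t$; reflection at 0 and 1 is implemented by mirroring; and the recycling rule is assumed to send a particle that is currently in the target [119/120, 1] to the source x = 1/2 at its next step. The potential of section 6 is not reproduced here, so `dV` is a hypothetical placeholder and the whole block only illustrates the structure of K.

```python
import numpy as np

BETA = 5.0               # inverse temperature, as in section 6
DT = 2e-5                # Euler-Maruyama time step delta t
N_SUBSTEPS = 10          # Euler-Maruyama steps per mutation step (the skeleton kernel)
TARGET_LEFT = 119 / 120  # target set [119/120, 1]
SOURCE = 0.5             # recycling point

def dV(x):
    # HYPOTHETICAL placeholder for V'(x); not the potential used in section 6.
    return 2.0 * (x - 0.5)

def recycled_em_step(x, rng):
    """One Euler-Maruyama step of dX = -V'(X) dt + sqrt(2/beta) dW on [0, 1],
    reflected at both ends; particles currently in the target are instead
    sent to the source (assumed recycling rule). Vectorized over particles."""
    in_target = x >= TARGET_LEFT
    y = x - dV(x) * DT + np.sqrt(2.0 * DT / BETA) * rng.standard_normal(x.shape)
    y = np.where(y < 0.0, -y, y)          # reflect at 0
    y = np.where(y > 1.0, 2.0 - y, y)     # reflect at 1
    return np.where(in_target, SOURCE, y)

def mutate(x, rng):
    """Apply the skeleton kernel: N_SUBSTEPS recycled Euler-Maruyama steps."""
    for _ in range(N_SUBSTEPS):
        x = recycled_em_step(x, rng)
    return x

rng = np.random.default_rng(1)
x = np.array([0.5, 0.995, 0.01])   # three hypothetical particle positions
x_next = mutate(x, rng)
```

In Algorithm 3.1, `mutate` would be applied to all particles in each mutation step. The noise scale $\sqrt{2\delta t/\beta} \approx 0.003$ is small enough that a single mirror reflection always keeps particles inside [0, 1].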

We will estimate the mean first passage time from x = 1/2 to x ∈ [119/120, 1], the latter being the target state and rightmost microbin. Thus, we define f as the characteristic function of this microbin, , so that

Weighted ensemble then estimates the mean first passage time via

| (6.3) |
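The inversion used to turn θT into a mean first passage time can be sketched as follows. Following the caption of Figure 6.5, we assume (6.3) reads $\mathbb{E}[\tau] \approx \delta t/\theta_T$, and we propagate the error bar to first order (delta method), which is one reasonable reading of "error bars are adjusted accordingly". The numbers below are hypothetical, chosen only to match the order of magnitude $10^{-7}$ quoted above for μ([119/120, 1]).

```python
def mfpt_from_hill(theta, sigma, dt=2e-5):
    """Invert an estimate theta of mu([119/120, 1]) into a mean first passage time
    dt / theta, with a first-order (delta-method) error bar from its standard error sigma."""
    mfpt = dt / theta
    mfpt_err = dt * sigma / theta**2   # |d(dt/theta)/dtheta| * sigma
    return mfpt, mfpt_err

# Hypothetical inputs of the order quoted in the text.
mfpt, err = mfpt_from_hill(theta=1e-7, sigma=1e-8)
# mfpt is about 200 and err about 20, in the time units of (6.1).
```

A 10% relative error in θT thus becomes a 10% relative error in the mean first passage time, which is why θT must be estimated precisely.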

We illustrate Algorithm 3.1 combined with Algorithms 5.1–5.2 in Figures 6.1–6.4. For Algorithms 5.1 and 5.2, to construct $\hat K$, $\hat\mu$, $\hat f$, and $\hat h$ as in section 5, we compute the matrix $\hat K$ using $10^4$ trajectories starting from each microbin’s midpoint, and we define $\hat f_p = 0$ for 1 ≤ p ≤ 119 and $\hat f_{120} = 1$. The pth rows of $\hat K$, $\hat\mu$, $\hat f$, and $\hat h$ correspond to the microbin [(p − 1)/120, p/120]. In Algorithm 5.2, to simplify visualization, we enforce the condition that the bins must be connected regions. We initialize all our simulations with Algorithm 5.3, where Ninit = 120, each microbin contains exactly one initial particle, and ωi = 1/120.

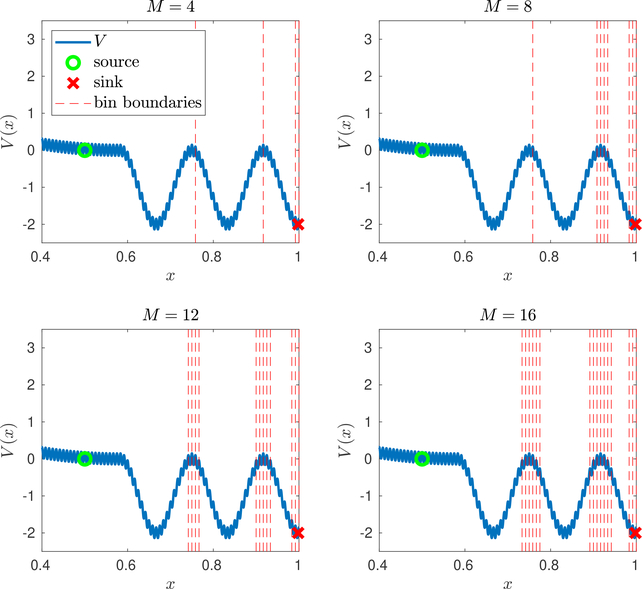

In Figure 6.1, we show the bins resulting from Algorithm 5.2, and plot the terms and appearing in the optimizations in Algorithms 5.1 and 5.2, along with the approximate steady state . Note that the bins resolve the superbasins of V. In Figure 6.2, we explore what happens when the number M of bins increases in Algorithm 5.2, finding that the bins begin to resolve the regions between superbasins, favoring regions closer to the support of f. As M increases, the optimal allocation leads to particles having more children when they are near the dividing surfaces between the superbasins; to see why, compare the particle allocation in Algorithm 5.1 with the bottom left of Figure 6.1.

Fig. 6.2.

Increasing the value of M in Algorithm 5.2. Plotted are weighted ensemble bins computed using Algorithm 5.2 with M = 4, 8, 12, 16. For each value of M, we use $10^6$ iterations of Algorithm 5.2, with α tuned between $10^5$ and $10^6$. Note that with increasing M, additional bins are initially devoted to resolving the energy barrier between the two rightmost superbasins. Since the observable f is the characteristic function of the rightmost microbin, this is the most important energy barrier for the bins to resolve. Note that the multiple adjacent small bins for M = 16 correspond to the steepest gradients of the curve in the top right of Figure 6.1. All plots have been cropped to x > 0.4.

In Figure 6.3, we illustrate weighted ensemble with the optimized allocation and binning of Algorithms 5.1 and 5.2 when the number of bins increases from M = 4 to M = 16. Observe that M = 4 bins is not enough to resolve the regions between the superbasins, but we still get a substantial gain over direct Monte Carlo (compare with Figure 6.4). With M = 16 bins we resolve the regions between superbasins, further reducing the variance. The bins we use are exactly the ones in Figure 6.2.

In Figure 6.4, we compare weighted ensemble with direct Monte Carlo when M = 4. Direct Monte Carlo can be seen as a version of Algorithm 3.1 where each parent always has exactly one child, for all t and i. For weighted ensemble, we consider optimizing either or both of the bins and the allocation. When we do not optimize the bins, we use uniform bins, that is, M bins of equal length on [0, 1]. When we do not optimize the allocation, we use uniform allocation, where we distribute particles evenly among the occupied bins. Notice the order-of-magnitude reduction in standard deviation compared with direct Monte Carlo for this relatively small number of bins.

In Figure 6.5, to illustrate the Hill relation, we plot the weighted ensemble estimates of the mean first passage time from our data in Figures 6.3 and 6.4 against the (numerically) exact value. The weighted ensemble estimates at small T tend to exhibit a “bias” because the weighted ensemble has not yet reached steady state. As T grows, this bias vanishes and the weighted ensemble estimates converge to the true mean first passage time. In the simple example in this section, we can directly compute the mean first passage time. For complicated biological problems, however, the first passage time can be so large that it is difficult to directly sample even once. In spite of this, the Hill relation reformulation can lead to useful estimates on much shorter time scales than the mean first passage time [1, 16, 63]. Indeed, this is the case in Figure 6.5, where we get accurate estimates in a time horizon orders of magnitude smaller than the mean first passage time. This speedup can be attributed in part to the initialization of Algorithm 5.3. In general, there can also be substantial speedup from the Hill relation itself, independently of the initial condition [63].

Fig. 6.5.

Illustration of the Hill relation for estimating the mean first passage time via (6.3). Left: The same data as in Figure 6.3, but θT is inverted and multiplied by δt to estimate the mean first passage time, and error bars are adjusted accordingly. Right: The same data as in Figure 6.4, but θT is inverted and multiplied by δt to estimate the mean first passage time, and error bars are adjusted accordingly. So that the x and y axes have the same units, we consider the “physical time” on the x-axis, defined as the total number, T, of selection steps of Algorithm 3.1 multiplied by the time, 10δt, between selection steps (see (6.2)). In both plots, the “exact” value of the mean first passage time is obtained from $10^5$ independent samples of the first passage time τ. As in Figures 6.3 and 6.4, optimizing the bins and allocation has the best performance, and M = 16 bins is better than M = 4, 8, 12 bins, though more bins are not always better; see Remark 5.1.

Optimizing the bins and allocation together has the best performance. We expect that, as in this numerical example, when the number M of bins is relatively small, optimizing the bins can be more important than optimizing the allocation. Optimizing only the allocation may lead to a less dramatic gain when the bins poorly resolve the landscape defined by h. On the other hand, for a large enough number M of bins it may be sufficient just to optimize the allocation. We emphasize that more bins are not always better, since for a fixed number N of particles, with too many bins we end up recovering direct Monte Carlo. See Remark 5.1 above.

7. Remarks and future work.

This work presents new procedures for choosing the bins and particle allocation for weighted ensemble, building in part from ideas in [5]. The bins and particle allocation, together with the resampling times, completely characterize the method. Though we do not try to optimize the latter, we argue that there is no significant variance cost associated with taking small resampling times. Optimizing weighted ensemble is worthwhile when the optimized parameter choices lead to significantly more particles in important regions of state space, compared to a naive method. The corresponding gain, represented by the rule of thumb (5.6), can be expected to grow as the dimension increases. This is because in high dimensions, the pathways between metastable sets are narrow compared to the vast state space (see [58, 59] and the references in the introduction).

Although our interest is in steady-state calculations, many of our ideas could just as well be applied to a finite-time setup. Our practical interest in steady state arises from the computation of mean first passage times via the Hill relation. On the mathematical side, general importance sampling particle methods with the unbiased property of Theorem 3.1 are often not appropriate for steady-state sampling. (By general methods, we mean methods for nonreversible Markov chains, or Markov chains with a steady state that is not known up to a normalization factor [40].) Indeed, as we explain in our companion article [4], standard sequential Monte Carlo methods can suffer from variance explosion at large times, even for carefully designed resampling steps. This article is therefore a step toward unbiased importance sampling particle methods that remain reliable for steady-state sampling.

We conclude by discussing some open problems. Applying the algorithms in this article to complex, high-dimensional problems will require substantial effort and modifications to the software in [65]. The aim of this article is to lay the groundwork for such applications. On a more theoretical note, there remain some questions regarding parameter optimization. We could consider adaptive or nonspatial bins instead of fixed bins as in Algorithm 5.2, for instance, bins chosen via k-means on the values of Kh at the N particles. Also, we choose the number M of weighted ensemble bins and the number N of particles a priori. It remains open how to pick the best value of M for a given number, N, of particles and fixed computational resources. Using only the variance analysis above, a straightforward answer to this question can probably only be obtained in the large N asymptotic regime. More analysis is therefore needed, since in practice N is generally small. We could also optimize over both N and M, or over N, M, and a total number S of independent simulations, subject to a fixed computational budget. We leave these questions to future work.

Acknowledgments.

D. Aristoff thanks Peter Christman, Tony Lelièvre, Josselin Garnier, Matthias Rousset, Gabriel Stoltz, and Robert J. Webber for helpful suggestions and discussions. D. Aristoff and D.M. Zuckerman especially thank Jeremy Copperman and Gideon Simpson for many ongoing discussions related to this work.

Funding: This work was supported by the National Science Foundation via the awards NSF-DMS-1522398 and NSF-DMS-1818726 and the National Institutes of Health via the award GM115805.

8. Appendix.

In this appendix, we describe residual resampling, and we draw a connection between mutation variance minimization and sensitivity.

8.1. Residual resampling.

Recall that in Algorithm 3.1 we assumed residual resampling is used to obtain the number of children of each particle at each time t. We could also use residual resampling to generate the samples in Algorithm 5.1. For the reader’s convenience, we describe residual resampling in Algorithm 8.1. Recall that ⌊x⌋ is the floor function (the greatest integer ≤ x).
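Residual resampling, as described in Algorithm 8.1, fits in a few lines of NumPy. The sketch returns the counts N_i, i.e., how many times each index is selected; the function name is hypothetical, and `numpy.random.Generator.multinomial` performs the residual multinomial draw.

```python
import numpy as np

def residual_resampling(d, n, rng):
    """Residual resampling: return counts N_i (summing to n) of how many times
    each index i is selected when drawing n samples from the distribution d."""
    d = np.asarray(d, dtype=float)
    base = np.floor(n * d).astype(int)        # deterministic part, floor(n d_i)
    frac = n * d - base                       # residuals delta_i
    delta = int(n - base.sum())               # number of multinomial trials
    if delta > 0:
        R = rng.multinomial(delta, frac / frac.sum())
    else:
        R = np.zeros_like(base)
    return base + R

rng = np.random.default_rng(0)
print(residual_resampling([0.5, 0.3, 0.2], 10, rng))     # exact multiples: [5 3 2], no randomness
counts = residual_resampling([0.25, 0.25, 0.5], 3, rng)  # floor part [0 0 1], plus 2 random draws
```

In Algorithm 3.1, d would be the normalized weights of the parents in a bin and n the number of children allocated to that bin. Only the fractional parts are randomized, which is why residual resampling has lower variance than plain multinomial resampling.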

8.2. Intuition behind mutation variance minimization.

The goal of this section is to give some intuition for the strategy (4.4), which chooses the particle allocation to minimize mutation variance, by introducing a connection with sensitivity of the stationary distribution μ to perturbations of K. In this section we make the simplifying assumption that state space is finite, and each point of state space is a microbin. Thus is a finite stochastic matrix. The Poisson solution

Algorithm 8.1.

Residual resampling.

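To generate n samples from a distribution $d = (d_1, \ldots, d_m)$ with $\sum_{i=1}^m d_i = 1$:

• Define δi = ndi − ⌊ndi⌋ and let $\delta = \sum_{i=1}^m \delta_i$. (Since $\sum_i n d_i = n$, δ is an integer.)

• Sample $(R_1, \ldots, R_m)$ from the multinomial distribution with δ trials and event probabilities $\delta_1/\delta, \ldots, \delta_m/\delta$ (if δ = 0, set all Ri = 0). In detail,

$$\mathbb{P}(R_1 = r_1, \ldots, R_m = r_m) = \frac{\delta!}{r_1! \cdots r_m!} \prod_{i=1}^m \left(\frac{\delta_i}{\delta}\right)^{r_i}, \qquad r_1 + \cdots + r_m = \delta.$$

• Define Ni = ⌊ndi⌋ + Ri, i = 1, …, m.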

h defined in (3.15) satisfies and hTμ = 0, or equivalently

| (8.1) |

where , , , and are column vectors, and is the all-ones column vector. Here, I is the identity matrix, and is the column vector with 1 in the pth entry and 0’s elsewhere.

We write μ(Q) for the stationary distribution of an irreducible stochastic matrix . More precisely, μ(Q) denotes a continuously differentiable extension of Q ↦ μ(Q) to an open neighborhood of the space of irreducible stochastic matrices which satisfies μ(Q)TQ = μ(Q)T and whenever . See [52] for details and a proof of existence of this extension. Abusing notation, we still write μ with no matrix argument to denote the stationary distribution μ(K) of K.

Theorem 8.1.

Let λ(u) > 0 be such that $\sum_u \lambda(u) = 1$. For each bin u, let ν(u) be a vector satisfying ν(u)p ≥ 0 for p ∈ u, ν(u)p = 0 for p ∉ u, and $\sum_p \nu(u)_p = 1$. Let A(u) be a random matrix with the distribution

| (8.2) |

Let A(u)(n) be independent copies of A(u), for each bin u and n = 1, 2, …. Define

| (8.3) |

Then

| (8.4) |

We now interpret Theorem 8.1 from the point of view of particle allocation. Note that A(u) is a centered sample of K obtained by picking a microbin p ∈ u according to the distribution ν(u), and then simulating a transition from p via K. By a centered sample, we mean that we adjust A(u) by subtracting its mean, so that A(u) has mean zero. The mean of $\left[\frac{d}{d\epsilon}\mu(K + \epsilon A(u))^T f\big|_{\epsilon=0}\right]^2$ measures the sensitivity of $\mu^T f$ to sampling from bin u according to the distribution ν(u). Similarly, the mean of $\left[\frac{d}{d\epsilon}\mu(K + \epsilon B(N))^T f\big|_{\epsilon=0}\right]^2$ measures the sensitivity of $\mu^T f$ corresponding to sampling from each bin exactly ⌊Nλ(u)⌋ times according to the distributions ν(u). Appropriately normalizing the latter in the limit N → ∞ leads to (8.4). The sensitivity in (8.4) is minimized over λ when

| (8.5) |

In light of the discussion above, we think of in (8.5) as a particle allocation. Note that this resembles our formula (4.4) for the optimal weighted ensemble particle allocation. We now try to make a connection between (4.4) and (8.5).

We first consider a simple case. Suppose the bins are equal to the microbins. Since we assume here that every point in state space is a microbin, this means that every point of state space is also a bin. In particular, the distributions ν(u) are trivial: ν(u)p = 1 when p = u. To make (8.5) agree with (4.4), we would need μp = ωt(u) when p = u. Of course, this equality does not hold in general. The approximation μp ≈ ωt(u) for p = u is reasonable, but only in the asymptotic regime N, t → ∞. To see why, note that the unbiased property and ergodicity show that $\lim_{t\to\infty}\mathbb{E}[\omega_t(u)] = \mu_p$ when p = u; provided an appropriate law of large numbers also holds, limt→∞ limN→∞ ωt(u) = μp. Recall, though, that we are interested in relatively small N, due to the high cost of particle evolution.

For the general case, where bins contain multiple points in state space, we see no direct connection between the allocation formulas (8.5) and (4.4). Indeed, to make an analogy between this sensitivity calculation and weighted ensemble, ν(u) should be given by the normalized particle weights in bin u. In other words, we should consider perturbations that correspond to sampling from the bins according to particle weights, in accordance with Algorithm 3.1. But making this choice of ν(u) in (8.5) gives something quite different from (4.4). So while the sensitivity minimization formula (8.5) is qualitatively similar to our mutation variance minimization formula (4.4), the two are not actually the same.

8.3. Proof of Theorem 8.1.

We begin by noting the following.

| (8.6) |

Like (8.1), this follows from the ergodicity of K. See, for instance, [31].

Lemma 8.2.

Suppose A is a matrix with . Then

Proof.

Since μ(K + ϵA)T(K + ϵA) = μ(K + ϵA)T, we have

Thus

| (8.7) |

Moreover since for any stochastic matrix Q,

| (8.8) |

Now by (8.1), (8.6), (8.7), and (8.8),

where the last line above uses to replace f with . ▯
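A quick numerical sanity check, assuming the conclusion of Lemma 8.2 is the first-order perturbation identity $\frac{d}{d\epsilon}\mu(K+\epsilon A)^T f\big|_{\epsilon=0} = \mu^T A h$ for A with $A\mathbf{1} = 0$. This is what differentiating $\mu(K+\epsilon A)^T(K+\epsilon A) = \mu(K+\epsilon A)^T$ and using $\mu(K+\epsilon A)^T\mathbf{1} = 1$ yields. The random 5-state matrices below are hypothetical, and the perturbation A = K2 − K keeps K + εA stochastic for small ε.

```python
import numpy as np

def stationary(Q):
    """Stationary vector of Q: solves mu^T (I - Q) = 0 with sum(mu) = 1."""
    P = Q.shape[0]
    M = np.vstack([(np.eye(P) - Q).T[:-1], np.ones(P)])
    b = np.zeros(P)
    b[-1] = 1.0
    return np.linalg.solve(M, b)

rng = np.random.default_rng(0)
P = 5
K = rng.random((P, P)) + 0.1
K /= K.sum(axis=1, keepdims=True)       # positive entries, so K is irreducible and aperiodic
K2 = rng.random((P, P)) + 0.1
K2 /= K2.sum(axis=1, keepdims=True)
A = K2 - K                              # zero row sums: A @ 1 = 0
f = rng.random(P)

mu = stationary(K)
ones = np.ones(P)
h = np.linalg.solve(np.eye(P) - K + np.outer(ones, mu), f - (mu @ f) * ones)  # Poisson solution

eps = 1e-6
finite_diff = (stationary(K + eps * A) @ f - stationary(K - eps * A) @ f) / (2 * eps)
formula = mu @ A @ h
print(finite_diff, formula)   # the central difference and mu^T A h should agree closely
```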

Lemma 8.3.

Suppose (A(n))n=1,…,N are matrices such that (i) (A(n))n=1,…,N are independent over n, (ii) for all n, and (iii) for all n. Then

Proof.

Since μ is continuously differentiable,

| (8.9) |

By (ii)–(iii) and Lemma 8.2, for all n. So by (i),

| (8.10) |

Lemma 8.4.

Let A(u) be a random matrix with the distribution (8.2). Then

Proof.

Note that since . So by Lemma 8.2,

From this and (8.2),

We are now ready to prove Theorem 8.1.

Proof of Theorem 8.1.

By Lemmas 8.3 and 8.4,

| (8.11) |

The result follows by letting N → ∞. ▯

REFERENCES

- [1] Adhikari U, Mostofian B, Copperman J, Subramanian SR, Petersen AA, and Zuckerman DM, Computational estimation of microsecond to second atomistic folding times, J. Amer. Chem. Soc, 141 (2019), pp. 6519–6526.

- [2] Allen RJ, Frenkel D, and ten Wolde PR, Forward flux sampling-type schemes for simulating rare events: Efficiency analysis, J. Chem. Phys, 124 (2006), 194111.

- [3] Anderson DF and Kurtz TG, Continuous time Markov chain models for chemical reaction networks, in Design and Analysis of Biomolecular Circuits, Springer, New York, 2011, pp. 3–42.

- [4] Aristoff D, An Ergodic Theorem for Weighted Ensemble, preprint, https://arxiv.org/abs/1906.00856 (2019).

- [5] Aristoff D, Analysis and optimization of weighted ensemble sampling, ESAIM Math. Model. Numer. Anal, 52 (2018), pp. 1219–1238.

- [6] Balesdent M, Marzat J, Morio J, and Jacquemart D, Optimization of interacting particle systems for rare event estimation, Comput. Statist. Data Anal, 66 (2013), pp. 117–128.

- [7] Bello-Rivas JM and Elber R, Exact milestoning, J. Chem. Phys, 142 (2015), 03B602_1.

- [8] Bhatt D, Zhang BW, and Zuckerman DM, Steady-state simulations using weighted ensemble path sampling, J. Chem. Phys, 133 (2010), 014110.

- [9] Bréhier C-E, Lelièvre T, and Rousset M, Analysis of adaptive multilevel splitting algorithms in an idealized case, ESAIM Probab. Stat, 19 (2015), pp. 361–394.

- [10] Bréhier C-E, Gazeau M, Goudenège L, Lelièvre T, and Rousset M, Unbiasedness of some generalized adaptive multilevel splitting algorithms, Ann. Appl. Probab, 26 (2016), pp. 3559–3601.

- [11] Cérou F, Guyader A, Lelièvre T, and Pommier D, A multiple replica approach to simulate reactive trajectories, J. Chem. Phys, 134 (2011), 054108.

- [12] Chong LT, Saglam AS, and Zuckerman DM, Path-sampling strategies for simulating rare events in biomolecular systems, Curr. Opinion Struc. Biol, 43 (2017), pp. 88–94.

- [13] Chraibi H, Dutfoy A, Galtier T, and Garnier J, Optimal Input Potential Functions in the Interacting Particle System Method, preprint, https://arxiv.org/abs/1811.10450 (2018).

- [14] Collet P, Martínez S, and San Martín J, Quasi-Stationary Distributions: Markov Chains, Diffusions and Dynamical Systems, Springer, Berlin, 2012.

- [15] Copperman J and Zuckerman DM, Accelerated Estimation of Long-Timescale Kinetics by Combining Weighted Ensemble Simulation with Markov Model “microstates” Using Non-Markovian Theory, preprint, https://arxiv.org/abs/1903.04673 (2019).

- [16] Copperman J, Aristoff D, Makarov DE, Simpson G, and Zuckerman DM, Transient probability currents provide upper and lower bounds on non-equilibrium steady-state currents in the Smoluchowski picture, J. Chem. Phys, 151 (2019), 174108.

- [17] Costaouec R, Feng H, Izaguirre J, and Darve E, Analysis of the accelerated weighted ensemble methodology, Discrete Contin. Dyn. Syst, 2013 (2013), pp. 171–181.

- [18] Darve E and Ryu E, Computing reaction rates in bio-molecular systems using discrete macro-states, in Innovations in Biomolecular Modeling and Simulations, Vol. 1, Schlick T, ed., Royal Society of Chemistry, Cambridge, 2012, pp. 138–206.

- [19] Del Moral P, Feynman-Kac formulae: Genealogical and interacting particle approximations, Probab. Appl., Springer, New York, 2004.

- [20] Del Moral P and Doucet A, Particle methods: An introduction with applications, ESAIM: Proc., 44 (2014), pp. 1–46.

- [21] Del Moral P and Garnier J, Genealogical particle analysis of rare events, Ann. Appl. Probab, 15 (2005), pp. 2496–2534.

- [22] Dinner AR, Mattingly JC, Tempkin JO, Van Koten B, and Weare J, Trajectory stratification of stochastic dynamics, SIAM Rev, 60 (2018), pp. 909–938.

- [23] Donovan RM, Sedgewick AJ, Faeder JR, and Zuckerman DM, Efficient stochastic simulation of chemical kinetics networks using a weighted ensemble of trajectories, J. Chem. Phys, 139 (2013), 09B642 1.

- [24] Doob JL, Stochastic Processes, Wiley, New York, 1953.

- [25] Doob JL, Regularity properties of certain families of chance variables, Trans. Amer. Math. Soc, 47 (1940), pp. 455–486.

- [26] Douc R and Cappé O, Comparison of resampling schemes for particle filtering, in Proceedings of the 4th International Symposium on Image and Signal Processing and Analysis (ISPA 2005), IEEE, Piscataway, NJ, 2005, pp. 64–69.

- [27] Douc R, Moulines E, and Stoffer D, Nonlinear Time Series: Theory, Methods, and Applications with R Examples, CRC Press, Boca Raton, FL, 2014.

- [28] Doucet A, De Freitas N, and Gordon N, Sequential Monte Carlo Methods in Practice, Stat. Eng. Inform. Sci, Springer, New York, 2001.

- [29] Durrett R, Probability: Theory and Examples, Cambridge University Press, Cambridge, 2019.

- [30] Glowacki DR, Paci E, and Shalashilin DV, Boxed molecular dynamics: Decorrelation time scales and the kinetic master equation, J. Chem. Theory Comput, 7 (2011), pp. 1244–1252.

- [31] Golub GH and Meyer CD, Using the QR factorization and group inversion to compute, differentiate, and estimate the sensitivity of stationary probabilities for Markov chains, SIAM J. Algebraic Discrete Methods, 7 (1986), pp. 273–281.

- [32] Hill TL, Free Energy Transduction and Biochemical Cycle Kinetics, Dover, Mineola, NY, 2005.

- [33] Hofmann H and Ivanyuk FA, Mean first passage time for nuclear fission and the emission of light particles, Phys. Rev. Lett, 90 (2003), 132701.

- [34] Huber GA and Kim S, Weighted-ensemble Brownian dynamics simulations for protein association reactions, Biophys. J, 70 (1996), pp. 97–110.

- [35] Husic BE and Pande VS, Markov state models: From an art to a science, J. Amer. Chem. Soc, 140 (2018), pp. 2386–2396.

- [36] Jacquemart D and Morio J, Tuning of adaptive interacting particle system for rare event probability estimation, Simul. Model. Practice Theory, 66 (2016), pp. 36–49.

- [37] Kloeden PE and Platen E, Numerical Solution of Stochastic Differential Equations, Springer, Berlin, 1992.

- [38] Kushner H and Yin GG, Stochastic Approximation and Recursive Algorithms and Applications, Appl. Math 35, Springer, New York, 2003.

- [39] Lelièvre T, Two mathematical tools to analyze metastable stochastic processes, in Numerical Mathematics and Advanced Applications, Springer, Berlin, 2011, pp. 791–810.

- [40] Lelièvre T and Stoltz G, Partial differential equations and stochastic methods in molecular dynamics, Acta Numer, 25 (2016), pp. 681–880.

- [41] Lloyd SP, Least squares quantization in PCM, IEEE Trans. Inform. Theory, 28 (1982), pp. 129–137.

- [42] Metzler R, Oshanin G, and Redner S, eds., First-Passage Phenomena and their Applications, World Scientific, Hackensack, NJ, 2014.

- [43] Meyn SP and Tweedie RL, Stability of Markovian processes II: Continuous-time processes and sampled chains, Adv. Appl. Probab, 25 (1993), pp. 487–517.

- [44] Nummelin E, On the Poisson equation in the potential theory of a single kernel, Math. Scand, 68 (1991), pp. 59–82.

- [45] Pande VS, Beauchamp K, and Bowman GR, Everything you wanted to know about Markov state models but were afraid to ask, Methods, 52 (2010), pp. 99–105.

- [46] Rojnuckarin A, Kim S, and Subramaniam S, Brownian dynamics simulations of protein folding: Access to milliseconds time scale and beyond, Proc. Natl. Acad. Sci. USA, 95 (1998), pp. 4288–4292.

- [47] Rojnuckarin A, Livesay DR, and Subramaniam S, Bimolecular reaction simulation using weighted ensemble Brownian dynamics and the University of Houston Brownian dynamics program, Biophys. J, 79 (2000), pp. 686–693.

- [48] Sarich M, Noé F, and Schütte C, On the approximation quality of Markov state models, Multiscale Model. Simul, 8 (2010), pp. 1154–1177.

- [49] Schütte C and Sarich M, Metastability and Markov State Models in Molecular Dynamics, Courant Lect. Notes 24, American Mathematical Society, Providence, RI, 2013.

- [50] Stoltz G, Rousset M, and Lelièvre T, Free Energy Computations: A Mathematical Perspective, World Scientific, Hackensack, NJ, 2010.

- [51] Suárez E, Lettieri S, Zwier MC, Stringer CA, Subramanian SR, Chong LT, and Zuckerman DM, Simultaneous computation of dynamical and equilibrium information using a weighted ensemble of trajectories, J. Chem. Theory Comput, 10 (2014), pp. 2658–2667.

- [52] Thiede E, Van Koten B, and Weare J, Sharp entrywise perturbation bounds for Markov chains, SIAM J. Matrix Anal. Appl, 36 (2015), pp. 917–941.

- [53] van Erp TS, Moroni D, and Bolhuis PG, A novel path sampling method for the calculation of rate constants, J. Chem. Phys, 118 (2003), pp. 7762–7774.

- [54] Vanden-Eijnden E and Venturoli M, Exact rate calculations by trajectory parallelization and tilting, J. Chem. Phys, 131 (2009), 044120.

- [55] Warmflash A, Bhimalapuram P, and Dinner AR, Umbrella sampling for nonequilibrium processes, J. Chem. Phys, 127 (2007), 154112.

- [56] Webber RJ, Plotkin DA, O’Neill ME, Abbot DS, and Weare J, Practical rare event sampling for extreme mesoscale weather, Chaos, 29 (2019), 053109.

- [57] Webber RJ, Unifying Sequential Monte Carlo with Resampling Matrices, preprint, https://arxiv.org/abs/1903.12583 (2019).

- [58] Weinan E, Ren W, and Vanden-Eijnden E, Transition pathways in complex systems: Reaction coordinates, isocommittor surfaces, and transition tubes, Chem. Phys. Lett, 413 (2005), pp. 242–247.

- [59] Weinan E and Vanden-Eijnden E, Transition-path theory and path-finding algorithms for the study of rare events, Annu. Rev. Phys. Chem, 61 (2010), pp. 391–420.

- [60] Wouters J and Bouchet F, Rare event computation in deterministic chaotic systems using genealogical particle analysis, J. Phys. A, 49 (2016), 374002.

- [61] Zhang BW, Jasnow D, and Zuckerman DM, Efficient and verified simulation of a path ensemble for conformational change in a united-residue model of calmodulin, Proc. Natl. Acad. Sci. USA, 104 (2007), pp. 18043–18048.

- [62] Zhang BW, Jasnow D, and Zuckerman DM, The “weighted ensemble” path sampling method is statistically exact for a broad class of stochastic processes and binning procedures, J. Chem. Phys, 132 (2010), 054107.

- [63] Zuckerman DM, Discrete-State Kinetics and Markov Models, http://www.physicallensonthecell.org/discrete-state-kinetics-and-markov-models, equation (34).

- [64] Zuckerman DM and Chong LT, Weighted ensemble simulation: Review of methodology, applications, and software, Annu. Rev. Biophys, 46 (2017), pp. 43–57.

- [65] Zwier MC, Adelman JL, Kaus JW, Pratt AJ, Wong KF, Rego NB, Suárez E, Lettieri S, Wang DW, Grabe M, Zuckerman DM, and Chong LT, WESTPA, https://westpa.github.io/westpa/ (2015).

- [66] Zwier MC, Adelman JL, Kaus JW, Pratt AJ, Wong KF, Rego NB, Suárez E, Lettieri S, Wang DW, Grabe M, Zuckerman DM, and Chong LT, WESTPA: An interoperable, highly scalable software package for weighted ensemble simulation and analysis, J. Chem. Theory Comput, 11 (2015), pp. 800–809.