Abstract

We recently developed a method to estimate speech-driven spectrotemporal receptive fields (STRFs) using fMRI. The method uses spectrotemporal modulation filtering, a form of acoustic distortion that renders speech sometimes intelligible and sometimes unintelligible. Using this method, we found significant STRF responses only in classic auditory regions throughout the superior temporal lobes. However, our analysis was not optimized to detect small clusters of STRFs as might be expected in non-auditory regions. Here, we re-analyze our data using a more sensitive multivariate statistical test for cross-subject alignment of STRFs, and we identify STRF responses in non-auditory regions including the left dorsal premotor cortex (dPM), left inferior frontal gyrus (IFG), and bilateral calcarine sulcus (calcS). All three regions responded more to intelligible than unintelligible speech, but left dPM and calcS responded significantly to vocal pitch and demonstrated strong functional connectivity with early auditory regions. Left dPM’s STRF generated the best predictions of activation on trials rated as unintelligible by listeners, a hallmark auditory profile. IFG, on the other hand, responded almost exclusively to intelligible speech and was functionally connected with classic speech-language regions in the superior temporal sulcus and middle temporal gyrus. IFG’s STRF was also (weakly) able to predict activation on unintelligible trials, suggesting the presence of a partial ‘acoustic trace’ in the region. We conclude that left dPM is part of the human dorsal laryngeal motor cortex, a region previously shown to be capable of operating in an ‘auditory mode’ to encode vocal pitch. Further, given previous observations that IFG is involved in syntactic working memory and/or processing of linear order, we conclude that IFG is part of a higher-order speech circuit that exerts a top-down influence on processing of speech acoustics. Finally, because calcS is modulated by emotion, we speculate that changes in the quality of vocal pitch may have contributed to its response.

Keywords: fMRI, Pitch, Premotor, Spectrotemporal Modulations, Speech Intelligibility, STRF

1. Introduction

Two decades ago, Buchsbaum et al. (2001) used functional magnetic resonance imaging (fMRI) to characterize a left-hemisphere network of regions in the posterior temporal and inferior frontal lobes that responded reliably during both perception and covert production of speech. The posterior superior temporal sulcus (pSTS) and dorsal speech-premotor cortex (dPM) responded relatively more during perception and production, respectively, while a region in the posterior Sylvian fissure (Spt) and the pars opercularis of the inferior frontal gyrus (pOP) responded roughly equally to perception and production of speech. The results were interpreted largely as evidence that auditory regions (pSTS) interface with articulatory regions (dPM) via an intermediary auditory-motor network (Spt to pOP) in service of speech production. The same network was later identified using sub-vocal repetition of speech and tonal melodies (Hickok, Buchsbaum, Humphries, & Muftuler, 2003). Wilson et al. (2004) independently demonstrated that listening to speech activates Spt, pOP and dPM. In contrast to the earlier studies, the authors interpreted their finding as evidence for a role of the motor cortex in speech perception. Indeed, they showed that overlapping regions of dPM were activated during perception and production of speech. The studies by Buchsbaum et al. (2001) and Wilson et al. (2004) both noted significant activation to heard speech in dPM for every single subject. A later meta-analysis by Buchsbaum and colleagues (Buchsbaum et al., 2011) found the cross-subject peak (overlap analysis) of regions activating to both speech perception and production was located in the dPM at Montreal Neurological Institute (MNI) coordinate [−51, −9, 42]. The average center of mass for perception-related activations in dPM noted by Wilson et al. (2004) was at MNI coordinate [−50, −6, 47] (see Figure 7, Discussion).

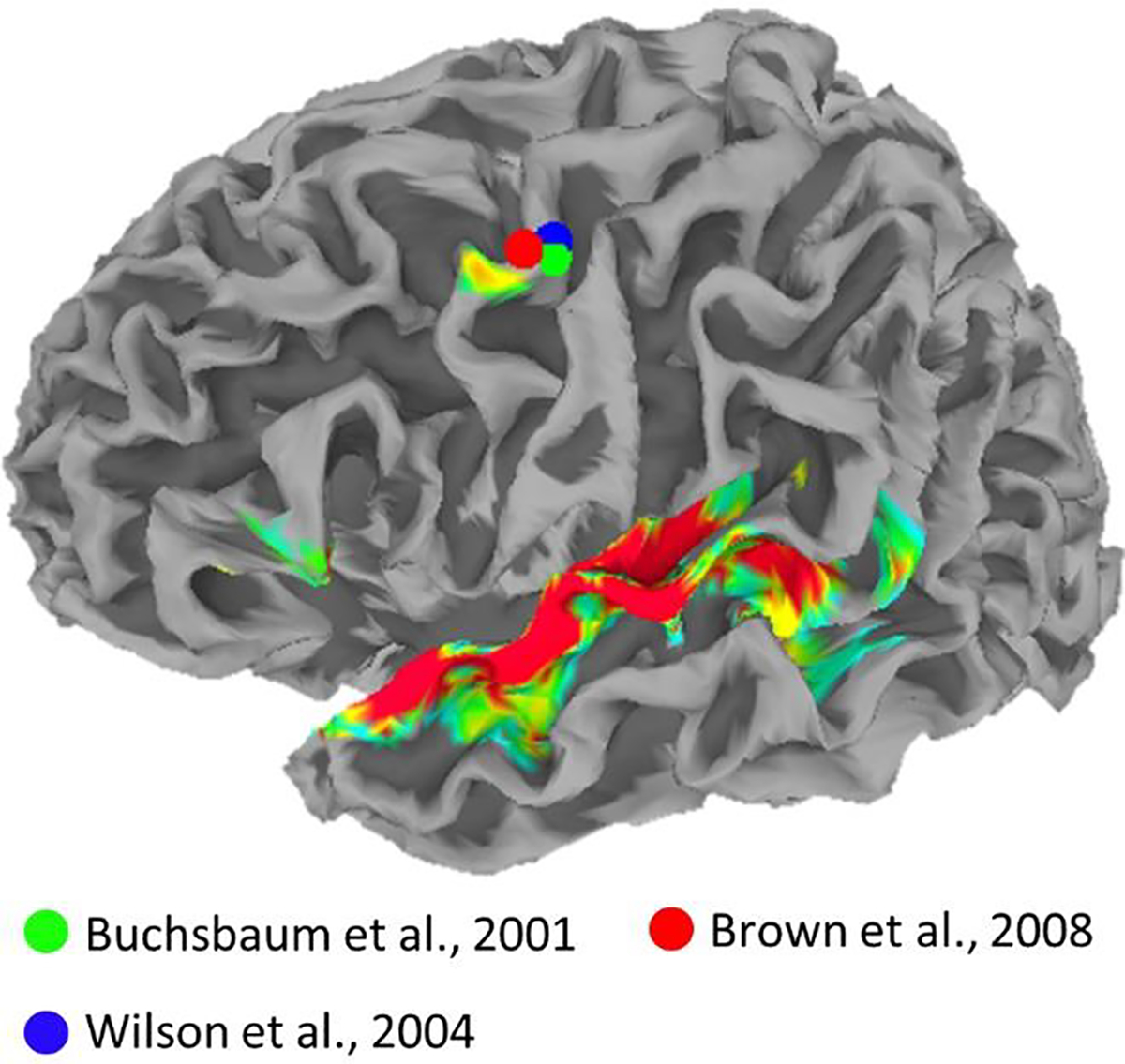

Figure 7.

Heatmap is a replication of Figure 4A. Spheres show peak dorsal premotor coordinates from previous studies showing activation during sensory and motor speech tasks (Buchsbaum et al., 2001, green; Wilson et al., 2004, blue) and during laryngeal motor tasks (Brown et al, 2008, red). Plots are overlaid on the standard topology white-matter-boundary surface derived from the Colin27 template in MNI space.

Despite the similarity of these findings, the study by Wilson et al. (2004) was cited more widely among studies on speech perception and became a major precipitant to years of renewed interest in the role of the motor cortex in speech perception (cf., D’Ausilio, Craighero, & Fadiga, 2012; Peelle, 2012; J. E. Peelle, I. S. Johnsrude, & M. H. Davis, 2010; Poeppel & Assaneo, 2020; Pulvermuller & Fadiga, 2010; Skipper, Devlin, & Lametti, 2017; Tremblay & Small, 2011). Overall, this more recent research has coalesced around analysis-by-synthesis (Bever, 2010; Skipper, Nusbaum, & Small, 2005) and Bayesian inference (Moulin-Frier, Diard, Schwartz, & Bessière, 2015) frameworks in which heard (or seen) speech is, at some level, re-encoded in terms of the articulatory commands used to generate the speech signal in order to form perceptual hypotheses that constrain the analysis and interpretation of incoming speech sounds. While several researchers have dismissed the notion that such mechanisms are a core component of speech perception (Hickok, 2010; Holt & Lotto, 2008; Rogalsky et al., 2020; Scott, McGettigan, & Eisner, 2009; Venezia & Hickok, 2009), it has been acknowledged that motor speech circuits likely play a small, modulatory role in certain listening situations (Stokes, Venezia, & Hickok, 2019).

An alternative computational account of the motor system’s involvement in speech perception is that some motor regions process heard speech in terms of its auditory features, without necessarily translating heard speech into motor commands. Specifically, recent studies show that pre- and primary-motor speech regions fail to discriminate among heard syllables in terms of their articulatory features (i.e., place of articulation; Arsenault & Buchsbaum, 2016; Cheung, Hamilton, Johnson, & Chang, 2016). However, a region within dPM discriminates among heard syllables based on features that are acoustically well defined (i.e. manner of articulation), shows auditory-like tuning to the spectrotemporal modulations in continuous speech, and tracks time-varying acoustic speech features including the spectral envelope, rhythmic phrasal structure, and pitch contour (Berezutskaya, Baratin, Freudenburg, & Ramsey, 2020; Cheung et al., 2016). Chang and colleagues (Breshears, Molinaro, & Chang, 2015; Dichter, Breshears, Leonard, & Chang, 2018) have shown convincingly that this region is part of the human dorsal laryngeal motor cortex (dLMC; cf., Brown, Ngan, & Liotti, 2008) and has both auditory and motor representations of vocal pitch. Among primates, the dLMC is unique to humans and likely evolved to provide voluntary control of the larynx (Belyk & Brown, 2017; Dichter et al., 2018; Simonyan, 2014). It has been suggested that the dLMC contains sulcal (BA 4) and gyral (BA 6) components (Brown, Yuan, & Belyk, 2020). The seminal study by Brown and colleagues (2008) puts the left-hemisphere peak of the gyral component at MNI coordinate [−54, −2, 46] (see Figure 7, Discussion).

To summarize, we and others have long recognized dPM as an important node in the auditory-motor “dorsal stream” that responds during both perception and production (Chen, Penhune, & Zatorre, 2009; Hickok & Poeppel, 2004). Initially, we dismissed dPM’s response to heard speech as somewhat trivial given that feedforward auditory-to-motor activation is expected within the dorsal stream where auditory representations serve as the “targets” for speech production (Venezia & Hickok, 2009). However, recent research suggests that speech is represented differently in dPM depending on whether speech is perceived or produced. This may relate to the fact that dPM is located within the dLMC, which some researchers have identified as playing crucial role in integrating phonation with other aspects of speech motor control (Belyk & Brown, 2017; Brown et al., 2020). We have independently suggested that dPM is part of the cortical circuit for laryngeal motor control (Hickok, 2017). Therefore, one hypothesis is that dPM responds to multiple aspects of speech motor control during production (Belyk et al., 2020; Cheung et al., 2016) but responds preferentially to pitch/voicing during perception (Cheung et al., 2016), which may owe to the presence of non-identical subpopulations of neurons coding auditory and motor features, respectively, as is typical of brain regions involved in sensorimotor integration (Sakata, Taira, Murata, & Mine, 1995).

We recently developed a method called Auditory Bubbles to estimate speech-driven spectrotemporal receptive fields (STRFs) using fMRI (Venezia, Thurman, Richards, & Hickok, 2019b). Using this method, we detected widespread and reliable responses to spectrotemporal speech features in the auditory core and immediate surrounds, superior temporal gyrus/sulcus, and planum temporale. We did not detect such responses beyond these classic auditory-speech regions, e.g., in inferior frontal or speech motor regions. However, spectrotemporal responses were quantified at the second (group) level using univariate statistical methods that were not optimized to detect less robust spectrotemporal responses in focal brain regions such as the dPM/dLMC (cf., Cheung et al., 2016). Here, we develop a new multivariate analysis for Auditory Bubbles – and applicable to any related feature-encoding method – that maximizes sensitivity to spectrotemporal responses while maintaining full statistical rigor at the second level. Note, here and elsewhere we use ‘multivariate’ to refer to statistical analysis of linear-encoding-model ‘weights’ associated with stimulus features in a multidimensional space, not to incorporation of information from multiple neighboring voxels such as in multivoxel pattern analysis. Using this technique, we re-analyze our original dataset with the intention of revealing STRF-like responses in inferior frontal speech-motor regions. To preview, we indeed find such responses in the two frontal regions previously identified as responding to heard speech: left dPM and left inferior frontal gyrus (IFG). Of note, Auditory Bubbles estimates STRFs in the spectrotemporal modulation domain (Figure 1). To improve the efficiency of STRF estimation, Auditory Bubbles introduces acoustic variation into the speech signal via spectrotemporal modulation filtering, rendering the filtered speech sometimes intelligible and sometimes unintelligible. Thus, Auditory Bubbles is uniquely positioned to answer several outstanding questions about the computations performed in left dPM and IFG during perception of continuous speech: (a) what acoustic-speech information are these regions sensitive to and how does this relate to information encoded at early and late stages of auditory processing in classic temporal lobe regions?; (b) do these patterns interact with speech intelligibility?; and (c) how well can spectrotemporal response properties be used to predict activation in inferior frontal versus temporal speech regions for speech that is (un-)intelligible?

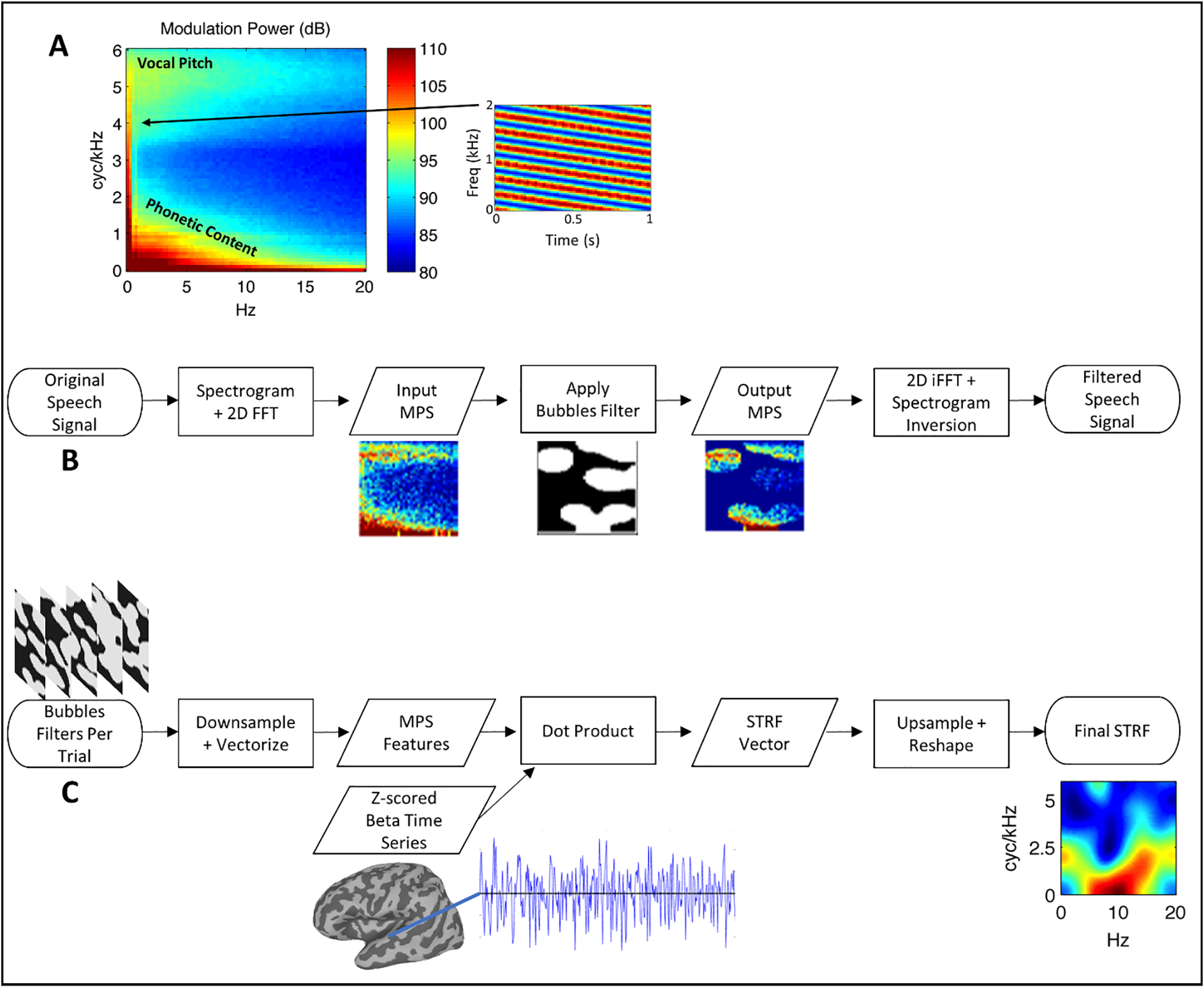

Figure 1.

(A) Average MPS of 452 IEEE sentences spoken by a female talker. The MPS describes the speech spectrogram as a weighted sum of spectrotemporal ripples containing energy at a unique combination of temporal (abscissa, Hz) and spectral (ordinate, cyc/kHz) modulation rate. An example of a downward-sweeping ripple (2Hz, 4 cyc/kHz) is shown at right. In this figure and throughout, energy at a given pixel location in the MPS reflects the average of downward- and upward-sweeping ripples at that location. Modulation energy clusters into two discrete regions: a high-spectral-modulation-rate region corresponding to vocal pitch (F0 Harmonics) and a low-spectral-modulation-rate region corresponding the resonant frequencies of the vocal tract (formants/phonetic content). Color scale = dB (arb. ref). (B) Flow-chart schematic of the signal processing steps involved in Bubbles filtering. (C) Flow-chart schematic of the Bubbles fMRI analysis (see also Eq. 1).

In principle, responses to heard speech in IFG and dPM could reflect auditory, motor, or auditory-motor processing. We aimed to characterize these responses in the STRF domain using a series of follow-up analyses to disentangle fundamentally auditory responses from motor, auditory-motor, or other higher-level (i.e., speech-specific) responses. Our confidence in interpreting a response as auditory increases if the following hold true: (i) a significant STRF-like response is observed, given that, generally, responses to speech in the spectrotemporal modulation domain are more likely to reflect processing of auditory-phonetic speech cues than processing at the articulatory-phonetic, phonological, or some other higher level; (ii) within the STRF, a significant response to vocal pitch is observed, given that vocal pitch is acoustically well defined in the spectrotemporal modulation domain (Figure 1); and (iii) an STRF-like response is observed within trials rated as unintelligible by the listener (i.e., when speech is not processed as speech per se), given that the ability to extract speech-motor or other speech-specific cues is greatly reduced when the presence of such cues in the signal is, by definition, diminished. Finally, for a given non-auditory region, confidence that its response is auditory-driven is further increased if that region is functionally connected to early auditory regions rather than higher-level auditory regions that respond selectively to intelligible speech.

Briefly, we find that responses in left IFG are primarily driven by spectotemporal features that mediate speech intelligibility (i.e., its properties mirror a classic intelligible vs. unintelligible contrast; Narain et al., 2003; Okada et al., 2010), while those in left dPM are driven by additional spectrotemporal features including, most notably, features associated with vocal pitch, though both regions are better activated by intelligible than unintelligible speech. In addition, both regions show some level of response to surface-level acoustic characteristics within trials rated as unintelligible by listeners, but this response is more pronounced in left dPM compared to left IFG. Interestingly, sensitivity to vocal pitch in left dPM emerges only within unintelligible trials. Finally, though both regions show functional connectivity with regions in the auditory cortex, left dPM is maximally correlated with regions in and around Heschl’s gyrus while left IFG is maximally correlated with downstream speech regions in the superior temporal gyrus/sulcus and middle temporal gyrus. We also report evidence that the bilateral early visual cortex shows STRF-like responses and patterns of functional connectivity similar to left dPM. In the Discussion, we clarify how these response profiles differ and speculate as to the role, if any, of visual regions in auditory speech processing.

2. Materials and Methods

2.1. Overview

A detailed description of the materials and methods is given by Venezia et al. (2019b). Here, we recapitulate only those methodological details necessary to understand the study, but we describe in full detail the present re-analysis of the data. In this overview section, we describe the Auditory Bubbles method and its use in fMRI to estimate speech-driven spectrotemporal receptive fields (STRFs). Auditory Bubbles falls within the class of techniques referred to as ‘relative weights’ or ‘reverse correlation’ in the psychoacoustic and/or neurophysiological literatures (Klein, Depireux, Simon, & Shamma, 2000; Matiasek & Richards, 1999), and as ‘encoding models’ in the fMRI literature (Naselaris, Kay, Nishimoto, & Gallant, 2011). In the context of neurophysiological responses to complex natural sounds such as speech, this class of methods is used to estimate STRFs (Theunissen & Elie, 2014). In typical STRF estimation, natural variation in the acoustic envelope of individual spectral bands is mapped to a time-locked neurophysiological response (e.g., a spike train) using a deconvolutional linear model. The STRF comprises the model weights, which describe the spectrotemporal patterns that best activate a given unit of analysis (neuron, voxel, electrode, etc.). The STRF can be visualized as an ‘impulse-response spectrogram’ with a time axis (abscissa) centered on time zero (impulse onset) and a frequency axis (ordinate) spanning the range of frequencies present in the stimulus. Alternatively, the STRF can be transformed into rate-scale space via the two-dimensional Fourier transform (Theunissen & Elie, 2014), such that best responses are described in terms of temporal (abscissa) and spectral (ordinate) modulations.

In fMRI, rate-scale STRFs must be estimated directly in the rate-scale space because sampling of the neurophysiological signal is much slower than the relevant temporal modulation rates in natural sounds (and sampling is often temporally sparse as well; Santoro et al., 2014; Venezia et al., 2019b). In other words, the acoustic stimulus must first be transformed to rate-scale space prior to the linear modeling phase of STRF estimation. This is the approach taken by Auditory Bubbles, though we take the additional step of introducing artificial acoustic variation into the stimulus by applying a filter in the rate-scale domain. This improves the efficiency of STRF estimation while introducing some acoustic distortion, where the latter has advantages (e.g., allows for a calibrated behavioral task) and disadvantages (e.g., makes the stimulus sound less natural). Here, we refer to the rate-scale representation of sounds as the modulation power spectrum (MPS). Briefly, Auditory Bubbles applies a binary filter to the MPS of acoustic speech stimuli – typically sentences – such that contiguous segments of the MPS are either retained in the stimulus or removed. The shape of the filter, which determines the MPS segments that will be retained/removed, is determined quasi-randomly. A different filter is applied to each sentence in the stimulus set, and the filtered sentences are presented sequentially to a listener in the MRI scanner. After scanning, the filters themselves – each summarizing the spectrotemporal patterns retained in each sentence – are weighted by the response elicited by the corresponding sentence and summed to produce a STRF. Essentially, the weighted sum is a linear model (cf., Venezia, Hickok, & Richards, 2016; Venezia, Leek, & Lindeman, 2020; Venezia, Martin, Hickok, & Richards, 2019a) whose predictors are the binary filters and whose criterion is the sentence-by-sentence fMRI response magnitude (i.e., event-related beta time series). A schematic of this process is shown in Figure 1C. Notably, Auditory Bubbles obtains the MPS from sentence spectrograms with a linear frequency axis, which results in speech energy clustering in two locations of the MPS – a high-spectral-modulation-rate region associated with vocal pitch and a low-spectral-modulation-rate region associated with phonetic content (roughly formant-scale features; Figure 1A). Thus, STRFs estimated with Auditory bubbles also cluster in these two regions of the MPS.

2.2. Participants

There were ten participants (mean age = 26, range = 20–33, 2 females) all of whom were right-handed, native speakers of American English with self-reported normal hearing and normal or corrected-to-normal vision. The participants all gave their informed consent in accordance with the University of California, Irvine Institutional Review Board guidelines.

2.3. Stimuli

The stimuli were recordings of 452 sentences from the Institute of Electrical and Electronics Engineers (IEEE) sentence corpus (IEEE, 1969) spoken by a single female talker. Each sentence was stored as a separate .wav file digitized at 22050 Hz with 16-bit quantization and the waveforms were zero-padded to an equal duration of 3.29 s. The stimuli were then filtered to remove randomly selected segments of the MPS (Figure 1B). Specifically, a log spectrogram was obtained using Gaussian windows with a 4.75 ms-33.5 Hz time-frequency scale and the MPS was then obtained as the modulus of the two-dimensional Fourier transform of the spectrogram. A binary “bubbles” filter was then multiplied with the MPS and the filtered waveform was obtained via inverse Fourier transform and iterative inversion of the resultant magnitude spectrogram (Griffin & Lim, 1984; Theunissen & Elie, 2014). Specifically, a 2D image of the same dimensions as the MPS was created with a set number of randomly selected pixel locations assigned the value 1 and the remainder of pixels assigned the value 0. A symmetric Gaussian low-pass filter (sigma = 7 pixels) was then applied to the image and all resultant values above 0.1 were set to 1 while the remaining values were set to 0. This produced a binary image with several contiguous regions with value 1. A second Gaussian low-pass filter (sigma = 1 pixel) was applied to smooth the edges between 0- and 1-valued regions, producing the final 2D filter. The number of pixels originally assigned a value of 1 corresponds to the number of “bubbles” in the filter. It is noteworthy that the low-pass form of the bubbles filters propagates to STRFs estimated from those filters (see Section 2.7.1), resulting in smoother STRFs than might be obtained from methods such as autocorrelation-normalized reverse correlation or boosting (David, Mesgarani, & Shamma, 2007; Theunissen, Sen, & Doupe, 2000). For each of the 452 sentences, filtered versions were created using independent, randomly generated filter patterns. This renders some filtered items unintelligible while others remain intelligible. Separate sets of filtered stimuli were created using different numbers of bubbles (20–100 in steps of five), producing a total of 7684 filtered sentences. All stimuli were generated offline and stored prior to the experiment. The average proportion of the MPS revealed to the listener is ~ 0.25 for 20 bubbles and ~ 0.7 for 100 bubbles. Examples of filtered stimuli with 50 bubbles are given in Supplementary Audio 1–3 (each presents a filtered sentence followed by the unfiltered source).

2.4. Procedure

Participants were presented with filtered sentences during sparse acquisition fMRI scanning. On each trial, a single filtered sentence was presented in the silent period (4 s) between image acquisitions (2 s). Stimulus presentation was triggered 400 ms into the silent period and sentence duration ranged from 1.57–3.29 s (mean = 3.02 s). At the end of sentence presentation, participants were visually cued to make a subjective yes-no judgment indicating whether the sentence was intelligible or not. The number of bubbles was adjusted using an up-down staircase such that participants rated sentences as intelligible on ~ 50% of trials. Participants performed 45 trials per run along with 5 trials of a visual baseline task that were quasi-randomly intermixed. We originally included the visual baseline task so that contrasts such as ‘speech vs. baseline’ would include a meaningful baseline condition rather than rest, but here the baseline condition is irrelevant to, and therefore not included in, all subsequent analyses. Two participants completed a total of 9 scan runs (405 experimental trials) and the remaining eight participants completed a total of 10 scan runs (450 experimental trials). Acoustic stimuli were amplified using a Dayton DTA-1 portable amplifier and presented diotically over Sensimetrics S14 piezoelectric earphones. Participants adjusted the volume to a comfortable level slightly above that of conversational speech (~75–80 dB SPL).

2.5. Image Acquisition Parameters

Images were acquired at the University of California, Irvine Neuroscience Imaging Center using a Philips Achieva 3T MRI scanner with a 32-channel sensitivity encoding (SENSE) head. A sparse, gradient-echo EPI acquisition sequence was employed (35 axial slices, interleaved slice order, TR = 6 s, TA = 2 s, TE = 30 ms, flip = 90°, SENSE factor = 1.7, reconstructed voxel size = 1.875 × 1.875 × 3 mm, matrix = 128 × 128, no gap). Fifty-two EPI volumes were collected per scan run. A single high-resolution, T1-weighted anatomical image was collected for each participant using a magnetization prepared rapid gradient echo (MPRAGE) sequence (160 axial slices, TR = 8.4 ms, TE = 3.7 ms, flip = 8°, SENSE factor = 2.4, 1 mm isotropic voxels, matrix = 256 × 256).

2.6. Preprocessing

Preprocessing of the functional data was performed using AFNI v17.0.05 (Cox, 2012). Functional images were slice-timing corrected based on slice time offsets extracted from the Philips PAR files, followed by motion correction and co-registration to the T1 image. The functional data were then mapped to a merged, standard-topology surface mesh using Freesurfer v5.3 (Fischl, 2012), AFNI, and the “surfing” toolbox v0.6 (https://github.com/nno/surfing; Oosterhof, Wiestler, Downing, & Diedrichsen, 2011). The surface-space data were smoothed to a target level of 4 mm full width at half maximum and scaled to have a mean of 100 across time points subject to a range of 0–200. At each node in the cortical surface mesh, a beta time series was obtained using the least squares separate (LSS) technique (AFNI 3dLSS; Mumford, Turner, Ashby, & Poldrack, 2012). The original surface mesh was produced using linear icosahedral tessellation with 128 edge divides, resulting in a two-hemisphere mesh with 327684 nodes. To reduce computational burden, the surface mesh was subsampled for eight decimation iterations using the function surfing_subsample_surface in the surfing toolbox. This produced a new surface mesh with 78812 nodes. Subsequent analyses were performed on the subsampled surface.

2.7. Analysis

2.7.1. STRF Estimation

The original dimensions of the binary bubbles filters were 165 × 215 pixels, corresponding to 0–16 cyc/kHz and 0–50 Hz on the MPS. For analysis, pixels at MPS locations > 6 cyc/kHz and > 20 Hz were discarded, resulting in filters of size 67 × 86. These were then vectorized to produce feature vectors of length 5762. To reduce the dimensionality of the feature space and eliminate covariance among the individual features, an experiment-wide feature matrix was generated by stacking the feature vectors across trials and participants. This feature matrix was then submitted to principal component analysis (PCA). It was determined that the first 104 components in the orthogonal PCA space explained > 95% of the variance in the original feature space. These components were thus retained, and the remainder discarded. The resultant, experiment-wide matrix of PCA scores, which represented the trial-by-trial bubbles feature vectors in the PCA-reduced space, was then split by participant, resulting in 10 participant-level feature matrices. For a given participant, this yielded a feature matrix, F, with number of rows equal to number of trials completed by that participant and number of columns equal to the number of the dimensions in the PCA-reduced feature space (104). At each cortical surface node (voxel-like unit), the beta time series containing a single estimate of activation magnitude (percent signal change) for each bubbles-filtered sentence was z-scored, resulting in a criterion matrix, C, with number of rows equal to the number of trials and number of columns equal to the number of surface nodes (78812). For each participant, a STRF matrix (i.e., STRF brain volume) of dimensions 78812 × 252 was obtained as:

| (1) |

In other words, at each cortical surface node a STRF was calculated as the sum of each feature across trials weighted by the z-scored activation on the corresponding trial. Of note, STRFs can be obtained in the original, full-resolution feature space by multiplying each element of the PCA-reduced STRF with the length-5762 vector of principal component weights for the corresponding principal component. We performed this backward projection into the original space to generate STRF plots and summary measures (2.7.4), computed statistics for second-level STRF alignment in the PCA-reduced space (2.7.3).

2.7.2. STRF Decomposition by Intelligibility

A residualizing procedure was used to decompose STRFs into separate components reflecting the neural response to trials with the same (what we call “within” STRFs) versus different (what we call “between” STRFs) intelligibility ratings. To accomplish this, a regression model with a single effect-coded predictor reflecting the behavioral response on each trial (1 = rated as intelligible, −1 = rated as unintelligible) was fitted at each cortical surface node where the criterion variable was the z-scored beta time series at that node. Two output time series were obtained from the regression: (1) the predicted values, , reflecting the mean activation to intelligible vs. unintelligible trials, respectively; and (2) the residuals, r, reflecting activation across trials with the main effect of intelligibility removed. Across all cortical surface nodes, this yielded two new criterion matrices, and Cr. The matrix Cr was further divided into separate matrices, Cr_intel and Cr_unintel, reflecting activation across trials rated as intelligible and unintelligible, respectively. The feature matrix, F, was similarly decomposed into Fintel and Funintel. Three additional STRF matrices were then obtained as follows:

| (2) |

| (3) |

| (4) |

This decomposition has the advantage that STRFs obtained from Eq. 1 must be equal to the sum of STRFs obtained from Eqs. 2–4. However, we cannot immediately conclude that “between” effects of intelligibility reflect high-level speech or linguistic processing, nor can we immediately conclude that such effects reflect general acoustic tuning. What is certain is that “between” STRFs must, by definition, reflect those MPS regions that support intelligibility behaviorally (Venezia et al., 2016; Venezia et al., 2019a; Venezia et al., 2019b). Conversely, it is likely that “within” STRFs reflect general acoustic tuning at some level, although such tuning may still be modulated by the experimental setting (i.e., stimuli that are exclusively speech and a task that requires subjective ratings of the speech according to intelligibility). Moreover, the “within” STRFs must, by definition, be biased away from those MPS regions described by the “between” STRFs.

2.7.3. Second-Level Assessment of STRF Alignment

The objective of STRF analysis at the second level is to determine whether the spectrotemporal response patterns exhibited at a given cortical surface node are reliable across subjects. Previously, we took the approach of calculating a t-statistic separately for each STRF feature, applying false discovery rate correction across features (Benjamini & Hochberg, 1995), clustering the data at corrected p < 0.05, and thresholding at the cluster level by obtaining a null distribution of max cluster sizes after sign-flipping the individual-participant STRFs in random order (Venezia et al., 2019b). With 10 participants, we previously used all possible sign-flip orders to form a null distribution of length 1022 (2^10 – 2). This method was sufficiently powered to detect large clusters of voxels with STRFs in the auditory cortex and immediate surrounds, but not small clusters that might be expected in nonspeech or speech-motor brain regions.

Here, we take an approach similar to Das and colleagues (2020) who used the multivariate, one-sample Hotelling T2 test with threshold free cluster enhancement (TFCE; Smith & Nichols, 2009) to assess significance of an entire feature vector at the second level. In our case, this corresponds to a test for a reliable pattern of STRF responses across participants at a given cortical surface node after correcting for multiple comparisons. However, we will replace the Hotelling T2 with a recently developed nonparametric alternative (Wang, Peng, & Li, 2015) that is appropriate for cases where the dimensionality of the feature space is high relative to the number of observations, and where the data may not be normally distributed. For a data matrix with n observations of p variables, the asymptotic null distribution (n, p → ∞) of the test statistic is standard normal. Thus, for the present application, large positive values indicate the individual-participant STRFs at a given cortical surface node are reliably concentrated in a similar region of the unit hypersphere and large negative values indicate that individual-participant STRFs are uniformly distributed about the unit hypersphere. This test statistic, which we shall call Z, was calculated at each cortical surface node. A null distribution of Z images was formed by first randomly permuting the trial order (rows) of the bubbles feature matrix, F, and then obtaining Z (Eq. 1) at each surface node (Stelzer, Chen, & Turner, 2013). In all, 10,000 permutations resulted in a null distribution of 10,000 Z images. Figure 2 compares the empirical null distribution of Z to the standard normal distribution. Relative to the standard normal, the empirical null distribution of Z is right-skewed and highly kurtotic, which is the expected behavior under the null when n or p is not sufficiently large. However, this is not problematic given we adopted TFCE, a nonparametric approach to cluster-level statistical testing and multiple comparison correction. Specifically, the original Z image and the null Z images were submitted to TFCE using CoSMoMVPA v1.1.0 (Oosterhof, Connolly, & Haxby, 2016). A node-wise, uncorrected p-value image was then obtained as the proportion of null TFCE values exceeding the true TFCE value at each node. The resultant p-value image was then corrected for multiple comparisons using the false discovery rate procedure (Benjamini & Hochberg, 1995; Chumbley, Worsley, Flandin, & Friston, 2010; Leblanc, Dégeilh, Daneault, Beauchamp, & Bernier, 2017).

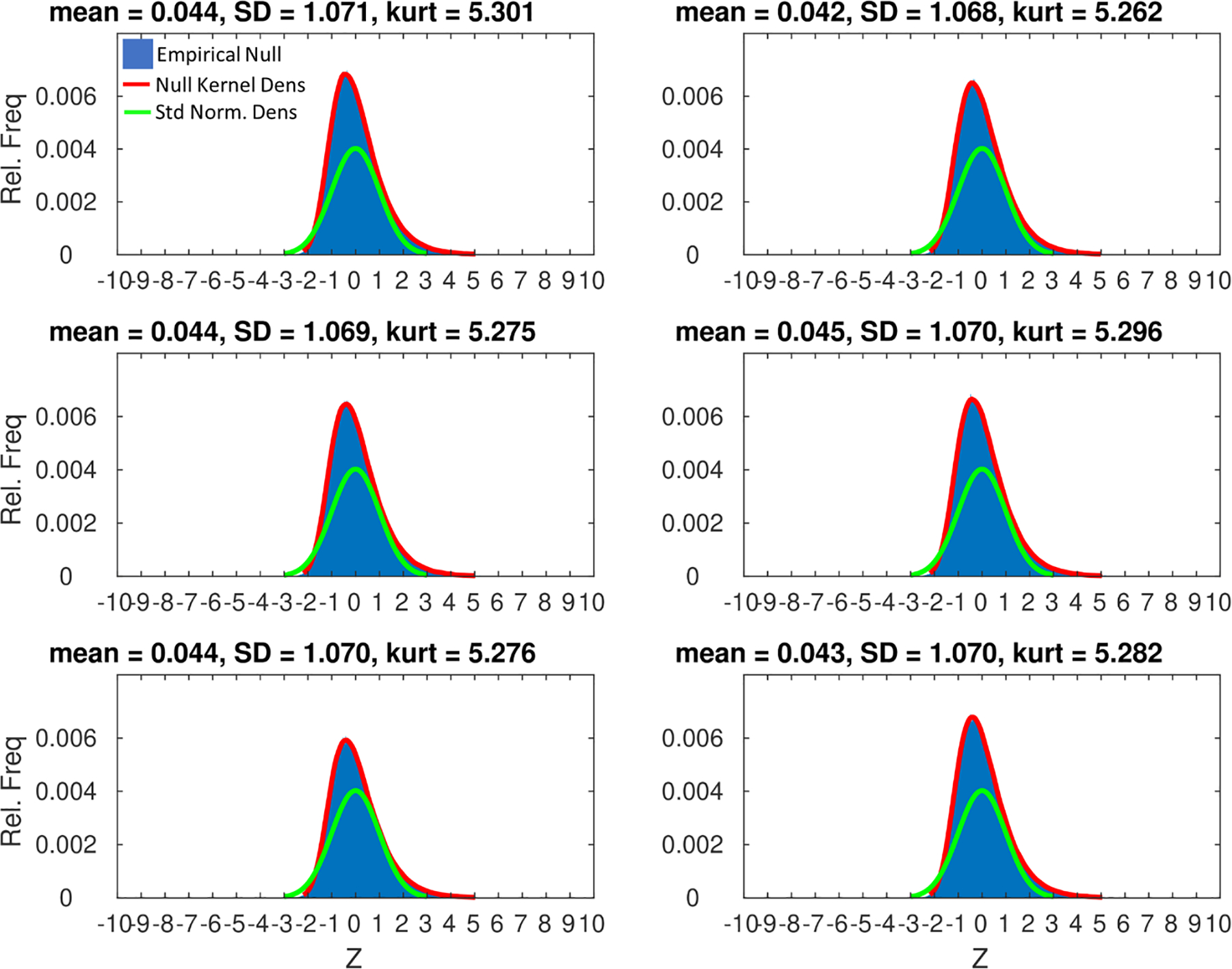

Figure 2.

For six samples of 100 cortical surface nodes selected at random from across the whole brain (panels), the empirical null distribution of Z (10,000 draws after random permutation of the trial order of bubbles feature vectors) is plotted as a relative frequency histogram (blue) overlayed with a kernel density estimate of the continuous probability density function of Z (red) and the standard normal probability density function (green). Above each panel is shown the mean, standard deviation (SD), and kurtosis (kurt) of the null distribution of Z for the corresponding sample. The empirical null is right-skewed and more kurtotic compared to the standard normal, which has a kurtosis of 3.

We chose TFCE for cluster-level analysis because: (i) TFCE obviates the requirement to choose an initial cluster forming threshold; (ii) TFCE maintains sensitivity to small local clusters with strong activation and large, spatially diffuse clusters with relatively weak activation, and (iii) TFCE is nonparametric (Smith & Nichols, 2009), which was important given the non-Gaussian form of the null distribution of the test statistic (Figure 2). A one-tailed test was employed because we were only interested in detecting STRFs with reliably similar – as opposed to reliably dissimilar – response properties across participants. Our primary interest was to apply this analysis to STRFs derived from Eq. 1. However, uncorrected Z maps were also obtained for STRFs derived from Eqs. 3–4 (“within” STRFs). These maps must be interpreted with caution because “within” STRFs are necessarily biased, as described above. No Z map was generated for “between” STRFs because the values of Z would be overwhelmed by bias resulting in unreliable statistics. For “between” STRFs, a map of the maximum STRF amplitude was obtained after averaging the STRFs across participants.

2.7.4. Region of Interest Analyses

Our multivariate analysis of STRF alignment with TFCE revealed five significant clusters outside the auditory cortex: left dPM, left IFG, calcS, right ventral speech-premotor cortex (vPM) and right supplementary motor area (SMA). For each of these regions, we obtained the mean value of Z across all nodes in the region for the overall STRF (Eq. 1), STRFwithin_intel (Eq. 3), and STRFwithin_unintel (Eq. 4). We also obtained the mean across all nodes in the region of the peak pixel intensity of STRFbetween (Eq. 2). We then obtained region level averages of STRFbetween, STRFwithin_intel, and STRFwithin_unintel. At each pixel in these region-level average STRFs, we obtained an importance index by calculating the sum, pixel by pixel, of the absolute pixel intensity across the STRF subtypes (STRFbetween, STRFwithin_intel, and STRFwithin_unintel) and expressing the contribution to this sum from each subtype as a proportion, resulting in three “proportion images”, one for each STRF subtype. We then multiplied these proportion images, pixel by pixel, with the absolute value of the region-level average overall STRF (Eq. 1). This produced three “importance images” for the three STRF subtypes. To obtain an overall measure of importance, the mean across-pixel importance was obtained each STRF subtype, and these means were scaled to sum to one. In other words, the importance index expresses the relative contribution of each decomposed STRF (Eqs. 2–4) to the overall STRF (Eq. 1). These overall importance indices were obtained separately for each region of interest. Finally, for each region of interest, we obtained a leave-one-out (LOO) estimate of the overall STRF’s ability to predict the region’s beta time series within intelligible and unintelligible trials, respectively. Specifically, for each cortical surface node in a given region, the beta time series, y, was obtained and a LOO predicted time series, , was calculated as follows. In each LOO iteration, the beta value, yLOO, and feature vector, FLOO, from a single trial were held out and STRFLOO was produced from the remaining data using Eq. 1. The held-out data point was then predicted using:

| (5) |

Iterating over all trials in order, was obtained by appending to the accumulated after each iteration. A Bayesian hierarchical linear mixed model was then employed to determine the ability of to predict y across participants. The form of the model was:

| (6) |

where intel is a binary predictor encoding trial-by-trial intelligibility ratings (1 = intelligible, 0 = unintelligible), is the product of intel==1 and , and is the product of intel==0 and . Prior to fitting the model, y and were z-scored within each participant. In Eq. 6, terms to the right of equality are fixed (non-parenthetical; group level) or random (parenthetical; participant level). This model is equivalent to a model with a main effect of intel and the two-way interaction of intel and , but in Eq. 6 the interaction is instead coded as simple effects of within intelligible and unintelligible trials, respectively. Note, here and in subsequent Eqs. 7–8, we use R model formula notation in which the names of the predictor variables in the fixed and random effects design matrices are specifically enumerated, but the associated regression coefficient terms (e.g., , etc.) are suppressed. The model was fitted using the BayesFactor package v0.9.12–4.2 in R v3.6.1 (R Core Team, 2019). The prior scale was set to ‘medium’ for fixed effects and ‘nuisance’ for random effects. The Bayes factor was obtained for the fixed effects of and using a model comparison approach (Morey & Rouder, 2011). That is, separate null models were fitted without the fixed effects and , respectively, and a Bayes factor was obtained showing the ratio of the evidence in favor of the full model (Eq. 6) versus each null model. We refer to these quantities as BFintel and BFunintel, where, controlling for “between” effects, values above one reflect increasing evidence that has some predictive validity with respect to y and values below one reflect increasing evidence that has no predictive validity with respect to y. The direction of this predictive validity is ambiguous in the Bayes factor and must be determined from the sign of the fixed effects regression coefficients. To generate region-level summaries, the geometric means of BFintel and BFunintel were obtained across all nodes in the region. For non-auditory regions of interest, we also obtained the 80th percentile of BFintel and BFunintel across all nodes in the region to identify cases where, despite a relatively low geometric mean, a nontrivial proportion (at least 20%) of nodes demonstrated high predictive validity.

2.7.5. Beta Time Series Functional Connectivity

For left dPM, left IFG, and calcS, we were interested in whether and which auditory cortical regions were functionally connected with these non-auditory regions of interest. Therefore, we performed a whole brain beta time series functional connectivity analysis for each of these three regions of interest. First, the mean beta time series from a given region of interest was extracted and z-scored within participants. A first-level linear regression model was then fitted as:

| (7) |

where yn is the z-scored beta time series at a given cortical surface node, intel is an effect-coded predictor reflecting intelligibility ratings (1 = intelligible, −1 = unintelligible), yseed is the z-scored beta time series from the seed region, and ‘*’ denotes the two-way interaction. No intercept was included in the model because yn was z-scored. The terms of interest in Eq. 7 were yseed and intel * yseed. The first-level regression coefficients for these terms were thus analyzed at the second level via one-sample t-test with false discovery rate correction (Benjamini & Hochberg, 1995). Second-level connectivity maps were thresholded at corrected p < 0.01. For intel * yseed, no significant surface nodes were detected after correction for multiple comparisons. Before concluding that no significant interaction of connectivity by intelligibility rating was present, we ran an additional Bayesian hierarchical model of the form:

| (8) |

This model can be interpreted just as Eq. 7, except it has been extended to a hierarchical (i.e., first-plus-second level) framework as in Eq. 6. The model was analyzed using the BayesFactor package just as explained for Eq. 6. The term of interest was the fixed effect of intel * yseed. Therefore, at each cortical surface node we produced a Bayes factor showing the ratio of the evidence in favor of the full model (Eq 8) and a null model without the fixed effect of intel * yseed. This interaction Bayes Factor map was thresholded at |logBF| > 1.6 (BFfull > 5 or BFnull > 5) for visualization.

For each of the three seed regions of interest – left dPM, left IFG, calcS – subregions of the auditory cortex were identified containing only those surface nodes that (a) were significantly correlated with the seed at the second level (yseed in EQ. 7; false-discovery-rate-corrected p < 0.01) and (b) had second-level correlations with the seed more than one standard deviation above the mean among auditory-cortical surface nodes with significant STRF alignment. Further, for left dPM and left IFG, subregions of the auditory cortex were identified containing only those surface nodes whose second-level interaction of seed connectivity by intelligibility rating were significant (Eq. 8, BFfull > 5). For each of these auditory-cortical subregions, average STRFs were calculated using Eqs. 1–4, and all summary measures described in the ‘Region of Interest Analyses’ subsection were tabulated.

3. Results

3.1. Significant STRF Alignment Beyond the Auditory Cortex

The results of our primary second-level analysis, which probed for reliable cross-subject alignment of STRFs (see Eq. 1), are shown in Figure 3A. In the auditory cortex and surrounds, this analysis replicated our previous results (Venezia et al., 2019b) with significant STRF alignment in the bilateral supratemporal plane, planum temporale, and superior temporal gyrus/sulcus (STG/S). Additionally, a new cluster emerged in the right pSTS that was disjoint from the main cluster of significant STRF responses in the right auditory cortex. This suggests that our analytic method was sensitive enough to detect relatively small clusters with strong, selective responses to the spectrotemporal modulations in speech. Indeed, unlike our previous result, we also detected small clusters of significant STRF responses outside the auditory cortex, with notable clusters in the left dPM, left IFG, right SMA, right vPM, and bilateral calcS.

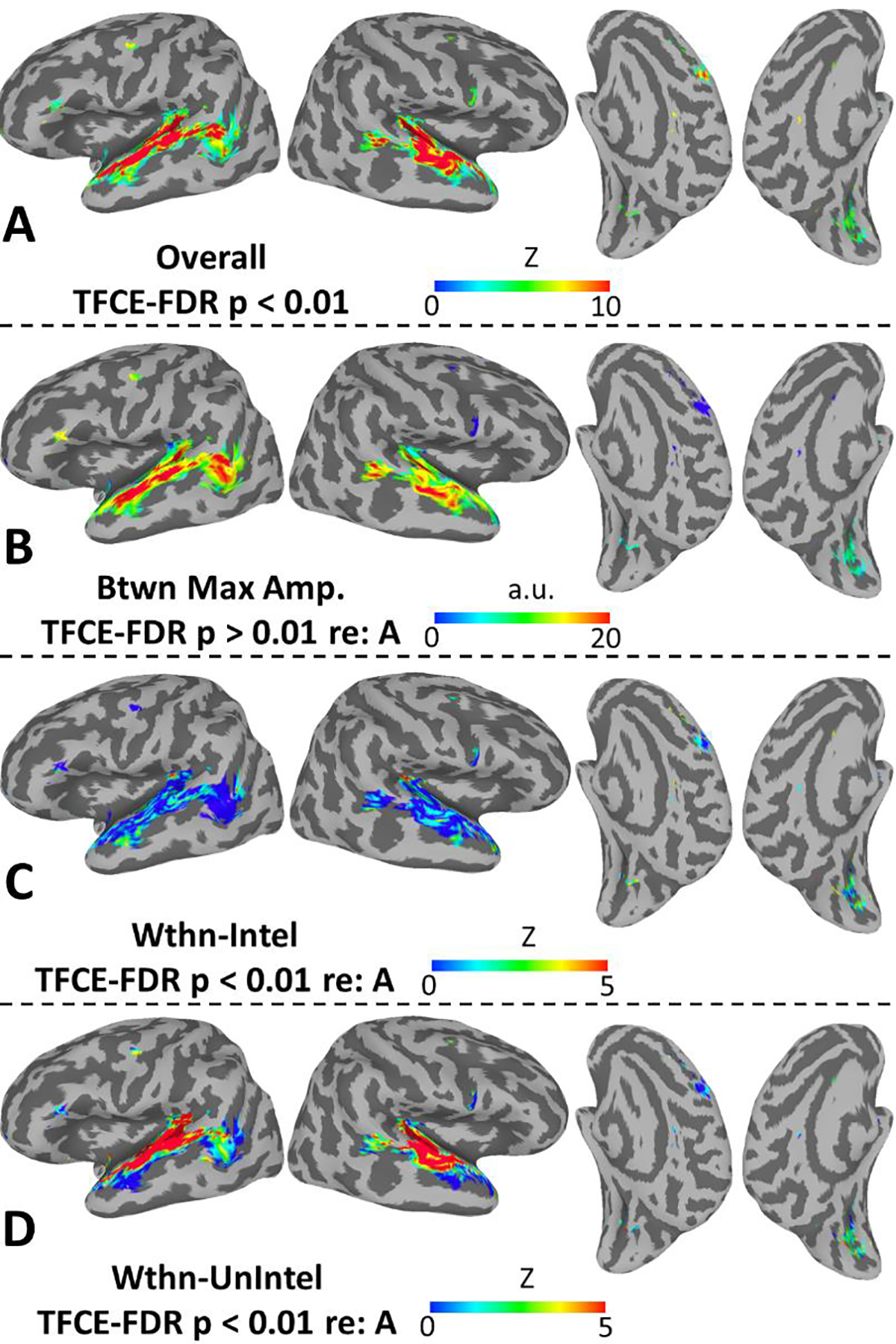

Figure 3.

(A) Maps show the strength of cross-subject STRF alignment at the second level as quantified with multivariate test statistic, Z (see Materials and Methods; only significant values shown, TFCE-FDR-corrected p < 0.01). (B) Maps show the second level mean of the maximum amplitude of STRFbetween, restricted to surface nodes identified as significant in A. (C) Maps show the strength of cross-subject STRF alignment within trials rated as intelligible by the listeners, restricted to surface nodes identified as significant in A. (D) Maps show the strength of cross-subject STRF alignment within trials rated as unintelligible by the listeners, restricted to surface nodes identified as significant in A. All plots use a standard topology inflated surface derived from the Colin N27 template in MNI space.

For those cortical surface nodes with significant cross-subject STRF alignment in Figure 3A, Figure 3B–D plots maps of “decomposed” STRF properties – specifically the maximum amplitude of the “between” STRF derived from the contrast of intelligible vs. unintelligible speech (Eq. 2), and the strength of STRF alignment within trials rated as intelligible (Eq. 3) and unintelligible (Eq. 4), respectively. The largest between effects (Figure 3B) were observed in classic auditory speech regions including the planum temporale, anterior and posterior STG, and dorsal STS bilaterally, as well as the left ventral pSTS and middle temporal gyrus. Outside the auditory cortex, the largest between effects were observed in the left IFG and right SMA (in fact, right SMA showed a large negative between effect as will be described below). All regions were modulated at least somewhat by intelligibility. In general, STRF alignment was poor within trials rated as intelligible (Figure 3C), with notable exceptions in the posterior planum temporale and anterior STS bilaterally. Among non-auditory areas, modest STRF alignment within intelligible trials was observed only in the calcS. A different pattern was observed for unintelligible trials (Figure 3D), where strong alignment was observed throughout the supratemporal plane and STG bilaterally. In non-auditory areas, the strongest alignment within unintelligible trials was observed in the left dPM, and alignment increased in strength within left dPM along a gradient from anterior to posterior (i.e., with maximum strength nearest to the central sulcus). Alignment ranging in strength from modest to moderate was also observed in the calcS, but STRFs in the other non-auditory areas were generally poorly aligned within unintelligible trials.

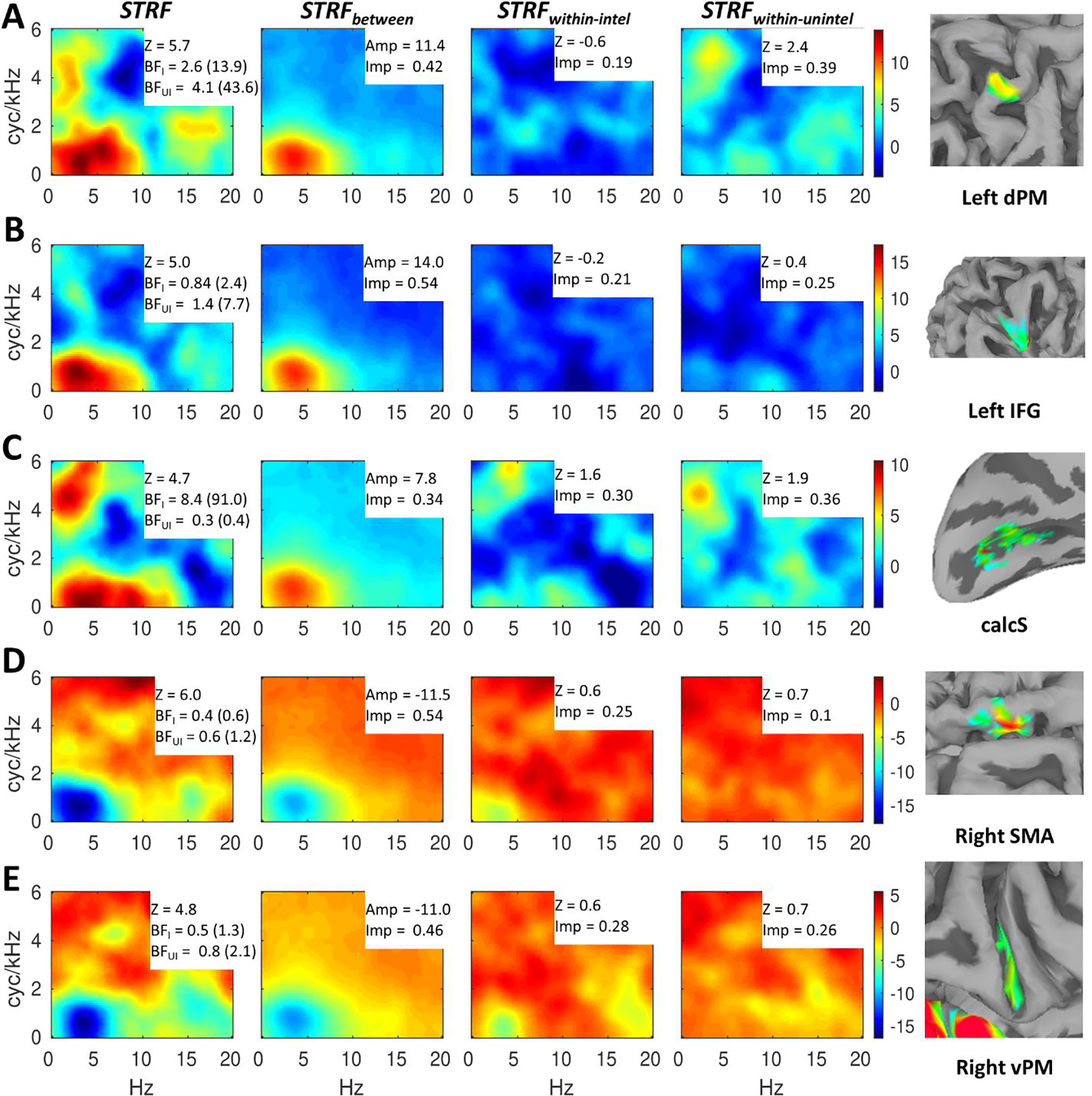

To visualize STRF responses in non-auditory regions, we obtained regional averages of second-level STRFs (overall and decomposed) for left dPM (Figure 4A), left IFG (Figure 4B), calcS (Figure 4C), right SMA (Figure 4D), and right vPM (Figure 4E). The overall STRFs (STRF, Figure 4, left column) are annotated with regional STRF summary measures including strength of alignment (Z) and ability to predict activation on held out data separately for intelligible (BFintel) and unintelligible (BFunintel) trials. The decomposed STRFs (Figure 4, right three columns) are annotated with regional STRF summary measures including max amplitude (STRFbetween, second column), strength of alignment (Z; STRFwithin_intel and STRFwithin_unintel, right two columns), and relative importance (all decomposed STRFs, right three columns). Several patterns are noteworthy. First, all five non-auditory regions were strongly driven by MPS patterns associated with speech intelligibility (0–10 Hz, 0–2 cyc/kHz) as visible in STRF and STRFbetween. However, this pattern was positive (intelligible > unintelligible) in left dPM, left IFG, and calcS, and negative (unintelligible > intelligible) in right SMA and right vPM. A negative STRF region reflects increased activation when that region of the MPS was removed from the signal. In other words, right SMA and right vPM activated more strongly when speech was more distorted, indicating a top-down or difficulty-driven response profile. Since this profile was not of primary interest, right SMA and right vPM will not be discussed further. Second, among the three regions with positive STRFs, left IFG responded most prominently to MPS patterns associated with intelligibility, while left dPM and calcS responded to MPS patterns associated with intelligibility and MPS patterns associated with vocal pitch (see Figure 1A). Indeed, left IFG had a larger amplitude in and more relative importance ascribed to STRFbetween compared to left dPM and calcS. Third, left dPM and calcS showed a non-trivial level of cross-subject alignment in STRFwithin_unintel, while left IFG did not; this was driven largely by reliable responses to vocal pitch, but to a lesser extent by responses to low spectral modulation rates (0–2 cyc/kHz) at relatively high temporal modulation rates (5–15 Hz). Interestingly, calcS showed a similar response profile in STRFwithin_intel, but left dPM and left IFG, like most of the auditory cortex, did not demonstrate strong STRF responses within intelligible trials. Relative importance was spread rather evenly across STRFbetween, STRFwithin_intel, and STRFwithin_unintel in calcS; in left IFG relative importance was weighted heavily toward STRFbetween, while in left dPM relative importance was weighted heavily toward STRFbetween and STRFwithin_unintel. The only regions in which LOO-predicted activation was significantly associated with true activation within unintelligible trials was left dPM (geometric mean of BFunintel = 4.1, 80th percentile = 43.6). However, this association was also present in a nontrivial proportion of surface nodes in left IFG (80th percentile of BFunintel = 7.7). LOO-predicted activation was significantly associated with true activation within intelligible trials in calcS (geometric mean of BFIntel = 8.4, 80th percentile = 91.0) and in a nontrivial proportion of surface nodes in left dPM (80th percentile of BFIntel = 13.9). However, inspection of the fixed effects regression coefficients in the LOO-predictive models revealed this association to be negative within intelligible trials. This means that STRF weights in calcS and left dPM should be sign-reversed in at least some subregion of the MPS to yield accurate predictions within intelligible trials.

Figure 4.

Speech-driven STRFs in non-auditory regions. A row (A-E) is dedicated to each region (right insets: zoomed versions of Figure 3A on semi-inflated or inflated standard topology surfaces). Overall responses (STRF) are shown in the first column, while effects of intelligibility (STRFbetween) and responses within intelligible (STRFintel) and unintelligible (STRFunintel) trials are shown in the second through fourth columns, respectively. For each region (A-E), the color scale is based on the overall STRF min/max and applied to each of the decomposed STRFs at right to allow a visualization of the relative contribution of each component STRF to the overall STRF. The overall STRFs are annotated with second-level alignment strength (Z) and Bayes Factors (geometric mean across surface nodes, 80th percentile given parenthetically) indicating quality of LOO predictions within intelligible (BFI = BFintel) and unintelligible (BFUI = BFunintel) trials. The decomposed STRFs are annotated with the max amplitude (Amp; STRFbetween) or second-level alignment strength (Z; STRFintel and STRFunintel) and the proportional relative importance (Imp).

3.2. Beta Time Series Functional Connectivity

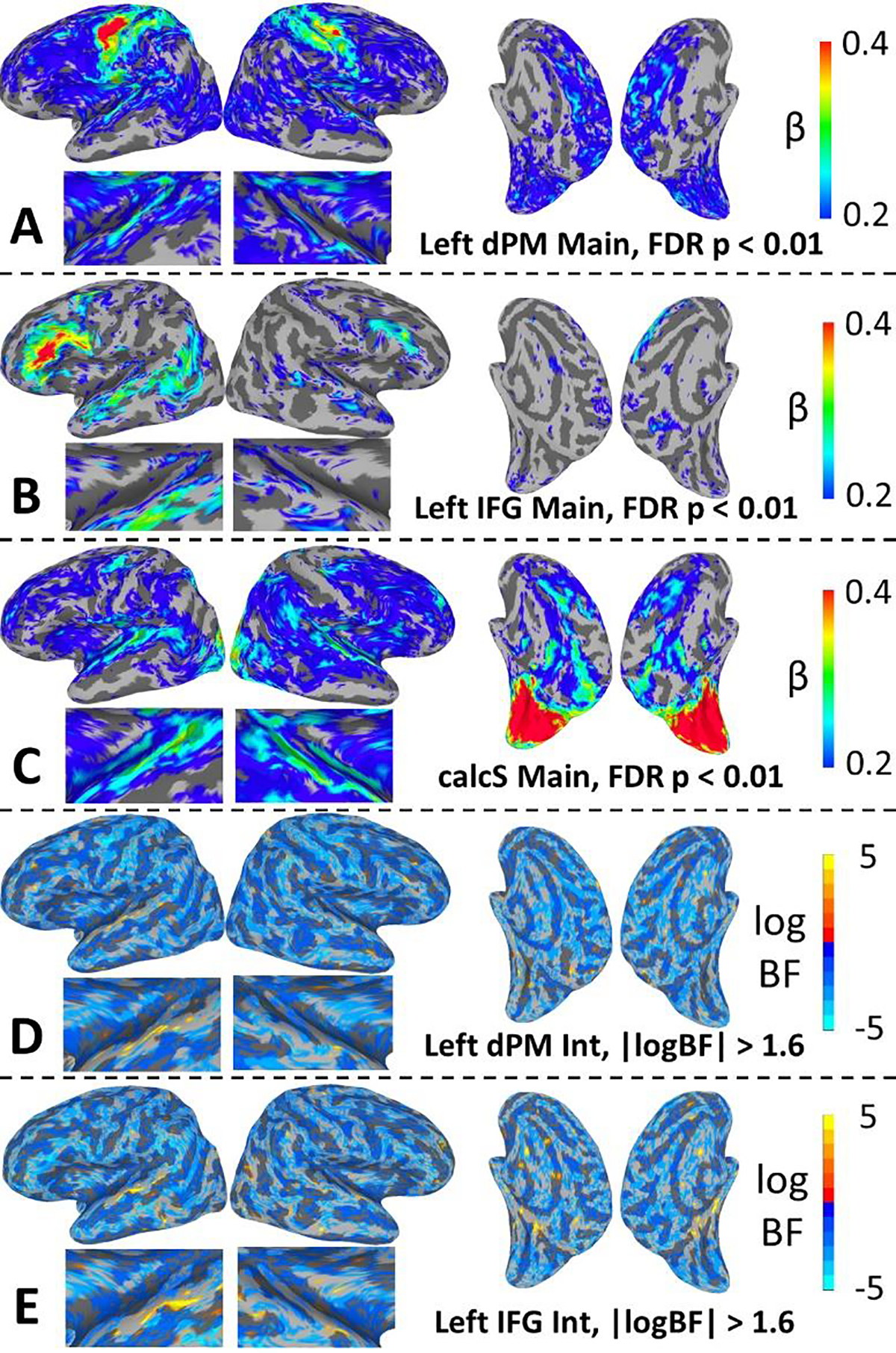

The primary motivation behind our functional connectivity analysis was to determine for each of the non-auditory regions with positive-valued STRFs – left dPM, left IFG, and calcS – whether and which areas of the auditory cortex were significantly correlated in their activation patterns across the course of the experiment. Secondarily, we wanted to know whether such patterns of functional connectivity were modulated by intelligibility. To these ends, we conducted whole brain regressions at the first level with non-auditory seed time series, binary intelligibility judgments, and their two-way interaction as predictors. The seed time series and intelligibility-by-seed interaction were the predictors of interest. In fact, the seed was significantly correlated with activation time series in the auditory cortex for all three regions of interest (Figure 5A–C). For left dPM and calcS, maximal correlations were observed in Heschl’s gyrus/sulcus and the immediately adjacent STG. Conversely, for left IFG maximal correlations were observed in the lateral/ventral STG, STS, and middle temporal gyrus. Moreover, left dPM and calcS were correlated with widespread sensorimotor networks across much of the cortical surface, while left IFG was correlated with a more restricted network of classic peri-Sylvian and inferior frontal speech-language networks. Together, these findings suggest that left dPM and calcS operate at a lower hierarchical level of information processing than left IFG.

Figure 5.

(A-C) Maps show the average regression coefficient (β) for the main effect of seed connectivity (two-way, FDR-corrected p < 0.01). Note the color scale: nearly all (>99%) significant effects were positive; the color scale was selected to allow visualization of the full range of these positive effects across brain regions. That is, both cool and warm colors denote positive functional connectivity. (D-E) Maps show the log Bayes Factor for the intelligibility-by-seed-connectivity interaction (thresholded at |logBF| > 1.6). Negative values (cool colors) indicate support for the null hypothesis (no interaction) and positive values indicate support for the alternative hypothesis (interaction present). Plots zoomed on the auditory cortex are shown beneath the lateral surface plots. All plots use a standard topology inflated surface derived from the Colin N27 template in MNI space.

For the intelligibility-by-seed interaction, no significant effects were detected at the chosen threshold of FDR-corrected p < 0.01. Therefore, we conducted a follow-up Bayesian analysis on the interaction effect to determine the extent to which the evidence favored the null hypothesis (no interaction) versus the alternative hypothesis (presence of an interaction). The results of a Bayesian hierarchical regression model are plotted for left dPM and left IFG in Figure 5D and Figure 5E, respectively. Specifically, the whole-brain plots show maps of the log Bayes factor (logBF) for the fixed (group-level) effect of the two-way interaction, where negative values reflect evidence in favor of the null and positive values reflect evidence in favor of the alternative. The maps are thresholded at |logBF| > 1.6 (BFfull > 5 or BFnull > 5). In general, evidence favored the null (negative logBF) across most of the cortical surface for both left dPM and left IFG. However, evidence in favor of the alternative was observed in some regions of the auditory cortex for both left dPM and left IFG. For left dPM, these effects were maximal in the left STG just lateral to Heschl’s gyrus. For left IFG, these effects were maximal in regions of the STG lateral and posterior to those observed for left dPM. In both cases, inspection of the regression coefficients showed that significant interactions were driven by relatively greater functional connectivity with the seed on unintelligible versus intelligible trials. Similar patterns were observed for calcS (not shown) but without any substantial evidence in favor of the alternative within the auditory cortex. In general, widespread evidence in favor of the null suggests that effects were dominated by intrinsic connectivity with the seeds in the broader functional context of speech processing (regardless of intelligibility).

3.3. STRFs in Auditory Regions Identified via Functional Connectivity

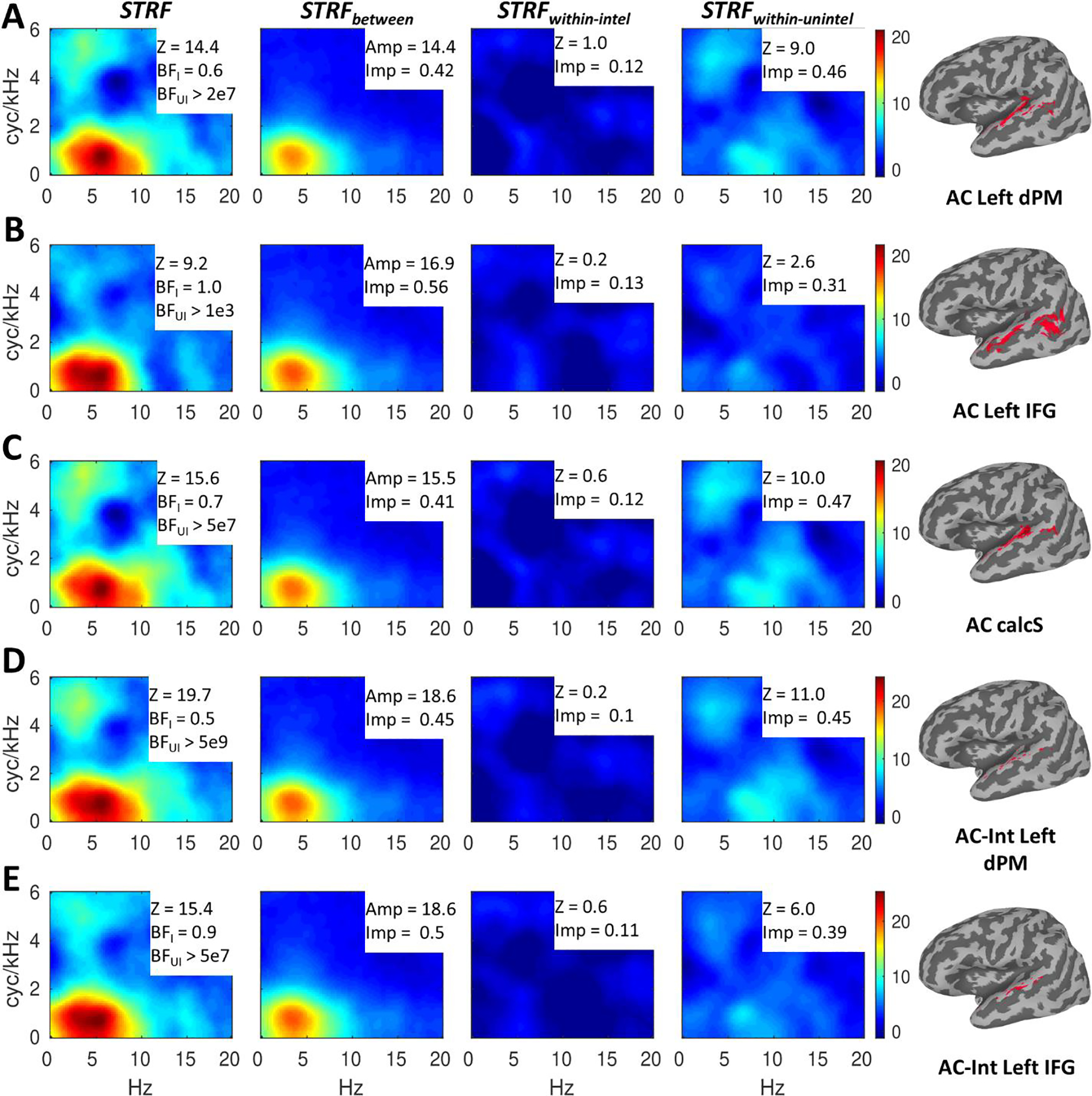

To visualize the STRF properties of the auditory regions that were maximally functionally connected to our non-auditory regions of interest (left dPM, left IFG, and calcS), we obtained second level average STRFs for auditory regions that (a) were significantly correlated with the seed at the second level and (b) had second-level correlations with the seed more than one standard deviation above the mean among those auditory-cortical surface nodes with significant cross-subject STRF alignment. These regional average STRFs are plotted in Figure 6A–C, each annotated with regional summary measures as in Figure 4. It is immediately apparent that the results pattern just as in Figure 4. Specifically, just as for left IFG, the auditory regions most correlated with left IFG responded primarily to MPS regions associated with speech intelligibility as indicated by increased relative importance/amplitude for STRFbetween and relatively decreased (but still significant) alignment for STRFwithin_unintel. On the other hand, just as for left dPM and calcS, the auditory regions most correlated with left dPM and calcS responded to MPS regions associated with both intelligibility and vocal pitch. In this case, pitch responses were driven almost entirely by STRFwithin_unintel, which mirrors the pattern in left dPM but not calcS which also responded to pitch in STRFwithin_intel. In other words, auditory regions that responded strongly to pitch (Figure 6A–B) only did so within trials rated as unintelligible by the listeners. The strength of this response was much greater in auditory regions than in left dPM and calcS (Z ~= 10 vs. Z ~= 2). Auditory regions that did not respond strongly to pitch (Figure 6C) were similar to left dPM in terms of the strength of cross-subject STRF alignment within unintelligible trials (Figure 4A; Z = 2.6 vs. Z = 2.4). For all these auditory regions (Figure 6A–C), there was a strong effect of speech intelligibility (large amplitude for STRFbetween) yet negligible STRF responses within intelligible trials (STRFwithin_intel). Correspondingly, LOO predictions for all auditory regions were significant within unintelligible (all BFunintel > 1e3) but not intelligible trials (all BFintel <= 1). In general, BFunintel was orders of magnitude larger in these auditory regions than in their corresponding non-auditory regions, though this effect was more pronounced for the early auditory regions connected to left dPM and calcS than for the downstream auditory regions connected to left IFG. This suggests BFunintel is a good proxy of the extent to which a given region has auditory-like properties (i.e., acoustically driven STRF responses that explain a relatively large proportion of signal variance).

Figure 6.

Speech-driven STRFs in auditory regions defined by functional connectivity with non-auditory regions. A row (A-E) is dedicated to each auditory region (right insets: region mask displayed on inflated standard topology surfaces and labeled with the corresponding seed region). Regions defined by the main effect of seed connectivity (A-C) are labeled as ‘AC’ and regions defined by the interaction of seed connectivity with speech intelligibility (D-E) are labeled as ‘AC-Int.’ Overall responses (STRF) are shown in the first column, while effects of intelligibility (STRFbetween) and responses within intelligible (STRFintel) and unintelligible (STRFunintel) trials are shown in the second through fourth columns, respectively. For each region (A-E), the color scale is based on the overall STRF min/max and applied to each of the decomposed STRFs at right to allow a visualization of the relative contribution of each component STRF to the overall STRF. The overall STRFs are annotated with second-level alignment strength (Z) and Bayes Factors (geometric mean across surface nodes) indicating quality of LOO predictions within intelligible (BFI = BFintel) and unintelligible (BFUI = BFunintel) trials. The decomposed STRFs are annotated with the max amplitude (Amp; STRFbetween) or second-level alignment strength (Z; STRFintel and STRFunintel) and the proportional relative importance (Imp).

We also obtained regional average STRFs for those auditory regions whose functional connectivity with the seed region (in this case, left dPM and left IFG) was significantly modulated by speech intelligibility (Figure 6D–E). These regions, located in more dorsal versus more ventral regions of the STG lateral to Heschl’s gyrus for left dPM and left IFG, respectively, showed a very similar response profile to the auditory regions that were maximally correlated with left dPM (Figure 6A). Specifically, STRFs exhibited responses to MPS regions associated with both intelligibility and vocal pitch, strong responses were observed for STRFwithin_unintel but not STRFwithin_intel, and strong effects of intelligibility (large amplitude for STRFbetween) were counterbalanced by a large relative importance for STRFwithin_unintel. As with the auditory areas defined by the main effect of connectivity, LOO predictions were significant for unintelligible trials (both BFunintel > 5e7) but not intelligible trials (both BFintel <= 0.9). These effects are unsurprising given that the interaction of functional connectivity with intelligibility was driven by relatively stronger connectivity within unintelligible trials. In other words, we would expect such effects to localize to relatively early auditory regions whose STRF responses are more acoustically driven. However, pitch responses and strength of alignment in STRFwithin_unintel were both relatively larger in auditory regions for which this interaction was present with left dPM (Figure 6D) than with left IFG (Figure 6E), which suggests that while both left dPM and left IFG receive input from acoustically driven regions of auditory cortex, left dPM receives such input from earlier and more strongly acoustically driven auditory regions. However, BFunintel was extremely large for both interaction-defined auditory regions, suggesting a predominantly acoustically driven response in both cases.

4. Discussion

In the present study, we re-analyzed fMRI data from which speech-driven spectrotemporal receptive fields (STRFs) could be estimated in young, normal-hearing listeners (Venezia et al., 2019b). Specifically, we developed a multivariate analysis combined with threshold free cluster enhancement to increase sensitivity to cross-subject STRF alignment at the second level, with the intention of revealing STRF responses beyond classical auditory-speech regions in the superior temporal lobe. Indeed, our primary analysis replicated our earlier findings in superior temporal regions (Venezia et al., 2019b) while identifying five non-auditory regions with reliable STRF alignment at the second level (Figure 3): left dorsal speech-premotor cortex (dPM), left inferior frontal gyrus (IFG), bilateral calcarine sulcus (calcS), right supplementary motor area (SMA), and right ventral speech-premotor cortex (vPM). Of these, right SMA and right vPM were shown to have a negative response to MPS regions associated with intelligible speech (Figure 4), suggesting a top-down profile in which these regions activate with increasing distortion of the acoustic speech signal and thus with increasing difficulty of speech recognition. Though such a response profile is surely of interest in the context of speech recognition in background noise (cf., Binder, Liebenthal, Possing, Medler, & Ward, 2004), it does not reflect canonical, auditory-like STRF responses which were of primary interest here. Therefore, these regions will not be discussed further. However, it is notable that this finding dovetails with a growing body of evidence suggesting that motor speech areas play a role in the processing of degraded speech (D’Ausilio et al., 2012; Du, Buchsbaum, Grady, & Alain, 2014, 2016; Erb & Obleser, 2013; Evans & Davis, 2015; J. E. Peelle, I. Johnsrude, & M. H. Davis, 2010; Szenkovits, Peelle, Norris, & Davis, 2012; Wild et al., 2012). The remaining three non-auditory regions – left dPM, left IFG, and calcS – showed auditory-like STRF profiles though with several important differences between them.

In our previous publication of this data set (Venezia et al., 2019b), we found that a defining characteristic of early auditory areas in the supratemporal plane was sensitivity to MPS regions associated with vocal pitch in addition to MPS regions associated with speech intelligibility, whereas intelligible speech much more selectively drove responses in downstream regions of the STG/S. Here, we observed similar patterns in non-auditory regions, with left dPM and calcS responding well to vocal pitch and intelligible speech while left IFG responded more selectively to intelligible speech (Figure 4). Interestingly, calcS responded to vocal pitch within both intelligible and unintelligible trials, while left dPM responded to vocal pitch only within unintelligible trials (Figure 4, compare STRFwithin_intel to STRFwithin_unintel). However, the STRFs estimated within calcS were not able to generate good leave-one-out (LOO) predictions of activation within unintelligible trials, a hallmark auditory profile. On the other hand, the STRFs estimated within left dPM were able to generate good LOO predictions within unintelligible trials (geometric mean of BFunintel = 4.1, 80th percentile = 43.6), and the STRFs estimated within left IFG were able to generate marginally good LOO predictions within unintelligible trials (geometric mean of BFunintel = 1.4, 80th percentile = 7.7).

In our beta time series functional connectivity analysis, left dPM and calcS were maximally connected with early auditory regions in the supratemporal plane, while left IFG was maximally correlated with downstream regions in the STG/S and middle temporal gyrus (Figure 5, A–C). As expected, and consistent with our prior publication (Venezia et al., 2019b), the STRFs in these auditory regions mirrored those in their non-auditory counterparts: sensitivity to vocal pitch and intelligible speech in the auditory regions maximally connected with left dPM and calcS, and more selective responses to intelligible speech in the auditory regions maximally connected with left IFG (Figure 6, A–C). All of these auditory regions showed strong STRF responses within unintelligible but not intelligible trials, and their STRFs generated good LOO predictions within unintelligible but not intelligible trials. However, these effects were stronger in the early auditory regions connected with left dPM and calcS than in the STG/S regions connected with left IFG. Interestingly, connectivity with auditory regions was modulated by intelligibility for left dPM and left IFG but not calcS. Regions of the STG just lateral to Heschl’s gyrus were more strongly connected with left dPM and left IFG within unintelligible trials (Figure 5, D–E). These effects localized to slightly more dorsal STG regions for left dPM relative to left IFG. In general, these STG regions responded similarly to the early auditory regions connected with left dPM and calcS, though the response to vocal pitch was larger in STG regions showing an intelligibility-by-connectivity interaction with left dPM relative to those showing such an interaction with left IFG (Figure 6, D–E). The STRFs in these regions generated very good LOO predictions of activation but only within unintelligible trials.

Taken together, these results suggest that only left dPM demonstrated a clear “auditory-like” response profile. Like early auditory regions, left dPM responded to both vocal pitch and intelligible speech, responded to vocal pitch primarily within unintelligible trials, demonstrated strong cross-subject STRF alignment within unintelligible but not intelligible trials, and generated good LOO predictions within unintelligible trials. Moreover, left dPM was maximally connected with early auditory regions and showed an increase in connectivity with certain early auditory regions on unintelligible trials during which vocal pitch more strongly activated those auditory regions. On the other hand, left IFG did not respond strongly to vocal pitch, generated only marginally good LOO predictions within unintelligible trials, and was maximally connected with downstream auditory regions that did not strongly encode vocal pitch. Finally, calcS responded to vocal pitch and was maximally connected with early auditory areas that strongly responded to pitch, but unlike in auditory regions this pitch response was not restricted to unintelligible trials. Moreover, calcS generated poor LOO predictions within unintelligible trials, which suggests that while activation in calcS was modulated by the relative presence or absence of vocal pitch, this modulation accounted for a trivial proportion of the overall signal variance. Like their auditory counterparts, all three non-auditory regions were strongly modulated by speech intelligibility (Figures 4/6, STRFbetween). Below, we explore what these patterns suggest about the computational roles played by these non-auditory regions during perception of continuous speech.

4.1. Left dPM: Sensitivity to Vocal Pitch in the Dorsal Laryngeal Motor Cortex

In the Introduction, we identified two prominent fMRI studies showing that a region of the left dorsal premotor cortex activates during speech perception and production (Buchsbaum et al., 2001; Wilson et al., 2004). We also noted subsequent work showing that the same region is part of a precentral/central complex that forms the human dorsal laryngeal motor cortex or ‘dLMC’ (Brown et al., 2008). In the present study, we found that left dPM exhibits auditory-like STRF properties including a substantial response to vocal pitch. In Figure 7, we reproduce the lateral left hemisphere plot from Figure 3A showing regions with significant cross-subject STRF alignment, here overlaid with 2mm spheres at the peak dorsal premotor coordinates from Buchsbaum et al. (2001), Wilson et al. (2004), and Brown et al. (2008). Considering the different samples and methods employed in these studies together with the fact that second level analysis introduces a degree of anatomical uncertainty, the proximity of activations across studies is, in our view, compelling support for a common underlying mechanism. Indeed, we suggest that left dPM, as identified in the present study, is the gyral component of the left dLMC.

Recent work suggests there is a dual representation of the larynx in human motor cortex, with a more ventral, premotor representation in the posterior frontal operculum (vLMC), and a more dorsal, primary motor representation in the central sulcus (dLMC) with close proximity to the primary motor representation of the lips (Bouchard, Mesgarani, Johnson, & Chang, 2013; Breshears et al., 2015; Chang, Niziolek, Knight, Nagarajan, & Houde, 2013; Dichter et al., 2018; Eichert, Papp, Mars, & Watkins, 2020; Galgano & Froud, 2008; Grabski et al., 2012; Olthoff, Baudewig, Kruse, & Dechent, 2008; Terumitsu, Fujii, Suzuki, Kwee, & Nakada, 2006). Current theory (Belyk & Brown, 2017; Brown et al., 2020; Eichert et al., 2020) poses that human vLMC is evolutionarily homologous with the laryngeal motor cortex in nonhuman primates, which lack the sophisticated vocal learning and voluntary laryngeal motor control abilities of humans, and whose laryngeal motor cortex is entirely confined to the premotor vLMC. The dLMC is thus uniquely human, which implies an evolutionary ‘duplication-and-migration’ (Belyk & Brown, 2017) allowing for voluntary laryngeal control. Further, it has been suggested that dLMC preferentially controls the extrinsic muscles of the larynx, which move the entire larynx vertically within the airway and play a key role in complex pitch modulations of the sort common in human speech and singing; conversely, the vLMC preferentially controls the intrinsic muscles of the larynx, which perform rapid tensioning and relaxation of the vocal folds at the onset and offset of vocalizations (i.e., serve as a coarse ‘on-off’ switch for phonation; Eichert et al., 2020; but see Belyk et al., 2020; Belyk & Brown, 2014). Interestingly, stimulation of vLMC produces speech arrest (Chang et al., 2017) while stimulation of dLMC produces involuntary vocalization and disruption of fluent speech (Belkhir et al., 2021; Dichter et al., 2018), and dLMC appears to be selectively involved in producing fast pitch changes of the sort that generate stress patterns within a sentence (Dichter et al., 2018).

While dLMC localizes most prominently to BA 4 deep in the central sulcus (Simonyan, 2014), numerous studies have now shown that it extends into BA 6 in a neighboring region of the dPM (cf., Brown et al., 2020). This gyral component of dLMC seems to be preferentially engaged during perception. As noted early on by Wilson et al. (2004), although sensory speech activations overlap with motor speech activations in BA 4, peak sensory activation occurs about 5 mm anterior to peak motor activations (i.e, in BA 6). Intracranial studies have consistently implicated this gyral component of dLMC in speech perception and production (Belkhir et al., 2021; Berezutskaya et al., 2020; Bouchard et al., 2013; Breshears et al., 2015; Chang et al., 2017; Chang et al., 2013), particularly with respect to encoding and production of vocal pitch (Cheung et al., 2016; Dichter et al., 2018). This work dovetails with the present study in which we have identified a region of left dPM that: (a) co-locates with gyral dLMC (Figure 7); (b) responds vigorously to vocal pitch (Figure 4); (c) shows maximum functional connectivity with core regions of the auditory cortex that also respond vigorously to vocal pitch; and (d) shows strong acoustically driven responses within speech trials rated as unintelligible.

One curious aspect of the present results is that left dPM responded to pitch selectively within unintelligible trials (Figure 4). While it is tempting to attribute this to acoustic biases introduced by separating the trials according to intelligibility judgments (i.e., we should, by definition, expect lower variance and therefore lower within-category STRF weights for acoustic features that contributed strongly to intelligibility judgments), such biases could not have affected estimation of the response to vocal pitch because vocal pitch did not contribute strongly to intelligibility judgments (CITE Venezia et al 2019). Moreover, it is notable that auditory regions responding to vocal pitch also did so selectively within unintelligible trials (Figure 6). In that sense, the broader issue is to determine why general auditory sensitivity to vocal pitch occurred selectively within trials rated as unintelligible. We suggest this pattern was driven by the intelligibility judgment task itself. Specifically, listeners were asked to make a binary, yes/no rating of intelligibility on each trial. Therefore, within trials rated as intelligible, the brain was able to tune in selectively to those acoustic features that are relevant to perception of intelligible speech (Figures 4/6, the “bulls-eye” in STRFbetween). Those features were adequately, or perhaps even completely, characterized by STRFbetween and so little to no signal was present in STRFwithin_intel (i.e., the brain responded rather uniformly within intelligible trials, at least with respect to the acoustic features that support intelligibility). On unintelligible trials, when those features were, by definition, less available, or unavailable in the signal, the brain keyed into other acoustic features of interest, for example vocal pitch or faster temporal modulations (> 10 Hz). Indeed, we suggest that regions responding to vocal pitch within unintelligible trials – including left dPM and core auditory regions – are the same regions that would respond to vocal pitch on intelligible trials if vocal pitch were relevant to the task (e.g., speech recognition with two co-located, simultaneous talkers).

Left dPM deviated from core auditory regions in that its STRF-derived LOO predictions of activation were correlated with true activation within both intelligible and unintelligible trials (Figure 4, first column). However, as noted in the Results, for intelligible trials this association was driven by LOO predictions that were significantly negatively correlated with true activation. In other words, STRF responses in left dPM shifted from a bottom-up profile (positive STRF weights) to a top-down profile (negative STRF weights) within intelligible trials, such that left dPM responded more to intelligible speech when that speech was partially distorted. It is unlikely that this top-down response was related to vocal pitch given that STRF weights on vocal pitch were positive overall for STRFwithin_intel in left dPM (Figure 4, third column). Like the entirety of auditory cortex, it appears that left dPM ‘re-tuned’ on intelligible trials to respond selectively to MPS regions associated with intelligibility, except that left dPM responded more strongly when those MPS regions were only partially represented in the stimulus. This suggests that responses in left dPM are highly dynamic, which reinforces our claim that left dPM is, in fact, the gyral component of dLMC, where response properties have been shown to shift adaptively depending on whether speech is perceived or produced (Cheung et al., 2016). Consistent with a top-down role and a role in perceptual processing of vocal pitch, it has been suggested that the left dPM contributes to speech comprehension by generating temporal predictions on the phrasal scale (Keitel, Gross, & Kayser, 2018). However, disruption or damage to left dPM appears to have very small effects on comprehension (Hickok et al., 2008; Krieger-Redwood, Gaskell, Lindsay, & Jefferies, 2013; Rogalsky et al., 2020; Rogalsky, Love, Driscoll, Anderson, & Hickok, 2011), which calls into question a core role for such a predictive mechanism in comprehension.

4.2. Left IFG: Response to Structured Speech Input