Abstract

With the increasing number of COVID-19 cases globally, all countries are ramping up testing. While RT-PCR kits are available in sufficient quantity in several countries, others face challenges due to the limited availability of testing kits and processing centers in remote areas. This has motivated researchers to find alternative testing methods that are reliable, easily accessible, and faster. Chest X-ray (CXR) imaging is gaining acceptance as a screening modality. In this direction, the paper makes two primary contributions. First, we present the COVID-19 Multi-Task Network (COMiT-Net), an automated end-to-end network for COVID-19 screening. The proposed network not only predicts whether COVID-19 features are present in the CXR but also performs semantic segmentation of the regions of interest to make the model explainable. Second, with the help of medical professionals, we manually annotate the lung regions and the semantic segmentation of COVID-19 symptoms in CXRs taken from the ChestXray-14, CheXpert, and a consolidated COVID-19 dataset. These annotations will be released to the research community. Experiments performed with more than 2500 frontal CXR images show that, at 90% specificity, the proposed COMiT-Net yields 96.80% sensitivity.

Keywords: X-Ray, COVID-19, Detection, Diagnostics, Deep learning, Explainable artificial intelligence, Multi-task learning

1. Introduction

The COVID-19 pandemic has affected the health and well-being of people across the globe and continues to have a devastating effect on the global population. The total number of cases has increased at an alarming rate and has crossed 116 million worldwide [1]. The growing number of COVID-19 patients raises concerns about the effective screening of infected patients. The current process of testing for COVID-19 is time-consuming and requires the availability of testing kits. This necessitates alternative screening methods that are available to the general population, cost-effective, time-efficient, and scalable.

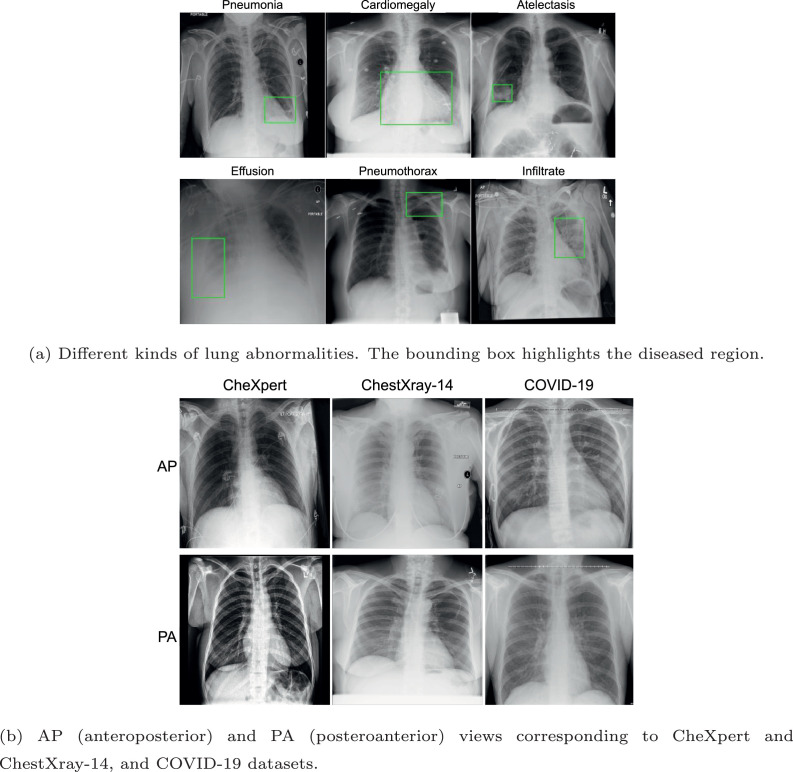

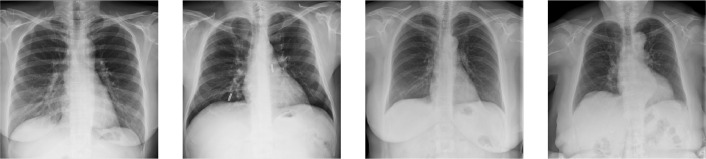

Dyspnea is a common symptom of COVID-19. Analyzing chest X-rays, radiologists have observed that the disease introduces specific abnormalities in a patient’s lungs [2]. For instance, COVID-19 pneumonia has a typical appearance on chest radiographs: bilateral, peripheral, patchy lung opacities with a lower-lung distribution and rounded morphology, and an absence of pleural effusion and lymphadenopathy. Figure 1 shows samples of chest x-ray images with different lung abnormalities, including COVID-19. Motivated by this observation and by the fact that x-ray imaging is faster, cheaper, more accessible, and offers scope for portability, many recent studies have proposed machine learning algorithms to predict COVID-19 from CXRs [3].

Fig. 1.

Samples of chest x-ray images used as a part of this research.

1.1. Literature review

Researchers have proposed AI-based techniques to detect COVID-19 using chest CT and x-ray images. Apostolopoulos and Mpesiana [4] explored transfer learning with various CNNs and observed that MobileNet v2 [5] yields the best results. Narin et al. [6] used three CNN models, namely ResNet50 [7], InceptionV3 [8], and InceptionResNetV2 [9], to detect COVID-19 from chest x-rays. The authors fine-tuned these pre-trained deep models to distinguish COVID-19 from normal x-rays and found that ResNet50 performed the best. They used 50 chest x-ray images of COVID-19 patients from a GitHub repository [10] and 50 normal chest x-ray images [11]. Nishio et al. [12] used a VGG-16 based model to differentiate between COVID-19 pneumonia, non-COVID-19 pneumonia, and healthy CXR images.

Horry et al. [13] proposed a CXR and CT based multi-modal classification for COVID-19 detection. They propose a data pre-processing technique and perform transfer learning on various deep learning architectures. Their findings suggest that VGG16 and VGG19 give promising results for classifying COVID-19 vs. pneumonia or normal. Further, Oh et al. [14] proposed a patch-based CNN approach for COVID-19 diagnosis. During testing, the final decision is made by majority voting over multiple patches taken from different locations of the lungs.

Only a limited number of studies address interpretation and explainability. Mangal et al. [15] and Jaiswal et al. [16] utilized DenseNet121 and DenseNet201 [17] for classification, respectively, and, with an emphasis on explainability, showed Class Activation Maps (CAM) and confusion matrices for interpretation. Along similar lines, Ghoshal and Tucker [18] applied ResNet50v2 [19] to four-class classification and interpreted the results using CAM, confusion matrices, Bayesian uncertainty, and Spearman correlation. Similarly, Shi et al. [20] and Tsiknakis et al. [21] used CAM for better interpretation of COVID-19 detection. Their approach aimed to extract relevant features while suppressing irrelevant ones.

Loey et al. [22] tackled the small sample size of COVID-19 chest x-ray images by generating new COVID-19 infected images using GANs. Wang et al. [23] introduced COVID-Net for detecting COVID-19 cases. The authors further investigated the predictions made by COVID-Net to gain insights into the critical factors associated with COVID-19 cases. In their work, a three-class classification is performed to distinguish COVID-19 cases from normal and Non-COVID cases. In another work, Afshar et al. [24] proposed a capsule network based framework, referred to as COVID-CAPS, that uses X-ray images for COVID-19 detection. The results of this framework look promising, as capsule networks have few parameters to train and work well on small datasets. Similarly, Shorfuzzaman and Hossain [25] proposed an n-shot meta-learning framework that uses a Siamese neural network for feature extraction, trained with a contrastive loss function in a few-shot setting for small datasets.

These studies demonstrate that AI-driven techniques can diagnose COVID-19 from chest x-ray images, potentially overcoming the challenge of limited test kits and speeding up the screening of COVID-19 cases. However, a significant limitation of existing studies is that the algorithms work as a black box: they predict whether the input x-ray is affected by COVID-19 or some related disease, but most fail to explain the decision, for instance, which lung regions are salient for a specific decision. Secondly, existing studies do not focus on radiological abnormalities such as consolidation, opacities, or pneumothorax. Without a clear emphasis on the lungs or the abnormality, it is hard to make an algorithm explainable in a crucial application such as COVID-19 diagnosis. Further, most of these studies work with a limited number of COVID-19 samples, around 100 in most scenarios. Thirdly, as shown in Fig. 1(b), the posteroanterior (PA) and anteroposterior (AP) views of CXR images vary due to the acquisition mechanisms. Samples from both views need to be considered during training, but existing algorithms are generally silent on these details.

1.2. Research contributions

In this research, we propose a deep learning network termed the COVID-19 Multi-Task Network (COMiT-Net), which learns the abnormalities present in chest x-ray images to differentiate between a COVID-19 affected lung and a Non-COVID affected lung. For medical applications, the explainability of machine learning systems is of paramount importance [26]. The black-box nature of deep algorithms prevents us from knowing which regions the model focuses on. Furthermore, these algorithms do not indicate what and where the disease is, which radiologists and doctors need in order to support their decisions. Hence, the proposed network incorporates additional tasks of lung and disease segmentation to provide post-hoc explainability.

The proposed COMiT-Net simultaneously processes the input X-ray for semantic lung segmentation, disease localization, and healthy/unhealthy classification. Incorporating these additional tasks alongside the primary task of COVID classification has multiple advantages. During COVID classification, the segmentation tasks force the network to focus on the lung regions and disease-affected areas only. The healthy/unhealthy classification helps COMiT-Net identify a healthy lung effectively. Moreover, assistance from the other tasks reduces the dependence on the large amounts of data otherwise required for training. The key research highlights are:

1. Develop the COVID-19 Multi-Task Network (COMiT-Net) for classification and segmentation of the lung and disease¹ regions. COMiT-Net further predicts whether the lungs are affected by COVID-19 or Non-COVID-19 disorders and differentiates them from healthy lungs.

2. Inclusion of simultaneous disease segmentation in COMiT-Net helps make its decisions explainable.

3. Extensive evaluation and comparison against existing deep learning algorithms for COVID-19 prediction, lung segmentation, and disease segmentation.

4. Assemble frontal chest x-rays from various sources, which can be used for diverse tasks such as classification and semantic segmentation of lungs and disease. For the assembled dataset, the CXR reports and the CXRs were manually verified by a radiologist to affirm the presence or absence of COVID-19 related abnormalities. From different sources, a total of 2513 frontal x-rays of COVID-19 affected patients are collected. Further, the manual annotations for lung and disease semantic segmentation of healthy, unhealthy, and COVID-19 affected X-ray images will be released to the research community.

2. COVID-19 multi-task network (COMiT-Net)

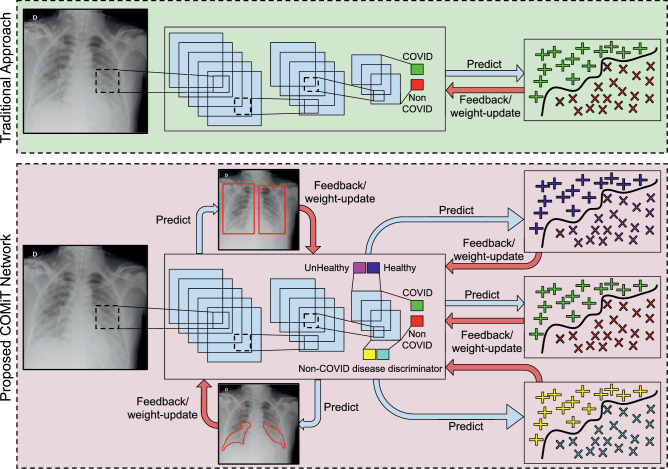

This section provides the details of the proposed COMiT-Net. Multi-task networks learn similar and related tasks together from the same input data. As shown in Fig. 2, a multi-task network has a shared base network with multiple objective-specific outputs. Since every task shares the same base network, the shared weights are learned jointly to suit all the tasks. The four tasks of COMiT-Net are (i) lung localization, (ii) disease localization, (iii) healthy/unhealthy classification, and (iv) multi-label classification for COVID-19 prediction. These tasks are accomplished using five loss functions: two for segmentation and three for classification. The details of these loss functions are described in the following subsections.

Fig. 2.

The proposed COMiT-Net performs multiple related tasks to improve classification performance for COVID-19 diagnostics using frontal x-rays. The figure contrasts the multi-task network with a single-task network.

Let $\mathcal{D} = \{X_i\}_{i=1}^{N}$ be the train set with $N$ images, where $X_i$ represents an image. $X_i$ is associated with five labels $\{M_i^{L}, M_i^{D}, y_i^{H}, y_i^{C}, y_i^{N}\}$, where $M_i^{L}$ and $M_i^{D}$ represent the ground-truth binary masks for lung and disease localization, respectively, and $y_i^{H}$, $y_i^{C}$, and $y_i^{N}$ represent the healthy/unhealthy, COVID/Non-COVID, and Non-COVID diseases discriminator labels, respectively. Let $\mathcal{F}$ be the proposed COMiT-Net that performs the four different tasks. The task set is defined as $\mathcal{T} = \{T_1, T_2, T_3, T_4\}$, where $T_1$ and $T_2$ represent the tasks of lung and disease localization, respectively, and $T_3$ and $T_4$ represent the tasks of healthy/unhealthy and COVID/Non-COVID (multi-label) classification, respectively.

2.1. Segmentation loss

Chest x-rays contain peripheral organs and structures along with the lung regions. The primary objective of this research is to differentiate between COVID and Non-COVID samples. Since the key information lies in the lungs, the first task is lung segmentation. The second segmentation loss aims to learn semantic segmentation of the diseased regions.

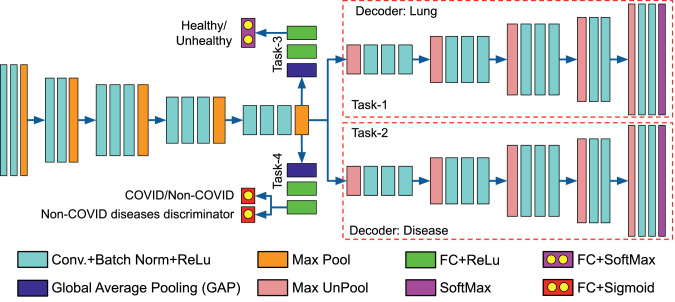

Lung segmentation can be achieved by learning a model that differentiates between the background and the foreground lung regions. COMiT-Net accomplishes this using a VGG16 encoder-decoder architecture [27]. The encoder uses VGG16 as its base network and has five blocks with 2, 2, 3, 3, and 3 convolution + batch norm + ReLU layers, respectively. The decoder builds upon the representation obtained from the encoder, with an architecture that mirrors (transposes) the encoder. The final output is produced by a SoftMax layer whose spatial dimensions equal the input resolution of the X-ray image and whose number of channels equals the number of segmentation classes. Hence, the final layer has two channels: lung and non-lung.
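As a rough illustration of the encoder dimensions described above, the sketch below tallies the five VGG16 blocks and the feature-map size after each block for a 224 × 224 input (the size given in the implementation details). It assumes the standard VGG16 channel widths (64, 128, 256, 512, 512) and a 2 × 2 max-pooling step at the end of every block, as in the original VGG16 design; `VGG16_BLOCKS` and `encoder_shapes` are hypothetical names, not taken from the COMiT-Net code.

```python
# Standard VGG16 encoder layout (assumption: 2x2 max-pool after every block,
# channel widths as in the original VGG16).
VGG16_BLOCKS = [  # (number of conv + BN + ReLU layers, output channels)
    (2, 64),
    (2, 128),
    (3, 256),
    (3, 512),
    (3, 512),
]


def encoder_shapes(height, width, blocks=VGG16_BLOCKS):
    """Return (channels, height, width) after each encoder block."""
    shapes = []
    for _, channels in blocks:
        # 3x3 convolutions with padding 1 keep the size; the pooling halves it.
        height, width = height // 2, width // 2
        shapes.append((channels, height, width))
    return shapes


shapes = encoder_shapes(224, 224)
num_conv_layers = sum(n for n, _ in VGG16_BLOCKS)
print(shapes)           # [(64, 112, 112), (128, 56, 56), (256, 28, 28), (512, 14, 14), (512, 7, 7)]
print(num_conv_layers)  # 13
```

Under these assumptions, the decoder upsamples the 512 × 7 × 7 map back to 224 × 224 for the two-channel lung/non-lung output, and the same 512 × 7 × 7 map is what a global average pooling layer would reduce to a 512-dimensional embedding.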

Similar to lung localization, disease localization also builds upon the encoder representation. However, the disease localization task has a separate decoder branch and is optimized for localizing more than lung-related disorders. For both lung and disease localization, the gradients are backpropagated through the respective decoder network into the encoder layers.

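Let $\mathcal{F}_L$ and $\mathcal{F}_D$ represent the sub-networks for lung and disease localization, respectively. For any image $X_i$, the predicted binary masks for lung and disease localization are represented as:

$$\hat{M}_i^{L} = \mathcal{F}_L(X_i), \qquad \hat{M}_i^{D} = \mathcal{F}_D(X_i) \tag{1}$$

In this research, binary cross entropy loss is used for lung and disease localization. Mathematically, it is represented as:

$$\mathcal{L}_L^{i} = -\sum_{(x,y)} \Big[ M_i^{L}(x,y)\log \hat{M}_i^{L}(x,y) + \big(1 - M_i^{L}(x,y)\big)\log\big(1 - \hat{M}_i^{L}(x,y)\big) \Big] \tag{2}$$

$$\mathcal{L}_D^{i} = -\sum_{(x,y)} \Big[ M_i^{D}(x,y)\log \hat{M}_i^{D}(x,y) + \big(1 - M_i^{D}(x,y)\big)\log\big(1 - \hat{M}_i^{D}(x,y)\big) \Big] \tag{3}$$

where $\mathcal{L}_L^{i}$ and $\mathcal{L}_D^{i}$ are the lung and disease losses, respectively, for image $X_i$, and $M_i^{L}(x,y)$ and $M_i^{D}(x,y)$ represent the pixel values at location $(x,y)$ of the lung and disease masks, respectively.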
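The pixel-wise binary cross entropy used for both the lung and the disease masks can be sketched in NumPy as follows. This is a minimal sketch, not the authors' implementation: it averages over pixels (as PyTorch's `nn.BCELoss` does with its default `reduction="mean"`), whereas a plain sum over pixel locations only rescales the loss by a constant, and the `eps` clipping is an added assumption to avoid `log(0)`.

```python
import numpy as np


def pixel_bce(pred_mask, true_mask, eps=1e-7):
    """Mean binary cross-entropy over all pixel locations of one mask.

    pred_mask: predicted foreground probabilities in [0, 1], shape (H, W).
    true_mask: binary ground-truth mask (1 = lung or disease), shape (H, W).
    """
    p = np.clip(pred_mask, eps, 1.0 - eps)  # keep log() finite
    m = true_mask.astype(float)
    return float(-np.mean(m * np.log(p) + (1.0 - m) * np.log(1.0 - p)))


true_lung = np.array([[0, 1], [1, 1]])
pred_lung = np.array([[0.1, 0.9], [0.8, 0.7]])
print(round(pixel_bce(pred_lung, true_lung), 4))  # 0.1976
```

The same function serves both segmentation heads; only the predicted map and the ground-truth mask change.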

2.2. Classification loss

The two classification tasks are healthy/unhealthy classification of the lung X-ray and multi-label classification for the presence of COVID-19 or other abnormalities. These tasks are performed using three classification loss functions. The lung and disease localization tasks provide supervision for the classification branches. For the healthy/unhealthy, COVID/Non-COVID, and Non-COVID diseases discrimination tasks, two branches are derived from the compact encoder representation (after global average pooling, GAP). Each branch has three fully connected (FC) layers, and in both branches the first two layers use ReLU activation. The healthy/unhealthy branch uses SoftMax activation at the last FC layer, while the multi-label branch (COVID/Non-COVID and Non-COVID diseases discrimination) uses Sigmoid activation at the last FC layer (Fig. 3).
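A minimal NumPy sketch of the two classification branches, assuming a 512 × 7 × 7 encoder output (VGG16 at 224 × 224) and hypothetical hidden widths of 256 and 128, since the FC layer sizes are not stated here. Random weights stand in for trained ones; the point is the structure: GAP, three FC layers per branch, ReLU on the first two, then SoftMax for healthy/unhealthy and independent sigmoids for the multi-label head.

```python
import numpy as np

rng = np.random.default_rng(0)


def relu(x):
    return np.maximum(x, 0.0)


def softmax(x):
    z = np.exp(x - x.max())  # subtract the max for numerical stability
    return z / z.sum()


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def make_fc_branch(sizes):
    """Randomly initialised FC layers; `sizes` lists the layer widths."""
    return [(rng.normal(0.0, 0.05, (n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def run_branch(layers, x, final_activation):
    for k, (w, b) in enumerate(layers):
        x = x @ w + b
        # ReLU after the first two FC layers, task-specific activation after the last.
        x = relu(x) if k < len(layers) - 1 else final_activation(x)
    return x


# Hypothetical encoder output for one X-ray: 512 channels on a 7 x 7 grid.
features = rng.normal(size=(512, 7, 7))
embedding = features.mean(axis=(1, 2))  # global average pooling -> shape (512,)

# Hidden widths 256 and 128 are assumptions; each head has 2 outputs.
healthy_head = make_fc_branch([512, 256, 128, 2])     # [healthy, unhealthy]
multilabel_head = make_fc_branch([512, 256, 128, 2])  # [COVID, Non-COVID disease]

p_health = run_branch(healthy_head, embedding, softmax)    # probabilities sum to 1
p_multi = run_branch(multilabel_head, embedding, sigmoid)  # independent scores in (0, 1)
print(p_health, p_multi)
```

The SoftMax makes the two healthy/unhealthy probabilities compete, whereas the sigmoids let each multi-label score move independently, which is what a multi-label formulation needs.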

Fig. 3.

Architecture of the proposed COVID-19 Multi-task Network (COMiT-Net), which is based on an encoder-decoder architecture (best viewed in color). (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

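Let $\mathcal{F}_H$ and $\mathcal{F}_M$ represent the sub-networks for healthy/unhealthy and multi-label classification, respectively. The output of $\mathcal{F}_H$ for image $X_i$ is:

$$\mathcal{F}_H(X_i) = \big[\,p_i^{h},\ p_i^{u}\,\big], \qquad p_i^{h} + p_i^{u} = 1 \tag{4}$$

where $p_i^{c}$ is the probability of predicting image $X_i$ as class $c \in \{h, u\}$ (healthy, unhealthy). The loss function for healthy/unhealthy classification is represented as:

$$\mathcal{L}_H^{i} = -\sum_{c \in \{h,u\}} \mathbb{1}\big[y_i^{H} = c\big]\,\log p_i^{c} \tag{5}$$

where $\mathcal{L}_H^{i}$ represents the healthy/unhealthy loss for image $X_i$. For multi-label classification, the output of sub-network $\mathcal{F}_M$ for an image $X_i$ is written as:

$$\mathcal{F}_M(X_i) = \big[\,\hat{y}_i^{C},\ \hat{y}_i^{N}\,\big] \tag{6}$$

where $\hat{y}_i^{C}$ and $\hat{y}_i^{N}$ represent the predicted scores ($\in [0, 1]$) for the COVID/Non-COVID and Non-COVID diseases discriminators, respectively. The radiological findings of COVID-19 pneumonia may overlap with those of other viral pneumonias and of acute respiratory distress syndrome due to other etiologies, so the network needs supervision to separate COVID-19 pneumonia from Non-COVID lung diseases. Hence, jointly optimizing COVID/Non-COVID classification with Non-COVID diseases discrimination helps differentiate COVID-19 affected lungs from lungs affected by diseases other than COVID-19. The joint loss for predicting both COVID/Non-COVID and Non-COVID diseases discrimination is written as:

$$\mathcal{L}_M^{i} = -\Big[y_i^{C}\log \hat{y}_i^{C} + \big(1-y_i^{C}\big)\log\big(1-\hat{y}_i^{C}\big)\Big] - \Big[y_i^{N}\log \hat{y}_i^{N} + \big(1-y_i^{N}\big)\log\big(1-\hat{y}_i^{N}\big)\Big] \tag{7}$$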
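For a single image, the healthy/unhealthy cross-entropy and the joint multi-label loss can be sketched with the standard library alone. The label encoding (index 1 = unhealthy; targets ordered as `[covid, other_disease]`) and the clipping constant are illustrative assumptions.

```python
import math

EPS = 1e-7  # clipping constant (assumption) to keep log() finite


def cross_entropy(probs, label):
    """Healthy/unhealthy loss for one image: -log of the true-class probability."""
    return -math.log(max(probs[label], EPS))


def binary_ce(score, target):
    score = min(max(score, EPS), 1.0 - EPS)
    return -(target * math.log(score) + (1 - target) * math.log(1.0 - score))


def multilabel_loss(scores, targets):
    """Joint loss: BCE on the COVID score plus BCE on the Non-COVID disease score."""
    return sum(binary_ce(s, t) for s, t in zip(scores, targets))


# A COVID-19 positive X-ray: unhealthy (index 1), COVID = 1, other disease = 0.
print(round(cross_entropy([0.2, 0.8], 1), 4))         # 0.2231
print(round(multilabel_loss([0.9, 0.3], [1, 0]), 4))  # 0.462
```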

Overall Loss Function: Ground-truth labels or segmentation masks may not be available for all images during training. In that case, not all branches of the network are active while training COMiT-Net on a given image. For instance, if the ground-truth mask for disease segmentation is unavailable, the sub-network $\mathcal{F}_D$ remains inactive and the loss $\mathcal{L}_D^{i}$ for image $X_i$ becomes zero. In the same manner, the other losses can have a 0/1 “switch”. Therefore, the total loss is computed as:

$$\mathcal{L} = \sum_{i=1}^{N} \Big( s_i^{1}\,\mathcal{L}_L^{i} + s_i^{2}\,\mathcal{L}_D^{i} + s_i^{3}\,\mathcal{L}_H^{i} + s_i^{4}\,\mathcal{L}_M^{i} \Big) \tag{8}$$

where $s_i^{1}$, $s_i^{2}$, $s_i^{3}$, and $s_i^{4}$ are the switches pertaining to the tasks $T_1$, $T_2$, $T_3$, and $T_4$, respectively. The values of these switches are either 0 or 1 depending on the availability of ground-truth labels/masks of the respective tasks for the $i$th image.
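The 0/1 switches can be sketched as follows, assuming per-image losses are kept in dictionaries keyed by hypothetical task names (`lung`, `disease`, `health`, `multi`) and that all tasks are weighted equally, as stated in the implementation details. Rather than multiplying by zero, the sketch skips switched-off tasks; this is equivalent for finite losses and also avoids propagating a NaN computed against a missing mask.

```python
def total_loss(per_image_losses, switches):
    """Sum per-task losses over images, keeping only tasks whose switch is 1.

    per_image_losses: list of dicts {task: loss value}, one dict per image.
    switches: list of dicts {task: 0 or 1} marking which labels/masks exist.
    """
    total = 0.0
    for losses, s in zip(per_image_losses, switches):
        # A switch of 0 removes that task's term for this image (equal task weights).
        total += sum(value for task, value in losses.items() if s[task] == 1)
    return total


losses = [
    {"lung": 0.20, "disease": 0.50, "health": 0.10, "multi": 0.30},
    {"lung": 0.40, "disease": float("nan"), "health": 0.20, "multi": 0.60},
]
switches = [
    {"lung": 1, "disease": 1, "health": 1, "multi": 1},  # fully annotated image
    {"lung": 1, "disease": 0, "health": 1, "multi": 1},  # no disease mask available
]
print(total_loss(losses, switches))  # approximately 2.3; the NaN term is skipped
```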

3. Experimental details

We next summarize the databases used for training and testing, the lung and disease annotations performed as part of this research, and the implementation details.

3.1. Database and protocol

The different tasks of the network require a chest X-ray database with multiple annotations and diverse properties. Thus, the database for the experiments is created by combining subsets of the ChestXray-14, CheXpert, and COVID-19 infected X-ray databases. We use only frontal X-rays from the following publicly available databases:

• ChestXray-14 [28]: The dataset contains healthy and unhealthy x-ray images. It has a total of 112,120 chest x-ray images, of which 67,310 are PA view images and the remaining 44,810 are AP view. Multiple radiographs of the same patient taken at different times are also present. From the database, we derive a subset of 13,360 images spanning both PA and AP views. The unhealthy X-rays are labeled with one or more of 14 classes: Atelectasis, Cardiomegaly, Consolidation, Edema, Effusion, Emphysema, Fibrosis, Hernia, Infiltration, Mass, Nodule, Pneumonia, Pneumothorax, and Pleural Thickening. Additionally, the dataset provides localization information of abnormalities for 880 X-rays. The details of the subset drawn from ChestXray-14 are given in Table 1.

• CheXpert [29]: The CheXpert dataset contains a total of 223,414 chest x-ray images, of which 29,420 are PA view, 161,590 are AP view, and the remaining are lateral or single-lung view images. Multiple studies of the same patient are available in the dataset. This dataset contains healthy and unhealthy X-ray images. We selected a subset of 18,078 images. Based on the radiological findings, each X-ray image is labeled positive/negative for 14 pre-defined classes (a few overlapping with ChestXray-14): No Finding, Enlarged Cardiomediastinum, Lung Lesion, Edema, Consolidation, Pneumonia, Atelectasis, Pneumothorax, Cardiomegaly, Pleural Effusion, Pleural Other, Lung Opacity, Fracture, and Support Devices. The details of the x-ray images selected from the CheXpert database are shown in Table 1.

• COVID-19: For this study, we collected a total of 415 X-rays from various internet sources. The sources contain a mix of PA and AP view frontal chest x-rays. The number of X-rays collected from each source is summarized in Table 2. Further, we have also performed additional experiments with 2388 images from the BIMCV+ COVID-19 Database [35]. Details and experimental results for the BIMCV+ database can be found in Section 4.4.

Since the above COVID-19 subset has a limited number of images, we perform data augmentation. Each image is augmented in five ways: clockwise rotation by 10°, anti-clockwise rotation by 10°, and translation by 10 pixels in the X, Y, and XY directions. Since pneumonia is a pathology closely related to COVID-19 [36], we select all the pneumonia samples of the ChestXray-14 and CheXpert datasets. Further, to accommodate the variations in non-healthy x-ray samples, about 50% more unhealthy samples are selected than healthy samples. AP view x-rays are more prevalent than PA views in the CheXpert dataset; hence, we select more AP view X-ray images.
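The five augmentations can be sketched in NumPy for a single-channel image. This is an illustrative stand-in rather than the authors' pipeline: it assumes nearest-neighbour rotation about the image centre with zero fill, positive shifts for the X, Y, and XY translations, and the hypothetical helper names `rotate`, `translate`, and `augment_five_ways`.

```python
import numpy as np


def translate(img, dx, dy):
    """Shift a 2-D image by (dx, dy) pixels without wrap-around; vacated pixels are 0."""
    h, w = img.shape
    out = np.zeros_like(img)
    ys, yd = (slice(0, h - dy), slice(dy, h)) if dy >= 0 else (slice(-dy, h), slice(0, h + dy))
    xs, xd = (slice(0, w - dx), slice(dx, w)) if dx >= 0 else (slice(-dx, w), slice(0, w + dx))
    out[yd, xd] = img[ys, xs]
    return out


def rotate(img, degrees):
    """Nearest-neighbour rotation about the centre; positive = anti-clockwise on screen."""
    h, w = img.shape
    theta = np.deg2rad(degrees)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    yy, xx = np.mgrid[0:h, 0:w]
    u, v = xx - cx, yy - cy  # output pixel coordinates relative to the centre
    # Inverse mapping: where does each output pixel come from in the source image?
    src_x = np.rint(u * np.cos(theta) - v * np.sin(theta) + cx).astype(int)
    src_y = np.rint(u * np.sin(theta) + v * np.cos(theta) + cy).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[valid] = img[src_y[valid], src_x[valid]]  # pixels mapped from outside stay 0
    return out


def augment_five_ways(img, angle=10, shift=10):
    """Five augmented copies: two rotations and three translations."""
    return [
        rotate(img, -angle),           # clockwise by `angle` degrees
        rotate(img, angle),            # anti-clockwise by `angle` degrees
        translate(img, shift, 0),      # X direction
        translate(img, 0, shift),      # Y direction
        translate(img, shift, shift),  # XY direction
    ]


xray = np.random.default_rng(0).random((224, 224))  # stand-in for a grayscale CXR
augmented = augment_five_ways(xray)
print(len(augmented), augmented[0].shape)  # 5 (224, 224)
```

Keeping each original alongside its five variants gives six images per X-ray; for example, 290 × 6 = 1740, which is consistent with the post-augmentation COVID training count in Table 3.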

Table 1.

Details of the databases used in the experiments.

Table 2.

Details for the COVID-19 databases used in the experiments.

The data is split into training and testing sets such that there is no patient overlap between them. The details of the train-test split across different properties are specified in Table 3. The first two columns give the number of samples for the segmentation tasks, i.e., the samples for which lung and disease masks are available. The next three columns give the number of samples in the different classes (normal, COVID, others) for the classification task. The last two columns characterize the overall database by subdividing the total number of samples into two categories: AP and PA. Since the AP and PA views of chest x-rays are substantially different, we attempt to balance the two views during training. Note that all the train set numbers in the table are post-augmentation.
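A patient-disjoint split can be sketched by grouping images by patient before assigning them to train or test. The `(image_id, patient_id)` record format, `test_fraction`, and `seed` are hypothetical; the actual split sizes are those in Table 3. Augmentation should be applied only after splitting, so that augmented copies of a test image never appear in the training set.

```python
import random
from collections import defaultdict


def patient_disjoint_split(records, test_fraction=0.2, seed=0):
    """Split (image_id, patient_id) records so that no patient is in both sets."""
    images_by_patient = defaultdict(list)
    for image_id, patient_id in records:
        images_by_patient[patient_id].append(image_id)

    # Shuffle patients (not images) so that all images of a patient move together.
    patients = sorted(images_by_patient)
    random.Random(seed).shuffle(patients)
    test_patients = set(patients[:round(len(patients) * test_fraction)])

    train, test = [], []
    for patient_id, image_ids in images_by_patient.items():
        (test if patient_id in test_patients else train).extend(image_ids)
    return train, test


records = [("img1", "p1"), ("img2", "p1"), ("img3", "p2"), ("img4", "p3"),
           ("img5", "p4"), ("img6", "p4"), ("img7", "p5")]
train, test = patient_disjoint_split(records, test_fraction=0.4)
print(train, test)
```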

Table 3.

Details of train-test split across different parameters. Train set for COVID-19 includes augmentation. Cols 1–2 specify number of samples for segmentation tasks. Cols 3–5 specify number of samples for classification task. Cols 6–7 specify total number of AP/PA view samples in the database.

| Split | Lung mask | Disease mask | Normal | COVID | Others | PA view | AP view |
|---|---|---|---|---|---|---|---|
| Train | 8730 | 1456 | 8173 | 1740 | 16551 | 10161 | 16690 |
| Test | 1837 | 251 | 2077 | 2223 | 4097 | 2464 | 3968 |
| Total | 10567 | 1707 | 10250 | 3963 | 20648 | 12625 | 20658 |

3.2. Lung and disease region annotation

The datasets mentioned above lack lung localization details, while COMiT-Net requires the ground-truth lung location to identify the lung region in the x-ray. For this purpose, we manually annotated a total of about 9000 lung x-rays. These x-rays include well-balanced healthy/unhealthy and AP/PA subsets taken equally from the CheXpert and ChestXray-14 datasets. All x-ray images available for COVID-19 are also manually annotated for lung segmentation. The mask for each x-ray image is created by drawing two solid bounding boxes, each covering the area of one lung. As a part of this study, we also plan to release the ground-truth masks for the manually annotated lung regions.
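Turning the two drawn boxes into a binary lung mask can be sketched as below. The `(x_min, y_min, x_max, y_max)` box format, the inclusive max edge, and the example coordinates are assumptions for illustration only.

```python
import numpy as np


def boxes_to_mask(height, width, boxes):
    """Rasterise solid bounding boxes into a binary lung mask.

    boxes: list of (x_min, y_min, x_max, y_max) in pixels, max edge inclusive;
    one box per lung. Overlapping boxes are merged, since pixels are set, not added.
    """
    mask = np.zeros((height, width), dtype=np.uint8)
    for x0, y0, x1, y1 in boxes:
        mask[y0:y1 + 1, x0:x1 + 1] = 1
    return mask


# Hypothetical boxes for the two lungs on a 224 x 224 image.
lung_mask = boxes_to_mask(224, 224, [(30, 40, 100, 190), (124, 40, 194, 190)])
print(lung_mask.sum())  # 21442 (2 boxes of 71 x 151 pixels)
```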

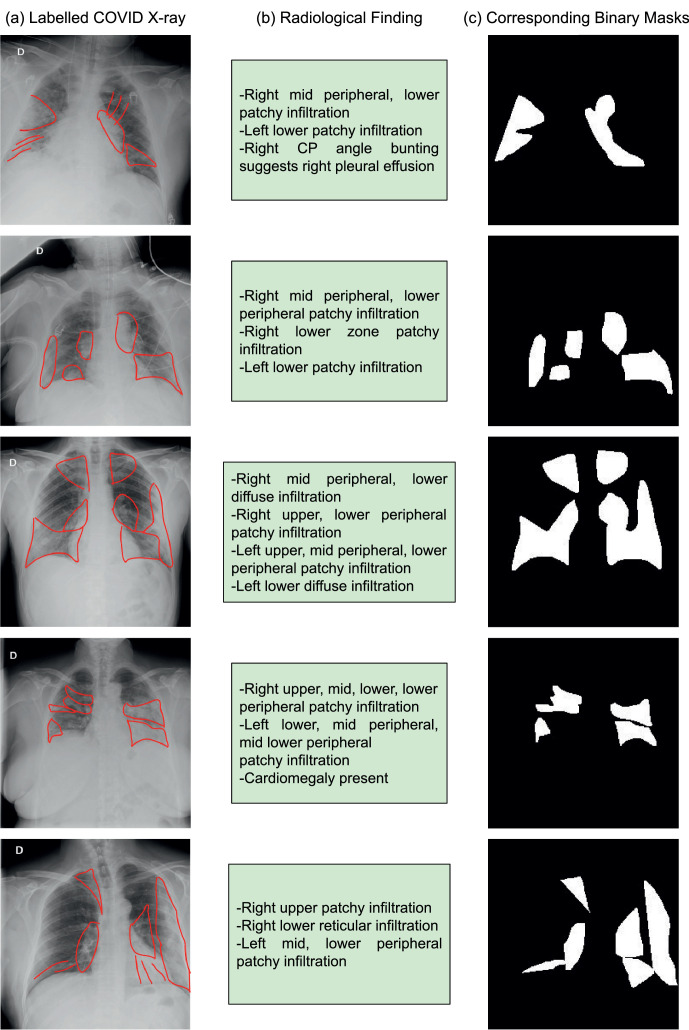

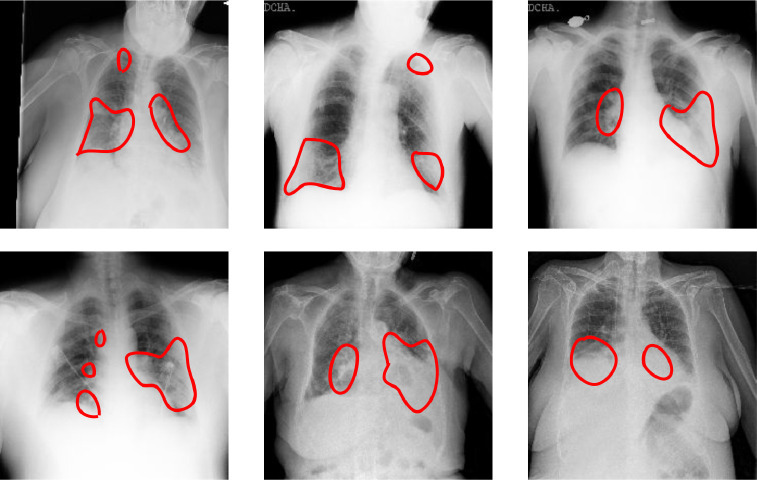

The datasets included in this study have only 880 images with disease localization annotations (from the ChestXray-14 database), and no disease segmentation masks were available for COVID-19 affected frontal lung x-ray images. Hence, as a part of this study, these x-ray images are annotated by a radiologist for various radiological findings. The findings the radiologists looked for include: (i) atelectasis, (ii) consolidation, (iii) interstitial shadows (reticular, nodular, ground glass), (iv) pneumothorax, (v) pleural effusion, (vi) pleural thickening, (vii) cardiomegaly, and (viii) lung lesion. The experts annotated a total of 200 COVID-19 affected chest x-rays. A few sample annotations are shown in Fig. 4(a), with the corresponding descriptions in Fig. 4(b). Since deep learning algorithms require binary masks as annotations during training, we created these masks from the annotations (Fig. 4(c)). We will release the ground-truth binary masks to promote the training of deep semantic segmentation algorithms for abnormality localization.

Fig. 4.

Annotations provided for COVID-19 affected frontal lung x-ray images as a part of this study: (a) Labeled COVID-19 X-ray for locations of radiological finding, (b) Description of the radiological finding, (c) Corresponding binary masks for training deep semantic segmentation algorithms for disease segmentation.

3.3. Implementation details

The proposed multi-task network takes input X-ray images of size 224 × 224 × 3. The encoder stream is initialized with a pre-trained VGG16 model. With a batch size of 16, the model is optimized over the binary cross-entropy loss using the Adam optimizer (learning rate ). Each loss is weighted equally. The model is trained for 30 epochs² on an NVIDIA GeForce RTX 2080Ti and is implemented in PyTorch.

The UNet and SegNet models are trained on an NVIDIA RTX 2080Ti using a PyTorch (v.1.4.0) implementation. The input size is kept the same (224 × 224 × 3), with a batch size of 4. The models are trained for 25 epochs by minimizing the binary cross-entropy loss using the Adam optimizer with an initial learning rate of 0.0001. Other Python library requirements include torchvision (v.0.5.0), tqdm (v.4.45.0), tensorboardX (v.1.1), and Pillow (v.7.0.0). Similarly, Mask-RCNN operates on images of the same size with a training batch size of 16. It is trained for 25 epochs by minimizing the binary cross-entropy loss using the SGD optimizer with a learning rate of 0.001 and momentum of 0.9. This model is trained on Google Colab with an NVIDIA Tesla T4 GPU accelerator using a PyTorch (1.6.0+cu101) implementation.

The comparative algorithms include DenseNet121 [15], [16], [17], MobileNetv2 [4], [5], ResNet18 [6], [7], [37], and VGG19 [13], [38]. For each of these networks, the ImageNet pre-trained version is selected and then fine-tuned with the dataset and protocol used for the proposed COMiT-Net. The input size, batch size, and number of epochs are kept the same as for COMiT-Net, i.e., 224 × 224 × 3, 16, and 30, respectively.

4. Results and analysis

We next evaluate the performance of the proposed COMiT-Net for classification and localization tasks and compare it with existing deep learning algorithms for COVID-19 chest radiograph studies. Further, to study the effectiveness of the proposed COMiT-Net, we perform experiments with different combinations of its sub-networks in subsection 4.3. Lastly, subsection 4.4 specifically discusses the prediction of COVID-19 affected CXRs, presenting predictions on a large database of COVID-19 positive CXRs, validated against predictions from radiologists.

4.1. Lung and disease localization

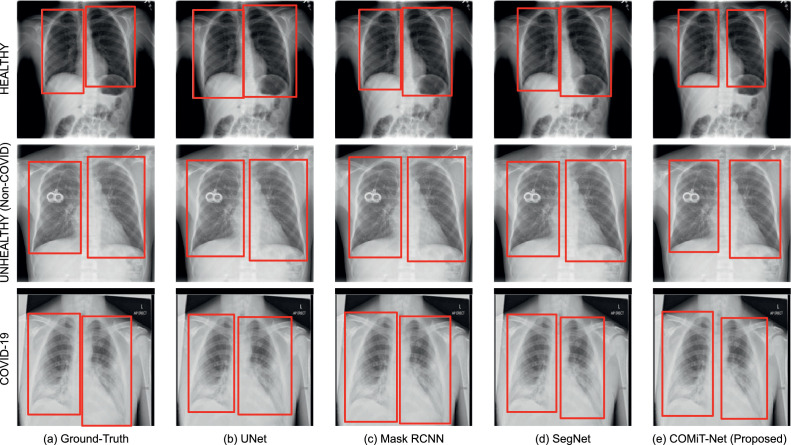

In this subsection, the segmentation results of the proposed COMiT-Net are compared against region predictions from UNet [39], Mask RCNN [40], and SegNet [27]. For lung segmentation, sample predictions of the proposed and existing algorithms are shown in Fig. 5. From the sample predictions, we observe that all four algorithms perform well and give comparable results; however, the proposed COMiT-Net yields the most precise bound for lung segmentation. To support the visual results in Fig. 5, we additionally report the Intersection over Union (IoU) for lung segmentation. The IoU scores of U-Net, Mask-RCNN, SegNet, and COMiT-Net are 0.82, 0.85, 0.83, and 0.85, respectively. The IoU of COMiT-Net is the same as that of the state-of-the-art segmentation method Mask-RCNN, while Fig. 5 shows that COMiT-Net provides the tightest bound for the lungs. Since the lung and disease localization tasks are performed simultaneously, and diseases are present within the lungs, the lung decoder network learns to focus on the lung regions rather than on the regions outside the lungs.
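For reference, the IoU between a predicted and a ground-truth binary mask can be computed as below. Whether the reported scores average per-image IoU over the test set or pool pixels across images is not stated, so this per-image version is an assumption; returning 1.0 when both masks are empty is also a convention chosen for the sketch.

```python
import numpy as np


def iou(pred, target):
    """Intersection over Union of two binary masks of the same shape."""
    pred, target = pred.astype(bool), target.astype(bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        return 1.0  # both masks empty: counted as perfect agreement (a convention)
    return float(np.logical_and(pred, target).sum() / union)


target = np.zeros((10, 10), dtype=np.uint8)
target[2:8, 2:8] = 1  # 6 x 6 ground-truth region
pred = np.zeros((10, 10), dtype=np.uint8)
pred[3:9, 2:8] = 1    # same region shifted down by one row
print(round(iou(pred, target), 4))  # 0.7143 (30 / 42)
```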

Fig. 5.

Samples of lung segmentation output for existing algorithms and the proposed COMiT-Net.

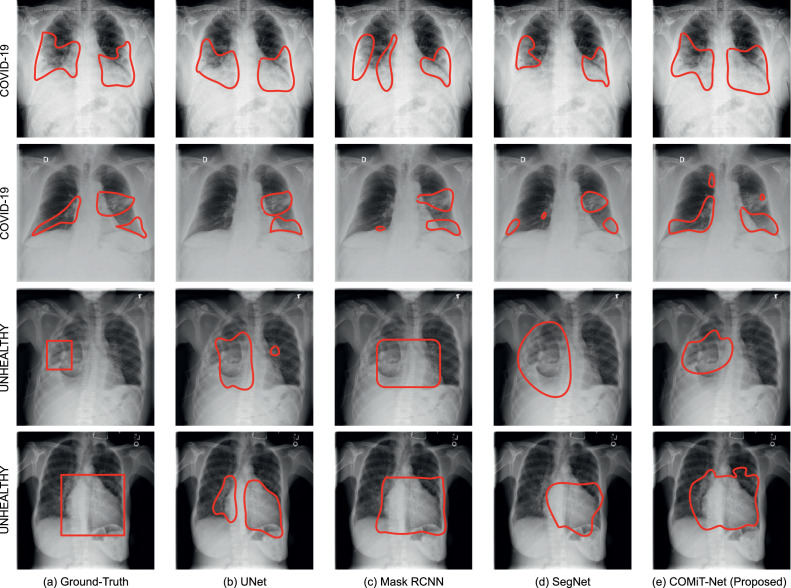

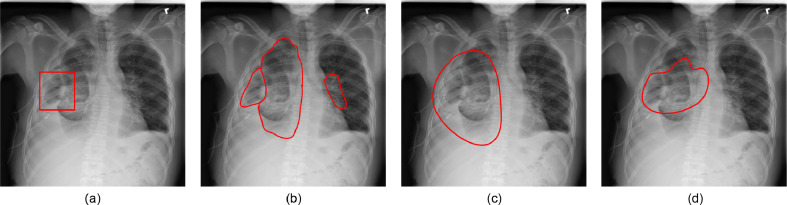

The results of disease segmentation are shown in Fig. 6. The first two rows of Fig. 6 illustrate abnormalities in COVID-19 affected lungs, while the last two rows show abnormality localization in unhealthy but Non-COVID affected lungs. For disease localization, a more relevant metric is the True Positive Rate (TPR): when localizing a disease, we do not want to miss diseased regions, even if some non-diseased regions get predicted as diseased. The TPRs of U-Net, Mask RCNN, SegNet, and COMiT-Net are 0.42, 0.51, 0.31, and 0.87, respectively. The masks for all four algorithms are predicted at a constant threshold of 0.5 (disease treated as foreground; label = 1), and the TPR is computed for the foreground disease class from the predicted mask. In terms of shape, Mask-RCNN tends to provide well-defined shape boundaries for Non-COVID unhealthy lungs, whereas SegNet and COMiT-Net provide irregularly shaped predictions that localize the radiological findings compactly. Overall, we observe that each of the four algorithms predicts additional regions for the abnormalities, i.e., the detected abnormalities contain false positive regions compared to the ground truth. As shown in Fig. 7(c) and (d), these false positives sometimes arise due to better localization by SegNet and the proposed COMiT-Net. The ground truth provided with the database is shown for reference in Fig. 7(a).
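The pixel-level TPR at a 0.5 threshold can be sketched as follows. Whether pixels exactly at 0.5 count as disease is not specified, so `>=` is an assumption. The toy example shows why TPR suits this purpose: false-positive pixels do not lower it, while missed disease pixels do.

```python
import numpy as np


def pixel_tpr(prob_map, target, threshold=0.5):
    """Fraction of ground-truth disease pixels that are predicted as disease."""
    pred = prob_map >= threshold  # disease is the foreground (label 1)
    positives = target.astype(bool)
    if positives.sum() == 0:
        return float("nan")  # TPR is undefined when there is no disease pixel
    return float((pred & positives).sum() / positives.sum())


target = np.array([[1, 1, 0],
                   [1, 1, 0],
                   [0, 0, 0]])
prob = np.array([[0.9, 0.7, 0.8],
                 [0.6, 0.2, 0.9],
                 [0.1, 0.0, 0.3]])
# One disease pixel is missed; the two false positives do not lower the TPR.
print(pixel_tpr(prob, target))  # 0.75
```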

Fig. 6.

Samples of semantic disease segmentation for existing algorithms and the proposed COMiT-Net. The x-ray images and corresponding abnormality localization for “Unhealthy” are derived from ChestXray-14 database [28].

Fig. 7.

Non-COVID affected unhealthy lung, with (a) ground-truth annotation from ChestXray-14 database, (b) abnormality manually marked by a radiologist (as a part of this study), (c) disease prediction from SegNet, and (d) disease prediction from the proposed COMiT-Net.

Further, we observe that for certain abnormalities in the ‘Unhealthy’ case, deep models fail to localize the abnormality. One reason is the limited training data for abnormality localization combined with large variations in the diseased regions. The unhealthy Non-COVID lung abnormalities are derived from ChestXray-14, which has 700 samples corresponding to 14 labels. Because of the small sample size for each abnormality, the networks cannot localize diseases properly. However, the proposed COMiT-Net has assistance from the other tasks; for instance, the lung prediction task implicitly reinforces COMiT-Net to predict diseases within the lungs. Hence, of the four algorithms, the proposed COMiT-Net provides the prediction with the most overlap with the ground truth.

Compared to 700 samples for 14+ different radiological findings (approx. 50 images per abnormality), there are 290 COVID-19 affected lung x-rays (prior to augmentation). A majority of COVID-19 affected chest radiographs demonstrate consolidations, which tend to be bilateral and more common in the lower zones [41]. Hence, deep models have more samples to learn the localization of COVID-19 specific abnormalities than of other diseases (290 vs. 50). Accordingly, the first two rows of Fig. 6 show that all four models perform relatively better for COVID-19 localization than for the “unhealthy” localization in the last two rows. In most cases, each of the four models predicts affected regions in the lower lung zones bilaterally. However, the proposed COMiT-Net outperforms the other algorithms. For instance, in the first row of Fig. 6, both Mask-RCNN and SegNet tend to leave out the darker region in the right lung, while the ground truth and COMiT-Net mark that region as diseased. Further, in the low-contrast x-ray in row two, the less opaque part of the right lower lung looks darker despite being diseased. As a result, UNet fails to detect any finding in the right lower lung, while Mask RCNN and SegNet detect only a few small regions. In contrast, the proposed COMiT-Net detects such faint differences in lung density.

4.2. Classification

Next, we evaluate COMiT-Net’s performance for healthy/unhealthy classification (Task 3) and multi-label classification of COVID-19 and other diseases (Task 4). For COVID/Non-COVID classification, a branch is derived from the last layer of the encoder network, i.e., the GAP layer, which outputs the embedding of the input sample. The branch has three fully connected (FC) layers: the first two use ReLU activation, and the last uses sigmoid activation for classification. The results of COMiT-Net are compared against popular deep networks: DenseNet121 [15], [16], [17], MobileNetv2 [4], [5], ResNet18 [6], [7], [37], and VGG19 [13], [38]. Further, we draw a comparison with Random Decision Forest (RDF) [42] and Support Vector Machines (SVM) [43] with three different kernels: sigmoid, Gaussian, and radial basis function (RBF). In COMiT-Net Embedding + RDF, the embeddings of the input samples are obtained from the GAP layer of the encoder network and are used to train the RDF classifier. Similarly, for COMiT-Net Embedding + SVM (Sigmoid), COMiT-Net Embedding + SVM (Gaussian), and COMiT-Net Embedding + SVM (RBF), the output embeddings are used to train SVM classifiers with sigmoid, Gaussian, and RBF kernels, respectively.

The classification results are presented in Table 4. Sensitivity is reported at two fixed specificities, 90% and 99%, at which COMiT-Net achieves 96.80% and 87.20% sensitivity, respectively. Further, for COVID classification, we observe an overall test classification accuracy³ of 98.79%. Lastly, we also compute precision and recall for the proposed method: at 99% specificity, the precision is 64.12% and the recall is 87.20%, giving an F1 score of 73.90%.
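The fixed-specificity operating points and the F1 score above can be computed from raw classifier scores along these lines. This is a sketch under stated assumptions: a sample is called COVID-19 positive when its score is at least the threshold, label 1 means COVID-19, and the function names are hypothetical; the paper does not describe its exact threshold search.

```python
def sensitivity_at_specificity(scores, labels, target_spec):
    """Best sensitivity over thresholds whose specificity is at least target_spec.

    A sample is predicted positive (COVID-19) when score >= threshold.
    """
    neg = [s for s, y in zip(scores, labels) if y == 0]
    pos = [s for s, y in zip(scores, labels) if y == 1]
    best = 0.0
    # Candidate thresholds: every observed score, plus +inf (predict all negative).
    for t in sorted(set(scores)) + [float("inf")]:
        specificity = sum(s < t for s in neg) / len(neg)
        if specificity >= target_spec:
            sensitivity = sum(s >= t for s in pos) / len(pos)
            best = max(best, sensitivity)
    return best

def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# Toy scores: four Non-COVID (label 0) and three COVID-19 (label 1) samples.
scores = [0.10, 0.20, 0.30, 0.40, 0.35, 0.80, 0.90]
labels = [0, 0, 0, 0, 1, 1, 1]
print(sensitivity_at_specificity(scores, labels, 0.75))  # 1.0
print(round(f1_score(0.6412, 0.8720) * 100, 2))  # 73.9, matching the reported F1
```

Plugging the reported precision (64.12%) and recall (87.20%) into `f1_score` reproduces the 73.90% figure.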

Table 4.

Evaluation and comparison of the proposed COMiT-Net with existing algorithms for COVID-19 prediction (FC = fully connected classification layers; EER = equal error rate). Sensitivity (%) is reported at fixed specificity values Y; for example, the column with Y = 99% gives the sensitivity of each network at 99% specificity.

| Algorithm | Sensitivity@Specificity (Y = 99%) | Sensitivity@Specificity (Y = 90%) | EER (%) |
|---|---|---|---|
| DenseNet121 + FC | 60.80 | 90.40 | 9.82 |
| MobileNetv2 + FC | 67.20 | 93.60 | 8.04 |
| ResNet18 + FC | 56.00 | 81.60 | 13.78 |
| VGG19 + FC | 50.40 | 82.40 | 13.70 |
| COMiT-Net Embedding + RDF | 79.20 | 95.20 | 7.34 |
| COMiT-Net Embedding + SVM (Sigmoid) | 6.40 | 24.00 | 41.46 |
| COMiT-Net Embedding + SVM (Gaussian) | 82.40 | 88.80 | 11.38 |
| COMiT-Net Embedding + SVM (RBF) | 82.40 | 88.80 | 11.38 |
| COMiT-Net (Proposed) | 87.20 | 96.80 | 7.30 |

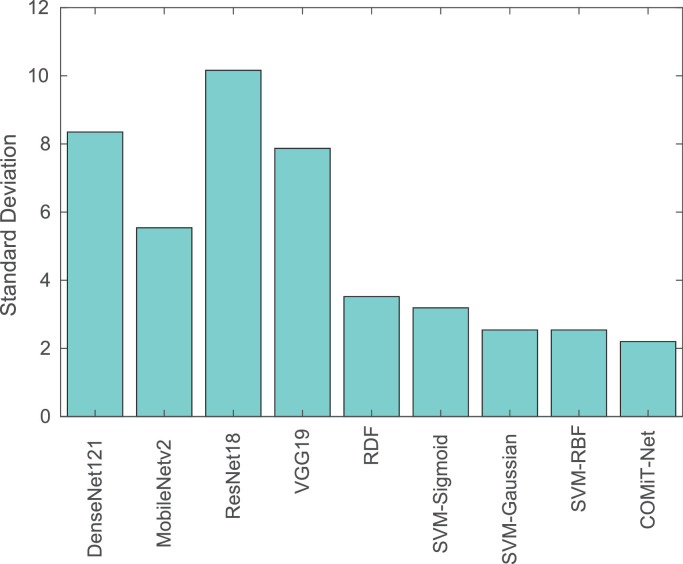

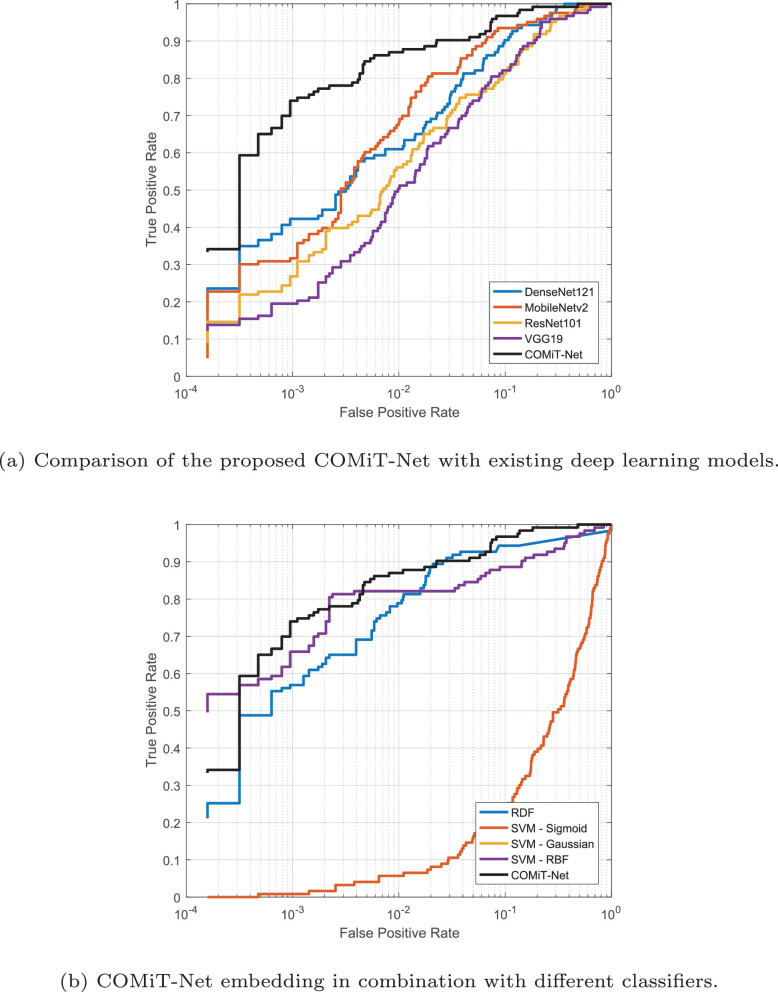

The proposed COMiT-Net achieves the highest TPR at both specificity levels and the lowest EER among the compared algorithms, benefiting from the implicit supervision of the lung and disease localization tasks. To assess the stability of the algorithms, each network is trained three times with different initializations, and we report the standard deviation of sensitivity across these runs (a lower standard deviation implies higher stability). As shown in Fig. 8, the proposed COMiT-Net is the most stable algorithm across initializations, and classifiers that use COMiT-Net embeddings also show lower standard deviations. This suggests that COMiT-Net consistently provides a discriminative representation, resulting in stable performance. Fig. 9 further compares the ROC curves of the proposed COMiT-Net and the existing algorithms.
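The EER column of Table 4 is the operating point where the false acceptance rate (FAR, Non-COVID samples flagged as COVID-19) equals the false rejection rate (FRR, COVID-19 samples missed). The sketch below shows one common way to estimate it from scores; averaging FAR and FRR at the threshold where they are closest is our assumption, since the paper does not state how it interpolates, and `equal_error_rate` is a hypothetical name.

```python
def equal_error_rate(scores, labels):
    """Approximate EER: average of FAR and FRR at the threshold where they are closest."""
    neg = [s for s, y in zip(scores, labels) if y == 0]
    pos = [s for s, y in zip(scores, labels) if y == 1]
    best_gap, eer = float("inf"), None
    for t in sorted(set(scores)):
        far = sum(s >= t for s in neg) / len(neg)  # Non-COVID wrongly flagged as COVID-19
        frr = sum(s < t for s in pos) / len(pos)   # COVID-19 samples missed
        if abs(far - frr) < best_gap:
            best_gap, eer = abs(far - frr), (far + frr) / 2
    return eer

scores = [0.10, 0.20, 0.30, 0.40, 0.35, 0.80, 0.90]
labels = [0, 0, 0, 0, 1, 1, 1]
print(round(equal_error_rate(scores, labels), 4))  # 0.2917
```

A finer threshold grid or interpolation along the ROC curve would give a smoother estimate on real data; the discrete sweep keeps the idea visible.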

Fig. 8.

Standard deviation (σ) of sensitivity (at 1% FAR, i.e., 99% specificity) for different algorithms, computed across different initializations of the deep networks. COMiT-Net delivers consistent sensitivity across initializations.

Fig. 9.

ROC curves summarizing the performance for COVID-19 classification.

We also analyze how COMiT-Net assigns the COVID-19 samples to the healthy and unhealthy classes. The proposed network classifies 97.25% of COVID-19 samples into the unhealthy class and 2.75% into the healthy class. The high TPR for the COVID-19 class, together with most COVID-19 samples being classified as unhealthy, shows the effectiveness of the proposed network for COVID-19 detection. Overall, the healthy/unhealthy classification performance is 75.17% across all test samples, and the Non-COVID disease classification performance is 73.87%. Fig. 10 shows some misclassified samples, where COMiT-Net predicts COVID-19 positive instances (as per the RT-PCR test) as healthy (Task 3). The same samples are also predicted as Non-COVID by Task 4 of COMiT-Net. In retrospect, we believe that minimal opacities in the lung region could be the probable cause of misclassification. This led us to check the ground truth for the day of hospitalization: of the four misclassified samples shown in Fig. 10, three were acquired in the early days of the patients’ hospitalization (up to day 3). Based on these observations, we believe that COMiT-Net predicts an x-ray as affected when radiological findings such as opacities and consolidations are present.

Fig. 10.

COVID-19 positive cases misclassified as both healthy and Non-COVID by the proposed COMiT-Net.

4.3. Ablation study

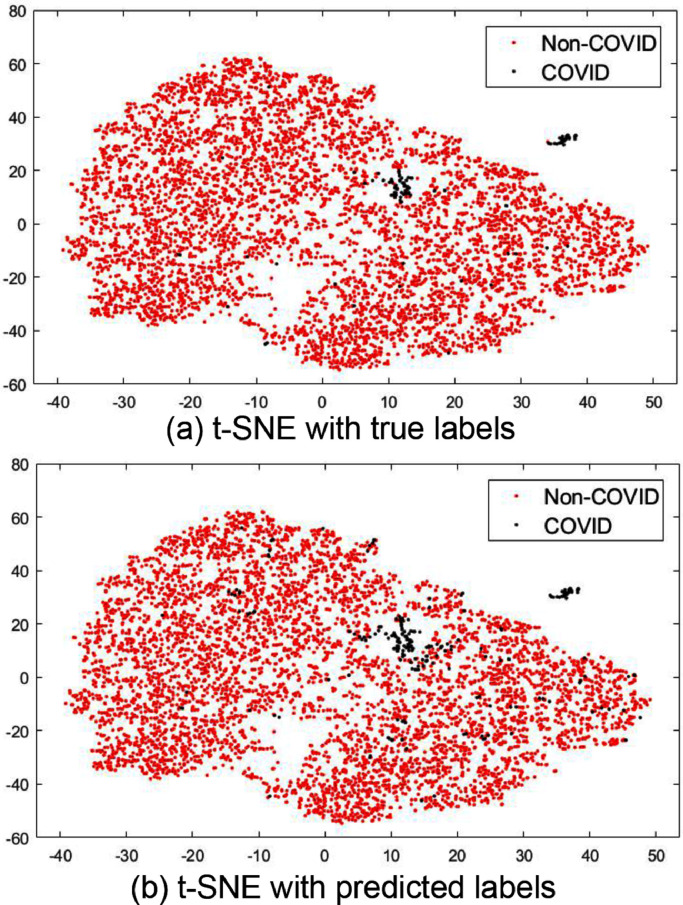

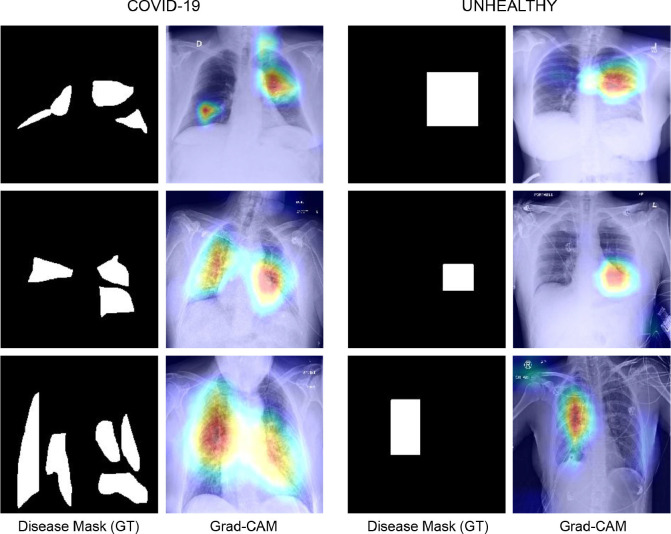

To study the importance of the different tasks in the proposed COMiT-Net, we perform an ablation study over different combinations of tasks. The four tasks in COMiT-Net are Task 1: semantic lung segmentation, Task 2: semantic disease segmentation, Task 3: healthy/unhealthy classification of the lung x-ray, and Task 4: multi-label classification for the presence of COVID-19 or other diseases. We perform eight ablation experiments, presented in Table 5. For COVID-19 prediction, each task (loss function) plays an important role: removing any of the three assisting tasks deteriorates the performance. Of the three assisting tasks, lung segmentation plays the most pivotal role. In a COVID-19 affected x-ray, the lungs are commonly affected bilaterally; the comprehensive view provided by the lung segmentation task gives more weight to the lung regions, so adding Task 1 improves performance more than adding any other task. We retain disease segmentation and healthy/unhealthy classification because their efficacy improves in conjunction with lung segmentation and they have a positive impact on Non-COVID disease classification.

The t-SNE feature space plots in Fig. 11 show that, as in the ground-truth plot (Fig. 11(a)), the predicted test COVID-19 samples (Fig. 11(b)) are well separated from the Non-COVID samples. This suggests that the model can distinguish COVID-19 affected samples and correctly predict the labels of unseen test samples. Further, we use Grad-CAM [44], a popular tool for producing visual explanations of decisions made by deep learning architectures. As observed in the Grad-CAM analysis (Fig. 12), the proposed COMiT-Net focuses on diseased regions, in both COVID-19 and unhealthy test samples, to make its predictions.
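The ablation amounts to switching individual loss terms on or off. The sketch below illustrates that idea under explicit assumptions: soft Dice loss for the two segmentation tasks, binary cross-entropy for Tasks 3 and 4, equal task weights, flattened masks, and the names `bce`, `soft_dice_loss`, and `multitask_loss` are all hypothetical choices, not the loss functions reported for COMiT-Net. Terms are evaluated lazily so a disabled task needs no annotations, which matters when lung or disease masks are unavailable.

```python
import math

def bce(p, y, eps=1e-7):
    """Binary cross-entropy for one predicted probability p and target y in {0, 1}."""
    p = min(max(p, eps), 1 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def soft_dice_loss(pred, target, eps=1e-7):
    """1 - soft Dice coefficient between flattened predicted and ground-truth masks."""
    inter = sum(p * t for p, t in zip(pred, target))
    return 1 - (2 * inter + eps) / (sum(pred) + sum(target) + eps)

def multitask_loss(out, gt, tasks=(1, 2, 3, 4), weights=None):
    """Weighted sum of the enabled task losses (hypothetical equal weights by default)."""
    weights = weights or {1: 1.0, 2: 1.0, 3: 1.0, 4: 1.0}
    # Lambdas defer each term so disabled tasks never touch missing annotations.
    terms = {
        1: lambda: soft_dice_loss(out["lung_mask"], gt["lung_mask"]),
        2: lambda: soft_dice_loss(out["disease_mask"], gt["disease_mask"]),
        3: lambda: bce(out["unhealthy"], gt["unhealthy"]),
        4: lambda: sum(bce(p, y) for p, y in zip(out["labels"], gt["labels"])) / len(gt["labels"]),
    }
    return sum(weights[k] * terms[k]() for k in tasks)

# "Task 4 only" ablation: only the multi-label targets are needed.
print(round(multitask_loss({"labels": [0.5, 0.5]}, {"labels": [1, 0]}, tasks=(4,)), 4))  # 0.6931
```

Each row of Table 5 then corresponds to a different `tasks` tuple, e.g. `(1, 4)` for "Tasks 1 and 4".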

Table 5.

Ablation study: effect of reducing the number of tasks on COVID-19 prediction.

| Tasks | COVID-19 Sensitivity (%) |
|---|---|
| All 4 tasks | 96.80 |
| Task 4 only | 84.40 |
| Tasks 1 and 4 | 94.40 |
| Tasks 2 and 4 | 57.60 |
| Tasks 3 and 4 | 67.80 |
| Tasks 1, 2, and 4 | 92.80 |
| Tasks 1, 3, and 4 | 87.20 |
| Tasks 2, 3, and 4 | 54.40 |

Fig. 11.

Interpretation of feature representation based on (a) ground-truth and (b) predicted labels using t-SNE plot for COVID/Non-COVID classification.

Fig. 12.

Interpretation of the regions focused on by COMiT-Net using Grad-CAM. As seen from the heat maps, supervision from the disease and lung annotations helps COMiT-Net focus on unhealthy regions.

In real-world scenarios, annotating disease and lung masks for chest x-rays is a time-consuming and challenging task. The performance of the proposed COMiT-Net depends on the annotated data available for the different tasks, and it might not be feasible to obtain an adequate number of disease and lung masks for the x-ray images in the training set. Moreover, the performance of the proposed model on COVID/Non-COVID classification depends on the lung and disease segmentation tasks: removing either of these assisting tasks deteriorates the performance of COMiT-Net.

4.4. Prediction on unseen COVID X-ray database

Recently, the BIMCV-COVID19+ database was released by the Medical Imaging Databank in the Valencian Region (BIMCV). To demonstrate the performance of the proposed COMiT-Net in a real-world scenario, we also report results on this database. At the time of download, it contained 2,388 frontal x-ray images captured from COVID-positive patients during hospitalization. For each patient, the database has one or more x-rays along with the reports of one or more COVID-19 diagnostic tests (RT-PCR, IgM, IgG) and their timestamps. Note that the timestamp of an x-ray does not coincide with the timestamp of a diagnostic test. Hence, if an x-ray is taken closer to a negative diagnostic test, or is at least 14 days away from the nearest positive diagnostic test, it is considered COVID-19 negative; otherwise, we label it COVID-19 positive. With this procedure, there are a total of 2,098 COVID-19 positive x-rays, which are used for testing only (no training is performed on this dataset). For reproducibility, we will release these labels along with the filenames of the radiographs.
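The labelling rule above can be written as a small function. This is a sketch of one reading of the text, under assumptions: "closer" means strictly closer in whole days, "at least 14 days" means a gap of 14 or more days, an x-ray with no positive test at all is labelled negative, and the name `label_bimcv_xray` and the `(test_date, is_positive)` input format are hypothetical.

```python
from datetime import date

def label_bimcv_xray(xray_date, tests, window_days=14):
    """Return "positive" or "negative" for one x-ray.

    tests: list of (test_date, is_positive) pairs for the patient's
    RT-PCR / IgM / IgG results.
    """
    def nearest_gap(positive):
        gaps = [abs((d - xray_date).days) for d, is_pos in tests if is_pos == positive]
        return min(gaps) if gaps else None

    pos_gap = nearest_gap(True)
    neg_gap = nearest_gap(False)
    if pos_gap is None:
        return "negative"  # assumption: no positive test at all
    if neg_gap is not None and neg_gap < pos_gap:
        return "negative"  # closer to a negative test
    if pos_gap >= window_days:
        return "negative"  # at least 14 days from the nearest positive test
    return "positive"

tests = [(date(2020, 3, 1), True), (date(2020, 3, 20), False)]
print(label_bimcv_xray(date(2020, 3, 3), tests))   # positive
print(label_bimcv_xray(date(2020, 3, 18), tests))  # negative
```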

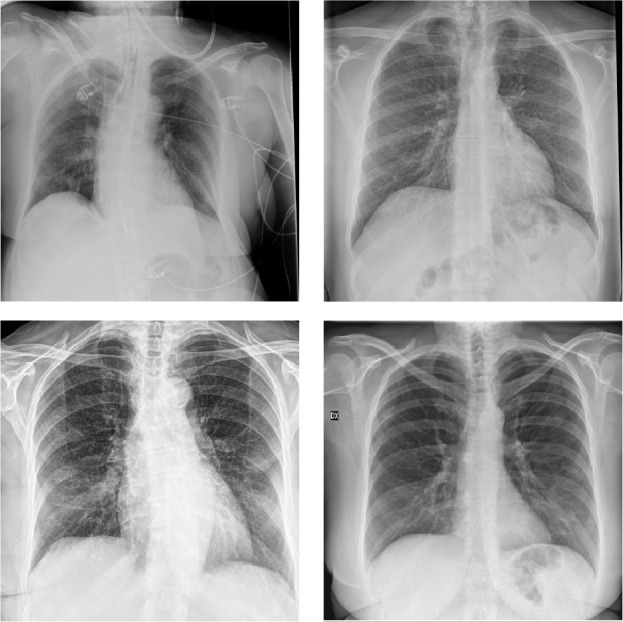

Of the 2,098 COVID-19 positive x-rays, the trained COMiT-Net correctly classifies 1,793 and misclassifies 305 (at 10% FAR); that is, at 90% specificity, COMiT-Net has a sensitivity of 85.46%. Sample disease segmentations from the 1,793 correctly classified samples are shown in Fig. 13. As in the earlier disease segmentation examples, COMiT-Net localizes abnormalities bilaterally.
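For reference, the 85.46% figure follows directly from the counts above, treating the 1,793 correctly classified x-rays as true positives (TP) and the 305 misclassified ones as false negatives (FN):

```latex
\text{Sensitivity} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}}
  = \frac{1793}{1793 + 305} = \frac{1793}{2098} \approx 0.8546 = 85.46\%
```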

Fig. 13.

A few instances of semantic disease segmentation by the proposed COMiT-Net on the BIMCV-COVID19+ database.

To better understand the 305 misclassified instances, radiologists reviewed each of these samples to determine whether any radiological abnormality is present. Of these 305, radiologists confirmed the absence of any abnormality in 115 instances. A few such cases, where COMiT-Net and the radiologists assessed the x-ray as negative although BIMCV-COVID19+ labelled it positive, are shown in Fig. 14.

Fig. 14.

Sample instances labeled positive in BIMCV-COVID19+ but predicted negative by COMiT-Net and the radiologists.

Lastly, we show additional results on the COVID-19 subsets of the COVID-19 Radiography dataset⁴. Of the six COVID subsets in this dataset, our experiments already used four (BIMCV, EuroRad, GitHub, SIRM). For the remaining two subsets, at the 90% specificity threshold, the sensitivity is 98.75% on the ARMIRO subset (400 images) and 99.45% on the ML-workgroup subset (183 images).

5. Conclusion and future work

In the face of the SARS-CoV-2 pandemic, it has become essential to perform mass screening and testing of patients. However, many countries around the world lack sufficient laboratory testing kits or medical personnel to do so. At the same time, X-rays are amongst the most popular, cost-effective, and widely available imaging technologies across the world. This paper presents an “explainable solution” for detecting COVID-19 pneumonia in patients through chest radiographs. We propose COMiT-Net, which performs classification and segmentation simultaneously. Experiments conducted on different chest radiograph datasets show promising results for COVID prediction, and the ablation study supports the use of the different tasks in the proposed multi-task network. We believe that the proposed COMiT-Net can serve as an attractive alternative solution that assists doctors and the research community in speeding up the screening of COVID cases.

In the future, we plan to extend this work and use the proposed framework for COVID-19 prediction with modalities other than X-ray, such as CT and ultrasound. Since these modalities provide complementary information, such as the nature and formation of abnormalities in the diseased region, incorporating them will help in crafting a robust solution. Further, we need to understand the distinguishing traits between COVID-19 pneumonia and non-COVID viral pneumonia; learning these traits can be useful for detecting COVID-19 pneumonia.

Declaration of Competing Interest

None.

Acknowledgments

The authors acknowledge the RAKSHAK project under NM-CPS DST and iHuB Drishti Jodhpur. Malhotra is partially supported by the Visvesvaraya Ph.D. Fellowship. Mittal is partially supported by the UGC-NET JRF Fellowship. Majumdar is partially supported by the DST INSPIRE Ph.D. Fellowship.

Biographies

A. Malhotra received the B.Tech. degree in CSE from IIIT-Delhi, India, in 2015, where he is currently pursuing the Doctoral degree. He received ORF from IIIT-Delhi to visit WVU as a Visiting Research Scholar and is a recipient of the Visvesvaraya Ph.D. fellowship. His research interests include DL and ML.

S. Mittal completed her B.Sc.(Hons) and M.Sc. in Computer Science from the University of Delhi, India in 2019. She was awarded the Junior Researcher Fellowship from UGC-NET in December 2018. Currently, she is pursuing her Ph.D. degree from IIT Jodhpur. Her research interests include computer vision and deep learning.

P. Majumdar received the M.Tech. degree in CSE from NIT-Delhi in 2017, where she was awarded the President Gold Medal. She is currently pursuing her Ph.D. at IIIT-Delhi. She is a recipient of the DST INSPIRE Fellowship. Her research interests are ML and DL with applications in face recognition.

S. Chhabra received the B.Tech degree in ECE from the LPU - Punjab, India. Currently, he is pursuing his Ph.D. degree in Computer Science from IIIT-Delhi, India. His research interests include deep learning with applications in privacy preservation. He has received the Best Student Paper Award at IEEE BTAS 2019.

K. Thakral received the Bachelor of Technology degree in Computer Science from the College of Engineering Roorkee, India, in 2019. Currently, he is pursuing a Ph.D. degree from the Indian Institute of Technology Jodhpur. His research interests include computer vision, deep learning, and biometrics.

M. Vatsa received the M.S. (2005) & Ph.D. (2008) degrees from WVU, USA. He is currently a Professor at IIT Jodhpur, India, and the Project Director of the TIH on Computer Vision and Augmented Reality. He is also an Adjunct Professor with IIIT-Delhi and WVU, USA.

R. Singh received the M.S. (2005) & Ph.D. (2008) degrees in CSE from WVU, USA. She is currently a Professor at IIT Jodhpur, India, and the Head of the Department of CSE. She is also an Adjunct Professor with IIIT-Delhi and WVU, USA.

S. Chaudhury is the Director of IIT Jodhpur, and a Professor, on lien, in ECE Department at IIT Delhi, India. His research interests include CV, robotics, embedded systems, and ML. He is an IAPR fellow and fellow of the Indian National Academy of Engineering and National Academy of Sciences, India.

A. Pudrod is a Pulmonologist working with the Ashwini Hospital and Ramakant Heart Care Centre, Maharashtra, India. He completed his MBBS from GMC Nagpur, India. He is a specialist for diagnostics of lung and chest related disorders.

A. Agrawal is a consultant radiologist at Teleradiology Solutions. She is a founder member and Secretary of the Society for Emergency Radiology in India, an editorial board member of the journal Emergency Radiology, and a Fellow of the American Society of Emergency Radiology.

We observe that the model's loss converges around the 30th epoch. To ensure that the model is trained and does not overfit, we track the training losses and stop early at the 30th epoch.

The overall test classification accuracy is

In our context, the terms ‘abnormality’, ‘disease’, and ‘radiological finding’ are used synonymously.

References

- 1. A. Schiffmann, World COVID-19 Stats, 2020, (https://ncov2019.live/). [Accessed: 6-March-2021].

- 2. Pan F., Ye T., Sun P., Gui S., Liang B., Li L., Zheng D., Wang J., Hesketh R.L., Yang L., et al. Time course of lung changes on chest CT during recovery from 2019 novel coronavirus (COVID-19) pneumonia. Radiology. 2020;295(3):715–721. doi: 10.1148/radiol.2020200370.

- 3. T.T. Nguyen, Artificial intelligence in the battle against coronavirus (COVID-19): a survey and future research directions, Preprint, 10.13140/RG.2.2.36491.23846 10 (2020).

- 4. Apostolopoulos I.D., Mpesiana T.A. COVID-19: automatic detection from x-ray images utilizing transfer learning with convolutional neural networks. Phys. Eng. Sci. Med. 2020;43(2):635–640. doi: 10.1007/s13246-020-00865-4.

- 5. Sandler M., Howard A., Zhu M., Zhmoginov A., Chen L.-C. IEEE Conference on Computer Vision and Pattern Recognition. 2018. MobileNetV2: inverted residuals and linear bottlenecks; pp. 4510–4520.

- 6. A. Narin, C. Kaya, Z. Pamuk, Automatic detection of coronavirus disease (COVID-19) using X-ray images and deep convolutional neural networks, arXiv preprint arXiv:2003.10849 (2020).

- 7. He K., Zhang X., Ren S., Sun J. IEEE Conference on Computer Vision and Pattern Recognition. 2016. Deep residual learning for image recognition; pp. 770–778.

- 8. Szegedy C., Vanhoucke V., Ioffe S., Shlens J., Wojna Z. IEEE Conference on Computer Vision and Pattern Recognition. 2016. Rethinking the inception architecture for computer vision; pp. 2818–2826.

- 9. Szegedy C., Ioffe S., Vanhoucke V., Alemi A.A. AAAI Conference on Artificial Intelligence. vol. 31. 2017. Inception-v4, inception-ResNet and the impact of residual connections on learning.

- 10. J.P. Cohen, P. Morrison, L. Dao, COVID-19 image data collection, arXiv preprint arXiv:2003.11597 (2020). [Accessed: 6-Nov-2020].

- 11. P. Mooney, Chest X-Ray Images (Pneumonia) (2018). [Accessed: 6-Sept-2020].

- 12. Nishio M., Noguchi S., Matsuo H., Murakami T. Automatic classification between COVID-19 pneumonia, non-COVID-19 pneumonia, and the healthy on chest X-ray image: combination of data augmentation methods. Sci. Rep. 2020;10(1):1–6. doi: 10.1038/s41598-020-74539-2.

- 13. Horry M.J., Chakraborty S., Paul M., Ulhaq A., Pradhan B., Saha M., Shukla N. COVID-19 detection through transfer learning using multimodal imaging data. IEEE Access. 2020;8:149808–149824. doi: 10.1109/ACCESS.2020.3016780.

- 14. Oh Y., Park S., Ye J.C. Deep learning COVID-19 features on CXR using limited training data sets. IEEE Trans. Med. Imaging. 2020;39(8):2688–2700. doi: 10.1109/TMI.2020.2993291.

- 15. A. Mangal, S. Kalia, H. Rajgopal, K. Rangarajan, V. Namboodiri, S. Banerjee, C. Arora, CovidAID: COVID-19 detection using chest X-Ray, arXiv preprint arXiv:2004.09803 (2020).

- 16. Jaiswal A., Gianchandani N., Singh D., Kumar V., Kaur M. Classification of the COVID-19 infected patients using DenseNet201 based deep transfer learning. J. Biomol. Struct. Dyn. 2020:1–8. doi: 10.1080/07391102.2020.1788642.

- 17. Huang G., Liu Z., Van Der Maaten L., Weinberger K.Q. IEEE Conference on Computer Vision and Pattern Recognition. 2017. Densely connected convolutional networks; pp. 4700–4708.

- 18. B. Ghoshal, A. Tucker, Estimating uncertainty and interpretability in deep learning for coronavirus (COVID-19) detection, arXiv preprint arXiv:2003.10769 (2020).

- 19. He K., Zhang X., Ren S., Sun J. European Conference on Computer Vision. Springer; 2016. Identity mappings in deep residual networks; pp. 630–645.

- 20. Shi W., Tong L., Zhuang Y., Zhu Y., Wang M.D. ACM International Conference on Bioinformatics, Computational Biology and Health Informatics. 2020. EXAM: an explainable attention-based model for COVID-19 automatic diagnosis; pp. 1–6.

- 21. Tsiknakis N., Trivizakis E., Vassalou E.E., Papadakis G.Z., Spandidos D.A., Tsatsakis A., Sánchez-García J., López-González R., Papanikolaou N., Karantanas A.H., et al. Interpretable artificial intelligence framework for COVID-19 screening on chest X-rays. Exp. Ther. Med. 2020;20(2):727–735. doi: 10.3892/etm.2020.8797.

- 22. Loey M., Smarandache F., M Khalifa N.E. Within the lack of chest COVID-19 X-ray dataset: a novel detection model based on GAN and deep transfer learning. Symmetry. 2020;12(4):651.

- 23. Wang L., Lin Z.Q., Wong A. COVID-Net: a tailored deep convolutional neural network design for detection of COVID-19 cases from chest x-ray images. Sci. Rep. 2020;10(1):1–12. doi: 10.1038/s41598-020-76550-z.

- 24. Afshar P., Heidarian S., Naderkhani F., Oikonomou A., Plataniotis K.N., Mohammadi A. COVID-CAPS: a capsule network-based framework for identification of COVID-19 cases from X-ray images. Pattern Recognit. Lett. 2020;138:638–643. doi: 10.1016/j.patrec.2020.09.010.

- 25. Shorfuzzaman M., Hossain M.S. MetaCOVID: a siamese neural network framework with contrastive loss for N-shot diagnosis of COVID-19 patients. Pattern Recognit. 2021;113:107700. doi: 10.1016/j.patcog.2020.107700.

- 26. Cutillo C.M., Sharma K.R., Foschini L., Kundu S., Mackintosh M., Mandl K.D., Beck T., Collier E., Colvis C., Gersing K., Gordon V., Jensen R., Shabestari B., Southall N., in Healthcare Workshop Working Group M. Machine intelligence in healthcare—perspectives on trustworthiness, explainability, usability, and transparency. Digit. Med. 2020;3(1):47. doi: 10.1038/s41746-020-0254-2.

- 27. Badrinarayanan V., Kendall A., Cipolla R. SegNet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017;39(12):2481–2495. doi: 10.1109/TPAMI.2016.2644615.

- 28. P. Rajpurkar, J. Irvin, K. Zhu, B. Yang, H. Mehta, T. Duan, D. Ding, A. Bagul, C. Langlotz, K. Shpanskaya, et al., CheXNet: radiologist-level pneumonia detection on chest X-rays with deep learning, arXiv preprint arXiv:1711.05225 (2017).

- 29. Irvin J., Rajpurkar P., Ko M., Yu Y., Ciurea-Ilcus S., Chute C., Marklund H., Haghgoo B., Ball R., Shpanskaya K., et al. AAAI Conference on Artificial Intelligence. vol. 33. 2019. Chexpert: a large chest radiograph dataset with uncertainty labels and expert comparison; pp. 590–597.

- 30. SIRM, COVID-19 Database (2020). [Accessed: 6-Sept-2020].

- 31. C. Imaging, COVID-19 CXR Spain (2020). [Accessed: 6-Sept-2020].

- 32. RadioPaedia, Search results for “covid 19”, [Accessed: 6-Sept-2020].

- 33. BSTI, COVID-19 BSTI IMAGING DATABASE, [Accessed: 6-Sept-2020].

- 34. EuroRad, EuroRad Search results for COVID-19, [Accessed: 6-Sept-2020].

- 35. BIMCV, BIMCV-COVID19, 2020, [Accessed: 16-Oct-2020].

- 36. B. Nazari, Coronavirus and Pneumonia, 2020, [Accessed: 10-Nov-2020].

- 37. Che Azemin M.Z., Hassan R., Mohd Tamrin M.I., Md Ali M.A. COVID-19 deep learning prediction model using publicly available radiologist-adjudicated chest X-ray images as training data: preliminary findings. Int. J. Biomed. Imaging. 2020;2020:1988–1996. doi: 10.1155/2020/8828855.

- 38. K. Simonyan, A. Zisserman, Very deep convolutional networks for large-scale image recognition, arXiv preprint arXiv:1409.1556 (2014).

- 39. Ronneberger O., Fischer P., Brox T. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2015. U-Net: convolutional networks for biomedical image segmentation; pp. 234–241.

- 40. He K., Gkioxari G., Dollár P., Girshick R. IEEE International Conference on Computer Vision. 2017. Mask R-CNN; pp. 2961–2969.

- 41. J. Sawani, How Does COVID-19 Appear in the Lungs?, 2020.

- 42. Ho T.K. International Conference on Document Analysis and Recognition. vol. 1. 1995. Random decision forests; pp. 278–282.

- 43. Suykens J.A., Vandewalle J. Least squares support vector machine classifiers. Neural Process. Lett. 1999;9(3):293–300.

- 44. Selvaraju R.R., Cogswell M., Das A., Vedantam R., Parikh D., Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. Int. J. Comput. Vis. 2020;128(2):336–359.