Abstract

Few studies address publication and outcome reporting biases of randomized controlled trials (RCTs) in psychiatry. The objective of this study was to determine publication and outcome reporting bias in RCTs funded by the Stanley Medical Research Institute (SMRI), a U.S.-based, non-profit organization funding RCTs in schizophrenia and bipolar disorder. We identified all RCTs (n = 280) funded by SMRI between 2000 and 2011, and using non-public final study reports and published manuscripts, we classified the results as positive or negative in terms of the drug compared to placebo. Design, outcome measures and statistical methods specified in the original protocol were compared to the published manuscript. Of 280 RCTs funded by SMRI between 2000 and 2011, at the time of this writing, three RCTs were ongoing and 39 were not performed. Among the 238 completed RCTs, 86 (36.1%) reported positive and 152 (63.9%) reported negative results: 86% (74/86) of those with positive findings were published in contrast to 53% (80/152) of those with negative findings (P < .001). In 70% of the manuscripts published, there were major discrepancies between the published manuscript and the original RCT protocol (change in the primary outcome measure or statistics, change in the number of patient groups, 25% or more reduction in sample size). We conclude that publication bias and outcome reporting bias are common in papers reporting RCTs in schizophrenia and bipolar disorder. These data have major implications regarding the validity of the reports of clinical trials published in the literature.

Keywords: publication bias, negative findings, misreporting, psychiatry

Introduction

Publication bias occurs when study results influence whether or not a study is published; outcome reporting bias occurs when authors selectively report outcomes. Both undermine clinical research by distorting the biomedical evidence base toward positive studies. Both have been reported extensively in general medicine,1,2 but relatively few papers have addressed this topic in psychiatry.3 Publication bias and outcome reporting bias may lead to overestimation of treatment effects, thus exposing patients to ineffective treatments in daily clinical practice. From a research point of view, they expose volunteering patients to the unnecessary risks and inconveniences of research procedures and hinder drug development, as scientists are unable to learn from one another’s experiences and to use funding and resources effectively.4

Accordingly, we conducted an audit of research funded by a non-profit research organization, the Stanley Medical Research Institute (SMRI), which funds randomized controlled trials (RCTs) of compounds for the treatment of schizophrenia or bipolar disorder. We identified studies that were funded but not performed, performed but not published (publication bias), or published in a biased fashion (outcome reporting bias). Outcome reporting bias was assessed by comparing the original trial protocols with the published manuscripts. This study is unique in that we had access to the final scientific report that SMRI requires before issuing final payment, enabling ascertainment of reporting bias in nearly 100% of the funded RCTs. We hypothesized that, as in general medicine, we would find high rates of publication bias and outcome reporting bias.

Methods

All RCTs funded by the SMRI between 2000 and 2011 were reviewed. The year 2000 was chosen because this is the earliest time for which trial protocols were available, and 2011 was chosen to ensure adequate time for RCTs to be completed and published in a peer-reviewed journal. Studies were considered completed if a final report was on file with SMRI.

Publications are reported to SMRI voluntarily, so the publication status for a substantial number of SMRI funded RCTs was unknown. If publication status was not apparent from archive review, a PubMed search was conducted by two of the authors, J.B. and L.L., using the following search criteria: name of the principal investigator, name of the compound, and the words “randomized,” “schizophrenia” or “bipolar.”

All completed RCTs were classified as positive or negative based on whether the primary outcome results, as reported in the final study report submitted to SMRI or in the published manuscript, showed a statistically significant improvement with study medication (positive) or not (negative). RCTs that reported positive results only for secondary outcome measure(s) were classified as negative. We assessed reporting bias among published RCTs by comparing the protocols submitted to SMRI with the corresponding manuscripts published in peer-reviewed journals. In addition, we searched ClinicalTrials.gov and EudraCT to determine which studies were pre-registered and, for those that were, what changes, if any, had been made to the protocols approved by SMRI.
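The classification rule above can be written as a small function. This is a minimal sketch, not the authors' rating instrument: the `TrialResult` record and its field names are hypothetical, and it assumes the P < .05 threshold stated in the Data Analysis section. It makes explicit that a trial counts as positive only when the primary outcome favors the study drug at that threshold, so a secondary-only win is still negative.

```python
from dataclasses import dataclass

ALPHA = 0.05  # significance threshold from the Data Analysis section


@dataclass
class TrialResult:
    """Hypothetical record of one completed RCT's reported results."""
    primary_p_value: float        # P value for the protocol's primary outcome
    primary_favors_drug: bool     # True if the effect direction favors study drug
    secondary_significant: bool   # recorded, but deliberately ignored by classify()


def classify(result: TrialResult, alpha: float = ALPHA) -> str:
    """'positive' only if the primary outcome significantly favors the drug.

    Significant secondary outcomes do not change the label, mirroring the
    rule that secondary-only positive RCTs were classified as negative.
    """
    if result.primary_p_value < alpha and result.primary_favors_drug:
        return "positive"
    return "negative"


print(classify(TrialResult(0.01, True, False)))   # positive
print(classify(TrialResult(0.30, True, True)))    # negative: secondary-only win
print(classify(TrialResult(0.01, False, False)))  # negative: favors placebo
```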

Data were collected in an online database (REDCap) under the following categories: (1) RCT design, (2) outcome measures, (3) statistical analyses, and (4) time to publication. The REDCap form is provided in the web supplement.

1. RCT Design

Consistency in design of RCTs was determined by comparing randomized controlled vs. open-label designation, length of the treatment phase, length of follow-up after the end of the treatment phase, number of patient groups, sample size, and use of lab procedures.

2. Primary and Secondary Outcomes

Authors determined whether primary outcomes were stated explicitly, implicitly, questionably implicit, or not stated in the original protocol, and whether they were described in the published manuscript as indicated in the original protocol. Authors also determined whether a published manuscript reported a positive treatment effect using an outcome that had not been stated as a primary outcome in the original protocol.

3. Statistical Comparisons

Differences between the analyses proposed in the original protocol and the published manuscripts were examined.

4. Time to Publication

Reviewers recorded the date that the RCT was funded, the date an investigator submitted a final study report to SMRI, and the date the RCT was published.

To establish a uniform rating system, ten published manuscripts were selected randomly and rated independently by J.B. and L.L., who then compared their ratings.

Differences observed between the original study protocols and the published manuscripts were classified as major or minor discrepancies. Major discrepancies included: RCT proposed but open-label study executed, different number of patient groups, sample size 25% or more below that proposed, change in the statistical analyses used, and change in the primary outcome measure. Minor discrepancies included: treatment length longer or shorter than proposed, duration of follow-up after the end of treatment longer or shorter than proposed, lab procedures proposed but not published, and secondary outcome measure(s) different from those proposed.
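The major/minor scheme can be expressed as a lookup over discrepancy codes. This is an illustrative sketch, not the REDCap form used in the study: the code strings are hypothetical labels for the categories listed above, and the sample-size rule assumes that "25% less than proposed" means a reduction of 25% or more, as phrased in the Abstract.

```python
from __future__ import annotations

# Hypothetical codes for the discrepancy categories defined in the Methods.
MAJOR = {
    "rct_proposed_open_label_done",
    "different_number_of_groups",
    "sample_size_reduced_25pct",
    "statistical_analysis_changed",
    "primary_outcome_changed",
}
MINOR = {
    "treatment_length_changed",
    "follow_up_length_changed",
    "lab_procedures_not_published",
    "secondary_outcome_changed",
}


def sample_size_reduced_25pct(proposed: int, randomized: int) -> bool:
    """True if the realized sample is at least 25% below the proposed one.

    Integer arithmetic (4 * randomized <= 3 * proposed) avoids
    floating-point edge cases exactly at the 25% boundary.
    """
    return 4 * randomized <= 3 * proposed


def grade(discrepancies: set[str]) -> tuple[bool, bool]:
    """Return (has_major, has_minor) for one protocol-manuscript pair."""
    unknown = discrepancies - MAJOR - MINOR
    if unknown:
        raise ValueError(f"unrecognized discrepancy codes: {sorted(unknown)}")
    return bool(discrepancies & MAJOR), bool(discrepancies & MINOR)


# Usage: a hypothetical trial that proposed 120 patients, randomized 80,
# and reported a different secondary outcome than planned.
found = {"secondary_outcome_changed"}
if sample_size_reduced_25pct(proposed=120, randomized=80):
    found.add("sample_size_reduced_25pct")
print(grade(found))  # (True, True)
```

Keeping the codes in two sets means a pair can carry both a major and a minor discrepancy at once, which matches how the Results report overlapping counts.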

To gain insight into why SMRI investigators did not publish their results, emails were sent to 67 investigators.

Data Analysis

We used descriptive statistics to analyze differences between the original RCT protocols and the published manuscripts. Chi-square and t-test analyses were used to test the differences between RCTs that reported positive findings and those which reported negative findings. Data analyses were conducted using SPSS version 22, and a P-value threshold of .05 was used for statistical significance. The statistical analysis code is available upon request.

Results

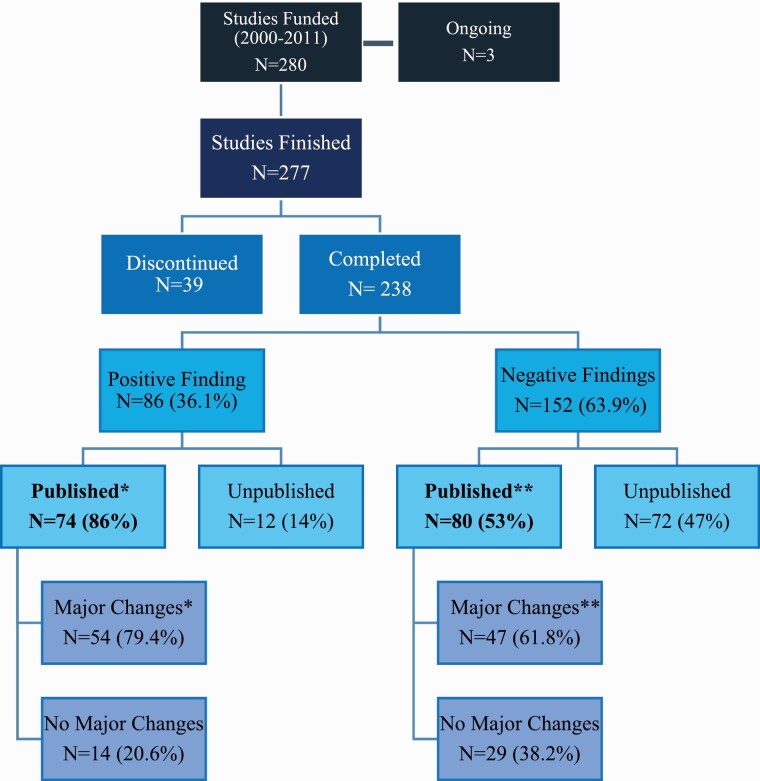

There were 280 RCTs funded by SMRI between 2000 and 2011, of which three are ongoing, 238 (85%) were completed and 39 (14%) were not completed. Among these 238 completed RCTs, 155 studied patients with schizophrenia, 80 studied patients with bipolar disorder, and 3 studied both (table 1); sample sizes ranged from 9 to 330 patients per study. Among the completed RCTs, 86/238 (36.1%) reported positive findings to SMRI, while 152/238 (63.9%) reported negative findings (figure 1). The median time from funding to final report submission was 5 years (IQR = 3–6 years).

Table 1.

Description of SMRI Funded RCTs (N = 238)

| | Published, No. (%) | Unpublished, No. (%) |
|---|---|---|
| Overall | 154 (64.7) | 84 (35.3) |
| Diagnosis | | |
| Schizophrenia | 100 (64.9) | 55 (65.5) |
| Bipolar disorder | 54 (35.1) | 26 (31.0) |
| Both | 0 | 3 (3.5) |
| Intervention | | |
| Pharmacological | 128 (83.1) | 76 (90.5) |
| Psychological/psychosocial | 3 (1.9) | 1 (1.2) |
| Other (TMS, dietary, etc.) | 23 (14.9) | 7 (8.3) |
| Average sample size (patients) | 72.4 | 76.1 |
| Studies completed in the United States | 68 (44.2) | 43 (51.2) |
| Studies completed in non-U.S. countries | 86 (55.8) | 41 (48.8) |

Fig. 1.

Publication rates of studies funded by SMRI from 2000 to 2011. *68 of the 74 published positive studies and **76 of the 80 published negative studies had full protocols available to match with the corresponding publications.

Publication Bias

Among the completed RCTs, 154 (65%) were published and 84 (35%) were performed but not published. Among the RCTs with positive findings, 86% (74/86) were published, in contrast to 53% (80/152) of the RCTs with negative findings (OR = 4.6, 95% CI: 2.3–9.0; P < .001; figure 1). Overall, the time elapsed between submission of the final trial report and publication ranged from 2 to 15 years (mean = 6.5 years, IQR = 5–8 years; table 2); 75% of the RCTs published more than 10 years after study completion reported negative results. When stratified by whether the RCT was positive or negative, there was no statistically significant difference in the time from funding to publication (median = 6 years for both positive and negative RCTs; P = .22).
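The publication-bias comparison is a 2×2 table (reported result × publication status), so the chi-square test named in the Data Analysis section can be recomputed from the counts above. The sketch below uses only the Python standard library; it assumes a Pearson chi-square without continuity correction and a crude (unadjusted) odds ratio with a Woolf log-scale confidence interval, since the paper does not state which variants were used. From the raw counts the crude OR comes out at about 5.5 (95% CI roughly 2.8 to 11.0) with P well below .001, so the reported OR of 4.6 (2.3 to 9.0) presumably reflects an estimator or adjustment not described in the Methods; treat this as a cross-check of the counts, not a reproduction of the published estimate.

```python
from __future__ import annotations

import math

# Counts from the Results: (published, unpublished) by reported result.
POS_PUB, POS_UNPUB = 74, 12   # 86 positive RCTs
NEG_PUB, NEG_UNPUB = 80, 72   # 152 negative RCTs


def pearson_chi2_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square (no continuity correction) for [[a, b], [c, d]], df = 1."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 df, P(X > chi2) = P(|Z| > sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


def odds_ratio_woolf(
    a: int, b: int, c: int, d: int, z: float = 1.96
) -> tuple[float, float, float]:
    """Crude odds ratio (a*d)/(b*c) with a Woolf (log-scale Wald) 95% CI."""
    or_ = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(or_) - z * se_log)
    upper = math.exp(math.log(or_) + z * se_log)
    return or_, lower, upper


chi2, p = pearson_chi2_2x2(POS_PUB, POS_UNPUB, NEG_PUB, NEG_UNPUB)
or_, lower, upper = odds_ratio_woolf(POS_PUB, POS_UNPUB, NEG_PUB, NEG_UNPUB)
pos_rate = POS_PUB / (POS_PUB + POS_UNPUB)
neg_rate = NEG_PUB / (NEG_PUB + NEG_UNPUB)
print(f"publication rate: positive {pos_rate:.1%}, negative {neg_rate:.1%}")
print(f"chi2 = {chi2:.1f}, P = {p:.1e}")
print(f"crude OR = {or_:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

Here the odds ratio compares the odds of publication for positive RCTs (74/12) with those for negative RCTs (80/72).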

Table 2.

Time to publication of the Stanley Medical Research Institute-funded studies

| Interval | Median years (IQR) |
|---|---|
| Funding to final report | 5.0 (3.0) |
| Funding to publication, all published studies | 6.0 (3.0) |
| Funding to publication, negative studies | 6.0 (3.0) |
| Funding to publication, positive studies | 6.0 (2.75) |

Outcome Reporting Bias

Of the 154 RCTs that were published, the original protocols submitted to SMRI for funding could not be located for 10, so all comparisons of original protocols with published manuscripts were performed on 144 protocol–manuscript pairs. We identified discrepancies in design, outcome measures and statistical analyses between the original protocols for these RCTs and the published manuscripts. Overall, 66% (95/144) of these RCTs had at least one minor change between the original protocol and the published manuscript, 70.8% (102/144) had at least one major change, and 47.9% (69/144) had both minor and major changes.
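Because the "minor" and "major" counts overlap, the number of pairs with any discrepancy follows from inclusion-exclusion. The short check below uses only the three counts reported above; the derived figures (128 pairs with at least one change, 16 with none) are arithmetic consequences of those counts, not separately reported results.

```python
TOTAL_PAIRS = 144
ANY_MINOR, ANY_MAJOR, BOTH = 95, 102, 69

# |minor ∪ major| = |minor| + |major| - |minor ∩ major|
any_change = ANY_MINOR + ANY_MAJOR - BOTH   # 128
major_only = ANY_MAJOR - BOTH               # 33
minor_only = ANY_MINOR - BOTH               # 26
no_change = TOTAL_PAIRS - any_change        # 16

# The four disjoint groups must account for every protocol-manuscript pair.
assert major_only + minor_only + BOTH + no_change == TOTAL_PAIRS
print(f"any discrepancy: {any_change}/{TOTAL_PAIRS} ({any_change / TOTAL_PAIRS:.1%})")
```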

Design

Discrepancies observed between protocols and published manuscripts are listed in table 3. Two RCTs were funded as randomized controlled trials but were reported as uncontrolled case studies. A different number of patient groups was used in 4.9% (7/144) of RCTs, and 27.8% (40/144) enrolled a sample 25% or more smaller than proposed; of the latter, 52.5% (21/40) described positive findings in the published manuscript and 47.5% (19/40) negative findings (P = .31). In 21.5% (31/144) of RCTs, the original protocol proposed biological markers and lab procedures that were not reported in the published manuscript; of these, 61.3% (19/31) reported positive findings and 38.7% (12/31) negative findings (P = .04).

Table 3.

Results and Comparisons Between Protocols and Papers

| Study design: differences between protocol and manuscript | No. (%) |
|---|---|
| RCT proposed, open label done | 2 (1.4) |
| Sample size 25% or more below that proposed | 40 (27.8) |
| Treatment length shorter than proposed | 22 (15.3) |
| Follow-up duration after study completion: longer than proposed | 5 (3.5) |
| Follow-up duration after study completion: shorter than proposed | 11 (7.6) |
| Different number of patient groups | 7 (4.9) |
| Lab procedures proposed but not published | 31 (21.5) |
| Outcome measures | |
| Primary outcome measure changed | 39 (27.1) |
| Of which, positive findings | 18 (46.2) |
| Of which, negative findings | 21 (53.8) |
| Secondary outcome measure different from that proposed | 71 (49.3) |
| Statistical comparisons | |
| Analyses proposed but not used/changed | 48 (33.3) |
| Positive findings reported using analyses not proposed in protocol | 31/48 (64.6) |
| RCTs with positive findings using statistical analyses not included in original protocol | 31/74 (41.9) |

Outcome Measures

Of the 144 published manuscripts, 39 (27%) reported a primary outcome measure different from that in the original protocol; 46.2% (18/39) of these were reported as positive RCTs and 53.8% (21/39) as negative RCTs (P = .58). Examples of changed primary outcome measures included use of a different scale to measure drug effect, inclusion of a new behavioral endpoint, and reporting an endpoint classified as secondary in the original protocol as the primary outcome measure in the published manuscript.

Statistical Analyses

One-third (33.3%, 48/144) of the RCTs used statistical analyses in the published manuscript that differed from those proposed in the original protocol to test the main hypothesis. Of these, 64.6% (31/48) were reported as positive RCTs and 35.4% (17/48) as negative RCTs (P = .003). Additionally, 31 of the 74 published RCTs reporting positive findings for the primary outcome (41.9%, table 3; 21.5% of all 144 published RCTs) used statistical analyses that were not included in the original protocol and were not labeled as post hoc in the published manuscript.

Of the 142 protocols that we searched for on ClinicalTrials.gov, 80 (56.3%) were not registered and 34 (23.9%) were registered after the study was completed. Of the 28 studies that were pre-registered, 23 (82.1%) registered the same drug and dose as the protocol; 11 (39.3%) registered the same sample size as the protocol, 16 (57.1%) did not state the planned sample size on ClinicalTrials.gov, and one (3.6%) showed a 40% decrease in sample size. Twenty-five (89.3%) used the same inclusion and exclusion criteria. All of the pre-registered studies used the same study design, 24 (85.7%) had the same primary outcome measure, and 21 (75%) had the same secondary outcome measure. Only 14.3% of the pre-registered studies showed no change at all between the protocol and the ClinicalTrials.gov record.
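The registration figures use two different denominators (142 protocols searched, then the 28 pre-registered trials), which is easy to misread. The sketch below recomputes each percentage from the counts in the paragraph above. The count of 4 trials with no change is an inference: it is back-calculated from the reported 14.3% of 28 and is not stated directly in the text.

```python
SEARCHED = 142
NOT_REGISTERED, REGISTERED_AFTER_COMPLETION = 80, 34
preregistered = SEARCHED - NOT_REGISTERED - REGISTERED_AFTER_COMPLETION  # 28

# Agreement between the SMRI protocol and the ClinicalTrials.gov record,
# among pre-registered trials only (denominator = preregistered).
agreement = {
    "same drug and dose": 23,
    "same inclusion/exclusion criteria": 25,
    "same study design": 28,
    "same primary outcome": 24,
    "same secondary outcome": 21,
    "no change at all (inferred from 14.3%)": 4,
}

# The three sample-size categories partition the pre-registered trials.
sample_size = {"same": 11, "not stated": 16, "decreased by 40%": 1}
assert sum(sample_size.values()) == preregistered

for label, n in agreement.items():
    print(f"{label}: {n}/{preregistered} = {n / preregistered:.1%}")
for label, n in sample_size.items():
    print(f"sample size {label}: {n}/{preregistered} = {n / preregistered:.1%}")
```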

Of the 67 investigators who were emailed and asked about the reason for nonpublication, 13 responded. Answers included:

“We could not recruit the amount of patients wanted…and I thought this would not be enough for publishing. But I still might want to publish it, since there is still no comparable study published.”

“We got stuck with statistics and it became somehow forgotten.”

“Due to time constraints…[submission] never happened.”

“The study had non-significant results and I did not think it would get accepted for publication.”

“We experienced unusual difficulties in our data analysis.”

“We have experienced difficulties in submitting to appropriate journals…the study does not fall within the scope of the journals.”

Discussion

Among 280 RCTs funded between 2000 and 2011 by SMRI, a U.S.-based, non-profit organization funding RCTs in schizophrenia and bipolar disorder, approximately one in seven were never performed. Among those that were performed, more than one-third were never published, and RCTs with positive findings were more likely to be published. Among those that were published, 70% had major discrepancies between the original protocol submitted to SMRI and how the RCT was reported in the peer-reviewed literature. As previously described in general medicine, we found concerning evidence of publication bias and outcome reporting bias among a large number of RCTs funded for schizophrenia and bipolar disorder. These data have major implications for the validity of the clinical trial reports published in the literature, on which psychiatrists base clinical decisions that often involve off-label use of medications approved for non-psychiatric indications, a practice particularly common in psychiatry.5 Over an 11-year period, SMRI committed over $68.5 million to RCTs, of which $25 million was spent on unpublished RCTs and $29 million on RCTs with at least one major discrepancy between the original protocol and the published manuscript. Publication bias and outcome reporting bias mislead preclinical and clinical research and lead to repeated testing of ineffective compounds, unnecessary exposure of patients to study procedures, and wasted research funds.

Publication Bias

Of the 238 completed studies, the results of 35% were not published. These publication rates are consistent with those of RCTs in cardiovascular medicine,6 vaccine research,7 and studies registered in ClinicalTrials.gov overall.2,8

The answers from investigators who did not publish their work indicated a variety of reasons, including time constraints, problems with statistics, problems with recruitment, non-significant findings, etc.

The current study is unique in that SMRI makes final grant payments contingent on submission of a final report, giving access to the results of the vast majority of studies, be they positive or negative. By comparison, in a similar study in other areas of medicine, Dickersin and Min9 contacted 293 PIs of NIH-funded RCTs and asked whether their studies were positive or negative, and published or unpublished. Of these, 74.1% (217/293) gave detailed information on study design and publication, 12.3% (36/293) gave publication information only, and 13.6% (40/293) refused to be interviewed.

In our sample, RCTs with positive results on the primary outcome measure had approximately 4.6 times the odds of being published, at the high end of estimates from other studies. Hopewell et al10 performed a meta-analysis of publication bias in cohorts of clinical trials in other fields of medicine and reported that trials with positive findings had 3.9 times the odds (95% CI 2.7–5.7) of being published compared with trials with negative findings. Our somewhat higher estimate might be due to our knowledge of the results of the unpublished study reports.

There are many possible reasons for the preferential publication of positive rather than negative results. Researchers might be reluctant to devote the effort needed to prepare and submit negative-findings manuscripts, believing that the submission will be rejected, although this is not supported by published data.4,11,12 It could also be that researchers invested in a “pet” hypothesis are less inclined to publish negative findings contradicting their theories. Similarly, reviewers with a vested interest in positive results, on which grants and reputations depend, might recommend rejection of a negative findings study.13

In the decades preceding the establishment of ClinicalTrials.gov by the National Institutes of Health, clinical studies funded by pharmaceutical companies on drugs submitted to the FDA for psychiatric indications neglected to publish data showing lack of efficacy or poor tolerability.14–17 However, a recent 2018 review of the EU Clinical Trials Register (EUCTR) showed that pharmaceutical companies and sponsors of trials with large samples are actually more compliant than universities and sponsors of trials with small sample sizes in publishing results, whether negative or positive.18

Most work on nonpublication and outcome reporting bias has been done on research funded by industry1 or governments;2 the data presented here indicate that publication bias and outcome reporting bias are also common in research funded by non-profit foundations. This is significant, as foundations, charities and other private research organizations contribute approximately 4% of all biomedical research funding in the United States, a sum approaching $4.2 billion in 2012.19

Assuming that each RCT reached its enrollment goal, the 90 grants awarded between 2000 and 2011 whose results were not published totaled $25 million and would have included 6500 patients exposed unnecessarily to study drug and/or study procedures. Of the RCTs whose results were published, nearly three-quarters were published within 36 months. Although other fields of medicine20 have reported faster publication of positive than of negative studies, this was not the case in the present study. However, the finding that 75% of the RCTs published 10–15 years after funding were negative is a further indication of investigators’ reluctance to write up and submit negative-finding manuscripts. Interestingly, this bias is not limited to for-profit commercial organizations but equally affects a not-for-profit organization such as SMRI.

Outcome Reporting Bias

Significant bias in outcome reporting was found: 27% of the RCTs changed the primary outcome measure, and almost half reported a secondary outcome measure different from that proposed. A third of the RCTs used a statistical analysis different from that specified in the protocol; two-thirds of these were reported as positive RCTs and one-third as negative, suggesting that, at least in some cases, the change may have been made to “achieve” a positive result.

Similar rates of outcome reporting bias have been reported in papers on early psychosis21 and in studies of psychiatric interventions more generally,3 as well as in other fields of medicine.22,23 We were able to obtain results for almost all of the studies funded by SMRI by examining the final scientific reports required before final grant payment. Previous research on this topic instead had to contact funded investigators to ask whether their results had been fully published. For example, Chan et al. attempted to contact investigators of 102 studies approved by the Scientific-Ethical Committees for Copenhagen and Frederiksberg, Denmark, in 1994–1995; only 48% (49/102) of trialists responded to a questionnaire regarding unreported outcomes.23

It is well recognized that in an RCT, a positive, often unanticipated finding, or a novel statistical analysis, might generate a new hypothesis and open a new line of research. The median time from funding to publication was 6 years, during which new findings might surface and affect the interpretation of study results. However, changes in statistical analyses and reporting should be clearly labeled as post hoc; they can then be viewed without prejudice and might indeed be emphasized. It is reasonable to hypothesize that one reason so many positive findings fail to replicate is that the original RCTs contained one of the biases described above. As the philosopher of science Karl Popper argued, negative findings, by allowing a hypothesis to be falsified, are essential to distinguishing science from non-science.24 Likewise, we agree with Iain Chalmers that the terms “negative” and “positive” findings can be misleading, because negative findings are just as important as positive ones.25

ClinicalTrials.gov and its European counterpart were created to provide transparency in reporting the results of clinical trials. A recent comparison of clinical trials on neuropsychiatric drugs submitted to the FDA before versus after the FDA mandated pre-registration of trials in 2007 reported decreased rates of publication bias and misleading interpretations in studies performed after 2007.26 In line with this, in 2016, SMRI started requiring all of its new grantees to register their trial on ClinicalTrials.gov before receiving initial payment for the study.

Limitations

The RCTs examined here were proof-of-concept studies; hence, our results apply primarily to RCTs at the exploratory stage. These differ from large confirmatory studies funded by pharmaceutical companies or governments, which are often closely monitored for quality control.

Implications

Most RCTs in psychiatry are only interpretable when included in a meta-analysis with other studies. An unpublished study may still be included in meta-analyses, especially if its results are reported to ClinicalTrials.gov. Similarly, a study that was never initiated does not introduce bias. A study that does not achieve its enrollment target can contribute meaningfully to meta-analyses and does not bias the conclusion unless it was terminated prematurely because an early analysis found a positive result without a pre-specified “stopping rule.” Failure to identify the a priori primary outcome is a critical flaw, and a change in statistical approach made in order to achieve a significant finding is highly problematic. However, such changes may be acceptable if they are prespecified in the protocol and in the ClinicalTrials.gov listing before data analysis and rest on a compelling rationale.

To remedy this, potential action items for funders (table 4) include formally tracking the previous performance of investigators and using this information when considering future funding. Journal editors should look favorably on publishing negative findings, require that the protocol be attached to the manuscript upon submission, require that the primary outcome be reported using the predefined statistics, and compare manuscripts with protocols. The cost would be small, but full, unbiased publication would increase the efficiency of science.

Table 4.

Action Items

| Stakeholder | Action items |
|---|---|
| Funding agencies | • Formally review final study reports and compare them with the original protocol, including number of patients proposed vs randomized and changes in primary outcome measures and statistics. |
| | • Track whether the study was published. |
| | • Funding agencies (NIMH, NARSAD, Stanley) might create publicly accessible databases reporting whether funded studies have been published. |
| | • Make this information available to the committee when considering new grants. |
| | • Do not fund investigators who were previously funded and did not publish their findings. |
| | • Where data are not published, authors should make their results available through databases such as ClinicalTrials.gov or by other public means. |
| Journal editors | • Look favorably on publishing negative findings. |
| | • Require inclusion of the protocol as a web supplement. |
| | • Require reviewers to compare the preregistered protocol with the paper. |
| | • Require that the primary outcome measure be reported with the primary statistics. Post hoc analyses should be labeled as such but can be emphasized. |
| | • Require full disclosure of important findings. |
| Readers of papers | • Be aware of potential reporting biases and publication bias. |

Conflict of Interests Statement

- Ms Jana Bowcut is an employee of the Stanley Medical Research Institute.
- Ms Linda Levi has no potential conflicts of interest to disclose.
- Ms Ortal Livnah has no potential conflicts of interest to disclose.
- Dr Joseph S. Ross declares the following interests: receiving research support through Yale University from Johnson and Johnson, from the Food and Drug Administration (FDA) (U01FD004585), from the Medical Devices Innovation Consortium (MDIC)/National Evaluation System for Health Technology (NEST), from the Centers of Medicare and Medicaid Services (CMS) (HHSM-500-2013-13018I), from the Agency for Healthcare Research and Quality (R01HS022882), from the National Heart, Lung and Blood Institute of the National Institutes of Health (NIH) (R01HS025164), and from the Laura and John Arnold Foundation.
- Dr Michael Knable has no potential conflicts of interest to disclose.
- Dr Michael Davidson is an employee of Minerva Neurosciences Inc., a biotech developing CNS drugs.
- Dr John M. Davis has no potential conflicts of interest to disclose.
- Dr Mark Weiser is an employee of the Stanley Medical Research Institute.

Acknowledgments

This work was supported in part by the Stanley Medical Research Institute.

References

1. Lundh A, Lexchin J, Mintzes B, Schroll JB, Bero L. Industry sponsorship and research outcome. Cochrane Database Syst Rev. 2017;2:MR000033.

2. Ross JS, Tse T, Zarin DA, Xu H, Zhou L, Krumholz HM. Publication of NIH funded trials registered in ClinicalTrials.gov: cross sectional analysis. BMJ. 2012;344.

3. Shinohara K, Suganuma AM, Imai H, Takeshima N, Hayasaka Y, Furukawa TA. Overstatements in abstract conclusions claiming effectiveness of interventions in psychiatry: a meta-epidemiological investigation. PLoS One. 2017;12(9):e0184786.

4. Dwan K, Gamble C, Williamson PR, Kirkham JJ; Reporting Bias Group. Systematic review of the empirical evidence of study publication bias and outcome reporting bias - an updated review. PLoS One. 2013;8(7):e66844.

5. Taylor D. Prescribing according to diagnosis: how psychiatry is different. World Psychiatry. 2016;15(3):224–225.

6. Gordon D, Taddei-Peters W, Mascette A, Antman M, Kaufmann PG, Lauer MS. Publication of trials funded by the National Heart, Lung, and Blood Institute. N Engl J Med. 2013;369(20):1926–1934.

7. Manzoli L, Flacco ME, D’Addario M, et al. Non-publication and delayed publication of randomized trials on vaccines: survey. BMJ. 2014;348:g3058.

8. Jones CW, Handler L, Crowell KE, Keil LG, Weaver MA, Platts-Mills TF. Non-publication of large randomized clinical trials: cross sectional analysis. BMJ. 2013;347:f6104.

9. Dickersin K, Min YI. NIH clinical trials and publication bias. Online J Curr Clin Trials. 1993;Doc No 50.

10. Hopewell S, Loudon K, Clarke MJ, Oxman AD, Dickersin K. Publication bias in clinical trials due to statistical significance or direction of trial results. Cochrane Database Syst Rev. 2009;(1):MR000006.

11. Dickersin K, Chan S, Chalmers TC, Sacks HS, Smith H Jr. Publication bias and clinical trials. Control Clin Trials. 1987;8(4):343–353.

12. Olson CM, Rennie D, Cook D, et al. Publication bias in editorial decision making. JAMA. 2002;287(21):2825–2828.

13. Adler AC, Stayer SA. Bias among peer reviewers. JAMA. 2017;318(8):755.

14. Le Noury J, Nardo JM, Healy D, et al. Restoring Study 329: efficacy and harms of paroxetine and imipramine in treatment of major depression in adolescence. BMJ. 2015;351:h4320.

15. Eyding D, Lelgemann M, Grouven U, et al. Reboxetine for acute treatment of major depression: systematic review and meta-analysis of published and unpublished placebo and selective serotonin reuptake inhibitor controlled trials. BMJ. 2010;341:c4737.

16. Vedula SS, Bero L, Scherer RW, Dickersin K. Outcome reporting in industry-sponsored trials of gabapentin for off-label use. N Engl J Med. 2009;361(20):1963–1971.

17. Nassir Ghaemi S, Shirzadi AA, Filkowski M. Publication bias and the pharmaceutical industry: the case of lamotrigine in bipolar disorder. Medscape J Med. 2008;10(9):211.

18. Goldacre B, DeVito NJ, Heneghan C, et al. Compliance with requirement to report results on the EU Clinical Trials Register: cohort study and web resource. BMJ. 2018;362:k3218.

19. Moses H 3rd, Matheson DH, Cairns-Smith S, George BP, Palisch C, Dorsey ER. The anatomy of medical research: US and international comparisons. JAMA. 2015;313(2):174–189.

20. Ioannidis JP. Effect of the statistical significance of results on the time to completion and publication of randomized efficacy trials. JAMA. 1998;279(4):281–286.

21. Amos AJ. A review of spin and bias use in the early intervention in psychosis literature. Prim Care Companion CNS Disord. 2014;16(1).

22. Al-Marzouki S, Roberts I, Evans S, Marshall T. Selective reporting in clinical trials: analysis of trial protocols accepted by The Lancet. Lancet. 2008;372(9634):201.

23. Chan AW, Hróbjartsson A, Haahr MT, Gøtzsche PC, Altman DG. Empirical evidence for selective reporting of outcomes in randomized trials: comparison of protocols to published articles. JAMA. 2004;291(20):2457–2465.

24. Popper KR. The Logic of Scientific Discovery. New York: Basic Books; 1959.

25. Chalmers I. Proposal to outlaw the term “negative trial.” BMJ. 1985;290(6473):1002.

26. Zou CX, Becker JE, Phillips AT, et al. Registration, results reporting, and publication bias of clinical trials supporting FDA approval of neuropsychiatric drugs before and after FDAAA: a retrospective cohort study. Trials. 2018;19(1):581.