Abstract

There are inconsistent findings concerning the efficacy of consensus messages in persuading individuals to hold scientifically supported positions on climate change. In this experiment, we tested the impact of consensus messages on skeptics’ climate beliefs and attitudes and investigated how the decision to pretest initial climate beliefs and attitudes prior to consensus message exposure may influence results. We found that although consensus messages led individuals to report higher scientific agreement estimates, total effects on key variables were likely an artifact of study design; consensus messages only affected climate attitudes and beliefs when they were measured both before and after message exposure. In the absence of a pretest, we did not observe significant total effects of consensus messages on climate outcomes. These results highlight the limitations of consensus messaging strategies in reducing political polarization and the importance of experimental designs that mimic real-world contexts.

Supplementary Information

The online version contains supplementary material available at 10.1007/s10584-021-03200-2.

Keywords: Scientific consensus, Political polarization, Pretest, Motivated reasoning, Science communication

There are inconsistent findings concerning whether communicating scientific agreement information can reduce partisan polarization and build support for climate mitigation policies. While some work finds that both liberals and conservatives respond positively to consensus messages (van der Linden et al. 2015b; van der Linden et al. 2019), other work has found null or backfire effects with conservatives in the U.S.A. (Bolsen and Druckman 2018; Cook and Lewandowsky 2016; Dixon 2016; Dixon et al. 2017; Dixon and Hubner 2018). These findings have prompted calls for additional work investigating what contextual and message factors may shape the effectiveness of consensus messaging strategies (Bayes et al. 2020; Rode et al. 2021). Given that consensus messages are often used by science communicators (@BarackObama 2013; NASA 2018), it is vital to clarify under what conditions they reduce or exacerbate political divisions around climate change. The present study responds to this call by exploring a novel factor that may shed light on the inconsistent findings: whether or not participants are asked about their beliefs and attitudes about climate change before exposure to a consensus message.

This study investigated (1) the mediated effects of consensus messages through agreement estimates on the climate beliefs and attitudes of liberals and conservatives, and (2) whether pretesting climate beliefs and attitudes prior to consensus message exposure influences responses. Though we found that consensus messages positively influenced conservatives’ scientific agreement estimates, the total effects suggested limited efficacy of consensus messaging for reducing political polarization. We only observed significant total effects on beliefs about whether climate change is happening and its anthropogenic causes when participants first responded to a pretest of climate attitudes and beliefs, and we found no total effects of consensus messages on worry about climate change or support for public or government action. These findings suggest that the decision of whether or not to pretest participant attitudes before exposure to consensus messages is, in part, responsible for inconsistent findings in extant literature, and that consensus messages may be most effective in controlled, interactive communicative contexts.

Background

Agreement estimates as a gateway belief

Individuals who believe that there is a high level of scientific agreement that climate change is real and caused by human activity are more likely to believe that climate change is happening and to support public action (Ding et al. 2011; McCright et al. 2013). Building on this, researchers have demonstrated that communicating expert agreement with scientific consensus messages (e.g., “97% of climate scientists agree”) increases perceived levels of scientific agreement (e.g., Chinn et al. 2018; van der Linden et al. 2019), which in turn are associated with holding personal beliefs and policy attitudes in line with scientific positions (e.g., Chinn et al. 2018; Ding et al. 2011; van der Linden et al. 2019). For these reasons, van der Linden et al. (2015; 2019) have argued that individuals’ estimates of scientific agreement act as a “gateway belief” for holding scientifically supported positions, and that scientific consensus messages are a useful tool for increasing perceptions of scientific agreement.

Inconsistent findings concerning political skeptics

Initial research was optimistic that consensus messages could be an effective tool for reducing political polarization around climate change in the U.S.A. because such messages increased scientific agreement estimates. Research found that individuals across the political spectrum shifted their agreement estimates by similar amounts in response to a consensus message (Deryugina and Shurchkov 2016; Goldberg et al. 2019a, b; van der Linden et al. 2015a; van der Linden et al. 2016). Other studies find that consensus messages about climate change are more impactful on conservatives’ agreement estimates than liberals’ (van der Linden et al. 2015b).

However, a growing body of work suggests caution on the effectiveness of consensus messages. This work argues that motivated reasoning is a central challenge to persuading the public to adopt scientists’ views: individuals may resist accepting scientists’ claims if doing so requires going against the positions of one’s social group or updating one’s prior beliefs (Bolsen and Druckman 2018; Kahan et al. 2011; Hahnel et al. 2020). In other words, agreement estimates may only operate as a gateway belief among those who are predisposed to believe scientists (Bolsen and Druckman 2018; Dixon 2016; Dixon et al. 2017). In addition, several studies suggest that although consensus messages may increase agreement estimates among most audiences, the downstream effects on beliefs and policy support are not uniform (Bolsen and Druckman 2018; Dixon 2016). Cook and Lewandowsky (2016) found that while consensus messages may alleviate negative effects of free market beliefs on climate attitudes in other parts of the world, these effects were not observed in a U.S. sample. Additionally, there are concerns that consensus messages may not only fail to persuade skeptics, but also backfire and lead to mistrust in scientific sources (Cook and Lewandowsky 2016; Dixon and Hubner 2018), as has been observed with some other climate messaging strategies (Palm et al. 2020; Hart and Nisbet 2012). In sum, there are reasons to be skeptical about the efficacy of consensus messages at persuading political skeptics to hold expert-supported positions on politically divisive issues.

Recent meta-analyses present additional information on the efficacy of consensus messages in extant work. Rode et al. (2021) examined the effects of different interventions on climate attitudes, including consensus messaging interventions. In a meta-analysis restricted to consensus messaging studies, they found that the average effect size was significant and positive, if very small (Hedges’ g = 0.09, 95% CI [0.05, 0.13], p = .004), and they found no evidence of political moderation (Rode et al. 2021). However, they found that the effect of consensus messaging was not significantly different from that of other interventions (e.g., religious appeals, psychological distance, national security). Rode et al. (2021) summarize that “although [consensus messaging] as an intervention itself has limited effectiveness […], consensus messaging is a valuable tool to inoculate the public against misinformation” (p. 12) and call for further research into the specific situations in which interventions may be more effective (p. 11). The present research responds to this call by exploring whether the decision to pretest affects the impact of consensus messages on skeptics’ climate attitudes.

Previous explanations for inconsistent findings

To date, work exploring causal factors for inconsistent findings has largely focused on differences in stimuli. Numerical stimuli have produced inconsistent results concerning skeptics, with some studies finding significant positive effects (e.g., Lewandowsky et al. 2012; van der Linden et al. 2015b; van der Linden et al. 2019) and others failing to find such effects (e.g., Cook and Lewandowsky 2016; Deryugina and Shurchkov 2016; Dixon 2016; Dixon et al. 2017). In addition, qualitative stimuli (e.g., “most scientists believe,” Dixon and Hubner 2018) or mere mentions of a consensus (Bolsen and Druckman 2018) do not appear to influence skeptical partisans. Further, Bayes et al. (2020) point out that subtle variations in the treatment across studies (e.g., “Did you know? 97% of climate scientists…” versus “97% of climate scientists…”) may play a role in explaining divergent findings for some outcomes, including reactance.

Others suggest that analytical decisions may be in part to blame for inconsistent findings. Kahan’s (2017) reanalysis of van der Linden et al.’s (2015b) study shows that despite the authors’ finding of indirect effects, there were no significant total effects on climate attitudes between control and treatment conditions. However, van der Linden et al. (2019), using a larger sample, demonstrate evidence of significant total effects, though effect sizes were small. The respective authors have also debated the appropriate sample size to observe small effects while maintaining responsible research practices (Kahan 2017; van der Linden et al. 2017). Thus, there may be several factors that could explain inconsistent findings. As Bayes et al. (2020) summarize, “differences in observed results across studies of scientific consensus messaging reflect the reality that the effects may not generalize not only across measures, but also across times, contexts, or even seemingly minor variations in the wording of the climate consensus statement” (p. 6). They call for further research into how different contexts, external events, and messages influence the efficacy of climate change consensus messaging strategies among different audiences (Bayes et al. 2020).

The decision to pretest

A factor that has received scant attention in previous research is the impact that choosing whether or not to pretest climate attitudes and beliefs before exposure to consensus messages may have on dependent variables of interest. Studies using pre-/posttest designs are responsible for the bulk of the evidence arguing that consensus messages positively affect the attitudes of U.S. skeptics (see Table 1). Studies that use posttest-only designs often find that U.S. skeptics’ attitudes about climate change and support for action are not affected by consensus messages (Bolsen and Druckman 2018; Dixon 2016) or that consensus messages may even produce backfire effects on these outcomes (Cook and Lewandowsky 2016; Dixon and Hubner 2018).

Table 1.

Study design and findings of prior experimental research examining moderating influence on the effects of consensus messages with U.S. samples

| | Pre-/posttest experimental design | Posttest-only experimental design |
|---|---|---|
| Consensus message effect IS moderated, such that the consensus message positively affects skeptics’ attitudes more than non-skeptics’ attitudes. | Van der Linden et al. (2015): climate change, prior beliefs; Van der Linden et al. (2019): climate change, political ideology | |
| Consensus message effect IS NOT moderated. The consensus message affects all individuals similarly. | Lewandowsky et al. (2012): climate change, free market beliefs (U.S. sample); Van der Linden et al. (2015): climate change, political ideology; Van der Linden et al. (2016): climate change, political ideology; Deryugina and Shurchkov (2016): climate change, political ideology; Goldberg et al. (2019a): climate change, political ideology; Goldberg et al. (2019b): climate change, political ideology | Van der Linden et al. (2015): vaccines, political ideology |
| Consensus message effect IS moderated, such that the consensus message does not affect skeptics’ attitudes as strongly as non-skeptics’ attitudes OR causes backfire effects among skeptical individuals. | Ma et al. (2019): climate change, reactance | Dixon (2016): GMOs, prior beliefs; Cook and Lewandowsky (2016): climate change, free market beliefs (U.S. sample); Dixon et al. (2017): climate change, political ideology; Bolsen and Druckman (2018): climate change, partisanship & knowledge; Dixon and Hubner (2018): climate change & nuclear power, political ideology |

Note: Each study is followed by the substantive topic it engaged with and then the moderator interacted with exposure to a consensus message

There are several reasons that the decision to pretest could influence results. Pretesting outcomes could sensitize participants to be more attentive to consensus information in the treatment, which could result in more accurate recall (Campbell 1957; Campbell and Stanley 1963; Nosanchuk et al. 1972) and elevated outcomes (Willson and Putnam 1982). It may also make consensus information more salient when reporting attitudes, where it may not have been as important a consideration to respondents in the absence of the pretest (Brossard 2010; Chong and Druckman 2007; Schwarz 1999; Tourangeau et al. 2000). Finally, a pre-/posttest design risks exposing the aim of the study to the participant by signaling outcomes of interest (Hauser et al. 2018; Parrot and Hertel 1999) and providing information for respondents to infer what a “good” participant will look like (Nosanchuk et al. 1972), which can lead respondents to modify their responses in later measures.

In one of the few studies examining the impact of pretesting climate attitudes and beliefs, Myers et al. (2015) found that respondents who were asked to estimate agreement before seeing a consensus message shifted their climate attitudes more than participants who did not see the pretest. Like Van der Linden et al. (2015), they also found that participants who estimated agreement twice reported higher agreement estimates the second time, even when they did not see a consensus message (Myers et al. 2015). These methodological considerations are important to the theory and practice of consensus messaging as they suggest that the efficacy of consensus messaging may hinge, in part, on whether participants are first asked about their initial attitudes and beliefs.

Though it is important to determine whether significant findings in previous research are influenced by study design, investigating the effect of a pretest also helps us to determine in what contexts consensus messages may be more or less effective. These inconsistent findings may indicate that consensus messages are more effective in interactive contexts, like classrooms, where “conceal and reveal” techniques may be employed (Myers et al. 2015). They may also indicate that consensus messages may be less effective in mass media contexts (e.g., online advertisements, news coverage), where people are not asked about their climate attitudes prior to exposure. Thus, investigating study design is vital to understanding under what conditions consensus messages may be more and less effective at persuading skeptics of scientists’ positions.

Hypotheses and research questions

Based on the findings of the previous research discussed above, we investigate the following hypotheses:

H1: Exposure to a consensus message (97% of climate scientists agree) will result in a higher estimate of scientific agreement on climate change.

H2: Higher agreement estimates will be positively associated with (a) belief in global warming, (b) belief in human causation, (c) worry and concern about climate change, (d) support for public action, and (e) support for government action.

H3: Effects of consensus messages on (a) belief in global warming, (b) belief in human causation, (c) worry and concern about climate change, (d) support for public action, and (e) support for government action will be mediated through agreement estimates.

Regarding the effects of including a pretest, we test the following hypotheses concerning the effects of study design drawn from the research discussed above:

H4: Those in the pre-/posttest treatment condition will report a higher estimate of scientific agreement that human activity is causing climate change than those in the posttest-only treatment condition.

H5: Those in the pre-/posttest treatment condition will report greater total effects on (a) belief in global warming, (b) belief in human causation, (c) worry and concern about climate change, (d) support for public action, and (e) support for government action than those in the posttest-only treatment condition.

Given the inconsistent findings as to whether political ideology may moderate associations, we propose the following research questions:

RQ1: How does political ideology moderate the effect of exposure to a consensus message on estimates of scientific agreement?

RQ2: How does political ideology moderate the effect of agreement estimates on (a) belief in global warming, (b) belief in human causation, (c) worry and concern about climate change, (d) support for public action, and (e) support for government action?

RQ3: How does political ideology moderate the indirect effects of exposure to a consensus message (97% of climate scientists agree) on (a) belief in global warming, (b) belief in human causation, (c) worry and concern about climate change, (d) support for public action, and (e) support for government action through agreement estimates?

The present study

This study investigates two important factors in the effects of consensus messaging: (1) how the choice of pretesting for climate attitudes and beliefs may influence responses to consensus messages and (2) how political ideology may moderate the effects of consensus messages. As consensus messaging strategies are already being employed concerning politically divisive science (@BarackObama 2013; NASA 2018; The Consensus Project, n.d.), it is vital to clarify the effectiveness of consensus messages in building support for expert-supported positions as well as whether they may potentially backfire.

Methods

Data

The data for this study were collected from Lucid Theorem, a diverse national online panel, between March 27 and March 30, 2020. After removing 69 participants who did not complete the survey because they failed to pass simple attention checks, the sample included 1998 respondents.

In our sample, 48.2% self-identified as men (N = 964) and 51.7% as women (N = 1034). Five participants who reported their gender as “other” were removed from the sample because the size of this group was too small to allow for meaningful comparison to other groups.1 71.3% of respondents identified as White, followed by 12.1% as Black or African American, 8.2% as Hispanic, 5.1% as Asian, and 3.4% as other racial groups. Age was measured on an 8-point scale from “18–24” to “85 or older,” with the median response being “35–44” (mean (M) = 3.55, standard deviation (SD) = 1.67). Education was also measured on an 8-point scale from “Less than high school diploma” to “Doctorate (e.g., PhD),” with the median response being “Some college, no degree” (M = 3.73, SD = 1.52). Finally, yearly household income was measured on a 7-point scale from “Less than $20,000” to “over $150,000,” with the median response being “$35,000 to $49,999” (M = 3.38, SD = 1.76).

Design

This online experiment used a two-by-two factorial design, crossing the treatment (exposure to a consensus message or not) and the inclusion of a pretest (pre-/posttest or posttest only). This resulted in four conditions: a pre-/posttest treatment condition (TPP, n = 485), in which participants responded to climate attitude and belief measures before and after reading a consensus message; a pre-/posttest control condition (CPP, n = 497), in which participants responded to climate attitude and belief measures twice but did not see a consensus message; a posttest-only treatment condition (TPO, n = 551), in which participants responded to climate attitude and belief measures only after reading a consensus message; and finally, a posttest-only control condition (CPO, n = 465), in which respondents did not see a consensus message and responded to climate attitude and belief measures only once. We refer to experimental conditions with acronyms such that the “T” or “C” indicates whether it is a “treatment” or “control” condition (that is, whether or not participants in these conditions saw a consensus message), while “PP” refers to pre-/posttest conditions and “PO” refers to posttest-only conditions.
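The acronym scheme can be made concrete with a short sketch. The function and variable names below are illustrative only and do not come from the study materials; the group sizes are the ones reported in the paragraph above.

```python
from itertools import product

# Group sizes as reported in the Design section.
GROUP_SIZES = {"TPP": 485, "CPP": 497, "TPO": 551, "CPO": 465}

def condition_label(consensus_message, pretest):
    """Build the acronym: T/C for treatment or control, PP/PO for the pretest factor."""
    return ("T" if consensus_message else "C") + ("PP" if pretest else "PO")

# Cross the two binary factors to enumerate the four cells of the design.
CELLS = {
    condition_label(message, pretest): {"consensus_message": message, "pretest": pretest}
    for message, pretest in product([True, False], repeat=2)
}

for name, factors in CELLS.items():
    print(name, factors, "n =", GROUP_SIZES[name])

# The four cells together account for the full analytic sample.
print("total n =", sum(GROUP_SIZES.values()))
```

The four group sizes sum to the 1998 respondents described in the Data section.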

Procedure

After consenting to participate, the participants were told that they were taking a public opinion survey about popular topics and would see questions about several different topics. The first question blocks, presented in random order, asked participants about (a) social media use; (b) travel; and, for those in the pre-/posttest conditions (TPP and CPP), (c) a pretest measuring a range of climate attitudes and beliefs (described below and in Appendix 2). As in previous studies (van der Linden et al. 2019), presenting the pretest with distractor questions was intended to obscure the purpose of the study and create space between the identical posttest questions. Respondents in posttest-only conditions (TPO and CPO) only responded to the social media use and travel questions, to control for any inadvertent effects the distractor questions may have had.

Second, participants in treatment conditions (TPO and TPP) were exposed to the consensus message. As in previous work, these participants were told that the researchers keep a large database of media messages and that they would be randomly shown one of these messages (Goldberg et al. 2019a, b; van der Linden et al. 2015b; van der Linden et al. 2019). All participants in treatment conditions saw a short message which read: “Did you know? 97% of climate scientists have concluded that human-caused climate change is happening” (Dixon et al. 2017). We then asked these participants to identify whether they had seen a message about the environment, crime, or movies, as an attention check.

Third, all participants responded to distractor questions about tourism to create space between the stimulus and outcome measures (van der Linden et al. 2015b; van der Linden et al. 2019).

Fourth, all participants estimated the level of scientific consensus on climate change (mediator) and reported their climate change beliefs and attitudes (outcomes).

Finally, all participants responded to demographic measures. Appendix 1 visually depicts the study procedure, showing the order of question blocks seen by respondents in each condition.2

Measures

Moderator

Political ideology was measured on a 7-point scale from “very liberal” (1) to “very conservative” (7) (mean (M) = 3.95, standard deviation (SD) = 1.70). The scale mid-point was “moderate” (4).

Mediator

Agreement estimate was measured by asking participants, “To the best of your knowledge, what percent (%) of climate scientists say that human activity is causing climate change? (0 - 100)” (M = 77.12, SD = 25.62) (van der Linden et al. 2015b; van der Linden et al. 2019). This measure was also included in the pretest.

Outcomes

Belief in climate change was measured by asking respondents, “How strongly do you believe that climate change is or is not happening?” (van der Linden et al. 2015b; van der Linden et al. 2019). Respondents reported their belief in climate change on a 5-point scale from “I strongly believe that climate change IS NOT happening” (1) to “I strongly believe that climate change IS happening” (5). The scale mid-point was, “I do not know whether climate change is or is not happening” (3) (M = 4.14, SD = 1.12). This measure was also included in the pretest.

Belief in human causation was measured by asking respondents, “Assuming climate change IS happening, to what extent do you think climate change is human-induced as opposed to a result of Earth’s natural changes?” (van der Linden et al. 2015b; van der Linden et al. 2019). Participants reported their belief in anthropogenic causation on a 5-point scale from “Climate change is completely caused by natural changes” (1) to “Climate change is completely caused by human activity” (5). The scale mid-point was, “Climate change is equally caused by human activity and natural changes” (3) (M = 3.42, SD = 1.12). This measure was also included in the pretest.

Worry was measured by asking participants, “How worried are you about climate change?” (van der Linden et al. 2015b; van der Linden et al. 2019). Responses were captured on a 5-point scale from “Not at all worried” (1) to “Extremely worried” (5). We also asked, “How concerned are you about climate change?” Responses were captured on a 5-point scale from “Not at all concerned” (1) to “Extremely concerned” (5). These measures of worry and concern about climate change were averaged to create a measure of worry and concern (M = 3.35, SD = 1.33, r = .92, p < .001). This measure was also included in the pretest.

Support for public action was measured with the question, “What do you think of peoples’ efforts to address climate change? Do you think that people should be putting more, less, or about the same amount of effort toward addressing climate change?” (van der Linden et al. 2015b; van der Linden et al. 2019). Participants responded on a 5-point scale from “People should put MUCH LESS effort toward addressing climate change” (1) to “People should put MUCH MORE effort toward addressing climate change” (5). The scale mid-point was, “People should do what they have been doing to address climate change” (3) (M = 4.08, SD = 1.16). This measure was also included in the pretest.

Finally, we asked participants about their support for government action with the question, “How strongly do you support or oppose government action to address climate change?” Responses were recorded on a 7-point scale from “Strongly support” (1) to “Strongly oppose” (7). The scale mid-point was, “Neither support nor oppose” (4) (M = 5.26, SD = 1.74). This measure was also included in the pretest.

Analyses

The analyses are presented in three sections. Following a discussion of random assignment, we first investigated the effect of experimental conditions on agreement estimates (H1, H4), as well as the possibility that political ideology moderates this effect (RQ1). Then, we investigated how agreement estimates are associated with outcome variables (H2), and how these effects may be moderated by political ideology (RQ2). Finally, we conducted a mediation analysis to investigate the indirect and total effects of experimental condition through agreement estimates on outcome variables, moderated by political ideology (H3, H5, RQ3).
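One way to write the model implied by this analysis plan is sketched below for a single contrast. The notation is ours rather than the authors': $X$ indicates a treatment condition relative to a reference condition, $W$ is political ideology, $M$ is the agreement estimate, $Y$ is one outcome, and $C$ collects the covariates. The sketch assumes the direct effect of $X$ is not itself moderated; the specification actually estimated may differ.

```latex
\begin{align}
M &= i_M + a_1 X + a_2 W + a_3 XW + \gamma_M^{\top} C + e_M \\
Y &= i_Y + c' X + b_1 M + b_2 W + b_3 MW + \gamma_Y^{\top} C + e_Y \\
\text{indirect effect at } W = w &: \quad (a_1 + a_3 w)(b_1 + b_3 w) \\
\text{total effect at } W = w &: \quad c' + (a_1 + a_3 w)(b_1 + b_3 w)
\end{align}
```

Under this sketch, a significant indirect effect does not guarantee a significant total effect, because the total effect also includes the direct path $c'$. This is why the two are reported separately.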

Random assignment

While conditions did not differ with respect to income (p = .33), conditions did differ with respect to gender (χ² = 9.22, p = .03) and age (F(3, 1993) = 2.65, p = .048). Differences between conditions with respect to education were also marginally significant (F(3, 1994) = 2.33, p = .072). Therefore, despite random assignment to experimental conditions, the analyses controlled for gender, age, and education. Analyses run without these controls follow a very similar pattern of results. Descriptive information for all outcomes by experimental condition is in Table 2.

Table 2.

Condition means for all outcomes

| | CPO | CPP | TPO | TPP |
|---|---|---|---|---|
| Consensus estimate (0–100) | 67.18 (26.18) | 67.59 (25.68) | 84.01 (22.82) | 88.55 (20.27) |
| Belief in global warming (1–5) | 4.14 (1.10) | 4.02 (1.20) | 4.19 (1.11) | 4.21 (1.08) |
| Belief in human causation (1–5) | 3.36 (1.10) | 3.32 (1.12) | 3.43 (1.12) | 3.57 (1.13) |
| Worry and concern (1–5) | 3.28 (1.31) | 3.33 (1.35) | 3.32 (1.30) | 3.45 (1.33) |
| Support for public action (1–5) | 4.03 (1.15) | 4.04 (1.19) | 4.10 (1.17) | 4.14 (1.13) |
| Support for government action (1–7) | 5.11 (1.73) | 5.29 (1.72) | 5.28 (1.75) | 5.36 (1.76) |

Note: descriptive means, standard deviation in parentheses
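To put these descriptive means on a common scale, they can be converted to standardized mean differences. The sketch below computes Hedges' g from the Table 2 means and standard deviations. It assumes the group sizes reported in the Design section apply to every outcome, and it ignores the covariates used in the regression models, so the values are rough, unadjusted illustrations and not results reported by the authors.

```python
import math

def hedges_g(m1, sd1, n1, m2, sd2, n2):
    """Standardized mean difference (group 1 minus group 2) with small-sample correction."""
    pooled_sd = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))
    d = (m1 - m2) / pooled_sd
    correction = 1 - 3 / (4 * (n1 + n2) - 9)  # Hedges' correction factor
    return d * correction

# Consensus estimate, posttest-only conditions: TPO versus CPO.
g_estimate = hedges_g(84.01, 22.82, 551, 67.18, 26.18, 465)
# Belief in human causation, posttest-only conditions: TPO versus CPO.
g_cause_po = hedges_g(3.43, 1.12, 551, 3.36, 1.10, 465)
# Belief in human causation, pre-/posttest conditions: TPP versus CPP.
g_cause_pp = hedges_g(3.57, 1.13, 485, 3.32, 1.12, 497)

print(f"agreement estimate, TPO vs CPO: g = {g_estimate:.2f}")
print(f"human causation,   TPO vs CPO: g = {g_cause_po:.2f}")
print(f"human causation,   TPP vs CPP: g = {g_cause_pp:.2f}")
```

On these unadjusted figures, the message shifts agreement estimates by roughly two thirds of a standard deviation, while the difference in belief in human causation is below 0.1 without a pretest and about 0.2 with one.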

Effects of experimental condition and political ideology on agreement estimates

First, we investigated the effect of the experimental conditions on the proposed mediator, scientific agreement estimates. To do so, we first ran OLS regressions with the experimental conditions and political ideology as predictors, as well as gender, age, and education. Then, to see if political ideology moderated the effect of experimental condition, we ran a second OLS regression that also included interactions between the experimental conditions and political ideology. Full results with the CPO condition (control, posttest only) as reference group are reported in Table 3. This condition is less likely than the CPP (control, pre-/posttest) to have influenced participants’ responses in any way, and thus, it was used as a baseline. Tables for results using the CPP and TPP as reference conditions are in Appendix 4.

Looking first at the main effect of experimental condition on the mediator, exposure to consensus messages increased the estimated percentage of scientists who say that climate change is caused by humans (TPO v. CPO, unstandardized β = 16.75, p < .001; TPP v. CPO, β = 21.13, p < .001; Table 3). Both treatment conditions also led to higher agreement estimates compared to the CPP condition (TPO v. CPP, β = 16.61, p < .001; TPP v. CPP, β = 20.99, p < .001). In sum, exposure to consensus messages elevated agreement estimates (supporting H1). In addition, agreement estimates were higher in the TPP than TPO condition (TPO v. TPP, β = −4.38, p < .001). That is, among participants who saw the consensus message, agreement estimates were higher among those who first reported their initial climate beliefs and attitudes in a pretest (supporting H4).
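The contrasts against CPP and TPP follow arithmetically from the CPO-referenced coefficients, because changing the reference group in a dummy-coded regression only re-expresses the same adjusted group differences. The sketch below illustrates this with the main-effects coefficients from Table 3; the function name is ours. Differencing recovers point estimates only, since standard errors and p-values for the new contrasts require the coefficient covariance matrix, which is not reported here.

```python
# Main-effects coefficients with CPO as the reference group (Table 3).
VS_CPO = {"CPO": 0.0, "CPP": 0.14, "TPO": 16.75, "TPP": 21.13}

def contrast(group, reference, coefs=VS_CPO):
    """Adjusted difference (group minus reference) from differencing dummy coefficients."""
    return round(coefs[group] - coefs[reference], 2)

print("TPO v. CPP:", contrast("TPO", "CPP"))  # 16.61
print("TPP v. CPP:", contrast("TPP", "CPP"))  # 20.99
print("TPO v. TPP:", contrast("TPO", "TPP"))  # -4.38
```

These values match the contrasts reported in the paragraph above.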

Political ideology negatively affected agreement estimates (β = −2.43, p < .001), such that conservatives reported lower agreement estimates than liberals. We then investigated whether political ideology moderated the effects of the experimental conditions on consensus estimates. There were significant interactive effects between TPO (v. CPO) and political ideology (unstandardized β = 3.04, p < .001) and between TPP (v. CPO) and political ideology (β = 4.45, p < .001). We saw a similar pattern in results using CPP as the reference condition. There were significant interactive effects between TPO (v. CPP) and political ideology (β = 1.74, p < .05) and between TPP (v. CPP) and political ideology (β = 3.17, p < .001). However, there were no significant interactions between the TPO (v. TPP) and political ideology or between CPO (v. CPP) and political ideology (see Appendix 4). Visual inspections of the significant interactions revealed that conservatives’ agreement estimates were more strongly impacted by the treatment conditions (v. CPO) than liberals’ agreement estimates. That is, conservatives’ agreement estimates increased more between control and treatment conditions compared to liberals (RQ1), such that in the absence of a consensus message, conservatives’ agreement estimates were lower than those of liberals, whereas after exposure to the consensus message, liberals and conservatives reported similar agreement estimates (see Fig. S2 in Appendix 4).
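The interaction coefficients can be read as conditional effects. The sketch below plugs the interaction-model coefficients from Table 3 (reference condition: CPO) into the usual linear combinations. The function names are ours, and the output consists of point estimates without confidence intervals, so it illustrates the pattern and does not reproduce any analysis reported by the authors.

```python
# Interaction-model coefficients from Table 3 (reference condition: CPO).
TREATMENT_AT_ZERO = {"TPO": 4.82, "TPP": 3.71}   # effect v. CPO at ideology = 0 (off-scale)
TREATMENT_X_IDEOLOGY = {"TPO": 3.04, "TPP": 4.45}
IDEOLOGY_SLOPE_CPO = -4.71                        # ideology slope in the reference condition

def conditional_effect(condition, ideology):
    """Estimated treatment effect v. CPO at a given ideology score (1 = very liberal, 7 = very conservative)."""
    return TREATMENT_AT_ZERO[condition] + TREATMENT_X_IDEOLOGY[condition] * ideology

def ideology_slope(condition):
    """Estimated change in agreement estimate per ideology point within a treatment condition."""
    return IDEOLOGY_SLOPE_CPO + TREATMENT_X_IDEOLOGY[condition]

for condition in ("TPO", "TPP"):
    effects = {w: round(conditional_effect(condition, w), 2) for w in (1, 4, 7)}
    print(condition, "effect v. CPO by ideology:", effects,
          "| ideology slope:", round(ideology_slope(condition), 2))
```

Under these coefficients the estimated treatment effect grows from about 8 points for the most liberal respondents to about 26 (TPO) or 35 (TPP) points for the most conservative, and the ideology slope shrinks from −4.71 in CPO to −1.67 in TPO and −0.26 in TPP. This is consistent with the convergence of liberals' and conservatives' estimates described above.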

Effects of agreement estimates and political ideology on outcomes

We next turn to the second leg of the proposed mediation model: whether agreement estimates were positively associated with climate beliefs and attitudes, and whether these associations were moderated by political ideology. We ran a series of OLS regressions looking at the effect of agreement estimates and political ideology on our outcome variables, controlling for experimental condition, gender, age, and education. We first report main effects of agreement estimates and political ideology on climate attitude outcomes, then test for the possibility that political ideology moderates the effect of agreement estimates on outcomes.

Agreement estimates were positively associated with all climate beliefs and attitudes (H2a–e are supported). Those who reported higher agreement estimates were more likely to report greater belief in global warming (unstandardized β = .01, p < .001), belief in human causation (β = .01, p < .001), worry and concern about climate change (β = .01, p < .001), support for public action (β = .01, p < .001), and support for government action (β = .01, p < .001). In sum, higher agreement estimates were associated with holding more scientifically supported positions on climate change, worry and concern about climate change, and greater support for action (Tables 3, 4 and 5).

Table 3.

Main and interactive effects of experimental condition and political ideology on mediator (reference condition: CPO)

| | Consensus estimate: main effects | Consensus estimate: with interactions |
|---|---|---|
| CPP (v. CPO) | .14 (1.52) | −4.88 (3.84) |
| TPO (v. CPO) | 16.75*** (1.48) | 4.82 (3.74) |
| TPP (v. CPO) | 21.13*** (1.52) | 3.71 (3.86) |
| Political ideology | −2.43*** (.32) | −4.71*** (.66) |
| Gender | .84 (1.07) | .72 (1.06) |
| Age | .35 (.32) | .35 (.32) |
| Education | 1.14*** (.35) | 1.01*** (.35) |
| Interactions | | |
| CPP (v. CPO) × political ideology | | 1.30 (.90) |
| TPO (v. CPO) × political ideology | | 3.04*** (.87) |
| TPP (v. CPO) × political ideology | | 4.45*** (.91) |
| Constant | 70.20*** (2.85) | 79.73*** (3.66) |
| Observations | 1998 | 1988 |
| Adjusted R^2 | .17 | .18 |
| Residual standard error | 23.390 (df = 1980) | 23.242 (df = 1977) |
| F statistic | 57.853*** (df = 7; 1980) | 43.838*** (df = 10; 1977) |

Note: *p < 0.1; **p < 0.05; ***p < 0.01

Table 4.

Main and interactive effects of agreement estimate and political ideology on outcomes (1/2)

| | Belief in global warming: main effects | Belief in global warming: with interaction | Belief in human causation: main effects | Belief in human causation: with interaction | Worry and concern: main effects | Worry and concern: with interaction |
|---|---|---|---|---|---|---|
| Consensus estimate | .01*** (.00) | .02*** (.00) | .01*** (.00) | .02*** (.00) | .01*** (.00) | .02*** (.00) |
| Political ideology | −.20*** (.01) | −.15*** (.04) | −.18*** (.01) | −.12*** (.04) | −.28*** (.02) | −.16*** (.05) |
| CPP (v. CPO) | −.14** (.07) | −.13** (.07) | −.04 (.07) | −.04 (.07) | .04 (.08) | .04 (.08) |
| TPO (v. CPO) | −.18*** (.07) | −.18*** (.07) | −.16** (.07) | −.16** (.07) | −.16** (.08) | −.16** (.08) |
| TPP (v. CPO) | −.02*** (.07) | −.23*** (.07) | −.08 (.07) | −.08 (.07) | −.08 (.08) | −.07 (.08) |
| Gender | .05 (.05) | .05 (.05) | .08 (.05) | .08* (.05) | .14*** (.05) | .14*** (.05) |
| Age | .02 (.01) | .02 (.01) | −.04*** (.01) | −.04*** (.01) | −.04** (.02) | −.04** (.02) |
| Education | .02 (.02) | .02 (.02) | .01 (.02) | .01 (.02) | .07*** (.02) | .07*** (.02) |
| Interactions | | | | | | |
| Consensus estimate × political ideology | | −.001 (.00) | | −.001 (.00) | | −.001** (.00) |
| Constant | 3.78*** (.14) | 3.59*** (.23) | 3.11*** (.14) | 2.85*** (.23) | 3.26*** (.16) | 2.76*** (.26) |
| Observations | 1988 | 1988 | 1988 | 1988 | 1988 | 1988 |
| Adjusted R^2 | .21 | .21 | .20 | .20 | .22 | .22 |
| Residual standard error | 0.998 (df = 1979) | 0.998 (df = 1978) | 1.001 (df = 1979) | 1.001 (df = 1978) | 1.170 (df = 1979) | 1.169 (df = 1978) |
| F statistic | 67.003*** (df = 8; 1979) | 59.705*** (df = 9; 1978) | 61.593*** (df = 8; 1979) | 55.010*** (df = 9; 1978) | 71.433*** (df = 8; 1979) | 64.319*** (df = 9; 1978) |

Note: *p < 0.1; **p < 0.05; ***p < 0.01

Table 5.

Main and interactive effects of agreement estimate and political ideology on outcomes (2/2)

| | Support for public action: main effects | Support for public action: with interaction | Support for government action: main effects | Support for government action: with interaction |
|---|---|---|---|---|
| Consensus estimate | .01*** (.00) | .01*** (.00) | .01*** (.00) | .01** (.00) |
| Political ideology | −.22*** (.01) | −.21*** (.05) | −.29*** (.02) | −.33*** (.07) |
| CPP (v. CPO) | .01 (.07) | .01 (.07) | .17 (.11) | .17 (.11) |
| TPO (v. CPO) | −.12* (.07) | −.12* (.07) | −.01 (.11) | −.01 (.11) |
| TPP (v. CPO) | −.13* (.07) | −.13* (.07) | −.01 (.11) | −.01 (.11) |
| Gender | .16*** (.05) | .16*** (.05) | .03 (.07) | .03 (.07) |
| Age | −.03** (.01) | −.03** (.01) | −.01 (.02) | −.01 (.02) |
| Education | −.02 (.02) | −.02 (.02) | .03 (.03) | .03 (.03) |
| Interactions | | | | |
| Consensus estimate × political ideology | | .00 (.00) | | .00 (.00) |
| Constant | 4.02*** (.15) | 4.00*** (.24) | 5.38*** (.23) | 5.54*** (.37) |
| Observations | 1987 | 1987 | 1987 | 1987 |
| Adjusted R^2 | .19 | .19 | .12 | .12 |
| Residual standard error | 1.043 (df = 1978) | 1.044 (df = 1977) | 1.634 (df = 1978) | 1.634 (df = 1977) |
| F statistic | 59.796*** (df = 8; 1978) | 53.126*** (df = 9; 1977) | 35.500*** (df = 8; 1978) | 31.579*** (df = 9; 1977) |

Note: *p < 0.1; **p < 0.05; ***p < 0.01

However, all outcomes were also negatively associated with political ideology. Conservatives, on average, reported less belief in global warming (β = − .20, p < .001), less belief in human causation (β = − .18, p < .001), less worry and concern about climate change (β = − .28, p < .001), less support for public action (β = − .22, p < .001), and less support for government action (β = − .29, p < .001).

Turning to whether political ideology moderated the effect of agreement estimates on the climate attitude outcomes (RQ2), we observed a significant interaction between agreement estimate and political ideology only when predicting worry and concern (β = − .001, p < .05; Tables 4 and 5). Visual inspection of this interaction revealed that perceived scientific agreement had a stronger association with worry and concern for liberals than for conservatives (RQ2; see Fig. S3 in Appendix 4).

Indirect effects

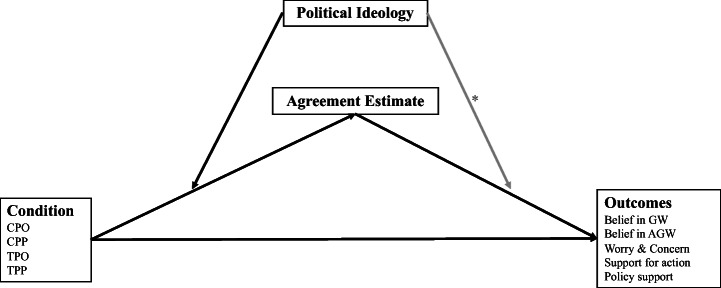

Finally, we test the mediated effect of exposure to a consensus message through agreement estimates on climate beliefs and attitudes. Figure 1 visually represents the moderated mediation model. To examine the moderated and unmoderated indirect effects, we used PROCESS version 3.5 (Hayes 2017). Analyses were run with 10,000 iterations to produce bootstrapped 95% confidence intervals. In these analyses, we looked directly at differences between the two treatment conditions (TPO v. TPP), between the two pre-/posttest conditions (TPP v. CPP), and between the two posttest conditions (TPO v. CPO). We examined unmoderated indirect and total effects using PROCESS model 4. The moderated mediation models were informed by the interaction results reported above. Thus, for the outcomes of belief in global warming, belief in anthropogenic causation, support for public action, and support for government action, we investigated moderated indirect effects using PROCESS model 7, in which political ideology only moderated the link from condition to agreement estimate. Because we found a significant interactive effect of agreement estimate and political ideology on worry and concern, we used PROCESS model 58, in which political ideology moderated the link from condition to agreement estimate and the link from agreement estimate to outcomes, to investigate the moderated-mediation effect of the experimental conditions on worry and concern about climate change. However, when investigating effects on worry and concern in the TPO v. TPP comparison, we used model 14, in which political ideology only moderates the link from agreement estimate to outcomes because we previously observed no moderating effect on agreement estimates between these conditions.

Fig. 1.

Visualizing the moderated mediation model. Note: The gray arrow with an asterisk indicates significance only in the model predicting worry and concern. OLS regressions suggested that the effects of agreement estimates were not moderated by political ideology for any other outcome.
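For readers unfamiliar with bootstrapped indirect effects, the following is a minimal sketch of the logic behind a simple (unmoderated) mediation test of the kind PROCESS model 4 performs. It is not the PROCESS macro and not our analysis code: it omits covariates, uses simulated data with hypothetical parameter values, and uses 1,000 resamples rather than 10,000 to keep run time short. The indirect effect is the product of the slope from condition to mediator (a) and the partial slope from mediator to outcome (b), and the confidence interval comes from the percentiles of that product across resamples.

```python
import random


def _cov(u, v):
    """Sample covariance of two equal-length sequences."""
    n = len(u)
    mean_u, mean_v = sum(u) / n, sum(v) / n
    return sum((a - mean_u) * (b - mean_v) for a, b in zip(u, v)) / (n - 1)


def indirect_effect(x, m, y):
    """Return a * b for the simple mediation model X -> M -> Y.

    a is the slope of M regressed on X; b is the partial slope of M
    when Y is regressed on both X and M.
    """
    sxx, smm, sxm = _cov(x, x), _cov(m, m), _cov(x, m)
    sxy, smy = _cov(x, y), _cov(m, y)
    a = sxm / sxx
    b = (smy * sxx - sxy * sxm) / (sxx * smm - sxm ** 2)
    return a * b


def bootstrap_ci(x, m, y, n_boot=1000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    draws = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        draws.append(indirect_effect([x[i] for i in idx],
                                     [m[i] for i in idx],
                                     [y[i] for i in idx]))
    draws.sort()
    tail = (1 - level) / 2
    return draws[round(tail * n_boot)], draws[round((1 - tail) * n_boot) - 1]


def simulate(n=400, seed=1):
    """Hypothetical data: treatment raises the mediator, mediator raises the outcome."""
    rng = random.Random(seed)
    x = [i % 2 for i in range(n)]                        # 0 = control, 1 = treatment
    m = [70 + 17 * xi + rng.gauss(0, 23) for xi in x]    # agreement estimate
    # A small negative direct effect offsets the positive indirect path.
    y = [3 + 0.01 * mi - 0.15 * xi + rng.gauss(0, 0.5) for xi, mi in zip(x, m)]
    return x, m, y


x, m, y = simulate()
ie = indirect_effect(x, m, y)
low, high = bootstrap_ci(x, m, y)
print(f"indirect effect = {ie:.3f}, 95% CI ({low:.3f}, {high:.3f})")
```

The simulated direct effect is deliberately negative so that the example also shows how an indirect effect can be reliably different from zero while the total effect (direct plus indirect) remains close to zero.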

Belief in global warming

Looking first to unmoderated mediation effects, TPO lowered belief in global warming compared to TPP (indirect effect (IE) = − .06, 95% confidence interval (CI) (− .10, − .02)), TPP increased belief compared to CPP (IE = .29, 95% CI (.23, .35)), and TPO increased belief compared to CPO (IE = .23, 95% CI (.17, .29)). H3a was supported.

However, we only saw significant total effects for the TPP v. CPP comparison (total effect (TE) = .19, 95% CI (.06, .32)). We did not observe a significant difference in total effects between TPO and CPO, or between TPO and TPP (H5a was unsupported).

Looking next to moderated-mediation effects, we used the index of moderated mediation to examine whether the indirect effect was moderated by political ideology, and, in cases of a significant moderated indirect effect, explored differences in the effect at different levels of partisanship.

The index of moderated mediation (IMM) was not significant for TPO v. TPP, but it was significant for TPP v. CPP (IMM = .05, 95% CI (.02, .08)). The indirect effect of TPP v. CPP on belief in global warming was stronger among conservatives (scale point 6; IE = .44, 95% CI (.35, .54)) than among liberals (scale point 2; IE = .24, 95% CI (.16, .32)). We also saw evidence of a moderated indirect effect in the TPO v. CPO comparison (IMM = .05, 95% CI (.02, .08)): again, the indirect effect was larger among conservatives (scale point 6; IE = .37, 95% CI (.27, .48)) than among liberals (scale point 2; IE = .18, 95% CI (.11, .25)) (RQ3a).
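As a reading aid, the algebra behind the index of moderated mediation in a first-stage moderated mediation model (PROCESS model 7) can be sketched as follows. The symbols are ours and covariates are omitted: X is the condition contrast, W is political ideology, M is the agreement estimate, and Y is the outcome.

```latex
\begin{align}
M &= i_M + a_1 X + a_2 W + a_3 XW + e_M \\
Y &= i_Y + c' X + b M + e_Y \\
\mathrm{IE}(W) &= (a_1 + a_3 W)\, b \\
\mathrm{IMM} &= a_3 b \\
\mathrm{IE}(6) - \mathrm{IE}(2) &= 4 \cdot \mathrm{IMM} \approx 4 \times .05 = .20
\end{align}
```

Because the indirect effect is linear in W, the IMM is the change in the indirect effect per scale point of ideology. The last line is consistent with the TPP v. CPP estimates reported above, where the indirect effects at scale points 6 and 2 differ by .44 − .24 = .20.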

Belief in human causation

Looking first to unmoderated mediation effects, TPO led to less belief in human causation than TPP (IE = − .06, 95% CI (− .10, − .02)), TPP led to greater belief than CPP (IE = .29, 95% CI (.23, .35)), and TPO led to greater belief than CPO (IE = .23, 95% CI (.17, .29)). H3b was supported.

However, we only saw significant unmoderated total effects for TPO v. TPP (TE = − .14, 95% CI (− .27, − .02)) and TPP v. CPP (TE = .25, 95% CI (.12, .38)) (H5b, RQ3b).

Turning to moderated mediation, the index of moderated mediation was not significant for the TPO v. TPP comparison. It did indicate that political ideology moderated the TPP v. CPP comparison (IMM = .05, 95% CI (.02, .08)): TPP increased belief in human causation more for conservatives (IE = .43, 95% CI (.34, .53)) than for liberals (IE = .23, 95% CI (.16, .31)). Similarly, political ideology moderated the TPO v. CPO comparison (IMM = .05, 95% CI (.02, .08)). TPO, when compared to CPO, led to a greater increase in belief in human causation for conservatives (IE = .36, 95% CI (.27, .47)) than for liberals (IE = .17, 95% CI (.10, .24)) (RQ3b).

Worry and concern

Looking first to unmoderated mediation effects, the TPO condition led to less worry and concern than the TPP condition (IE = − .05, 95% CI (− .09, − .02)), TPP led to more worry and concern than CPP (IE = .25, 95% CI (.19, .31)), and TPO led to more worry and concern than CPO (IE = .20, 95% CI (.15, .25)). H3c was supported. However, the total effects were not significant for any of these comparisons (H5c was unsupported).

Looking at moderated mediation effects, when comparing TPP v. TPO, we used PROCESS model 14, in which political ideology moderates only the link from estimated scientific consensus to worry and concern, because our initial investigation indicated that political ideology does not moderate the difference between TPP and TPO in predicting estimated scientific consensus. Model 14 revealed significant moderation (IMM = − .007, 95% CI (− .02, − .006)), such that TPP, when compared to TPO, increased worry and concern more for liberals (IE = .071, 95% CI (.028, .118)) than for conservatives (IE = .043, 95% CI (.017, .073)).

For moderated mediation effects comparing TPP to CPP and TPO to CPO, we used PROCESS model 58, which examines how political ideology moderates both links of the indirect effect. Because the indirect effect is a nonlinear function of the moderator in model 58, we cannot use the index of moderated mediation (Hayes 2017) and instead examine bootstrapped contrasts of the indirect effect at different levels of political ideology. Looking first to TPP v. CPP, the pairwise contrast between the indirect effects for liberals (IE = .225, 95% CI (.140, .324)) and conservatives (IE = .251, 95% CI (.154, .354)) did not reveal a significant difference (contrast (C) = .026, 95% CI (− .119, .167)); we compared the indirect effects at all levels of political ideology, and none were significantly different from each other. Similarly, when comparing TPO and CPO, the indirect effects for liberals (IE = .165, 95% CI (.094, .248)) and conservatives (IE = .211, 95% CI (.123, .309)) were not significantly different (C = .046, 95% CI (− .086, .176)); again, we compared the indirect effects at all levels of political ideology, and none were significantly different from each other. For clarity, we note that in the model 58 output for both TPP v. CPP and TPO v. CPO, political ideology did moderate both links of the indirect effect; the lack of an overall moderating effect is likely because the moderation of the first link and the moderation of the second link run in opposite directions (RQ3c).
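The reason no single index exists for model 58 can be seen from a sketch of its algebra. The symbols are ours and covariates are omitted: X is the condition contrast, W is political ideology, M is the agreement estimate, and Y is worry and concern.

```latex
\begin{align}
M &= i_M + a_1 X + a_2 W + a_3 XW + e_M \\
Y &= i_Y + c' X + b_1 M + b_2 W + b_3 MW + e_Y \\
\mathrm{IE}(W) &= (a_1 + a_3 W)(b_1 + b_3 W) \\
               &= a_1 b_1 + (a_1 b_3 + a_3 b_1)\, W + a_3 b_3\, W^2
\end{align}
```

The indirect effect is quadratic in W, so its change per scale point is not constant and has to be assessed through contrasts at chosen values of W. When the first-stage moderation is positive (a_3 > 0, as in Table 3) and the second-stage moderation is negative (b_3 < 0, as in Table 4), the two factors move in opposite directions as W increases, which can leave the product nearly flat across ideology.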

Support for public action

We observed significant unmoderated indirect effects on support for public action for TPO v. TPP (IE = − .05, 95% CI (− .08, − .02)), TPP v. CPP (IE = .24, 95% CI (.19, .30)), and TPO v. CPO (IE = .19, 95% CI (.14, .25)). H3d was supported. However, in none of these comparisons did we see significant total effects (H5d was unsupported).

Looking to moderated mediation effects, the index of moderated mediation was not significant for the comparison of TPO v. TPP. However, the index of moderated mediation was significant for TPP v. CPP (IMM = .05, 95% CI (.02, .07)). The indirect effect of TPP v. CPP on support for public action was stronger among conservatives (IE = .39, 95% CI (.30, .49)) than among liberals (IE = .21, 95% CI (.14, .29)). Additionally, the index of moderated mediation was significant for TPO v. CPO (IMM = .04, 95% CI (.02, .07)). The indirect effect of TPO v. CPO on support for public action was stronger among conservatives (IE = .33, 95% CI (.24, .43)) than among liberals (IE = .16, 95% CI (.09, .22)) (RQ3d).

Support for government action

Finally, we found significant unmoderated indirect effects on support for government action for TPO v. TPP (IE = − .05, 95% CI (− .09, − .02)), TPP v. CPP (IE = .24, 95% CI (.17, .32)), and TPO v. CPO (IE = .19, 95% CI (.13, .26)). H3e was supported. However, none of the comparisons yielded significant total effects (H5e was unsupported).

Looking to moderated mediation effects, the index of moderated mediation was not significant for the comparison of TPO v. TPP. In contrast, the index of moderated mediation was significant for TPP v. CPP (IMM = .05, 95% CI (.02, .08)). The indirect effect of TPP v. CPP on support for government action was stronger among conservatives (IE = .42, 95% CI (.30, .53)) than among liberals (IE = .22, 95% CI (.14, .31)). Additionally, the index of moderated mediation was significant for TPO v. CPO (IMM = .05, 95% CI (.02, .08)). The indirect effect of TPO v. CPO on support for government action was stronger among conservatives (IE = .35, 95% CI (.24, .47)) than among liberals (IE = .16, 95% CI (.10, .24)) (RQ3e).

Discussion

This study focused on two overarching questions: does consensus messaging have positive effects on climate attitudes and are these effects influenced by political ideology or pretesting initial climate beliefs and attitudes?

Exposure to a consensus message increased respondents’ agreement estimates and appeared to be more influential among conservatives. After exposure to a consensus message, liberals and conservatives reported similar agreement estimates, whereas without such exposure conservatives had lower estimates than liberals. We also saw evidence of moderated indirect effects, such that conservatives’ belief in climate change, belief in anthropogenic causation, support for public action, and support for government action were more strongly affected by consensus messages than liberals’ attitudes. We did not observe that political ideology moderated the effect of consensus messages on worry and concern about climate change in a similar way.

Yet, importantly, we found limited evidence that consensus messages “work” for skeptics in shifting the total effects for our ultimate dependent variables. These indirect effects appeared insufficient to counteract the direct effect of political ideology on climate attitudes. That is, we saw no evidence of total effects of consensus messages on worry and concern about climate change, support for public action, or support for government action. Further, we only saw evidence of total effects of consensus messages on belief in global warming and belief in anthropogenic causation when climate beliefs and attitudes were pretested (TPP condition). We did not see evidence of consensus messages affecting these outcomes when climate beliefs and attitudes were not measured prior to showing the consensus message (TPO condition). While indirect associations are valid explorations of relationships between variables, the failure to find consistent total effects, or any total effects for the TPO comparisons, suggests that consensus messaging is likely to have limited overall influence on political polarization around climate attitudes.

Finally, this study presents evidence that the choice to pretest climate beliefs and attitudes is likely to influence results of consensus messaging studies. The posttest-only treatment condition (TPO) led to lower agreement estimates than the pre-/posttest treatment condition (TPP). As a result, only the TPP condition saw both significant indirect effects and significant total effects on belief in global warming and human causation. Though van der Linden et al. (2015b; 2019) and others claim that a combined between- and within-subjects design gives their studies greater statistical power, such a design also has the potential to affect responses in ways that may bias these studies toward finding effects.

Furthermore, it is important to utilize study designs that are most relevant to the study of real-world effects. For researchers interested in the effects of consensus messages in mass media environments (e.g., news broadcasts, online advertisements), posttest-only designs better parallel such contexts; the results presented here suggest that the implementation of consensus messaging in mass media may shift perceptions of scientific consensus but is unlikely to significantly shift any of the other outcome variables measured. Alternatively, studies using pre-/posttest designs may be more applicable to interactive or educational settings where “conceal and reveal” techniques may be employed (Myers et al. 2015). In short, the results taken together provide mixed evidence for the gateway belief model when participants are given a pretest, but no evidence of total effects when a pretest is not given.

Strengths and limitations

This study was well powered with nearly 500 respondents in each condition from a diverse online panel. However, it is important to note that while our sample is nationally diverse, it is not a representative sample, and appropriate caution for top-line results should be taken.

Though this study tested a series of associations and the mediated effects of consensus messages on outcomes through agreement estimates, it did not test the full serial mediation model proposed by van der Linden et al. (2015b). That model differs from our tests with respect to support for public action, for which van der Linden et al. (2015b) examine serial mediation through both agreement estimates and the belief that humans are causing climate change. We did not test this pathway of serial mediators, but note that the overall impact of such a serial indirect effect is captured within our testing of total effects; that is, in instances where a total effect is not observed, we can infer that such an indirect effect would have been counteracted by other influences on the variable of interest. Finally, although previous literature informed the order of the variables in our mediation analysis, given the cross-sectional nature of our dataset, we cannot test for causality from the mediator to the dependent variable, and it is always possible that unobserved variables could be driving reported effects (McGrath 2020).

Conclusions

The debate around consensus messaging research largely concerns its efficacy at reducing political polarization around climate change in the U.S.A. Because strategic actors opposed to climate policies have sought to undermine, or spread misinformation about, the scientific consensus on climate change in efforts to build support for their policy positions (Elsasser and Dunlap 2013), some suggest that informing the public about the high degree of consensus will ameliorate this polarization. While many studies, including this one, find some evidence for indirect effects of consensus messages on climate attitudes and beliefs, this does not mean that consensus messages alone are sufficient for changing minds on climate change. Rather, it may be more effective to pair consensus messaging with other promising interventions, such as emotion, psychological distance, and religious interventions (Rode et al. 2021). Despite finding that conservatives’ agreement estimates are more impacted by consensus messages, we only see effects on belief in global warming and anthropogenic causation in the TPP condition, and we fail to observe substantive effects of consensus messages on people’s emotional responses and policy attitudes in either treatment condition. These findings reflect concerns raised by Kahan (2017) that significant correlations between climate attitude measures do not imply that consensus messages cause changes in attitudes and beliefs that are not observed in mean differences. In the past, researchers promoting consensus messaging have cited articles whose authors conclude against the efficacy of consensus messaging (e.g., Deryugina and Shurchkov 2016) as part of “the overwhelming majority of published research” that supports the use of consensus messaging strategies (Cook and Pearce 2019). We therefore want to be explicit on this point: the results of the present study do not find that consensus messaging is likely to lead to greater support for public or government action on the issue of climate change.

Further, this study reveals that effects of consensus messaging may be stronger in more interactive contexts compared to mass media messaging campaigns. This highlights the importance of designing studies that mimic real-world messaging conditions to produce the best understanding of real-world effects. Pre-/posttest designs may be applied to the study of consensus messaging in classrooms or other interactive settings (e.g., interactive Instagram stories). Posttest-only designs, on the other hand, can better speak to messaging contexts like online advertisements or news coverage. Experimental manipulations that echo intended messaging conditions will provide a better understanding of the conditions under which consensus messaging strategies are and are not effective.

Author contribution

All authors contributed to the study conception, data collection, and analysis. The first draft of the manuscript was written by S. Chinn, and P.S. Hart commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Funding

No funding to declare.

Data availability

The data that support these findings are available from the corresponding author, upon reasonable request.

Declarations

Ethics approval

This study is exempted from ethical approval by the Health Sciences and Behavioral Sciences Institutional Review Board (IRB-HSBS) at the University of Michigan (HUM00176552).

Consent to participate

Individuals gave informed consent prior to participating in the study, in compliance with IRB-HSBS protocols.

Consent for publication

All authors have consented to the submission of the manuscript.

Competing interests

The authors declare no competing interests.

Footnotes

Our intention was not to exclude individuals who selected “other” for their gender from our study but rather to ensure that any comparisons between gender identity groups had enough statistical power to be meaningfully interpreted. Results from analyses run with and without these respondents did not differ.

Just before demographic measures, participants were asked three questions concerning reactance and perceived manipulation of the consensus message. These variables were explored in a separate analysis. Additionally, given that data was collected in March of 2020, we also asked all participants two questions about their concern about COVID-19. COVID-19 concern did not vary by condition (p = .79) and was therefore not included as a control in the analyses presented.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Sedona Chinn, Email: schinn@wisc.edu.

P. Sol Hart, Email: solhart@umich.edu.

References

- @BarackObama. (2013) Ninety-seven percent of scientists agree: #climate change is real, man-made and dangerous. Read more: https://twitter.com/barackobama/status/335089477296988160?lang=en

- Bayes R, Bolsen T & Druckman JN (2020) A Research Agenda for Climate ChangeCommunication and Public Opinion: The Role of Scientific Consensus Messaging and Beyond, Environmental Communication. 10.1080/17524032.2020.1805343

- Bolsen T, Druckman JN. Do partisanship and politicization undermine the impact of a scientific consensus message about climate change? Group Processes and Intergroup Relations. 2018;21(3):389–402. doi: 10.1177/1368430217737855. [DOI] [Google Scholar]

- Brossard D (2010) Framing and priming in science communication. In Encyclopedia of Science and Technology Communication. 10.1007/978-1-4419-0851-3

- Campbell DT. Factors relevant to the validity of experiments in social settings. Psychol Bull. 1957;54(4):297–312. doi: 10.1037/h0040950. [DOI] [PubMed] [Google Scholar]

- Campbell, D. T., & Stanley, J. C. (1963). Experimental and quasi-experimental designs for research. Houghton Mifflin Company

- Chinn S, Lane DS, Hart PS. In consensus we trust? Persuasive effects of scientific consensus communication. Public Underst Sci. 2018;27(7):807–823. doi: 10.1177/0963662518791094. [DOI] [PubMed] [Google Scholar]

- Chong D, Druckman JN. Framing theory. Annu Rev Polit Sci. 2007;10(1):103–126. doi: 10.1146/annurev.polisci.10.072805.103054. [DOI] [Google Scholar]

- Cook J, Lewandowsky S. Rational irrationality: modeling climate change belief polarization using Bayesian networks. Top Cogn Sci. 2016;8(1):160–179. doi: 10.1111/tops.12186. [DOI] [PubMed] [Google Scholar]

- Cook J, Pearce W (2019) Is emphasising consensus in climate science helpful for policymaking? In: Hulme M (ed) Contemporary climate change debates (1st ed., pp. 127–145). Routledge. 10.4324/9780429446252-10

- Deryugina T, Shurchkov O. The effect of information provision on public consensus about climate change. PLoS One. 2016;11(4):1–14. doi: 10.1371/journal.pone.0151469. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ding D, Maibach EW, Zhao X, Roser-Renouf C, Leiserowitz A. Support for climate policy and societal action are linked to perceptions about scientific agreement. Nat Clim Chang. 2011;1(9):462–466. doi: 10.1038/nclimate1295. [DOI] [Google Scholar]

- Dixon G. Applying the gateway belief model to genetically modified food perceptions: new insights and additional questions. J Commun. 2016;66(6):888–908. doi: 10.1111/jcom.12260. [DOI] [Google Scholar]

- Dixon G, Hmielowski J, Ma Y. Improving climate change acceptance among U.S. conservatives through value-based message targeting. Sci Commun. 2017;39(4):520–534. doi: 10.1177/1075547017715473. [DOI] [Google Scholar]

- Dixon G, Hubner A. Neutralizing the effect of political worldviews by communicating scientific agreement: a thought-listing study. Sci Commun. 2018;40(3):393–415. doi: 10.1177/1075547018769907. [DOI] [Google Scholar]

- Elsasser SW, Dunlap RE. Leading voices in the denier choir: conservative columnists’ dismissal of global warming and denigration of climate science. Am Behav Sci. 2013;57(6):754–776. doi: 10.1177/0002764212469800. [DOI] [Google Scholar]

- Goldberg MH, van der Linden S, Ballew MT, Rosenthal SA, Gustafson A, Leiserowitz A. The experience of consensus: video as an effective medium to communicate scientific agreement on climate change. Sci Commun. 2019;41(5):659–673. doi: 10.1177/1075547019874361. [DOI] [Google Scholar]

- Goldberg MH, van der Linden S, Ballew MT, Rosenthal SA, Leiserowitz A. The role of anchoring in judgments about expert consensus. J Appl Soc Psychol. 2019;49(3):192–200. doi: 10.1111/jasp.12576. [DOI] [Google Scholar]

- Hahnel UJJ, Mumenthaler C, Brosch T. Emotional foundations of the public climate change divide. Clim Chang. 2020;161(1):9–19. doi: 10.1007/s10584-019-02552-0. [DOI] [Google Scholar]

- Hart PS, Nisbet EC. Boomerang effects in science communication: how motivated reasoning and identity cues amplify opinion polarization about climate mitigation policies. Commun Res. 2012;39(6):701–723. doi: 10.1177/0093650211416646. [DOI] [Google Scholar]

- Hayes AF (2017) Introduction to mediation, moderation, and conditional process analysis: a regression-based approach, 2nd edn. Guilford Press, New York, NY

- Hauser DJ, Ellsworth PC, Gonzalez R. Are manipulation checks necessary? Front Psychol. 2018;9:1–10. doi: 10.3389/fpsyg.2018.00998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kahan DM. The “gateway belief” illusion: reanalyzing the results of a scientific-consensus messaging study. J Sci Commun. 2017;16(5):20. doi: 10.22323/2.16050203. [DOI] [Google Scholar]

- Kahan DM, Jenkins‐Smith H, & Braman D (2011) Cultural cognition of scientific consensus. Journal of risk research 14(2):147–174

- Lewandowsky S, Gignac GE, Vaughan S. The pivotal role of perceived scientific consensus in acceptance of science. Nat Clim Chang. 2012;3(4):399–404. doi: 10.1038/nclimate1720. [DOI] [Google Scholar]

- Ma Y, Dixon G, & Hmielowski JD (2019) Psychological reactance from reading basic facts on climate change: The role of prior views and political identification. Environmental Communication 13(1):71–86

- McCright AM, Dunlap RE, Xiao C. Perceived scientific agreement and support for government action on climate change in the USA. Clim Chang. 2013;119(2):511–518. doi: 10.1007/s10584-013-0704-9. [DOI] [Google Scholar]

- McGrath, M. C. (2020). Experiments on problems of climate change. In advances in experimental political science (p. 606-629)

- Myers TA, Maibach E, Peters E, Leiserowitz A. Simple messages help set the record straight about scientific agreement on human-caused climate change: the results of two experiments. PLoS One. 2015;10(3):1–17. doi: 10.1371/journal.pone.0120985. [DOI] [PMC free article] [PubMed] [Google Scholar]

- NASA. (2018) Global climate change: facts. https://climate.nasa.gov/scientific-consensus/

- Nosanchuk TA, Mann L, Pletka I. Attitude change as a function of commitment, decisioning, and information level of pretest. Educ Psychol Meas. 1972;32:377–386. doi: 10.1177/001316447203200213.

- Palm R, Bolsen T, Kingsland JT (2020) “Don’t tell me what to do”: resistance to climate change messages suggesting behavior change. Weather, Climate, and Society. 10.1175/WCAS-D-19-0141.1

- Parrot WG, Hertel P (1999) Research methods in cognition and emotion. In: Dalgleish T, Power MJ (eds) Handbook of cognition and emotion. John Wiley & Sons, Ltd, pp 61–81. 10.1093/jpids/pix105/4823046

- Rode JB, Dent AL, Benedict CN, Brosnahan DB, Martinez RL, & Ditto PH (2021) Influencing Climate Change Attitudes in the United States: A Systematic Review and Meta-Analysis. Journal of Environmental Psychology, 101623

- Schwarz N. Self-reports: how the questions shape the answers. Am Psychol. 1999;54(2):93–105. doi: 10.1037/0003-066X.54.2.93.

- The Consensus Project. (n.d.). http://theconsensusproject.com

- Tourangeau, R., Rips, L., & Rasinski, K. (2000). Attitude judgments and context effects. In The Psychology of Survey Response. Cambridge University Press. 10.1017/CBO9780511819322.008

- van der Linden S, Clarke CE, Maibach EW (2015a) Highlighting consensus among medical scientists increases public support for vaccines: evidence from a randomized experiment. BMC Public Health 15(1207). 10.1186/s12889-015-2541-4

- van der Linden S, Leiserowitz AA, Feinberg GD, Maibach EW (2015b) The scientific consensus on climate change as a gateway belief: experimental evidence. PLoS One 10(2). 10.1371/journal.pone.0118489

- van der Linden S, Leiserowitz A, Maibach E. Gateway illusion or cultural cognition confusion? J Sci Commun. 2017;16(5):17.

- van der Linden S, Leiserowitz A, Maibach E. The gateway belief model: a large-scale replication. J Environ Psychol. 2019;62:49–58. doi: 10.1016/j.jenvp.2019.01.009.

- van der Linden, S., Leiserowitz, A., & Maibach, E. W. (2016). Communicating the scientific consensus on human-caused climate change is an effective and depolarizing public engagement strategy: experimental evidence from a large national replication study. SSRN Electronic Journal. 10.2139/ssrn.2733956

- Willson VL, Putnam RR. A meta-analysis of pretest sensitization effects in experimental design. Am Educ Res J. 1982;19(2):249–258. doi: 10.3102/00028312019002249.

Associated Data

Supplementary Materials

(DOCX 157 kb)

Data Availability Statement

The data that support these findings are available from the corresponding author, upon reasonable request.