Abstract

Community colleges have been under pressure for years to improve retention rates. Given well-publicized reductions in state funding during and after the Great Recession, progress in this area would be unexpected. And yet this is precisely what we find. Using the Integrated Postsecondary Education Data System (IPEDS), we find an average increase in retention of nearly 5 percentage points, or 9 percent, across the sector from 2004 to 2017. Over 70% of institutions posted retention gains, and average retention improved every year of the period except for a reversal at the height of the Great Recession. Gains were smaller on average at schools with higher tuition and at those serving more disadvantaged populations, and larger at institutions with lower student-faculty ratios and higher per-student instructional spending. Fixed-effects regression and Oaxaca decomposition analyses indicate that these gains were not driven by observable changes in student body composition or in institutional characteristics such as increased per-student instructional spending.

Keywords: Community colleges, Retention, Panel data

Introduction

Under the banner of “accountability”, policy entrepreneurs, nonprofits, and politicians have for years pressured colleges and universities to improve performance indicators (Ewell, 2011). While accountability-based critiques are commonly leveled at higher education in general, non-elite public institutions are the most vulnerable to any resulting penalties, as they depend directly on public support and often post unimpressive performance statistics (Dougherty et al., 2016). The pressure is felt most acutely by community colleges, which depend most heavily on state and local appropriations and tend to have very low rates of retention and degree completion (Monaghan et al., 2018).

Retention1 and completion rates at community colleges are often shockingly low. In recent national measurements, public four-year colleges retained 81% of their students after one year and graduated 60% within 150% of “normal” time; the corresponding rates at community colleges were 62% and 25%, respectively2 (Hussar et al., 2020). Racial disparities in completion are similarly immense: 30% of White students who begin at a community college complete a degree within six years, compared with only 18% of Black students (Shapiro et al., 2017). Many have called for community colleges to do better, and community colleges have themselves pledged to raise completion rates. However, these demands have not been accompanied by increased funding; community colleges are simply expected to “do more with less” (Jenkins & Belfield, 2014).

Community colleges are tremendously important institutions within the higher education landscape, enrolling 39% of all undergraduates. As “access institutions”, they enroll a large share of students from historically excluded populations: 38% of African-American students, 46% of Latinx students, 47% of first-generation college-goers, 34% of Pell recipients, and 46% of undergraduates aged 25 or older.3 Their performance in retaining students and shepherding them to degrees therefore has profound implications for equity in higher education and even, given the well-documented impact of degree completion on wages, for socioeconomic mobility. Yet despite the importance and policy relevance of the issue, there has been little research to date into whether community colleges have succeeded in improving retention rates.

We redress this omission using college-level data. First, we ask what has happened to retention at community colleges since 2004, when the Department of Education first began collecting institution-level retention data. Second, we examine variation in retention gains: at what sorts of institutions have retention gains or losses been larger or smaller? Finally, we attempt to explain sector-wide retention changes: do observed changes result primarily from changes in student composition or from changes in organizational practices? We conclude by evaluating potential explanations for our findings and outlining implications for retention research.

Literature Review

Retention Theories

Only in the past four decades has dropout and persistence in higher education been an acknowledged “problem”, let alone something that college leaders believe they are responsible for addressing. Accordingly, when the issue entered the research horizon, scholars focused on students, identifying characteristics that made them more or less likely to persist (e.g., Bean, 1980; Pascarella & Terenzini, 1980; Tinto, 1987). The college environment was largely taken as a given, something to which students were differentially able to adapt based on their social, academic, and other characteristics. Gradually, however, colleges accepted responsibility for student well-being and outcomes, particularly with regard to systemically marginalized populations. Reflecting and contributing to this change, more recent retention research has examined how college environments can be changed to support the persistence of diverse students (e.g., Guiffrida, 2006; Kuh & Love, 2000; Tierney, 1992, 1999).

Despite these developments, dominant theoretical models remain problematic for community college students and others who do not attend in the culturally normative fashion: direct, full-time enrollment at a residential campus. Specifically, these models conceptualize re-enrollment as a function of the interaction between student inputs (pre-matriculation characteristics) and college environments (Astin & Oseguera, 2005; Bean, 1980; Kuh & Love, 2000; Reason, 2009; Tinto, 1987). They presume that the student’s experience of college is uniformly immersive, and that the world outside college matters only insofar as it shaped the student before enrollment.

Bean and Metzner (1985) introduce two crucial modifications to this schema. First, the amount of interaction between student and school environment is itself a crucial variable. Most community college students attend part-time, many interrupt their enrollment for a semester or more, and an increasing proportion attend some or all of their classes virtually. Researchers have shown that on-campus residence, full-time attendance, and continuous enrollment are strongly associated with degree completion (DesJardins et al., 2006; Goldrick-Rab, 2006; Schudde, 2011). Findings for online coursework are mixed, but it appears detrimental for less-prepared students (Bettinger et al., 2017). Second, because community college students spend less time on campus, the influence of the college environment wanes while that of the external environment (family, neighborhood, and work) waxes (Davidson & Wilson, 2017).

What Impacts Retention Rates at Community Colleges?

All major retention models have an individual-level focus, and implicitly consider the relevant variance in environments to be between colleges. However, they can be intuitively applied to explain changes in institutions’ retention rates over time, because student body composition and college environments vary longitudinally.

What student characteristics matter for retention is well established: pre-college academic skills as measured through grades, course-taking, and test scores; family education and affluence; gender and race; and non-cognitive skills such as tenacity, conscientiousness, and organization (Bowen et al., 2009; Burrus et al., 2013; Flores et al., 2017; Galla et al., 2019; for community colleges see Fike & Fike, 2008; Spangler & Slate, 2015; Windham et al., 2014). Depending on their size and prestige, colleges can use admissions to screen out students with weaker academic records and thus a lower propensity to be retained.

The community college context requires a few addenda. First, as open-enrollment institutions, community colleges do not screen out applicants; student body characteristics are mostly a function of surrounding-community demographics (Stange, 2012). Second, enrollment is famously countercyclical (Charles et al., 2018; Hillman & Orians, 2013). Spiking unemployment nudges marginal students into community colleges, while a hot job market draws them directly into work (Kienzl et al., 2007). Since economic growth diverts less academically oriented individuals from school, it likely boosts retention. Finally, community colleges are more age-heterogeneous than four-year colleges, and research is mixed on the relationship between age and retention (Calcagno et al., 2008; Fike & Fike, 2008).

As for the college environment, dominant paradigms stress social and academic factors that heighten students’ engagement with or sense of belonging to the college community (Astin, 1984; Kuh et al., 2006; Strayhorn, 2018). But because most community college students are commuters, opportunities for “socio-academic integrative moments” occur mostly in class and with faculty (Deil-Amen, 2011). This shrinks the importance of social factors and boosts that of the academic environment (Bean & Metzner, 1985; Halpin, 1990). Thus, at community colleges retention is consistently associated with institutional spending on instruction and, less consistently, with spending on student services (Bailey et al., 2006; Gansemer-Topf & Schuh, 2006; Webber & Ehrenberg, 2010). Contingent instructors are often only tenuously connected to the college organization and so may be less committed to it, as well as less able to mentor or advise students effectively (Levin & Hernandez, 2014). Therefore, exposure to full-time, core faculty rather than contingent instructors seems to reduce student dropout (Calcagno et al., 2008; Goble et al., 2008; Jacoby, 2006; Jaeger & Eagan, 2009; Jaeger & Hinz, 2008). Finally, because the likelihood of connecting with a faculty member declines in larger classes, retention rates vary inversely with student-faculty ratios (Jacoby, 2006; Townsend & Wilson, 2009).

Two other institutional factors seem to matter. Student success is lower at larger institutions, though this effect is modest (Bailey et al., 2006; Burrus et al., 2013; Calcagno et al., 2008; Vasquez Urias & Wood, 2014). It is not clear whether enrollment spikes and declines within institutions bear a similar relationship to retention. Cross-sectionally, retention is also negatively associated with tuition (Calcagno et al., 2008), but the effect of tuition increases on retention (i.e., within an institution over time) is not well understood. Since many community college students are under economic strain, increases might discourage re-enrollment of students at the margin (Kienzl et al., 2007). On the other hand, because baseline tuition is low at these colleges, need-based aid may largely blunt the impact of increases. Tuition increases may also lead more students to rely on loans (Dwyer et al., 2012; Herzog, 2018). Among loan-averse students, even modest cumulative loan debt might inspire considerable anxiety, leading to withdrawal (Baker & Doyle, 2017; McKinney & Burridge, 2015).

From the above discussion we may derive empirical predictions for changes in institutional retention rates. First, community college retention rates will increase when colleges enroll more (empirically) retention-prone students: broadly, those with greater academic preparation and less social disadvantage. Student composition will in turn be driven by demographic changes, economic fluctuations, and trends in academic preparation and college participation. Second, retention rates will increase when colleges adopt retention-conducive practices: boosting per-student instructional and student support spending, lowering student-faculty ratios, relying less on contingent faculty, and reducing the cost of attendance. Third, retention rates will increase when students attend more intensively (e.g., full-time). Finally, retention may improve when enrollments are down.

What Happened in the Community College Sector Between 2004 and 2017?

The most striking phenomenon over this period was the massive boom and bust in enrollment surrounding the Great Recession. Enrollment spiked between 2008 and 2010 but fell sharply afterward; by 2018 community college enrollment was lower than at any point since 1999 (Hussar et al., 2020). The direct enrollment rate of recent high school graduates into two-year colleges fell from 27.7% in 2009 to 22.6% in 2017 before rebounding in 2018, while the rate of enrollment into four-year colleges showed no marked trend (NCES, 2019a). This suggests that fewer graduates have been enrolling in college at all, leaving more academically oriented and retention-prone students in community colleges.4 Since 2010, the gender ratio of students has not changed, White and Black enrollment has fallen, and Latinx enrollment has risen (Jenkins & Fink, 2020a).

State funding of public colleges, community colleges included, tends to be slashed during recessions but only partially restored during booms, resulting in progressive state disinvestment offset by tuition increases. This pattern held for the Great Recession and subsequent recovery, but enrollment declines have been steep enough that state funding per full-time-equivalent (FTE) student surpassed its pre-recession peak in 2017. Tuition rose 33% in inflation-adjusted terms between 2000 and 2010, and a further 15% over the next decade. These increases, while considerable, were similar in percentage terms to those at four-year colleges (College Board, 2020). On a per-FTE basis, community colleges have actually seen revenue expand steadily since 2009–10, eclipsing even pre-recession levels (Jenkins et al., 2020).

Accordingly, several indicators have shifted in ways that predict improved retention. Per-FTE instructional spending in 2018 was 16% above its 2010 level (NCES, 2019c). And since non-contingent staffing is largely fixed in the short term, student-faculty and student-staff ratios may have decreased as enrollment fell. Finally, the adjunct share of the faculty likely expanded during the recession and has declined since, as adjuncts are usually the first to be cut when enrollment falls.

Overall, institutional conditions between 2004 and 2017, and particularly after 2010, favored retention gains. These conditions include smaller enrollments and growing revenues that follow from an expanding economy. From these driving forces come increased per-student instructional resources as well as a potential upward shift in the underlying distribution of student academic skill. Potentially offsetting these are rapid increases in tuition costs, as well as increasing shares of minoritized populations with historically lower retention rates.

Data and Methods

Data and Sample

We use data from the Integrated Postsecondary Education Data System (IPEDS). IPEDS makes publicly available the data that higher education institutions report to the Department of Education (DOE) as a condition of Title IV eligibility. IPEDS data are therefore available yearly and at the institutional level. The institution-level nature of the data limits us to examining retention (re-enrollment at the same college) rather than persistence (re-enrollment at any college), the latter of which is arguably more significant for policy. However, even at community colleges most students who persist (73%) do so at their initial institution, so raising persistence will mostly occur through raising retention. IPEDS is also limited in what it measures: in addition to lacking measures of student-body academic preparation (for community colleges) and socioeconomic status, it lacks measures of potential student assets such as strengths, skills, talents, and competencies. Regardless, IPEDS provides the richest yearly data on the American postsecondary sector, including on retention.

DOE reporting rules require institutions to submit most quantities of interest yearly, but some only biennially. The DOE has changed reporting requirements repeatedly, typically toward more elaborate reporting. So although the earliest IPEDS data date from 1980, the DOE did not collect many quantities of interest until much later. The length of the available panel thus depends on one’s research questions.

The DOE first required institutions to report retention rates in 2004. As discussed below, retention figures for a given year describe the retention of the prior year’s entering cohort, so what matters is the relationship between retention in a given year and the conditions prevailing in the prior year. We therefore use retention data from 2004 to 2017 but draw all other variables from 2003 to 2016.
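A minimal sketch of this one-year offset follows. It assumes, purely for illustration, that the panel is held as Python dictionaries keyed by (institution, year); the function `align_lagged` and the toy values are hypothetical and do not reflect how IPEDS files are laid out.

```python
def align_lagged(retention, covariates):
    """Pair each institution's retention rate in year t with its covariates from year t - 1.

    retention:  dict mapping (unit_id, year) -> retention rate reported in that year
    covariates: dict mapping (unit_id, year) -> dict of predictor values for that year
    Returns a list of (unit_id, year, retention_rate, lagged_covariates) tuples.
    Retention years with no prior-year covariates are dropped.
    """
    rows = []
    for (unit, year), rate in sorted(retention.items()):
        prior = covariates.get((unit, year - 1))
        if prior is not None:
            rows.append((unit, year, rate, prior))
    return rows


# Hypothetical toy panel: retention reported in 2004 is paired with 2003 covariates.
retention = {("A", 2004): 50.0, ("A", 2005): 52.0, ("B", 2004): 47.5}
covariates = {
    ("A", 2003): {"tuition": 2400.0},
    ("A", 2004): {"tuition": 2500.0},
    # institution B has no 2003 record, so its 2004 retention rate is dropped
}
rows = align_lagged(retention, covariates)
```

Dropping retention years without a prior-year record is one simple choice; other handling of gaps is equally possible.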

The universe of community colleges5 changed over this period, and some institutions are missing data in some years. For some descriptive analyses we use the full universe of community colleges present in IPEDS (N = 1359), but most analyses include only institutions with complete data for the full study period. Doing so circumvents the parent–child institution problem6 identified by Jaquette and Parra (2014). This yields an analytic sample of 833 institutions and 11,662 institution-year observations.7 We also incorporate county-level8 yearly average unemployment rates from the Bureau of Labor Statistics. Table 1 presents descriptive statistics for the analytic sample.
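Restricting to institutions with complete data amounts to keeping a balanced panel. The sketch below uses a hypothetical `balanced_units` helper and toy records, where a record of `None` marks a year with incomplete data; it also shows the resulting count, since 833 institutions observed in each of 14 retention years give 11,662 institution-years.

```python
def balanced_units(observations, years):
    """Return the units with a complete record in every year of `years`.

    observations: iterable of (unit_id, year, record) tuples, where `record` is a
                  dict of variables, or None if the unit's data are incomplete that year.
    """
    required = set(years)
    complete = {}
    for unit, year, record in observations:
        seen = complete.setdefault(unit, set())
        if record is not None:
            seen.add(year)
    return {unit for unit, seen in complete.items() if required <= seen}


YEARS = range(2004, 2018)  # retention years 2004-2017 inclusive (14 years)

# Hypothetical panel: "X" is complete, "Y" lacks data for 2010, "Z" first appears in 2008.
obs = [("X", year, {"retention": 50.0}) for year in YEARS]
obs += [("Y", year, None if year == 2010 else {"retention": 48.0}) for year in YEARS]
obs += [("Z", year, {"retention": 55.0}) for year in range(2008, 2018)]
keep = balanced_units(obs, YEARS)  # {"X"}

# In a balanced panel, observations = institutions x years.
n_obs = 833 * len(YEARS)  # 11,662
```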

Table 1.

Descriptive statistics for community colleges, 2004–2017 (833 institutions; 11,662 observations)

| Variable | Mean | SD |
|---|---|---|
| Retention rate | 51.91 | 9.75 |
| Percent need-based grant recipients | 50.37 | 17.73 |
| Percent part-time, FTF | 34.84 | 17.64 |
| Percent female, FTF | 51.71 | 8.16 |
| Percent Black, FTF | 14.83 | 16.68 |
| Percent Latinx, FTF | 13.61 | 17.64 |
| Percent Asian, FTF | 3.29 | 6.94 |
| Percent Native, FTF | 2.43 | 9.50 |
| Percent White, FTF | 58.72 | 25.17 |
| Percent first-time freshmen (FTF) | 23.18 | 9.30 |
| Enrollment (FTE) | 4016.58 | 4025.88 |
| Tuition | 2465.76 | 1196.49 |
| Percent loan recipients | 21.15 | 20.67 |
| Student-faculty ratio | 19.57 | 8.73 |
| Adjunct share of instructional staff | 64.33 | 33.48 |
| Instructional spending/FTE | 5895.00 | 2897.51 |
| Academic support/FTE | 1152.62 | 859.57 |
| Student services/FTE | 1449.10 | 916.94 |
| Scholarships/FTE | 1361.83 | 1028.31 |
| County unemployment rate | 6.80 | 2.66 |

Source: IPEDS. FTE = full-time equivalent; FTF = first-time freshmen.

Variables

Outcome

Our outcome variable is the one-year (fall-to-fall) retention rate for first-time, degree-seeking freshmen (FTFs).9 The retention rate reported in a given year (e.g., academic year 2014–15) describes the enrollment status in that year’s fall semester (e.g., fall 2014) of students who were FTFs the prior fall (e.g., fall 2013). Students count as retained if they re-enrolled or completed their program during the prior academic year. Institutions calculate and submit separate retention rates for full- and part-time students (by initial enrollment status). We constructed a composite measure by averaging the full- and part-time retention rates, weighted by the full- and part-time shares of the prior fall’s FTF cohort.
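The composite measure is a cohort-weighted average of the two reported rates. As a sketch, assuming the weights are the headcounts of the prior fall's full- and part-time FTF cohorts (the function name and numbers are hypothetical):

```python
def composite_retention(ft_rate, pt_rate, ft_cohort, pt_cohort):
    """Cohort-weighted average of full- and part-time retention rates.

    ft_rate, pt_rate:     retention rates (percent) reported for full- and part-time FTFs
    ft_cohort, pt_cohort: sizes of the prior fall's full- and part-time FTF cohorts
    """
    total = ft_cohort + pt_cohort
    if total <= 0:
        raise ValueError("the FTF cohort must be non-empty")
    return (ft_rate * ft_cohort + pt_rate * pt_cohort) / total


# Hypothetical college: 300 full-time FTFs retained at 60%, 100 part-time FTFs at 40%.
print(composite_retention(60.0, 40.0, 300, 100))  # 55.0
```

Because full-timers make up three quarters of this toy cohort, the composite sits three quarters of the way from the part-time rate to the full-time rate.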

Independent Variables

We model retention using three sets of variables: student composition, institutional practices, and external conditions. Because retention is defined only for FTFs, we measure compositional quantities for the relevant FTF cohort whenever possible. As discussed above, the student composition quantities most relevant to retention are socioeconomic status, academic preparation, and enrollment intensity, and secondarily race/ethnicity, gender, and age. For student-body SES, we include an admittedly inadequate measure: the proportion of students receiving need-based grants. Unfortunately, IPEDS offers nothing better. IPEDS also provides no measure of aggregate academic preparation for open-access colleges.10 For enrollment intensity, we measure the proportion of FTF students enrolled part-time. IPEDS presents counts of students by gender and race/ethnicity separately by attendance and FTF status, from which we construct the proportions of FTE11 first-time freshmen identified as Black, Latinx, Asian, or Native, and as female (White and male are the excluded reference categories). We measure student age composition indirectly, through the FTF share of total FTE enrollment. This measure captures something about the age distribution of the overall student body, potentially indicates how oriented the institution is toward first-time students, and is measured annually.12

We include several measures of institutional practices and conditions related to retention, including institutional size (logged FTE enrollment). Institutional pricing is measured through logged tuition (in constant 2016 dollars) and the proportion of students taking out loans. We measure the quantity of instructional staffing through the student-faculty ratio, which IPEDS defines as FTE enrollment divided by FTE instructional faculty.13 Instructional quality is proxied by the adjunct share of total faculty: FTE adjunct faculty divided by total FTE faculty. Finally, we include four measures of per-FTE spending that may influence retention, all in constant 2016 dollars and logged. Instructional spending includes total expenses of the institution’s instructional divisions “which are not separately budgeted”. Academic support spending includes all spending on “activities and services that support” instruction, research, and service. Student services expenditure covers admissions, the registrar, student activities and organizations, intramural athletics, events, remedial instruction, counseling and guidance, financial aid administration, and student records. We also measure per-FTE scholarship spending.

Given the importance of economic cycles to community colleges, we include the unemployment rate in the institution’s county to measure the impact of changes in the local economy. We also include a linear year term and a dummy variable identifying the recessionary years 2008–11.14

Procedures

Our methods for describing trends are straightforward. To account for changes in retention, we employ fixed-effects regression models and Blinder-Oaxaca decomposition. Fixed-effects models are well suited to tracing the correlates of within-unit change in panel data. By including a separate dummy variable for each unit (here, each college), fixed-effects models absorb time-invariant unit characteristics and model only within-unit change in the outcome. If all relevant time-varying confounders are measured, these models can credibly identify causal effects (Angrist & Pischke, 2009). We make no such claim here, because many relevant confounders are clearly not available in IPEDS.
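The equivalence between unit dummies and within-unit demeaning can be sketched for a single regressor. The function names and the two-college toy panel are hypothetical, and the sketch omits the year trend, recession dummy, and other covariates of the actual models; it only shows how removing each unit's mean strips out stable between-college differences.

```python
from collections import defaultdict


def within_slope(units, x, y):
    """Fixed-effects (within) slope for a single regressor.

    Demeaning x and y within each unit is algebraically equivalent to adding one
    dummy variable per unit, so time-invariant differences between units drop out
    and only within-unit change identifies the slope.
    """
    members = defaultdict(list)
    for i, unit in enumerate(units):
        members[unit].append(i)
    num = den = 0.0
    for idx in members.values():
        x_bar = sum(x[i] for i in idx) / len(idx)
        y_bar = sum(y[i] for i in idx) / len(idx)
        for i in idx:
            num += (x[i] - x_bar) * (y[i] - y_bar)
            den += (x[i] - x_bar) ** 2
    return num / den


def pooled_slope(x, y):
    """Ordinary (pooled) OLS slope that ignores which unit each row belongs to."""
    x_bar = sum(x) / len(x)
    y_bar = sum(y) / len(y)
    num = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    den = sum((a - x_bar) ** 2 for a in x)
    return num / den


# Hypothetical two-college panel: within each college y rises by 2 per unit of x,
# but college B has both higher x and a lower baseline than college A.
units = ["A", "A", "A", "B", "B", "B"]
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [12.0, 14.0, 16.0, 8.0, 10.0, 12.0]
print(within_slope(units, x, y))  # 2.0
print(pooled_slope(x, y))         # negative: confounded by the college baselines
```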

Blinder-Oaxaca decomposition (Blinder, 1973; Oaxaca, 1973) is a counterfactual method that accounts for the difference in the mean of a dependent variable between two subgroups. It is used extensively to study sources of the gender wage gap, where the two groups are male and female workers. Here, we use it to account for the difference in mean retention rates between two years, 2004 and 2017. The method involves separate regressions for the two groups of observations (years) and then decomposes the difference in the mean outcome into three components. The first component, “composition effects”, is the portion of the difference in means traceable to group differences in the means of the predictor variables: differences in student composition, institutional spending, tuition, and so on. It answers a counterfactual question: what would the mean retention rate have been in 2017 if colleges had the same measured characteristics they had in 2004? Composition effects are usually the focal point of a decomposition, as they reveal the portion of the difference “explained” by observed differences. For ease of interpretation, we display only these effects in the main body of the paper; full results are available in the appendix.15 All effects are expressed in percentage terms. We implement the decomposition using the user-written oaxaca command in Stata (Jann, 2008).
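Written out, the threefold decomposition (in the form given by Jann, 2008, as we understand it) splits the gap as mean(y_A) − mean(y_B) = (x̄_A − x̄_B)'β_B + x̄_B'(β_A − β_B) + (x̄_A − x̄_B)'(β_A − β_B): endowments, coefficients, and interaction. The Python sketch below computes those three terms for one predictor plus an intercept. Which group's coefficients weight the endowments term is a convention, and we assume, without confirmation from the text, that the “composition effects” discussed here correspond to the endowments term. All names and data are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def simple_ols(x, y):
    """Return [intercept, slope] from a bivariate OLS regression of y on x."""
    x_bar = sum(x) / len(x)
    y_bar = sum(y) / len(y)
    slope = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y)) / sum(
        (a - x_bar) ** 2 for a in x
    )
    return [y_bar - slope * x_bar, slope]


def threefold_oaxaca(xbar_a, xbar_b, beta_a, beta_b):
    """Threefold Blinder-Oaxaca decomposition of mean(y_A) - mean(y_B).

    xbar_*: mean predictor vectors, with a leading 1.0 for the intercept
    beta_*: coefficient vectors estimated separately within each group
    """
    dx = [a - b for a, b in zip(xbar_a, xbar_b)]
    dbeta = [a - b for a, b in zip(beta_a, beta_b)]
    endowments = dot(dx, beta_b)       # differences in characteristics ("composition")
    coefficients = dot(xbar_b, dbeta)  # differences in how characteristics relate to y
    interaction = dot(dx, dbeta)       # simultaneous differences in both
    return endowments, coefficients, interaction


# Hypothetical toy data: group B stands in for 2004, group A for 2017.
x_b, y_b = [1.0, 2.0, 3.0], [50.0, 52.0, 54.0]
x_a, y_a = [2.0, 3.0, 4.0], [53.0, 56.0, 59.0]
beta_b, beta_a = simple_ols(x_b, y_b), simple_ols(x_a, y_a)
xbar_b = [1.0, sum(x_b) / len(x_b)]
xbar_a = [1.0, sum(x_a) / len(x_a)]
e, c, inter = threefold_oaxaca(xbar_a, xbar_b, beta_a, beta_b)
print(e, c, inter)  # 2.0 1.0 1.0, summing to the 4-point gap in means
```

Because OLS with an intercept passes through the group means, the three terms always add up to the raw difference in mean outcomes.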

Our analytic sample is not randomly drawn from a larger population. It is closer to population data, except that some units are absent and we do not know whether the mechanism generating missingness is effectively “random”. Standard frequentist inference is inappropriate for such data. Thus, wherever possible we report permutation p-values alongside conventional standard errors. In a regression context, permutation randomly reassigns values of the dependent variable to cases in the sample and then recalculates the coefficients. This process is repeated many times (we used 1000 permutations). The p-value is the proportion of permutations in which the coefficient was at least as large in absolute value as that in the empirical analysis. Permutation p-values thus provide a nonparametric estimate of the probability of obtaining the observed results by chance.
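A single-regressor version of the permutation procedure can be sketched as follows. It compares coefficients in absolute value (a two-sided test), which is our assumption; the models reported here include many predictors, and the helper names, seed, and data below are hypothetical.

```python
import random


def slope(x, y):
    """Bivariate OLS slope of y on x."""
    x_bar = sum(x) / len(x)
    y_bar = sum(y) / len(y)
    num = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    den = sum((a - x_bar) ** 2 for a in x)
    return num / den


def permutation_p_value(x, y, n_perm=1000, seed=0):
    """Share of random permutations of y whose slope is at least as large in
    absolute value as the observed slope (a two-sided permutation test)."""
    rng = random.Random(seed)
    observed = abs(slope(x, y))
    y_perm = list(y)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(y_perm)  # reassign outcomes to cases at random
        if abs(slope(x, y_perm)) >= observed:
            hits += 1
    return hits / n_perm


# Hypothetical data with a strong linear signal, so p should be close to 0.
x_demo = [float(v) for v in range(20)]
y_demo = [2.0 * v for v in x_demo]
p = permutation_p_value(x_demo, y_demo, n_perm=1000, seed=42)
```

Fixing the seed makes the p-value reproducible; with more permutations, the estimate becomes less sensitive to the seed.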

Results

Retention Trends

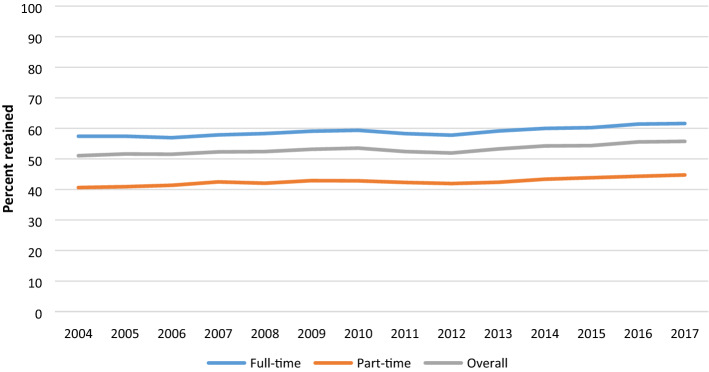

Since 2004, community colleges have posted considerable increases in retention, with retention increasing in nine out of fourteen years (Fig. 1). The mean retention rate across community colleges rose from 51.1% in 2004 to 55.7% in 2017, an increase of 4.7 percentage points, or 9.1%. The increase was nearly identical in absolute terms for full-time and part-time students (4.18 vs. 4.12 percentage points). These shifts may seem small in numeric terms. But considering that the gains occurred during a period that included a recession, during which progress temporarily reversed, and that they represent average improvements across hundreds of institutions, this “modest” increase becomes more impressive.

Fig. 1.

Mean retention rates at community colleges, 2004–2017 (Source: IPEDS). Figure created using MS Excel

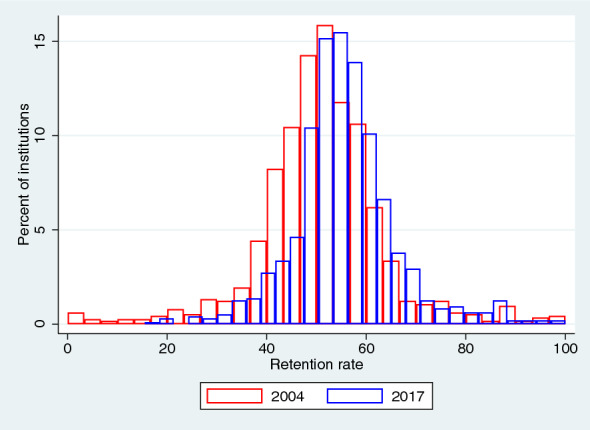

The increase in mean retention rates was not the result of large gains in a few institutions. Instead, there is a clear rightward shift in the whole distribution of retention rates (Fig. 2). Gains were slightly greater in the lower half of the distribution. In 2004, the institution at the 25th percentile had a retention rate of 44.9%, and by 2017 this had risen to 50.4% (5.5 percentage points). Meanwhile, the 75th percentile institution’s retention rate increased from 57.4 to 60.5% (3.1 percentage points).

Fig. 2.

Distribution of retention rates at community colleges in 2004 and 2017. Source: IPEDS. Figure created using Stata

Many closures, consolidations, and recategorizations of community colleges took place over this period, shrinking the total number of community colleges by 16%, from 1172 institutions in 2004 to 981 in 2017. If the institutions that disappeared were disproportionately low-performing, and newly opened institutions performed strongly, sector-wide retention could rise without progress at any individual institution. However, restricting to the 833 institutions in our analytic sample does not appreciably alter the results. Among these institutions, the mean retention rate increased from 56.8% to 60.5%, an increase of 3.7 percentage points. The 25th percentile institution increased its retention rate from 44.8% to 50.1%, and the 75th percentile institution saw a shift from 55.7% to 59.3%. Selective closure clearly did not drive the retention gains.

After weighting by FTE enrollment (thus expressing the improvement in retention for the full population of community college students), gains are smaller. Overall, retention among the full community college population rose from 52.1% to 55.9%, a gain of 3.8 percentage points (7.3%). Full-time students gained 3.1 percentage points (5.2%), and part-time students gained 3.6 percentage points (8.8%). That gains are smaller after weighting by enrollment suggests smaller increases at larger colleges.
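Why enrollment weighting can shrink the measured gain is easy to see with hypothetical numbers: if the largest colleges improve least, the FTE-weighted mean rises less than the simple mean across colleges. The sketch below holds each college's FTE fixed across the two years, a simplifying assumption; the colleges and figures are invented for illustration.

```python
def mean(values):
    return sum(values) / len(values)


def weighted_mean(values, weights):
    """Weighted average, e.g. retention rates weighted by FTE enrollment."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


# Hypothetical sector: (retention 2004, retention 2017, FTE enrollment) per college.
# For simplicity, FTE is held fixed across the two years.
colleges = [(45.0, 52.0, 1000), (55.0, 60.0, 2000), (60.0, 61.0, 7000)]
r04 = [c[0] for c in colleges]
r17 = [c[1] for c in colleges]
fte = [c[2] for c in colleges]

unweighted_gain = mean(r17) - mean(r04)                            # about 4.33 points
weighted_gain = weighted_mean(r17, fte) - weighted_mean(r04, fte)  # about 2.4 points
```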

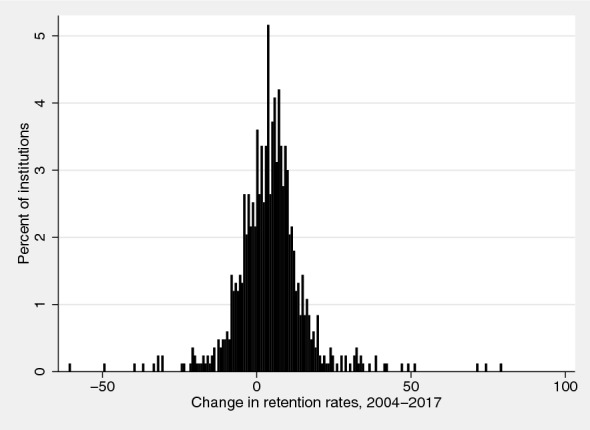

Variance in Retention Change

Changes in retention rates over this period were not uniform across the sector, and many institutions posted lower retention rates at the end of the period than at the beginning. Figure 3 displays this variation for our analytic sample. The majority of colleges (589 out of 833, or 71%) posted increases in retention. The distribution of retention change is reasonably symmetric, with a mean (4.4) only slightly higher than the median (4.2), but highly peaked. The interquartile range spans only 11 percentage points (−1.3 to 9.3).

Fig. 3.

Distribution of change in retention rates at community colleges between 2004 and 2017. Source: IPEDS. Figure created using Stata

We explored the correlates of this variation through a regression analysis. The analysis is purely descriptive: we interpret it as indicating which factors predicted greater or lesser improvement, not as an explanation for differential gains. Just over half of the variation in retention gains across institutions is explained by the base-year retention rate, exactly as regression to the mean would predict: schools with lower retention rates in 2004 experienced, on average, greater gains, and vice versa. Because this relationship is analytically uninteresting, we computed residuals from a regression of retention gains on 2004 retention and used these residuals as the dependent variable in the regression analysis.
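The residualization step can be sketched as follows: regress the gain on base-year retention, keep the residuals, and note that by construction they sum to zero and are uncorrelated with the base-year rate. The helper names and the five toy colleges are hypothetical.

```python
def ols_fit(x, y):
    """Return (intercept, slope) from a bivariate OLS regression of y on x."""
    x_bar = sum(x) / len(x)
    y_bar = sum(y) / len(y)
    slope = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y)) / sum(
        (a - x_bar) ** 2 for a in x
    )
    return y_bar - slope * x_bar, slope


def residualize(y, x):
    """Residuals of y after removing its linear relationship with x."""
    intercept, slope = ols_fit(x, y)
    return [b - (intercept + slope * a) for a, b in zip(x, y)]


# Hypothetical colleges: base-year (2004) retention and 2004-2017 retention gain.
base_2004 = [40.0, 50.0, 60.0, 45.0, 55.0]
gain = [8.0, 4.0, 1.0, 7.0, 2.0]
_, base_slope = ols_fit(base_2004, gain)   # negative: regression to the mean
resid_gain = residualize(gain, base_2004)  # used as the outcome in later models
```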

In the first column of Table 2, we regress residual retention gains on the size and composition of the student body in 2004. Overall, schools serving racially and socioeconomically disadvantaged populations experienced smaller retention gains. Specifically, gains were smaller at institutions with larger African-American or Native shares and at institutions where more students received need-based aid. Schools that were more heavily female in 2004 also experienced smaller retention gains. Finally, larger gains were made by institutions where first-time freshmen were more prevalent.

Table 2.

OLS regression predicting 2004–2017 retention change (net of 2004 retention rate) (N = 833)

| | (1) | (2) | (3) |
|---|---|---|---|
| Percent need-based grant recipients | −0.039* | −0.017 | −0.001 |
| | (0.018) | (0.020) | (0.020) |
| Percent part-time, FTF | −0.032 | −0.050** | −0.049* |
| | (0.018) | (0.018) | (0.018) |
| Percent female, FTF | −0.135*** | −0.135** | −0.126*** |
| | (0.034) | (0.036) | (0.036) |
| Percent Black, FTF | −0.043* | −0.0745*** | −0.070*** |
| | (0.017) | (0.018) | (0.018) |
| Percent Latinx, FTF | 0.023 | −0.007 | −0.002 |
| | (0.018) | (0.019) | (0.019) |
| Percent Asian, FTF | 0.035 | 0.012 | 0.003 |
| | (0.034) | (0.034) | (0.034) |
| Percent Native, FTF | −0.084* | −0.093** | −0.094* |
| | (0.030) | (0.030) | (0.030) |
| Percent first-time freshmen (FTF) | 0.091** | 0.082** | 0.075** |
| | (0.026) | (0.027) | (0.027) |
| Enrollment (FTE), logged | | 0.803* | 0.774* |
| | | (0.372) | (0.370) |
| Tuition | | −0.559** | −0.538* |
| | | (0.218) | (0.217) |
| Percent loan recipients | | −0.065*** | −0.071*** |
| | | (0.017) | (0.017) |
| Student-faculty ratio | | −0.142** | −0.126* |
| | | (0.049) | (0.049) |
| Adjunct share of instructional staff | | −0.002 | −0.003 |
| | | (0.008) | (0.008) |
| Instructional spending/FTE, logged | | 1.201** | 1.168** |
| | | (0.409) | (0.407) |
| Academic support/FTE, logged | | −1.756*** | −1.862*** |
| | | (0.378) | (0.378) |
| Student services/FTE, logged | | 0.289 | 0.456 |
| | | (0.453) | (0.453) |
| Scholarships/FTE, logged | | −0.034 | −0.044 |
| | | (0.142) | (0.142) |
| County unemployment rate | | | −0.574** |
| | | | (0.181) |
| Constant | 8.316*** | 10.59** | 12.68** |
| | (2.078) | (3.856) | (3.891) |
| Observations | 833 | 833 | 833 |
| R-squared | 0.090 | 0.156 | 0.166 |

Source: IPEDS; permutation p values: * p < 0.05, ** p < 0.01, *** p < 0.001

In the second column, we add college environment measures. Contrary to our earlier speculation, retention gains appear to have been greater at larger institutions. More expensive schools seem to have made less progress: both tuition and the proportion of students taking out loans in 2004 are negatively associated with retention gains. Institutions with lower student-faculty ratios improved retention more, but there is no relationship between retention gains and adjunct density. Per-student instructional spending in 2004 positively predicts retention gains. However, there is a strong inverse relationship between per-student academic support spending and retention gains, possibly because institutions serving more academically challenged student bodies spend more on academic support and are less able to raise retention. Finally, in the third column we add the contextual economic measure and find that schools in counties with higher unemployment experienced smaller improvements in retention.

Changes at Community Colleges, 2004–2017

To trace the sources of system-wide gains, Table 3 shows how community colleges changed over the period in question in terms of composition, resources, and spending. Yearly averages are expressed relative to their values in the base year of 2003. Many of the factors we examine were clearly influenced by the onset and waning of the Great Recession. This is true of full-time equivalent enrollment, which rose by about a quarter between 2006 and its peak in 2010 before declining to just above its pre-recessionary level by 2016. System-wide shifts in FTE enrollment correlate highly16 (r = 0.93) with changes in the unemployment rate. The student-faculty ratio shifted similarly, most likely driven by this surge and drop in enrollment, and it also correlates highly (r = 0.97) with unemployment rates. The share of students receiving need-based aid at the average institution spiked by eight percentage points between 2009 and 2010 and continued to rise for another two years before trending downward. This pattern reflects three recession-driven phenomena: increased lower-income enrollment, a population-wide income decline that rendered more students eligible for aid, and a legislative expansion of eligibility (part of the American Recovery and Reinvestment Act). The share of students taking out loans trended upward prior to the recession, then spiked and subsequently declined. These two factors correlate moderately (r = 0.42 and r = 0.53, respectively) with shifts in unemployment, as does institutional scholarship spending (r = 0.56). Finally, student composition also shifted with the economy: the share of entering students who were African-American rose and fell, while an inverse pattern occurred among Asian students (correlations with unemployment of r = 0.80 and r = −0.78, respectively).

Table 3.

Year-by-year changes in relevant measures (relative to 2003) (N = 833)

| | 2003 | 2004 | 2005 | 2006 | 2007 | 2008 | 2009 | 2010 | 2011 | 2012 | 2013 | 2014 | 2015 | 2016 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Retention rate (lead) | 1.00 | 1.00 | 1.00 | 1.01 | 1.02 | 1.03 | 1.04 | 1.02 | 1.01 | 1.03 | 1.05 | 1.06 | 1.08 | 1.09 |
| Percent need-based grant recipients | 1.00 | 1.03 | 1.04 | 1.01 | 0.98 | 1.02 | 1.08 | 1.27 | 1.40 | 1.45 | 1.41 | 1.40 | 1.39 | 1.31 |
| Percent part-time, FTF | 1.00 | 0.96 | 0.94 | 0.94 | 0.91 | 0.93 | 0.90 | 0.91 | 0.94 | 0.94 | 0.93 | 0.93 | 0.91 | 0.91 |
| Percent female, FTF | 1.00 | 1.00 | 0.99 | 0.98 | 0.97 | 0.97 | 0.95 | 0.96 | 0.96 | 0.95 | 0.95 | 0.95 | 0.94 | 0.94 |
| Percent Black, FTF | 1.00 | 1.01 | 1.02 | 1.02 | 1.05 | 1.03 | 1.05 | 1.08 | 1.11 | 1.08 | 1.07 | 1.05 | 0.99 | 1.00 |
| Percent Latinx, FTF | 1.00 | 1.06 | 1.10 | 1.14 | 1.17 | 1.19 | 1.20 | 1.37 | 1.46 | 1.56 | 1.67 | 1.74 | 1.82 | 1.92 |
| Percent Asian, FTF | 1.00 | 1.04 | 1.05 | 1.05 | 1.03 | 1.00 | 0.89 | 0.80 | 0.79 | 0.82 | 0.83 | 0.86 | 0.88 | 0.90 |
| Percent Native, FTF | 1.00 | 1.00 | 1.04 | 1.05 | 1.04 | 1.03 | 1.03 | 1.02 | 1.02 | 1.00 | 1.01 | 0.98 | 0.97 | 0.94 |
| Percent White, FTF | 1.00 | 0.99 | 0.98 | 0.97 | 0.96 | 0.94 | 0.91 | 0.89 | 0.88 | 0.87 | 0.85 | 0.85 | 0.84 | 0.83 |
| Percent first-time freshmen (FTF) | 1.00 | 0.99 | 0.96 | 0.99 | 0.98 | 1.02 | 1.03 | 0.97 | 0.95 | 0.96 | 0.97 | 0.97 | 0.97 | 0.99 |
| Enrollment (FTE) | 1.00 | 1.01 | 1.01 | 1.02 | 1.05 | 1.10 | 1.23 | 1.27 | 1.23 | 1.20 | 1.17 | 1.13 | 1.09 | 1.06 |
| Tuition | 1.00 | 1.04 | 1.07 | 1.07 | 1.08 | 1.06 | 1.13 | 1.21 | 1.25 | 1.28 | 1.30 | 1.31 | 1.35 | 1.38 |
| Percent loan recipients | 1.00 | 1.13 | 1.19 | 1.26 | 1.29 | 1.32 | 1.43 | 1.56 | 1.62 | 1.84 | 1.80 | 1.70 | 1.58 | 1.46 |
| Student-faculty ratio | 1.00 | 0.99 | 0.99 | 0.95 | 0.95 | 0.98 | 1.08 | 1.09 | 1.05 | 1.08 | 1.05 | 1.00 | 0.99 | 0.97 |
| Adjunct share of instructional staff | 1.00 | 1.00 | 1.02 | 1.02 | 1.00 | 1.01 | 1.02 | 1.03 | 1.04 | 0.98 | 0.97 | 0.98 | 0.98 | 0.98 |
| Instructional spending/FTE | 1.00 | 1.11 | 1.18 | 1.20 | 1.21 | 1.19 | 1.12 | 1.24 | 1.30 | 1.36 | 1.40 | 1.46 | 1.53 | 1.51 |
| Academic support/FTE | 1.00 | 1.10 | 1.16 | 1.18 | 1.19 | 1.18 | 1.13 | 1.24 | 1.28 | 1.34 | 1.38 | 1.47 | 1.59 | 1.61 |
| Student services/FTE | 1.00 | 1.10 | 1.19 | 1.21 | 1.24 | 1.20 | 1.17 | 1.31 | 1.34 | 1.43 | 1.46 | 1.53 | 1.65 | 1.68 |
| Scholarships/FTE | 1.00 | 1.08 | 1.10 | 1.03 | 0.97 | 0.97 | 1.09 | 1.68 | 1.95 | 1.84 | 1.70 | 1.65 | 1.62 | 1.48 |
| County unemployment rate | 1.00 | 0.93 | 0.87 | 0.79 | 0.79 | 0.96 | 1.51 | 1.57 | 1.47 | 1.34 | 1.23 | 1.04 | 0.90 | 0.84 |
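As a rough check on the correlations reported above, which are computed from the year-by-year sector averages alone, the following Python sketch recomputes two of them from the rounded values in Table 3. It is an illustrative reconstruction, not the authors' code, and because the table rounds to two decimals the results can differ slightly from the reported coefficients.

```python
# Year-by-year sector averages from Table 3 (2003-2016), each expressed
# relative to its 2003 value. The table rounds to two decimals, so the
# correlations computed here only approximate those reported in the text.
YEARS = list(range(2003, 2017))
FTE_ENROLLMENT = [1.00, 1.01, 1.01, 1.02, 1.05, 1.10, 1.23,
                  1.27, 1.23, 1.20, 1.17, 1.13, 1.09, 1.06]
UNEMPLOYMENT = [1.00, 0.93, 0.87, 0.79, 0.79, 0.96, 1.51,
                1.57, 1.47, 1.34, 1.23, 1.04, 0.90, 0.84]
RETENTION = [1.00, 1.00, 1.00, 1.01, 1.02, 1.03, 1.04,
             1.02, 1.01, 1.03, 1.05, 1.06, 1.08, 1.09]


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have equal length of at least 2")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


if __name__ == "__main__":
    for label, series in [("FTE enrollment", FTE_ENROLLMENT),
                          ("Retention rate (lead)", RETENTION)]:
        r = pearson_r(series, UNEMPLOYMENT)
        print(f"{label} vs. county unemployment: r = {r:.2f}")
```

With these rounded inputs, the correlation between enrollment and unemployment comes out at about 0.92, close to the reported 0.93, while the retention series is nearly uncorrelated with unemployment (about −0.08).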

Other factors we measure show clear linear trends. The proportion of first-year students who are Latinx increased while the share who are White declined. The female share of entering students declined as well. Unsurprisingly, tuition trended upward, though it spiked in the wake of the recession’s onset. Per-student spending also increased in areas likely to affect retention: instruction, student services, and academic support.

These trends are notable because many seem incongruent with rising retention. Since White and female students are typically retained at higher rates and Latinx students at lower rates, compositional shifts seem to be working against retention gains. Similarly, we would expect higher tuition to lower retention. Nonetheless, at the sector level these trends are all highly correlated with retention in directions counter to expectation. Only trends in per-student spending are in keeping with theoretical expectation.

It is notable that, across the sector over time, changes in retention are largely independent of the unemployment rate. But many factors influenced by the recession had not returned to their pre-recessionary baseline by 2016. This is true of the shares of students receiving loans and need-based grants, and of scholarship spending. These all correlate modestly with retention patterns. However, we would expect increases in the share of students receiving loans and grants to push retention downward.

Accounting for Rising Retention

We first attempt to account for the upward shift in retention through fixed-effects regression (Table 4). In the first column we regress (lead) retention rate only on the time trend and the recession dummy variable. After adjusting for the recessionary downturn, there is an average linear increase of roughly three-tenths of a percentage point per year, or a 4.2 percentage point improvement over 14 years (0.303 × 14 ≈ 4.2). This is consistent with the descriptive findings discussed above. In the next column we include our full set of covariates. Doing so reduces the coefficient on the year term by only about 16% (from 0.303 to 0.254; (0.303 − 0.254)/0.303 = 0.162). In other words, the full set of covariates explains only 16% of the average linear yearly change in retention. If the measured characteristics had remained at their 2004 levels, there would still be a 3.6 percentage point gain in retention rates (0.254 × 14 ≈ 3.6), and a 3.6 percentage point gain is statistically indistinguishable from a 4.2 percentage point gain (p > 0.05; calculation not shown). We examined many competing models, some of which included additional variables, and none better explained the upward trend in retention rates.17
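The arithmetic in this paragraph can be reproduced in a few lines. This Python sketch simply restates the Table 4 year coefficients and the 14-year span used above; the constant and function names are illustrative and are not taken from any replication code.

```python
# Coefficients on the linear year term from Table 4 (percentage points per year).
YEAR_COEF_TREND_ONLY = 0.303  # column (1): year trend and recession dummy only
YEAR_COEF_FULL = 0.254        # column (2): adds the full covariate set
N_YEARS = 14                  # span used in the text


def implied_gain(coef_per_year, n_years=N_YEARS):
    """Cumulative gain implied by a constant per-year slope."""
    return coef_per_year * n_years


def share_explained(coef_without, coef_with):
    """Proportional reduction in the year coefficient once covariates are added."""
    return (coef_without - coef_with) / coef_without


if __name__ == "__main__":
    print(f"Trend-only gain:          {implied_gain(YEAR_COEF_TREND_ONLY):.1f} points")
    print(f"Gain net of covariates:   {implied_gain(YEAR_COEF_FULL):.1f} points")
    print(f"Share of trend explained: "
          f"{share_explained(YEAR_COEF_TREND_ONLY, YEAR_COEF_FULL):.1%}")
```

Run as a script, it prints gains of 4.2 and 3.6 points and an explained share of 16.2%, matching the figures in the text.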

Table 4.

Linear regression predicting (lead) retention rate, institution fixed-effects

| | (1) | (2) |
|---|---|---|
| Year | 0.303*** | 0.254*** |
| | (0.025) | (0.037) |
| Recession | −0.488** | −0.259 |
| | (0.172) | (0.206) |
| Percent need-based grant recipients | | −0.036** |
| | | (0.013) |
| Percent part-time, FTF | | −0.177*** |
| | | (0.017) |
| Percent female, FTF | | 0.049* |
| | | (0.026) |
| Percent Black, FTF | | −0.142*** |
| | | (0.032) |
| Percent Latinx, FTF | | 0.164*** |
| | | (0.025) |
| Percent Asian, FTF | | 0.036 |
| | | (0.020) |
| Percent Native, FTF | | 0.090 |
| | | (0.094) |
| Percent first-time freshmen (FTF) | | −0.053** |
| | | (0.023) |
| Enrollment (FTE), logged | | −1.937** |
| | | (0.976) |
| Tuition | | −0.019 |
| | | (0.010) |
| Percent loan recipients | | −0.224 |
| | | (0.264) |
| Student-faculty ratio | | 0.028* |
| | | (0.014) |
| Adjunct share of instructional staff | | −0.013* |
| | | (0.006) |
| Instructional spending/FTE, logged | | 0.790** |
| | | (0.369) |
| Academic support/FTE, logged | | 0.256 |
| | | (0.321) |
| Student services/FTE, logged | | −0.861** |
| | | (0.353) |
| Scholarships/FTE, logged | | −0.018 |
| | | (0.090) |
| County unemployment rate | | 0.068 |
| | | (0.055) |
| Constant | −556.9*** | −436.9*** |
| | (51.44) | (76.26) |
| Observations | 11,662 | 11,662 |
| R-squared | 0.036 | 0.108 |
| Number of institutions | 833 | 833 |

Source: IPEDS; permutation p values *p < .05, **p < .01, ***p < .001
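The institution fixed effects in Table 4 can be illustrated with the standard within estimator: demean every variable by institution, then run OLS, so that stable differences between colleges drop out. The Python sketch below (using NumPy) applies it to a hypothetical simulated panel of 200 institutions over 14 years. The recession years, coefficients, and covariate are invented, and the sketch does not reproduce the permutation p values reported in the table.

```python
import numpy as np


def within_transform(values, groups):
    """Subtract each group's mean (the fixed-effects 'within' transformation)."""
    values = np.asarray(values, dtype=float)
    demeaned = values.copy()
    for g in np.unique(groups):
        rows = groups == g
        demeaned[rows] -= values[rows].mean(axis=0)
    return demeaned


def fixed_effects_ols(y, X, groups):
    """Within estimator: OLS on institution-demeaned y and X (intercepts drop out)."""
    coef, *_ = np.linalg.lstsq(within_transform(X, groups),
                               within_transform(y, groups), rcond=None)
    return coef


def simulate_panel(n_inst=200, n_years=14, seed=0):
    """Hypothetical balanced panel; every parameter here is invented."""
    rng = np.random.default_rng(seed)
    inst = np.repeat(np.arange(n_inst), n_years)
    year = np.tile(np.arange(n_years), n_inst).astype(float)
    recession = np.isin(year, [6, 7]).astype(float)  # hypothetical recession years
    alpha = rng.normal(50.0, 8.0, n_inst)[inst]       # unobserved institution effects
    x = (alpha - 50.0) / 4.0 + rng.normal(0.0, 1.0, inst.size)  # correlated with alpha
    y = alpha + 0.3 * year - 0.5 * recession + 0.1 * x + rng.normal(0.0, 1.0, inst.size)
    return y, np.column_stack([year, recession, x]), inst


if __name__ == "__main__":
    y, X, inst = simulate_panel()
    fe = fixed_effects_ols(y, X, inst)
    pooled = np.linalg.lstsq(np.column_stack([np.ones(len(y)), X]), y, rcond=None)[0][1:]
    print("Fixed effects (year, recession, x):", np.round(fe, 3))
    print("Pooled OLS    (year, recession, x):", np.round(pooled, 3))
```

Because the simulated covariate is correlated with the unobserved institution effects, pooled OLS badly overstates its coefficient, whereas the within estimator should land close to the simulated values of 0.3, −0.5, and 0.1.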

Results are consistent with some hypothesized relationships between retention rates and predictor variables. For the most part, increases in the shares of racially and socioeconomically disadvantaged students and of part-time students are associated with reduced retention rates. The clear exception is the Latinx share of a community college’s enrollment, which exhibits a strong positive relationship with retention. Meanwhile, increases in total enrollment and in the share of students who are first-time freshmen are negatively associated with retention rates. This suggests that enrollment surges and larger entering cohorts may overwhelm institutional supports, leading more students to leave early. Increased instructional spending per student is associated with increased retention, but, counter to intuition, so is a higher student-faculty ratio. This latter relationship is not affected by removing measures of instructional or other spending from the model. As expected, increases in the adjunct share of instructional faculty predict declines in retention. Student services spending is associated with lower retention, but this relationship becomes null if instructional spending is removed, suggesting that collinearity is driving this result.18 Finally, net of other factors, retention does not appear to be strongly influenced by tuition, the share of students taking out loans, or the local unemployment rate.

In Table 5, we employ a Blinder-Oaxaca decomposition of changes in retention rates between 2004 and 2017. This analysis confirms the fixed-effects results: changes in measured variables between 2004 and 2017 account for only 0.775 of the 4.352 percentage point increase in retention rates, or about 18%.19 The rest of the retention gain remains unexplained. The decomposition suggests that the increasing Latinx share, the decreasing proportion of part-time students, and increasing per-student instructional and academic support spending pushed retention rates upward. These gains, however, were largely offset by rising tuition, a falling share of female students, and increases in scholarship and student services spending. Overall, student body compositional changes boosted retention by just under a percentage point (0.983), while college environment changes reduced it by about a quarter of a percentage point (−0.268).
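The subtotals and the explained share can be checked directly against Table 5. This Python sketch copies the reported contributions (the dictionary keys are shorthand labels chosen for illustration) and sums them; small discrepancies reflect rounding in the published table.

```python
# Contributions to the explained 2004-2017 difference, copied from Table 5
# (percentage points of retention).
STUDENT_BODY = {
    "need-based grant recipients": 0.041, "part-time": 0.739,
    "female": -0.057, "Black": 0.0021, "Latinx": 0.235,
    "Asian": -0.015, "Native": 0.050, "first-time freshmen": -0.012,
}
COLLEGE_ENVIRONMENT = {
    "enrollment": 0.040, "tuition": -0.444, "loan recipients": 0.0322,
    "student-faculty ratio": 0.084, "adjunct share": 0.015,
    "instructional spending": 0.549, "academic support": 0.434,
    "student services": -0.206, "scholarships": -0.773,
}
UNEMPLOYMENT_CONTRIBUTION = 0.0612
TOTAL_DIFFERENCE = 4.352  # 2017 mean minus 2004 mean


def explained_summary():
    """Return the two subtotals, the explained total, and its share of the gap."""
    student = sum(STUDENT_BODY.values())
    environment = sum(COLLEGE_ENVIRONMENT.values())
    explained = student + environment + UNEMPLOYMENT_CONTRIBUTION
    return student, environment, explained, explained / TOTAL_DIFFERENCE


if __name__ == "__main__":
    student, environment, explained, share = explained_summary()
    print(f"Student body: {student:+.3f}  College environment: {environment:+.3f}")
    print(f"Explained: {explained:.3f} of {TOTAL_DIFFERENCE} points ({share:.0%})")
```

Summed this way, the contributions give about 0.983 for student body composition, about −0.269 for the college environment, and an explained total of about 0.775, or roughly 18% of the 4.352-point gap, matching the reported figures to within rounding.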

Table 5.

Blinder-Oaxaca decomposition of difference in mean retention rate between 2017 & 2004

| | Mean retention rate |
|---|---|
| 2017 retention | 54.64*** |
| | (0.304) |
| 2004 retention | 50.29*** |
| | (0.386) |
| Difference | 4.352*** |
| | (0.491) |
| Total explained by compositional factors | 0.775 |
| | (0.611) |
| Contributions to explained difference | |
| Student body composition variables | |
| Percent need-based grant recipients | 0.041 |
| | (0.336) |
| Percent part-time, FTF | 0.739*** |
| | (0.211) |
| Percent female, FTF | −0.057 |
| | (0.149) |
| Percent Black, FTF | 0.0021 |
| | (0.051) |
| Percent Latinx, FTF | 0.235 |
| | (0.238) |
| Percent Asian, FTF | −0.015 |
| | (0.022) |
| Percent Native, FTF | 0.050 |
| | (0.153) |
| Percent first-time freshmen (FTF) | −0.012 |
| | (0.046) |
| Total student body composition | 0.983* |
| | (0.490) |
| College environment variables | |
| Enrollment (FTE), logged | 0.040 |
| | (0.087) |
| Tuition | −0.444*** |
| | (0.129) |
| Percent loan recipients | 0.0322 |
| | (0.170) |
| Student-faculty ratio | 0.084 |
| | (0.055) |
| Adjunct share of instructional staff | 0.015 |
| | (0.024) |
| Instructional spending/FTE, logged | 0.549 |
| | (0.891) |
| Academic support/FTE, logged | 0.434 |
| | (0.586) |
| Student services/FTE, logged | −0.206 |
| | (0.872) |
| Scholarships/FTE, logged | −0.773** |
| | (0.258) |
| Total college environment | −0.268 |
| | (0.292) |
| County unemployment rate | 0.0612 |
| | (0.214) |
| Observations | 833 |

Source: IPEDS; *p < 0.05, **p < 0.01, ***p < 0.001
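For readers unfamiliar with the technique, the following Python sketch (using NumPy) implements a textbook three-fold Blinder-Oaxaca decomposition with 2004 as the reference period: composition effects value changes in covariate means at 2004 coefficients, rate effects value changes in coefficients (including the constant) at 2004 means, and the interaction is the product of the two changes. We assume this is the convention behind the full results in the appendix; it is consistent with them, since the three reported totals (0.775, 3.653, and −0.076) sum to the 4.352-point difference and the constant difference appears only among the rate effects. The data below are simulated, and the covariates and coefficients are hypothetical.

```python
import numpy as np


def ols_with_intercept(X, y):
    """OLS coefficients; element 0 is the intercept."""
    X1 = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return coef


def threefold_oaxaca(X_base, y_base, X_later, y_later):
    """Split mean(y_later) - mean(y_base) into composition, rate, and
    interaction terms, using base-period means and coefficients as reference."""
    b_base = ols_with_intercept(X_base, y_base)
    b_later = ols_with_intercept(X_later, y_later)
    xbar_base = np.concatenate([[1.0], X_base.mean(axis=0)])
    xbar_later = np.concatenate([[1.0], X_later.mean(axis=0)])
    dx, db = xbar_later - xbar_base, b_later - b_base
    composition = dx * b_base   # shifts in means, valued at base coefficients
    rate = xbar_base * db       # shifts in coefficients (incl. constant), at base means
    interaction = dx * db       # joint shifts in means and coefficients
    return composition, rate, interaction


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    n = 833  # hypothetical: same number of colleges in each year
    X_2004 = rng.normal([35.0, 10.0], [10.0, 5.0], size=(n, 2))
    X_2017 = rng.normal([32.0, 18.0], [10.0, 5.0], size=(n, 2))
    y_2004 = 60.0 - 0.23 * X_2004[:, 0] + 0.02 * X_2004[:, 1] + rng.normal(0, 8, n)
    y_2017 = 66.0 - 0.14 * X_2017[:, 0] - 0.02 * X_2017[:, 1] + rng.normal(0, 8, n)
    comp, rate, inter = threefold_oaxaca(X_2004, y_2004, X_2017, y_2017)
    print(f"Gap:         {y_2017.mean() - y_2004.mean():.3f}")
    print(f"Composition: {comp.sum():.3f}")
    print(f"Rate:        {rate.sum():.3f}")
    print(f"Interaction: {inter.sum():.3f}")
```

Because OLS with an intercept reproduces each period's mean exactly, the three components always sum to the observed gap. This is why a small explained (composition) share leaves most of the change in the rate effects rather than making it disappear.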

Discussion and Conclusion

Considerable progress has recently been made by community colleges in retaining students. We find, using national data, that over 70% of community colleges have posted retention gains since 2004, and that the average gain across institutions is 4.9 percentage points, or 9%. These gains occurred in most of the years we analyze, with the main exception being a reversal at the height of the Great Recession. Gains appear to have been somewhat greater at the lower end of the distribution than at the higher end, and gains are smaller but still noteworthy when weighting by institutional enrollment. We can reject with confidence the possibility that retention gains were driven by the closing, consolidation, or recategorization of institutions; the size of average gains is virtually identical when only institutions in continuous independent operation as community colleges over the study window are observed.

Retention gains are not, however, explained by observable changes in student composition or college environment. In fact, a number of changes, such as an increase in the proportion of racially and socioeconomically disadvantaged students and rising tuition, worked against increases in retention. Shifts that predict rising retention were too small to offset retention-reducing changes and still account for observed gains.

Of course, IPEDS measures poorly or not at all some of the most pertinent predictors of student success: socioeconomic status and academic preparation. It is possible that upward shifts in these factors among the aggregate student population drove the gains we observe, but this seems unlikely. Conceptually, the likely sources of such shifts are either cyclical economic changes or secular trends in the underlying population. We controlled for a measure of local economic strength (county unemployment rate), and this variable should capture the effects of unmeasured factors downstream from it. Changes in the local unemployment rate have little impact on student retention. This leaves underlying trends unrelated to economic cycles. But over this period, enrollment rates of high school graduates mostly trended upward while 12th-grade national skills tests show no gains since the early 2000s (Hussar et al., 2020; NCES, 2020), suggesting that the academic skill of the marginal community college entrant was likely stable or falling.

We suggest four potential explanations for our findings. The first is that retention increases are the result of colleges massaging their reported statistics. IPEDS derives, after all, from college self-reports, and colleges have been under great pressure to show retention gains. Outright mendacity is not necessary; for colleges, the institutional presentation of the self can involve generating “the most flattering accurate defensible numbers” (Stevens, 2009). But three pieces of evidence argue against this possibility. First, degree completion has also trended upward over the period in question (Juszkiewicz, 2020), though completion figures also come from IPEDS. Second, the National Student Clearinghouse Research Center, which tracks students independently using social security numbers, also reports gains in retention, persistence, and degree completion (National Student Clearinghouse Research Center, 2020a, 2020b). Third, using IPEDS, Census data, and two NCES longitudinal surveys, Denning et al. (2019) find that degree completion rates (which are contingent on retention) increased across postsecondary education from 1990 to 2010. As here, Denning et al. cannot attribute gains to student- or institution-level characteristics.

The second explanation is that advanced by Denning et al.: colleges may have collectively reduced “standards for degree receipt”. They provide evidence that the GPA distribution has shifted upwards in nearly all college types despite changes in college and student characteristics that predict reduced average grades. Other research does not unequivocally support the finding of escalating grade inflation in higher education (Kostal et al., 2016; Pattison et al., 2013).

A third possibility is that retention gains result from increased academic commitment on the part of students that does not translate directly into improved grades or performance on skills tests. This could derive from academically relevant non-cognitive skills like grit, or it could reflect an increased societal emphasis on the importance of degree completion. Over time students have raised their academic expectations (Goyette, 2008), and this may be a response to education-based earnings premiums as well as to the increased cultural association of education with a positive valuation of the self (Monaghan, 2020). Such factors could shift the margin of persistence and completion downward in terms of GPA. This could also explain rising high school completion despite flat National Assessment of Educational Progress (NAEP) scores. As mentioned above, IPEDS lacks measures that capture non-cognitive assets, and is arguably implicitly oriented to a “deficit model” of accounting for student outcomes (Kohli et al., 2017; Valencia, 2010).

Finally, increased retention may result from better academic and organizational practices at colleges. Raising retention and completion has been the stated goal of a wide range of innovations that have swept the community college sector since the early 2000s: guided pathways, accelerated or co-requisite remediation, college skills courses, learning communities, and intrusive advising. It is unclear from the research (and IPEDS does not tell us) how widespread or well implemented these practices are, and research on their effects on student outcomes is still developing (Monaghan et al., 2018). But if they are both pervasive and effective, they could have kept more students in school.

A word of caution is in order regarding the application of our findings beyond the period we studied. This study was completed and submitted for review prior to the onset of the COVID-19 pandemic. At the time it represented, so far as time lags in data releases allow, the “current” state of the community college field. That is clearly no longer the case. The pandemic profoundly disrupted nearly every social institution, community colleges included. Moreover, there is strong evidence that “pandemic recessions” are fundamentally different from “normal” economic recessions, of which the Great Recession was a particularly dramatic example (Alon et al., 2020). We encourage readers not to apply the lessons of this study regarding the Great Recession’s impact on retention rates (a minor, temporary reversal) to what is unfolding in the wake of the pandemic.

Our study has an implication for the prevailing models of retention summarized above. These models focus explicitly on individual-level explanations, bringing in the surrounding environment mostly insofar as it affects individual students. They are also implicitly cross-sectional, taking the environment to be fixed in character. As such, they make little attempt to explain why average success outcomes would change over time, except as an aggregate of individual-level causal processes. What is notable here is how little of the change in retention we are able to explain using standard measures. Something else is clearly affecting student outcomes, and the fact that it is happening across a large number of colleges suggests that the change occurred in the larger sectoral environment. Retention research would be well served by incorporating higher-level ecological factors that change slowly over time.

We contribute to an accumulating body of research pointing to improved performance across the postsecondary sector in the first two decades of the twenty-first century. This trend has received very little attention. Instead, researchers, policymakers, and the public seem stuck within the “completion crisis” problematic. Research can lead the way in reorienting the conversation to highlight and account for the relative turnaround that has taken place. Much more needs to be known about why fewer students are dropping out and more are completing. This is not to argue that advocacy and research should forget about those who do not complete degrees or the still-glaring disparities in attainment. But when positive changes happen, it is worthwhile to find out why.

Appendix

We dropped a small number of institutions because of missing data on key study variables. We dropped others because they were not observed in the data in all study years. Table 6 compares colleges in the analytic sample to those excluded. We conclude that there are significant differences between included and excluded colleges. In Table 7 we present two sensitivity analyses of the main findings from Table 4 to explore whether exclusions could bias results. First (Columns 1–2), we examine an unbalanced panel, including all colleges in the data regardless of full representation across study years. Second (Columns 3–4), we reweight by the inverse of the probability of analytic sample inclusion, up-weighting included cases that better resemble excluded cases and down-weighting those that are more dissimilar. In both cases results are substantively similar to those from Table 4 (Columns 5–6). We conclude that bias from sample exclusions was unlikely to drive results. Table 8 presents the full results of the Blinder-Oaxaca decomposition summarized in Table 5.
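The inverse-probability reweighting described above can be sketched as follows. As an illustration only, we assume that inclusion probabilities come from a logistic regression of an inclusion indicator on baseline characteristics, fit here by Newton-Raphson in NumPy; the text does not specify the model, and the simulated data use a single hypothetical covariate.

```python
import numpy as np


def fit_logit(X, d, n_iter=25):
    """Logistic regression via Newton-Raphson; returns [intercept, slopes...]."""
    X1 = np.column_stack([np.ones(len(d)), X])
    beta = np.zeros(X1.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X1 @ beta))
        gradient = X1.T @ (d - p)
        information = X1.T @ (X1 * (p * (1.0 - p))[:, None])
        beta = beta + np.linalg.solve(information, gradient)
    return beta


def inverse_probability_weights(X, included):
    """Weights 1 / P(included | X) for the included units only."""
    beta = fit_logit(X, included.astype(float))
    X1 = np.column_stack([np.ones(len(included)), X])
    p_hat = 1.0 / (1.0 + np.exp(-X1 @ beta))
    return 1.0 / p_hat[included]


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    n = 5000
    x = rng.normal(size=n)                     # hypothetical 2004 covariate
    p_true = 1.0 / (1.0 + np.exp(-(0.5 + x)))  # hypothetical inclusion model
    included = rng.random(n) < p_true
    w = inverse_probability_weights(x[:, None], included)
    print(f"Full-sample mean:          {x.mean():.3f}")
    print(f"Included, unweighted mean: {x[included].mean():.3f}")
    print(f"Included, IPW mean:        {np.average(x[included], weights=w):.3f}")
```

Included units with low estimated inclusion probability, which most resemble excluded units, receive the largest weights, so the weighted mean of the included sample moves back toward the full-sample mean that the unweighted included sample misses.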

Table 6.

T-tests for sample equivalence (quantities measured in 2004)

| | In sample | Out of sample | p |
|---|---|---|---|
| Enrollment (FTE) | 7.799 | 6.866 | < .001 |
| Percent female, FTF | 53.67 | 54.21 | 0.4248 |
| Percent Black, FTF | 14.34 | 16.52 | 0.0578 |
| Percent Latinx, FTF | 10.415 | 7.393 | 0.0029 |
| Percent Asian, FTF | 3.689 | 5.081 | 0.0548 |
| Percent Native, FTF | 2.405 | 4.66 | 0.0035 |
| Percent part-time, FTF | 35.9 | 28.49 | < .001 |
| Percent first-time freshmen (FTF) | 23.25 | 30.1 | < .001 |
| Percent need-based grant recipients | 43.08 | 43.6 | 0.681 |
| County unemployment rate | 5.802 | 5.768 | 0.7579 |
| Tuition | 7.3563 | 6.335 | < .001 |
| Percent loan recipients | 16.52 | 14.85 | 0.194 |
| Student/faculty ratio | 19.1 | 17.7 | 0.0055 |
| Adjunct share of instructional staff | 64.48 | 56.39 | 0.0003 |
| Instructional spending/FTE | 7.9702 | 6.211 | < .001 |
| Academic support/FTE | 6.276 | 4.664 | < .001 |
| Student services/FTE | 6.536 | 4.998 | < .001 |
| Scholarships/FTE | 5.923 | 4.07 | < .001 |
| N | 833 | 307 | |

Table 7.

Sensitivity analysis of the impact of sample exclusions on main analysis

| | Unbalanced panel (1) | Unbalanced panel (2) | Inverse probability weights (3) | Inverse probability weights (4) | Original analysis (5) | Original analysis (6) |
|---|---|---|---|---|---|---|
| Year | 0.3*** | 0.28*** | 0.384*** | 0.359*** | 0.303*** | 0.254*** |
| | (0.02) | (0.038) | (0.056) | (0.0697) | (0.025) | (0.037) |
| Recession | −0.41* | −0.192 | −0.624* | −0.384 | −0.488** | −0.259 |
| | (0.17) | (0.220) | (0.314) | (0.374) | (0.172) | (0.206) |
| Enrollment (FTE), logged | | −1.152 | | −2.008 | | −1.937* |
| | | (0.903) | | (2.652) | | (0.976) |
| Percent female, FTF | | 0.0646* | | 0.0558 | | 0.0493 |
| | | (0.025) | | (0.0380) | | (0.026) |
| Percent Black, FTF | | −0.1*** | | −0.152*** | | −0.142*** |
| | | (0.028) | | (0.039) | | (0.032) |
| Percent Latinx, FTF | | 0.12*** | | 0.128*** | | 0.164*** |
| | | (0.026) | | (0.0341) | | (0.025) |
| Percent Asian, FTF | | 0.0439 | | 0.0392 | | 0.0360 |
| | | (0.023) | | (0.0273) | | (0.020) |
| Percent Native, FTF | | 0.052 | | 0.228*** | | 0.0901 |
| | | (0.066) | | (0.0631) | | (0.094) |
| Percent part-time, FTF | | −0.1*** | | −0.127*** | | −0.177*** |
| | | (0.015) | | (0.0371) | | (0.017) |
| Percent first-time freshmen (FTF) | | −0.041* | | −0.0532 | | −0.053* |
| | | (0.018) | | (0.0489) | | (0.023) |
| Percent need-based grant recipients | | −0.028* | | −0.0393 | | −0.036** |
| | | (0.011) | | (0.0237) | | (0.013) |
| County unemployment rate | | 0.0369 | | 0.0247 | | 0.0680 |
| | | (0.058) | | (0.0964) | | (0.055) |
| Tuition | | −0.209 | | −0.231 | | −0.224 |
| | | (0.244) | | (0.429) | | (0.264) |
| Percent loan recipients | | −0.0** | | −0.0323* | | −0.0192 |
| | | (0.010) | | (0.0141) | | (0.010) |
| Student-faculty ratio | | 0.0089 | | 0.0428*** | | 0.0286* |
| | | (0.018) | | (0.00896) | | (0.014) |
| Adjunct share of instructional staff | | −0.01* | | −0.0237 | | −0.013* |
| | | (0.009) | | (0.0135) | | (0.006) |
| Instructional spending/FTE, logged | | 0.428 | | 1.016* | | 0.790* |
| | | (0.339) | | (0.486) | | (0.369) |
| Academic support/FTE, logged | | 0.72** | | 0.468 | | 0.256 |
| | | (0.255) | | (0.582) | | (0.321) |
| Student services/FTE, logged | | −1.0** | | −1.054* | | −0.861* |
| | | (0.370) | | (0.516) | | (0.353) |
| Scholarships/FTE, logged | | −0.003 | | −0.140 | | −0.0184 |
| | | (0.102) | | (0.198) | | (0.0906) |
| Constant | −54*** | −49*** | −718.4*** | −650.0*** | −556.9*** | −436.9*** |
| | (52.6) | (76.74) | (113.3) | (138.3) | (51.44) | (76.26) |
| Observations | 14,757 | 14,507 | 11,662 | 11,662 | 11,662 | 11,662 |
| R-squared | 0.025 | 0.082 | 0.042 | 0.106 | 0.036 | 0.108 |
| Number of institutions | 1,251 | 1,215 | 833 | 833 | 833 | 833 |

Robust standard errors in parentheses; ***p < 0.001, **p < 0.01, *p < 0.05

Table 8.

Blinder-Oaxaca decomposition of difference in mean retention rate between 2017 & 2004, full results

| | 2004 (1) | 2017 (2) | Differential (3) | Composition effects (4) | Rate effects (5) | Interaction (6) |
|---|---|---|---|---|---|---|
| Percent need-based grant recipients | 0.003 | −0.007 | | 0.041 | −0.430 | −0.135 |
| | (0.025) | (0.023) | | (0.336) | (1.458) | (0.459) |
| Percent part-time, FTF | −0.230*** | −0.142*** | | 0.739*** | 3.281** | −0.283* |
| | (0.022) | (0.017) | | (0.211) | (1.072) | (0.119) |
| Percent female, FTF | 0.018 | −0.195*** | | −0.057 | −11.41*** | 0.678** |
| | (0.046) | (0.036) | | (0.149) | (3.173) | (0.207) |
| Percent Black, FTF | −0.065** | −0.106*** | | 0.0021 | −0.589 | 0.001 |
| | (0.023) | (0.019) | | (0.051) | (0.434) | (0.033) |
| Percent Latinx, FTF | 0.025 | −0.022 | | 0.235 | −0.471 | −0.435 |
| | (0.026) | (0.016) | | (0.238) | (0.305) | (0.284) |
| Percent Asian, FTF | 0.043 | 0.005 | | −0.015 | −0.132 | 0.013 |
| | (0.045) | (0.054) | | (0.022) | (0.253) | (0.028) |
| Percent Native, FTF | −0.336*** | −0.154*** | | 0.050 | 0.437** | −0.027 |
| | (0.040) | (0.030) | | (0.153) | (0.134) | (0.082) |
| Percent first-time freshmen (FTF) | 0.093** | 0.129*** | | −0.012 | 0.833 | −0.004 |
| | (0.036) | (0.036) | | (0.046) | (1.212) | (0.018) |
| Enrollment (FTE), logged | 1.835*** | 1.806*** | | 0.040 | −0.228 | −0.001 |
| | (0.489) | (0.367) | | (0.087) | (4.766) | (0.013) |
| Tuition | −1.168*** | −1.057*** | | −0.444*** | 0.806 | 0.042 |
| | (0.266) | (0.236) | | (0.129) | (2.581) | (0.135) |
| Percent loan recipients | 0.004 | −0.066*** | | 0.0322 | −1.050* | −0.486* |
| | (0.025) | (0.015) | | (0.170) | (0.438) | (0.212) |
| Student-faculty ratio | −0.149* | −0.136* | | 0.084 | 0.255 | −0.007 |
| | (0.061) | (0.059) | | (0.055) | (1.650) | (0.048) |
| Adjunct share of instructional staff | −0.012 | −0.016* | | 0.015 | −0.271 | 0.005 |
| | (0.011) | (0.008) | | (0.024) | (0.905) | (0.018) |
| Instructional spending/FTE, logged | 0.385 | 1.270* | | 0.549 | 6.484 | 1.259 |
| | (0.625) | (0.584) | | (0.891) | (6.275) | (1.222) |
| Academic support/FTE, logged | 0.349 | −0.719 | | 0.434 | −6.188 | −1.326 |
| | (0.472) | (0.370) | | (0.586) | (3.473) | (0.750) |
| Student services/FTE, logged | −0.153 | −0.550 | | −0.206 | −2.391 | −0.537 |
| | (0.646) | (0.562) | | (0.872) | (5.153) | (1.157) |
| Scholarships/FTE, logged | −0.602** | 0.140 | | −0.773** | 4.074** | 0.953** |
| | (0.194) | (0.175) | | (0.258) | (1.435) | (0.346) |
| County unemployment rate | −0.062 | −0.280 | | 0.0612 | −1.361 | 0.213 |
| | (0.218) | (0.182) | | (0.214) | (1.774) | (0.279) |
| Retention 2017 | | | 54.64*** | | | |
| | | | (0.304) | | | |
| Retention 2004 | | | 50.29*** | | | |
| | | | (0.386) | | | |
| Difference | | | 4.352*** | | | |
| | | | (0.491) | | | |
| Total | | | | 0.775 | 3.653*** | −0.076 |
| | | | | (0.611) | (0.852) | (0.926) |
| Constant | 54.44*** | 66.45*** | | | 12.00 | |
| | (5.041) | (5.594) | | | (7.530) | |
| Observations | 833 | 833 | 1,666 | 1,666 | 1,666 | 1,666 |
| R-squared | 0.251 | 0.289 | | | | |

Source: IPEDS; *p < 0.05, **p < 0.01, ***p < 0.001

Footnotes

We follow common postsecondary education practice in using retention to refer to continuation in one’s initial institution and persistence for continuation in any institution.

150% of normal time is six years at four-year colleges and three years at community colleges. We note that open-enrollment public four-year colleges posted an average retention rate equal to that of community colleges (62%).

Authors’ calculations from the National Postsecondary Student Aid Study of 2016, accessed through the NCES DataLab (NCES 2021).

Since recent high school graduates constitute only 10% of community college students each year, and this share has been flat since 2010, most of the enrollment decline has been among older and returning students (NCES, 2019a, 2019b).

The Community College Research Center (CCRC) has questioned the categorization of “community colleges” in IPEDS (Fink & Jenkins 2020). Regardless, we employ the IPEDS categorization.

Within multi-campus systems, satellite campuses may appear separately in some years and for some questions but not for others.

We exclude 526 institutions, or 38%, from analysis. Sensitivity analyses (using an unbalanced panel of 1,251 institutions without missing data on key variables, and using inverse-probability weights to reduce exclusion bias) suggest that these exclusions do not substantively affect the analyses. See Tables 6 and 7 in the Appendix.

IPEDS permits county identification through FIPS codes beginning in 2009.

DOE only requires reporting of retention for first-time freshmen. Freshmen are “first-time” from the perspective of the entire postsecondary sector, not the reporting institution.

For four-year colleges but not community colleges, IPEDS includes SAT/ACT percentile scores and admissions criteria.

We calculate FTE enrollment using the DOE formula: full-time enrollment plus one-third of part-time enrollment.
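
As a quick illustration of this formula, the hypothetical function below computes FTE enrollment for a college with made-up counts; only the one-third weighting comes from the footnote.

```python
def fte_enrollment(full_time: float, part_time: float) -> float:
    """FTE enrollment: full-time count plus one-third of the part-time count."""
    return full_time + part_time / 3.0


# Hypothetical college with 3,000 full-time and 6,000 part-time students.
print(fte_enrollment(3000, 6000))  # 5000.0
```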

IPEDS reports student counts by age group, but only every two years, and these counts do not separate first-time freshmen (FTF) from continuing students.

FTE faculty is full-time faculty plus one-third of part-time faculty. IPEDS provides a student-faculty ratio beginning in 2009; for prior years, we constructed a measure that follows the formula above as closely as possible. Because instructional faculty are identified separately by credit/noncredit status only beginning in 2012, we included all FTE instructional faculty. For institutions not separately reporting instructional faculty, we substituted all FTE faculty. Our measure correlates with the IPEDS measure at r = 0.77 for 2009–2017.
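
A sketch of the pre-2009 construction follows, assuming the numerator is FTE enrollment computed with the same one-third rule. The function and argument names are hypothetical stand-ins for the relevant IPEDS fields, and `None` marks an institution that does not report instructional faculty separately, triggering the fallback to all FTE faculty.

```python
from typing import Optional

def fte(full_time: float, part_time: float) -> float:
    """Full-time count plus one-third of the part-time count."""
    return full_time + part_time / 3.0

def student_faculty_ratio(
    ft_students: float,
    pt_students: float,
    ft_instr_faculty: Optional[float],
    pt_instr_faculty: Optional[float],
    ft_all_faculty: float,
    pt_all_faculty: float,
) -> float:
    """Constructed ratio (hypothetical fields): FTE students per FTE faculty.

    Uses FTE instructional faculty when reported; otherwise substitutes
    all FTE faculty, mirroring the fallback described in the footnote.
    """
    if ft_instr_faculty is None or pt_instr_faculty is None:
        faculty = fte(ft_all_faculty, pt_all_faculty)
    else:
        faculty = fte(ft_instr_faculty, pt_instr_faculty)
    return fte(ft_students, pt_students) / faculty


# Instructional faculty reported: 5,000 FTE students / 250 FTE faculty.
print(student_faculty_ratio(3000, 6000, 150, 300, 150, 750))    # 20.0
# Not reported: fall back to all faculty, 5,000 / 400.
print(student_faculty_ratio(3000, 6000, None, None, 150, 750))  # 12.5
```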

Inclusion of the recession dummy was informed by the descriptive analysis of average retention trends discussed below and depicted in Fig. 1. We created five different specifications of this dummy and chose the one that best predicted within-institution variance in a regression of retention on only the linear year term and the recession dummy. This specification also outperformed quadratic and cubic year transformations as well as splines.
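
The selection rule can be sketched as follows: demean retention, the year term, and each candidate dummy within institution, fit OLS, and keep the candidate with the highest within-institution R². The five candidate recession windows, the helper `within_r2`, and the simulated panel are all hypothetical; the footnote does not list the specifications the authors compared.

```python
import numpy as np

def within_r2(y, year, inst, dummy):
    """Within-institution R^2 from regressing y on a linear year term and a dummy.

    All variables are demeaned by institution (the fixed-effects "within"
    transformation) before fitting OLS without an intercept.
    """
    X = np.column_stack([year, dummy]).astype(float)
    Xd = X.copy()
    yd = y.astype(float)
    for g in np.unique(inst):
        m = inst == g
        Xd[m] -= X[m].mean(axis=0)
        yd[m] -= y[m].mean()
    beta, *_ = np.linalg.lstsq(Xd, yd, rcond=None)
    resid = yd - Xd @ beta
    return 1.0 - (resid @ resid) / (yd @ yd)

# Simulated balanced panel, 2004-2017, with a dip in 2009-2010.
rng = np.random.default_rng(1)
years = np.arange(2004, 2018)
n_inst = 200
inst = np.repeat(np.arange(n_inst), years.size)
year = np.tile(years, n_inst)
y = (55.0 + rng.normal(0.0, 5.0, n_inst)[inst]   # institution fixed effects
     + 0.35 * (year - 2004)                      # linear trend
     - 2.0 * np.isin(year, [2009, 2010])         # simulated recession dip
     + rng.normal(0.0, 0.5, year.size))          # idiosyncratic noise

# Hypothetical candidate recession windows.
candidates = [(2009,), (2010,), (2009, 2010), (2010, 2011), (2008, 2009, 2010)]
scores = {c: within_r2(y, year - 2004, inst, np.isin(year, c)) for c in candidates}
best = max(scores, key=scores.get)
print(best, round(scores[best], 3))
```

Demeaning by institution makes the fit use only within-institution variation, which matches the fixed-effects framing of the footnote; the same `within_r2` score could be computed for quadratic or cubic year terms by swapping the columns of `X`.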

The second component, the “rate effects”, captures the portion of the change attributable to differences in the variables’ coefficients between the two regressions. The third component is the interaction of the differences in coefficients with the differences in the independent variables’ means between the groups. Both rate and interaction effects are usually treated as residuals.
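
In the threefold form described by Jann (2008), with group B as the reference, the gap in mean outcomes splits as gap = (x̄_A − x̄_B)′β_B + x̄_B′(β_A − β_B) + (x̄_A − x̄_B)′(β_A − β_B): endowments (differences in means), coefficients (the “rate effects”), and their interaction. The NumPy sketch below computes the three terms on simulated data; which year serves as the reference group, the variable names, and the data are assumptions, not the authors' specification.

```python
import numpy as np

def ols(X, y):
    """OLS coefficients; X must include an intercept column."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

def threefold_oaxaca(X_a, y_a, X_b, y_b):
    """Threefold Blinder-Oaxaca split of mean(y_a) - mean(y_b), group B as reference."""
    b_a, b_b = ols(X_a, y_a), ols(X_b, y_b)
    m_a, m_b = X_a.mean(axis=0), X_b.mean(axis=0)
    endowments = (m_a - m_b) @ b_b             # explained by observables
    coefficients = m_b @ (b_a - b_b)           # "rate effects"
    interaction = (m_a - m_b) @ (b_a - b_b)    # joint differences
    return endowments, coefficients, interaction

# Simulated earlier (B) and later (A) cross-sections of institutions.
rng = np.random.default_rng(2)
n = 800
x_b = rng.normal(0.0, 1.0, n)
x_a = rng.normal(0.3, 1.0, n)   # covariate mean shifts between years
y_b = 55.0 + 2.0 * x_b + rng.normal(0.0, 3.0, n)
y_a = 58.0 + 2.5 * x_a + rng.normal(0.0, 3.0, n)
X_a = np.column_stack([np.ones(n), x_a])
X_b = np.column_stack([np.ones(n), x_b])

endow, coef, inter = threefold_oaxaca(X_a, y_a, X_b, y_b)
gap = y_a.mean() - y_b.mean()
print(f"gap={gap:.3f} endowments={endow:.3f} coefficients={coef:.3f} interaction={inter:.3f}")
print(f"share explained by observables: {endow / gap:.3f}")
```

Because each OLS fit includes an intercept, a group's mean outcome equals its mean covariates times its coefficients, so the three terms sum exactly to the raw gap; dividing the endowments term by the gap gives the share explained by observables.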

The correlations reported in this paragraph are between year-by-year averages of the variables; that is, they were calculated using only the quantities in this table.
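
To make the distinction concrete, the sketch below collapses a panel to annual means before correlating and compares the result with the pooled institution-year correlation. The helper names and toy values are hypothetical.

```python
import numpy as np

def yearly_means(values, year):
    """Average a panel variable within each year (one mean per distinct year)."""
    return np.array([values[year == t].mean() for t in np.unique(year)])

def corr_of_yearly_means(a, b, year):
    """Pearson correlation between the year-by-year averages of two panel variables."""
    return np.corrcoef(yearly_means(a, year), yearly_means(b, year))[0, 1]

# Toy panel: two institutions observed in each of three years.
year = np.array([2004, 2004, 2005, 2005, 2006, 2006])
a = np.array([1.0, 3.0, 3.0, 5.0, 5.0, 7.0])
b = np.array([10.0, 10.0, 20.0, 20.0, 30.0, 30.0])

print(corr_of_yearly_means(a, b, year))  # correlation of annual averages
print(np.corrcoef(a, b)[0, 1])           # pooled institution-year correlation
```

Here the annual averages move in lockstep (correlation of 1), while the pooled correlation is lower (about 0.85) because averaging removes within-year differences between institutions; correlations of yearly averages should therefore not be read as institution-level associations.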

Other models controlled for factors such as student-staff and student-administrator ratios, different revenue sources, additional expenditure items, and the composition of returning-year students.

The coefficient for student services spending shrinks from −0.86 to −0.31 when the instructional spending variable is removed, and changes sign (to 0.17) when academic support spending is also removed. In the full sample, these three variables are correlated at over 0.8, and student services spending and instructional spending are correlated at over 0.9.

This figure is listed as “total explained by observables” in the top panel of Table 5. The percent change divides this by the difference in retention rates between 2017 and 2004 (0.775/4.35 = 0.178).

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Alon T, Doepke M, Olmstead-Rumsey J, Tertilt M. This time it's different: The role of women's employment in a pandemic recession. NBER Working Paper 27660. National Bureau of Economic Research; 2020.

- Angrist JD, Pischke J. Mostly harmless econometrics: An empiricist’s companion. Princeton University Press; 2009.

- Astin AW. Student involvement: A developmental theory for higher education. Journal of College Student Personnel. 1984;25(4):297–308.

- Astin AW, Oseguera L. Pre-college and institutional influences on degree attainment. In: Seidman A, editor. College student retention: Formula for student success. Rowman & Littlefield Publishers Inc; 2005. pp. 119–146.

- Bailey T, Calcagno JC, Jenkins D, Leinbach T, Kienzl G. Is student-right-to-know all you should know? An analysis of community college graduation rates. Research in Higher Education. 2006;47(5):491–519. doi: 10.1007/s11162-005-9005-0.

- Baker DJ, Doyle WR. Impact of community college student debt levels on credit accumulation. The ANNALS of the American Academy of Political and Social Science. 2017;671(1):132–153. doi: 10.1177/0002716217703043.

- Bean JP. Dropouts and turnover: The synthesis and test of a causal model of student attrition. Research in Higher Education. 1980;12:155–187. doi: 10.1007/BF00976194.

- Bean JP, Metzner BS. A conceptual model of nontraditional undergraduate student attrition. Review of Educational Research. 1985;55(4):485–540. doi: 10.3102/00346543055004485.

- Bettinger EP, Fox L, Loeb S, Taylor ES. Virtual classrooms: How online college courses affect student success. American Economic Review. 2017;107(9):2855–2875. doi: 10.1257/aer.20151193.

- Blinder AS. Wage discrimination: Reduced form and structural estimates. Journal of Human Resources. 1973;8(4):436–455. doi: 10.2307/144855.

- Bowen WG, Chingos MM, McPherson MS. Crossing the finish line: Completing college at America’s public universities. Princeton University Press; 2009.

- Burrus J, Elliott D, Brenneman M, Markle R, Carney L, Moore G, Betancourt A, Jackson T, Robbins S, Kyllonen P, Roberts RD. Putting and keeping students on track: Toward a comprehensive model of college persistence and goal attainment. ETS; 2013.

- Calcagno JC, Bailey T, Jenkins D, Kienzl G, Leinbach T. Community college student success: What institutional characteristics make a difference? Economics of Education Review. 2008;27(6):632–645. doi: 10.1016/j.econedurev.2007.07.003.

- Charles KK, Hurst E, Notowidigdo MJ. Housing booms and busts, labor market opportunities, and college attendance. American Economic Review. 2018;108(10):2947–2994. doi: 10.1257/aer.20151604.

- College Board. Trends in college pricing 2020. College Board; 2020.

- Davidson JC, Wilson KB. Community college student dropouts from higher education: Toward a comprehensive conceptual model. Community College Journal of Research and Practice. 2017;41(8):517–530. doi: 10.1080/10668926.2016.1206490.

- Deil-Amen R. Socio-academic integrative moments: Rethinking academic and social integration among two-year college students in career-related programs. The Journal of Higher Education. 2011;82(1):54–91. doi: 10.1353/jhe.2011.0006.

- Denning J, Eide E, Warnick M. Why have college completion rates increased? IZA Discussion Paper 12411. SSRN; 2019. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3408309

- DesJardins SL, Ahlburg DA, McCall BP. The effects of interrupted enrollment on graduation from college: Racial, income, and ability differences. Economics of Education Review. 2006;25(6):575–590. doi: 10.1016/j.econedurev.2005.06.002.

- Dougherty KJ, Jones SM, Pheatt L, Natow RS, Reddy V. Performance funding for higher education. Johns Hopkins University Press; 2016.

- Dwyer RE, McCloud L, Hodson R. Debt and graduation from American universities. Social Forces. 2012;90(4):1133–1155. doi: 10.1093/sf/sos072.

- Ewell PT. Accountability and institutional effectiveness in the community college. New Directions for Community Colleges. 2011;2011(153):23–36. doi: 10.1002/cc.434.

- Fike DS, Fike R. Predictors of first-year student retention in the community college. Community College Review. 2008;36(2):68–88. doi: 10.1177/0091552108320222.

- Fink J, Jenkins D. Shifting sectors: How a commonly used federal datapoint undercounts over a million community college students. Community College Research Center; 2020. https://ccrc.tc.columbia.edu/easyblog/shifting-sectors-community-colleges-undercounting.html

- Flores SM, Park TJ, Baker DJ. The racial college completion gap: Evidence from Texas. The Journal of Higher Education. 2017;88(6):894–921. doi: 10.1080/00221546.2017.1291259.

- Galla BM, Shulman EP, Plummer BD, Gardner M, Hutt SJ, Goyer JP, D’Mello SK, Finn AS, Duckworth AL. Why high school grades are better predictors of on-time college graduation than are admissions test scores: The roles of self-regulation and cognitive ability. American Educational Research Journal. 2019;56(6):2077–2115. doi: 10.3102/0002831219843292.

- Gansemer-Topf AM, Schuh JH. Institutional selectivity and institutional expenditures: Examining organizational factors that contribute to retention and graduation. Research in Higher Education. 2006;47(6):613–642. doi: 10.1007/s11162-006-9009-4.

- Goble LJ, Rosenbaum JE, Stephan JL. Do institutional attributes predict individuals’ degree success at two-year colleges? New Directions for Community Colleges. 2008;2008(144):63–72. doi: 10.1002/cc.346.

- Goldrick-Rab S. Following their every move: An investigation of social-class differences in college pathways. Sociology of Education. 2006;79(1):67–79. doi: 10.1177/003804070607900104.

- Goyette KA. College for some to college for all: Social background, occupational expectations, and educational expectations over time. Social Science Research. 2008;37(2):461–484. doi: 10.1016/j.ssresearch.2008.02.002.

- Guiffrida DA. Toward a cultural advancement of Tinto’s theory. The Review of Higher Education. 2006;29(4):451–472. doi: 10.1353/rhe.2006.0031.

- Halpin RL. An application of the Tinto model to the analysis of freshman persistence in a community college. Community College Review. 1990;17(4):22–32. doi: 10.1177/009155219001700405.

- Herzog S. Financial aid and college persistence: Do student loans help or hurt? Research in Higher Education. 2018;59(3):273–301. doi: 10.1007/s11162-017-9471-1.

- Hillman NW, Orians EL. Community colleges and labor market conditions: How does enrollment demand change relative to local unemployment rates? Research in Higher Education. 2013;54(7):765–780. doi: 10.1007/s11162-013-9294-7.

- Hussar B, Zhang J, Hein S, Wang K, Roberts A, Cui J, Smith M, Bullock Mann F, Barmer A, Dilig R. The condition of education 2020. National Center for Education Statistics, U.S. Department of Education; 2020.

- Jacoby D. Effects of part-time faculty employment on community college graduation rates. The Journal of Higher Education. 2006;77(6):1081–1103. doi: 10.1353/jhe.2006.0050.

- Jaeger AJ, Eagan MK Jr. Unintended consequences: Examining the effect of part-time faculty members on associate degree completion. Community College Review. 2009;36(3):167–194. doi: 10.1177/0091552108327070.

- Jaeger AJ, Hinz D. The effects of part-time faculty on first semester freshmen retention: A predictive model using logistic regression. Journal of College Student Retention: Research, Theory & Practice. 2008;10(3):265–286. doi: 10.2190/CS.10.3.b.

- Jann B. A Stata implementation of the Blinder-Oaxaca decomposition. Stata Journal. 2008;8(4):453–479. doi: 10.1177/1536867X0800800401.

- Jaquette O, Parra EE. Using IPEDS for panel analyses: Core concepts, data challenges, and empirical applications. In: Paulsen MB, editor. Higher education: Handbook of theory and research. Springer; 2014. pp. 467–533.

- Jenkins D, Belfield C. Can community colleges continue to do more with less? Change: The Magazine of Higher Learning. 2014;46(3):6–13. doi: 10.1080/00091383.2014.905417.

- Jenkins D, Fink J. How will COVID-19 affect community college enrollment? Looking to the Great Recession for clues. Community College Research Center; 2020. https://ccrc.tc.columbia.edu/easyblog/covid-community-college-enrollment.html

- Jenkins D, Fink J, Brock T. More clues from the Great Recession: How will COVID-19 affect community college funding? Community College Research Center; 2020. https://ccrc.tc.columbia.edu/easyblog/community-college-funding-covid-19.html/

- Juszkiewicz J. Trends in community college enrollment and completion data, issue 6. American Association of Community Colleges; 2020 Jul. https://www.aacc.nche.edu/wp-content/uploads/2020/08/Final_CC-Enrollment-2020_730_1.pdf

- Kienzl GS, Alfonso M, Melguizo T. The effect of local labor market conditions in the 1990s on the likelihood of community college students’ persistence and attainment. Research in Higher Education. 2007;48(7):751–774. doi: 10.1007/s11162-007-9050-y.

- Kohli R, Pizarro M, Nevárez A. The “new racism” of K–12 schools: Centering critical research on racism. Review of Research in Education. 2017;41(1):182–202. doi: 10.3102/0091732X16686949.

- Kostal JW, Kuncel NR, Sackett PR. Grade inflation marches on: Grade increases from the 1990s to 2000s. Educational Measurement: Issues and Practice. 2016;35(1):11–20. doi: 10.1111/emip.12077.

- Kuh GD, Kinzie JL, Buckley JA, Bridges BK, Hayek JC. What matters to student success: A review of the literature. National Postsecondary Education Cooperative; 2006.

- Kuh GD, Love PG. A cultural perspective on student departure. In: Braxton JM, editor. Reworking the student departure puzzle. Vanderbilt University Press; 2000. pp. 196–212.

- Levin JS, Hernandez VM. Divided identity: Part-time faculty in public colleges and universities. The Review of Higher Education. 2014;37(4):531–557. doi: 10.1353/rhe.2014.0033.