Abstract

Social determinants of health (SDoH) are increasingly important factors for population health, healthcare outcomes, and care delivery. However, many of these factors are not reliably captured within structured electronic health record (EHR) data. In this work, we evaluated and adapted a previously published natural language processing (NLP) tool to extract additional social risk factors for deployment at Vanderbilt University Medical Center in an acute myocardial infarction (AMI) cohort. We also developed a transformation of the tool's SDoH outputs into the Observational Medical Outcomes Partnership (OMOP) common data model (CDM) for re-use across many potential use cases. On a held-out test set, the adapted tool achieved an overall precision of 0.83, recall of 0.74, and F-measure of 0.78 across 8 SDoH classes.

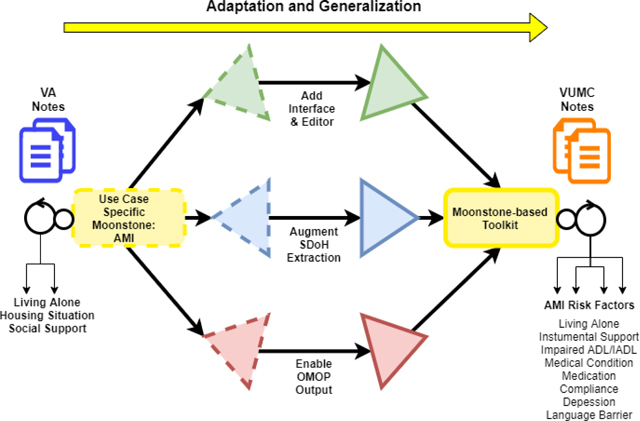

Graphical Abstract

1. Introduction

Cardiovascular disease (CVD) morbidity and mortality improved dramatically in the United States and worldwide through the 1980s, 1990s, and early 2000s. Since then, however, outcome gains have noticeably slowed. This reflects a larger pattern in the United States (US), where life expectancy is decreasing. Social determinants of health (SDoH), which include social, behavioral, cultural, environmental, and occupational influencers of health and disease outcomes,1 may be a key contributor to this pattern. According to the American Heart Association, SDoH include socioeconomic position (including education and income), race, ethnicity, social support, culture and language, access to care, and residential environment.2 The World Health Organization more broadly defines the social determinants of health as “the circumstances in which people are born, grow, live, work, and age, and the systems put in place to deal with illness.”2,3 Social risk factors (e.g., social support, housing situation, living situation, and functional status) are important SDoH that affect access to care, quality of life, health outcomes, and life expectancy.4

While several prior studies highlight the association between SDoH and incident CVD, the association between SDoH and outcomes following a cardiovascular event is equally important.5,6 For example, patients who experience acute myocardial infarction (AMI) require several medications, close follow-up, and, in some cases, substantial lifestyle modifications. Suboptimal SDoH can be a barrier to these post-AMI management steps. To understand SDoH and improve outcomes accordingly, we need methods that allow clinicians and health systems to extract and catalogue patient-specific SDoH characteristics. The overall goal of this project is to develop a natural language processing (NLP) approach to identify SDoH features in healthcare providers’ clinical narrative documentation.

High rates of AMI, a significant cause of death and disability worldwide, have been attributed to social risk factors.7,8 Prior efforts to characterize SDoH have often focused on neighborhood characteristics or hospital-level characterization.9–11 Clinicians and health systems often know where the patient lives, and agencies like the US Census Bureau provide neighborhood-specific characteristics. Such neighborhood- and hospital-level approaches allow for population-level characterization, but not patient-specific characterization. Yet patient-specific characteristics may improve risk stratification, identifying patients at highest risk for adverse events, and may facilitate patient-level rather than system-level interventions. There have been efforts to capture SDoH from electronic health records (EHRs), because identifying them from EHRs can provide insight into health and disease outcomes.12–14 Currently, SDoH characteristics are not collected in a systematic, structured way that would allow analyses, although, as Chen, Tan and Padman (2020) discuss, leveraging structured data to assemble aspects of SDoH can improve risk prediction model performance.15 EHR vendors are increasingly interested in incorporating available structured data into an SDoH model.16 As Cantor and Thorpe point out in their 2018 report, even though EHR vendors such as Epic and Cerner have made strides toward collecting SDoH data, without overarching standards and medically driven measures to ground these data collection services, a wide gap remains between information related to social factors and variables derived from structured data (e.g., lab results, vital signs) in terms of interpretable, computable, and even actionable datasets.17 The report recommends research that ties collected SDoH data to clinical outcomes, and the research reported here provides a pathway toward that goal.

Clinicians often document explicit SDoH in clinical notes as part of routine care, and this information can be extracted using NLP. NLP applied to medical records can recognize and classify clinically meaningful concepts occurring in clinical notes. One advantage of this technology is that clinicians can maintain their preferred workflow, typing or dictating notes relevant to patient care rather than clicking checkboxes in the EHR interface.18,19 Challenges include creating NLP algorithms that are both robust across different healthcare systems and able to extract a wide range of concepts. Using clinical information from EHR notes to capture SDoH is rare because the task is complex, but if successful, this approach can identify populations with immediate social needs or social risk factors that may be of public health concern.20 Several studies have shown that NLP over clinical notes can extract SDoH information not otherwise captured in structured administrative data. Gundlapalli et al. successfully extracted indications of homelessness from Veterans Affairs (VA) notes.21,22 Haerian et al. compared ICD E-codes against an algorithm enhanced with NLP-extracted concepts of suicidality such as harm, ideation, and medication overdoses. Wang et al. extracted substance use variables from clinical text.23 Other studies have shown that information captured from notes can improve prediction of hospital readmission; for example, Greenwald et al. showed that including keywords related to cognitive status, physical function, and psychosocial support improved readmission prediction.24,25

Developing impactful AI systems will require adapting algorithms from one EHR environment to another. The current study highlights the strategies we used to adapt our SDoH NLP system across environments. Our strategy included leveraging curated knowledge bases such as the Unified Medical Language System (UMLS) and the Observational Medical Outcomes Partnership (OMOP) CDM developed by the Observational Health Data Sciences and Informatics (OHDSI) collaborative,26 as well as employing a rule-based system to capture medically specific linguistic knowledge. In contrast to machine learning NLP algorithms, these strategies required only a modest amount of labelled data to achieve the SDoH information extraction goals.

Moonstone, a rule-based NLP system, has been used to automatically extract social risk factors from clinical notes within the EHR of the Veterans Affairs (VA) across four broad cohort categories (congestive heart failure, acute myocardial infarction, pneumonia, and stroke) with good to excellent performance.4 Extraction of social risk factors by Moonstone required semantic role interpretation and inferencing, which are uncommonly applied in clinical NLP. The system developed in this study is an extension of Moonstone, adapted for use in Vanderbilt’s EHR and tuned to SDoH pertinent to AMI. The toolkit was adapted to the AMI use case from a general SDoH use case, to Vanderbilt’s EHR from the VA EHR, and to Vanderbilt’s patient population from a VA population. Although Moonstone is a rule-based system, it supports term expansion, which it achieves by exploiting its semantic typing system rather than the word-embedding approaches used by machine learning methods such as word2vec. Other SDoH-detecting systems in the machine learning domain exploit word-association methods to measure the association between terms in a corpus, such as the work of Dore et al. (2019) on psychosocial terms,27 or combine embedding and association techniques, such as the work of Bejan et al. (2018) on homelessness terminology in clinical notes.14 Moonstone’s grammar compilation is responsible for distinguishing a generalized notion of topic within a document, in contrast to topic modelling, an unsupervised machine learning technique that discovers latent topics within a set of documents. For example, Wang et al. (2018) applied topic modelling to notes of patients with dementia, which surfaced social support and nutritional information.28 In describing the adaptation of Moonstone’s grammar and rule base to a different use case and environment, we report on the advantages of having control over the interaction between semantics and the syntactic structures encountered in the documents it processes.

The novelty of this NLP work lies in its adaptation to a new patient population, its extension to multiple additional SDoH classifications, and the development of new tools to support adapting Moonstone to new extraction tasks, including an interface for graphical interaction with the system’s language model that aids in developing and testing the effects of semantic interactions.

2. Methods

2.1. Study Design:

As part of a larger research program to develop risk prediction models that incorporate SDoH, we sought to adapt and deploy a previously developed NLP system within Vanderbilt University Medical Center (VUMC) and represent the outputs within the Observational Medical Outcomes Partnership (OMOP) Common Data Model (CDM). This approach supports an informatics data transformation workflow that could be used to support numerous downstream use cases.

2.2. Data Sources:

The data sources for developing this cohort and for subsequent information delivery were drawn from the VUMC EHR system in use at the time of study initiation, StarPanel, which VUMC maintained in partnership with McKesson. Our clinical use case was prediction of readmission among patients hospitalized for AMI, which included 6,502 patients from 2007 to 2016. AMI was defined using the Centers for Medicare & Medicaid Services (CMS) definition. The following International Classification of Diseases (ICD) codes and Diagnosis Related Groups formed the readmission cohort selection criteria: ICD-9 410.00, 410.10, 410.11, 410.20, 410.21, 410.30, 410.31, 410.40, 410.41, 410.50, 410.51, 410.60, 410.61, 410.70, 410.71, 410.80, 410.81, 410.90, 410.91; ICD-10 I21. ICD-10-CM I21.9 is grouped within Medicare Severity Diagnosis Related Groups (MS-DRG v37.0) 222, 223, 280, 281, 282, 283, 284, and 285. Data from the defined cohort spanned numerous EHR domains: unstructured clinical notes and inpatient and outpatient administrative data, including ICD codes, Current Procedural Terminology (CPT) codes, lab values, length of hospital stay, and medication orders and fill records. A machine learning 30-day readmission prediction model for AMI patients, using only these structured data types standardized to a CDM, was developed with this cohort.29 The present study incorporates the unstructured text corpus of these patient admissions, which formed the dataset to which the NLP toolkit was applied.
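The code criteria above can be expressed as a simple filter over diagnosis records. The following Python sketch is illustrative only: it assumes diagnoses arrive as (person_id, code, vocabulary_id) tuples with dotted codes and OMOP-style vocabulary identifiers, the function names are hypothetical, and the MS-DRG criteria are not modelled.

```python
# Sketch only: flags AMI diagnoses using the ICD code lists given above.
# Assumed (hypothetical) input layout: (person_id, code, vocabulary_id) tuples,
# with dotted codes and OMOP-style vocabulary ids "ICD9CM" / "ICD10CM".
# The MS-DRG criteria are not modelled here.

AMI_ICD9 = {
    "410.00", "410.10", "410.11", "410.20", "410.21", "410.30", "410.31",
    "410.40", "410.41", "410.50", "410.51", "410.60", "410.61", "410.70",
    "410.71", "410.80", "410.81", "410.90", "410.91",
}
AMI_ICD10_CATEGORY = "I21"  # the whole ICD-10 I21 category, including subcodes such as I21.9


def is_ami_code(code: str, vocabulary_id: str) -> bool:
    """Return True if a single diagnosis code meets the AMI code criteria."""
    code = code.strip().upper()
    if vocabulary_id == "ICD9CM":
        return code in AMI_ICD9
    if vocabulary_id == "ICD10CM":
        return code.startswith(AMI_ICD10_CATEGORY)
    return False


def select_ami_patients(diagnoses):
    """Return the sorted, de-duplicated person_ids with at least one AMI code."""
    return sorted({pid for pid, code, vocab in diagnoses if is_ami_code(code, vocab)})


if __name__ == "__main__":
    rows = [
        (1, "410.11", "ICD9CM"),   # in the ICD-9 list
        (2, "I21.4", "ICD10CM"),   # within the I21 category
        (3, "I25.10", "ICD10CM"),  # I25 category (chronic ischemic heart disease): excluded
        (4, "410.12", "ICD9CM"),   # not in the list above: excluded
    ]
    print(select_ami_patients(rows))  # [1, 2]
```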

2.3. Natural Language Processing Tool External Validation and Recalibration:

To develop, train, and evaluate Moonstone for extracting expressions representing specific social risk factors among hospitalized patients at VUMC, we created a corpus of clinical notes and, with the assistance of a nurse chart-review team, produced a reference standard annotated with a targeted set of social risk factors. These risk factors and their associated properties formed a social risk ontology of concepts, which was the basis for Moonstone’s output. The developmental steps described here were used to retrain the prior version of Moonstone, which was developed on VA data with overlapping but not identical social risk concepts.4 The Vanderbilt version was designed for social risk factors specific to a particular cohort type (AMI), in contrast to the generalized SDoH design of the prior version. Additional concepts are described in the Reference Standard Creation section. Because the tool was being adapted to a new environment and a different use case, was exposed to data organized and formatted differently, and required developers unfamiliar with its architecture to test modifications at multiple levels, our design for the Vanderbilt version included an interface for observing the effects of modifying the concept ontology and an editor to semi-automate rule generation.

2.3.1. NLP Toolkit:

The NLP tool, Moonstone, was designed to identify variables that are poorly documented or undocumented within structured EHR data elements by extracting the information from clinical narrative notes. Moonstone is a rule-based system housed within a customizable grammar engine. The starting point was the version developed in the US veteran population for general social risk factors.4 That version captured three classifications: housing situation, living alone, and social support. Each was broken out into positive vs. negative assertion values; for housing situation, three categories were collected: homeless/marginally housed, lives in a facility, and not homeless. The final performance (F-measure) of the tool in the VA cohort was .82 for housing situation, .94 for living alone, and .88 for social support. We trained Moonstone to extract additional SDoH variables particularly salient to post-discharge recovery among patients hospitalized for AMI, and modified the algorithms for the original three concepts (Living Alone, Housing Situation, Social Support) to handle an expanded semantic range of textual evidence. New concepts added to Moonstone include Impaired ADL/IADL (Activities of Daily Living and Instrumental Activities of Daily Living); Medical Condition (conditions that affect activities of daily living); Medication Compliance; Depression; Dementia; and Language Barrier. The original concepts Living Alone and Housing Situation were reconfigured with respect to how assertion values handle evidence of patients living with others, resulting in a single new Living Alone concept. The original concept Social Support was expanded to include more types of evidence (e.g., food and rental subsidy support from community outreach or governmental support agencies, and family involvement in the medical plan of care), becoming Instrumental Support in the current version of Moonstone.

2.4. Document Sampling Strategy:

To construct the language model for the targeted SDoH concepts, human reviewers constructed a reference standard by annotating a corpus of inpatient notes drawn from a cohort of patients hospitalized at VUMC for AMI. Using elements from the OMOP CDM, we designed a document selection strategy representative of the target cohort as well as of document types likely to contain meaningful social and functional status factors. This sample defines a corpus of documents derived from a cohort of patients hospitalized with a primary diagnosis of AMI, including document types from the hospitalization and using distributions across three demographic strata: gender (50% women, 50% men); age (50% 65 years or older, 50% under 65 years of age); and ethnicity (60% majority [white], 40% minority [non-white]). For each patient, we selected notes dated from 72 hours prior to the first AMI admission through the date of discharge; pre-admission notes were included to account for patients entering through the emergency department. The resulting corpus consists of the following eight note categories: 1) Emergency Department Notes, 2) Primary Care Notes, 3) Inpatient History & Physical/Admission Notes, 4) Inpatient Discharge Summaries, 5) Mental Health Notes, 6) Case Management Notes, 7) Social Worker Notes, 8) Occupational & Physical Therapy Notes. To avoid over-representation of specific language, whether from a given provider or from patient-specific information, we selected one document per patient satisfying the demographic and note type criteria to complete a block of notes. Each annotation block consists of 160 notes: for each of the 8 note categories, notes from 20 patients reflecting the demographic strata described above, as laid out in Table 1.

Table 1:

Sampled Notes for Reference Standard Corpus

| Stratum (per note type) | Under 65 cohort (1 note per patient) | 65 and over cohort (1 note per patient) |
|---|---|---|
| Female, Majority | 3 | 3 |
| Female, Minority | 2 | 2 |
| Male, Majority | 3 | 3 |
| Male, Minority | 2 | 2 |
| Notes per note type | 10 | 10 |
| Total across 8 note types (160 notes) | 80 | 80 |
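The per-note-type quotas in Table 1 follow from multiplying the three stratum shares by the 20 patients sampled per note type (for example, 20 × 0.5 × 0.5 × 0.6 = 3 women under 65 in the majority stratum). A minimal Python sketch of that arithmetic, assuming the strata are crossed independently; the variable and function names are hypothetical:

```python
from itertools import product

# Hypothetical names; the shares and the 20-patients-per-note-type figure come from the text.
NOTE_TYPES = [
    "Emergency Department", "Primary Care", "Inpatient H&P/Admission",
    "Inpatient Discharge Summary", "Mental Health", "Case Management",
    "Social Worker", "Occupational & Physical Therapy",
]
PATIENTS_PER_NOTE_TYPE = 20
STRATA = {
    "age": {"under 65": 0.5, "65 and over": 0.5},
    "gender": {"female": 0.5, "male": 0.5},
    "ethnicity": {"Majority": 0.6, "Minority": 0.4},
}


def quotas(n=PATIENTS_PER_NOTE_TYPE, strata=STRATA):
    """Patients (one note each) to sample per combined stratum for one note type."""
    cells = {}
    for combo in product(*(s.items() for s in strata.values())):
        labels = tuple(label for label, _ in combo)
        share = 1.0
        for _, p in combo:
            share *= p  # strata are treated as independent
        cells[labels] = round(n * share)
    return cells


if __name__ == "__main__":
    q = quotas()
    for cell, k in q.items():
        print(cell, k)
    print("per note type:", sum(q.values()), "| corpus:", sum(q.values()) * len(NOTE_TYPES))
```

With other shares, rounding could make the cells sum to slightly more or less than 20, so a real sampler would need a rule for distributing the remainder.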

To determine the note category for this corpus, we used concepts and linkages available from multiple sources within the OMOP CDM. To ascertain provider type from characteristics of the note’s author, we accessed the OMOP provider concept table, which draws on the National Uniform Claim Committee vocabulary of healthcare worker specialty and subspecialty classifications. Additionally, we mapped local note types and subtypes to the OMOP document type concept table, which draws on the Logical Observation Identifiers Names and Codes (LOINC) clinical document ontology. Finally, we drew values from the combined OMOP Care Site and Visit Occurrence tables to confirm inpatient status and the clinical setting of the notes in the selected corpus. The demographic and clinical characteristics of the cohort from which the annotation corpus was derived were also determined using OMOP concepts: the admitting diagnosis was drawn from the OMOP condition table, which uses SNOMED-CT concepts, while gender and age were drawn from the OMOP person table.
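The note selection window and the OMOP visit and person linkages can be sketched as a query. The Python/SQLite example below is a toy under stated assumptions: it uses only a small subset of OMOP CDM columns, assumes the visit rows have already been restricted to each patient’s first AMI admission, treats 72 hours as 3 calendar days, and omits the provider-specialty and note-type joins. Concepts 9201 (Inpatient Visit) and 8532 (FEMALE) are standard OMOP concept ids; the data and the function name are invented for illustration.

```python
import sqlite3

INPATIENT_VISIT = 9201  # OMOP standard concept "Inpatient Visit"

# Minimal subset of OMOP CDM columns; the real tables have many more.
SCHEMA = """
CREATE TABLE person (person_id INTEGER, gender_concept_id INTEGER, year_of_birth INTEGER);
CREATE TABLE visit_occurrence (visit_occurrence_id INTEGER, person_id INTEGER,
    visit_concept_id INTEGER, visit_start_date TEXT, visit_end_date TEXT);
CREATE TABLE note (note_id INTEGER, person_id INTEGER, note_date TEXT);
"""

# Notes dated from 3 days (72 hours at date granularity) before an inpatient
# admission through its end date. Joining on person_id rather than visit id
# keeps pre-admission ED notes recorded under a separate visit.
QUERY = """
SELECT n.note_id,
       p.gender_concept_id,
       CAST(strftime('%Y', v.visit_start_date) AS INTEGER) - p.year_of_birth AS approx_age
FROM note AS n
JOIN visit_occurrence AS v ON v.person_id = n.person_id
JOIN person AS p ON p.person_id = n.person_id
WHERE v.visit_concept_id = ?
  AND n.note_date BETWEEN date(v.visit_start_date, '-3 days') AND v.visit_end_date
ORDER BY n.note_id
"""


def select_notes(conn):
    return conn.execute(QUERY, (INPATIENT_VISIT,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.execute("INSERT INTO person VALUES (1, 8532, 1950)")  # 8532 = FEMALE
conn.execute("INSERT INTO visit_occurrence VALUES (10, 1, 9201, '2015-03-10', '2015-03-14')")
conn.executemany(
    "INSERT INTO note VALUES (?, ?, ?)",
    [(100, 1, "2015-03-08"),   # ED note 2 days before admission: kept
     (101, 1, "2015-03-12"),   # during the stay: kept
     (102, 1, "2015-03-01"),   # too early: dropped
     (103, 1, "2015-03-15")],  # after discharge: dropped
)
rows = select_notes(conn)
print(rows)  # [(100, 8532, 65), (101, 8532, 65)]
```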

2.5. Reference Standard Creation:

To create the reference standard against which Moonstone would be evaluated, and to provide training data for its iterative development, we used the annotation tool eHOST (extensible Human Oracle Suite of Tools) to identify text fitting the definitions of a set of concepts and associated attributes.30 Moonstone was trained to map representative expressions to the following SDoH-specific concepts, each of which was additionally characterized by an assertion value: present, absent, or possible (e.g., might be present). Table 2 illustrates examples of each of the targeted SDoH concepts.

Table 2:

Targeted SDoH Concept Examples

| Concept | Definition | Example |
|---|---|---|
| Living Alone | living situation of the patient | Pt does not live with anyone else; Lives with wife & children |
| Instrumental Support | financial, medical, food, or housing assistance by friends, family, community, or government | Ex-wife brings food by daily; Receives help from operation stand down |
| Impaired ADL/IADL | impaired ability to perform activities of daily living (ADL) or instrumental activities of daily living (IADL) | No longer able to prepare meals for herself; Unable to communicate |
| Medical Condition | medical conditions preventing the patient from performing ADL/IADL without assistance | No evidence of dysphasia; Patient is comatose |
| Medication Compliance | evidence of willful or inadvertent failure to adhere to medication | Pt forgets to take her digoxin; Reported he quit taking water pills |
| Depression | diagnosis of depression or bipolar disorder | Pt has Hx of depression; Dysthymia noted |
| Dementia | diagnosis of dementia or indications of long-term cognitive impairment | Although pt. shows signs of memory loss, she is not demented |
| Language Barrier | indications that the patient has problems understanding medical instructions due to language differences between the patient and healthcare workers | Needs interpreter; Clearly understands discharge instructions |
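Because each mention pairs one of these concepts with an assertion value, it can be carried into the OMOP CDM as a NOTE_NLP record. The Python sketch below shows one possible mapping and is not the project’s documented transformation: the local concept ids are hypothetical, and mapping only present assertions to term_exists = 'Y' while keeping the raw assertion in term_modifiers is our assumption.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum


class Assertion(Enum):
    PRESENT = "present"
    ABSENT = "absent"
    POSSIBLE = "possible"


SDOH_CONCEPTS = (
    "Living Alone", "Instrumental Support", "Impaired ADL/IADL", "Medical Condition",
    "Medication Compliance", "Depression", "Dementia", "Language Barrier",
)
# Hypothetical site-local concept ids (by OMOP convention, ids above
# 2,000,000,000 are reserved for local concepts).
LOCAL_CONCEPT_ID = {name: 2_000_000_001 + i for i, name in enumerate(SDOH_CONCEPTS)}


@dataclass(frozen=True)
class Mention:
    note_id: int
    concept: str
    assertion: Assertion
    snippet: str
    offset: int


def to_note_nlp_row(m: Mention, note_nlp_id: int, run_date: date) -> dict:
    """One possible NOTE_NLP-style row for a mention (the mapping choices are assumptions)."""
    if m.concept not in LOCAL_CONCEPT_ID:
        raise ValueError(f"unknown SDoH concept: {m.concept}")
    return {
        "note_nlp_id": note_nlp_id,
        "note_id": m.note_id,
        "snippet": m.snippet,
        "offset": str(m.offset),
        "lexical_variant": m.snippet,
        "note_nlp_concept_id": 0,  # 0 = no standard concept mapped
        "note_nlp_source_concept_id": LOCAL_CONCEPT_ID[m.concept],
        "nlp_system": "Moonstone",
        "nlp_date": run_date.isoformat(),
        # Only a present assertion counts as the term existing; the raw value is kept.
        "term_exists": "Y" if m.assertion is Assertion.PRESENT else "N",
        "term_modifiers": f"assertion={m.assertion.value}",
    }


row = to_note_nlp_row(
    Mention(7, "Living Alone", Assertion.ABSENT, "Lives with wife & children", 120),
    note_nlp_id=1, run_date=date(2020, 1, 15),
)
print(row["note_nlp_source_concept_id"], row["term_exists"], row["term_modifiers"])
```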

2.5.1. Annotator Training Process

In a pilot phase of the annotation process, annotator teams from Vanderbilt, the University of Utah, and Dartmouth College used publicly available clinical notes from Medical Transcription Sample Reports and Examples.24 The group effort to construct guidelines and lay out the annotation schema within the annotation application allowed the team to refine the classes and attributes needed to capture social factors pertinent to the AMI cohort, and to resolve inconsistencies in interpretation across all groups.31 After the guidelines were refined, three Vanderbilt nurse annotators were trained to capture the SDoH concepts and associated assertion values using non-project data. Training on these data stopped when inter-annotator agreement reached 85%. Subsequently, two annotators independently annotated the project corpus of 160 notes, with the third acting as adjudicator for disagreements. The annotation guidelines used for this task are available in this repository: https://github.com/leenlp/DVUMoonstoneAMI.

2.6. Alterations to Moonstone Programming Environment

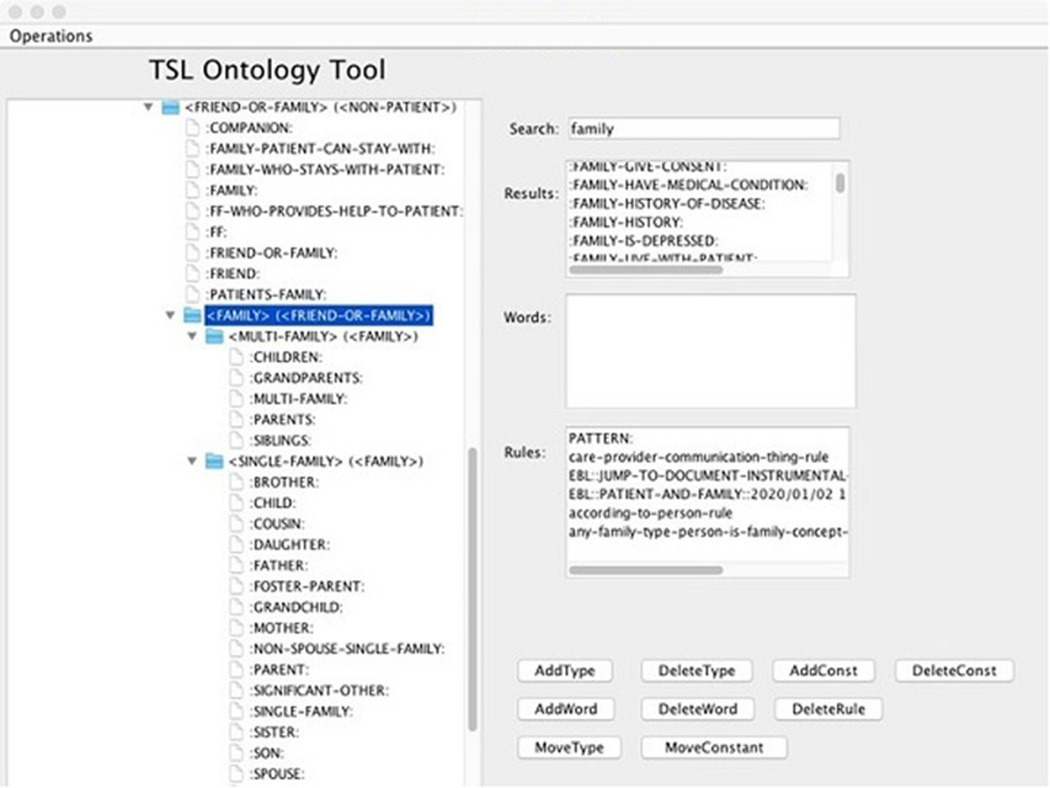

Moonstone is a complex NLP tool, so we developed a programming environment to support its enrichment. The environment provides more control over, and awareness of, environmental dependencies within the architecture of Moonstone’s rule base and data mapping processes. A graphical tool was added to simplify enhancement of the Moonstone ontology. Moonstone has several features that distinguish it from traditional grammar-based NLP systems. For instance, it uses a semantic grammar whose rules match sequences of semantically typed constants rather than syntactic phrasal types, as in the rule definition shown in Fig 1, which states that any negated reference to friends suggests the patient is socially isolated. Moonstone’s ontology describes not just objects in the task domain but English expressions, with base-level types that include Things (e.g., nouns such as Person), Actions (e.g., verbs such as Transport), and sentence-level summarizations such as “Patient-Interact-With-Provider”. Moonstone contains a typed first-order language (called “TSL”, for “typed semantic language”) for generating interpretations of phrases and sentences using terms from the ontology and semantic grammar.

Fig 1.

Typed Semantic Rule example.
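To make the distinction between semantic and syntactic matching concrete, the following Python sketch matches a rule against a sequence of semantic constants rather than parts of speech. It is a toy, not Moonstone’s rule syntax or the actual rule in Fig 1: the lexicon, the constants :NOT:, :FRIEND:, and :SOCIALLY-ISOLATED:, and the function names are hypothetical, and skipping unknown words is a simplification a real grammar would not make.

```python
# Hypothetical lexicon: surface words -> semantic constants.
LEXICON = {
    "no": ":NOT:", "denies": ":NOT:", "without": ":NOT:",
    "friend": ":FRIEND:", "friends": ":FRIEND:",
}
# Hypothetical rule in the spirit of Fig 1: a negated reference to friends
# is read as evidence of social isolation.
RULES = {
    (":NOT:", ":FRIEND:"): ":SOCIALLY-ISOLATED:",
}


def constants(sentence):
    """Map known words to constants; unknown words are skipped (a simplification)."""
    words = [w.strip(".,;:").lower() for w in sentence.split()]
    return [LEXICON[w] for w in words if w in LEXICON]


def apply_rules(seq, rules=RULES):
    """Return the result of each rule whose constant sequence occurs contiguously in seq."""
    found = []
    for pattern, result in rules.items():
        n = len(pattern)
        if any(tuple(seq[i:i + n]) == pattern for i in range(len(seq) - n + 1)):
            found.append(result)
    return found


print(apply_rules(constants("Patient reports no close friends.")))  # [':SOCIALLY-ISOLATED:']
print(apply_rules(constants("Friends visited today.")))            # []
```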

One important characteristic of Moonstone is its ability to analyze and summarize relevant sentences and phrases at a literal level of meaning. Unlike the targets of more common medical NLP methods, such as statistical classifiers for specific diseases, information about SDoH is often expressed in common, non-technical words and phrases, and arriving at the relevant conclusion often involves contextual knowledge and one or more steps of common-sense inference. For instance, the common expression “spouse at bedside” taken literally means that the patient’s spouse is next to the patient’s bed in the hospital setting, but it also suggests that family members are closely involved with the patient’s care, an indicator of the SDoH “Instrumental Support”. Phrases such as “police at bedside” or “priest at bedside” have different interpretations, each requiring an understanding of the likely roles of the individuals involved. In addition, in contrast to the highly regularized medical vocabularies used to describe diseases and symptoms, a virtually unlimited number of English expressions could indicate instrumental support or its absence. For instance, “patient arrived at clinic with mom” strongly suggests support, while “patient arrived at clinic by taxi” suggests the opposite, although the wording is nearly identical.

Moonstone manages the virtually unlimited number of relevant phrases by looking for a finite set of abstract patterns that occur again and again, patterns such as “Provider interact-with Family”, “Family Provide Transportation”, “Provider Educate Family”, “Friends/Neighbors Do Chores for Patient”, etc. Recognizing these patterns requires a literal level of analysis, then abstraction through the ontology mediated by the semantic grammar. This approach is different from the more standard search for statistical correlations between medical terminology and target concepts; it relies on common knowledge of word meanings and inferences that can be drawn from those meanings.

The following example illustrates one family of support-related inferences based on communication between the patient’s family members and healthcare providers. The number of possible sentences matching this description (e.g., “I spoke with the wife”, “Attending instructed the family”) is clearly vast, but Moonstone manages this by abstracting out the general description “Provider Interact-With Family”, which is then matched by a grammar rule that recognizes it as evidence of social support. Most examples of support involve family, friends, neighbors, or community support resources such as Meals on Wheels. Figure 2 below displays a view of the Moonstone ontology tool showing a segment of the type hierarchy that enumerates semantic constants relating to family relationships.

Fig 2.

Moonstone Ontology Tool.

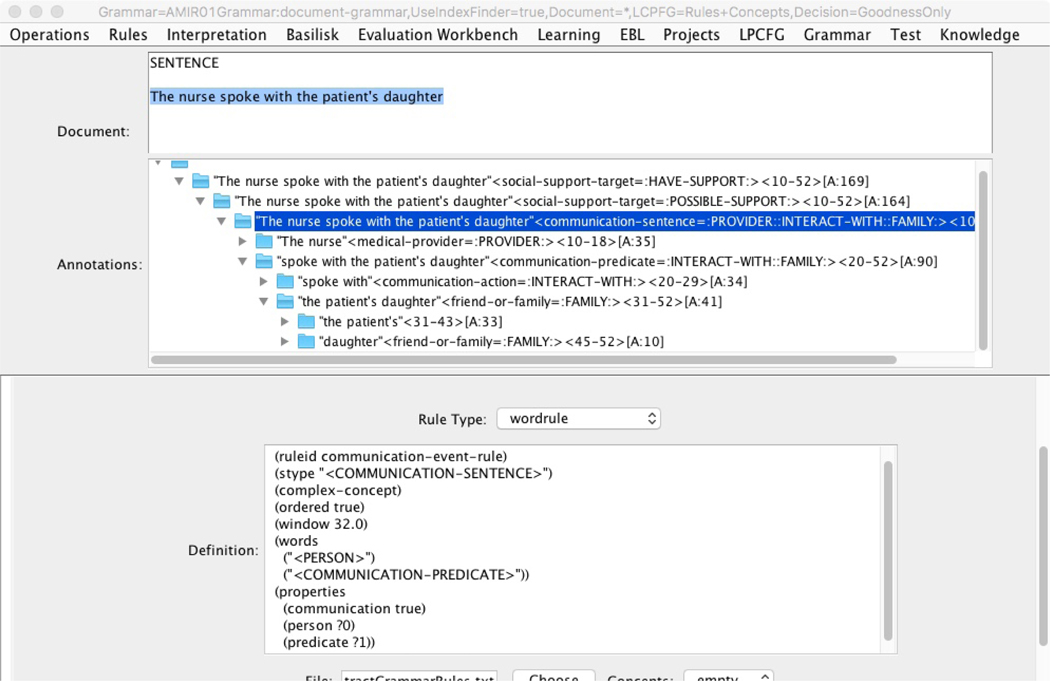
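The type hierarchy is what lets many different sentences collapse onto one abstract pattern such as “Provider Interact-With Family”. A hypothetical Python sketch, assuming a simple child-to-parent map (the constants and function names are illustrative and do not reproduce Moonstone’s ontology):

```python
# Hypothetical fragment of a type hierarchy: child constant -> parent constant.
PARENT = {
    ":WIFE:": ":SPOUSE:", ":HUSBAND:": ":SPOUSE:", ":SPOUSE:": ":FAMILY:",
    ":DAUGHTER:": ":CHILD:", ":SON:": ":CHILD:", ":CHILD:": ":FAMILY:",
    ":FAMILY:": ":PERSON:",
    ":NURSE:": ":PROVIDER:", ":ATTENDING:": ":PROVIDER:", ":PROVIDER:": ":PERSON:",
    ":SPEAK-WITH:": ":INTERACT-WITH:", ":INSTRUCT:": ":INTERACT-WITH:",
}
PATTERN_LEVEL = {":FAMILY:", ":PROVIDER:", ":INTERACT-WITH:"}


def ancestors(constant):
    """The constant followed by each of its supertypes, most specific first."""
    chain = [constant]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain


def is_a(constant, supertype):
    return supertype in ancestors(constant)


def abstract(constant, level=PATTERN_LEVEL):
    """Replace a constant by its nearest supertype at the pattern level, if any."""
    return next((c for c in ancestors(constant) if c in level), constant)


def pattern(seq):
    return tuple(abstract(c) for c in seq)


# "Nurse spoke with wife" and "Attending instructed the family" share one pattern.
p1 = pattern([":NURSE:", ":SPEAK-WITH:", ":WIFE:"])
p2 = pattern([":ATTENDING:", ":INSTRUCT:", ":FAMILY:"])
print(p1 == p2, p1)  # True (':PROVIDER:', ':INTERACT-WITH:', ':FAMILY:')
```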

The semantic grammar maps sequences of words in an English sentence to low-level literal constants such as :FAMILY: and :PROVIDER:, which are then grouped and mapped to more complex phrase- and sentence-level constants. Figure 3 shows a view of the “parse tree” produced by the application of the semantic grammar to the sentence “The nurse spoke with the patient’s daughter”. The most comprehensive literal summary recognized by the grammar is captured by the constant “:PROVIDER::INTERACT-WITH::FAMILY:”, which is then matched by another grammar rule which recognizes this as possibly signifying social support. Each node in the tree represents a grammar rule which successfully fired, and using the mouse to select that node in the Moonstone control GUI causes the definition of that rule to be displayed in the lower window as shown in figure 3. Using this tool to identify gaps in the grammar is central to the process of training Moonstone.

Fig 3.

Firing a Grammar Rule.
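The bottom-up construction of a parse tree like the one in Fig 3 can be approximated as repeated rule firing over a sequence of nodes. The Python sketch below is an illustrative assumption, not Moonstone’s parser: the lexicon, the two rules, and the function names are hypothetical, and unknown words are simply skipped.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    label: str                                   # semantic constant
    children: list = field(default_factory=list)
    text: str = ""                               # covered words, for leaf nodes


# Hypothetical lexicon (multi-word entries allowed) and grammar rules.
LEXICON = {
    ("nurse",): ":PROVIDER:",
    ("spoke", "with"): ":INTERACT-WITH:",
    ("patient's", "daughter"): ":FAMILY:",
    ("daughter",): ":FAMILY:",
}
RULES = [  # (sequence of constants matched, constant produced)
    ((":PROVIDER:", ":INTERACT-WITH:", ":FAMILY:"), ":PROVIDER::INTERACT-WITH::FAMILY:"),
    ((":PROVIDER::INTERACT-WITH::FAMILY:",), ":POSSIBLE-SUPPORT:"),
]
MAX_PHRASE = max(len(k) for k in LEXICON)


def lexical_nodes(sentence):
    """Longest-match lookup of words into leaf nodes; unknown words are skipped."""
    words = [w.strip(".,").lower() for w in sentence.split()]
    nodes, i = [], 0
    while i < len(words):
        for n in range(min(MAX_PHRASE, len(words) - i), 0, -1):
            key = tuple(words[i:i + n])
            if key in LEXICON:
                nodes.append(Node(LEXICON[key], text=" ".join(key)))
                i += n
                break
        else:
            i += 1
    return nodes


def parse(sentence):
    """Fire rules bottom-up until none applies; each fired rule becomes a tree node."""
    nodes = lexical_nodes(sentence)
    fired = True
    while fired:
        fired = False
        for rhs, lhs in RULES:
            n = len(rhs)
            for i in range(len(nodes) - n + 1):
                if tuple(nd.label for nd in nodes[i:i + n]) == rhs:
                    nodes[i:i + n] = [Node(lhs, children=nodes[i:i + n])]
                    fired = True
                    break
            if fired:
                break  # restart from the first rule after any change
    return nodes


def show(node, depth=0):
    line = "  " * depth + node.label + (f'  "{node.text}"' if node.text else "")
    return "\n".join([line] + [show(c, depth + 1) for c in node.children])


for root in parse("The nurse spoke with the patient's daughter."):
    print(show(root))
```

Running it prints an indented tree whose root is :POSSIBLE-SUPPORT:, with :PROVIDER::INTERACT-WITH::FAMILY: beneath it and the three lexical leaves (“nurse”, “spoke with”, “patient's daughter”) at the bottom; each non-leaf node corresponds to one fired rule.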

Four new types of functionality were added during this project to aid in training Moonstone. First, the graphical tool shown in figure 2 above was created to help in viewing and editing the TSL ontology, which had previously been done by hand using a text editor.

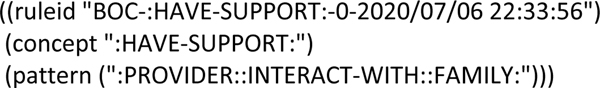

The second addition was a function (called the “snapshot function”) which can semi-automatically derive a new grammar rule directly from a parse tree such as shown in fig 3. If the Moonstone grammar fails to recognize an expression containing two or more known constants, one of the abstract grammar rules may generate a constant on-the-fly which is simply a concatenation of the subsumed lower constants. The human trainer can then assign a meaning to that constant from the ontology, either to a literal or inferred semantic constant, and a grammar rule which bridges the two constants is then automatically added to the grammar. This is a principal method by which rules are written that map complex literal meanings to target-level inferences, such as mapping :PROVIDER::INTERACT-WITH::FAMILY: to the ontology constant :POSSIBLE-SUPPORT: as shown in the “snapshot-generated” rule in figure 4:

Figure 4:

Automatically generated “snapshot rule”
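The snapshot function described above can be sketched as follows. This Python example is a simplified assumption about the workflow, not Moonstone’s code: an unrecognized run of known constants is concatenated into an on-the-fly constant, and when a trainer assigns it an ontology constant, a bridging rule is stored. The class, method, and ONTOLOGY names are hypothetical.

```python
# Hypothetical target constants available in the ontology.
ONTOLOGY = {":POSSIBLE-SUPPORT:", ":SOCIALLY-ISOLATED:"}


def concatenate(constants):
    """What an abstract rule does with an unrecognized run of known constants."""
    if len(constants) < 2:
        raise ValueError("an on-the-fly constant needs two or more known constants")
    return "".join(constants)


class Grammar:
    def __init__(self):
        self.snapshot_rules = {}  # on-the-fly constant -> ontology constant

    def interpret(self, constants):
        combined = concatenate(constants)
        return self.snapshot_rules.get(combined, combined)

    def snapshot(self, constants, meaning):
        """The trainer assigns a meaning; record a rule bridging the two constants."""
        if meaning not in ONTOLOGY:
            raise ValueError(f"{meaning} is not an ontology constant")
        combined = concatenate(constants)
        self.snapshot_rules[combined] = meaning
        return f"{combined} => {meaning}"


g = Grammar()
seq = [":PROVIDER:", ":INTERACT-WITH:", ":FAMILY:"]
before = g.interpret(seq)                        # ':PROVIDER::INTERACT-WITH::FAMILY:'
rule = g.snapshot(seq, ":POSSIBLE-SUPPORT:")
after = g.interpret(seq)                         # ':POSSIBLE-SUPPORT:'
print(rule)
```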

Thirdly, we instantiated a generalized parameterization of note structure to manage differences between VA and Vanderbilt clinical note formats. Parameters can be set to accommodate macros and templates, that is, text far from the norms of natural language structure, such as autogenerated text or questions and attribute names followed by brief descriptions, as in the following:

Stairs or Elevator: Stairs Number stairs inside: 12 Number stairs outside: 8 ADL Prior to Admission: Moderate assistance needed

Moonstone treats the questions/header portion of such statements in the same manner as headers to a sectioned report such as “History of Present Illness”. Code was added to Moonstone to extract a list of headings from the training corpus, which could then be matched against reports analyzed by Moonstone. Headers are not directly analyzed using Moonstone’s semantic grammar, but they can be referenced in tests stored with the grammar rules. For instance, the rule in figure 5 recognizes mention of a friend or family member preceded by a header containing strings such as “discuss”, “plan”, and “transport” as evidence of instrumental support.

Figure 5:

Headers in Relation to Grammar Rules
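The header-conditioned rule can be illustrated with a short Python sketch. It assumes a hypothetical header list standing in for the headers extracted from the training corpus, and it substitutes a regular expression for Moonstone’s semantic recognition of family and friend mentions; only the cue strings “discuss”, “plan”, and “transport” come from the text.

```python
import re

# Hypothetical header list, standing in for headers harvested from a training corpus.
KNOWN_HEADERS = [
    "Stairs or Elevator", "Number stairs inside", "Number stairs outside",
    "Transportation", "Discharge Plan", "History of Present Illness",
]
SUPPORT_HEADER_CUES = ("discuss", "plan", "transport")
# A regex stands in for the semantic grammar's family/friend recognition.
FAMILY_OR_FRIEND = re.compile(
    r"\b(wife|husband|daughter|son|mother|father|sister|brother|friend|neighbor)s?\b", re.I
)
HEADER_RE = re.compile(
    "(" + "|".join(re.escape(h) for h in KNOWN_HEADERS) + r")\s*:", re.I
)


def segments(text):
    """Split text into (header, body) pairs wherever a known header is followed by ':'."""
    matches = list(HEADER_RE.finditer(text))
    out = []
    for k, m in enumerate(matches):
        end = matches[k + 1].start() if k + 1 < len(matches) else len(text)
        out.append((m.group(1), text[m.end():end].strip()))
    return out


def instrumental_support_evidence(text):
    """Bodies mentioning family/friends under a header containing a support cue."""
    return [
        (header, body)
        for header, body in segments(text)
        if any(cue in header.lower() for cue in SUPPORT_HEADER_CUES)
        and FAMILY_OR_FRIEND.search(body)
    ]


note = ("Stairs or Elevator: Stairs Number stairs inside: 12 "
        "Transportation: daughter will drive pt home Discharge Plan: home with wife")
print(instrumental_support_evidence(note))
```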

Finally, we added a grammar compilation process designed to improve Moonstone’s runtime processing speed, which had degraded considerably with the addition of many new semantic types and constants, and of abstract grammar rules with a high branching factor used mostly during training. After training, the abstract rules are removed from the grammar, and newly compiled “snapshot” rules are activated to run in their place. In our experience, this grammar compilation step speeds up Moonstone’s runtime 10- to 100-fold.
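The text does not detail how compilation is implemented; the Python sketch below only illustrates the general idea under our own assumptions: abstract, high-branching training rules are dropped, and the remaining rules are indexed by the first constant they match so that fewer rules need to be tried at each position. The Rule class and function names are hypothetical, and the sketch makes no claim about reproducing the reported speedup.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    rhs: tuple              # sequence of constants the rule matches
    lhs: str                # constant the rule produces
    abstract: bool = False  # True for high-branching, training-time rules


def compile_grammar(rules):
    """Drop abstract rules and index the rest by the first constant they match."""
    index = defaultdict(list)
    for rule in rules:
        if not rule.abstract:
            index[rule.rhs[0]].append(rule)
    return dict(index)


def candidate_rules(compiled, constant):
    """At parse time, only rules that can start at this constant are tried."""
    return compiled.get(constant, [])


rules = [
    Rule((":PROVIDER:", ":INTERACT-WITH:", ":FAMILY:"), ":PROVIDER::INTERACT-WITH::FAMILY:"),
    Rule((":PROVIDER::INTERACT-WITH::FAMILY:",), ":POSSIBLE-SUPPORT:"),
    Rule(("*", "*"), "<concatenation>", abstract=True),  # stand-in for an abstract rule
]
compiled = compile_grammar(rules)
print(len(candidate_rules(compiled, ":PROVIDER:")), len(candidate_rules(compiled, "*")))  # 1 0
```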

The ontology resulting from the interaction of these sub-parts is complex. At the completion of training, it contained 541 semantic types, 2,413 object constants, and 2,505 lexical items. These items combine with the grammar to support automated inferences, for example, that a note contains evidence of instrumental support. The class Instrumental Support is defined as assistance by friends, family, community, or government in the form of financial, medical (including communication with healthcare workers), food, or housing assistance. Table 3 illustrates examples of classes used by the grammar to generate the inference of Instrumental Support.

Table 3:

Moonstone Lexicon and Inferencing Example Accommodating Instrumental Support Classification

| Sample class | Lexicon | Example sentence for Instrumental Support |
|---|---|---|
| Family member | mother, sister, brother, son-in-law, etc. | Father at the bedside |
| Transportation | car, taxi, bus | Took taxi home after procedure |
| Communication | report, instruct, talk with, discuss | Discussed procedure with mother |

3. Results

We measured inter-annotator agreement to determine the stability of the annotation guidelines and the reliability of the annotated dataset as a reference standard for training and evaluating Moonstone’s output. Overall agreement was 89.5%, indicating that the dataset is stable.

3.1. Inter-Annotator Agreement

Inter-annotator agreement rates on the project corpus (160 documents) are shown in Table 4.

Table 4:

Inter-annotator agreement

| Concept | IAA |
|---|---|
| Summary | 89.5% |
| Living Alone | 89.6% |
| Instrumental Support | 85.6% |
| Impaired ADL/IADL | 93.5% |
| Medical Condition | 83.5% |
| Medication Compliance | 88.6% |
| Depression | 94.5% |
| Dementia | 87.5% |
| Language Barrier | 83.3% |
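The agreement formula is not specified here. One common formulation for span annotations, sketched below in Python as an assumption rather than the authors’ exact computation, counts two annotations as matching only when concept, assertion value, and span are identical, and reports 2 × matches / (annotations by A + annotations by B). The annotation tuples and function names are hypothetical.

```python
def pairwise_agreement(ann_a, ann_b):
    """Agreement as 2*matches / (annotations by A + annotations by B), exact matches only."""
    a, b = set(ann_a), set(ann_b)
    total = len(a) + len(b)
    return 2 * len(a & b) / total if total else 1.0


def agreement_by_concept(ann_a, ann_b):
    """Apply pairwise_agreement separately to each concept's annotations."""
    concepts = sorted({x[0] for x in ann_a} | {x[0] for x in ann_b})
    return {
        c: pairwise_agreement([x for x in ann_a if x[0] == c], [x for x in ann_b if x[0] == c])
        for c in concepts
    }


# Hypothetical annotations: (concept, assertion, start_offset, end_offset).
annotator_1 = [("Depression", "present", 10, 25), ("Living Alone", "absent", 40, 62),
               ("Dementia", "present", 80, 95)]
annotator_2 = [("Depression", "present", 10, 25), ("Living Alone", "absent", 40, 62),
               ("Dementia", "possible", 80, 95)]
print(round(pairwise_agreement(annotator_1, annotator_2), 3))  # 0.667
print(agreement_by_concept(annotator_1, annotator_2))
```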

3.2. Moonstone Performance Measures

The annotated dataset of 160 documents was divided into a development set of 104 documents, used to train the system, and a test set of 56 documents, reserved to evaluate its performance. We measured Moonstone’s performance both on the documents it was trained on (Table 5, 104 documents) and on documents it had not been exposed to (Table 6, 56 documents). Moonstone achieved an overall F-measure of .88 on the development set; on the test set, it achieved an overall precision of .83, recall of .74, and F-measure of .78. The highest-performing concept, Impaired ADL/IADL, achieved a precision of .94, recall of .85, and F-measure of .89; this concept was not among those in the original version of the tool. Also new to the social risk ontology in this version are Depression (precision .83, recall .83, F-measure .83) and Dementia (precision 1.00, recall .71, F-measure .83). Living Alone, a concept adapted from the prior version, achieved a precision of .83, recall of .74, and F-measure of .78, compared with an F-measure of .94 in the prior version. However, the prior-version concept most closely aligned with the current annotation definition of Living Alone was Housing Situation, which had an F-measure of .82. Medical Condition showed moderate performance, with an F-measure of .51, and the two concepts with very small sample sizes, Medication Compliance (F-measure .31) and Language Barrier (F-measure .00), performed poorly.

Table 5:

Moonstone performance per SDoH concept on 104 development documents

| Concept | TP | FP | FN | Precision | Recall | F1 |
|---|---|---|---|---|---|---|
| Summary | 1178 | 144 | 155 | 0.89 | 0.88 | 0.88 |
| Living Alone | 108 | 8 | 18 | 0.93 | 0.85 | 0.89 |
| Instrumental Support | 238 | 24 | 34 | 0.90 | 0.87 | 0.89 |
| Impaired ADL/IADL | 617 | 30 | 40 | 0.95 | 0.93 | 0.94 |
| Medical Condition | 132 | 77 | 60 | 0.63 | 0.68 | 0.65 |
| Medication Compliance | 21 | 1 | 0 | 0.95 | 1.00 | 0.97 |
| Depression | 41 | 3 | 2 | 0.93 | 0.95 | 0.94 |
| Dementia | 15 | 0 | 1 | 1.00 | 0.93 | 0.96 |
| Language Barrier | 6 | 1 | 0 | 0.85 | 1.00 | 0.92 |

Table 6:

Moonstone performance per SDoH concept on 56 test documents

| Concept | TP | FP | FN | Precision | Recall | F1 |
|---|---|---|---|---|---|---|
| Summary | 517 | 104 | 185 | 0.83 | 0.74 | 0.78 |
| Living Alone | 40 | 8 | 14 | 0.83 | 0.74 | 0.78 |
| Instrumental Support | 89 | 13 | 45 | 0.87 | 0.66 | 0.75 |
| Impaired ADL/IADL | 300 | 20 | 53 | 0.94 | 0.85 | 0.89 |
| Medical Condition | 54 | 49 | 55 | 0.52 | 0.50 | 0.51 |
| Medication Compliance | 4 | 7 | 11 | 0.36 | 0.27 | 0.31 |
| Depression | 25 | 5 | 5 | 0.83 | 0.83 | 0.83 |
| Dementia | 5 | 0 | 2 | 1.00 | 0.71 | 0.83 |
| Language Barrier | 0 | 2 | 0 | 0.00 | - | 0.00 |
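In Tables 5 and 6, the Summary row equals the column sums of the per-concept TP, FP, and FN counts. For the test set these sums are 517, 104, and 185. The overall figures are therefore micro-averaged and weighted toward frequent concepts such as Impaired ADL/IADL, which contributes 300 of the 517 test-set true positives.

The sketch below uses the Table 6 counts and hypothetical function names. It contrasts the micro average with a macro average, the unweighted mean of per-concept F-measures. The macro average comes out near .61 because Medication Compliance and Language Barrier count equally with the frequent concepts.

```python
from typing import Dict, Tuple

Counts = Tuple[int, int, int]  # (TP, FP, FN)

# Per-concept counts copied from Table 6 (56 test documents)
TEST_COUNTS: Dict[str, Counts] = {
    "Living Alone": (40, 8, 14),
    "Instrumental Support": (89, 13, 45),
    "Impaired ADL/IADL": (300, 20, 53),
    "Medical Condition": (54, 49, 55),
    "Medication Compliance": (4, 7, 11),
    "Depression": (25, 5, 5),
    "Dementia": (5, 0, 2),
    "Language Barrier": (0, 2, 0),
}


def f1(tp: int, fp: int, fn: int) -> float:
    """F-measure as 2TP / (2TP + FP + FN); 0.0 when there is nothing to score."""
    den = 2 * tp + fp + fn
    return 2 * tp / den if den else 0.0


def micro_counts(counts: Dict[str, Counts]) -> Counts:
    """Pool TP, FP and FN across concepts before scoring (micro-averaging)."""
    tp = sum(c[0] for c in counts.values())
    fp = sum(c[1] for c in counts.values())
    fn = sum(c[2] for c in counts.values())
    return tp, fp, fn


def macro_f1(counts: Dict[str, Counts]) -> float:
    """Unweighted mean of the per-concept F-measures (macro-averaging)."""
    return sum(f1(*c) for c in counts.values()) / len(counts)


if __name__ == "__main__":
    pooled = micro_counts(TEST_COUNTS)
    print("pooled counts:", pooled)
    print(f"micro F1: {f1(*pooled):.2f}")
    print(f"macro F1: {macro_f1(TEST_COUNTS):.2f}")
```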

3.2.1. Moonstone Performance

Moonstone performance measures per concept on the development set of 104 documents are shown in Table 5.

Moonstone performance measures per concept on the test set of 56 documents are shown in Table 6.

4. Discussion

We evaluated Moonstone’s performance at identifying the extended set of SDoH on an unseen test set and demonstrated moderate to excellent performance for most concepts. Compared with its predecessor, the current version of Moonstone covers a greater number of social risk factors with a more nuanced set of associated values. It was deployed to capture these factors in a different clinical domain, processing documents produced by a different medical records system at a different institution. The programming environment in which it was developed was also repurposed to facilitate more generalized use. The analyses of the modifications to Moonstone address these differences.

Extraction of SDoH from clinical text is a complex NLP problem. The semantic classes required to identify concepts like Living Alone and Instrumental Support go beyond typical classes like disease, anatomic location, and procedures to include family members, chores, transportation, relationships, activities, houseware, food, etc. In addition to a much more diverse lexicon, the task requires a deeper understanding of semantic roles. For instance, to determine that the sentence “Patient requires help from his wife to eat” indicates an Impaired Activity of Daily Living, the system must differentiate between a patient helping feed his wife and a wife helping feed the patient. We made substantive changes to the programming environment for Moonstone to enable adaptation to a new clinical domain, to a different medical record system, and to an extended set of SDoH. These additions enabled developers who were not involved in the initial creation of Moonstone to make the changes necessary for this complex task. The code base and user information for the most recent version of Moonstone can be found in this repository: https://github.com/leenlp/DVUMoonstoneAMI.
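The toy Python sketch below makes the semantic-role problem concrete. It uses two hand-written regular expressions for a single activity of daily living (eating), and they fire only when the patient is the one receiving help. Keyword matching alone cannot separate these cases, because the same words (patient, wife, help, feed) appear whether the patient gives or receives help. The patterns, helper vocabulary, and names are hypothetical illustrations, not Moonstone’s actual rules, which combine an embedded ontology with inferencing rules.

```python
import re

# Hypothetical toy vocabulary for one activity of daily living (eating).
EAT_VERBS = r"(?:eat|eating|feed|feeding)"
HELPER = r"(?:his|her|the\s+patient's)\s+(?:wife|husband|daughter|son|caregiver)"

# Patient as subject who receives help: "Patient requires help from his wife to eat"
PATIENT_RECEIVES_HELP = re.compile(
    rf"\bpatient\s+(?:requires|needs|receives)\s+help\s+from\s+{HELPER}\s+(?:to|with)\s+{EAT_VERBS}\b",
    re.IGNORECASE,
)
# Helper as subject, patient as object: "His wife helps feed the patient"
HELPER_FEEDS_PATIENT = re.compile(
    rf"\b{HELPER}\s+helps?\s+(?:to\s+)?feed\s+(?:the\s+)?patient\b",
    re.IGNORECASE,
)


def indicates_impaired_eating(sentence: str) -> bool:
    """True only when the patient, not a family member, is the one helped to eat."""
    return bool(PATIENT_RECEIVES_HELP.search(sentence) or HELPER_FEEDS_PATIENT.search(sentence))


if __name__ == "__main__":
    for s in (
        "Patient requires help from his wife to eat.",
        "His wife helps feed the patient at dinner.",
        "Patient helps feed his wife, who had a stroke.",
    ):
        print(indicates_impaired_eating(s), "|", s)
```

Surface patterns like these break down quickly as phrasing varies. This brittleness is one reason a rule system benefits from an ontology of roles and activities rather than fixed strings.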

4.1. Moonstone Error Analysis

Text mentions corresponding to the class Medical Condition were more prevalent, more variable in expression, and appeared in a wider range of note types than mentions of other classes. Its modest performance was therefore not surprising. It can be understood in light of a decision made during reference standard creation: medical conditions were annotated only when they adversely affect the patient’s ability to perform activities of daily living, such as dysphagia in relation to eating. Even so, the variety of such conditions was too large to capture successfully in a single class. Similarly, the causes of patients’ failure to comply with medication orders were numerous and heterogeneous enough that Medication Compliance, though potentially useful as an indicator of suboptimal health management, will require more granular subclasses in future Moonstone versions.

In future work, we will combine text and structured variables to predict hospital readmission. To enable integration of the information extracted from text with structured data from the EHR, we converted Moonstone’s output to OMOP. Whether NLP performance is good enough is a relative question that depends on the use case. Because the readmission prediction models under development are machine-learning-based, we plan to use all concepts with an F-measure above .50 as inputs to the predictive models, and we will assess whether information from the clinical notes improves predictive accuracy. Moonstone’s output includes the values present, absent, and possible for the SDoH concepts, but it also includes the layers of information used to generate those values. For instance, for the sentence “she came to the clinic with her friend,” Moonstone concludes that Instrumental Support is present. It also captures that a patient is being described, that there is travel to a medical facility, and that the patient was accompanied by a friend or family member. Even if Moonstone’s summative classification of present is mistaken, a machine learning classifier can use these layers of information to help predict whether the patient will be readmitted to the hospital. In keeping with the principles involved in making Moonstone outputs (and NLP-derived variables in general) compatible with clinical CDMs, Moonstone was designed to balance generalizability with the intelligibility of its working parts. Moonstone outputs were mapped to the OMOP CDM specifications for NLP data provided by OHDSI. For each class in our study’s SDoH ontology, we selected the closest concept available within the medical vocabularies (e.g., SNOMED-CT, MedDRA, LOINC).
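The Python sketch below illustrates two ideas from this paragraph, assuming a hypothetical dictionary layout for Moonstone output. First, concepts are kept only if their test-set F-measure in Table 6 exceeds .50. Second, each kept concept contributes both its summative value and its intermediate layers as binary features. The layer names are invented labels for the layers described for the sentence “she came to the clinic with her friend,” not Moonstone’s internal identifiers. With the Table 6 values, Medication Compliance (.31) and Language Barrier (.00) are excluded, and the other six concepts are kept, including Medical Condition (.51).

```python
from typing import Dict, List

# Test-set F-measures copied from Table 6
TEST_F1: Dict[str, float] = {
    "Living Alone": 0.78,
    "Instrumental Support": 0.75,
    "Impaired ADL/IADL": 0.89,
    "Medical Condition": 0.51,
    "Medication Compliance": 0.31,
    "Depression": 0.83,
    "Dementia": 0.83,
    "Language Barrier": 0.00,
}


def select_concepts(f1_by_concept: Dict[str, float], threshold: float = 0.50) -> List[str]:
    """Return concepts whose F-measure is strictly above the threshold, sorted by name."""
    return sorted(c for c, f in f1_by_concept.items() if f > threshold)


def to_features(doc_output: Dict[str, dict], keep: List[str]) -> Dict[str, int]:
    """Flatten one document's (hypothetical) Moonstone-style output into binary features.

    Each kept concept yields one indicator for its summative value and one per
    intermediate layer, so a classifier can still use the layers when the
    summative value is wrong.
    """
    features: Dict[str, int] = {}
    for concept, result in doc_output.items():
        if concept not in keep:
            continue  # drop concepts below the performance threshold
        features[f"{concept}={result['value']}"] = 1
        for layer in result.get("layers", []):
            features[f"{concept}|{layer}"] = 1
    return features


if __name__ == "__main__":
    kept = select_concepts(TEST_F1)
    # Hypothetical output for "she came to the clinic with her friend"
    doc = {
        "Instrumental Support": {
            "value": "present",
            "layers": ["PatientMention", "TravelToMedicalFacility", "AccompaniedByFriendOrFamily"],
        },
        "Language Barrier": {"value": "possible", "layers": []},
    }
    print(kept)
    print(to_features(doc, kept))
```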
We believe that the OHDSI recommendations for the mapping of NLP-derived variables, associated attribute values, and select metadata elements deserve a fresh look from informaticians interested in aggregating variables from both structured and unstructured data streams, at a minimum in terms of information provenance and confidence-level determinants. Because SDoH information in particular has yet to achieve an authoritative consensus definition, careful review of existing vocabularies was required for this phase of integrating Moonstone’s results into the AMI readmission prediction model. The concept-mapping-by-topic approach discussed by Winden, Chen and Melton (2016) for the representation of Residence, Living Situation, and Living Conditions delineates a rigorous methodology for leveraging existing resources.32 Future steps include modifications to the developer interface to allow adding new concepts, and more transparent unit tests for testing modifications to either the ontology or the rule set. We also expect to be able to leverage the SDoH measures planned for mandated collection by the Office of the National Coordinator for Health Information Technology. These measures were developed from recommendations by the National Academy of Medicine (the Institute of Medicine at the time of the recommendation) for implementation as Logical Observation Identifiers Names and Codes (LOINC) codes.33 Further, the user manual for deploying the system in its current state is being updated. Finally, the Moonstone attribute module, which assigns temporal status values (e.g., historical, current, chronic) to detected entities, will be reintegrated into the code base.
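The OMOP CDM stores NLP output in its NOTE_NLP table. As one possible layout, the Python sketch below builds a row from a single hypothetical Moonstone finding, assuming OMOP CDM v5.3/v5.4 column names and using only a subset of the NOTE_NLP columns. Every concept_id is set to 0, the OMOP convention for “no matching concept,” as a placeholder for the result of the vocabulary review described above. OHDSI defines term_exists as false when, for example, a mention is negated or of very low certainty. Following that definition, the sketch maps only present to 'Y' and preserves the original value in term_modifiers. Treating possible this way is an assumption. term_temporal is left empty because the temporal attribute module has not yet been reintegrated.

```python
from dataclasses import asdict, dataclass
from datetime import date
from typing import Dict, Optional

# Placeholder mapping from SDoH class to OMOP concept_id. 0 means "no matching
# concept" in OMOP; real ids would come from a vetted vocabulary review.
CONCEPT_MAP: Dict[str, int] = {
    "Living Alone": 0,
    "Instrumental Support": 0,
    "Impaired ADL/IADL": 0,
    "Medical Condition": 0,
    "Depression": 0,
    "Dementia": 0,
}

VALID_VALUES = ("present", "absent", "possible")


@dataclass
class NoteNlpRow:
    """Subset of the OMOP CDM NOTE_NLP columns (v5.3/v5.4 names)."""
    note_nlp_id: int
    note_id: int
    section_concept_id: int
    snippet: str
    offset: str
    lexical_variant: str
    note_nlp_concept_id: int
    note_nlp_source_concept_id: int
    nlp_system: str
    nlp_date: date
    term_exists: str
    term_temporal: Optional[str]
    term_modifiers: str


def to_note_nlp(row_id: int, note_id: int, sdoh_class: str, value: str,
                snippet: str, start: int, matched_text: str, run_date: date) -> NoteNlpRow:
    """Convert one (hypothetical) Moonstone finding into a NOTE_NLP row."""
    if value not in VALID_VALUES:
        raise ValueError(f"unexpected Moonstone value: {value!r}")
    return NoteNlpRow(
        note_nlp_id=row_id,
        note_id=note_id,
        section_concept_id=0,              # section not resolved in this sketch
        snippet=snippet,
        offset=str(start),                 # NOTE_NLP.offset is a character column
        lexical_variant=matched_text,
        note_nlp_concept_id=CONCEPT_MAP.get(sdoh_class, 0),
        note_nlp_source_concept_id=0,
        nlp_system="Moonstone",
        nlp_date=run_date,
        # Assumption: only "present" counts as an existing term; "absent" and
        # "possible" become 'N', with the original value kept in term_modifiers.
        term_exists="Y" if value == "present" else "N",
        term_temporal=None,                # temporal module not yet reintegrated
        term_modifiers=f"sdoh_class={sdoh_class};value={value}",
    )


if __name__ == "__main__":
    row = to_note_nlp(1, 42, "Instrumental Support", "present",
                      "she came to the clinic with her friend", 0,
                      "with her friend", date(2021, 1, 1))
    print(asdict(row))
```

Keeping the original Moonstone value in term_modifiers means a downstream model can still distinguish possible from absent, even though OMOP’s term_exists flag collapses them.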

The Office of the National Coordinator for Health IT has been planning for the collection and coding of SDoH using measures recommended by the National Academy of Medicine. Even so, no standardized vocabulary, typology, or SDoH ontology has been available for mapping to such measures. A recent feasibility study, developed using data within the National Health Service of the United Kingdom, addresses the need for an SDoH ontology for the purpose of prescribing according to the resources available to the patient.34 Construction of the UK SDoH ontology is underway, using codes available within the NHS, whose descriptors could potentially be used for a US version. Deciding which social factors are important for a given demographic or cohort type is a complex decision process. Lasser, Kim, Hatef, et al. (2021) discuss this in an SDoH recommendations study, citing the need for a limited set of variables that is nonetheless dynamic enough to allow change within the collection process.35 A feasible way of limiting SDoH variables to a cohort type would be to deploy distinct versions of Moonstone for collecting and storing outputs across cohorts, with patient membership defined independently of Moonstone. As described in previously published work on Moonstone, it optionally accesses several existing vocabularies and ontologies within the Unified Medical Language System (UMLS) to recognize and classify some types of text. By contrast, the other lexical items involved in the semantics of how social situations interact with medical information required much smaller lexicons for SDoH inferencing. In its current state, Moonstone provides the user with several levels of interaction between the embedded ontology and its inferencing rules.

A limitation of this study is that we did not compare against a baseline algorithm. Using a machine learning classifier as a baseline would have required far more labeled training data than it was feasible to annotate in this study. In addition, the previous Moonstone publication already demonstrated that a version of the algorithm without the inferencing additions discussed here performed poorly.

5. Conclusion

The adaptation of Moonstone to differences in clinical use case, medical record system, and programming environment for the collection of social determinants of health delivers variables that can be incorporated into the OMOP CDM, with overall precision of 0.83, recall of 0.74, and F-measure of 0.78. The programming environment of the toolkit has been modified to allow developers to adjust and adapt the system to differences in clinical use case, EHR formatting, and documentation practices.

Highlights.

SDoH can affect access to care, quality of life, health outcomes & life expectancy

Currently, SDoH are not collected in a systematic way that would allow analyses

The Moonstone NLP tool has been successfully adapted from VA EHRs to the Vanderbilt EHR

Moonstone extracts 8 SDoH from clinical notes: prec. .83, recall .74, F-measure .78

Moonstone’s graphical interface aids testing the effects of semantic interactions

6. Funding and Support Acknowledgements

This study was supported by NHLBI grant R01HL130828 (principal investigators: Drs Matheny, Chapman, and Brown). Dr Shah is supported in part by grant K08HL136850 from the NHLBI.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

7. References

1. Hatef ESK, Predmore Z, Lasser EC, et al. The Impact of Social Determinants of Health on Hospitalization in the Veterans Health Administration. American Journal of Preventive Medicine 2019;56:811–8.

2. Havranek EP, Mujahid MS, Barr DA, et al. Social Determinants of Risk and Outcomes for Cardiovascular Disease: A Scientific Statement From the American Heart Association. Circulation 2015;132:873–98.

3. World Health Organization. World Health Organization Commission on Social Determinants of Health. Geneva: World Health Organization; 2008.

4. Conway M, Keyhani S, Christensen L, et al. Moonstone: a novel natural language processing system for inferring social risk from clinical narratives. J Biomed Semantics 2019;10:6.

5. Wang SYTA, Claggett B, Chandra A, Khatana SAM, Lutsey PL, Kucharska-Newton A, Koton S, Solomon SD, Kawachi I. Longitudinal Associations Between Income Changes and Incident Cardiovascular Disease: The Atherosclerosis Risk in Communities Study. JAMA Cardiology 2019;4:1203–12.

6. Elfassy T, Swift SL, Glymour MM, et al. Associations of Income Volatility With Incident Cardiovascular Disease and All-Cause Mortality in a US Cohort. Circulation 2019;139:850–9.

7. Joynt KEMT. Looking Beyond the Hospital to Reduce Acute Myocardial Infarction: Progress and Potential. JAMA Cardiology 2016;1:251–3.

8. Manrique-Garcia ESA, Hallqvist J, et al. Socioeconomic position and incidence of acute myocardial infarction: a meta-analysis. Journal of Epidemiology and Community Health 2011;65:301–9.

9. Spatz ESBA, Wang Y, Desai NR, Krumholz HM. Geographic Variation in Trends and Disparities in Acute Myocardial Infarction Hospitalization and Mortality by Income Levels. JAMA Cardiology 2016;1:255–65.

10. Hu J, Gonsahn MD, Nerenz DR. Socioeconomic status and readmissions: evidence from an urban teaching hospital. Health Aff (Millwood) 2014;33:778–85.

11. Barnett ML, Hsu J, McWilliams JM. Patient Characteristics and Differences in Hospital Readmission Rates. JAMA Intern Med 2015;175:1803–12.

12. National Academy of Medicine (formerly Institute of Medicine). Capturing Social and Behavioral Domains in Electronic Health Records: Phase 1. The National Academies Press; 2014.

13. National Academy of Medicine (formerly Institute of Medicine). Capturing Social and Behavioral Domains and Measures in Electronic Health Records: Phase 2. The National Academies Press; 2014.

14. Bejan CA, Angiolillo J, Conway D, Nash R, Shirey-Rice JK, Lipworth, et al. Mining 100 million notes to find homelessness and adverse childhood experiences: 2 case studies of rare and severe social determinants of health in electronic health records. Journal of the American Medical Informatics Association 2018;25:61–71.

15. Chen M, Tan X, Padman R. Social determinants of health in electronic health records and their impact on analysis and risk prediction: A systematic review. J Am Med Inform Assoc 2020;27:1764–73.

16. Freij M, Dullabh P, Lewis S, Smith SR, Hovey L, Dhopeshwarkar R. Incorporating Social Determinants of Health in Electronic Health Records: Qualitative Study of Current Practices Among Top Vendors. JMIR Med Inform 2019;7:e13849.

17. Cantor MN, Thorpe L. Integrating Data On Social Determinants Of Health Into Electronic Health Records. Health Aff (Millwood) 2018;37:585–90.

18. Wade G, Rosenbloom S. Experiences mapping a legacy interface terminology to SNOMED CT. BMC Medical Informatics and Decision Making 2008;8:S3.

19. Rosenbloom S, Miller R, Johnson K, Elkin P, Brown S. Interface Terminologies: Facilitating Direct Entry of Clinical Data into Electronic Health Record Systems. Journal of the American Medical Informatics Association 2006;13:277–88.

20. Hatef E, Rouhizadeh M, Tia I, et al. Assessing the Availability of Data on Social and Behavioral Determinants in Structured and Unstructured Electronic Health Records: A Retrospective Analysis of a Multilevel Health Care System. JMIR Med Inform 2019;7:e13802.

21. Gundlapalli AV, Carter ME, Divita G, Shen S, Palmer M, South B, et al. Extracting Concepts Related to Homelessness from the Free Text of VA Electronic Medical Records. AMIA Annu Symp Proc 2014;2014:589–98.

22. Gundlapalli AV, Carter ME, Palmer M, Ginter T, Redd A, Pickard S, et al. Using natural language processing on the free text of clinical documents to screen for evidence of homelessness among US veterans. AMIA Annu Symp Proc 2013;2013:537–46.

23. Wang Y, et al. Automated Extraction of Substance Use Information from Clinical Texts. AMIA Annu Symp Proc 2015;2015:2121–30.

24. Greenwald JL, Cronin PR, Carballo V, Danaei G, Choy G. A Novel Model for Predicting Rehospitalization Risk Incorporating Physical Function, Cognitive Status, and Psychosocial Support Using Natural Language Processing. Med Care 2017;55:261–6.

25. Rumshisky A, Ghassemi M, Naumann T, et al. Predicting early psychiatric readmission with natural language processing of narrative discharge summaries. Transl Psychiatry 2016;6:e921.

26. OHDSI. The Book of OHDSI: Observational Health Data Sciences and Informatics. OHDSI; 2019.

27. Dorr D, Bejan CA, Pizzimenti C, Singh S, Storer M, Quinones A. Identifying Patients with Significant Problems Related to Social Determinants of Health with Natural Language Processing. Stud Health Technol Inform 2019;264:1456–7.

28. Wang L, Lakin J, Riley C, Korach Z, Frain LN, Zhou L. Disease Trajectories and End-of-Life Care for Dementias: Latent Topic Modeling and Trend Analysis Using Clinical Notes. AMIA Annu Symp Proc 2018;2018:1056–65.

29. Matheny ME, Ricket I, Goodrich CA, et al. Development of Electronic Health Record–Based Prediction Models for 30-Day Readmission Risk Among Patients Hospitalized for Acute Myocardial Infarction. JAMA Network Open 2021;4:e2035782.

30. South BR, Mowery D, Suo Y, et al. Evaluating the effects of machine pre-annotation and an interactive annotation interface on manual de-identification of clinical text. J Biomed Inform 2014;50:162–72.

31. Ruth M. Reeves BS, Glenn Gobbel, Lee Christensen, Paul Thompson, Wendy Chapman, Michael Matheny, Jeremiah Brown. Annotating Social Determinants of Health and Functional Status Information Using Publicly Accessible Corpora. AMIA Annual Symposium. San Francisco, CA; 2018.

32. Winden TJ, Chen ES, Melton GB. Representing Residence, Living Situation, and Living Conditions: An Evaluation of Terminologies, Standards, Guidelines, and Measures/Surveys. AMIA Annu Symp Proc 2016;2016:2072–81.

33. National Academy of Medicine (formerly Institute of Medicine). Capturing Social and Behavioral Domains and Measures in Electronic Health Records: Phase 2. Washington (DC); 2015.

34. Jani A, Liyanage H, Okusi C, et al. Using an Ontology to Facilitate More Accurate Coding of Social Prescriptions Addressing Social Determinants of Health: Feasibility Study. J Med Internet Res 2020;22.

35. Lasser EC, Kim JM, Hatef E, Kharrazi H, Marsteller JA, DeCamp LR. Social and Behavioral Variables in the Electronic Health Record: A Path Forward to Increase Data Quality and Utility. Acad Med 2021.