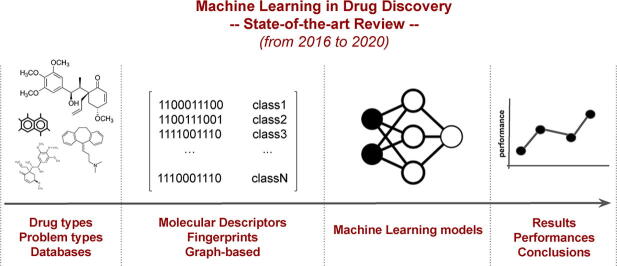

Graphical abstract

Keywords: Machine Learning, Drug Discovery, Cheminformatics, QSAR, Molecular Descriptors, Deep Learning

Abbreviations: ML, Machine Learning; AI, Artificial Intelligence; SMILES, simplified molecular-input line-entry system; DNA, Deoxyribonucleic acid; RNA, Ribonucleic Acid; PCA, Principal Component Analysis; t-SNE, t-Distributed Stochastic Neighbor Embedding; FS, Feature Selection; CV, Cross Validation; QSAR, Quantitative structure–activity relationship; MD, Molecular Descriptors; FP, Fingerprints; ECFP, Extended Connectivity Fingerprints; MACCS, Molecular ACCess System; APFP, Atom Pairs 2d FingerPrint; CDK, Chemistry Development Kit; SVM, Support Vector Machines; ANN, Artificial Neural Networks; NB, Naive Bayes; FNN, Fully Connected Neural Networks; CNN, Convolutional Neural Networks; GNN, Graph Neural Networks; GCN, Graph Convolutional Networks; ADMET, Absorption, distribution, metabolism, elimination and toxicity; ADR, Adverse Drug Reaction; CPI, Compound-protein interaction; CNS, Central Nervous System; BBB, Blood–Brain barrier; KEGG, Kyoto Encyclopedia of Genes and Genomes; WHO, World Health Organization; AUC, Area under the Curve; GEO, Gene Expression Omnibus; FDA, Food and Drug Administration; MKL, Multiple Kernel Learning; OOB, Out of Bag; TCGA, The Cancer Genome Atlas; GO, Gene Ontology; MCC, Matthews correlation coefficient; RF, Random Forest; DL, Deep Learning

Highlights

- Machine Learning in drug discovery has greatly benefited the pharmaceutical industry.
- Application of machine learning algorithms must entail a robust design in real clinical tasks.
- Trending machine learning algorithms in drug design: NB, SVM, RF and ANN.

Abstract

Drug discovery aims at finding new compounds with specific chemical properties for the treatment of diseases. In recent years, this search has acquired a strong computational component, driven by the rapid rise and democratization of machine learning techniques. With the objectives set by the Precision Medicine Initiative and the new challenges they generate, it is necessary to establish robust, standard and reproducible computational methodologies. Currently, predictive models based on Machine Learning have gained great importance in the step prior to preclinical studies. This stage drastically reduces the cost and duration of research in the discovery of new drugs. This review focuses on how these methodologies have been used in recent years of research. Analyzing the state of the art in this field gives an idea of where cheminformatics will develop in the short term, the limitations it presents and the positive results it has achieved. The review focuses mainly on the methods used to model the molecular data, the biological problems addressed and the Machine Learning algorithms used for drug discovery in recent years.

1. Introduction

According to the Precision Medicine Initiative, precision medicine is “an emerging approach for disease treatment and prevention that takes into account individual variability in genes, environment and lifestyle for each person” [1]. This new approach allows physicians and researchers to increase accuracy in predicting disease treatment and prevention strategies that will work for particular groups of people. This approach contrasts with the “one-size-fits-all” approach, more widely used until relatively recently, in which the strategies mentioned above are developed with the average person in mind, regardless of differences between individuals.

The opportunity for the creation of new treatments offered by precision medicine generates at the same time great difficulties in the development of new methodologies. For this reason, in recent years a large amount of biomedical data has been generated, coming from very diverse sources: from small individual laboratories to large international initiatives. These data, known mostly as omic data (genomic, proteomic, metabolomic, pharmacogenomic, etc.), are an inexhaustible source of information for the scientific community, which allows stratifying patients, obtaining specific diagnoses or generating new treatments [2].

Diagnostic tests are frequently performed in some disease areas, as they allow immediate identification of the most effective treatment for a specific patient through a specific molecular analysis. This often avoids the practice of trial-and-error medicine, which is frequently frustrating and considerably more expensive. In addition, drugs created from these molecular characteristics usually improve treatment results and reduce side effects. One of the most common examples can be found in the treatment of patients with breast cancer. A significant percentage of patients with this type of tumor are characterized by overexpression of human epidermal growth factor receptor 2 (HER2). For these patients, treatment with the drug trastuzumab (Herceptin) in addition to chemotherapy can reduce the risk of recurrence by more than 50% [3].

On the other hand, there are also the so-called pharmacogenomic tests, which assist in decisions about the drug and the dose prescribed for each patient. These decisions are based on the genomic profiles of the patients, since patients can metabolize certain drugs in different ways according to their genetics, which may cause adverse reactions. These reactions are related to variants in the genes that encode drug-metabolizing enzymes, such as cytochrome P450 (CYP450). Pharmacogenomic testing can contribute to the safe and effective application of drugs in many different areas of health, including heart disease, cancer adjunctive therapy, psychiatry, HIV and other infectious diseases, dermatology, etc.

The greatest complexity in drug discovery for certain molecular targets and/or patient subgroups lies in the process itself and in the strict requirements set by the regulatory bodies. Currently, the discovery and development of new drugs is still a long and extremely costly process. The average time for the development of a new drug is between 10 and 15 years of research and testing. The large number of existing molecules that could be tested as new drugs makes their study in wet lab experiments practically impossible. However, in the last decade, the evolution of Information and Communication Technologies, as well as the increase in available computational capacity, has given way to new in silico methodologies for the screening of extensive drug libraries. This step prior to preclinical studies reduces the economic cost and enlarges the search space for new drugs. In this context, Machine Learning (ML) techniques have gained great prominence in the pharmaceutical industry, offering the ability to accelerate and automate the analysis of the large amount of data currently available.

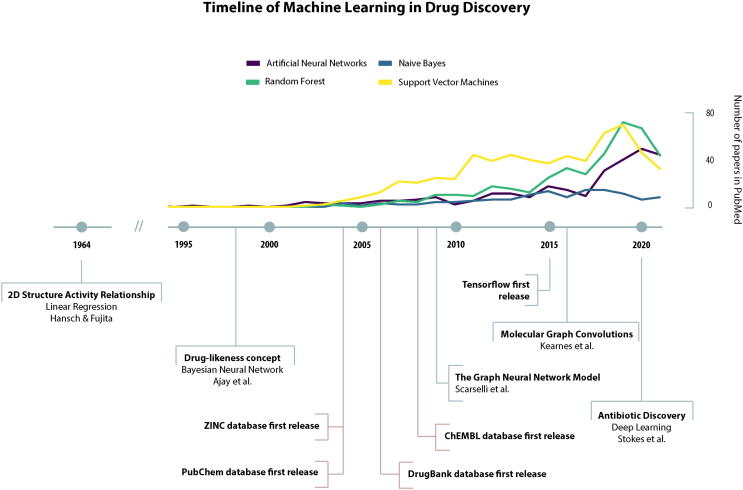

ML is a branch of Artificial Intelligence (AI) that aims to develop and apply computer algorithms that learn from raw, unprocessed data in order to later perform a specific task. The main tasks performed by these algorithms are classification, regression, clustering and pattern recognition within a large data set. A great variety of ML methods have been used in the pharmaceutical industry for the prediction of new molecular characteristics, biological activities, interactions and adverse effects of drugs. Some examples of these methods are Naive Bayes, Support Vector Machines, Random Forest and, more recently, Deep Neural Networks [4], [5], [6], [7], [8], [9], [10].

In order to study the state of the art in this field, this work has been designed and developed. It gathers the most relevant publications of the last five years in the use of ML techniques for early drug discovery. Next, the works identified in this study are presented in different sections, analyzing with special interest the descriptors used, the biological problem to be solved and the ML algorithm used.

2. Standard machine learning methodology

The design of the experimental phase is a crucial step in the field of Computational Intelligence and especially in ML. For this, it is essential to first define the methodology to be implemented.

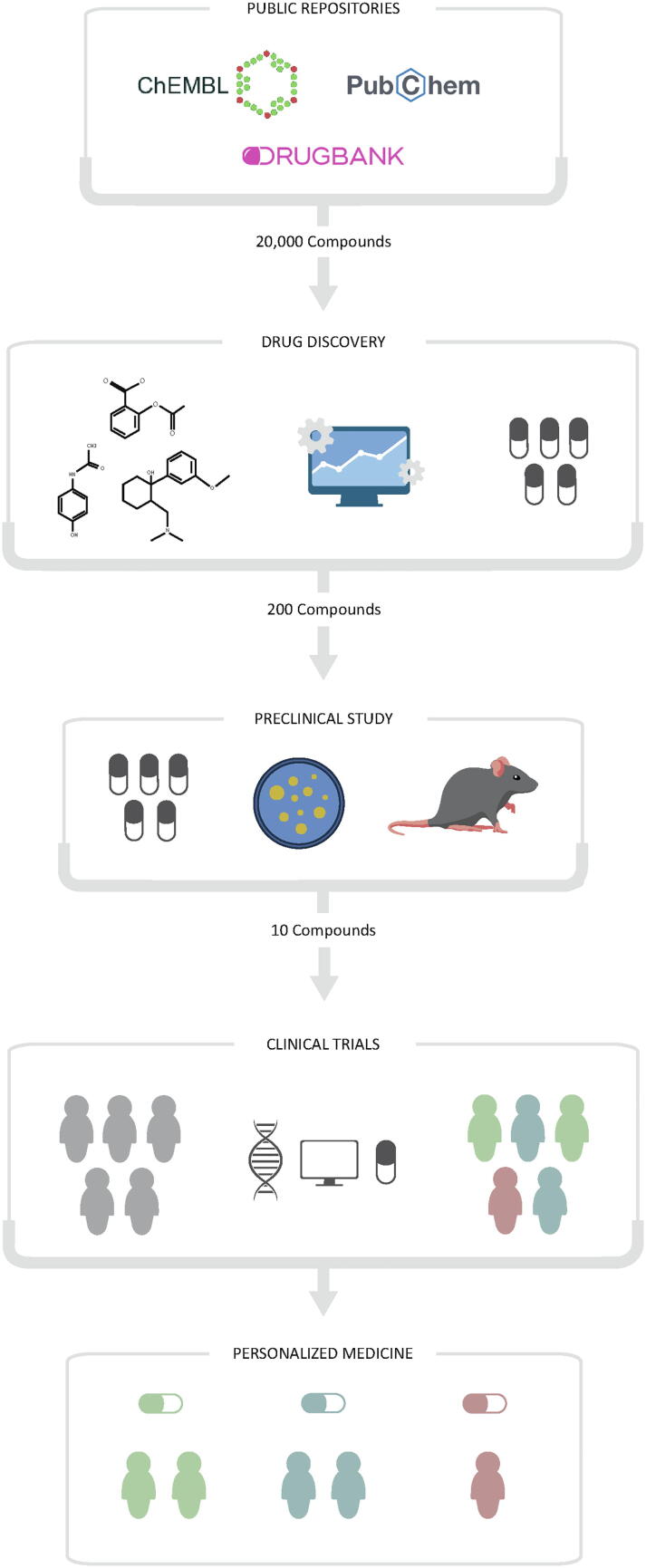

Fig. 1. Stages in the discovery of new drugs in the context of precision medicine.

The application of an ML methodology must be transversal in any field of research [11], even if all fields share certain steps in the experimental design. Specifically, in the ML methodology applied in drug discovery we can differentiate the following steps: 1) data collection; 2) generation of mathematical descriptors; 3) search for the best subset of variables; 4) model training; 5) model validation.

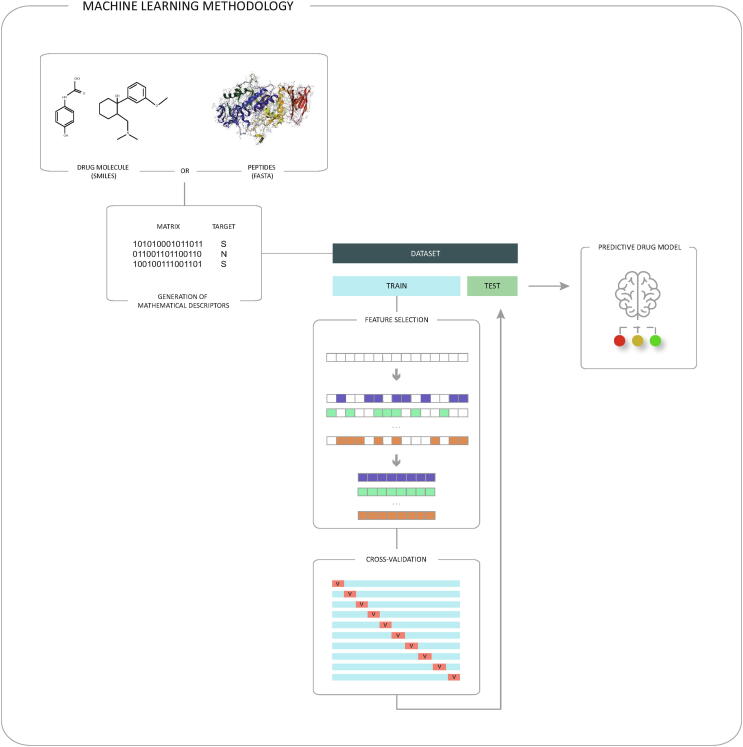

Fig. 2 shows a diagram of the Machine Learning methodology commonly used for drug discovery.

Fig. 2. Machine Learning methodology commonly used for drug discovery.

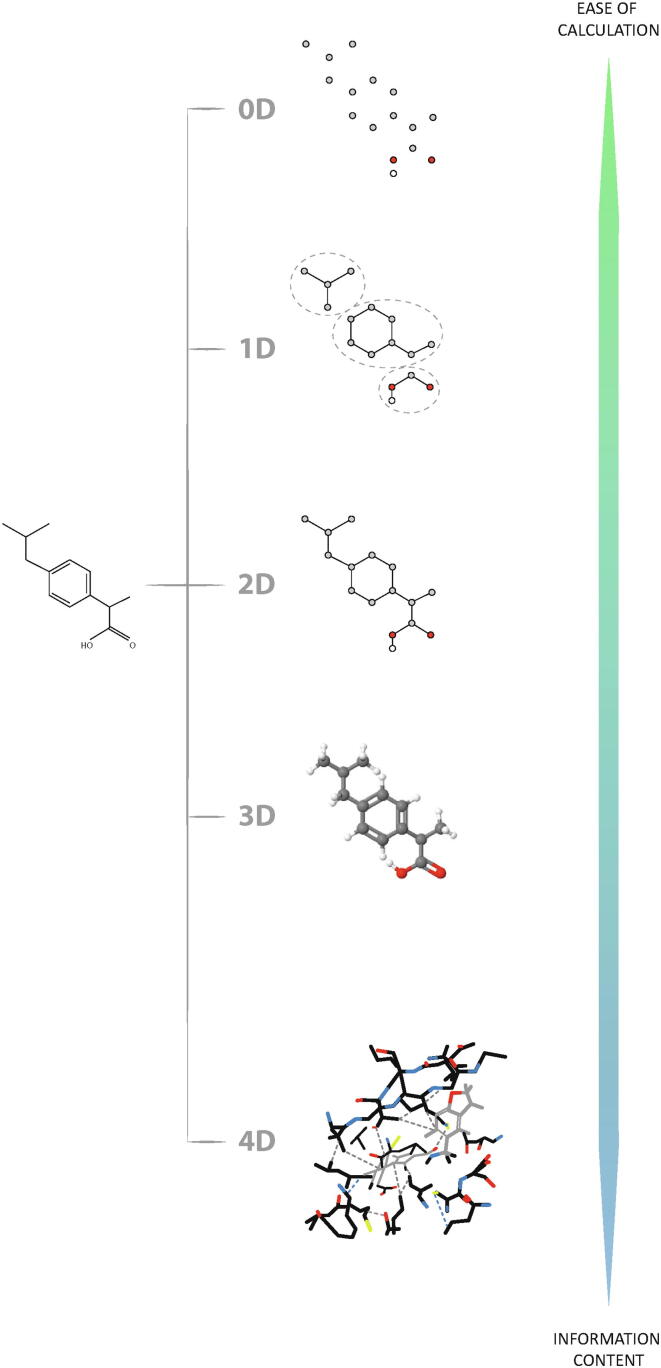

Fig. 3. Representation of the information coded by the different molecular descriptors according to their dimensions.

The first step is to obtain the data set, whose compounds must have certain characteristics. In addition to physicochemical characteristics that favour absorption, specificity and low toxicity, they must also have characteristics that allow them to be easily produced and handled in the laboratory. This is because the pharmaceutical industry does not employ large proteins or extremely complex molecules. The main compounds it usually works on include small molecules and peptides. In order to simplify the handling and analysis of these compounds, the SMILES and FASTA formats are used to represent the structure of small molecules and the sequence of peptides, respectively.
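As a minimal sketch of how these two text formats are typically held in memory, the following standard-library Python reads FASTA records into a dictionary and stores small molecules as SMILES strings. The function name `parse_fasta`, the record identifiers and the peptide sequences are hypothetical and serve only as illustration.

```python
from typing import Dict, Iterable


def parse_fasta(lines: Iterable[str]) -> Dict[str, str]:
    """Parse FASTA-formatted lines into {record_id: sequence}."""
    records: Dict[str, str] = {}
    current_id = None
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        if line.startswith(">"):
            # Header line: the identifier is the first token after '>'.
            tokens = line[1:].split()
            current_id = tokens[0] if tokens else f"record_{len(records) + 1}"
            records[current_id] = ""
        elif current_id is not None:
            # Sequence lines may be wrapped; concatenate them.
            records[current_id] += line.upper()
    return records


# Hypothetical peptide records, for illustration only.
fasta_text = """>pep1 example peptide
ACDEFGHIK
LMNPQ
>pep2
GGAVL
"""
peptides = parse_fasta(fasta_text.splitlines())

# Small molecules are stored as SMILES strings, one line of text per structure.
small_molecules = {
    "ethanol": "CCO",
    "benzene": "c1ccccc1",
    "acetic_acid": "CC(=O)O",
}
```

Both representations are plain text, which is what makes them convenient to store and exchange; turning them into numerical descriptors is a separate step.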

Currently, there are numerous public repositories (Table 1) that store a large amount of useful data for the field of drug discovery, such as DrugBank [12], PubChem [13], ChEMBL [14] or ZINC [15].

Table 1. Top public chemistry repositories used in Machine Learning model training. The table shows the number of available compounds in each repository and its main use.

| Database | No. of compounds | Usability | Link | Reference |
|---|---|---|---|---|
| DrugBank | 14 K | Drug Discovery | https://go.drugbank.com/ | [12] |
| PubChem | 110 M | Computational Chemistry | https://pubchem.ncbi.nlm.nih.gov/ | [13] |
| ChEMBL | 2.1 M | Drug Discovery | https://www.ebi.ac.uk/chembl/ | [14] |
| ZINC | 750 M | Virtual Screening | https://zinc.docking.org/ | [15] |

New sequencing technologies have made great progress in generating sequence data (DNA, RNA, proteins, small molecules, etc.). The sequences of compounds are the starting point in drug discovery, although few mathematical models are capable of generating predictions based solely on these sequences. In order to perform the prediction, it is necessary to convert the sequences into matrices that can be subsequently addressed by ML algorithms (matrix in Fig. 2). The labelling of the different compounds is also important (target in Fig. 2). Although there are some ML models that do not need labelling, it is common in the field of drug discovery to use supervised learning models. In this case, the labelling defined by the researchers will be essential in the experimental process.
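The conversion from sequences to a matrix and a target vector can be sketched with one very simple encoding, the amino acid composition of a peptide. This encoding is chosen here only for illustration and is not prescribed by the text; the sequences and activity labels below are hypothetical.

```python
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard residues


def composition(sequence: str) -> list:
    """Return the fraction of each standard amino acid in the sequence."""
    if not sequence:
        raise ValueError("sequence must not be empty")
    n = len(sequence)
    return [sequence.count(aa) / n for aa in AMINO_ACIDS]


# Hypothetical labelled peptides: (sequence, label), 1 = active, 0 = inactive.
dataset = [("ACDKK", 1), ("GGAVL", 0), ("KKKWW", 1)]

# One row per compound, one column per descriptor ("matrix" in Fig. 2).
matrix = [composition(seq) for seq, _ in dataset]
# One label per row, as required by supervised learning ("target" in Fig. 2).
target = [label for _, label in dataset]
```

Every row has the same length regardless of the peptide length, which is the property an ML algorithm needs from its input.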

With the generation of the mathematical descriptors, a set of data is obtained which the ML model can process. This dataset is divided into two subsets: a larger one, dedicated to training the model (represented by the blue colour in Fig. 2), and a smaller one dedicated to testing the model (represented by the green colour in Fig. 2).
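A hold-out split of this kind can be sketched in a few lines of standard-library Python. The 75/25 proportion, the fixed seed and the function name `train_test_split` are assumptions made for the example; the text only states that the training subset is the larger one.

```python
import random


def train_test_split(rows, labels, test_fraction=0.25, seed=0):
    """Shuffle the indices once and hold out a fraction of them for testing."""
    if len(rows) != len(labels):
        raise ValueError("rows and labels must have the same length")
    indices = list(range(len(rows)))
    random.Random(seed).shuffle(indices)
    n_test = max(1, round(len(indices) * test_fraction))
    test_idx, train_idx = indices[:n_test], indices[n_test:]

    def pick(idx):
        return [rows[i] for i in idx], [labels[i] for i in idx]

    return pick(train_idx), pick(test_idx)


# Hypothetical descriptor matrix (20 compounds x 3 descriptors) and labels.
X = [[i, i * 0.5, i % 3] for i in range(20)]
y = [i % 2 for i in range(20)]
(X_train, y_train), (X_test, y_test) = train_test_split(X, y)
```

The test subset is set aside at this point and is not used again until the final validation of the selected model.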

Within the training set, the search for the best subset of variables is carried out, with the right and necessary information. Normally, during the generation of mathematical descriptors, a large number of numerical variables are presented. The main objective of this process is to reduce as much as possible the useless or redundant variables. To this end, there are different techniques such as PCA, t-SNE, FS, Autoencoder, etc. FS techniques obtain a subgroup of features belonging to the original set, which does not modify the content of the variables. This provides a justification that is understandable at a biological level and that is why a large majority of researchers use these techniques in their experimental designs [16].
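One simple family of FS techniques, filter methods, can be sketched as follows: descriptors that are (almost) constant are dropped, and so is any descriptor that is highly correlated with one already kept. The thresholds, the function names and the descriptor values are illustrative assumptions, and other FS strategies (wrapper or embedded methods) would look different.

```python
from statistics import mean, pvariance


def pearson(a, b):
    """Pearson correlation of two equally long numeric columns."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    norm = (sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b)) ** 0.5
    return cov / norm if norm else 0.0


def filter_descriptors(columns, min_variance=1e-8, max_abs_corr=0.95):
    """Return the names of descriptors kept after variance and correlation filters."""
    kept = []
    for name, values in columns.items():
        if pvariance(values) <= min_variance:
            continue  # (near-)constant column carries no information
        if any(abs(pearson(values, columns[k])) > max_abs_corr for k in kept):
            continue  # redundant with a descriptor that was already kept
        kept.append(name)
    return kept


# Hypothetical descriptor columns, computed on the training set only.
train_columns = {
    "mol_weight": [180.2, 151.2, 206.3, 194.2, 46.1],
    "heavy_atoms": [13, 11, 15, 14, 3],  # almost proportional to mol_weight
    "n_rings": [1, 1, 1, 2, 0],
    "is_organic": [1, 1, 1, 1, 1],       # constant, hence useless
}
selected = filter_descriptors(train_columns)
```

Because the surviving columns are original descriptors rather than combinations of them, the selected subset (here `mol_weight` and `n_rings`) keeps its chemical meaning, which is the interpretability argument made above.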

Once the optimal subset of variables has been located, the model is trained. First, the algorithms and their parameters must be selected. These must be chosen carefully to ensure that they are appropriate to the problem in question and to the amount and type of data available. Then, different runs of the experiment are performed with the training data. Overfitting, that is, excessive fitting to the training data, should be avoided to ensure the validity of the model on unseen data. The use of techniques such as cross-validation (CV) is common in these cases. CV measures the degree of generalization of the model during the training phase, evaluating its performance and estimating the performance on unseen data. In each run of the experiment, the data are divided again into two subsets: the training set and the validation set. Fig. 2 shows the CV technique with 10 runs. For each of these runs, the blue set corresponds to the training set and the red set to the validation set.
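The 10-run scheme can be sketched in plain Python as follows. The helper names (`k_fold_indices`, `cross_validate`) are hypothetical, and the "model" is a deliberately trivial majority-class predictor standing in for a real learner so that the example stays self-contained.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, val_idx) pairs; each sample is validated exactly once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k nearly equal-sized folds
    for i, val_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, val_idx


def cross_validate(fit, score, X, y, k=10):
    """Average validation score of a model over k folds."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(X), k):
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        scores.append(score(model, [X[i] for i in val_idx], [y[i] for i in val_idx]))
    return sum(scores) / len(scores)


# Toy stand-in for a real learner: the "model" is just the majority class.
def fit_majority(X_train, y_train):
    return max(set(y_train), key=y_train.count)


def accuracy(model, X_val, y_val):
    return sum(label == model for label in y_val) / len(y_val)


# Hypothetical training data: 50 compounds, 35 of them labelled active.
X = [[float(i)] for i in range(50)]
y = [1 if i < 35 else 0 for i in range(50)]
mean_accuracy = cross_validate(fit_majority, accuracy, X, y, k=10)
```

Repeating `cross_validate` for each candidate parameter setting and keeping the setting with the best mean score is one common way to carry out the parameter selection that CV is used for.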

The ultimate goal of the CV process is to select the best combination of parameters for each technique. From these parameters the performance of each model is measured. The best model is the one that achieves the highest performance value with the lowest total cost.

Finally, the test set extracted from the original set is recovered (represented by the green colour in Fig. 2) and a final validation of the best model resulting from the CV process is carried out. If the validation results are statistically significant [17], it can be said that a new predictive drug model has been created.

Machine learning techniques have been used in many fields, and the number of published papers has increased especially in recent years. However, few of the machine learning publications related to drug development are found on open access websites. To facilitate this understanding, works such as [18], [19] provide an overview of machine learning techniques and the current state of applications for drug discovery in both academic and industrial settings.

3. The importance of input data in Machine Learning predictions

A critical step in the training of the model depends on the representation of the molecules by descriptors that are capable of capturing their properties and structural characteristics. Hundreds of molecular descriptors have been reported in the literature ranging from simple properties of the molecules to elaborate three-dimensional and complex molecular fingerprint formulations, stored in vectors of hundreds and/or thousands of elements.

3.1. Quantitative structure–activity relationship

Quantitative structure–activity relationship (QSAR) models rest on two premises: “the structure of a molecule defines its biological activity” and “structurally similar molecules have a similar biological activity”. They numerically relate the chemical structures of molecules to their biological activity and thereby allow the physicochemical and biological fate properties of a new compound to be predicted from its chemical structure and from existing experimental studies.

QSAR models integrate computer and statistical techniques in order to make a theoretical prediction of biological activity that allows the theoretical design of possible future new drugs, avoiding the trial-and-error process of organic synthesis. As it is a science that exists only in a virtual environment, it makes it possible to dispense with certain resources such as equipment, instruments, materials and laboratory staff. With a focus on the relationships between chemical structure and biological activity, the design of candidates for new drugs is much cheaper and faster. Modeling studies such as QSAR are among the most effective methods for predicting the properties of compounds when adequate experimental data and facilities are lacking [20].

To carry out a QSAR study, three types of information are needed [21]:

1. Molecular structure of different compounds with a common mechanism of action.
2. Biological activity data of each of the ligands included in the study.
3. Physicochemical properties, which are described from a set of numerical variables, obtained from the molecular structure virtually generated by computational techniques.

QSAR studies can be prospective or retrospective. In the prospective type, the resulting equation or QSAR model allows predicting the biological activity of compounds that have not yet been synthesized and that are generated virtually in a short time. These compounds must share structural characteristics with the ligands included in the study, so that they stay within the chemical pattern and the range of descriptor values covered by the model. The retrospective type analyzes already existing molecules (those that have been synthesized and bioassayed) to understand the non-obvious interrelations between their structures and biological activities. The preparation of the input data is the most crucial step, since the result is obtained in an automated way and depends only on the input.

The QSAR methodology is interdisciplinary, so it receives information from Organic Chemistry and Pharmacology. QSAR returns this contribution, and fulfils its objective, through the directed design of ligands that do not yet exist but that, according to the generated equations, show a high probability of pharmacological success, because these equations allow the biological activity to be predicted. When information has been collected from the literature or from a laboratory, a statistical tool called multiple linear regression is used, taking the biological activity values of the ligands as the dependent variable and the calculated descriptors as independent variables.
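The regression just described can be written compactly. The symbols are introduced here for illustration only: $y_i$ is the biological activity of ligand $i$, $x_{ij}$ its $j$-th calculated descriptor, $p$ the number of descriptors, $\beta_j$ the fitted coefficients and $\varepsilon_i$ the residual; $X$ is the descriptor matrix with a leading column of ones.

```latex
y_i = \beta_0 + \sum_{j=1}^{p} \beta_j \, x_{ij} + \varepsilon_i ,
\qquad
\hat{\boldsymbol{\beta}} = \left( X^{\top} X \right)^{-1} X^{\top} \mathbf{y}
```

The ordinary least-squares estimate on the right exists only when $X^{\top} X$ is invertible, which requires at least as many ligands as coefficients and no perfectly collinear descriptors.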

A molecular simulation carried out with computational tools takes much less time than the synthesis and bioassay of new compounds, which could take months or even years. This advantage makes it possible to evaluate a series of molecules and, thanks to the speed with which results are obtained, to feed the synthesis laboratory directly as part of the continuous process of the project. Thus, QSAR predicts new structures never seen before and proposes them to the organic chemists to be taken to the bioassays, whose results confirm or contradict the values predicted by the QSAR model. In an optimal case, this operational cycle yields better candidates than pure trial and error. This saves time, money and resources, and avoids failures for those who develop new drugs.

The advantages of QSAR are the low cost, since it does not use laboratory instruments, nor chemical reagents, and in addition, there is free software for the generation of the models that provide interfaces that facilitate the handling and design. In addition, the construction of the molecules and the calculation of descriptors can be extremely fast. Among its disadvantages we can mention the need for training in computational methodologies (different operating systems and graphic interfaces, database management, software development) and in this sense, the resolution of different computational problems (compatibility, updates, records, data formats) as well as the fact of having to have data on biological activity of the molecules coming from the same source, the change of perspective in the way of working, etc.

3.2. Molecular descriptors

Molecular descriptors (MD) play a key role in many areas of research. They can be defined as numerical representations of the molecule that quantitatively describe its physicochemical information. Only part of the information contained in a molecule can be extracted through experimental measurements. In recent decades there has been an increasing focus on how to capture and convert, in a theoretical way, the information encoded in the molecular structure into one or more numbers that are used to establish quantitative relationships between structures and properties, biological activities and other experimental properties. In this way, MDs have become a very useful tool for similarity searches in molecular repositories, since molecules with similar physicochemical properties can be found by comparing the values of their calculated descriptors.

Since they were first applied, thousands of molecular descriptors have been defined, which encode molecules in different ways. Some give a generic description of the whole molecule (1D descriptors) and are simple to calculate. Others are calculated from two- and three-dimensional (2D and 3D) structures; they define more specific characteristics, but their calculation is more complex.

It has been argued that the number of atomic and molecular descriptors developed to date constitutes a sufficient arsenal for the search for new drugs to develop. However, one of the causes of the lack of fit in the models may be the very nature of the sample or the inappropriate selection of the structural descriptors. The latter may be due to the selection procedure used, or to the insufficient capacity of the models to describe the phenomenon. All this is reason enough to continue the search for new structural or atomic descriptors that can be used in QSAR-based model studies.

Molecular descriptors can be divided into two main categories. The first comprises experimental measurements, such as log P, molar refractivity, dipole moment, polarisability and, in general, additive physicochemical properties. The second comprises theoretical molecular descriptors, which are derived from a symbolic representation of the molecule and can be further classified according to the different types of molecular representation. Theoretical descriptors, in turn, are classified into:

1. Constitutional: reflect general properties of molecular nature.
2. Topological: calculated through graph theory.
3. Geometric: derived from empirical schemes; they encode the ability of the molecule to participate in different types of interactions.
4. Electronic: refer to the electronic properties.
5. Physicochemical: define the behaviour of the molecule in the face of external reactions.

If we consider the dimensions of the molecular characteristics represented by the theoretical molecular descriptors, the following categories are established.

3.2.1. 0D descriptors

0D descriptors are the easiest to calculate and interpret. This category includes all molecular descriptors whose calculation needs no structural information about the molecule or the connectivity between its atoms; they are therefore independent of any conformation problem and do not need optimization of the molecular structure.

They usually show a very high degeneracy, that is, they take equal values for several molecules, such as isomers. Their information content is low, but they can nevertheless play an important role in the modelling of various physicochemical properties or participate in more complex models. Examples of these descriptors are the number of atoms, the number of bonds of a certain type, molecular weight, average atomic weight or sums of atomic properties such as van der Waals volumes.
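The following sketch computes three of these 0D descriptors from nothing more than a molecular formula and illustrates the degeneracy with a pair of isomers. The formula parser is deliberately minimal (no brackets or charges), the function names are hypothetical, and only four elements are tabulated.

```python
import re

# Standard atomic weights (g/mol) for a few common elements.
ATOMIC_WEIGHT = {"H": 1.008, "C": 12.011, "N": 14.007, "O": 15.999}


def atom_counts(formula: str) -> dict:
    """Count atoms in a simple molecular formula such as 'C2H6O'."""
    counts = {}
    for symbol, number in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[symbol] = counts.get(symbol, 0) + (int(number) if number else 1)
    return counts


def descriptors_0d(formula: str) -> dict:
    """0D descriptors: no connectivity or conformation is needed."""
    counts = atom_counts(formula)
    n_atoms = sum(counts.values())
    weight = sum(ATOMIC_WEIGHT[s] * n for s, n in counts.items())
    return {
        "n_atoms": n_atoms,
        "molecular_weight": round(weight, 3),
        "mean_atomic_weight": round(weight / n_atoms, 3),
    }


# Ethanol (CH3CH2OH) and dimethyl ether (CH3OCH3) are isomers: both are C2H6O,
# so every 0D descriptor is identical although the molecules differ.
ethanol = descriptors_0d("C2H6O")
dimethyl_ether = descriptors_0d("C2H6O")
```

Since the only input is the formula, two isomers cannot be told apart at this level; distinguishing them requires descriptors that use connectivity.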

3.2.2. 1D descriptors

All the molecular descriptors that encode information from fragments of a molecule can be included in this category. They are usually represented as fingerprints, which are simply binary vectors in which 1 indicates the presence of a substructure and 0 its absence. A great advantage of this representation is that similarities between molecules can be calculated very quickly. Like 0D descriptors, they are easy to calculate and interpret, do not require optimization of the molecular structure and are independent of any conformation problem. They usually show medium to high degeneracy and are often very useful for modelling both physicochemical and biological properties.
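To illustrate why binary vectors make similarity calculations fast, the sketch below uses the Tanimoto (Jaccard) coefficient, a common similarity measure for fingerprints that is introduced here as an example and is not named in the text. The six-bit vectors and the substructures they stand for are hypothetical.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto (Jaccard) similarity between two equal-length bit vectors."""
    if len(fp_a) != len(fp_b):
        raise ValueError("fingerprints must have the same length")
    both = sum(1 for a, b in zip(fp_a, fp_b) if a and b)
    either = sum(1 for a, b in zip(fp_a, fp_b) if a or b)
    return both / either if either else 0.0


# Hypothetical bit positions:
# [hydroxyl, carbonyl, aromatic ring, amine, halogen, nitrile]
phenol_like = [1, 0, 1, 0, 0, 0]
aniline_like = [0, 0, 1, 1, 0, 0]
nitrile_like = [0, 0, 0, 0, 0, 1]

similar = tanimoto(phenol_like, aniline_like)    # share the aromatic ring
unrelated = tanimoto(phenol_like, nitrile_like)  # no substructure in common
```

The comparison only counts bits set in both vectors against bits set in either one, so its cost grows linearly with the fingerprint length and does not depend on the size of the molecule.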

The 1D descriptors include those based on counts of chemical functional groups, such as the total number of primary carbon atoms, cyanates or nitriles, and the so-called atom-centred fragments, which are based on counts of different fragments of the molecule. Examples of the latter are hydrogen bonded to a heteroatom, hydrogen bonded to an alpha carbon and fluorine bonded to a primary carbon.

3.2.3. 2D descriptors

They describe properties that can be calculated from two-dimensional representations of molecules. They are obtained through graph theory and are independent of the conformation of the molecule. Their calculation is based on a graph representation of the molecule, and they are graph invariants: structural properties that are preserved by isomorphism, i.e. that take identical values for isomorphic graphs. The invariant can be a characteristic polynomial, a sequence of numbers or a single numerical index obtained by applying algebraic operators to matrices representing molecular structures, and its value is independent of the numbering or labelling of the vertices.

They are generally derived from a hydrogen-depleted molecular structure. They can be sensitive to one or more structural characteristics of the molecule, such as size, shape, symmetry, branching and cyclicity, and can also encode chemical information about atom types and bond multiplicity. They are generally divided into two categories:

1. Structural-topology index: encodes only information about the adjacency and distance of atoms in the molecular structure.
2. Topochemical index: quantifies information on topology but also on specific properties of atoms, such as their chemical identity or state of hybridization.
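A classic index of the first kind is the Wiener index, the sum of the shortest-path distances between all pairs of atoms in the hydrogen-depleted graph. It is used here purely as an illustration and is not discussed in the text; the sketch shows that it depends on branching but not on how the vertices are numbered.

```python
from collections import deque


def wiener_index(adjacency):
    """Sum of shortest-path distances over all atom pairs of a molecular graph."""
    total = 0
    for source in adjacency:
        # Breadth-first search gives the topological distances from `source`.
        distance = {source: 0}
        queue = deque([source])
        while queue:
            atom = queue.popleft()
            for neighbour in adjacency[atom]:
                if neighbour not in distance:
                    distance[neighbour] = distance[atom] + 1
                    queue.append(neighbour)
        total += sum(distance.values())
    return total // 2  # every pair was counted once from each end


# Hydrogen-depleted graphs: vertices are carbon atoms, edges are bonds.
n_butane = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
isobutane = {0: [1, 2, 3], 1: [0], 2: [0], 3: [0]}
# The same n-butane skeleton with its atoms numbered differently.
n_butane_relabelled = {2: [0], 0: [2, 3], 3: [0, 1], 1: [3]}

w_linear = wiener_index(n_butane)        # 10
w_branched = wiener_index(isobutane)     # 9
```

The two C4H10 isomers receive different values, whereas renumbering the atoms of n-butane leaves the value unchanged, which is the invariance property required of a topological index.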

3.2.4. 3D descriptors

The three-dimensional descriptors are related to the 3D representation of the molecule and include the conformation of the molecular structure, where bond distances, bond angles, dihedral angles, etc. are considered, which makes it possible to describe the stereochemical properties of the molecules. Their calculation is more complex than for the previous classes and may require the analysis of many molecular conformations.

The most popular 3D descriptors include representations of pharmacophore type molecules, defined as a set of steric and electronic features needed to ensure optimal supramolecular interactions with a specific biological target and trigger or block its biological response, where features such as hydrophobic centres or hydrogen bond donors, which are known or believed to be responsible for biological activity, are mapped into positions in a molecule. The conformation-dependent distances between these points are then calculated and recorded. Three-point pharmacophores are widely used, but more potent four-point pharmacophores have been introduced, which may require the analysis of millions of possible pharmacophores for a test compound. Complex 3D descriptors are calculated, for example, to identify active conformations of a compound or to identify critical characteristics for differences in activity in series of analogues. At the same time, this type of calculation is needed to generate the “pharmacophore shape” of a query molecule in order to search databases for compounds with similar 3D characteristics. In addition, the use of pharmacophore type descriptors is fundamental for the derivation of 3D-QSAR or 4D-QSAR models.
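A much simplified sketch of three-point pharmacophore enumeration for a single conformation is given below. The feature types, coordinates and function name are hypothetical, and real implementations additionally bin the distances and keep track of which distance belongs to which pair of features.

```python
from itertools import combinations
from math import dist


def pharmacophore_triplets(features):
    """Describe every 3-feature combination by its types and sorted distances.

    `features` is a list of (feature_type, (x, y, z)) for one conformation.
    """
    triplets = []
    for trio in combinations(features, 3):
        types = tuple(sorted(kind for kind, _ in trio))
        distances = sorted(
            round(dist(a[1], b[1]), 2) for a, b in combinations(trio, 2)
        )
        triplets.append((types, tuple(distances)))
    return triplets


# Hypothetical feature positions (in angstroms) for a single conformer.
conformer = [
    ("donor", (0.0, 0.0, 0.0)),
    ("acceptor", (3.0, 0.0, 0.0)),
    ("hydrophobe", (0.0, 4.0, 0.0)),
    ("hydrophobe", (3.0, 4.0, 0.0)),
]
triplets = pharmacophore_triplets(conformer)
```

Four features already give four triplets per conformer; because the number of combinations grows quickly with the number of features and must be repeated for every conformation, four-point pharmacophores are considerably more expensive to enumerate.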

3.2.5. 4D descriptors

Also referred to as grid-based, these descriptors provide additional information by introducing a fourth dimension that allows characterization of interactions between molecules, their conformational states and the active sites of a biological receptor. The central hypothesis is that consideration of ligand conformational variation, influenced by factors such as solvent molecules and non-covalent interactions within protein binding pockets, will result in descriptors that characterize the molecular properties of compounds more accurately and thus lead to more reliable QSAR models.

In the work published by Cramer et al. in 1988 [22], the use of the field properties of molecules in three-dimensional space was proposed to develop and apply QSAR models. This method was called Comparative Molecular Field Analysis (CoMFA) and its basic foundation consists of sampling the steric (van der Waals) and electrostatic (Coulombic) fields around a set of aligned molecules, in order to capture all the information necessary to explain the final response exhibited by the molecules. The sampling consists of calculating the interaction energies of the molecules by means of appropriate probes located in a three-dimensional lattice arranged around the molecular structures.
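The sampling idea can be sketched as follows, assuming a Lennard-Jones 6-12 term for the steric field and a Coulomb term for the electrostatic field. This is only an illustration of the principle, not the published CoMFA implementation: the probe charge, the `epsilon` and `sigma` values, the energy cutoff, the grid spacing and the two-atom "molecule" are all assumptions made for the example.

```python
from itertools import product
from math import dist

# Commonly used conversion factor so that q1*q2/r (charges in e, r in angstroms)
# is expressed in kcal/mol.
COULOMB_K = 332.06


def clip(energy, cutoff):
    """Truncate very large energies that occur close to atomic centres."""
    return max(-cutoff, min(cutoff, energy))


def probe_energies(atoms, point, probe_charge=1.0, epsilon=0.1, sigma=3.4, cutoff=30.0):
    """Steric (Lennard-Jones 6-12) and electrostatic (Coulomb) energy at one point.

    `atoms` is a list of ((x, y, z), partial_charge). epsilon, sigma and cutoff
    are illustrative values, not parameters of a specific force field.
    """
    steric = electrostatic = 0.0
    for position, charge in atoms:
        r = max(dist(position, point), 1e-3)  # avoid division by zero
        steric += 4 * epsilon * ((sigma / r) ** 12 - (sigma / r) ** 6)
        electrostatic += COULOMB_K * charge * probe_charge / r
    return clip(steric, cutoff), clip(electrostatic, cutoff)


def sample_fields(atoms, lower, upper, spacing=2.0):
    """Evaluate both fields on a regular lattice enclosing the molecule."""
    axes = [
        [lo + i * spacing for i in range(int(round((hi - lo) / spacing)) + 1)]
        for lo, hi in zip(lower, upper)
    ]
    return {point: probe_energies(atoms, point) for point in product(*axes)}


# Hypothetical aligned "molecule": two atoms with opposite partial charges.
molecule = [((0.0, 0.0, 0.0), -0.4), ((1.2, 0.0, 0.0), 0.4)]
fields = sample_fields(molecule, lower=(-4.0, -4.0, -4.0), upper=(4.0, 4.0, 4.0))
```

Each lattice point yields one steric and one electrostatic value per molecule; collected over all aligned molecules, these values form the columns of the descriptor matrix on which the QSAR model is then built.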

A critical step in the CoMFA method is the proper alignment of the molecules, which can be time-consuming and requires prior knowledge of the precise molecular conformation. For this reason, it is necessary to analyze the most active molecules in the dataset and use them as a template. Additionally, when the dataset features molecules with several conformational degrees of freedom, the selection of the active conformation is often a significant hurdle in 3D-QSAR modeling. Therefore, a prior systematic conformational search must be performed to define the most stable conformer according to the generated Potential Energy Surface (PES). Currently, there are different computational methods to improve the alignment performance of the CoMFA technique [23].

Most QSAR models use numerical descriptors derived from the two- and/or three-dimensional structures of molecules. Conformation-dependent characteristics of flexible molecules and interactions with biological targets are not encoded by these descriptors, which limits their predictive power. 2D/3D QSAR models are successful for virtual screening, but often perform poorly in the optimization stages. For this reason, conformation-dependent 4D-QSAR modeling was developed two decades ago, but these methods have always suffered from the associated computational cost. Recently, 4D-QSAR has undergone a significant breakthrough due to rapid advances in GPU-accelerated molecular dynamics simulations and modern machine learning techniques [24].

During the last 15 years, new multidimensional descriptors have been incorporated into QSAR modeling, termed 5D and 6D descriptors. These are based on structural parameters associated with the flexibility of the receptor binding site along with the topology of the ligand. Specifically, the 5D descriptors are calculated from multiple conformations, orientations, protonation states and stereoisomers of the ligand under analysis. In the case of the 6D descriptors, it is necessary to take into account the solvation scenarios of the complex, the ligands and the interacting environment [25].

3.2.6. How many descriptors are needed?

In broad terms, the number of descriptors to be used will depend on the computational tools available and the number of molecules included in the study. The most frequent error is to let the linear regression model build an over-dimensioned numerical space that describes each molecule independently, without establishing a rule with reliable predictive power. This happens when the number of descriptors exceeds the number of molecules. On the other hand, an exhaustive search over descriptor subsets can be applied only to the simplest cases, since the search space becomes impractical when the number of molecular descriptors is high. The reliability of the model can be affected not only by the presence of noise, but also by correlated, redundant descriptors and by irrelevant descriptors. Therefore, variable selection techniques are widely used to remedy this situation and improve the accuracy and predictive power of classification or regression models [16].
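Both points can be made concrete with a short calculation. The helper names below are hypothetical: one counts the descriptor subsets an exhaustive search would have to evaluate, and the other checks the condition stated above for an over-dimensioned linear model.

```python
from math import comb


def subset_count(n_descriptors, max_size=None):
    """Number of non-empty descriptor subsets an exhaustive search must evaluate."""
    max_size = n_descriptors if max_size is None else max_size
    return sum(comb(n_descriptors, k) for k in range(1, max_size + 1))


def has_more_descriptors_than_molecules(n_molecules, n_descriptors):
    """Condition under which a linear model can describe each molecule separately."""
    return n_descriptors > n_molecules


small_search = subset_count(10)                   # 2**10 - 1 = 1023 subsets
large_search = subset_count(50)                   # 2**50 - 1, about 1.1e15 subsets
capped_search = subset_count(1000, max_size=3)    # still about 1.7e8 subsets
risky = has_more_descriptors_than_molecules(n_molecules=80, n_descriptors=1000)
```

Even with only 50 descriptors the full search space is out of reach, and capping the subset size at three descriptors out of 1000 still leaves more than a hundred million candidate models, which is why heuristic variable selection is preferred.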

In recent years, the scientific community has focused much attention on techniques dedicated to variable selection, i.e. the selection of molecular descriptors in QSAR. Since there are thousands of descriptors available to describe a molecule and often there is no a priori knowledge about which characteristics are most responsible for a specific property, subsets of the most appropriate descriptors are explored through different strategies. Today there are many software tools for calculating molecular descriptors, each with its advantages and disadvantages (ease of use, licences, number of descriptors, etc.).
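As a minimal sketch of what a simple variable selection step can look like, the following Python function removes near-constant descriptors and one member of each highly correlated pair. This is only one of many possible strategies and is not taken from the works cited here; the function name, the thresholds and the toy matrix are assumptions made for illustration.

```python
import numpy as np

def filter_descriptors(X, var_threshold=1e-8, corr_threshold=0.95):
    """Return the column indices of descriptors kept after two simple filters."""
    X = np.asarray(X, dtype=float)
    # 1) Drop (near-)constant descriptors: they carry no information.
    candidates = [j for j in range(X.shape[1]) if X[:, j].var() > var_threshold]
    kept = []
    for j in candidates:
        # 2) Drop a descriptor that is strongly correlated with one already kept.
        redundant = any(
            abs(np.corrcoef(X[:, j], X[:, k])[0, 1]) > corr_threshold for k in kept
        )
        if not redundant:
            kept.append(j)
    return kept

# Toy matrix: 6 molecules (rows) x 4 descriptors (columns), invented values.
# Column 1 is a linear function of column 0; column 2 is constant.
X = np.array([
    [1, 3, 7, 3],
    [2, 5, 7, 1],
    [3, 7, 7, 4],
    [4, 9, 7, 1],
    [5, 11, 7, 5],
    [6, 13, 7, 9],
], dtype=float)

selected = filter_descriptors(X)
print(selected)
```

Filters of this kind only address redundancy; identifying descriptors that are irrelevant to the property of interest requires methods that also look at the response variable.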

3.3. Fingerprints

Fingerprints (FP) are a particular form of molecular descriptor that represents the molecular structure quickly and simply as a fixed-length string or vector of bits, each of which indicates the presence or absence of a given substructure or functional group. This form of molecular encoding is very efficient for storing, processing and comparing the molecular information contained in these strings. However, fingerprints derived from chemical structures ignore the biological context, leaving a gap between molecular structure and biological activity, since small changes in the former can produce substantial differences in bioactivity.
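As a minimal sketch of how such bit vectors are compared, the following Python snippet computes the Tanimoto coefficient, a similarity measure widely used with fingerprints although it is not named in the text above. The two 8-bit vectors are invented for illustration and do not correspond to real molecules.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto (Jaccard) similarity between two equal-length bit vectors."""
    if len(fp_a) != len(fp_b):
        raise ValueError("fingerprints must have the same length")
    both = sum(1 for a, b in zip(fp_a, fp_b) if a and b)    # bits set in both
    either = sum(1 for a, b in zip(fp_a, fp_b) if a or b)   # bits set in at least one
    return both / either if either else 0.0

# Hypothetical fingerprints: each position stands for one substructure.
fp_1 = [1, 0, 1, 1, 0, 0, 1, 0]
fp_2 = [1, 0, 0, 1, 0, 1, 1, 0]

similarity = tanimoto(fp_1, fp_2)
print(similarity)
```

Three bits are set in both vectors and five in at least one of them, so the coefficient is 3/5.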

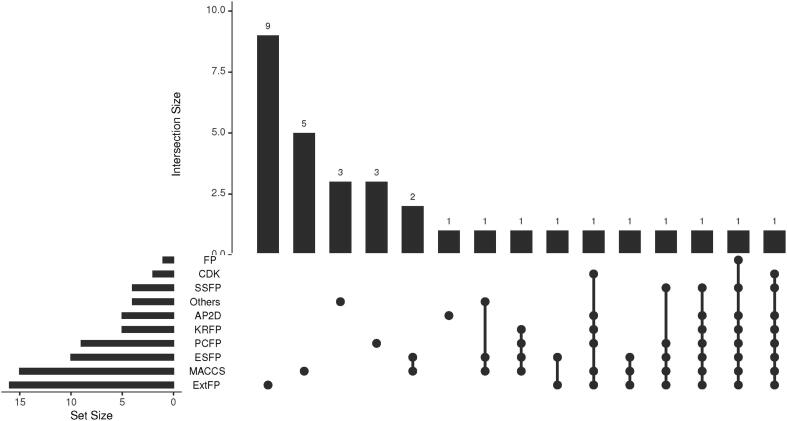

There is a wide variety of FPs, from the simplest, which encode a catalog of 2D substructures (e.g. MACCS), to more advanced versions that include 3D information on molecular conformation. The most commonly used ones are summarized below. Fig. 4 summarizes the fingerprints found in the articles consulted. It shows the number of publications in which each fingerprint appears on its own, as well as the number of studies that used several of them, since it is common in this type of study to compare several fingerprints. Fig. 4 shows that ExtFP fingerprints are the most used in absolute terms, followed by MACCS. The main reasons for their extensive use are that they are easy to calculate and have given good results across different problems. Given that the sample of articles consulted is representative of the last five years of research, these fingerprints clearly remain in use today, and their use has not been reduced by the appearance of more sophisticated analysis techniques.

Fig. 4.

Number of identified articles that used each of the most common fingerprints.

3.3.1. Extended-Connectivity Fingerprints

Extended Connectivity Fingerprints (ECFP) are a class of topological fingerprints for molecular characterization [26], [27]. Historically, topological fingerprints were developed for substructure and similarity searching, whereas ECFPs were developed specifically for structure–activity modeling [28], [29], [30], [31], [32].

ECFPs are circular fingerprints with a number of useful qualities:

1. They can be calculated very quickly.
2. They are not predefined and can represent a large number of different molecular features (including stereochemical information).
3. Their features represent the presence of particular substructures, which makes the analysis results easier to interpret.
4. They are designed to represent both the presence and absence of functionality, as both are crucial for analyzing molecular activity.
5. The ECFP algorithm can be adapted to generate different types of circular fingerprints, optimized for different uses.

They are among the most popular similarity search tools in drug discovery [33], [34], [35], [36], [37], [38] and are used effectively in a wide variety of applications. They store information about the environment surrounding each atom in a molecule. In addition to similarity searching, ECFPs are well suited to recognizing the presence or absence of particular substructures, so they are often used in the construction of QSAR and QSPR models.
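The idea of encoding the environment around each atom can be sketched in a few lines of Python. The code below is a deliberately simplified, Morgan-style illustration and not the actual ECFP algorithm: it uses only the element and the number of neighbours as initial atom identifiers, ignores bond orders and stereochemistry, does not remove duplicate substructures, and relies on a CRC32 hash chosen here purely for reproducibility. The function names and the 64-bit length are assumptions.

```python
import zlib

def _hash(obj):
    """Deterministic 32-bit hash of the repr of a Python object."""
    return zlib.crc32(repr(obj).encode("utf-8"))

def circular_fingerprint(atoms, bonds, radius=2, n_bits=64):
    """Toy circular fingerprint: atoms is a list of element symbols,
    bonds is a list of (i, j) index pairs between heavy atoms."""
    neighbors = {i: [] for i in range(len(atoms))}
    for a, b in bonds:
        neighbors[a].append(b)
        neighbors[b].append(a)
    # Iteration 0: each atom is identified by its element and its degree.
    ids = [_hash((atoms[i], len(neighbors[i]))) for i in range(len(atoms))]
    features = set(ids)
    for _ in range(radius):
        # Each new identifier combines the atom's own identifier with the
        # sorted identifiers of its neighbours, so the encoded environment
        # grows by one bond per iteration.
        ids = [
            _hash((ids[i], tuple(sorted(ids[j] for j in neighbors[i]))))
            for i in range(len(atoms))
        ]
        features.update(ids)
    # Fold the identifiers into a fixed-length bit vector.
    bits = [0] * n_bits
    for f in features:
        bits[f % n_bits] = 1
    return bits

# Ethanol heavy atoms (C-C-O), written with two different atom orderings.
fp_a = circular_fingerprint(["C", "C", "O"], [(0, 1), (1, 2)])
fp_b = circular_fingerprint(["O", "C", "C"], [(0, 1), (1, 2)])
print(fp_a == fp_b)
```

Because neighbour identifiers are sorted before hashing, the result does not depend on how the atoms are numbered. The features are generated from the molecule itself rather than from a predefined list, which corresponds to the second property listed above.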

3.3.2. MACCS keys

MACCS (Molecular ACCess System) keys are another of the most widely used sets of structural keys [36], [37], [39], [38]. They are sometimes known as MDL keys, after the company that developed them. There are two sets of MACCS keys, one with 960 keys and the other containing a subset of 166 keys [40], [41], [42], [43], [44], but only the shorter set of fragment definitions is available to the general public. These 166 public keys are implemented in open source chemoinformatics software packages, including RDKit and CDK.

3.3.3. PubChem fingerprints

PubChem is a repository that houses a large amount of molecular information that can be consulted and downloaded for free. A substructure is a fragment of a chemical structure, and PubChem generates a fingerprint, which is a list of bits, from the substructures present in a compound [41], [42], [45]. PubChem fingerprints are 881-bit structural keys, which PubChem uses to perform similarity searches [44], [46]. They are also used for structure neighboring, which pre-computes a list of similar chemical structures for each compound. This pre-computed list can be accessed through the Compound Summary page.

3.3.4. Atom pairs

Atom-pair fingerprints are based on topological paths: they represent the connectivity paths, as defined by the specific fingerprint, that can be traced through an input compound [36], [39], [37], [41]. They focus mainly on the chemical connectivity information of synthetic compounds. Within this class we can distinguish:

1. AtomPairs2DFingerprint (APFP): defined in terms of the atomic environment of, and the shortest path separation between, all pairs of atoms in the topological representation of a compound structure. It encodes 780 atom pairs at various topological distances.
2. GraphOnlyFingerprint (GraphFP): a specialized version of the molecular fingerprint in the Chemistry Development Kit (CDK), which encodes fragment paths in the compound structure into 1024 bits and does not take bond order information into account.
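The shortest-path idea behind atom-pair fingerprints can be sketched as follows. This is a simplified illustration, not the APFP implementation: atoms are described only by their element symbol rather than by a fuller atomic environment, the result is a dictionary of counts rather than a fixed 780-bit vector, and the function names are hypothetical.

```python
from collections import Counter, deque

def topological_distances(adjacency, start):
    """Breadth-first search: number of bonds on the shortest path from start."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for nxt in adjacency[current]:
            if nxt not in dist:
                dist[nxt] = dist[current] + 1
                queue.append(nxt)
    return dist

def atom_pair_counts(atoms, bonds):
    """Count (element, element, topological distance) triples over all atom pairs."""
    adjacency = {i: [] for i in range(len(atoms))}
    for a, b in bonds:
        adjacency[a].append(b)
        adjacency[b].append(a)
    counts = Counter()
    for i in range(len(atoms)):
        dist = topological_distances(adjacency, i)
        for j in range(i + 1, len(atoms)):
            if j in dist:  # skip pairs in disconnected fragments
                first, second = sorted((atoms[i], atoms[j]))
                counts[(first, second, dist[j])] += 1
    return counts

# Ethanol heavy atoms: C0 - C1 - O2
pairs = atom_pair_counts(["C", "C", "O"], [(0, 1), (1, 2)])
print(dict(pairs))
```

For ethanol this yields one C-C pair at distance 1, one C-O pair at distance 1 and one C-O pair at distance 2. A fixed-length fingerprint would then map each such triple to a predefined bit position.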

3.3.5. CDK

The Chemistry Development Kit (CDK) is a set of open source chemoinformatics tools widely used in areas such as drug discovery and toxicology. It provides data structures to represent chemical concepts, including 2D and 3D representations of chemical structures, along with methods to manipulate these structures and perform calculations on them. The library implements a wide variety of algorithms, ranging from the canonicalization of chemical structures to molecular descriptor calculations and pharmacophore perception [36].

The CDK provides methods for common tasks in molecular computing, including 2D and 3D representation of chemical structures, SMILES generation, ring searches, isomorphism verification, structure diagram generation, etc. Implemented in Java, it is used both for server-side computational services, possibly equipped with a web interface, and for client-side applications [37].

3.3.6. Other types of fingerprints

In addition to the types of fingerprints described above, there are many others that, although not as widely used, appear regularly in the literature, such as EstateFP [42], [47], [48] and Klekota-Roth [39], [41], [36].

3.4. Graph-based machine learning algorithms

Most predictive models in chemoinformatics base their input data on molecular descriptors calculated and coded in numerical vectors, as described in the previous section. The use of these descriptors generates high dimensionality matrices for the use of classical ML algorithms such as Random Forest, SVM, ANN, NB, etc. These algorithms are designed to process data structured in matrices or vectors, but are not capable of using the total information of molecules that are represented as a mathematical graph.

A molecular graph is the representation of the structural formula of a chemical compound in terms of graph theory. Each molecule is represented as a graph (G), in which each atom is a node and each bond is an edge. V is the set of atoms in the molecule, A is the adjacency matrix that indicates the connectivity between atoms, and X is the matrix of atomic features, with one row per atom. Therefore, each graph is mathematically represented as G = (V, A, X) [49], [50].
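The representation G = (V, A, X) can be made concrete with a short sketch. The helper below is hypothetical and makes two simplifying assumptions: only heavy atoms are included, and the atomic features in X are a one-hot encoding of the element over a small, arbitrary vocabulary (real models typically use richer features such as charge or hybridization).

```python
import numpy as np

def molecule_to_graph(atoms, bonds, elements=("C", "N", "O")):
    """Build (V, A, X) from a list of element symbols and (i, j) bond pairs."""
    n = len(atoms)
    # A: symmetric adjacency matrix, 1 where two atoms are bonded.
    A = np.zeros((n, n), dtype=int)
    for i, j in bonds:
        A[i, j] = A[j, i] = 1
    # X: one row of features per atom (here, a one-hot element encoding).
    X = np.zeros((n, len(elements)), dtype=int)
    for i, symbol in enumerate(atoms):
        X[i, elements.index(symbol)] = 1
    return list(atoms), A, X

# Ethanol heavy atoms: C0 - C1 - O2
V, A, X = molecule_to_graph(["C", "C", "O"], [(0, 1), (1, 2)])
print(V)
print(A)
print(X)
```

The adjacency matrix is symmetric because chemical bonds are undirected, and each row of X describes the atom with the same index in V.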

Recently, chemoinformatic models capable of predicting specific functions have been built on the information extracted from these molecular graphs, using artificial neural networks. Unlike more conventional topologies such as the fully connected neural network (FNN) or the convolutional neural network (CNN), which extract information from vectors or numerical matrices, graph neural networks (GNNs) are capable of extracting structural information from a mathematical graph. In January 2009, Scarselli et al. [51] presented The Graph Neural Network Model, and since then these models have been widely used in various applications. Among these, chemoinformatics is one that has grown significantly in the last decade.

Subsequently, several works were published to improve these graph-based models and extend their applicability. For example, Duvenaud et al. [52] presented an architecture that generalizes fingerprint computation so that it can be learned through backpropagation. Bruna et al. [53] introduced deep convolutional networks on spectral representations of graphs, while Masci et al. [54] described convolutional networks on non-Euclidean manifolds. Graph-based machine learning algorithms, in particular GNNs, have recently begun to attract significant attention in chemical science [55], [56], [57], [51].

Graph convolutions are a deep learning architecture for learning directly from undirected graphs. In 2016, researchers from Stanford and Google Inc. developed what is known as Molecular Graph Convolutions [58]. The application of convolutional algorithms to graphs allowed computational research in drug discovery to take a step forward, and in recent years many studies have been published that use this kind of algorithm, or variants of it, for a specific task [49]. For example, the authors of [59] propose a robust, guided molecular representation based on Deep Metric Learning, which automatically generates an optimal representation for a given data set. In this way they try to account for the changes in molecular properties produced by changes in molecular structure. The authors of [60] developed new network definitions that use the assignment of atom and bond types in the force fields of molecular dynamics methods as node and edge labels, respectively, thereby improving the accuracy of activity classification for chemicals. These algorithms can also be combined with others, as in [61], where new drugs were designed using GCNs and reinforcement learning. In that work, the problem of generating new molecules with desired interaction properties was addressed as a multi-objective optimization problem. GCNs were trained with binding interaction data, and combinations of these terms, together with drug-likeness and synthetic accessibility, were optimized using reinforcement learning based on a graph convolution policy approach. Moreover, the authors of [62] propose a comprehensive method for applying symmetry in graph neural networks, which extends the coverage of property prediction to orbital symmetry for both normal and excited states. This method is able to include molecular symmetry in the predictive models by linking real space (R) and momentum space (K).

Finally, another example is the work in [63], which implemented graph-based deep learning models to predict the flash points of organic molecules, a property that plays an important role in preventing flammability risks. After comparison with different techniques, the graph-based models outperformed the others. In addition, the authors of [55] implemented another graph-convolutional neural network for the prediction of chemical reactivity.
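To give an idea of what a graph convolution computes, the sketch below implements a single propagation step with NumPy. It uses a commonly used symmetric-normalized update rule and is not necessarily the formulation of [58] or of any other work cited here; the weight matrix is random rather than trained, and the graph is a three-atom toy example with invented two-dimensional atom features.

```python
import numpy as np

def graph_convolution(A, X, W):
    """One graph-convolution step: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    A_hat = A + np.eye(A.shape[0])            # add self-loops
    degree = A_hat.sum(axis=1)
    D_inv_sqrt = np.diag(1.0 / np.sqrt(degree))
    H = D_inv_sqrt @ A_hat @ D_inv_sqrt @ X @ W
    return np.maximum(H, 0.0)                 # ReLU non-linearity

# Toy graph: three atoms in a chain (0 - 1 - 2).
A = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]], dtype=float)
X = np.array([[1, 0],
              [1, 0],
              [0, 1]], dtype=float)           # one feature row per atom

rng = np.random.default_rng(0)
W = rng.normal(size=(2, 4))                   # untrained weights, 2 -> 4 features

H = graph_convolution(A, X, W)                # updated features for each atom
graph_embedding = H.sum(axis=0)               # sum readout over atoms
print(H.shape, graph_embedding.shape)
```

Each atom's new features mix its own features with those of its bonded neighbours, and stacking several such steps lets information travel further along the graph. Summing over atoms gives a molecule-level vector that does not depend on atom numbering, and this vector can be passed to a standard classifier or regressor.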

One of the weaknesses of these technologies is that they are usually treated as black boxes, whose results have very low interpretability. To address this, the authors of [64] developed a new graph neural network architecture for molecular representation that uses a graph attention mechanism to learn from relevant drug discovery data sets. The interpretability of the results obtained with this new architecture is the main strength of that work.

4. Biological problems addressed by Machine Learning in drug discovery

A drug can be defined as a molecule that interacts with a functional entity in the organism, called a therapeutic target or molecular target, modifying its behaviour in some way. Known drugs act on known targets, but the discovery of new ones that can modify the course of a disease or improve the effectiveness of existing treatments is one of the main objectives of research in the field of chemistry and biology.

The development of a new drug can take up to 12 years and it is estimated that its average cost, until it reaches the market, is approximately one billion euros. The time and costs involved are largely associated with the large number of molecules that fail at one or more stages of their development, as it is estimated that only 1 in 5,000 drugs finally reach the market.

The previous statistics show that the discovery and development of new drugs is a very complex and expensive process. This process has been carried out for a long time using exclusively experimental methods. The technological advances of the last few decades have promoted the birth of the term in silico, a term that is now common in biology laboratories, and which designates a type of experiment that is not done directly on a living organism (these are called in vivo experiments) or in a test tube or other artificial environment outside the organism (experiments called in vitro), but is carried out virtually through computer simulations of biological processes.

The complexity of modern biology has made these computational tools essential for biological experimentation, as they allow theoretical models to be coded with great precision and are capable of processing large amounts of information, thus facilitating and accelerating the process of developing new drugs.

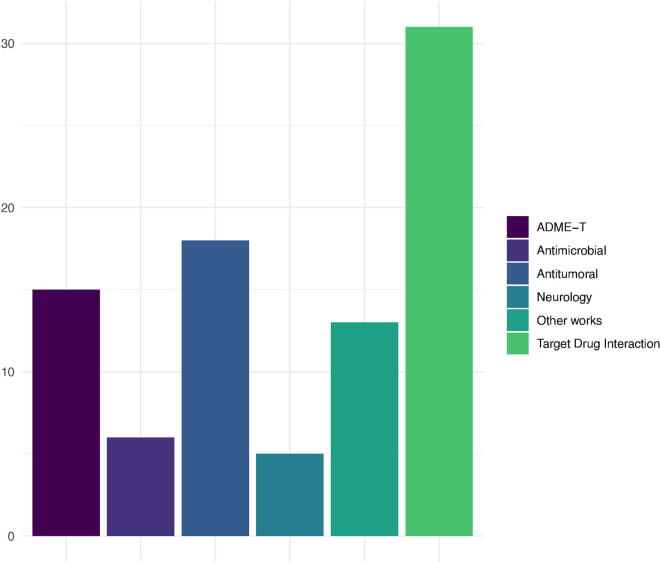

The development of a new drug begins with the search, through high-throughput screening, for so-called hits: molecules or compounds that show biological activity against a therapeutic or molecular target, which is the place in the body where the drug is intended to act. This phase is followed by the generation of leads, in which the previously selected molecules are validated and structurally refined to increase their potency against the target. In addition, appropriate pharmacokinetic properties are expected, i.e. adequate absorption, distribution, metabolism and elimination (ADME) rates, as well as low toxicity and low rates of adverse effects. Fig. 5 shows a bar chart of the number of articles identified for each biological problem. These problems were defined by the researchers according to the set of articles consulted. The vast majority of the articles belong to the group of therapeutic targets. Work related to cancer is also of great interest, as is work aimed at predicting the adverse effects of certain drugs.

Fig. 5.

Count of identified articles classified according to the biological problem addressed. The selected articles were sampled from the period 2016 to 2020.

4.1. Absorption, distribution, metabolism, elimination and toxicity

The concept of drug-likeness, established from the analysis of the physicochemical properties and structural characteristics of existing or candidate compounds, has been widely used to filter out compounds with undesirable properties in terms of absorption, distribution, metabolism, elimination and toxicity (ADMET) [65]. The study of the ADME phases that a drug undergoes after being administered to an individual is another fundamental task in the development of new compounds [66]. An alteration of any of these stages in a patient (for example, excretion problems due to renal failure, increased volume of distribution in obese people, absorption problems due to gastrointestinal pathology, or problems in drug metabolism due to impaired liver function) may influence the final plasma concentration of the drug and modify the expected response of the organism, thus requiring a decrease or increase in the dose in each case. Therefore, it is essential to estimate the pharmacokinetic properties of a compound in the early stages of research, and new tools have been developed to improve and speed up this phase of development. One example is Chemi-Net [67], a tool for predicting ADME properties that improves accuracy over an existing tool with the same purpose.

Bayer Pharma has implemented an in silico ADMET (absorption, distribution, metabolism, excretion and toxicity) platform with the aim of generating models for a wide variety of pharmacokinetic and physicochemical properties that are useful in the early stages of drug discovery. These tools are accessible to all scientists within the company [68].

The octanol–water partition coefficient is a measure of the hydrophobicity or lipid affinity of a substance dissolved in water. Chemical compounds with high values of this coefficient usually accumulate in the lipid portions of organisms, thus producing toxicity. In contrast, compounds with a low coefficient tend to be distributed in water or air, so they can be eliminated from the organism without accumulating [69]. Furthermore, an adequate estimate of the elimination half-life of a drug has potential applications in early pharmacokinetic evaluations and can provide guidance for designing drug candidates with a favourable in vivo exposure profile [70], so improving this estimate is another small contribution to this complex development process.
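For reference, the partition coefficient P is the ratio of the equilibrium concentrations of the un-ionized compound in the octanol and water phases, and it is usually reported as log P. The helper below is a minimal sketch of that definition; the function name and the example concentrations are invented, and both concentrations are assumed to be in the same units.

```python
import math

def log_p(conc_octanol, conc_water):
    """log10 of the octanol/water concentration ratio at equilibrium."""
    if conc_octanol <= 0 or conc_water <= 0:
        raise ValueError("concentrations must be positive")
    return math.log10(conc_octanol / conc_water)

# A compound 100 times more concentrated in octanol than in water (lipophilic).
lipophilic = log_p(100.0, 1.0)
# A compound 10 times more concentrated in water than in octanol (hydrophilic).
hydrophilic = log_p(1.0, 10.0)
print(lipophilic, hydrophilic)
```

Positive values indicate a preference for the lipid-like phase and negative values a preference for water, which is the behaviour described in the paragraph above.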

Metabolism is the main route of elimination from the body for most of the 200 most marketed medicines. The study of compound stability in NADPH-fortified liver microsomes is common in the research of new drugs to predict clearance and thus estimate the maximum exposure to a drug for a given dose [35]. In addition, the liver is the main organ involved in drug metabolism, and drug-induced liver injury has therefore often hindered the development of new drugs. Assessing the risk of liver injury for drug candidates is an effective strategy to reduce the risk of failure in new drug discovery.

Toxicity is a major cause of failure in drug research and development. International data showed that in the period from 2006 to 2010, toxicity accounted for 22% and 54% of failures in drug research and development at the clinical and preclinical stages, respectively. Adverse drug reactions (ADRs), which can increase morbidity, occur more significantly in hospitalized patients and, in addition to the clinical burden, also represent a significant economic cost. For all these reasons, early stage virtual screening of drug candidate molecules plays a key role in the pharmaceutical industry in preventing ADRs. It is therefore essential to study toxicological properties as early as possible and to give priority, at the hit discovery stage, to the lead compounds that pose the least threat, thus increasing the chances of success during clinical development.

Computer-based predictions of toxicity and ADR can point drug safety testing in the right direction and consequently shorten the time required and save costs during drug development. Some adverse reactions can be part of the natural pharmacological action of a drug that cannot be avoided, but more often, they can be unpredictable at the development stage. They may occur due to the administration of one drug or the combined use of two or more drugs. The aim of [71] is to identify treatments for dermatological diseases (including psoriasis, atopic dermatitis, rosacea, acne vulgaris, alopecia, melanoma, eczema, keratosis, and pruritus) that may induce adverse reactions when taken in combination with other topical or oral drugs (immunosuppressants, enzyme inhibitors) prescribed to treat other pathologies (fungal/bacterial infections).

To advance research into compounds against infectious agents, these compounds must show a relative lack of toxicity in mammalian cells. In initial trials, the use of Vero cells to measure the cytotoxicity that a drug can produce is common, thus allowing the identification of non-cytotoxic components [28].

Prior to its clinical application, a drug must go through two stages of ADR detection: preclinical safety profiling and clinical safety trials [72]. The high number of potential adverse effects that can occur when drugs are taken alone or in combination makes it difficult to detect many of them during early drug development, so tools such as SDHINE [73] have been proposed to predict adverse drug reactions. The authors of [74] developed a new methodology for predicting drug side effects (the 'Feature Selection-based Multi-Label K-Nearest Neighbor method'), which can also help reveal the possible causes of adverse effects. Computational tools have also been developed to distinguish between carcinogenic and non-carcinogenic compounds [37].

Many drug candidates can block potassium channels, a potentially fatal phenomenon as it can produce long QT syndrome, leading to death from ventricular fibrillation. The authors of [67] provide a web-based tool for predicting the hERG potassium channel-related cardiotoxicity of chemicals [75], which can be used in the high-throughput virtual screening stage for drug candidates.

Computational attempts have also been made to evaluate toxicity profiles of compounds included in drugs used in the treatment of HIV and Malaria [76].

4.2. Antimicrobials

Infectious diseases are caused by pathogenic microorganisms such as bacteria, viruses, parasites or fungi. These diseases can be transmitted, directly or indirectly, from one person to another.

Few new classes of antibiotics have emerged in recent decades, and this pace of discovery is unable to keep up with the increasing prevalence of resistance. However, the large amount of data available promotes the use of machine learning techniques in discovery projects (for example, building regression and classification models, and virtual screening or selection of compounds). The authors of [77] review some Machine Learning applications focusing on the development of new antibiotics and on the prediction of resistance and its mechanisms.

Antibiotics are the treatment of choice for bacterial diseases, but their increased use and abuse has led to the emergence of bacterial resistance to many of today’s antibiotics, hence the need to generate new compounds that can combat multi-resistant organisms. Thus, much research is being done on Halicin [78], a molecule with bactericidal capacity that has shown great promise in attacking bacteria that are difficult to treat with current antibiotics. Its structure is different from that of conventional antibiotics and it shows bactericidal capacity against a wide phylogenetic spectrum that includes Mycobacterium tuberculosis (a bacterium for which more efficient treatments are still being sought [31]), enterobacteria, Clostridioides difficile and Acinetobacter baumannii. But resistance is not exclusive to bacteria: resistance to current treatments for malaria, which is caused by the protozoan parasite Plasmodium falciparum and affects more than 200 million people worldwide, is also a concern. While the treatment of choice includes combinations of drugs [79], studies are already underway to identify new combinations that may circumvent current resistance mechanisms [80].

Viruses can cause common infectious diseases such as the common cold and influenza, for which there is currently no specific treatment (it is the immune system that eliminates them from the body) or preventive treatment (vaccines). But viruses also cause serious diseases such as AIDS, Ebola (the subject of a large number of studies [81] because of the 2016 epidemic), or COVID-19 (on whose curative or preventive treatment most current global efforts are focused). In addition, co-infection with certain viruses is common in certain populations, such as co-infection with human immunodeficiency virus type 1 and hepatitis C virus (HIV/HCV). In this case, treating the co-infection is a challenge because of the special considerations needed to ensure liver safety and avoid drug interactions. Therefore, drugs are sought that are effective against multiple pathogens, have lower toxicity and can provide a therapeutic strategy in certain co-infections [38].

An effective therapeutic strategy is urgently needed to treat the rapidly growing number of COVID-19 patients all over the world. As there is no proven effective drug to treat COVID-19 patients, it is fundamental to develop efficient strategies that allow the repurposing of existing drugs or the design of new drugs against SARS-CoV-2.

The main strategies based on artificial intelligence and machine learning techniques that are being used for this purpose are summarized in [82].

The 3C-like protease (3CLpro) of the coronavirus associated with severe acute respiratory syndrome (SARS) is a potential target for anti-SARS agents, due to its critical role in viral replication and transcription. Due to the high structural similarity between the enzymes of the older SARS-CoV strain and the new SARS-CoV-2, compounds that inhibit the former enzyme would be expected to show similar interactions with the latter. In numerous studies [83], [84], QSAR models have been developed to search for compounds that act as SARS-CoV 3CLpro inhibitors and to study the structural characteristics of the molecules that control their 3CLpro inhibitory activity (pIC50).
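The pIC50 value used as the response variable in such QSAR models is the negative decimal logarithm of the half-maximal inhibitory concentration (IC50) expressed in mol/L. The sketch below shows the conversion from nanomolar values; the function name and the example concentrations are illustrative and are not taken from [83] or [84].

```python
import math

def pic50_from_ic50_nm(ic50_nm):
    """Convert an IC50 given in nanomolar to pIC50 = -log10(IC50 in mol/L)."""
    if ic50_nm <= 0:
        raise ValueError("IC50 must be positive")
    # 1 mol/L = 1e9 nmol/L, so -log10(ic50_nm * 1e-9) = 9 - log10(ic50_nm).
    return 9.0 - math.log10(ic50_nm)

weak_inhibitor = pic50_from_ic50_nm(1000.0)   # IC50 = 1 micromolar
potent_inhibitor = pic50_from_ic50_nm(10.0)   # IC50 = 10 nanomolar
print(weak_inhibitor, potent_inhibitor)
```

Working on this logarithmic scale means that a higher pIC50 corresponds to a more potent inhibitor, and a tenfold drop in IC50 raises pIC50 by one unit.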

Central nervous system (CNS) infections are a major cause of morbidity and mortality. The passage of fluids and solutes into the CNS is closely regulated through the blood–brain barrier (BBB). The penetration of any drug into the cerebrospinal fluid (CSF) depends on molecular size, lipophilicity, binding to plasma proteins and their affinity for transporters. In the search for drugs indicated for CNS infections, it is essential to predict their capacity to cross this barrier [85], being able to discard from the beginning those that do not do so in minimal concentrations.

4.3. Target drug interaction

Determining the target is the most important, and most error-prone, step in the development of a therapeutic treatment for a disease, where failures are potentially costly given the long time frames and expenses of drug development. Compound-protein interaction (CPI) analysis has become a crucial prerequisite for new drug discovery [86], [87], [88], [89], [90]. In vitro experiments are commonly used to identify CPIs, but it is not feasible to perform this task through experimental approaches alone, and advances in machine learning for predicting CPIs have made great contributions to drug discovery. To improve the prediction of ligand–protein interactions, tools such as Multi-channel PINN [26] have been developed. In addition, the study of these interactions is essential for identifying leads for novel drugs and for predicting the side effects of approved drugs and candidates [40].

On the other hand, identifying the viability of target proteins is another preliminary step of drug discovery [91], [92], [93]. Determining a protein’s ability to bind drugs that modulate its function, known as druggability, requires a non-trivial amount of time and resources. This task is aided by new functions developed in eFindSite [94], a freely available stand-alone software package that uses supervised machine learning to predict the druggability of a given protein.

The crystallised ligands in the Protein Data Bank (PDB) can be treated as inverse shapes of the active sites of the corresponding proteins. Shape similarity between a molecule and PDB ligands indicates the possibility that the molecule binds to certain targets [95]. Membrane proteins are involved in many essential biomolecular mechanisms as a key factor in enabling the transport of small molecules and signals across the cell membrane. Therefore, accurate identification of membrane protein–ligand binding sites will significantly improve drug discovery. To this end, MPLs-Pred [96] has been developed as a freely available tool for general users. Predicting interactions with multiple kernel methods also makes it possible to identify possible drug-target interaction pairs [97].

Cone snails are venomous sea snails that inject their prey with a lethal cocktail of conotoxins, which are small, secreted, cysteine-rich peptides. Given their diversity and often high affinity for their molecular targets, which consist of ion channels, receptors or transporters, many conotoxins have become valuable pharmacological probes. However, homology-based search techniques, by definition, can only detect new toxins that are homologous to previously reported conotoxins. To overcome this obstacle, ConusPipe is a machine learning tool that uses the chemical characteristics of conotoxins to predict whether a given transcript in a Conus transcriptome, which has no otherwise detectable homologues in current reference databases, is a putative conotoxin. This provides a new computational pathway for the discovery of new families of toxins [98].

4.4. Antitumorals

Cancer is a major public health problem throughout the world and it is therefore imperative that new drugs are developed for its treatment. The main goal of cancer research is to discover the most effective method of treatment for each cancer patient, since not everyone responds equally to a specific treatment due to external factors, such as the use of tobacco products and unhealthy diets, and internal factors, such as heterogeneity of cancer cells and immune conditions. As the number of cancer patients worldwide increases each year, being able to correctly predict a cancer’s response or non-response to a specific drug would be invaluable.

The word cancer refers to the uncontrolled proliferation of abnormal cells, which when they outgrow normal cells, make it difficult for the body to function the way it should. Although the word cancer is used in a general way, the term encompasses a range of diseases with very different pathogenesis, evolution and treatment.

In general, many therapeutic targets that may be amenable to treatment have been studied and identified, and computational techniques have been widely used to predict the activity of many compounds on these targets. For example, it is known that G-protein-coupled receptors (GPCRs) play a key role in many cell-signalling mechanisms whose alteration may be involved in the pathogenesis of cancer [99]. Bromodomain-containing protein 4 (BRD4) has emerged as a promising therapeutic target for many diseases, including cancer, heart failure and inflammatory processes. Nitroxoline is an antibiotic that showed potential as a BRD4 inhibitor and has been shown to be effective against leukaemia cell lines [100]. Indoleamine 2,3-dioxygenase (IDO), an immune checkpoint, is a promising target for cancer immunotherapy. Three IDO inhibitors with potent activity have been identified by machine learning methods [34], but have not yet been approved for clinical use. Attempts have also been made to predict the response of different types of tumours to the same drug [101], including breast cancer, triple-negative breast cancer and multiple myeloma. Histone deacetylases (HDAC) are an important class of enzyme targets for cancer therapy, and computational techniques have been used to search for compounds that inhibit them [102].

The phosphoinositide 3-kinase protein (PI3K) plays a key role in an intracellular signalling pathway responsible for many processes in response to extracellular signals, such as regulation of the cell cycle, cell survival, cell growth and angiogenesis. Vascular tumours in children often show mutations in this molecule, so it has become a promising drug target for cancer chemotherapy. PI3K inhibitors have gained importance as a viable cancer treatment strategy because they act on most features of cancer, including cell cycle, survival, metabolism, motility and genomic instability. Structure-based virtual screening has been performed to identify PI3K inhibitors [103]. A membrane glycoprotein that has also been studied as a target, and for which inhibition simulations have been carried out, is P-glycoprotein (P-gp) [104]. Different tumour cell lines have also been used to study antibodies as a possible treatment [105], quantifying levels of proliferation and apoptosis to predict their performance.

Molecules can be identified as anti-cancer agents through two widely used drug discovery methods [106]: target-based drug discovery (TDD, target-first, direct chemical biology) and phenotype-based drug discovery (PDD, function-first, reverse chemical biology).

Kinases are one of the largest protein families considered to be attractive pharmacological targets for neoplastic diseases, due to their fundamental role in signal transduction and the regulation of most cellular activities [107]. As a result, kinase inhibitors have gained great importance in cancer drug discovery over the last two decades. Despite considerable academic and industry effort, however, current chemical knowledge of kinase inhibitors remains limited. Tools such as Kinformation [108], which relies on machine learning methods to automate the classification of kinase structures, have therefore been developed, and this approach is expected to improve protein kinase modelling in both active and inactive conformations.

Neoplastic growth and cell differentiation are fundamental characteristics of tumour development. It is well established that communication between tumour cells and normal cells through connexin-containing channels, including gap junctions, tunnelling nanotubes and hemichannels, regulates tumour differentiation and proliferation, aggressiveness and resistance to treatment. It has been proposed that new computational approaches [109] to the identification and characterisation of these communication systems, and their associated signalling, could provide new targets for preventing or reducing the consequences of cancer.

It has been postulated that new drug combinations can improve personalized cancer therapy. Using various types of genomic information on cancer cell lines, drug targets and pharmacological information, it is possible to predict drug combination synergy by regressing the level of synergy between two drugs and a cell line, as well as classifying whether synergy or antagonism exists between them [110].

In the current diagnosis of many cancers, nuclear morphometric measurements are used to make an accurate prognosis at late stages, but early diagnosis remains a great challenge. Recent evidence highlights the importance of alterations in the mechanical properties of individual cells and their nuclei as critical drivers for the emergence of cancer. Detecting subtle changes in nuclear morphometry at single-cell resolution by combining fluorescence imaging and deep learning [111] allows discrimination between normal cells and breast cancer cell lines, thus opening new avenues for early disease diagnosis and drug discovery.

4.5. Neurology

Chemotherapy-induced peripheral neuropathy (CIPN) is a common adverse side effect of cancer chemotherapy, which can cause extreme pain and even disable the patient. Lack of knowledge about the mechanisms of multifactorial toxicity of certain compounds has prevented the identification of new treatment strategies, but computational models of drug neurotoxicity [112] are used early in drug development to detect high-risk compounds and select safer candidate drugs.

Many CNS disorders, whether neurodegenerative or traumatic, require multiple strategies to address neuroprotection, repair and regeneration of cells. The knowledge accumulated on neurodegenerative processes and neuroprotective treatments can be used, through computational techniques such as Machine Learning, to identify combinations of drugs that can be repurposed as potential neuroprotective agents [113]. Another branch of neurology that generates great scientific interest is the study of neurodegenerative diseases such as Alzheimer’s disease, the main cause of dementia and a pathology that currently has no cure. Several studies have reported that the expression of ROCK2, but not of ROCK1, is significantly increased in the nervous tissue of patients with neurodegenerative disorders, so the suppression of ROCK2 expression is considered a pharmacological target for the treatment of this disease [48]. Similarly, 5-HT1A is a brain receptor used as a biomarker for degenerative disorders, and work has been carried out to predict compounds that will bind to this receptor [114]. More generally, starting from an equal number of drugs approved or withdrawn for the treatment of CNS pathologies, discriminative fragments have been studied that allow the search for other similar compounds for the treatment of these pathologies [115].

4.6. Other works

Type 2 diabetes mellitus is the most common endocrine pathology in the world. It causes complications in many organ systems that can shorten life and considerably reduce the quality of life of the patients who suffer from it. For this reason, the pharmaceutical industry has made many efforts in the search for efficient treatments that can cure this disease or, failing that, minimize the lesions produced in target organs by excess blood glucose. One branch of research focuses on the inhibition of the sodium-dependent glucose co-transporters (SGLT1 and SGLT2), through which glucose is absorbed. Dual inhibitors have been developed, but the search continues for compounds aimed at reducing the absorption of glucose by SGLT1 [33]. Another branch of research within endocrinology focuses on the physiopathology and treatment of obesity. The nuclear receptors known as PPARs (Peroxisome Proliferator Activated Receptors) are transcription factors that are activated by the binding of specific ligands and regulate the expression of genes involved in lipid and glucose metabolism. These receptors have been proposed as therapeutic targets for metabolic diseases, and ISE (Iterative Stochastic Elimination) [116], a tool that allows PPAR agonist compounds to be distinguished, has been developed.

When the C1 complement component is over-activated, its regulation can be altered, producing tissue damage that activates the complement system again. The result is a self-perpetuating cycle of activations that produces ever greater damage. Treatments to inhibit C1 are expensive, so the search for cheaper inhibitors continues [117].

As mentioned, the development of new drugs is a complex and resource-intensive process. Therefore, the search for new clinical indications for existing drugs has become an alternative that accelerates the process and reduces its costs. The term drug repositioning refers to the process of developing a compound for use in a pathology other than its current indication, taking advantage of the abundance, variety and easy access to pharmaceutical products and biomedical data [118]. A promising approach to drug repositioning is to use machine learning algorithms to learn patterns in the available drug-related biological data and link them to the specific diseases to be treated [119], [120]. For example, new indications against malaria, tuberculosis and large cell carcinoma have been proposed by predicting protein interactions, with accuracy assessed by comparing the similarity of these interactions with those of drugs approved for other indications [121].

The WHO proposed the Anatomical Therapeutic Chemical (ATC) classification, which assigns codes to compounds according to their therapeutic, pharmacological and chemical characteristics, as well as their sites of in vivo activity. The ability to predict the ATC codes of compounds can assist in the creation of high-quality chemical libraries for compound screening and drug repositioning [30].

5. Trending in ML algorithms used in drug design

5.1. Naive Bayes

Generally speaking, Machine Learning algorithms try to find the best hypothesis for a given data set of interest; in a classification problem, this means predicting the class of an unknown data sample. Bayesian classifiers assign the most likely class to each sample, according to the description given by the values of its variables. In its simplest version, the algorithm assumes that the variables are conditionally independent given the class, which greatly simplifies the application of Bayes’ Theorem [122]. Although this assumption is unrealistic (variables are rarely independent, and not all of them are equally important), the family of classifiers that arises from this premise, known as NB (Naïve Bayes), obtains outstanding results even in cases where there are strong dependencies among the attributes. It is a simple, fast model that is capable of working with noisy data. It is able to learn from small data sets, which is an advantage, and it also copes well with a very high number of samples. It is not the ideal algorithm for high-dimensional problems with a large number of attributes, since it uses frequency tables to extract knowledge from the data and treats each variable as categorical, so numerical variables must first undergo some kind of transformation.
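To make the frequency-table idea concrete, the following is a minimal sketch of a Bernoulli Naïve Bayes classifier written from scratch. It is an illustration only and is not taken from any of the reviewed works: the binary input vectors stand in for fingerprint bits, the toy data and the function names `train_bernoulli_nb` and `predict` are hypothetical, and the Laplace smoothing constant `alpha` is an assumption of this sketch.

```python
import math
from collections import defaultdict


def train_bernoulli_nb(X, y, alpha=1.0):
    """Fit a Bernoulli Naive Bayes model on binary feature vectors.

    X: list of equal-length lists of 0/1 values (hypothetical fingerprint bits).
    y: list of class labels, one per row of X.
    alpha: Laplace smoothing constant (an assumption of this sketch).
    """
    n_features = len(X[0])
    class_counts = defaultdict(int)
    bit_counts = defaultdict(lambda: [0] * n_features)
    # Build the frequency tables: how often each bit is set within each class
    for row, label in zip(X, y):
        class_counts[label] += 1
        for j, bit in enumerate(row):
            bit_counts[label][j] += bit
    model = {}
    total = len(y)
    for label, count in class_counts.items():
        log_prior = math.log(count / total)
        # P(bit_j = 1 | class), smoothed so it is never exactly 0 or 1
        probs = [(bit_counts[label][j] + alpha) / (count + 2 * alpha)
                 for j in range(n_features)]
        model[label] = (log_prior, probs)
    return model


def predict(model, row):
    """Return the class with the highest posterior log-probability."""
    best_label, best_score = None, -math.inf
    for label, (log_prior, probs) in model.items():
        # Independence assumption: per-feature log-likelihoods simply add up
        score = log_prior
        for bit, p in zip(row, probs):
            score += math.log(p if bit else 1.0 - p)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Toy data: four "molecules" described by three made-up binary descriptors
X_train = [[1, 1, 0], [1, 0, 0], [0, 1, 1], [0, 0, 1]]
y_train = ["active", "active", "inactive", "inactive"]
nb_model = train_bernoulli_nb(X_train, y_train)
prediction = predict(nb_model, [1, 1, 0])
```

Because each variable contributes an independent term to the score, training reduces to counting, which is why the method is fast and tolerates small data sets.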

5.1.1. Naive Bayes in drug discovery

This model has been used in drug discovery for the prediction of possible drug targets. Specifically, the authors of [123] developed a Bayesian model that integrates different data sources, such as known side effects or gene expression data, achieving 90% accuracy on more than 2,000 molecules and also carrying out an experimental validation of the screening process. In [38], multi-target molecules for the treatment of HIV/HCV were predicted with an AUC of 80% from data obtained from ChEMBL, generating two types of descriptors (MACCS and ECFP6) for each molecule and validating the results by docking techniques. In [40], a model for the prediction of ligand-target interactions with an accuracy of 95% was generated from 5125 known interactions with four different subtypes of proteins (enzymes, ion channels, GPCRs and nuclear receptors) obtained from KEGG and DrugBank, together with random interactions from STITCH. In [30], drug prediction models according to the ATC (Anatomical Therapeutic Chemical) system of the WHO were generated with an accuracy of 65%, using a data set taken from STITCH and ChEMBL and calculating three different types of molecular descriptors (based on structure, interaction between compounds and interactions with similar targets). In [31], an experimental design based on machine learning and molecular docking was followed for the prediction of possible inhibitors of Mttopo I, a target protein for tuberculosis, with AUC values of 74% and in vitro validation of the computational results.

Furthermore, in [39], different molecular descriptors were generated for a set of compounds known to damage the liver, and possible liver damage by traditional Chinese medicine was predicted with an accuracy of 72%. In [34], prediction models for IDO inhibitors with an AUC of 80% were generated from 50 ChEMBL compounds, calculating QSAR descriptors and validating the results by means of molecular docking techniques.

This type of model has also been used in [28] to predict the toxicity of chemical compounds obtained from previous publications in PubMed, with an AUC higher than 80% and with the best predictions validated in the laboratory. It has likewise been used in [103] to predict PI3K inhibitors from 3D QSAR descriptors obtained from ChEMBL and BindingDB, with AUC values of 97% and with validation both in vitro and by means of computational docking techniques.

5.2. Support vector machines

Vapnik introduced Support Vector Machines (SVM) in the late seventies [124]. They are one of the most widely used techniques because of their good performance and their ability to generalize in high-dimensional domains, especially in bioinformatics [125], [126], [127]. In machine learning, sets of points in a given space are used to learn how to deal with new observations. Kernel-based methods use those points to learn how similar new observations are to them and to make a decision accordingly. Kernels encode and measure the similarity between objects [128], [129], [130].
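As an illustration of a kernel acting as a similarity measure, the sketch below computes a Gaussian (RBF) kernel between small numeric vectors and assembles the resulting similarity (Gram) matrix. The choice of the RBF kernel, the value of `gamma`, the toy points and the helper names `rbf_kernel` and `gram_matrix` are assumptions made for this example; the reviewed works may use other kernels.

```python
import math


def rbf_kernel(x, z, gamma=0.5):
    """Gaussian (RBF) kernel: similarity decays with squared Euclidean distance."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


def gram_matrix(points, kernel):
    """Pairwise similarity matrix K[i][j] = kernel(points[i], points[j])."""
    return [[kernel(p, q) for q in points] for p in points]


# Three hypothetical descriptor vectors: the first two are close, the third is far away
points = [[0.0, 0.0], [0.1, 0.0], [3.0, 4.0]]
K = gram_matrix(points, rbf_kernel)
```

Here identical points have similarity 1, nearby points have similarity close to 1, and distant points have similarity close to 0, which is the information a kernel-based classifier works with.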

The base implementation works with two-class problems in which the data are separated by a hyperplane, although there are implementations for regression or survival problems. If n is the dimension of the data, a hyperplane is an affine subspace of dimension n-1 that divides the space into two halves, each corresponding to the entries of one of the two classes [130]. In the classification task, the goal of the SVM is to find the hyperplane that separates the positive examples from the negative ones, oriented in such a way that the distance between the border and the nearest data of each class is maximum. These nearest points, known as support vectors, are used to define the margins [131]. This is the concept of the optimal hyperplane.
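The maximum-margin idea described above can be written compactly in its standard hard-margin form. The notation is an assumption of this sketch and does not come from the reviewed works: $\mathbf{w}$ is the normal vector of the hyperplane, $b$ its offset, $\mathbf{x}_i$ the $i$-th of $m$ training samples and $y_i \in \{-1, +1\}$ its class label.

```latex
% Separating hyperplane in an n-dimensional input space
\mathbf{w}^{\top}\mathbf{x} + b = 0, \qquad \mathbf{w} \in \mathbb{R}^{n},\; b \in \mathbb{R}

% Hard-margin SVM: minimising \|\mathbf{w}\| maximises the margin 2 / \|\mathbf{w}\|
\min_{\mathbf{w},\, b} \; \frac{1}{2}\,\|\mathbf{w}\|^{2}
\quad \text{subject to} \quad
y_i \left( \mathbf{w}^{\top}\mathbf{x}_i + b \right) \ge 1, \qquad i = 1, \dots, m
```

The support vectors are the training samples for which the constraint holds with equality, that is, the points lying exactly on the margins.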