Abstract

Unsupervised learning is becoming an essential tool to analyze the increasingly large amounts of data produced by atomistic and molecular simulations, in materials science, solid state physics, biophysics, and biochemistry. In this Review, we provide a comprehensive overview of the methods of unsupervised learning that have been most commonly used to investigate simulation data and indicate likely directions for further developments in the field. In particular, we discuss feature representation of molecular systems and present state-of-the-art algorithms for dimensionality reduction, density estimation, clustering, and kinetic modeling. We divide our discussion into self-contained sections, each discussing a specific method. In each section, we briefly touch upon the mathematical and algorithmic foundations of the method, highlight its strengths and limitations, and describe the specific ways in which it has been used (or can be used) to analyze molecular simulation data.

1. Introduction

In recent years, we have witnessed a substantial expansion in the amount of data generated by molecular simulation. This has inevitably led to an increased interest in the development and use of algorithms capable of analyzing, organizing, and eventually, exploiting such data to aid or accelerate scientific discovery.

The data sets obtained from molecular simulations are very large both in terms of the number of data points—namely, the number of saved configurations along the trajectory—and in terms of the number of particles simulated, which can be several millions or more. However, we know both empirically and from fundamental physics that such data usually have much lower-dimensional representations that convey the relevant information without significant information loss. A striking example is given by the kinetics of complex conformational changes in biomolecules, which, on long time scales, can be well described by transition rates between a few discrete states. Moreover, symmetries, such as the invariance of physical properties under translation, rotation, or permutation of equivalent particles, can also be leveraged to obtain a more compact representation of simulation data.

Traditionally, finding such low-dimensional representations is considered a task which can be tackled based on domain knowledge: The analysis of molecular simulations is often performed by choosing a small set of collective variables (CVs), possibly complex and nonlinear functions of the coordinates, that are assumed to provide a satisfactory description of the thermodynamic and kinetic properties of the system. If the CV is appropriately chosen, a histogram created from the CV summarizes all the relevant information, even if the molecular system includes millions or billions of atoms. The list of possible CVs among which one can choose is enormous and is still growing. Tools now exist which can describe phenomena of high complexity, such as the packing of a molecular solid, the allostery of a biomolecule, or the folding path of a protein. However, choosing the right CV remains to some extent an art, which can be successfully accomplished only by domain experts, or by investing a significant amount of time in a trial-and-error procedure.

Machine learning (ML) has emerged as a conceptually powerful alternative to this approach. ML algorithms can be broadly divided in three categories.1 In supervised learning, a training data set consisting of input–output pairs is available, and a ML algorithm is trained with the goal of providing predictions of the desired output for unseen input values. This approach has been extensively used to predict specific properties of materials and molecules, such as the atomization energy of a compound or the force acting on an atom during a trajectory. In unsupervised learning, no specific output is available for the data in the training set, and the goal of the ML algorithm is to extract useful information using solely the input values. A typical application of this approach in the field of molecular simulation is the construction of low dimensional collective variables, which can compactly yet effectively describe a molecular trajectory. Finally, in reinforcement learning, no data at all is used to train the ML model, which instead learns by “trial and error” and by continuously interacting with its environment. Reinforcement learning has proven particularly successful at certain computer science challenges (such as playing board games), and it is now beginning to find application in molecular and materials science. In this Review, we focus exclusively on unsupervised learning methods. We aim to provide an overview of all algorithms currently used to extract simplified models from molecular simulations to understand the simulated systems on a physical level. The review is oriented toward researchers in the fields of computational physics, chemistry, materials and molecular science, who routinely deal with large volumes of molecular dynamics simulation data, and are hence interested in using, or extending, the techniques we describe.

Some of the algorithms we review are as old as the simulation methods themselves, and have been successfully used for decades. Others are much more recent and their conceptual and practical power is only now becoming clear. Despite the recent surge in, for example, machine learning algorithms for determining CVs, many problems in the field can still be considered open, making this research area extremely active.

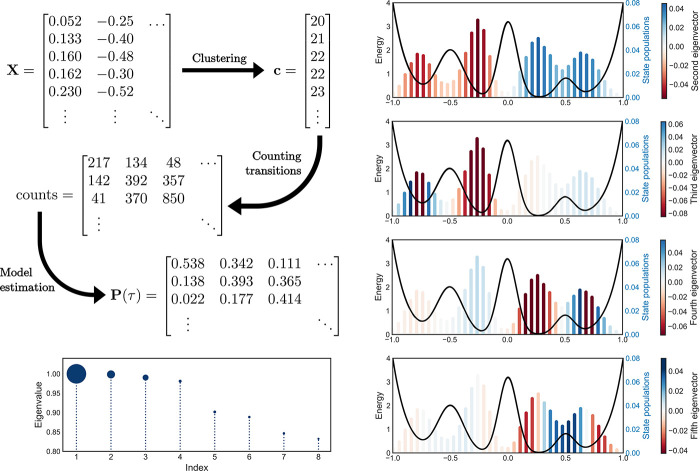

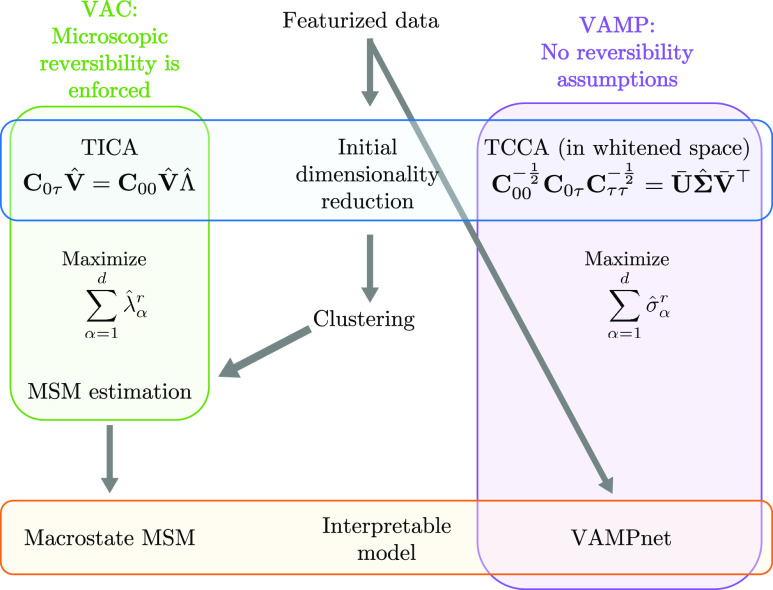

We divide our discussion into five sections, each with its own introduction: feature representation, dimensionality reduction, density estimation, clustering, and kinetic models. A graphical table of contents depicting the different methods and how they relate to each other is shown in Figure 1.

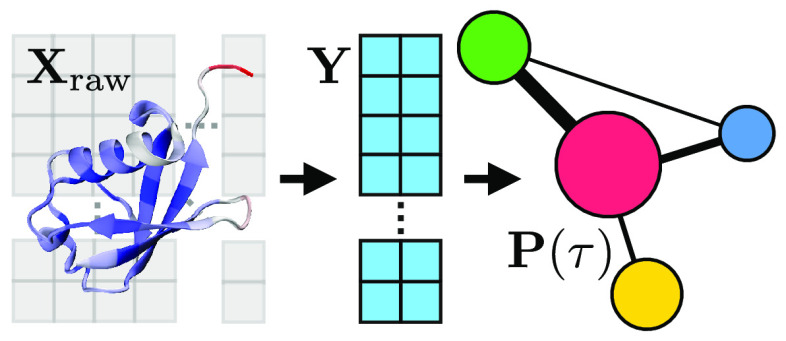

Figure 1.

Illustration of the possible steps that can be performed to analyze data from a molecular simulation with an indication of the particular section where these are discussed.

In Section 2, we discuss the choice of feature representation for atomistic and molecular systems, a topic relevant for any analysis or application of learning algorithms. We then turn to the description of unsupervised learning algorithms, which we divide into four groups. In Section 3, we review algorithms of dimensionality reduction, whose primary objective is to provide a low dimensional representation of the data set that is easy to analyze or interpret. While in molecular simulations the probability density from which the configurations are harvested is in principle known (e.g., the Boltzmann distribution), this probability density is defined over the whole molecular state space, which is too high-dimensional to be visualized and understood. In Section 4, we review algorithms of density estimation, which enable the estimation of probability distributions restricted to sets of relevant variables describing the data. These variables can be chosen, for example, using the techniques described in Section 3. In Section 5, we review clustering algorithms. Clustering divides the data set into a few distinct groups, or “clusters,” whose elements are similar according to a certain notion of distance in their original space. These techniques make it possible to capture the gross features of the probability distribution (for example, the presence of independent probability peaks) even without performing a preliminary dimensionality reduction. Therefore, the techniques described in this section can be considered complementary to those described in Section 3. When clustering is viewed as an assignment of data points to integer labels, clustering itself can be viewed as an extreme form of dimensionality reduction. In Section 6, we present kinetic models. While Sections 3−5 focus on modeling methods in which the ordering of the data points is not considered, kinetic models instead exploit dynamical information (i.e., ordering of data points in time) to reduce the dimensionality of the system representation. Altogether, this set of approaches is qualitatively based on the requirement that a meaningful low-dimensional model should reproduce the relevant time-correlation properties of the original dynamics (e.g., the transition rates). Throughout the review we present these techniques highlighting their specific application to the analysis of molecular dynamics, and discussing their advantages and disadvantages in this context. In Section 7 we list the software programs that are currently most used to perform the different unsupervised learning analyses described. Finally, in the Conclusions (Section 8), we present a general perspective on the important open problems in the field.

Some other review articles have a partial overlap with the present work. In particular, ref (2) reviews algorithms of dimensionality reduction for collective variable discovery, while refs (3 and 4) also review some approaches to build kinetic models. In this work, we review not only all these approaches but also other algorithms of unsupervised learning, namely, density estimation and clustering, focusing on the relationship between these different approaches and on the perspectives opened by their combination. Other valuable review articles of potential significance to the reader interested in machine learning for molecular and materials science are refs (5−9).

2. Feature Representation

Throughout this Review, we assume that molecular data have been obtained from a simulation procedure, such as Monte Carlo (MC)10 or molecular dynamics (MD).11 In general, the data set comes in the form of a trajectory: a series of configurations, each containing the positions of all particles in the system. These particles usually correspond to atoms but may also represent larger “sites”; for example, multiple atoms in a coarse graining framework. Given a trajectory data set, before any machine learning algorithm can be deployed, we must first choose a specific numerical representation X for our trajectory. This amounts to choosing a set of “features” (often referred to as “descriptors” or “fingerprints”) that adequately describes the system of interest.

The “raw” trajectory data set (the direct output of the simulation procedure) is represented by a matrix $X_r \in \mathbb{R}^{N \times D_r}$, where r stands for “raw”, N is the number of simulation time points collected, and Dr is the number of degrees of freedom in the data set. When three-dimensional spatial coordinates are retained from the simulation, Dr is equal to three times the number of simulated particles. When momenta are also retained, Dr is equal to six times the number of simulated particles. It is from this representation that we seek to featurize our data set; namely, transform our data into a new matrix $X \in \mathbb{R}^{N \times D}$. For later reference, we denote the function that performs the featurization on each “raw” data point xi,r as $\mathcal{F}: \mathbb{R}^{D_r} \to \mathbb{R}^{D}$, that is,

$$x_i = \mathcal{F}(x_{i,r}) \tag{1}$$

A trivial featurization is to let X = Xr (and thus D = Dr). In this case, the “raw” data output of the simulation is directly used for analysis. For molecular systems, however, this is often disadvantageous, since Dr is typically very large.

When sufficient prior knowledge of the system is available, it is convenient to choose a feature space such that D ≪ Dr that can appropriately characterize the molecular motion of interest without significant loss of information. Often, the number of degrees of freedom required to encode many physical properties or observables of a molecular system is relatively low.12,13 In the following, we will review features that have been frequently used to represent atomic and molecular systems. We can divide these features into two fundamentally different groups, which we will discuss in turn. For certain physical systems, such as biomolecules, each atom may be considered as having a unique identity that represents its position in a molecular graph. For such systems, it is often convenient to use features that are not invariant with respect to permutations of chemically identical atoms. On the other hand, for most condensed matter systems, and materials, atoms of the same element should be treated as indistinguishable, and descriptors should be invariant under any permutation of chemically identical atoms. We conclude this section with a brief discussion of representation learning, a promising new direction for determining features automatically.

It is worth noticing here that an explicit numerical representation for the molecular trajectory is not always necessary since some algorithms can work even if only a distance measure between molecular structures is defined.14−22 The main limitation of this approach is that its computational cost scales quadratically with the number of data points.

2.1. Representations for Macromolecular Systems

Many of the methods discussed in this Review have been applied to classical simulation data sets of systems such as DNA, RNA, and proteins. A macromolecular simulation of such a system will contain at least one solute (for example, a protein) comprising 100–100 000 atoms connected by covalent bonds which cannot be broken. The system will also typically include several thousand water molecules, as well as several ions and lipids. Other small molecules, for example a drug or other ligand, are often also included.

In most analyses of macromolecules, the focus is on the solute and the solvent degrees of freedom are neglected. In the absence of an external force, we then expect the solute’s dynamics and thermodynamics to be invariant to translation and rotation of the molecule (in three dimensions for fully solvated systems and in two dimensions for membrane-bound systems). A simple manner of obtaining a set of coordinates obeying this invariance is to remove the solvent molecules and, then, superpose (i.e., align) each configuration in the trajectory to a reference structure of the molecule (or of the complex), which is known to be of physical or biological interest. This can be done by finding the rotation and the translation minimizing the root-mean-square deviation (RMSD) with the reference structure.23
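As an illustration of the superposition step, the following Python sketch aligns one configuration onto a reference by minimizing the RMSD. It assumes an SVD-based (Kabsch-type) solution, which may differ in detail from the procedure of ref 23; the function name `kabsch_align` is hypothetical, and mass weighting and periodic boundary conditions are ignored.

```python
import numpy as np

def kabsch_align(mobile, reference):
    """Superpose `mobile` onto `reference` (both arrays of shape (n_atoms, 3)).

    Returns the aligned copy of `mobile` and the resulting RMSD.
    Hypothetical helper: unweighted atoms, no periodic boundaries.
    """
    ref_center = reference.mean(axis=0)
    mob_c = mobile - mobile.mean(axis=0)      # remove translation
    ref_c = reference - ref_center
    H = mob_c.T @ ref_c                       # 3 x 3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1)
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    aligned = mob_c @ R.T + ref_center
    rmsd = np.sqrt(np.mean(np.sum((aligned - reference) ** 2, axis=1)))
    return aligned, rmsd
```

Applying such a function to every frame of a trajectory yields Cartesian features in which the overall translation and rotation relative to the chosen reference have been removed.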

While this simple procedure is adequate in many cases, in many others it is suboptimal; for example, when a reference structure is not known or when the configurations are too diverse to be aligned to the same structure. This is the case for simulations of very mobile systems, such as RNA and intrinsically disordered proteins, or for the simulation of folding processes, in which the system dynamically explores extremely diverse configurations that cannot be meaningfully aligned to a single structure. Beyond RMSD-based approaches, an appropriate choice of features satisfying rotational and translational invariance is given by the so-called internal coordinates, first introduced by Gordon and Pople.24 For classical simulations of proteins or nucleic acids, one can consider the bond lengths and the angles formed by three consecutive atoms as approximately fixed, and therefore, the configuration of the molecule can be described by the values of the dihedral angles formed by four consecutive atoms.25,26 For example, in a protein the configuration of the backbone is defined by the so-called ϕ- and ψ-dihedral (Ramachandran) angles.27 Using these coordinates, which by construction are invariant with respect to system rotation and translation, enables a significant dimensionality reduction of the system’s representation. For example, an amino acid residue has six backbone atoms, which corresponds to 18 degrees of freedom if one uses their Cartesian coordinates to represent the residue. On the other hand, their positions are determined with good approximation by the values of only the two Ramachandran angles.
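To make the notion of a dihedral feature concrete, the sketch below computes the dihedral angle defined by four consecutive atoms. The function name `dihedral` is hypothetical, the angle is returned in radians, and the sign convention is an assumption that may differ from the one adopted by a given analysis package.

```python
import numpy as np

def dihedral(p0, p1, p2, p3):
    """Dihedral angle (radians) defined by four points in 3D.

    Hypothetical helper; the sign convention is an assumption.
    """
    b0 = p0 - p1
    b1 = p2 - p1
    b2 = p3 - p2
    b1 = b1 / np.linalg.norm(b1)          # unit vector along the central bond
    # Components of the outer bonds orthogonal to the central bond
    v = b0 - np.dot(b0, b1) * b1
    w = b2 - np.dot(b2, b1) * b1
    x = np.dot(v, w)
    y = np.dot(np.cross(b1, v), w)
    return np.arctan2(y, x)
```

Because the angle depends only on relative positions, it is unchanged when the whole molecule is rotated or translated. Since dihedrals are periodic, it is common practice to use their sine and cosine as features rather than the raw angle.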

For larger systems whose dynamics are characterized by more global changes in conformation (as in protein folding or allostery), it is more common to use a contact-based featurization. For example, the “contact distances” between all monomers can be used according to a predesignated site (e.g., α-carbons in a protein) or the closest (heavy) atoms of each pair. These contacts can be also defined by selecting a given cutoff distance and then using a contact indicator function.25
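A minimal sketch of a contact-based featurization is given below. It assumes one representative site per monomer (for instance the α-carbon), a hard distance cutoff as the contact indicator, and a hypothetical function name `contact_features`; the cutoff value and its units are placeholders that must be adapted to the system.

```python
import numpy as np

def contact_features(sites, cutoff=0.8):
    """Pairwise distances and binary contacts for one configuration.

    sites  : (n_sites, 3) array, one representative position per monomer
    cutoff : contact threshold in the same length unit as `sites` (placeholder)
    Returns two vectors over the unique pairs i < j.
    """
    diff = sites[:, None, :] - sites[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    i, j = np.triu_indices(len(sites), k=1)   # unique pairs only
    d = dist[i, j]
    contacts = (d < cutoff).astype(float)     # hard indicator function
    return d, contacts
```

A smooth switching function can be substituted for the hard threshold when differentiable features are needed.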

There are many more specialized feature representations that can be employed according to the problem under study. For example, in simulations that involve two or more solute molecules, such as ligand binding or protein–protein association, distances, angles, and other data specific to the binding site can be used.28,29 In some cases, featurizations involving assignment to secondary structure elements,30 solvent-accessible surface area,31 or hand-picked features may be most appropriate.32 Furthermore, different types of features can be concatenated (and appropriately scaled). In some cases, featurizations that explicitly or implicitly treat solvent molecules, lipids or ions are important. Examples include when characterizing the solvent dynamics around binding pockets,33 ions moving through ion channels,34,35 or the lipid composition around membrane proteins.36,37 Several publications have compared the suitability of various feature sets for protein data for analyses, particularly in the context of the kinetic models described in Section 6.38−40

2.2. Representations for Condensed Matter Systems

In many condensed matter systems, such as solvents and materials, the physical properties of interest are invariant with respect to the exchange of equivalent atoms or molecules as such permutations merely exchange the particle labels. In such situations, it is often essential that the system configurations are represented in a permutation-invariant way. For example, in a pure water simulation, water molecules diffuse around and change places. Comparing different configurations by a simple RMSD, which depends on the order of molecules in the coordinate vector, is therefore inadequate.

A direct approach for working with Cartesian coordinates in a way that preserves permutational invariance is to relabel exchangeable particles or molecules such that the distance to a reference configuration is minimized. For example, in a simulation of water, one could define an ice-like configuration where water molecules are aligned on a lattice as a reference configuration. The (arbitrary) sequence in which waters are enumerated in this configuration defines the reference labeling. Then, for every configuration visited during the simulation, one must determine to which label each water molecule should be assigned (i.e., which permutation matrix should be applied) such that the relabeled configuration will have the minimal RMSD to the reference configuration. This problem can be solved by a bipartite matching method, such as the Hungarian algorithm.41 Such approaches have been applied to estimate solvation entropies42 and sampling permutation-invariant system configurations.43
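The relabeling step can be sketched with `scipy.optimize.linear_sum_assignment`, which solves the same bipartite matching problem as the Hungarian algorithm. The function name `relabel_to_reference` is hypothetical, each molecule is reduced to a single point, and periodic boundary conditions and prior alignment are ignored for brevity.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

def relabel_to_reference(config, reference):
    """Permute the particles of `config` to minimize the RMSD to `reference`.

    Both arrays have shape (n_particles, 3). Hypothetical helper that
    treats every particle as a point and ignores periodic images.
    """
    # cost[i, j] = squared distance between reference site i and particle j
    cost = np.sum((reference[:, None, :] - config[None, :, :]) ** 2, axis=-1)
    _, perm = linear_sum_assignment(cost)     # optimal one-to-one matching
    return config[perm], perm
```

After relabeling, row i of the returned array holds the particle assigned to reference site i, so that configurations differing only by an exchange of identical particles are mapped to the same coordinates.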

Another common strategy for designing permutation-invariant features is to first represent a system as a union of low order constituents (“n-plets”) for which a permutation-invariant descriptor is computed. In this approach, invariance can be achieved by enumerating all permutations of subsets of permutable particles or molecules. While this is not feasible for permutations of the full system (the number of which scales factorially), it is relatively straightforward for pairs or triplets of atoms.44−46 As a simple example, a system of M atoms is first broken down into the M(M – 1)/2 unique pairs of atoms that constitute it. Then, the distribution functions of interatomic distances between two atomic species are computed. Finally, these distribution functions are binned to produce permutation invariant feature vectors.
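The pair-based example above can be sketched as follows for a system containing a single atomic species. The function name `pair_distance_histogram`, the number of bins, and the maximum distance `r_max` are illustrative assumptions; periodic boundary conditions are not handled.

```python
import numpy as np

def pair_distance_histogram(positions, bins=20, r_max=5.0):
    """Permutation-invariant feature vector from binned pair distances.

    positions : (M, 3) array of atoms of one species (hypothetical setup)
    Returns the fraction of the M(M-1)/2 unique pairs falling in each bin.
    """
    diff = positions[:, None, :] - positions[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    d = dist[np.triu_indices(len(positions), k=1)]   # M(M-1)/2 unique pairs
    hist, _ = np.histogram(d, bins=bins, range=(0.0, r_max))
    return hist / len(d)
```

Relabeling the atoms only reorders the list of pair distances, so the histogram, and hence the feature vector, is unchanged.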

Different descriptors have been proposed which build upon the above idea in the context of specific aims. For example, atom-centered symmetry functions (ACSFs)47,48 are obtained by expanding 2-body (radial) and 3-body (angular) distribution functions onto specific invariant bases. The ACSF representation was the first widely adopted representation for materials systems. ACSFs are very efficient to evaluate numerically and they have been shown to be able to resolve geometric information well enough to train highly efficient interatomic potentials.49,50 ACSFs are now widely used in shallow neural networks that replace molecular force fields by learning quantum-chemical energies.47,48,51,52

Another commonly used descriptor for materials systems is the so-called Smooth Overlap of Atomic Positions (SOAP).53,54 The SOAP representation is obtained by expanding a smoothed atomic density function onto a radial and a spherical harmonics basis set. This representation can be considered an expansion of 3-body features.55 The SOAP vector has also been shown to resolve geometric information very precisely.54

Many other representations based on the n-plets principle have been suggested. They differ in the specific low-order contributions chosen and in the particular basis in which the low order information is expanded. Notable examples are the Coulomb matrix,56 the “Bag of Bonds”,57 the histograms of distances, angles, and dihedral angles (HDAD),58 and the many body tensor representation (MBT).59 In general, finding informative numerical representations for condensed matter systems is a very hard problem,60,61 and an exhaustive review of all the approaches which have been proposed to tackle it is beyond the scope of this work. The interested reader can refer to refs (62−64) for a more comprehensive analysis of the different descriptors developed for systems with permutation invariance.

2.3. Representation Learning

In the past few years, substantial research has been directed toward learning a feature representation rather than manually selecting it. Good examples of representation learning algorithms for molecules are given by graph-neural networks (GNNs)65 such as SchNet,66 PhysNet,67 DimeNet,68 Cormorant,69 or Tensor field Networks.70 These networks are usually trained to predict molecular properties, and they do so by performing continuous convolutions across the spatial neighborhoods of all atoms. In contrast to other neural network approaches based on fixed representations,47,71 in GNNs the representations are not predefined but are instead learnable using convolutional kernels. The feature representation is a vector attached to each atom or particle. It is initialized to denote the chemical identity of the particle (e.g., nuclear charge or type of bead), and it is then updated in every neural network layer depending on the chemical environment of every particle and through the action of convolutional kernels whose weights are optimized during training. The representation found at the last GNN layer can thus be interpreted as a learned feature representation which encodes the many-body information required to predict the target property (e.g., the potential energy of the molecule).

GNNs have been extensively used to predict quantum-mechanical properties66−68,72 and coarse-grained molecular models.73,74 See refs (4 and 75) for recent reviews of these topics.

For the rest of this Review, we will assume that the featurization step has been performed and that a sufficiently general numerical representation X, appropriate for the system under study, has been obtained.

3. Dimensionality Reduction and Manifold Learning

In this section, we review dimensionality reduction techniques that have been used most extensively in the analysis of molecular simulations. All these techniques involve transforming a data matrix X of dimensions N × D into a new representation Y of dimensions N × d, with d ≪ D, with the goal of preserving the information contained in the original data set. It is typically impossible to achieve this task exactly, and the different methods reviewed here can only provide different approximate solutions whose utility needs to be evaluated on a case by case basis. We first describe linear projection methods: Principal component analysis and Multidimensional scaling. These are arguably the simplest and most widely used techniques of dimensionality reduction. However, if the data do not lie on hyperplanes these linear methods can easily fall short. In the subsequent sections we describe a set of nonlinear projection methods that can deal also with data lying on twisted and curved manifolds. In order, we will cover Isomap, Kernel PCA, Diffusion map, Sketch-map, t-SNE, and deep learning methods.

We will present the theory behind the mentioned algorithms only briefly; the interested reader can refer to existing specialized reviews for more information on such aspects.3,76,77 Instead, we will focus our attention on the practical issues related to utilizing these methods for the analysis of molecular trajectories and highlight the relative merits and pitfalls of each method.

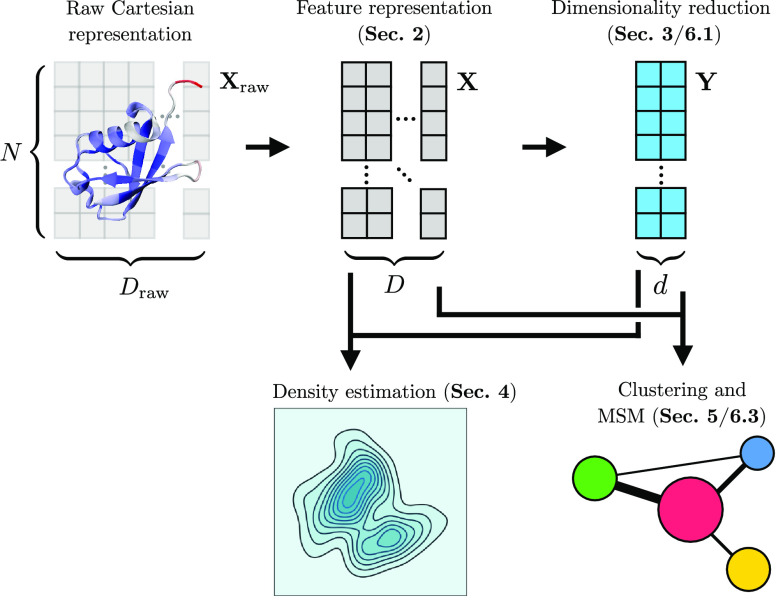

The mentioned algorithms are grouped together for reference in Figure 2. The figure emphasizes that a manifold of increasing complexity can only be efficiently reduced in dimensionality using methods of increasing sophistication, which however will typically require a greater computational power and a longer trial and error procedure to be properly deployed.

Figure 2.

Different algorithms of dimensionality reduction discussed, divided in three groups. From left to right, the listed algorithms are capable of dealing with manifolds of higher complexity, which comes at the price of a higher computational cost and a larger number of free parameters to choose.

3.1. Linear Dimensionality Reduction Methods

3.1.1. Principal Component Analysis

Principal component analysis (PCA)78−80 is possibly the best known procedure for reducing the dimension of a data set and further benefits from an intuitive conceptual basis. The objective of PCA is to find the set of orthogonal directions along which the variance of the data set is the highest. These directions can be efficiently found as follows. First, the data is centered by subtracting the average, obtaining a zero-mean data matrix X̂. This operation guarantees the translational invariance of the PCA projection. Second, the covariance matrix of the data is estimated as $C = \frac{1}{N-1}\hat{X}^T\hat{X}$. Finally, the eigenvectors of the matrix C are found by solving the eigenproblem

$$C v_\alpha = \lambda_\alpha v_\alpha \tag{2}$$

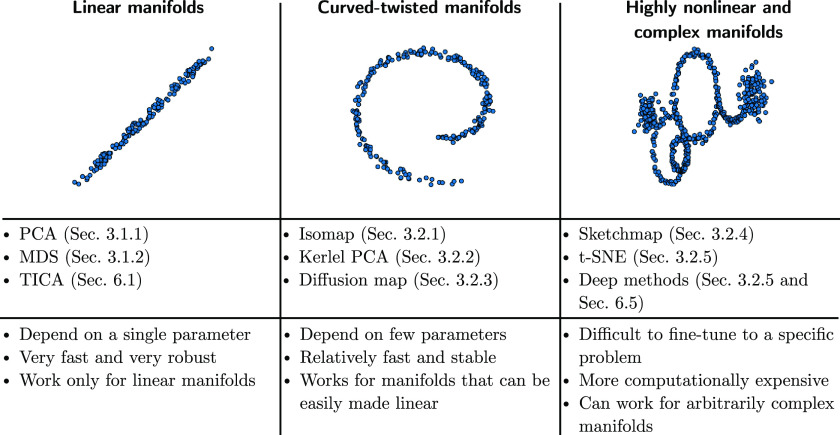

It is simple to show that the direction of maximum variance coincides with v1, the eigenvector corresponding to the largest eigenvalue λ1.80 The direction of maximum variance in the subspace orthogonal to v1 is v2, and so on. The PCA representation is ultimately obtained by choosing a number of components d, and projecting the original data onto these vectors as Y = XV, where V is a matrix of dimension D × d containing the first d eigenvectors of C. An illustration of this procedure is shown in Figure 3a on a data set obtained by harvesting data points from a 10-dimensional Gaussian stretched in two dimensions.
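The three steps of PCA can be sketched in a few lines of NumPy. The function name `pca` is hypothetical, the covariance is normalized by N − 1 (an assumption; a 1/N normalization only rescales the eigenvalues), and the centered matrix X̂ is projected so that the embedding has zero mean.

```python
import numpy as np

def pca(X, d):
    """Project the (N, D) data matrix X onto its first d principal components.

    Returns the (N, d) embedding, all eigenvalues in decreasing order,
    and the (D, d) matrix V of leading eigenvectors.
    """
    X_hat = X - X.mean(axis=0)                  # step 1: center the data
    C = X_hat.T @ X_hat / (X.shape[0] - 1)      # step 2: covariance (assumed N - 1)
    eigvals, eigvecs = np.linalg.eigh(C)        # step 3: eigenproblem, ascending order
    order = np.argsort(eigvals)[::-1]           # sort by decreasing variance
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    V = eigvecs[:, :d]
    return X_hat @ V, eigvals, V

# Example: 10-dimensional Gaussian stretched along two directions
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(500, 10)) * np.array([5.0, 3.0] + [0.1] * 8)
Y_demo, spectrum, _ = pca(X_demo, d=2)
```

In this synthetic example the first two entries of `spectrum` are much larger than the remaining eight, which is the gap structure discussed for a low-dimensional linear manifold.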

Figure 3.

Illustration of the working principle of the PCA projection. (A) Two-dimensional linear manifold embedded in a high dimensional space. (B) Eigenspectrum of the covariance matrix of the data: because the manifold is two-dimensional, a clear gap appears after the second eigenvalue (blue line); a more typical eigenspectrum is shown in orange. (C) Low-dimensional representation of the data obtained through PCA.

Importantly, the eigenvalue λα is exactly equal to the variance of the data along the given direction vα.80 For this reason, it is common to select the dimensionality d most appropriate for a PCA projection by looking at the eigenvalue spectrum as a function of the eigenvalue index. A clear gap in such a plot, as seen in the blue curve in Figure 3b, is an indication that a dimensionality reduction including the components before the gap is meaningful. In real world applications, however, it is common to observe a continuous, although fast, decay of the magnitude of the eigenvalues (orange curve in Figure 3b). In this situation the selection of d is more arbitrary. A common rule of thumb is to choose the smallest d that is able to capture a good portion of the total variance of the data set. In practice, one can choose the smallest d such that $\sum_{\alpha=1}^{d}\lambda_\alpha \big/ \sum_{\alpha=1}^{D}\lambda_\alpha \geq f$, where f is a free parameter, which is often set to 0.98 or 0.95.
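The variance-fraction rule of thumb can be written as a short helper. The function name `smallest_d` is hypothetical; it takes the PCA eigenvalues and the threshold f and returns the smallest number of components whose cumulative variance fraction reaches f.

```python
import numpy as np

def smallest_d(eigvals, f=0.95):
    """Smallest d whose leading eigenvalues capture a fraction >= f of the variance."""
    lam = np.sort(np.asarray(eigvals, dtype=float))[::-1]   # decreasing order
    ratio = np.cumsum(lam) / lam.sum()                      # cumulative variance fraction
    d = int(np.searchsorted(ratio, f)) + 1                  # first index with ratio >= f
    return min(d, len(lam))                                 # guard against round-off at f = 1
```

When the spectrum decays smoothly, the value returned can change appreciably with f, which reflects the arbitrariness of the choice noted above.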

The use of PCA to analyze biomolecular trajectories was first proposed in refs (81−83). In these papers, it was numerically found that a very small fraction of coordinates was capable of describing the majority of the motion of the molecules studied. These coordinates have often been named essential coordinates and, accordingly, the methodology has been named “essential dynamics”.83 Importantly, the variation along the essential degrees of freedom was connected to the functional motion of the protein. In the years that followed, PCA has been extensively used to characterize both functional motions and the free energy surface of small peptides and proteins.84−90 In ref (91), the use of PCA was also proposed in conjunction with metadynamics for enhanced sampling. In the context of solid state and materials physics, PCA has been commonly used for exploratory analysis, visualization, data organization, and structure–property prediction.92−94

In spite of its empirical success, the theoretical foundation of the use of PCA for the analysis of molecular trajectories has been the subject of debate. In particular, soon after essential dynamics was proposed it was argued that the sampling needed to robustly characterize the essential coordinates was beyond the time-scales accessible to MD simulations.95,96 Other studies, however, argued that the convergence time needed for the characterization of a stable eigenspace of principal components is reachable and in the range of nanoseconds of simulation time.97−100 Aside from sampling concerns, the strong assumption of the existence of a linear manifold which correctly captures the important modes of variation of a molecular system can easily fall short, giving rise to systematic errors in analysis and predictions.101 The approach presented in the next section provides a manner for overcoming this limitation.

3.1.2. Multidimensional Scaling

Multidimensional scaling (MDS)102,103 is closely related to PCA, and equivalent to it under certain conditions. MDS will be the basis for the advanced nonlinear dimensionality reduction techniques which will be described in the following sections. MDS provides a low dimensional description of the data by finding the d dimensional space which best preserves the pairwise distances between points. It does so by minimizing a cost function quantifying the difference between the pairwise distance Rij as measured in the original D dimensional space and the one computed in the low dimensional embedding

$$\mathcal{L}_{\mathrm{MDS}} = \sum_{i<j}\left(R_{ij} - \lVert y_i - y_j\rVert\right)^2 \tag{3}$$

If the distance matrix is given by Rij = ∥xi – xj∥, then, it is simple to show that the vectors yα that minimize eq 3 are found solving the following eigenproblem104

$$K v_\alpha = \lambda_\alpha v_\alpha \tag{4}$$

and taking $y_\alpha = \sqrt{\lambda_\alpha}\, v_\alpha$. The matrix K in eq 4 contains the inner products of all the centered data vectors $K_{ij} = \hat{X}_i^T \hat{X}_j$, where $\hat{X}_i = x_i - \frac{1}{N}\sum_{j=1}^{N} x_j$. The key trick of MDS is that such a matrix can be obtained from the matrix of distances Rij = ∥xi – xj∥ in a simple way, namely104,105

$$K_{ij} = -\frac{1}{2}\left(R_{ij}^2 - \frac{1}{N}\sum_{k} R_{ik}^2 - \frac{1}{N}\sum_{k} R_{kj}^2 + \frac{1}{N^2}\sum_{k,l} R_{kl}^2\right) \tag{5}$$

It is important

to note that the embeddings

generated by MDS and PCA are exactly equivalent if

the distance between the data points is estimated as Rij = ∥xi – xj∥. This follows from the fact that

the eigenvectors of the covariance matrix {vαc} and of those of the K matrix

{vα} are related to each other as  .76

.76

The covariance matrix C and the matrix of inner products K have dimensions D × D and N × N, respectively, which greatly affects the computational cost of the methods. So, for instance, if the number of points greatly exceeds the number of dimensions N ≫ D, PCA is more computationally efficient, while the contrary is true if D ≫ N.

The most important difference between PCA and MDS lies in the fact that MDS can be used also when the data matrix X is not available and one is only provided with the matrix Rij of pairwise distances between points. This is an important feature of MDS, which allows it to also work in the context of nonlinear dimensionality reduction as we will describe in the next section.
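
The equivalence between MDS and PCA for Euclidean distances can be checked numerically. The following is a minimal NumPy sketch, not a reference implementation: the function names `classical_mds` and `pca_projection`, the toy data set, and the choice of d = 2 are illustrative assumptions. The MDS routine only receives the distance matrix, whereas the PCA routine needs the data matrix itself.

```python
import numpy as np

def classical_mds(R, d):
    """Embed N points in d dimensions from an N x N matrix of pairwise distances R."""
    N = R.shape[0]
    H = np.eye(N) - np.ones((N, N)) / N        # centering matrix
    K = -0.5 * H @ (R ** 2) @ H                # double centering of squared distances
    eigval, eigvec = np.linalg.eigh(K)         # eigenvalues in ascending order
    order = np.argsort(eigval)[::-1][:d]       # indices of the d largest eigenvalues
    lam = np.clip(eigval[order], 0.0, None)    # guard against tiny negative round-off
    return eigvec[:, order] * np.sqrt(lam)     # coordinates sqrt(lambda_alpha) * v^alpha

def pca_projection(X, d):
    """Project centered data on the d leading eigenvectors of the D x D covariance matrix."""
    Xc = X - X.mean(axis=0)
    C = Xc.T @ Xc / X.shape[0]
    eigval, eigvec = np.linalg.eigh(C)
    order = np.argsort(eigval)[::-1][:d]
    return Xc @ eigvec[:, order]

# Toy data (assumption): N = 50 points in D = 5 dimensions with anisotropic variance.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5)) * np.array([3.0, 2.0, 1.0, 0.5, 0.1])
R = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)   # Euclidean distances

Y_mds = classical_mds(R, d=2)
Y_pca = pca_projection(X, d=2)

# Eigenvectors are defined up to a sign, so absolute values are compared.
max_diff = np.max(np.abs(np.abs(Y_mds) - np.abs(Y_pca)))
```

Here `max_diff` should be at the level of floating-point round-off. The MDS route diagonalizes an N × N matrix and the PCA route a D × D one, which is the cost asymmetry discussed above.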

3.2. Nonlinear Dimensionality Reduction

3.2.1. Isomap

The Isometric feature mapping (Isomap) algorithm106 was introduced with the goal of alleviating the problems of linear methods, such as PCA, which fail to find the correct coordinates whenever the embedding manifold is not a hyperplane. The key idea of Isomap is the generation of a low dimensional representation that best preserves the geodesic distances between the data points on the data manifold, rather than the Cartesian distance.

Isomap comprises three steps. First, a graph of the local connectivities is constructed. In this graph, each point is linked to its k nearest neighbors with edges weighted by the pairwise distances. Second, an approximation of the geodesic distance between all pairs of points is computed as the shortest path on this graph. Finally, MDS is performed on the matrix Rij containing the geodesic distances. The final representation thus minimizes the loss function in eq 3, and provides the low dimensional Euclidean projection that best preserves the computed geodesic distances. An illustration of the working principle of Isomap is presented in Figure 4.
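
The three steps can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not the implementation used in the cited works: the function name `isomap`, the Floyd-Warshall shortest-path loop (chosen for brevity, with O(N³) cost), and the toy data set of points on a circular arc are all hypothetical choices.

```python
import numpy as np

def isomap(X, n_neighbors, d):
    """Return a d-dimensional Isomap embedding and the matrix of graph geodesic distances."""
    N = X.shape[0]
    R = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)

    # Step 1: k-nearest-neighbor graph with edges weighted by Euclidean distance.
    G = np.full((N, N), np.inf)
    np.fill_diagonal(G, 0.0)
    nn = np.argsort(R, axis=1)[:, 1:n_neighbors + 1]      # skip the point itself
    rows = np.repeat(np.arange(N), n_neighbors)
    G[rows, nn.ravel()] = R[rows, nn.ravel()]
    G = np.minimum(G, G.T)                                # make the graph undirected

    # Step 2: shortest paths on the graph (Floyd-Warshall).
    for k in range(N):
        G = np.minimum(G, G[:, k:k + 1] + G[k:k + 1, :])
    if not np.all(np.isfinite(G)):
        raise ValueError("neighbor graph is disconnected; increase n_neighbors")

    # Step 3: classical MDS on the geodesic distances.
    H = np.eye(N) - np.ones((N, N)) / N
    K = -0.5 * H @ (G ** 2) @ H
    eigval, eigvec = np.linalg.eigh(K)
    order = np.argsort(eigval)[::-1][:d]
    Y = eigvec[:, order] * np.sqrt(np.clip(eigval[order], 0.0, None))
    return Y, G

# Toy data (assumption): 100 points on three quarters of a unit circle.
theta = np.linspace(0.0, 1.5 * np.pi, 100)
X = np.column_stack([np.cos(theta), np.sin(theta)])

Y, G = isomap(X, n_neighbors=4, d=1)
euclidean_end_to_end = np.linalg.norm(X[0] - X[-1])    # straight-line distance
geodesic_end_to_end = G[0, -1]                         # close to the arc length 1.5 * pi
corr = np.corrcoef(Y[:, 0], theta)[0, 1]               # embedding tracks the arc length
```

On this data set the graph geodesic between the two end points approximates the arc length, while the Euclidean distance is much shorter, and the one-dimensional embedding is (up to a sign) nearly linear in the angle.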

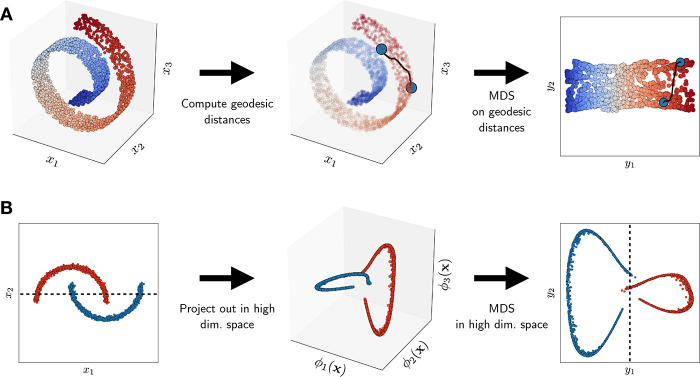

Figure 4.

(A) Illustration of Isomap on a Swiss roll data set. The original 3D data set (left) is projected on a 2D space in a way that optimally preserves geodesic distances between points (middle and right). (B) Illustration of Kernel PCA on a data set with two segments that are not linearly separable. The original 2D data set (left) is first represented in a higher dimensional space, where it becomes linearly separable (middle), and finally, MDS is performed on the transformed data set (right). Note that the transformed data set typically lies in a space of very high—and often infinite—dimensionality, and the 3D embedding shown here only serves illustrative purposes.

A drawback of Isomap is its potential for topological instabilities. Indeed, if the manifold containing the data is not isomorphic to a hyperplane (for example, it is isomorphic to a sphere or to a torus) the procedure becomes ill defined, since a sphere or a torus cannot be mapped to a hyperplane without cutting it. More generally, the quality of the representation generated depends on the quality of the geodesic distances, which can only be estimated approximately. In particular, the algorithm used to compute geodesic distances requires choosing which data points can be considered directly connected, namely close enough that their geodesic distance coincides with their Cartesian distance. Treating points that are too far apart as connected can lead to a severe underestimation of the geodesic distance if the manifold is strongly curved.107

The computation of the geodesic distance between all pairs of points is also the main performance bottleneck when using Isomap on large data sets of molecular trajectories. For this reason, when Isomap was first used for the analysis of molecular data sets,101 it involved the preselection of a small number of landmark points nL that were assumed to correctly span the data manifold. This modified procedure, named “ScIMAP” (Scalable Isomap), is much faster than the standard Isomap implementation since the geodesic distance is computed only between a small fraction nL ≪ N of landmark points. The scalability of Isomap to large data sets was further improved with the introduction of “DPES-ScIMAP” (Distance-based Projection onto Euclidean Space ScIMAP) in ref 108. This procedure involves an initial projection of the points onto a low-dimensional Euclidean space.

In ref (101), it is shown that using Isomap in place of PCA allows the folding process of a small protein to be described with far fewer variables. This result is confirmed in refs (109) and (110), in which it is shown that Isomap coordinates faithfully describe the motion of small molecules and the free energy landscape of small peptides. On the other hand, in ref (111), only small improvements were observed when using Isomap in place of PCA for describing the folding process of another peptide. In ref (112), an Isomap embedding was successfully used to generate the collective biasing variables for a metadynamics simulation. In ref (113), Isomap was employed to construct an enhanced MD sampling method.

In general, it is clear that the extent to which a nonlinear method like Isomap proves beneficial, or even necessary, depends upon the degree of nonlinearity of the manifold in which the molecular trajectory is (approximately) contained. This nonlinearity depends on the system under study as well as on the type of coordinates chosen to describe it (see section 2). While it is difficult to determine precisely the degree of nonlinearity of a data manifold, an approximate estimate can be obtained by comparing its linear dimension, obtained for instance by an analysis of the PCA eigenspectrum (Figure 3B), with its nonlinear intrinsic dimension, which can be computed using several tools.114,115 An intrinsic dimension much smaller than the linear dimension can then be seen as an indication of the presence of curvature and topological complexity in the manifold. Providing a quantitative measure of this complexity can still be considered an open problem.

3.2.2. Kernel PCA

A different strategy for finding a low-dimensional representation for data points embedded in a curved and twisted manifold is Kernel PCA.116 In Kernel PCA, data are represented in a new space using a nonlinear transformation ϕ(x), where ϕ is a high-dimensional vector-valued function. A linear dimensionality reduction is then performed in this space. The transformation ϕ should be chosen in such a way that even if the original data manifold is nonlinear, the transformed data set is approximately linear, allowing for the usage of MDS in the transformed space. An illustration of this concept is provided in Figure 4.

In Kernel PCA, the transformation is not performed explicitly, but obtained through the use of a kernel function κ(x, x′). By definition, a kernel function represents a dot product in some vector space. Hence, one sets

$$\kappa(x, x') = \phi(x)^{T} \phi(x') \tag{6}$$

which implicitly defines the function ϕ. One then implicitly removes the mean from the transformed data105 by the same procedure used in MDS (see eq 5)

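$$\hat{K}_{ij} = K_{ij} - \frac{1}{N}\sum_{k} K_{ik} - \frac{1}{N}\sum_{k} K_{kj} + \frac{1}{N^{2}}\sum_{k,l} K_{kl} \tag{7}$$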

where Kij = κ(xi, xj). Finally, the MDS algorithm is used on such a matrix, providing the principal components of the data in the transformed space.

It is crucial to note that the transformed data matrix is never explicitly computed in the algorithm. One only needs to compute the matrix of dot products K, and this can be done efficiently through the use of the kernel function. This fact is known as the “kernel trick”,1 and it allows the data to be transformed into spaces of very high and often infinite dimensionality, thereby enabling the encoding of highly nonlinear manifolds without knowing suitable feature functions. A drawback, however, is that the kernel matrix has dimension N × N, resulting in unfavorable storage and computing costs for large data sets.

The simplest kernel one can use is the linear kernel $\kappa(x_i, x_j) = x_i^{T} x_j$, which makes Kernel PCA equivalent to standard PCA. Polynomial kernels of the kind $\kappa(x_i, x_j) = (x_i^{T} x_j)^{p}$ systematically enlarge the feature space as the parameter p increases. The use of a specific kernel allows one to recover Isomap.117 Perhaps the most widely used kernel for Kernel PCA is the Gaussian kernel $\kappa(x_i, x_j) = e^{-\lVert x_i - x_j \rVert^{2} / 2h^{2}}$. The Gaussian kernel depends on the parameter h, which determines the length scale of distances below which two points are considered similar in the induced feature space.
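
The procedure can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: the function names, the toy data, and the value h = 1 are hypothetical, and the kernel matrix is centered in the usual way (subtracting row and column means and adding back the global mean). The sketch also checks that the linear kernel reproduces standard PCA.

```python
import numpy as np

def linear_kernel(X):
    """Linear kernel: matrix of plain dot products."""
    return X @ X.T

def gaussian_kernel(X, h):
    """Gaussian kernel with length scale h."""
    sq = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2.0 * h ** 2))

def kernel_pca(K, d):
    """Return the d leading kernel principal components from an N x N kernel matrix."""
    N = K.shape[0]
    one = np.ones((N, N)) / N
    Kc = K - one @ K - K @ one + one @ K @ one     # implicit centering in feature space
    eigval, eigvec = np.linalg.eigh(Kc)
    order = np.argsort(eigval)[::-1][:d]
    lam = np.clip(eigval[order], 0.0, None)
    return eigvec[:, order] * np.sqrt(lam)

# Toy data (assumption): 40 points in 3 dimensions.
rng = np.random.default_rng(1)
X = rng.normal(size=(40, 3)) * np.array([2.0, 1.0, 0.3])

Y_linear = kernel_pca(linear_kernel(X), d=2)
Y_gauss = kernel_pca(gaussian_kernel(X, h=1.0), d=2)

# Reference: standard PCA projection on the two leading covariance eigenvectors.
Xc = X - X.mean(axis=0)
cov_val, cov_vec = np.linalg.eigh(Xc.T @ Xc / len(X))
Y_pca = Xc @ cov_vec[:, np.argsort(cov_val)[::-1][:2]]
```

Up to the sign of each component, `Y_linear` should coincide with `Y_pca`, while `Y_gauss` is a genuinely nonlinear embedding whose character depends on h.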

Kernel PCA is a powerful method for nonlinear dimensionality reduction, since in principle it can overcome the limitations of linear methods without a significant increase in computational cost. However, a practical issue in using Kernel PCA lies in its sensitivity to the specific choice of kernel function used and any parameters it may have. In ref (118), it is suggested to choose the kernel by systematically increasing the kernel complexity (from the simple linear kernel to the polynomial kernel with p = 2 and so on) until a clear gap in the eigenspectrum of the kernel matrix appears (see discussion of Figure 3B). Although this procedure is not guaranteed to be successful in general, in ref 118 the authors successfully identify the reaction coordinate of a protein with the aid of Kernel PCA and a polynomial kernel. We refer to ref 119 for more information on the use of Kernel PCA and the various possible choices of kernels to analyze molecular motion.

In the context of materials physics, Kernel PCA has been particularly fruitful when used in conjunction with the SOAP descriptors (reviewed in section 2.2). Indeed, the SOAP descriptor forms the basis for accurate interpolators of atomic energies and forces,120−122 meaning that this descriptor generates data manifolds in which similar materials lie close to each other. Kernel PCA has been successfully used for visualization and exploration of materials databases,123 for identifying new materials candidates,92 and to predict phase stability of crystal structures.124

A common feature of PCA, MDS, and Isomap is that their result is strongly affected by the largest pairwise distances between the data points. This can be a problem as the distances deemed relevant for the analysis of molecular trajectories are often those of intermediate value: not the largest, which only convey the information that the configurations are different, and not the smallest, whose exact value is also determined by irrelevant details (for example bond vibrations). Kernel PCA can partially accommodate this issue via the choice of kernel, since the distances which are preserved are those computed in the transformed space that the kernel implicitly generates. In the next subsection, we will describe Diffusion map, another projection algorithm that can be used in these situations.

3.2.3. Diffusion Map

Diffusion map125,126 is another technique similar to Kernel PCA, which enables the discovery of nonlinear variables capable of providing a low-dimensional description of the system. The diffusion map has a direct application to the analysis of molecular dynamics trajectories generated by a diffusion process, as the collective coordinates emerging from it approximate the eigenfunctions of the Fokker–Planck operator of the process. In ref (127), it has been shown that the diffusion map eigenfunctions are equivalent, up to a constant, to the eigenfunctions of an overdamped Langevin equation.

To compute a diffusion map, one starts by computing a Gaussian kernel

$$K_{ij} = e^{-\lVert x_i - x_j \rVert^{2} / 2h^{2}} \tag{8}$$

identical to the one introduced in the previous section. The length scale parameter h entering eq 8 has here a specific physical interpretation. It can be seen as the scale within which transitions between two configurations can be considered “direct” or without any significant barrier crossing. In principle, all pairs of configurations enter eq 8, but in practice, only the pairs closer than h are relevant in the definition of the kernel Kij, as the contribution of pairs further apart decays exponentially with their squared distance. For this reason, practical implementations usually define a cutoff distance equal to a multiple of h and compute only the distances of pairs within this cutoff.

To provide an approximation of the eigenfunctions of the Fokker–Planck operator, the kernel (eq 8) needs to be properly normalized128

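$$\tilde{K}_{ij} = \frac{K_{ij}}{\sqrt{\sum_{k} K_{ik} \sum_{k} K_{jk}}} \tag{9}$$

We note that different normalizations are possible and commonly used to approximate different operators (e.g., graph Laplacian or Laplace–Beltrami instead of Fokker–Planck).129 From this normalized kernel, one estimates the diffusion map transition matrix as

$$P_{ij} = \frac{\tilde{K}_{ij}}{\sum_{k} \tilde{K}_{ik}} \tag{10}$$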

The resulting elements Pij can be thought of as the transition probability from data point i to data point j. Indeed ∑jPij = 1.

We now consider the spectral decomposition of P

$$P v^{\alpha} = \lambda_{\alpha} v^{\alpha} \tag{11}$$

Since P is a stochastic matrix obtained by normalizing a positive-definite Gaussian kernel, its eigenvalues are real and positive: exactly one of them is equal to 1, and all the others are smaller than 1. If the collection of configurations used to construct the diffusion matrix samples the equilibrium distribution, in the limit of h → 0 and infinite sampling, the eigenvectors vα converge to the eigenfunctions of the Fokker–Planck operator associated with the dynamics. In practice, for finite h and finite sampling, the eigenvectors vα provide discrete approximations to these eigenfunctions, and are called “diffusion coordinates”. Reweighting tricks can be used to apply the diffusion map approach to analyze molecular dynamics trajectories out of equilibrium.130
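
The construction of the transition matrix and of its leading eigenvectors can be sketched with NumPy as follows. This is an illustration under explicit assumptions: the kernel is normalized by the square root of the product of its row sums (one common choice for targeting the Fokker–Planck operator), the function name `diffusion_map` and the two-cluster toy data are hypothetical, and the eigenvectors of P are obtained from a symmetric matrix similar to P purely for numerical convenience.

```python
import numpy as np

def diffusion_map(X, h, d):
    """Return the transition matrix P and its d + 1 leading eigenvalues and eigenvectors."""
    sq = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    K = np.exp(-sq / (2.0 * h ** 2))               # Gaussian kernel
    q = K.sum(axis=1)
    K_norm = K / np.sqrt(np.outer(q, q))           # assumed density normalization
    D = K_norm.sum(axis=1)
    P = K_norm / D[:, None]                        # row-stochastic transition matrix

    # P is similar to the symmetric matrix S, so both share the same eigenvalues.
    S = K_norm / np.sqrt(np.outer(D, D))
    eigval, U = np.linalg.eigh(S)
    order = np.argsort(eigval)[::-1][:d + 1]
    lam = eigval[order]
    V = U[:, order] / np.sqrt(D)[:, None]          # right eigenvectors of P
    return P, lam, V

# Toy data (assumption): two clusters playing the role of two metastable states.
rng = np.random.default_rng(2)
X = np.vstack([rng.normal(size=(40, 2)) * 0.3 + np.array([-1.0, 0.0]),
               rng.normal(size=(40, 2)) * 0.3 + np.array([+1.0, 0.0])])

P, lam, V = diffusion_map(X, h=0.5, d=2)
# V[:, 0] is the trivial constant eigenvector (eigenvalue 1);
# V[:, 1], V[:, 2] are the first two diffusion coordinates.
```

For well-separated clusters such as these, one would expect the first nontrivial diffusion coordinate to vary mainly between the two clusters, reflecting the slowest transition in the data.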

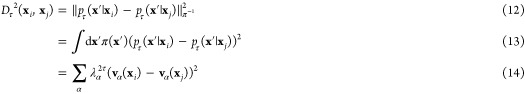

It can be shown that the Euclidean distance in the diffusion coordinates space, scaled by the corresponding eigenvalues, defines a “natural” distance metric on the diffusion manifold, the so-called diffusion distance:

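$$D_{\tau}^{2}(x_i, x_j) = \sum_{\alpha} \lambda_{\alpha}^{2\tau} \left( v_{i}^{\alpha} - v_{j}^{\alpha} \right)^{2} = \sum_{x'} \frac{\left[ p_{\tau}(x'|x_i) - p_{\tau}(x'|x_j) \right]^{2}}{\pi(x')} \tag{14}$$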

where pτ(x′|xi) is the probability of being in configuration x′ after a time τ for a diffusion process started at position xi, and π is the equilibrium distribution. Because of the equivalence expressed in eq 14, the diffusion coordinates provide an accurate description of the diffusion process, and are usually robust to noise.

Similarly to the other dimensionality reduction algorithms discussed so far, Diffusion map is particularly useful if the spectrum of λ exhibits a gap, say after the d-th eigenvalue, with λd+1 ≪ λd. In this case, the sum over the eigenvectors in eq 14 can be truncated including only the first d terms and the first d diffusion coordinates can be used to characterize the system. The diffusion distance (eq 14) has inspired the definition of a “kinetic distance”,131,132 where the same mathematical form is retained but eigenvectors obtained with different spectral methods for the approximation of the dynamics eigenfunctions are used instead.133,134

The local scale parameter h entering eq 8 is crucial in determining the transition probability between two configurations. Data configurations coming from the Boltzmann distribution are typically distributed very nonuniformly as they are highly concentrated in metastable states and very sparse in transition states. Therefore, a uniform h may be inadequate in MD applications. To address this, the “locally-scaled diffusion map” has been developed by Rohrdanz et al.,135 where the parameter h becomes position-dependent. The density adaptive diffusion maps approach136 is a related technique to deal with highly nonuniform data densities.

Diffusion map has been applied to analyze the slow transitions of molecules,137,138 to guide enhanced-sampling methods,139−141 and has been combined with the kinetic variational principles described in section 6.142

3.2.4. Sketch-Map

While building a low dimensional representation of a molecular trajectory, we might be interested in preserving the distances falling within a specific window which is assumed to characterize the important modes of the system under study. This is the main motivation for the introduction of the Sketch-map algorithm.143 Sketch-map finds the projection Y which minimizes the following loss function:

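$$\mathcal{L}(Y) = \sum_{i \neq j} w_{ij} \left[ s_x(R_{ij}) - s_y\left(\lVert y_i - y_j \rVert\right) \right]^{2} \tag{15}$$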

The above equation differs from the standard MDS loss function (eq 3) in two ways. First, the parameters w are introduced to control the importance of the distance between any two points. Second, the distances in the original space and in the projected space are passed through the sigmoid functions sx and sy before being compared in the loss. Each sigmoid function depends on the three parameters, σ, a, and b:

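$$s(r) = 1 - \left[ 1 + \left( 2^{a/b} - 1 \right) \left( \frac{r}{\sigma} \right)^{a} \right]^{-b/a} \tag{16}$$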

The parameter σ represents the transition point of the sigmoid (s(σ) = 1/2), and it should be chosen as the typical distance that one wishes to preserve. The parameters a and b determine the rate at which the functions approach 0 and 1, respectively.

With a careful choice of these parameters, the Sketch-map projection will depend very weakly on distances that are too small or too large, since these will always be squashed either to 0 or to 1, thus giving little or no contribution to the loss of eq 15.
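
The squashing effect of the sigmoids can be illustrated with a short NumPy sketch. It assumes the sigmoid form s(r) = 1 − [1 + (2^{a/b} − 1)(r/σ)^a]^{−b/a}, which satisfies s(σ) = 1/2, and a loss with pairwise weights; the function names, the parameter values, and the toy data are hypothetical. Only the loss is evaluated here, not the nonconvex optimization that the full method requires.

```python
import numpy as np

def sigmoid(r, sigma, a, b):
    """Sigmoid with transition point sigma; a and b control the approach to 0 and 1."""
    return 1.0 - (1.0 + (2.0 ** (a / b) - 1.0) * (r / sigma) ** a) ** (-b / a)

def pairwise_distances(Z):
    return np.linalg.norm(Z[:, None, :] - Z[None, :, :], axis=-1)

def sketch_map_loss(R, Y, params_x, params_y, w=None):
    """Weighted squared mismatch between sigmoid-transformed distances."""
    N = R.shape[0]
    r = pairwise_distances(Y)
    if w is None:
        w = np.ones((N, N))                        # uniform weights (assumption)
    diff = sigmoid(R, *params_x) - sigmoid(r, *params_y)
    off_diag = ~np.eye(N, dtype=bool)              # exclude the i = j terms
    return np.sum(w[off_diag] * diff[off_diag] ** 2)

# Toy data (assumption): 30 points in 10 dimensions and a random 2D projection.
rng = np.random.default_rng(3)
X = rng.normal(size=(30, 10))
R = pairwise_distances(X)
params_x = (4.0, 8.0, 8.0)    # hypothetical (sigma, a, b) for the original space
params_y = (4.0, 2.0, 8.0)    # hypothetical (sigma, a, b) for the projected space

s_at_sigma = sigmoid(4.0, *params_x)       # equals 1/2 by construction
s_small = sigmoid(0.5, *params_x)          # short distances are squashed toward 0
s_large = sigmoid(40.0, *params_x)         # long distances are squashed toward 1

loss_random = sketch_map_loss(R, rng.normal(size=(30, 2)), params_x, params_y)
loss_identity = sketch_map_loss(R, X, params_x, params_x)   # zero: distances unchanged
```

Distances much smaller or much larger than σ map to nearly constant values, so changing them in the projection barely affects the loss.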

Contrary to the other projection methods described so far, Sketch-map requires solving a highly nonconvex optimization problem (eq 15). In practice, to find a sensible solution, one is forced to use a combination of heuristics,143 and in general, there are no guarantees on the computation time needed to find a sufficiently good solution.

The advantage of Sketch-map is that, with a proper selection of the parameters in eq 16, it enables the extraction of relevant structures from a trajectory even when simpler methods fail. However, an adequate choice of parameters can require a lengthy trial-and-error operation, which can be particularly challenging given the absence of guarantees on the time complexity of solving eq 15. Sketch-map has been successfully applied for visualization of free energy surfaces,143 biasing of molecular dynamics simulations,144 building “atlases” of molecular or materials databases,145 and for structure–property prediction.146

In the following section, we describe an approach which allows finding a low dimensional representation of the data working on a very different premise: that relative distances between points are harvested from a stochastic process. This method, like Sketch-map, also works if the data manifold is not isomorphic to a hyperplane.

3.2.5. t-Distributed Stochastic Neighbor Embedding

The t-distributed stochastic neighbor embedding147 (henceforth “t-SNE”) performs dimensionality reduction on high-dimensional data sets following a different route with respect to the approaches discussed so far. The underlying idea (already present in the original Stochastic neighbor embedding (SNE)148) is to estimate, from the distances in the high-dimensional space, the probability of each point to be a neighbor of each other point. The goal of the algorithm is then to obtain a set of projected coordinates in which these “neighborhood probabilities” are as similar as possible to the ones in the original space.

The probability that point j is a neighbor of i is estimated as

$$P_{ij} = \frac{K_{ij}}{\sum_{k \neq i} K_{ik}} \tag{17}$$

where Kij is a Gaussian kernel. In this approach, contrary to what happens in the diffusion map (see eq 10), the length scale parameter hi is chosen independently for each point i by setting

$$\mathrm{Perp} = 2^{-\sum_{j} P_{ij} \log_{2} P_{ij}} \tag{18}$$

where Perp is a free parameter called “perplexity”, which roughly represents the number of nearest neighbors whose probabilities are preserved by the projection. Adaptively changing the length scale to match a given perplexity, hence, allows the method to preserve smaller length scales in denser regions of the data set. These probabilities Pij are then transformed into a joint distribution by symmetrizing the matrix:

$$\bar{P}_{ij} = \frac{P_{ij} + P_{ji}}{2N} \tag{19}$$

The neighborhood probabilities in the original space defined in this manner are then transferred to the projected space. For doing this, one needs to choose a parametric form for this probability distribution. At variance with the original SNE implementation, in which a Gaussian form is assumed, in t-SNE, a Student’s t-distribution with one degree of freedom is employed:

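$$\bar{Q}_{ij} = \frac{\left( 1 + \lVert y_i - y_j \rVert^{2} \right)^{-1}}{\sum_{k \neq l} \left( 1 + \lVert y_k - y_l \rVert^{2} \right)^{-1}} \tag{20}$$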

The shape of this distribution is chosen in such a way that it mitigates the so-called “crowding problem”,147 namely the tendency to superimpose points when projecting a high-dimensional data set onto a space of lower dimensionality. Indeed, the heavy tails associated with this distribution relax the constraints on the distances in the projected space, thereby allowing a less “crowded” representation.

The two distributions are compared by measuring their Kullback–Leibler (KL) divergence:150

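$$\mathrm{KL}(\bar{P} \Vert \bar{Q}) = \sum_{i \neq j} \bar{P}_{ij} \log \frac{\bar{P}_{ij}}{\bar{Q}_{ij}} \tag{21}$$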

The feature vectors y entering in eq 20 are initialized (randomly in the original formulation, other possible schemes have been proposed151) and iteratively modified to minimize the loss function KL(P̅||Q̅) with a steepest descent algorithm.

The t-SNE loss function eq 21 is nonconvex, making its optimization difficult. In particular, if one uses steepest descent the low-dimensional embedding can differ significantly in runs performed with different initial conditions. This makes t-SNE a method that is, in principle, very powerful and flexible but difficult to use in practice.
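
The ingredients of the loss can be sketched in NumPy. This is a hedged illustration rather than a full t-SNE implementation: it evaluates the neighborhood probabilities and the KL divergence for a given projection but omits the iterative minimization. The function names, the bisection on β = 1/(2hi²) used to match the perplexity, and the toy data are assumptions made for the example.

```python
import numpy as np

def squared_distances(Z):
    return np.sum((Z[:, None, :] - Z[None, :, :]) ** 2, axis=-1)

def perplexity_of(p):
    """Perplexity 2^H of a discrete distribution p (zero entries are skipped)."""
    p = p[p > 0]
    return 2.0 ** (-np.sum(p * np.log2(p)))

def conditional_probabilities(X, perplexity, tol=1e-6, max_iter=200):
    """Row-normalized Gaussian neighbor probabilities with a per-point length scale."""
    N = X.shape[0]
    sq = squared_distances(X)
    P = np.zeros((N, N))
    for i in range(N):
        others = np.arange(N) != i
        d_i = sq[i, others]
        lo, hi = 1e-12, 1e12                       # bracket for beta = 1 / (2 h_i^2)
        for _ in range(max_iter):
            beta = np.sqrt(lo * hi)                # bisection on a logarithmic scale
            p = np.exp(-(d_i - d_i.min()) * beta)  # shift avoids underflow of all terms
            p /= p.sum()
            perp = perplexity_of(p)
            if abs(perp - perplexity) < tol:
                break
            if perp > perplexity:
                lo = beta                          # too many neighbors: narrow the kernel
            else:
                hi = beta
        P[i, others] = p
    return P

def joint_q(Y):
    """Student-t (one degree of freedom) similarities in the projected space."""
    W = 1.0 / (1.0 + squared_distances(Y))
    np.fill_diagonal(W, 0.0)
    return W / W.sum()

def kl_divergence(P, Q):
    mask = P > 0
    return np.sum(P[mask] * np.log(P[mask] / Q[mask]))

# Toy data (assumption): 60 points in 10 dimensions, random initial 2D projection.
rng = np.random.default_rng(4)
X = rng.normal(size=(60, 10))
Y = rng.normal(size=(60, 2)) * 1e-2

P_cond = conditional_probabilities(X, perplexity=10.0)
P_joint = (P_cond + P_cond.T) / (2 * X.shape[0])   # symmetrized joint distribution
Q_joint = joint_q(Y)
loss = kl_divergence(P_joint, Q_joint)
row_perplexities = np.array([perplexity_of(row) for row in P_cond])
```

A complete implementation would then update Y by gradient descent on `loss`; as noted above, the result of that step depends on the initialization.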

Recently, the t-SNE method has been adapted to better fit the needs of molecular simulations. In ref (152), the authors propose a time-lagged version of the method, in the same spirit of TICA (see section 6.1). However, as the authors comment, the time-lagged version distorts the densities from the ones in the original space, which makes the method inappropriate for computing free energies. In ref (153), it is claimed that t-SNE provides a dimensionality reduction which minimizes the information loss and can be used for describing a multimodal free energy surface of a model allosteric protein system (Vivid). The same authors further employ this approach to obtain a kinetic model.154

3.2.6. Deep Manifold Learning Methods

Deep learning methods are now frequently used to learn nonlinear low-dimensional manifolds embedding high-dimensional data, and these techniques are also starting to be adopted for analysis and enhanced sampling of molecular simulations.

A popular deep dimensionality reduction method is the autoencoder.155 Autoencoders work by mapping input configurations x through an encoder network E to a lower-dimensional latent space representation y, and mapping this back to the original space through a decoder network D

$$y = E(x) \tag{22}$$

$$\bar{x} = D(y) \tag{23}$$

The network learns a low-dimensional representation y by minimizing the error between the original data points xi and the reconstructed data points x̅i. Note that if E and D are chosen to be linear maps rather than nonlinear neural networks, then the optimal solution can be found analytically by PCA (section 3.1.1): indeed, the encoder E is given by the matrix of selected eigenvectors, and the decoder D is given by its transpose.
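
The linear case can be verified directly. The following NumPy sketch builds the encoder and decoder from the leading PCA eigenvectors and checks two properties: the mean squared reconstruction error equals the sum of the discarded covariance eigenvalues, and a randomly oriented orthonormal encoder does no better. The toy data, the latent dimension d = 2, and all variable names are illustrative assumptions.

```python
import numpy as np

# Toy data (assumption): 200 correlated points in 6 dimensions.
rng = np.random.default_rng(5)
X = rng.normal(size=(200, 6)) @ rng.normal(size=(6, 6))
Xc = X - X.mean(axis=0)
d = 2                                              # latent dimension

# PCA: eigenvectors of the covariance matrix, sorted by decreasing eigenvalue.
C = Xc.T @ Xc / len(Xc)
eigval, eigvec = np.linalg.eigh(C)
order = np.argsort(eigval)[::-1]
W = eigvec[:, order[:d]]                           # D x d matrix of selected eigenvectors

def encode(x, W):
    return x @ W                                   # linear encoder: y = W^T x

def decode(y, W):
    return y @ W.T                                 # linear decoder: transpose of the encoder

def reconstruction_error(Xc, W):
    X_rec = decode(encode(Xc, W), W)
    return np.mean(np.sum((Xc - X_rec) ** 2, axis=1))

mse_pca = reconstruction_error(Xc, W)
discarded_variance = eigval[order[d:]].sum()       # equals mse_pca

# Comparison: an encoder spanning a random d-dimensional subspace.
W_random, _ = np.linalg.qr(rng.normal(size=(6, d)))
mse_random = reconstruction_error(Xc, W_random)
```

A nonlinear autoencoder replaces the two matrix products with neural networks and minimizes the same reconstruction error by stochastic gradient descent.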

More involved deep learning approaches to obtain a low-dimensional latent space representation are generative neural networks. Key examples include Variational Autoencoders (VAEs)156 and Generative Adversarial Networks (GANs).157 VAEs are structurally similar to autoencoders, but involve a sampling step in the latent space that is required in order to draw samples from the conditional probability distribution of the underdetermined high-dimensional state given the latent space representation, p(x|y). VAEs and other neural network architectures have been widely employed to extract nonlinear reaction coordinates and multidimensional manifolds for molecular simulation.158−171 The first goal of these approaches is to aid the understanding of the structural mechanisms associated with rare events or transitions. See section 6 for a deeper discussion of variationally optimal approaches to identify rare-event transitions. Second, the low-dimensional representation of the “essential dynamics” learned by these methods is poised to serve the enhanced sampling of events that are rare and particularly important to compute certain observables of interest. This has been approached by constructing biased dynamics in the learned manifold space and reweighting the resulting simulations to obtain equilibrium thermodynamic quantities,163,165−167 as well as by selecting starting points for unbiased MD simulations which can then be similarly reweighted to obtain equilibrium dynamical quantities.161,162,164,172

GANs implicitly learn a low-dimensional latent space representation in the input of a generator network. Classical GANs are trained by playing a zero-sum game between the generator and a discriminator network, where the generator tries to fool the discriminator with fake samples and the discriminator tries to predict whether its input came from the generator or was a real sample from a database. In the molecular sciences, GANs have (so far) primarily been used for sampling across chemical space; e.g., to aid the optimization of small molecules with respect to certain properties.

For completeness, we mention that there is a third very popular class of generative neural networks called normalizing flows,173 which have recently been combined with statistical mechanics in the method of Boltzmann generators,43 which facilitate rare-event sampling of molecular systems. However, flows are invertible neural networks and therefore do not inherently perform any dimensionality reduction, but rather a variable transformation to a space from which it is easier to sample, but which still has the same dimension as the original input. Recently, it has been proposed to combine flows with renormalization group theory to gradually marginalize out some of the dimensions in each neural network layer.174 This is a promising direction for combining the tasks of dimensionality reduction and rare-event sampling in the molecular sciences.

4. Density Estimation

After the configurations have been projected into a low dimensional space, one can use the representation in this space for estimating the probability density function of the data set. Furthermore, if the representation is of dimension smaller than three, one can directly visualize the probability density ρ. Equivalently, one can also visualize its logarithm which, if the simulation is performed in the canonical ensemble at temperature T, gives the free energy up to a factor −kBT; that is, F(y) = −kBT log(ρ(y)).

Density estimation is of great interest well beyond molecular simulations, and is a key working tool in unsupervised machine learning. Density estimation consists of estimating the underlying probability density function (PDF) from which a given data set has been drawn. Although its main applications relate to data visualization (as in the case of visualizing the free energy surface), it is also part of the pipeline of other analysis methods such as kernel regression,175 anomaly detection,176 and clustering (see Section 5).

The natural connection between density estimation and free energy reconstruction can be exploited to increase the efficiency of simulation analyses. For example, many kinetic analysis methods rely on density estimation177−181 (see Section 6).

In the case of equilibrium molecular systems, the PDF as a function of a feature vector y is in principle known exactly. In the canonical ensemble, this is given by,

$$\rho(y) = \frac{\int dx\, e^{-U(x)/k_{B}T}\, \delta\left( y - \mathcal{F}(x) \right)}{\int dx\, e^{-U(x)/k_{B}T}} \tag{24}$$

where U(x) is the potential energy and $\mathcal{F}(x)$ is the function which enables the computation of the feature vector y as a function of the coordinates x. In practice, however, calculating this integral is not possible, and the resulting PDF can only be estimated approximately following one of the procedures described in this section.

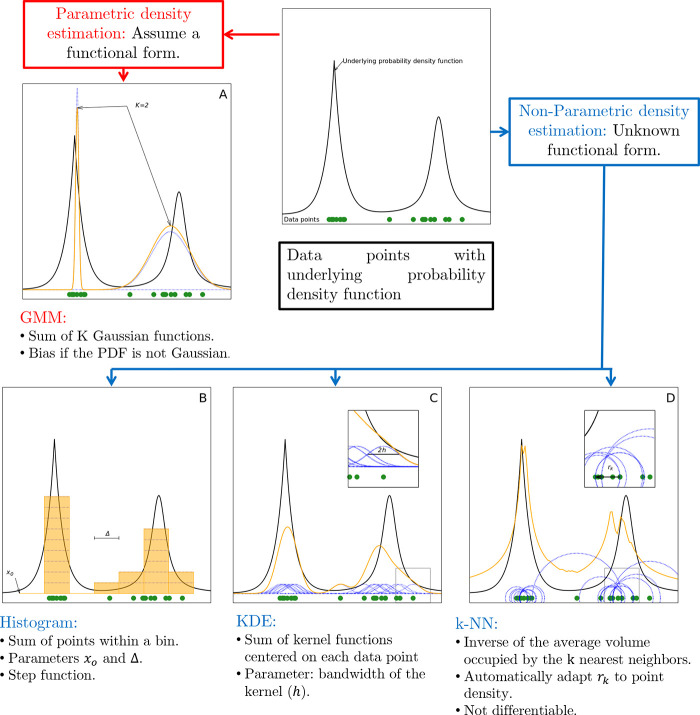

Density estimation methods can be grouped into two categories, namely, parametric and nonparametric methods (see Figure 5). In the first one, a specific functional form for the PDF is chosen and its free parameters are inferred from the data. In contrast, nonparametric methods attempt to describe the PDF without making a strong assumption about its form.182

Figure 5.

Graphical summary of the density estimation methods. Different density estimates on 20 1D data points (green) extracted from a mixture of a Laplace and a Cauchy distribution (black line).

The choice between parametric and nonparametric methods is heavily problem dependent. As a general rule, when the origin of the data permits a reasonable hypothesis for the functional form of the PDF, parametric density estimation should be preferred, since it quickly converges with relatively few data points. If the functional form is unknown, however, it is often better to sacrifice performance than to risk introducing bias into the estimation.

4.1. Parametric Density Estimation

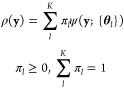

In parametric density estimation a fixed functional form of the PDF is assumed and one estimates its parameters from the data. For example, if one assumes that the data are sampled from a Gaussian distribution one can then estimate the density by simply computing mean and variance of the data. Such a procedure is common throughout various scientific branches. However, this procedure can lead to an error in the estimate that cannot be reduced by increasing the number of observations, since the error can be brought to zero only if the data points are truly generated from a Gaussian distribution. To add flexibility to this approach and, therefore, alleviate the bias inherent in assuming the form of the distribution a priori, a common technique is to model the PDF as a mixture of K distributions

$$\rho(y) = \sum_{l=1}^{K} \pi_{l}\, \psi(y; \{\theta_{l}\}) \tag{25}$$

where ψ(y;{θl}) is a function that depends upon the set of parameters θl and πl is the weight of this function in the estimate. A common choice for ψ is a Gaussian, leading to Gaussian mixture models (GMMs).183

The parameters {θl,πl} can be estimated by a maximum likelihood method, namely

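$$\{\theta_{l}, \pi_{l}\} = \underset{\{\theta_{l}, \pi_{l}\}}{\arg\max} \sum_{i=1}^{N} \log \sum_{l=1}^{K} \pi_{l}\, \psi(y_{i}; \{\theta_{l}\}) \tag{26}$$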

where the sum over i runs over the N observations yi, i = 1, ..., N. This likelihood function can then be optimized through (for example) an expectation-maximization scheme.183
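
As an illustration, an expectation-maximization loop for a one-dimensional Gaussian mixture can be sketched in NumPy as follows. The function names, the quantile-based initialization, the small variance floor, and the synthetic bimodal data are all assumptions made for the example; production analyses would typically rely on an established library.

```python
import numpy as np

def gaussian(y, mu, var):
    return np.exp(-(y - mu) ** 2 / (2.0 * var)) / np.sqrt(2.0 * np.pi * var)

def fit_gmm_1d(y, K, n_iter=100):
    """Fit a K-component 1D Gaussian mixture by expectation-maximization."""
    mu = np.quantile(y, (np.arange(K) + 0.5) / K)  # deterministic initialization (assumption)
    var = np.full(K, y.var())
    pi = np.full(K, 1.0 / K)
    log_lik = []
    for _ in range(n_iter):
        # E step: responsibility of each component for each data point.
        dens = pi * gaussian(y[:, None], mu, var)  # shape (N, K)
        total = dens.sum(axis=1, keepdims=True)
        log_lik.append(np.sum(np.log(total)))      # log-likelihood of the current model
        resp = dens / total
        # M step: weighted maximum-likelihood update of the parameters.
        Nk = resp.sum(axis=0)
        pi = Nk / len(y)
        mu = (resp * y[:, None]).sum(axis=0) / Nk
        var = (resp * (y[:, None] - mu) ** 2).sum(axis=0) / Nk + 1e-9  # variance floor
    return pi, mu, var, np.array(log_lik)

# Synthetic data (assumption): two states centered at -2 and +2.
rng = np.random.default_rng(6)
y = np.concatenate([rng.normal(-2.0, 0.5, size=300), rng.normal(2.0, 0.5, size=300)])

pi, mu, var, log_lik = fit_gmm_1d(y, K=2)
```

Each EM iteration does not decrease the log-likelihood, but the loop converges only to a local maximum, so results can depend on the initialization.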

A general problem of GMMs is that, since the likelihood to be optimized is a nonlinear function of the parameters, finding its global maximum is typically very difficult. Another critical issue in this approach is the choice of the hyperparameter K, that is, the number of functions used in the mixture. There is no straightforward relationship between the quality of a density estimate and K because the best choice strongly depends on the shape of the PDF. The choice of K is a model selection problem,184 which can be approached by maximizing the likelihood on a validation data set.185 Alternative Bayesian approaches for this problem involve maximizing the marginal likelihood with respect to K or using a Dirichlet process prior distribution for the hyperparameter K.186

There are numerous applications of mixture models in the analysis of molecular simulation. In refs 187 and 188, the free energy surfaces of biomolecules are reconstructed as a sum of Gaussian functions. In refs 189 and 190, GMMs are employed as a basis for an enhanced sampling algorithm in a way that follows the spirit of metadynamics191 but in which the bias potential is updated to be the sum of a few Gaussian functions. In this case, the number of basis functions can be adjusted by using variationally enhanced sampling.192 Moreover, in ref 181, GMMs are used as a basis for the MD propagator in a kinetic model (see section 6), while in ref (193), they are used for atomic position reconstruction from coarse-grained models.

4.2. Nonparametric Density Estimation

4.2.1. Histograms

Nonparametric methods avoid making strong assumptions on the functional form of the PDF underlying the data. The most popular nonparametric method, especially in the molecular simulation community, is the histogram.194−198

In this method, the space of the data is divided into bins and the PDF is estimated by counting the number of data points within each bin. In one dimension, denoting the center of bin I as yI, and taking yI = y0 + IΔ, where Δ is the bin width, we have

$$\hat{\rho}(y_I) = \frac{n_I}{N \Delta} \tag{27}$$

where $n_I$ is the number of configurations within bin $I$, and can be computed as $n_I = \sum_{i=1}^{N} \chi_{[y_I - \Delta/2,\, y_I + \Delta/2]}(y_i)$, where $\chi_{[a,b]}(y) = 1$ if $y \in [a, b]$ and 0 otherwise.

Under the assumption that the different observations are independent, $n_I$ is sampled from a binomial distribution $n_I \sim B(N, p_I)$, where (in a one-dimensional feature space) $p_I = \int_{y_I - \Delta/2}^{y_I + \Delta/2} \rho(y)\, dy$ is the probability of observing a configuration in the bin. The expected value of $n_I/N$ is equal to $p_I$, which implies that the estimator in eq 27 is correct only if $p_I$ can be approximated with $\Delta \cdot \rho(y_I)$. If $\Delta$ is too large, this approximation is not valid and the density estimation becomes too coarse-grained, thereby inducing a systematic error, which is referred to as bias.

The variance of nI is Var(nI) = N pI (1 – pI), and the statistical error on the density estimate in eq 27 can be estimated as

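$$\varepsilon(y_I) = \frac{\sqrt{\mathrm{Var}(n_I)}}{N \Delta} = \frac{\sqrt{N p_I (1 - p_I)}}{N \Delta} \tag{28}$$

$$\varepsilon(y_I) \simeq \sqrt{\frac{\rho(y_I)}{N \Delta}} \tag{29}$$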

where to go from the first to the second line we used the fact that pI ≪ 1 and pI ≅ ρ(yI)Δ. If one chooses a value of Δ that is too small, the number of configurations within the bin nI becomes small, and the resulting error, commonly referred to as variance, becomes large. In practice, the value of Δ can be chosen by considering the so-called bias/variance trade-off in which both small variance and small bias are desired, but decreasing one often increases the other.182 This trade-off is common to all nonparametric density estimators.
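The histogram estimator and its statistical error can be sketched in a few lines. The example below assumes the estimate $n_I/(N\Delta)$ with bins centered at $y_0 + I\Delta$ and the error $\sqrt{\rho(y_I)/(N\Delta)}$, with the unknown density replaced by its estimate; the function name `histogram_density` and the Gaussian test data are hypothetical choices for illustration.

```python
import math
import random


def histogram_density(data, y0, width, n_bins):
    """Return bin centers, density estimates n_I/(N*width), and their statistical errors."""
    n = len(data)
    counts = [0] * n_bins
    left_edge = y0 - 0.5 * width  # bin I is centered at y0 + I*width
    for y in data:
        index = math.floor((y - left_edge) / width)
        if 0 <= index < n_bins:
            counts[index] += 1
    centers = [y0 + i * width for i in range(n_bins)]
    density = [c / (n * width) for c in counts]
    # Error sqrt(rho/(N*width)), using the estimated density in place of the true one.
    errors = [math.sqrt(rho / (n * width)) for rho in density]
    return centers, density, errors


# Hypothetical data: 20000 samples from a standard normal distribution.
rng = random.Random(0)
samples = [rng.gauss(0.0, 1.0) for _ in range(20000)]

# A moderate bin width and a ten times smaller one, both with a bin centered at 0.
centers, density, errors = histogram_density(samples, y0=-4.0, width=0.2, n_bins=41)
_, density_fine, errors_fine = histogram_density(samples, y0=-4.0, width=0.02, n_bins=401)

print("width 0.20:", density[20], "+/-", errors[20])
print("width 0.02:", density_fine[200], "+/-", errors_fine[200])
```

With the smaller bin width the estimate at the origin carries a visibly larger statistical error, which is the variance side of the trade-off discussed above.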

Histograms are typically used to estimate the density for d ≤ 3, because in higher dimensions the estimator becomes noisy as an increasing number of bins are either empty or visited only a few times. This problem can be alleviated only if one exponentially increases the number of data points with d: a manifestation of the curse of dimensionality.199 Finally, another drawback of the histogram is that its estimate of the PDF is not differentiable.

4.2.2. Kernel Density Estimation

Kernel density estimation (KDE) partially overcomes the problems associated with histogramming. KDE approximates the PDF of a data set as a sum of kernel functions centered at each data point. In one dimension, the approximation reads

$$\hat{\rho}(y) = \frac{1}{N h} \sum_{i=1}^{N} \kappa\!\left(\frac{y - y_i}{h}\right) \tag{30}$$

where the kernel function $\kappa$ is chosen as a unimodal probability density symmetric around 0. In particular, it satisfies the properties

$$\int_{-\infty}^{\infty} \kappa(x)\, dx = 1, \qquad \kappa(-x) = \kappa(x) \tag{31}$$

The estimator depends on the kernel function of choice and the hyperparameter h > 0 (i.e., the bandwidth). Some popular examples of kernels are summarized in Table 1.

Table 1. Some Widely Used Kernels for Density Estimation.

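| Kernel | $\kappa(x)$ |
| --- | --- |
| uniform | $\tfrac{1}{2}$ for $\lvert x \rvert \le 1$, 0 otherwise |
| triangle | $1 - \lvert x \rvert$ for $\lvert x \rvert \le 1$, 0 otherwise |
| Epanechnikov | $\tfrac{3}{4}\,(1 - x^2)$ for $\lvert x \rvert \le 1$, 0 otherwise |
| cosine | $\tfrac{\pi}{4} \cos\!\left(\tfrac{\pi}{2} x\right)$ for $\lvert x \rvert \le 1$, 0 otherwise |
| Gaussian | $\tfrac{1}{\sqrt{2\pi}}\, e^{-x^2/2}$ |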

The Gaussian kernel is arguably the most used. The Epanechnikov kernel is optimal in the sense that it minimizes the mean integrated squared error (MISE).200 However, experience has shown that the choice of the kernel affects the quality of the estimation less than the choice of the bandwidth does.201

The bandwidth hyperparameter $h$ plays a role similar to the bin width parameter $\Delta$ in the histogram method. $h$ is also referred to as the “smoothing” parameter, since the larger it is, the smoother the resulting PDF estimate is. However, too much smoothing can artificially erase important features of the PDF. The dependence of the MISE on $h$ can be decomposed into two terms, namely, the bias, which scales as $h^2$, and the variance, which corresponds to the error induced by the statistical fluctuations in the sampling and scales as $1/(Nh)$.202

As in the case of the histogram, the optimal value of h should be chosen as a trade-off between the bias and the variance terms. Much research has been focused on the optimal choice of h in eq 30.203−205
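The role of the bandwidth can be illustrated with a minimal one-dimensional sketch. It assumes the standard form of the estimator, $(Nh)^{-1}\sum_i \kappa((y - y_i)/h)$, with the usual Gaussian and Epanechnikov kernels; the function names and the synthetic bimodal data are assumptions made for this example.

```python
import math
import random


def gaussian_kernel(u):
    """Standard normal density."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def epanechnikov_kernel(u):
    """Epanechnikov kernel, supported on [-1, 1]."""
    return 0.75 * (1.0 - u * u) if abs(u) <= 1.0 else 0.0


def kde(y, data, h, kernel=gaussian_kernel):
    """Kernel density estimate at y: (1/(N h)) * sum_i kernel((y - y_i) / h)."""
    return sum(kernel((y - yi) / h) for yi in data) / (len(data) * h)


# Hypothetical bimodal data with modes at -1.5 and +1.5.
rng = random.Random(0)
data = [rng.gauss(-1.5, 0.5) for _ in range(500)] + [rng.gauss(1.5, 0.5) for _ in range(500)]

for h in (0.2, 2.0):
    at_barrier = kde(0.0, data, h)   # between the two modes
    at_mode = kde(1.5, data, h)      # on top of one mode
    print(f"h = {h}: rho(0) = {at_barrier:.3f}, rho(1.5) = {at_mode:.3f}")
```

With the small bandwidth the minimum between the two modes is resolved, whereas with the large bandwidth the estimate at the midpoint exceeds the estimate at the mode, so the bimodal structure is smoothed away.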

Alternatively, the bandwidth selection problem can be addressed by introducing an adaptive kernel with a smoothing parameter that varies for different data points.206,207 A position-dependent bandwidth can be obtained by optimizing a global measure of discrepancy of the density estimation from the true density.182,208 However, this global measure is typically a complex nonlinear function,209 and its optimization can be prohibitive for large data sets. A more feasible approach, first proposed by Lepskii,210 is to adapt the bandwidth to the data locally. This approach has been further developed by Spokoiny, Polzehl, and others.211−216

Kernel density estimation can also be used in more than one dimension by employing multivariate kernels. However, the number of hyperparameters increases with the number of dimensions. In the case of the multivariate Gaussian kernel, the parameters can be summarized in a d×d symmetric matrix H, which plays a role analogous to h in the one-dimensional case. The corresponding estimator is

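$$\hat{\rho}(\mathbf{y}) = \frac{1}{N} \sum_{i=1}^{N} \frac{1}{(2\pi)^{d/2}\, \lvert \mathbf{H} \rvert^{1/2}} \exp\!\left[-\frac{1}{2}\, (\mathbf{y} - \mathbf{y}_i)^{T}\, \mathbf{H}^{-1} (\mathbf{y} - \mathbf{y}_i)\right] \tag{32}$$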

In comparison with histograms, kernel density estimation has the advantage that it is differentiable. KDE is gaining increasing popularity in the analysis of molecular dynamics simulations, leading even to the development of specific parameters tailored for MD.217 In addition to their use in visualization of free energy surfaces218 and the construction of kinetic models,178 KDEs have been applied in the study of nonexponential and multidimensional kinetics219 and the computation of entropy differences.220
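For the multivariate case, a two-dimensional Gaussian KDE can be sketched as follows. The sketch assumes the common convention in which the bandwidth matrix H enters as a covariance-like matrix, so that each kernel is a normal density with covariance H; other conventions exist, and the function name `gaussian_kde_2d` and the test data are hypothetical.

```python
import math
import random


def gaussian_kde_2d(y, data, H):
    """2D Gaussian KDE at point y, with H a symmetric positive-definite 2x2 matrix."""
    (h11, h12), (h21, h22) = H
    det = h11 * h22 - h12 * h21
    # Explicit inverse of the 2x2 bandwidth matrix.
    i11, i12, i21, i22 = h22 / det, -h12 / det, -h21 / det, h11 / det
    norm = 1.0 / (2.0 * math.pi * math.sqrt(det))
    total = 0.0
    for yi in data:
        dx, dy = y[0] - yi[0], y[1] - yi[1]
        quad = dx * (i11 * dx + i12 * dy) + dy * (i21 * dx + i22 * dy)
        total += math.exp(-0.5 * quad)
    return norm * total / len(data)


# Hypothetical data: 2000 samples from a 2D standard normal distribution.
rng = random.Random(0)
data = [(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(2000)]

H = ((0.09, 0.0), (0.0, 0.09))  # isotropic bandwidth, equivalent to h = 0.3 per axis
estimate = gaussian_kde_2d((0.0, 0.0), data, H)
print(estimate)
```

For this isotropic choice the expected value of the estimate at the origin is the true density smoothed by the kernel, $1/(2\pi \cdot 1.09) \approx 0.146$, slightly below the true value $1/(2\pi) \approx 0.159$.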

4.2.3. k-Nearest Neighbor Estimator

Another route for estimating the density of a data set is the k-nearest neighbor (k-NN) estimator.221 In this method, the probability density ρi = ρ(yi) is estimated as

$$\rho_i = \frac{k}{N\, V_d\, r_k(y_i)^{d}} \tag{33}$$

where $V_d$ is the volume of the unit sphere in dimension $d$ and $r_k(y_i)$ is the distance between $y_i$ and its $k$-th nearest neighbor point.

The k-NN method can be thought of as a special kernel density estimation with local bandwidth selection, where the role played by k is similar to that of h in the KDE. The statistical error of this estimator is given by13

$$\varepsilon_i = \frac{\rho_i}{\sqrt{k}} = \frac{\sqrt{k}}{N\, V_d\, r_k(y_i)^{d}} \tag{34}$$

The higher the value of k, the smaller the variance of the estimator, but the larger the bias, since this error estimate is valid only if the probability density is constant in the hypersphere of radius $r_k(y_i)$ centered on $y_i$. The k-NN method can in principle be used for estimating the PDF in any dimension d. However, if d = 1, the PDF estimated in this manner cannot be normalized since the integral of 1/r over the whole space does not converge.222
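A brute-force version of the k-NN estimator is sketched below. It assumes the estimate $k/(N V_d r_k^d)$ with $V_d = \pi^{d/2}/\Gamma(d/2 + 1)$ and a statistical error of $\rho_i/\sqrt{k}$; the function names and the two-dimensional Gaussian test data are assumptions made for illustration, and the quadratic-cost neighbor search would be replaced by a tree or grid search for realistic data sets.

```python
import math
import random


def unit_ball_volume(d):
    """Volume of the unit sphere in d dimensions."""
    return math.pi ** (d / 2.0) / math.gamma(d / 2.0 + 1.0)


def knn_density(points, k):
    """Return k-NN density estimates and their errors (rho_i / sqrt(k)) for every point."""
    n = len(points)
    d = len(points[0])
    v_d = unit_ball_volume(d)
    densities, errors = [], []
    for i, p in enumerate(points):
        # Distances to all other points, sorted; index k-1 is the k-th nearest neighbor.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        r_k = dists[k - 1]
        rho = k / (n * v_d * r_k ** d)
        densities.append(rho)
        errors.append(rho / math.sqrt(k))
    return densities, errors


# Hypothetical data: 500 samples from a 2D standard normal distribution.
rng = random.Random(0)
points = [(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(500)]
densities, errors = knn_density(points, k=20)

inner = [rho for p, rho in zip(points, densities) if math.hypot(*p) < 0.5]
outer = [rho for p, rho in zip(points, densities) if math.hypot(*p) > 2.0]
print(sum(inner) / len(inner), sum(outer) / len(outer))
```

Near the origin the average estimate should be close to the true density $1/(2\pi) \approx 0.16$, and it drops in the tails, where the distance to the k-th neighbor grows.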

The k-NN method is also affected by the curse of dimensionality. Indeed, it can be shown that for any fixed number of data points, the difference between the distance from the kth nearest neighbor ($r_k$) and the distance from the next nearest neighbor ($r_{k+1}$) tends to 0 when d → ∞,223 leading to problems in the definition of the density. Much effort has been put into bypassing this limitation.224 One approach involves avoiding estimating the density in the full feature space and instead estimating it in the manifold that contains the data (which usually has a much lower dimensionality). This approach, first suggested in ref (225), was further developed in ref (13) with a specific focus on the analysis of MD trajectories.
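The loss of contrast between consecutive neighbor distances can be observed numerically. The sketch below measures the gap relative to $r_k$, that is, $(r_{k+1} - r_k)/r_k$ averaged over all points; this normalization, the uniform sampling in the unit hypercube, and the function name `mean_relative_gap` are assumptions made for this example rather than the construction used in the cited work.

```python
import math
import random


def mean_relative_gap(n_points, dim, k, seed=0):
    """Average of (r_{k+1} - r_k) / r_k over points drawn uniformly in [0, 1]^dim."""
    rng = random.Random(seed)
    points = [[rng.random() for _ in range(dim)] for _ in range(n_points)]
    total = 0.0
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        # dists[k - 1] is the k-th neighbor distance, dists[k] the (k+1)-th.
        total += (dists[k] - dists[k - 1]) / dists[k - 1]
    return total / n_points


gap_low = mean_relative_gap(n_points=100, dim=2, k=5)
gap_high = mean_relative_gap(n_points=100, dim=200, k=5)
print(f"d = 2:   {gap_low:.4f}")
print(f"d = 200: {gap_high:.4f}")
```

At fixed sample size, the relative gap in 200 dimensions is far smaller than in two dimensions, so neighbor ranks carry little information about local density in the full feature space.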

While in molecular systems the configurations are defined by a high-dimensional feature space, restraints induced by the chemical and physical nature of the atoms prevent the system from moving in many directions (for example, in the direction which significantly shortens a covalent bond). In practice, these restraints reduce the dimension of the space to a value which is referred to as the intrinsic dimension. The intrinsic dimension can be estimated, for example, using the approaches in refs (226−229). The probability density can then be estimated using eq 33, where the dimension of the feature space d is replaced with the intrinsic dimension, which can be orders of magnitude smaller, making the estimate better behaved. This framework also addresses the problem of finding a k that yields sufficiently small variance and bias. Ref (13) proposes choosing, for each data point separately, the largest possible k for which the probability density can be considered constant (within a given level of confidence). This optimal k, which is point-dependent and denoted by $k_i$, is then used to estimate the probability density by a likelihood optimization procedure allowing for density variation up to a first-order correction. The estimator of the density then becomes

| 35 |

where $v_{i,l} = V_{\mathrm{ID}} \left( r_l^{\mathrm{ID}}(y_i) - r_{l-1}^{\mathrm{ID}}(y_i) \right)$ is the volume of the hyperspherical shell enclosed between the lth and the (l – 1)th neighbor of the configuration $y_i$, and ID denotes the intrinsic dimension. This approach enables the estimation of the probability density directly in the space of the coordinates introduced in section 2 (for example, the dihedral angles), without first performing a dimensionality reduction with one of the methods described in section 3 or explicitly defining a collective variable for describing the system.

In the field of MD simulations, k-NN has been used as part of the pipeline for more complex analyses230,231 and for computing both entropies232,233 and free energies.234,235

5. Clustering

Clustering is a general-purpose data analysis technique in which data points are grouped together based on their similarity according to a suitable measure (for example, a metric). In molecular simulations, the use of clustering is very common, since clusters can be seen as a way of compactly representing a complex multidimensional probability distribution. Clustering, thus, performs an effective dimensionality reduction in a manner which can be seen as complementary to the approaches described in section 3. It is well-known that there is no problem-agnostic measure of the success or appropriateness of a given clustering algorithm or its results.236 Thus, it is crucial to choose a clustering algorithm based on what is known about the data set and what is expected about the result. In particular, in the field of molecular simulations, we can conveniently differentiate the following two classes of techniques.

Partitioning schemes: In these approaches, the clusters are groups of configurations which are similar to each other and different from configurations belonging to other clusters. These clusters define a partition (or tessellation) of the space in which the configurations are defined.

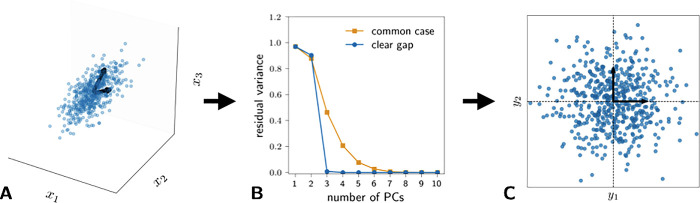

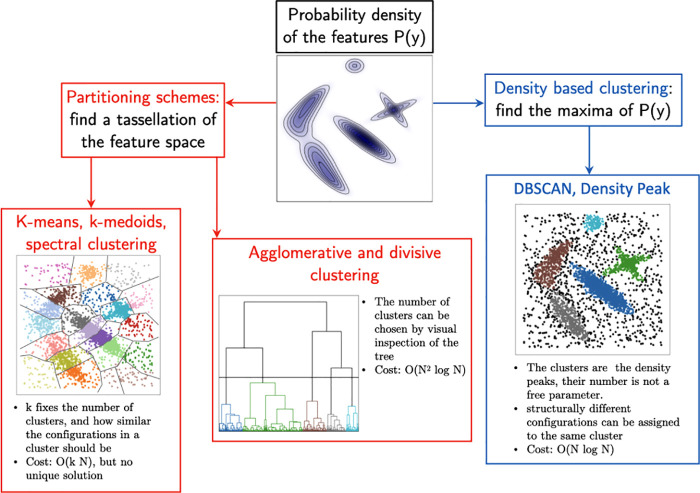

Density-based schemes: In these approaches, the clusters correspond to the peaks of the probability distribution from which the data are harvested (or, equivalently, to the free energy minima). Data points belonging to different clusters are not necessarily far apart if they are separated by a region where the probability density is low (see Figure 6).

Figure 6. Cartoon illustration of the different clustering schemes.

The most striking difference between these two distinct approaches emerges if one attempts to cluster a set of data harvested from a uniform probability distribution. Using a partitioning scheme, one can find any number of clusters depending on the chosen level of resolution. On the other hand, using a density-based scheme, one will obtain a single cluster. Clearly, which approach one should employ strongly depends upon the purpose of the analysis. For instance, if one wants to find the metastable states of the system directly from a cluster analysis, one should use a density-based clustering approach. If, instead, one wants to find an appropriate basis to represent the dynamics, as in kinetic modeling methods (see section 6), a partitioning scheme may be more appropriate, since these schemes allow one to control how similar the configurations assigned to the same cluster are.

5.1. Partitioning Schemes

Partition-based algorithms aim to classify the configurations in sets (i.e., clusters) that include only similar configurations. These algorithms can be further divided into two classes.

Centroid-based/Voronoi tessellation algorithms: In these methods, the number of clusters is determined by a specific parameter, which can be a cutoff specifying the maximum allowed distance between two configurations assigned to the same cluster or, alternatively, the number of clusters itself. The well-known k-means and k-medoids algorithms belong to this class, as well as the faster but less optimal leader algorithms k-centers and regular-space clustering. In all of these methods, each cluster is associated with a so-called centroid, which is a configuration representing the content of the cluster. The centroids induce a so-called Voronoi tessellation, which divides space such that each point is associated with the nearest centroid in the chosen distance metric.

Hierarchical/agglomerative and divisive clustering: Here, the choice of the number of clusters is deferred, so the algorithm can be run without setting it in advance. A (usually binary) tree is constructed according to a linkage criterion, and the number of clusters can be selected by appropriately “cutting” the tree, usually by visual inspection and taking into account the scope of the analysis. These approaches are commonly referred to as hierarchical clustering, which can be “agglomerative” or “divisive” depending on whether the data points are first considered to be individual clusters or members of a single cluster, respectively.
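The Voronoi assignment shared by the centroid-based methods amounts to labeling each configuration with the index of its nearest centroid. The following minimal sketch assumes a Euclidean metric and centroids that are already given; the function name `assign_to_centroids` and the toy coordinates are hypothetical.

```python
import math


def assign_to_centroids(points, centroids):
    """Return, for each point, the index of its nearest centroid (Euclidean metric)."""
    return [
        min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))
        for p in points
    ]


# Toy two-dimensional configurations and two centroids (hypothetical values).
points = [(0.0, 0.0), (0.2, 0.1), (1.0, 1.0), (0.9, 1.2)]
centroids = [(0.0, 0.0), (1.0, 1.0)]
labels = assign_to_centroids(points, centroids)
print(labels)
```

For molecular configurations, the Euclidean distance would typically be replaced by a metric suited to the chosen features, for example a root-mean-square deviation after alignment.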

5.1.1. k-Means and k-Medoids

Arguably, the most popular clustering schemes in molecular simulations and beyond are the centroid-based methods k-means237 and k-medoids.238 In both cases, the number of clusters k is chosen before running the algorithm.