Abstract

Chemical compound space (CCS), the set of all theoretically conceivable combinations of chemical elements and (meta-)stable geometries that make up matter, is colossal. The first-principles based virtual sampling of this space, for example, in search of novel molecules or materials which exhibit desirable properties, is therefore prohibitive for all but the smallest subsets and simplest properties. We review studies aimed at tackling this challenge using modern machine learning techniques based on (i) synthetic data, typically generated using quantum mechanics based methods, and (ii) model architectures inspired by quantum mechanics. Such Quantum mechanics based Machine Learning (QML) approaches combine the numerical efficiency of statistical surrogate models with an ab initio view on matter. They rigorously reflect the underlying physics in order to reach universality and transferability across CCS. While state-of-the-art approximations to quantum problems impose severe computational bottlenecks, recent QML based developments indicate the possibility of substantial acceleration without sacrificing the predictive power of quantum mechanics.

1. Introduction

Promising applications of machine learning techniques have been rapidly gaining momentum throughout the chemical sciences. Apart from this present special issue in Chemical Reviews, a number of special issues in common theoretical chemistry community journals have appeared, including International Journal of Quantum Chemistry (2015),1Journal of Chemical Physics (2018),2Journal of Physical Chemistry (2018),3Journal of Physical Chemistry Letters (2020),4 and Nature Communications (2020).5 Books, essays, reviews, and opinion pieces have also been contributed by practitioners in the field.6−23 Such growth of interest prompted a general discussion in Angewandte Chemie within a trilogy of essays by Hoffmann and Malrieu on the seemingly conflicting nature of simulation and understanding in quantum chemistry.24−26 The overall enthusiasm in the hard sciences for machine learning has even led to the introduction of novel journals, such as Springer’s Nature Machine Intelligence, IOP’s Machine Learning: Science and Technology,27 or Wiley’s Applied Artificial Intelligence Letters.28

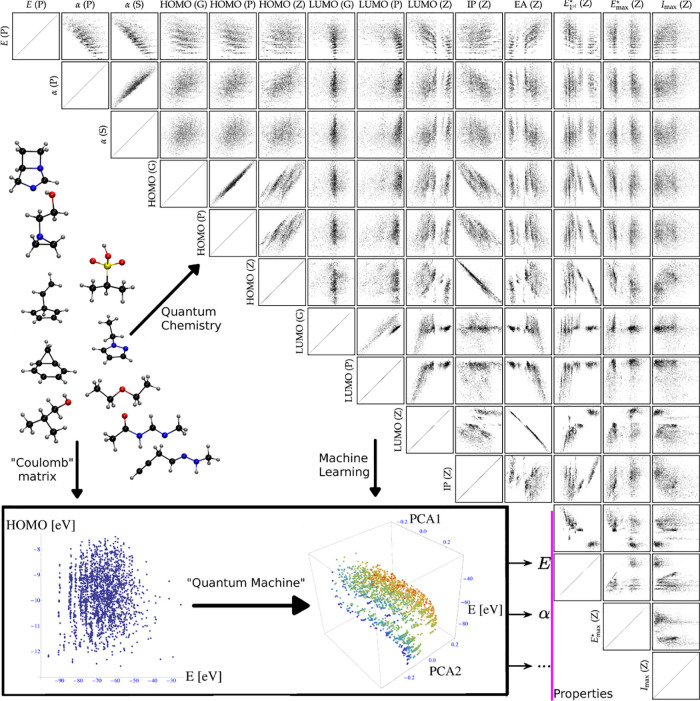

In this review, we attempt to provide a comprehensive overview on recent progress made regarding the problem of using machine learning models to train and predict quantum properties throughout chemical compound space (CCS) (Figure 1). In contrast to the current trend of machine learning in quantum computing, we here refer to the application of statistical learning to quantum properties as “quantum machine learning” (QML). This notation follows a common convention in atomistic simulation, where the quantum nature of the object to be studied corresponds to a prefix, while the actual algorithms are rather classical in nature. Examples include Quantum Monte Carlo or Quantum Molecular Dynamics (also known as ab initio or “first-principles” molecular dynamics).

Figure 1.

A cartoon of similarities among atoms across chemical compound space, not in conflict with quantum mechanics. The exemplary molecule aspirin is highlighted by bonds, and each of its atoms is superimposed with a similar atom in another molecule (hydrogens omitted for clarity). Green, yellow, gray, red, and blue refer to sulfur, phosphor, carbon, oxygen, and nitrogen, respectively. Reproduced with permission from ref (15). Copyright 2020 Springer Nature.

Within this introductory section, we will begin by first providing a qualitative description of chemical compound space (CCS) in terms of fundamental variables, which is consistent with the quantum mechanical picture within the Born–Oppenheimer approximation and neglecting nuclear quantum and relativistic effects. Thereafter, we briefly review related but complementary and system specific QML models which predominantly are not used throughout CCS but rather for training and predicting potential energies and forces in terms of conformational degrees of freedom, e.g., using molecular dynamics. Quantum mechanics based explorations for the purpose of materials design are mentioned subsequently, followed by a short subsection on studies which establish the quantitative and rigorous quantum chemistry based view on CCS.

1.1. Multiscale Nature of CCS

Figuratively speaking, CCS refers to the virtual set of all the theoretically (meta-)stable compounds one could possibly realize in this universe. To paraphrase Buckingham and Utting, a compound “...is a group of atoms...with a binding energy which is large in comparison with the thermal energy kT”.29 In other words, with respect to all its spatial degrees of freedom, it is that locally averaged atomic configuration, for which the free energy is in a local minimum surrounded by barriers sufficiently high to prevent spontaneous reactions within some observable lifetime. As such, CCS depends on external conditions. It loses all meaning, for example, when conditions are such that bonding spontaneously emerges and vanishes (e.g., aggregation state of plasma).

The mathematical number of compounds grows explosively with the number of constituting atoms due to the mutual enhancement of combinatorial scaling at three rather distinct but well established energetic scales: First, the number of possible stoichiometries for any given system size (in terms of electrons and total proton number) represents an integer partitioning problem which grows combinatorially, see ref (30), for example. The energetic variance among compounds that differ in stoichiometry is on the scale of chemical bonding due to having different number and different types of atoms. Second, the number of possible connectivity patterns, i.e., incomplete labeled undirected weighted graphs distinguishing constitutional isomers/allotropes (commonly drawn as Lewis structures) is mathematically known to grow combinatorially with number of atoms.31−33 The energetic variance among constitutional isomers is on the scale of differences in chemical bonding. Third, the number of possible conformational degrees of freedom grows combinatorially with number of atoms in a molecular graph (cf. Levinthal’s paradoxon for polymers), and one could even consider different atomic configurations of disconnected graphs, i.e., macromolecular or molecular condensed systems, to fall into this category of isomers. As such, the energetic variance among conformational isomers is on the scale of noncovalent intra- as well as intermolecular interactions. We note that stereoisomerism typically occurs among constitutional and conformational isomers. Its extension to compositional chirality has been proposed only recently.34 Given such size and diversity, highly universal, and efficient methods are in dire need in order to meaningfully explore CCS in search of deepened chemical insight and intuition and of new compounds and materials which exhibit desirable properties. While quantum mechanics and statistical mechanics offer the appropriate physical framework for dealing with CCS in an unbiased and universal manner, the computational complexity of the equations involved has hampered their widespread use.

We note that our ab initio definition of CCS implies that only those compounds are part of CCS that should, at least in principle, be experimentally accessible as long as sufficiently sophisticated synthetic chemical procedures and reservoirs of the necessary chemical elements are available. While any such synthetic procedure would have to follow the corresponding relevant free energy paths, by navigating the virtual analogue of CCS we do enjoy more design freedom and can, namely for any property that is a state function, also exploit unrealistic fictitious transformations in line with Hess’ law, i.e., without the need for direct correspondence to experimental realization (cf. “alchemical” transmutations).

We conclude this section by noting that our definition generalizes the more commonly made reference to CCS, which typically excludes conformational isomers, reactive intermediates, or minima in electronically excited states. For example, first steps toward an ab initio based representative exploration of the latter were also proposed in 2013 for drug-like compounds by Beratan and co-workers.35 However, for this review, we do not assume the most general view on CCS which would still be consistent with quantum mechanics, namely, that CCS comprises any chemical system, i.e., compounds with any chemical composition and any atomic configuration (being close to some state’s energy minimum or not). Such an encompassing definition would sacrifice the minimal free energy requirement mentioned above, and it would trivially correspond to the entire domain of CCS. Therefore, it would forego the useful link to observable lifetimes of systems as well as the appealing complementarity (not to be confused with orthogonality) to the well established problem of sampling potential energy hyper surfaces to study free energies or competing elementary reaction steps.

1.2. Machine Learning the Potential Energy Surface

While QM based studies of CCS are mostly concerned with (meta-)stable compounds, from inspection of the electronic Hamiltonian, it is quite clear that the effect of nuclear charges and nuclear coordinates are intimately linked. The well-known cusp condition due to Kato’s theorem36 explicitly links these two variables through the electron density observable. As such, ab initio studies of the PES aimed at calculating geometric distortion, transition states, or statistical mechanical averages are closely related to the topic of this review. More specifically, early attempts of QML have focused on the PES of homonuclear system (e.g., diamond37 or Sin cluster38) due to its relative simplicity (cf. compositional degree of freedom), for which many QML methods developed are also applicable to CCS. The distinction between CCS and the PES is somewhat arbitrary. For example, some molecular quantities of significant interest, such as libraries of ensemble properties of protein–ligand binding free energies, require accurate potentials as well as representative sampling of CCS. Also, instead of considering (meta-)stable constitutional or conformational isomers as distinct compounds, they can also equally well be viewed as local minima of the global PES hypersurface.

As mentioned above, within studies of the PES, the focus (at least currently) is typically placed on a single system and on computing energies and forces from scratch, i.e., ab initio. As such, one does not exploit correlations, constraints, and relationships, which only emerge through relationships observed among constitutional and compositional isomers, i.e., throughout all dimensions of CCS. The most common use-case of quantum methods for atomistic simulations deals with the problem of sampling the configurational degrees of freedom of the atoms of a given system. To develop a better informed understanding of the field, we now also briefly discuss relevant and select machine learning studies which touch upon the quantum based understanding of CCS but which primarily are concerned with the PES.

The question of how to best model a PES using some (physical or surrogate) function approximator and based on scarce and expensive potential energy surface data sets of specific systems, i.e., not through CCS, obtained from computationally demanding calculations, is long-standing. Potential energy hypersurfaces were traditionally studied for the purpose of molecular spectroscopy or for molecular dynamics applications of a given system. The development of empirical interatomic potentials, particularly the reactive force-field (ReaxFF) approach developed by van Duin and co-workers since 2001,39,40 amounts essentially to a traditional multidimensional regression problem for fixed functional basis functions and constitutes one of the mainstream efforts in this active field. Unlike traditional force field approaches, ReaxFF requires no predefined connectivity between atoms (topology) and casts the empirical interatomic potential within a formalism of bond order, which depends on the interatomic distances only. This improved adaptation of an atom to its environment allows for accurate descriptions of bond breaking and bond formation and has been applied extensively to model reactive chemistry at heterogeneous interfaces, involving typically very large systems,40 made up of millions of atoms.

The force-field approach, despite its efficiency, its chemical motivation, and its broad applicability and potential accuracy, suffers from the fixed functional forms imposed when relying on empirical interatomic potentials, implying that the model is hard to improve by adding more training data and could even fail catastrophically in certain regimes and classes. This limitation motivates interest in more flexible data-driven models. For example, already in 1994, Ischtwan and Collins improved the Shepard interpolation scheme for PES approximations.41 This paper illustrates the close relationship to QML: The authors utilized a formalism very similar to the modern kernel ridge regression, one of the workhorses of QML. The authors also already discussed one of the frequent challenges coming along with any new ML model project: How to best down-select optimal configurations for minimal data acquisition and training costs, and how to obtain systematic model improvements with increasing training set size.41 Early awareness of the trade-off between accuracy and training cost was also already addressed more than a decade earlier in the 1981 paper by Wagner, Schatz, and Bowman: Given a finite compute budget, data for which training instances should be acquired in order to obtain the most accurate model of the potential energy hypersurface?42 When facing the exploration of CCS with QML models, analogous questions must be addressed. For references to similar studies related to the problem of potential fitting and preceding 1989, we refer the reader to the comprehensive review by Schatz.43

Most of the data-driven models in the 1990s favored the neural network regressors for PES fitting. More specifically, in 1992, Sumpter and Noid published a neural network model for macromolecules.44 Additional neural network potentials were published by Blank et al. in 199545 for the CO/Ni(111) system, and Brown et al. in 199646 for the study of ground-state vibrational properties of two weakly bound molecular complexes: (FH)2 and FH–ClH. Neural networks were revisited for systems with increased size in the same year by Lorenz, Gross, and Scheffler47 for H2/K(2×2)/Pd(100) (with substrate fixed), followed by their application to represent high-dimensional potential energy surfaces for H2O2 by Manzhos and Carrington in 2006.48 Even larger systems, i.e., water clusters (up to 6 units), were dealt with by Handley and Popelier from 2009 onward,49 accounting for important electrostatic properties through learning of the atomic multipoles. These early developments used Cartesian/internal coordinates directly as the input of NN models, which is justified for modeling small to medium-sized systems; for large systems, however, this setup proves to be too inefficient. In 2007, Behler and Parinello published much improved deep neural network-based potentials,50 encoding molecular geometry effectively in terms of rotation, translation, and permutation invariant atom-centered symmetry function (ACSF), followed by molecular dynamics applications using metadynamics to identify Si phases under high pressure.51 A detailed overview of various neural network-based advances since 2010 was given in 2017.52 Starting in the same year, multiple, more universal, neural network models were introduced. In particular, Smith et al. advanced the idea of Behler’s symmetry functions in neural networks with the aforementioned normal mode displacements in order to generate a powerful neural network trained on millions of configurations of tens of thousands of organic molecules, called ANI.53 An accurate and transferable neural network exploiting an “on-the-fly” equilibration of atomic charges was introduced that same year by Faraji et al.,54 and soon thereafter, equally universal neural nets SchNet55 and PhysNet56 were published. An extensive review on neural network potentials for modeling the potential energy surfaces of small molecules and reaction is also part of the present issue in Chemical Reviews.57

Kernel models started to play a noticeable role for PES fitting in the late 1990s. In 1996, Ho and Rabitz presented kernel-based models for the fitting of potential energies58 for three small systems, He–He, He–CO, and H3+. Similar to early PES fitting works within NN framework, these early kernel-based models also utilized simple Cartesian/internal coordinates as input, and therefore applicability was limited. While the mathematics of kernel-based surrogate models was firmly established many decades ago, only from 2010 and onward, kernel-based models began to flourish, building on the seminal work contributed by Csanyi, Bartok, and co-workers within their “Gaussian-Approximated Potential” (GAP) method, relying on Gaussian process regression (GPR) and an atom index invariant bispectrum representation.59 In 2012, Henkelmann and co-workers introduced an interesting application of support vector machines (SVM) toward the identification of transition states.60 One year later, the first flavor of the smooth overlap of atomic positions (SOAP) representation for GPR based potentials was published.38 The SNAP61 method popularized the GAP idea using linear kernels in 2015, and other GPR applications with automatically improving forces were published the same year.62,63 Around the same time, a first stepping stone toward a universal force field, trained on atomic forces throughout the chemical space of molecules displaced along their normal modes, was established.64 Reproducing kernels were also shown in 2015 to be applicable toward dynamic processes in biomolecular simulations,65 and ever more accurate GPR based potentials were introduced in 201666 and in 2017.67,68 GPR was also applied to challenging processes in ferromagnetic iron69 and to the problem of the on-the-fly prediction of parameters in intermolecular force fields.70 Amorphous carbon was studied using SOAP based GPR/KRR models,71,72 and GDML, another series of highly robust and accurate GPR/KRR based molecular force fields, was introduced in refs (67 and 73−75) starting in 2017.

GPR and NN are currently the two most popular regressors for PES fitting, and each exhibits advantages and disadvantages. Seemingly very different in design, they do resemble each other to some extent in the sense that they take the role of basis functions (to be elaborated in section “Regressor”), although the similarity may be blurred within the framework of deep NN. Numerical comparison of the performance of these two methods is interesting. Most notably, such a comparison was made for modeling the potential energy surface of formaldehyde in 2018 by Manzhos and co-workers.76 A similar yet independent study on the same system was performed in 2020 by Meuwly and co-workers.77 Both studies confirm that kernel based QML models reach higher predictive power than neural network based models for same training set sizes. A highly related comparative study on modeling vibrations in formaldehyde was contributed by Käser et al.,77 also in 2020.

As for the active learning of interatomic potentials, most of the related studies relied on the kernel framework, some of them also detailed below in the section “Training Set Selection”. As early as in 2004, De Vita and co-workers proposed updating potential parameters to ab initio results during molecular dynamics runs (“learning-on-the-fly”),78 for a very large system, i.e., silicon systems composed of up to ∼200 000 atoms, although the reference level of theory is quite approximate. Podryabinkin and Shapeev proposed the so-called D-optimality criterion for selecting the most representative atomistic configurations for training on-the-fly as early as 2017.79 Using kernel ridge regression (KRR, a variant of GPR), Hammer and co-workers revisited the on-the-fly learning idea for structural relaxation in 2018,80 and investigated the exploration vs exploitation trade-off.81 In 2019, Weinan, Car, and co-workers contributed another active learning procedure for accurate potentials of Al–Mg alloys,82 and Westermayr et al. extended the use of neural networks for molecular dynamics in the electronic ground state toward photodynamics.83 Among the many purposes (also challenges) of QML for PES, one particular one is to scale to an extremely large (thus more realistic) system. Numerous efforts have pushed us closer to this goal, and most notably, Weinan, Car, and co-workers made full use of the Summit supercomputer to simulate 100 million atoms with ab initio accuracy using convolutional neural networks,84 for which they subsequently were awarded the Gordon Bell prize 2020 by the Association for Computing Machinery.

1.3. Navigating CCS from First Principles

The scientific research question of how properties trend across CCS lies at the core of the chemical sciences. Because of ever-improving hardware performance, improved approximations to Schrödinger’s equation, most notably within density functional theory and localized coupled cluster theory, QM data sets of considerable size have emerged, enabling the use of statistical learning to train surrogate QML models which can provide accurate and rapid quantum property estimates for new compounds within their applicability domain.

While quantum mechanics based computational materials design efforts had been undertaken as early as the 1990s85−88 with important progress made in the 1980s,89,90 the first-principles based computational high-throughput design has by now become an important success story.91 First attempts to employ machine learning and quantum predictions to discover new ternary materials databases date back to seminal work by Hautier and Ceder in 2010.92,93

As a promising alternative to ab initio high-throughput computations (or solving Schrodinger equation in general), one often assumes locality of atoms in molecules when constructing the mapping from molecular distance/similarity to difference in properties within QML, and the final predictive performance depends on how similar two local (and thus global) entities are, i.e., nuclear types covered by a test set are required to be retained in the training set. The capability of QML to treat species made up of elements not seen in training set is, however, very limited. There exists the so-called “alchemical” methods, being quite different in philosophy, allowing for effective and efficient treatment of the change of nuclear types, with or without the constraint that the number of electron number (Ne) being fixed (i.e., isoelectronic). We note in passing that alchemy is typically established within the density functional theory framework, as it would be tremendously simpler to expand molecular property (mostly energy) as a function of four variables (x, y, z, and Z) than 4NI ones in the case of wave function-based formulation.

Previous methodological works tackling chemical compound space from first-principles through variable (“alchemical”) nuclear charges were contributed by various pioneers, including Wilson’s formal four-dimensional density functional theory94 in 1962, which expresses the exact nonrelativistic ground-state energy of an electronic system as a functional of the electron density, which per se is a function of the spatial coordinates, and nuclear charges. Following Wilson’s idea, Politzer and Parr95 in 1972 made one step further toward practical computation by transforming Wilson’s formula into a functional of the total electrostatic potential V(r, Z) and derived some useful semiempirical formulas for the total energy of atoms and molecules, through the use of thermodynamic integration.95 Later in the 1980s, Mezey made some interesting discoveries96,97 about the global electronic energy bounds for a variety of isoelectronic polyatomic systems, which may be found useful for quantum-chemical synthesis planning, using multidimensional potential surfaces.

The theoretical alchemical research was resurrected in the new millennium. Among the numerous contributions, notable ones include a variational particle number (variable proton and electron number) approach for rational compound design90 proposed by one of the authors and collaborators, followed by a more detailed description of the underlying theories, in the name of molecular grand-canonical ensembles (GCE).98 In the same year, a reformulation of GCE in terms of linear combinations of atomic potentials (LCAP)99 (instead of Z and Ne as in GCE) was proposed by Wang et al., but for the optimization of molecular electronic polarizability and hyper-polarizability, with the optimal molecule determined analytically in the space of electron–nuclei attraction potentials. For the isoelectronic case, related works include the development of ab initio methods for the computation of higher-order alchemical derivatives100 by Lesiuk et al. in 2012, as well as the assessment of the predictability of alchemical derivatives101 by Munoz et al. in 2017. More recently, alchemical normal modes in CCS,102 alchemical perturbation density functional theory,103 and even a quantum computing algorithm for alchemical materials optimization104 were proposed, further enriching the field.

Starting in 1996 with stability of solid solutions,105 multiple promising applications, based on quantum alchemical changes, have been published over recent years, including thermodynamic integrations,106 mixtures in metal clusters,107,108 optimization of hyperpolarizabilities,109 reactivity estimates,110 chemical space exploration,111 covalent binding,112 water adsorption on BN-doped graphene,113 the nearsightedness of electronic matter,114 BN-doping in fullerenes,115 energy and density decompositioning,116 catalyst design,117−119 and protonation energy predictions120,121 Symmetry relations among perturbing Hamiltonians have also enabled the introduction of “alchemical chirality”.34

An extension of computational alchemy toward descriptions which go beyond the Born–Oppenheimer approximation has been introduced within path-integral molecular dynamics, enabling the calculation of kinetic isotope effects, already in 2011,122 and subsequently by Ceriotti and Markland.123

However, also varying the electron number is a long-standing concept within conceptual DFT.124,125 Actual variations have only more recently been considered, e.g., to estimate redox potentials,98,126 higher-order derivatives,100−102 or for the development of improved exchange-correlation potentials.127

2. Heuristic Approaches

Modern systematic attempts to establish quantitative structure–property relationships (QSPRs) have led to computationally advanced bio-, chem-, and materials-informatics methodologies. Unfortunately, conventional approaches in QSPR predominantly rely on heuristic assumptions about the nature of the forward problem, and are thus inherently limited to certain applicability domains. The implicit bias, often due to lacking basis in the underlying physics is known, as discussed, e.g. in a 2010 review by G. Schneider,128 and many improvements have been contributed more recently.20

While heuristic in nature, QSPR can still provide useful qualitative trends and insights for relevant applications, and sometimes yield accurate predictions for specific property subdomains and systems. Albeit not directly relying on the laws of quantum mechanics, these early developments are still valuable, in the sense that some just correspond to special variants of the more complicated models, for instance, a linear model can be mapped onto the framework of kernel method, by choosing a linear kernel, instead of say Gaussian kernel for Gaussian process regression (GPR). Other heuristic approaches, exhibiting more quantitative characteristics can be considered important precursors for modern QML. Such examples include Collin’s improved Shepard interpolation scheme41 for accurate representation of molecular potential energy surfaces, which resembles the form of kernel methods except that the weights are determined in a heuristic way, instead of being regressed as in GPR. One may also argue that Collins’ scheme could be recast into the kernel framework, except that a specific kernel is chosen such that the Shepard interpolation weights in Collins’ scheme are exactly reproduced (with the constraint that these weights sum up to 1). Another highly related concept is Ramon Carbo-Dorca’s quantum similarity (for a comprehensive review, see ref (129)), derived based on density matrix, or molecular orbitals, or other related quantum quantitites, it is also closely linked to kernel based methods and may be used directly as parameter-free kernel matrix elements (unlike in GPR, kernel matrix element characterizing similarity is typically hyper-parameter dependent).

In the sections below, we focus on relevant literature regarding three distinct perspectives which largely follow chronological order: (i) low-dimensional correlations or simple models from the early days of chemistry, (ii) coarse representations of molecules and derived quantities, mostly providing an overview of QSPR, and (iii) molecular representations based on properties.

2.1. Low-dimensional Correlations

Early practices of fundamental chemical research dealt with spotting correlations between inherent properties of the system and systematic changes of observed quantities. Possibly the most famous example for such work is Mendeleev’s discovery of the periodic table.130 Other important examples correspond to Pauling’s electronegativity concept and covalent bond postulate,131 or Pettifor’s Mendeleev numbering scheme.132,133 Work along such lines has been continued, and recent contributions include revisiting Pettifor scales,134,135 use of variational autoencoders to “rediscover” the ordering of elements in the periodic table,136 or the chemplitude model which extends Pauling’s concept,137 among many others. Free-energy relationships are the subject of yet another broad category of early research which is still active today. Relating logarithms of reaction constants (free energy difference) across CCS for related series of reactions138 has led to the famous Hammett equation, a 2D projection of all degrees freedom onto composition and reaction conditions.139−141 Similarly low-dimensional effective degrees of freedom have been identified within Hammond’s postulate,142 or Bell–Evans–Polanyi principle.143,144

Most of the aforementioned concepts were proposed to gain a better (or more useful) understanding of molecular behavior in the first place. For extended systems such as metallic surfaces, complexities arise and many of the simplified molecular models are no longer applicable. With the advent of density functional theory (DFT),145−149 alternative descriptors have been proposed during the past decades, playing an increasingly important role. Notable contributions include the d-band center model by Hammer et al.,150,151 the generalized coordination number,152,153 and the Fermi softness.154 Free-energy relationships are more robust against subtle changes in the electronic structure and are being widely applied in analyzing surface elementary reaction steps.155 Scaling relations between the energetics of adsorbed species on surfaces156 also enjoy extensive attention and have been proven useful for catalyst design regardless of the surface not being metallic.157−159 Many of the empirical chemical concepts such as electronegativity, softness/hardness, and electrophilicity/nucleophilicity can be rationalized and quantified within what is known as “conceptual” DFT.160,161 This specific field, as pioneered by Fukui or Parr and Yang,160 has been championed and furthered by many including Geerlings, De Proft, Ayers, Cardenas, and co-workers.161,162

We note that simple models, involving one or a few variables in general, represent effective coarse-grained schemes applicable to specific subdomains of chemistry. While they lack the desired transferability of quantum mechanics, they often do encode well tempered approximations and therefore are capable of capturing much of the essential physics. As such, they have much to offer, and they could, for example, serve the design of robust and general representations enabling the training and application of improved QML models (see below or refs (6 and 163)). Alas, this idea, to connect low-dimensional model, based on well established heuristics, with more recently developed generic ML models, is still largely unexplored, despite the fact that the latter often bear (magic) black box characteristics allowing for little qualitative insights. Unifying modern ML with low-dimensional model could therefore also help resolve open challenges in QML. For instance, how can we properly represent different electronic (spin-) states of molecules in the molecular representation or different oxidation states? Conceptual DFT derived linear or quadratic energy relationships suggest treating the number of electrons (Ne) and/or its powers as independent features might be a reasonable starting point. Another thus-inspired direction of research is to utilize conceptual DFT-based local indicators as properties of composing atoms/bonds/fragments of a target molecule as a starting point (much like the fundamental variables such as Z and R) for building representations. This might be necessary in order to address hard and outstanding problems such as building QML models of intensive properties or to account for multireference character in the electronic structure.

2.2. Stoichiometry

Given a fixed pattern of structure, stoichiometry alone can be used as a unique representation of the system under study. This idea has been demonstrated for an exhaustive QML based scan of the elpasolite (ABC2D6 stoichiometry) subspace of CCS, predicting cohesive energies of all the 2 million crystals made up from main-group elements.164 Elpasolites are the most abundant quaternary crystal form found in the Inorganic Crystal Structure Database. Comparison of the QML results to known competing ternary and binary phases enabled favorable stability predictions for nearly 90 crystals (convex hull) which subsequently have been added to the Materials Project database.165 A compact stoichiometry based representation in terms of period and group entry for elements A, B, C, and D was shown to reach the accuracy of explicit geometry based many-body potential representations at larger training set size, indicating the dominance of the former in large training data regimes.166 Similar work was subsequently done by Ye et al.167 as well as Marques et al. for perovskites on crystal stability,168 as well as by Legrain et al.,169 for predicting vibrational free energies and entropies for compounds, drawn from the Inorganic Crystal Structure Database.

A naive but useful derived concept is the so-called “dressed atom” concept,170 which characterizes the atom in a molecule of a fixed stoichiometry. When using this approach together with a linear regression model to approximate the total energy (or atomization energy), the accuracy turns out to be surprisingly reasonable,171 at least for common data sets of organic molecules with small variance among constitutional isomers. For instance, the corresponding mean absolute error (MAE) for QM7 data set is only 15.1 kcal/mol.170 Using bond counting, the MAE could be improved further to less than 10.0 kcal/mol, within reach of a conventional DFT GGA functionals.172 Therefore, it seems advisible to always use the dressed atom approach for centering the data for any fixed stoichiometry (i.e., averaging out constitutional and conformational isomers) before proceeding to the next level of QML training on the complete set of degrees of freedom. This normalization step can also be seen as data preprocessing, enabling the QML model to focus on “minor” deviations from the mean.164,173

2.3. Connectivity Graph

When the systems under study do not share some common structural skeleton, stoichiometry alone is not enough, and the covalent bonding connectivity between atoms, as well as conformations, may have to be also examined.

It is worth pointing out that chemists often assume a one-to-one relationship between the molecular graph and its associated global conformational minima (or the second lowest energy minima, or the third, etc.), and therefore it should be possible to build a QML model to predict relevant quantum properties of such ordered minima from graph-input only. In fact, the remarkable performance of extended Hückel theory for some systems could be explained in this way.

Because of the intuitive accessibility and applicability of (incomplete) graph based representations, such as Lewis structures and their extensions, for a wide range of molecular systems, associated ML methods have received broad attention and wide applications in many fields such as cheminformatics or bioinformatics. Examples of such representations include various fingerprint representations,174 such as the signature methodology.175−177 Another notable example corresponds to the so-called extended circular fingerprint (ECFP).178 ECFP and similar representations have been used for drug design179,180 and qualitative exploration of CCS.181,182 ECFP has also been used in KRR models for prediction of quantum properties of QM9 molecules. Numerical results for ECFP based QML models indicate a substantially worse performance compared to more complete, geometry derived representations.183

Modern molecular graphs, typically in SMILES format, based neural networks models, have gained considerable momentum during the past decade. A vast amount of related literature deal with chemical synthesis and retrosynthesis in such representation spaces (mainly in organic chemistry),184,185 typically favoring different deep learning architectures, chemical reaction network,186 as well as molecular design using variational autoencoder (VAE, which maps a molecule represented by SMILES string to some latent space187). The absence or presence of relationships between functional groups and binding affinity was also recently explored through use of random matrix theory in drug design.188 The incorporation of new and improved formats, such as SELFIES,189 might still lead to further improvements for such research.

In the context of a first-principles view on CCS, we note however that molecular graphs only encode a (biased) statistical average of the many conformational configurations for a molecule near some local minima in the potential energy surface. As such, they are naturally disposed for use of QML models of ensemble properties. Work along such lines still awaits being explored in the future. Albeit popular and justified for certain problems, graph-based approaches are inherently limited when it comes to noncovalent problems, such as supramolecular assembly processes governed by van der Waals interactions, metal cluster/bulk/surface adsorption involving “multivalent” (transition) metal elements controlled by weaker metallic bond (cf., covalent bond), or chemical reactions requiring the transformation of graphs from one into the other. In such situations, the intuitive concept of a graph is ill-defined, and the necessary corrections are not always obvious.

2.4. Coarse-grained

As the system size grows, the cost in training and prediction of QML models increases accordingly, although with more favorable scaling than typical quantum chemistry methods. Therefore, it may become very demanding or even impossible to deal with system sizes which cross certain thresholds. In such scenarios, one typically represents the systems in a coarse-grained fashion, meaning “superatoms” (groups of atoms in close proximity, or beads) in a molecule are being considered. Coarse-grained approaches can drastically reduce the number of degrees of freedom and are therefore the only feasible way to model systems at macroscopic scale. More importantly, they enable a significant collapse of the size of chemical space due to the transferability of beads by design.190

Current practices of coarse-grained ideas comprise mostly coarse-grained force fields (CGFF) for simulation of large systems such as macromolecular systems and soft matter. With the emerging need for systematic control of the accuracy of models of such systems, coarse-grained representation based QML models (CGQML) may be a rather promising alternative to CGFF, much the same as how QML models based on full-atom representation remedy the deficiencies of classical force field approaches for small to medium-sized molecules.19 Such comparison between QML and FF makes sense, as the most modern implementations of ML hold promise to approach the computational efficiency of FFs. Some of the first studies on coarse-grained representation used together with QML include John and Csányi’s free energy surface modeling of molecular liquids in 2017.191 Later, efforts to tackle complicated biosystems were reported by Bereau and co-workers in 2019,190 as well as by Clementi and co-workers.192 Compared to CGFF, CGQML could be significantly more accurate once the system information is properly encoded in the coarse-grained representation, as the QML part can recover what is missing in the CG part by careful selection of training data (vide infra).

2.5. Property Based

There exists another type of representation, typically referred to as descriptor and the least “ab initio” in spirit, in which the basic idea is to simply select a set of pertinent atomic/molecular properties as underlying degrees of freedom. The properties can stem from calculation and/or experiment and have to be relatively easy to obtain and are typically supposed to somehow “describe” the property of interest, and hence the name “descriptor”. This representation is often utilized in combination with some nonlinear regressor like a neural network, as the relationship between the chosen properties is commonly highly nonlinear. Although this approach could be universally applicable, no matter the size or composition of target systems, its predictive power is limited by construction due to its potential lack of uniqueness.30,163 Most of the studies following this direction can be traced back to the early applications of ML in chemistry and related fields, one example being Karthikeyan et al.’s work193 on melting and boiling points prediction of molecular crystals using the properties of standalone molecules as a feature vector. A more recent and systematic study of this idea has applied optimization algorithms toward the down-selection of descriptor candidates in order to build predictive ML models of formation energies of binary solids.194 From a first-principles point of view, however, such representations are questionable because relationships between different observables (or other arbitrary mathematical properties), obtained as expectation values of independent operators, are not necessarily well-defined.

3. QML Methodology

The fundamental idea to employ machine learning models in order to infer solutions to Schrödinger’s equation throughout CCS, rather than solving them numerically, was first introduced in 2011.195 The authors stated that ”....the external potential...uniquely determines the HamiltonianHof any system, and thereby the ground state’s potential energy by optimizing Ψ”, and they show that one can use QML instead (encoding the number of electrons implicitly by imposing charge neutrality). As such, the problem of predicting quantum properties throughout CCS belongs to what is commonly known as “supervised learning”. One typically distinguishes between unsupervised (compound data only) and supervised (data records including compounds and associated properties) learning. In this review, we focus on the latter, i.e., on the question how, given sufficient exemplary structure–property pairs, properties can be inferred for new, out-of-sample compounds.

The generic procedure for supervised learning requires first defining the model architecture, i.e., the mathematical expression for the statistical surrogate model f, which estimates some quantum property p as a function of any query compound M, pQML(M) ≈ f((M)|{Mi},{piref};{ci}), wherefcorresponds to the regressor, and regression coefficients and hyper parameters {ci} are obtained via minimization of training loss-function quantifying the deviation of pQML from {pi} for all training compounds {Mi}. In other words, f is parametric in regression coefficients and hyperparameter which, in return, are nonlinear functions in the training data. The origin (calculated or measured) as well as the actual existence (some properties, such as energies of atoms in molecules,116 are not observables but can still be inferred) of pref is secondary. Noise in the data (due to experimental or numerical uncertainty, or due to minor inconsistencies) can be accommodated to a certain degree through well-established regularization procedures. Converged cross-validation protocols help to avoid overfitting and to enable the optimization of hyper-parameters as well as meaningful estimates for any interpolative query. For introductory texts on kernel based regressors, the reader is referred to the book by Rasmussen et al.;197 as for representation and training sets, several reviews have recently been published.7,10,19,198

3.1. Regressor

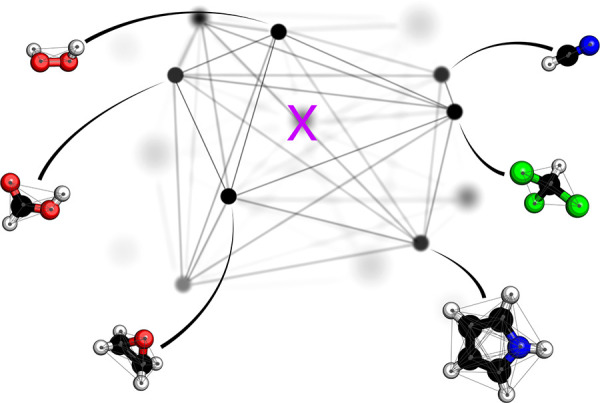

When considering the problem of fitting a generic set of basis functions to precalculated data, some of the most commonly made choices in the field of atomistic simulation include support vector machines (kernel ridge regression), tantamount to Gaussian process regression in their specific model form, neural networks, random forests, or permutationally invariant polynomials (PIPs).197,199,200 While agnostic about the training labels by construction, the choice of these basis set expansions constitutes a crucial step. Most evidently for support vector machines, nonlinear kernel functions (based on feature representations vide infra) map any nonlinear high-dimensional regression problem into a low-dimensional kernel space within which the regression problem becomes linear and therefore straightforward to solve through a closed-form expression (“kernel-trick”). How kernel space relates to CCS is also quite intuitive to grasp when thinking about it as a graph of compounds. As displayed in Figure 2, each compound, being representable by a molecular graph (or derived matrix such as a Coulomb matrix or Cartesian coordinate and nuclear charge vector) is projected into higher-dimensional feature space (shown are only three principal dimensions from the infinite number of dimensions defined within the framework of KRR/GPR). The complete connection between all compounds in the new space form another type of graph, with each vertex corresponding to a compound and each edge corresponding to a similarity measure of compounds (edge length may indicates a metric distance between two compounds). Inferring the property of a new compound (labeled as pink “X” in Figure 2) may be conceptualized as summing up distance scaled property weights. Within this picture, it becomes intuitively obvious that the interpolating accuracy must improve with increasing compound density.

Figure 2.

3D projection of high-dimensional kernel representation of chemical compound space. Within kernel ridge regression, chemical compound space corresponds to a complete graph where every compound is represented by a black vertex and black lines correspond to the edges which quantify similarities. Each compound, in return, can be represented by a molecular complete graph (e.g., the Coulomb matrix (CM)195) recording the elemental type of each atom and its distances to all other atoms. Given known training data for all compounds shown, a property prediction can be made for any query compound as illustrated by X. Choice of kernel-function, metric, and representation will strongly impact the specific shape of this space and thereby the learning efficiency of the resulting QML model.

While deep neural network models are very powerful and possess significant black-box character, their training requires data sets of very large size as well as a substantial calculation effort in order to optimize the regression coefficients and hyper-parameters (no closed-form solution is known). In this sense, kernel methods are rather lightweight and preferable in scarce data scenarios, as they enjoy the potential benefits of being more intuitive and faster to train. The specific architecture of the neural network will affect its performance and data efficiency dramatically. Deep, recurrent, convolutional, message passing, generative, adversarial, geometric neural networks, and other flavors, as well as choices of activation function, number of layers, and neurons, have all shown significant impact on the cost of training and on the predictive power in atomistic simulation.

In the case of GPR/KRR, the architecture is much simpler and hence of a lesser concern (GPR/KRR can be seen as a single-layer neural network model in the limit of infinite width201), but the specific kernel space does not only depend on the choice of kernel function but also on the choice of metric. While it is clear that one should avoid similarity measures which do not meet the mathematical criteria of how a metric is defined (identity, symmetry, triangle inequality), the impact of the specific metric choice has not yet been studied much in the field of atomistic simulation. Euclidean, Manhattan, or Frobenius norms are commonly used. Only most recently, the use of the Wasserstein norm has been proposed to gain permutational atom-index invariance while using index-dependent matrix representations.202 From inspection of Figure 2, it should be obvious that any nonlinear change in metric will strongly affect the shape of the kernel regression space and thereby the overall performance.

3.2. Learning Curves

Correct implementations of ML algorithms applied to noise-free data sets afford interpolating ML models, which avoid overfitting and enable statistically meaningful predictions of properties of out-of-sample compounds,199 after proper regularization and hyper-parametrization through converged cross-validation protocols, as discussed in great detail in the literature, for example, in refs (203 and 204).

On the basis of statistical learning theory, the leading order term of the out-of-sample prediction error (E) was shown to decay inversely with training set size N, i.e., E ∝ a/Nb, for GPR/KRR as well as for neural network models.205,206 This is not surprising, considering the great similarities shared between NN models and GPR/KRR model, as also mentioned in the preceding subsection. This asymptotic behavior for QML models has been confirmed numerically within numerous and independent studies, many of which are referenced herein. As illustrated in Figure 3, learning curves (LC), i.e., prediction error E vs training set size N, plotted on log–log scales assume linear form (log E = log a – b log N) and serve as a useful standard, facilitating systematic comparison and quality control of the efficiency of differing ML models. For maximal consistency, the QML models should be trained and tested on the exact same cross-validation splits stemming from the exact same data set. When the data contains noise, or when relevant degrees of freedom are neglected (e.g., through use of a nonunique representation, such as the bag of bond (BoB) representation,170 see section 4.1 for more details), the learning will cease eventually for some training-set size, manifesting itself visually through learning curves which level off, cf. solid-black line in Figure 3. For noise-free data and complete representations, however, a linear correlation between log(E) and log(N) is to be expected (see the dotted and dashed lines in Figure 3), with some slope b typically more or less a constant for different unique representations and related to the effective dimensionality of the problem, and some offset log a, which typically reflects the capability of the representation to capture the most relevant feature variations in kernel space. More specifically, the offset measures the degree to which the representation encodes the right physics. An illustrative example for this statement can be given by comparison of the learning curves obtained for the CM representation versus derived matrices with off-diagonal entries dependent on alternative interatomic power-laws.163 For interatomic off-diagonal elements approaching London’s R–6 law, the representation achieved lower offsets than for off-diagonal elements decaying according to Coulomb’s law. Correspondingly, representation matrices with off-diagonal elements linearly or quadratically growing with the interatomic distances resulted in LCs with dramatically increased offsets.163 At first glance, it might seem that the slope of LC (aka, “learning rate” of QML model) barely changes, when switching from one unique representation to another. It is therefore natural to ask if it is impossible to further speed up the learning process as indicated by the dotted-dashed learning curve in Figure 3, exhibiting a much steeper slope. Through an expert-informed reduction of effective dimensionality (through a priori removal of irrelevant information stored in randomly selected training data), it was shown that this is indeed possible. Such strategies for a more rational sampling of training data will be discussed in section 6. Note that in contrast to conventional curve fitting, training errors for properly trained machine learning models applied to synthetic data are typically orders of magnitude smaller than the variance of the signal. As such, they are negligible and carry little meaning because noise levels are typically close to zero or at least many orders of magnitude smaller than label variance. Consequences of model construction, i.e., choice of regressor, metric, optimizer, loss-function, representation, or computational efficiency, become immediately apparent in the characteristic shape of learning curves. When training a small parametric regressor, e.g., a shallow neural network with few neurons, to estimate a complex and high-dimensional target function, the learning curve will rapidly “saturate” and converge toward a finite optimal residual prediction error that can no longer be lowered by mere increase of training set size. As such, it should come as no surprise that learning curves have emerged as a crucial tool for development, validation, comparison, and demonstration purposes of QML models in the field.

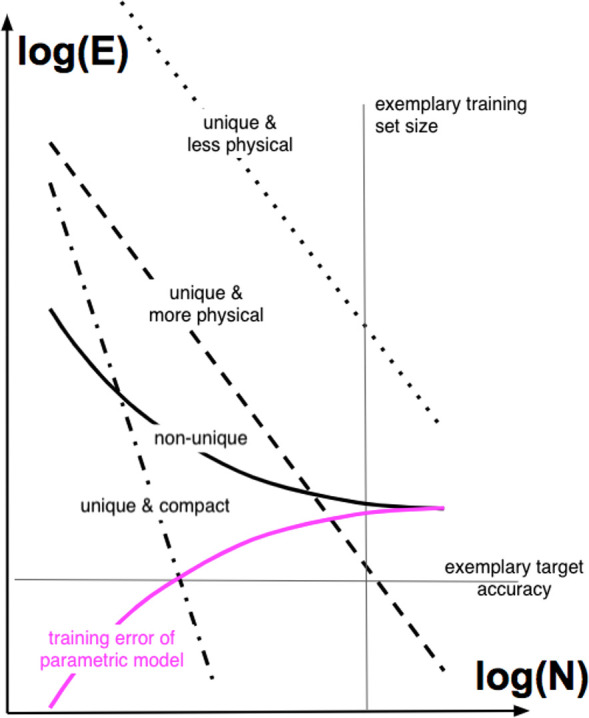

Figure 3.

Illustration of learning curves: Errors (E) versus training set size (N). Horizontal and vertical thin lines illustrate exemplary target accuracy and available training set size, respectively. For functional ML models, training errors are close to zero (not shown), and prediction errors must decay linearly with N on log–log scales. Black-solid, dotted, dashed and dotted-dashed lines exemplify prediction errors of ML models with incomplete information (ceases to learn for large N due to being parametric, using nonunique representations, or training on noisy data), unique and less physical representation, unique and more physical representation, and explicit account of lowered effective dimensionality (i.e., “compact”), respectively. The solid-pink line corresponds to the training error for a parametric model. Training errors for ML models are negligible for noise-free data.

3.3. Loss Functions

Imposing differential relationships during training amounts to adaptation of the loss function to better reflect the problem at hand. In particular, inclusion of derivative information (gradients and Hessian) has led to dramatic improvements when tackling the problem of potential energy surface fitting.66,67,73,74 A generalization of this idea to adapt the loss function for response properties of any QM observable was established for KRR in 2018207−209 (exemplified for forces, Hessians, dipolemoments, and IR spectra) and for deep neural nets in 2020 (FieldSchNet exemplified moreover also for solvent effects and magnetic effects).210

While conventional machine learning assumes that train and test loss function are identical, for atomistic simulation (or other application domains for that matter), a mathematically, more “gready” alternative might exist. In particular, the role of gradients in loss functions differing for training and testing has been studied in ref (211), with results suggesting that for predicting atomization energies throughout a CCS of distorted structures, inclusion of gradients in training improves learning curves negligibly while surely inflating the number of necessary kernel basis functions. However, when it comes to predicting the potential energy surface of a given system, they do improve the energy predictions in the above referenced studies. Conversely, when predicting gradients throughout CCS, the use of energies alone in training offers no advantage over using forces, suggesting that the inclusion of forces (if computationally less demanding than energies) should always be beneficial.

4. Representations

One could consider the choice of functional form of the representation M to be part of the machine learning methodology. However, this is a much studied question which is at the heart of how one views CCS. More specifically, what are the truly defining aspects in a compound? And how does one measure similarity? These are old questions which have already been answered for an impressive array of applications and instruct much of the basic and fundamental textbook knowledge. For example, Hammett’s σ-parameter provides a low-dimensional quantitative data-driven measure of similarity between distinct functional groups in terms of their impact on reaction rates or yields.212,213 Within QML, physically more motivated representations are sought after for subsequent use within high-dimensional nonlinear interpolators which are more universal and transferable. As illustrated for KRR in Figure 2, also the specific form of the representation (as well as the metric used) can dramatically affect the way CCS is represented within the regressor. It should therefore not come as a surprise that the data efficiency of QML models was found to depend dramatically on the specifics of the representation used. Because the importance of the choice of the distance measures has already been mentioned above, in this section, we will focus on research that was done to find improved representations.

The choice of this particular compound representation, aka descriptor or feature, plays a particularly crucial role. Correspondingly, substantial research on the design of descriptors has already been made in the fields of chem-, bio-, or materials informatics where scarce data is typical.174,214 Often, a large set of prospective features is hypothesized and subsequently reduced within iterative procedures in order to distill the most relevant variables and low-dimensional projections pertinent to the problem at hand (see above). While it is certainly possible to also pursue this approach within a quantum mechanical description of CCS,194 its heuristic and speculative character remains as unsatisfactory as its lack of universality and transferability. Fortunately, the quantum nature of CCS allows us to follow more systematic and rigorous procedures in order to address this question.

For example, it is a necessary condition for any successful ML model to rely on uniqueness (or completeness) in the representation, as pointed out, proven and discussed several years ago in refs (215 and 216) and more recently in refs (217 and 218), Uniqueness is essential in order to avoid the introduction of spurious noise due to uncontrolled “coarsening” of that subset of degrees of freedom which is neglected. Molecular graphs based on covalent bond connectivity only, for example, do not account for conformational degrees of freedom. Consequently, their use as a representation will make it impossible to quench prediction errors below the variance of the target property’s conformational distribution, no matter how large the training set.

Other characteristics, desirable for representations to display, include compactness, computational efficiency, symmetries, invariances, and meaning. Representations, in conjunction with the regressor’s functional form, define the basis functions in which properties are being expanded and strongly affect the shape of the learning curves, e.g., accounting for a target property’s invariances through the representation typically leads to an immediate decrease of the learning curve’s offset.

While it is possible to model all QM properties using the same representation and kernel,219 as also demonstrated for neural nets with multiple outputs already in 2013,220 it should be stressed, however, that this is a distinct feature of QML which stands in stark contrast to conventional QSAR or QSPR, where the ML model is typically strongly dependent on the target property. If regressor, metric, and representation M are independent of the label, i.e., the quantum property, there is a strict analogy to quantum mechanics in the sense of the Hamiltonian (or the wave function) of a system not depending on the operator for which the expectation value of any given observable is calculated.8 This becomes obvious by considering the training of a KRR model where the regression coefficients are obtained through inversion of the kernel matrix, α = (K + λ I)−1pref, where for synthetic calculated data with signals being orders of magnitude smaller than noise, the regularization λ (also known as noise level) is typically close to zero. Using property independent representations, metrics, and kernel functions, it is therefore obvious that the regression coefficients adapt to each property only because of the reference property vector pref. In ref (219), this has been illustrated numerically by generating learning curves for various properties using always the same inverted kernel matrix for any fixed training set size.

The predictive accuracy for specific properties varies wildly as a function of representation and regressor choice.183 The historic development over years 2012–2018 for a selection of ever-improved machine learning models (due to improved representations and/or regressor architectures) can be exemplified for the prediction errors of atomization energies stored in the QM9 data set171 and has also recently been summarized in the context of the “QM9-IPAM-challenge” in refs (15, 16, and 18).

The inclusion of increasingly more (less) “physics” in the representation has been demonstrated to systematically improve (worsen) learning curves163 and has been followed by a series of developments which have all been benchmarked on the same set of atomization energies of small organic molecules in the QM9 data set171 and which demonstrate the progress made. While binding energies of “frozen” geometries still constitute an application somewhat remote from most real-world applications in chemistry, from a basic physics point of view they do represent a crucial intermediate step before tackling more complex properties. In other words, if machine learning models failed to predict binding energies, one should not expect them to work for free energies. But also from a practical point of view, the computational cost of single-point energy calculations typically dominates all quantum chemistry compute campaigns and therefore represents one of the most worthwhile targets for surrogate models used for the navigation of CCS.

We note that with the emergence of deep neural networks, the problem of also “learning” the representation can be mitigated to be incorporated in the overall learning problem.55,221 While many intriguing and sophisticated representations, such as Fourier-series expansions,216 wavelets,222 multitensors,223 or molecular orbitals224 have been proposed, most representations can be categorized to either correspond to discrete adjacency matrices or to continuous many-body expansions through distribution functions. We therefore limit ourselves to discuss in the following, both predominantly in the context of KRR based QML models. A comprehensive overview on representations for KRR based QML models has also recently been contributed by Rupp and co-workers.225

4.1. Discrete

Coordinate-free, bonding neighbors (covalently bonded atom pairs) based graphs, as well as their systematic extensions to arbitrary number of neighboring shells, have formed an important research direction in cheminformatics for many years.32,174,176,178,214 In 2011, supervised learning was proposed as an alternative to solving Schrödinger’s equation throughout a chemical compound space relying as a representation on a complete undirected labeled graph that encodes the simplex spanned by all atoms.195 More specifically, this graph was represented by the “Coulomb matrix” (CM), an atom by atom matrix with the nuclear Coulomb repulsion on off-diagonal elements and with approximate energy estimates of free atoms (EI ≈ 0.5ZI2.4226) as diagonal elements. Formal requirements such as uniqueness, translational and rotational invariance, as well as basic symmetry relations (symmetric atoms will share the same matrix elements in their respective rows or columns) are all met by the CM. Atom index invariance can be achieved through use of its eigenvalues (thereby sacrificing uniqueness215,227), sets of randomly permuted CMs,220 or sorting by norms of rows,203 thereby losing differentiability due to sudden switches in ranks.202 We reiterate once more that the atom indexing dependence can be mitigated through using more sophisticated distance measures such as the Wasserstein metric.202

Similarly encouraging findings of KRR based QML models applicable throughout CCS were quickly reproduced for other materials classes such as polymers228 or crystalline solids.229 While off-diagonal elements with a London dispersion power law (r–6) have subsequently been found to be preferable for QML models of atomization energies,163 other representations (vide infra) offer lower learning curve offsets. In particular, the bag of bonds representation (BoB) is worthwhile mentioning.170 Introduced in 2015, BoB groups the entries of the CM in separate sets for each combination of atomic element pairs within which all entries have been sorted. When calculating the similarity between two molecules, only Coulomb repulsions between atoms with the same nuclear charge are being compared, rendering thereby the similarity measurement more balanced and effectively lowering the learning curve offset. While even more compact than the CM, BoB lacks uniqueness due to being strictly a two-body representation which can not distinguish between homometric configurations.216 The generalization of BoB toward the explicit incorporation of covalent bond information, angles, as well as dihedrals in terms of a systematic expansion in Bond, Angle, and higher-order interactions (i.e., BAML representation) was accomplished in 2016163 by using functional forms and parameters from the universal force-field.230 A similar, but more elaborate, parameter-free, many-body dispersion (MBD) based representation involving two and three body terms231 was proposed later in 2018.

The CM has been essential as a baseline for the interpretation, analysis, and further development of subsequent QML models. It has also been adapted successfully to account for periodicity in the condensed phase, as evinced by learning curves for formation energies of solids.232 For other properties, such as forces, electronic eigenvalues, or excited states, the CM (or its inverse distance analogues for QML applications with fixed chemical composition) is still competitive with state of the art representations.67,73−75,83,233−236 Furthermore, because of its uniqueness, compactness, and obvious meaning, the CM (or its variants) are conveniently used to overcome frequent data analysis problems in atomistic simulations, such as removal of duplicates, quantification of noise levels, and simple learning tests.

Regarding the interatomic distance dependent decay rate of off-diagonal elements, it is also worthwhile to mention exponential functions, rather than 1/r. In particular, the overlap matrix between atomic basis functions of all atoms has been proposed237 and used with great success for QML models of basis-set effects238 and excited-state surfaces.239 The overlap matrix was also included within a recent sensitivity assessment of various state-of-the art representations and performed in impressive ways.218 A constant-size descriptor based on a combination of the CM with more common molecular graph fingerprints was also proposed in 2018.240

Viewing BoB and CM as first and second rank tensors, to the best of our knowledge, use of a third rank tensor (explicitly encoding the surface of all possible triangles in a compound) has not yet been tested.

4.2. Continuous

Aforementioned discrete and global representations such as BoB enjoy fast computation. One important requirement for this kind of representation to work, however, is to introduce atom indexing invariance by sorting atoms according to the magnitude of entries belonging to each bond or other many-body types. This is artificial and may introduce derivative discontinuities with unfavorable consequences in related applications such as force predictions.

The sorting and associated problems can be naturally overcome by selecting continuous or distribution based representations, which, in essence, integrate out atom index dependent terms such as distance (w/wo angle and dihedral angle) and/or nuclear charge (i.e., alchemically166) through use of smeared out projections (a Gaussian is commonly placed on each degree of freedom). Distribution based representations, also closely related to many-body or cluster expansion,241 have gained much popularity also within QML models building on Behler’s seminal work on atom-centered symmetry functions for training neural networks on potential energy surfaces,242 or through the subsequent introduction of smooth overlap of atomic potentials (SOAP) for use in GPR by Bartok et al. in 2013.38 The first variant of linearly independent distribution based representations for QML models, applicable throughout CCS, a Fourier series expansion of nuclear charge weighted radial distribution functions, was also contributed already in 2013,216 albeit published in its final version only in 2015. Radial distributions were also used for representing crystals in solids in 2014.229 The atomic spectrum of London Axilrod–Teller–Muto (aSLATM) terms was first presented in 2017 within the “AMON” approach by Huang et al.243 (vide infra), yielding unprecedentedly low offsets in learning curves for atomization energies in the QM9 data set.171 In that same year, SOAP based QML models were generalized and shown to be also applicable throughout CCS.244

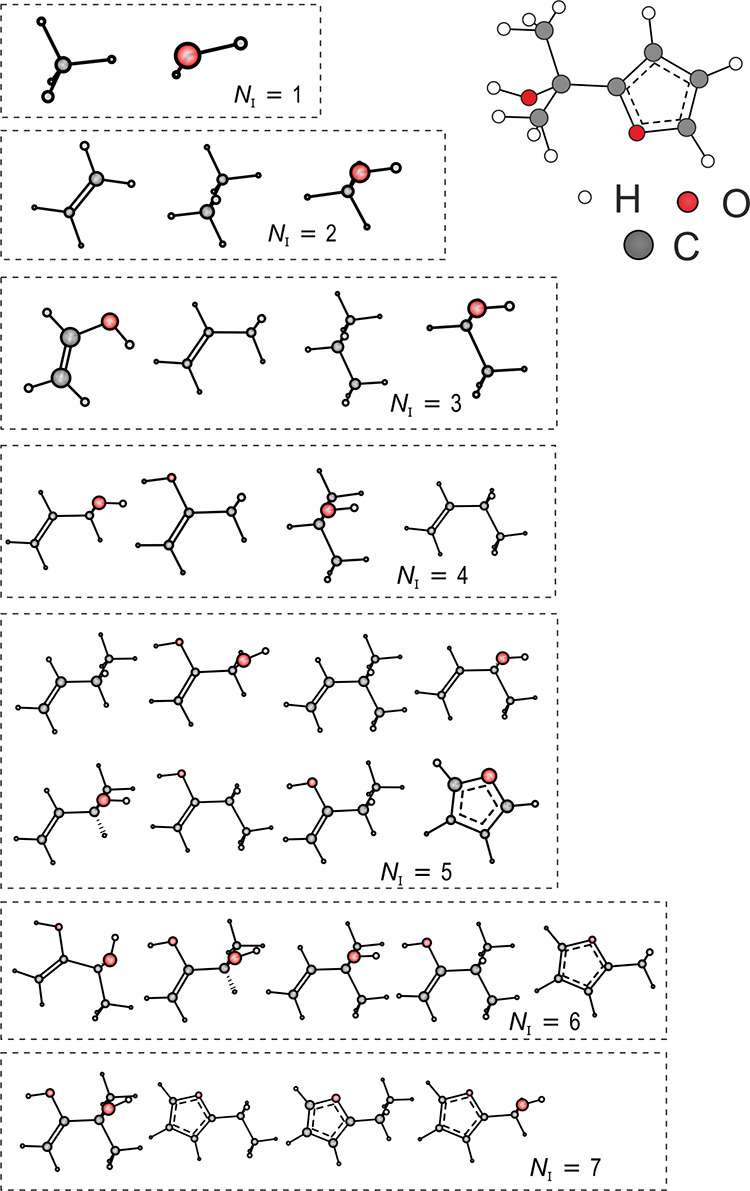

The generic histogram of distances, angles, and dihedrals (HDAD),234 a continuous but simplified version of BAML, both including many-body terms up to torsions, was contributed in 2017. In the following year, Faber et al. conceived the idea of adding alchemical degrees of freedom in a structural distribution based many-body representation, dubbed FCHL18166 (FCHL indicating the first letters of the last names of the authors and 18 in the year 2018). The FCHL family of representations encodes a systematic interatomic many-body expansion in terms of Gaussians weighted by power laws due to the insights gained in ref (163). Power law exponents and Gaussian widths were optimized as hyper-parameters through nested cross-validation during training. FCHL18 consists of three parts: The one-body term corresponds to a two-dimensional Gaussian encoding the chemical identity of the atom in terms of groups and periods of the periodic table; the two-body term encodes the interatomic distance distribution scaled down by r–4, and the three-body term encodes all angular distributions and is scaled down by r–2. The impact of four-body terms has been tested on QM9 but was found to have negligible impact on learning curves.166 Most importantly within the context of CCS, FCHL18 based QML models have been demonstrated to be capable of accurately inferring property estimates of systems containing chemical elements which were not part of training. More specifically, consider the family of molecules of formula HnY ∼ X, where Y corresponds to an element from group IV (either C, Si, or Ge), where “∼” represents single, double, or triple bond depending on chemical element X being from groups VII, VI, or V, respectively, and where n is the number of H atoms that saturates the total valences. Semiquantitative covalent bond potential binding curves have been predicted for any X/Y/bond-order combination using QML models after training on corresponding DFT curves for all other molecules that neither contain X nor Y.(see the top- and left-most subplot in Figure 4 for an illustration). For example, the ML binding curve of HC#N was obtained after training on binding curves of all other molecules that neither contained N nor C, i.e., when predicting the blue curve in the upper left panel of Figure 4, the red and green curves of that panel were not part of training nor were any other blue curve from the other panels. FCHL19, a recent revision, has been shown to provide a substantial speed-up in training and testing while imposing only a small reduction in predictive accuracy.209

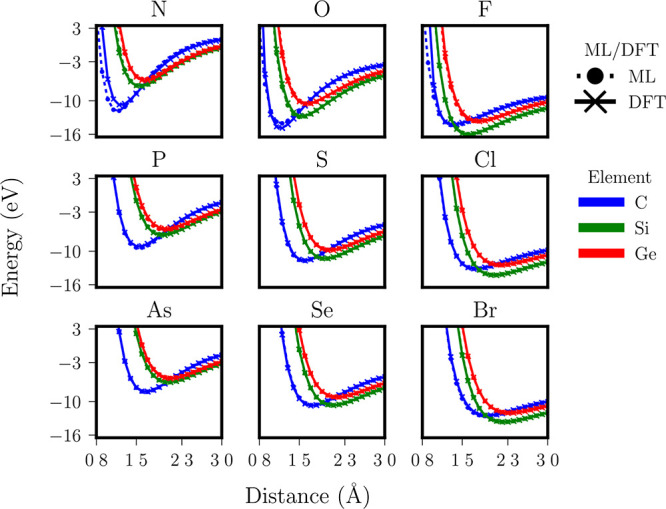

Figure 4.

QML models infer properties for new chemical compositions. DFT and QML (FCHL+KRR) based predictions of covalent triple, double, and single bonding between groups IV and V (left column), VI (mid column), and VII (right column) elements, respectively. Open valencies in the group IV elements have been saturated with hydrogens. QML models were trained on the DFT results for all of those chemical elements that are not present in the query molecule. Reproduced with permission from ref (166). Copyright 2018 licensed under a Creative Commons Attribution (CC BY) license.

We note in passing also the related moment tensor model (MTM) by Shapeev and co-workers, introduced in 2018,245 as well as the unifying interpretation of many of the popular distribution based representations by Ceriotti and co-workers.246

5. Regressor

Depending on how regression parameters are being obtained, the incorporation of legacy methods in QML models applicable throughout CCS is typically done either within neural networks or within Gaussian process regression (GPR) (or kernel ridge regression, KRR for short). Here, we mostly focus on kernel methods, mentioning only shortly the idea of transfer learning in neural network models,247 which is also applicable to QML models as shown in 2018 by Smith et al.248

More specifically, five categories of QML models can easily be distinguished, each of which accounting for legacy information in its own way: QML models of parameters of existing models, QML models of corrections to existing models (Δ-ML), multifidelity ML (MF-ML), multilevel-grid-combination (MLGC), and transfer learning techniques. We briefly review each of these in the following.

5.1. ML Models of Parameters

Existing force-field models can capture nicely the essential physics of a wide range of chemical systems, the main drawback being that force-field parameters (e.g., atom charges, harmonic force constants, etc.) are often rigid and unable to adapt to different atomic environments. Therefore, it would be natural to make these parameters flexible and predicted by ML models. This idea dates back to the 1990s, and the first piece of related works was done by Hobday et al.,249 where they proposed a neural network model to predict parameters of the Tersoff potential for C–H systems. In 2009, Handley and Popelier proposed to use machine learning models for multipole moments.49 This idea was revisited in 2015, when learning curves for atomic QML models of electrostatic properties, such as atomic charges, dipole moments, or atomic polarizabilities were presented.250 Their use for the construction of universal noncovalent potentials was established in 2018.70 Neural-network based equilibrated atomic charges were also proposed in 2015 by Goedecker and co-workers54,251 and in 2018 by Roitberg, Tretiak, Isayev, and co-workers.252,253

Similar strategy could also be applied to semiempirical quantum chemistry methods relying on parameters typically fitted by computational/experimental data. In 2015, QML models of nuclear screening parameters were contributed by Pavlo and co-workers.254 In 2018, unsupervised learning for improved repulsion in tight-binding DFT was introduced by Elstner et al.,255 followed by substantial further improvements in 2020.256 Extended Hückel theory was revisited in 2019 by Tretiak and co-workers.257

5.2. Δ-ML

The idea to present QML models of label corrections applicable throughout CCS and which systematically improve with training data size was first established in 2015 in terms of Δ-machine learning. Numerical results provided overwhelming evidence for the success of this idea as demonstrated for modeling energy and geometry differences between various levels of theory, including PM7, PBE, BLYP, B3LYP, PBE0, G2MP4, HF, MP2, CCSD, and CCSD(T) for QM9171 and subsets thereof.258

Δ-ML also works for correcting complex and subtle properties, such as van der Waals interactions in extremely data-scarce limits, as illustrated for DFT corrections based on training sets with less than 100 training instances,259 or to model higher-order corrections to alchemical perturbation density functional theory based estimates of heterogeneous catalyst activity.260 Among many other applications, Δ-ML has enabled corrections to electron densities,261 electron correlation based on electronic structure representations within Hartree–Fock or MP2 level of theory,262 or DFT and CCSD(T) based potential energy surface estimates.263

For noise-free data and functional QML models (unique representations), numerical results for learning curves indicate a constant lowering of offset, no matter which training set size. Such nonvanishing improvement appears to turn into vanishing improvement when employing Δ-ML in order to correct low-quality or coarse-grained baselines, such as a semiempirical PM7258 or Hammett’s relation.213

5.3. Multifidelity