Abstract

Electronically excited states of molecules are at the heart of photochemistry, photophysics, and photobiology, and they also play a role in materials science. Their theoretical description requires highly accurate quantum chemical calculations, which are computationally expensive. In this review, we focus not only on how machine learning is employed to speed up such excited-state simulations but also on how this branch of artificial intelligence can be used to advance this exciting research field in all its aspects. Discussed applications of machine learning for excited states include excited-state dynamics simulations, static calculations of absorption spectra, and many others. In order to put these studies into context, we discuss the promises and pitfalls of the involved machine learning techniques. Since the latter are mostly based on quantum chemistry calculations, we also provide a short introduction to excited-state electronic structure methods and approaches for nonadiabatic dynamics simulations and describe tricks and problems when using them in machine learning for excited states of molecules.

1. Introduction

1.1. From Foundations to Applications

In recent years, machine learning (ML) has become a pioneering field of research and has an increasing influence on our daily lives. Today, it is a component of almost all applications we use. For example, when we talk to Siri or Alexa, we interact with a voice assistant and make use of natural language processing,1,2 which has recently also been used to perform quantum chemistry calculations.3 ML is further applied, for example, to refugee integration,4 playing board games,5 medicine,6 image recognition,7 and autonomous driving.8 A short historical overview of general ML is provided in ref (9).

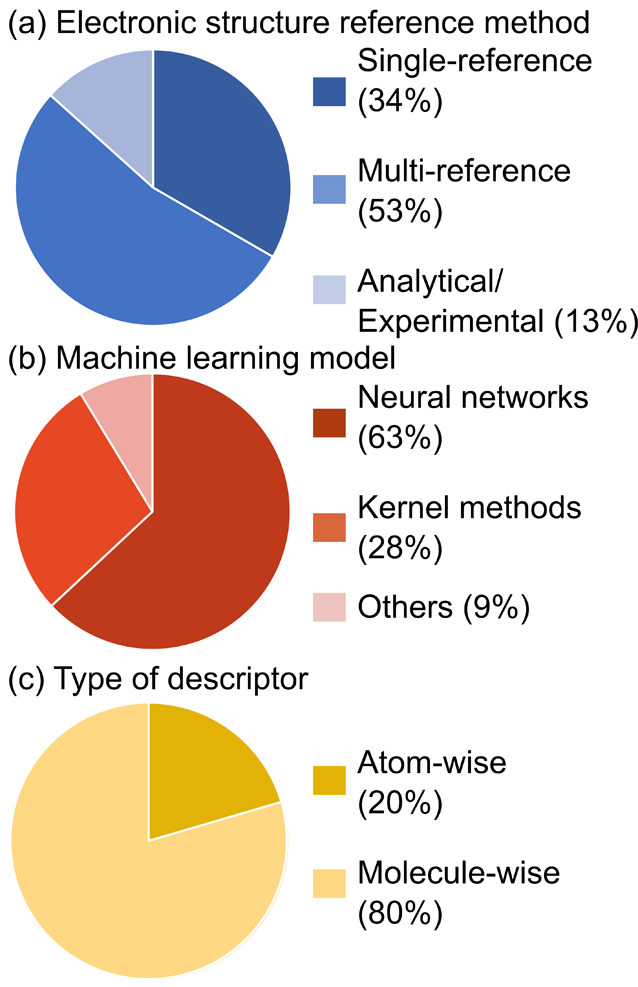

Recently, ML has also gained increasing interest in the field of quantum chemistry.10,11 The power of (big) data-driven science is even seen as the “fourth paradigm of science”,12 which has the potential to accelerate and enable quantum chemical simulations that were considered unfeasible just a few years ago.13 The reason is, at least in theory, that ML models can learn any input–output relation and offer interpolations thereof at almost no cost while retaining the accuracy of the underlying reference data. With regard to quantum chemical applications, ML thus allows the cost of the quantum chemistry calculations to be decoupled from the application, such as dynamics simulations or the computation of different types of spectra. In general, the field of ML in quantum chemistry is progressing ever faster. In this review, we focus on an emerging part of this field, namely, ML for electronically excited states. In doing so, we concentrate on singlet and triplet states of molecular systems, since almost all existing approaches of ML for the excited states focus on singlet states and only a few studies consider triplet states.14−17 We note that electron detachment or uptake further leads to doublet and quartet states, and even higher spin multiplicities, such as quintets and sextets, are common in transition metal complexes, where an important task is to identify which multiplicity yields the lowest energy and is thus the ground state;17 see, e.g., refs (18−21).

The theoretical study of the excited states of molecules is crucial to complement experiments and to shed light on many fundamental processes of life and nature.22−24 For example, photosynthesis,25,26 human vision,27,28 photovoltaics,29−32 and the photodamage of biologically relevant molecules are a result of light-induced reactions.33−35 Experimental techniques such as UV/visible spectroscopy or photoionization spectroscopy36−43 cannot directly reveal the exact electronic mechanisms of photoinduced reactions. Theoretical simulations of the corresponding experiments can go hand-in-hand with experimental results and can provide the missing details of the photodamage and photostability of molecules.42,44−68 However, the computation of excited states is highly complex and costly and often requires expert knowledge.69 As ML models have only recently been applied in the field of photochemistry, this field is still in its initial stage, and keeping track of the approaches is still possible.

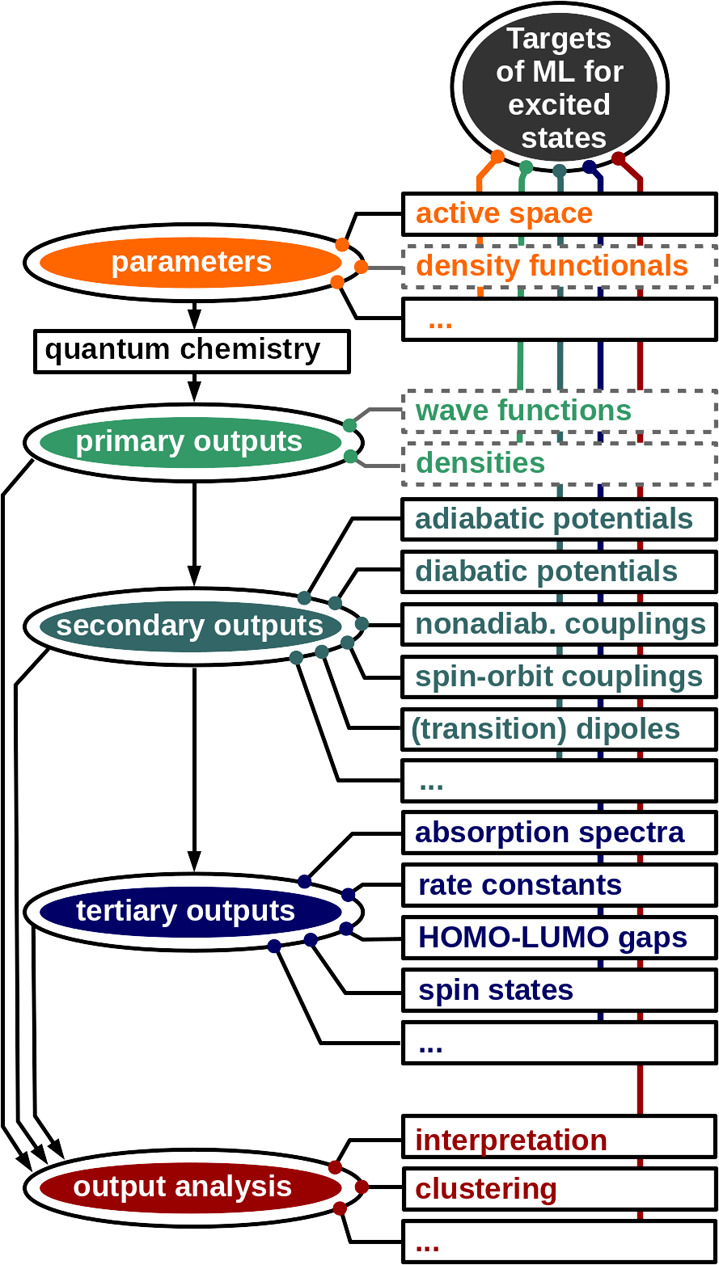

Because of the multifaceted photochemistry of molecular systems, ML models can target this research field in many different ways, which are summarized in Figure 1. For example, the choice of relevant molecular orbitals for active space selection can be assisted with ML.71 The fundamentals of quantum chemistry, e.g., obtaining an optimal solution to the Schrödinger equation or to the equations of density functional theory, can also be central targets of ML. For the ground state, ML approximations to the molecular wave function72−80 or to the density (functional) of a system exist.70,80−89 Obtaining a molecular wave function from ML can be seen as the most powerful approach in many respects, as any property we wish to know could be derived from it. Unfortunately, such models for the excited states are lacking and have so far been investigated only for a one-dimensional system,90 leaving much room for improvement.

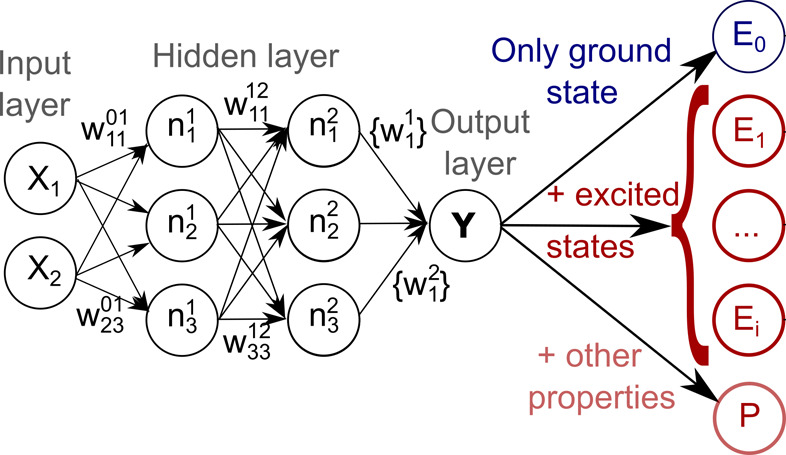

Figure 1.

Targets of ML for the excited states of molecules (dashed: not yet achieved). All areas of excited-state quantum chemistry (QC) calculations can be enhanced with ML, ranging from input to primary outputs that are used in the computation of secondary outputs, which in turn are employed to calculate tertiary outputs. Analysis can be carried out at all stages. The classification is inspired by the one in ref (70).

Most ML studies instead focus on predicting the output of a quantum chemical calculation, the so-called “secondary output”.70 Hence, they fit a manifold of electronic states of different spin multiplicities, their derivatives, and properties thereof. With respect to different spin states of molecular systems, only a few studies exist, which predict the spin states of transition metal complexes17,91 or the singlet and triplet energies of carbenes14 of different composition, or which focus on the conformational changes within one molecular system15,92,93 for the sake of improving molecular dynamics (MD) simulations. The energies of a system in combination with its properties, i.e., the derivatives, the couplings between the states, and the permanent and transition dipole moments,15,16,92−99 can be used in MD simulations to study the temporal evolution of a system in the ground state100−137 and in the excited states.15,16,92−94,132,138−145,145−149,149−151

With energies and different properties, tertiary outputs can be computed, such as absorption, ionization or X-ray spectra,152−155 gaps between highest occupied molecular orbital (HOMO) and lowest unoccupied molecular orbital (LUMO), or vertical excitation energies.156−159
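As a rough illustration of how a tertiary output follows from secondary outputs, the sketch below assembles an absorption spectrum from vertical excitation energies and oscillator strengths by placing a Gaussian of unit area, scaled by the oscillator strength, at each excitation energy. The energies, oscillator strengths, and the 0.3 eV line width are hypothetical, and Gaussian broadening is only one of several common choices.

```python
import math

def broadened_spectrum(energies_ev, osc_strengths, grid_ev, fwhm_ev=0.3):
    """Sum of unit-area Gaussians at each excitation energy, each scaled by its oscillator strength."""
    sigma = fwhm_ev / (2.0 * math.sqrt(2.0 * math.log(2.0)))  # convert FWHM to standard deviation
    norm = 1.0 / (sigma * math.sqrt(2.0 * math.pi))            # makes each Gaussian integrate to 1
    spectrum = []
    for e in grid_ev:
        intensity = sum(
            f * norm * math.exp(-0.5 * ((e - e0) / sigma) ** 2)
            for e0, f in zip(energies_ev, osc_strengths)
        )
        spectrum.append(intensity)
    return spectrum

# Hypothetical vertical excitation energies (eV) and oscillator strengths of three states
energies = [4.2, 4.9, 5.6]
strengths = [0.01, 0.35, 0.12]
grid = [3.5 + 0.01 * k for k in range(300)]  # energy grid from 3.50 to 6.49 eV
spec = broadened_spectrum(energies, strengths, grid)
peak_energy = grid[spec.index(max(spec))]
print(f"band maximum near {peak_energy:.2f} eV")
```

Because each Gaussian has unit area, integrating the spectrum over the grid recovers the sum of the oscillator strengths. In an ML setting, the energies and oscillator strengths would be predicted for each geometry instead of being typed in.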

In addition, quantum chemical outputs can also be analyzed or fitted in a direct way, e.g., reaction kinetics, as results of dynamics simulations can be mapped to a set of molecular geometries and can be predicted with ML models.160 Excitation energy transfer properties can be learned,161,162 and structure–property correlations can be explored to design materials with specific properties.18,31,32,77,133,154,163−170

1.2. Scope and Philosophy of this Review

ML has entered the research field of excited states relatively late, and it might seem that this field is developing at a slower pace than the exploding field of ML for the electronic ground state.169,171−174 In our opinion, important reasons are the complexity and high cost of the underlying reference calculations and the associated complexity of the corresponding ML models. It might thus be more accurate to say that ML for the excited states is developing at a similar pace, but toward a much more complex target. Simulation techniques to understand excited-state processes are not yet viable for many applications at an acceptable cost and accuracy. Therefore, within this review, we also want to highlight the existing problems of quantum chemical approaches that might be solvable with ML and put emphasis on identifying challenges and limitations that hamper the application of ML to the excited states. The young age of this research field leaves much room for improvement and new methods.

This review is structured as follows:

(1) After a general introduction,

(2) we will start by discussing the differences between the ground-state potential energy hypersurfaces (PESs) and the excited-state PESs and will also emphasize the differences in their properties in section 2.

(3) We then provide an overview of the theoretical methods that can be used to describe the excited states of molecules. In this discussion, we describe different reference methods with a view to their application in time-dependent simulations, namely, MD simulations.59,172 It is worth mentioning that, unlike for the ground state, where many different methods can provide reliable reference computations for training, choosing a proper quantum chemistry method for the treatment of excited states is a challenge on its own.175−177 Many methods require expert knowledge, which further limits their use.178,179 In addition, not every method can provide the necessary properties for every type of application. Subsequently, we review the different flavors of excited-state MD simulations with a focus on nonadiabatic methods that have recently been enhanced with ML models.

(4) After having provided the basic theoretical background on electronic structure theory and quantum chemical simulation techniques, we summarize the basic ML models applied in studies focusing on the excited states of molecules. The overview of different types of ML models will help the reader to identify a proper model for a specific purpose. Advantages and disadvantages of certain regressors and descriptors are discussed.

(5) The theoretical background on electronic structure theory and ML is followed by a discussion of how to generate a comprehensive yet compact training set for the excited states from quantum chemistry data. We summarize the existing approaches that are applied to create a full-fledged training set and put emphasis on the bottlenecks of existing methods that can also limit the application of ML. This will provide the reader with starting points for future research questions and clarify where method development is needed. It further provides the basis for the discussion of ML models for the excited states of molecular systems.

(6) A summary of state-of-the-art ML methods for photochemistry follows. We differentiate between single-state and multistate ML models as well as between single-property and multiproperty ML models.95 As mentioned before, ML models can tackle a quantum chemical calculation in many different ways; see Figure 1. The different ML models will be classified according to the way they enhance quantum chemical simulations. Most approaches aim at providing an ML-based force field for the excited states, so we put particular emphasis on this topic. Lastly, the prospects of ML models to revolutionize this field of research and future avenues for ML will be highlighted.

Notably, we focus on the excited states of molecules, as excited electronic states in the condensed phase are challenging to fit and are thus often not explicitly considered in conventional approaches.180−185 In solid state physics, for example, the electronic states are usually treated as continua. The density of states at the Fermi level,186 band gaps,187−189 and electronic friction tensors125,190,191 have been described with ML models to date, and the electronic friction tensor in particular is useful to study the indirect effects of electronic excitations in materials.192−197 Electron transfer processes resulting from electron–hole-pair excitations can further be investigated along with multiquantum vibrational transitions by discretizing the continuum of electronic states and fitting them (often manually) to reproduce experimental or quantum chemical data in a model Hamiltonian.183,198−203 Yet, to the best of our knowledge, excited electronic states in the condensed phase have not been fitted with ML. A recent review on reactive and inelastic scattering processes and the use of ML for quantum dynamics of reactions in the gas phase and at a gas-phase interface can be found in ref (204).

Besides the electronic excitations that take place in molecules after light excitation, ML models have successfully entered research fields that focus on other types of excitations as well. Examples are vibrational or rotational excitations giving rise to Raman or infrared spectra,41,111,205−209 nuclear magnetic resonance,210 or magnetism,211,212 which we will not consider in this review.

2. General Background: From the Ground State to the Excited States

The chemistry we are interested in is not static but rather depends to a large extent on the changes that matter undergoes. In this regard, it is more intuitive to study the temporal evolution of a system. Much effort has been devoted to developing methods to study the temporal evolution of matter in the ground-state potential. For example, the potential energy can be approximated by physically motivated functions in conventional force fields, such as AMBER,213 CHARMM,214 or GROMOS.215,216 The first force fields date back to the 1940s and 1950s. Such force fields enable the study of large and complex systems, protein dynamics, or binding free energies on time scales of up to a couple of nanoseconds.180,217−225 However, their applicability is restricted by their limited accuracy and their inability to describe bond formation and breaking. Novel approaches, such as reactive force fields, exist but still suffer from generally low accuracy.226
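The inability of conventional force fields to describe bond breaking can be seen from their bond-stretching term. In AMBER and CHARMM, for example, bonds are described by harmonic terms, which grow without bound as a bond is stretched. The sketch below compares such a harmonic term with a Morse potential, which levels off at the dissociation energy; all parameters are hypothetical and chosen only so that both curves share the same curvature at the minimum.

```python
import math

# Hypothetical parameters for a single bond (not taken from any real force field)
D_E = 4.5      # dissociation energy, eV
A = 1.9        # Morse width parameter, 1/Angstrom
R_EQ = 0.74    # equilibrium bond length, Angstrom
K = 2.0 * D_E * A**2  # harmonic force constant matching the Morse curvature at R_EQ

def harmonic(r):
    """Harmonic bond-stretching term: the energy keeps rising, so the bond never breaks."""
    return 0.5 * K * (r - R_EQ) ** 2

def morse(r):
    """Morse potential: approaches D_E as the bond is stretched, i.e., the bond can dissociate."""
    return D_E * (1.0 - math.exp(-A * (r - R_EQ))) ** 2

for r in (0.74, 1.0, 2.0, 4.0):
    print(f"r = {r:4.2f} A  harmonic = {harmonic(r):7.2f} eV  Morse = {morse(r):5.2f} eV")
```

Both curves agree near the minimum, which is why harmonic terms work well for small vibrations, but they diverge strongly once the bond is stretched toward dissociation.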

ML models can combine the accuracy of ab initio methods with the efficiency of conventional force fields. They have been shown to advance simulations in the ground state considerably and allow for the fitting of almost any input–output relation.100−134,137,172,227 Accurate and reactive PESs of molecules in the ground state can be obtained from a comprehensive reference data set, which contains the energies, forces, and ground-state properties of the system under investigation. A properly trained ML model can then retain the accuracy of the reference method, while inferences are made much faster. In this way, ML models allow for a description of reactions and can overcome the limitations of existing force fields.135,171,228−232
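A minimal sketch of this idea uses kernel ridge regression with a Gaussian kernel, one of several regressors used in the field and chosen here only for brevity. A one-dimensional Morse curve with hypothetical parameters stands in for expensive ab initio reference energies, and the kernel width and regularization strength are untuned, hypothetical hyperparameters. Training amounts to a single linear solve, after which each prediction is a cheap weighted sum of kernel evaluations.

```python
import numpy as np

def morse(r, d_e=4.5, a=1.9, r_eq=0.74):
    """Stand-in 'reference' PES (hypothetical parameters) replacing costly ab initio data."""
    return d_e * (1.0 - np.exp(-a * (r - r_eq))) ** 2

def gaussian_kernel(x1, x2, width):
    """Gaussian (RBF) kernel matrix between two sets of 1D geometries."""
    return np.exp(-((x1[:, None] - x2[None, :]) ** 2) / (2.0 * width**2))

# Training set: a few reference energies along a bond coordinate
r_train = np.linspace(0.5, 3.0, 25)
e_train = morse(r_train)

# Kernel ridge regression: solve (K + lambda * I) alpha = y once ...
width, lam = 0.3, 1e-8
k_train = gaussian_kernel(r_train, r_train, width)
alpha = np.linalg.solve(k_train + lam * np.eye(len(r_train)), e_train)

# ... after which every new prediction is just a kernel-weighted sum
r_test = np.linspace(0.6, 2.9, 200)
e_pred = gaussian_kernel(r_test, r_train, width) @ alpha
max_err = np.max(np.abs(e_pred - morse(r_test)))
print(f"max. interpolation error: {max_err:.2e} eV")
```

In realistic applications, the input would be a molecular descriptor rather than a single bond length, and the hyperparameters would be optimized, e.g., by cross-validation.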

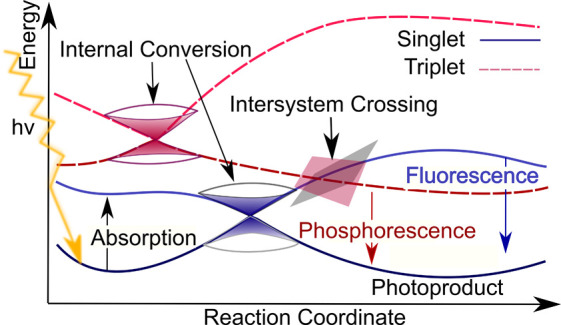

Regarding the excited states, processes become much more complex, and the computation of excited-state PESs is far more difficult than that of ground-state PESs. Figure 2 gives an overview of the excited-state processes that will be discussed within this review. As can be seen, several excited states of different spin multiplicity in addition to the ground state have to be accounted for, which feature different local minima, transition states or other saddle points, and crossing points. The latter in particular make a separate treatment of each electronically excited state inaccurate and lead to further challenges that prohibit the straightforward and large-scale use of many existing quantum chemical methods and, consequently, also of existing ML models for the ground state.

Figure 2.

Excited-state processes that can take place after excitation of a molecule by light. Absorption of light can bring the molecule into a higher electronic singlet state. Internal conversion to another state of the same spin multiplicity and/or intersystem crossing to a triplet state can take place. Radiative emission, i.e., fluorescence and phosphorescence, is possible from an excited singlet and triplet state, respectively.

As can be seen, processes usually start from a minimum in the electronic ground state (dark-blue line). When light hits a molecule and the photon energy of the incident light coincides with the energy gap between two electronic states, the light can be absorbed, and higher electronic states can be reached if the transition is dipole-allowed (here the second excited singlet state in light blue). Internal conversion between states of the same spin multiplicity and/or intersystem crossing between states of different spin multiplicity (here a transition to triplet states indicated as dashed red curves) can protect the molecule from photodamage. Nonradiative transitions usually take place on a sub-picosecond time scale. With respect to intersystem crossing, it was long believed that it happens on a longer time scale and is only possible if heavy atoms are part of the molecule.233,234 However, this belief has been disproved, and today many examples of small molecules or transition metal complexes are known that show ultrafast intersystem crossing.178,235−237 In the case of nonradiative transitions, the electronic energy is converted into molecular vibrations, and the molecule relaxes back to the original starting point in the ground state. However, photodamage can also occur via such nonradiative transitions, where photoproducts can be formed, e.g., by bond breaking and bond formation. If nonadiabatic transitions do not take place, radiative emission, i.e., fluorescence and phosphorescence, can occur on a much slower time scale, i.e., in the range of nano- to milliseconds.

For small systems, such as SO2, highly accurate ab initio methods can be applied to describe the excited states, while cruder approximations have to be used for larger systems. The unfavorable scaling of many quantum chemical methods with the size of the system under investigation requires this compromise between accuracy and system size. For systems larger than several hundreds of atoms, crude approximations become inevitable.44,178,238

Additionally, computations of the excited states are generally less efficient. To name only one central problem: the larger the system becomes, the closer the electronic states lie in energy, and the more excited-state processes can usually take place. The necessary consideration of an increasing number of excited states increases the already substantial computational cost even further and restricts the use of accurate methods to systems containing only a few dozen atoms within a reasonable amount of time on current computers. This increasing complexity makes not only the reference computations but also the application of ML models more complicated for the excited states than for the ground state. At the same time, the application of ML models for the excited states might also be more promising, because a higher speed-up can be achieved.
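The combined effect of system size and number of states can be estimated with simple arithmetic. The sketch below assumes, as a deliberate simplification, that the cost of a method grows as the number of treated states times the number of atoms raised to a formal scaling exponent p; the actual dependence on the number of states differs between methods, and the exponents used are illustrative only.

```python
def relative_cost(n_atoms_ref, n_atoms_new, exponent, n_states_ref=1, n_states_new=1):
    """Cost ratio under the assumed model: cost ~ n_states * n_atoms**exponent."""
    size_factor = (n_atoms_new / n_atoms_ref) ** exponent
    state_factor = n_states_new / n_states_ref
    return size_factor * state_factor

# Doubling the system size and, at the same time, the number of treated excited states
for p in (3, 5, 7):
    print(f"p = {p}: cost increases by a factor of {relative_cost(20, 40, p, 3, 6):.0f}")
```

Even under this optimistic model, a method with a formal exponent of 7 becomes 256 times more expensive when both the system size and the number of states are doubled.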

For the excited states, methods similar to force fields, like the linear vibronic coupling (LVC) approach,239,240 are usually limited to small regions of conformational space and restricted to a single molecule. General force fields that are valid for different molecules in the excited states do not exist. Their ML analogues, so-called transferable ML models that fit the excited-state PESs of molecules throughout chemical compound space, are also unavailable to date. Only recently, we have provided a first hint at transferability in the excited states by training an ML model on two isoelectronic molecules.241 An ML model that is capable of describing the photochemistry of several different molecular systems, e.g., different amino acids or DNA bases of different sizes, is clearly highly desirable. A lot remains to be done to achieve this goal: to the best of our knowledge, no more than about 20 atoms and 3 electronic states of a given multiplicity have been fitted accurately with ML models to date (refs (15, 16, 92−94, 96, 132, 138−145, 146−149, 149−151, and 241)).
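To make the structure of the LVC approach concrete, the sketch below builds a two-state diabatic LVC Hamiltonian in its commonly used form: a harmonic ground-state potential V0(Q) = Σa (ωa/2) Qa² on the diagonal, shifted by vertical excitation energies εi and linear intrastate terms κa(i) Qa, with linear interstate couplings λa(12) Qa off the diagonal. Diagonalizing this matrix yields adiabatic PESs. All parameter values are hypothetical; in practice, they are obtained from quantum chemical calculations around one reference geometry, which is why such models remain restricted to a single molecule and to small displacements.

```python
import math

# Hypothetical two-state, two-mode LVC parameters (eV); coordinates Q are
# dimensionless, mass-frequency-scaled normal-mode displacements.
OMEGA = [0.10, 0.20]        # harmonic frequencies of the reference ground state
EPS = [4.0, 4.3]            # vertical excitation energies of diabatic states 1 and 2
KAPPA = [[0.05, -0.10],     # intrastate gradients kappa_a^(i); rows = states, columns = modes
         [-0.08, 0.12]]
LAMBDA = [0.0, 0.04]        # interstate couplings lambda_a^(12) per mode

def lvc_diabatic(q):
    """Elements (W11, W22, W12) of the 2x2 diabatic LVC Hamiltonian at displacements q."""
    v0 = sum(0.5 * w * qa**2 for w, qa in zip(OMEGA, q))
    w11 = v0 + EPS[0] + sum(k * qa for k, qa in zip(KAPPA[0], q))
    w22 = v0 + EPS[1] + sum(k * qa for k, qa in zip(KAPPA[1], q))
    w12 = sum(l * qa for l, qa in zip(LAMBDA, q))
    return w11, w22, w12

def lvc_adiabatic(q):
    """Adiabatic energies: eigenvalues of the symmetric 2x2 diabatic matrix, in ascending order."""
    w11, w22, w12 = lvc_diabatic(q)
    mean = 0.5 * (w11 + w22)
    half_gap = math.sqrt((0.5 * (w11 - w22)) ** 2 + w12**2)
    return mean - half_gap, mean + half_gap

print(lvc_adiabatic([0.0, 0.0]))   # reference geometry: energies equal EPS (up to rounding)
print(lvc_adiabatic([1.0, -2.0]))  # displaced geometry: states mixed by the coupling
```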

Whether or not the excited states of a molecular system become populated depends on the ability of the molecule to absorb energy in the form of light or, more generally, electromagnetic radiation of a given wavelength. Usually, the so-called resonance condition has to be fulfilled; i.e., the energy gap between two electronic states has to match the photon energy of the incident light. Note, however, that multiphoton processes can also occur, where several photons are absorbed at once to bridge the energy difference between two electronic states.242−244 Further, the absorption of light most often provides access not only to one but to a manifold of energetically close-lying states. The number of states that can be excited is related to the range of photon energies contained in the electromagnetic radiation. This energy range is inversely proportional to the duration of the electric field, e.g., of a laser pulse, due to the Fourier relation between energy and time.245 However, the energy range of the photons and the energy difference between the electronic states are not the only factors influencing the absorption of light, which gives rise to questions such as: Is the molecule able to absorb light of a given wavelength? Which of the excited states is populated with the highest probability?
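The resonance condition and the Fourier relation between pulse duration and spectral width can be turned into a quick estimate. The sketch below converts a wavelength into a photon energy via E = hc/λ and uses the time–bandwidth product of a transform-limited Gaussian pulse, Δν·Δt = 2 ln 2/π ≈ 0.441 (both widths as intensity FWHM), to obtain the energy range covered by the pulse. The helper `resonant_states` and its "within half the spectral FWHM" criterion are hypothetical simplifications that ignore oscillator strengths and non-Gaussian pulse shapes.

```python
import math

HC_EV_NM = 1239.841984   # h*c in eV*nm
H_EV_FS = 4.135667696    # Planck constant h in eV*fs

def photon_energy_ev(wavelength_nm):
    """Photon energy E = h*c/lambda in eV."""
    return HC_EV_NM / wavelength_nm

def gaussian_pulse_bandwidth_ev(fwhm_fs):
    """Spectral FWHM (eV) of a transform-limited Gaussian pulse with intensity FWHM duration fwhm_fs."""
    time_bandwidth_product = 2.0 * math.log(2.0) / math.pi  # about 0.441 for Gaussian pulses
    return H_EV_FS * time_bandwidth_product / fwhm_fs

def resonant_states(excitation_energies_ev, wavelength_nm, fwhm_fs):
    """Hypothetical helper: excitation energies within +/- half the spectral FWHM of the pulse center."""
    center = photon_energy_ev(wavelength_nm)
    half_width = 0.5 * gaussian_pulse_bandwidth_ev(fwhm_fs)
    return [e for e in excitation_energies_ev if abs(e - center) <= half_width]

states = [4.55, 4.62, 4.66, 4.70, 4.90]  # hypothetical excitation energies (eV)
print(f"{photon_energy_ev(266.0):.3f} eV photon energy at 266 nm")
for duration in (10.0, 100.0):
    print(duration, "fs:", resonant_states(states, 266.0, duration))
```

With these illustrative numbers, a 10 fs pulse centered at 266 nm covers three of the hypothetical states, whereas a 100 fs pulse covers only one, in line with the inverse relation between pulse duration and energy range.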

An answer to these questions can be obtained from an analysis of the oscillator strengths of the different transitions. In order to make an electronic transition possible, an oscillating dipole must be induced as a result of the interaction of the molecule with light. In atomic units (a.u.), the oscillator strength, $f_{ij}^{\mathrm{osc}}$, between two electronic states, i and j, is proportional to the respective energy difference, $\Delta E_{ij}$, and to the square of the respective transition dipole moment, $\boldsymbol{\mu}_{ij}$:246

$$f_{ij}^{\mathrm{osc}} = \frac{2}{3}\,\Delta E_{ij}\,\lvert\boldsymbol{\mu}_{ij}\rvert^{2} \tag{1}$$

If the transition dipole moment between two states is zero, no transition is allowed. One reason can be that a change of the electronic spin would be required, so that the transition is spin forbidden. Another reason can be the molecular symmetry, leading to symmetry-forbidden transitions. The latter are common in molecules with an inversion center, where transitions that conserve parity are forbidden.247 An electronic state is called dark if the transition dipole moment to it is very small or zero. In contrast, a state is called bright if the transition dipole moment is large. Most often, studies that target the photochemistry of molecules focus on excitation to the brightest low-lying singlet state, i.e., the state that absorbs most of the incident energy. The same is true for emission processes. While fluorescence is an allowed transition, phosphorescence is a spin-forbidden process, in many cases a triplet–singlet emission.248
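The oscillator strength formula f = (2/3) ΔE |μ|², valid in atomic units, can be evaluated directly once excitation energies and transition dipole moments are available. The sketch below converts energies from eV to hartree, computes oscillator strengths, and labels transitions from the ground state as bright or dark. The input values and the threshold of 0.01 separating bright from dark states are hypothetical; no universal threshold exists.

```python
HARTREE_EV = 27.211386245988  # 1 hartree in eV

def oscillator_strength(delta_e_ev, tdm_au):
    """f = 2/3 * dE * |mu|^2 in atomic units (dE converted to hartree, mu given in e*bohr)."""
    delta_e_au = delta_e_ev / HARTREE_EV
    mu_sq = sum(c * c for c in tdm_au)
    return 2.0 / 3.0 * delta_e_au * mu_sq

def classify_states(excitations, bright_threshold=0.01):
    """Label S0 -> Sj transitions as bright or dark; the threshold is an arbitrary choice."""
    result = []
    for label, delta_e, tdm in excitations:
        f = oscillator_strength(delta_e, tdm)
        result.append((label, round(f, 4), "bright" if f >= bright_threshold else "dark"))
    return result

# Hypothetical excitation energies (eV) and transition dipole vectors (a.u.)
excitations = [
    ("S1", 4.5, (0.0, 0.0, 0.0)),    # e.g., a symmetry-forbidden transition: zero transition dipole
    ("S2", 5.2, (1.2, 0.3, 0.0)),
    ("S3", 6.0, (0.1, 0.0, 0.05)),
]
for row in classify_states(excitations):
    print(row)
```

For these inputs, only S2 exceeds the threshold, so it would be the bright state targeted in a typical photochemistry study, even though S1 lies lower in energy.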

After an excitation process, the molecule moves on the excited-state PESs and is expected to undergo further conversions. The excess energy the molecule carries as a result of the initial absorption is most often converted into heat, into light, such as fluorescence or phosphorescence, or into chemical energy. If the molecule returns to its original state, it is photostable. Otherwise, either photodamage, such as decomposition, or useful photochemical reactions including bond breaking/formation occur. In all cases, heat or light can be emitted, which can also be harnessed in light-emission applications.59,249−251 With respect to photostability, ultrafast transitions in the range of femto- to picoseconds (10⁻¹⁵ to 10⁻¹² s) take place and lead the molecule back to the ground state. This means that the electronic energy is converted into vibrations of the molecule, and the molecule is termed hot. This heat is usually dissipated into the environment, a process that is often neglected in excited-state simulations due to the cost of describing the surrounding molecules.

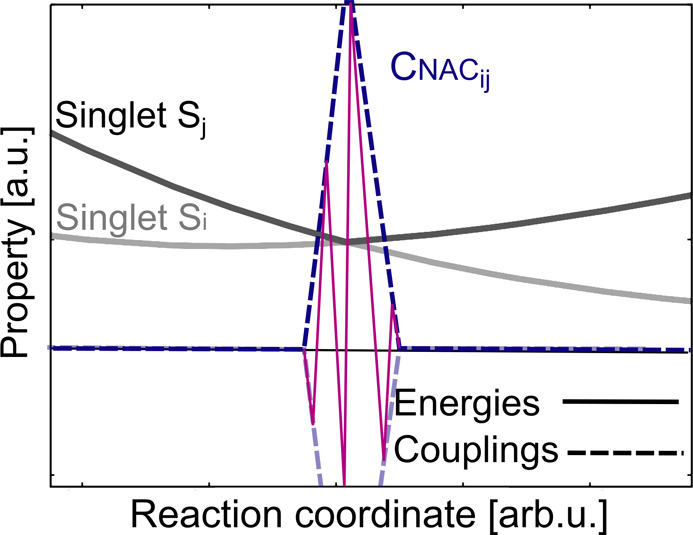

Radiationless transitions from one electronic state to another take place in so-called critical regions of the PESs. As the name suggests, critical regions are crucial for the dynamics of a molecule but are also challenging to model accurately. The critical points where transitions are most likely to occur are called conical intersections and are illustrated in Figure 2. At these crossing points, the PESs computed with quantum chemistry show cusps, i.e., their derivatives become discontinuous. Such discontinuities can also occur in other excited-state properties and pose an additional challenge for an ML model when fitting excited-state quantities.
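The cusp at a conical intersection can be reproduced with the simplest model of a two-state crossing. The sketch below assumes a hypothetical 2×2 diabatic Hamiltonian [[x, y], [y, −x]] in two nuclear coordinates x and y, whose adiabatic energies ±√(x² + y²) form a double cone touching at the origin. The lower surface itself is continuous, but its finite-difference gradient in the x direction jumps from about −1 to about +1 when the intersection is crossed, which is the kind of feature that makes fitting excited-state quantities with ML challenging.

```python
import math

def adiabatic_energies(x, y):
    """Eigenvalues (-r, +r) of the model diabatic matrix [[x, y], [y, -x]]: a double cone."""
    r = math.hypot(x, y)
    return -r, r

def lower_state_gradient(x, y, h=1e-7):
    """Central finite-difference gradient of the lower adiabatic surface."""
    gx = (adiabatic_energies(x + h, y)[0] - adiabatic_energies(x - h, y)[0]) / (2 * h)
    gy = (adiabatic_energies(x, y + h)[0] - adiabatic_energies(x, y - h)[0]) / (2 * h)
    return gx, gy

# Approach the intersection at (0, 0) from opposite sides along x
for x in (1e-3, -1e-3):
    print(x, lower_state_gradient(x, 0.0))
```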

In addition to the aforementioned complications of treating a manifold of excited states, the probability of a radiationless transition between them also has to be computed. This probability is usually determined by the couplings between two approaching PESs. Between states of the same spin multiplicity, nonadiabatic couplings (NACs) arise, whereas spin–orbit couplings (SOCs) give rise to the transition probability between states of different spin multiplicities. These couplings are intimately linked to the excited-state PESs and should therefore also be considered with ML. However, only a handful of publications describe couplings with ML,15,92,94−96,140,145,146,149,252 which highlights the difficulty of providing the necessary reference data as well as the challenges of fitting them accurately. New methods are needed to further advance this exciting research field.
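For a hypothetical cone-shaped model Hamiltonian H = [[x, y], [y, −x]] in two coordinates, the NAC vector between the lower state 1 and the upper state 2 can be evaluated with the off-diagonal Hellmann–Feynman relation, d12 = ⟨ψ1|∇H|ψ2⟩/(E2 − E1), using the analytic eigenvectors of the 2×2 matrix. The sketch shows that the NAC norm, 1/(2r) at distance r from the intersection, diverges as the states approach each other, while its product with the energy gap stays finite. Sign conventions for d12 differ between codes and the sign of each eigenvector is arbitrary, so only magnitudes are compared here.

```python
import math

def eigenstates(x, y):
    """Analytic eigenpairs (energy, vector) of H = [[x, y], [y, -x]]; requires (x, y) != (0, 0)."""
    r = math.hypot(x, y)
    half_phi = 0.5 * math.atan2(y, x)
    lower = (-r, (-math.sin(half_phi), math.cos(half_phi)))
    upper = (r, (math.cos(half_phi), math.sin(half_phi)))
    return lower, upper

def nac_lower_upper(x, y):
    """NAC vector d_12 = <psi_1|dH/dR|psi_2> / (E_2 - E_1) for R = (x, y), plus the energy gap."""
    (e1, v1), (e2, v2) = eigenstates(x, y)
    dh_dx = ((1.0, 0.0), (0.0, -1.0))   # derivative of H with respect to x
    dh_dy = ((0.0, 1.0), (1.0, 0.0))    # derivative of H with respect to y

    def braket(a, m, b):
        return sum(a[i] * m[i][j] * b[j] for i in range(2) for j in range(2))

    gap = e2 - e1
    return (braket(v1, dh_dx, v2) / gap, braket(v1, dh_dy, v2) / gap), gap

for r in (1.0, 0.1, 0.01):
    d, gap = nac_lower_upper(r, 0.0)
    norm_d = math.hypot(*d)
    print(f"r = {r:5.2f}  |NAC| = {norm_d:7.2f}  gap*|NAC| = {gap * norm_d:.2f}")
```

The finite product of gap and NAC corresponds to what some works call an interstate coupling, which illustrates why the choice of quantity to fit matters near crossing points.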

3. Quantum Chemical Theory and Methods

In this section, we present some key aspects of quantum theory for excited states, which is the basis of any study focusing on ML for excited states of molecules. We do so because (i) the outcome of the corresponding calculations serves as training data for ML, which inseparably connects quantum chemistry and ML in many cases, and (ii) to clarify the employed nomenclature. We will discuss electronic structure theory (section 3.1); the different bases (section 3.2), i.e., the diabatic, adiabatic, and diagonal bases; and the computation of excited-state molecular dynamics simulations (section 3.3) with different flavors of quantum nuclear dynamics and mixed quantum-classical methods, along with the computation of dipole moments and spectra. Experts on these topics may skip directly to section 4, which focuses on ML methods.

In the following, we describe how excited-state computations differ from calculations for the electronic ground state and which challenges arise from the treatment of a manifold of excited states. These challenges also point to issues that are problematic for ML. These explanations provide the groundwork to evaluate different quantum chemical methods for their use in generating a training set for ML and for different types of applications, such as excited-state MD simulations. Naturally, we can only provide a general idea of this field and refer the interested reader to pertinent textbooks and reviews, such as refs (178, 253−264).

In order to follow a consistent notation within this review, we try to explain all basic concepts with notations that are frequently used in the literature. Currently, a zoo of different notations for the same property can be found. For example, the NACs, or derivative couplings, are sometimes referred to as interstate couplings, i.e., couplings between two states multiplied by the corresponding energy gap between those two states,144 while in other works, interstate couplings refer to the off-diagonal elements of the Hamiltonian in another basis, in which the potential energies are not eigenvalues of the electronic Schrödinger equation. To avoid confusion, we provide a consistent definition below. For the excited states, a number of different electronic states have to be treated. Throughout this review, we adopt the following labeling convention: the lowercase Latin letters i, j, etc. denote different electronic states. The abbreviations NS, NM, and NA indicate the number of states, molecules, and atoms, respectively.

The foundation for the following sections is a separation of electronic and nuclear degrees of freedom, which is based on the work of Born and Oppenheimer.265 However, the famous Born–Oppenheimer approximation is later (partly) lifted, and the coupling between electrons and nuclei is taken into account in nonadiabatic dynamics simulations.

3.1. Electronic Structure Theory for Excited States

The main goal when carrying out an electronic structure calculation is usually to compute the potential energy and other physicochemical properties of a compound. We distinguish between two overarching theories to achieve this goal: wave function theory (WFT) and density functional theory (DFT) as outlined, e.g., by Kohn in his Nobel lecture.266

The basis of WFT, as for any electronic structure calculation, is the electronic Schrödinger equation267,268 with the electronic Hamilton operator, Ĥel, and the N-electron wave function Ψi(R, r) of electronic state i, which is dependent on the electronic coordinates r and parametrically dependent on the nuclear coordinates, R:

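$$\hat{H}_{\mathrm{el}}\,\Psi_i(\mathbf{R},\mathbf{r}) = E_i(\mathbf{R})\,\Psi_i(\mathbf{R},\mathbf{r}) \tag{2}$$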

From the wave function, i.e., the eigenvector of this eigenvalue equation, any property of the system under investigation can be derived. In principle, it is known how to solve the electronic Schrödinger equation exactly to obtain the potential energy, Ei, of an electronic state i. In practice, however, this computation is infeasible for molecules more complex than, for example, H2, He2+, and similar systems.269 In order to make the computation of larger and more complex systems viable, approximate wave functions are introduced.
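The statement that ground and excited states are eigenpairs of one eigenvalue problem can be illustrated with a toy model. The sketch below is not an electronic structure calculation: it discretizes a one-dimensional harmonic oscillator, H = −½ d²/dx² + ½ x², on a grid with second-order finite differences and diagonalizes the resulting matrix with NumPy. The lowest eigenvalue corresponds to the ground state and the higher ones to excited states; for this model, they should approach the analytic values n + ½.

```python
import numpy as np

def harmonic_levels(n_grid=2000, x_max=10.0, n_levels=4):
    """Lowest eigenpairs of H = -1/2 d^2/dx^2 + x^2/2 on a uniform grid (toy model)."""
    x, dx = np.linspace(-x_max, x_max, n_grid, retstep=True)
    # Second-order finite-difference kinetic energy plus the potential on the diagonal
    main = 1.0 / dx**2 + 0.5 * x**2
    off = np.full(n_grid - 1, -0.5 / dx**2)
    hamiltonian = np.diag(main) + np.diag(off, 1) + np.diag(off, -1)
    energies, vectors = np.linalg.eigh(hamiltonian)  # eigenvalues in ascending order
    return energies[:n_levels], vectors[:, :n_levels]

energies, states = harmonic_levels()
print(np.round(energies, 3))  # close to 0.5, 1.5, 2.5, 3.5
```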

In contrast to WFT, DFT reformulates the energy of a system in terms of the ground-state electron density rather than the N-electron wave function, and the energy is expressed as a functional thereof. The advantage of DFT is a rather high accuracy at a rather low computational cost. If applied properly, DFT is considered one of the most efficient ways to obtain reliable and reasonably accurate results for molecules with up to hundreds of atoms. In solid state physics, DFT is even the workhorse of most studies aiming to describe ground-state properties.270 However, the exact form of the equations to be solved is unknown: the missing piece is the exact exchange–correlation functional. To date, researchers have come up with many different approximations to this functional that can be used to treat specific problems, but a universal functional capable of describing different problems equally accurately has not yet been found. Moreover, there is no systematic way to improve a density functional. The results obtained with DFT therefore critically depend on the choice of the functional.269,271

In the following sections, we describe both theories with a focus on the excited states of molecules. We start with ab initio methods, i.e., methods derived from first principles without parametrization. We mention the basic underlying concepts here because we believe them to be essential for generating training data and carrying out ML for excited states. Furthermore, these methods present starting points for an ML approximation to the excited-state wave function or density, which, to the best of our knowledge, is still lacking. Such an ML model would be extremely powerful and could provide a solution to many existing quantum chemical problems.

3.1.1. Wave Function Theory

The basis of all discussed ab initio methods is the Hartree–Fock method. The N-electron wave function is represented by a single Slater determinant, ϕ0, which turns the N-body problem into N coupled one-electron problems. This Slater determinant is the antisymmetrized product of one-electron wave functions, the spin orbitals, which can be atomic, molecular, or crystal orbitals, depending on the system. Molecular (or crystal) orbitals are usually expanded as a linear combination of atomic orbitals, where the expansion coefficients are optimized during the calculation. In order to do so efficiently, the atomic orbitals are themselves expanded in a basis set. The N-electron wave function is therefore obtained as a double expansion. Two approximations are thus made: the use of a finite basis set to represent the atomic and, in turn, the molecular orbitals on the one hand, and the use of a single Slater determinant on the other hand. This usually gives a poor description of the system under investigation because electron correlation is lacking.
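The antisymmetry built into a Slater determinant can be checked numerically. In the sketch below, three hypothetical one-dimensional orbitals of the same spin are evaluated at three electron positions to form the matrix φj(xi). Its determinant, with the usual 1/√N! prefactor (which normalizes the function only for orthonormal orbitals, unlike the unnormalized ones used here), changes sign when two electrons are exchanged and vanishes when two electrons occupy the same point, as required by the Pauli principle.

```python
import math
import numpy as np

# Three hypothetical, unnormalized one-dimensional orbitals sharing the same spin
ORBITALS = [
    lambda x: math.exp(-x**2),
    lambda x: x * math.exp(-x**2),
    lambda x: (2 * x**2 - 1) * math.exp(-x**2),
]

def slater_determinant(positions):
    """det[phi_j(x_i)] / sqrt(N!) for electron positions x_i (rows) and orbitals phi_j (columns)."""
    n = len(positions)
    matrix = np.array([[phi(x) for phi in ORBITALS[:n]] for x in positions])
    return np.linalg.det(matrix) / math.sqrt(math.factorial(n))

psi = slater_determinant([0.3, -0.5, 1.1])
psi_swapped = slater_determinant([-0.5, 0.3, 1.1])  # electrons 1 and 2 exchanged: sign flips
psi_same = slater_determinant([0.3, 0.3, 1.1])      # two electrons at the same point: zero
print(psi, psi_swapped, psi_same)
```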

Electronic correlation describes how much the motion of an electron is influenced by all other electrons. The Hartree–Fock method is a mean-field theory, in which an electron “feels” only the average field of the other electrons. The missing correlation is quantified by the correlation energy, i.e., the difference between the exact (nonrelativistic) energy of a system and its Hartree–Fock energy, $E_\mathrm{corr} = E_\mathrm{exact} - E_\mathrm{HF}$. This quantity is negative because the Hartree–Fock energy is a variational upper bound.

Unsurprisingly, all further discussed quantum chemical methods aim at improving on the Hartree–Fock method. They can be seen as different flavors of the same solution to the problem: all of them include more determinants in one way or another. Accordingly, the wave function is expanded as a linear combination of determinants. Each determinant consists of molecular orbitals, which in turn are expanded in atomic orbitals. This ansatz contains two types of coefficients that can be optimized: those of the determinants and those defining the molecular orbitals. If the latter are kept the same for different determinants, we speak of a single-reference wave function. If both types of coefficients are optimized, we speak of a multireference wave function. Similarly, electron correlation is divided into two parts, termed dynamic correlation and static correlation. Single-reference methods improve on the dynamic correlation, while a multireference wave function allows for static correlation. However, this separation is not strict. Both the single-reference and the multireference variants become equivalent when an infinite number of terms is included, and they deliver the exact solution to the Schrödinger equation if an infinite basis set is used as well.

Configuration Interaction

In single-reference methods, the orbitals obtained from the reference calculation (usually Hartree–Fock) are kept fixed. More orbitals than electrons are usually obtained, so different Slater determinants can be constructed from these orbitals and used to expand the actual wave function:272,273

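$$\Psi_i = c_{i0}\,\phi_0 + \sum_{a,r} c_{ia}^{r}\,\phi_a^{r} + \sum_{a<b,\;r<s} c_{iab}^{rs}\,\phi_{ab}^{rs} + \cdots = \sum_{I} c_{iI}\,\phi_I \tag{3}$$

Here, $\phi_a^{r}$ and $\phi_{ab}^{rs}$ denote determinants in which one or two electrons are excited from occupied orbitals a, b into unoccupied orbitals r, s.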

Each Slater determinant is weighted by a coefficient, ciI. These coefficients can be obtained variationally by minimizing the total energy under the constraint of fixed orbitals, which leads to the configuration interaction (CI) methods. ϕ0 is the reference (Hartree–Fock) wave function. In principle, the exact solution can be obtained by considering all possible Slater determinants in combination with a complete basis set. Using all possible configurations, i.e., all possible arrangements of the electrons in the orbitals, is called full CI. Practicable methods require truncation. Frequently used examples are CIS (CI singles), which accounts only for single excitations, and CISD (CI singles and doubles), which additionally accounts for double excitations. Figure 3 gives a schematic overview of the improvements of CI that one can apply. A huge advantage of these methods is that the route to the exact solution is known and that they are systematically improvable. However, truncated CI does not scale correctly with the system size. It is therefore not size-extensive and also not size-consistent. Consider two fragments A and B at large distance: the energy computed for both together, E(A + B), differs from the sum of the energies obtained from separate calculations, E(A) + E(B).274
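The missing size consistency of truncated CI can be checked on a hypothetical toy model. Each "molecule" has only a reference determinant (energy 0) and one doubly excited determinant (energy 2Δ), and the two are coupled by K. This loosely mimics H2 in a minimal basis. For two noninteracting copies, CID omits the determinant in which both fragments are doubly excited, because that determinant is a quadruple excitation of the dimer. Full CI keeps it. The parameter values and function names are our own assumptions. The small Jacobi eigensolver is included only to keep the sketch free of dependencies.

```python
import math


def jacobi_eigenvalues(matrix, tol=1e-14, max_sweeps=100):
    """Eigenvalues (ascending) of a real symmetric matrix via cyclic Jacobi rotations."""
    a = [row[:] for row in matrix]
    n = len(a)
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # rotation that zeroes the (p, q) element
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # update columns p and q
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # update rows p and q
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return sorted(a[i][i] for i in range(n))


DELTA, K = 1.0, 0.5  # hypothetical excitation energy scale and coupling (hartree)

# one fragment: reference |0> (energy 0) and doubly excited |D> (energy 2*DELTA)
monomer = [[0.0, K], [K, 2.0 * DELTA]]

# two noninteracting fragments, basis |00>, |D0>, |0D>, |DD>
dimer_fci = [
    [0.0, K, K, 0.0],
    [K, 2.0 * DELTA, 0.0, K],
    [K, 0.0, 2.0 * DELTA, K],
    [0.0, K, K, 4.0 * DELTA],
]
# CID keeps excitations up to doubles relative to |00> and therefore drops |DD>,
# which is a quadruple excitation of the dimer
dimer_cid = [row[:3] for row in dimer_fci[:3]]

e_monomer = jacobi_eigenvalues(monomer)[0]  # CID equals full CI for one fragment
e_fci = jacobi_eigenvalues(dimer_fci)[0]
e_cid = jacobi_eigenvalues(dimer_cid)[0]
print(f"2 E(A)     = {2 * e_monomer:.6f}")
print(f"E_FCI(A+B) = {e_fci:.6f}")
print(f"E_CID(A+B) = {e_cid:.6f}")
```

With these parameters, full CI reproduces E(A + B) = 2E(A) ≈ −0.2361 hartree. CID yields only about −0.2247 hartree for the dimer.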

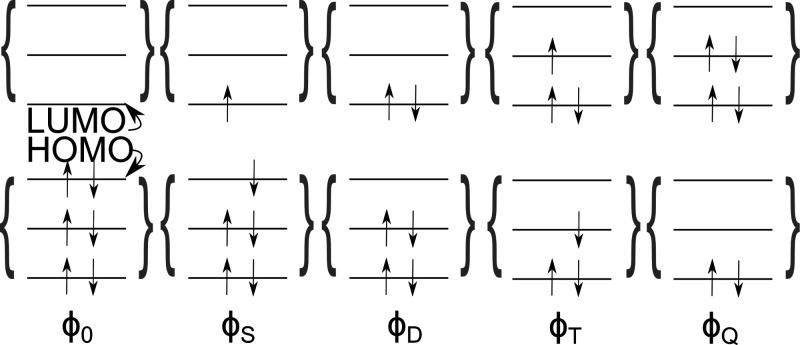

Figure 3.

Different arrangements of electrons in molecular orbitals giving rise to the configuration interaction (CI) method. Including excited configurations in addition to the ground-state reference determinant, ϕ0, allows one to go beyond the Hartree–Fock method. Electrons are excited into higher-lying orbitals, and the resulting Slater determinants are labeled S, D, T, and Q for single, double, triple, and quadruple excitations.

The CI scheme can be employed to improve the ground-state wave function by mixing the Hartree–Fock determinant with determinants of different electron configurations. Wave functions of excited states can be computed in the same way. They correspond to the higher eigenvalues and eigenvectors of the CI Hamiltonian matrix rather than the lowest one, so each state i has its own set of coefficients, ciI. Beginners in the field often confuse terms such as single excitation and first excited state. A singly excited determinant (see Figure 3) can be part of the wave function of the first excited state, but it can also contribute to the ground-state wave function.

Electron Propagator Methods

Another class of methods that we briefly want to mention here are electron propagator methods. They are based on the one-electron Green's function and are a variant of perturbation theory schemes. A popular method of this family is the algebraic diagrammatic construction scheme of second order (ADC(2)).275 ADC(2) is a single-reference method and can be used to efficiently compute excited states of molecules. It offers a good compromise between computational efficiency and accuracy while being systematically improvable (variants such as ADC(2)-x or ADC(3) exist). The approach rests on the polarization propagator, which describes the time evolution of a system's polarization and contains information on its excited states.272,276−279 The ground-state energy of ADC(2) is based on second-order Møller–Plesset perturbation theory (MP2),280,281 which can formally be shown to include double excitations to improve on Hartree–Fock; see ref (272). The dependence of ADC(2) on MP2 gives rise to instabilities in regions where excited states come close to the ground state or where homolytic dissociation takes place. The excited states of bound molecules are described with reasonable accuracy. Compared to multireference CI methods (see below), the black-box behavior of ADC(2) is a clear advantage.275
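For reference, the MP2 correlation energy on which the ADC(2) ground state builds can be written in the standard spin-orbital form. Here i and j label occupied Hartree–Fock orbitals, a and b label virtual ones, and ε are the orbital energies. Only double excitations contribute. The denominators become small when occupied and virtual orbitals approach degeneracy, which is one way to rationalize the instabilities mentioned above.

$$E^{(2)}_{\mathrm{MP2}} = \frac{1}{4}\sum_{ij}^{\mathrm{occ}}\sum_{ab}^{\mathrm{virt}} \frac{\left|\langle ij\|ab\rangle\right|^{2}}{\varepsilon_i + \varepsilon_j - \varepsilon_a - \varepsilon_b}, \qquad \langle ij\|ab\rangle = \langle ij|ab\rangle - \langle ij|ba\rangle$$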

Coupled Cluster

The current gold standard of ab initio methods for the ground state is the family of coupled cluster (CC) methods. CC is often referred to as the size-extensive and size-consistent version of CI. The different electronic configurations accounting for single or double excitations (as in CIS and CISD) are obtained by applying an excitation operator, T̂, in an exponential ansatz:282

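$$\Psi_{\mathrm{CC}} = e^{\hat{T}}\,\phi_0, \qquad \hat{T} = \hat{T}_1 + \hat{T}_2 + \hat{T}_3 + \cdots \tag{4}$$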

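Similarly to CI, this operator can be truncated. If T̂ = T̂1 + T̂2, single and double excitations are accounted for (CCSD). At the same truncation level, CC usually converges faster toward the full-CI limit than CI. The reason is that the exponential also generates products of excitations, e.g., T̂2²/2, which describe simultaneous double excitations of different electron pairs.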
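The effect of the exponential can be seen in a hypothetical toy model. Each fragment has a reference determinant (energy 0) and one doubly excited determinant (energy 2Δ) coupled by K. Two such fragments are placed at infinite distance. The sketch below solves the coupled cluster doubles (CCD) amplitude equation by simple fixed-point iteration, using the diagonal energy difference as denominator. This update scheme is our assumption, chosen for brevity, and the function names are our own. By symmetry, both fragments share one amplitude t. The t² term of exp(T̂A + T̂B) supplies the simultaneous double excitation on both fragments, so the dimer energy is exactly twice the fragment energy.

```python
DELTA, K = 1.0, 0.5  # hypothetical toy parameters (hartree)


def solve_ccd(h, psi_of_t, tol=1e-12, max_iter=500):
    """Solve one CCD amplitude equation <D|(H - E)|exp(T) phi0> = 0 and return E.

    h        -- Hamiltonian in a determinant basis; index 0 is the reference phi0,
                index 1 a doubly excited determinant D.
    psi_of_t -- coefficients of exp(T) phi0 for amplitude t (intermediate
                normalization: the coefficient of phi0 is 1).
    """
    denom = h[1][1] - h[0][0]  # diagonal energy difference used as preconditioner
    t = 0.0
    for _ in range(max_iter):
        psi = psi_of_t(t)
        energy = sum(h[0][j] * c for j, c in enumerate(psi))  # <phi0|H|Psi>
        residual = sum(h[1][j] * c for j, c in enumerate(psi)) - energy * psi[1]
        t_new = t - residual / denom
        converged = abs(t_new - t) < tol
        t = t_new
        if converged:
            break
    psi = psi_of_t(t)
    return sum(h[0][j] * c for j, c in enumerate(psi))


monomer = [[0.0, K], [K, 2.0 * DELTA]]
dimer = [  # basis |00>, |D0>, |0D>, |DD> for two noninteracting fragments
    [0.0, K, K, 0.0],
    [K, 2.0 * DELTA, 0.0, K],
    [K, 0.0, 2.0 * DELTA, K],
    [0.0, K, K, 4.0 * DELTA],
]
# exp(T) phi0 = phi0 + t D for one fragment
e_monomer = solve_ccd(monomer, lambda t: [1.0, t])
# exp(T_A + T_B) phi0 = phi0 + t D0 + t 0D + t^2 DD; the t^2 term is the
# disconnected quadruple excitation that truncated CID would miss
e_dimer = solve_ccd(dimer, lambda t: [1.0, t, t, t * t])
print(e_monomer, e_dimer, 2 * e_monomer)
```

Both energies agree with the exact values of this model, E(A) = Δ − (Δ² + K²)^{1/2} and E(A + B) = 2E(A).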

Excited states can be computed in a single-reference approach by equation-of-motion CC (EOM-CC), where the excited-state wave function is written as an excitation operator acting on the CC ground-state wave function. For further details, see, e.g., refs (283) or (284).

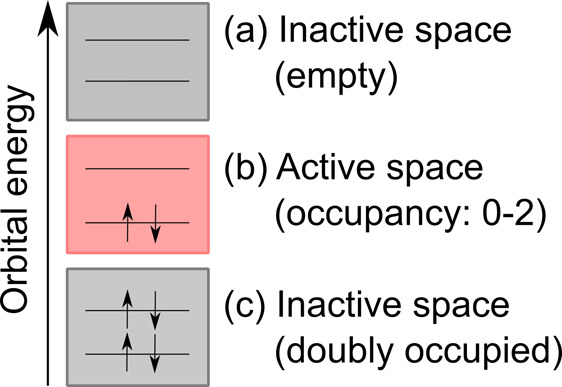

CASSCF

The problem of missing static correlation in the Hartree–Fock approach is tackled by a multireference ansatz for the wave function, in which not only the CI coefficients but also the orbitals are optimized.271 This treatment is important for many excited-state problems. It is also needed for some transition metal complexes in their ground state, for transition states, and for homolytic bond breaking, with the dissociation of the N2 molecule being a notoriously difficult example.285,286 An accurate ML training set for many chemical problems involving excited states therefore often calls for such methods.

The multiconfigurational self-consistent field (MCSCF) method can be seen as the multireference counterpart to the Hartree–Fock method.287 One of the most popular variants of MCSCF methods is the complete active space SCF (CASSCF) method.288,289 In CASSCF, important molecular orbitals and electrons are selected, giving rise to an active space. An example is shown in Figure 4. According to this scheme, the orbitals are split into an inactive, doubly occupied part, an active part, and an inactive, empty part. Within the active space, a full CI (FCI) computation is carried out. The active space has to be chosen manually by selecting a number of active electrons and active orbitals. CASSCF is therefore no black-box method, and a meaningful active space selection is the full responsibility of the user. As an advantage, CASSCF describes static correlation well, which is necessary in systems with configurations that are nearly degenerate with the reference Slater determinant. For completeness, state averaging (SA-CASSCF) is most often applied, in which the orbitals are optimized for an average of several states, usually of the same symmetry. Another variant of MCSCF methods is restricted active space SCF (RASSCF). It is very similar to CASSCF, but the active space is split into subspaces with restricted occupations, so no FCI computation over the whole active space is carried out.272

Figure 4.

Electrons and orbitals of an arbitrary system to exemplify the active space needed for many multireference methods. (a) The highest molecular orbitals, which are not included in the active space, are inactive and always empty. (b) The active space, shown with two active electrons in two active orbitals. (c) The lowest molecular orbitals, which are not included in the active space, are always doubly occupied.

DMRG

To deal with large active spaces, the density matrix renormalization group (DMRG) can be used as an alternative.290 A DMRG-SCF calculation is similar to a CASSCF calculation. However, instead of an FCI solution in the active space, an approximate DMRG solution is obtained, which avoids the exponential scaling of the computational cost with the number of active orbitals.291−296 Very recently, transcorrelated DMRG (tcDMRG) was introduced for strongly correlated systems.297

MR-CI

Even higher accuracy can be obtained with multireference CI methods,253,298,299 such as MR-CISD. These methods start from a multireference (typically CASSCF) wave function and additionally include single and double excitations with respect to the reference configurations, including excitations out of the active space. With this approach, both static and dynamic correlation can be treated.

CASPT2

Alternatively, complete active space perturbation theory of second order, CASPT2,300−302 adds the missing dynamic correlation to a multireference description by means of perturbation theory. This variant of multireference perturbation theory uses the CASSCF wave function as the zeroth-order wave function. CASPT2 can be applied to each state separately (single-state (SS)-CASPT2). Alternatively, correlated states can be mixed at second order, resulting in a multistate perturbation treatment (MS-CASPT2).300−302 Other perturbation approaches for multireference problems exist, such as n-electron valence state perturbation theory (NEVPT2).303−305

MRCC

In addition to multireference methods based on CI, multireference variants of CC approaches exist. Relatively efficient examples are the Mk-MRCC approach of Mukherjee and co-workers306 and the Brillouin–Wigner approach,307 which, however, is not size-extensive. Notably, the development of multireference CC approaches is a rather young research field compared to other excited-state methods, and the computation of properties and forces is not well explored. Many studies therefore focus on the simulation of energies of low-lying states with MRCC methods. Additionally, such methods suffer from algebraic complexity and numerical instabilities. Readers seeking a more extensive summary of existing MRCC methods are referred to refs (253, 308, and 309).

Challenges

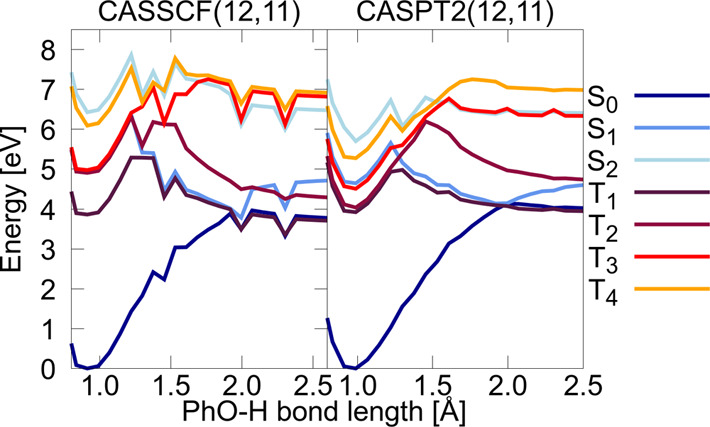

Probably the biggest drawback of the aforementioned multireference methods is that their protocols are very demanding. Finding a proper active space is a tedious task that often requires expert knowledge. Too small active spaces can lead to inaccurate energies, and problems with so-called intruder states are common. These are electronic states that are high in energy at a reference molecular geometry but become very low in energy at another molecular geometry visited along a reaction coordinate. The active space then changes along this path, which can result in inconsistent potential energies. In the case of CASPT2, intruder configurations can lead to large contributions to the second-order energy, which invalidates the assumption of a small perturbation. Such inconsistencies are especially problematic for describing molecular systems with many energetically close-lying states and for generating ML training sets. Figure 5 shows an example of potential energy curves of three singlet states and four triplet states of tyrosine. They were computed with (a) CASSCF(12,11) and (b) CASPT2(12,11), where 12 refers to the number of active electrons and 11 to the number of active orbitals. We used OpenMolcas310 to compute an unrelaxed scan along the reaction coordinate, which is a stretching of the O–H bond of the hydroxy group at the phenyl ring of tyrosine.
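Why an intruder state breaks the perturbative assumption can be seen in a generic two-state model. A reference configuration at energy 0 couples with strength V to one perturbing configuration at energy "gap". The sketch compares the exact lowest eigenvalue with the second-order Rayleigh–Schrödinger estimate E0 + V²/(E0 − Ek). All numbers are hypothetical, the function names are our own, and this is not a CASPT2 implementation. As the perturbing configuration approaches the reference, the second-order energy diverges, while the exact energy stays bounded.

```python
import math


def exact_lowest(e_ref, e_pert, v):
    """Lowest eigenvalue of the two-state Hamiltonian [[e_ref, v], [v, e_pert]]."""
    mean = 0.5 * (e_ref + e_pert)
    return mean - math.hypot(0.5 * (e_pert - e_ref), v)


def second_order(e_ref, e_pert, v):
    """Rayleigh-Schroedinger estimate E0 + |v|^2 / (E0 - Ek) for one perturber."""
    return e_ref + v * v / (e_ref - e_pert)


V = 0.05  # hypothetical coupling to the perturbing configuration (hartree)
errors = {}
for gap in (1.0, 0.1, 0.01, 0.001):
    exact = exact_lowest(0.0, gap, V)
    pt2 = second_order(0.0, gap, V)
    errors[gap] = pt2 - exact
    print(f"gap {gap:6.3f}  exact {exact: .5f}  PT2 {pt2: .5f}")
```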

Figure 5.

Potential energy curves of the three lowest singlet states (S0–S2) and the four lowest triplet states (T1–T4) of the amino acid tyrosine along the O–H bond length of the hydroxy group located at the phenyl ring (Ph–OH), computed with CASSCF(12,11)/ano-rcc-pVDZ and CASPT2(12,11)/ano-rcc-pVDZ.311

Intruder states are not an exception; they are quite common in small- to medium-sized organic molecules. A large enough reference space can mitigate this problem but makes computations almost infeasible, because the computational cost increases exponentially with the number of active orbitals. In many cases, the improved accuracy due to a larger active space cannot justify the considerably higher expense. At best, with massively parallel implementations, an active space of about 20 electrons in 20 orbitals can be treated.312 This is impracticable for many applications, such as dynamics simulations. For medium-sized molecules, the active space required for a given simulation might even be too large for static calculations at individual geometries. With respect to ML, a model that can detect such regions along reaction coordinates would be very helpful. The dipole moment or nonadiabatic couplings are commonly used to identify a change in the character of a state, and monitoring these properties along reaction coordinates could help to identify such regions. To the best of our knowledge, such a model does not yet exist. What is known so far is that ML can provide a smooth interpolation of such cusps in potential energy curves as long as the density of electronic states is not too high.94,171
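The exponential growth of the active-space problem can be quantified by counting configurations. The sketch below counts Slater determinants with M_S = S as products of binomial coefficients for α and β electrons. It counts spin-adapted configuration state functions (CSFs) with the Weyl–Paldus formula, assuming an even number of electrons and integer spin. The function names are our own, and real programs may additionally exploit spatial symmetry to reduce these numbers.

```python
from math import comb


def n_determinants(n_elec, n_orb, spin=0):
    """Slater determinants with M_S = S for n_elec electrons in n_orb spatial orbitals."""
    n_alpha = n_elec // 2 + spin
    n_beta = n_elec - n_alpha
    return comb(n_orb, n_alpha) * comb(n_orb, n_beta)


def n_csfs(n_elec, n_orb, spin=0):
    """Spin-adapted CSFs with total spin S (Weyl-Paldus formula); n_elec even, S integer."""
    half = n_elec // 2
    return ((2 * spin + 1) * comb(n_orb + 1, half - spin)
            * comb(n_orb + 1, half + spin + 1)) // (n_orb + 1)


for n_elec, n_orb in [(2, 2), (12, 11), (20, 20)]:
    print(f"CAS({n_elec},{n_orb}): {n_determinants(n_elec, n_orb):>14,d} determinants, "
          f"{n_csfs(n_elec, n_orb):>13,d} singlet CSFs")
```

The CAS(2,2) example of Figure 4 has 4 determinants (3 singlet CSFs). CAS(12,11), as used for Figure 5, already has 213,444 determinants (60,984 singlet CSFs). CAS(20,20) has about 3.4 × 10^10 determinants (about 5.9 × 10^9 singlet CSFs).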

Rydberg states are also worth mentioning at this point, because they often need to be considered in small- to medium-sized molecules. Rydberg states can be strongly mixed with valence excited states. In such cases, the active space needs to be large enough to treat both the valence and the Rydberg molecular orbitals. Additionally, the one-electron basis set must be flexible enough (e.g., include diffuse functions) to describe both types of orbitals, which further increases the computational cost. More details on the inclusion of Rydberg states in simulations can be found in refs (313−316). Especially in such cases, an ML representation of the electronic wave function that reduces the computational time would be highly beneficial.

A promising tool to eliminate the complex choice of active orbitals is autoCAS.317−319 It selects active orbitals based on measures of orbital entanglement obtained from (approximate) DMRG calculations. In principle, no prior selection of the active space is required. If possible, the initial reference space should be the full valence space in order to identify the relevant orbitals and electrons. If the full valence space is too large for a DMRG-CI calculation, one or several smaller, chemically sensible reference active spaces should be selected, so that the importance of all orbitals can be analyzed.317−319 As an alternative, ML can be used to determine an active space.71
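One entanglement measure used for this purpose is the single-orbital entropy. It is computed from the probabilities that a spatial orbital is empty, singly occupied (α or β), or doubly occupied: s_i = −Σ w ln w. The sketch below evaluates this entropy for hypothetical occupation probabilities and keeps orbitals whose entropy exceeds a fixed fraction of the maximum ln 4. This threshold rule and all numbers are illustrative assumptions. The sketch is not autoCAS's actual selection algorithm, which also uses further information such as mutual information between orbitals.

```python
import math


def single_orbital_entropy(probs):
    """s_i = -sum w ln w over the four occupations (empty, alpha, beta, double)."""
    return -sum(w * math.log(w) for w in probs if w > 0.0)


# Hypothetical occupation probabilities (empty, alpha, beta, double),
# e.g., extracted from an approximate DMRG wave function
orbitals = {
    "sigma(core)": (0.000, 0.001, 0.001, 0.998),
    "pi":          (0.050, 0.100, 0.100, 0.750),
    "pi*":         (0.750, 0.100, 0.100, 0.050),
    "sigma*":      (0.997, 0.0015, 0.0015, 0.000),
}
S_MAX = math.log(4)  # maximal entropy: all four occupations equally likely
THRESHOLD = 0.10     # hypothetical cut-off relative to S_MAX

entropies = {name: single_orbital_entropy(p) for name, p in orbitals.items()}
active = [name for name, s in entropies.items() if s / S_MAX > THRESHOLD]
print(entropies)
print("suggested active orbitals:", active)
```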

3.1.2. Density Functional Theory

A complementary view on how to obtain the energy of a system is provided by DFT. DFT dates back to 1964, when Hohenberg and Kohn320 formulated it entirely in terms of the electron density, η(r⃗). They showed that a one-to-one correspondence (up to an additive constant) exists between the ground-state density and the external potential, v(r⃗), acting on the electrons. The energy can then be formulated in terms of a universal functional of the electron density, F[η(r⃗)], which is independent of the external potential. In this way, the ground-state energy of a system can be computed from the following expression:

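$$E_0 = \min_{\eta}\left\{ \int v(\vec{r})\,\eta(\vec{r})\,\mathrm{d}\vec{r} + F[\eta(\vec{r})] \right\} \tag{5}$$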

The most widely used implementations of DFT rely on the Kohn–Sham approach.321 In fact, Kohn–Sham DFT is so successful that it is often simply referred to as DFT. In this approach, an auxiliary wave function in the form of a Slater determinant is employed. A single Slater determinant is the exact solution for a system of noninteracting electrons. Kohn–Sham DFT can therefore be seen as describing a system of noninteracting electrons that are forced to have the same density as the real, interacting system. This can be achieved only by an unknown modification of the Hamiltonian, or rather of the aforementioned functional. In other words, the Slater determinant is the exact wave function of the auxiliary system, but the Hamiltonian can only be approximated. Hartree–Fock is the reverse case: it uses the true electronic Hamiltonian, but the Slater determinant is only an approximate wave function.

The functional F[η(r⃗)] can be separated into the classical Coulomb (Hartree) interaction and a non-Coulombic part. The latter can further be divided into two terms. The first is the kinetic energy of the noninteracting electrons. The second is the exchange-correlation part, which contains all nonclassical electron–electron interactions and also corrects for the difference between the real kinetic energy and that of the fictitious system of noninteracting electrons. The exchange-correlation functional is the part of DFT that is unknown, and finding it remains the holy grail of DFT.
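Written in the standard Kohn–Sham notation, this partition reads as follows. Here T_s is the kinetic energy of the noninteracting electrons, J is the classical Coulomb (Hartree) energy, and T and V_ee are the kinetic and electron–electron interaction energies of the real, interacting system:

$$F[\eta] = T_s[\eta] + J[\eta] + E_{\mathrm{xc}}[\eta], \qquad J[\eta] = \frac{1}{2}\iint \frac{\eta(\vec{r})\,\eta(\vec{r}\,')}{|\vec{r}-\vec{r}\,'|}\,\mathrm{d}\vec{r}\,\mathrm{d}\vec{r}\,'$$

$$E_{\mathrm{xc}}[\eta] = \big(T[\eta] - T_s[\eta]\big) + \big(V_{ee}[\eta] - J[\eta]\big)$$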

In principle, if the exact functional were known, the exact ground-state energy of a system could be computed. Unfortunately, it is not known, and the success of a DFT calculation critically depends on the approximation used for the exchange-correlation functional.
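A textbook case shows how much the approximation matters. The sketch below evaluates the local spin-density approximation (LSDA, Dirac–Slater) exchange energy for the exact density of the hydrogen atom, η(r) = e^(−2r)/π in atomic units. It compares the result with the exact exchange energy. For a one-electron system, the exact exchange must cancel the Hartree energy J = 5/16 hartree. The choice of functional, the Simpson integration grid, and the function names are our own assumptions for illustration.

```python
import math


def simpson(f, a, b, n=4000):
    """Composite Simpson rule on [a, b] with n (even) intervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def density_h1s(r):
    """Exact ground-state density of the hydrogen atom (atomic units)."""
    return math.exp(-2.0 * r) / math.pi


# LSDA exchange for a fully spin-polarized density:
#   E_x = -(3/4) (6/pi)^(1/3) * integral of eta^(4/3) over space
c_x = 0.75 * (6.0 / math.pi) ** (1.0 / 3.0)
integral = simpson(lambda r: density_h1s(r) ** (4.0 / 3.0) * 4.0 * math.pi * r * r, 0.0, 40.0)
e_x_lsda = -c_x * integral
e_x_exact = -5.0 / 16.0  # exact exchange of H cancels J = 5/16 hartree
print(f"LSDA exchange : {e_x_lsda:.4f} hartree")
print(f"exact exchange: {e_x_exact:.4f} hartree")
```

The LSDA value of about −0.268 hartree underestimates the magnitude of the exact −0.3125 hartree by roughly 14%, even for this simplest possible system.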

Excited States

As explained above, the electron density is computed from a single Kohn–Sham determinant, i.e., the wave function of noninteracting electrons that have the density of the real system. This makes DFT a single-reference method. In fact, most failures of DFT are a consequence of an improper description of static correlation.271 To describe excited states, the time-dependent (TD) version of DFT, TDDFT, can be used. The foundation of this theory was laid in the 1980s with the Runge–Gross theorems,322 which can be regarded as the analogues of the Hohenberg–Kohn theorems. They establish a one-to-one correspondence between a time-dependent potential and the time-dependent electron density (for a given initial state). A system can therefore be completely described by its time-dependent density. A variational (stationary-action) principle for the time-dependent density was proposed as well.

The most widely used approach is linear-response TDDFT (LR-TDDFT). Again, TDDFT is often used as a synonym for LR-TDDFT because of its extensive use. Within this theory and the Kohn–Sham approximation, no explicit propagation of the time-dependent density is needed to compute excitation energies and excited-state properties. Instead, linear response theory is applied directly to the ground-state density.323,324 Casida's formulation of this theory is the most popular one and gives rise to random-phase approximation (RPA) pseudoeigenvalue equations, which are also known as the Casida equations. Within the adiabatic approximation, they are implemented efficiently in many existing electronic structure programs. The Tamm–Dancoff approximation325,326 neglects the coupling between excitations and de-excitations and thereby simplifies the equations to a standard eigenvalue problem, the counterpart to CIS.327 Especially when the time evolution of a system is studied, the Tamm–Dancoff approximation is beneficial since it leads to more stable computations close to critical regions of the PESs.269,328
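For a single occupied–virtual transition, the Casida matrices A and B reduce to numbers. The RPA excitation energy then follows from ω² = (A − B)(A + B), whereas the Tamm–Dancoff approximation (B = 0) simply gives ω = A. The hypothetical values below show a trend. As B approaches A in magnitude, which signals an instability of the ground-state solution, the RPA excitation energy drops toward zero and can even become imaginary. The TDA value stays real. This is a one-transition caricature with our own function names, not a TDDFT implementation.

```python
import cmath


def rpa_energy(a, b):
    """Casida (RPA) excitation energy for one transition: omega**2 = (A - B) * (A + B)."""
    return cmath.sqrt((a - b) * (a + b))


def tda_energy(a, b):
    """Tamm-Dancoff approximation: the coupling B is neglected, so omega = A."""
    return a


# hypothetical single-transition values (hartree): weak, strong, too strong coupling
for a, b in [(0.30, 0.05), (0.30, 0.25), (0.30, 0.35)]:
    print(f"A={a:.2f} B={b:.2f}  RPA={rpa_energy(a, b):.4f}  TDA={tda_energy(a, b):.4f}")
```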

Advantages and Disadvantages

The advantage of LR-TDDFT is its computational efficiency. Combined with reasonable accuracy when a proper functional is chosen, this often makes LR-TDDFT the method of choice to study the photochemistry of medium-sized to large and complex systems. Such systems cannot be treated with costly multireference WFT-based methods.253,329,330 One shortcoming of LR-TDDFT is the incorrect dimensionality of conical intersections with the ground state. Such intersections are among the most important regions in nonadiabatic MD simulations.331−336 Standard TDDFT implementations therefore give a qualitatively incorrect description of these critical regions. The missing couplings can be recovered, for example, with the CI-corrected Tamm–Dancoff approximation337 or the hole–hole Tamm–Dancoff approximation,338 which provide the correct dimensionality at conical intersections. Alternatively, the spin-restricted ensemble-referenced Kohn–Sham method can be incorporated into the tight-binding TDDFT approach339 to describe conical intersections with reasonable accuracy.

In addition, one should be aware that double excitations cannot be described with LR-TDDFT within the adiabatic approximation. They can be obtained with a frequency-dependent exchange-correlation kernel, an approach known as dressed TDDFT.340,341 Alternatively, spin-flip TDDFT342−344 can be used. Here, a high-spin triplet state serves as the reference, and single excitations are combined with a flip of the electron spin. However, spin contamination is quite common within these methods. In general, a description of double excitations from a multireference state would be more favorable, although spin-flip TDDFT is often considered to be a multireference method. To enforce specific orbital occupations, and thus to compute excitations and charge-transfer states, an alternative approximation known as the Δ-SCF approach exists. In this approach, the electrons are forced into specific Kohn–Sham orbitals, and the SCF procedure converges the energy for this configuration.345−347 Other multireference variants of TDDFT exist as well. Their description is beyond the scope of this review, and we refer the reader to recent reviews covering this topic in much more detail.253,348 The accuracy of (TD)DFT simulations for the ground state349 or for excitation energies and absorption spectra of organic molecules has been improved by ML corrections. Examples include a combined genetic algorithm and NN approach (GANN),350 support vector machines,351 and AdaBoost ensemble correction models.352

Last but not least, we briefly discuss the most critical part of a DFT calculation: the choice of the exchange-correlation functional. In the case of excited states, treating valence excitations, Rydberg states, and long-range charge-transfer excitations on the same footing is highly problematic. Hybrid (meta-)generalized gradient approximation (GGA) or range-separated hybrid functionals353 are, for example, well suited for vertical excitations, and the latter also for Rydberg states. Global hybrid meta-GGA or range-separated hybrid GGA functionals, in contrast, are better suited to describe charge transfer.269,354 Most often, functionals are accurate for one specific problem but fail to describe others. Although much effort has been devoted to developing functionals, a universal functional for DFT is still far from being achieved.180,253,269,328 ML could be particularly powerful to advance DFT and TDDFT in this regard. For example, models exist that predict the energy of a system from self-consistent charge densities or external potentials. Kohn–Sham DFT can be circumvented, and density functionals can be constructed from ML models. Besides density–potential and energy–density maps, whole functionals can be learned.82,355−357
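Range-separated hybrids rest on splitting the Coulomb operator, usually with the error function: 1/r = erfc(ωr)/r + erf(ωr)/r. In long-range-corrected hybrids, the short-range part is treated with a (semi)local functional and the long-range part with exact (Hartree–Fock) exchange. This restores the correct −1/R distance dependence of long-range charge-transfer excitation energies. The sketch below only shows how the split distributes 1/r over distance. The value of ω is a hypothetical choice, since actual functionals use their own (sometimes system-tuned) values, and the function name is our own.

```python
import math


def coulomb_split(r, omega):
    """Split 1/r into a short-range erfc(omega r)/r and a long-range erf(omega r)/r part."""
    return math.erfc(omega * r) / r, math.erf(omega * r) / r


OMEGA = 0.4  # hypothetical range-separation parameter (1/bohr)
for r in (0.5, 2.0, 5.0, 10.0):
    sr, lr = coulomb_split(r, OMEGA)
    print(f"r={r:5.1f} bohr  short-range fraction {sr * r:.4f}  long-range fraction {lr * r:.4f}")
```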

In summary, there is generally not one single solution to a particular problem; several approaches can lead to an equivalent description. For the excited states of molecules, it is of utmost importance to think carefully about the photochemical processes that may occur in order to find the method best suited for the anticipated reactions. Within the same molecular system, one method may describe a certain photochemical reaction quite well, while another reaction is better described by a different method. However, mixing methods is not practicable for standard applications. Recently, ML studies have emerged that combine the strengths of several methods, e.g., Δ-learning techniques358,359 or transfer learning.360 These approaches could be well-suited solutions for many future applications to overcome the current limitations of existing quantum mechanical methods for the excited states. Even more than for ground-state properties, the quality of excited-state results depends critically on the ability of a method to describe the different possible reactions. This is a consequence of the larger accessible configuration space of a molecular system. Even for medium-sized systems, a suitable method may already be computationally impracticable, so a balance between accuracy and computational effort has to be found.
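The idea behind Δ-learning can be sketched in one dimension. A cheap method provides a baseline, and an ML model is trained only on the difference between an expensive reference and that baseline. The two "methods" below are hypothetical analytic functions of a single coordinate. The regressor is Gaussian-kernel ridge regression, which is one common choice rather than the one used in the cited studies. All function names, hyperparameters, and data are our own assumptions.

```python
import math


def cheap(x):
    """Hypothetical low-level method (stand-in for a cheap excited-state energy)."""
    return math.sin(x)


def expensive(x):
    """Hypothetical high-level reference that differs from the cheap method."""
    return math.sin(x) + 0.3 * math.cos(2.0 * x)


def gaussian_kernel(x, y, sigma=0.5):
    return math.exp(-((x - y) ** 2) / (2.0 * sigma ** 2))


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting (small dense systems)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_krr(xs, ys, lam=1e-6):
    """Kernel ridge regression: solve (K + lam I) alpha = y and return a predictor."""
    kmat = [[gaussian_kernel(xi, xj) + (lam if i == j else 0.0) for j, xj in enumerate(xs)]
            for i, xi in enumerate(xs)]
    alpha = solve_linear(kmat, ys)
    return lambda x: sum(ai * gaussian_kernel(x, xi) for ai, xi in zip(alpha, xs))


# Delta-learning: the model only learns the difference between the two methods
train_x = [0.5 * i for i in range(13)]  # 0.0, 0.5, ..., 6.0
correction = fit_krr(train_x, [expensive(x) - cheap(x) for x in train_x])


def delta_model(x):
    """Cheap baseline plus learned correction."""
    return cheap(x) + correction(x)


for x in (1.25, 3.75):
    print(f"x={x}: cheap={cheap(x):.4f}  delta-ML={delta_model(x):.4f}  ref={expensive(x):.4f}")
```

The design rationale is that the correction is often smaller and smoother than the full target. It can therefore be learned from fewer expensive reference calculations.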

3.2. Bases

The potentials computed with the aforementioned methods for different nuclear geometries can be represented in different bases, which are connected by unitary transformations. An example of five states in different bases is given in Figure 6. A system in a certain basis is often also referred to as being in a certain picture or representation. Here, we avoid the term representation so as not to confuse the reader with the molecular representations used in ML. As shown in the figure, we focus on three types of bases: (a) the diabatic basis, (b) the adiabatic (spin-diabatic) basis, i.e., the direct output of standard electronic structure programs, and (c) the diagonalized version of (a) and (b), i.e., the spin-adiabatic basis. The different names given to these bases throughout the literature are summarized in Table 1. All of these bases stem from a partition of the total wave function into a sum of products of electronic and nuclear wave functions, which can be written for all bases as

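$$\Psi(\mathbf{r},\mathbf{R},t) = \sum_i \chi_i^{\mathrm{basis}}(\mathbf{R},t)\,\psi_i^{\mathrm{basis}}(\mathbf{r},\mathbf{R}) \tag{6}$$

Here, r and R denote the electronic and nuclear coordinates, respectively, and χi are the nuclear wave functions.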

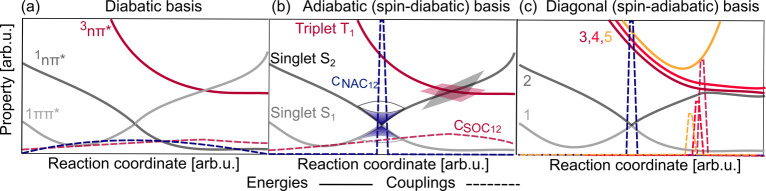

Figure 6.

(a) Example of three potential energy curves ordered by their character, along with the respective potential couplings between different states shown by dashed lines. (b) Two singlets (Ei and Ej) and one triplet state (Ek), including coupling values (vectorial properties are shown by their norm), in the adiabatic basis, in which the triplet state crosses the singlet states. (c) The diagonal, or spin-adiabatic, basis, in which all states are ordered by their energy and are spin-mixed. Kinetic couplings are shown by their norm. Note that the ground state is not shown. Potential energy curves are represented using solid lines and couplings using dashed lines.

Table 1. Commonly Used Names of Bases for the Excited-State Potential Energy Surfaces

| a | b | c |
|---|---|---|
| diabatic | adiabatic | diagonal |
| crude adiabatic | spin-diabatic | spin-adiabatic |
| spectroscopic | MCH | field-adiabatic |
| quasi-diabatic | field-free | field-dressed |

In principle, the total wave function can be expanded in infinitely many different bases. The electronic functions ψibasis(r,R) are eigenfunctions of the electronic Hamiltonian in only one of these bases, namely, the adiabatic (MCH) basis listed in column b of Table 1. Associated with these functions are the corresponding potentials, depicted for a model system in Figure 6. A different approach is taken in the exact factorization method,361 where the total wave function is written as a single product, i.e., without the sum in eq 6, giving rise to only one (time-dependent) potential.

3.2.1. Adiabatic (Spin-Diabatic) Basis

The direct output of an electronic structure calculation usually provides the eigenenergies and eigenfunctions of the electronic Hamiltonian. In many cases, only one spin multiplicity is calculated. If this procedure is repeated along a nuclear coordinate, the resulting potential curves are termed adiabatic. Adiabatic means “not going through” (from Greek a- = not, dia = through, batos = passable). Indeed, potentials of one multiplicity remain ordered by energy and do not pass through each other. This situation is schematically illustrated in Figure 6b for the singlet states Si and Sj.

Within one multiplicity, 3NA-dimensional adiabatic PESs are obtained that are strictly ordered by energy. Hence, the states are usually denoted by the first letter of the multiplicity and a number as subscript, e.g., S0, S1, etc. For states of the same multiplicity, critical points and seams exist. These regions of the PESs are referred to as conical intersections (seams), in which the corresponding states become degenerate. Such features make adiabatic PESs nonsmooth functions of the atomic coordinates, which makes them difficult to predict with the intrinsically smooth regressors of ML. At a conical intersection, the approaching potential energy curves form a cone. The NACs between them, denoted as $\mathbf{C}_{ij}^{\mathrm{NAC}}$, show singularities because they are inversely proportional to the vanishing energy gap:298,366

$$\mathbf{C}_{ij}^{\mathrm{NAC}} = \left\langle \psi_i \middle| \frac{\partial \psi_j}{\partial \mathbf{R}} \right\rangle = \frac{\left\langle \psi_i \middle| \frac{\partial \hat{H}_{\mathrm{el}}}{\partial \mathbf{R}} \middle| \psi_j \right\rangle}{E_j - E_i}, \qquad i \neq j \tag{7}$$
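The 1/ΔE behavior of the NACs can be reproduced with a hypothetical two-state model. Two diabatic curves, +x and −x, cross at x = 0 and are coupled by a constant v. The sketch computes the NAC between the two adiabatic states in two ways. The first uses the right-hand side of eq 7, ⟨ψ1|∂H/∂x|ψ2⟩/(E2 − E1). The second uses central finite differences of the adiabatic eigenvector. Both agree, and at the crossing the NAC magnitude equals 1/(2v), i.e., the inverse of the adiabatic energy gap. The model and the function names are our own.

```python
import math


def diabatic_hamiltonian(x, v):
    """Hypothetical 2-state model: diabats +x and -x coupled by a constant v."""
    return [[x, v], [v, -x]]


def adiabatic_states(h):
    """Eigenpairs of a real symmetric 2x2 matrix, lower state first."""
    a, b, d = h[0][0], h[0][1], h[1][1]
    theta = 0.5 * math.atan2(2.0 * b, a - d)  # mixing angle
    c, s = math.cos(theta), math.sin(theta)
    mean, half = 0.5 * (a + d), math.hypot(0.5 * (a - d), b)
    return (mean - half, (-s, c)), (mean + half, (c, s))


def nac_from_hamiltonian(x, v):
    """Eq 7: <psi_1| dH/dx |psi_2> / (E_2 - E_1), with dH/dx = diag(1, -1) here."""
    (e1, p1), (e2, p2) = adiabatic_states(diabatic_hamiltonian(x, v))
    dh_element = p1[0] * p2[0] - p1[1] * p2[1]
    return dh_element / (e2 - e1)


def nac_finite_difference(x, v, step=1e-6):
    """<psi_1 | d psi_2/dx> from central differences of the upper eigenvector."""
    _, p1 = adiabatic_states(diabatic_hamiltonian(x, v))[0]
    _, plus = adiabatic_states(diabatic_hamiltonian(x + step, v))[1]
    _, minus = adiabatic_states(diabatic_hamiltonian(x - step, v))[1]
    return sum(p1[k] * (plus[k] - minus[k]) / (2.0 * step) for k in range(2))


V = 0.01  # hypothetical diabatic coupling
for x in (-0.5, -0.05, 0.0, 0.05, 0.5):
    print(f"x={x:+.2f}  gap={2 * math.hypot(x, V):.3f}  NAC={nac_from_hamiltonian(x, V):+.3f}")
```

The printed NAC is sharply peaked at the crossing and nearly zero away from it. This is the spike that makes NACs hard to learn with smooth regressors.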

Second-order derivatives are neglected here, as is done in many quantum chemistry programs that compute NAC vectors. The blue dashed curve in Figure 6a illustrates the norm of the NAC vector that couples the states Si and Sj. At the avoided crossing points of the states, the NAC norm shows a sharp spike, but it almost vanishes elsewhere. If more than one multiplicity is considered, the term adiabatic is no longer adequate, because potentials of different multiplicity can cross each other. This situation is then called diabatic with respect to the spin multiplicities, or spin-diabatic for short. For example, singlets are adiabatic among each other and triplets are adiabatic among each other, but singlets are diabatic with respect to triplets. However, the diabatic basis (see Figure 6a and also below) also qualifies as spin-diabatic. This nomenclature issue sometimes confuses even experts. We therefore refer to this basis as “Molecular Coulomb Hamiltonian” (MCH), because it is obtained from the eigenfunctions and eigenvalues of the nonrelativistic electronic Hamiltonian, where only Coulomb interactions are considered.

As an example, a crossing of a singlet state and a triplet state is shown in Figure 6b. As can be seen, the triplet components, which are defined by different magnetic quantum numbers, are degenerate. The states are coupled by SOCs, denoted as $C_{ij}^{\mathrm{SOC}}$, which are usually obtained as smooth potential couplings from standard quantum chemistry programs:256,365,367

$$C_{ij}^{\mathrm{SOC}} = \left\langle \Psi_i \middle| \hat{H}_{\mathrm{SO}} \middle| \Psi_j \right\rangle \tag{8}$$

In contrast to the NAC vectors, these couplings are scalar quantities, which can be real- or complex-valued.368,369 Whether they are complex or not depends on the electronic structure program employed, but the two representations can be converted into each other.256,257,368,370 It is therefore important to know how an electronic structure program presents the SOCs in order to find the best possible strategy for approximating them with ML.15

$\hat{H}_{\mathrm{SO}}$ in eq 8 is the spin–orbit Hamilton operator, which describes the relativistic effect arising from the interaction of the electron spin with the orbital angular momentum and allows states of different spin multiplicities to couple.370−372 Note that SOCs also exist between different states of the same multiplicity, except for singlets. No exact expression for including relativistic effects in the many-body equations has been found yet. Among the most popular approximations is the Breit equation,373 which replaces the electronic Hamiltonian with an adapted Hamiltonian that comprises, among other terms, a relativistic part. This additional part of the Hamiltonian accounts for spin–orbit effects and is proportional to the nuclear charge,257,368,370,372,373 which led to the belief that SOCs are relevant only in systems containing heavy atoms.233,234 Today, it is known that spin–orbit effects also play a crucial role in many other molecular systems and are important for intersystem crossing between states of different spin multiplicities.178,235−237

The states in the MCH basis can also be coupled via external electromagnetic fields, e.g., by sunlight or a laser. The corresponding couplings are given by the transition dipole moments multiplied by the electric field. Since the effect of the field enters not into the potentials but as off-diagonal potential couplings, the MCH basis is also called field-free.362−364,374 However, the diabatic basis also qualifies as field-free.

3.2.2. Diabatic Basis

In the diabatic basis, the electronic wave function does not depend parametrically on the nuclear coordinates. Diabatic potentials usually need to be determined from adiabatic potentials and are not unique; i.e., they depend on the diabatization method and on the reference point chosen in the adiabatic basis to fit the diabatic potentials.239,256 The advantage of diabatic potentials is that the singularities in the NACs are removed together with the nondifferentiable points of the PESs. Note that a strictly diabatic basis does not exist for polyatomic systems in practice, and only approximate, so-called quasi-diabatic PESs can be fit, in which the NACs are largely, but not completely, removed. In the literature, quasi-diabatic PESs are most often referred to as diabatic ones, and we adopt this convention here.

An example of a system in the diabatic basis is given in Figure 6a, and commonly used notations can be found in the first column of Table 1. In regions where an avoided crossing is present in the adiabatic basis, the coupled diabatic potential energy curves cross. Since the electronic wave function of a state is ideally independent of the nuclear coordinates, its character is conserved. Consequently, the states are labeled according to their character and multiplicity, e.g., as ¹ππ*, or with symmetry labels. Like the character, spectroscopically important quantities such as the dipole moment are also mostly conserved or vary smoothly along the nuclear coordinates. Therefore, spectroscopic experiments can easily be interpreted in the diabatic basis, which is thus sometimes also called the spectroscopic basis. Note that labels such as S1 are sometimes also used for the diabatic basis, especially in experimental papers, when the character of the wave function has not been identified and only one geometry is considered. However, at a different geometry, the energetic order of the states might have changed, such that a state previously labeled as S2 might now be lower in energy than a state previously labeled as S1. Furthermore, this labeling scheme in the diabatic basis can lead to confusion with the labels of the MCH basis, and we suggest reserving it for the MCH basis.

Because of the mostly conserved characters and the crossing of states, diabatic potentials are smooth functions of the nuclear coordinates, in contrast to adiabatic potentials. A diabatic PES is thus highly favorable for several numerical applications including ML.

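The MCH and diabatic bases can be interconverted by a unitary transformation

$$\mathbf{H}^{\mathrm{MCH}} = \mathbf{U}^{\dagger} \mathbf{H}^{\mathrm{diab}} \mathbf{U} \tag{9}$$

with a unitary matrix, $\mathbf{U}$, that is determined only up to an arbitrary sign (as a result of the arbitrary sign of the wave function, which will be discussed in detail in section 5.3). In the case of two states, $\mathbf{U}$ is a rotation matrix:

$$\mathbf{U} = \begin{pmatrix} \cos\theta & \sin\theta \\ -\sin\theta & \cos\theta \end{pmatrix} \tag{10}$$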

which depends on the rotation angle θ. Accordingly, the sharply peaked NACs, which are obtained as derivative couplings (also called kinetic couplings) in the MCH basis, are converted into smooth potential couplings in the diabatic basis. The smooth SOCs of the MCH basis become even smoother (ideally constant) in the diabatic basis.
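The two-state transformation of eqs 9 and 10 can be sketched for a hypothetical model of the same kind (arbitrary units): linear diabatic potentials crossing at x = 0 with a constant coupling. Here, the mixing angle θ(x) is known analytically because the diabatic matrix is given; with the rotation convention of eq 10, the NAC between the two MCH states then equals dθ/dx. The offset of π/2 in mixing_angle is an assumption chosen so that the first MCH state is the lower one; adding π to θ would only flip the signs of both states, reflecting the arbitrary sign of U. All function names are illustrative.

```python
import math

# Hypothetical two-state model (arbitrary units): linear diabatic potentials
# crossing at x = 0 with a constant diabatic coupling.
SLOPE, COUPLING = 1.0, 0.05


def diabatic_matrix(x):
    return [[SLOPE * x, COUPLING], [COUPLING, -SLOPE * x]]


def rotation(theta):
    """Two-state rotation matrix U of eq 10."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s], [-s, c]]


def matmul(p, q):
    return [[sum(p[i][k] * q[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def transpose(p):
    return [[p[j][i] for j in range(2)] for i in range(2)]


def mixing_angle(x):
    """Angle theta for which U^T H_diab U is diagonal with the lower state first.

    Known analytically for this model; for a real molecule, finding it is the
    diabatization problem discussed in the text.
    """
    (a, c), (_, b) = diabatic_matrix(x)
    return 0.5 * math.pi - 0.5 * math.atan2(2.0 * c, a - b)


def mch_matrix(x):
    """Eq 9 with a real U (so U^dagger = U^T): H_MCH = U^T H_diab U."""
    u = rotation(mixing_angle(x))
    return matmul(transpose(u), matmul(diabatic_matrix(x), u))


def nac_from_angle(x, dx=1e-5):
    """With eq 10, <Psi_1|d/dx Psi_2> = d(theta)/dx; central finite difference."""
    return (mixing_angle(x + dx) - mixing_angle(x - dx)) / (2.0 * dx)


for x in (-0.5, -0.1, 0.0, 0.1, 0.5):
    h = mch_matrix(x)
    print(f"x = {x:+.2f}  E1 = {h[0][0]:+.4f}  E2 = {h[1][1]:+.4f}  "
          f"|H_MCH[0][1]| = {abs(h[0][1]):.1e}  V12 = {COUPLING}  NAC = {nac_from_angle(x):+.3f}")
```

The diabatic coupling stays constant (V12 = COUPLING) while the NAC, obtained here as dθ/dx, peaks at x = 0; this is the conversion of a peaked kinetic coupling into a smooth potential coupling described above.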

While diagonalization can straightforwardly be applied to convert diabatic PESs into adiabatic PESs (and similarly adiabatic PESs into diagonal PESs), a dilemma arises when one wants to take the inverse route and obtain diabatic PESs from adiabatic ones (and similarly adiabatic PESs from diagonal ones). In fact, finding diabatic PESs is highly complex and most often requires expert knowledge. To date, accurate diabatic potentials have been obtained only for small molecules, and developing a method to generate diabatic PESs automatically remains an active field of research. Existing methods to obtain diabatic potentials require human input and are mostly applicable to small systems and certain reaction coordinates. Early pioneering works can be found in refs (239 and 375). Many more variants exist today. Examples are diabatization using explicit derivative couplings,376,377 the propagation diabatization procedure,378 diabatization by localization,379 Procrustes diabatization,252 and diabatization by ansatz.142,380,381 Further methods are based on couplings or other properties,382−385 configuration uniformity,386 block-diagonalization,387,388 a hybrid scheme combining block-diagonalization and diabatization by ansatz,389,390 or CI vectors,391 and some procedures are carried out at least partly using ML.142,143,380,390,392
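As a minimal illustration of diabatization by ansatz, the sketch below fits the simplest possible two-state ansatz, diabatic potentials mean ± k·x with a constant coupling λ, to adiabatic energies. Because (gap/2)² = k²x² + λ² is linear in k² and λ², ordinary least squares suffices. The "reference" data are generated from hypothetical parameters, and the function names are illustrative; actual diabatizations by ansatz rely on much more flexible parametrizations, more states, and often properties besides energies.

```python
import math


def fit_linear_crossing_ansatz(xs, e_lower, e_upper):
    """Fit the ansatz (gap/2)^2 = k^2 x^2 + lam^2 to adiabatic energies.

    The ansatz is linear in A = k^2 and B = lam^2, so the 2x2 normal equations
    of least squares suffice. Returns (|k|, |lam|): the signs cannot be
    recovered from adiabatic energies alone.
    """
    ys = [(0.5 * (eu - el)) ** 2 for el, eu in zip(e_lower, e_upper)]
    n = len(xs)
    s2 = sum(x ** 2 for x in xs)
    s4 = sum(x ** 4 for x in xs)
    sy = sum(ys)
    sy2 = sum(y * x ** 2 for x, y in zip(xs, ys))
    det = s4 * n - s2 * s2
    a = (sy2 * n - s2 * sy) / det
    b = (s4 * sy - s2 * sy2) / det
    return math.sqrt(max(a, 0.0)), math.sqrt(max(b, 0.0))


def diabatic_potentials(x, e_lower, e_upper, k, lam):
    """Diabatic matrix elements (V11, V22, V12) implied by the fitted ansatz."""
    mean = 0.5 * (e_lower + e_upper)
    return mean + k * x, mean - k * x, lam


# Hypothetical "reference" adiabatic data, generated from parameters the fit does not see.
TRUE_K, TRUE_LAM = 0.8, 0.03
xs = [-0.4 + 0.05 * i for i in range(17)]
e_lower = [0.2 * x ** 2 - math.hypot(TRUE_K * x, TRUE_LAM) for x in xs]
e_upper = [0.2 * x ** 2 + math.hypot(TRUE_K * x, TRUE_LAM) for x in xs]

k, lam = fit_linear_crossing_ansatz(xs, e_lower, e_upper)
print(f"fitted |k| = {k:.4f}, |lambda| = {lam:.4f}")
for x, el, eu in zip(xs[::4], e_lower[::4], e_upper[::4]):
    v11, v22, v12 = diabatic_potentials(x, el, eu, k, lam)
    print(f"x = {x:+.2f}  V11 = {v11:+.4f}  V22 = {v22:+.4f}  V12 = {v12:.4f}")
```

Since the synthetic data follow the ansatz exactly, the fit recovers the generating parameters, and diagonalizing the fitted diabatic matrix reproduces the adiabatic input. With real data, the quality of the result hinges on how well the chosen ansatz matches the true coupling pattern, which is where expert knowledge enters.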

3.2.3. Diagonal Basis

As the name indicates, the diagonal basis is obtained by diagonalizing the potential matrix, including the SOCs, in the MCH or diabatic basis. In this case, a strictly adiabatic picture is obtained, in which states never cross.256 Accordingly, the concept of multiplicity is lost for an individual state, because the state might be of singlet character in one region and of triplet character in another. Therefore, the basis is also called spin-mixed or spin-adiabatic.257,369,393 The states are strictly ordered by energy and can be labeled simply with numbers (see Figure 6c). The resulting wave functions are eigenfunctions of the relativistic electronic Hamiltonian.236,256,257 Instead of via diagonalization, these eigenfunctions and eigenenergies can also be obtained directly, e.g., with relativistic two-component or four-component calculations.394

In this basis, the effect of the SOCs is incorporated into the PESs to a large extent. What remains are localized kinetic couplings, which are similar in nature to the NACs in the MCH basis. An example is given in Figure 6c. The parts of the potentials that correspond to the different triplet components in the MCH basis are split energetically in the diagonal basis. For small SOCs, the diagonal potentials look similar to the MCH potentials. However, if the SOCs are strong, potentials that are degenerate in the MCH basis can easily be shifted apart by 1 eV in the diagonal basis. Such splittings are then also experimentally observable, and the diagonal basis yields a more intuitive interpretation of these experiments.43,395,396
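The splitting in the diagonal basis can be made concrete for a restricted, hypothetical model: one singlet S coupled by SOCs c_m to the three components T_m of one triplet, with triplet–triplet SOCs and all other states neglected. For this Hermitian 4×4 potential matrix, diagonalization reduces exactly to a 2×2 problem: the singlet couples only to the triplet combination along c with strength |c| = (Σ_m |c_m|²)^(1/2), while the two orthogonal combinations stay at the MCH triplet energy. The SOC values and energies below are made up for illustration.

```python
import math


def spin_mixed_energies(e_s, e_t, socs):
    """Diagonal-basis energies for one singlet coupled to the three components of one triplet.

    Potential matrix in the MCH basis (order S, T_-1, T_0, T_+1):
        [[e_s, conj(c)], [c, e_t * I_3]],  c = socs = (<T_m|H_SO|S> for m = -1, 0, +1).
    Triplet combinations orthogonal to c are not coupled and stay at e_t; the singlet
    couples only to the combination c/|c|, with strength |c|, leaving a 2x2 problem.
    """
    c = math.sqrt(sum(abs(z) ** 2 for z in socs))  # works for real or complex SOCs
    mean = 0.5 * (e_s + e_t)
    half = math.hypot(0.5 * (e_s - e_t), c)
    return sorted([mean - half, e_t, e_t, mean + half])


# Hypothetical scan (eV): the singlet and the triplet cross at x = 0.
SOCS = (0.01 + 0.02j, 0.03, -0.01 + 0.02j)
for x in (-0.2, -0.05, 0.0, 0.05, 0.2):
    e_s, e_t = 3.0 + x, 3.0 - x
    levels = spin_mixed_energies(e_s, e_t, SOCS)
    print(f"x = {x:+.2f}  MCH: S = {e_s:.3f}, T (3x) = {e_t:.3f}  diagonal: "
          + ", ".join(f"{e:.3f}" for e in levels))
```

Near x = 0, the singlet and one triplet combination repel each other by 2|c| (about 0.09 eV with these made-up SOCs), while two levels stay at the MCH triplet energy; including triplet–triplet SOCs or further states can lift this remaining degeneracy as well.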

As mentioned above, the states in the MCH basis can also be coupled via electromagnetic fields. Diagonalizing the potential matrix then yields so-called field-dressed states or light-induced potentials, which can also be termed field-adiabatic.362,374,397−399 Since the fields are usually time-dependent, time is the most important axis along which the potentials in this field-dressed basis need to be plotted.374
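A field-dressed basis can be sketched for two hypothetical states at one fixed geometry: the field couples them through -MU12*E(t) (dipole approximation, permanent dipoles neglected), and diagonalizing the instantaneous 2×2 potential matrix at each time gives field-adiabatic energies. The pulse parameters, energies, and transition dipole are illustrative values in atomic units, and this quasi-static picture is only one of several ways to define light-induced potentials (Floquet-type treatments are common as well).

```python
import math


def field(t, e0=0.02, omega=0.1, t0=200.0, width=60.0):
    """Hypothetical linearly polarized pulse with a Gaussian envelope (atomic units)."""
    return e0 * math.exp(-(((t - t0) / width) ** 2)) * math.cos(omega * (t - t0))


def field_dressed_energies(v1, v2, mu12, t):
    """Instantaneous eigenvalues of [[v1, -mu12*E(t)], [-mu12*E(t), v2]].

    Dipole approximation; permanent dipole moments are neglected for brevity.
    """
    w = -mu12 * field(t)
    mean, half = 0.5 * (v1 + v2), math.hypot(0.5 * (v1 - v2), w)
    return mean - half, mean + half


# Hypothetical field-free potentials at one fixed geometry (Hartree) and transition dipole (a.u.).
V1, V2, MU12 = 0.0, 0.15, 1.5
for t in range(0, 401, 25):
    lower, upper = field_dressed_energies(V1, V2, MU12, float(t))
    print(f"t = {t:3d} a.u.   E_lower = {lower:+.5f}   E_upper = {upper:+.5f}")
```

Far from the pulse maximum at t0, the energies return to V1 and V2, whereas near t0 the two dressed states are pushed apart under the pulse envelope; the time axis therefore carries the essential information, as stated above.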

In principle, all these bases are equivalent, but only if an infinite number of terms is considered in eq 6. In practice, potentials represented in different bases have different advantages for dynamics simulations, especially in combination with the different approximations made in the various dynamics methods, as outlined below.

3.3. Excited-State Dynamics Simulations

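In order to investigate the temporal evolution of an isolated molecular system in the excited states, the time-dependent Schrödinger equation has to be solved:254

$$i\hbar \frac{\partial}{\partial t} \Psi(\mathbf{r}, \mathbf{R}, t) = \hat{H} \Psi(\mathbf{r}, \mathbf{R}, t) \tag{11}$$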

From a technical point of view, a sequence of time steps is computed: in every step, the electronic problem is solved to yield the potentials, which determine the forces acting on the nuclei, so that the nuclear equations of motion can be integrated for the current time step.
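The time-stepping scheme just described can be sketched with the velocity Verlet integrator for one classical nucleus on a single adiabatic potential. The function electronic_structure is a hypothetical placeholder for a quantum chemistry or ML call that returns energy and gradient; the model potential (the lower state of an avoided crossing plus a harmonic term), the units, and the step size are assumptions. Nonadiabatic transitions between states are deliberately left out here.

```python
import math


def electronic_structure(x):
    """Hypothetical stand-in for a quantum chemistry (or ML) call at geometry x.

    Returns energy and gradient of the lower adiabatic state of a model with
    an avoided crossing at x = 0, plus a harmonic term (arbitrary units).
    """
    slope, coupling, k = 1.0, 0.05, 0.5
    root = math.hypot(slope * x, coupling)
    return 0.5 * k * x ** 2 - root, k * x - slope ** 2 * x / root


def velocity_verlet(x, v, mass, dt, n_steps):
    """Propagate one classical trajectory, calling the electronic structure once per step."""
    energy, grad = electronic_structure(x)
    trajectory = [(0.0, x, v, energy + 0.5 * mass * v ** 2)]
    for step in range(1, n_steps + 1):
        v_half = v - 0.5 * dt * grad / mass     # half-step velocity from the current force
        x = x + dt * v_half                     # full-step position update
        energy, grad = electronic_structure(x)  # new electronic problem at the new geometry
        v = v_half - 0.5 * dt * grad / mass     # finish the velocity step with the new force
        trajectory.append((step * dt, x, v, energy + 0.5 * mass * v ** 2))
    return trajectory


traj = velocity_verlet(x=1.0, v=0.0, mass=1.0, dt=0.01, n_steps=2000)
total_energies = [state[3] for state in traj]
print(f"final time = {traj[-1][0]:.1f}, final x = {traj[-1][1]:.3f}")
print(f"total-energy drift = {max(total_energies) - min(total_energies):.2e}")
```

Each loop iteration calls electronic_structure exactly once, so the cost of such a simulation is essentially the number of time steps times the cost of one electronic structure calculation; the small total-energy drift checks that the time step is adequate.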

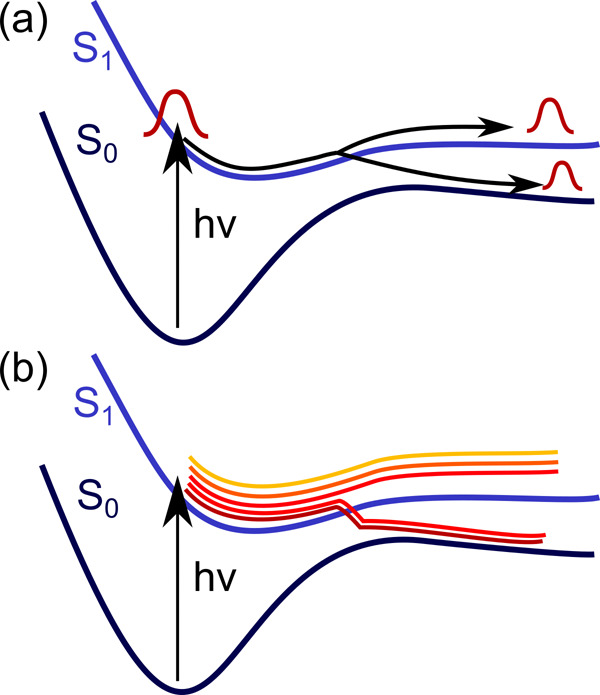

Ideally, the nuclei are treated quantum mechanically. In this case, the PESs are usually computed in advance and either interpolated or stored on a grid for later use. Such global PESs are needed because the nuclei are described by a wave function, which extends over a range of nuclear coordinates at the same time (see Figure 7a). The hope is that ML can drastically improve the interpolation of such potentials. An overview of the corresponding dynamics methods is given in section 3.3.1.

Figure 7.

Excited-state dynamics can be treated with (a) quantum approaches, where wave functions are used for the nuclei, or (b) classical approaches, based on trajectories.

The nuclear dynamics can also be approximated classically while quantum potentials are used; i.e., mixed quantum–classical dynamics (MQCD) simulations are carried out. Such methods are discussed in section 3.3.2. Since the classical nuclear trajectories are defined at only one nuclear geometry at a time (see Figure 7b), the potential energies can be calculated on the fly. An on-the-fly scheme is computationally advantageous if the number of geometries visited during the dynamics is smaller than the number of points needed to represent the conformational space on a grid or via interpolation.236,256,258,331,332,367,400 No fitting of PESs is necessary in an on-the-fly approach, but fitted PESs can still be used as an alternative. Since ML approaches provide such interpolated potentials, they are advantageous only if the number of training points generated with quantum chemistry is smaller than the number of points needed in an on-the-fly approach. This condition is satisfied, e.g., for long time scales or if many trajectories are necessary.
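The break-even condition stated above is simple arithmetic. The sketch below counts the electronic structure calculations of an on-the-fly simulation and compares them with the size of an ML training set. All numbers are hypothetical, and the criterion assumes the same cost per reference calculation in both cases while neglecting the cost of training and evaluating the ML model.

```python
def on_the_fly_calls(n_trajectories, simulation_time_fs, time_step_fs):
    """Quantum chemistry calls if every geometry of every trajectory is computed on the fly."""
    n_steps = round(simulation_time_fs / time_step_fs)
    return n_trajectories * (n_steps + 1)  # +1 for the initial geometry


def ml_is_advantageous(n_training_points, n_trajectories, simulation_time_fs, time_step_fs):
    """Criterion from the text: fewer reference calculations than on-the-fly calls.

    Assumes equal cost per reference calculation and neglects the cost of
    training and evaluating the ML model.
    """
    return n_training_points < on_the_fly_calls(n_trajectories, simulation_time_fs, time_step_fs)


# Hypothetical numbers for illustration only.
for n_traj, t_max in ((1, 1000.0), (100, 1000.0), (100, 10000.0)):
    calls = on_the_fly_calls(n_traj, t_max, 0.5)
    print(f"{n_traj:4d} trajectories x {t_max:7.0f} fs: {calls:9d} on-the-fly calls; "
          f"ML with 5000 training points worthwhile: {ml_is_advantageous(5000, n_traj, t_max, 0.5)}")
```

A single 1 ps trajectory needs fewer calculations than such a training set, whereas 100 trajectories already require about 200,000, which is why many trajectories or long time scales favor the ML route.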

In the following, we briefly discuss the different ways of describing nuclear motion and the opportunities ML models offer to enhance the respective dynamics simulations.

3.3.1. Quantum Nuclear Dynamics

The computational cost of an exact nuclear dynamics simulation scales exponentially with the number of nuclear degrees of freedom. Hence, such simulations are limited to small systems, typically containing fewer than five atoms.59,368,401,402 Even so, the calculation of the PESs can be a rather expensive part of the whole scheme, and the use of ML algorithms is advisable even for such small systems.
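The exponential scaling can be made tangible by counting grid points. The sketch assumes a direct-product grid with a fixed, hypothetical number of 10 points per internal coordinate (3N − 6 coordinates for a nonlinear molecule with N atoms) and one complex double-precision amplitude per point, and it estimates only the memory needed to store the nuclear wave function of a single electronic state.

```python
def grid_points(n_atoms, points_per_coordinate=10, linear=False):
    """Size of a direct-product grid over all internal nuclear coordinates."""
    n_internal = 3 * n_atoms - (5 if linear else 6)
    return points_per_coordinate ** n_internal


BYTES_PER_COMPLEX = 16  # one complex double-precision amplitude per grid point and state
for n_atoms in range(3, 7):
    n = grid_points(n_atoms)
    print(f"{n_atoms} atoms: {3 * n_atoms - 6:2d} coordinates, {n:.0e} grid points, "
          f"{n * BYTES_PER_COMPLEX / 1e9:.3g} GB per electronic state")
```

Going from four to five atoms raises the storage from about 16 MB to about 16 GB per state, and six atoms would need about 16 TB, which illustrates why exact grid-based simulations are typically restricted to fewer than five atoms.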

To treat larger systems, approximations have to be invoked.402 A prominent approach that can be converged to the exact solution is the multiconfigurational time-dependent Hartree (MCTDH) method.48,403−405 Its high efficiency stems from the use of time-dependent basis functions to represent the nuclear wave functions. Nonetheless, the calculations remain costly, and the nuclear degrees of freedom are often reduced to only a few key coordinates,239,406 which classical simulations can help to identify.407 Whether quantum dynamics of such reduced-dimensionality models is better than classical dynamics of the full-dimensional system is still under debate and probably depends on the system. For quantum dynamics, the potentials need to be provided to the algorithm in the diabatic basis, mostly for reasons of numerical stability (e.g., smooth couplings are easier to integrate than singular ones). For more than 20 years, the (modified) Shepard interpolation has been used to fit diabatic potentials.151,408−411 Notably, the GROW algorithm151 can be used to efficiently generate the database of points on which the interpolation is based. However, it is clearly desirable to treat larger systems, and ML models like neural networks (NNs) promise higher performance or more flexibility in such cases.143,146,147,149,378,392,412−414
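The idea behind the modified Shepard interpolation can be sketched in one dimension: every reference geometry stores a value, gradient, and Hessian, and the interpolated potential is a weighted average of the resulting local second-order Taylor expansions, with normalized inverse-distance weights. The Morse-type reference function, the weighting exponent power=6, and the evenly spaced data points are assumptions for illustration; in practice, the method operates in many internal coordinates and is applied to the elements of a diabatic potential matrix, and the placement of data points is chosen by algorithms such as GROW rather than on a fixed grid.

```python
import math


def morse(x):
    """Hypothetical smooth reference potential (arbitrary units) and its first two derivatives."""
    e = math.exp(-x)
    return 0.5 * (1.0 - e) ** 2, (1.0 - e) * e, -e + 2.0 * e * e


def shepard(x, data, power=6):
    """Modified Shepard interpolation of a scalar potential in one dimension.

    data: list of (x_i, V_i, dV_i, d2V_i). Each point contributes its second-order
    Taylor expansion, weighted by the normalized inverse distance |x - x_i|**(-power).
    """
    weights, expansions = [], []
    for xi, vi, gi, hi in data:
        d = x - xi
        if d == 0.0:
            return vi  # the interpolant reproduces the reference data exactly
        weights.append(abs(d) ** (-power))
        expansions.append(vi + gi * d + 0.5 * hi * d * d)
    return sum(w * t for w, t in zip(weights, expansions)) / sum(weights)


# Hypothetical reference data: value, gradient and Hessian at evenly spaced geometries.
data = [(xi, *morse(xi)) for xi in (-0.5 + 0.25 * i for i in range(11))]
test_points = [-0.375 + 0.05 * k for k in range(45)]
max_error = max(abs(shepard(x, data) - morse(x)[0]) for x in test_points)
print(f"{len(data)} data points, max interpolation error = {max_error:.1e}")
```

Because each expansion is exact at its own data point and the weights become singular there, the interpolant passes through the reference energies; between the points, the error is governed by the neglected third-order Taylor terms.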