Abstract

We provide an introduction to Gaussian process regression (GPR) machine-learning methods in computational materials science and chemistry. The focus of the present review is on the regression of atomistic properties: in particular, on the construction of interatomic potentials, or force fields, in the Gaussian Approximation Potential (GAP) framework; beyond this, we also discuss the fitting of arbitrary scalar, vectorial, and tensorial quantities. Methodological aspects of reference data generation, representation, and regression, as well as the question of how a data-driven model may be validated, are reviewed and critically discussed. A survey of applications to a variety of research questions in chemistry and materials science illustrates the rapid growth in the field. A vision is outlined for the development of the methodology in the years to come.

1. Introduction

At the heart of chemistry is the need to understand the nature, transformations, and macroscopic effects of atomistic structure. This is true for materials—crystals, glasses, nanostructures, composites—as well as for molecules, from the simplest industrial feedstocks to entire proteins. And with the often-quoted role of chemistry as the “central science”,1,2 its emphasis on atomistic understanding has a bearing on many neighboring disciplines: candidate drug molecules are made by synthetic chemists based on an atomic-level knowledge of reaction mechanisms; functional materials for technological applications are characterized on a range of length scales, which begins with increasingly accurate information about where exactly the atoms are located relative to one another in three-dimensional space.

Research progress in structural chemistry has largely been driven by advances in experimental characterization techniques, from landmark studies in X-ray and neutron crystallography to novel electron microscopy techniques which make it possible to visualize individual atoms directly. Complementing these new developments, detailed and realistic structural insight is also increasingly gained from computer simulations. Today, chemists (together with materials scientists) are heavy users of large-scale supercomputing facilities, and the computationally guided discovery of previously unknown molecules and materials has come within reach.3−8

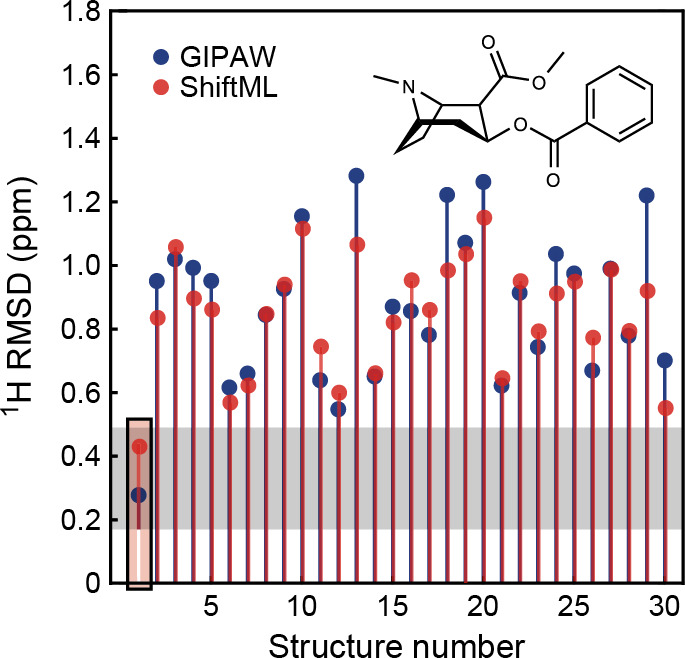

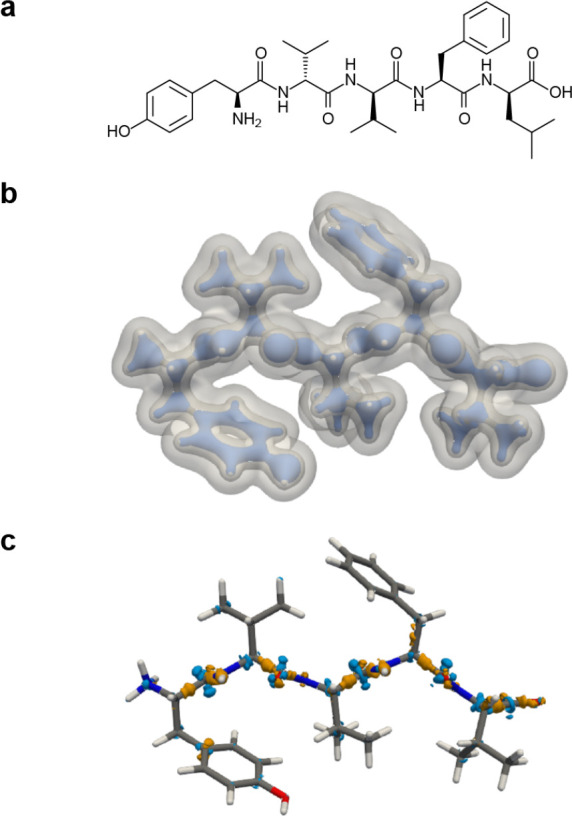

Computations based on the quantum mechanics of electronic structure, currently most commonly within the framework of density-functional theory (DFT), are widely used to study structures of molecules and materials and to predict a range of atomic-scale properties.9−11 Two approaches are of note here. One is the prediction of atomically resolved physical quantities, e.g., isotropic chemical shifts, δiso, that can be used to simulate NMR spectra with a large degree of realism12—thereby making it possible to corroborate or falsify a candidate structural model or to deconvolute experimentally measured spectra. The other central task is the determination of atomistic structure itself, achieved through molecular dynamics (MD), structural optimization, and other quantum-mechanically driven techniques. Many implementations of DFT exist and are widely used, and their consistency has been demonstrated in a comprehensive community-wide exercise.13

Electronic-structure computations are expensive, in terms

of both

their absolute resource requirements and their scaling behavior with

the number of atoms, N. For DFT, the scaling is typically  in the most common implementations; see

ref (14) for the current

status of a linear-scaling implementation. Routine use is therefore

limited to a few thousand atoms at most for DFT single-point evaluations,

to a few hundred atoms for DFT-driven “ab initio” MD,

and to even fewer for high-level wave function theory methods such

as coupled cluster (CC) theory or quantum Monte Carlo (QMC). The latter

techniques offer an accuracy far beyond standard DFT, and they are

beginning to become accessible not only for isolated molecules but

also for condensed phases. However, running MD with these methods

requires substantial effort and is currently largely limited to proof-of-principle

simulations.15−17 For studies that predict atomistic properties, such

as NMR shifts, derived from the wave function, a new electronic-structure

computation has to be carried out every time a new structure is considered,

again incurring large computational expense.

in the most common implementations; see

ref (14) for the current

status of a linear-scaling implementation. Routine use is therefore

limited to a few thousand atoms at most for DFT single-point evaluations,

to a few hundred atoms for DFT-driven “ab initio” MD,

and to even fewer for high-level wave function theory methods such

as coupled cluster (CC) theory or quantum Monte Carlo (QMC). The latter

techniques offer an accuracy far beyond standard DFT, and they are

beginning to become accessible not only for isolated molecules but

also for condensed phases. However, running MD with these methods

requires substantial effort and is currently largely limited to proof-of-principle

simulations.15−17 For studies that predict atomistic properties, such

as NMR shifts, derived from the wave function, a new electronic-structure

computation has to be carried out every time a new structure is considered,

again incurring large computational expense.

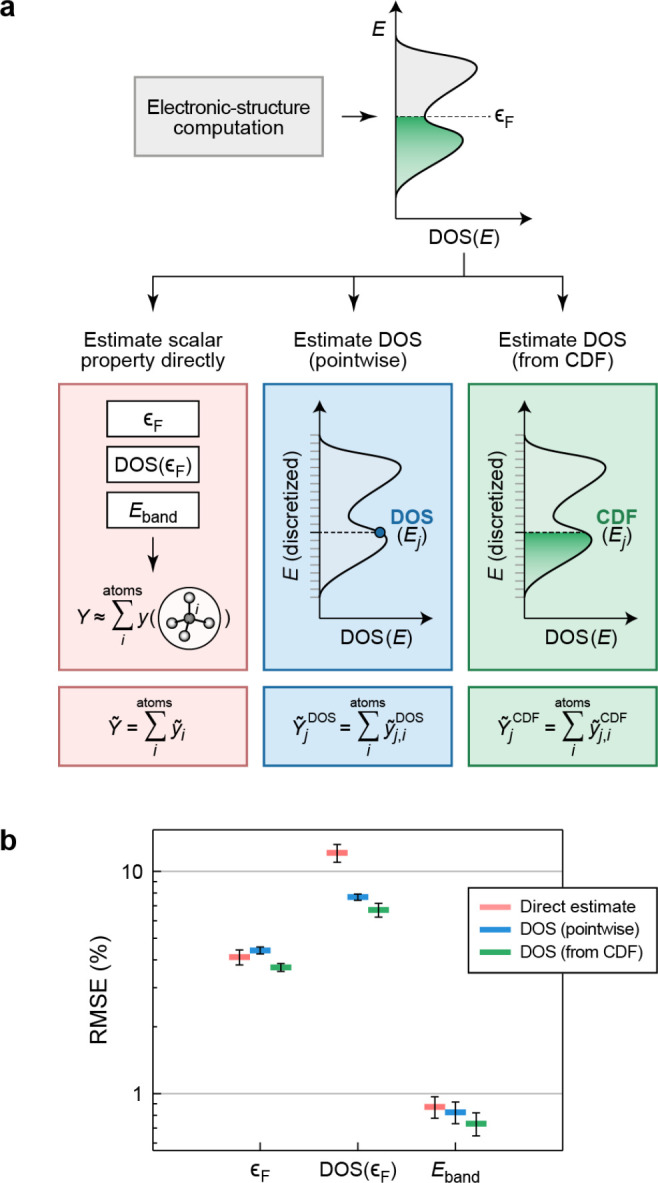

In the past decade, machine learning (ML) techniques have become a popular alternative, aiming to make the same type of predictions using an approximate or surrogate model, while requiring only a small fraction of the computational costs. There is practical interest in being able to access much more realistic descriptions of structurally complex systems (e.g., disordered and amorphous phases) than currently feasible, as well as a wider chemical space (e.g., scanning large databases of candidate materials rather than just a few selected ones). There is also a fundamental interest in the question of how one might “teach” chemical and physical properties to a computer algorithm which is inherently chemically agnostic and in the relationship of established chemical rules with the outcome of purely data-driven techniques.19 We may direct the reader to high-level overviews of ML methods in the physical sciences by Butler et al.,20 Himanen et al.,21 and Batra et al.,22 to more detailed discussions of various technical aspects,23−26 and to a physics-oriented review that places materials science in the context of many other topics for which ML is currently being used.27

The use of ML in computational chemistry, materials science, and also condensed-matter physics is often focused on the regression (fitting) of atomic properties, that is, the functional dependence of a given quantity on the local structural environment. For the case of force fields and interatomic potentials, there are a number of general overview articles28−31 and examples of recent benchmark studies.32,33 There are also specialized articles that offer more detailed introductions.34−38

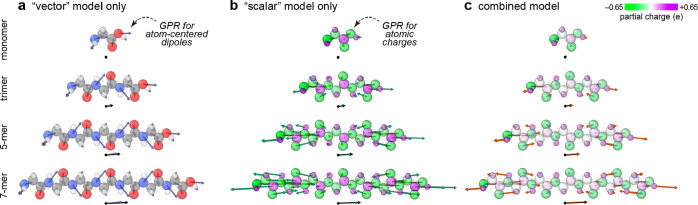

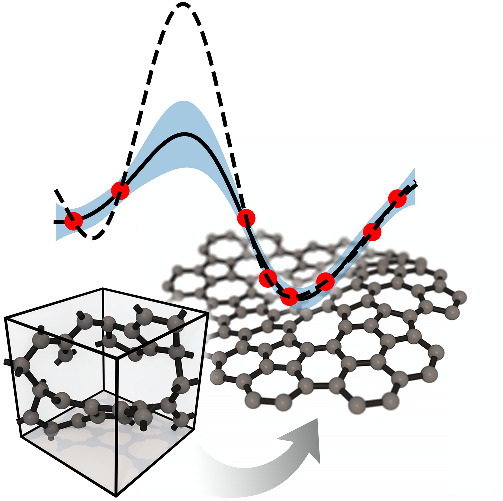

In the present work, we review the application of Gaussian process regression (GPR) to computational chemistry, with an emphasis on the development of the methodology over the past decade. Figure 1 provides an overview of the central concepts. Given early successes, there is significant emphasis on the construction of accurate, linear-scaling force-field models and the new chemical and physical insights that can be gained by using them. We also survey, more broadly, methodology and emerging applications concerning the “learning” of general atomistic properties that are of interest for chemical and materials research. Quantum-mechanical properties, including the eletronic energy, are inherently nonlocal, but the degree to which local approximations, taking account of the immediate neighborhood of an atom, can be used will be of central importance. It is hoped that the present work—indeed the entire thematic issue in which it appears—will provide guidance and inspiration for research in this quickly evolving field and that it will help advance the transition of the methodology from relatively specialized to much more widely used.

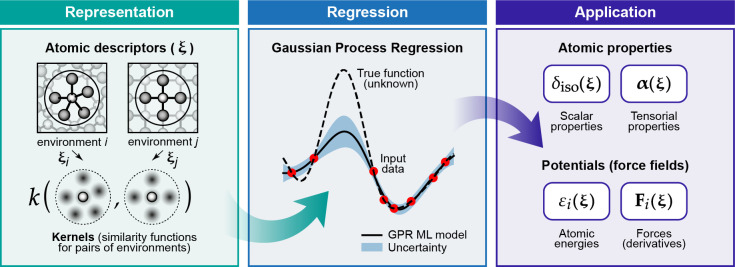

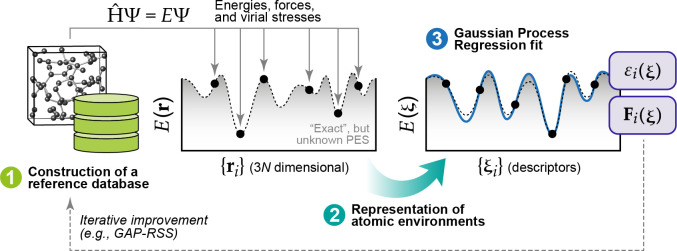

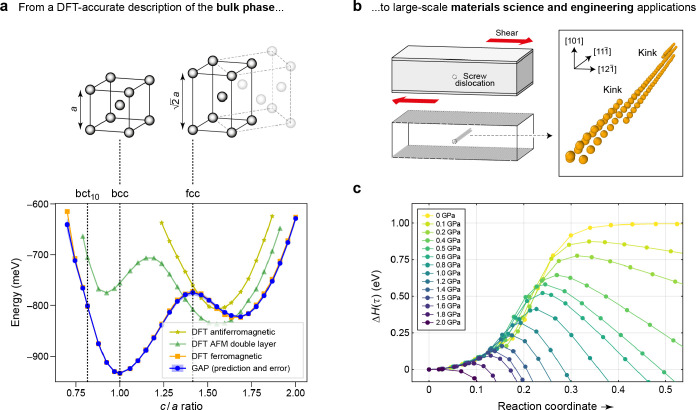

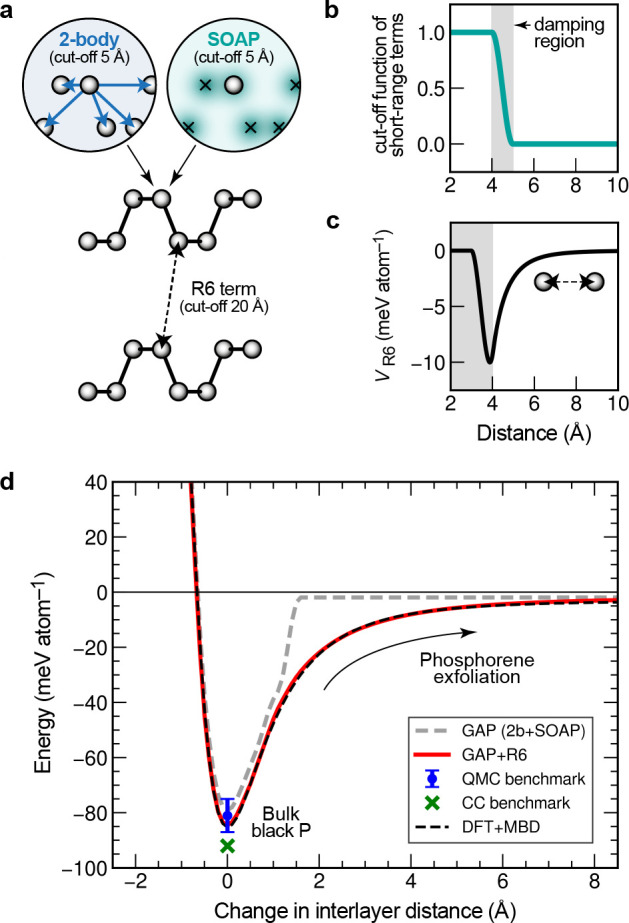

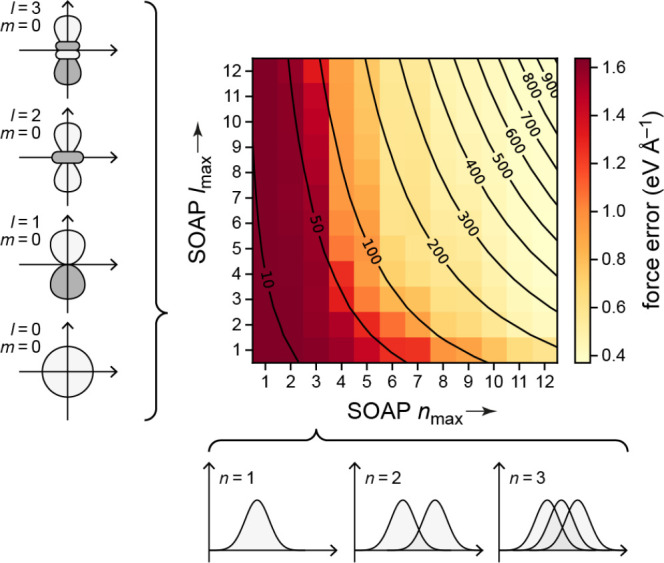

Figure 1.

Overview of central concepts in Gaussian process regression (GPR) machine-learning models of atomistic properties. Left: The models discussed in the present review are based on atomistic structure, and therefore, they require a suitable representation of atomic environments up to a cutoff. The neighborhood is “encoded” using a descriptor vector, ξ, and a kernel function, k, which is used to evaluate the similarity of two atomic environments. Center: In the regression task, the goal is to infer an unknown function from a limited number of observations or input data (section 2). The result, in GPR, is a function with quantifiable uncertainty. Right: Applications of GPR. There are two main classes within the scope of the present review. The first class of applications is the fitting of atomic properties (section 3): these can be scalar, such as the isotropic chemical shift in NMR, δiso, or vectors or higher-order tensors, such as the polarizability, α. The second class of applications is the construction of interatomic potentials or force fields (section 4), which describe atomic energies, εi , as well as interatomic forces, Fi. All these properties are fitted as functions of the descriptor, ξ. The drawings on the left are adapted from ref (18). Adapted by permission of The Royal Society of Chemistry. Copyright 2020 The Royal Society of Chemistry.

2. Gaussian Process Regression

We begin this review article with a brief general introduction to the basic principles of GPR. The present section is not yet concerned with applications, but rather provides a discussion of the underlying mathematical concepts and motivates them for modeling functions in the context of chemistry and physics, as a preparation for subsequent sections of this review. A glossary of the most important terms is provided in Table 1.

Table 1. Glossary of Technical Terms and Concepts Relevant to GPRa.

| Covariance | A measure for the strength of statistical correlation between two data values, y(x) and y(x′), usually expressed as a function of the distance between x and x′. Uncorrelated data lead to zero covariance. |

| Descriptor | In the context of regression, descriptors (sometimes called “features”) encode the independent variables into a vector, x, on which the modeled variable, y, depends. |

| Hyperparameter | A global parameter of an ML model that controls the behavior of the fit. Distinct from the potentially very large number of “free parameters” that are determined when the model is fitted to the data. Hyperparameters are estimated from experience or iteratively optimized using data. |

| Kernel | A similarity measure between two data points, normally denoted k(x, x′). Used to construct models of covariance. |

| Overfitting | A fit that is accurate for the input data but has uncontrolled errors elsewhere (typically because it has not been regularized appropriately). |

| Prior | A formal quantification, as a probability distribution, of our initial knowledge or assumption about the behavior of the model, before the model is fitted to any data. |

| Regularity | Here, we take a function to be regular if all of its derivatives are bounded by moderate bounds. Loosely interchangeable with “degree of smoothness”. |

| Regularization | Techniques to enforce the regularity of fitted functions. In the context of GPR, this is achieved by penalizing solutions which have large basis coefficient values. The magnitude of the regularization may be taken to correspond to the “expected error” of the fit. |

| Sparsity | In the context of GPR, a sparse model is one in which there are far fewer kernel basis functions than input data points, and the locations of these basis functions (which we call the representative set) need not coincide with the input data locations. |

| Underfitting | A fit that does not reach the accuracy, on neither the training nor the test data, that would be possible to achieve by a better choice of hyperparameters. |

These definitions do not yet refer to physical properties, but they will be used in subsequent sections. For a comprehensive introduction to GPR, we refer the reader to ref (39).

Inferring a continuous function from a set of individual (observed or computed) data points is a common task in scientific research. Depending on the prior knowledge of the process that underlies the observations, a wide range of approaches are available. If there exists a plausible model that can be translated to a closed functional formula, parametric fitting is most suitable, as limited data are often sufficient to estimate the unknown parameters. Examples include the interaction of real (nonideal) gas particles, the Arrhenius equation, or, closer to the topic of the present review, the r–6 decay of the long-range tail of the van der Waals dispersion interaction.

In practice, not all processes can be modeled well by simple expressions. Structure–property relations, kinetics of biomolecular reactions, and quantum many-body interactions are examples of observable outcomes that depend on input variables in a complex, not easily separable way, because of the presence of hidden variables. Instead of trying to understand this dependence analytically, one may set out to describe it purely based on existing data and observations. Interpolation and regression techniques provide tools to fill in the space between data points, resulting in a continuous function representation which, once established, can be used in further work. Linear interpolation and cubic splines are widely used examples of these methods, but they are limited to low-dimensional data and cases where there is little noise in the observations. With more than a few variables, it becomes exponentially more difficult to collect sufficient data for the uniform coverage that is required by these methods. As interpolation techniques are inherently local, noise in observations is not averaged out over a larger domain, meaning that these approaches tend to be less tolerant to uncertainty in the data.

From the practitioner’s point of view, GPR is a nonlinear, nonparametric regression tool, useful for interpolating between data points scattered in a high-dimensional input space. It is based on Bayesian probability theory and has very close connections to other regression techniques, such as kernel ridge regression (KRR) and linear regression with radial basis functions. In the following, we will discuss how these methods are related.

Nonparametric regression does not assume an ansatz or a closed functional form, nor does it try to explain the process underlying the data using theoretical considerations. Instead, we rely on a large amount of data to fit a flexible function with which predictions can be made; this is what we call “machine learning”.

GPR provides a solution to the modeling problem such that the locality of the interpolation may be explicitly and quantitatively controlled, by encoding it in the a priori assumption of smoothness of the underlying function. To introduce GPR, we consider a smooth, regular function, y(x), which takes a d-dimensional vector as input and maps it onto a single scalar value:

| 1 |

We do not know the functional form of y, but we have made N independent observations, yn , of its value at the locations xn , resulting in a dataset,

| 2 |

We can consider the observations, yn to be samples of y(x) at the given location, which may contain observation noise. The goal is now to use these data values to create an estimator that can predict the continuous function y(x) at arbitrary locations x and also to quantify the uncertainty (“expected error”) of this prediction.

There are two equivalent approaches to deriving the GPR framework: the weight-space and the function-space views, each highlighting somewhat different aspects of the fitting process.39 We provide both derivations in the following.

2.1. Weight-Space View of GPR

In the weight-space

view of GPR, which is also the one most closely aligned with the usual

exposition of kernel ridge regression, we approximate y(x) by  (x), defined as a linear combination

of M basis functions (Figure 2):

(x), defined as a linear combination

of M basis functions (Figure 2):

| 3 |

where the basis functions, k, are placed at arbitrary locations in the input space, xm , comprising what we

refer to

as the representative set, {xm}m=1M (sometimes also called the

“active” or “sparse” set), and cm are coefficients or weights.

At this stage, we do not need to specify the actual functional form

of k; we only need to know that  describes the similarity

between the function

at two arbitrary locations,

describes the similarity

between the function

at two arbitrary locations,  , that the function is symmetric to swapping

its arguments, viz. k(x, x′)

= k(x′, x), and that it is positive semidefinite.

The kernel function is positive semidefinite when, given an arbitrary

set of inputs {xn}, the matrix

built from k(xn, xn′) is positive

semidefinite.

, that the function is symmetric to swapping

its arguments, viz. k(x, x′)

= k(x′, x), and that it is positive semidefinite.

The kernel function is positive semidefinite when, given an arbitrary

set of inputs {xn}, the matrix

built from k(xn, xn′) is positive

semidefinite.

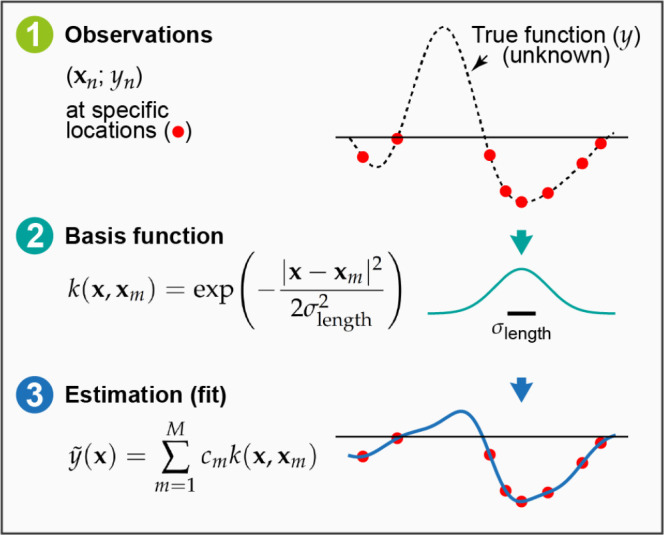

Figure 2.

Basic elements of GPR as discussed in the present

section: (1)

observations of an unknown function at a number of locations; (2)

basis functions (only one of them shown for clarity), centered at

the data locations; (3) an estimation,  , defined by the set

of coefficients, cm,

and the corresponding basis

functions; this is the prediction of the GPR model.

, defined by the set

of coefficients, cm,

and the corresponding basis

functions; this is the prediction of the GPR model.

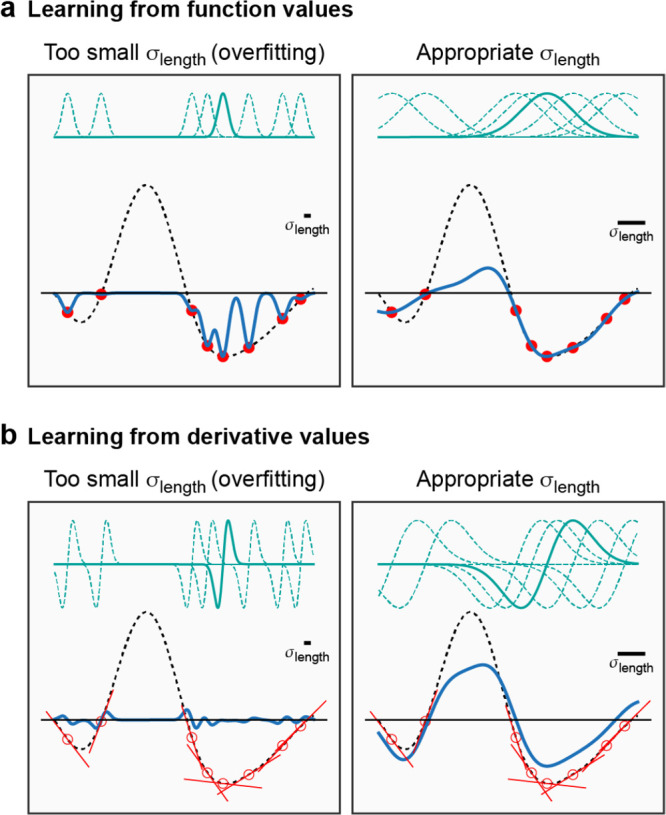

Although the form of the kernel function does not matter in principle, the practical success or failure of a GPR model will depend to a large extent on choosing the appropriate kernel. Figure 3a demonstrates this using the example of the Gaussian kernel which includes a length scale hyperparameter, σlength (defined in Figure 2). In fact, this kernel is a universal approximator for any setting of the length scale, but choosing an inappropriate length scale will result in very slow convergence as a function of the number of training data points.

Figure 3.

Effect of the kernel length scale on the GPR fit for different types of input data. (a) Learning from function value observations. We illustrate the effect of using a small (left) or larger (right) hyperparameter associated with the correlation length scale (represented by a solid bar in each panel) on the GPR models (solid black line). Basis functions (teal dashed lines—one is highlighted as solid for clarity), centered on data points (red circles) sampled from the target function (black dashed line), are also shown. (b) Learning from derivative values (section 2.4). Data points are represented by red points and derivatives by red sticks: in this example, the data values themselves, i.e. the {yn}, are not included in the fits. For all fits in this figure, the regularizer was very small, just large enough to ensure that a stable numerical solution to the linear least-squares problem can be obtained.

The fitting of the GPR model to the data is accomplished by finding the coefficients c = (c1, ..., cM) that minimize the loss function,

| 4 |

where R is a regularization term and the relative importance of individual data points is controlled by the parameters σn. In GPR, the Tikhonov regularization is used, defined as

| 5 |

Two objectives are included in the loss function that is defined in eq 4. The first term is designed to achieve a close fit to the data points. However, this term alone would lead to overfitting because of the large flexibility of the functional form, and it is therefore controlled by the second term, the regularization, which forces the coefficients to remain small. The collection of parameters {σn}n=1N (together with the length scale hyperparameter) adjusts the balance between accurately reproducing the fitting data points and the overall smoothness of the estimator.

Crucially, the coefficients in this regularization term are also weighted by the corresponding kernel elements, a relation that can be understood when formally deriving GPR from the properties of the reproducing kernel Hilbert space (RKHS), which we discuss below.39,40Equation 4 is often written as

| 6 |

using a uniform σn = σ parameter for all data points, but we later exploit the ability to express the reliability of each data point individually. Because in this form, σ multiplies the Tikhonov regularizer, most practitioners identify σ with the regularization “strength” or “magnitude”. Using this definition, we can rewrite the loss function in matrix form:

| 7 |

where the matrix elements are defined as

| 8 |

and y = (y1, ..., yN). Recall

that N indicates the number of data points in  and M indicates the number

of representative points, respectively. Our notation emphasizes the

dimensions of the various kernel matrices in the subscript and implies

that KNMT ≡ KMN because the kernel function is

symmetric. In eq 7, Σ is a diagonal matrix of size N, collecting

all the σn values,

with Σnn = σn. To minimize

and M indicates the number

of representative points, respectively. Our notation emphasizes the

dimensions of the various kernel matrices in the subscript and implies

that KNMT ≡ KMN because the kernel function is

symmetric. In eq 7, Σ is a diagonal matrix of size N, collecting

all the σn values,

with Σnn = σn. To minimize  , we differentiate eq 7 with respect to cm for all m and then search for solutions

that satisfy

, we differentiate eq 7 with respect to cm for all m and then search for solutions

that satisfy

| 9 |

and we obtain

| 10 |

Rearranging gives an analytical expression for the coefficients,

| 11 |

and once these coefficients have been determined, the prediction at a new location x is evaluated using eq 3, which in matrix notation is

| 12 |

where a shorthand notation k(x) is introduced for the vector of kernel values at the prediction location (x) and the set of representative points ({xm}),

| 13 |

When the number and locations of the representative points are set to coincide with the input data points, a case to which we refer as “full GPR”, we have M = N, and the expression for the coefficients simplifies to

| 14 |

We note that the expression in eq 14, together with eq 3, is formally equivalent to kernel

ridge regression (KRR), which is also based on the solution of the

least-squares problem using Tikhonov regularization.41 Full GPR becomes expensive for large datasets, because

the computational time required to generate the approximation scales

with the cube of the dataset size,  , and the memory requirement scales as

, and the memory requirement scales as  , at least when direct dense linear algebra

is used to solve eq 14. While iterative solvers, which are ubiquitous in ML generally,

might reduce this scaling, they are not widely employed in the context

of GPR/KRR. In our applications, detailed in the rest of this review,

we use relatively few representative points, i.e. M ≪ N, and we refer to this regime as “sparse

GPR”, following the Gaussian process literature.42,43 The matrix equations that specify both the full and the sparse GPR

fits are visualized in Figure 4. More details on how we select representative points in practice

will be given in section 4.3.

, at least when direct dense linear algebra

is used to solve eq 14. While iterative solvers, which are ubiquitous in ML generally,

might reduce this scaling, they are not widely employed in the context

of GPR/KRR. In our applications, detailed in the rest of this review,

we use relatively few representative points, i.e. M ≪ N, and we refer to this regime as “sparse

GPR”, following the Gaussian process literature.42,43 The matrix equations that specify both the full and the sparse GPR

fits are visualized in Figure 4. More details on how we select representative points in practice

will be given in section 4.3.

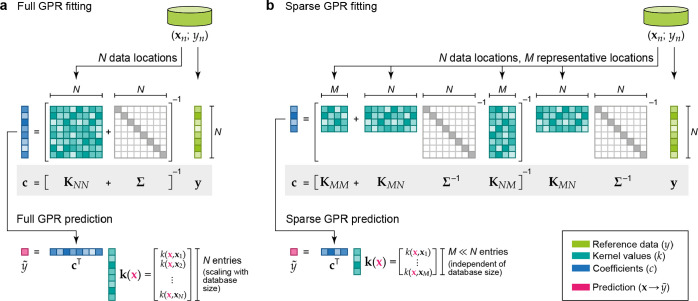

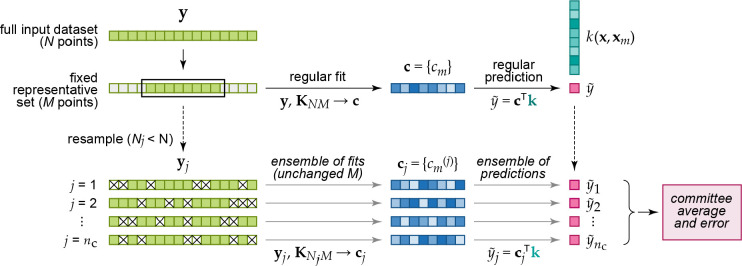

Figure 4.

Visualization of the matrix equations that define the fitting of

full (eq 14) and sparse

(eq 11) GPR models,

and the way they are used for prediction. (a) The reference database

consists of entries {xn; yn}; the data labels y1 to yn are collected in the vector y (light green);

the data locations x1 to xN are used to construct the kernel matrix, K, of size N × N (teal).

The regularizer, Σ, is shown as a light gray diagonal

matrix. By solving the linear problem, the coefficient vector c (blue) is computed, and this can be used to make a prediction

at a new location,  (x) (eq 12), the cost of which scales with the number

of data locations, N. (b) In sparse GPR, the full

data vector y is used as well, but now M representative (“sparse”) locations are chosen, with M ≪ N. The coefficient vector is

therefore of length M, and the cost of prediction

is now independent of N.

(x) (eq 12), the cost of which scales with the number

of data locations, N. (b) In sparse GPR, the full

data vector y is used as well, but now M representative (“sparse”) locations are chosen, with M ≪ N. The coefficient vector is

therefore of length M, and the cost of prediction

is now independent of N.

2.2. Function-Space View of GPR

The function-space

view is an alternative way of deriving, defining, and understanding

GPR/KRR. Again, we aim to estimate an unknown function which we can

sample at specified locations, resulting in the dataset  , and we consider

estimators of the form

, and we consider

estimators of the form

| 15 |

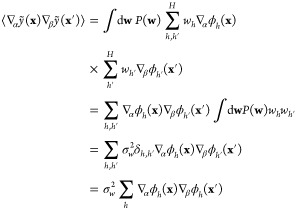

where ϕ are fixed, and for-now unspecified, basis functions. It is important to emphasize that even though eqs 3 and 15 are formally similar, the basis functions ϕh are not equivalent to the kernel function k (their relationship is shown below), nor are the coefficients c equivalent to w. Whereas in the weight-space view, the kernel basis functions are placed on the representative set of points xm, which typically (but not necessarily) coincide with data points, the fixed basis functions here are independent of the data and serve purely as a framework to define a probability distribution of functions.

The function  is determined by the

coefficients, w = (w1, w2, ...), which

are drawn from

independent,

identically distributed Gaussian probability distributions,

is determined by the

coefficients, w = (w1, w2, ...), which

are drawn from

independent,

identically distributed Gaussian probability distributions,

| 16 |

leading not to a single estimate of  but to a distribution of estimators, which

corresponds to a Gaussian prior and is commonly called a Gaussian

process (GP). For these generalized estimators, the covariance of

two estimator values at the locations x and x′ is

but to a distribution of estimators, which

corresponds to a Gaussian prior and is commonly called a Gaussian

process (GP). For these generalized estimators, the covariance of

two estimator values at the locations x and x′ is

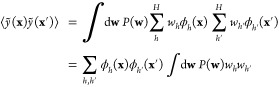

|

17 |

With the information from eq 16, the integral evaluates to σw2δhh′, and then we have

| 18 |

The sum over the basis functions in the last expression is used to define a kernel function, k, as

| 19 |

This definition makes it clear why the kernel function needs to be positive semidefinite: it has the structure of a Gram matrix, i.e. a matrix of scalar products. For coinciding arguments (x = x′), the value of the kernel function corresponds to a variance.

Each function value in the dataset is taken to incorporate observation noise, yn = y(xn) + ϵ, where ϵ is a random variable, independent for each data point and identically distributed, drawn from a Gaussian distribution with zero mean and variance σ2. It follows that the covariance of any two actual function observations in the dataset is given by

| 20 |

The probability distribution of all the observations y = (y1, ..., yN) is therefore a multivariate Gaussian with zero mean and covariance of KNN + σ2I, written as

| 21 |

Note that, for convenience in the derivation, we assume that the mean of the prior distribution of functions is zero, but very often there is a good prior guess for the mean of the function, in which case it is straightforward to modify the distribution—or simply to subtract the prior mean from the observed function values before fitting, to be added back on after prediction.

Function estimation based on the data now proceeds by fixing the N data locations and values and considering the probability distribution of a new function value, yN+1, observed at a new location, xN+1. Bayes’ rule gives this distribution as a conditional probability in terms of the old (previous) observations and the joint distribution of the old and new observations,

| 22 |

After substituting eq 21 into eq 22 (using it for both the numerator and denominator appropriately), and some algebraic manipulation,44 we find that the distribution of y(xN+1) is also Gaussian, and we can express its mean and variance as

| 23 |

| 24 |

where we again use k for the vector of covariances (kernel values) evaluated between the new data location and the set of N previous ones,

| 25 |

It is interesting to note that the GP variance estimate is formally independent of the training function values: the expression in eq 24 depends solely on the location of data points but not on the data values y. However, if the model hyperparameters are optimized either by maximizing the marginal likelihood or by cross-validation (see below), then the variance estimates do implicitly depend on training function values through this optimization.

The fact that both the estimators in eqs 23 and 24 only depend explicitly on the kernel function, k, and not on the basis functions, ϕh, shows that a GP may be defined by its kernel, without ever specifying the underlying basis set (although it is possible to determine the corresponding basis set from any given kernel). Recall that the meaning of the kernel function is the covariance of data values (eq 19) and is thus regarded as a measure of similarity between data points. This route to specifying a basis for modeling nonlinear functions is often referred to as the “kernel trick”.

Note that the combination of eqs 3 and 14 defining GPR in the weight-space view is equivalent to the result of the function-space view in eq 23. This equivalence reveals that the magnitude of the regularization term in the weight-space view, σ2 in eq 6, is the same as the variance of the Gaussian noise model on the function observations (cf. eq 20). We can use this to understand regularization from a new perspective: it is the expression of uncertainty of our observations and naturally leads to a model with an imperfect fit to the data.

Notable kernels include the Gaussian, or squared exponential, kernel (the latter name is in common use to emphasize the distinction between the form of the kernel function and the multivariate Gaussian distributions that underlie the entire GPR framework),

| 26 |

parametrized by the spatial length scale, σlength.44 The linear, or dot-product, kernel is defined as

| 27 |

where xa = [x]a are the elements of the d-dimensional input vector x. Substituting this kernel definition into eq 3 gives the prediction formula,

| 28 |

which shows that using the linear kernel in GPR is equivalent to performing regularized linear regression, with coefficients given by

| 29 |

It follows that the basis functions corresponding to the dot-product kernel are simply M functions that each pick out one element of the data vector {xm}m=1M. Finally, the polynomial kernel is

| 30 |

and expressing the prediction formula explicitly reveals that the basis functions are outer products of the elements of the data vectors. For ζ = 2, for example, we obtain the expression

| 31 |

that corresponds to a polynomial basis with a degree of ζ = 2.45

2.3. Explicit Construction of the Reproducing Kernel Hilbert Space

It is instructive to see how the function-space view of GPR arises from an explicit construction of an approximation of the RKHS.46 Consider the kernel matrix that is computed for the representative set of data points, KMM, and its eigenvalue decomposition which is given by

| 32 |

Because the kernel is positive semidefinite, the eigenvalues, Λi, are greater than or equal to zero, and it is possible to compute the feature matrix,

| 33 |

such that

| 34 |

The definition in eq 33 corresponds to performing a kernel principal component analysis (KPCA)47 without discarding any of the resulting components and is consistent with the introduction of an explicit function-space model, as follows. The elements of a feature vector, ϕ, associated with an arbitrary input point, x, are given by

| 35 |

where the sum runs over all M representative points and the number of features is the same; that is, the index j takes values from 1 to M. For any pair of locations within the representative set, we have

| 36 |

which corresponds to the definition of the kernel in terms of a scalar product in the RKHS, as given in eq 19. For arbitrary pairs of locations that are not included in the representative set, the above expression is only an approximation of the kernel, which can be improved by enlarging M.

This point of view also makes it possible to directly derive the Nyström form of sparse GPR,48 by considering it as ridge regression in the RKHS defined by the representative points. The feature matrix associated with a set of N points is ΦNM = KNMUMMΛM–1/2. This expression may be regarded as an approximate decomposition of the full kernel matrix KNN ≈ ΦNMΦNMT. The resulting regularized linear regression weights are

| 37 |

and the predictions are given by ϕ(x)·wM. By substituting for the features, ϕ, the definition in terms of the eigendecomposition of KMM (eq 35), we obtain the model predictions,

|

38 |

This is the same as eq 11, revealing how the abstract function-space derivation can be formulated as a matrix approximation problem and more generally how kernel methods can be seen as simultaneously addressing the problem of building a data-adapted feature space and performing linear regression in it.

2.4. GPR Based on Linear Functional Observations

In later sections, we will need to use the GPR formalism to estimate functions whose value cannot be directly observed. This is the case for fitting an atomic energy function (using the neighbor environment of an atom as the input) to data from quantum-mechanical electronic-structure computations, which yield the system’s total energy, not individual atomic energies, and atomic forces and stresses, which are derivatives of this total energy with respect to the atomic positions and the lattice deformation, respectively. It is therefore useful to consider this problem in the abstract: estimating a function when it is not possible to directly observe values of a function, but we have access to derived properties. The formalism that follows was introduced in ref (49) for modeling materials, which itself builds on ref (50) that discusses learning a function from its derivatives using GPR.

As a simple example,

assume that we observe data values Y at data locations X, but we wish to model the estimator as a sum of values of

the elementary estimator function  ,

,

| 39 |

where x and x′ are subsets of the degrees of freedom in X,

| 40 |

using a kernel function that is defined between points in the smaller space, k(x, x′). In the spirit of the function-space view of GPR, it follows that the covariance of two such observations Y1 and Y2 (taken at X1 = x1 ⊕ x1′ and X2 = x2 ⊕ x2′, respectively) is given by the sum of kernels,

and the rest of the regularized kernel regression formalism follows using this definition of the kernel. When building a sparse GPR model, we have the choice of picking representative points such as x from the smaller space or X from the larger space. In either case, kernels can be computed between the observed data locations and representative points, e.g. k(X1, x) = k(x1, x) + k(x1′, x).

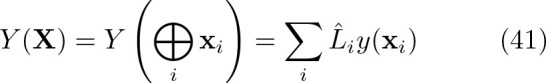

It is straightforward to generalize this construction to any linear functional observation, and the resulting kernel model becomes a linear functional of the corresponding kernel functions. To formalize this, we model the observations as

|

41 |

where L̂i is a linear operator applied on the elementary model function y. In the previous example, L̂ was simply the identity operator, but it can also include differentiation, scaling, or any other linear operation. To illustrate how fitting based on derivative observations can be performed, we consider the derivative of the estimator function defined in eq 15 with respect to the α component of the input vector, x, viz.

| 42 |

We obtain the covariance of two such derivative observations as

|

43 |

from which it follows, using eq 19, that the kernel for derivative observations is the double derivative of the original kernel:

| 44 |

In a similar manner, the covariance between a function value and a derivative observation can be found as

| 45 |

allowing a covariance matrix to be built for arbitrary observations that are linear functionals of an underlying function. For example, the block of the covariance matrix corresponding to the data vector [y, ∇1y], collected at the points [x, x′], is given by

|

46 |

For a general linear operator, L̂, the coefficients in eq 11 that constitute the regularized solution of the regression problem then become

| 47 |

where y, of length D, contains all

the training data. This matrix equation is visualized

in Figure 5. When implementing

this in code, the operator  is applied to the kernel matrix KNM which results in a matrix of size M × D, that we label

is applied to the kernel matrix KNM which results in a matrix of size M × D, that we label  or alternatively

or alternatively  .

.

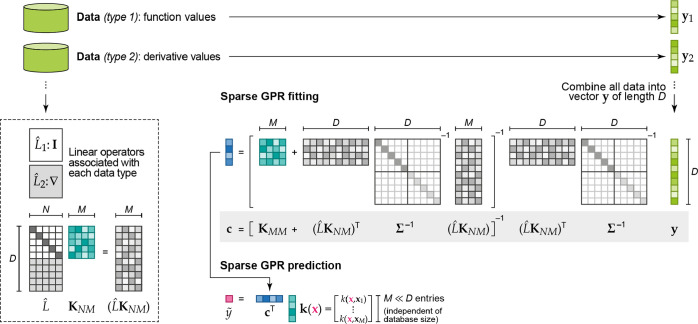

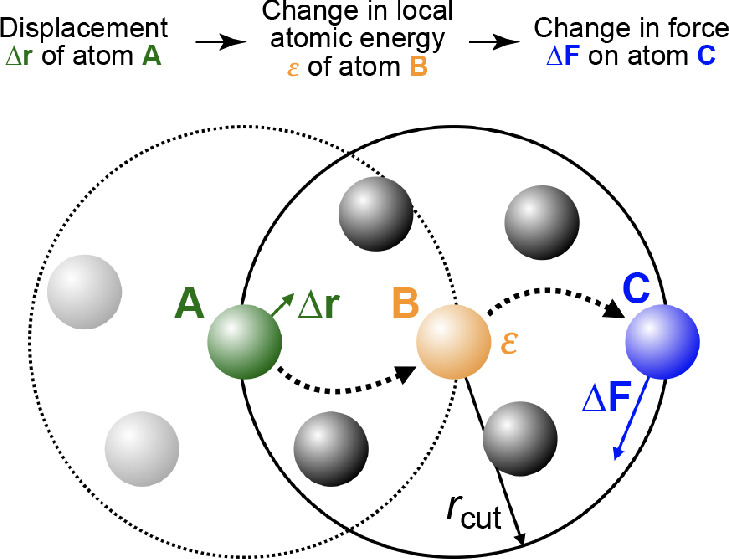

Figure 5.

Sparse GPR fitting based on different types of input data (eq 47). In this example, two types of data are present in the reference database: function values (corresponding to the identity operator, I) and derivatives (corresponding to the differential operator, ∇). All these observations are combined into a single vector, y, which has D entries. The fit itself proceeds as shown in Figure 4b but now includes the use of a matrix of operators, L̂. Some sizes of vectors and matrices (M, N, or D) are indicated. The regularization, Σ, is indicated as a block diagonal matrix (one block corresponding to function values, one to derivatives); more individual settings are also possible. Once c is determined, it is used for sparse GPR prediction in the same way as shown in Figure 4.

Figure 3 illustrates these concepts for a simple one-dimensional function (dashed lines) for which GPR estimates are made (solid lines). The examples presented here show “full GPR” fits (i.e., when the set of representative points associated with the basis functions is exactly the same as the set of input data points) in the two cases when either function values (Figure 3a) or derivative values (Figure 3b) are used in the regression. In each case, we show two choices for the length scale of the squared exponential kernel, σlength, namely a value that is too small, and also a larger (near optimal) value. If the length scale is chosen too small (left panels in Figure 3), the result is a terrible overfit in both cases, but showing different behavior. When fitting to function observations, the fit matches the data exactly near the data points (red points) and reverts to near zero away from the data points. When fitting to derivatives, the estimate has the correct derivatives locally but overall is nearly zero everywhere. For the near optimal value of the length scale (right panels in Figure 3), fitting to function observations results in an excellent fit near the right-hand side minimum where there are a lot of data and a rather poor fit elsewhere. Fitting to derivatives reproduces the shape of both minima, and the maximum in between qualitatively too, despite there being no data points there. However, the relative depths of the two minima are not well captured.

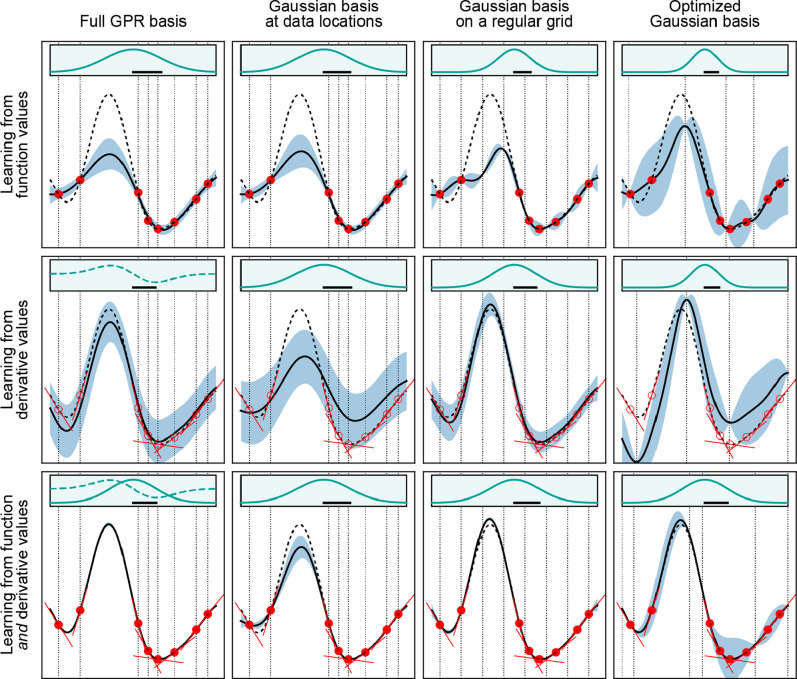

In Figure 6, we show the fit quality for the same simple one-dimensional function, but this time using sparse GPR and exploring different ways of constructing the representative set and the corresponding basis set. In the first row, only function values are used in the fit, in the second row, only derivatives are used, and in the third row, function values and derivatives are combined to form the dataset. The first column shows full GPR (as in Figure 2), using square kernel matrices and placing a basis function on each data point, with the basis function type corresponding to the data type: function value observations induce Gaussian basis functions, and derivative value observations induce Gaussian-derivative basis functions (cf. eq 45). Therefore, in the first column of Figure 6, the top panel shows a fit to the function values and uses eight Gaussian basis functions, the middle panel shows a fit to only derivative values and uses eight Gaussian-derivatives, and the bottom panel shows a fit to all the available data and uses both types of basis functions (16 altogether). The improvement in the fit from top to bottom is steep, with the bottom panel showing an almost perfect fit.

Figure 6.

Effects of different types of data and basis functions on GPR fits. These are illustrated using the same example function as in Figure 2 (black dashed lines), showing the predicted mean (black solid lines) and variance (light blue shaded area) of the fit. Observations are indicated by the red points for values and short red line segments for derivatives. The fitting data included only function values in the first row, only derivative values in the second row, and both function and derivative values in the bottom row. Full GPR was used for the data shown in the first column, and sparse GPR for those in the others. Representative point locations (vertical dotted lines) coincide with the data point locations for the first and second columns, whereas they were placed at regular intervals for the third column. In the fourth column, the number and location of representative points were optimized to maximize the marginal likelihood. The regularization hyperparameter σ as well as the length-scale hyperparameter σlength were independently optimized for each panel to maximize the marginal likelihood. Insets show the kernel basis functions used in the fit (solid for Gaussians; dashed for Gaussian derivatives); scale bars represent the optimized values of σlength.

The second, third, and fourth columns of Figure 6 all use sparse GPR fits, but with Gaussian basis functions irrespective of what the type of the data is (that is, even if only derivative values are used). In the second column, the eight basis functions are simply placed at the input data locations (and thus the first two panels of the first row are identical!). In the third column, again we use eight Gaussian basis functions but they are centered on a regular grid. This has little effect when the fitting data consist of function values, but it shows a considerable improvement in rows two and three, when derivative data are used. In the fourth column, the locations of the representative set are optimized (by maximizing the marginal likelihood; see below). Note that fewer than eight basis functions are used, because some of the basis function centers have merged during the optimization. We observe some improvement in the first row and an improved estimation of the maximum in the second row, albeit with a poor description of the relative depths of the minima. In the last row, when both the function value and derivative information are provided, the fit is as good as using a regular grid and almost as good as with full GPR (first column).

Studying such simple toy models can be very instructive in understanding GPR, but of course one has to be careful in drawing conclusions and applying them to the high-dimensional problems of materials and molecular data. Nevertheless, it is clear that full GPR does not scale to large datasets and that high dimensionality precludes the use of regular grids when setting up basis sets—indeed the fundamental reason why GPR is efficient even in many dimensions is because the basis set can adapt to the data locations. In the Gaussian approximation potential (GAP) scheme, detailed in section 4, the construction is most similar to column-four-row-three of Figure 6, because both total energy and derivative data are used, and the representative set is selected as the optimized subset of the very large number of atomic environments that are present in the input dataset.

The general formulation in eq 41 for modeling arbitrary linear observations in the framework of sparse GPR allows for the complete separation of the basis functions of the representative set and the training data. This greatly simplifies the application of GPR for force-field development, where a large proportion of the training data are in the form of atomic forces. This is because each structure contributes three times the number of atoms as Cartesian force components and just one total energy value. Attempting to use full GPR would result in square kernel matrices with row and column sizes equal to the number of input data values, which in turn would limit the models to rather small datasets. Therefore, in the context of interatomic potentials, where the fitting data correspond to total energies (sums of many atomic energies), forces, and stresses (sums of partial derivatives of many atomic energies), we model the atomic energy as the elementary function and use representative points that are individual atomic environments and corresponding to kernel (rather than kernel derivative) basis functions.

2.5. Regularization

Regularization can be regarded as a mechanism to deal with noisy and incomplete data, which balances the requirements of a smooth estimator and a close fit to the data. We introduced the Tikhonov regularization term when we described the weight-space view of GPR in section 2.1 and made the connection with the noise model assumed for function observations in section 2.2 in the function-space view of GPR. From a Bayesian point of view, a noise parameter that is significantly larger than the covariance of function values, σ2 ≫ k(x, x′), favors the prior assumption on the function space, which is smoothness, and ultimately leads to the trivial solution of the constant function y(x) = 0 as σ → ∞ (assuming that the mean of the GP prior is zero). Equivalently, the loss function in eq 4 is dominated by the regularization term for the choice of large σ and leads to the trivial solution of c = 0 in the σ → ∞ limit. Conversely, small σ values force the estimator to follow the data points as closely as possible, at the price of potentially significant overfitting. The extreme case of σ = 0 reduces eq 4 to the unregularized least-squares fit.

Apart from these considerations, regularization is of practical relevance from the point of view of numerical stability: it conditions the kernel matrix by adding a diagonal matrix with positive values. In the case of the location of some data points coinciding, the determinant of the kernel matrix KNN would otherwise become zero, and the inverse KNN–1 would become undefined without conditioning the diagonal values. Of course, it would be possible to remove exactly duplicate data points, but even close data points would cause numerical instabilities in practice, which are less trivial to eliminate. Furthermore, it may actually be desirable to have multiple data points at the same or similar locations if the observations do genuinely contain noise, as the observed function values would sample the function, and GPR would effectively and automatically perform averaging. Note that noise in the observations does not have to be of stochastic nature: even in the case of deterministic observations, model error can give rise to deviations that appear as noise, as we will discuss in section 4.

2.6. Hyperparameters

A particularly appealing feature of GPR is that it is parameter-free, in the sense that once the prior assumptions (i.e., the kernel and the observation noise) are specified, the function estimator follows. In some cases (particularly when working in the well-established field of atomic-scale modeling), the appropriate kernel and the observation noise might be known. For example, we might have a very good idea of how smoothly the atomic forces vary with atomic position (corresponding to the length scale hyperparameter, σlength, introduced above), or how much error we expect in observed values (corresponding to σ) due to a lack of convergence in the electronic-structure computations that provide the fitting data. But often, the hyperparameters describing the problem are not available. In section 6 below, we will describe strategies to set these for material models that we found effective. Formally, when using sparse GPR, the locations of the basis functions are also hyperparameters, and their choice can dramatically influence the accuracy of the fit (cf. Figure 6).

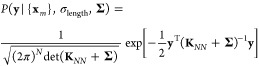

In the Bayesian interpretation of GPR, we have already made use of the marginal likelihood44 (or evidence; eq 21), which can also be understood as a conditional probability over the hyperparameters,

|

48 |

This provides a route to eliminating all of the unknown hyperparameters, because Bayes’ formula allows one to integrate the likelihood over all possible hyperparameter values when making a prediction. This is essentially an encapsulation of the Bayesian principle of “Occam’s razor”: we are not just interested in hyperparameter settings that lead to small fitting error, but in solutions that are also robust, in the sense that parameters in a large volume of parameter space near the optimum all lead to small fitting error. This turns out to be a good predictor of performance on any future test set, without having to explicitly do the test.

However, integrating the likelihood is often not a practical proposition for large models, because the integral cannot be evaluated analytically. Instead, the hyperparameters corresponding to the highest marginal likelihood are often selected, and these can be obtained in a straightforward way by maximizing the logarithm of the marginal likelihood,

|

49 |

for which the derivatives with respect to hyperparameters may also be computed.

Another route for hyperparameter optimization, more often used in the context of KRR, is cross-validation. There are many variations to this approach, but commonly the available data are divided into a “training” and a “test” set. The training set is used for the regression, with the predictions evaluated on the test set. The hyperparameters are then adjusted to achieve the lowest possible error on the test set. There are more sophisticated versions, where multiple splits are created (so-called “k-fold cross-validation”).

3. Learning Atomistic Properties

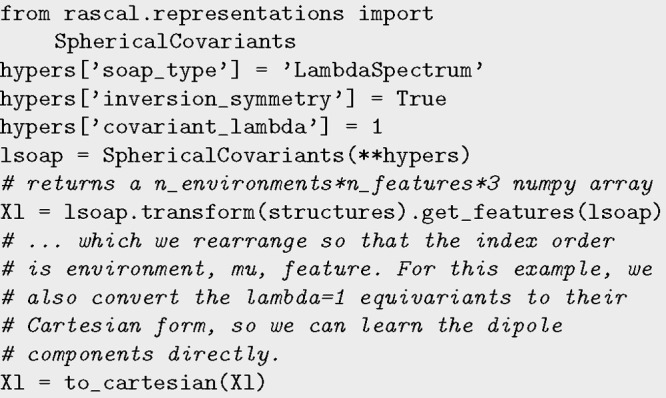

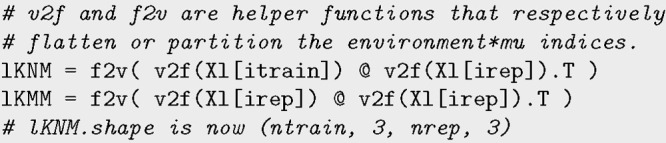

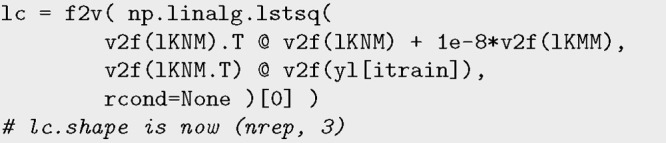

Let us now show how the general GPR framework translates into a scheme to model the atomic-scale properties of molecules and materials. First, we discuss how the Cartesian coordinates and the atomic numbers that determine the specific configuration of the system should be represented to obtain a description that is suitable for atomistic ML. This is one of the central problems in the field, and we refer the reader to a dedicated review51 in the present thematic issue for a more detailed discussion. Here, we limit ourselves to a family of approaches which covers most of the example applications that are discussed in what follows. We then present a “hands-on” example: the construction of a GPR model of the energy and dipole moment of an isolated water molecule. We use this example to introduce the relevant concepts and show them “in action”; for more details, the reader is referred to subsequent sections. We provide Python (Jupyter) notebooks that reproduce the results shown in the present section, and we report code snippets to show the connection between general expressions and the practical implementation for an atomistic problem.

3.1. Representing Atomic Structures

The chemical structure of molecules and materials is defined most directly by the Cartesian positions, {ri}, of the constituent atoms. Interatomic potential models do not typically use these positions as input directly but rather transform them into a different mathematical representation. This way, the resulting potential can automatically gain some desirable properties, particularly symmetries of the potential energy with respect to translation, rotation, and permutation (swapping) of atoms of the same element. Furthermore, the representation should reflect other physical requirements, such as smoothness of the mapping, additivity when applied to the learning of extensive properties, as well as correct limiting behaviors, e.g., that the atomization energy is zero (by definition) when atoms are at infinite separation.

The classic example of such a transformation is to represent the relative positions of two atoms i and j by their mutual distance (Figure 7a),

| 50 |

If, in addition, the potential energy is written as a separable sum of functions of these distances, the result is a pair potential,

| 51 |

where V2 is a one-dimensional function. The simplicity of the above form obscures its implications as the basis of a regression model for atomic-scale properties. The fact that the interatomic distances are independent of an absolute coordinate reference frame guarantees that the potential is invariant with respect to translation and rotation. Since the true potential energy obeys these invariances exactly, this is universally agreed to be a good thing. The true potential is also invariant to permutation of like atoms, and the separable form and the sum over each pair of atoms guarantees this invariance but at the cost of a drastic approximation: the true quantum-mechanical energy is not separable into a sum of pairwise terms. Whether this approximation still results in a usable potential depends on the system: the Lennard–Jones pair potential is an excellent approximation for noble gases, and similar models give qualitatively decent models of simple fcc metals54 and simple ionic halides55 and some oxides.56 For covalently bonded systems, pair potentials that reflect the connectivity of the system can provide reasonably accurate descriptions of small displacements, e.g., vibrational dynamics, but fail to give a natural description of chemical reactivity and are basically unusable as general-purpose models.

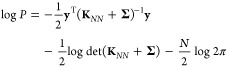

Figure 7.

Descriptors for atomistic structure. (a) Conventional 2-body and 3-body terms, viz. distances and angles between atoms, as typically used in empirical force fields. Adapted from ref (29) with permission. Copyright 2019 Wiley-VCH. (b) General descriptor for 3-body terms: the three distances, d, between the atoms, specify the relative geometry of the three atoms completely. (c) Schematic of the smooth overlap of atomic positions (SOAP) descriptor.52 The neighbor density ρ is permutationally invariant; expanding it in a local basis of radial functions and spherical harmonics, Ylm, and then summing up the square modulus of the expansion coefficients cnlm over the index m ensures rotational invariance of the power spectrum p (eq 56). Adapted from ref (53). Original figure published under the CC BY 4.0 license (https://creativecommons.org/licenses/by/4.0/). (d) Illustration why the power-spectrum vector, p, is a 3-body descriptor (here shown without element indices for clarity); the consequences of this are discussed in the text.

The traditional route to improving the potential is to add a correction in the same spirit, but at a higher body order, i.e. a term that explicitly depends on the positions of three atoms (Figure 7b) and is summed up over all atom triplets in the system to preserve permutational symmetry:

| 52 |

The three-body term is sometimes approximated to explicitly depend only on the angle between the three atoms (cf. Figure 7a), rather than their individual distances, thereby reducing the number of adjustable parameters. Interestingly, as a result of recent developments in high-dimensional fitting using many parameters, it has become apparent that a lot can be accomplished with just three-body but fully flexible potentials.57−62 In principle, one could continue along this direction and add even higher-order, viz. general four-body, terms. Because of the complexity of managing the increasing number of parameters while still maintaining the permutation symmetry exactly (which involves summing over all atom tuples of increasing size), this has only been done systematically for small molecules63 and is only now beginning to be explored for larger systems64 and materials.65

An alternative approach is to not make the approximation of separability in body order in the first place but instead to write the total energy of the system as a sum of atomic (“local” or “site”) energies that depend on many-body descriptions of atomic environments. This, however, requires a representation that itself is invariant to permutation of like atoms and also incorporates the approximation that interactions are of finite range. The foundational works of Behler and Parrinello66 and Bartók et al.49 precisely hinged on such innovations: the former, on atom-centered symmetry functions; the latter, on spherical harmonic spectra, originally the bispectrum and later the power spectrum,52 also called the smooth overlap of atomic positions (SOAP; Figure 7c). Coupled with nonlinear regression models, the remaining significant approximations are controlled by the number of training data points and the interaction range. All of our examples in sections 6 and 7 will use the SOAP representation, and so we give a brief definition here for completeness.

To obtain the SOAP representation of the neighborhood of a given atom i, we first build a set of neighbor densities, one for each chemical element in the set that is relevant for the system at hand:52

| 53 |

where the sum is over neighbors j of element a that are within the cutoff rcut and fcut(r) is a cutoff function that smoothly goes to zero at rcut. The hyperparameter σa has units of length and determines the regularity (smoothness) of the representation. The above neighbor density is thus a mollified version of a neighbor distribution where each atom would be represented by a Dirac delta function. It is tempting to associate the Gaussian mollifier with an atomic electron density or a smeared nuclear charge, but the correspondence is not so direct. The direct effect of the mollification in the density is only to ensure that the interatomic potential constructed using the SOAP representation is regular, and it would be reasonable to construct a SOAP representation from Dirac delta densities, given that the regularity of the potential is ensured in some other way. For example, the moment tensor potentials (MTP)67 and the atomic cluster expansion (ACE)68 do exactly that.

In the following, for each expression,

we will give both the notation

that was introduced in ref (52) and (highlighted in blue) a recently proposed69 bra-ket notation of the form  that uses q to describe

the indices enumerating the entries of a feature vector and A to indicate the nature of the representation. Note that

the expressions typeset in blue are here to make the connection to

ref (51) explicit and

are not needed to follow most of the exposition in the present review.

Using this notation, for example, the equivalent expression corresponding

to eq 53 reads:

that uses q to describe

the indices enumerating the entries of a feature vector and A to indicate the nature of the representation. Note that

the expressions typeset in blue are here to make the connection to

ref (51) explicit and

are not needed to follow most of the exposition in the present review.

Using this notation, for example, the equivalent expression corresponding

to eq 53 reads:

| 54 |

It is important

to emphasize that for each

atom i, irrespective of what element it is, the full

set of elemental neighbor densities is constructed. Each elemental

neighbor density is invariant to permutations of that element. To

achieve rotational invariance, we first expand the neighbor density

in a basis of orthogonal radial functions,  , and spherical harmonics,

, and spherical harmonics,  ,

,

|

55 |

or equivalently, where the expansion

coefficients are labeled cnlmi,a for consistency with earlier publications34,52 and are not to be confused with the coefficients of the kernel regression

model that have been introduced in section 2. Note the similarity with how atom-centered

orbitals, containing radial and angular parts, are constructed in

quantum chemistry. As emphasized by the bra-ket notation, the expansion

in spherical harmonics just amounts to a change of basis, and these

coefficients are not rotationally invariant. A symmetrized

combination of these coefficients yields the power spectrum,

where the expansion

coefficients are labeled cnlmi,a for consistency with earlier publications34,52 and are not to be confused with the coefficients of the kernel regression

model that have been introduced in section 2. Note the similarity with how atom-centered

orbitals, containing radial and angular parts, are constructed in

quantum chemistry. As emphasized by the bra-ket notation, the expansion

in spherical harmonics just amounts to a change of basis, and these

coefficients are not rotationally invariant. A symmetrized

combination of these coefficients yields the power spectrum,

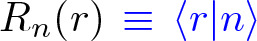

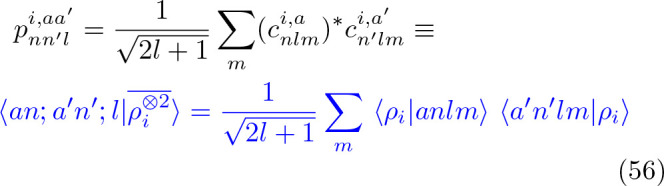

|

56 |

where the notation  hints at the fact that the SOAP power spectrum

is obtained by averaging a two-point tensor product of the atom density

over rotations—which, in the spherical harmonic basis, is equivalent

to summing over m. The l-dependent

prefactor in the definition of the power spectrum is necessary to

make a connection to the overlap of densities (see below). Note that

various other constant numerical factors have appeared in the definition

in the past,34,52 but none of them are consequential,

because the power spectrum is typically normalized to yield a unit

length vector. The descriptor for each atomic environment now has

five indices: two for the neighbor-element channels (a, a′), two radial channels (n, n′), and an angular channel (l). This power spectrum, also commonly referred to as the SOAP descriptor,

or SOAP vector, is a concise representation of atomic neighbor environments.

It is smooth and continuous with respect to atomic displacements and

invariant with respect to physical symmetries, and its only free parameters,

the cutoff and the length scale, σa, are physically intuitive.

hints at the fact that the SOAP power spectrum

is obtained by averaging a two-point tensor product of the atom density

over rotations—which, in the spherical harmonic basis, is equivalent

to summing over m. The l-dependent

prefactor in the definition of the power spectrum is necessary to

make a connection to the overlap of densities (see below). Note that

various other constant numerical factors have appeared in the definition

in the past,34,52 but none of them are consequential,

because the power spectrum is typically normalized to yield a unit

length vector. The descriptor for each atomic environment now has

five indices: two for the neighbor-element channels (a, a′), two radial channels (n, n′), and an angular channel (l). This power spectrum, also commonly referred to as the SOAP descriptor,

or SOAP vector, is a concise representation of atomic neighbor environments.

It is smooth and continuous with respect to atomic displacements and

invariant with respect to physical symmetries, and its only free parameters,

the cutoff and the length scale, σa, are physically intuitive.

An important question in the context of building atomistic regression models based on any structural descriptor is whether the descriptor is complete, in the sense that two atomic environments that are not related by symmetry should map to different descriptors (that is, whether the functional definition of the descriptor is injective). If this were not the case, the accuracy of any ML model based on the descriptor would be ultimately limited by the corresponding loss of information. Since their introduction, it was believed or implied70 that SOAP and all related descriptors (i.e., those that are based on three-body correlations, such as the atom centered symmetry functions of Behler and Parrinello66) are complete. Recently, however, it was discovered that neither SOAP nor the other equivalent descriptors are complete, and counter-examples were shown also for the higher-order bispectrum (which corresponds to four-body correlations).71 Therefore, SOAP-based models cannot describe an atomic energy function of its neighborhood to arbitrary precision, although practical successes suggest that the corresponding errors are on the same order or smaller than other systematic errors that are due to locality and k-point sampling (see below for a more detailed discussion of these). Yet, it may well be possible that complete descriptors can lead to more efficient learning; see ref (51) for more details.

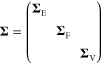

The full SOAP descriptor for each atom i contains all entries of pnn′li,aa′, resulting in a vector whose length scales with the square of the number of elements (due to the presence of the two element indices, a and a′), with the square of the radial basis expansion limit (due to the two indices n and n′), and linearly with the angular basis expansion limit (due to the index l). This vector has hundreds of components (thousands, for systems with several elements) when the basis expansion of the neighbor density is truncated in n and l such that these truncations do not give rise to noticeable inaccuracy. It is therefore natural to think about suitable subsets of the SOAP vector components that could be used without compromising accuracy. There is a highly abstract question here: given the dimensionality of the Cartesian positions, most of the SOAP components must be algebraically related to one another. Knowing such relationships would be useful in reducing the number of components to the independent ones, although it is quite likely that a regression model might work significantly better with more inputs, even if many of those are not independent, because the functional relationship being modeled might be simpler. We are not aware of any theoretical results in this area. On the practical side, however, given datasets and specific regression models, one can numerically experiment with choosing subsets of the SOAP components, and considerable compression is possible.72−74

While such atomic environment descriptors can be used as the basis of any kind of regression scheme, to use them in GPR (which is the focus of the present review), we need to define a kernel that allows us to compare two atomic environments, denoted A and A′. While a standard Gaussian kernel is certainly an option, applications to date have used low-order polynomial kernels, viz.

| 57 |

where ξ and ξ′ indicate the feature vectors corresponding to the normalized power spectrum vectors, ξ = p/|p|, associated with the two environments—with the power spectrum vector associated with an atom i being built from the components that are defined in eq 56, viz.

| 58 |

Considering the linear kernel (ζ = 1) explains the origin of the SOAP name (cf. “smooth overlap of atomic positions”), because the dot product of the power spectra is equivalent to the rotationally integrated squared overlap of the corresponding neighbor densities of two atoms,52

| 59 |

where R̂ is a 3D rotation. A kernel model made using this linear kernel results in a three-body model, i.e. one in which the model can be written as a sum, over triplets of atoms, of a function which only depends on the Cartesian coordinates of the triplet.58 This is not obvious, but it follows from the fact that the SOAP vector itself is a three-body representation of the atomic environment, which is not obvious either, but which we show as follows (and have illustrated in Figure 7d).

Let us separate out the contribution of each neighbor j to the neighborhood density of atom i,

| 60 |

so that the neighbor density for element a is simply

| 61 |

We then form the two-point correlation of this density,

| 62 |

and compute the SOAP vector by transforming it into the spherical harmonic basis in both arguments and then summing over m to ensure rotational invariance:

| 63 |

|

64 |

| 65 |

where Pl are the Legendre polynomials. Thus, the SOAP vector elements can be written explicitly as sums over pairs of neighbors. In all software implementations, eq 56 is used to compute the SOAP vectors, because that makes the calculation independent of the number of neighbors—this is commonly referred to as the “density trick” and is essentially the swapping of the sum and the integral in the last expression.

Using ζ = 2, i.e. raising the scalar product to the power of 2, results in dependence on four neighbors and together with the central atom yields 5-body terms; in general, the body order of the model is 2ζ + 1. Quantum mechanics is a fundamentally many-body theory, and although it is clear that for many properties an expansion in atomic body order is a good idea, formally all body orders are necessary for convergence. In the kernel framework, there is no extra computational cost to increasing the body order in this way, because there is no explicit sum over atom tuples: the SOAP components are computed just once, and the body order is set when the kernel is evaluated between environments. Yet, it is likely a good idea to not choose the body order higher than necessary for achieving the target accuracy: a model with lower body order, and therefore with lower dimensionality, will converge more quickly to its ultimate accuracy as the amount of input data is increased. Many successful SOAP-GAP interatomic potentials for materials have been built with ζ = 2 and ζ = 4, and such potentials and their applications are discussed in section 6.

We note that this link between the body order of the model and the quadratic nature of the power spectrum features (and the fact that the bispectrum features correspond to the next body order) leads naturally to body ordered linear models, that are three-body potentials if they use the power spectrum58,75−77 and four-body when using the bispectrum (because its terms are cubic in the neighbor density coefficients), as is the case for the spectral neighbor analysis potential (SNAP).78 One can go further in body order explicitly while continuing to keep the regression linear.64,65,67,68,73,79

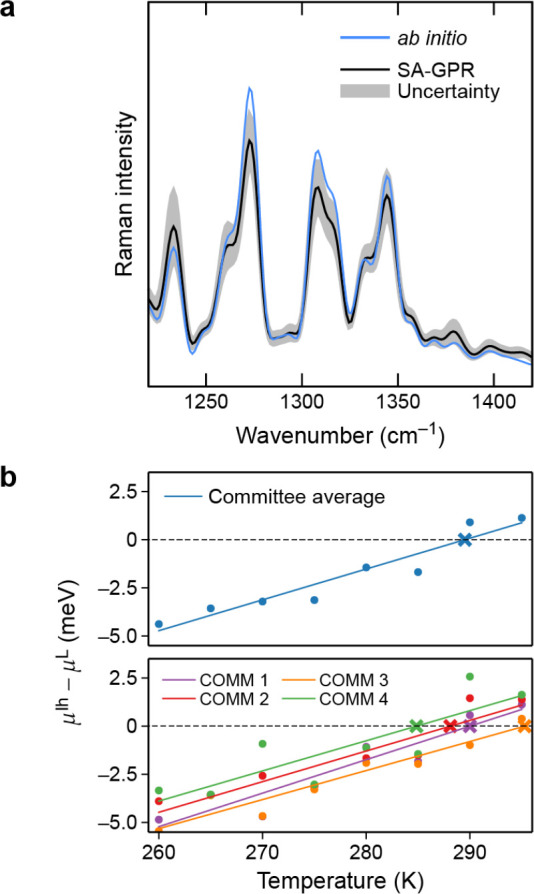

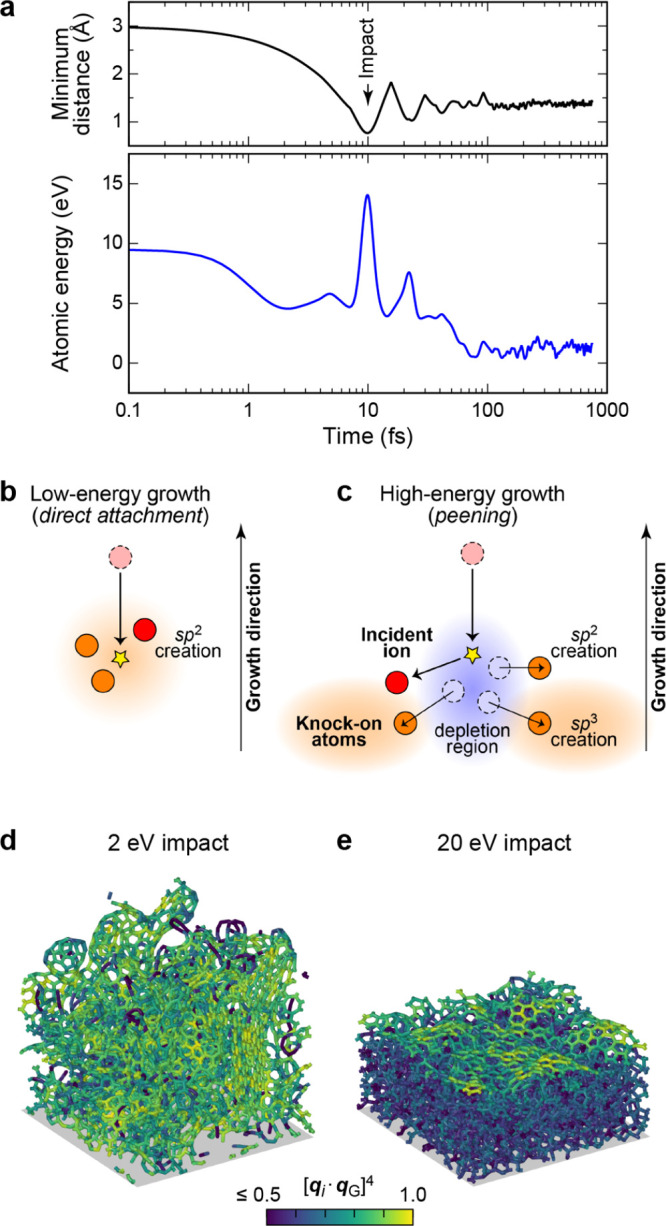

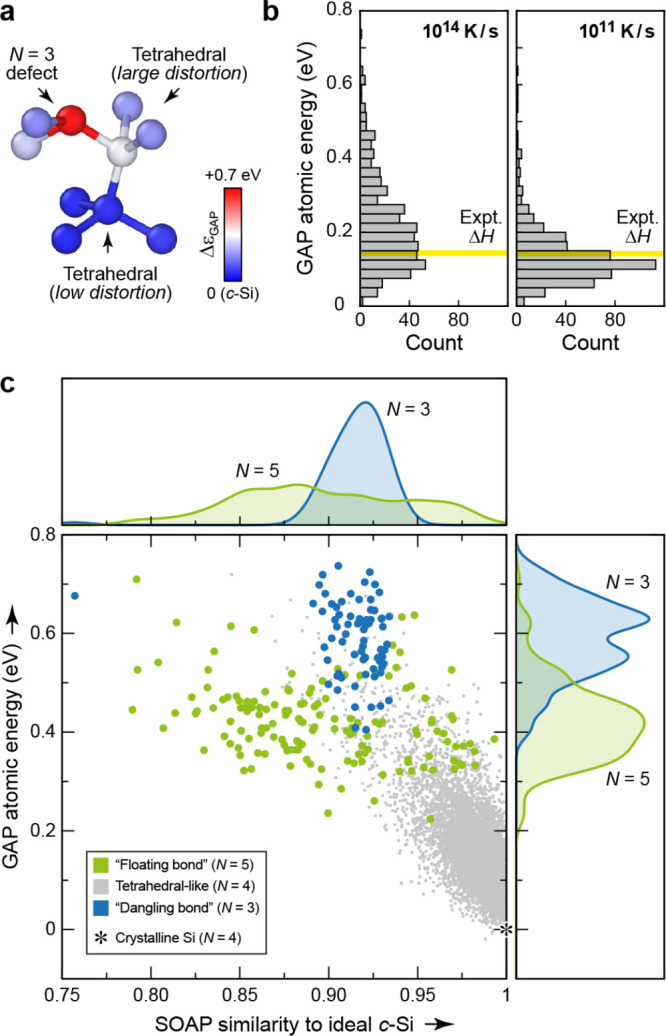

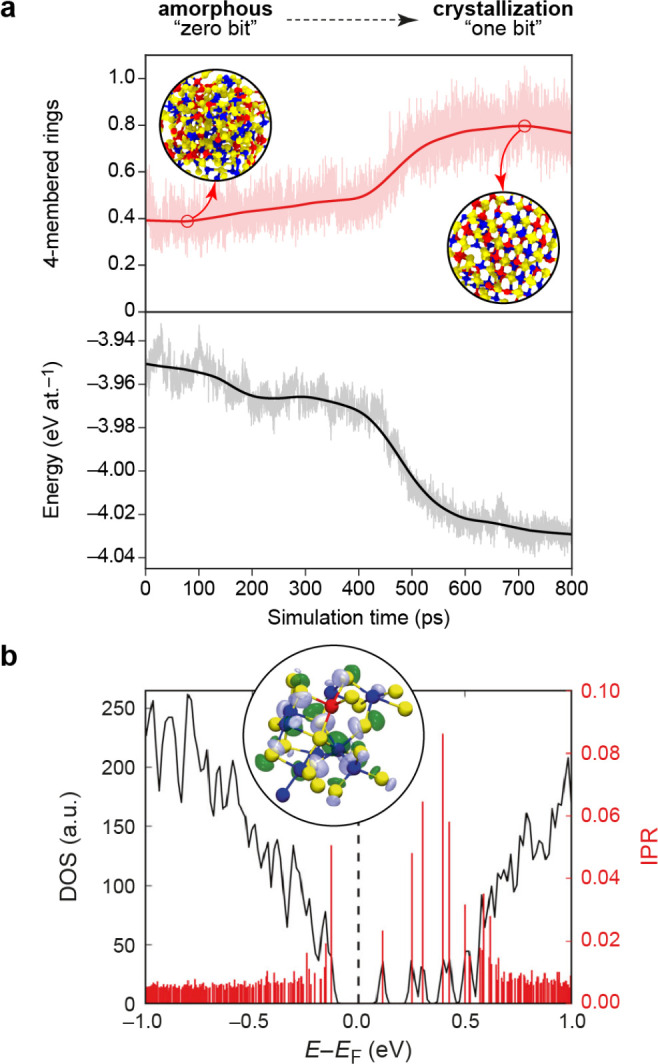

3.2. Symmetry-Adapted Representation

In contrast to scalar properties such as the potential energy, which are invariant under rotations of a system, tensorial properties such as molecular dipole moments and material polarizations transform covariantly when the system is rotated. A natural way to account for this covariance is to build it into the training and prediction processes. The procedure for doing so was first discussed by Glielmo et al. in the context of learning Cartesian vectors.80 They noted that the GPR interpretation of a kernel function as a covariance naturally dictates the symmetry properties of kernels for predicting vectors, requiring the kernel function k(ξi, ξi′) to be replaced by a matrix-valued function k(ξi, ξi′). In this function, the block kαα′(ξi, ξi′) represents the coupling between the Cartesian component α of a coordinate system centered on the i-th atom and the coordinate α′ of a reference system centered on the i′-th atom. A number of symmetry-adapted methods for predicting tensors have appeared in recent years, generally relying on the use of reference frames based on the internal molecular coordinates. These have been successfully applied to generate ML models for the multipole moments of small organic molecules81,82 and the hyperpolarizability of water,83 as well as being used to predict vibrational spectra, including infrared spectra of organic molecules84,85 and the Raman spectrum of liquid water.86 It has become clear in the past few years that both linear73,79,87 and fully nonlinear88−90 models can be built using covariant representations.

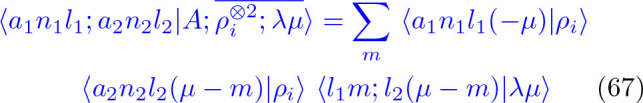

It is possible to generalize the approach of ref (80) to arbitrary orders of tensor by applying analogous symmetry arguments,91,92 and we refer to the resulting method as symmetry-adapted GPR (SA-GPR). Rather than working with Cartesian tensors, it is more convenient to decompose them into their irreducible spherical components,93 which are more naturally related to the transformation properties of the rotation group and afford a more concise description of the problem. For instance, the polarizability (a symmetric 3 × 3 tensor with six independent components) can be decomposed into its trace, which transforms as a scalar, and a 5-vector that transforms as a λ = 2 spherical harmonic. (Note that we use λ to indicate the angular momentum symmetry of the fitting target, rather than l, to distinguish it from the analogous angular momentum index that appears in the density expansion.) Given that a covariant kernel must describe the correlations between the entries of the tensors associated with two environments, this transformation allows us to work with a 1 × 1 and a 5 × 5 kernel, rather than one with 6 × 6 entries. The transformation between Cartesian and spherical tensors is not entirely trivial for λ > 1, but it is well-established93 and necessary for separating the Cartesian tensor into components according to how they transform under rotation. The basic form of a kernel that is suitable for fitting spherical tensors of order λ is a generalization of the SOAP kernel of eq 59:

| 66 |

is the Wigner D matrix

of order λ. These kernel matrices encode information on the

relative orientation of the two environments, as well as their similarity,

and are referred to as λ-SOAP kernels. A kernel built using eq 66 satisfies the two properties

that are necessary for learning a tensorial quantity: namely, that

the predictions of a SA-GPR model are invariant to a rotation of any

member of the training set and that when a rotation is applied to

a test structure, the predictions of the model transform covariantly

with this rotation.

is the Wigner D matrix

of order λ. These kernel matrices encode information on the

relative orientation of the two environments, as well as their similarity,

and are referred to as λ-SOAP kernels. A kernel built using eq 66 satisfies the two properties

that are necessary for learning a tensorial quantity: namely, that

the predictions of a SA-GPR model are invariant to a rotation of any

member of the training set and that when a rotation is applied to

a test structure, the predictions of the model transform covariantly

with this rotation.

For λ = 0, which has  eq 66 reduces to the expression for the scalar SOAP kernel.

For

the general spherical case, the integral of eq 66 can be carried out analytically.91 In practice, the kernel can be computed from

an equivariant generalization of the power spectrum,

eq 66 reduces to the expression for the scalar SOAP kernel.

For

the general spherical case, the integral of eq 66 can be carried out analytically.91 In practice, the kernel can be computed from

an equivariant generalization of the power spectrum,

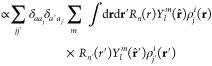

|

67 |

where  is a Clebsch–Gordan

coefficient,

is a Clebsch–Gordan

coefficient,  is a density

expansion coefficient (eq 55), and the notation

is a density

expansion coefficient (eq 55), and the notation  alludes to the fact that these are features

obtained from the symmetrized average of a two-point density correlation

(akin to SOAP) that transforms under rotation as a spherical harmonic Yλμ. The λ-SOAP kernel (eq 66) can be obtained by summing over

the feature indices,

alludes to the fact that these are features

obtained from the symmetrized average of a two-point density correlation

(akin to SOAP) that transforms under rotation as a spherical harmonic Yλμ. The λ-SOAP kernel (eq 66) can be obtained by summing over

the feature indices,

|

68 |

where we use  as a shorthand notation for the full set

of indices

as a shorthand notation for the full set

of indices  .

.

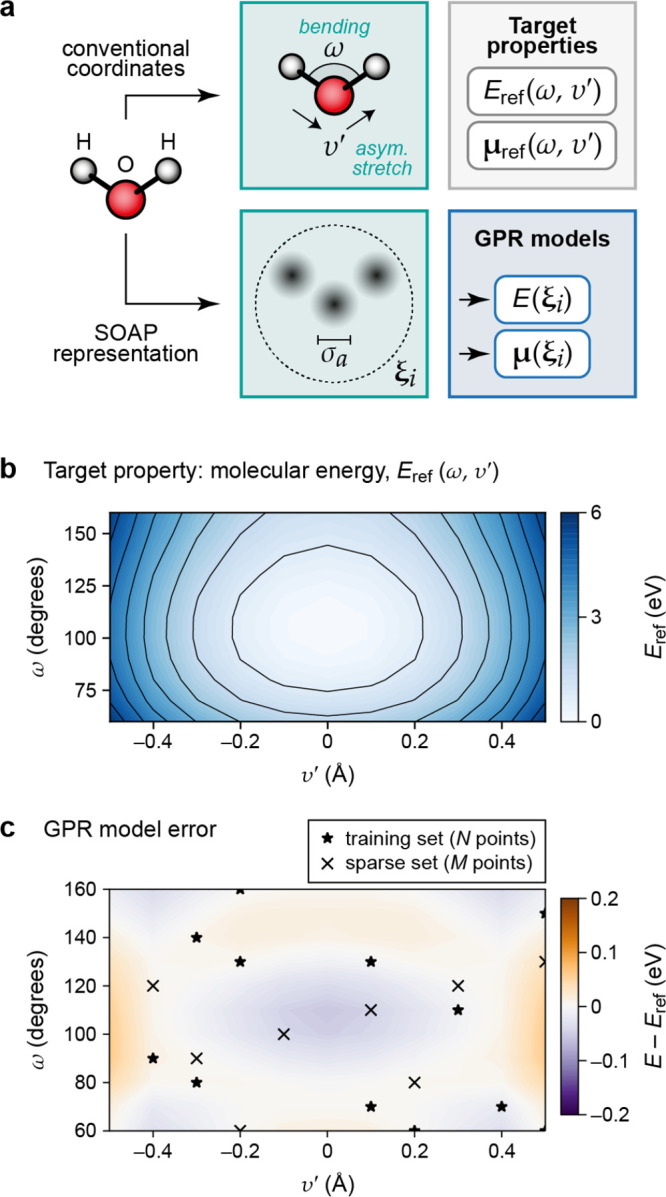

3.3. H2O Potential Energy: A Hands-On Example

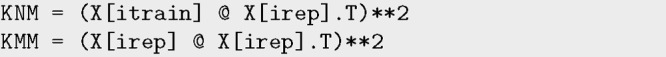

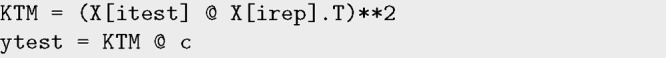

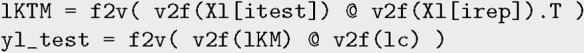

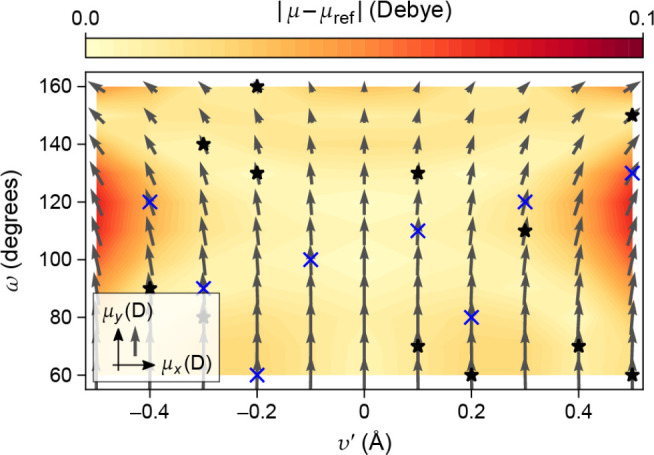

The Dataset

We consider as a toy model the prediction

of the energy of a water molecule, deformed along the bending coordinate

ω and the asymmetric stretch coordinate ν′

= dOH(1) – dOH(2), with fixed symmetric

stretch coordinate  Å (Figure 8). The dataset is a collection of 121 configurations,