Abstract

In recent years, the use of machine learning (ML) in computational chemistry has enabled numerous advances previously out of reach due to the computational complexity of traditional electronic-structure methods. One of the most promising applications is the construction of ML-based force fields (FFs), with the aim to narrow the gap between the accuracy of ab initio methods and the efficiency of classical FFs. The key idea is to learn the statistical relation between chemical structure and potential energy without relying on a preconceived notion of fixed chemical bonds or knowledge about the relevant interactions. Such universal ML approximations are in principle only limited by the quality and quantity of the reference data used to train them. This review gives an overview of applications of ML-FFs and the chemical insights that can be obtained from them. The core concepts underlying ML-FFs are described in detail, and a step-by-step guide for constructing and testing them from scratch is given. The text concludes with a discussion of the challenges that remain to be overcome by the next generation of ML-FFs.

1. Introduction

In 1964, physicist Richard Feynman famously remarked “that all things are made of atoms, and that everything that living things do can be understood in terms of the jigglings and wigglings of atoms”.1 As such, an atomically resolved picture can provide invaluable insights on biological (and other) processes. The first molecular dynamics (MD) study of a protein in 1977 by McCammon et al.2 did not consider explicit solvent molecules and was limited to less than 10 ps of simulation. Still, it challenged the (at that time) common belief that proteins are essentially rigid structures3 and, instead, suggested that the interior of proteins behaves more fluid-like. Since then, systems consisting of more than a million atoms have been studied,4 simulation times extended to the millisecond regime,5 and the study of entire viruses in atomic detail made possible.6,7 Recently, a distributed computing effort even allowed to study the viral proteome of SARS-CoV-2 for a total of 0.1 s of simulation time.8

To perform MD simulations, typically, the Newtonian equations

of

motion are integrated numerically, which requires knowledge of the

forces acting on individual atoms at each time step of the simulation.9 In principle, the most accurate way to obtain

these forces is by solving the Schrödinger equation (SE), which

describes the physical laws underlying most chemical phenomena and

processes.10 Unfortunately, an analytic

solution is only possible for two-body systems such as the hydrogen

atom. For larger chemical structures, the SE can only be solved approximately.

However, even with approximations, an accurate numerical solution

is a computationally demanding task. For example, the CCSD(T) method

(coupled cluster with singles, doubles and perturbative triples),

which is widely regarded as the “gold standard” of chemistry

(as its predictions compare well with experimental results),11 scales ∝ N7 with the number of atoms N. (Strictly speaking,

the true scaling of the CCSD(T) method is  , where n is the number

of basis functions used for the wave function ansatz. Depending on

the desired accuracy and which atoms are present (more precisely,

how many electrons are in their shells), n can vary

greatly. However, the number of atoms is usually a good proxy.) Because

of this, it is unfeasible to calculate the forces for many different

configurations of large chemical systems, which is required for running

MD simulations, with accurate methods. Instead, simple empirical functions

are commonly used to model the relevant interactions. From these so-called

force fields (FFs), atomic forces can be readily derived analytically.

, where n is the number

of basis functions used for the wave function ansatz. Depending on

the desired accuracy and which atoms are present (more precisely,

how many electrons are in their shells), n can vary

greatly. However, the number of atoms is usually a good proxy.) Because

of this, it is unfeasible to calculate the forces for many different

configurations of large chemical systems, which is required for running

MD simulations, with accurate methods. Instead, simple empirical functions

are commonly used to model the relevant interactions. From these so-called

force fields (FFs), atomic forces can be readily derived analytically.

Most conventional FFs model chemical interactions as a sum over bonded and nonbonded terms.12,13 The former can be described with simple functions of the distances between directly bonded atoms, or angles and dihedrals between atoms sharing some of their bonding partners. The nonbonded terms consider pairwise combinations of atoms, typically by modeling electrostatics with Coulomb’s law (assuming a point charge at each atom’s position) and dispersion with a Lennard-Jones potential.14 Because of the computational efficiency of these terms, such classical FFs allow to study systems consisting of many thousands of atoms. However, while offering a qualitatively reasonable description of chemical interactions, the quality of MD simulations, and hence the insights that can be obtained from them, are ultimately limited by the accuracy of the underlying FF.15 This is particularly problematic when polarization, or many-body interactions in general, are of significant importance, as these effects are not adequately modeled by the terms described above. While it is possible to construct polarizable FFs16−19 or account for other important effects, for example, anisotropic charge distributions,20,21 to improve accuracy (in exchange for computational efficiency), it is not always clear a priori when such modifications are necessary. Beyond that, conventional FFs require a preconceived notion of bonding patterns and thus cannot describe bond breaking or bond formation. While there exist reactive FFs offering an approximated description of reactions,22−24 they are often not sufficiently accurate for quantitative studies or restricted to specific types of reactions. Mixed quantum mechanics/molecular mechanics (QM/MM) treatments25 pose an alternative solution: Here, the SE is only solved for a small part of the system where high accuracy is required or reactions may take place, for example, the active site of an enzyme. Meanwhile, all remaining atoms are treated at the FF level of accuracy. Although this is more efficient than a pure quantum-mechanical approach, it is often necessary to model a large number of atoms at the QM level for converged results,26 which is still highly computationally demanding.

In a “dream scenario” for computational chemists and biologists, it would be possible to treat even large systems at the highest levels of theory, which would require prohibitively large computational resources in the real world. Machine learning (ML) methods could help to achieve this dream in a principled manner, thus closing the gap between the accuracy of ab initio methods and the efficiency of classical FFs (Figure 1). ML methods aim to learn the functional relationship between inputs (chemical descriptors) and outputs (properties) from patterns or structure in the data. Ideally, a trained learning machine would then reflect the underlying effective “rules” of quantum mechanics.27 Practically, ML models can take a shortcut by not having to solve any equations that follow from the physical laws governing the structure–property relation. Because of this unique ability, ML methods have been enjoying growing popularity in the chemical sciences in recent years. They allow to explore chemical space and predict the properties of compounds with both unprecedented efficiency and high accuracy.27−32 ML has also been used to augment and accelerate traditional methods used in molecular simulations, for example, for sampling equilibrium states33,34 and rare events,35 computing reaction rates,36 exploring protein folding dynamics37 and other biophysical processes,38−42 Markov state modeling,43−49 and coarse-graining50−53 (for a recent review on applications of ML in molecular simulatons, see ref (54)). Recent advances made it even possible to predict molecular wave functions, which can act as an interface between ML and quantum chemistry,55,56 as knowledge of the wave function allows to deduce many different quantum mechanical observables at once. ML can also be combined with more traditional semiempirical methods, for example by predicting accurate repulsive potentials for density functional tight-binding approaches.57 Instead of circumventing equations, ML methods can also help when solving them. They have been used to find novel density functionals58−60 and solutions of the Schrödinger equation.61,62 Other promising applications include the generation of molecular structures to tackle inverse design problems,63−67 or planning chemical syntheses.68

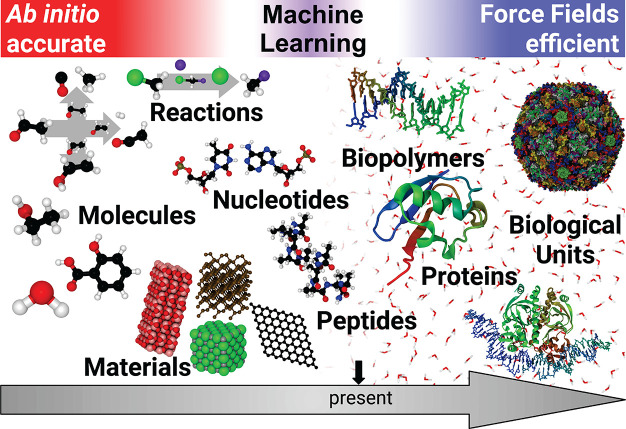

Figure 1.

Accurate ab initio methods are computationally demanding and can only be used to study small systems in gas phase or regular periodic materials. Larger molecules in solution, such as proteins, are typically modeled by force fields, empirical functions that trade accuracy for computational efficiency. Machine learning methods are closing this gap and allow to study increasingly large chemical systems at ab initio accuracy with force field efficiency.

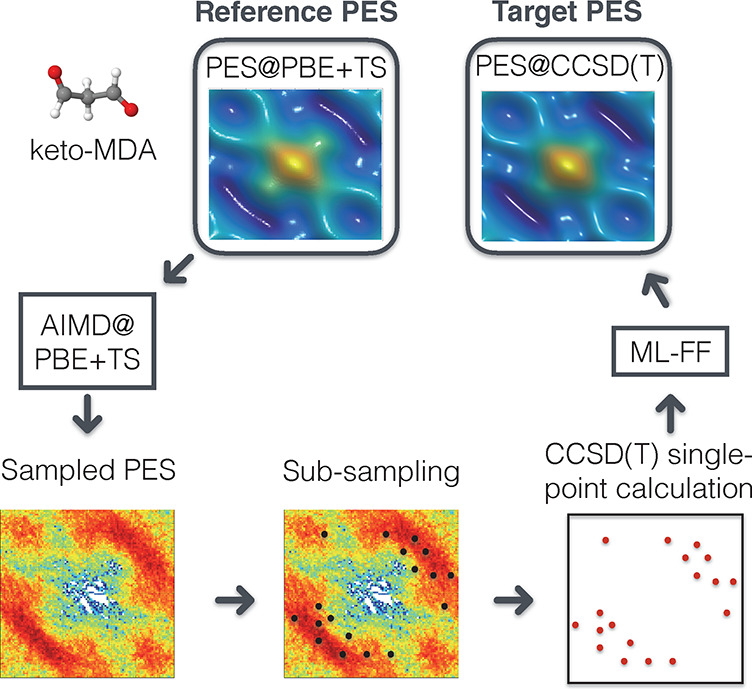

For constructing ML-FFs, suitable reference data to learn the relevant structure–property relation include energy, forces, or a combination of both, obtained from ab initio calculations. Contrary to conventional FFs, no preconceived notion of bonding patterns needs to be assumed. Instead, all chemical behavior is learned from the reference data. This allows to reconstruct the important interactions purely from atomic positions without imposing a restricted analytical form on the interatomic potential and enables a natural description of chemical reactions. For example, it is now possible to construct ML-FFs for small molecules from CCSD(T) reference data close to spectroscopic accuracy and with computational efficiency similar to conventional FFs.69,70 This has enabled studies that would be prohibitively expensive with conventional methods of computational chemistry and allowed to obtain novel chemical insights (see Figure 2).

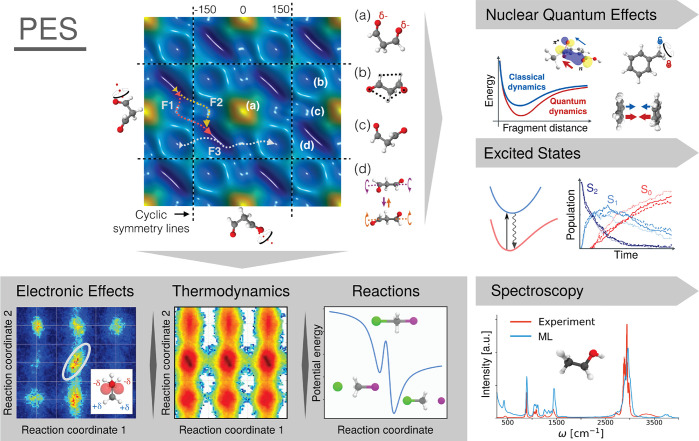

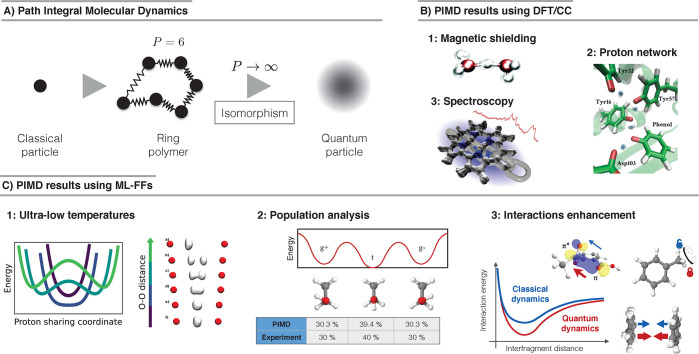

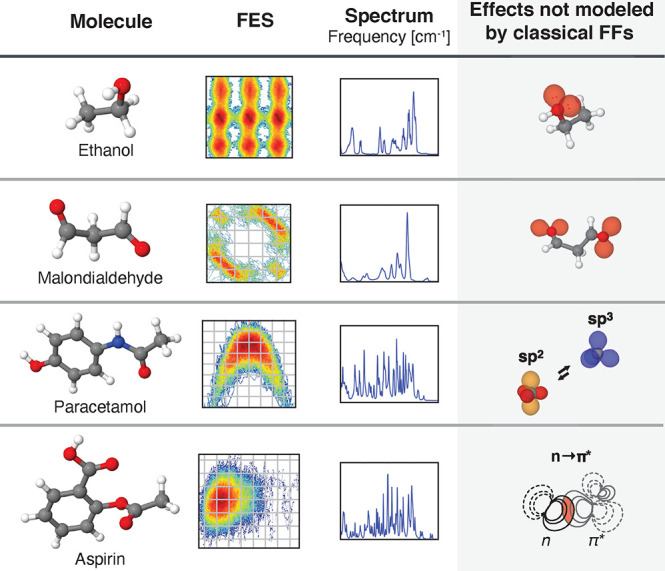

Figure 2.

ML-FFs combine the accuracy of ab initio methods and the efficiency of classical FFs. They provide easy access to a system’s potential energy surface (PES), which can in turn be used to derive a plethora of other quantities. By using them to run MD simulations on a single PES, ML-FFs allow chemical insights inaccessible to other methods (see gray box). For example, they accurately model electronic effects and their influence on thermodynamic observables and allow a natural description of chemical reactions, which is difficult or even impossible with conventional FFs. Their efficiency also allows them to be applied in situations where the Born–Oppenheimer approximation begins to break down and a single PES no longer provides an adequate description. An example is the study of nuclear quantum effects and electronically excited states (upper right). Finally, ML-FFs can be further enhanced by modeling additional properties. This provides direct access to a wide range of molecular spectra, building a bridge between theory and experiment (lower right). In general, such studies would be prohibitively expensive with ab initio methods.

Other properties than energies and forces can be predicted as well: For example, dipole moments, which are a measure for how polar molecules are, can be used to calculate infrared spectra from MD simulations71−73 and allow a comparison to experimental measurements. Other prediction targets could be used to screen large compound databases for molecules with desirable properties several orders of magnitude faster than with ab initio methods. The HOMO/LUMO gap, which is important for materials used in electronic devices such as solar cells,74 is only one prominent example of many potentially interesting prediction targets.

This review will focus on the construction of ML-FFs for the usage in MD simulations and other applications (for details on how to set up such simulations or how to extract physical insights from them, refer to refs (75−77)). The text is structured as follows: Section 2 reviews fundamental concepts of chemistry (Section 2.1) and machine learning (Section 2.2) relevant to the construction of ML-FFs and discusses special considerations when the two are combined (Section 2.3). As this article is intended for both chemists and machine learning practitioners, these sections provide all readers with the necessary background to understand the remainder of the review. Experts in either field may want to skip over the respective sections, as they discuss fundamentals. The next part (Section 3) serves as a step-by-step guide and reference for readers that want to apply ML-FFs in their own research. Here, the best practices for constructing ML-FFs are outlined, possible problems that may be encountered along the way (and how to avoid them) are discussed and an overview of several software packages, which may be used to accelerate the construction of ML-FFs, is provided. Section 4 lists several applications of ML-FFs described in the literature and highlights physical and chemical insights made possible through the use of ML. The review is concluded in Section 5 by a discussion of obstacles that still need to be overcome to extend the applicability of ML-FFs to an even broader context.

2. Mathematical and Conceptual Framework

Section 2.1 reviews important chemical concepts such as the potential energy surface and invariance properties of physical systems, which are essential for constructing physically meaningful models. It is meant as a short summary of the most important physical principles and fundamental chemical knowledge for readers with a primarily ML-focused background who are interested in constructing ML-FFs. On the other hand, to offer readers with a chemical background a first orientation, an overview of two important methodologies in Machine Learning, namely kernel-based learning approaches and artificial neural networks, is given in Section 2.2. Finally, Section 2.3 lists constraints related to the physical invariances mentioned earlier and gives examples of models for constructing ML-FFs and how they implement these constraints in practice.

2.1. Chemistry Foundations

The Schrödinger equation (SE),78 which describes the interaction of atomic nuclei and electrons, is sufficient for understanding most chemical phenomena and processes.10 Unfortunately, it can only be solved analytically for very simple systems, such as the hydrogen atom. For more complex systems like molecules, exact numerical solutions are often impractical due to a steep increase of computational costs as a function of system size. For this reason, numerous approximation schemes have been devised to enable insights into more complicated chemical systems. Virtually all of these are based on the Born–Oppenheimer (BO) approximation,79 which decouples electronic and nuclear motion so that the latter can be neglected. It is assumed that electrons adjust instantaneously to changes in the nuclear positions, which is motivated by the observation that atomic nuclei are heavier than electrons by several orders of magnitude, thus moving on a vastly different time scale. Hence, the nuclear positions appear almost stationary to the electrons and therefore enter the resulting “electronic SE” only parametrically: The energy of the electrons depends on the external potential caused by the nuclei, which in turn is fully determined by their positions and nuclear charges. By summing electronic energy and Coulomb repulsion between nuclei, the total potential energy of the system is obtained, which is one of the most important properties of molecules. Alongside entropic contributions, it determines the relative stability of different compounds, whether reactions are exothermic or endothermic, and can even serve as proxy for more complex properties. For example, the potency of some drugs can be estimated from their binding energy to biomolecules.80

2.1.1. Potential Energy Surface

By introducing

a parametric dependency between energy and nuclei, the BO approximation

implies the existence of a functional relation  , which maps the nuclear

charges Zi and positions ri of N atoms

directly

to their potential energy E. This function, called

the potential energy surface (PES), governs the dynamics of a chemical

system, similar to a ball rolling on a hilly landscape. Minima (“valleys”)

on the PES correspond to stable molecules and significant structural

changes (or even chemical reactions) occur when a system crosses over

a transition state (“ridge”) from one minimum into another

(Figure 3).

, which maps the nuclear

charges Zi and positions ri of N atoms

directly

to their potential energy E. This function, called

the potential energy surface (PES), governs the dynamics of a chemical

system, similar to a ball rolling on a hilly landscape. Minima (“valleys”)

on the PES correspond to stable molecules and significant structural

changes (or even chemical reactions) occur when a system crosses over

a transition state (“ridge”) from one minimum into another

(Figure 3).

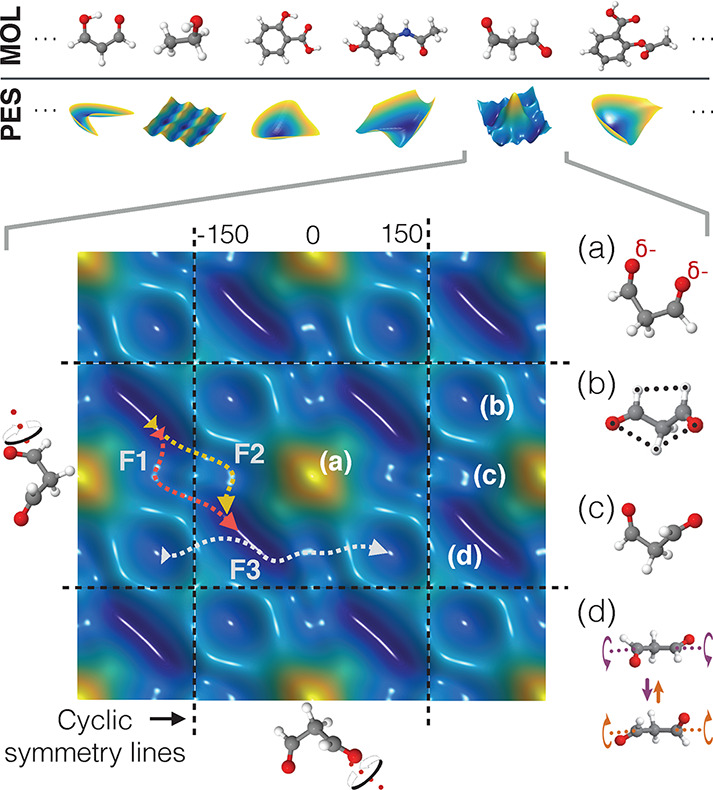

Figure 3.

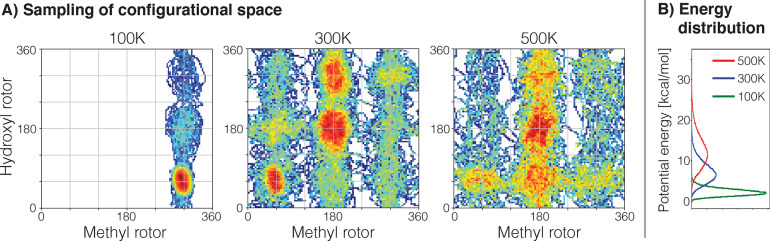

Top: Two-dimensional projections of the PESs of different molecules, highlighting rich topological differences and various possible shapes. Bottom: Cut through the PES of keto-malondialdehyde for rotations of the two aldehyde groups. Note that the shape repeats periodically for full rotations. Regions with low potential energy are drawn in blue and high energy regions in yellow. Structure (a) leads to a steep increase in energy due to the proximity of the two oxygen atoms carrying negative partial charges. Local minima of the PES are shown in (b) and (c), whereas (d) displays structural fluctuations around the global minimum. By running molecular dynamics simulations, the most common transition paths (F1, F2, and F3) between the different minima could be revealed.

Knowledge of the PES therefore also allows to predict how a system evolves over time. For example, by studying a thermal ensemble of molecules starting from the same minimum on the PES, it is possible to determine which fraction of them will reach different minima and in what time frame, allowing to assess their reactivity and which products are formed. It is also possible to deduce the macroscopic thermodynamic properties of a system by studying how it behaves at an atomic level. In such molecular dynamics (MD) simulations, a classical treatment of nuclear dynamics is sometimes sufficiently accurate. In case of significant nuclear delocalization, which may occur in systems with light atoms, strong bonds, or for shallow potential energy landscapes,81 nuclear quantum effects (NQEs) must be included as well. Even then, methods like path-integral MD establish a one-to-one correspondence between the properties of a quantum object and a classical system with an extended phase space, eliminating the need to solve the nuclear SE.82−84

At each

time step of a dynamics simulation, the forces Fi acting on each atom i must

be known so that the equations of motion can be integrated

numerically (e.g., using the Verlet algorithm85). They can be derived from the PES by using the relation Fi = − riE, that is, the forces are the negative

gradient of the potential energy E with respect to

the atomic positions ri (see

also Section 2.1.2). Forces can also be used to perform geometry optimizations, e.g.,

to find special configurations of atoms which correspond to critical

points on the PES. For example, the height of a reaction barrier can

be computed from the energy difference between the saddle point (transition

state) and either of the two minima (equilibrium structures) which

are connected by it.

riE, that is, the forces are the negative

gradient of the potential energy E with respect to

the atomic positions ri (see

also Section 2.1.2). Forces can also be used to perform geometry optimizations, e.g.,

to find special configurations of atoms which correspond to critical

points on the PES. For example, the height of a reaction barrier can

be computed from the energy difference between the saddle point (transition

state) and either of the two minima (equilibrium structures) which

are connected by it.

Although the BO approximation simplifies the SE, even approximate solutions can be computationally demanding, in particular for large systems containing many degrees of freedom. Thus, it is often unfeasible to derive ab initio energies and forces for each time step of an MD simulation. For this reason, analytical functions known as force fields (FFs), are typically used to represent the PES, circumventing the problem of solving an equation altogether. The difficulty is then shifted to finding an appropriate functional form and parametrization of the FF. ML methods automate this demanding and time-consuming process by learning an appropriate function from reference data.

2.1.2. Invariances of Physical Systems

Closed physical systems are governed by various conservation laws that describe invariant properties. They are fundamental principles of nature that characterize symmetries that must not be violated. As such, conservation laws provide strong constraints that can be used as guiding principles in search of physically plausible ML models. The basic invariances of molecular systems are directly derived from Noether’s theorem,86 which states that each conserved quantity is associated with a differentiable symmetry of the action of a physical system. Typical conserved quantities include the total energy (following from temporal invariance) as well as angular and linear momentum (rototranslational invariance). Energy conservation imposes a particular structure on vector fields in order for them to be valid force fields with corresponding potentials. Namely, forces must be the negative gradient of the potential energy with respect to atomic positions. This relation ensures that when atoms move, they always acquire the same amount of kinetic energy as they lose in potential energy (and vice versa), i.e., the total energy is constant (the work done along closed paths is zero). The conservation of linear and angular momentum implies that the potential energy of a molecule only depends on the relative position of its atoms to each other and does not change with rigid rotations or translations. Another invariance (not derived from Noether’s theorem) follows from the fact that, from the perspective of the electrons, atoms with the same nuclear charge appear identical to each other. They can thus be exchanged without affecting the energy and forces, which makes the PES symmetric with respect to permutations of some of its arguments. To ensure physically meaningful predictions, ML-FFs must be made invariant under the same transformations as the true PES by introducing appropriate constraints.

2.2. Machine Learning Foundations

A question that frequently arises for researchers new to the field of ML concerns the difference of ML modeling to plain interpolation in the noise free regression case. After all, the Shannon sampling theorem gives bounds for the number of “training samples” needed to reconstruct a band-limited signal exactly.87 Since the regression tasks considered in this review use ab initio data as reference, they can be considered practically noise-free. Furthermore, PESs are usually smooth, which means there is a well-defined frequency cutoff in the spectrum of this “signal”. Thus, both requirements for Shannon interpolation are satisfied and it should in principle be possible to reconstruct FFs via interpolation of the training samples without error, provided there are enough of them.

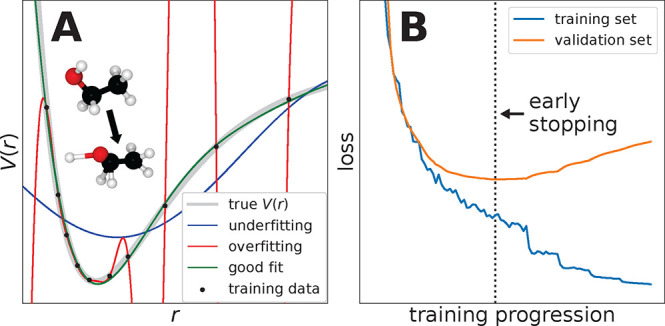

This is where ML diverges from signal interpolation theory. In practice, there is often not enough data available to fully capture all the necessary information for a perfect reconstruction. In that case, the goal of ML methods is not to recover the training data, but rather to estimate the true process with its underlying regularities that also describes all new and unseen data; this is often denoted as generalization. The key to generalization is selecting a model based on the well-known principle of Occam’s razor, i.e., the notion that simpler hypotheses are more likely to be correct.88 The capacity of the model can be controlled using the bias–variance trade-off89 (a compromise between expressiveness and complexity) and is practically done by exercising model selection techniques (see Section 2.2.3) such as cross-validation that leave out part of the data from the ML training process and use it later to obtain a valid estimate of the generalization error.30,90 The reason why regularization is often needed is that ML algorithms are universal approximators that can approximate any continuous function on a closed interval arbitrarily close. Since for a finite amount of reference data infinitely many such functions are thinkable, a regularization mechanism is often needed to select a preferably simple function from the vast space of possibilities.

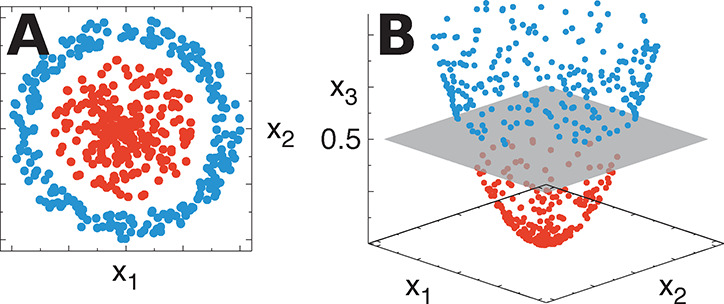

ML methods typically rely on the fact that nonlinear problems, such as predicting energy from nuclear positions, can be “linearized” by mapping the input to a (often higher-dimensional) “feature space” (see Figure 4).90−93 Note that such feature spaces are explicitly constructed for kernel-based learning methods (see Section 2.2.1) or learned respectively for deep learning models94 (see Section 2.2.2). Kernel-based methods achieve this by taking advantage of the so-called kernel trick,92,95−98 which allows implicitly operating in a high-dimensional feature space without explicitly performing any computation there. In contrast, artificial neural networks (NNs) decompose a complex nonlinear function into a composition of linear transformations with learnable parameters connected by nonlinear activation functions. With increasingly many of such nonlinear transformations organized in “layers” (deep NNs), it is possible to efficiently learn highly complex feature spaces.

Figure 4.

(A) Blue and red points with coordinates (x1, x2) are linearly inseparable. (B) By defining a suitable mapping from the input space (x1, x2) to a higher-dimensional feature space (x1, x2, x3), blue and red points become linearly separable (gray plane at x3 = 0.5).

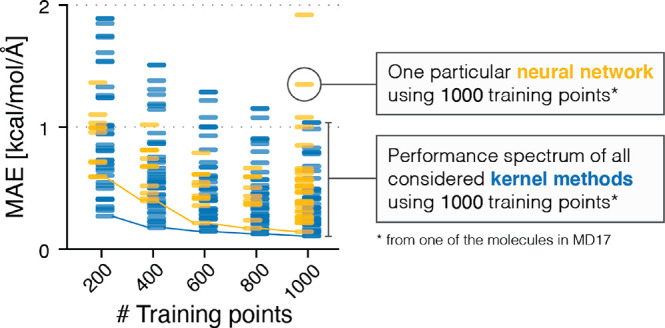

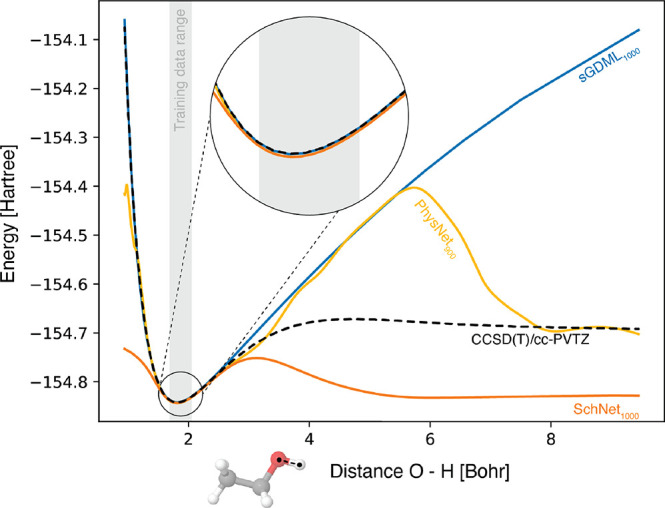

While NNs tend to require more training data to reach the same accuracy as kernel methods (see Figure 5),99 they typically scale better to larger data sets. In general, neither method is strictly superior over the other,100 and both have advantages and disadvantages that must be weighed against each other for a specific application. Recently, it has even been discovered that in the limit of infinitely wide layers, deep NNs are equivalent to kernel methods, which shifts the main differentiating factor between both methodologies to how they are constructed and trained101,102 and makes deep NNs accessible to kernel-based analysis methods.103,104

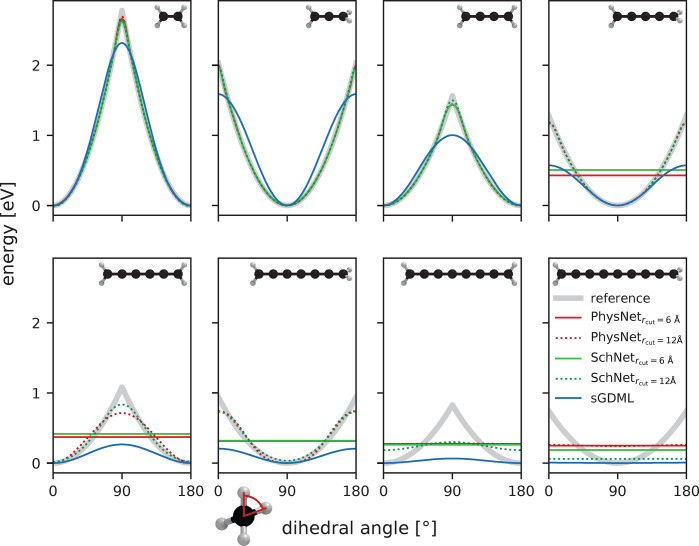

Figure 5.

Mean absolute force prediction errors (MAEs) of different ML models trained on molecules in the MD17 data set,105 colored by model type. Overall, kernel methods (GDML,105 sGDML,69 FCHL18/19106,107) are slightly more data efficient, that is, they produce more accurate predictions with smaller training data sets, but neural network architectures (PhysNet,108 SchNet,109 DimeNet,110 EANN,111 DeePMD,112 DeepPot-SE,113 ACSF,114 HIP-NN115) catch up quickly with increasing training set size and continue to improve when more data for training is available.

In the following, kernel methods and neural networks are described in more detail to highlight the most important properties that differentiate both methodologies.

2.2.1. Kernel-Based Methods

Given a data

set {(yi; xi)}i = 1M of M reference

values  for inputs

for inputs  kernel regression aims to estimate

kernel regression aims to estimate  for unknown inputs

for unknown inputs  . For example, for PES construction, y is the potential

energy and x encodes structural

information about the atoms, i.e., their nuclear charges and relative

positions in space. Popular choices for such “descriptors”

are vectors of internal coordinates, Coulomb matrices,28 representations of atomic environments (e.g.,

symmetry functions,116 SOAP117 or FCHL106,107), or an encoding of

crystal structure.118−120 See ref (121) for a recent review on structural descriptors.

. For example, for PES construction, y is the potential

energy and x encodes structural

information about the atoms, i.e., their nuclear charges and relative

positions in space. Popular choices for such “descriptors”

are vectors of internal coordinates, Coulomb matrices,28 representations of atomic environments (e.g.,

symmetry functions,116 SOAP117 or FCHL106,107), or an encoding of

crystal structure.118−120 See ref (121) for a recent review on structural descriptors.

The representer theorem states that the functional relation

| 1 |

where ϵ denotes measurement noise, can be optimally approximated as a linear combination

| 2 |

where αi are coefficients, and K(x, x′) is a (typically nonlinear) symmetric and

positive semidefinite function122−124 that measures the similarity

of two compound descriptors x and x′

(see Figure 7). (The

function K(x, x′)

computes the inner product of two points ϕ(x) and

ϕ(x′) in some Hilbert space  (the feature

space) without the need to

evaluate (or even know) the mapping

(the feature

space) without the need to

evaluate (or even know) the mapping  explicitly, i.e., K(x, x′) is a reproducing kernel of

explicitly, i.e., K(x, x′) is a reproducing kernel of  .90,125) Examples for such

functions K are the polynomial kernel

.90,125) Examples for such

functions K are the polynomial kernel

| 3 |

where hyperparameter d is the degree of the polynomial and ⟨·, ·⟩ is the dot product, or the Gaussian kernel given by

| 4 |

with hyperparameter γ controlling its width/scale and ∥·∥ denoting the L2-norm (see refs (30, 90, 97, and 126) for more examples of kernel functions).

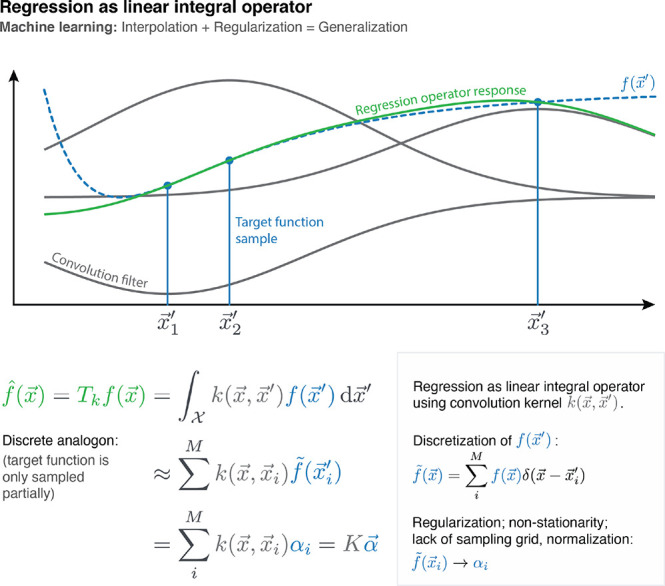

Figure 7.

Kernel ridge regression can be understood as a linear integral operator Tk that is applied to the (only partially known) target function of interest f(x). Such operators are defined as convolutions with a continuous kernel function K, whose response is the regression result. Because the training data is typically not sampled on a grid, this convolution task transforms to a linear system that yields the regression coefficients α. Because only Tkf(x) and not the true f(x) is recovered, the challenge is to find a kernel that defines an operator that leaves the relevant parts of its original function invariant. This is why the Gaussian kernel (eq 4) is a popular choice: Depending on the chosen length scale γ, it attenuates high frequency components, while passing through the low frequency components of the input, therefore making only minimal assumptions about the target function. However, stronger assumptions (e.g., by combining kernels with physically motivated descriptors) increase the sample efficiency of the regressor.

The structure and number of dimensions

of the associated Hilbert

space  depends on

the choice of K(x, x′)

and dimension of the inputs x and x′.

As an example, consider the

polynomial kernel (eq 3) with degree d = 2 and two-dimensional inputs.

The corresponding homogeneous (c = 0) polynomial mapping is given by

depends on

the choice of K(x, x′)

and dimension of the inputs x and x′.

As an example, consider the

polynomial kernel (eq 3) with degree d = 2 and two-dimensional inputs.

The corresponding homogeneous (c = 0) polynomial mapping is given by  , so the associated

, so the associated  is three-dimensional.

While in this case,

it is still possible to compute ϕ and evaluate the inner product

of two points ϕ(x) and ϕ(x′)

explicitly, the advantage of using kernels becomes apparent when the

Gaussian kernel (eq 4) is considered. Rewriting eq 4 as

is three-dimensional.

While in this case,

it is still possible to compute ϕ and evaluate the inner product

of two points ϕ(x) and ϕ(x′)

explicitly, the advantage of using kernels becomes apparent when the

Gaussian kernel (eq 4) is considered. Rewriting eq 4 as

| 5 |

and expanding the third factor in a Taylor

series  reveals that the Gaussian kernel

is equivalent

to an infinite sum over (scaled) polynomial kernels (see eq 3) and the associated

reveals that the Gaussian kernel

is equivalent

to an infinite sum over (scaled) polynomial kernels (see eq 3) and the associated  is ∞-dimensional.

Fortunately, by using the kernel function K(x, x′), it is possible to operate in

is ∞-dimensional.

Fortunately, by using the kernel function K(x, x′), it is possible to operate in  implicitly

and evaluate

implicitly

and evaluate  (x) (eq 2) without computing the mapping ϕ. This

is often referred to as the kernel trick.90,92,95−97,126

(x) (eq 2) without computing the mapping ϕ. This

is often referred to as the kernel trick.90,92,95−97,126

It remains the question how the coefficients αi in eq 2 are determined. One way to do so is by adopting a Bayesian, or probabilistic, point of view.127,128 Here, it is assumed that the reference data {(yi; xi)}i = 1M are generated by a Gaussian process (GP), i.e., drawn from a multivariate Gaussian distribution. For simplicity, it can be assumed that this distribution has a mean of zero, as other values can be generated by simply adding a constant term. Further, the possibility that the reference data might be contaminated by noise (for example due to uncertainties in measuring yi) is accounted for explicitly. Typically, Gaussian noise is assumed, i.e.,

| 6 |

where λ is the variance of the normally

distributed noise  . In

the GP picture, the choice of K(x, x′) expresses an assumption

about the underlying function class. For example, choosing the Gaussian

kernel implies that f(x) does not change

drastically over a length scale controlled by γ (see eq 4). As such, a particular

kernel function K corresponds to an implicit regularization,

i.e., an assumption about the underlying smoothness properties of

the function to be estimated.129 The challenge

lies in finding a kernel that represents the structure in the data

that is being modeled as good as possible.103,129 Many kernels are able to approximate continuous functions on a compact

subset arbitrarily well,129,130 but a strong prior

has the advantage of restricting the hypothesis space, which drastically

improves the convergence of the learning task with respect to the

available training data.131

. In

the GP picture, the choice of K(x, x′) expresses an assumption

about the underlying function class. For example, choosing the Gaussian

kernel implies that f(x) does not change

drastically over a length scale controlled by γ (see eq 4). As such, a particular

kernel function K corresponds to an implicit regularization,

i.e., an assumption about the underlying smoothness properties of

the function to be estimated.129 The challenge

lies in finding a kernel that represents the structure in the data

that is being modeled as good as possible.103,129 Many kernels are able to approximate continuous functions on a compact

subset arbitrarily well,129,130 but a strong prior

has the advantage of restricting the hypothesis space, which drastically

improves the convergence of the learning task with respect to the

available training data.131

Under

these conditions, it is now possible to rigorously answer

the question “given the data y = [y1···yM]T, how likely is it to observe the value  for input

for input  ?” As

?” As  is generated by

the same GP as the reference

data, the conditional probability

is generated by

the same GP as the reference

data, the conditional probability  can be expressed as

can be expressed as

| 7 |

where IM is the identity

matrix of size M, K is the M × M kernel matrix90,132 with entries Kij = K(xi, xj) and  =

=  . In other words, eq 7 expresses a probability

distribution over

possible predictions, where its mean value

. In other words, eq 7 expresses a probability

distribution over

possible predictions, where its mean value

| 8 |

is the

most likely estimate for  (given the reference data) and its variance

(given the reference data) and its variance

| 9 |

provides information about how strongly other likely predictions vary from the mean. Note that while eq 9 can be used as uncertainty estimate for a particular prediction, it should not be confused with error bars. The optimal coefficients α = [α1···αM] in eq 2 are thus given by

| 10 |

or simply α = K–1y in the noise-free case (λ

= 0). However, even in the absence of noise, it can be beneficial

to choose a nonzero λ to obtain a regularized solution. The

addition of λ > 0 to the diagonal

of K increases numerical stability and has the effect

of damping the magnitude of the coefficients, thereby increasing the

smoothness of  (x). The downside is that the

known reference values yi are only approximately reproduced. This, however, also decreases

the chance of overfitting and can lead to better generalization, that

is, increased accuracy when predicting unknown values.

(x). The downside is that the

known reference values yi are only approximately reproduced. This, however, also decreases

the chance of overfitting and can lead to better generalization, that

is, increased accuracy when predicting unknown values.

Matrix

factorization methods like Cholesky decomposition133 are typically used to efficiently solve the

linear problem in eq 10 in closed form. However, this type of approach scales as  (M3) with the

number of reference data and may become problematic for extremely

large data sets. Iterative gradient-based solvers can reduce the complexity

to

(M3) with the

number of reference data and may become problematic for extremely

large data sets. Iterative gradient-based solvers can reduce the complexity

to  .134 Once the

coefficients have been determined, the value

.134 Once the

coefficients have been determined, the value  for an arbitrary input

for an arbitrary input  can be estimated according to eq 2 with

can be estimated according to eq 2 with  complexity (a sum over all M reference data points is required).

complexity (a sum over all M reference data points is required).

Alternatively, a variety

of approximation techniques exploit that

kernel matrices usually have a small numerical rank, i.e., a rapidly

decaying eigenvalue spectrum. This enables approximate factorizations RRT ≈ K, where R is either a rectangular matrix  with L < M or sparse. As a result, eq 10 becomes easier to solve, albeit the result will not be exact.135−139

with L < M or sparse. As a result, eq 10 becomes easier to solve, albeit the result will not be exact.135−139

A straightforward approach to approximate a linear system

is to

pick a representative or random subset of L points x̃ from the data set (in principle, even arbitrary x̃ could be chosen) and construct a rectangular kernel

matrix  with entries KLM,ij = K (x̃, xj). Then the

corresponding coefficients can be obtained via the Moore-Penrose pseudoinverse:140,141

with entries KLM,ij = K (x̃, xj). Then the

corresponding coefficients can be obtained via the Moore-Penrose pseudoinverse:140,141

| 11 |

Solving eq 11 scales

as  and is much less computationally

demanding

than inverting the original matrix in eq 10. Once the L coefficients α̃ are obtained, the model can be evaluated with

and is much less computationally

demanding

than inverting the original matrix in eq 10. Once the L coefficients α̃ are obtained, the model can be evaluated with  (x) = ΣLα̃iK(x, x̃i), i.e., an additional

benefit is that evaluation now scales as

(x) = ΣLα̃iK(x, x̃i), i.e., an additional

benefit is that evaluation now scales as  instead of

instead of  (see eq 2).

(see eq 2).

However, the approximation above gives rise to an overdetermined system with fewer parameters than training points and therefore reduced model capacity. Strictly speaking, the involved matrix does not satisfy the properties of a kernel matrix anymore, as it is neither symmetric nor positive semidefinite. To obtain a kernel matrix that still maintains these properties, the Nyström135 approximation

| 12 |

can be used instead. Here, the submatrix KLL is a true kernel matrix between all inducing points x̃i. Using the Woodbury matrix identity,142 the regularized inverse is given by

| 13 |

and  .

The computational complexity of solving

the Nyström approximation is

.

The computational complexity of solving

the Nyström approximation is

It should be mentioned that kernel regression methods are known under different names in the literature of different communities. Because of their relation to GPs, some authors prefer the name Gaussian process regression (GPR). Others favor the term kernel ridge regression (KRR), since determining the coefficients with eq 10 corresponds to solving a least-squares objective with L2-regularization in the kernel feature space ϕ and is similar to ordinary ridge regression.143 Sometimes, the method is also referred to as reproducing kernel Hilbert space (RKHS) interpolation, since eq 2 “interpolates” between known reference values (when coefficients are determined with λ = 0, all known reference values are reproduced exactly). All these methods are formally equivalent and essentially differ only in the manner the relevant equations are derived. There are small philosophical differences, however: For example, in the KRR and RKHS pictures, λ in eq 10 is a regularization hyperparameter that has to be introduced ad hoc, whereas in the GPR picture, λ is directly related to the Gaussian noise in eq 6. The expansion coefficients obtained from eq 10 can change drastically depending on the choice of λ, so this is an important detail. Further, while eq 9 can be used to compute uncertainty estimates for all kernel regression methods, the GPR picture allows to relate it directly to the variance of a Gaussian process.

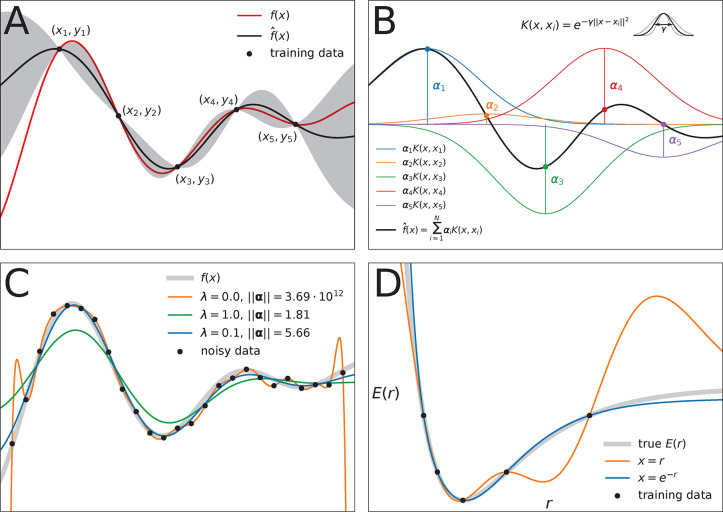

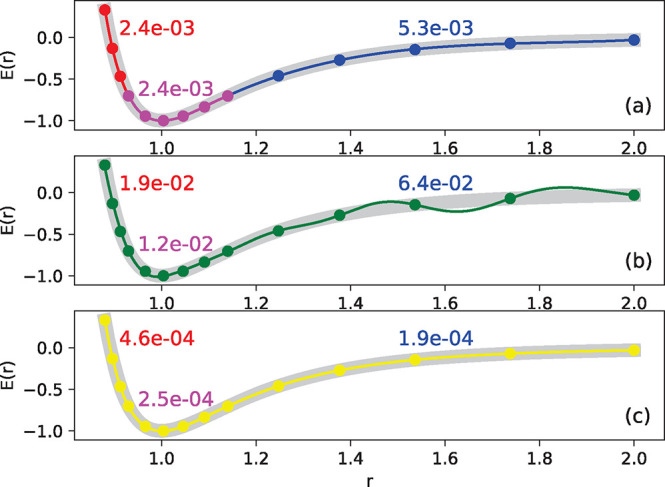

The most important concepts discussed in this section are summarized visually in Figure 6.

Figure 6.

Overview of the mathematical concepts that form the basis

of kernel

methods. (A) Gaussian process regression of a one-dimensional function f(x) (red line) from M = 5 data samples

(xi, yi). The black line  (x) depicts the mean (eq 8) of the conditional probability p(

(x) depicts the mean (eq 8) of the conditional probability p( |y)

(see eq 7), whereas the

gray area depicts two standard

deviations from its mean (see eq 9). Note that predictions are most confident in regions where

training data is present. (B) Function

|y)

(see eq 7), whereas the

gray area depicts two standard

deviations from its mean (see eq 9). Note that predictions are most confident in regions where

training data is present. (B) Function  (x) can be expressed as

a linear combination of M kernel functions K(x, xi) weighted with regression coefficients αi (see eq 2). In this example, the Gaussian kernel (eq 4) is used (the hyperparameter γ

controls its width). (C) Influence of noise on prediction performance.

Here, the function f(x) (thick gray

line) is learned from M = 25 samples, however, each data point (xi, yi) contains observational noise (see eq 6). When the coefficients αi are determined without

regularization, i.e., no noise is assumed to be present, the model

function reproduces the training samples faithfully, but undulates

wildly between data points (orange line, λ = 0). The regularized

solution (blue line, λ = 0.1, see eq 10) is much smoother and stays closer to the

true function f(x), but individual

data points are not reproduced exactly. When the regularization is

too strong (green line, λ = 1.0), the model function becomes

unable to fit the data. Note how regularization shrinks the magnitude

of the coefficient vectors ∥α∥. (D)

For constructing force fields, it is necessary to encode molecular

structure with a representation x. The choice of this

structural descriptor may strongly influence model performance. Here,

the potential energy E of a diatomic molecule (thick

gray line) is learned from M = 5 data points by two kernel machines

using different structural representations (both models use a Gaussian

kernel). When the interatomic distance r is used

as descriptor (orange line, x = r), the predicted potential energy oscillates between data points,

leading to spurious minima and qualitatively wrong behavior for large r. A model using the descriptor x = e–r (blue line) predicts

a physically meaningful potential energy curve that is qualitatively

correct even when the model extrapolates.

(x) can be expressed as

a linear combination of M kernel functions K(x, xi) weighted with regression coefficients αi (see eq 2). In this example, the Gaussian kernel (eq 4) is used (the hyperparameter γ

controls its width). (C) Influence of noise on prediction performance.

Here, the function f(x) (thick gray

line) is learned from M = 25 samples, however, each data point (xi, yi) contains observational noise (see eq 6). When the coefficients αi are determined without

regularization, i.e., no noise is assumed to be present, the model

function reproduces the training samples faithfully, but undulates

wildly between data points (orange line, λ = 0). The regularized

solution (blue line, λ = 0.1, see eq 10) is much smoother and stays closer to the

true function f(x), but individual

data points are not reproduced exactly. When the regularization is

too strong (green line, λ = 1.0), the model function becomes

unable to fit the data. Note how regularization shrinks the magnitude

of the coefficient vectors ∥α∥. (D)

For constructing force fields, it is necessary to encode molecular

structure with a representation x. The choice of this

structural descriptor may strongly influence model performance. Here,

the potential energy E of a diatomic molecule (thick

gray line) is learned from M = 5 data points by two kernel machines

using different structural representations (both models use a Gaussian

kernel). When the interatomic distance r is used

as descriptor (orange line, x = r), the predicted potential energy oscillates between data points,

leading to spurious minima and qualitatively wrong behavior for large r. A model using the descriptor x = e–r (blue line) predicts

a physically meaningful potential energy curve that is qualitatively

correct even when the model extrapolates.

2.2.2. Artificial Neural Networks

Originally,

artificial neural networks (NNs) were, as suggested by their name,

intended to model the intricate networks formed by biological neurons.144 Since then, they have become a standard ML

algorithm94,98,144−150 only remotely related to their original biological inspiration.

In the simplest case, the fundamental building blocks of NNs are dense

(or “fully-connected”) layers–linear transformations

from input vectors  to output vectors

to output vectors  according to

according to

| 14 |

where

both weights  and biases

and biases  are parameters, and nin and nout denote the number of

dimensions of x and y, respectively. Evidently,

a single dense layer can only express linear functions. Nonlinear

relations between inputs and outputs can only be modeled when at least

two dense layers are stacked and combined with a nonlinear activation

function σ:

are parameters, and nin and nout denote the number of

dimensions of x and y, respectively. Evidently,

a single dense layer can only express linear functions. Nonlinear

relations between inputs and outputs can only be modeled when at least

two dense layers are stacked and combined with a nonlinear activation

function σ:

| 15 |

Provided that the number of dimensions of the “hidden layer” h is large enough, this arrangement can approximate any mapping between inputs x and outputs y to arbitrary precision, i.e., it is a general function approximator.151,152

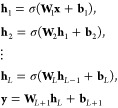

In theory, shallow NNs as shown above are sufficient to approximate any functional relationship.152 However, deep NNs with multiple hidden layers are often superior and were shown to be more parameter-efficient.153−156 To construct a deep NN, L hidden layers are combined sequentially

|

16 |

mapping the

input x to several

intermediate feature representations hl, until the output y is obtained by a linear regression

on the features hL in the

final layer. For PES construction, typically, the NN maps a representation

of chemical structure x to a one-dimensional output representing

the energy. Contrary to the coefficients α in kernel

methods (see eq 10),

the parameters {Wl,bl}l = 1L + 1 of an NN cannot be fitted in closed form. Instead,

they are initialized randomly and optimized (usually using a variant

of stochastic gradient descent) to minimize a loss function that measures

the discrepancy between the output of the NN and the reference data.157 A common choice is the mean squared error (MSE),

which is also used in kernel methods. During training, the loss and

its gradient are estimated from randomly drawn batches of training

data, making each step independent of the number of training data M. On the other hand, finding the coefficients for kernel

methods scales as  due to the need of inverting the M × M kernel matrix. Evaluating an

NN according to eq 16 for a single input x scales linearly with respect to

the number of model parameters. The same is true for kernel methods,

but here the number of model parameters is tied to the number of reference

data M used for training the model (see eq 2), which means that evaluating kernel

methods scales

due to the need of inverting the M × M kernel matrix. Evaluating an

NN according to eq 16 for a single input x scales linearly with respect to

the number of model parameters. The same is true for kernel methods,

but here the number of model parameters is tied to the number of reference

data M used for training the model (see eq 2), which means that evaluating kernel

methods scales  . As the evaluation cost

of NNs is independent

of M and only depends on the chosen architecture,

they are typically the method of choice for learning large data sets.

A schematic overview of the mathematical concepts behind NNs is given

in Figure 8.

. As the evaluation cost

of NNs is independent

of M and only depends on the chosen architecture,

they are typically the method of choice for learning large data sets.

A schematic overview of the mathematical concepts behind NNs is given

in Figure 8.

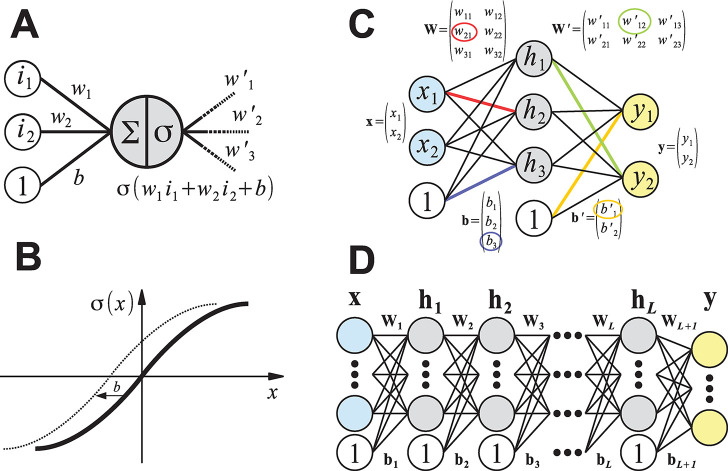

Figure 8.

Schematic representation of the mathematical concepts underlying artificial (feed-forward) neural networks. (A) A single artificial neuron can have an arbitrary number of inputs and outputs. Here, a neuron that is connected to two inputs i1 and i2 with “synaptic weights” w1 and w2 is depicted. The bias term b can be thought of as the weight of an additional input with a value of 1. Artificial neurons compute the weighted sum of their inputs and pass this value through an activation function σ to other neurons in the neural network (here, the neuron has three outputs with connection weights w′1, w′2, and w′3). (B) Possible activation function σ(x). The bias term b effectively shifts the activation function along the x-axis. Many nonlinear functions are valid choices, but the most popular are sigmoid transformations such as tanh(x) or (smooth) ramp functions, for example, max(0, x) or ln(1 + ex). (C) Artificial neural network with a single hidden layer of three neurons (gray) that maps two inputs x1 and x2 (blue) to two outputs y1 and y2 (yellow), see eq 15. For regression tasks, the output neurons typically use no activation function. Computing the weighted sums for the neurons of each layer can be efficiently implemented as a matrix vector product (eq 14). Some entries of the weight matrices (W and W′) and bias vectors (b and b′) are highlighted in color with the corresponding connection in the diagram. (D) Schematic depiction of a deep neural network with L hidden layers (eq 16). Compared to using a single hidden layer with many neurons, it is usually more parameter-efficient to connect multiple hidden layers with fewer neurons sequentially.

2.2.3. Model Selection: How to Choose Hyperparameters

In addition to the parameters that are determined when learning an ML model for a given data set, for example, the weights W and biases b in NNs or the regression coefficients α in kernel methods, many models contain hyperparameters that need to be chosen before training. They allow to tune a given model to the prior beliefs about the data set/underlying physics and thus play a significant role in how a model generalizes to different data patterns. Two types of hyperparameters can be distinguished. The first kind influences the composition of the model itself, such as the type of kernel or the NN architecture, whereas the second kind affects the training procedure and thus the final parameters of the trained model. Examples for hyperparameters are the width (number of neurons per layer) and depth (number of hidden layers) of an NN, the kernel width γ (see eq 4), or the strength of regularization terms (e.g., λ in eq 10).

The range of valid values is strongly dependent on the hyperparameter in question. For example, certain hyperparameters might need to be selected from the positive real numbers (e.g., γ and λ, see above), while others are restricted to positive integers or have interdependencies (such as depth and width of an NN). This is why hyperparameters are often optimized with primitive exhaustive search schemes like grid search or random search in combination with educated guesses for suitable search ranges, or more sophisticated Bayesian approaches.158 Common gradient-based optimization methods can typically not be applied effectively. Fortunately, for many hyperparameters, model performance is fairly robust to small changes and good default values can be determined, which work across many different data sets.

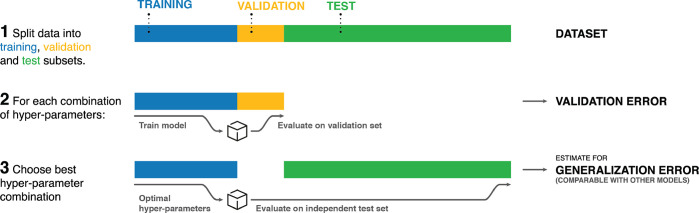

Before any hyperparameters may be optimized, a so-called test set must be split off from the available reference data and kept strictly separate. The remainder of the data is further divided into a training and a validation set. This is done because the performance of ML models is not judged by how well they predict the data they were trained on, as it is often possible to achieve arbitrarily small errors in this setting. Instead, the generalization error, that is, how well the model is able to predict unseen data, is taken as indicator for the quality of a model. For this reason, for every trial combination of hyperparameters, a model is trained on the training data and its performance measured on the validation set to estimate the generalization error. Finally, the best performing model is selected. To get better statistics for estimates of the generalization error, instead of splitting the remaining data (reference data excluding test set) into just two parts, it is also possible to divide it into k parts (or folds). Then a total of k models is trained, each using k – 1 folds as the training set and the last fold as validation set. This method is known as k-fold cross-validation.30,159

As the validation data influence model selection (even though it is not used directly in the training process), the validation error may give too optimistic estimates and is no reliable way to judge the true generalization error of the final model. A more realistic value can be obtained by evaluating the model on the held-out test set, which has neither direct nor indirect influence on model selection. To not invalidate this estimate, it is crucial not to further tweak any parameters or hyperparameters in response to test set performance. More details on how to construct ML models (including the selection of hyperparameters and the importance of keeping an independent test set) can be found in Section 3. The model selection process is summarized in Figure 9.

Figure 9.

Overview of model selection process.

2.3. Combining Machine Learning and Chemistry

The need for ML methods often arises from the lack of theory to describe a desired mapping between input and output. A classical example for this is image classification: It is not clear how to distinguish between pictures of different objects, as it is unfeasible to formulate millions of rules by hand to solve this task. Instead, the best results are currently achieved by learning statistical image characteristics from hundreds of thousands of examples that were extracted from a large data set representing a particular object class. From that, the classifier learns to estimate the distribution inherent to the data in terms of feature extractors with learned parameters like convolution filters that reflect different scales of the image statistics.94,98,101 This working principle represents the best approach known to date to tackle this particular challenge in the field of computer vision.

On the other hand, the benchmark for solving molecular problems is set by rigorous physical theory that provides essentially exact descriptions of the relationships of interest. While the introduction of approximations to exact theories is common practice and essential to reduce their complexity to a workable level, those simplifications are always physical or mathematical in nature. This way, the generality of the theory is only minimally compromised, albeit with the inevitable consequence of a reduction in predictive power. In contrast, statistical methods can be essentially exact, but only in a potentially very narrow regime of applicability. Thus, a main role of ML algorithms in the chemical sciences has been to shortcut some of the computational complexity of exact methods by means of empirical inference, as opposed to providing some mapping between input and output at all (as is the case for image classification). Notably, recent developments could show that machine learning can provide novel insight beyond providing efficient shortcuts of complex physical computations.33,55,59,62,70,105,160,161

Force field construction poses unique challenges that are absent from traditional ML application domains, as much more stringent demands on accuracy are placed on ML approaches that attempt to offer practical alternatives to established methods. Additionally, considerable computational cost is associated with the generation of high-level ab initio training data, with the consequence that practically obtainable data sets with sufficiently high quality are typically not very large. This is in stark contrast with the abundance of data in traditional ML application domains, such as computer vision, natural language processing etc. The challenge in chemistry, however, is to retain the generality, generalization ability and versatility of ML methods, while making them accurate, data-efficient, transferable, and scalable.

2.3.1. Physical Constraints

To increase data efficiency and accuracy, ML-FFs can (and should) exploit the invariances of physical systems (see Section 2.1.2), which provide additional information in ways that are not directly available for other ML problems. Those invariances can be used to reduce the function space from which the model is selected, in this manner effectively reducing the degrees of freedom for learning,69,162 making the learning problem easier and thus also solvable with a fraction of data. As ML algorithms are universal approximators with virtually no inherent flexibility restrictions, it is important that physically meaningful solutions are obtained. In the following, important physical constraints of such solutions and possible ways of their realization are discussed in detail. Furthermore, existing kernel-based methods and neural network architectures tailored for the construction of FFs and how they implement these physical constraints in practice are described.

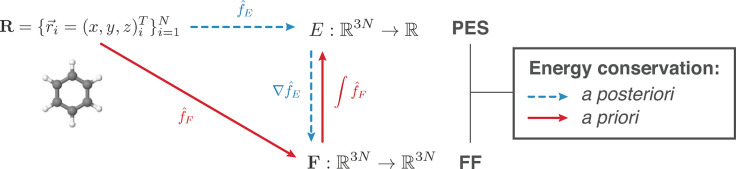

2.3.1.1. Energy Conservation

A necessary requirement for ML-FFs is that, in the absence of external forces, the total energy of a chemical system is conserved (see Section 2.1.2). When the potential energy is predicted by any differentiable method and forces derived from its gradient, they will be conservative by construction. However, when forces are predicted directly, this is generally not true, which makes deriving energies from force samples slightly more complicated. The main challenge to overcome is that not every vector field is necessarily a valid gradient field. Therefore, the learning problem cannot simply be cast in terms of a standard multiple output regression task, where the output variables are modeled without enforcing explicit correlations. A big advantage of predicting forces directly is that they are true quantum-mechanical observables within the BO approximation by virtue of the Hellmann–Feynman theorem,163,164 i.e., they can be calculated analytically and therefore at a relatively low additional cost when generating ab initio reference data. As a rough guideline, the computational overhead for analytic forces scales with a factor of only around ∼1–7 on top of the energy calculation.165 In contrast, at least 3N + 1 energy evaluations would be necessary for a numerical approximation of the forces by using finite differences. For example, at the PBE0/DFT (density functional theory with the Perdew–Burke–Ernzerhof hybrid functional) level of theory,166 calculating energy and analytical forces for an ethanol molecule takes only ∼1.5 times as long as calculating just the energy (the exact value is implementation-dependent), whereas for numerical gradients, a factor of at least ∼10 would be expected.

As forces provide additional information about how the energy changes when an atom is moved, they offer an efficient way to sample the PES, which is why it is desirable to formulate ML models that can make direct use of them in the training process. Another benefit of a direct reconstruction of the forces is that it avoids the amplification of estimation errors due to the derivative operator that would otherwise be applied to the PES reconstruction (see Figure 10).58,70,105

Figure 10.

Differentiation of an energy estimator (blue) versus direct force reconstruction (red). The law of energy conservation is trivially obeyed in the first case but requires explicit a priori constraints in the latter scenario. The challenge in estimating forces directly lies in the complexity arising from their high 3N-dimensionality (three force components for each of the N atoms) in contrast to predicting a single scalar for the energy.

2.3.1.2. Rototranslational Invariance

A crucial requirement for ML-FFs is the rotational

and translational

invariance of the potential energy, i.e.,  , where

, where  and

and  are rigid

rotations and translations and R are the Cartesian coordinates

of the atoms. As long as the

representation x(R) of chemical structure

chosen as input for the ML model itself is rototranslationally invariant,

ML-FFs inherit its desired properties and even the gradients will

automatically behave in the correct equivariant way due to the outer

derivative

are rigid

rotations and translations and R are the Cartesian coordinates

of the atoms. As long as the

representation x(R) of chemical structure

chosen as input for the ML model itself is rototranslationally invariant,

ML-FFs inherit its desired properties and even the gradients will

automatically behave in the correct equivariant way due to the outer

derivative  . One example of appropriate features

to

construct a representation x with the desired properties

are pairwise distances. For a system with N atoms,

there are

. One example of appropriate features

to

construct a representation x with the desired properties

are pairwise distances. For a system with N atoms,

there are  different pairwise distances that

result

in reasonably sized feature sets for systems with a few dozen atoms.

Apart from very few pathological cases, this representation is complete,

in the sense that any possible configuration of the system can be

described exactly and uniquely.117 However,

while pairwise distances serve as an efficient parametrization of

some geometry distortions like bond stretching, they are relatively

inefficient in describing others, for example, rotations of functional

groups. In the latter case, many distances are affected even for slight

angular changes, which can pose a challenge when trying to learn the

geometry-energy mapping. Complex transition paths or reaction coordinates

are often better described in terms of bond and torsion angles in

addition to pairwise distances. The problem is that the number of

these features grows rather quickly, with

different pairwise distances that

result

in reasonably sized feature sets for systems with a few dozen atoms.

Apart from very few pathological cases, this representation is complete,

in the sense that any possible configuration of the system can be

described exactly and uniquely.117 However,

while pairwise distances serve as an efficient parametrization of

some geometry distortions like bond stretching, they are relatively

inefficient in describing others, for example, rotations of functional

groups. In the latter case, many distances are affected even for slight

angular changes, which can pose a challenge when trying to learn the

geometry-energy mapping. Complex transition paths or reaction coordinates

are often better described in terms of bond and torsion angles in

addition to pairwise distances. The problem is that the number of

these features grows rather quickly, with  and

and  , respectively. At that rate, the

size of

the feature set quickly becomes a bottleneck, resulting in models

that are slow to train and evaluate. While an expert choice of relevant

angles would circumvent this issue, it reduces some of the “data-driven”

flexibility that ML models are typically appreciated for. Note that

models without rototranslational invariance are practically unusable,

as they may start to generate spurious linear or angular momentum

during dynamics simulations.

, respectively. At that rate, the

size of

the feature set quickly becomes a bottleneck, resulting in models

that are slow to train and evaluate. While an expert choice of relevant

angles would circumvent this issue, it reduces some of the “data-driven”

flexibility that ML models are typically appreciated for. Note that

models without rototranslational invariance are practically unusable,

as they may start to generate spurious linear or angular momentum

during dynamics simulations.

2.3.1.3. Indistinguishability of Identical Atoms

In the BO approximation, the potential energy of a chemical system only depends on the charges and positions of the nuclei. As a consequence, the PES is symmetric under permutation of atoms with the same nuclear charge. However, symmetric regions are not necessarily sampled in an unbiased way during MD simulations (see Figure 11). Consequently, ML-FFs that are not constrained to treat all symmetries equivalently may predict different results when permuting atoms (due to the uneven sampling).

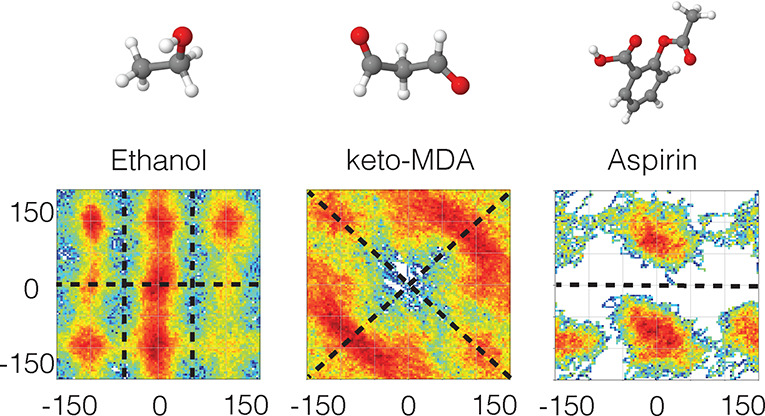

Figure 11.

Regions of the PESs for ethanol, keto-malondialdehyde and aspirin visited during a 200 ps ab initio MD simulation at 500 K using the PBE+TS/DFT level of theory167,168 (density functional theory with the Perdew–Burke–Ernzerhof functional and Tkatchenko-Scheffler dispersion correction). The black dashed lines indicate the symmetries of the PES. Note that regions related by symmetry are not necessarily visited equally often.

While it is in principle possible to arrive at a ML-FF that is symmetric with respect to permutations of same-species atoms indirectly via data augmentation29,169 or by simply using data sets that naturally include all relevant symmetric configurations in an unbiased way, there are obvious scaling issues with this approach. It is much more efficient to impose the right constraints onto the functional form of the ML-FF such that all relevant symmetric variants of a certain atomic configuration appear equivalent automatically. Such symmetric functions can be constructed in various ways, each of which has advantages and disadvantages.

Assignment-based approaches do not symmetrize the ML-FF per se, but instead aim to normalize its input, such that all symmetric variants of a configuration are mapped to the same internal representation. In its most basic realization, this assignment is done heuristically, that is, by using inexact, but computationally cheap criteria. Examples for this approach are the Coulomb matrix28 or the Bag-of-Bonds31 descriptors, that use simple sorting schemes for this purpose. Histograms107,170 and some density-based117,171,172 approaches follow the same principle, although not explicitly. All of those schemes have in common that they compare the features in aggregate as opposed to individually. A disadvantage is that dissimilar features are likely to be compared to each other or treated as the same, which limits the accuracy of the prediction. Such weak assignments are better suited for data sets with diverse conformations rather than gathered from MD trajectories that contain many similar geometries. In the latter case, the assignment of features might change as the geometry evolves, which would lead to discontinuities in the prediction and would effectively be treated by the ML model as noise (see ϵ in eq 1).

An alternative path is to recover the true correspondence of molecular features using a graph matching approach.173,174 Each input x is matched to a canonical permutation of atoms x̃ = Px before generating the prediction. This procedure effectively compresses the PES to one of its symmetric subdomains (see dashed black lines in Figure 11), but in an exact way. Note that graph matching is in all generality an NP-complete problem which can only be solved approximately. In practice, however, several algorithms exist to ensure at least consistency in the matching process if exactness can not be guaranteed.175 A downside of this strategy is that any input must pass through a matching process, which is relatively costly, despite being approximate. Another issue is that the boundaries of the symmetric subdomains of the PES will necessarily lie in the extrapolation regime of the reconstruction in which prediction accuracy tends to degrade. As the molecule undergoes symmetry transformations, these boundaries are frequently crossed, to the detriment of prediction performance.

Arguably the most

universal way of imposing symmetry, especially

if the functional form of the model is already given, is via invariant

integration over the relevant symmetry group fsym(x) = ∫π∈Sf(Pπx). Typically,  would

be the permutation group and Pπ the

corresponding permutation matrix that transforms each

vector of atom positions x. Some approaches117,176,177 avoid this implicit ordering

of atoms in x by adopting

a three-dimensional density representation of the molecular geometry

defined by the atom positions, albeit at the cost of losing rotational

invariance, which then must be recovered by integration. Invariant

integration gives rise to functional forms that are truly symmetric

and do not require any pre- or postprocessing of the in- and output

data. A significant disadvantage is, however, that the cardinality

of even basic symmetry groups is exceedingly high, which affects both

training and prediction times.

would

be the permutation group and Pπ the

corresponding permutation matrix that transforms each

vector of atom positions x. Some approaches117,176,177 avoid this implicit ordering

of atoms in x by adopting

a three-dimensional density representation of the molecular geometry

defined by the atom positions, albeit at the cost of losing rotational

invariance, which then must be recovered by integration. Invariant

integration gives rise to functional forms that are truly symmetric

and do not require any pre- or postprocessing of the in- and output

data. A significant disadvantage is, however, that the cardinality

of even basic symmetry groups is exceedingly high, which affects both

training and prediction times.

This combinatorial challenge can be solved by limiting the invariant integral to the physical point group and fluxional symmetries that actually occur in the training data set. Such a subgroup of meaningful symmetries can be automatically recovered and is often rather small.165 For example, each of the molecules benzene, toluene and azobenzene have only 12 physically relevant symmetries, whereas their full symmetric groups have orders 6!6!, 7!8!, and 12!10!2! symmetries, respectively.

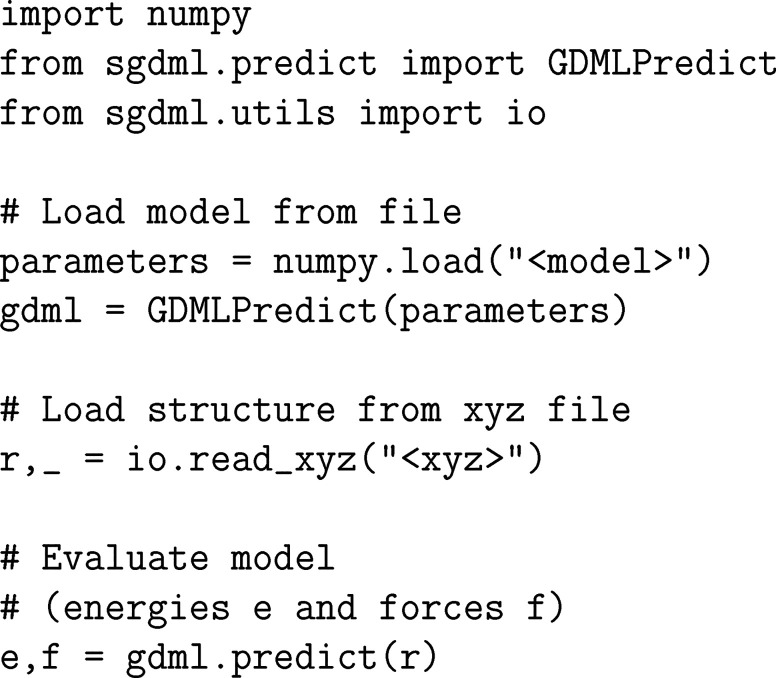

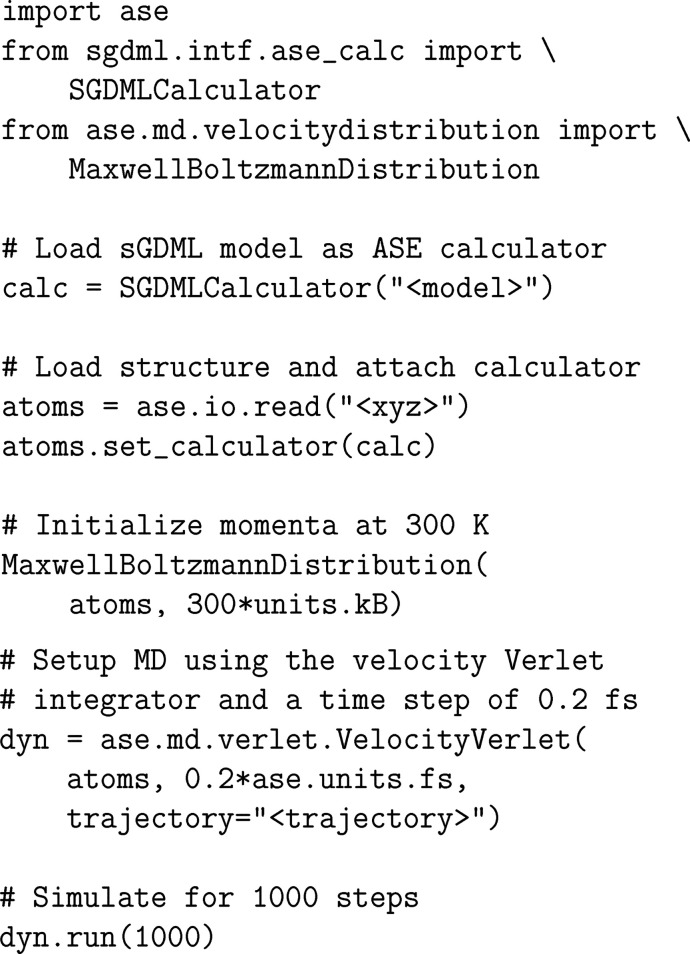

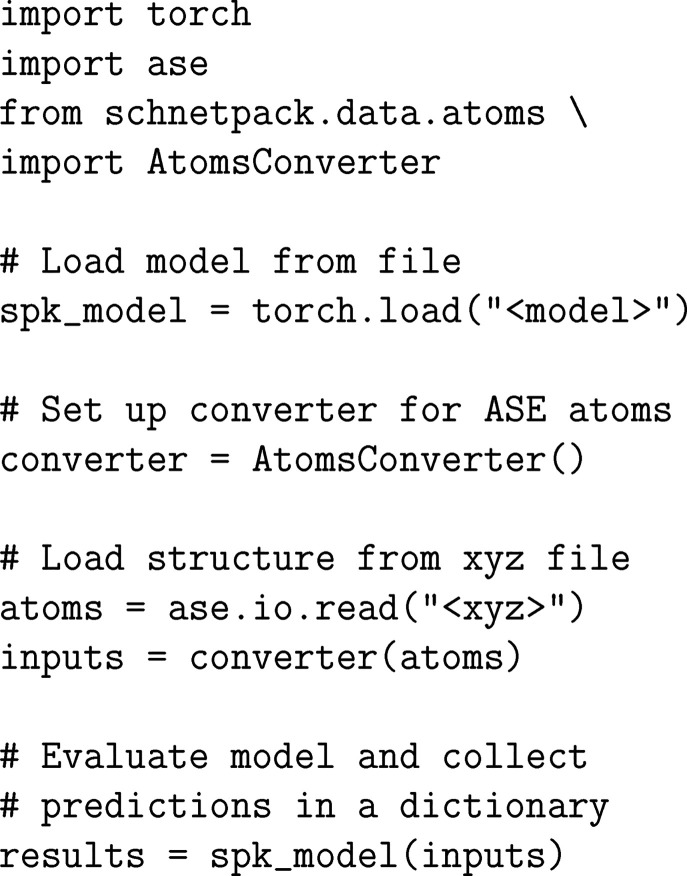

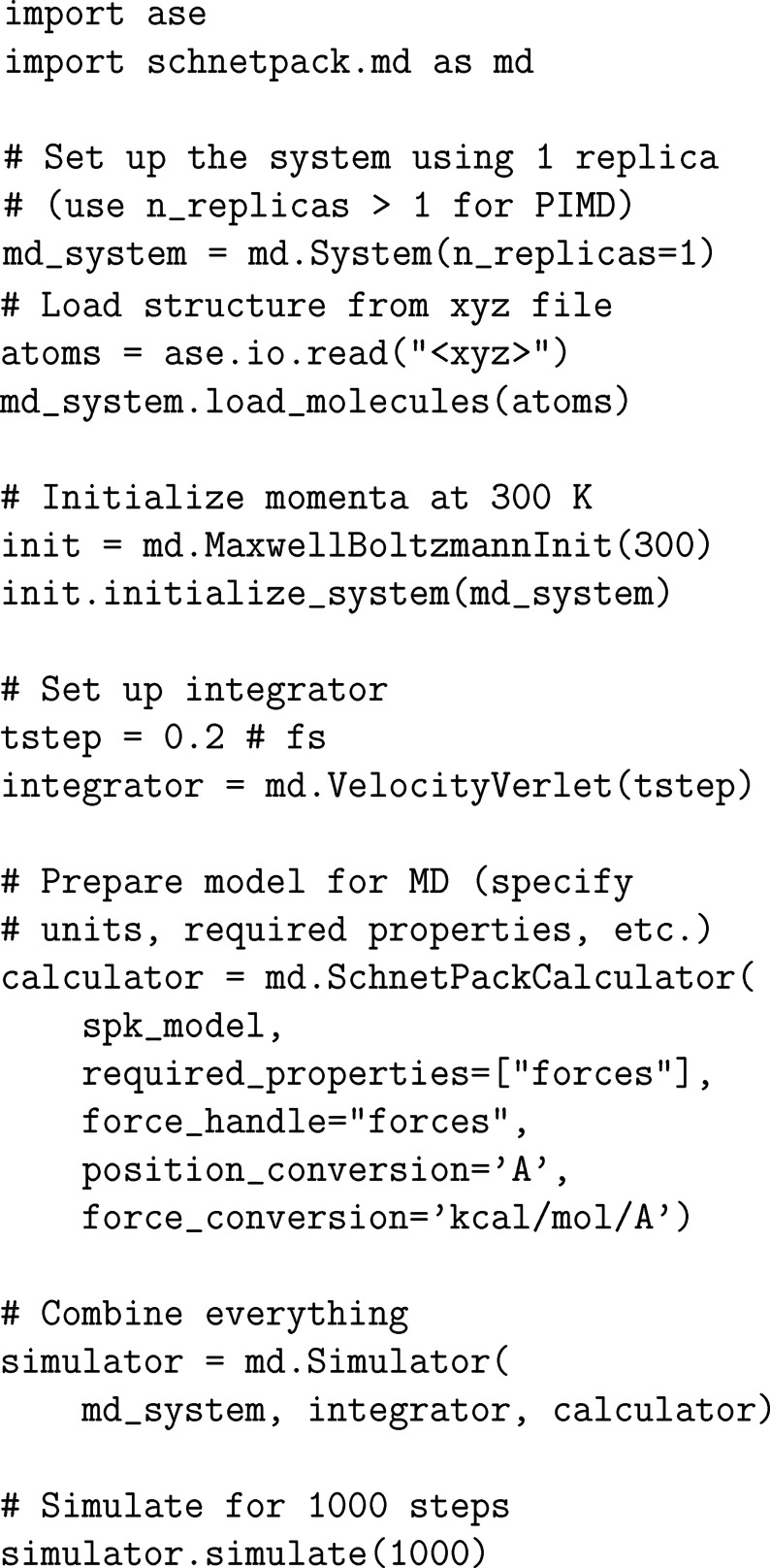

2.3.2. (Symmetric) Gradient Domain Machine Learning ((s)GDML)

Gradient domain machine learning (GDML) is a kernel-based

method introduced as a data efficient way to obtain accurate reconstructions

of flexible molecular force fields from small reference data sets

of high-level ab initio calculations.105 Contrary to most other ML-FFs, instead of predicting

the energy and obtaining forces by derivation with respect to nuclear

coordinates, GDML predicts the forces directly. As mentioned in Section 2.3.1, forces

obtained in this way may violate energy conservation. To ensure conservative

forces, the key idea is to use a kernel K (x, x′) =  xKE(x, x′)

xKE(x, x′) x′T that models the forces F as a transformation of an unknown potential energy surface E such that

x′T that models the forces F as a transformation of an unknown potential energy surface E such that

| 17 |

Here,  and

and  are the prior mean and covariance functions

of the latent energy-based Gaussian process

are the prior mean and covariance functions

of the latent energy-based Gaussian process  , respectively. The descriptor

of chemical

structure

, respectively. The descriptor

of chemical

structure  consists of

the inverse of all D pairwise distances, which guarantees

rototranslational

invariance of the energy. Training on forces is motivated by the fact

that they are available analytically from electronic structure calculations,

with only moderate computational overhead atop energy evaluations.

The big advantage is that for a training set of size M, only M reference energies are available, whereas

there are three force components for each of the N atoms, that is, a total of 3NM force values. This

means that a kernel-based model trained on forces contains more coefficients

(see eq 2) and is thus

also more flexible than an energy-based variant. Additionally, the

amplification of noise due to the derivative operator is avoided.

consists of

the inverse of all D pairwise distances, which guarantees

rototranslational

invariance of the energy. Training on forces is motivated by the fact

that they are available analytically from electronic structure calculations,

with only moderate computational overhead atop energy evaluations.

The big advantage is that for a training set of size M, only M reference energies are available, whereas

there are three force components for each of the N atoms, that is, a total of 3NM force values. This

means that a kernel-based model trained on forces contains more coefficients

(see eq 2) and is thus

also more flexible than an energy-based variant. Additionally, the

amplification of noise due to the derivative operator is avoided.

A limitation of the GDML method is that the structural descriptor x is not permutationally invariant because the values of its

entries (inverse pairwise distances) change when atoms are reordered.

An extension of the original approach, sGDML69,165 (symmetric GDML), additionally incorporates all relevant rigid space

group symmetries, as well as dynamic nonrigid symmetries of the system

at hand into the kernel, to further improve its efficiency and ensure

permutational invariance. Usually, the identification of symmetries

requires chemical and physical intuition about the system at hand,

which is impractical in an ML setting. Here, however, a data-driven

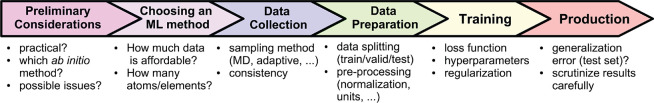

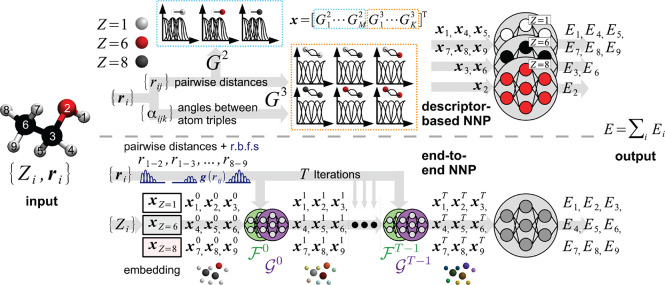

multipartite matching approach is employed to automatically recover