Abstract

Objectives:

To investigate differences in speech, language, and neurocognitive functioning between samples of normal-hearing (NH) children and deaf children with cochlear implants (CIs), based on performance on an anomalous sentence recognition test. Semantically anomalous sentences block the use of downstream predictive coding strategies during speech recognition, allowing investigation of the roles of other factors, such as rapid phonological coding and executive functioning, in sentence recognition.

Methods:

Extreme groups were extracted from samples of children with CIs and NH peers (ages 9 to 17) based on the 6 highest and 6 lowest scores in each sample on the Harvard Anomalous sentence recognition test (Harvard-A). The four extreme groups were compared on measures of speech, language, and neurocognitive functioning.

Results:

The 6 highest-scoring CI users and the 6 lowest-scoring NH peers did not differ in Harvard-A scores. Despite these comparable Harvard-A scores, the high-performing CI users and low-performing NH peers differed significantly on several measures of neurocognitive functioning. Compared to low-performing NH peers, high-performing children with CIs had significantly lower nonword repetition scores but higher nonverbal intelligence scores, greater verbal working memory (WM) capacity, and stronger executive function (EF) skills related to inhibition, shifting attention/mental flexibility, and working memory updating.

Discussion:

High-performing deaf children with CIs are able to adapt to and compensate for their sensory deficits and weaknesses in rapid, automatic phonological coding of speech by actively engaging a slow, effortful mode of information processing involving inhibition, working memory, and executive functioning, whereas NH peers are less dependent on these cognitive control processes for successful sentence recognition.

Keywords: deafness, cochlear implants, speech recognition, language, executive functioning, working memory

Spoken sentences are typically processed as integrated holistic linguistic units by listeners and not as lists of isolated unrelated words concatenated together as a sequence of symbols (Miller, 1962b). Meaningful sentences have a complex internal structure and rule-governed linguistic organization that is automatically recovered (i.e., parsed) by listeners when they perceive and understand spoken language. This emergent property of grammatical sentences as perceptual units of analysis in speech perception has been observed consistently across a wide range of information processing tasks using different types of sentence materials (Bever, 1970; Fodor, Bever, & Garrett, 1974).

Recovering the internal organizational structure of meaningful grammatical sentences using downstream linguistic parsing strategies and semantic and syntactic constraints is widely assumed to be a fundamental prerequisite for understanding the meaning of a talker’s intended linguistic message (Fodor et al., 1974). Sentence perception reflects the integration of the sensory evidence available in the speech signal with grammatical knowledge and top-down semantic predictive processing, which depend on the listener’s prior linguistic experience and developmental history (Boothroyd, 1968). In everyday real-world listening, sensory information and context are tightly and seamlessly integrated, supporting robust language understanding under a wide range of challenging listening conditions.

The present study used a novel set of semantically anomalous sentences (sentences that maintain correct grammatical syntax and word order but have no coherent semantic meaning) specifically designed to block the automatic use of semantic constraints in sentence processing. Our aim was to investigate how prelingually deaf, early-implanted, long-term cochlear implant (CI) users adapt to the loss of contextual information and modify their predictive coding strategies when recognizing words. The speech recognition skills of CI users are typically assessed in quiet listening conditions with lists of isolated monosyllabic consonant-nucleus-consonant (CNC) words or with short meaningful sentences such as the Hearing in Noise Test (HINT) or the Arizona Biomedical Institute Sentence Test (AzBio) (Adunka, Gantz, Dunn, Gurgel, & Buchman, 2018; Kirk, Diefendorf, Pisoni, & Robbins, 1997; Luxford et al., 2001; Mendel & Danhauer, 1997). Studies that experimentally manipulate the semantic attributes of words in sentences have rarely been undertaken with CI users. This gap is problematic because sentence context is central to, and clinically relevant for, everyday speech recognition, and differences in reliance on semantic and contextual cues may underlie the enormous individual differences routinely observed in speech and language outcomes following cochlear implantation (Pichora-Fuller, Schneider, & Daneman, 1995). A detailed understanding of the core foundational information processing mechanisms underlying individual differences in speech and language outcomes (such as the use of semantics, fast-automatic phonological coding, and/or slow-effortful cognitive control strategies) is important for designing novel, efficacious interventions to improve speech recognition and sentence processing outcomes in low-functioning CI users (Moberly, Bates, Harris, & Pisoni, 2016).

In studying highly overlearned and automatized information processing strategies, like those routinely used in speech recognition and spoken language understanding, it is often informative to use experimental methodologies specifically designed to challenge the information processing system, in order to investigate adaptive functioning and perceptual adjustments to novel, unfamiliar stimulus materials. By using nonwords in nonword repetition tasks or semantically anomalous sentences in word recognition tests, it is possible to experimentally block the use of downstream predictive coding strategies and higher-level linguistic context, and thereby observe the operation of other cognitive strategies that support spoken word recognition performance.

In a seminal study on sentence processing, Miller and Isard (1963) created a novel set of anomalous sentences in order to investigate the independent effects of syntactic and semantic factors on sentence perception. Although their anomalous sentences maintained correct grammatical syntax and word order, the sentences violated semantic constraints used in interpreting the meaning of words in sentences and blocked the use of downstream predictive coding strategies normally used in understanding sentences. Miller and Isard found that the intelligibility of strings of English words depended on their conformity to linguistic rules. Intelligibility was highest for meaningful grammatical sentences, lower for semantically anomalous sentences, and lowest for ungrammatical random strings of words, suggesting that linguistic rules facilitate the perception of speech by limiting the number of available response alternatives that a listener has to consider in understanding the meaning of sentences (Miller, 1962a).

Anomalous sentence tasks are used to block the automatic, obligatory use of higher-order syntactic and semantic predictive constraints based on prior linguistic knowledge and long-term experience, in order to assess spoken word recognition skills under challenging conditions. Anomalous sentences force the listener to actively suppress the highly overlearned, automatic use of semantic prediction/coding and language understanding processes in sentence recognition, and instead to reallocate controlled attention, memory, and conscious processing resources to the bottom-up acoustic-phonetic evidence encoded in the speech signal. Recognizing words in anomalous sentences requires listeners to make greater active, effortful use of the bottom-up sensory evidence they hear, rather than relying on automatic downstream semantic predictive coding and processing strategies based on their linguistic expectations.

Recognizing words in semantically anomalous sentences is a fundamentally different process than recognizing words in ordinary conventional meaningful sentences because the processing of anomalous sentences requires the listener to actively engage cognitive control processes that rely heavily on the primary domains of executive functioning: inhibition, mental flexibility, and verbal working memory (Miyake et al., 2000). Inhibition involves suppressing fast-automatic responding in the service of controlled, conscious processing; mental flexibility requires disengagement of an initial cognitive processing strategy in order to consider and shift to more effective and appropriate alternative strategies; and working memory consists of active, effortful storage and manipulation of the contents of short-term memory under the demands of additional concurrent cognitive processing operations.

Unlike traditional measures of executive function, such as the Stroop, Flanker, and Stop-Signal tasks and the Wisconsin Card Sorting Test (WCST) (Golden & Freshwater, 2002; Zelazo et al., 2013), anomalous sentences provide an experimental methodology that can be used to investigate the core foundational components of executive functioning and speech recognition simultaneously, within the same information processing task. Successful recognition of words in anomalous sentences requires the listener to actively inhibit the automatic semantic predictive coding processes, based on the semantic interpretation of the individual words and the overall meaning of the sentence, that normally help listeners recognize individual words rapidly, in close to real time. The listener must then actively shift attention and recognition strategies to focus conscious attention on the acoustic-phonetic information and sensory evidence encoded in the speech signal itself. The listener must also engage and update working memory to encode and maintain sequences of incompatible words that violate conventional predictive semantic rules. Working memory for sequences of words without downstream support from semantics and meaningful linguistic context is far more demanding for the listener, requiring active maintenance strategies and controlled attention.

Analyses of open-set response protocols and error patterns obtained from subjects listening to semantically anomalous sentences have demonstrated very clearly that listeners commonly struggle with inhibition and shifting from automatic semantic processing to effortful acoustic-phonetic processing, routinely attempting to make sense of semantically incompatible sequences of words by assigning a coherent and appropriate meaningful linguistic interpretation to the sentence based on downstream semantic and syntactic constraints (Herman & Pisoni, 2003; Pisoni, 1982; Pisoni, Nusbaum, & Greene, 1985). Recognition of words in anomalous sentences is often worse than might be expected based on sequences of words in typical meaningful grammatical sentences or sequences of words that are randomly concatenated together without any linguistic organization (Marks & Miller, 1964; Salasoo & Pisoni, 1985).

Other studies using anomalous sentences have been carried out by Stelmachowicz (2000) and Moberly and Reed (2019), who investigated the effects of sentence context and semantic constraints on word recognition. Stelmachowicz (2000) studied speech recognition in children with hearing aids using a set of nonsense sentences with conflicting context. Moberly and Reed (2019) used the set of Harvard anomalous sentences developed by Herman and Pisoni (2003) to reduce the contribution of downstream predictive semantic information in a group of older, postlingually deaf adults who were experienced cochlear implant users. Both studies found that coherent, meaningful top-down linguistic context facilitated word recognition when bottom-up sensory evidence alone was insufficient to support speech recognition. Moberly and Reed (2019) suggested that the active use of inhibitory control processes may play an important role in recognizing words in sentences.

Although very little research has been carried out on the processing of semantic information in deaf children with cochlear implants, a recent study by Wechsler-Kashi et al. (2014) investigated picture naming and verbal retrieval fluency in this clinical population. In the verbal retrieval task, both phonological fluency and semantic fluency were measured using conventional retrieval fluency methodologies in a group of 20 children with CIs and a control group of 20 age-matched children with normal hearing. Compared with the NH controls, the deaf children with cochlear implants retrieved fewer words in the phonological fluency task than in the semantic fluency task, suggesting selective weaknesses in the phonological representations of words in lexical memory. In a follow-up computational analysis, Kenett et al. (2013) applied graph-theoretic methods from network science to the response protocols obtained by Wechsler-Kashi et al. in the verbal fluency task for animal names. Kenett et al. found that the semantic networks of the children with CIs were compromised and appeared developmentally delayed compared to the network structures computed for the NH controls. These differences in verbal fluency suggest that the lexical networks and organization of words in lexical memory of deaf children with CIs may be more densely organized, reflecting incomplete and underspecified semantic knowledge of words in the language.

In this study, we used an extreme-groups design to investigate the cognitive (primarily executive) and speech recognition strategies underlying the ability to suppress or abandon contextual processing in anomalous sentence recognition in samples of children and adolescents with either normal hearing or CIs. High- and low-performers within each hearing group (NH or CI), defined by scores on the anomalous sentence recognition test, were compared on demographics, hearing history, speech recognition skills, and three primary domains of executive functioning (EF): inhibition, shifting, and working memory (WM). We chose an extreme-groups design to depict more clearly the differences in background, speech recognition, and executive functioning skills associated with high versus low anomalous sentence recognition performance in CI users and NH peers. Additionally, an extreme-groups design allowed us to compare subgroups of CI users and NH peers with comparable performance on the anomalous sentence recognition test; because those subgroups did not differ on anomalous sentence recognition, differences between them could provide insight into the different processes underlying anomalous sentence recognition in CI users compared to NH peers. Thus, this design provided new information about how sentence context and downstream predictive coding constrain speech recognition in children with CIs compared to children with NH, as well as about the cognitive and speech-language skills that support processing in a challenging task that combines speech perception and executive functioning. The following specific hypotheses were investigated:

1. NH children will score higher than children with CIs on the anomalous sentence recognition task, such that the strongest performers in the CI sample will fall in a range comparable to that of the weakest performers in the NH sample. This hypothesis is based on findings from challenging speech perception tests, which typically show overlap between the upper tail of performance (i.e., the highest scorers) in CI samples and the lower tail of performance (i.e., the lowest scorers) in NH samples (Smith, Pisoni, & Kronenberger, 2019).

2. High- and low-performers within the NH sample and within the CI sample will differ on measures of inhibition, shifting of attention, and working memory, reflecting the important contribution of these primary EF domains in challenging sentence perception tasks.

3. High-scoring children with CIs will demonstrate stronger nonverbal intelligence, inhibition, shifting, working memory, and speech perception skills than low-scoring NH children, even though both groups are equivalent on anomalous sentence perception scores. These differences were expected to reflect the compensatory skills and mental effort/cognitive control processes that children with CIs need in order to perceive speech without the benefit of downstream predictive context, whereas for NH peers the task is much less demanding and more highly automatic. Specifically, because speech perception is more demanding and effortful for CI users than for NH peers, we expected that CI users would need additional strengths to compensate for the greater demands of speech perception (Kronenberger, Henning, Ditmars, & Pisoni, 2018; Smith et al., 2019).

Method

Participants

Participants in the present study were selected from a larger sample of 58 children and adolescents aged 9-17 years with either normal hearing (NH; N=33) or cochlear implants (CIs; N=25) who participated in a study of speech, language, and neurocognitive development (Smith et al., 2019). Inclusion criteria for the CI sample were: severe-to-profound hearing loss [>70 dB Hearing Level (HL)] prior to age 12 months, cochlear implantation prior to age 36 months, use of a modern, multichannel CI system for ≥7 years, and, at the time of testing, enrollment in a rehabilitative program or residence in an environment that actively encouraged the development and use of spoken language listening and speaking skills. Inclusion criteria for the NH sample were no history of hearing problems or hearing loss by parent report and passing a hearing screen (in each ear individually at 20 dB HL at 500, 1000, 2000, and 4000 Hz) administered during the study visit. Inclusion criteria for both the CI and NH samples were: under 18 years of age, English as the primary language spoken in the household, no other neurological or neurodevelopmental disorders or delays documented in the medical chart or reported by parents, and nonverbal intelligence >70 as measured by the Geometric Nonverbal Intelligence Composite Index (normed standard score) of the Comprehensive Test of Nonverbal Intelligence, Second Edition (CTONI-2; Hammill, Pearson, & Wiederholt, 2009).
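The numeric inclusion criteria above can be expressed as simple predicate checks. The sketch below is illustrative only: the record fields and the `passes_hearing_screen` and `meets_ci_criteria` helpers are hypothetical, the pass rule for the screen (tone detected at every frequency in both ears) is an assumption, and the non-numeric criteria (CI system type, rehabilitative setting, household language, medical history) are not modeled.

```python
from dataclasses import dataclass

# Screening frequencies and level taken from the NH inclusion criterion.
SCREEN_FREQS_HZ = (500, 1000, 2000, 4000)
SCREEN_LEVEL_DB_HL = 20


def passes_hearing_screen(detected):
    """Assumed pass rule: the SCREEN_LEVEL_DB_HL tone was detected at every
    screening frequency in each ear. `detected` maps (ear, freq_hz) -> bool;
    a missing entry is treated as a failure."""
    return all(
        detected.get((ear, freq), False)
        for ear in ("left", "right")
        for freq in SCREEN_FREQS_HZ
    )


@dataclass
class CICandidate:
    # Hypothetical record holding only the numeric CI inclusion criteria.
    hearing_level_db_hl: float   # degree of loss identified before age 12 months
    implant_age_months: float    # age at cochlear implantation
    ci_use_years: float          # duration of CI use
    nonverbal_iq: float          # CTONI-2 Geometric Nonverbal Intelligence composite
    age_years: float             # age at testing


def meets_ci_criteria(c):
    """True if a candidate meets every numeric CI inclusion criterion."""
    return (
        c.hearing_level_db_hl > 70
        and c.implant_age_months < 36
        and c.ci_use_years >= 7
        and c.nonverbal_iq > 70
        and c.age_years < 18
    )
```

Keeping each criterion as a separate comparison makes it easy to report which criterion excluded a given candidate.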

Because the purpose of this study was to investigate factors related to good or poor anomalous sentence recognition performance, an extreme-groups method was used to select the study sample from the larger sample of 58 children (CI: N=25, Harvard-A mean=50.7, standard deviation [SD]=20.6; NH: N=33, Harvard-A mean=89.4, SD=6.1). One primary goal in defining extreme groups was to identify a group of CI users and a group of NH peers whose Harvard-A scores overlapped and did not differ significantly. To accomplish this goal, the distributions of the CI and NH samples on the Harvard-A were first examined for overlap. Because the entire NH sample scored at or above 72% keywords correct on the Harvard-A, we arbitrarily set a Harvard-A score of 70% or higher as the criterion for identifying overlap between the NH and CI samples. Children in the CI sample who scored 70% or higher on the Harvard-A (N=6; range=70-89%, mean=75.5, SD=7.6) were therefore identified as overlapping with the low end of the NH distribution. To match these 6 high-performing CI users, we selected an equal-sized subsample consisting of the 6 lowest-performing NH peers on the Harvard-A (range=72-83%; mean=79.2, SD=4.0). Thus, the 6 highest-performing children with CIs (CI High Performers) and the 6 lowest-performing children with NH (NH Low Performers) had comparable Harvard-A mean scores (t(10)=1.05, p=0.32) as well as overlapping ranges.
Finally, to further examine factors related to anomalous sentence recognition within the CI and NH samples, two additional groups of the same size (i.e., N=6) were identified on the Harvard-A: a low-performing group of CI users (the 6 CI users with the lowest Harvard-A scores: CI Low Performers; range=16-22%; mean=20.0, SD=3.3) and a high-performing group of NH peers (the 6 NH peers with the highest Harvard-A scores: NH High Performers; range=95-97%; mean=96.0, SD=0.9).
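The subgroup-selection procedure described above can be summarized as a short routine. This is a minimal sketch, assuming scores are keyed by a participant identifier and ignoring ties at group boundaries (the text does not say how ties would be handled); the function name `select_extreme_groups` is hypothetical. Group size is set by the number of CI users at or above the 70% overlap criterion, and that same size is then used for the other three subgroups.

```python
def select_extreme_groups(ci_scores, nh_scores, criterion=70.0):
    """Return the four extreme subgroups as lists of participant IDs.

    ci_scores, nh_scores: dicts mapping participant ID -> Harvard-A percent
    keywords correct. Ties at group boundaries are broken arbitrarily by sort
    order (an assumption; the text does not specify a tie rule).
    """
    ci_sorted = sorted(ci_scores.items(), key=lambda kv: kv[1])
    nh_sorted = sorted(nh_scores.items(), key=lambda kv: kv[1])

    # CI users whose scores reach the overlap criterion define the group size.
    ci_high = [pid for pid, score in ci_sorted if score >= criterion]
    n = len(ci_high)
    if n == 0 or 2 * n > min(len(ci_sorted), len(nh_sorted)):
        raise ValueError("criterion yields empty or overlapping groups")

    return {
        "CI Low": [pid for pid, _ in ci_sorted[:n]],
        "CI High": ci_high,
        "NH Low": [pid for pid, _ in nh_sorted[:n]],
        "NH High": [pid for pid, _ in nh_sorted[-n:]],
    }
```

The guard on `2 * n` ensures that the high and low groups drawn from the same sample never share participants.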

Thus, the final study sample consisted of 24 children, with six participants assigned to each of four subgroups: (1) CI Low Performers, (2) CI High Performers, (3) NH Low Performers, and (4) NH High Performers. Although all participants in the CI Low and CI High subgroups had early onset of deafness and early implantation, they differed significantly in Harvard-A scores, as expected (t(10)=16.5, p<0.001). Similarly, although all participants in the NH Low Performers and NH High Performers subgroups had normal hearing, they also differed significantly in Harvard-A scores, as expected (t(10)=10.0, p<0.001). Finally, although the CI High Performers and NH Low Performers differed in hearing status, they did not differ on Harvard-A sentence recognition scores or on any of the other three sentence recognition tests, as described below. Contrasts between the four subgroups could therefore be used to identify factors related to hearing status or Harvard-A performance, each independent of the other.
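With two subgroups of six, an independent-samples t-test has 6 + 6 - 2 = 10 degrees of freedom. The sketch below recomputes Student's pooled-variance t from the subgroup means and SDs reported above; assuming equal variances is our choice for illustration, and the helper name is hypothetical. Because the published means and SDs are rounded, the recomputed values (roughly 1.06, 16.4, and 10.0) land close to, but not exactly on, the reported t(10) values. SciPy's `scipy.stats.ttest_ind_from_stats` performs the same computation and also returns a p-value.

```python
import math


def pooled_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t (equal variances assumed) from summary stats.

    Returns (t, df) with df = n1 + n2 - 2; t is positive when m2 > m1.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m2 - m1) / se, df


# Subgroup summaries (mean, SD, n) as reported in the text.
CI_LOW = (20.0, 3.3, 6)
CI_HIGH = (75.5, 7.6, 6)
NH_LOW = (79.2, 4.0, 6)
NH_HIGH = (96.0, 0.9, 6)

for name, a, b in [
    ("CI High vs NH Low", CI_HIGH, NH_LOW),
    ("CI Low vs CI High", CI_LOW, CI_HIGH),
    ("NH Low vs NH High", NH_LOW, NH_HIGH),
]:
    t, df = pooled_t_from_summary(*a, *b)
    print(f"{name}: t({df}) = {t:.2f}")
```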

Procedures

Study procedures were approved by the local Institutional Review Board, and written consent from the parent/guardian and assent from the child were obtained prior to administration of study procedures. Participants took part in 2 waves of data collection (addressing different research aims) separated by an average of 1.84 (SD 0.96) years; the 4 subgroups did not differ in years between waves (F(3,23)=0.11, p=0.96). The Language, Spatial Span, and Behavior Rating Inventory of Executive Function (BRIEF) scores were obtained at Wave 1, while the Speech Perception/Sentence Recognition, Nonverbal Intelligence, and Digit Span measures were obtained at Wave 2. Each wave of data collection took place over 1 to 2 laboratory visits totaling about 4 hours; all Speech Perception/Sentence Recognition measures were administered during the same visit. Participants were administered measures of speech perception, language, and neurocognitive functioning at each visit. Speech perception and sentence recognition stimuli were presented in quiet at 65 dB SPL through a high-quality loudspeaker (Advent AV570, Audiovox Electronics) positioned on a table approximately 3 feet in front of the participant. All tests were administered by ASHA-certified speech-language pathologists using the same standard directions for the CI and NH samples, in auditory-verbal format without the use of any sign language.

Measures

Demographics, Hearing, and Communication History.

Demographic and hearing history variables were obtained from a questionnaire and interview as well as from review of medical records. Demographic variables obtained were current chronological age, gender, race, and household income (coded on a 1 (under $5000) to 10 ($95,000+) scale) (Kronenberger, Colson, Henning, & Pisoni, 2014). Hearing history variables were age at which deafness was identified, age at implantation, duration of deafness, duration of CI use, and preimplant unaided pure-tone average (PTA) for frequencies 500, 1000, and 2000 Hz. Communication mode was coded using criteria in Geers and Brenner (2003), and all participants were required to have a communication mode of “Auditory-Oral,” defined as “the child is encouraged throughout the day to both lipread and listen to the talker. No formal sign language is used…the child both watches and listens to the talker for most of the day.”
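The preimplant pure-tone average (PTA) is the arithmetic mean of the unaided thresholds at 500, 1000, and 2000 Hz. A minimal sketch follows; the `pure_tone_average` name, the dict input format, and the example audiogram are hypothetical, and the sketch does not handle no-response thresholds, which clinics code in different ways.

```python
def pure_tone_average(thresholds_db_hl, freqs_hz=(500, 1000, 2000)):
    """Mean unaided threshold (dB HL) across the given frequencies."""
    missing = [f for f in freqs_hz if f not in thresholds_db_hl]
    if missing:
        raise KeyError(f"no threshold recorded at {missing} Hz")
    return sum(thresholds_db_hl[f] for f in freqs_hz) / len(freqs_hz)


# Hypothetical preimplant audiogram for one ear (frequency in Hz -> dB HL).
audiogram = {250: 85, 500: 95, 1000: 105, 2000: 110, 4000: 115}
print(round(pure_tone_average(audiogram), 1))  # -> 103.3
```

Thresholds at frequencies outside the PTA set (here 250 and 4000 Hz) are ignored.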

Nonverbal Intelligence.

The three Geometric Scale subtests of the Comprehensive Test of Nonverbal Intelligence, Second Edition (Hammill et al., 2009) were used to measure nonverbal reasoning and concept formation using abstract visual-spatial designs that reduce the influence of verbal mediation. The Geometric Analogies subtest measures analogical reasoning to identify relationships among stimuli; the Geometric Categories subtest measures the ability to identify a shared stimulus category among pictures; and the Geometric Sequences subtest measures the ability to identify a progressive sequential pattern in a series of stimuli. Norm-based scaled scores (normative mean of 10, SD of 3) were obtained for each of the 3 subtests using the test manual. The Geometric Nonverbal Intelligence composite index is derived from the sum of the 3 subtest scaled scores and expressed as a standard score (normative mean of 100, SD of 15).

Harvard Anomalous Sentences Test (Harvard-A).

A set of novel semantically anomalous sentences was used to block the effects of top-down lexical-semantic processing and the contribution of sentence context to word recognition. The anomalous sentences used in this study were originally developed by Herman and Pisoni (2003) from a set of meaningful sentences taken from lists 11-20 of the Harvard-IEEE sentences originally developed by Egan (1944). Words in each sentence were replaced by randomly selected words of the same lexical-syntactic category (noun, verb, adjective, etc.) drawn from lists 21-70, producing a new set of sentences that preserved the surface syntactic structure of the original meaningful sentences but contained sequences of semantically incompatible words. Examples are “Crackers reach gray and rude in the paint” and “These dice bend in a hot desk.” The percentage of keywords correctly repeated was used as the Harvard-A score.
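The substitution idea behind the anomalous sentences can be sketched as follows, assuming each sentence has already been tagged by lexical-syntactic category. The tagging, the donor-pool format, and the `make_anomalous` helper are hypothetical; the original materials were constructed by Herman and Pisoni (2003), and details such as exactly which words were replaced and how inflections were matched are not specified here.

```python
import random


def make_anomalous(tagged_sentence, donor_pool, rng):
    """Replace each tagged word with a random word of the same category.

    tagged_sentence: list of (word, category) pairs; category None marks a
        word that is kept as-is (e.g., function words).
    donor_pool: dict mapping category -> candidate replacement words, standing
        in for words drawn from other sentence lists.
    rng: a random.Random instance, so results are reproducible.
    """
    out = []
    for word, category in tagged_sentence:
        if category is None:
            out.append(word)
            continue
        # Exclude the original word so a substitution actually occurs.
        choices = [w for w in donor_pool[category] if w.lower() != word.lower()]
        out.append(rng.choice(choices))
    return " ".join(out)


# Illustrative input: a meaningful sentence with hypothetical category tags,
# and a small donor pool using words from the anomalous examples above.
tagged = [("The", None), ("boy", "NOUN"), ("was", None), ("there", None),
          ("when", None), ("the", None), ("sun", "NOUN"), ("rose", "VERB")]
pool = {"NOUN": ["dice", "desk", "paint"], "VERB": ["bend", "reach"]}
print(make_anomalous(tagged, pool, random.Random(0)))
```

The word order and function words survive, so the output keeps the sentence frame while the content words no longer form a coherent meaning.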

Measures of Speech Perception and Sentence Recognition.

A nonword repetition test and three sentence recognition tests were used to assess speech perception and sentence recognition skills. The Children’s Test of Nonword Repetition (Gathercole & Baddeley, 1996; Gathercole, Willis, Baddeley, & Emslie, 1994) was used to measure rapid phonological coding and perception of spoken nonwords presented via a loudspeaker. Percentage of nonwords repeated correctly was the primary dependent measure used in the current data analysis.

The Harvard Standard Sentence test (Harvard-S) consisted of 28 meaningful, grammatically correct sentences taken from the Harvard-IEEE corpus (Egan, 1944; IEEE, 1969), produced in quiet by a male speaker. Harvard-S sentences consist of 6 to 10 words (mean=8.0 words), with 5 words per sentence designated as keywords (e.g., “The boy was there when the sun rose”). The percentage of keywords correctly repeated was the primary dependent measure used in the current data analysis.

The Perceptually Robust English Sentence Test Open-Set (PRESTO; Gilbert, Tamati, & Pisoni, 2013) and PRESTO-Foreign Accented English (PRESTO-FAE; Tamati & Pisoni, 2015) are high-variability sentence recognition tests that use multiple talkers, with a different talker and dialect for each sentence. PRESTO consists of 30 sentences drawn from the TIMIT (Texas Instruments/Massachusetts Institute of Technology) Acoustic-Phonetic Continuous Speech Corpus (Garofolo et al., 1993). Each sentence was spoken by a different male or female talker from 1 of 6 regional United States dialects, with 3-5 words in each sentence serving as keywords (e.g., “A flame would use up air,” “John cleaned shellfish for a living”). PRESTO-FAE consisted of 26 low-predictability (LP) sentences selected from the Speech Perception in Noise (SPIN) test (Kalikow, Stevens, & Elliott, 1977). Each sentence was spoken by a non-native speaker of English, with talkers differing in accent and international English dialect, and 3-6 words per sentence were identified as keywords (e.g., “It was stuck together with glue,” “My jaw aches when I chew gum”). The percentage of keywords correctly recognized was the primary dependent measure for PRESTO and PRESTO-FAE in the current data analysis.
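All of the sentence tests above are scored as the percentage of keywords correctly reported. A minimal sketch of that computation follows, assuming a strict exact-word-form match after lowercasing and stripping punctuation; the scoring rules actually used (for example, credit for morphological variants) are not specified here, the helper names are hypothetical, and the keyword sets in the example are illustrative, not the tests' official keyword lists.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase word tokens; punctuation other than apostrophes is dropped."""
    return re.findall(r"[a-z']+", text.lower())


def percent_keywords_correct(trials):
    """trials: list of (keywords, response) pairs for one test.

    A keyword is scored correct only if the same word form appears in the
    response (assumed strict rule). Each response word can be credited to
    at most one keyword.
    """
    hits = total = 0
    for keywords, response in trials:
        available = Counter(tokenize(response))
        for kw in keywords:
            total += 1
            key = kw.lower()
            if available[key] > 0:
                available[key] -= 1
                hits += 1
    return 100.0 * hits / total if total else 0.0


trials = [
    (["flame", "use", "air"], "a flame would use the air"),
    (["John", "cleaned", "shellfish", "living"], "John cleaned fish for a living"),
]
print(round(percent_keywords_correct(trials), 1))  # -> 85.7
```

Pooling keywords across sentences, rather than averaging per-sentence percentages, weights sentences with more keywords more heavily; the choice matters when keyword counts vary, as they do on PRESTO and PRESTO-FAE.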

The utility and validity of the Children’s Test of Nonword Repetition (CNREP), Harvard-S, PRESTO, and PRESTO-FAE in CI users have been documented in previous work from our research group (Smith et al., 2019). Strong correlations have been found between CNREP scores and the three sentence recognition tests in a sample of CI users. Furthermore, CNREP scores correlated significantly with the high-variability PRESTO tests even after controlling for Harvard-S scores, demonstrating that the high-variability, multiple-talker PRESTO tests place demands on rapid phonological coding over and above those of single-talker sentence recognition. Additionally, NH participants did not show ceiling effects on CNREP or PRESTO-FAE, allowing these tests to be used to compare the speech perception performance of the CI and NH samples without the confounding influence of restricted range in the NH sample.

Measures of Language.

Three language measures were chosen to assess receptive vocabulary (word knowledge) and higher-order language skills (organization and integration of linguistically complex information in the service of language comprehension or expression) (Kronenberger & Pisoni, 2019). The Peabody Picture Vocabulary Test, Fourth Edition (PPVT-4; Dunn & Dunn, 2007) is a measure of receptive vocabulary in which participants identify which of four pictures corresponds to a word spoken by the examiner; PPVT-4 age-based standard scores were used to measure receptive vocabulary. The Formulated Sentences (ability to produce grammatically and semantically correct sentences based on a word and a picture) and Recalling Sentences (ability to recall and repeat spoken sentences of increasing length and linguistic complexity) subtests of the Clinical Evaluation of Language Fundamentals, Fourth Edition (CELF-4; Semel, Wiig, & Secord, 2003) were used as measures of higher-order language skills, based on prior work demonstrating that these subtests are especially complex and challenging for CI users (Kronenberger & Pisoni, 2019).

Measures of Working Memory and Executive Functioning.

Individually administered neurocognitive measures of verbal (digit span) and spatial (spatial span) working memory were given to all participants. Digit span forward (DSF; repeat a series of digits in forward order) and digit span backward (DSB; repeat a series of digits in the reverse order of their presentation) were administered visually on a computer touch-screen monitor, with digits presented individually at 1-second intervals followed by a 3x3 response screen (9 digits) on which the child pointed to the digits in the appropriate sequence (AuBuchon, Pisoni, & Kronenberger, 2015a, 2015b). The Spatial Span subtest of the WISC-IV Integrated (Wechsler et al., 2004) requires participants to point to a series of spatial locations on a board of blocks, in the same order as demonstrated by the examiner (Spatial Span Forward; SSF) or in the reverse order (Spatial Span Backward; SSB). For Digit Span and Spatial Span, two sequences were presented at each sequence length, beginning with a length of 2 and increasing by 1 until the child failed both sequences at the same length. For Digit Span, raw scores were the number of sequences correctly reproduced; for Spatial Span, age-based scaled scores were used.
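The span administration and discontinue rule described above can be sketched as a loop over sequence lengths. This is a minimal sketch: the `respond` callback stands in for presenting a sequence and checking the child's reply, the maximum length of 9 is an assumption (9 matches the 3x3 digit grid but is not stated as the test ceiling), and whole-sequence scoring is assumed.

```python
def is_correct(presented, response, backward=False):
    """Whole-sequence scoring: the response must match the target item by item.
    For backward span the target is the presented sequence reversed."""
    target = list(reversed(presented)) if backward else list(presented)
    return list(response) == target


def administer_span(respond, start_len=2, trials_per_len=2, max_len=9):
    """Return the raw span score (number of sequences reproduced correctly).

    respond(length, trial) -> bool stands in for presenting one sequence of
    `length` items and scoring the child's response (e.g., with is_correct).
    """
    correct = 0
    for length in range(start_len, max_len + 1):
        results = [respond(length, trial) for trial in range(trials_per_len)]
        correct += sum(results)
        # Discontinue rule: both sequences at this length were failed.
        if not any(results):
            break
    return correct


# A simulated child who reproduces every sequence up to length 5 and none beyond.
print(administer_span(lambda length, trial: length <= 5))  # -> 8
```

Passing one of the two sequences at a given length keeps testing going, so the raw score counts every correct sequence rather than only the longest length reached.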

Three subscales from the Behavior Rating Inventory of Executive Function (BRIEF; Gioia, Isquith, Retzlaff, & Espy, 2002) were selected to match the three core domains of EF identified by Miyake et al. (2000): Inhibition, Shifting, and Working Memory. The BRIEF is an 86-item behavior rating questionnaire on which parents report on their child’s executive functioning behaviors in everyday situations. The Inhibit scale measures the child’s ability to control impulses and behavior; the Shift scale measures the ability to switch flexibly between activities, strategies, or perspectives; and the Working Memory scale measures the ability to hold information in mind in order to complete activities or stay on task. Raw BRIEF scores were converted to T-scores based on age- and gender-based national norms (Gioia, Isquith, Kenworthy, & Barton, 2002; Gioia, Isquith, Retzlaff, et al., 2002).

Data Analysis Approach

Sample characteristics are reported for demographic and hearing history variables for each of the four subgroups; subgroup differences were tested using one-way ANOVAs across the four subgroups and t-tests comparing each pair of subgroups. For the nonverbal intelligence, speech perception, language, and working memory/EF measures, a multivariate analysis of variance (MANOVA) was first computed comparing the four subgroups on all measures within each domain (i.e., four MANOVAs, one each for nonverbal intelligence, speech perception, language, and working memory/EF; only the three CTONI-2 subtests were entered into the nonverbal intelligence MANOVA, because the composite is redundant with the sum of the subtests). Significant MANOVAs were followed up with ANOVAs for each measure within the domain, as well as planned contrasts (t-tests) between the CI High Performers and NH Low Performers subgroups (subgroups 2 and 3), in order to investigate differences between CI and NH children with equivalent Harvard-A performance. When the ANOVA for a specific measure was significant, additional t-tests were carried out between all pairs of subgroups. Uncorrected p-values were used because, apart from the planned CI High Performers versus NH Low Performers contrasts, individual measures were tested only when the MANOVA for their domain was significant.
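
Because Table 1 reports subgroup means and SDs with equal group sizes, the follow-up one-way ANOVA F statistics can be approximately recomputed from those summaries. The sketch below is a minimal illustration under that equal-n assumption; it does not implement the MANOVA step, and converting F to a p-value would additionally require the F distribution (e.g., from a statistics library), which is omitted here.

```python
from statistics import fmean


def one_way_anova_f(means, sds, n):
    """One-way ANOVA F for k groups of equal size n, from group means and SDs."""
    k = len(means)
    if k < 2 or len(sds) != k or n < 2:
        raise ValueError("need >= 2 groups, one SD per mean, and n >= 2")
    grand_mean = fmean(means)  # with equal n, the grand mean is the mean of means
    ss_between = n * sum((m - grand_mean) ** 2 for m in means)
    ms_between = ss_between / (k - 1)
    # With equal n, the pooled within-group variance is the mean of the variances.
    ms_within = fmean(sd ** 2 for sd in sds)
    return ms_between / ms_within, (k - 1, k * (n - 1))


# BRIEF Working Memory row of Table 1 (CI Low, CI High, NH Low, NH High).
f_stat, df = one_way_anova_f([65.8, 43.8, 51.8, 46.7], [7.9, 7.1, 7.2, 6.1], n=6)
print(f"F{df} = {f_stat:.2f}")  # F(3, 20) = 11.33
```

For the BRIEF Working Memory row the sketch reproduces the reported F of 11.33 with df = (3, 20). Rows whose summaries are more coarsely rounded reproduce only approximately; the Age row, for example, gives F ≈ 4.97 versus the reported 5.02.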

Results

Demographics and Hearing History

A summary of the demographics for the four Harvard-A subgroups (CI Low, CI High, NH Low, and NH High Performers) is presented in Table 1, and a comparison of hearing history variables between the CI Low Performers and CI High Performers subgroups is presented in Table 2. Among CI users, age was inversely related to Harvard-A performance: the CI Low Performers subgroup was significantly older at the time of testing than the CI High Performers subgroup. The opposite pattern was observed for NH peers; children in the NH High Performers subgroup were older than those in the NH Low Performers subgroup. For household income, all children in the CI High Performers subgroup were in the highest income category ($95,000+/year), which was significantly higher than household income in the CI Low Performers and NH Low Performers subgroups. As shown in Table 2, no significant differences were found between the two CI subgroups on any of the hearing history variables. Differences in gender and race could not be tested because of the small sample size.

Table 1.

Comparison of Harvard-A Subsamples

| Measure | CI Low Performers | CI High Performers | NH Low Performers | NH High Performers | F |
|---|---|---|---|---|---|
| Demographics | | | | | 3.69** |
| Age (years) | 14.9 (2.7)a | 11.6 (2.5)b | 11.9 (2.1)b | 15.6 (1.5)a | 5.02** |
| Household Income | 6.2 (3.1)a | 10.0 (0.0)b | 7.2 (1.2)a | 8.2 (2.0)ab | 3.62* |
| Gender (N; Male/Female) | 2/4 | 4/2 | 5/1 | 4/2 | not tested |
| Race (N; White/Black) | 6/0 | 6/0 | 4/3 | 6/1 | not tested |
| Speech Perception | | | | | 4.97*** |
| Harvard-A | 20.0 (3.3)a | 75.5 (7.6)b | 79.2 (4.0)b | 96.0 (0.9)c | 308.1*** |
| Nonword Repetition | 8.3 (8.2)a | 62.5 (14.1)b | 83.3 (10.3)c | 91.7 (5.2)c | 84.8*** |
| Harvard Sentences | 41.5 (6.5)a | 91.8 (1.7)b | 91.5 (3.6)b | 99.2 (1.3)c | 285.6*** |
| PRESTO | 27.2 (2.5)a | 83.0 (4.9)b | 87.2 (6.8)b | 98.3 (1.0)c | 317.5*** |
| PRESTO-FAE | 20.3 (10.4)a | 64.0 (5.9)b | 69.5 (10.2)b | 84.7 (1.9)c | 73.4*** |
| Language | | | | | 7.73*** |
| PPVT-4 | 64.3 (8.4)a | 106.5 (5.4)b | 108.5 (15.2)b | 121.3 (18.8)b | 21.5*** |
| CELF-4 Formulated Sentences | 1.5 (1.2)a | 12.7 (2.3)b | 12.2 (2.4)b | 12.5 (1.2)b | 52.0*** |
| CELF-4 Recalling Sentences | 1.7 (1.2)a | 12.8 (2.0)b | 9.0 (0.9)c | 13.0 (0.9)b | 93.4*** |
| Nonverbal Intelligence | | | | | 2.12* |
| Geometric Analogies | 11.0 (2.7)a | 10.7 (0.8)a | 8.3 (3.6)a | 10.2 (2.2)a | 1.33 |
| Geometric Categories | 8.0 (3.1)a | 13.0 (2.1)b | 11.5 (2.3)b | 10.5 (2.6)ab | 4.05* |
| Geometric Sequences | 11.5 (3.1)ab | 14.0 (2.6)a | 10.0 (3.0)b | 12.3 (2.1)ab | 2.25 |
| IQ Composite | 100.8 (14.2)ab | 117.2 (9.8)a | 99.5 (17.5)b | 106.5 (11.6)ab | 2.10 |
| Executive Functioning | | | | | 3.13*** |
| Digit Span Forward | 5.8 (0.8)a | 8.5 (1.9)b | 7.3 (1.5)ab | 11.7 (1.5)c | 17.1*** |
| Digit Span Backward | 4.3 (0.5)a | 4.2 (1.6)a | 4.7 (2.4)a | 9.7 (3.3)b | 8.68*** |
| Spatial Span Forward | 9.2 (1.8)a | 10.2 (2.7)a | 9.5 (1.8)a | 11.3 (3.7)a | 0.80 |
| Spatial Span Backward | 10.5 (2.2)ab | 13.5 (2.7)a | 10.3 (2.8)b | 12.8 (2.4)ab | 2.42† |
| BRIEF Inhibit | 59.7 (6.9)a | 48.7 (15.6)a | 48.2 (5.3)a | 47.5 (10.2)a | 1.90 |
| BRIEF Shift | 63.5 (12.5)a | 43.0 (4.8)b | 45.7 (7.5)b | 51.2 (22.0)b | 2.76† |
| BRIEF Working Memory | 65.8 (7.9)a | 43.8 (7.1)b | 51.8 (7.2)b | 46.7 (6.1)b | 11.33*** |

Note: CI = cochlear implant; NH = normal hearing; N = 6 per subgroup. Values are mean (standard deviation) unless otherwise indicated. F values are for MANOVAs (df = (6,36) for Demographics; df = (9,60) for Nonverbal Intelligence and Language; df = (12,57) for Speech Perception; df = (21,48) for Executive Functioning) or ANOVAs (df = (3,20), except Household Income, df = (3,18)). Within a row, means followed by different letters (a, b, c) differ at p < .05. Household income is reported on a scale from 1 (under $5,500) to 10 ($95,000+) (Kronenberger et al., 2013). Because some participants reported more than one race, N for race may be greater than 6.

† p < .10; * p < .05; ** p < .01; *** p < .001

Table 2.

Comparison of Harvard-A Cochlear Implant Subsamples on Hearing History

| Measure | CI Low Performers | CI High Performers | t |
|---|---|---|---|
| Hearing History | | | |
| Age at Deafness Onset (months) | 0.8 (2.0) | 0.3 (0.8) | 0.56 |
| Age at Implantation (months) | 26.9 (5.5) | 19.0 (8.5) | 1.92 |
| Duration of Deafness (months) | 26.1 (6.8) | 18.7 (8.6) | 1.67 |
| Duration of CI Use (years) | 12.6 (2.9) | 10.0 (2.6) | 1.64 |
| Preimplant Best PTA (dB HL) | 113.1 (7.2) | 106.4 (11.4) | 1.21 |

Note: CI=cochlear implant. df=10 for all independent groups t-tests; all p-values > .05. Values are mean (standard deviation). PTA=preimplant unaided pure-tone average for frequencies 500, 1000, 2000 Hz in dB HL.
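
The t values in Table 2 can be checked from the summary statistics. The note's df = 10 with six children per subgroup is consistent with Student's pooled-variance t-test (Welch's test would generally give fractional df), so the sketch below assumes equal variances; it is an illustrative recomputation, not the authors' analysis code.

```python
from math import sqrt


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's independent-samples t (equal variances assumed) from summary stats."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# Age at Implantation (months): CI Low vs. CI High, six children each.
t, df = pooled_t(26.9, 5.5, 6, 19.0, 8.5, 6)
print(f"t({df}) = {t:.2f}")  # t(10) = 1.91
```

The result is in line with the reported 1.92; small discrepancies of this size reflect rounding of the published means and SDs.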

Speech Perception and Language

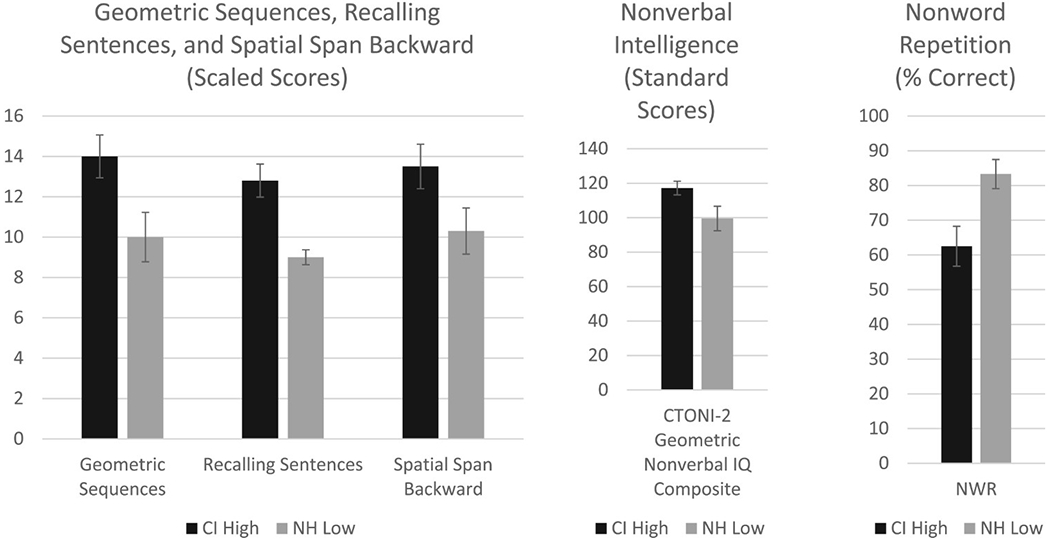

The MANOVAs and all ANOVAs comparing speech perception and language scores across Harvard-A performance subgroups were statistically significant (Table 1). On all three sentence recognition tests (Harvard-Standard, PRESTO, and PRESTO-FAE), the CI Low Performers subgroup performed more poorly than the CI High Performers and NH Low Performers subgroups, which did not differ from each other. The NH High Performers subgroup scored significantly better than the other three subgroups on all three sentence recognition tests. For nonword repetition, the two NH subgroups did not differ from each other; both scored significantly higher than the CI High Performers subgroup, which in turn scored significantly higher than the CI Low Performers subgroup. Of note, the NH Low Performers subgroup scored significantly higher than the CI High Performers subgroup on nonword repetition, even though the two subgroups did not differ on any of the three sentence recognition tests or on the Harvard-A test used to form the subgroups. Figure 1 presents comparisons for all variables in the study that differed significantly between the NH Low Performers and CI High Performers subgroups.

Figure 1. Significant Differences between CI High Performers and NH Low Performers.

Mean subgroup scores (error bars show standard error) are shown for the 5 speech perception, language, and cognitive/executive functioning scores that differed significantly (p < .05) between the CI High Performers and NH Low Performers subgroups, despite both subgroups having very similar scores on the Harvard-A (Table 1). Values in the left panel (Geometric Sequences, Recalling Sentences, and Spatial Span Backward) are norm-based scaled scores; values in the middle panel (CTONI-2 Geometric Nonverbal Intelligence Composite) are standard scores; and values in the right panel (Nonword Repetition) are percent-correct raw scores.

On the Peabody Picture Vocabulary Test (PPVT-4) and the CELF-4 Formulated Sentences subtest, the CI Low Performers subgroup scored more poorly than the other three subgroups, which did not differ from each other. On the CELF-4 Recalling Sentences subtest, however, the CI High Performers subgroup scored significantly better than the NH Low Performers subgroup (see Figure 1). The mean CELF-4 Recalling Sentences score for the CI Low Performers subgroup was well below those of the other three subgroups and almost 3 standard deviations (SD) below the normative mean for this subtest (Table 1).
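
The "almost 3 SD" statement can be checked by expressing each subgroup mean as a distance from the normative mean in SD units. This sketch assumes the conventional score metrics for these tests: CELF-4 subtest scaled scores have a normative mean of 10 and SD of 3, and PPVT-4 standard scores have a normative mean of 100 and SD of 15.

```python
# Normative metrics as (mean, SD); assumed conventional values.
SCALED = (10.0, 3.0)      # subtest scaled scores, e.g., CELF-4 subtests
STANDARD = (100.0, 15.0)  # standard scores, e.g., PPVT-4


def sd_units_from_norm(score, metric):
    """Signed distance of a score from the normative mean, in normative SDs."""
    norm_mean, norm_sd = metric
    return (score - norm_mean) / norm_sd


# CI Low Performers subgroup means from Table 1.
ci_low = {
    "PPVT-4": (64.3, STANDARD),
    "CELF-4 Formulated Sentences": (1.5, SCALED),
    "CELF-4 Recalling Sentences": (1.7, SCALED),
}
for name, (score, metric) in ci_low.items():
    print(f"{name}: {sd_units_from_norm(score, metric):+.2f} SD")
```

For the CI Low Performers subgroup this gives about -2.38 SD on the PPVT-4, -2.83 SD on Formulated Sentences, and -2.77 SD on Recalling Sentences.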

Nonverbal Intelligence and Executive Functioning/Working Memory

MANOVAs for nonverbal intelligence and executive functioning/working memory scores by Harvard-A performance subgroup were statistically significant (Table 1). For nonverbal intelligence, the ANOVA comparing Harvard-A subgroups on Geometric Categories was statistically significant, with the CI Low Performers subgroup scoring lower than the CI High Performers and NH Low Performers subgroups. Planned contrasts between the CI High Performers and NH Low Performers subgroups were statistically significant for the Geometric Sequences subtest and the Geometric Nonverbal Intelligence Composite, with the CI High Performers subgroup scoring higher than the NH Low Performers subgroup (see Figure 1).

On the Digit Span measures of working memory, the NH High Performers subgroup scored higher than the other three subgroups on both the forward and backward tasks. The CI High Performers subgroup also scored better than the CI Low Performers subgroup, but on Digit Span Forward only. The four subgroups did not differ on Spatial Span Forward, but the CI High Performers subgroup scored higher than the NH Low Performers subgroup on Spatial Span Backward (see Figure 1). The ANOVA comparing the four subgroups on BRIEF Working Memory scores was highly significant (p < .001), with the CI Low Performers subgroup scoring higher (indicating more problems with working memory) than the other three subgroups.

Discussion

Overview

In this study, we used an extreme-groups design to investigate the perception of anomalous sentences by prelingually deaf children with CIs compared to NH peers. Semantically anomalous sentences block the automatic deployment of lexical access and semantic processing routines, as well as the powerful downstream linguistic contextual constraints that normally operate in sentence perception. As a consequence, the perception of anomalous sentences requires the active use of executive function and cognitive control processes to initiate and sustain effortful attention to individual words and to support word recognition in the presence of conflicting semantic contexts that deviate from the expected pattern and sequence of words in meaningful sentences. To recognize words in anomalous sentences successfully, listeners must not only activate speech perception processes but also engage core components of EF, including working memory, mental flexibility/shifting of attention, and inhibitory control.

Because the compromised sensory input provided by a CI interferes with fast, automatic perceptual processing and encoding of the phonological and lexical components of speech (Smith et al., 2019), CI users must recruit additional EF mechanisms to compensate for deficits in fast, automatic phonological coding if they are to perform at a high level on an anomalous sentence recognition test. In contrast, NH peers can perform at a high level on the anomalous sentence test through fast, automatic encoding of the phonological information in the sentences, with less dependence on EF mechanisms. We therefore hypothesized that CI and NH groups with the same performance on an anomalous sentence test would differ in EF (higher in the CI group) and in nonword repetition, a measure of fast phonological coding (higher in the NH group).

The extreme-groups approach allowed us to make direct comparisons between two subgroups of children who had equivalent word recognition performance on the Harvard-A sentences but very different hearing histories and early developmental experiences. Because of the design of the larger study from which these subsamples were drawn (Smith et al., 2019), comparisons could also be carried out with two other subgroups: CI users who scored low on the Harvard-A (CI Low Performers) and NH peers who scored high on the Harvard-A (NH High Performers). Comparisons among these four subgroups also provided several new insights into the demographic, language, intellectual, and neurocognitive differences that underlie the recognition of words in semantically anomalous sentences.
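
The subgroup formation behind the extreme-groups design can be sketched as ranking each sample by Harvard-A score and taking the k lowest and k highest scorers. In this minimal sketch the participant IDs and scores are hypothetical, k is a parameter (Table 1 reports six children per subgroup), and ties are broken by ID, which is an assumption because the tie-handling rule is not described.

```python
from typing import Dict, List, Tuple


def extreme_groups(scores: Dict[str, float], k: int) -> Tuple[List[str], List[str]]:
    """Return (lowest_k, highest_k) participant IDs ranked by score.

    Ties are broken by participant ID so the selection is reproducible;
    this tie rule is an assumption, not the study's documented procedure.
    """
    if k < 1 or 2 * k > len(scores):
        raise ValueError("need k >= 1 and at least 2k participants")
    ranked = sorted(scores, key=lambda pid: (scores[pid], pid))
    return ranked[:k], ranked[-k:]


# Hypothetical Harvard-A percent-correct scores for a small CI sample.
ci_scores = {"c1": 20.0, "c2": 75.0, "c3": 50.0, "c4": 80.0, "c5": 10.0}
low, high = extreme_groups(ci_scores, k=2)
print(low, high)  # ['c5', 'c1'] ['c2', 'c4']
```

Running the function separately on the CI and NH samples yields the four subgroups; the CI High and NH Low subgroups are the pair compared in the planned contrasts.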

Demographics and Hearing History.

Several nonsignificant trends in the demographic and hearing history variables suggest that additional research is warranted to investigate whether the CI High Performers group had a more enriched auditory-verbal-linguistic environment than the CI Low Performers group. The CI High Performers group received their CIs at an earlier age, had a shorter duration of deafness before implantation, and had lower preimplant pure-tone average thresholds (PTAs; i.e., more residual hearing) than the CI Low Performers group. Although these differences were not statistically significant, when taken together with the other performance measures they may reflect an enriched auditory-verbal-linguistic environment for the CI High Performers group, which may promote better language development, EF, and cognitive control skills (Smith et al., 2019). The significantly higher SES (household income) of the CI High Performers group relative to both the CI Low Performers and NH Low Performers groups may also underlie their enriched verbal experiences (Hart & Risley, 1995; Suskind, 2015). Interestingly, age was inversely related to Harvard-A performance for CI users (high Harvard-A scorers were younger than low Harvard-A scorers), whereas it was positively related to Harvard-A performance for NH peers. While it is unsurprising that older NH children outperformed younger children on anomalous sentence recognition (reflecting stronger language skills with age), the reverse relationship for CI users may be a result of younger users benefiting from more modern devices, strategies, and audiological/speech interventions (Ruffin, Kronenberger, Colson, Henning, & Pisoni, 2013), although we did not directly test this effect.

Speech Perception and Rapid Phonological Coding.

The three additional sentence recognition tests replicated the pattern of results observed with the Harvard-A sentences used to form the four subgroups. However, the CI High Performers and NH Low Performers groups differed significantly on the nonword repetition task. Nonword repetition is a well-established psycholinguistic test that is routinely used to measure rapid phonological coding in speech perception (Cleary, Dillon, & Pisoni, 2002; Gathercole et al., 1994). The difference in nonword repetition scores suggests that the NH Low Performers group had much better rapid automatic phonological coding skills and was able to use bottom-up sensory evidence in the signal more efficiently to recognize words in sentences than the CI High Performers group, who may have had to rely on slow, effortful processing instead.

Although the CI High Performers subgroup displayed word recognition performance on the sentence recognition tests that was comparable to that of the NH Low Performers subgroup, their poorer performance on the nonword repetition task is theoretically and clinically informative because it suggests that these children may have used compensatory information processing strategies to overcome deficits and weaknesses in rapid automatic phonological coding of speech. Specifically, children in the CI High Performers group may have achieved excellent sentence recognition scores by relying on slow, effortful, controlled information processing, with executive functioning and cognitive control processes orchestrating the elementary operations used in word recognition, lexical access, and sentence perception (Smith et al., 2019). Such compensatory processing is likely to be especially important under challenging conditions, such as recognizing words in anomalous sentences that contain conflicting and incoherent semantic and lexical information that is difficult to parse linguistically.

Language.

No differences were found between the CI High Performers and NH Low Performers subgroups on the PPVT-4 or the CELF Formulated Sentences subtest, even though large differences in vocabulary knowledge and language scores are routinely found between CI and NH groups on these measures (Kronenberger et al., 2014; Kronenberger & Pisoni, 2019). Furthermore, norm-based scores on the PPVT-4 and CELF Formulated Sentences were at the upper end of the average range for the CI High Performers group, suggesting that these children had exceptionally good global language skills, well beyond the scores typically observed in the CI population. These strong language scores are consistent with the demographic results summarized earlier for the CI High Performers group.

However, the CI High Performers group scored significantly higher than the NH Low Performers group on the CELF Recalling Sentences subtest. This finding suggests that verbal memory for linguistically complex sentences, which depends heavily on verbal working memory updating and executive control, is much stronger in the CI High Performers group than in the NH Low Performers group. Because of their enriched early verbal-linguistic environment (e.g., higher family SES, larger vocabulary) and their development of robust compensatory strategies for encoding, storing, and recalling words in meaningful sentences, the CI High Performers group may have developed more efficient parsing and chunking strategies for using syntactic cues in sentences, as well as self-generated organizational strategies for updating and maintaining lexical information in verbal working memory. In stark contrast, the CI Low Performers group had very low scores on all of the language tests, roughly 2.4 to 2.8 SDs below the normative mean (Table 1).

Nonverbal Intelligence.

As shown in Table 1, the CI High Performers group scored much higher than the NH Low Performers subgroup on both the CTONI-2 Geometric Sequences subtest and the CTONI-2 Geometric Nonverbal Intelligence Composite. The CI High Performers subgroup scored in the above-average range on the Geometric Categories and Geometric Sequences subtests and on the composite, suggesting that their fluid, nonverbal reasoning skills are above average compared to the general population. It is also noteworthy that, although not all of these differences were statistically significant, the CI High Performers subgroup obtained numerically higher scores than all other subgroups (including the NH High Performers subgroup) on the Geometric Categories and Geometric Sequences subtests as well as the composite score; on Geometric Analogies, the CI Low Performers subgroup scored slightly higher.

Executive Functioning and Working Memory.

The perception of anomalous sentences requires the listener to actively engage cognitive control processes that rely heavily on executive functions such as inhibition, mental flexibility/shifting, and updating the contents of verbal working memory (Miyake et al., 2000). The CI High Performers subgroup obtained numerically higher memory span scores than the NH Low Performers subgroup on Digit Span Forward and Spatial Span Forward, although these differences were not statistically significant; their advantage on Spatial Span Backward was significant. BRIEF Working Memory scores showed a similar nonsignificant trend (p < .07), with the CI High Performers subgroup scoring lower (indicating better working memory) than the NH Low Performers subgroup. The pattern observed in both sets of measures, the memory spans and the BRIEF ratings, suggests that the two subgroups may differ in working memory dynamics, but replication with a larger N is necessary. Moreover, both sets of working memory measures suggest that the CI High Performers group represents a sample of very high-functioning CI users, because earlier studies of short-term and working memory capacity using much larger samples of deaf children with CIs have consistently reported large differences in memory span between children with CIs and age- and IQ-matched normal-hearing controls (Kronenberger et al., 2013). Furthermore, norm-based scores on the Spatial Span and BRIEF measures were consistently better than the normative mean (i.e., in the direction of better EF) for the CI High Performers subgroup. In contrast, norm-based scores on Spatial Span and the BRIEF were closer to the normative mean (or exceeded it in the problem direction) for the NH Low Performers group and, to an even greater extent, for the CI Low Performers group. Together, the BRIEF and Spatial Span norm-based scores suggest that the CI High Performers subgroup has excellent EF and behavior regulation skills that may underlie their strong sentence recognition performance.

Conclusions and Implications

Although there is broad agreement among speech and hearing scientists that speech perception is a highly interactive process involving the interpretation of bottom-up sensory evidence against a background of top-down knowledge and context, several core issues remain unresolved and await further investigation, especially in clinical populations with hearing loss, where enormous individual differences in speech recognition outcomes are commonly observed (Lenarz, 2017). Because speech perception is a very fast and highly automatic process reflecting a tight coupling between the sensory evidence encoded in the speech signal and higher-order linguistic context, it has been difficult to dissociate these two factors completely and to study the underlying component processes independently of each other.

Conventional sentence recognition tests used in speech audiometry to assess the audibility and recognition of speech signals provide reliable and valid endpoint clinical benchmark measures of speech recognition, but these measures reflect the combined effects of sensory evidence in the signal and of higher-order context and downstream predictive coding. To study these two factors independently and to assess how they interact, it is necessary to use special stimulus materials that attenuate the automatic use of normal lexical and semantic constraints, forcing the listener to focus attention and processing resources on the sensory evidence encoded in the speech signal.

Using an extreme-groups design with a set of novel anomalous sentences, we studied sentence perception and word recognition skills in a small group of deaf children with CIs who had exceptionally good sentence recognition skills and compared them to a small group of NH children with similar performance on the anomalous sentence test. Although both groups displayed comparable word recognition scores on the anomalous sentences, they appear to have achieved these scores in fundamentally different ways: they differed selectively on a number of measures across different information processing domains, including rapid phonological coding, nonverbal fluid intelligence, and executive functioning. Compared to NH peers who scored comparably on the Harvard-A, children in the CI High Performers subgroup had higher nonverbal intelligence scores, stronger verbal WM capacity for recalling linguistically complex sentences, and excellent EF skills related to inhibition, shifting attention/mental flexibility, and working memory updating. These children appear to compensate successfully for their sensory deficits and weaknesses in bottom-up rapid automatic phonological coding by engaging a slow, effortful mode of information processing that relies heavily on downstream predictive coding and cognitive control processes to fill in and restore missing or incomplete acoustic-phonetic and lexical information that is not reliably encoded or fully specified in the initial sensory input.

Limitations of the Present Study

The results of this study should be interpreted in the context of several limitations. Most importantly, the sample size in each group was small (N = 6), a consequence of the limited overlap between CI and NH participants scoring in the same range on the Harvard-A. Identifying these matched groups required taking the upper tail of the CI users' distribution and the lower tail of the NH peers' distribution, which captures only a fraction of each sample; larger samples will be needed to increase the size of this overlapping fraction. Because of the small N, the results should be replicated with larger samples, and nonsignificant results should be interpreted with caution. An additional limitation is the possible influence of confounding variables. For example, the groups differed in age, income, and intelligence, which may have affected performance on the language and executive functioning tests. The interrelations among demographic, hearing history, intelligence, language, and executive functioning characteristics are certainly complex, involving reciprocal effects and associations that may not reflect the effects of Harvard-A performance alone (Kronenberger & Pisoni, 2020). There is also a need to investigate EF and other domains of neurocognitive functioning using additional neuropsychological measures of constructs such as mental efficiency and concept formation. Finally, because this study was cross-sectional, causal interpretations should be made with caution: associations between variables may be consistent with causal effects, but causality cannot be definitively established with this methodology.

Acknowledgments:

The research reported in this article was supported by National Institute on Deafness and Other Communication Disorders Research Grant R01 DC-015257.

Footnotes

Disclosure Statement: David Pisoni reports no conflict of interest. William Kronenberger is a paid consultant to Takeda/Shire Pharmaceuticals, Homology Medicines, and the Indiana Hemophilia and Thrombosis Center (none are relevant to the current article).

References

- Adunka OF, Gantz BJ, Dunn C, Gurgel RK, & Buchman CA (2018). Minimum Reporting Standards for Adult Cochlear Implantation. Otolaryngol Head Neck Surg, 159(2), 215–219. doi: 10.1177/0194599818764329

- AuBuchon AM, Pisoni DB, & Kronenberger WG (2015a). Short-Term and Working Memory Impairments in Early-Implanted, Long-Term Cochlear Implant Users Are Independent of Audibility and Speech Production. Ear Hear, 36(6), 733–737. doi: 10.1097/AUD.0000000000000189

- AuBuchon AM, Pisoni DB, & Kronenberger WG (2015b). Verbal processing speed and executive functioning in long-term cochlear implant users. J Speech Lang Hear Res, 58(1), 151–162. doi: 10.1044/2014_JSLHR-H-13-0259

- Bever TG (1970). The cognitive basis for linguistic structures. In Hayes JR (Ed.), Cognition and the development of language (pp. 279–362). New York: Wiley.

- Boothroyd A (1968). Statistical theory of the speech discrimination score. J Acoust Soc Am, 43(2), 362–367. doi: 10.1121/1.1910787

- Cleary M, Dillon C, & Pisoni DB (2002). Imitation of nonwords by deaf children after cochlear implantation: preliminary findings. Ann Otol Rhinol Laryngol Suppl, 189, 91–96. Retrieved from https://www.ncbi.nlm.nih.gov/pubmed/12018358

- Dunn LM, & Dunn DM (2007). Peabody Picture Vocabulary Test - Fourth Edition (PPVT-4). Minneapolis, MN: Pearson Assessments.

- Egan JP (1944). OSRD Report No. 3802: Articulation Testing Methods II. Cambridge, MA: Psycho-Acoustic Laboratory, Harvard University.

- Fodor JA, Bever TG, & Garrett MF (1974). The psychology of language: An introduction to psycholinguistics and generative grammar. New York: McGraw-Hill, Inc.

- Garofolo JS, Lamel LF, Fisher WM, Fiscus JG, Pallett DS, Dahlgren NL, & Zue V (1993). The DARPA TIMIT Acoustic-Phonetic Continuous Speech Corpus LDC93S1. Philadelphia, PA: Linguistic Data Consortium.

- Gathercole SE, & Baddeley AD (1996). Children's Test of Nonword Repetition. London, UK: Psychological Corporation.

- Gathercole SE, Willis CS, Baddeley AD, & Emslie H (1994). The Children's Test of Nonword Repetition: a test of phonological working memory. Memory, 2(2), 103–127. doi: 10.1080/09658219408258940

- Geers A, & Brenner C (2003). Background and educational characteristics of prelingually deaf children implanted by five years of age. Ear Hear, 24(1 Suppl), 2S–14S. doi: 10.1097/01.AUD.0000051685.19171.BD

- Gilbert JL, Tamati TN, & Pisoni DB (2013). Development, reliability, and validity of PRESTO: a new high-variability sentence recognition test. J Am Acad Audiol, 24(1), 26–36. doi: 10.3766/jaaa.24.1.4

- Gioia GA, Isquith PK, Kenworthy L, & Barton RM (2002). Profiles of everyday executive function in acquired and developmental disorders (Vol. 8).

- Gioia GA, Isquith PK, Retzlaff PD, & Espy KA (2002). Confirmatory factor analysis of the behavior rating inventory of executive function (BRIEF) in a clinical sample (Vol. 8).

- Golden CJ, & Freshwater SM (2002). The Stroop Color and Word Test: A manual for clinical and experimental uses. Wood Dale, IL: Stoelting.

- Hammill DD, Pearson NA, & Wiederholt JL (2009). Comprehensive Test of Nonverbal Intelligence (CTONI-2), Second Edition. Austin, TX: Pro-Ed.

- Hart B, & Risley TR (1995). Meaningful differences in the everyday experiences of young American children. Baltimore: Paul H Brookes Publishing Co.

- Herman R, & Pisoni DB (2003). Perception of "Elliptical Speech" Following Cochlear Implantation: Use of Broad Phonetic Categories in Speech Perception. Volta Rev, 102(4), 321–347. Retrieved from https://www.ncbi.nlm.nih.gov/pubmed/21625300

- IEEE. (1969). IEEE recommended practice for speech quality measurements. Retrieved from

- Kalikow DN, Stevens KN, & Elliott LL (1977). Development of a test of speech intelligibility in noise using sentence materials with controlled word predictability. The Journal of the Acoustical Society of America, 61(5), 1337–1351. doi: 10.1121/1.381436 [DOI] [PubMed] [Google Scholar]

- Kenett YN, Wechsler-Kashi D, Kenett DY, Schwartz RG, Ben-Jacob E, & Faust M (2013). Semantic organization in children with cochlear implants: computational analysis of verbal fluency. Front Psychol, 4, 543. doi: 10.3389/fpsyg.2013.00543 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kirk KI, Diefendorf AO, Pisoni DB, & Robbins AM (1997). Assessing speech perception in children. In Mendel LL & Danhauer JL (Eds.), Audiologic Evaluation and Management and Speech Perception Assessment (pp. 101–132). San Diego: Singular Publishing Group.

- Kronenberger WG, Colson BG, Henning SC, & Pisoni DB (2014). Executive functioning and speech-language skills following long-term use of cochlear implants. J Deaf Stud Deaf Educ, 19(4), 456–470. doi: 10.1093/deafed/enu011

- Kronenberger WG, Henning SC, Ditmars AM, & Pisoni DB (2018). Language processing fluency and verbal working memory in prelingually deaf long-term cochlear implant users: A pilot study. Cochlear Implants Int, 19(6), 312–323. doi: 10.1080/14670100.2018.1493970

- Kronenberger WG, & Pisoni DB (2019). Assessing Higher Order Language Processing in Long-Term Cochlear Implant Users. Am J Speech Lang Pathol, 28(4), 1537–1553. doi: 10.1044/2019_AJSLP-18-0138

- Kronenberger WG, & Pisoni DB (2020). Why are children with cochlear implants at risk for executive functioning delays: Language only or something more? In Marschark M & Knoors H (Eds.), Oxford Handbook of Deaf Studies in Learning and Cognition. New York: Oxford University Press.

- Kronenberger WG, Pisoni DB, Harris MS, Hoen HM, Xu H, & Miyamoto RT (2013). Profiles of verbal working memory growth predict speech and language development in children with cochlear implants. J Speech Lang Hear Res, 56(3), 805–825. doi: 10.1044/1092-4388(2012/11-0356)

- Lenarz T (2017). Cochlear implant - state of the art. GMS Curr Top Otorhinolaryngol Head Neck Surg, 16, Doc04. doi: 10.3205/cto000143

- Luxford WM, & Ad Hoc Subcommittee of the Committee on Hearing and Equilibrium of the American Academy of Otolaryngology-Head and Neck Surgery (2001). Minimum speech test battery for postlingually deafened adult cochlear implant patients. Otolaryngol Head Neck Surg, 124(2), 125–126. doi: 10.1067/mhn.2001.113035

- Marks L, & Miller GA (1964). The role of semantic and syntactic constraints in the memorization of English sentences. Journal of Verbal Learning and Verbal Behavior, 3, 1–5.

- Mendel LL, & Danhauer JL (Eds.) (1997). Audiologic evaluation and management and speech perception assessment. San Diego: Singular Publishing Group, Inc.

- Miller GA (1962a). Decision units in the perception of speech. IRE Transactions on Information Theory, 8, 81–83.

- Miller GA (1962b). Some Psychological Studies of Grammar. American Psychologist, 17, 748–762.

- Miller GA, & Isard S (1963). Some perceptual consequences of linguistic rules. Journal of Verbal Learning and Verbal Behavior, 2, 217–228.

- Miyake A, Friedman NP, Emerson MJ, Witzki AH, Howerter A, & Wager TD (2000). The unity and diversity of executive functions and their contributions to complex “Frontal Lobe” tasks: a latent variable analysis. Cogn Psychol, 41(1), 49–100. doi: 10.1006/cogp.1999.0734

- Moberly AC, Bates C, Harris MS, & Pisoni DB (2016). The Enigma of Poor Performance by Adults With Cochlear Implants. Otol Neurotol, 37(10), 1522–1528. doi: 10.1097/MAO.0000000000001211

- Moberly AC, & Reed J (2019). Making Sense of Sentences: Top-Down Processing of Speech by Adult Cochlear Implant Users. J Speech Lang Hear Res, 62(8), 2895–2905. doi: 10.1044/2019_JSLHR-H-18-0472

- Pichora-Fuller MK, Schneider BA, & Daneman M (1995). How young and old adults listen to and remember speech in noise (Vol. 97).

- Pisoni DB (1982). Perception of speech: The human listener as a cognitive interface. Speech Technology, 1, 10–23.

- Pisoni DB, Nusbaum HC, & Greene BG (1985). Perception of synthetic speech generated by rule. Proceedings of the IEEE.

- Ruffin CV, Kronenberger WG, Colson BG, Henning SC, & Pisoni DB (2013). Long-term speech and language outcomes in prelingually deaf children, adolescents and young adults who received cochlear implants in childhood. Audiol Neurootol, 18(5), 289–296. doi: 10.1159/000353405

- Salasoo A, & Pisoni DB (1985). Interaction of Knowledge Sources in Spoken Word Identification. J Mem Lang, 24(2), 210–231. doi: 10.1016/0749-596X(85)90025-7

- Semel EM, Wiig EH, & Secord WA (2003). Clinical evaluation of language fundamentals (4th ed.). San Antonio, TX: The Psychological Corporation.

- Smith GNL, Pisoni DB, & Kronenberger WG (2019). High-Variability Sentence Recognition in Long-Term Cochlear Implant Users: Associations With Rapid Phonological Coding and Executive Functioning. Ear Hear, 40(5), 1149–1161. doi: 10.1097/AUD.0000000000000691

- Stelmachowicz PG, Hoover BM, Lewis DE, Kortekaas RW, & Pittman AL (2000). The relation between stimulus context, speech audibility, and perception for normal-hearing and hearing-impaired children. J Speech Lang Hear Res, 43(4), 902–914. doi: 10.1044/jslhr.4304.902

- Suskind DL (2015). Thirty Million Words: Building a Child’s Brain. New York: Dutton.

- Tamati TN, & Pisoni DB (2015). The perception of foreign-accented speech by cochlear implant users. Paper presented at the International Congress of Phonetic Sciences, Glasgow.

- Wechsler D, Kaplan E, Fein D, Kramer J, Morris R, Delis D, & Maerlender A (2004). Wechsler Intelligence Scale for Children - Fourth Edition Integrated. San Antonio, TX: Harcourt Assessment.

- Wechsler-Kashi D, Schwartz RG, & Cleary M (2014). Picture naming and verbal fluency in children with cochlear implants. J Speech Lang Hear Res, 57(5), 1870–1882. doi: 10.1044/2014_JSLHR-L-13-0321

- Zelazo PD, Anderson JE, Richler J, Wallner-Allen K, Beaumont JL, & Weintraub S (2013). II. NIH Toolbox Cognition Battery (CB): measuring executive function and attention. Monogr Soc Res Child Dev, 78(4), 16–33. doi: 10.1111/mono.12032