Abstract

Based on the behavior of living beings, which react mostly to external stimuli, we introduce a neural-network model that uses external patterns as a fundamental tool for the process of recognition. In this proposal, external stimuli appear as an additional field, and basins of attraction, representing memories, arise in accordance with this new field. This is in contrast to the more-common attractor neural networks, where memories are attractors inside well-defined basins of attraction. We show that this procedure considerably increases the storage capabilities of the neural network; this property is illustrated by the standard Hopfield model, which reveals that the recognition capacity of our model may be enlarged, typically, by a factor . The primary challenge here consists in calibrating the influence of the external stimulus, in order to attenuate the noise generated by memories that are not correlated with the external pattern. The system is analyzed primarily through numerical simulations. However, since there is the possibility of performing analytical calculations for the Hopfield model, the agreement between these two approaches can be tested—matching results are indicated in some cases. We also show that the present proposal exhibits a crucial attribute of living beings, which concerns their ability to react promptly to changes in the external environment. Additionally, we illustrate that this new approach may significantly enlarge the recognition capacity of neural networks in various situations; with correlated and non-correlated memories, as well as diluted, symmetric, or asymmetric interactions (synapses). This demonstrates that it can be implemented easily on a wide diversity of models.

Keywords: neural networks, models of single neurons, artificial intelligence, nonlinear dynamical systems

PACS: 07.05.Mh, 87.85.dq, 87.19.ll, 87.18.Sn, 05.90.+m

1. Introduction

Although the area of neural networks (NNs) has experienced impressive developments in the last few decades [1,2,3,4], essential characteristics and reactions of the brain are still far from being satisfactorily replicated in these models. This is an essential direction to pursue, since NNs were initially introduced in order to reproduce some of the primary functions of the brain [5], and their first main practical application was pattern recognition [6]. So far, NNs have been more successful in artificial intelligence applications, such as information and image processing, than in emulating the reactions of living beings in nature.

The remarkable works of Darwin and Wallace on evolutionary theory [7,8,9] were fiercely debated throughout the 19th century, but are now considered fundamental to our understanding of the world. They are accepted by all scientists as a starting point for studying everything related to living beings, and all organs of living creatures were, of course, molded by evolution. In particular, the brain itself grew and developed under the action of natural selection; it works in accordance with its evolutionary history. But what is meant by the evolutionary history of the brain? It means the development, from generation to generation, from species to species, of a complex system with fast responses to external stimuli, a fundamental condition of survival. A living being needs to react, in many cases instantaneously, to escape from a predator, to catch prey, to avoid an accident, and in many other situations. This implies that the nervous systems of animals were developed as fundamental tools, able to react promptly to changes in the environment.

After millions of years of the development of nervous systems, which generally increased in size and complexity, a concentrated region of nervous cells appeared—the primitive brain—which quickly enlarged its size; this was a breakthrough in the evolution of living beings. However, a further duty was yet to be fully developed. Living beings had to react quickly and more efficiently to changes in the environment—this task was at the origin of the brain and is recorded “au fer et au feu” in the way that it works. This stimuli-dependence of the brain is behind all of its assignments, the old ones (essentially instinctive reactions), as well as the biologically newer ones (e.g., those related to cognition). During the whole of its evolutionary history, pattern recognition was a basic attribute of the brain, allowing living beings to react appropriately. NN models should, therefore, incorporate some of the main features related to this; below we outline, briefly, the main procedure for pattern recognition in living beings.

- (a) Living beings accumulate memories (past input patterns) that are stored in some way, e.g., using Hebb’s rule [10,11].
- (b) Without external stimuli, they do not recognize any pattern, and remain in a noisy state.
- (c) In the presence of an external stimulus that is associated with some stored pattern, they recognize that pattern; if the external stimulus has no relation to any stored pattern, nothing is recognized.
- (d) If the external stimulus associated with some memory disappears, the effectivity of the recognized pattern decreases, becoming essentially null after some time delay. This induces a return to a noisy state.
- (e) In line with a common feature in nature, whenever an external stimulus abruptly changes its pattern, the living being quickly adjusts to the new recognized pattern and away from the old one.
- (f) These steps are followed repeatedly in the presence of each new stimulus, or sequences of stimuli.

Biologically realistic pattern-recognition NN models should reproduce the actions sketched above (at least, most of them), by following the route of new concepts in complex systems [12,13,14,15]. These systems essentially live at the chaos–order or ordered–disordered borderlines, and effective NN models should be defined at these borderlines; chaotic, ordered, or disordered regimes can never yield appropriate proposals for NNs.

Along these lines, we will introduce a stimuli-dependent neural-network model, and its effectiveness in pattern recognition will be demonstrated; certainly, similar scenarios can be constructed for other brain processes. Stimuli will be defined in the form of an external local field, which has already shown its effectiveness in the suppression of chaos in a recurrent fully connected NN model [16]. Here, we will illustrate how this works using the standard Hopfield model. In the next section we briefly review the paradigmatic Hopfield model, define the class of attractor neural networks (ANNs), and analyze some of their limitations. In Section 3, we present our model, which incorporates several of the points sketched above; the procedure will be applied to the Hopfield model, for which analytical calculations are feasible. We also discuss the new concepts embodied in this approach, emphasizing its pattern storage capacity and showing that this is substantially larger than the capacity of ANN models. Analytical calculations within a mean-field approximation, with special attention given to its zero-temperature ($T = 0$) limit, are carried out in Section 4. In Section 5, we present results from numerical simulations of uncorrelated patterns at $T = 0$, comparing some of these with the analytical results. The significant increase in pattern storage capacity, as compared to ANN models, is indicated by these numerical simulations. The ability of the model to react promptly to changes in the external stimuli (particularly in replicating actions (b)–(e) described above, which are crucial for living beings) is verified in Section 6. The good performance of the present proposal in the case of previously stored correlated patterns is reported in Section 7. In Section 8, we introduce site dilution into the model, revealing that it also functions well for asymmetric diluted synapses. In Section 9, we discuss our main results, emphasizing some of the advantages with respect to previous NN models, and propose potential applications; finally, in Section 10, we present our conclusions.

2. The Hopfield Model and Attractor Neural Networks

A breakthrough in the NN area occurred in the beginning of the 1980s, with the proposal of a model due to Hopfield [17] (known in the literature as the Hopfield model), which has attracted the attention of many during the last few decades [1,2,3]. Imitating ideas from random magnetic models, particularly those from the Ising spin-glass model [4,18,19], one makes the analogies, and . In this way, the Hopfield model is defined by means of a Hamiltonian,

$$H = -\sum_{(i,j)} J_{ij}\, S_i(t)\, S_j(t), \qquad (1)$$

with $S_i(t)$ ($S_i(t) = \pm 1$) representing the state of the i-th neuron at time t [5], activated or at rest. Moreover, $J_{ij}$ stands for the intensity of the synapses between neurons i and j (considered as symmetric), whereas $\sum_{(i,j)}$ denotes a sum over all distinct pairs of neurons, corresponding to a fully connected neural network. The intensity of the synapses is expressed in terms of p stored memories $\{\xi_i^{\mu}\}$ ($\mu = 1, \ldots, p$), according to Hebb’s rule [10,11],

$$J_{ij} = \frac{1}{N} \sum_{\mu=1}^{p} \xi_i^{\mu}\, \xi_j^{\mu}. \qquad (2)$$

These memories should remain fixed along the whole time evolution (i.e., are quenched variables) and are assumed to be orthogonal on average [for finite N there may occur overlaps between memories of $O(1/\sqrt{N})$]. The $\xi_i^{\mu}$ obey the probability distribution

$$P(\xi_i^{\mu}) = \frac{1}{2}\,\delta(\xi_i^{\mu} - 1) + \frac{1}{2}\,\delta(\xi_i^{\mu} + 1). \qquad (3)$$

The definition of the Hamiltonian above has attracted the interest of many physicists, particularly because statistical-mechanics techniques could be applied [20,21,22,23]. However, an immediate question concerns the assumption of symmetric couplings, $J_{ij} = J_{ji}$, which is a serious obstacle to an appropriate emulation of the brain, since it is well-known that real synapses violate this symmetry, i.e., $J_{ij} \neq J_{ji}$. In this case no Hamiltonian can be defined.
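As a minimal sketch of the construction just described, the snippet below draws unbiased binary memories and builds Hebbian couplings from them. The function names, the $1/N$ normalization, and the choice of zero self-couplings are assumptions made for illustration (they follow the usual textbook convention), not details confirmed by the text.

```python
import numpy as np

def random_memories(p, N, rng):
    """Draw p memories of N units; each entry is +1 or -1 with equal probability."""
    return rng.choice(np.array([-1, 1]), size=(p, N))

def hebb_couplings(xi):
    """Hebb's rule, assumed here as J_ij = (1/N) sum_mu xi_i^mu xi_j^mu, with J_ii = 0."""
    p, N = xi.shape
    J = (xi.T @ xi) / N
    np.fill_diagonal(J, 0.0)  # no self-coupling (assumption)
    return J

rng = np.random.default_rng(0)
xi = random_memories(p=5, N=200, rng=rng)
J = hebb_couplings(xi)

# Overlaps between different memories: zero on average, O(1/sqrt(N)) for finite N.
cross_overlaps = (xi @ xi.T) / xi.shape[1]
print(np.round(cross_overlaps, 2))
```

The couplings come out symmetric by construction, which is what allows a Hamiltonian to be defined.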

The Hopfield model has helped to create the class of ANNs; systems where the fundamental states become stored memories. Each such memory defines a nontrivial phase space and possesses its own basin of attraction. In ANNs, an initial pattern that has a significant overlap with one stored memory—that is, belonging to its basin of attraction—evolves by means of an appropriate dynamics (stochastic or not) to a lower energy state, i.e., to the stored memory. This leads to the recognition of the pattern [1,2,3,4,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40]. However, once a pattern is recognized, the system stays in its resultant state forever, even if the input pattern has acted only at the beginning of the process. Due to this aspect, it is easy to see that, of the items listed in the previous section, few are consistently fulfilled.

Three basic limitations that are common for ANNs are listed and discussed below. (i) They do not operate at the chaos–order border, as any complex system should [12,13,14,15], but rather on the ordered side; (ii) They present a maximum limit in their storage capacity; and (iii) They are inefficient when reacting to changes in the external environment.

The ordered side, where a multiplicity of low-energy states appears, is the main ingredient of an ANN; as a proposal for modeling one of the most complex systems in nature, restriction (i) means that the ANNs are very far from achieving an appropriate approach to the brain. The constraint (ii) comes from the fact that the memories correspond to ground states, each one having its own basin of attraction, so that the size of the basins reduces as the number of stored memories increases. This severely limits their number, even if these memories are not correlated. In general, the storage capacity of ANNs, with N neuronal units, may be expressed as , with characterized by threshold values (); in most cases is much smaller than one. In the standard Hopfield model, it is well-known that [22], so that the system becomes unable to recognize any further pattern for . The limitation (iii) is related to the above-mentioned fact that, once a pattern is recognized, the system stays in its corresponding state; this feature prevents quick reactions to external changes. In order to consider such reactions in the standard Hopfield model, for , one should change the initial state , which may lead to a jump in phase space to a different basin of attraction. As will be shown later, for the model we define next, reactions that follow changes in the external environment occur naturally and smoothly.
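Limitation (ii) can be illustrated with a small experiment, sketched here under assumptions: a standard Hopfield network (Hebbian couplings normalized by $N$, no self-coupling) is started exactly on a stored memory and relaxed with zero-temperature sequential updates, once at a load well below the known critical value of about 0.138 and once well above it. The function names and sizes are illustrative only.

```python
import numpy as np

def relax(xi, start, sweeps=20):
    """Zero-temperature sequential dynamics of the standard Hopfield model."""
    p, N = xi.shape
    S = start.copy()
    M = xi @ S  # M[mu] = sum_j xi_j^mu S_j, kept up to date after each flip
    for _ in range(sweeps):
        changed = False
        for i in range(N):
            # Hebbian local field on neuron i, excluding the self-coupling term
            h = (xi[:, i] @ M - p * S[i]) / N
            new = 1 if h >= 0 else -1
            if new != S[i]:
                M += xi[:, i] * (new - S[i])
                S[i] = new
                changed = True
        if not changed:  # fixed point reached
            break
    return S

def retrieval_overlap(p, N, rng):
    """Overlap with memory 0 after relaxing from that same memory."""
    xi = rng.choice(np.array([-1, 1]), size=(p, N))
    S = relax(xi, xi[0])
    return float(np.mean(S * xi[0]))

rng = np.random.default_rng(0)
m_low = retrieval_overlap(p=10, N=200, rng=rng)    # load p/N = 0.05
m_high = retrieval_overlap(p=100, N=200, rng=rng)  # load p/N = 0.50
print(m_low, m_high)
```

At the low load the memory is essentially a fixed point, whereas at the high load the state drifts away from it, even though the network was started on the memory itself.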

Modifications to the Hopfield model, such as modifying coupling constants to be asymmetric (in which case there is no Hamiltonian) [41,42,43], correlating or coupling pairs of memories [44,45,46,47,48,49,50,51], and introducing dilution into the synapses [3,43,52,53,54,55,56,57,58,59,60,61,62], yield minor changes, but largely fail to satisfy the requirements for an appropriate description of the brain [steps (b)–(e) of the previous section], and do not overcome the drawbacks described in items (i)–(iii) above. Asymmetric coupling constants, correlations among memories, and dilution may lead to fixed points or cycles in the dynamics, which appear on the ordered side of the chaos–order dichotomy. These ingredients could also lead to a chaotic state [63,64]; in both situations (ordered or disordered states) one is not at the border of chaos and order, and thus the situation is inappropriate for emulating a complex system such as the brain.

3. Biologically Motivated Model: The Relevance of External Stimuli

Reacting quickly to changes in the neighboring environment is so important to life that many reactions are written into our DNA. Being the most primitive ones, they are commonly called instinctive reactions. The new-born mammal, which sucks anything that enters its mouth, illustrates an instinctive reaction, fundamental to the first weeks of any mammal’s life. Rapid and involuntary movements to escape from predators provide additional examples, among many other instinctive reactions in animals. Clearly, the brain was forged by evolution for the task of quickly analyzing any external stimulus and triggering a muscle reaction if necessary. With evolution, further types of reactions to external stimuli, non-instinctive reactions, were developed in the nervous systems of many animals. These animals can take some time to analyze the stimulus, recognize it, and then react.

Prompt reactions to external stimuli should be ubiquitous for any brain activity; besides, of course, the important task of pattern recognition. During pattern recognition, in short, the system is able to store a certain number of patterns (memories), which come from previous experiences. If an external stimulus related to one of these patterns is presented, the system should be able to recognize it as one of the stored patterns.

The typical framework of using ANNs for pattern recognition generates a topography, in an appropriate mathematical space, where memories are at the bottom of the valleys and external stimuli are (initially) located on the mountain slopes, giving birth to the recognition process. An appropriate choice of dynamics should lead the external stimulus from the mountain slopes to the bottom of the valley, associating it with the corresponding memory.

In these types of ANN models, the influence of an external stimulus on a specific neuron belonging to the NN can be essentially split into two contributions; namely, a signal, connected with the external stimulus and correlated to a stored pattern (memory), as well as noise, produced by all stored memories that are not related to the external stimulus. This external stimulus yields the initial state of neuronal activity that, by evolving according to some internal process, leads the system to recognize the memory associated with the initial stimulus. As the number of stored patterns (memories) increases, the width of the noise distribution also increases, so that when the system attains a sufficiently large number of stored memories, this width becomes of the order of the signal; once this occurs, the NN is not able to recognize any further stored pattern. The ability of an ANN to recognize stored memories fails because the width of the noise distribution (roughly) cancels out the signal from the external initial stimulus.

In this paper, our main assumption is that the long-term evolution of living beings has allowed them to calibrate the external-stimulus influence, so that the noise produced by memories that are not correlated with the external stimulus is canceled as much as possible. Roughly, we can express the influence () due to other neurons () on a particular neuron i, in the presence of an external stimulus correlated with some stored memory, in terms of the following contributions [65],

| (4) |

If the external stimulus succeeds in canceling the noise contribution, the signal should remain, allowing the recognition of the stimulus whenever it is associated to some stored pattern. The external stimulus does not merely correspond to an initial state, which is responsible for starting the process of recognition as happens in standard NNs [1,2,3,4], but it will rather be present during the whole process of recognition. In this framework, the initial state becomes irrelevant; the fundamental point concerns the process of tuning the external-stimulus intensity in order to cancel precisely (or approximately) the noise term. Operationally, in a given computational NN, the first two contributions on the right-hand-side of Equation (4) result from the model construction [e.g., from the definition of the intensity of synapses in Equations (1) and (2)], whereas the last contribution corresponds to an extra term acting on each neuron i, introduced in order to attenuate the noise contribution. From now on, we refer to this scheme as a stimulus-dependent neural network (SDNN).

In principle, this framework may be implemented in many NN models, but here it will be illustrated for the Hopfield model, due to its simplicity and potential for producing analytical results. In this case, the local field at time t becomes

| (5) |

where, in the first term on the r.h.s., we have the usual contribution due to neurons, whereas the second term corresponds to the external stimulus acting on neuron i. This latter contribution should remain fixed during the whole time evolution, with and depicting its intensity, to be considered in the recognition process. The main idea is to maintain the influence of the external pattern during the whole process, and not only as an initial state, as happens in typical ANNs.
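A minimal sketch of such a local field is given below, assuming the stimulus enters as a fixed sign pattern multiplied by a scalar intensity. The names `eta` (stimulus signs) and `lam` (intensity) are hypothetical labels introduced here for illustration; the symbols used in Equation (5) are not recoverable from the text.

```python
import numpy as np

def local_field(J, S, eta, lam):
    """Neuronal contribution J @ S plus a persistent external stimulus lam * eta.

    J   : (N, N) symmetric couplings with zero diagonal
    S   : (N,) current neuron states, entries +1 or -1
    eta : (N,) external-stimulus signs (hypothetical name), entries +1 or -1
    lam : scalar stimulus intensity (hypothetical name)
    """
    return J @ S + lam * eta

rng = np.random.default_rng(0)
N, p = 100, 20
xi = rng.choice(np.array([-1, 1]), size=(p, N))
J = (xi.T @ xi) / N
np.fill_diagonal(J, 0.0)

S = rng.choice(np.array([-1, 1]), size=N)  # arbitrary neuron state
eta = xi[0].copy()                         # stimulus equal to memory 0
h = local_field(J, S, eta, lam=0.4)
```

Because `eta` and `lam` are arguments rather than part of the initial state, the stimulus keeps acting at every update step.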

We will consider two typical situations concerning this external stimulus: (i) It should present an overlap with one specific stored memory (e.g., memory , ). It should be orthogonal to all other memories and should lead to an external pattern being correlated with a specific memory; (ii) It should be orthogonal to all stored memories, possess no overlap with any memory, and correspond to an external pattern not correlated with any stored memory. Case (i) can be expressed by assuming that the set obeys the probability distribution

| (6) |

where for and for . The particular case means that the set of external-stimulus signs yields the same pattern as the memory , covering common real situations where one should recognize an external pattern that coincides precisely with the stored one. On the other hand, case (ii) is characterized by

| (7) |
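The two kinds of external stimulus can be generated as sketched below. The parameterization is an assumption made for illustration: each stimulus sign copies the chosen memory with probability `q` and is flipped otherwise, giving an expected overlap of `2q - 1`; this is not necessarily the exact form of Equation (6). The uncorrelated case uses independent signs, which are orthogonal to the stored memories only on average.

```python
import numpy as np

def correlated_stimulus(memory, q, rng):
    """Copy each sign of `memory` with probability q, flip it otherwise."""
    keep = rng.random(memory.shape[0]) < q
    return np.where(keep, memory, -memory)

def uncorrelated_stimulus(N, rng):
    """Independent +/-1 signs; overlap with any stored memory is O(1/sqrt(N))."""
    return rng.choice(np.array([-1, 1]), size=N)

rng = np.random.default_rng(3)
memory = rng.choice(np.array([-1, 1]), size=10_000)

eta_same = correlated_stimulus(memory, q=1.0, rng=rng)      # identical to the memory
eta_partial = correlated_stimulus(memory, q=0.75, rng=rng)  # expected overlap 0.5
eta_free = uncorrelated_stimulus(memory.size, rng=rng)      # expected overlap 0

for name, eta in [("same", eta_same), ("partial", eta_partial), ("free", eta_free)]:
    print(name, np.mean(eta * memory))
```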

Let us investigate the local field of Equation (5), by separating the contribution of memory from those of other memories () in Equation (2); one obtains,

| (8) |

Comparing the equation above with Equation (4), one immediately identifies each of the contributions on its r.h.s.: the first one is the signal activated when the neuron configuration is roughly the same as the stored memory ; the second one represents the noise, induced by other memories distinct from ; and the third contribution corresponds to the external stimulus. In the case , if we choose , then

| (9) |

which shows that one may calibrate appropriately, allowing the external stimulus to roughly cancel the noise term, in order to favor the signal contribution. This would allow the system to recognize the submitted external pattern. Therefore, must not be so small, since it will not be able to cancel the noise term, nor very large, in which case it may dominate the local field, forcing an alignment with the external stimulus, whether correlated with a particular memory or not. Finding an optimal value for is a fundamental point of this framework.
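The separation of the local field into signal, noise, and external-stimulus contributions can be checked numerically. The sketch below assumes Hebbian couplings normalized by $N$ with zero diagonal, and uses the hypothetical names `eta` and `lam` for the stimulus signs and intensity; memory 0 plays the role of the condensed pattern.

```python
import numpy as np

rng = np.random.default_rng(1)
N, p, lam = 300, 60, 0.4
xi = rng.choice(np.array([-1, 1]), size=(p, N))
S = rng.choice(np.array([-1, 1]), size=N)  # arbitrary neuron state
eta = xi[0].copy()                         # stimulus fully correlated with memory 0

# Total local field from the coupling matrix.
J = (xi.T @ xi) / N
np.fill_diagonal(J, 0.0)
h_total = J @ S + lam * eta

# The same field, term by term. M[mu] = sum_j xi_j^mu S_j includes j = i,
# so the j = i term is subtracted explicitly in each contribution.
M = xi @ S
signal = xi[0] * (M[0] - xi[0] * S) / N       # memory 0 only
noise = (xi[1:].T @ M[1:] - (p - 1) * S) / N  # all other memories
stimulus = lam * eta                          # external field

print(np.abs(signal + noise + stimulus - h_total).max())
```

Calibrating `lam` then amounts to choosing it comparable to the typical magnitude of `noise`.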

Next, we estimate, approximately, the optimal value of , by focusing on the noise contribution in Equation (9),

| (10) |

Considering that there are no correlations between patterns and , and that patterns associated with different sites () are independent, each of these contributions takes the values $\pm 1$ with equal probability. For large enough values of N and p the average over the variables is,

| (11) |

whereas its associated variance yields

| (12) |

Hence, the width of the noise distribution is approximately , so that for independent patterns, a good choice for should roughly be ; the numerical simulations to be presented later on display good agreement with this choice. This procedure could indicate pattern recognition for values of p that are much higher than those restricted by the upper limit of the Hopfield model. However, in those models where analytical calculations for the optimal value of are not feasible, it should still be possible to estimate this value numerically; in fact, this is an easy task, as will be shown later on.
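The width of the noise distribution can also be estimated directly by sampling. The sketch below assumes Hebbian couplings normalized by $N$ without self-coupling and a neuron state equal to memory 0; under these assumptions each noise term is a sum of $(p-1)(N-1)$ independent signs divided by $N$, so its standard deviation should be close to $\sqrt{p/N}$ for large $N$ and $p$.

```python
import numpy as np

rng = np.random.default_rng(2)
N, p = 1000, 500
xi = rng.choice(np.array([-1, 1]), size=(p, N))

# Neuron state aligned with memory 0: S_j = xi_j^0.
# M[mu] = sum_j xi_j^mu xi_j^0 for every other memory mu (includes j = i).
M = xi[1:] @ xi[0]

# noise_i = (1/N) sum_{mu != 0} xi_i^mu sum_{j != i} xi_j^mu xi_j^0
noise = (xi[1:].T @ M - (p - 1) * xi[0]) / N

empirical_width = noise.std()
predicted_width = np.sqrt(p / N)
print(empirical_width, predicted_width)
```

For a single realization the two numbers agree only up to sampling fluctuations, which is consistent with the remark that a rough estimate of the optimal intensity is sufficient.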

From Equation (8), with the reminder that the couplings are symmetric in the Hopfield model, one can define a Hamiltonian at time t,

| (13) |

where denotes sums over all distinct pairs of neurons, corresponding to a fully connected NN.

In the simulations to be presented later, we essentially focused on two parameters, namely, the macroscopic superposition (or overlap) of a neuron state with a given pattern at time t,

| (14) |

as well as the overlap of this neuron state with the special case where the external-stimuli signs are orthogonal to all stored memories [i.e., case (ii) in Equation (7) with for all memories], defined as

| (15) |

The quantity above represents a macroscopic superposition with an external pattern, not stored and orthogonal to all stored patterns, for which with equal probability. Frequently, the set is considered to contain the components of a p-dimensional vector, , and we will give a special emphasis to , as associated with a single condensed pattern and identified in the first term of the Hamiltonian in Equation (13). All other components appear in the noise contribution of the Hamiltonian.
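Both overlaps are averages of the product between the neuron state and a reference sign pattern, assuming the usual normalization by $N$. A small helper (hypothetical name `overlap`) makes this explicit; the same function serves for a stored memory and for a non-stored external pattern.

```python
import numpy as np

def overlap(S, pattern):
    """Macroscopic overlap (1/N) sum_i pattern_i S_i between a state and a pattern."""
    return float(np.mean(S * pattern))

rng = np.random.default_rng(0)
pattern = rng.choice(np.array([-1, 1]), size=1000)

print(overlap(pattern, pattern))   # identical state
print(overlap(-pattern, pattern))  # fully reversed state
```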

4. Analytic Calculations

In this section we perform analytical calculations, which essentially correspond to a mean-field approach, along the lines of Refs. [21,22]. We assume that the system defined by the Hamiltonian in Equation (13) attains, after a sufficiently long time, well-defined thermal equilibrium states (for finite temperatures, ), together with its zero-temperature limit (). For a given realization of the quenched disorder , one may define a partition function , so that the free energy per neuron becomes

| (16) |

where (we work in units ). Above, indicates quenched averages on and , which, according to Equations (3) and (6), should be carried out with the average over the taken before the one over . Following standard procedure, we apply the replica method to calculate the free energy [1,2,3,4],

| (17) |

where corresponds to the partition function of n independent replicas, for a given realization of the disorder.

Then, one assumes that the total number of stored patterns is an extensive quantity, expressed as ; in the standard Hopfield model, this holds up [22], so that pattern recognition does not work for . At the above-mentioned equilibrium, one may perform the usual averages, so the quantity of Equation (14) leads to

| (18) |

whereas, for the case where the external stimulus is orthogonal to all stored memories, the superposition in Equation (15) yields

| (19) |

with corresponding to local magnetizations.

Lengthy (but well-established) calculations for the free energy of Equation (17) are outlined in Appendix A, where we have split the patterns into two sets; namely, s condensed patterns and non-condensed ones. From now on, we will investigate the recognition of a single pattern (i.e., ), meaning that the external stimulus presents a superposition with memory , corresponding to case (i) of Equation (6), for which one has the overlap

| (20) |

Moreover, the free energy at the end of Appendix A [cf. Equation (A7)] becomes

| (21) |

where

| (22) |

and the averages should be considered over the single condensed pattern only. Two further parameters appear in the above free energy, namely, the Edwards–Anderson one (which becomes relevant only for very low temperatures),

| (23) |

and a parameter that measures the noise produced by non-condensed patterns,

| (24) |

In deriving the free energy in Equation (21), as well as the parameters in Equations (23) and (24), we have assumed the replica-symmetry ansatz: in full replica space, both parameters depend on two replica indices, representing matrix elements, as can be seen in Appendix A. In this ansatz, one assumes that all off-diagonal matrix elements are equal, given by Equations (23) and (24) [1,2,3,4,18].

The equilibrium solution comes from the derivatives of the free-energy with respect to the parameters above, leading to the saddle-point equations,

| (25) |

| (26) |

| (27) |

For case (ii) in Equation (7), where the external stimulus is orthogonal to all memories, there is no condensed pattern, and the parameter of Equation (19) may be calculated from

| (28) |

where we have assumed, as usual, that the derivative commutes with the average operations, since the latter are essentially expressed by sums and integrals. It is important to stress that the averages above should be considered over the orthogonal patterns, for which and with equal probability. In this way,

| (29) |

Limit $T \to 0$

Throughout this work, a special emphasis will be given to the limit $T \to 0$ (or $\beta \to \infty$); in this case, , so that the internal energy per neuron becomes

| (30) |

Additionally, Equations (25)–(29) lead to

| (31) |

| (32) |

with the case where the external stimulus is orthogonal to all stored memories yielding

| (33) |

where erf(x) denotes the error function. Notice that in the particular limit , Equations (31) and (32) become

| (34) |

and

| (35) |

which, for , recover the zero-temperature equations of Ref. [22].
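For orientation, the standard Hopfield zero-temperature result can be reproduced numerically. The sketch below does not implement Equations (31)–(35); it uses the textbook single-equation form of the replica-symmetric result without external stimulus, $y\,[\sqrt{2\alpha} + (2/\sqrt{\pi})\,e^{-y^2}] = \operatorname{erf}(y)$ with retrieval overlap $m = \operatorname{erf}(y)$, where $\alpha = p/N$. The function name and the grid-scan root search are choices made here for illustration.

```python
import math
import numpy as np

def hopfield_zero_temperature_overlap(alpha, y_max=10.0, n_grid=20001):
    """Retrieval overlap of the standard Hopfield model at T = 0 (no stimulus).

    Scans F(y) = erf(y) - y * (sqrt(2 alpha) + (2/sqrt(pi)) exp(-y^2)) on a grid
    and returns erf(y) at the largest crossing from positive to non-positive
    values, or 0.0 if only the trivial solution y = 0 exists.
    """
    ys = np.linspace(1e-3, y_max, n_grid)
    erf_y = np.array([math.erf(y) for y in ys])
    F = erf_y - ys * (np.sqrt(2.0 * alpha) + (2.0 / np.sqrt(np.pi)) * np.exp(-ys**2))
    crossings = np.where((F[:-1] > 0.0) & (F[1:] <= 0.0))[0]
    if crossings.size == 0:
        return 0.0
    return math.erf(ys[crossings[-1]])

for alpha in (0.05, 0.10, 0.13, 0.20):
    print(alpha, hopfield_zero_temperature_overlap(alpha))
```

The retrieval solution disappears between the loads 0.13 and 0.20 in this scan, consistent with the known critical value of about 0.138.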

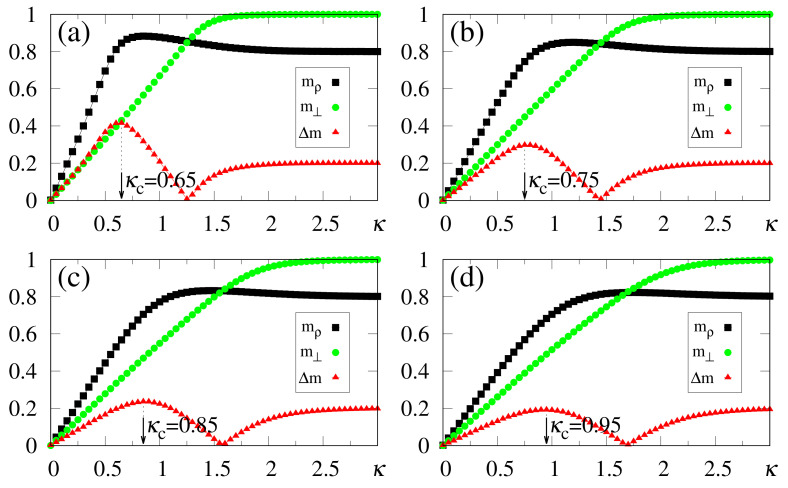

The above zero-temperature equations may be solved numerically for given values of , and ; in Figure 1 we illustrate the particular case , with curves for the overlaps and resulting from the solution of Equations (33)–(35). The overlaps and versus (typical values of ), or versus (typical values of ), are presented in Figure 1a,b and Figure 1c,d, respectively. Here, we again emphasize that two different situations for the external stimulus are being considered, i.e., a fully correlated external stimulus (plots for ) and an external stimulus orthogonal to all memories (plots for ). One notices certain ranges of and where multiple solutions for these quantities appear, characterizing first-order phase transitions. Some typical cases are illustrated in the corresponding insets, showing the coexistence of more than one solution; in these cases, the precise locations of the discontinuities may be computed through Maxwell constructions, where one equates the internal energy of Equation (30) for both solutions. For the purpose of pattern recognition, the quantities and are expected to vary smoothly, so that discontinuities, where infinitesimal changes of given parameters may produce large variations in either one of these overlaps, do not correspond to common situations in natural systems. Hence, for the rest of this work we will concentrate on solutions where the parameters and , associated with two different types of external stimuli, vary smoothly with either one of the parameters or ; more particularly, we will focus on the role played by in the non-retrieval region of the standard Hopfield model, i.e., , along which one has for . We call attention to those plots for higher values of (or ) in Figure 1, focusing on the relevant regions for pattern recognition (), around which one notices significant values for the overlap (typically, ).

Figure 1.

(Color online) Results from mean-field approximation in the zero-temperature limit, for the overlaps [Equation (34)] and [Equation (33)], are exhibited for the case : and are shown versus (typical values of ) in panels (a,b), and represented versus (typical values of ) in panels (c,d), respectively. In (a,b) one has , and (from bottom to top), whereas in (c,d) one has , and (from top to bottom). For smaller values of and , one notices multiple solutions for both and , typical of first-order phase transitions. Insets illustrate some cases where two solutions coexist for certain intervals of these parameters: (b) and ; (c) ; (d) .

5. Numerical Simulations: Recognition of Uncorrelated Patterns

We studied the SDNN, defined by the local field of Equations (5)–(8), through zero-temperature numerical simulations, for neurons and stored memories, considering several choices for the parameters and . At first, we set couplings and external stimuli according to Equations (2), (3) and (6); being quenched variables, these quantities were kept fixed along each time evolution. The initial neuron configuration was chosen at random, i.e., with equal probability; then, these dynamical variables were updated in a sequential way, through the zero-temperature dynamics,

$$S_i(t+1) = \mathrm{sgn}\left[h_i(t)\right], \qquad (36)$$

and each time unit corresponded to the operation above on a single neuron. Following this procedure, the nearest local minimum of energy is attained after a sufficiently long time (, i.e., each neuron is visited times), with macroscopic quantities presenting slight fluctuations for . In our analysis, we have emphasized the overlaps of Equations (19) and (20); at a given local minimum of energy, i.e., for , these quantities can be calculated, and according to Equations (3) and (6), the average over should be taken before the one over ; for these averaging procedures, each simulation was repeated times.
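The simulation procedure can be sketched as follows, under stated assumptions: Hebbian couplings normalized by $N$ without self-coupling, a random initial state, neurons visited in a fixed sequential order, and each neuron set to the sign of its local field. The names `run_sdnn`, `eta`, and `lam` are hypothetical, and the sizes are far smaller than would be needed for quantitative results.

```python
import numpy as np

def run_sdnn(xi, eta, lam, sweeps=20, rng=None):
    """Zero-temperature sequential dynamics with a persistent external stimulus."""
    if rng is None:
        rng = np.random.default_rng()
    p, N = xi.shape
    S = rng.choice(np.array([-1, 1]), size=N)  # random initial configuration
    M = xi @ S                                 # M[mu] = sum_j xi_j^mu S_j
    for _ in range(sweeps):                    # one sweep visits every neuron once
        changed = False
        for i in range(N):
            # Hebbian field without self-coupling, plus the external stimulus
            h = (xi[:, i] @ M - p * S[i]) / N + lam * eta[i]
            new = 1 if h >= 0 else -1
            if new != S[i]:
                M += xi[:, i] * (new - S[i])
                S[i] = new
                changed = True
        if not changed:                        # local minimum reached
            break
    return S

rng = np.random.default_rng(5)
N, p = 200, 50
xi = rng.choice(np.array([-1, 1]), size=(p, N))
eta = xi[0].copy()

S_mid = run_sdnn(xi, eta, lam=0.5, rng=rng)     # moderate intensity
S_big = run_sdnn(xi, eta, lam=1000.0, rng=rng)  # stimulus dominates the field
print(np.mean(S_mid * xi[0]), np.mean(S_big * eta))
```

With a very large intensity the final state simply copies the stimulus, which is the non-relevant limit discussed in the text; the interesting regime lies at moderate intensities.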

Throughout this section, we restrict ourselves to the recognition of uncorrelated patterns; the satisfactory performance of the present proposal in the case of correlated patterns will be shown later. In what follows, we will be particularly interested in the role played by in the non-retrieval region of the standard Hopfield model, where for . The intensity of the external stimulus yields two distinct situations concerning the external pattern; namely, for and for . According to this, sufficiently large values of lead to and . However, these two particular limits ( small or large) are not appropriate for pattern recognition; on the other hand, a well-calibrated value of , to be called hereafter , chosen in such a way as to cancel (or diminish as much as possible) the effects of the noise contribution in Equation (8), as discussed in Section 3, represents the crucial point of this new framework. Values will not be sufficient to cancel the noise term, whereas for , the external field will dominate the total local field and there will be no pattern recognition at all; in this latter case, the local field will simply reproduce the external stimulus, whether associated with a memory or not. Here, we propose to monitor the absolute value of the difference between the overlaps of Equations (19) and (20), which correspond to two different types of external stimuli,

| (37) |

in order to identify the optimal value . One notices that only the orthogonal (i.e., non-stored) memories will contribute to , so that the appropriate choice of should correspond to its maximum value, .
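A rough sketch of this calibration is given below, using a single realization of the disorder rather than an average over many samples. For each intensity on a grid, the dynamics is run once with a stimulus equal to memory 0 and once with an independent random stimulus; the intensity that maximizes the absolute difference between the two overlaps is kept. All names (`final_state`, `delta_curve`, `lam`, `eta_*`) and the grid values are assumptions made for illustration.

```python
import numpy as np

def final_state(xi, eta, lam, rng, sweeps=15):
    """Relax a random initial state under zero-temperature sequential dynamics."""
    p, N = xi.shape
    S = rng.choice(np.array([-1, 1]), size=N)
    M = xi @ S
    for _ in range(sweeps):
        changed = False
        for i in range(N):
            h = (xi[:, i] @ M - p * S[i]) / N + lam * eta[i]
            new = 1 if h >= 0 else -1
            if new != S[i]:
                M += xi[:, i] * (new - S[i])
                S[i] = new
                changed = True
        if not changed:
            break
    return S

def delta_curve(N, p, lams, rng):
    """|m - m0| for each intensity, from one realization of memories and stimuli."""
    xi = rng.choice(np.array([-1, 1]), size=(p, N))
    eta_corr = xi[0].copy()                           # stimulus equal to memory 0
    eta_orth = rng.choice(np.array([-1, 1]), size=N)  # non-stored random stimulus
    deltas = []
    for lam in lams:
        m = np.mean(final_state(xi, eta_corr, lam, rng) * xi[0])
        m0 = np.mean(final_state(xi, eta_orth, lam, rng) * eta_orth)
        deltas.append(abs(m - m0))
    return np.array(deltas)

rng = np.random.default_rng(4)
lams = np.array([0.0, 0.2, 0.4, 0.6, 0.8, 1.0, 1000.0])
deltas = delta_curve(N=200, p=60, lams=lams, rng=rng)
lam_star = lams[np.argmax(deltas)]
print(dict(zip(lams.tolist(), np.round(deltas, 3).tolist())), lam_star)
```

The last grid point is deliberately huge: there both final states copy their stimuli, both overlaps equal one, and the difference vanishes. A finer grid and averaging over samples would be needed for a reliable estimate of the optimum.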

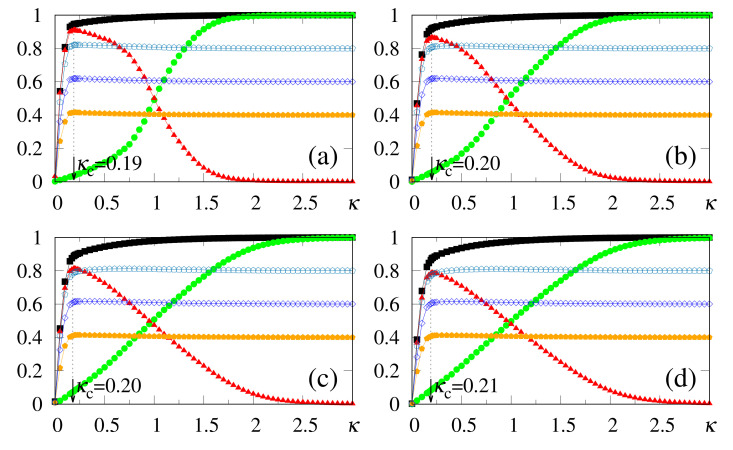

Results from numerical simulations are shown in Figure 2, where the quantities defined in Equations (19), (20) and (37) are plotted versus , for and typical values of . In all cases, is clearly identified by means of , and since the storage capacity p grows with , the variance of the noise contribution also increases. The analytical approximate result [cf. Equation (12)] shows good agreement with the numerical estimates of Figure 2. As another consequence of this increase, one finds a tendency towards diminishing the difference , as well as the magnitude of the values . In general, computing extremely accurate estimates for is not a central aim in the present scheme, since slight variations around the values of provide equally good results. This is directly related to fluctuations in the noise contribution, shown in Section 3. It should be stressed that all values of considered in Figure 2 are greater (or much greater) than the critical value , above which the standard Hopfield NN does not recognize any pattern. Moreover, one can observe the limits (), as well as and ().

Figure 2.

(Color online) Results from numerical simulations for the overlaps (black squares) [cf. Equation (20)], (green circles) [cf. Equation (19)], and the modulus of their difference, (red triangles) [cf. Equation (37)], are plotted versus , for , and typical values of : (a) ; (b) ; (c) ; (d) . In each case, the maximum value of yields the best choice for , denoted by . The two overlaps shown represent, respectively, macroscopic superpositions of a neuron state with a stored pattern (), and with non-stored patterns orthogonal to the stored one (). The lines interpolating the symbols are guides for the eye.

An interesting effect appears in Figure 3, where we present results from numerical simulations for the quantities defined in Equations (19), (20) and (37) for , and the same values of used in Figure 2. For , all plots are qualitatively similar to those of Figure 2 and, in particular, very close estimates for (or even equal in some cases) were obtained; one should notice that although the analytical result of Equation (12) was derived for the case , it holds for as a good approximation. Nevertheless, the limits and are fulfilled for , so that their plots cross () at some value of , yielding . To the right of this crossing point one has that , signaling that the external stimulus starts dominating the total local field, as one approaches a non-relevant limit for the SDNN. In this region, pattern recognition begins deteriorating, and for the local field will simply reproduce the external stimulus, whether associated with a memory or not. The optimal value , associated with the maximum difference between overlaps and , represents the appropriate one, allowing the recognition of a stored pattern, for ; it cannot be too large, so that the external stimulus does not dominate the other terms of the local field in Equation (8). This subtle balance is the heart of this approach.

Figure 3.

(Color online) Results from numerical simulations for the overlaps (black squares) [cf. Equation (20)], (green circles) [cf. Equation (19)], and the modulus of their difference, (red triangles) [cf. Equation (37)], are plotted versus , for , and typical values of : (a) ; (b) ; (c) ; (d) . In each case, the maximum value of yields the best choice for , denoted by . The two overlaps shown represent, respectively, macroscopic superpositions of a neuron state with a stored pattern (), and with non-stored patterns orthogonal to the stored one (). The lines interpolating the symbols are guides for the eye.

One of the main virtues of the SDNN, illustrated in Figure 2 and Figure 3, concerns the range of values, above the critical value of the standard Hopfield model, that still allow significant pattern recognition capabilities. Considering, as an illustrative example, the case [cf. Figure 2d and Figure 3d], one finds in both cases and , and the macroscopic superposition with a given pattern presents the values [Figure 2d] and [Figure 3d]. It should be noted that superpositions with memories are still present, being of order . This enlargement in the pattern recognition capacity is directly related to the fact that the SDNN expresses no basins of attraction, although it creates a huge and single basin of attraction when . However, by setting , this basin of attraction disappears and the memories do not occupy any volume in phase space, for any . As will be shown later, within this framework recognition works well, even for values of .

As defined in Equation (6), the parameter measures the disturbance associated with recognition of a given stored pattern . Therefore, as decreases from the value of one, we should find dissimilarities between the external stimulus and the stored pattern, so that pattern recognition fails for some . We illustrate this aspect in Figure 4, where we present a situation close to the limit of disturbance for the recognition of the stored pattern, . One notices that there is not a clear optimal choice for in this case, so that one cannot distinguish a stored pattern from a new pattern that is not stored and is orthogonal to other stored patterns. This feature of the model was investigated in Ref. [65], where this limit was verified, and it was shown that it is not possible to find an optimal choice of for . Hence, in this approach, we can deform the stored pattern by close to 25% and the SDNN will still recognize it, even for values of greater than the critical limit of the Hopfield model. This peculiarity demonstrates an impressive performance compared with other NN models that are studied in the literature.
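To make the role of the disturbance parameter concrete, the sketch below draws stimulus signs under the assumption that Equation (6) assigns the sign +1 with probability `a` and −1 otherwise, independently on each neuron; the function names are hypothetical. Under this reading, the stimulus has an expected overlap of 2a − 1 with the stored pattern, so a = 0.75 corresponds to flipping about 25% of the sites.

```python
import random


def stimulus_signs(n, a, rng):
    """Independent signs: +1 with probability a, -1 with probability 1 - a (assumed form)."""
    return [1 if rng.random() < a else -1 for _ in range(n)]


def disturbed_stimulus(pattern, a, rng):
    """Stored pattern with each site flipped with probability 1 - a."""
    signs = stimulus_signs(len(pattern), a, rng)
    return [s * x for s, x in zip(signs, pattern)]


def overlap(u, v):
    return sum(x * y for x, y in zip(u, v)) / len(u)


if __name__ == "__main__":
    rng = random.Random(0)
    stored = [rng.choice((-1, 1)) for _ in range(10000)]
    for a in (1.0, 0.9, 0.75):
        m = overlap(disturbed_stimulus(stored, a, rng), stored)
        print(f"a={a:.2f}  overlap with stored pattern={m:+.3f}  expected={2 * a - 1:+.3f}")
```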

Figure 4.

(Color online) Results from numerical simulations for the overlaps (black squares) [cf. Equation (20)], (green circles) [cf. Equation (19)], and the modulus of their difference, (red triangles) [cf. Equation (37)], are plotted versus , for , and typical values of : (a) ; (b) ; (c) ; (d) . The two overlaps shown represent, respectively, macroscopic superpositions of a neuron state with a stored pattern (), and with non-stored patterns orthogonal to the stored one (). The lines interpolating the symbols are guides for the eye.

We have also carried out simulations that consider much higher values of , with the external pattern corresponding to , i.e., , as shown in Figure 5. One sees that there is no well-defined limit for the storage capacity, showing that the procedure described above holds for considerably large values of . In particular, as presented in Figure 5c, this technique was easily implemented for ; in this case we obtain , together with , whereas the difference remains quite significant (revealing a maximum value ), still allowing recognition. One should notice that the value of used in Figure 5c is more than times larger than the threshold of the standard Hopfield model () [22]. Analyzing together the results of Figure 1 and Figure 5 (all of them for ), two characteristics deserve discussion, as follows. (i) The analytical approximate result (derived for and ), [cf. Equation (12)], shows a good agreement with most numerical estimates (typically within error), although the discrepancies increase for the larger values. One sees that the analytical calculation yields overestimates for , with respect to the numerical results. These discrepancies are mostly due to the approximations leading to Equation (12), as well as to the finite sizes used in the simulations. (ii) Considering even larger values for , a saturation effect occurs, in the sense that from below, yielding . This characteristic is illustrated in Figure 5d, where we consider , which shows that the curves for and become very close, leading to a flat behavior in . In this regime, clear identification of becomes difficult, explaining the increase in the discrepancy between analytical and numerical results. We verified that such a saturation occurs, typically, for . Nevertheless, we can state that for relatively high values of , as illustrated in Figure 5b,c, the SDNN is able to achieve pattern recognition with no difficulties.

Figure 5.

(Color online) Results from numerical simulations for the overlaps (black squares) [cf. Equation (20)], (green circles) [cf. Equation (19)], and the modulus of their difference, (red triangles) [cf. Equation (37)], are plotted versus , for , and typical high values of : (a) ; (b) ; (c) ; (d) . In each case, the maximum value of yields the best choice for , denoted by . The two overlaps shown represent, respectively, macroscopic superpositions of a neuron state with a stored pattern (), and with non-stored patterns orthogonal to the stored one (). The lines interpolating the symbols are guides for the eye.

In Figure 6, we compare data from numerical simulations of neurons (symbols) with results from analytical calculations in the zero-temperature limit (full lines), considering typical choices for and . The analytical results for follow from Equations (33) and (32), whereas those for were obtained from Equations (31) and (32); the corresponding numerical estimates were computed from Equations (19) and (20), respectively, with given by Equation (37). In all cases one notices a good agreement between the two approaches, with small discrepancies between them (at most of the order ), which become more significant for small values of , as well as around the best choice , as expected, signaling the most relevant region for pattern recognition. However, the results from the two approaches essentially coincide when the external stimulus becomes very large, since in this limit the fluctuations in pattern recognition disappear. In Figure 6a, we present results for (i.e., very close to the limit of disturbance for the recognition of a stored pattern, as illustrated in Figure 4), so that there is not a well-defined value for . However, may be obtained clearly from Figure 6b–d, and one notices in these cases that the analytical estimates (given by the maximum of ) are always overestimates with respect to the numerical ones [even in the cases of Figure 6b,c]. This is in agreement with previous discussions referring to the approximations that led to Equation (12). The above-mentioned discrepancies are essentially due to finite-size effects, since analytical and numerical results are expected to coincide for , in which limit the mean-field approximation becomes exact for a fully connected network [1,2,3,4].

Figure 6.

(Color online) Zero-temperature results from analytical calculations (full lines) are plotted versus and compared with those from numerical simulations (symbols) for typical values of and : (a) and ; (b) and ; (c) and ; (d) and . To distinguish from the numerical data, in each case the upper index stands for theoretical results. The theoretical overlaps of Equation (33) (blue full line) and Equation (31) (brown full line) are compared with the numerical estimates for (green circles) [cf. Equation (19)] and (black squares) [cf. Equation (20)], respectively, whereas the theoretical modulus of the difference between these quantities (purple full line) is compared with the numerical (red triangles) [cf. Equation (37)].

6. Time Evolution of the Macroscopic Superposition under Changes in the External Pattern

One well-known characteristic of living beings, fundamental to their survival, consists in their ability to react to changes in the environment. In this section, we show how the SDNN adjusts itself, being capable of replacing a previously recognized pattern with a new one, according to changes in the external stimulus. Let us consider a typical real situation where at a time we present a pattern associated with one stored memory, say memory ; then, at a later time , we withdraw it and present a different pattern associated with memory . In order to illustrate the effectiveness of the SDNN in responding to these changes, we now allow the set of external-stimulus signs to change in time, i.e., . Furthermore, we assume abrupt changes in these variables at two given times, and , so that the probability distribution in Equation (6) is replaced by the time-dependent set

| (38) |

and

| (39) |

with . The actions at times and correspond, respectively, to the presentation of pattern , and to its replacement by the new pattern , giving rise to the question of whether the SDNN will respond adequately to these modifications.

In Figure 7, we illustrate how the SDNN reacts to the above-mentioned changes, by plotting the time-dependent macroscopic superpositions [cf. Equation (14)] (black circles) and (dashed green line) versus time, for , while considering four different intensities of the external stimulus, in increasing order, as shown in Figure 7a–d. Up to , no external pattern is presented (), and no memory is recognized, as expected. Then, a pattern with () superposition with the stored pattern is presented, leading to the onset of the macroscopic superposition (). Similarly, up to , the macroscopic superposition remains zero. It becomes nonzero only for , due to the abrupt change in the stimulus at , where pattern is replaced by the new pattern, presenting a superposition with stored pattern . Accordingly, Figure 7 presents another important attribute of the SDNN—that is, its reactions to this change at , resulting in a decrease in , together with a growth in () for .

Figure 7.

(Color online) Results from numerical simulations showing the time evolution of two different macroscopic superpositions of states of neurons with memories and [ and , defined according to Equation (14)], for . The external stimulus changes in time, following Equations (38) and (39), where the two relevant times are and . Hence, for there is no stimulus, i.e., , and at a stimulus with an intensity appears with a superposition with stored pattern ; then, at it changes abruptly, presenting a superposition with stored pattern . Four different intensities of the external stimulus are considered for : (a) ; (b) ; (c) ; (d) . The macroscopic superposition (dashed green line) remains zero for , whereas (black circles) becomes nonzero for .

Some interesting effects are shown in Figure 7, as discussed next. (i) The overlaps and approach a plateau whose height increases for larger values of , in agreement with the results presented in Figure 2, Figure 3, Figure 4, Figure 5 and Figure 6. (ii) The response time to the changes considered, either in the growth or reduction in the overlaps, is directly related to the intensity of the external stimulus : larger (smaller) values of lead to smaller (larger) response times. This type of behavior is also in agreement with reactions of living beings. In this way, one notices that for the long-time limits for the overlaps have not been fully attained, as one can see by comparing the plot of of Figure 7a with the long-time result of Figure 2c at (both for and ). One should note that the time interval for the action of the external stimulus in all panels of Figure 7 is (for both patterns and ), which is smaller than the time used in the simulations of Figure 2, Figure 3, Figure 4, Figure 5 and Figure 6 (i.e., ), the latter being sufficient for the SDNN to reach its nearest local minimum of energy. Due to this, for the smaller values of [cf., e.g., Figure 7a], both overlaps and have not yet reached their long-time limits. However, for larger values of , much smaller times are required for the overlaps and to approach their long-time limits; in particular, in Figure 7d, one notices that these overlaps have attained their maximum values; namely, and . (iii) All panels of Figure 7 are illustrative, and exhibit the ability of the SDNN to react under changes in the environment. However, the most relevant interval for pattern recognition should be close to , as considered in Figure 7b, according to the values of estimated in Figure 2c and Figure 3c.

One should note that reactions can also be studied in ANNs, as in the standard Hopfield model for . In these cases, this may be achieved by changing the initial state , which may lead to jumps in phase space among different basins of attraction. The results presented above show, clearly, that the SDNN modifies the recognized pattern, in a smooth way, according to changes in the external pattern, which is a common characteristic of living beings. In many real situations, living beings need to react to any new external pattern presented; this model behaves precisely in this way.
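The switching protocol of this section (no stimulus, then a stimulus aligned with one stored memory, then an abrupt replacement by a stimulus aligned with another) can be illustrated with the following sketch. It is a simplified setup under stated assumptions: Hebb-rule couplings, undisturbed stimuli, and time measured in sweeps of N single-neuron updates rather than in single updates. The names `run_switching`, `t1`, `t2`, and `eta` are hypothetical.

```python
import random


def overlap(S, pattern):
    return sum(s * x for s, x in zip(S, pattern)) / len(S)


def stimulus_at(t, t1, t2, first, second):
    """No stimulus before t1, `first` on [t1, t2), `second` from t2 onwards."""
    if t < t1:
        return None
    return first if t < t2 else second


def run_switching(n=100, p=30, eta=1.0, t1=5, t2=20, t_end=35, seed=1):
    rng = random.Random(seed)
    patterns = [[rng.choice((-1, 1)) for _ in range(n)] for _ in range(p)]
    # Hebb-rule couplings with zero self-coupling (assumed form).
    J = [[0.0 if i == j else sum(xi[i] * xi[j] for xi in patterns) / n
          for j in range(n)] for i in range(n)]
    S = [rng.choice((-1, 1)) for _ in range(n)]
    order = list(range(n))
    history = []
    for t in range(t_end):
        stim = stimulus_at(t, t1, t2, patterns[0], patterns[1])
        rng.shuffle(order)
        for i in order:  # one time unit here = one sweep over all neurons
            field = sum(J[i][j] * S[j] for j in range(n))
            if stim is not None:
                field += eta * stim[i]
            S[i] = 1 if field >= 0 else -1
        history.append((t, overlap(S, patterns[0]), overlap(S, patterns[1])))
    return history


if __name__ == "__main__":
    for t, m_first, m_second in run_switching(eta=1.0):
        print(f"t={t:2d}  m_first={m_first:+.2f}  m_second={m_second:+.2f}")
```

Running the sketch with different values of `eta` gives a rough feel for how the stimulus intensity affects the response to the switch; the quantitative response times of Figure 7 depend on the network size and time units used there.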

7. Recognition of Correlated Patterns

It is also interesting to investigate the performance of this framework when correlated patterns are stored. Let us suppose an external stimulus that presents a maximum overlap with one particular stored memory, let us say memory , i.e., in Equation (6). Then, we consider another pattern, , that presents a correlation b () with pattern ; this means that for a fraction b of indices i, leading to

| (40) |

Above, the limits and correspond to anti-correlated and fully correlated patterns, respectively, whereas the most interesting situations occur for .
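A correlated pattern of this kind can be generated as in the sketch below. It assumes that the sites outside the chosen fraction b carry the opposite sign, which is the reading consistent with the anti-correlated and fully correlated limits quoted above; the resulting overlap between the two patterns is then 2b − 1. The function name `correlated_copy` is hypothetical.

```python
import random


def correlated_copy(pattern, b, rng):
    """Copy `pattern` on a fraction b of sites and flip it on the remaining sites."""
    n = len(pattern)
    k = round(b * n)  # number of sites where both patterns agree
    keep = set(rng.sample(range(n), k))
    return [x if i in keep else -x for i, x in enumerate(pattern)]


def overlap(u, v):
    return sum(x * y for x, y in zip(u, v)) / len(u)


if __name__ == "__main__":
    rng = random.Random(0)
    base = [rng.choice((-1, 1)) for _ in range(1000)]
    for b in (0.0, 0.5, 0.75, 1.0):
        other = correlated_copy(base, b, rng)
        print(f"b={b:.2f}  overlap={overlap(base, other):+.3f}")
```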

In Figure 8, we present results from the simulations of two patterns, and , with a correlation parameter between them; all other patterns are uncorrelated among themselves, as well as with these two. One may notice that the macroscopic superpositions, and , attain the expected saturation limits for sufficiently large values of , i.e., (due to its maximum overlap with the external stimulus) and [cf. Equation (40)]. Similarly to the previous situations investigated with uncorrelated patterns, the optimal value increases for increasing values of . Around their corresponding optimal values , the system recognizes both patterns, with and already close to its saturation limit, , for all values of considered, whereas all memories present overlaps that are essentially zero (in fact, of order ). It is important to stress that even for one finds recognition of both stored patterns, indicating that pattern presents a significant overlap with the external stimulus, as a direct consequence of its correlation with the stored pattern , demonstrating an appropriate feature of the SDNN model when dealing with correlated patterns. As another interesting result, one should call attention to the reduction of the estimates of , as a consequence of correlations; this aspect is revealed by comparing Figure 2d () and Figure 8d (), both for and , for which one typically notices a decrease in the value of .

Figure 8.

(Color online) Results from numerical simulations of two correlated patterns ( and ), which present a correlation parameter between them [cf. Equation (40)], are plotted versus . The pattern is fully correlated with the external stimulus (), and data for typical values of are shown: (a) ; (b) ; (c) ; (d) . The macroscopic superpositions with memories and ( and ) are represented by black squares and brown pentagons, respectively; the green circles are data for the macroscopic superposition [cf. Equation (19)], computed with a different external stimulus, orthogonal to all stored memories, whereas the red triangles stand for the modulus of the difference, . In each case, the maximum value of yields the best choice for , denoted by . The lines interpolating the symbols are guides for the eye.

Results from the numerical simulations of several patterns (more precisely, , and three other patterns correlated with ) are exhibited in Figure 9. The remaining patterns are uncorrelated with these four and among themselves. Pattern presents a maximum overlap with the external stimulus (), whereas the three correlated ones follow Equation (40) with , and . All macroscopic superpositions attain the expected saturation limits for sufficiently large values of , i.e., , whereas the three correlated patterns approach their corresponding values, according to Equation (40). Notice that is sufficient to recover all four patterns, with and the other three macroscopic superpositions already very close to their saturation limits, for all values of considered; such significant values appear as direct consequences of the correlations with pattern . A curious aspect of Figure 9 concerns the fact that the optimal value increases much more slowly with , when compared with previous cases investigated, for both uncorrelated patterns (see, e.g., Figure 2 and Figure 3) and two correlated patterns (cf. Figure 8). Besides small variations in , the magnitude of is diminished by increasing the number of correlated patterns, as can be seen by comparing, e.g., Figure 2d (), Figure 8d (), and Figure 9d (), all of them for and . The present simulations suggest that, for a sufficiently large number of correlated patterns, should converge to a small finite value , for fixed; by increasing , a slow increase in the values of should occur. This latter result is in agreement with the recognition of similar patterns that is performed by living beings, where once one constituent of a given group is recognized, all similar members of the group are also recognized immediately, requiring little external stimulus for this task.
The results of Figure 8 and Figure 9 illustrate additional important features of the SDNN model, presenting characteristics very similar to those of living beings in the recognition of correlated patterns.

Figure 9.

(Color online) Results from numerical simulations for three patterns, correlated with pattern , are plotted versus . The pattern is fully correlated with the external stimulus () and data for typical values of are shown: (a) ; (b) ; (c) ; (d) . The macroscopic superposition with memory , , is represented by black squares, whereas those for the three correlated patterns, for values , and , with respect to pattern [cf. Equation (40)], are depicted by brown pentagons, open blue diamonds, and open green pentagons, respectively (increasing values of b from bottom to top). The green circles are data for the macroscopic superposition [cf. Equation (19)], computed with a different external stimulus, orthogonal to all stored memories, whereas the red triangles stand for the modulus of the difference, . In each case, the maximum value of yields the best choice for , denoted by ; one notices that still increases with , although much more slowly than in Figure 8. The lines interpolating the symbols are guides for the eye.

We have also tested how the SDNN behaves by considering several correlated patterns in the regime of large values, as shown in Figure 10. Similarly to the experiments for Figure 8 and Figure 9, the estimates of decrease for correlated patterns. This effect may be verified by comparing the results of Figure 10 (four correlated patterns) with those of Figure 5 (uncorrelated patterns), e.g., in the case [Figure 10b () and Figure 5a ()], as well as [Figure 10d () and Figure 5c ()], all for . This aspect is directly related to the gaps between the curves for and , as can be seen from the corresponding above-mentioned plots. In these cases, one notices that the maximum value of is typically doubled in Figure 10b,d, when contrasted to those of Figure 5a,c, respectively, making it easier to compute the values of . Such an increase in the maximum of , together with the reduction in the values of , should yield an enlargement in the total number of memories, since the range of values for pattern recognition is expanded; these results indicate that the saturation effect observed in Figure 5 should occur for even larger values of , when one introduces correlations among patterns. Consequently, the introduction of such an important ingredient for real systems, i.e., correlations among patterns, is expected to improve, even further, the storage capacity of the SDNN.

Figure 10.

(Color online) Results from numerical simulations for three patterns, correlated with pattern , are plotted versus . The pattern is fully correlated with the external stimulus () and data for typical values of are shown: (a) ; (b) ; (c) ; (d) . The macroscopic superposition with memory , , is represented by black squares, whereas those for the three correlated patterns, for values , and , with respect to pattern [cf. Equation (40)], are depicted by brown pentagons, open blue diamonds, and open green pentagons, respectively (increasing values of b from bottom to top). The green circles are data for the macroscopic superposition [cf. Equation (19)], computed with a different external stimulus, orthogonal to all stored memories, whereas the red triangles stand for the modulus of the difference, . In each case, the maximum value of yields the best choice for , denoted by ; one notices that still increases with . The lines interpolating the symbols are guides for the eye.

8. Pattern Recognition in a Diluted Neural Network

In this section we show that the SDNN also performs well for diluted models; we illustrate this by analyzing its performance on the Hopfield model with non-symmetric synapse dilution. Let us then consider synaptic couplings in the modified form [52,53],

| (41) |

where are independent random variables following the probability distribution,

| (42) |

The variables represent the asymmetric dilution of the couplings and is a dilution parameter, defined as the fraction of the total number of connections that have been eliminated, i.e., a macroscopic dilution. Notice that the interactions are not symmetric now, since for each neuron pair [ and ], and are independent variables with ; consequently, no Hamiltonian can be defined. The minimum value of the parameter d corresponds to (undiluted limit), whereas its maximum value follows from Equation (41), leading to .
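As an illustration of asymmetric dilution, the sketch below removes each directed connection independently with probability d, so that the bond from j to i may be cut while the bond from i to j survives. The optional rescaling by 1/(1 − d) is an assumption made here (it keeps the mean signal strength unchanged and is consistent with d having to stay below one); the exact normalization should be taken from Equation (41). The names `diluted_couplings`, `rescale`, and the mask `c` are hypothetical.

```python
import random


def diluted_couplings(patterns, d, rng, rescale=True):
    """Hebb couplings with each directed bond removed independently with probability d."""
    if not 0.0 <= d < 1.0:
        raise ValueError("d must satisfy 0 <= d < 1")
    n = len(patterns[0])
    # Assumed normalization: divide by N(1 - d) when rescale is True, by N otherwise.
    norm = n * (1.0 - d) if rescale else float(n)
    # c[i][j] and c[j][i] are drawn independently, hence the asymmetry.
    c = [[0 if (i == j or rng.random() < d) else 1 for j in range(n)]
         for i in range(n)]
    J = [[c[i][j] * sum(xi[i] * xi[j] for xi in patterns) / norm
          for j in range(n)] for i in range(n)]
    return J, c


if __name__ == "__main__":
    rng = random.Random(0)
    patterns = [[rng.choice((-1, 1)) for _ in range(50)] for _ in range(10)]
    J, c = diluted_couplings(patterns, 0.5, rng)
    n = len(c)
    cut = sum(1 - c[i][j] for i in range(n) for j in range(n) if i != j)
    asym = sum(1 for i in range(n) for j in range(i) if c[i][j] != c[j][i])
    print("fraction of removed bonds:", cut / (n * (n - 1)))
    print("pairs with one-way connection:", asym)
```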

Next, to understand better the effects on due to the dilution of synapses, let us estimate its value within the molecular-field approach; the procedure is similar to the one carried out in Section 3, through an analysis of the noise contribution. Therefore, the local field on neuron i is given by

| (43) |

with now given by Equation (41). Then, we assume that at a time t the neuron states , as well as the external stimulus , coincide with pattern , leading to,

| (44) |

As before, the sum over memories was split into two contributions; namely, a signal produced by pattern , and a noise due to memories ,

| (45) |

Assuming that there are no correlations between patterns and , and that patterns associated with different sites () are independent, each memory contribution takes the values with equal probability. Noting that are also independent variables, the noise random variables are not Gaussian distributed for a finite N, but they should become Gaussian distributed for . Hence, considering large enough values of N and p, one obtains for the average over the variables ,

| (46) |

whereas

| (47) |

Since , the associated variance with respect to the variables becomes

| (48) |

Similarly to the discussion carried out in Section 3, one should choose equal to the width of the noise distribution,

| (49) |

in order to cancel (approximately) the noise contribution. According to this, a dilution parameter yields an increase in the analytical approximate value for , as compared to the undiluted case ; this effect is in agreement with numerical simulations, as will be shown below. The limit , i.e., a fully diluted system, is not relevant for living beings and will not be investigated here; in this case one should have .
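The growth of the noise width with dilution can be checked numerically with a small Monte Carlo sketch. It rests on two assumptions that are not taken from the equations above: the neuron states coincide with the first stored pattern, and the diluted couplings are normalized by N(1 − d). Under these assumptions, a Gaussian estimate of the width is sqrt(alpha / (1 − d)), with alpha = p/N; whether this coincides with Equation (49) depends on the normalization actually used in Equation (41). The function names are hypothetical.

```python
import math
import random


def noise_width(patterns, d, rng):
    """Standard deviation, over neurons, of the noise term for a network in pattern 0."""
    n = len(patterns[0])
    target = patterns[0]
    samples = []
    for i in range(n):
        total = 0.0
        for j in range(n):
            if j == i or rng.random() < d:  # bond from j to i is absent
                continue
            # Contribution of all memories other than the presented one.
            cross = sum(xi[i] * xi[j] for xi in patterns[1:])
            total += cross * target[j]
        # Assumed normalization 1 / (N (1 - d)).
        samples.append(target[i] * total / (n * (1.0 - d)))
    mean = sum(samples) / n
    return math.sqrt(sum((x - mean) ** 2 for x in samples) / n)


def gaussian_estimate(alpha, d):
    """Width expected under the assumptions stated above (not quoted from the text)."""
    return math.sqrt(alpha / (1.0 - d))


if __name__ == "__main__":
    rng = random.Random(0)
    n, p = 100, 30
    patterns = [[rng.choice((-1, 1)) for _ in range(n)] for _ in range(p)]
    for d in (0.0, 0.25, 0.5, 0.75):
        w = noise_width(patterns, d, rng)
        print(f"d={d:.2f}  measured width={w:.2f}  estimate={gaussian_estimate(p / n, d):.2f}")
```

For a single small network the measured width fluctuates noticeably from one pattern set to another, so only the trend with d should be read from such a run.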

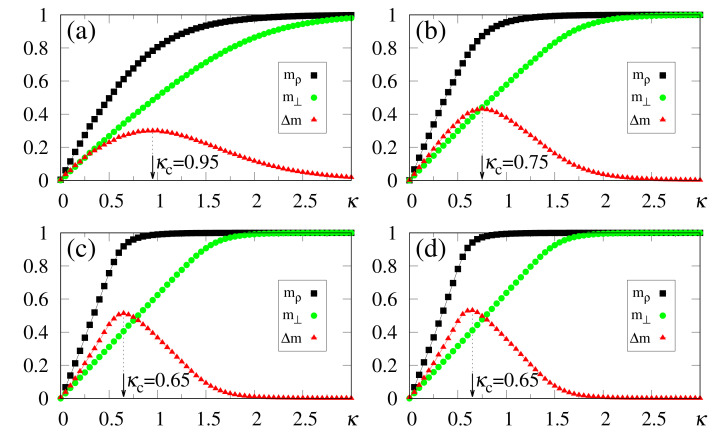

In what follows, for simplicity, we illustrate the effects of synapse dilution for uncorrelated patterns, considering in Equation (6), i.e., an external pattern coinciding precisely with the stored one. Results from numerical simulations are shown in Figure 11 and Figure 12, with the quantities defined in Equations (19), (20) and (37) being plotted versus , for decreasing values of the dilution parameter d, and two typical choices of , namely, [Figure 11] and [Figure 12]. One notices that the limits () still hold; furthermore, for sufficiently high values of , the saturation limits and should be approached, even for high dilutions. One important aspect in these figures concerns the fact that is clearly identified by means of , even for the largest value of d considered [ in Figure 11a and Figure 12a]. Moreover, for fixed choices of the dilution parameter d, increases with , as already observed for the undiluted case, whereas for fixed , one notices that decreases by decreasing d; these results are in agreement with the theoretical estimate of Equation (49). However, the discrepancies between the numerical and analytical estimates for are larger for , when compared to those for the undiluted limit (); whereas the relative discrepancies in the undiluted limit are typically of the order , for larger dilutions, e.g., , one may have relative discrepancies slightly larger than . Similarly to the large limit of the undiluted case [see, e.g., Figure 5], the curves for become flatter for high dilutions, leading to such larger discrepancies. Furthermore, as before, the analytical result of Equation (49) overestimates the numerical ones, for all cases shown in Figure 11 and Figure 12. An increase in with dilution is qualitatively expected, since synapse dilution diminishes the possible paths for transmitting given information, and so, larger intensities of the external field should be necessary in the pattern-recognition process.

Figure 11.

(Color online) Results from numerical simulations for the overlaps (black squares) [cf. Equation (20)], (green circles) [cf. Equation (19)], and the modulus of their difference, (red triangles) [cf. Equation (37)], are plotted versus , for , , and typical values of the dilution parameter d: (a) ; (b) ; (c) ; (d) . In each case, the maximum value of yields the best choice for , denoted by . The two overlaps shown represent, respectively, macroscopic superpositions of a neuron state with a stored pattern (), and with non-stored patterns orthogonal to the stored one (). The lines interpolating the symbols are guides for the eye.

Figure 12.

(Color online) Results from numerical simulations for the overlaps (black squares) [cf. Equation (20)], (green circles) [cf. Equation (19)], and the modulus of their difference, (red triangles) [cf. Equation (37)], are plotted versus , for , , and typical values of the dilution parameter d: (a) ; (b) ; (c) ; (d) . In each case, the maximum value of yields the best choice for , denoted by . The two overlaps shown represent, respectively, macroscopic superpositions of a neuron state with a stored pattern (), and with non-stored patterns orthogonal to the stored one (). The lines interpolating the symbols are guides for the eye.

9. General Discussion and Potential Applications

In contrast to standard ANNs, where memories are associated with minima in a metaphorical energy landscape, in the present SDNN model a given minimum appears only after a stimulus correlated with a stored pattern is presented. Such a stimulus is inspired by the form of a random magnetic field, commonly used in models of magnetism, acting independently on each neuron; it is expressed as [; ] and kept active during the entirety of the recognition process.

The crucial part of the scheme is to calibrate the intensity of the external stimulus in order to cancel out, as much as possible, the noise contribution due to other patterns, which are not correlated with the external pattern presented. We developed a technique for calculating this optimal value (referred to as ), which consists in estimating the maximum gap between: (i) the macroscopic superpositions of a neuron state with a stored pattern (), and (ii) those with non-stored patterns orthogonal to the stored one (). More specifically, the maximum of yields , and it was shown that, around this value, the overlap attained significant values, making the model appropriate for pattern recognition.

We showed that the SDNN considerably increases the capability of the NN to recognize previously stored patterns. The proposal was illustrated through the inclusion of the additional contribution to the standard Hopfield model, for which the number of stored patterns is expressed as (N representing the total number of neurons), and which is known to be unable to recognize any further patterns for . Taking into account that analytical calculations may be performed for the Hopfield model, we compared analytical and numerical results, showing good agreement between the two approaches. In contrast to the standard Hopfield case, we found no threshold value for . Rather, a saturation effect for sufficiently large was observed, in the sense that the two overlaps, and , become very close, resulting in . In spite of this, we verified a significant increase in the recognition capacity of the neural network, so that for this specific application, considering no correlations between stored patterns, the range of possible values of is enlarged, typically, by a factor .

The impressive performance of the present proposal was also demonstrated in situations designed to mimic the common daily tasks of living beings, as described next. (i) Its ability to react promptly to changes in the external environment, reproducing a fundamental characteristic of living beings, which need to react quickly to newly presented external patterns. (ii) Its recognition of correlated patterns, showing that correlations lead to a decrease in the optimal values of , together with an increase in the maxima of ; as a direct consequence, the numerical simulations indicate that the range of values becomes enlarged, due to these correlations. These results are in agreement with the recognition of groups of patterns performed by living beings; once one constituent of a given group is recognized, all similar members of this group are identified immediately, and this process requires only a small external stimulus. (iii) Its correct functioning for both asymmetric and diluted synapses, showing that when dilution is included, higher values of the external stimulus are necessary.
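Item (i) above, the prompt reaction to a change of stimulus, can be illustrated with a short sketch that switches the external pattern during a run and records the overlap with whichever pattern is currently presented. This is a toy under stated assumptions (Hebb synapses, a stimulus entering as an amplitude `h` times a binary pattern, tie-break to +1); the function names and parameter values are hypothetical and are not taken from the article.

```python
import numpy as np

def sweep(J, field, s):
    """One sequential zero-temperature sweep; updates s in place."""
    for i in range(s.size):
        s[i] = 1 if J[i] @ s + field[i] >= 0 else -1

def follow_stimuli(patterns, stimuli, h, sweeps_per_stimulus=5, seed=0):
    """Present the stimuli one after another, without resetting the neurons."""
    rng = np.random.default_rng(seed)
    n = patterns.shape[1]
    J = patterns.T @ patterns / n              # Hebb rule (assumed)
    np.fill_diagonal(J, 0.0)
    s = rng.choice([-1, 1], size=n)            # random initial neuron state
    history = []
    for eta in stimuli:
        for _ in range(sweeps_per_stimulus):
            sweep(J, h * eta, s)
            # Overlap with the stimulus currently being presented.
            history.append(float(s @ eta) / n)
    return history

rng = np.random.default_rng(1)
xi = rng.choice([-1, 1], size=(10, 200))       # 10 stored patterns, 200 neurons
trace = follow_stimuli(xi, [xi[0], xi[3]], h=1.0)
print(trace)
```

The first half of `trace` follows the overlap with the first stimulus and the second half the overlap with the second one; how quickly the second half rises after the switch gives a rough measure of the reaction time in sweeps.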

It is important to stress that the features of the present SDNN can be implemented both computationally (i.e., in software) and on devices. Computationally, there are many modern algorithms that use various gates (such as AND or XOR), and which implement Hebb-like rules (see, e.g., Refs. [66,67,68,69]). In line with these algorithms, the synapse values may be updated whenever new memories are added to the network. In the present proposal, synapses are responsible for the first two terms on the right-hand side of Equations (8) and (9), so that by changing their values, these two contributions are modified. To introduce the external-pattern contribution [third term of Equations (8) and (9)], one should add the signal of the external stimulus to each neuron i. Hence, neurons will be updated in a sequential way, through the zero-temperature dynamics, . In a given device, Hebb’s rule can be implemented in several ways by means of neuromorphic engineering, which is a recently developed area of research (see, e.g., Refs. [70,71]).
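A software realization along the lines just described could look like the minimal sketch below. It is not the authors' code: it assumes the standard Hebb increment for the synapses, an external signal entering the local field of neuron i as an amplitude `h` times the i-th component of a binary stimulus, and a sign update with ties resolved to +1. The names `add_memory` and `recognize` are hypothetical.

```python
import numpy as np

def add_memory(J, pattern):
    """Hebb-like increment J_ij += xi_i * xi_j / N, keeping J_ii = 0."""
    n = pattern.size
    J += np.outer(pattern, pattern) / n
    np.fill_diagonal(J, 0.0)
    return J

def recognize(J, stimulus, h, state, max_sweeps=50):
    """Sequential zero-temperature dynamics with the stimulus kept active."""
    s = state.copy()
    for _ in range(max_sweeps):
        changed = False
        for i in range(s.size):
            # Synaptic contribution plus the external signal on neuron i.
            local_field = J[i] @ s + h * stimulus[i]
            new = 1 if local_field >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:                        # fixed point reached
            break
    return s

rng = np.random.default_rng(0)
n = 100
J = np.zeros((n, n))
memories = rng.choice([-1, 1], size=(5, n))
for xi in memories:                            # synapses updated per new memory
    add_memory(J, xi)

start = rng.choice([-1, 1], size=n)
final = recognize(J, memories[2], h=0.5, state=start)
print("overlap with presented memory:", final @ memories[2] / n)
```

Storing memories incrementally through `add_memory` mirrors the remark that synapse values may be updated whenever new memories are added, while the stimulus term is kept separate so that its amplitude can be tuned without touching the synapses.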

10. Conclusions

Based on the common behavior of living beings, we proposed a new neural-network framework, in which an external stimulus exerts a strong influence on the pattern-recognition process. This external stimulus, introduced in the form of a random field, remains active during the whole recognition process, considerably increasing the capability of the neural network to recognize previously stored patterns. In contrast to the more-common attractor neural networks, which operate in the absence of an external field, memories here are not attractors inside well-defined basins of attraction; instead, basins are generated by external stimuli that present significant macroscopic superpositions with stored memories.

Finally, it is important to mention that this procedure may be implemented in a large diversity of neural-network models, utilizing both analytical and numerical investigations. Moreover, its potential application in other processes, distinct from pattern recognition, is very appealing. The present proposal should help to shorten the wide gap between the performance of many of these NN models and the common characteristics of real living beings, improving their performance and, in particular, leading to a considerable increase in their recognition and reaction capabilities. Furthermore, the application of the presented theoretical concepts, both computationally (i.e., in software) and on physical devices, is a very promising prospect, and we hope that this task is taken up in the near future.

Acknowledgments

The authors thank Constantino Tsallis for useful comments.

Appendix A. Mean-Field Equations

In this appendix, we detail some of the analytic calculations of Section 4; in particular, those leading to the free energy of Equation (21). We follow the lines of Refs. [21,22], where one assumes that the total number of memories p increases linearly with N, by introducing $\alpha = p/N$ as a finite number. In order to calculate the average over the quenched disorder, one applies the replica method, as defined in Equation (17), where $Z^n$ corresponds to the partition function of n independent replicas for a given realization of the disorder [1,2,3,4]. One has that

| (A1) |

where denotes a sum over all distinct pairs of neurons, corresponding to a fully connected neural network; in this case, the mean-field approach becomes exact in the thermodynamic limit. Moreover, a tags the n replicas () and stands for a trace over the neuron states, for each of the n replicas.

Now, using the Gaussian integral

| (A2) |

one transforms the neuron-pair contributions into single-neuron ones, by introducing integration variables . Splitting the p patterns into two sets, namely, condensed , and non-condensed patterns, one may calculate the averages over the non-condensed patterns ,

| (A3) |

Assuming that for non-condensed patterns , one uses the approximation and integrates over for , leading to

| (A4) |

where the change was used. The matrix is defined by the elements , and from now on, the index applies to condensed states (). The integrals over the set are evaluated by means of the steepest-descent method; introducing as Lagrange multipliers for the non-diagonal elements , one has

| (A5) |

with the functional (in full replica space) given by

| (A6) |

Now, one assumes the replica-symmetry ansatz [4], by imposing all off-diagonal elements of the matrix (as well as their corresponding Lagrange multipliers) to be equal, i.e., and (). Considering the limit $n \to 0$, the free energy in Equation (A5) may be written as

| (A7) |

where

| (A8) |
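For reference, the Gaussian linearization invoked in this appendix is usually written as the identity below. It is given here in its generic textbook form, as an assumption about what Equation (A2) expresses; the symbols $\lambda$, $a$, $x$, $\beta$, $m$, $\xi_i$, and $S_i$ are illustrative and may differ from the authors' notation.

```latex
% Gaussian (Hubbard-Stratonovich) identity, valid for \lambda > 0:
\exp\!\left(\frac{\lambda a^{2}}{2}\right)
  = \sqrt{\frac{\lambda}{2\pi}}
    \int_{-\infty}^{\infty} dx\,
    \exp\!\left(-\frac{\lambda x^{2}}{2} + \lambda a x\right)

% Choosing \lambda = \beta N and a = \frac{1}{N}\sum_i \xi_i S_i turns a
% neuron-pair term into a sum of single-neuron terms:
\exp\!\left[\frac{\beta}{2N}\Big(\sum_{i} \xi_i S_i\Big)^{2}\right]
  = \sqrt{\frac{\beta N}{2\pi}}
    \int_{-\infty}^{\infty} dm\,
    \exp\!\left(-\frac{\beta N m^{2}}{2} + \beta m \sum_{i} \xi_i S_i\right)
```

The second line shows why the step is useful: the exponent becomes linear in the neuron states, so the trace over each neuron can be carried out independently, at the cost of one integration variable per pattern and replica.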

Author Contributions

Conceptualization, E.M.F.C. and F.D.N.; methodology, E.M.F.C., N.B.M. and F.D.N.; numerical studies and plots, N.B.M.; formal analysis, E.M.F.C., N.B.M. and F.D.N.; discussions, E.M.F.C., N.B.M. and F.D.N.; original draft preparation, E.M.F.C. and F.D.N.; writing—review and editing, E.M.F.C., N.B.M. and F.D.N. All authors have read and agreed to the published version of the manuscript.

Funding

The authors acknowledge partial financial support from CNPq, CAPES and FAPERJ (Brazilian funding agencies).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Amit D.J. Modelling Brain Function: The World of Attractor Neural Networks. Cambridge University Press; Cambridge, UK: 1989.

- 2. Peretto P. An Introduction to the Modelling of Neural Networks. Cambridge University Press; Cambridge, UK: 1992.

- 3. Hertz J.A., Krogh A., Palmer R.G. Introduction to the Theory of Neural Computation. CRC Press; Miami, FL, USA: 2018.

- 4. Nishimori H. Statistical Physics of Spin Glasses and Information Processing. Oxford University Press; Oxford, UK: 2001.

- 5. McCulloch W.S., Pitts W. A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys. 1943;5:115–133. doi: 10.1007/BF02478259.

- 6. Pitts W., McCulloch W.S. How we know universals: The perception of auditory and visual forms. Bull. Math. Biophys. 1947;9:127–147. doi: 10.1007/BF02478291.

- 7. Darwin C.R., Wallace A.R. On the tendency of species to form varieties and on the perpetuation of varieties and species by natural means of selection. J. Proc. Linn. Soc. Lond. Zool. 1858;3:45–62. doi: 10.1111/j.1096-3642.1858.tb02500.x.

- 8. Darwin C.R. On the Origin of Species. John Murray; London, UK: 1859.

- 9. Beddall B.G. Wallace, Darwin, and the theory of natural selection: A study in the development of ideas and attitudes. J. Hist. Biol. 1968;1:261–323. doi: 10.1007/BF00351923.

- 10. Hebb D.O. The Organization of Behavior: A Neuropsychological Theory. Wiley; New York, NY, USA: 1949.

- 11. Freeman W.J. The Hebbian paradigm reintegrated: Local reverberations as internal representations. Behav. Brain Sci. 1995;18:631. doi: 10.1017/S0140525X0004022X.

- 12. Schroeder M. Fractals, Chaos, Power Laws: Minutes from an Infinite Paradise. W. H. Freeman; New York, NY, USA: 1991.

- 13. Manneville P. An Introduction to Nonlinear Dynamics and Complex Systems. Imperial College Press; London, UK: 2004.

- 14. Tsallis C. Introduction to Nonextensive Statistical Mechanics: Approaching a Complex World. Springer; New York, NY, USA: 2009.

- 15. Cencini M., Cecconi F., Vulpiani A. Chaos: From Simple Models to Complex Systems. World Scientific Publishing; Singapore: 2010.

- 16. Rajan K., Abbott L.F., Sompolinsky H. Stimulus-dependent suppression of chaos in recurrent neural networks. Phys. Rev. E. 2010;82:011903. doi: 10.1103/PhysRevE.82.011903.

- 17. Hopfield J.J. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. USA. 1982;79:2554–2558. doi: 10.1073/pnas.79.8.2554.

- 18. Mezard M., Parisi G., Virasoro M.A. Spin Glass Theory and Beyond. World Scientific; Singapore: 1987.

- 19. Curado E.M.F., Meunier J.-L. Spin-glass in low dimensions and the Migdal-Kadanoff Approximation. Phys. A. 1988;149:164–181. doi: 10.1016/0378-4371(88)90212-9.

- 20. Peretto P. Collective properties of neural networks: A statistical physics approach. Biol. Cybern. 1984;50:51–62. doi: 10.1007/BF00317939.

- 21. Amit D.J., Gutfreund H., Sompolinsky H. Spin-glass models of neural networks. Phys. Rev. A. 1985;32:1007–1018. doi: 10.1103/PhysRevA.32.1007.

- 22. Amit D.J., Gutfreund H., Sompolinsky H. Storing infinite numbers of patterns in a spin-glass model of neural networks. Phys. Rev. Lett. 1985;55:1530–1533. doi: 10.1103/PhysRevLett.55.1530.

- 23. Mezard M., Nadal J.P., Toulouse G. Solvable models of working memories. J. Phys. 1986;47:1457–1462. doi: 10.1051/jphys:019860047090145700.

- 24. Abu-Mostafa Y.S., St. Jacques J.-M. Information capacity of the Hopfield model. IEEE Trans. Inf. Theory. 1985;31:461–464. doi: 10.1109/TIT.1985.1057069.

- 25. McEliece R.J., Posner E.C., Rodemich E.R., Venkatesh S.S. The capacity of the Hopfield associative memory. IEEE Trans. Inf. Theory. 1987;33:461–482. doi: 10.1109/TIT.1987.1057328.

- 26. Fontanari J., Köberle R. Information storage and retrieval in synchronous neural networks. Phys. Rev. A. 1987;36:2475–2477. doi: 10.1103/PhysRevA.36.2475.

- 27. Chiueh T.-D., Goodman R.M. High-capacity exponential associative memories. IEEE Int. Conf. Neural Netw. 1988;1:153–160.

- 28. Buhmann J., Divko R., Schulten K. Associative memory with high information content. Phys. Rev. A. 1989;39:2689–2692. doi: 10.1103/PhysRevA.39.2689.

- 29. Penna T.J.P., de Oliveira P.M.C. Simulations with a large number of neurons. J. Phys. A. 1989;22:L719–L721. doi: 10.1088/0305-4470/22/14/012.

- 30. Geszti T. Physical Models of Neural Networks. World Scientific Publishing; Singapore: 1990.

- 31. de Oliveira P.M.C. Computing Boolean Statistical Models. World Scientific Publishing; Singapore: 1991.

- 32. Aiyer S.V.B., Niranjan M., Fallside F. A theoretical investigation into the performance of the Hopfield model. IEEE Trans. Neural Netw. 1990;1:204–215. doi: 10.1109/72.80232.

- 33. Clark J.W. Neural network modelling. Phys. Med. Biol. 1991;36:1259–1317. doi: 10.1088/0031-9155/36/10/001.

- 34. Sherrington D. Neural networks: The spin glass approach. N.-Holl. Math. Libr. 1993;51:261–291.

- 35. Chapeton J., Fares T., LaSota D., Stepanyants A. Efficient associative memory storage in cortical circuits of inhibitory and excitatory neurons. Proc. Natl. Acad. Sci. USA. 2012;109:E3614–E3622. doi: 10.1073/pnas.1211467109.

- 36. Alemi A., Baldassi C., Brunel N., Zecchina R. A three-threshold learning rule approaches the maximal capacity of recurrent neural networks. PLoS Comput. Biol. 2015;11:e1004439. doi: 10.1371/journal.pcbi.1004439.

- 37. Da Silva I.N., Spatti D.H., Flauzino R.A., Liboni L.H.B., dos Reis Alves S.F. Artificial Neural Networks: A Practical Course. Springer International Publishing; Cham, Switzerland: 2017.

- 38. Folli V., Leonetti M., Ruocco G. On the maximum storage capacity of the Hopfield model. Front. Comput. Neurosci. 2017;10:144. doi: 10.3389/fncom.2016.00144.

- 39. Kim D.-H., Park J., Kahng B. Enhanced storage capacity with errors in scale-free Hopfield neural networks: An analytical study. PLoS ONE. 2017;12:e0184683. doi: 10.1371/journal.pone.0184683.