Abstract

Objective

To assess existing knowledge related to methodological considerations for linking population-based surveys and health facility data to generate effective coverage estimates. Effective coverage estimates the proportion of individuals in need of an intervention who receive it with sufficient quality to achieve health benefit.

Design

Systematic review of available literature.

Data sources

Medline, Carolina Population Center and Demographic and Health Survey publications and handsearch of related or referenced works of all articles included in full text review. The search included publications from 1 January 2000 to 29 March 2021.

Eligibility criteria

Publications explicitly evaluating (1) the suitability of data, (2) the implications of the design of existing data sources and (3) the impact of choice of method for combining datasets to obtain linked coverage estimates.

Results

Of 3805 papers reviewed, 70 publications addressed relevant issues. Limited data suggest household surveys can be used to identify sources of care, but their validity in estimating intervention need was variable. Methods for collecting provider data and constructing quality indices were diverse and had limitations. There was little empirical evidence supporting an association between structural, process and outcome quality. Few studies addressed how the design of common data sources influences linking analyses, including imprecise household geographical information system data, provider sampling design and estimate stability. The most consistent evidence suggested that, under certain conditions, combining data based on geographical proximity or administrative catchment (ecological linking) produced estimates similar to those from linking based on the specific provider used (exact match linking).

Conclusions

Linking household and healthcare provider data can leverage existing data sources to generate more informative estimates of intervention coverage and care. However, existing evidence on methods for linking data for effective coverage estimation is variable, and numerous methodological questions remain. Additional research is needed to develop evidence-based, standardised best practices for these analyses.

Keywords: public health, quality in healthcare, statistics & research methods

Strengths and limitations of this study.

We systematically reviewed a wide range of methodological issues pertaining to linking population-based and health provider data for effective coverage estimation.

The review was limited by the diversity of terminology and fields related to the linking methodology.

Multiple search strategies were used to minimise the likelihood of overlooking relevant publications.

Results of the review are summarised and related to actionable items and needs for future research.

Background

There is growing demand for tracking progress towards the sustainable development goals through effective coverage estimates.1 2 Effective coverage measures assess not only the proportion of individuals in need of an intervention who receive it, but also the content and quality of the services received, with the aim of estimating the proportion of individuals who receive the health benefit of an intervention.2 Numerous publications have estimated effective coverage3 using a range of methods and measures to define intervention need, receipt and quality.

Linking household and health provider data is a promising means of generating effective coverage estimates that are population based and incorporate data on service quality from health facilities. Data from household surveys can provide a population-based estimate of intervention need and care-seeking for services, such as the proportion of women with a recent live birth who delivered in a health facility. However, a number of maternal, newborn and child health interventions4 5 cannot be accurately measured through household surveys because of reporting errors and biases by respondents (eg, the proportion of women who received a uterotonic during delivery). Health provider assessments yield information on provider quality, including available infrastructure, commodities, equipment, human resources and potentially provision of care. Provider data do not capture need for care in the population, care-seeking behaviour or the experience of individuals who do not access the formal health system. Linking these two data sources can provide a more complete picture of population access to and coverage of high-quality health services, for example, the proportion of women who delivered at a health facility with sufficient structural resources and competence to provide appropriate labour and delivery care.
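To make the two linking strategies discussed in this review concrete, the sketch below computes a quality-adjusted (effective) coverage estimate from hypothetical household and provider records. It assumes that each child in need has a recorded source of care (a provider ID, which requires a survey that records the specific provider used), that each assessed provider has a quality score between 0 and 1, and that children who sought no care, or used an unassessed provider, contribute zero quality. Exact match linking uses the score of the provider actually used; ecological linking substitutes the mean score of assessed providers in the child's district. The names `Child`, `exact_match_coverage` and `ecological_coverage`, and all data values, are illustrative assumptions.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Child:
    in_need: bool               # e.g. had a qualifying illness episode (hypothetical)
    provider_id: Optional[str]  # provider used, or None if no care was sought
    district: str               # administrative area of residence


def exact_match_coverage(children, quality):
    """Mean quality received among those in need, using the specific provider
    each child attended. Unassessed providers and no care-seeking contribute 0."""
    need = [c for c in children if c.in_need]
    total = sum(quality.get(c.provider_id, 0.0) for c in need if c.provider_id)
    return total / len(need)


def ecological_coverage(children, quality, provider_district):
    """Same estimate, but each child who sought care is assigned the average
    quality of all assessed providers in their district (0 if there are none)."""
    by_district = {}
    for pid, score in quality.items():
        by_district.setdefault(provider_district[pid], []).append(score)
    district_mean = {d: mean(scores) for d, scores in by_district.items()}
    need = [c for c in children if c.in_need]
    total = sum(district_mean.get(c.district, 0.0) for c in need if c.provider_id)
    return total / len(need)


# Hypothetical data: three assessed facilities in two districts.
quality = {"F1": 0.9, "F2": 0.4, "F3": 0.6}
provider_district = {"F1": "A", "F2": "A", "F3": "B"}
children = [
    Child(True, "F1", "A"),
    Child(True, "F1", "A"),
    Child(True, None, "A"),   # in need but sought no care
    Child(True, "F3", "B"),
    Child(False, "F1", "A"),  # not in need: excluded from the denominator
]

crude = sum(1 for c in children if c.in_need and c.provider_id) / sum(c.in_need for c in children)
print("crude coverage:", crude)                                                    # → 0.75
print("exact match:", round(exact_match_coverage(children, quality), 3))           # → 0.6
print("ecological:", round(ecological_coverage(children, quality, provider_district), 3))  # → 0.475
```

Both children in district A used the higher-quality facility, so exact match linking gives a higher estimate than ecological linking; when care-seeking within a district is spread evenly across the assessed facilities, the two approaches converge. This illustrates the mechanism only and does not reproduce any reviewed study.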

There are many approaches for combining household and provider datasets.6 Results depend on the choice of data and of the method used to combine them. However, little guidance exists to inform these decisions. We conducted a systematic review to understand the current evidence base for effective coverage linking methods and to identify needs for further research.

Methods

We searched for papers addressing methods or assumptions regarding: (1) the suitability of household and provider (defining health providers as healthcare outlets such as health facilities, pharmacies, and community-based health workers) data used in linking analyses, (2) the implications of the design of existing household (Demographic and Health Survey (DHS) and Multiple Indicator Cluster Survey (MICS)) and provider (Service Provision Assessment (SPA) and Service Availability and Readiness Assessment (SARA)) data sources commonly used in linking analyses and (3) the impact of choice of method for combining datasets to obtain linked coverage estimates.

Our primary search was conducted in Medline. The search was limited to papers published between 1 January 2000 and 29 March 2021 that included terms related to (1) effective coverage, benchmarking, system dynamics or universal health coverage (UHC) metrics; (2) structural, process and/or health outcome quality; (3) linking analyses, using terms adapted from Do et al;6 (4) validity of self-reported health indicators; or (5) spatial methods for measuring utilisation or distance to care. A full list of Medline search terms is presented in online supplemental file 1. The search was conducted using English-language terms; however, publications in English, Spanish and French were reviewed if captured in the search. Additionally, we conducted searches using these criteria in Population Health Metrics (which was not fully indexed in Medline at the time of our search) and in Carolina Population Center and DHS publications. In a second step, we handsearched the references of a systematic review by Do et al on linking household and facility data to estimate coverage of reproductive, maternal, newborn and child health (RMNCH) services,6 and of a review by Amouzou et al of effective coverage analyses.3 Both the Do and Amouzou reviews summarised publications that linked data or estimated effective coverage; however, they did not systematically address methodological concerns or report results that could guide application of these methods. We also handsearched the references, citing works and related publications generated by the journal or database interface for all articles that passed the title and abstract review.


Publications were reviewed for relevant analyses or commentary related to linking methodologies. Articles were included if they explicitly evaluated or compared assumptions used in linking approaches for at least one of the areas defined above. The review focused on low-income and middle-income countries (LMICs) and data sources common in these settings; however, publications from high-income settings were retained if the relevant evidence could translate to LMICs (eg, use of centroid global positioning system (GPS) locations in estimates of distance, validity of provider quality measures). No formal quality assessment was conducted because of the diversity of study designs and research objectives among the relevant papers. Titles and abstracts were reviewed in a single step by the first author (EC). Data extraction included the title, author, year of publication, country or countries included in the analysis, data source and specific analyses or findings relevant to linking, loosely categorised by topic area. Topical groupings emerged from the review and were used to structure the findings.

Patient and public involvement

As this was a systematic review, neither patients nor the public were involved in the design, conduct, reporting or dissemination plans of our research.

Results

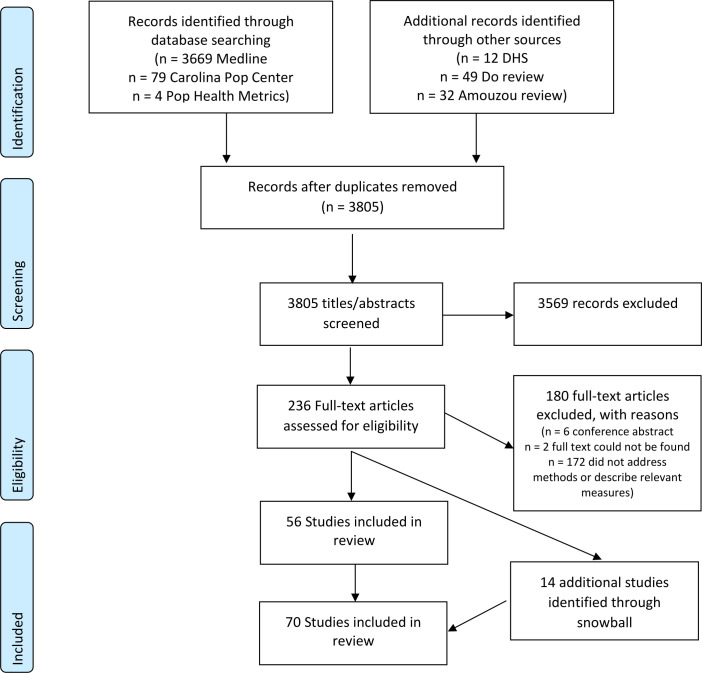

The Medline search produced 3669 publications, along with 79 from the Carolina Population Center, 4 from Population Health Metrics, 12 DHS publications, 35 papers included in the review by Amouzou et al and 49 papers included in the review by Do et al meeting the publication date restrictions. After removing duplicates, 3805 publications were included in the title and abstract review and 236 were included in the full text review. Of those papers included in the full text review, 56 publications addressed a methodological concern related to linking household and provider data and were included in the final review. Fourteen additional publications were identified through the snowball review of references and related works (figure 1). In total, 70 publications addressed one or more methodological concerns, including the suitability of household (n=13) and provider data (n=39) for use in linking analyses, concerns related to the design of existing household (n=6) and provider (n=4) data sources, and methods for combining household and facility data (n=14). A list of publications included in the review and a summary of their contributions are provided in table 1.

Figure 1.

PRISMA flow diagram. DHS, Demographic and Health Survey; PRISMA, Preferred Reporting Items for Systematic Reviews and Meta-Analyses.

Table 1.

Summary of publications included in the review and contribution to the literature

| Author | Year | Country | Key method contribution |
|---|---|---|---|
| Suitability of household and provider data for linking analyses: household data | | | |
| Ayede et al8 | 2018 | Nigeria | Accuracy of maternal report of pneumonia symptoms measured through household survey. |
| Blanc et al17 | 2016 | Mexico | Accuracy of maternal report of delivery/immediate PNC attendant measured through household survey. |
| Blanc et al18 | 2016 | Kenya | Accuracy of maternal report of delivery/immediate PNC attendant measured through household survey. |
| Carter et al15 | 2018 | Zambia | Accuracy of maternal report of care-seeking for child illness measured through household survey. |
| Chang et al14 | 2018 | Nepal | Accuracy of maternal report of birth weight and preterm birth measured through household survey. |
| D’Acremont et al10 | 2010 | SSA | Declining proportion of fever cases that are due to malaria. |
| Hazir et al9 | 2013 | Pakistan and Bangladesh | Accuracy of maternal report of pneumonia symptoms measured through household survey. |
| Keenan et al12 | 2017 | USA | Accuracy of maternal recall of birth complications. |
| Shengelia et al7 | 2005 | – | Effect of true versus perceived intervention need on effective coverage estimation. |
| Stanton et al16 | 2013 | Mozambique | Accuracy of maternal report of place of delivery measured through household surveys. |
| Fischer Walker et al11 | 2013 | – | Issues with measurement of child diarrhoea through household surveys. |
| Wang et al19 | 2018 | Multiple regions | Issues with provider categories and alignment between DHS and SPA surveys. |
| Zimmerman et al13 | 2019 | Ethiopia | Reliability of maternal report of maternal and newborn birth complications. |
| Suitability of household and provider data for linking analyses: provider quality data | | | |
| Akachi and Kruk57 | 2017 | – | Limited evidence that process quality is associated with health outcomes. |
| Carter et al33 | 2018 | Zambia | Quality score for child health effective coverage. |
| Chou et al39 | 2019 | Multiple regions | Quality score for maternal and neonatal health effective coverage. |
| Davis et al32 | 2006 | High-income countries | Agreement between provider self-assessment and observed quality. |
| Diamond-Smith et al24 | 2016 | Kenya and Namibia | Association between maternal perception of care and measured structural and process quality. |
| Fisseha et al51 | 2017 | Ethiopia | Internal consistency of structural and process quality indicators. |
| Gabrysch et al45 | 2011 | Zambia | Quality score for labour and delivery effective coverage. |
| Getachew et al25 | 2020 | Ethiopia | Association between caregiver perception of care and measured structural and process quality. |
| Hoogenboom et al27 | 2015 | Thai-Myanmar border | Agreement between facility records and observed care. |
| Hrisos et al30 | 2009 | High-income countries | Systematic review of agreement between observed quality of care and provider self-report, patient report and/or chart review. |
| Jackson et al53 | 2015 | Tanzania | Use of PCA to reduce a quality index. |
| Joseph et al42 | 2020 | Malawi | Association between quality-adjusted ANC nutrition intervention coverage and LBW. |
| Kanyangarara et al37 | 2017 | SSA | Quality score for ANC effective coverage. |
| Kruk et al55 | 2017 | SSA | Association between structural and process quality. |
| Larson et al26 | 2014 | Tanzania | Association between perceived quality of care and measured structural and process quality. |
| Leegwater et al59 | 2015 | – | Association between UHC index and infant mortality and life expectancy at national level. |
| Leslie et al58 | 2016 | Malawi | Association between quality of delivery care and neonatal mortality. |
| Leslie et al41 | 2017 | SSA | Association between structural and process quality. |
| Leslie et al54 | 2018 | Multiple regions | Performance of approaches for generating service readiness indices. |
| Leslie et al46 | 2019 | Mexico | Quality score for ANC, labour and delivery, newborn, sick child, chronic conditions and cancer treatment effective coverage. |
| Lozano et al49 | 2006 | Mexico | UHC index using weighted versus simple average of indicators. |
| Mallick et al52 | 2017 | Haiti, Malawi and Tanzania | Comparison of measures of family planning quality. |
| Marchant et al35 | 2015 | Ethiopia, Nigeria and India | Measurement of quality using “last delivery module”. |
| Mboya et al36 | 2016 | Tanzania | mHealth tool to measure quality. |
| MCSP23 | 2018 | Multiple regions | Availability and quality of data captured through HMIS. |
| Moucheraud and McBride48 | 2020 | SSA and Haiti | Systematic review of quality measures derived from SPA data. |
| Munos et al40 | 2018 | Cote d’Ivoire | Quality score for ANC, labour and delivery, PNC and child health effective coverage. |
| Nesbitt et al28 | 2013 | Ghana | Quality score for labour and delivery and PNC effective coverage. |
| Nguhiu et al38 | 2017 | Kenya | Quality score for ANC, labour and delivery, sick child and family planning effective coverage. |
| Nickerson et al21 | 2015 | Multiple regions | Comparison of data collected through health facility assessments. |
| Osen et al29 | 2011 | Ghana | Agreement between provider-reported and observed surgical service quality. |
| Peabody et al31 | 2000 | USA | Agreement between vignettes, chart abstraction and simulated client measures. |
| Serván-Mori et al47 | 2019 | Mexico | Quality score for labour and delivery and newborn care effective coverage. |
| Sheffel et al22 | 2018 | Multiple regions | Summary of quality data collected through SPA and SARA. |
| Sheffel50 | 2018 | Haiti, Malawi and Tanzania | Association between structural and process quality. |
| Willey et al44 | 2018 | Uganda | Quality score for labour and delivery and newborn care effective coverage. |
| Wilunda et al34 | 2015 | Uganda | Quality score for maternal and neonatal care effective coverage. |
| Zurovac et al56 | 2015 | Vanuatu | Poor association between structural quality and clinical care in fever management. |
| Implications of design of existing household and provider data sources commonly used in linking analyses: household and cluster location data | | | |
| Bliss et al62 | 2012 | USA | Comparison of distance using centroid versus true location. |
| Healy and Gilliland64 | 2012 | Canada and UK | Comparison of distance using centroids of varying areal groupings. |
| Jones et al63 | 2010 | USA | Comparison of distance using zip-code centroid versus true household location. |
| Nesbitt et al65 | 2014 | Ghana | Comparison of straight-line distance, network distance, raster and network-based travel time distance measures using village versus compound centroid. |
| Perez-Heydrich et al60 | 2013 | – | Effect of DHS cluster displacement on distance measures. |
| Skiles et al66 | 2013 | Rwanda | Effect of DHS cluster displacement on estimates of service environment. |
| Implications of design of existing household and provider data sources commonly used in linking analyses: provider sampling | | | |
| Carter et al33 | 2018 | Zambia | Effect of excluding non-facility providers from sampling frame on effective coverage estimates. |
| Munos et al40 | 2018 | Cote d’Ivoire | Effect of excluding non-facility providers from sampling frame on effective coverage estimates. |
| Skiles et al66 | 2013 | Rwanda | Effect of facility sampling on estimates of service environment. |
| Turner et al67 | 2001 | – | Implications of provider survey sampling design for linking analyses; linked sampling approaches. |
| Implications of design of existing household and provider data sources commonly used in linking analyses: stability of data over time | | | |
| Baker et al68 | 2005 | Uganda and Tanzania | Stability of facility diagnostic capacity over time. |
| Marchant et al69 | 2008 | Tanzania | Stability of IPTp stocks. |
| Wang et al71 | 2011 | Multiple regions | Stability of maternal healthcare-seeking behaviours measured through household survey over time. |
| Willey et al44 | 2018 | Uganda | Stability of facility infrastructure indicators for labour, delivery and newborn care. |
| Winter et al70 | 2015 | Multiple regions | Stability of care-seeking for child illness behaviours measured through household survey over time. |
| Impact of choice of method for combining household and provider data: exact match versus ecological linking | | | |
| Carter et al33 | 2018 | Zambia | Comparison of exact match and ecological linking methods in estimating effective coverage in sick child care. |
| Munos et al40 | 2018 | Cote d’Ivoire | Comparison of exact match and ecological linking methods in estimating effective coverage in ANC, labour and delivery, PNC and sick child care. |
| Willey et al44 | 2018 | Uganda | Comparison of exact match and ecological linking methods in estimating effective coverage in ANC, labour and delivery, PNC and sick child care. |
| Impact of choice of method for combining household and provider data: geographical proximity and catchment methods | | | |
| Carter et al33 | 2018 | Zambia | Comparison of true source of care for child illness to straight-line and road distance measures. |
| Delamater et al74 | 2019 | USA | Comparison of FCA, simple distance and Huff distance measure against true utilisation patterns. |
| Gething et al76 | 2004 | Kenya | Comparison of Thiessen boundaries and true utilisation patterns. |
| Munos et al40 | 2018 | Cote d’Ivoire | Comparison of true source of care for ANC, labour and delivery, PNC and child illness to straight-line and road distance measures. |
| Noor et al72 | 2006 | Kenya | Comparison of true source of care for child fever to closest by Euclidean and road distance. |
| Tanser et al77 | 2001 | South Africa | Comparison of Thiessen boundaries and true utilisation patterns. |
| Tanser et al73 | 2006 | South Africa | Comparison of typical source of care to closest by travel time. |
| Tsoka and Le Sueur75 | 2004 | South Africa | Comparison of Thiessen boundaries and true utilisation patterns. |
| Impact of choice of method for combining household and provider data: variance estimation | | | |
| Sauer et al79 | 2020 | – | Comparison of exact, parametric bootstrap and delta method for estimating effective coverage variance. |
| Wang et al19 | 2018 | Multiple regions | Use of delta method for estimating effective coverage variance. |
| Willey et al44 | 2018 | Uganda | Use of delta method for estimating effective coverage variance. |

ANC, antenatal care; DHS, Demographic and Health Survey; FCA, floating catchment area; HMIS, Health Management Information Systems; IPTp, intermittent preventive treatment of malaria in pregnancy; LBW, low birth weight; MCSP, Maternal and Child Survival Program; PCA, principal component analysis; PNC, postnatal care; SARA, Service Availability and Readiness Assessment; SPA, Service Provision Assessment; SSA, sub-Saharan Africa; UHC, universal health coverage.

Suitability of household and provider data for linking analyses

Suitability of household data needed for linked estimates

In effective coverage linking analyses, household surveys can be used to estimate the proportion of the population in need of healthcare, as well as care-seeking behaviour. Household surveys must produce valid estimates of these parameters and provide care-seeking data that can be linked to provider assessments. This review identified papers discussing issues in defining intervention need (n=8) and care-seeking (n=5) that should guide selection of indicators for linking.

Intervention need

Estimation of intervention need may require only population demographics such as age (eg, for prevention and health promotion interventions) or may require identifying specific illnesses or conditions. The latter is more subject to reporting bias.7 Multiple studies have shown poor association or bias between maternally reported symptoms and clinical pneumonia,8 9 malaria10 and diarrhoea11 in children under 5. Three studies showed that the accuracy of maternal report of maternal and newborn birth complications is variable.12–14 A simulation by Shengelia et al demonstrated the effect of divergence between true and perceived intervention need on effective coverage estimates. The authors proposed using a Bayesian model to estimate the posterior probability of disease from responses to symptom questions, measuring disease presence on a probabilistic scale.7 However, no work has yet addressed how to integrate these adjusted estimates into effective coverage estimates.
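The core of this probabilistic approach is Bayes' rule. The sketch below is a deliberate simplification, not Shengelia et al's model: it assumes that the sensitivity and specificity of a caregiver-reported symptom pattern against true disease, and the true prevalence, are known, and all values are hypothetical. It shows why reported symptoms can overstate need: when true prevalence is low, many positive reports are false positives.

```python
def posterior_disease_probability(prevalence, sensitivity, specificity, reported):
    """P(true disease | symptom report) by Bayes' rule.

    prevalence  -- assumed prior probability of true disease
    sensitivity -- assumed P(symptoms reported | disease)
    specificity -- assumed P(symptoms not reported | no disease)
    reported    -- whether the respondent reported the symptom pattern
    """
    if reported:
        with_disease = sensitivity * prevalence
        without_disease = (1 - specificity) * (1 - prevalence)
    else:
        with_disease = (1 - sensitivity) * prevalence
        without_disease = specificity * (1 - prevalence)
    return with_disease / (with_disease + without_disease)


# Hypothetical inputs: 5% true prevalence, 80% sensitivity, 90% specificity.
prev, sens, spec = 0.05, 0.80, 0.90
p_report = sens * prev + (1 - spec) * (1 - prev)  # share who report symptoms
p_pos = posterior_disease_probability(prev, sens, spec, reported=True)
p_neg = posterior_disease_probability(prev, sens, spec, reported=False)

print(f"reported 'need': {p_report:.3f}")                                    # → 0.135
print(f"P(disease | report): {p_pos:.3f}; P(disease | no report): {p_neg:.3f}")  # → 0.296; 0.012
# Averaging posteriors over all respondents recovers the assumed true prevalence.
print(f"expected true need: {p_report * p_pos + (1 - p_report) * p_neg:.3f}")    # → 0.050
```

With these assumed inputs, 13.5% of respondents would be classified as in need, whereas the expected share with true disease is 5%, and fewer than a third of reported cases are true cases.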

Care-seeking behaviour

Four studies addressed the accuracy of respondent report of care-seeking. Mothers in Zambia and Mozambique were able to accurately report the type of health provider where they sought sick child15 and delivery care,16 respectively. However, studies in two countries suggested women could not accurately report the type of health worker who attended them during labour and delivery and immediate postnatal care.17 18 Wang et al noted that provider categories are not standardised between population surveys and health system assessments: population surveys often include vague or overly broad categories that do not directly match SPA/SARA categories and require harmonisation.19
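Harmonisation is often implemented as an explicit crosswalk that maps each survey's provider categories onto a shared set of categories. The sketch below uses hypothetical category labels (they are not actual DHS, MICS, SPA or SARA codes) and returns `None` for household responses too vague to map, so that they are flagged rather than silently assigned. It also lists reported sources of care that have no counterpart among assessed facility types.

```python
from typing import Optional

# Hypothetical crosswalks to a shared set of provider categories.
HOUSEHOLD_CROSSWALK = {
    "government hospital": "public_hospital",
    "government health centre": "public_primary",
    "government health post": "public_primary",
    "private hospital/clinic": "private_facility",
    "pharmacy": "pharmacy",
    "community health worker": "chw",
    "other public sector": None,  # too broad to match any assessed facility type
}
FACILITY_CROSSWALK = {
    "regional hospital": "public_hospital",
    "district hospital": "public_hospital",
    "health centre": "public_primary",
    "health post": "public_primary",
    "private clinic": "private_facility",
}


def harmonise(label: str, crosswalk: dict) -> Optional[str]:
    """Map a raw category label to a harmonised category.

    Raises KeyError for labels missing from the crosswalk, so that new response
    options are noticed; returns None for labels deliberately left unmatched.
    """
    return crosswalk[label.strip().lower()]


responses = ["Government Health Post", "Pharmacy", "Other public sector"]
print([harmonise(r, HOUSEHOLD_CROSSWALK) for r in responses])
# → ['public_primary', 'pharmacy', None]

not_assessed = {v for v in HOUSEHOLD_CROSSWALK.values() if v} - set(FACILITY_CROSSWALK.values())
print("reported sources with no assessed counterpart:", sorted(not_assessed))
# → ['chw', 'pharmacy']
```

Raising an error for unknown labels is a design choice: it forces each new response option to be reviewed instead of defaulting to an arbitrary category.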

Suitability of healthcare quality data needed for linked estimates

Provider assessments present data on service content and quality for effective coverage linking analyses. However, the measurement, construction and interpretation of provider quality measures are highly variable and may significantly alter effective coverage estimates. This paper does not present an exhaustive review of healthcare quality measures or the association between levels of quality. A comprehensive summary of quality of care concepts and measurement approaches, along with their relative strengths and limitations, was presented by Hanefeld et al.20 Publications of particular relevance to linking analyses are noted here, with an emphasis on national provider survey data as the most common source of provider data for linking analyses.

Methods used in assessing provider quality

A review by Nickerson et al found significant variability in the data collected and methods used in health facility assessment tools in LMICs.21 While SPA and SARA data are the most widely used sources of data on health service delivery in LMICs, one paper noted that these surveys focused primarily on structural quality with less data on provision and experience of care.22 The lack of process quality data is in part related to the reliance on direct observation of clinical care—a time-intensive and resource-intensive method—to collect these data. None of the studies included in the review used Health Management Information Systems (HMIS) data to generate linked coverage estimates. A desk review by the Maternal and Child Survival Program (MCSP) found that data collected through HMIS was variable across countries, data recorded within registers often was not transmitted through the system, and only a limited number of indicators collected were related to the provision of health services.23

Nine publications assessed alternatives to direct observation of clinical care for collecting process quality data. Two studies found no association between process quality and maternal perceptions of the quality of care received,24 25 while one study found perceived quality was associated with the number of services received but not with structural quality.26 Agreement between observed care and health records or provider report was also variable.27–29 A review by Hrisos et al found few studies to support use of patient report, provider self-report or record review as proxy measures of clinical care quality.30 In the USA, vignettes performed better than chart abstraction for estimating quality.31 Another review found providers were unable to accurately assess their own performance, with the worst accuracy among the least skilled providers.32 Five other publications used alternative methods for measuring process quality, including vignettes,26 33 register review,26 34 a most recent delivery interview35 and an mHealth tool,36 but did not assess their performance against other measurement methods.
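Agreement between two measurement modes for the same consultations is commonly summarised with percentage agreement and Cohen's kappa, which discounts the agreement expected by chance. The reviewed studies may have used other statistics; the sketch below only illustrates the idea, using hypothetical binary indicators of whether a clinical action was observed and whether it was documented in the record.

```python
def percent_agreement_and_kappa(observed, recorded):
    """Percentage agreement and Cohen's kappa for two binary ratings of the
    same consultations (True = action performed / documented)."""
    if len(observed) != len(recorded) or not observed:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(observed)
    p_agree = sum(o == r for o, r in zip(observed, recorded)) / n
    p_obs_yes = sum(observed) / n
    p_rec_yes = sum(recorded) / n
    # Agreement expected if the two modes were statistically independent.
    p_chance = p_obs_yes * p_rec_yes + (1 - p_obs_yes) * (1 - p_rec_yes)
    if p_chance == 1:
        return p_agree, float("nan")  # kappa undefined when both ratings are constant
    return p_agree, (p_agree - p_chance) / (1 - p_chance)


# Hypothetical: 10 consultations, direct observation versus record review.
observed = [True, True, True, True, False, False, False, False, True, True]
recorded = [True, True, True, True, True, True, False, False, True, False]
agree, kappa = percent_agreement_and_kappa(observed, recorded)
print(f"agreement {agree:.2f}, kappa {kappa:.2f}")  # → agreement 0.70, kappa 0.35
```

Here 70% raw agreement corresponds to a kappa of about 0.35, because much of that agreement would be expected by chance given how often both modes record the action.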

Content of provider quality indices

Most linking papers estimating effective coverage included in this review (n=15) characterised provider quality using structural measures of quality, with or without measures of process quality. Various approaches were used to select items for inclusion in these measures. Measures of structural and process quality were derived from either national or international guidance on minimum service availability and required commodities, equipment, infrastructure, training or actions. Measures used by effective coverage analyses included SPA or SARA structural indicators33 37–39 and/or clinical observations,38 40–43 emergency obstetric and newborn care functions,28 34 44 45 provider recall of actions during their last delivery,35 44 and measured health outcomes.46 47

Construction of provider quality indices

In addition to the range of variables used in provider measures, there was no consensus on how to select and combine variables to generate quality indices. The reviewed publications used a variety of approaches to construct indices, including weighted indices,41 simple averages across all indicators or domains33 39 40 42–44 and categorisation using set thresholds or relative categories.26 37 45 A review of quality measurement using SPA data found that studies frequently did not apply a theoretical framework when selecting indicators for quality measures and that the indicators included in quality scores varied widely.48 In our review, seven publications presented data on the performance of different measurement modes and summary approaches. Two studies found the method of selecting and combining quality indicators had little effect on overall effective coverage estimates.49 50 However, two other studies found inconsistency in the rankings of health facilities when using different index methods.51 52 Two studies using principal component analysis (PCA) to create SPA health service indices found the reduced indices explained only a limited amount of the variance across indicators.52 53 An analysis of SPA data in ten countries found that indices empirically derived through machine learning captured a large proportion of the service readiness data in the full SPA index; however, the selected set of indicators varied across countries, and an index generated through expert review captured very little of the data from the full index.54 Two studies found that few facilities could meet all requirements when a threshold was applied, limiting the utility of the approach.45 51
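The sketch below illustrates, on hypothetical facility data, three of the index approaches described above (simple average, domain average and an all-items threshold) and one way to express how much item-level variance a single principal component retains. It assumes binary item availability, a hypothetical grouping of items into two domains and standard PCA on the item correlation matrix, computed by power iteration to avoid external dependencies; the reviewed studies may have used other weighting schemes or PCA variants.

```python
from statistics import mean

# Hypothetical binary availability of four items in four facilities.
DOMAINS = {"equipment": ["e1", "e2", "e3"], "medicines": ["m1"]}
FACILITIES = {
    "A": {"e1": 1, "e2": 1, "e3": 1, "m1": 0},
    "B": {"e1": 1, "e2": 0, "e3": 0, "m1": 1},
    "C": {"e1": 1, "e2": 1, "e3": 1, "m1": 1},
    "D": {"e1": 0, "e2": 1, "e3": 0, "m1": 0},
}


def simple_average(items):
    return mean(items.values())  # every item weighted equally


def domain_average(items):
    # Every domain weighted equally, so items in small domains weigh more.
    return mean(mean(items[i] for i in names) for names in DOMAINS.values())


def meets_threshold(items):
    return all(items.values())  # "ready" only if every item is present


def ranking(score):
    return sorted(FACILITIES, key=lambda f: score(FACILITIES[f]), reverse=True)


def first_component_share(rows):
    """Share of total standardised variance captured by the first principal
    component: largest eigenvalue of the correlation matrix divided by its trace."""
    n = len(rows)
    cols = [c for c in zip(*rows) if len(set(c)) > 1]  # drop constant items
    p = len(cols)
    z = []
    for c in cols:
        m = mean(c)
        sd = (sum((x - m) ** 2 for x in c) / (n - 1)) ** 0.5
        z.append([(x - m) / sd for x in c])
    corr = [[sum(a * b for a, b in zip(z[i], z[j])) / (n - 1) for j in range(p)] for i in range(p)]
    v = [1.0] * p
    for _ in range(1000):  # power iteration towards the leading eigenvector
        w = [sum(corr[i][j] * v[j] for j in range(p)) for i in range(p)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    eigenvalue = sum(v[i] * corr[i][j] * v[j] for i in range(p) for j in range(p))
    return eigenvalue / p  # the trace of a correlation matrix equals p


print("simple average:", ranking(simple_average))   # → ['C', 'A', 'B', 'D']
print("domain average:", ranking(domain_average))   # → ['C', 'B', 'A', 'D']
print("meet threshold:", [f for f in FACILITIES if meets_threshold(FACILITIES[f])])  # → ['C']
rows = [list(items.values()) for items in FACILITIES.values()]
print(f"variance share of first component: {first_component_share(rows):.2f}")  # → 0.50
```

In this toy example the two averaging rules agree on the best and worst facilities but swap A and B, only one facility meets the all-items threshold, and the first principal component retains half of the standardised variance.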

Performance of provider measures

Despite the common use of structural and process quality measures derived from SPA and SARA data, the review found limited data explicitly assessing the association of these measures with each other and with health outcomes (n=7). Three studies, two incorporating data from multiple countries, found little association between structural and process quality.41 55 56 However, an analysis of SPA data from three countries found a small but significant association between antenatal care (ANC) facility structural and process quality, and suggested that structural quality can limit provider performance when basic infrastructure and commodities are unavailable.50 Akachi and Kruk emphasised the limited number of studies showing process quality associated with health outcomes.57 Two studies in Malawi found a small association between an obstetric quality index and decreased neonatal mortality58 and an association between quality-adjusted ANC nutrition intervention coverage and decreased low birth weight prevalence.42 Another found a national UHC ‘health service coverage’ index correlated strongly with infant mortality rate and life expectancy.59

Implications of design of existing household and provider data sources commonly used in linking analyses

Issues related to household and cluster location data

The way in which common household surveys, particularly the DHS and MICS, collect and process location data may also affect the validity of some linked estimates. In many household datasets used for linked analyses, the precise location of individual households is unknown. The DHS collects central point locations for clusters, rather than household locations, and displaces these points in publicly released datasets.60 MICS often does not collect geographical information system (GIS) data or make them available.61 Imprecision around household location may influence the accuracy of estimates generated by linking household and provider data based on geographical proximity.

Data on household location

The effect of using cluster central point locations rather than individual household locations in linking analyses was not addressed by any publication identified in this review. However, four studies looked at the effect of using centroids of varying areal units versus household locations in distance analyses. Two studies found using US census tract62 and zip-code63 centroid locations produced little difference in measures of facility access compared with household location. A third study showed use of areal unit centroids resulted in misclassification of household access to health-related facilities, especially in less densely populated rural areas.64 However, in rural Ghana, measures calculated from village centroids identified the same closest facility as measures from compound locations for over 85% of births.65
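These comparisons come down to whether an areal centroid identifies the same nearest provider as each household's own location. A minimal sketch, assuming hypothetical coordinates, an unweighted mean centroid and straight-line (great-circle) distance rather than road distance or travel time:

```python
from math import asin, cos, radians, sin, sqrt


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(h))


def nearest_facility(point, facilities):
    return min(facilities, key=lambda name: haversine_km(point, facilities[name]))


def share_matching_centroid(households, facilities):
    """Share of households whose own nearest facility is the same as the one
    nearest to the (unweighted mean) centroid of all household locations."""
    centroid = (
        sum(lat for lat, _ in households) / len(households),
        sum(lon for _, lon in households) / len(households),
    )
    reference = nearest_facility(centroid, facilities)
    return sum(nearest_facility(h, facilities) == reference for h in households) / len(households)


# Hypothetical village: four compounds and two facilities about 11 km apart.
facilities = {"F1": (0.0, 0.0), "F2": (0.0, 0.1)}
households = [(0.0, 0.02), (0.0, 0.03), (0.01, 0.04), (0.0, 0.08)]
print(share_matching_centroid(households, facilities))  # → 0.75
```

The compound on the far edge of the village is closer to the other facility, so a centroid-based link misassigns it; the more dispersed the households relative to facility spacing, the more such misclassification to expect.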

Cluster displacement

Displacement of cluster central points may introduce additional error in analyses based on geographical proximity. A DHS analytical report found that ignoring DHS displacement in analyses that used distance to a resource as a covariate increased bias and mean squared error. However, displacement should not affect linking by administrative unit, because since 2009 the DHS has restricted displacement to remain within the administrative unit at which the sample is representative.60 A simulation analysis in Rwanda reported that DHS cluster displacement produced less misclassification in level of access and relative service quality than healthcare provider sampling did.66
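The effect of displacement on proximity-based linking can be explored by simulation. The sketch below applies a simplified version of the DHS displacement scheme (a random direction and a random distance of up to 2 km for urban clusters and up to 5 km for rural clusters, with about 1% of rural clusters displaced up to 10 km), ignores the administrative boundary constraint, and records how often the displaced point is nearer to a different facility than the true cluster location. Coordinates are hypothetical, and distances use a flat-earth approximation that is adequate over a few kilometres.

```python
import random
from math import cos, degrees, pi, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0


def approx_km(a, b):
    """Equirectangular distance in km between (lat, lon) points; fine at small scales."""
    x = radians(b[1] - a[1]) * cos(radians((a[0] + b[0]) / 2))
    y = radians(b[0] - a[0])
    return EARTH_RADIUS_KM * sqrt(x * x + y * y)


def displace(point, urban, rng):
    """Simplified DHS-style displacement (no administrative-boundary check)."""
    max_km = 2.0 if urban else (10.0 if rng.random() < 0.01 else 5.0)
    dist_km, angle = rng.uniform(0, max_km), rng.uniform(0, 2 * pi)
    lat, lon = point
    dlat = degrees(dist_km * cos(angle) / EARTH_RADIUS_KM)
    dlon = degrees(dist_km * sin(angle) / (EARTH_RADIUS_KM * cos(radians(lat))))
    return lat + dlat, lon + dlon


def nearest(point, facilities):
    return min(facilities, key=lambda name: approx_km(point, facilities[name]))


def misclassification_rate(cluster, urban, facilities, draws=10_000, seed=1):
    """Share of simulated displacements that change the nearest facility."""
    rng = random.Random(seed)
    true_nearest = nearest(cluster, facilities)
    wrong = sum(nearest(displace(cluster, urban, rng), facilities) != true_nearest for _ in range(draws))
    return wrong / draws


facilities = {"F1": (0.0, 0.0), "F2": (0.0, 0.1)}
cluster = (0.0, 0.02)  # about 2.2 km from F1 and 8.9 km from F2
urban_rate = misclassification_rate(cluster, True, facilities)
rural_rate = misclassification_rate(cluster, False, facilities)
print("urban:", urban_rate, "rural:", rural_rate)
```

Because the hypothetical cluster is 2.2 km from its nearest facility and the midpoint between the two facilities lies about 3.3 km further east, urban-scale displacement never changes the nearest facility in this setup, while rural-scale displacement occasionally does. A real analysis would repeat this over many clusters and facility configurations.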

Issues related to provider sampling

Typical sampling designs for healthcare provider data also present issues for linking analyses. Both SPAs and SARAs are sampled independently of household surveys, so there may be no sampled facilities near household survey clusters.67 SPA and SARA surveys typically collect data on a sample, rather than a census, of public, private and non-governmental organisation (NGO) health facilities and exclude non-facility providers, such as pharmacies or community health workers (CHWs). In most settings, facilities are sampled and analysed to be representative of all facilities within a managing authority, level and/or geographical area, and the results of the provider assessment are not intended to represent the population using health services.67 For provider assessments that directly observe clinical care, the number and type of interactions observed within each health facility depend on patient volume and chance.

Provider sampling frame

Two papers assessed the impact of excluding non-facility providers on linked effective coverage estimates. In Zambia and Cote d’Ivoire, CHWs offered a level of care for sick children similar to first-level public facilities. Excluding these providers reduced estimates of effective coverage in Zambia where CHWs were a significant source of skilled care in rural areas,33 but had little effect in Cote d’Ivoire where they were an insignificant source of care.40 In both studies, exclusion of pharmacies did not alter effective coverage estimates as they were an uncommon source of care, though they offered moderate structural quality.33 40

Provider sampling design

Two publications addressed the impact of facility survey sampling designs. At the facility level, Skiles et al's analysis demonstrated that sampling facilities, rather than using a census, led to underestimation of the adequacy of the health service environment and to substantial misclassification of the relative service environment for individual clusters.66 No studies assessed whether SPA or SARA facility sampling strategies generate quality estimates stable enough for linking analyses at a level below the administrative unit used in the sampling design.
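One way to quantify the kind of cluster-level misclassification Skiles et al describe is to link each cluster to its nearest facility twice, once using a full census and once using a random sample, classify clusters as above or below the median linked readiness, and count disagreements. The sketch below does this with invented coordinates and scores, a nearest-facility rule and a median cut-off; these are illustrative assumptions, not the design of the published simulation.

```python
import math
import random
import statistics

def linked_score(point, facilities):
    """Readiness score of the facility nearest to `point` (straight-line distance)."""
    closest = min(facilities, key=lambda f: math.dist(point, f["xy"]))
    return closest["score"]

def classify(clusters, facilities):
    """Label each cluster 'high' or 'low' relative to the median linked score."""
    scores = [linked_score(c, facilities) for c in clusters]
    cut = statistics.median(scores)
    return ["high" if s >= cut else "low" for s in scores]

def misclassification(clusters, census, fraction=0.5, seed=7):
    """Share of clusters whose label differs between census and sample linking."""
    rng = random.Random(seed)
    sample = rng.sample(census, max(1, round(fraction * len(census))))
    full, sampled = classify(clusters, census), classify(clusters, sample)
    return sum(a != b for a, b in zip(full, sampled)) / len(clusters)

# Hypothetical census of 40 facilities and 200 cluster centroids on a 50 km square.
rng = random.Random(0)
census = [{"xy": (rng.uniform(0, 50), rng.uniform(0, 50)), "score": rng.uniform(0, 100)}
          for _ in range(40)]
clusters = [(rng.uniform(0, 50), rng.uniform(0, 50)) for _ in range(200)]
print(misclassification(clusters, census, fraction=0.5))
```

With `fraction=1.0` the sample contains every facility and the misclassification is zero, which is a useful sanity check before varying the sampling fraction.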

A Measure Evaluation manual emphasised that data on provision of services (collected through observation of client–staff interactions), experience of care (collected through client exit interviews) and staff characteristics (collected through health worker interviews) are sampled independently and collected only among the health workers and care interactions available on the day of the survey. These data are a subsample of the overall survey and are representative at the level at which the survey is designed to be representative, not at the facility level.67 The manual proposed multiple linked sampling approaches to capture geographically concordant household and provider data for linked analyses. While several studies included in this review used a census or sample of providers derived from a household sample, none implemented this approach at a national scale.

Issues related to timing of surveys used in linked coverage estimates

Both care-seeking behaviour and provider quality are likely to vary over time, yet household and provider surveys are conducted infrequently in LMICs (roughly every 3–5 years). Linked coverage estimates for RMNCH may span a long time frame because the reference period for care-seeking in household surveys ranges from 2 weeks (sick child care) to 2–5 years (peripartum care). Population movement and quality improvement efforts at facilities further weaken the correspondence between the two data sources as the time lag between them grows. The implications of linking household and provider indicators from different time periods are unclear.

Stability of provider indicators

No paper in this review specifically addressed the effect of provider indicator stability on linked effective coverage estimates. However, three linking papers presented data on the stability of some health facility indicators over time. Expanded Quality Management Using Information Power (EQUIP) studies in Uganda and Tanzania found moderate variability in the availability of some maternal and newborn health commodities and services over a period of 2–3 years.44 68 69

Stability of household indicators

Care-seeking behaviour, including overall rates of care and utilisation of different sources of care, may also change over time. Analysis of care-seeking for child illness70 and maternal healthcare71 in multiple LMICs over time showed high inconsistency in trends across countries. However, no identified studies addressed the consequences of this temporal variability within the context of linking analyses.

Impact of choice of method for combining household and provider data

The approach for combining household and provider data can potentially have a significant impact on linked coverage estimates. Methods used to link data, including exact match and various types of ecological linking, are defined in table 2. Exact match linking assigns provider information to individuals in the target population based on their specific source of care. This approach, while potentially subject to the reporting biases described previously, is considered the most precise approach for combining the two data sets in the absence of individual patient health records.6 Without data on specific source of care, ecological linking approaches are designed to approximate care-seeking behaviour or model healthcare access by linking the target population to sources of care based on geographical proximity or administrative catchment area, making assumptions about service access and use.
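To make exact match linking concrete, the sketch below assigns each person in need the quality score of the provider they reported and averages over the population in need, using household survey weights. It assumes quality scores on a 0 to 1 scale, that every reported provider was assessed, and that people in need who sought no care contribute 0; the `Person` class and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class Person:
    in_need: bool               # member of the intervention's target population
    provider_id: Optional[str]  # reported source of care; None if no care sought
    weight: float = 1.0         # household survey sampling weight

def exact_match_ec(people: List[Person], quality: Dict[str, float]) -> float:
    """Weighted mean quality received among people in need (exact match linking).

    Raises KeyError if a reported provider has no quality score, rather
    than silently treating that person as having received no care."""
    in_need = [p for p in people if p.in_need]
    total = sum(p.weight for p in in_need)
    received = sum(p.weight * quality[p.provider_id]
                   for p in in_need if p.provider_id is not None)
    return received / total

quality = {"HC1": 0.6, "HOSP": 0.9}  # hypothetical provider quality scores
people = [Person(True, "HC1"), Person(True, "HOSP"), Person(True, None), Person(False, "HC1")]
print(exact_match_ec(people, quality))  # (0.6 + 0.9 + 0) / 3
```

The fourth person is not in need and so does not enter the denominator, even though they used a provider.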

Table 2.

Table of linking approaches

| Approach | Method |
|---|---|
| Exact match | Link to specific reported source of care. |
| Ecological | Link to one or more providers based on geographical proximity or administrative association. |
| Geographical proximity | |
| Straight-line/Euclidean distance | Closest by absolute (crow-flies) distance. |
| Manhattan distance | Closest by sum of horizontal and vertical distance between points on a grid (blockwise). |
| Minkowski distance | Closest by a generalised distance of order p, which reduces to Manhattan distance when p = 1 and Euclidean distance when p = 2. |
| Road distance | Closest by distance along a road (line and joint) network. |
| Raster-based travel time | Closest by travel time between points on a continuous grid surface with variable transit speed coefficients in each cell. |
| Network-based travel time | Closest by travel time along a road network accounting for variable speed and road conditions. |
| Buffer | All providers within a defined radius from household. |
| Thiessen polygon | Define catchment boundaries so that each location is assigned to its nearest known provider. |
| Kernel density estimation | Define relative draw of providers over geographical area weighted by a density variable. |
| Interpolated surface | Define continuous surface of provider access or quality by smoothing between provider point data. |
| Floating catchment area | Define catchments for known providers allowing for cross-border use (catchment overlap) and distance decay. |
| Administrative | All providers within administrative unit boundaries. |
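Several of the proximity measures in table 2 are simple to compute. The sketch below implements straight-line, Manhattan and Minkowski distance, nearest-provider assignment and buffer linking for planar coordinates (for example, projected coordinates in kilometres). Analyses that use latitude and longitude would need a projection or great-circle distance, and road or travel-time measures need network or raster data not shown here. Function names and coordinates are hypothetical.

```python
import math

def euclidean(a, b):
    """Straight-line (crow-flies) distance between planar points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])

def manhattan(a, b):
    """Blockwise distance: sum of horizontal and vertical offsets."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

def minkowski(a, b, p):
    """Minkowski distance of order p >= 1 (p=1 Manhattan, p=2 Euclidean)."""
    return (abs(a[0] - b[0]) ** p + abs(a[1] - b[1]) ** p) ** (1.0 / p)

def closest(household, providers, metric=euclidean):
    """Id of the provider that minimises the chosen distance metric."""
    return min(providers, key=lambda pid: metric(household, providers[pid]))

def within_buffer(household, providers, radius, metric=euclidean):
    """Ids of all providers within `radius` of the household (buffer linking)."""
    return sorted(pid for pid, xy in providers.items() if metric(household, xy) <= radius)

providers = {"A": (3.0, 3.0), "B": (0.0, 5.0)}  # hypothetical provider locations (km)
home = (0.0, 0.0)
print(closest(home, providers, euclidean))  # A is about 4.24 km away, B is 5 km
print(closest(home, providers, manhattan))  # blockwise, A is 6 km away, B is 5 km
print(within_buffer(home, providers, radius=5.0))
```

The two metrics assign the same household to different providers, which is one reason the choice of proximity measure can matter for ecological linking.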

Comparison of exact match and ecological linking methods for estimating effective coverage

Three publications explicitly compared effective coverage estimates generated using exact match and ecological linking methods (table 3).33 40 44 Estimates generated using the exact match linking approach were considered the gold-standard measure of effective coverage. All three publications found exact match linked effective coverage estimates were similar to straight-line,33 40 travel time,33 40 5 km buffer,33 10 km buffer40 and administrative unit33 40 44 geolinked estimates for antenatal,40 labour and delivery,40 44 postnatal40 and sick child33 40 care when linking was restricted by the reported provider category (eg, hospital, health centre, CHW). Distance-restricted linking approaches, such as linking to providers within a 5 km radius, produced inaccurate results if unlinked events were treated as no care.33 Restriction of geographical linking to only providers within the reported category of care and/or weighting by providers’ relative patient volume improved agreement between the exact match and ecological linking estimates.40 44 All three studies also used provider data obtained from a census of health facilities, and therefore, the findings may not be applicable when household data are linked to a sample survey of health facilities.
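The two adjustments that improved agreement, restricting links to the reported provider category and weighting by patient volume, can be sketched as district-level ecological linking. In this hypothetical example each person is assigned the mean quality of assessed providers in their district and reported category, either as a simple average or weighted by volume. People who sought no care contribute 0; people whose category has no assessed provider in their district are counted as unlinked and set aside rather than treated as no care, a choice made here purely for illustration. Survey weights are omitted, and field names and data are invented.

```python
from collections import defaultdict

def district_category_means(providers, weighted=True):
    """Map (district, category) to mean provider quality, optionally volume-weighted."""
    num, den = defaultdict(float), defaultdict(float)
    for p in providers:
        w = p["volume"] if weighted else 1.0
        key = (p["district"], p["category"])
        num[key] += w * p["quality"]
        den[key] += w
    return {key: num[key] / den[key] for key in num}

def ecological_ec(people, providers, weighted=True):
    """District-level ecological linking restricted to the reported provider category.

    Returns (estimate, n_unlinked). People in need who sought no care add 0
    to the numerator; people whose category has no assessed provider in their
    district are set aside (a hypothetical choice, not a recommendation)."""
    means = district_category_means(providers, weighted)
    total = received = 0.0
    unlinked = 0
    for person in people:
        if not person["in_need"]:
            continue
        if person["category"] is None:  # no care sought
            total += 1
            continue
        key = (person["district"], person["category"])
        if key not in means:
            unlinked += 1
            continue
        total += 1
        received += means[key]
    return received / total, unlinked

providers = [  # hypothetical assessed providers
    {"district": "D1", "category": "health_centre", "quality": 0.4, "volume": 300},
    {"district": "D1", "category": "health_centre", "quality": 0.8, "volume": 100},
    {"district": "D1", "category": "hospital", "quality": 0.9, "volume": 200},
]
people = [  # hypothetical survey respondents in need
    {"in_need": True, "district": "D1", "category": "health_centre"},
    {"in_need": True, "district": "D1", "category": "hospital"},
    {"in_need": True, "district": "D1", "category": None},
    {"in_need": True, "district": "D1", "category": "CHW"},
]
print(ecological_ec(people, providers, weighted=True))
print(ecological_ec(people, providers, weighted=False))
```

In this toy example the volume-weighted estimate (about 0.47) is lower than the unweighted one (0.50) because the busier health centre has lower quality, and the CHW user stays unlinked because no CHWs were assessed.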

Table 3.

Exact versus ecological linking estimates for select indicators across studies

Cells show the estimate, % (95% CI), followed by the relative % difference from the exact match reference (REF).

| Linking approach | Willey labour and delivery structural QoC | Carter child health urban structural QoC | Carter child health rural structural QoC | Munos labour and delivery structural QoC | Munos labour and delivery process QoC | Munos child health structural QoC | Munos child health process QoC |
|---|---|---|---|---|---|---|---|
| Exact match | 9.86 (3.2 to 16.5); REF | 49 (43.6 to 54.5); REF | 60.3 (55.6 to 65.1); REF | 37.2 (30.5 to 43.9); REF | 40.1 (32.9 to 47.3); REF | 22.9 (18.2 to 27.5); REF | 16.8 (12.8 to 20.8); REF |
| Ecological (geographical) | | | | | | | |
| Absolute distance | | | | 36.5 (29.5 to 43.5); −1.9% | 39.8 (32.2 to 47.5); −0.7% | 18.2* (12.3 to 24.1); −20.5% | 14.3 (9.1 to 19.6); −14.9% |
| Absolute distance and provider category* | | 49.1 (43.7 to 54.6); 0.2% | 61.1 (56.3 to 65.9); 1.3% | 37 (30.0 to 44.0); −0.5% | 39.6 (32.1 to 47.1); −1.2% | 20.8* (16.1 to 25.4); −9.2% | 16.5 (12.2 to 20.7); −1.8% |
| Road distance | | | | 36.8 (30.0 to 44.0); −1.1% | 40.4 (32.6 to 48.1); 0.7% | 16* (10.9 to 21.1); −30.1% | 13.8 (8.5 to 19.1); −17.9% |
| Road distance and provider category* | | 48.7 (43.2 to 54.1); −0.6% | 58.8 (54.1 to 63.5); −2.5% | 37.5 (30.4 to 44.6); 0.8% | 40.2 (32.5 to 47.9); 0.2% | 20.2* (16.0 to 24.4); −11.8% | 16.5 (12.3 to 21.8); −1.8% |
| Radius 5 km and provider category* | | 49.2 (43.7 to 54.7); 0.4% | 59.4 (54.8 to 64.1); −1.5% | | | | |
| Radius 10 km, unweighted† | | | | 35.3* (29.3 to 41.4); −5.1% | 39.1 (32.0 to 46.2); −2.5% | 18.8* (14.9 to 22.7); −17.9% | 15.7 (12.4 to 19.1); −6.5% |
| Radius 10 km, weighted‡ | | | | 37.5 (30.6 to 44.4); 0.8% | 39.8 (32.5 to 47.1); −0.7% | 19.1* (15.1 to 23.0); −16.6% | 15.6 (12.1 to 19.1); −7.1% |
| KDE, single | | 71.8* (69.3 to 74.2); 46.5% | 55* (50.4 to 59.6); −8.8% | | | | |
| KDE, aggregate | | 74.3* (73.2 to 75.5); 51.6% | 54.9* (50.4 to 59.5); −9.0% | | | | |
| Ecological (administrative) | | | | | | | |
| Facility catchment and provider category* | | 49.1 (43.6 to 54.6); 0.2% | 59.8 (55.1 to 64.5); −0.8% | | | | |
| Subdistrict and provider category* | | 49.4 (43.9 to 54.9); 0.8% | 57.9 (53.4 to 62.4); −4.0% | | | | |
| District, unweighted† | 4.7* (21.4 to 31.7); −52.5% | | | 34.9* (29.0 to 40.8); −6.2% | 39 (32.3 to 45.7); −2.7% | 17.8* (14.6 to 21.0); −22.3% | 21* (17.2 to 24.8); 25.0% |
| District, unweighted† and provider category* | 11.0 (3.8 to 18.2); 11.8% | | | 37 (30.4 to 43.6); −0.5% | 39.7 (32.7 to 46.7); −1.0% | 20.3* (15.8 to 24.8); −11.4% | 17.4 (13.3 to 21.4); 3.6% |
| District, weighted‡ | | | | 37.9 (31.3 to 44.4); 1.9% | 40.7 (33.7 to 47.7); 1.5% | 19.7* (16.1 to 23.2); −14.0% | 21.2* (17.4 to 25.0); 26.2% |
| District, weighted‡ and provider category* | | | | 38.8* (31.9 to 45.7); 4.3% | 40.8 (33.6 to 48.0); 1.7% | 21.1* (16.4 to 25.8); −7.9% | 17.1 (13.1 to 21.2); 1.8% |

*Ecological linking restricted to only providers within the category (type of outlet, managing authority, and facility level) reported by survey respondent.

†Simple average of provider quality scores applied, not accounting for differentials in patient volume.

‡Provider quality scores weighted by provider utilisation volume for relevant health area.

QoC, quality of care.
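The relative differences in table 3 are consistent with (ecological − exact) / exact × 100, with the exact match estimate as the reference. The snippet below applies that formula to a few cells as a check; the formula is inferred from the reported values rather than stated in the source studies.

```python
def relative_pct_diff(ecological, exact):
    """Relative % difference of an ecological estimate from the exact match reference."""
    return 100.0 * (ecological - exact) / exact

# (ecological estimate, exact match reference) pairs taken from table 3
checks = {
    "Munos L&D structural, absolute distance": (36.5, 37.2),
    "Carter urban child health, KDE single": (71.8, 49.0),
    "Munos child health process, district unweighted": (21.0, 16.8),
    "Carter rural child health, 5 km radius and category": (59.4, 60.3),
}
for label, (eco, ref) in checks.items():
    print(f"{label}: {relative_pct_diff(eco, ref):.1f}%")
```

The printed values match the table's −1.9%, 46.5%, 25.0% and −1.5%.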

Performance of measures of geographical proximity for ecological linking

Eight studies assessed how well geographical measures assigned households or individuals to their reported source of healthcare. Four studies in sub-Saharan Africa compared the source of care predicted by geographical proximity with the true source of care: one found that straight-line and road distance performed similarly,72 one reported high performance of the shortest-travel-time method73 and two found that straight-line distance performed better than road distance.33 40 In the USA, more sophisticated approaches (two-step and three-step floating catchment area methods) performed better than alternative methods in assigning households to their source of care.74 Three studies in sub-Saharan Africa evaluated Thiessen polygons, which define catchment boundaries so that each location falls within the catchment of its nearest known provider, for assigning households to the catchments of the facilities they used. They found high performance in some settings75 but poorer performance where households used higher-order facilities76 or where public transportation routes influenced care-seeking.77
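One simple performance measure for these comparisons is the share of households whose predicted source of care matches the one they reported. Assigning each household to its nearest facility by straight-line distance is equivalent to placing it in that facility's Thiessen (Voronoi) polygon, so the sketch below covers both. Coordinates and facility ids are hypothetical; the household at x = 4 reports bypassing a nearer facility.

```python
import math

def nearest_facility(point, facilities):
    """Nearest facility by straight-line distance (equivalently, the Thiessen
    polygon containing the point, ignoring ties on polygon boundaries)."""
    return min(facilities, key=lambda fid: math.dist(point, facilities[fid]))

def assignment_agreement(households, facilities):
    """Share of households whose nearest facility is the one they reported using."""
    hits = sum(nearest_facility(xy, facilities) == reported for xy, reported in households)
    return hits / len(households)

facilities = {"A": (0.0, 0.0), "B": (10.0, 0.0)}  # hypothetical facility locations
households = [((1.0, 0.0), "A"), ((9.0, 0.0), "B"), ((4.0, 0.0), "B"), ((6.0, 0.0), "B")]
print(assignment_agreement(households, facilities))  # 3 of 4 households match
```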

Statistical challenges

Most linking analyses that generated effective coverage estimates by assigning individuals the quality score of their reported or linked source of care derived uncertainty estimates from household sampling error alone, ignoring sampling error in the provider data. However, two analyses used the delta method78 to estimate the variance of effective coverage estimates generated by multiplying service use and readiness.19 44 A simulation study compared three variance estimation methods for linked effective coverage measures (household sampling error alone, parametric bootstrapping and the delta method) and found that all three performed similarly with large samples. However, the delta method produced more valid confidence bounds with smaller samples or when the effective coverage estimate approached 0% or 100%.79
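When effective coverage is estimated as the product of a household-survey service-use proportion u and a facility-survey readiness score r from independent samples, the first-order delta method gives Var(ur) ≈ r²Var(u) + u²Var(r). The sketch below compares this with a simple normal-approximation parametric bootstrap. It is an illustration under those assumptions, with invented inputs, and not the implementation used in the cited studies.

```python
import math
import random
import statistics

def delta_se(u, se_u, r, se_r):
    """First-order delta-method SE of EC = u * r, assuming u and r are independent."""
    return math.sqrt((r * se_u) ** 2 + (u * se_r) ** 2)

def bootstrap_se(u, se_u, r, se_r, draws=20000, seed=42):
    """Parametric bootstrap SE: draw u and r from normal approximations
    to their sampling distributions and take the SD of the product."""
    rng = random.Random(seed)
    products = [rng.gauss(u, se_u) * rng.gauss(r, se_r) for _ in range(draws)]
    return statistics.stdev(products)

# Hypothetical inputs: 70% service use (SE 0.02), mean readiness 0.60 (SE 0.05)
u, se_u, r, se_r = 0.70, 0.02, 0.60, 0.05
print(f"EC = {u * r:.3f}")
print(f"delta-method SE = {delta_se(u, se_u, r, se_r):.4f}")
print(f"bootstrap SE    = {bootstrap_se(u, se_u, r, se_r):.4f}")
```

Dropping the facility-side term u²Var(r) would leave an SE of only r × se_u = 0.012 here, illustrating how household-only uncertainty can understate the total.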

Discussion

The number of publications addressing each of the diverse methodological issues related to linking household and provider datasets varied. A summary of key findings and needs for further research is presented in table 4 and discussed below.

Table 4.

Summary of evidence related to methodological issues for linking analyses and related needs for future research

Suitability of household and provider data for linking analyses

| Issue | Evidence | Action |
|---|---|---|
| How valid are data on target population for interventions? | | Explore alternative methods for defining population in need (eg, biomarkers, Bayesian modelling of disease probability). |
| How valid are data on care-seeking? | | |
| How are QoC data being collected and what are the limitations of these methods? | | |
| How are quality measures being constructed and what do we know about the performance of these indices? | | Develop standardised and validated summary QoC measures. |
| How well do measures of quality track with each other, clinical quality and/or health benefit? | | Standardise methods and terminology for defining and interpreting QoC measures to more accurately reflect role in the coverage cascade. |

Implications of design of existing household and health provider data sources commonly used in linking analyses

| Issue | Evidence | Action |
|---|---|---|
| Does imprecise DHS/MICS household location data affect ecological linking results? | Handful of studies suggest minimal effect on results produced by linking on geographical proximity. | Assess impact of household vs cluster centroid location vs displaced centroid in ecological linking analyses in multiple settings. |
| How does SPA/SARA sampling design affect estimates? | | |
| How stable are indicators over time? | | |

Impact of choice of method for combining household and provider data

| Issue | Evidence | Action |
|---|---|---|
| How do exact match and ecological linking approaches compare? | | |
| How do different ecological linking methods and measures of geographical proximity perform? | | |
| What are the statistical challenges in combining data for effective coverage estimation? | | Continue developing tools and approaches for estimating uncertainty around linked estimates. |

DHS, Demographic and Health Survey; EC, effective coverage; EmONC, emergency obstetric and newborn care; HMIS, Health Management Information Systems; MICS, Multiple Indicator Cluster Survey; QoC, quality of care; SARA, Service Availability and Readiness Assessment; SPA, Service Provision Assessment.

Suitability of household and provider data for linking analyses

We identified a number of papers that critically assessed the household and provider data needed for linking analyses. The limited existing data on respondent-reported care-seeking suggest that respondents can identify their source of care, though not necessarily the cadre of the individual health worker; additional validation in other settings and service areas, such as postnatal care, would be informative. Further, the categorisation of sources of care in household surveys must align with the categories used in provider assessments for the datasets to be linked. The validity of household survey data for estimating populations in need was more variable. While some populations in need can be clearly defined, others, particularly those requiring symptom-derived diagnoses based on respondent report, have shown potential for bias. Additional work is needed to explore alternative methods for identifying populations in need of interventions within population-based data sources.

The content and construction of provider quality indices varied widely across publications, but indices were largely derived from facility surveys and informed by international guidelines or recommendations. Methods for collecting provider quality data have a number of limitations, and no single method captures all aspects of care.80 The review found a lack of agreement between quality measures derived from different collection methods. Overall, there was little empirical evidence of an association between structural and process quality, or between measures of quality and appropriate care or good health outcomes, although the number of studies reviewed was very limited. However, as articulated by Nguhiu et al, quality indicators should also be considered for their ‘intrinsic value as levers for management action’ and their application to policy decision making, in addition to their ability to capture or predict associated health gain.38 Many important indicators of healthcare quality, particularly around patient-centred care, are not currently measured by existing tools, and there is a need to capture these indicators better.81 82 In the short term, research is needed to develop and evaluate new quality indices using existing data sources (eg, facility surveys, HMIS and medical records), with the aim of identifying a standardised approach for selecting, combining and interpreting indicators that reflect the aspects of provider quality necessary for delivering appropriate, respectful and effective care. In the longer term, substantial effort is needed to strengthen or adapt existing mechanisms, or to develop alternative methods, for collecting provider quality indicators that can produce timely and informative estimates for tracking effective coverage of key interventions.

Implications of the design of existing household and provider data sources commonly used in linking analyses

Few studies addressed the influence of the design of common data sources on linking analyses, including imprecise household GIS data, provider sampling frame and sampling design, and estimate stability, and those that did provided little concrete evidence on how these factors affect linked effective coverage estimates. Explicitly evaluating the impact of imprecise household location, sampling design and temporal gaps between measures within the context of effective coverage estimation would be informative. Mixed results on the inclusion of non-facility providers in provider assessments for effective coverage estimation emphasise the need to empirically assess the utilisation and service quality of non-facility providers in a given setting before conducting a linking analysis, as the quality and use of these providers vary by health area and setting.70 71 83 Although data on the impact on effective coverage estimation were limited, small samples of client–staff observations, sampling of health workers and facilities, and temporal gaps between household and provider data all have the potential to bias estimates. The available data suggest that developing and testing alternative means of sampling health providers could improve the validity of linked estimates of effective coverage, including evaluating the joint sampling approaches proposed by Measure Evaluation67 or used by other data collection mechanisms such as Performance Monitoring for Action (PMA) and the India District Level Household and Facility Survey.

Impact of choice of method for combining household and provider data

The most consistent evidence found through the review was around methods for combining data sets. Three papers compared ecological and exact match linking and reported that ecological linking (when accounting for frequency of provider utilisation by type) produced similar estimates to exact match linking. The agreement between the three publications that compared exact match and ecological linking is promising. Exact match linking is considered the most precise method for generating linked estimates; however, ecological linking is often more feasible because it does not require information on exact source of care or data on all providers. The papers further point to the need to maintain data on type of provider from which care was sought or the relative volume of patients seen by providers in order to generate valid estimates of effective coverage. All three studies were conducted in rural sub-Saharan Africa in settings with high utilisation of public sector health facilities; additional studies evaluating the performance of these methods in settings with a more diverse healthcare landscape would be informative. Other papers evaluated ecological linking approaches and found similar estimates of access to care or effective coverage using different approaches for assessing geographical proximity, although the ability of methods to capture true source of care was more variable. External to this review, additional data suggest that individuals may not always use the closest source of care and may bypass providers in favour of providers offering better care.37 84 85 These findings along with the analyses comparing exact match and ecological linking approaches emphasise the need to carefully select methods for performing ecological linking and to control for true care-seeking behaviour as much as possible by accounting for the type of provider from which care was sought or weighting by utilisation in linking analyses. 
There is also a need to further develop approaches and tools for estimating uncertainty around linked effective coverage estimates.

Evidence across the review demonstrates the need for careful choice of methods, data sources and indicators when conducting studies or analyses to link household and provider data for effective coverage estimation. An exploration of the precise effect of setting characteristics, such as variation in provider quality, on effective coverage estimates is needed to guide decision making in the selection of linking methods. Once more of these issues have been evaluated, additional tools and guidance to facilitate use of these methods will be needed.

The review was limited by the diversity of terminology and fields related to the linking methodology. However, the use of multiple search strategies minimised the likelihood of overlooking relevant publications. No formal grading of publication quality was included in the assessment, but the choice to conduct the search through Medline was intended to ensure a basic level of quality across the diverse study designs included in the review. Additionally, the diversity of fields, approaches and questions made it difficult to summarise the findings neatly, emphasising the need for communication between researchers, more standard terminology, and, ideally, a cohesive research strategy going forward. Recent efforts have aimed to align definitions of effective coverage.2 We attempt in table 4 to translate the review results into actionable items and needs for future research.

Conclusions

Linking household and healthcare provider data is a promising approach that leverages existing data sources to generate more informative estimates of intervention coverage and care. These methods can potentially address limitations of both household and provider surveys to generate population-based estimates that reflect not only use of services, but also the content and quality of care received and the potential for health benefit. However, there is a need for additional research to develop evidence-based, standardised best practices for these analyses. The most pressing priorities identified in this review are: (1) for those collecting data from health systems to explore methods to strengthen existing provider data collection mechanisms and promote temporal and geographical alignment with population-based measures, (2) for those collecting population-based data to address validity of self-reported intervention need and ensure indicators of access and utilisation of care are measured to facilitate linking analyses and (3) for those conducting linked analyses to standardise approaches for generating and interpreting effective coverage indicators, including sources of uncertainty, to ensure we are producing evidence that is harmonised, informative and actionable for governments and valid for monitoring population health globally.

Supplementary Material

Footnotes

Contributors: ICMJE criteria for authorship read and met: EC, HL, TM, AA and MKM. Conceived of the study design: EC, MKM. Conducted review: EC. Drafted paper: EC. Agree with manuscript and conclusions: EC, HL, TM, AA and MKM. All authors read, edited and approved the manuscript.

Funding: This work was supported, in whole, by the Improving Measurement and Program Design (IMPROVE) grant (OPP1172551) from the Bill & Melinda Gates Foundation. The funders did not have any role in the design of the study and collection, analysis, and interpretation of data or in writing the manuscript.

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Data availability statement

Data sharing not applicable as no datasets generated and/or analysed for this study. As a review article, this article reports data from previously published studies.

Ethics statements

Patient consent for publication

Not required.

References

- 1.Ng M, Fullman N, Dieleman JL, et al. Effective coverage: a metric for monitoring universal health coverage. PLoS Med 2014;11:e1001730. 10.1371/journal.pmed.1001730 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Marsh AD, Muzigaba M, Diaz T, et al. Effective coverage measurement in maternal, newborn, child, and adolescent health and nutrition: progress, future prospects, and implications for quality health systems. Lancet Glob Health 2020;8:e730–6. 10.1016/S2214-109X(20)30104-2 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Amouzou A, Leslie HH, Ram M, et al. Advances in the measurement of coverage for RMNCH and nutrition: from contact to effective coverage. BMJ Glob Health 2019;4:e001297. 10.1136/bmjgh-2018-001297 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Bryce J, Arnold F, Blanc A, et al. Measuring coverage in MNCH: new findings, new strategies, and recommendations for action. PLoS Med 2013;10:e1001423. 10.1371/journal.pmed.1001423 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Day LT, Sadeq-Ur Rahman Q, Ehsanur Rahman A, et al. Assessment of the validity of the measurement of newborn and maternal health-care coverage in hospitals (EN-BIRTH): an observational study. Lancet Glob Health 2021;9:e267–79. 10.1016/S2214-109X(20)30504-0 [DOI] [PubMed] [Google Scholar]

- 6.Do M, Micah A, Brondi L, et al. Linking household and facility data for better coverage measures in reproductive, maternal, newborn, and child health care: systematic review. J Glob Health 2016;6:020501. 10.7189/jogh.06.020501 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Shengelia B, Tandon A, Adams OB, et al. Access, utilization, quality, and effective coverage: an integrated conceptual framework and measurement strategy. Soc Sci Med 2005;61:97–109. 10.1016/j.socscimed.2004.11.055 [DOI] [PubMed] [Google Scholar]

- 8.Ayede AI, Kirolos A, Fowobaje KR, et al. A prospective validation study in south-west Nigeria on caregiver report of childhood pneumonia and antibiotic treatment using demographic and health survey (DHS) and multiple indicator cluster survey (MICs) questions. J Glob Health 2018;8:020806. 10.7189/jogh.08-020806 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Hazir T, Begum K, El Arifeen S, et al. Measuring coverage in MNCH: a prospective validation study in Pakistan and Bangladesh on measuring correct treatment of childhood pneumonia. PLoS Med 2013;10:e1001422. 10.1371/journal.pmed.1001422 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.D'Acremont V, Lengeler C, Genton B. Reduction in the proportion of fevers associated with Plasmodium falciparum parasitaemia in Africa: a systematic review. Malar J 2010;9:240. 10.1186/1475-2875-9-240 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Fischer Walker CL, Fontaine O, Black RE. Measuring coverage in MNCH: current indicators for measuring coverage of diarrhea treatment interventions and opportunities for improvement. PLoS Med 2013;10:e1001385. 10.1371/journal.pmed.1001385 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Keenan K, Hipwell A, McAloon R, et al. Concordance between maternal recall of birth complications and data from obstetrical records. Early Hum Dev 2017;105:11–15. 10.1016/j.earlhumdev.2017.01.003 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Zimmerman LA, Shiferaw S, Seme A, et al. Evaluating consistency of recall of maternal and newborn care complications and intervention coverage using PMA panel data in SNNPR, Ethiopia. PLoS One 2019;14:e0216612. 10.1371/journal.pone.0216612 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Chang KT, Mullany LC, Khatry SK, et al. Validation of maternal reports for low birthweight and preterm birth indicators in rural Nepal. J Glob Health 2018;8. 10.7189/jogh.08.010604 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Carter ED, Ndhlovu M, Munos M, et al. Validity of maternal report of care-seeking for childhood illness. J Glob Health 2018;8:010602. 10.7189/jogh.08.010602 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Stanton CK, Rawlins B, Drake M, et al. Measuring coverage in MNCH: testing the validity of women's self-report of key maternal and newborn health interventions during the Peripartum period in Mozambique. PLoS One 2013;8:e60694. 10.1371/journal.pone.0060694 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Blanc AK, Diaz C, McCarthy KJ, et al. Measuring progress in maternal and newborn health care in Mexico: validating indicators of health system contact and quality of care. BMC Pregnancy Childbirth 2016;16:255. 10.1186/s12884-016-1047-0

- 18.Blanc AK, Warren C, McCarthy KJ, et al. Assessing the validity of indicators of the quality of maternal and newborn health care in Kenya. J Glob Health 2016;6:010405. 10.7189/jogh.06.010405

- 19.Wang W, Mallick L, Allen C. Effective coverage of facility delivery in Bangladesh, Haiti, Malawi, Nepal, Senegal, and Tanzania, 2018. Available: https://dhsprogram.com/publications/publication-as65-analytical-studies.cfm [Accessed 17 May 2019].

- 20.Hanefeld J, Powell-Jackson T, Balabanova D. Understanding and measuring quality of care: dealing with complexity. Bull World Health Organ 2017;95:368. 10.2471/BLT.16.179309

- 21.Nickerson JW, Adams O, Attaran A, et al. Monitoring the ability to deliver care in low- and middle-income countries: a systematic review of health facility assessment tools. Health Policy Plan 2015;30:675–86. 10.1093/heapol/czu043

- 22.Sheffel A, Karp C, Creanga AA. Use of service provision assessments and service availability and readiness assessments for monitoring quality of maternal and newborn health services in low-income and middle-income countries. BMJ Glob Health 2018;3:e001011. 10.1136/bmjgh-2018-001011

- 23.Maternal and Child Survival Program (MCSP). What data on maternal and newborn health do national health management information systems include? 2018. Available: https://www.mcsprogram.org/resource/what-data-on-maternal-and-newborn-health-do-national-health-management-information-systems-include/ [Accessed 20 May 2019].

- 24.Diamond-Smith N, Sudhinaraset M, Montagu D. Clinical and perceived quality of care for maternal, neonatal and antenatal care in Kenya and Namibia: the service provision assessment. Reprod Health 2016;13:92. 10.1186/s12978-016-0208-y

- 25.Getachew T, Abebe SM, Yitayal M, et al. Assessing the quality of care in sick child services at health facilities in Ethiopia. BMC Health Serv Res 2020;20:574. 10.1186/s12913-020-05444-7

- 26.Larson E, Hermosilla S, Kimweri A, et al. Determinants of perceived quality of obstetric care in rural Tanzania: a cross-sectional study. BMC Health Serv Res 2014;14:483. 10.1186/1472-6963-14-483

- 27.Hoogenboom G, Thwin MM, Velink K, et al. Quality of intrapartum care by skilled birth attendants in a refugee clinic on the Thai-Myanmar border: a survey using WHO Safe Motherhood Needs Assessment. BMC Pregnancy Childbirth 2015;15:17. 10.1186/s12884-015-0444-0

- 28.Nesbitt RC, Lohela TJ, Manu A, et al. Quality along the continuum: a health facility assessment of intrapartum and postnatal care in Ghana. PLoS One 2013;8:e81089. 10.1371/journal.pone.0081089

- 29.Osen H, Chang D, Choo S, et al. Validation of the World Health Organization tool for situational analysis to assess emergency and essential surgical care at district hospitals in Ghana. World J Surg 2011;35:500–4. 10.1007/s00268-010-0918-1

- 30.Hrisos S, Eccles MP, Francis JJ, et al. Are there valid proxy measures of clinical behaviour? A systematic review. Implement Sci 2009;4:37. 10.1186/1748-5908-4-37

- 31.Peabody JW, Luck J, Glassman P, et al. Comparison of vignettes, standardized patients, and chart abstraction: a prospective validation study of 3 methods for measuring quality. JAMA 2000;283:1715–22. 10.1001/jama.283.13.1715

- 32.Davis DA, Mazmanian PE, Fordis M, et al. Accuracy of physician self-assessment compared with observed measures of competence: a systematic review. JAMA 2006;296:1094–102. 10.1001/jama.296.9.1094

- 33.Carter ED, Ndhlovu M, Eisele TP, et al. Evaluation of methods for linking household and health care provider data to estimate effective coverage of management of child illness: results of a pilot study in southern Province, Zambia. J Glob Health 2018;8:010607. 10.7189/jogh.08.010607

- 34.Wilunda C, Oyerinde K, Putoto G, et al. Availability, utilisation and quality of maternal and neonatal health care services in Karamoja region, Uganda: a health facility-based survey. Reprod Health 2015;12:30. 10.1186/s12978-015-0018-7

- 35.Marchant T, Tilley-Gyado RD, Tessema T, et al. Adding content to contacts: measurement of high quality contacts for maternal and newborn health in Ethiopia, North East Nigeria, and Uttar Pradesh, India. PLoS One 2015;10:e0126840. 10.1371/journal.pone.0126840

- 36.Mboya D, Mshana C, Kessy F, et al. Embedding systematic quality assessments in supportive supervision at primary healthcare level: application of an electronic tool to improve quality of healthcare in Tanzania. BMC Health Serv Res 2016;16:578. 10.1186/s12913-016-1809-4

- 37.Kanyangarara M, Munos MK, Walker N. Quality of antenatal care service provision in health facilities across sub-Saharan Africa: evidence from nationally representative health facility assessments. J Glob Health 2017;7:021101. 10.7189/jogh.07.021101

- 38.Nguhiu PK, Barasa EW, Chuma J. Determining the effective coverage of maternal and child health services in Kenya, using demographic and health survey data sets: tracking progress towards universal health coverage. Trop Med Int Health 2017;22:442–53. 10.1111/tmi.12841

- 39.Chou VB, Walker N, Kanyangarara M. Estimating the global impact of poor quality of care on maternal and neonatal outcomes in 81 low- and middle-income countries: a modeling study. PLoS Med 2019;16:e1002990. 10.1371/journal.pmed.1002990

- 40.Munos MK, Maiga A, Do M, et al. Linking household survey and health facility data for effective coverage measures: a comparison of ecological and individual linking methods using the Multiple Indicator Cluster Survey in Côte d’Ivoire. J Glob Health 2018;8:020803. 10.7189/jogh.08.020803

- 41.Leslie HH, Sun Z, Kruk ME. Association between infrastructure and observed quality of care in 4 healthcare services: a cross-sectional study of 4,300 facilities in 8 countries. PLoS Med 2017;14:e1002464. 10.1371/journal.pmed.1002464

- 42.Joseph NT, Piwoz E, Lee D, et al. Examining coverage, content, and impact of maternal nutrition interventions: the case for quality-adjusted coverage measurement. J Glob Health 2020;10:010501. 10.7189/jogh.10.010501

- 43.Yakob B, Gage A, Nigatu TG. Low effective coverage of family planning and antenatal care services in Ethiopia. Int J Qual Health Care 2019;31:725–32.

- 44.Willey B, Waiswa P, Kajjo D, et al. Linking data sources for measurement of effective coverage in maternal and newborn health: what do we learn from individual- vs ecological-linking methods? J Glob Health 2018;8:010601. 10.7189/jogh.08.010601

- 45.Gabrysch S, Simushi V, Campbell OMR. Availability and distribution of, and geographic access to emergency obstetric care in Zambia. Int J Gynecol Obstet 2011;114:174–9. 10.1016/j.ijgo.2011.05.007

- 46.Leslie HH, Doubova SV, Pérez-Cuevas R. Assessing health system performance: effective coverage at the Mexican Institute of Social Security. Health Policy Plan 2019;34:ii67–76. 10.1093/heapol/czz105

- 47.Servan-Mori E, Avila-Burgos L, Nigenda G, et al. A performance analysis of public expenditure on maternal health in Mexico. PLoS One 2016;11:e0152635. 10.1371/journal.pone.0152635

- 48.Moucheraud C, McBride K. Variability in health care quality measurement among studies using service provision assessment data from low- and middle-income countries: a systematic review. Am J Trop Med Hyg 2020;103:986–92. 10.4269/ajtmh.19-0644

- 49.Lozano R, Soliz P, Gakidou E, et al. Benchmarking of performance of Mexican states with effective coverage. Lancet 2006;368:1729–41. 10.1016/S0140-6736(06)69566-4

- 50.Sheffel A. Association between antenatal care facility readiness and provision of care at the individual and facility level in Haiti, Malawi and Tanzania 2018.

- 51.Fisseha G, Berhane Y, Worku A, et al. Quality of the delivery services in health facilities in northern Ethiopia. BMC Health Serv Res 2017;17:187. 10.1186/s12913-017-2125-3

- 52.Mallick L, Wang W, Temsah G. A comparison of summary measures of quality of service and quality of care for family planning in Haiti, Malawi, and Tanzania, 2017. Available: https://dhsprogram.com/publications/publication-mr20-methodological-reports.cfm [Accessed 17 May 2019].

- 53.Jackson EF, Siddiqui A, Gutierrez H, et al. Estimation of indices of health service readiness with a principal component analysis of the Tanzania service provision assessment survey. BMC Health Serv Res 2015;15:536. 10.1186/s12913-015-1203-7

- 54.Leslie HH, Zhou X, Spiegelman D, et al. Health system measurement: harnessing machine learning to advance global health. PLoS One 2018;13:e0204958. 10.1371/journal.pone.0204958

- 55.Kruk ME, Chukwuma A, Mbaruku G, et al. Variation in quality of primary-care services in Kenya, Malawi, Namibia, Rwanda, Senegal, Uganda and the United Republic of Tanzania. Bull World Health Organ 2017;95:408–18. 10.2471/BLT.16.175869

- 56.Zurovac D, Guintran J-O, Donald W, et al. Health systems readiness and management of febrile outpatients under low malaria transmission in Vanuatu. Malar J 2015;14:489. 10.1186/s12936-015-1017-4

- 57.Akachi Y, Kruk ME. Quality of care: measuring a neglected driver of improved health. Bull World Health Organ 2017;95:465–72. 10.2471/BLT.16.180190

- 58.Leslie HH, Fink G, Nsona H, et al. Obstetric facility quality and newborn mortality in Malawi: a cross-sectional study. PLoS Med 2016;13:e1002151. 10.1371/journal.pmed.1002151

- 59.Leegwater A, Wong W, Avila C. A concise, health service coverage index for monitoring progress towards universal health coverage. BMC Health Serv Res 2015;15:230. 10.1186/s12913-015-0859-3

- 60.Perez-Haydrich C, Warren JL, Burgert CR. Guidelines on the use of DHS GPS data, 2013. Available: https://dhsprogram.com/publications/publication-SAR8-Spatial-Analysis-Reports.cfm [Accessed 14 Sep 2018].

- 61.FAQ - UNICEF MICS. Available: http://mics.unicef.org/faq [Accessed 19 Jun 2019].

- 62.Bliss RL, Katz JN, Wright EA, et al. Estimating proximity to care: are straight line and zipcode centroid distances acceptable proxy measures? Med Care 2012;50:99–106. 10.1097/MLR.0b013e31822944d1

- 63.Jones SG, Ashby AJ, Momin SR, et al. Spatial implications associated with using Euclidean distance measurements and geographic centroid imputation in health care research. Health Serv Res 2010;45:316–27. 10.1111/j.1475-6773.2009.01044.x

- 64.Healy MA, Gilliland JA. Quantifying the magnitude of environmental exposure misclassification when using imprecise address proxies in public health research. Spat Spatiotemporal Epidemiol 2012;3:55–67. 10.1016/j.sste.2012.02.006

- 65.Nesbitt RC, Gabrysch S, Laub A, et al. Methods to measure potential spatial access to delivery care in low- and middle-income countries: a case study in rural Ghana. Int J Health Geogr 2014;13:25. 10.1186/1476-072X-13-25

- 66.Skiles MP, Burgert CR, Curtis SL, et al. Geographically linking population and facility surveys: methodological considerations. Popul Health Metr 2013;11:14. 10.1186/1478-7954-11-14