Abstract

The classical account of reasoning posits that analytic thinking weakens belief in COVID-19 misinformation. We tested this account in a demographically representative sample of 742 Australians. Participants completed a performance-based measure of analytic thinking (the Cognitive Reflection Test) and were randomized to groups in which they either rated the perceived accuracy of claims about COVID-19 or indicated whether they would be willing to share these claims. Half of these claims were previously debunked misinformation, and half were statements endorsed by public health agencies. We found that participants with higher analytic thinking levels were less likely to rate COVID-19 misinformation as accurate and were less likely to be willing to share COVID-19 misinformation. These results support the classical account of reasoning for the topic of COVID-19 misinformation and extend it to the Australian context.

Supplementary Information

The online version contains supplementary material available at 10.3758/s13421-021-01219-5.

Keywords: misinformation, COVID-19, cognitive reflection, decision-making, classical account

Introduction

The World Health Organization (WHO) has warned that the current COVID-19 pandemic has fueled an “infodemic,” an outbreak of misinformation about the disease (WHO, 2020). Misinformation about COVID-19 can inflict serious harm, for instance through the spread of false cures or baseless preventive measures (Mian & Khan, 2020; Swire-Thompson & Lazer, 2020; Tangcharoensathien et al., 2020). In response, cognitive and behavioral scientists have begun to study the psychological mechanisms that enable the spread of COVID-19 misinformation and to propose interventions to mitigate it (Van Bavel, Baicker, et al., 2020).

Misinformation is any message that is not supported by the best available evidence (Swire-Thompson & Lazer, 2020). While some misinformation is innocuous, a recent review of research on health-related misinformation found that harmful misinformation is abundant, particularly on social media platforms (Wang et al., 2019). This is of considerable concern because health-related misinformation has been linked with poor health decisions in previous disease outbreaks. For example, during the 2014–2016 Ebola outbreak, Americans who believed conspiratorial misinformation about the disease were significantly less willing to seek medical assistance should they develop symptoms (Earnshaw et al., 2019). Exposure to conspiratorial misinformation has also been associated with lower engagement in COVID-19 preventive health behaviors (Allington et al., 2020; Barua et al., 2020). Moreover, the sharing of COVID-19 misinformation on Twitter has been associated with subsequent increases in case numbers in the same geographical areas (Singh et al., 2020). It stands to reason that reducing the spread of misinformation could help mitigate harmful health behaviors during the COVID-19 pandemic.

There are multiple causes of belief in and sharing of misinformation, including many cognitive biases (Van Bavel, Harris, et al., 2020). Recent work on cognitive biases has focused on the dual-process framework that distinguishes between two reasoning processes (Evans & Stanovich, 2013; Kahneman, 2012; Pennycook et al., 2015b): “Type 1” or intuitive processes that do not require working memory and that tend to be fast and automatic, and “Type 2” or analytic processes that require working memory and that tend to be slow and deliberative. In this framework, an important function of analytic processes is to monitor intuitive processes for mistakes and intervene when necessary. In the context of misinformation, a natural prediction is that engaging Type 2 processes facilitates the discernment between true and false claims (Pennycook & Rand, 2019a). An individual’s propensity to engage in analytic (rather than intuitive) processes is often measured using the Cognitive Reflection Test (CRT; Frederick, 2005). Consider an item from this measure: “A bat and a ball cost $1.10 in total. The bat costs $1 more than the ball. How much does the ball cost?” An intuitive answer that rapidly comes to mind is 10 cents. However, reflecting upon this question reveals that the correct response is 5 cents. Better performance on the CRT predicts correct responses to many thinking and reasoning tasks (Toplak et al., 2011), lower levels of epistemically suspect beliefs (Pennycook et al., 2015a), and less belief in and sharing of misinformation (Bago et al., 2021; Bronstein et al., 2019; Pennycook & Rand, 2019a, 2019b; Ross et al., 2021; Sindermann et al., 2020).
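The arithmetic that reflection reveals in the bat-and-ball item can be written out explicitly. Writing b for the price of the ball in dollars, the two constraints in the item give:

```latex
\begin{aligned}
b + (b + 1.00) &= 1.10 \\
2b &= 0.10 \\
b &= 0.05
\end{aligned}
```

The ball therefore costs 5 cents and the bat $1.05. The intuitive answer fails this check: a 10-cent ball would make the bat $1.10 and the total $1.20.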

The classical account of reasoning

According to the classical account of reasoning, people who fail to override their Type 1 responses are more likely to perceive misinformation as true (Pennycook & Rand, 2019b). Consistent with this hypothesis, research indicates that people who rely more on analytic thinking and less on emotions are less likely to believe in misinformation (Martel et al., 2019); people tend to rationalize information that aligns with their attitudes when put under time pressure but are more likely to form objectively correct interpretations when allowed more time for reflection (Bago et al., 2021); and people higher in analytic thinking are less likely to believe political misinformation, even when it aligns with their political ideology (Pennycook & Rand, 2019b; Ross et al., 2021).

Extending this line of research to COVID-19, there is evidence that lower levels of analytic thinking (among other individual differences) are associated with believing misinformation about the disease. This association has been found in samples from Canada (Pennycook, McPhetres, Bago, et al., 2021), Italy (Salvi et al., 2021), Iran (Sadeghiyeh et al., 2020), Slovakia (Čavojová et al., 2020), Turkey (Alper et al., 2020), the U.S. (Pennycook, McPhetres, Zhang, et al., 2020; Salvi et al., 2021; Stanley et al., 2020), and the UK (Pennycook, McPhetres, Zhang, et al., 2020). While there is evidence that higher levels of analytic thinking predict better discernment between accurate and inaccurate information about COVID-19 in Italy (Salvi et al., 2021) and the United States (Calvillo et al., 2020; Salvi et al., 2021), analytic thinking did not predict the acceptance of accurate COVID-19 information in Slovakia (Čavojová et al., 2020). Better understanding these predictors of beliefs about COVID-19 is important because people who believe misinformation about COVID-19 are less likely to follow public health advice designed to mitigate the disease’s spread (Roozenbeek et al., 2020).

To our knowledge, only one study has investigated the relationship between analytic thinking and the willingness to share COVID-19 misinformation, finding a significant negative relationship in a U.S. sample (Pennycook, McPhetres, Zhang, et al., 2020). More work on this topic is needed to inform the development of interventions that reduce the spread of COVID-19 misinformation.

Rationale

The relationship between analytic thinking and both belief in and willingness to share COVID-19 misinformation has not yet been examined in Australia. Australia provides a useful point of comparison with existing research from other countries because its per capita rates of COVID-19 cases and fatalities were very low (WHO, 2021). Its per capita fatality rate was less than one-tenth of that in every other country where the relationship between analytic thinking and belief in COVID-19 misinformation had been studied, with the exception of Slovakia (Roser et al., 2020; see Table 1).

Table 1.

COVID-19 cases and fatalities as of 2 May 2020 (the date our data collection commenced)

| Country | Fatalities per million | Cases per million |
|---|---|---|
| Italy | 474.8 | 3,462.2 |
| United Kingdom | 413.0 | 2,750.7 |
| United States | 210.3 | 3,454.0 |
| Canada | 120.0 | 1,534.8 |
| Iran | 73.3 | 1,148.3 |
| Turkey | 39.6 | 1,474.7 |
| Slovakia | 4.4 | 257.7 |
| Australia | 3.7 | 266.6 |

Nonetheless, owing to the global nature of digital communication, Australians have been exposed to substantial amounts of COVID-19 misinformation. A nationally representative survey conducted in April 2020 found that 59% of Australians indicated they had encountered COVID-19 misinformation at least “some of the time” (Park et al., 2020). This is likely to underestimate misinformation exposure, because people who agree with a piece of COVID-19 misinformation may not recognize it as misinformation. Moreover, there is evidence that many Australians believe COVID-19 misinformation (Pickles et al., 2021). A nationally representative survey conducted in May 2020 found that many Australians believed at least one debunked claim about COVID-19: 39% believed that the virus was engineered in a Chinese laboratory in Wuhan, and 12% believed that the 5G network had been used to spread the virus (Essential Research, 2020).

In summary, the Australian context provides an excellent opportunity to investigate misinformation beliefs and the willingness to share misinformation in a country where exposure to COVID-19 misinformation was high, but the relative risk of contracting COVID-19 was low.

Hypotheses

We preregistered six hypotheses relating to the role of analytic thinking in the spread of COVID-19 misinformation. Because the classical account of reasoning predicts that people with higher levels of analytic thinking are better at identifying accurate information, we hypothesized that people with higher levels of analytic thinking would be less likely to perceive COVID-19 misinformation as accurate, more likely to perceive COVID-19 information as accurate, and better able to discern between the two.

H1: Higher analytic thinking predicts lower belief in COVID-19 misinformation.

H2: Higher analytic thinking predicts higher belief in COVID-19 information.

H3: Higher analytic thinking predicts better discernment between COVID-19 misinformation and information.

While the classical reasoning account does not make explicit predictions about the willingness to share misinformation, it stands to reason that people with higher levels of analytic thinking would be less likely to share misinformation: most people report that accuracy is very important when deciding what information to share (Pennycook et al., 2021), and people higher in analytic thinking should be better at recognizing which claims are inaccurate.

H4: Higher analytic thinking predicts lower willingness to share COVID-19 misinformation.

H5: Higher analytic thinking predicts higher willingness to share COVID-19 information.

H6: Higher analytic thinking predicts better discernment between COVID-19 misinformation and information in the willingness to share them.

Methods

In a confirmatory approach, we preregistered our hypotheses and analysis plan with the AsPredicted repository (#40309).¹ Ethics approvals were granted by the University of Kent (#202015872211976468), Macquarie University (#52020640915322), and the Australian National University (#2020/235). Analyses were performed using R (Version 4.0.3; R Core Team, 2018) with the psych (Revelle, 2020) and MASS (Venables & Ripley, 2020) packages. The data, analysis code, wording for all questions, and other materials are available on the Open Science Framework (https://osf.io/gcfb7/).

Participants

Australian participants were recruited as part of the International Collaboration on the Social & Moral Psychology of COVID-19 (ICSMP) project (https://icsmp-covid19.netlify.app/index.html) (Van Bavel, Cichocka, et al., 2020). Australian residents who completed the ICSMP survey were randomly allocated either to additional questions regarding COVID-19 statements that are the focus of the present study or to a separate study. The answers to questions from the ICSMP and the additional Australia-only survey were combined to test the present study’s hypotheses. The sample size was based on available financial resources and is comparable to those used in related misinformation research (e.g., Pennycook, McPhetres, Zhang, et al., 2020).

Participants were recruited via Lucid, a professional survey company that has been assessed as suitable for social science research (Coppock & McClellan, 2019). Data were collected between 2 May and 12 May 2020, 45–55 days after the formal declaration of a human biosecurity emergency in Australia due to the COVID-19 pandemic (Commonwealth of Australia, 2020). Our sample was quota matched for age, gender, and state or territory of residence within Australia. No analyses were conducted until data collection was complete. As per our preregistered analysis plan, we used two attention check questions from the main ICSMP survey. In the first, participants were asked to move a slider to the left, setting it to zero on a numerical scale. In the second, participants were asked to type the number “213” as their response. In line with the preregistered plan, any participant who answered either question incorrectly was removed from the data set before analysis. In total, 342 participants failed at least one attention check and were removed, leaving a sample of 742 participants. The median survey completion time was 21.3 minutes. Participants were not forced to answer survey questions. Participants with missing responses on any item used in a given analysis were excluded from that analysis only, so the number of observations differs slightly across analyses.
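The exclusion rule above can be sketched in Python. This is a minimal illustration, not the project's analysis code (which was written in R and is available on the OSF): the `Response` record and its field names are hypothetical, and treating a missing answer as a failed check is our assumption.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Response:
    participant_id: int
    slider_check: Optional[float]  # hypothetical field: instructed answer is 0
    typed_check: Optional[str]     # hypothetical field: instructed answer is "213"


def passes_attention_checks(r: Response) -> bool:
    """True only if both checks were answered as instructed.

    A missing answer (None) never equals the instructed value, so it
    counts as a failure; this is an assumption, not a documented rule.
    """
    slider_ok = r.slider_check == 0
    typed_ok = r.typed_check == "213"
    return slider_ok and typed_ok


def apply_exclusions(responses: List[Response]) -> List[Response]:
    """Keep only participants who passed both attention checks."""
    return [r for r in responses if passes_attention_checks(r)]


if __name__ == "__main__":
    demo = [
        Response(1, 0, "213"),     # passes both checks
        Response(2, 35, "213"),    # slider not moved to zero
        Response(3, 0, "231"),     # typed the wrong number
        Response(4, None, "213"),  # slider question left unanswered
    ]
    kept = apply_exclusions(demo)
    print([r.participant_id for r in kept])  # [1]
```

Because a participant is dropped if either check fails, the filter is a logical AND over the two checks rather than a count of failures.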

Variables

As part of the ICSMP project, participants completed a modified version of the three-item Cognitive Reflection Test (CRT), designed to measure the tendency to engage in analytic thinking (Frederick, 2005). The original three-item CRT is generally considered reliable and valid (Bialek & Pennycook, 2018). New items were developed for the ICSMP because the original CRT has become widely known and correct responses can be easily found online (Bialek & Pennycook, 2018). The total number of correct answers forms the CRT score, which ranges from 0 to 3 (M = 0.83, SD = 0.99). See Supplementary Fig. S1 for a histogram of CRT scores. Cronbach’s alpha is known to be a poor index of internal consistency for nonnormally distributed data and for scales with few items (Sijtsma, 2009). As an alternative, we used the greatest lower bound (glb) to reliability (Jackson & Agunwamba, 1977; Ten Berge & Sočan, 2004), following the procedure specified by McNeish (2017, pp. 431–432). The CRT scale was internally consistent (glb = 0.80).

Participants were presented with ten statements about COVID-19 (see Tables 2 and 3): five “information” statements made by public health agencies (e.g., the Australian Department of Health and the WHO) and five “misinformation” statements that had been debunked by signatories of the International Fact-Checking Network’s code of principles (Poynter, 2020). We slightly reworded some of the misinformation statements while maintaining their central factual claim. The statements were presented in a random order. See Supplementary Table S1 for the original statements and their amendments.

Table 2.

Perceived accuracy and willingness to share misinformation statements

| Misinformation statements | Perceived accuracy (%) | Willingness to share (%) |
|---|---|---|
| 1. “The coronavirus is not a virus. It’s 5G that’s actually killing people and not a virus. They are trying to get you scared of a fake virus when it’s the 5G towers being built around the world.” | 5.6 | 8.5 |
| 2. “The coronavirus pandemic can be dramatically slowed or stopped completely with the immediate widespread use of high doses of vitamin C.” | 9.8 | 12.9 |
| 3. “The truth is that the WuXi pharma lab located in Wuhan, China, is where COVID-19 was developed and conveniently broke out.” | 39.7 | 34.3 |
| 4. “Boil some orange peels with cayenne pepper in it. Stand over the pot and breathe in the steam so all that mucus can release. Keep blowing your nose too. Mucus is the problem; it is where the virus lives.” | 7.7 | 9.1 |
| 5. “They started mass vaccination for COVID-19 in Africa, and the first 7 children who received it died on the spot.” | 5.3 | 8.8 |

Table 3.

Perceived accuracy and willingness to share information statements

| Information statements | Perceived accuracy (%) | Willingness to share (%) |
|---|---|---|
| 1. “COVID-19 presents a more serious risk to people aged 70 or over, people aged 65 and over with chronic medical conditions, and people with a compromised immune system.” | 94.4 | 90.1 |
| 2. “If you live in an apartment with a security entrance, don’t allow delivery people to enter the building or use lifts or internal stairways. This minimizes the risk to any older or vulnerable people who share the common areas of the property.” | 80.2 | 66.2 |
| 3. “Being able to hold your breath for 10 seconds or more without coughing or feeling discomfort does not mean you are free from COVID-19.” | 53.5 | 37.9 |
| 4. “You should clean and disinfect frequently used objects such as mobile phones, keys, wallets, and work passes to stop the coronavirus from spreading.” | 89.9 | 86.0 |
| 5. “One way to slow the spread of viruses, such as coronavirus, is social distancing (also called physical distancing). The more space between you and others, the harder it is for the virus to spread.” | 94.7 | 91.5 |

Participants were randomly allocated either to the accuracy group (n = 378), who were asked about the accuracy of the statements, or to the sharing group (n = 364), who were asked about their willingness to share them. We used separate groups because experimental research demonstrates that asking people about accuracy can nudge them toward greater truth discernment in which social media headlines they are willing to share (Fazio, 2020; Pennycook, McPhetres, Zhang, et al., 2020; Pennycook & Rand, 2021; Pennycook et al., 2021; Roozenbeek et al., 2021); asking the same participants about both accuracy and sharing could therefore have altered their sharing responses.

Those participants in the accuracy group were asked to indicate whether each misinformation and information statement was accurate: “You will be presented with claims that have been made about the Coronavirus (COVID-19). To the best of your knowledge, are these claims accurate?” The response options were “yes” or “no.” The number of “yes” responses on the misinformation statements was added to create the perceived misinformation accuracy scale, ranging from 0 to 5 (M = 0.68, SD = 1.00). The scale was internally consistent (glb = 0.92). The “yes” responses to the information statements were tallied into a perceived information accuracy scale, ranging from 0 to 5 (M = 4.13, SD = 1.03). This scale was also internally consistent (glb = 0.87).
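Each of these scales is a count of “yes” answers across the five statements of one type. The sketch below shows that tally in Python, together with the percentage of participants scoring at least 1, which is how the “at least one statement” percentages in the Results can be read. The function names are hypothetical, and returning `None` when any item is unanswered mirrors the per-analysis exclusion described under Participants; it is our simplification, not the authors' code.

```python
from typing import List, Optional, Sequence


def tally_scale(answers: Sequence[Optional[str]]) -> Optional[int]:
    """Count "yes" answers to the five statements of one type (range 0-5).

    Returns None if any statement was left unanswered, so that the
    participant can be dropped from analyses that use this scale.
    """
    if len(answers) != 5:
        raise ValueError("expected answers to exactly five statements")
    if any(a is None for a in answers):
        return None
    return sum(1 for a in answers if a == "yes")


def percent_at_least_one(scores: List[Optional[int]]) -> float:
    """Percentage of non-missing scale scores that are 1 or higher."""
    valid = [s for s in scores if s is not None]
    if not valid:
        raise ValueError("no complete responses")
    return 100 * sum(1 for s in valid if s >= 1) / len(valid)


if __name__ == "__main__":
    misinfo_answers = [
        ["no", "no", "yes", "no", "no"],
        ["no", "no", "no", "no", "no"],
        ["yes", "yes", "yes", "no", "no"],
        ["no", None, "no", "no", "no"],  # incomplete: excluded
    ]
    scores = [tally_scale(a) for a in misinfo_answers]
    print(scores)                                  # [1, 0, 3, None]
    print(round(percent_at_least_one(scores), 1))  # 66.7
```

The same function serves all four scales; only the set of statements passed in changes.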

Those participants in the sharing group were asked whether they would consider sharing each misinformation and information statement: “Would you consider sharing these claims with your family or friends (such as during a phone call, in a text message, or via social media)?” Unlike some earlier studies in this literature (e.g., Pennycook & Rand, 2019b), this question was worded to enquire about any form of sharing (i.e., not specifically about sharing via social media) because previous research had found that the most common way Australians share information about COVID-19 is through in-person conversations (Park et al., 2020). The response options were “yes” or “no.” The “yes” responses to the misinformation statements were added together to create a willingness to share misinformation scale, ranging from 0 to 5 (M = 0.74, SD = 1.08). This scale was internally consistent (glb = 0.89). The “yes” responses to information statements were added to create a willingness to share information scale, ranging from 0 to 5 (M = 3.72, SD = 1.22). This scale was internally consistent (glb = 0.89).

Histograms of these four variables are available in Supplementary Figs. S2 to S5. We deviated from our preregistration by using Spearman’s rather than Pearson’s correlations, because the data were not normally distributed (Spearman, 1987).
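Spearman’s r_s is the Pearson correlation computed on ranks, with tied values given the average of the ranks they occupy. Ties matter here because the CRT takes only four values (0 to 3) and each outcome scale only six (0 to 5). The stdlib-only Python sketch below illustrates the computation. It is not the authors' R code, and it omits the significance test (in R, `cor.test(x, y, method = "spearman")` reports the coefficient together with a p value).

```python
from math import sqrt
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j over the run of values tied with the one at position i.
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        # Sorted positions i..j correspond to ranks i+1..j+1; use their mean.
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return sxy / sqrt(sxx * syy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rank correlation: Pearson's r on average ranks."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    return pearson(average_ranks(x), average_ranks(y))


if __name__ == "__main__":
    print(average_ranks([0, 0, 1, 3]))      # [1.5, 1.5, 3.0, 4.0]
    print(spearman([1, 2, 3], [3, 2, 1]))   # -1.0
```

Because only the ordering of scores enters the calculation, the coefficient does not rely on the skewed, bounded scales being normally distributed, which is the motivation the authors give for the switch.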

Following the procedure developed by Pennycook and Rand (2019b), we computed an accuracy discernment score by subtracting each participant’s perceived misinformation accuracy score from their perceived information accuracy score and standardizing this difference as a z-score. Likewise, we computed a sharing discernment score by subtracting each participant’s willingness to share misinformation score from their willingness to share information score and standardizing the result.
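The discernment score is a difference score followed by standardization. A minimal sketch in Python, assuming standardization with the sample mean and sample standard deviation (n − 1 denominator, as in R's `scale()`); the function name is hypothetical and the input scores are illustrative, not study data:

```python
from statistics import mean, stdev
from typing import List, Sequence


def discernment_z(info_scores: Sequence[int], misinfo_scores: Sequence[int]) -> List[float]:
    """Standardized (information - misinformation) difference per participant.

    Both inputs are 0-5 tallies of "yes" answers, aligned by participant.
    """
    if len(info_scores) != len(misinfo_scores):
        raise ValueError("score lists must be aligned by participant")
    diffs = [i - m for i, m in zip(info_scores, misinfo_scores)]
    mu, sd = mean(diffs), stdev(diffs)  # sample SD (n - 1 denominator)
    return [(d - mu) / sd for d in diffs]


if __name__ == "__main__":
    # Raw differences are 5, 3, and 1: mean 3, sample SD 2.
    print(discernment_z([5, 4, 3], [0, 1, 2]))  # [1.0, 0.0, -1.0]
```

A positive score means a participant endorsed (or was willing to share) relatively more information than misinformation statements compared with the sample average; the raw difference ranges from −5 to 5 before standardizing.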

Results

Table 2 shows the perceived accuracy and willingness to share ratings for the COVID-19 misinformation statements. In total, 42.9% of participants in the accuracy group reported that at least one of these statements was accurate, and 43.9% of participants in the sharing group indicated that they would be willing to share at least one of them.

Table 3 shows the perceived accuracy and willingness to share ratings for the COVID-19 information statements. In total, 96.2% of participants in the accuracy group reported that at least one of these statements was accurate, and 98.4% of participants in the sharing group indicated that they would be willing to share at least one of them.

Analysis

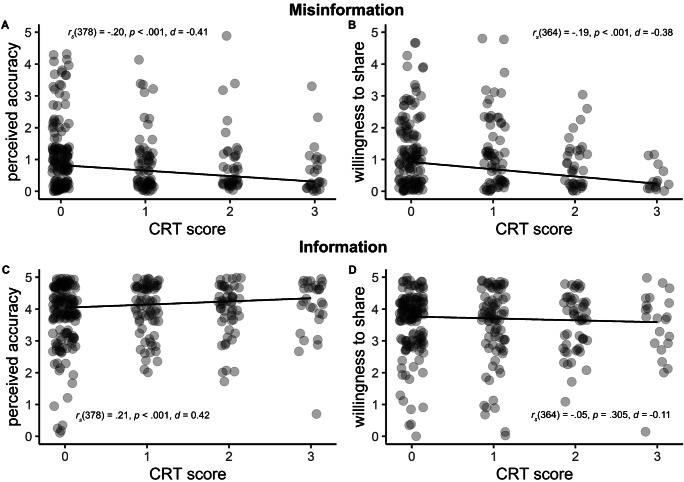

Regarding hypothesis H1, as predicted, there was a significant negative association between CRT score and the perceived misinformation accuracy scale, rs(378) = −.20, p < .001, d = −0.41. This relationship is shown in Fig. 1a and indicates that participants with higher analytic thinking levels were less likely to perceive misinformation about COVID-19 to be accurate.

Fig. 1.

Associations between CRT and perceived accuracy and the willingness to share. Note. Each dot represents an individual participant. The value on the accuracy scale shows how many items (out of five) the participant rated as accurate. The value on the willingness to share scale shows how many items (out of five) the participant indicated they were willing to share.

However, we found no support for H2, as there was no significant association between CRT scores and the perceived information accuracy scale, rs(378) = .09, p = .094, d = 0.17 (see Fig. 1c). Supporting H4, we found a significant negative relationship between CRT score and the willingness to share misinformation scale, rs(364) = −.19, p < .001, d = −0.38 (see Fig. 1b). However, H5 was not supported, as we found no significant association between CRT score and the willingness to share information scale, rs(364) = −.05, p = .305, d = −0.11 (see Fig. 1d).
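The text does not state how the d values reported alongside each correlation were obtained. They appear consistent, up to the rounding of r to two decimals, with the common conversion d = 2r / √(1 − r²); this is our inference, not a procedure stated by the authors. A quick check in Python:

```python
from math import sqrt


def r_to_d(r: float) -> float:
    """Convert a correlation to Cohen's d via d = 2r / sqrt(1 - r^2).

    Assumed conversion: the article does not state how d was computed.
    """
    if not -1 < r < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    return 2 * r / sqrt(1 - r ** 2)


if __name__ == "__main__":
    print(round(r_to_d(-0.20), 2))  # -0.41, the value reported for H1
```

Values computed from the rounded correlations can differ from the reported d in the second decimal (for example, r = .21 gives 0.43 against the reported 0.42), which would be expected if d was computed from the unrounded r.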

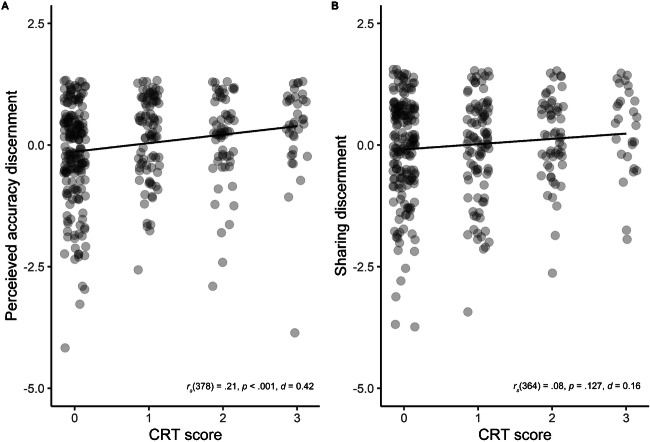

Regarding hypothesis H3, we found a significant positive association between CRT scores and accuracy discernment scores, rs(378) = .21, p < .001, d = 0.42, which is shown in Fig. 2a. In line with our predictions, this shows that people who had higher levels of analytic thinking could discern the COVID-19 misinformation from the COVID-19 information statements more accurately.

Fig. 2.

Associations between CRT score and perceived accuracy discernment and sharing discernment. Note. Each dot represents an individual participant. A higher score on the perceived accuracy discernment scale indicates that the participant was better able to discern between COVID-19 information statements and misinformation statements. A higher score on the sharing discernment scale indicates that the participant was more willing to share COVID-19 information statements relative to misinformation statements. Y-axis values are standard deviations from the mean.

However, contrary to H6, we found no significant relationship between CRT scores and sharing discernment scores, rs(364) = .08, p = .127, d = 0.16 (see Fig. 2b). This means that higher analytic thinking levels did not predict better discernment between COVID-19 misinformation and information items when it came to willingness to share them with other people.² Correlations between all variables are reported in Supplementary Tables S3 and S4.

Discussion

In this study we sought to establish whether analytic thinking predicts whether Australians believe or are willing to share misinformation about COVID-19, in line with the classical reasoning account of misinformation perception (Pennycook & Rand, 2019b). Our results support this account: we found a significant negative relationship between analytic thinking and both belief in COVID-19 misinformation and willingness to share it among Australians.

More broadly, and in line with other research (Essential Research, 2020; Pickles et al., 2021), we find that many Australians rate some debunked COVID-19 misinformation as accurate: 42.9% reported believing at least one of our misinformation items, and 43.9% reported that they were willing to share at least one of them. These findings confirm that Australians have been affected by the “infodemic” declared by the World Health Organization, even though Australia’s COVID-19 infection and fatality rates were very low at the time the study was conducted.

As predicted, participants higher in analytic thinking (measured using the CRT) were significantly less likely to perceive COVID-19 misinformation as accurate compared with participants lower in analytic thinking. These findings are consistent with similar research on beliefs about COVID-19 misinformation in Canada, Italy, Iran, Slovakia, Turkey, the United States, and the UK (Alper et al., 2020; Čavojová et al., 2020; Pennycook, McPhetres, Bago, et al., 2021; Sadeghiyeh et al., 2020; Salvi et al., 2021). We also found that people with higher analytic thinking levels were less willing to share debunked misinformation about COVID-19, which is consistent with research from the United States (Pennycook, McPhetres, Zhang, et al., 2020).

Given that Australia had much lower COVID-19 fatalities per capita than many other countries, our results contribute to the classical reasoning literature by showing that the relationship between analytic thinking and COVID-19 misinformation beliefs persists even when the risk level is relatively low. While the classical reasoning account is silent about the willingness to share misinformation, the negative relationship we found between analytic thinking and willingness to share COVID-19 misinformation is unsurprising: people report that accuracy is an important consideration when deciding what information to share (Pennycook et al., 2021), and people higher in analytic thinking are better able to identify misinformation and thus avoid sharing it.

However, our hypothesis that people with higher analytic thinking levels would be more likely to believe objectively accurate information statements about COVID-19 was not supported. This is a somewhat curious finding, but it does align with similar research conducted in Slovakia, where cases per capita and fatalities per capita were also low and where no relationship was found between analytic thinking and perceiving coronavirus facts as true (Čavojová et al., 2020). Our prediction that people with higher analytic thinking levels would be more willing to share COVID-19 information statements was not supported either. A potential explanation for this lack of associations is that cognitive reflection might only be strongly impactful for rejecting false claims (particularly when they are implausible), not for endorsing true claims (particularly when they are plausible). This possibility is consistent with research that found only inconsistent evidence for a relationship between analytic thinking and belief in legitimate news headlines despite strong associations with fake news headlines (Pennycook & Rand, 2019b). Alternatively, the lack of associations with belief in and willingness to share information statements may reflect methodological limitations of our study (see below).

As predicted, we also found that people with higher analytic thinking levels could better discern true COVID-19 information statements from debunked misinformation, in line with research from Italy (Salvi et al., 2021) and the United States (Calvillo et al., 2020; Salvi et al., 2021). However, contrary to our prediction, we found no evidence that people with higher analytic thinking levels showed better discernment in their willingness to share COVID-19 information versus misinformation. It is possible that people do not always consider whether a claim is accurate before deciding to share it (Pennycook, McPhetres, Zhang, et al., 2020).

Taken together, these findings provide support for the classical reasoning account of misinformation perception (Pennycook & Rand, 2019b), consistent with a growing body of evidence accumulated across the world (Bago et al., 2021; Bronstein et al., 2019; Martel et al., 2019; Pennycook, McPhetres, Zhang, et al., 2020; Pennycook & Rand, 2019b).

Limitations

Our study has some limitations. First, we used self-reported measures of beliefs and behavioral intentions, which might not match true beliefs and behaviors. While there is evidence that the self-reported willingness to share misinformation correlates with real-world sharing behavior (Mosleh et al., 2020), it is conceivable that our survey questions elicited socially desirable responding (Paulhus, 2002) or insincere responding (Levy & Ross, 2021) when participants reported their beliefs and intentions, and there may also be an intention-behavior gap (Sheeran & Webb, 2016). For example, misinformation statement 3, which claims that the COVID-19 virus was created in a laboratory in China, received much higher support than the other debunked statements. Some respondents may have answered on the basis of racial or geopolitical attitudes rather than a sincere belief in the statement.

Second, the null results for the information items could reflect a ceiling effect: most information items were perceived as accurate by a large majority of participants (Table 3), which could have restricted variation in perceived accuracy scores. Further studies might benefit from using information items that are less obviously accurate.

Finally, there is debate about the extent to which the CRT captures the disposition to rely upon analytic thinking as opposed to measuring numeracy or general cognitive ability (Isler et al., 2020; Pennycook & Ross, 2016; Sinayev & Peters, 2015). Future work on the relationship between analytic thinking and misinformation beliefs and sharing intentions would benefit from using additional measures of analytic thinking or controlling for general cognitive ability. We also note that as this study was correlational in design, we cannot make causal inferences about the relationship between analytic thinking and beliefs, or analytic thinking and sharing intentions.

Conclusion

Our results provide insights into the psychological correlates of COVID-19 misinformation beliefs and the willingness to share that misinformation among Australians. Supporting the classical account of misinformation perception, we find that people who are lower in analytic thinking are more likely to perceive debunked COVID-19 misinformation as accurate and are also more willing to share such misinformation.


Acknowledgements

M. S. Nurse was supported by an Australian Government Research Training Program (RTP) scholarship and R. M. Ross was supported by the Australian Research Council (grant no: DP180102384). The authors would also like to thank Dr Will J. Grant for providing feedback on a previous version of this article.

Footnotes

¹ Hypotheses relating to vaccination intention that are listed in this preregistration will be examined in a separate study on that topic.

² In our preregistration we stated that we would compare accuracy judgments and sharing intentions with a mixed-design ANOVA. However, as a reviewer pointed out, an analysis in which different participants were asked different questions is difficult to interpret. Consequently, we do not report this analysis here. Instead, Supplementary Table S2 compares the strength of the correlations using Hittner, May, and Silver’s z statistic (Hittner et al., 2003; Zou, 2007).

Open practices statement

The data, analysis code, and materials for all analyses are available at https://osf.io/gcfb7/wiki/home/, and our hypotheses and confirmatory analysis plan were preregistered with AsPredicted.org (https://aspredicted.org/s4r8d.pdf).

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Allington, D., Duffy, B., Wessely, S., Dhavan, N., & Rubin, J. (2020). Health-protective behaviour, social media usage and conspiracy belief during the COVID-19 public health emergency. Psychological Medicine, 1–7. Advance online publication. 10.1017/s003329172000224x

- Alper, S., Bayrak, F., & Yilmaz, O. (2020). Psychological correlates of COVID-19 conspiracy beliefs and preventive measures: Evidence from Turkey. Current Psychology. Advance online publication. 10.1007/s12144-020-00903-0

- Bago, B., Rand, D. G., & Pennycook, G. (2021). Fake news, fast and slow: Deliberation reduces belief in false (but not true) news headlines. Journal of Experimental Psychology: General. Advance online publication. 10.1037/xge0000729

- Barua, Z., Barua, S., Aktar, S., Kabir, N., & Li, M. (2020). Effects of misinformation on COVID-19 individual responses and recommendations for resilience of disastrous consequences of misinformation. Progress in Disaster Science, 8, Article 100119. 10.1016/j.pdisas.2020.100119

- Bialek, M., & Pennycook, G. (2018). The cognitive reflection test is robust to multiple exposures. Behavior Research Methods, 50(5), 1953–1959. 10.3758/s13428-017-0963-x

- Bronstein, M. V., Pennycook, G., Bear, A., Rand, D. G., & Cannon, T. D. (2019). Belief in fake news is associated with delusionality, dogmatism, religious fundamentalism, and reduced analytic thinking. Journal of Applied Research in Memory and Cognition, 8(1), 108–117. 10.1016/j.jarmac.2018.09.005

- Calvillo, D. P., Ross, B. J., Garcia, R. J. B., Smelter, T. J., & Rutchick, A. M. (2020). Political ideology predicts perceptions of the threat of COVID-19 (and susceptibility to fake news about it). Social Psychological and Personality Science, 194855062094053. 10.1177/1948550620940539

- Čavojová, V., Šrol, J., & Ballová Mikušková, E. (2020). How scientific reasoning correlates with health-related beliefs and behaviors during the COVID-19 pandemic? Journal of Health Psychology, 135910532096226. 10.1177/1359105320962266

- Commonwealth of Australia. (2020). Biosecurity Human Biosecurity Emergency, Human Coronavirus with Pandemic Potential Declaration. Commonwealth of Australia. https://www.legislation.gov.au/Details/F2020L00266

- Coppock, A., & McClellan, O. A. (2019). Validating the demographic, political, psychological, and experimental results obtained from a new source of online survey respondents. Research & Politics, 6(1), 205316801882217. 10.1177/2053168018822174

- Earnshaw, V. A., Bogart, L. M., Klompas, M., & Katz, I. T. (2019). Medical mistrust in the context of Ebola: Implications for intended care-seeking and quarantine policy support in the United States. Journal of Health Psychology, 24(2), 219–228. 10.1177/1359105316650507

- Essential Research. (2020). The Essential Report 18 May 2020. https://essentialvision.com.au/wp-content/uploads/2020/05/Essential-Report-180520-1.pdf

- Evans, J. S. B. T., & Stanovich, K. E. (2013). Dual-process theories of higher cognition. Perspectives on Psychological Science, 8(3), 223–241. 10.1177/1745691612460685

- Fazio, L. (2020). Pausing to consider why a headline is true or false can help reduce the sharing of false news. Harvard Kennedy School Misinformation Review. 10.37016/mr-2020-009

- Frederick, S. (2005). Cognitive reflection and decision making. Journal of Economic Perspectives, 19(4), 25–42. 10.1257/089533005775196732

- Hittner, J. B., May, K., & Silver, N. C. (2003). A Monte Carlo evaluation of tests for comparing dependent correlations. The Journal of General Psychology, 130(2), 149–168. 10.1080/00221300309601282

- Isler, O., Yilmaz, O., & Dogruyol, B. (2020). Activating reflective thinking with decision justification and debiasing training. Judgment and Decision Making, 15(6), 926–938.

- Jackson, P. H., & Agunwamba, C. C. (1977). Lower bounds for the reliability of the total score on a test composed of non-homogeneous items: I. Algebraic lower bounds. Psychometrika, 42(4), 567–578. 10.1007/bf02295979

- Kahneman, D. (2012). Thinking, fast and slow. Penguin.

- Levy, N., & Ross, R. (2021). The cognitive science of fake news. In M. Hannon & J. de Ridder (Eds.), The Routledge handbook of political epistemology (pp. 181–191). Routledge. 10.31234/osf.io/3nuzj

- Martel, C., Pennycook, G., & Rand, D. G. (2019). Reliance on emotion promotes belief in fake news. PsyArXiv. 10.31234/osf.io/79ejc

- McNeish, D. (2017). Thanks coefficient alpha, we'll take it from here. Psychological Methods. 10.1037/met0000144

- Mian, A., & Khan, S. (2020). Coronavirus: The spread of misinformation. BMC Medicine, 18(1). 10.1186/s12916-020-01556-3

- Mosleh, M., Pennycook, G., & Rand, D. G. (2020). Self-reported willingness to share political news articles in online surveys correlates with actual sharing on Twitter. PLOS ONE, 15(2), Article e0228882. 10.1371/journal.pone.0228882

- Park, S., Fisher, C., Lee, J. Y., & McGuiness, K. (2020). COVID-19: Australian news and misinformation. University of Canberra. https://www.canberra.edu.au/research/faculty-research-centres/nmrc/publications/documents/COVID-19-Australian-news-and-misinformation.pdf

- Paulhus, D. L. (2002). Socially desirable responding: The evolution of a construct. In H. I. Braun, D. N. Jackson, & D. E. Wiley (Eds.), The role of constructs in psychological and educational measurement (pp. 49–69). Erlbaum.

- Pennycook, G., & Rand, D. G. (2019). Fighting misinformation on social media using crowdsourced judgments of news source quality. Proceedings of the National Academy of Sciences of the United States of America, 116(7), 2521–2526. 10.1073/pnas.1806781116

- Pennycook, G., & Rand, D. G. (2019). Lazy, not biased: Susceptibility to partisan fake news is better explained by lack of reasoning than by motivated reasoning. Cognition, 188, 39–50. 10.1016/j.cognition.2018.06.011

- Pennycook, G., & Rand, D. (2021). Reducing the spread of fake news by shifting attention to accuracy: Meta-analytic evidence of replicability and generalizability. PsyArXiv. 10.31234/osf.io/v8ruj

- Pennycook, G., & Ross, R. M. (2016). Commentary: Cognitive reflection vs. calculation in decision making. Frontiers in Psychology, 7. 10.3389/fpsyg.2016.00009

- Pennycook, G., Fugelsang, J. A., & Koehler, D. J. (2015). Everyday consequences of analytic thinking. Current Directions in Psychological Science, 24(6), 425–432. 10.1177/0963721415604610

- Pennycook, G., Fugelsang, J. A., & Koehler, D. J. (2015). What makes us think? A three-stage dual-process model of analytic engagement. Cognitive Psychology, 80, 34–72. 10.1016/j.cogpsych.2015.05.001

- Pennycook, G., McPhetres, J., Zhang, Y., Lu, J., & Rand, D. G. (2020). Fighting COVID-19 misinformation on social media: Experimental evidence for a scalable accuracy-nudge intervention. Psychological Science. 10.1177/0956797620939054

- Pennycook, G., Epstein, Z., Mosleh, M., Arechar, A. A., Eckles, D., & Rand, D. (2021). Shifting attention to accuracy can reduce misinformation online. Nature. https://www.nature.com/articles/s41586-021-03344-2

- Pennycook, G., McPhetres, J., Bago, B., & Rand, D. (2021). Beliefs about COVID-19 in Canada, the U.K., and the U.S.A.: A novel test of political polarization and motivated reasoning. Personality and Social Psychology Bulletin. 10.31234/osf.io/zhjkp

- Pickles, K., Cvejic, E., Nickel, B., Copp, T., Bonner, C., Leask, J., Ayre, J., Batcup, C., Cornell, S., Dakin, T., Dodd, R. H., Isautier, J. M. J., & McCaffery, K. J. (2021). COVID-19 misinformation trends in Australia: Prospective longitudinal national survey. Journal of Medical Internet Research, 23(1), Article e23805. 10.2196/23805

- Poynter. (2020). International Fact-Checking Network's code of principles. Poynter. Retrieved 5 June from https://ifcncodeofprinciples.poynter.org/

- R Core Team. (2018). R: A language and environment for statistical computing. R Foundation for Statistical Computing. https://www.R-project.org

- Revelle, W. (2020). psych: Procedures for psychological, psychometric, and personality research (R Package Version 1.9.12.31). Northwestern University. https://cran.r-project.org/web/packages/psych/psych.pdf

- Roozenbeek, J., Freeman, A. L. J., & Van Der Linden, S. (2021). How accurate are accuracy-nudge interventions? A preregistered direct replication of Pennycook et al. (2020). Psychological Science, 095679762110245. 10.1177/09567976211024535

- Roozenbeek, J., Schneider, C. R., Dryhurst, S., Kerr, J., Freeman, A. L. J., Recchia, G., Van Der Bles, A. M., & Van Der Linden, S. (2020). Susceptibility to misinformation about COVID-19 around the world. Royal Society Open Science, 7(10), Article 201199. 10.1098/rsos.201199

- Roser, M., Ritchie, H., Ortiz-Ospina, E., & Hasell, J. (2020). Coronavirus pandemic (COVID-19). https://ourworldindata.org/coronavirus

- Ross, R. M., Rand, D. G., & Pennycook, G. (2021). Beyond “fake news”: Analytic thinking and the detection of false and hyperpartisan news headlines. Judgment and Decision Making, 16(2), 484–504.

- Sadeghiyeh, H., Khanahmadi, I., Farhadbeigi, P., & Karimi, N. (2020). Cognitive reflection and the coronavirus conspiracy beliefs. PsyArXiv Preprints. 10.31234/osf.io/p9wxj

- Salvi, C., Iannello, P., Cancer, A., McClay, M., Rago, S., Dunsmoor, J. E., & Antonietti, A. (2021). Going viral: How fear, socio-cognitive polarization and problem-solving influence fake news detection and proliferation during COVID-19 pandemic. Frontiers in Communication, 5. 10.3389/fcomm.2020.562588

- Sheeran, P., & Webb, T. L. (2016). The intention-behavior gap. Social and Personality Psychology Compass, 10(9), 503–518. 10.1111/spc3.12265

- Sijtsma, K. (2009). On the use, the misuse, and the very limited usefulness of Cronbach’s alpha. Psychometrika, 74(1), 107–120. 10.1007/s11336-008-9101-0

- Sinayev, A., & Peters, E. (2015). Cognitive reflection vs. calculation in decision making. Frontiers in Psychology, 6. 10.3389/fpsyg.2015.00532

- Sindermann, C., Cooper, A., & Montag, C. (2020). A short review on susceptibility to falling for fake political news. Current Opinion in Psychology, 36, 44–46. 10.1016/j.copsyc.2020.03.014

- Singh, L., Bansal, S., Bode, L., Budak, C., Chi, G., Kawintiranon, K., Padden, C., Vanarsdall, R., Vraga, E., & Wang, Y. (2020). A first look at COVID-19 information and misinformation sharing on Twitter. ArXiv Preprint. https://arxiv.org/abs/2003.13907

- Spearman, C. (1987). The proof and measurement of association between two things. The American Journal of Psychology, 100(3/4), 441–471. 10.2307/1422689

- Stanley, M. L., Barr, N., Peters, K., & Seli, P. (2020). Analytic-thinking predicts hoax beliefs and helping behaviors in response to the COVID-19 pandemic. Thinking & Reasoning, 1–14. Advance online publication. 10.1080/13546783.2020.1813806

- Swire-Thompson, B., & Lazer, D. (2020). Public health and online misinformation: Challenges and recommendations. Annual Review of Public Health, 41(1), 433–451. 10.1146/annurev-publhealth-040119-094127

- Tangcharoensathien, V., Calleja, N., Nguyen, T., Purnat, T., D’Agostino, M., Garcia-Saiso, S., Landry, M., Rashidian, A., Hamilton, C., Abdallah, A., Ghiga, I., Hill, A., Hougendobler, D., Van Andel, J., Nunn, M., Brooks, I., Sacco, P. L., De Domenico, M., Mai, P., Gruzd, A., Alaphilippe, A., & Briand, S. (2020). Framework for managing the COVID-19 infodemic: Methods and results of an online, crowdsourced WHO technical consultation. Journal of Medical Internet Research, 22(6), Article e19659. 10.2196/19659

- Ten Berge, J. M. F., & Sočan, G. (2004). The greatest lower bound to the reliability of a test and the hypothesis of unidimensionality. Psychometrika, 69(4), 613–625. 10.1007/bf02289858

- Toplak, M. E., West, R. F., & Stanovich, K. E. (2011). The Cognitive Reflection Test as a predictor of performance on heuristics-and-biases tasks. Memory & Cognition, 39(7), 1275–1289. 10.3758/s13421-011-0104-1

- Van Bavel, J. J., Baicker, K., Boggio, P. S., Capraro, V., Cichocka, A., Cikara, M., Crockett, M. J., Crum, A. J., Douglas, K. M., Druckman, J. N., Drury, J., Dube, O., Ellemers, N., Finkel, E. J., Fowler, J. H., Gelfand, M., Han, S., Haslam, S. A., Jetten, J., Kitayama, S., Mobbs, D., Napper, L. E., Packer, D. J., Pennycook, G., Peters, E., Petty, R. E., Rand, D. G., Reicher, S. D., Schnall, S., Shariff, A., Skitka, L. J., Smith, S. S., Sunstein, C. R., Tabri, N., Tucker, J. A., Van Der Linden, S., Van Lange, P. A. M., Weeden, K. A., Wohl, M. J. A., Zaki, J., Zion, S. R., & Willer, R. (2020). Using social and behavioural science to support COVID-19 pandemic response. Nature Human Behaviour, 4(5), 460–471. 10.1038/s41562-020-0884-z

- Van Bavel, J. J., Cichocka, A., Capraro, V., Sjåstad, H., Nezlek, J. B., Pavlović, T., et al. (2020). National identity predicts public health support during a global pandemic. 10.31234/osf.io/ydt95

- Van Bavel, J. J., Harris, E. A., Pärnamets, P., Rathje, S., Doell, K., & Tucker, J. A. (2020). Political psychology in the digital (mis)information age. 10.31234/osf.io/u5/

- Venables, W., & Ripley, B. (2020). Modern applied statistics with S. Springer. https://cran.r-project.org/web/packages/MASS/index.html

- Wang, Y., McKee, M., Torbica, A., & Stuckler, D. (2019). Systematic literature review on the spread of health-related misinformation on social media. Social Science & Medicine, 240, Article 112552. 10.1016/j.socscimed.2019.112552

- WHO. (2020). Director General's speech to the Munich Security Conference. World Health Organization. Retrieved 13 April 2021 from https://www.who.int/dg/speeches/detail/munich-security-conference

- WHO. (2021). WHO Coronavirus Disease (COVID-19) Dashboard. World Health Organization.

- Zou, G. Y. (2007). Toward using confidence intervals to compare correlations. Psychological Methods, 12(4), 399–413. 10.1037/1082-989X.12.4.399
