Abstract

Background:

This study is part of a larger research program focused on developing objective, scalable tools for digital behavioral phenotyping. We evaluated whether a digital app delivered on a smartphone or tablet using computer vision analysis (CVA) can elicit and accurately measure one of the most common early autism symptoms, namely, failure to respond to a name call.

Methods:

During a pediatric primary care well-child visit, 910 toddlers, 17-37 months old, were administered an app on an iPhone or iPad consisting of brief movies during which the child's name was called three times by an examiner standing behind them. Thirty-seven toddlers were subsequently diagnosed with autism spectrum disorder (ASD). Name calls and children’s behavior were recorded by the camera embedded in the device and children’s head turns were coded by both CVA and a human.

Results:

CVA coding of response to name was found to be comparable to human coding. Based on CVA, children with ASD responded to their name significantly less frequently than children without ASD. CVA also revealed that children with ASD who did orient to their name exhibited a longer latency before turning their head. Combining information about both the frequency and delay in response to name improved the ability to distinguish toddlers with and without ASD.

Conclusions:

A digital app delivered on an iPhone or iPad in real world settings using computer vision analysis to quantify behavior can reliably detect a key early autism symptom - failure to respond to name. Moreover, the higher resolution offered by CVA identified a delay in head turn in toddlers with ASD who did respond to their name. Digital phenotyping is a promising methodology for early assessment of ASD symptoms.

Introduction

Infants as young as 7 months of age typically respond when their name is called by turning their head and looking at the speaker. In contrast, young children with autism spectrum disorder (ASD) often fail to respond when their name is called (Dawson, Meltzoff, Osterling, Rinaldi, & Brown, 1998; Dawson et al., 2004; Miller et al., 2017; Nadig et al., 2007). Failure to respond to name is one of the earliest symptoms in infants who later develop ASD. Studies of home videotapes documented a failure to respond to name in 8-10-month-old infants who later developed autism (Werner, Dawson, Osterling, & Dinno, 2000). In a prospective longitudinal study of infant siblings of children with autism, 9-month-old infants who were later diagnosed with ASD failed to orient to their name and this persisted until preschool age (Miller et al., 2017). Caregivers are likely to report concerns about their child failing to respond to their name by the time their child is 18 months of age (Becerra-Culqui, Lynch, Owen-Smith, Spitzer, & Croen, 2018). Because of the robustness and early appearance of this autism symptom, assessments for responding to name are part of routinely-used autism screening questionnaires (Robins, Fein, Barton, & Green, 2001) and standardized diagnostic protocols (Gotham, Risi, Pickles, & Lord, 2007).

We have been developing novel computational approaches for behavioral assessment of a wide range of autism symptoms, with the aim of creating reliable, objective, quantitative, and scalable methods for behavioral measurement of symptoms of ASD and other developmental disorders (Dawson & Sapiro, 2019). Our goal is to design and empirically validate time-efficient methods, based on video analytics, for collecting, quantifying, and automatically interpreting behavioral data using relatively inexpensive, ubiquitous devices that clinicians, researchers, and caregivers can use to augment traditional methods of neurodevelopmental assessment. Caregiver autism screening questionnaires, while useful, have lower performance rates with caregivers who have lower education and are from minority ethnic/racial backgrounds; current methods often require relatively high levels of literacy and knowledge of child development (Khowaja, Hazzard, & Robins, 2015). To what extent can digital tools based on direct behavioral observation assist in providing accurate and reliable assessments of autism behavioral symptoms?

The tools we are developing for digital assessment are downloadable software applications for low-cost mobile devices (e.g., tablet or smartphone) that can automatically and objectively measure autism risk behaviors as indexed by the child’s visual attention (Campbell et al., 2019; Egger et al., 2018), sensorimotor behaviors (Dawson et al., 2018), and facial expressions (Carpenter et al., 2020) in response to stimuli carefully designed to elicit such behaviors. In a previous study (Campbell et al., 2019), we showed brief, engaging movies on an iPad to 104 toddlers, 16-30 months old, during their well-child checkup with a pediatrician. Twenty-two of the toddlers were diagnosed with ASD. At pre-specified time points, the child's name was called by an adult in the room three times. Children’s behavior while watching the movies was recorded by the camera in the iPad and coded automatically using computer vision analysis (CVA). The computer accurately coded the head turns in response to name; the intraclass correlation coefficient between human and computer coding of orienting to name was .84. We found that only 8% of toddlers with ASD oriented to name on more than one trial, compared to 63% of toddlers in the comparison group. This proof-of-concept study suggested that response to name could be accurately and efficiently detected via a digital app.

The current study extends our previous work in several novel ways. First, whereas the previous app was administered solely on an iPad, we were interested in whether the app could also be used to accurately detect responses to name when delivered on an iPhone. If successful, the app could have broader exportability given that smartphones are more commonly available in a wide range of settings. Second, in order to deploy the app on both an iPhone and iPad in a pediatric clinic setting, we made several significant improvements to the computational tools used in our previous work. These included more accurate and robust detection of head position during the name call, automatic name call detection in often noisy clinic environments, and handling of the challenge that the device’s speaker (used to play the movies) and its microphone (which recorded the background noise and name calls) are located in close proximity to each other. Third, the present study enrolled a new and substantially larger sample of children. As was done in the previous study, toddlers were recruited from pediatric primary care clinics rather than from high-risk populations, such as infant siblings of children with ASD. Although this community-based study design requires a much larger sample of children to be assessed to detect an adequate number of children with ASD, the strength of this approach is that it mirrors how a screening tool would eventually be used by pediatricians and other providers. Fourth, we examined whether differences in the frequency of head turns were apparent solely in response to name, rather than being a general feature that distinguished children with and without ASD.

We hypothesized that, compared to non-ASD toddlers, those diagnosed with ASD would exhibit fewer head turns in response to their name. Furthermore, for children with ASD who did turn in response to their name, we were interested in whether CVA would detect differences in the latency between the name call and head turn between toddlers with and without ASD. Detection of subtle but reliable differences in motor speed is an ideal application for CVA since this measurement, which is on a subsecond scale, would be difficult to perform by a human during a clinical examination. We also evaluated whether combining these two features, namely, frequency of responding to name and speed of response to name, improved our ability to distinguish between children with and without ASD. Finally, we wanted to further evaluate the accuracy of CVA for measuring response to name by comparing results based on CVA versus human coding of head turns.

Methods

Participants

910 children between the ages of 17-37 months completed the app administration. Demographic characteristics including sex, socioeconomic status and race/ethnicity are shown in Table 1.

Table 1:

Demographic Characteristics of Study Sample

| | N (%) | | |
|---|---|---|---|
| Subgroups | TD (N=856; 94.07%) | ASD (N=37; 4.07%) | LD-DD (N=17; 1.87%) |
| Age in months | | | |
| Mean (SD) | 20.72 (3.32)a | 23.86 (3.79)a | 22.12 (3.46) |
| Sex | | | |
| Female | 437 (51.05%)ab | 9 (24.32%)a | 3 (17.64%)b |
| Male | 419 (48.95%)ab | 28 (75.68%)a | 14 (82.35%)b |
| Race | | | |
| American Indian/Alaskan Native | 25 (2.92%) | 3 (8.11%) | 0 (0.00%) |
| Asian | 29 (3.39%) | 1 (2.70%) | 0 (0.00%) |
| Black or African American | 140 (16.36%) | 5 (13.51%) | 6 (35.29%) |
| Native Hawaiian or Other Pacific Islander | 0 (0.00%) | 0 (0.00%) | 0 (0.00%) |
| White/Caucasian | 520 (60.75%) | 17 (45.94%) | 7 (41.18%) |
| More Than One Race | 88 (10.28%) | 7 (18.92%) | 1 (5.88%) |
| Other | 49 (5.84%) | 4 (10.81%) | 2 (11.76%) |
| Unknown/Not Reported | 5 (0.58%) | 0 (0.00%) | 1 (5.88%) |
| Ethnicity | | | |
| Hispanic/Latino | 134 (15.65%)a | 12 (32.43%)a | 5 (29.41%) |
| Not Hispanic/Latino | 717 (83.76%)a | 25 (67.58%)a | 12 (70.59%) |
| Unknown/Not Reported | 5 (0.58%)a | 0 (0.00%)a | 0 (0.00%) |
| Highest Level of Education | | | |
| Without High School Diploma | 33 (3.86%)ab | 3 (8.11%)a | 3 (17.65%)b |
| High School Diploma or Equivalent | 56 (6.54%)ab | 5 (13.51%)a | 6 (35.29%)b |
| Some College Education | 148 (17.29%)ab | 13 (35.14%)a | 2 (11.76%)b |
| 4-Year College Degree or More | 589 (68.81%)ab | 16 (43.24%)a | 5 (29.41%)b |
| Unknown/Not Reported | 30 (3.40%)ab | 0 (0.00%)a | 1 (5.88%)b |
| M-CHAT-R/F Result | | | |
| Positive | 1 (0.12%)ab | 29 (78.38%)ac | 7 (41.18%)bc |
| Negative | 855 (99.88%)ab | 6 (16.22%)ac | 9 (52.94%)bc |
| Missing | 0 (0.00%)ab | 2 (5.41%)ac | 1 (5.88%)bc |

| Clinical Variables | Mean (SD) | |
|---|---|---|
| | ASD (N=37) | LD-DD (N=17) |
| ADOS-2 Toddler Module | | |
| Calibrated Severity Score | 7.67 (1.68)c | 3.9 (1.6)c |
| Mullen Scales of Early Learning | | |
| Early Learning Composite Score | 63.00 (10.13)c | 74.05 (15.71)c |
| Expressive Language T-Score | 27.41 (6.82)c | 36.06 (11.21)c |
| Receptive Language T-Score | 22.51 (4.35)c | 32.06 (14.05)c |
| Fine Motor T-Score | 34.35 (10.59)c | 38.35 (5.93)c |
| Visual Reception T-Score | 33.43 (10.83) | 37.29 (12.28) |

TD: Typical development; ASD: Autism spectrum disorder; LD-DD: Language delay and/or developmental delay; M-CHAT-R/F: Modified Checklist for Autism in Toddlers, Revised with Follow-Up Questions

ADOS-2: Autism Diagnostic Observation Schedule – Second Edition

a: Significant difference between typical and ASD groups

b: Significant difference between typical and LD-DD groups

c: Significant difference between ASD and LD-DD groups (P’s < .05)

Recruitment.

This study is part of a larger study that is following toddlers longitudinally from their 18-month well-child visit through age 3 years. Toddlers were recruited via an email sent to the caregiver describing the study before an upcoming well-child visit. Then, after the child was seen by their provider, a research assistant approached the caregiver and invited them to have the app administered. Reference to diagnosis or concern about autism was not included in the recruitment script. Inclusion criteria were as follows: the child was being seen by their pediatrician for an 18-, 24-, or 36-month well-child visit, was 16 to 38 months of age, and was not ill at the time of assessment, and the caregiver’s primary language at home was English or Spanish. Exclusionary criteria included a significant sensory or motor impairment that precluded sitting upright and/or seeing the movies shown on the app.

Ethical Considerations.

All caregivers/legal guardians provided written informed consent, and the study protocols were approved by the Duke University Health System IRB (Pro00085434, Pro00085435).

Measures

Modified Checklist for Autism in Toddlers – Revised with Follow-up.

All but three caregivers completed the Modified Checklist for Autism in Toddlers, Revised with Follow-Up Questions (M-CHAT-R/F) (Chlebowski, Robins, Barton, & Fein, 2013) as part of routine pediatric care during the child’s 18-month well-child visit. The M-CHAT-R/F is a caregiver survey consisting of 20 questions regarding the presence or absence of autism symptoms that has been widely used as a screening tool for ASD. Toddlers whose total scores were 3 or greater initially and those whose scores were 2 or greater after the follow-up questions are considered at risk for ASD.

Referral determination and diagnostic and developmental assessments.

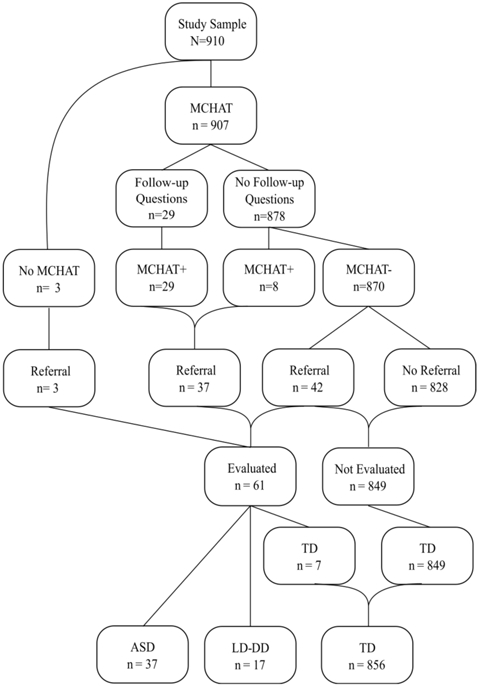

Figure 1 shows the flow diagram for the 910 participants, including the numbers screened with the M-CHAT-R/F, administered the follow-up interview, and referred for evaluation, as well as the diagnostic outcome. As illustrated in Figure 1, there were two ways in which a child could be referred for a diagnostic and developmental assessment: either the child had a positive M-CHAT-R/F score, or the caregiver or provider had a concern that prompted a referral by the provider. The evaluation included the Autism Diagnostic Observation Schedule – Second Edition (ADOS-2) (Gotham et al., 2007), conducted by a research-reliable clinician to establish whether the child met DSM-5 criteria for ASD, and the Mullen Scales of Early Learning (Mullen Scales), used to establish whether the child had developmental delays in cognitive/language functioning (Mullen, 1995). The average time from M-CHAT-R/F screening to completed evaluation was 107.98 days.

Figure 1.

Study flow depicting participants’ M-CHAT-R/F, whether follow-up questions were administered, screening status and referral, and diagnostic outcome.

Group definitions.

Three groups were defined for analyses. (1) The ASD group (N = 37) was defined as having been referred for evaluation based on a positive M-CHAT-R/F score or caregiver/provider concern, and meeting DSM-5 diagnostic criteria for ASD (with or without developmental delay) based on both the ADOS-2 and clinical judgement by a licensed psychologist. Sixteen of the 37 children with ASD (43%) had significantly delayed nonverbal cognitive ability, as evidenced by a score of 30 or below on the Visual Reception subscale of the Mullen Scales. (2) The non-ASD language/developmental delay group (LD-DD, N = 17) was defined as having failed the M-CHAT-R/F or having provider or caregiver concern, having been administered the ADOS-2, and having been determined by a licensed psychologist not to meet criteria for ASD but to have language or developmental delay. All children in the LD-DD group scored at least 9 points below the mean on at least one Mullen Early Learning Subscale (1 SD = 10 points). (3) The typical development (TD) group (N = 856) was defined as having a high likelihood of typical development due to having scored 1 or below on the M-CHAT-R/F after having been administered the follow-up questions (when appropriate) with no concern raised by physician or caregiver (N = 849), or having been referred based on the M-CHAT-R/F (N = 1) or caregiver/provider concern but then determined by a licensed psychologist, based on the ADOS-2 and Mullen Scales, to be developing typically (N = 7). While it is possible that a small number of children in the TD group might have ASD and/or language/developmental delay, it was not feasible to administer the ADOS-2 and Mullen Scales to all 910 children. Due to the low number of LD-DD participants, especially when results are broken down by device used (iPad versus iPhone), data for this group are presented in a descriptive fashion in the Supplementary Material. Demographic characteristics of the subsamples are shown in Table 1.

Digital screening app.

Caregivers were asked to hold their child on their laps, and an iPad or iPhone was set on a tripod about 60 centimeters away from the child. To minimize distraction, other adults and siblings (if present) were asked to stand behind the caregiver. Caregivers were asked to remain quiet and not direct the child’s behavior or attention once the movies began. An examiner stood directly behind and 1.3-1.6 meters from the caregiver’s chair. A series of short, engaging, developmentally-appropriate dynamic movies were shown on the iPad or iPhone that in combination took less than 10 minutes to administer. Examples include cascading bubbles, a woman saying rhymes, and an actor blowing a pinwheel or spinning a top. The frontal camera in the iPad or iPhone recorded the child’s behavior during the movies, shown at 1280 x 720 resolution and 30 frames per second.

At three separate points during the movies a small icon appeared on the screen to prompt the examiner standing behind the child to call the child’s name (twice during the first movie, which consisted of floating bubbles cascading across the screen with a background sound of water gurgling, and once during the fourth movie, which consisted of a noise-making mechanical toy that moved across the screen). If the child was already looking at the examiner or caregiver when the prompt appeared, the examiner did not call the name and this was considered a missing trial. If the child turned to look at the caregiver or examiner after the name call, the examiner silently smiled and waved.

Dependent variables were the automatic detection of the frequency and latency of head turns to the examiner when the child’s name was called based on all three name calls, and the first name call only. Responding to name was defined as a head turn toward the examiner within 5 seconds from the name call. Note that we examined the impact of changing the interval length to 2 or 10 seconds, and the results of the analyses described below did not change. As such, we selected an interval of 5 seconds to align with a previous study (Campbell et al., 2019).

Latency to respond to name was defined as the difference between the first frame of the name-calling event and the first frame of the head-turning sequence. This dependent variable was analyzed for all instances of responding, defined as head turns that occurred within the first 5 seconds after the name call.
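
The two dependent variables defined above can be illustrated with a minimal sketch. It assumes that the name call and the head turn have each already been reduced to a single frame index; the function names are hypothetical, and treating a latency of exactly 5 seconds as a response is an assumption, since the text does not specify the boundary case.

```python
from typing import Iterable, Optional, Tuple

FPS = 30                 # frame rate stated in the text
RESPONSE_WINDOW_S = 5.0  # response window stated in the text


def latency_seconds(name_call_frame: int,
                    head_turn_frame: Optional[int],
                    fps: int = FPS,
                    window_s: float = RESPONSE_WINDOW_S) -> Optional[float]:
    """Return the latency in seconds if the turn counts as a response, else None.

    Assumption: a turn that starts exactly at the window boundary is counted.
    """
    if head_turn_frame is None or head_turn_frame < name_call_frame:
        return None  # no turn detected, or the turn began before the call
    latency = (head_turn_frame - name_call_frame) / fps
    return latency if latency <= window_s else None


def response_frequency(trials: Iterable[Tuple[int, Optional[int]]]) -> int:
    """Count responses over (name_call_frame, head_turn_frame) pairs."""
    return sum(latency_seconds(call, turn) is not None for call, turn in trials)
```

For instance, a name call at frame 300 followed by a head turn starting at frame 345 corresponds to a latency of 45 frames, or 1.5 seconds at 30 frames per second.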

Detection of head turns in response to name

Methods for automatic detection of head turns were adapted from a procedure described and validated in a previous paper (Campbell et al., 2019) and are described in detail in the Supplementary Material. The adaptations were made to address the challenges of testing in a noisy environment and the use of multiple types of devices. In brief, the face was first detected in the video frame to validate that it was that of the participant, each frame in which the face was detected was marked, and 49 facial landmarks were tracked, allowing for the detection of head, mouth, and eye positions (De la Torre, 2015). These landmarks were used to estimate the pitch, yaw, and roll angles of the head (the head’s orientation) on a frame-by-frame basis (30 frames per second). Head orientation relative to the camera was estimated by computing the optimal rotation parameters between the detected landmarks and a three-dimensional canonical face model. We used the toddler’s head orientation as a proxy for the child facing toward the stimuli (or toward the examiner calling the child’s name); this is further supported by the ‘center bias’ property (Li, 2013; Manna, 1995). Engagement with the movie stimuli was assumed when the toddler exhibited “forward” head poses within specific angles, as detailed in the Supplementary Material. Since the examiner and caregiver were positioned behind the child, the child was required to perform a head turn by rotating their head from facing the screen (forward) to looking behind in response to a name call event. A head turn was defined as the toddler (a) starting from being engaged with the stimulus (“small yaw”), (b) turning their head away (disengagement, “large yaw or face not fully visible”), and (c) then turning back to watch the movie (back to “small yaw”).
Since we tracked the participants’ facial landmarks to derive the head position from the landmarks on a frame-by-frame basis, these orientations and their sequence could all be automatically computed. As such, the precise frame-by-frame time evolution from engagement to disengagement and back to engagement was obtained.
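
The three-step head-turn definition above can be sketched as a small state machine over per-frame yaw estimates. This is only an illustration under stated assumptions: the yaw threshold of 30 degrees is hypothetical (the actual angles are given in the Supplementary Material), a frame with no tracked face is represented as `None`, and the function names are not taken from the study's code.

```python
from typing import List, Optional, Tuple

YAW_ENGAGED_MAX_DEG = 30.0  # hypothetical threshold, not the study's value


def is_engaged(yaw: Optional[float],
               max_yaw: float = YAW_ENGAGED_MAX_DEG) -> bool:
    """A frame is 'engaged' when the face is tracked and the yaw is small."""
    return yaw is not None and abs(yaw) <= max_yaw


def detect_head_turns(yaws: List[Optional[float]]) -> List[Tuple[int, int]]:
    """Return (start, end) frame indices of engaged -> away -> engaged sequences.

    start is the first disengaged frame after an engaged frame;
    end is the first frame at which the child is engaged again.
    """
    turns = []
    start = None          # first frame of the current disengagement, if any
    prev_engaged = False  # a turn must begin from an engaged state
    for i, yaw in enumerate(yaws):
        engaged = is_engaged(yaw)
        if start is None and prev_engaged and not engaged:
            start = i                  # (a) -> (b): child turns away
        elif start is not None and engaged:
            turns.append((start, i))   # (b) -> (c): child returns to the screen
            start = None
        prev_engaged = engaged
    return turns
```

In this sketch a disengagement that never returns to the screen before the recording ends is not counted, which follows step (c) of the definition literally.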

In addition, a human coder naive to child diagnosis viewed the videotapes of each participant and made a judgement regarding whether and when the child responded to their name by turning their head for each name call.

Detection of name call

To ensure that the child’s head turns corresponded to a name call event, the name call event was detected automatically from the recorded audio. The method for automatically detecting the audio of the name call event was extended from the technique described and validated in previous work (Hashemi, 2015); the improvements implemented for this study are detailed in the Supplementary Material. The recorded audio in a pre-defined window (in seconds) around each of the known name-call prompts was extracted, automatically processed (including denoising and filtering), and inspected (Buades, 2005; Rafii, 2012). Focusing on the power spectral density of the extracted audio signal in the 0-3000 Hz frequency range, we estimated when the examiner called the child’s name by finding the frame with the highest energy in the denoised and filtered spectrogram. Some of the name call events were easier to detect than others because the device’s microphone captured all background sound in the often-noisy clinical environment, including sounds that were part of the movie. The current implementation provided sufficient accuracy on both devices (more details are presented in the Supplementary Material).
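
The peak-energy step described above can be sketched as follows. This is not the study's implementation: it omits the denoising and filtering stage entirely, uses a naive discrete Fourier transform so that it has no dependencies (in practice a library FFT would normally be used), and the frame length, hop size, and function names are illustrative assumptions.

```python
import cmath
import math
from typing import Sequence


def band_energy(frame: Sequence[float], fs: int,
                f_max: float = 3000.0) -> float:
    """Sum of DFT power over bins from 0 Hz up to f_max (naive DFT)."""
    n = len(frame)
    k_max = min(int(f_max * n / fs), n // 2)  # highest bin inside the band
    energy = 0.0
    for k in range(k_max + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(frame))
        energy += abs(coeff) ** 2
    return energy


def estimate_name_call_time(signal: Sequence[float], fs: int,
                            frame_len: int = 128, hop: int = 64) -> float:
    """Return the center time (s) of the frame with the highest band energy."""
    if len(signal) < frame_len:
        raise ValueError("signal is shorter than one analysis frame")
    starts = range(0, len(signal) - frame_len + 1, hop)
    energies = [band_energy(signal[s:s + frame_len], fs) for s in starts]
    best = max(range(len(energies)), key=energies.__getitem__)
    return (starts[best] + frame_len / 2) / fs
```

Applied to the audio window extracted around a name-call prompt, the returned time is relative to the start of that window and would need to be offset by the window's position in the recording.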

Statistical methods

A two-way, random, consistency intraclass correlation with a 95% confidence interval was used to compare the computer algorithm detection versus human coding of a head turn during the 5-second period after the name call. Group comparisons between children with ASD versus TD in the number of responses to a name call were made using the Mann-Whitney U test with a one-sided tail. A time-to-event analysis was used to investigate group differences between the ASD and TD groups. The time the child initiated the head movement toward the examiner (as defined above) was the event of interest. For the first name call, hazard ratios were tested against the null hypothesis of equal hazards between groups with the log-rank test. For the three name calls aggregated, Cox proportional hazards models were created with all three name calls for each child linked as repeated measures and with age as a covariate. The Mann-Whitney U test was used to compare groups in terms of the cumulative number of spontaneous head turns, with effect size computed using the rank-biserial correlation. Effect sizes are noted as “r.” Kaplan–Meier curves of the cumulative events were constructed to visualize the proportion of events in the TD and ASD groups over time for the three name calls. A tree classifier was used to assess discrimination between the ASD and TD groups based on one versus two features (Breiman, 1984). Gini impurity was used as the automatic splitting criterion. The first node of the otherwise automatically computed depth-3 tree was set to separate participants with no name calling response from those with a response. Confidence interval computation for the Kaplan–Meier curves used Greenwood's exponential formula. Statistics were computed in Python using SciPy low-level functions V.1.4.1, Statsmodels V.0.10.1, Lifelines V.0.24.6, and Pingouin V.0.3.4 (Davidson-Pilon, 2020; Jones, 2001; Seabold, 2010; Vallat, 2018).
Assumptions of normality were tested using the Shapiro-Wilk test. Homoscedasticity (equality of variance) was tested using Levene’s test. Except for the Kaplan–Meier curves, confidence intervals were computed using the bootstrap resampling method with 1,000 iterations. Statistical significance was set at the alpha = 0.05 level. Confidence intervals for the AUCs were computed using the Hanley and McNeil method (Hanley, 1982). Differences between AUCs based on different features were compared using the DeLong method (DeLong, 1988).
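
Two of the quantities named above have simple closed forms, sketched below for illustration. The study reports using SciPy, Pingouin, and related libraries; this dependency-free version is not their code, and the function names are hypothetical. The rank-biserial correlation is computed from the Mann-Whitney U statistic, and the AUC confidence interval uses the Hanley and McNeil standard error with a normal approximation.

```python
import math
from typing import Sequence, Tuple


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> float:
    """U statistic for x: count of pairs with x_i > y_j, ties counted as 0.5."""
    return sum(1.0 if a > b else 0.5 if a == b else 0.0
               for a in x for b in y)


def rank_biserial(x: Sequence[float], y: Sequence[float]) -> float:
    """Rank-biserial correlation r = 2U / (n1 * n2) - 1, ranging from -1 to 1."""
    return 2.0 * mann_whitney_u(x, y) / (len(x) * len(y)) - 1.0


def hanley_mcneil_ci(auc: float, n_pos: int, n_neg: int,
                     z: float = 1.96) -> Tuple[float, float]:
    """Approximate 95% confidence interval for an AUC (Hanley & McNeil, 1982)."""
    q1 = auc / (2.0 - auc)
    q2 = 2.0 * auc ** 2 / (1.0 + auc)
    variance = (auc * (1.0 - auc)
                + (n_pos - 1) * (q1 - auc ** 2)
                + (n_neg - 1) * (q2 - auc ** 2)) / (n_pos * n_neg)
    se = math.sqrt(variance)
    # Clip to the valid AUC range [0, 1]
    return max(0.0, auc - z * se), min(1.0, auc + z * se)
```

Because U divided by the number of pairs equals the AUC of the raw scores, the rank-biserial correlation is simply twice that AUC minus one, which is why the two measures are shown together here.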

Results

Comparison of human and computer coding

To validate the automatic detection of head turns in response to name, human coding of the child’s head turn to name and computer-detected orienting were compared and found to be in excellent agreement (ICC = 0.99). Raw data showing correspondence between computer and human coding can be found in the Supplementary Material (Figures S2 and S3).

Compliance/engagement during app administration

Engagement while watching the movie was computed by filtering out frames in which the child was not attending, using criteria based on head orientation, gaze, and head movement (see details in Supplementary Material). For the floating bubbles movie (containing two name calls), participants were attending 78% (+/− 17%) of the time for the iPad and 66% (+/− 21%) for the iPhone. For the bunny movie, featuring one name call, participants were attending 86% (+/− 16%) and 84% (+/− 18%) of the time for the iPad and the iPhone, respectively. Children with TD and ASD did not differ statistically in terms of their level of engagement.

Frequency of responses to name

There were 2,462 (86% out of a potential 2,856) valid name calls considered for analyses. In total, 86% of TD children (N = 733) and 92% of the children with ASD (N = 34) had valid administrations of the first name call prompt and were included in the analyses. Reasons for an invalid administration were that the child was already turned at the time of the name call (62%), the administration was not completed due to non-compliance (20%), the clinician forgot to administer the name call (13%), or the name call was not detected (5%). Note that some children who were provided a diagnostic evaluation were administered both the iPhone and iPad versions of the app (one in the pediatric clinic and one on a separate day during the evaluation), in which case the first version administered was used for combined analyses.

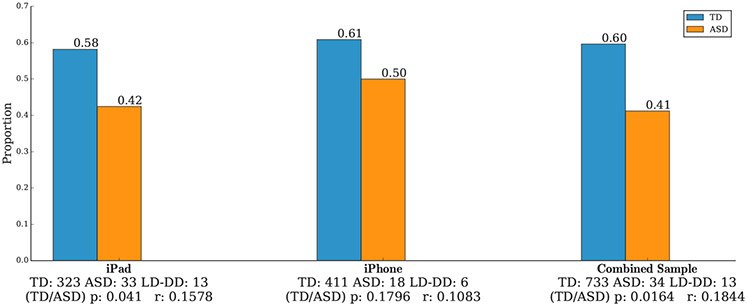

We first examined the proportion of children in each group who responded to the first name call, as shown in Figure 2. A significantly higher proportion of TD children responded to the first name call as compared to the children with ASD (Proportions = 0.60 for TD and 0.41 for ASD, P = 0.0164 for the combined sample). Descriptive results for the LD-DD sample are shown in Figure S4 in the Supplementary Material.

Figure 2.

Proportion of toddlers with typical development (TD) versus ASD who responded by turning their head to the first name call, which occurred in the first movie (Floating bubbles). The difference between groups is statistically significant (P = 0.0164 for the combined sample). Effect sizes are denoted as "r."

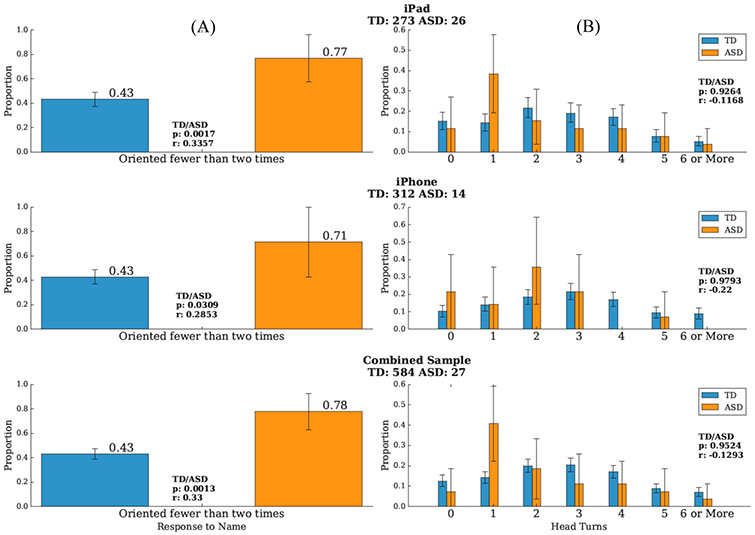

We next analyzed the frequency of orienting to a name call with a head turn for those children who had three valid name calls [68% of TD children (N=584), 73% of children with ASD (N=27)]. Figure 3a shows the proportion of participants who oriented fewer than two times for each group, separately for the iPad and iPhone and for the combined sample. Toddlers with ASD were significantly more likely to respond to their name fewer than 2 times, whereas TD toddlers were more likely to orient 2 or more times (Ps = 0.0017, 0.0309 and 0.0013 for the iPad, iPhone, and combined samples, respectively). This variable distinguished more robustly between the toddlers with and without ASD than did the frequency of orienting to the first name call. For the combined sample, the proportion of toddlers with ASD who oriented fewer than two times was 0.78, compared to 0.43 for the TD toddlers, and a one-way ANCOVA comparing ASD and TD participants, controlling for age, revealed a significant group difference in frequency (F = 6.5, P = 0.001). Spearman correlations between age and response frequency were r = 0.048 (P = 0.169) for the whole sample, r = 0.055 (P = 0.12) for the TD sample, and r = 0.29 (P = 0.09) for the ASD sample. Descriptive results for the LD-DD sample are shown in Figure S5 in the Supplementary Material.

Figure 3.

(A) The proportion of children who oriented fewer than two times versus twice or more to three name calls was significantly different for those with typical development (TD) versus those with ASD. (B) The proportion of children who spontaneously turned their heads 0, 1, 2, 3, 4, 5 and 6 or more times during the two movies during which the name calls occurred. The TD and ASD groups did not exhibit a significant difference in the cumulative number of spontaneous head turns. Effect sizes are denoted as “r.”

We next examined whether these differences in head turning were specific to instances in which the child’s name was called. For example, if toddlers with ASD were less interested in their surroundings in general, we might expect fewer head turns overall. To test this, we examined whether there were group differences in the cumulative number of spontaneous head turns (i.e., head turns that were not within 5 seconds of the name call) throughout the movies during which the name calls occurred. The ASD and TD groups did not differ in the cumulative number of spontaneous head turns (P = 0.9524; see Figure 3b); thus, group differences in head turns were specific to responding to name.

Latency differences in head turns in response to name

We were interested in whether CVA revealed a difference between the TD and ASD groups in the latency between the name call and the head turn for those instances in which a child oriented to name. Group comparisons were made using a time-to-event analysis both for the first name call only and also for the subgroup of children who were administered three valid name calls. For the latter, latency was averaged for instances of orienting across the three name call trials. The time the child initiated the head movement toward the examiner was defined as the event of interest. Kaplan–Meier curves of the cumulative events are shown in Figure 4 and illustrate the slower orienting response in the autism group. For both analyses, for the combined sample, children with ASD exhibited a significantly longer latency to orient compared to children with typical development (orient to first name: P = 0.025; across three trials: P = 0.00008), and a one-way ANCOVA comparing ASD and TD participants, controlling for age, revealed a significant group difference in latency (F = 5.6, P = 0.003). Spearman correlations between the age and latency to respond were r=−0.012 (P = 0.75) for the whole sample, r=−0.024 (P = 0.54) for the TD sample, and r = −0.022 (P = 0.91) for the ASD sample.
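
The cumulative-event curves used in this time-to-event analysis are one minus the Kaplan–Meier survival estimate. The study computed these with the Lifelines library; the sketch below is a dependency-free illustration of the product-limit estimator only. Treating a trial with no head turn inside the 5-second window as censored at 5 seconds is an assumption made here for illustration, and the function name is hypothetical.

```python
from typing import List, Sequence, Tuple


def kaplan_meier(durations: Sequence[float],
                 observed: Sequence[bool]) -> List[Tuple[float, float]]:
    """Return [(t, S(t))] at each distinct event time (product-limit estimator).

    durations: latency to head turn, or the censoring time if no turn occurred.
    observed:  True if a head turn (the event) was observed for that trial.
    """
    at_risk = len(durations)
    survival = 1.0
    curve = []
    for t in sorted(set(durations)):
        events = sum(1 for d, o in zip(durations, observed) if d == t and o)
        leaving = sum(1 for d in durations if d == t)  # events plus censored
        if events:
            survival *= 1.0 - events / at_risk
            curve.append((t, survival))
        at_risk -= leaving
    return curve


def cumulative_events(curve: List[Tuple[float, float]]) -> List[Tuple[float, float]]:
    """Convert survival estimates S(t) into cumulative event proportions 1 - S(t)."""
    return [(t, 1.0 - s) for t, s in curve]
```

With made-up latencies of 0.5, 1.0, 1.0, and 2.0 seconds and two trials censored at 5.0 seconds, the cumulative proportion of responders rises in steps at 0.5, 1.0, and 2.0 seconds and plateaus at four of six trials.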

Figure 4.

Kaplan-Meier plots of cumulative events of orienting to name over time, for the three name calls (A) and for the first name call only (B), with 95% confidence intervals for toddlers with ASD versus those with typical development (TD). Children with ASD who oriented to their name showed a significantly longer latency between the name call and turning their head.

Combining features

We investigated the value of combining information about the frequency and the latency of head turns in response to a name call. Figure 5 shows the receiver operating characteristic (ROC) curves and area under the curve (AUC) for tree-based classifiers for both the iPad and iPhone apps when using only information about the frequency of head turns (AUC = 0.70 for both the iPad and iPhone administrations) versus only information about the latency of the head turn (AUC = 0.77 for iPad and 0.69 for iPhone administrations) versus the combination of these two features (AUC = 0.83 for iPad and 0.78 for iPhone). Combining information improved our ability to distinguish between toddlers with ASD and TD toddlers. Specifically, the decision tree trained on the combined features outperformed the trees trained on single features, and the improvement was statistically significant in three of the four comparisons. For the frequency versus combined features comparison, Z = −4.25, P < 0.00002 for the iPad and Z = −1.42, P = 0.15 for the iPhone (not significant). For the latency versus combined features comparison, Z = −2.1, P = 0.035 for the iPad and Z = −4.84, P < 0.000002 for the iPhone.

Figure 5.

ROC curves and AUC results for tree-based classifiers (iPad study on the left and iPhone study on the right) showing level of ASD versus TD group discrimination when using only information about the frequency of head turns (blue line, AUC = 0.70 for iPad and iPhone) versus only information about the latency of the head turn (orange line, AUC = 0.77 for iPad and 0.69 for iPhone) versus the combination of these two features (green line, AUC = 0.83 for iPad and 0.78 for iPhone).
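As a reminder of what the AUC values in Figure 5 measure, the sketch below computes the AUC as the probability that a randomly chosen ASD case receives a higher classifier score than a randomly chosen TD case, together with the approximate standard error given by Hanley and McNeil (1982). It is an illustration only: the scores are invented, the function names are hypothetical, and the comparison of correlated ROC curves reported in the text would require a method such as DeLong's, which is not implemented here.

```python
import math
from typing import Sequence


def auc(scores_pos: Sequence[float], scores_neg: Sequence[float]) -> float:
    """AUC as P(score_pos > score_neg), counting ties as one half."""
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


def hanley_mcneil_se(a: float, n_pos: int, n_neg: int) -> float:
    """Approximate standard error of an AUC (Hanley & McNeil, 1982)."""
    q1 = a / (2.0 - a)
    q2 = 2.0 * a * a / (1.0 + a)
    variance = (a * (1.0 - a)
                + (n_pos - 1) * (q1 - a * a)
                + (n_neg - 1) * (q2 - a * a)) / (n_pos * n_neg)
    return math.sqrt(variance)


# Invented classifier scores; higher means "more ASD-like" by assumption.
asd_scores = [0.9, 0.8, 0.4]
td_scores = [0.7, 0.3, 0.4, 0.1]
a = auc(asd_scores, td_scores)
print(a, hanley_mcneil_se(a, len(asd_scores), len(td_scores)))
```

With so few cases the standard error is large, which is one reason AUC differences between feature sets need a formal test rather than a visual comparison.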

Comparisons between app measures, M-CHAT-R/F and other clinical characteristics

The Spearman correlation between M-CHAT-R/F final score and frequency of responding to name is r=−0.06 (P=0.13) for the whole sample, r=−0.01 (P=0.7) for the TD sample, and r=−0.39 (P=0.025) for the ASD sample. The correlations between the M-CHAT-R/F final score and mean delay in responding to name are r=0.02 (P=0.56) for the whole sample, r=−0.001 (P=0.97) for the TD sample, and r=0.24 (P=0.25) for the ASD sample.
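Because the frequency of responding takes only a few distinct values, a rank correlation with tie handling is the natural choice here. The following is a minimal sketch of how a Spearman coefficient is obtained (Pearson correlation of average ranks). The helper names and the toy values are assumptions for illustration, and the sketch does not compute P values.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Plain Pearson correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rho: Pearson correlation computed on average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy data: questionnaire score vs. number of name-call responses (invented).
print(spearman([0, 1, 3, 5, 8], [3, 3, 2, 1, 0]))
```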

We next compared the app measure of responding to name with caregiver report of the same behavior on the MCHAT-R/F (Question 10) in relation to diagnostic outcome. For the combined sample, 78% of the children subsequently diagnosed with ASD responded to their name fewer than two times. In comparison, on the single Question 10, 31% of caregivers indicated their child did not respond when their name was called.

Children with ASD who responded fewer than two times versus those who responded two or more times did not differ in terms of their receptive language skills or severity of autism symptoms [Mullen Receptive Language Subscale: M = 20.9 (SD = 2.5) vs. 23.5 (SD = 5.8) and ADOS Calibrated Severity Score: M = 7.7 (SD = 1.9) vs. 7.5 (SD = 1.2)].

Discussion

Our goal was to determine whether a digital screening tool that is delivered on a smartphone or tablet and uses computer vision analysis (CVA) to automatically code behavior can reliably elicit, detect, and accurately measure a key early autism symptom - failure to orient when the child’s name is called. Several methodological advances (described in detail in the Supplementary Material) were implemented that increased the robustness for implementing the app on both an iPhone and iPad in real world, often noisy community settings (pediatric primary care clinic). For example, coding of both the head turns and name calls was automated, requiring separation of these features from background noise that would be expected in a clinic setting.

When we compared the detection of a head turn in response to name as measured by CVA versus a human coder, we found strong correspondence, which provides confidence in the validity of the CVA results. We found that toddlers with ASD were significantly less likely to respond to the first name call and were less likely to respond to 2 out of the 3 name calls, compared to toddlers with typical development (TD). Notably, outside of the context of a name call, the groups did not differ in the number of times they spontaneously turned their heads. Thus, the group differences in frequency of head turns were specific to moments when the child’s name was called.

Using the quantitative measurement of CVA, we also found that, when children with ASD do orient to their name, their head turn is significantly delayed compared to TD toddlers. This finding highlights one of the advantages of using a computer rather than a human to measure human behavior - higher resolution. This difference in latency to respond to their name is likely not detectable by the human eye. Thus, clinicians evaluating a child’s response to their name during a diagnostic evaluation would likely miss it. Importantly, combining information about the speed with which the child turns their head in response to name with information about the frequency of head turns improved the ability to distinguish between toddlers diagnosed with ASD and typically-developing toddlers (AUC increased from 0.70 to 0.83 for the iPad administration and to 0.78 for the iPhone administration).

The difference in latency of the orienting response suggests that ASD is associated with sensorimotor differences potentially related to both the processing of the auditory stimulus (name call) and the initiation of a motor response (head turn). Numerous studies have documented differences in motor coordination as an early feature of ASD, and it is possible that a delay in head turning to a name call reflects subtle deficits in sensorimotor abilities. In a previously published paper, other sensorimotor differences between toddlers with and without ASD, namely, differences in postural sway while watching the movies on the app, were detected with CVA (Dawson et al., 2018).

This study extends previous clinical research on the failure to respond to name by young children with ASD by demonstrating that this symptom can be detected using relatively inexpensive, ubiquitous devices, namely a smartphone or tablet, using the camera in the device to record the child’s behavior and CVA to automatically code the child’s response. The app used in the present study demonstrated the capability to fully automate the elicitation of a head turn in response to name and the detection and measurement of both the name call and head turn in real world, noisy environments. The assessment involved very few instructions and was administered with children sitting on their caregiver’s lap while watching engaging movies. The study sample was relatively diverse in terms of ethnic/racial background and caregiver education level, involving both English- and Spanish-speaking families. While the results show some minor differences between the iPad and iPhone, these could be either intrinsic to the devices (e.g., different screen sizes, 9.7 inches for the iPad and 5.5 inches for the iPhone) or a result of one set having a larger number of ASD participants. It is, therefore, premature to infer any critical difference between the two devices, beyond the fact that the measures reported here appear generally viable on both types of devices. By implementing this digital assessment on multiple devices on a relatively large community-based sample, we are increasingly confident that a digital approach may offer a complementary method for reliably and objectively assessing children’s behavior (Campbell et al., 2019; Carpenter et al., 2020; Dawson et al., 2018; Egger et al., 2018).

It is noteworthy that the 4% ASD prevalence rate in this study is substantially higher than the 2% rate reported by the ADDM Network (Maenner et al., 2020). We speculate that several factors could have influenced the prevalence rate in the present study: near-universal screening with the MCHAT-R/F, providers who were aware that they were part of a study about autism screening, which could have heightened their awareness of developmental concerns, a caregiver population that was motivated to attend their well-child visits, and immediate access to a free diagnostic evaluation. A strength of the design of the present study is that the relatively diverse sample was drawn from a large community-based population of toddlers who had not been previously evaluated for ASD and who were recruited without knowledge of the presence of a risk factor, such as an older sibling with ASD. The advantage of this study design is its comparability to how screening tools would pragmatically be used in settings such as pediatric primary care. However, we stress that we do not view one behavior – in this case, response to name call – as sufficient for screening for ASD. Indeed, 43% of typically-developing toddlers did not respond consistently to their name. Thus, while it was unusual for a child with ASD to orient consistently (only 23% of children with ASD did so), it was not unusual for a typically-developing child to fail to orient consistently. Given the heterogeneous presentation of ASD, one behavior will not provide accurate screening for ASD. Even adding information about the latency to orient significantly increased accuracy in ASD risk prediction. Our long-term goal is to combine features related to orienting to name, such as frequency and latency, with other behavioral symptoms that the app is designed to elicit, such as differences in sensorimotor behavior, attention, and affective expression. For example, when the child watches the engaging movies, a spontaneous head turn toward an adult combined with a smile offers a potential way to measure social referencing and affective sharing. The goal is to combine several autism risk behaviors in a multi-behavior algorithm to identify young children at risk for ASD. Each child would be expected to exhibit a unique subset of potential risk behaviors, which, in combination, would reach a threshold of risk for ASD. This information could be made readily available to a provider in the form of a risk index. Information from a digital screening tool can also be combined with other types of information, including caregiver report, such as the M-CHAT-R/F, and medical record data, to increase the accuracy of risk detection.
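A back-of-the-envelope calculation shows why a single behavior is insufficient for screening. The sketch below applies Bayes' rule under a loudly stated assumption: "not orienting consistently" is treated as the only screening flag, with the rates quoted above used as inputs (77% of children with ASD, i.e. 100% minus 23%, 43% of typically-developing children, and the 4% study prevalence). This is an illustration of the reasoning, not a result reported by the study, and the function name is hypothetical.

```python
def positive_predictive_value(sensitivity: float,
                              false_positive_rate: float,
                              prevalence: float) -> float:
    """P(condition | flagged) from Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = false_positive_rate * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


# Illustrative inputs taken from the percentages quoted in the text,
# combined under the assumption that this one behavior is the sole flag.
ppv = positive_predictive_value(sensitivity=0.77,
                                false_positive_rate=0.43,
                                prevalence=0.04)
print(f"Share of flagged toddlers who would have ASD: {ppv:.1%}")
```

Under these assumptions only about 7% of flagged toddlers would have ASD, which is consistent with the argument for combining several behaviors into a multi-behavior algorithm.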

Conclusions

By creating and validating screening tools that allow for direct behavioral observation and are quantitative, scalable, and exportable to real world environments, we hope to develop methods that can complement existing methods, such as those reliant on caregiver report. The use of digital methods will also facilitate the collection of large data sets amenable to machine learning, which opens new avenues for developing optimized screening algorithms. It is hoped that such approaches will improve our ability to accurately detect children at risk for ASD and other developmental disorders with the goal of providing the earliest access to intervention and improving children’s outcomes.

Supplementary Material

What’s known

Failure to respond to name is a well-established autism symptom.

This symptom is currently assessed via questionnaires or clinical observation, requiring caregiver literacy and/or trained professionals.

What’s new

We studied whether a digital app delivered in community settings on a smartphone or tablet using computer vision analysis to code behavior could assess a failure to respond to name.

The app reliably detected a failure to respond to name in toddlers with ASD, and delayed orienting in children with ASD who responded. These behaviors discriminated toddlers with ASD vs typical development.

What’s relevant

Digital behavioral assessment complements existing methods and enables collection of large data sets of behavioral observations amenable to machine learning, opening new avenues for scalable, exportable methods for measuring autism symptoms.

Acknowledgements

We wish to thank the many caregivers and children for their participation in the study, without whom this research would not have been possible. We gratefully acknowledge the collaboration of the physicians and nurses in Duke Children’s Primary Care, and the contributions of Samantha Bowen, Karen Goetz, Emily Henderson, Gordon Keeler, Abby Scheer, Sarah Sipe, and Elizabeth Sturdivant, as well as several clinical research specialists. Data analysis for this project was independently reviewed by Scott Compton, PhD, who has no conflict of interest related to this work. This work was supported by NIH Autism Centers of Excellence Award NICHD 1P50HD093074 (Dawson & Kollins, Co-PIs), NIMH 1 R01MH121329 (Dawson & Sapiro, Co-PIs) and NIMH R01MH120093 (Sapiro & Dawson, Co-PIs), with additional support provided by The Marcus Foundation, the Simons Foundation, NSF-1712867, ONR N00014-18-1-2143 and N00014-20-1-233, NGA HM04761912010, Apple, Inc., Microsoft, Inc., Amazon Web Services, and Google, Inc. The funders had no direct role in the study design, data analysis, decision to publish or preparation of the manuscript.

Abbreviations

- ADOS-2: Autism Diagnostic Observation Schedule, Second Edition

- ASD: Autism spectrum disorder

- CVA: Computer vision analysis

- MCHAT-R/F: Modified Checklist for Autism in Toddlers, Revised with Follow-up

- TD: Typically-developing

- LD-DD: Language delay/developmental delay

Footnotes

Competing Interests: K.C., Z.C., S.E., G.D., and G.S. developed technology related to the app that has been licensed to Apple, Inc., and both they and Duke University have benefited financially. J.H. reports consulting fees from Roche. S.K. has received research support and/or consulting fees from the following commercial sources: Adlon Pharmaceuticals, Akili Interactive, Bose Corporation, OnDosis, Sana Health, Tali Health, Tris Pharma, Vallon Pharmaceuticals. S.K. holds equity and/or stock options in Behavioral Innovations Group, LLC and Akili Interactive. G.D. is on the Scientific Advisory Boards of Janssen Research and Development, Akili, Inc, LabCorp, Inc, Roche Pharmaceutical Company, and Tris Pharma, is a consultant for Apple, Gerson Lehrman Group, Guidepoint, Inc, Axial Ventures, and Teva Pharmaceutical, and is CEO of DASIO, LLC. She has received book royalties from Guilford Press, Oxford University Press, and Springer Nature Press, and has the following patent applications: 1802952, 1802942, 15141391, and 16493754. G.S. is a consultant for Apple, Volvo, Restore3D, and SIS, and is CEO of DASIO, LLC. He is on the Board of SIS and Tanku and has invention disclosures and patent applications registered at the Duke Office of Licensing and Venture. He received speaking fees from Janssen.

Contributor Information

Sam Perochon, Department of Electrical and Computer Engineering, Duke University.

Matias Di Martino, Department of Electrical and Computer Engineering, Duke University.

Rachel Aiello, Department of Psychiatry and Behavioral Sciences, Duke University.

Jeffrey Baker, Department of Pediatrics, Duke University.

Kimberly Carpenter, Department of Psychiatry and Behavioral Sciences, Duke University.

Zhuoqing Chang, Department of Electrical and Computer Engineering, Duke University.

Scott Compton, Department of Psychiatry and Behavioral Sciences, Duke University.

Naomi Davis, Department of Psychiatry and Behavioral Sciences, Duke University.

Brian Eichner, Department of Pediatrics, Duke University.

Steven Espinosa, Office of Information Technology, Duke University.

Jacqueline Flowers, Department of Psychiatry and Behavioral Sciences, Duke University.

Lauren Franz, Department of Psychiatry and Behavioral Sciences, Duke University.

Martha Gagliano, Department of Pediatrics, Duke University.

Adrianne Harris, Department of Psychiatry and Behavioral Sciences, Duke University; Department of Psychology & Neuroscience, Duke University.

Jill Howard, Department of Psychiatry and Behavioral Sciences, Duke University.

Scott H. Kollins, Department of Psychiatry and Behavioral Sciences, Duke University.

Eliana M. Perrin, Department of Pediatrics, Duke University; Duke Center for Childhood Obesity Research.

Pradeep Raj, Department of Electrical and Computer Engineering, Duke University.

Marina Spanos, Department of Psychiatry and Behavioral Sciences, Duke University.

Barbara Walter, Department of Psychiatry and Behavioral Sciences, Duke University.

Guillermo Sapiro, Department of Electrical and Computer Engineering, Duke University; Biomedical Engineering, Mathematics, and Computer Sciences, Duke University.

Geraldine Dawson, Department of Psychiatry and Behavioral Sciences, Duke University.

References

- Becerra-Culqui TA, Lynch FL, Owen-Smith AA, Spitzer J, & Croen LA (2018). Parental First Concerns and Timing of Autism Spectrum Disorder Diagnosis. J Autism Dev Disord, 48(10), 3367–3376.

- Breiman L, Friedman J, Olshen R, and Stone C (1984). Classification and regression trees. Wadsworth, Belmont, CA.

- Buades A, Coll B, and Morel J (2005). A non-local algorithm for image denoising. IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2, 60–65.

- Campbell K, Carpenter KL, Hashemi J, Espinosa S, Marsan S, Borg JS, … Dawson G (2019). Computer vision analysis captures atypical attention in toddlers with autism. Autism, 23(3), 619–628.

- Carpenter KLH, Hashemi J, Campbell K, Lippmann SJ, Baker JP, Egger HL, … Dawson G (2020). Digital Behavioral Phenotyping Detects Atypical Pattern of Facial Expression in Toddlers with Autism. Autism Res.

- Chlebowski C, Robins DL, Barton ML, & Fein D (2013). Large-scale use of the modified checklist for autism in low-risk toddlers. Pediatrics, 131(4), e1121–1127.

- De la Torre F, Chu W, Xiong X, Vincente F, Ding X, and Cohn J (2015). IntraFace. International Conference on Automatic Face and Gesture Recognition.

- Davidson-Pilon C, Kalderstam J, Jacobson N, Zivich P, Kuhn B, Williamson M, and Moncada-Torres A (2020). CamDavidsonPilon.lifelines: Version 0.24.6. Zenodo.

- Dawson G, Campbell K, Hashemi J, Lippmann SJ, Smith V, Carpenter K, … Sapiro G (2018). Atypical postural control can be detected via computer vision analysis in toddlers with autism spectrum disorder. Sci Rep, 8(1), 17008.

- Dawson G, Meltzoff AN, Osterling J, Rinaldi J, & Brown E (1998). Children with autism fail to orient to naturally occurring social stimuli. Journal of Autism and Developmental Disorders, 28(6), 479–485.

- Dawson G, & Sapiro G (2019). Potential for Digital Behavioral Measurement Tools to Transform the Detection and Diagnosis of Autism Spectrum Disorder. JAMA Pediatr, 173(4), 305–306.

- Dawson G, Toth K, Abbott R, Osterling J, Munson J, Estes A, & Liaw J (2004). Early social attention impairments in autism: social orienting, joint attention, and attention to distress. Developmental Psychology, 40(2), 271–283.

- DeLong E, et al. (1988). Comparing the areas under two or more correlated receiver operating characteristic curves: A nonparametric approach. Biometrics, 44(3), 837–845.

- Egger HL, Dawson G, Hashemi J, Carpenter KLH, Espinosa S, Campbell K, … Sapiro G (2018). Automatic emotion and attention analysis of young children at home: a ResearchKit autism feasibility study. NPJ Digit Med, 1, 20.

- Gotham K, Risi S, Pickles A, & Lord C (2007). The Autism Diagnostic Observation Schedule: revised algorithms for improved diagnostic validity. J Autism Dev Disord, 37(4), 613–627.

- Hanley J, & McNeil BJ (1982). The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology, 143(1), 29–36.

- Hashemi J, Campbell K, Carpenter KLH, Harris A, Qiu Q, Tepper M, Espinosa S, Schaich Borg J, Maon S, Calderbank R, Baker JP, Egger HL, Dawson G, and Sapiro G (2015). A scalable app for measuring autism risk behaviors in young children: A technical validity and feasibility study. MobiHealth, October.

- Jones E, Oliphant T, Peterson P, et al. (2001). SciPy: Open Source Scientific Tools for Python.

- Khowaja MK, Hazzard AP, & Robins DL (2015). Sociodemographic Barriers to Early Detection of Autism: Screening and Evaluation Using the M-CHAT, M-CHAT-R, and Follow-Up. J Autism Dev Disord, 45(6), 1797–1808. doi: 10.1007/s10803-014-2339-8

- Li Y, Fathi A, and Rehg J (2013). Learning to predict gaze in egocentric video. IEEE International Conference on Computer Vision.

- Maenner MJ, Shaw KA, Baio J, Washington A, Patrick M, … Dietz PM (2020). Prevalence of Autism Spectrum Disorder Among Children Aged 8 Years - Autism and Developmental Disabilities Monitoring Network, 11 Sites, United States, 2016. MMWR Surveill Summ, 69(4), 1–12.

- Manna S, Ruddock K, and Wooding D (1995). Automatic control of saccadic eye movements made in visual inspection of briefly presented 2-D images. Spatial Vision, 9(3), 363–386.

- Miller M, Iosif AM, Hill M, Young GS, Schwichtenberg AJ, & Ozonoff S (2017). Response to Name in Infants Developing Autism Spectrum Disorder: A Prospective Study. J Pediatr, 183, 141–146.e141.

- Mullen E (1995). Mullen Scales of Early Learning. Western Psychological Services, Inc.

- Nadig AS, Ozonoff S, Young GS, Rozga A, Sigman M, & Rogers SJ (2007). A prospective study of response to name in infants at risk for autism. Archives of Pediatrics & Adolescent Medicine, 161(4), 378–383.

- Rafii Z, & Pardo B (2012). Music/voice separation using the similarity matrix. IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2 (San Diego, CA), 60–65.

- Robins DL, Fein D, Barton ML, & Green JA (2001). The Modified Checklist for Autism in Toddlers: an initial study investigating the early detection of autism and pervasive developmental disorders. Journal of Autism and Developmental Disorders, 31(2), 131–144.

- Seabold S, and Perktold J (2010). Econometric and statistical model with python. Proceedings of the 9th Python in Science Conference.

- Vallat R (2018). Pingouin: Statistics in Python. Journal of Open Source Software, 3(31), 1026.

- Werner E, Dawson G, Osterling J, & Dinno N (2000). Brief report: Recognition of autism spectrum disorder before one year of age: a retrospective study based on home videotapes. Journal of Autism and Developmental Disorders, 30(2), 157–162.
