Abstract

A new segmentation technique is introduced for delineating the lung region in 3D computed tomography (CT) images. To accurately model the distribution of Hounsfield scale values within both chest and lung regions, a new probabilistic model is developed based on a linear combination of Gaussians (LCG). Moreover, we modified the conventional expectation-maximization (EM) algorithm to run sequentially, estimating both the dominant Gaussian components (one for the lung region and one for the chest region) and the subdominant Gaussian components, which are used to refine the final estimated joint density. To estimate the marginal densities from the mixed density, a modified k-means clustering approach is employed to assign each subdominant Gaussian component to either the lung or the chest. The initial segmentation, based on the LCG model, is then refined by imposing 3D morphological constraints based on a 3D Markov–Gibbs random field (MGRF) with analytically estimated potentials. The proposed approach was tested on CT data from 32 coronavirus disease 2019 (COVID-19) patients. Segmentation quality was quantitatively evaluated using four metrics: Dice similarity coefficient (DSC), overlap coefficient, 95th-percentile bidirectional Hausdorff distance (BHD), and absolute lung volume difference (ALVD), and the approach achieved , , , and , respectively. The reported results showed the capability of the proposed approach to accurately segment healthy lung tissues as well as pathological lung tissues caused by COVID-19, outperforming four current, state-of-the-art deep learning-based lung segmentation approaches.

Keywords: computed tomography (CT), lung, chest, segmentation, COVID-19

1. Introduction

Pulmonary diseases, such as coronavirus disease 2019 (COVID-19), are serious public health threats that may arise from inflammation or fluid accumulation in the lung and can lead to respiratory failure. The primary cause of COVID-19 death is acute respiratory distress syndrome (ARDS) [1]. According to Gupta et al. [2], of the COVID-19 patients in their study needed mechanical ventilation support, of whom had ARDS. Therefore, detecting and grading COVID-19 is vital for prioritizing patients' need for ventilator support. The accuracy attainable by a computer-aided diagnostic (CAD) system that uses lung imaging data for COVID-19 depends on the accuracy of the lung segmentation. Accurate lung segmentation is challenging because different pathologies alter the appearance of the lung, and any infected regions missed during segmentation degrade every subsequent step. Therefore, this paper focuses on developing an automatic system to detect and segment the lungs in chest computed tomography (CT), a popular noninvasive clinical modality used by physicians to diagnose lung pathologies.

In the last few years, many preliminary studies have been conducted to detect and segment the lungs as well as pathological lesions. Some of these studies [3,4,5,6,7,8,9] proposed threshold-based approaches for lung segmentation. These performed well on normal CT scans, where the lungs can easily be discriminated from the background owing to large differences in attenuation [10], but failed in pathological cases, especially severe ones. To overcome this problem, more recent studies have employed texture, shape, deep learning, or hybrid techniques to accurately segment both normal lungs and lungs with various pathologies. These studies are briefly discussed below.

In [11,12,13,14], the authors used texture analysis, shape analysis, or both to discriminate between objects. A recent study by Oulefki et al. [15] proposed a system to automatically segment COVID-19-infected lung regions by applying a multilevel entropy-based thresholding approach, namely a modified Kapur method. Their system achieved a sensitivity, specificity, Dice similarity coefficient (DSC), and precision of , , , and , respectively. Another study by Korfiatis et al. [16] employed k-means clustering to partition CT voxels into four classes (lung, muscle, fat, and bone) based on intensity values. The initial lung region was then extracted by applying a filling operation. Finally, a support vector machine (SVM) was used to determine the final lung border based on intensity and wavelet-based descriptors. In [17], the authors proposed a segmentation system that first eliminated unwanted regions and produced an initial lung segmentation using a thresholding approach. A 3D gray-level co-occurrence matrix (GLCM) was then constructed for a window of size centered on each voxel. Predefined features were extracted from the GLCM, and a new image was constructed as the product of the entropy and the inverse difference moment of the GLCM. Subsequently, the abnormal regions were identified in the constructed image using a thresholding approach. Finally, the abnormal-region segmentation and the initial segmentation were merged to determine the final segmentation. Dehmeshki et al. [18] used a genetic algorithm (GA) to construct a system that identifies spherical nodules in CT images. First, the lung was segmented using adaptive thresholding. The authors then used a geometric feature, namely the volumetric shape index (VSI), of the segmented lung as a weighting factor in the GA fitness function. The VSI of a spherical object is 1, while that of a cylindrical object is , so the fitness values for nodules were higher than those for blood vessels. 
The GA's criterion for accepting a shape as a nodule was threshold-based. The detection rate of their system was approximately with a false positive per scan. Moreover, Nakagomi [19] presented a min-cut graph segmentation algorithm based on multiple shapes and prior information about neighboring structures to detect and segment lungs affected by pleural effusion. In [20], the authors presented a lung segmentation system for different lung pathologies. Their system first determined two seed points within the two lungs using a thresholding approach; a fuzzy connectedness (FC) algorithm was then used to extract the lungs. Furthermore, multiple refinement stages based on machine learning classification and neighboring anatomy-guided learning mechanisms were included to detect pathological regions during FC segmentation. A recent study by Houssein et al. [21] developed a segmentation system for COVID-19 CT images that employed a heuristic method, manta ray foraging optimization (MRFO), combined with opposition-based learning (OBL) and with Otsu's method as the fitness function, to obtain the best threshold values. More information about texture- and shape-based lung segmentation can be found in [22].

Recently, deep learning approaches have been employed to segment both normal lungs and lungs with COVID-19 pathology. For example, Saood et al. [23] investigated two deep learning approaches, SegNet [24] and U-Net [25], to semantically segment infected and non-infected lung regions in CT images. The authors employed these networks for binary and multi-class classification and conducted multiple experiments with different hyperparameters. The best reported results for binary (multi-class) classification gave accuracies of () and () using SegNet and U-Net, respectively. A similar study [26] proposed a segmentation system using a convolutional neural network (CNN). Their network employed a feature variation block to enhance the efficiency of the feature representation, as well as progressive atrous spatial pyramid pooling to handle the differences in appearance and shape caused by complex infections. The DSC (sensitivity, specificity) of their system was (, ) and (, ) for COVID-19 infections and normal lung CT images, respectively. A multi-task deep learning-based system was implemented by Amyar et al. [27]. This system included reconstruction, for better feature representation; segmentation, to extract lesion regions; and classification, to categorize the scan as normal, COVID-19, or other diseases. Their system employed an encoder–decoder architecture based on the U-Net network, with a common encoder shared by the three tasks. The best reported DSC of their segmentation task was . A recent study by Fan et al. [28] developed a deep learning-based binary and multi-class segmentation system for chest CT images, called Inf-Net. To compensate for the limited number of labeled images, they also included random sampling-based semi-supervised learning, namely Semi-Inf-Net. Their system employed edge attention as well as reverse attention to improve the feature representation by modeling lung boundaries. 
In addition, high-level features were exploited by their network and combined by a parallel partial decoder. Their infection segmentation system achieved a DSC of and , sensitivity of and , and specificity of and using Inf-Net and Semi-Inf-Net, respectively. A similar study [29] proposed an automatic deep learning-based multi-class segmentation system for COVID-19 using chest CT images. It exploited aggregated residual transformations together with a soft attention mechanism to better represent the features and to increase the system's ability to distinguish between different COVID-19 lesions. The reported DSC (accuracy, precision) of their system was (, ) and (, ) with and without data augmentation, respectively. Another study [30] proposed a semi-supervised deep learning-based segmentation system, called FSS-2019-nCov, to detect lesion infection in COVID-19 patients. It was based on an encoder–decoder architecture with a Res2Net [31] encoder backbone. In this architecture, the authors used a context enrichment module, namely a smoothed atrous convolution block and a multi-scale pyramid pooling block, to mitigate any degradation of the knowledge represented in the encoder phase. The system consisted of three modules: a conditioner path, an adaptive interaction module, and a segmentation path. The conditioner path was responsible for learning feature maps from support sets, which contain CT slices and their ground truths. These feature maps were then transmitted via the adaptive interaction module to the segmentation path, which was responsible for detecting lesions in the CT slice. Their system achieved a DSC of , sensitivity of , and specificity of . In [32], the authors employed V-Net [33] to segment the lungs in COVID-19 CT images, refining the result with a shape deformation module. A similar study by Li et al. [34] employed U-Net to segment the lungs in CT images. 
They then proposed a deep learning network, called COVNet, with a ResNet-50 [35] backbone to detect COVID-19 lesions. A recent study [36] developed a deep learning-based segmentation system, called LungINFseg, to detect COVID-19 lesions in CT images. This system was built on an encoder–decoder architecture. In the encoder phase, the authors employed a 2D discrete wavelet transform (DWT) with four Haar filters and a receptive field aware (RFA) module, which can change the size of the receptive field, to capture more relevant features related to infected regions. Their system achieved a DSC and intersection over union (IoU) score of and , respectively. Other studies have also employed deep learning for segmentation, with varying accuracy, as reported in [36,37,38,39,40,41,42,43].

Deep learning-based segmentation techniques represent the current state of the art for CT data. However, they have drawbacks in practical applications, such as the need for huge databases to learn the different pathologies of the lung regions, which makes training such networks computationally very expensive [44]. Moreover, segmentation approaches based on deformable models, which optimize a trade-off between the smoothness of the deformable boundary and the homogeneity of the region inside it, suffer from high computational complexity and limited capabilities when the desired boundary has concavities or encloses a naturally inhomogeneous region, such as an infected lung. To overcome these limitations, we propose an unsupervised lung segmentation approach that models the first-order appearance of CT data using a probabilistic model based on a linear combination of Gaussians (LCG), which estimates dominant components, corresponding to the lung and chest regions, as well as subdominant components. These subdominant components are then clustered with one of the dominant components to estimate the marginal densities. This model can capture the variability in the Hounsfield distributions that may result from changes in screening protocols and from the severity of lung infections. Finally, we refine the lung segmentation by applying 3D morphological constraints based on a Markov–Gibbs random field (MGRF) model with analytically estimated parameters.

2. Methods

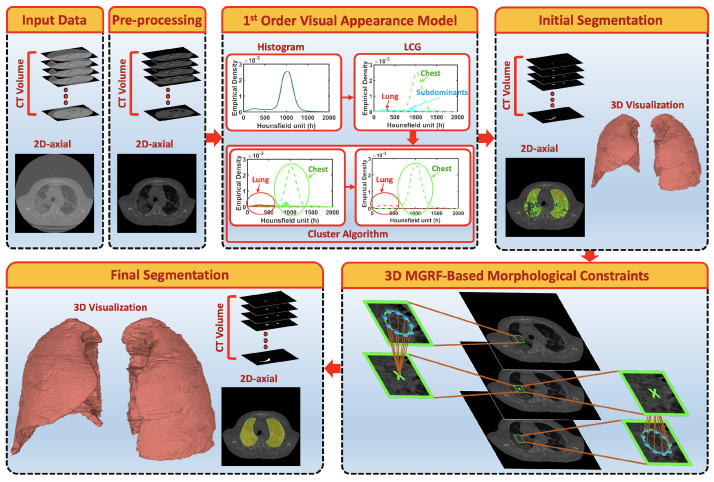

A fully automated segmentation framework is presented to extract both healthy lung tissues and pathological lung tissues that may be caused by COVID-19. The major steps of the framework, depicted in Figure 1, are as follows: (i) preprocessing 3D chest CT scans to identify background voxels; (ii) modeling the gray-level distribution of the CT data as a Gaussian mixture whose parameters are estimated using a novel, sequential, expectation-maximization (EM)-based approach; (iii) preliminary segmentation of the lung region using a Bayes classifier; and (iv) refining the segmentation using a three-dimensional, rotation- and translation-invariant MGRF to impose morphological constraints. The details of each step are described below.

Figure 1.

Schematic illustration of the pipeline of the proposed segmentation system using CT images.

2.1. First-Order Visual Appearance Model

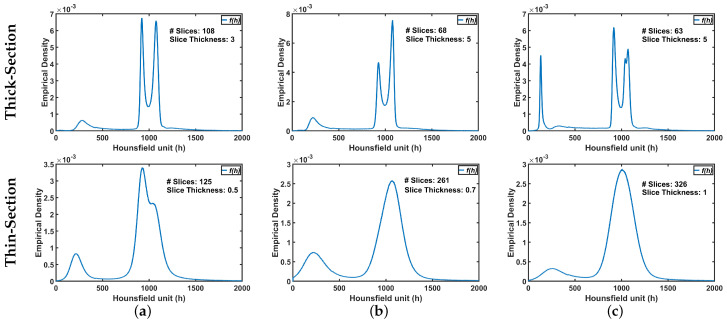

The ultimate goal is accurate labeling of voxels as belonging to lung tissue or background, where accuracy is defined as close agreement with the “ground-truth” lung region delineated by a radiologist. The main challenge in modeling the distribution of the radiodensities (in Hounsfield units) of lung and chest tissues, i.e., the relative frequency histogram of CT voxel values, is that this distribution depends on slice thickness and on the severity of lung infection, as shown in Figure 2.

Figure 2.

An illustrative example of variability of CT appearance (distribution of radiodensities) for (a) healthy/mild, (b) moderate, and (c) severe COVID-19 infections.

To address this challenge, we assume that the first-order visual appearance of the CT data ($\mathbf{g}$) can be modeled by a linear combination of $K$ Gaussian distributions [45]. The first two components, called the dominant modes, correspond to the lung region ($k = 1$) and the chest region exterior to the lungs ($k = 2$). The remaining $K - 2$ Gaussian components are called subdominant modes. Thus, the proposed probabilistic model is

$$P(h) = \sum_{k=1}^{K} w_k\, \varphi(h \mid \theta_k) \tag{1}$$

where the $w_k$ are mixing weights and $\varphi(h \mid \theta_k)$ is a Gaussian density with parameters $\theta_k = (\mu_k, \sigma_k^2)$. For $P(h)$ to be a density function, the weights must satisfy the constraint

$$\sum_{k=1}^{K} w_k = 1, \qquad w_k \ge 0 \tag{2}$$

Given the number $K$ of Gaussian components, the parameters of Equation (1), namely the mixing weights $\mathbf{w} = (w_1, \dots, w_K)$ and the means and variances $\Theta = \{(\mu_k, \sigma_k^2)\}_{k=1}^{K}$, are estimated by maximizing the log-likelihood of the empirical data

$$L(\mathbf{w}, \Theta) = \sum_{h=0}^{H} f(h) \log P(h) \tag{3}$$

where $C(h)$ is the histogram of the CT data, whose voxel values range from 0 to $H$, and $f(h) = C(h)/N$ is the corresponding relative frequency histogram, $N$ being the total number of voxels. To maximize the likelihood in Equation (3), we employ an iterative block relaxation process as follows.

Let $m$ indicate an iteration, such that $\mathbf{w}^{[m]}$ and $\Theta^{[m]}$ are the parameter estimates at that iteration, and

$$P^{[m]}(h) = \sum_{k=1}^{K} w_k^{[m]}\, \varphi\big(h \mid \theta_k^{[m]}\big) \tag{4}$$

is the proposed probabilistic model for the CT data. The conditional weights $\pi^{[m]}(k \mid h)$ are estimated as follows:

$$\pi^{[m]}(k \mid h) = \frac{w_k^{[m]}\, \varphi\big(h \mid \theta_k^{[m]}\big)}{P^{[m]}(h)} \tag{5}$$

This conditional probability specifies the relative contribution of voxel value $h$ to each component $k$ at step $m$. Using these variables, Equation (3) can be written in the equivalent form:

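$$L\big(\mathbf{w}^{[m]}, \Theta^{[m]}\big) = \sum_{h=0}^{H} f(h) \sum_{k=1}^{K} \pi^{[m]}(k \mid h) \log\Big(w_k^{[m]}\, \varphi\big(h \mid \theta_k^{[m]}\big)\Big) - \sum_{h=0}^{H} f(h) \sum_{k=1}^{K} \pi^{[m]}(k \mid h) \log \pi^{[m]}(k \mid h) \tag{6}$$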

From given starting values at $m = 0$, the block relaxation scheme converges to a local maximum of the likelihood function in Equation (6) through iteration of the following two steps:

E-step $[m+1]$: estimate $\mathbf{w}^{[m+1]}$ and $\Theta^{[m+1]}$, which maximize $L$ under the fixed conditional weights of Equation (5) at step $m$.

M-step $[m+1]$: recalculate the conditional weights $\pi^{[m+1]}(k \mid h)$, which maximize $L$ with the parameters $\mathbf{w}^{[m+1]}$ and $\Theta^{[m+1]}$ held fixed.

The process is repeated until the changes in all parameters become small.

The E-step maximizes the likelihood function of Equation (6) subject to the constraints of Equation (2). The solution for the weights is

$$w_k^{[m+1]} = \sum_{h=0}^{H} f(h)\, \pi^{[m]}(k \mid h) \tag{7}$$

Then, the parameters of each Gaussian component are found using the ordinary (unconstrained) maximum likelihood estimates:

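$$\mu_k^{[m+1]} = \frac{1}{w_k^{[m+1]}} \sum_{h=0}^{H} h\, f(h)\, \pi^{[m]}(k \mid h), \qquad \big(\sigma_k^{[m+1]}\big)^2 = \frac{1}{w_k^{[m+1]}} \sum_{h=0}^{H} \big(h - \mu_k^{[m+1]}\big)^2 f(h)\, \pi^{[m]}(k \mid h) \tag{8}$$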
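The update rules in Equations (5), (7), and (8) can be sketched on a voxel-value histogram as follows. This is a minimal NumPy sketch under our own assumptions, not the authors' implementation: the function names (`gaussian_matrix`, `fit_lcg`), the caller-supplied initialization, the stopping tolerance, and the variance floor are illustrative choices. It fits a fixed number $K$ of components; the sequential addition of subdominant components in Algorithm 1 is not reproduced.

```python
import numpy as np


def gaussian_matrix(h, mu, var):
    """phi(h | mu_k, var_k) for every voxel value h (rows) and component k (columns)."""
    d = h[:, None] - mu[None, :]
    return np.exp(-d**2 / (2.0 * var[None, :])) / np.sqrt(2.0 * np.pi * var[None, :])


def fit_lcg(f, w, mu, var, n_iter=500, tol=1e-10, var_floor=1e-6):
    """Fit a K-component Gaussian mixture to a relative-frequency histogram f(h), h = 0..H.

    f must sum to 1; w, mu, var are initial estimates of length K.
    Returns the fitted (w, mu, var) and the log-likelihood sum_h f(h) log P(h).
    """
    f = np.asarray(f, dtype=float)
    h = np.arange(f.size, dtype=float)
    w, mu, var = (np.array(a, dtype=float) for a in (w, mu, var))
    prev = -np.inf
    for _ in range(n_iter):
        comp = w[None, :] * gaussian_matrix(h, mu, var)   # w_k * phi(h | theta_k)
        p = comp.sum(axis=1) + 1e-300                      # mixture P(h), Eq. (4)
        loglik = float(np.sum(f * np.log(p)))              # log-likelihood, Eq. (3)
        if loglik - prev < tol:                            # stop once L no longer increases
            break
        prev = loglik
        pi = comp / p[:, None]                             # conditional weights, Eq. (5)
        fpi = f[:, None] * pi
        w = fpi.sum(axis=0)                                # mixing weights, Eq. (7)
        wd = np.maximum(w, 1e-12)                          # avoid division by zero
        mu = (fpi * h[:, None]).sum(axis=0) / wd           # means, Eq. (8)
        var = (fpi * (h[:, None] - mu[None, :]) ** 2).sum(axis=0) / wd  # variances, Eq. (8)
        var = np.maximum(var, var_floor)                   # keep components from collapsing
    p = (w[None, :] * gaussian_matrix(h, mu, var)).sum(axis=1) + 1e-300
    return w, mu, var, float(np.sum(f * np.log(p)))
```

In practice, a caller would seed `mu` near the lung and chest peaks of the histogram; choosing $K$ happens outside this function.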

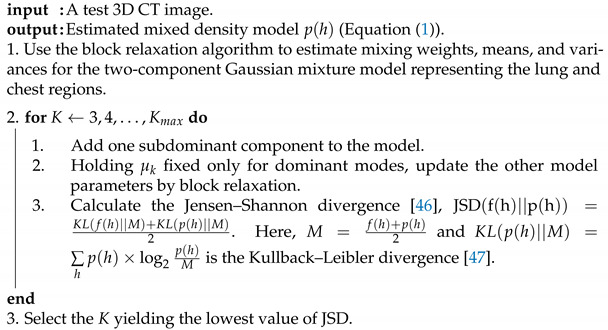

Algorithm 1 summarizes the steps for estimating the parameters of the proposed probabilistic model. The final estimated density consists of the two dominant Gaussian components and $K - 2$ subdominant Gaussian components. The Jensen–Shannon divergence (JSD) [46] is employed in this algorithm to measure the similarity between the empirical density and the mixed density, serving as the convergence criterion that determines the number of Gaussian components. The JSD is a symmetrized version of the Kullback–Leibler divergence [47].
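The JSD used as the convergence criterion can be computed as below. This is a minimal sketch, assuming both densities are sampled on the same grid of voxel values and using the natural logarithm, so the JSD lies in [0, ln 2]; the function names are illustrative.

```python
import numpy as np


def kl_divergence(p, q):
    """Kullback-Leibler divergence D(p || q) for discrete densities (natural log)."""
    mask = p > 0                       # terms with p = 0 contribute nothing
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))


def jensen_shannon(p, q):
    """Jensen-Shannon divergence: average KL divergence of p and q to their mean m."""
    p = np.asarray(p, dtype=float) / np.sum(p)
    q = np.asarray(q, dtype=float) / np.sum(q)
    m = 0.5 * (p + q)                  # m > 0 wherever p > 0 or q > 0
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)
```

One plausible way to use it (our assumption, not necessarily the rule in Algorithm 1) is to keep adding subdominant components while the JSD between the empirical histogram and the fitted mixture keeps decreasing noticeably.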

Algorithm 1: Estimation of the proposed probabilistic model parameters

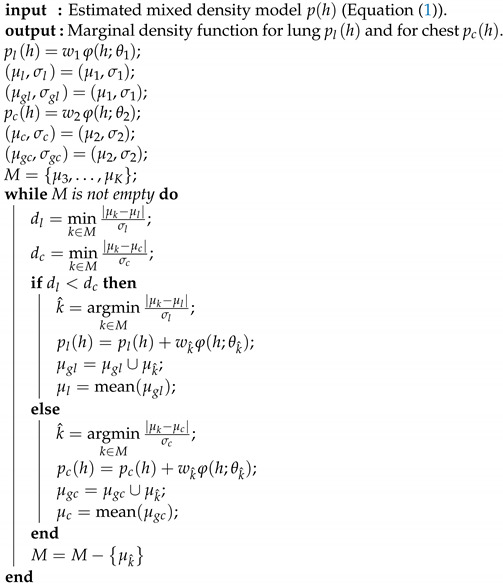

Estimation of the marginal density: The subdominant components of the final estimated model need to be partitioned between the two dominant modes. Each subdominant component is associated with one dominant component so as to minimize the expected misclassification rate. This is accomplished using the proposed Algorithm 2.
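One way to realize such an assignment is sketched below. This is a hypothetical reading of the "modified k-means" mentioned in the abstract, not the authors' Algorithm 2, and it does not explicitly minimize the expected misclassification rate: the two cluster centres are initialized at the dominant means, the dominant components are pinned to their own classes, subdominant components join the nearest centre by their means, and the centres are updated as weight-averaged means. The sketch then forms the lung and chest marginal densities by summing the assigned components and applies a Bayes decision on voxel values, as in step (iii) of the framework. Component 0 is assumed to be the lung mode and component 1 the chest mode; all function names are illustrative.

```python
import numpy as np


def gaussian_matrix(h, mu, var):
    """phi(h | mu_k, var_k) for every value h (rows) and component k (columns)."""
    d = h[:, None] - mu[None, :]
    return np.exp(-d**2 / (2.0 * var[None, :])) / np.sqrt(2.0 * np.pi * var[None, :])


def assign_components(mu, w, n_iter=50):
    """Label each component 0 (lung) or 1 (chest); components 0 and 1 are the dominant modes."""
    mu = np.asarray(mu, dtype=float)
    w = np.asarray(w, dtype=float)
    centres = mu[:2].copy()                        # centres start at the dominant means
    labels = np.array([0, 1] + [0] * (mu.size - 2))
    for _ in range(n_iter):
        new = np.argmin(np.abs(mu[:, None] - centres[None, :]), axis=1)
        new[0], new[1] = 0, 1                      # dominant modes stay in their own class
        centres = np.array([np.average(mu[new == c], weights=w[new == c]) for c in (0, 1)])
        if np.array_equal(new, labels):
            break
        labels = new
    return labels


def marginal_densities(h, w, mu, var, labels):
    """Sum the weighted components assigned to each class: (lung density, chest density)."""
    comp = np.asarray(w, float)[None, :] * gaussian_matrix(h, np.asarray(mu, float), np.asarray(var, float))
    return comp[:, labels == 0].sum(axis=1), comp[:, labels == 1].sum(axis=1)


def bayes_lung_mask(values, lung, chest):
    """Initial labelling: a voxel value is lung where the lung marginal exceeds the chest marginal."""
    return lung[values] > chest[values]
```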

Algorithm 2: The proposed clustering algorithm

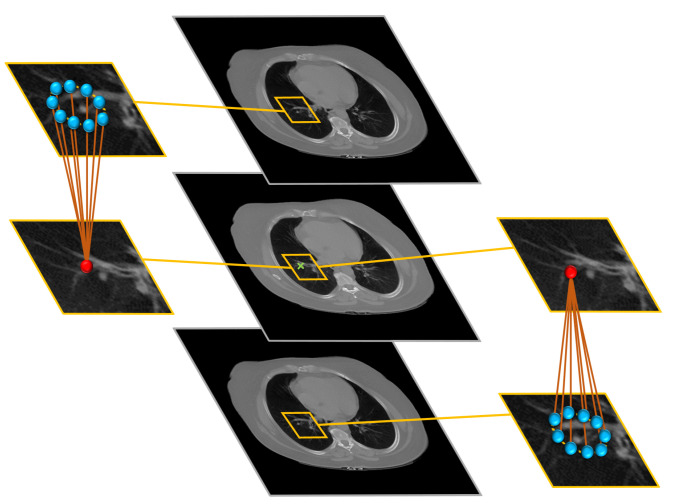

2.2. MGRF-Based Morphological Constraints

To obtain a consistent segmentation of the lung region, we applied rotation-invariant spatial constraints using a generic Markov–Gibbs model of region maps [45]. The model, which incorporates voxel–voxel interaction effects as shown in Figure 3, has, in general, an arbitrary interaction structure and corresponding Gibbs potentials. For simplicity, we restrict the interaction neighborhood system ($\mathbf{N}$) to the 9 nearest neighbors in the CT slice above and the 9 nearest neighbors in the CT slice below.

Figure 3.

Illustration of the 3D MGRF-Based morphological constraints on the anatomical segmentation. The middle column shows the selected slice, and its upper and lower slices; the left column shows the selected pixel and its neighbors at the upper slice while the right column shows the selected pixel and its neighbors at the lower slice.

To model the interactions between CT voxels, we assume that all interactions within each region are the same. To estimate these interactions analytically, let $V$ denote a bi-valued Gibbs potential describing pairwise interactions between the labels $\lambda$ and $\lambda'$ of neighboring voxels, where

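$$V(\lambda, \lambda') = \begin{cases} V_{\mathrm{eq}}, & \lambda = \lambda' \\ V_{\mathrm{ne}}, & \lambda \neq \lambda' \end{cases} \tag{9}$$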

Then, the Gibbs probability distribution (GPD) of region maps $\mathbf{m}$ on the 3D lattice $\mathbf{R}$ is as follows [45], with $Z$ a normalizing constant:

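$$P(\mathbf{m}) = \frac{1}{Z} \exp\left( \sum_{(x,y,z) \in \mathbf{R}} \; \sum_{(\xi,\eta,\zeta) \in \mathbf{N}} V\big(m_{x,y,z},\, m_{x+\xi,\,y+\eta,\,z+\zeta}\big) \right) \tag{10}$$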

By modifying the derivation scheme in [45] to fit our model, the following first approximation of the maximum likelihood estimator (MLE) of the potential values for a given map $\mathbf{m}$ is obtained:

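$$V_{\mathrm{eq}} \approx 2 f_{\mathrm{eq}}(\mathbf{m}) - 1, \qquad V_{\mathrm{ne}} \approx 2 f_{\mathrm{ne}}(\mathbf{m}) - 1 \tag{11}$$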

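where $f_{\mathrm{eq}}(\mathbf{m})$ and $f_{\mathrm{ne}}(\mathbf{m})$ denote the relative frequencies of equal and non-equal label pairs, respectively, among all equivalent voxel pairs $\big((x,y,z), (x+\xi, y+\eta, z+\zeta)\big)$ with $(x,y,z) \in \mathbf{R}$ and $(\xi,\eta,\zeta) \in \mathbf{N}$.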
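The analytical estimate can be sketched as follows. This sketch assumes the 18-voxel neighborhood described above (the 3 × 3 block of voxels in the slice above and in the slice below) and, for a two-label map, the symmetric first-approximation form V_eq = −V_ne ≈ 2 f_eq(m) − 1; the closed form, the `OFFSETS` list, and the helper names are our assumptions for illustration.

```python
import numpy as np

# Assumed 18-neighbourhood: 3x3 voxels in the slice above (dz=+1) and below (dz=-1).
OFFSETS = [(dz, dy, dx) for dz in (-1, 1) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def pair_slices(offset, shape):
    """Slices selecting voxels x (first) and their neighbours x + offset (second) inside the volume."""
    first, second = [], []
    for d, n in zip(offset, shape):
        first.append(slice(max(0, -d), n - max(0, d)))
        second.append(slice(max(0, d), n + min(0, d)))
    return tuple(first), tuple(second)


def estimate_potentials(m, offsets=OFFSETS):
    """Return (V_eq, V_ne) for a label volume m, assuming V_eq = -V_ne ~= 2 f_eq(m) - 1."""
    equal = total = 0
    for off in offsets:
        a, b = pair_slices(off, m.shape)
        equal += int(np.count_nonzero(m[a] == m[b]))  # equal-label pairs for this offset
        total += m[a].size                             # all valid pairs for this offset
    f_eq = equal / total                               # relative frequency of equal pairs
    v_eq = 2.0 * f_eq - 1.0
    return v_eq, -v_eq
```

Each pair is counted once from each side (offsets come in ± pairs), which leaves the relative frequency unchanged.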

2.3. Joint MGRF Model and Lung Segmentation Algorithm

To integrate the first-order appearance model with the spatial probabilistic model that describes the morphological/anatomical constraints, we assume that the CT data $\mathbf{g}$, described by the visual appearance model, and its spatial map $\mathbf{m}$ (the data labels) follow the two-level MGRF model:

$$P(\mathbf{g}, \mathbf{m}) = P(\mathbf{m})\, P(\mathbf{g} \mid \mathbf{m}) \tag{12}$$

Here, $P(\mathbf{m})$ is an unconditional distribution of maps, modeled by the MGRF probabilistic model of Equation (10), and $P(\mathbf{g} \mid \mathbf{m})$ is a conditional distribution of gray levels for a given labeling. The Bayesian maximum a posteriori (MAP) estimate of the labeling, given the image $\mathbf{g}$, maximizes the log-likelihood

$$L(\mathbf{g}, \mathbf{m}) = \log P(\mathbf{g} \mid \mathbf{m}) + \log P(\mathbf{m}) \tag{13}$$

The basic steps of the proposed segmentation system are summarized in Algorithm 3.

Algorithm 3: Lung Extraction Algorithm

Input: A test 3D CT image.

Output: Final 3D lung segmentation.
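The refinement step can be sketched as an iterated-conditional-modes (ICM) style search for a labeling that increases the log-likelihood of Equation (13). This is an illustrative sketch, not the authors' optimizer. It assumes precomputed per-voxel log-likelihoods log p(g | label) from the lung and chest marginals, the 18-voxel neighborhood described above, and a symmetric potential V_ne = −V_eq, so that the prior contribution of a label is V_eq × (agreeing neighbors − disagreeing neighbors). For brevity, all voxels are updated simultaneously, which simplifies classical sequential ICM; `OFFSETS`, `agreement`, and `refine_labels` are hypothetical names.

```python
import numpy as np

# Assumed 18-neighbourhood: 3x3 voxels in the slice above and in the slice below.
OFFSETS = [(dz, dy, dx) for dz in (-1, 1) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def pair_slices(offset, shape):
    """Slices selecting voxels x (first) and their neighbours x + offset (second)."""
    first, second = [], []
    for d, n in zip(offset, shape):
        first.append(slice(max(0, -d), n - max(0, d)))
        second.append(slice(max(0, d), n + min(0, d)))
    return tuple(first), tuple(second)


def agreement(m, label, offsets=OFFSETS):
    """Per voxel: (#neighbours equal to label) - (#neighbours different); outside voxels ignored."""
    out = np.zeros(m.shape, dtype=float)
    for off in offsets:
        a, b = pair_slices(off, m.shape)
        out[a] += np.where(m[b] == label, 1.0, -1.0)
    return out


def refine_labels(loglik, v_eq, n_iter=10):
    """loglik: array (2, Z, Y, X) with log p(g | label) for labels 0 (chest) and 1 (lung).

    Starts from the appearance-only (Bayes) labelling and repeatedly picks, for every
    voxel, the label maximising log p(g | label) + v_eq * agreement.
    """
    m = np.argmax(loglik, axis=0)
    for _ in range(n_iter):
        score = np.stack([loglik[k] + v_eq * agreement(m, k) for k in (0, 1)])
        new = np.argmax(score, axis=0)
        if np.array_equal(new, m):     # no voxel changed: local optimum reached
            break
        m = new
    return m
```

In this sketch, `v_eq` would come from the analytical potential estimate, and the log-likelihoods from the lung and chest marginal densities.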

3. Evaluation Metrics

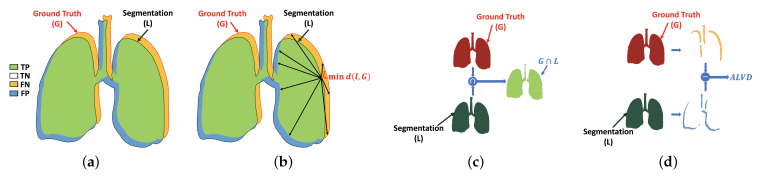

This section describes the metrics used to gauge the performance of our proposed system: the Dice similarity coefficient (DSC), the overlap coefficient, and the absolute lung volume difference (ALVD). Each of these quantifies either the agreement or the dissimilarity between the segmentation result and the corresponding ground-truth segmentation; they are detailed in Sections 3.1, 3.2, and 3.3, respectively. A fourth metric, the 95th-percentile bidirectional Hausdorff distance (BHD) (Section 3.4), is employed to quantify the accuracy of the boundary of the segmented region relative to the ground truth.

3.1. Dice Similarity Coefficient (DSC)

The Dice similarity coefficient (DSC) is one of the most common metrics for measuring the similarity between two regions. Here it evaluates the proposed system by measuring the similarity between the binary (black-and-white) segmented lung (L) and the ground truth (G), i.e., the proportion of the region common to both images (the green part in Figure 4a). It ranges from 0 (completely dissimilar) to 1 (identical) and is computed as follows:

$$\mathrm{DSC} = \frac{2\,|L \cap G|}{|L| + |G|} \tag{14}$$

where $|L \cap G|$ is the number of white pixels in the intersection of the segmented lung (L) and the ground truth (G), while $|L|$ and $|G|$ are the numbers of white pixels in the segmentation (L) and the ground truth (G), respectively.
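Equation (14) translates directly into code on binary masks. A minimal NumPy sketch; the function name and the convention of returning 1.0 when both masks are empty are our assumptions.

```python
import numpy as np


def dice(seg, gt):
    """DSC = 2|L & G| / (|L| + |G|) for binary masks; returns 1.0 if both masks are empty."""
    seg = np.asarray(seg, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    denom = seg.sum() + gt.sum()
    if denom == 0:
        return 1.0
    return 2.0 * np.logical_and(seg, gt).sum() / denom
```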

Figure 4.

Illustration of the evaluation metrics: (a) DSC, (b) HD, (c) overlap coefficient, and (d) ALVD. Note that TP, TN, FP, and FN are true positive (correct lung pixel), true negative (correct background pixel), false positive (incorrect lung pixel), and false negative (incorrect background pixel).

3.2. Overlap Coefficient

The overlap coefficient is used in our assessment pipeline to measure the similarity between the predicted object and its ground truth by computing the percentage of overlap between them (see Figure 4c). It equals 1 for identical objects and 0 for non-overlapping ones, and it is estimated as follows:

$$\mathrm{Overlap} = \frac{|L \cap G|}{|L \cup G|} \tag{15}$$

where $|L \cup G|$ is the number of white pixels in the union of the segmented lung (L) and the ground truth (G).
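A minimal sketch of the overlap coefficient, taking it as |L ∩ G| / |L ∪ G|, i.e., the intersection over the union described above; the function name and the empty-mask convention are our assumptions.

```python
import numpy as np


def overlap(seg, gt):
    """Overlap = |L & G| / |L | G| for binary masks; returns 1.0 if both masks are empty."""
    seg = np.asarray(seg, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    union = np.logical_or(seg, gt).sum()
    if union == 0:
        return 1.0
    return np.logical_and(seg, gt).sum() / union
```

For the same pair of masks, this overlap and the DSC are related by DSC = 2 · Overlap / (1 + Overlap).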

3.3. Absolute Lung Volume Difference (ALVD)

Another metric used to assess our work is the absolute lung volume difference (ALVD), which measures the discrepancy between the ground truth (G) and the binary segmented lung (L) in terms of their volumes (Figure 4d). The ALVD is 0 when the two volumes are equal. This metric is defined as

$$\mathrm{ALVD} = \frac{\big|\,|G| - |L|\,\big|}{|G|} \tag{16}$$

where $\big|\,|G| - |L|\,\big|$ is the absolute difference between the numbers of white pixels in the ground truth (G) and the segmentation (L).
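A minimal sketch of the ALVD, assuming the absolute volume difference is normalized by the ground-truth volume |G| so that it can be reported as a fraction (multiply by 100 for a percentage); drop the division if the unnormalized voxel count is wanted. The function name is illustrative.

```python
import numpy as np


def alvd(seg, gt):
    """Absolute lung volume difference, assumed normalised by the ground-truth volume |G|."""
    seg_vol = int(np.count_nonzero(seg))
    gt_vol = int(np.count_nonzero(gt))
    if gt_vol == 0:
        raise ValueError("ground truth is empty; ALVD is undefined")
    return abs(gt_vol - seg_vol) / gt_vol
```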

3.4. Bidirectional Hausdorff Distance (BHD)

This section describes the last metric, the bidirectional Hausdorff distance (BHD), which complements the previous three metrics. BHD combines the Hausdorff distance (HD) from the binary segmented lung (L) to the ground truth (G) with the HD from G to L. HD is the maximum, over all border points of L, of the Euclidean distance to the closest border point of G, as visualized in Figure 4b, and is computed as follows [48,49]:

$$\mathrm{HD}(L, G) = \max_{l \in L}\, \min_{g \in G}\, d(l, g) \tag{17}$$

where $l$ and $g$ denote border points of L and G, respectively, and $d(l, g)$ is the Euclidean distance between them.

Accordingly, $\mathrm{BHD}(L, G)$ is estimated as

$$\mathrm{BHD}(L, G) = \max\big\{\mathrm{HD}(L, G),\ \mathrm{HD}(G, L)\big\} \tag{18}$$

In this paper, the 95th-percentile BHD is used to evaluate our proposed system: instead of taking the maximum Euclidean distance between L and G, the 95th percentile of all computed distances is used, which reduces the influence of outliers.
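A sketch of the 95th-percentile BHD under stated assumptions: border voxels are obtained by removing a binary erosion from each mask, distances are measured in physical units through a voxel `spacing` tuple, and the 95th percentile is taken separately in each direction before keeping the larger value (other variants pool all distances first). It uses SciPy's `ndimage.binary_erosion` and `spatial.cKDTree`; the function names are illustrative, and both masks must be non-empty.

```python
import numpy as np
from scipy import ndimage
from scipy.spatial import cKDTree


def border_points(mask, spacing):
    """Coordinates (in physical units) of mask voxels removed by one binary erosion."""
    mask = np.asarray(mask, dtype=bool)
    border = mask & ~ndimage.binary_erosion(mask)
    return np.argwhere(border) * np.asarray(spacing, dtype=float)


def bhd95(seg, gt, spacing=(1.0, 1.0, 1.0)):
    """95th-percentile bidirectional Hausdorff distance between two non-empty 3D masks."""
    l_pts = border_points(seg, spacing)
    g_pts = border_points(gt, spacing)
    d_lg, _ = cKDTree(g_pts).query(l_pts)   # each border point of L to its nearest in G
    d_gl, _ = cKDTree(l_pts).query(g_pts)   # each border point of G to its nearest in L
    return float(max(np.percentile(d_lg, 95), np.percentile(d_gl, 95)))
```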

4. Experimental Results

The segmentation framework described above was applied to the problem of segmenting lungs containing pathological tissue in COVID-19 patients. The proposed segmentation system was evaluated on 32 chest CT volumes with different severities of COVID-19 infection, selected from 249 CT volumes in the COVID-19 dataset [50]. Four of them had healthy/mild COVID-19 infections, with image sizes ranging from to ; 17 patients, with volumes of size , had moderate infections; and 11 chest CT volumes of size had severe COVID-19 infections. To compare our framework with other approaches that require a training dataset, we selected another 34 3D chest CT volumes (3713 images in total) from the same dataset for training. Table 1 summarizes the characteristics of the dataset used in our experiments. The data are graded according to the radiology protocol in [51]. To obtain a more accurate segmentation, we included morphological/anatomical constraints based on a rotation-invariant MGRF model.

Table 1.

Dataset characteristics.

| Set | Class | Resolution | #Slices | #Patients | Total |
|---|---|---|---|---|---|
| Training | Healthy/Mild | | | 2 | 34 |
| | Moderate | | 35–397 | 20 | |
| | Severe | | | 12 | |
| Testing | Healthy/Mild | | | 4 | 32 |
| | Moderate | | | 17 | |
| | Severe | | | 11 | |

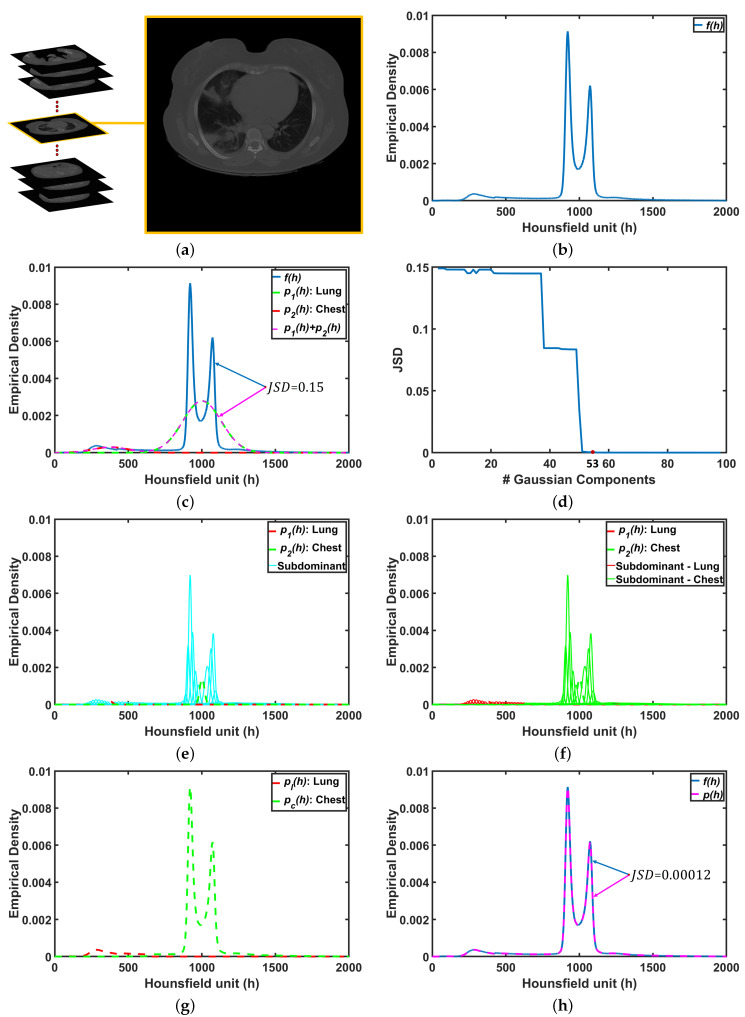

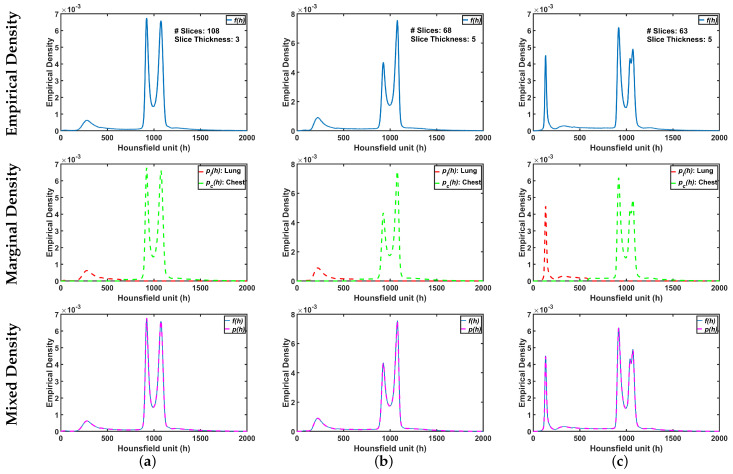

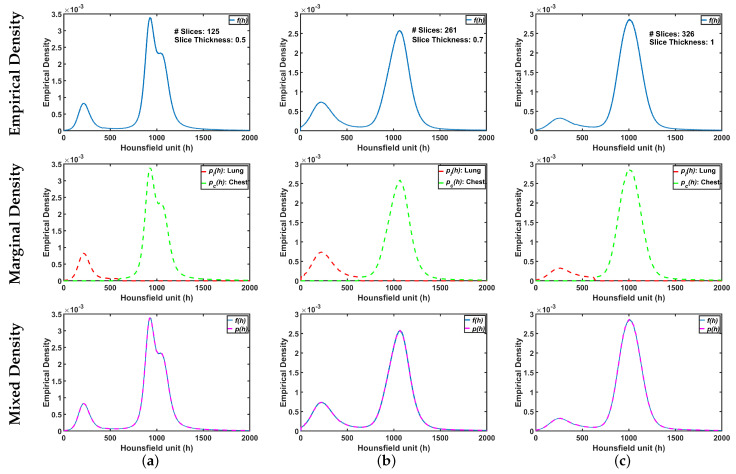

To demonstrate step by step how our proposed approach works, Figure 5b shows the empirical density of the 3D chest CT volume in Figure 5a, and Figure 5c presents the two Gaussian components approximating its dominant modes, together with the JSD between the empirical density and these two estimated dominant components. Figure 5d shows the changes in the JSD, which determine the best-estimated number of Gaussian components shown in Figure 5e. Figure 5f shows the classification of the subdominant Gaussian components by the proposed clustering algorithm (Algorithm 2). Figure 5g,h shows the final marginal densities for the lung and chest, as well as the final estimated mixed density. Figure 6 and Figure 7 demonstrate the ability of the proposed probabilistic model to handle variability in the empirical density that may arise from the severity of infection or from differences in scanning protocol.

Figure 5.

An illustrative example of the proposed system: (a) CT chest volume, (b) empirical density, (c) two dominant Gaussian components, (d) JSD between empirical density and mixed density, (e) two dominant and subdominant Gaussian components, (f) proposed clustering algorithm, (g) marginal density for lung and chest, and (h) final mixed density for all components. Note that JSD stands for Jensen–Shannon divergence.

Figure 6.

An illustrative example of the proposed appearance model estimated from thick-section CT for (a) healthy/mild, (b) moderate, and (c) severe COVID-19-infected lungs. The first, second, and third rows show the empirical, marginal, and mixed densities, respectively.

Figure 7.

An illustrative example of the proposed appearance model estimated from thin-section CT for (a) healthy/mild, (b) moderate, and (c) severe COVID-19-infected lungs. The first, second, and third rows show the empirical, marginal, and mixed densities, respectively.

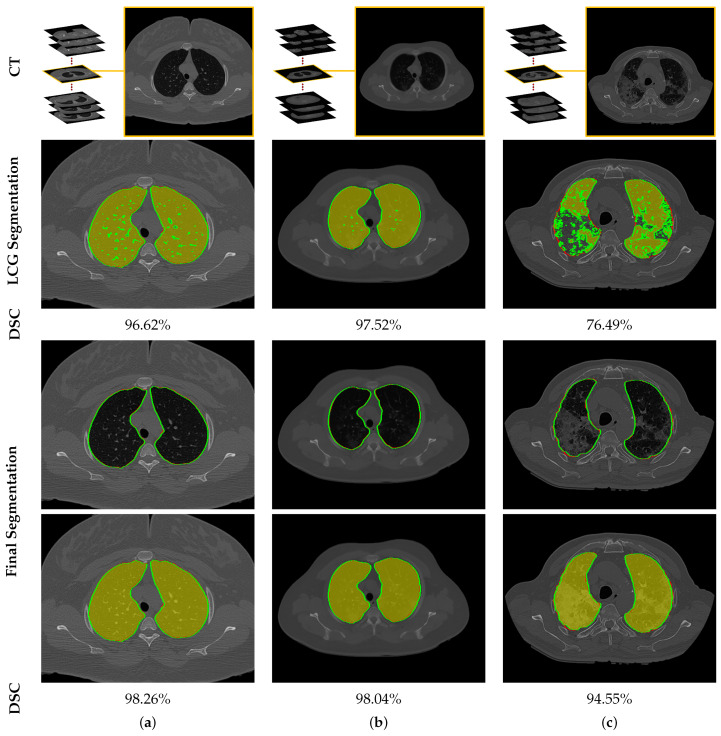

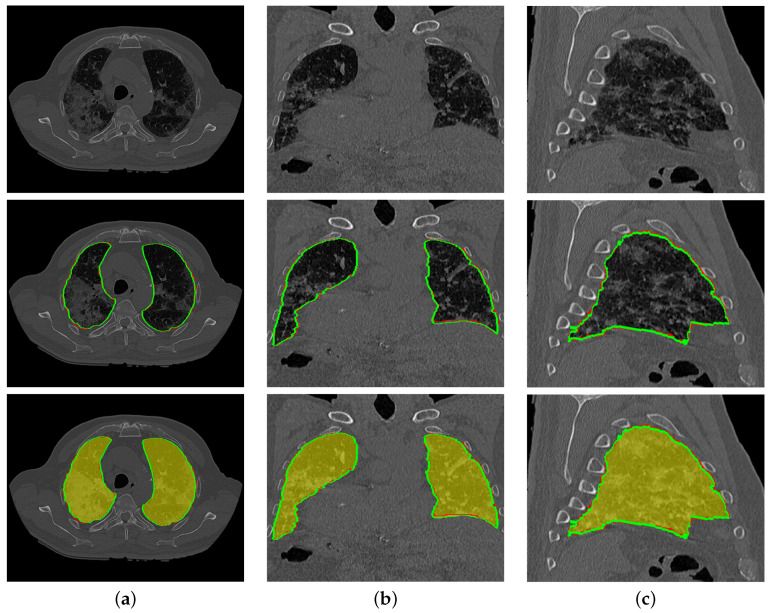

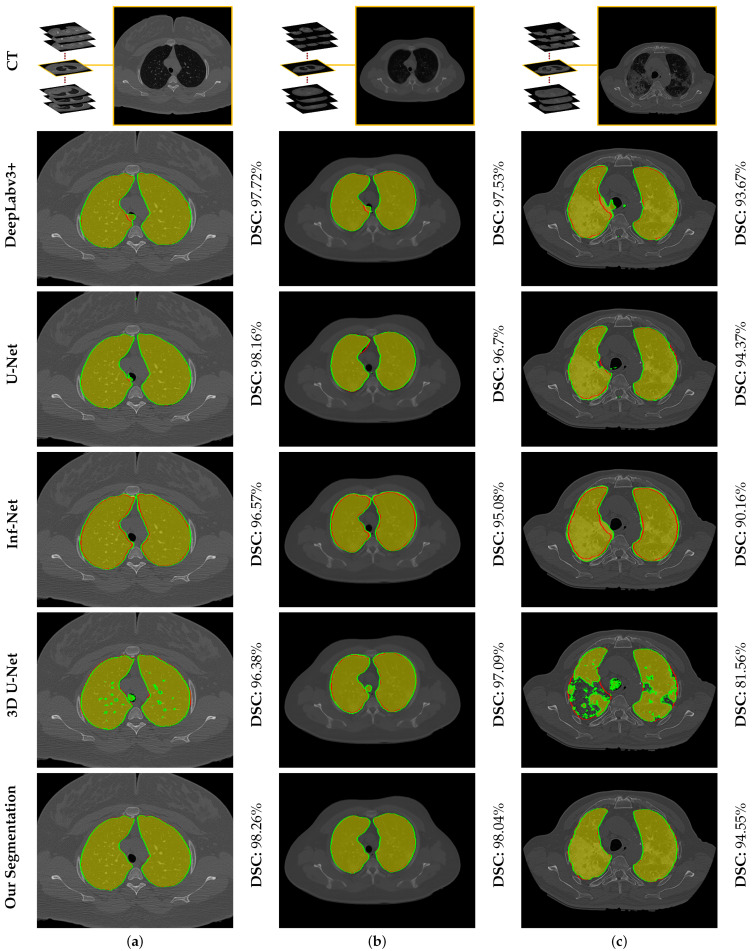

To highlight the benefit of combining the rotation-invariant MGRF-based morphological/anatomical constraints with the adaptive first-order appearance model, the system's performance is evaluated before and after their inclusion, as shown in Table 2. As the table shows, the segmentation improves once the MGRF-based morphological/anatomical constraints are included, particularly in severe cases, where the DSC increases markedly from to . To further illustrate this improvement, Figure 8 presents three examples of the segmentation before and after the inclusion for healthy/mild, moderate, and severe COVID-19-infected lungs. As shown in the figure, the full system outperforms the appearance model alone (i.e., LCG) in all three examples; in particular, it segments a severe COVID-19 infection with a DSC of , compared with a DSC of for the appearance model alone. Moreover, Figure 9 presents the proposed segmentation of a severely infected lung in different cross-sections (i.e., 2D axial, coronal, and sagittal) to show the efficiency of the proposed system visually. Overall, the proposed system achieves a DSC, overlap, BHD, and ALVD of , , , and , respectively. Finally, to assess the robustness of the proposed segmentation approach, it is compared with four deep learning approaches: DeepLabv3+ [52] with a ResNet-50 backbone, Inf-Net [28] with a ResNet-50 backbone, U-Net [25], and 3D U-Net [53]. The results are reported in Table 3. As the table shows, the 3D U-Net approach gives the worst DSC, overlap, BHD, and ALVD, while the proposed segmentation approach gives the best performance of all the compared approaches. Moreover, to demonstrate the capability of the proposed system visually, three examples of healthy/mild, moderate, and severe COVID-19 lung infections segmented by these approaches are presented in Figure 10. 
As the figure shows, the proposed approach segments the three examples better than the other four approaches. Although U-Net achieves a DSC close to that of the proposed approach, it segments some parts incorrectly, e.g., classifying part of the trachea or chest as lung, as the figures show. The proposed segmentation is therefore closer to the ground truth, which makes our approach well suited to segmenting lungs infected by COVID-19, where it outperformed the state-of-the-art deep learning approaches tested here. In addition, because it is an unsupervised technique, it does not suffer from the underfitting and overfitting problems that affect supervised models trained on limited data.

Table 2.

Quantitative evaluation of the proposed segmentation system before and after applying rotation invariant Markov–Gibbs random field (MGRF). Note that LCG, DSC, BHD, and ALVD stand for linear combination of Gaussian, Dice similarity coefficient, 95th-percentile bidirectional Hausdorff distance, and absolute lung volume difference, respectively.

| Model | Class | DSC | Overlap | BHD | ALVD |
|---|---|---|---|---|---|
| LCG-model | Healthy/Mild | | | | |
| | Moderate | | | | |
| | Severe | | | | |
| | Overall | | | | |
| Final System | Healthy/Mild | | | | |
| | Moderate | | | | |
| | Severe | | | | |
| | Overall | | | | |

Figure 8.

An illustrative example of the proposed segmentation for (a) healthy/mild, (b) moderate, and (c) severe COVID-19-infected lungs. The red border denotes the ground truth, and the green border (yellow region) denotes the segmentation.

Figure 9.

An illustrative example of the proposed segmentation (second and third rows) for a severe COVID-19-infected lung in (a) 2D axial, (b) coronal, and (c) sagittal cross-sections of an original image (first row). The red border denotes the ground truth, and the green border (yellow region) denotes the segmentation.

Table 3.

Quantitative evaluation of the proposed segmentation system compared with other deep learning approaches. Note that DSC, BHD, and ALVD stand for Dice similarity coefficient, 95th-percentile bidirectional Hausdorff distance, and absolute lung volume difference, respectively.

Figure 10.

An illustrative example of the proposed segmentation compared with other deep learning approaches for (a) healthy/mild, (b) moderate, and (c) severe COVID-19-infected lungs. The red border denotes the ground truth, and the green border (yellow region) denotes the segmentation.

5. Discussion and Conclusions

Experiments demonstrate that the proposed framework is promising and achieves high accuracy: identifying the first-order appearance model and then imposing 3D morphological constraints based on an MGRF with analytically estimated parameters produces good results when segmenting the COVID-19-infected lung region from CT images. The quantitative accuracy metrics, namely the DSC, overlap coefficient, 95th-percentile BHD, and ALVD, all show consistent performance on our data set of 32 subjects, outperforming current state-of-the-art deep learning-based lung segmentation approaches. The results also demonstrate the ability of the developed system to segment the lung in CT images, with the DSC improving from to when the 3D morphological MGRF-based constraints are included in the system pipeline. However, the accuracy of the proposed system may degrade if the lung is severely damaged or fluid-filled, or if the appearance of the lung is close to that of the chest, since separating the two on the basis of an appearance model alone then becomes very challenging. We therefore plan to add a shape model to our system to overcome these problems. Moreover, a future extension of this work will integrate the proposed segmentation approach into a computer-aided diagnostic system to assess pulmonary function and the risk of mortality in COVID-19 patients, which is the ultimate goal of our research group. Furthermore, the morphological constraints could be extended to handle the large-scale inhomogeneity seen in severe lung infection by expanding the neighborhood system to include larger cliques, so that the MGRF model captures higher-order interaction effects.
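Of the four metrics, the 95th-percentile bidirectional Hausdorff distance is the least standardized, so a minimal brute-force sketch may help. It is written in Python/NumPy and is not the authors' implementation. It assumes that surface voxels are foreground voxels with at least one 6-connected background neighbor, that distances are Euclidean in physical units set by a hypothetical `spacing` tuple, and that the bidirectional value is the larger of the two directed 95th-percentile distances; other conventions (e.g., pooling both directions before taking the percentile) also exist.

```python
import numpy as np


def surface_points(mask: np.ndarray, spacing=(1.0, 1.0, 1.0)) -> np.ndarray:
    """Physical coordinates of the boundary voxels of a 3D binary mask.

    A voxel is on the boundary if it is foreground and at least one of its six
    face neighbours is background (outside the volume counts as background).
    """
    mask = mask.astype(bool)
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    interior = padded.copy()
    for axis in range(3):
        for shift in (-1, 1):
            # np.roll wraps around the edges, but wrapped values only land in
            # the padding, which is cropped away below.
            interior &= np.roll(padded, shift, axis=axis)
    boundary = mask & ~interior[1:-1, 1:-1, 1:-1]
    return np.argwhere(boundary) * np.asarray(spacing, dtype=float)


def directed_percentile(src: np.ndarray, dst: np.ndarray, q: float) -> float:
    """q-th percentile of distances from each point in src to its nearest point in dst."""
    diffs = src[:, None, :] - dst[None, :, :]  # shape (len(src), len(dst), 3)
    nearest = np.sqrt((diffs ** 2).sum(axis=-1)).min(axis=1)
    return float(np.percentile(nearest, q))


def bhd(seg: np.ndarray, gt: np.ndarray, spacing=(1.0, 1.0, 1.0), q: float = 95.0) -> float:
    """Bidirectional q-th percentile Hausdorff distance (max of both directions)."""
    a, b = surface_points(seg, spacing), surface_points(gt, spacing)
    if len(a) == 0 or len(b) == 0:
        raise ValueError("both masks must be non-empty")
    return max(directed_percentile(a, b, q), directed_percentile(b, a, q))


# Toy check: a 2x2x2 cube and a copy shifted by one voxel along the first axis.
gt = np.zeros((4, 4, 4), dtype=bool)
gt[1:3, 1:3, 1:3] = True
seg = np.roll(gt, 1, axis=0)
print(bhd(gt, gt), bhd(seg, gt), bhd(seg, gt, spacing=(2.0, 1.0, 1.0)))
```

The pairwise-distance step is O(N·M) in memory, which is fine for a toy check but not for full CT volumes; there one would typically query a KD-tree (e.g., `scipy.spatial.cKDTree`) or use a Euclidean distance transform (`scipy.ndimage.distance_transform_edt` with its `sampling` argument) instead.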

Author Contributions

Methodology, A.S. (Ahmed Sharafeldeen), M.E., N.S.A., A.S. (Ahmed Soliman), and A.E.-B.; Software, A.S. (Ahmed Sharafeldeen) and A.E.-B.; Validation, A.S. (Ahmed Sharafeldeen) and A.E.-B.; Formal analysis, A.S. (Ahmed Sharafeldeen), M.E., N.S.A., A.S. (Ahmed Soliman), and A.E.-B.; Investigation, A.E.-B.; Writing—original draft preparation, A.S. (Ahmed Sharafeldeen), M.E., N.S.A., A.S. (Ahmed Soliman), and A.E.-B.; Writing—review and editing, A.S. (Ahmed Sharafeldeen), M.E., N.S.A., A.S. (Ahmed Soliman), and A.E.-B.; Visualization, A.S. (Ahmed Sharafeldeen) and A.E.-B.; Supervision, A.E.-B.; Project administration, N.S.A. and A.E.-B. All authors have read and agreed to the published version of the manuscript.

Funding

The authors extend their appreciation to the Deputyship for Research & Innovation, Ministry of Education in Saudi Arabia, for funding this research work through project number PNU-DRI-Targeted-20-001.

Institutional Review Board Statement

Not applicable; this study used only publicly available data.

Informed Consent Statement

Not applicable; this study used only publicly available data.

Data Availability Statement

The data presented in this study are openly available in The Cancer Imaging Archive (TCIA) at https://doi.org/10.7937/TCIA.2020.GQRY-NC81 (accessed on 5 June 2021) [50].

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Machhi J., Herskovitz J., Senan A.M., Dutta D., Nath B., Oleynikov M.D., Blomberg W.R., Meigs D.D., Hasan M., Patel M., et al. The Natural History, Pathobiology, and Clinical Manifestations of SARS-CoV-2 Infections. J. Neuroimmune Pharmacol. 2020;15:359–386. doi: 10.1007/s11481-020-09944-5.

2. Gupta S., Hayek S.S., Wang W., Chan L., Mathews K.S., Melamed M.L., Brenner S.K., Leonberg-Yoo A., Schenck E.J., Radbel J., et al. Factors Associated With Death in Critically Ill Patients With Coronavirus Disease 2019 in the US. JAMA Intern. Med. 2020;180:1436. doi: 10.1001/jamainternmed.2020.3596.

3. Vijayaraj J. Various Segmentation Techniques for Lung Cancer Detection using CT Images: A Review. Turk. J. Comput. Math. Educ. 2021;12:918–928. doi: 10.17762/turcomat.v12i2.1102.

4. Silveira M., Nascimento J., Marques J. Automatic segmentation of the lungs using robust level sets; Proceedings of the 2007 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Lyon, France. 22–26 August 2007.

5. Sun X., Zhang H., Duan H. 3D Computerized Segmentation of Lung Volume With Computed Tomography. Acad. Radiol. 2006;13:670–677. doi: 10.1016/j.acra.2006.02.039.

6. Leader J.K., Zheng B., Rogers R.M., Sciurba F.C., Perez A., Chapman B.E., Patel S., Fuhrman C.R., Gur D. Automated lung segmentation in X-ray computed tomography. Acad. Radiol. 2003;10:1224–1236. doi: 10.1016/S1076-6332(03)00380-5.

7. Hu S., Hoffman E., Reinhardt J. Automatic lung segmentation for accurate quantitation of volumetric X-ray CT images. IEEE Trans. Med. Imaging. 2001;20:490–498. doi: 10.1109/42.929615.

8. Brown M.S., Goldin J.G., McNitt-Gray M.F., Greaser L.E., Sapra A., Li K.T., Sayre J.W., Martin K., Aberle D.R. Knowledge-based segmentation of thoracic computed tomography images for assessment of split lung function. Med. Phys. 2000;27:592–598. doi: 10.1118/1.598898.

9. Brown M., McNitt-Gray M., Mankovich N., Goldin J., Hiller J., Wilson L., Aberle D. Method for segmenting chest CT image data using an anatomical model: Preliminary results. IEEE Trans. Med. Imaging. 1997;16:828–839. doi: 10.1109/42.650879.

10. Van Rikxoort E.M., de Hoop B., Viergever M.A., Prokop M., van Ginneken B. Automatic lung segmentation from thoracic computed tomography scans using a hybrid approach with error detection. Med. Phys. 2009;36:2934–2947. doi: 10.1118/1.3147146.

11. Tan J.H., Acharya U.R. Active spline model: A shape based model—Interactive segmentation. Digit. Signal Process. 2014;35:64–74. doi: 10.1016/j.dsp.2014.09.002.

12. Gill G., Toews M., Beichel R.R. Robust Initialization of Active Shape Models for Lung Segmentation in CT Scans: A Feature-Based Atlas Approach. Int. J. Biomed. Imaging. 2014;2014:1–7. doi: 10.1155/2014/479154.

13. Lassen B., van Rikxoort E.M., Schmidt M., Kerkstra S., van Ginneken B., Kuhnigk J.M. Automatic Segmentation of the Pulmonary Lobes From Chest CT Scans Based on Fissures, Vessels, and Bronchi. IEEE Trans. Med. Imaging. 2013;32:210–222. doi: 10.1109/TMI.2012.2219881.

14. Birkbeck N., Kohlberger T., Zhang J., Sofka M., Kaftan J., Comaniciu D., Zhou S.K. Medical Image Computing and Computer-Assisted Intervention–MICCAI 2014. Springer International Publishing; Berlin/Heidelberg, Germany: 2014. Lung Segmentation from CT with Severe Pathologies Using Anatomical Constraints; pp. 804–811.

15. Oulefki A., Agaian S., Trongtirakul T., Laouar A.K. Automatic COVID-19 lung infected region segmentation and measurement using CT-scans images. Pattern Recognit. 2021;114:107747. doi: 10.1016/j.patcog.2020.107747.

16. Korfiatis P., Kalogeropoulou C., Karahaliou A., Kazantzi A., Skiadopoulos S., Costaridou L. Texture classification-based segmentation of lung affected by interstitial pneumonia in high-resolution CT. Med. Phys. 2008;35:5290–5302. doi: 10.1118/1.3003066.

17. Wang J., Li F., Li Q. Automated segmentation of lungs with severe interstitial lung disease in CT. Med. Phys. 2009;36:4592–4599. doi: 10.1118/1.3222872.

18. Dehmeshki J., Ye X., Lin X., Valdivieso M., Amin H. Automated detection of lung nodules in CT images using shape-based genetic algorithm. Comput. Med. Imaging Graph. 2007;31:408–417. doi: 10.1016/j.compmedimag.2007.03.002.

19. Nakagomi K., Shimizu A., Kobatake H., Yakami M., Fujimoto K., Togashi K. Multi-shape graph cuts with neighbor prior constraints and its application to lung segmentation from a chest CT volume. Med. Image Anal. 2013;17:62–77. doi: 10.1016/j.media.2012.08.002.

20. Mansoor A., Bagci U., Xu Z., Foster B., Olivier K.N., Elinoff J.M., Suffredini A.F., Udupa J.K., Mollura D.J. A Generic Approach to Pathological Lung Segmentation. IEEE Trans. Med. Imaging. 2014;33:2293–2310. doi: 10.1109/TMI.2014.2337057.

21. Houssein E.H., Emam M.M., Ali A.A. Improved manta ray foraging optimization for multi-level thresholding using COVID-19 CT images. Neural Comput. Appl. 2021. doi: 10.1007/s00521-021-06273-3.

22. El-Baz A., Beache G.M., Gimel’farb G., Suzuki K., Okada K., Elnakib A., Soliman A., Abdollahi B. Computer-Aided Diagnosis Systems for Lung Cancer: Challenges and Methodologies. Int. J. Biomed. Imaging. 2013;2013:942353. doi: 10.1155/2013/942353.

23. Saood A., Hatem I. COVID-19 lung CT image segmentation using deep learning methods: U-Net versus SegNet. BMC Med. Imaging. 2021;21. doi: 10.1186/s12880-020-00529-5.

24. Badrinarayanan V., Kendall A., Cipolla R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017;39:2481–2495. doi: 10.1109/TPAMI.2016.2644615.

25. Ronneberger O., Fischer P., Brox T. Lecture Notes in Computer Science. Springer International Publishing; Berlin/Heidelberg, Germany: 2015. U-Net: Convolutional Networks for Biomedical Image Segmentation; pp. 234–241.

26. Yan Q., Wang B., Gong D., Luo C., Zhao W., Shen J., Shi Q., Jin S., Zhang L., You Z. COVID-19 Chest CT Image Segmentation—A Deep Convolutional Neural Network Solution. arXiv. 2020. arXiv:2004.10987.

27. Amyar A., Modzelewski R., Li H., Ruan S. Multi-task deep learning based CT imaging analysis for COVID-19 pneumonia: Classification and segmentation. Comput. Biol. Med. 2020;126:104037. doi: 10.1016/j.compbiomed.2020.104037.

28. Fan D.P., Zhou T., Ji G.P., Zhou Y., Chen G., Fu H., Shen J., Shao L. Inf-Net: Automatic COVID-19 Lung Infection Segmentation From CT Images. IEEE Trans. Med. Imaging. 2020;39:2626–2637. doi: 10.1109/TMI.2020.2996645.

29. Chen X., Yao L., Zhang Y. Residual Attention U-Net for Automated Multi-Class Segmentation of COVID-19 Chest CT Images. arXiv. 2020. arXiv:2004.05645.

30. Abdel-Basset M., Chang V., Hawash H., Chakrabortty R.K., Ryan M. FSS-2019-nCov: A deep learning architecture for semi-supervised few-shot segmentation of COVID-19 infection. Knowl. Based Syst. 2021;212:106647. doi: 10.1016/j.knosys.2020.106647.

31. Gao S.H., Cheng M.M., Zhao K., Zhang X.Y., Yang M.H., Torr P. Res2Net: A New Multi-Scale Backbone Architecture. IEEE Trans. Pattern Anal. Mach. Intell. 2021;43:652–662. doi: 10.1109/TPAMI.2019.2938758.

32. Zhao C., Xu Y., He Z., Tang J., Zhang Y., Han J., Shi Y., Zhou W. Lung segmentation and automatic detection of COVID-19 using radiomic features from chest CT images. Pattern Recognit. 2021;119:108071. doi: 10.1016/j.patcog.2021.108071.

33. Milletari F., Navab N., Ahmadi S.A. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation; Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV); Stanford, CA, USA. 25–28 October 2016.

34. Li L., Qin L., Xu Z., Yin Y., Wang X., Kong B., Bai J., Lu Y., Fang Z., Song Q., et al. Using Artificial Intelligence to Detect COVID-19 and Community-acquired Pneumonia Based on Pulmonary CT: Evaluation of the Diagnostic Accuracy. Radiology. 2020;296:E65–E71. doi: 10.1148/radiol.2020200905.

35. He K., Zhang X., Ren S., Sun J. Deep Residual Learning for Image Recognition; Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Las Vegas, NV, USA. 27–30 June 2016.

36. Singh V.K., Abdel-Nasser M., Pandey N., Puig D. LungINFseg: Segmenting COVID-19 Infected Regions in Lung CT Images Based on a Receptive-Field-Aware Deep Learning Framework. Diagnostics. 2021;11:158. doi: 10.3390/diagnostics11020158.

37. Shan F., Gao Y., Wang J., Shi W., Shi N., Han M., Xue Z., Shen D., Shi Y. Abnormal lung quantification in chest CT images of COVID-19 patients with deep learning and its application to severity prediction. Med. Phys. 2021;48:1633–1645. doi: 10.1002/mp.14609.

38. Gerard S.E., Herrmann J., Xin Y., Martin K.T., Rezoagli E., Ippolito D., Bellani G., Cereda M., Guo J., Hoffman E.A., et al. CT image segmentation for inflamed and fibrotic lungs using a multi-resolution convolutional neural network. Sci. Rep. 2021;11. doi: 10.1038/s41598-020-80936-4.

39. Pan F., Li L., Liu B., Ye T., Li L., Liu D., Ding Z., Chen G., Liang B., Yang L., et al. A novel deep learning-based quantification of serial chest computed tomography in Coronavirus Disease 2019 (COVID-19). Sci. Rep. 2021;11:1–11. doi: 10.1038/s41598-020-80261-w.

40. Ma J., Wang Y., An X., Ge C., Yu Z., Chen J., Zhu Q., Dong G., He J., He Z., et al. Toward data-efficient learning: A benchmark for COVID-19 CT lung and infection segmentation. Med. Phys. 2021;48:1197–1210. doi: 10.1002/mp.14676.

41. Elharrouss O., Subramanian N., Al-Maadeed S. An encoder-decoder-based method for COVID-19 lung infection segmentation. arXiv. 2020. arXiv:2007.00861. doi: 10.1007/s42979-021-00874-4.

42. Müller D., Rey I.S., Kramer F. Automated Chest CT Image Segmentation of COVID-19 Lung Infection based on 3D U-Net. arXiv. 2020. arXiv:2007.04774. doi: 10.1016/j.imu.2021.100681.

43. Tilborghs S., Dirks I., Fidon L., Willems S., Eelbode T., Bertels J., Ilsen B., Brys A., Dubbeldam A., Buls N., et al. Comparative study of deep learning methods for the automatic segmentation of lung, lesion and lesion type in CT scans of COVID-19 patients. arXiv. 2020. arXiv:2007.15546.

44. Tajbakhsh N., Jeyaseelan L., Li Q., Chiang J.N., Wu Z., Ding X. Embracing imperfect datasets: A review of deep learning solutions for medical image segmentation. Med. Image Anal. 2020;63:101693. doi: 10.1016/j.media.2020.101693.

45. El-Baz A.S., Gimel’farb G.L., Suri J.S. Stochastic Modeling for Medical Image Analysis. OCLC: 1086143882; CRC Press; Boca Raton, FL, USA: 2016.

46. Fuglede B., Topsoe F. Jensen-Shannon divergence and Hilbert space embedding; Proceedings of the International Symposium on Information Theory 2004; Chicago, IL, USA. 27 June–2 July 2004.

47. MacKay D.J.C. Information Theory, Inference, and Learning Algorithms. Cambridge University Press; New York, NY, USA: 2003. p. 34.

48. Gerig G., Jomier M., Chakos M. Medical Image Computing and Computer-Assisted Intervention–MICCAI 2001. Springer; Berlin/Heidelberg, Germany: 2001. Valmet: A New Validation Tool for Assessing and Improving 3D Object Segmentation; pp. 516–523.

49. Soliman A., Khalifa F., Alansary A., Gimel’farb G., El-Baz A. Performance Evaluation of an Automatic MGRF-Based Lung Segmentation Approach. AIP; College Park, MD, USA: 2013.

50. An P., Xu S., Harmon S.A., Turkbey E.B., Sanford T.H., Amalou A., Kassin M., Varble N., Blain M., Anderson V., et al. CT Images in COVID-19. Available online: https://wiki.cancerimagingarchive.net/display/Public/CT+Images+in+COVID-19 (accessed on 5 June 2021).

51. Kasper J., Decker J., Wiesenreiter K., Römmele C., Ebigbo A., Braun G., Häckel T., Schwarz F., Wehler M., Messmann H., et al. RöFo-Fortschritte auf dem Gebiet der RöNtgenstrahlen und der Bildgebenden Verfahren. Georg Thieme Verlag KG; New York, NY, USA: 2021. Typical Imaging Patterns in COVID-19 Infections of the Lung on Plain Chest Radiographs to Aid Early Triage.

52. Chen L.C., Zhu Y., Papandreou G., Schroff F., Adam H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation; Proceedings of the European Conference on Computer Vision (ECCV); Munich, Germany. 8–14 September 2018.

53. Çiçek Ö., Abdulkadir A., Lienkamp S.S., Brox T., Ronneberger O. Medical Image Computing and Computer-Assisted Intervention–MICCAI 2016. Springer International Publishing; Berlin/Heidelberg, Germany: 2016. 3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation; pp. 424–432.
