Abstract

Surveillance of sleeping posture is essential for bed-ridden patients or individuals at risk of falling out of bed. Existing sleep posture monitoring and classification systems may not be able to accommodate the covering of a blanket, which represents a barrier to conducting pragmatic studies. The objective of this study was to develop an unobtrusive sleep posture classification system that could accommodate the use of a blanket. The system uses an infrared depth camera for data acquisition and a convolutional neural network to classify sleeping postures. We recruited 66 participants (40 men and 26 women) to perform seven major sleeping postures (supine, prone (head left and right), log (left and right) and fetal (left and right)) under four blanket conditions (thick, medium, thin, and no blanket). Data augmentation was conducted by affine transformation and data fusion, generating additional blanket conditions from the original dataset. Coarse-grained (four-posture) and fine-grained (seven-posture) classifiers were trained using two fully connected network layers. For the coarse classification, the log and fetal postures were merged into a side-lying class and the prone class (head left and right) was pooled. The results show that the overall F1-score dropped by 8.2% when switching to the fine-grained classifier. In addition, compared to no blanket, a thick blanket reduced the overall F1-scores by 3.5% and 8.9% for the coarse- and fine-grained classifiers, respectively; the lowest performance was seen in classifying the log (right) posture under a thick blanket, with an F1-score of 72.0%. In conclusion, we developed a system that can classify seven types of common sleeping postures under blankets and achieved an F1-score of 88.9%.

Keywords: sleep posture recognition, convolutional neural network, sleep disorder, sleep behavior, sleep monitoring, sleep surveillance

1. Introduction

Good sleep is imperative to health and well-being [1]. Significant associations have been made between sleep disorders and chronic diseases/conditions such as obesity, diabetes, and hypertension [2,3,4]. Poor sleep or sleep disturbance can contribute to depression, anxiety, and other mental or psychiatric conditions [5]. The prevalence of sleep disorders is 47 individuals among every 1000 in the population, while some reports suggest that half the population may experience sleep disorders [6]. In China, more than one-quarter of adolescents have been reported to suffer from sleep disturbance [7]. Insomnia represents the most common sleep disorder and has been investigated using polysomnography, ballistocardiography, photoplethysmography and actigraphy [1].

Apart from sleep quality, sleep posture constitutes another important category in the field [8]. Different lying or recumbent positions in sleep alter spinal loading, intervertebral disc pressure, and muscle activity [9,10], potentially leading to sleep-related musculoskeletal pain and disorders such as neck spasm, back pain and waking symptoms [11,12]. For example, a prolonged non-symmetrical sleeping posture might lead to structural spinal changes and symptoms [12,13]. Moreover, sleep apnea, sleep paralysis, and nocturnal gastroesophageal reflux can also be associated with poor sleeping posture [14,15,16]. Investigating sleeping posture is of paramount importance in order to understand the pathomechanism of sleeping disorders and to design interventions for them.

Surveillance of sleeping posture is essential for bed-ridden patients because of the risk of pressure sores [17]. Appropriate body turning frequency or changes in sleeping posture can relieve the prolonged localization of pressure that leads to tissue ischemia and necrosis [18]. In addition, it is also essential to monitor sleeping posture for individuals at risk of falling out of bed, particularly elderly people with dementia or delirium, or those at the end of life [19]. While pressure mats and infrared fences have been routinely exploited for this purpose, some researchers developed an integrated depth camera and impulse radar system to monitor wandering behavior and alert caregivers when elderly people get out of bed [20].

Traditionally, measurement of sleep posture relied on videotaping with manual labeling or self-reported assessments, which could be inaccurate [12,21]. Tang et al. [22] reviewed the nonintrusive technology applied for recognition of sleep posture, including visible light, infrared, and depth cameras [23,24], inertial measurement units with wireless connection [25], and radar/radio sensors [1,8,26]. Although efforts were made to utilize and integrate different sensors for better versatility, there were few attempts to recognize and classify sleeping postures accurately [22]. Body load and pressure patterns represent another area of study dedicated to sleep posture recognition. A pressure-sensing mattress or distributed pressure sensors embedded in the mattress can be used to estimate changes of posture during sleep [27,28,29]. Recently, machine learning techniques, such as deep learning, support vector machines (SVM), k-nearest neighbors (KNN), and convolutional neural networks (CNN), were applied to improve the accuracy of posture classification for both optical and pressure sensing [23,24,30,31,32,33,34,35]. However, a lack of robustness remained the major issue that limited their application in the field [33].

A state-of-the-art review on sleep surveillance technology highlighted that tracking movements and postures through blankets was the primary barrier to achieving accurate noncontact monitoring, which remained unresolved [36]. To this end, we aimed to develop a sleep posture classifier using deep learning models with an infrared depth camera and blankets with different thicknesses and materials. Accordingly, we implemented fine-grained classification of seven postures with regard to head and leg positions [36] to improve the robustness of the classifier, and coarse classification of the four standard postures (supine, prone, left side-lying, and right side-lying) to facilitate comparison with existing studies.

2. Materials and Methods

2.1. Participant Recruitment

We recruited 66 adults (40 men and 26 women) from the university campus by convenience sampling. The mean age of the participants was 35.7 years (SD: 17.4, range 18 to 72), and their average height and weight were 167 cm (SD: 18 cm) and 63 kg (SD: 12.23 kg), respectively. They had no reported severe sleep deprivation, sleep disorder, musculoskeletal pain, or deformity. The experiment was approved by the Institutional Review Board of the university (reference no.: HSEARS20210127007). All participants signed informed consent after receiving oral and written descriptions of the experimental procedures before the start of the experiment.

2.2. Hardware Setup

The sleeping posture data were collected from participants lying on a standard electronic rehabilitation bed that was 196 cm long by 90 cm wide and 55 cm high. A 3D time-of-flight near-infrared red, green, blue (RGB) camera (RealSense D435i depth camera, Intel Corp., Santa Clara, CA, USA) was mounted 1.6 m above the bed surface on a steel camera stand. The bed and the annotated area with a color-coded paper for labeling the posture scene were within the camera coverage. The resolution of the camera was 848 × 480 pixels at a frame rate of 6 fps. Four blanket conditions were used: no blanket and three types of blankets representing a thick (8 cm thick), medium (2 cm thick), and thin (0.4 cm thick) blanket. The product names of the blankets were the FJALLARNIKA extra warm duvet, SAFFERO light warm duvet, and VALLKRASSING duvet, purchased from IKEA (Delft, The Netherlands).

2.3. Experimental Procedure

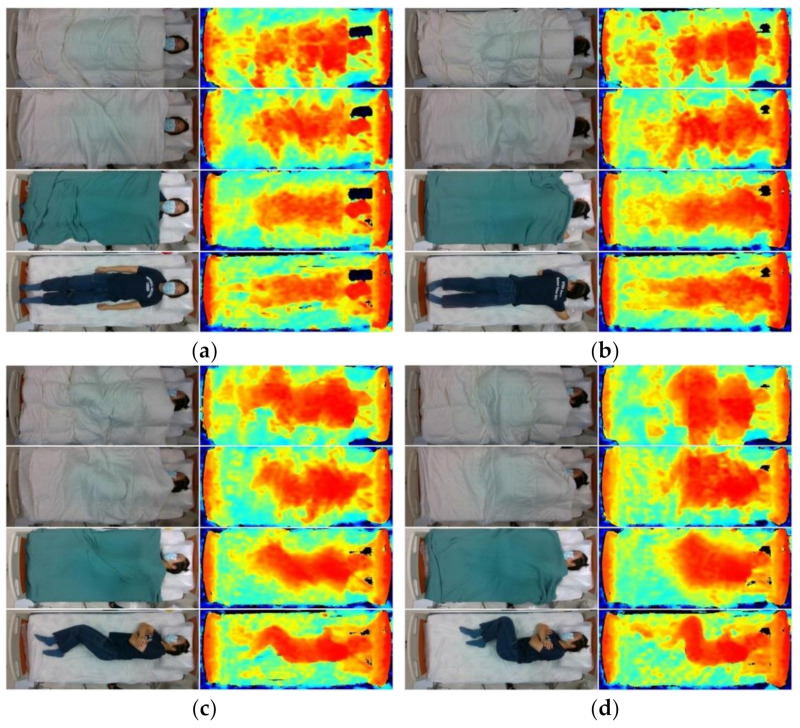

During the experiment, participants were instructed to perform 7 recumbent postures: (1) supine, (2) prone with head turned left, (3) prone with head turned right, (4) log left, (5) log right, (6) fetal left, and (7) fetal right (log and fetal are variations of side-lying). In addition, we instructed the participants to flex their knees at a higher level resembling a cuddle for the fetal position compared to the log position. The supine, prone, log, and fetal positions are demonstrated in Figure 1.

Figure 1.

RGB (left column) and infrared (right column) images of a typical participant under thick (first row), medium (second row), thin (third row), and control (fourth row) blanket conditions in (a) supine, (b) prone (head left), (c) log (right), and (d) fetal (right) postures.

The participants were given time to adjust themselves into their most comfortable position, with a voice cue given for each posture. They were then asked to maintain their position after final adjustment. For each posture, the investigators covered the participant with each blanket in turn, from the thickest to no blanket, so that the body position under the different blankets would be identical. If any movement or change in posture was observed without a voice cue, the participant was asked to repeat the posture. The infrared RGB depth camera recorded continuously throughout the entire course of the experiment. For each posture condition, we replaced a color-coded paper next to the bed, within the camera coverage, to mark the time for splicing and to facilitate labeling and pre-processing of the data.

2.4. Data Pre-Processing and Augmentation

On the RealSense Software Development Kit (SDK) platform, the RGB and infrared depth image data were aligned and streamlined. The images/videos were cropped to the bed area only through a manual pipeline program built on the SDK and OpenCV. All data were spliced and labeled based on the color-coded paper placed during the experiment and on observation. Together with the participant information, the processed and labeled data were organized into a MariaDB database. Of the 66 participants, the data of 51 were randomly selected for model training, while the data of the remaining 15 were used for model testing and evaluation.
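A participant-level split like the one described above keeps every image of a given person on one side of the train/test boundary. The following is a minimal sketch of such a split; the function name `split_participants`, the integer participant IDs, and the seed are illustrative assumptions and are not taken from the study's code.

```python
import random


def split_participants(participant_ids, n_test=15, seed=0):
    """Randomly assign whole participants to the training or testing set."""
    ids = sorted(participant_ids)
    rng = random.Random(seed)  # fixed seed is an assumption, for repeatability only
    rng.shuffle(ids)
    test_ids = set(ids[:n_test])
    train_ids = set(ids[n_test:])
    return train_ids, test_ids


# 66 hypothetical participant IDs, split into 51 for training and 15 for testing.
train_ids, test_ids = split_participants(range(1, 67))
print(len(train_ids), len(test_ids))
```

Splitting by participant rather than by image avoids the same body appearing in both sets, which would otherwise inflate the test scores.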

The original model training dataset consisted of approximately 1400 data samples (51 participants × 7 postures × 4 blanket conditions). A customized data augmentation strategy was applied to the model training to generate more blanket conditions to account for the limited number of samples. Data augmentation was conducted using affine transformation of the image data and data fusion of the blanket conditions.

The affine transformation was implemented using the Albumentations library, which scaled, translated, rotated, and sheared the images with range shifts of 5%, 2%, 5.0°, and 2.5°, respectively [37]. The range shifts were determined empirically, such that the participant’s whole body remained within the image after transformation.
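To make the four range shifts concrete, the sketch below samples one random affine matrix with NumPy. It is not the Albumentations pipeline used in the study: the function name `sample_affine_matrix`, the interpretation of each range as a symmetric interval (for example ±5% scale), the composition order, and the 848 × 480 image size are all assumptions made for illustration.

```python
import numpy as np


def sample_affine_matrix(rng, width, height,
                         scale_range=0.05, shift_range=0.02,
                         rotate_deg=5.0, shear_deg=2.5):
    """Sample a 3x3 homogeneous affine matrix acting about the image centre."""
    s = 1.0 + rng.uniform(-scale_range, scale_range)        # isotropic scale factor
    tx = rng.uniform(-shift_range, shift_range) * width     # horizontal shift in pixels
    ty = rng.uniform(-shift_range, shift_range) * height    # vertical shift in pixels
    theta = np.deg2rad(rng.uniform(-rotate_deg, rotate_deg))
    phi = np.deg2rad(rng.uniform(-shear_deg, shear_deg))

    scale = np.diag([s, s, 1.0])
    shear = np.array([[1.0, np.tan(phi), 0.0],
                      [0.0, 1.0, 0.0],
                      [0.0, 0.0, 1.0]])
    rotate = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                       [np.sin(theta), np.cos(theta), 0.0],
                       [0.0, 0.0, 1.0]])
    to_origin = np.array([[1.0, 0.0, -width / 2.0],
                          [0.0, 1.0, -height / 2.0],
                          [0.0, 0.0, 1.0]])
    back = np.array([[1.0, 0.0, width / 2.0 + tx],
                     [0.0, 1.0, height / 2.0 + ty],
                     [0.0, 0.0, 1.0]])
    # Move the centre to the origin, scale, shear, rotate, then move back and shift.
    return back @ rotate @ shear @ scale @ to_origin


rng = np.random.default_rng(0)
M = sample_affine_matrix(rng, width=848, height=480)
print(M.round(3))
```

The top two rows of such a matrix could then be passed to an image-warping routine such as OpenCV's `cv2.warpAffine`; small ranges like these keep the whole body inside the frame, which matches the stated selection criterion.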

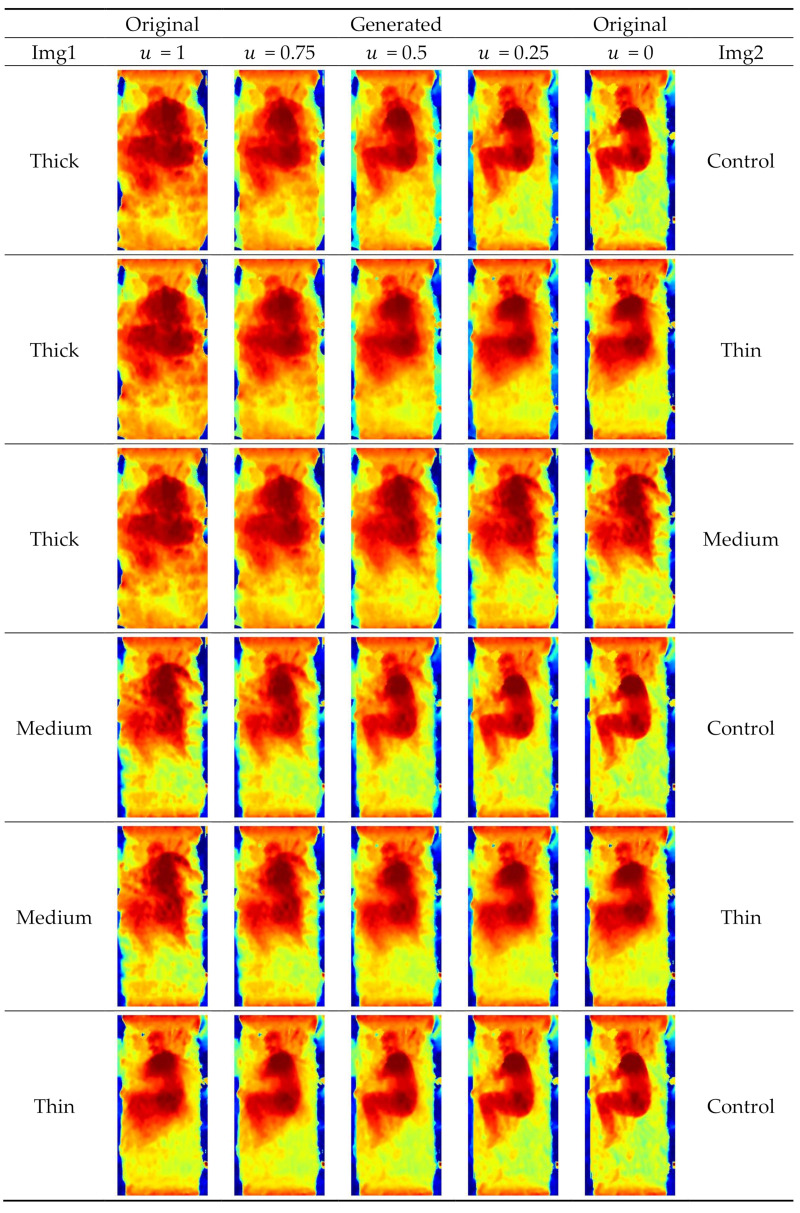

For the data fusion, we synthesized an additional dataset from the original model training dataset and generated more blanket conditions using an intraclass mix-up algorithm with random variables. The depth images can be interpreted as a linear combination over the multiple positions of the human body and the blanket, under the premise that the participants maintained their posture across blanket conditions during data acquisition. The technique interpolated the depth image data and constructed hypothetical variations of new blankets. Mathematically, the weighted sum of two depth images (blanket conditions) was applied, as shown in Equation (1).

$$\mathrm{img}_{\mathrm{new}} = u \cdot \mathrm{img}_1 + (1 - u) \cdot \mathrm{img}_2 \qquad (1)$$

where img1 and img2 are two depth images of the same posture drawn from different blanket conditions (all pairwise combinations were used), and u is a random number drawn from a uniform distribution between 0 and 1.

Four blanket conditions contributed to 6 possible combinations and thus 6 hypothetical blanket conditions for img1 and img2 (i.e., the 6 rows in Figure 2). For ease of understanding, we present Figure 2 to illustrate all 6 combinations of blanket pairs with 3 pre-assigned u values. It should be noted that a random u value will be assigned in every combination and in every epoch during model training.
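The pairing and weighting described above can be sketched in a few lines of NumPy. This is an illustrative reading of the intraclass mix-up, assuming the weighted sum takes the convex form u · img1 + (1 − u) · img2; the function name `fuse_blankets`, the dictionary layout, and the constant-valued toy frames are hypothetical.

```python
from itertools import combinations

import numpy as np

BLANKETS = ("thick", "medium", "thin", "control")


def fuse_blankets(depth_by_blanket, rng):
    """Return one synthetic depth image per blanket pair for a single posture."""
    fused = {}
    for a, b in combinations(BLANKETS, 2):
        u = rng.uniform(0.0, 1.0)  # redrawn for every pair and on every call
        fused[(a, b)] = u * depth_by_blanket[a] + (1.0 - u) * depth_by_blanket[b]
    return fused


rng = np.random.default_rng(0)
# Toy 4x4 "depth images", one per blanket condition, filled with 0, 1, 2 and 3.
frames = {name: np.full((4, 4), float(i)) for i, name in enumerate(BLANKETS)}
fused = fuse_blankets(frames, rng)
print(len(fused))
```

Calling `fuse_blankets` once per sample in every epoch would give a fresh u each time, so the network rarely sees exactly the same synthetic blanket twice.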

Figure 2.

Illustration of data fusion technique on six combinations of blanket conditions.

Both the original model training dataset (51 participants) and the synthesized dataset were used for the model training. Only the original testing dataset (15 participants) was used for model evaluation and testing.

2.5. Model Training and Architecture

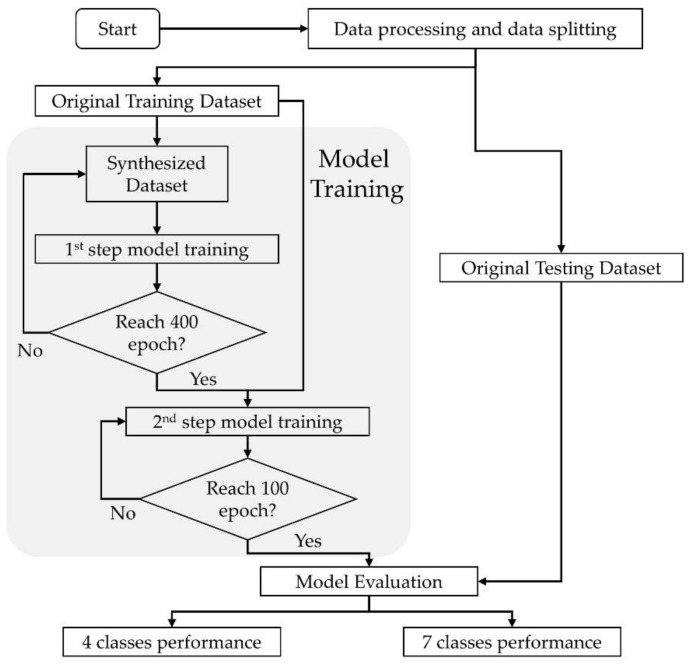

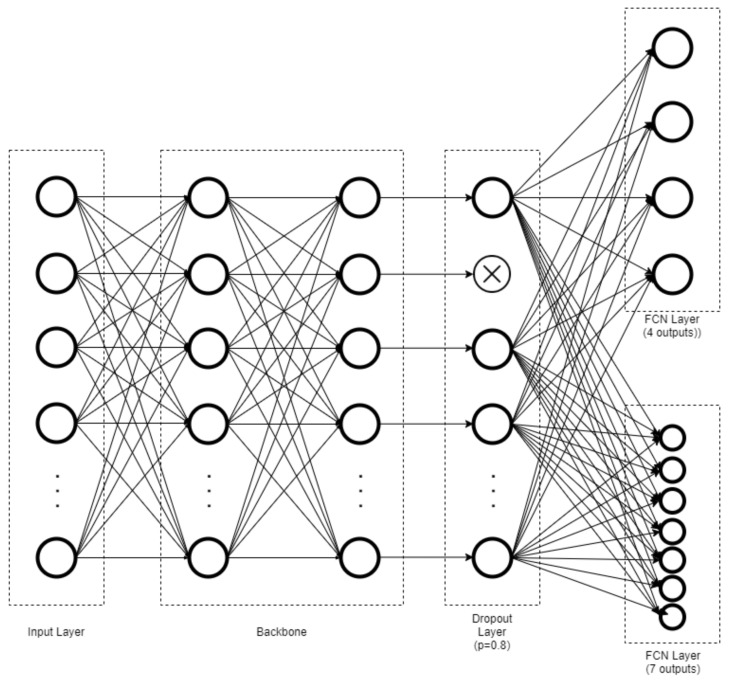

A convolutional neural network (CNN) with EfficientNet as the backbone [38] over 2 output channels was implemented via TensorFlow Keras v. 2.4.1 (Google Brain Team, Google Alphabet, Mountain View, CA, USA) with Compute Unified Device Architecture, CUDA (Nvidia, Santa Clara, CA, USA). The CNN was built by replacing the last layer of EfficientNet with a dropout layer, followed by 2 parallel fully connected network (FCN) layers with Softmax as the activation function, to achieve coarse- (4-posture) and fine-grained (7-posture) classification. The network was initialized with weights pretrained using the “noisy-student” transfer learning method [39]. The dropout layer was assigned a probability of 0.8 to mitigate overfitting. Figure 3 and Figure 4 show flowcharts of the functional component linkage and the architecture of the model network, respectively. The model was subsequently trained using the prepared dataset for the coarse- (4-posture) and fine-grained (7-posture) classification. The fine-grained classification was based on the 7 postures performed by the participants. In the coarse classification, the log and fetal posture classes were merged into the corresponding side-lying class, and the prone postures with different head positions were pooled.
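Because both heads are trained from the same images, each sample needs a fine label and a derived coarse label. The mapping below is a minimal sketch of the merging rule described above; the label strings, the class ordering, and the helper name `encode` are illustrative assumptions.

```python
# Merge rule: log and fetal collapse into side-lying (per side), and the two
# prone head orientations collapse into a single prone class.
FINE_TO_COARSE = {
    "supine": "supine",
    "prone (head left)": "prone",
    "prone (head right)": "prone",
    "log (left)": "side (left)",
    "fetal (left)": "side (left)",
    "log (right)": "side (right)",
    "fetal (right)": "side (right)",
}

FINE_CLASSES = list(FINE_TO_COARSE)                      # 7 fine-grained classes
COARSE_CLASSES = sorted(set(FINE_TO_COARSE.values()))    # 4 coarse classes


def encode(fine_label):
    """Return (coarse_index, fine_index) targets for the two classification heads."""
    coarse_label = FINE_TO_COARSE[fine_label]
    return COARSE_CLASSES.index(coarse_label), FINE_CLASSES.index(fine_label)


print(encode("fetal (right)"))
```

Deriving the coarse target from the fine one guarantees that the two heads never receive contradictory labels for the same image.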

Figure 3.

Flowchart of functional component linkage of model network.

Figure 4.

Architecture of model network illustrating fully connected network (FCN) layers toward coarse-grained (4-posture) and fine-grained (7-posture) classification.

During the model training, the model parameters were determined by stochastic gradient descent to optimize the loss function. The outputs were passed to the categorical cross-entropy loss functions for both the coarse and fine classification, as shown in Equations (2) and (3). Finally, the total loss function was constructed based on a simple sum of losses, as shown in Equation (4):

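$$L_{\mathrm{coarse}} = -\sum_{c=1}^{C_{\mathrm{coarse}}} \mathbb{1}_{y \in c} \, \log p(y \in c) \qquad (2)$$

$$L_{\mathrm{fine}} = -\sum_{c=1}^{C_{\mathrm{fine}}} \mathbb{1}_{y \in c} \, \log p(y \in c) \qquad (3)$$

$$L_{\mathrm{total}} = L_{\mathrm{coarse}} + L_{\mathrm{fine}} \qquad (4)$$

where $C$ is the number of classes ($C_{\mathrm{coarse}} = 4$ and $C_{\mathrm{fine}} = 7$); $\mathbb{1}_{y \in c}$ is an indicator function, which is one if the input $y$ belongs to class $c$ and zero otherwise; and $p(y \in c)$ is the predicted probability that the input belongs to class $c$. $L_{\mathrm{coarse}}$ and $L_{\mathrm{fine}}$ denote the categorical cross-entropy between the true and predicted outputs of the network for the coarse- and fine-grained classifications, respectively. The pseudocode of the algorithm is included in the Appendix (Table A1).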
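The summed two-head loss can be illustrated with a small NumPy forward pass. This is a sketch under several assumptions: the backbone is replaced by random 16-dimensional features, bias terms and dropout are omitted, and the loss is averaged over the batch (the text does not say whether it is summed or averaged). The names `softmax`, `cross_entropy`, and `total_loss` are illustrative.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax with max subtraction for numerical stability."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def cross_entropy(probs, labels):
    """Mean categorical cross-entropy for integer class labels."""
    picked = probs[np.arange(len(labels)), labels]  # probability of the true class
    return float(-np.mean(np.log(picked + 1e-12)))


def total_loss(features, w_coarse, w_fine, y_coarse, y_fine):
    """Simple sum of the coarse (4-class) and fine (7-class) head losses."""
    p_coarse = softmax(features @ w_coarse)
    p_fine = softmax(features @ w_fine)
    return cross_entropy(p_coarse, y_coarse) + cross_entropy(p_fine, y_fine)


rng = np.random.default_rng(0)
features = rng.normal(size=(8, 16))      # stand-in for backbone features (hypothetical size)
w_coarse = np.zeros((16, 4))             # untrained coarse head
w_fine = np.zeros((16, 7))               # untrained fine head
y_coarse = rng.integers(0, 4, size=8)
y_fine = rng.integers(0, 7, size=8)
loss = total_loss(features, w_coarse, w_fine, y_coarse, y_fine)
print(loss)
```

With all-zero weights both heads predict uniform probabilities, so the loss equals ln 4 + ln 7, which is a convenient sanity check before training starts.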

The Adam optimizer was used with a fixed learning rate of 0.0001 and an L2 regularization factor of 0.0005. The model was trained in two steps because of the bias in the probability density distribution of the synthesized dataset. It was first trained on the synthesized dataset for 400 epochs and then on the original training dataset for 100 epochs; the numbers of epochs were determined by observing the learning curve.

2.6. Model Evaluation

The original testing dataset of the 15 participants was used for model evaluation. The model’s performance was evaluated using macro accuracy, recall, precision, and F1 score parameters based on the true/false positives and negatives of the predicted classes. In addition, Cohen’s kappa was used to assess the agreement of the predicted outcome and the dataset labels (ground truth) [40]. These parameters were calculated based on Equations (5)–(9). A confusion matrix across the coarse- and fine-grained classifications was also developed to identify whether the source of errors was mainly the classification technique, posture, or blanket conditions.

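$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (5)$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \qquad (6)$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \qquad (7)$$

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \qquad (8)$$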

Here, TP, FP, TN, and FN are the numbers of true positives, false positives, true negatives, and false negatives, respectively.

$$\kappa = \frac{p_o - p_e}{1 - p_e} \qquad (9)$$

Here, $p_o$ is the empirical probability of agreement on the label assigned to any sample (observed agreement ratio), and $p_e$ is the expected agreement when both annotators assign labels randomly, estimated using a per-annotator empirical prior over the class labels [40].
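The evaluation metrics can all be derived from a confusion matrix. The sketch below assumes that accuracy is the overall fraction of correct predictions and that recall, precision, and F1 are unweighted (macro) means over classes; the source does not spell out the averaging, so this is one plausible reading. It also assumes every class occurs at least once among both the labels and the predictions. The function names are illustrative.

```python
import numpy as np


def macro_metrics(cm):
    """cm[i, j] counts samples of true class i that were predicted as class j."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # predicted as the class but belonging elsewhere
    fn = cm.sum(axis=1) - tp   # belonging to the class but predicted elsewhere
    recall = tp / (tp + fn)
    precision = tp / (tp + fp)
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": float(tp.sum() / cm.sum()),
        "recall": float(recall.mean()),
        "precision": float(precision.mean()),
        "f1": float(f1.mean()),
    }


def cohen_kappa(cm):
    """Cohen's kappa between true and predicted labels."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    p_o = np.trace(cm) / total                                  # observed agreement
    p_e = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / total ** 2  # chance agreement
    return float((p_o - p_e) / (1.0 - p_e))


toy_cm = [[5, 1],
          [2, 4]]  # hypothetical two-class example, not study data
print(macro_metrics(toy_cm), cohen_kappa(toy_cm))
```

For the toy matrix the observed agreement is 0.75 and the chance agreement is 0.5, so kappa comes out at 0.5; the same functions apply unchanged to 4 × 4 or 7 × 7 matrices.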

3. Results

The overall performance of the coarse- and fine-grained classification is presented in Table 1. In general, the former performed better than the latter. The accuracy, recall, and precision of the coarse classification model were approximately 8 percentage points higher than those of the fine-grained classification. The F1 score of the four-posture classifier was 97.1%, whereas that of the seven-posture classifier was 88.9%. In addition, the four-posture coarse classification model demonstrated excellent reliability, with a Cohen’s kappa of 0.970, whereas the seven-posture fine-grained classification had a Cohen’s kappa coefficient of 0.891, indicating strong agreement between the ground truth and the predictions.

Table 1.

Overall performance of the 4-posture coarse-grained and 7-posture fine-grained classification models trained with the convolutional neural network.

| Performance | 4-Posture Coarse Classification | 7-Posture Fine-Grained Classification |
|---|---|---|
| Accuracy | 97.5% | 89.0% |
| Recall | 97.3% | 89.0% |
| Precision | 97.0% | 88.9% |
| F1 score | 97.1% | 88.9% |
| Cohen’s kappa coefficient | 0.970 | 0.891 |

A subgroup analysis of the model performance (F1-score) was conducted, stratifying the posture and blanket conditions. The blanket affected the model performance of the classifiers. As seen in Table 2, the overall performance of the coarse- and fine-grained classification declined from 98.6% to 95.1% and from 93.3% to 84.4%, respectively, when the condition shifted from control (no blanket) to thick blanket. The overall F1-score difference between the thin blanket and control was minimal: 0.1% for the coarse-grained and 2% for the fine-grained classification.

Table 2.

Model performance (F1-score) of coarse- and fine-grained classifications in each posture and blanket condition.

| Posture/Blanket | Thick | Medium | Thin | Control | Overall |
|---|---|---|---|---|---|
| 4-posture coarse-grained classification | | | | | |
| Supine | 92.3% | 92.9% | 96.0% | 96.3% | 94.3% |
| Side (right) | 95.8% | 98.0% | 100% | 98.0% | 98.0% |
| Side (left) | 98.1% | 98.0% | 100% | 100% | 99.0% |
| Prone | 94.3% | 96.2% | 98.1% | 100% | 97.1% |
| Overall | 95.1% | 96.3% | 98.5% | 98.6% | 97.1% |
| 7-posture fine-grained classification | | | | | |
| Supine | 92.9% | 96.3% | 96.0% | 96.3% | 95.3% |
| Log (right) | 72.0% | 83.3% | 88.9% | 87.0% | 82.8% |
| Fetal (right) | 81.5% | 83.3% | 84.6% | 92.9% | 85.7% |
| Log (left) | 92.3% | 92.9% | 96.3% | 96.3% | 94.4% |
| Fetal (left) | 96.0% | 88.0% | 91.7% | 96.0% | 92.9% |
| Prone (head right) | 75.0% | 78.6% | 89.7% | 92.9% | 84.4% |
| Prone (head left) | 81.5% | 83.3% | 91.7% | 91.7% | 86.9% |
| Overall | 84.4% | 86.5% | 91.3% | 93.3% | 88.9% |
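The blanket and granularity effects quoted in the text follow directly from the "Overall" rows of Table 2. As a quick arithmetic check, the snippet below recomputes them in percentage points; the dictionary layout and the helper name `drop` are illustrative only.

```python
# "Overall" rows of Table 2 (F1-score, %), keyed by classifier and blanket condition.
overall_f1 = {
    "coarse": {"thick": 95.1, "medium": 96.3, "thin": 98.5, "control": 98.6, "overall": 97.1},
    "fine": {"thick": 84.4, "medium": 86.5, "thin": 91.3, "control": 93.3, "overall": 88.9},
}


def drop(classifier, condition):
    """Percentage-point drop in overall F1-score relative to no blanket."""
    row = overall_f1[classifier]
    return round(row["control"] - row[condition], 1)


for clf in overall_f1:
    print(clf, {c: drop(clf, c) for c in ("thick", "medium", "thin")})

# Gap between the coarse and fine classifiers across all blanket conditions.
granularity_gap = round(overall_f1["coarse"]["overall"] - overall_f1["fine"]["overall"], 1)
print(granularity_gap)
```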

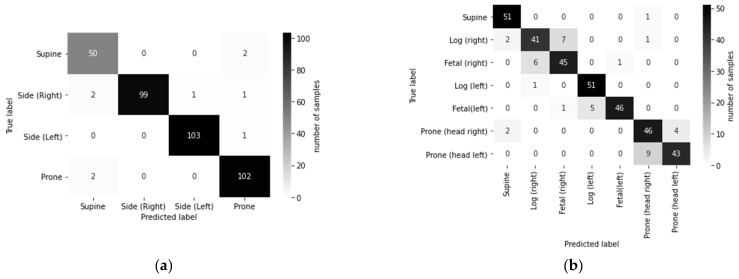

Side-lying produced the best prediction performance in the four-posture coarse classification. The overall F1-scores for right and left side-lying were 98.0% and 99.0%, respectively. However, subdividing the side-lying posture into the log and fetal postures reduced the model performance. The overall F1-score for log (right) was 82.8%, the lowest among the fine-grained classes, and it dropped further to 72.0% under the thick blanket. Subdividing prone into left and right head positions also weakened the model performance: under the thick blanket, the F1-scores were 75.0% and 81.5% for the prone postures with head right and head left, respectively (Table 2).

The confusion matrix in Figure 5a shows that the performance in classifying supine and prone was slightly inferior to that of left and right side-lying posture. The confusion matrix in Figure 5b shows that the majority of predicted errors were contributed by the further sub-classification of log and fetal postures and the head facing left and right in prone posture.

Figure 5.

Confusion matrix across true and predicted labels for (a) 4-posture coarse-grained classification and (b) 7-posture fine-grained classification.

4. Discussion

The innovation of this study is the robustness gained by including blankets in the classification of sleep postures, which enables practical applications. We also classified postures with higher granularity, from the four standard postures (supine, prone, right and left lateral) to seven postures (including head and leg positions) [36]. Another novelty is the use of a data fusion technique to generate hypothetical blanket conditions from the original dataset, which enhances the generalizability of the model. This has practical value because it is infeasible to test every available blanket. It should be noted that although this paper presents both RGB and infrared depth camera images, the deep learning model used the infrared depth images only. Therefore, confidentiality need not be compromised in actual applications.

Other posture classification systems have used depth cameras but with different configurations or levels of classification. Ren et al. [41] developed a system with the Kinect Artec scanner. They classified six postures (without prone) using SVM with the scale-invariant feature transform (SIFT) feature, which yielded an accuracy of 92.5%. The performance of our seven-posture fine-grained classifier was higher (F1-score of 93.3%) for the no-blanket condition. Although the overall accuracy of our classifier was lower (89.0%), the experimental design of our dataset allowed for more features, including the prone posture and the interference of blankets. It was challenging to differentiate the supine and prone classes because the limited depth resolution made it difficult to trace detailed head/face features. On the other hand, Grimm et al. [42] proposed an alleviation map approach to delineate depth camera images followed by a CNN to classify three postures: supine and left and right side-lying. Their system achieved an accuracy of 94%, while our four-posture coarse classification system performed better, with an overall accuracy of 97.5% despite blanket interference.

The performance of the coarse-grained classifier was approximately 8 percentage points higher than that of the fine-grained classifier, which could be due to feature-diminishing effects. For instance, the head left and head right positions in the prone posture were primarily determined by the head orientation and facial features. However, some important features, such as the nose, were hidden because of individual posture differences and the limited resolution of the depth camera. Similarly, there was a high variability of lower limb positions among individuals during side-lying, which challenged the classifier when distinguishing the log and fetal postures. Furthermore, the accuracy was lower in classifying the right side postures than the left side postures, which could be explained by the fixed condition sequence of the experiment. The right side postures were tested first, and the participants may have become more aware of the instructions and requirements of the experiment by the time the left side postures were tested. In addition, some studies reported that an individual’s dominant sleep side could contribute to accuracy bias in sleep posture classification [43,44]. A randomized cross-over trial is warranted.

We found one study that considered blankets in sleep posture classification using a noncontact approach. Mohammad et al. [45] classified 12 kinds of sleeping postures with and without a soft blanket using a Microsoft Kinect infrared depth camera, which yielded 76% and 91% accuracy, respectively. While that system examined higher postural granularity, our classifier produced higher overall accuracy and considered more blanket conditions; in particular, the thick blanket condition was very challenging. Intriguingly, for some postures, such as right side-lying in the coarse classification and log (right) in the fine-grained classification, the F1-score was greater for the thin blanket than for no blanket (Table 2), although the overall scores were slightly lower. We believe that this was because the thin blanket acted as a smoothing filter that reduced noise during the classification.

Aside from the depth camera, the classification accuracy of pressure mats and wearables, such as embedded accelerometers, has been evaluated. Ostadabbas et al. [46] applied the Gaussian mixture model to process data from a pressure mat and classify three sleep postures (supine, left and right side-lying). Their system had slightly better performance (98.4%) than our system, albeit with less granularity. In addition, Fallmann et al. [43] utilized generalized matrix learning vector quantization to classify six sleeping postures from accelerometry over the chest and both legs, with an accuracy of 98.3%. Contact-based sleep posture classification systems thus seemed to perform better. However, these systems can be obtrusive and have maintenance issues. Wearables may be unfavorable for long-term surveillance of the elderly, since they may forget to put the device back on after taking it off.

There were some strengths and weaknesses in our system. Our work involved a relatively large sample size, higher granularity of posture definitions, accommodation of blanket conditions, and easy setup. Accounting for the robustness, our network was generally effective and accurate compared to state-of-the-art methods of different modalities. However, we considered our sample to be medium sized. Our dataset also did not encompass participants older than 72 or younger than 18. The gender ratio of the participants was also unbalanced. It is necessary to use a larger dataset in order to enhance the model’s generalizability, in particular to observe positions with finer granularity [42]. It would also be useful to construct gender subgroup models and evaluate the influence of gender on the model performance.

The long-term goal of this research is to develop a sleep surveillance system that can track participants’ sleeping postures and behaviors, and it represents a milestone in establishing baseline parameters to classify postures and remedy the pragmatic problems of blankets in a controlled setting. We achieved another milestone in monitoring the behavior of getting out of bed in a previous paper [20]. The next step is to consider real-world sleeping conditions and evaluate the proportion of each posture across the time axis.

Furthermore, sleep-associated musculoskeletal disorders and pain can manifest as significant consequences of sleep complaints and deprivation. Sleep posture itself cannot indicate whether the posture is poor or problematic, although this risk factor is modifiable and can be mitigated by using the proper mattress and pillows or engaging in physical exercise [12,47,48]. We are aiming for a machine learning-based measurement of spinal alignment and limb placement with a classification of sleeping postures that could be important in identifying related problems such as neck and back pain [12,36].

5. Conclusions

This study demonstrates that the overall F1-score of our fine-grained seven-posture system, accounting for the interference of blankets, was satisfactory at 88.9%, which can pave the way toward pragmatic sleep surveillance at care homes and hospitals. We will conduct field tests on the system and enrich the dataset with participants with different health conditions. In addition, the system will be further developed to identify body morphotypes, body segment positions, and joint angles.

Appendix A

The pseudocode of the algorithm is included in Table A1.

Table A1.

The pseudocode of the model algorithm.

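| Step | Comment |
|---|---|
| Require: $\alpha$ | Step size |
| Require: $\beta_1, \beta_2 \in [0, 1)$ | Exponential decay rates for the moment estimates |
| Require: $\theta_0$ | Initial parameter vector |
| $m_0 \leftarrow 0$ | Initial 1st moment vector |
| $v_0 \leftarrow 0$ | Initial 2nd moment vector |
| $t \leftarrow 0$ | Initial time step |
| while $\theta_t$ not converged do | |
| $t \leftarrow t + 1$ | |
| Feature_vector ← BackboneNetwork(x) | Extract feature vector using CNN |
| DropoutFeatureVector ← Dropout(Feature_vector) | Dropout layer |
| $\hat{y}_{\mathrm{coarse}}$ ← fullyConnectedNetwork1(DropoutFeatureVector) | Predict coarse classification |
| $\hat{y}_{\mathrm{fine}}$ ← fullyConnectedNetwork2(DropoutFeatureVector) | Predict fine classification |
| $L_{\mathrm{total}} \leftarrow L_{\mathrm{coarse}} + L_{\mathrm{fine}}$ | Compute loss |
| $g_t \leftarrow \nabla_{\theta} L_{\mathrm{total}}(\theta_{t-1})$ | Get gradients w.r.t. stochastic objective at time step t |
| $m_t \leftarrow \beta_1 m_{t-1} + (1 - \beta_1) g_t$ | Update biased first moment estimate |
| $v_t \leftarrow \beta_2 v_{t-1} + (1 - \beta_2) g_t^2$ | Update biased second raw moment estimate |
| $\hat{m}_t \leftarrow m_t / (1 - \beta_1^t)$ | Compute bias-corrected first moment estimate |
| $\hat{v}_t \leftarrow v_t / (1 - \beta_2^t)$ | Compute bias-corrected second raw moment estimate |
| $\theta_t \leftarrow \theta_{t-1} - \alpha \, \hat{m}_t / (\sqrt{\hat{v}_t} + \epsilon)$ | Update parameters |
| end while | |
| return $\theta_t$ | Resulting parameters |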

Author Contributions

Conceptualization, D.W.-C.W. and J.C.-W.C.; methodology, D.W.-C.W., J.C.-W.C. and A.Y.-C.T.; software, A.Y.-C.T. and T.T.-C.C.; formal analysis, A.Y.-C.T. and T.T.-C.C.; investigation, A.Y.-C.T., B.P.-H.S. and A.K.-Y.C.; data curation, A.Y.-C.T., B.P.-H.S. and A.K.-Y.C.; writing—original draft preparation, A.Y.-C.T. and D.W.-C.W.; writing—review and editing, J.C.-W.C.; visualization, B.P.-H.S. and T.T.-C.C.; validation, B.P.-H.S. and T.T.-C.C.; supervision, D.W.-C.W. and J.C.-W.C.; project administration, J.C.-W.C.; funding acquisition, J.C.-W.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board of the Hong Kong Polytechnic University (HSEARS20210127007).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The program, model codes, and updates presented in this study are openly available in GitHub at https://github.com/BME-AI-Lab?tab=repositories (accessed on 18 July 2021). The dataset for the model is not publicly available since the videos and images of the participants would disclose their identity, violating confidentiality.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Lin F., Zhuang Y., Song C., Wang A., Li Y., Gu C., Li C., Xu W. SleepSense: A noncontact and cost-effective sleep monitoring system. IEEE Trans. Biomed. Circuits Syst. 2016;11:189–202. doi: 10.1109/TBCAS.2016.2541680.

- 2. Khalil M., Power N., Graham E., Deschênes S.S., Schmitz N. The association between sleep and diabetes outcomes—A systematic review. Diabetes Res. Clin. Pract. 2020;161:108035. doi: 10.1016/j.diabres.2020.108035.

- 3. Vorona R.D., Winn M.P., Babineau T.W., Eng B.P., Feldman H.R., Ware J.C. Overweight and obese patients in a primary care population report less sleep than patients with a normal body mass index. Arch. Intern. Med. 2005;165:25–30. doi: 10.1001/archinte.165.1.25.

- 4. Spiegel K., Knutson K., Leproult R., Tasali E., Cauter E.V. Sleep loss: A novel risk factor for insulin resistance and Type 2 diabetes. J. Appl. Physiol. 2005;99:2008–2019. doi: 10.1152/japplphysiol.00660.2005.

- 5. Short M.A., Booth S.A., Omar O., Ostlundh L., Arora T. The relationship between sleep duration and mood in adolescents: A systematic review and meta-analysis. Sleep Med. Rev. 2020;52:101311. doi: 10.1016/j.smrv.2020.101311.

- 6. Hombali A., Seow E., Yuan Q., Chang S.H.S., Satghare P., Kumar S., Verma S.K., Mok Y.M., Chong S.A., Subramaniam M. Prevalence and correlates of sleep disorder symptoms in psychiatric disorders. Psychiatry Res. 2019;279:116–122. doi: 10.1016/j.psychres.2018.07.009.

- 7. Liang M., Guo L., Huo J., Zhou G. Prevalence of sleep disturbances in Chinese adolescents: A systematic review and meta-analysis. PLoS ONE. 2021;16:e0247333. doi: 10.1371/journal.pone.0247333.

- 8. Liu J., Chen X., Chen S., Liu X., Wang Y., Chen L. TagSheet: Sleeping posture recognition with an unobtrusive passive tag matrix; Proceedings of the IEEE INFOCOM 2019-IEEE Conference on Computer Communications; Paris, France. 29 April–2 May 2019; pp. 874–882.

- 9. Dreischarf M., Shirazi-Adl A., Arjmand N., Rohlmann A., Schmidt H. Estimation of loads on human lumbar spine: A review of in vivo and computational model studies. J. Biomech. 2016;49:833–845. doi: 10.1016/j.jbiomech.2015.12.038.

- 10. Lee W.-H., Ko M.-S. Effect of sleep posture on neck muscle activity. J. Phys. Ther. Sci. 2017;29:1021–1024. doi: 10.1589/jpts.29.1021.

- 11. Canivet C., Östergren P.-O., Choi B., Nilsson P., Af Sillen U., Moghadassi M., Karasek R., Isacsson S.-O. Sleeping problems as a risk factor for subsequent musculoskeletal pain and the role of job strain: Results from a one-year follow-up of the Malmö Shoulder Neck Study Cohort. Int. J. Behav. Med. 2008;15:254–262. doi: 10.1080/10705500802365466.

- 12. Cary D., Briffa K., McKenna L. Identifying relationships between sleep posture and non-specific spinal symptoms in adults: A scoping review. BMJ Open. 2019;9:e027633. doi: 10.1136/bmjopen-2018-027633.

- 13. Cary D., Collinson R., Sterling M., Briffa K. Examining the relationship between sleep posture and morning spinal symptoms in the habitual environment using infrared cameras. J. Sleep Disord. Treat. Care. 2016;5:1000173.

- 14. Ye S.-Y., Eum S.-H. Implement the system of the Position Change for Obstructive sleep apnea patient. J. Korea Inst. Inf. Commun. Eng. 2017;21:1231–1236.

- 15. Cheyne J.A. Situational factors affecting sleep paralysis and associated hallucinations: Position and timing effects. J. Sleep Res. 2002;11:169–177. doi: 10.1046/j.1365-2869.2002.00297.x.

- 16. Johnson D.A., Orr W.C., Crawley J.A., Traxler B., McCullough J., Brown K.A., Roth T. Effect of esomeprazole on nighttime heartburn and sleep quality in patients with GERD: A randomized, placebo-controlled trial. Am. J. Gastroenterol. 2005;100:1914–1922. doi: 10.1111/j.1572-0241.2005.00285.x.

- 17. Waltisberg D., Arnrich B., Tröster G. Pervasive Health. Springer; Berlin/Heidelberg, Germany: 2014. Sleep quality monitoring with the smart bed; pp. 211–227.

- 18. Sprigle S., Sonenblum S. Assessing evidence supporting redistribution of pressure for pressure ulcer prevention: A review. J. Rehabil. Res. Dev. 2011;48:203–213. doi: 10.1682/JRRD.2010.05.0102.

- 19. Sharp C.A., Moore J.S.S., McLaws M.-L. Two-Hourly Repositioning for Prevention of Pressure Ulcers in the Elderly: Patient Safety or Elder Abuse? J. Bioethical Inq. 2019;16:17–34. doi: 10.1007/s11673-018-9892-3.

- 20. Cheung J.C.-W., Tam E.W.-C., Mak A.H.-Y., Chan T.T.-C., Lai W.P.-Y., Zheng Y.-P. Night-time monitoring system (eNightLog) for elderly wandering behavior. Sensors. 2021;21:704. doi: 10.3390/s21030704.

- 21. Kubota T., Ohshima N., Kunisawa N., Murayama R., Okano S., Mori-Okamoto J. Characteristic features of the nocturnal sleeping posture of healthy men. Sleep Biol. Rhythm. 2003;1:183–185. doi: 10.1046/j.1446-9235.2003.00040.x.

- 22. Tang K., Kumar A., Nadeem M., Maaz I. CNN-Based Smart Sleep Posture Recognition System. IoT. 2021;2:119–139. doi: 10.3390/iot2010007.

- 23. Yu M., Rhuma A., Naqvi S.M., Wang L., Chambers J. A posture recognition-based fall detection system for monitoring an elderly person in a smart home environment. IEEE Trans. Inf. Technol. Biomed. 2012;16:1274–1286. doi: 10.1109/TITB.2012.2214786.

- 24. Masek M., Lam C.P., Tranthim-Fryer C., Jansen B., Baptist K. Sleep monitor: A tool for monitoring and categorical scoring of lying position using 3D camera data. SoftwareX. 2018;7:341–346. doi: 10.1016/j.softx.2018.10.001.

- 25. Hoque E., Dickerson R.F., Stankovic J.A. Monitoring body positions and movements during sleep using wisps; Proceedings of the Wireless Health 2010, WH 2010; San Diego, CA, USA. 5–7 October 2010; pp. 44–53.

- 26. Zhang F., Wu C., Wang B., Wu M., Bugos D., Zhang H., Liu K.R. Smars: Sleep monitoring via ambient radio signals. IEEE Trans. Mobile Comput. 2019;20:217–231. doi: 10.1109/TMC.2019.2939791.

- 27. Liu J.J., Xu W., Huang M.-C., Alshurafa N., Sarrafzadeh M., Raut N., Yadegar B. A dense pressure sensitive bedsheet design for unobtrusive sleep posture monitoring; Proceedings of the 2013 IEEE International Conference on Pervasive Computing and Communications (PerCom); San Diego, CA, USA. 18–22 March 2013; pp. 207–215.

- 28. Pino E.J., De la Paz A.D., Aqueveque P., Chávez J.A., Morán A.A. Contact pressure monitoring device for sleep studies; Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Osaka, Japan. 3–7 July 2013; pp. 4160–4163.

- 29. Lin L., Xie Y., Wang S., Wu W., Niu S., Wen X., Wang Z.L. Triboelectric active sensor array for self-powered static and dynamic pressure detection and tactile imaging. ACS Nano. 2013;7:8266–8274. doi: 10.1021/nn4037514.

- 30. Matar G., Lina J.-M., Kaddoum G. Artificial neural network for in-bed posture classification using bed-sheet pressure sensors. IEEE J. Biomed. Health Inform. 2019;24:101–110. doi: 10.1109/JBHI.2019.2899070.

- 31. Liu Z., Mingliang S., Lu K. A Method to Recognize Sleeping Position Using an CNN Model Based on Human Body Pressure Image; Proceedings of the 2019 IEEE International Conference on Power, Intelligent Computing and Systems (ICPICS); Shenyang, China. 12–14 July 2019; pp. 219–224.

- 32. Zhao A., Dong J., Zhou H. Self-supervised learning from multi-sensor data for sleep recognition. IEEE Access. 2020;8:93907–93921. doi: 10.1109/ACCESS.2020.2994593.

- 33. Byeon Y.-H., Lee J.-Y., Kim D.-H., Kwak K.-C. Posture Recognition Using Ensemble Deep Models under Various Home Environments. Appl. Sci. 2020;10:1287. doi: 10.3390/app10041287.

- 34. Viriyavit W., Sornlertlamvanich V. Bed Position Classification by a Neural Network and Bayesian Network Using Noninvasive Sensors for Fall Prevention. J. Sens. 2020;2020. doi: 10.1155/2020/5689860.

- 35. Wang Z.-W., Wang S.-K., Wan B.-T., Song W.W. A novel multi-label classification algorithm based on K-nearest neighbor and random walk. Int. J. Distrib. Sens. Netw. 2020;16:1550147720911892. doi: 10.1177/1550147720911892.

- 36. Fallmann S., Chen L. Computational sleep behavior analysis: A survey. IEEE Access. 2019;7:142421–142440. doi: 10.1109/ACCESS.2019.2944801.

- 37. Buslaev A., Iglovikov V.I., Khvedchenya E., Parinov A., Druzhinin M., Kalinin A.A. Albumentations: Fast and flexible image augmentations. Information. 2020;11:125. doi: 10.3390/info11020125.

- 38. Tan M., Le Q. Efficientnet: Rethinking model scaling for convolutional neural networks; Proceedings of the International Conference on Machine Learning; Long Beach, CA, USA. 9–15 June 2019; pp. 6105–6114.

- 39. Xie Q., Luong M.-T., Hovy E., Le Q.V. Self-training with noisy student improves imagenet classification; Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; Seattle, WA, USA. 13–19 June 2020; pp. 10687–10698.

- 40. Artstein R., Poesio M. Inter-coder agreement for computational linguistics. Comput. Linguist. 2008;34:555–596. doi: 10.1162/coli.07-034-R2.

- 41. Ren A., Dong B., Lv X., Zhu T., Hu F., Yang X. A non-contact sleep posture sensing strategy considering three dimensional human body models; Proceedings of the 2016 2nd IEEE International Conference on Computer and Communications (ICCC); Chengdu, China. 14–17 October 2016; pp. 414–417.

- 42. Grimm T., Martinez M., Benz A., Stiefelhagen R. Sleep position classification from a depth camera using bed aligned maps; Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR); Cancun, Mexico. 4–8 December 2016; pp. 319–324.

- 43. Fallmann S., Van Veen R., Chen L., Walker D., Chen F., Pan C. Wearable accelerometer based extended sleep position recognition; Proceedings of the 2017 IEEE 19th International Conference on e-Health Networking, Applications and Services (Healthcom); Dalian, China. 12–15 October 2017; pp. 1–6.

- 44. Yousefi R., Ostadabbas S., Faezipour M., Farshbaf M., Nourani M., Tamil L., Pompeo M. Bed posture classification for pressure ulcer prevention; Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Boston, MA, USA. 30 August–3 September 2011; pp. 7175–7178.

- 45. Mohammadi S.M., Alnowami M., Khan S., Dijk D.-J., Hilton A., Wells K. Sleep posture classification using a convolutional neural network; Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Honolulu, HI, USA. 18–21 July 2018; pp. 1–4.

- 46. Ostadabbas S., Pouyan M.B., Nourani M., Kehtarnavaz N. In-bed posture classification and limb identification; Proceedings of the 2014 IEEE Biomedical Circuits and Systems Conference (BioCAS) Proceedings; Lausanne, Switzerland. 22–24 October 2014; pp. 133–136.

- 47. Ren S., Wong D.W.-C., Yang H., Zhou Y., Lin J., Zhang M. Effect of pillow height on the biomechanics of the head-neck complex: Investigation of the cranio-cervical pressure and cervical spine alignment. PeerJ. 2016;4:e2397. doi: 10.7717/peerj.2397.

- 48. Wong D.W.-C., Wang Y., Lin J., Tan Q., Chen T.L.-W., Zhang M. Sleeping mattress determinants and evaluation: A biomechanical review and critique. PeerJ. 2019;7:e6364. doi: 10.7717/peerj.6364.
