Abstract

Background: In the field of biomedical imaging, radiomics is a promising approach that aims to provide quantitative features from images. It is highly dependent on accurate identification and delineation of the volume of interest to avoid mistakes in the implementation of the texture-based prediction model. In this context, we present a customized deep learning approach aimed at the real-time and fully automated identification and segmentation of COVID-19 infected regions in computed tomography images. Methods: In a previous study, we adopted ENET, originally used for image segmentation tasks in self-driving cars, for whole parenchyma segmentation in patients with idiopathic pulmonary fibrosis, which has several similarities to COVID-19 disease. To automatically identify and segment COVID-19 infected areas, a customized ENET, namely C-ENET, was implemented and its performance compared to the original ENET and some state-of-the-art deep learning architectures. Results: The experimental results demonstrate the effectiveness of our approach. Considering the similarity between the segmentation result and the gold standard (dice similarity coefficient ~75%), our proposed methodology can be used for the identification and delineation of COVID-19 infected areas without any supervision by a radiologist, in order to obtain a volume of interest independent of the user. Conclusions: We demonstrated that the proposed customized deep learning model can be applied to rapidly identify and segment COVID-19 infected regions, to subsequently extract useful information for assessing disease severity through radiomics analyses.

Keywords: COVID-19, deep learning, segmentation, computed tomography, customized ENET

1. Introduction

In the era of the 2019 coronavirus (COVID-19) pandemic, medical imaging, e.g., computed tomography (CT), plays a preponderant role in the fight against this disease [1]. Specifically, there is a growing interest in novel image segmentation techniques to improve radiomics studies related to COVID-19 [2]. Unlike qualitative approaches, in which biomedical images are inspected and interpreted visually [3], radiomics analyses a large number of parameters, looking for statistical correlations with measurable aspects of the disease in order to identify the most relevant features. On the other hand, radiomics is strongly linked to the identification of the target region. When the delineation is performed manually, hundreds of CT slices have to be segmented, making this an extremely time-consuming task. Moreover, and above all, the segmentations become highly operator-dependent [4,5,6]. Radiomics obtains repeatable results only if the segmentation task is independent of the operator [7]. For this reason, automatic target identification (e.g., of COVID-19 infected regions) becomes crucial to avoid bias in the feature extraction task and to ensure repeatable radiomics results [8,9]. Automated segmentation is a key issue in medical image analysis in general and remains a popular and challenging research area. Although CT provides high-quality images for COVID-19 detection, the lungs can be affected by extensive patchy ground-glass regions and consolidations and may even show pleural fluid [10,11]. As a result, automated lung segmentation is a very challenging process. Furthermore, supervised segmentation algorithms, such as those proposed in our previous lung study [12], require user control and time-consuming manual corrections.
Artificial intelligence (AI) can be used to overcome these limitations by achieving accurate and operator-independent identification and delineation of COVID-19 infections, facilitating subsequent image quantification for disease diagnosis and prognosis [13]. Consequently, a substantial body of research on AI applications to chest X-rays or CT images shows effectiveness in both follow-up assessment and evaluation of COVID-19 evolution [14]. These studies indicate great potential for clinical support, but they are often prone to overfitting, being trained using proprietary data or data from a single site, e.g., [15,16]. In [15], the authors proposed a new weakly supervised learning method (Inf-Net) in which they aggregated high-level features using a parallel partial decoder for segmentation of infected regions. To improve the representation, explicit edge and implicit reverse attention were used, resulting in a dice similarity coefficient (DSC) of ~58% on nine real CT volumes. Zhou et al. [16] integrated the spatial and channel attention mechanism in U-Net to obtain a better representation of the features. Additionally, Tversky focal loss was introduced to address the segmentation of small lesions. The two datasets used in the experiments come from the Italian Society of Medical and Interventional Radiology, and the method obtained a DSC of ~83%. To overcome the issue of proprietary or single-site data, Roth et al. [17] organized an international challenge and competition for the development and comparison of AI algorithms using public data. Consequently, this study proposes a customized Efficient Neural Network (ENET) to segment COVID-19 infections, using the dataset provided by [17] for the experiments. Specifically, ENET was originally proposed for real-time image delineation on low-power mobile devices [18]. Subsequently, it has been used successfully for lung, aorta, and prostate segmentation tasks [19,20,21].
In the lung study [19], a small dataset of images from CT studies of patients with idiopathic pulmonary fibrosis (IPF) was considered. IPF is a form of interstitial lung disease characterized by a continuous and irreversible process of fibrosis that causes the progressive decay of lung function, leading to death. In patients with IPF, CT images are characterized by honeycombing, extensive patchy ground-glass regions with or without consolidations, presence of pleural fluid, and, of course, fibrotic regions. Quantification of the affected IPF regions is crucial for patient assessment and for the detection of parenchyma abnormalities [12]. COVID-19 shares several features with IPF; some of the features mentioned above can be identified in COVID-19 infected lungs [22]. The main difference between the two diseases is the presence of peripheral ground-glass opacities and little or no fibrosis in COVID-19 infected lungs [23]. Consequently, this study aims to investigate how a DL network used for the parenchyma segmentation task in patients with IPF can be applied, with appropriate adjustments, to detect and segment COVID-19 infected lung regions. We explore the effectiveness of the customized ENET model, namely C-ENET, compared to other state-of-the-art models, namely UNET [24] and ERFNET [25], using the online COVID-19 Lung CT Lesion Segmentation Challenge—2020 (COVID-19-20) dataset [17,26]. The results show the great potential of the proposed network in automatically identifying and segmenting COVID-19 infected subregions for the subsequent extraction of quantitative features. In this way, radiomics studies could benefit from an operator-independent segmentation method capable of automatically extracting the target from COVID-19 infected lungs.

2. Materials and Methods

2.1. Dataset

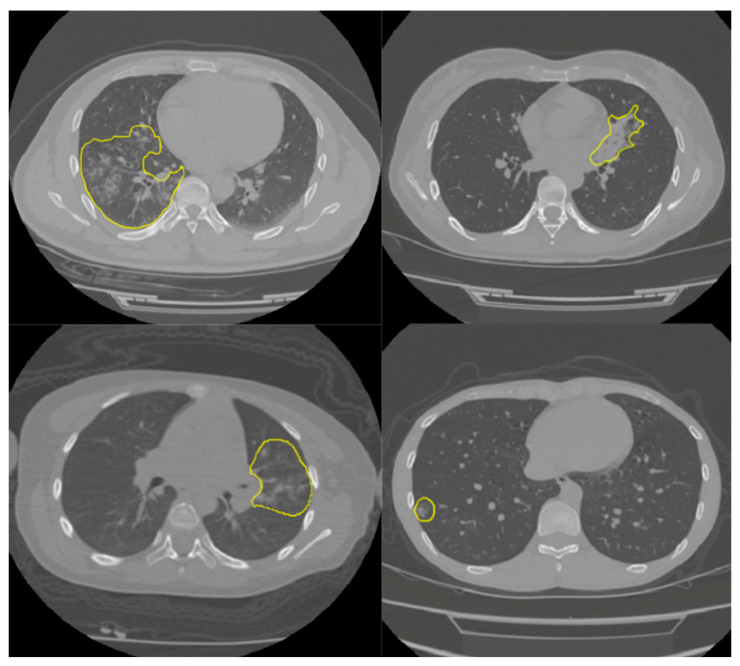

In this study, the online COVID-19 Lung CT Lesion Segmentation Challenge—2020 (COVID-19-20) dataset was used to train and test our model. The dataset contains 199 lung studies with a matrix resolution of 512 × 512 and with labels provided by the Challenge [26] (four CT examples are shown in Figure 1). Dataset annotation was made possible through the joint work of the Children’s National Hospital, NVIDIA and the National Institutes of Health for the COVID-19-20 Lung CT Lesion Segmentation Grand Challenge. The aim was to identify and segment the lung subregions infected with the disease. Since DL networks require inputs of the same size for training, CT voxels were resampled to the isotropic size of 1 × 1 × 1 mm³ using linear interpolation. We implemented the network and the Tversky loss function [27] (see Section 2.3) using Keras with a TensorFlow backend in the open-source language Python (www.python.org, accessed on 1 January 2021). We used the whole dataset in a k-fold (k = 5) cross-validation fashion, as explained in Section 2.4.

Figure 1.

CT images showing the parenchyma with visible subregions (yellow) infected with COVID-19 disease.

2.2. C-ENET

ENET is an optimized neural network implemented for the high accuracy and fast inference typically needed in the self-driving car industry. Its architecture is described in detail in [18]. Briefly, it is based on building blocks of residual networks, with each block consisting of three convolutional layers. ENET is characterized by asymmetric and separable convolutions with sequences of 5 × 1 and 1 × 5. A 5 × 5 convolution has 25 parameters, while the corresponding asymmetric convolution has only 10, which reduces the size of the network. This network has been applied for parenchyma segmentation in patients with IPF, which presents several similarities to COVID-19 disease [19]. Here, we customize that network to apply it to COVID-19 infected lungs. The difference between the two network architectures (ENET and customized ENET, namely C-ENET) is shown in Table 1. In stage 3, the output of 128 × 64 × 64 was replaced by an output of 256 × 64 × 64. This change yields a DSC distribution that is, on average, higher and less variable than that of ENET, as shown in Section 3.
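The parameter saving quoted above can be checked with a few lines of arithmetic. The following is a minimal sketch, assuming a single input and output channel and no bias terms; the helper name `conv_weights` is hypothetical and not taken from the ENET implementation.

```python
def conv_weights(kernel_h, kernel_w, in_channels=1, out_channels=1):
    """Number of weights in a 2-D convolution kernel (bias terms ignored)."""
    return kernel_h * kernel_w * in_channels * out_channels


# One full 5 x 5 kernel.
full_5x5 = conv_weights(5, 5)

# Asymmetric replacement: a 5 x 1 kernel followed by a 1 x 5 kernel.
asymmetric_5 = conv_weights(5, 1) + conv_weights(1, 5)

# Fraction of weights saved by the asymmetric factorization.
saving = 1 - asymmetric_5 / full_5x5

print(full_5x5, asymmetric_5, saving)
```

With more channels the absolute counts grow, but under the assumption that both factorized layers keep the same channel widths, the ratio between the two variants stays the same.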

Table 1.

Difference between ENET and C-ENET network architecture.

| Name | Type | Stage | Output Size |
|---|---|---|---|
| initial | | stage0 | 16 × 256 × 256 |
| bottleneck1.0 | Down-sampling | stage1 | 64 × 128 × 128 |
| 4 × bottleneck1.x | | stage1 | 64 × 128 × 128 |
| bottleneck2.0 | Down-sampling | stage2 | 128 × 64 × 64 |
| bottleneck2.1 | | stage2 | 128 × 64 × 64 |
| bottleneck2.2 | dilated 2 | stage2 | 128 × 64 × 64 |
| bottleneck2.3 | asymmetric 5 | stage2 | 128 × 64 × 64 |
| bottleneck2.4 | dilated 4 | stage2 | 128 × 64 × 64 |
| bottleneck2.5 | | stage2 | 128 × 64 × 64 |
| bottleneck2.6 | dilated 8 | stage2 | 128 × 64 × 64 |
| bottleneck2.7 | asymmetric 5 | stage2 | 128 × 64 × 64 |
| bottleneck2.8 | dilated 16 | stage2 | 128 × 64 × 64 |
| ENET | Repeat stage2, without bottleneck2.0 | stage3 | 128 × 64 × 64 |
| C-ENET | Repeat stage2, without bottleneck2.0 | stage3 | 256 × 64 × 64 |
| bottleneck4.0 | Up-sampling | stage4 | 64 × 128 × 128 |
| bottleneck4.1 | | stage4 | 64 × 128 × 128 |
| bottleneck4.2 | | stage4 | 64 × 128 × 128 |
| bottleneck5.0 | Up-sampling | stage5 | 16 × 256 × 256 |
| bottleneck5.1 | | stage5 | 16 × 256 × 256 |
| fullconv | | Final output | C × 512 × 512 |

2.3. Loss Function

In CT images, the number of voxels belonging to the target is small compared to the number of voxels belonging to the background. The COVID-19 infected lung regions are very small compared to the background, which can be composed of many different organs or tissues characterized by a wide range of Hounsfield unit (HU) values. DL suffers from this class imbalance because it has difficulty learning a reliable feature representation of the target class and, consequently, tends to assign most CT regions to the background class. To overcome this issue, as reported in [28], the Tversky loss function [27] is used to adjust the weights of false positives (FPs) and false negatives (FNs), unlike the DSC, which is the most used DL loss function. Specifically, DSC is the harmonic mean of precision and recall and therefore weighs FPs and FNs equally. In contrast, the Tversky loss is based on the Tversky index, defined as follows:

| $T(\alpha, \beta) = \dfrac{\sum_{i=1}^{N} p_{0i}\, g_{0i}}{\sum_{i=1}^{N} p_{0i}\, g_{0i} + \alpha \sum_{i=1}^{N} p_{0i}\, g_{1i} + \beta \sum_{i=1}^{N} p_{1i}\, g_{0i}}$ | (1) |

where $N$ is the number of voxels, $p_{0i}$ is the probability of voxel $i$ being part of the target, and $p_{1i}$ is the probability of it belonging to the background. The ground truth training label $g_{0i}$ is 1 for target and 0 for everything else, and vice versa for the label $g_{1i}$. By adjusting the parameters 𝛼 and 𝛽, the trade-off between FPs and FNs can be controlled. Setting 𝛼 = 𝛽 = 0.5 yields the DSC. Setting 𝛽 > 0.5 weighs recall higher than precision by placing more emphasis on FNs, which helps in slices with small target regions.
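To make the role of 𝛼 and 𝛽 concrete, the following is a minimal pure-Python sketch of the Tversky index in the form commonly given in [27]. It assumes binary segmentation, so the background probability and label are taken as one minus the target values. Taking the loss as one minus the index and adding the small smoothing term `eps` are assumptions of this sketch, not details confirmed by the text; the function names are hypothetical.

```python
def tversky_index(p_target, g_target, alpha=0.5, beta=0.5, eps=1e-7):
    """Tversky index for flat lists of target probabilities and binary labels.

    alpha weights false positives, beta weights false negatives.
    """
    pairs = list(zip(p_target, g_target))
    tp = sum(p * g for p, g in pairs)              # predicted target, is target
    fp = sum(p * (1 - g) for p, g in pairs)        # predicted target, is background
    fn = sum((1 - p) * g for p, g in pairs)        # predicted background, is target
    return (tp + eps) / (tp + alpha * fp + beta * fn + eps)


def tversky_loss(p_target, g_target, alpha=0.3, beta=0.7):
    """Loss to minimize (assumed here to be 1 - index)."""
    return 1.0 - tversky_index(p_target, g_target, alpha, beta)


# With alpha = beta = 0.5 the index equals the Dice coefficient.
dice_like = tversky_index([1, 1, 0, 0], [1, 0, 1, 0], alpha=0.5, beta=0.5)

# Same number of true positives, one error each: a missed target voxel (FN)
# costs more than a spurious one (FP) when beta > alpha.
loss_with_fn = tversky_loss([1, 0, 0, 0], [1, 1, 0, 0])
loss_with_fp = tversky_loss([1, 1, 0, 0], [1, 0, 0, 0])

print(dice_like, loss_with_fn, loss_with_fp)
```

In a Keras training setup the same expression would be written with tensor operations so that it remains differentiable; the list-based version here is only meant to show the weighting.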

2.4. Training

In AI studies, images are divided into three datasets: (i) training, (ii) validation, and (iii) testing. However, if only a small number of images are available for training, as is often the case in the biomedical field, the k-fold cross-validation strategy can be used. In this way, CT studies are divided into k folds: one fold is used as the validation set and the remaining folds are used as the training set. This process is repeated k times, using each fold in turn as the validation set and the others as the training set. Accordingly, we used 5-fold cross-validation, randomly dividing the CT studies into 5 folds of 40 or 39 studies. In this way, 5 models were trained on different splits. The final performance was obtained by averaging the results on the validation sets of the 5 models.
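The splitting procedure can be sketched as follows. This is a minimal illustration using only the standard library; the study identifiers (integers 0 to 198), the random seed, and the function name `k_fold_splits` are hypothetical, and the actual assignment of studies to folds in the experiments is not given in the text.

```python
import random


def k_fold_splits(study_ids, k=5, seed=0):
    """Yield (training_ids, validation_ids) pairs for k-fold cross-validation."""
    ids = list(study_ids)
    random.Random(seed).shuffle(ids)          # random assignment of studies
    folds = [ids[i::k] for i in range(k)]     # k folds of near-equal size
    for i in range(k):
        validation = folds[i]
        training = [s for j, fold in enumerate(folds) if j != i for s in fold]
        yield training, validation


# 199 studies, as in the dataset described in Section 2.1.
splits = list(k_fold_splits(range(199), k=5))
fold_sizes = [len(validation) for _, validation in splits]

print(fold_sizes)
```

Each study appears in exactly one validation fold, so averaging the five validation scores uses every study once.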

2.5. Experimental Details

We implemented the DL networks using the open-source programming language Python on a high-end HPC system equipped with a GPU (NVIDIA QUADRO P4000 with 8 GB of RAM, 1792 CUDA Cores). We used the Adam optimizer with a learning rate of 0.0001 for ENET and C-ENET, and 0.00001 for ERFNET and UNET, while a batch size of 8 slices was set for all experiments [19,20,21]. During training, a maximum of 100 epochs was allowed. The Tversky loss function was used with 𝛼 = 0.3 and 𝛽 = 0.7. As a stopping criterion, training was stopped if the training loss did not decrease for 10 consecutive epochs. We also applied data augmentation by randomly rotating (20), translating in both the x (0.2) and y (0.3) directions, and applying shearing (20), horizontal flip, zooming (0.4), and elastic transform (parameters 0.8 and 0.2). In addition, the data were standardized and normalized to converge faster and to avoid numerical instability and excessively large weights.
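The stopping rule described above (at most 100 epochs, stop after 10 consecutive epochs without a decrease in training loss) can be sketched independently of any DL framework. The function name and the loss sequences below are hypothetical; in a Keras workflow the same behaviour is usually obtained with an early-stopping callback rather than hand-written code.

```python
def stopping_epoch(losses, max_epochs=100, patience=10):
    """Return the 1-based epoch at which training would stop."""
    best_loss = float("inf")
    epochs_without_improvement = 0
    last_epoch = 0
    for epoch, loss in enumerate(losses[:max_epochs], start=1):
        last_epoch = epoch
        if loss < best_loss:
            best_loss = loss                  # new best: reset the counter
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break                         # patience exhausted
    return last_epoch


# Loss improves for three epochs, then stays flat: stop 10 epochs later.
plateau_run = stopping_epoch([0.5, 0.4, 0.3] + [0.3] * 20)

# Loss keeps decreasing: the epoch limit is what ends training.
long_run = stopping_epoch([1.0 / (i + 1) for i in range(150)])

print(plateau_run, long_run)
```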

2.6. Evaluation Metrics

The performance of the proposed approach was evaluated using DSC, sensitivity, volume overlap error (VOE), volumetric difference (VD), and positive predictive value (PPV), each reported as mean, standard deviation (std), and confidence interval (CI) [29]. The DSC measures the spatial overlap between the reference volume and the segmented volume. It ranges between 0% (no overlap) and 100% (perfect overlap) and is calculated as:

| $\mathrm{DSC} = \dfrac{2 \times TP}{2 \times TP + FP + FN} \times 100\%$ | (2) |

where TP, FP, and FN are the number of true positives, false positives, and false negatives respectively.

Sensitivity is the true positive rate and is defined as:

| $\mathrm{Sensitivity} = \dfrac{TP}{TP + FN} \times 100\%$ | (3) |

Finally, VOE, VD, and PPV are defined respectively as:

| $\mathrm{VOE} = \left(1 - \dfrac{TP}{TP + FP + FN}\right) \times 100\%$ | (4) |

| $\mathrm{VD} = \dfrac{FN - FP}{2 \times TP + FP + FN} \times 100\%$ | (5) |

| $\mathrm{PPV} = \dfrac{TP}{TP + FP} \times 100\%$ | (6) |
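As an illustration, the five metrics can be computed from voxel counts as in the sketch below. It uses the standard definitions of sensitivity, TP/(TP + FN), and PPV, TP/(TP + FP), takes VOE as one minus the Jaccard overlap, and takes VD as (FN − FP)/(2TP + FP + FN). The function name and the example counts are hypothetical, and empty masks (zero denominators) are not handled.

```python
def segmentation_metrics(tp, fp, fn):
    """Return DSC, sensitivity, PPV, VOE, and VD as percentages."""
    dsc = 2 * tp / (2 * tp + fp + fn)
    sensitivity = tp / (tp + fn)              # true positive rate (recall)
    ppv = tp / (tp + fp)                      # precision
    voe = 1 - tp / (tp + fp + fn)             # 1 - Jaccard overlap
    vd = (fn - fp) / (2 * tp + fp + fn)       # signed volume difference
    values = {"DSC": dsc, "Sensitivity": sensitivity, "PPV": ppv,
              "VOE": voe, "VD": vd}
    return {name: 100.0 * value for name, value in values.items()}


# Hypothetical voxel counts for one segmented study.
metrics = segmentation_metrics(tp=80, fp=20, fn=20)

for name, value in metrics.items():
    print(f"{name}: {value:.2f}%")
```

Because DSC, VOE, VD, and PPV do not involve true negatives, they are not inflated by the large background that dominates each CT slice.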

To test the significance of differences between the DL algorithms’ performance, a one-way analysis of variance (ANOVA) on the DSC was used, with Tukey HSD (honestly significant difference) as the multiple comparison correction technique. A p-value ≤ 0.05 was considered statistically significant.
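For readers unfamiliar with the test, the F-statistic of a one-way ANOVA is the ratio of between-group to within-group variance. The sketch below computes it in plain Python on small made-up groups; the numbers are purely illustrative and are not the DSC values of this study. The Tukey HSD step is not implemented here, and in practice a statistics library would be used for both the p-value and the post hoc comparison.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n_total

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    # Variation of the samples around their own group mean.
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, means) for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Illustrative data only: three groups of three observations.
f_value, df_between, df_within = one_way_anova_f(
    [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
)

print(f_value, df_between, df_within)
```

The computed F is then compared with the critical value of the F distribution for the two degrees of freedom at the chosen significance level.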

3. Results

We applied the proposed segmentation approach to identify and contour COVID-19 infected regions and we provided a comparison with the results of the original ENET, UNET, and ERFNET. Table 2 shows the performance evaluation using the k-fold strategy. C-ENET showed the highest DSC (74.83 ± 11.18%), while ENET and ERFNET achieved a DSC of 72.28 ± 13.44% and 54.23 ± 18.64%, respectively.

Table 2.

Performance of DL networks.

| | DSC | VOE | VD | PPV | Sensitivity |
|---|---|---|---|---|---|
| C-ENET | | | | | |
| Mean | 74.83% | 39.01% | 20.97% | 76.26% | 76.50% |
| ±std | 11.18% | 13.67% | 21.21% | 10.01% | 16.79% |
| ±CI (95%) | 3.51% | 4.29% | 6.66% | 3.14% | 5.27% |
| ENET | | | | | |
| Mean | 72.28% | 41.80% | 27.60% | 70.84% | 77.10% |
| ±std | 13.44% | 15.41% | 38.35% | 14.19% | 15.95% |
| ±CI (95%) | 4.22% | 4.84% | 12.04% | 4.45% | 5.01% |
| ERFNET | | | | | |
| Mean | 54.23% | 60.56% | 119.92% | 48.65% | 72.97% |
| ±std | 18.64% | 17.66% | 180.15% | 20.67% | 20.11% |
| ±CI (95%) | 5.85% | 5.54% | 56.54% | 6.49% | 6.31% |

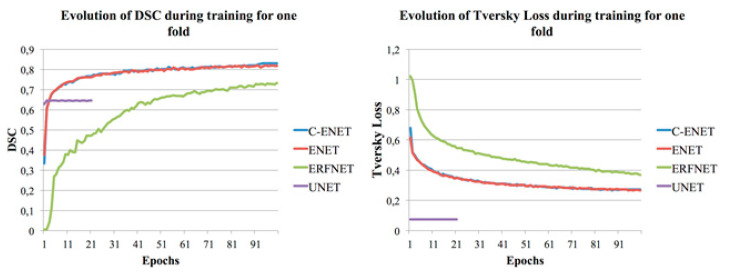

UNET [24] was unable to achieve any convergence after more than twenty-one epochs (with reference to Figure 2 where the training DSC and Tversky loss function [27] plots are shown for one-fold and for each considered algorithm), unlike our previous studies whose purpose was the segmentation of the whole lung, aorta, and prostate [19,20,21]. Specifically, during the training, the network immediately gave a low value of Tversky loss (0.075) and a value of DSC = 62.79%. The network maintained the same values after twenty-one epochs, consequently, the training was stopped (the training loss did not decrease for 10 consecutive epochs). Although the network maintained low Tversky loss values, it was unable to learn; in fact, by testing the validation dataset, we obtained a DSC of 0%. A further test was performed with a modified version of UNET where all 3 × 3 convolutions were replaced by larger 5 × 5 convolution operators as reported in [19], and similar results were obtained. For this reason, UNET was not included in Table 2. Figure 2 also shows how C-ENET and ENET models outperformed the other two models. Specifically, both models achieved a training DSC greater than 80% in less than 50 epochs.

Figure 2.

Training DSC and Tversky loss plots for C-ENET, ENET, ERFNET and UNET.

At the analysis of variance, the p-value corresponding to the F-statistic of one-way ANOVA was less than 0.05, suggesting that one or more methods were significantly different (see Table 3).

Table 3.

ANOVA on the DSC showed statistical differences between DL methods.

| ANOVA | F Value | F Critical Value | p-Value |
|---|---|---|---|
| C-ENET vs. ENET vs. ERFNET | 22.010 | 3.076 | p < 0.01 |

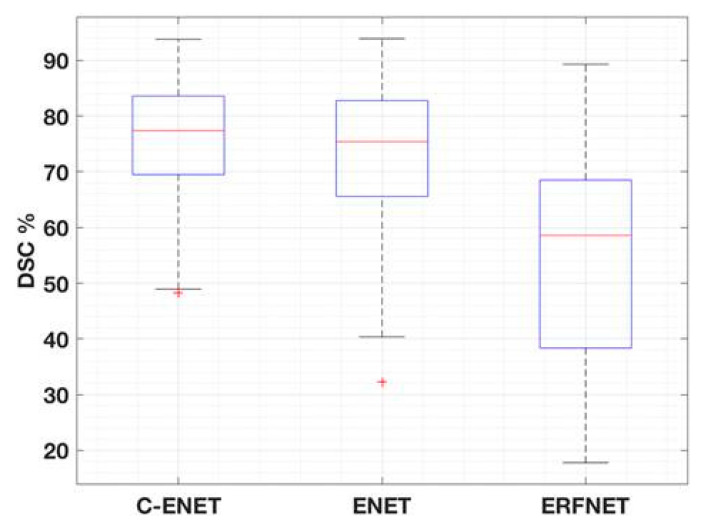

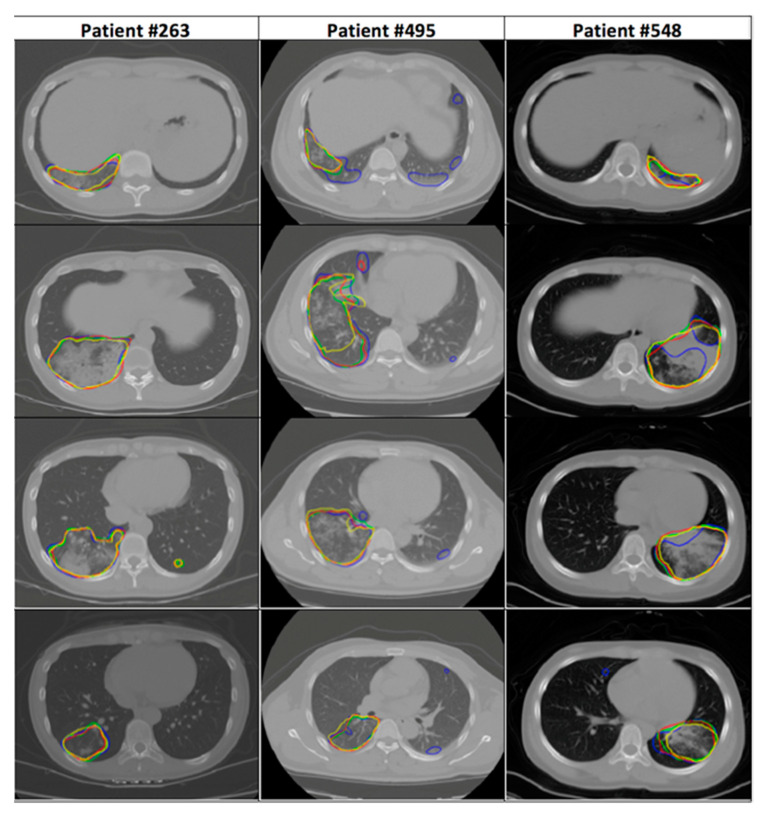

Table 4 shows the multiple comparison using the Tukey HSD correction technique. C-ENET was significantly different from ERFNET but not from ENET. Nevertheless, DSC-based boxplots were also calculated (see Figure 3) to further evaluate the differences in algorithm performance. Although both the C-ENET and ENET methods obtained very good performance, C-ENET showed a DSC distribution that was, on average, higher and less variable than that of ENET. Consequently, it can be assumed that the C-ENET results concentrate around higher performance values. Examples of the obtained results are shown in Figure 4.

Table 4.

Tukey HSD was used as a multiple comparison correction technique.

| Tukey HSD | Q-Statistic | p-Value |
|---|---|---|
| C-ENET vs. ENET | 10.659 | 0.7137408 |
| C-ENET vs. ERFNET | 75.402 | 0.0010053 |
| ENET vs. ERFNET | 86.062 | 0.0010053 |

Figure 3.

DSC-based boxplots of the different DL networks.

Figure 4.

CT images showing parenchyma with visible subregions (yellow for the gold standard, red for C-ENET, green for ENET, and blue for ERFNET) infected with COVID-19 disease.

Finally, Table 5 shows the computational complexity of the three DL networks. In particular, we estimated the time taken to complete a CT study by considering the average time required to obtain the output for each study. Using the GPU (NVIDIA QUADRO P4000, 8 GB VRAM, 1792 CUDA Cores) about 2–4 s were required for each network. When calculations were performed on a CPU (Intel(R) Xeon(R) W-2125 CPU 4.00 GHz processor), DL networks required less than 17 s.

Table 5.

Comparison of computational complexity and performance of DL models.

| Model Name | Trainable Parameters | Non-Trainable Parameters | Size on Disk | Inference Time/Dataset, CPU (s) | Inference Time/Dataset, GPU (s) | Training Time/Dataset, GPU (days) |
|---|---|---|---|---|---|---|
| C-ENET | 793,917 | 11,426 | 11 MB | 16.857 | 4.026 | 4.22 |
| ENET | 363,069 | 8,354 | 5.8 MB | 12.833 | 3.505 | 3.47 |
| ERFNET | 2,056,440 | 0 | 25.3 MB | 10.630 | 2.614 | 2.87 |

4. Discussion and Conclusions

In this study, we investigate user-independent strategies to support clinicians with the automatic identification and delineation of COVID-19 infected regions in CT images. Our main goal is to devise an algorithm capable of satisfying the growing need for an efficient, repeatable, and real-time segmentation approach without any user supervision, unlike our previous studies [12,30,31]. Identifying the volume of interest is the first task of a radiomics analysis. Accurate delineation is essential to avoid mistakes in the calculation of features. Furthermore, when target identification is performed manually, the results are characterized by high variability [7]. For this reason, with the advent of the COVID-19 pandemic, there is a growing interest in the use of automated and operator-independent image segmentation strategies to detect SARS-CoV-2 infection in the parenchyma. DL methods are more efficient than classical statistical approaches, obtaining better performance in the classification of images and in the segmentation of several anatomical regions. In this study, we explore the performance of ENET, as proposed in [19] for IPF patient studies and appropriately modified for our purpose, henceforth referred to as C-ENET (see Section 2.2). CT scans of patients with IPF, like CT scans of COVID-19 patients, show extensive patchy ground-glass regions with or without consolidations and the presence of pleural fluid. For this reason, and considering that ENET was designed to be used with limited hardware resources (it was originally developed for image segmentation and recognition in self-driving car applications) and therefore benefits from rapid training and reduced training data requirements, we investigate this approach for identifying COVID-19 infected subregions. Specifically, we customize this network by changing, in stage 3 as shown in Table 1, the ENET output of 128 × 64 × 64 to an output of 256 × 64 × 64.
With this change, C-ENET performs better than the original ENET, as suggested by the DSC boxplots of Figure 3: although the C-ENET and ENET methods are not statistically different, C-ENET shows a DSC distribution that is, on average, higher and less variable than that of ENET. Additionally, two solutions were implemented to overcome two typical issues in imaging data processing, namely unbalanced data (see Section 2.3) and a limited number of labelled images (see Section 2.4). Regarding the unbalanced data issue in CT images, COVID-19 infected regions are very small compared to the background, which is characterized by a wide range of HU values. Consequently, DL networks tend to predict as target a CT region smaller than the true one. The Tversky loss function [27] was therefore used to assign a higher weight to the target voxels, unlike DSC, which is the most used DL loss function. In this way, the network was able to learn more effectively how to recognize the target area, as reported in [28]. Regarding the limited number of labelled images, we addressed the problem through a five-fold cross-validation strategy. The whole CT dataset was randomly split into five folds: one fold was used as the validation set and four folds were used as the training set. This process was repeated five times, using each fold in turn as the validation set and the others as the training set. As a result, five models were trained on different splits. The final performance was obtained by averaging the results on the validation sets of the five models. Finally, two other state-of-the-art DL networks were evaluated in terms of segmentation accuracy, training and execution time, and hardware and data requirements: UNET, the most widely used model for the segmentation of biomedical images [24], and ERFNET [25], which, like ENET, was developed for the self-driving car task.
All DL networks were tested with the same public dataset of COVID-19 studies, containing 199 CT studies with labels provided by the Challenge [26] (see Section 2.1), and on a high-end HPC system equipped with a GPU (NVIDIA QUADRO P4000 with 8 GB of RAM, 1792 CUDA Cores) (see Section 2.5), which takes an average time of about 2–4 s to produce the output for each study. Although the main limitation of this study was the limited number of cases, our results demonstrated the feasibility and effectiveness of C-ENET, which showed good segmentation accuracy with a DSC of approximately 75%. Furthermore, the choice of a public dataset allows for easier reproducibility of the results and provides a clear reference for future studies in this research area: all images and labels are freely available for use by other researchers. Moreover, despite the relatively small number of cases, we obtained good results with a solution that is efficient in terms of both computational time and processing power requirements. Validation on a larger image dataset will be performed to improve segmentation performance and to confirm our results, for example, using the datasets proposed in [32,33].

In conclusion, the clinical application of C-ENET can improve the assessment of COVID-19 disease and open the way to a clinical decision-support system for risk stratification and patient management. In particular, the clinical application of C-ENET for automatic identification and segmentation of COVID-19 infected regions can support tailored management of COVID-19 patients by discriminating between different degrees of disease aggressiveness. Finally, the application of this DL network in a radiomics study will be described in a forthcoming paper.

Author Contributions

Conceptualization: A.C. and A.S.; data curation: A.C.; formal analysis: A.C.; funding acquisition: A.C. and A.S.; investigation: A.C.; methodology: A.C.; project administration: A.C. and A.S.; resources: A.C. and A.S.; software: A.C.; supervision: A.C. and A.S.; validation: A.C.; visualization: A.C.; writing—original draft: A.S. and A.C.; writing—review and editing: A.C. and A.S. Both authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The data used in this study was downloaded from a public database, so this study did not require the approval of the ethics committee.

Informed Consent Statement

Not applicable as the data used in this study was downloaded from a public database.

Data Availability Statement

CT Images in COVID-19—The Cancer Imaging Archive (TCIA) Public Access—Cancer Imaging Archive Wiki. Available online: https://wiki.cancerimagingarchive.net/display/Public/CT+Images+in+COVID-19#702271074dc5f53338634b35a3500cbed18472e0 (accessed on 25 March 2021).

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Li Y., Xia L. Coronavirus disease 2019 (COVID-19): Role of chest CT in diagnosis and management. Am. J. Roentgenol. 2020;214:1280–1286. doi: 10.2214/AJR.20.22954. [DOI] [PubMed] [Google Scholar]

- 2.Wei W., Hu X., Cheng Q., Zhao Y., Ge Y. Identification of common and severe COVID-19: The value of CT texture analysis and correlation with clinical characteristics. Eur. Radiol. 2020:1–9. doi: 10.1007/s00330-020-07012-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Khoo V.S., Adams E.J., Saran F., Bedford J.L., Perks J.R., Warrington A.P., Brada M. A comparison of clinical target volumes determined by CT and MRI for the radiotherapy planning of base of skull meningiomas. Int. J. Radiat. Oncol. Biol. Phys. 2000;46:1309–1317. doi: 10.1016/S0360-3016(99)00541-6. [DOI] [PubMed] [Google Scholar]

- 4.Cuocolo R., Stanzione A., Ponsiglione A., Romeo V., Verde F., Creta M., La Rocca R., Longo N., Pace L., Imbriaco M. Clinically significant prostate cancer detection on MRI: A radiomic shape features study. Eur. J. Radiol. 2019 doi: 10.1016/j.ejrad.2019.05.006. [DOI] [PubMed] [Google Scholar]

- 5.Sun C., Tian X., Liu Z., Li W., Li P., Chen J., Zhang W., Fang Z., Du P., Duan H., et al. Radiomic analysis for pretreatment prediction of response to neoadjuvant chemotherapy in locally advanced cervical cancer: A multicentre study. EBioMedicine. 2019;46:160–169. doi: 10.1016/j.ebiom.2019.07.049. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Cutaia G., La Tona G., Comelli A., Vernuccio F., Agnello F., Gagliardo C., Salvaggio L., Quartuccio N., Sturiale L., Stefano A., et al. Radiomics and Prostate MRI: Current Role and Future Applications. J. Imaging. 2021;7:34. doi: 10.3390/jimaging7020034. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Comelli A., Stefano A., Coronnello C., Russo G., Vernuccio F., Cannella R., Salvaggio G., Lagalla R., Barone S. Communications in Computer and Information Science. Volume 1248 CCIS. Springer; Cham, Switzerland: 2020. Radiomics: A New Biomedical Workflow to Create a Predictive Model; pp. 280–293. [Google Scholar]

- 8.Alongi P., Stefano A., Comelli A., Laudicella R., Scalisi S., Arnone G., Barone S., Spada M., Purpura P., Bartolotta T.V., et al. Radiomics analysis of 18F-Choline PET/CT in the prediction of disease outcome in high-risk prostate cancer: An explorative study on machine learning feature classification in 94 patients. Eur. Radiol. 2021 doi: 10.1007/s00330-020-07617-8. [DOI] [PubMed] [Google Scholar]

- 9.Stefano A., Comelli A., Bravatà V., Barone S., Daskalovski I., Savoca G., Sabini M.G., Ippolito M., Russo G. A preliminary PET radiomics study of brain metastases using a fully automatic segmentation method. BMC Bioinf. 2020;21:325. doi: 10.1186/s12859-020-03647-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.George P.M., Wells A.U., Jenkins R.G. Pulmonary fibrosis and COVID-19: The potential role for antifibrotic therapy. Lancet Respir. Med. 2020 doi: 10.1016/S2213-2600(20)30225-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Christe A., Peters A.A., Drakopoulos D., Heverhagen J.T., Geiser T., Stathopoulou T., Christodoulidis S., Anthimopoulos M., Mougiakakou S.G., Ebner L. Computer-Aided Diagnosis of Pulmonary Fibrosis Using Deep Learning and CT Images. Invest. Radiol. 2019;54:627–632. doi: 10.1097/RLI.0000000000000574. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Stefano A., Gioè M., Russo G., Palmucci S., Torrisi S.E., Bignardi S., Basile A., Comelli A., Benfante V., Sambataro G., et al. Performance of Radiomics Features in the Quantification of Idiopathic Pulmonary Fibrosis from HRCT. Diagnostics. 2020;10:306. doi: 10.3390/diagnostics10050306. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Mei X., Lee H.C., Diao K., Huang M., Lin B., Liu C., Xie Z., Ma Y., Robson P.M., Chung M., et al. Artificial intelligence–enabled rapid diagnosis of patients with COVID-19. Nat. Med. 2020;26:1224–1228. doi: 10.1038/s41591-020-0931-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Shi F., Wang J., Shi J., Wu Z., Wang Q., Tang Z., He K., Shi Y., Shen D. Review of Artificial Intelligence Techniques in Imaging Data Acquisition, Segmentation, and Diagnosis for COVID-19. IEEE Rev. Biomed. Eng. 2021;14:4–15. doi: 10.1109/RBME.2020.2987975. [DOI] [PubMed] [Google Scholar]

- 15.Fan D.P., Zhou T., Ji G.P., Zhou Y., Chen G., Fu H., Shen J., Shao L. Inf-Net: Automatic COVID-19 Lung Infection Segmentation from CT Images. IEEE Trans. Med. Imaging. 2020;39:2626–2637. doi: 10.1109/TMI.2020.2996645. [DOI] [PubMed] [Google Scholar]

- 16.Zhou T., Canu S., Ruan S. Automatic COVID-19 CT segmentation using U-Net integrated spatial and channel attention mechanism. Int. J. Imaging Syst. Technol. 2021;31:16–27. doi: 10.1002/ima.22527. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17. Roth H., Diez C.T., Jacob R.S., Zember J., Harouni A., Isensee F., Tang C. Rapid Artificial Intelligence Solutions in a Pandemic - The COVID-19-20 Lung CT Lesion Segmentation Challenge. Res. Sq. 2021. doi: 10.21203/rs.3.rs-571332/v1.

- 18. Paszke A., Chaurasia A., Kim S., Culurciello E. ENet: A Deep Neural Network Architecture for Real-Time Semantic Segmentation. arXiv. 2016. arXiv:1606.02147.

- 19. Comelli A., Coronnello C., Dahiya N., Benfante V., Palmucci S., Basile A., Vancheri C., Russo G., Yezzi A., Stefano A. Lung Segmentation on High-Resolution Computerized Tomography Images Using Deep Learning: A Preliminary Step for Radiomics Studies. J. Imaging. 2020;6:125. doi: 10.3390/jimaging6110125.

- 20. Comelli A., Dahiya N., Stefano A., Benfante V., Gentile G., Agnese V., Raffa G.M., Pilato M., Yezzi A., Petrucci G., et al. Deep learning approach for the segmentation of aneurysmal ascending aorta. Biomed. Eng. Lett. 2020. doi: 10.1007/s13534-020-00179-0.

- 21. Cuocolo R., Comelli A., Stefano A., Benfante V., Dahiya N., Stanzione A., Castaldo A., De Lucia D.R., Yezzi A., Imbriaco M. Deep Learning Whole-Gland and Zonal Prostate Segmentation on a Public MRI Dataset. J. Magn. Reson. Imaging. 2021. doi: 10.1002/jmri.27585.

- 22. Cozzi D., Cavigli E., Moroni C., Smorchkova O., Zantonelli G., Pradella S., Miele V. Ground-glass opacity (GGO): A review of the differential diagnosis in the era of COVID-19. Jpn. J. Radiol. 2021. doi: 10.1007/s11604-021-01120-w.

- 23. Fetita C., Rennotte S., Latrasse M., Tapu R., Maury M., Mocanu B., Nunes H., Brillet P.-Y. Transferring CT image biomarkers from fibrosing idiopathic interstitial pneumonia to COVID-19 analysis; Proceedings of the Medical Imaging 2021: Computer-Aided Diagnosis; Online Only. 15–20 February 2021; p. 5.

- 24. Ronneberger O., Fischer P., Brox T. U-net: Convolutional networks for biomedical image segmentation; Proceedings of the Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Fuzhou, China. 13–15 November 2015.

- 25. Romera E., Alvarez J.M., Bergasa L.M., Arroyo R. ERFNet: Efficient Residual Factorized ConvNet for Real-Time Semantic Segmentation. IEEE Trans. Intell. Transp. Syst. 2018. doi: 10.1109/TITS.2017.2750080.

- 26. CT Images in COVID-19 - The Cancer Imaging Archive (TCIA) Public Access - Cancer Imaging Archive Wiki. [(accessed on 25 March 2021)]; Available online: https://wiki.cancerimagingarchive.net/display/Public/CT+Images+in+COVID-19#702271074dc5f53338634b35a3500cbed18472e0.

- 27. Salehi S.S.M., Erdogmus D., Gholipour A. Tversky loss function for image segmentation using 3D fully convolutional deep networks; Proceedings of the Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Quebec City, QC, Canada. 10 September 2017; pp. 379–387.

- 28. Comelli A., Dahiya N., Stefano A., Vernuccio F., Portoghese M., Cutaia G., Bruno A., Salvaggio G., Yezzi A. Deep Learning-Based Methods for Prostate Segmentation in Magnetic Resonance Imaging. Appl. Sci. 2021;11:782. doi: 10.3390/app11020782.

- 29. Comelli A., Bignardi S., Stefano A., Russo G., Sabini M.G., Ippolito M., Yezzi A. Development of a new fully three-dimensional methodology for tumours delineation in functional images. Comput. Biol. Med. 2020;120:103701. doi: 10.1016/j.compbiomed.2020.103701.

- 30. Palmucci S., Torrisi S.E., Falsaperla D., Stefano A., Torcitto A.G., Russo G., Pavone M., Vancheri A., Mauro L.A., Grassedonio E., et al. Assessment of Lung Cancer Development in Idiopathic Pulmonary Fibrosis Patients Using Quantitative High-Resolution Computed Tomography: A Retrospective Analysis. J. Thorac. Imaging. 2020;35:115–122. doi: 10.1097/RTI.0000000000000468.

- 31. Torrisi S.E., Palmucci S., Stefano A., Russo G., Torcitto A.G., Falsaperla D., Gioè M., Pavone M., Vancheri A., Sambataro G., et al. Assessment of survival in patients with idiopathic pulmonary fibrosis using quantitative HRCT indexes. Multidiscip. Respir. Med. 2018;13:1–8. doi: 10.4081/mrm.2018.206.

- 32. Zaffino P., Marzullo A., Moccia S., Calimeri F., De Momi E., Bertucci B., Arcuri P.P., Spadea M.F. An open-source covid-19 ct dataset with automatic lung tissue classification for radiomics. Bioengineering. 2021;8:26. doi: 10.3390/bioengineering8020026.

- 33. Tsai E.B., Simpson S., Lungren M.P., Hershman M., Roshkovan L., Colak E., Erickson B.J., Shih G., Stein A., Kalpathy-Cramer J., et al. The RSNA International COVID-19 Open Radiology Database (RICORD). Radiology. 2021;299:E204–E213. doi: 10.1148/radiol.2021203957.

Associated Data

Data Availability Statement

CT Images in COVID-19 - The Cancer Imaging Archive (TCIA) Public Access - Cancer Imaging Archive Wiki. Available online: https://wiki.cancerimagingarchive.net/display/Public/CT+Images+in+COVID-19#702271074dc5f53338634b35a3500cbed18472e0 (accessed on 25 March 2021).