Abstract

Competition in health insurance markets may fail to improve health outcomes if consumers are not able to identify high quality plans. We develop and apply a novel instrumental variables framework to quantify the variation in causal mortality effects across plans and how much consumers attend to this variation. We first document large differences in the observed mortality rates of Medicare Advantage plans within local markets. We then show that when plans with high (low) mortality rates exit these markets, enrollees tend to switch to more typical plans and subsequently experience lower (higher) mortality. We derive and validate a novel “fallback condition” governing the subsequent choices of those affected by plan exits. When the fallback condition is satisfied, plan terminations can be used to estimate the relationship between observed plan mortality rates and causal mortality effects. Applying the framework, we find that mortality rates unbiasedly predict causal mortality effects. We then extend our framework to study other predictors of plan mortality effects and estimate consumer willingness to pay. Higher spending plans tend to reduce enrollee mortality, but existing quality ratings are uncorrelated with plan mortality effects. Consumers place little weight on mortality effects when choosing plans. Good insurance plans dramatically reduce mortality, and redirecting consumers to such plans could improve beneficiary health.

1. Introduction

When product quality is difficult to observe, consumers and producers may make suboptimal choices and investments. This concern is heightened in healthcare markets, where the quality of healthcare providers or insurance plans can be especially hard to infer. If consumers cannot determine whether certain plans are more likely to improve their health, then competition is unlikely to incentivize insurers to invest in this dimension of quality. To better inform consumers, policymakers disseminate provider and plan quality measures. But there is little evidence for how well existing quality measures predict the causal impacts of insurance plans on enrollee health, much less whether consumers attend to such differences in plan quality.

This paper estimates the effects of different private health insurance plans on enrollee mortality, investigates why some plans are higher quality by this measure, and assesses whether consumer demand responds to plan mortality effects. Our setting is the Medicare Advantage (MA) market, in which beneficiaries choose from a broad array of private managed care plans that are subsidized by the government. The MA program is large and growing, covering more than one third of Medicare beneficiaries (KFF, 2019). Annual mortality in the elderly MA population is high, at 4.7%.

Measuring plan mortality effects is fundamentally challenging. Differences in observed mortality rates may reflect non-random selection by consumers of different unobserved health, while quasi-experimental variation in plan choice is both limited and likely under-powered to detect different mortality effects across individual plans. Quantifying the extent to which consumer demand responds to mortality effects is also difficult, since any set of effect estimates are likely noisy and potentially biased by non-random sorting. We develop tools to overcome these challenges by combining observational and quasi-experimental variation, following a small but growing literature on quality estimation in education and health (Chetty, Friedman, and Rockoff, 2014; Angrist et al., 2017; Hull, 2020). We add to this literature by showing that instrumental variables (IV) methods relating observational quality estimates to true causal effects require a previously overlooked condition governing individual choice. We build theoretical and empirical support for the condition in the MA setting, and show how extensions of such IV regressions can be combined with standard discrete choice modeling to estimate consumer willingness to pay for plan quality.

We begin by documenting large differences in the one-year mortality rates of MA plans operating in the same county, after adjusting for observable differences in enrollee demographics and accounting for statistical noise. We refer to these adjusted mortality rates as “observational mortality,” which we calculate as a time-invariant plan characteristic measured over our sample period. If causal, our estimated variation in observational mortality would suggest that a one standard deviation higher quality plan decreases beneficiary mortality by 1.1 percentage points—a 23% reduction in mortality from a baseline rate of 4.7%, comparable to the sizable variation in mortality effects across hospitals (Doyle et al., 2015; Doyle, Graves, and Gruber, 2019; Hull, 2020). Given conventional estimates of the value of a statistical life (VSL), such variation suggests consumers should value higher quality MA plans at tens or even hundreds of thousands of dollars per year.

However, variation in our observational mortality measure may reflect unobserved sorting as well as causal plan health effects. We next validate the measure by leveraging variation in MA choice sets arising from plan terminations. Intuitively, when plans with high or low observational mortality exit a market their enrollees tend to re-enroll in plans that have more typical observational mortality. The enrollees of non-terminated plans, in contrast, tend to be inertial and so they tend to remain in high- or low-mortality plans. If the observational mortality variation reflects variation in true mortality effects, we would therefore expect the subsequent mortality of enrollees in high-(low-) mortality plans to decline (rise) when these plans exogenously exit the market, relative to the subsequent mortality of beneficiaries in similar plans that do not terminate. The magnitude of this relationship should furthermore reveal the relationship between observational estimates and causal plan effects. All else equal, subsequent enrollee mortality should change one-for-one with observational predictions when plan-level selection bias is negligible or uncorrelated with observational mortality across plans.

We formalize this quasi-experimental approach to validating observational mortality with a novel IV framework. Our main parameter of interest is the mortality effect “forecast coefficient,” defined by the regression of unobserved plan mortality effects on observational mortality. While not identifying mortality effects for individual plans, the forecast coefficient can be used to evaluate many policies of interest. For example, it allows the prediction of average impacts of policies (based on, e.g., information or incentives) that would redirect consumers to plans with different observational mortality levels. We show how a feasible beneficiary-level IV regression identifies the forecast coefficient under three assumptions. First, we assume that terminations impact the observational mortality of an enrollee’s plan via subsequent plan enrollment. We verify that the first stage is strong in our setting. Second, we assume that any relationship between observational mortality and underlying beneficiary health is the same in terminated and non-terminated plans, conditional on observables. We build support for this assumption, which allows for direct termination effects, by showing that there are not economically meaningful differences in patient observables across terminated and non-terminated MA plans, and that past cohorts in these plans have similar mortality prior to termination. In some specifications, we isolate terminations arising from a nationwide change in reimbursement policy for a category of Medicare Advantage plans.

Our primary methodological contribution is to show that these two standard IV conditions are not generally enough to estimate the plan forecast coefficient. Instead, the IV exclusion restriction which identifies the forecast coefficient comprises a usual “balance condition” (which would be satisfied when terminations are as-good-as-randomly assigned) and a novel “fallback condition.” In our setting, this condition restricts the fallback (second choice) plans that enrollees choose after a plan termination. Fallback choices must be similar to those chosen initially in terms of the unforecastable component of plan mortality effects. We show how this third assumption can be microfounded by a standard discrete choice model, in which there is no persistent unobserved heterogeneity in choices, and how it can be relaxed under different model assumptions. We further show how the assumption can be investigated empirically by testing for observable differences in fallback plans following plan terminations.

Our IV framework shows that observational mortality is a strong predictor of true MA mortality effects. Across a variety of specifications, we find first-stage effects of terminations on enrolled plan observational mortality which closely match the associated reduced-form effects of terminations on enrollee mortality. Consequently, IV forecast coefficient estimates are close to and statistically indistinguishable from one. This finding does not rule out selection bias in individual plan mortality rates. Instead, the finding shows that variation in observational mortality across plans accurately predicts variation in causal mortality effects, at least on average.

We then extend our approach to answer a series of policy-relevant questions. We first generalize the three IV assumptions to estimate the relationship between plan mortality effects and plan characteristics other than observational mortality. We find that the most widely used measure of plan quality, CMS star ratings, is uncorrelated with plan mortality effects. Higher premium plans have better mortality effects, as do plans with more generous prescription drug coverage and higher medical-loss ratios. Thus, in every way we measure, plans that spend more tend to reduce enrollee mortality. Overall, our estimates imply very large variation across plans. Future work should explore additional mechanisms—including networks of providers—that could help explain this variation.

We next extend the IV approach to measure the extent to which consumers value plan mortality effects. Plans with better mortality effects tend to have larger market shares conditional on premiums. We show how this finding can be used in our IV framework to estimate the implicit willingness to pay (WTP) for plan quality. Estimating WTP is challenging because we observe only noisy and biased measures of mortality effects. We show how this challenge can be overcome by using our IV framework to compute forecast coefficients that relate mortality effects to premium-adjusted mean utility for each plan. Under our three IV assumptions, these forecast coefficients can be used to compute an upper bound on consumer WTP for plan quality. We find a positive WTP, but one which is several orders of magnitude smaller than standard VSL estimates. Thus, while we find consumers to be somewhat responsive to differences in plan quality, they underrespond relative to the large variation in mortality effects. In simple partial-equilibrium simulations, we find that redirecting consumers to higher quality plans could produce large benefits.

Our analysis of MA plan quality adds to a growing literature estimating the impact of health insurance on health. Miller, Johnson, and Wherry (2021) and Goldin, Lurie, and McCubbin (2021), for example, show that gaining access to Medicaid leads to large mortality reductions. Card, Dobkin, and Maestas (2008) similarly document a discontinuous drop in mortality when beneficiaries age into Medicare. Less well studied is the question of whether different types of insurance plans in a market can differentially affect health outcomes.1 By connecting plan quality differences to consumer demand, we add to a long literature studying consumer attentiveness to plan heterogeneity (Abaluck and Gruber, 2011, 2016; Ericson and Starc, 2016; Handel, 2013; Handel and Kolstad, 2015). Our findings have general equilibrium implications, to the extent consumer demand impacts the characteristics of offered plans (Starc and Town, 2020; Miller et al., 2019).2

Our analysis also adds to a recent methodological literature combining observational and quasi-experimental variation to estimate heterogeneity in the quality of institutions, such as hospitals, doctors, nurses, teachers, schools, and regions (Hull, 2020; Fletcher, Horwitz, and Bradley, 2014; Yakusheva, Lindrooth, and Weiss, 2014; Kane and Staiger, 2008; Chetty, Friedman, and Rockoff, 2014; Angrist et al., 2016, 2017; Doyle, Graves, and Gruber, 2019; Finkelstein et al., 2017). The literature draws on “value-added” estimation methods originally developed in the field of education; we are the first to apply such methods to measure the health effects of individual health insurance plans. We extend this literature in two ways. First, we formalize and develop tests for a novel assumption (i.e. the fallback condition) under which IV can be used to measure the relationship between observational value-added estimates and causal effects in the presence of selection bias. Second, we show how conventional discrete choice modeling can be integrated with such IV procedures to both microfound the key fallback condition and to measure how sensitive consumer choice is to true value-added (e.g. the implicit consumer WTP).

Broadly, our approach builds on many earlier studies using exogenous displacements from institutions or regions in order to estimate their causal effects. Examples include studies of industry wage differentials (e.g. Krueger and Summers, 1988; Murphy and Topel, 1987; Gibbons and Katz, 1992) or firm wage premiums (e.g. Abowd, Kramarz, and Margolis, 1999; Card et al., 2018) using job transitions, studies of neighborhood or place effects using natural disasters (e.g. Chetty and Hendren, 2018; Deryugina and Molitor, 2020) or housing demolitions (e.g. Jacob, 2004; Chyn, 2018), and studies of school or hospital effects using unanticipated closures (e.g. Angrist et al., 2016; Carroll, 2019). We develop a new framework for using such displacements to evaluate the relationship between causal effects and observational proxies, while allowing for the kinds of endogeneity in fallback choices that has been a concern in some of the earlier studies.

We organize the remainder of this paper as follows. In Section 2, we describe the institutional setting and data, document large variation in observational mortality across MA plans, and motivate our quasi-experimental validation approach. In Section 3, we develop our econometric framework for IV estimation of forecast coefficients and related parameters. In Section 4, we present our main forecast coefficient estimates. In Section 5, we study the correlates of mortality effects and estimate consumer WTP. We conclude in Section 6. Additional results and other material is given in an Online Appendix.

2. Setting and Data

2.1. Medicare Advantage

The Medicare program was established in 1965 primarily to provide insurance coverage for Americans aged 65 and older. Parts A and B of the Medicare program are often referred to as “traditional Medicare” (TM). TM is centrally administered by the Centers for Medicare and Medicaid Services (CMS) and covers hospitalizations and physician services for most Medicare beneficiaries.3 In recent years a large and growing share of beneficiaries have instead opted to receive coverage through a set of diverse private managed care plans (34% as of 2019; see KFF (2019)). This parallel private program has gone by various names (see McGuire, Newhouse, and Sinaiko (2011) for a comprehensive history), but is currently known as Medicare Advantage (MA).

Medicare beneficiaries can choose between TM and typically many MA plans in their local market. Broadly, MA plans must provide all of the mandated insurance benefits of TM in exchange for a capitated monthly payment. Competitive plans may charge lower premiums or offer supplemental benefits to attract certain consumers. MA plans also tend to vary significantly in their insurance networks, with some restricting access to providers (similar to commercial HMOs) while offering more generous financial coverage or better cost-sharing. While there is significant geographic heterogeneity in MA enrollment, most markets offer a wide variety of MA plans to choose from. In 2011, for example, 19 MA plans operated in the average county (KFF, 2021).

The MA program has historically had two broad and sometimes conflicting goals: to expand consumer choice and to reduce Medicare costs (Commission, 2001, 1998).4 Less discussed is the role of competition among MA plans in enhancing product quality, though policymakers recognize the need for beneficiaries to make informed decisions in the MA market. Consequently, some form of public plan quality ratings has existed since 1999, with current quality rankings (known as star ratings) provided since 2007. These ratings score plans on multiple dimensions, including quality of care and customer service. Star ratings have also begun to play a role in policy-making, with the 2009 Affordable Care Act giving bonus payments to high-ranked MA plans. Unlike with other programs, such as Value-Based Purchasing for hospitals, MA plans are not currently ranked or rewarded for achieving low enrollee mortality rates.

Multiple insurers may enter or exit a local market in any given year and change MA consumer choice sets. Broadly, insurers consider the cost of maintaining a given network, the potential revenue from different groups of beneficiaries, and policies affecting federal reimbursement when deciding what plans to offer. Duggan, Gruber, and Vabson (2018) argue that the factors that drive plan exit are unlikely to relate to outcomes through any other channel. For example, a policy change in 2008 increased the fixed costs of certain MA plans, known as private-fee-for-service (PFFS). Pelech (2018) documents significant plan terminations in the year following the policy, with the market share of PFFS plans falling by two-thirds between 2008 and 2011. We leverage this specific policy variation in some analyses below.

2.2. Data and Summary Statistics

We use data on the universe of Medicare beneficiaries aged 65 or older in one of 50 US states or the District of Columbia from 2006 to 2011. For each beneficiary in each year, we observe the identity of their selected plan (both MA and TM), their local market (county), standard beneficiary demographics (age, sex, race, and dual-eligible status), and their end-of-year mortality status. For traditional Medicare enrollees, we further observe inpatient claims. We supplement these data with characteristics of plans such as annual premiums, star ratings, and medical loss ratios.

Our Medicare data consists of 186,603,694 beneficiary-years with non-missing enrollment, demographics, and mortality information. We use the full sample to construct our observational mortality measure, as discussed below. For our IV analysis we restrict attention to the subset of beneficiaries in 2008–2011 who ended the previous year in a MA plan. Because of changes to Medicare reimbursement policy (Pelech, 2018), the vast majority of plan terminations we observe take place during these years. The restrictions yield an analysis sample of 11,442,053 enrollees in 34,559 plans, where we treat plans in different counties as different products. Appendix B describes the construction of these samples in detail.

Table I summarizes our analysis samples. Column (1) shows average demographics, outcomes, and plan characteristics for the universe of Medicare beneficiaries in 2008–2011. The average Medicare beneficiary is 77.5 years old; 85.5% are white, 41.9% are male, and 15.9% are low-income and eligible for Medicaid in addition to Medicare (“dual-eligibles”). In any given year of our sample, 10.0% of Medicare beneficiaries change plans and 5.6% die. Among all Medicare beneficiaries, 12.5% are enrolled in a Health Maintenance Organization (HMO), 2.0% are enrolled in a Preferred Provider Organization (PPO), and 2.4% are enrolled in a PFFS plan. Within a county-year, we find about 25 plans in the median beneficiary choice set (including both TM and MA plans).

Table I:

Summary Statistics

| All Medicare Plans | IV Sample |

|||

|---|---|---|---|---|

| All MA Plans | Non-Terminated | Terminated | ||

| (1) | (2) | (3) | (4) | |

|

| ||||

| Beneficiary Age | 77.5 | 77.3 | 77.3 | 77.0 |

| % White | 85.5 | 87.4 | 87.3 | 90.5 |

| % Male | 41.9 | 41.1 | 41.0 | 43.3 |

| % Dual-Eligible | 15.9 | 8.2 | 8.3 | 6.2 |

| % Switched Plans | 10.0 | 14.1 | 11.6 | 100.0 |

| % Died | 5.6 | 4.7 | 4.7 | 4.5 |

| % HMO | 12.5 | 73.3 | 74.8 | 21.9 |

| % PPO | 2.0 | 9.9 | 9.9 | 9.1 |

| % PFFS | 2.4 | 10.8 | 9.1 | 67.1 |

| Median N Plans in Choice Set | 25 | 17 | 17 | 12 |

|

| ||||

| Total Plans | 226,459 | 34,559 | 25,140 | 9,419 |

| N Beneficiary-Years | 118,184,127 | 11,442,053 | 11,119,125 | 322,928 |

Notes: This table summarizes the analysis samples in 2008–2011. Column (1) reports average enrollee demographics, annual plan switching rates, annual mortality, and plan type for the full Medicare population. Column (2) restricts the sample to beneficiary-years who ended the previous year in a MA plan. Columns (3) and (4) present the sample divided into beneficiary-years previously enrolled in MA plans that did and did not terminate. The total number of plans in column (3) subtracts the number of plans that ever terminate in column (4) from the number of MA plans in column (2). Choice sets are defined as county-years; plans operating in different counties are treated as different plans. We round the % Switched Plans in the final column to 100% from 99.99%.

Columns (2)-(4) of Table I summarize the subpopulation of beneficiary-years who ended the previous year in any MA plan (our IV sample). MA enrollees are less likely to be dual-eligible than Medicare beneficiaries as a whole, but are otherwise demographically similar. A higher rate of MA beneficiaries switch plans in a given year (14.1%) and their annual mortality rate is somewhat lower than in the full sample (4.7%). The vast majority of MA enrollees are in HMOs (73.7%), PPOs (9.9%), and PFFS plans (10.8%).5

Columns (3) and (4) of Table I summarize the subpopulations of enrollees of MA plans that did and did not terminate in the previous year. Broadly, these two groups appear similar, though beneficiaries in terminated plans are slightly less likely to be dual-eligible and are located in somewhat smaller markets.6 The largest difference in these samples is the annual plan-switching rate: while all beneficiaries previously enrolled in a terminated plan are forced to change to a new MA plan, only 11.6% of beneficiaries in non-terminated plans switch.7 The majority of terminated plans (67.1%, when weighted by beneficiaries) are PFFS, reflecting the 2008 policy change.

2.3. Observational Mortality

We begin our analysis by computing observational differences in one-year mortality rates among Medicare plans operating in the same county, adjusting for observable differences in plan enrollees and statistical noise. These observational mortality estimates come from ordinary least squares (OLS) regressions, of the form

| (1) |

where Yit is an indicator for beneficiary i dying in year t and Dijt indicates her enrollment in a given plan j at the start of this period. The control vector Xit contains observable characteristics of enrollees (age, sex, race, and dual-eligibility status) as well as a full set of county and year fixed effects. We allow the coefficient vector ω to vary flexibly by plan size (see Appendix C.1 for details). Given the fixed effects and controls, variation in the observational mortality coefficients μj thus reflects within-county differences in one-year plan mortality rates among observably similar enrollees. We estimate this model across all plans (both MA and TM), treating plans operating in different counties as different plans.

We account for statistical noise in the observational mortality estimates by applying a conventional empirical Bayes correction (Morris, 1983). This correction, detailed in Appendix C.1, “shrinks” the estimated μj towards their county- and plan size-level mean, in proportion to their expected degree of estimation error. The shrinkage is larger for smaller plans but minimal for the larger plans that make up the majority of our sample; as discussed in the appendix, our shrinkage procedure further allows for correlation of observational mortality rates within an insurer’s offerings. In practice the shrinkage procedure plays a minimal role for the typical plan, which enrolls several thousand beneficiary-years. The average effective shrinkage coefficient is very close to one, with 90% of plans having a coefficient greater than 0.92.8

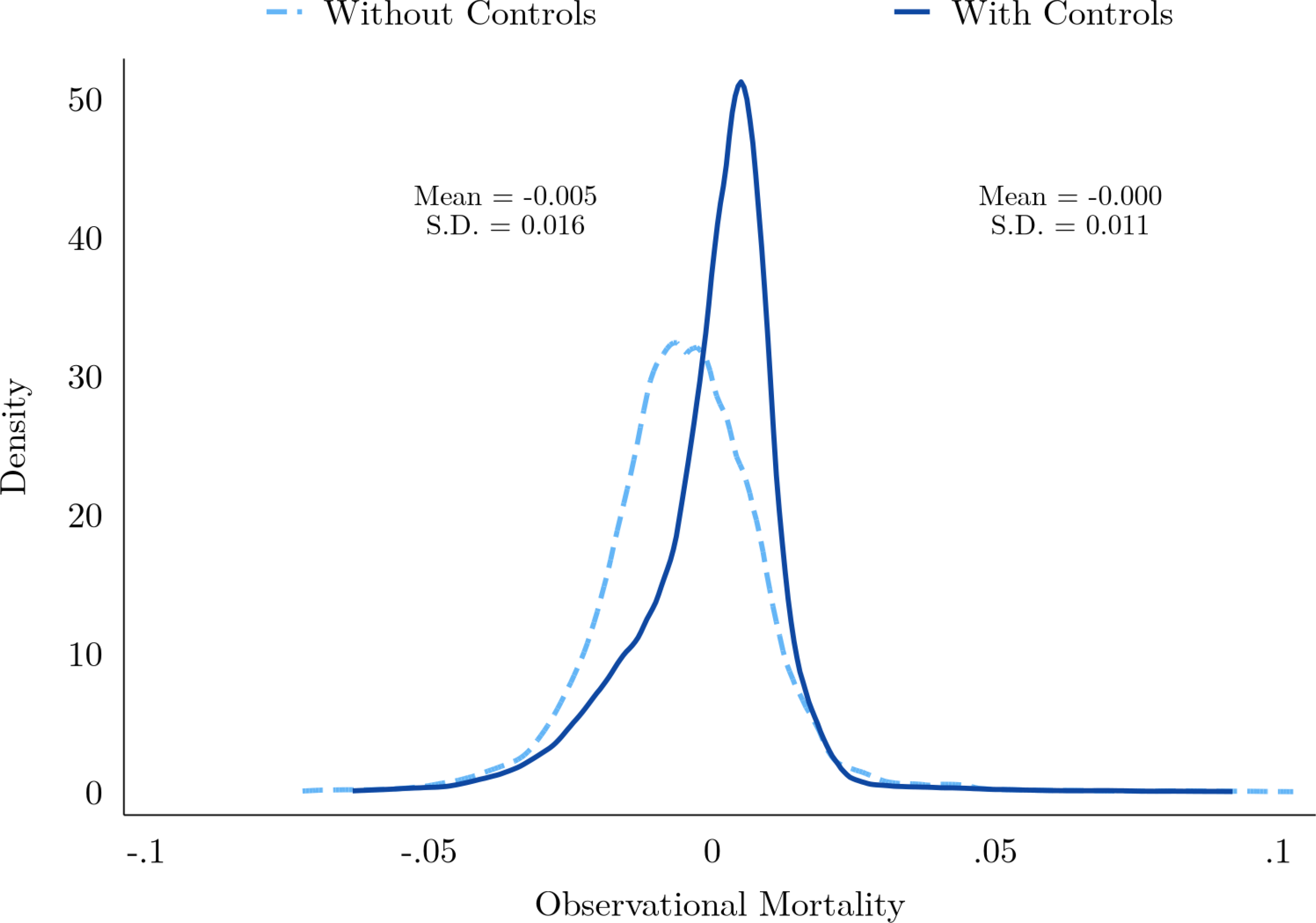

Estimates of Equation (1) reveal substantial within-county variation in MA plan mortality rates among observably similar beneficiaries. The estimated beneficiary-weighted standard deviation of μj across MA plans, after correcting for estimation error, is 1.1 percentage points or 23% of the average one-year mortality rate of 4.7%. Figure I plots the full distribution of shrunk observational mortality rates across MA plans. The solid line shows this distribution for our baseline specification of Equation (1), with all observable controls included in Xit, while the dashed line shows the corresponding distribution for a simpler specification that omits the beneficiary demographic controls. We normalize average observational mortality in both models by the average in the complete model that includes TM. The model without controls has a slightly lower mean (implying that MA plans have observably healthier beneficiaries than TM plans, on average) and a 45% larger standard deviation of 1.6 percentage points.

Figure I:

Observational Mortality

Notes: This figure summarizes the enrollment-weighted distribution of observational mortality across MA plans. The solid dark line shows this distribution when observational mortality is estimated from Equation (1), with all demographic controls, while the light dashed line shows the corresponding distribution for a simpler specification that omits age, race, sex, and dual-eligible status. Average observational mortality across all plans (TM and MA) is normalized to the average of the full model. Estimates are shrunk via the empirical Bayes procedure in Appendix C.1. Estimated means and standard deviations of μj for MA plans are for the prior distribution, computed as described in Appendix C.1, and shown for each estimation procedure.

The fact that the mean and standard deviation of observational mortality changes when beneficiary demographic controls are included suggests some degree of non-random selection. In other words, the variation in observational mortality from the simpler specification appears to be in part driven by observable differences in the health of plan enrollees and not the true mortality effects of plans. This selection appears to be primarily on two dimensions of our observable characteristics: age and dual-eligibility. Conditional on these characteristics, further controlling for beneficiary sex and race has little effect on the estimated distribution of observational mortality (e.g. the noise-adjusted standard deviation of μj remains at 1.1 percentage points). Absent further observables, we are unable to directly test for remaining selection bias in our benchmark specification.9 Instead, we derive an indirect validation based on termination-induced variation in MA choice sets.

2.4. Plan Terminations

To build intuition for our quasi-experimental approach to validating observational mortality, consider a set of beneficiaries who end a year enrolled in a MA plan with a high observational mortality rate μj. Since Medicare plan choice is highly inertial (only 14.1% of MA beneficiaries change plans in a given year, per Table I), most of these enrollees will remain in their high-mortality plan throughout the following year. Suppose, however, that at the end of the year the high-mortality plan terminates for a plausibly idiosyncratic reason (such as a federal change in reimbursement policy).

This termination would force the plan’s enrollees to make an active enrollment choice, and under standard regression-to-the-mean, they will tend to switch to a new MA plan that is more typical in terms of μj.10 If the observational mortality rates were causal, then all else equal we would expect the mortality of this enrollee cohort to fall commensurate to the decline in μj. Identical logic holds for beneficiaries enrolled in exogenously terminated plans with low observational mortality rates: subsequent plan choice is likely to be more typical in terms of μj, relative to enrollees in non-terminated low observational mortality plans. If observational mortality variation reflects causal effects, then mortality should rise. Combining these two termination quasi-experiments may reveal the predictive content of our observational mortality rate estimates while allowing for direct termination effects that are common to the high- and low-mortality terminations.

Figure II illustrates the relationship between plan mortality rates and termination status for high- and low-mortality plans in our IV sample. The solid lines indicate regression-adjusted trends in observational mortality for beneficiaries before and after a plan termination, separately for beneficiaries previously enrolled in plans with above-median (blue) and below-median (red) mortality.11 The dashed lines indicate comparable trends in observational mortality for beneficiaries in the same counties and years whose plans did not terminate, again separately for beneficiaries enrolled in above- and below-median mortality plans. The solid lines indicate a regression-to-the-mean in plan choice following termination: those previously enrolled in high- and low-mortality plans tend to switch to more typical plans on average. At the same time, the dotted lines indicate inertia in plan choice absent termination: beneficiaries previously enrolled in high- and low-mortality plans tend to stay in these different plans provided they remain available. Bracketed 95% confidence intervals show that the post-termination difference in observational mortality is statistically significant for both high- and low-mortality plans, despite terminated and non-terminated plans having statistically indistinguishable observational mortality prior to termination.

Figure II:

Plan Terminations and Observational Mortality

Notes: This figure shows regression-adjusted trends in the observational mortality for enrollees in non-terminated and terminated MA plans, separately for plans with above- and below-median observational mortality. The median is defined over the entire IV sample. Data is plotted in the last year prior to termination for terminated plans and the following year. Termination effects are estimated in each year and median group by a separate regression which controls for county-by-year fixed effects; flexible interactions of lagged plan type and market shares; and beneficiary demographics (age in 5-year bands, sex, race and dual-eligibility status). County-clustered 95% confidence intervals for the termination effects are shown in brackets.

Figure III illustrates the corresponding relationship between realized beneficiary mortality and plans termination status for beneficiaries enrolled in high- and low-mortality plans. Here the solid and dashed lines correspond to the one-year mortality rates of the same groups of beneficiaries summarized in Figure II. Unlike the (time-invariant) observational mortality in Figure II, true mortality risk increases with age, such that the beneficiaries in non-terminated plans (dashed blue and red lines) exhibit an increasing trend in realized mortality. However, the solid blue line (indicating the realized mortality of beneficiaries enrolled in a low-mortality plan prior to termination) exhibits a steeper trend while the solid red line (indicating the realized mortality of beneficiaries enrolled in a high-mortality rate plan prior to termination) exhibits a decreasing trend. Again the bracketed 95% confidence intervals show a significant termination effect for both high- and low-mortality plans, with no statistically significant difference in average mortality prior to termination.

Figure III:

Plan Terminations and Beneficiary Mortality

Notes: This figure shows regression-adjusted trends in the one-year mortality of enrollees of non-terminated and terminated MA plans, separately for plans with above- and below-median observational mortality. The median is defined over the entire IV sample. Data is plotted in the last year prior to termination for terminated plans and the following year. Termination effects are estimated in each year and median group by a separate regression which controls for county-by-year fixed effects; flexible interactions of lagged plan type and lagged market shares; and beneficiary demographics (age in 5-year bands, sex, race and dual-eligibility status). County-clustered 95% confidence intervals for these effects are shown in brackets.

Together, the differential trends in Figures II and III suggest that a termination-induced move to MA plans with more typical observational mortality μj has a differential causal effect on actual mortality Yit. This result suggests that the sizable variation in observational mortality we find in Figure I is not driven entirely by selection bias. At least some of the variation in observational mortality appears to be attributed to causal variation in MA plan mortality effects. We next develop an econometric framework to formalize this logic and measure the predictive validity of observational mortality for such causal effects.

3. Econometric Framework

We use an instrumental variables (IV) framework, leveraging plan terminations, to measure the validity of observational mortality differences in predicting differences in causal plan mortality effects. While not identifying mortality effects for individual plans, this approach is sufficient to estimate the expected mortality impact of reallocating beneficiaries across observably different plans. We first outline the econometric setting and parameter of interest before providing three conditions under which this parameter is identified by an IV regression. We devote special attention to the third condition, what we term the fallback condition, which is novel to this paper.

3.1. Plan Health Effects

We use a simple model to define causal plan effects and the IV parameter of interest. Let Yijt denote the potential mortality outcome of individual i in year t if she were to enroll in a plan j in her market. For the moment, we assume an additively separable model of Yijt =βj +uit; we extend our framework to account for unobserved treatment effect heterogeneity in Section 3.4 below. By normalizing the beneficiary-weighted average βj in each market to zero, we can interpret each βj as the average mortality effect from moving a random beneficiary to plan j, with uit capturing latent differences in beneficiary health. Projecting uit on a vector of observable characteristics Xit (which includes a constant) yields

| (2) |

where E[Xitεit] = 0 by definition of the projection coefficient γ.

Consumers choose among the set of available plans in their market, with Dijt = 1 indicating that consumer i enrolls in plan j in year t. Observed consumer mortality is then given by . Substituting in the previous expression for Yijt yields

| (3) |

In contrast to the regression model (1) in the previous section, Equation (3) is a causal model linking beneficiary plan choice Dijt to subsequent mortality Yit via the causal plan effects βj.

Nonrandom plan selection creates fundamental econometric challenges in estimating plan mortality effects. To the extent that any given plan attracts consumers of poor (good) unobserved health, its observed mortality rate will be an upward- (downward-)biased estimate of βj. For this reason, variation in the regression parameters μj that we estimate in Equation (1) need not coincide with variation in the causal parameters βj in Equation (3): formally, average unobserved health εit need not be uncorrelated with the Dijt choice indicators.

In principle, quasi-experimental variation in plan choice could be used to address such selection bias and estimate the full set of plan effects. This IV approach would require a set of exogenous variables Zijt to instrument for the plan choice indicators in Equation (3). In practice, any available quasi-experimental variation in plan choice is unlikely to generate enough instruments for such a procedure (given the large number of MA plans in each market) nor have sufficient power to detect small differences in mortality effects (since mortality is relatively rare). We next discuss our approach to quantifying variation in the plan mortality effects in light of these challenges.12

3.2. The Forecast Coefficient

Our first goal is to measure the relationship between observational mortality μj and true MA mortality effects βj. Formally, we seek to estimate the MA forecast coefficient λ, defined by the projection of causal mortality effects βj on observational mortality μj. Normalizing the means of both parameters to zero, this projection can be written

| (4) |

where ηj is mean-zero and uncorrelated with μj by definition. This regression is infeasible in the sense that the dependent variable βj is neither observed nor estimated, despite measurement of the independent variable μj. The forecast coefficient nevertheless captures the predictive validity of the observational mortality measures. For example, μj is an on-average unbiased predictor of causal mortality effects when λ = 1, while observational mortality has little association with true causal effects when λ is small.13 We emphasize that Equation (4) reflects an equilibrium statistical relationship, given by existing patterns of selection, and that λ is not a structural parameter.

Along with the forecast coefficient, Equation (4) defines a forecast residual, ηj. This residual reflects the fact that for a given level of observational mortality μj, some plans may increase mortality by more or less than expected due to selection bias (even when λ = 1). Only when both λ = 1 and ηj = 0 for all j is observational mortality unbiased for individual MA plans (i.e. μj =βj).14 Since Cov(ηj, μj)= 0, knowledge of the forecast coefficient is enough to place a lower bound on the variance in true causal effects, even in the presence of selection bias, by ignoring the contribution of ηj. Namely, Var(βj) ≥ λ2Var(μj).

While it is not feasible to estimate Equation (4) directly, we can relate it to observed enrollee mortality via the causal model (3). Substituting the former equation into the latter, we obtain

| (5) |

where denotes the observational mortality of beneficiary i given her plan choice Dijt and is the corresponding forecast residual of her selected plan.

Equation (5) is again a causal model, linking observational mortality μit to realized mortality Yit via the forecast coefficient λ. As with Equation (3), OLS estimation of Equation (5) will be biased when consumers of different unobserved health sort non-randomly into plans.15 To estimate the forecast coefficient, we instead use an IV approach that follows the logic of Figures II and III. This approach uses an instrument for the observational mortality of an enrollee’s plan that combines quasi-experimental choice set variation from plan terminations and the lagged observational mortality of an enrollee’s plan. In contrast to the initial causal model, a single valid instrument is enough to identify λ in Equation (5). There is, however, a cost to simplifying Equation (3), captured by the additional residual term ηit. We next discuss this cost in formalizing our IV approach.

3.3. Identification

Intuition and Related Literature

To see the basic logic of our IV approach, consider a market with three plans of equal market shares. Two of the plans, A and B, have an observational mortality of 0.05 and the third plan C has an observational mortality of 0.03. Suppose plan C exogenously terminates, and that subsequently all of its enrollees move to plan A or B. In either case, enrollees in plan C move to a plan where observational mortality is 2 percentage points higher. All else equal, the forecast regression (4) should then predict the resulting change in beneficiary mortality. If λ = 1, we expect mortality for the plan C cohort to rise by 5 − 3 = 2 percentage points. If instead λ = 1/2, we expect this cohort’s mortality to rise by percentage point, as the 2 percentage point difference in observational mortality between plan C and either A or B would then partly reflect selection bias and not causal effects. Such intuition mirrors the motivation for quasi-experimental evaluations of observational quality measures in other settings (e.g. Kane and Staiger, 2008; Chetty, Friedman, and Rockoff, 2014; Angrist et al., 2016; Doyle, Graves, and Gruber, 2019).

A subtle but key ingredient to this intuition is “all else equal.” In the three-plan example, there is an implicit assumption that not only are terminations as-good-as-randomly assigned to plan C, in the sense of being unrelated to unobserved beneficiary health εi, but that the plans chosen before and after its termination are representative in terms of ηj, the error term in Equation (4). In fact, the presence of ηj may confound quasi-experimental inferences on λ, even when terminations are completely randomly assigned and thus independent of beneficiary health.

To see how the forecast residual can yield misleading quasi-experimental estimates of the forecast coefficient, suppose that while observational mortality is unbiased on average (λ = 1), there is still bias at the level of individual plans (ηj ≠ 0). Concretely, suppose in the three-plan example that βA = βC = 0.03 and βB = 0.07. In this case the exact mixture of fallback plans A and B determines how mortality responds to the termination. If all enrollees move to plan B following plan C’s termination, then mortality will rise by 4 percentage points. Given the observational mortality difference of 2 percentage points, a naïve estimate of the forecast coefficient will be inflated by a factor of 2 (i.e.). Conversely, if all of C’s enrollees switch to plan A, one might falsely conclude that observational mortality has no relationship with true causal effects (i.e.). Only in the case where beneficiaries sort evenly into plans A and B following C’s termination, maintaining the equal market shares of the original plan choice distribution, will the comparison of actual mortality effects to observational mortality effects yield the correct estimate of λ = 1.

This potential challenge with quasi-experimental estimation of parameters like λ is quite general. For example, Doyle, Graves, and Gruber (2019) uses ambulance referral patterns to measure returns to hospital spending, implicitly relating a hospital’s average spending to its quality βj. As they discuss, this approach requires more than random assignment of patients to ambulances. For their IV estimates to be unbiased, ambulance companies cannot systematically bring patients to higher quality hospitals conditional on spending. Similarly, Chetty, Friedman, and Rockoff (2014) consider the case of teachers quasi-randomly moving across schools. To recover a forecast coefficient for grade-level teacher value-added parameters βj, they require an additional assumption. New schools cannot be systematically good conditional on observational value-added. Within schools, grade assignments further cannot track variation in value-added not captured by the observational measure.16

We next formalize a novel solution to this general issue. The formal challenge in such settings is that the usual instrument exclusion restriction comprises two distinct assumptions: a familiar balance condition (satisfied when the instrument is as-good-as-randomly assigned) and a novel condition restricting the fallback choices of individuals subjected to a quasi-experimental shock (such as plan terminations, ambulance company assignment, or teacher moves). Intuitively, in our setting, the choices following a plan termination cannot systematically differ across terminated plans in ways that are correlated with the forecast residual ηj.

This solution can be compared and contrasted with other strategies using shocks to institutional choices in order to estimate causal effects. Abowd, Kramarz, and Margolis (1999) famously estimate worker and firm premiums using a two-way fixed effects model. Their approach identifies firm-specific βj under a parallel trends restriction, akin to that of difference-in-differences, which assumes workers moving between different firms j would have seen similar wage changes absent a move (Card et al., 2018; Hull, 2018). The two-way fixed effects approach differs from our IV approach—as well as the examples above—in which an external shock to movement decisions is used to relate an observed characteristic of j to the βj’s, without restricting outcome trends. Gibbons and Katz (1992) use an approach more similar to ours in the wage premium literature. They use plausibly exogenous plant closings to relate the observed differences in wages of industries j to industry premiums βj. Here a worker’s fallback industry following a plant closing need not be exogenous, and the authors test for this possibility. We provide a formal foundation for such an approach and propose new tests.

In our stylized example above, the fallback condition required that beneficiaries sort evenly into plans, which might suggest that this condition is generally quite strong. In fact, when pooling termination-induced choice set variation across many markets, the solution becomes weaker and more natural. We show below that the fallback condition holds in a wide range of discrete choice models (including those typically estimated in the industrial organization literature) and can be empirically investigated. Before presenting the general condition and its microfoundation, we first discuss the more standard first-stage and balance assumptions required by our IV approach.

The First-Stage and Balance Assumptions

Our approach to estimating the forecast coefficient uses an instrument which, as in Figures II and III, leverages the interaction of past plan choice and plan terminations. Consider, for a beneficiary i observed in year t, the instrument

| (6) |

where μi,t−1 denotes the observational mortality of the beneficiary’s plan in the previous year, and Ti,t−1 is an indicator for whether that year was the plan’s last (prior to termination). We first derive conditions for this instrument to identify λ in a simplified setting where observational mortality is known without estimation error, there is no unobserved treatment effect heterogeneity, and we control only for characteristics of a beneficiary’s plan in the previous year (including μi,t−1 and Ti,t−1). We discuss how we relax each of these simplifying assumptions in Section 3.4 below.

An IV regression of beneficiary mortality Yit on observational mortality μit which instruments with Zit and controls for Xit identifies the forecast coefficient λ under three conditions, per Equation (5). First, we require that the residualized instrument (that is, Zit after partialling out Xit in the population) is correlated with observational mortality:

Assumption 1. (First Stage):.

The first-stage condition is highly intuitive in our setting. We expect most beneficiaries to remain in their previous year’s plan due to inertia, unless the plan is terminated. Beneficiaries forced into an active choice by a termination, however, will tend to switch to more typical plans. This combination of inertia and regression-to-the-mean implies that lagged terminations are likely to predict the observational mortality of year t choices differentially depending on lagged observational mortality, so that and μit are negatively correlated. Such negative correlation is shown in Figure II, where terminated enrollees in below-median (above-median) observational mortality plans saw an increased (decreased) observational mortality of their enrolled plan in the following year.

The second condition is a standard balance assumption: that Zit is conditionally uncorrelated with unobserved beneficiary health εit.

Assumption 2. (Balance):.

As-good-as-random assignment of plan terminations is sufficient, but not necessary for this condition to hold. Since Zit is given by the interaction of terminations and lagged observational mortality, and since both Zit and Xit only vary at the lagged plan level, a minimal assumption is that any relationship between observational mortality and the average unobserved health of a plan’s beneficiaries is the same for terminated and non-terminated plans. Formally, we can evaluate Assumption 2 in terms of the infeasible plan-level difference-in-differences regression,

| (7) |

where denotes the average unobserved health among beneficiaries previously enrolled in plan j and Xj,t−1 includes the lagged plan characteristics in Xit (including the μj and Tj,t−1 main effects). Appendix C.2 shows that if and only if ϕZ = 0 in the version of this regression that weights by lagged market shares. Since Tj,t−1 is included in Xj,t−1, this formulation of Assumption 2 makes clear that we allow both for terminated and non-terminated plans to enroll beneficiaries of systematically different unobserved health, and for plan terminations to have direct disruption effects. We only require that this imbalance or effect is not systematically related to the observational mortality measure.17 The similarity of the pre-period mortality in Figure III supports the stronger version of Assumption 2 in our setting; we develop and apply additional falsification tests of the sufficient balance assumption in Section 4.1 below.

The Fallback Condition

The third identification condition we formalize is novel, and follows the above intuition regarding fallback plans. Even when terminations are as-good-as-randomly assigned (satisfying Assumption 2), consumers are not randomly assigned to fallback plans after terminations. Imbalance in the forecast residual ηj must thus be ruled out for Zit to identify λ:

Assumption 3. (Fallback):.

Recall that is the forecast residual of the plan that consumer i selects in period t, potentially following a termination in time t −1. For the instrument to be relevant, must be correlated with subsequent plan choice Dijt; thus, the as-good-as-random assignment with respect to ηj does not guarantee that is uncorrelated with ηit. Assumption 3 rules out this correlation, requiring fallback choices to be “typical” in a particular sense.

Interpreting Assumption 3 can be challenging because ηit is not structural. It instead arises from the statistical Equation (4) and the potentially complex realizations of consumer choices and health which give rise to μj. We take two approaches to better understand the fallback condition. First, we give a plan-level interpretation analogous to Equation (7). Second, we microfound the condition by asking what restrictions on consumer plan choices would cause it to hold.18

The fallback condition can be viewed (as with Assumption 2) as restricting the relationship between observational mortality and a particular plan-level unobservable to be similar across terminated and non-terminated plans. Specifically, Assumption 3 restricts a plan-level difference-indifferences regression which replaces in Equation (7) with . For the fallback condition to hold, the interaction of observational mortality μj and lagged plan termination Tj,t−1 must not predict conditional on the controls. This, in turn, says that the conditional relationship between μj and the average ηj of beneficiaries previously enrolled in terminated and non-terminated plans must be the same. This plan-level interpretation gives some intuition for the behavioral restrictions that might be sufficient. The fallback condition requires the first- and second-choice plans of consumers (i.e. the choices made before and after termination) to be similar, in terms of the relationship between the predictable dimension of plan quality μj and the unpredictable dimension ηj. Since the first-choice μj and ηj are uncorrelated by definition, the fallback condition requires that this lack of correlation remains as consumers switch from their first-choice plan to their second-choice plan. The fallback condition holds if consumers, after terminations, make similar choices from the remaining plans as new consumers in the market.

Microfounding the fallback condition requires behavioral restrictions on underlying consumer choice, since Assumption 3 is not ensured by as-good-as-random assignment of plan terminations. Appendix C.4 presents a discrete choice model that yields such restrictions. The simplest version of the model assumes that consumers in non-terminated plans are fully inertial, while consumers in terminated plans make an unrestricted choice that maximizes their latent utility Uijt. We show that the fallback condition holds provided the IV control vector Xit includes any lagged characteristics of plans that lead to persistent unobserved heterogeneity in choice (along with μi,t−1 and Ti,t−1). Suppose, for example, that consumer utility has the form

| (8) |

where αit captures potentially heterogeneous preferences over observed plan characteristics Wj, ξj denotes a fixed plan unobservable, and uijt captures unobserved idiosyncratic time-varying plan-specific preferences. We show in Appendix C.4 that the fallback condition holds in this model (absent any functional form assumptions) when αit is either fixed across consumers or idiosyncratic over time. For general αit, we show that the fallback condition holds provided flexible transformations of the lagged plan characteristics Wj are controlled for: namely, when one conditions on the characteristics of plans over which consumers exhibit heterogeneous and persistent preferences. Similar logic can be extended outside the utility model of Equation (8): in Appendix C.4 we discuss how any controls sufficient to capture persistent heterogeneity in plan choice probabilities can be included to satisfy Assumption 3 more generally. We further show that consumers in non-terminated plans need not be fully inertial; the same logic can hold in models with partial inertia, such as that of Ho, Hogan, and Morton (2017).19

The microfoundation suggests the novel fallback condition is likely to hold in discrete choice specifications that are commonly estimated in both canonical and recent papers in the industrial organization literature. For example, Equation (8) is the classic random-coefficient model of demand for differentiated products used in Berry, Levinsohn, and Pakes (1995). More recently, Allende (2019) employs a model in this class when estimating school value-added. That said, there exist choice specifications that would violate the fallback condition. Assumption 3 could fail if, for example, termination-induced changes in preferences cause consumers to select plans differently.20

The microfoundation of the fallback condition has two implications for our IV approach. First, when estimating the MA forecast coefficient it may be important to control for lagged plan characteristics over which consumers may have persistent heterogeneous preferences. We include such controls in our baseline specification, as discussed below. Second, as with the conventional balance assumption, the fallback condition may be investigated empirically. Assumption 3 asserts that the forecast error of a beneficiary’s plan, ηit, is conditionally uncorrelated with the instrument . We do not observe this residual directly, just as we do not observe the beneficiary residual εit which enters Assumption 2. However, just as standard IV falsification tests can investigate whether the instrument is correlated with observable proxies of εit, we can construct and test for instrument balance on an observable proxy for ηit. Intuitively, we would check whether the observable characteristics of a beneficiary’s fallback plans have a differential relationship with the observational mortality of her previous plan, across those previously enrolled in terminated and non-terminated plans. We conduct this test in the MA setting below.

3.4. Extensions

We consider four extensions to our basic econometric framework before bringing it to the data. First, we note that while we have derived the first-stage, balance, and fallback conditions for an IV regression involving μj, in practice the observational mortality of each plan is not known and must be estimated. We show in Appendix C.5 how each of these conditions extend to the case where μj is replaced with an empirical Bayes posterior mean of observational mortality . The untestable balance assumption is unchanged in this case, while the feasible IV regression fallback condition is satisfied under the same microfoundation we considered above. Importantly, we continue to estimate the same forecast coefficient λ with the feasible IV regression as we would if observational mortality were known, although increased estimation error in is likely to reduce power. In practice the issue of estimating μj should be of little empirical consequence in our setting, since the typical plan in our sample has thousands of enrollees and the typical shrinkage coefficient is correspondingly close to one (see Appendix Figure A.II.).

Second, we note that we simplified the exposition by only considering an IV regression with lagged plan-level controls, of the form . This restriction also allows for controls at a level higher than plan, such as county-by-year fixed effects. In practice we further include controls that vary at the beneficiary level (such as demographics) in some IV specifications. When not necessary for identification, we expect such controls to absorb residual variation in beneficiary mortality and potentially yield precision gains.

Third, in Appendix C.6 we show how our framework can accommodate unobservable selection on heterogeneous treatment effects. Our core argument proceeds similarly, although we require a further condition on unobserved selection on treatment effects. The new condition requires that any relationship between the degree of such “Roy selection” and observational mortality is again the same among consumers in terminated and non-terminated plans. Below we probe the role of treatment effect heterogeneity by allowing plan effects to vary by observables.

Finally, we note that while we have derived first-stage, balance, and fallback conditions for the purposes of estimating the forecast coefficient λ, analogous conditions can be imposed to estimate the coefficient from regressing plan effects βj on any plan observable Wj. The first stage for an instrument of the form Zit =Wi,t−1×Ti,t−1 (where ) continues to derive power from a combination of plan choice inertia and termination-induced regression-to-the-mean; the balance assumption is analogous to Assumption 2, and the appropriate fallback condition continues to hold under our choice model microfoundation. We use this extension in Section 5 to study the observable correlates of plan quality, such as premiums and star ratings. We also show how our IV framework can be used to bound the implicit willingness to pay for plan quality using the association between plan mortality effects and premium-adjusted market shares.

4. Results

4.1. Tests of Assumptions

We first investigate Assumption 1 by showing that termination-induced changes to consumers’ choice sets lead to predictable changes in the observational mortality of the plan in which they subsequently enroll. We show this by estimating an OLS first-stage regression of

| (9) |

where again μit denotes the plan observational mortality for beneficiary i at time t and Zit = μi,t−1× Ti,t−1 is the interaction of observational mortality of the lagged plan and an indicator for lagged plan termination. To explore robustness, we sometimes replace the linear interaction with more flexible alternatives, such as interactions of percentiles of lagged observational mortality and lagged plan terminations. The baseline control vector Xit includes county-by-year fixed effects (such that we only exploit variation within choice sets), year- and county-specific termination main effects (to allow for flexible direct effects) and flexible interactions of lagged plan type, lagged observational mortality, and lagged plan size and market shares (to allow for a weakened fallback condition).21

In some specifications we also include controls for beneficiary demographics (age in 5-year bands, sex, race and dual-eligibility status). We cluster standard errors at the county level, allowing for arbitrary correlation in the regression residual across different beneficiaries, plans, and years.22

First-stage coefficient estimates are reported in Panel A of Table II. The finding of πZ < 0 is consistent with a combination of inertia and regression-to-the-mean in MA plan choice, first documented in Figure II. Beneficiaries enrolled in high- or low-mortality plans that are terminated in year t −1 tend to choose plans in year t which are more typical in terms of observational mortality, relative to the mostly inertial beneficiaries in non-terminated plans; consequently, and μit are negatively correlated. In column (1), we estimate a termination-induced regression-to-the-mean of −0.72, implying that a consumer in a one percentage point higher observational mortality plan in the previous period switches to a plan with 0.72 percentage points lower observational mortality in the period following termination, relative to a consumer in a similarly high-mortality plan that does not terminate. Column (2), corresponding more directly to Figure II, shows that the termination of an above-median observational mortality plan in year t − 1 induces a differential reduction in the observational mortality of year t plans of 0.02 percentage points, relative to a termination of a below-median observational mortality plan. Both specifications yield high first-stage F statistics, confirming the relevance of our instrument (Assumption 1).

Table II:

Tests of Assumptions

| (1) | (2) | |

|---|---|---|

|

| ||

| Dep. Var.: Observational Mortality | A. First Stage | |

| Instrument | −0.724 (0.015) | −0.0189 (0.0014) |

| F Statistic | 2,358.1 | 173.9 |

| Dep. Var.: Predicted Mortality | B. Balance | |

| Instrument | −0.020 (0.013) | −0.0011 (0.0006) |

| Dep. Var.: Predicted Forecast Residual | C. Fallback | |

| Instrument | 0.010 (0.001) | −0.0000 (0.0001) |

|

| ||

| Specification | Linear | Median |

| Demographic Controls | No | No |

| N Beneficiary-Years | 11,441,205 | |

Notes: Panel A of this table is based on estimation of Equation (9) and presents the OLS coefficient in a first-stage regression of observational mortality on the instrument. Panel B replaces observational mortality as the dependent variable with a prediction of one-year mortality based on beneficiary demographics. Panel C uses as the dependent variable a prediction of the forecast residual based on plan characteristics. In column (1) the instrument is the interaction of observational mortality of the lagged plan and a lagged plan termination indicator. In column (2) the instrument is the interaction of an indicator for above-median observational mortality of the lagged plan and a lagged plan termination indicator. In all specifications, we control for the observational mortality of the lagged plan and termination main effects, county-by-year fixed effects, year- and county-specific termination effects, and interactions of lagged plan characteristics (as described in the text). Standard errors are clustered by county and reported in parentheses.

Panel A of Figure IV illustrates the first-stage relationship by replacing the linear instrument in Equation (9) with one based on deciles of lagged observational mortality (controlling for decile main effects). We use this specification to plot the estimated contemporaneous plan observational mortality for enrollees who, in the previous year, were enrolled in plans of different deciles of observational mortality that did and did not terminate. The figure shows that while observational mortality of the lagged plan predicts current plan observational mortality among the non-terminated group, the relationship is essentially flat for terminated plans. The flattening again reflects the combination of inertia and regression-to-the-mean in plan choice that yields negative first-stage coefficients in Panel A of Table II.23

Figure IV:

Graphical Tests of Assumptions and the Reduced Form

Notes: This figure illustrates the three assumptions in our IV approach, as well as the IV reduced form. Panel A shows average observational mortality by deciles of lagged observational mortality among non-terminated and terminated plans, controlling for county-by-year fixed effects and other observables in our baseline specification. Panel B shows the corresponding averages of predicted one-year mortality given omitted beneficiary demographics (age, sex, race, and dual-eligible status). Panel C shows the corresponding averages of a predicted forecast residual given omitted plan characteristics (star ratings, premiums, MLRs, and an indicator for donut hole coverage). Panel D shows the corresponding averages of one-year mortality. Points are the average of each left-hand side variable in deciles of lag plan observational mortality, predicted by the lagged observational mortality in the regression model, combined with the decile-specific termination effects estimated from specifications of the form of Equation (9). The controls as in Table II, including decile main effects. Coefficients are normalized to remove termination main effects.

We next build support for the balance condition (Assumption 2) by testing whether the instrument predicts observable differences in beneficiary health. We replace the observational mortality outcome in Equation (9) with a prediction of one-year beneficiary mortality, obtained from a regression of one-year mortality on dummies for 5-year age bands, sex, race, and dual-eligibility fixed effects (see Appendix Table A.III. for model estimates). The results are in Panel B of Table II. In contrast to the large and significant first-stage effects in Panel A, we cannot reject the null of instrument balance on predicted beneficiary mortality. With the baseline linear specification we obtain an insignificant coefficient of −0.020, while in the median specification we obtain an insignificant coefficient of −0.0011. Both of these estimates are more than an order of magnitude smaller than the corresponding first-stage estimates. Finding balance for our instrument on predicted mortality is not surprising in light of the motivating Figure III.

Panel B of Figure IV illustrates the predicted mortality regressions by replacing the observational mortality measure in Panel A. We plot the average predicted mortality among terminated and non-terminated plans at different deciles of lagged observational mortality. In contrast to the clear first-stage effect, there is no differential trend in predicted mortality for terminated versus non-terminated plans. Any differential trend in the actual mortality of beneficiaries in terminated and non-terminated plans is therefore unlikely to be due to pre-existing differences in their health.

Appendix Figure A.III. similarly shows that our instrument appears visually balanced on age and average CMS risk scores, which attempt to predict enrollee costs based on demographics and diagnoses. Additional balance tests are given in Appendix Table A.IV..24

Finally, we build support for the novel fallback condition (Assumption 3) by testing whether our instrument predicts an observable proxy for the forecast residual ηi. We construct the proxy by first regressing observational mortality on a set of observable plan characteristics (plan star ratings, premiums, medical loss ratios, and an indicator for donut hole coverage). We then take the residual from projecting the fitted values from this regression (as an observable proxy of βj) on μj. This residual yields an observable proxy for ηj, and thus of given a beneficiary’s plan. Panel C of Table II reports the resulting instrument coefficients from replacing the outcome in Equation (9) with this proxy. In this case, we find a coefficient of 0.01 in the linear specification and a coefficient of effectively zero in the median specification. While statistically significant, the linear imbalance is quantitatively negligible—almost two orders of magnitude smaller than the associated first-stage effect. In Appendix C.7, we show how the frameworks of Altonji, Elder, and Taber (2005) and Oster (2019) can be adopted to quantify the importance of imbalances on both beneficiary and plan-level observables. The statistical imbalances are too small to substantially alter our forecast coefficient estimates, even under conservative assumptions.

Panel C of Figure IV illustrates these predicted forecast residual regressions by replacing the predicted mortality measure in Panel B. As before, we see no systematic relationship between terminations and the predicted enrollee unobservable at any decile of lagged observational mortality. This result builds confidence in our third and final identification condition, suggesting that termination-induced changes in observational mortality can be related to termination-induced changes in actual mortality to estimate the MA forecast coefficient. We next present these IV estimates.

4.2. Forecast Coefficient Estimates

Table III reports first-stage, reduced-form, and second-stage estimates for our main IV specification. The second-stage estimates come from a regression of

| (10) |

with the first stage given by Equation (9). The second-stage coefficient λ estimates the observational mortality forecast coefficient under Assumptions 1–3. The reduced form regression replaces the observational mortality outcome in Equation (9) with the actual mortality outcome in Equation (10). As before, we use both this linear specification and an alternative specification which replaces the instrument with one constructed from an above-median lag observational mortality indicator. We also report two specifications for the control vector Xit; one which mirrors the tests of our assumptions, and a second which adds beneficiary demographics (age, sex, race, and dual-eligible status). Given the balance of our instrument on these beneficiary observables, via the predicted mortality measure, we do not expect the inclusion of these controls to meaningfully affect the IV estimates (though it may increase their precision).

Table III:

Forecast Coefficient Estimates

| (1) | (2) | (3) | (4) | |

|---|---|---|---|---|

|

| ||||

| Dep. Var.: Observational Mortality | A. First Stage | |||

| Instrument | −0.724 (0.015) | −0.0189 (0.0014) | −0.724 (0.015) | −0.0189 (0.0014) |

| F Statistic | 2,358.1 | 173.9 | 2,358.7 | 173.6 |

| Dep. Var.: One-Year Mortality | B. Reduced Form | |||

| Instrument | −0.764 (0.069) | −0.0214 (0.0025) | −0.745 (0.069) | −0.0203 (0.0023) |

| Dep. Var.: One-Year Mortality | C. Second Stage (Forecast Coefficient) | |||

| Observational Mortality | 1.056 (0.098) | 1.130 (0.117) | 1.029 (0.098) | 1.073 (0.106) |

|

| ||||

| Specification | Linear | Median | Linear | Median |

| Demographic Controls | No | No | Yes | Yes |

| N Beneficiary-Years | 11,441,205 | |||

Notes: Panels A and C of this table report first- and second-stage coefficient estimates from Equations (9) and (10). Panel B reports the corresponding reduced-form coefficients. The dependent variable is observational mortality in Panel A and realized mortality in Panels B and C. In columns (1) and (3) the instrument is the interaction of observational mortality of the lagged plan and a lagged plan termination indicator. In columns (2) and (4) the instrument is the interaction of an indicator for above-median observational mortality of the lagged plan and a lagged plan termination indicator. In all specifications, we control for lagged observational mortality and termination main effects, county-by-year fixed effects, year- and county-specific termination effects, and interactions of lagged plan characteristics (as described in the text). Columns (3) and (4) additionally control for beneficiary demographics. Standard errors are clustered by county and reported in parentheses.

Panel A of Table III replicates the first-stage results reported in Panel A of Table II and confirms that these change little when we add the demographic controls. Panel B shows the corresponding reduced-form estimates from the same specifications. We find reduced-form coefficients of −0.76 and −0.75 for the linear specification (without and with demographic controls) and of −0.0214 and −0.0203 for the median specification. Each of these estimates are quite similar to the corresponding first-stage coefficients, reflecting the pattern first shown in Figures II and III: terminations tend to shift observational mortality and realized mortality by similar amounts.

Panel C of Table III shows that the similarity of first-stage and reduced-form effects yields high forecast coefficient estimates, in the range of 1.029–1.130, with standard errors in the range of 0.098–0.117. The point estimates are again similar with and without demographic controls, which reduce standard errors slightly. The median specification yields a somewhat higher forecast coefficient, though the estimates are not statistically distinguishable. Together, these IV estimates suggest the variation in observational mortality unbiasedly predicts variation in true mortality effects (i.e. that λ ≈ 1).

Panel D of Figure IV illustrates this finding by plotting reduced-form variation in one-year mortality rates for beneficiaries in terminated and non-terminated plans by deciles of lagged observational mortality. The resulting differential trend (obtained by replacing observational mortality in Equation (9) with actual one-year mortality) strongly mirrors that of the first stage in Panel A, consistent with the finding of a forecast coefficient that is close to one. Lagged observational mortality strongly predicts the subsequent mortality of beneficiaries previously enrolled in non-terminated plans, but this relationship is effectively flat for beneficiaries previously enrolled in terminated plans (who switch to more typical plans). This finding is striking in contrast to Panel B of Figure IV, which shows no such relationship for predicted one-year mortality. Beneficiaries in high- and low-mortality terminated plans appear similar to those in corresponding non-terminated plans until they are induced by terminations to choose more average plans.25

4.3. Robustness Checks

We verify the robustness of our forecast coefficient estimates in a number of exercises summarized in Appendix Table A.VI.. First, we show that the estimates in Table III are unaffected by the removal of counties which do not see a plan termination during our sample period. The first row of Appendix Table A.VI. shows we obtain similar forecast coefficient estimates of around 1.00–1.05 in this specification, with comparable standard errors. This finding is consistent with the fact that the vast majority of counties see MA plan terminations (see Appendix Figure A.I.) and that counties with and without terminations are broadly similar (see Appendix Table A.I.).

Second, we verify that similar results are obtained when we drop the minority of beneficiaries who switch from a MA plan to a TM plan (our baseline specification includes comparisons between the majority of MA plans and a single TM plan in each county). While this specification may be biased by selecting on an endogenous variable, we nevertheless obtain similar forecast coefficients in the second row of Appendix Table A.VI..

Third, we show that we obtain similar but less precise estimates when we limit attention to terminations of PFFS plans. Pelech (2018) links such terminations to a 2008 policy change which increased PFFS operating costs. While these plan terminations are perhaps more plausibly exogenous, there may also be less variation across PFFS plans, which typically do not establish restrictive networks. The third row of Appendix Table A.VI. shows that these plan terminations yield a similar forecast coefficient estimate of 1.08, with a standard error of 0.11. The corresponding median specification gives a slightly larger but similar estimate, with a similar standard error. The fourth row of Appendix Table A.VI. reports the results of excluding PFFS terminations. Forecast coefficient estimates from this specification are more imprecise, but qualitatively similar to (and not statistically distinguishable from) our baseline estimates.

We next investigate the role of treatment effect heterogeneity. The fifth row of Appendix Table A.VI. shows that we obtain similar estimates, of around 1.05, when we exclude dual-eligible beneficiaries from both the IV sample and the sample used to construct the observational mortality measure. The sixth row further shows that our results are similar when we allow observational mortality to vary by beneficiary age, estimating Equation (1) separately by five-year age bins. This specification yields forecast coefficients of around 1.03–1.07, with similar or slightly smaller standard errors. This robustness is especially striking as age and dual-eligible status appear to drive the majority of selection bias in the most naïve observational mortality estimates, as discussed in Section 2.3. The findings suggest either that treatment effect heterogeneity is not first-order in this setting, or that the extension of our framework in Appendix C.6 (that accommodates such heterogeneity) is likely to hold.