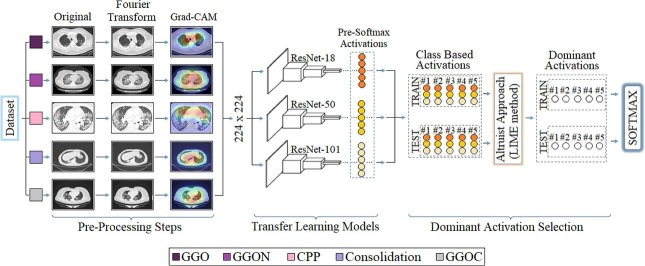

Graphical abstract

Keywords: COVID-19, Chest CT findings, Deep learning, Image processing, Medical decision support system

Abstract

COVID-19 is a disease that affects the upper and lower respiratory tract and can be fatal, so early diagnosis is important. The datasets used in this study were collected from hospitals in Istanbul. The first dataset consists of COVID-19, viral pneumonia, and bacterial pneumonia images. The second dataset consists of the following COVID-19 findings: ground glass opacity; ground glass opacity and nodule; crazy paving pattern; consolidation; and consolidation and ground glass opacity. The approach proposed in this paper is based on artificial intelligence and consists of three steps. In the first step, preprocessing was performed by applying the Fourier Transform and Gradient-weighted Class Activation Mapping (Grad-CAM) methods to the input images. In the second step, type-based activation sets were created with three different ResNet models before the Softmax layer. In the third step, the best type-based activations were selected among the CNN models using the local interpretable model-agnostic explanations (LIME) method and re-classified with the Softmax method. The proposed approach achieved an overall accuracy of 99.15% on the dataset containing three image types and 99.62% on the dataset of COVID-19 findings.

1. Introduction

Coronaviruses are a large family of viruses that can cause respiratory illness. Previously identified coronaviruses caused severe illness in the outbreaks of Severe Acute Respiratory Syndrome (SARS) in 2003 and Middle East Respiratory Syndrome (MERS) in 2012. The outbreak of infection with the new type of coronavirus (COVID-19), which emerged as a public health threat in Wuhan, Hubei province, China, in December 2019, was declared a pandemic by the World Health Organization (WHO) on March 11, 2020 [1]. The most typical symptoms of COVID-19 are high fever, dry cough, and tiredness. These symptoms usually appear on the fifth day of the illness, although in some cases they have been found to emerge anywhere from the second to the fourteenth day [2].

However, these symptoms are not specific to COVID-19; they may also be associated with other viral or bacterial pneumonia. Pneumonia is the filling of the air sacs in the lungs with inflammatory fluid and is caused by a bacterial infection or by a virus, such as the one that causes COVID-19. Most bacterial pneumonia can be treated with antibiotics, whereas antibiotic treatment is generally not used for pneumonia caused by viruses [3]. No specific treatment has been approved so far for COVID-19, which is highly contagious, is transmitted by respiratory droplets between people in close contact, and has become a leading cause of death worldwide [4].

Globally, by late June 2021, the World Health Organization had reported 181,544,130 confirmed COVID-19 cases and 3,938,369 deaths. The number of cases reported weekly was approximately 400,000 in March 2020 and eight times higher in June 2021 [5]. Early diagnosis is therefore crucial for fighting the pandemic: it reduces the spread of the outbreak and can help keep the intensive care burden within service capacity.

The real-time polymerase chain reaction (RT-PCR) test for detecting viral nucleic acids, which is also used to detect SARS-CoV, MERS-CoV, and influenza viruses, was designated by WHO as the gold standard for COVID-19 diagnosis. However, collecting the specimen too early, poor specimen quality, inappropriate specimen transport, and kit performance can lead to false-negative results; the sensitivity of the RT-PCR test ranges between 59% and 71% [6], [7].

Developing countries have more difficulty managing outbreaks because of limited resources. Comparing the number of RT-PCR tests per 1 million people shows that developing countries perform 10 times fewer tests than developed countries and test only people with obvious symptoms [6].

Although early diagnosis, rapid isolation, and prompt initiation of treatment are very important in outbreak management, RT-PCR test results take up to 14 h to return in manually operated systems [8]. Because of these slow turnaround times and low sensitivity, faster and more reliable screening techniques, used alongside RT-PCR tests or as independent screening tools, are needed to keep the intensive care unit load within service capacity and to slow the spread of the outbreak.

Computed tomography (CT) scans of the thorax have been found to be more sensitive than RT-PCR (up to 98% compared with 71% for RT-PCR) [6], [7]. Chest X-ray (CXR) is also commonly performed in the initial diagnosis of COVID-19, with a sensitivity of about 57% [9]. Studies report that in the early stage of COVID-19, CXR has 15% lower sensitivity than computed tomography; for example, the small ground glass opacities in the lower lobes that are frequently seen on CT at the early stage of COVID-19 do not appear as abnormalities on CXR [10], [11]. Poor sensitivity to early-stage findings, which are important for treating the disease, is therefore seen as the most important disadvantage of CXR. CT findings vary with the stage of pneumonia in the lung and are reported as defined by the Fleischner Society [12]. Radiological interpretation of CT findings determines not only whether an individual has COVID-19 but also whether mechanical ventilation and intensive care are needed [13]. The performance of radiologists in distinguishing COVID-19 from non-COVID-19 pneumonia using chest CT findings is therefore critical.

Research has shown that the chest CT findings of COVID-19 are similar to those detected in viral pneumonia (H1N1 influenza A) and bacterial pneumonia [14], [15], [16], [17], [18]. Among positive patients, the most frequent CT findings in the early stages of these pneumonias are Ground Glass Opacity (GGO) (hazy areas of opacity caused by partial displacement of air, for example by alveolar collapse or partial filling of air spaces) and nodules (small, rounded opacities up to 3 cm in size, well or poorly defined, with a peripheral halo of ground glass). Consolidation (a homogeneous increase in pulmonary parenchymal attenuation that obscures the margins of adjacent vessels and airway walls) is the second most frequently detected finding within the first 10 days after symptom onset, and Crazy Paving Pattern (CPP) findings (irregularly shaped cobblestones formed by ground glass opacity combined with smooth interlobular septal thickening) are sometimes seen after GGO and consolidations increase in grade [14], [12], [15], [16], [17]. Discriminating COVID-19 from other pneumonia is much more difficult because the CT findings of COVID-19 are not distinct from those of other viral pneumonia (e.g., influenza, adenovirus); the CT abnormalities of COVID-19 and non-COVID-19 viral pneumonia have been reported to be highly similar, except for a more peripheral distribution in the upper and middle lobes in COVID-19 [16]. Bacterial pneumonia is also known to differ significantly from viral pneumonia only in showing diffuse airspace opacification with ground glass opacity or consolidation in one segment or lobe [18], [19].

The accuracy of radiological interpretation of chest CT depends on the radiologist's experience [20]. A study evaluating the performance of radiologists in distinguishing COVID-19 from non-COVID-19 pneumonia and in identifying COVID-19 CT findings (for the most common ground glass pattern) showed that the radiologists' sensitivity ranged from 100% down to 60% and from 91% down to 68%, respectively [21]. Given the limitations of the PCR test and CXR, the rapid turnaround and high sensitivity of CT are a great advantage; however, the accuracy of chest CT in diagnosing COVID-19 depends on the experience of the radiologists, whose performance varies because the findings of different pneumonias are highly similar.

Artificial intelligence technology has been used for COVID-19 detection, and many such studies have been carried out in the literature. Some of them are summarized here. Tianqing et al. [22] designed a deep CNN (DCNN) model to detect COVID-19 positive cases. They applied the class activation map (CAM) technique to each image as a preprocessing step, used the extreme learning machine (ELM) method in the last layer of their DCNN model, and used the chimpanzee optimization method to stabilize the DCNN-ELM. They performed analyses on two public datasets and achieved overall accuracies of 98.25% and 99.11%, respectively. M. Turkoglu [23] used X-ray images to detect COVID-19. He designed a model called COVIDetectioNet based on a pre-trained deep learning network and used the SVM method for classification, achieving an overall accuracy of 99.18%. JavadiMoghaddam et al. [24] proposed a new deep learning-based model to detect COVID-19 cases. Their approach combined squeeze-and-excitation block (SE-block) layers, and they used the Mish activation function to improve the analysis performance of the model. They achieved an overall accuracy of 99.03%. A. Chaddad et al. [25] used CT and X-ray images to detect COVID-19 and performed analyses on two datasets: the first consisted of normal, pneumonia, and COVID-19 images, and the second consisted of images of COVID-19 findings. They trained the datasets with pre-trained deep learning models and used the Region of Interest (ROI) technique to improve classification performance. Their overall accuracy was 97% on the first dataset and between 70% and 83% on the second. V. Perumal et al. [26] used CT images to detect COVID-19. They used color maps to show the region of interest in each image and analyzed the dataset with machine learning methods and deep learning models; the best overall accuracy they achieved was 96.69%. E. Luz et al. [27] used X-ray images to detect COVID-19. Their dataset consisted of normal, pneumonia, and COVID-19 images, and they used the EfficientNet model family to train the dataset and classify the image types, with an overall accuracy of 93.9%.

The aims of this article are as follows:

- Successful classification of three types of pneumonia (COVID-19, H1N1 Type A, and bacterial) and five types of COVID-19 chest CT findings.
- Successful extraction of the type-based activations passed to Softmax, the last activation layer of the CNN models used for the datasets (three types and three activation sets for dataset #1; five types and five activation sets for dataset #2).
- Selection, with the Local Interpretable Model-agnostic Explanations (LIME) method, of the type-based activations that give the best performance among the activation sets extracted for both the training and test data, followed by classification with the Softmax method.

The remaining sections of this study are organized as follows. The second section describes the datasets, the methods used in preprocessing, the CNN models, the LIME method, and the proposed approach. The third section presents the experimental studies and the analysis results. The last two sections are the Discussion and the Conclusion, respectively.

2. Dataset, models, and methods

2.1. Dataset

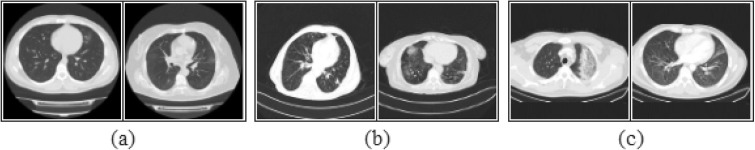

To apply the proposed method, chest CT scans of three different pneumonia classes were retrospectively obtained from the hospitals' Picture Archiving and Communication System (PACS). Data were collected from hospitals in İstanbul, Turkey. The datasets obtained are COVID-19, viral pneumonia (H1N1 Type A), and bacterial pneumonia. The COVID-19 dataset consists of 631 CT scans from 201 patients, obtained after the first cases in Turkey in March 2020. The viral pneumonia (H1N1 Type A) dataset consists of 417 CT scans from 212 patients acquired between 2017 and 2019. The bacterial pneumonia dataset consists of 518 CT scans from 10 patients acquired before 2019. These CT datasets were used to classify three highly similar pneumonia classes, with the aim of supporting rapid diagnosis of patients with pneumonia during the flu season. We refer to the combined dataset as Cov-Pne-Bac (CPB). All images are in JPG format. Image resolutions vary within the datasets, from 512 × 512 up to 1096 × 1289 pixels; all images were resized to 224 × 224 pixels to match the input size of the deep learning models used. The demographic characteristics are summarized in Table 1, and a sample subset of the CPB dataset is shown in Fig. 1.

Table 1.

Demographic Characteristics of the Patient Group in the CPB dataset. (* Numbers are means ± standard deviations, with ranges in parentheses.)

| Characteristic | COVID-19 (n = 200) | Viral pneumonia (n = 212) | Bacterial pneumonia (n = 10) |
|---|---|---|---|
| Age (y) | | | |
| Mean* | 52.9 ± 17.4 | 59 ± 20.5 | 58 ± 11 |
| <60 | 132 (66%) | 98 (46%) | 4 (40%) |
| ≥60 | 68 (34%) | 114 (54%) | 6 (60%) |
| Male | 109 (54%) | 131 (61%) | 5 (50%) |
| Female | 91 (46%) | 81 (39%) | 5 (50%) |

Fig. 1.

Sample subset images of the CPB dataset; a) COVID-19, b) viral pneumonia, c) bacterial pneumonia.

CT scans were evaluated for the following characteristics, which physicians at Şişli Hamidiye Etfal Training and Research Hospital consider when determining patients' need for intensive care and mechanical ventilation:

- a) presence of ground-glass opacities (GGO),
- b) presence of consolidation,
- c) presence of nodules and ground-glass opacities (GGON),
- d) presence of consolidation and ground-glass opacities (GGOC),
- e) presence of crazy-paving pattern (CPP).

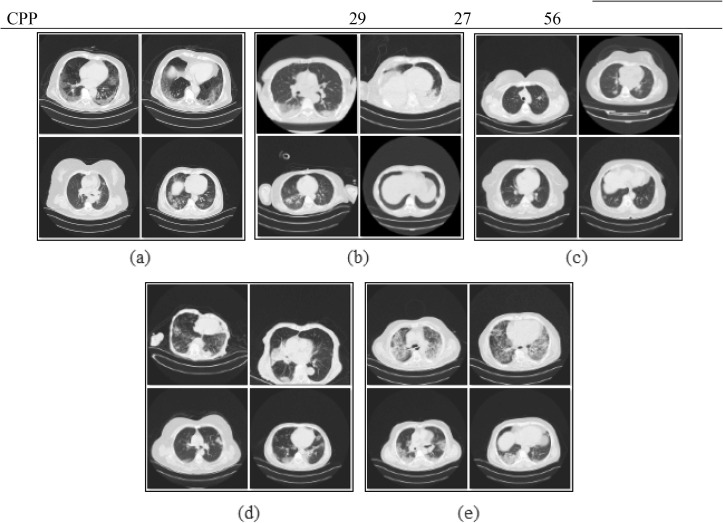

Based on the five CT findings listed above, 270 CT scans from the COVID-CT dataset published by J. Zhao et al. [28] and 614 CT scans from our COVID-19 dataset were labeled, creating a CT findings dataset of 884 images in total. To the best of our knowledge, this is the first dataset of COVID-19 CT findings; we refer to it as CovCT-Findings. Details of the CPB and CovCT-Findings datasets are given in Table 2, and sample images of COVID-19 CT findings (dataset #2) are shown in Fig. 2. All CT examinations were performed using a 128-detector row helical CT scanner (Somatom Sensation 16, Siemens Medical Systems, Erlangen, Germany). CT images were acquired during patient breath-hold with the following parameters: 120 kVp, variable tube current (150–250 mAs), 5 mm slice thickness, and 2 mm reconstruction interval. Chest CT scans were reviewed blindly and independently by two radiologists (with 15 and 25 years of experience), and final agreement was reached by consensus between them.

Table 2.

Numerical information about CPB and CovCT-Findings datasets.

| CPB dataset (Dataset #1) | Number of images |
|---|---|
| COVID-19 | 631 |
| Viral pneumonia | 417 |
| Bacterial pneumonia | 518 |
| Total | 1566 |

| CovCT-Findings dataset (Dataset #2) | COVID-CT | COVID-19 | Number of images |
|---|---|---|---|
| GGO | 99 | 337 | 436 |
| Consolidation | 108 | 36 | 144 |
| GGON | 7 | 191 | 198 |
| GGOC | 27 | 23 | 50 |
| CPP | 29 | 27 | 56 |
| Total | 270 | 614 | 884 |

Fig. 2.

Sample subset images of the CovCT-Findings dataset; a) GGO, b) Consolidation, c) GGON, d) GGOC, e) CPP.

In the experimental analysis of this study, each dataset was split into 70% training data and 30% test data.
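A minimal sketch of such a 70/30 split in Python is shown below. The paper does not state how the split was made, so this sketch assumes a stratified random split at the image level using scikit-learn's `train_test_split`, with the CPB class counts from Table 2; the seed value is arbitrary.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Class counts of the CPB dataset (Dataset #1), taken from Table 2.
class_counts = {"COVID-19": 631, "Viral pneumonia": 417, "Bacterial pneumonia": 518}
labels = np.concatenate([np.full(n, name) for name, n in class_counts.items()])
indices = np.arange(labels.size)

# Assumption: stratified random split, 30% test / 70% training, fixed seed.
train_idx, test_idx = train_test_split(
    indices, test_size=0.3, stratify=labels, random_state=42
)

for name in class_counts:
    n_train = int(np.sum(labels[train_idx] == name))
    n_test = int(np.sum(labels[test_idx] == name))
    print(f"{name}: {n_train} train / {n_test} test")
```

Because this split is per image, slices from one patient can end up in both sets; if that should be avoided, scikit-learn's `GroupShuffleSplit` with patient IDs as groups is the usual alternative.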

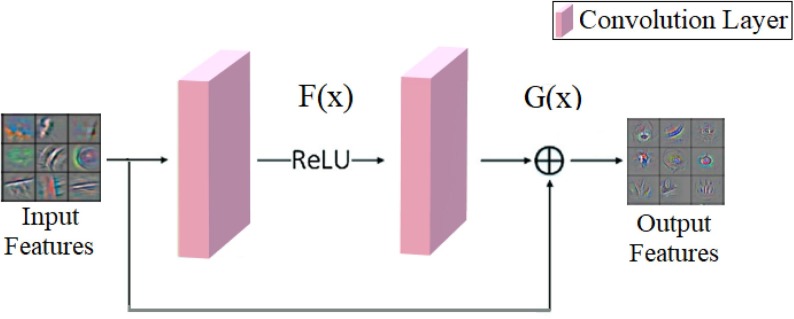

2.2. Residual models

Residual networks work slightly differently from plain convolutional networks: a residual block takes the activation maps produced by a convolutional layer and passes them directly, through a shortcut connection, to a convolutional layer two or three layers later. Residual blocks therefore let the network bypass convolutional layers that it treats as unnecessary, which contributes to model performance and saves time. As the depth of a CNN model increases, training becomes slower and more time-consuming. In addition, adding unnecessary convolutional layers may degrade the performance of the deep learning model. One solution is to use residual blocks in CNN architectures: with residual blocks, layers that do not contribute to the performance of a deep network can effectively be skipped [29], [30].

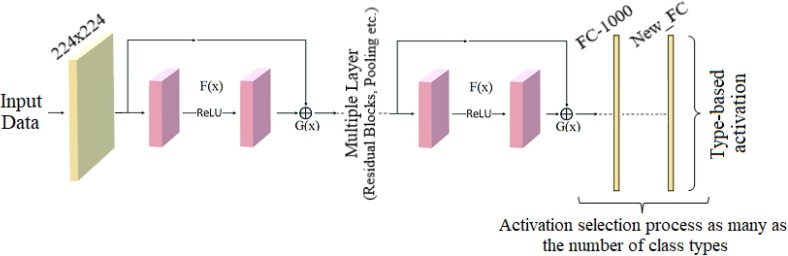

ResNet models consist of convolutional layers, pooling layers, fully connected layers, residual blocks, and other components [31]. The convolutional layer creates activation maps by convolving the input features with filters (e.g., 3 × 3 or 5 × 5). The pooling layer is usually placed after convolutional layers and speeds up the CNN model by reducing the size of the activation maps [32]. Fully connected layers combine the features extracted by the preceding layers and produce the scores from which the class probabilities of each input are computed [33]. Softmax, the activation function of the last layer that performs classification in ResNet models, converts the values produced by the fully connected layers into probabilities in the range [0, 1] that sum to 1. Softmax is generally preferred in CNN models for binary and multi-class classification [34]. ResNet models also use batch normalization and the rectified linear unit (ReLU) activation function within their layers. Batch normalization speeds up training by keeping the input values of a layer within a normalized range and helps reduce problems such as overfitting. ReLU is a simple, inexpensive nonlinearity: if the input value is positive, ReLU passes it to the output unchanged, and if it is negative, ReLU outputs zero [35]. The structure of the residual block is shown in Fig. 3. If $x$ denotes the identity input that is transferred directly from input to output through the shortcut, and $F(x)$ the residual mapping learned by the stacked layers, the output of the block is $H(x) = F(x) + x$ [36].
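The operations described above can be illustrated with a small NumPy sketch. It is a toy, fully connected version of a residual block (real ResNet blocks use convolutions and batch normalization), and the weight matrices `w1` and `w2` are hypothetical; as in the original ResNet design, the final ReLU is applied after the shortcut addition $F(x) + x$.

```python
import numpy as np

def relu(x):
    """ReLU: positive inputs pass unchanged, negative inputs become zero."""
    return np.maximum(x, 0.0)

def softmax(z):
    """Map a score vector to probabilities in [0, 1] that sum to 1."""
    e = np.exp(z - np.max(z))        # subtracting the max avoids overflow
    return e / e.sum()

def residual_block(x, w1, w2):
    """Toy residual block: F(x) = W2 . ReLU(W1 . x), output ReLU(F(x) + x)."""
    f = w2 @ relu(w1 @ x)            # residual mapping learned by the stacked layers
    return relu(f + x)               # identity shortcut added before the final ReLU

rng = np.random.default_rng(0)
x = rng.standard_normal(4)
w1, w2 = rng.standard_normal((4, 4)), rng.standard_normal((4, 4))
out = residual_block(x, w1, w2)
probs = softmax(rng.standard_normal(3))   # e.g. scores for three classes
```

With zero weights the residual mapping vanishes and the block reduces to `relu(x)`, which is the behaviour that lets a deep network effectively skip a block that does not help.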

Fig. 3.

The functioning of the residual block.

The general structure of the ResNet model is shown in Fig. 4. ResNet variants differ in the number of layers and the depth of the model (for example, ResNet-18, ResNet-50, and ResNet-101). All of these models take 224 × 224 input images and have a fully connected (FC) layer as the output layer [37]. In this study, the ResNet-18, ResNet-50, and ResNet-101 models were used for feature extraction from the images and were trained with a transfer learning approach. The same parameter values were used for all three ResNet models. The number of epochs was set to 50 for the CPB dataset and 100 for the CovCT-Findings dataset. In all experimental analyses, the learning rate of the CNN models was set to $10^{-5}$, the mini-batch size to 32, and stochastic gradient descent (SGD) was used as the optimization method. The epoch values differ because the two datasets required different numbers of epochs to complete training in the proposed approach.
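The reported hyperparameters can be collected in a small configuration record, which also makes the relation between mini-batch size and iterations per epoch explicit. This is a hypothetical Python sketch (the original training was done in MATLAB); the `iterations_per_epoch` helper assumes the 30% test share is rounded up and uses the dataset sizes from Table 2, and since frameworks differ on whether the final partial mini-batch is kept, both options are exposed.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainingConfig:
    """Hyperparameters reported for ResNet-18/50/101 (shared by the three models)."""
    epochs: int
    learning_rate: float = 1e-5
    mini_batch_size: int = 32
    optimizer: str = "sgd"           # stochastic gradient descent
    test_fraction: float = 0.3

    def iterations_per_epoch(self, n_images: int, drop_last: bool = False) -> int:
        """Mini-batch updates per epoch on the training split (rounding rules assumed)."""
        n_test = math.ceil(self.test_fraction * n_images)   # assumption: test size rounded up
        n_train = n_images - n_test
        if drop_last:                                        # last partial mini-batch discarded
            return n_train // self.mini_batch_size
        return math.ceil(n_train / self.mini_batch_size)

cpb = TrainingConfig(epochs=50)        # CPB dataset, 1566 images (Table 2)
findings = TrainingConfig(epochs=100)  # CovCT-Findings dataset, 884 images (Table 2)
for name, cfg, n in [("CPB", cpb, 1566), ("CovCT-Findings", findings, 884)]:
    steps = cfg.iterations_per_epoch(n)
    print(name, steps, "iterations/epoch,", steps * cfg.epochs, "in total")
```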

Fig. 4.

The general structure of ResNet architectures.

In the last layer of the ResNet models, a "New_FC" layer was used to extract type-based activation sets (one activation set per input class). With this layer, three activation sets were obtained from each CNN model for the three-class dataset (Bacterial, COVID-19, Viral); likewise, five activation sets were obtained for the five COVID-19 findings.
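The following NumPy sketch shows what a type-based activation set is: the pre-Softmax output of the fully connected layer, split into one column per class. The feature matrix stands in for pooled ResNet features (512-dimensional, as in ResNet-18), and the FC weights are random placeholders; in the study these values come from the trained "New_FC" layer in MATLAB.

```python
import numpy as np

def class_activation_sets(features, fc_weights, fc_bias, class_names):
    """Return one pre-Softmax activation set (a column of logits) per class.

    features:   (n_images, n_features) pooled backbone features
    fc_weights: (n_features, n_classes) weights of the new fully connected layer
    fc_bias:    (n_classes,) bias of the new fully connected layer
    """
    logits = features @ fc_weights + fc_bias      # (n_images, n_classes), before Softmax
    return {name: logits[:, k] for k, name in enumerate(class_names)}

rng = np.random.default_rng(0)
classes = ["Bacterial", "COVID-19", "Viral"]
features = rng.standard_normal((4, 512))          # placeholder features for 4 images
fc_weights = rng.standard_normal((512, len(classes)))
fc_bias = np.zeros(len(classes))

activation_sets = class_activation_sets(features, fc_weights, fc_bias, classes)
for name, values in activation_sets.items():
    print(name, values.shape)                     # one value per image for each class
```

Repeating this for ResNet-18, ResNet-50, and ResNet-101 yields three candidate activation sets per class, which is the input to the selection step of the proposed approach.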

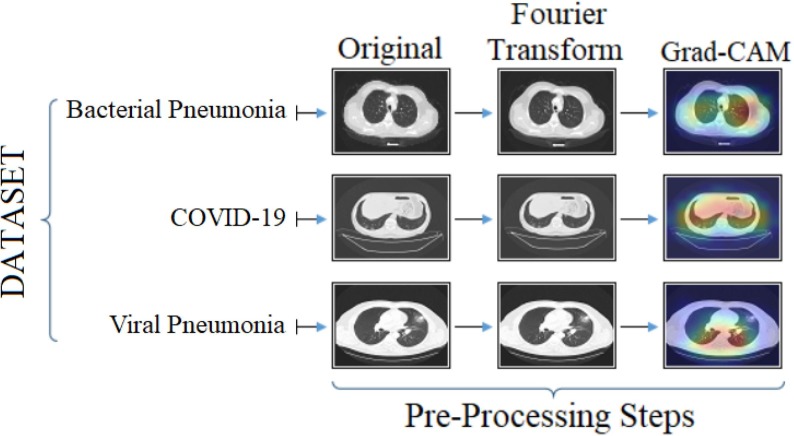

2.3. Preprocessing steps: Fourier transform and Grad-CAM

Preprocessing steps generally consist of methods and techniques that enable the improvement of input data. Fourier Transform and Gradient-weighted Class Activation Mapping (Grad-CAM) techniques were applied in the preprocessing steps of this study.

The Fourier Transform is a preprocessing technique that decomposes the input image into sine and cosine components. It can be used for tasks such as image processing, image reconstruction, and image compression. The transform maps the input image in the spatial domain to an output image in the frequency domain. The number of frequencies in the output image equals the number of pixels in the input image, so the image in the spatial domain and its representation in the Fourier domain have the same size [38], [39]. Each point of the Fourier-domain image is calculated with Eq. (1), written here for a square image of size $N \times N$. In Eq. (1), $f(a, b)$ is the image in the spatial domain, $\mathrm{i}$ is the imaginary unit, and the exponential term is the basis function corresponding to each point $F(k, l)$ in the Fourier space. These basis functions are sine and cosine waves of increasing frequency. The value of $F(k, l)$ is therefore obtained by multiplying the spatial image by the corresponding basis function and summing the result [38].

$$F(k,l)=\sum_{a=0}^{N-1}\sum_{b=0}^{N-1} f(a,b)\, e^{-\mathrm{i}2\pi\left(\frac{ka}{N}+\frac{lb}{N}\right)} \tag{1}$$

Because the Fourier Transform is separable, Eq. (1) can also be evaluated for each point $(k, l)$ with Eqs. (2) and (3). The spatial-domain image is first transformed into an intermediate image $P(k, b)$ using $N$ one-dimensional Fourier transforms (Eq. (3)); this intermediate image is then transformed into the final two-dimensional image $F(k, l)$ using $N$ further one-dimensional Fourier transforms (Eq. (2)) [38], [39].

$$F(k,l)=\sum_{b=0}^{N-1} P(k,b)\, e^{-\mathrm{i}2\pi\frac{lb}{N}} \tag{2}$$

$$P(k,b)=\sum_{a=0}^{N-1} f(a,b)\, e^{-\mathrm{i}2\pi\frac{ka}{N}} \tag{3}$$

The Fourier Transform code used in this study was written in Python 3.6 using the OpenCV and NumPy libraries, with default parameter values [40].
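Eqs. (1) to (3) can be checked numerically with NumPy: computing one set of 1-D transforms along the columns and then along the rows gives the same result as `np.fft.fft2`. The paper does not say which frequency-domain operation was used to reduce noise, so `lowpass_denoise` below is a hypothetical example of such a step (a circular low-pass mask with an assumed `radius`), not the authors' implementation, and it uses NumPy only rather than OpenCV.

```python
import numpy as np

def dft2_separable(image):
    """2-D DFT via Eqs. (3) and (2): 1-D transforms over a, then over b."""
    p = np.fft.fft(image, axis=0)   # Eq. (3): P(k, b), one 1-D DFT per column b
    return np.fft.fft(p, axis=1)    # Eq. (2): F(k, l), one 1-D DFT per row k of P

def lowpass_denoise(image, radius):
    """Hypothetical denoising: keep only frequencies within `radius` of the spectrum centre."""
    spectrum = np.fft.fftshift(np.fft.fft2(image))            # move F(0, 0) to the centre
    n_rows, n_cols = image.shape
    rows, cols = np.ogrid[:n_rows, :n_cols]
    distance = np.hypot(rows - n_rows // 2, cols - n_cols // 2)
    spectrum[distance > radius] = 0                            # discard high frequencies
    return np.real(np.fft.ifft2(np.fft.ifftshift(spectrum)))  # back to the spatial domain

rng = np.random.default_rng(0)
image = rng.random((224, 224))            # stand-in for a resized grayscale CT slice
spectrum = dft2_separable(image)
print(np.allclose(spectrum, np.fft.fft2(image)), spectrum.shape)
smoothed = lowpass_denoise(image, radius=30)
```

The output spectrum has the same 224 × 224 size as the input, matching the statement that the spatial and Fourier representations have equal size.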

The main advantage of the Grad-CAM technique is that it directs the attention of CNN models to the regions that should be focused on during training. Grad-CAM is a localization approach that highlights discriminative regions in input images by processing the activation maps of a convolutional layer of a CNN model. For each class $c$, a score $y^c$ is produced, and the gradients of this score with respect to the convolutional feature maps are global-average-pooled to obtain one weight per feature map; the weighted combination of the feature maps then gives a class-specific localization map that highlights the regions contributing most to the score. These operations are given in Eqs. (4) and (5). Here $A^k$ denotes the $k$-th feature map, $\alpha_k^c$ is the weight of feature map $k$ for class $c$, $Z$ is the number of pixels in the feature map, and $L^c_{\text{Grad-CAM}}$ is the localization map obtained with Eq. (4) [41].

$$L^{c}_{\text{Grad-CAM}}=\mathrm{ReLU}\left(\sum_{k}\alpha_{k}^{c}A^{k}\right) \tag{4}$$

$$\alpha_{k}^{c}=\frac{1}{Z}\sum_{i}\sum_{j}\frac{\partial y^{c}}{\partial A_{ij}^{k}} \tag{5}$$

The Grad-CAM technique makes it easier for CNN models to find the heat maps of the relevant regions in the input images. Heat maps visualize two-dimensional input data as color maps: they convert numeric values into colors, using parameters such as hue, saturation, or brightness to reveal details in the input data. The Grad-CAM technique improves the quality of the input data visualized by these heat maps [42], [43]. In this study, Grad-CAM was applied to the images processed with the Fourier Transform. The Grad-CAM code was written in Python using the Keras library, and the heat maps were extracted with the Xception model available in Keras. Default Keras values were used for the other Grad-CAM parameters [44].
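Eqs. (4) and (5) reduce to a few array operations once the feature maps and their gradients are available. The sketch below is framework-agnostic NumPy and assumes both arrays have already been obtained from the chosen convolutional layer (in Keras/TensorFlow the gradients are typically computed with `tf.GradientTape`); the nearest-neighbour upsampling used to bring the map toward image resolution is an illustrative choice.

```python
import numpy as np

def grad_cam(feature_maps, gradients):
    """Grad-CAM map for one class from a convolutional layer (Eqs. 4 and 5).

    feature_maps: (H, W, K) activations A^k
    gradients:    (H, W, K) gradients of the class score y^c w.r.t. A^k
    Returns an (H, W) map scaled to [0, 1].
    """
    alpha = gradients.mean(axis=(0, 1))                         # Eq. (5): one weight per map
    cam = np.tensordot(feature_maps, alpha, axes=([2], [0]))    # sum_k alpha_k^c * A^k
    cam = np.maximum(cam, 0.0)                                  # Eq. (4): ReLU
    peak = cam.max()
    return cam / peak if peak > 0 else cam                      # normalise for display

def upsample_nearest(cam, factor):
    """Nearest-neighbour upsampling so the map can be overlaid on the input image."""
    return np.kron(cam, np.ones((factor, factor)))

# Toy example: 2 x 2 maps, K = 2 channels whose gradients have opposite signs.
feature_maps = np.zeros((2, 2, 2))
feature_maps[0, 0, 0] = 1.0
feature_maps[1, 1, 1] = 2.0
gradients = np.stack([np.ones((2, 2)), -np.ones((2, 2))], axis=-1)
heat = upsample_nearest(grad_cam(feature_maps, gradients), factor=3)
```

In the toy example only the first channel has a positive weight, so the ReLU keeps just the top-left region; the negatively weighted region of the second channel is suppressed.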

In addition, all images in CPB and CovCT-Findings datasets have been preprocessed. The sample images of the preprocessing step performed in this study and applied to the dataset are shown in Fig. 5 .

Fig. 5.

Outputs of the preprocessing steps performed in the experimental analysis of this study, applied to sample images from the CPB dataset.

Two preprocessing steps were applied to the datasets used in this study. The Fourier Transform technique was used to improve each image in the datasets and reduce the noise in the images. In addition, the input images improved with the Fourier Transform are processed faster in the convolutional layers of the deep learning models, so the models were trained faster. With the Grad-CAM technique, heat maps of each image in the datasets were extracted, which facilitates training and focuses the deep learning models on the regions of interest in the images.

2.4. Local interpretable model-agnostic explanations method

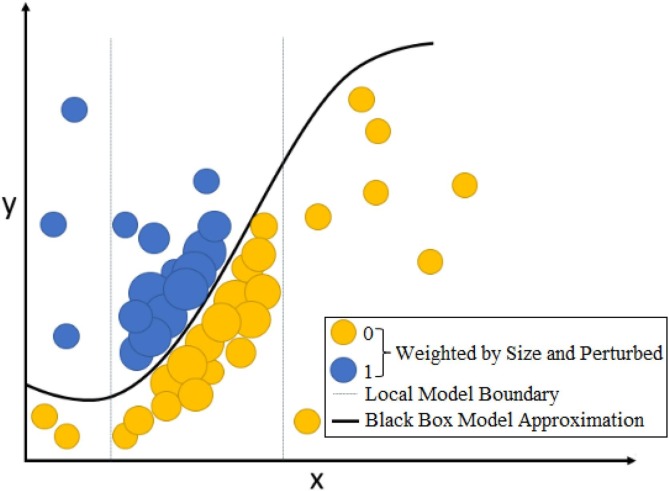

LIME explains the predictions of any classifier by learning an interpretable model locally around each prediction [45]. It is model-agnostic: it can be used with approaches other than CNN models and can be integrated into any image classification system [46]. The method perturbs the input data and observes how the output of the black-box model changes for the perturbed samples. If perturbing a feature at the local level changes the output of the black-box model, LIME considers that feature important. The explanation produced by LIME is computed with Eq. (6), where $G$ is a class of interpretable models such as decision trees or linear models, $g \in G$ is one such model, and $\Omega(g)$ is its complexity; for decision trees, for example, complexity can be measured by the depth of the tree. $f$ is the black-box model, $\pi_x$ defines the locality around the instance $x$, and $\mathcal{L}(f, g, \pi_x)$ measures how unfaithfully $g$ approximates $f$ within that locality. The goal of LIME is to minimize $\mathcal{L}(f, g, \pi_x)$ while keeping $\Omega(g)$ low [47].

$$\xi(x)=\underset{g\in G}{\operatorname{arg\,min}}\;\mathcal{L}(f,g,\pi_x)+\Omega(g) \tag{6}$$

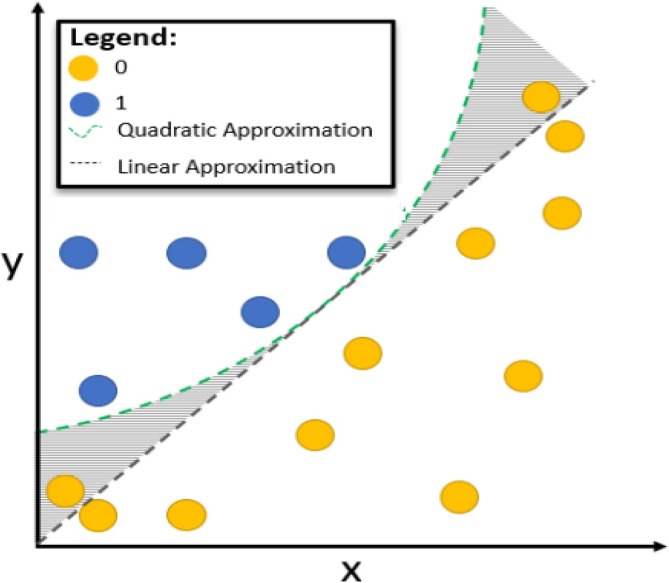

This local approximation gives more successful results when the black-box model behaves approximately linearly around the instance. For nonlinear models, however, LIME has limitations: computing the perturbation values alone may not be sufficient, and special solutions and parameter values must be chosen for the problem at hand. Fig. 6 shows a sample representation of weighted perturbations around a focal point within local boundaries. For nonlinear data, a quadratic transform is applied, as shown in Fig. 7 [47].

Fig. 6.

Representation of perturbed and weighted points [47].

Fig. 7.

Improving nonlinear values using the LIME method [47].
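The core idea of Eq. (6) can be sketched for tabular inputs with NumPy alone: perturb the instance, weight each perturbed sample with an exponential proximity kernel $\pi_x$, and fit a weighted linear surrogate $g$ whose coefficients serve as local feature importances. This is a simplified illustration (Gaussian perturbations, no complexity term $\Omega(g)$, no feature selection), not the Altruist code used in the study; `kernel_width`, `scale`, and `n_samples` are assumed values.

```python
import numpy as np

def lime_linear_explanation(black_box, x, n_samples=500, kernel_width=0.75, scale=0.1, seed=0):
    """LIME-style local surrogate: weighted linear fit around instance x.

    Returns the local intercept and one weight per feature.
    """
    rng = np.random.default_rng(seed)
    z = x + scale * rng.standard_normal((n_samples, x.size))   # perturbed samples around x
    y = np.array([black_box(row) for row in z])                # black-box outputs f(z)
    distances = np.linalg.norm(z - x, axis=1)
    pi = np.exp(-distances ** 2 / kernel_width ** 2)           # proximity kernel pi_x(z)
    design = np.hstack([np.ones((n_samples, 1)), z - x])       # intercept + centred features
    sqrt_w = np.sqrt(pi)[:, None]                              # weighted least squares via row scaling
    coef, *_ = np.linalg.lstsq(design * sqrt_w, y * sqrt_w[:, 0], rcond=None)
    return coef[0], coef[1:]

def black_box(z):
    """Stand-in black-box model: linear, so the surrogate should recover it."""
    return 3.0 * z[0] - 2.0 * z[1] + 1.0

intercept, weights = lime_linear_explanation(black_box, np.array([0.5, -1.0]))
print(intercept, weights)   # local weights close to [3, -2]
```

For a nonlinear black box such as $f(z) = z_0^2$, the same routine returns the local slope (about 2 at $z_0 = 1$), which is exactly the kind of locally valid, globally incomplete explanation discussed above.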

Further mathematical details of the LIME method can be found in references [45], [48], [49], [50], [51]. The LIME method used in the experimental analysis of this study was built on open-source Python code released to the public as the "Altruist" approach in the literature [48], [49].

The LIME method in this study was implemented in Python on top of this open-source code [48], [49] using the scikit-learn library. LIME operates with a classifier selected in the pipeline: a Support Vector Machine (SVM) was used in this study, with GridSearchCV used for the parameter search. The radial basis function (RBF) was selected as the SVM kernel, 10-fold cross-validation was used, and the gamma value of the SVM was set to 0.1.
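The SVM settings above map onto scikit-learn as sketched below. The paper does not list the grid that was searched, so the values of `C` are hypothetical, and synthetic data from `make_classification` (nine features, standing in for three activation values from each of three models) replaces the real activation sets. `probability=True` is set so that the fitted model exposes `predict_proba`, which LIME needs as its prediction function.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.svm import SVC

# Synthetic stand-in for activation features: 3 models x 3 classes = 9 columns.
X, y = make_classification(
    n_samples=300, n_features=9, n_informative=5, n_classes=3, random_state=0
)

pipeline = Pipeline([("svm", SVC(kernel="rbf", gamma=0.1, probability=True, random_state=0))])
param_grid = {"svm__C": [0.1, 1.0, 10.0]}          # hypothetical search grid
search = GridSearchCV(pipeline, param_grid, cv=10)  # 10-fold cross-validation
search.fit(X, y)

print(search.best_params_, round(search.best_score_, 3))
probabilities = search.predict_proba(X[:5])         # class probabilities usable by LIME
```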

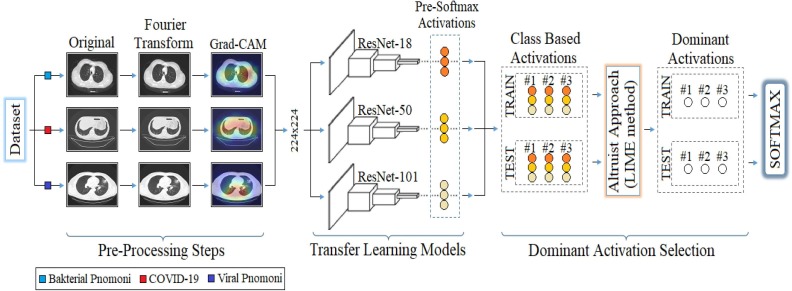

2.5. Proposed approach

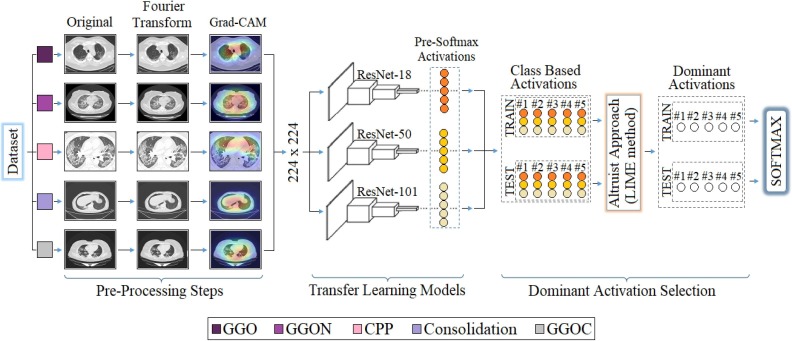

The proposed approach is a hybrid approach that aims to improve COVID-19 diagnosis from chest CT images and to classify COVID-19 findings accurately. Its contribution to the literature is that it extracts the type-based activation sets that the CNN models produce for the classification process (before Softmax) and uses the LIME method to select the dominant activations for both training and test data. By passing the most efficient (dominant) activation sets among the class-based activations to the Softmax activation function, the approach aims to improve classification performance. For example, when a five-class dataset is trained with three CNN models in the proposed approach, there are three activation sets for the first class (from ResNet-18, ResNet-50, and ResNet-101), and the aim is to use the LIME method to choose the best of these for the first class. The model structures designed for COVID-19 diagnosis and for the COVID-19 findings are shown in Figs. 8 and 9, respectively.

Fig. 8.

The general design of the proposed approach used in COVID-19 detection.

Fig. 9.

The general design of the proposed approach used in the detection of COVID-19 findings.

The proposed approach consists of four steps:

- Preprocessing step;
- Transfer learning models;
- Selection of dominant activation classes (by CNN models);
- Classification.

The first step is preprocessing, in which the Fourier Transform and Grad-CAM techniques are applied, in that order, to each image in the dataset. The Fourier Transform is intended to remove noise from each image and improve image quality. The images enhanced with the Fourier Transform are then processed with Grad-CAM, which performs regional focusing on each image so that the CNN models concentrate on the local regions they consider important. By keeping unnecessary image regions out of the training process, this step aims to save time and improve model performance.

The second step covers the training of CNN models built from residual blocks: ResNet-18, ResNet-50, and ResNet-101. The Softmax method, which performs classification in the last layer of the CNN models, is also used in the proposed approach. Before the last layer of the transfer learning-based CNN models (pre-Softmax), one activation set is formed per class. For example, when a five-class dataset is trained with a transfer learning-based CNN model, the fully connected layers produce five activation sets, one for each class, during classification. The aim of this step is to obtain an activation set for each class from each of the CNN models (ResNet-18, ResNet-50, ResNet-101), giving a total of three activation sets per class.

In the third step, the dominant activation set for each class is selected from these three activation sets using the LIME method. This selection is performed for both the training data and the test data. In the last step, the dominant activation sets selected for the training and test data are given as input to the Softmax method and classified.
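The selection and classification steps can be outlined with NumPy as below. The paper does not specify how LIME's output is turned into a choice between models, so this sketch assumes that each model receives one importance score per class (for example, aggregated LIME weights) and that the model with the highest score supplies that class's activation column; the final step simply applies Softmax to the assembled activations and takes the most probable class. All names and values are hypothetical.

```python
import numpy as np

def select_dominant_activations(activation_sets, importance):
    """Build an (n_images, n_classes) matrix from the best model's column for each class.

    activation_sets: model name -> (n_images, n_classes) pre-Softmax activations
    importance:      model name -> (n_classes,) importance scores (assumed, e.g. from LIME)
    """
    models = list(activation_sets)
    n_classes = activation_sets[models[0]].shape[1]
    columns, chosen = [], []
    for k in range(n_classes):
        best = max(models, key=lambda m, k=k: importance[m][k])   # dominant model for class k
        chosen.append(best)
        columns.append(activation_sets[best][:, k])
    return np.stack(columns, axis=1), chosen

def softmax_rows(z):
    """Row-wise Softmax with max-subtraction for numerical stability."""
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

# Toy example: 2 images, 3 classes, 3 models.
activation_sets = {
    "ResNet-18": np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]),
    "ResNet-50": np.array([[2.0, 0.0, 0.0], [0.0, 3.0, 0.0]]),
    "ResNet-101": np.array([[0.0, 0.0, 5.0], [0.0, 0.0, 1.0]]),
}
importance = {
    "ResNet-18": np.array([0.1, 0.2, 0.3]),
    "ResNet-50": np.array([0.9, 0.8, 0.1]),
    "ResNet-101": np.array([0.2, 0.1, 0.7]),
}
selected, chosen = select_dominant_activations(activation_sets, importance)
probabilities = softmax_rows(selected)
predictions = probabilities.argmax(axis=1)
```

The same functions can be applied to the activations extracted for the training data and for the test data.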

ResNet models are preferred in the proposed approach because their residual blocks provide shortcut connections that pass data directly across two or three layers within the model. In this way, the model can bypass layers it deems unnecessary, which is intended to support training and save time.
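The shortcut idea can be illustrated with the standard basic residual block, y = ReLU(F(x) + x). The sketch below is a simplification under stated assumptions: dense matrices `w1` and `w2` stand in for convolution layers, batch normalization is omitted, and ResNet-50/101 actually use three-layer bottleneck blocks rather than this two-layer form.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, w1, w2):
    """Basic two-layer residual block: y = relu(F(x) + x), F(x) = w2 @ relu(w1 @ x).

    Dense weights stand in for convolutions; batch normalisation is omitted.
    """
    residual = w2 @ relu(w1 @ x)
    # Identity shortcut: x skips both layers and is added back before the final ReLU.
    return relu(residual + x)
```

If the block's layers learn weights close to zero (`w2 = 0`), the block passes a non-negative input through unchanged, which is what allows a deep ResNet to effectively bypass layers it does not need.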

3. Experimental analysis and results

The CNN models used in the experimental analysis were implemented in MATLAB (R2020a). The preprocessing steps and the LIME method were coded in Python 3.6 and run in Jupyter Notebook. The analyses were performed on a computer running 64-bit Windows 10 with an 8 GB graphics card, 16 GB of memory, and an Intel® Core i5 3.2 GHz processor. Confusion matrices and the metrics derived from them were used to compare the analyses. The formulas used to calculate the metrics are given in Eqs. (7) to (11), with the following abbreviations: sensitivity (Se), specificity (Sp), precision (Pre), F-score (F-Scr), accuracy (Acc), true positive (TP), false positive (FP), true negative (TN), and false negative (FN) [52], [53], [54], [55], [56].

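$$Se = \frac{TP}{TP + FN} \tag{7}$$

$$Sp = \frac{TN}{TN + FP} \tag{8}$$

$$Pre = \frac{TP}{TP + FP} \tag{9}$$

$$F\text{-}Scr = \frac{2 \times Pre \times Se}{Pre + Se} \tag{10}$$

$$Acc = \frac{TP + TN}{TP + TN + FP + FN} \tag{11}$$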
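As a sketch of how Eqs. (7) to (11) apply to a multi-class confusion matrix, the NumPy function below treats each class one-vs-rest. The one-vs-rest treatment is an assumption on our part, although it is consistent with the per-class values reported in the tables; the toy matrix in the usage check is illustrative and not taken from this study.

```python
import numpy as np


def class_metrics(cm):
    """Per-class one-vs-rest metrics (Eqs. 7-11) from a confusion matrix.

    cm[i, j] counts samples whose true class is i and predicted class is j.
    Returns per-class arrays plus the overall accuracy.
    """
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp   # true class c, predicted as another class
    fp = cm.sum(axis=0) - tp   # predicted as c, true class is another class
    tn = total - tp - fn - fp
    se = tp / (tp + fn)                   # Eq. (7)
    sp = tn / (tn + fp)                   # Eq. (8)
    pre = tp / (tp + fp)                  # Eq. (9)
    f_scr = 2 * pre * se / (pre + se)     # Eq. (10)
    acc = (tp + tn) / total               # Eq. (11)
    return {"Se": se, "Sp": sp, "Pre": pre, "F-Scr": f_scr, "Acc": acc,
            "Overall Acc": tp.sum() / total}
```

For the toy matrix `[[50, 0, 0], [0, 45, 5], [0, 3, 47]]`, every error involves classes 1 and 2, so their one-vs-rest accuracy equals the overall accuracy; the same pattern appears in the CPB tables, where bacterial pneumonia is classified perfectly.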

When training the CNN models on the two datasets used in this study, 70% of each dataset was used as training data and 30% as test data. The experimental analyses for each dataset consist of three steps.
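A 70/30 split of this kind can be sketched as below. The text states only the ratio, so splitting each class separately (stratification) and the fixed random `seed` are assumptions made for illustration.

```python
import numpy as np


def split_70_30(labels, train_fraction=0.7, seed=0):
    """Return (train_idx, test_idx), splitting each class 70/30.

    Per-class (stratified) splitting and the fixed seed are assumptions;
    the text only states the 70/30 ratio.
    """
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    train_idx, test_idx = [], []
    for cls in np.unique(labels):
        # Shuffle the indices of this class, then cut at the 70% mark.
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_train = int(round(train_fraction * len(idx)))
        train_idx.extend(idx[:n_train])
        test_idx.extend(idx[n_train:])
    return np.sort(np.array(train_idx, dtype=int)), np.sort(np.array(test_idx, dtype=int))
```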

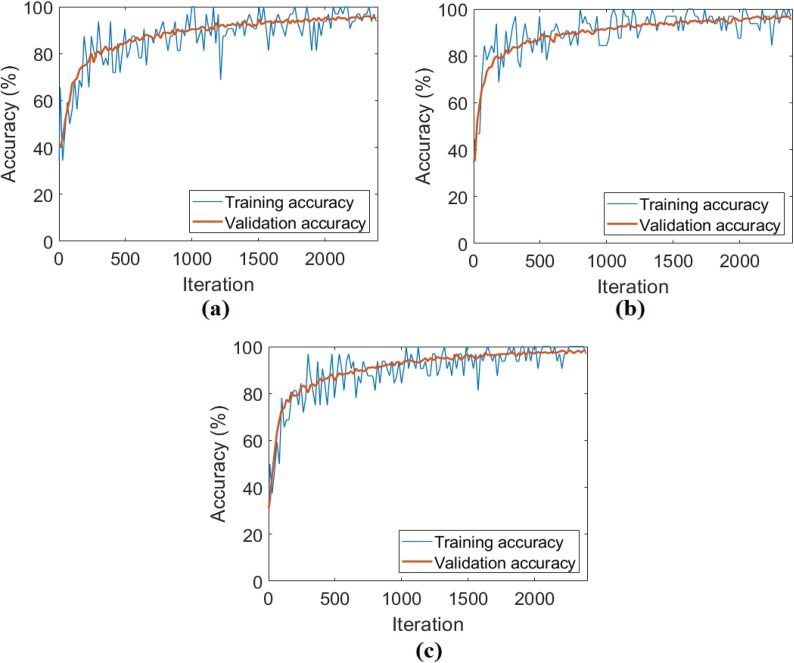

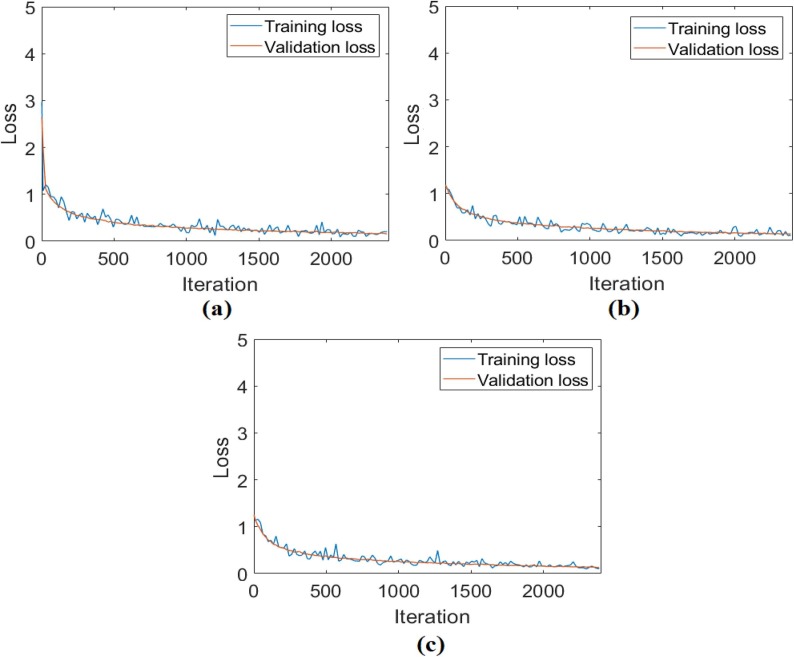

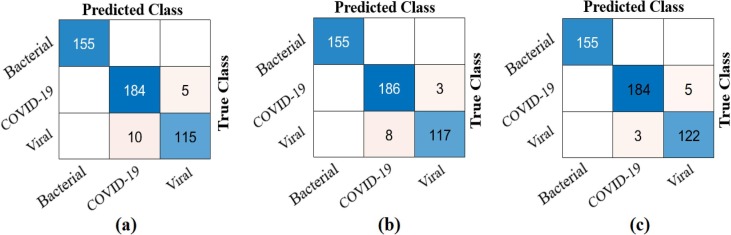

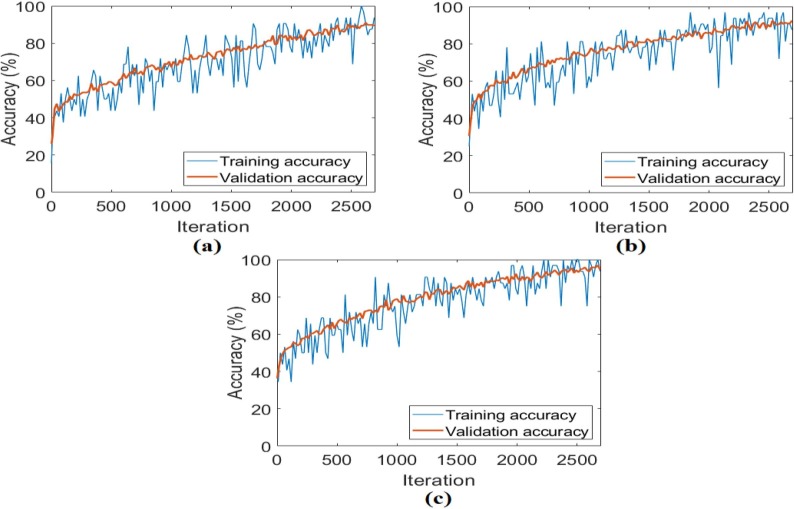

In the first analysis of the CPB dataset, the original images, without preprocessing, were trained with each ResNet model. The training and test overall accuracy graphs are shown in Fig. 10, the training and test loss graphs in Fig. 11, and the resulting confusion matrices in Fig. 12. The metrics obtained from the confusion matrices are given in Table 3. In this first step, the ResNet-18 model achieved an overall accuracy of 96.80%, the ResNet-50 model 97.65%, and the ResNet-101 model 98.29%. Among the ResNet models, the deepest, ResNet-101, gave the best result. The class-based accuracy rates of the ResNet-101 model were 100% for bacterial pneumonia, 98.29% for COVID-19, and 98.29% for viral pneumonia.

Fig. 10.

The training-test overall accuracy graphs obtained by ResNet models of the original CPB dataset; a) ResNet-18, b) ResNet-50, c) ResNet-101.

Fig. 11.

The training-test loss graphs obtained by ResNet models of the original CPB dataset; a) ResNet-18, b) ResNet-50, c) ResNet-101.

Fig. 12.

Confusion matrices of the original CPB dataset obtained by ResNet models; a) ResNet-18, b) ResNet-50, c) ResNet-101.

Table 3.

Analysis results of the original CPB dataset performed by ResNet models (%).

| Model | Class | Se | Sp | Pre | F-Scr | Acc | Overall Acc |
|---|---|---|---|---|---|---|---|
| ResNet-18 | Bacterial pneumonia | 100 | 100 | 100 | 100 | 100 | 96.80 |
| | COVID-19 | 97.35 | 96.42 | 94.84 | 96.08 | 96.80 | |
| | Viral pneumonia | 92 | 98.54 | 95.83 | 93.87 | 96.80 | |
| ResNet-50 | Bacterial pneumonia | 100 | 100 | 100 | 100 | 100 | 97.65 |
| | COVID-19 | 98.41 | 97.14 | 95.87 | 97.12 | 97.65 | |
| | Viral pneumonia | 93.60 | 99.12 | 97.50 | 95.51 | 97.65 | |
| ResNet-101 | Bacterial pneumonia | 100 | 100 | 100 | 100 | 100 | 98.29 |
| | COVID-19 | 97.35 | 98.92 | 98.39 | 97.87 | 98.29 | |
| | Viral pneumonia | 97.60 | 98.54 | 96.06 | 96.82 | 98.29 | |

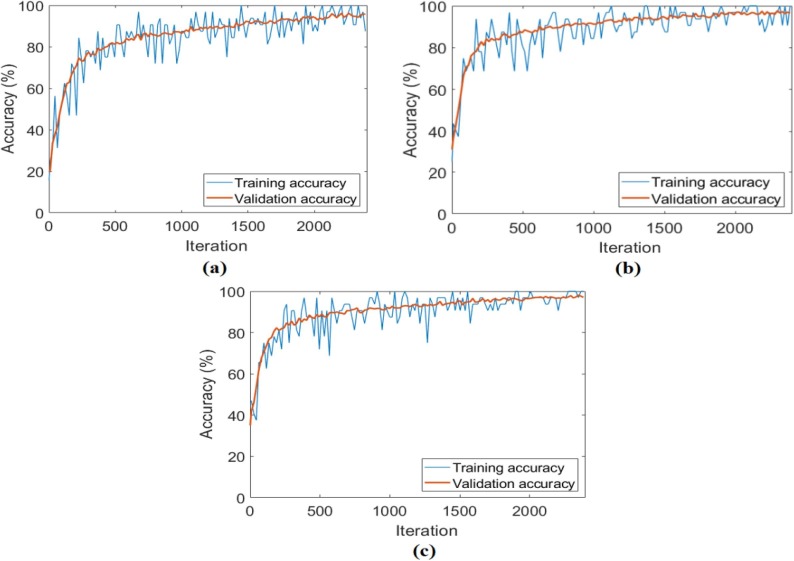

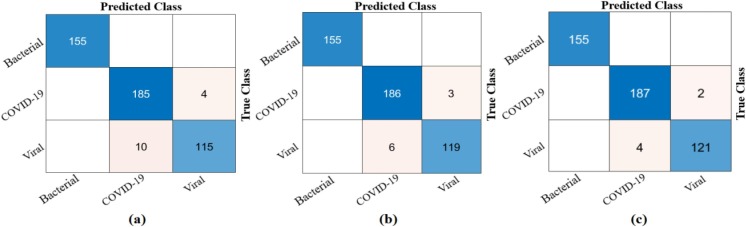

In the second analysis of the CPB dataset, the images of the three class types (bacterial pneumonia, COVID-19, viral pneumonia) were first preprocessed and then trained with each ResNet model. The training and test overall accuracy graphs are shown in Fig. 13, the training and test loss graphs in Fig. 14, and the resulting confusion matrices in Fig. 15. The metrics obtained from the confusion matrices are given in Table 4. In this second step, the ResNet-18 model achieved an overall accuracy of 97.01%, the ResNet-50 model 98.08%, and the ResNet-101 model 98.72%. Among the ResNet models, the deepest, ResNet-101, again gave the best result. The class-based accuracy rates of the ResNet-101 model were 100% for bacterial pneumonia, 98.72% for COVID-19, and 98.72% for viral pneumonia.

Fig. 13.

The training-test overall accuracy graphs obtained by ResNet models of the CPB dataset created by preprocessing; a) ResNet-18, b) ResNet-50, c) ResNet-101.

Fig. 14.

The training-test loss graphs obtained by ResNet models of the CPB dataset created by preprocessing; a) ResNet-18, b) ResNet-50, c) ResNet-101.

Fig. 15.

Confusion matrices obtained by ResNet models of the CPB dataset created by preprocessing; a) ResNet-18, b) ResNet-50, c) ResNet-101.

Table 4.

Analysis results obtained by ResNet models of the CPB dataset created by preprocessing (%).

| Model | Class | Se | Sp | Pre | F-Scr | Acc | Overall Acc |
|---|---|---|---|---|---|---|---|
| ResNet-18 | Bacterial pneumonia | 100 | 100 | 100 | 100 | 100 | 97.01 |
| | COVID-19 | 97.88 | 96.42 | 94.87 | 96.35 | 97.01 | |
| | Viral pneumonia | 92.0 | 98.83 | 96.63 | 94.26 | 97.01 | |
| ResNet-50 | Bacterial pneumonia | 100 | 100 | 100 | 100 | 100 | 98.08 |
| | COVID-19 | 98.41 | 97.85 | 96.87 | 97.63 | 98.08 | |
| | Viral pneumonia | 95.20 | 99.12 | 97.54 | 96.35 | 98.08 | |
| ResNet-101 | Bacterial pneumonia | 100 | 100 | 100 | 100 | 100 | 98.72 |
| | COVID-19 | 98.94 | 98.57 | 97.90 | 98.42 | 98.72 | |
| | Viral pneumonia | 96.80 | 99.41 | 98.37 | 97.58 | 98.72 | |

Comparing the first two steps shows that the preprocessing applied to the original dataset improved the training and test performance of the ResNet models.

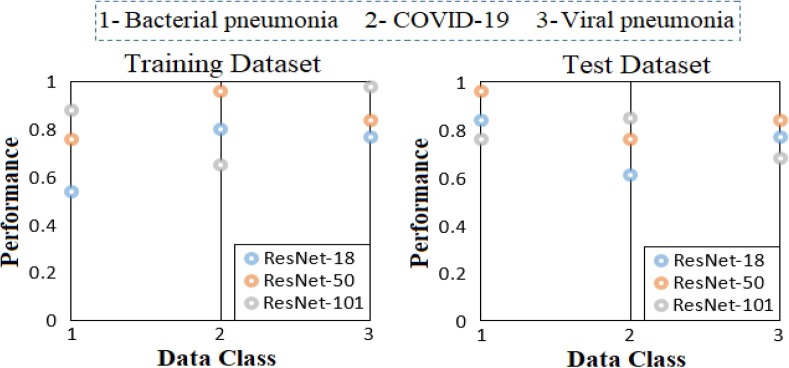

In the third step of the analysis of the CPB dataset, each CNN model (ResNet-18, ResNet-50, ResNet-101) produces, in the layer preceding its final Softmax layer (New FC), as many activations as there are classes. Since the CPB dataset has three classes, activations for 3 class types are passed to the Softmax layer of the ResNet-18 model (input: 3, output: 3 types). Counting the other two CNN models (ResNet-50 and ResNet-101) as well, each class of the CPB dataset has 3 activation sets, for a total of 9. For example, Class #1 (bacterial pneumonia) has 3 activation sets: one each from ResNet-18, ResNet-50, and ResNet-101. The aim is to use the LIME method to select which CNN model provides the better (dominant) activation set for each class. This selection was carried out with the LIME method for both the training data and the test data, and the dominant CNN models selected for each class are shown in Fig. 16. In the training data, ResNet-101 was chosen as the best activation set for Class #1 (bacterial pneumonia), ResNet-50 for Class #2 (COVID-19), and ResNet-101 for Class #3 (viral pneumonia). In the test data, the LIME method selected ResNet-50 for Class #1 (bacterial pneumonia), ResNet-101 for Class #2 (COVID-19), and ResNet-50 for Class #3 (viral pneumonia).
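The bookkeeping behind this step (3 models × 3 classes = 9 candidate activation sets, one winner per class) can be sketched as below. This is not the authors' implementation: LIME itself is not reproduced here, and the hypothetical `separation_score` (mean activation on samples of the class minus mean activation on all other samples) is a simple stand-in for the LIME-based importance that decides which model's activation set is dominant. The activations are assumed to be stored as an array of shape (n_models, n_samples, n_classes).

```python
import numpy as np

MODELS = ("ResNet-18", "ResNet-50", "ResNet-101")


def separation_score(column, is_class):
    """Hypothetical stand-in for LIME importance of one activation column:
    mean activation on samples of the class minus mean on all other samples."""
    return column[is_class].mean() - column[~is_class].mean()


def select_dominant(activations, labels):
    """activations: (n_models, n_samples, n_classes) pre-Softmax outputs.

    For every class c there are n_models candidate columns activations[m, :, c].
    Returns the chosen model index per class and the (n_samples, n_classes)
    matrix built from the chosen columns.
    """
    n_models, _, n_classes = activations.shape
    labels = np.asarray(labels)
    chosen = np.empty(n_classes, dtype=int)
    for c in range(n_classes):
        scores = [separation_score(activations[m, :, c], labels == c)
                  for m in range(n_models)]
        chosen[c] = int(np.argmax(scores))
    # Column c of the result comes from the model chosen for class c.
    selected = np.stack([activations[chosen[c], :, c] for c in range(n_classes)], axis=1)
    return chosen, selected
```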

Fig. 16.

Sorting the selected type-based activations among CNN models by applying the LIME method to the CPB data.

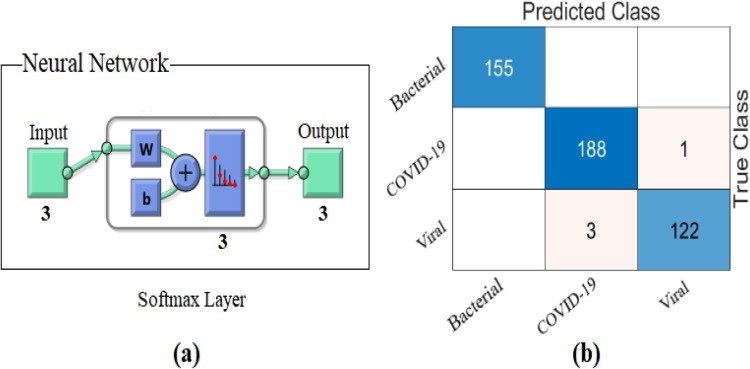

Finally, the dominant activations selected with the LIME method were extracted from the training and test data and reclassified with Softmax. The resulting confusion matrix is shown in Fig. 17, and Table 5 gives detailed results. The overall accuracy of this classification was 99.15%. The type-based accuracy rates were 100% for bacterial pneumonia, 99.15% for COVID-19, and 99.15% for viral pneumonia. Compared with the individual ResNet models, the proposed approach improved both type-based and overall accuracy.
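The text does not detail the Softmax stage, so the following is a sketch under the assumption that it is a multinomial logistic (softmax) regression trained on the selected training activations and then applied to the selected test activations. The learning rate `lr` and the number of `epochs` are hypothetical choices.

```python
import numpy as np


def softmax(z):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def train_softmax(x, y, n_classes, lr=0.5, epochs=500):
    """Multinomial logistic (softmax) regression fitted by gradient descent.

    x: (n_samples, n_features) selected activations; y: integer labels.
    """
    n, d = x.shape
    w = np.zeros((d, n_classes))
    b = np.zeros(n_classes)
    onehot = np.eye(n_classes)[y]
    for _ in range(epochs):
        p = softmax(x @ w + b)
        grad = (p - onehot) / n   # gradient of mean cross-entropy w.r.t. the logits
        w -= lr * x.T @ grad
        b -= lr * grad.sum(axis=0)
    return w, b


def predict(x, w, b):
    return np.argmax(softmax(x @ w + b), axis=1)
```

Under this assumption, the matrix of dominant training activations would be passed to `train_softmax`, and the matrix of dominant test activations to `predict`.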

Fig. 17.

Confusion matrix obtained by classifying the type-based dominant activations selected with the LIME method using the Softmax method; a) Softmax input–output process, b) confusion matrix.

Table 5.

Analysis results from the application of the proposed approach to the CPB data (%).

| Model | Class | Se | Sp | Pre | F-Scr | Acc | Overall Acc |
|---|---|---|---|---|---|---|---|
| Proposed approach | Bacterial pneumonia | 100 | 100 | 100 | 100 | 100 | 99.15 |
| | COVID-19 | 99.47 | 98.93 | 98.43 | 98.95 | 99.15 | |
| | Viral pneumonia | 97.60 | 99.71 | 99.19 | 98.39 | 99.15 | |

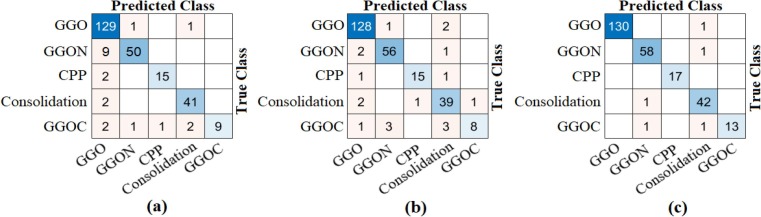

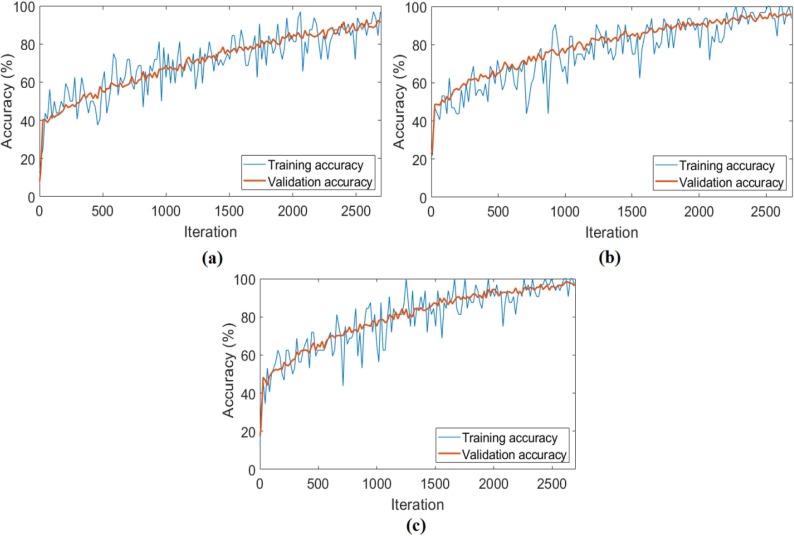

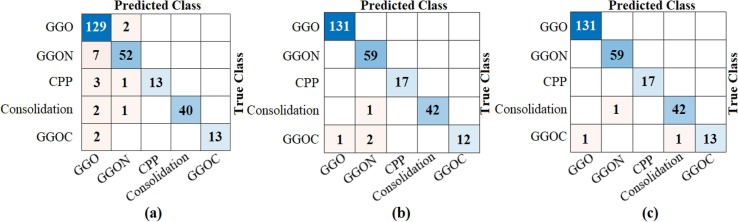

The same analyses performed on the CPB dataset were applied to the second dataset (CovCT-Findings), which contains images of COVID-19 findings. In the first step, the original dataset, without preprocessing, was trained with each ResNet model on five class types (GGO, Consolidation, GGON, GGOC, CPP). The accuracy graphs of the ResNet models' training processes are shown in Fig. 18, and the resulting confusion matrices in Fig. 19. The metrics calculated from the confusion matrices are given in Table 6. In classifying COVID-19 findings, the overall accuracy was 92.08% for ResNet-18, 92.83% for ResNet-50, and 98.11% for ResNet-101. Among the CNN models, the deepest, ResNet-101, achieved the highest accuracy.

Fig. 18.

The training-test overall accuracy graphs obtained by ResNet models of the original CovCT-Findings dataset; a) ResNet-18, b) ResNet-50, c) ResNet-101.

Fig. 19.

Confusion matrices of the original CovCT-Findings dataset obtained by ResNet models; a) ResNet-18, b) ResNet-50, c) ResNet-101.

Table 6.

Analysis results of the original CovCT-Findings dataset performed by ResNet models (%).

| Model | Class | Se | Sp | Pre | F-Scr | Acc | Overall Acc |
|---|---|---|---|---|---|---|---|
| ResNet-18 | GGO | 98.47 | 88.46 | 89.58 | 93.81 | 93.48 | 92.08 |
| | GGON | 84.74 | 98.97 | 96.15 | 90.09 | 95.68 | |
| | CPP | 88.23 | 99.56 | 93.75 | 90.90 | 98.78 | |
| | Consolidation | 95.34 | 98.54 | 93.18 | 94.25 | 97.99 | |
| | GGOC | 60 | 100 | 100 | 75 | 97.60 | |
| ResNet-50 | GGO | 97.70 | 95.16 | 95.52 | 96.60 | 96.47 | 92.83 |
| | GGON | 94.91 | 97.93 | 93.33 | 94.11 | 97.23 | |
| | CPP | 88.23 | 99.56 | 93.75 | 90.90 | 98.79 | |
| | Consolidation | 90.69 | 96.72 | 84.78 | 87.64 | 95.71 | |
| | GGOC | 53.33 | 99.58 | 88.88 | 66.66 | 96.85 | |
| ResNet-101 | GGO | 99.23 | 100 | 100 | 99.61 | 99.61 | 98.11 |
| | GGON | 98.30 | 99.01 | 96.66 | 97.47 | 98.85 | |
| | CPP | 100 | 100 | 100 | 100 | 100 | |
| | Consolidation | 97.67 | 98.64 | 93.33 | 95.45 | 98.48 | |
| | GGOC | 86.66 | 100 | 100 | 92.85 | 99.23 | |

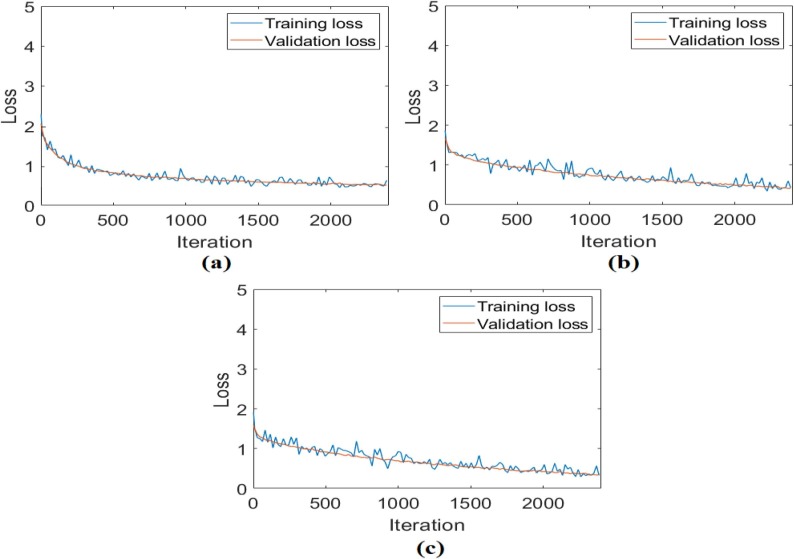

In the second step, the CovCT-Findings dataset was preprocessed, and the ResNet models were trained on the resulting dataset for the five class types (GGO, Consolidation, GGON, GGOC, CPP). The accuracy graphs of the training processes are shown in Fig. 20. In Fig. 20, the training and test curves of all ResNet models rise together without abnormal fluctuations, which suggests that the models neither overfit nor underfit. The overall accuracy curves remain stable toward the last iteration, and the curve of the ResNet-101 model is more favorable than those of ResNet-18 and ResNet-50. The confusion matrices are shown in Fig. 21, and the metrics calculated from them are given in Table 7. In classifying COVID-19 findings, the overall accuracy was 93.21% for ResNet-18, 98.49% for ResNet-50, and 98.87% for ResNet-101. Among the CNN models, the deepest, ResNet-101, achieved the highest accuracy. The class-based accuracies of ResNet-101 were 99.61% for GGO and GGON, 100% for CPP, and 99.24% for Consolidation and GGOC.

Fig. 20.

The training-test overall accuracy graphs obtained by ResNet models of the CovCT-Findings dataset created by preprocessing; a) ResNet-18, b) ResNet-50, c) ResNet-101.

Fig. 21.

The confusion matrices obtained by ResNet models of the CovCT-Findings dataset created by preprocessing; a) ResNet-18, b) ResNet-50, c) ResNet-101.

Table 7.

Analysis results obtained by ResNet models of the CovCT-Findings dataset created by preprocessing (%).

| Model | Class | Se | Sp | Pre | F-Scr | Acc | Overall Acc |
|---|---|---|---|---|---|---|---|
| ResNet-18 | GGO | 98.47 | 89.39 | 90.20 | 94.16 | 93.91 | 93.21 |
| | GGON | 88.13 | 97.98 | 92.85 | 90.43 | 95.73 | |
| | CPP | 76.47 | 100 | 100 | 86.66 | 98.40 | |
| | Consolidation | 93.02 | 100 | 100 | 96.38 | 98.80 | |
| | GGOC | 86.66 | 100 | 100 | 92.85 | 99.19 | |
| ResNet-50 | GGO | 100 | 99.23 | 99.24 | 99.61 | 99.61 | 98.49 |
| | GGON | 100 | 98.53 | 95.16 | 97.52 | 98.86 | |
| | CPP | 100 | 100 | 100 | 100 | 100 | |
| | Consolidation | 97.67 | 100 | 100 | 98.82 | 99.61 | |
| | GGOC | 80.0 | 100 | 100 | 88.88 | 98.86 | |
| ResNet-101 | GGO | 100 | 99.24 | 99.24 | 99.61 | 99.61 | 98.87 |
| | GGON | 100 | 99.50 | 98.33 | 99.15 | 99.61 | |
| | CPP | 100 | 100 | 100 | 100 | 100 | |
| | Consolidation | 97.67 | 99.54 | 97.67 | 97.67 | 99.24 | |
| | GGOC | 86.66 | 100 | 100 | 92.85 | 99.24 | |

The experimental analyses in the first two steps on the CovCT-Findings dataset showed that preprocessing improved the training of the ResNet models. The preprocessing methods therefore played an active role for both the first and the second dataset.

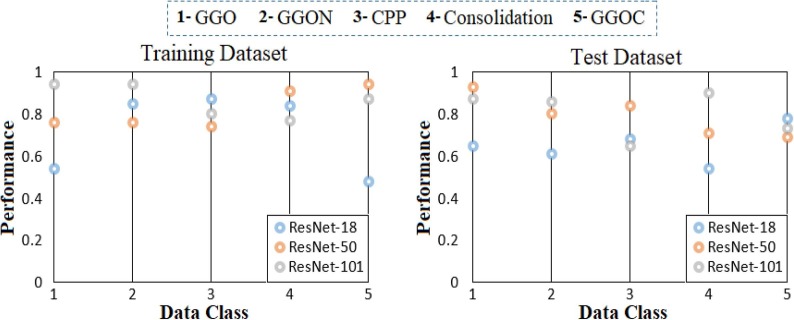

In the third step, the proposed approach was applied to the preprocessed CovCT-Findings dataset. As with the CPB dataset, each CNN model (ResNet-18, ResNet-50, ResNet-101) produces, in the layer preceding its final Softmax layer (New FC), as many activations as there are classes. Since the CovCT-Findings dataset has five classes, activations for 5 class types are passed to the Softmax layer of the ResNet-18 model (input: 5, output: 5 types). Counting the other two CNN models (ResNet-50 and ResNet-101) as well, each class of the CovCT-Findings dataset has 3 activation sets, for a total of 15. For example, Class #1 (GGO) has 3 activation sets: one each from ResNet-18, ResNet-50, and ResNet-101. The LIME method was used to select the dominant activation set for each class, for both the training data and the test data, and the dominant CNN models selected for each class are shown in Fig. 22. In the training data, ResNet-101 was selected for Class #1 (GGO) and Class #2 (GGON), ResNet-18 for Class #3 (CPP), and ResNet-50 for Class #4 (Consolidation) and Class #5 (GGOC).

In the test data, the LIME method selected ResNet-50 for Class #1 (GGO), ResNet-101 for Class #2 (GGON), ResNet-50 for Class #3 (CPP), ResNet-101 for Class #4 (Consolidation), and ResNet-18 for Class #5 (GGOC).
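Using the selections reported for Fig. 22, the input matrices for the final Softmax stage can be assembled as in the sketch below. It assumes (hypothetically) that each model's pre-Softmax activations are stored as an (n_samples, 5) array keyed by model name, with columns ordered as in `CLASSES`; the five classes and three models give the 15 candidate activation sets described in the text.

```python
import numpy as np

MODELS = ("ResNet-18", "ResNet-50", "ResNet-101")
CLASSES = ("GGO", "GGON", "CPP", "Consolidation", "GGOC")

# Dominant model per class as reported for the CovCT-Findings data (Fig. 22).
TRAIN_CHOICE = {"GGO": "ResNet-101", "GGON": "ResNet-101", "CPP": "ResNet-18",
                "Consolidation": "ResNet-50", "GGOC": "ResNet-50"}
TEST_CHOICE = {"GGO": "ResNet-50", "GGON": "ResNet-101", "CPP": "ResNet-50",
               "Consolidation": "ResNet-101", "GGOC": "ResNet-18"}


def assemble(activations, choice):
    """activations: dict model name -> (n_samples, 5) pre-Softmax outputs.

    For each class column, takes the column of the model named in `choice`,
    giving one (n_samples, 5) input matrix for the Softmax classifier.
    """
    return np.stack([activations[choice[c]][:, i] for i, c in enumerate(CLASSES)],
                    axis=1)
```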

Fig. 22.

Sorting the selected type-based activations among CNN models by applying the LIME method to the CovCT-Findings data.

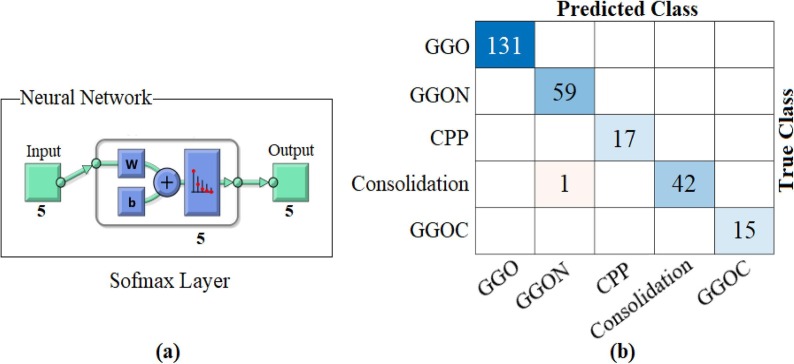

Finally, the dominant activations selected with the LIME method were extracted from the training and test data and reclassified with Softmax. The resulting confusion matrix is shown in Fig. 23, and detailed results are given in Table 8. The overall accuracy of this classification was 99.62%. The type-based accuracy rates were 100% for GGO, CPP, and GGOC, and 99.62% for GGON and Consolidation. Compared with the individual ResNet models, the proposed approach improved both type-based and overall accuracy.

Fig. 23.

Confusion matrix obtained by classifying the type-based dominant activations selected with the LIME method using the Softmax method; a) Softmax input–output process, b) confusion matrix.

Table 8.

Analysis results from the application of the proposed approach to the CovCT-Findings data (%).

| Model | Class | Se | Sp | Pre | F-Scr | Acc | Overall Acc |
|---|---|---|---|---|---|---|---|
| Proposed approach | GGO | 100 | 100 | 100 | 100 | 100 | 99.62 |
| | GGON | 100 | 99.51 | 98.33 | 99.15 | 99.62 | |
| | CPP | 100 | 100 | 100 | 100 | 100 | |
| | Consolidation | 97.67 | 100 | 100 | 98.82 | 99.62 | |
| | GGOC | 100 | 100 | 100 | 100 | 100 | |

4. Discussion

In this study, COVID-19 was detected from CT images, and the types of COVID-19 findings were classified with the proposed approach. The datasets, created after obtaining the necessary ethical permissions, were labeled by two expert radiologists. Since the lungs are the organ most affected by COVID-19, we jointly decided to build the CPB dataset so that COVID-19 images could be compared with images of bacterial and viral pneumonia, which also occur in the lungs. We created the CovCT-Findings dataset to test whether the proposed approach can separate five COVID-19 finding types that radiologists are able to distinguish. Differentiating these finding types is important but time-consuming for radiologists, so diagnosing them correctly in less time with an artificial intelligence-based approach is valuable. One objective of this study was therefore to distinguish the GGO, GGON, CPP, Consolidation, and GGOC classes from each other; the proposed approach achieved an overall accuracy of 99.62% on this task. On the CPB dataset, the proposed approach achieved an overall accuracy of 99.15%, and its accuracy on the COVID-19 class was also 99.15%. We also observed that the preprocessing steps improved the training of the ResNet models: in the first two analyses of both datasets, the preprocessed datasets gave better results than the original ones. In the last step (the proposed approach), performance improved further on both datasets.

The proposed approach consists of three steps. In the first step, preprocessing with the Fourier transform aims to remove noise from the images, and Grad-CAM aims to provide regional focusing through heat maps so that the CNN models can be trained more easily on CT images. In the second step, three CNN models with residual blocks are used. Residual blocks were chosen because their shortcut connections let the model skip convolutional layers it deems unnecessary, with the aim of faster and more accurate analysis. The main contribution of the proposed approach to the literature is that it obtains type-based activation sets from CNN models (as many output activation sets as there are input types) and uses the LIME method to determine which CNN model provides the best activation set for each type. In the third step, the dominant type-based activations are reclassified with Softmax, which allowed us to assess whether the approach achieved its goals. The main drawbacks of the proposed approach are that it is not an end-to-end model and that using several CNN models in its structure increases processing time. However, we consider this cost acceptable given the results obtained.

In the experimental analyses of both datasets, ResNet-101 performed best among the ResNet models. The analyses indicate that, among the ResNet models, performance increases with network depth. However, greater depth also lengthens training time, which reduced the speed of the proposed approach. On the other hand, all ResNet models take part in the selection of the dominant sets among the type-based activation sets. Therefore, although ResNet-101 outperformed the other ResNet models overall, the other models could still be selected by the LIME method for particular types, as the selections in Figs. 16 and 22 show. These results indicate that the LIME method makes effective selections. A comparison of the proposed approach with state-of-the-art models is given in Table 9. However, the studies in Table 9 cannot be compared directly with the proposed approach, because they differ in dataset size, training-test split ratios, software, hardware, and other factors.

Table 9.

Comparison of studies on COVID-19 with this study.

| Models/Methods | Year | Dataset | # of classes | Overall Acc (%) |
|---|---|---|---|---|
| Transfer learning, Social Mimic optimization [57] | 2020 | Public | 3 | 99.27 |
| DarkCovidNet [58] | 2020 | Public | 3 | 87.02 |
| DRE-Net [59] | 2020 | Private | 2 | 86 |
| CNNs, Transfer learning [60] | 2020 | Private | 2 | 89.5 |
| COVIDetectioNet [23] | 2021 | Public | 3 | 99.18 |
| CNN, Bayesian optimization [61] | 2020 | Public | 3 | 98.97 |
| Data augmentation, Segmentation, CNN [62] | 2021 | Private | 2 | 90.3 |
| Proposed approach | 2021 | Private | 3 | 99.15 |

Researchers have focused on chest images for the clinical assessment of COVID-19 cases. Many COVID-19 datasets have been made available, and their number is increasing day by day. However, these datasets are either small or formed by combining several open-access datasets, and most of the studies in Table 9 rely on such datasets.

Toğaçar et al. [57] reconstructed chest X-ray images by processing them with a fuzzy color approach. Using a stacking technique, they combined the original dataset with the dataset reconstructed by the fuzzy color technique, thereby improving the quality of each image. They used 295 COVID-19 chest images, 65 normal chest images, and 98 pneumonia images. They trained the dataset with CNN models, optimized the features obtained from the last layers of the models with the social mimic optimization method, and achieved an overall accuracy of 99.27% by classifying with the SVM method. Ozturk et al. [58] used X-ray images to detect COVID-19, with both a two-class and a multi-class dataset. Of the positive cases in their dataset, 43 were female and 82 were male, and the total number of X-ray images from COVID-19 cases was 127. They also added normal and pneumonia X-ray images from the publicly available ChestX-ray8 dataset, using 500 no-findings images and 500 pneumonia images in the experimental analysis. Their deep learning model was built from convolutional layers based on a real-time object detection model. They achieved an overall accuracy of 98.08% in binary classification and 87.02% in multi-class classification. Ying et al. [59] used CT images collected from hospitals in two provinces of China. Their dataset consisted of COVID-19 images from 88 patients and chest images from 86 healthy people, giving 777 image slices from COVID-19 patients and 708 from healthy people. Their approach, which combines the ResNet-50 model with a feature pyramid network (FPN), achieved an overall accuracy of 86%. Wang et al. [60] used a dataset of 1065 CT images, consisting of 325 COVID-19 images and 740 images of typical viral pneumonia. They used a modified Inception transfer-learning model and achieved an overall accuracy of 89.5%. Turkoglu [23] designed a hybrid CNN model for the diagnosis of COVID-19 that uses the AlexNet model together with a feature selection method. His dataset consisted of chest X-ray images: 219 COVID-19, 1583 normal (healthy), and 4290 pneumonia images. He achieved an overall accuracy of 99.18% in the classification performed with the SVM method. Nour et al. [61] used Bayesian optimization with a pre-trained deep learning model to detect COVID-19. Their dataset consisted of 2905 chest X-ray images: 219 COVID-19, 1341 normal (healthy), and 1345 viral pneumonia images. They achieved an overall accuracy of 98.97%. Keidar et al. [62] used chest X-ray images to diagnose COVID-19. Their dataset included 1384 COVID-19 images and 1024 images of other diseases, and their approach combined deep CNN models with data augmentation and segmentation techniques. They achieved an overall accuracy of 90.3%.

Some studies improved time-speed performance by training end-to-end [58], [60], [61], while others did not use end-to-end training [57], [62]. The proposed approach is not an end-to-end model, so it may take longer to train than end-to-end models. Approaches trained from scratch may also require long training periods [61], whereas approaches that use transfer learning and pre-trained CNN models can obtain results sooner [23], [57], [58], [59], [60], [62]. Because the proposed approach uses pre-trained CNN models, it produces results faster, which benefits its performance. Hybrid approaches use optimization methods to select efficient features among those extracted by the models [23], [57], [61], which can yield more efficient performance in a shorter time. In the proposed approach, feature selection was performed with the LIME method, which contributed to classification performance. Some studies improved the performance of their models with segmentation and ROI techniques [59], [60], [62]. Running several CNN models may make the proposed approach slower than other studies, but this variety was chosen to enable the selection of efficient features. Moreover, the type-based selection of dominant activation sets is the novel aspect of the proposed approach.

5. Conclusion

COVID-19 has imposed new norms on the global economy, public health, and people's living standards. The number of people affected by this infectious disease is increasing day by day. Since COVID-19 directly affects the lungs, studies have focused on developing computer-aided diagnosis systems that can distinguish it from other lung diseases such as pneumonia. In this paper, an artificial intelligence-based approach is proposed for the detection of COVID-19 and its findings. The proposed approach successfully distinguished COVID-19 from bacterial and viral pneumonia images. It is based on pre-trained CNN models whose last layers include fully connected layers that produce as many activation sets as there are classes. The LIME method is then used to select the best activation set for each type, and the selected sets are classified. An overall accuracy of 99.15% was achieved on the CPB dataset. On the CovCT-Findings dataset, the proposed approach successfully detected the COVID-19 finding types with an overall accuracy of 99.62%. Analyzing the finding types of COVID-19 gives the study an additional dimension. Extracting as many output activation sets as there are input types from CNN models and selecting the best CNN activation sets with the LIME method is the novel contribution of the proposed approach to the literature. In addition, the proposed approach provides a reliable diagnostic tool that can support the decision-making of clinical experts and radiologists.

Radiologists have been reported to discriminate COVID-19 from other types of viral pneumonia with 67% to 97% sensitivity [21]. Because the chest CT findings of COVID-19 are highly similar to those of other pneumonias, radiologists without sufficient experience may underperform. Distinguishing COVID-19 pneumonia from other pneumonias is very important during a pandemic in which COVID-19 spreads rapidly in the community. The proposed approach successfully classifies three different types of pneumonia at once.

Chest CT images are very helpful in diagnosing COVID-19, and radiologists are the readers of these images. Chest CT reports not only identify the type of viral pneumonia but also describe its chest tomography findings. These findings carry information about the progression of pneumonia in the lung and are critical for clinicians and intensive care physicians in continuing treatment. To the best of our knowledge, this study is the first to successfully classify the most common COVID-19 CT findings.

In future work, we plan to extend the proposed approach with different structuring techniques and optimization algorithms and to evaluate it on other datasets.

CRediT authorship contribution statement

Conceptualization: Mesut Toğaçar, Burhan Ergen. Data curation: Nedim Muzoğlu, Bekir Sıddık Binboğa Yarman, Ahmet Mesrur Halefoğlu. Formal analysis: Mesut Toğaçar, Nedim Muzoğlu, Burhan Ergen, Ahmet Mesrur Halefoğlu, Bekir Sıddık Binboğa Yarman. Investigation: Nedim Muzoğlu, Mesut Toğaçar. Methodology: Mesut Toğaçar. Resources: Mesut Toğaçar, Nedim Muzoğlu, Bekir Sıddık Binboğa Yarman. Software: Mesut Toğaçar. Supervision: Nedim Muzoğlu, Burhan Ergen, Mesut Toğaçar. Validation: Mesut Toğaçar. Visualization: Mesut Toğaçar. Writing - original draft: Mesut Toğaçar, Nedim Muzoğlu. Writing - review & editing: Mesut Toğaçar, Nedim Muzoğlu.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

The authors wish to thank Prof. Dr. Mehmet Ruhi ONUR, an expert radiologist in the Hacettepe University Radiology Department, for his valuable help and contributions. We also thank the Department of Radiology of Şişli Hamidiye Etfal Training and Research Hospital.

Funding

There is no funding source for this article.

Ethical approval

This article does not contain any data or other information from studies or experimentation involving human or animal subjects.

References

- 1. WHO. WHO Director-General’s opening remarks at the media briefing on COVID-19. 2020. https://www.who.int/director-general/speeches/detail/who-director-general-s-opening-remarks-at-the-media-briefing-on-covid-19---11-march-2020 (accessed June 20, 2021).

- 2. Auwaerter P. Coronavirus COVID-19 (SARS-CoV-2). Johns Hopkins ABX Guide. 2020. https://www.hopkinsguides.com/hopkins/view/Johns_Hopkins_ABX_Guide/540747/all/Coronavirus_COVID_19__SARS_CoV_2_.

- 3. Ginsburg A.S., Klugman K.P. COVID-19 pneumonia and the appropriate use of antibiotics. Lancet Glob. Heal. 2020;8(12):e1453–e1454. doi: 10.1016/S2214-109X(20)30444-7.

- 4. Mohapatra R.K., Pintilie L., Kandi V., Sarangi A.K., Das D., Sahu R., Perekhoda L. The recent challenges of highly contagious COVID-19, causing respiratory infections: Symptoms, diagnosis, transmission, possible vaccines, animal models, and immunotherapy. Chem. Biol. Drug Des. 2020;96(5):1187–1208. doi: 10.1111/cbdd.13761.

- 5. WHO. WHO COVID-19 Explorer. 2021. https://covid19.who.int/ (accessed July 31, 2021).

- 6. Fang Y., Zhang H., Xie J., Lin M., Ying L., Pang P., Ji W. Sensitivity of Chest CT for COVID-19: Comparison to RT-PCR. Radiology. 2020;296(2):E115–E117. doi: 10.1148/radiol.2020200432.

- 7. Ai T., Yang Z., Hou H., Zhan C., Chen C., Lv W., Tao Q., Sun Z., Xia L. Correlation of Chest CT and RT-PCR Testing for Coronavirus Disease 2019 (COVID-19) in China: A Report of 1014 Cases. Radiology. 2020;296:E32–E40. doi: 10.1148/radiol.2020200642.

- 8. Ching L., Chang S.P., Nerurkar V.R. COVID-19 special column: principles behind the technology for detecting SARS-CoV-2, the cause of COVID-19. Hawai’i J. Heal. Soc. Welf. 2020;79:136–142. https://pubmed.ncbi.nlm.nih.gov/32432217

- 9. Borakati A., Perera A., Johnson J., Sood T. Diagnostic accuracy of X-ray versus CT in COVID-19: a propensity-matched database study. BMJ Open. 2020;10(11):e042946. doi: 10.1136/bmjopen-2020-042946.

- 10. Stephanie S., Shum T., Cleveland H., Challa S.R., Herring A., Jacobson F.L., Hatabu H., Byrne S.C., Shashi K., Araki T., Hernandez J.A., White C.S., Hossain R., Hunsaker A.R., Hammer M.M. Determinants of chest X-Ray sensitivity for COVID-19: a multi-institutional study in the United States. Radiol. Cardiothorac. Imaging. 2020;2. doi: 10.1148/ryct.2020200337.

- 11. Lei Y., Zhang H.-W., Yu J., Patlas M.N. COVID-19 infection: early lessons. Can. Assoc. Radiol. J. 2020;71(3):251–252. doi: 10.1177/0846537120914428.

- 12. Hansell D.M., Bankier A.A., MacMahon H., McLoud T.C., Müller N.L., Remy J. Fleischner society: glossary of terms for thoracic imaging. Radiology. 2008;246(3):697–722. doi: 10.1148/radiol.2462070712.

- 13. Emara D.M., Naguib N.N., Moustafa M.A., Ali S.M., El Abd A.M. Typical and atypical CT chest imaging findings of novel coronavirus 19 (COVID-19) in correlation with clinical data: impact on the need to ICU admission, ventilation and mortality. Egypt. J. Radiol. Nucl. Med. 2020;51:227. doi: 10.1186/s43055-020-00339-3.

- 14. Rodrigues R.S., Marchiori E., Bozza F.A., Pitrowsky M.T., Velasco E., Soares M., Salluh J.I. Chest computed tomography findings in severe influenza pneumonia occurring in neutropenic cancer patients. Clinics (Sao Paulo). 2012;67(4):313–318. doi: 10.6061/clinics/2012(04)03.

- 15. Shi H., Han X., Jiang N., Cao Y., Alwalid O., Gu J., Fan Y., Zheng C. Radiological findings from 81 patients with COVID-19 pneumonia in Wuhan, China: a descriptive study. Lancet Infect. Dis. 2020;20(4):425–434. doi: 10.1016/S1473-3099(20)30086-4.

- 16. Altmayer S., Zanon M., Pacini G.S., Watte G., Barros M.C., Mohammed T.-L., Verma N., Marchiori E., Hochhegger B. Comparison of the computed tomography findings in COVID-19 and other viral pneumonia in immunocompetent adults: a systematic review and meta-analysis. Eur. Radiol. 2020;30(12):6485–6496. doi: 10.1007/s00330-020-07018-x.

- 17. Koo H.J., Lim S., Choe J., Choi S.-H., Sung H., Do K.-H. Radiographic and CT Features of Viral Pneumonia. RadioGraphics. 2018;38(3):719–739. doi: 10.1148/rg.2018170048.

- 18. Miller W.T., Mickus T.J., Barbosa E., Mullin C., Van Deerlin V.M., Shiley K.T. CT of viral lower respiratory tract infections in adults: comparison among viral organisms and between viral and bacterial infections. AJR. Am. J. Roentgenol. 2011;197(5):1088–1095. doi: 10.2214/AJR.11.6501.

- 19. Hani C., Trieu N.H., Saab I., Dangeard S., Bennani S., Chassagnon G., Revel M.-P. COVID-19 pneumonia: a review of typical CT findings and differential diagnosis. Diagn. Interv. Imaging. 2020;101(5):263–268. doi: 10.1016/j.diii.2020.03.014.

- 20. Kwee T.C., Kwee R.M. Chest CT in COVID-19: what the radiologist needs to know. RadioGraphics. 2020;40(7):1848–1865. doi: 10.1148/rg.2020200159.

- 21. Bai H.X., Hsieh B., Xiong Z., Halsey K., Choi J.W., Tran T.M.L., Pan I., Shi L.-B., Wang D.-C., Mei J., Jiang X.-L., Zeng Q.-H., Egglin T.K., Hu P.-F., Agarwal S., Xie F.-F., Li S., Healey T., Atalay M.K., Liao W.-H. Performance of radiologists in differentiating COVID-19 from non-COVID-19 viral pneumonia at chest CT. Radiology. 2020;296(2):E46–E54. doi: 10.1148/radiol.2020200823.

- 22. Hu T., Khishe M., Mohammadi M., Parvizi G.-R., Taher Karim S.H., Rashid T.A. Real-time COVID-19 diagnosis from X-Ray images using deep CNN and extreme learning machines stabilized by chimp optimization algorithm. Biomed. Signal Process. Control. 2021;68:102764. doi: 10.1016/j.bspc.2021.102764.

- 23. Turkoglu M. COVIDetectioNet: COVID-19 diagnosis system based on X-ray images using features selected from pre-learned deep features ensemble. Appl. Intell. 2021;51(3):1213–1226. doi: 10.1007/s10489-020-01888-w.

- 24. JavadiMoghaddam S., Gholamalinejad H. A novel deep learning based method for COVID-19 detection from CT image. Biomed. Signal Process. Control. 2021;70:102987. doi: 10.1016/j.bspc.2021.102987.

- 25. Chaddad A., Hassan L., Desrosiers C. Deep CNN models for predicting COVID-19 in CT and x-ray images. J. Med. Imaging (Bellingham, Wash.). 2021;8:14502. doi: 10.1117/1.JMI.8.S1.014502.

- 26. Perumal V., Narayanan V., Rajasekar S.J.S., Pan D. Prediction of COVID-19 with computed tomography images using hybrid learning techniques. Dis. Markers. 2021;2021:1–15. doi: 10.1155/2021/5522729.

- 27. Luz E., Silva P., Silva R., Silva L., Guimarães J., Miozzo G., Moreira G., Menotti D. Towards an effective and efficient deep learning model for COVID-19 patterns detection in X-ray images. Res. Biomed. Eng. 2021. doi: 10.1007/s42600-021-00151-6.

- 28. Zhao J., He X., Yang X., Zhang Y., Zhang S., Xie P. COVID-CT-dataset: a CT image dataset about COVID-19. ArXiv. 2020:1–14.

- 29. Zhang K., Sun M., Han T.X., Yuan X., Guo L., Liu T. Residual networks of residual networks: multilevel residual networks. IEEE Trans. Circuits Syst. Video Technol. 2018;28(6):1303–1314. doi: 10.1109/TCSVT.2017.2654543.

- 30. Naranjo-Alcazar J., Perez-Castanos S., Martin-Morato I., Zuccarello P., Cobos M. On the performance of residual block design alternatives in convolutional neural networks for end-to-end audio classification. ArXiv. 2019:1–6.

- 31. Hanif M.S., Bilal M. Competitive residual neural network for image classification. ICT Express. 2020;6(1):28–37. doi: 10.1016/j.icte.2019.06.001.

- 32. Pandya M.D., Shah P.D., Jardosh S. Medical image diagnosis for disease detection: a deep learning approach. In: Dey N., Ashour A.S., Fong S.J., Borra S. (Eds.), U-Healthcare Monitoring Systems. Adv. Ubiquitous Sens. Appl. Healthc. Academic Press; 2019. pp. 37–60. doi: 10.1016/B978-0-12-815370-3.00003-7.

- 33. Basha S.H.S., Dubey S.R., Pulabaigari V., Mukherjee S. Impact of fully connected layers on performance of convolutional neural networks for image classification. Neurocomputing. 2020;378:112–119. doi: 10.1016/j.neucom.2019.10.008.

- 34. Maharjan S., Alsadoon A., Prasad P.W.C., Al-Dalain T., Alsadoon O.H. A novel enhanced softmax loss function for brain tumour detection using deep learning. J. Neurosci. Methods. 2020;330:108520. doi: 10.1016/j.jneumeth.2019.108520.

- 35. Chen G., Chen P., Shi Y., Hsieh C.-Y., Liao B., Zhang S. Rethinking the usage of batch normalization and dropout in the training of deep neural networks. ArXiv. 2019. http://arxiv.org/abs/1905.05928.

- 36. Yue B., Fu J., Liang J. Residual recurrent neural networks for learning sequential representations. Inf. 2018;9(3):56. doi: 10.3390/info9030056.

- 37. Mahmood A., Bennamoun M., An S., Sohel F., Boussaid F. ResFeats: Residual network based features for underwater image classification. Image Vis. Comput. 2020;93:103811. doi: 10.1016/j.imavis.2019.09.002.

- 38. Fisher R., Perkins S. Image Transforms: Fourier Transform. University of Edinburgh. 2021. http://homepages.inf.ed.ac.uk/rbf/HIPR2/fourier.htm (accessed January 23, 2021).

- 39. Cadet F., Fontaine N., Vetrivel I., Ng Fuk Chong M., Savriama O., Cadet X., Charton P. Application of fourier transform and proteochemometrics principles to protein engineering. BMC Bioinform. 2018;19(1). doi: 10.1186/s12859-018-2407-8.

- 40. Chen H. The Fourier transform source code. GitHub. 2020. https://gist.github.com/HiCraigChen/931a6abf9d78e8fa2de4df60580f3ffe (accessed January 23, 2021).

- 41. Selvaraju R.R., Cogswell M., Das A., Vedantam R., Parikh D., Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. In: 2017 IEEE Int. Conf. Comput. Vis. (ICCV). 2017. pp. 618–626. doi: 10.1109/ICCV.2017.74.

- 42. Morbidelli P., Carrera D., Rossi B., Fragneto P., Boracchi G. Augmented Grad-CAM: heat-maps super resolution through augmentation. In: ICASSP 2020, 2020 IEEE Int. Conf. Acoust. Speech Signal Process. 2020. pp. 4067–4071. doi: 10.1109/ICASSP40776.2020.9054416.

- 43. Courtemanche F., Léger P.-M., Dufresne A., Fredette M., Labonté-LeMoyne É., Sénécal S. Physiological heatmaps: a tool for visualizing users’ emotional reactions. Multimed. Tools Appl. 2018;77(9):11547–11574. doi: 10.1007/s11042-017-5091-1.

- 44. Chollet F. Grad-CAM class activation visualization. Keras. 2021. https://keras.io/examples/vision/grad_cam/ (accessed June 7, 2021).

- 45. Shi S., Zhang X., Fan W. A modified perturbed sampling method for local interpretable model-agnostic explanation. ArXiv. 2020:1–5. http://arxiv.org/abs/2002.07434

- 46. Palatnik de Sousa I., Maria Bernardes Rebuzzi Vellasco M., Costa da Silva E. Local interpretable model-agnostic explanations for classification of lymph node metastases. Sensors (Basel). 2019;19(13):2969. doi: 10.3390/s19132969.

- 47. Bramhall S., Horn H., Tieu M., Lohia N. QLIME-A quadratic local interpretable model-agnostic explanation approach. SMU Data Sci. Rev. 2020;3. https://scholar.smu.edu/datasciencereview/vol3/iss1/4/

- 48. Mollas I., Bassiliades N., Tsoumakas G. Altruist: argumentative explanations through local interpretations of predictive models. ArXiv. 2020. http://arxiv.org/abs/2010.07650.