Abstract

Introduction/Purpose

Meeting the increasing demand for training in focused cardiac ultrasound (FCU) is constrained by the availability of supervisors to oversee training on patients. We designed and tested the feasibility of a cloud‐based (internet) system that enables remote supervision and monitoring of the learning curve of image quality and interpretative accuracy for one novice learner.

Methods

After initial training in FCU (iHeartScan and FCU TTE Course, University of Melbourne), a novice submitted the images and interpretation of 30 practice FCU examinations on hospitalised patients to a supervisor via a cloud‐based portal. Before the novice performed each subsequent FCU examination, the supervisor provided electronic feedback comprising an image quality score (for each view) and interpretation errors. The primary outcome of the study was the number of FCU scans required for two consecutive scans to score (i) above the lower limit of acceptable total image quality score (64%) and (ii) below the upper limit of acceptable interpretive errors (15%).

Results

The number of FCU practice examinations required to meet the adequate image quality and interpretation error standards was 10 and 13, respectively. Thereafter, image acquisition continued to improve and remained within the limits of acceptable image quality. Conversely, some examinations continued to show interpretive inaccuracy (error > 15%).

Conclusion

This electronic FCU mentoring system circumvents (but should not replace) the requirement for bed‐side supervision, which may increase the capacity of supervision of physicians learning FCU. The system also allows real‐time tracking of their progress and identifies weaknesses that may assist in guiding further training.

Keywords: simulation, supervision, training, transthoracic echocardiography

Introduction

Demand for training in focused cardiac ultrasound (FCU) is increasing, and FCU is becoming a requirement of training in critical care specialties including anaesthesia, intensive care and emergency medicine. There are three components to achieving competence: knowledge, initial practical training and practice, the last of which is traditionally supervised. The first two components, knowledge and initial practical training, can be scaled up to meet a large volume of demand by using methods such as flipped classroom teaching (replacement of didactic teaching with self‐directed learning) with electronic resources, and simulators to teach image acquisition and interpretation of pathology.1 However, the ability to scale training to the expanding demand is constrained by the availability of supervisors to oversee the practice component. Electronic feedback from supervisors to trainees has demonstrated some scalability in an ultrasound curriculum in the intensive care setting;2 however, a system for monitoring the improvement of trainee ultrasound competence over time is yet to be tested.

The numbers of FCU studies required to achieve basic competence (Level 1) published in societal recommendations vary from 30 (intensive care medicine3, 4) to 90 (anaesthesia5) and are largely based on opinion rather than on evidence, which is currently weak and suggests 20–40 scans.6, 7, 8 See et al. reported that seven pulmonologists required an average of 30 FCU studies after initial training in FCU to reach 90% agreement with experts.6 Vignon et al.7 reported that after performing 33 FCU studies, six residents achieved acceptable image acquisition and interpretation when compared with competent ICU physicians. Royse et al.8 reported acceptable agreement between a medical student and an expert in the interpretation of haemodynamic state after 20 studies, but 40 studies were required to reach acceptable agreement on all measured variables. One issue in interpreting these findings is the differing methods used to assess the FCU findings, ranging from a binary assessment of the presence or absence of a dilated left ventricle, right ventricle and inferior vena cava7 to a more comprehensive assessment of haemodynamic state and valvular pathology.8 Furthermore, the novices differed in background, from pulmonology and intensive care trainees to a medical student. Because many factors are likely to affect the rate of achieving competency (extent of the FCU assessment, motivation of the learner and supervisor, and availability of time, supervision and equipment), it may be argued that the actual number of scans required is less important than monitoring performance until competence has been attained. A useful training and assessment tool for novices learning FCU would therefore track progress in competency during the learning phase, so as to determine when a satisfactory level of competency has been reached.

To help address this aim, we designed and tested the feasibility of a cloud‐based supervised practice system that uses the internet to share FCU images and reports and provides an electronic portal for feedback between supervisor and novice. The aims were to determine the feasibility of the system, which is designed to increase the capacity of supervision, and to determine how many supervised practice scans the novice required to achieve a desired level of competency in image acquisition and interpretation accuracy.

Materials/Patients

The novice, a second‐year medical officer, received initial training in FCU (iHeartScan9 and Focused Cardiac Ultrasound Transthoracic Echocardiography Simulator course,1 University of Melbourne). Both courses include 20 h of eLearning, which covers the basics of ultrasound, how to obtain FCU views, how to perform the FCU protocol and how to interpret frequently encountered abnormal haemodynamic states or major structural cardiac or pericardial pathology. The eLearning also includes practice interpretation of 20 real pathology cases, assessed online using automatically marked multiple‐choice questions (MCQs). The practical teaching is a 2‐day hands‐on workshop (iHeartScan course) and a 3‐h workshop followed by 10 self‐directed simulator case studies (Simulator course). The simulator used in the Simulator course is a Vimedix™ simulator (CAE Healthcare, Montreal, Canada).

After the basic FCU training, the novice completed 30 practice FCU examinations on hospitalised patients (aged over 18 years) to complete their FCU training, supervised remotely using the cloud‐based method described below. The selection of patients to be scanned was at the discretion of the novice, and there were no exclusion criteria. The rationale for selecting 30 FCU examinations was based on previous reports.6, 7, 8 The ultrasound equipment used was a 3.5–5 MHz transthoracic probe and handheld display (iViz, Sonosite, Bothwell, Andover, MA, USA). Image acquisition and interpretation followed the iHeartScan protocol (Hemodynamic Echocardiography Assessment in Real Time; the Ultrasound Education Group, University of Melbourne, Melbourne, Victoria, Australia9), a limited study designed to diagnose abnormal haemodynamic states, haemodynamically significant valve disease and pericardial effusion. The protocol includes two‐dimensional imaging and colour‐flow Doppler using the parasternal, apical and subcostal windows, and was designed to be performed in fewer than 10 min so that it can be integrated with bed‐side clinical examination and management.

After institutional ethics approval (Melbourne Health Human Research Ethics Committee) and written patient consent, the novice uploaded the FCU images (dropbox.com) and a completed standardised FCU report, containing the novice's interpretation of the images, for each of the 30 practice examinations. As soon as possible after notification from the novice, the supervisor reviewed the images and recorded their own interpretation on the standardised FCU report form, aiming to provide feedback within 48 h of receiving the FCU data. Differences in FCU interpretation between the novice and supervisor were noted. The supervisor then evaluated the FCU images using a validated image quality score (IQS), described below.10 The report form and IQS sheet constituted the detailed feedback to the novice, supplemented by general comments from the supervisor (including differences in interpretation). The novice waited for feedback on each FCU examination before performing the next examination.

The IQS assesses, for each of 10 standard FCU views, the adequacy of two‐dimensional visualisation of key cardiac structures (e.g. separation of the valve leaflet tips), sector axis alignment (e.g. lack of foreshortening of the left ventricle or aorta), positioning of the region of interest in the centre of the sector (e.g. the tricuspid valve in the centre of the RV inflow view) and a suitable sector depth. Each view is scored by between 1 and 10 binary assessment questions (1 point for correct, 0 points for incorrect): parasternal long‐axis view (10 points), right ventricular inflow view (4 points), parasternal short‐axis view at the level of the aortic valve (7 points) and at the mid LV (8 points), apical 4‐chamber view (9 points), apical 5‐chamber view (1 point), apical 2‐chamber view (8 points), apical long‐axis view (9 points), subcostal 4‐chamber view (8 points) and subcostal inferior vena cava view (4 points). The points from all views are summed to give the total IQS, which is then expressed as a percentage of the maximum score (68 points). Further detail on the image quality score is published2 and reproduced with permission in Appendix S1. The limits of acceptable image quality scores were defined as the mean (80%) ± 2 standard deviations of experts' scores (64% to 97%), which were obtained in a previous study in which two experts performed FCU on 5 healthy volunteers.2
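The scoring arithmetic above can be sketched in Python. This is a minimal illustration, not the authors' scoring software: the view labels, the function names and the representation of each view's items as a list of True/False answers are assumptions made for the example; only the per‐view maxima, the 68‐point total and the 64% lower limit come from the Methods. The helper `acceptable_limits` derives limits as mean ± 2 standard deviations from a list of expert scores, assuming the sample standard deviation was intended.

```python
import statistics

# Maximum points per view, as listed in the Methods (total = 68).
# The view labels are illustrative names, not official field names.
MAX_POINTS = {
    "parasternal long-axis": 10,
    "right ventricular inflow": 4,
    "parasternal short-axis, aortic valve": 7,
    "parasternal short-axis, mid LV": 8,
    "apical 4-chamber": 9,
    "apical 5-chamber": 1,
    "apical 2-chamber": 8,
    "apical long-axis": 9,
    "subcostal 4-chamber": 8,
    "subcostal inferior vena cava": 4,
}
MAX_TOTAL = sum(MAX_POINTS.values())  # 68

IQS_LOWER_LIMIT = 64.0  # % (expert mean 80% minus 2 SD, per the Methods)


def total_iqs_percent(answers: dict[str, list[bool]]) -> float:
    """Return the total IQS as a percentage of the 68-point maximum.

    `answers` maps a view label to its binary item results
    (True = 1 point, False = 0 points). Views missing from the
    dict contribute 0 points (an assumption of this sketch).
    """
    total = 0
    for view, items in answers.items():
        if view not in MAX_POINTS:
            raise ValueError(f"unknown view: {view!r}")
        if len(items) != MAX_POINTS[view]:
            raise ValueError(
                f"{view!r}: expected {MAX_POINTS[view]} items, got {len(items)}"
            )
        total += sum(items)  # each True counts as 1 point
    return 100.0 * total / MAX_TOTAL


def meets_iqs_standard(percent: float) -> bool:
    """True if the total IQS reaches the 64% lower limit."""
    return percent >= IQS_LOWER_LIMIT


def acceptable_limits(expert_scores: list[float]) -> tuple[float, float]:
    """Mean +/- 2 sample standard deviations of expert IQS percentages."""
    mean = statistics.mean(expert_scores)
    sd = statistics.stdev(expert_scores)
    return mean - 2 * sd, mean + 2 * sd
```

Because 64% of 68 is 43.52 points, a scan needs at least 44 of the 68 points (about 64.7%) to meet the lower limit; 43 points gives about 63.2%, so whether the limit is read as "above" or "at or above" 64% makes no practical difference.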

Interpretive error was defined as the number of interpretive errors by the novice divided by the number (eighteen) of categorical interpretive items on the iHeartScan report (Appendix S2). Acceptable interpretive error between experts has been reported to be 10% owing to interobserver variability.11, 12 For this study, the upper limit of interpretive error for a novice in FCU was agreed to be 15%. The lower limit is 0% (no errors); hence, the acceptable range of interpretive error was set at 0–15%.
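The interpretive error rate can be sketched the same way. In this hypothetical Python example, the novice's and supervisor's answers to the eighteen categorical report items are passed as two equal‐length lists of strings (an assumption; Appendix S2 defines the actual items), and any disagreement with the supervisor counts as one error. The function names are illustrative only.

```python
N_ITEMS = 18                 # categorical items on the iHeartScan report
MAX_ACCEPTABLE_ERROR = 15.0  # % upper limit agreed for this study


def interpretive_error_percent(novice: list[str], supervisor: list[str]) -> float:
    """Percentage of report items on which the novice differs from the supervisor."""
    if len(novice) != N_ITEMS or len(supervisor) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} items from each reader")
    errors = sum(1 for a, b in zip(novice, supervisor) if a != b)
    return 100.0 * errors / N_ITEMS


def meets_interpretation_standard(percent: float) -> bool:
    """True if the error rate lies within the acceptable 0-15% range."""
    return 0.0 <= percent <= MAX_ACCEPTABLE_ERROR
```

With 18 items, two discordant items give 2/18 ≈ 11.1% (acceptable), whereas three give 3/18 ≈ 16.7% (unacceptable), so in practice the 15% limit allows at most two interpretive errors per scan.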

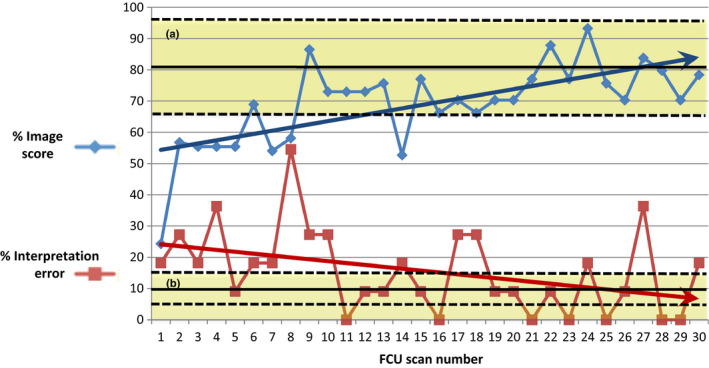

Image quality scores and interpretive errors were plotted for each successive scan to produce a ‘learning curve’. The primary outcome of the study was the number of scans required for two consecutive scans to fall within the limits of acceptable image quality and interpretive accuracy.
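The primary outcome amounts to finding the first run of consecutive scans that meet a standard. The Python sketch below assumes that the count reported is the scan number of the second scan in the first qualifying pair; the `run_length` parameter (a name introduced here) allows the two‐scan end‐point to be changed. The thresholds are written inline so the block stands alone, and the example sequence is made‐up, not study data.

```python
from typing import Callable, Optional, Sequence


def scans_required(
    values: Sequence[float],
    meets: Callable[[float], bool],
    run_length: int = 2,
) -> Optional[int]:
    """Return the 1-based scan number at which `run_length` consecutive
    scans first meet the standard, or None if that never happens."""
    streak = 0
    for scan_number, value in enumerate(values, start=1):
        streak = streak + 1 if meets(value) else 0  # reset on any failure
        if streak == run_length:
            return scan_number
    return None


# Standards from the Methods: IQS of at least 64%, interpretive error of at most 15%.
def iqs_ok(percent: float) -> bool:
    return percent >= 64.0


def error_ok(percent: float) -> bool:
    return percent <= 15.0


# Hypothetical learning-curve data, for illustration only.
iqs = [50.0, 66.2, 60.3, 65.0, 70.6]
print(scans_required(iqs, iqs_ok))                # 5
print(scans_required(iqs, iqs_ok, run_length=3))  # None
```

Applying the same function separately to the image quality and interpretive error series gives the two counts reported in the Results.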

Results

Of the thirty FCU scans performed, acceptable images were obtained for all participants. After each submission of FCU images and an interpretation report by the novice, the supervisor returned image quality and interpretation scores with feedback before the novice performed the next FCU examination. Significant pathology was frequently identified, and many patients had multiple pathologies, including left ventricular systolic and/or diastolic dysfunction (22 participants), right ventricular systolic dysfunction (11), aortic stenosis (7), tricuspid regurgitation (5) and mitral regurgitation (4).

The number of FCU practice examinations required to meet the adequate image quality and interpretation error standards was 10 and 13, respectively (Figure 1). Image acquisition showed a trend toward improvement over the whole study period and, after the tenth scan, remained within the limits of acceptable image quality. Conversely, some FCU examinations continued to fall outside the acceptable interpretive accuracy (interpretive error > 15%).

Figure 1.

Focused cardiac ultrasound image acquisition scores and interpretation errors. The % image score for each practice focused cardiac ultrasound (FCU) examination performed by the novice is displayed; this is the sum of the image quality scores for each view divided by the maximum possible score (68). The % interpretation error for each practice FCU is also displayed; this is the number of differences in FCU interpretation by the novice compared with an expert divided by the maximum number of assessments (18). The upper yellow shaded area (a) represents the limits of acceptable image quality scores, defined as the mean (80%) ± 2 standard deviations of experts' scores (64% to 97%), obtained in a previous study in which two experts performed FCU on 5 healthy volunteers (Supplemental digital information 1).3 The lower yellow shaded area (b) represents the acceptable interpretive error rate (0–15%), whose upper limit was set 50% above the expected interobserver variability (10%).11, 12 The arrows represent linear trend lines (lines of best fit) calculated using the least‐squares method (regression).
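The trend lines in Figure 1 are least‐squares fits of each score against scan number. The closed‐form ordinary least‐squares fit can be sketched in plain Python as below; the function name is hypothetical, and the authors do not state which software produced the figure.

```python
from typing import Sequence


def trend_line(scores: Sequence[float]) -> tuple[float, float]:
    """Least-squares line y = slope * x + intercept, where x is the
    1-based scan number and y is the score for that scan."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scans to fit a line")
    xs = range(1, n + 1)
    mean_x = (n + 1) / 2          # mean of 1..n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, scores))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept
```

A positive slope for the image score and a negative slope for the interpretation error both indicate improvement with practice.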

Discussion

In this pilot study, we demonstrated the feasibility of a cloud‐based system that monitored a novice's development of competence in image acquisition and interpretation of focused cardiac ultrasound and was also able to deliver timely feedback. This method enables prospective tracking of the learner's progress and identification of weaknesses (e.g. interpretation vs. image quality, or which views need more practice) to help guide both the learner and supervisor. A limitation of conventional assessment methods, which nominate a set minimum number of cases required for eligibility for competence, is that the learning curve differs between learners, because it is affected by many factors beyond the control of the echocardiography supervisor of training. Our method notifies the learner and supervisor in real time when a set level of competency has been achieved. To our knowledge, this has not been reported elsewhere.

Unlike previous reports,6, 7, 8 our method enabled assessment of competency after each scan, informing the supervisor of the learner's progress. Vignon et al.7 reported agreement with the reference assessors after a mean of 33 scans per participant; this number was therefore fixed in advance, not dictated by the participants' performance. It is possible that acceptable agreement was reached before 33 scans, but these data were not available. See et al.6 reported assessment in blocks of 10 scans (1–10, 11–20, 21–30 and 31–40). However, the assessment was reported as the proportion of the 10 scans in each block that had acceptable agreement with the reference observers for simple end‐points, such as all seven acoustic windows being obtained; the presence or absence of pericardial effusion, RV dilation and mitral regurgitation; visual estimation of the category of LV dysfunction; and correct measurement of inferior vena cava size. See et al. did not prospectively specify an acceptable level of agreement for image quality and interpretation error with which to decide when the required number of scans had been reached. In our study, the end‐point was set at two consecutive scans with acceptable image quality scores and interpretation errors; however, this end‐point is arbitrary and could be set at any number of consecutive scans, with the likelihood of competence increasing as that number increases. Hence, our findings that only 10 scans were required for an acceptable image quality score and 13 scans for acceptable image interpretation are of less importance than our finding that the process is feasible.

In our study, the plateau in improvement was reached after fewer scans than in these studies, but errors in interpretation still occurred at 30 scans, indicating that greater emphasis may be required on interpretation than on acquisition as the novice progresses. This feedback is useful because it directs the learner to areas of deficiency that require more practice, in this case interpretation. It could also be useful to the supervisor by highlighting areas of the teaching program to improve. Adding suggestions on how to improve image quality to the image quality scoring sheet could further improve the feedback. Some hints are already included; for example, one point is scored at the start of the apical and subcostal views for adjusting the depth setting so that the heart fills at least two‐thirds of the screen without being too large to fit on it.

Limitations include the analysis of only one novice FCU learner (a junior resident doctor); hence, conclusions about the number of scans required to attain a pre‐specified competency cannot be extrapolated to the general population of FCU trainees. Another limitation is the use of only one supervising assessor, with the associated risk of rating bias; however, the primary purpose of this study was to determine feasibility rather than how many FCU studies are required to achieve competency. Asynchronous remote supervision affects the quality of the feedback given for each ultrasound examination. Good feedback should be timely, contextual and based on observable behaviours (e.g. how the probe is manipulated).13 Remote supervision satisfies fewer of these principles than face‐to‐face supervision and training. For example, there were no reference images (acquired by an expert) with which to compare the novice's images, which is an inherent limitation of remote supervision. Hence, this cloud‐based supervision method could not replace face‐to‐face supervision entirely but could be used to supplement it. A further limitation is that factors known to impair FCU image quality, such as increased body mass index, chronic obstructive pulmonary disease and the immediate postoperative period, were not recorded or included in the assessment. It is possible that the learner avoided patients with these characteristics, which may have increased the image quality and interpretation scores. Recording these factors could be a valuable addition in future research in this area.

This electronic FCU mentoring system circumvents the requirement for bed‐side supervision, which may increase the capacity of supervision of physicians learning FCU. The system also allows real‐time documentation of the trainee's learning curve in both image acquisition and interpretation, allowing their progress to be tracked and potentially their competency to be assessed. Further research is required to determine the average number of scans required to achieve competency, especially in interpretation.

Conclusion

This electronic FCU mentoring system circumvents (but should not replace) the requirement for bed‐side supervision, which may increase the capacity of supervision of physicians learning FCU. The system also allows real‐time tracking of their progress and identifies weaknesses that may assist in guiding further training.

Disclosure

Colin Royse, Alistair Royse and David Canty are employees of the University of Melbourne, and Colin Royse and Alistair Royse are directors of iTeachU limited. Educational content from these entities is available for sale in the United States of America through the American Society of Anesthesiologists and the Society of Cardiovascular Anesthesiology. The University of Melbourne has received loan equipment from the simulator company CAE.

Supporting information

Appendix S1. Details, definitions, and evaluation of the image quality scoring system

Appendix S2. Focused Cardiac Ultrasound report form

Acknowledgements

We acknowledge the significant contributions to performing this study from the administrative staff of the Ultrasound Education Group, The University of Melbourne.

Prior Presentations: Vijayakumar R. Cloud‐based supervision of training in focused cardiac ultrasound – a scalable solution? Biennial Conference of the Cardiothoracic Vascular Perfusion Special Interest Group of the Australian and New Zealand College of Anaesthetists 2017, Queenstown, New Zealand.

References

- 1. Canty DJ, Royse AG, Royse CF. Self‐directed simulator echocardiography training: a scalable solution. Anaesth Intensive Care 2015; 43: 425–7.

- 2. Arntfield RT. The utility of remote supervision with feedback as a method to deliver high‐volume critical care ultrasound training. J Crit Care 2015; 30(2): 441.e1‐6.

- 3. Expert Round Table on Ultrasound in ICU. International expert statement on training standards for critical care ultrasonography. Intensive Care Med 2011; 37: 1077–83.

- 4. https://www.cicm.org.au/CICM_Media/CICMSite/CICM‐Website/Resources/Trainee%20Resources/T‐35‐(2014)‐Focused‐Cardiac‐Ultrasound‐in‐Intensive‐Care‐Syllabus.pdf (last accessed 7 Aug 2018)

- 5. http://www.anzca.edu.au/documents/ps46-2014-guidelines-ontraining-and-practice-of-p.pdf (last accessed 7 Aug 2018)

- 6. See KC, Ong V, Ng J, Tan RA, Phua J. Basic critical care echocardiography by pulmonary fellows: learning trajectory and prognostic impact using a minimally resourced training model. Crit Care Med 2014; 42: 2169–77.

- 7. Vignon P, Mücke F, Bellec F, Marin B, Croce J, Brouqui T, et al. Basic critical care echocardiography: validation of a curriculum dedicated to noncardiologist residents. Crit Care Med 2011; 39(4): 636–42.

- 8. Royse C, Seah J, Donelan L, Royse A. Point of care ultrasound for basic haemodynamic assessment: novice compared with an expert operator. Anaesthesia 2006; 61(9): 849–55.

- 9. Royse C, Haji D, Faris J, Veltman M, Kumar A, Royse A. Evaluation of the interpretative skills of participants of a limited transthoracic echocardiography training course (H.A.R.T. scan course). Anaesth Intensive Care 2012; 40: 498–504.

- 10. Canty DJ, Heiberg J, Tan JA, Yang Y, Royse AG, Royse CF, et al. Assessment of image quality of repeated limited transthoracic echocardiography after cardiac surgery. J Cardiothorac Vasc Anesth 2017; 31: 965–72.

- 11. Canty DJ, Royse CF, Kilpatrick D, Williams DL, Royse AG. The impact of pre‐operative focused transthoracic echocardiography in emergency non‐cardiac surgery patients with known or risk of cardiac disease. Anaesthesia 2012; 67(7): 714–20.

- 12. Cowie B. Three years’ experience of focused cardiovascular ultrasound in the perioperative period. Anaesthesia 2011; 66: 268–73.

- 13. Ende J. Feedback in clinical medical education. J Am Med Assoc 1983; 250(6): 777–81.
