Abstract

The year 2020 will certainly be remembered for the COVID-19 outbreak. Since it was first reported in Wuhan, China, in December 2019, the number of people infected by this contagious virus has grown exponentially. Given the population density of India, the test, track, and isolate strategy is not producing satisfactory results. A shortage of testing kits and a rising number of new cases motivated us to develop a model that can aid radiologists in detecting COVID-19 from chest X-ray images. In the proposed framework, features are extracted from chest X-ray images using an ensemble of four pre-trained Deep Convolutional Neural Network (DCNN) architectures, namely VGGNet, GoogleNet, DenseNet, and NASNet, and are then fed to a fully connected layer for classification. The proposed multi-model ensemble architecture is validated on two publicly available datasets and one private dataset. We show that the multi-model ensemble performs better than any of the single classifiers. On the publicly available datasets, we obtain an accuracy of 88.98% for three-class classification and 98.58% for binary classification. On the private dataset, we obtain an accuracy of 93.48% for three-class classification. The source code and the dataset are available on GitHub: https://github.com/sagardeepdeb/ensemble-model-for-COVID-detection.

Keywords: COVID-19, DCNN, Ensemble network, CNN

1. Introduction

The novel coronavirus disease of 2019 was first observed in Wuhan, in the Hubei province of China, in December 2019. The novel virus belongs to the coronavirus (CoV) family and was named Severe Acute Respiratory Syndrome Coronavirus 2 (SARS-CoV-2). In February 2020, the World Health Organization (WHO) named the disease COVID-19. As of 20 August 2020, at the time of writing, 22.6 million people had been infected with this virus and 792,000 had succumbed to it (according to WHO updates on COVID-19).

The WHO declared the outbreak a pandemic in March 2020. Since then, the number of new cases has risen exponentially. The virus has spread to more than 210 countries, with the USA, Brazil, and India being the worst affected. As of 20 August, the USA alone had reported 5.61 million cases, while Brazil and India had reported 3.5 and 2.91 million cases, respectively. A patient infected with COVID-19 may develop symptoms such as fever, cough, and respiratory illness. Severely ill patients may experience pneumonia and/or difficulty in breathing, multi-organ failure, and death [1]. The radiographic findings of established COVID-19 pneumonia are bilateral, multifocal, randomly scattered ground-glass opacities, present mainly in the peripheral subpleural region, with a thickened pulmonary interstitium. Bronchovascular prominence and consolidation are seen in moderate to severe cases [2]. The health systems of many countries are on the verge of collapse due to the rapid increase in COVID-19 cases, and most are experiencing a shortage of ventilators and testing kits. There are data suggesting that the R0 value of COVID-19, a measure of how contagious an infectious disease is, is higher than that of the deadly 1918 influenza outbreak. The rapid spread of such a disease demands highly accurate point-of-care COVID-19 screening [3].

According to [4], a report published in The Lancet, combining isolation, testing, contact tracing, and physical distancing can greatly reduce the transmission of SARS-CoV-2. However, the shortage of testing kits hampers large-scale testing, which in turn makes contact tracing nearly impossible. Moreover, the RT-PCR test, which is regarded as the gold-standard screening method for COVID-19, does not provide results immediately.

Given the availability of X-ray machines in all primary health care centers, detecting COVID-19 from chest X-ray (CXR) images could considerably speed up the testing process and allow more tests to be performed. According to [5], [6], reports published in Radiology, chest imaging can be useful in detecting COVID-19. Given the success of deep learning models in classification and detection, they can be used to help radiologists detect COVID-19 from CXR images. A trained deep learning model can also deliver results almost instantly, making screening fast.

2. Related works

Researchers around the world are working day and night to counter this pandemic, and researchers in image processing and biomedical engineering are no exception: new deep learning models for detecting COVID-19 are proposed almost every day. These works use either CT scans or chest X-ray images. Given the success of deep learning, and more specifically of Convolutional Neural Networks, in computer vision, most researchers have approached the problem with deep learning. This preference for DCNNs arises primarily because deep learning, unlike traditional methods, does not depend on handcrafted features, and because DCNNs have repeatedly proved to be among the best algorithms for image classification.

Singh et al. [7] proposed a multi-objective differential evolution (MODE)-based DCNN to identify COVID-19 patients from their chest CT images and obtained an accuracy of about 93%. Maghdid et al. [8] also used CT images to detect and classify COVID-19; they introduced an AI-based mobile application that reads smartphone sensor measurements and analyzes CT images to estimate the severity of pneumonia in the patient. Wang et al. [9] used an Inception-based transfer learning model to detect COVID-19 from 453 CT images and achieved an accuracy of 73.1%. Li et al. [10] also used chest CT images to detect COVID-19 and reported an AUC (area under the ROC curve) of 0.96. Harmon et al. [11] used hybrid 3-D and full 3-D models, both based on the DenseNet-121 architecture, to detect COVID-19 from CT images and obtained an accuracy of 90.8%. Regarding CT, it is worth noting that nearly half of patients with COVID-19 have a normal CT scan if screened soon after the onset of symptoms [12]. Moreover, the radiation dose, an important health concern, is much higher for CT than for X-ray imaging. This is especially important for pregnant women and children, who are more susceptible to high radiation doses [13]. Taking all these facts into consideration, we use CXR images in our experiments.

Wang et al. [14] proposed COVID-Net to classify chest radiography images into four classes: normal, COVID-19, bacterial pneumonia, and viral pneumonia. Their tailored network is one of the first open-source designs for COVID-19 detection from chest X-ray (CXR) images, and it obtained an accuracy of about 83.5%. COVIDX-Net [15], proposed by Hemdan et al., used seven DCNN architectures to classify CXR images into COVID-19 and normal cases. Sethy and Behera [16] used various pre-trained DCNNs for feature extraction and an SVM for classification; ResNet50 followed by an SVM gave an accuracy of 95.38%. CoroNet [17], proposed by Khan et al., uses a DCNN based on the Xception [18] model to classify CXR images into COVID-19, normal, and pneumonia classes. They were also the first to consider three different classes for pneumonia. Their four-class classification obtained an accuracy of 89.6%, three-class classification 95%, and binary classification 99%.

2.1. Motivation and key contribution

Our motivation is to develop a fast and accurate testing mechanism that can assist radiologists in detecting COVID-19 from chest X-ray images. CheXNet [19], proposed by Rajpurkar et al. to classify chest X-ray images into 14 different diseases, also served as a motivation. The key contributions are listed below.

- A multi-model ensemble DCNN structure is proposed that extracts features from CXR images with four networks and concatenates them before classification. The DCNNs used to build the proposed model, namely VGGNet, GoogleNet, DenseNet, and NASNet, are among the most common DCNN architectures in the literature. We show that this scheme improves performance.

- To date, most work in the literature uses very few test images for the COVID-19 class. We aim to use a larger number of images for testing. To that end, the COVID-19 test images from the Kaggle dataset are added to the original Cohen et al. dataset. More details are given in the next section.

- The performance of the proposed multi-model ensemble architecture is validated on a private dataset collected from MGM Medical College and Hospital.

- Lastly, we investigate transfer learning and provide a comparative analysis with state-of-the-art models for COVID-19 detection.

3. Dataset description

Since the outbreak of COVID-19, researchers have made many clinically verified CXR datasets available online. We use three different datasets in our experiments.

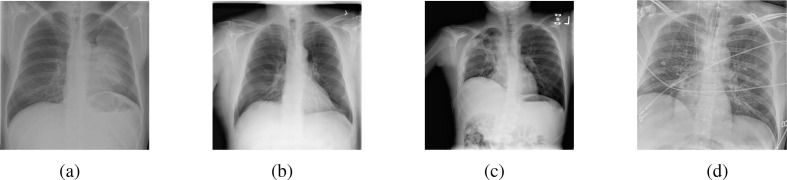

Cohen et al. [20]. The dataset was acquired on 20 April 2020. Along with chest X-rays of COVID-19 cases, it contains examples of Community-Acquired Pneumonia (CAP) and normal cases. Fig. 1 shows sample images from all three classes. The dataset was partitioned as described in [14], to allow comparison with that work.

Fig. 1.

(a) CAP, (b) normal, and (c) COVID-19 examples from the Cohen et al. dataset; (d) COVID-19 example from the Kaggle dataset.

Kaggle. In the Cohen et al. dataset, the number of images in train/COVID-19 and test/COVID-19 is very small compared with the CAP and normal classes. We therefore also use images from Kaggle's chest-xray-covid19-pneumonia dataset: all COVID-19 images in its train and test splits are added to the original dataset. This is done solely to increase the number of COVID-19 examples in the training and test sets. The numbers of images used for training, validation, and testing are given in Table 1. For train/COVID-19, 175 images are taken from Cohen et al. and 460 from Kaggle. The Test 1 row gives the number of samples from each class used in the first part of the experiments.

Table 1.

Number of images in each dataset split.

| | CAP | Normal | COVID-19 |
|---|---|---|---|
| Train | 4836 | 7081 | 175 + 460 |
| Valid | 605 | 885 | 20 |
| Test 1 | 605 | 885 | 20 + 116 |
| Test 2 | 28 | 35 | 29 |

Private dataset. The private dataset was prepared at MGM Medical College and Hospital, Indore. It was retrospectively collected over a period of one week from patients seen at the Radiodiagnosis Center of MGM Medical College and Hospital. Each image was reviewed by radiologists, and the ground truth was confirmed with biopsy. This small private dataset was collected solely to validate the performance of the ensemble model trained on the datasets described above. It has three classes, and the numbers of images are given in the Test 2 row of Table 1.

A re-sampling approach known as under-sampling is used here because classifiers are often biased towards the majority class. Under-sampling randomly deletes instances from the majority classes until all classes have the same number of examples, which removes the class imbalance present in the dataset.
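The under-sampling step described above can be sketched in a few lines. This is a minimal, dependency-free illustration, assuming each training example is an (item, label) pair; the function name `undersample`, the placeholder file names, and the fixed seed are hypothetical and not taken from the authors' repository. The class sizes are the training counts from Table 1; the source does not state exactly which split the under-sampling was applied to.

```python
import random
from collections import Counter, defaultdict


def undersample(samples, seed=0):
    """Randomly drop examples from the larger classes until every class
    has as many examples as the smallest one.

    `samples` is a list of (item, label) pairs; the result is shuffled.
    """
    rng = random.Random(seed)  # seeded so the kept subset is reproducible
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append((item, label))

    target = min(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        # rng.sample draws without replacement, so no example is duplicated
        balanced.extend(rng.sample(group, target))
    rng.shuffle(balanced)
    return balanced


# Placeholder file names with the training-set class sizes from Table 1
train = ([(f"cap_{i}.png", "CAP") for i in range(4836)]
         + [(f"normal_{i}.png", "Normal") for i in range(7081)]
         + [(f"covid_{i}.png", "COVID-19") for i in range(175 + 460)])

balanced = undersample(train)
print(Counter(label for _, label in balanced))
```

With these counts every class is reduced to 635 examples, the size of the COVID-19 training set; the fixed seed keeps the selected subset identical across runs.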

4. Methodology

4.1. Data pre-processing

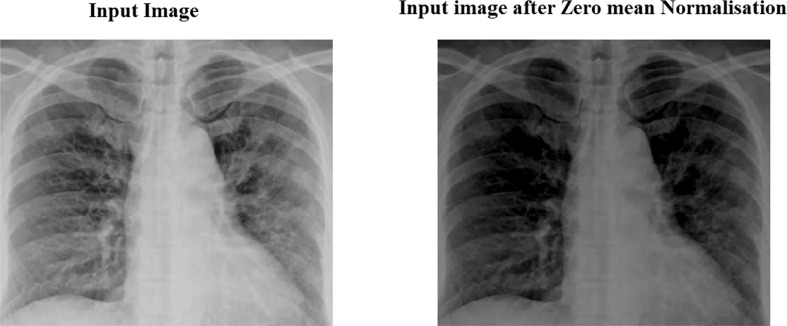

We use two publicly available datasets, one from Cohen et al. and the other from Kaggle, and one private dataset. Zero-mean normalization is applied to remove the differences in lighting between them, and pixel values are scaled to the range 0 to 1. This speeds up the convergence of the model during training [21]. A sample input lung image before and after normalization is shown in Fig. 3.

Fig. 3.

Input lung image before and after pre-processing.
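The two normalization operations mentioned above are sketched separately below: min-max scaling, which maps intensities to [0, 1], and per-image zero-mean, unit-variance standardization. The exact combination and order used by the authors is not specified, so this is an illustration under that assumption; the function names and the four-pixel example row are hypothetical, and plain lists keep the example dependency-free (a real pipeline would operate on image arrays).

```python
from statistics import fmean, pstdev


def min_max_scale(pixels):
    """Linearly rescale intensities so the darkest pixel maps to 0 and the brightest to 1."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:  # constant image: nothing to stretch, avoid division by zero
        return [0.0] * len(pixels)
    return [(p - lo) / (hi - lo) for p in pixels]


def zero_mean_normalize(pixels):
    """Standardize intensities to zero mean and unit (population) standard deviation."""
    mu = fmean(pixels)
    sigma = pstdev(pixels, mu)
    if sigma == 0:
        return [0.0] * len(pixels)
    return [(p - mu) / sigma for p in pixels]


# A hypothetical 8-bit row of pixels from a chest X-ray
row = [0, 64, 128, 255]
scaled = min_max_scale(row)
standardized = zero_mean_normalize(row)
print([round(v, 3) for v in scaled])
print(round(fmean(standardized), 6), round(pstdev(standardized), 6))
```

Min-max scaling preserves the relative spacing of intensities, while standardization removes each image's overall brightness and contrast, which is what compensates for lighting differences between datasets.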

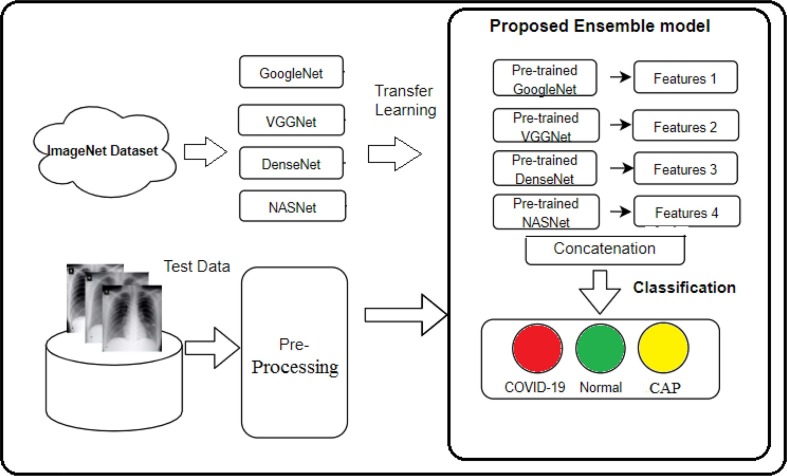

4.2. Transfer learning

With only 12,552 training images (4836 CAP, 7081 normal, and 635 COVID-19), it would not be judicious to train a network from scratch. We therefore use transfer learning, which reuses a network trained for a different task on a considerably larger dataset. The proposed architecture, shown in Fig. 2, uses four pre-trained networks, VGGNet [22], DenseNet [23], NASNet [24], and GoogleNet [25], all trained on the ImageNet [26] dataset, to extract features from the input images.

Fig. 2.

Schematic illustration of our model.

Ensembles of multiple models have been shown to perform well in various image classification and detection tasks. In [27], the authors used ensemble models for melanoma classification. In [28], the authors proposed a bi-stream network comprising two sub-branches with different structures, ResNet50 and VGG16, for salient object detection, with the aim of exploiting the rich semantic information in their proposed dataset. A novel triple-stream network was proposed by Wang et al. [29] for salient object detection. In [30], the authors successfully used VGG16 as the backbone architecture for salient object detection. Similarly, for detecting objects in video sequences, [31] proposed a novel plug-and-play scheme that weakly retrains a pre-trained image saliency deep model for video data using newly sensed and coded temporal information.

4.3. Ensemble model for feature extraction

We first used each DCNN individually for feature extraction, with the extracted features fed to a fully connected layer for final classification. We then used an ensemble of four of the most common DCNN architectures, VGGNet, DenseNet, GoogleNet, and NASNet, for feature extraction; these state-of-the-art networks have performed strongly in object classification and recognition. As shown in Fig. 2, features are extracted from the input images by all four pre-trained DCNNs and concatenated. The parameters and architecture are detailed in Table 2. We observed that this scheme improves classification performance. A brief description of each of the four DCNNs in our multi-model ensemble is given below.

Table 2.

Architecture of the proposed model.

| Layer (type) | Output shape | # of parameters |
|---|---|---|
| VGG (Model) | 512 | 20,025,923 |
| GoogLeNet (Model) | 2048 | 21,802,784 |
| DenseNet (Model) | 1920 | 18,321,984 |
| NASNet (Model) | 1056 | 4,269,716 |
| concatenate_6 (Concatenate) | 5536 | 0 |
| dropout (Dropout) | 5536 | 0 |
| dense (Dense) | 256 | 1,417,216 |
| dense_1 (Dense) | 3 | 768 |
| Total parameters | | 65,838,400 |
| Trainable parameters | | 1,417,985 |
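The shapes in Table 2 follow from simple arithmetic: concatenating the pooled feature vectors of the four backbones gives the input width of the classification head, dropout adds no parameters, and a fully connected layer with n_in inputs and n_out outputs has n_in × n_out weights plus n_out biases. The sketch below uses only the widths listed in Table 2; the helper `dense_params` is hypothetical. Note that Keras's `model.summary()` counts bias terms as parameters of a `Dense` layer.

```python
# Pooled feature widths of the four backbones, as listed in Table 2
BACKBONE_WIDTHS = {"VGG": 512, "GoogLeNet": 2048, "DenseNet": 1920, "NASNet": 1056}


def dense_params(n_in, n_out, use_bias=True):
    """Parameter count of a fully connected layer: an n_in x n_out weight
    matrix plus, optionally, one bias per output unit."""
    return n_in * n_out + (n_out if use_bias else 0)


fused_width = sum(BACKBONE_WIDTHS.values())  # width after concatenation
hidden_units, n_classes = 256, 3             # dense and dense_1 in Table 2

print("concatenated width:", fused_width)
print("dense weights:", dense_params(fused_width, hidden_units, use_bias=False))
print("dense_1 weights:", dense_params(hidden_units, n_classes, use_bias=False))
print("head with biases:",
      dense_params(fused_width, hidden_units) + dense_params(hidden_units, n_classes))
```

The first three printed values (5536, 1,417,216, and 768) match the concatenate, dense, and dense_1 entries of Table 2; counting the 256 + 3 biases as well gives 1,418,243 parameters in the head.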

4.3.1. VGGNet

With an architecture similar to that of AlexNet [32], the Visual Geometry Group network (VGGNet) [22] took first place in localization and second place in classification at the ImageNet LSVRC 2014 challenge. It uses 3 × 3 convolution filters and 2 × 2 max-pooling. Owing to its simple, uniform design and greater depth (16 or 19 weight layers), it performs better than AlexNet [33].
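The benefit of VGG's small filters can be checked with the argument given in [22]: three stacked 3 × 3 convolutions with stride 1 cover a 7 × 7 receptive field but need 27C² weights, versus 49C² for a single 7 × 7 layer, where C is the number of input and output channels. The sketch evaluates both; the helper names are hypothetical, C = 256 is an arbitrary illustrative value, and biases are ignored.

```python
def stacked_receptive_field(kernel, n_layers):
    """Receptive field of n stacked stride-1 convolutions of the same kernel size."""
    return 1 + n_layers * (kernel - 1)


def conv_weights(kernel, channels):
    """Weights of a kernel x kernel convolution mapping C channels to C channels (no bias)."""
    return kernel * kernel * channels * channels


C = 256  # illustrative channel count
three_3x3 = 3 * conv_weights(3, C)  # 27 * C^2
one_7x7 = conv_weights(7, C)        # 49 * C^2

print(stacked_receptive_field(3, 3))  # 7, the same as a single 7x7 layer
print(three_3x3, one_7x7)             # 1769472 3211264
```

The stacked version also inserts two extra non-linearities between the convolutions, which is part of the motivation given in [22].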

4.3.2. DenseNet

Introduced in 2017 by Huang et al. [23], the densely connected convolutional network (DenseNet) connects each layer to every other layer in a feed-forward fashion. This design made it possible to build deeper and more accurate convolutional neural networks.
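Within a dense block, each layer receives the concatenated feature maps of all preceding layers, so the number of input channels grows linearly with the growth rate k, and an L-layer block has L(L+1)/2 direct connections [23]. The sketch below computes both; the helper names are hypothetical, and the values (64 input channels, k = 32, six layers) correspond to the first dense block of DenseNet-121 and are used only for illustration.

```python
def dense_block_channels(k0, growth_rate, n_layers):
    """Channels entering each layer of a dense block, plus the block output.

    Layer l sees the block input (k0 channels) concatenated with the
    growth_rate new feature maps produced by each of the l preceding layers.
    """
    inputs = [k0 + growth_rate * l for l in range(n_layers)]
    output = k0 + growth_rate * n_layers
    return inputs, output


def direct_connections(n_layers):
    """Direct connections in an L-layer dense block: L(L+1)/2."""
    return n_layers * (n_layers + 1) // 2


inputs, output = dense_block_channels(k0=64, growth_rate=32, n_layers=6)
print(inputs)                 # [64, 96, 128, 160, 192, 224]
print(output)                 # 256
print(direct_connections(6))  # 21
```

Because each layer adds only k feature maps, DenseNet layers can stay narrow even though every layer has access to all earlier features.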

4.3.3. GoogleNet

GoogleNet [25], a 22-layer deep network, won the 2014 ImageNet LSVRC classification challenge. With the introduction of the inception module, networks could be made deeper while containing fewer parameters; GoogleNet contains 12 times fewer parameters than AlexNet [32], [25].
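Much of the inception module's parameter saving comes from 1 × 1 "reduction" convolutions placed before the expensive 3 × 3 and 5 × 5 filters [25]. The sketch counts the weights of the 5 × 5 branch of GoogLeNet's inception (3a) module (192 input channels, 16 reduction filters, 32 output filters) with and without the 1 × 1 bottleneck; the helper name is hypothetical and biases are ignored.

```python
def conv_weight_count(kernel, c_in, c_out):
    """Weights of a kernel x kernel convolution from c_in to c_out channels (no bias)."""
    return kernel * kernel * c_in * c_out


# 5x5 branch of GoogLeNet's inception (3a) module [25]
c_in, c_reduce, c_out = 192, 16, 32

direct = conv_weight_count(5, c_in, c_out)             # 5x5 applied to all 192 channels
bottleneck = (conv_weight_count(1, c_in, c_reduce)     # 1x1 reduction to 16 channels
              + conv_weight_count(5, c_reduce, c_out)) # then 5x5 on the reduced maps
print(direct, bottleneck)  # 153600 15872
```

The bottleneck cuts this branch's weights by almost a factor of ten while keeping the same 5 × 5 receptive field.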

4.3.4. NASNet

Introduced by Google Brain in 2018 [24], NASNet showed that an architecture learned on a small dataset can be transferred to a larger one. The best convolutional layers, dubbed cells, were searched for on the CIFAR-10 classification problem, and stacks of these cells were then used to solve the ImageNet [26] classification problem.

4.4. Model architecture and development

The ensemble model used in our experiments is shown in Fig. 2, and its architecture is detailed in Table 2; Table 3 compares its performance with that of individual models. Transfer learning is employed to mitigate overfitting, since the training data are limited. The experiments were implemented in Python using the Keras package with TensorFlow as the backend.

Table 3.

Performance of individual models compared with the proposed ensemble model (three-class problem).

| Architecture | Accuracy |
|---|---|
| VGGNet | 87.23% |
| GoogleNet | 86.78% |
| DenseNet | 85.67% |
| NASNet | 83.23% |
| ResNet | 82.64% |
| ResNeXt | 85.96% |
| Ensemble Model | 88.98% |

4.5. Implementation and training

We implemented two scenarios using the proposed model to detect COVID-19 from CXR images.

4.5.1. First scenario

In the first scenario, the model is trained and validated on the publicly available datasets. A three-class multi-model ensemble architecture is trained first; it classifies CXR images into three classes: Community-Acquired Pneumonia (CAP), normal, and COVID-19. The numbers of training images are given in Table 1. For testing, we used images from Cohen et al. and Kaggle; their numbers are given in the Test 1 row of the same table.

The same model was then modified for binary classification: it was retrained to classify the input CXR images into COVID-19 and non-COVID-19 cases.

4.5.2. Second scenario

The performance of the trained ensemble model is evaluated on a private dataset obtained from MGM Medical College and Hospital, Indore. Both three-class and binary classification are performed. The number of test images is given in the Test 2 row of Table 1.

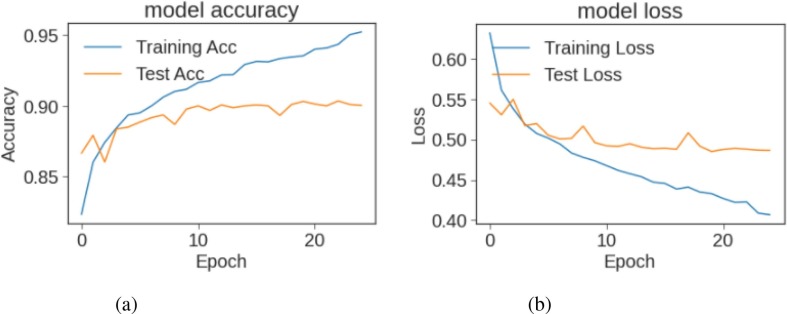

Both scenarios were run in Google Colaboratory, which provides a Tesla K80 GPU. The training progress for the first experiment is shown in Fig. 4. The Adam optimizer with a learning rate of 0.0001 is used in both cases, and early stopping was applied to shorten training.

Fig. 4.

(a) Training progress; (b) model loss.
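Early stopping halts training once the validation loss stops improving. The framework-independent sketch below shows the logic; the class name, the patience value, `min_delta`, and the loss sequence are hypothetical, since the source does not report them. In Keras the same behavior is provided by the `tf.keras.callbacks.EarlyStopping` callback (for example with `monitor="val_loss"` and a chosen `patience`), passed to `model.fit` alongside a model compiled with `tf.keras.optimizers.Adam(learning_rate=1e-4)`.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved by more than
    `min_delta` for `patience` consecutive epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.best_epoch = None
        self.wait = 0  # epochs since the last improvement

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True to stop."""
        if val_loss < self.best - self.min_delta:
            self.best, self.best_epoch, self.wait = val_loss, epoch, 0
        else:
            self.wait += 1
        return self.wait >= self.patience


# Hypothetical validation losses, one per epoch
val_losses = [0.90, 0.62, 0.48, 0.45, 0.46, 0.47, 0.44, 0.49, 0.50, 0.51]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        stopped_at = epoch
        break
print(f"stopped after epoch {stopped_at}; best epoch {stopper.best_epoch}")
```

Here the temporary rise at epochs 4 and 5 does not trigger a stop because the loss improves again at epoch 6; training ends after three non-improving epochs (7 to 9).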

5. Results

The entire experiment is divided into two scenarios as given below.

5.1. First scenario

The ensemble model for three-class classification achieves an accuracy of 88.98%. A comparison with the individual DCNNs in terms of accuracy is given in Table 3, where the ensemble is also compared with two recent networks, ResNet [34] and ResNeXt [35]. The proposed ensemble model is also evaluated on the dataset with and without the pre-processing step described in Section 4.1; the results are shown in Table 4. The 95% confidence interval of the proposed model for three-class classification is 88.95% ± 1.52%, and for two-class classification it is 98.58% ± 0.57%.
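Assuming the reported intervals use the normal (Wald) approximation for a binomial proportion, p ± z·sqrt(p(1 − p)/n) with z = 1.96, and that n is the full test-set size from Table 1 (1626 images for Test 1, 92 for Test 2), the half-widths can be reproduced as sketched below. The helper `wald_interval` is hypothetical; under these assumptions it reproduces the three-class half-widths of 1.52% and 5.04%, and the two-class half-widths to within about 0.01 percentage points.

```python
import math


def wald_interval(accuracy, n, z=1.96):
    """Normal-approximation (Wald) confidence interval for a proportion.

    Returns (accuracy, half_width); the interval is accuracy ± half_width.
    z = 1.96 corresponds to 95% coverage.
    """
    half_width = z * math.sqrt(accuracy * (1.0 - accuracy) / n)
    return accuracy, half_width


# Test-set sizes from Table 1: Test 1 = 605 + 885 + 136, Test 2 = 28 + 35 + 29
n_test1 = 605 + 885 + 136  # 1626
n_test2 = 28 + 35 + 29     # 92

for name, acc, n in [("public, three-class", 0.8898, n_test1),
                     ("private, three-class", 0.9348, n_test2)]:
    _, hw = wald_interval(acc, n)
    print(f"{name}: {100 * acc:.2f}% ± {100 * hw:.2f}%")
```

Because the private test set has only 92 images, its interval is more than three times wider than that of Test 1, even though the accuracy is higher.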

Table 4.

Performance of the proposed ensemble model on the dataset with and without pre-processing.

| Architecture (with/without pre-processing) | Accuracy |
|---|---|
| Proposed Ensemble Model (without pre-processing) | 85.72% |
| Proposed Ensemble Model (with pre-processing) | 88.98% |

Precision, recall, and F-measure are widely used metrics for evaluating classification algorithms. The class-wise measures for three-class classification are presented in Table 5, and the corresponding training progress is shown in Fig. 4.
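These metrics are computed per class from a confusion matrix: precision is TP / (TP + FP), recall is TP / (TP + FN), and the F-measure is their harmonic mean. The sketch below uses a small hypothetical confusion matrix, not the paper's results, and a hypothetical helper name. Read against Table 5, a high COVID-19 precision combined with a lower recall means that few non-COVID images were labelled COVID-19, but a sizeable share of COVID-19 images were assigned to other classes.

```python
def per_class_metrics(confusion, labels):
    """Precision, recall and F-measure for each class.

    confusion[i][j] counts examples whose true class is labels[i]
    and whose predicted class is labels[j].
    """
    k = len(labels)
    results = {}
    for c in range(k):
        tp = confusion[c][c]
        predicted = sum(confusion[r][c] for r in range(k))  # column sum: TP + FP
        actual = sum(confusion[c])                           # row sum: TP + FN
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results[labels[c]] = (precision, recall, f1)
    return results


# Hypothetical confusion matrix (rows = true class, columns = predicted class)
labels = ["CAP", "COVID-19", "Normal"]
confusion = [
    [40, 2, 8],
    [5, 15, 0],
    [3, 0, 47],
]
metrics = per_class_metrics(confusion, labels)
for label, (p, r, f) in metrics.items():
    print(f"{label:9s} P={p:.2f} R={r:.2f} F={f:.2f}")
```

For binary evaluation, the same function applies to a 2 × 2 matrix built after merging the CAP and normal labels into a single non-COVID-19 class.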

Table 5.

Results obtained for three-class classification.

| Class | Precision (%) | Recall (%) | F-measure (%) |
|---|---|---|---|
| CAP | 88 | 86 | 87 |
| COVID-19 | 98 | 62 | 75 |
| Normal | 89 | 95 | 92 |

After carefully analyzing the results and consulting experts, we concluded that the model confuses the CAP and COVID-19 classes, which is consistent with the low COVID-19 recall (62%) in Table 5. Therefore, in the second part of the experiment, the proposed multi-model ensemble structure is retrained for binary classification, in which the input CXR images are classified into either the COVID-19 or the non-COVID-19 class. For this, the normal and CAP classes are merged to form the non-COVID-19 class. An accuracy of 98.58% is obtained. Class-wise precision, recall, and F-measure are presented in Table 6.

Table 6.

Results obtained for binary classification.

| Class | Precision (%) | Recall (%) | F-measure (%) |
|---|---|---|---|
| COVID-19 | 87.41 | 97.05 | 91.97 |
| Non-COVID-19 | 99.72 | 98.74 | 99.23 |

Our results compare favorably with those of other models, most of which were tested on very few COVID-19 examples. A comparison with other models is given in Table 9. Narin et al. [36] used the ResNet50 architecture to detect COVID-19; although they obtained an accuracy of 98% in binary classification, only 10 COVID-19 images were used to test their model. Similarly, Khan et al. [17], who proposed the Xception-based CoroNet, used only 29 images for both binary and three-class classification. Ozturk et al. [37] proposed a DarkNet-based COVID-19 detector but used only 127 images in total, with a 5-fold cross-validation procedure. Sethy and Behera [16] performed binary classification using a DCNN based on ResNet50 and obtained an accuracy of 95.38%, but only 25 COVID-19 examples were used for testing. Ioannis et al. [38] used the VGG network and achieved a higher accuracy, but they used a total of only 224 COVID-19 images for training, testing, and validation, again with 5-fold cross-validation. Most importantly, their dataset contains images from Kaggle only.

Table 9.

Comparison of the proposed model with other networks in the literature.

| Study | Dataset used | Architecture | 3-class accuracy | Binary accuracy | # of test images (COVID-19) |
|---|---|---|---|---|---|
| Narin et al. [36] | Cohen et al. [20] (date of procurement not given) | ResNet50 | NA | 98 | 10 |
| Khan et al. [17] | Cohen et al. (date of procurement not given) | CoroNet (Xception as base model) | 89.6 | 99 | 29 |
| Narin et al. [36] | Cohen et al. (date of procurement not given) | InceptionV3 | NA | 97 | 10 |
| Wang and Wong [39] | COVIDx dataset | COVID-Net (based on residual architecture) | NA | 92.4 | 100 |
| Ozturk et al. [37] | Cohen et al. (date of procurement not given) | DarkNet | 87.02 | 98.08 | 127 (total) |
| Sethy and Behera [16] | Cohen et al. (date of procurement not given) | ResNet50 | NA | 95.38 | 25 |
| Hemdan et al. [15] | Cohen et al. (date of procurement not given) | VGG16 | NA | 90 | 5 |
| Ioannis et al. [38] | Kaggle | VGG19 | 93.48 | 98.75 | 224 (total) |
| Goodwin et al. [40] | Cohen et al. (acquired on 17 April) | DenseNet201 | 88.4 | NA | 20 |
| Deb et al. [41] | Cohen et al. (acquired on 17 April) | DCNN network | 88.39 | 96 | 20 |
| Proposed study | Cohen et al. [20] (procured on 20 April) and Kaggle | Ensemble model (based on four pre-trained DCNNs) | 88.98 | 98.58 | 136 |
| Proposed study | Private dataset | Ensemble model (based on four pre-trained DCNNs) | 93.48 | 95.65 | 29 |

Accuracy is given in percent. NA: not applicable, as the authors did not perform that classification task.

In contrast, the proposed ensemble model is tested on 136 COVID-19 examples from two publicly available datasets: 20 images from Cohen et al. [20] and the remaining 116 from Kaggle. We consider the proposed model more robust because it is tested on two publicly available datasets and one private dataset.

5.2. Second scenario

The second scenario involves classifying the dataset acquired from MGM Medical College, Indore. As this dataset is small, we did not retrain the model; instead, the model trained in the first scenario was used to test the private dataset. For three-class classification, we obtained an accuracy of 93.48%, with a 95% confidence interval of 93.48% ± 5.04%. For two-class classification, the 95% confidence interval is 95.65% ± 4.16%. Precision, recall, and F-measure are given in Table 7. As shown in Table 7, the precision for COVID-19 is 100%: no patient with pneumonia and no healthy individual in this dataset was misclassified as COVID-19. The recall for the normal class is also 100%, which means that no healthy individual was classified as COVID-19 or CAP. To classify the input CXR images into COVID-19 and non-COVID-19 classes, we merged the CAP and normal images into a single non-COVID-19 class, and an accuracy of 95.65% was obtained (Table 8).

Table 7.

Results obtained for three-class classification (private dataset).

| Class | Precision (%) | Recall (%) | F-measure (%) |
|---|---|---|---|
| CAP | 90 | 93 | 91 |
| COVID-19 | 100 | 86 | 93 |
| Normal | 92 | 100 | 96 |

6. Conclusions

With the increasing number of cases, it is important to ensure that no COVID-19 patient goes undetected. This requires a testing mechanism that is not only accurate but also real-time. The proposed method, which is based on four pre-trained DCNN architectures, can assist radiologists in detecting COVID-19 from chest X-ray images. The model achieved promising results, including on the private dataset (see Table 8), and we expect its accuracy to improve as more training data becomes available.

Table 8.

Results obtained for binary classification (private dataset).

| Class | Precision (%) | Recall (%) | F-measure (%) |
|---|---|---|---|
| COVID-19 | 88.31 | 95.05 | 91.56 |
| Non-COVID-19 | 97.52 | 97.74 | 97.62 |

7. Source code and dataset

The source code and dataset are available on GitHub: https://github.com/sagardeepdeb/ensemble-model-for-COVID-detection

CRediT authorship contribution statement

Sagar Deep Deb: Methodology, Writing - original draft. Rajib Kumar Jha: Supervision, Visualization, Investigation. Kamlesh Jha: Resources, Supervision. Prem S Tripathi: Resources, Supervision.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgment

All relevant ethical issues have been properly addressed, and there is no ethical violation in this work.

References

- 1.E. Mahase, Coronavirus: covid-19 has killed more people than sars and mers combined, despite lower case fatality rate, 2020. [DOI] [PubMed]

- 2.Sohail S. Rational and practical use of imaging in covid-19 pneumonia. Pakistan Journal of Medical Sciences. 2020;36(COVID19-S4):S130. doi: 10.12669/pjms.36.COVID19-S4.2760. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.X. Li, D. Zhu, Covid-xpert: An ai powered population screening of covid-19 cases using chest radiography images, arXiv preprint arXiv:2004.03042, 2020.

- 4.A.J. Kucharski, P. Klepac, A. Conlan, S.M. Kissler, M. Tang, H. Fry, J. Gog, J. Edmunds, C.C.-. W. Group et al., Effectiveness of isolation, testing, contact tracing and physical distancing on reducing transmission of sars-cov-2 in different settings, medRxiv, 2020. [DOI] [PMC free article] [PubMed]

- 5.A. Bernheim, X. Mei, M. Huang, Y. Yang, Z.A. Fayad, N. Zhang, K. Diao, B. Lin, X. Zhu, K. Li et al., Chest ct findings in coronavirus disease-19 (covid-19): relationship to duration of infection, Radiology, 2020, p. 200463. [DOI] [PMC free article] [PubMed]

- 6.X. Xie, Z. Zhong, W. Zhao, C. Zheng, F. Wang, J. Liu, Chest ct for typical 2019-ncov pneumonia: relationship to negative rt-pcr testing. Radiology, 343, 2020, pp. 200 343–200. [DOI] [PMC free article] [PubMed]

- 7.Singh D., Kumar V., Kaur M. Classification of covid-19 patients from chest ct images using multi-objective differential evolution–based convolutional neural networks. European Journal of Clinical Microbiology & Infectious Diseases. 2020:1–11. doi: 10.1007/s10096-020-03901-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.H. Maghdid, K. Ghafoor, A. Sadiq, K. Curran, and K. Rabie, A novel ai-enabled framework to diagnose coronavirus covid-19 using smartphone embedded sensors: Design study. arxiv, 2003.

- 9.S. Wang, B. Kang, J. Ma, X. Zeng, M. Xiao, J. Guo, M. Cai, J. Yang, Y. Li, X. Meng et al., A deep learning algorithm using ct images to screen for corona virus disease (covid-19), MedRxiv, 2020. [DOI] [PMC free article] [PubMed]

- 10.Li L., Qin L., Xu Z., Yin Y., Wang X., Kong B., Bai J., Lu Y., Fang Z., Song Q., et al. Artificial intelligence distinguishes covid-19 from community acquired pneumonia on chest ct. Radiology. 2020 doi: 10.1148/radiol.2020200905. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Harmon S.A., Sanford T.H., Xu S., Turkbey E.B., Roth H., Xu Z., Yang D., Myronenko A., Anderson V., Amalou A., et al. Artificial intelligence for the detection of covid-19 pneumonia on chest ct using multinational datasets. Nature Communications. 2020;11(1):1–7. doi: 10.1038/s41467-020-17971-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.J.P. Kanne, B.P. Little, J.H. Chung, B.M. Elicker, L.H. Ketai, Essentials for radiologists on covid-19: an updateradiology scientific expert panel, 2020. [DOI] [PMC free article] [PubMed]

- 13.Kim Y.Y., Shin H.J., Kim M.-J., Lee M.-J. Comparison of effective radiation doses from x-ray, ct, and pet/ct in pediatric patients with neuroblastoma using a dose monitoring program. Diagnostic and Interventional Radiology. 2016;22(4):390. doi: 10.5152/dir.2015.15221. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Linda W. A tailored deep convolutional neural network design for detection of covid-19 cases from chest radiography images. Journal of Network and Computer Applications. 2020 [Google Scholar]

- 15.E.E.-D. Hemdan, M.A. Shouman, M.E. Karar, Covidx-net: A framework of deep learning classifiers to diagnose covid-19 in x-ray images, arXiv preprint arXiv:2003.11055, 2020.

- 16.P.K. Sethy, S.K. Behera, Detection of coronavirus disease (covid-19) based on deep features, Preprints, vol. 2020030300, 2020, p. 2020.

- 17.Khan A.I., Shah J.L., Bhat M.M. Computer Methods and Programs in Biomedicine. 2020. Coronet: A deep neural network for detection and diagnosis of covid-19 from chest x-ray images; p. 105581. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Chollet F. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017. Xception: Deep learning with depthwise separable convolutions; pp. 1251–1258. [Google Scholar]

- 19.P. Rajpurkar, J. Irvin, K. Zhu, B. Yang, H. Mehta, T. Duan, D. Ding, A. Bagul, C. Langlotz, K. Shpanskaya et al., Chexnet: Radiologist-level pneumonia detection on chest x-rays with deep learning, arXiv preprint arXiv:1711.05225, 2017.

- 20.J.P. Cohen, P. Morrison, L. Dao, K. Roth, T.Q. Duong, M. Ghassemi, Covid-19 image data collection: Prospective predictions are the future, arXiv preprint arXiv:2006.11988, 2020.

- 21.Li H., Zhuang S., Li D.-A., Zhao J., Ma Y. Benign and malignant classification of mammogram images based on deep learning. Biomedical Signal Processing and Control. 2019;51:347–354. [Google Scholar]

- 22.K. Simonyan, A. Zisserman, Very deep convolutional networks for large-scale image recognition, arXiv preprint arXiv:1409.1556, 2014.

- 23.Huang G., Liu Z., Van Der Maaten L., Weinberger K.Q. Densely connected convolutional networks. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017, pp. 4700–4708.

- 24.Zoph B., Vasudevan V., Shlens J., Le Q.V. Learning transferable architectures for scalable image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018, pp. 8697–8710.

- 25.Szegedy C., Liu W., Jia Y., Sermanet P., Reed S., Anguelov D., Erhan D., Vanhoucke V., Rabinovich A. Going deeper with convolutions. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2015, pp. 1–9.

- 26.Deng J., Dong W., Socher R., Li L.-J., Li K., Fei-Fei L. Imagenet: A large-scale hierarchical image database. 2009 IEEE Conference on Computer Vision and Pattern Recognition. IEEE, 2009, pp. 248–255.

- 27.Perez F., Avila S., Valle E. Solo or ensemble? Choosing a cnn architecture for melanoma classification. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops. 2019.

- 28.Wu Z., Li S., Chen C., Hao A., Qin H. A deeper look at image salient object detection: Bi-stream network with a small training dataset. IEEE Transactions on Multimedia. 2020.

- 29.Wang X., Li S., Chen C., Fang Y., Hao A., Qin H. Data-level recombination and lightweight fusion scheme for rgb-d salient object detection. IEEE Transactions on Image Processing. 2020;30:458–471. doi: 10.1109/TIP.2020.3037470.

- 30.Ma G., Li S., Chen C., Hao A., Qin H. Stage-wise salient object detection in 360 omnidirectional image via object-level semantical saliency ranking. IEEE Transactions on Visualization and Computer Graphics. 2020;26(12):3535–3545. doi: 10.1109/TVCG.2020.3023636.

- 31.Li Y., Li S., Chen C., Hao A., Qin H. A plug-and-play scheme to adapt image saliency deep model for video data. IEEE Transactions on Circuits and Systems for Video Technology. 2020;31(6):2315–2327.

- 32.Krizhevsky A., Sutskever I., Hinton G.E. Imagenet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems. 2012, pp. 1097–1105.

- 33.Khan S., Islam N., Jan Z., Din I.U., Rodrigues J.J.C. A novel deep learning based framework for the detection and classification of breast cancer using transfer learning. Pattern Recognition Letters. 2019;125:1–6.

- 34.He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016, pp. 770–778.

- 35.Xie S., Girshick R., Dollár P., Tu Z., He K. Aggregated residual transformations for deep neural networks. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017, pp. 1492–1500.

- 36.A. Narin, C. Kaya, Z. Pamuk, Automatic detection of coronavirus disease (covid-19) using x-ray images and deep convolutional neural networks, arXiv preprint arXiv:2003.10849, 2020.

- 37.Ozturk T., Talo M., Yildirim E.A., Baloglu U.B., Yildirim O., Acharya U.R. Automated detection of covid-19 cases using deep neural networks with x-ray images. Computers in Biology and Medicine. 2020, p. 103792.

- 38.Apostolopoulos I.D., Mpesiana T.A. Covid-19: automatic detection from x-ray images utilizing transfer learning with convolutional neural networks. Physical and Engineering Sciences in Medicine. 2020, p. 1.

- 39.L. Wang, A. Wong, Covid-net: A tailored deep convolutional neural network design for detection of covid-19 cases from chest x-ray images, arXiv preprint arXiv:2003.09871, 2020.

- 40.B.D. Goodwin, C. Jaskolski, C. Zhong, H. Asmani, Intra-model variability in covid-19 classification using chest x-ray images, arXiv preprint arXiv:2005.02167, 2020.

- 41.S.D. Deb, R.K. Jha, Covid-19 detection from chest x-ray images using ensemble of cnn models, in: 2020 International Conference on Power, Instrumentation, Control and Computing (PICC). IEEE, 2020, pp. 1–5.