Abstract

Intelligence quotient (IQ) testing is standard for evaluating cognitive abilities in genomic studies, but it requires professional expertise in administration and interpretation, and IQ scores do not translate into insights on implicated brain systems that can link genes to behavior. Individuals with 22q11.2 deletion syndrome (22q11.2DS) often undergo IQ testing to address special needs, but access to testing in resource‐limited settings is challenging. The brief Penn Computerized Neurocognitive Battery (CNB) provides measures of cognitive abilities related to brain systems and can screen for cognitive dysfunction. To examine the relation between CNB measures and IQ, we evaluated participants with 22q11.2DS from Philadelphia and Tel Aviv (N = 117; 52 females; mean age 18.8 years) who completed both an IQ test and the CNB no more than 5 years apart, as well as a subsample (n = 24) who had both IQ and CNB assessments at two time points. We estimated domain‐level CNB scores using exploratory factor analysis (including a bifactor model for overall scores) and related those scores to IQ using intraclass correlations (ICCs). The overall CNB accuracy score showed time 1 to time 2 consistency similar to that of IQ (0.775 for IQ and 0.721 for CNB accuracy), correlated well with IQ (ICC = 0.565 and 0.593 at time 1 and time 2, respectively), and correlated similarly with adaptive functioning (0.165 and 0.172 for IQ and CNB, respectively). We provide a crosswalk (from linear equating) between standardized CNB and IQ scores. Results suggest that the CNB can substitute for IQ testing in future genetic studies that aim to probe specific domains of brain‐behavior relations beyond IQ.

Keywords: 22q11.2 deletion syndrome, intelligence, IQ, linear equating, Penn Computerized Neurocognitive Battery

IQ testing is standard for evaluating cognitive abilities in genomic studies, and individuals with 22q11.2 deletion syndrome often undergo IQ testing, but access to testing in resource‐limited settings is challenging. The brief Penn Computerized Neurocognitive Battery (CNB) provides measures of cognitive abilities related to brain systems and can screen for cognitive dysfunction. We evaluated participants with 22q11.2DS from Philadelphia and Tel Aviv and related their CNB scores to their IQ scores. The overall CNB accuracy score showed Time 1 to Time 2 consistency similar to that of IQ and correlated well with IQ, and we provide a crosswalk between CNB and IQ scores. Results suggest that the CNB can substitute for IQ testing in future genetic studies that aim to probe specific domains of brain‐behavior relations beyond IQ.

1. INTRODUCTION

Cognitive dysfunction is evident in individuals with neurogenetic disorders such as 22q11.2 deletion syndrome (22q11.2DS; McDonald‐McGinn et al., 2015), and intelligence quotient (IQ) testing is standard in the evaluation of cognitive abilities in affected individuals (Duijff et al., 2012; Green et al., 2009; Morrison et al., 2020). Like other groups with compromised cognitive functioning, people with 22q11.2DS often receive IQ testing to determine optimal educational placement and to help inform intervention and prognosis (Antshel et al., 2017; Hooper et al., 2013). IQ scores are lower in individuals with 22q11.2DS, with an average IQ of 70, which is at the traditional border between “normal” abilities and disability.

Major cognitive domains that are impaired in 22q11.2DS include executive functions and complex and social cognition (Azuma et al., 2015; Duijff et al., 2012; Gur et al., 2014). Neurocognitive evaluation is important in 22q11.2DS because cognitive abilities are the best predictor of successful school assignment and are needed for recommending specific in‐school assistance for children with 22q11.2DS (Mosheva et al., 2019). In addition, lower baseline IQ and decline over time in cognitive abilities (e.g., executive functioning) and in verbal IQ predict the later onset of psychotic disorders (Antshel et al., 2010; Vorstman et al., 2015). Yet IQ testing requires professional expertise in administration and interpretation, and IQ scores do not readily translate into insights on implicated brain systems that can help link genes to behavior.

While the Wechsler tests (Wechsler, 1981) are considered the “gold standard,” there are faster methods for obtaining IQ estimates, such as the Peabody Picture Vocabulary Test (PPVT; Dunn, 1965) and Raven's Progressive Matrices (Raven & Court, 1938). However, these tests may be suboptimal, mainly because each assesses only a narrow construct. For example, Raven's test examines the ability to derive rules from abstract patterns and apply those rules to select an optimal related pattern. Likewise, the PPVT assesses only vocabulary tied to visual stimuli. This narrowness of construct is what allows relatively quick assessment, but at the cost of ignoring broader domains of ability.

We have developed a computerized neurocognitive battery (CNB), based on functional neuroimaging tasks, which has been applied in several populations, providing normative data and measures of performance accuracy and response time (Gur et al., 2010, 2012; Moore et al., 2015; Roalf et al., 2014). The tests, which have been validated with functional neuroimaging, are scored automatically and can be administered through a web interface by minimally trained staff who can proctor the testing on site or remotely. In 22q11.2DS, we reported overall impaired performance across cognitive domains, most notably for complex cognition and social cognition (Goldenberg et al., 2012; Gur et al., 2014; Niarchou et al., 2018; Weinberger et al., 2016; Yi et al., 2016). Previous studies associated scores of CNB domains with the severity of subthreshold psychotic symptoms and characterized cognitive deficits of psychotic individuals with 22q11.2DS (Weinberger et al., 2016; Yi et al., 2016). The battery provides performance measures that are highly correlated with other estimates of IQ and educational attainment (Gur et al., 2010; Swagerman et al., 2016) and includes tests, such as matrix reasoning, that have been used as proxies for IQ. Examining associations between traditional IQ measures and those from a neuroscience‐based computerized battery would allow integration of older data with new genomic studies that increasingly incorporate computerized assessments implemented on a large scale and in resource‐limited settings.

The purpose of the present study was to examine whether an open‐source, brief neurocognitive battery (specifically, the Penn CNB or a subset of its tests) could be used as a bridge to IQ. The CNB requires minimal professional expertise for administration and scoring, is more time‐efficient than traditional IQ testing, and assesses a wide range of abilities including executive functions, episodic memory, complex cognition, and social cognition. Thus, it allows probing more specific brain‐behavior systems differentially implicated by genetic mechanisms. Importantly, it also taps domains targeted by existing brief IQ tests (e.g., matrix reasoning). Comparing CNB scores to IQ could help gauge the potential utility of the CNB as a substitute for IQ testing in large‐scale genomic studies and resource‐limited settings. Our study tested the hypotheses that (1) exploratory factor analysis would show that the CNB in this sample has the same factorial structure as in previous studies with normative populations, in both correlated‐traits and bifactor frameworks; (2) consistency of scores across repeated measures would be comparable between IQ and the CNB‐derived measure; (3) a composite score based on average accuracy would correlate strongly with IQ; and (4) IQ and the CNB composite score would have similar correlations with an external validator such as level of functioning. Another aim of the study was to explore a data‐driven approach to two questions: (1) How well can IQ be predicted from CNB scores in a cross‐validation (CV) framework? (2) What is the smallest set of CNB measures that best predicts IQ? These exploratory analyses were designed to help investigators who want to use portions of the CNB but would still like to know how well their measures can be expected to predict IQ. These analyses also allow us to provide a crosswalk (from linear equating) between standardized CNB and IQ scores.

2. MATERIALS AND METHODS

2.1. Participants

The sample included 117 (52 female) participants, mean age 18.8 years (range 8.11–44.7). They were recruited at two collaborating sites: 50.4% were from Tel Aviv, Israel, and the remainder from Philadelphia, USA. All participants had a 22q11.2 deletion confirmed by fluorescence in situ hybridization, comparative genomic hybridization, multiplex ligation‐dependent probe amplification, or single nucleotide polymorphism (SNP) microarray (Jalali et al., 2008). For the total sample, mean parental education was 14.9 years.

The Tel Aviv cohort was recruited from the Behavioral Neurogenetic Center at the Sheba Medical Center, a large referral center that coordinates research and treatment of individuals with 22q11.2DS from all over Israel. The Philadelphia cohort was part of an ongoing collaboration between the “22q and You Center” at the Children's Hospital of Philadelphia (CHOP) and the Brain Behavior Laboratory at Penn Medicine. The 22q and You Center is a large program supporting multidisciplinary coordinated care and research activities specific to individuals with 22q11.2DS. Enrollment criteria included proficiency in English or Hebrew, being ambulatory and in stable health, and the physical and cognitive capability to participate in an interview and perform neurocognitive assessments. The IQ cutoff for inclusion was IQ ≥ 70 for the Philadelphia sample and IQ ≥ 60 for the Tel Aviv sample. Participants provided informed consent/assent after receiving a complete description of the study, and the Institutional Review Boards at Penn, CHOP, and Sheba Medical Center approved the protocol.

2.2. Measures

2.2.1. IQ tests

Data include standardized cognitive assessments obtained from ongoing studies or IQ scores available from medical records. All IQ assessments used the age‐appropriate version of the Wechsler test: the Wechsler Intelligence Scale for Children (Wechsler, 1949), the Wechsler Abbreviated Scale of Intelligence (Wechsler, 1999), or the Wechsler Adult Intelligence Scale (Wechsler, 1981). Across the sample, mean (SD) Full Scale IQ was 78.0 (11.8), and the mean Global Assessment of Functioning (GAF; McGlashan et al., 2003) score was 67.4.

2.2.2. Penn CNB

The CNB comprises 14 tests grouped into five domains of neurobehavioral function. These tests were selected because they represent well‐established brain systems, and tests in the battery were validated with functional neuroimaging (Roalf et al., 2014). Four domains (executive control, episodic memory, complex cognition, and social cognition) each contain three tests, as summarized in Supplementary Figure S1 (reproduced from Moore et al., 2015). A fifth domain comprises two speed tests, motor and sensorimotor. Although speed is incorporated into some measures that compose traditional IQ tests, those tests do not log response speed at millisecond precision, and because the current analyses emphasized accuracy, the two speed‐only tests were omitted. The Penn Verbal Reasoning Test was also omitted because it required English comprehension and could not be readily translated into Hebrew. All CNB scores were age‐standardized by regressing age, age², and age³ out of each test score, leaving all CNB scores in an age‐corrected, standardized (z) metric. The mean interval between CNB and IQ testing was 1.6 years (maximum 5 years).
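The age correction described above can be sketched as an ordinary least‐squares regression of each test score on a cubic polynomial in age, with the residuals rescaled to z‐scores. This is a minimal sketch, assuming the regression is fitted within a single analysis sample; the function name `age_standardize` and the simulated data are illustrative, not taken from the study.

```python
import numpy as np


def age_standardize(scores, ages):
    """Regress age, age^2 and age^3 out of one test score; return z-scored residuals.

    Assumption: the regression is fitted within the sample being analyzed.
    """
    scores = np.asarray(scores, dtype=float)
    ages = np.asarray(ages, dtype=float)
    # Centering age keeps the cubic design well conditioned; it spans the same
    # space as [1, age, age^2, age^3], so the residuals are unchanged.
    a = ages - ages.mean()
    X = np.column_stack([np.ones_like(a), a, a**2, a**3])
    beta, *_ = np.linalg.lstsq(X, scores, rcond=None)
    resid = scores - X @ beta
    # Rescale residuals to mean 0 and SD 1 (the z metric used for CNB scores).
    return (resid - resid.mean()) / resid.std(ddof=1)


# Usage with simulated (hypothetical) data
rng = np.random.default_rng(0)
ages = rng.uniform(8, 45, size=200)
raw = 0.5 * ages - 0.01 * ages**2 + rng.normal(0, 1, size=200)
z = age_standardize(raw, ages)
```

Because age and its powers are in the design matrix, the resulting z‐scores are uncorrelated with age, age², and age³ in the sample used for fitting.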

2.2.3. Repeated assessments

A subsample of individuals had two IQ assessments (N = 103), two CNB assessments (N = 130), or both (N = 24). This subsample permitted comparison of the stability of IQ and CNB‐derived measures and of their associations. Comparing participants with both repeated measures to the remaining sample on key characteristics (age, sex, parental education, IQ‐CNB interval, IQ, CNB accuracy, CNB efficiency, GAF) indicated that the groups differed significantly only in age, with the group having both repeated measures being younger (F(1, 115) = 4.67, p = .033).

2.3. Statistical analysis

To assess the consistency of the current data with the theoretical Penn CNB measurement model (Moore et al., 2015), we performed exploratory factor analyses (EFA); to keep the study goals simple, we then created a single summary score for use in subsequent analyses. We anticipated that our EFA results would conform to those of Moore et al. (2015) from a non‐22q11.2DS sample and Niarchou et al. (2018) from a 22q11.2DS sample. To simplify replication and future use of the CNB in this population, we used a unit‐weighted factor score (mean z‐score composite) rather than, for example, calculating scores from the pattern or structure matrix of the analyses below.
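A unit‐weighted composite simply averages a participant's z‐scores, so every test counts equally regardless of its factor loading. This is a minimal sketch; skipping missing tests (passed as `None`) is an assumption, since the text does not say how missing scores were handled, and the function name is illustrative.

```python
from statistics import mean


def unit_weighted_composite(z_scores):
    """Mean of one participant's age-standardized CNB z-scores (unit weights).

    Assumption: missing tests are passed as None and simply skipped.
    """
    present = [z for z in z_scores if z is not None]
    return mean(present) if present else None


# Hypothetical accuracy z-scores for one participant, with one test missing
print(unit_weighted_composite([0.5, -1.0, None, 0.2]))
```

Because no weights are estimated, the same composite can be computed identically in any new sample, which is the replication advantage the text refers to.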

The measurement model was subjectively assessed using an exploratory bifactor model (Reise et al., 2010; Schmid & Leiman, 1957), which includes a general (overall, “g”) factor as well as orthogonal specific factors (e.g., memory). The benefit of a bifactor model is that it avoids the distortion in factor loadings on the overall “g” factor caused by multidimensionality (Reise et al., 2015). For example, extracting only one factor or using the first unrotated component of a principal components analysis will yield biased loadings (Reise et al., 2011). Consider a test for psychosis that includes three items about positive symptoms (e.g., hallucinations) and three items about negative symptoms (e.g., anhedonia). To make an overall “psychosis” score combining all six items, one might wish to find optimal weights for each item (e.g., perhaps an item about auditory hallucinations should contribute more to the score than an item about olfactory hallucinations). One way to find the optimal weights is to estimate a unidimensional (one‐factor) model and use the loadings on that factor as the weights. However, as demonstrated by the above‐cited studies, this will result in incorrect (biased) loadings because a one‐factor model ignores the fact that there are really two correlated dimensions (positive and negative symptoms). The bifactor model treats this multidimensionality (here, two factors) as nuisance, producing a single general factor (a third factor) that combines all items with optimal, unbiased weights.
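The Schmid–Leiman transformation cited above can be sketched in a few lines: each test's general loading is its oblique first‐order loading multiplied by that factor's loading on a second‐order g, and its specific loading is the first‐order loading scaled by the square root of the factor variance g leaves unexplained. This is a minimal sketch, assuming exactly three first‐order factors (so the second‐order loadings follow in closed form from the inter‐factor correlations) and simple structure. The inputs are the accuracy inter‐factor correlations and three primary accuracy loadings (EDI, VMEM, ATT) from Table 1, used purely as illustration; the output is not a reproduction of Figure 1, and the function names are hypothetical.

```python
import math


def second_order_loadings(r12, r13, r23):
    """Loadings of three correlated first-order factors on a single g.

    With three factors, l1*l2 = r12, l1*l3 = r13, l2*l3 = r23 has an exact solution.
    """
    l1 = math.sqrt(r12 * r13 / r23)
    return [l1, r12 / l1, r13 / l1]


def schmid_leiman(first_order, g_loadings):
    """Split oblique loadings into general and orthogonal specific loadings.

    general[i]     = sum_k A[i][k] * g[k]
    specific[i][k] = A[i][k] * sqrt(1 - g[k]**2)
    """
    general = [sum(a * g for a, g in zip(row, g_loadings)) for row in first_order]
    scale = [math.sqrt(1 - g * g) for g in g_loadings]
    specific = [[a * s for a, s in zip(row, scale)] for row in first_order]
    return general, specific


# Accuracy inter-factor correlations from Table 1 (F1-F2, F1-F3, F2-F3)
g = second_order_loadings(0.55, 0.32, 0.28)
# Simplified loadings: EDI on F1, VMEM on F2, ATT on F3 (cross-loadings ignored)
A = [[0.81, 0.0, 0.0], [0.0, 0.77, 0.0], [0.0, 0.0, 0.87]]
general, specific = schmid_leiman(A, g)
for name, gl in zip(["EDI", "VMEM", "ATT"], general):
    print(f"{name}: general loading {gl:.2f}")
```

Even in this simplified form, attention receives the lowest general loading (roughly 0.35, versus about 0.64 for EDI and 0.53 for VMEM) because its executive factor is the least correlated with the other two, so most of its common variance goes to the specific factor.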

With the measurement model supported (see below), we examined the bivariate relationships between IQ scores and the CNB summary score using intraclass correlations (ICCs), because we wanted the means, not only the rank order, to be stable across time (captured by ICCs but not by correlations). Whereas correlations indicate relative consistency across time, ICCs indicate both relative and absolute consistency. For example, if five subjects scored 90, 95, 100, 105, and 110 at time point 1 and then (in the same order) scored 110, 111, 112, 113, and 114 at time point 2, the correlation between time points (Pearson or Spearman) would be 1.00, while the ICC between time points would be 0.38, reflecting the change in the level and spread of scores between the time points. We used the method described in Ibrahim et al. (2015) to correct for practice effects on the CNB and, for comparability, applied the same procedure to the IQ scores (although such corrections for IQ are admittedly not routine). Notably, the practice‐effect‐corrected ICCs were consistently higher than the uncorrected ICCs for both CNB measures and IQ. We also calculated the bivariate relationships between IQ and CNB tests administered at two different times (maximum 5 years apart) in the subsample with both repeated IQ assessments and repeated CNB testing.
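The worked example above can be checked with the standard two‐way ANOVA formulas for single‐measure ICCs (Shrout and Fleiss notation). The text does not state which ICC form was used, so this sketch computes two common ones; the function names are illustrative. With these five score pairs, the consistency form ICC(3,1) comes out at about 0.38, the value quoted above, while the absolute‐agreement form ICC(2,1), which additionally penalizes the 12‐point shift in means, is about 0.12.

```python
def mean_squares(rows):
    """Two-way ANOVA mean squares for an n-subjects x k-occasions table."""
    n, k = len(rows), len(rows[0])
    grand = sum(sum(r) for r in rows) / (n * k)
    row_means = [sum(r) / k for r in rows]
    col_means = [sum(r[j] for r in rows) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for r in rows for x in r)
    ss_err = ss_total - ss_rows - ss_cols
    return (n, k, ss_rows / (n - 1), ss_cols / (k - 1),
            ss_err / ((n - 1) * (k - 1)))


def icc_consistency(rows):
    """ICC(3,1): two-way mixed model, consistency, single measure."""
    n, k, msr, msc, mse = mean_squares(rows)
    return (msr - mse) / (msr + (k - 1) * mse)


def icc_agreement(rows):
    """ICC(2,1): two-way random model, absolute agreement, single measure."""
    n, k, msr, msc, mse = mean_squares(rows)
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


t1 = [90, 95, 100, 105, 110]
t2 = [110, 111, 112, 113, 114]
rows = list(zip(t1, t2))
print(round(pearson(t1, t2), 2), round(icc_consistency(rows), 2), round(icc_agreement(rows), 2))
# prints: 1.0 0.38 0.12
```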

To determine whether a smaller subset of tests might predict IQ as well as the full battery, saving administration time for those mainly interested in estimating IQ, we applied best‐subset regression to the individual CNB tests. Best‐subset regression (sometimes called “exhaustive search” regression) is a variable‐selection technique in which all possible combinations of variables are compared and the set with the maximum R‐squared is selected. Unlike other regression‐based techniques such as stepwise regression, which test only a small range of models, best‐subset regression tests every possible model with a user‐specified number of variables. Because this carries a clear risk of overfitting, cross‐validation is necessary. We first split the sample into random halves (50%/50%) and, in one half, ran best‐subset regression for two, three, four, and up to 12 variables, selecting the best model for each number of variables, as is standard practice. These 11 models (best two through best 12) were then cross‐validated in the second half of the sample using 10‐fold cross‐validation, producing 11 predicted values for each person, each from a model estimated without that individual. These predicted values were correlated with the observed values, and the correlations were squared to obtain the CV R‐squared. For thoroughness, we also cross‐validated the models using ridge regression, a regularized regression method that often predicts better out of sample than ordinary least‐squares regression. Thus, each person received 11 + 11 = 22 predicted values, yielding 22 CV R‐squared values. This entire procedure was repeated 1000 times, and the results were recorded. Results were then examined graphically to determine an optimal number of variables based on the CV values, with a general preference for parsimony. We also calculated ICCs for all repetitions to ensure consistency with the bivariate R‐squared and to allow translation from absolute CNB values to absolute IQ estimates.
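One repetition of the procedure above (select on one random half, cross‐validate on the other with both ordinary least squares and ridge) can be sketched with NumPy. This is a minimal sketch, not the authors' code: the ridge penalty `lam` is a fixed, hypothetical value (the text does not say how it was chosen; in practice it is often tuned by nested cross‐validation), predictors are standardized within each training fold, and the data in the usage lines are simulated.

```python
import itertools

import numpy as np


def sq_corr(y, yhat):
    # R-squared as described in the text: squared correlation of predicted with observed
    return np.corrcoef(y, yhat)[0, 1] ** 2


def fit(X, y, lam=0.0):
    """OLS (lam=0) or ridge (lam>0) on standardized predictors; intercept unpenalized."""
    mu, sd = X.mean(axis=0), X.std(axis=0)
    Z = (X - mu) / sd
    beta = np.linalg.solve(Z.T @ Z + lam * np.eye(Z.shape[1]), Z.T @ (y - y.mean()))
    return lambda X_new: y.mean() + ((X_new - mu) / sd) @ beta


def best_subset(X, y, k):
    """Exhaustive search over all k-column subsets; keep the highest in-sample R-squared."""
    def in_sample_r2(cols):
        cols = list(cols)
        return sq_corr(y, fit(X[:, cols], y)(X[:, cols]))
    return list(max(itertools.combinations(range(X.shape[1]), k), key=in_sample_r2))


def cv_r2(X, y, cols, lam, rng, folds=10):
    """K-fold CV: each person is predicted by a model fitted without them."""
    fold = rng.permutation(len(y)) % folds
    pred = np.empty(len(y))
    for f in range(folds):
        train, test = fold != f, fold == f
        pred[test] = fit(X[train][:, cols], y[train], lam)(X[test][:, cols])
    return sq_corr(y, pred)


def one_repetition(X, y, sizes, lam, rng):
    """Select on a random half; cross-validate OLS and ridge on the other half."""
    idx = rng.permutation(len(y))
    sel, val = idx[: len(y) // 2], idx[len(y) // 2:]
    results = {}
    for k in sizes:
        cols = best_subset(X[sel], y[sel], k)
        results[k] = (cv_r2(X[val], y[val], cols, 0.0, rng),
                      cv_r2(X[val], y[val], cols, lam, rng))
    return results


# Usage with simulated (hypothetical) data: 6 "CNB scores", IQ driven by two of them
rng = np.random.default_rng(1)
X = rng.normal(size=(120, 6))
y = 2 * X[:, 0] + 1.5 * X[:, 1] + rng.normal(size=120)
reps = [one_repetition(X, y, sizes=[2, 3, 4], lam=5.0, rng=rng) for _ in range(20)]
```

Each entry of `reps` maps a model size to an (OLS CV R‐squared, ridge CV R‐squared) pair; averaging these across many repetitions gives curves of the kind plotted against model size.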

Finally, as a tool for investigators, we calculated equivalence scores using linear equating, which combines linear regression and weighting to achieve equivalent scores. The linear regression determines an equation in classical slope‐intercept form, and the weighting preserves the variability of the dependent variable, counteracting regression to the mean. Based on the linear equating, we created a “crosswalk” between age‐normed CNB scores and IQ. A crosswalk is a tool for translating scores on one instrument into scores on another. If one had scores on one test of ability (e.g., the Graduate Record Examination) and wanted to translate them into scores on a different test of ability (e.g., an IQ test), a crosswalk would provide this information, usually as a lookup table in which the score on one test is shown beside the equivalent score on the other. The lookup table is available in Table S1.
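Linear equating maps a score by matching standardized positions: the equated score is the target mean plus the ratio of standard deviations times the deviation from the source mean. Equivalently, it is the regression line with its slope divided by the correlation, which is the weighting that preserves the spread of IQ instead of shrinking estimates toward the mean. This is a minimal sketch, assuming simple (unsmoothed) linear equating on paired scores; the function names, the data, and the z‐score grid are hypothetical, and the published lookup values come from the study data, not from this code.

```python
from statistics import mean, stdev


def linear_equating(x, y):
    """Return a function mapping scale-x scores into the scale-y metric.

    y* = mean_y + (sd_y / sd_x) * (x - mean_x)
    """
    mx, my, sx, sy = mean(x), mean(y), stdev(x), stdev(y)
    return lambda score: my + (sy / sx) * (score - mx)


def crosswalk(equate, lo=-3.0, hi=3.0, step=0.5):
    """Lookup table of (CNB z, equated IQ rounded to an integer) pairs."""
    n = int(round((hi - lo) / step))
    return [(lo + i * step, round(equate(lo + i * step))) for i in range(n + 1)]


# Hypothetical paired data: CNB accuracy composite (z) and Full Scale IQ
cnb = [-1.2, -0.4, 0.0, 0.3, 0.9, 1.5]
iq = [64, 72, 79, 80, 88, 95]
equate = linear_equating(cnb, iq)
for z, est in crosswalk(equate, lo=-2.0, hi=2.0, step=1.0):
    print(f"CNB z = {z:+.1f} -> IQ ~ {est}")
```

Unlike the regression prediction, equated scores have the same mean and SD as the observed IQ scores, which is what makes the table usable as a score‐to‐score translation.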

3. RESULTS

We first examined the comparability of the two samples. Figure S2 compares the sites on key characteristics, with the y‐axis in a z metric for all variables. The Tel Aviv (TLV) group is older (F = 57.03; p < .001) and has lower parental education (F = 8.42; p = .004), lower overall CNB accuracy (F = 29.72; p < .001), lower overall CNB efficiency (F = 13.12; p < .001), and higher GAF (F = 9.29; p = .003). However, there was no significant difference between sites in sex distribution or in the time interval between IQ and CNB tests; the latter is the only variable one would expect to affect the strength of the relationship between IQ and CNB. Indeed, when the analyses were conducted separately by site, the results were very similar (Penn ICC = 0.583; Tel Aviv ICC = 0.575).

3.1. Hypothesis testing

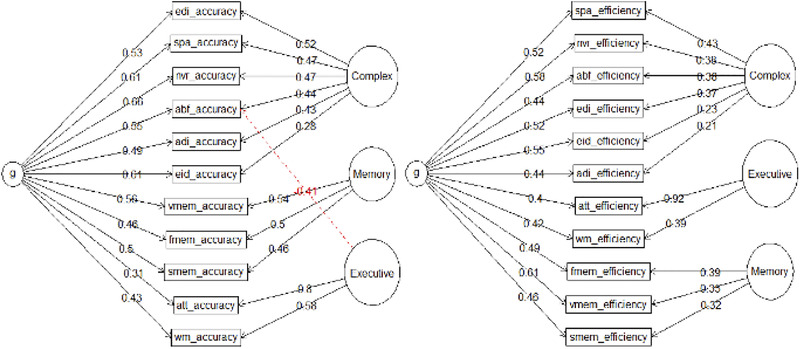

Figure 1 shows the exploratory bifactor measurement models. The efficiency general factor had three strong indicators: nonverbal reasoning (progressive matrices), emotion identification, and word memory. The accuracy general factor was most strongly determined by nonverbal reasoning, spatial processing (the line orientation test), and emotion identification. For both accuracy and efficiency, the weakest indicator was attention (continuous performance test).

FIGURE 1.

Exploratory bifactor (Schmid–Leiman) analyses of Computerized Neurocognitive Battery (CNB) accuracy and efficiency scores for the purposes of calculating the general factor scores. Abbreviations as in Table 1

Table 1 shows the results of the EFAs using oblique rotation. For efficiency, the strongest indicators of the three factors were abstraction and mental flexibility, face memory, and attention. For both accuracy and efficiency, the item‐factor clustering matches that suggested by the exploratory bifactor results: three separate factors for complex/social cognition, memory, and executive function. The strongest indicators of the three accuracy factors were emotion differentiation, word memory, and attention, respectively. The high loading of attention on executive function in both models (accuracy and efficiency) explains why the attention test had such a low loading on the general factors. Specifically, the bifactor model involves a “competition” between the general factor and a specific factor (here, executive) for the variance explained in each test score (here, attention), and the specific executive factor “won out” over the general factor. Finally, as expected, the moderate interfactor correlations suggest the existence of a general factor, justifying the bifactor model above. These results supported hypothesis 1: the CNB in this sample has the same factorial structure as in previous studies with normative populations, in both correlated‐traits and bifactor frameworks.

Table 1.

Exploratory factor analysis results for Computerized Neurocognitive Battery accuracy and efficiency scores in the Philadelphia and Tel Aviv combined samples

| Accuracy | Efficiency | |||||

|---|---|---|---|---|---|---|

| Test | F1 | F2 | F3 | F1 | F2 | F3 |

| EDI | 0.81 | 0.74 | ||||

| SPA | 0.75 | 0.81 | ||||

| NVR | 0.75 | 0.64 | ||||

| ABF | 0.74 | −0.44 | 0.86 | |||

| ADI | 0.66 | 0.40 | 0.34 | |||

| EID | 0.45 | 0.31 | 0.40 | |||

| VMEM | 0.77 | 0.64 | ||||

| FMEM | 0.72 | 0.86 | ||||

| SMEM | 0.65 | 0.84 | ||||

| ATT | 0.87 | 0.94 | ||||

| WM | 0.62 | 0.79 | ||||

| Inter‐factor correlations | Accuracy F1 | Accuracy F2 | Accuracy F3 | Efficiency F1 | Efficiency F2 | Efficiency F3 |
|---|---|---|---|---|---|---|
| F1 | 1.00 | | | 1.00 | | |
| F2 | 0.55 | 1.00 | | 0.57 | 1.00 | |
| F3 | 0.32 | 0.28 | 1.00 | 0.44 | 0.41 | 1.00 |

Note: Loadings < 0.30 removed for clarity.

Abbreviations: EDI = Emotion Differentiation Test; SPA = Line Orientation Test; NVR = Nonverbal (Matrix) Reasoning Test; ABF = Conditional Exclusion Test; ADI = Age‐Differentiation Test; EID = Emotion Identification Test (ER40); VMEM = Verbal Memory Test; FMEM = Face Memory Test; SMEM = Spatial Memory Test (Visual Object Learning Test, VOLT); ATT = Continuous Performance Test (CPT); WM = Working Memory (NBack).

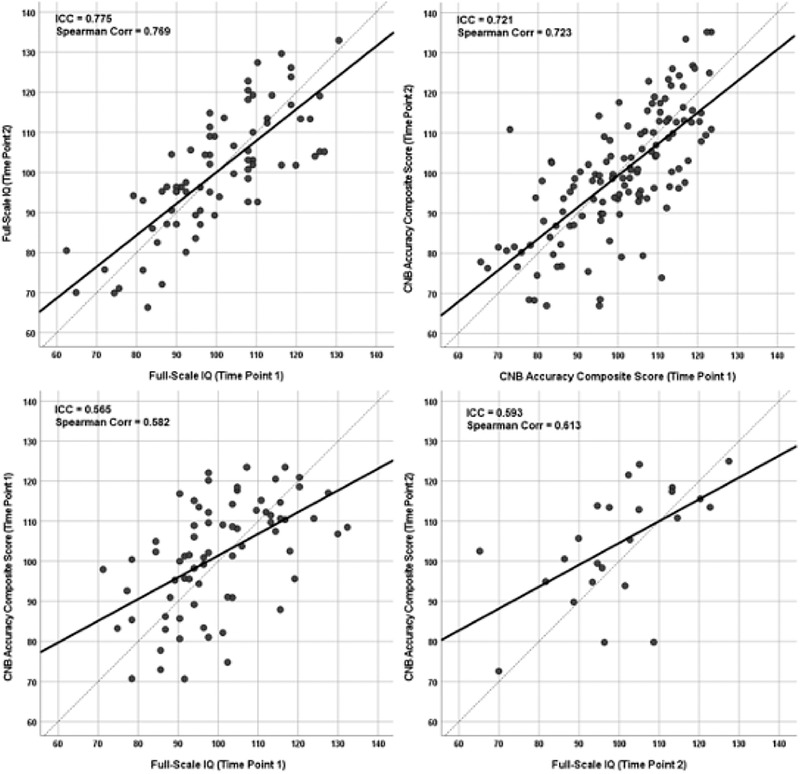

Based on these analyses and earlier work (Gur et al., 2010; Swagerman et al., 2016), we selected the CNB composite accuracy score for the main analyses testing the hypotheses comparing IQ and CNB measures; results with other CNB parameters are summarized in Figure S3. Figure 2 shows the bivariate relationships between CNB and IQ scores at two time points. Supporting hypothesis 2, concordance between the two time points was high and comparable for IQ scores (upper left panel) and CNB accuracy scores (upper right panel), with ICCs of 0.775 and 0.721, respectively. Supporting hypothesis 3, the ICC between overall accuracy and IQ was similar at both time points: 0.565 at time 1 (lower left panel) and 0.593 at time 2 (lower right panel). Finally, supporting hypothesis 4, the CNB accuracy score and IQ had similar correlations with the external validator, GAF (0.165 and 0.172, respectively; p < .05; no significant difference between correlations using the Steiger test for dependent correlations).

FIGURE 2.

Bivariate relationships between intelligence quotient (IQ) and CNB scores at two time points corresponding to longitudinal data in subsequent analyses. Scatterplots show the relation between CNB accuracy scores (scaled to IQ) in time 1 and time 2 (top left panel), the same relation for IQ (top right panel), the relation between CNB and IQ at time 1 (bottom left panel) and the same relation at time 2 (bottom right panel)
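The Steiger comparison reported above tests whether two correlations that share a variable (here, GAF with IQ and GAF with CNB accuracy) differ, taking into account the correlation between the other two variables. This is a minimal sketch of Steiger's (1980) Z with a pooled mean correlation, as it is commonly implemented; the function name is illustrative, and the IQ‐CNB correlation of 0.58 and n = 117 in the usage line are placeholders, since the text does not report the exact inputs used for this test.

```python
import math


def steiger_z(r_jk, r_jh, r_kh, n):
    """Steiger (1980) Z for two dependent correlations sharing variable j.

    r_jk, r_jh: correlations of the shared variable j (e.g., GAF) with k and h
    r_kh: correlation between k and h (e.g., IQ and CNB accuracy); n: sample size
    """
    z_jk, z_jh = math.atanh(r_jk), math.atanh(r_jh)  # Fisher r-to-z
    r_bar = (r_jk + r_jh) / 2
    psi = r_kh * (1 - 2 * r_bar**2) - 0.5 * r_bar**2 * (1 - 2 * r_bar**2 - r_kh**2)
    s_bar = psi / (1 - r_bar**2) ** 2  # covariance of the two Fisher z values
    z = (z_jk - z_jh) * math.sqrt(n - 3) / math.sqrt(2 - 2 * s_bar)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return z, p


# Placeholder inputs: GAF-IQ r, GAF-CNB r, IQ-CNB r (hypothetical), sample size
z, p = steiger_z(0.165, 0.172, 0.58, 117)
print(f"Z = {z:.3f}, p = {p:.3f}")
```

The stronger the correlation between the two predictors, the larger the shared covariance term and the more sensitive the test becomes to small differences between the two correlations.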

3.2. Exploratory analyses

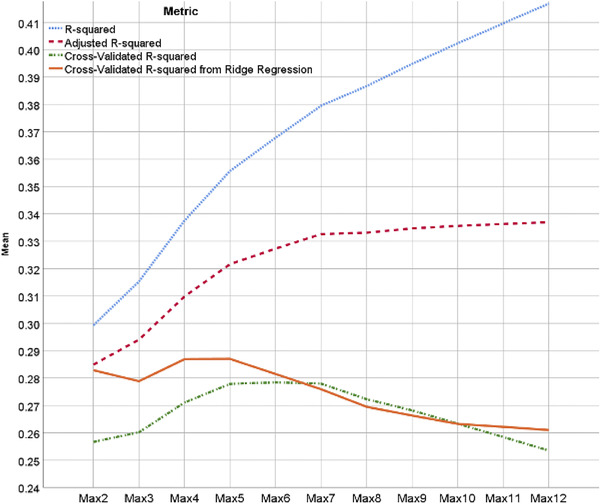

Figure 3 shows prediction metrics (R‐squared) for the 11 best‐subset regression models, including raw, adjusted, and CV versions. As expected, the raw and adjusted R‐squared overestimate the predictive utility of the models, the CV results increase and then decrease, and the ridge regression models show superior CV performance, at least for the smaller models. The CV R‐squared for the ordinary regression models (green line) approaches its peak at five variables, while that for the ridge regressions (orange line) peaks at only four variables. The ridge models are therefore not only better but more parsimonious, suggesting that the ridge model with four variables is the optimal choice, achieving a CV R‐squared of ∼0.54 and a raw R‐squared of ∼0.58. The ICCs suggest the same, with mean ridge values (across repetitions) of 0.445, 0.449, 0.462, 0.467, and 0.465 for the max‐2, max‐3, max‐4, max‐5, and max‐6 models, respectively. As for R‐squared, although the max‐5 model technically has the highest ICC, it is not sufficiently higher than the max‐4 ICC to justify a fifth variable.

FIGURE 3.

Prediction metrics for 11 best subset regression models, ordered by maximum variables allowed in model

The four variables selected were attention accuracy, working memory (WM) accuracy, matrix (nonverbal) reasoning accuracy, and matrix reasoning speed. Conveniently, this means that only three tests need to be administered to obtain the four scores. Figure S4 shows the rank order of variables selected during the variable‐selection stage, where variable importance was defined ad hoc as the number of times a variable was selected, with each selection divided by the square root of the maximum number of variables in the model (so being one of two variables counts more than being one of 12). Though not all were used in the present model, the following variables show promise as IQ proxies: NVR accuracy, WM accuracy, ATT accuracy, NVR speed, SPA accuracy, and EID accuracy. Combined, these results indicate that by administering the attention, WM, and nonverbal (matrix) reasoning tests, which together take an average of 20 min, one can calculate a reliable proxy measure for IQ. If any of these tests is unavailable, it could be replaced by the spatial (line orientation) or emotion identification test (5.2 and 2.3 min, respectively).
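The ad hoc importance score described above amounts to a weighted selection count. This is a minimal sketch, assuming each selection contributes 1/√k, where k is the maximum number of variables allowed in the model that selected it; the function name, variable labels, and selections are hypothetical.

```python
import math
from collections import defaultdict


def selection_importance(selections):
    """Rank variables by selection frequency, down-weighting larger models.

    `selections` holds (max_vars, chosen_vars) pairs, one per fitted model; each
    time a variable is chosen it earns 1 / sqrt(max_vars).
    """
    scores = defaultdict(float)
    for max_vars, chosen in selections:
        for var in chosen:
            scores[var] += 1 / math.sqrt(max_vars)
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical selections from three model sizes in one repetition
picks = [
    (2, ["NVR_acc", "WM_acc"]),
    (3, ["NVR_acc", "WM_acc", "ATT_acc"]),
    (4, ["NVR_acc", "WM_acc", "ATT_acc", "NVR_speed"]),
]
for var, score in selection_importance(picks):
    print(f"{var}: {score:.2f}")
```

Over many repetitions and model sizes, variables that keep appearing in the smallest models rise to the top, which is the property the ranking is meant to capture.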

4. DISCUSSION

With increased interest in cognitive performance as a phenotype in brain disorders, genomic research has examined available indicators of intelligence, and prospective studies are incorporating a collection of multimodal brain‐behavior parameters that can point to mechanisms. Most measures of intelligence available for genomic analyses rely on tests generally described as measuring IQ, typically reported as scores standardized to a mean of 100 and a standard deviation of 15. Such scores are abundant in developmental disorders with intellectual disability, including rare genetic disorders such as 22q11.2DS. These scores are also available in genomic studies of common and rare variants in population samples (Davies et al., 2016; Davies et al., 2020; Huguet et al., 2018; Kendall et al., 2017; Spain et al., 2016; Zhao et al., 2018). Notably, it is impractical to incorporate traditional IQ tests in large‐scale prospective genomic studies because they are lengthy and their administration requires extensive training. Furthermore, IQ scores are limited in their ability to contribute to mechanistic models and are being replaced by computerized neuroscience‐based measures with established links to underlying brain circuitry. The current study offers a bridge between the novel computerized measures and traditional IQ measures in a binational sample that has received multiple measurements of both types during the course of care.

Our results indicate that the Penn CNB administered to individuals with 22q11.2DS shows a factor structure comparable to that seen in the general population and allows performance to be parsed into more specific domains, including executive functions, episodic memory, complex cognition, and social cognition. The factorial structure supports the existence of both a general factor and specific factors. Note that the goal here was not to test the measurement models; the factor analyses were a means to an end, and further research is needed to confirm the factor structures found here in other 22q11.2DS samples. However, the EFA results closely matched those of Moore et al. (2015), providing evidence for the generalizability of the CNB to unique populations.

Comparing CNB accuracy to IQ indicated similar test‐retest correlations across an average interval of 1.6 years. These correlations are high (ICCs exceeding 0.7) and thus indicate stable measures of generalized cognitive performance. CNB accuracy and IQ also correlate well with each other at both the first and subsequent administrations. These cross‐measure ICCs, approaching 0.6, are lower than the within‐measure correlations, which indicates that the two measures tap highly related but not identical traits. This difference could reflect either a lack of overlap in the variance explained by the measures or differences in how reliably the two measures assess the latent trait. That CNB accuracy and IQ have similar test‐retest reliability supports the first possibility rather than lower reliability of one of the measures. Notably, both correlate equally with the GAF and hence can be considered similarly predictive of adjustment. Thus, there seems to be reasonable justification to use either measure as an indicator of cognitive functioning with respect to this validity criterion.

Because the CNB consists of multiple measures that are differentially related to the g‐factor, and hence likely differ in their correlations with IQ, we were able to explore which combination of CNB parameters best predicts IQ scores. These results were remarkably close to expectations given what is known about neurocognitive batteries and IQ. As shown in Figure S4, the top three neurocognitive predictors of IQ were nonverbal (matrix) reasoning, WM, and attention. The first is expected, as matrix reasoning tests such as Raven's have long been used as proxies for IQ, and the other two (WM and attention) have long been centrally implicated in IQ (Kane & Engle, 2002; Unsworth et al., 2009). The fourth highest predictor, matrix reasoning response time, is consistent with our previous work (Moore et al., 2016) showing that fast performance on this task is strongly indicative of poor effort. The right half of Figure S4, showing the CNB scores least associated with IQ, is also consistent with expectations: eight of the 12 are measures of pure speed, including finger‐tapping speed. These prediction results strongly support the possibility of capturing IQ with fewer tests than were used here, as shown most clearly by the fact that the relevant CV R‐squared from Figure 3 (maximum of the solid orange function, ∼0.288) is impressively close to the non‐CV R‐squared (0.582² = 0.339) achieved with the accuracy composite (main analysis; bottom left panel of Figure 2).

By offering a bridge between traditional IQ tests and a summary measure from a neuroscience‐based computerized battery, the present study facilitates linking archival data with current and future studies that use large‐scale computerized assessments of brain‐behavior parameters. Genetically mediated associations with IQ uncovered in biobanks could then be pursued in samples with computerized measures. This would allow closer examination of specific cognitive domains, which may be regulated by different genetic mechanisms related to brain and behavior.
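The bridge described above is the crosswalk obtained by linear equating (see Abstract). The minimal sketch below assumes the standard mean‐sigma form. A score is mapped from one scale to the other by giving it the same z‐score, using the means and standard deviations of both measures in the same sample. The fitted parameters and crosswalk values from this study are not reproduced here. The function names and the toy numbers in the checks are hypothetical.

```python
import numpy as np

def linear_equate(x, mean_x, sd_x, mean_y, sd_y):
    """Mean-sigma linear equating: return the score on scale Y that has
    the same z-score as x has on scale X."""
    return mean_y + sd_y * (np.asarray(x, dtype=float) - mean_x) / sd_x

def fit_crosswalk(cnb_scores, iq_scores):
    """Estimate a CNB -> IQ equating function from paired scores in one
    sample (sample SDs, ddof=1). Returns a callable crosswalk."""
    cnb = np.asarray(cnb_scores, dtype=float)
    iq = np.asarray(iq_scores, dtype=float)
    m_x, s_x = cnb.mean(), cnb.std(ddof=1)
    m_y, s_y = iq.mean(), iq.std(ddof=1)
    return lambda x: linear_equate(x, m_x, s_x, m_y, s_y)

if __name__ == "__main__":
    # Hypothetical paired scores: standardized CNB accuracy and full-scale IQ.
    crosswalk = fit_crosswalk([-1.2, -0.4, 0.1, 0.6, 1.1], [62, 70, 74, 80, 86])
    print(crosswalk([-1.0, 0.0, 1.0]))
```

Unlike a regression of IQ on CNB, linear equating is symmetric. Swapping the roles of the two scales gives the exact inverse mapping, which is the property a crosswalk table needs. It matches only the first two moments, however, so it assumes the two score distributions have similar shapes.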

This study has several limitations. It was a collaborative naturalistic study rather than a prospective study with regularly timed assessments, and the timing of testing followed existing clinical protocols. Maximizing the sample size in this rare genomic disorder required that we extend the inter‐test intervals to as long as 5 years. While this should theoretically not be a problem given the stability of IQ, there may be reason to doubt the stability of IQ in persons with 22q11.2DS (Antshel et al., 2010; Tang et al., 2017; Vorstman et al., 2015). However, our findings indicate that both IQ and CNB performance were stable over this time period. Another limitation is that we did not examine the association with psychopathology, which is prevalent in 22q11.2DS (Antshel et al., 2010, 2017; Goldenberg et al., 2012; Green et al., 2009; Hooper et al., 2013; Mekori‐Domachevsky et al., 2017; Morrison et al., 2020; Niarchou et al., 2018; Schneider et al., 2014; Tang et al., 2017; Vorstman et al., 2015; Weinberger et al., 2016). We and others have examined this association in earlier work, and we considered it beyond the scope of the present report. As the sample grows, we will be in a position to compare IQ and CNB in relation to psychopathology, medical comorbidities, and medications. The study is also limited by the absence of a verbal measure such as vocabulary or verbal reasoning. Such measures require extensive adaptation with the involvement of linguists and would be sensitive to regional variation and educational exposure even within language communities. Finally, we excluded from analyses individuals whose IQ fell in the range of intellectual disability. Our results may therefore not apply to such individuals, and further research is required.

Notwithstanding these limitations, our study has demonstrated that the Penn CNB might offer a more efficient alternative to traditional IQ tests. The Penn CNB is open‐source and free to the public, can be administered online or remotely, and has been deployed in multiple resource‐limited settings across the globe. Furthermore, rather than a single measure of general intelligence, the CNB offers measures of accuracy and speed across multiple neurocognitive domains. These domains have been well investigated in neuroscience in relation to brain function as well as in genomics. Of particular relevance in this context, data derived from this battery can easily be integrated with larger electronic databases owing to automated quality control and scoring algorithms. Finally, modern psychometric methodology such as computerized adaptive testing based on item‐response theory is enabling further reductions in administration time without loss of information. These methods can be phased into ongoing studies.
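To illustrate how computerized adaptive testing based on item‐response theory can shorten administration, here is a generic textbook sketch. It makes several assumptions: a two‐parameter logistic (2PL) model, selection of the item with maximum Fisher information at the current ability estimate, and an expected a posteriori (EAP) ability estimate under a standard‐normal prior. It is not the Penn CNB's adaptive implementation, which this section does not describe. The item parameters, the fixed‐length stopping rule, and all function names are hypothetical.

```python
import numpy as np

def p_correct(theta, a, b):
    """2PL probability of a correct response given ability theta,
    discrimination a, and difficulty b."""
    return 1.0 / (1.0 + np.exp(-a * (theta - b)))

def item_information(theta, a, b):
    """Fisher information of a 2PL item at ability theta."""
    p = p_correct(theta, a, b)
    return a ** 2 * p * (1.0 - p)

def eap_estimate(responses, a, b, grid=None):
    """EAP ability estimate: posterior mean over a quadrature grid,
    standard-normal prior times the 2PL likelihood of the responses."""
    if grid is None:
        grid = np.linspace(-4.0, 4.0, 81)
    posterior = np.exp(-0.5 * grid ** 2)  # unnormalized N(0, 1) prior
    for u, ai, bi in zip(responses, a, b):
        p = p_correct(grid, ai, bi)
        posterior *= p if u else (1.0 - p)
    posterior /= posterior.sum()
    return float(np.sum(grid * posterior))

def run_cat(a, b, answer, max_items=5):
    """Administer items adaptively; `answer(j)` returns True/False for item j.
    Stops after a fixed number of items (real CATs often stop on a
    standard-error threshold instead)."""
    theta, administered, responses = 0.0, [], []
    for _ in range(max_items):
        info = item_information(theta, a, b)
        if administered:
            info[administered] = -np.inf  # never reuse an item
        j = int(np.argmax(info))
        administered.append(j)
        responses.append(bool(answer(j)))
        theta = eap_estimate(responses, a[administered], b[administered])
    return theta, administered

if __name__ == "__main__":
    a = np.array([1.2, 0.8, 1.5, 1.0, 1.3, 0.9])    # hypothetical discriminations
    b = np.array([-1.5, -0.5, 0.0, 0.4, 1.0, 2.0])  # hypothetical difficulties
    print(run_cat(a, b, answer=lambda j: b[j] < 0.5, max_items=4))
```

The efficiency gain comes from each examinee seeing only the items that are most informative near his or her current estimate, so fewer items are needed for a given precision.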

CONFLICT OF INTEREST

The authors declare that they have no conflict of interest.

AUTHOR CONTRIBUTION

Ruben C. Gur, Raquel E. Gur, and Doron Gothelf designed the research; Ronnie Weinberger, Ehud Mekori‐Domachevsky, and Raz Gross performed the study in Tel Aviv; Beverly S. Emanuel, Elaine H. Zackai, Edward Moss, Robert Sean Gallagher, Daniel E. McGinn, Terrence Blaine Crowley, and Donna McDonald‐McGinn performed the study in Philadelphia; Tyler M. Moore conducted the statistical analyses; Ruben C. Gur, Tyler M. Moore, Raquel E. Gur, Ronnie Weinberger, and Doron Gothelf wrote the paper; and all authors critically contributed to drafts of the manuscript.

PEER REVIEW

The peer review history for this article is available at https://publons.com/publon/10.1002/brb3.2221.

Supporting information


ACKNOWLEDGMENTS

The study was supported by the National Institute of Mental Health grants U01MH101719 (REG), U01MH119738 (REG), MH119738 (DMM), U01MH101722 (DMM) and R01MH117014 (RCG) and the Binational Science Foundation, grant number 2017369. We thank the study participants and the research teams.

Gur, R. C., Moore, T. M., Weinberger, R., Mekori‐Domachevsky, E., Gross, R., Emanuel, B. S., Zackai, E. H., Moss, E., Gallagher, R. S., McGinn, D. E., Crowley, T. B., McDonald‐McGinn, D., Gothelf, D., & Gur, R. E. (2021). Relationship between intelligence quotient measures and computerized neurocognitive performance in 22q11.2 deletion syndrome. Brain and Behavior, 11, e2221. 10.1002/brb3.2221

[Correction added on 30 August 2021, after first online publication: Peer review history statement has been added.]

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available from the corresponding author upon request.

REFERENCES

- Antshel, K. M., Fremont, W., Ramanathan, S., & Kates, W. R. (2017). Predicting cognition and psychosis in young adults with 22q11.2 deletion syndrome. Schizophrenia Bulletin, 43(4), 833–842. 10.1093/schbul/sbw135

- Antshel, K. M., Shprintzen, R., Fremont, W., Higgins, A. M., Faraone, S. V., & Kates, W. R. (2010). Cognitive and psychiatric predictors to psychosis in velocardiofacial syndrome: A 3‐year follow‐up study. Journal of the American Academy of Child and Adolescent Psychiatry, 49(4), 333–344.

- Azuma, R., Deeley, Q., Campbell, L. E., Daly, E. M., Giampietro, V., Brammer, M. J., Murphy, K. C., & Murphy, D. G. M. (2015). An fMRI study of facial emotion processing in children and adolescents with 22q11.2 deletion syndrome. Journal of Neurodevelopmental Disorders, 7(1), 1.

- Davies, R. W., Fiksinski, A. M., Breetvelt, E. J., Williams, N. M., Hooper, S. R., Monfeuga, T., Bassett, A. S., Owen, M. J., Gur, R. E., Morrow, B. E., McDonald‐McGinn, D. M., Swillen, A., Chow, E. W. C., van den Bree, M., Emanuel, B. S., Vermeesch, J. R., van Amelsvoort, T., Arango, C., Armando, M., … Vorstman, J. A. S. (2020). Using common genetic variation to examine phenotypic expression and risk prediction in 22q11.2 deletion syndrome. Nature Medicine, 26(12), 1912–1918. 10.1038/s41591-020-1103-1

- Davies, G., Marioni, R. E., Liewald, D. C., Hill, W. D., Hagenaars, S. P., Harris, S. E., Ritchie, S. J., Luciano, M., Fawns‐Ritchie, C., Lyall, D., Cullen, B., Cox, S. R., Hayward, C., Porteous, D. J., Evans, J., McIntosh, A. M., Gallacher, J., Craddock, N., Pell, J. P., … Deary, I. J. (2016). Genome‐wide association study of cognitive functions and educational attainment in UK Biobank (N = 112 151). Molecular Psychiatry, 21(6), 758–767. 10.1038/mp.2016.45

- Duijff, S. N., Klaassen, P. W. J., De Veye, H. F. N. S., Beemer, F. A., Sinnema, G., & Vorstman, J. A. S. (2012). Cognitive development in children with 22q11.2 deletion syndrome. British Journal of Psychiatry, 200(6), 462–468. 10.1192/bjp.bp.111.097139

- Dunn, L. M. (1965). Expanded manual, Peabody Picture Vocabulary Test. American Guidance Services.

- Goldenberg, P. C., Calkins, M. E., Richard, J., McDonald‐McGinn, D., Zackai, E., Mitra, N., Emanuel, B., Devoto, M., Borgmann‐Winter, K., Kohler, C., Conroy, C. G., Gur, R. C., & Gur, R. E. (2012). Computerized neurocognitive profile in young people with 22q11.2 deletion syndrome compared to youths with schizophrenia and at‐risk for psychosis. American Journal of Medical Genetics Part B: Neuropsychiatric Genetics, 159B(1), 87–93. 10.1002/ajmg.b.32005

- Green, T., Gothelf, D., Glaser, B., Debbane, M., Frisch, A., Kotler, M., Weizman, A., & Eliez, S. (2009). Psychiatric disorders and intellectual functioning throughout development in velocardiofacial (22q11.2 deletion) syndrome. Journal of the American Academy of Child and Adolescent Psychiatry, 48(11), 1060–1068. 10.1097/CHI.0b013e3181b76683

- Gur, R. E., Yi, J. J., McDonald‐McGinn, D. M., Tang, S. X., Calkins, M. E., Whinna, D., Souders, M. C., Savitt, A., Zackai, E. H., Moberg, P. J., Emanuel, B. S., & Gur, R. C. (2014). Neurocognitive development in 22q11.2 deletion syndrome: Comparison with youth having developmental delay and medical comorbidities. Molecular Psychiatry, 19(11), 1205–1211. 10.1038/mp.2013.189

- Gur, R. C., Richard, J., Hughett, P., Calkins, M. E., Macy, L., Bilker, W. B., Brensinger, C., & Gur, R. E. (2010). A cognitive neuroscience‐based computerized battery for efficient measurement of individual differences: Standardization and initial construct validation. Journal of Neuroscience Methods, 187(2), 254–262. 10.1016/j.jneumeth.2009.11.017

- Gur, R. C., Richard, J., Calkins, M. E., Chiavacci, R., Hansen, J. A., Bilker, W. B., Loughead, J., Connolly, J. J., Qiu, H., Mentch, F. D., Abou‐Sleiman, P. M., Hakonarson, H., & Gur, R. E. (2012). Age group and sex differences in performance on a computerized neurocognitive battery in children age 8–21. Neuropsychology, 26(2), 251–265. 10.1037/a0026712

- Hooper, S. R., Curtiss, K., Schoch, K., Keshavan, M. S., Allen, A., & Shashi, V. (2013). A longitudinal examination of the psychoeducational, neurocognitive, and psychiatric functioning in children with 22q11.2 deletion syndrome. Research in Developmental Disabilities, 34(5), 1758–1769. 10.1016/j.ridd.2012.12.003

- Huguet, G., Schramm, C., Douard, E., Jiang, L., Labbe, A., Tihy, F., Mathonnet, G., Nizard, S., Lemyre, E., Mathieu, A., Poline, J.‐B., Loth, E., Toro, R., Schumann, G., Conrod, P., Pausova, Z., Greenwood, C., Paus, T., Bourgeron, T., & Jacquemont, S. (2018). Measuring and estimating the effect sizes of copy number variants on general intelligence in community‐based samples. JAMA Psychiatry, 75(5), 447–457. 10.1001/jamapsychiatry.2018.0039

- Ibrahim, I., Tobar, S., Elassy, M., Mansour, H., Chen, K., Wood, J., Gur, R. C., Gur, R. E., El Bahaei, W., & Nimgaonkar, V. (2015). Practice effects distort translational validity estimates for a Neurocognitive Battery. Journal of Clinical and Experimental Neuropsychology, 37(5), 530–537. 10.1080/13803395.2015.1037253

- Jalali, G. R., Vorstman, J. A. S., Errami, A., Vijzelaar, R., Biegel, J., Shaikh, T., & Emanuel, B. S. (2008). Detailed analysis of 22q11.2 with a high density MLPA probe set. Human Mutation, 29(3), 433–440. 10.1002/humu.20640

- Kane, M. J., & Engle, R. W. (2002). The role of prefrontal cortex in working‐memory capacity, executive attention, and general fluid intelligence: An individual‐differences perspective. Psychonomic Bulletin & Review, 9(4), 637–671. 10.3758/BF03196323

- Kendall, K. M., Rees, E., Escott‐Price, V., Einon, M., Thomas, R., Hewitt, J., O'Donovan, M. C., Owen, M. J., Walters, J. T. R., & Kirov, G. (2017). Cognitive performance among carriers of pathogenic copy number variants: Analysis of 152,000 UK Biobank subjects. Biological Psychiatry, 82(2), 103–110. 10.1016/j.biopsych.2016.08.014

- McDonald‐McGinn, D. M., Sullivan, K. E., Marino, B., Philip, N., Swillen, A., Vorstman, J. A. S., Zackai, E. H., Emanuel, B. S., Vermeesch, J. R., Morrow, B. E., Scambler, P. J., & Bassett, A. S. (2015). 22q11.2 deletion syndrome. Nature Reviews Disease Primers, 1(1), 15071. 10.1038/nrdp.2015.71

- McGlashan, T., Miller, T. J., Woods, S. W., Rosen, J. L., Hoffman, R. E., & Davidson, L. (2003). Structured interview for prodromal syndromes (Version 4). PRIME Research Clinic, Yale School of Medicine.

- Mekori‐Domachevsky, E., Guri, Y., Yi, J., Weisman, O., Calkins, M. E., Tang, S. X., Gross, R., McDonald‐McGinn, D. M., Emanuel, B. S., Zackai, E. H., Zalsman, G., Weizman, A., Gur, R. C., Gur, R. E., & Gothelf, D. (2017). Negative subthreshold psychotic symptoms distinguish 22q11.2 deletion syndrome from other neurodevelopmental disorders: A two‐site study. Schizophrenia Research, 188, 42–49. 10.1016/j.schres.2016.12.023

- Moore, T. M., Reise, S. P., Gur, R. E., Hakonarson, H., & Gur, R. C. (2015). Psychometric properties of the Penn Computerized Neurocognitive Battery. Neuropsychology, 29(2), 235–246. 10.1037/neu0000093

- Moore, T. M., Reise, S. P., Roalf, D. R., Satterthwaite, T. D., Davatzikos, C., Bilker, W. B., Port, A. M., Jackson, C. T., Ruparel, K., Savitt, A. P., Baron, R. B., Gur, R. E., & Gur, R. C. (2016). Development of an itemwise efficiency scoring method: Concurrent, convergent, discriminant, and neuroimaging‐based predictive validity assessed in a large community sample. Psychological Assessment, 28(12), 1529–1542. 10.1037/pas0000284

- Morrison, S., Chawner, S. J. R. A., Van Amelsvoort, T. A. M. J., Swillen, A., Vingerhoets, C., Vergaelen, E., Linden, D. E. J., Linden, S., Owen, M. J., & Van Den Bree, M. B. M. (2020). Cognitive deficits in childhood, adolescence and adulthood in 22q11.2 deletion syndrome and association with psychopathology. Translational Psychiatry, 10(1), 53. 10.1038/s41398-020-0736-7

- Mosheva, M., Pouillard, V., Fishman, Y., Dubourg, L., Sofrin‐Frumer, D., Serur, Y., Weizman, A., Eliez, S., Gothelf, D., & Schneider, M. (2019). Education and employment trajectories from childhood to adulthood in individuals with 22q11.2 deletion syndrome. European Child & Adolescent Psychiatry, 28(1), 31–42. 10.1007/s00787-018-1184-2

- Niarchou, M., Calkins, M. E., Moore, T. M., Tang, S. X., McDonald‐McGinn, D. M., Zackai, E. H., Emanuel, B. S., Gur, R. C., & Gur, R. E. (2018). Attention deficit hyperactivity disorder symptoms and psychosis in 22q11.2 deletion syndrome. Schizophrenia Bulletin, 44(4), 824–833. 10.1093/schbul/sbx113

- Raven, J. C., & Court, J. H. (1938). Raven's progressive matrices. Western Psychological Services.

- Reise, S. P., Moore, T. M., & Haviland, M. G. (2010). Bifactor models and rotations: Exploring the extent to which multidimensional data yield univocal scale scores. Journal of Personality Assessment, 92(6), 544–559. 10.1080/00223891.2010.496477

- Reise, S. P., Cook, K. F., & Moore, T. M. (2015). Evaluating the impact of multidimensionality on unidimensional item response theory model parameters. In S. Reise & D. Revicki (Eds.), Handbook of item response theory modeling. Taylor & Francis.

- Reise, S. P., Moore, T., & Maydeu‐Olivares, A. (2011). Using targeted rotations to assess the impact of model violations on the parameters of unidimensional and bifactor IRT models. Educational and Psychological Measurement, 71(4), 684–711. 10.1177/0013164410378690

- Roalf, D. R., Ruparel, K., Gur, R. E., Bilker, W., Gerraty, R., Elliott, M. A., Gallagher, R. S., Almasy, L., Pogue‐Geile, M. F., Prasad, K., Wood, J., Nimgaonkar, V. L., & Gur, R. C. (2014). Neuroimaging predictors of cognitive performance across a standardized neurocognitive battery. Neuropsychology, 28(2), 161–176. 10.1037/neu0000011

- Schmid, J., & Leiman, J. M. (1957). The development of hierarchical factor solutions. Psychometrika, 22(1), 53–61. 10.1007/BF02289209

- Schneider, M., Debbané, M., Bassett, A. S., Chow, E. W. C., Fung, W. L. A., Van Den Bree, M. B. M., Owen, M., Murphy, K. C., Niarchou, M., Kates, W. R., Antshel, K. M., Fremont, W., McDonald‐McGinn, D. M., Gur, R. E., Zackai, E. H., Vorstman, J., Duijff, S. N., Klaassen, P. W. J., Swillen, A., … Eliez, S. (2014). Psychiatric disorders from childhood to adulthood in 22q11.2 deletion syndrome: Results from the international consortium on brain and behavior in 22q11.2 deletion syndrome. American Journal of Psychiatry, 171, 627–639. 10.1176/appi.ajp.2013.13070864

- Spain, S. L., Pedroso, I., Kadeva, N., Miller, M. B., Iacono, W. G., McGue, M., Stergiakouli, E., Smith, G. D., Putallaz, M., Lubinski, D., Meaburn, E. L., Plomin, R., & Simpson, M. A. (2016). A genome‐wide analysis of putative functional and exonic variation associated with extremely high intelligence. Molecular Psychiatry, 21(8), 1145–1151. 10.1038/mp.2015.108

- Swagerman, S. C., De Geus, E. J. C., Kan, K.‐J., Van Bergen, E., Nieuwboer, H. A., Koenis, M. M. G., Hulshoff Pol, H. E., Gur, R. E., Gur, R. C., & Boomsma, D. I. (2016). The computerized neurocognitive battery: Validation, aging effects, and heritability across cognitive domains. Neuropsychology, 30(1), 53–64. 10.1037/neu0000248

- Tang, S. X., Moore, T. M., Calkins, M. E., Yi, J. J., McDonald‐McGinn, D. M., Zackai, E. H., Emanuel, B. S., Gur, R. C., & Gur, R. E. (2017). Emergent, remitted and persistent psychosis‐spectrum symptoms in 22q11.2 deletion syndrome. Translational Psychiatry, 7(7), e1180. 10.1038/tp.2017.157

- Unsworth, N., Spillers, G. J., & Brewer, G. A. (2009). Examining the relations among working memory capacity, attention control, and fluid intelligence from a dual‐component framework. Psychology Science Quarterly, 51(4), 388–402. https://maidlab.uoregon.edu/PDFs/UnsworthSpillers&Brewer(2009)PSQ.pdf

- Vorstman, J. A. S., Breetvelt, E. J., Duijff, S. N., Eliez, S., Schneider, M., Jalbrzikowski, M., Armando, M., Vicari, S., Shashi, V., Hooper, S. R., Chow, E. W. C., Fung, W. L. A., Butcher, N. J., Young, D. A., McDonald‐McGinn, D. M., Vogels, A., Van Amelsvoort, T., Gothelf, D., Weinberger, R., … Bassett, A. S. (2015). Cognitive decline preceding the onset of psychosis in patients with 22q11.2 deletion syndrome. JAMA Psychiatry, 72(4), 377–385. 10.1001/jamapsychiatry.2014.2671

- Wechsler, D. (1981). WAIS‐R manual: Wechsler Adult Intelligence Scale–Revised. Psychological Corporation.

- Wechsler, D. (1949). Wechsler Intelligence Scale for Children. Psychological Corporation.

- Wechsler, D. (1999). Wechsler Abbreviated Scale of Intelligence (WASI). Psychological Corporation.

- Weinberger, R., Yi, J., Calkins, M., Guri, Y., McDonald‐McGinn, D. M., Emanuel, B. S., Zackai, E. H., Ruparel, K., Carmel, M., Michaelovsky, E., Weizman, A., Gur, R. C., Gur, R. E., & Gothelf, D. (2016). Neurocognitive profile in psychotic versus nonpsychotic individuals with 22q11.2 deletion syndrome. European Neuropsychopharmacology, 26(10), 1610–1618. 10.1016/j.euroneuro.2016.08.003

- Yi, J. J., Weinberger, R., Moore, T. M., Calkins, M. E., Guri, Y., McDonald‐McGinn, D. M., Zackai, E. H., Emanuel, B. S., Gur, R. E., Gothelf, D., & Gur, R. C. (2016). Performance on a computerized neurocognitive battery in 22q11.2 deletion syndrome: A comparison between US and Israeli cohorts. Brain and Cognition, 106, 33–41. 10.1016/j.bandc.2016.02.002

- Zhao, Y., Guo, T., Fiksinski, A., Breetvelt, E., McDonald‐McGinn, D. M., Crowley, T. B., Diacou, A., Schneider, M., Eliez, S., Swillen, A., Breckpot, J., Vermeesch, J., Chow, E. W. C., Gothelf, D., Duijff, S., Evers, R., Van Amelsvoort, T. A., Van Den Bree, M., Owen, M., … Morrow, B. E. (2018). Variance of IQ is partially dependent on deletion type among 1,427 22q11.2 deletion syndrome subjects. American Journal of Medical Genetics Part A, 176(10), 2172–2181. 10.1002/ajmg.a.40359
