Abstract

Purpose

This study aimed to design instruction that uses formative assessment as an instructional strategy in real-time online classes, and to explore the application of Bloom’s taxonomy to the development of formative assessment items.

Methods

We designed real-time online classes that used formative assessment, developed formative assessment items, analyzed the items statistically, and investigated students’ perceptions of formative assessment through a survey.

Results

Each hour of class was designed to cover 2–3 learning outcomes, with a formative assessment of 1–2 items after the lecture on each learning outcome. Formative assessments were conducted 31 times in the physiology classes (48 hours in total) of three integrated basic medicine subjects. There were nine “knowledge” items, 40 “comprehension” items, and 55 “application” items. Thirty-three items (31.7%) had a correct-answer rate of 80% or higher, the level the instructor considered appropriate. In the survey on their perceptions of formative assessment, students answered that it helped them concentrate in class and achieve learning outcomes.

Conclusion

The students focused during class because they had to take a formative assessment immediately after each learning outcome lecture. “Integration of lesson and assessments” was maximized both by solving the assessment items and through the instructor’s immediate explanation of the answers. Through formative assessment, students were able to use metacognition by learning which content they understood and which they did not. Items developed with Bloom’s taxonomy in mind allowed students to remember and understand the content and to apply it to clinical contexts.

Keywords: Real-time online classes, Formative assessment, Assessment for learning, Assessment as learning, Bloom’s taxonomy, Integration of lesson and assessment

Introduction

In the spring of 2020, most medical school classes in Korea switched from face-to-face to online classes due to the coronavirus disease 2019 (COVID-19) pandemic. Within a short time, full-scale online teaching became the norm: professors either held real-time online classes through video-conferencing platforms such as Zoom or Google Meet, or recorded lecture videos and distributed them to students.

Real-time online classes have the advantage of enabling interaction between professors and students; however, some teachers complained that it was difficult to check whether students were participating in the class or understanding the content, because students turned off their cameras or because the small video frames made it hard to see students’ facial expressions and gestures clearly. Serhan [1] in 2020 found that 48.4% of students displayed a negative attitude toward Zoom classes compared with face-to-face lectures, and 61.3% answered that Zoom classes did not help improve their learning. Particular attention should be paid to the negative responses regarding Zoom classes, such as “difficulty focusing on the lecture”, “dissatisfaction over the quality of interaction and feedback”, and “poor class quality” [1]. Against this background, Bao [2] in 2020 proposed the following five high-impact teaching practice principles to increase the quality of online learning: (1) keep the amount, level, and length of class content appropriate to students’ level; (2) control the pace of classes while delivering content effectively; (3) provide sufficient support through timely feedback from professors and teaching assistants to students after class, including e-mail guidance; (4) heighten the level and depth of student participation during class; and (5) prepare a contingency plan for unexpected problems that may occur on the online education platform. Thus, the studies of Serhan [1] and Bao [2] indicate that the sudden shift to online classes due to the COVID-19 pandemic requires strengthening class design, class operation, and feedback to students, in addition to various other efforts to improve the quality of learning.

Recent assessment trends have moved beyond summative assessment, which checks students’ achievement, toward an emphasis on formative assessment, in which assessment itself is used for and applied in learning. Formative assessment refers to assessment that gives feedback to students while teaching and learning are in progress and that is used to improve the curriculum and teaching methods. Black and Wiliam [3] in 1998 regarded all feedback activities undertaken by the teacher or students during class to improve teaching and learning as formative assessment, and claimed that “assessment for learning” should be emphasized because formative assessment has a significant impact on student achievement. Formative assessment is now emphasized and implemented in many classes and subjects, and many studies have examined its theories, effects, and methods [3-9]. However, few studies have examined the “items” used in formative assessment; those that exist include studies that used the cognitive domain of Bloom’s taxonomy (BT) [9] and studies that applied cognitive diagnostic models [10,11]. Kim et al. [12] in 2017 argued that formative assessment items should cover all important elements of the learning unit and each level of the taxonomy of educational objectives. In addition, formative assessment should present items whose difficulty corresponds to the minimum criterion, so that it can be determined whether a student has achieved the learning outcome [12]. However, studies on this matter remain insufficient.

Based on the literature, particularly Bao [2] and Kim et al. [12], this study aimed (1) to explore the use of formative assessment as an instructional strategy to increase student participation and enhance understanding of content in real-time online classes, and (2) to apply BT to item development and consider its implications for the main functions of formative assessment, namely improving student learning and improving classes.

Methods

1. Research design

This study designed real-time online classes using formative assessment, developed formative assessment items, analyzed the items statistically, and investigated students’ perceptions of formative assessment through a survey.

2. Design for online classes

The Function of Human Body (FHB), Basic Science of Circulatory and Respiratory System (BSCRS), and Basic Science of Urinary and Reproductive System (BSURS) subjects for first-year students at the CHA University School of Medicine are integrated subjects comprising histology, physiology, internal medicine, and emergency medicine classes. One physiology professor taught the physiology classes of all three subjects to 43 enrolled students. In 2020, all classes were conducted online in real time through Zoom because of the COVID-19 pandemic.

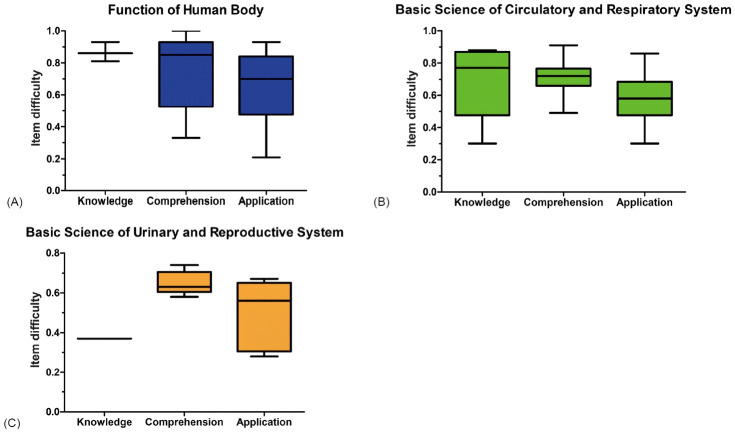

To increase the quality of online learning, Bao [2] in 2020 proposed adjusting the level and length of lessons appropriately and controlling the pace of lessons in consideration of students’ level and the lesson content. Accordingly, the existing learning outcomes were adjusted to 2–3 per hour of class, which freed time for online formative assessment. To enhance student participation and interaction between the professor and students, each class was designed to include a formative assessment on the content of each learning outcome presented, as illustrated in Fig. 1.

Fig. 1. Class Design.

For each learning outcome, students attended a 10–15-minute real-time lecture through Zoom and then, right after the lecture, solved 1–2 formative assessment items on that learning outcome through Google Classroom. Once the assessment was completed, the professor could check the results instantly and explain the correct answer to every item. Formative assessment scores did not count toward students’ grades.

3. Development of formative assessment items

Kim et al. [12] in 2017 stated that the taxonomy of educational objectives is important when developing formative assessment items. In Korea, primary and secondary school teachers use a taxonomy of educational objectives when developing test or exam items; it takes the form of a two-dimensional matrix of assessment content and behavioral elements [13-15]. The sub-elements of the behavioral elements may differ among teachers, but the most commonly used are the cognitive sub-behavioral elements of Bloom’s cognitive domain [12]. BT (1956) consists of six major categories [16]: knowledge, comprehension, application, analysis, synthesis, and evaluation. These form a hierarchy within the cognitive domain, from “knowledge,” which requires the lowest level of cognitive ability, to “evaluation,” which requires the highest. In this study, only “knowledge, comprehension, and application” were applied as behavioral elements. Behavioral elements beyond “application” were excluded because a previous study pointed out that teachers had difficulty distinguishing between knowledge and comprehension, application and analysis, and analysis and synthesis [13]. We also judged that the higher levels of “analysis, synthesis, and evaluation” require time for students to internalize the lesson content into their own learning.

Each item was developed by matching a content element (a learning outcome) with a behavioral element (“knowledge,” “comprehension,” or “application”), and all items were multiple-choice. Application items that required using physiology knowledge in a clinical context were included in the formative assessments, so that by solving them students learned how basic medical knowledge can be applied in clinical contexts.
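As a rough illustration of the two-dimensional matrix described above, each item can be recorded with its content element (learning outcome) and behavioral element (BT category), and the matrix is then a count of items per cell. The Python sketch below is hypothetical: the `BloomLevel`, `Item`, and `blueprint` names and the example learning outcomes are illustrative assumptions, not the study’s materials.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class BloomLevel(Enum):
    """The three BT categories used as behavioral elements in this study."""
    KNOWLEDGE = "knowledge"
    COMPREHENSION = "comprehension"
    APPLICATION = "application"


@dataclass(frozen=True)
class Item:
    """A multiple-choice item matched to one content and one behavioral element."""
    item_id: str
    learning_outcome: str  # content element
    level: BloomLevel      # behavioral element


def blueprint(items: list[Item]) -> dict[str, Counter]:
    """Count items in each cell of the learning outcome x BT category matrix."""
    matrix: dict[str, Counter] = {}
    for item in items:
        matrix.setdefault(item.learning_outcome, Counter())[item.level] += 1
    return matrix


# Hypothetical items for illustration only.
items = [
    Item("Q1", "LO1 (hypothetical)", BloomLevel.KNOWLEDGE),
    Item("Q2", "LO1 (hypothetical)", BloomLevel.APPLICATION),
    Item("Q3", "LO2 (hypothetical)", BloomLevel.COMPREHENSION),
]

for outcome, counts in blueprint(items).items():
    # Counter returns 0 for empty cells, so every category appears in each row.
    print(outcome, {level.value: counts[level] for level in BloomLevel})
```

Empty cells show up as zeros, which makes it easy to spot a learning outcome that has no item at a given BT level.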

4. Data collection

For every formative assessment item used in the FHB, BSCRS, and BSURS subjects in 2020, the number of correct answers was collected together with the item’s BT cognitive-domain category, and item difficulty was calculated based on classical test theory.
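Under classical test theory, an item’s difficulty is usually expressed as its p-value, the proportion of examinees who answered it correctly. A minimal Python sketch, assuming each student’s result on each item is coded 1 (correct) or 0 (incorrect) and that every student has a result for every item; the `difficulty_index` function and the scores are hypothetical.

```python
def difficulty_index(responses: list[list[int]]) -> list[float]:
    """Classical test theory difficulty (p) of each item.

    responses[s][i] is 1 if student s answered item i correctly and 0 otherwise.
    p is the proportion of students answering correctly, so a higher p means an
    easier item. Assumes every student has a score recorded for every item.
    """
    if not responses:
        raise ValueError("at least one student is required")
    n_students = len(responses)
    n_items = len(responses[0])
    return [sum(row[i] for row in responses) / n_students for i in range(n_items)]


# Hypothetical scores: 5 students x 3 items (not the study's data).
scores = [
    [1, 1, 0],
    [1, 0, 0],
    [1, 1, 1],
    [1, 1, 0],
    [0, 1, 0],
]
print(difficulty_index(scores))  # → [0.8, 0.8, 0.2]
```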

After the semester ended, students’ opinions on the class and formative assessments were collected through a survey we developed. The survey was anonymous, and students could respond freely. It consisted of 5-point Likert scale questions, multiple-choice questions, and open-ended questions. This study analyzed only the responses about formative assessment.

5. Data analysis

We analyzed the number of formative assessments carried out in the physiology classes of the three subjects, the frequency of items, and item difficulty by BT category. For student responses to the survey questions related to formative assessment, descriptive statistics and frequency analysis were conducted using JAMOVI ver. 1.6.15 (JAMOVI, Sydney, Australia; https://www.jamovi.org), and the answers to the open-ended questions were summarized.
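The Mean±SD values and response frequencies reported for the Likert items can also be computed with Python’s standard library. This sketch assumes responses are coded 1 to 5 and that the reported SD is the sample SD (n - 1 denominator); the function name and the answers are hypothetical, not the study’s data.

```python
from collections import Counter
from statistics import mean, stdev


def describe_likert(responses: list[int]) -> tuple[float, float, dict[int, int]]:
    """Mean, sample SD (n - 1 denominator), and frequency of each point
    on a 5-point Likert scale coded 1 to 5."""
    counts = Counter(responses)
    frequencies = {point: counts[point] for point in range(1, 6)}
    return mean(responses), stdev(responses), frequencies


# Hypothetical answers from 10 respondents (not the study's data).
answers = [5, 4, 4, 5, 4, 3, 5, 4, 4, 5]
m, sd, freq = describe_likert(answers)
print(f"{m:.2f}±{sd:.2f}", freq)  # → 4.30±0.67 {1: 0, 2: 0, 3: 1, 4: 5, 5: 4}
```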

6. Ethical considerations

This study used formative assessment results from the previous year’s classes and survey data collected for class improvement, and it did not collect personally identifiable information about the study subjects. Thus, the institutional review board exempted this study from review (1044308-202105-HR-032-01).

Results

1. The results of the online classes and formative assessments

Table 1 presents the number of classes, number of formative assessments, and number of formative assessment items per BT category for the FHB, BSCRS, and BSURS subjects taken by first-year students in 2020. In each subject, students were given 2–4 formative assessment items in each class, each classified as a “knowledge,” “comprehension,” or “application” item. The instructor kept the proportion of “application” items at 45%–55%, so that students could apply the basic medical knowledge learned in class to clinical contexts through the formative assessment items.
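The 45%–55% range for “application” items can be checked directly from the item counts in Table 1. A short Python sketch using those counts (the `application_share` name is illustrative):

```python
# Item counts per BT category, copied from Table 1.
items_per_level = {
    "FHB": {"knowledge": 3, "comprehension": 18, "application": 25},
    "BSCRS": {"knowledge": 5, "comprehension": 17, "application": 25},
    "BSURS": {"knowledge": 1, "comprehension": 5, "application": 5},
}


def application_share(counts: dict[str, int]) -> float:
    """Proportion of a subject's items that are application items."""
    return counts["application"] / sum(counts.values())


for subject, counts in items_per_level.items():
    share = application_share(counts)
    print(f"{subject}: {counts['application']}/{sum(counts.values())} = {share:.1%}")
```

This gives 54.3% for FHB, 53.2% for BSCRS, and 45.5% for BSURS, all inside the stated range.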

Table 1.

Results of Online Classes and Formative Assessments

| Variable | FHB | BSCRS | BSURS |
|---|---|---|---|
| No. of classes | | | |
| Total (hr) | 55 | 54 | 40 |
| Physiology classes (hr) | 24 | 16 | 8 |
| Formative assessment | | | |
| No. of assessments | 14 | 12 | 5 |
| Total no. of questions | 46 | 47 | 11 |
| Questions per cognitive domain category | | | |
| Knowledge | 3 | 5 | 1 |
| Comprehension | 18 | 17 | 5 |
| Application | 25 | 25 | 5 |
| Items with a correct-answer rate of 80% or higher | | | |
| Knowledge | 3 | 2 | 0 |
| Comprehension | 12 | 2 | 0 |
| Application | 12 | 2 | 0 |

FHB: Function of Human Body, BSCRS: Basic Science of Circulatory and Respiratory System, BSURS: Basic Science of Urinary and Reproductive System.

2. The analysis results for the item difficulty

As mentioned earlier, formative assessment should present items whose difficulty corresponds to the minimum criterion, so that it can be determined whether a student has achieved the learning outcome. The instructor targeted a correct-answer rate of 80% for each item. However, some “application” items that the instructor expected to be difficult for students were nevertheless included because they were judged necessary for learning.

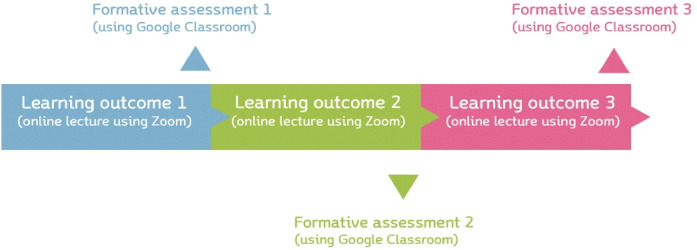

The item difficulty of the formative assessment items in the FHB, BSCRS, and BSURS subjects was analyzed with classical test theory (the higher the value, the easier the item); the results are presented in Fig. 2. The average difficulty was 0.70 for FHB, 0.64 for BSCRS, and 0.55 for BSURS. Students solved “knowledge” items easily, and the higher the level of cognitive ability an item required, the lower its average difficulty value (that is, the harder the item). “Application” items appear to be harder because students must draw on a full understanding of the content learned in order to apply it.

Fig. 2. Difficulty Level of the Formative Assessment Question per Subject.
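The pattern in Fig. 2, where average difficulty falls as the required cognitive level rises, comes from grouping item p-values by BT category and averaging them. A hypothetical Python sketch; the function name and the p-values are invented for illustration and are not the values plotted in Fig. 2.

```python
from collections import defaultdict
from statistics import mean


def mean_difficulty_by_level(items: list[tuple[str, float]]) -> dict[str, float]:
    """Average CTT difficulty (proportion correct) per BT category.

    Each element of `items` is a (bt_category, p) pair for one item.
    """
    grouped: dict[str, list[float]] = defaultdict(list)
    for level, p in items:
        grouped[level].append(p)
    return {level: mean(ps) for level, ps in grouped.items()}


# Hypothetical item p-values (not the values plotted in Fig. 2).
example = [
    ("knowledge", 0.90), ("knowledge", 0.86),
    ("comprehension", 0.75), ("comprehension", 0.62),
    ("application", 0.58), ("application", 0.47),
]
for level, avg in mean_difficulty_by_level(example).items():
    print(f"{level}: {avg:.3f}")
```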

Table 1 shows the number of items with a correct-answer rate of 80% or higher; these made up 58.7% of the items in FHB, 12.8% in BSCRS, and 0% in BSURS. FHB had the most such items, and BSURS had none. Because the difference between the instructor’s expectation and the actual item difficulty is large, it seems necessary to adjust the item difficulty in the future. However, item difficulty under classical test theory depends on the learner group, and this should be considered when interpreting the results. That is, even for the same item, the difficulty value (proportion correct) is higher in a group of high-achieving learners and lower in a group of low-achieving learners.
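The percentages above follow from the counts in Table 1: sum the knowledge, comprehension, and application items at or above the 80% threshold, then divide by each subject’s total number of items. A short Python check:

```python
# Counts copied from Table 1.
above_80 = {"FHB": 3 + 12 + 12, "BSCRS": 2 + 2 + 2, "BSURS": 0 + 0 + 0}
total_items = {"FHB": 46, "BSCRS": 47, "BSURS": 11}

for subject, n in above_80.items():
    share = n / total_items[subject]
    print(f"{subject}: {n}/{total_items[subject]} = {share:.1%}")

n_all, total_all = sum(above_80.values()), sum(total_items.values())
print(f"All subjects: {n_all}/{total_all} = {n_all / total_all:.1%}")
```

This reproduces 58.7%, 12.8%, and 0% per subject and 33 of 104 items (31.7%) overall, matching the figures reported in the Abstract.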

3. Students’ perception on formative assessment

Of the 43 students, 26 responded to the survey for class improvement: 14 were male (53.8%) and 12 were female (46.2%). Their answers to the 5-point Likert scale questions on whether formative assessment helped their learning are presented in Table 2. According to the students, formative assessment helped them focus on class, understand the learning content, and achieve learning outcomes. In addition, by solving the “application” items and listening to the professor’s explanation of the correct answers, they were able to apply basic medicine to clinical contexts. For all four questions, no student answered 1 (not at all) or 2 (not really).

Table 2.

Students’ Perception on Formative Assessments

| Item | Mean±SD |
|---|---|
| Formative assessment helped me focus in class. | 4.31±0.55 |
| Formative assessment helped me understand the class content. | 4.50±0.51 |
| Formative assessment helped me achieve learning outcome. | 4.38±0.64 |
| Formative assessment helped me deeply understand and apply the class content. | 4.15±0.54 |

SD: Standard deviation.

Table 3 presents the details: students’ opinions on how formative assessment helped their learning, which BT item type helped their learning most, the appropriate timing of formative assessment in online classes, and their responses to the open-ended question. The most common reason given (53.8%) was that formative assessment showed them what the important content was. Students reported that “comprehension” items helped most in understanding the class content (61.5%) and “application” items helped most in achieving learning outcomes (50.0%). As for the appropriate timing, the largest share of students (44.0%) answered that formative assessment would be most effective toward the end of the class. This may reflect the burden students felt from being assessed repeatedly within each class. Other opinions included increasing the number of items and adjusting their difficulty.

Table 3.

Students’ Opinion on How Formative Assessments Facilitated Learning

| Item | % |
|---|---|
| 1. What are the reasons that the formative assessment helped your understanding of class content and achievement of learning outcomes? (Multiple answers possible) | |
| I learned what the important content was. | 53.8 |
| Important content was repeated. | 35.5 |
| I found out what I knew and what I did not. | 35.5 |
| I could make the class content my own during class time. | 26.9 |
| It motivated my learning, and I was able to concentrate in class. | 23.1 |
| 2. Which formative assessment question type helped you understand the class content the most? | |
| Questions concerning the acquisition of knowledge | 11.5 |
| Questions concerning my comprehension of the content | 61.5 |
| Questions concerning the application in clinical situations or real life | 26.9 |
| 4. Which formative assessment question type helped you achieve learning outcomes the most? | |
| Questions concerning the acquisition of knowledge | 7.7 |
| Questions concerning my comprehension of the content | 42.3 |
| Questions concerning the application in clinical situations or real life | 50.0 |
| 5. When is the adequate time for formative assessments to be effective for online classes? | |
| During class, right after the explanation of relevant content | 20.0 |
| Towards the end of the class | 44.0 |
| Right after the class ends | 8.0 |
| Any time within the day the class was held | 28.0 |
| 6. Other opinions regarding formative assessments | |
| A formative assessment after the learning outcome lecture was appropriate. | |
| The number of questions should be increased as it greatly helps students understand the content and apply the content in clinical situations. | |
| By solving the questions, I can check if I understood the content correctly. | |
| Some clinical situation application questions were too difficult to solve with just the content learned during class. | |
| The professor’s explanation of the correct answers greatly helped learning, and solving one problem had the same effect as solving multiple problems. | |

Discussion

Online classes have become common in educational institutions around the world due to the COVID-19 pandemic. Agarwal and Kaushik [17] in 2020 found that most learners in online classes using Zoom in medical education believed that online classes would remain part of the postgraduate curriculum even after the COVID-19 pandemic ends. Owing to such changes in teaching methods and the learning environment, many schools and professors are seeking ways to design effective online classes and increase student participation.

Against this background, we propose using formative assessment to increase students’ concentration during online classes, to allow instructors to check immediately how well students understood the learning content, and to allow interaction between instructors and students. In this study, 1–2 formative assessment items were presented after each learning outcome lecture so that formative assessment served as an online instructional strategy, and during development each item was matched to one of the BT categories “knowledge,” “comprehension,” or “application.” The results are as follows:

First, the students focused during class because they had to take a formative assessment immediately after each learning outcome lecture, and they were thus able to use the knowledge acquired during the class. Second, the instructor could check the students’ answers immediately in Google Classroom and thus provide instant feedback. In addition, the instructor could adjust his teaching immediately because he could gauge how well the students understood the content. Third, “integration of lesson and assessments” was maximized both by solving the assessment items and through the instructor’s immediate explanation of the answers; students were able to learn through the problem-solving process. This also means that learning, previously organized by class unit, was subdivided into learning outcome units, each following a cycle of lecture delivery, formative assessment, and explanation of the correct answer, the incorrect answers, and the distractors. Fourth, through formative assessment, the students were able to use metacognition by learning which content was important and which content they did or did not understand. Fifth, formative assessment items targeting diverse levels of cognitive ability allowed students to understand the class content and apply it to clinical contexts. The application items themselves served as examples of how the knowledge learned is applied, so solving them was itself a key learning activity.

This study addressed the timing of formative assessment during class and the development of its items. Below, we consider the method of formative assessment, the cognitive level to be measured and the appropriate difficulty in item development, and the effects of formative assessment perceived by students.

First, although formative assessment was not included in grades, students appeared to feel burdened simply by being frequently exposed to assessment situations. Studies on formative assessment through online platforms or mobile apps such as Kahoot! existed before the COVID-19 outbreak [4-6], and these earlier studies suggested several methods to make participation in assessment fun and interesting for students. With a mobile app, assessment can be run like a game, and students who answer more items correctly, or answer faster, than others can be rewarded. Such approaches go beyond assessing students’ understanding of the lesson: assessment itself becomes part of learning, that is, lecture and assessment are integrated.

Second, the feedback provided after formative assessment enables students’ self-reflection and self-assessment, which made “assessment as learning” possible. The three most common reasons students gave for why formative assessment helped their learning were: (1) I learned what the important content was; (2) important content was repeated; and (3) I found out what I knew and what I did not. Of these, (3) relates to students’ metacognition, which students develop through self-reflection and self-assessment prompted by the commentary and feedback on the items.

Earl [18] in 2013 presented “assessment of learning”, “assessment for learning”, and “assessment as learning” as the paradigms of student assessment. According to Earl [18], “assessment as learning” is a subset of assessment for learning that emphasizes the role of the students. Learning is the process of combining new knowledge with the structure of the student’s existing knowledge, and the student’s own role is what matters in this process. In other words, it is important for students to think about what they already know, what the new knowledge is, and how to internalize it by organizing it with their existing knowledge, and assessment helps with this. Earl [18] stated that “assessment as learning,” in which students become the subjects of assessment and check and adjust their own knowledge for further learning, should be used most widely, followed by “assessment for learning,” which enables instructors to obtain information for instructional judgments and to provide effective feedback to students. What these two paradigms have in common is that assessment is done to support learning rather than to report results, and that formative assessment is the preferred assessment method [19].

Third, items that can maximize the function of formative assessment should be developed. According to Seong [10] in 2018, test tools for formative assessment have the following general characteristics: (1) they are conducted by teachers, although recently learners can also participate in test production; (2) they have the characteristics of a criterion-referenced test because they aim to analyze how well students understand the content of teaching and learning; (3) because their purpose is criterion-referenced, they should consist of items whose difficulty corresponds to the criterion that distinguishes success from failure in learning, rather than items of varying difficulty; (4) their items should continuously arouse learning motivation and interest; and (5) their items must include distractors that reflect misconceptions that may lead to underachievement [10].
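Points (2) and (3) rest on the difference between criterion-referenced and norm-referenced interpretation. The hypothetical Python sketch below contrasts the two: a criterion-referenced decision compares each student with a fixed cutoff, whereas a norm-referenced rank compares students with each other. The function names, the cutoff of 0.8, and the scores are illustrative assumptions, not values from Seong [10] or from this study.

```python
def criterion_referenced(scores: dict[str, float], cutoff: float) -> dict[str, bool]:
    """Judge each student against a fixed criterion (proportion correct)."""
    return {student: score >= cutoff for student, score in scores.items()}


def norm_referenced_rank(scores: dict[str, float]) -> dict[str, int]:
    """Rank students against each other (1 = highest); ties keep input order."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    return {student: rank for rank, student in enumerate(ordered, start=1)}


# Hypothetical proportions correct on one learning outcome, and a hypothetical cutoff.
scores = {"S1": 1.0, "S2": 0.5, "S3": 0.8}
print(criterion_referenced(scores, cutoff=0.8))  # → {'S1': True, 'S2': False, 'S3': True}
print(norm_referenced_rank(scores))              # → {'S1': 1, 'S3': 2, 'S2': 3}
```

Adding a stronger student to the group would push lower-ranked students down one rank but would not change any criterion-referenced decision, which is why a criterion-referenced test needs items pitched at the criterion rather than spread across difficulties.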

However, although formative assessment is expected to serve positive functions, it is difficult to invest as much effort in developing its items as in developing summative assessment items. In this study, BT was applied when developing the items, and each item was expected to be answered correctly by 80% of students. Analysis of the actual correct-answer rates showed a large difference among subjects in the number of items answered correctly by 80% or more of students. Developing items with a difficulty of 0.80 is not easy, so more related research is needed. In addition, to diagnose accurately through formative assessment how well a student currently understands the class content, it is important to develop good distractors. If each distractor is written to reflect a specific cognitive error that students are likely to make, the distractor a student selects provides accurate diagnostic information about that student. As formative assessment is increasingly emphasized, continuous research on item development is needed.
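The diagnostic use of distractors described above can be sketched by mapping each option of an item to the misconception it was written to detect and tallying students’ choices. In this hypothetical Python sketch, the item, the misconception labels, the `diagnose` function, and the responses are all invented for illustration.

```python
from collections import Counter
from typing import Optional

# Hypothetical item key: each option maps to the misconception its distractor was
# written to detect; None marks the correct answer.
option_misconception: dict[str, Optional[str]] = {
    "A": None,
    "B": "confuses preload with afterload",
    "C": "ignores the effect of heart rate",
    "D": "reverses the direction of the pressure gradient",
}


def diagnose(choices: list[str], key: dict[str, Optional[str]]) -> Counter:
    """Count how many students selected the distractor for each misconception."""
    tally: Counter = Counter()
    for choice in choices:
        misconception = key[choice]
        if misconception is not None:  # skip students who chose the correct answer
            tally[misconception] += 1
    return tally


# Hypothetical responses from 8 students.
choices = ["A", "B", "B", "A", "C", "B", "A", "D"]
for misconception, n in diagnose(choices, option_misconception).most_common():
    print(f"{n} student(s): {misconception}")
```

For an individual student, the same key gives a diagnosis directly: `option_misconception[choice]` names the misconception behind that student’s answer.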

This study has a limitation. Because it is a case study of the design, item development, item analysis, and resulting student perceptions of formative assessment in real-time online physiology classes at one school, its results are difficult to generalize. However, it is based on data accumulated continuously over 48 hours of classes taught by one professor in one semester. This study is significant in that it suggests an appropriate time to implement formative assessment in online classes and addresses the application of BT to the development of formative assessment items.

Acknowledgments

None.

Footnotes

Funding

This work was partially supported by a National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (NRF-2019R1F1A1058771).

Conflicts of interest

No potential conflict of interest relevant to this article was reported.

Author contributions

Conceptualization: SJN, YGJ, DHL. Data curation: DHL. Formal analysis: SJN. Methodology: DHL, YGJ. Project administration: SJN, DHL. Writing–original draft: SJN. Writing–review & editing: SJN, DHL, YGJ.

References

1. Serhan D. Transitioning from face-to-face to remote learning: students’ attitudes and perceptions of using Zoom during COVID-19 pandemic. Int J Technol Educ Sci. 2020;4(4):335–342.

2. Bao W. COVID-19 and online teaching in higher education: a case study of Peking University. Hum Behav Emerg Technol. 2020;2(2):113–115. doi: 10.1002/hbe2.191.

3. Black P, Wiliam D. Assessment and classroom learning. Assess Educ Princ Policy Pract. 1998;5(1):7–74.

4. Velan GM, Jones P, McNeil HP, Kumar RK. Integrated online formative assessments in the biomedical sciences for medical students: benefits for learning. BMC Med Educ. 2008;8:52. doi: 10.1186/1472-6920-8-52.

5. Ismail MA, Ahmad A, Mohammad JA, Fakri NM, Nor MZ, Pa MN. Using Kahoot! as a formative assessment tool in medical education: a phenomenological study. BMC Med Educ. 2019;19(1):230. doi: 10.1186/s12909-019-1658-z.

6. Kühbeck F, Berberat PO, Engelhardt S, Sarikas A. Correlation of online assessment parameters with summative exam performance in undergraduate medical education of pharmacology: a prospective cohort study. BMC Med Educ. 2019;19(1):412. doi: 10.1186/s12909-019-1814-5.

7. McMillan JH, Venable JC, Varier D. Studies of the effect of formative assessment on student achievement: so much more is needed. Pract Assess Res Eval. 2013;18(1):2.

8. Lim YS. Students’ perception of formative assessment as an instructional tool in medical education. Med Sci Educ. 2019;29(1):255–263. doi: 10.1007/s40670-018-00687-w.

9. Li W, Cai H, Yang X, Cheng X. Medical teachers’ action research: the application of Bloom’s taxonomy in formative assessments based on rain classroom. In: Lee LK, U LH, Wang FL, Cheung SK, Au O, Li KC, editors. Technology in Education: Innovations for Online Teaching and Learning. Singapore: Springer; 2020. pp. 112–125.

10. Seong TJ. Development and analysis of formative assessment for mathematics ‘Number and Operation’ in the first grade of middle school by the Cognitive Diagnosis Model. J Educ Eval. 2018;31(1):29–53.

11. Seong TJ, Lee B, Ahn S, Gwak YL. Effects of computerized formative assessment with the Cognitive Diagnosis Model on achievement and affective attitude for math. J Educ Eval. 2019;32(1):1–25.

12. Kim SS, Kim HK, Seo MH, Seong TJ. Formative assessment for classroom practice. Seoul, Korea: Hakjisa; 2017.

13. Kang SC. A study on the improvement for building the taxonomy table of two dimensional objectives based on it’s actual condition survey in the standards based assessment of achievement. J Curric Eval. 2014;17(2):21–48.

14. Choi JI, Paik SH. An analysis of content validity of behavioral domain of descriptive tests and factors that affect content validity: focus on the fifth and sixth grade science. J Korean Assoc Sci Educ. 2016;36(1):87–101.

15. Kim S, Kim S, Chang JH, Lee S. An analysis of secondary school teachers’ evaluation professionality on Bloom’s taxonomy and achievement standards. J Educ Eval. 2017;30(2):173–197.

16. Anderson LW, Krathwohl DR, Airasian PW, et al. A taxonomy for learning, teaching, and assessing: a revision of Bloom’s taxonomy of educational objectives. New York, USA: Longman; 2001.

17. Agarwal S, Kaushik JS. Student’s perception of online learning during COVID pandemic. Indian J Pediatr. 2020;87(7):554. doi: 10.1007/s12098-020-03327-7.

18. Earl LM. Assessment of learning, for learning, and as learning. In: Earl LM, editor. Assessment as Learning: Using Classroom Assessment to Maximize Student Learning. 2nd ed. Thousand Oaks, USA: Corwin, a SAGE Company; 2013. pp. 25–34.

19. Park JI. A study on the future direction of the online formative assessment system for learning Korean Language Arts. Korean J Teach Educ. 2015;31(2):41–60.